Adaface Sample Site Reliability Engineering Questions

Here are some sample Site Reliability Engineering questions from our premium questions library (10273 non-googleable questions).

Skills

Aptitude & Soft Skills

Logical Reasoning

Abstract Reasoning

English

Reading Comprehension

Spatial Reasoning

Verbal Reasoning

Diagrammatic Reasoning

Critical Thinking

Data Interpretation

Situational Judgement

Attention to Detail

Numerical Reasoning

Aptitude

Quantitative Reasoning

Inductive Reasoning

Deductive Reasoning

Error Checking

English Speaking

Pronunciation

Product & Design

Visualization & BI Tools

Programming Languages

Frontend Development

Backend Development

Data Science & AI

Data Engineering & Databases

Cloud & DevOps

Testing & QA

Accounting & Finance

Microsoft & Power Platform

CRM & ERP Platforms

Cybersecurity & Networking

Marketing & Growth

Oracle Technologies

Other Tools & Technologies

| 🧐 Question | |||||

|---|---|---|---|---|---|

|

Medium

Error Budget Management

|

Solve

|

||||

|

|

|||||

|

Medium

Incident Response Procedure

|

Solve

|

||||

|

|

|||||

|

Medium

Service Balancer Decision-making

|

Solve

|

||||

|

|

|||||

| 🧐 Question | 🔧 Skill | ||

|---|---|---|---|

|

Medium

Error Budget Management

|

3 mins Site Reliability Engineering

|

Solve

|

|

|

Medium

Incident Response Procedure

|

3 mins Site Reliability Engineering

|

Solve

|

|

|

Medium

Service Balancer Decision-making

|

2 mins Site Reliability Engineering

|

Solve

|

| 🧐 Question | 🔧 Skill | 💪 Difficulty | ⌛ Time | ||

|---|---|---|---|---|---|

|

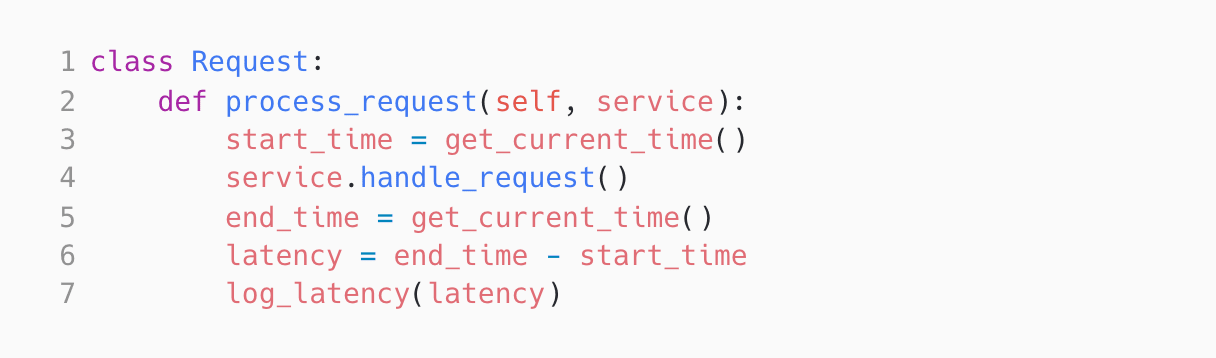

Error Budget Management

|

Site Reliability Engineering

|

Medium | 3 mins |

Solve

|

|

|

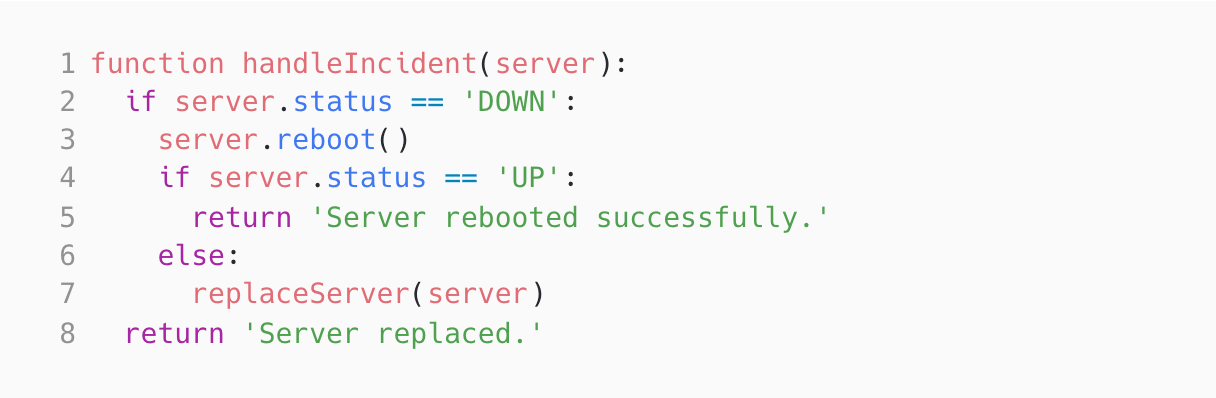

Incident Response Procedure

|

Site Reliability Engineering

|

Medium | 3 mins |

Solve

|

|

|

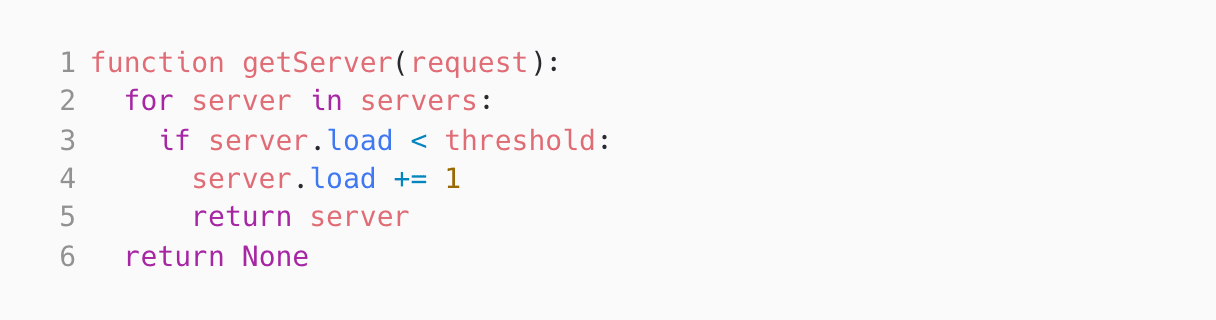

Service Balancer Decision-making

|

Site Reliability Engineering

|

Medium | 2 mins |

Solve

|

We evaluated several of their competitors and found Adaface to be the most compelling. Great library of questions that are designed to test for fit rather than memorization of algorithms.

Swayam Narain, CTO, Affable

Join 1200+ companies in 80+ countries.

Try the most candidate friendly skills assessment tool today.

Ready to streamline your recruitment efforts with Adaface?

Ready to streamline your recruitment efforts with Adaface?