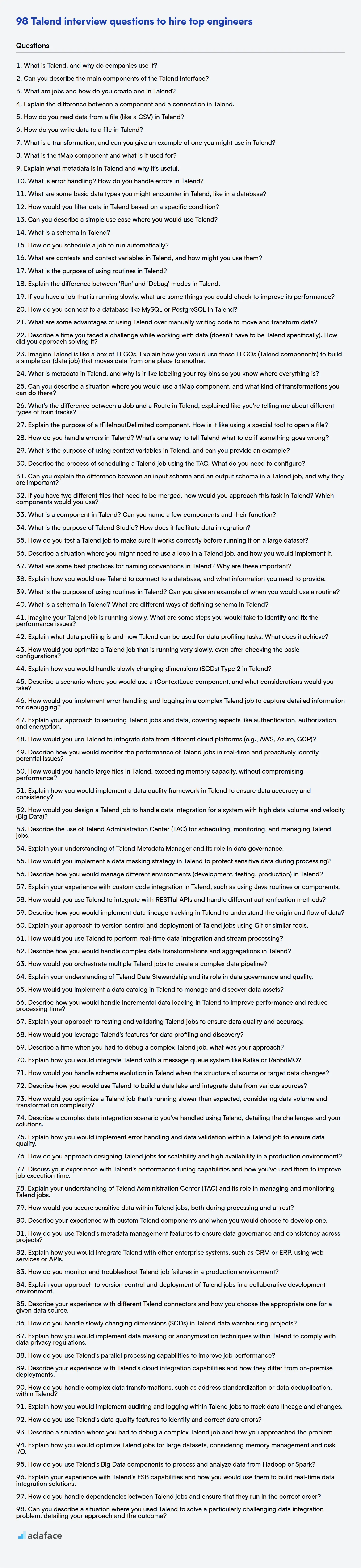

Talend is an extract, transform, load (ETL) tool that is widely used for data integration, data management, and big data projects. Recruiters looking for data integration experts need to ask the right questions to filter through the noise.

This blog post compiles Talend interview questions for every experience level, from freshers and juniors to advanced professionals and seasoned experts, and includes multiple-choice questions (MCQs).

Use these questions alongside our Talend online test to screen candidates and hire the best talent for your organization. You may also want to see how to screen for data analysts.

Talend interview questions for freshers

1. What is Talend, and why do companies use it?

Talend is a data integration platform that simplifies the process of extracting, transforming, and loading (ETL) data from various sources into a target system. It offers a graphical user interface (GUI) for designing data integration jobs, making it easier to build complex data workflows without extensive coding.

Companies use Talend because it offers a unified platform for data integration, data quality, and data governance. This helps improve data accuracy, consistency, and accessibility. It streamlines data processes and enables better decision-making. Some key benefits include: faster time-to-value, improved data quality, reduced data silos, and lower costs compared to custom coding solutions.

2. Can you describe the main components of the Talend interface?

The Talend interface consists of several key components. The Repository is where all project items like jobs, metadata, and routines are stored. The Design Workspace is the area where you visually create and configure data integration jobs using drag-and-drop components. The Palette contains a wide array of reusable components categorized by function (e.g., file input/output, database connections, transformations). The Component tab lets you configure the parameters of whichever component is selected in the design workspace. Finally, the Run tab is where you execute your jobs and view the results, including logs and error messages.

3. What are jobs and how do you create one in Talend?

In Talend, a Job is the basic executable unit. It's a visual representation of a data integration process, essentially a program designed to perform specific tasks like data extraction, transformation, and loading (ETL). Jobs are designed using a graphical interface where you drag and drop components, configure them, and connect them to define the data flow.

To create a Job in Talend Studio:

- Go to the 'Repository' panel.

- Right-click on 'Job Designs' and select 'Create Job'.

- Enter a name and a purpose for your Job.

- Click 'Finish'. A blank Job Designer workspace will open where you can drag and drop components and configure them to build your data flow.

4. Explain the difference between a component and a connection in Talend.

In Talend, a component represents a specific action or transformation that needs to be performed on data. Examples include reading data from a file (tFileInputDelimited), transforming data (tMap), or writing data to a database (tDBOutput). Components have properties that define their behavior, such as the file path for tFileInputDelimited or the database connection details for tDBOutput.

On the other hand, a connection defines how components are linked. It visually joins two components, specifying the source and destination of the data stream or the order in which they execute. There are different types of connections: 'Row' connections carry data (for example 'Main' for the primary flow and 'Lookup' for enrichment from another source), while 'Trigger' connections control execution order, optionally based on conditions.

5. How do you read data from a file (like a CSV) in Talend?

In Talend, you typically use the tFileInputDelimited component to read data from a delimited file like a CSV. You configure the component with the file path, field separator (e.g., comma for CSV), text enclosure (e.g., double quotes), and header row settings. You then define the schema in the component to match the columns in your file; this tells Talend the data types of each column.

After configuring tFileInputDelimited, you connect it to other components using a row connection. For example, you might connect it to a tLogRow component to display the data or a tMap component to transform it. The data flows from the file, through tFileInputDelimited, and into the subsequent components for processing. Other file input components like tFileInputPositional exist for other file types.

6. How do you write data to a file in Talend?

In Talend, you can write data to a file using various components, the most common being tFileOutputDelimited for delimited files (like CSV) and tFileOutputPositional for fixed-width files. First, you need a data flow that transforms or extracts data. Then, connect the output of that flow to the file output component.

Configure the component with the file path, field separator (for delimited files), and other settings like header and footer. Define the schema in the component or propagate it from the incoming data flow. Ensure the file path is accessible and that Talend has the necessary permissions to write to it. Example: For writing to a CSV file, use tFileOutputDelimited, specify the file name in the 'File Name' property, field separator in the 'Field Separator' property, and check the 'Include Header' box if needed.

7. What is a transformation, and can you give an example of one you might use in Talend?

A transformation in Talend is a component that modifies the data as it flows through a Job. It takes data from an input, performs an operation on it, and outputs the transformed data. These operations can involve cleaning, converting, filtering, or enriching the data. Transformations are the core part of an ETL process.

For example, I might use a tMap component as a transformation. tMap lets you map fields from one or more input flows to one or more output flows. I might use it to concatenate first and last names into a single "Full Name" field, to convert a date from one format to another, or to perform a lookup that enriches data with information from another source.
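Expressions in tMap output columns are ordinary Java, so the two transformations mentioned above can be sketched as plain static methods. This is an illustration, not code generated by Talend: the class name `TMapExpressionSketch`, the method names, and the `dd/MM/yyyy` input pattern are all assumptions made for the example.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical helper class; in a real job these expressions would sit in tMap output columns.
final class TMapExpressionSketch {

    // Concatenate first and last name, tolerating nulls and stray whitespace.
    static String fullName(String firstName, String lastName) {
        String first = firstName == null ? "" : firstName.trim();
        String last = lastName == null ? "" : lastName.trim();
        return (first + " " + last).trim();
    }

    // Convert a date string from dd/MM/yyyy to ISO yyyy-MM-dd (the input pattern is assumed).
    static String toIsoDate(String ddMMyyyy) {
        DateTimeFormatter input = DateTimeFormatter.ofPattern("dd/MM/yyyy");
        return LocalDate.parse(ddMMyyyy, input).format(DateTimeFormatter.ISO_LOCAL_DATE);
    }

    public static void main(String[] args) {
        System.out.println(fullName("Ada", "Lovelace"));
        System.out.println(toIsoDate("31/12/2024"));
    }
}
```

Keeping such logic in small, null-safe methods makes it easy to move into a shared routine later if several jobs need it.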

8. What is the tMap component and what is it used for?

The tMap component in Talend is a powerful data transformation component. It's primarily used for mapping, transforming, and routing data from one or more input sources to one or more output destinations. Think of it as a visual data manipulation tool where you can define complex data transformations using expressions, conditions, and lookup tables.

Specifically, tMap can perform actions such as data type conversions, string manipulation, applying business rules using expressions, joining data from multiple sources (lookup tables), filtering data, and creating new data fields based on calculations or conditions. It is a core component for almost any ETL process, enabling you to cleanse, enrich, and reshape data to meet specific business requirements.

9. Explain what metadata is in Talend and why it's useful.

In Talend, metadata is essentially data about data. It describes the structure, format, and properties of your data sources and targets. Think of it as the blueprint for your data. It includes things like table names, column names, data types, key constraints, and file formats (CSV, XML, etc.).

Metadata is useful because it promotes reusability and consistency and simplifies development. By storing metadata in Talend, you can reuse connection details and data structures across multiple jobs instead of redefining them manually each time. This reduces errors, speeds up development, and makes it easier to maintain and update your data integration processes. For example, you can update a database connection once in the metadata repository, and all jobs using that connection will automatically be updated. Ultimately, it provides a central repository of information about the data used in the integration flows.

10. What is error handling? How do you handle errors in Talend?

Error handling is the process of anticipating, detecting, and resolving errors that occur during program execution. It involves implementing mechanisms to gracefully manage unexpected situations, prevent program crashes, and provide informative feedback to users or administrators.

In Talend, errors can be handled using several components:

- tDie: Stops the job immediately upon encountering an error. You can configure it to output an error message.

- tWarn: Issues a warning message but allows the job to continue.

- tLogCatcher: Catches Java exceptions thrown during job execution and routes them to a specified output, such as a file or database table. This allows you to log errors for analysis and debugging. You can then use a tMap to transform the error data.

- tFlowToIterate: Can be used to iterate over error rows and perform specific actions, like sending notifications or retrying failed processes.

- Try...Catch blocks (in tJava, tJavaRow, tJavaFlex): Standard Java error handling mechanisms can be incorporated directly into Java code components. Within the 'Catch' block you can implement your error handling logic.

The choice of error handling strategy depends on the severity of the error and the desired behavior. For critical errors that would corrupt data or halt the system, tDie might be appropriate. For less critical errors, tWarn or tLogCatcher might be used to log the error and continue processing. Use tFlowToIterate when iterative error processing is needed.
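The try/catch option can be sketched as follows. This is a minimal, standalone illustration of the pattern one might place in a tJavaRow-style snippet, not Talend-generated code; the class `RowErrorHandlingSketch`, the `parseQuantity` method, and the `errors` list are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of row-level error handling with a standard Java try/catch.
final class RowErrorHandlingSketch {

    // Collected messages stand in for what a job would send to a log or a reject flow.
    final List<String> errors = new ArrayList<>();

    // Parse a quantity field; on bad input, record the problem and return null
    // instead of letting the exception stop the whole job.
    Integer parseQuantity(String raw) {
        if (raw == null) {
            errors.add("Missing quantity");
            return null;
        }
        try {
            return Integer.valueOf(raw.trim());
        } catch (NumberFormatException e) {
            errors.add("Invalid quantity: " + raw);
            return null;
        }
    }
}
```

Whether a bad value should be logged and skipped, as here, or should stop the job depends on how critical the field is, which mirrors the tWarn versus tDie choice described above.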

11. What are some basic data types you might encounter in Talend, like in a database?

Talend, when interacting with databases, commonly deals with standard data types similar to those found in the database itself or in Java. Some basic data types include: String (for text), Integer (for whole numbers), Double or Float (for decimal numbers), Boolean (for true/false values), and Date (for date and time information). Talend also has Object which allows any type.

12. How would you filter data in Talend based on a specific condition?

In Talend, data can be filtered based on a specific condition primarily using the tFilterRow component. You define the condition in the component's settings, either by choosing a column, an operator, and a value, or by writing a Java expression in advanced mode. tFilterRow then directs each record either to the 'Filter' output flow (records meeting the condition) or to the 'Reject' output flow (records not meeting it).

For example, to keep records where the 'age' field is greater than 25, you would set the condition on the age column to "greater than 25", or in advanced mode write input_row.age > 25. Other common components used in conjunction with tFilterRow include tLogRow to display the results of filtering or tFileOutputDelimited to write the filtered data to a file.
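The routing behaviour, one condition and two output flows, can be sketched in plain Java. This is only a conceptual model of what the component does; `FilterRowSketch` and its `filter` and `reject` fields are names invented for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Conceptual model of a row filter: every row goes to exactly one of two lists.
final class FilterRowSketch<T> {

    final List<T> filter = new ArrayList<>(); // rows that meet the condition
    final List<T> reject = new ArrayList<>(); // rows that do not

    FilterRowSketch(List<T> rows, Predicate<T> condition) {
        for (T row : rows) {
            (condition.test(row) ? filter : reject).add(row);
        }
    }
}
```

For instance, `new FilterRowSketch<>(Arrays.asList(18, 25, 26, 40), age -> age > 25)` would place 26 and 40 in `filter` and 18 and 25 in `reject`.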

13. Can you describe a simple use case where you would use Talend?

A simple use case for Talend is extracting data from a CSV file, transforming it to conform to a specific format, and loading it into a database table. Imagine you receive daily sales data as a CSV with inconsistent date formats and product IDs. Using Talend, you can:

- Read the CSV file: Use a tFileInputDelimited component.

- Transform the data: Use a tMap component to:

  - Convert date formats to a standard format.

  - Look up product IDs in a reference table (if needed) and replace them with standardized IDs.

  - Filter out any rows with invalid data.

- Load into the database: Use a tDBOutput component (e.g., tMysqlOutput for MySQL) to insert the transformed data into the sales table.

14. What is a schema in Talend?

In Talend, a schema defines the structure of data flowing through a component or job. It specifies the name, data type, and other properties of each field (column) within a dataset. Schemas are crucial for ensuring data integrity and enabling Talend components to correctly interpret and process the data.

Schemas can be defined manually or automatically propagated throughout a job. They act as metadata, providing essential information to Talend about the expected format and content of the data. This information is then used for various tasks, such as data validation, transformation, and routing. Examples include:

- Column Name: e.g., CustomerID

- Data Type: e.g., Integer, String, Date

- Length: e.g., 10 (maximum characters for a string)

- Nullable: true or false (indicates if a field can contain null values)

- Key: Whether it is a Primary Key or Foreign Key.

Conceptually, a simple schema can be thought of as a map from column name to data type, for example in Java:

    import java.util.HashMap;
    import java.util.Map;

    Map<String, String> schema = new HashMap<>();
    schema.put("name", "String");
    schema.put("age", "Integer");

15. How do you schedule a job to run automatically?

The method for scheduling jobs automatically depends on the operating system or platform.

On Linux/Unix systems, I'd use cron: edit the crontab with crontab -e and specify the schedule and the command to run. For example, 0 0 * * * /path/to/my/script.sh runs the script daily at midnight. For a Talend job, that script is typically the launcher script produced when the job is built as a standalone archive. On Windows, Task Scheduler plays the same role: you define a task with a time-based or event-based trigger and specify the program or script to execute. Cloud platforms offer managed scheduling services, such as Amazon EventBridge (formerly CloudWatch Events) on AWS, Azure Logic Apps, and Google Cloud Scheduler, which often add monitoring and retry mechanisms. Talend's own Administration Center (TAC) can also schedule jobs.

16. What are contexts and context variables in Talend, and how might you use them?

Contexts in Talend are sets of variables that allow you to parameterize your jobs, making them more flexible and reusable across different environments (e.g., development, testing, production). Context variables are the individual parameters within a context, holding values like database connection details, file paths, or threshold values.

You might use contexts to:

- Easily switch between database connections for different environments.

- Specify different input or output file paths based on the environment.

- Define error thresholds that vary depending on the system load.

- Securely store sensitive information like passwords.

- Create jobs that use environment-specific values. Examples: context.database_host, context.username, context.filepath.
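The idea of switching values by environment can be sketched with `java.util.Properties`, which reads simple key=value text. This is a standalone illustration rather than Talend's own context-loading code; the class `ContextSketch`, the environment names, and every value shown are made up for the example.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical sketch: one set of variable names, different values per environment.
final class ContextSketch {

    // Parse key=value text into a Properties object.
    static Properties load(String text) {
        Properties context = new Properties();
        try {
            context.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return context;
    }

    // Pick the value set by environment name; anything other than "prod" falls back to dev.
    static Properties forEnvironment(String environment) {
        String dev = "database_host=localhost\nfilepath=/tmp/in.csv\n";
        String prod = "database_host=db.example.internal\nfilepath=/data/in.csv\n";
        return load("prod".equals(environment) ? prod : dev);
    }
}
```

The job logic only ever asks for `database_host` or `filepath`; which value it gets is decided once, when the environment is chosen.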

17. What is the purpose of using routines in Talend?

Routines in Talend serve as reusable code snippets (Java) that can be called from various parts of a Talend job or across multiple jobs. This promotes code reusability, reduces redundancy, and simplifies complex data transformation logic. They encapsulate frequently used functions, making jobs more modular and maintainable.

Specifically, routines can be used to:

- Perform complex calculations or string manipulations.

- Standardize data formats (e.g., date conversions).

- Connect to external systems or APIs.

- Implement custom error handling.
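A user routine is essentially a Java class exposing public static methods. The sketch below shows what such a class might look like; the class name `StringRoutinesSketch` and the `maskEmail` method are hypothetical, and the package declaration a routine would have inside Talend Studio is left out so the example compiles on its own.

```java
// Hypothetical routine-style class: stateless, with public static helper methods.
final class StringRoutinesSketch {

    // Mask the local part of an email address, keeping its first character and the domain.
    public static String maskEmail(String email) {
        if (email == null) {
            return null;
        }
        int at = email.indexOf('@');
        if (at <= 0) {
            return email; // nothing recognisable to mask; return the value unchanged
        }
        return email.charAt(0) + "***" + email.substring(at);
    }
}
```

Once defined, a method like this could be called from any expression field, for example `StringRoutinesSketch.maskEmail(row1.email)` in a tMap output column (the `row1.email` column is assumed).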

18. Explain the difference between 'Run' and 'Debug' modes in Talend.

In Talend, 'Run' mode executes the Job as a compiled Java application at full speed. It doesn't pause at breakpoints or offer step-by-step execution, which makes it the mode that matches how jobs behave once they are deployed.

'Debug' mode, on the other hand, is specifically designed for troubleshooting and understanding Job execution. It allows you to set breakpoints, inspect variable values at different stages, step through the Job execution line by line, and monitor data flow. This mode is critical for identifying errors, understanding transformation logic, and ensuring data integrity during development.

19. If you have a job that is running slowly, what are some things you could check to improve its performance?

If a job is running slowly, I'd first check resource utilization (CPU, memory, disk I/O, network I/O) to identify bottlenecks. I'd also review the code for inefficient algorithms or data structures. Profiling the code can pinpoint slow sections. Look for database inefficiencies like missing indexes or slow queries.

Specifically, I would:

- Monitor resource usage: Use tools like top, htop, iostat, and vmstat to observe CPU, memory, and I/O.

- Profile the code: Use a profiler to identify performance hotspots. Because Talend jobs run as Java, a JVM profiler such as VisualVM fits here.

- Optimize database queries: Analyze query execution plans, add indexes, and rewrite slow queries.

- Review algorithms and data structures: Ensure they are appropriate for the task and data size.

- Check for blocking operations: Identify any I/O bound operations or locks that are causing delays.

- Consider parallelism: If applicable, explore using multi-threading or multi-processing to distribute the workload.

- Review logs: Check for any error messages or warnings that might indicate issues.

- If the job involves external dependencies (API calls, external services), check their responsiveness and latency.

20. How do you connect to a database like MySQL or PostgreSQL in Talend?

In Talend, you connect to databases like MySQL or PostgreSQL using database components. You typically use components like tMysqlConnection or tPostgresqlConnection for establishing the connection. These components require you to specify connection details such as host, port, database name, username, and password. After configuring the connection component, you can use other components like tMysqlInput or tPostgresqlInput to read data, or tMysqlOutput or tPostgresqlOutput to write data to the database.

Specifically, you'll drag the appropriate connection component onto your Talend job canvas, double-click it to open its configuration panel, and enter the database connection properties. For instance, you might set the 'Host' to 'localhost', 'Port' to '3306' (MySQL) or '5432' (PostgreSQL), 'Database' to your database name, and then provide valid 'Username' and 'Password' credentials. After the connection is established in the component, you can utilize the connection in other input and output components to read/write data.
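Talend's database components connect through JDBC, so the host, port, and database name end up in a JDBC URL. A minimal sketch of that assembly follows; `JdbcUrlSketch` is a hypothetical helper, and real connections usually add driver-specific parameters that are omitted here.

```java
// Hypothetical helper that assembles basic JDBC URLs from connection properties.
final class JdbcUrlSketch {

    static String mysql(String host, int port, String database) {
        return "jdbc:mysql://" + host + ":" + port + "/" + database;
    }

    static String postgresql(String host, int port, String database) {
        return "jdbc:postgresql://" + host + ":" + port + "/" + database;
    }

    public static void main(String[] args) {
        System.out.println(mysql("localhost", 3306, "sales"));
        System.out.println(postgresql("localhost", 5432, "sales"));
    }
}
```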

21. What are some advantages of using Talend over manually writing code to move and transform data?

Talend offers several advantages over manual coding for data movement and transformation. It provides a visual, drag-and-drop interface that simplifies complex data integration tasks, reducing development time and the risk of errors. Its pre-built components and connectors for various data sources and destinations eliminate the need to write custom code for common operations, enhancing productivity.

Furthermore, Talend offers features like built-in data quality checks, data profiling, and comprehensive error handling. It facilitates collaboration through version control and shared repositories. Scalability is also a key benefit, as Talend can handle large volumes of data and complex transformations efficiently, which might require significant optimization efforts in manual coding scenarios. In essence, Talend streamlines data integration processes, making them faster, more reliable, and easier to manage compared to manually coded solutions.

22. Describe a time you faced a challenge while working with data (doesn't have to be Talend specifically). How did you approach solving it?

During a project involving customer churn prediction, I encountered a significant challenge with missing data. A substantial portion of customer profiles lacked complete information, particularly regarding demographics and usage patterns. This threatened to bias the model and reduce its accuracy. To address this, I first performed a thorough analysis of the missing data, categorizing it by type (e.g., missing completely at random, missing at random, missing not at random) and identifying the potential causes.

Based on the analysis, I employed a combination of techniques: for features with a small number of missing values, I used simple imputation methods like mean or median imputation. For features with a larger proportion of missing data or where imputation could introduce bias, I created new features indicating whether the original feature was missing (missing indicator variables). I also leveraged domain knowledge to inform the imputation process, for example, using the average usage patterns of similar customer segments to impute missing usage data. Finally, I evaluated the impact of these strategies on the model's performance through cross-validation, selecting the approach that minimized bias and maximized predictive accuracy.

Talend interview questions for juniors

1. Imagine Talend is like a box of LEGOs. Explain how you would use these LEGOs (Talend components) to build a simple car (data job) that moves data from one place to another.

Okay, think of Talend components as LEGO bricks. To build a simple data "car" (job), I'd start with a tFileInputDelimited component. This is like the wheels - it's how the car starts moving by reading data from a file (our data source). Next, I'd use a tMap component. This is like the car's chassis - it transforms and maps the input data to the desired structure. Finally, I'd use a tFileOutputDelimited component. This is like the destination - it writes the transformed data to a new file (our data target). In short, the job reads data, transforms it, and writes it elsewhere.

2. What is metadata in Talend, and why is it like labeling your toy bins so you know where everything is?

In Talend, metadata is descriptive information about your data. It defines the structure, format, and properties of data objects within your Talend jobs. Think of it as the blueprint or schema of your data, describing fields, data types, lengths, and even database connections. Like labeling toy bins, Talend metadata helps you easily identify, understand, and manage your data sources and targets without having to rummage through them blindly.

Just as labeled toy bins prevent chaos, Talend metadata allows for efficient data integration. Without it, you'd waste time figuring out the layout of each file or database table every time you build a job. Properly defined metadata enables Talend to automatically handle data transformations and mapping, reducing errors and improving development speed. It promotes reusability and maintainability of your data integration processes.

3. Can you describe a situation where you would use a tMap component, and what kind of transformations you can do there?

A tMap component in Talend is primarily used for data transformation and mapping between one or more input sources and one or more output targets. A common scenario is enriching customer data. Imagine you have customer data (name, address) in one file and customer purchase history in another. Using tMap, you can join these two datasets based on a common key (e.g., CustomerID) to create a combined dataset containing customer details and their purchase history.

tMap supports various transformations. These include:

- Data type conversions: Converting strings to integers, dates to specific formats.

- String manipulation: Concatenating strings, extracting substrings, replacing characters.

- Conditional mapping: Using expressions to map data based on certain conditions (e.g., using row1.age > 18 ? "Adult" : "Minor").

- Lookups: Retrieving data from lookup tables (other input flows) based on matching keys.

- Mathematical operations: Performing calculations on numeric fields.

- Date calculations: Adding or subtracting dates.

- Using routines: utilizing built-in or custom routines to apply complex business logic or standardized functions to the data.
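Two of the items above, lookups and conditional mapping, can be sketched in plain Java. This models the behaviour conceptually rather than reproducing tMap itself; `LookupJoinSketch`, the semicolon-separated output, and the "none" placeholder for unmatched rows are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Conceptual model of a keyed lookup (left outer join) and a conditional expression.
final class LookupJoinSketch {

    // Keep every customer; when the lookup has no match for the key, emit "none".
    static List<String> join(Map<Integer, String> customers, Map<Integer, String> lastPurchase) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<Integer, String> customer : customers.entrySet()) {
            String purchase = lastPurchase.getOrDefault(customer.getKey(), "none");
            out.add(customer.getKey() + ";" + customer.getValue() + ";" + purchase);
        }
        return out;
    }

    // Conditional mapping, the same shape as the ternary expression in the list above.
    static String ageGroup(int age) {
        return age > 18 ? "Adult" : "Minor";
    }
}
```

An inner join would simply skip customers without a match instead of emitting a placeholder.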

4. What's the difference between a Job and a Route in Talend, explained like you're telling me about different types of train tracks?

Think of Talend as a giant railway system. A Job is like a complete train track that takes cargo (your data) from one station (source) to another (destination), performing actions (transformations) along the way. It's designed to run from start to finish, often multiple times, carrying out a specific data integration task. A Job defines the entire data flow from A to Z.

Now, a Route is like a specialized, dynamic segment of track designed for real-time or near real-time transport, often responding to events. It's more interactive. Imagine a small section of rail where signals change frequently based on incoming events. Routes are designed to be always on, listening for requests, transforming data on the fly, and sending it where it needs to go. Example: A route could pick up file information dropped in a webserver, transform it and send the relevant data to a database, as soon as the file is there. Thus, Routes are primarily used for service-oriented integration, working with APIs and message queues, constantly ready to process data requests as they arrive.

5. Explain the purpose of a tFileInputDelimited component. How is it like using a special tool to open a file?

The tFileInputDelimited component in Talend is used to read data from delimited text files (like CSV files). It parses the file, treating each line as a record and splitting each record into fields based on a specified delimiter (e.g., comma, tab, pipe). Think of it like using a specialized tool (like a letter opener) designed for a specific task: opening envelopes (CSV files) without damaging the contents.

Instead of manually writing code to read each line and split it based on the delimiter, tFileInputDelimited automates this process. It offers options to define:

- The file path.

- The delimiter.

- The text enclosure (if any).

- The header row (to use as column names).

- Data types for each column.

- Error handling options.
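To show what the delimiter and text-enclosure options save you from writing, here is a minimal sketch of splitting one line by hand. It is a simplified illustration, not the component's actual parser; `DelimitedLineSketch` is a hypothetical name, and treating a doubled enclosure as an escaped character is an assumption based on common CSV practice.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified splitter for one delimited line with optional enclosed fields.
final class DelimitedLineSketch {

    // Split on the separator, treating separators inside the enclosure as data.
    // A doubled enclosure inside an enclosed field is read as one literal enclosure character.
    static List<String> split(String line, char separator, char enclosure) {
        List<String> fields = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean enclosed = false;
        for (int i = 0; i < line.length(); i++) {
            char c = line.charAt(i);
            if (c == enclosure) {
                if (enclosed && i + 1 < line.length() && line.charAt(i + 1) == enclosure) {
                    current.append(enclosure); // escaped enclosure
                    i++;
                } else {
                    enclosed = !enclosed; // entering or leaving an enclosed field
                }
            } else if (c == separator && !enclosed) {
                fields.add(current.toString());
                current.setLength(0);
            } else {
                current.append(c);
            }
        }
        fields.add(current.toString());
        return fields;
    }
}
```

Even this short version has to track state across characters, which is the kind of detail the component's settings handle for you.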

6. How do you handle errors in Talend? What's one way to tell Talend what to do if something goes wrong?

In Talend, error handling is crucial for robust data integration. You can handle errors using several components and techniques. One primary method is using the tDie component, or tWarn when the job should keep running. These components are typically connected to the reject flow of a component where errors might occur, or triggered through an OnComponentError or OnSubjobError link. When a record is rejected (due to data type mismatch, constraint violation, etc.), it's routed to the tDie or tWarn component.

Specifically, tDie halts the job execution immediately. You can configure it to print a custom error message to the console or log file. For example, you might link a tMap's 'Reject' output to a tDie to stop the job if data transformation fails for any record. This ensures that faulty data doesn't propagate further into the system, preserving data integrity.

7. What is the purpose of using context variables in Talend, and can you provide an example?

Context variables in Talend allow you to parameterize your jobs, making them more flexible and reusable. Instead of hardcoding values directly into components, you can define context variables that hold these values. At runtime, you can set or change these context variables, allowing the same job to behave differently based on the context. This simplifies managing configurations across different environments (development, testing, production) or for different data sources.

For example, consider a job that reads data from a database. Instead of hardcoding the database connection details (host, port, database name, username, password) directly into the tDBConnection component, you can define context variables like context.db_host, context.db_port, context.db_name, context.db_user, and context.db_password. Then, in the tDBConnection component, you reference these context variables. Now, you can easily switch between different database connections by simply changing the values of these context variables, without modifying the job itself. This simplifies deployment and promotes code reusability.

8. Describe the process of scheduling a Talend job using the TAC. What do you need to configure?

To schedule a Talend job using the Talend Administration Center (TAC), you first need to log in to the TAC. Then, navigate to the 'Job Conductor' section. Click on 'Add Task' to create a new task associated with your published Talend job. You'll need to select the job to schedule, configure the execution server, and then define the scheduling parameters. This involves setting the start date, time, and recurrence pattern (e.g., daily, weekly, monthly, cron expression). You can also configure execution parameters such as contexts, variables, and priorities.

Specifically, you need to configure:

- Job Selection: Choose the specific Talend job you want to schedule.

- Execution Server: Select the execution server where the job will run.

- Scheduling: Define the schedule (start date/time, recurrence).

- Context: Choose the context to use for the job execution.

- Parameters: Set any required job parameters (variables).

- Priority: Set the job's priority.

9. Can you explain the difference between an input schema and an output schema in a Talend job, and why they are important?

In a Talend job, the input schema defines the structure and data types of the data that enters a component. It specifies the fields (columns), their data types (e.g., string, integer, date), and any constraints or metadata associated with the input data. The output schema describes the structure and data types of the data that the component produces as output, after processing the input data. Similar to the input schema, it defines fields, data types, and metadata.

They are important because schemas enable Talend to perform data type validation, transformation, and mapping operations correctly. They ensure that data is processed consistently and accurately throughout the job. Mismatched schemas can lead to errors, data corruption, or unexpected results. Using schemas improves the maintainability and readability of the Talend job by providing clear documentation of the data structures involved, and helps ensure data quality.

10. If you have two different files that need to be merged, how would you approach this task in Talend? Which components would you use?

To merge two different files in Talend, I would use the tMap component as the primary tool. The basic approach involves reading both files using appropriate input components (e.g., tFileInputDelimited for delimited files, tFileInputExcel for Excel files). These input components feed into the tMap component.

Inside the tMap, I would define the input flows (one for each file) and create an output flow that combines the desired fields from both input flows. Joins (inner join, left outer join, etc.) can be configured within tMap to handle matching records based on a common key, if required. For simply appending the rows of two files that share the same schema, tUnite is the better fit, since tMap combines flows by joining them rather than stacking them. Finally, the combined flow would be written to the desired output file using an output component (e.g., tFileOutputDelimited).

11. What is a component in Talend? Can you name a few components and their function?

In Talend, a component is a pre-built, reusable block of code that performs a specific task within a data integration job. Think of them as building blocks you drag and drop to create a data flow. Components handle things like reading from a file, transforming data, writing to a database, or calling an API.

Some common components include:

- tFileInputDelimited: Reads data from a delimited file (e.g., CSV).

- tFileOutputDelimited: Writes data to a delimited file.

- tMap: Performs data transformations and mappings between input and output schemas.

- tMysqlInput/tOracleInput/tPostgresqlInput: Reads data from a MySQL/Oracle/PostgreSQL database, respectively.

- tMysqlOutput/tOracleOutput/tPostgresqlOutput: Writes data to a MySQL/Oracle/PostgreSQL database, respectively.

- tLogRow: Prints data to the console (useful for debugging).

12. What is the purpose of Talend Studio? How does it facilitate data integration?

Talend Studio is an Eclipse-based development environment used to build, test, and deploy data integration processes. Its primary purpose is to simplify the movement and transformation of data between various systems, sources, and destinations. It allows users to create ETL (Extract, Transform, Load) processes, data quality checks, and data governance rules.

Talend facilitates data integration through its graphical interface, pre-built components (connectors), and code generation capabilities. Users can visually design data flows by dragging and dropping components, configuring them, and connecting them to create complete integration workflows. It supports a wide range of data sources, including databases, flat files, cloud applications, and big data platforms. The underlying engine translates these graphical flows into executable code (Java), which handles the data processing and movement.

13. How do you test a Talend job to make sure it works correctly before running it on a large dataset?

Before running a Talend job on a large dataset, thorough testing is crucial. Start with unit testing individual components or transformations. Use small, representative datasets for these tests. Verify that each component processes data as expected by checking the output against known correct values. Log specific values at different stages within the job to aid debugging.

Next, perform integration testing by combining several components and testing the overall flow. Use a slightly larger dataset for this. Leverage Talend's debugging features, such as the debugger and data viewers, to step through the job execution and inspect the data at each step. Check for data quality issues, performance bottlenecks, and unexpected errors. For example, verify output file format is correct using a command-line tool like head -n 1 output.csv and compare with expected output.

14. Describe a situation where you might need to use a loop in a Talend job, and how you would implement it.

A loop is needed when you want to repeat the same processing for each item in a set. For example, imagine processing multiple files in a directory. The tFileList component lists the files that match a file mask and iterates over them itself, so no separate loop component is required. For each file, we'd run a subjob built from components like tFileInputDelimited, tMap, and tFileOutputDelimited. (tLoop is the right choice for a counter-based or condition-based loop, where you define 'From', 'To', and 'Step' values or a While condition.)

Implementation involves connecting tFileList to the first component of the processing subjob with an Iterate link. Critically, to access the file within each iteration, you read the global variable that tFileList sets, for example ((String)globalMap.get("tFileList_1_CURRENT_FILEPATH")), and use it as the file name in tFileInputDelimited (or another relevant component). Effectively, tFileList drives the processing sequence for each identified file.
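The iteration can be pictured in plain Java. In a job, tFileList publishes the path of the current file in globalMap under a key such as tFileList_1_CURRENT_FILEPATH (the prefix is the component's unique name). The sketch below simulates that with an ordinary map; the class name and the "read" step are hypothetical stand-ins for the real subjob.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical simulation of a tFileList iteration; not Talend-generated code.
final class FileListIterationSketch {

    static List<String> processAll(List<String> filePaths) {
        Map<String, Object> globalMap = new HashMap<>();
        List<String> processed = new ArrayList<>();
        for (String path : filePaths) {
            // One iteration: the current path is published under a well-known key.
            globalMap.put("tFileList_1_CURRENT_FILEPATH", path);
            // The file input component would use this expression as its file name.
            String currentFile = (String) globalMap.get("tFileList_1_CURRENT_FILEPATH");
            processed.add("read " + currentFile);
        }
        return processed;
    }
}
```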

15. What are some best practices for naming conventions in Talend? Why are these important?

Talend naming conventions are crucial for readability, maintainability, and collaboration. Consistent naming makes jobs easier to understand and debug, especially in large projects.

Some best practices include:

- Use descriptive names that clearly indicate the component's purpose (e.g., tFileInputDelimited_Customers).

- Follow a consistent naming structure (e.g., [component type]_[description] or [system]_[process]_[component]).

- Use PascalCase or camelCase for component names.

- Avoid spaces and special characters; use underscores instead.

- Use abbreviations sparingly and consistently.

- For variables or context parameters: context.variableName

- Job naming convention: [ProjectName]_[ProcessName]_[Version]

- Routine naming convention: routines.RoutineName

Following these conventions reduces ambiguity, promotes code reuse, and simplifies troubleshooting, saving time and effort in the long run.

16. Explain how you would use Talend to connect to a database, and what information you need to provide.

To connect to a database using Talend, you would typically use a database component like tDBConnection and tDBInput (or similar, depending on the database type). First, you would drag and drop the appropriate database connection component (e.g., tMysqlConnection, tOracleConnection) onto the job designer. Then, you'd configure the component by providing necessary connection details.

The required information usually includes:

- Connection Type/Mode: e.g., 'Built-in' or 'Repository'

- Database Type: The specific type of database (MySQL, Oracle, PostgreSQL, etc.)

- Host: The hostname or IP address of the database server.

- Port: The port number the database server is listening on.

- Database Name/SID: The name of the database or the Service ID for Oracle.

- Username: The username for authentication.

- Password: The password for the given username. Optionally, you can use context variables for these details for security and reusability.

- Schema (Optional): The database schema to connect to.

- Additional parameters (Optional): JDBC URL parameters

17. What is the purpose of using routines in Talend? Can you give an example of when you would use a routine?

Routines in Talend are reusable Java code snippets that perform specific tasks or calculations. They allow you to encapsulate complex logic and reuse it across multiple Talend jobs, improving maintainability and reducing code duplication. They serve the purpose of extending Talend's built-in functionalities.

For example, you might use a routine to:

- Format a phone number according to a specific pattern.

- Perform custom data validation.

- Encrypt or decrypt data using a specific algorithm.

- Calculate a complex business metric.

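A routine is then called like any static Java method, for example (PhoneNumberFormatter is a hypothetical user routine):

String formattedPhoneNumber = PhoneNumberFormatter.format("1234567890");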
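The call above assumes that a user routine named PhoneNumberFormatter exists. A minimal sketch of such a routine could look like the following; the output pattern and the handling of unexpected input are assumptions made for illustration.

```java
// Hypothetical user routine. In Talend Studio it would be a public class in the "routines" package.
final class PhoneNumberFormatter {

    // Formats a 10-digit number as (123) 456-7890; any other input is returned unchanged.
    static String format(String raw) {
        if (raw == null) {
            return null;
        }
        // Keep digits only, so inputs like "123-456-7890" are accepted too.
        String digits = raw.replaceAll("\\D", "");
        if (digits.length() != 10) {
            return raw;
        }
        return "(" + digits.substring(0, 3) + ") "
                + digits.substring(3, 6) + "-" + digits.substring(6);
    }
}
```

Returning the input unchanged for unexpected lengths keeps the routine safe to call from a tMap expression without extra null or length checks.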

18. What is a schema in Talend? What are different ways of defining schema in Talend?

In Talend, a schema defines the structure of your data. It specifies the name, data type (e.g., string, integer, date), and other properties of each column in a dataset. Think of it as a blueprint for your data, ensuring consistency and allowing Talend components to properly process and transform the data.

There are several ways to define a schema in Talend:

- Built-in: Defined and stored locally in a single component of a single job. It is not shared with other jobs, which makes it useful for quick prototyping.

- Repository: Stored and managed centrally within the Talend repository. Reusable across multiple jobs, promoting consistency.

- Manual Definition: You define the schema column by column in the component's schema editor, which is how a built-in schema is typically created. Useful for simple transformations or when a schema is not available elsewhere.

- Copy from another Component: Copy the schema directly from another component already present in the job. Useful when components share a common data structure.

- Retrieve Schema: Retrieve the schema from a database table through a metadata connection. Schemas can also be derived from file-based sources such as XML or Excel.

19. Imagine your Talend job is running slowly. What are some steps you would take to identify and fix the performance issues?

First, I'd monitor the job's execution using Talend's monitoring features or the execution logs to pinpoint the slow component(s) or phase. Key metrics to observe include processing time, row counts, and memory usage. I'd then analyze the identified bottleneck, checking for common issues like:

- Data Volume: Large datasets can slow processing. Consider filtering, sampling, or using database-side operations.

- Inefficient Transformations: Use efficient Talend components and avoid unnecessary computations. For example, use tMap effectively, minimize the usage of tJavaRow, and optimize tJoin. Use built-in functions instead of custom code wherever possible.

- Database Bottlenecks: Optimize SQL queries, add indexes, or tune database parameters. Check connection pooling and database server load.

- Memory Issues: Increase JVM heap size allocated to the Talend job. Verify that there are no memory leaks.

- Parallelization: If applicable, increase the number of parallel execution threads to exploit multi-core processors. Use the tParallelize component to parallelize independent tasks. However, be careful about resource contention.

Finally, after implementing a fix, I'd re-run the job and compare the performance metrics to ensure the problem is resolved, iterating until the desired performance is achieved.

20. Explain what data profiling is and how Talend can be used for data profiling tasks. What does it achieve?

Data profiling is the process of examining data to collect statistics and information about it. It involves analyzing data sets to understand their structure, content, relationships, and quality. Talend can be used for data profiling through the Profiling perspective in Talend Studio, where you define analyses on your data sources to identify data quality issues (like missing values, invalid formats, and inconsistencies) and generate reports.

Data profiling with Talend achieves several things: It helps in understanding data assets, identifying data quality problems, assessing risks associated with data integration projects, and improving data quality. This ultimately leads to better data-driven decision-making, more effective data integration, and increased trust in data.

Advanced Talend interview questions

1. How would you optimize a Talend job that is running very slowly, even after checking the basic configurations?

To optimize a slow-running Talend job beyond basic configuration checks, I'd focus on data flow and resource utilization. First, I'd examine the job's execution plan to identify bottlenecks. This involves analyzing component execution times and data volumes at each stage. Then I'd consider techniques such as:

- Optimize database queries: Ensure queries are indexed correctly and retrieve only necessary data.

- Reduce data volume: Filter data as early as possible in the job flow.

- Improve data transformation: Use built-in Talend functions efficiently, and avoid unnecessary string manipulations.

- Increase parallelism: Utilize tParallelize to run independent subjobs concurrently. Also, look into the performance of tAggregateRow, which holds its groups in memory. If the input is already sorted on the group-by columns, tAggregateSortedRow uses less memory; otherwise, consider pushing the aggregation down to the database.

- Optimize memory usage: Adjust JVM heap size and use tFileOutputDelimited or similar components with appropriate buffer sizes.

- Leverage database features: Utilize database-specific bulk loading features if writing large amounts of data to a database.

- Check resources: Monitor CPU, memory, and disk I/O during job execution. Identify resource constraints.

2. Explain how you would handle slowly changing dimensions (SCDs) Type 2 in Talend?

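To handle Slowly Changing Dimensions (SCD) Type 2 in Talend, I would either use a dedicated SCD component (e.g., tMysqlSCD or tOracleSCD), which implements the Type 2 logic through its SCD editor, or build the logic manually with tMap and tDBOutput (or a similar database output component) along with other helper components.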

The general process involves comparing incoming source data with existing data in the dimension table. If a change is detected for attributes tracked as Type 2, I'd perform the following actions: 1) Expire the current record: Set the end_date column of the current record in the dimension table to the current date or the date the change was detected. 2) Insert the new record: Insert a new record into the dimension table with the updated attribute values, a new surrogate key, and set start_date to the current date and end_date to a far future date (e.g., '9999-12-31'). If no change is detected, then no action needs to be taken. Talend components like tDie or tWarn can be used to catch unexpected scenarios.
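The expire-and-insert logic described above can be sketched in plain Java on an in-memory dimension. This only illustrates the Type 2 rules and is not how a Talend component implements them; the class, the field names, and the use of a single tracked attribute (city) are assumptions.

```java
import java.time.LocalDate;
import java.util.List;

// Hypothetical in-memory illustration of SCD Type 2 rules.
final class Scd2Sketch {

    static final LocalDate OPEN_END = LocalDate.of(9999, 12, 31);

    static final class DimRow {
        final int surrogateKey;
        final String customerId;
        final String city;
        final LocalDate startDate;
        LocalDate endDate;

        DimRow(int surrogateKey, String customerId, String city,
               LocalDate startDate, LocalDate endDate) {
            this.surrogateKey = surrogateKey;
            this.customerId = customerId;
            this.city = city;
            this.startDate = startDate;
            this.endDate = endDate;
        }
    }

    static void applyChange(List<DimRow> dimension, String customerId,
                            String city, LocalDate changeDate) {
        // Find the current (open-ended) version of this business key, if any.
        DimRow current = null;
        for (DimRow row : dimension) {
            if (row.customerId.equals(customerId) && row.endDate.equals(OPEN_END)) {
                current = row;
            }
        }
        // No change in the tracked attribute: nothing to do.
        if (current != null && current.city.equals(city)) {
            return;
        }
        // 1) Expire the current record.
        if (current != null) {
            current.endDate = changeDate;
        }
        // 2) Insert the new version with a new surrogate key and an open end date.
        //    Rows are never deleted here, so size + 1 is a simple unique key.
        int nextKey = dimension.size() + 1;
        dimension.add(new DimRow(nextKey, customerId, city, changeDate, OPEN_END));
    }
}
```

In a real job the surrogate key would normally come from a database sequence or from the SCD component itself rather than from the list size.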

3. Describe a scenario where you would use a tContextLoad component, and what considerations would you take?

I would use a tContextLoad component when I need to load context variables from an external source at runtime, such as a file (e.g., CSV, properties file) or a database. For example, imagine a job that processes customer data, and the database connection details (host, port, username, password) are stored in a configuration file. I would use tContextLoad to read these connection parameters from the file and set them as context variables before the database connection component executes. This way, I can easily change the connection details without modifying the job itself.

Considerations include: file encoding (to ensure correct character interpretation), input schema (tContextLoad expects a two-column key/value flow), data type conversion (making sure the values read from the file can be converted to the types declared for the corresponding context variables), handling missing or invalid values (using error handling to gracefully manage scenarios where the context file is unavailable or contains incorrect data), and security (avoiding storing sensitive data, like passwords, in plain text; consider encrypting the configuration file or using environment variables instead).
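The considerations about missing values and type conversion can be illustrated with a small Java sketch that loads key/value pairs and validates them before use. It mimics the idea of loading context values from a properties-style file and is not tContextLoad's implementation; the class name and the keys db_host and db_port are hypothetical.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Map;
import java.util.Properties;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical illustration of loading and validating key/value context settings.
final class ContextLoadSketch {

    static Map<String, String> load(String propertiesText, Set<String> requiredKeys) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(propertiesText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Map<String, String> context = new TreeMap<>();
        for (String key : props.stringPropertyNames()) {
            context.put(key, props.getProperty(key).trim());
        }
        // Fail fast when an expected variable is missing or empty.
        for (String key : requiredKeys) {
            String value = context.get(key);
            if (value == null || value.isEmpty()) {
                throw new IllegalStateException("Missing context variable: " + key);
            }
        }
        return context;
    }

    // Type conversion happens when the value is consumed; bad input raises NumberFormatException.
    static int asInt(Map<String, String> context, String key) {
        return Integer.parseInt(context.get(key));
    }
}
```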

4. How would you implement error handling and logging in a complex Talend job to capture detailed information for debugging?

In a complex Talend job, robust error handling and logging are critical. I would use tJavaRow or tJavaFlex components to implement custom error handling logic where needed. Within these components, I'd use try-catch blocks to catch exceptions and log the error details using Talend's logging mechanisms or a logging library such as Log4j, which is configured at the project or job level rather than in an individual component. The error log message would include relevant information like component name, timestamp, error message, and input data. Where components offer a reject flow, I'd route bad rows to it instead of failing the whole job.

To centralize error handling, I'd use tWarn to raise non-blocking warnings and tDie to stop the job with an error code, typically connected through OnComponentError or OnSubjobError triggers. A tLogCatcher component would then collect the messages raised by tDie, tWarn, and Java exceptions, and I'd connect it to tFileOutputDelimited or a database output component to store the error information in a dedicated log file or table for later analysis. Storing detailed error context in a global variable is also useful.
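As a rough illustration of row-level try-catch handling, the following Java sketch parses a field and records a structured error entry instead of failing. The class, the log format, and the component name passed in are assumptions; in a real job the entry would go to a logger or a reject flow rather than to a list.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of row-level error handling in custom Java code.
final class RowErrorHandlingSketch {

    final List<String> errorLog = new ArrayList<>();

    // Returns the parsed amount, or null when the row is rejected.
    Integer parseAmount(String componentName, String rawAmount) {
        try {
            if (rawAmount == null) {
                throw new IllegalArgumentException("amount is null");
            }
            return Integer.valueOf(rawAmount.trim());
        } catch (IllegalArgumentException e) {
            // NumberFormatException is a subclass of IllegalArgumentException.
            // Record timestamp, component, error type, and the offending input.
            errorLog.add(Instant.now() + " | " + componentName + " | "
                    + e.getClass().getSimpleName() + " | input=" + rawAmount);
            return null;
        }
    }
}
```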

5. Explain your approach to securing Talend jobs and data, covering aspects like authentication, authorization, and encryption.

Securing Talend jobs and data involves multiple layers. For authentication, Talend uses its own user management system or integrates with external systems like LDAP/Active Directory. Authorization is controlled through roles and permissions within Talend Studio and Talend Administration Center, defining what users can access and modify. Data encryption is applied both at rest and in transit. At rest, data can be encrypted within databases using the database's native encryption capabilities, and when stored on the file system, encryption tools can be employed. In transit, communication channels between Talend components (Studio, TAC, Execution Servers) are secured using SSL/TLS.

Specifically, securing Talend includes:

- Authentication: Using TAC's user management or integrating with LDAP/AD.

- Authorization: Defining user roles and permissions for job access and modification.

- Encryption: Data at rest is encrypted using database native encryption or file system encryption tools and data in transit is secured via SSL/TLS protocols.

- Masking/Tokenization: Protecting sensitive data fields using built-in Talend components during data processing. Also, we can control access using IP whitelisting.

- Auditing: Keeping track of user actions and data access within TAC for compliance and security analysis.

6. How would you use Talend to integrate data from different cloud platforms (e.g., AWS, Azure, GCP)?

Talend can integrate data from diverse cloud platforms like AWS, Azure, and GCP by leveraging its pre-built connectors and components. These connectors facilitate connectivity to various cloud services such as AWS S3, Azure Blob Storage, Google Cloud Storage, databases like AWS RDS, Azure SQL Database, Google Cloud SQL, and data warehouses like Amazon Redshift, Azure Synapse Analytics, and Google BigQuery. Talend's cloud connectors support authentication and authorization specific to each cloud platform. Jobs can be designed to extract data from one cloud, transform it, and load it into another, or into a central data warehouse, or data lake hosted on one of the platforms.

To achieve this, you can design Talend jobs using the graphical interface. For example, to read data from an AWS S3 bucket, you'd download the object with the tS3Get component (configured with AWS credentials and bucket details) and read it with a file input component, then transform the data using components like tMap or tFilterRow, and finally load the result into Azure Blob Storage using the tAzureStoragePut component, configured with Azure credentials and container details. With Talend Cloud, a Remote Engine can execute the job within the cloud environment, close to the data.

7. Describe how you would monitor the performance of Talend jobs in real-time and proactively identify potential issues?

To monitor Talend job performance in real-time and proactively identify issues, I'd leverage Talend's built-in monitoring capabilities and external monitoring tools. For real-time insights, I'd configure Talend Administration Center (TAC) to monitor job execution statistics such as execution time, memory usage, and error counts. I would also set up alerts within TAC to trigger notifications when jobs exceed predefined thresholds or encounter errors. These alerts would be sent to relevant personnel, enabling prompt investigation and resolution.

For proactive issue identification, I'd implement centralized logging using tools like ELK stack (Elasticsearch, Logstash, Kibana) or Splunk. This allows for comprehensive log analysis and anomaly detection. I'd configure Talend jobs to write detailed logs including performance metrics and custom metrics. By analyzing these logs, I can identify performance bottlenecks, data quality issues, and potential job failures before they significantly impact operations. Regular review of these logs and dashboards is crucial for proactive monitoring and optimization. Additionally, I'd use TAC's monitoring dashboards to observe long-term trends and identify areas for job optimization or resource scaling.

8. How would you handle large files in Talend, exceeding memory capacity, without compromising performance?

To handle large files exceeding memory capacity in Talend without compromising performance, I'd rely on the fact that components like tFileInputDelimited or tFileInputPositional stream rows one at a time, so the file itself never has to fit in memory. The memory pressure usually comes from components that buffer data, such as sorts, aggregations, and tMap lookups. For those, I'd use disk-backed options: tSortRow's 'Sort on disk' setting allows sorting data larger than memory by using temporary disk storage, and tMap's 'Store temp data' setting does the same for large lookups. I'd also filter rows and drop unused columns as early as possible. On the output side, using tFileOutputDelimited with an appropriate flush buffer size can improve write performance, and setting a commit interval and batch size in database output components writes data in chunks instead of one large transaction. Finally, it's a good idea to increase JVM memory allocation if possible.
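The idea of processing a file row by row with constant memory can be sketched in plain Java. The example keeps only a running total rather than the rows themselves; the class name and the delimited layout are assumptions for illustration.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.regex.Pattern;

// Hypothetical illustration of streaming a delimited source without loading it into memory.
final class StreamingSumSketch {

    static long sumColumn(Reader source, String separator, int columnIndex) {
        long total = 0;
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            // Only one line is held in memory at any time.
            while ((line = reader.readLine()) != null) {
                if (line.isEmpty()) {
                    continue;
                }
                String[] fields = line.split(Pattern.quote(separator), -1);
                total += Long.parseLong(fields[columnIndex].trim());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }
}
```

For a real file, the reader could be obtained from java.nio.file.Files.newBufferedReader; memory use stays flat regardless of file size because nothing but the running total is retained.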

9. Explain how you would implement a data quality framework in Talend to ensure data accuracy and consistency?

To implement a data quality framework in Talend, I'd leverage Talend's built-in components and features. The core involves profiling data in the Profiling perspective of Talend Studio to understand data characteristics like frequency, completeness, and patterns. Based on the profiling results, I'd define data quality rules and metrics. Then, I'd use components like tMap, tFilterRow, and tReplace to cleanse and standardize data based on these rules. Components such as tMatchGroup and tRuleSurvivorship can be used for data de-duplication and implementing survivorship strategies. Finally, I'd implement monitoring by generating reports using tFileOutputDelimited or sending alerts based on predefined thresholds to ensure continuous data quality.

Consistency would be ensured through standardization and validation. For example, consistent date formats and address standardization can be implemented across all sources. I'd use Talend's data masking and encryption features where needed and monitor data quality metrics regularly using Talend's administration console or custom dashboards and alert on data quality issues. Failed records would be directed to a quarantine area for review and correction. The overall data quality framework becomes an integral part of the data integration pipelines, regularly ensuring data accuracy and consistency.

10. How would you design a Talend job to handle data integration for a system with high data volume and velocity (Big Data)?

For high data volume and velocity in Talend, I'd design a job leveraging Talend's Big Data components. This involves using technologies like Hadoop, Spark, or cloud-based data processing services (AWS EMR, Databricks).

Specifically, the Talend job would:

- Use tFileInputDelimited or similar components to read the data.

- Process the data using tHDFSOutput, tSparkExecute, or tHiveOutput based on the chosen Big Data platform. I'd partition the data appropriately to enhance parallelism.

- Use an efficient serialization format such as Apache Avro or Parquet, optionally combined with a schema registry, for compact storage and schema evolution.

- Implement error handling mechanisms for bad records using tDie or tLogCatcher.

- Employ a scheduler (like TAC or Airflow) for orchestrating the job.

- Monitor the job using Talend Administration Center or cloud specific monitoring tools.

11. Describe the use of Talend Administration Center (TAC) for scheduling, monitoring, and managing Talend jobs.

Talend Administration Center (TAC) is the central web-based application for managing and administering Talend projects. For scheduling, TAC allows you to define job execution schedules, including setting recurrence patterns, start and end dates, and dependencies between jobs. It supports both time-based and event-based triggers.

Regarding monitoring, TAC provides a centralized view of job executions, allowing you to track job status (success, failure, running), execution time, and resource consumption. It also offers alerting capabilities, enabling you to receive notifications via email or other channels when jobs fail or exceed defined thresholds. For management, TAC allows you to manage users, roles, and permissions, ensuring secure access to Talend projects and resources. It also facilitates the deployment of jobs to different execution servers, managing execution server configurations and load balancing.

12. Explain your understanding of Talend Metadata Manager and its role in data governance.

Talend Metadata Manager (TMM) is a tool focused on discovering, managing, and governing metadata across an organization's data landscape. It acts as a central repository for technical, business, and operational metadata, enabling data lineage, impact analysis, and improved data quality. It helps to understand where data comes from, how it's transformed, and where it's used, which is crucial for data governance.

TMM plays a key role in data governance by:

- Providing a unified view of metadata.

- Supporting data lineage and impact analysis.

- Enabling data quality monitoring.

- Facilitating collaboration between data stakeholders.

- Helping to enforce data governance policies and standards.

It also allows teams to discover and document metadata from various data sources.

13. How would you implement a data masking strategy in Talend to protect sensitive data during processing?

In Talend, data masking can be implemented using various components and techniques. One common approach involves using the tReplace component with regular expressions to mask specific data patterns like credit card numbers or email addresses. For example, to mask all but the last four digits of a credit card number, you could use a regular expression like ^(\d{12})(\d{4})$ and replace it with XXXXXXXXXXXX$2.
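The same regular expression can be tried out in plain Java before configuring it in a component. This is a minimal sketch; the class and method names are hypothetical.

```java
// Hypothetical helper that applies the masking pattern described above.
final class CardMaskingSketch {

    // Masks the first 12 digits of a 16-digit card number; other inputs are returned unchanged.
    static String maskCardNumber(String cardNumber) {
        if (cardNumber == null) {
            return null;
        }
        // $2 refers to the second capture group, i.e. the last four digits.
        return cardNumber.replaceAll("^(\\d{12})(\\d{4})$", "XXXXXXXXXXXX$2");
    }
}
```

For example, maskCardNumber("1234567812345678") returns XXXXXXXXXXXX5678, while a value that is not exactly 16 digits is left as-is because the anchored pattern does not match.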

Another strategy is using the tDataMasking component (part of Talend's data quality features), which offers built-in masking functions for different data types. You can configure the component to replace characters with asterisks, randomly generate data, or use predefined dictionaries to substitute values. For more complex scenarios, you could leverage Java routines within tJavaRow or tJavaFlex components to implement custom masking logic. These routines might involve external data sources or sophisticated algorithms to anonymize the data effectively.

14. Describe how you would manage different environments (development, testing, production) in Talend?

In Talend, I would manage different environments (development, testing, production) primarily using Context Variables together with a deployment and scheduling tool: the Job Conductor in Talend Administration Center (TAC) or Talend Management Console (TMC). Context Variables allow me to define environment-specific values for database connections, file paths, and other parameters. I would create separate context files for each environment (e.g., dev.properties, test.properties, prod.properties) containing the appropriate values. These files would then be loaded into my Talend jobs based on the target environment.

Additionally, Talend Management Console provides capabilities for environment management and deployment. I can configure different execution environments within TMC and deploy jobs accordingly, ensuring the correct context variables are used for each environment. This also facilitates version control, scheduling, and monitoring across all environments. The tMap component and routines would also use context variables to conditionally control the logic based on the environment.

15. Explain your experience with custom code integration in Talend, such as using Java routines or components.

My experience with custom code integration in Talend primarily involves leveraging Java routines and tJava components. I've created reusable Java routines for complex data transformations, such as custom date format conversions or advanced string manipulations that aren't readily available through built-in Talend components. These routines are then called within Talend jobs to perform the necessary logic.

Furthermore, I've used tJava components for tasks like dynamic file name generation, executing external system commands, or handling custom error scenarios. For example, I've used it to conditionally route data based on the result of an external API call or to interact with specific Java libraries. I have experience in packaging custom java classes as jars, importing them in Talend and using them as custom components.

16. How would you use Talend to integrate with RESTful APIs and handle different authentication methods?

Talend simplifies REST API integration using components like tRESTClient and tExtractJSONFields. The tRESTClient component makes the API call, while tExtractJSONFields parses the JSON response. For authentication, Talend supports various methods:

- Basic Authentication: Enable authentication in tRESTClient and provide the username and password.

- OAuth: Obtain an access token (for example with a preceding tRESTClient call to the token endpoint, or with custom Java code) and send it in the Authorization header as a bearer token.

- API Keys: Include the API key in the URL as a query parameter, or in a request header defined in the component's HTTP header settings.

- Custom Authentication: Implement custom Java code using tJava or tJavaRow components for more complex scenarios, such as request signing. The tREST component can also be used to set the body and the necessary headers.
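When a header has to be built by hand, for instance in a tJava component or a routine, the Authorization values for Basic and bearer-token authentication can be computed as in this sketch. The class and method names are hypothetical; only standard JDK classes are used.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper that builds Authorization header values.
final class AuthHeaderSketch {

    // Basic authentication: "Basic " followed by base64("username:password").
    static String basic(String username, String password) {
        String credentials = username + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(credentials.getBytes(StandardCharsets.UTF_8));
    }

    // OAuth 2.0 bearer token: "Bearer " followed by the access token.
    static String bearer(String accessToken) {
        return "Bearer " + accessToken;
    }
}
```

The credentials themselves would typically come from context variables rather than literals.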

17. Describe how you would implement data lineage tracking in Talend to understand the origin and flow of data?

To implement data lineage tracking in Talend, I would primarily use the Talend Metadata Manager (TMM) or the built-in lineage features within Talend Studio. The key is to configure Talend jobs to automatically capture and publish metadata about data transformations.

I would ensure the following:

- Metadata Harvesting: Configure TMM to harvest metadata from Talend jobs, databases, and other relevant sources. This involves defining connections to these systems within TMM.

- Job Design Best Practices: Design Talend jobs with clear naming conventions and descriptions for components and transformations. This helps in understanding the lineage visually.

- Use of tLineage components: Utilize Talend's built-in tLineage components where available in the data flows to explicitly capture data lineage information.

- Data flow tracing: Leverage the data flow tracing capabilities within Talend Studio to visually trace the data's path through a job. This can then be published to TMM.

- Impact Analysis: Use TMM's impact analysis features to determine the upstream and downstream dependencies of a specific data element.

18. Explain your approach to version control and deployment of Talend jobs using Git or similar tools.

My approach to version control and deployment of Talend jobs leverages Git for managing code and promoting changes through environments. I typically create a Git repository for Talend projects, committing all job designs, routines, and related files. Branches are used for development, feature implementation, and hotfixes, ensuring isolation and preventing conflicts. I follow a branching strategy like Gitflow where develop and main branches are key. Pull requests are mandatory for merging code, enabling peer review and quality checks.

To deploy Talend jobs, I use a CI/CD pipeline, often built with Jenkins or GitLab CI. The pipeline automates the build process (exporting Talend jobs as executable archives or OSGi bundles), performs testing, and then deploys the artifacts to the appropriate Talend execution environment (TAC or standalone Job Servers). Environment-specific configurations (e.g., database connections) are managed using parameters or context variables, ensuring jobs work correctly across different environments. These configs are injected at deployment time.

19. How would you use Talend to perform real-time data integration and stream processing?

Talend can perform real-time data integration and stream processing primarily through Spark Streaming Jobs in Talend Studio (part of Talend Real-Time Big Data Platform) or through Pipeline Designer (formerly Talend Data Streams). You would typically use components like tKafkaInput, tMQTTInput, or tRESTClient to ingest real-time data streams. These components connect to various sources like Kafka, MQTT brokers, or REST APIs, respectively. Then, you would apply transformations and enrichments using components like tMap, tFilterRow, or custom code routines. Finally, you would output the processed data to target systems using components like tKafkaOutput, tFileOutputDelimited (for micro-batches), or a database connector.

Specifically, you would design a Talend Job or Route to handle the stream. A Route is better suited for real-time processing due to its inherent ability to handle continuous data flow. You'd configure the input components to listen for incoming data, apply transformations, and then direct the output to the appropriate target. Key considerations include error handling, fault tolerance, and ensuring that the processing logic is optimized for low latency.

20. Describe how you would handle complex data transformations and aggregations in Talend?

In Talend, I would handle complex data transformations and aggregations using a combination of built-in components and custom code when necessary. For aggregations, the tAggregateRow component is often the first choice. I'd configure it to perform calculations like sums, averages, or counts based on grouping criteria. For more complex aggregations or windowing functions, I might use a tMap component with Java code, or push the work down to the database, using tDBInput with an aggregating SQL query or the ELT components, to leverage its SQL aggregation capabilities.

For transformations, I would use a series of components like tMap, tConvertType, tReplace, and tFilterRow to cleanse, reshape, and enrich the data. If the transformation logic becomes intricate, embedding Java routines within tJavaFlex or tJavaRow gives greater flexibility. For example, if I needed to parse a complex JSON structure, I might use a tJavaRow component with Jackson library calls. If the same transformations are needed in multiple jobs, I would create reusable Talend routines or subjobs to avoid duplication. I would use the debugger to trace transformations and ensure they are doing what they should.
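What a group-by aggregation computes can be shown with a few lines of Java. This sketch sums an amount per group, which is the kind of result a sum with one group-by column produces; the class name and the `region;amount` row layout are assumptions.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration of a group-by sum.
final class GroupSumSketch {

    // rows hold "region;amount" lines (assumed layout).
    static Map<String, Long> sumByRegion(List<String> rows) {
        Map<String, Long> totals = new TreeMap<>();
        for (String row : rows) {
            String[] fields = row.split(";", -1);
            // merge() adds the amount to the existing total, or starts a new group.
            totals.merge(fields[0], Long.parseLong(fields[1]), Long::sum);
        }
        return totals;
    }
}
```

Note that every distinct group stays in memory until the input ends, which is why high-cardinality aggregations are often better pushed down to the database.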

21. How would you orchestrate multiple Talend jobs to create a complex data pipeline?

To orchestrate multiple Talend jobs into a complex data pipeline, I would primarily use Talend's built-in orchestration capabilities. Within Studio, a parent job can call child jobs in a defined sequence using the tRunJob component, with OnSubjobOk and OnSubjobError triggers controlling the flow. For scheduling and monitoring, I would use the Job Conductor and execution plans in Talend Administration Center (TAC), or tasks and plans in Talend Management Console (TMC). A plan lets you define dependencies between steps, so a job only starts upon successful completion of its predecessor.

Alternatively, I would consider an external orchestrator like Apache Airflow to manage the Talend jobs. In this scenario, Airflow would invoke each job from outside Talend, for example by running the launcher script of a built job or by calling the TMC API, rather than through tRunJob, which only chains jobs inside a parent job. Airflow DAGs would define the dependencies and execution order. This approach can provide more flexibility and broader monitoring, particularly in complex environments where jobs are deployed across diverse platforms or systems. It also keeps pipeline orchestration separate from the ETL tool.
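The core idea behind external orchestration is simply "run jobs in dependency order and stop when one fails". The sketch below shows that idea with only the Python standard library; it is not an Airflow DAG. The job names, the `PIPELINE` mapping, and the launcher script path layout are all assumptions made for illustration.

```python
import subprocess
from graphlib import TopologicalSorter

# Hypothetical job names mapped to the set of jobs each one depends on.
PIPELINE = {
    "load_customers": set(),
    "load_orders": set(),
    "build_sales_mart": {"load_customers", "load_orders"},
    "export_report": {"build_sales_mart"},
}


def run_launcher(job_name):
    """Run a built job's launcher script; this path layout is an assumption."""
    result = subprocess.run([f"./{job_name}/{job_name}_run.sh"])
    return result.returncode == 0


def run_pipeline(pipeline, runner=run_launcher):
    """Run jobs in dependency order and stop at the first failure."""
    completed = []
    for job in TopologicalSorter(pipeline).static_order():
        if not runner(job):
            raise RuntimeError(f"Job {job} failed; downstream jobs were skipped")
        completed.append(job)
    return completed
```

Passing a different `runner` (for example, one that calls a scheduler API instead of a shell script) leaves the dependency logic unchanged, which is the separation of concerns an external orchestrator is meant to give you.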

22. Explain your understanding of Talend Data Stewardship and its role in data governance and quality.

Talend Data Stewardship is a collaborative data governance and quality management tool within the Talend ecosystem. It enables data professionals and business users to work together to resolve data quality issues, manage data certification, and ensure data accuracy and compliance. It facilitates the creation of data quality campaigns and tasks, allowing stakeholders to collaboratively identify, investigate, and resolve data errors or inconsistencies.

Its role in data governance and quality is significant. It provides a platform for implementing and enforcing data quality rules and policies. By centralizing data stewardship activities, it improves data accuracy, consistency, and reliability, leading to better decision-making and reduced data-related risks. This helps organizations meet regulatory requirements, improve data-driven insights, and build trust in their data assets.

23. How would you implement a data catalog in Talend to manage and discover data assets?

Implementing a data catalog in Talend involves several steps. First, use Talend Data Catalog (if it is licensed) or integrate with a compatible third-party catalog. Then, configure automated metadata harvesting from data sources and targets, and from the Talend jobs themselves so that lineage can be traced. This metadata, including schema definitions, data types, and lineage information, is stored in the catalog. Finally, use the catalog's search and discovery features to browse and understand available data assets.

Consider using Talend's metadata extraction capabilities and APIs to automate the population of the data catalog. Regularly update the catalog as data assets evolve or new ones are introduced. Enforce data governance policies by tagging data assets with relevant classifications and access controls. This ensures that users can easily find and understand data while adhering to compliance requirements. A good data catalog improves collaboration between data engineers, analysts, and business users.

24. Describe how you would handle incremental data loading in Talend to improve performance and reduce processing time.

To handle incremental data loading in Talend, I would use a combination of techniques focused on identifying and processing only the changed or new data. First, I'd implement a mechanism to track the last successful load timestamp or a unique sequence ID. This could involve storing the last processed value in a file or database table and loading it into a context variable at the start of each run (for example, with tContextLoad), since context variable values do not persist between executions on their own.

Then, in the Talend job, I would query the source system using this tracked value in a WHERE clause to filter for records modified or created after the last load. Key Talend components would include tDBInput (to query the source), tMap (for transformations), and tDBOutput (to load into the target). For CDC (Change Data Capture) scenarios, I'd consider using features provided by the source database (e.g., triggers, transaction logs) or specialized Talend components designed for CDC, such as those available in the Talend Data Management Platform, ensuring only changed data is processed.
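The watermark pattern described above can be sketched in plain Python with an in-memory SQLite database. This is not Talend code; the table names (`src_orders`, `tgt_orders`, `load_watermark`) and the sample rows are hypothetical. Timestamps are stored as ISO 8601 strings so that string comparison matches chronological order.

```python
import sqlite3


def incremental_load(conn):
    """Copy rows changed since the stored watermark, then advance the watermark."""
    cur = conn.cursor()
    (last_ts,) = cur.execute(
        "SELECT last_loaded_at FROM load_watermark WHERE job = 'orders'"
    ).fetchone()
    rows = cur.execute(
        "SELECT id, amount, updated_at FROM src_orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_ts,),
    ).fetchall()
    if rows:
        # Upsert so that a re-modified row replaces its earlier version.
        cur.executemany(
            "INSERT OR REPLACE INTO tgt_orders (id, amount, updated_at) "
            "VALUES (?, ?, ?)",
            rows,
        )
        # Advance the watermark to the newest timestamp just loaded.
        cur.execute(
            "UPDATE load_watermark SET last_loaded_at = ? WHERE job = 'orders'",
            (rows[-1][2],),
        )
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT);
    CREATE TABLE tgt_orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT);
    CREATE TABLE load_watermark (job TEXT PRIMARY KEY, last_loaded_at TEXT);
    INSERT INTO load_watermark VALUES ('orders', '2024-01-01T00:00:00');
    INSERT INTO src_orders VALUES (1, 10.0, '2023-12-31T09:00:00');
    INSERT INTO src_orders VALUES (2, 20.0, '2024-01-02T09:00:00');
    INSERT INTO src_orders VALUES (3, 30.0, '2024-01-03T09:00:00');
""")
first_run = incremental_load(conn)   # picks up only rows newer than the watermark
second_run = incremental_load(conn)  # nothing new, so nothing is reprocessed
```

Updating the watermark only after the target write succeeds, and in the same transaction where possible, is what keeps a failed run from silently skipping data on the next execution.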

25. Explain your approach to testing and validating Talend jobs to ensure data quality and accuracy.

My approach to testing Talend jobs focuses on data quality and accuracy throughout the development lifecycle. I start by understanding the data sources, transformations, and target requirements. I then unit test individual components or subjobs to ensure they process data as expected. This involves creating test data sets that cover valid, invalid, and boundary conditions, then comparing the output against expected results. Data profiling shows what the actual data looks like, which leads to better test cases. I also do data sampling and schema validation. Within a job, the tAssert component (together with tAssertCatcher) can be used to validate data transformations.

Integration testing is crucial to ensure that the entire job functions correctly when all components are connected. This involves testing the end-to-end flow of data and validating that the output matches the expected results in the target system. I monitor resource utilization, performance, and error handling during execution. Finally, User Acceptance Testing (UAT) with business users validates that the data meets their requirements: business teams check that data is correctly transformed and loaded into the target tables. I also use the logging and monitoring features available in Talend Management Console (TMC) or Talend Administration Center (TAC), depending on the deployment.
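As a small illustration of testing with valid, invalid, and boundary inputs, here is a table-driven sketch in plain Python. It is not a Talend test case; `normalize_amount` is a hypothetical transformation rule, and the expected values are assumptions chosen for the example.

```python
def normalize_amount(raw):
    """Hypothetical rule under test: parse an amount and reject bad input."""
    try:
        value = round(float(raw), 2)
    except (TypeError, ValueError):
        return None  # in a job, this row would go to a reject flow
    if value < 0:
        return None
    return value


# (input, expected output) pairs covering valid, boundary, and invalid cases.
TEST_CASES = [
    ("19.999", 20.0),  # valid, rounded to two decimals
    ("0", 0.0),        # boundary: zero is allowed
    ("-5", None),      # invalid: negative amount
    ("abc", None),     # invalid: not a number
    (None, None),      # invalid: missing value
]


def run_cases(transform, cases):
    """Return every case whose actual output differs from the expected output."""
    return [
        (raw, expected, transform(raw))
        for raw, expected in cases
        if transform(raw) != expected
    ]


failures = run_cases(normalize_amount, TEST_CASES)
```

Keeping inputs and expected outputs in one table makes it easy to add a new case every time a production defect reveals a scenario the tests missed.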

26. How would you leverage Talend's features for data profiling and discovery?

Talend offers built-in functionality specifically designed for data profiling and discovery. I would primarily use the Profiling perspective in Talend Studio (part of Talend Data Quality) to create column analyses on data in various sources. These analyses provide statistical summaries such as min, max, mean, and frequency distributions, along with data quality indicators (e.g., null counts, duplicate counts) for each column.

Furthermore, I would leverage Talend's schema discovery capabilities to automatically detect the data types, lengths, and formats of columns in a data source. By analyzing the results of the data profiling and schema discovery processes, I can identify data quality issues, inconsistencies, and potential data transformation needs, leading to more effective data integration and cleansing strategies. For example, to find the number of distinct values in a field, I could add the Distinct Count indicator to a column analysis.
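To show what column-level indicators actually compute, here is a plain-Python sketch. It is not Talend code, and the function name `profile_column` and the sample `ages` column are hypothetical; a profiling tool calculates the same kind of figures at scale and against the source directly.

```python
from statistics import mean


def profile_column(values):
    """Compute simple column indicators; None is treated as a null value."""
    non_null = [v for v in values if v is not None]
    return {
        "row_count": len(values),
        "null_count": len(values) - len(non_null),
        "distinct_count": len(set(non_null)),
        "min": min(non_null) if non_null else None,
        "max": max(non_null) if non_null else None,
        "mean": mean(non_null) if non_null else None,
    }


# Hypothetical sample column with one null and one repeated value.
ages = [34, 41, None, 34, 29]
report = profile_column(ages)
```

A high null count or an unexpectedly low distinct count in a report like this is usually the first hint that a cleansing or deduplication step is needed before loading.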

27. Describe a time when you had to debug a complex Talend job. What was your approach?

When debugging a complex Talend job, my approach typically involves a combination of systematic investigation and leveraging Talend's debugging features. First, I isolate the failing component or subjob by examining the error logs and monitoring the job's execution flow. I then use Talend's built-in debugger to step through the process, inspect variable values at each step, and identify discrepancies between expected and actual data. I often add tLogRow components at strategic points to print intermediate results and track data transformations.

If the issue is data-related, I carefully examine the input data for inconsistencies or errors that might be causing the problem. For performance issues, I use Talend's monitoring tools to identify bottlenecks and optimize resource allocation or adjust component configurations. For example, if I encounter a java.lang.OutOfMemoryError, I would investigate the tMap and tAggregateRow components to see whether I could reduce the volume of data held in memory, enable the "Store temp data" option on tMap lookups so that lookup data is written to disk, or increase the JVM heap size (-Xmx) for the job. I also validate data types at each step to avoid unexpected casts.

28. Explain how you would integrate Talend with a message queue system like Kafka or RabbitMQ.

To integrate Talend with a message queue system like Kafka or RabbitMQ, I would utilize Talend's built-in components specifically designed for these systems. For Kafka, I'd use components like tKafkaConnection, tKafkaInput, and tKafkaOutput. tKafkaConnection establishes the connection to the Kafka broker. tKafkaInput consumes messages from a Kafka topic, and tKafkaOutput publishes messages to a Kafka topic. I would configure these components with the appropriate broker addresses, topic names, group IDs, and serialization formats.

For RabbitMQ, components such as tRabbitMQConnection, tRabbitMQInput, and tRabbitMQOutput would be used. Similar to Kafka, tRabbitMQConnection manages the connection. tRabbitMQInput retrieves messages from a queue, while tRabbitMQOutput sends messages to a queue. Configuration includes the exchange, routing key, queue name, and credentials. Error handling is crucial in both scenarios, so I would implement robust exception handling to manage connection failures or message processing errors.

29. How would you handle schema evolution in Talend when the structure of source or target data changes?

Schema evolution in Talend can be handled using several techniques. When a source schema changes, you can use the tMap component to map the incoming fields to the target schema, ignoring new fields or providing default values for missing ones. When column types change, the tConvertType component can convert values from the source type to the type the target expects. Storing schemas centrally as metadata in the Repository lets you control schema definitions in one place and propagate updates to the jobs that use them, which keeps jobs consistent. Dynamic schemas are also helpful; in subscription editions, the tFileInputDelimited and tFileOutputDelimited components, among others, support a Dynamic column type, allowing you to handle varying column structures.

When a target schema changes, similar techniques apply. If the target requires new fields, tMap can be used to populate them with default values or data from other sources. If the target no longer requires certain fields, tMap can simply omit them. To change the target table structure from within a Talend job, you can run ALTER TABLE statements with tDBRow, or use the "Action on table" setting of tDBOutput (for example, create the table if it does not exist, or drop and re-create it); the "Update" option is an action on data and does not modify the schema. Proper error handling and monitoring are crucial in either scenario to ensure data integrity.
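The mapping rule described above (ignore unexpected source fields, fill missing ones with defaults, convert types) can be sketched in plain Python. This is not Talend code; `TARGET_SCHEMA`, the `conform` function, and the sample records are hypothetical.

```python
# Hypothetical target schema: column name -> (type converter, default if missing).
TARGET_SCHEMA = {
    "id": (int, None),
    "name": (str, ""),
    "country": (str, "UNKNOWN"),
}


def conform(record, schema=TARGET_SCHEMA):
    """Map a source record onto the target schema.

    Source fields not in the schema are dropped; missing fields get the default.
    """
    out = {}
    for column, (convert, default) in schema.items():
        value = record.get(column)
        out[column] = convert(value) if value is not None else default
    return out


# A record from before the source added "country".
old_row = conform({"id": "1", "name": "Ada"})
# A record from after the source added "country" and an unexpected extra field.
new_row = conform({"id": "2", "name": "Lin", "country": "SG", "loyalty_tier": "gold"})
```

Because both the old and the new source layouts produce rows with the same target columns, downstream steps keep working while the source evolves.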

30. Describe how you would use Talend to build a data lake and integrate data from various sources.

To build a data lake with Talend, I'd start by configuring connections to various data sources like databases (using JDBC connectors), cloud storage (S3, Azure Blob Storage), APIs (using tRESTClient), and flat files (using tFileInputDelimited). Talend's components enable extracting data from these diverse sources, often requiring data cleansing and transformation using components like tMap, tFilterRow, and tConvertType to ensure data quality and consistency. This transformed data would then be loaded into the data lake, which could be implemented using cloud storage solutions like AWS S3 or Azure Data Lake Storage Gen2. Talend's orchestration features allow for scheduling and monitoring the data ingestion pipelines.

To handle different data formats (structured, semi-structured, unstructured), I'd leverage Talend's schema management capabilities. For structured data, schemas can be defined and applied directly. For semi-structured data like JSON or XML, components like tExtractJSONFields and tExtractXMLField are useful. Unstructured data might require more advanced processing, potentially using custom code or integration with external services for tasks like text extraction and sentiment analysis. Talend Data Preparation could also be used for interactive data profiling and cleansing. The goal is to land both the raw and the transformed data in the data lake with appropriate metadata, making it accessible for analytics and machine learning.
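For semi-structured data, the usual first step is flattening nested objects into columns. The plain-Python sketch below shows one way to do that with dotted column names; it is not Talend code, and the `flatten` function and the sample JSON document are hypothetical. Arrays are left as-is here, since how to expand them (one row per element, or a joined string) is a design decision that depends on the target.

```python
import json


def flatten(obj, prefix=""):
    """Flatten nested JSON objects into a single dict with dotted keys."""
    flat = {}
    for key, value in obj.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            # Recurse into nested objects, extending the dotted path.
            flat.update(flatten(value, prefix=f"{path}."))
        else:
            flat[path] = value
    return flat


# Hypothetical semi-structured record.
raw = (
    '{"id": 7, "customer": {"name": "Ada", "address": {"city": "Paris"}}, '
    '"tags": ["a", "b"]}'
)
row = flatten(json.loads(raw))
```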

Expert Talend interview questions

1. How would you optimize a Talend job that's running slower than expected, considering data volume and transformation complexity?

To optimize a slow Talend job with high data volume and transformation complexity, I'd focus on several areas. First, I'd analyze the job's run statistics (rows per second and execution time per component) to identify bottlenecks, paying close attention to components with high processing times or excessive memory usage. Optimizations would include: minimizing data reads by filtering early in the process; using appropriate data types to reduce memory footprint; using bulk components such as tDBOutputBulkExec for database loads; and optimizing transformations using efficient routines or expressions. For example, set the tMap lookup model to "Load once" so that lookup data is cached in memory, or push aggregations down to the database.

Second, I would improve resource allocation by adjusting the JVM heap size and tuning the number of parallel execution threads. Component-specific optimization is also key; for instance, caching reference data in memory with tHashOutput and reading it back with tHashInput can dramatically improve performance compared to repeated database queries. Finally, I would use the monitoring features within Talend Administration Center (TAC) to track performance in real time and identify resource constraints. If the bottleneck isn't easily resolved, the job may need to be refactored.
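The benefit of caching lookup data comes from replacing one query per row with a single query up front. The plain-Python sketch below illustrates that pattern with an in-memory SQLite database; it is not Talend code, and the `customers` table, the `enrich` function, and the sample orders are hypothetical.

```python
import sqlite3


def enrich(orders, conn):
    """Join orders to customer names using a lookup loaded once into a dict."""
    # One query for the whole lookup table instead of one query per order row.
    lookup = dict(conn.execute("SELECT id, name FROM customers"))
    return [
        {**order, "customer_name": lookup.get(order["customer_id"], "UNKNOWN")}
        for order in orders
    ]


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    INSERT INTO customers VALUES (1, 'Ada');
    INSERT INTO customers VALUES (2, 'Lin');
""")
# Hypothetical main flow; customer 3 has no match in the lookup.
orders = [{"order_id": 10, "customer_id": 1}, {"order_id": 11, "customer_id": 3}]
enriched = enrich(orders, conn)
```

The trade-off is memory: loading the lookup once is fast while it fits in the heap, which is why very large lookups are better joined in the database or spilled to disk.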

2. Describe a complex data integration scenario you've handled using Talend, detailing the challenges and your solutions.