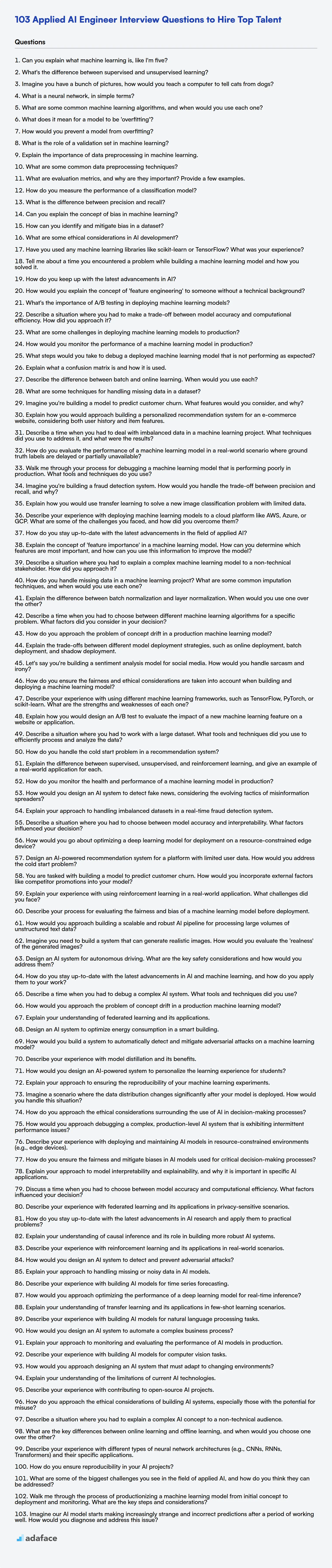

Are you looking to hire an Applied AI Engineer and need a way to filter through the noise? Having a structured list of questions, like the one in our post on the skills required for a machine learning engineer, helps interviewers assess candidates thoroughly and identify top talent.

This blog post provides a question bank covering basic, intermediate, advanced, and expert level Applied AI Engineer interview questions. We also provide multiple-choice questions (MCQs) to make your interview process smoother.

By using these questions, you can quickly evaluate candidates and gauge their readiness for the role. To further streamline your hiring, consider using Adaface's Applied AI Engineer Test to pre-screen candidates before the interview.


Basic Applied AI Engineer interview questions

1. Can you explain what machine learning is, like I'm five?

Imagine you have a box of toys, and you want a robot to learn how to play with them. Machine learning is like teaching the robot by showing it lots and lots of examples. If you show the robot many pictures of cats, it will eventually learn what a cat looks like. It's like teaching a dog tricks, but instead of treats, we give the computer lots of data!

So, basically, instead of telling the computer exactly what to do, we give it examples, and it learns from those examples to make its own decisions or predictions. It's like learning from experience, but for computers.

2. What's the difference between supervised and unsupervised learning?

Supervised learning uses labeled data to train a model to predict outcomes. The algorithm learns from the input features and their corresponding target values (labels). Examples include classification (predicting categories) and regression (predicting continuous values).

Unsupervised learning, on the other hand, uses unlabeled data to discover hidden patterns or structures. There are no target values to guide the learning process. Algorithms try to identify clusters, reduce dimensionality, or find associations in the data. Examples include clustering, dimensionality reduction, and anomaly detection.
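To make the contrast concrete, here is a minimal sketch using scikit-learn. The toy data, the choice of logistic regression and k-means, and all variable names are assumptions made for illustration, not part of the original answer.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.linear_model import LogisticRegression

# Toy data (made up for illustration): two well-separated groups of 2-D points.
rng = np.random.default_rng(0)
group_a = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2))
group_b = rng.normal(loc=[5.0, 5.0], scale=0.5, size=(50, 2))
X = np.vstack([group_a, group_b])
y = np.array([0] * 50 + [1] * 50)  # labels are only used in the supervised case

# Supervised: the model is given both the features and the labels.
clf = LogisticRegression().fit(X, y)
supervised_accuracy = clf.score(X, y)

# Unsupervised: the model is given only the features and groups them itself.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)
cluster_ids = kmeans.labels_

print(f"Supervised training accuracy: {supervised_accuracy:.2f}")
print(f"Cluster sizes found without labels: {np.bincount(cluster_ids)}")
```

Note that the cluster ids are arbitrary: k-means may call the first group cluster 1 and the second cluster 0, because it never sees the labels.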

3. Imagine you have a bunch of pictures, how would you teach a computer to tell cats from dogs?

I'd teach a computer to distinguish cats from dogs using a machine learning approach. First, I'd gather a large dataset of images, carefully labeling each image as either "cat" or "dog". This dataset is crucial for training the model. Then, I'd use a Convolutional Neural Network (CNN), a type of deep learning algorithm well-suited for image recognition. The CNN learns to identify patterns and features in the images, such as the shape of the ears, nose, and overall body structure, that differentiate cats from dogs.

During training, the CNN adjusts its internal parameters to minimize the difference between its predictions and the correct labels. After training, I would test it on new, unseen images to evaluate its accuracy. If the accuracy is not satisfactory, I would iterate, improving the training data, adjusting the CNN architecture, or tweaking the training process. Techniques like data augmentation (e.g., rotating, flipping, and cropping images) can also improve the model's robustness. Libraries like TensorFlow or PyTorch could be used.

Here is an example of using PyTorch:

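    import torch
    import torchvision.models as models

    # Load a ResNet-18 pretrained on ImageNet (the weights argument replaces the deprecated pretrained=True)
    model = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)

    # Replace the last layer for binary classification (cat vs. dog)
    model.fc = torch.nn.Linear(model.fc.in_features, 2)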

4. What is a neural network, in simple terms?

A neural network is a computing system inspired by the structure of the human brain. It consists of interconnected nodes called neurons that process and transmit information.

Think of it like this: inputs go into the first layer of neurons, these neurons perform simple calculations and pass the results to the next layer, and so on, until the final layer produces the output. The "learning" process involves adjusting the connections between neurons (the weights) to improve the accuracy of the network's predictions. They are particularly good at pattern recognition.
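The layer-by-layer flow described above can be sketched in a few lines of NumPy. This is only a forward pass with random, untrained weights; the layer sizes and activation functions are assumptions chosen for illustration.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)

# Hypothetical sizes: 3 input features, 4 hidden neurons, 1 output neuron.
W1, b1 = rng.normal(size=(3, 4)), np.zeros(4)
W2, b2 = rng.normal(size=(4, 1)), np.zeros(1)

def forward(x):
    hidden = relu(x @ W1 + b1)        # first layer: weighted sum, then non-linearity
    return sigmoid(hidden @ W2 + b2)  # output layer: squashed to a value in [0, 1]

x = np.array([[0.5, -1.2, 3.0]])  # one example with 3 features
output = forward(x)
print(output.shape)
```

Training would adjust `W1`, `b1`, `W2`, and `b2` so that `output` moves closer to the desired target; that step is omitted here.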

5. What are some common machine learning algorithms, and when would you use each one?

Some common machine learning algorithms include: Linear Regression (for predicting continuous values based on linear relationships), Logistic Regression (for binary classification problems), Decision Trees (for both classification and regression, useful when interpretability is important), Support Vector Machines (SVMs) (effective in high-dimensional spaces and for non-linear classification using kernel functions), and K-Nearest Neighbors (KNN) (a simple, non-parametric algorithm for classification and regression based on proximity to neighbors).

The choice of algorithm depends on the data type (continuous vs. categorical), the problem type (regression vs. classification), the desired level of interpretability, and the size of the dataset. For example, if you have a large dataset and need high accuracy, you might choose a neural network; kernel SVMs can also be very accurate, but their training cost grows quickly with the number of samples, so they tend to suit small and medium-sized datasets. If interpretability is key, Decision Trees or Linear Regression might be preferred.

6. What does it mean for a model to be 'overfitting'?

Overfitting occurs when a model learns the training data too well, capturing noise and specific details that don't generalize to new, unseen data. Essentially, it memorizes the training set instead of learning the underlying patterns. As a result, the model performs exceptionally well on the training data but poorly on test or validation data.

Indications of overfitting include a large discrepancy between training and testing performance, where the training accuracy is very high but the testing accuracy is significantly lower. Regularization techniques are often employed to combat overfitting by penalizing complex models and encouraging simpler, more generalizable solutions.
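One way to see the train/test gap is to fit an unconstrained decision tree on noisy data. The sketch below uses synthetic data from scikit-learn; the dataset parameters and the depth limit are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with 20% of labels flipped at random, so there is noise to memorize.
X, y = make_classification(
    n_samples=500, n_features=20, n_informative=5, flip_y=0.2, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# An unconstrained tree keeps splitting until it fits every training point.
deep_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
train_acc = deep_tree.score(X_train, y_train)
test_acc = deep_tree.score(X_test, y_test)

# Limiting depth is one simple way to constrain model complexity.
shallow_tree = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, y_train)
shallow_train_acc = shallow_tree.score(X_train, y_train)
shallow_test_acc = shallow_tree.score(X_test, y_test)

print(f"Deep tree:    train={train_acc:.2f}  test={test_acc:.2f}")
print(f"Shallow tree: train={shallow_train_acc:.2f}  test={shallow_test_acc:.2f}")
```

The deep tree reaches perfect training accuracy while scoring noticeably lower on the test split, which is the signature of overfitting. The constrained tree usually shows a smaller gap, though the exact numbers depend on the data.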

7. How would you prevent a model from overfitting?

To prevent overfitting, several strategies can be employed. One common approach is to increase the amount of training data. More data allows the model to generalize better to unseen examples. Another technique is regularization, which adds a penalty to the model's complexity. L1 and L2 regularization are popular methods. Cross-validation helps in assessing the model's performance on unseen data and tuning hyperparameters to avoid overfitting.

Furthermore, feature selection and feature engineering can simplify the model by reducing the number of input features or creating more informative features. Early stopping, which involves monitoring the model's performance on a validation set and stopping training when the performance starts to degrade, can also be effective. Finally, techniques like dropout (especially in neural networks) randomly deactivate neurons during training, forcing the network to learn more robust features.

8. What is the role of a validation set in machine learning?

The validation set is a portion of the labeled data that is held out from model fitting and used to evaluate the model during development. It helps to tune hyperparameters and prevent overfitting. Unlike the test set, the validation set is used iteratively throughout the training process. By evaluating the model on this independent dataset, one can identify how well the model generalizes to unseen data and make necessary adjustments to the model's complexity or hyperparameters.

In essence, it acts as a proxy for the test set during training, allowing for adjustments that optimize the model's ability to generalize without 'peeking' at the final test data, which is reserved for a final, unbiased evaluation. Choosing the best model or hyperparameter set is often based on the performance observed on the validation set.
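A minimal sketch of this workflow with scikit-learn follows. The 60/20/20 split, the synthetic data, and the candidate values of `C` are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)

# Split into 60% train, 20% validation, 20% test.
X_train, X_tmp, y_train, y_tmp = train_test_split(X, y, test_size=0.4, random_state=0)
X_val, X_test, y_val, y_test = train_test_split(X_tmp, y_tmp, test_size=0.5, random_state=0)

# Pick the regularization strength using the validation set only.
best_C, best_val_acc = None, -1.0
for C in [0.01, 0.1, 1.0, 10.0]:
    model = LogisticRegression(C=C, max_iter=1000).fit(X_train, y_train)
    val_acc = model.score(X_val, y_val)
    if val_acc > best_val_acc:
        best_C, best_val_acc = C, val_acc

# The test set is touched once, after the choice has been made.
final_model = LogisticRegression(C=best_C, max_iter=1000).fit(X_train, y_train)
test_acc = final_model.score(X_test, y_test)
print(f"best C={best_C}, validation acc={best_val_acc:.3f}, test acc={test_acc:.3f}")
```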

9. Explain the importance of data preprocessing in machine learning.

Data preprocessing is crucial in machine learning because real-world data is often incomplete, inconsistent, and noisy. Without preprocessing, models may struggle to learn effectively, leading to poor performance and inaccurate predictions. Preprocessing ensures data quality, making it suitable for machine learning algorithms.

Specifically, preprocessing steps like handling missing values, removing outliers, scaling features, and encoding categorical variables significantly impact model performance. Clean and well-prepared data improves the accuracy, efficiency, and interpretability of machine learning models. It reduces bias and improves generalization, allowing models to make better predictions on unseen data.

10. What are some common data preprocessing techniques?

Common data preprocessing techniques include:

- Data Cleaning: Handling missing values (imputation or removal), removing outliers, correcting inconsistencies, and addressing noisy data.

- Data Transformation: Scaling or normalizing numerical features (e.g., min-max scaling, standardization), converting categorical variables to numerical representations (e.g., one-hot encoding, label encoding), and handling skewed distributions (e.g., log transformation).

- Data Reduction: Reducing the dimensionality of the data (e.g., PCA, feature selection), aggregating data, and sampling (e.g., under-sampling, over-sampling). Useful if resources are limited or model complexity needs to be constrained.

- Feature Engineering: Creating new features from existing ones to improve model performance (e.g., polynomial features, interaction terms).

These techniques aim to improve data quality, prepare it for machine learning algorithms, and ultimately enhance model performance.
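Several of these steps can be chained in a scikit-learn pipeline. The sketch below is one possible arrangement; the column names and values are made up for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical customer table with missing values in every column.
df = pd.DataFrame({
    "age": [25.0, np.nan, 47.0, 33.0],
    "income": [40000.0, 52000.0, np.nan, 61000.0],
    "plan": ["basic", "pro", "basic", np.nan],
})

# Numeric columns: fill gaps with the median, then standardize.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Categorical columns: fill gaps with the most frequent value, then one-hot encode.
categorical = Pipeline([
    ("impute", SimpleImputer(strategy="most_frequent")),
    ("encode", OneHotEncoder(handle_unknown="ignore")),
])

preprocess = ColumnTransformer([
    ("num", numeric, ["age", "income"]),
    ("cat", categorical, ["plan"]),
])

features = preprocess.fit_transform(df)
print(features.shape)  # 2 scaled numeric columns + 2 one-hot columns
```

Wrapping the steps in a pipeline means the same transformations, fitted on the training data, are reapplied unchanged at prediction time.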

11. What are evaluation metrics, and why are they important? Provide a few examples.

Evaluation metrics are quantitative measures used to assess the performance of a model or algorithm. They're vital for understanding how well a model is achieving its intended goals, comparing different models, and tuning model parameters for optimal performance. Without them, it's impossible to objectively determine if a model is improving or if one model is better than another.

Examples include:

- Accuracy: The proportion of correctly classified instances.

- Precision: The proportion of true positives out of all predicted positives.

- Recall: The proportion of true positives out of all actual positives.

- F1-score: The harmonic mean of precision and recall.

- Mean Squared Error (MSE): The average squared difference between predicted and actual values, MSE = (1/n) * Σ(y_i - ŷ_i)^2.

- Area Under the ROC Curve (AUC-ROC): Measures the ability of a classifier to distinguish between classes.

12. How do you measure the performance of a classification model?

Performance of a classification model is typically measured using metrics derived from the confusion matrix. Key metrics include:

- Accuracy: Overall correctness (TP+TN)/(TP+TN+FP+FN).

- Precision: Measures how many of the positive predictions are actually correct TP/(TP+FP).

- Recall (Sensitivity): Measures how many of the actual positives are correctly predicted TP/(TP+FN).

- F1-score: Harmonic mean of precision and recall 2 * (Precision * Recall) / (Precision + Recall).

- AUC-ROC: Area under the Receiver Operating Characteristic curve, representing the model's ability to distinguish between classes.

Choosing the appropriate metric depends on the specific problem and the relative importance of different types of errors. For example, in medical diagnosis, recall might be more important than precision.
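The formulas above translate directly into code. The following sketch computes them from raw confusion-matrix counts; the function name and the example counts are hypothetical.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * (precision * recall) / (precision + recall)
    else:
        f1 = 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Made-up counts: 40 true positives, 45 true negatives, 5 false positives, 10 false negatives.
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts, accuracy is 0.85 while recall is 0.80, which shows how a single headline number can hide the share of positives that were missed.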

13. What is the difference between precision and recall?

Precision and recall are two important metrics used to evaluate the performance of classification models.

Precision measures the accuracy of the positive predictions. It answers the question: "Of all the items the model predicted as positive, how many were actually positive?" It is calculated as True Positives / (True Positives + False Positives).

Recall, on the other hand, measures the completeness of the positive predictions. It answers the question: "Of all the actual positive items, how many did the model correctly predict as positive?" It is calculated as True Positives / (True Positives + False Negatives).

14. Can you explain the concept of bias in machine learning?

Bias in machine learning refers to systematic errors in a model's predictions. It occurs when a model makes consistent and inaccurate assumptions about the data, leading to poor performance on both the training data and new, unseen data. High bias models tend to underfit the data, meaning they are too simple to capture the underlying patterns.

Sources of bias can include using an overly simplistic model, incomplete or unrepresentative training data, or flawed assumptions in the algorithm itself. Mitigating bias often involves using more complex models, gathering more representative data, and carefully evaluating model performance across different subgroups of the population to identify and address any disparities.

15. How can you identify and mitigate bias in a dataset?

Identifying bias in a dataset involves exploring the data for skewed distributions, missing values in specific subgroups, and correlations between sensitive attributes (e.g., gender, race) and outcomes. Visualization techniques like histograms and scatter plots can reveal these patterns. Statistical tests can also quantify differences between groups. For example, if analyzing loan applications, calculate approval rates for different demographic groups to spot potential bias.
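The loan approval check mentioned above might look like the following pandas sketch. The data, the group labels, and the ratio used as a summary are all assumptions for illustration.

```python
import pandas as pd

# Made-up loan decisions for two demographic groups.
loans = pd.DataFrame({
    "group": ["A", "A", "A", "A", "B", "B", "B", "B"],
    "approved": [1, 1, 1, 0, 1, 0, 0, 0],
})

# Approval rate per group.
approval_rates = loans.groupby("group")["approved"].mean()

# Ratio of the lowest to the highest rate; values far below 1 warrant a closer look.
disparity_ratio = approval_rates.min() / approval_rates.max()

print(approval_rates)
print(f"Disparity ratio: {disparity_ratio:.2f}")
```

A gap like this does not prove the process is unfair on its own, since the groups may differ in other relevant features, but it tells you where to investigate further.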

Mitigating bias often starts with data preprocessing, such as resampling (oversampling minority groups, undersampling majority groups) or re-weighting instances. Algorithmic approaches like adversarial debiasing, and fairness-aware algorithms that explicitly optimize for equitable outcomes, can complement this. Regular auditing and monitoring of model performance across different subgroups are crucial to ensure bias is not reintroduced.

16. What are some ethical considerations in AI development?

Ethical considerations in AI development are crucial. Bias in training data can lead to discriminatory outcomes, perpetuating societal inequalities. It's important to ensure fairness and avoid reinforcing harmful stereotypes. Transparency and explainability are also key; understanding how AI systems arrive at their decisions is vital for accountability and trust.

Privacy is another major concern, especially with AI systems processing vast amounts of personal data. Responsible data handling and robust security measures are essential to protect individuals' privacy. Safety and control also matter: as AI systems become more autonomous, it is critical to ensure they operate safely and remain under human control, preventing unintended consequences or misuse. Finally, the potential for job displacement due to AI automation raises ethical questions about the need for retraining and social safety nets to mitigate the negative impacts on workers.

17. Have you used any machine learning libraries like scikit-learn or TensorFlow? What was your experience?

Yes, I have experience using scikit-learn and TensorFlow. I've used scikit-learn for various tasks like classification, regression, and clustering. I'm familiar with common algorithms like linear regression, support vector machines, decision trees, and k-means. I've also used it for model selection, hyperparameter tuning with GridSearchCV and RandomizedSearchCV, and evaluation with metrics like accuracy, precision, recall, and F1-score. For example, in one project, I used scikit-learn to build a model that predicts customer churn based on historical data. The following code snippet shows how I used it:

    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split

    # X (feature matrix) and y (churn labels) are assumed to be loaded already
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Train a random forest and evaluate it on the held-out split
    model = RandomForestClassifier(n_estimators=100, random_state=42)
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    accuracy = accuracy_score(y_test, y_pred)
    print(f"Accuracy: {accuracy}")

I've also worked with TensorFlow for building and training neural networks. I have experience creating different types of layers (dense, convolutional, recurrent) and defining loss functions, optimizers, and training loops. I've used TensorFlow for tasks such as image classification and natural language processing, and I'm comfortable using the Keras API within TensorFlow to build models. I am familiar with concepts like backpropagation, gradient descent, and regularization techniques.

18. Tell me about a time you encountered a problem while building a machine learning model and how you solved it.

During a project to predict customer churn, I encountered a significant class imbalance – only a small percentage of customers actually churned. This caused the model to be biased towards predicting the majority class (non-churn). To address this, I employed several techniques. First, I experimented with different sampling methods, including oversampling the minority class (churned customers) using SMOTE and undersampling the majority class.

Second, I adjusted the model's parameters to penalize misclassification of the minority class more heavily. Specifically, I used the class_weight='balanced' parameter in scikit-learn's Logistic Regression. Finally, I evaluated the model's performance using metrics that are less sensitive to class imbalance, such as precision, recall, F1-score, and AUC-ROC, instead of solely relying on accuracy. By combining these approaches, I was able to build a more robust and accurate churn prediction model.
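A sketch of the class-weighting step is shown below. It uses synthetic data with roughly 5% positives as a stand-in for the churn dataset, which is an assumption; only `class_weight='balanced'` comes from the answer above.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for churn data: about 5% of samples belong to the positive class.
X, y = make_classification(
    n_samples=2000, n_features=10, weights=[0.95, 0.05], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Same model, with and without class re-weighting.
plain = LogisticRegression(max_iter=1000).fit(X_train, y_train)
weighted = LogisticRegression(max_iter=1000, class_weight="balanced").fit(X_train, y_train)

recall_plain = recall_score(y_test, plain.predict(X_test))
recall_weighted = recall_score(y_test, weighted.predict(X_test))

print(f"Minority-class recall without weighting: {recall_plain:.2f}")
print(f"Minority-class recall with weighting:    {recall_weighted:.2f}")
```

Re-weighting typically raises recall on the minority class at some cost in precision, so it is worth checking both before settling on a configuration.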

19. How do you keep up with the latest advancements in AI?

I stay updated on AI advancements through a multi-faceted approach. I regularly read research papers on arXiv, particularly those from leading AI labs. I also subscribe to newsletters and blogs from reputable sources like Google AI Blog, OpenAI Blog, and DeepMind, as well as AI-focused news aggregators.

Furthermore, I actively participate in online communities and forums such as Reddit's r/MachineLearning and follow virtual sessions and webinars from conferences like NeurIPS, ICML, and ICLR. This helps me stay informed about the latest trends, techniques, and real-world applications of AI. I also experiment with new libraries such as transformers or torchvision to gain practical experience.

20. How would you explain the concept of 'feature engineering' to someone without a technical background?

Imagine you're baking a cake. The ingredients (flour, sugar, eggs) are like the initial data. Feature engineering is like preparing those ingredients to make them better for baking. For example, instead of just using raw sugar, you might grind it into powdered sugar for a smoother texture. Or, instead of using whole eggs, you might separate the yolks and whites and whip the whites for added volume. These preparations (powdering sugar, whipping egg whites) are like creating new 'features' from your original data to help the cake (your machine learning model) turn out better.

In simpler terms, it's about taking the information you have and transforming it in a way that makes it easier for a computer to understand patterns and make predictions. We create new ingredients from the existing ones to give our 'cake' (prediction) the best chance of success.

21. What's the importance of A/B testing in deploying machine learning models?

A/B testing is crucial for deploying machine learning models because it allows for data-driven comparison of different model versions in a real-world setting. It mitigates risks associated with deploying a new model blindly, ensuring that the new model genuinely improves performance compared to the existing one, or a baseline. Without A/B testing, it's difficult to confidently assess the impact of changes and avoid potentially negative consequences.

Specifically, A/B testing helps to:

- Quantify the impact of model changes on key metrics like conversion rate, click-through rate, or revenue.

- Identify unexpected issues or biases that may not be apparent in offline testing.

- Ensure that the model performs well across different user segments or scenarios.

- Provide a statistically significant basis for making deployment decisions.

- Reduce the risk of deploying a model that degrades performance.
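As an illustration of the "statistically significant basis" point, here is a sketch of a two-proportion z-test on conversion counts, written with the standard library only. The function name and the traffic numbers are hypothetical, and a real experiment would also need a pre-planned sample size.

```python
from math import erf, sqrt

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rate between variants A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Standard normal CDF evaluated at |z|, via the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Made-up numbers: the existing model (A) converts 200/2000, the new model (B) 250/2000.
z, p_value = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=250, n_b=2000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

Here the p-value falls below the conventional 0.05 threshold, so the lift from 10% to 12.5% would usually be treated as unlikely to be chance alone.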

22. Describe a situation where you had to make a trade-off between model accuracy and computational efficiency. How did you approach it?

In a fraud detection project, I initially developed a highly accurate deep learning model. However, its inference time was too slow for real-time transaction processing. To address this, I explored several trade-offs. First, I experimented with model distillation, training a smaller, faster model to mimic the behavior of the larger one. This reduced the model size and improved inference speed, but with a slight decrease in accuracy. Second, I reduced the number of features used by the model, focusing on the most important ones as determined by feature importance metrics. This also helped reduce the computational complexity without significantly compromising accuracy. Ultimately, I chose the distilled model combined with feature selection because it provided a good balance between acceptable accuracy and the computational efficiency required for real-time deployment.

Specifically, for feature selection, I used techniques such as:

- Variance Thresholding: Removing features with low variance.

- Univariate Feature Selection: Using statistical tests (e.g., chi-squared) to select features with the strongest relationship to the target variable.

- Recursive Feature Elimination (RFE): Recursively removing features and building a model on the remaining features.

I evaluated the performance using cross-validation. This helped me determine an optimal subset of features.
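The three techniques listed above are all available in scikit-learn. The sketch below runs them on synthetic data; the dataset, the number of features kept, and the use of `f_classif` as the univariate test are assumptions (`chi2` would require non-negative features, which this data does not have).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE, SelectKBest, VarianceThreshold, f_classif
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=300, n_features=10, n_informative=3, random_state=0)
X = np.hstack([X, np.ones((X.shape[0], 1))])  # add a constant column carrying no information

# 1. Variance thresholding: drops the constant column.
X_var = VarianceThreshold(threshold=0.0).fit_transform(X)

# 2. Univariate selection: keep the 5 features with the highest ANOVA F-score.
X_uni = SelectKBest(score_func=f_classif, k=5).fit_transform(X_var, y)

# 3. Recursive feature elimination: repeatedly refit and drop the weakest feature.
rfe = RFE(estimator=LogisticRegression(max_iter=1000), n_features_to_select=5)
X_rfe = rfe.fit_transform(X_var, y)

print(X.shape, X_var.shape, X_uni.shape, X_rfe.shape)
```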

23. What are some challenges in deploying machine learning models to production?

Deploying machine learning models to production presents several challenges. One major hurdle is model maintenance: models can degrade over time due to changes in the data distribution (data drift) or the relationship between features and the target variable (concept drift). This requires continuous monitoring, retraining, and potentially model updates, which can be resource-intensive.

Other challenges include:

- Scalability: Ensuring the model can handle a large volume of requests with low latency.

- Reproducibility: Maintaining consistent model performance across different environments.

- Monitoring: Tracking model performance, identifying anomalies, and triggering alerts when necessary.

- Integration: Seamlessly integrating the model into existing systems and workflows.

- Security: Protecting the model and data from unauthorized access and manipulation.

- Explainability: Understanding why the model makes certain predictions, especially in regulated industries.

24. How would you monitor the performance of a machine learning model in production?

Monitoring a machine learning model in production involves tracking various metrics to ensure it's performing as expected. Key areas to monitor include:

- Performance Metrics: Track metrics relevant to the model's objective (e.g., accuracy, precision, recall, F1-score for classification; RMSE, MAE for regression). Monitor these metrics over time to detect degradation.

- Data Drift: Monitor the input data distribution for significant changes compared to the training data. Techniques like calculating the Population Stability Index (PSI) or using statistical tests can help detect drift. If drift is detected, it may be time to retrain the model.

- Prediction Drift: Monitor the model's output distribution for changes. Unexpected shifts could indicate underlying issues.

- Infrastructure Metrics: Track resource usage (CPU, memory, disk I/O) and latency to ensure the model is serving predictions efficiently. Set up alerts for anomalies or performance degradation in any of these areas.

- Model Versioning and Rollbacks: Keep track of model versions and have a plan for rolling back to a previous version if issues arise. Implement automated testing and validation procedures before deploying new models.
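The Population Stability Index mentioned under data drift can be sketched as follows. The binning scheme, the function name, and the simulated data are assumptions; the 0.1 and 0.25 cut-offs in the comments are a commonly quoted rule of thumb rather than a formal standard.

```python
import numpy as np

def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a new sample."""
    # Bin edges come from the quantiles of the reference distribution.
    inner_edges = np.quantile(expected, np.linspace(0, 1, n_bins + 1))[1:-1]
    expected_counts = np.bincount(np.digitize(expected, inner_edges), minlength=n_bins)
    actual_counts = np.bincount(np.digitize(actual, inner_edges), minlength=n_bins)
    # Convert to proportions and avoid log(0) for empty bins.
    expected_pct = np.clip(expected_counts / len(expected), eps, None)
    actual_pct = np.clip(actual_counts / len(actual), eps, None)
    return float(np.sum((actual_pct - expected_pct) * np.log(actual_pct / expected_pct)))

rng = np.random.default_rng(0)
training_feature = rng.normal(0.0, 1.0, size=5000)
production_same = rng.normal(0.0, 1.0, size=5000)     # same distribution
production_shifted = rng.normal(1.0, 1.0, size=5000)  # mean shifted by one standard deviation

psi_same = population_stability_index(training_feature, production_same)
psi_shifted = population_stability_index(training_feature, production_shifted)

# Rule of thumb: below 0.1 is often read as stable, above 0.25 as significant drift.
print(f"PSI (no drift): {psi_same:.3f}")
print(f"PSI (shifted):  {psi_shifted:.3f}")
```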

25. What steps would you take to debug a deployed machine learning model that is not performing as expected?

Debugging a deployed ML model involves several key steps. First, establish a baseline by comparing the model's current performance against its performance during training and validation using relevant metrics. If performance degradation is confirmed, isolate the issue. Monitor input data for anomalies or distribution shifts using techniques like calculating summary statistics and visualizing distributions. Check model infrastructure like logging, API endpoints, and resource utilization for errors or bottlenecks. It is also crucial to check for software updates, library updates or dependency conflicts between various model components.

Then, analyze model outputs using techniques like error analysis to identify patterns in mispredictions. For example, are specific groups of data points consistently misclassified? Implement detailed logging to capture model inputs, intermediate calculations, and predictions, allowing for thorough post-mortem analysis. If applicable, conduct A/B testing to compare the problematic model against a previous version or a simpler baseline model. Also consider the need to retrain the model with new data, or investigate the possibility of concept drift.

26. Explain what a confusion matrix is and how it is used.

A confusion matrix is a table that summarizes the performance of a classification model. It tabulates the counts of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) predictions. By a common convention (the one scikit-learn uses), each row represents the actual class and each column the predicted class; some references transpose this, so check the layout before reading the counts.

It is used to evaluate the accuracy of a classification model and identify areas where the model is performing well or poorly. From the confusion matrix, various metrics can be calculated, such as accuracy, precision, recall, and F1-score, providing a more detailed understanding of the model's performance than overall accuracy alone. This helps in model selection, tuning, and identifying biases.
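For a binary problem, scikit-learn's `confusion_matrix` can produce the table directly. The labels below are made up for illustration.

```python
from sklearn.metrics import confusion_matrix

# Made-up ground truth and predictions for 8 examples (1 = positive, 0 = negative).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

# Rows are actual classes and columns are predicted classes, ordered [0, 1].
cm = confusion_matrix(y_true, y_pred)
tn, fp, fn, tp = cm.ravel()

print(cm)
print(f"TN={tn}, FP={fp}, FN={fn}, TP={tp}")
```

In this example the model makes one false positive and one false negative, which the matrix shows as the two off-diagonal entries.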

27. Describe the difference between batch and online learning. When would you use each?

Batch learning involves training a model on a fixed, pre-existing dataset. The model learns from the entire dataset at once and is then deployed. It's suitable when the dataset is relatively small, unchanging, and available in its entirety before training, such as training a spam filter on a historical email dataset.

Online learning, also known as incremental learning, trains a model one data point or a small batch of data points at a time. The model updates its parameters with each new data point it receives. This is useful when dealing with large, continuous streams of data, where it's impractical or impossible to store the entire dataset in memory, or when the data distribution changes over time. For example, training a model to predict stock prices or for real-time fraud detection benefits from online learning.
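In scikit-learn, incremental updates are exposed through `partial_fit` on estimators that support it. The sketch below simulates a stream by slicing a synthetic dataset into mini-batches; the batch size and the choice of `SGDClassifier` are assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import SGDClassifier

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)
classes = np.unique(y)  # partial_fit needs the full set of classes up front

model = SGDClassifier(random_state=0)

# Simulate a data stream: the model only ever sees 100 samples at a time.
batch_size = 100
for start in range(0, len(X), batch_size):
    X_batch = X[start:start + batch_size]
    y_batch = y[start:start + batch_size]
    model.partial_fit(X_batch, y_batch, classes=classes)

accuracy = model.score(X, y)
print(f"Accuracy after streaming all batches: {accuracy:.2f}")
```

A batch learner would instead call `fit` once on the whole dataset, and would need a full retrain to incorporate new data.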

28. What are some techniques for handling missing data in a dataset?

Several techniques exist for handling missing data. The simplest approaches involve removing rows or columns with missing values, but this can lead to significant data loss. Imputation techniques are often preferred, where missing values are replaced with estimated values. Common imputation methods include:

- Mean/Median/Mode Imputation: Replacing missing values with the mean, median, or mode of the available data in that column.

- Constant Value Imputation: Replacing missing values with a predefined constant (e.g., 0, -999).

- Regression Imputation: Predicting missing values using a regression model based on other variables.

- K-Nearest Neighbors (KNN) Imputation: Imputing missing values based on the values of the k-nearest neighbors.

- Multiple Imputation: Creating multiple plausible datasets with different imputed values and combining the results. This helps quantify the uncertainty associated with imputation.

Libraries like scikit-learn (using SimpleImputer or KNNImputer) and statsmodels in Python provide implementations for many of these techniques.
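A short sketch of mean and KNN imputation with scikit-learn follows; the small array is made up for illustration.

```python
import numpy as np
from sklearn.impute import KNNImputer, SimpleImputer

# Made-up feature matrix with two missing entries.
X = np.array([
    [1.0, 2.0],
    [np.nan, 4.0],
    [5.0, 6.0],
    [7.0, np.nan],
])

# Mean imputation: each gap is replaced by the mean of its column.
mean_filled = SimpleImputer(strategy="mean").fit_transform(X)

# KNN imputation: each gap is replaced by the average over the 2 most similar rows.
knn_filled = KNNImputer(n_neighbors=2).fit_transform(X)

print(mean_filled)
print(knn_filled)
```

Mean imputation ignores relationships between columns, while KNN imputation uses the other features of a row to pick plausible values, at a higher computational cost.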

29. Imagine you're building a model to predict customer churn. What features would you consider, and why?

To predict customer churn, I'd consider features falling into several categories. Customer demographics (age, location, gender) might reveal patterns. Engagement metrics are crucial: website visits, time spent on site, app usage frequency, and feature adoption. Customer service interactions like support ticket volume, resolution time, and sentiment scores (if available) provide insights into satisfaction. Subscription details are also important: plan type, payment method, billing frequency, and tenure. Finally, purchase history (frequency, amount spent, product types) can indicate loyalty.

I'd prioritize these features based on business context. For instance, in a SaaS business, feature usage and support interactions are paramount. In an e-commerce setting, purchase history and website behavior would be more significant. Feature selection would then involve exploring correlations with churn and using techniques like feature importance from machine learning models to further refine the feature set. Also, one-hot encoding might be used to handle categorical variables.

Intermediate Applied AI Engineer interview questions

1. Explain how you would approach building a personalized recommendation system for an e-commerce website, considering both user history and item features.

To build a personalized recommendation system for an e-commerce website, I would use a hybrid approach combining collaborative filtering and content-based filtering. For collaborative filtering, I'd analyze user purchase history, browsing data, and ratings to identify users with similar tastes. Then, I'd recommend items that similar users have liked or purchased. For content-based filtering, I'd analyze item features like category, price, brand, and description. This allows me to recommend items similar to those a user has previously interacted with.

Specifically, I'd likely implement matrix factorization (e.g., Singular Value Decomposition) on the user-item interaction matrix for collaborative filtering. For content-based filtering, I'd represent item features as vectors and use cosine similarity to find similar items. The final recommendations would be a weighted combination of the outputs from both methods, with the weights tuned based on A/B testing to optimize for metrics like click-through rate and conversion rate. Cold start problems (new users or items) can be handled by emphasizing content-based recommendations initially.
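The hybrid scoring described above can be sketched with NumPy alone. The ratings, item features, rank, and blending weight are all made up, and treating missing interactions as zeros in the SVD is a simplification that a production system would likely avoid.

```python
import numpy as np

# Hypothetical user-item matrix: rows are users, columns are items, 0 means no interaction.
ratings = np.array([
    [5.0, 4.0, 0.0, 0.0],
    [4.0, 5.0, 0.0, 1.0],
    [0.0, 0.0, 5.0, 4.0],
])

# Hypothetical item features, e.g. a one-hot encoded category.
item_features = np.array([
    [1.0, 0.0],
    [1.0, 0.0],
    [0.0, 1.0],
    [0.0, 1.0],
])

# Collaborative filtering: rank-2 truncated SVD of the interaction matrix.
U, s, Vt = np.linalg.svd(ratings, full_matrices=False)
k = 2
collab_scores = (U[:, :k] * s[:k]) @ Vt[:k, :]

# Content-based filtering: cosine similarity between item feature vectors.
unit = item_features / np.linalg.norm(item_features, axis=1, keepdims=True)
item_sim = unit @ unit.T
content_scores = (ratings @ item_sim) / np.clip(item_sim.sum(axis=0), 1e-9, None)

# Weighted blend; alpha is a hypothetical weight that would be tuned via A/B testing.
alpha = 0.5
hybrid = alpha * collab_scores + (1 - alpha) * content_scores

def recommend(user, n=1):
    """Return the indices of the top-n items the user has not interacted with."""
    scores = hybrid[user].copy()
    scores[ratings[user] > 0] = -np.inf  # exclude items already seen
    return np.argsort(scores)[::-1][:n].tolist()

print(recommend(user=0))
```

For a brand-new user or item, setting `alpha` closer to 0 would lean on the content-based scores, which matches the cold-start handling described above.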

2. Describe a time when you had to deal with imbalanced data in a machine learning project. What techniques did you use to address it, and what were the results?

In a churn prediction project, I encountered a highly imbalanced dataset where only 5% of customers churned. This skewed the model's predictions, leading to high accuracy but poor recall for the churned class.

To address this, I used a combination of techniques. First, I employed oversampling (specifically SMOTE) to generate synthetic samples of the minority class (churned customers), effectively balancing the dataset. Second, I adjusted the model's class weights during training, penalizing misclassification of the minority class more heavily. I also experimented with different evaluation metrics beyond accuracy, such as F1-score, precision, and recall, to get a more comprehensive view of model performance. After applying these techniques, the model's ability to identify churned customers (recall) significantly improved, with a more balanced precision-recall trade-off. While the overall accuracy decreased slightly, the model became much more useful for identifying at-risk customers and preventing churn.

3. How do you evaluate the performance of a machine learning model in a real-world scenario where ground truth labels are delayed or partially unavailable?

Evaluating machine learning models with delayed or partially unavailable ground truth is challenging. Common approaches include using proxy metrics that correlate with the desired outcome but are available sooner. For example, in fraud detection, the number of transactions flagged for review can serve as an early indicator, even before confirmed fraud labels are available. A/B testing with gradual rollout and monitoring key business metrics (e.g., conversion rate, customer satisfaction) is also helpful. Additionally, techniques like survival analysis, which are used for time-to-event data, can be adapted to estimate performance when labels are delayed, by treating the delay as censoring. Finally, simulated experiments with realistic delay patterns can provide insights into how the model would perform in the long run. It is also important to use techniques like active learning to selectively request ground truth labels for instances where the model is uncertain, maximizing the information gain from limited labeling resources.

Specifically, you can use metrics like precision@k or recall@k, focusing on the top k predictions. You can also use historical data to estimate the expected distribution of delays and use this to weight the available labels appropriately, down-weighting early labels that are more likely to be inaccurate. Furthermore, monitoring model stability and retraining frequently with newly available labels helps to adapt to changes in the data distribution over time. Regular calibration checks are essential to ensure that the model's predicted probabilities are well-aligned with the observed outcomes as ground truth becomes available.
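As a rough illustration of precision@k and recall@k, the sketch below ranks items by model score and compares the top k against the positives confirmed so far. The transaction IDs, scores, and the `confirmed_fraud` set are hypothetical, and the sketch assumes every scored item already has a label, which is a simplification when labels are delayed.

```python
def top_k(scored_items, k):
    """Return the k item IDs with the highest scores."""
    ranked = sorted(scored_items, key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in ranked[:k]]

def precision_at_k(scored_items, relevant, k):
    """Share of the top-k predictions that are confirmed positives."""
    hits = sum(1 for item in top_k(scored_items, k) if item in relevant)
    return hits / k

def recall_at_k(scored_items, relevant, k):
    """Share of all confirmed positives that appear in the top k."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in top_k(scored_items, k) if item in relevant)
    return hits / len(relevant)

# Hypothetical model scores and the fraud cases confirmed so far.
scored = [("t1", 0.9), ("t2", 0.8), ("t3", 0.4), ("t4", 0.3), ("t5", 0.1)]
confirmed_fraud = {"t1", "t3", "t5"}

print(precision_at_k(scored, confirmed_fraud, k=2))
print(recall_at_k(scored, confirmed_fraud, k=2))
```

When only part of the data is labeled, one option is to restrict `scored_items` to the labeled subset before computing these metrics, so unlabeled items are not counted as misses.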

4. Walk me through your process for debugging a machine learning model that is performing poorly in production. What tools and techniques do you use?

When a machine learning model performs poorly in production, my debugging process involves several steps. First, I establish a baseline by comparing the current performance metrics with historical data and expected benchmarks. Then, I focus on data quality by analyzing input data for anomalies, missing values, or distribution shifts using tools like data profiling libraries. I also examine feature importance to ensure the model is still relying on relevant features and that no feature decay has occurred. If data issues are ruled out, I investigate model drift by comparing the distributions of predicted outputs in production versus training and validation sets. Techniques include using statistical tests or visual comparisons. I also check for software bugs in the deployed code such as version mismatch. Finally, retraining the model with updated data, tweaking hyperparameters, or ensembling might be necessary if the model has drifted significantly. Tools I use include: model monitoring dashboards, logging frameworks, and version control systems.

5. Imagine you're building a fraud detection system. How would you handle the trade-off between precision and recall, and why?

In a fraud detection system, the trade-off between precision and recall is critical. Precision focuses on minimizing false positives (identifying legitimate transactions as fraudulent), while recall aims to minimize false negatives (failing to detect actual fraudulent transactions). The optimal balance depends on the specific context and associated costs.

In fraud detection, recall is often prioritized over precision. The cost of missing a fraudulent transaction (a false negative) is typically higher than the cost of investigating a legitimate transaction flagged as potentially fraudulent (a false positive). A missed fraudulent transaction can lead to significant financial losses and damage to the company's reputation. While a high false positive rate can cause inconvenience for customers and require additional investigation, the financial impact is usually less severe compared to undetected fraud. However, extremely low precision can overwhelm investigators and erode customer trust. Therefore, the balance is calibrated based on factors like fraud volume, transaction values, and investigation capacity. Techniques to improve precision without severely impacting recall include using more sophisticated machine learning models, incorporating more features, and implementing stricter rule-based systems.
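One way to make this calibration concrete is to pick the decision threshold that minimizes total cost on a validation set. The sketch below assumes made-up scores, labels, candidate thresholds, and per-error costs; real costs would come from the business.

```python
def total_cost(y_true, scores, threshold, cost_fn, cost_fp):
    """Cost of the errors made when flagging every score >= threshold."""
    false_negatives = sum(1 for t, s in zip(y_true, scores) if t == 1 and s < threshold)
    false_positives = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= threshold)
    return false_negatives * cost_fn + false_positives * cost_fp

def best_threshold(y_true, scores, candidates, cost_fn, cost_fp):
    """Candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda th: total_cost(y_true, scores, th, cost_fn, cost_fp))

# Hypothetical validation data: 1 = fraud, 0 = legitimate.
y_true = [0, 0, 0, 0, 1, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.60, 0.70, 0.90]
candidates = [0.3, 0.5, 0.8]

# Missed fraud is expensive: a low threshold (high recall) wins.
recall_oriented = best_threshold(y_true, scores, candidates, cost_fn=500, cost_fp=20)

# Reviews are expensive: a high threshold (high precision) wins.
precision_oriented = best_threshold(y_true, scores, candidates, cost_fn=20, cost_fp=500)

print(recall_oriented, precision_oriented)
```

The same model yields different operating points depending only on the cost ratio, which is why the trade-off is a business decision as much as a modeling one.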

6. Explain how you would use transfer learning to solve a new image classification problem with limited data.

To solve a new image classification problem with limited data using transfer learning, I would start by selecting a pre-trained model on a large dataset like ImageNet. Common choices include ResNet, Inception, or MobileNet. These models have already learned generic image features. Then, I would remove the final classification layer of the pre-trained model and replace it with a new layer tailored to the specific classes of my new problem. Next, I would freeze the weights of the earlier layers of the pre-trained model to prevent overfitting on the limited data, typically fine-tuning only the newly added classification layer or a few of the top layers. Finally, I would train the model on the new dataset, using techniques like data augmentation (e.g., rotations, flips, zooms) to artificially increase the size and variability of the training set. This allows the model to generalize better despite the limited data available.

7. Describe your experience with deploying machine learning models to a cloud platform like AWS, Azure, or GCP. What are some of the challenges you faced, and how did you overcome them?

I've deployed machine learning models on AWS using SageMaker and Lambda. My experience involves containerizing models (primarily with Docker) and deploying them as REST APIs. I've also used S3 for model storage and data preprocessing. Some challenges I faced included managing dependencies and ensuring consistent environments between development and production.

One specific challenge was optimizing model inference time for real-time predictions. To overcome this, I used techniques like model quantization and optimized the input data pipelines. I also experimented with different instance types on AWS to find the best balance between cost and performance. Another challenge was version control of models and datasets, which I addressed by implementing robust tracking systems using tools like DVC.

8. How do you stay up-to-date with the latest advancements in the field of applied AI?

I stay up-to-date with applied AI through a variety of channels. I regularly read research papers on arXiv and publications from leading AI conferences like NeurIPS, ICML, and ICLR. I also follow prominent AI researchers and thought leaders on social media platforms like Twitter and LinkedIn, as well as subscribe to newsletters and blogs focused on practical AI applications.

Furthermore, I actively participate in online communities like Reddit's r/MachineLearning and attend webinars and workshops to learn about new tools, techniques, and real-world case studies. Hands-on experience with cloud platforms like AWS, Azure, and GCP, as well as libraries such as TensorFlow and PyTorch helps me to grasp the practical implications of new advancements.

9. Explain the concept of 'feature importance' in a machine learning model. How can you determine which features are most important, and how can you use this information to improve the model?

Feature importance refers to assigning a score to input features based on how useful they are at predicting a target variable. In essence, it quantifies the contribution of each feature to the model's predictive power. Higher scores indicate that a specific feature has a larger impact on the model's predictions. Feature importance can be determined using various techniques, including:

- Tree-based models (e.g., Random Forest, Gradient Boosting): These directly provide feature importance scores based on how often a feature is used to split nodes in the trees and how much those splits reduce impurity (e.g., Gini impurity or entropy).

- Permutation importance: Randomly shuffling the values of a single feature and measuring the resulting decrease in model performance. A larger decrease indicates a more important feature.

- Coefficient analysis: In linear models (e.g., Linear Regression, Logistic Regression), the magnitude of the coefficients can indicate feature importance (after feature scaling).

- Feature selection techniques: Select the best features using methods like SelectKBest or recursive feature elimination (RFE).

Using feature importance information, we can improve a model in several ways. These include feature selection (remove unimportant features to simplify the model and reduce overfitting), feature engineering (create new features based on important ones), and model interpretation (gain insights into the underlying data and relationships).
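Permutation importance from the list above is simple enough to sketch without any library. In this illustration the "model" is a hypothetical stand-in function that only uses the first feature, the data is synthetic, and mean squared error is an assumed choice of metric.

```python
import random

def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in error after shuffling each feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            error = mean_squared_error(y, [predict(row) for row in X_perm])
            increases.append(error - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

def predict(row):
    """Hypothetical fitted model: depends on feature 0 and ignores feature 1."""
    return 3.0 * row[0]

# Synthetic data: the target is driven entirely by feature 0.
X = [[float(i), float((i * 7) % 5)] for i in range(10)]
y = [3.0 * row[0] for row in X]

importances = permutation_importance(predict, X, y)
print(importances)
```

Feature 1 gets an importance of exactly zero because shuffling it never changes a prediction, which makes it a candidate for removal. In practice the importance should be measured on held-out data rather than the training set.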

10. Describe a situation where you had to explain a complex machine learning model to a non-technical stakeholder. How did you approach it?

In a project predicting customer churn for a telecom company, I had to present our model to the marketing director, who had no technical background. Instead of diving into the algorithms, I focused on the business value. I explained that the model helps us identify customers at high risk of leaving, allowing us to proactively offer them personalized incentives. I used analogies like comparing the model to a 'smart filter' that sorts customers based on their likelihood to churn.

Instead of talking about coefficients and feature importance, I presented visuals. For instance, a simple bar chart showing the top factors influencing churn, described in plain English (e.g., 'customers with frequent complaints' or 'customers with short contract durations'). I also showed examples of how the model's predictions translate into actual actions, like targeted email campaigns with customized offers, emphasizing the potential ROI from reduced churn.

11. How do you handle missing data in a machine learning project? What are some common imputation techniques, and when would you use each one?

Handling missing data is a crucial step in machine learning. Ignoring it can lead to biased models. Several techniques exist to address this issue. Dropping rows or columns with missing values is the simplest, but it can lead to significant data loss if missingness is prevalent. Imputation techniques are generally preferred.

Common imputation methods include:

- Mean/Median Imputation: Replace missing values with the mean or median of the column. Use median imputation if the data is skewed.

- Mode Imputation: Replace missing values with the most frequent value. Suitable for categorical features.

- Constant Value Imputation: Replace missing values with a specific constant value (e.g., 0, -1).

- Regression Imputation: Predict the missing values using a regression model based on other features.

- K-Nearest Neighbors (KNN) Imputation: Impute based on the average or mode of the k-nearest neighbors.

The choice depends on the data type and distribution. For numerical data without outliers, mean imputation is a good starting point. If there are outliers, median imputation is preferred. For categorical data, mode imputation is used. For more accurate results, regression or KNN imputation may be considered, but these are more complex and computationally expensive. sklearn.impute provides tools like SimpleImputer and KNNImputer to implement these techniques in Python.
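The difference between mean, median, and mode imputation is easiest to see on a tiny example. This sketch uses only the standard library and made-up values, with `None` marking a missing entry; the `impute` helper is hypothetical and stands in for what a library imputer would do.

```python
from statistics import mean, median, mode

def impute(values, strategy):
    """Fill None entries using a statistic computed from the observed values."""
    observed = [v for v in values if v is not None]
    if strategy == "mean":
        fill = mean(observed)
    elif strategy == "median":
        fill = median(observed)
    elif strategy == "mode":
        fill = mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]

# A skewed numeric column: one outlier (400) pulls the mean far from typical values.
incomes = [30, 32, 35, None, 400]
print(impute(incomes, "mean"))    # fills with 124.25
print(impute(incomes, "median"))  # fills with 33.5

# A categorical column: the most frequent value is used.
colors = ["red", None, "blue", "red"]
print(impute(colors, "mode"))     # fills with 'red'
```

The mean fill of 124.25 is larger than every typical value in the column, while the median fill of 33.5 sits among them, which is why the median is the safer default for skewed data.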

12. Explain the difference between batch normalization and layer normalization. When would you use one over the other?

Batch normalization normalizes the activations of each feature across the examples in a batch. Layer normalization normalizes the activations across the features of a single input. In other words, batch norm computes the mean and variance for each feature across the batch, while layer norm computes the mean and variance for each input across all features.

You'd typically use batch normalization when the batch size is large and stable. Layer normalization is often preferred when dealing with recurrent neural networks (RNNs) or when the batch size is small, as it is less sensitive to batch size variations. For example, in sequence models or when the input data characteristics vary significantly across the batch, layer normalization often performs better.
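The two normalizations differ only in the axis over which the statistics are computed. The following sketch shows that on a small matrix of rows (examples) and columns (features). It is deliberately simplified: it omits the learnable scale and shift parameters and the running statistics that real batch norm layers keep for inference, and the `eps` value is an assumed default.

```python
from statistics import fmean, pstdev

def standardize(values, eps=1e-5):
    """Subtract the mean and divide by sqrt(variance + eps)."""
    mu = fmean(values)
    variance = pstdev(values) ** 2
    return [(v - mu) / (variance + eps) ** 0.5 for v in values]

def batch_norm(batch):
    """Normalize each feature (column) across the examples in the batch."""
    columns = [standardize(column) for column in zip(*batch)]
    return [list(row) for row in zip(*columns)]

def layer_norm(batch):
    """Normalize each example (row) across its own features."""
    return [standardize(row) for row in batch]

batch = [
    [1.0, 10.0, 100.0],
    [2.0, 20.0, 200.0],
    [3.0, 30.0, 300.0],
]
bn = batch_norm(batch)  # every column now has mean ~0
ln = layer_norm(batch)  # every row now has mean ~0

# With a batch of one, batch norm has no variation to work with,
# while layer norm still produces a meaningful result.
single = [[1.0, 2.0, 3.0]]
print(batch_norm(single))  # [[0.0, 0.0, 0.0]]
print(layer_norm(single))
```

The single-example case illustrates why layer normalization is less sensitive to batch size: its statistics never depend on other examples in the batch.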

13. Describe a time when you had to choose between different machine learning algorithms for a specific problem. What factors did you consider in your decision?

In a project aimed at predicting customer churn for a subscription service, I had to choose between Logistic Regression, Support Vector Machines (SVM), and Random Forests. Logistic Regression offered interpretability and speed but might struggle with complex non-linear relationships. SVM could capture these complexities but required careful kernel selection and was computationally expensive. Random Forests provided robustness and feature importance but were less interpretable.

I prioritized interpretability and speed initially, opting for Logistic Regression as a baseline. Its performance was mediocre, so I considered Random Forests for their higher potential accuracy and feature importance capabilities. Ultimately, I trained both SVM and Random Forest models and compared them using cross-validation across metrics like precision, recall, F1-score, and AUC. Random Forest performed best and was selected. I also ran SHAP analysis on the Random Forest model to get a good sense of feature importance.

14. How do you approach the problem of concept drift in a production machine learning model?

Concept drift, where the relationship between input data and the target variable changes over time, is a common challenge in production machine learning. My approach involves continuous monitoring and model retraining. I would first establish a monitoring system to track model performance metrics (e.g., accuracy, precision, recall) and data distributions. Significant deviations from baseline values would trigger alerts.

Then, I would implement an automated retraining pipeline. This could involve periodic retraining (e.g., daily, weekly) or triggered retraining based on the drift detection alerts. Several drift detection techniques can be used (e.g., Kolmogorov-Smirnov test for data drift, CUSUM for performance drift). When retraining, I would consider using a rolling window of recent data to capture the updated relationships and potentially explore adaptive learning algorithms designed to handle drift more effectively. Techniques such as online learning or ensemble methods with model weighting can be employed.
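The Kolmogorov-Smirnov check mentioned above compares the empirical distribution of a feature at training time with its distribution in recent production data. Below is a minimal sketch of the two-sample KS statistic. The sample values and the `DRIFT_THRESHOLD` of 0.3 are hypothetical; a real pipeline would more likely use a p-value from a statistics library such as `scipy.stats.ks_2samp`.

```python
from bisect import bisect_right

def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    max_gap = 0.0
    for x in sorted(set(ref) | set(cur)):
        cdf_ref = bisect_right(ref, x) / len(ref)  # share of reference values <= x
        cdf_cur = bisect_right(cur, x) / len(cur)  # share of current values <= x
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap

DRIFT_THRESHOLD = 0.3  # hypothetical alerting threshold

training_values = [1, 2, 3, 4]
production_values = [3, 4, 5, 6]

statistic = ks_statistic(training_values, production_values)
needs_retraining = statistic > DRIFT_THRESHOLD
print(statistic, needs_retraining)
```

A statistic of 0 means the two samples have identical empirical distributions, and 1 means they do not overlap at all. Note that this detects a shift in the inputs (data drift); a change in the input-target relationship itself still requires labeled data to confirm.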

15. Explain the trade-offs between different model deployment strategies, such as online deployment, batch deployment, and shadow deployment.

Online deployment provides immediate predictions, ideal for real-time applications. However, it requires significant infrastructure to handle peak loads and can be more complex to monitor and update without impacting users. Batch deployment processes data periodically, offering high throughput and cost-effectiveness. This is suitable when immediate predictions aren't crucial, but it introduces latency and may not be appropriate for time-sensitive tasks. Shadow deployment runs the new model alongside the existing one, comparing performance without affecting production traffic. It minimizes risk and provides valuable insights before a full rollout, but it requires more resources and careful analysis to ensure fair comparison and the appropriate logging metrics.

16. Let's say you're building a sentiment analysis model for social media. How would you handle sarcasm and irony?

Handling sarcasm and irony in sentiment analysis is tricky because they rely on context and often involve expressing a sentiment opposite to the literal meaning. Several approaches can be combined. First, contextual analysis is crucial. The model needs to consider surrounding words and phrases to understand the overall tone. Features like exclamation marks, question marks, and specific emojis (e.g., eye-roll emoji) can be strong indicators of sarcasm. Phrases and patterns that are frequently used sarcastically or ironically on the target platform are also useful signals.

Second, advanced techniques like fine-tuning pre-trained language models (e.g., BERT, RoBERTa) on datasets specifically annotated for sarcasm and irony can be very effective. These models learn complex patterns and relationships within the text, which aids in identifying subtle cues. Contrastive learning, which trains the model to distinguish literal from sarcastic uses of the same phrase, can also help. Finally, ensemble methods that combine the outputs of multiple models trained on different features (e.g., lexical, syntactic, sentiment) can improve the robustness and accuracy of sarcasm detection.

17. How do you ensure the fairness and ethical considerations are taken into account when building and deploying a machine learning model?

Accounting for fairness and ethics in ML involves several steps. First, data bias needs to be addressed. This includes careful data collection, preprocessing, and augmentation techniques to mitigate skewed or unrepresentative data. We need to evaluate models for disparate impact across different demographic groups, using metrics like equal opportunity or demographic parity. Regular auditing helps to identify and correct biases that might emerge over time. Algorithmic transparency is also crucial. Understanding how a model makes decisions (interpretability) allows us to pinpoint sources of bias or unfairness. We need clear documentation of the model's intended use, limitations, and potential ethical implications. Finally, clear accountability and redress mechanisms should be defined for unintended outcomes.

18. Describe your experience with using different machine learning frameworks, such as TensorFlow, PyTorch, or scikit-learn. What are the strengths and weaknesses of each one?

I have experience using scikit-learn, TensorFlow, and PyTorch. Scikit-learn is excellent for classical machine learning algorithms, providing a wide range of tools for tasks like classification, regression, and clustering. Its strengths are its ease of use and comprehensive documentation, but it is less suited for deep learning tasks. TensorFlow and PyTorch are powerful frameworks specifically designed for deep learning. TensorFlow is known for its production readiness and scalability, while PyTorch is favored for its dynamic computation graph and ease of debugging.

Specifically, I've used scikit-learn for building models for fraud detection and customer churn prediction. For deep learning, I've employed TensorFlow and Keras (a high-level API within TensorFlow) to develop image classification models and natural language processing (NLP) applications. More recently, I've been using PyTorch for its flexibility in research and experimentation, particularly in generative adversarial networks (GANs).

19. Explain how you would design an A/B test to evaluate the impact of a new machine learning feature on a website or application.

To A/B test a new machine learning feature, I'd randomly split users into two groups: a control group (A) and a treatment group (B). Group A sees the existing experience, while group B sees the new feature. Key metrics, such as conversion rate, click-through rate, and engagement, would be tracked for both groups over a defined period.

Statistical significance tests (e.g., t-tests) would then be used to determine if the difference in metrics between the two groups is statistically significant, indicating a real impact from the new feature. We would also monitor for any unintended negative side effects, and consider both statistical significance and practical significance (the magnitude of the effect) when making a decision about whether to launch the feature to all users.
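For a binary metric such as conversion, one common choice of significance test is a two-proportion z-test (named here as an alternative to the t-test mentioned above). The sketch below implements it with the standard library; the visitor and conversion counts are invented for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    # Standard normal CDF via the error function.
    normal_cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value

# Hypothetical results: control converts 200 of 10,000, treatment 260 of 10,000.
z, p_value = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
absolute_lift = 260 / 10_000 - 200 / 10_000

print(f"z = {z:.2f}, p = {p_value:.4f}, lift = {absolute_lift:.3%}")
```

The p-value answers the statistical significance question, while `absolute_lift` is what the practical significance discussion is about: a tiny lift can be statistically significant with enough traffic and still not be worth shipping.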

20. Describe a situation where you had to work with a large dataset. What tools and techniques did you use to efficiently process and analyze the data?

In a previous role, I worked with a dataset containing several million customer records, including demographic information, purchase history, and website activity. Due to the size, loading the entire dataset into memory wasn't feasible. I used Python with pandas for initial data exploration and cleaning, but quickly moved to using Dask to handle out-of-memory computations by processing the data in chunks. For more complex analytical queries and aggregations, I loaded the data into a cloud-based data warehouse like Snowflake, which allowed me to leverage its distributed processing capabilities and SQL for efficient querying.

Specifically, I used techniques like data sampling to understand the data distribution, optimized data types within pandas to reduce memory footprint, and partitioned the data in Snowflake to improve query performance. Additionally, I utilized visualization libraries like matplotlib and seaborn to identify patterns and communicate insights effectively. I also incorporated a robust error handling and logging mechanism to track data quality and processing steps.

21. How do you handle the cold start problem in a recommendation system?

The cold start problem occurs when a recommendation system lacks sufficient information about new users or items to provide accurate recommendations. Several strategies can address this:

- Popularity-based recommendations: Initially, recommend the most popular items to all new users. This provides some initial engagement.

- Content-based filtering: If item metadata is available (e.g., genre, keywords), recommend items similar to those the user has interacted with (if any) or items whose metadata matches user-provided profile information.

- Collaborative filtering with knowledge transfer: Leverage data from similar users or items to bootstrap recommendations. For example, if the new item is similar to an existing item, use the interaction data of the existing item to recommend it.

- Hybrid approaches: Combine multiple strategies to leverage their strengths. For example, use content-based filtering to make initial recommendations and then transition to collaborative filtering as more data becomes available. Gathering explicit user feedback (e.g., asking users to rate items) early on can also help personalize recommendations more quickly.

22. Explain the difference between supervised, unsupervised, and reinforcement learning, and give an example of a real-world application for each.

Supervised learning involves training a model on a labeled dataset, where each input is paired with a correct output. The goal is to learn a mapping function that can predict the output for new, unseen inputs. A real-world example is email spam detection: the model is trained on emails labeled as either 'spam' or 'not spam', and then it can classify new emails. Unsupervised learning, on the other hand, uses unlabeled data to discover patterns and structures within the data. Clustering customer data into distinct segments based on purchasing behavior is an example, where we don't initially know what the segments are. Reinforcement learning involves an agent learning to make decisions in an environment to maximize a reward. The agent learns through trial and error, receiving feedback (rewards or penalties) for its actions. A real-world example is training a self-driving car to navigate traffic, where the agent (the car) learns to drive by receiving rewards for safe and efficient driving and penalties for accidents or traffic violations.

23. How do you monitor the health and performance of a machine learning model in production?

Monitoring the health and performance of a machine learning model in production is crucial for ensuring its continued accuracy and reliability. Key aspects include tracking model performance metrics like accuracy, precision, recall, F1-score, and AUC over time. Significant drops in these metrics can indicate model degradation or data drift. It's also important to monitor input data for changes in distribution, which can impact model performance. Monitoring infrastructure metrics (CPU usage, memory consumption, latency) helps ensure the model is serving predictions efficiently.

Specific techniques include setting up automated monitoring dashboards and alerts to notify when performance deviates from acceptable thresholds. A/B testing new model versions against existing ones can also reveal performance improvements or regressions. Tools for monitoring and alerting depend on the infrastructure used, but might include Prometheus, Grafana, or cloud-specific monitoring services. Regularly retraining the model with fresh data is important, as well as having a process for model rollback if an issue is detected.

Advanced Applied AI Engineer interview questions

1. How would you design an AI system to detect fake news, considering the evolving tactics of misinformation spreaders?

To design an AI system for detecting fake news, I'd employ a multi-layered approach combining several techniques. First, content analysis would involve natural language processing (NLP) to identify stylistic markers (e.g., sensationalism, emotional language), factual inconsistencies by cross-referencing with reliable sources (using a knowledge graph), and source credibility assessment by analyzing the domain's history, reputation, and author information. Feature engineering focusing on these elements would be crucial for training machine learning models. Second, network analysis would examine how news spreads on social media, identifying bot networks and coordinated disinformation campaigns. Finally, I would implement an adversarial training component, where the system is continuously exposed to evolving fake news examples to improve its robustness against new tactics. This requires setting up pipelines that monitor emerging disinformation trends to update training datasets and model architectures, keeping the system adaptive and resilient.

2. Explain your approach to handling imbalanced datasets in a real-time fraud detection system.

When dealing with imbalanced datasets in a real-time fraud detection system, my approach focuses on a combination of techniques to improve the model's ability to accurately identify fraudulent transactions without being overwhelmed by the majority class.

First, I would consider resampling: undersampling the majority class (genuine transactions) or oversampling the minority class (fraudulent transactions). Because undersampling can result in information loss, a technique like SMOTE (Synthetic Minority Oversampling Technique), which generates synthetic samples for the minority class, is often preferable. During model training, I'd prioritize metrics like precision, recall, and F1-score over overall accuracy, as accuracy can be misleading in imbalanced scenarios. Furthermore, implementing cost-sensitive learning, where misclassifying a fraudulent transaction incurs a higher penalty, can guide the model to focus on correctly identifying fraud. For the model architecture, an ensemble method such as Random Forest or Gradient Boosting Machines can effectively handle imbalanced data by combining multiple decision trees or weak learners, and can easily be deployed in a real-time system.

3. Describe a situation where you had to choose between model accuracy and interpretability. What factors influenced your decision?

In a fraud detection project, I encountered a situation where I had to balance model accuracy and interpretability. A complex deep learning model offered higher accuracy (92%) compared to a simpler logistic regression model (88%). However, the deep learning model was essentially a black box, making it difficult to understand why a particular transaction was flagged as fraudulent. This lack of transparency posed a challenge for regulatory compliance and for providing explanations to customers.

I ultimately chose the logistic regression model. Several factors influenced this decision. First, interpretability was paramount for regulatory compliance. Second, explaining the reasoning behind fraud alerts to customers was crucial for maintaining trust. Third, the drop in accuracy (4 percentage points) was deemed acceptable given the significant gains in interpretability and explainability. Finally, I could still use feature importance from the logistic regression to guide fraud investigation. We also considered techniques like LIME and SHAP to add some explainability to the deep learning model, but those added complexity to the debugging process and deployment pipeline.

4. How would you go about optimizing a deep learning model for deployment on a resource-constrained edge device?

Optimizing a deep learning model for resource-constrained edge devices involves several techniques. Model quantization (e.g., converting weights from float32 to int8) significantly reduces model size and inference time. Techniques like pruning (removing less important connections) and knowledge distillation (training a smaller "student" model to mimic a larger "teacher" model) can further reduce the model's complexity.

Furthermore, consider using efficient model architectures like MobileNet or EfficientNet, specifically designed for mobile and embedded devices. Optimize the inference engine by using libraries like TensorFlow Lite or optimized custom kernels. Hardware acceleration (e.g., using a dedicated neural processing unit) can also dramatically improve performance. Finally, consider techniques like layer fusion which merges multiple operations into a single one to reduce overhead, and operator optimization by picking the fastest implementations for the target hardware.
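To give a feel for what float32-to-int8 quantization does to weights, here is a minimal sketch of symmetric per-tensor quantization. The weight values are made up, and real toolchains add details this sketch leaves out, such as per-channel scales, zero points for asymmetric ranges, and calibration of activations.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical layer weights.
weights = [0.82, -1.27, 0.003, 0.5, -0.41]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(quantized)
print(max_error)
```

Each weight now needs one byte instead of four, at the price of a rounding error of at most half the scale per weight. Very small weights such as 0.003 collapse to zero, which hints at why accuracy should be re-validated after quantization.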

5. Design an AI-powered recommendation system for a platform with limited user data. How would you address the cold start problem?

To design an AI-powered recommendation system with limited user data, particularly addressing the cold start problem, I'd implement a hybrid approach. Initially, I'd leverage content-based filtering using item metadata (descriptions, categories, tags) to recommend similar items, and complement this with popularity-based recommendations that highlight trending or frequently purchased items. For new users, an onboarding process can ask for a few preferences, which are then used to create a user profile and recommend suitable items.

To improve the recommendation engine, I would A/B test different recommendation algorithms (e.g., collaborative filtering with matrix factorization as data grows) and implement contextual bandit algorithms to balance exploring new items with exploiting known user preferences. I would also gather implicit feedback (e.g., time spent on a page, items added to cart) to refine recommendations over time. Synthetic data generation or pre-trained models fine-tuned on relevant data could also mitigate the cold start problem.

6. You are tasked with building a model to predict customer churn. How would you incorporate external factors like competitor promotions into your model?

To incorporate external factors like competitor promotions into a customer churn model, I would first gather data on these promotions, including the type of promotion, duration, and target audience, if available. This data can be obtained through web scraping, market research reports, or partnerships with data providers.

Next, I would engineer relevant features from the raw data. For example:

- A binary feature indicating whether a competitor promotion was active during a specific period.

- A count of the number of competitor promotions active in a given month.

- A feature representing the intensity or attractiveness of competitor promotions (e.g., discount percentage).

Finally, these features would be included as input variables in the churn prediction model. I would experiment with different modeling techniques (e.g., logistic regression, gradient boosting) to determine which best captures the impact of external factors on churn.
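The three example features can be derived from a table of promotion records. The sketch below assumes a hypothetical record layout (`start`, `end`, `discount_pct`) and computes the features for one observation window.

```python
from datetime import date


def promo_features(period_start, period_end, promotions):
    """Summarise competitor promotions overlapping [period_start, period_end]."""
    active = [
        p for p in promotions
        if p["start"] <= period_end and p["end"] >= period_start
    ]
    return {
        # Binary flag: was any competitor promotion running?
        "promo_active": int(bool(active)),
        # Count of overlapping promotions.
        "promo_count": len(active),
        # Intensity proxy: the deepest discount on offer.
        "max_discount_pct": max((p["discount_pct"] for p in active), default=0.0),
    }


# Hypothetical competitor promotions.
PROMOTIONS = [
    {"start": date(2024, 1, 10), "end": date(2024, 1, 20), "discount_pct": 15.0},
    {"start": date(2024, 1, 25), "end": date(2024, 2, 5), "discount_pct": 30.0},
    {"start": date(2024, 3, 1), "end": date(2024, 3, 7), "discount_pct": 10.0},
]

january = promo_features(date(2024, 1, 1), date(2024, 1, 31), PROMOTIONS)
print(january)
```

Each customer-month row in the churn dataset would then be joined with the feature dictionary for its window.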

7. Explain your experience with using reinforcement learning in a real-world application. What challenges did you face?

In a project aimed at optimizing inventory management for a retail chain, I applied reinforcement learning (RL) using a Deep Q-Network (DQN). The agent's environment was defined by historical sales data, current inventory levels, and various cost factors (holding costs, ordering costs, stockout penalties). The agent learned to make ordering decisions that minimized total costs. States were defined by inventory levels, demand forecasts, and time-related features, while actions represented the quantity of each product to order. The reward function penalized stockouts and high inventory levels while rewarding efficient inventory management, ultimately leading to a significant reduction in overall inventory expenses compared to traditional heuristic-based methods.

Challenges included defining a realistic and stable environment that accurately mirrored real-world demand fluctuations. Hyperparameter tuning for the DQN was crucial for avoiding overfitting to the training data, and it was computationally expensive. The exploration-exploitation trade-off was tricky: ensuring adequate exploration without compromising performance was a significant hurdle. Furthermore, the environment was non-stationary because of changing consumer preferences and external factors, which required periodic retraining and adaptation of the RL agent.

8. Describe your process for evaluating the fairness and bias of a machine learning model before deployment.

Before deploying a machine learning model, I evaluate fairness and bias through several steps. First, I define fairness metrics relevant to the specific application (e.g., demographic parity, equal opportunity). Then, I analyze the training data for potential sources of bias, such as imbalanced representation or skewed labels. Next, I assess model performance across different demographic groups using the chosen fairness metrics. This involves calculating metrics like disparate impact or equalized odds to identify disparities in outcomes. I'd also examine feature importance and model explanations (e.g., using SHAP values or LIME) to understand how the model is using potentially sensitive features.

If bias is detected, I apply mitigation techniques like re-weighting data, adjusting decision thresholds, or using fairness-aware algorithms. Post mitigation, I re-evaluate the fairness metrics to ensure the bias has been reduced without significantly compromising overall model performance. This iterative process of detection, mitigation, and evaluation helps ensure the model is both accurate and fair. Finally, I document all steps taken, including the metrics used, the results of the fairness evaluation, and any mitigation strategies applied, ensuring transparency and reproducibility.
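To make the group-level comparison concrete, here is a minimal sketch that computes demographic parity difference, disparate impact, and equal opportunity difference from binary labels and predictions. The data and the choice of privileged group are hypothetical. In practice a library such as Fairlearn or AIF360 would typically be used instead.

```python
def _rate(values):
    """Share of 1s in a list of 0/1 values (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def fairness_report(labels, preds, groups, privileged):
    """Compare the unprivileged group(s) against the privileged group."""
    def stats(in_privileged):
        idx = [i for i, g in enumerate(groups) if (g == privileged) == in_privileged]
        selection = _rate([preds[i] for i in idx])                 # P(pred = 1)
        tpr = _rate([preds[i] for i in idx if labels[i] == 1])     # P(pred = 1 | y = 1)
        return selection, tpr

    sel_p, tpr_p = stats(True)
    sel_u, tpr_u = stats(False)
    return {
        "demographic_parity_diff": sel_u - sel_p,
        "disparate_impact": sel_u / sel_p if sel_p else float("inf"),
        "equal_opportunity_diff": tpr_u - tpr_p,
    }


# Hypothetical evaluation set with two groups, "a" (privileged) and "b".
labels = [1, 0, 1, 1, 0, 1, 0, 1]
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

report = fairness_report(labels, preds, groups, privileged="a")
print(report)
```

A disparate impact ratio well below 1 (a commonly cited rule of thumb flags values under 0.8) would prompt the mitigation steps described above, followed by re-running the same report.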

9. How would you approach building a scalable and robust AI pipeline for processing large volumes of unstructured text data?

To build a scalable and robust AI pipeline for processing large volumes of unstructured text data, I would prioritize modularity and parallel processing. First, I'd break down the pipeline into distinct stages: data ingestion, preprocessing (cleaning, tokenization, stemming/lemmatization), feature extraction (TF-IDF, word embeddings), model training/inference, and output. Each stage would be designed as an independent module using technologies like Apache Kafka for message queuing and Apache Spark/Dask for distributed data processing. For model training, I'd explore cloud-based ML platforms (AWS SageMaker, Google AI Platform) to leverage their scalability and pre-built algorithms. Monitoring and logging are crucial; I'd use tools like Prometheus and Grafana to track performance metrics (throughput, latency, error rates) and set up alerts for any anomalies.

Key considerations also include data storage using cloud object storage (e.g., AWS S3) and version control using Git for both code and model artifacts. Robustness is achieved through comprehensive testing (unit, integration, end-to-end) and the implementation of retry mechanisms for transient failures. The system should be designed to handle different data formats and schemas and provide data lineage information, enabling traceability and debugging. Finally, the infrastructure would be defined as code (Infrastructure as Code - IaC) using tools like Terraform or CloudFormation for automation and reproducibility.
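One of the robustness measures mentioned above, retrying transient failures, can be sketched as follows. Which exceptions count as transient is an assumption here (`ConnectionError`), and `flaky_fetch` is a hypothetical stand-in for a pipeline stage.

```python
import time


def with_retries(fn, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call fn(), retrying on ConnectionError with exponential backoff.

    The delay doubles after each failed attempt. The final failure is
    re-raised so the caller (or an orchestrator) can handle it.
    """
    for attempt in range(attempts):
        try:
            return fn()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


# Hypothetical stage that fails twice before succeeding.
calls = {"n": 0}


def flaky_fetch():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary network issue")
    return "batch-001"


delays = []  # record the delays instead of actually sleeping
result = with_retries(flaky_fetch, sleep=delays.append)
print(result, delays)
```

Injecting `sleep` keeps the helper easy to unit test. Production code would usually add jitter and a cap on the maximum delay.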

10. Imagine you need to build a system that can generate realistic images. How would you evaluate the 'realness' of the generated images?

Evaluating the 'realness' of generated images is a multifaceted problem. Subjective human evaluation is crucial: for example, asking people to rate images on a realism scale, or running a Turing test-style experiment in which participants try to distinguish real images from generated ones. For automated metrics, we can use Inception Score (IS) and Fréchet Inception Distance (FID). IS measures both the quality and diversity of generated images. FID measures the distance between the distributions of Inception features extracted from real and generated images, with a lower score indicating more realistic images.

Other evaluation methods include assessing image quality metrics like sharpness and contrast (though these don't directly measure 'realness'), using classifiers trained on real images to see how well they classify generated images (lower confidence suggests more unrealistic images), and checking for common artifacts (e.g., repeating patterns or distorted features) that are often present in generated images. Combining both subjective and objective measures provides a comprehensive assessment.
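To show the idea behind FID, the sketch below computes the Fréchet distance between two Gaussians fitted to feature vectors. It is a deliberate simplification: it assumes diagonal covariances so that it runs on the standard library alone, whereas the standard FID uses full covariance matrices (with a matrix square root) over Inception-v3 features. The feature vectors are hypothetical.

```python
import math
from statistics import fmean, pvariance


def diagonal_frechet_distance(real_feats, gen_feats):
    """Fréchet distance between per-dimension Gaussians (diagonal covariance).

    For each feature dimension:
        (mu_r - mu_g)^2 + var_r + var_g - 2 * sqrt(var_r * var_g)
    summed over all dimensions.
    """
    total = 0.0
    for d in range(len(real_feats[0])):
        r = [f[d] for f in real_feats]
        g = [f[d] for f in gen_feats]
        mu_r, mu_g = fmean(r), fmean(g)
        var_r, var_g = pvariance(r), pvariance(g)
        total += (mu_r - mu_g) ** 2 + var_r + var_g - 2 * math.sqrt(var_r * var_g)
    return total


# Hypothetical 2-D feature vectors (real FID features are 2048-dimensional).
real = [[0.0, 1.0], [2.0, 3.0]]
shifted = [[1.0, 2.0], [3.0, 4.0]]  # same spread, mean shifted by 1 per dimension

print(diagonal_frechet_distance(real, real))
print(diagonal_frechet_distance(real, shifted))
```

Identical feature sets give a distance of zero, and the distance grows as the means or variances of the two sets diverge.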

11. Design an AI system for autonomous driving. What are the key safety considerations and how would you address them?

An AI system for autonomous driving necessitates a layered approach to safety. Key considerations include perception accuracy (identifying objects reliably in varied conditions), prediction of other agents' behavior (pedestrians, vehicles), path planning that adheres to traffic rules and avoids collisions, and robust control to execute planned maneuvers precisely. Addressing these involves using diverse sensor modalities (cameras, lidar, radar) with sensor fusion techniques to improve perception robustness. Model predictive control (MPC) can be used for path planning, allowing for dynamic adjustments based on predicted scenarios, combined with reinforcement learning trained on simulated environments with extreme conditions.

Redundancy and fail-safe mechanisms are crucial. This includes redundant sensors and computing units, along with a fallback system that can safely bring the vehicle to a stop if the primary AI system fails. Formal verification methods and extensive simulation testing, including corner cases and adversarial attacks, should be employed to validate the system's safety and reliability. Regular over-the-air (OTA) updates are required to patch vulnerabilities and improve the system based on real-world driving data.

12. How do you stay up-to-date with the latest advancements in AI and machine learning, and how do you apply them to your work?

I stay updated with AI/ML advancements through several channels. I regularly read research papers on arXiv, attend webinars and online courses on platforms like Coursera and edX (focusing on areas relevant to my work, such as deep learning, natural language processing or computer vision), and follow prominent AI researchers and thought leaders on social media (Twitter, LinkedIn). I also subscribe to newsletters like Import AI and The Batch.

To apply these advancements, I try to implement new techniques in my projects, even if it's just a small-scale experiment. For example, if I read about a new optimization algorithm, I might try integrating it into a model I'm working on. I also look for opportunities to use pre-trained models or APIs that leverage the latest AI research, like using transformer models for text analysis or incorporating object detection for image processing tasks. This hands-on approach allows me to understand the practical benefits and challenges of new technologies.

13. Describe a time when you had to debug a complex AI system. What tools and techniques did you use?

During the development of a reinforcement learning model for automated trading, I encountered unexpected behavior where the agent would consistently make high-risk trades despite being penalized for losses. Debugging this involved a multi-pronged approach. First, I meticulously examined the reward function to ensure it accurately reflected the desired trading strategy. I used TensorBoard to visualize the agent's performance metrics (cumulative reward, win rate, average trade size) over time, which helped identify patterns in the erroneous behavior. Also, I wrote a custom callback function to log the agent's actions, state, and predicted Q-values for each time step. This revealed that the agent was overestimating the value of certain risky actions due to a bias in the Q-network.

To address the bias, I implemented experience replay with prioritized sampling to ensure the agent learned more effectively from important transitions. I also experimented with different network architectures and regularization techniques (like dropout) to prevent overfitting. Finally, I used gradient checking to ensure that the gradients were being computed correctly during backpropagation. After several iterations of debugging and experimentation, the agent's trading behavior improved significantly, leading to a more stable and profitable strategy. When I had access to the training environment, I also used pdb and ipdb to step through execution, inspect values, and better understand the state transitions.

14. How would you approach the problem of concept drift in a production machine learning model?

Concept drift refers to the change in the relationship between input features and the target variable over time. To handle this in production, I'd implement a monitoring system to track model performance using metrics relevant to the business objective. If a significant performance degradation is detected (compared to a baseline), it signals potential concept drift.

My approach would include:

- Data Monitoring: Track changes in the input data distribution. Metrics such as the population stability index (PSI) can quantify these changes.

- Model Retraining: Regularly retrain the model with recent data. The frequency depends on the observed drift rate and the model's sensitivity.

- Adaptive Learning Techniques: Explore online learning algorithms that can adapt to changing data in real-time or ensemble methods where models are weighted dynamically based on their recent performance.

- A/B Testing: When deploying a new model version (e.g., retrained model), conduct A/B testing against the existing model to validate its performance improvement before a full rollout.
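The data monitoring step can be illustrated with a small PSI calculation. This is a minimal sketch: the bin edges and sample values are hypothetical, and out-of-range values are clamped into the end bins as a simplifying assumption.

```python
import math
from bisect import bisect_right


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between a baseline and a recent sample.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i
    are the bin proportions of the baseline and recent data.
    """
    n_bins = len(edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            # Locate the bin; clamp out-of-range values into the end bins.
            idx = min(max(bisect_right(edges, v) - 1, 0), n_bins - 1)
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values at training time vs. in production.
baseline = [0.5] * 5 + [1.5] * 5   # 50% / 50% across the two bins
recent = [0.5] * 2 + [1.5] * 8     # 20% / 80% across the two bins
edges = [0.0, 1.0, 2.0]

drift = psi(baseline, recent, edges)
print(round(drift, 3))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the threshold that should trigger retraining depends on the application.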

15. Explain your understanding of federated learning and its applications.

Federated learning is a decentralized machine learning approach that enables training a model across multiple devices or servers holding local data samples, without exchanging the data samples themselves. This preserves data privacy and reduces communication costs, as only model updates are shared. It typically involves multiple rounds of local training and aggregation of model updates on a central server.

Applications include personalized mobile keyboard prediction (training on user's phone data without uploading it), healthcare (training on patient data across hospitals without sharing sensitive records), and financial modeling (training on transaction data across banks while maintaining confidentiality). It's useful in scenarios where data is distributed, sensitive, or communication bandwidth is limited.
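The aggregation step on the central server is often a weighted average of client parameters, as in the federated averaging (FedAvg) algorithm. Here is a minimal sketch that assumes each client reports a flat list of weights along with its number of local examples. The client values are hypothetical.

```python
def federated_average(client_updates):
    """Average client weights, weighted by each client's example count.

    client_updates: list of (weights, num_examples) tuples, where weights
    is a flat list of floats of the same length for every client.
    """
    total_examples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total_examples
        for i in range(dim)
    ]


# Hypothetical round: two clients send locally trained weights.
updates = [
    ([1.0, 2.0], 10),   # client A trained on 10 examples
    ([3.0, 6.0], 30),   # client B trained on 30 examples
]

global_weights = federated_average(updates)
print(global_weights)
```

Only the weight vectors and example counts leave the clients. The raw training data stays on each device, which is the privacy property described above.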

16. Design an AI system to optimize energy consumption in a smart building.

An AI system for optimizing energy consumption in a smart building can leverage Reinforcement Learning (RL). The RL agent observes the building's state (temperature, occupancy, weather forecast, energy prices) and takes actions (adjusting HVAC, lighting, blinds). A reward function incentivizes energy savings while maintaining occupant comfort. We can model the environment using historical data and simulations to train the agent. The agent could be implemented using a Deep Q-Network (DQN) to handle the high-dimensional state space.

Alternatively, a hybrid approach combining predictive modeling with rule-based control could be employed. Predictive models (e.g., using time series forecasting like ARIMA or LSTM networks) would forecast energy demand based on historical data and real-time conditions. Then, a rule-based system would use these predictions to adjust building systems, supplemented with anomaly detection (using techniques like autoencoders) to identify unusual energy usage patterns and trigger alerts or corrective actions. This can provide both proactive energy management and reactive responses to unexpected events.

17. How would you build a system to automatically detect and mitigate adversarial attacks on a machine learning model?