Hiring QA engineers is challenging because the role demands a blend of technical skills and a detail-oriented mindset. We at Adaface know this well, since we help companies hire tech and non-tech talent. Without the right interview questions, you risk hiring someone who can't ensure product quality, which can lead to unhappy customers and costly fixes.

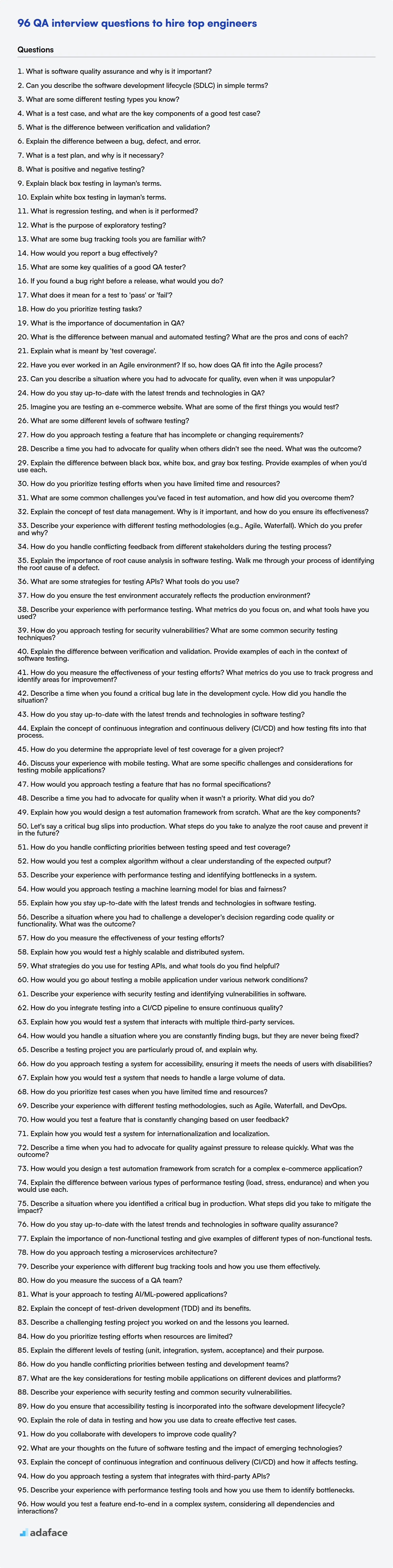

This blog post provides a list of QA interview questions, divided into basic, intermediate, advanced, and expert levels, including some QA MCQs. These questions will help you assess candidates' knowledge and problem-solving abilities, from fundamental testing concepts to advanced automation techniques.

With this question bank, you can evaluate candidates effectively and select top-tier QA talent. You can even identify strong candidates before the interview by using Adaface assessments.


Basic QA interview questions

1. What is software quality assurance and why is it important?

Software Quality Assurance (SQA) is a systematic approach to ensure that software meets the required quality standards and expectations. It encompasses all activities from initial planning to final testing and release, aimed at preventing defects and ensuring that the software is reliable, usable, and maintainable. SQA involves processes like requirements analysis, design reviews, code inspections, testing, and configuration management.

SQA is crucial because it directly impacts user satisfaction, reduces development costs in the long run by catching errors early, and enhances the overall reputation of the software and the organization. Poor software quality can lead to security vulnerabilities, system failures, data loss, and financial losses. By implementing robust SQA practices, organizations can deliver high-quality software that meets user needs and business objectives effectively.

2. Can you describe the software development lifecycle (SDLC) in simple terms?

The SDLC is essentially a roadmap for building software, ensuring a structured and efficient process. It outlines the various stages involved from the initial idea to the final product and its maintenance. Common phases include:

- Planning: Defining the project's goals and scope.

- Analysis: Gathering and documenting the requirements.

- Design: Creating the software architecture and specifications.

- Implementation (Coding): Writing the actual code.

- Testing: Verifying the software's functionality and quality.

- Deployment: Releasing the software to users.

- Maintenance: Addressing bugs, adding features, and providing support.

Different SDLC models, such as Waterfall and Agile, determine how these phases are sequenced and iterated.

3. What are some different testing types you know?

There are many types of software testing, each serving a specific purpose. Some common types include:

- Unit Testing: Testing individual components or functions in isolation.

- Integration Testing: Testing the interaction between different modules or components.

- System Testing: Testing the entire system as a whole to ensure it meets requirements.

- Acceptance Testing: Testing to determine if the system is ready for release by end-users or stakeholders. This often involves User Acceptance Testing (UAT).

- Regression Testing: Retesting existing functionality after changes to ensure no new issues are introduced.

- Performance Testing: Evaluating the system's speed, stability, and scalability under various loads.

- Security Testing: Identifying vulnerabilities and ensuring the system is protected against unauthorized access.

- Usability Testing: Assessing how easy the system is to use and whether it meets user needs.

4. What is a test case, and what are the key components of a good test case?

A test case is a specific set of conditions and inputs designed to verify that a software application or feature is functioning correctly. It outlines the steps to be taken, the data to be used, and the expected outcome.

Key components of a good test case include:

- Test Case ID: A unique identifier.

- Test Case Name/Title: A descriptive name.

- Purpose/Objective: What the test case aims to verify.

- Pre-conditions: The state of the system before the test.

- Test Steps: Detailed steps to execute.

- Test Data: Input values for the test.

- Expected Result: The anticipated outcome.

- Actual Result: The outcome of the test execution.

- Pass/Fail Status: Whether the test passed or failed.

- Post-conditions: The state of the system after the test.
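To make these components concrete, here is a minimal sketch of a test case as a Python record. The class name `ManualTestCase`, the field names, and the login example are illustrative assumptions rather than a standard format, and the exact-string comparison used to derive Pass/Fail is a simplification of how a tester would judge the actual result.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ManualTestCase:
    """One test case, with a field for each component listed above (illustrative)."""
    case_id: str                         # unique identifier, e.g. "TC-LOGIN-001"
    title: str
    objective: str
    preconditions: List[str]
    steps: List[str]
    test_data: dict
    expected_result: str
    actual_result: Optional[str] = None  # filled in after execution
    postconditions: List[str] = field(default_factory=list)

    @property
    def status(self) -> str:
        """'Not Run' until executed, then Pass/Fail by comparing actual with expected."""
        if self.actual_result is None:
            return "Not Run"
        return "Pass" if self.actual_result == self.expected_result else "Fail"


login_case = ManualTestCase(
    case_id="TC-LOGIN-001",
    title="Login succeeds with valid credentials",
    objective="Verify that a registered user can sign in",
    preconditions=["User account 'qa_user' exists and is active"],
    steps=["Open the login page", "Enter username and password", "Click 'Sign in'"],
    test_data={"username": "qa_user", "password": "correct-password"},
    expected_result="Dashboard is displayed",
    postconditions=["User session is active"],
)
print(login_case.status)  # Not Run
login_case.actual_result = "Dashboard is displayed"
print(login_case.status)  # Pass
```

Keeping the status derived from the expected and actual results, rather than stored separately, means the two can never disagree.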

5. What is the difference between verification and validation?

Verification is the process of checking that the software meets the specified requirements. It asks the question, "Are we building the product right?" It focuses on ensuring that the code is correct and functions as intended according to the design and specifications. Activities include reviews, inspections, and testing, such as unit testing.

Validation, on the other hand, is the process of checking that the software meets the user's needs and expectations. It asks, "Are we building the right product?" It focuses on ensuring that the software actually solves the problem it was designed to solve and meets the needs of the user. This often involves user testing and feedback. Example: for a website, verification checks that the links on each page work, while validation checks whether the website actually helps users make purchases.

6. Explain the difference between a bug, defect, and error.

While the terms are often used interchangeably, there are subtle differences.

An error is a mistake made by a human (developer, designer, etc.) during any phase of the software development lifecycle. A defect is a flaw or imperfection in a software component or system. It's the manifestation of an error. A bug is a specific instance of a defect that causes the software to behave in an unexpected or incorrect way. Simply put, an error leads to a defect, and that defect becomes a bug when the software isn't working correctly.

7. What is a test plan, and why is it necessary?

A test plan is a document outlining the strategy, objectives, schedule, estimation, deliverables, and resources needed for testing a software product. It serves as a blueprint for the entire testing process, guiding testers on what to test, how to test, and when to test.

It's necessary because it ensures comprehensive test coverage, minimizes risks by identifying potential issues early, improves software quality by systematically validating functionality, and facilitates efficient collaboration among team members by providing a clear roadmap. A well-defined test plan also enables better tracking of progress and provides evidence of testing efforts for audits and compliance.

8. What is positive and negative testing?

Positive testing involves verifying that the software works as expected with valid inputs. It aims to confirm that the application produces the correct output when given the correct input. Negative testing, on the other hand, focuses on ensuring the software handles invalid or unexpected inputs gracefully. It aims to identify error handling issues, such as crashes or incorrect error messages, when the application receives incorrect or malicious data.

In essence, positive testing is about confirming 'happy path' scenarios, while negative testing is about validating the system's robustness and error handling capabilities in the face of unexpected or invalid conditions.
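A small example shows both sides. The sketch below uses Python's built-in `unittest` and a hypothetical `parse_quantity` function that should accept whole numbers from 1 to 99; the function and its rules are assumptions made up for illustration.

```python
import unittest


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: accepts whole numbers from 1 to 99."""
    value = int(text)  # raises ValueError for non-numeric input
    if not 1 <= value <= 99:
        raise ValueError(f"quantity out of range: {value}")
    return value


class ParseQuantityTests(unittest.TestCase):
    # Positive tests: valid input must produce the correct output.
    def test_valid_quantities(self):
        self.assertEqual(parse_quantity("1"), 1)
        self.assertEqual(parse_quantity("42"), 42)
        self.assertEqual(parse_quantity("99"), 99)

    # Negative tests: invalid input must be rejected with a clear error,
    # not a crash elsewhere or a silently wrong value.
    def test_rejects_non_numeric(self):
        with self.assertRaises(ValueError):
            parse_quantity("abc")

    def test_rejects_out_of_range(self):
        for bad in ("0", "100", "-5"):
            with self.subTest(bad=bad):
                with self.assertRaises(ValueError):
                    parse_quantity(bad)
```

Run it with `python -m unittest` from the directory containing the file.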

9. Explain black box testing in layman's terms.

Imagine you're testing a new blender. Black box testing means you're only looking at what goes into the blender (the ingredients) and what comes out (the smoothie). You don't care about the blades, the motor, or any of the internal workings. You just want to see if it makes a good smoothie based on the ingredients you put in.

Basically, it's testing a system without knowing how it works internally. Testers provide inputs and observe the outputs to ensure they match the expected behavior, without needing any knowledge of the code or internal design. This helps find issues like incorrect outputs, missing features, or usability problems from the user's perspective.
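As a sketch of black box testing in code, the tests below check Python's standard `calendar.isleap` purely against the published leap-year rule (divisible by 4, except century years, unless divisible by 400). They never look at how the function is implemented, only at inputs and expected outputs; the choice of years is an illustrative assumption.

```python
import calendar
import unittest


class LeapYearBlackBoxTests(unittest.TestCase):
    """Cases derived only from the leap-year rule, not from calendar.isleap's source."""

    def test_rule_examples(self):
        cases = {
            2024: True,   # divisible by 4
            2023: False,  # not divisible by 4
            1900: False,  # century year not divisible by 400
            2000: True,   # century year divisible by 400
        }
        for year, expected in cases.items():
            with self.subTest(year=year):
                self.assertEqual(calendar.isleap(year), expected)
```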

10. Explain white box testing in layman's terms.

Imagine you're checking if a cake recipe works perfectly. White box testing is like going into the kitchen, looking at the recipe (the code) step-by-step, and making sure each instruction is followed correctly and does what it's supposed to do. You know exactly what ingredients are used and how they are mixed. You're not just tasting the final cake (black box testing), you're making sure the baking process itself is flawless.

Essentially, white box testing means you're looking at the internal workings of something (like software code) to ensure it functions as designed. Testers use their knowledge of the code to create tests that check specific paths and conditions within the program. This helps uncover hidden errors and optimize the code's efficiency.

11. What is regression testing, and when is it performed?

Regression testing is a type of software testing that verifies that existing functionality of an application continues to work as expected after changes, modifications, or updates have been made to the code. Its main goal is to ensure that new code changes haven't inadvertently introduced new bugs or negatively impacted existing features. This is done by re-running existing test cases, and sometimes adding new tests specifically designed to target the changed areas.

Regression testing is performed frequently throughout the software development lifecycle. Key times include after new features are added, when bug fixes are implemented, after code refactoring, and during integration of different software modules. The aim is to catch regression bugs early and prevent them from reaching production. Automated regression test suites are common to increase efficiency and coverage.
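One common practice is to add a test for every fixed bug so it cannot quietly return. The sketch below assumes a hypothetical `average_order_value` function that used to raise `ZeroDivisionError` on an empty list; the function and the bug are invented for illustration.

```python
import unittest


def average_order_value(totals):
    """Hypothetical function. It once crashed on an empty list; the fix returns 0.0."""
    if not totals:
        return 0.0
    return sum(totals) / len(totals)


class AverageOrderValueRegressionTests(unittest.TestCase):
    def test_existing_behaviour(self):
        # Existing functionality that must keep working after the fix.
        self.assertEqual(average_order_value([10.0, 20.0]), 15.0)

    def test_empty_list_regression(self):
        # Pinned to the fixed bug: fails if the crash is ever reintroduced.
        self.assertEqual(average_order_value([]), 0.0)
```

Because both tests live in the automated suite, every later change re-checks the old behaviour and the fixed bug together.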

12. What is the purpose of exploratory testing?

The purpose of exploratory testing is to discover as much as possible about a software product in a short period. It emphasizes the tester's skill, intuition, and experience to uncover unexpected issues and gain insights into the application's behavior.

Unlike scripted testing, exploratory testing is less structured. Testers simultaneously design, execute, and evaluate tests based on their current understanding of the system. The goal is to identify potential bugs and areas of risk that might be missed by traditional, pre-defined test cases, especially edge cases and user experience issues.

13. What are some bug tracking tools you are familiar with?

I'm familiar with a variety of bug tracking tools used in software development. Some popular ones include Jira, Bugzilla, and Azure DevOps (formerly TFS). Jira is known for its customizable workflows and integration with other Atlassian products. Bugzilla is a free and open-source option often used for its simplicity and comprehensive bug reporting features. Azure DevOps offers a full suite of development tools, including robust bug tracking integrated with version control and CI/CD pipelines.

Other tools I've encountered or am aware of include Redmine (another open-source option), Trello (which can be adapted for basic bug tracking), and specialized tools like Sentry, which focuses on error tracking in production code. The choice of tool often depends on the specific needs of the team, the size of the project, and budget considerations.

14. How would you report a bug effectively?

To report a bug effectively, I would first ensure I can reliably reproduce it. Then, I'd write a clear and concise report including:

- Summary: A brief description of the bug.

- Steps to Reproduce: A numbered list of actions to trigger the bug.

- Expected Result: What should have happened.

- Actual Result: What actually happened.

- Environment: Operating system, browser, software version, etc.

- Severity/Priority: An assessment of the bug's impact.

- Attachments: Screenshots, logs, or code snippets, with code formatted as a code block, for example:

```python
print("hello")
```

15. What are some key qualities of a good QA tester?

A good QA tester possesses several key qualities. Attention to detail is crucial for identifying even subtle defects. Strong analytical skills enable them to understand complex systems and potential failure points. Communication skills are essential for clearly reporting bugs and collaborating with developers. Curiosity drives them to explore different scenarios and think outside the box. Persistence helps them to thoroughly investigate issues.

Beyond these, adaptability and a willingness to learn are important in a constantly evolving technological landscape. Understanding of testing methodologies like black box, white box, and grey box testing can be very helpful. Experience with test automation tools is valuable, especially in agile environments.

16. If you found a bug right before a release, what would you do?

If I found a bug right before a release, my immediate action would be to assess the severity and impact of the bug. I'd need to determine how critical it is to the core functionality and how many users it might affect. I would then communicate the bug to the team lead/project manager and the development team, providing as much detail as possible (steps to reproduce, logs, screenshots, etc.).

Next steps depend on the severity. If it's a showstopper, halting the release is necessary to fix it. If it's a minor bug with a workaround, a decision needs to be made in consultation with the product owner: either delay the release, release with a known bug and a plan for a hotfix, or defer the fix to a later release. The decision should consider risk, impact, and available resources. Creating a hotfix should include testing on a staging environment.

17. What does it mean for a test to 'pass' or 'fail'?

A test 'passes' when the actual output or behavior of the code under test matches the expected output or behavior defined in the test case. This indicates that the code is functioning as intended according to the specific criteria covered by that test. Conversely, a test 'fails' when the actual output or behavior deviates from the expected output or behavior. This signifies a discrepancy, suggesting a bug or unexpected outcome in the code being tested.

Essentially, a test outcome is a boolean value. Pass is true, and Fail is false. Tests should be designed to be repeatable and deterministic; running the same test with the same input should always produce the same result (pass or fail).
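Randomness is a frequent source of non-deterministic tests. A minimal sketch, assuming a hypothetical `pick_discount_code` function: passing in a seeded `random.Random` instance instead of using the global generator makes the result identical on every run.

```python
import random
import unittest


def pick_discount_code(codes, rng):
    """Hypothetical function that picks a promo code at random.
    Taking the generator as a parameter lets tests control the randomness."""
    return rng.choice(codes)


class DeterministicTests(unittest.TestCase):
    def test_same_seed_same_result(self):
        codes = ["SAVE5", "SAVE10", "SAVE15"]
        # Two generators with the same seed yield the same sequence,
        # so this test passes or fails the same way on every run.
        first = pick_discount_code(codes, random.Random(42))
        second = pick_discount_code(codes, random.Random(42))
        self.assertEqual(first, second)
        self.assertIn(first, codes)
```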

18. How do you prioritize testing tasks?

I prioritize testing tasks based on risk, impact, and frequency. High-risk areas that could cause significant damage or downtime, and features that are frequently used by users, are tested first. Also, new functionalities or major changes get precedence to catch regressions early.

Specifically, I might consider the following:

- Critical path: Test the main flows of the application first.

- High visibility: Features used by many users.

- Recently changed code: Focus on areas where recent changes were made.

- Past failures: Prioritize retesting areas where bugs were previously found.

After assessing each of these attributes, I will rank tasks accordingly.
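The ranking step can be sketched as a simple weighted score over the four factors above. The `TestArea` fields, the weights, and the sample areas are all hypothetical; a real team would tune them to its own product and risk appetite.

```python
from dataclasses import dataclass


@dataclass
class TestArea:
    name: str
    on_critical_path: bool
    user_share: float       # fraction of users who touch this feature (0.0 to 1.0)
    recently_changed: bool
    past_defects: int       # defects found here in previous releases


# Hypothetical weights, one per factor in the list above.
WEIGHTS = {"critical": 5.0, "visibility": 3.0, "changed": 2.0, "defects": 1.0}


def priority_score(area: TestArea) -> float:
    return (
        WEIGHTS["critical"] * area.on_critical_path
        + WEIGHTS["visibility"] * area.user_share
        + WEIGHTS["changed"] * area.recently_changed
        # Cap the defect count so one historically noisy area can't dominate.
        + WEIGHTS["defects"] * min(area.past_defects, 5) / 5
    )


def rank(areas):
    """Highest-priority areas first."""
    return sorted(areas, key=priority_score, reverse=True)


areas = [
    TestArea("Checkout", True, 0.6, False, 3),
    TestArea("Profile settings", False, 0.2, True, 1),
    TestArea("Search", True, 0.9, True, 0),
]
for area in rank(areas):
    print(f"{area.name}: {priority_score(area):.2f}")
```

A score like this is only a starting point for discussion; judgment about business context should still override it.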

19. What is the importance of documentation in QA?

Documentation is crucial in QA because it provides a clear understanding of the software's intended behavior, functionality, and expected outcomes. This clarity is vital for testers to accurately create test cases, execute tests, and report defects effectively. Well-maintained documentation ensures consistency and reduces ambiguity throughout the testing process. It also serves as a valuable resource for onboarding new team members and resolving issues quickly.

Specifically, consider these points:

- Test Plans: Define the scope, objectives, resources, and schedule for testing.

- Test Cases: Outline the steps, inputs, and expected results for individual tests.

- Requirements Documentation: Detailed specifications of what the software should do.

- User Guides: Instructions for users on how to interact with the software.

- API Documentation: Critical when testing backend services, providing how to correctly call methods and expected results.

Without these, QA effectiveness diminishes significantly.

20. What is the difference between manual and automated testing? What are the pros and cons of each?

Manual testing involves a human tester executing test cases without automation. Automated testing uses scripts and tools to execute tests.

Manual Testing: Pros: Good for exploratory testing, usability testing, and ad-hoc testing. Requires less upfront investment. Catches nuanced issues. Cons: Time-consuming, prone to human error, difficult to repeat consistently, and hard to scale to large or complex systems.

Automated Testing: Pros: Faster, more reliable, repeatable, suitable for regression testing and large systems. Frees up testers for more creative tasks. Excellent for executing repetitive tasks. Can often be integrated into CI/CD pipelines. Cons: Requires initial investment in tools and script development, may not be effective for all types of testing (e.g., usability), and can be brittle (failing when there are minor UI changes).

21. Explain what is meant by 'test coverage'.

Test coverage is a metric that quantifies how much of the application's source code has been executed during testing. It's usually expressed as a percentage. Higher test coverage generally indicates a lower chance of undetected bugs, but it doesn't guarantee the absence of bugs.

Different types of test coverage include:

- Statement coverage: Measures whether each statement in the code has been executed.

- Branch coverage: Measures whether each branch of control structures (e.g., if statements, loops) has been executed.

- Path coverage: Measures whether all possible paths through the code have been executed.

- Function coverage: Measures whether each function or method has been called.
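The gap between statement and branch coverage is easiest to see on a single `if`. In this sketch (the `apply_member_discount` function is hypothetical), the first test alone executes every statement, so statement coverage is 100%, yet the `False` side of the `if` is never taken. A tool such as coverage.py run with its `--branch` option would report that missing branch.

```python
def apply_member_discount(price: float, is_member: bool) -> float:
    """Hypothetical function with one if statement, i.e. two branches."""
    if is_member:
        price = price * 0.9
    return price


# On its own, this test runs every statement (100% statement coverage)
# but only the True branch of the if (50% branch coverage).
def test_member_gets_discount():
    assert apply_member_discount(100.0, True) == 90.0


# Adding the False case exercises the other branch: 100% branch coverage.
def test_non_member_pays_full_price():
    assert apply_member_discount(100.0, False) == 100.0
```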

22. Have you ever worked in an Agile environment? If so, how does QA fit into the Agile process?

Yes, I have experience working in Agile environments. In Agile, QA is integrated throughout the entire software development lifecycle, not just at the end. Testers are part of the Scrum team and collaborate with developers, product owners, and other stakeholders from the beginning of each sprint.

QA's role involves participating in sprint planning, reviewing user stories, creating test cases, performing continuous testing (including unit, integration, and system testing), and providing feedback to the development team. The goal is to identify and address issues early, leading to higher quality software and faster delivery. Automation is also important for quickly and regularly running tests.

23. Can you describe a situation where you had to advocate for quality, even when it was unpopular?

During a project involving a tight deadline, the team decided to skip writing unit tests to speed up development. I argued that while it would save time initially, the lack of testing would likely lead to more bugs in production, increasing the overall development time and potentially impacting user experience. My suggestion was unpopular as it meant delaying the initial release. However, I emphasized the long-term benefits, including reduced debugging efforts and a more stable product.

I proposed a compromise: we'd write focused unit tests for the core functionalities initially and add more tests later. This strategy was adopted, and while we still faced pressure, we managed to release a relatively stable product. We did encounter fewer bugs in production than anticipated, proving the value of even limited testing. I believe this demonstrated the importance of advocating for quality, even when it conflicts with short-term goals.

24. How do you stay up-to-date with the latest trends and technologies in QA?

I stay updated with QA trends through a combination of active learning and community engagement. This includes reading industry blogs like Software Testing Weekly and Guru99, subscribing to relevant newsletters from organizations like the ISTQB, and following key influencers on platforms like LinkedIn and Twitter.

I also participate in online communities and forums such as Stack Overflow and Reddit's r/softwaretesting to learn from others' experiences and discuss emerging technologies. Furthermore, I dedicate time to experimenting with new testing tools and frameworks, such as Cypress or Playwright, through personal projects or online courses on platforms like Coursera and Udemy.

25. Imagine you are testing an e-commerce website. What are some of the first things you would test?

When testing an e-commerce website for the first time, I would prioritize testing the core functionality that directly impacts the user's ability to make purchases. This includes:

- Search Functionality: Verify that the search bar returns relevant results for different search terms, including misspellings and synonyms.

- Product Pages: Check that product details (images, descriptions, price, availability) are displayed correctly and are consistent.

- Add to Cart: Ensure that adding products to the cart works without errors, and that the cart displays the correct items and quantities.

- Checkout Process: Test the entire checkout flow, including address entry, shipping options, payment processing (using test payment methods), and order confirmation. Test each supported payment method, such as credit cards and PayPal.

- Responsiveness: Confirm that the website is responsive and usable across different devices (desktop, mobile, tablet) and browsers. Basic UI elements should also be checked to see if they are correctly placed and formatted.
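In practice these checks run against the real site through a UI or API testing tool. The sketch below only illustrates the kinds of assertions involved for "Add to Cart", using a hypothetical in-memory `Cart` class in place of the real application.

```python
import unittest


class Cart:
    """Hypothetical stand-in for the site's cart, used only to illustrate the checks."""

    def __init__(self):
        self.items = {}  # product_id -> quantity

    def add(self, product_id: str, quantity: int = 1) -> None:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self.items[product_id] = self.items.get(product_id, 0) + quantity

    def total_items(self) -> int:
        return sum(self.items.values())


class AddToCartTests(unittest.TestCase):
    def test_same_product_accumulates_quantity(self):
        cart = Cart()
        cart.add("SKU-1")
        cart.add("SKU-1", 2)
        self.assertEqual(cart.items, {"SKU-1": 3})

    def test_cart_lists_each_distinct_item(self):
        cart = Cart()
        cart.add("SKU-1")
        cart.add("SKU-2", 4)
        self.assertEqual(set(cart.items), {"SKU-1", "SKU-2"})
        self.assertEqual(cart.total_items(), 5)

    def test_rejects_zero_quantity(self):
        with self.assertRaises(ValueError):
            Cart().add("SKU-1", 0)
```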

26. What are some different levels of software testing?

Software testing is typically performed at various levels, each with a specific focus and objective. Some of the common levels include:

- Unit Testing: Focuses on testing individual components or modules of the software in isolation. The goal is to verify that each unit of code functions as expected. Example: Testing a single function in a class.

- Integration Testing: Tests the interaction between different units or components of the software. It aims to ensure that the integrated units work together correctly.

- System Testing: Tests the entire system as a whole to ensure that it meets the specified requirements. It verifies the functionality, performance, and reliability of the complete system.

- Acceptance Testing: Conducted by the end-users or stakeholders to determine whether the system meets their needs and is ready for deployment. This level focuses on validating the system from a user perspective.

- Regression Testing: This is not really a 'level' of testing, but more of a type. It is used during all stages of the testing lifecycle. It ensures that new code changes have not broken existing code.

Intermediate QA interview questions

1. How do you approach testing a feature that has incomplete or changing requirements?

When requirements are incomplete or changing, I prioritize flexibility and collaboration. I'd start by clarifying what aspects are known and stable versus those that are uncertain. For testing, I'd focus on the stable parts first, writing tests that cover those core functionalities. For the uncertain areas, I'd use more exploratory testing and write tests that are easily adaptable. I'd also create smaller, more modular tests to minimize rework when requirements shift. Regularly communicating with the product owner, developers, and other stakeholders is crucial to get quick feedback and adjust the testing strategy accordingly. This helps ensure that the tests remain relevant and effective throughout the development process.

Specifically, for the uncertain areas, I'd consider:

- Using mocks and stubs: To isolate the feature being tested from dependencies that might change.

- Parameterized tests: To easily adjust test data as requirements evolve.

- Keeping tests small and focused: To reduce the impact of changes.

- Prioritizing integration tests later: Focusing on unit and component tests initially and then adding integration tests as the feature stabilizes.
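The first two ideas can be combined in one test. This sketch assumes a hypothetical `quote_shipping` feature whose rate service is still changing: `unittest.mock.Mock` stands in for that dependency, and the cases live in a single list that is easy to edit when requirements shift (pytest's `@pytest.mark.parametrize` is a common alternative to `subTest`).

```python
import unittest
from unittest import mock


def quote_shipping(weight_kg: float, rates_client) -> float:
    """Hypothetical feature under test. The rate comes from a dependency whose
    API is still in flux, so tests replace it with a stub."""
    rate = rates_client.get_rate_per_kg()
    return round(weight_kg * rate, 2)


class QuoteShippingTests(unittest.TestCase):
    def test_quotes_with_stubbed_rates(self):
        # (weight, stubbed rate, expected quote): update this table as requirements evolve.
        cases = [
            (1.0, 5.0, 5.0),
            (2.5, 4.0, 10.0),
            (0.0, 4.0, 0.0),
        ]
        for weight, rate, expected in cases:
            with self.subTest(weight=weight, rate=rate):
                rates_client = mock.Mock()
                rates_client.get_rate_per_kg.return_value = rate
                self.assertEqual(quote_shipping(weight, rates_client), expected)
                rates_client.get_rate_per_kg.assert_called_once_with()
```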

2. Describe a time you had to advocate for quality when others didn't see the need. What was the outcome?

During a project to migrate a legacy system to a new cloud-based platform, the initial focus was solely on meeting the deadline. The team was cutting corners by skipping unit tests and rigorous code reviews to accelerate development. I noticed this would lead to increased bugs and maintainability issues post-launch. I advocated for dedicating time to write comprehensive unit tests and conduct thorough code reviews, explaining the long-term benefits of reduced technical debt and improved system stability. Initially, there was resistance due to the tight schedule.

I proposed a compromise: prioritize testing and reviewing the most critical components first, and gradually increase coverage as the deadline approached. I also volunteered to assist in writing test cases and leading code review sessions. Eventually, the team agreed. As a result, we identified and fixed several critical bugs before deployment, preventing major incidents after launch. The improved code quality also made subsequent maintenance and enhancements much easier, proving the value of investing in quality upfront.

3. Explain the difference between black box, white box, and gray box testing. Provide examples of when you'd use each.

Black box testing focuses on testing the functionality of the software without knowledge of the internal code structure or implementation. It's like testing a car by driving it and observing its behavior (does the radio work, do the brakes stop the car?). Examples include testing user interfaces, validating input/output, and performing end-to-end testing where you only care about the final result given a specific input. This is useful when testing from a user perspective, or when you lack access to the source code.

White box testing, conversely, involves testing the internal structure and code of the software. Testers have complete knowledge of the system's inner workings and can design tests to cover specific code paths, branches, and statements. Examples include unit testing (testing individual functions or methods) and testing specific algorithms or data structures. For example, you might verify that a sorting algorithm correctly handles edge cases and different input sizes, using code coverage tools to confirm that every line of code executes at least once.

Gray box testing is a hybrid approach combining aspects of both black box and white box testing. Testers have partial knowledge of the system's internal workings, such as the architecture or data structures, but not complete access to the source code. This enables them to design more effective tests by targeting specific areas of the system based on their understanding of the internal design. An example is testing an API integration where you know the input/output format and some of the API's internal logic, but don't have access to its full source code. This lets you craft tests that validate the API's behavior based on your understanding of how it's supposed to work internally.

4. How do you prioritize testing efforts when you have limited time and resources?

When time and resources are limited, I prioritize testing based on risk and impact. First, I'd identify the areas of the application that are most critical to the business and/or have the highest likelihood of failure. This includes core functionalities, frequently used features, and areas where changes have recently been made.

Then, I'd focus on testing these high-risk areas using a combination of techniques. This might involve prioritizing automated tests for critical paths, performing exploratory testing to uncover unexpected issues, and using risk-based testing to allocate testing effort proportionally to the risk level. Finally, I would focus on testing things used by a majority of users. This might also involve delaying testing of less critical features or reducing the scope of testing for lower-risk areas, while also documenting what's been deferred.
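Allocating effort "proportionally to the risk level" can be as simple as splitting a fixed time budget by risk score. The risk scores below (for example, likelihood times impact on 1 to 5 scales) and the 40-hour budget are hypothetical.

```python
def allocate_hours(risk_scores: dict, total_hours: float) -> dict:
    """Split a fixed testing budget across areas in proportion to their risk scores."""
    total_risk = sum(risk_scores.values())
    if total_risk == 0:
        raise ValueError("at least one area needs a non-zero risk score")
    return {area: total_hours * score / total_risk for area, score in risk_scores.items()}


# Hypothetical risk scores, e.g. likelihood x impact, each on a 1-5 scale.
risks = {"Payments": 20, "Login": 12, "Search": 6, "Help pages": 2}
plan = allocate_hours(risks, total_hours=40)
for area, hours in plan.items():
    print(f"{area}: {hours:.1f} h")
# Payments: 20.0 h, Login: 12.0 h, Search: 6.0 h, Help pages: 2.0 h
```

Areas that end up with very little time are good candidates for the "deferred" list mentioned above.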

5. What are some common challenges you've faced in test automation, and how did you overcome them?

Some common challenges I've faced in test automation include dealing with flaky tests, maintaining test data, and keeping up with UI changes. Flaky tests are particularly frustrating; to address them, I've implemented strategies like adding retries with exponential backoff, improving test isolation to minimize dependencies, and thoroughly investigating the root cause of the failures to identify and fix underlying issues, which may involve code refactoring or adjusting test timing. For example, in a web application, a test might intermittently fail due to network latency. Implementing a retry mechanism that waits progressively longer intervals before retrying can often mitigate this.
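A retry helper with exponential backoff might look like the sketch below. It is an assumption-laden illustration: it retries only on `ConnectionError` (standing in for a transient network problem), and the `sleep` parameter exists so tests can record delays instead of actually waiting. Retrying assertions themselves would hide real bugs, so retries belong around the flaky operation, not the check.

```python
import time


def retry_with_backoff(operation, attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call operation(); on ConnectionError wait base_delay * 2**n seconds and retry.
    Re-raises the last error if every attempt fails."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...


# Usage: a fake operation that fails twice with a simulated network blip.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated network blip")
    return "ok"


delays = []
result = retry_with_backoff(flaky_fetch, sleep=delays.append)
print(result, delays)  # ok [0.5, 1.0]
```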

Another challenge is managing test data. Creating and maintaining realistic and consistent test data can be time-consuming. To overcome this, I've used techniques like data factories, database seeding, and virtualization to generate or isolate test data. Dealing with UI changes is a third challenge: I invest time in refactoring tests to use robust element locators (e.g., IDs or data attributes instead of XPaths, which are prone to break). I also structure tests around page object models to minimize the work needed when the application's UI changes.

6. Explain the concept of test data management. Why is it important, and how do you ensure its effectiveness?

Test data management (TDM) involves planning, designing, storing, and governing the data used for software testing. It's crucial because realistic and well-managed test data directly impacts the quality and reliability of testing. Insufficient or poorly managed data can lead to inaccurate test results and missed defects. Furthermore, it helps ensure compliance with data privacy regulations by avoiding the use of production data directly.

To ensure effectiveness, you need to:

- Data Generation: Create synthetic or anonymized data that mirrors production data.

- Data Masking: Protect sensitive information (e.g., PII) when using production data subsets.

- Data Subsetting: Extract relevant subsets of production data for testing, reducing size and complexity.

- Data Refreshing: Regularly update test data to reflect changes in production.

- Data Versioning: Maintain versions of test data for repeatable tests.

- Data Governance: Implement policies for data usage, security, and access control.

- Automation: Automate test data provisioning to improve efficiency.
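As an illustration of data masking, the sketch below masks a hypothetical customer record. It replaces the email with a deterministic salted-hash alias (so the same customer maps to the same alias across tables, keeping joins intact) and keeps only the last four digits of the phone number. The record fields and salt are assumptions, and this is a teaching sketch, not a compliance-grade anonymization scheme; the salt must be kept secret.

```python
import hashlib


def mask_email(email: str, salt: str) -> str:
    """Deterministically replace an email with a salted-hash alias."""
    digest = hashlib.sha256((salt + email.strip().lower()).encode("utf-8")).hexdigest()
    return f"user_{digest[:12]}@example.com"


def mask_phone(phone: str) -> str:
    """Keep only the last four digits and star out the rest."""
    digits = [ch for ch in phone if ch.isdigit()]
    return "*" * max(len(digits) - 4, 0) + "".join(digits[-4:])


def mask_customer(record: dict, salt: str) -> dict:
    """Return a masked copy of a hypothetical customer record; the input is not modified."""
    masked = dict(record)
    masked["name"] = "Test Customer"
    masked["email"] = mask_email(record["email"], salt)
    masked["phone"] = mask_phone(record["phone"])
    return masked


customer = {"id": 7, "name": "Jane Doe", "email": "Jane.Doe@corp.com", "phone": "+1 (555) 123-4567"}
print(mask_customer(customer, salt="keep-this-secret"))
```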

7. Describe your experience with different testing methodologies (e.g., Agile, Waterfall). Which do you prefer and why?

I have experience with both Agile and Waterfall methodologies. In Waterfall, I've worked on projects where requirements were clearly defined upfront, allowing for structured testing phases after development. This involved creating detailed test plans based on those initial requirements. I've also participated in Agile projects, particularly Scrum, where testing is integrated throughout the development sprints. This meant collaborating closely with developers and product owners, participating in sprint planning and reviews, and performing continuous testing.

I prefer Agile methodologies, specifically Scrum, due to its iterative nature and emphasis on collaboration. The flexibility to adapt to changing requirements, the constant feedback loop, and the ability to deliver value incrementally make it a more efficient and responsive approach to software development. Continuous testing and integration in Agile ensure higher quality and faster time-to-market. The daily stand-ups and retrospectives help identify and address issues promptly.

8. How do you handle conflicting feedback from different stakeholders during the testing process?

When facing conflicting feedback from stakeholders, I prioritize understanding the reasoning behind each perspective. I'd first gather all the feedback and identify the specific points of disagreement. Then, I would facilitate a discussion involving all relevant stakeholders to delve into the 'why' behind their suggestions. This often involves presenting data from testing, user research, or industry best practices to support my recommendations.

My goal is to find a solution that addresses the core concerns of each stakeholder while maintaining the quality and integrity of the product. If a consensus isn't immediately achievable, I would propose alternative solutions, prioritize feedback based on impact and risk, and involve a product owner or decision-maker to make a final determination. Clear communication and documentation of the decision-making process are crucial throughout.

9. Explain the importance of root cause analysis in software testing. Walk me through your process of identifying the root cause of a defect.

Root cause analysis (RCA) is crucial in software testing because it helps identify the underlying reason why a defect occurred, not just that it occurred. This prevents similar defects from recurring in the future, leading to improved software quality, reduced development costs, and more stable releases. Simply fixing a symptom doesn't address the real problem, which can manifest in other parts of the system or reappear later.

My process for identifying the root cause of a defect involves the following steps:

- Reproduce the defect: Confirm the defect and gather detailed information (steps, data, environment).

- Collect data: Examine logs, code, configurations, and any relevant documentation. Speak with developers, testers, and other stakeholders involved.

- Identify contributing factors: Use techniques like the '5 Whys' to drill down and uncover the chain of events leading to the defect.

- Determine the root cause: Pinpoint the fundamental issue that, if corrected, would prevent the defect from happening again. For example, was it a code logic error, an environmental issue, a misunderstanding of requirements, or a data integrity problem?

- Implement corrective actions: Propose and implement solutions to address the root cause. This might involve code changes, process improvements, better training, or updated documentation.

- Verify the fix: Retest the defect and, if appropriate, conduct regression testing to ensure the fix doesn't introduce new problems.

- Document the RCA: Record the process, findings, and corrective actions for future reference and learning.

10. What are some strategies for testing APIs? What tools do you use?

Strategies for testing APIs include: unit testing individual API endpoints, integration testing to ensure different API components work together, functional testing to validate that APIs meet business requirements, performance testing to measure API response times and scalability under load, security testing to identify vulnerabilities like injection flaws and authentication issues, and contract testing to verify that APIs adhere to the agreed-upon contract between provider and consumer. Testing should also consider input validation and error handling.

Tools used depend on the type of testing. Some common tools are:

- Postman: For manual API testing and exploration.

- curl: For simple command-line API requests.

- JUnit/Mockito (Java): For unit and integration testing of Java-based APIs.

- Pytest/requests (Python): For unit and integration testing of Python-based APIs.

- Swagger/OpenAPI: For generating tests from API definitions.

- Gatling/JMeter: For performance and load testing.

- OWASP ZAP/Burp Suite: For security testing.
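To show what a small automated functional API test looks like, here is a minimal sketch that starts a throwaway stub server and checks a positive and a negative case. It uses the standard library (`http.server`, `urllib`) instead of pytest/requests so it runs without extra packages; the `/users/1` endpoint and its payload are hypothetical.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

class StubUserAPI(BaseHTTPRequestHandler):
    """Hypothetical stub API: GET /users/1 returns a user, anything else returns 404."""

    def do_GET(self):
        if self.path == "/users/1":
            status, body = 200, {"id": 1, "name": "Asha"}
        else:
            status, body = 404, {"error": "not found"}
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep test output quiet

# Opener with an empty ProxyHandler so localhost calls bypass any proxy settings
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))

def get_json(url):
    """Return (status, parsed JSON body), including for HTTP error responses."""
    try:
        with opener.open(url, timeout=5) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())

server = HTTPServer(("127.0.0.1", 0), StubUserAPI)  # port 0 lets the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f"http://127.0.0.1:{server.server_port}"

# Positive case: check status code and response body
status, body = get_json(f"{base}/users/1")
assert status == 200 and body["name"] == "Asha"

# Negative case: check error handling for a missing resource
status, body = get_json(f"{base}/users/999")
assert status == 404 and "error" in body

server.shutdown()
server.server_close()
print("API checks passed")
```

In a real suite, each check would typically be its own test function run by a framework such as pytest, pointed at a deployed test environment rather than a stub.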

11. How do you ensure the test environment accurately reflects the production environment?

Ensuring the test environment mirrors production is crucial for reliable testing. Key strategies include:

- Configuration Management: Use infrastructure-as-code tools (like Terraform, CloudFormation) to provision both environments identically. Automate configuration using tools like Ansible or Chef. Regularly sync configuration from production to test.

- Data Synchronization: Anonymize or mask production data before loading it into the test environment. Keep the size of the test data representative of production for performance testing. Refresh data periodically.

- Software Versions: Maintain identical versions of operating systems, databases, libraries, and application code in both environments. Use containerization (Docker) to help ensure consistent dependencies.

- Network Topology: Replicate network configurations, including firewalls, load balancers, and DNS settings, to simulate real-world conditions.

- Monitoring & Logging: Employ similar monitoring and logging setups in both environments to aid in issue detection and analysis.

- Regular Audits: Conduct periodic audits to identify and rectify any discrepancies between the environments. Automate these checks where possible.
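One way to automate such audits is to export a version/config manifest from each environment and diff them. The sketch below assumes the manifests are simple key-value dictionaries; the keys and versions shown are hypothetical.

```python
def find_drift(prod, test):
    """Compare two environment manifests and return human-readable discrepancies."""
    issues = []
    for key in sorted(set(prod) | set(test)):
        if key not in test:
            issues.append(f"{key}: missing in test (prod={prod[key]})")
        elif key not in prod:
            issues.append(f"{key}: only in test (test={test[key]})")
        elif prod[key] != test[key]:
            issues.append(f"{key}: prod={prod[key]} test={test[key]}")
    return issues

# Hypothetical manifests; in practice they might be exported by IaC or config tooling
prod_env = {"os": "ubuntu-22.04", "postgres": "15.4", "app": "2.3.1", "tls": "1.3"}
test_env = {"os": "ubuntu-22.04", "postgres": "14.9", "app": "2.3.1"}

for issue in find_drift(prod_env, test_env):
    print(issue)
```

Running a check like this on a schedule (or as a pipeline step) turns environment drift into a visible, actionable report instead of a surprise during testing.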

12. Describe your experience with performance testing. What metrics do you focus on, and what tools have you used?

My experience with performance testing includes both designing and executing tests to identify and address bottlenecks in web applications and APIs. I typically focus on key metrics such as:

- Response Time: The time taken for a request to be processed.

- Throughput: The number of requests processed per unit of time (e.g., requests per second).

- Error Rate: The percentage of failed requests.

- CPU Utilization: The amount of CPU resources being used.

- Memory Usage: The amount of memory being consumed.

I've used tools like JMeter and Gatling to simulate user load and measure these metrics. I've also integrated performance testing into CI/CD pipelines using tools like Jenkins to ensure that performance is continuously monitored and improved. Additionally, I have experience with monitoring tools such as Prometheus and Grafana for real-time performance analysis and visualization.
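The request-level metrics above can be derived from raw load-test samples. This is a minimal sketch assuming each sample is a `(latency_ms, ok)` pair and using the nearest-rank percentile method; CPU and memory usage would come from monitoring tools, not from this calculation. The sample data is synthetic.

```python
def summarize(samples, duration_s):
    """samples: list of (latency_ms, ok) tuples collected during a run lasting duration_s seconds."""
    latencies = sorted(lat for lat, _ in samples)
    failures = sum(1 for _, ok in samples if not ok)
    n = len(latencies)

    def percentile(p):
        # Nearest-rank percentile with integer ceiling: rank = ceil(p * n / 100)
        rank = max(1, (p * n + 99) // 100)
        return latencies[rank - 1]

    return {
        "throughput_rps": n / duration_s,
        "error_rate_pct": 100 * failures / n,
        "p50_ms": percentile(50),
        "p95_ms": percentile(95),
        "max_ms": latencies[-1],
    }

# Synthetic run: 20 requests over 10 s, latencies 100..290 ms, one failed request
samples = [(100 + 10 * i, i != 7) for i in range(20)]
report = summarize(samples, duration_s=10)
print(report)
```

Percentiles such as p95 are usually more informative than averages, because a small share of very slow requests can hide behind a healthy-looking mean.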

13. How do you approach testing for security vulnerabilities? What are some common security testing techniques?

I approach security vulnerability testing in layers, starting with understanding the application's architecture and potential attack surfaces. This includes identifying critical assets and data flows, and then prioritizing testing efforts based on risk. Common techniques include static application security testing (SAST) to identify vulnerabilities in the source code (e.g., using tools like SonarQube or Fortify), dynamic application security testing (DAST) to simulate attacks and identify vulnerabilities in the running application (e.g., using tools like OWASP ZAP or Burp Suite), and penetration testing to simulate real-world attacks and identify vulnerabilities that may not be found by automated tools.

Other techniques include vulnerability scanning to identify known vulnerabilities in software components, security audits to assess the overall security posture of the application and infrastructure, and threat modeling to identify potential threats and vulnerabilities early in the development lifecycle. I also stay updated on the latest security threats and vulnerabilities by following security blogs, attending conferences, and participating in security communities. Finally, I believe in continuous security testing as part of the CI/CD pipeline to ensure that vulnerabilities are identified and addressed early and often.
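As a concrete example of the kind of flaw these techniques target, the sketch below sends a classic SQL injection probe to two versions of a lookup function using Python's built-in `sqlite3` module. The table and functions are hypothetical; the point is that string-concatenated SQL is exploitable while a parameterized query is not.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "a-secret"), ("bob", "b-secret")])

def find_user_unsafe(name):
    # Vulnerable: user input is concatenated directly into the SQL string
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()

def find_user_safe(name):
    # Parameterized query: the driver treats the input strictly as data
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

payload = "' OR '1'='1"  # injection probe a tester or DAST tool might send
print("unsafe:", find_user_unsafe(payload))
print("safe:", find_user_safe(payload))
```

The unsafe version returns every row because the payload turns the WHERE clause into an always-true condition; a security test would assert that such input returns nothing.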

14. Explain the difference between verification and validation. Provide examples of each in the context of software testing.

Verification is the process of checking whether the software conforms to its specification. It asks: "Are we building the product right?" Validation, on the other hand, is the process of evaluating the final product to check whether it meets the customer's needs and expectations. It asks: "Are we building the right product?"

For example, verification could involve a code review to ensure that the code adheres to coding standards and implements the design correctly. A validation example would be performing user acceptance testing (UAT) to confirm that the software meets the user's requirements and business needs. Other examples of verification include static analysis, inspections, and walkthroughs; other examples of validation include unit, integration, and system testing.

15. How do you measure the effectiveness of your testing efforts? What metrics do you use to track progress and identify areas for improvement?

I measure the effectiveness of testing efforts using several key metrics. Defect density (defects per thousand lines of code or per function point) helps assess code quality. Defect leakage (the share of defects found in production rather than before release) indicates how well testing covers real-world scenarios. Test coverage (statement, branch, path) measures the extent to which code is exercised by tests. Test execution rate (percentage of planned tests executed) and test pass rate (percentage of executed tests that passed) track progress. Finally, mean time to detect (MTTD) and mean time to resolve (MTTR) for defects highlight the efficiency of the defect management process.

To track progress and identify improvement areas, I use tools like Jira or Azure DevOps for defect tracking, and test management tools that provide reporting dashboards for test execution and coverage. Analyzing these metrics reveals trends, such as increasing defect density in specific modules, which might require focused code reviews or refactoring. Low test coverage could indicate the need for more comprehensive test cases. By regularly monitoring and analyzing these metrics, I can adjust testing strategies and improve overall software quality.

16. Describe a time when you found a critical bug late in the development cycle. How did you handle the situation?

Late in the development cycle of a major e-commerce feature, while conducting final integration tests, I discovered a critical bug that caused incorrect tax calculations for certain product categories. This would have had severe legal and financial implications if deployed.

My immediate actions were to:

- Isolate and document the bug with precise reproduction steps.

- Notify the project manager and lead developer immediately, emphasizing the severity.

- Collaborate with the team to identify the root cause, which turned out to be an outdated tax rate table that was not properly updated during data migration.

- Develop a fix that updated the TaxCalculator class to pull tax rates dynamically from the database.

- Thoroughly test the fix across all affected product categories and regions.

After verification and sign-off, we implemented the fix, re-ran regression tests to ensure no new issues were introduced, and then deployed the updated code.

17. How do you stay up-to-date with the latest trends and technologies in software testing?

I stay updated with software testing trends through a variety of channels. I regularly read industry blogs and articles from reputable sources like Martin Fowler's website and the Ministry of Testing. Actively participating in online communities and forums like Stack Overflow and Reddit's r/softwaretesting helps me understand real-world challenges and solutions.

I also attend webinars, workshops, and conferences when possible. For instance, I recently attended a webinar on performance testing using Gatling. Experimenting with new tools and frameworks (e.g., Playwright, Cypress, or even contributing to open-source testing projects) provides hands-on experience and deeper understanding. Following thought leaders on social media (LinkedIn, Twitter) also exposes me to current discussions and emerging technologies.

18. Explain the concept of continuous integration and continuous delivery (CI/CD) and how testing fits into that process.

Continuous Integration (CI) is a development practice where developers regularly merge their code changes into a central repository, after which automated builds and tests are run. The key goal is to detect integration issues early and often. Continuous Delivery (CD) extends CI to ensure that the software can be reliably released at any time. This typically involves automating the release process, including testing, staging, and deployment.

Testing is an integral part of the CI/CD pipeline. Automated tests at different levels (unit, integration, system, and acceptance) are executed as part of the build process. If any test fails, the build is considered broken, and the development team is notified. This allows for rapid feedback and ensures that only tested and validated code is released. It also gives developers confidence that the changes they merge into the main branch are not broken.
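The "stop on first failure" behavior can be modeled in a few lines. This is a toy sketch, not how Jenkins, GitLab CI, or GitHub Actions are actually configured (those declare stages in their own pipeline files); the stage names and pass/fail results are hypothetical.

```python
def run_pipeline(stages):
    """Run (name, check) stages in order; stop at the first failing stage."""
    results = []
    for name, check in stages:
        passed = check()
        results.append((name, "passed" if passed else "FAILED"))
        if not passed:
            # A failing stage breaks the build: later stages, including deploy, never run
            break
    return results

# Hypothetical stage checks standing in for real test commands
stages = [
    ("unit", lambda: True),
    ("integration", lambda: False),
    ("e2e", lambda: True),
    ("deploy", lambda: True),
]
for name, status in run_pipeline(stages):
    print(f"{name}: {status}")
```

Ordering fast, cheap stages (unit tests) before slow ones (end-to-end tests) means most broken builds are caught within minutes.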

19. How do you determine the appropriate level of test coverage for a given project?

Determining appropriate test coverage involves balancing risk, resources, and business needs. It's not about achieving 100% coverage, which is often impractical and not cost-effective, but rather focusing on critical areas of the application. Factors to consider include: the complexity of the code, the criticality of the feature, the potential impact of a failure, and the availability of time and resources.

Techniques like risk-based testing, which prioritizes testing based on the likelihood and impact of failures, can be used. Metrics like code coverage (statement, branch, path) provide insights, but should be used judiciously. Business requirements, user stories, and regulatory requirements should also influence the scope and depth of testing. A well-defined test strategy, regular risk assessments, and collaboration with stakeholders are crucial for determining the right level of test coverage for a project.
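Risk-based prioritization is often expressed as a simple score: likelihood of failure multiplied by impact. The sketch below assumes 1 to 5 ratings agreed with stakeholders and an arbitrary threshold of 12 for "deep" testing; the features and ratings are hypothetical.

```python
# Hypothetical features with 1-5 ratings for failure likelihood and business impact
features = [
    {"name": "checkout", "likelihood": 4, "impact": 5},
    {"name": "search", "likelihood": 3, "impact": 3},
    {"name": "profile page", "likelihood": 2, "impact": 2},
    {"name": "tax calculation", "likelihood": 3, "impact": 5},
]

def prioritize(features, deep_threshold=12):
    """Rank features by risk = likelihood x impact and assign a test depth."""
    ranked = sorted(features, key=lambda f: f["likelihood"] * f["impact"], reverse=True)
    plan = []
    for f in ranked:
        risk = f["likelihood"] * f["impact"]
        # "deep": full automated + exploratory coverage; "light": smoke-level checks
        depth = "deep" if risk >= deep_threshold else "light"
        plan.append((f["name"], risk, depth))
    return plan

for name, risk, depth in prioritize(features):
    print(f"{name:16} risk={risk:2} depth={depth}")
```

The scores are only as good as the ratings behind them, so they should be revisited during regular risk assessments.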

20. Discuss your experience with mobile testing. What are some specific challenges and considerations for testing mobile applications?

My mobile testing experience includes both manual and automated testing across iOS and Android platforms. I've worked on projects involving native, hybrid, and web-based mobile apps. A significant portion of my experience has focused on functional testing, UI testing, performance testing (including battery consumption and memory usage), and security testing. I've used tools like Appium, Espresso, and XCUITest for automation.

Specific challenges in mobile testing include device fragmentation (different screen sizes, OS versions), network conditions (testing under varying bandwidths), and platform-specific behaviors. Considerations involve thoroughly testing installation/uninstallation processes, handling interruptions (calls, notifications), and ensuring responsiveness on different devices. Also, localization and internationalization testing are crucial for mobile applications targeting a global audience.

Advanced QA interview questions

1. How would you approach testing a feature that has no formal specifications?

When facing a feature without formal specifications, I'd focus on exploratory testing and collaboration. First, I'd work with stakeholders (developers, product owners, and potentially end-users) to understand the intended behavior, use cases, and potential edge cases. I'd document these assumptions. Then I'd use various testing techniques, like boundary value analysis, equivalence partitioning, and state transition testing, based on the assumptions to design test cases.

Next, I would perform exploratory testing, where I'd interact with the feature directly, observing its behavior and identifying potential issues not covered in the initial assumptions. I would document each test and its outcome, providing clear steps to reproduce failures. If bugs or inconsistencies are found, I'd use those to guide further testing and communicate frequently with the development team to clarify intended behavior and update documentation if needed.
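Boundary value analysis and equivalence partitioning translate directly into test inputs once an assumption about valid ranges is documented. This sketch assumes a hypothetical agreed rule that an order quantity must be between 1 and 99 inclusive.

```python
def boundary_values(low, high):
    """Classic boundary value analysis for an inclusive integer range [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]

def equivalence_classes(low, high):
    """One representative value per partition: below range, inside range, above range."""
    return {"below": low - 10, "valid": (low + high) // 2, "above": high + 10}

# Documented assumption gathered from stakeholders (hypothetical): quantity must be 1..99
def accepts_quantity(q):
    return 1 <= q <= 99

for value in boundary_values(1, 99):
    print(value, accepts_quantity(value))
print(equivalence_classes(1, 99))
```

Writing the assumed rule down as code makes it easy to confirm with stakeholders and to update if the clarified behavior turns out to be different.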

2. Describe a time you had to advocate for quality when it wasn't a priority. What did you do?

In a previous role, I was working on a project with a tight deadline, and the initial focus was solely on delivering features quickly. I noticed that code reviews were being skipped and testing was minimal, leading to a growing number of bugs. I advocated for incorporating automated testing into our CI/CD pipeline to catch issues earlier in the development cycle.

To demonstrate the value of quality, I took the initiative to write a few key unit tests and showed how they could quickly identify existing bugs and prevent future regressions. I presented this demo to the team lead and explained how spending a bit of time on quality now would save us significant time and effort in the long run by reducing debugging and rework. Eventually, the team adopted a more balanced approach, prioritizing both speed and quality, leading to a more stable and reliable product. Even though there was resistance, framing the quality initiatives as 'time-saving' resonated with management.

3. Explain how you would design a test automation framework from scratch. What are the key components?

When designing a test automation framework, I'd focus on modularity, maintainability, and reusability. Key components would include:

- Test Scripts: The actual automated tests, written using a language like Python or Java, ideally following a clear naming convention.

- Test Data Management: Handles test data, often separated from the scripts themselves using CSV or JSON files.

- Test Execution Engine: Runs the tests, manages dependencies, and orchestrates the overall test process. This can be integrated with CI/CD pipelines.

- Reporting: Generates detailed reports on test results, including pass/fail rates and any errors encountered.

- Utilities/Helpers: Reusable code for common tasks like reading configuration files, database connections, or interacting with APIs.

Finally, the framework should have a clear structure that is easy to understand and extend. This often involves using design patterns like the Page Object Model (POM).

For example, using Python with pytest:

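```python
# example test script (test_example.py); run it with: pytest
def test_example():
    # placeholder assertion; a real test would exercise application code
    assert 1 == 1
```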
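To show how several of these components fit together, here is a minimal, dependency-free sketch of a page object plus data-driven tests with test data kept separate from test logic. `FakeDriver` is a hypothetical stand-in for a real browser driver (such as a Selenium WebDriver) so the example runs anywhere; its methods are not a real driver API.

```python
import json

class FakeDriver:
    """Hypothetical stand-in for a browser driver so the sketch runs without a browser."""
    def __init__(self):
        self.fields = {}
        self.page = "login"

    def enter_text(self, field, text):
        self.fields[field] = text

    def click(self, button):
        # Toy application logic: only one known credential pair logs in
        if button == "submit":
            ok = self.fields.get("username") == "qa_user" and self.fields.get("password") == "s3cret"
            self.page = "dashboard" if ok else "login"

class LoginPage:
    """Page object: tests call intent-level methods instead of touching locators directly."""
    def __init__(self, driver):
        self.driver = driver

    def login(self, username, password):
        self.driver.enter_text("username", username)
        self.driver.enter_text("password", password)
        self.driver.click("submit")
        return self.driver.page

# Test data kept separate from test logic (would normally live in a JSON or CSV file)
TEST_DATA = json.loads("""[
  {"username": "qa_user", "password": "s3cret", "expected_page": "dashboard"},
  {"username": "qa_user", "password": "wrong",  "expected_page": "login"}
]""")

def run_login_tests():
    """Data-driven runner: one result per test-data row."""
    results = []
    for case in TEST_DATA:
        page = LoginPage(FakeDriver()).login(case["username"], case["password"])
        results.append(page == case["expected_page"])
    return results

print(run_login_tests())
```

If the login form's locators change, only `LoginPage` needs updating; the tests and their data stay the same, which is the main maintainability benefit of POM.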

4. Let's say a critical bug slips into production. What steps do you take to analyze the root cause and prevent it in the future?

First, I'd focus on immediate mitigation: identify the impact, roll back the problematic code if feasible, or implement a hotfix. Then, I'd perform a thorough root cause analysis. This involves:

- Gathering information: Collect logs, error reports, user feedback, and any relevant metrics surrounding the incident. Analyze code changes leading up to the bug using version control history (e.g., git blame).

- Reproducing the bug: Attempt to recreate the issue in a development or staging environment to understand the exact conditions that trigger it.

- Identifying the root cause: Use debugging tools and techniques (e.g., code review, stepping through the code) to pinpoint the underlying problem. Consider the "5 Whys" technique to dig deeper beyond the immediate symptom.

To prevent recurrence, I'd propose these actions:

- Enhance testing: Improve unit, integration, and end-to-end tests to catch similar bugs before they reach production. Implement automated testing where possible.

- Improve code review process: Ensure thorough code reviews with a focus on identifying potential edge cases and vulnerabilities. Use static analysis tools for automated code quality checks.

- Strengthen monitoring and alerting: Set up more robust monitoring to detect anomalies and proactively alert the team to potential problems. Investigate implementing canary deployments or feature flags for safer releases.

- Knowledge sharing: Document the bug, its root cause, and the preventive measures taken to share the learnings with the team. Conduct a post-mortem meeting to discuss the incident and identify areas for improvement in our development processes.

5. How do you handle conflicting priorities between testing speed and test coverage?

Conflicting priorities between testing speed and test coverage require a strategic approach. I prioritize risk assessment to identify critical areas of the application and focus on achieving high test coverage in those areas. This may involve using techniques like risk-based testing and prioritizing test cases based on their likelihood of uncovering critical defects. For less critical areas, I may opt for faster testing methods with lower coverage, such as using simpler test cases or relying more on exploratory testing.

To balance speed and coverage, I also leverage automation strategically. Unit tests and integration tests for core functionalities are automated for speed and regression prevention. End-to-end tests, which are slower, are reserved for critical user flows and areas with complex interactions. Furthermore, I ensure efficient test design by avoiding redundancy and focusing on tests that provide maximum value. Continuous integration and continuous delivery (CI/CD) pipelines can be optimized to run tests in parallel, reducing overall testing time. Finally, regularly reviewing and refining the test suite is essential to eliminate obsolete tests and improve efficiency.
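Parallel execution is one of the cheapest speed wins, provided tests are independent of each other. This sketch uses `concurrent.futures.ThreadPoolExecutor` with hypothetical I/O-bound "tests" that simply sleep; for a real pytest suite, a plugin such as pytest-xdist is the usual way to do this.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def make_test(name, seconds, passes=True):
    """Build a hypothetical I/O-bound test that sleeps, then reports its result."""
    def test():
        time.sleep(seconds)
        return name, passes
    return test

tests = [make_test(f"test_{i}", 0.2) for i in range(5)]

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    # map() preserves input order, so results line up with the test list
    results = list(pool.map(lambda t: t(), tests))
elapsed = time.perf_counter() - start

print(results)
print(f"elapsed: {elapsed:.2f}s (about 1.0s if run serially)")
```

Tests that share state (a database row, a file, a global) can interfere with each other when run this way, so isolation is a prerequisite for parallelism.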

6. How would you test a complex algorithm without a clear understanding of the expected output?

When faced with testing a complex algorithm lacking a clearly defined expected output, I'd employ a multi-faceted approach. Firstly, focus on input variability and edge cases. Create a wide array of inputs, particularly those known to cause problems in similar algorithms or that test boundary conditions. Monitor for unexpected crashes, errors, or resource exhaustion.

Secondly, use relative comparison and stability testing. If incremental changes are expected, ensure the output changes incrementally too. If running the algorithm with the same input multiple times should produce similar results (or identical if it's deterministic), then verify that stability. Also, analyze intermediate results or metrics within the algorithm. Even without knowing the final output, internal values can provide clues about the algorithm's behavior, revealing bottlenecks, unexpected loops, or incorrect calculations. Tools for profiling and debugging become essential in these scenarios. Finally, compare against simpler, alternative implementations where feasible or validate using manual calculations on a small subset of data.
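Comparing against a simpler implementation is often called differential testing. In this sketch, Kadane's maximum-subarray algorithm is a hypothetical stand-in for "the complex algorithm", and a brute-force version serves as the trusted oracle; random inputs are checked against both, along with a repeatability (stability) check.

```python
import random

def fast_max_subarray(nums):
    """Kadane's algorithm: the 'complex' implementation under test (O(n))."""
    best = current = nums[0]
    for x in nums[1:]:
        current = max(x, current + x)
        best = max(best, current)
    return best

def reference_max_subarray(nums):
    """Brute-force oracle (O(n^2) subarrays): slow but easy to trust."""
    return max(sum(nums[i:j]) for i in range(len(nums)) for j in range(i + 1, len(nums) + 1))

def differential_test(trials=500, seed=0):
    rng = random.Random(seed)
    for _ in range(trials):
        # Small random inputs, including all-negative and single-element edge cases
        nums = [rng.randint(-10, 10) for _ in range(rng.randint(1, 12))]
        expected = reference_max_subarray(nums)
        actual = fast_max_subarray(nums)
        assert actual == expected, f"mismatch for {nums}: {actual} != {expected}"
        # Stability check: a deterministic algorithm must give the same answer twice
        assert fast_max_subarray(nums) == actual
    return trials

print(differential_test(), "random cases agreed")
```

Keeping inputs small lets the slow oracle run quickly, and a fixed seed makes any failure reproducible.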

7. Describe your experience with performance testing and identifying bottlenecks in a system.

I have experience with performance testing using tools like JMeter and Gatling to simulate user load and identify performance bottlenecks. I've designed and executed load, stress, and endurance tests, analyzing metrics such as response time, throughput, and error rates using monitoring tools like Grafana and Prometheus.

Specifically, I've identified bottlenecks in several systems. For example, in one project, performance tests revealed slow database queries as a major bottleneck. We optimized these queries by adding indexes and rewriting complex joins, which significantly improved response times. In another case, excessive garbage collection was causing performance issues. By tuning the JVM garbage collection parameters, we were able to reduce pause times and improve overall system performance. I use profiling tools like JProfiler to pinpoint the exact lines of code causing the issue, and I also review CPU and memory metrics to identify potential problems.

8. How would you approach testing a machine learning model for bias and fairness?

To test a machine learning model for bias and fairness, I would first define what fairness means in the context of the specific application. Then, I would identify protected attributes (e.g., race, gender, religion). Next, I would collect or create a dataset that includes these protected attributes.

I would then evaluate the model's performance across different subgroups defined by the protected attributes using appropriate fairness metrics like disparate impact, equal opportunity difference, or statistical parity difference. If bias is detected, I would explore techniques like data re-balancing, algorithm modification, or fairness-aware learning to mitigate it. It's crucial to document the fairness testing process and results for transparency and accountability. For example, using a library like fairlearn in Python to calculate fairness metrics is one approach.
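The three fairness metrics named above can be computed by hand from predictions, true labels, and group membership. This is a plain-Python sketch, not the fairlearn API; the eight-row dataset is hypothetical, with group "A" treated as privileged and "B" as unprivileged.

```python
def selection_rate(preds, groups, group):
    """Share of the group that received a positive prediction."""
    picked = [p for p, g in zip(preds, groups) if g == group]
    return sum(picked) / len(picked)

def true_positive_rate(preds, labels, groups, group):
    """Share of the group's actual positives that the model predicted as positive."""
    positives = [p for p, y, g in zip(preds, labels, groups) if g == group and y == 1]
    return sum(positives) / len(positives)

def fairness_report(preds, labels, groups, privileged, unprivileged):
    sr_priv = selection_rate(preds, groups, privileged)
    sr_unpriv = selection_rate(preds, groups, unprivileged)
    return {
        "statistical_parity_difference": sr_unpriv - sr_priv,
        "disparate_impact": sr_unpriv / sr_priv,
        "equal_opportunity_difference": (true_positive_rate(preds, labels, groups, unprivileged)
                                         - true_positive_rate(preds, labels, groups, privileged)),
    }

# Hypothetical model output for 8 applicants
preds = [1, 1, 1, 0, 1, 0, 0, 0]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = fairness_report(preds, labels, groups, privileged="A", unprivileged="B")
print(report)
```

A commonly cited heuristic, the "four-fifths rule", flags a disparate impact below 0.8; the appropriate threshold should follow from the fairness definition agreed for the application.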

9. Explain how you stay up-to-date with the latest trends and technologies in software testing.

I stay up-to-date with the latest trends and technologies in software testing through a variety of channels. I actively follow industry blogs and publications like Martin Fowler's blog and TechBeacon, and I attend webinars and online conferences such as SeleniumConf and EuroSTAR. I also participate in online communities and forums, such as Stack Overflow and Reddit's r/softwaretesting, to engage in discussions and learn from other testers' experiences.

Specifically, I dedicate time each week to explore new testing frameworks and tools. For instance, recently I've been learning more about Playwright and its capabilities for end-to-end testing. I often try out small projects to practice new techniques. I'm currently experimenting with incorporating contract testing using tools like Pact to improve the reliability of microservices in projects where that applies. I also subscribe to newsletters from companies like Applitools and Sauce Labs to get insights into their latest product updates and testing methodologies.

10. Describe a situation where you had to challenge a developer's decision regarding code quality or functionality. What was the outcome?

I once reviewed code where a developer chose to implement a complex regular expression for data validation instead of using existing, well-tested library functions. I challenged this because the regex was hard to read, difficult to maintain, and potentially less performant than the readily available library. I explained the benefits of using the existing library: increased readability, maintainability, and potentially better performance due to optimizations within the library itself. We also discussed the risk of introducing subtle bugs with a custom regex. After discussing the pros and cons and running some performance tests, the developer agreed to refactor the code to use the library functions. This led to a cleaner, more maintainable solution.

In another instance, a developer proposed a new feature implementation that bypassed existing security checks for a perceived performance gain. I challenged this immediately, emphasizing the importance of security over a marginal performance improvement. We explored alternative solutions, such as optimizing the existing security checks or using caching mechanisms, which allowed us to maintain the necessary security level without sacrificing performance significantly. The feature was ultimately implemented with the security checks in place, preventing a potential vulnerability.

11. How do you measure the effectiveness of your testing efforts?

I measure the effectiveness of my testing efforts using several key metrics. These include defect detection rate (the percentage of bugs found before release), defect density (the number of bugs per thousand lines of code or per feature), test coverage (the percentage of code covered by tests), and customer satisfaction (measured through surveys or feedback). Reduced post-release defects and improved customer satisfaction are strong indicators of effective testing.

Additionally, I track test execution time and resource utilization. If testing consistently finds critical bugs early in the development cycle, that demonstrates effective test planning and execution. The key is to have quantifiable metrics that demonstrate the value testing adds to the overall quality of the product. I also track how long bugs stay open and what caused them in the first place.

12. Explain how you would test a highly scalable and distributed system.

Testing a highly scalable and distributed system requires a multi-faceted approach. I would focus on these key areas:

- Performance and Load Testing: I'd use tools to simulate high user loads and data volumes to measure response times, throughput, and resource utilization. This includes stress testing (pushing the system beyond its limits) and soak testing (observing performance over extended periods). Metrics like latency, error rates, and CPU/memory usage are crucial. Specifically, I'd monitor for bottlenecks and ensure horizontal scalability is actually working (new nodes joining the cluster handle more load).

- Fault Tolerance and Resilience Testing: This involves intentionally introducing failures (e.g., node crashes, network partitions) to verify the system's ability to recover and maintain functionality. Tools like chaos engineering platforms could be valuable here. I would check data consistency after failures and ensure automatic failover mechanisms are working correctly.

- Data Consistency and Integrity Testing: I would verify that data remains consistent across all nodes in the distributed system, especially during concurrent updates and failures. Different consistency models (e.g., eventual consistency, strong consistency) would need to be validated based on the application's requirements. Automated integrity checks, such as comparing record counts or checksums across nodes, are necessary.

- Security Testing: Authentication, authorization, and data encryption must be rigorously tested to protect against unauthorized access and data breaches.
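A basic data-consistency check compares a canonical digest of each node's data and flags nodes that disagree with the majority. This sketch assumes each node's state can be exported as a key-value dictionary; the node names and records are hypothetical. Under eventual consistency, such a check would be run (or retried) after an allowed convergence window rather than immediately.

```python
import hashlib
import json
from collections import Counter

def snapshot_digest(records):
    """Digest of a node's records (dict of key -> value), independent of key order."""
    canonical = json.dumps(records, sort_keys=True).encode()
    return hashlib.sha256(canonical).hexdigest()

def check_replicas(nodes):
    """Return the names of nodes whose data diverges from the majority digest."""
    digests = {name: snapshot_digest(records) for name, records in nodes.items()}
    majority, _ = Counter(digests.values()).most_common(1)[0]
    return sorted(name for name, d in digests.items() if d != majority)

# Hypothetical state after a simulated network partition heals
nodes = {
    "node-a": {"order:1": "paid", "order:2": "shipped"},
    "node-b": {"order:2": "shipped", "order:1": "paid"},
    "node-c": {"order:1": "pending", "order:2": "shipped"},
}
print("divergent nodes:", check_replicas(nodes))
```

Combined with fault injection (crashing a node, partitioning the network), a check like this verifies that the system actually converges after recovery.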

13. What strategies do you use for testing APIs, and what tools do you find helpful?

My API testing strategies involve a combination of manual and automated tests. I start by understanding the API's functionality and specifications, then write test cases covering different scenarios: positive, negative, and edge cases. I pay close attention to input validation, authentication, authorization, data formats (JSON, XML), HTTP status codes, and error handling. I also test for performance aspects like response time and throughput.

For tools, I commonly use Postman for manual testing and exploring APIs. For automated testing, I prefer using Python with the requests library for sending HTTP requests and pytest as the test framework for assertions. Also useful are tools like Swagger/OpenAPI for generating test cases and documentation, and potentially tools like JMeter for performance testing, depending on the project requirements. Specifically, for validating JSON responses, jsonschema is also very useful.
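To illustrate the idea behind response-schema validation without an extra dependency, here is a simplified, hand-rolled checker. It is not the jsonschema library's API, which supports far richer schemas; the `USER_SCHEMA` fields are hypothetical.

```python
import json

# Hypothetical expected shape of a /users/{id} response: field name -> required Python type
USER_SCHEMA = {"id": int, "name": str, "email": str, "active": bool}

def schema_errors(payload, schema):
    """Return a list of problems: wrong types, missing fields, unexpected fields."""
    errors = []
    for field, expected_type in schema.items():
        if field not in payload:
            errors.append(f"missing field: {field}")
            continue
        value = payload[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields
        if not isinstance(value, expected_type) or (expected_type is int and isinstance(value, bool)):
            errors.append(f"{field}: expected {expected_type.__name__}, got {type(value).__name__}")
    for field in sorted(payload.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors

good = json.loads('{"id": 7, "name": "Asha", "email": "asha@example.test", "active": true}')
bad = json.loads('{"id": "7", "name": "Asha", "active": true, "debug": 1}')
print(schema_errors(good, USER_SCHEMA))
print(schema_errors(bad, USER_SCHEMA))
```

Checking shape separately from values catches contract regressions (a renamed field, a number serialized as a string) that value-only assertions can miss.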

14. How would you go about testing a mobile application under various network conditions?

To test a mobile application under various network conditions, I'd simulate different network speeds and connectivity issues. This can be achieved through several methods. Device-based tools are useful, like Network Link Conditioner on macOS or developer options on Android, which allow throttling network speed. Network emulation tools, such as Charles Proxy or Fiddler, can intercept network traffic and simulate latency, packet loss, and bandwidth limitations. Cloud-based testing platforms like BrowserStack or Sauce Labs often provide network simulation features as well.

Testing involves systematically varying network conditions (e.g., 2G, 3G, 4G, Wi-Fi, and no connectivity) and observing the application's behavior. I'd focus on aspects like data loading times, responsiveness to user input, error handling (especially graceful degradation when connectivity is lost), battery consumption under different network loads, and proper synchronization of data when the network recovers. Automated tests using tools like Appium can be incorporated to streamline this process and ensure consistent results.

15. Describe your experience with security testing and identifying vulnerabilities in software.

Throughout my work in software development, I have actively participated in security testing and vulnerability identification using both manual and automated methods. I've used tools like OWASP ZAP and Burp Suite to perform penetration testing on web applications, identifying vulnerabilities such as Cross-Site Scripting (XSS), SQL Injection, and Cross-Site Request Forgery (CSRF). I've also run static code analysis with tools like SonarQube to catch potential security flaws early in the development lifecycle.

I've also been involved in implementing secure coding practices and reviewing code for security vulnerabilities. For instance, I helped implement input validation to prevent injection attacks and regularly updated dependencies to patch known vulnerabilities. I also have experience with vulnerability scanning and reporting tools to identify, prioritize, and manage security risks. I document all findings and work with developers to remediate identified security flaws promptly and ensure security best practices are followed.
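To make the injection point concrete, here is a minimal sketch of the kind of regression test that guards against SQL injection, using Python's built-in `sqlite3` module. The `users` table and both login functions are hypothetical. The test sends the classic `' OR '1'='1` payload and checks that string-built SQL is bypassed while the parameterized query is not.

```python
import sqlite3


def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_vulnerable(conn, name, password):
    # BAD: user input is spliced directly into the SQL text.
    query = f"SELECT COUNT(*) FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn, name, password):
    # GOOD: placeholders make the driver treat input strictly as data.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


INJECTION = "' OR '1'='1"

conn = make_db()
print(login_vulnerable(conn, "alice", INJECTION))  # True: authentication bypassed
print(login_safe(conn, "alice", INJECTION))        # False: payload treated as a literal
```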

16. How do you integrate testing into a CI/CD pipeline to ensure continuous quality?

Integrating testing into a CI/CD pipeline is crucial for continuous quality. We automate various tests at different stages of the pipeline. Unit tests are run early and often, typically triggered by code commits. Integration tests, which verify the interaction between different components, are executed after the application is built and deployed to a test environment. End-to-end (E2E) tests simulate user behavior and are performed after integration tests.

Specifically, these tests are integrated as stages in the CI/CD pipeline definition (e.g., in Jenkins, GitLab CI, or GitHub Actions). Each stage executes a set of tests. If any test fails, the pipeline stops, preventing defective code from being deployed further. Feedback is provided to developers quickly so they can address the issues. For example:

```yaml
# Example pipeline stage (using GitLab CI)
test_unit:
  image: node:20   # assumption: a Node.js image; use the project's runtime version
  stage: test
  script:
    - npm install
    - npm run test:unit
```

17. Explain how you would test a system that interacts with multiple third-party services.

Testing a system interacting with multiple third-party services involves a multi-faceted approach. I would start by identifying all the third-party services and their functionalities. Then, I'd create mock/stub versions of these services to isolate my system and control the responses. This allows for testing various scenarios, including successful responses, error conditions, timeouts, and unexpected data formats.

Specifically, I would use tools like WireMock (to stub third-party HTTP APIs) or Mockito (to mock client classes in Java unit tests). Tests would verify that my system correctly handles different responses from the third-party services, adheres to expected data formats, and implements appropriate error handling and retry mechanisms. Performance and security testing are also crucial: assessing the impact of third-party latency and validating data security during interactions. Finally, I would write integration tests that exercise the integration points between the application and each third-party service to confirm that everything works together as expected.
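The same idea carries over to Python's standard `unittest.mock`, which can script a dependency's responses call by call. In this sketch, `PaymentService` and its retry-on-timeout policy are hypothetical; the mock plays the third-party gateway, timing out twice before succeeding in one scenario and never responding in the other.

```python
from unittest.mock import Mock


class PaymentService:
    """Hypothetical code under test that wraps a third-party payment gateway."""

    def __init__(self, gateway, max_attempts=3):
        self.gateway = gateway
        self.max_attempts = max_attempts

    def charge(self, amount_cents):
        for attempt in range(1, self.max_attempts + 1):
            try:
                return self.gateway.charge(amount_cents)
            except TimeoutError:
                if attempt == self.max_attempts:
                    # Fail gracefully instead of propagating the gateway error.
                    return {"status": "failed", "reason": "gateway timeout"}


# Scenario 1: the gateway times out twice, then succeeds, so the service retries.
flaky = Mock()
flaky.charge.side_effect = [TimeoutError(), TimeoutError(), {"status": "ok", "id": "ch_1"}]
result_ok = PaymentService(flaky).charge(500)
print(result_ok, flaky.charge.call_count)  # {'status': 'ok', 'id': 'ch_1'} 3

# Scenario 2: the gateway never responds, so the service gives up cleanly.
down = Mock()
down.charge.side_effect = TimeoutError()
result_down = PaymentService(down).charge(500)
print(result_down)  # {'status': 'failed', 'reason': 'gateway timeout'}
```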

18. How would you handle a situation where you are constantly finding bugs, but they are never being fixed?

If I were constantly finding bugs that weren't being fixed, I would first document each bug thoroughly, including steps to reproduce, expected vs. actual behavior, and the potential impact. I'd then prioritize the bugs based on severity and frequency of occurrence.

Next, I'd communicate the issue clearly to the relevant stakeholders (development team, project manager, etc.), emphasizing the impact of these unfixed bugs on the product's quality, user experience, and potentially the project timeline. If the bugs continue to be ignored, I'd escalate the concern to higher management, providing data and examples to demonstrate the importance of addressing these issues. I would propose potential solutions, such as better testing strategies, improved code review processes, or more resources allocated to bug fixing. If it becomes a persistent pattern that seriously affects the product or my morale, I might consider whether the work environment aligns with my values.

19. Describe a testing project you are particularly proud of, and explain why.

I'm particularly proud of my work testing a new e-commerce platform's payment processing system. The project spanned the full testing lifecycle, from unit and integration testing through user acceptance testing. I developed comprehensive test cases covering scenarios such as successful transactions, payments declined for insufficient funds, fraud detection triggers, and refunds. What made the project rewarding was the complexity of integrating multiple payment gateways while ensuring PCI DSS compliance.

The highlight was identifying a critical vulnerability: a race condition in the refund processing logic that, if exploited, could have caused significant financial losses for the company. By discovering and reporting it proactively, I helped prevent that damage and improved the platform's security and reliability. I also created automated tests that simulated high transaction volumes to confirm the system could handle peak loads without errors.
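A race condition like this is typically exposed by firing many concurrent requests at the same resource and asserting that the side effect happens exactly once. The sketch below assumes a hypothetical in-memory `RefundService`; its lock makes the check-then-act step atomic, and a `threading.Barrier` releases all twenty refund attempts at once to maximise overlap. Removing the lock is how you would try to reproduce the bug, although the outcome then becomes timing-dependent and may not fail on every run.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class RefundService:
    """Hypothetical refund logic; the lock makes check-then-act atomic."""

    def __init__(self):
        self._lock = threading.Lock()
        self._refunded = set()
        self.total_refunded_cents = 0

    def refund(self, order_id, amount_cents):
        with self._lock:
            # Without the lock, two threads could both pass this check and double-refund.
            if order_id in self._refunded:
                return False
            self._refunded.add(order_id)
            self.total_refunded_cents += amount_cents
            return True


service = RefundService()
barrier = threading.Barrier(20)  # hold all workers until every request is ready


def attempt(_):
    barrier.wait(timeout=5)
    return service.refund("order-42", 5_000)


with ThreadPoolExecutor(max_workers=20) as pool:
    results = list(pool.map(attempt, range(20)))

print(results.count(True), service.total_refunded_cents)  # 1 5000
```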

20. How do you approach testing a system for accessibility, ensuring it meets the needs of users with disabilities?

I approach accessibility testing using a combination of automated tools and manual testing techniques. Automated tools like WAVE, axe, and Lighthouse can quickly identify common accessibility issues such as missing alt text, insufficient color contrast, and semantic HTML errors.

Manual testing involves using screen readers (NVDA, VoiceOver), keyboard navigation, and magnification software to simulate the experience of users with disabilities. I would test different user flows and ensure all interactive elements are accessible and usable. Additionally, I follow the Web Content Accessibility Guidelines (WCAG) and the WAI-ARIA (Accessible Rich Internet Applications) specification to guide my testing and check compliance.
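Automated checkers apply many rules of this kind; the sketch below implements just one of them, flagging `<img>` elements that have no `alt` attribute, using Python's built-in `html.parser`. It is illustrative only and no substitute for axe or manual screen-reader testing. An empty `alt=""` is deliberately allowed, because that is the accepted markup for purely decorative images.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Flags <img> tags with no alt attribute (one of many rules real tools check)."""

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        # alt="" is valid for decorative images, so only a missing attribute is flagged.
        if tag == "img" and "alt" not in dict(attrs):
            line, _ = self.getpos()
            self.problems.append(f"line {line}: <img> missing alt text")


def check_alt_text(html):
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.problems


page = """<main>
  <img src="logo.png" alt="Company logo">
  <img src="chart.png">
  <img src="divider.png" alt="">
</main>"""
print(check_alt_text(page))  # ['line 3: <img> missing alt text']
```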

21. Explain how you would test a system that needs to handle a large volume of data.

To test a system handling large data volumes, I'd focus on performance, scalability, and reliability. I'd start with performance testing, using realistic datasets to measure response times and throughput under load. This includes load testing to simulate expected user activity and stress testing to push the system beyond its limits. Key metrics include transaction latency, data processing speed, and resource utilization (CPU, memory, disk I/O).

Next, I'd perform scalability testing to ensure the system can handle growing data volumes and user loads. This involves evaluating both horizontal scaling (adding more servers) and vertical scaling (increasing resources on existing servers) and measuring how closely performance gains track the added resources, since scaling is rarely perfectly linear. Finally, reliability testing focuses on data integrity and system availability: ensuring data is not lost or corrupted during high-volume processing and that the system recovers gracefully from failures. This includes testing data backup and recovery procedures, as well as failover mechanisms.
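One concrete way to check that no data is lost or corrupted in a high-volume load is to compare a record count and an order-independent checksum between source and destination. In the sketch below, `generate_records` and `load_in_chunks` are hypothetical stand-ins for a test-data generator and the batch loader under test; both stream their data so memory stays flat as the volume grows, and the chunk size leaves a partial final batch, a common place for records to go missing.

```python
import hashlib
from itertools import islice


def fingerprint(records):
    """Order-independent (count, checksum) over a stream of records."""
    count, total = 0, 0
    for rec in records:
        digest = hashlib.sha256(repr(rec).encode()).digest()
        # Summing (not XOR-ing) digests means duplicated records still change the checksum.
        total = (total + int.from_bytes(digest, "big")) % 2**256
        count += 1
    return count, total


def generate_records(n):
    """Hypothetical synthetic data; real tests should mirror production shapes and sizes."""
    for i in range(n):
        yield {"id": i, "amount": i % 1000, "region": ("EU", "US", "APAC")[i % 3]}


def load_in_chunks(records, chunk_size):
    """Hypothetical loader under test: processes input in fixed-size batches."""
    it = iter(records)
    while batch := list(islice(it, chunk_size)):
        yield from batch  # a real loader would write each batch to the target store


N = 100_000
source = fingerprint(generate_records(N))
loaded = fingerprint(load_in_chunks(generate_records(N), chunk_size=7_000))
print(source == loaded, source[0])  # True 100000
```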

22. How do you prioritize test cases when you have limited time and resources?

When time and resources are limited, I prioritize test cases based on risk and impact. I focus on testing critical functionalities and high-traffic areas first. This involves identifying test cases that cover the most likely scenarios and potential failure points. Prioritization can also be guided by factors like business requirements, user stories, and areas with recent code changes or known bugs. Regression testing for core functionality is also crucial.

Specifically, I might use a risk-based approach to categorize test cases into priority levels (e.g., P0, P1, P2) based on the severity of the potential impact and the likelihood of failure. P0 covers critical-path tests, P1 important but not critical ones, and P2 the rest. Under tight time constraints, P2 cases can be reduced in scope, deferred, or automated to run later.
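The risk-based scoring can be as simple as multiplying impact by likelihood. The 1 to 5 scales, the example test cases, and the P0/P1 cut-offs below are hypothetical values chosen for illustration; teams usually calibrate their own.

```python
from dataclasses import dataclass


@dataclass
class Case:
    name: str
    impact: int      # 1 = cosmetic ... 5 = revenue or data loss
    likelihood: int  # 1 = stable, untouched code ... 5 = recently changed or bug-prone

    @property
    def risk(self) -> int:
        return self.impact * self.likelihood


def priority(case: Case) -> str:
    """Map a risk score (1..25) to a priority level; cut-offs are hypothetical."""
    if case.risk >= 15:
        return "P0"
    if case.risk >= 8:
        return "P1"
    return "P2"


cases = [
    Case("footer link colours", impact=1, likelihood=3),
    Case("checkout with saved card", impact=5, likelihood=4),
    Case("password reset email", impact=4, likelihood=2),
]
for case in sorted(cases, key=lambda c: c.risk, reverse=True):
    print(priority(case), case.name)
# P0 checkout with saved card
# P1 password reset email
# P2 footer link colours
```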

23. Describe your experience with different testing methodologies, such as Agile, Waterfall, and DevOps.

I have experience working within Agile, Waterfall, and DevOps methodologies. In Agile environments, I actively participated in sprint planning, daily stand-ups, and retrospectives, ensuring continuous testing and feedback integration. This included using techniques like Behavior-Driven Development (BDD) and Test-Driven Development (TDD) where appropriate, with frequent regression testing. I've also worked on projects following the Waterfall model, where testing phases were more sequential and documentation-heavy, requiring detailed test plans and reports.

Furthermore, I have experience in DevOps environments, which focused on automation and continuous integration/continuous delivery (CI/CD). This involved creating and maintaining automated test suites (unit, integration, and end-to-end), using tools like Selenium, JUnit, and Jenkins. I've collaborated with developers and operations teams to implement testing strategies that support rapid deployment and ensure code quality throughout the development pipeline.

24. How would you test a feature that is constantly changing based on user feedback?

Testing a constantly evolving feature requires a flexible, iterative approach. I'd first establish a core set of tests covering the fundamental functionality and user flows that are least likely to change drastically, and automate them as much as possible so each iteration gets rapid feedback. For areas undergoing frequent change, I'd rely more on exploratory testing, user feedback, and A/B testing to understand the impact of each modification. I would also use feature flags to expose changes to specific user groups and track the results and feedback. This supports continuous integration and delivery (CI/CD) while mitigating the risks of constantly changing code. Regression testing remains crucial after each iteration to ensure new changes haven't broken existing functionality.

Specifically, I would combine automated unit tests for the core logic, integration tests for key workflows, and manual/exploratory testing to uncover unexpected behaviors and gather qualitative feedback. Postman is useful for automating API checks, while Selenium, Cypress, or Playwright are well suited to automating UI tests. To capture user feedback, I'd integrate in-app survey tools or analyze user behavior through analytics dashboards.
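Percentage-based feature-flag rollouts are often implemented by hashing a stable user ID into a bucket, so each user consistently sees the same variant across sessions. The sketch below shows that idea with `hashlib`; `in_rollout` and the `new-checkout` flag are hypothetical, and managed flag services have their own APIs and bucketing schemes. Because a user enrolled at 10% has a bucket below 10, they stay enrolled when the rollout widens to 20%, which keeps test cohorts stable between iterations.

```python
import hashlib


def in_rollout(user_id: str, flag: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` of 100 buckets for `flag`."""
    # Hashing flag + user ID gives a stable, roughly uniform bucket in 0..99,
    # so the same user gets the same answer on every request.
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


users = [f"user-{i}" for i in range(10_000)]
enrolled_10 = {u for u in users if in_rollout(u, "new-checkout", 10)}
enrolled_20 = {u for u in users if in_rollout(u, "new-checkout", 20)}
print(len(enrolled_10), len(enrolled_20))  # approximately 1000 and 2000
print(enrolled_10 <= enrolled_20)          # True: widening the rollout keeps existing users
```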

25. Explain how you would test a system for internationalization and localization.

Testing a system for internationalization (i18n) and localization (l10n) involves verifying that the application adapts correctly to different languages, regions, and cultural conventions. I'd start by testing core i18n aspects like proper handling of Unicode characters and correct date/time/number formatting for different locales. This involves creating test data in various languages and ensuring it displays correctly throughout the application. I would also verify that text is externalized (not hardcoded) and can be easily translated.

For localization testing, I'd focus on region-specific adaptations. This includes verifying that translations are accurate and culturally appropriate, and testing currency symbols, address formats, postal codes, and keyboard layouts. For example, if the application uses a resource bundle or file (.properties, .json, .xml) for each locale, I'd verify that it loads the correct bundle based on the user's settings. Where required, I would also check that UI elements adapt to right-to-left languages. Finally, I'd use pseudo-localization, automatically replacing strings with modified versions (accented characters, extra length) to surface i18n issues such as hardcoded text or truncated labels early.
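A pseudo-localization pass can be a small transform applied to every externalized string before the UI renders. The sketch below is a hypothetical implementation: it accents vowels, pads each string by about 30% to mimic languages that run longer than English, and wraps it in brackets so truncation is visible, while leaving `{placeholder}` tokens untouched so string formatting still works. Any UI text that appears without the brackets was likely hardcoded rather than externalized.

```python
import re

# Map plain vowels to accented look-alikes (equal-length strings, one code point each).
ACCENTED = str.maketrans("aeiouAEIOU", "àéîöûÀÉÎÖÛ")
PLACEHOLDER = re.compile(r"(\{[^}]*\})")


def pseudo_localize(text: str, expansion: float = 0.3) -> str:
    """Accent letters, pad length, and bracket the string; leave {placeholders} intact."""
    parts = PLACEHOLDER.split(text)
    # split() with a capturing group puts the placeholders at odd indices.
    out = "".join(p if i % 2 else p.translate(ACCENTED) for i, p in enumerate(parts))
    padding = "~" * max(1, round(len(text) * expansion))
    return f"[{out}{padding}]"


print(pseudo_localize("Hello {name}, you have {count} items"))
# [Héllö {name}, yöû hàvé {count} îtéms~~~~~~~~~~~]
```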

Expert QA interview questions

1. Describe a time when you had to advocate for quality against pressure to release quickly. What was the outcome?

In my previous role as a software engineer, we were developing a new feature with a tight deadline. The pressure to release quickly was immense, and some team members suggested cutting corners in testing to meet the deadline. I strongly advocated for maintaining our quality standards, arguing that releasing a buggy feature would ultimately damage our reputation and create more work in the long run with bug fixes and customer support. I proposed a more targeted testing approach, focusing on the core functionality and high-risk areas, while still performing essential integration tests.

Ultimately, the team agreed to my proposal. We prioritized the most critical tests, and although we released slightly later than initially planned, the feature was stable and well received by users. We avoided a potential public-relations problem and saved time and resources by preventing post-release bug fixes. This experience reinforced my belief that quality should never be compromised, even under pressure.

2. How would you design a test automation framework from scratch for a complex e-commerce application?

I'd design a modular, layered framework using a hybrid approach that combines data-driven and keyword-driven testing. I'd start by identifying the key modules of the e-commerce application (e.g., login, product catalog, cart, checkout, order management). For each module, I'd define a set of reusable keywords or actions (e.g., login_user, add_to_cart, checkout). A data layer (e.g., CSV, Excel, database) would store test data and expected results. The core framework would use a programming language like Python or Java, along with Selenium for UI automation, an API testing library such as RestAssured (Java) or requests (Python), and pytest/JUnit for test execution and reporting.
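A stripped-down version of the keyword-driven core might look like this: keywords are registered in a lookup table, and test steps arrive as rows from the data layer (inline CSV text here). The keywords, SKUs, and in-memory cart are hypothetical; in a real framework each keyword would drive Selenium page objects or API calls rather than a dictionary.

```python
import csv
import io

KEYWORDS = {}


def keyword(name):
    """Decorator that registers a reusable test action under a keyword name."""
    def register(fn):
        KEYWORDS[name] = fn
        return fn
    return register


@keyword("add_to_cart")
def add_to_cart(state, sku, qty):
    state["cart"][sku] = state["cart"].get(sku, 0) + int(qty)


@keyword("remove_from_cart")
def remove_from_cart(state, sku):
    state["cart"].pop(sku, None)


@keyword("assert_cart_count")
def assert_cart_count(state, expected):
    actual = sum(state["cart"].values())
    assert actual == int(expected), f"cart has {actual} items, expected {expected}"


def run_test(rows):
    """Execute each (keyword, *args) row against fresh state and return the final state."""
    state = {"cart": {}}
    for kw, *args in rows:
        KEYWORDS[kw](state, *args)
    return state


# Data layer: in practice this would be a CSV/Excel file or a database table.
CHECKOUT_SMOKE = """add_to_cart,SKU-123,2
add_to_cart,SKU-456,1
remove_from_cart,SKU-456
assert_cart_count,2
"""

steps = list(csv.reader(io.StringIO(CHECKOUT_SMOKE)))
print(run_test(steps))  # {'cart': {'SKU-123': 2}}
```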