In the world of data management, ETL Testing plays a crucial role in ensuring data quality and integrity. As an interviewer, having a well-prepared list of ETL Testing interview questions is key to identifying the most qualified candidates for your team.

This blog post offers a comprehensive collection of ETL Testing interview questions, categorized by experience level and specific testing areas. From common questions for beginners to advanced queries for senior testers, we've got you covered.

By using these questions, you'll be able to thoroughly evaluate candidates' ETL Testing skills and make informed hiring decisions. Consider pairing these interview questions with an ETL online test to get a complete picture of your candidates' abilities.


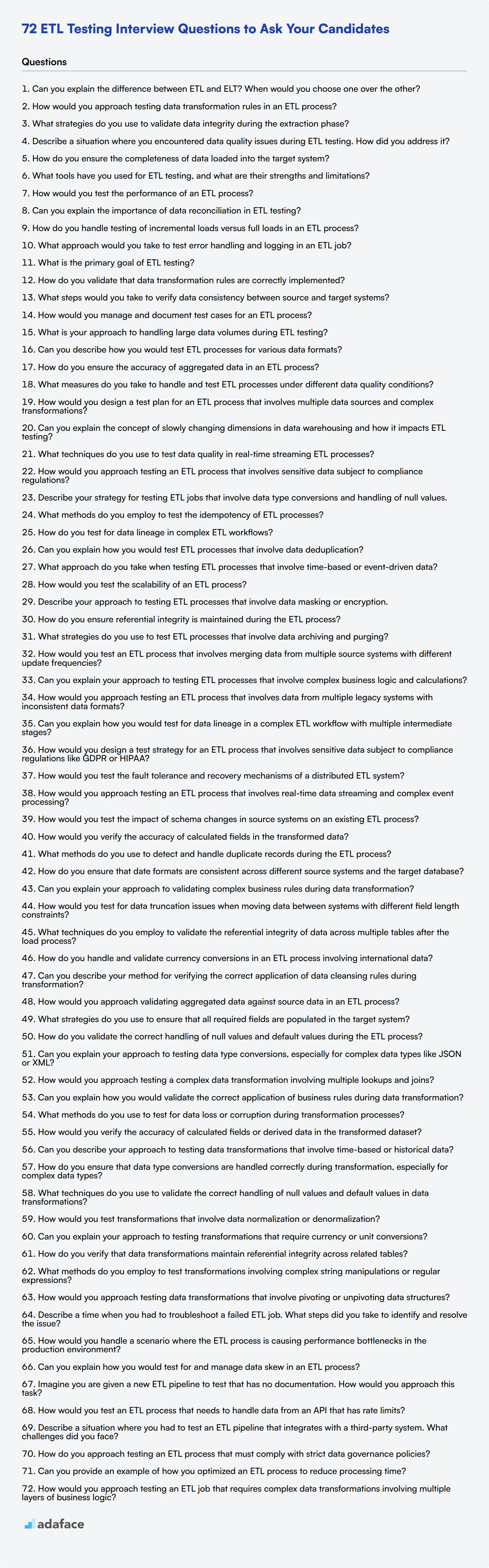

10 common ETL Testing interview questions to ask your candidates

To assess candidates' practical knowledge and problem-solving abilities in ETL testing, use these carefully curated questions. They will help you evaluate a candidate's understanding of ETL processes and their ability to apply testing principles in real-world scenarios.

- Can you explain the difference between ETL and ELT? When would you choose one over the other?

- How would you approach testing data transformation rules in an ETL process?

- What strategies do you use to validate data integrity during the extraction phase?

- Describe a situation where you encountered data quality issues during ETL testing. How did you address it?

- How do you ensure the completeness of data loaded into the target system?

- What tools have you used for ETL testing, and what are their strengths and limitations?

- How would you test the performance of an ETL process?

- Can you explain the importance of data reconciliation in ETL testing?

- How do you handle testing of incremental loads versus full loads in an ETL process?

- What approach would you take to test error handling and logging in an ETL job?

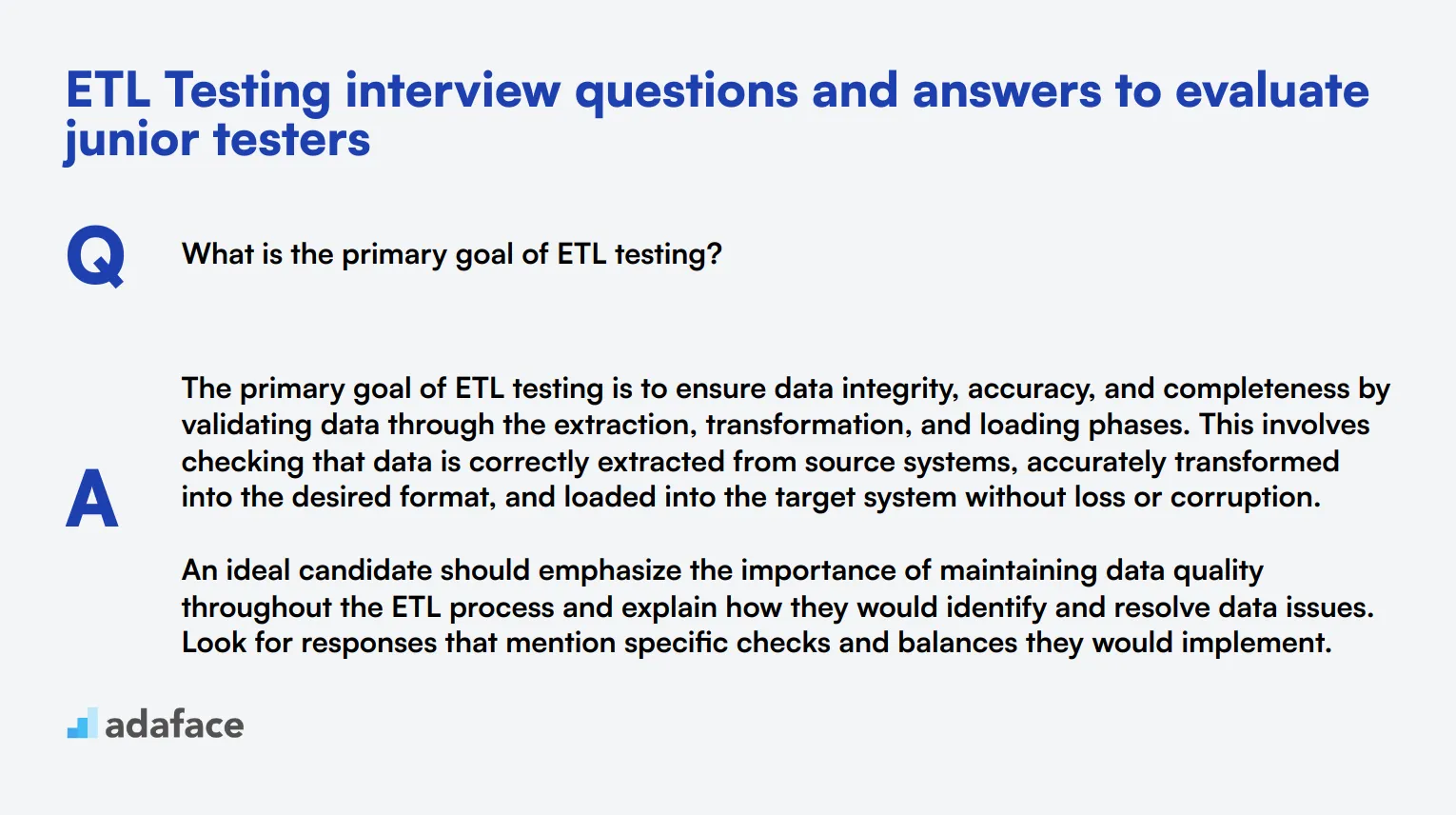

8 ETL Testing interview questions and answers to evaluate junior testers

To find out if your junior testers have the foundational skills for ETL testing, use these interview questions. They're designed to gauge their understanding and practical knowledge—without getting too technical.

1. What is the primary goal of ETL testing?

The primary goal of ETL testing is to ensure data integrity, accuracy, and completeness by validating data through the extraction, transformation, and loading phases. This involves checking that data is correctly extracted from source systems, accurately transformed into the desired format, and loaded into the target system without loss or corruption.

An ideal candidate should emphasize the importance of maintaining data quality throughout the ETL process and explain how they would identify and resolve data issues. Look for responses that mention specific checks and balances they would implement.

2. How do you validate that data transformation rules are correctly implemented?

To validate data transformation rules, I typically create detailed test cases that compare the source data with the transformed data based on the specified rules. This involves running SQL queries or using data comparison tools to verify that the transformations are applied correctly.

A strong candidate should mention methods like sample data testing, edge case testing, and using data profiling tools to check the transformations. Look for answers that demonstrate a methodical approach to comparing pre- and post-transformation data.
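Here is a minimal sketch of the SQL comparison described in the answer, using Python's built-in `sqlite3` module and an in-memory database. The tables `src_customers` and `tgt_customers` and the transformation rule (trim and concatenate first and last name, uppercase the country code) are hypothetical examples. The idea is to re-apply the documented rule to the source in SQL and `EXCEPT` the actual target rows. Anything left over was not transformed as specified.

```python
import sqlite3


def find_transformation_mismatches(conn: sqlite3.Connection) -> list:
    """Return expected (rule-applied) rows that do not appear in the target table."""
    # Re-apply the hypothetical rule to the source, then subtract the actual
    # target rows. Any row that survives the EXCEPT was transformed incorrectly
    # or is missing from the target.
    query = """
        SELECT id,
               TRIM(first_name) || ' ' || TRIM(last_name) AS full_name,
               UPPER(country) AS country
        FROM src_customers
        EXCEPT
        SELECT id, full_name, country FROM tgt_customers
        ORDER BY id
    """
    return conn.execute(query).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customers (id INTEGER, first_name TEXT, last_name TEXT, country TEXT);
    CREATE TABLE tgt_customers (id INTEGER, full_name TEXT, country TEXT);
    INSERT INTO src_customers VALUES (1, ' Ada', 'Lovelace', 'gb'), (2, 'Alan', 'Turing ', 'gb');
    -- Row 2 in the target kept a trailing space, violating the trim rule
    INSERT INTO tgt_customers VALUES (1, 'Ada Lovelace', 'GB'), (2, 'Alan Turing ', 'GB');
""")
print(find_transformation_mismatches(conn))  # [(2, 'Alan Turing', 'GB')]
```

`EXCEPT` only checks one direction. Running the comparison the other way round (target minus expected) catches rows that exist in the target but should not.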

3. What steps would you take to verify data consistency between source and target systems?

To verify data consistency, I would first perform a row count check to ensure the number of records in the source and target systems match. Next, I would compare key data fields to ensure their values are consistent. Additionally, I’d conduct data profiling to identify any discrepancies.

Ideal responses should include a clear, step-by-step approach to verifying data consistency, mentioning specific techniques like row count checks, field-by-field comparisons, and the use of automated tools for data profiling.
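The row count and key-field steps from the answer can be sketched in plain Python over lists of dicts. In practice the rows would come from queries against the source and target systems. The function and field names here are hypothetical. The sample data shows why row counts alone are not enough: both sides have three rows, yet one record is missing and another is duplicated.

```python
from collections import Counter


def reconcile(source_rows, target_rows, key, fields):
    """Compare source and target rows by business key; return a reconciliation report."""
    # Assumes `key` is unique in the source; duplicates there would need their own check
    src = {row[key]: row for row in source_rows}
    tgt = {row[key]: row for row in target_rows}
    target_key_counts = Counter(row[key] for row in target_rows)
    return {
        # Step 1: row counts
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        # Step 2: key-level completeness
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "duplicate_keys_in_target": sorted(k for k, n in target_key_counts.items() if n > 1),
        # Step 3: field-by-field comparison for keys present on both sides
        "field_mismatches": [
            (k, f, src[k][f], tgt[k][f])
            for k in sorted(src.keys() & tgt.keys())
            for f in fields
            if src[k][f] != tgt[k][f]
        ],
    }


source = [
    {"id": 1, "amount": 100.0, "status": "paid"},
    {"id": 2, "amount": 50.0, "status": "open"},
    {"id": 3, "amount": 75.0, "status": "paid"},
]
target = [
    {"id": 1, "amount": 100.0, "status": "paid"},
    {"id": 2, "amount": 55.0, "status": "open"},
    {"id": 2, "amount": 55.0, "status": "open"},  # duplicated row
]
report = reconcile(source, target, key="id", fields=["amount", "status"])
print(report["source_count"] == report["target_count"])  # True, despite the problems below
print(report["missing_in_target"], report["duplicate_keys_in_target"])  # [3] [2]
```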

4. How would you manage and document test cases for an ETL process?

I manage and document test cases using a combination of test management tools and detailed documentation practices. Each test case is documented with its purpose, input data, expected results, and actual results. Additionally, I organize the test cases based on the stages of the ETL process they apply to.

Look for candidates who mention the importance of maintaining a test case repository and using tools for version control and collaboration. Ideal answers should highlight their systematic approach to documentation and test management.
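One lightweight way to keep test cases consistent and reviewable under version control is to store them as structured records instead of free text. The fields below mirror the ones named in the answer (purpose, input data, expected and actual results, ETL stage). The class and field names are hypothetical and not tied to any particular test management tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Stage(Enum):
    EXTRACT = "extract"
    TRANSFORM = "transform"
    LOAD = "load"


@dataclass
class EtlTestCase:
    """One documented test case: purpose, input, expected and actual results."""
    case_id: str
    stage: Stage
    purpose: str
    input_data: str
    expected_result: str
    actual_result: Optional[str] = None  # filled in after execution

    @property
    def status(self) -> str:
        if self.actual_result is None:
            return "not run"
        return "pass" if self.actual_result == self.expected_result else "fail"


def group_by_stage(cases):
    """Organize test cases by the ETL stage they apply to."""
    grouped = defaultdict(list)
    for case in cases:
        grouped[case.stage].append(case)
    return dict(grouped)


cases = [
    EtlTestCase("EX-001", Stage.EXTRACT, "All source orders extracted",
                "orders table, 2024-01-01 partition", "10000 rows", "10000 rows"),
    EtlTestCase("TR-001", Stage.TRANSFORM, "Country codes uppercased",
                "rows with lowercase country", "all codes uppercase", "3 rows lowercase"),
    EtlTestCase("LD-001", Stage.LOAD, "No duplicate order_id in target",
                "full load output", "0 duplicates"),
]
for stage, stage_cases in group_by_stage(cases).items():
    print(stage.value, [(c.case_id, c.status) for c in stage_cases])
```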

5. What is your approach to handling large data volumes during ETL testing?

When handling large data volumes, my approach includes using sampling techniques to test subsets of data, leveraging parallel processing to speed up tests, and employing data virtualization tools to manage data efficiently. I also ensure robust error handling to identify issues quickly.

Candidates should demonstrate an understanding of practical techniques for managing large datasets and mention tools or methods they have used in the past. Look for insights into their ability to balance thoroughness with efficiency.
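For the sampling technique mentioned in the answer, one useful refinement is to pick the sample deterministically from the record key instead of using `random`. That way the same records are selected when you query the source and the target, and the two subsets can be compared directly. A minimal sketch, assuming each record has a stable business key; the helper name `in_sample` is hypothetical.

```python
import hashlib


def in_sample(key, percent: float) -> bool:
    """Return True if this record key falls inside a deterministic `percent`% sample."""
    digest = hashlib.sha256(str(key).encode("utf-8")).digest()
    # Map the key to one of 10,000 buckets; the same key always lands in the same bucket
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100


source_keys = range(100_000)
sample = [k for k in source_keys if in_sample(k, 1.0)]
print(len(sample))  # roughly 1,000 keys; the exact count depends on the hash values
```

Because a key's bucket never changes, a 1% sample is always a subset of a 5% sample. This makes it easy to widen the sample when a discrepancy turns up.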

6. Can you describe how you would test ETL processes for various data formats?

Testing ETL processes for various data formats involves creating specific test cases for each format, such as JSON, XML, and CSV. I ensure that the ETL process can correctly extract, transform, and load each format by validating the integrity and structure of the data at each stage.

A good candidate response should include examples of different data formats they have worked with and how they approached testing for each. Look for attention to detail in handling data format-specific challenges.
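One way to structure format-specific test cases is to parse each format into the same normalized record shape, then assert that the same logical data produces the same records regardless of format. The sketch below uses only the standard library. The two-field schema and the sample payloads are hypothetical.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


# Each parser normalizes one format into the same list-of-dicts shape
def from_json(text):
    return [{"id": int(r["id"]), "name": r["name"]} for r in json.loads(text)]


def from_csv(text):
    return [{"id": int(r["id"]), "name": r["name"]} for r in csv.DictReader(io.StringIO(text))]


def from_xml(text):
    root = ET.fromstring(text)
    return [
        {"id": int(rec.get("id")), "name": rec.findtext("name")}  # findtext -> None if absent
        for rec in root.findall("record")
    ]


def structural_errors(records):
    """Flag records whose required fields are missing or empty after parsing."""
    return [r for r in records if not r.get("name")]


json_text = '[{"id": 1, "name": "Ada"}, {"id": 2, "name": "Alan"}]'
csv_text = "id,name\n1,Ada\n2,Alan\n"
xml_text = (
    '<records><record id="1"><name>Ada</name></record>'
    '<record id="2"><name>Alan</name></record></records>'
)
expected = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Alan"}]
print(from_json(json_text) == from_csv(csv_text) == from_xml(xml_text) == expected)  # True
```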

7. How do you ensure the accuracy of aggregated data in an ETL process?

Ensuring the accuracy of aggregated data involves validating the aggregation logic with smaller datasets before applying it to larger datasets. I use SQL queries to compare the aggregated data against the source data to verify the correctness of sums, averages, counts, etc.

Ideal candidates should discuss their approach to writing and validating aggregation queries and mention any tools they use for this purpose. Look for an understanding of common aggregation pitfalls and how to avoid them.
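The aggregate check from the answer can be sketched as a single SQL query that recomputes the aggregates independently from source detail rows and joins them to the target's aggregates. The example runs against SQLite through Python's `sqlite3`. The tables `src_orders` and `tgt_region_totals` and the 0.005 tolerance are hypothetical.

```python
import sqlite3

AGGREGATE_CHECK = """
    SELECT s.region, s.order_count, s.total_amount, t.order_count, t.total_amount
    FROM (
        -- Recompute the aggregate independently from source detail rows
        SELECT region, COUNT(*) AS order_count, SUM(amount) AS total_amount
        FROM src_orders
        GROUP BY region
    ) AS s
    LEFT JOIN tgt_region_totals AS t ON t.region = s.region
    WHERE t.region IS NULL                                -- group missing in target
       OR s.order_count <> t.order_count                  -- wrong count
       OR ABS(s.total_amount - t.total_amount) > 0.005    -- wrong sum beyond rounding
    ORDER BY s.region
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (region TEXT, amount REAL);
    CREATE TABLE tgt_region_totals (region TEXT, order_count INTEGER, total_amount REAL);
    INSERT INTO src_orders VALUES ('EU', 10.0), ('EU', 15.5), ('US', 20.0), ('APAC', 5.0);
    INSERT INTO tgt_region_totals VALUES ('EU', 2, 25.5), ('US', 1, 22.0);
""")
for row in conn.execute(AGGREGATE_CHECK):
    print(row)
# ('APAC', 1, 5.0, None, None)
# ('US', 1, 20.0, 1, 22.0)
```

Two common pitfalls shape this design. Comparing sums and counts avoids the error of averaging pre-averaged groups, which weights small and large groups equally. The tolerance absorbs floating-point rounding. This query does not catch target groups that have no source rows; a reverse join covers that case.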

8. What measures do you take to handle and test ETL processes under different data quality conditions?

To handle and test ETL processes under different data quality conditions, I first perform data profiling to understand the quality of the source data. I then create test cases that cover a range of data quality scenarios, from perfect data to data with missing values, duplicates, and incorrect formats.

Strong responses should reflect a proactive approach to identifying and addressing data quality issues. Look for candidates who mention specific tests and checks they incorporate to handle various data conditions.
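The profiling step in the answer could start as a small function that counts missing values, finds duplicate keys, and flags malformed dates. The sample batch deliberately contains each of these problems. Field names are hypothetical. Parsing the date, rather than matching a `YYYY-MM-DD` regex, also catches impossible dates such as `2024-02-30`.

```python
from collections import Counter
from datetime import datetime


def is_valid_date(value) -> bool:
    """True if value is a real calendar date in YYYY-MM-DD form."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False


def profile(rows, key, date_field):
    """Summarize common data quality problems in a batch of rows."""
    key_counts = Counter(r[key] for r in rows)
    return {
        "rows": len(rows),
        "missing_values": sum(1 for r in rows for v in r.values() if v is None or v == ""),
        "duplicate_keys": sorted(k for k, n in key_counts.items() if n > 1),
        # Only populated dates are format-checked; nulls are already counted above
        "bad_dates": [r[key] for r in rows if r.get(date_field) and not is_valid_date(r[date_field])],
    }


rows = [
    {"id": 1, "email": "a@example.com", "signup_date": "2024-01-15"},  # clean
    {"id": 2, "email": "", "signup_date": "15/01/2024"},               # empty field, wrong format
    {"id": 2, "email": "b@example.com", "signup_date": None},          # duplicate key, null
    {"id": 3, "email": None, "signup_date": "2024-02-30"},             # null, impossible date
]
print(profile(rows, "id", "signup_date"))
# {'rows': 4, 'missing_values': 3, 'duplicate_keys': [2], 'bad_dates': [2, 3]}
```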

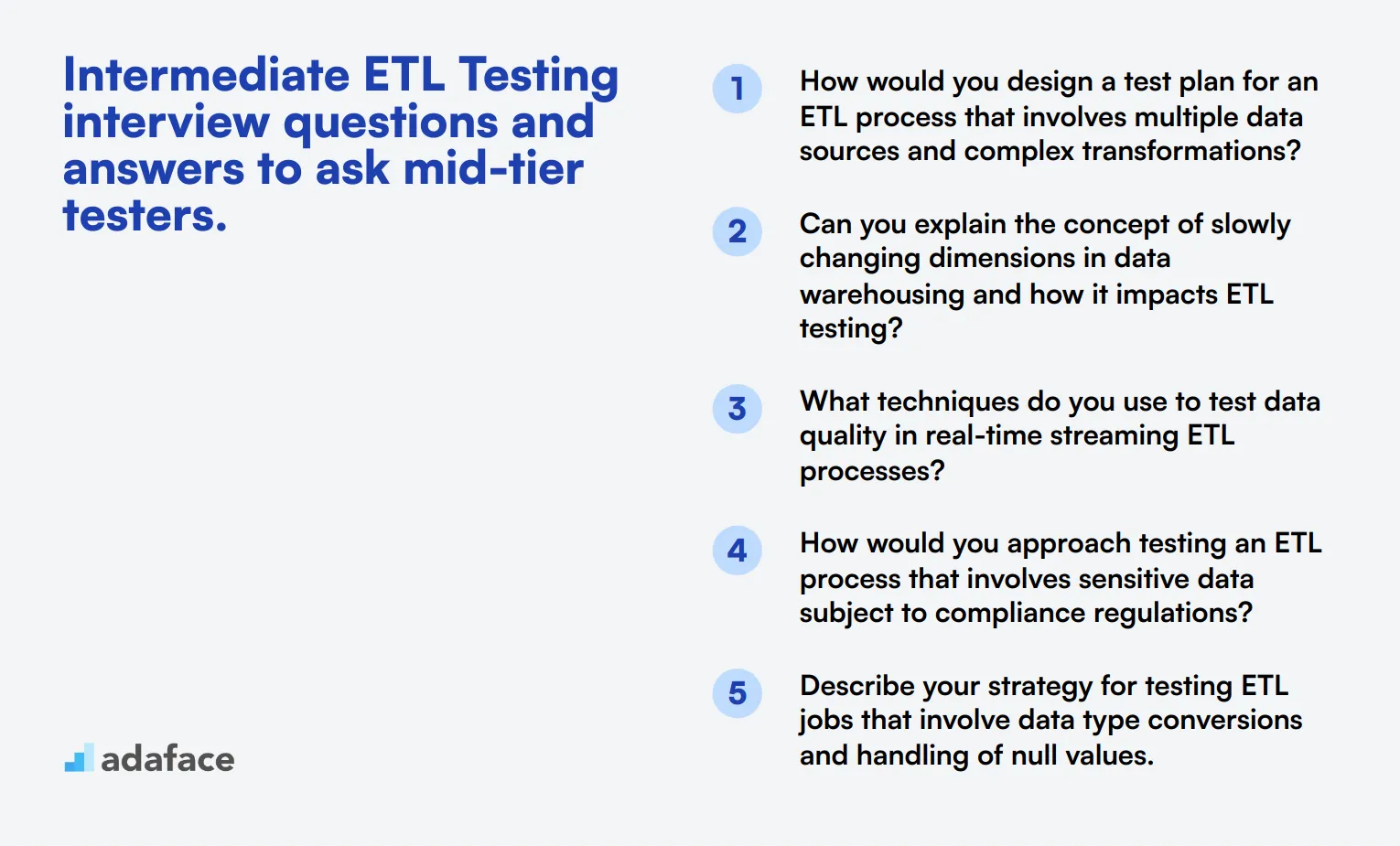

15 intermediate ETL Testing interview questions to ask mid-tier testers

To assess the intermediate-level skills of ETL testers, use these 15 targeted questions. They cover practical scenarios and technical knowledge, helping you identify candidates who can handle complex ETL testing tasks effectively.

- How would you design a test plan for an ETL process that involves multiple data sources and complex transformations?

- Can you explain the concept of slowly changing dimensions in data warehousing and how it impacts ETL testing?

- What techniques do you use to test data quality in real-time streaming ETL processes?

- How would you approach testing an ETL process that involves sensitive data subject to compliance regulations?

- Describe your strategy for testing ETL jobs that involve data type conversions and handling of null values.

- What methods do you employ to test the idempotency of ETL processes?

- How do you test for data lineage in complex ETL workflows?

- Can you explain how you would test ETL processes that involve data deduplication?

- What approach do you take when testing ETL processes that involve time-based or event-driven data?

- How would you test the scalability of an ETL process?

- Describe your approach to testing ETL processes that involve data masking or encryption.

- How do you ensure referential integrity is maintained during the ETL process?

- What strategies do you use to test ETL processes that involve data archiving and purging?

- How would you test an ETL process that involves merging data from multiple source systems with different update frequencies?

- Can you explain your approach to testing ETL processes that involve complex business logic and calculations?

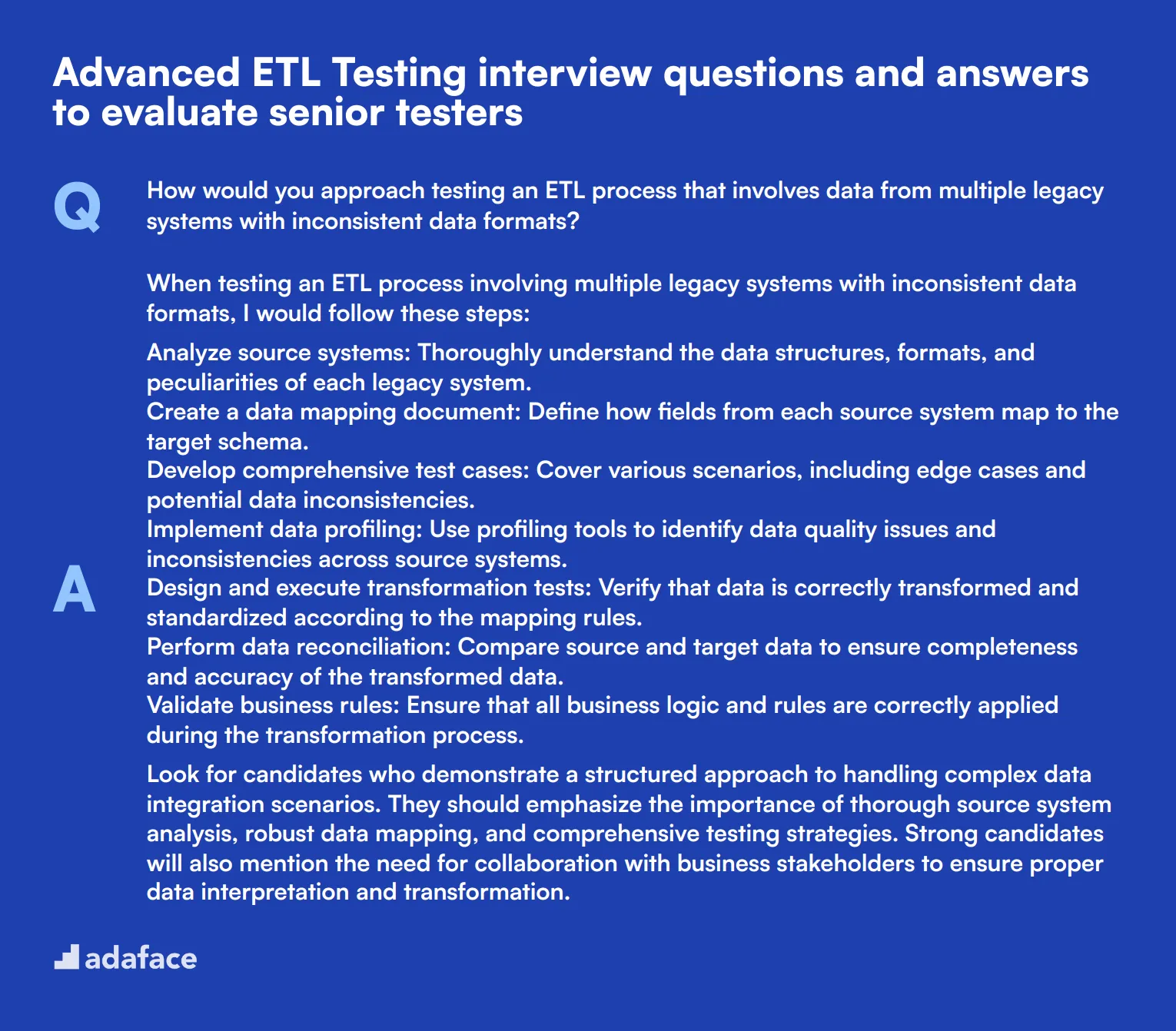

6 advanced ETL Testing interview questions and answers to evaluate senior testers

Ready to separate the ETL wizards from the data dabblers? These advanced ETL testing questions will help you identify senior testers who can handle complex scenarios and ensure data integrity. Use these questions to evaluate candidates' deep understanding of ETL processes and their ability to tackle challenging situations in data engineering.

1. How would you approach testing an ETL process that involves data from multiple legacy systems with inconsistent data formats?

When testing an ETL process involving multiple legacy systems with inconsistent data formats, I would follow these steps:

- Analyze source systems: Thoroughly understand the data structures, formats, and peculiarities of each legacy system.

- Create a data mapping document: Define how fields from each source system map to the target schema.

- Develop comprehensive test cases: Cover various scenarios, including edge cases and potential data inconsistencies.

- Implement data profiling: Use profiling tools to identify data quality issues and inconsistencies across source systems.

- Design and execute transformation tests: Verify that data is correctly transformed and standardized according to the mapping rules.

- Perform data reconciliation: Compare source and target data to ensure completeness and accuracy of the transformed data.

- Validate business rules: Ensure that all business logic and rules are correctly applied during the transformation process.

Look for candidates who demonstrate a structured approach to handling complex data integration scenarios. They should emphasize the importance of thorough source system analysis, robust data mapping, and comprehensive testing strategies. Strong candidates will also mention the need for collaboration with business stakeholders to ensure proper data interpretation and transformation.
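The mapping and transformation-test steps in this answer can be combined into a convergence test. Feed the same logical customer, as each legacy system represents it, through the mapping and assert that it comes out as one canonical target record. The mapping table, field names, and date formats below are hypothetical, and `transform` stands in for whatever ETL logic is under test.

```python
from datetime import datetime

# Hypothetical mapping document for two legacy systems feeding one target schema
MAPPINGS = {
    "legacy_crm": {
        "fields": {"CUST_NO": "customer_id", "OPEN_DT": "opened_on"},
        "date_format": "%d/%m/%Y",
    },
    "legacy_billing": {
        "fields": {"cust": "customer_id", "start": "opened_on"},
        "date_format": "%Y%m%d",
    },
}


def transform(system, record):
    """Stand-in for the ETL transformation under test: map and standardize one record."""
    mapping = MAPPINGS[system]
    out = {target: record[source] for source, target in mapping["fields"].items()}
    out["customer_id"] = int(out["customer_id"])  # strips leading zeros
    out["opened_on"] = datetime.strptime(out["opened_on"], mapping["date_format"]).date().isoformat()
    return out


def check_convergence(cases, expected):
    """Return (system, actual) pairs whose output differs from the canonical target record."""
    failures = []
    for system, record in cases:
        actual = transform(system, record)
        if actual != expected:
            failures.append((system, actual))
    return failures


expected = {"customer_id": 42, "opened_on": "2021-04-03"}
cases = [
    ("legacy_crm", {"CUST_NO": "00042", "OPEN_DT": "03/04/2021"}),
    ("legacy_billing", {"cust": "42", "start": "20210403"}),
]
print(check_convergence(cases, expected))  # [] means every source maps to the same record
```

Edge cases belong in `cases` too. A CRM record with day and month swapped, for example, produces a different date and shows up as a failure instead of passing silently.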

2. Can you explain how you would test for data lineage in a complex ETL workflow with multiple intermediate stages?

Testing for data lineage in a complex ETL workflow with multiple intermediate stages involves tracking the flow of data from source to destination, including all transformations and intermediate steps. Here's how I would approach it:

- Document the entire ETL workflow: Create a detailed diagram showing all data sources, transformations, and intermediate stages.

- Implement data tagging: Add unique identifiers or metadata to track data elements through each stage of the process.

- Use data lineage tools: Employ specialized tools that can automatically trace data lineage in complex workflows.

- Perform sample-based tracing: Select specific data points and manually trace their journey through the entire ETL process.

- Validate transformation rules: Ensure that each transformation step is correctly applied and documented.

- Check for data loss or duplication: Verify that no data is unexpectedly lost or duplicated between stages.

- Test error handling and logging: Ensure that any data discrepancies or processing errors are properly logged with relevant lineage information.

An ideal candidate should emphasize the importance of maintaining clear documentation and using both manual and automated methods to trace data lineage. They should also mention the significance of data lineage in ensuring data quality, facilitating audits, and supporting troubleshooting efforts in complex ETL environments.
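The data tagging and loss/duplication checks from the answer might look like this in code. The sketch assumes every record carries a hypothetical `lineage_id` that is assigned at extraction and propagated unchanged through each stage. It also assumes stages are one-to-one, with no intentional filtering or fan-out. Stages that legitimately drop or split rows would need their expected behaviour encoded separately.

```python
from collections import Counter


def lineage_gaps(stages):
    """stages: ordered list of (stage_name, rows); each row carries a 'lineage_id' tag."""
    problems = []
    for (_, prev_rows), (name, rows) in zip(stages, stages[1:]):
        prev_ids = Counter(r["lineage_id"] for r in prev_rows)
        ids = Counter(r["lineage_id"] for r in rows)
        # Tags that existed upstream but never arrived at this stage
        for lid in sorted(prev_ids.keys() - ids.keys()):
            problems.append((name, lid, "lost"))
        # Tags that appear more often than upstream, or appear from nowhere
        for lid, count in sorted(ids.items()):
            if count > prev_ids.get(lid, 0):
                problems.append((name, lid, "duplicated" if lid in prev_ids else "unexpected"))
    return problems


def tagged(*ids):
    return [{"lineage_id": i} for i in ids]


stages = [
    ("raw", tagged("src-1", "src-2", "src-3")),
    ("staging", tagged("src-1", "src-2", "src-2", "src-3")),
    ("warehouse", tagged("src-1", "src-2", "src-2")),
]
print(lineage_gaps(stages))
# [('staging', 'src-2', 'duplicated'), ('warehouse', 'src-3', 'lost')]
```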

3. How would you design a test strategy for an ETL process that involves sensitive data subject to compliance regulations like GDPR or HIPAA?

Designing a test strategy for an ETL process involving sensitive data subject to compliance regulations like GDPR or HIPAA requires a careful approach that prioritizes data security and regulatory compliance. Here's how I would structure the test strategy:

- Compliance assessment: Review all relevant regulations and identify specific requirements that impact the ETL process.

- Data classification: Clearly identify and categorize sensitive data elements within the ETL workflow.

- Access control testing: Verify that only authorized personnel can access sensitive data throughout the ETL process.

- Data masking and encryption: Test the effectiveness of data masking and encryption techniques used to protect sensitive information.

- Audit trail validation: Ensure that all data access and modifications are properly logged and traceable.

- Data retention and deletion: Test processes for proper data retention and secure deletion as per regulatory requirements.

- Consent management: Verify that data processing aligns with user consent and preferences, especially for GDPR compliance.

- Cross-border data transfer: If applicable, test compliance with regulations governing international data transfers.

- Incident response testing: Simulate data breaches or unauthorized access attempts to test response procedures.

Look for candidates who demonstrate a strong understanding of data privacy regulations and their impact on ETL processes. They should emphasize the importance of integrating compliance requirements into every stage of the ETL workflow and testing process. Strong candidates will also mention the need for collaboration with legal and compliance teams to ensure all regulatory requirements are met.
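For the data masking step, one automated check is to scan the target for any raw sensitive value copied from the source, plus anything that still looks like an email address. This is a minimal sketch. The field names are hypothetical, and the single email pattern is only an example. A real suite would typically add a pattern for each data class identified during data classification.

```python
import re

# Example pattern only: anything shaped like an email address
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}")


def find_pii_leaks(source_rows, target_rows, sensitive_fields):
    """Return (row_index, field) pairs in the target that expose sensitive data."""
    raw_values = {str(r[f]) for r in source_rows for f in sensitive_fields if r.get(f)}
    leaks = []
    for i, row in enumerate(target_rows):
        for field, value in row.items():
            text = str(value)
            # Flag exact copies of raw source values and email-shaped strings anywhere
            if text in raw_values or EMAIL_RE.search(text):
                leaks.append((i, field))
    return leaks


source = [{"id": 1, "email": "ada@example.com", "ssn": "123-45-6789"}]
masked_ok = [{"id": 1, "email": "user_3f1a9c", "ssn": "***-**-6789"}]
masked_bad = [
    {"id": 1, "email": "ada@example.com", "ssn": "***-**-6789"},  # email not masked
    {"id": 2, "email": "user_77", "notes": "contact bob@corp.io"},  # PII in free text
]
print(find_pii_leaks(source, masked_ok, ["email", "ssn"]))   # []
print(find_pii_leaks(source, masked_bad, ["email", "ssn"]))  # [(0, 'email'), (1, 'notes')]
```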

4. How would you test the fault tolerance and recovery mechanisms of a distributed ETL system?

Testing the fault tolerance and recovery mechanisms of a distributed ETL system is crucial for ensuring data integrity and system reliability. Here's how I would approach this:

- Identify failure points: Map out all potential points of failure in the distributed system, including network issues, node failures, and resource constraints.

- Simulate failures: Deliberately introduce various types of failures (e.g., network partitions, node crashes, disk failures) during ETL processes.

- Test checkpoint and restart mechanisms: Verify that the system can properly save its state and resume processing from the last known good state after a failure.

- Validate data consistency: Ensure that data remains consistent across all nodes after recovery from a failure.

- Performance under failure: Measure system performance and data processing rates during and after failure scenarios.

- Test scalability: Verify that fault tolerance mechanisms work effectively as the system scales up or down.

- Monitor error handling: Evaluate how the system logs and reports errors, ensuring that issues are properly captured for analysis.

- Recovery time objectives (RTO): Measure the time taken for the system to recover from different types of failures and compare against defined RTOs.

- Data loss prevention: Confirm that no data is lost during failure and recovery scenarios.

An ideal candidate should demonstrate a comprehensive understanding of distributed systems and their potential failure modes. They should emphasize the importance of thorough testing under various failure scenarios and the need to validate both data integrity and system performance during recovery. Look for candidates who mention the significance of automated testing for fault tolerance, as manual testing alone may not be sufficient for complex distributed systems.
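The checkpoint/restart and data-loss checks can be rehearsed in miniature with a simulated crash. In this sketch, a hypothetical batch loader records its progress in a checkpoint. The test crashes the loader at a chosen batch, restarts it, and compares the result with the source. The second scenario crashes after a batch is written but before the checkpoint advances, which is exactly the window these tests are meant to expose.

```python
class SimulatedCrash(Exception):
    """Raised to imitate a node or process failure in the middle of a load."""


def run_load(source, target, checkpoint, batch_size=3, crash_at_batch=None, crash_after_write=False):
    """Hypothetical batch loader that resumes from checkpoint['offset']."""
    start = checkpoint.get("offset", 0)
    for batch_no, offset in enumerate(range(start, len(source), batch_size)):
        batch = source[offset:offset + batch_size]
        if batch_no == crash_at_batch and not crash_after_write:
            raise SimulatedCrash(f"crash before writing offset {offset}")
        target.extend(batch)
        if batch_no == crash_at_batch and crash_after_write:
            # Unsafe window: rows are written but the checkpoint has not advanced yet
            raise SimulatedCrash(f"crash after writing offset {offset}")
        checkpoint["offset"] = offset + len(batch)


def crash_and_recover(source, **crash_options):
    """Run the load with an injected failure, restart it, and return what was loaded."""
    target, checkpoint = [], {}
    try:
        run_load(source, target, checkpoint, **crash_options)
    except SimulatedCrash:
        pass
    run_load(source, target, checkpoint)  # restart from the last saved checkpoint
    return target


source = list(range(10))
clean = crash_and_recover(source, crash_at_batch=2)
dirty = crash_and_recover(source, crash_at_batch=2, crash_after_write=True)
print(clean == source)              # True: nothing lost or duplicated
print(len(dirty), len(set(dirty)))  # 13 10: one batch was loaded twice
```

The duplicated batch in the second run is the classic symptom of a write and its checkpoint not being atomic. Production systems typically close that window with transactional commits or idempotent upserts keyed on a business key.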

5. How would you approach testing an ETL process that involves real-time data streaming and complex event processing?

Testing an ETL process involving real-time data streaming and complex event processing requires a specialized approach to ensure data accuracy, timeliness, and system performance. Here's how I would tackle this:

- Set up a realistic test environment: Create a test setup that closely mimics the production environment, including data sources, streaming infrastructure, and processing components.

- Generate test data streams: Develop tools or scripts to generate realistic, high-volume data streams that match production patterns.

- Test data ingestion: Verify that the system can handle the expected data volume and velocity without data loss or significant lag.

- Validate event processing logic: Ensure that complex event processing rules are correctly applied to the streaming data.

- Check for data consistency: Verify that processed data remains consistent across all system components and matches expected outcomes.

- Performance testing: Measure system latency, throughput, and resource utilization under various load conditions.

- Fault tolerance testing: Simulate failures in different components and verify that the system can recover without data loss.

- End-to-end testing: Perform comprehensive tests from data ingestion to final data delivery, ensuring all transformations are correctly applied.

- Monitor and alert testing: Verify that monitoring systems can detect and alert on issues in real-time processing.

- Data quality checks: Implement automated data quality checks to ensure the integrity of processed data.

Look for candidates who demonstrate familiarity with real-time data processing technologies and testing methodologies. They should emphasize the importance of performance testing and monitoring in streaming environments. Strong candidates will also mention the need for automated testing tools and continuous monitoring to ensure the reliability of real-time ETL processes.
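When validating event processing logic, one property worth testing is that results depend on event time, not arrival order. The sketch below uses a simple tumbling-window counter as a stand-in for the streaming job, a seeded generator for reproducible test streams, and a batch recomputation as the reference result. Everything here is hypothetical and deliberately simplified. A real stream processor also has to handle watermarks and late data, which this sketch ignores.

```python
import random
from collections import Counter

WINDOW_SECONDS = 60


def window_start(ts: int) -> int:
    """Start of the 60-second tumbling window containing event time ts."""
    return ts - ts % WINDOW_SECONDS


def streaming_counts(events):
    """Stand-in for the job under test: update counts one event at a time."""
    counts = Counter()
    for event in events:
        counts[(window_start(event["ts"]), event["key"])] += 1
    return counts


def generate_events(n: int, seed: int = 7):
    """Reproducible synthetic stream: n events over 10 minutes across 3 keys."""
    rng = random.Random(seed)
    return [{"ts": rng.randrange(0, 600), "key": rng.choice("abc")} for _ in range(n)]


events = generate_events(1_000)
# Reference result computed in batch over the full data set
expected = Counter((window_start(e["ts"]), e["key"]) for e in events)

# Deliver the same events out of order, as a network or partitioned source might
shuffled = events[:]
random.Random(42).shuffle(shuffled)
print(streaming_counts(shuffled) == expected)  # True
```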

6. How would you test the impact of schema changes in source systems on an existing ETL process?

Testing the impact of schema changes in source systems on an existing ETL process is crucial for maintaining data integrity and preventing disruptions. Here's my approach to this scenario:

- Impact analysis: Review the proposed schema changes and identify all affected ETL components and downstream systems.

- Version control: Ensure that all ETL code and configurations are properly versioned before making any changes.

- Test environment setup: Create a separate test environment that mirrors the production setup, including the modified source schema.

- Data profiling: Perform thorough data profiling on the source system with the new schema to identify any potential data quality issues.

- Update ETL mappings: Modify the ETL mappings to accommodate the schema changes and document all modifications.

- Regression testing: Run comprehensive regression tests to ensure that existing functionality is not broken by the schema changes.

- Performance testing: Measure the performance impact of the schema changes on the ETL process, particularly for any new or modified transformations.

- Data reconciliation: Compare the results of the modified ETL process with the original process to ensure data accuracy and completeness.

- Error handling: Test error scenarios to ensure that the ETL process can gracefully handle any issues arising from the schema changes.

- Rollback plan: Develop and test a rollback plan in case the schema changes need to be reverted.

An ideal candidate should emphasize the importance of thorough impact analysis and testing before implementing schema changes in production. They should demonstrate an understanding of the ripple effects that schema changes can have throughout the data pipeline. Look for candidates who mention the need for close collaboration with database administrators and source system owners to ensure smooth transitions during schema changes.
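A useful first automated check for the impact analysis step is to diff the source schema before and after the change, then intersect the result with the columns the ETL mapping actually reads. The sketch reads column metadata with SQLite's `PRAGMA table_info` via `sqlite3`. Many other databases expose similar metadata through `information_schema.columns`. The table, the column names, and the `mapped_columns` list are hypothetical.

```python
import sqlite3


def table_schema(conn, table):
    """Column name -> declared type, read from SQLite's PRAGMA table_info."""
    # `table` is assumed to be a trusted, known table name (it is interpolated directly)
    return {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}


def diff_schemas(old, new):
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "type_changed": sorted((c, old[c], new[c]) for c in old.keys() & new.keys() if old[c] != new[c]),
    }


def impacted_columns(diff, mapped_columns):
    """Columns the ETL mapping reads that were removed or changed type."""
    broken = set(diff["removed"]) | {c for c, _, _ in diff["type_changed"]}
    return sorted(broken & set(mapped_columns))


before = sqlite3.connect(":memory:")
before.execute("CREATE TABLE customers (id INTEGER, name TEXT, phone TEXT)")
after = sqlite3.connect(":memory:")
after.execute("CREATE TABLE customers (id INTEGER, full_name TEXT, phone INTEGER, email TEXT)")

diff = diff_schemas(table_schema(before, "customers"), table_schema(after, "customers"))
print(diff)
print(impacted_columns(diff, mapped_columns=["id", "name", "phone"]))  # ['name', 'phone']
```

A renamed column shows up as one removal plus one addition (`name` and `full_name` here). The diff therefore flags it for review instead of silently guessing the new mapping.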

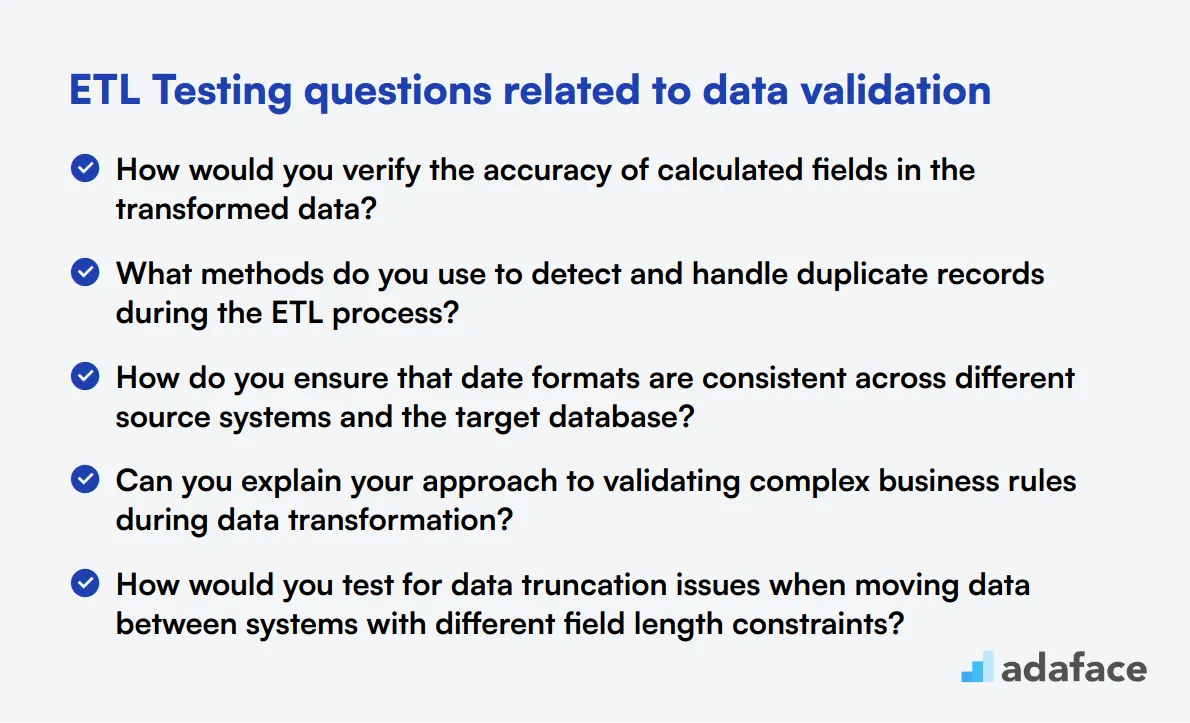

12 ETL Testing questions related to data validation

To assess candidates' proficiency in data validation during ETL processes, use these 12 targeted questions. They cover key aspects of ensuring data accuracy, completeness, and consistency throughout the ETL pipeline, helping you identify skilled professionals who can maintain data integrity.

- How would you verify the accuracy of calculated fields in the transformed data?

- What methods do you use to detect and handle duplicate records during the ETL process?

- How do you ensure that date formats are consistent across different source systems and the target database?

- Can you explain your approach to validating complex business rules during data transformation?

- How would you test for data truncation issues when moving data between systems with different field length constraints?

- What techniques do you employ to validate the referential integrity of data across multiple tables after the load process?

- How do you handle and validate currency conversions in an ETL process involving international data?

- Can you describe your method for verifying the correct application of data cleansing rules during transformation?

- How would you approach validating aggregated data against source data in an ETL process?

- What strategies do you use to ensure that all required fields are populated in the target system?

- How do you validate the correct handling of null values and default values during the ETL process?

- Can you explain your approach to testing data type conversions, especially for complex data types like JSON or XML?

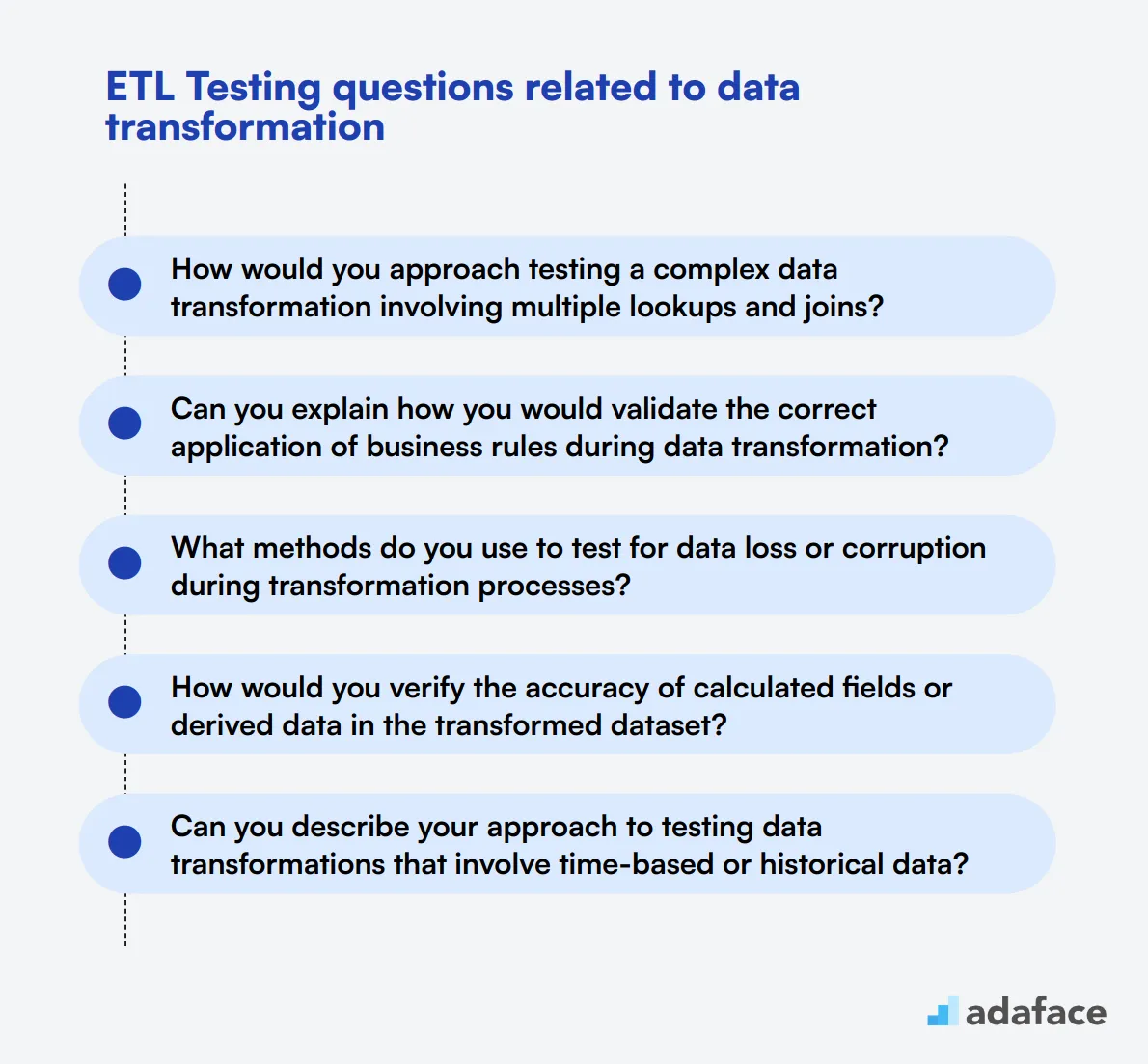

12 ETL Testing questions related to data transformation

To assess a candidate's proficiency in data transformation techniques, use these 12 ETL testing questions. These questions will help you evaluate the applicant's ability to handle complex data manipulations and ensure data quality throughout the ETL process.

- How would you approach testing a complex data transformation involving multiple lookups and joins?

- Can you explain how you would validate the correct application of business rules during data transformation?

- What methods do you use to test for data loss or corruption during transformation processes?

- How would you verify the accuracy of calculated fields or derived data in the transformed dataset?

- Can you describe your approach to testing data transformations that involve time-based or historical data?

- How do you ensure that data type conversions are handled correctly during transformation, especially for complex data types?

- What techniques do you use to validate the correct handling of null values and default values in data transformations?

- How would you test transformations that involve data normalization or denormalization?

- Can you explain your approach to testing transformations that require currency or unit conversions?

- How do you verify that data transformations maintain referential integrity across related tables?

- What methods do you employ to test transformations involving complex string manipulations or regular expressions?

- How would you approach testing data transformations that involve pivoting or unpivoting data structures?

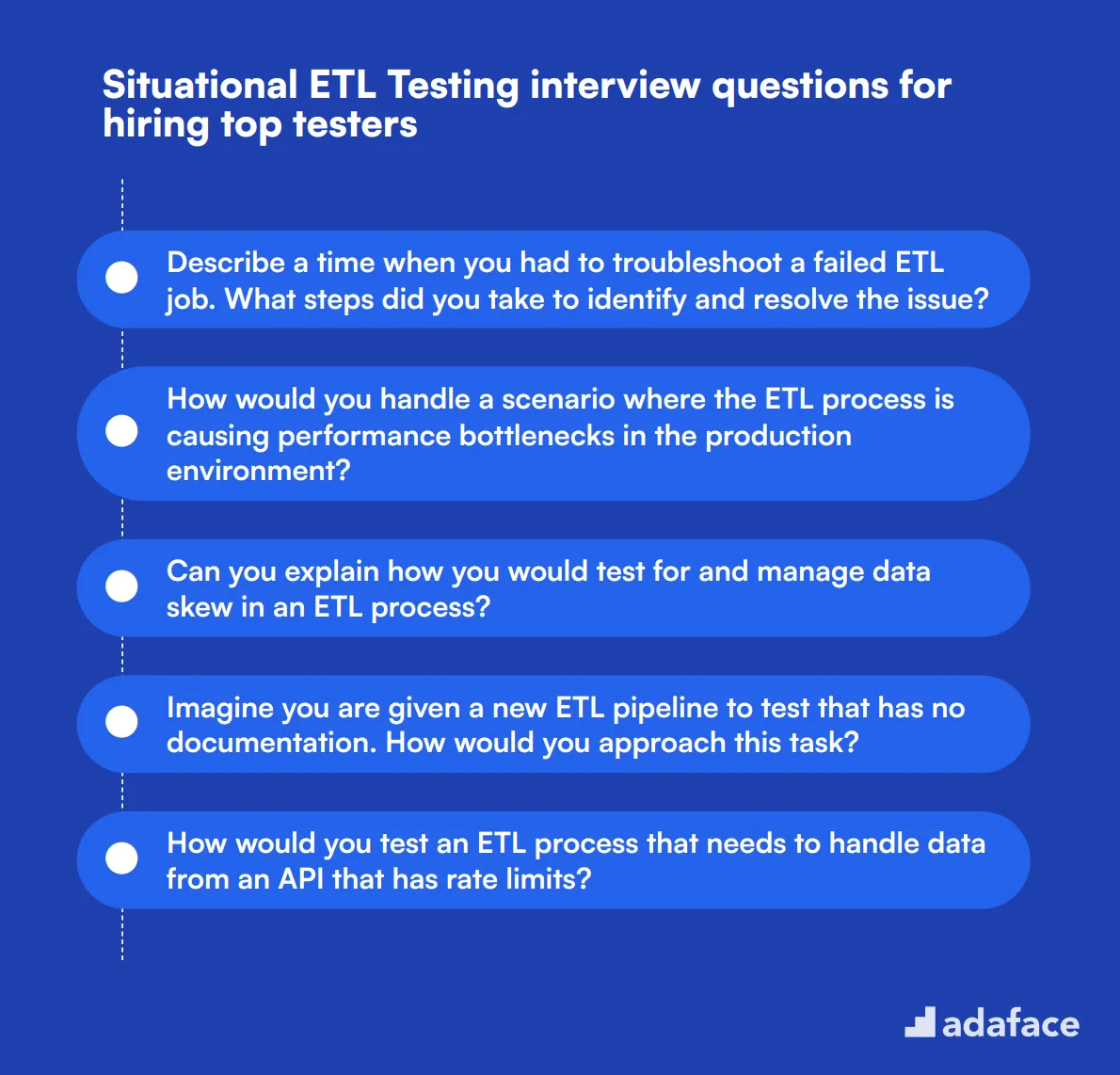

9 situational ETL Testing interview questions for hiring top testers

To identify top ETL testers and assess their practical skills, consider using these situational ETL Testing interview questions. They can help you evaluate how candidates handle real-world testing challenges and ensure they have the expertise needed for your projects. For more details on what to look for, check out our ETL developer job description.

- Describe a time when you had to troubleshoot a failed ETL job. What steps did you take to identify and resolve the issue?

- How would you handle a scenario where the ETL process is causing performance bottlenecks in the production environment?

- Can you explain how you would test for and manage data skew in an ETL process?

- Imagine you are given a new ETL pipeline to test that has no documentation. How would you approach this task?

- How would you test an ETL process that needs to handle data from an API that has rate limits?

- Describe a situation where you had to test an ETL pipeline that integrates with a third-party system. What challenges did you face?

- How do you approach testing an ETL process that must comply with strict data governance policies?

- Can you provide an example of how you optimized an ETL process to reduce processing time?

- How would you approach testing an ETL job that requires complex data transformations involving multiple layers of business logic?

Which ETL Testing skills should you evaluate during the interview phase?

While a single interview may not reveal every facet of a candidate’s potential, focusing on key ETL Testing skills can provide significant insight into their capabilities. This section breaks down the essential skills to evaluate, so you can gauge both the breadth and the depth of a candidate’s technical proficiency and problem-solving ability in ETL processes.

SQL

SQL skills are indispensable in ETL testing because testers use SQL to inspect data at each stage of extraction, transformation, and loading. A strong command of SQL allows testers to effectively query databases, manipulate data, and validate transformations, ensuring data accuracy and integrity.

To gauge SQL proficiency early in the selection process, consider utilizing an assessment that challenges candidates on SQL-related scenarios. Adaface offers a comprehensive SQL Coding Test that can help filter candidates effectively.

During interviews, pose specific SQL-related questions to assess the practical application of their knowledge. Here's a question to get you started:

Explain how you would use SQL to test the integrity of a data transformation from one schema to another.

Look for answers that demonstrate a thorough understanding of SQL commands and their application in data validation. Effective responses should detail the process of comparing data sets before and after transformation.

Data Validation

Data validation is at the core of ETL testing, ensuring that data loaded into the target system meets all specifications and is free from errors. This skill is crucial for maintaining the integrity and quality of the data that business processes depend on.

To dive deeper into a candidate’s data validation expertise, consider asking the following interview question:

What steps would you take to validate data completeness after an ETL process?

Candidates should outline a comprehensive strategy for verifying data completeness, including specific checks and tools they would use to ensure no data has been lost or misinterpreted during the ETL process.

Problem Solving

Problem-solving is a critical skill for ETL testers, as they must routinely identify and resolve issues that arise with data transformations and flows. The ability to troubleshoot effectively saves time and prevents data corruption.

To initially screen for problem-solving abilities in a relevant context, you can utilize Adaface's Logical Reasoning Test, which simulates scenarios that require logical and analytical thinking similar to those encountered in ETL testing.

Further explore their problem-solving skills during the interview with this question:

Describe a challenging data discrepancy issue you encountered during ETL testing and how you resolved it.

Effective answers should display not only the candidate’s ability to diagnose and resolve issues but also their approach to preventing similar problems in the future, demonstrating proactive problem management.

Optimize Your Hiring: Combine ETL Skills Tests and Targeted Interview Questions

When you're looking to hire someone with ETL skills, it's important to verify that candidates actually possess the necessary expertise. Assessing these skills accurately is key to finding the right fit for your team.

The most direct way to assess these skills is through specialized skills tests. Consider using assessments like our ETL Online Test, Data Warehouse Online Test, and SQL Online Test to evaluate candidates effectively.

After administering these tests, you can confidently shortlist the top candidates. This selection process allows you to invite only the most promising applicants for interviews, streamlining your hiring process.

To get started with these assessments and further enhance your hiring strategy, sign up at our dashboard or explore more on our online assessment platform page.



ETL Testing Interview Questions FAQs

ETL testing involves verifying the processes of Extract, Transform, and Load in data integration to ensure data accuracy, completeness, and reliability.

An ETL tester should have strong SQL knowledge, data warehousing concepts, attention to detail, and experience with ETL tools and software.

Review common ETL testing concepts, practice SQL queries, understand data validation and transformation techniques, and familiarize yourself with popular ETL tools.

Common challenges include handling large volumes of data, ensuring data quality, managing data transformation complexity, and dealing with varying data sources.

Data validation ensures that the data being loaded into the data warehouse is accurate and consistent, preventing errors and ensuring reliable business analytics.

Common tools encountered in ETL testing include the ETL platforms Informatica, Talend, and Apache NiFi, as well as database-vendor tools such as Microsoft SQL Server Integration Services (SSIS).
