As a recruiter or hiring manager, your goal is to identify the best Embedded C developers. That means asking questions that reveal a candidate's knowledge, experience, and practical skills. The right interview questions help you make informed decisions and find the right fit for your team.

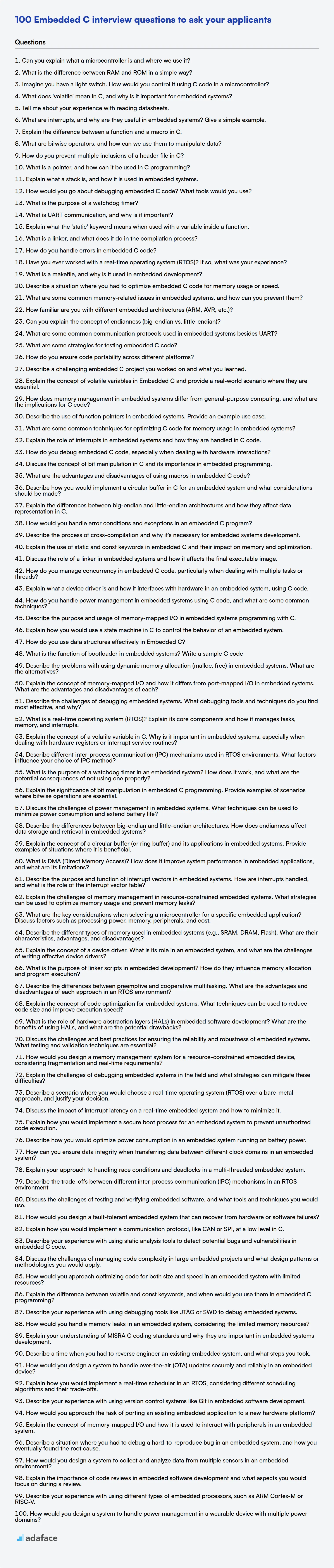

This blog post provides a comprehensive list of interview questions for Embedded C developers, ranging from beginner to expert levels. We've categorized questions based on experience levels, including MCQs, to help you structure your interviews effectively.

By using these questions, you can streamline your hiring process and accurately evaluate candidates' expertise. Consider using an assessment before interviews, such as our Embedded C online test, to get a head start.

Embedded C interview questions for freshers

1. Can you explain what a microcontroller is and where we use it?

A microcontroller is a small, self-contained computer on a single integrated circuit. It contains a processor core, memory (RAM, ROM, or flash), and programmable input/output peripherals. Microcontrollers are designed to be embedded in devices to control specific functions.

They are used in a wide range of applications, including:

- Consumer electronics: such as washing machines, microwave ovens, remote controls.

- Automotive: engine control, anti-lock braking systems (ABS), airbags.

- Industrial automation: programmable logic controllers (PLCs), motor control.

- Medical devices: pacemakers, blood glucose meters.

- Internet of Things (IoT): sensor nodes, smart home devices.

2. What is the difference between RAM and ROM in a simple way?

RAM (Random Access Memory) is volatile memory used for storing data that the computer is actively using. It's fast and allows reading and writing, but data is lost when power is turned off. Think of it like a whiteboard where you can quickly write and erase things.

ROM (Read-Only Memory) is non-volatile memory that stores permanent instructions, such as a PC's BIOS or a microcontroller's bootloader and firmware. The data in ROM cannot be easily changed or written to. It's like a printed manual that provides initial instructions, and the content is retained even without power.

3. Imagine you have a light switch. How would you control it using C code in a microcontroller?

To control a light switch with a microcontroller in C, you'd typically interact with a GPIO (General Purpose Input/Output) pin connected to the switch's control circuit (e.g., via a relay). First, configure the GPIO pin as an output using microcontroller-specific register settings. Then, to turn the light on, set the GPIO pin high; to turn it off, set the pin low. This toggling of the GPIO pin activates or deactivates the relay (or similar circuit), thus controlling the light switch.

Example (Conceptual):

#include <stdint.h> // uint32_t

// Assuming a specific microcontroller's register names and addresses

#define GPIO_PIN 5

#define GPIO_PORT_ADDRESS 0x40000000

void init_gpio() {

// Configure GPIO_PIN as output (microcontroller specific)

}

void turn_light_on() {

// Set GPIO_PIN high (microcontroller specific)

*(volatile uint32_t *)(GPIO_PORT_ADDRESS + 0x10) |= (1 << GPIO_PIN); // Example: set bit for the pin

}

void turn_light_off() {

// Set GPIO_PIN low (microcontroller specific)

*(volatile uint32_t *)(GPIO_PORT_ADDRESS + 0x10) &= ~(1 << GPIO_PIN); // Example: clear bit for the pin

}

Note: The exact code will vary significantly based on the specific microcontroller and its peripheral libraries.

4. What does 'volatile' mean in C, and why is it important for embedded systems?

In C, volatile is a keyword used as a qualifier in a variable declaration. It tells the compiler that the value of the variable may change at any time without any action being taken by the code the compiler generates. This prevents the compiler from performing certain optimizations, such as caching the variable's value in a register, because the value could be modified by external factors like hardware or an interrupt service routine. Note that volatile does not make an access atomic and is not a substitute for proper synchronization.

In embedded systems, volatile is crucial. Embedded systems often interact directly with hardware registers, which can be updated asynchronously by peripherals. Without volatile, the compiler might optimize away reads or writes to these registers, leading to incorrect or unpredictable behavior. For example:

volatile uint8_t *status_register = (volatile uint8_t *)0x1234; // Assume a hardware register

while (!(*status_register & 0x01)); // Wait for a bit to be set by hardware

// If status_register is not volatile, the compiler might optimize the loop,

// assuming the value never changes.

5. Tell me about your experience with reading datasheets.

I have extensive experience reading datasheets, primarily for selecting and implementing hardware components and understanding software APIs. My process generally involves starting with the overview and features section to grasp the component's purpose. I then focus on the electrical characteristics (voltage, current, power consumption) and timing diagrams, which are crucial for hardware integration. For software or hardware-software interface related information, I carefully study the command sets, registers, and communication protocols, using example code snippets when available to guide my understanding.

I've used datasheets for microcontrollers (e.g., STM32, ESP32), sensors (e.g., accelerometers, temperature sensors), memory chips (e.g., EEPROM, Flash), and communication interfaces (e.g., UART, SPI, I2C). I am adept at extracting key information like operating conditions, absolute maximum ratings, pin configurations, and performance metrics. I always cross-reference multiple sources when possible to ensure accuracy and clarity, and pay close attention to errata sheets.

6. What are interrupts, and why are they useful in embedded systems? Give a simple example.

Interrupts are hardware or software signals that temporarily suspend the normal execution of the main program to handle a specific event or task. They allow the system to respond to events asynchronously and efficiently, without constantly polling for changes. The system saves the current state, jumps to an Interrupt Service Routine (ISR), executes the ISR, and then restores the previous state and resumes the main program.

In embedded systems, interrupts are crucial for handling real-time events like sensor data arrival, timer expirations, or external button presses. For example, consider a microcontroller reading data from an accelerometer. Instead of continuously polling the accelerometer for new data, the accelerometer can trigger an interrupt when new data is ready. The ISR can then read the data and store it, allowing the main program to focus on other tasks. This improves responsiveness and efficiency compared to polling.
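The accelerometer scenario above can be sketched roughly as follows. This is a hardware-free illustration only: `accel_data_ready_isr`, `accel_read_sample`, and the simulated register `fake_accel_register` are hypothetical names, and on a real target the ISR would be attached through the vendor's interrupt vector table rather than called directly.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for the accelerometer's data register. */
static volatile int16_t fake_accel_register = 0;

/* Shared between the ISR and the main loop, hence volatile. */
static volatile bool sample_ready = false;
static volatile int16_t latest_sample = 0;

/* ISR: keep it short. Copy the data and raise a flag. */
void accel_data_ready_isr(void)
{
    latest_sample = fake_accel_register;
    sample_ready = true;
}

/* Called from the main loop. Returns true and stores the sample in
 * *out if a new one has arrived since the last call. */
bool accel_read_sample(int16_t *out)
{
    if (!sample_ready) {
        return false;
    }
    *out = latest_sample;
    sample_ready = false;
    return true;
}
```

The main loop would call `accel_read_sample()` whenever it has time and do other work otherwise. On real hardware, the copy and flag clear in `accel_read_sample()` would typically be protected by briefly disabling the interrupt so that a new sample cannot arrive halfway through.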

7. Explain the difference between a function and a macro in C.

Functions and macros in C offer ways to reuse code, but they differ significantly in how they're processed. A function is a self-contained block of code that's executed at runtime. When a function is called, the program's execution jumps to the function's location, performs the defined operations, and then returns to the calling point. This involves overhead due to function call setup (pushing arguments onto the stack, etc.).

In contrast, a macro is a preprocessor directive. Before the code is compiled, the preprocessor replaces each instance of the macro with its definition. This is essentially a text substitution. Macros are expanded inline, avoiding function call overhead, which can lead to faster execution. However, this can also increase the code size. Macros don't have type checking (since the preprocessor just does text substitution) so they can be error-prone. Debugging macros can also be trickier. For example:

#define SQUARE(x) ((x) * (x))

int main() {

int y = SQUARE(5); // Becomes int y = ((5) * (5));

return 0;

}
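To make the "error-prone" point concrete, here is a small sketch contrasting a carelessly written macro with a parenthesized macro and an inline function. The names `BAD_SQUARE` and `square_int` are made up for this illustration.

```c
/* Unparenthesized macro: text substitution breaks operator precedence. */
#define BAD_SQUARE(x) x * x

/* Parenthesized macro: safe for expressions without side effects. */
#define SQUARE(x) ((x) * (x))

/* Function alternative: type-checked, argument evaluated exactly once. */
static inline int square_int(int x)
{
    return x * x;
}
```

`BAD_SQUARE(2 + 3)` expands to `2 + 3 * 2 + 3`, which evaluates to 11, while `SQUARE(2 + 3)` and `square_int(2 + 3)` both give 25. Even the parenthesized macro evaluates its argument twice, so an argument with side effects (such as `i++`) should only be passed to the function version.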

8. What are bitwise operators, and how can we use them to manipulate data?

Bitwise operators perform operations on individual bits of data. They treat values as sequences of bits rather than as whole numbers. Common bitwise operators include:

- & (AND): Sets a bit to 1 only if both corresponding bits are 1.

- | (OR): Sets a bit to 1 if at least one of the corresponding bits is 1.

- ^ (XOR): Sets a bit to 1 if the corresponding bits are different.

- ~ (NOT): Inverts each bit.

- << (Left Shift): Shifts bits to the left, filling with zeros.

- >> (Right Shift): Shifts bits to the right. For unsigned types the vacated bits are filled with zeros; in C, right-shifting a negative signed value is implementation-defined (most compilers perform an arithmetic shift).

For example:

int x = 5; // Binary: 0101

int y = 3; // Binary: 0011

printf("%d\n", x & y); // 1 (Binary: 0001)

printf("%d\n", x | y); // 7 (Binary: 0111)

printf("%d\n", x ^ y); // 6 (Binary: 0110)

printf("%d\n", ~x); // -6 (Binary: ...1010 in two's complement)

printf("%d\n", x << 1); // 10 (Binary: 1010)

printf("%d\n", x >> 1); // 2 (Binary: 0010)

They're useful for tasks like setting/clearing specific bits in a flag variable, efficient multiplication/division by powers of 2 (using shifts), and low-level hardware manipulations. They can also be used to improve the performance of certain algorithms and data structures.
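Setting, clearing, toggling, and testing individual bits in a flag or register value might look like the sketch below. The helper names (`BIT`, `set_bit`, `clear_bit`, `toggle_bit`, `test_bit`) are illustrative and not taken from any particular library.

```c
#include <stdbool.h>
#include <stdint.h>

/* Mask with only bit n set (n must be less than the width of unsigned int). */
#define BIT(n) (1u << (n))

/* Each helper returns the new value; the input is not modified. */
static inline uint8_t set_bit(uint8_t reg, unsigned n)
{
    return (uint8_t)(reg | BIT(n));
}

static inline uint8_t clear_bit(uint8_t reg, unsigned n)
{
    return (uint8_t)(reg & ~BIT(n));
}

static inline uint8_t toggle_bit(uint8_t reg, unsigned n)
{
    return (uint8_t)(reg ^ BIT(n));
}

static inline bool test_bit(uint8_t reg, unsigned n)
{
    return (reg & BIT(n)) != 0;
}
```

For instance, `set_bit(0x00, 3)` yields 0x08 and `clear_bit(0xFF, 0)` yields 0xFE. Using an unsigned constant (`1u`) in the mask avoids shifting into the sign bit of a signed int.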

9. How do you prevent multiple inclusions of a header file in C?

To prevent multiple inclusions of a header file in C, we use include guards. These are preprocessor directives that ensure a header file is included only once during compilation.

This is typically achieved using the following pattern:

#ifndef HEADER_FILE_NAME_H

#define HEADER_FILE_NAME_H

/* Header file content */

#endif /* HEADER_FILE_NAME_H */

Where HEADER_FILE_NAME_H is a unique identifier, usually based on the header file's name. When the header file is included for the first time, HEADER_FILE_NAME_H is not defined, so the preprocessor defines it and includes the header's content. Subsequent inclusions will find that HEADER_FILE_NAME_H is already defined, so the content between #ifndef and #endif will be skipped, preventing multiple inclusions.

10. What is a pointer, and how can it be used in C programming?

A pointer is a variable that stores the memory address of another variable. It "points to" that memory location. In C, pointers are fundamental and provide powerful capabilities for memory management and manipulating data.

Here's how pointers are used in C programming:

- Dynamic memory allocation: Pointers are essential for malloc() and calloc() to allocate memory at runtime.

- Passing arguments by reference: Functions can modify variables in the calling function by receiving pointers to those variables.

- Accessing array elements: Pointers can be used to traverse and manipulate array elements efficiently. *(array + i) is equivalent to array[i].

- Creating data structures: Pointers are used to link nodes in linked lists, trees, and other dynamic data structures.

int x = 10;

int *p = &x; // p stores the address of x

printf("%d", *p); // Dereferences p to access the value of x (prints 10)
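Two of the uses listed above, passing arguments by reference and walking an array through a pointer, can be sketched as follows. The function names `swap_ints` and `sum_array` are chosen for this example only.

```c
#include <stddef.h>

/* Pass by reference: the caller's variables are modified through pointers. */
void swap_ints(int *a, int *b)
{
    int tmp = *a;
    *a = *b;
    *b = tmp;
}

/* Array traversal: *(values + i) is the same element as values[i]. */
int sum_array(const int *values, size_t count)
{
    int total = 0;
    for (size_t i = 0; i < count; i++) {
        total += *(values + i);
    }
    return total;
}
```

A caller would write `swap_ints(&x, &y);` to exchange two variables, and could pass an array name directly, as in `sum_array(data, 4)`, because the array decays to a pointer to its first element.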

11. Explain what a stack is, and how it is used in embedded systems.

A stack is a data structure that follows the LIFO (Last-In, First-Out) principle. Think of it like a stack of plates; you can only add or remove plates from the top. Key operations include push (adding an element to the top) and pop (removing the top element).

In embedded systems, stacks are crucial for managing function calls and local variables. When a function is called, its return address and local variables are pushed onto the stack. When the function returns, this data is popped off the stack, allowing the program to resume execution correctly. Stacks are also used for interrupt handling, where the current program state is saved on the stack before the interrupt service routine (ISR) is executed. Proper stack size is critical; insufficient stack space can lead to stack overflows, causing unpredictable behavior. For example, a stack overflow can occur when a function calls itself recursively without a proper exit condition. void func() { func(); } will eventually cause a stack overflow.

12. How would you go about debugging embedded C code? What tools would you use?

Debugging embedded C code involves a mix of hardware and software techniques. I'd start with the simplest methods: printf debugging (using UART or similar) to trace program flow and variable values. I'd also carefully review the code for common issues like buffer overflows, memory leaks, and incorrect pointer arithmetic. If printf debugging is insufficient, I'd use a debugger like GDB in conjunction with a JTAG or SWD interface to step through the code, inspect memory, set breakpoints, and examine registers. A logic analyzer or oscilloscope can be invaluable for observing external signals and timing relationships. Tools like static analyzers can also help identify potential code defects before runtime.

13. What is the purpose of a watchdog timer?

A watchdog timer is a hardware or software timer that is used to detect and recover from malfunctions in a system, typically an embedded system or real-time system. Its primary purpose is to prevent the system from getting stuck in an unresponsive state due to software errors or hardware failures.

The watchdog timer works by requiring the system to periodically 'kick' or 'pet' the timer (i.e., reset it). If the system fails to do so within a specified timeout period, the watchdog timer will trigger a reset, restarting the system and hopefully clearing the fault. This helps ensure the system remains operational and responsive even in the presence of unexpected errors.
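The kick-or-reset behavior can be modeled in plain C as shown below. This is a software simulation under assumed names (`wdt_kick`, `wdt_tick`, `wdt_has_fired`, `WDT_TIMEOUT_TICKS`); a real watchdog is a hardware peripheral configured through device-specific registers, and it resets the microcontroller instead of setting a flag.

```c
#include <stdbool.h>
#include <stdint.h>

#define WDT_TIMEOUT_TICKS 3u   /* assumed timeout, in timer ticks */

static uint32_t wdt_counter = WDT_TIMEOUT_TICKS;
static bool wdt_reset_fired = false;

/* Called by healthy application code to restart the countdown. */
void wdt_kick(void)
{
    wdt_counter = WDT_TIMEOUT_TICKS;
}

/* Called once per timer tick; in hardware this countdown runs on its own. */
void wdt_tick(void)
{
    if (wdt_counter > 0u) {
        wdt_counter--;
    }
    if (wdt_counter == 0u) {
        wdt_reset_fired = true;   /* real hardware would reset the MCU here */
    }
}

/* Reports whether the simulated watchdog has expired. */
bool wdt_has_fired(void)
{
    return wdt_reset_fired;
}
```

As long as the application calls `wdt_kick()` at least once every three ticks, the flag is never set. If the main loop hangs and stops kicking, the third tick after the last kick triggers the simulated reset.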

14. What is UART communication, and why is it important?

UART (Universal Asynchronous Receiver/Transmitter) is a hardware communication protocol that enables serial data exchange between two devices. It's 'asynchronous' because it doesn't rely on a shared clock signal. Instead, it uses start and stop bits to indicate the beginning and end of a data frame.

UART is important because it's a simple and widely available method for connecting microcontrollers, computers, and other peripherals. It's used in GPS modules, Bluetooth modules, serial consoles, and many other applications. Its simplicity and low cost make it ideal for basic communication needs, even though it is typically slower than synchronous protocols such as SPI.
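To illustrate the start and stop bits, the sketch below builds the bit sequence for one byte in the common 8N1 configuration (8 data bits, no parity, 1 stop bit). The function name `uart_frame_8n1` is hypothetical; in practice the UART peripheral generates this framing in hardware.

```c
#include <stdint.h>

/* Build a 10-bit 8N1 frame for one byte, in transmission order:
 * bit 0 of the result is sent first.
 *   bit 0      : start bit (0)
 *   bits 1..8  : data bits, least significant first
 *   bit 9      : stop bit (1)
 */
uint16_t uart_frame_8n1(uint8_t data)
{
    uint16_t frame = 0;
    frame |= (uint16_t)((uint16_t)data << 1);  /* data occupies bits 1..8 */
    frame |= (uint16_t)(1u << 9);              /* stop bit */
    return frame;                              /* start bit (bit 0) stays 0 */
}
```

For the byte 0x55 this produces 0x2AA: a low start bit, the alternating data bits, and a high stop bit. Both ends must agree on the baud rate in advance, since no clock line tells the receiver when to sample each bit.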

15. Explain what the 'static' keyword means when used with a variable inside a function.

When the static keyword is used with a variable inside a function in languages like C or C++, it means that the variable's lifetime extends across multiple function calls. Unlike regular local variables that are created and destroyed each time the function is called, a static variable is initialized only once and retains its value between calls to the function.

Effectively, the variable has 'local scope' (it is only accessible within the function), but 'static lifetime' (it persists throughout the execution of the entire program). For example, a static variable can be used to keep track of how many times a function has been called, without relying on global variables.
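The call-counting use case mentioned above could look like this minimal sketch (the function name `count_calls` is made up for the example):

```c
/* Returns how many times it has been called so far. */
unsigned int count_calls(void)
{
    static unsigned int calls = 0;  /* initialized once, not on every call */
    calls++;
    return calls;
}
```

The first call returns 1, the second returns 2, and so on. The variable `calls` cannot be accessed from outside `count_calls()`, which is the main advantage over a global counter.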

16. What is a linker, and what does it do in the compilation process?

A linker is a program that combines one or more object files (generated by a compiler or assembler) into a single executable file, library file, or another object file. It resolves symbolic references between these object files, which are placeholders for addresses that aren't known until all the code is combined. The linker takes the machine code generated by compilers/assemblers and creates the final runnable program.

Specifically, the linker performs two main tasks:

- Symbol Resolution: It matches symbolic references (e.g., function calls, variable references) in one object file with their definitions in other object files or libraries.

- Relocation: It adjusts the addresses of code and data sections within the combined output file, ensuring that the program can run correctly at its designated memory location. This often involves modifying addresses in the instruction stream.

For example, if foo() in file1.o calls bar() in file2.o, the linker replaces the placeholder address for bar() in file1.o with bar()'s actual address after merging the two object files.

17. How do you handle errors in embedded C code?

Error handling in embedded C is crucial for system stability. C has no built-in exceptions, so common strategies involve return code checking, assertions, and, occasionally, non-local jumps with setjmp and longjmp as a limited substitute for exceptions (used with caution). We often define custom error codes (enums) and check function return values against these codes. If an error is detected, appropriate action is taken, like logging the error, attempting recovery, or resetting the system.

Specifically, here are some methods:

- Return codes: Functions return error codes indicating success or failure.

- Assertions: assert() statements verify assumptions and halt execution if violated (mainly for debugging).

- Error flags: Global or local flags can be set to indicate error conditions.

- Watchdog timers: Reset the system if a process takes too long.

- Custom error handlers: Functions dedicated to handling specific error types, e.g., logging the error using printf, resetting a sensor, or restarting the system.

A code example might look like:

enum {

OK,

ERROR_INVALID_INPUT,

ERROR_TIMEOUT

};

int myFunction(int input) {

if (input < 0) {

return ERROR_INVALID_INPUT;

}

// ... perform operations ...

if (timeoutOccurred()) { // timeoutOccurred() is assumed to be defined elsewhere

return ERROR_TIMEOUT;

}

return OK;

}

int main() {

int result = myFunction(-5);

if (result != OK) {

// Handle error

printf("Error occurred: %d\n", result);

}

return 0;

}

18. Have you ever worked with a real-time operating system (RTOS)? If so, what was your experience?

Yes, I have experience working with real-time operating systems (RTOS), specifically FreeRTOS and Micrium OS. My experience primarily involves embedded systems development where deterministic behavior is crucial. I've used RTOS features like tasks, mutexes, semaphores, and message queues for inter-task communication and resource management.

For example, I've implemented task scheduling to prioritize critical operations like sensor data acquisition and motor control. I have also used mutexes to protect shared resources from race conditions. I also have debugging experience using tools like GDB and tracealyzer when using an RTOS.

19. What is a makefile, and why is it used in embedded development?

A makefile is a text file that contains a set of rules used by the make utility to automate the process of compiling and building software. It specifies dependencies between source files, object files, and the final executable, along with the commands needed to create them. In essence, it defines how to build your project.

Makefiles are crucial in embedded development for several reasons:

- Automation: They automate the build process, eliminating the need to manually compile each file and link them together every time you make a change.

- Dependency Management: They track dependencies between files, ensuring that only the necessary files are recompiled when changes are made, significantly reducing build times.

- Configuration: They allow you to easily configure build settings, such as compiler flags, optimization levels, and target architectures.

- Portability: Makefiles can be used across different platforms and toolchains with minimal modifications.

- Consistency: They enforce a consistent build process, reducing the risk of errors caused by manual intervention.

20. Describe a situation where you had to optimize embedded C code for memory usage or speed.

I was working on a low-power IoT device where memory and processing power were extremely limited. The device needed to perform real-time data compression before transmitting data over a narrowband connection. The initial implementation of the compression algorithm was too slow and consumed too much RAM.

To optimize it, I first profiled the code to identify the performance bottlenecks using a simple timing mechanism. Then, I focused on reducing memory usage by switching from dynamically allocated memory (using malloc) to pre-allocated static buffers where the maximum size was known. Additionally, I replaced some floating-point calculations with fixed-point arithmetic, which significantly reduced memory footprint and improved execution speed on the microcontroller. I also inlined frequently called functions to reduce function call overhead. Finally, I used bit-fields to pack several small values into a single byte, for example by declaring a struct member as uint8_t flags : 3;.
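As an illustration of the fixed-point technique mentioned in this answer, the sketch below uses a Q16.16 format (16 integer bits, 16 fractional bits). The format choice and the names `q16_16_t`, `Q16_ONE`, `q16_from_int`, and `q16_mul` are assumptions for the example, not details of the project described.

```c
#include <stdint.h>

typedef int32_t q16_16_t;              /* 16 integer bits, 16 fractional bits */

#define Q16_ONE ((q16_16_t)65536)      /* the value 1.0 in Q16.16 */

/* Convert a small integer to Q16.16 (the caller must keep |v| below 32768). */
static inline q16_16_t q16_from_int(int32_t v)
{
    return (q16_16_t)(v * Q16_ONE);
}

/* Multiply using a 64-bit intermediate to avoid overflow, then rescale. */
static inline q16_16_t q16_mul(q16_16_t a, q16_16_t b)
{
    return (q16_16_t)(((int64_t)a * (int64_t)b) / Q16_ONE);
}
```

Here `q16_mul(q16_from_int(3), Q16_ONE / 2)` computes 3 × 0.5 and returns the Q16.16 representation of 1.5, using only integer instructions. On microcontrollers without a floating-point unit this is often considerably faster than software-emulated floats, at the cost of a fixed range and precision.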

21. What are some common memory-related issues in embedded systems, and how can you prevent them?

Common memory-related issues in embedded systems include memory leaks, buffer overflows, stack overflows, and fragmentation. Memory leaks occur when memory is allocated but not freed, eventually leading to resource exhaustion. Buffer overflows happen when data is written beyond the allocated buffer's boundaries, potentially corrupting adjacent memory or causing crashes. Stack overflows arise when the call stack exceeds its allocated size, often due to excessive recursion or large local variables.

To prevent these issues, employ static analysis tools to detect potential memory leaks and buffer overflows during development. Use dynamic memory allocation cautiously, always pairing malloc with free and ensuring timely deallocation. Implement bounds checking to prevent writing beyond buffer limits. Reduce stack usage by minimizing recursion depth, using iterative solutions where possible, and placing large buffers in static storage instead of on the stack. Consider using memory management techniques like memory pools to reduce fragmentation.

22. How familiar are you with different embedded architectures (ARM, AVR, etc.)?

I have experience with both ARM and AVR architectures. I'm comfortable working with ARM Cortex-M series microcontrollers, having used them in projects involving sensor data acquisition and motor control. This included configuring peripherals like timers, ADCs, UARTs, and SPI. I've also worked with the AVR architecture, specifically the ATmega series, for simpler embedded applications, where I focused on low-power operation and interrupt-driven programming.

My familiarity extends to understanding the differences in their instruction sets, memory organization, and interrupt handling mechanisms. While I have more hands-on experience with ARM, I can quickly adapt to new architectures based on project requirements.

23. Can you explain the concept of endianness (big-endian vs. little-endian)?

Endianness refers to the order in which bytes of a multi-byte data type (like integers or floating-point numbers) are stored in computer memory. There are two main types: big-endian and little-endian.

- Big-endian: The most significant byte (MSB) is stored at the lowest memory address. This is like reading a number from left to right as we usually do.

- Little-endian: The least significant byte (LSB) is stored at the lowest memory address. Most modern x86 processors use little-endian.

For example, the number 0x12345678 would be stored in memory as follows:

- Big-Endian: 12 34 56 78

- Little-Endian: 78 56 34 12
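A common follow-up is how to detect or neutralize endianness in code. The sketch below shows one possible approach; the function names `is_little_endian`, `swap_bytes32`, and `store_be32` are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Returns true if the machine running this code is little-endian. */
bool is_little_endian(void)
{
    const uint32_t probe = 0x12345678u;
    uint8_t first_byte;
    memcpy(&first_byte, &probe, 1);   /* byte at the lowest address */
    return first_byte == 0x78u;
}

/* Reverse the byte order of a 32-bit value. */
uint32_t swap_bytes32(uint32_t v)
{
    return ((v & 0x000000FFu) << 24) |
           ((v & 0x0000FF00u) << 8)  |
           ((v & 0x00FF0000u) >> 8)  |
           ((v & 0xFF000000u) >> 24);
}

/* Serialize in big-endian order regardless of host endianness. */
void store_be32(uint8_t out[4], uint32_t v)
{
    out[0] = (uint8_t)(v >> 24);
    out[1] = (uint8_t)(v >> 16);
    out[2] = (uint8_t)(v >> 8);
    out[3] = (uint8_t)v;
}
```

Writing bytes out with shifts, as `store_be32()` does, is usually preferable to casting a pointer and swapping conditionally, because the same code produces the same byte stream on any host.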

24. What are some common communication protocols used in embedded systems besides UART?

Besides UART, several other communication protocols are commonly used in embedded systems. SPI (Serial Peripheral Interface) is a synchronous serial communication interface used for short-distance, high-speed communication. I2C (Inter-Integrated Circuit) is another synchronous serial communication protocol, often used for communication between a microcontroller and peripherals. CAN (Controller Area Network) is a robust serial communication protocol widely used in automotive and industrial applications for communication between different electronic control units (ECUs).

Other protocols include Ethernet (for network connectivity), USB (for connecting to host devices), and protocols like Bluetooth and Zigbee for wireless communication. The choice of protocol depends on factors such as speed requirements, distance, the number of devices on the bus, and the environment in which the system operates.

25. What are some strategies for testing embedded C code?

Strategies for testing embedded C code include unit testing, integration testing, and system testing. Unit testing focuses on individual functions or modules using frameworks like Unity or CMock. Create test harnesses to simulate the embedded environment and use mocking to isolate the unit under test from hardware dependencies.

Integration testing verifies the interaction between different modules, and system testing validates the entire system's functionality. For integration and system testing, consider using hardware-in-the-loop (HIL) simulation to test the code in a simulated environment or target testing on the actual hardware. Code coverage analysis is essential to identify untested code paths. Static analysis tools and dynamic analysis with debuggers can also help find bugs and ensure code quality. Regularly running test suites during development is important.

26. How do you ensure code portability across different platforms?

To ensure code portability, I focus on using standard libraries and avoid platform-specific features. This includes adhering to language standards (e.g., using standard C such as C99 or C11 instead of compiler-specific extensions). When dealing with hardware- or OS-level functionality, I isolate it behind an abstraction layer, such as a hardware abstraction layer (HAL), so that only that layer has to change between targets.

Specifically, I'd pay attention to potential differences in file paths, byte order, and data sizes across platforms. Using conditional compilation (e.g., #ifdef directives) or build systems like CMake can help manage platform-specific code sections. Thorough testing on different target platforms is crucial to verify portability and identify potential issues.

27. Describe a challenging embedded C project you worked on and what you learned.

In a previous role, I worked on a battery management system (BMS) for a high-power electric vehicle. The system was responsible for monitoring cell voltages, temperatures, and currents, and then using that information to control charging and discharging, as well as providing safety features like over-voltage and over-current protection. One major challenge was implementing a robust state-of-charge (SoC) estimation algorithm given noisy sensor data and the non-linear characteristics of the battery. We used an extended Kalman filter (EKF) to improve accuracy, but tuning the filter parameters for different operating conditions was difficult and involved a lot of testing and data analysis. The BMS was implemented on a microcontroller using C.

I learned a lot about Kalman filtering, battery electrochemistry, and real-time embedded systems development. I also gained valuable experience in debugging complex systems and working collaboratively with a cross-functional team. Specifically, I improved my skills with: data acquisition, signal processing, and control systems, as well as C programming for embedded devices.

Intermediate Embedded C interview questions

1. Explain the concept of volatile variables in Embedded C and provide a real-world scenario where they are essential.

In Embedded C, a volatile variable informs the compiler that the variable's value may change unexpectedly, outside the normal flow of program execution. This prevents the compiler from performing optimizations that assume the variable's value remains constant, such as caching the value in a register. The compiler will always read the variable's value directly from memory instead of relying on a potentially stale cached value.

A real-world scenario where volatile is essential is when dealing with hardware registers in embedded systems. For example, consider reading data from a sensor connected to a microcontroller. The sensor's data is stored in a specific memory location (a hardware register). The microcontroller needs to periodically read this register to get the latest sensor reading. Without declaring the register as volatile, the compiler might optimize the code by reading the register only once and then reusing the cached value, even if the sensor data has changed. This would lead to inaccurate readings. Declaring the register as volatile ensures that the microcontroller always reads the most up-to-date value from the sensor.

volatile uint8_t *sensor_data_register = (volatile uint8_t *)0x1234; // Example memory address

uint8_t read_sensor_data() {

return *sensor_data_register; // Always reads from the memory location 0x1234

}

2. How does memory management in embedded systems differ from general-purpose computing, and what are the implications for C code?

Memory management in embedded systems differs significantly from general-purpose computing due to resource constraints and real-time requirements. Embedded systems often have limited RAM, ROM/Flash memory, and processing power. Dynamic memory allocation (using malloc and free) is often avoided or carefully controlled because of its non-deterministic behavior and potential for memory fragmentation. Instead, static allocation, memory pools, or custom memory managers are preferred.

This has implications for C code:

- Static Allocation: Using global or static variables extensively to avoid dynamic allocation overhead.

- Memory Pools: Implementing custom memory pools for managing fixed-size memory blocks efficiently.

- Careful Resource Tracking: Meticulously tracking memory usage to prevent leaks or overflows.

- Avoiding Recursion/Deep Call Stacks: Minimizing stack usage due to limited stack sizes.

- Custom Memory Managers: Writing custom memory allocation routines optimized for the specific application and hardware.
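A fixed-size memory pool, one of the techniques listed above, can be sketched as follows. The block size, block count, and the names `pool_init`, `pool_alloc`, and `pool_free` are assumptions for illustration; the sketch is not thread-safe or interrupt-safe as written.

```c
#include <stddef.h>
#include <stdint.h>

#define POOL_BLOCK_SIZE  32u   /* assumed block size in bytes */
#define POOL_BLOCK_COUNT 4u    /* assumed number of blocks */

/* A free block stores the link to the next free block in its own memory. */
static union pool_block {
    union pool_block *next;            /* valid only while the block is free */
    uint8_t payload[POOL_BLOCK_SIZE];
} pool_storage[POOL_BLOCK_COUNT];

static union pool_block *pool_free_list = NULL;

/* Put every block on the free list. Call once at startup. */
void pool_init(void)
{
    pool_free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; i++) {
        pool_storage[i].next = pool_free_list;
        pool_free_list = &pool_storage[i];
    }
}

/* Take one block in constant time; returns NULL when the pool is empty. */
void *pool_alloc(void)
{
    union pool_block *block = pool_free_list;
    if (block != NULL) {
        pool_free_list = block->next;
    }
    return block;
}

/* Return a block to the pool in constant time. */
void pool_free(void *ptr)
{
    if (ptr != NULL) {
        union pool_block *block = ptr;
        block->next = pool_free_list;
        pool_free_list = block;
    }
}
```

Because all blocks are the same size and live in a static array, allocation time is constant and the pool cannot fragment, which addresses the two main objections to malloc in real-time code.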

3. Describe the use of function pointers in embedded systems. Provide an example use case.

Function pointers in embedded systems are pointers that store the memory address of a function. This allows for dynamic function calls at runtime, enabling flexibility and code reusability. Instead of directly calling a function by its name, the system calls the function pointed to by the pointer. This is particularly useful when the specific function to be executed is determined at runtime, based on external inputs, sensor data, or system states.

Example use case: Consider a system controlling different peripherals. Instead of using a large switch statement or if/else chains to select the appropriate peripheral control function, an array of function pointers can be used. Each element of the array points to a specific peripheral's control function. Based on a peripheral ID, the corresponding function can be called using the function pointer, leading to cleaner and more maintainable code. For example:

typedef void (*peripheral_function)(void);

peripheral_function peripheral_functions[NUM_PERIPHERALS] = {&peripheral1_control, &peripheral2_control, ...};

void control_peripheral(int peripheral_id) {

if (peripheral_id >= 0 && peripheral_id < NUM_PERIPHERALS) {

peripheral_functions[peripheral_id]();

}

}

4. What are some common techniques for optimizing C code for memory usage in embedded systems?

Several techniques help optimize C code for memory usage in embedded systems. Using smaller data types like int8_t or uint8_t instead of int can significantly reduce memory footprint, especially in large arrays or structures. Bit fields allow packing multiple variables into a single word, optimizing space for flags or small values. Careful memory allocation and deallocation using malloc and free (or custom memory management schemes) prevents memory leaks. Avoid dynamic allocation when possible. Consider using static allocation instead.

Furthermore, be aware that speed-oriented techniques like loop unrolling and function inlining usually increase code size, so apply them selectively when flash is limited; compiling for size (for example with -Os on GCC) can help. Minimizing the use of global variables, which consume memory throughout the program's execution, is also beneficial. Using the const keyword whenever possible for data that won't change can help the compiler place it in read-only memory (typically flash), freeing up scarce RAM.

5. Explain the role of interrupts in embedded systems and how they are handled in C code.

Interrupts in embedded systems are crucial for handling asynchronous events efficiently. They allow the system to respond to real-time events without constantly polling, making it more responsive and efficient. When an interrupt occurs, the current program execution is suspended, and a special function called an Interrupt Service Routine (ISR) or interrupt handler is executed.

In C code, interrupts are handled using interrupt vectors and ISRs. An interrupt vector table maps interrupt numbers to the memory addresses of their corresponding ISRs. To define an ISR in C, you typically use compiler-specific keywords (e.g., __interrupt in some compilers) or libraries (e.g., using CMSIS) to indicate that the function is an interrupt handler. For example:

__interrupt void timer_isr(void) {

// Code to handle the timer interrupt

// For example, update a counter or toggle an LED

}

Within the ISR, you perform the necessary actions to handle the interrupt, such as reading data from a sensor, updating system state, or signaling another part of the program. It's critical that ISRs are short and efficient to minimize the time the main program is interrupted.
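A common way to keep an ISR short is to have it only record the event and let the main loop do the real work. The sketch below shows that pattern in portable C. The function names are hypothetical, and the compiler-specific interrupt keyword is left out so the code also builds on a host.

```c
#include <stdbool.h>
#include <stdint.h>

/* Shared between the ISR and the main loop, so both are volatile. */
static volatile bool     tick_pending = false;
static volatile uint32_t tick_count   = 0;

/* Hypothetical timer ISR. On real hardware the declaration needs the
 * compiler- or vendor-specific interrupt attribute. */
void timer_isr(void)
{
    tick_count = tick_count + 1; /* minimal work inside the ISR */
    tick_pending = true;         /* signal the main loop */
}

/* Called from the main loop: returns true if a tick was pending
 * and clears the flag. Ticks that arrive between polls coalesce. */
bool consume_tick(void)
{
    if (!tick_pending) {
        return false;
    }
    tick_pending = false;
    return true;
}
```

The main loop would call consume_tick() on each pass and run the slower processing only when it returns true.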

6. How do you debug embedded C code, especially when dealing with hardware interactions?

Debugging embedded C code, especially with hardware, often involves a multi-pronged approach. Key strategies include:

- Serial print debugging: Utilizing printf or similar functions over UART to output variable values and program flow information, while staying mindful of timing impacts.

- Hardware debuggers (JTAG/SWD): Using tools like GDB with JTAG/SWD interfaces allows stepping through code, setting breakpoints, and inspecting memory and registers in real time.

- Logic analyzers and oscilloscopes: These are invaluable for observing hardware signals, timing, and interactions between components.

Additional techniques involve:

- Code reviews: Having peers review code to catch errors.

- Unit testing: Testing individual functions in isolation.

- Static analysis tools: Using tools to identify potential bugs and vulnerabilities in the code before execution.

- Assertions: Verifying assumptions within the code.

- Datasheets and schematics: Carefully examining them is crucial for understanding the expected behavior and identifying potential hardware-related issues.

- Toolchain configuration: Ensuring the toolchain is configured correctly for the target.

7. Discuss the concept of bit manipulation in C and its importance in embedded programming.

Bit manipulation in C involves directly manipulating individual bits within a variable, often an integer. It's crucial in embedded programming because it allows for precise control over hardware registers and memory, which are often bit-oriented. Common bitwise operators include:

- & (AND): Sets a bit to 1 only if both corresponding bits are 1.

- | (OR): Sets a bit to 1 if either or both corresponding bits are 1.

- ^ (XOR): Sets a bit to 1 if the corresponding bits are different.

- ~ (NOT): Inverts all the bits.

- << (Left Shift): Shifts bits to the left, filling with zeros.

- >> (Right Shift): Shifts bits to the right. For unsigned types the vacated bits are filled with zeros; for negative signed values the result is implementation-defined (arithmetic or logical shift), so unsigned types are preferred for bit manipulation.

Embedded systems often have limited resources, and bit manipulation helps optimize memory usage and execution speed. For example, packing multiple boolean flags into a single byte reduces memory footprint. Directly setting or clearing specific bits in a hardware register (like enabling an interrupt) is another frequent application. Consider this example:

#define ENABLE_INTERRUPT 0x01

void enableInterrupt() {

// Assume register address is 0x1000

volatile unsigned char *registerAddress = (volatile unsigned char *)0x1000; // volatile: the access must not be optimized away

*registerAddress |= ENABLE_INTERRUPT; // Set the first bit to enable interrupt

}

In the above example, ENABLE_INTERRUPT is a macro representing a bitmask (0x01 in this case). The |= operator is used to set that bit, effectively enabling the interrupt without modifying the other bits in the register.
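The same idea extends to clearing, toggling, and testing bits. The helpers below are a small sketch that operates on a plain value rather than a real register so it can run anywhere. The names BIT, bit_set, bit_clear, bit_toggle, and bit_is_set are illustrative, not part of any standard header.

```c
#include <stdbool.h>
#include <stdint.h>

/* Mask with only bit n set (n in 0..7). */
#define BIT(n) ((uint8_t)(1u << (n)))

/* OR with the mask sets the bit and leaves the others unchanged. */
static inline uint8_t bit_set(uint8_t reg, unsigned n)
{
    return (uint8_t)(reg | BIT(n));
}

/* AND with the inverted mask clears the bit. */
static inline uint8_t bit_clear(uint8_t reg, unsigned n)
{
    return (uint8_t)(reg & (uint8_t)~BIT(n));
}

/* XOR with the mask flips the bit. */
static inline uint8_t bit_toggle(uint8_t reg, unsigned n)
{
    return (uint8_t)(reg ^ BIT(n));
}

/* AND with the mask isolates the bit for testing. */
static inline bool bit_is_set(uint8_t reg, unsigned n)
{
    return (reg & BIT(n)) != 0;
}
```

For example, bit_clear(0xFF, 0) yields 0xFE and bit_set(0x00, 3) yields 0x08.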

8. What are the advantages and disadvantages of using macros in embedded C code?

Macros in embedded C can offer several advantages, primarily related to performance and code brevity. They allow for code substitution at compile time, potentially eliminating function call overhead and improving execution speed, which is crucial in resource-constrained embedded systems. Macros can also be used for conditional compilation using #ifdef, #ifndef, #else, #endif which enables easy configuration of code for different hardware platforms or software features. They can also be used to define constants which might offer improved readability.

However, macros also have disadvantages. They can reduce code readability and maintainability due to their textual substitution nature making debugging more difficult. Because macros are pre-processed before compilation, error messages can be cryptic and difficult to trace back to the original macro definition. Macros don't have type checking, which can lead to unexpected behavior or errors at runtime. Also, macros can introduce side effects if not carefully designed, particularly when dealing with expressions that have side effects such as increment/decrement operations. The lack of namespace management can also lead to naming conflicts. A safer alternative to macros is often using inline functions or const variables. inline offers much of the performance of a macro with type checking and debugging support, while const offers the readability of constants.
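The side-effect problem is easiest to see with an argument that increments a variable. This sketch contrasts a typical MAX-style macro with an equivalent inline function. MAX_MACRO, max_int, and the two helper functions are names invented for the example.

```c
/* Classic function-like macro: arguments are pasted in textually. */
#define MAX_MACRO(a, b) ((a) > (b) ? (a) : (b))

/* Inline function: arguments are evaluated exactly once and type-checked. */
static inline int max_int(int a, int b)
{
    return a > b ? a : b;
}

/* Returns the value of i after MAX_MACRO(i++, 3), starting from i = 5. */
int counter_after_macro(void)
{
    int i = 5;
    int m = MAX_MACRO(i++, 3); /* expands to ((i++) > (3) ? (i++) : (3)) */
    (void)m;
    return i;                  /* i++ ran twice */
}

/* Returns the value of i after max_int(i++, 3), starting from i = 5. */
int counter_after_inline(void)
{
    int i = 5;
    int m = max_int(i++, 3);   /* i++ ran once */
    (void)m;
    return i;
}
```

With the macro the counter ends at 7, while the inline function leaves it at 6, which is what the caller most likely intended.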

9. Describe how you would implement a circular buffer in C for an embedded system and what considerations should be made?

A circular buffer in C for an embedded system can be implemented using an array and two pointers: head and tail. head points to the next available slot for writing, and tail points to the oldest element in the buffer. When writing data, the data is placed at the head location, and head is incremented. When reading, data is taken from the tail location, and tail is incremented. When either pointer reaches the end of the array, it wraps around to the beginning. A crucial part is handling buffer full and empty conditions.

Important considerations include:

- Memory allocation: Static allocation is preferred in embedded systems to avoid dynamic allocation overhead and fragmentation.

- Thread safety: If multiple threads or interrupt routines access the buffer, you need proper synchronization mechanisms like mutexes or disabling interrupts.

- Data size: Consider the size of the data being stored and choose an appropriate data type for the buffer.

- Buffer size: Choose the buffer size carefully to balance memory usage and performance needs. Make sure the buffer is large enough to accommodate burst writes or reads; otherwise data could be lost. Using a power-of-2 size lets you wrap indices with a bitwise AND, index & (buffer_size - 1), instead of the modulo % operator. This can be noticeably faster on small microcontrollers that lack a hardware divider.
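Putting these points together, a statically allocated byte buffer might look like the sketch below. It assumes a power-of-two size and a single producer and single consumer. The type and function names are illustrative, and no interrupt protection is included.

```c
#include <stdbool.h>
#include <stdint.h>

#define RB_SIZE 8u              /* must be a power of two */
#define RB_MASK (RB_SIZE - 1u)  /* used instead of the % operator */

typedef struct {
    uint8_t  data[RB_SIZE];     /* statically sized storage */
    uint32_t head;              /* total number of bytes written */
    uint32_t tail;              /* total number of bytes read */
} ring_buffer_t;

static bool rb_is_empty(const ring_buffer_t *rb)
{
    return rb->head == rb->tail;
}

static bool rb_is_full(const ring_buffer_t *rb)
{
    /* Unsigned subtraction stays correct when the counters wrap. */
    return (rb->head - rb->tail) == RB_SIZE;
}

/* Returns false (and stores nothing) when the buffer is full. */
bool rb_put(ring_buffer_t *rb, uint8_t byte)
{
    if (rb_is_full(rb)) {
        return false;
    }
    rb->data[rb->head & RB_MASK] = byte;
    rb->head++;
    return true;
}

/* Returns false when the buffer is empty. */
bool rb_get(ring_buffer_t *rb, uint8_t *out)
{
    if (rb_is_empty(rb)) {
        return false;
    }
    *out = rb->data[rb->tail & RB_MASK];
    rb->tail++;
    return true;
}
```

Keeping head and tail as free-running counters, and masking only when indexing, makes the full and empty conditions unambiguous without sacrificing a slot. If an ISR and the main loop share the buffer, the accesses still need the synchronization described above.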

10. Explain the differences between big-endian and little-endian architectures and how they affect data representation in C.

Big-endian and little-endian are two ways to store multi-byte data types (like integers, floats) in computer memory. In big-endian, the most significant byte (MSB) is stored at the lowest memory address. In little-endian, the least significant byte (LSB) is stored at the lowest memory address.

In C, endianness affects how you interpret data stored in memory or read from files/networks. For example, if you have a 4-byte integer with the value 0x12345678, in big-endian it would be stored in memory as 12 34 56 78, while in little-endian it would be stored as 78 56 34 12. This matters when you're working with binary data, network protocols, or memory dumps, especially when transferring data between systems with different endianness. You might need to use functions like ntohl() (network to host long) and htonl() (host to network long) to convert between network byte order (big-endian) and the host's byte order.

#include <stdio.h>

int main() {

unsigned int num = 0x12345678;

unsigned char *ptr = (unsigned char *)&num;

printf("Byte representation: ");

for (size_t i = 0; i < sizeof(num); i++) {

printf("%02x ", ptr[i]);

}

printf("\n");

return 0;

}

Running this code will output 78 56 34 12 on a little-endian system and 12 34 56 78 on a big-endian system.

11. How would you handle error conditions and exceptions in an embedded C program?

In embedded C, error handling is crucial due to limited resources and real-time constraints. Common strategies include using return codes to indicate success or failure. A typical approach is to define error codes (e.g., an enum) and return them from functions. The caller then checks the return code and takes appropriate action. For example:

enum error_code {

SUCCESS = 0,

ERROR_NULL_POINTER,

ERROR_INVALID_VALUE

};

enum error_code some_function(int *ptr) {

if (ptr == NULL) {

return ERROR_NULL_POINTER;

}

// ... rest of function

return SUCCESS;

}

For exception-like scenarios, especially when a function cannot meaningfully continue, consider using assertion macros (assert.h) during development to catch unexpected conditions. In production, these assertions can be disabled (by defining NDEBUG) to avoid halting the system, or replaced with custom error handlers that log the error and attempt a recovery, such as restarting a task or entering a safe state. Standard C has no exception mechanism. setjmp/longjmp can emulate non-local exits, but it is usually avoided in embedded code because it bypasses normal cleanup and makes control flow hard to reason about.

12. Describe the process of cross-compilation and why it's necessary for embedded systems development.

Cross-compilation is the process of compiling code on one platform (the host) to create an executable for a different platform (the target). This is essential for embedded systems development because embedded devices often have limited resources (processing power, memory) that make it impractical or impossible to compile code directly on the device itself.

Think of it this way: Your powerful laptop (host) compiles code that runs on a tiny microcontroller (target) in a coffee machine. Without cross-compilation, you'd need to somehow get a full-fledged compiler running on that microcontroller, which is usually not feasible. We use specialized toolchains, for example a gcc cross-compiler built for a specific target architecture, like arm-none-eabi-gcc for ARM microcontrollers. This involves setting up build environments, understanding target-specific libraries, and often handling platform-dependent optimization flags during the compilation process. With GCC, the target architecture is fixed by the toolchain itself (the arm-none-eabi- prefix), and flags such as -mcpu=cortex-m4 and -mthumb select the specific core and instruction set; Clang instead takes a --target=<triple> option.

13. Explain the use of static and const keywords in embedded C and their impact on memory and optimization.

In embedded C, static and const keywords serve distinct purposes related to memory and optimization. static has two main uses: for variables within a function, it means the variable retains its value between function calls and is initialized only once. For global variables or functions, static limits their scope to the file they are defined in, preventing naming conflicts and potentially allowing the compiler to optimize by knowing the variable is only used within that file. This can reduce code size.

const declares a variable as read-only, meaning its value cannot be changed after initialization. This allows the compiler to place the variable in read-only memory (like flash), saving RAM. The compiler can also perform optimizations based on the knowledge that the variable's value will not change, such as inlining the constant value directly into the code or removing redundant calculations. Here's an example:

const int MAX_VALUE = 100; // read-only; may be placed in flash or folded directly into the code

static int counter = 0; // persists between function calls, file scope

14. Discuss the role of a linker in embedded systems and how it affects the final executable image.

In embedded systems, a linker plays a crucial role in creating the final executable image that runs on the target device. It takes one or more object files (generated by the compiler from source code) and libraries as input and combines them to produce a single executable file. The linker resolves symbolic references (like function calls or variable accesses) between different object files. This involves matching function calls to their definitions, and ensuring correct memory addresses are assigned.

The linker significantly impacts the final executable in several ways. It determines the memory layout of the program, assigning addresses to different sections of code and data. It can also eliminate unused code and data (for example, GNU ld's --gc-sections option combined with -ffunction-sections and -fdata-sections at compile time), reducing the size of the final image, which is particularly important in resource-constrained embedded systems. Linker scripts are often used to precisely control the memory layout, placing specific code or data at specific addresses, which is necessary for vector tables, bootloaders, and other fixed-address regions. Without a linker, the individual object files remain fragmented and cannot be executed as a cohesive program.

15. How do you manage concurrency in embedded C code, particularly when dealing with multiple tasks or threads?

Managing concurrency in embedded C often involves techniques to prevent race conditions and ensure data consistency when multiple tasks or threads access shared resources. Common approaches include using mutexes (mutual exclusion locks) to protect critical sections of code, semaphores for signaling between tasks, and atomic operations for simple data updates.

Interrupts also introduce concurrency. When the main code accesses data shared with an ISR, it's crucial to disable interrupts briefly around the access to prevent race conditions. Shared variables should be declared volatile so the compiler re-reads them on every access; note that volatile does not make an access atomic, so multi-byte or read-modify-write operations still need a critical section. Non-blocking techniques using flags can also be used to coordinate an ISR with the main loop.
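As a rough sketch of the "disable interrupts briefly" approach, the code below protects a 64-bit counter that an ISR updates. irq_lock and irq_unlock are hypothetical stand-ins for the target's real interrupt-masking calls. Here they only track nesting so the example compiles and runs on a host.

```c
#include <stdint.h>

/* Stand-ins for the target's interrupt control (hypothetical names).
 * On hardware these would mask and restore interrupts. */
static int irq_lock_depth = 0;
static void irq_lock(void)   { irq_lock_depth++; }
static void irq_unlock(void) { irq_lock_depth--; }

/* 64-bit value updated by an ISR. Reading it takes several instructions
 * on a 32-bit or smaller core, so an unprotected read could be torn. */
static volatile uint64_t uptime_ms = 0;

/* Would be called from a periodic timer ISR. */
void uptime_tick_isr(void)
{
    uptime_ms = uptime_ms + 1;
}

/* Copy the shared value inside a short critical section. */
uint64_t uptime_read(void)
{
    uint64_t snapshot;

    irq_lock();
    snapshot = uptime_ms;   /* the ISR cannot run between the partial reads */
    irq_unlock();

    return snapshot;
}
```

The critical section contains only the copy, so interrupts stay masked for as short a time as possible. Under an RTOS, a mutex or the kernel's own critical-section API would usually take the place of these stubs.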

16. Explain what a device driver is and how it interfaces with hardware in an embedded system, using C code.

A device driver is software that allows the operating system and other programs to interact with a specific hardware device. It acts as a translator, converting high-level software requests into low-level hardware commands and vice versa. In an embedded system, this interface is crucial because the hardware is often custom or highly specialized.

In C, a device driver interacts with hardware primarily via memory-mapped I/O or port I/O. For example, to control an LED connected to a GPIO pin, a driver might write to a specific memory address that corresponds to the GPIO controller. Here's a snippet illustrating that:

#define GPIO_BASE 0x40000000

#define GPIO_OUTPUT_EN (GPIO_BASE + 0x04)

#define GPIO_OUTPUT_DATA (GPIO_BASE + 0x08)

#define LED_PIN 5 // hypothetical pin number; use the pin the LED is actually wired to

void led_on() {

// Enable the GPIO pin for output

*(volatile unsigned int *)GPIO_OUTPUT_EN |= (1 << LED_PIN);

// Set the GPIO pin high to turn the LED on

*(volatile unsigned int *)GPIO_OUTPUT_DATA |= (1 << LED_PIN);

}

void led_off() {

// Enable the GPIO pin for output

*(volatile unsigned int *)GPIO_OUTPUT_EN |= (1 << LED_PIN);

// Set the GPIO pin low to turn the LED off

*(volatile unsigned int *)GPIO_OUTPUT_DATA &= ~(1 << LED_PIN);

}

This code directly manipulates memory addresses to control the hardware.

17. How do you handle power management in embedded systems using C code, and what are some common techniques?

Power management in embedded systems using C involves minimizing energy consumption to extend battery life or reduce heat dissipation. Common techniques include:

- Clock gating: Disabling the clock signal to inactive peripherals or modules.

- Voltage/Frequency scaling (DVFS): Dynamically adjusting the voltage and frequency of the processor based on workload. This can be implemented using system calls or hardware drivers.

- Power modes: Utilizing different power modes (e.g., sleep, deep sleep, standby) to reduce power consumption when the system is idle. C code interacts with hardware registers to set these modes. For example:

// Example: Entering sleep mode

void enter_sleep_mode() {

// Disable interrupts

disable_interrupts();

// Configure sleep mode registers

SLEEP_MODE_CTRL |= SLEEP_MODE_IDLE;

// Enable sleep

set_sleep_enable();

// Go to sleep

sleep_cpu();

// Wake-up sequence

}

- Peripheral management: Disabling unused peripherals and configuring them to low-power modes.

- Interrupt management: Carefully managing interrupts to avoid unnecessary wake-ups.

- Code optimization: Optimizing C code for efficiency (e.g., minimizing memory access, using efficient algorithms).

18. Describe the purpose and usage of memory-mapped I/O in embedded systems programming with C.

Memory-mapped I/O (MMIO) is a technique in embedded systems where peripheral device registers are mapped into the system's memory address space. Instead of using special I/O instructions, the CPU can access and control peripherals by reading from and writing to specific memory addresses, treating the peripheral registers like memory locations. This simplifies programming in C because standard memory access instructions (e.g., pointer dereferencing) can be used for I/O operations. For example:

#define UART_DR (*((volatile unsigned int *) 0x4000C000)) // UART Data Register at address 0x4000C000

void transmit(unsigned char data) {

UART_DR = data; // Write data to the UART data register

}

Using MMIO allows device drivers to be written in a straightforward manner using standard C constructs. It provides a unified address space, where peripherals can be accessed using the same instructions as RAM or ROM. This simplifies the overall system architecture and potentially improves performance in certain scenarios by leveraging the CPU's memory access mechanisms.
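When a peripheral has several registers, a common variation is to overlay a struct on the register block. In the sketch below the register layout and the status bit are invented for illustration and would have to come from the device datasheet. The function takes the block as a pointer so it can be exercised against ordinary RAM.

```c
#include <stdint.h>

/* Hypothetical UART register block (layout assumed for illustration). */
typedef struct {
    volatile uint32_t DR;  /* data register */
    volatile uint32_t SR;  /* status register */
} uart_regs_t;

#define UART_SR_TX_READY (1u << 0)  /* assumed "transmitter ready" bit */

/* On hardware the block would be bound to its address, for example:
 * #define UART0 ((uart_regs_t *)0x4000C000u)
 */

/* Returns 0 on success, -1 if the transmitter is busy. */
int uart_try_send(uart_regs_t *uart, uint8_t byte)
{
    if ((uart->SR & UART_SR_TX_READY) == 0u) {
        return -1;
    }
    uart->DR = byte;  /* volatile member: the write is always performed */
    return 0;
}
```

Passing the register block as a parameter also makes the driver easy to unit-test on a host by pointing it at a plain struct instead of the real peripheral.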

19. Explain how you would use a state machine in C to control the behavior of an embedded system.

A state machine in C for an embedded system allows you to manage complex behavior by defining distinct states and transitions between them. Each state represents a specific mode of operation for the system. The code uses a switch statement or a function pointer array to determine the actions to perform based on the current state. Inputs (sensor data, user input, etc.) trigger transitions to different states.

For example, consider a coffee machine. States could be IDLE, BREWING, DISPENSING, ERROR. Input from a button press in the IDLE state might trigger a transition to BREWING. Each state has associated functions executed within that state, handling tasks like heating water or dispensing coffee. This approach improves code organization, maintainability, and predictability.
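The coffee machine above could be sketched with an enum and a switch-based transition function. The event names and the exact transitions are assumptions added for illustration; only the four states come from the description.

```c
typedef enum {
    STATE_IDLE,
    STATE_BREWING,
    STATE_DISPENSING,
    STATE_ERROR
} state_t;

/* Hypothetical events that drive the machine. */
typedef enum {
    EVT_BUTTON,
    EVT_BREW_DONE,
    EVT_CUP_FULL,
    EVT_FAULT,
    EVT_RESET
} event_t;

/* Pure transition function: next state from current state and event. */
state_t coffee_next_state(state_t current, event_t evt)
{
    if (evt == EVT_FAULT) {
        return STATE_ERROR;  /* a fault is handled from any state */
    }

    switch (current) {
    case STATE_IDLE:
        return (evt == EVT_BUTTON) ? STATE_BREWING : STATE_IDLE;
    case STATE_BREWING:
        return (evt == EVT_BREW_DONE) ? STATE_DISPENSING : STATE_BREWING;
    case STATE_DISPENSING:
        return (evt == EVT_CUP_FULL) ? STATE_IDLE : STATE_DISPENSING;
    case STATE_ERROR:
        return (evt == EVT_RESET) ? STATE_IDLE : STATE_ERROR;
    }
    return STATE_ERROR;  /* invalid state value: fail safe */
}
```

Keeping the transition logic free of hardware access makes it straightforward to test. The actions for each state (heating, dispensing) would run separately, based on the state this function returns.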

20. How do you use data structures effectively in Embedded C?

In Embedded C, data structures are crucial for organizing and managing memory-constrained data efficiently. I use structures (struct) to group related data elements into a single unit. Unions (union) are helpful for representing data that can take on different types, saving memory by overlapping storage. Arrays are frequently used for storing sequences of data and can be used in conjunction with pointers for efficient manipulation. Understanding memory allocation and the trade-offs between static and dynamic memory is key.

Effective use involves selecting appropriate data structures based on the problem's constraints. For example, a circular buffer implemented with an array can be useful for managing incoming data streams. Bit-fields within structures are useful for representing individual bits, but their layout is implementation-defined, so explicit masks and shifts are safer when the structure must match a hardware register exactly. When a structure overlays memory-mapped hardware or memory that can change outside the program's control, declaring it volatile ensures that the compiler does not optimize away reads and writes.

struct sensor_data {

uint16_t temperature;

uint16_t humidity;

};

21. What is the function of a bootloader in embedded systems? Write sample C code.

The bootloader in embedded systems is a small piece of code that runs before the main operating system or application. Its primary function is to initialize the hardware, load (or locate) the main application code, and then transfer control to it. It is also commonly the component that makes firmware updates possible, whether over-the-air (OTA) or through other interfaces.

Here's a simple example of a bootloader code snippet in C:

void bootloader_main() {

// Initialize hardware (clocks, memory, peripherals)

init_hardware();

// Load application from flash memory

load_application();

// Jump to application entry point

jump_to_application();

}

22. Describe the problems with using dynamic memory allocation (malloc, free) in embedded systems. What are the alternatives?

Dynamic memory allocation, while flexible, poses several challenges in embedded systems. Fragmentation is a significant concern, leading to memory exhaustion over time. Each allocation and deallocation can leave small, unusable blocks, reducing the available contiguous memory. Also, malloc and free are often non-deterministic, meaning their execution time can vary significantly, which is unacceptable in real-time systems where predictable performance is critical. Memory leaks, where allocated memory isn't freed, are another risk, potentially causing system instability or failure. Finally, standard library malloc implementations might be large and consume precious flash and RAM resources.

Alternatives to dynamic memory allocation include static allocation (defining fixed-size arrays or buffers at compile time), using memory pools (allocating fixed-size blocks from a pre-allocated pool), and using custom memory management schemes tailored to the specific application's needs. Memory pools mitigate fragmentation and provide deterministic allocation/deallocation times. Static allocation eliminates fragmentation and allocation overhead but requires knowing memory needs upfront. Custom allocators can be optimized for specific allocation patterns to reduce overhead and fragmentation.
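A fixed-size memory pool can be sketched in a few lines. The block size, block count, and function names below are illustrative choices, and the sketch is not thread-safe or ISR-safe as written.

```c
#include <stddef.h>
#include <stdint.h>

#define POOL_BLOCK_SIZE  32u  /* bytes per block (illustrative) */
#define POOL_BLOCK_COUNT 4u   /* number of blocks (illustrative) */

/* While a block is free, its storage holds the link to the next free block. */
typedef union pool_block {
    union pool_block *next;
    uint8_t           payload[POOL_BLOCK_SIZE];
} pool_block_t;

static pool_block_t  pool_storage[POOL_BLOCK_COUNT];  /* static, no heap */
static pool_block_t *pool_free_list = NULL;

/* Link every block into the free list. Call once at startup. */
void pool_init(void)
{
    pool_free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; i++) {
        pool_storage[i].next = pool_free_list;
        pool_free_list = &pool_storage[i];
    }
}

/* O(1): pop the first free block, or return NULL if the pool is exhausted. */
void *pool_alloc(void)
{
    pool_block_t *block = pool_free_list;
    if (block != NULL) {
        pool_free_list = block->next;
    }
    return block;
}

/* O(1): push the block back onto the free list. */
void pool_free(void *ptr)
{
    pool_block_t *block = (pool_block_t *)ptr;
    if (block == NULL) {
        return;
    }
    block->next = pool_free_list;
    pool_free_list = block;
}
```

Because every block has the same size, the pool cannot fragment, and both operations take constant time. That is the deterministic behaviour real-time code needs.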

Advanced Embedded C interview questions

1. Explain the concept of memory-mapped I/O and how it differs from port-mapped I/O in embedded systems. What are the advantages and disadvantages of each?

Memory-mapped I/O treats peripheral device registers as memory locations, allowing the CPU to access them using the same instructions used for accessing RAM. Port-mapped I/O uses separate address spaces and dedicated I/O instructions (e.g., in and out in x86) to communicate with peripherals.

Memory-mapped I/O's advantages include simpler programming (using standard memory access instructions), a larger address space for peripherals, and easier implementation of DMA. Disadvantages include potentially reducing the available memory space and the possibility of accidental memory corruption due to unintended writes. Port-mapped I/O's advantages are that it doesn't consume memory address space and can offer better isolation between memory and I/O. Its disadvantages include the need for specialized I/O instructions, a limited number of I/O ports, and more complex DMA implementations.

2. Describe the challenges of debugging embedded systems. What debugging tools and techniques do you find most effective, and why?

Debugging embedded systems presents unique challenges compared to traditional software development due to limited resources, real-time constraints, and close interaction with hardware. Access to the system can be restricted, and the lack of standard operating systems or debugging interfaces can complicate the process. Issues related to timing, interrupts, and peripheral interactions can be difficult to isolate. Memory constraints often limit the ability to use extensive logging or debugging tools.

Effective debugging tools and techniques include: JTAG debuggers for low-level access and control, logic analyzers to observe signal behavior, oscilloscopes to analyze timing and signal integrity, and in-circuit emulators (ICE) for real-time debugging. Techniques like breakpoints, single-stepping, and memory inspection are crucial. Remote debugging via UART or network connections, using debug print statements (sparingly, due to resource constraints), and leveraging custom debugging interfaces tailored to the specific hardware can also be effective. Writing comprehensive unit tests is essential to isolate software problems early in the development cycle. Understanding the hardware specifications and using a systematic approach is key to quickly identifying the root cause of the problem. Using tools like gdb alongside openocd can be helpful for ARM based systems.

3. What is a real-time operating system (RTOS)? Explain its core components and how it manages tasks, memory, and interrupts.

A Real-Time Operating System (RTOS) is an operating system designed for applications with strict timing requirements. It guarantees that certain processes or tasks will be completed within a specified time constraint, making it suitable for embedded systems, robotics, and industrial control. Core components include a scheduler, inter-process communication (IPC) mechanisms, memory management, and interrupt handlers.

RTOS manages tasks using a scheduler, often priority-based, to determine which task runs next. Memory management may involve static allocation or dynamic allocation schemes, with careful control to avoid fragmentation and ensure timely access. Interrupts are handled with minimal latency, using interrupt handlers to respond quickly to external events. Task context switching is optimized for speed, enabling the RTOS to switch between tasks rapidly in response to interrupts or scheduling decisions.

4. Explain the concept of a volatile variable in C. Why is it important in embedded systems, especially when dealing with hardware registers or interrupt service routines?

A volatile variable in C tells the compiler that the value of the variable may change at any time, without any action being taken by the code. This prevents the compiler from performing optimizations that assume the variable's value remains constant. This is crucial in embedded systems because hardware registers and interrupt service routines (ISRs) can modify variables asynchronously.

In embedded systems, consider a hardware register that updates a sensor reading. If the compiler isn't aware that the register can change outside the normal program flow, it might optimize code based on a stale value. Similarly, ISRs can update variables used in the main program. Without volatile, the compiler might optimize away reads or writes to these shared variables, leading to incorrect behavior. By declaring a variable volatile, we force the compiler to always read the variable from memory and write changes directly back to memory, ensuring the program always uses the most up-to-date value. For example: volatile uint32_t *hardware_register;

5. Describe different inter-process communication (IPC) mechanisms used in RTOS environments. What factors influence your choice of IPC method?

RTOS environments employ various IPC mechanisms to enable tasks to communicate and synchronize. Common methods include:

- Queues: Allow tasks to send and receive data in a FIFO (First-In, First-Out) manner. Useful for passing messages and data streams.

- Semaphores: Used for synchronization and signaling. Binary semaphores can provide simple mutual exclusion but typically lack ownership and priority inheritance, while counting semaphores control access to a limited number of resources.

- Mutexes: Provide mutual exclusion, ensuring that only one task can access a shared resource at a time. Often include priority inheritance to avoid priority inversion issues.

- Mailboxes: Enable tasks to send and receive single messages, usually pointers to data structures.

- Shared Memory: Allows tasks to directly access a common memory region. Requires careful synchronization using other IPC mechanisms to prevent data corruption.

- Pipes: Support unidirectional data flow between tasks.

Factors influencing the choice of IPC method include: data size, synchronization requirements, latency constraints, complexity, and RTOS support. For example, queues are suitable for asynchronous data transfer, while mutexes are ideal for protecting critical sections. If speed is paramount, shared memory might be considered, but with careful management.

6. What is the purpose of a watchdog timer in an embedded system? How does it work, and what are the potential consequences of not using one properly?

A watchdog timer is a hardware or software timer used in embedded systems to detect and recover from malfunctions. Its primary purpose is to reset the system if it gets stuck in an unexpected state, preventing complete system failure. The watchdog timer works by requiring the main program to periodically 'kick' or 'pet' the watchdog within a specified time window. If the watchdog isn't kicked in time, it assumes the system is malfunctioning and triggers a reset.

If a watchdog timer isn't used or isn't configured properly, the embedded system can become unresponsive or enter an infinite loop, leading to system crashes or unpredictable behavior. This can have severe consequences, such as data loss, equipment damage, or even safety hazards in critical applications like medical devices or automotive systems. Improper configuration, such as too long a timeout, can render the watchdog ineffective in detecting failures quickly. Conversely, too short a timeout can cause spurious resets if the system is performing a lengthy, legitimate operation.
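The kick-or-reset behaviour can be modelled in plain C to make the timing rule explicit. This is a host-side simulation under assumed names and an assumed three-tick timeout, not a driver for any particular watchdog peripheral.

```c
#include <stdbool.h>
#include <stdint.h>

#define WDT_TIMEOUT_TICKS 3u  /* illustrative timeout */

static uint32_t wdt_remaining = WDT_TIMEOUT_TICKS;
static bool     wdt_reset_requested = false;

/* "Kick" the watchdog: reload the countdown. */
void wdt_kick(void)
{
    wdt_remaining = WDT_TIMEOUT_TICKS;
}

/* Models one clock tick of the watchdog counter. */
void wdt_tick(void)
{
    if (wdt_reset_requested) {
        return;  /* already expired */
    }
    if (wdt_remaining > 0u) {
        wdt_remaining--;
    }
    if (wdt_remaining == 0u) {
        wdt_reset_requested = true;  /* real hardware would reset the MCU here */
    }
}

/* True once the countdown has reached zero without a kick. */
bool wdt_expired(void)
{
    return wdt_reset_requested;
}
```

As long as wdt_kick() is called at least once every three ticks, the countdown never reaches zero. A main loop that hangs stops kicking, and the third tick after the last kick triggers the reset.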

7. Explain the significance of bit manipulation in embedded C programming. Provide examples of scenarios where bitwise operations are essential.

Bit manipulation is crucial in embedded C programming because it allows direct control and manipulation of individual bits within memory locations. This is essential in resource-constrained environments where memory and processing power are limited. It is efficient in setting or clearing specific hardware registers, interpreting sensor data, and implementing communication protocols.

Examples where bitwise operations are essential:

- Hardware control: Setting specific bits in a register to enable/disable peripherals or configure their operating mode.

#define ENABLE_LED (1 << 5) // Mask with only bit 5 set

PORTA |= ENABLE_LED; // Enable the LED by setting bit 5

- Data packing: Combining multiple small data values into a single byte or word to save memory.

- Status flags: Representing multiple boolean states within a single variable.

#define FLAG_A (1 << 0)

#define FLAG_B (1 << 1)

uint8_t flags = 0;

flags |= FLAG_A; // Set flag A

if (flags & FLAG_B) { // Check if flag B is set

}

- Communication protocols: Extracting or inserting data bits in specific formats required by protocols like SPI or I2C.
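Data packing and protocol field extraction both come down to shifting and masking. The sketch below packs a 3-bit mode and a 5-bit channel into one byte. The field layout and names are made up for the example.

```c
#include <stdint.h>

/* Illustrative layout: bits 7..5 = mode (3 bits), bits 4..0 = channel (5 bits). */
#define MODE_SHIFT   5u
#define MODE_MASK    0x07u
#define CHANNEL_MASK 0x1Fu

/* Combine both fields into one byte; out-of-range bits are masked off. */
uint8_t pack_config(uint8_t mode, uint8_t channel)
{
    return (uint8_t)(((mode & MODE_MASK) << MODE_SHIFT) |
                     (channel & CHANNEL_MASK));
}

/* Shift the mode field down to bit 0, then mask it. */
uint8_t unpack_mode(uint8_t packed)
{
    return (uint8_t)((packed >> MODE_SHIFT) & MODE_MASK);
}

/* The channel already sits at bit 0, so masking is enough. */
uint8_t unpack_channel(uint8_t packed)
{
    return (uint8_t)(packed & CHANNEL_MASK);
}
```

For instance, mode 5 and channel 17 pack to 0xB1, and unpacking 0xB1 returns the same two values.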

8. Discuss the challenges of power management in embedded systems. What techniques can be used to minimize power consumption and extend battery life?

Power management in embedded systems is challenging due to the constraints of limited battery capacity, real-time operation requirements, and the need to support diverse functionalities. Key challenges include minimizing static power consumption (leakage), dynamic power consumption (switching), and managing power during idle states. Balancing performance with energy efficiency is crucial.

Several techniques can be used to minimize power consumption:

- Clock gating: Disabling the clock signal to inactive modules.

- Voltage/Frequency scaling (DVFS): Reducing voltage and frequency when full performance isn't required.

- Power gating: Completely shutting down power to inactive modules.

- Sleep modes: Transitioning to low-power states when the system is idle.

- Efficient coding: Optimizing code to reduce CPU cycles and memory accesses (e.g., using lookup tables instead of complex calculations where appropriate or utilizing efficient data structures to minimize CPU usage).

- Peripheral management: Disabling unused peripherals and configuring peripherals to operate at lower power levels.

9. Describe the differences between big-endian and little-endian architectures. How does endianness affect data storage and retrieval in embedded systems?

Big-endian and little-endian are two ways of ordering the bytes of multi-byte data types (like integers and floating-point numbers) in memory. In big-endian, the most significant byte (MSB) is stored at the lowest memory address, while in little-endian, the least significant byte (LSB) is stored at the lowest memory address. For example, storing the 32-bit value 0x12345678 at memory address 0x1000 places the bytes 0x12, 0x34, 0x56, 0x78 at addresses 0x1000 through 0x1003 on a big-endian system, and 0x78, 0x56, 0x34, 0x12 on a little-endian system.

In embedded systems, endianness affects data storage and retrieval when dealing with:

- Communication protocols: Different devices might use different endianness, requiring byte swapping for interoperability, for example with a function like this:

```c
uint32_t swap_endian(uint32_t value) {
    return ((value >> 24) & 0xFF) |
           ((value >> 8) & 0xFF00) |
           ((value << 8) & 0xFF0000) |
           ((value << 24) & 0xFF000000);
}
```

- File formats: Storing and reading data from files created on systems with different endianness can lead to misinterpretations if not handled correctly.

- Memory mapping: When accessing hardware registers or memory regions, understanding the endianness is crucial for interpreting the data correctly.

Misinterpreting endianness can lead to incorrect calculations, system malfunctions, and data corruption.
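An alternative to swapping after the fact is to serialize byte by byte, which gives the same result on any host. The sketch below is one possible approach; the function names are hypothetical and big-endian is assumed as the wire format:

```c
#include <stdint.h>

/* Write a 32-bit value into a byte buffer in big-endian order.
 * Shifts operate on the value, not on memory, so the result does not
 * depend on the endianness of the CPU running this code. */
void store_be32(uint8_t *out, uint32_t value)
{
    out[0] = (uint8_t)(value >> 24);
    out[1] = (uint8_t)(value >> 16);
    out[2] = (uint8_t)(value >> 8);
    out[3] = (uint8_t)value;
}

/* Rebuild a 32-bit value from four big-endian bytes. */
uint32_t load_be32(const uint8_t *in)
{
    return ((uint32_t)in[0] << 24) | ((uint32_t)in[1] << 16) |
           ((uint32_t)in[2] << 8)  |  (uint32_t)in[3];
}

/* Returns 1 on a little-endian host and 0 on a big-endian one by
 * inspecting the byte stored at the lowest address of a known value. */
int host_is_little_endian(void)
{
    const uint32_t probe = 0x12345678u;
    return *(const uint8_t *)&probe == 0x78u;
}
```

Calling `store_be32(buf, 0x12345678)` fills `buf` with `0x12, 0x34, 0x56, 0x78` regardless of the host, and `load_be32` reverses it.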

10. Explain the concept of a circular buffer (or ring buffer) and its applications in embedded systems. Provide examples of situations where it is beneficial.

A circular buffer, also known as a ring buffer, is a data structure that uses a fixed-size buffer as if it were connected end-to-end. It's commonly implemented as an array with two pointers: one for the head (where data is written) and another for the tail (where data is read). When either pointer reaches the end of the buffer, it wraps around to the beginning, creating a circular effect.

In embedded systems, circular buffers are beneficial in several situations:

- Data streaming: When receiving data from sensors or communication interfaces (e.g., UART, SPI), a circular buffer can store the incoming data until the main processing loop is ready to process it. This prevents data loss due to timing variations.

- Audio processing: When recording or playing back audio, circular buffers can act as a buffer between the audio input/output and the processing algorithms. This helps to maintain a smooth audio stream.

- Real-time data logging: When logging data from various system parameters, a circular buffer can store the most recent data, allowing analysis of recent system behavior. Old data is overwritten as new data arrives.

- Inter-task communication: In multi-threaded or multi-tasking embedded systems, circular buffers can be used as a communication mechanism between tasks, allowing them to exchange data without blocking each other.

- Undo/Redo functionality: A fixed-size ring can hold the most recent states, so a limited number of operations can be rolled back while older states are discarded automatically.

For example, consider a UART receiver in an embedded system. The interrupt handler receives bytes from the UART and writes them to a circular buffer. The main application loop reads bytes from the buffer and processes them. This decouples the fast interrupt routine from the slower main loop. Code for a simple circular buffer write operation could look like this:

```c
void circular_buffer_write(circular_buffer *cb, uint8_t data) {
    cb->buffer[cb->head] = data;
    cb->head = (cb->head + 1) % cb->size;
    if (cb->head == cb->tail) {
        cb->tail = (cb->tail + 1) % cb->size; // Overwrite oldest data
    }
}
```
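To round out the write routine, here is a self-contained sketch that adds a matching read operation. The struct layout and the `circular_buffer_init` and `circular_buffer_read` names are assumptions, since the snippet above does not show them; the write function is repeated only so the block compiles on its own:

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Assumed layout: the original snippet does not define this struct. */
typedef struct {
    uint8_t *buffer;  /* backing storage supplied by the caller */
    size_t   size;    /* number of slots in buffer */
    size_t   head;    /* next slot to write */
    size_t   tail;    /* next slot to read */
} circular_buffer;

void circular_buffer_init(circular_buffer *cb, uint8_t *storage, size_t size)
{
    cb->buffer = storage;
    cb->size = size;
    cb->head = 0;
    cb->tail = 0;
}

/* Same overwrite-oldest policy as the write routine above. */
void circular_buffer_write(circular_buffer *cb, uint8_t data)
{
    cb->buffer[cb->head] = data;
    cb->head = (cb->head + 1) % cb->size;
    if (cb->head == cb->tail) {
        cb->tail = (cb->tail + 1) % cb->size;
    }
}

/* Pop the oldest byte into *out; returns false when the buffer is empty
 * (head == tail). */
bool circular_buffer_read(circular_buffer *cb, uint8_t *out)
{
    if (cb->head == cb->tail) {
        return false;
    }
    *out = cb->buffer[cb->tail];
    cb->tail = (cb->tail + 1) % cb->size;
    return true;
}
```

Because `head == tail` means "empty", this scheme holds at most `size - 1` bytes. When the writer runs in an interrupt handler and the reader in the main loop, access to `head` and `tail` also needs protection (for example, briefly disabling the interrupt around the read), which this sketch leaves out.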

11. What is DMA (Direct Memory Access)? How does it improve system performance in embedded applications, and what are its limitations?

DMA (Direct Memory Access) is a hardware technique that allows certain hardware subsystems within a computer to access system memory (RAM) independently of the CPU. Instead of the CPU being involved in every data transfer operation, a DMA controller takes over, moving data directly between peripherals and memory or vice versa. This frees up the CPU to perform other tasks.

DMA significantly improves system performance in embedded applications by reducing CPU overhead. This allows the CPU to handle more complex tasks or reduce its clock speed to save power. However, DMA has limitations: it requires careful configuration to prevent memory conflicts, and faulty DMA configurations can cause system instability. Additionally, setting up DMA transfers involves some initial overhead and requires dedicated DMA channels/hardware, increasing system complexity.

12. Describe the purpose and function of interrupt vectors in embedded systems. How are interrupts handled, and what is the role of the interrupt vector table?

Interrupt vectors in embedded systems provide the addresses of interrupt service routines (ISRs) or interrupt handlers. When an interrupt occurs, the system uses the interrupt vector corresponding to that interrupt to locate the appropriate ISR to execute. This mechanism allows the system to quickly respond to various events without constantly polling for them.

Interrupt handling involves several steps:

1) An interrupt is triggered by a hardware or software event.
2) The CPU suspends its current execution and saves its state (registers, program counter).
3) The CPU uses the interrupt vector to find the address of the ISR.
4) The ISR is executed to handle the interrupt.
5) After the ISR completes, the CPU restores its saved state and resumes execution from where it left off.

The interrupt vector table is a data structure (usually an array) that stores the interrupt vectors. Each entry in the table corresponds to a specific interrupt, and the value stored in the entry is the memory address of the associated ISR.
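The table lookup can be modeled in plain C as an array of function pointers. This is only a host-side simulation under assumed names and interrupt numbers; on a real microcontroller the lookup and state saving are done by the hardware, and the table's format and location are fixed by the architecture:

```c
#include <stddef.h>
#include <stdint.h>

typedef void (*isr_handler_t)(void);

/* Hypothetical interrupt numbers used only for this illustration. */
enum { IRQ_TIMER = 0, IRQ_UART_RX = 1, IRQ_COUNT = 2 };

volatile uint32_t timer_ticks = 0;
volatile uint32_t uart_bytes = 0;

/* Example handlers: each does a small amount of work and returns. */
void timer_isr(void)   { timer_ticks++; }
void uart_rx_isr(void) { uart_bytes++; }

/* The vector table: entry N holds the address of the ISR for interrupt N. */
static const isr_handler_t vector_table[IRQ_COUNT] = {
    [IRQ_TIMER]   = timer_isr,
    [IRQ_UART_RX] = uart_rx_isr,
};

/* Software stand-in for step 3 and step 4: index the table with the
 * interrupt number and call the handler. Returns -1 for an unknown or
 * unassigned interrupt. */
int dispatch_interrupt(unsigned irq)
{
    if (irq >= (unsigned)IRQ_COUNT || vector_table[irq] == NULL) {
        return -1;
    }
    vector_table[irq]();
    return 0;
}
```

Calling `dispatch_interrupt(IRQ_TIMER)` runs `timer_isr` and increments `timer_ticks`, mirroring how the CPU selects a handler from the table.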

13. Explain the challenges of memory management in resource-constrained embedded systems. What strategies can be used to optimize memory usage and prevent memory leaks?

Memory management in resource-constrained embedded systems presents several challenges. Limited RAM necessitates careful allocation and deallocation to prevent fragmentation and out-of-memory errors. The absence of virtual memory in many embedded systems means that physical memory is the only memory available, and memory leaks can quickly lead to system instability. Real-time constraints further complicate matters, as memory allocation and garbage collection (if present) must be deterministic to avoid unpredictable delays. Complex memory management schemes can also consume scarce RAM and CPU cycles themselves, slowing execution.

Strategies to optimize memory usage include static memory allocation whenever possible, using memory pools, minimizing dynamic memory allocation, employing techniques like data compression, and carefully managing the stack size. Preventing memory leaks involves rigorous code reviews, using smart pointers (if the language supports them and the overhead is acceptable), and implementing custom memory management schemes with explicit allocation and deallocation routines. Regular testing with leak detection tools is essential. For code that can be built and run on a host machine, Valgrind (Memcheck) is a useful option during development.
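A memory pool, mentioned above, can be sketched as a fixed array of equal-sized blocks linked into a free list. The block size, block count, and function names below are hypothetical; the point is that allocation and release take constant time and cannot fragment:

```c
#include <stddef.h>
#include <stdint.h>

#define POOL_BLOCK_SIZE  32u  /* bytes per block (hypothetical) */
#define POOL_BLOCK_COUNT 8u   /* number of blocks (hypothetical) */

/* A free block stores the link to the next free block inside its own
 * storage, so the pool needs no extra bookkeeping memory. */
typedef union pool_block {
    union pool_block *next;
    uint8_t payload[POOL_BLOCK_SIZE];
} pool_block;

static pool_block pool_storage[POOL_BLOCK_COUNT];
static pool_block *free_list = NULL;

/* Link every block into the free list. Call once at startup. */
void pool_init(void)
{
    free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; i++) {
        pool_storage[i].next = free_list;
        free_list = &pool_storage[i];
    }
}

/* O(1) allocation: pop the first free block, or NULL if none remain. */
void *pool_alloc(void)
{
    pool_block *block = free_list;
    if (block != NULL) {
        free_list = block->next;
    }
    return block;
}

/* O(1) release: push the block back. The pointer must have come from
 * pool_alloc; this sketch does not validate it. */
void pool_free(void *ptr)
{
    if (ptr == NULL) {
        return;
    }
    pool_block *block = (pool_block *)ptr;
    block->next = free_list;
    free_list = block;
}
```

Since the pool is statically sized, its worst-case memory use is known at link time. If it is shared between tasks or interrupt handlers, the free-list updates need to be protected, which is omitted here.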

14. What are the key considerations when selecting a microcontroller for a specific embedded application? Discuss factors such as processing power, memory, peripherals, and cost.

Selecting a microcontroller for an embedded application requires careful consideration of several key factors. Processing power (roughly indicated by clock frequency in MHz, or more comparably by benchmark scores such as DMIPS or CoreMark) should match the application's computational demands. Insufficient power leads to sluggish performance, while excessive power increases cost and energy consumption. Memory (RAM and Flash) needs to be adequate for storing program code, data, and buffers. Consider future growth and potential for over-the-air updates. Peripherals are crucial; choose a microcontroller with the necessary interfaces (UART, SPI, I2C, ADC, DAC, timers) to interact with sensors, actuators, and other external devices. The number of GPIO pins is also important.

Finally, cost is always a factor. Balance performance and features with budget constraints. Consider not only the microcontroller's price but also development tools, software libraries, and long-term availability. Evaluate the power consumption for battery-powered applications. Furthermore, consider the operating temperature and any required certifications.

15. Describe the different types of memory used in embedded systems (e.g., SRAM, DRAM, Flash). What are their characteristics, advantages, and disadvantages?

Embedded systems utilize various types of memory, each with distinct characteristics:

- SRAM (Static RAM) is known for its speed and low latency, making it ideal for frequently accessed data. It retains data as long as power is supplied and needs no refresh, but it's relatively expensive and takes more silicon area per bit than DRAM, which limits its capacity.

- DRAM (Dynamic RAM) is denser and cheaper than SRAM, but it requires periodic refreshing to maintain data, leading to slower access times. DRAM is commonly used for main memory due to its cost-effectiveness.

- Flash memory is non-volatile, meaning it retains data even without power. It's used for storing firmware, configuration data, and file systems. Flash memory comes in two main types: NOR Flash, which allows random access for code execution (execute-in-place or XIP), and NAND Flash, which offers higher density and lower cost but is read and written in pages and erased in blocks, making it suitable for data storage rather than direct code execution.

In summary, SRAM is fast but expensive per bit, DRAM is cheaper but slower and requires refreshing, and Flash is non-volatile for persistent storage with varying access methods (NOR for code execution, NAND for data storage).

16. Explain the concept of a device driver. What is its role in an embedded system, and what are the challenges of writing effective device drivers?

A device driver acts as a translator between the operating system or application software and a specific hardware device. Its role in an embedded system is to provide a standardized interface for the system to interact with peripherals like sensors, actuators, communication interfaces (UART, SPI, I2C), and memory devices. Without drivers, the OS wouldn't know how to send or receive data from the hardware.

Challenges in writing effective device drivers include: hardware complexity and limited documentation, real-time constraints and interrupt handling, resource management (memory, DMA), debugging (often requires specialized tools like JTAG debuggers), and ensuring portability across different hardware platforms or OS versions. Also, driver developers often need a deep understanding of both the hardware and software aspects of the system, including memory management, interrupt handling, and concurrency.
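The "standardized interface" idea can be sketched as a table of function pointers that application code calls without knowing which hardware sits behind it. Everything here is hypothetical: the `char_driver_ops` interface is invented for illustration, and the mock driver uses an in-memory loopback FIFO in place of real UART registers:

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical driver interface: upper layers see only these operations. */
typedef struct {
    int (*init)(void);
    int (*write)(const uint8_t *data, size_t len); /* returns bytes accepted */
    int (*read)(uint8_t *data, size_t len);        /* returns bytes delivered */
} char_driver_ops;

/* Mock "hardware": a loopback FIFO standing in for a real peripheral. */
#define MOCK_FIFO_SIZE 16u
static uint8_t mock_fifo[MOCK_FIFO_SIZE];
static size_t mock_count = 0;

static int mock_init(void)
{
    mock_count = 0;
    return 0;
}

static int mock_write(const uint8_t *data, size_t len)
{
    size_t written = 0;
    while (written < len && mock_count < MOCK_FIFO_SIZE) {
        mock_fifo[mock_count++] = data[written++];
    }
    return (int)written;
}

static int mock_read(uint8_t *data, size_t len)
{
    size_t n = (len < mock_count) ? len : mock_count;
    for (size_t i = 0; i < n; i++) {
        data[i] = mock_fifo[i];
    }
    /* Shift any unread bytes to the front of the FIFO. */
    for (size_t i = n; i < mock_count; i++) {
        mock_fifo[i - n] = mock_fifo[i];
    }
    mock_count -= n;
    return (int)n;
}

const char_driver_ops mock_uart_driver = { mock_init, mock_write, mock_read };

/* Application code depends only on the interface, not on the hardware. */
int send_greeting(const char_driver_ops *drv)
{
    static const uint8_t msg[] = { 'h', 'i' };
    return drv->write(msg, sizeof msg);
}
```

Swapping in a driver for different hardware means providing another `char_driver_ops` instance; `send_greeting` stays unchanged. A mock like this also lets driver-dependent logic be tested on a host before the hardware is available.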

17. What is the purpose of linker scripts in embedded development? How do they influence memory allocation and program execution?

Linker scripts in embedded development are crucial for defining how the compiled object files are combined to form the final executable image and how this image is loaded into the target device's memory. They primarily serve to map sections of the code and data to specific memory regions defined by the hardware architecture, such as Flash, RAM, and peripherals. Without a linker script, the default behavior might not be suitable for the target device, potentially leading to program malfunction.

Linker scripts influence memory allocation by specifying the memory regions (e.g., FLASH, RAM) and assigning input sections (e.g., .text, .data, .bss) to these regions. They define the starting address of each section and ensure that sections are placed in the correct memory location. This affects program execution because the CPU fetches instructions and data from these pre-defined memory addresses, and incorrect placement would cause the program to crash or behave unexpectedly. Additionally, they are used to define the location of the interrupt vector table, which is essential for handling interrupts correctly.
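One visible consequence of this placement is the work done by startup code before `main` runs: initialized variables (.data) are stored in Flash but live in RAM, so they are copied across, and .bss is zeroed. The sketch below shows that step with the addresses passed in as parameters so it can run anywhere; the function names are hypothetical:

```c
#include <stdint.h>

/* Copy the initial values of .data from its load address (in Flash)
 * to its run address (in RAM), one word at a time. */
void copy_data_section(const uint32_t *load_addr,
                       uint32_t *start, const uint32_t *end)
{
    while (start < end) {
        *start++ = *load_addr++;
    }
}

/* Clear .bss so that uninitialized globals start at zero, as C requires. */
void zero_bss_section(uint32_t *start, const uint32_t *end)
{
    while (start < end) {
        *start++ = 0u;
    }
}
```

On a real target the arguments would be the addresses of symbols that the linker script defines at the section boundaries, for example `copy_data_section(&_sidata, &_sdata, &_edata)`. Those symbol names are an assumption here; they vary between toolchains and vendor startup files.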

18. Describe the differences between preemptive and cooperative multitasking. What are the advantages and disadvantages of each approach in an RTOS environment?

Preemptive multitasking allows the operating system to interrupt a running task and switch to another task based on priority or time slice. This ensures that no single task can monopolize the CPU simply by failing to yield. Advantages in an RTOS include better responsiveness and the ability to meet deadlines for high-priority tasks when priorities are assigned correctly. Disadvantages are higher overhead due to context switching, the need to protect shared data against concurrent access, and potential for priority inversion.

Cooperative multitasking relies on tasks to voluntarily yield control to other tasks. If a task doesn't yield, it can block other tasks from running. Advantages in an RTOS are lower overhead and simpler implementation. Disadvantages include poor responsiveness, inability to guarantee deadlines, and vulnerability to tasks that don't yield, leading to system stalls.
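A cooperative scheduler can be as small as a loop over a table of task functions, where returning from the function is the "yield". This is a minimal sketch with hypothetical task names, not how any particular RTOS implements it:

```c
#include <stddef.h>
#include <stdint.h>

typedef void (*task_fn)(void);

uint32_t sensor_runs = 0;
uint32_t logger_runs = 0;

/* Each task does one short slice of work and returns. A task that loops
 * forever here would stall every other task. */
void sensor_task(void) { sensor_runs++; }
void logger_task(void) { logger_runs++; }

static const task_fn task_table[] = { sensor_task, logger_task };
#define TASK_COUNT (sizeof task_table / sizeof task_table[0])

/* Run a fixed number of round-robin passes over the task table.
 * A real system would typically loop forever instead. */
void run_cooperative(uint32_t rounds)
{
    for (uint32_t r = 0; r < rounds; r++) {
        for (size_t i = 0; i < TASK_COUNT; i++) {
            task_table[i]();
        }
    }
}
```

There is no context switch and no saved task state, which is where the low overhead comes from. The cost is that the worst-case response time equals the sum of the longest slices of all tasks, since nothing can interrupt a task that is running.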

19. Explain the concept of code optimization for embedded systems. What techniques can be used to reduce code size and improve execution speed?

Code optimization in embedded systems focuses on reducing resource consumption (memory, power) and improving performance (execution speed). Given the limited resources of embedded devices, it's a critical process.