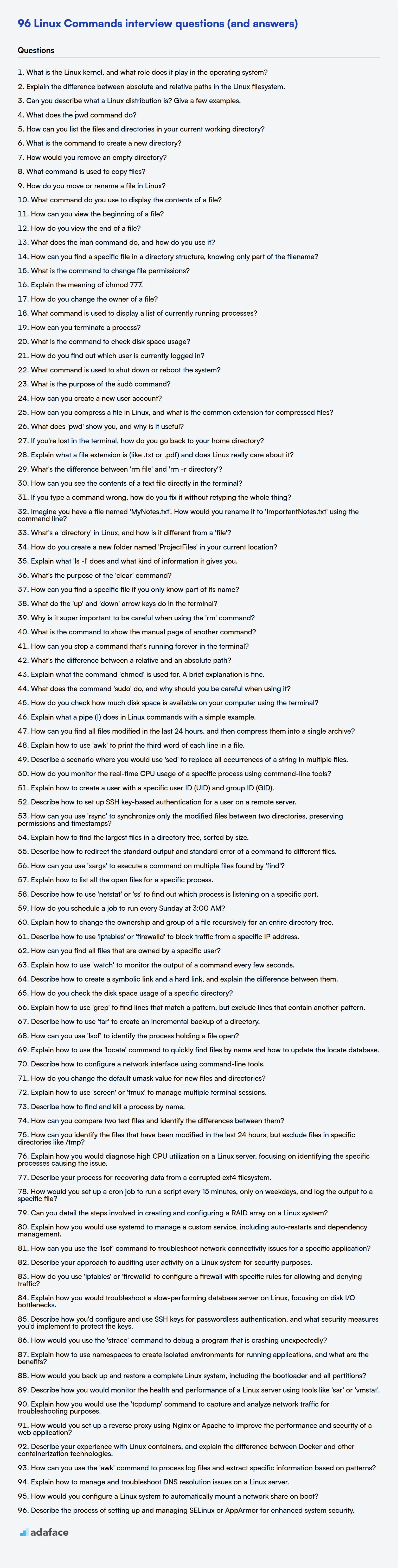

In today's tech landscape, Linux skills are highly sought after, and command-line proficiency is a key differentiator between candidates. Targeted interview questions help you confirm that a hire is ready to tackle real-world challenges, just as they do when you assess the other essential skills of a systems engineer.

This blog post provides a curated list of Linux command interview questions, categorized by experience level, from freshers to experienced professionals, along with multiple-choice questions. This resource equips you with the right questions to gauge candidates' understanding and practical skills in Linux environments.

These questions will help you streamline your interview process, accurately assess candidates' Linux capabilities, and build a stronger team. Before the interviews, you can use our Linux online test to shortlist the most skilled candidates.


Linux Commands interview questions for freshers

1. What is the Linux kernel, and what role does it play in the operating system?

The Linux kernel is the core of the Linux operating system. It's the software layer that sits between the hardware and user-level applications. Essentially, it manages the system's resources, allowing software to interact with the hardware.

The kernel's primary role includes:

- Process management: Creating, scheduling, and terminating processes.

- Memory management: Allocating and freeing memory for programs.

- Device drivers: Enabling communication with hardware devices (e.g., disks, network cards).

- System calls: Providing an interface for user-level applications to request services from the kernel (e.g., file I/O, network communication).

- Security: Enforcing access control and security policies.

2. Explain the difference between absolute and relative paths in the Linux filesystem.

In the Linux filesystem, an absolute path starts from the root directory (/) and specifies the complete location of a file or directory. For example, /home/user/documents/report.txt is an absolute path. It always points to the same location, regardless of the current working directory. A relative path, on the other hand, is defined relative to the current working directory. For example, if your current working directory is /home/user, then documents/report.txt is a relative path that points to the same file as the absolute path above. Relative paths can use . (current directory) and .. (parent directory) to navigate the file system.

To illustrate:

- Absolute path: /usr/bin/python3

- Relative path (from /home/user): ../../usr/bin/python3
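To see both kinds of path in action without touching real system directories, the sketch below builds a throwaway tree in a temporary directory. The `$base` prefix stands in for `/`, and `documents/report.txt` is just a sample file; both are assumptions made for the demo.

```shell
# Throwaway tree: $base plays the role of the filesystem root.
base="$(mktemp -d)"
mkdir -p "$base/home/user/documents"
echo "quarterly numbers" > "$base/home/user/documents/report.txt"

cd "$base/home/user"
cat documents/report.txt                    # relative: resolved from the current directory
cat "$base/home/user/documents/report.txt"  # absolute: works from any directory

cd documents
cat ../documents/report.txt                 # .. is the parent (user), so this is the same file
cat ./report.txt                            # . is the current directory
```

All four cat commands print the same line, because every path resolves to the same file.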

3. Can you describe what a Linux distribution is? Give a few examples.

A Linux distribution is essentially an operating system built around the Linux kernel. It bundles the kernel with other software, such as system utilities, desktop environments (like GNOME or KDE), applications, and installation tools, to make it a complete and usable OS for various purposes.

Some popular examples include:

- Ubuntu

- Fedora

- Debian

- CentOS (now CentOS Stream)

- Arch Linux

- Pop!_OS

4. What does the `pwd` command do?

The pwd command stands for "print working directory". It displays the absolute path of the current directory you are in. This path starts from the root directory (/) and shows the complete hierarchy of directories to reach your current location.

For example, if you are in the directory /home/user/documents, running pwd will output: /home/user/documents

5. How can you list the files and directories in your current working directory?

To list files and directories in the current working directory, you can use the ls command in Unix-like systems or dir command in Windows.

- Unix/Linux/macOS: Open a terminal and type ls. For more detailed information, use options like ls -l (long listing format), ls -a (lists all files, including hidden files), or ls -t (sorts by modification time, newest first).

- Windows: Open Command Prompt or PowerShell and type dir. To include hidden files, use dir /a in Command Prompt or dir -Force in PowerShell.
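A small sketch of the ls options above, run in a temporary directory so it lists only the sample files it creates (the filenames are made up for the demo):

```shell
cd "$(mktemp -d)"
touch notes.txt report.txt .hidden_config   # names starting with . are hidden
mkdir archive

ls          # archive, notes.txt, report.txt (no hidden entries)
ls -a       # also shows ., .. and .hidden_config
ls -l       # long format: permissions, links, owner, group, size, date, name
ls -t       # sorted by modification time, newest first
```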

6. What is the command to create a new directory?

The command to create a new directory is mkdir. For example, to create a directory named 'mydirectory', you would use the command mkdir mydirectory. This command works in most command-line interfaces, including those on Linux, macOS, and Windows (PowerShell, WSL).
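A short sketch, assuming a POSIX shell and a scratch directory, that also shows the -p option for creating nested directories in one step:

```shell
cd "$(mktemp -d)"
mkdir mydirectory                  # a single new directory
mkdir -p projects/2024/reports     # -p also creates any missing parent directories
mkdir -p projects/2024/reports     # ...and does not complain if the path already exists
mkdir mydirectory 2>/dev/null || echo "mkdir without -p fails when the directory exists"
```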

7. How would you remove an empty directory?

To remove an empty directory, use the rmdir command in Unix-like systems or the Remove-Item cmdlet in PowerShell. For example, in a terminal you would type rmdir directory_name to remove the directory. rmdir returns an error if the directory is not empty; in PowerShell, Remove-Item directory_name asks for confirmation (or fails in a non-interactive session) when the directory still has contents, unless you add -Recurse.

Alternatively, if you are using a programming language like Python, you can use the os.rmdir() function from the os module. For instance, import os; os.rmdir("directory_name") achieves the same result, removing the directory only if it's empty. Handling potential errors with try...except blocks is recommended in production code.
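The sketch below shows rmdir's safety behavior in a scratch directory: it removes an empty directory but refuses a non-empty one (the directory names are illustrative).

```shell
cd "$(mktemp -d)"
mkdir empty_dir full_dir
touch full_dir/file.txt

rmdir empty_dir                     # succeeds: the directory is empty
if ! rmdir full_dir 2>/dev/null; then
  echo "rmdir refused: full_dir is not empty"
fi
```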

8. What command is used to copy files?

The primary command used to copy files is cp. Its basic syntax is cp source destination, where source is the file or directory to be copied and destination is the location where the copy should be placed.

For example, cp file.txt /path/to/new/location/ will copy file.txt to the specified directory. Options like -r are used for recursively copying directories, and -p preserves file attributes.
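A minimal sketch of the cp forms described above, using made-up files in a temporary directory:

```shell
cd "$(mktemp -d)"
mkdir -p src/sub backup
echo "hello" > file.txt
echo "nested" > src/sub/inner.txt

cp file.txt backup/            # copy into a directory, keeping the name
cp file.txt copy.txt           # copy under a new name
cp -r src backup/src_copy      # -r is required to copy a directory tree
cp -p file.txt preserved.txt   # -p keeps mode, timestamps and (where permitted) ownership
```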

9. How do you move or rename a file in Linux?

In Linux, you can move or rename a file using the mv command. The basic syntax is mv [source] [destination]. To rename a file, the destination should be the new name for the file in the same directory. For example, mv oldfile.txt newfile.txt will rename oldfile.txt to newfile.txt.

To move a file, the destination should be the path to the new directory. For example, mv file.txt /path/to/new/directory/ will move file.txt to the specified directory. You can also rename and move a file simultaneously by providing a new name along with the destination directory, like so: mv file.txt /path/to/new/directory/newname.txt. This will move file.txt to /path/to/new/directory/ and rename it to newname.txt in the process.
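The three uses of mv can be tried side by side in a scratch directory. The paths mirror the examples above and are placeholders:

```shell
cd "$(mktemp -d)"
mkdir -p path/to/new/directory
echo "draft" > oldfile.txt
echo "data"  > file.txt

mv oldfile.txt newfile.txt                       # rename in place
mv newfile.txt path/to/new/directory/            # move, keeping the name
mv file.txt path/to/new/directory/newname.txt    # move and rename in one step
ls path/to/new/directory
```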

10. What command do you use to display the contents of a file?

To display the contents of a file, I would use the cat command. For example, cat filename.txt would display the entire contents of filename.txt to the terminal. Other useful commands include less for viewing large files page by page and head or tail to view the beginning or end of a file, respectively.

11. How can you view the beginning of a file?

To view the beginning of a file, you can use the head command in Unix-like systems. By default, head displays the first 10 lines of a file. For example:

head filename.txt

You can specify a different number of lines to view using the -n option. For example, to view the first 20 lines:

head -n 20 filename.txt

Another common tool is a text editor like nano, vim, or emacs, which allows you to open the file and immediately see the beginning. However, for quick viewing without editing, head is generally preferred.

12. How do you view the end of a file?

On the command line, the tail command shows the end of a file: by default it prints the last 10 lines, tail -n 20 filename prints the last 20, tail -c 100 filename prints the last 100 bytes, and tail -f filename keeps following the file as it grows (useful for logs). wc -l filename tells you how many lines the file has. In a text editor or pager, you simply jump to the last line (for example, G in vim or less). In a programming context, you can seek to the end of the file and read the last chunk of data; in Python, file.seek(0, os.SEEK_END) positions the file pointer at the end.

Note that Linux files do not contain an EOF (End-Of-File) character. End-of-file is a condition that programs detect when a read returns no more data; at an interactive terminal, pressing Ctrl+D signals it.
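A quick sketch of tail on a predictable file: seq writes the numbers 1 to 100, one per line, so the expected output of each command is easy to check.

```shell
cd "$(mktemp -d)"
seq 1 100 > numbers.txt       # 100 lines: 1..100

tail numbers.txt              # last 10 lines (91..100)
tail -n 3 numbers.txt         # last 3 lines: 98, 99, 100
tail -c 4 numbers.txt         # last 4 bytes: "100" plus its trailing newline
wc -l < numbers.txt           # line count: 100
# tail -f app.log             # follow a growing file such as a log; stop with Ctrl+C
```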

13. What does the `man` command do, and how do you use it?

The man command is used to display the user manual pages for a given command or topic in a Unix-like operating system. It's an essential tool for understanding how to use commands, utilities, and system calls.

To use it, you simply type man followed by the name of the command you want to learn about. For example, man ls will display the manual page for the ls command. You can navigate the manual page using the arrow keys, j and k, or Page Up and Page Down. To search within the page, use / followed by your search term. To quit, press q.

14. How can you find a specific file in a directory structure, knowing only part of the filename?

You can use the find command in Unix-like systems or Get-ChildItem in PowerShell. For find, use wildcards with the -name option. For example, find . -name "*part_of_filename*" searches the current directory and its subdirectories for files containing "part_of_filename". In PowerShell, use Get-ChildItem -Path . -Recurse -Filter "*part_of_filename*". The -Recurse parameter ensures that the command searches through all subdirectories.

Alternatively, many code editors and IDEs have built-in find-in-files functionalities that allow searching based on partial filenames across a directory structure. These are often more user-friendly than command-line tools, especially for large directory structures.
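A sketch of partial-name searches with find in a sample tree. It also uses -iname for case-insensitive matching, which GNU and BSD find support even though it is not part of POSIX:

```shell
cd "$(mktemp -d)"
mkdir -p docs/2024 src
touch docs/2024/annual_report_final.pdf docs/Report_draft.txt src/main.c

find . -name "*report*"            # case-sensitive: only annual_report_final.pdf
find . -iname "*report*"           # ignores case: also Report_draft.txt
find . -type f -name "*report*"    # -type f restricts results to regular files
```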

15. What is the command to change file permissions?

The command to change file permissions in Unix-like systems is chmod. It modifies the access rights for the owner, group, and others.

For example, chmod 755 filename sets read, write, and execute permissions for the owner, and read and execute permissions for the group and others. Alternatively, you can use symbolic mode, such as chmod u+x filename to add execute permission for the owner.
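The sketch below applies both numeric and symbolic modes to a sample script and checks the result with ls -l. Each octal digit is a sum of read (4), write (2), and execute (1):

```shell
cd "$(mktemp -d)"
printf '#!/bin/sh\necho hi\n' > script.sh

chmod 755 script.sh          # owner 7 = 4+2+1 (rwx); group and others 5 = 4+1 (r-x)
ls -l script.sh              # starts with -rwxr-xr-x
chmod go-rx script.sh        # symbolic mode: remove read/execute from group and others
ls -l script.sh              # starts with -rwx------
```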

16. Explain the meaning of `chmod 777`.

chmod 777 is a command in Unix-like operating systems (like Linux and macOS) that changes the permissions of a file or directory. The '777' part represents the permissions being granted. Each digit corresponds to a permission level for the owner, group, and others (respectively). In this case, 7 grants read (4), write (2), and execute (1) permissions to everyone: the owner of the file, the group the file belongs to, and all other users on the system.

Essentially, chmod 777 makes a file or directory completely open, allowing anyone to read, modify, or execute it. This is generally discouraged due to security risks, as it can leave files vulnerable to tampering or unauthorized access. It is recommended to assign permissions more restrictively based on need.

17. How do you change the owner of a file?

To change the owner of a file on Linux/Unix-like systems, use the chown command. The basic syntax is chown new_owner filename. On Linux, changing a file's owner requires superuser (root) privileges, which is why the examples below use sudo; a regular user who owns a file can only change its group, and only to a group they belong to.

For example, to change the owner of myfile.txt to john, you would use the command: sudo chown john myfile.txt. If you also need to change the group ownership, you can specify both, separated by a colon: sudo chown john:developers myfile.txt.

18. What command is used to display a list of currently running processes?

The command to display a list of currently running processes depends on the operating system. Common commands include:

- Linux/Unix/macOS: ps aux, top, or htop (htop usually has to be installed separately).

- Windows: tasklist, run from Command Prompt or PowerShell. The GUI tool is Task Manager.
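A non-interactive sketch with ps (top and htop are interactive, so they are left out here). $$ expands to the PID of the current shell:

```shell
ps aux | head -n 5              # BSD-style listing of all processes (header + 4 rows)
ps -ef | head -n 5              # the equivalent System V-style listing
ps -p $$ -o pid,ppid,comm       # only this shell, with columns chosen via -o
```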

19. How can you terminate a process?

A process can be terminated in several ways, depending on the operating system. On Unix/Linux, the kill command sends a signal to the process; on Windows, you can end it from Task Manager (or with taskkill). The default signal, SIGTERM, politely asks the process to terminate and lets it clean up its resources.

Alternatively, you can forcefully terminate a process with SIGKILL (Unix/Linux) or "End Task" (Windows), which stops it immediately without giving it a chance to clean up. Programmatically, you can use functions such as the Kill method of os.Process in Go or TerminateProcess in the Windows API. Here's an example using the kill command on Linux/Unix:

kill <pid>

kill -9 <pid> # forceful termination (SIGKILL)
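The sketch below starts a disposable background sleep, terminates it with the default SIGTERM, and reads the exit status. A shell reports a process killed by signal N with status 128 + N:

```shell
sleep 300 &                      # a long-running background process
pid=$!                           # $! is the PID of the most recent background job

kill "$pid"                      # no signal given, so SIGTERM (15) is sent
status=0
wait "$pid" 2>/dev/null || status=$?   # reap it and capture its exit status
echo "exit status: $status"      # 143 = 128 + 15: terminated by SIGTERM

# For a process that ignores SIGTERM: kill -9 "$pid" sends SIGKILL, which cannot be caught.
```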

20. What is the command to check disk space usage?

The primary command to check disk space usage is df. Specifically, df -h provides human-readable output (e.g., using 'K', 'M', 'G' suffixes) making the results easier to understand. Another useful command is du, which estimates file space usage. du -sh * in a directory will give the total size of each file and subdirectory.

df reports on filesystem usage, while du reports on the space occupied by files. There can be discrepancies between the two due to factors like reserved space or deleted files that are still open.
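A sketch comparing df and du in a scratch directory with a roughly 2 MB sample file. Exact du figures depend on the filesystem (for example, compressed filesystems may report less), so treat the numbers as illustrative:

```shell
cd "$(mktemp -d)"
mkdir logs
head -c 2000000 /dev/zero > logs/big.bin   # ~2 MB sample file
echo "small" > notes.txt

df -h .      # size, used and available space of the filesystem holding this directory
du -sh *     # one total per entry: logs and notes.txt
du -sh .     # a single summary for the whole directory
```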

21. How do you find out which user is currently logged in?

The method for determining the currently logged-in user depends heavily on the specific operating system, programming language, and framework in use. However, here are some general approaches:

- Operating system level: In a Linux/macOS terminal, the whoami command prints the current user's name, the $USER environment variable usually holds the same value, and who (or w) lists every user with an active login session. Windows has similar environment variables and API calls for user identification; for example, a program can call GetUserName() in the Windows API to get the logged-in user.

- Application level: Web applications often use session management to track logged-in users. The server stores a session ID (often in a cookie) that is associated with a specific user's data, so you can look up the session to find the user's details. Similarly, a desktop application might store the logged-in user in a class that other parts of the application can query.
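A short sketch of the command-line options. In containers, $USER may be unset and who may print nothing, so the example handles both cases:

```shell
whoami                              # effective user name of this shell
id -un                              # same name via id; plain `id` also prints UID, GID and groups
echo "${USER:-USER is not set}"     # environment variable usually set at login
who                                 # users with an active login session (may be empty)
```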

22. What command is used to shut down or reboot the system?

The shutdown command is used to shut down or reboot a Linux system. It can be used to halt, power-off, or reboot the machine. For example, sudo shutdown -r now will reboot the system immediately. The -r option specifies reboot, and now specifies the time.

23. What is the purpose of the `sudo` command?

The sudo command allows a user to execute commands with the security privileges of another user, by default the superuser (root). It stands for "superuser do".

Its main purpose is to grant administrative privileges to regular users on a temporary and controlled basis, without requiring them to log in as root directly. This enhances system security by minimizing the risk of accidental or malicious damage to the system caused by running everything as root.

24. How can you create a new user account?

The process of creating a new user account depends on the system in question. Typically, it involves administrative privileges. For example:

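- Operating systems (Windows, Linux, macOS): These usually have a 'User Accounts' or 'Users & Groups' section in the system settings or control panel where an administrator can add a new user. On Linux, the command-line equivalent is sudo useradd -m newuser (which creates the account and its home directory), followed by sudo passwd newuser to set its password.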

- Web Applications: These often have a registration form where a new user can enter their details (email, password, etc.). An administrator might also be able to create users via an admin panel. The backend code would typically store the new user’s data in a database.

- Databases: Databases often have a CREATE USER command, which requires administrative privileges. Example (PostgreSQL): CREATE USER new_user WITH PASSWORD 'some_password';

25. How can you compress a file in Linux, and what is the common extension for compressed files?

In Linux, you can compress a file with the gzip command. For example, gzip filename compresses 'filename' and replaces it with filename.gz, so .gz is the usual extension for gzip-compressed files. Other common compression tools include bzip2 (extension .bz2) and xz (extension .xz), which often achieve better compression ratios at the cost of slower compression.

To uncompress a gzip file, you would use the gunzip filename.gz command. Similarly, bunzip2 filename.bz2 and unxz filename.xz are used to uncompress bzip2 and xz compressed files, respectively.
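A round-trip sketch with a sample file, which shows that gzip replaces the original unless you write to standard output with -c:

```shell
cd "$(mktemp -d)"
seq 1 10000 > data.txt

gzip data.txt                    # replaces data.txt with data.txt.gz
ls                               # data.txt.gz
gunzip data.txt.gz               # restores data.txt and removes the .gz
gzip -c data.txt > copy.txt.gz   # -c writes to stdout, so the original is kept
```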

Linux Commands interview questions for juniors

1. What does 'pwd' show you, and why is it useful?

pwd (print working directory) shows the absolute path of the directory you are currently in. It's useful because it shows your location within the file system, which prevents confusion when navigating or executing commands.

For example, if you are in /home/user/documents then pwd will output /home/user/documents. This becomes critical when dealing with relative paths. Knowing the present working directory allows you to correctly reference files and directories using paths relative to your current location, ensuring commands operate on the intended files.

2. If you're lost in the terminal, how do you go back to your home directory?

To navigate back to your home directory in the terminal, you can use the cd command without any arguments. Simply typing cd and pressing Enter will take you back. Alternatively, you can use cd ~, where ~ is a shortcut representing the home directory. Both commands achieve the same result.

3. Explain what a file extension is (like .txt or .pdf) and does Linux really care about it?

A file extension is the suffix at the end of a filename, usually separated by a period (.). It's a convention used by operating systems and applications to identify the type of file. For example, .txt typically indicates a plain text file, and .pdf indicates a Portable Document Format file. The extension helps the operating system and applications determine which program to use to open the file and how to handle its contents. While often three letters long, extensions can be different lengths.

The Linux kernel itself does not care about extensions. Whether a file can be run depends on its execute permission and its content (for example, an ELF header or a #! line), not its name. Tools such as the file command identify a file's type by inspecting its content, particularly the "magic number" at the beginning of the file (a sequence of bytes that identifies the format). You can rename a .txt file to .pdf, and a text editor will still open it as plain text. However, desktop environments and many applications do use extensions to decide which program should open a file, so a graphical file manager might try to open it with a PDF viewer. In short, the operating system does not depend on extensions, but parts of the desktop often do.
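To see that content, not the name, determines the format, the sketch below saves gzip data under a misleading .pdf name and inspects its first two bytes, the gzip magic number 1f 8b. The file utility is optional here, since minimal systems may not have it installed:

```shell
cd "$(mktemp -d)"
echo "just some text" | gzip > report.pdf   # gzip data behind a .pdf name

od -An -tx1 -N2 report.pdf    # first two bytes: 1f 8b, the gzip magic number
if command -v file >/dev/null 2>&1; then
  file report.pdf             # file inspects content, so it reports gzip data
fi
gunzip -c < report.pdf        # still decompresses: prints "just some text"
```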

4. What's the difference between 'rm file' and 'rm -r directory'?

rm file removes a single file. It cannot remove directories, and will throw an error if you try to use it on a directory.

rm -r directory recursively removes a directory and all its contents (files and subdirectories). The -r option stands for recursive, and it's essential when you want to delete a directory that isn't empty. Use with caution, as deleted files are not typically recoverable.
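A sketch run in a throwaway directory, so nothing real is deleted. It shows that plain rm refuses a directory and that rm -r removes the whole tree:

```shell
cd "$(mktemp -d)"
touch file
mkdir -p directory/sub
touch directory/a.txt directory/sub/b.txt

rm file                                    # removes a single file
rm directory 2>/dev/null || echo "rm without -r refuses to delete a directory"
rm -r directory                            # removes the directory and everything in it
```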

5. How can you see the contents of a text file directly in the terminal?

You can use several commands in the terminal to view the contents of a text file. The most common ones are:

- cat filename.txt: Concatenates files and prints them to standard output; with a single file, it prints the entire file.

- less filename.txt: Lets you view the file page by page, which suits larger files. Navigate with the arrow keys or Page Up/Page Down, and press q to quit.

- head filename.txt: Displays the first few lines of the file (10 by default).

- tail filename.txt: Displays the last few lines of the file (10 by default).

6. If you type a command wrong, how do you fix it without retyping the whole thing?

Several methods can help avoid retyping an entire command.

- Bash history: Use the up arrow key to cycle through previously entered commands. Once you find the incorrect command, edit it with the left/right arrow keys and the Delete or Backspace keys. You can also press Ctrl+a to move to the beginning of the line and Ctrl+e to move to the end. Ctrl+k deletes from the cursor to the end of the line, and Ctrl+u deletes from the cursor to the beginning of the line.

- Command-line editing: Bash (and other shells) have advanced editing capabilities. Ctrl+f and Ctrl+b move the cursor forward and backward one character, and Alt+f and Alt+b move forward and backward one word. !! re-executes the last command, !abc executes the most recent command starting with abc, and ^old^new^ replaces the first occurrence of old with new in the last command and runs it.

- Tab completion: Use the Tab key to auto-complete commands, filenames, and paths, which reduces typing errors in the first place.

7. Imagine you have a file named 'MyNotes.txt'. How would you rename it to 'ImportantNotes.txt' using the command line?

To rename 'MyNotes.txt' to 'ImportantNotes.txt' from the command line, you would use the mv command (short for move) on Linux/macOS or the ren command on Windows.

- Linux/macOS: mv MyNotes.txt ImportantNotes.txt

- Windows: ren MyNotes.txt ImportantNotes.txt

8. What's a 'directory' in Linux, and how is it different from a 'file'?

In Linux, a directory is a special type of file that contains a list of files and subdirectories. Think of it as a container or folder used to organize the file system.

The key difference between a directory and a regular file is their purpose. A regular file stores data (text, images, executables, etc.), while a directory stores entries that map names to inode numbers. The inode, not the directory, holds each file's metadata, such as its permissions, owner, and the location of its data blocks. A directory therefore doesn't store the actual content of the files it contains; it simply points to them.
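A directory entry is just a name that points at an inode. You can see this by giving one file two names with a hard link. This sketch assumes a filesystem that supports hard links, which most Linux filesystems do:

```shell
cd "$(mktemp -d)"
mkdir ProjectDir
echo "meeting at 10" > notes.txt
ln notes.txt alias.txt      # hard link: a second directory entry for the same inode

ls -ld ProjectDir           # the leading "d" marks a directory
ls -i notes.txt alias.txt   # both names show the same inode number
```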

9. How do you create a new folder named 'ProjectFiles' in your current location?

To create a new folder named 'ProjectFiles' in your current location, you can use the following command in your terminal:

mkdir ProjectFiles

This command, mkdir, is short for "make directory." Executing this creates the new folder.

10. Explain what 'ls -l' does and what kind of information it gives you.

ls -l is a command in Unix-like operating systems that lists directory contents in a long listing format. This format provides detailed information about each file and directory.

The information provided includes:

- File type and permissions: For example, -rw-r--r-- for a file or drwxr-xr-x for a directory. The first character indicates the file type (- for a regular file, d for a directory, l for a symbolic link). The next nine characters represent the read, write, and execute permissions for the owner, group, and others.

- Number of hard links: The number of links pointing to the file.

- Owner username: The username of the file's owner.

- Group name: The group associated with the file.

- File size: The size of the file in bytes.

- Last modified time: The date and time the file was last modified.

- Filename: The name of the file or directory.
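A sketch that creates a 6-byte file with known permissions and then pulls individual columns out of the long listing with awk. The owner "alice" in the comment is only a placeholder; the date columns also vary:

```shell
cd "$(mktemp -d)"
printf 'hello\n' > notes.txt     # 6 bytes
chmod 644 notes.txt
ls -l notes.txt                  # e.g. -rw-r--r-- 1 alice alice 6 <date> notes.txt

# $1 = type+permissions, $2 = hard links, $3 = owner, $4 = group, $5 = size in bytes
ls -l notes.txt | awk '{print "perms=" $1, "links=" $2, "owner=" $3, "group=" $4, "size=" $5}'
```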

11. What's the purpose of the 'clear' command?

The clear command clears the terminal screen and removes previously executed commands and their output from view, which gives you a clean slate. It does not erase your command history, so the up arrow still recalls earlier commands. This is useful for improving readability, reducing clutter, and focusing on the current task.

12. How can you find a specific file if you only know part of its name?

You can use the find command in Unix-like systems (Linux, macOS) to locate files based on partial names. The basic syntax is find <directory> -name "*part_of_name*". The <directory> argument specifies where to start the search (e.g., . for the current directory, or / for the entire system). The -name option matches filenames against the given pattern, and the wildcards (*) allow 'part_of_name' to appear anywhere in the filename. Quote the pattern so the shell does not expand the asterisks itself.

For example, to find all files containing 'report' in their name within the current directory, you would use find . -name "*report*". On many systems you can also use the locate command for a faster lookup; for example, locate report lists indexed paths containing 'report'. Because locate searches a database that is only updated periodically, recently created files may not appear until you refresh it with sudo updatedb.

13. What do the 'up' and 'down' arrow keys do in the terminal?

In most shells (like Bash, Zsh, or PowerShell), the 'up' and 'down' arrow keys navigate your command history. Pressing the 'up' arrow key recalls the previously executed command, so you can quickly re-run or modify it. Pressing 'up' repeatedly moves further back through the history.

The 'down' arrow key moves in the opposite direction, forward through the history. After you have gone back with the 'up' arrow, 'down' takes you to more recent commands and eventually returns you to the line you were typing before you started browsing (often an empty prompt).

14. Why is it super important to be careful when using the 'rm' command?

The rm command in Unix-like operating systems permanently deletes files and directories. There is no undo function or recycle bin. Once a file is removed using rm, it's gone. This can lead to significant data loss if used carelessly, such as accidentally deleting important documents, system files, or entire directories.

Incorrect usage, especially with wildcard characters (e.g., rm -rf *), can quickly escalate into a disaster. Therefore, it's crucial to double-check the target and options before executing rm to avoid irreversible consequences. The -i option can be used for interactive confirmation before deleting each file, providing an extra layer of safety.

15. What is the command to show the manual page of another command?

To show the manual page of another command, use the man command followed by the name of the command you want to learn about. For example, to see the manual page for the ls command, you would type man ls in your terminal. This will display detailed information about the command, including its syntax, options, and usage examples.

Alternatively, some commands also provide built-in help using the --help flag. However, man pages are typically more comprehensive.

16. How can you stop a command that's running forever in the terminal?

To stop a command running indefinitely in the terminal, the most common and direct approach is to use the keyboard shortcut Ctrl + C. This sends an interrupt signal (SIGINT) to the process, which usually causes it to terminate gracefully.

Alternatively, if Ctrl + C doesn't work (e.g., the program is ignoring the signal), you can press Ctrl + Z. This suspends (stops) the process and returns you to the prompt. You can then use the kill command with the process ID (PID) to terminate it. To find the PID, use jobs -l (if you suspended it from this shell) or ps aux | grep <command_name>, and then run kill <PID>. If a normal kill doesn't work, kill -9 <PID> is a last resort.

17. What's the difference between a relative and an absolute path?

An absolute path specifies the location of a file or directory from the root directory of the file system. It always starts with the root directory (e.g., / on Unix-like systems or C:\ on Windows). A relative path, on the other hand, specifies the location of a file or directory relative to the current working directory.

For example, if your current working directory is /home/user/documents, an absolute path to a file named report.txt might be /home/user/documents/report.txt, while a relative path could be simply report.txt (if the file is in the current directory) or ./report.txt which explicitly refers to the current directory. If it were one directory up it would be ../report.txt.

18. Explain what the command 'chmod' is used for. A brief explanation is fine.

The chmod command in Unix-like operating systems (Linux, macOS, etc.) is used to change the access permissions of files and directories. It allows you to control who can read, write, and execute a file.

For example, chmod 755 my_script.sh lets the owner read, write, and execute the script, and lets its group and all other users read and execute it.

19. What does the command 'sudo' do, and why should you be careful when using it?

The sudo command allows a permitted user to execute a command as the superuser (root) or another user, as specified in the sudoers file. It essentially grants elevated privileges temporarily.

You should be extremely careful when using sudo because root access bypasses normal security restrictions. A mistake with sudo can potentially damage your system, delete important files, or compromise security. Always double-check the command you're about to execute with sudo, and ensure you understand its implications.

20. How do you check how much disk space is available on your computer using the terminal?

To check disk space on the terminal, you can use the df command. df -h is commonly used because the -h option makes the output human-readable, displaying sizes in units like KB, MB, GB, and TB.

Alternatively, you can use the du command to check the disk usage of specific files or directories. For example, du -sh * will show the total size of each file or directory in the current directory. The -s flag provides a summary, and the -h flag again makes the output human-readable.

21. Explain what a pipe (|) does in Linux commands with a simple example.

In Linux, a pipe (|) is a form of redirection that is used to send the output of one command as the input to another command. It allows you to chain commands together to perform complex operations.

For example, ls -l | grep 'myfile.txt' will first list all files and directories in the current directory (using ls -l) and then pass that output to the grep command, which will filter the results to only show lines containing 'myfile.txt'. This effectively finds 'myfile.txt' in the long listing of the directory.
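A sketch of the same idea with sample files, plus a second pipeline whose result is captured in a variable. No temporary files are needed between the stages:

```shell
cd "$(mktemp -d)"
touch myfile.txt notes.txt report.pdf

# ls writes its listing to stdout; the pipe connects that to grep's stdin.
ls -l | grep 'myfile.txt'

# Count the .txt entries by piping ls into grep -c.
txt_count=$(ls | grep -c '\.txt$')
echo "$txt_count"     # 2
```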

Linux Commands intermediate interview questions

1. How can you find all files modified in the last 24 hours, and then compress them into a single archive?

To find all files modified in the last 24 hours and compress them into a single archive, you can use the find command in combination with tar. Here's how:

First, use the find command to locate files modified within the last 24 hours:

find . -type f -mtime -1

This command searches the current directory (.) for files (-type f) modified within the last one day (-mtime -1). To compress these files into a tar.gz archive, pipe the output of find to tar:

find . -type f -mtime -1 -print0 | tar -czvf archive.tar.gz --null -T -

Explanation of tar options:

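- -c: Create an archive.

- -z: Compress the archive with gzip.

- -v: Verbose output (list each file as it is added).

- -f archive.tar.gz: The name of the archive file.

- --null: Treat the file list read with -T as NUL-separated, matching the -print0 output of find.

- -T -: Read the list of files to archive from standard input, here piped from find.

Because -print0 separates filenames with a NUL character, which cannot appear in a filename, this pairing is safe for names containing spaces, newlines, or other special characters. With plain newline-separated output, a filename containing a newline would be misread. Also note that the archive is written into the directory being searched, so find may also list the half-written archive.tar.gz itself; writing the archive outside the searched directory avoids this.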
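A self-checking sketch of the pipeline. It assumes GNU tar or bsdtar (both accept --null and -T), backdates one sample file with POSIX touch -t so that only the other file qualifies, and writes the archive outside the searched tree:

```shell
work="$(mktemp -d)"
mkdir "$work/src" && cd "$work/src"
echo fresh > "new file.txt"        # modified now; the name contains a space
echo stale > old.txt
touch -t 202001010000 old.txt      # backdate mtime to 2020-01-01 00:00

# The archive goes to $work, outside the tree being searched.
find . -type f -mtime -1 -print0 | tar -czf "$work/recent.tar.gz" --null -T -

tar -tzf "$work/recent.tar.gz"     # should list only ./new file.txt
```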

2. Explain how to use 'awk' to print the third word of each line in a file.

To print the third word of each line in a file using awk, you can use the following command:

awk '{print $3}' filename

Here, $3 refers to the third field of each line, where fields are separated by whitespace (by default). awk processes each line of the file, and the print $3 action prints the content of the third field. Replace filename with the actual name of your file.
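A sketch on a small sample file showing default whitespace splitting (any run of spaces or tabs separates fields). It also shows what happens on a line with fewer than three fields and how -F changes the separator:

```shell
cd "$(mktemp -d)"
printf 'alpha beta gamma delta\none   two\tthree\nshort line\n' > words.txt

awk '{print $3}' words.txt     # gamma, three, then an empty line (line 3 has only 2 fields)

# -F sets another field separator, e.g. a comma for simple CSV.
printf 'id,name,role\n1,ana,admin\n' | awk -F, '{print $3}'   # role, admin
```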

3. Describe a scenario where you would use 'sed' to replace all occurrences of a string in multiple files.

A common scenario is updating a copyright notice across many files. Let's say I need to replace Copyright 2023 Company A with Copyright 2024 Company A in all .txt files under a directory. I would combine find and xargs with GNU sed's -i (in-place) option, which also implies -s (treat each file separately).

Here's the command:

find . -name "*.txt" -print0 | xargs -0 sed -i 's/Copyright 2023 Company A/Copyright 2024 Company A/g'

This command finds all .txt files in the current directory tree and passes them to sed, which performs a global replacement (the g flag in the s/old/new/g substitution) in each file. The -print0 and -0 options of find and xargs, respectively, keep filenames with spaces safe. The -i option tells sed to edit files in place; with GNU sed, -i also implies -s, so each file is treated as a separate input rather than one continuous stream. To keep a copy of each original, give -i a suffix, for example -i.bak.
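The same bulk edit can be sketched in Python for a literal (non-regex) string. This is an illustration under that assumption; replace_in_files is a hypothetical helper, and unlike sed -i, it rewrites each file directly instead of through a temporary file.

```python
from pathlib import Path


def replace_in_files(root, pattern_glob, old, new):
    """Replace every occurrence of old with new in files matching
    pattern_glob under root, similar in effect to
    find root -name "*.txt" -print0 | xargs -0 sed -i 's/old/new/g'.
    Returns the list of files that changed."""
    changed = []
    for path in sorted(Path(root).rglob(pattern_glob)):
        if not path.is_file():
            continue
        text = path.read_text()
        updated = text.replace(old, new)  # replaces all occurrences, like the g flag
        if updated != text:
            path.write_text(updated)
            changed.append(path)
    return changed
```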

4. How do you monitor the real-time CPU usage of a specific process using command-line tools?

To monitor the real-time CPU usage of a specific process using command-line tools, you can use top or ps. top provides a dynamic, real-time view.

To use top, first identify the process ID (PID) of the process you want to monitor. Then, execute top -p <PID>. This will filter the top output to only show information for the specified process, including its CPU usage (%CPU).

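Alternatively, you can use ps in combination with watch: watch -n 1 ps -p <PID> -o %cpu. The -n 1 option tells watch to rerun the command every second, and -o %cpu tells ps to print only the CPU usage column. Keep in mind that ps calculates %CPU as the process's total CPU time divided by the time it has been running, so it shows an average over the process's lifetime rather than its current load; top measures usage over each refresh interval and is the better choice for spotting a sudden spike.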
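To illustrate how an interval-based measurement works, here is a Linux-only Python sketch that does what top does for a single process: it reads the cumulative CPU time from /proc/<pid>/stat twice and divides the difference by the sampling interval. The helper names cpu_ticks and sample_cpu_percent are hypothetical; the field positions follow the /proc/<pid>/stat layout documented in proc(5).

```python
import os
import time


def cpu_ticks(stat_text):
    """Return utime + stime (in clock ticks) from /proc/<pid>/stat contents.
    Field 2 (the command name) is in parentheses and may contain spaces,
    so split only after the last ')'."""
    after_comm = stat_text.rsplit(")", 1)[1].split()
    # after_comm[0] is field 3 (state), so field N is at index N - 3.
    utime, stime = int(after_comm[11]), int(after_comm[12])  # fields 14 and 15
    return utime + stime


def sample_cpu_percent(pid, interval=1.0):
    """CPU usage of pid over the next `interval` seconds, in percent of one CPU."""
    clk_tck = os.sysconf("SC_CLK_TCK")  # clock ticks per second
    with open(f"/proc/{pid}/stat") as f:
        before = cpu_ticks(f.read())
    time.sleep(interval)
    with open(f"/proc/{pid}/stat") as f:
        after = cpu_ticks(f.read())
    return (after - before) / clk_tck / interval * 100.0
```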

5. Explain how to create a user with a specific user ID (UID) and group ID (GID).

To create a user with a specific UID and GID on a Linux system, use the useradd command with the -u and -g options, respectively. You need root privileges to run it. For example, sudo useradd -u 1001 -g 1001 newuser creates a user named newuser with UID 1001 whose primary group has GID 1001. The group must already exist; you can create it first with sudo groupadd -g 1001 newgroup. To create a home directory, add -m (and -d /path if you want a non-default location). Finally, set a password for the user with sudo passwd newuser.

Alternatively, you can use the adduser command. On Debian-based systems it is a friendlier, interactive wrapper around the lower-level tools and also accepts --uid and --gid options; on Red Hat-based systems, adduser is simply a link to useradd. Because useradd behaves consistently across distributions, it is generally the safer choice in scripts or when creating many users with specific UID/GID requirements. Always verify the result with id <username> after creation.

6. Describe how to set up SSH key-based authentication for a user on a remote server.

To set up SSH key-based authentication, first generate an SSH key pair on your local machine using ssh-keygen. This creates a private key (e.g., id_rsa) and a public key (e.g., id_rsa.pub). Securely copy the public key to the remote server, typically to the ~/.ssh/authorized_keys file for the desired user. You can use ssh-copy-id user@remote_host if it's available, or manually copy the contents of the .pub file.

Ensure the .ssh directory and authorized_keys file on the remote server have the correct permissions: .ssh should be 700 (drwx------) and authorized_keys should be 600 (-rw-------). After this setup, you should be able to SSH into the remote server without being prompted for a password, using ssh user@remote_host.
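The permission step can also be scripted. This Python sketch assumes the standard ~/.ssh layout and simply applies the modes described above, then reads them back; fix_ssh_permissions is a hypothetical helper name.

```python
import os
import stat


def fix_ssh_permissions(home):
    """Set ~/.ssh to 700 and ~/.ssh/authorized_keys to 600,
    then return the resulting permission bits for verification."""
    ssh_dir = os.path.join(home, ".ssh")
    keys = os.path.join(ssh_dir, "authorized_keys")
    os.chmod(ssh_dir, 0o700)  # drwx------
    os.chmod(keys, 0o600)     # -rw-------
    return (stat.S_IMODE(os.stat(ssh_dir).st_mode),
            stat.S_IMODE(os.stat(keys).st_mode))
```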

7. How can you use 'rsync' to synchronize only the modified files between two directories, preserving permissions and timestamps?

To synchronize only modified files between two directories using rsync, while preserving permissions and timestamps, you can use the following command:

rsync -avz --delete source_directory/ destination_directory

- -a (archive mode): Shorthand for -rlptgoD, which recurses into directories and preserves symbolic links, permissions (-p), modification times (-t), group, owner, and device and special files. Owner preservation only takes effect when the receiving rsync runs as root.

- -v (verbose): Lists the files being transferred.

- -z (compress): Compresses file data during the transfer, which is useful over a network.

- --delete: Removes files from the destination that no longer exist in the source, so the destination becomes a mirror of the source. Without --delete, rsync only adds and updates files; it never removes anything from the destination.

Only modified files are transferred because, by default, rsync's "quick check" skips any file whose size and modification time already match on the destination. Preserving timestamps with -t (part of -a) is what keeps this check reliable on later runs.

Remember to include the trailing / on the source directory. Omitting it changes the behavior of rsync, causing the source directory itself to be copied as a subdirectory of the destination.
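To make the quick-check idea concrete, here is a simplified Python sketch. It is not rsync's algorithm (there is no delta transfer, no ownership handling, and symlinks are copied as regular files); it only shows the size-plus-mtime decision and a files-only --delete. sync_dirs and needs_copy are hypothetical helper names.

```python
import os
import shutil


def needs_copy(src, dst):
    """Quick check: copy if dst is missing or its size or mtime (to the second) differs."""
    if not os.path.exists(dst):
        return True
    s, d = os.stat(src), os.stat(dst)
    return s.st_size != d.st_size or int(s.st_mtime) != int(d.st_mtime)


def sync_dirs(src_root, dst_root, delete=False):
    """Copy new or changed files, preserving mode bits and timestamps.
    With delete=True, remove destination files missing from the source."""
    copied = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        rel_dir = os.path.relpath(dirpath, src_root)
        os.makedirs(os.path.join(dst_root, rel_dir), exist_ok=True)
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(dst_root, rel_dir, name)
            if needs_copy(src, dst):
                shutil.copy2(src, dst)  # copies data, permission bits, and mtime
                copied.append(os.path.normpath(os.path.join(rel_dir, name)))
    if delete:
        for dirpath, _dirnames, filenames in os.walk(dst_root):
            rel_dir = os.path.relpath(dirpath, dst_root)
            for name in filenames:
                if not os.path.exists(os.path.join(src_root, rel_dir, name)):
                    os.remove(os.path.join(dirpath, name))
    return sorted(copied)
```

Running sync_dirs twice in a row copies nothing the second time, because copy2 preserved the timestamps that the quick check compares.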

8. Explain how to find the largest files in a directory tree, sorted by size.

To find the largest files in a directory tree sorted by size, you can use a combination of command-line tools. A common approach involves using find to locate all files, du to determine their size, and sort to order them.

Here's a basic example:

find . -type f -print0 | xargs -0 du -h | sort -hr | head -n 10

This command does the following:

- find . -type f -print0: Finds all regular files (-type f) under the current directory (.) and prints their names separated by null characters (-print0).

- xargs -0 du -h: Reads the null-separated names and passes them to du -h, which reports each file's disk usage in human-readable form.

- sort -hr: Sorts the du output using human-numeric comparison (-h, so 2G ranks above 900M) in reverse order (-r), largest first.

- head -n 10: Shows the top 10 entries.

Note that du reports allocated disk space, which can differ from a file's apparent size, for example for sparse files.

You can adjust the head -n 10 to show a different number of files as needed.
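For comparison, a Python sketch of the same search. It ranks files by apparent size (st_size) rather than the allocated space du reports, and largest_files is a hypothetical helper name.

```python
import heapq
import os
import stat


def largest_files(root, n=10):
    """Return the n largest regular files under root as (size_bytes, path), biggest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)  # lstat: do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable
            if stat.S_ISREG(st.st_mode):
                sizes.append((st.st_size, path))
    return heapq.nlargest(n, sizes)
```

heapq.nlargest keeps only n entries in its heap instead of sorting the full list, which matters on large trees.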

9. Describe how to redirect the standard output and standard error of a command to different files.

To redirect standard output (stdout) and standard error (stderr) to different files in a Unix-like environment, you use the > and 2> redirection operators respectively.

For example, to redirect stdout to output.txt and stderr to errors.txt for a command named my_command, you would use:

my_command > output.txt 2> errors.txt

This command will run my_command, and any standard output will be written to output.txt, while any standard error will be written to errors.txt. If the files don't exist, they will be created. If they do exist, they will be overwritten. To append to existing files, use >> and 2>>.
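The same redirection can be done from a program. In this Python sketch, which assumes the command is given as an argument list, the two open files play the roles of > and 2> (or >> and 2>> in append mode); run_with_redirects is a hypothetical helper name.

```python
import subprocess


def run_with_redirects(cmd, out_path, err_path, append=False):
    """Run cmd with stdout sent to out_path and stderr to err_path,
    like `cmd > out 2> err`, or `cmd >> out 2>> err` when append=True."""
    mode = "a" if append else "w"
    with open(out_path, mode) as out, open(err_path, mode) as err:
        return subprocess.run(cmd, stdout=out, stderr=err).returncode
```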

10. How can you use 'xargs' to execute a command on multiple files found by 'find'?

You can use xargs in conjunction with find to execute a command on multiple files. The find command locates the files, and xargs takes the output of find (the list of files) as input and executes the specified command with those files as arguments.

For example, to find all .txt files in the current directory and its subdirectories and then compress them using gzip, you can use the following command:

find . -name "*.txt" -print0 | xargs -0 gzip

Here, -print0 and -0 handle filenames with spaces or special characters safely. find . -name "*.txt" finds all files ending in .txt, and -print0 separates their names with null characters. xargs -0 gzip reads this null-separated list and runs gzip with the names as arguments, packing as many names into each gzip invocation as the system's command-line length limit allows; use -n to cap the number of names per invocation.
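To illustrate the batching behavior, here is a simplified Python model. It assumes a fixed number of arguments per call, which corresponds to xargs -n rather than xargs's default length-based limit; batches and run_batched are hypothetical helper names.

```python
import subprocess


def batches(args, max_args):
    """Split args into consecutive groups of at most max_args items (like xargs -n)."""
    return [args[i:i + max_args] for i in range(0, len(args), max_args)]


def run_batched(command, args, max_args=1000):
    """Run command once per batch with the batch appended as arguments,
    the way xargs appends input items. Returns each invocation's exit code."""
    return [subprocess.run(command + batch).returncode
            for batch in batches(args, max_args)]
```

For example, run_batched(["gzip"], names, max_args=100) would start one gzip process per 100 files instead of one per file.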

11. Explain how to list all the open files for a specific process.

To list all open files for a specific process, you can use lsof or the /proc filesystem. With lsof, run lsof -p <pid>, replacing <pid> with the process ID; for example, lsof -p 1234 lists the open files of process 1234.

Alternatively, using the proc filesystem (typically available on Linux systems), you can navigate to /proc/<pid>/fd/. This directory contains symbolic links representing the file descriptors. ls -l /proc/<pid>/fd/ will show the files associated with each file descriptor. For example, ls -l /proc/1234/fd/. Note that access to these methods may require appropriate permissions (e.g., sudo).
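The /proc approach is easy to script. This Linux-only Python sketch reads the same symbolic links that ls -l /proc/<pid>/fd/ displays; open_files is a hypothetical helper name, and inspecting another user's process still requires the appropriate permissions.

```python
import os


def open_files(pid):
    """Map each file descriptor number of pid to the target of its /proc link."""
    fd_dir = f"/proc/{pid}/fd"
    result = {}
    for fd in os.listdir(fd_dir):
        try:
            result[int(fd)] = os.readlink(os.path.join(fd_dir, fd))
        except OSError:
            pass  # descriptor was closed between listdir() and readlink()
    return result
```

Targets that are not regular files show up in the kernel's own notation, such as socket:[12345] or pipe:[67890].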

12. Describe how to use 'netstat' or 'ss' to find out which process is listening on a specific port.

To find the process listening on a specific port using netstat, you can use the following command: netstat -tulnp | grep :<port_number>. The -t option shows TCP connections, -u shows UDP connections, -l shows listening sockets, -n displays numerical addresses and port numbers, and -p shows the process ID and name. Piping the output to grep :<port_number> filters the results to only show lines containing the specified port number.

Using ss (socket statistics), the command is: ss -tulnp | grep :<port_number>. Similar to netstat, -t is for TCP, -u for UDP, -l for listening sockets, -n for numerical ports, and -p shows the process using the socket. grep filters for the specific port. For example, to find the process listening on port 8080, you would use ss -tulnp | grep :8080 or netstat -tulnp | grep :8080.
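To show roughly what ss -p and netstat -p do under the hood on Linux, here is a Python sketch: it finds listening TCP sockets for a port in /proc/net/tcp and /proc/net/tcp6, then matches their inode numbers against the socket:[inode] links in /proc/<pid>/fd. The helper names are hypothetical, and processes you are not allowed to inspect are silently skipped, just as they are when these tools run without root.

```python
import os

LISTEN = "0A"  # TCP state code for LISTEN in /proc/net/tcp


def listening_inodes(port):
    """Return inode numbers of TCP sockets listening on port (IPv4 and IPv6)."""
    inodes = set()
    for table in ("/proc/net/tcp", "/proc/net/tcp6"):
        try:
            with open(table) as f:
                lines = f.readlines()[1:]  # skip the header line
        except FileNotFoundError:
            continue  # e.g. IPv6 disabled
        for line in lines:
            fields = line.split()
            # local_address is hex "IP:PORT"; fields[3] is the state, fields[9] the inode
            local_port = int(fields[1].rsplit(":", 1)[1], 16)
            if fields[3] == LISTEN and local_port == port:
                inodes.add(fields[9])
    return inodes


def pids_listening_on(port):
    """Return PIDs holding a listening socket on port."""
    targets = {f"socket:[{inode}]" for inode in listening_inodes(port)}
    pids = set()
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        fd_dir = f"/proc/{entry}/fd"
        try:
            fds = os.listdir(fd_dir)
        except OSError:
            continue  # permission denied or process exited
        for fd in fds:
            try:
                target = os.readlink(os.path.join(fd_dir, fd))
            except OSError:
                continue  # descriptor closed meanwhile
            if target in targets:
                pids.add(int(entry))
                break
    return pids
```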

13. How do you schedule a job to run every Sunday at 3:00 AM?

The method depends on the operating system. On Linux-based systems, you would typically use cron. You would add a line to the crontab file using the crontab -e command. The line would look something like this:

0 3 * * 0 command_to_execute

This line means: at minute 0 of hour 3, on every day of the month, every month, and only on Sunday (0 represents Sunday), execute the specified command. Replace command_to_execute with the actual command or script you want to run. Windows uses Task Scheduler, where you can configure a task to run on a schedule, including weekly on Sundays at 3:00 AM.
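To check the schedule logic, here is a small Python sketch that computes when the entry 0 3 * * 0 would next fire after a given time. It ignores time zones and daylight-saving changes, which real cron has to handle; next_sunday_3am is a hypothetical helper name.

```python
from datetime import datetime, timedelta


def next_sunday_3am(now):
    """Return the next time `0 3 * * 0` fires strictly after `now`."""
    candidate = now.replace(hour=3, minute=0, second=0, microsecond=0)
    days_ahead = (6 - now.weekday()) % 7  # Python: Monday=0 ... Sunday=6
    candidate += timedelta(days=days_ahead)
    if candidate <= now:  # already at or past 03:00 this Sunday
        candidate += timedelta(days=7)
    return candidate
```

For example, from Wednesday 3 January 2024 at 10:00, the next run is Sunday 7 January 2024 at 03:00.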

14. Explain how to change the ownership and group of a file recursively for an entire directory tree.

To change the owner and group of every file and subdirectory in a directory tree, you can use the chown and chgrp commands with the -R option. The -R option stands for recursive, meaning the command applies to the specified directory and everything inside it.

For example, to change the ownership to user newuser and group to newgroup for the directory /path/to/directory and all its contents, you would use the following commands:

sudo chown -R newuser /path/to/directory

sudo chgrp -R newgroup /path/to/directory

sudo may be needed depending on your permissions and the current ownership of the files; changing a file's owner to another user requires root. chown changes the user ownership and chgrp changes the group ownership, so running both changes both recursively. chown can also do both in a single pass with an owner:group argument: sudo chown -R newuser:newgroup /path/to/directory.
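Here is a Python sketch of the recursive walk behind chown -R. It takes numeric IDs (pwd.getpwnam and grp.getgrnam can translate names), changes symlinks themselves rather than their targets, and, like the shell command, needs root to give files to another user. chown_recursive is a hypothetical helper name.

```python
import os


def chown_recursive(root, uid, gid):
    """Set owner and group of root and everything below it."""
    os.chown(root, uid, gid)
    for dirpath, dirnames, filenames in os.walk(root):  # does not descend into symlinked dirs
        for name in dirnames + filenames:
            # follow_symlinks=False changes a symlink itself, not what it points to
            os.chown(os.path.join(dirpath, name), uid, gid, follow_symlinks=False)
```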

15. Describe how to use 'iptables' or 'firewalld' to block traffic from a specific IP address.

To block traffic from a specific IP address using iptables, you would use the following command:

sudo iptables -A INPUT -s <IP_ADDRESS> -j DROP

Replace <IP_ADDRESS> with the actual IP address you want to block. This command adds a rule to the INPUT chain that drops all packets coming from the specified IP address. To make the rule persistent across reboots, you'll need to save the iptables rules (e.g., using iptables-save).

With firewalld, you can use the following command:

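sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="<IP_ADDRESS>" reject'

sudo firewall-cmd --reload

Again, replace <IP_ADDRESS> with the IP you want to block. The --add-rich-rule option lets you express more complex rules than the basic zone options, and reject sends a rejection back to the sender (use drop to discard packets silently). The --permanent flag writes the rule to the permanent configuration so it survives reboots, and --reload loads the permanent configuration into the running firewall. If you omit --permanent, the rule takes effect immediately without a reload but only lasts until the next reload or reboot; running --reload after a runtime-only change would discard it.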
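When these commands are generated by a script, validating the address first avoids blocking the wrong host or passing stray text to the firewall. This Python sketch only builds the argument lists shown above (it does not run them); block_commands is a hypothetical helper, and the ip6tables branch for IPv6 addresses is an addition to the examples above.

```python
import ipaddress


def block_commands(address, use_firewalld=False):
    """Build (but do not run) argv lists that block traffic from address.
    ipaddress.ip_address() raises ValueError for anything that is not
    a valid IPv4 or IPv6 address."""
    ip = ipaddress.ip_address(address)
    if use_firewalld:
        family = "ipv4" if ip.version == 4 else "ipv6"
        rule = f'rule family="{family}" source address="{ip}" reject'
        return [["firewall-cmd", "--permanent", f"--add-rich-rule={rule}"],
                ["firewall-cmd", "--reload"]]
    tool = "iptables" if ip.version == 4 else "ip6tables"
    return [[tool, "-A", "INPUT", "-s", str(ip), "-j", "DROP"]]
```

Each list can then be passed to subprocess.run(cmd, check=True) from a process running as root.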

16. How can you find all files that are owned by a specific user?

You can use the find command in Unix-like operating systems to find files owned by a specific user. The basic syntax is:

find /path/to/search -user username

Where /path/to/search is the directory you want to search in (e.g., /, /home), and username is the username of the user you are looking for. This command will recursively search the specified directory and print the paths of all files owned by that user. You can use other options with find to filter the results further, such as -type f to only find files (not directories).
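A Python sketch of find /path -user username -type f, for cases where the search is part of a larger script. It resolves the username to a UID once and compares it with each file's st_uid; files_owned_by is a hypothetical helper name, and unreadable directories are skipped silently.

```python
import os
import pwd
import stat


def files_owned_by(root, username):
    """Yield paths of regular files under root owned by username."""
    uid = pwd.getpwnam(username).pw_uid  # KeyError if the user does not exist
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)
            except OSError:
                continue
            if stat.S_ISREG(st.st_mode) and st.st_uid == uid:
                yield path
```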

17. Explain how to use 'watch' to monitor the output of a command every few seconds.

The watch command in Linux (and other Unix-like systems) is used to execute a command periodically and display its output in full-screen mode. This is useful for monitoring how a command's output changes over time.

To use watch, simply type watch followed by the command you want to monitor. For example, watch ls -l will execute ls -l every two seconds (by default) and update the display with the new output. You can change the interval using the -n option followed by the number of seconds; for example, watch -n 1 date will show the output of the date command every second. To exit watch, press Ctrl+C.

18. Describe how to create a symbolic link and a hard link, and explain the difference between them.

To create a symbolic link (symlink), use ln -s target link_name. To create a hard link, use ln target link_name. A symbolic link is a separate small file that stores the path to its target; if the target is moved or deleted, the symlink breaks (it dangles). A hard link is an additional directory entry for the same inode, the structure that holds the file's metadata and points to its data blocks. Because every hard link is an equally valid name for that inode, deleting the original name does not affect the hard link, and vice versa; the data is freed only when the last link is removed and no process still has the file open. Hard links cannot span filesystems and normally cannot point to directories, whereas symlinks can do both.
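The difference is easy to observe. This Python sketch creates both kinds of link in a scratch directory, compares inode numbers, deletes the original, and reports what survives; link_demo is a hypothetical helper name.

```python
import os


def link_demo(directory):
    """Return (same_inode, link_count, hard_link_content, symlink_broken)."""
    original = os.path.join(directory, "original.txt")
    hard = os.path.join(directory, "hard.txt")
    soft = os.path.join(directory, "soft.txt")
    with open(original, "w") as f:
        f.write("hello")
    os.link(original, hard)     # like: ln original.txt hard.txt
    os.symlink(original, soft)  # like: ln -s original.txt soft.txt
    same_inode = os.stat(original).st_ino == os.stat(hard).st_ino
    link_count = os.stat(original).st_nlink  # two names now refer to one inode
    os.remove(original)
    with open(hard) as f:
        hard_content = f.read()  # data is still reachable through the hard link
    symlink_broken = not os.path.exists(soft)  # exists() follows the now-dangling link
    return same_inode, link_count, hard_content, symlink_broken
```

Calling link_demo on an empty temporary directory returns (True, 2, "hello", True).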

19. How do you check the disk space usage of a specific directory?

To check the disk space usage of a specific directory, you can use the du command in Linux or macOS. The basic syntax is du -sh <directory_path>. The -s flag provides a summary, and the -h flag displays the output in a human-readable format (e.g., KB, MB, GB).

For example, to check the disk space used by the /var/log directory, you would use the command du -sh /var/log. The output will show the total size of the directory and its contents.
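To show where du's numbers come from, here is a Python approximation of du -s: it sums allocated blocks (st_blocks, counted in 512-byte units) rather than apparent file sizes, counts each hard-linked inode once, and does not follow symlinks. The formatter mimics the spirit of -h with 1024-based suffixes, though du's exact rounding differs. Both helper names are hypothetical.

```python
import os


def disk_usage_bytes(root):
    """Approximate `du -s root` in bytes."""
    seen = set()
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for path in [dirpath] + [os.path.join(dirpath, n) for n in filenames]:
            try:
                st = os.lstat(path)
            except OSError:
                continue
            key = (st.st_dev, st.st_ino)
            if key not in seen:  # count each inode once, as du does for hard links
                seen.add(key)
                total += st.st_blocks * 512
    return total


def human(n):
    """Format a byte count with 1024-based suffixes, e.g. 1536 -> '1.5K'."""
    for unit in ("B", "K", "M", "G", "T"):
        if n < 1024 or unit == "T":
            return f"{n}B" if unit == "B" else f"{n:.1f}{unit}"
        n /= 1024
```

For example, human(disk_usage_bytes("/var/log")) gives a figure comparable to du -sh /var/log.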

20. Explain how to use 'grep' to find lines that match a pattern, but exclude lines that contain another pattern.

To find lines matching one pattern while excluding lines containing another, you can combine grep with its -v option (for invert-match) and pipe the results. First, grep for the desired pattern. Then, pipe that output to another grep -v command that excludes the unwanted pattern.

For example, to find lines in filename that contain "apple" but not "orange", use: grep "apple" filename | grep -v "orange". The first grep selects all lines containing "apple", and the second removes any of those that also contain "orange".
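The same two-stage filter in Python, as a sketch. Python's re syntax differs from grep's basic regular expressions for anything beyond plain words, so this assumes simple patterns; grep_excluding is a hypothetical helper name.

```python
import re


def grep_excluding(lines, include, exclude):
    """Return lines matching `include` but not `exclude`,
    like grep include file | grep -v exclude."""
    inc, exc = re.compile(include), re.compile(exclude)
    return [line for line in lines if inc.search(line) and not exc.search(line)]
```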

21. Describe how to use 'tar' to create an incremental backup of a directory.

To create an incremental backup using tar, you primarily use the --listed-incremental option along with a snapshot file. The snapshot file keeps track of which files have been backed up in previous backups. First you create a full backup, then subsequent backups only save changes since the last backup recorded in the snapshot file. For example:

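tar --create --gzip --file=/backup/full.tar.gz --listed-incremental=/backup/snapshot /data (initial full backup; creates the snapshot file)

tar --create --gzip --file=/backup/incremental1.tar.gz --listed-incremental=/backup/snapshot /data (first incremental backup; updates the snapshot)

tar --create --gzip --file=/backup/incremental2.tar.gz --listed-incremental=/backup/snapshot /data (second incremental backup; updates the snapshot again)

The initial backup must also use --listed-incremental: when the snapshot file does not exist yet, tar makes a full (level 0) backup and records the state of the files in the snapshot. Each later run with the same snapshot file archives only what has changed since the previous run and then updates the snapshot. To restore, extract the full backup first and then each incremental backup in the order it was created, passing --listed-incremental=/dev/null when extracting (for example, tar --extract --gzip --listed-incremental=/dev/null --file=/backup/incremental1.tar.gz) so that tar also reproduces the deletions recorded in the incremental archives.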
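To illustrate the role of the snapshot file, here is a simplified Python model. It stores file modification times in a JSON file (a hypothetical format, not GNU tar's snapshot format) and archives only files that are new or whose mtime changed; unlike tar, it does not track directories or deletions. incremental_backup is a hypothetical helper name.

```python
import json
import os
import tarfile


def incremental_backup(src, archive_path, snapshot_path):
    """Archive files under src that are new or changed since the last run.
    With no snapshot file yet, every file is included (a full backup)."""
    try:
        with open(snapshot_path) as f:
            previous = json.load(f)
    except FileNotFoundError:
        previous = {}
    current, added = {}, []
    with tarfile.open(archive_path, "w:gz") as tar:
        for dirpath, _dirnames, filenames in os.walk(src):
            for name in filenames:
                path = os.path.join(dirpath, name)
                rel = os.path.relpath(path, src)
                current[rel] = os.stat(path).st_mtime_ns
                if previous.get(rel) != current[rel]:
                    tar.add(path, arcname=rel)
                    added.append(rel)
    with open(snapshot_path, "w") as f:
        json.dump(current, f)  # becomes the baseline for the next run
    return sorted(added)
```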

22. How can you use 'lsof' to identify the process holding a file open?

You can use lsof to identify the process holding a file open by specifying the file path as an argument. For example, to find the process holding /path/to/your/file.txt open, you would run lsof /path/to/your/file.txt. The output will list the process ID (PID), user, and other relevant information about the process using the file.

The output of lsof includes an FD column showing the descriptor number and the access mode (for example, r for read, w for write, u for read and write). To get only the PIDs, for instance to pass them to another command, use lsof -t /path/to/your/file.txt, which prints nothing but process IDs. Filtering with awk also works, but lsof /path/to/your/file.txt | awk '{print $2}' also prints the PID column header.
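On Linux, the core of lsof -t <file> can be sketched in Python by scanning the /proc/<pid>/fd links of every process for the file's resolved path. pids_holding is a hypothetical helper name; processes you cannot inspect are skipped, so run it as root for a complete answer.

```python
import os


def pids_holding(path):
    """Return PIDs that have an open descriptor on path."""
    target = os.path.realpath(path)
    pids = set()
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        fd_dir = f"/proc/{entry}/fd"
        try:
            fds = os.listdir(fd_dir)
        except OSError:
            continue  # permission denied or process exited
        for fd in fds:
            try:
                if os.readlink(os.path.join(fd_dir, fd)) == target:
                    pids.add(int(entry))
                    break
            except OSError:
                continue  # descriptor closed meanwhile
    return pids
```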

23. Explain how to use the 'locate' command to quickly find files by name and how to update the locate database.

The locate command quickly finds files by name by searching a pre-built database. To use it, simply type locate <filename> (e.g., locate myfile.txt). This is much faster than find because it searches the database instead of the entire filesystem in real-time.

The database is not updated in real time, so recently created files may be missing from locate results and deleted files may still appear. To refresh it, run updatedb as a privileged user (usually sudo updatedb), which crawls the filesystem and rebuilds the database. Most distributions schedule updatedb to run regularly (e.g., daily) via cron or a systemd timer.

24. Describe how to configure a network interface using command-line tools.

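To configure a network interface from the command line, use the ip command (from iproute2) or the older ifconfig and route commands (from net-tools, which is deprecated on most modern distributions). First, identify the interface name (e.g., eth0, wlan0) with ip link or ifconfig -a. Then set the IP address, netmask, and default gateway.

With ip: sudo ip addr add 192.168.1.10/24 dev eth0, sudo ip link set eth0 up, and sudo ip route add default via 192.168.1.1. The ifconfig equivalent is sudo ifconfig eth0 192.168.1.10 netmask 255.255.255.0 up followed by sudo route add default gw 192.168.1.1. Settings made this way are lost on reboot. For a persistent configuration, and for DNS, use your distribution's network configuration: /etc/network/interfaces on older Debian/Ubuntu systems, Netplan files in /etc/netplan/ on recent Ubuntu releases, /etc/sysconfig/network-scripts/ on older RedHat/CentOS releases, or nmcli where NetworkManager manages the interface. /etc/resolv.conf sets the DNS servers but is often generated by NetworkManager or systemd-resolved, so manual edits may be overwritten.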
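The /24 prefix used with ip and the 255.255.255.0 netmask used with ifconfig describe the same subnet. This Python sketch uses the standard ipaddress module to show the equivalence and to check that the default gateway is on that subnet; describe_interface is a hypothetical helper name.

```python
import ipaddress


def describe_interface(cidr, gateway):
    """Expand an address in CIDR form and check the gateway is on-link."""
    iface = ipaddress.ip_interface(cidr)
    net = iface.network
    return {
        "address": str(iface.ip),
        "netmask": str(net.netmask),
        "network": str(net.network_address),
        "broadcast": str(net.broadcast_address),
        "gateway_on_link": ipaddress.ip_address(gateway) in net,
    }
```

For the example above, describe_interface("192.168.1.10/24", "192.168.1.1") reports netmask 255.255.255.0, network 192.168.1.0, broadcast 192.168.1.255, and an on-link gateway.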

25. How do you change the default umask value for new files and directories?

The default umask value can be changed in several ways. For a specific user, the umask can be set in the user's shell configuration file, such as .bashrc or .zshrc. Add a line like umask 022 to set the desired umask. This change will only affect new shells started by that user.

For a system-wide default, set the umask in a system-wide shell startup file such as /etc/profile or /etc/bash.bashrc (the exact file depends on the distribution and shell), for example with the line umask 027. Many distributions also honor the UMASK setting in /etc/login.defs (written as UMASK 027), which is applied at login by login or the pam_umask module. Modifying these files requires root privileges. Be careful with system-wide changes: a umask that is too permissive (such as 000) exposes new files to other users, while one that is too strict can break shared directories.
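The arithmetic behind umask is a bitwise mask: programs usually request mode 666 for new files and 777 for new directories, and the bits set in the umask are cleared. This Python sketch computes the result and also confirms it by creating a real file under a temporary umask; both helper names are hypothetical.

```python
import os
import stat
import tempfile


def permissions_after_umask(umask):
    """Return (file_mode, dir_mode) for the usual requested modes 0o666 and 0o777."""
    return 0o666 & ~umask, 0o777 & ~umask


def created_file_mode(umask):
    """Create a file while `umask` is active and return its permission bits."""
    old = os.umask(umask)  # sets the new mask and returns the previous one
    try:
        with tempfile.TemporaryDirectory() as d:
            path = os.path.join(d, "f")
            open(path, "w").close()  # open() requests mode 0o666
            return stat.S_IMODE(os.stat(path).st_mode)
    finally:
        os.umask(old)
```

With umask 022, new files get 644 (rw-r--r--) and directories 755; with 027, they get 640 and 750.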

26. Explain how to use 'screen' or 'tmux' to manage multiple terminal sessions.

screen and tmux are terminal multiplexers that allow you to manage multiple terminal sessions within a single window. They are particularly useful for running long-running processes, detaching and reattaching to sessions, and working on multiple tasks simultaneously.

To use screen, you typically start a new session with screen. You can then create new windows within the session using Ctrl+a c. Switch between windows using Ctrl+a n (next) or Ctrl+a p (previous). To detach from the session (leaving it running in the background), use Ctrl+a d. You can reattach to the session later with screen -r. tmux works similarly, but uses a different command prefix (usually Ctrl+b). Start a tmux session with tmux new -s <session_name>. Create new windows with Ctrl+b c, switch with Ctrl+b n or Ctrl+b p, detach with Ctrl+b d, and reattach with tmux attach -t <session_name>.

27. Describe how to find and kill a process by name.

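To find and kill a process by name, first list processes and filter by name. On Linux and macOS, ps aux | grep process_name shows the matching processes and their PIDs (the grep command itself usually appears in the results too; pgrep -l process_name avoids that). Once you have the PID, terminate the process with kill PID, which sends SIGTERM and lets the process clean up; use kill -9 PID (SIGKILL) only if it does not respond. pkill process_name or killall process_name combines both steps by signaling every process with that name. On Windows, the equivalents are tasklist | findstr process_name and taskkill /PID PID /F.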

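Alternatively, programming languages often provide ways to interact with the OS to achieve the same. For example, in Python you can use the third-party psutil library to find processes by name and terminate them. A basic illustration:

import psutil

TARGET = "process_name"  # substring of the process name to match

for proc in psutil.process_iter(['pid', 'name']):
    name = proc.info['name'] or ''  # name is None if it could not be read
    if TARGET in name:
        try:
            proc.terminate()  # sends SIGTERM on POSIX systems
            print(f"Process with PID {proc.info['pid']} terminated.")
        except (psutil.NoSuchProcess, psutil.AccessDenied):
            pass  # process already exited or belongs to another user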

28. How can you compare two text files and identify the differences between them?

You can compare two text files and identify the differences using various methods, including command-line tools, dedicated software, or programming languages. A common approach is to use the diff command in Unix-like systems or Compare-Object in PowerShell. These tools highlight additions, deletions, and modifications between the files.

For programmatic comparison, many languages let you read files line by line and compare them. In Python, for example, you can read both files into lists of strings and compare them position by position:

with open("file1.txt") as f1, open("file2.txt") as f2:
    file1_lines = f1.readlines()
    file2_lines = f2.readlines()

for i, (line1, line2) in enumerate(zip(file1_lines, file2_lines), start=1):
    if line1 != line2:
        print(f"Difference at line {i}:")
        print(f"File1: {line1.strip()}")
        print(f"File2: {line2.strip()}")

if len(file1_lines) != len(file2_lines):
    print(f"Files have different lengths: {len(file1_lines)} vs {len(file2_lines)} lines")

This positional comparison is simple, but after a single inserted or deleted line it reports every following line as different; the difflib module in Python's standard library aligns the files the way diff does.
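For output closer to diff itself, Python's standard difflib module can produce the unified format of diff -u. In this sketch, unified_diff is a hypothetical wrapper name around difflib.unified_diff; because difflib aligns the two files, an inserted line is reported once instead of shifting every later line.

```python
import difflib


def unified_diff(path1, path2):
    """Return a unified diff (the diff -u format) of two text files."""
    with open(path1) as f1, open(path2) as f2:
        lines1, lines2 = f1.readlines(), f2.readlines()
    return "".join(difflib.unified_diff(lines1, lines2, fromfile=path1, tofile=path2))
```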

Linux Commands interview questions for experienced

1. How can you identify the files that have been modified in the last 24 hours, but exclude files in specific directories like /tmp?

To identify files modified in the last 24 hours, excluding specific directories like /tmp, you can use the find command in Unix-like systems. The command would be similar to:

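find . -type f -mtime -1 -not -path "*/tmp/*"

This command searches the current directory (.) for regular files (-type f) modified within the last 24 hours (-mtime -1) and excludes any file whose path contains a /tmp/ directory component. A looser pattern such as "*/tmp*" would also exclude unrelated paths such as ./tmpfiles/report.txt. find still descends into the excluded directories, though; to skip them entirely, which is faster on large trees, use -prune: find . -path ./tmp -prune -o -type f -mtime -1 -print. Adjust the starting point and excluded paths as needed, for example find / -path /tmp -prune -o -type f -mtime -1 -print.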
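The pruning idea maps directly onto Python's os.walk: removing a directory from the dirnames list in place stops the walk from descending into it. This sketch assumes the excluded directories are given as paths; recent_files is a hypothetical helper name.

```python
import os
import time


def recent_files(root, exclude_dirs, max_age_seconds=24 * 60 * 60):
    """List files under root modified within max_age_seconds,
    never entering the directories in exclude_dirs (like find's -prune)."""
    excluded = {os.path.abspath(p) for p in exclude_dirs}
    cutoff = time.time() - max_age_seconds
    found = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Editing dirnames in place tells os.walk not to descend into them.
        dirnames[:] = [d for d in dirnames
                       if os.path.abspath(os.path.join(dirpath, d)) not in excluded]
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.path.isfile(path) and os.path.getmtime(path) >= cutoff:
                    found.append(path)
            except OSError:
                continue
    return sorted(found)
```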

2. Explain how you would diagnose high CPU utilization on a Linux server, focusing on identifying the specific processes causing the issue.

To diagnose high CPU utilization on a Linux server, I would start with top or htop, which show a continuously updated list of processes sorted by CPU usage by default, and note the PIDs of the top consumers. For each PID, ps -p <PID> -o user,%cpu,%mem,etime,cmd shows who runs the process, its full command line, and its resource usage (ps's %cpu is averaged over the process's lifetime, so compare it with what top shows). pidstat -p <PID> 1 (from the sysstat package) reports per-second CPU statistics for that process, and top -H -p <PID> breaks usage down by thread. strace -p <PID> shows the system calls the process is making, which can reveal causes such as a tight loop of failing calls or excessive file or network I/O; strace -c -p <PID> summarizes call counts and time instead of printing every call.

If the process is a Java application, tools like jstack <PID> or a profiler such as JProfiler or VisualVM are helpful to analyze thread activity and identify CPU-intensive methods. For other languages, similar profiling tools might be available (e.g., for Python, you can use cProfile). If the high CPU usage is intermittent, tools like perf can be used to sample system-wide events and identify hotspots in the code.

3. Describe your process for recovering data from a corrupted ext4 filesystem.

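Data recovery from a corrupted ext4 filesystem involves several steps, and their order matters. First, I'd stop writing to the device and make a block-level image of the partition with dd (or ddrescue if the disk has read errors), then do all further work on a copy of that image, so that a failed repair attempt cannot make things worse. Next, I'd try mounting the filesystem read-only (mount -o ro) to copy off whatever is still accessible. If that fails, fsck.ext4 (e2fsck) is the primary repair tool; it must be run on an unmounted filesystem, with -y to answer yes to all repair prompts and -v for verbose output. If the primary superblock is damaged, e2fsck -b <block> can use one of the backup superblocks instead.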

If fsck doesn't fully recover the data, I'd turn to testdisk, which can recover lost partitions and repair partition tables, and photorec, which recovers files by scanning for known file headers even when the directory structure is damaged. debugfs is also helpful for manually inspecting and repairing filesystem metadata in advanced situations.
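The "image first" step can be sketched in Python: copy the source in fixed-size chunks, the way dd with a block size does, and hash the data so the image can be verified before any repair is attempted on a copy. The helper names are hypothetical. Unlike ddrescue, this sketch stops at the first read error, so it only suits disks that still read cleanly.

```python
import hashlib


def image_copy(src, dst, chunk_size=4 * 1024 * 1024):
    """Copy src (e.g. a partition device) to dst in chunks, like
    dd if=src of=dst bs=4M, and return the SHA-256 of the copied data."""
    digest = hashlib.sha256()
    with open(src, "rb") as fin, open(dst, "wb") as fout:
        while True:
            chunk = fin.read(chunk_size)
            if not chunk:
                break
            fout.write(chunk)
            digest.update(chunk)
    return digest.hexdigest()


def sha256_of(path, chunk_size=4 * 1024 * 1024):
    """SHA-256 of a file, read in chunks to keep memory use flat."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

If sha256_of() on the image matches the value returned by image_copy(), the image is a faithful copy to work from.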

4. How would you set up a cron job to run a script every 15 minutes, only on weekdays, and log the output to a specific file?

To schedule a cron job that runs a script every 15 minutes, only on weekdays, and logs the output to a specific file, you would edit the crontab using the command crontab -e. Then add a line similar to this:

*/15 * * * 1-5 /path/to/your/script.sh >> /path/to/your/logfile.log 2>&1

This line does the following:

- */15 * * * 1-5: The schedule. */15 in the minute field means every 15 minutes (at :00, :15, :30, and :45), and 1-5 in the day-of-week field means Monday through Friday.

- /path/to/your/script.sh: The full path to the script you want to execute.

- >> /path/to/your/logfile.log 2>&1: Appends standard output (stdout) to the log file and sends standard error (stderr) to the same place. If you want to overwrite the log file each time the script runs, use > instead of >>.
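To check which times an expression like */15 * * * 1-5 covers, here is a minimal Python cron matcher. It supports *, single numbers, ranges, steps, and comma lists, but not names (MON) or 7 for Sunday, and it requires both day fields to match, whereas real cron fires when either matches if both are restricted. field_matches and cron_matches are hypothetical helper names.

```python
from datetime import datetime


def field_matches(field, value, low):
    """Check one cron field: '*', 'N', 'A-B', '*/S', 'A-B/S', or comma lists."""
    for part in field.split(","):
        rng, _, step = part.partition("/")
        step = int(step) if step else 1
        if rng == "*":
            start, end = low, None
        elif "-" in rng:
            start, end = (int(x) for x in rng.split("-"))
        else:
            start = end = int(rng)
        if value >= start and (end is None or value <= end) and (value - start) % step == 0:
            return True
    return False


def cron_matches(expr, when):
    """Does the five-field cron expression fire at datetime `when`?"""
    minute, hour, dom, month, dow = expr.split()
    cron_dow = (when.weekday() + 1) % 7  # cron: Sunday=0, Monday=1 ... Saturday=6
    return (field_matches(minute, when.minute, 0)
            and field_matches(hour, when.hour, 0)
            and field_matches(dom, when.day, 1)
            and field_matches(month, when.month, 1)
            and field_matches(dow, cron_dow, 0))
```

For example, cron_matches("*/15 * * * 1-5", datetime(2024, 1, 3, 10, 30)) is true (a Wednesday at 10:30), while the same time on Sunday 7 January 2024 does not match.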

5. Can you detail the steps involved in creating and configuring a RAID array on a Linux system?

Creating and configuring a RAID array on Linux typically involves these steps: First, install the mdadm utility, which is used to manage RAID arrays. This is typically done via sudo apt-get install mdadm (Debian/Ubuntu) or sudo yum install mdadm (CentOS/RHEL). Next, identify the disks you'll be using for the array using commands like lsblk. Then, create the RAID array using mdadm --create /dev/md0 --level=<raid_level> --raid-devices=<number_of_devices> <device1> <device2> .... Replace <raid_level> with the desired RAID level (e.g., 1, 5, 10), <number_of_devices> with the number of disks, and <device1>, <device2>, etc., with the actual device names (e.g., /dev/sdb, /dev/sdc). After creating the array, format it with a filesystem like ext4 using mkfs.ext4 /dev/md0. Create a mount point (e.g., /mnt/raid) and mount the array using mount /dev/md0 /mnt/raid.

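Finally, to make the array persistent across reboots, save its configuration with mdadm --detail --scan >> /etc/mdadm/mdadm.conf (on RHEL/CentOS the file is /etc/mdadm.conf) and regenerate the initramfs with update-initramfs -u on Debian/Ubuntu or dracut -f on RHEL/CentOS. Then add an entry to /etc/fstab so the array is mounted at boot, for example /dev/md0 /mnt/raid ext4 defaults 0 0. Because md device names can change between boots (an array may come up as /dev/md127, for instance), referring to the filesystem by its UUID, as shown by blkid /dev/md0, is more robust: UUID=<uuid> /mnt/raid ext4 defaults 0 0. Replace /dev/md0, /mnt/raid, and ext4 with your actual device, mount point, and filesystem.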

6. Explain how you would use systemd to manage a custom service, including auto-restarts and dependency management.

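To manage a custom service with systemd, I'd create a service unit file (e.g., my-service.service) in /etc/systemd/system/. This file defines the service's behavior, including the command to execute (ExecStart), the user and group it runs as (User, Group), and the restart policy (Restart=on-failure restarts the service automatically when it exits with an error or is killed by a signal). Dependencies are expressed with Requires= or Wants= (which other units must be started along with this one) and After= (start ordering). Note that network.target only means the network stack is being brought up; to start after the network is actually configured, use Wants=network-online.target together with After=network-online.target. After creating or editing the unit file, run systemctl daemon-reload so systemd picks up the changes, then enable and start the service with systemctl enable my-service.service and systemctl start my-service.service (or both at once with systemctl enable --now my-service.service). Finally, systemctl status my-service.service confirms that the service is running, and journalctl -u my-service.service shows its logs.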

For example, a simple service unit file could look like this:

[Unit]
Description=My Custom Service
After=network.target

[Service]
User=myuser
ExecStart=/usr/local/bin/my-service-script.sh
Restart=on-failure

[Install]
WantedBy=multi-user.target

7. How can you use the 'lsof' command to troubleshoot network connectivity issues for a specific application?

The lsof command can help troubleshoot network connectivity issues by showing which files and network sockets a specific application has open. lsof -i lists network connections, which you can narrow to the application by PID or command name: lsof -a -i -p <PID> (the -a option combines the selections with AND; without it, lsof lists files matching either -i or -p) or lsof -i | grep <application_name>. This shows the application's open TCP and UDP sockets, its listening ports, and the remote addresses it is communicating with.

By examining the output, you can determine whether the application is listening on the correct port, whether it is establishing connections to the expected remote servers, and whether connections are stuck in a problematic state (for example, many sockets in CLOSE_WAIT usually means the application is not closing connections it has finished with). This helps diagnose issues like incorrect port configuration, firewall blocks, or bugs in the application's network logic. lsof +c 15 widens the COMMAND column to 15 characters so that longer process names are not truncated, which also matters when you grep by name. Adding -n skips host name resolution and -P skips port name lookup, which speeds up the query and shows raw addresses and port numbers.

8. Describe your approach to auditing user activity on a Linux system for security purposes.

My approach to auditing user activity on a Linux system for security involves several key areas. Primarily, I focus on leveraging the system's built-in auditing capabilities using auditd. This involves configuring auditd rules to monitor critical system calls related to file access, process execution, and network connections. These rules generate detailed logs that capture information about user actions. Analyzing these logs, often using tools like ausearch and aureport, helps to identify suspicious patterns or unauthorized activities.

Additionally, I'd examine standard system logs like /var/log/auth.log (or /var/log/secure on some systems) for authentication attempts (successful and failed), and /var/log/syslog for general system events that might indicate security issues. Implementing log rotation and centralized log management (e.g., using rsyslog or syslog-ng and sending logs to a SIEM) are also crucial for long-term analysis and correlation. Regularly reviewing user accounts, permissions, and sudo access is also part of the process. For more in-depth analysis, tools like osquery can be used to query system state as if it were a database, enabling more complex correlations.
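As a small example of the kind of analysis these logs support, this Python sketch counts failed SSH password attempts per source address in auth.log-style lines. The regular expression assumes OpenSSH's usual "Failed password for ... from <address>" message wording; failed_logins_by_ip is a hypothetical helper name, and a SIEM or aureport would do this at scale.

```python
import re
from collections import Counter

# Matches OpenSSH messages such as
# "sshd[1234]: Failed password for invalid user admin from 203.0.113.5 port 52211 ssh2"
FAILED = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")


def failed_logins_by_ip(lines):
    """Count failed SSH password attempts per source address."""
    counts = Counter()
    for line in lines:
        match = FAILED.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts
```

For example, with open("/var/log/auth.log") as f: print(failed_logins_by_ip(f).most_common(5)) lists the five most active sources.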

9. How do you use 'iptables' or 'firewalld' to configure a firewall with specific rules for allowing and denying traffic?

Both iptables and firewalld configure Linux firewalls, but they have different mechanisms. iptables directly manipulates kernel tables using commands like iptables -A INPUT -p tcp --dport 80 -j ACCEPT (accepts HTTP traffic). firewalld is a dynamic firewall manager providing a higher-level abstraction. With firewalld, you'd use firewall-cmd --permanent --add-port=80/tcp --zone=public (permits HTTP on the public zone). To deny traffic, you can replace ACCEPT with DROP or REJECT in iptables or use the --add-rich-rule option with firewall-cmd to define complex conditions, such as firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.10" drop' (drops traffic from 192.168.1.10). Remember to reload firewalld after permanent changes: firewall-cmd --reload.

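Basic rules include specifying the table (filter, nat, mangle), chain (INPUT, OUTPUT, FORWARD), protocol (tcp, udp, icmp), source/destination IP addresses and ports, and the target action (ACCEPT, DROP, REJECT). iptables rules are evaluated in order: the first matching rule with a terminating target such as ACCEPT, DROP, or REJECT decides the packet's fate, and if no rule matches, the chain's default policy applies. firewalld uses zones (e.g., public, internal, trusted) to apply different rulesets depending on the network a connection comes from. Always start with a default deny policy and selectively allow necessary traffic, making sure the rule that permits your own SSH access is in place before you apply the deny policy.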
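To illustrate first-match evaluation and the default policy, here is a simplified Python model of a single chain. It only handles terminating targets and a few match fields; real iptables has many more match options and non-terminating targets such as LOG. Rule and evaluate are hypothetical names.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    target: str                     # "ACCEPT", "DROP" or "REJECT"
    protocol: Optional[str] = None  # None matches any protocol
    source: Optional[str] = None    # CIDR such as "192.168.1.0/24"
    dport: Optional[int] = None     # None matches any destination port


def evaluate(rules, policy, protocol, source, dport):
    """Walk the chain top to bottom; the first matching rule decides.
    If nothing matches, the chain's default policy applies."""
    for rule in rules:
        if rule.protocol and rule.protocol != protocol:
            continue
        if rule.source and ipaddress.ip_address(source) not in ipaddress.ip_network(rule.source):
            continue
        if rule.dport is not None and rule.dport != dport:
            continue
        return rule.target
    return policy
```

With a DROP rule for 192.168.1.10 placed before an ACCEPT rule for TCP port 80, that host is dropped even on port 80, because the earlier rule matches first.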

10. Explain how you would troubleshoot a slow-performing database server on Linux, focusing on disk I/O bottlenecks.

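To troubleshoot a slow-performing database server on Linux with a focus on disk I/O bottlenecks, I'd start by confirming that I/O is the problem and then narrow it down. I would use tools such as iostat, vmstat, and iotop to monitor disk I/O. Specifically, iostat -xz 1 prints extended per-device statistics every second (omitting idle devices), including %util (the percentage of time the device had I/O requests in flight) and await (the average time in milliseconds a request took to complete, including time spent queued; newer versions report r_await and w_await separately). Consistently high %util combined with rising await values indicates a potential I/O bottleneck. On SSDs and RAID arrays, which serve many requests in parallel, %util near 100% is a weaker signal than await.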

Next, I'd investigate the specific processes consuming the most I/O using iotop. If the database process is the culprit, I'd examine the database queries and operations being performed. Analyzing slow query logs or using database-specific performance monitoring tools can help identify inefficient queries or operations that are generating excessive disk I/O. Solutions might involve query optimization, indexing, or even upgrading the storage system to faster drives or a RAID configuration.
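To make the two iostat metrics concrete, this Python sketch derives them from two samples of /proc/diskstats: await is the change in milliseconds spent on reads and writes divided by the number of I/Os completed, and utilization is the change in "time spent doing I/O" divided by the sampling interval. Field positions follow the kernel's diskstats documentation; the helper names are hypothetical, and iostat's own calculations include further refinements.

```python
import time


def parse_diskstats(text):
    """Map device -> (reads, read_ms, writes, write_ms, io_ticks_ms)
    using fields 4, 7, 8, 11 and 13 of each /proc/diskstats line."""
    stats = {}
    for line in text.splitlines():
        f = line.split()
        if len(f) < 14:
            continue
        stats[f[2]] = (int(f[3]), int(f[6]), int(f[7]), int(f[10]), int(f[12]))
    return stats


def io_metrics(before, after, interval_s):
    """Return (await_ms, util_percent) between two samples."""
    r0, rms0, w0, wms0, t0 = before
    r1, rms1, w1, wms1, t1 = after
    ios = (r1 - r0) + (w1 - w0)
    await_ms = ((rms1 - rms0) + (wms1 - wms0)) / ios if ios else 0.0
    util = (t1 - t0) * 100 / (interval_s * 1000)
    return await_ms, util


def sample(device, interval_s=1.0):
    """Measure await and %util for a device such as 'sda' over interval_s seconds."""
    with open("/proc/diskstats") as f:
        before = parse_diskstats(f.read())[device]
    time.sleep(interval_s)
    with open("/proc/diskstats") as f:
        after = parse_diskstats(f.read())[device]
    return io_metrics(before, after, interval_s)
```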

11. Describe how you'd configure and use SSH keys for passwordless authentication, and what security measures you'd implement to protect the keys.

To configure SSH keys for passwordless authentication, I'd first generate a key pair using ssh-keygen on my local machine. I'd then copy the public key (e.g., ~/.ssh/id_rsa.pub) to the remote server's ~/.ssh/authorized_keys file for the user I want to log in as. This allows authentication without a password. To protect the SSH keys, I would implement several security measures:

- Restrict key permissions: Ensure the ~/.ssh directory has permissions 700 and the authorized_keys file (and the private key) has permissions 600. With its default StrictModes setting, sshd rejects key files that others can write to, and the ssh client refuses private keys that others can read.

- Use a strong passphrase: When generating the key pair, protect the private key with a strong, unique passphrase, and use ssh-agent so you only type it once per session.

- Disable password authentication: On the server, set PasswordAuthentication no and ChallengeResponseAuthentication no (named KbdInteractiveAuthentication on newer OpenSSH releases) in /etc/ssh/sshd_config, check the file with sshd -t, and restart the sshd service (ssh on Debian/Ubuntu). Keep an existing session open until you have confirmed that key-based login works.

- Key rotation: Regularly rotate SSH keys to limit the impact of a compromised key.

- Store private key securely: Keep the private key under restrictive file system permissions, and consider an encrypted container if the key will be stored long term without passphrase protection.

- Use SSH agent forwarding with caution: If the remote server is compromised, an attacker there can use your forwarded agent to authenticate to further systems as you, for as long as the connection is open.

- Implement MFA: Add Multi-Factor Authentication (MFA) using a hardware key or an authenticator app as an additional layer of protection.
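The setup steps and permission rules above could look roughly like this in a shell script. The key type (Ed25519), file names, and user@server are placeholders, the interactive commands are shown as comments, and fix_ssh_perms is a hypothetical helper that applies the 700/600 permissions to a given home directory.

```shell
#!/bin/sh
# Sketch of the key setup (user@server is a placeholder):
#   ssh-keygen -t ed25519 -a 100 -f ~/.ssh/id_ed25519   # prompts for a passphrase
#   ssh-copy-id -i ~/.ssh/id_ed25519.pub user@server    # appends to authorized_keys
#   ssh-add ~/.ssh/id_ed25519                           # unlock once into the agent
#
# fix_ssh_perms applies the permissions sshd and ssh expect for a given
# home directory; it can be run on both the client and the server.
fix_ssh_perms() {
    home=${1:-$HOME}
    mkdir -p "$home/.ssh"
    chmod 700 "$home/.ssh"
    for f in "$home/.ssh/authorized_keys" "$home"/.ssh/id_*; do
        [ -e "$f" ] || continue          # skip missing files / unmatched glob
        case $f in
            *.pub) chmod 644 "$f" ;;     # public keys may be world-readable
            *)     chmod 600 "$f" ;;     # private keys and authorized_keys
        esac
    done
}
```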

12. How would you use the 'strace' command to debug a program that is crashing unexpectedly?

To debug a crashing program with strace, I'd start by running strace <program_name> to observe the system calls it makes before the crash. I would write the output to a file (e.g., strace -o trace.txt <program_name>) for easier analysis, especially if the program makes many calls. After the crash, I would examine the end of trace.txt, where the last system calls and the terminating signal usually point to the cause, such as:

- Segmentation faults: strace reports the signal itself, e.g. --- SIGSEGV {si_signo=SIGSEGV, ...} --- followed by +++ killed by SIGSEGV +++. The invalid memory access happens in user space, so the preceding calls (for example a failed mmap or brk returning ENOMEM) provide context rather than the fault itself.

- File issues: examine calls like open/openat, read, write, and close for errors such as ENOENT (missing file) or EACCES (permission denied).

- Signal handling: look for signal-related calls (e.g., rt_sigaction, kill, tgkill) that might terminate the program; with glibc, abort() appears as a tgkill call sending SIGABRT to the process itself.

By analyzing the last system calls, I can see what the program was doing immediately before the crash and narrow down where to look in the code. Adding -f traces child processes and threads, and -T shows the time spent in each system call, which helps with performance or timing issues. For example, if a write call fails with EPIPE and is followed by SIGPIPE, the process was killed for writing to a broken pipe.
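A hypothetical summarize_trace helper can pull the interesting parts out of a long trace file. This sketch assumes strace's default output format, where failed calls end in = -1 followed by an errno name, and signals and process exits appear on lines marked with --- and +++.

```shell
#!/bin/sh
# Sketch: capture a trace, then summarise its end. ./myprog is a placeholder.
#   strace -f -T -o trace.txt ./myprog
# summarize_trace prints recent failed syscalls and any signal/exit lines.
summarize_trace() {
    trace=$1
    echo "== last failed system calls"
    # Failed calls return -1 and name the errno, e.g. "= -1 ENOENT (...)".
    grep -E '= -1 E[A-Z]+' "$trace" | tail -n 10
    echo "== signals and exit status"
    # strace marks signals with "--- SIG... ---" and exits with "+++ ... +++".
    grep -e '--- SIG' -e '+++ ' "$trace"
}
```

Usage: summarize_trace trace.txt after the traced program has crashed.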

13. Explain how to use namespaces to create isolated environments for running applications. What are the benefits?

Namespaces isolate kernel resources per process, so that processes in different namespaces behave as if they were running on separate systems. Separate namespace types cover process IDs (PID), network interfaces, mount points, inter-process communication (IPC), the hostname (UTS), and user and group IDs. Namespaces are created with the clone() or unshare() system calls using flags such as CLONE_NEWPID or CLONE_NEWNET, or from the shell with the unshare utility; nsenter joins a process to existing namespaces.

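The benefits include improved security, since a compromised application sees only its own processes, network stack, and filesystem view; fewer conflicts between applications (for example, two services can each bind port 80 in their own network namespaces); and simpler deployment and testing with container technologies like Docker, which rely heavily on namespaces. Namespaces control what a process can see, while limits on how much CPU and memory it can use come from cgroups. A container is, in effect, a process running in a set of namespaces under cgroup limits.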
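A quick way to see a namespace in action is to change the hostname inside a new UTS namespace and confirm the host is unaffected. This sketch assumes util-linux's unshare, the hostname command, and a kernel that allows unprivileged user namespaces (some distributions and container runtimes disable them); demo_uts_isolation is an illustrative name.

```shell
#!/bin/sh
# Sketch: give a shell its own hostname via new user + UTS namespaces.
# --map-root-user maps the caller to root inside the user namespace, which
# grants the privilege needed to set the hostname there (and only there).
demo_uts_isolation() {
    unshare --user --map-root-user --uts \
        sh -c 'hostname isolated-demo && echo "inside:  $(uname -n)"'
}

echo "host before: $(uname -n)"
demo_uts_isolation || echo "unshare not permitted in this environment"
echo "host after:  $(uname -n)"

# Other namespace types (root required):
#   sudo unshare --pid --fork --mount-proc ps aux   # ps sees itself as PID 1
#   sudo unshare --net ip link                      # only a loopback device
```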

14. How would you back up and restore a complete Linux system, including the bootloader and all partitions?

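To back up a complete Linux system, including the bootloader and all partitions, I would use dd or partclone, ideally from a live environment so the filesystems are not mounted and changing during the copy. dd can image the entire disk, which also captures the partition table and bootloader: dd if=/dev/sda of=/path/to/backup.img bs=4M status=progress. Alternatively, partclone backs up individual partitions and saves space by copying only used blocks: partclone.ext4 -c -s /dev/sda1 -o /path/to/sda1.img. When partitions are backed up individually, the boot components need separate copies. On a BIOS/MBR system, save the first sector, which holds the boot code and partition table: dd if=/dev/sda of=/path/to/mbr.img bs=512 count=1. On a UEFI system, image the whole EFI system partition (e.g., dd if=/dev/sdaN of=/path/to/efi.img bs=4M, where /dev/sdaN is the EFI partition) or copy its files, and save the GPT partition table with sgdisk --backup=/path/to/gpt.bin /dev/sda.

To restore, I would boot from a live environment. For a dd image, reverse the command: dd if=/path/to/backup.img of=/dev/sda bs=4M status=progress. For partclone, use partclone.ext4 -r -s /path/to/sda1.img -o /dev/sda1. Restoring the bootloader depends on whether the system uses MBR or EFI. For MBR, dd if=/path/to/mbr.img of=/dev/sda bs=512 count=1 restores both the boot code and the partition table; use bs=446 count=1 instead to restore only the boot code. For EFI, write the partition image back with dd or copy the backed-up files onto the mounted EFI partition. If the partition layout is gone, recreate it first (with fdisk, gdisk, or sgdisk --load-backup for a saved GPT table) before restoring partition data. Because dd overwrites its target without confirmation, double-check the device name (e.g., with lsblk) before running it.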
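Because a mistyped dd target is destructive, it can help to rehearse the MBR commands on a disk-image file first. This sketch uses a throwaway 1 MiB file as a stand-in for /dev/sda; it is an illustration of the commands, not a complete backup script.

```shell
#!/bin/sh
# Sketch: rehearse MBR backup/restore on an image file instead of /dev/sda.
set -eu
work=$(mktemp -d)
disk=$work/disk.img          # stand-in for a real disk such as /dev/sda

# Create a 1 MiB "disk" and put random bytes in sector 0 to act as an MBR.
dd if=/dev/zero of="$disk" bs=512 count=2048 status=none
dd if=/dev/urandom of="$disk" bs=512 count=1 conv=notrunc status=none

# Back up sector 0: 446 bytes of boot code, the 64-byte partition table,
# and the 2-byte boot signature.
dd if="$disk" of="$work/mbr.img" bs=512 count=1 status=none

# Simulate damage, then restore. conv=notrunc stops dd from truncating the
# image file to 512 bytes; it makes no difference on a real block device.
dd if=/dev/zero of="$disk" bs=512 count=1 conv=notrunc status=none
dd if="$work/mbr.img" of="$disk" bs=512 count=1 conv=notrunc status=none
# (bs=446 count=1 would restore the boot code but keep the current table.)

head -c 512 "$disk" | cmp -s - "$work/mbr.img" && echo "MBR restored"
```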

15. Describe how you would monitor the health and performance of a Linux server using tools like 'sar' or 'vmstat'.

To monitor a Linux server's health and performance with sar and vmstat, I'd focus on a few key metrics. vmstat gives a quick overview of CPU usage (user, system, idle, I/O wait), memory (free, buffers, cache), swap activity, and block I/O. High CPU usage (especially system time), low free memory combined with active swapping (non-zero si/so), and high I/O wait are the main warning signs. sar, part of the sysstat package, adds historical data and a more granular view. Using sar, I would look for trends over time with flags such as -u for CPU utilization, -r for memory statistics, -b for I/O transfer rates (-d for per-device detail), and -n TCP,ETCP for TCP statistics and errors. I'd enable sysstat's periodic data collection so I can analyze performance patterns and spot bottlenecks that vmstat's real-time snapshot would miss. Quick vmstat checks combined with trended sar data give a fairly complete picture of server health.
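The commands above, plus a small filter for spotting high I/O wait, might look like this. The sampling intervals, the 20% threshold, and the flag_iowait name are assumptions, and the sar data-file path differs between distributions (for example /var/log/sa or /var/log/sysstat).

```shell
#!/bin/sh
# Quick checks (sar needs sysstat; history needs its collector enabled):
#   vmstat 5 3                  # 3 samples, 5 s apart
#   sar -u 5 3                  # CPU utilisation
#   sar -r 5 3                  # memory
#   sar -b 5 3                  # I/O transfer rates
#   sar -n TCP,ETCP 5 3         # TCP activity and errors
#   sar -u -f /var/log/sa/sa15  # stored history for the 15th (path varies)
#
# flag_iowait reads vmstat output on stdin and prints samples whose "wa"
# column exceeds a threshold. The column is found by name because newer
# procps releases add extra columns.
flag_iowait() {
    threshold=${1:-20}
    awk -v t="$threshold" '
        $1 == "r" && $2 == "b" { for (i = 1; i <= NF; i++) if ($i == "wa") wa = i; next }
        wa && $1 ~ /^[0-9]+$/ { n++; if ($wa + 0 > t) printf "sample %d: wa=%s%%\n", n, $wa }
    '
}
```

Usage: vmstat 5 12 | flag_iowait 20 reports which of twelve samples showed more than 20% I/O wait.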

16. Explain how you would use the 'tcpdump' command to capture and analyze network traffic for troubleshooting purposes.

To capture network traffic with tcpdump, I'd start with a basic command like tcpdump -i <interface>. This captures all traffic on the specified interface. For example, tcpdump -i eth0 captures traffic on the eth0 interface. To filter, I'd use expressions like tcpdump port 80 to capture HTTP traffic, or tcpdump src host 192.168.1.100 to capture traffic from a specific IP address. I can also save the captured traffic to a file using tcpdump -w <filename.pcap> -i <interface>.

For analysis, I'd open the .pcap file in Wireshark, or read it back in the terminal with tcpdump -r <filename.pcap> and apply the same filter expressions to the saved capture. I'd look for patterns, errors, or unexpected traffic. For example, TCP resets can be isolated with the filter 'tcp[tcpflags] & (tcp-rst) != 0', while retransmissions are easier to find with the TCP analysis in Wireshark or tshark (the tcp.analysis.retransmission display filter), since a capture filter cannot recognize them. Analyzing packet sizes and timing can also reveal bandwidth bottlenecks or latency problems. I usually add -nn to skip DNS and port-name lookups, which speeds up the output, and -v or -vv for more verbose decoding.
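The capture-then-analyze workflow can be wrapped in a few shell functions. This is a sketch: the interface, port, packet count, and file names are placeholders, capturing requires root or CAP_NET_RAW, retransmission analysis assumes tshark is installed, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: capture HTTP traffic to a file, then analyse the saved capture.
run() { if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi; }

capture_http() {          # $1 = interface, $2 = output .pcap file
    # -nn: no name/port lookups; -s 0: full packets; -c: stop after 1000
    run tcpdump -i "$1" -nn -s 0 -c 1000 -w "$2" 'tcp port 80'
}

show_resets() {           # $1 = saved capture; print only TCP RST packets
    run tcpdump -nn -r "$1" 'tcp[tcpflags] & (tcp-rst) != 0'
}

show_retransmissions() {  # $1 = saved capture; needs tshark (Wireshark CLI)
    run tshark -r "$1" -Y 'tcp.analysis.retransmission'
}
```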

17. How would you set up a reverse proxy using Nginx or Apache to improve the performance and security of a web application?

To set up a reverse proxy with Nginx or Apache, you'd configure it to sit in front of your web application server. For performance, this allows the reverse proxy to cache static content like images, CSS, and JavaScript, reducing the load on the application server and speeding up response times for users. The proxy can also handle SSL/TLS encryption, offloading this processing from the application server.

For security, the reverse proxy acts as a shield, hiding the application server's IP address and internal structure from the outside world. This makes it harder for attackers to target the application server directly. You can also configure the reverse proxy to enforce rate limiting, apply web application firewall (WAF) rules, and absorb some denial-of-service traffic before it reaches the application. Here's a simple Nginx configuration example:

```nginx
server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://backend_server_ip:8080;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```
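The basic example above doesn't yet show the caching, TLS, and rate-limiting features mentioned in the answer. The shell function below writes a fuller Nginx configuration as a sketch; the domain, certificate paths, backend address (127.0.0.1:8080), cache directory, and rate limits are all hypothetical, and it assumes the file goes into a directory that the stock nginx.conf includes inside its http block, such as /etc/nginx/conf.d/.

```shell
#!/bin/sh
# Sketch: write a reverse-proxy config with HTTPS redirect, TLS termination,
# per-client rate limiting and caching of static assets.
# Usage: write_proxy_conf /etc/nginx/conf.d/app.conf
write_proxy_conf() {
cat > "$1" <<'EOF'
# http-context directives (conf.d files are included inside http {})
limit_req_zone $binary_remote_addr zone=perip:10m rate=10r/s;
proxy_cache_path /var/cache/nginx/app levels=1:2 keys_zone=appcache:10m
                 max_size=1g inactive=60m;

server {
    listen 80;
    server_name example.com;
    return 301 https://$host$request_uri;      # force HTTPS
}

server {
    listen 443 ssl;
    server_name example.com;
    ssl_certificate     /etc/ssl/certs/example.com.pem;
    ssl_certificate_key /etc/ssl/private/example.com.key;

    # Cache static assets at the proxy to offload the backend.
    location ~* \.(css|js|png|jpg|svg)$ {
        proxy_cache appcache;
        proxy_cache_valid 200 10m;
        proxy_pass http://127.0.0.1:8080;
    }

    location / {
        limit_req zone=perip burst=20 nodelay;  # per-client rate limit
        proxy_pass http://127.0.0.1:8080;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
EOF
}
```

After writing the file, sudo nginx -t validates the syntax before sudo systemctl reload nginx applies it.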

18. Describe your experience with Linux containers, and explain the difference between Docker and other containerization technologies.

I have experience using Linux containers, primarily with Docker. I've used Docker to containerize applications, manage dependencies, and create portable environments for development, testing, and deployment. I'm familiar with writing Dockerfiles, building images, managing containers, and orchestrating them using tools like Docker Compose.

The main difference between Docker and other containerization technologies lies in Docker's comprehensive ecosystem. Lower-level technologies like LXC (Linux Containers) provide the underlying containerization capabilities, whereas Docker adds a user-friendly CLI, tooling for image management, networking, and storage, and a large community with a public registry (Docker Hub). Docker simplifies the entire container lifecycle, from building and sharing images to deploying and managing containers, making it accessible and efficient for developers and operations teams. Runtimes such as containerd (which Docker itself uses under the hood) and the now-discontinued rkt focus primarily on running containers, leaving the rest of the lifecycle to other tools. Docker's integrated approach has contributed to its widespread adoption.

19. How can you use the 'awk' command to process log files and extract specific information based on patterns?

Awk is a powerful tool for processing log files: it matches lines against patterns, splits them into fields, and formats the output. For example, awk '/error/' logfile.txt prints every line containing 'error' (the match is case-sensitive; use awk 'tolower($0) ~ /error/' logfile.txt to ignore case). To print the first whitespace-separated field of each line, use awk '{print $1}' logfile.txt.

Awk can also combine patterns with custom output formats. For instance, awk '/error/ {print "Timestamp: " $1 ", Message: " $NF}' logfile.txt finds lines containing "error" and prints the first field as a timestamp and the last field as the message. Note that $NF is only the final whitespace-separated word, so a multi-word message needs a loop over the fields or a different field separator (-F). Beyond that, awk scripts can redirect output to files, accumulate counts in associative arrays, perform calculations, and define functions for more sophisticated log analysis.
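Building on these examples, the following sketch counts errors per program and reconstructs the full message instead of only its last word. It assumes a hypothetical log format of timestamp, host, program name with a trailing colon, level, then the message; the field numbers would need adjusting for real logs.

```shell
#!/bin/sh
# Sketch: summarise ERROR lines (case-insensitive) from a log.
# Assumed format: <timestamp> <host> <program>: <LEVEL> <message...>
# Usage: summarize_errors /var/log/app.log   (or pipe the log on stdin)
summarize_errors() {
    awk '
        toupper($4) == "ERROR" {
            prog = $3
            sub(/:$/, "", prog)                    # drop the trailing colon
            count[prog]++
            msg = $5                               # rebuild fields 5..NF
            for (i = 6; i <= NF; i++) msg = msg " " $i
            printf "Timestamp: %s, Program: %s, Message: %s\n", $1, prog, msg
        }
        END { for (p in count) printf "%s errors: %d\n", p, count[p] }
    ' "$@"
}
```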

20. Explain how to manage and troubleshoot DNS resolution issues on a Linux server.

To manage and troubleshoot DNS resolution issues on a Linux server, start by checking /etc/resolv.conf to ensure the correct DNS server addresses are listed (on systems running systemd-resolved it often points to the local stub at 127.0.0.53, and resolvectl status shows the real upstream servers). Use nslookup, dig, or host to query DNS servers directly, for example nslookup google.com or dig google.com, and getent hosts google.com to test the system resolver as applications see it, including /etc/hosts and /etc/nsswitch.conf. Together these show whether the server can reach its DNS servers and whether they return the correct IP addresses.

Common troubleshooting steps include flushing the local DNS cache if one is running (resolvectl flush-caches with systemd-resolved, or systemd-resolve --flush-caches on older releases; /etc/init.d/nscd restart for nscd), verifying network connectivity to the DNS servers with ping, and checking firewall rules that might block DNS traffic on port 53 (UDP, plus TCP for large responses). If the issue persists, investigate the DNS server's own configuration for errors or outages. System logs (/var/log/syslog or /var/log/messages, or journalctl -u systemd-resolved) can also provide valuable clues about resolution failures.
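The checks above can be bundled into a small helper that compares the system resolver with direct queries to each configured nameserver. dns_check is a hypothetical name; the sketch assumes getent is available and uses dig only if it is installed. If getent fails while dig succeeds, the problem is likely local (/etc/hosts or /etc/nsswitch.conf); if both fail, look at the DNS servers, the network path, or the firewall.

```shell
#!/bin/sh
# Sketch: compare the system resolver with direct per-nameserver queries.
# Usage: dns_check example.com   (returns non-zero if getent finds nothing)
dns_check() {
    name=${1:?usage: dns_check NAME}
    echo "== nameservers in /etc/resolv.conf"
    grep '^nameserver' /etc/resolv.conf 2>/dev/null || echo "(none listed)"

    echo "== system resolver (getent: /etc/hosts, nsswitch.conf, DNS)"
    status=0
    getent hosts "$name" || { echo "(no answer)"; status=1; }

    # Query each listed nameserver directly, if dig is installed.
    # The unquoted $(...) is intentional: one nameserver per word.
    if command -v dig >/dev/null 2>&1; then
        for ns in $(awk '/^nameserver/ { print $2 }' /etc/resolv.conf 2>/dev/null); do
            echo "== dig @$ns"
            dig +short +time=2 +tries=1 @"$ns" "$name" A || echo "(query failed)"
        done
    fi
    return "$status"
}
```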

21. How would you configure a Linux system to automatically mount a network share on boot?

To automatically mount a network share on boot in Linux, you'd typically add an entry to /etc/fstab specifying the share's location, mount point, filesystem type, and mount options. For example, to mount an SMB/CIFS (Samba) share, you might add a line like:

//server/share /mnt/share cifs credentials=/path/to/credentials.txt,uid=1000,gid=1000,_netdev,nofail 0 0

Replace //server/share with the network share's address, /mnt/share with the desired mount point, and /path/to/credentials.txt with a file containing the username and password (as username= and password= lines), readable only by its owner (chmod 600) so the password isn't exposed. The uid and gid options set the user and group ownership of files in the mounted share. _netdev marks the share as requiring the network so it is mounted after networking is up, and nofail keeps the boot from stopping if the server is unreachable. The final 0 0 fields disable dump backups and boot-time filesystem checks, which don't apply to network shares. Make sure the mount point directory exists, then test the entry with sudo mount -a before rebooting.
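The fstab steps can be scripted so they are repeatable and idempotent. In this sketch, add_cifs_mount is a hypothetical helper; the share, mount point, uid/gid, and credentials path are placeholders, and it takes the fstab path as an argument so it can be tried on a scratch file before editing the real /etc/fstab as root.

```shell
#!/bin/sh
# Sketch: register a CIFS share in fstab so it is mounted at boot.
# Usage: add_cifs_mount //server/share /mnt/share /root/.smbcreds [/etc/fstab]
add_cifs_mount() {
    share=$1 mnt=$2 creds=$3 fstab=${4:-/etc/fstab}

    # Create an owner-only credentials file if none exists yet;
    # edit it afterwards to hold the real username and password.
    if [ ! -f "$creds" ]; then
        (umask 077; printf 'username=CHANGE_ME\npassword=CHANGE_ME\n' > "$creds")
    fi
    chmod 600 "$creds"
    mkdir -p "$mnt"

    # _netdev: wait for the network; nofail: don't block boot if unreachable.
    line="$share $mnt cifs credentials=$creds,uid=1000,gid=1000,_netdev,nofail 0 0"
    # Append only if this exact entry is not already present.
    grep -qxF "$line" "$fstab" 2>/dev/null || printf '%s\n' "$line" >> "$fstab"
}
```

Afterwards, sudo mount -a mounts everything listed in fstab, and findmnt /mnt/share confirms the share is mounted.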

22. Describe the process of setting up and managing SELinux or AppArmor for enhanced system security.