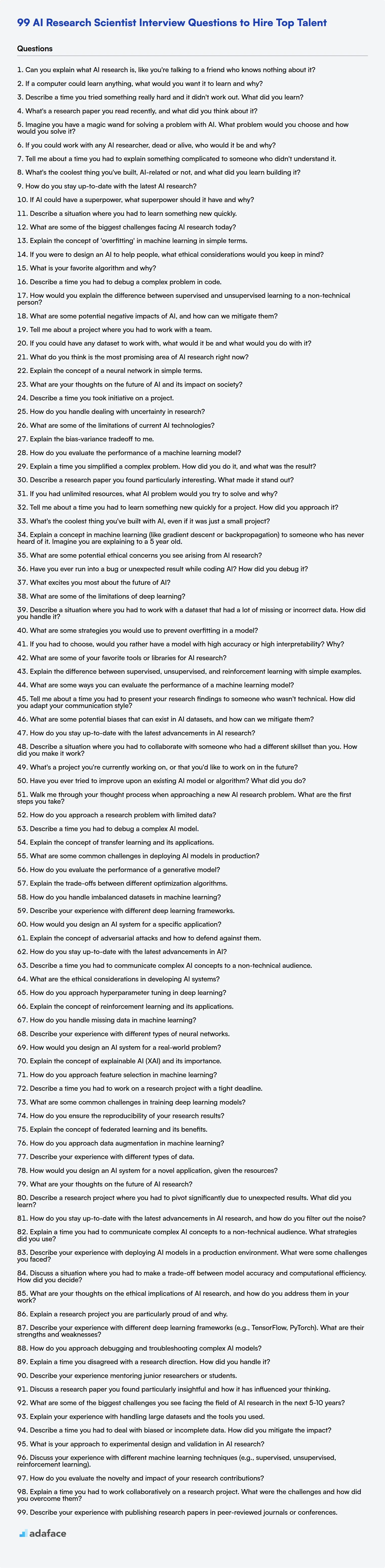

Hiring an AI Research Scientist requires more than checking that candidates understand algorithms. As with hiring a machine learning engineer, you need professionals who can innovate and push the boundaries of what's possible. A well-structured interview process is essential for identifying candidates who have the knowledge, creativity, and problem-solving skills to drive your organization's AI initiatives.

This blog post provides a curated list of interview questions for AI Research Scientist roles, covering various experience levels from freshers to experienced professionals. You will also find multiple-choice questions (MCQs) to help you thoroughly assess a candidate's theoretical and practical knowledge.

By using these questions, you can better gauge a candidate's suitability and potential to excel in your AI research team. To further streamline your hiring, consider using Adaface's Research Scientist Test to pre-screen candidates for core skills before the interview.


AI Research Scientist interview questions for freshers

1. Can you explain what AI research is, like you're talking to a friend who knows nothing about it?

Okay, so imagine AI research as trying to make computers think and act like humans. It's not about building robots that take over the world, but more about creating smart tools that can solve problems, learn from data, and make decisions. Think of it like teaching a computer to recognize your face in photos, translate languages, or even play games like chess really well.

Basically, AI researchers are exploring different ways to give computers 'intelligence'. This could involve developing new algorithms, creating massive datasets for training, or even trying to understand how the human brain works so we can mimic those processes in machines. It is a wide field that borrows ideas from math, computer science, psychology, and even neuroscience.

2. If a computer could learn anything, what would you want it to learn and why?

If a computer could learn anything, I'd want it to learn common sense reasoning and human empathy. While AI excels at processing data and performing specific tasks, it often struggles with the nuances of everyday situations and understanding human emotions.

Learning common sense would allow AI to make better decisions in complex, real-world scenarios, avoiding illogical or harmful outcomes. Combining that with empathy, the AI could provide more personalized and compassionate assistance, fostering better human-computer interactions and addressing ethical concerns related to AI's growing influence.

3. Describe a time you tried something really hard and it didn't work out. What did you learn?

During my final year project, I attempted to implement a novel image recognition algorithm from a research paper. The paper claimed state-of-the-art accuracy, and I was eager to replicate it. I spent weeks carefully coding the algorithm, debugging, and tuning hyperparameters. Despite my best efforts, I couldn't match the reported performance: my implementation consistently fell short of the paper's results, even after I verified the data preprocessing and environment setup.

I realized that replicating complex research often depends on unstated nuances and implementation details that the paper never mentions. I learned the importance of understanding the fundamental concepts first and thoroughly testing individual components before integrating everything. While the project didn't achieve its initial goal, it deepened my understanding of image recognition algorithms and of the challenges of reproducing research. More importantly, I now take a more iterative and analytical approach to complex tasks, building a solid foundation before aiming for ambitious targets. Debugging this project also greatly improved my skill with git bisect.

4. What's a research paper you read recently, and what did you think about it?

Recently, I read the paper "Language Models are Few-Shot Learners" (Brown et al., 2020), which introduced GPT-3. I found the scale of the model and its few-shot learning capabilities quite impressive. The paper demonstrated that large language models, without any gradient updates or fine-tuning, can perform reasonably well on a wide range of tasks simply by providing a few examples in the prompt.

While the results were compelling, I also considered the limitations. The paper highlights the computational cost and the potential for biased outputs. Furthermore, the lack of interpretability in such large models raises concerns about understanding their decision-making process. Overall, I found the paper a significant contribution to the field, showcasing the potential and challenges of large language models.

5. Imagine you have a magic wand for solving a problem with AI. What problem would you choose and how would you solve it?

If I had a magic AI wand, I'd tackle personalized education. Current education systems often follow a one-size-fits-all approach, which isn't effective for all students. The AI would analyze each student's learning style, strengths, weaknesses, and interests to create a completely customized learning path.

The AI would continuously adapt the curriculum based on the student's progress and feedback. It would identify areas where the student is struggling and provide targeted support and resources. Conversely, if a student is excelling, the AI would offer more advanced challenges to keep them engaged and motivated. This personalized approach could unlock each student's full potential and make learning more enjoyable and effective.

6. If you could work with any AI researcher, dead or alive, who would it be and why?

I would choose to work with Geoffrey Hinton. His pioneering work on backpropagation and deep learning laid the foundation for many of the AI advancements we see today. I'm particularly interested in his research on capsule networks and his continued exploration of how to make AI systems more robust and interpretable.

Working with him would provide invaluable learning opportunities. I'd be excited to contribute to his team and to gain insights into his approach to problem-solving, his intuition about promising research directions, and his dedication to pushing the boundaries of AI. His work inspires me, and collaborating with him would be a dream come true.

7. Tell me about a time you had to explain something complicated to someone who didn't understand it.

I once had to explain the concept of RESTful APIs to a marketing team who were unfamiliar with software development. They needed to understand how our new website integrated with third-party services. I avoided technical jargon and instead used an analogy of a restaurant. I explained that the API was like a waiter (the interface), the menu was the available requests (like getting user data or submitting a form), and the kitchen was the server processing the request. I focused on the what and why – what data we were exchanging and why it was important for their marketing campaigns – rather than the how, which involved code and servers.

I used visuals, like a simplified diagram showing the flow of information between the website, the API, and the external services. For example, the diagram showed: Website -> API (requesting user profile) -> External Service (providing user profile) -> API (delivering to website). I also related it to their daily tasks by showing how updating product information in the CMS ultimately triggered an API call that updated the live website. By framing it in terms of familiar concepts and focusing on their specific needs, I was able to help them understand the basics of APIs and how they supported our marketing efforts.

8. What's the coolest thing you've built, AI-related or not, and what did you learn building it?

The coolest thing I built was a real-time object detection system for a robotics project. It used YOLOv5 and ran on a Raspberry Pi with a Neural Compute Stick. The robot could identify and track objects in its environment, allowing it to navigate autonomously. The robot also made use of a Kalman filter for sensor fusion to further improve accuracy.

Building it taught me a lot about optimizing deep learning models for embedded systems. I learned about quantization, pruning, and other techniques to reduce model size and improve inference speed. I also gained experience with hardware acceleration and working with limited resources. This included troubleshooting hardware interfacing and efficiently debugging code in a constrained environment. Furthermore, it gave me practical experience deploying a full AI solution from start to finish, from the initial design to real-world execution.
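To make the quantization idea concrete, here is a minimal sketch of symmetric, per-tensor int8 post-training quantization in NumPy. It is an illustration under simplifying assumptions (a single scale for the whole tensor, no calibration data, random stand-in weights), not the toolchain the robot project actually used; the function names are hypothetical.

```python
import numpy as np


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to int8 using one scale."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.5, size=(64, 64)).astype(np.float32)  # stand-in layer weights
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)

print("bytes before:", w.nbytes, "after:", q.nbytes)  # int8 storage is 4x smaller
print("max abs error:", float(np.max(np.abs(w - w_hat))))  # bounded by about scale / 2
```

Real deployments usually go further (per-channel scales, calibration, quantized kernels on the accelerator), but the core trade of precision for size and speed is the same.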

9. How do you stay up-to-date with the latest AI research?

I stay up-to-date with the latest AI research through a combination of online resources and community engagement. I regularly read research papers on arXiv and follow key AI conferences like NeurIPS, ICML, and ICLR to understand the current trends and breakthroughs. Additionally, I subscribe to AI-focused newsletters and blogs from reputable organizations and researchers, such as those from Google AI, OpenAI, and DeepMind.

To go further, I actively participate in online communities such as Reddit's r/MachineLearning, follow prominent AI researchers on Twitter, and occasionally take online courses or workshops to deepen my understanding of specific topics. This multi-faceted approach ensures I am aware of both theoretical advancements and practical applications in the field.

10. If AI could have a superpower, what superpower should it have and why?

If AI could have a superpower, it should have the ability to flawlessly and ethically synthesize information from all available sources, regardless of format or language. This would allow it to provide humans with a comprehensive and unbiased understanding of complex issues, facilitating better decision-making and accelerating scientific discovery.

This superpower would be invaluable because current AI is often limited by the data it's trained on, leading to biases and incomplete perspectives. Imagine AI instantly synthesizing the entire corpus of medical research to find a cure for cancer or analyzing all climate data to develop effective mitigation strategies. The ethical component ensures responsible use, preventing manipulation or the spread of misinformation.

11. Describe a situation where you had to learn something new quickly.

During my internship, I was assigned to a project involving a new cloud platform I had no prior experience with. The project was already underway and I needed to contribute immediately. I spent the first few days immersing myself in the platform's documentation and online tutorials. I focused on the specific services we were using, such as serverless functions and message queues. I also paired with a senior engineer who walked me through the codebase and explained the architecture.

To solidify my understanding, I took on small tasks and actively sought feedback. For example, I was asked to implement a new API endpoint using serverless functions, which I completed with the help of the platform documentation and the senior engineer's examples. Thanks to this focused learning and hands-on approach, I was contributing meaningfully to the project within a week.

12. What are some of the biggest challenges facing AI research today?

AI research faces several significant challenges. One major hurdle is the lack of truly generalizable AI. Current AI models often excel in specific tasks but struggle to adapt to new, unseen situations. This requires more research into areas like transfer learning and meta-learning. Another issue is data dependency: many AI algorithms, especially deep learning models, require massive amounts of labeled data, which can be expensive and time-consuming to acquire or create. This limits the applicability of AI in domains where data is scarce.

Furthermore, ensuring AI safety and alignment remains a critical concern. As AI systems become more powerful, we need mechanisms that keep their goals aligned with human values and ensure they operate safely and ethically. Addressing algorithmic bias, improving explainability, and defending against adversarial attacks are all essential for building trustworthy AI. Finally, computational resources remain a limiting factor, especially for training large models, so advances in hardware and more efficient algorithms are needed to keep pushing the boundaries of AI capabilities.

13. Explain the concept of 'overfitting' in machine learning in simple terms.

Overfitting happens when a machine learning model learns the training data too well, including its noise and outliers. Instead of generalizing to new, unseen data, the model essentially memorizes the training set. This leads to very high accuracy on the training data, but poor performance on new data.

Think of it like studying for an exam by only memorizing the answers to practice questions. You'll do great on the practice questions, but if the exam has any slightly different questions, you'll struggle because you haven't actually understood the underlying concepts.
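To see overfitting in numbers, the sketch below fits polynomials of increasing degree to a dozen noisy points. The data is synthetic and the degrees are arbitrary choices for illustration. Training error can only go down as the degree rises, while error on fresh points typically stops improving and, for the degree-9 fit that starts chasing the noise, often gets worse.

```python
import numpy as np

rng = np.random.default_rng(42)

# 12 noisy training samples of an underlying curve y = sin(pi * x).
x_train = np.sort(rng.uniform(-1, 1, 12))
y_train = np.sin(np.pi * x_train) + rng.normal(0, 0.2, x_train.size)
# Clean "unseen" points from the same curve to measure generalization.
x_test = np.linspace(-1, 1, 200)
y_test = np.sin(np.pi * x_test)


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


results = {}
for degree in (1, 3, 9):
    coeffs = np.polyfit(x_train, y_train, degree)
    train_err = mse(y_train, np.polyval(coeffs, x_train))
    test_err = mse(y_test, np.polyval(coeffs, x_test))
    results[degree] = (train_err, test_err)
    print(f"degree {degree}: train MSE {train_err:.4f}, test MSE {test_err:.4f}")
```

The degree-9 model is the "memorized the practice questions" student: near-perfect on the training points, with no guarantee of doing well anywhere else.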

14. If you were to design an AI to help people, what ethical considerations would you keep in mind?

If I were to design an AI to help people, several ethical considerations would be paramount.

First, bias mitigation is crucial. The training data must be carefully curated to avoid perpetuating or amplifying existing societal biases related to gender, race, socioeconomic status, and so on. Regular audits and testing are needed to identify and correct unintended biases in the AI's decision-making processes.

I'd also prioritize transparency and explainability. Users should understand how the AI arrives at its recommendations or decisions; this builds trust and allows them to critically evaluate the AI's output. Explainable AI (XAI) techniques would be necessary to achieve this.

Finally, data privacy and security are fundamental. The AI must handle sensitive user data responsibly, comply with relevant regulations (e.g., GDPR, CCPA), and implement strong security measures to prevent unauthorized access or misuse. Techniques such as anonymization and differential privacy can help here.
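As one concrete example of differential privacy, here is a minimal sketch of the Laplace mechanism applied to a counting query. The ages, the predicate, and the privacy budget `epsilon=0.5` are made-up values for illustration; `private_count` is a hypothetical helper, not part of any library.

```python
import numpy as np


def private_count(values, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person's record changes a count by at most 1
    (sensitivity = 1), so Laplace noise with scale 1 / epsilon is sufficient.
    """
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon)
    return true_count + noise


rng = np.random.default_rng(7)
ages = [23, 35, 41, 29, 62, 57, 33, 48, 71, 19]
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")  # true count is 5
```

Smaller `epsilon` means more noise and stronger privacy; the released number is useful in aggregate while revealing little about any single person.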

15. What is your favorite algorithm and why?

My favorite algorithm is the A* search algorithm. I find it elegant and efficient for pathfinding and graph traversal problems. Its use of a heuristic function to guide the search allows it to intelligently explore the most promising paths first, leading to significantly faster solutions compared to uninformed search algorithms.

Specifically, I appreciate how A* balances the actual cost from the start node (g(n)) with an estimated cost to the goal (h(n)), using f(n) = g(n) + h(n) to prioritize nodes. The adaptability of the heuristic function makes it useful in various scenarios. Implementing it often involves priority queues, which are also useful data structures to be familiar with. For example:

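```python
import heapq
from itertools import count


def a_star(graph, start, goal, heuristic):
    """Return the cheapest path from start to goal, or None if unreachable.

    graph maps each node to a dict of {neighbor: edge_cost}.
    heuristic(node, goal) must never overestimate the true remaining cost.
    """
    tie = count()  # tie-breaker so nodes themselves never need to be comparable
    open_heap = [(heuristic(start, goal), next(tie), start)]  # entries: (f, tie, node)
    came_from = {}
    g_score = {start: 0}

    while open_heap:
        f, _, current = heapq.heappop(open_heap)
        if current == goal:
            return reconstruct_path(came_from, current)
        # Skip stale entries left behind when a cheaper route was found later.
        if f > g_score[current] + heuristic(current, goal):
            continue
        for neighbor, cost in graph.get(current, {}).items():
            tentative_g = g_score[current] + cost
            if tentative_g < g_score.get(neighbor, float("inf")):
                came_from[neighbor] = current
                g_score[neighbor] = tentative_g
                f_score = tentative_g + heuristic(neighbor, goal)
                heapq.heappush(open_heap, (f_score, next(tie), neighbor))
    return None


def reconstruct_path(came_from, current):
    """Walk parent links back from current to the start node."""
    path = [current]
    while current in came_from:
        current = came_from[current]
        path.append(current)
    path.reverse()
    return path
```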

16. Describe a time you had to debug a complex problem in code.

During my work on a high-throughput data processing pipeline, we experienced intermittent failures in one of the critical data transformation services. The logs showed generic 'internal server error' messages without any specific error details. To debug this, I started by implementing more verbose logging, adding request IDs and timestamps to track individual data flows. I also introduced health check endpoints to monitor the service's resource utilization (CPU, memory) and database connectivity. After deploying these changes, I correlated the error timestamps with system metrics and identified a memory leak that gradually exhausted available memory, eventually causing the service to crash.

To fix this, I used a memory profiler to identify the objects consuming the most memory. It turned out that a caching mechanism, intended to improve performance, was not properly evicting old data. The cache was unbounded and grew continuously. I implemented a Least Recently Used (LRU) eviction policy for the cache, limiting its size. After deploying the fix, the memory leak was resolved, and the intermittent failures stopped.
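A bounded LRU cache like the one described can be sketched with `collections.OrderedDict`, which remembers insertion order and can move a key to the end. This is a minimal illustration with a hypothetical `LRUCache` class, not the service's actual code; for memoizing pure functions, Python's built-in `functools.lru_cache` covers the same idea.

```python
from collections import OrderedDict


class LRUCache:
    """Bounded cache that evicts the least recently used entry when full."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # drop the least recently used entry

    def __len__(self):
        return len(self._data)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now the most recently used
cache.put("c", 3)  # evicts "b", the least recently used
print(cache.get("b"), cache.get("a"), cache.get("c"))  # None 1 3
```

Because the cache can never hold more than `capacity` entries, memory use stays bounded no matter how long the service runs.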

17. How would you explain the difference between supervised and unsupervised learning to a non-technical person?

Imagine you're teaching a dog tricks. In supervised learning, you show the dog exactly what to do, giving it a treat (the correct answer) when it does it right. You have a clear idea of the desired outcome and provide labeled examples. In AI terms, you show the system many pictures labeled "this is a cat" so that it can then identify other cats on its own.

In unsupervised learning, you let the dog explore on its own. You don't tell it the "right" answer; instead, it figures out patterns and relationships by itself. Think of giving the dog a bunch of toys: it might naturally group them by size, color, or material, even though you never told it how to classify them. In AI terms, you might give the system a pile of customer data, and it discovers distinct groups of customers based on their spending habits without ever being told what the groups are.
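The contrast can be shown on the customer-spending example with a small sketch. The spending figures are made up, the supervised side uses a simple nearest-centroid classifier, and the unsupervised side uses a bare-bones k-means with k = 2; both are illustrative choices rather than the only options.

```python
import numpy as np

rng = np.random.default_rng(0)

# Monthly spending for two kinds of customers (made-up numbers).
low = rng.normal(50, 10, 20)
high = rng.normal(300, 40, 20)
spend = np.concatenate([low, high])

# Supervised: labels are given, so we learn one average per labelled group
# and classify a new customer by the nearest average (nearest-centroid classifier).
labels = np.array([0] * 20 + [1] * 20)
centroids = np.array([spend[labels == k].mean() for k in (0, 1)])
new_customer = 280.0
predicted = int(np.argmin(np.abs(centroids - new_customer)))
print("supervised prediction for 280:", predicted)

# Unsupervised: no labels, so k-means (k = 2) discovers the groups itself.
centers = np.array([spend.min(), spend.max()])  # simple deterministic start
for _ in range(20):
    assign = np.argmin(np.abs(spend[:, None] - centers[None, :]), axis=1)
    centers = np.array([spend[assign == k].mean() for k in (0, 1)])
print("clusters found around:", np.round(np.sort(centers), 1))
```

The supervised model needed the `labels` array; k-means recovered the same two groups from the raw numbers alone.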

18. What are some potential negative impacts of AI, and how can we mitigate them?

Potential negative impacts of AI include job displacement due to automation, algorithmic bias leading to unfair or discriminatory outcomes, privacy concerns from extensive data collection and surveillance, and the potential for misuse in autonomous weapons systems. Addressing these requires proactive measures.

Mitigation strategies involve investing in retraining programs for displaced workers, developing techniques for detecting and mitigating algorithmic bias (e.g., using fairness-aware algorithms, diverse datasets), establishing robust data privacy regulations and ethical guidelines for AI development, and promoting international cooperation to prevent the weaponization of AI. Public education and transparency in AI systems are also crucial to foster trust and understanding.

19. Tell me about a project where you had to work with a team.

In my previous role, I was part of a team developing a new feature for our company's mobile application. My primary responsibility was to implement the user authentication module using OAuth 2.0. This involved close collaboration with backend engineers to define API endpoints, UI/UX designers to ensure a seamless user experience, and QA testers to identify and resolve any bugs.

We used agile methodologies, with daily stand-up meetings to track progress and identify roadblocks. One challenge we faced was integrating with a third-party authentication provider that had inconsistent documentation. To overcome this, we held multiple meetings with their support team, experimented with different approaches, and shared our findings with the rest of the team using a shared document. Ultimately, we successfully implemented the authentication module, which led to an increase in user engagement.

20. If you could have any dataset to work with, what would it be and what would you do with it?

If I could choose any dataset, I'd love to work with a comprehensive dataset of social media interactions, encompassing platforms like Twitter, Reddit, and Facebook (if ethically sourced, of course). The data would include posts, comments, likes, shares, and user profiles, anonymized to protect privacy.

I would use this data for several interesting projects:

- Sentiment Analysis: Analyzing overall public sentiment towards various topics, brands, or events over time.

- Network Analysis: Mapping the relationships between users and communities to understand influence and information flow. Python with NetworkX would be my primary tool.

- Misinformation Detection: Building models to identify and flag potentially false or misleading information spreading online. I'd use NLP techniques with libraries like spaCy and transformers to train models on labelled data.

21. What do you think is the most promising area of AI research right now?

One of the most promising areas of AI research right now is the intersection of large language models (LLMs) with reasoning and planning. While LLMs have demonstrated impressive capabilities in generating text and answering questions, their ability to perform complex reasoning and planning remains limited. Research is focused on augmenting LLMs with external tools and knowledge sources to enable them to solve more intricate problems and make informed decisions. This includes techniques like chain-of-thought prompting, retrieval-augmented generation, and the integration of symbolic reasoning systems. These advancements hold immense potential for applications such as automated problem-solving, robotics, and decision support systems.

Another area with great potential is self-supervised learning and unsupervised learning. Scaling up labelled datasets is expensive, so research into methods that can learn effectively from unlabelled data is important. Advancements in contrastive learning, masked autoencoders, and generative models are paving the way for more robust and generalizable AI systems that can adapt to new environments and tasks with minimal human supervision.

22. Explain the concept of a neural network in simple terms.

A neural network is a computer system loosely inspired by the human brain. It consists of interconnected nodes called "neurons" organized in layers. These neurons process information, and the connections between them have weights that are adjusted during learning.

Essentially, the network learns by example. You feed it lots of data, and it adjusts the weights of the connections to become better at predicting or classifying new data. Think of it like teaching a child to recognize a cat by showing it many pictures of cats and non-cats. The network gradually learns what features (ears, whiskers, etc.) are important for identifying a cat.
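"Adjusting the weights" can be shown with the smallest possible network: a single artificial neuron learning the logical OR function by gradient descent. This is a toy sketch; the learning rate and number of steps are arbitrary choices, and real networks stack many such neurons in layers.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Four examples of the logical OR function: inputs and the correct answers.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

weights = np.zeros(2)  # connection strengths, adjusted during learning
bias = 0.0
learning_rate = 0.5

for _ in range(2000):
    predictions = sigmoid(X @ weights + bias)
    # Gradient of the cross-entropy loss with respect to the neuron's pre-activation.
    error = predictions - y
    weights -= learning_rate * X.T @ error / len(y)
    bias -= learning_rate * error.mean()

print("learned weights:", np.round(weights, 2), "bias:", round(float(bias), 2))
print("answers:", (sigmoid(X @ weights + bias) > 0.5).astype(int))
```

Each pass nudges the weights in the direction that reduces the error on the examples, which is the "learning by example" described above.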

23. What are your thoughts on the future of AI and its impact on society?

The future of AI is poised to bring significant transformations across various aspects of society. We can expect advancements in areas like healthcare (personalized medicine, drug discovery), transportation (autonomous vehicles), and automation (increased efficiency in industries). However, it's crucial to proactively address potential challenges such as job displacement, ethical concerns regarding algorithmic bias, and the need for robust regulatory frameworks. Education and reskilling initiatives will be essential to adapt to the changing job market, and careful consideration must be given to ensuring fairness, transparency, and accountability in AI systems.

Ultimately, the impact of AI will depend on the choices we make today. By focusing on responsible development and deployment, prioritizing human well-being, and promoting collaboration between researchers, policymakers, and the public, we can harness the power of AI to create a more equitable and prosperous future for all. Ignoring these considerations risks exacerbating existing inequalities and creating new challenges that could undermine the potential benefits of AI.

24. Describe a time you took initiative on a project.

During my time working on the data pipeline project at my previous company, I noticed that the current data validation process was taking an unusually long time, especially when dealing with large datasets. The existing validation script checked for data types and missing values, but it lacked more sophisticated checks for data anomalies or inconsistencies.

Taking initiative, I researched and implemented a new data validation module using Python and Pandas. This module included checks for statistical outliers, data range constraints, and cross-field consistency. I integrated it into the existing pipeline, and the overall validation time was reduced by approximately 30%, significantly improving data quality and reducing downstream errors. This proactive step prevented several costly data-related issues down the line and was well-received by the data science team.
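A minimal pandas sketch of the three kinds of checks mentioned (statistical outliers, range constraints, and cross-field consistency) might look like this. The column names, the allowed quantity range, the 1.5 × IQR outlier rule, and the 0.01 tolerance are all illustrative assumptions, not details of the module in the story.

```python
import pandas as pd


def validate(df):
    """Return a DataFrame of boolean flags, one column per check."""
    issues = pd.DataFrame(index=df.index)

    # Range constraint: quantities must be between 1 and a plausible cap.
    issues["quantity_out_of_range"] = ~df["quantity"].between(1, 1000)

    # Statistical outliers: price outside 1.5 * IQR of the observed distribution.
    q1, q3 = df["price"].quantile([0.25, 0.75])
    iqr = q3 - q1
    issues["price_outlier"] = (df["price"] < q1 - 1.5 * iqr) | (df["price"] > q3 + 1.5 * iqr)

    # Cross-field consistency: the stored total must equal quantity * price.
    expected_total = df["quantity"] * df["price"]
    issues["total_mismatch"] = (df["total"] - expected_total).abs() > 0.01

    return issues


orders = pd.DataFrame({
    "quantity": [2, 1, 0, 3, 1],
    "price": [10.0, 12.5, 11.0, 9.5, 480.0],
    "total": [20.0, 12.5, 0.0, 30.0, 480.0],
})
flags = validate(orders)
print(orders[flags.any(axis=1)])  # rows that failed at least one check
```

Because every check is a vectorized column operation, this style tends to scale better on large datasets than row-by-row loops.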

25. How do you handle dealing with uncertainty in research?

Uncertainty is inherent in research. I address it by first acknowledging and quantifying it whenever possible. This involves identifying potential sources of error or bias in my data, methods, or assumptions. I then try to mitigate uncertainty through strategies like:

- Robust Experimental Design: Employing techniques like randomization, control groups, and blinding to minimize confounding variables.

- Sensitivity Analysis: Testing how my results change under different assumptions or parameter values.

- Bayesian methods: Incorporating prior beliefs and updating them with observed data, so that uncertainty is represented explicitly as a probability distribution.

- Extensive Literature Review: Understanding what's already known and where gaps remain.

When communicating results, I clearly articulate the limitations and uncertainties, using confidence intervals or other statistical measures to convey the range of possible outcomes. In short, embrace it, quantify it, and mitigate it.
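One distribution-free way to put a confidence interval around a result is the percentile bootstrap. The sketch below is illustrative: the per-seed accuracies are hypothetical numbers, and `bootstrap_ci` is a helper written for this example.

```python
import numpy as np


def bootstrap_ci(samples, stat=np.mean, n_resamples=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a statistic of `samples`."""
    rng = np.random.default_rng(seed)
    samples = np.asarray(samples)
    # Resample with replacement and recompute the statistic for each resample.
    idx = rng.integers(0, len(samples), size=(n_resamples, len(samples)))
    stats = stat(samples[idx], axis=1)
    lower, upper = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return float(lower), float(upper)


# Hypothetical accuracies of one model trained with 8 different random seeds.
accuracies = [0.812, 0.797, 0.825, 0.803, 0.818, 0.790, 0.809, 0.821]
low, high = bootstrap_ci(accuracies)
print(f"mean {np.mean(accuracies):.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

Reporting the interval rather than a single number makes it clear how much of a difference between two models could be explained by seed-to-seed noise.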

26. What are some of the limitations of current AI technologies?

Current AI technologies, particularly those relying on deep learning, have several limitations. They often require massive amounts of labeled data to train effectively, making them data-hungry. Furthermore, these models can be brittle and lack robustness, meaning they perform poorly when exposed to data outside of their training distribution. Explainability is another major challenge; it's often difficult to understand why an AI model makes a particular decision, hindering trust and accountability.

AI systems also struggle with common-sense reasoning and generalization. They may excel at specific tasks but fail to transfer their knowledge to related problems. Additionally, they can be susceptible to biases present in the training data, leading to unfair or discriminatory outcomes. Finally, current AI systems generally lack true understanding and consciousness; they are good at pattern recognition and prediction but do not possess genuine intelligence.

27. Explain the bias-variance tradeoff to me.

The bias-variance tradeoff is a fundamental concept in machine learning that describes two competing sources of prediction error. Bias is the error introduced by approximating a real-world problem, which is often complex, with a simplified model. High-bias models tend to underfit the data, missing relevant relationships. Variance is the error caused by the model's sensitivity to small fluctuations in the training data. High-variance models tend to overfit the data, learning the noise instead of the underlying signal.

A good model strikes the balance that minimizes total error (for squared-error loss, roughly bias squared plus variance plus irreducible noise), because reducing bias often increases variance, and vice versa. For example, a complex model (e.g., high-degree polynomial regression) has low bias but high variance: it fits the training data closely, but its predictions change substantially from one training set to another and generalize poorly. A simple model (e.g., linear regression) has high bias but low variance: it may miss real structure, but it is stable across training sets. Regularization techniques (e.g., L1 or L2 regularization) help control model complexity, and cross-validation estimates performance on unseen data so you can tune this tradeoff.
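L2 (ridge) regularization makes the tradeoff tunable with a single knob, λ. The sketch below uses the closed-form ridge solution on a synthetic, deliberately over-parameterized polynomial fit; the data and λ values are arbitrary, λ = 0 reduces to ordinary least squares, and for simplicity the intercept column is penalized too (many libraries leave it unpenalized).

```python
import numpy as np

rng = np.random.default_rng(1)

# Small noisy regression problem with many polynomial features (prone to high variance).
x = rng.uniform(-1, 1, 15)
y = np.sin(np.pi * x) + rng.normal(0, 0.2, x.size)
X = np.vander(x, N=10, increasing=True)  # columns 1, x, x^2, ..., x^9


def ridge_fit(X, y, lam):
    """Closed-form L2-regularized least squares: (X^T X + lam * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


norms, train_mses = [], []
for lam in (0.0, 0.01, 1.0):
    w = ridge_fit(X, y, lam)
    norms.append(float(np.linalg.norm(w)))
    train_mses.append(float(np.mean((X @ w - y) ** 2)))
    print(f"lambda={lam}: ||w|| = {norms[-1]:.2f}, train MSE = {train_mses[-1]:.4f}")
```

Larger λ shrinks the weights (lower variance) at the cost of a worse fit to the training data (higher bias); cross-validation is the usual way to pick the λ in between.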

28. How do you evaluate the performance of a machine learning model?

Evaluating a machine learning model depends on the problem type. For classification, common metrics include accuracy, precision, recall, F1-score, and AUC-ROC. Regression models are often evaluated using metrics like Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and R-squared.

Beyond these, it's crucial to consider the context. For example, if one type of error is much more costly than another (like in fraud detection), you might prioritize recall over precision, and consider specific metrics such as average precision. Ultimately, the right evaluation metric aligns with the business objective and the model's intended use.
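The fraud-detection point is easy to see by computing the metrics by hand on an imbalanced toy example. The labels and predictions below are made up; scikit-learn's `precision_score`, `recall_score`, and `f1_score` compute the same quantities.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(y_true)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# 20 transactions, 2 of them fraudulent (label 1); predictions are made up.
y_true = [0] * 18 + [1, 1]
always_legit = [0] * 20                 # never flags anything
flag_happy = [0] * 15 + [1, 1, 1, 1, 1]  # flags 5, catches both frauds
print(classification_metrics(y_true, always_legit))
print(classification_metrics(y_true, flag_happy))
```

The "always legitimate" model scores 90% accuracy while catching no fraud at all; the second model has lower accuracy and precision but perfect recall, which is usually what a fraud team cares about.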

AI Research Scientist interview questions for juniors

1. Explain a time you simplified a complex problem. How did you do it, and what was the result?

In a previous role, I was tasked with optimizing a slow-running data processing pipeline. The existing code was a tangled mess of nested loops and conditional statements, making it difficult to understand and improve. To simplify it, I first profiled the code to identify the performance bottlenecks. Then, I refactored the code to break it down into smaller, more modular functions with single responsibilities. This allowed me to replace inefficient algorithms with more optimized ones for each module.

The result was a significant performance improvement. The pipeline's execution time was reduced by approximately 60%, and the codebase became much easier to maintain and extend. This also allowed other members of the team to contribute more easily, as the new design was far more comprehensible.

2. Describe a research paper you found particularly interesting. What made it stand out?

A research paper that I found particularly interesting was "Attention Is All You Need" (Vaswani et al., 2017), which introduced the Transformer architecture. What made it stand out was its departure from recurrent neural networks for sequence-to-sequence tasks. It relied solely on attention mechanisms, which enabled parallel computation across the sequence, significantly faster training, and better modeling of long-range dependencies.

The core idea of using self-attention to capture relationships between different parts of the input sequence, without relying on sequential processing, was a game-changer. The paper was well-written and presented a novel approach with strong empirical results on machine translation tasks, paving the way for numerous advancements in natural language processing and other fields.
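The core operation of the paper, scaled dot-product attention, computes softmax(QKᵀ / √d_k)V. Below is a minimal single-head NumPy sketch with no masking; the random projection matrices stand in for learned weights and the sizes are arbitrary.

```python
import numpy as np


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for a single head, without masking."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                 # (n_queries, n_keys)
    scores -= scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
x = rng.normal(size=(seq_len, d_model))
# Self-attention: queries, keys and values all come from the same sequence.
# Random projection matrices stand in for learned weights here.
W_q, W_k, W_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
output, attn = scaled_dot_product_attention(x @ W_q, x @ W_k, x @ W_v)
print(output.shape)  # (4, 8)
print(np.round(attn, 2))
```

Every position attends to every other position in one matrix multiplication, which is why the computation parallelizes so well compared with stepping through a sequence one token at a time.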

3. If you had unlimited resources, what AI problem would you try to solve and why?

If I had unlimited resources, I would focus on achieving true Artificial General Intelligence (AGI) with a strong emphasis on safety and ethical considerations. This would involve creating an AI system capable of understanding, learning, and applying knowledge across a wide range of domains, much like a human. The 'why' stems from the potential to solve some of humanity's most pressing problems, from climate change and disease eradication to resource management and scientific discovery, at a scale and speed currently unimaginable.

Achieving AGI, however, requires significant breakthroughs in areas like common-sense reasoning, contextual understanding, and the ability to generalize from limited data. Furthermore, guaranteeing its alignment with human values and preventing unintended consequences is paramount. Therefore, I'd dedicate resources not only to developing advanced AI architectures but also to robust safety mechanisms, explainable AI techniques, and ethical frameworks, ensuring AGI benefits all of humanity.

4. Tell me about a time you had to learn something new quickly for a project. How did you approach it?

In my previous role, I was assigned to a project involving sentiment analysis using a library I hadn't used before, spaCy. The timeline was tight, so I needed to get up to speed quickly.

My approach involved a few key steps: 1) I started with the official spaCy documentation and focused on the core concepts and examples relevant to sentiment analysis. 2) I searched for online tutorials and blog posts that specifically addressed sentiment analysis using spaCy. 3) I started with a small, simple test case, trying to replicate examples I found. This hands-on approach allowed me to quickly grasp the basic usage and identify any potential issues. 4) I then gradually increased the complexity of the test cases, incorporating more features and edge cases, until I felt comfortable enough to apply the library to the actual project.

5. What's the coolest thing you've built with AI, even if it was just a small project?

The coolest thing I've built with AI, even though it was a small project, was a simple text summarization tool using the transformers library in Python. I fine-tuned a pre-trained model (BART or T5) on a small dataset of news articles and their summaries. It was fascinating to see how the model learned to extract the key information from longer texts and generate concise summaries. The project highlighted the power of transfer learning and how readily available pre-trained models can be adapted to specific tasks with relatively little data and effort.

Specifically, I enjoyed seeing how a model that had been pre-trained on millions of webpages could learn to produce meaningful summaries from just a few hundred examples; that alone was quite awesome. The key was Hugging Face `transformers`: its `pipeline` API let me run the summarization model with very little code, which made these otherwise complex models very accessible to work with.

6. Explain a concept in machine learning (like gradient descent or backpropagation) to someone who has never heard of it. Imagine you are explaining it to a 5-year-old.

Imagine you have a toy car, and you want to push it to the bottom of a bowl. Gradient descent is like you closing your eyes and feeling which way is downhill with your finger, then moving the car a little bit in that direction. You keep doing that, feeling and moving, feeling and moving, until the car gets to the bottom! In machine learning, the bowl is like a problem we want to solve, and the car is like the computer's guess. We use gradient descent to help the computer find the best guess, just like finding the bottom of the bowl.

Backpropagation is how the computer figures out which way is downhill. Imagine the car's path is decided by lots of little pushes, and it went up a bump instead of down. Backpropagation traces back from that mistake to work out how much each little push was to blame, so next time each push can be nudged in a slightly better direction to avoid the bump and get to the bottom faster. It's like learning from mistakes!
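For an interviewer who wants the grown-up version, here is a toy sketch showing both ideas together. The "network" is a hypothetical two-weight model, `pred = w2 * (w1 * x)`, trained to hit a target with squared-error loss. The backward pass applies the chain rule by hand (backpropagation), and the update step is plain gradient descent.

```python
def train_two_weight_model(x, target, lr=0.01, steps=500):
    """Fit pred = w2 * (w1 * x) to target using hand-written backprop + gradient descent."""
    w1, w2 = 1.0, 1.0
    for _ in range(steps):
        # Forward pass
        h = w1 * x
        pred = w2 * h
        loss = (pred - target) ** 2
        # Backward pass (backpropagation): chain rule from the loss back to each weight
        dloss_dpred = 2 * (pred - target)
        dloss_dw2 = dloss_dpred * h
        dloss_dh = dloss_dpred * w2
        dloss_dw1 = dloss_dh * x
        # Gradient descent: take a small step downhill for each weight
        w1 -= lr * dloss_dw1
        w2 -= lr * dloss_dw2
    return w1, w2, loss

w1, w2, final_loss = train_two_weight_model(x=1.0, target=6.0)
print(w1 * w2, final_loss)  # the product approaches 6 and the loss approaches 0
```

Frameworks like PyTorch automate the backward pass, but the logic is the same: compute how the loss changes with each weight, then step against that gradient.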

7. What are some potential ethical concerns you see arising from AI research?

AI research presents several ethical concerns. One major area is bias amplification. AI models are trained on data, and if that data reflects existing societal biases (e.g., in gender, race), the AI can perpetuate and even amplify these biases, leading to unfair or discriminatory outcomes in areas like hiring, loan applications, and criminal justice. Another concern is job displacement. As AI and automation become more sophisticated, they could replace human workers in various industries, potentially leading to widespread unemployment and economic inequality. Finally, the potential for misuse, particularly in areas like surveillance, autonomous weapons, and disinformation campaigns, raises serious ethical questions about accountability and the potential for harm.

8. Have you ever run into a bug or unexpected result while coding AI? How did you debug it?

Yes, I encountered an unexpected result when training a neural network for image classification: training accuracy plateaued at a surprisingly low level despite reasonable-looking hyperparameters. Debugging involved several steps. First, I verified the data pipeline to ensure data integrity, checking for corrupted images and proper normalization. Then, I visualized the data to see whether any underlying patterns or biases were present that the model was struggling with. Next, I reviewed the loss function and evaluation metrics to confirm they reflected the desired outcome and that the metric implementation itself was correct. With those ruled out, I turned to the model itself. I used TensorBoard to monitor training metrics like loss and gradients, and I also visualized the activations of different layers. This revealed vanishing gradients: the gradients shrank to almost nothing by the time they propagated back to the earlier layers. I addressed this by switching to ReLU activation functions and adding Batch Normalization, which improved gradient flow and allowed the network to learn effectively. Finally, careful hyperparameter tuning using grid search further optimized the model's performance.
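A minimal PyTorch sketch of the kind of check that exposes vanishing gradients: run one backward pass and compare the gradient norm of the first layer's weights with that of the last layer. The 10-layer MLP, random data, and layer widths are illustrative assumptions, not the original image model. In a real run you could log the same numbers to TensorBoard with `SummaryWriter.add_scalar`.

```python
import torch
from torch import nn

def make_mlp(depth, width, activation, batch_norm=False):
    """Stack `depth` hidden layers of the given activation, optionally with BatchNorm."""
    layers = []
    for _ in range(depth):
        layers.append(nn.Linear(width, width))
        if batch_norm:
            layers.append(nn.BatchNorm1d(width))
        layers.append(activation())
    layers.append(nn.Linear(width, 1))
    return nn.Sequential(*layers)

def layer_grad_norms(model, x, y):
    """One backward pass; return the weight-gradient norm of every Linear layer, input to output."""
    model.zero_grad()
    loss = nn.functional.mse_loss(model(x), y)
    loss.backward()
    return [m.weight.grad.norm().item() for m in model if isinstance(m, nn.Linear)]

torch.manual_seed(0)
x, y = torch.randn(64, 32), torch.randn(64, 1)

sigmoid_norms = layer_grad_norms(make_mlp(10, 32, nn.Sigmoid), x, y)
relu_bn_norms = layer_grad_norms(make_mlp(10, 32, nn.ReLU, batch_norm=True), x, y)

# First-layer / last-layer gradient ratio: values near zero signal vanishing gradients
sigmoid_ratio = sigmoid_norms[0] / sigmoid_norms[-1]
relu_bn_ratio = relu_bn_norms[0] / relu_bn_norms[-1]
print(f"sigmoid:   {sigmoid_ratio:.2e}")
print(f"relu + bn: {relu_bn_ratio:.2e}")
```

The sigmoid network's ratio is typically many orders of magnitude smaller, which mirrors the fix described above: ReLU plus Batch Normalization keeps gradients flowing to the early layers.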

9. What excites you most about the future of AI?

I'm most excited about AI's potential to democratize access to knowledge and expertise. Imagine AI-powered tools that can provide personalized education, healthcare, and legal advice to anyone, regardless of their background or location. The possibility of leveling the playing field and empowering individuals to achieve their full potential is truly inspiring.

Furthermore, I anticipate significant advancements in AI's ability to collaborate with humans on complex problems. From scientific discovery to artistic creation, AI could augment human capabilities and unlock new levels of innovation. This collaborative synergy, where AI complements human strengths, promises to reshape various industries and drive societal progress in profound ways.

10. What are some of the limitations of deep learning?

Deep learning, while powerful, has several limitations. A major one is its reliance on vast amounts of labeled data. Acquiring and labeling this data can be expensive and time-consuming. If the training data is biased, the model will likely inherit and amplify those biases, leading to unfair or inaccurate predictions.

Another limitation is the lack of interpretability. Deep learning models are often considered "black boxes" because it's difficult to understand why they make specific decisions. This lack of transparency can be problematic in critical applications where trust and explainability are essential. Furthermore, deep learning models can be computationally expensive to train and deploy, requiring specialized hardware like GPUs and consuming significant energy. They are also susceptible to adversarial attacks, where small, carefully crafted perturbations to the input data can fool the model.

11. Describe a situation where you had to work with a dataset that had a lot of missing or incorrect data. How did you handle it?

In a recent project involving customer churn prediction, I encountered a dataset with significant missing values and inconsistencies. Several fields, like income and tenure, had a high percentage of missing entries, and categorical variables had unexpected values due to data entry errors.

To handle this, I first performed a thorough data exploration to understand the patterns of missingness and identify the sources of errors. I then combined several techniques: median imputation for numerical features, mode imputation for categorical features where appropriate, and, for columns with a high proportion of missing values, an additional boolean column indicating whether the value was originally missing. I also corrected inconsistencies using business knowledge, such as mapping variant spellings of the same value to a standard category. Finally, I validated the imputation by comparing the data distributions before and after.
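A minimal pandas sketch of these cleaning steps. The column names (`income`, `tenure`, `plan`), the 30% missingness threshold for adding indicator columns, and the category mapping are all hypothetical. Note that the indicators are created before imputation, otherwise the "was missing" information is lost.

```python
import numpy as np
import pandas as pd

def clean_churn_data(df, numeric_cols, categorical_cols, indicator_threshold=0.3, category_map=None):
    df = df.copy()
    # 1. Flag heavily missing columns before imputing, so the model can use "missingness" itself
    for col in numeric_cols + categorical_cols:
        if df[col].isna().mean() > indicator_threshold:
            df[f"{col}_was_missing"] = df[col].isna()
    # 2. Map inconsistent category spellings to a standard value (business rules)
    for col, mapping in (category_map or {}).items():
        df[col] = df[col].replace(mapping)
    # 3. Median imputation for numerical features
    for col in numeric_cols:
        df[col] = df[col].fillna(df[col].median())
    # 4. Mode imputation for categorical features
    for col in categorical_cols:
        df[col] = df[col].fillna(df[col].mode().iloc[0])
    return df

raw = pd.DataFrame({
    "income": [50000, np.nan, 62000, np.nan, 58000],
    "tenure": [12, 24, np.nan, 6, 36],
    "plan": ["Basic", "basic ", "Premium", None, "Basic"],
})
cleaned = clean_churn_data(raw, ["income", "tenure"], ["plan"],
                           category_map={"plan": {"basic ": "Basic"}})
print(cleaned)
```

Comparing `raw.describe()` with `cleaned.describe()` is a quick way to do the before/after distribution check mentioned above.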

12. What are some strategies you would use to prevent overfitting in a model?

To prevent overfitting, several strategies can be employed. One common approach is to increase the amount of training data. More data allows the model to learn a more generalized representation of the underlying patterns. Another strategy is to use regularization techniques such as L1 or L2 regularization, which penalize large weights in the model, effectively simplifying it.

Further strategies include:

- Cross-validation: Evaluate model performance on several held-out folds to detect overfitting and guide model selection.

- Early stopping: Monitor performance on a validation set and stop training when it stops improving.

- Dropout: Randomly deactivate neurons during training.

- Data augmentation: Artificially increase the size of the training dataset by creating modified versions of existing data.

- Simplifying the model architecture: Use a model with fewer parameters. For example, reduce the number of layers or neurons in a neural network. A simpler model is less likely to memorize the training data.
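Early stopping is simple enough to sketch framework-free. This minimal helper stops once validation loss has failed to improve for `patience` consecutive epochs; the `patience` and `min_delta` names and the simulated loss curve are illustrative assumptions, not from a specific library.

```python
class EarlyStopping:
    """Stop training when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta          # minimum decrease that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss):
        """Record this epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

# Simulated validation losses: improving at first, then rising as the model overfits
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.67]
stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}, best epoch {stopper.best_epoch} (loss {stopper.best_loss})")
```

In practice you would also save a checkpoint whenever `best_loss` improves and restore it after stopping.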

13. If you had to choose, would you rather have a model with high accuracy or high interpretability? Why?

The better choice between high accuracy and high interpretability depends heavily on the specific application. In scenarios where errors carry significant consequences, such as medical diagnosis or autonomous driving, high accuracy is paramount. We prioritize correct predictions, even if the model's decision-making process is a black box.

However, in contexts where understanding why a model makes certain predictions is crucial, high interpretability takes precedence. This is often the case in scientific research, legal settings, or when building trust with users. An interpretable model allows us to validate its reasoning, identify potential biases, and ensure fairness. There is often a trade-off between accuracy and interpretability. Sometimes simpler models can be both sufficiently accurate and highly interpretable.

14. What are some of your favorite tools or libraries for AI research?

Some of my favorite tools and libraries for AI research include:

- TensorFlow and Keras: These are powerful and flexible frameworks for building and training deep learning models. TensorFlow provides low-level control, while Keras offers a user-friendly API for rapid prototyping.

- PyTorch: Another popular deep learning framework known for its dynamic computation graph and Pythonic interface, making it great for research and experimentation.

- Scikit-learn: A comprehensive library for various machine learning tasks, including classification, regression, clustering, and dimensionality reduction. It's easy to use and provides a wide range of algorithms.

- Hugging Face Transformers: Essential for Natural Language Processing (NLP) research, providing pre-trained models and tools for fine-tuning and building NLP applications, with access to thousands of models. Hugging Face also maintains the companion `accelerate` library for distributed training, which `transformers` integrates with.

- NumPy and Pandas: Fundamental libraries for numerical computation and data manipulation in Python, respectively. They are the foundation for most AI and data science workflows.

- MLflow or Weights & Biases: These are great for tracking experiments and managing machine learning workflows. They help in reproducibility and collaboration.

- CUDA: NVIDIA's parallel computing platform and programming model for running general-purpose computations on NVIDIA GPUs. Deep learning frameworks such as PyTorch use it under the hood; for example, selecting a GPU in PyTorch when one is available:

```python
import torch

# Use the GPU if PyTorch can see a CUDA device; otherwise fall back to the CPU
if torch.cuda.is_available():
    device = torch.device('cuda')
    print('GPU is available.')
else:
    device = torch.device('cpu')
    print('GPU is not available, using CPU.')

# Example: create a random 3x3 tensor on the selected device
tensor = torch.randn(3, 3).to(device)
print(tensor)
```

15. Explain the difference between supervised, unsupervised, and reinforcement learning with simple examples.

Supervised learning involves training a model on a labeled dataset, where the correct output is known. For example, training a classifier to identify images of cats and dogs, where each image is labeled as either 'cat' or 'dog'. The model learns to map inputs to outputs based on the provided labels. Unsupervised learning, in contrast, deals with unlabeled data, where the goal is to discover hidden patterns or structures. A simple example is clustering customers based on their purchasing behavior, without any prior knowledge of customer segments. The algorithm identifies groups of customers with similar behaviors.

Reinforcement learning is about training an agent to make decisions in an environment to maximize a reward. Think of training a robot to navigate a maze. The robot receives a reward when it reaches the goal and penalties for bumping into walls. It learns through trial and error to find the optimal path to the goal by maximizing the cumulative reward it receives.
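The maze example can be shrunk to a toy reinforcement learning sketch: tabular Q-learning in a hypothetical five-cell corridor, where the agent is rewarded for reaching the goal cell, penalized for bumping into the left wall, and pays a small cost per move. The environment, rewards, and hyperparameters are illustrative assumptions.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Hypothetical environment: returns (next_state, reward, done)."""
    nxt = state + action
    if nxt < 0:                      # bumped into the left wall: penalty, stay put
        return state, -1.0, False
    if nxt == N_STATES - 1:          # reached the goal
        return nxt, 1.0, True
    return nxt, -0.01, False         # small cost for every other move

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the best-known action, sometimes explore
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            nxt, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted value of the best next action
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)
```

No labels are ever provided: the agent discovers that "always move right" maximizes cumulative reward purely through trial and error.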

16. What are some ways you can evaluate the performance of a machine learning model?

Evaluating a machine learning model's performance depends on the type of task. For classification tasks, common metrics include accuracy, precision, recall, F1-score, and the Area Under the ROC Curve (AUC-ROC). We can also use a confusion matrix to visualize the model's performance, highlighting true positives, true negatives, false positives, and false negatives.

For regression tasks, metrics like Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and R-squared are frequently used. Additionally, it's essential to consider factors like overfitting (evaluating performance on both training and validation datasets) and the model's ability to generalize to unseen data. Cross-validation techniques are useful to get a robust estimate of the model's performance.
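To make the metrics concrete, here is a dependency-free sketch computing the confusion matrix, accuracy, precision, recall, and F1 for a binary classifier, plus MAE, MSE, RMSE, and R-squared for regression. The labels and predictions are made-up illustrative values; in practice `sklearn.metrics` provides tested versions of all of these.

```python
import math

def classification_metrics(y_true, y_pred, positive=1):
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = len(y_true) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0   # of predicted positives, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0      # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"confusion": {"tp": tp, "fp": fp, "fn": fn, "tn": tn},
            "accuracy": (tp + tn) / len(y_true),
            "precision": precision, "recall": recall, "f1": f1}

def regression_metrics(y_true, y_pred):
    errors = [p - t for t, p in zip(y_true, y_pred)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_y = sum(y_true) / n
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    r2 = 1 - (mse * n) / ss_tot                      # 1 - SS_residual / SS_total
    return {"mae": mae, "mse": mse, "rmse": math.sqrt(mse), "r2": r2}

clf = classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 1, 1, 0])
reg = regression_metrics([3.0, 5.0, 2.0, 7.0], [2.5, 5.0, 3.0, 8.0])
print(clf)
print(reg)
```

Here accuracy (0.625) hides the fact that precision (0.6) and recall (0.75) differ, which is why reporting several metrics together is good practice.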

17. Tell me about a time you had to present your research findings to someone who wasn't technical. How did you adapt your communication style?

I once had to present my findings on a machine learning model for predicting customer churn to the marketing team. They didn't have a technical background, so I avoided jargon and focused on the 'so what?' I explained the model's purpose as simply 'identifying customers likely to leave so we can try to keep them'. Instead of discussing algorithms and metrics like precision/recall, I used analogies, for example, 'think of the model as a filter that catches customers at risk, it's not perfect but better than guessing'.

I used visualizations and relatable examples to illustrate the model's predictions. Instead of showing them code or equations, I displayed graphs of the potential revenue saved by acting on the model's predictions, along with scenarios showing how the marketing team could use these insights to run targeted campaigns and improve customer retention. I also invited questions throughout the presentation and encouraged the audience to ask them in their own words.

18. What are some potential biases that can exist in AI datasets, and how can we mitigate them?

AI datasets can suffer from several biases, including: historical bias (reflecting past societal inequalities), sampling bias (when the data doesn't represent the population), measurement bias (errors in data collection), and representation bias (certain groups are underrepresented). These biases can lead to unfair or discriminatory outcomes when the AI model is deployed.

Mitigation strategies include: careful data collection and preprocessing to ensure diverse and representative datasets, using techniques like data augmentation to balance classes, employing fairness-aware algorithms that explicitly address bias during training, and rigorously evaluating model performance across different subgroups to identify and correct disparities. For instance, one could use techniques like re-weighting or adversarial debiasing. Regular auditing and monitoring of the AI system after deployment are also crucial.
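Two of these mitigations can be sketched in plain Python. The first function implements the reweighing scheme of Kamiran and Calders, which gives each (group, label) combination the weight P(group) * P(label) / P(group, label) so that group membership and label look statistically independent in the weighted data. The second evaluates accuracy per subgroup. The groups, labels, and predictions are made-up illustrative data.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Per-sample weight P(group) * P(label) / P(group, label)."""
    n = len(labels)
    g_counts, y_counts = Counter(groups), Counter(labels)
    gy_counts = Counter(zip(groups, labels))
    return [g_counts[g] * y_counts[y] / (n * gy_counts[(g, y)]) for g, y in zip(groups, labels)]

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup, to surface hidden disparities."""
    totals, correct = Counter(), Counter()
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / totals[g] for g in totals}

groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]   # group A: 67% positive, group B: 25% positive
weights = reweighing_weights(groups, labels)

for g in ("A", "B"):
    w_pos = sum(w for w, gg, y in zip(weights, groups, labels) if gg == g and y == 1)
    w_all = sum(w for w, gg in zip(weights, groups) if gg == g)
    print(g, "weighted positive rate:", round(w_pos / w_all, 3))   # 0.5 for both groups

y_pred = [1, 1, 1, 1, 0, 0, 0, 1, 0, 0]
print(accuracy_by_group(labels, y_pred, groups))   # overall accuracy is 0.8, but group B lags
```

The weights can be passed to most training APIs through a `sample_weight` argument; the per-group report is what reveals a disparity that overall accuracy would hide.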

19. How do you stay up-to-date with the latest advancements in AI research?

I stay updated on AI research through a combination of strategies. I regularly read research papers on arXiv and follow publications from leading AI conferences like NeurIPS, ICML, and ICLR. I also subscribe to AI-related newsletters and blogs from reputable sources such as OpenAI, Google AI, and DeepMind.

Furthermore, I actively participate in online communities like Reddit's r/MachineLearning and engage in discussions on platforms like Twitter to learn from other researchers and practitioners. I also experiment with new tools and frameworks to understand their capabilities and limitations better. Finally, I follow influential AI researchers and thought leaders on social media and professional networking sites like LinkedIn.

20. Describe a situation where you had to collaborate with someone who had a different skillset than you. How did you make it work?

In a recent project, I, as a backend developer, needed to collaborate with a UX designer who focused on user interface and user experience. Our skillsets were vastly different, as mine revolved around server-side logic and data management, while theirs focused on visual appeal and user interaction. To make it work, we established clear communication channels, using daily stand-ups to discuss progress and challenges. We also made a point of explaining our respective domains to each other. For instance, I'd explain the constraints of the backend API, and they'd explain how those constraints would affect user experience.

Specifically, there was a feature where the designer envisioned a complex data visualization. Initially, I wasn't sure how to efficiently retrieve and format the data to support it. Instead of simply saying it was impossible, I worked with the designer to understand the core user need and to propose alternative, more efficient data structures. We iterated on the design and data model together, eventually arriving at a solution that met the UX requirements within the technical constraints. This involved open communication, mutual respect for each other's expertise, and a willingness to compromise to achieve the best overall outcome.

21. What's a project you're currently working on, or that you'd like to work on in the future?

I'm currently working on a personal project involving building a web application for managing personal finances. It's a full-stack project where I'm using React for the frontend, Node.js with Express for the backend, and MongoDB for the database. The aim is to create a user-friendly interface for tracking income, expenses, and investments, with features like budget planning and generating insightful reports.

In the future, I'd like to explore machine learning applications, specifically in natural language processing. I envision working on a project that utilizes NLP techniques to summarize long documents or analyze sentiment from text data. I'm particularly interested in using transformer-based models like BERT for these kinds of tasks and deploying them through a simple API.

22. Have you ever tried to improve upon an existing AI model or algorithm? What did you do?

Yes, I have. In a previous role, I worked on improving a pre-existing sentiment analysis model used for classifying customer reviews. The initial model, while functional, had accuracy issues, particularly with nuanced or sarcastic language. To address this, I employed a few strategies.

First, I augmented the training dataset with a larger and more diverse set of labeled reviews, including examples specifically chosen to challenge the model's ability to detect sarcasm and implied sentiment. Second, I experimented with different model architectures, specifically exploring transformer-based models like BERT, which are known for their strong performance in natural language understanding tasks. Finally, I tuned the hyperparameters of the selected model, such as the learning rate and batch size, using grid search against a validation set. The result was a significant improvement in the model's accuracy and its ability to handle complex sentiment expressions.
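Grid search itself is a small loop over every combination of candidate values. This sketch assumes a hypothetical `train_and_evaluate(**params)` callable that trains a model and returns a validation score; the toy stand-in below just returns a made-up score so the search logic can run on its own.

```python
import math
from itertools import product

def grid_search(train_and_evaluate, param_grid):
    """Try every combination in param_grid; return (best_params, best_score, all_results)."""
    names = list(param_grid)
    results = []
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = train_and_evaluate(**params)   # e.g. validation F1 or accuracy
        results.append((params, score))
    best_params, best_score = max(results, key=lambda r: r[1])
    return best_params, best_score, results

def toy_train_and_evaluate(learning_rate, batch_size):
    """Hypothetical stand-in for a real fine-tuning run; returns a made-up validation score."""
    return 0.9 - 0.1 * abs(math.log10(learning_rate / 3e-5)) - abs(batch_size - 16) / 1000

param_grid = {"learning_rate": [1e-5, 3e-5, 5e-5], "batch_size": [8, 16, 32]}
best_params, best_score, all_results = grid_search(toy_train_and_evaluate, param_grid)
print(best_params, round(best_score, 3))
```

The cost grows multiplicatively with each added hyperparameter (9 runs here), which is why random search or Bayesian optimization is often preferred for larger search spaces.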

23. Walk me through your thought process when approaching a new AI research problem. What are the first steps you take?

When approaching a new AI research problem, I typically start by thoroughly understanding the problem's context, goals, and potential impact. I conduct a literature review to identify existing solutions, relevant research papers, and state-of-the-art techniques. This helps me understand the current landscape and identify gaps in knowledge.

Next, I try to define a clear and measurable objective function. I then explore various approaches, considering their feasibility, computational cost, and potential performance. This might involve brainstorming different algorithms, architectures, or data representations. I prioritize experimenting with simpler approaches first before moving to more complex models. I focus on establishing a baseline and then iteratively improving upon it, documenting my experiments, and analyzing results to guide further research.

AI Research Scientist intermediate interview questions

1. How do you approach a research problem with limited data?

When faced with a research problem with limited data, my initial approach involves a combination of strategies to maximize the information available and mitigate the limitations. First, I would focus on a thorough understanding of the problem domain to derive insights from existing knowledge and theories. This helps to formulate reasonable assumptions and constraints that can compensate for data scarcity. Next, I would consider data augmentation or synthetic data generation (e.g., using generative models, but only if applicable and carefully validated) to expand the dataset, and use resampling methods such as bootstrapping to estimate how stable the results are. Furthermore, transfer learning from related datasets or pre-trained models (if suitable) can be very useful.

In parallel, I would emphasize simpler models with fewer parameters to avoid overfitting the limited data. Regularization techniques are also crucial, for example L1 or L2 regularization with linear or logistic regression. Careful feature selection and engineering become even more important to extract maximum information from the existing features, and I would pay close attention to cross-validation and other validation methods to rigorously evaluate the model's generalization ability. Finally, I would clearly document the limitations of the research and acknowledge the uncertainty in the results.
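A NumPy sketch combining two of these ideas: L2-regularized (ridge) linear regression, fitted in closed form, with k-fold cross-validation used to choose the regularization strength. The synthetic dataset (30 samples, 20 features, only 3 informative) is an illustrative assumption chosen to mimic a small-data setting.

```python
import numpy as np

def ridge_fit(X, y, alpha):
    """Closed-form L2-regularized least squares: w = (X^T X + alpha * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def kfold_mse(X, y, alpha, k=5, seed=0):
    """Average validation MSE of ridge regression across k folds (same folds for every alpha)."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    errors = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        w = ridge_fit(X[train_idx], y[train_idx], alpha)
        errors.append(np.mean((X[val_idx] @ w - y[val_idx]) ** 2))
    return float(np.mean(errors))

# Small synthetic problem: 30 samples, 20 features, only the first 3 matter
rng = np.random.default_rng(42)
X = rng.normal(size=(30, 20))
true_w = np.zeros(20)
true_w[:3] = [2.0, -1.0, 0.5]
y = X @ true_w + rng.normal(scale=1.0, size=30)

scores = {alpha: kfold_mse(X, y, alpha) for alpha in [0.01, 0.1, 1.0, 10.0, 100.0]}
best_alpha = min(scores, key=scores.get)
print(scores)
print("best alpha:", best_alpha)
```

With nearly as many features as training samples, the almost-unregularized fit (`alpha=0.01`) tends to overfit; cross-validation makes that visible rather than relying on training error.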

2. Describe a time you had to debug a complex AI model.

During a project to build a recommendation engine, the model began producing inexplicably poor recommendations for a subset of users. Initial metrics looked fine, but deeper analysis revealed a significant bias towards popular items, effectively ignoring user preferences. I began by systematically examining the data pipeline, feature engineering, and model architecture. I identified a subtle bug in the feature scaling process where a certain feature related to user activity was being inadvertently normalized using the global dataset statistics instead of user-specific statistics. This skewed the feature values and caused the model to prioritize the overall popularity of items over individual user history.

To debug, I implemented a series of targeted tests that isolated each component of the pipeline. I then rewrote the scaling function to use user-specific statistics and ran simulations with synthetic data to confirm the fix. After correcting the feature scaling, the model's performance improved significantly and the popularity bias was eliminated. The recommendations became more personalized, leading to higher user engagement.
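The bug and its fix can be illustrated with pandas. The column names (`user_id`, `sessions`) and the data are hypothetical. Global scaling pushes every light user's activity far below zero, while per-user scaling (via `groupby(...).transform`) expresses each value relative to that user's own history.

```python
import pandas as pd

def scale_global(df, col):
    """Buggy version: standardize with dataset-wide mean and std."""
    return (df[col] - df[col].mean()) / df[col].std()

def scale_per_user(df, col, user_col="user_id"):
    """Fixed version: standardize each user's values with that user's own mean and std."""
    grouped = df.groupby(user_col)[col]
    mean = grouped.transform("mean")
    # Guard against zero or undefined std (constant values or single-row users)
    std = grouped.transform("std").replace(0, 1.0).fillna(1.0)
    return (df[col] - mean) / std

df = pd.DataFrame({
    "user_id": ["heavy"] * 3 + ["light"] * 3,
    "sessions": [100, 120, 140, 1, 2, 3],
})
df["global_scaled"] = scale_global(df, "sessions")
df["per_user_scaled"] = scale_per_user(df, "sessions")
print(df)
```

After the fix, both users get the same pattern (-1, 0, 1), so the model sees each user's relative activity instead of a signal dominated by overall popularity.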

3. Explain the concept of transfer learning and its applications.

Transfer learning is a machine learning technique where a model trained on one task is re-used as the starting point for a model on a second task. It leverages the knowledge gained from solving a similar problem to improve learning efficiency and performance on a new, related problem. This is especially useful when you have limited data for the target task, as it allows you to benefit from the pre-trained model's understanding of general features.

Applications include:

- Image Recognition: Using models pre-trained on ImageNet for tasks like object detection with less training data.

- Natural Language Processing: Employing pre-trained language models (e.g., BERT, GPT) for text classification, sentiment analysis, or question answering.

- Speech Recognition: Adapting models trained on large speech datasets to specific accents or dialects.
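A minimal PyTorch sketch of the most common transfer learning recipe: freeze a pre-trained feature extractor, attach a new task-specific head, and optimize only the head. To keep it self-contained, the "pre-trained" backbone here is a small randomly initialized stand-in (an assumption); in practice it would be loaded from a checkpoint, such as one of torchvision's ImageNet models.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in for a pre-trained feature extractor (hypothetical; normally loaded from a checkpoint)
backbone = nn.Sequential(nn.Linear(64, 32), nn.ReLU(), nn.Linear(32, 16), nn.ReLU())

# 1. Freeze the pre-trained weights so they are not updated
for param in backbone.parameters():
    param.requires_grad = False

# 2. Attach a new head for the target task (e.g. 3 classes)
num_classes = 3
model = nn.Sequential(backbone, nn.Linear(16, num_classes))

# 3. Optimize only the parameters that are still trainable
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(trainable, lr=1e-3)

# One training step on a small placeholder batch
x = torch.randn(8, 64)
y = torch.randint(0, num_classes, (8,))
frozen_before = backbone[0].weight.clone()
loss = nn.functional.cross_entropy(model(x), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()

n_trainable = sum(p.numel() for p in trainable)
print(n_trainable, "trainable parameters")   # only the new head: 16 * 3 weights + 3 biases
```

A common follow-up, once the head has converged, is to unfreeze some of the later backbone layers and continue training with a smaller learning rate.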

4. What are some common challenges in deploying AI models in production?

Deploying AI models in production introduces several challenges. Model performance degradation is a significant issue; models can become less accurate over time due to changes in the input data (data drift) or the environment. Ensuring scalability and reliability is also crucial, as models must handle varying workloads and maintain consistent performance under pressure.

Other challenges include:

- Monitoring and explainability: Tracking model performance and understanding its decision-making process are essential for maintaining trust and identifying potential biases.

- Infrastructure limitations: Existing infrastructure may not be optimized for the computational demands of AI models, requiring significant investment in hardware and software.

- Security concerns: AI models can be vulnerable to adversarial attacks or data breaches, necessitating robust security measures.

- Reproducibility: Difficulty in recreating the model training environment, leading to inconsistencies in model behavior.

- Cost Management: Managing the operational costs of AI models, including compute, storage, and monitoring.
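Data drift, mentioned above, is one of the easier challenges to monitor automatically. This NumPy sketch computes the Population Stability Index (PSI) between a feature's training-time distribution and its production distribution, using bins cut at the reference data's deciles. The data is synthetic, and the thresholds in the comment are a commonly cited rule of thumb rather than a universal standard.

```python
import numpy as np

def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI = sum((cur - ref) * ln(cur / ref)) over bins defined by reference quantiles."""
    # Interior cut points at the reference quantiles, so each bin holds ~1/bins of the reference
    cuts = np.quantile(reference, np.linspace(0, 1, bins + 1)[1:-1])
    ref_frac = np.bincount(np.digitize(reference, cuts), minlength=bins) / len(reference)
    cur_frac = np.bincount(np.digitize(current, cuts), minlength=bins) / len(current)
    # Avoid log(0) for empty bins
    ref_frac = np.clip(ref_frac, eps, None)
    cur_frac = np.clip(cur_frac, eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))

rng = np.random.default_rng(0)
train_feature = rng.normal(0.0, 1.0, size=5000)
stable = rng.normal(0.0, 1.0, size=5000)     # production data from the same distribution
drifted = rng.normal(1.0, 1.0, size=5000)    # production data whose mean has shifted

psi_stable = population_stability_index(train_feature, stable)
psi_drifted = population_stability_index(train_feature, drifted)
# Rule of thumb: < 0.1 little change, 0.1 to 0.25 moderate, > 0.25 significant drift
print(f"stable: {psi_stable:.3f}, drifted: {psi_drifted:.3f}")
```

Running a check like this per feature on a schedule, and alerting when it crosses a threshold, is a simple way to trigger investigation or retraining.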

5. How do you evaluate the performance of a generative model?

Evaluating generative models involves assessing both the quality and diversity of the generated samples. Several metrics and approaches are used, often tailored to the specific type of data being generated (images, text, audio, etc.). For images, common metrics include Inception Score (IS) and Fréchet Inception Distance (FID). IS uses a pre-trained Inception classifier to reward samples that are classified confidently (quality) and that span many classes (diversity). FID instead compares the statistics of Inception features for real and generated images using the Fréchet distance; a lower FID indicates better performance.
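The Fréchet distance at the heart of FID has a closed form for two Gaussians: `||mu_r - mu_g||^2 + Tr(cov_r + cov_g - 2 (cov_r cov_g)^(1/2))`. This NumPy sketch computes it from two sets of feature vectors. It assumes the features are already extracted; real FID uses Inception-v3 activations, whereas the random features here are placeholders. The matrix square root's trace is obtained from the eigenvalues of `cov_r @ cov_g`, which are real and non-negative for covariance matrices.

```python
import numpy as np

def frechet_distance(feats_real, feats_gen):
    """Fréchet distance between Gaussians fitted to two feature sets (rows = samples)."""
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    cov_r = np.cov(feats_real, rowvar=False)
    cov_g = np.cov(feats_gen, rowvar=False)
    # Tr((cov_r cov_g)^(1/2)) = sum of square roots of the eigenvalues of cov_r @ cov_g;
    # tiny imaginary or negative parts are floating-point round-off
    eigvals = np.linalg.eigvals(cov_r @ cov_g)
    tr_sqrt = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(np.sum((mu_r - mu_g) ** 2) + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_sqrt)

rng = np.random.default_rng(0)
real = rng.normal(size=(500, 4))   # placeholder features; real FID uses 2048-d Inception features

fid_same = frechet_distance(real, real)
fid_shifted = frechet_distance(real, real + 3.0)   # same spread, mean shifted by 3 in 4 dimensions
print(fid_same, fid_shifted)
```

Identical feature sets score about 0, and a pure mean shift contributes exactly its squared length (4 × 3² = 36), which helps build intuition for what an FID number measures.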

For text generation, metrics like Perplexity, BLEU score (comparing generated text to a reference), and ROUGE score are frequently employed. More recent approaches often use human evaluation or rely on pre-trained language models to assess fluency, coherence, and relevance. Evaluating diversity can involve measuring the number of unique generated samples or using metrics like the self-BLEU score to assess the similarity within the generated dataset. It's important to note that no single metric is perfect, and a combination of quantitative metrics and qualitative human evaluation is usually preferred for a comprehensive assessment.
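Perplexity is the simplest of these to compute: it is the exponential of the average negative log-likelihood a language model assigns to each token of held-out text. This sketch assumes the per-token probabilities have already been obtained from some model; the values below are illustrative.

```python
import math

def perplexity(token_probs):
    """exp(mean negative log-likelihood) over the probabilities assigned to each actual token."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

uniform_ppl = perplexity([0.25, 0.25, 0.25, 0.25])
confident_ppl = perplexity([0.9, 0.8, 0.95, 0.7])
print(uniform_ppl)     # ≈ 4: as uncertain as a uniform choice among 4 tokens
print(confident_ppl)   # ≈ 1.2: closer to 1 means the model is less "surprised"
```

Lower is better, but perplexity only measures how well the model predicts reference text; it says little about the diversity or factuality of free-form generations, which is why it is paired with other metrics.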

6. Explain the trade-offs between different optimization algorithms.

Optimization algorithms offer different trade-offs, primarily balancing speed, accuracy, and robustness. Gradient Descent is simple and fast for smooth, convex functions, but it can be slow on poorly conditioned problems and can get stuck in local minima on non-convex ones. Momentum-based variants accelerate progress along consistent directions and damp oscillations. Algorithms like Adam and RMSprop adapt the learning rate for each parameter, often converging faster than standard Gradient Descent and being less sensitive to the initial learning rate, but they introduce additional hyperparameters that may require tuning. Second-order methods like Newton's method converge in fewer iterations (quadratically near a minimum), but computing and inverting the Hessian matrix is expensive, which makes them impractical for models with many parameters.

Evolutionary algorithms (e.g., Genetic Algorithms) are robust to non-convexity and explore the search space more globally, though without any guarantee of finding the global optimum, and they are slow and computationally intensive. Simulated Annealing is another option for global optimization, using probabilistic acceptance of worse solutions to escape local minima, but it requires careful tuning of the cooling schedule. The choice depends on the specific problem, data size, and available computational resources. A good starting point is often Adam or a similar adaptive gradient method, but experimenting and observing the results is crucial.
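The trade-offs between first-order methods are easiest to see on an ill-conditioned quadratic bowl, `f(w) = 0.5 * (w0^2 + 25 * w1^2)`, where one direction is 25 times steeper than the other. This NumPy sketch compares plain gradient descent, heavy-ball momentum, and Adam (with bias correction). The learning rates are illustrative choices; note that plain gradient descent must keep its step below 2/25 to stay stable in the steep direction, which makes it slow in the shallow one.

```python
import numpy as np

CURVATURE = np.array([1.0, 25.0])   # f(w) = 0.5 * (w0^2 + 25 * w1^2)

def loss(w):
    return 0.5 * np.sum(CURVATURE * w ** 2)

def grad(w):
    return CURVATURE * w

def gradient_descent(w, lr=0.03, steps=200):
    for _ in range(steps):
        w = w - lr * grad(w)
    return w

def momentum(w, lr=0.03, beta=0.9, steps=200):
    v = np.zeros_like(w)
    for _ in range(steps):
        v = beta * v + grad(w)        # running direction: consistent gradients add up
        w = w - lr * v
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=200):
    m, v = np.zeros_like(w), np.zeros_like(w)
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g          # first-moment (mean) estimate
        v = beta2 * v + (1 - beta2) * g ** 2     # second-moment estimate
        m_hat = m / (1 - beta1 ** t)             # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w = w - lr * m_hat / (np.sqrt(v_hat) + eps)   # per-parameter step size
    return w

start = np.array([5.0, 1.0])
results = {name: loss(opt(start.copy()))
           for name, opt in [("GD", gradient_descent), ("Momentum", momentum), ("Adam", adam)]}
for name, final_loss in results.items():
    print(f"{name:9s} final loss: {final_loss:.2e}")
```

On this problem momentum reaches a much lower loss than plain gradient descent in the same number of steps; results on real, non-convex models will differ, which is why empirical comparison matters.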

7. How do you handle imbalanced datasets in machine learning?

Imbalanced datasets can negatively impact machine learning model performance by biasing predictions towards the majority class. Several strategies can address this:

- Resampling Techniques:

  - Oversampling: Increase the number of instances in the minority class, either by duplicating existing examples or by generating synthetic ones (e.g., SMOTE, which interpolates between neighboring minority-class samples).

  - Undersampling: Reduce the number of instances in the majority class. This can lead to information loss.

- Cost-Sensitive Learning: Assign higher misclassification costs to the minority class during model training. Many libraries expose this directly through class-weight parameters (e.g., `class_weight` in scikit-learn).

- Algorithm Selection: Some algorithms are less susceptible to imbalanced data (e.g., tree-based methods with careful hyperparameter tuning). When the target represents very rare events, consider framing the problem as anomaly detection.

- Evaluation Metrics: Avoid relying on accuracy alone; use metrics like precision, recall, F1-score, and AUC-ROC that better reflect performance on the minority class.

- Ensemble Methods: Utilize ensemble techniques specifically designed for imbalanced datasets, such as EasyEnsemble or BalanceCascade.
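Two of these strategies fit in a few lines of plain Python: "balanced" class weights, using the formula `n_samples / (n_classes * count)` that scikit-learn documents for `class_weight="balanced"`, and random oversampling of minority classes with replacement. The toy dataset is illustrative, and oversampling should be applied to the training split only, never before the train/test split.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * cnt) for cls, cnt in counts.items()}

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen minority-class samples until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for cls, cnt in counts.items():
        members = [x for x, label in zip(X, y) if label == cls]
        for _ in range(target - cnt):
            X_out.append(rng.choice(members))
            y_out.append(cls)
    return X_out, y_out

X = list(range(10))
y = [0] * 8 + [1] * 2          # 80/20 imbalance

weights = balanced_class_weights(y)
X_res, y_res = random_oversample(X, y)
print(weights)                 # minority class gets 4x the weight of the majority class
print(Counter(y_res))          # both classes now have 8 samples
```

Class weighting changes the loss without touching the data, while oversampling changes the data without touching the loss; duplicating samples can encourage overfitting to those few minority examples, which is what synthetic methods like SMOTE try to mitigate.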

8. Describe your experience with different deep learning frameworks.

I have experience with several deep learning frameworks, primarily TensorFlow and PyTorch. With TensorFlow, I've used both the Keras API for high-level model building and the TensorFlow Core API for more customized implementations. I've built and trained various models using TensorFlow, including convolutional neural networks (CNNs) for image classification, recurrent neural networks (RNNs) for time series analysis, and autoencoders for anomaly detection. A typical Keras step, for example, is compiling a model before training:

```python
# `model` is an already-defined Keras model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```

I'm also proficient in PyTorch. I appreciate its dynamic computation graph, which allows for easier debugging and more flexible model architectures. My experience with PyTorch includes implementing custom layers and loss functions, utilizing pre-trained models from torchvision, and training models on both CPUs and GPUs. For example, I have used the following command to set up my environment:

```shell
conda install pytorch torchvision torchaudio -c pytorch
```

I have also used other libraries such as Scikit-learn for preprocessing, model evaluation, and comparison.

9. How would you design an AI system for a specific application?

Designing an AI system involves several key steps. First, I'd clearly define the application's goals and the specific problem AI will solve. This includes identifying the input data available, the desired output, and the metrics for success. For example, if designing an AI for image classification, the goal might be to accurately categorize images with a high degree of precision. I'd then choose an appropriate AI model (e.g., CNN for images, RNN/Transformers for text).

Next, data preparation and model training are critical. Data would be cleaned, preprocessed, and split into training, validation, and testing sets. The model would then be trained using a suitable algorithm and loss function, iteratively adjusted until performance on the validation set is satisfactory. Finally, the trained model would be rigorously tested on the test set to ensure generalization and deployed to the target environment. Monitoring and retraining are essential for long-term performance.
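The train/validation/test split described above can be sketched with NumPy by shuffling indices once and slicing them. The 70/15/15 proportions and the fixed seed are illustrative assumptions; for classification tasks a stratified split (e.g., scikit-learn's `train_test_split` with `stratify=`) is usually preferable.

```python
import numpy as np

def train_val_test_split(n_samples, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle indices once, then slice them into disjoint train/validation/test sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_frac))
    n_val = int(round(n_samples * val_frac))
    test_idx = idx[:n_test]                     # held out until the very end
    val_idx = idx[n_test:n_test + n_val]        # used for model selection and tuning
    train_idx = idx[n_test + n_val:]            # used for fitting
    return train_idx, val_idx, test_idx

train_idx, val_idx, test_idx = train_val_test_split(100)
print(len(train_idx), len(val_idx), len(test_idx))   # 70 15 15
```

Keeping the test indices untouched until final evaluation is what makes the test score an honest estimate of generalization.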

10. Explain the concept of adversarial attacks and how to defend against them.

Adversarial attacks involve intentionally crafting inputs (adversarial examples) that cause machine learning models to make incorrect predictions. These attacks exploit vulnerabilities in the model's decision boundaries. For example, adding a small, carefully chosen perturbation to an image can cause an image classifier to misclassify it, even though the change is imperceptible to humans.

Defenses against adversarial attacks include:

- Adversarial training: Retraining the model on adversarial examples to make it more robust.

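- Defensive distillation: Training a second model on the softened probability outputs (produced with a high softmax temperature) of an initial model trained on the same task. Stronger attacks were later shown to bypass this defense.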

- Input sanitization: Preprocessing the input to remove or reduce adversarial perturbations. E.g., using image compression or adding noise to the image.

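- Gradient masking: Obscuring the gradients that attackers use to craft adversarial examples. However, this tends to give a false sense of security, because attackers can often approximate the gradients (for example, with a substitute model) and still succeed.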
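To make the attack side concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one well-known way to craft adversarial examples, applied to a toy logistic-regression model in plain Python. The weights, input, and epsilon are made-up values for illustration; real attacks usually target deep networks and obtain the input gradient through a framework's automatic differentiation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def log_loss(w, b, x, y):
    """Binary cross-entropy for a single example with label y in {0, 1}."""
    p = predict_proba(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM: shift each input feature by eps in the direction that raises the loss."""
    p = predict_proba(w, b, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


w, b = [2.0, -3.0], 0.5   # hypothetical trained weights
x, y = [1.0, 0.5], 1      # a correctly classified input (p > 0.5)
x_adv = fgsm(w, b, x, y, eps=0.1)
print(log_loss(w, b, x, y), log_loss(w, b, x_adv, y))
```

Adversarial training, the first defense above, would generate inputs like `x_adv` during training and add them, with their correct labels, to each batch.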

11. How do you stay up-to-date with the latest advancements in AI?

I stay up-to-date with AI advancements through a variety of channels. These include:

- Reading Research Papers: I regularly check arXiv and the proceedings of NeurIPS, ICML, and other relevant conferences for the latest research.

- Following Industry Blogs and Newsletters: I subscribe to blogs like Google AI Blog, OpenAI's blog, and newsletters such as The Batch by Andrew Ng.

- Online Courses and Tutorials: I utilize platforms like Coursera, edX, and fast.ai to take courses and tutorials on specific AI topics.

- Attending Conferences and Webinars: I participate in AI conferences and webinars to learn from experts and network with other professionals.

- Following AI Influencers on Social Media: I keep an eye on leading AI researchers and practitioners on platforms like Twitter and LinkedIn to stay informed about current trends and discussions.

- Hands-on Experimentation: I implement small projects with new libraries (e.g., Hugging Face `transformers`) to gain practical experience.

12. Describe a time you had to communicate complex AI concepts to a non-technical audience.

I once had to explain the concept of a recommendation engine to our marketing team, who primarily focused on traditional advertising. Instead of diving into algorithms, I used the analogy of a well-stocked grocery store. I explained that the engine works like a knowledgeable store clerk who remembers past purchases and suggests other items a customer might like, such as suggesting coffee filters after someone buys coffee. I emphasized that this allows us to personalize marketing campaigns and improve customer engagement by showing relevant products, leading to a better return on investment.

To further clarify, I used examples they would understand, like Netflix movie suggestions, and avoided technical jargon like 'collaborative filtering.' I focused on the outcome (increased sales and customer satisfaction) rather than the technical details of how the AI works. This made the concept much more approachable and helped them understand its value for marketing initiatives.

13. What are the ethical considerations in developing AI systems?

Ethical considerations in AI development are crucial and multifaceted. Key concerns include bias in data and algorithms, which can perpetuate and amplify existing societal inequalities. This necessitates careful data curation, algorithm auditing, and fairness-aware design principles to mitigate discriminatory outcomes. Another concern is transparency and explainability. It is crucial to understand how AI systems arrive at their decisions, especially in high-stakes applications like healthcare and criminal justice. Opaque 'black box' AI models can erode trust and accountability. We also need to consider job displacement due to automation and the potential for misuse of AI in surveillance and autonomous weapons. Therefore, focusing on responsible AI development, ensuring human oversight, and establishing clear ethical guidelines are paramount.

Specifically, developers need to think about:

- Data privacy: Protecting sensitive user information.

- Bias mitigation: Actively working to remove bias in training data and algorithms.

- Transparency: Ensuring that AI systems' decision-making processes are understandable.

- Accountability: Establishing clear lines of responsibility for AI system errors and harms.
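One concrete way to start the algorithm auditing mentioned above is to compare a model's positive-prediction rate across groups, a metric often called the demographic parity difference. The sketch below assumes binary predictions and a single sensitive attribute; the data is invented, and a small gap on this one metric does not by itself show that a system is fair.

```python
def demographic_parity_difference(predictions, groups):
    """Return the largest gap in positive-prediction rate between groups,
    plus the per-group rates.

    predictions: 0/1 model outputs; groups: group label for each prediction.
    """
    counts = {}  # group -> (number of positive predictions, total predictions)
    for pred, group in zip(predictions, groups):
        positives, total = counts.get(group, (0, 0))
        counts[group] = (positives + pred, total + 1)
    rates = {g: pos / total for g, (pos, total) in counts.items()}
    return max(rates.values()) - min(rates.values()), rates


preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(preds, groups)
print(rates, gap)  # {'a': 0.75, 'b': 0.25} 0.5
```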

14. How do you approach hyperparameter tuning in deep learning?

Hyperparameter tuning in deep learning is often done through a combination of automated search and manual refinement. I generally start with a broad search using random search or grid search to identify promising regions of the hyperparameter space. Random search is often preferred because, when only a few hyperparameters strongly affect performance, it tries more distinct values of each one than a grid with the same number of trials. Bayesian optimization, available through tools like Optuna, goes further by choosing each new configuration based on the results of previous trials.

Once I have a sense of the important hyperparameters and their effective ranges, I refine the search, potentially focusing on a smaller subset of hyperparameters. This can involve more targeted searches or manual experimentation based on intuition and understanding of the model and data. Evaluating performance on a validation set is crucial throughout the process, and techniques like cross-validation can improve the robustness of the evaluation. Consider using techniques such as learning rate schedules or early stopping to further improve the model.
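A bare-bones version of the random-search stage might look like the sketch below. It is an illustration under stated assumptions: `toy_validation_loss` is a hypothetical stand-in for training a model and returning its validation loss, and the search space is invented. Sampling the learning rate on a log scale is a common choice because useful values span several orders of magnitude.

```python
import math
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample hyperparameters at random and keep the lowest-scoring configuration.

    `space` maps each name to a (low, high, log_scale) tuple.
    """
    rng = random.Random(seed)
    best_params, best_score = None, math.inf
    for _ in range(n_trials):
        params = {}
        for name, (low, high, log_scale) in space.items():
            if log_scale:
                # Uniform in log space, e.g. for learning rates
                params[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
            else:
                params[name] = rng.uniform(low, high)
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_loss(params):
    # Hypothetical stand-in for "train the model, return validation loss";
    # it is minimized at lr = 1e-3 and dropout = 0.3.
    return (math.log10(params["lr"]) + 3) ** 2 + (params["dropout"] - 0.3) ** 2


space = {"lr": (1e-5, 1e-1, True), "dropout": (0.0, 0.5, False)}
best, score = random_search(toy_validation_loss, space, n_trials=200)
print(best, score)
```

Bayesian optimization tools keep the same loop structure (propose parameters, evaluate, record the score) but replace uniform sampling with a model of which regions look promising.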

15. Explain the concept of reinforcement learning and its applications.

Reinforcement learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with an environment to maximize a cumulative reward. The agent takes actions, observes the outcome (reward and next state), and updates its strategy (policy) accordingly. The goal is to learn an optimal policy that maps states to actions, leading to the highest possible cumulative reward over time. It differs from supervised learning because there is no labeled training data; instead, the agent learns through trial and error.
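The agent-environment loop above can be illustrated with tabular Q-learning, one classic RL algorithm, on a hypothetical five-state corridor where the only reward is for reaching the right-hand end. The environment, hyperparameters, and episode count are invented for illustration; real problems usually need function approximation (for example, a neural network) instead of a lookup table.

```python
import random

N_STATES = 5        # states 0..4; state 4 is the goal and ends the episode
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Toy environment: reward 1.0 only on reaching the goal state."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random, otherwise pick a best-valued action
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)
```

The learned policy is read off by choosing, in each state, the action with the highest estimated value; no labeled examples were needed, only rewards from trial and error.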

RL has many applications, including robotics (e.g., teaching robots to walk or manipulate objects), game playing (e.g., training AI to play chess or Go), recommendation systems (e.g., personalizing content recommendations), and resource management (e.g., optimizing energy consumption in buildings). Other examples include autonomous driving and financial trading.

16. How do you handle missing data in machine learning?

Missing data can be handled in several ways. Some common techniques include:

- Imputation: Replacing missing values with estimated values. Simple strategies include using the mean, median, or mode of the column. More complex methods use machine learning models, such as k-NN or regression, to predict the missing values from other features.

- Deletion: Removing rows or columns with missing values. This is suitable when the amount of missing data is small and the values are missing completely at random (MCAR); otherwise, deletion can discard useful information and bias the remaining sample.

- Using algorithms that handle missing data: Gradient-boosting libraries such as XGBoost and LightGBM can handle missing values natively, without imputation or deletion. During training, they learn at each split which branch missing values should follow.

- Creating a missing data indicator: Add a new binary column indicating if the value was originally missing. This can help the model learn if the missingness itself is a predictive feature.
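Imputation and a missing-data indicator are often combined. The sketch below mean-imputes a single numeric column in plain Python and returns a parallel indicator column; the column values are made up. In a real pipeline the mean should be computed on the training split only, so that no information leaks from validation or test data.

```python
def impute_with_indicator(column):
    """Mean-impute None values and return a parallel 0/1 'was missing' column."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("column has no observed values to compute a mean from")
    mean = sum(observed) / len(observed)
    imputed = [mean if v is None else v for v in column]
    indicator = [1 if v is None else 0 for v in column]
    return imputed, indicator


ages = [25, None, 40, 31, None]
imputed, was_missing = impute_with_indicator(ages)
print(imputed)      # [25, 32.0, 40, 31, 32.0]
print(was_missing)  # [0, 1, 0, 0, 1]
```

scikit-learn's `SimpleImputer(strategy="mean", add_indicator=True)` packages the same idea for whole feature matrices.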

17. Describe your experience with different types of neural networks.

I have experience working with various types of neural networks. I've used feedforward neural networks (FFNNs) with different activation functions (ReLU, sigmoid, tanh) for classification and regression tasks. I've also worked with Convolutional Neural Networks (CNNs) extensively for image recognition and object detection, using libraries like TensorFlow and PyTorch. My CNN experience includes implementing architectures like ResNet and VGG, and I am familiar with concepts like convolutional layers, pooling layers, and batch normalization.

Furthermore, I have experience with Recurrent Neural Networks (RNNs), including LSTMs and GRUs, for sequence modeling tasks like time series analysis and natural language processing. I understand the challenges of vanishing gradients in RNNs and how LSTMs/GRUs address this. I've also experimented with transformers for NLP tasks, leveraging pre-trained models and fine-tuning them for specific applications.
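The vanishing-gradient problem can be seen numerically. In this toy sketch (a hypothetical stack of scalar sigmoid layers, each with weight 1), the chain rule multiplies one sigmoid derivative per layer; because that derivative never exceeds 0.25, the gradient is at most 0.25 raised to the number of layers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def chain_gradient(depth, weight=1.0, x=0.0):
    """Gradient d(output)/d(input) through `depth` stacked scalar sigmoid layers.

    Each layer computes h = sigmoid(weight * h); by the chain rule the gradient
    is the product of weight * sigmoid'(pre-activation) over all layers.
    """
    h, grad = x, 1.0
    for _ in range(depth):
        s = sigmoid(weight * h)
        grad *= weight * s * (1.0 - s)  # sigmoid'(z) = s * (1 - s) <= 0.25
        h = s
    return grad


for depth in (1, 5, 10, 20):
    print(depth, chain_gradient(depth))
```

LSTMs and GRUs ease this across time steps because their gated, largely additive state updates let gradients pass through many steps without being repeatedly multiplied by small derivatives.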

18. How would you design an AI system for a real-world problem?

Designing an AI system for a real-world problem involves several key steps. First, clearly define the problem and the desired outcome. What are we trying to achieve, and what data is available or can be acquired? This requires a thorough understanding of the problem domain. Next, select an appropriate AI model based on the problem type and data characteristics. Consider options like supervised learning (classification or regression), unsupervised learning (clustering), or reinforcement learning. Data preprocessing is vital: cleaning the data, transforming it, and engineering features can all improve model performance.

After model selection and data preparation, train the chosen model using the prepared data. Validate the model's performance using appropriate metrics and iterate by tuning hyperparameters or even switching models if necessary. Finally, deploy the trained model into the real-world environment and continuously monitor its performance. Be ready to retrain the model with new data as it becomes available to maintain accuracy and adapt to changing conditions. Consider ethical implications and potential biases throughout the entire process.

19. Explain the concept of explainable AI (XAI) and its importance.

Explainable AI (XAI) refers to AI models and techniques that allow humans to understand and interpret the reasoning behind their decisions or predictions. Unlike "black box" AI models, XAI aims to make the internal workings of AI systems transparent and understandable. This involves providing insights into which features or data points were most influential in arriving at a specific outcome.

The importance of XAI stems from several factors:

- Trust and acceptance: Understanding how an AI system makes decisions builds trust and encourages wider adoption.

- Accountability: XAI enables auditing and verification of AI systems, ensuring fairness and identifying potential biases.

- Improved decision-making: Human experts can leverage XAI insights to refine their own understanding of the problem and make better decisions.

- Regulatory compliance: Increasingly, regulations require transparency and explainability in AI systems, particularly in sensitive domains like finance and healthcare.
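As one concrete, model-agnostic XAI technique, permutation feature importance measures how much a model's accuracy drops when a single feature's values are shuffled, which breaks that feature's link to the target. The sketch below uses a hypothetical rule-based model that only looks at feature 0 and synthetic data, so shuffling feature 1 should cost nothing.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def model(row):
    # Hypothetical "trained" model: predicts 1 when feature 0 exceeds 0.5
    return int(row[0] > 0.5)


X = [[i / 19, (i * 7 % 20) / 19] for i in range(20)]  # synthetic features
y = [model(row) for row in X]                          # labels the model fits exactly
importances = permutation_importance(model, X, y)
print(importances)
```

scikit-learn provides the same technique as `sklearn.inspection.permutation_importance`, which works with any fitted estimator.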

20. How do you approach feature selection in machine learning?

Feature selection aims to identify the most relevant features for a machine learning model, improving performance and interpretability. I typically start by understanding the data and the problem. Then, I consider these approaches:

- Filter methods: Use statistical measures like correlation, chi-squared, or ANOVA to rank features independently of the model. Quick and computationally inexpensive.

- Wrapper methods: Evaluate different feature subsets by training and testing a model. Examples include forward selection, backward elimination, and recursive feature elimination (RFE). Computationally expensive but often yields better results.

- Embedded methods: Feature selection is integrated into the model training process. For example, LASSO (L1-regularized) regression can shrink some coefficients exactly to zero, effectively removing those features; Ridge (L2) regression, by contrast, shrinks coefficients without zeroing them, so it does not select features on its own. Tree-based models also have built-in feature importance measures. I might also consider techniques like Principal Component Analysis (PCA) for dimensionality reduction, though this transforms the features rather than selecting a subset of the original features.

Choosing the right method depends on the dataset size, the model being used, and the desired trade-off between performance and computational cost. It is also important to avoid data leakage when using feature selection and carefully validate the model on unseen data.
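A filter method can be as simple as ranking features by the absolute Pearson correlation between each feature and the target. This is a minimal sketch with invented data and feature names; correlation only captures linear, one-feature-at-a-time relationships, and to avoid leakage the scores should be computed on training data only.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # constant column: no linear relationship to measure
    return cov / math.sqrt(var_a * var_b)


def rank_features_by_correlation(X, y, names):
    """Filter method: rank features by |Pearson correlation| with the target."""
    scores = {}
    for j, name in enumerate(names):
        column = [row[j] for row in X]
        scores[name] = abs(pearson(column, y))
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


X = [[1, 5, 2], [2, 3, 2], [3, 8, 2], [4, 1, 2], [5, 7, 2]]
y = [2.1, 3.9, 6.2, 8.0, 9.9]
ranking = rank_features_by_correlation(X, y, ["size", "noise", "constant"])
print(ranking)
```

In scikit-learn, `SelectKBest` with a scoring function such as `f_regression` implements a similar filter step.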

21. Describe a time you had to work on a research project with a tight deadline.

During my final year, I worked on a machine learning project to predict customer churn. We had only two months to deliver a functional prototype, including data gathering, model training, and evaluation. The timeline was extremely challenging, especially considering the initial data quality issues and the complexity of achieving acceptable model accuracy.

To manage the deadline, we divided the project into smaller, time-boxed tasks. I focused on data preprocessing and feature engineering, while other team members handled model selection and deployment. We held daily stand-up meetings to track progress, identify roadblocks, and re-prioritize tasks as needed. We used techniques like early stopping and efficient hyperparameter optimization to speed up model training. Despite the pressure, we delivered a working churn prediction prototype within the timeframe, along with a clear account of how the data quality issues limited the models' performance.

22. What are some common challenges in training deep learning models?

Training deep learning models presents several challenges. One major hurdle is overfitting, where the model learns the training data too well, leading to poor generalization on unseen data. This is often addressed using techniques like regularization, dropout, and data augmentation. Another significant challenge is the vanishing/exploding gradient problem, which occurs when gradients become extremely small or large during backpropagation, hindering effective learning. Solutions include using appropriate activation functions (e.g., ReLU), gradient clipping, and batch normalization.
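Gradient clipping is simple to state: if the combined L2 norm of all gradients exceeds a threshold, rescale them so the norm equals the threshold. The plain-Python sketch below assumes gradients are given as lists of floats (one list per parameter tensor); the threshold and values are illustrative.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient vectors so their combined L2 norm is <= max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total_norm <= max_norm:
        return grads, total_norm
    scale = max_norm / total_norm  # same factor for every gradient keeps the direction
    return [[g * scale for g in vec] for vec in grads], total_norm


grads = [[30.0, 40.0], [0.0]]  # global norm = 50
clipped, norm = clip_by_global_norm(grads, max_norm=5.0)
print(norm, clipped)
```

Frameworks provide this directly, for example `torch.nn.utils.clip_grad_norm_` in PyTorch and `tf.clip_by_global_norm` in TensorFlow.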