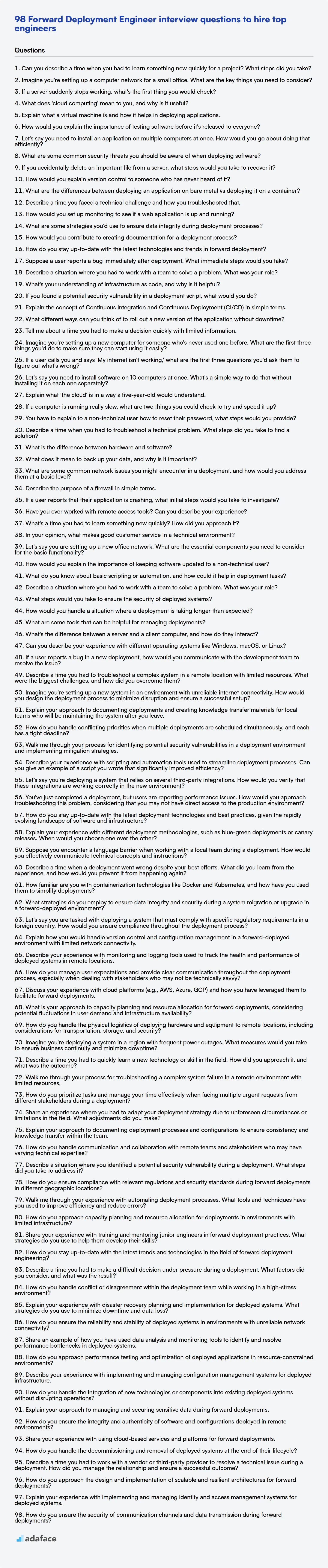

Hiring a Forward Deployment Engineer requires a keen understanding of their specific skills and expertise, more so than hiring a systems engineer or a cloud engineer. A well-prepared interviewer can assess candidates effectively, ensuring they possess the necessary technical and problem-solving abilities.

This blog post provides a curated list of interview questions for Forward Deployment Engineers across different experience levels, from freshers to experienced professionals. It also includes multiple-choice questions to help you gauge a candidate's grasp of fundamental concepts.

By using these questions, you can identify candidates who are ready to tackle the challenges of Forward Deployment. To further refine your selection process, consider using Adaface's Forward Deployed Engineer test before the interview.

Forward Deployment Engineer interview questions for freshers

1. Can you describe a time when you had to learn something new quickly for a project? What steps did you take?

During a recent project, we needed to integrate a new payment gateway, Stripe, which I had no prior experience with. The timeline was tight, so I needed to learn quickly. I started by thoroughly reviewing Stripe's official documentation and API reference. I then explored online tutorials and code examples to understand the fundamental concepts and implementation patterns. Next, I set up a test environment and started experimenting with the API, writing small code snippets to handle different payment scenarios.

I focused on understanding the core concepts like tokens, charges, and webhooks. I actively sought help from senior developers on my team when I encountered roadblocks or had specific questions. I would show them the code I was trying and explain my assumptions. This combination of independent learning and seeking expert guidance allowed me to quickly grasp the key aspects of Stripe and successfully integrate it into our project within the given timeframe.

2. Imagine you're setting up a computer network for a small office. What are the key things you need to consider?

When setting up a computer network for a small office, start with the infrastructure: choose a suitable router, a switch if you need more wired ports, and Ethernet cabling, and add wireless access points for device mobility. Next, select an ISP and a bandwidth plan that match the office's usage. Then address security: firewall configuration, password policies, and network segmentation where needed (for example, a separate guest network). Finally, decide how the network will be configured, including IP addressing (DHCP or static), subnetting, and DNS settings for reaching resources. Leave room for future growth so the network can scale with the business.
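The addressing and subnetting step can be sketched with Python's standard `ipaddress` module. The `192.168.10.0/24` range and the four segment names are assumptions chosen for illustration; a real office would pick its own ranges and segments.

```python
import ipaddress

# Assumed private range for the office; any RFC 1918 block would work.
office = ipaddress.ip_network("192.168.10.0/24")

# Split the /24 into four /26 subnets, one per (hypothetical) segment.
segments = ["staff", "guests", "printers", "servers"]
plan = dict(zip(segments, office.subnets(new_prefix=26)))

for name, net in plan.items():
    hosts = list(net.hosts())
    # Convention used in this sketch: the first usable address is the gateway.
    print(f"{name:<9} {net}  gateway={hosts[0]}  usable={len(hosts)}")
```

Keeping guests and printers on their own subnets makes it easier to write firewall rules per segment later.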

3. If a server suddenly stops working, what's the first thing you would check?

The very first thing I'd check is the server's power and network connectivity. Is it plugged in and powered on? Can I ping it from another machine on the network? A simple check of the power cord and a ping can quickly rule out basic physical and network issues.

If power and network are confirmed, I'd then access the server's console (if possible) to examine any error messages displayed during the boot process or in the operating system logs. Checking system logs (/var/log/syslog, /var/log/messages, /var/log/kern.log on Linux or the Event Viewer on Windows) would be a crucial next step to identify any recent errors or warnings that might indicate the cause of the failure.

4. What does 'cloud computing' mean to you, and why is it useful?

Cloud computing, to me, is essentially using someone else's computers (servers, storage, databases, etc.) over the internet. Instead of owning and maintaining physical infrastructure, you access resources on demand from a provider like AWS, Azure, or Google Cloud.

It's useful because it offers several benefits: scalability (easily increase or decrease resources as needed), cost efficiency (pay-as-you-go model reduces capital expenditure), reliability (providers often have redundancy and disaster recovery measures in place), and accessibility (access resources from anywhere with an internet connection).

5. Explain what a virtual machine is and how it helps in deploying applications.

A virtual machine (VM) is essentially a software-defined computer within a physical computer. It emulates a complete hardware system, allowing you to run an operating system and applications as if they were running on dedicated hardware.

VMs are helpful in application deployment because they provide isolation. Each application can run in its own VM, preventing conflicts with other applications or the host operating system. This also allows for easier scaling, as you can easily create and deploy new VMs as needed. Furthermore, VMs encapsulate the application and its dependencies, ensuring consistent behavior across different environments, such as development, testing, and production. This eliminates the "it works on my machine" problem.

6. How would you explain the importance of testing software before it's released to everyone?

Testing software before release is crucial for several reasons. Primarily, it helps to identify and fix bugs or defects that could cause the software to malfunction or crash. Early detection prevents these issues from impacting end-users, leading to a better user experience and preserving the reputation of the developers or company. Think about a scenario where a core banking function fails on a release, without proper testing, leading to financial losses.

Furthermore, thorough testing ensures the software meets the required specifications and performs as expected. This includes verifying functionality, security, usability, performance, and compatibility. It can also save significant costs in the long run because fixing bugs in production is much more expensive and time-consuming than fixing them during the development or testing phases.

7. Let's say you need to install an application on multiple computers at once. How would you go about doing that efficiently?

To efficiently install an application on multiple computers simultaneously, I'd leverage a combination of tools and strategies. Configuration management tools like Ansible, Puppet, or Chef are excellent for automating software deployments across a network. These tools allow me to define the desired state of the systems, including which applications should be installed and configured. I would then create a playbook or recipe that describes the installation process.

Alternatively, if the environment is Windows-centric, tools like Microsoft Endpoint Configuration Manager (MECM) or Group Policy Objects (GPO) can be used to distribute software packages. For simpler scenarios, scripting combined with remote execution tools (e.g., PowerShell remoting on Windows or ssh with scp on Linux/macOS) could work. The key is to centralize the application package and automate the installation process as much as possible, ensuring consistency and reducing manual intervention.
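For the simple scripting route, a minimal sketch might look like the following. It assumes key-based SSH access is already set up and that the local `ssh` client is available; the function names `run_over_ssh` and `install_everywhere` are hypothetical, and a configuration management tool would normally replace this for anything beyond a handful of machines.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor


def run_over_ssh(host: str, command: str) -> int:
    """Run a command on a host through the local ssh client; return its exit code."""
    result = subprocess.run(
        ["ssh", "-o", "BatchMode=yes", host, command],
        capture_output=True,
        text=True,
        timeout=300,
    )
    return result.returncode


def install_everywhere(hosts, command, runner=run_over_ssh, max_workers=5):
    """Run the same install command on every host in parallel.

    Returns a dict mapping host -> True (exit code 0) or False.
    """
    def attempt(host):
        try:
            return host, runner(host, command) == 0
        except Exception:
            # Unreachable host, timeout, missing ssh binary, and so on.
            return host, False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(attempt, hosts))
```

The `runner` parameter is injectable so the fan-out logic can be exercised without touching real machines, and the returned mapping shows at a glance which hosts need a retry.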

8. What are some common security threats you should be aware of when deploying software?

When deploying software, being aware of potential security threats is crucial. Common threats include: SQL injection (where malicious SQL code is inserted into queries), Cross-Site Scripting (XSS) (injecting malicious scripts into websites viewed by other users), and Cross-Site Request Forgery (CSRF) (tricking users into performing actions they didn't intend to).

Other threats to consider are vulnerabilities in third-party libraries, which can be exploited if not properly patched, and Denial-of-Service (DoS) attacks, which aim to overwhelm the system and make it unavailable. Broken authentication and sensitive data exposure caused by inadequate security measures can also be severe. Keeping software and dependencies up to date, implementing strong authentication and authorization mechanisms, and employing input validation and output encoding techniques can help mitigate these risks. Static analysis tools and penetration testing can also help identify and address vulnerabilities.
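As a small illustration of output encoding against XSS, the sketch below escapes user-supplied values with the standard library's `html.escape` before placing them in markup. The `render_comment` helper is hypothetical; real applications usually rely on a templating engine that escapes by default.

```python
import html


def render_comment(author: str, text: str) -> str:
    """Build an HTML snippet, escaping user-supplied values first."""
    return f"<p><b>{html.escape(author)}</b>: {html.escape(text)}</p>"


# The script tag is rendered as inert text instead of being executed.
print(render_comment("mallory", "<script>alert('x')</script>"))
```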

9. If you accidentally delete an important file from a server, what steps would you take to recover it?

First, immediately stop any activity on the affected server to prevent further data overwriting. Then, check the server's recycle bin or trash folder if the file was simply moved there. If not, I'd examine backups. I'd look for recent backups of the server or the specific directory where the file was located and restore the file from the most recent backup. If backups aren't available or useful, I would explore data recovery tools, prioritizing those designed for the server's operating system and file system. Before using a data recovery tool in production, I would test it thoroughly in a safe, isolated environment.

10. How would you explain version control to someone who has never heard of it?

Imagine you're working on a document, and you want to be able to go back to earlier versions if you make mistakes or decide you liked something better before. Version control is like a super-powered "undo" button and a detailed history book for your files, especially code. It tracks every change made, who made it, and when, allowing you to revert to previous states, compare different versions, and work collaboratively without overwriting each other's work.

For example, using git, if you want to track changes to a project you would use commands like git add, git commit and git push. A typical workflow using git might look like:

- `git clone <repository_url>`: Download the project to your computer.

- `git checkout -b feature/new-feature`: Create a branch to work on a new feature.

- Make changes to the files.

- `git add .`: Stage the changes.

- `git commit -m "Add new feature"`: Commit the changes with a message.

- `git push origin feature/new-feature`: Upload the changes to the remote repository.

- Create a pull request on the remote repository to merge the new feature into the main branch. This allows others to review the code before it's merged.

11. What are the differences between deploying an application on bare metal vs deploying it on a container?

Deploying an application on bare metal involves installing the application directly onto the physical hardware's operating system. This gives you maximum control over resources and performance tuning, but also requires you to manage the entire infrastructure stack, including OS updates, security patching, and hardware maintenance.

In contrast, deploying an application in a container involves packaging the application and its dependencies into a lightweight, isolated environment. Containers share the host OS kernel, making them more resource-efficient than virtual machines. Containerization provides portability, consistency across different environments (dev, test, prod), and easier scaling. Managing containers typically relies on container orchestration platforms like Kubernetes.

12. Describe a time you faced a technical challenge and how you troubleshot it.

During a recent project, I encountered a performance bottleneck with our data processing pipeline. The pipeline, built using Apache Spark, was taking significantly longer than expected to process a large dataset. To troubleshoot, I first monitored the Spark UI to identify resource bottlenecks (CPU, memory, network). This revealed that a particular transformation step involving a large join was consuming excessive memory and causing frequent garbage collection.

To address this, I explored several optimization techniques. Initially, I tried increasing the Spark executor memory, but it only provided marginal improvement. I then focused on optimizing the join operation and realized the join was being performed on a non-optimal key. After switching to a more appropriate join key and using a broadcast join for the smaller dataset, the processing time was reduced by over 50%. The code changes included repartitioning the data on the new key, `df.repartition(col("new_key"))`, and adding a broadcast hint, `broadcast(small_df).join(large_df, "join_key")`. This experience highlighted the importance of careful data analysis and choosing the right optimization strategies for distributed data processing.

13. How would you set up monitoring to see if a web application is up and running?

I would set up a monitoring system to periodically check the availability of the web application. This can be done by using tools like Pingdom, UptimeRobot, or custom solutions built with libraries like requests in Python or curl in shell scripts.

The monitoring system would send HTTP requests (e.g., GET requests) to specific endpoints of the application (e.g., the homepage). It would then verify if the server responds with a successful HTTP status code (e.g., 200 OK). If the response code is not 200 or if the request times out, the monitoring system would trigger an alert (e.g., via email, SMS, or integration with a communication platform like Slack). I would configure thresholds for response time to also alert if the application becomes slow.
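A custom check along these lines could be sketched with only the standard library. The function names, the 2-second slowness threshold, and the use of `print` as the alert channel are assumptions; a hosted service or a real alerting integration would replace them in practice.

```python
import time
import urllib.error
import urllib.request


def classify(status, elapsed_s, slow_after_s=2.0):
    """Turn one probe result into 'up', 'slow', or 'down'."""
    if status != 200:
        return "down"
    return "slow" if elapsed_s > slow_after_s else "up"


def probe(url, timeout_s=5.0):
    """Send one GET request; return (status code or None, elapsed seconds)."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered with a 4xx/5xx code
    except OSError:
        status = None      # DNS failure, refused connection, or timeout
    return status, time.monotonic() - start


def check(url, alert=print):
    """Probe once and call alert() for anything other than 'up'."""
    state = classify(*probe(url))
    if state != "up":
        alert(f"{url} is {state}")
    return state
```

Calling `check()` on a schedule (for example from cron) against a lightweight health endpoint gives both the availability and the response-time alerting described above.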

14. What are some strategies you'd use to ensure data integrity during deployment processes?

To ensure data integrity during deployment processes, I would employ several strategies. Firstly, implement database backups before any deployment. This provides a reliable rollback point. Secondly, use transactional deployments where possible. Wrap database changes in transactions to ensure atomicity – either all changes succeed, or none do. If a rollback is needed, it’s a simple transaction rollback.
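The all-or-nothing behaviour can be demonstrated with an in-memory SQLite database. The `settings` table and the `apply_changes` helper are hypothetical, and the sketch only covers data changes; how schema changes (DDL) behave inside transactions varies by database and driver.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE settings (key TEXT PRIMARY KEY, value TEXT NOT NULL)")
conn.execute("INSERT INTO settings VALUES ('api_version', 'v1')")
conn.commit()


def apply_changes(conn, statements):
    """Apply every statement in one transaction; return True if it committed."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            for sql in statements:
                conn.execute(sql)
        return True
    except sqlite3.Error:
        return False


# The second statement violates NOT NULL, so the first one is rolled back too.
bad_release = [
    "UPDATE settings SET value = 'v2' WHERE key = 'api_version'",
    "INSERT INTO settings VALUES ('timeout', NULL)",
]
print(apply_changes(conn, bad_release))
print(conn.execute("SELECT value FROM settings WHERE key = 'api_version'").fetchone())
```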

Other strategies include: Validation scripts that run before and after deployment to verify data consistency and correctness, checksums for file transfers to guarantee no data corruption occurs during transfer, and implementing monitoring to detect anomalies immediately after deployment. Also, consider using blue/green deployments or canary releases to minimize the impact of potential errors and provide a controlled environment for validation.
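The checksum idea can be sketched as follows: hash the artifact before and after the transfer and compare the digests. SHA-256 and the helper names are assumptions for this example; any strong hash works as long as both sides use the same one.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large artifacts do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_transfer(source, copy):
    """Return True when both files have identical SHA-256 digests."""
    return sha256_of(source) == sha256_of(copy)
```

In a deployment script, a failed `verify_transfer` would typically abort the rollout before the corrupted artifact is ever started.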

15. How would you contribute to creating documentation for a deployment process?

I would contribute to deployment documentation by focusing on clarity, completeness, and maintainability. I'd start by identifying the target audience and tailoring the language and level of detail accordingly. For each step in the deployment process, I would document the purpose, inputs, expected outputs, and any potential error scenarios, including troubleshooting steps. This can be achieved by using numbered steps with examples and code snippets, where needed.

To keep documentation up-to-date, I would propose a versioning system and a review process involving developers, QA, and operations. Furthermore, I would actively participate in maintaining the documentation by incorporating feedback, updating instructions when the process changes, and ensuring it's easily accessible and searchable. This could also include creating diagrams or flowcharts to visually represent the deployment workflow, using tools like Mermaid.js.

For example:

Step 1: Build the application

- Command: `./gradlew build`

- Purpose: Compile the application code.

- Input: Source code.

- Output: Deployable artifact (e.g., a `.jar` file).

Step 2: Deploy to staging environment

- Command: `scp build/libs/my-app.jar user@staging-server:/opt/my-app/`

- Purpose: Copy the application to the staging server.

- Input: Compiled application artifact.

- Output: Application deployed on the staging server.

16. How do you stay up-to-date with the latest technologies and trends in forward deployment?

I stay current with forward deployment technologies through a combination of continuous learning and practical application. I actively follow industry blogs and publications like the AWS News Blog, the Google Cloud Blog, and specific technology-focused sites (e.g., for Kubernetes, Istio). I also participate in relevant online communities and forums like Stack Overflow and Reddit's r/devops to learn from others' experiences and insights. Attending webinars and conferences focused on cloud technologies and deployment strategies (e.g., AWS re:Invent, KubeCon) is valuable.

Practically, I dedicate time to hands-on experimentation with new tools and techniques. For example, I might explore a new CI/CD pipeline tool, experiment with different container orchestration strategies using kubectl, or delve into infrastructure-as-code (IaC) with tools like Terraform or CloudFormation. This hands-on experience helps me understand the real-world applicability and challenges associated with these technologies.

17. Suppose a user reports a bug immediately after deployment. What immediate steps would you take?

First, acknowledge the user report and thank them for reporting the issue. Immediately gather as much information as possible from the user about the bug: what were they doing, what did they expect to happen, and what actually happened? Then, alert the relevant team members (developers, QA, DevOps) about the reported bug, emphasizing that it's a post-deployment issue.

Next, begin triage. Attempt to reproduce the bug in a staging or development environment to confirm its existence and understand its scope. Investigate recent code changes or deployments to identify potential causes. Based on the severity and impact, decide whether to roll back the deployment to the previous stable version, implement a hotfix, or disable the problematic feature. Communicate the chosen course of action to stakeholders and keep the user who reported the bug updated on the progress.

18. Describe a situation where you had to work with a team to solve a problem. What was your role?

During a recent project at my previous company, we encountered a critical bug in our e-commerce platform's checkout process, causing a significant drop in sales. Our team, consisting of frontend developers, backend developers, and QA engineers, needed to identify and resolve the issue quickly. My role was primarily as a backend developer.

I took the lead in debugging the backend services related to order processing and payment integration. We used logging and debugging tools to trace the flow of data and identify the root cause: a misconfiguration in the payment gateway API. After identifying the problem, I worked with other team members to implement a fix and push it to production through our continuous deployment pipeline. We then monitored the application together for any further issues.

19. What's your understanding of infrastructure as code, and why is it helpful?

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through machine-readable definition files, rather than through manual processes or interactive configuration tools. It treats infrastructure configuration as code.

IaC is helpful because it enables automation, consistency, and repeatability in infrastructure management. This leads to faster deployments, reduced errors, improved version control, and easier scalability. Key benefits include:

- Automation: Automates provisioning and configuration.

- Version Control: Infrastructure definitions can be version controlled.

- Consistency: Ensures consistent environments across deployments.

- Cost Reduction: Automates tasks and reduces manual effort.

- Faster Deployments: Speeds up the deployment process.
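The declarative idea behind IaC can be illustrated with a toy "plan" step that compares the declared state with what currently exists. This is only a sketch of the concept with made-up resource names; it is not how Terraform, CloudFormation, or any other specific tool is implemented.

```python
def plan(desired: dict, current: dict) -> dict:
    """Compare declared resources with what exists and list the needed actions."""
    return {
        "create": sorted(desired.keys() - current.keys()),
        "delete": sorted(current.keys() - desired.keys()),
        "update": sorted(
            name for name in desired.keys() & current.keys()
            if desired[name] != current[name]
        ),
    }


# Hypothetical definitions: what the code declares vs. what is running today.
desired = {"web-1": {"size": "small"}, "web-2": {"size": "small"}, "db-1": {"size": "large"}}
current = {"web-1": {"size": "small"}, "db-1": {"size": "medium"}, "old-cache": {"size": "small"}}

print(plan(desired, current))
# {'create': ['web-2'], 'delete': ['old-cache'], 'update': ['db-1']}
```

Because the desired state lives in a file, it can be reviewed, versioned, and re-applied to produce the same environment every time.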

20. If you found a potential security vulnerability in a deployment script, what would you do?

If I discovered a potential security vulnerability in a deployment script, my immediate action would be to stop the deployment process if it's currently running. Then I would report the vulnerability to the appropriate team or individual, such as the security team or the script's owner, providing as much detail as possible about the potential impact and location of the issue within the script.

Next, I'd work with the relevant team to assess the vulnerability and prioritize its remediation. This might involve modifying the script to eliminate the vulnerability, for example, by using parameterized queries to prevent SQL injection or securely storing secrets instead of hardcoding them. Once the fix is implemented, it would be thoroughly tested before being redeployed. Finally, I'd document the vulnerability and its resolution to prevent similar issues in the future. The following example shows how to use parameterized queries in Python with psycopg2:

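```python
import psycopg2

# Connection details are placeholders; real credentials should come from a
# secrets store or environment variables rather than being hardcoded.
conn = psycopg2.connect(database="mydb", user="user", password="password", host="host", port="5432")
cur = conn.cursor()

user_id = input("Enter user ID: ")

# Avoid SQL injection using parameterized queries: the value is passed
# separately and psycopg2 quotes it, so it cannot alter the query.
query = "SELECT * FROM users WHERE id = %s;"
cur.execute(query, (user_id,))

results = cur.fetchall()
for row in results:
    print(row)

cur.close()
conn.close()
```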

21. Explain the concept of Continuous Integration and Continuous Deployment (CI/CD) in simple terms.

CI/CD is like an automated assembly line for software. Continuous Integration (CI) is the practice of regularly merging code changes from multiple developers into a central repository, then automatically building and testing that code. This helps catch integration bugs early.

Continuous Deployment (CD) takes it a step further. Once the code passes all the automated tests in the CI phase, CD automatically deploys the code to various environments, such as staging or production. This means code changes are released to users more frequently and reliably. Ultimately, CI/CD reduces the risk involved in software releases, increases velocity, and enables faster feedback loops.
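The gating behaviour of a pipeline (later stages run only if earlier ones pass) can be sketched in a few lines. The stage names and the `run_pipeline` helper are hypothetical; real CI/CD systems express the same idea in their own configuration formats.

```python
def run_pipeline(stages):
    """Run (name, step) pairs in order; stop at the first step that fails.

    Each step is a callable returning True on success.
    Returns (succeeded, names_of_stages_that_ran).
    """
    ran = []
    for name, step in stages:
        ran.append(name)
        if not step():
            return False, ran
    return True, ran


# A failing test stage prevents the deploy stage from ever running.
stages = [
    ("build", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
]
print(run_pipeline(stages))
# (False, ['build', 'test'])
```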

22. What different ways can you think of to roll out a new version of the application without downtime?

Several strategies minimize or eliminate downtime during application updates:

- Blue/Green Deployment: Maintain two identical environments (blue and green). Deploy the new version to the inactive environment, test it, and then switch traffic to it. If issues arise, switch back to the original environment.

- Rolling Deployment: Update instances gradually. Replace a subset of servers with the new version while the remaining ones handle traffic. Continue until all servers are updated. This reduces the impact of any errors.

- Canary Deployment: Release the new version to a small subset of users or servers to monitor its behavior in production before wider rollout. If no problems are detected, roll out to the remaining users/servers.

- Feature Flags: Introduce new features behind feature flags. These flags can be enabled or disabled without deploying new code, which allows for testing in production and a gradual rollout.

- Load Balancing with Health Checks: Load balancers can route traffic only to healthy instances. During deployment, take instances out of the load balancer's rotation, update them, and then reintroduce them after successful validation.
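For canary releases and percentage-based feature flags, one common approach is to hash each user into a stable bucket so the same user always gets the same answer. The sketch below assumes string user IDs and a hypothetical `in_rollout` helper; flag services offer richer targeting than this.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Deterministically place a user in or out of a percentage rollout."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return bucket < percent


# Raising the percentage only adds users; nobody who had the feature loses it.
for pct in (0, 10, 50, 100):
    enabled = sum(in_rollout(f"user-{i}", "new-checkout", pct) for i in range(1000))
    print(f"{pct:>3}% target -> {enabled} of 1000 users enabled")
```

Including the feature name in the hash keeps different rollouts from always selecting the same users first.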

23. Tell me about a time you had to make a decision quickly with limited information.

During an incident where our production database server started experiencing high CPU utilization, I had to quickly decide how to mitigate the issue. We had limited monitoring in place to pinpoint the exact cause immediately. I prioritized restoring service quickly. My options were to restart the server, which would cause a brief outage, or try to identify the specific problematic query causing the load, which could take longer.

I decided to restart the server. While it meant a short interruption, it was the fastest way to bring the system back to a stable state. I documented the steps taken, the potential risks, and the reasoning behind the decision. After the restart, we implemented more granular monitoring to prevent similar incidents and facilitate quicker diagnosis in the future. This involved adding query performance logging and setting up alerts for specific resource thresholds.

Forward Deployment Engineer interview questions for juniors

1. Imagine you're setting up a new computer for someone who's never used one before. What are the first three things you'd do to make sure they can start using it easily?

The first three things I'd do are:

- Set up the display and input devices: Ensure the monitor is properly connected and displaying correctly. Then, I'd verify the mouse and keyboard are functioning (wired or wireless) and that the user understands basic operations like clicking, scrolling, and typing. I might adjust mouse sensitivity for ease of use.

- Connect to the internet: Establishing an internet connection is crucial. I'd connect to a Wi-Fi network or use a wired connection. Then, I'd test the connection by opening a web browser and navigating to a familiar website to make sure the connection is working.

- Introduce basic software and navigation: I'd familiarize the user with essential software such as the web browser and demonstrate how to use the start menu/dock (depending on the operating system) to find and launch applications. I would explain the concept of files and folders and how to save a file to a known location.

2. If a user calls you and says 'My internet isn't working,' what are the first three questions you'd ask them to figure out what's wrong?

Okay, if a user calls and says their internet isn't working, the first three questions I'd ask are:

- "What device are you using to access the internet?" (This helps narrow down if the issue is device-specific.)

- "Are any other devices in your household able to connect to the internet?" (This helps determine if it's a network-wide outage or isolated to one device.)

- "Have you tried restarting your modem and router?" (This addresses a common and simple fix, and also helps verify if they have physical access to the equipment. This might require clarification such as 'unplug them from the power outlet, wait 30 seconds, and plug them back in').

3. Let's say you need to install software on 10 computers at once. What's a simple way to do that without installing it on each one separately?

A simple way to install software on multiple computers simultaneously is to use a software deployment tool or system. Many options exist, ranging from built-in operating system features to third-party solutions. For example, in a Windows environment, you could utilize Group Policy Objects (GPOs) to deploy software packages (.msi files) across a domain.

Alternatively, you could use tools like Ansible, Chef, or Puppet for configuration management and software deployment. These tools allow you to define the desired state of your systems and automatically enforce it across multiple machines. For simpler scenarios, network shared folders coupled with scripts that install from the share can also work, especially for basic installations. The key is to centralize the installation process and automate it to avoid manual installations on each machine.

4. Explain what 'the cloud' is in a way a five-year-old would understand.

Imagine you have lots of toys, but instead of keeping them all at home, you keep some in a big, safe playroom far away. That playroom is 'the cloud'. It's a place on the internet where we can keep things like pictures, videos, and games, so they don't take up space on your phone or tablet. You can get your toys from the cloud anytime you want, as long as you have the internet!

5. If a computer is running really slow, what are two things you could check to try and speed it up?

Two common things to check when a computer is running slow are:

- CPU Usage: High CPU usage indicates a program or process is consuming a lot of processing power. Use Task Manager (Windows) or Activity Monitor (macOS) to identify the culprit and close it if possible or troubleshoot the underlying issue. If the CPU is constantly at or near 100%, even when idle, there may be malware or background processes that are causing this.

- Memory (RAM) Usage: Insufficient RAM can cause the computer to use the hard drive as virtual memory, which is much slower. Check RAM usage and close unnecessary applications or browser tabs to free up memory. Consider adding more RAM if usage is consistently high.

6. You have to explain to a non-technical user how to reset their password. What steps would you provide?

Okay, no problem! Let's reset your password. First, go to the website or app where you want to reset the password. Look for a link that says something like "Forgot Password?", "Reset Password", or "Need Help Logging In?". Click on that link.

You'll then be asked to enter either your username or the email address you used when you signed up. Type that in, and then click "Submit" or "Send". Check your email inbox (and also your spam or junk folder, just in case) for an email from them. This email will contain a link or button you can click to reset your password. Click the link, and you'll be taken to a page where you can create a new password. Choose a strong password (a mix of upper and lowercase letters, numbers, and symbols), type it in twice to confirm, and then click "Save" or "Update Password". You should then be able to log in with your new password.

7. Describe a time when you had to troubleshoot a technical problem. What steps did you take to find a solution?

During a recent project, our application started experiencing intermittent errors related to database connectivity. I began by checking the application logs and the database server's status, which revealed numerous connection timeout errors. My troubleshooting steps included:

- Isolate the Problem: Confirmed the issue was specifically related to database connections.

- Gather Information: Reviewed recent code changes and system updates to identify potential causes.

- Hypothesis and Testing: Suspected a resource exhaustion issue and used monitoring tools to check CPU, memory, and connection limits on the database server. Increased the database connection pool size in the application configuration.

- Verification: Monitored the application and database after increasing the pool size, and the errors subsided. The root cause was indeed insufficient connections available in the pool to handle the application's load. I also suggested using the connection pool more efficiently by wrapping connections in `try-with-resources` to avoid resource leaks in the future.

8. What is the difference between hardware and software?

Hardware refers to the physical components of a computer system, such as the CPU, memory, storage devices, and peripherals. It's the tangible equipment you can touch. Software, on the other hand, is a set of instructions, data, or programs used to operate computers and execute specific tasks. It is intangible and exists as code.

In essence, hardware provides the platform, and software provides the instructions for the hardware to perform actions. Hardware executes the software's instructions. For example, the monitor (hardware) displays the output generated by a word processor (software). Without software, hardware is essentially useless, and without hardware, software cannot run.

9. What does it mean to back up your data, and why is it important?

Backing up data means creating a copy of your digital information and storing it in a separate location from the original. This ensures that you have a safeguard in case the original data is lost, corrupted, or becomes inaccessible due to hardware failure, software issues, human error, cyberattacks (like ransomware), or natural disasters.

Data backups are crucial because they allow you to recover your information and resume operations quickly after an unforeseen event. Without backups, you risk losing valuable files, documents, settings, and systems, potentially leading to significant financial losses, reputational damage, and disruptions to your personal or business activities.

10. What are some common network issues you might encounter in a deployment, and how would you address them at a basic level?

Some common network issues in a deployment include connectivity problems, slow network speeds, and DNS resolution failures. To address connectivity issues, I would first verify basic network settings like IP address, subnet mask, and gateway using tools like ipconfig (Windows) or ifconfig/ip addr (Linux). I'd then test connectivity to other devices on the network using ping and ensure firewalls aren't blocking traffic. For slow network speeds, I would investigate potential bandwidth bottlenecks by checking network usage and possibly running speed tests. If DNS resolution is failing, I'd check the DNS server configuration and verify that the DNS server is reachable and functioning correctly. I would also use nslookup or dig to diagnose DNS resolution issues.
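The same triage order (name resolution first, then connectivity) can be sketched in Python. This is an illustrative helper, not a replacement for ping or dig; the function name `diagnose` and its return labels are assumptions made for this example.

```python
import socket


def diagnose(host: str, port: int, timeout: float = 3.0) -> str:
    """Classify a problem as 'dns' or 'connectivity', or report 'ok'."""
    try:
        # Step 1: can the name be resolved at all?
        addresses = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror:
        return "dns"
    # Step 2: can a TCP connection be opened to any resolved address?
    for family, socktype, proto, _, sockaddr in addresses:
        try:
            with socket.socket(family, socktype, proto) as sock:
                sock.settimeout(timeout)
                sock.connect(sockaddr)
                return "ok"
        except OSError:
            continue  # refused, unreachable, or timed out; try the next address
    return "connectivity"
```

A "connectivity" result would then point toward firewall rules, routing, or the service itself rather than DNS.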

11. Describe the purpose of a firewall in simple terms.

A firewall is like a security guard for your computer or network. Its main purpose is to control network traffic, allowing legitimate traffic to pass through while blocking malicious or unauthorized traffic.

Think of it as a filter that examines incoming and outgoing data packets, comparing them against a set of predefined rules. If a packet matches a rule that permits it, it's allowed through. If it doesn't match any allowing rules, it's blocked, protecting the system from potential threats such as viruses, hackers, and other unwanted intrusions.
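To make the rule-matching idea concrete, here is a toy sketch in Python. It assumes a first-match rule list with a default-deny policy, which is a common but not universal design; the `Rule` fields and the sample addresses are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str  # "allow" or "deny"
    source: str  # CIDR block the rule applies to, e.g. "10.0.0.0/8"
    port: int    # destination port the rule applies to


def decide(rules: list[Rule], source_ip: str, port: int) -> str:
    """Return the action of the first matching rule; deny when none match."""
    address = ipaddress.ip_address(source_ip)
    for rule in rules:
        if address in ipaddress.ip_network(rule.source) and port == rule.port:
            return rule.action
    return "deny"  # default deny: anything not explicitly allowed is blocked


RULES = [
    Rule("deny", "10.0.5.0/24", 22),  # block SSH from one subnet
    Rule("allow", "10.0.0.0/8", 22),  # allow SSH from the rest of the internal range
    Rule("allow", "0.0.0.0/0", 443),  # allow HTTPS from anywhere
]
```

Because the first match wins in this sketch, rule order matters: the narrower deny rule has to come before the broader allow rule.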

12. If a user reports that their application is crashing, what initial steps would you take to investigate?

First, I'd gather information from the user: what were they doing when the crash occurred? What version of the application are they using? What's their operating system? Are there any error messages? Are they able to reproduce the crash consistently?

Next, I'd examine the application's logs (if available) for error messages or stack traces around the time of the crash. I would look for things like exceptions, out-of-memory errors, or other unusual events. If possible, I'd try to reproduce the crash myself. I may also use debugging tools to attach to the application and examine its state when the crash occurs. If the crash happens on the client side, I would check the resources the client is using, such as memory, CPU, and disk I/O.
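The log review step could be partly automated. The sketch below assumes plain-text logs and a hypothetical set of crash-related patterns; both would need tuning to the application's actual log format.

```python
import re

# Hypothetical patterns; adjust them to what the application really logs.
CRASH_PATTERNS = re.compile(
    r"Traceback|Exception|OutOfMemory|Segmentation fault|FATAL", re.IGNORECASE
)


def find_crash_clues(log_lines, context=2):
    """Return (line_number, excerpt) pairs for lines matching crash patterns.

    Each excerpt includes up to `context` lines before and after the match.
    """
    clues = []
    for index, line in enumerate(log_lines):
        if CRASH_PATTERNS.search(line):
            start = max(0, index - context)
            end = min(len(log_lines), index + context + 1)
            clues.append((index + 1, log_lines[start:end]))
    return clues
```

Keeping a few lines of context around each match helps show what the application was doing just before it failed.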

13. Have you ever worked with remote access tools? Can you describe your experience?

Yes, I have experience with various remote access tools. I've used tools like TeamViewer and AnyDesk for providing technical support and accessing remote systems for troubleshooting. I also used Remote Desktop Protocol (RDP) extensively, particularly within Windows Server environments, for server administration and maintenance.

My experience includes setting up remote access connections, configuring security settings, managing user permissions, and performing remote software installations and updates. I'm familiar with the importance of using strong passwords and enabling multi-factor authentication when configuring remote access to protect against unauthorized access.

14. What's a time you had to learn something new quickly? How did you approach it?

In my previous role, we adopted a new CI/CD tool, Jenkins X, to improve our deployment pipeline. I had no prior experience with it. My approach was multi-faceted: First, I reviewed the official documentation and online tutorials to grasp the fundamental concepts and architecture. Then, I set up a local development environment to experiment with the tool's features hands-on. I built a simple pipeline and gradually added complexity, addressing issues as they arose. Finally, I collaborated closely with a senior engineer who had used Jenkins X before, asking clarifying questions and soliciting feedback on my implementation.

Specifically, I remember struggling with configuring the jx-requirements.yml file. To overcome this, I referred to example configurations and used a process of trial and error, committing small changes and testing frequently until I achieved the desired behavior. This hands-on approach, combined with documentation and mentorship, allowed me to quickly become proficient and contribute to the team's migration to the new CI/CD tool.

15. In your opinion, what makes good customer service in a technical environment?

Good customer service in a technical environment hinges on understanding the customer's technical skill level and adapting communication accordingly. It involves prompt, clear, and accurate responses, explaining solutions without condescension. Empathy is crucial; acknowledging the customer's frustration and actively listening to their issues builds trust.

Key elements include:

- Technical Proficiency: Possessing the necessary technical knowledge to address the issue effectively, for example being able to debug code or troubleshoot system errors.

- Clear Communication: Explaining technical concepts in a way that the customer can understand, avoiding jargon when possible.

- Patience and Empathy: Understanding the customer's frustration and remaining calm and helpful throughout the interaction.

- Responsiveness: Addressing the customer's issue in a timely manner and keeping them informed of progress.

- Documentation: Providing clear and concise documentation for common issues and solutions.

16. Let's say you are setting up a new office network. What are the essential components you need to consider for the basic functionality?

For a basic office network, several essential components are crucial. First, you'll need internet connectivity, typically through an Internet Service Provider (ISP), and a modem to translate the signal. Next, a router is required to distribute the internet connection to multiple devices, create a local network, and provide security through a firewall. You'll also need network cables (Ethernet cables) for wired connections and a switch to connect multiple wired devices to the network.

Additionally, devices with network adapters (computers, printers, etc.) are obviously needed. If wireless connectivity is desired, a wireless access point (WAP), often integrated into the router, is necessary. Finally, DNS servers are required to translate domain names to IP addresses for accessing resources on the internet.

17. How would you explain the importance of keeping software updated to a non-technical user?

Keeping your software updated is like giving your computer or phone regular check-ups and tune-ups. These updates are important for a few key reasons. First, they often include security fixes that protect you from viruses and hackers trying to steal your personal information. Think of it like locking your doors and windows to keep burglars out; updates do the same for your digital life. Second, updates can make your software run faster and more smoothly. Just like a car needs regular maintenance to perform well, your software benefits from updates that improve its performance and fix bugs.

18. What do you know about basic scripting or automation, and how could it help in deployment tasks?

I have experience with basic scripting and automation, primarily using shell scripting (Bash) and Python. I understand fundamental concepts like variables, loops, conditional statements, and functions which enable creating scripts to automate repetitive tasks.

In deployment tasks, scripting can be incredibly helpful. For example, I can automate server provisioning, configuration management, and application deployment. Specifically, this may involve tasks like:

- Setting up new servers: Automating OS installation, package installation, and user creation.

- Configuring software: Modifying configuration files across multiple servers using tools like sed or Python templating.

- Deploying code: Writing scripts to pull code from a repository, run tests, and deploy to production or staging environments. This can also include tasks such as database migrations or cache invalidation. Code example:

    #!/bin/bash
    git pull origin main && ./deploy.sh
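The Python templating option mentioned in the list can be sketched with the standard library's `string.Template`. The template keys and host names below are hypothetical and only illustrate rendering one configuration per server.

```python
from string import Template

# Hypothetical template; the keys are illustrative, not from a real product.
CONFIG_TEMPLATE = Template(
    "server_name=$hostname\n"
    "listen_port=$port\n"
    "environment=$environment\n"
)


def render_config(hostname: str, port: int, environment: str) -> str:
    """Fill in the template; substitute() raises KeyError if a value is missing."""
    return CONFIG_TEMPLATE.substitute(
        hostname=hostname, port=port, environment=environment
    )


if __name__ == "__main__":
    for host in ["app-01", "app-02"]:
        print(render_config(host, 8080, "staging"))
```

Rendering from one template keeps per-server files consistent, which is harder to guarantee with hand edits.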

19. Describe a situation where you had to work with a team to solve a problem. What was your role?

In my previous role, our team was tasked with improving the efficiency of our data processing pipeline. The existing system was experiencing bottlenecks, leading to delays in report generation. My role was to identify and optimize slow-performing database queries. I used profiling tools to pinpoint resource-intensive queries and then rewrote them using more efficient indexing and join strategies.

I collaborated with the data engineers who were responsible for the ETL processes, sharing my optimized queries and working with them to integrate the changes into the pipeline. I also worked with the DevOps team to ensure the database server had sufficient resources to handle the increased load after the optimization. Through collaborative effort, we were able to reduce the processing time by 40%, significantly improving the report generation time.

20. What steps would you take to ensure the security of deployed systems?

To ensure the security of deployed systems, I would take several steps. First, I'd implement a robust security patching and vulnerability management process, ensuring systems are regularly updated with the latest security patches. This includes using automated tools for vulnerability scanning and patch deployment.

Second, I'd focus on access control and least privilege principles, restricting user and application access to only what's necessary. This can involve implementing multi-factor authentication (MFA), using role-based access control (RBAC), and regularly reviewing user permissions. Network segmentation and firewalls would be configured to limit lateral movement. Furthermore, intrusion detection and prevention systems would be set up to monitor for and respond to malicious activity. Regular security audits and penetration testing would identify weaknesses.

21. How would you handle a situation where a deployment is taking longer than expected?

If a deployment is taking longer than expected, my first step is to assess the situation to determine the reason for the delay. I would check logs, monitor resource utilization (CPU, memory, network), and verify the deployment pipeline for any bottlenecks or errors. If possible, I'd communicate with the team to ensure everyone is aware and collaborating.

Next, I would prioritize mitigation strategies. This could involve scaling up resources, rolling back to a previous stable version, or implementing circuit breakers to prevent cascading failures. I'd also ensure that communication with stakeholders is clear and consistent, providing updates on the progress and any expected impact. Finally, after the deployment is complete (regardless of rollback or fix), I would conduct a post-mortem to identify the root cause of the delay and implement measures to prevent similar issues in the future.

22. What are some tools that can be helpful for managing deployments?

Several tools facilitate deployment management. Ansible, Chef, Puppet, and SaltStack are configuration management tools that can automate infrastructure provisioning and application deployments. Terraform is an Infrastructure as Code (IaC) tool that allows you to define and manage infrastructure resources across various cloud providers. Docker packages applications into isolated containers, and Kubernetes orchestrates those containers at scale. For CI/CD pipelines, tools like Jenkins, GitLab CI, CircleCI, and Azure DevOps help automate the build, test, and deployment processes. Cloud-specific tools like AWS CloudFormation, Azure Resource Manager, and Google Cloud Deployment Manager are also popular for managing deployments on their respective platforms.

Furthermore, monitoring tools such as Prometheus, Grafana, and Datadog help in tracking the health and performance of deployed applications. Helm simplifies templating and managing Kubernetes deployments.

23. What's the difference between a server and a client computer, and how do they interact?

A server provides resources, data, services, or programs to other computers (clients) over a network. A client, on the other hand, requests and uses these resources offered by the server. Servers are typically powerful machines optimized for availability and handling multiple requests simultaneously, while clients are often user-facing devices like desktops, laptops, or mobile phones.

The interaction follows a request-response model. A client sends a request to the server for a specific resource or service. The server processes the request and sends back a response to the client. For example, when you access a website, your web browser (the client) sends a request to the web server, which then responds by sending the HTML, CSS, and JavaScript files that make up the webpage. HTTP is a common protocol used for this interaction.
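The request-response model can be demonstrated on a single machine. This is a minimal sketch using Python's standard library, with the server and client running in the same process purely for illustration; `HelloHandler` and `fetch_once` are hypothetical names.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Server side: build and send the response to the client's request.
        body = b"<h1>Hello from the server</h1>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging for this demo


def fetch_once() -> tuple[int, bytes]:
    """Start a local server, send one GET request as a client, return the reply."""
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        # Client side: send a request and read the response.
        client = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        client.request("GET", "/")
        response = client.getresponse()
        result = (response.status, response.read())
        client.close()
        return result
    finally:
        server.shutdown()
        server.server_close()
```

Calling `fetch_once()` returns the status code and the HTML body, mirroring what a browser receives from a web server.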

24. Can you describe your experience with different operating systems like Windows, macOS, or Linux?

I have experience with Windows, macOS, and Linux operating systems. I've used Windows extensively for personal and professional tasks, including software development, general productivity, and gaming. I'm familiar with the Windows command line, system administration tools, and the Windows Subsystem for Linux (WSL).

My experience with macOS includes software development using Xcode, managing applications through the App Store, and general desktop usage. I also have experience using Linux, primarily Ubuntu and CentOS, for server administration, scripting (Bash), and development. I'm comfortable with the command line interface, package management (apt, yum), and configuring system services. I've also worked with Linux in cloud environments like AWS.

25. If a user reports a bug in a new deployment, how would you communicate with the development team to resolve the issue?

First, I'd gather comprehensive details from the user regarding the bug, including steps to reproduce, the observed behavior, the expected behavior, and any error messages. Then, I would concisely document the issue, prioritize it based on severity and impact, and promptly share the information with the development team. This communication should include:

- A clear description of the bug.

- Steps to reproduce the bug.

- The expected vs. actual results.

- The user's environment (browser, OS, etc.).

- Any relevant logs or screenshots.

I would also remain available to provide additional information or clarification as needed by the development team throughout the debugging and resolution process. Furthermore, I'd track the progress of the bug fix and communicate updates to the user.

Forward Deployment Engineer intermediate interview questions

1. Describe a time you had to troubleshoot a complex system in a remote location with limited resources. What were the biggest challenges, and how did you overcome them?

In a previous role, I was responsible for maintaining a network of IoT devices deployed across remote agricultural fields. One time, the central data server experienced a sudden outage, disrupting data collection from hundreds of sensors. I had to troubleshoot the system remotely with limited information and intermittent satellite internet access.

The biggest challenges were the slow internet connection, lack of on-site technical support, and uncertainty about the root cause. I overcame these by first establishing a secure remote connection and prioritizing essential diagnostic tasks, such as checking system logs and network connectivity. Because access was slow, I wrote a small Python script that polled the server for specific diagnostic data and pulled only what I needed, for example a basic port check:

    import socket

    def check_port(host, port):
        """Return True if a TCP connection to host:port succeeds within 5 seconds."""
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        sock.settimeout(5)
        result = sock.connect_ex((host, port))  # 0 means the connection succeeded
        sock.close()
        return result == 0

    print(check_port("example.com", 80))

I then collaborated with local farm staff (who had limited technical skills) via phone to perform basic hardware checks and reboots. By analyzing logs and sensor data patterns, I identified a software bug causing a memory leak, which eventually crashed the server. I pushed a patch remotely, restoring functionality and preventing future issues.

2. Imagine you're setting up a new system in an environment with unreliable internet connectivity. How would you design the deployment process to minimize disruption and ensure a successful setup?

To minimize disruption during deployment with unreliable internet, I'd prioritize an offline-first approach. This involves packaging all necessary dependencies, code, and configurations locally. The deployment process would be designed as a series of idempotent operations, allowing for retries without causing inconsistencies. A local repository (e.g., using apt-mirror for Debian-based systems or a similar solution for other package managers) would be created to serve packages without relying on external sources. Critical services are deployed first, ensuring a functional baseline. Monitoring is set up with local alerting mechanisms to detect and address issues promptly even without immediate internet access.

Further, I would implement robust logging and rollback procedures. Before any major change, I would take a backup of the existing system state. Deployments would be done in stages, allowing for quick rollbacks if an issue is detected. The system would be designed to continue functioning, perhaps in a degraded mode, even during deployment failures, preserving business continuity as much as possible until full connectivity is restored and the deployment can be retried. Automated scripts and health checks are essential to verify correct functionality after each stage. Tools like Ansible or Chef can provide automated, idempotent configuration management, and Docker containers can encapsulate deployments.
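An idempotent operation is one that can be repeated safely after an interrupted run. The sketch below shows the idea for a single configuration line; the helper name `ensure_line` is hypothetical, and tools like Ansible provide comparable behavior out of the box.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file at `path`.

    Returns True if the file was changed and False if it was already correct,
    so re-running the step after a dropped connection is harmless.
    """
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True
```

Returning whether anything changed also gives the deployment log a clear record of which retries actually did work.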

3. Explain your approach to documenting deployments and creating knowledge transfer materials for local teams who will be maintaining the system after you leave.

My approach to documenting deployments and creating knowledge transfer materials emphasizes clarity and maintainability. I start by creating a comprehensive deployment runbook containing step-by-step instructions, including:

- Prerequisites (software, hardware, access credentials).

- Configuration settings (environment variables, configuration files).

- Deployment commands (using tools like Ansible, Terraform, or shell scripts).

- Rollback procedures.

- Troubleshooting tips for common issues.

For knowledge transfer, I create a simplified architecture diagram and a maintainer's guide. The guide covers system functionality, monitoring procedures, log file locations, and escalation paths. I conduct training sessions with the local team, walking them through the documentation and providing hands-on experience with the system. I also record these sessions for future reference, ensuring the team is comfortable maintaining the system independently.

4. How do you handle conflicting priorities when multiple deployments are scheduled simultaneously, and each has a tight deadline?

When facing conflicting deployment priorities and tight deadlines, I prioritize communication and collaboration. I would first gather all stakeholders to understand the business impact and dependencies of each deployment. Then, I'd work with them to assess the urgency and potential consequences of delaying each. Based on this, we collaboratively define a prioritized deployment order. Technical strategies like feature toggles, blue-green deployments, or canary releases can help mitigate risks and allow for faster, incremental deployments, potentially enabling us to deliver key features from multiple deployments within the given timeframe. If conflicts remain, I would escalate to management with a clear explanation of the trade-offs and proposed solutions.

I also emphasize clear communication and continuous monitoring throughout the deployment process. This includes providing regular updates to stakeholders, proactively identifying and addressing potential issues, and having rollback plans in place. Automation is key to handle the complexities of multiple simultaneous deployments.

5. Walk me through your process for identifying potential security vulnerabilities in a deployment environment and implementing mitigation strategies.

My process for identifying security vulnerabilities involves a multi-faceted approach. First, I conduct thorough vulnerability scanning using tools like Nmap, Nessus, or OpenVAS to identify potential weaknesses in the system. I also perform manual code reviews, focusing on areas known for common vulnerabilities, such as input validation, authentication, and authorization. For web applications, I utilize tools like OWASP ZAP or Burp Suite to identify vulnerabilities such as SQL injection and cross-site scripting (XSS). I then analyze the scan results and prioritize vulnerabilities based on severity and potential impact.

To implement mitigation strategies, I prioritize vulnerabilities based on risk (likelihood and impact). I apply security patches promptly and configure firewalls and intrusion detection/prevention systems (IDS/IPS) to block malicious traffic. I also implement strong authentication and authorization mechanisms, enforce the principle of least privilege, and encrypt sensitive data both in transit and at rest. Regular security audits and penetration testing are also crucial to ensure the effectiveness of the implemented mitigation strategies and to identify any new vulnerabilities that may arise. I document all findings and mitigation steps for future reference and improvement.
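Risk-based prioritization (likelihood and impact) can be expressed as a simple score. This sketch assumes a 1 to 5 scale for both factors and multiplies them, which is one common convention rather than a standard; the finding names are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); illustrative scale
    impact: int      # 1 (minor) to 5 (critical); illustrative scale

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def prioritize(findings):
    """Sort findings from highest to lowest risk score."""
    return sorted(findings, key=lambda finding: finding.risk, reverse=True)
```

In practice, scanner output such as CVSS scores would usually feed the impact value instead of a hand-assigned number.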

6. Describe your experience with scripting and automation tools used to streamline deployment processes. Can you give an example of a script you wrote that significantly improved efficiency?

I have experience using scripting and automation tools like Bash, Python, Ansible, and Terraform to streamline deployment processes. I've used them for tasks such as automating server provisioning, configuring network settings, deploying applications, and running tests. My experience includes writing scripts to automate repetitive tasks, which reduces deployment time and minimizes the risk of human error.

For example, I wrote a Python script using the boto3 library to automate the deployment of EC2 instances on AWS. The script took several parameters (instance type, AMI, security group, etc.), created the instances, configured them with necessary software (installing packages with apt through subprocess), and integrated them with our monitoring system. This script reduced deployment time from several hours of manual effort to approximately 15 minutes, and it also improved consistency across environments. The script also included error handling and logging to ensure a robust deployment process.

7. Let’s say you're deploying a system that relies on several third-party integrations. How would you verify that these integrations are working correctly in the new environment?

To verify third-party integrations in a new environment, I would implement a multi-faceted approach. First, I'd use automated integration tests that simulate real user flows. These tests should cover critical functionalities and data exchanges with each third-party service. These tests should also confirm that the correct data mapping is taking place.

Second, I would use monitoring and alerting tools to proactively detect issues after deployment. This involves setting up dashboards to track key metrics such as API response times, error rates, and data synchronization status. Synthetic transactions can be created to check that API endpoints are healthy. Finally, end-to-end system tests that validate data after each call to an integration are also vital. A rollback plan should be prepared in the event of critical failures.

8. You've just completed a deployment, but users are reporting performance issues. How would you approach troubleshooting this problem, considering that you may not have direct access to the production environment?

First, I'd gather as much information as possible from the users reporting the issues. This includes specific examples of slow operations, timestamps of when the problems occurred, their geographic location (if relevant), and the browsers/devices they are using. I'd then check the monitoring dashboards (e.g., CPU utilization, memory usage, network latency, error rates) and logs to identify potential bottlenecks or error patterns.

Since I don't have direct access, I'd collaborate closely with the operations team or whoever has access to the production environment. Based on the user reports and the monitoring data, I'd suggest specific actions to the operations team, such as checking database query performance, examining application server logs for errors, verifying cache hit rates, or inspecting network traffic patterns. If code changes were part of the deployment, I'd ask them to check for any newly introduced errors or performance regressions. We could also consider rolling back to the previous version as a temporary solution while we investigate the root cause.

9. How do you stay up-to-date with the latest deployment technologies and best practices, given the rapidly evolving landscape of software and infrastructure?

I stay current with deployment technologies through a combination of active learning and community engagement. I regularly read industry blogs and articles from sources like InfoQ, DZone, and the official blogs of major cloud providers (AWS, Azure, GCP). I also participate in online forums and communities such as Stack Overflow and relevant subreddits to learn from others' experiences and contribute my own knowledge.

Specifically, I dedicate time each week to explore new tools or techniques. For example, recently I've been experimenting with infrastructure-as-code tools like Terraform and Ansible, containerization technologies like Docker and Kubernetes, and CI/CD pipelines using tools such as Jenkins, GitLab CI, and GitHub Actions. I also follow thought leaders on social media and attend webinars and online conferences to stay informed about emerging trends and best practices. In addition, I browse trending GitHub repositories related to DevOps, such as ArgoCD and FluxCD, and tools for automating infrastructure as code.

10. Explain your experience with different deployment methodologies, such as blue-green deployments or canary releases. When would you choose one over the other?

I have experience with both blue-green and canary deployments. Blue-green deployments involve running two identical environments, one live (blue) and one staged (green). New code is deployed to the green environment, tested, and then traffic is switched over from blue to green. This provides a very safe deployment with minimal downtime. Canary releases, on the other hand, involve gradually rolling out a new version to a small subset of users. This allows you to monitor the impact of the new version in a production environment before a full rollout. For example, you might route 5% of your user traffic to the canary release and monitor error rates and performance.

I would choose blue-green deployments when I need a very low-risk deployment strategy with minimal downtime, perhaps for critical systems or large feature changes. I'd opt for canary releases when I want to test the impact of a new version on a live user base with real-world traffic, allowing me to identify and address any issues before a full rollout to all users. Canary deployments are particularly useful for changes that are difficult to test in a staging environment, such as changes that impact performance or scalability.
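The 5% canary split described above can be sketched as deterministic, hash-based routing. This is an illustration only, assuming traffic is split per user ID; real deployments usually do this in a load balancer or service mesh, and the function name `routes_to_canary` is hypothetical.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically assign a user to the canary based on a hash bucket."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return bucket < canary_percent
```

Hashing the user ID keeps each user on the same version across requests, which makes error rates for the canary group easier to interpret than random per-request routing.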

11. Suppose you encounter a language barrier when working with a local team during a deployment. How would you effectively communicate technical concepts and instructions?

When facing a language barrier during a deployment, I'd prioritize clear and visual communication. I'd use diagrams, flowcharts, and simple illustrations to explain technical concepts. I would create step-by-step guides with screenshots and annotations, translating key terms into the local language with help from a translator or team member proficient in both languages.

For instructions, I would use short, concise sentences, avoiding jargon. I would leverage tools like Google Translate for basic translation, but always double-check the accuracy with someone fluent in the language. To demonstrate configurations or code changes, I'd use code snippets with comments in both English and the local language, for instance `# This is a comment in English` followed by `# Esto es un comentario en español`.

I'd encourage active listening and use clarifying questions to ensure understanding. Finally, I would remain patient and respectful throughout the communication process.

12. Describe a time when a deployment went wrong despite your best efforts. What did you learn from the experience, and how would you prevent it from happening again?

During a recent deployment, we experienced a critical database connection failure despite thorough pre-deployment testing. We'd load-tested the application and database separately, but hadn't accurately simulated the combined load during the actual deployment window. The root cause was traced to connection pool exhaustion under the sustained write load of the new release, something we hadn't foreseen. We had set up monitoring, but the alerts were not sensitive enough to catch the slow degradation.

From that experience, I learned the importance of end-to-end simulation under realistic conditions and fine-tuning monitoring thresholds. Moving forward, we now conduct more comprehensive integration tests that mirror production load and scale. We also implemented more granular monitoring and alerting that triggers at earlier signs of resource constraints, including proactive connection pool monitoring. Another step was adding automated rollback procedures as a safeguard against similar unforeseen issues. Specifically for connection issues we added retry logic to some of our critical jobs and code. Code example:

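    import time

    def execute_with_retry(func, max_retries=3, delay=1):
        """Call func, retrying up to max_retries times with a fixed delay."""
        for i in range(max_retries):
            try:
                return func()
            except Exception as e:
                print(f"Attempt {i+1} failed: {e}")
                if i == max_retries - 1:
                    raise  # out of retries: surface the last error
                time.sleep(delay)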

13. How familiar are you with containerization technologies like Docker and Kubernetes, and how have you used them to simplify deployments?

I have a solid understanding of containerization technologies, primarily Docker and Kubernetes. I've used Docker extensively for building, packaging, and distributing applications as lightweight, portable containers. This has significantly simplified deployments by ensuring consistency across different environments (development, staging, production). I'm comfortable writing Dockerfiles, composing multi-container applications with Docker Compose, and pushing/pulling images to/from container registries.

With Kubernetes, I've used it to orchestrate and manage containerized applications at scale. This includes deploying applications, scaling them based on demand, managing rolling updates and rollbacks, and handling service discovery and load balancing. I have experience defining Kubernetes manifests (YAML files) for deployments, services, pods, and configmaps. I've also used tools like Helm to streamline application deployments on Kubernetes. For example, I've automated the deployment of a Node.js application using a Dockerfile and then orchestrated it with Kubernetes ensuring high availability and scalability.

14. What strategies do you employ to ensure data integrity and security during a system migration or upgrade in a forward-deployed environment?

During system migrations or upgrades in a forward-deployed environment, I prioritize data integrity and security using a multi-faceted approach. This begins with meticulous planning, including comprehensive data backups and validation procedures before, during, and after the migration. We use checksums and data validation scripts to confirm data accuracy.

Security is paramount. This includes encrypting data in transit and at rest, adhering to strict access control policies throughout the migration, and conducting thorough security testing post-migration. We also establish secure channels for data transfer and closely monitor system logs for any anomalies. A robust rollback plan is essential in case unforeseen issues arise during the migration process.
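The checksum validation described in this answer could look something like the sketch below. It assumes the data is a directory tree of files and that SHA-256 is an acceptable digest; the function names are illustrative only.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare_manifests(source: dict[str, str], target: dict[str, str]) -> dict[str, list[str]]:
    """Report files that are missing, unexpected, or changed after migration."""
    return {
        "missing": sorted(set(source) - set(target)),
        "unexpected": sorted(set(target) - set(source)),
        "changed": sorted(k for k in source.keys() & target.keys() if source[k] != target[k]),
    }
```

One way to use this is to build a manifest on the source system before the migration, build another on the target afterwards, and treat any non-empty list in the comparison as a reason to pause or roll back.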

15. Let's say you are tasked with deploying a system that must comply with specific regulatory requirements in a foreign country. How would you ensure compliance throughout the deployment process?

To ensure compliance with regulatory requirements in a foreign country during system deployment, I would take a multi-faceted approach. First, I'd thoroughly research and document all relevant regulations, potentially engaging legal counsel or regulatory experts specializing in that region. This includes understanding data privacy laws (like GDPR equivalents), security standards, and any specific industry regulations. I would then translate these requirements into concrete, verifiable specifications for the system.

Next, throughout the deployment process, I'd implement regular compliance checks. This includes using automated tools to scan for potential violations and conducting audits. I would work closely with the development, security, and operations teams to ensure that all aspects of the system meet the defined specifications. For example, if the regulation dictates data encryption, I'd verify that encryption is properly implemented using the correct algorithms and key management practices and ensure the system logs actions for auditability.
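To make "concrete, verifiable specifications" more tangible, here is a minimal sketch of an automated compliance check. The rule IDs, configuration keys, and region names are all hypothetical; real rules would be derived from the documented regulatory requirements.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Rule:
    """One verifiable requirement derived from a regulation."""
    rule_id: str
    description: str
    check: Callable[[dict[str, Any]], bool]


# Hypothetical rules and config keys, for illustration only.
RULES = [
    Rule("ENC-01", "Data at rest is encrypted",
         lambda c: c.get("encryption_at_rest") is True),
    Rule("ENC-02", "TLS 1.2 or newer in transit",
         lambda c: c.get("min_tls_version") in {"1.2", "1.3"}),
    Rule("AUD-01", "Audit logging is enabled",
         lambda c: c.get("audit_logging") is True),
    Rule("RES-01", "Data stays in an approved region",
         lambda c: c.get("data_region") in c.get("approved_regions", [])),
]


def audit(config: dict[str, Any], rules: list[Rule] = RULES) -> list[str]:
    """Return the IDs of the rules that the configuration fails."""
    return [rule.rule_id for rule in rules if not rule.check(config)]
```

Running a check like this in the deployment pipeline gives each regulation a traceable pass or fail result, which also helps when auditors ask for evidence.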

16. Explain how you would handle version control and configuration management in a forward-deployed environment with limited network connectivity.

In a forward-deployed environment with limited connectivity, I'd prioritize a distributed version control system like Git. I'd use local Git repositories on each deployed system, allowing developers to commit changes independently. We can stage changes, commit, and branch locally.

Configuration management would rely on Infrastructure as Code (IaC) tools like Ansible or Terraform, with playbooks and configurations stored in the Git repository and applied locally on each system. When network connectivity is available, systems synchronize by running git pull to fetch updates and then ansible-playbook to apply the new configuration. If connectivity is extremely limited, Git bundles can be physically transported as a last resort: git bundle create packs the commits into a single file, and git fetch or git pull reads from that file on the receiving system.
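The offline transfer workflow can be sketched as a few helper functions that assemble the Git commands involved. The branch name, the `refs/remotes/bundle/` prefix, and the helper names are assumptions for illustration; the underlying `git bundle create`, `git bundle verify`, and `git fetch` commands are standard Git.

```python
import subprocess
from pathlib import Path


def bundle_create_cmd(bundle: Path, rev_range: str = "--all") -> list[str]:
    """Command to pack commits into a single file for offline transport."""
    return ["git", "bundle", "create", str(bundle), rev_range]


def bundle_verify_cmd(bundle: Path) -> list[str]:
    """Command to check the bundle is valid and its prerequisite commits exist locally."""
    return ["git", "bundle", "verify", str(bundle)]


def bundle_fetch_cmd(bundle: Path, branch: str = "main") -> list[str]:
    """Command to fetch a branch from the bundle file into a separate local ref."""
    return ["git", "fetch", str(bundle), f"{branch}:refs/remotes/bundle/{branch}"]


def run(cmd: list[str], repo: Path) -> None:
    """Run a git command inside the given repository, raising on failure."""
    subprocess.run(cmd, cwd=repo, check=True)
```

On the connected side you would run the create command and copy the file to removable media. On the disconnected side you would verify, fetch, review the incoming ref, merge it, and only then apply the updated configuration.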

17. Describe your experience with monitoring and logging tools used to track the health and performance of deployed systems in remote locations.

I have experience with several monitoring and logging tools for tracking system health and performance in remote locations. For monitoring, I've used Prometheus and Grafana to collect and visualize metrics like CPU utilization, memory usage, disk I/O, and network traffic. These tools allowed me to set up alerts based on predefined thresholds, enabling proactive identification and resolution of potential issues. I've also used tools like Datadog for more comprehensive monitoring across infrastructure, applications, and logs.

For logging, I've worked with the ELK stack (Elasticsearch, Logstash, and Kibana) and Graylog to aggregate, analyze, and search logs from remote systems. These tools helped me identify patterns, troubleshoot errors, and gain insights into system behavior. I've configured agents like Filebeat or rsyslog to ship logs securely from remote locations to centralized logging servers. I have also worked with cloud-based logging services like AWS CloudWatch and Azure Monitor.
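Threshold-based alerting is easier to discuss with a small example. The sketch below is a simplified stand-in, not how Prometheus or Datadog implement alerts internally: it fires only after a metric stays above its threshold for several consecutive samples, which avoids paging on a single spike. The class name and default values are assumptions.

```python
from collections import deque


class ThresholdAlert:
    """Fire only after a metric breaches its threshold for several consecutive samples."""

    def __init__(self, threshold: float, consecutive: int = 3) -> None:
        self.threshold = threshold
        # Keep only the most recent breach/no-breach results.
        self.recent = deque(maxlen=consecutive)

    def observe(self, value: float) -> bool:
        """Record a sample and return True if the alert should be firing."""
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)
```

For remote sites with unreliable links, requiring a sustained breach is often a sensible default, since delayed or bursty metric delivery can otherwise produce noisy alerts.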

18. How do you manage user expectations and provide clear communication throughout the deployment process, especially when dealing with stakeholders who may not be technically savvy?

Managing user expectations, especially with non-technical stakeholders, is crucial. I focus on clear, consistent communication using non-technical language. Before deployment, I'd explain the process in simple terms, highlighting the benefits and potential disruptions (if any) in a meeting, and then follow up with an email summary. I'd set realistic timelines and scope, and manage expectations around what the deployment will achieve. Regular updates are key, even if there's no significant progress; a brief email stating 'Deployment progressing as planned' can alleviate anxiety. I would make myself readily available for questions, offering multiple channels for communication (email, phone, quick meetings). Finally, post-deployment, I'd provide a summary of what was accomplished, any deviations from the plan, and any next steps.

19. Discuss your experience with cloud platforms (e.g., AWS, Azure, GCP) and how you have leveraged them to facilitate forward deployments.

I have experience with AWS, Azure, and GCP, utilizing them for various forward deployments. On AWS, I've used services like EC2, ECS, and Lambda, coupled with CodePipeline and CodeDeploy for automated deployments. I've configured CI/CD pipelines to build, test, and deploy applications seamlessly, including blue/green deployments for zero-downtime updates. I've also used services such as S3 for storing artifacts and CloudFront for content delivery.

On Azure, I've leveraged services such as Azure VMs, Azure Kubernetes Service (AKS), and Azure DevOps for similar purposes. This includes setting up build and release pipelines, defining infrastructure as code using ARM templates, and implementing deployment strategies like rolling updates using Kubernetes. I have also used Azure's monitoring capabilities to ensure smooth application operation post-deployment. On GCP, I've handled comparable deployment tasks using Compute Engine, Google Kubernetes Engine (GKE), and Cloud Build.

20. What is your approach to capacity planning and resource allocation for forward deployments, considering potential fluctuations in user demand and infrastructure availability?

My approach to capacity planning for forward deployments involves a combination of proactive forecasting and adaptive resource allocation. I start by analyzing historical user demand data, considering seasonal trends and potential growth factors. This helps establish a baseline capacity requirement. Then, I build in buffer capacity to handle unexpected spikes in demand or temporary infrastructure outages. This includes scaling cloud resources or pre-positioning backup systems.

Resource allocation is dynamically adjusted based on real-time monitoring of key performance indicators (KPIs) like CPU utilization, memory consumption, and network bandwidth. I utilize infrastructure-as-code (IaC) to automate resource provisioning and scaling. Tools like Terraform or Ansible enable rapid deployment and configuration of additional resources as needed. Continuous monitoring and alerting systems notify me of potential capacity bottlenecks, triggering automated or manual scaling operations. I also prioritize communication with field teams to anticipate localized surges in demand or infrastructure challenges.
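The baseline-plus-buffer reasoning can be written down as simple arithmetic. This is a minimal sketch under stated assumptions: demand is measured in requests per second, each instance has a known sustainable throughput, and the growth and headroom percentages are placeholders to be replaced with figures from your own data.

```python
import math


def required_instances(
    peak_rps: float,
    rps_per_instance: float,
    growth_rate: float = 0.20,
    headroom: float = 0.30,
    min_instances: int = 2,
) -> int:
    """Estimate instance count from peak demand, expected growth, and a safety buffer."""
    if rps_per_instance <= 0:
        raise ValueError("rps_per_instance must be positive")
    # Baseline: historical peak scaled by expected growth.
    projected_peak = peak_rps * (1 + growth_rate)
    # Buffer: extra capacity for spikes or partial infrastructure outages.
    with_buffer = projected_peak * (1 + headroom)
    # Never drop below a floor that keeps the service redundant.
    return max(min_instances, math.ceil(with_buffer / rps_per_instance))
```

For example, a historical peak of 1,000 requests per second with instances that each handle 150 gives 1,000 × 1.2 × 1.3 = 1,560 requests per second to plan for, which rounds up to 11 instances.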

21. How do you handle the physical logistics of deploying hardware and equipment to remote locations, including considerations for transportation, storage, and security?

Handling physical hardware deployments to remote locations requires careful planning and coordination. For transportation, I'd assess the location's accessibility to determine the most appropriate method (e.g., ground, air, sea freight), considering factors like cost, speed, and reliability. Secure packaging and tracking mechanisms are essential to minimize damage and loss during transit. Insurance coverage is also important. Storage, if needed, should be in secure, climate-controlled facilities to prevent environmental damage or theft. Detailed inventory management is crucial.

Security protocols at the remote site should be established beforehand. This could involve background checks for personnel handling the equipment, security cameras, access control systems, and secure storage areas. Chain of custody documentation should track the hardware from origin to final deployment. We should also conduct risk assessments to identify potential security vulnerabilities and implement mitigation strategies. I'd also consider working with local security providers if necessary.

22. Imagine you're deploying a system in a region with frequent power outages. What measures would you take to ensure business continuity and minimize downtime?

To ensure business continuity during frequent power outages, I'd implement several key measures. First, I'd invest in uninterruptible power supplies (UPS) for critical servers and network equipment to provide short-term power during outages. For longer outages, I'd use generators with automatic failover. Replicating data to multiple availability zones within the region, or even to a secondary region, is crucial to minimize data loss and allow for rapid failover.

Furthermore, I'd implement a robust monitoring system to detect outages quickly and trigger automated failover procedures, and I'd test the failover mechanisms regularly to confirm they work. I'd also develop a comprehensive disaster recovery plan that outlines the specific steps to take during an outage, including communication protocols, roles, and responsibilities. This includes backing up configuration files to a separate location so the system can be set up quickly in a new environment if needed. Finally, where workloads can run off-site, I'd consider managed or serverless cloud services, since the provider takes on power and hardware redundancy.
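A quick back-of-the-envelope check helps decide whether a UPS can bridge the gap until a generator takes over. The sketch below uses a simplified energy model; the efficiency, usable battery fraction, and safety margin are assumed values, and real sizing should follow the manufacturer's runtime charts.

```python
def ups_runtime_minutes(
    battery_wh: float,
    load_w: float,
    inverter_efficiency: float = 0.9,
    usable_fraction: float = 0.8,
) -> float:
    """Estimate how long a UPS can carry a load, in minutes."""
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    # Only part of the rated capacity is usable, and the inverter loses some energy.
    usable_wh = battery_wh * usable_fraction * inverter_efficiency
    return usable_wh / load_w * 60


def bridges_generator_start(
    runtime_min: float, generator_start_min: float, margin: float = 2.0
) -> bool:
    """True if the UPS lasts at least `margin` times the generator start-up time."""
    return runtime_min >= generator_start_min * margin
```

With a 1,500 Wh battery and a 500 W load, the estimate is 1,500 × 0.8 × 0.9 / 500 hours, or roughly 130 minutes, which comfortably covers a generator that needs a few minutes to start.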

Forward Deployment Engineer interview questions for experienced

1. Describe a time you had to quickly learn a new technology or skill in the field. How did you approach it, and what was the outcome?

During a project involving data migration to AWS, I needed to quickly learn AWS Glue. Initially, I felt overwhelmed, but I broke it down by focusing on the core functionalities for data transformation. I started with the AWS documentation and tutorials, then explored example Glue jobs. I then built a simple proof-of-concept pipeline to understand how Glue crawlers, Spark ETL jobs, and the Glue Data Catalog work together.

The outcome was successful data migration with optimized ETL processes. I was able to write efficient PySpark scripts within Glue to handle data transformations and load data into S3 and Redshift. This experience taught me the importance of focusing on practical application and iterative learning when tackling new technologies.

2. Walk me through your process for troubleshooting a complex system failure in a remote environment with limited resources.

When troubleshooting remotely with limited resources, I prioritize a systematic approach. First, I gather as much information as possible about the failure: logs, error messages, user reports, and any recent changes. I then try to reproduce the issue locally, if possible, to isolate the problem. If direct reproduction isn't possible, I'll focus on analyzing logs and metrics to identify patterns or anomalies that might indicate the root cause. Remote access tools are key, and I would use them to examine system configurations, process states and resource usage.

Next, I formulate hypotheses and test them methodically. Due to limited resources, I favor incremental changes with careful monitoring after each step to avoid compounding the issue. I always communicate clearly with the remote team and document all actions and observations in a shared document. If needed, I would collaborate with subject matter experts, making sure that I get their buy-in as they are more familiar with that specific part of the system. My goal is to restore functionality quickly while also understanding the root cause to prevent future occurrences.
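When bandwidth is limited, summarizing logs on the remote machine is often more practical than copying them back. The sketch below counts error-level lines per component to show where failures cluster. The log line format is an assumption; adjust the pattern to match the system being investigated.

```python
import re
from collections import Counter

# Assumed log line shape: "<timestamp> <LEVEL> <component>: <message>"
LINE_PATTERN = re.compile(
    r"^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+(?P<component>[\w.-]+):\s+(?P<msg>.*)$"
)


def summarize_errors(lines, levels=("ERROR", "CRITICAL")):
    """Count error-level lines per component, most frequent first."""
    counts = Counter()
    for line in lines:
        match = LINE_PATTERN.match(line.strip())
        # Lines that do not fit the assumed format are skipped rather than guessed at.
        if match and match.group("level") in levels:
            counts[match.group("component")] += 1
    return counts.most_common()
```

A summary like this gives a first hypothesis to test (for instance, that one component accounts for most errors) before making any change to the system.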

3. How do you prioritize tasks and manage your time effectively when facing multiple urgent requests from different stakeholders during a deployment?

When facing multiple urgent requests during a deployment, I prioritize by assessing impact and urgency. I quickly evaluate which requests are blocking the deployment or have the most significant impact if delayed. I communicate with stakeholders to understand their priorities and negotiate timelines if necessary. I use a simple prioritization matrix (High-Impact/High-Urgency, High-Impact/Low-Urgency, etc.) to guide my decisions.

To manage time, I break down larger tasks into smaller, manageable chunks. I use tools like a Kanban board or a simple to-do list to track progress and stay organized. I also proactively identify potential bottlenecks and escalate them early to avoid delays. I focus on completing the most critical tasks first and delegate or postpone less critical items if possible. Clear communication and proactive problem-solving are key to navigating competing priorities effectively. If a decision requires technical insight I lack, I ask the team lead or the most experienced team member for input.

4. Share an experience where you had to adapt your deployment strategy due to unforeseen circumstances or limitations in the field. What adjustments did you make?

During a recent project involving deploying a new microservice to a cloud environment, we initially planned a blue/green deployment strategy. However, we encountered an unexpected limitation: the cloud provider had a temporary restriction on creating new load balancers due to an ongoing maintenance window in that region. This meant we couldn't provision a separate 'green' environment with its own load balancer for testing.

To adapt, we switched to a canary deployment strategy using the existing load balancer. We configured the load balancer to route a small percentage (5%) of traffic to the new microservice version while monitoring its performance and stability. We gradually increased the traffic percentage in small increments while closely watching for any errors or performance degradation. This allowed us to deploy the new service with minimal risk and impact, despite the initial limitation.
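The canary approach in this answer can be sketched as a small control loop. The two callbacks are hypothetical hooks: one would update the load balancer's traffic weights and the other would read the new version's error rate from monitoring. The step percentages and error threshold are assumed values.

```python
from typing import Callable, Iterable


def run_canary(
    set_traffic_percent: Callable[[int], None],
    get_error_rate: Callable[[], float],
    steps: Iterable[int] = (5, 10, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> bool:
    """Shift traffic to the new version step by step; roll back on elevated errors."""
    for percent in steps:
        set_traffic_percent(percent)
        # In practice you would wait for a soak period here before sampling metrics.
        if get_error_rate() > max_error_rate:
            set_traffic_percent(0)  # send all traffic back to the stable version
            return False
    return True
```

The function returns True when the rollout reaches the final step and False when it rolls back, so a pipeline can use the result to decide whether to retire the old version.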

5. Explain your approach to documenting deployment processes and configurations to ensure consistency and knowledge transfer within the team.