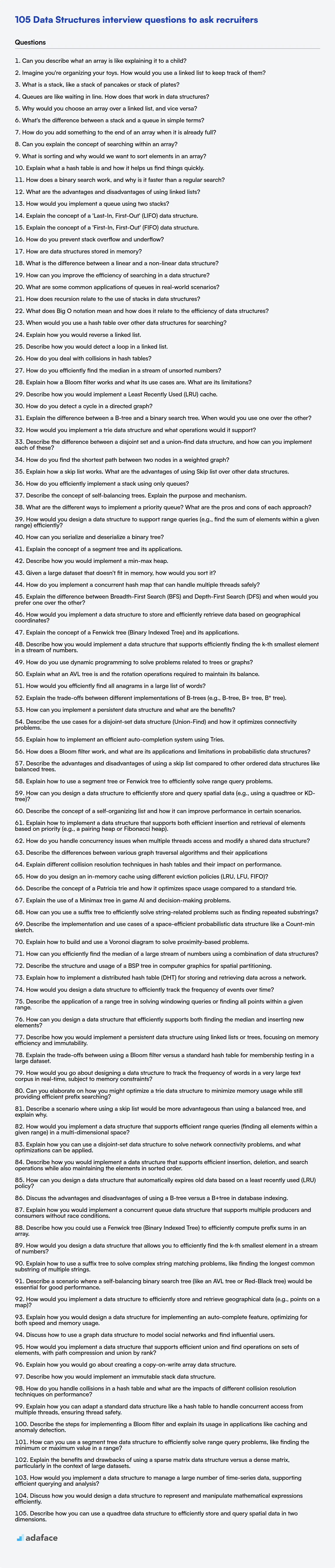

As a recruiter or hiring manager, you know that data structures are the building blocks of efficient software. Understanding data structures is a must if you aim to assess the technical capabilities of candidates.

This blog post offers a comprehensive list of interview questions, broken down by difficulty level, to help you evaluate candidates thoroughly.

By using these questions, you can streamline your interview process and ensure you're hiring candidates who possess a strong understanding of data structures. For a quick way to assess, try our pre-employment tests for candidates before your interviews.

Basic Data Structures interview questions

1. Can you describe what an array is, as if you were explaining it to a child?

Imagine you have a toy box, but instead of throwing all your toys in randomly, you line them up neatly in a row, each toy having its own special number. An array is just like that! It's a list of things (like your toys), and each thing has a number that tells you where it is in the list. We start counting from zero, so the first toy is number 0, the second toy is number 1, and so on.

For example, in code we could write let myArray = ["car", "ball", "doll"]; Here myArray[0] gives you "car", myArray[1] gives you "ball", and myArray[2] gives you "doll". The number that identifies each item's position is called its index. So an array stores many items in order, just as the box organizes many toys.

2. Imagine you're organizing your toys. How would you use a linked list to keep track of them?

Imagine each toy is a node in the linked list. Each node contains two things: the toy itself (or a description of it) and a pointer (or link) to the next toy in the list. The first toy in the list is the 'head', and the last toy's pointer points to null, signifying the end.

To organize, I could simply add toys to the end of the list as I find them. Alternatively, I could insert toys in a specific order. For example, I might want to keep my stuffed animals grouped together. I could easily insert a new stuffed animal node after the last existing stuffed animal node in the list, even if it's not at the absolute end. This insertion/deletion is efficient in a linked list since I only need to update the pointers and not shift the remaining items like in an array.

3. What is a stack, like a stack of pancakes or stack of plates?

A stack is a fundamental data structure that follows the LIFO (Last-In, First-Out) principle. Think of it like a stack of pancakes; the last pancake you put on top is the first one you eat. Operations on a stack include:

- Push: Adds an element to the top of the stack.

- Pop: Removes the element from the top of the stack.

- Peek: Views the top element without removing it.

- IsEmpty: Checks if the stack is empty.

Stacks are commonly used in programming for tasks like function call management, expression evaluation, and undo/redo functionalities. Example: stack.push(element) adds an element to the stack.

4. Queues are like waiting in line. How does that work in data structures?

In data structures, a queue operates on the principle of "First-In, First-Out" (FIFO), much like waiting in a line. Elements are added to the rear (enqueue) and removed from the front (dequeue). Think of it like people joining the back of a line and being served from the front.

Common operations and characteristics include:

- Enqueue: Adds an element to the rear of the queue.

- Dequeue: Removes an element from the front of the queue.

- Front: Retrieves the element at the front of the queue without removing it.

- Rear: Retrieves the element at the rear of the queue without removing it.

Queues can be implemented using arrays or linked lists. The key is maintaining the FIFO order. For example, a simple array-based queue could use two pointers to track the front and rear indices.

    class Queue:
        def __init__(self):
            self.items = []

        def enqueue(self, item):
            self.items.append(item)

        def dequeue(self):
            if not self.is_empty():
                return self.items.pop(0)
            else:
                return None

        def is_empty(self):
            return len(self.items) == 0

5. Why would you choose an array over a linked list, and vice versa?

Arrays offer constant time access to elements via their index (O(1)), making them ideal when you need frequent random access. They also offer better cache locality due to contiguous memory allocation, leading to improved performance in many scenarios. However, arrays require a contiguous block of memory, making insertion or deletion in the middle expensive (O(n)) as elements need to be shifted.

Linked lists, on the other hand, excel in dynamic memory allocation. Insertion and deletion at a known location are efficient (O(1)) because they only involve changing pointers. They don't require contiguous memory, making them suitable when memory fragmentation is a concern. However, accessing an element in a linked list requires traversing from the head (O(n)), making random access slow compared to arrays.

6. What's the difference between a stack and a queue in simple terms?

A stack and a queue are both linear data structures used to store collections of items, but they differ in how items are added and removed. A stack operates on a LIFO (Last-In, First-Out) principle, like a stack of plates: the last plate you put on is the first one you take off. Think of it like this: push() adds to the top and pop() removes from the top.

In contrast, a queue operates on a FIFO (First-In, First-Out) principle, like a waiting line: the first person in line is the first one served. Operations are enqueue() to add to the back and dequeue() to remove from the front.

7. How do you add something to the end of an array when it is already full?

When an array is full and you need to add more elements, you cannot directly extend it in most languages because arrays have a fixed size upon creation. The common approach is to create a new, larger array and copy the contents of the old array into the new one, then add the new element.

Here's how you might accomplish this conceptually (or in languages like JavaScript that abstract array resizing):

- Create a new array with a larger capacity (e.g., double the size or increment by a fixed amount).

- Copy all elements from the original (full) array to the new array.

- Add the new element to the end of the new array.

- If necessary, replace the original array with the new, larger array, taking care with memory management to avoid leaks.
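
The steps above can be sketched in Python. This is only an illustration: Python lists already resize themselves, so the hypothetical DynamicArray class below uses a preallocated list to stand in for a fixed-size array, and the doubling growth factor is an assumption rather than a rule.

```python
class DynamicArray:
    """Illustrative growable array built on a fixed-capacity backing store."""

    def __init__(self, capacity=2):
        self._capacity = capacity
        self._size = 0
        # A preallocated list stands in for a fixed-size array.
        self._data = [None] * capacity

    def append(self, item):
        # When the backing store is full, grow before writing.
        if self._size == self._capacity:
            self._grow()
        self._data[self._size] = item
        self._size += 1

    def _grow(self):
        # Step 1: create a new, larger array (here: double the capacity).
        new_capacity = self._capacity * 2
        new_data = [None] * new_capacity
        # Step 2: copy every existing element across.
        for i in range(self._size):
            new_data[i] = self._data[i]
        # Step 4: replace the old array; Python frees it automatically.
        self._data = new_data
        self._capacity = new_capacity

    def __len__(self):
        return self._size

    def __getitem__(self, index):
        if not 0 <= index < self._size:
            raise IndexError("index out of range")
        return self._data[index]


numbers = DynamicArray(capacity=2)
for value in [1, 2, 3, 4, 5]:
    numbers.append(value)  # capacity grows 2 -> 4 -> 8 along the way
```

Doubling keeps the average cost of an append constant, because the expensive copy happens less and less often as the array grows.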

8. Can you explain the concept of searching within an array?

Searching within an array involves finding a specific element within the array's collection of elements. The goal is to determine if the target element exists and, optionally, to locate its position (index) within the array. Common approaches include linear search, where each element is checked sequentially, and binary search, which is more efficient but requires the array to be sorted first.

- Linear Search: Simple but inefficient for large arrays.

- Binary Search: Requires a sorted array but is significantly faster for large datasets.

    def linear_search(arr, target):
        for i in range(len(arr)):
            if arr[i] == target:
                return i  # Element found at index i
        return -1  # Element not found

9. What is sorting and why would we want to sort elements in an array?

Sorting is the process of arranging elements in a specific order (ascending or descending). We sort arrays for several reasons. Primarily, it makes searching for elements much faster and more efficient, especially with algorithms like binary search which requires a sorted array.

Beyond searching, sorting is crucial for many other tasks: displaying data in a user-friendly manner, preprocessing data for other algorithms, and identifying duplicates. It can also assist in tasks such as finding the median or mode of a dataset. Common sorting algorithms include:

- Bubble Sort

- Insertion Sort

- Merge Sort

- Quick Sort

10. Explain what a hash table is and how it helps us find things quickly.

A hash table (or hash map) is a data structure that stores key-value pairs. It uses a hash function to compute an index (a 'hash') into an array of buckets or slots, from which the desired value can be found.

The beauty of a hash table lies in its speed. Instead of searching through a list one item at a time (O(n) time), we can jump directly to the bucket where the value should be, assuming we know the key. Ideally, this gives us an average-case time complexity of O(1) for lookups, insertions, and deletions. Collisions (when different keys hash to the same index) can affect performance, but well-designed hash functions and collision resolution strategies (like chaining or open addressing) help minimize their impact.

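    # Example of a simple hash function (assumes integer keys)
    def hash_function(key, table_size):
        return key % table_size

    # Basic lookup operation. It checks only the key's home slot, so it
    # assumes no collisions; a real table would probe further or use chaining.
    # Each occupied slot holds a (key, value) pair; empty slots hold None.
    def hash_table_lookup(table, key):
        index = hash_function(key, len(table))
        if table[index] and table[index][0] == key:
            return table[index][1]  # Return the value
        else:
            return None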

11. How does a binary search work, and why is it faster than a regular search?

Binary search is an efficient algorithm for finding a specific element within a sorted list. It repeatedly divides the search interval in half. If the middle element matches the target, the search is successful. Otherwise, if the target is less than the middle element, the search continues in the left half; if the target is greater, the search continues in the right half. This halving process continues until the target is found or the interval is empty. For example, given a sorted array [2, 5, 7, 8, 11, 12], to search for 13, first compare it to the middle element 8. Because 13 > 8, the search continues in the right half [11, 12]. 13 is greater than both remaining elements, so the interval becomes empty and the search reports that 13 is not in the array.

Binary search is faster than a regular (linear) search because it eliminates half of the remaining search space with each comparison. Linear search, in the worst case, has to check every element in the list. Therefore, binary search has a time complexity of O(log n), while linear search has a time complexity of O(n). For large lists, this logarithmic difference makes binary search significantly faster.
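
A minimal iterative sketch of the halving process described above. The function name binary_search and the convention of returning -1 for a missing target are choices made for this example. The midpoint here rounds down, so the exact elements probed can differ slightly from a walkthrough that rounds up.

```python
def binary_search(arr, target):
    """Return the index of target in the sorted list arr, or -1 if absent."""
    low, high = 0, len(arr) - 1
    while low <= high:
        mid = (low + high) // 2  # middle of the current interval
        if arr[mid] == target:
            return mid
        if arr[mid] < target:
            low = mid + 1   # target can only be in the right half
        else:
            high = mid - 1  # target can only be in the left half
    return -1  # interval is empty: target is not present


sorted_values = [2, 5, 7, 8, 11, 12]
missing = binary_search(sorted_values, 13)  # -1
found = binary_search(sorted_values, 11)    # 4
```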

12. What are the advantages and disadvantages of using linked lists?

Advantages of linked lists include dynamic size allocation, meaning they can grow or shrink as needed during runtime. Insertion and deletion of elements are efficient, requiring only pointer adjustments without shifting elements in memory, unlike arrays. Memory is allocated and freed one node at a time, so the list never reserves capacity it does not use.

Disadvantages start with the pointers themselves: every node stores at least one, so a linked list uses more memory per element than an array holding the same data. Random access is not supported; you must traverse the list sequentially to reach a specific node, which results in slower access times compared to arrays.

13. How would you implement a queue using two stacks?

To implement a queue using two stacks, stack1 (for enqueue) and stack2 (for dequeue), the enqueue operation simply pushes the element onto stack1. For dequeue, if stack2 is empty, all elements from stack1 are popped and pushed onto stack2, reversing their order. The top element of stack2 is then popped and returned. If stack2 is not empty, its top element is simply popped and returned. This ensures FIFO order.

Here's a conceptual overview:

- Enqueue(x): Push x to stack1.

- Dequeue():

  - If stack2 is empty, transfer all elements from stack1 to stack2.

  - Pop and return the top element from stack2. If stack2 is still empty, return null/error as the queue is empty.
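
The two-stack approach can be sketched in Python using plain lists as stacks. The class name QueueWithTwoStacks and the choice to return None on an empty queue are assumptions made for this example.

```python
class QueueWithTwoStacks:
    """FIFO queue built from two LIFO stacks (Python lists)."""

    def __init__(self):
        self._stack1 = []  # receives newly enqueued items
        self._stack2 = []  # holds items in dequeue order

    def enqueue(self, item):
        self._stack1.append(item)

    def dequeue(self):
        # Refill stack2 only when it is empty; popping everything from
        # stack1 reverses the order, so the oldest item ends up on top.
        if not self._stack2:
            while self._stack1:
                self._stack2.append(self._stack1.pop())
        if not self._stack2:
            return None  # queue is empty
        return self._stack2.pop()


queue = QueueWithTwoStacks()
queue.enqueue("A")
queue.enqueue("B")
first = queue.dequeue()   # "A"
queue.enqueue("C")
second = queue.dequeue()  # "B"
```

Each element is moved between the stacks at most once, so dequeue runs in amortized O(1) time even though a single call can take O(n).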

14. Explain the concept of a 'Last-In, First-Out' (LIFO) data structure.

A Last-In, First-Out (LIFO) data structure, also known as a stack, operates on the principle that the most recently added item is the first one to be removed. Think of it like a stack of plates: you add new plates to the top, and when you need a plate, you take it from the top as well.

Common operations on a LIFO stack include:

- push: Adds an element to the top of the stack.

- pop: Removes and returns the element at the top of the stack.

- peek: Returns the element at the top of the stack without removing it.

- isEmpty: Checks if the stack is empty.

A simple implementation can be visualised:

    stack.push(1);
    stack.push(2);
    stack.pop(); // Returns 2

15. Explain the concept of a 'First-In, First-Out' (FIFO) data structure.

FIFO (First-In, First-Out) is a data structure where the first element added to the structure is the first element to be removed. Think of it like a queue or a line – the person who joins the queue first is the first to be served. The primary operations are enqueue (adding an element to the end) and dequeue (removing the element from the front).

Key characteristics of a FIFO data structure include that elements are processed in the order they arrive, which makes it suitable for tasks like managing print jobs or handling requests in a web server. Common implementations include using a linked list or an array with pointers to track the head and tail of the queue. For example, consider this sequence: add A, add B, add C, then remove. 'A' would be removed first, followed by 'B', and then 'C'.

16. How do you prevent stack overflow and underflow?

Stack overflow occurs when a program exceeds the call stack's memory limit, typically due to excessive recursion or large local variables. To prevent it:

- Use iterative solutions instead of recursion where possible.

- If recursion is necessary, ensure it has a clearly defined base case and reduces the problem size with each call to avoid infinite recursion.

- Limit the size of local variables within recursive functions.

- Increase the stack size (OS-dependent), but this is usually a workaround, not a solution.

Stack underflow, in the context of stack data structures, happens when you try to pop from an empty stack. To prevent it:

- Always check if the stack is empty before attempting to pop an element.

- Implement error handling or return a default value when underflow occurs.

17. How are data structures stored in memory?

Data structures are stored in memory as bytes, either in one contiguous block or in separate blocks connected by pointers. The specific arrangement depends on the data structure type and the programming language. Primitive data types like integers and floats are stored directly in memory locations, using a fixed number of bytes based on their type (e.g., an int might use 4 bytes).

For more complex data structures such as arrays, memory is allocated to hold all elements sequentially. For linked lists, each node contains the data and a pointer (memory address) to the next node, thus, elements are not stored contiguously. Structures and objects are stored as a sequence of their member variables, padded as necessary for memory alignment. Pointers are simply memory addresses that hold the location of other data. Memory management (allocation and deallocation) is handled either manually by the programmer (e.g., in C/C++) or automatically by a garbage collector (e.g., in Java, Python, Go).

18. What is the difference between a linear and a non-linear data structure?

Linear data structures arrange data elements in a sequential manner, where each element is connected to its predecessor and successor. Examples include arrays, linked lists, stacks, and queues. Operations like traversal are typically straightforward, following a linear path.

Non-linear data structures, in contrast, do not arrange data elements sequentially. Elements can have multiple connections to other elements, creating a hierarchical or network-like structure. Trees and graphs are common examples. These structures often allow for more complex relationships between data elements and can be more efficient for certain operations, but traversal and manipulation can also be more complex than with linear structures.

19. How can you improve the efficiency of searching in a data structure?

To improve search efficiency, consider the following:

- Choose the right data structure: For fast lookups, HashMaps (or dictionaries) offer O(1) average time complexity. If data is sorted, binary search on arrays or balanced trees (like AVL or Red-Black trees) provides O(log n) efficiency.

- Indexing: Creating indexes on frequently searched columns in databases significantly speeds up queries.

- Caching: Store frequently accessed data in a cache (e.g., using Redis or Memcached) to avoid repeated searches in the primary data store. This is especially helpful for immutable data.

- Algorithm Optimization: Improve search algorithms by short circuiting where possible and breaking when a satisfactory result is found, instead of continuing.

20. What are some common applications of queues in real-world scenarios?

Queues are fundamental data structures employed in various real-world applications due to their FIFO (First-In, First-Out) nature. A very common example is print spooling, where print jobs are added to a queue and processed in the order they are received. Operating systems use queues to manage processes waiting for CPU time or I/O resources. In call centers, incoming calls are placed in a queue until an agent becomes available.

Another set of use cases revolves around asynchronous communication and data processing. Message queues, like RabbitMQ or Kafka, enable decoupling of applications by queuing messages for later processing. Web servers often use queues to handle incoming requests. Queues are also used in breadth-first search (BFS) algorithms for traversing graphs and trees. They also can manage order processing in e-commerce systems.

21. How does recursion relate to the use of stacks in data structures?

Recursion relies heavily on the stack data structure. Each recursive call adds a new frame onto the stack. This frame contains information such as the function's arguments, local variables, and the return address (where execution should resume after the recursive call completes).

When a recursive function reaches its base case, the stack unwinds. Each frame is popped off the stack, and the return values are passed back up the call chain. The stack ensures that the program returns to the correct point in the calling function after each recursive call completes, maintaining the proper order of execution. Without a stack, tracking the state of nested recursive calls would be incredibly difficult, if not impossible, leading to incorrect program behavior.
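
To make the connection concrete, here is a small sketch that sums a nested list twice: once recursively, relying on the call stack, and once with an explicit stack that plays the same role. The function names and the nested-list task are chosen purely for illustration.

```python
def sum_nested_recursive(items):
    """Sum numbers in arbitrarily nested lists; the call stack tracks nesting."""
    total = 0
    for item in items:
        if isinstance(item, list):
            total += sum_nested_recursive(item)  # new frame pushed here
        else:
            total += item
    return total


def sum_nested_iterative(items):
    """Same result, but pending sublists are kept on an explicit stack."""
    total = 0
    stack = [items]
    while stack:
        current = stack.pop()
        for item in current:
            if isinstance(item, list):
                stack.append(item)  # defer the sublist, like a pending call
            else:
                total += item
    return total


data = [1, [2, 3, [4]], 5]
recursive_total = sum_nested_recursive(data)  # 15
iterative_total = sum_nested_iterative(data)  # 15
```

The iterative version is not limited by the interpreter's recursion depth, which is one reason deep recursion is sometimes rewritten this way.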

22. What does Big O notation mean and how does it relate to the efficiency of data structures?

Big O notation is a way to classify the efficiency of algorithms. It describes how the runtime or memory usage of an algorithm grows as the input size grows. Specifically, it gives an upper bound on the growth rate and is most often quoted for the worst case, although average-case costs are expressed in the same notation. For example, O(n) means the time or space required grows linearly with the input size 'n', while O(1) means it remains constant regardless of 'n'.

Big O notation directly relates to the efficiency of data structures because the choice of data structure impacts the performance of operations performed on it. Different data structures have different Big O complexities for common operations like insertion, deletion, searching, etc. Choosing a data structure with lower Big O complexity for frequently used operations can significantly improve overall efficiency. For instance, searching in a hash table is on average O(1), while searching in an unsorted array is O(n). Understanding Big O helps in selecting the optimal data structure for a given task.

Here are some examples:

- Array Access: O(1)

- Linked List Insertion (at head): O(1)

- Searching a Sorted Array (Binary Search): O(log n)

- Searching an Unsorted Array (Linear Search): O(n)

- Sorting an Array (Merge Sort): O(n log n)

23. When would you use a hash table over other data structures for searching?

A hash table is ideal for searching when you need very fast average-case performance (O(1)) for lookups, insertions, and deletions, and when the order of elements doesn't matter. It excels when you need to frequently check for the presence of a specific key within a large dataset.

Specifically, consider using a hash table over other structures like:

- Arrays/Lists: When searching is frequent and you want to avoid O(n) linear search.

- Binary Search Trees: Hash tables generally offer faster average-case performance than BSTs (O(log n)), although BSTs maintain sorted order, which hash tables do not. Use BSTs when order matters or you need range queries.

- Treesets or other sorted sets: Use a treeset when order matters or you need min/max/range queries.

24. Explain how you would reverse a linked list.

To reverse a linked list, you iterate through the list, changing the next pointer of each node to point to the previous node. You'll need three pointers: prev, current, and next. Initially, prev is null, current points to the head of the list, and next will temporarily store the next node in the original list.

In each iteration:

- Store the next node in next (next = current.next).

- Reverse the current node's next pointer to point to the previous node (current.next = prev).

- Move prev to the current node (prev = current).

- Move current to the next node (current = next).

After the loop finishes (when current is null), prev will be the new head of the reversed list. Return prev.
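
The three-pointer procedure can be sketched in Python as follows. The ListNode class and the to_list helper are minimal stand-ins defined for this example, and next_node is used instead of next to avoid shadowing Python's built-in function.

```python
class ListNode:
    """Singly linked list node (minimal, for illustration)."""

    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def reverse_list(head):
    """Reverse the list in place and return the new head."""
    prev = None
    current = head
    while current is not None:
        next_node = current.next  # remember the rest of the list
        current.next = prev       # point this node backwards
        prev = current            # advance prev
        current = next_node       # advance current
    return prev


def to_list(head):
    """Collect node values into a Python list for easy inspection."""
    values = []
    while head is not None:
        values.append(head.value)
        head = head.next
    return values


head = ListNode(1, ListNode(2, ListNode(3)))
reversed_values = to_list(reverse_list(head))  # [3, 2, 1]
```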

25. Describe how you would detect a loop in a linked list.

To detect a loop in a linked list, I would use Floyd's cycle-finding algorithm, also known as the "tortoise and hare" algorithm. It involves using two pointers: a 'slow' pointer that moves one node at a time and a 'fast' pointer that moves two nodes at a time. If there's a loop, the fast pointer will eventually catch up to the slow pointer.

The algorithm works as follows:

- Initialize both slow and fast pointers to the head of the linked list.

- Iterate through the list. In each iteration, move the slow pointer one step and the fast pointer two steps.

- If the fast pointer becomes NULL or the next node of the fast pointer is NULL, it means there is no loop in the linked list, and the algorithm terminates.

- If the slow pointer and fast pointer meet at any point, it means there is a loop in the linked list.

    bool hasCycle(ListNode *head) {
        if (head == nullptr) return false;
        ListNode *slow = head;
        ListNode *fast = head;
        while (fast != nullptr && fast->next != nullptr) {
            slow = slow->next;
            fast = fast->next->next;
            if (slow == fast) return true;
        }
        return false;
    }

26. How do you deal with collisions in hash tables?

Collisions in hash tables occur when different keys produce the same hash value, thus mapping to the same index in the underlying array. There are several techniques to handle this. Two common methods are separate chaining and open addressing.

- Separate Chaining: Each index in the hash table points to a linked list (or other data structure) of key-value pairs. When a collision occurs, the new key-value pair is simply added to the linked list at that index. Retrieval involves searching the linked list at the index.

- Open Addressing: When a collision occurs, the algorithm probes for an empty slot in the hash table. Common probing techniques include linear probing (incrementing the index by 1), quadratic probing (incrementing the index by a quadratic function), and double hashing (using a second hash function to determine the probe interval). For example, (hash(key) + i) % table_size for linear probing, where 'i' is the probe number.
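
As a rough illustration of separate chaining, the sketch below stores each bucket as a Python list of (key, value) pairs rather than a hand-built linked list. The class name ChainedHashTable, the default bucket count, and the use of Python's built-in hash() are all assumptions for this example.

```python
class ChainedHashTable:
    """Hash table that resolves collisions by chaining within each bucket."""

    def __init__(self, bucket_count=8):
        self._buckets = [[] for _ in range(bucket_count)]

    def _bucket_for(self, key):
        # Map the key's hash onto one of the buckets.
        return self._buckets[hash(key) % len(self._buckets)]

    def put(self, key, value):
        bucket = self._bucket_for(key)
        for i, (existing_key, _) in enumerate(bucket):
            if existing_key == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))  # a collision just extends the chain

    def get(self, key, default=None):
        # Walk the chain for this bucket looking for the key.
        for existing_key, value in self._bucket_for(key):
            if existing_key == key:
                return value
        return default


# A single bucket forces every key to collide, yet lookups still work.
table = ChainedHashTable(bucket_count=1)
table.put("apple", 1)
table.put("banana", 2)
```

A production table would also resize once the chains grow long; that step is left out here to keep the sketch short.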

Intermediate Data Structures interview questions

1. How do you efficiently find the median in a stream of unsorted numbers?

To efficiently find the median in a stream of unsorted numbers, we can use two heaps: a max-heap to store the smaller half of the numbers and a min-heap to store the larger half. The max-heap's root will be the largest element in the smaller half, and the min-heap's root will be the smallest element in the larger half. We maintain a balance such that the sizes of the heaps differ by at most 1.

When a new number arrives, we insert it into the appropriate heap. If the number is smaller than the root of the max-heap, it goes into the max-heap; otherwise, it goes into the min-heap. After insertion, we rebalance the heaps to maintain the size constraint. If the max-heap becomes larger than the min-heap by more than 1, we move the root of the max-heap to the min-heap. Conversely, if the min-heap becomes larger than the max-heap by more than 1, we move the root of the min-heap to the max-heap. The median can then be found in O(1) time by averaging the roots of the heaps if they have the same size, or simply taking the root of the larger heap if their sizes differ by 1.
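
A possible Python sketch of the two-heap approach follows. Python's heapq module only provides a min-heap, so the max-heap is simulated by storing negated values; the class name RunningMedian is hypothetical.

```python
import heapq


class RunningMedian:
    """Tracks the median of a stream using a max-heap and a min-heap."""

    def __init__(self):
        self._low = []   # max-heap of the smaller half (values negated)
        self._high = []  # min-heap of the larger half

    def add(self, number):
        # Route the number to the half it belongs to.
        if not self._low or number <= -self._low[0]:
            heapq.heappush(self._low, -number)
        else:
            heapq.heappush(self._high, number)
        # Rebalance so the sizes differ by at most 1.
        if len(self._low) > len(self._high) + 1:
            heapq.heappush(self._high, -heapq.heappop(self._low))
        elif len(self._high) > len(self._low) + 1:
            heapq.heappush(self._low, -heapq.heappop(self._high))

    def median(self):
        if not self._low and not self._high:
            raise ValueError("no numbers added yet")
        if len(self._low) == len(self._high):
            return (-self._low[0] + self._high[0]) / 2
        if len(self._low) > len(self._high):
            return -self._low[0]
        return self._high[0]


stream = RunningMedian()
for value in [5, 2, 8]:
    stream.add(value)
middle = stream.median()  # 5
```

Each insertion costs O(log n) because of the heap operations, while reading the median stays O(1).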

2. Explain how a Bloom filter works and what its use cases are. What are its limitations?

A Bloom filter is a probabilistic data structure used to test whether an element is a member of a set. It works by hashing each element using multiple hash functions, and setting bits in a bit array at the positions indicated by the hash functions. To check if an element is in the set, hash the element again and check if all the corresponding bits in the bit array are set. If any of the bits are not set, the element is definitely not in the set. If all bits are set, the element is probably in the set. Bloom filters can return false positives (reporting an element is in the set when it is not), but never false negatives.

Common use cases include: checking if a username is available before registration, filtering URLs in web crawlers to avoid revisiting already crawled pages, and speeding up database queries by checking if a key exists before querying the database. The limitations are: the possibility of false positives, elements cannot be removed once added (without significantly impacting accuracy and potentially introducing false negatives), and the need to know the approximate number of elements to be stored in advance in order to choose an appropriate bit array size and number of hash functions. The standard sizing formulas are m = -(n * ln(p)) / (ln(2))^2 and k = (m / n) * ln(2), where n is the number of expected items, p is the desired false positive rate, m is the number of bits, and k is the number of hash functions.
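
Here is a small Python sketch of a Bloom filter sized from the expected item count and target false positive rate. Several choices are assumptions made for readability: the k hash functions are derived by salting SHA-256 with a seed, items are converted with str(), and one byte is used per bit instead of a packed bit array.

```python
import hashlib
import math


class BloomFilter:
    """Illustrative Bloom filter; not tuned for speed or memory."""

    def __init__(self, expected_items, false_positive_rate):
        n, p = expected_items, false_positive_rate
        # m: number of bits, k: number of hash functions.
        self.m = max(1, math.ceil(-n * math.log(p) / (math.log(2) ** 2)))
        self.k = max(1, round(self.m / n * math.log(2)))
        self._bits = bytearray(self.m)  # one byte per bit, for clarity

    def _positions(self, item):
        data = str(item).encode("utf-8")
        for seed in range(self.k):
            # Salting with the seed yields k different hash values.
            digest = hashlib.sha256(seed.to_bytes(4, "big") + data).digest()
            yield int.from_bytes(digest[:8], "big") % self.m

    def add(self, item):
        for position in self._positions(item):
            self._bits[position] = 1

    def might_contain(self, item):
        # False means definitely absent; True means probably present.
        return all(self._bits[position] for position in self._positions(item))


seen_urls = BloomFilter(expected_items=100, false_positive_rate=0.01)
seen_urls.add("https://example.com/a")
```

Because any set bit may have been set by a different item, might_contain can occasionally return True for something never added, but it never returns False for something that was.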

3. Describe how you would implement a Least Recently Used (LRU) cache.

An LRU cache can be implemented using a combination of a hash map and a doubly linked list. The hash map allows for O(1) average-case time complexity for get and put operations, while the doubly linked list maintains the order of keys based on their usage. When a key is accessed (either through get or put), it's moved to the head of the list. When the cache reaches its capacity, the least recently used key (the one at the tail of the list) is evicted.

Specifically:

- The hash map stores key-value pairs, where the value is a pointer to the corresponding node in the doubly linked list.

- The doubly linked list stores the keys in the order of their usage, from most recently used (head) to least recently used (tail).

- get(key): If the key exists, move the node to the head of the list and return the value. Otherwise, return null.

- put(key, value): If the key exists, update the value and move the node to the head. Otherwise, create a new node, add it to the head, and add the key-node pair to the hash map. If the cache is full, remove the tail node from the list and the corresponding entry from the hash map.
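
One way to sketch this design in Python is shown below. The sentinel head and tail nodes are an implementation convenience added for this example (they remove the edge cases around an empty list), and the class and method names are illustrative.

```python
class _Node:
    """Doubly linked list node holding one cache entry."""

    def __init__(self, key=None, value=None):
        self.key = key
        self.value = value
        self.prev = None
        self.next = None


class LRUCache:
    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._nodes = {}      # key -> node
        self._head = _Node()  # sentinel: head.next is most recently used
        self._tail = _Node()  # sentinel: tail.prev is least recently used
        self._head.next = self._tail
        self._tail.prev = self._head

    def _unlink(self, node):
        node.prev.next = node.next
        node.next.prev = node.prev

    def _push_front(self, node):
        node.prev = self._head
        node.next = self._head.next
        self._head.next.prev = node
        self._head.next = node

    def get(self, key):
        node = self._nodes.get(key)
        if node is None:
            return None
        # Accessing a key makes it the most recently used.
        self._unlink(node)
        self._push_front(node)
        return node.value

    def put(self, key, value):
        node = self._nodes.get(key)
        if node is not None:
            node.value = value
            self._unlink(node)
            self._push_front(node)
            return
        if len(self._nodes) >= self._capacity:
            # Evict the least recently used entry before inserting.
            lru = self._tail.prev
            self._unlink(lru)
            del self._nodes[lru.key]
        node = _Node(key, value)
        self._nodes[key] = node
        self._push_front(node)


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" becomes most recently used
cache.put("c", 3)  # evicts "b"
```

In everyday Python code, collections.OrderedDict or functools.lru_cache would usually be used instead of a hand-written list.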

4. How do you detect a cycle in a directed graph?

A cycle in a directed graph can be detected using Depth First Search (DFS). The key idea is to track the nodes currently in the recursion stack (or the current path being explored). If we encounter a node already present in the recursion stack during DFS traversal, it implies the existence of a cycle.

Here's a breakdown of the approach:

- Maintain a visited array to track visited nodes.

- Maintain a recursionStack (or currentlyInPath) array to track nodes in the current DFS path.

- During DFS:

  - Mark the current node as visited and add it to the recursionStack.

  - For each neighbor of the current node:

    - If the neighbor is not visited, recursively call DFS on the neighbor.

    - If the neighbor is already in the recursionStack, a cycle is detected. Return true.

  - After exploring all neighbors, remove the current node from the recursionStack (backtrack) and return false if no cycle was found.
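
The DFS approach might look like this in Python. The graph is assumed to be a dictionary mapping each node to a list of its outgoing neighbors, and sets are used in place of the arrays mentioned above.

```python
def has_cycle(graph):
    """Return True if the directed graph (dict: node -> neighbors) has a cycle."""
    visited = set()
    in_path = set()  # nodes on the current DFS path (the recursion stack)

    def dfs(node):
        visited.add(node)
        in_path.add(node)
        for neighbor in graph.get(node, []):
            if neighbor in in_path:
                return True  # back edge to a node on the current path
            if neighbor not in visited and dfs(neighbor):
                return True  # a cycle was found deeper in the search
        in_path.remove(node)  # backtrack
        return False

    # Start a DFS from every node not yet reached, so that
    # disconnected parts of the graph are covered as well.
    return any(node not in visited and dfs(node) for node in graph)


cyclic = {"a": ["b"], "b": ["c"], "c": ["a"]}
acyclic = {"a": ["b", "c"], "b": ["c"], "c": []}
```

Note that a node reachable by two different paths, as "c" is in the acyclic example, does not count as a cycle, which is why the visited set alone is not enough.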

5. Explain the difference between a B-tree and a binary search tree. When would you use one over the other?

A binary search tree (BST) has at most two children per node, whereas a B-tree can have many children per node (typically far more than two). BSTs aim for O(log n) time complexity for search, insertion, and deletion in the average case, but can degrade to O(n) in the worst case (e.g., a skewed tree). B-trees, on the other hand, are self-balancing and remain balanced through insertions and deletions, guaranteeing O(log n) time for these operations, with a larger logarithm base because each node has many children. They are also optimized for disk access: a B-tree keeps many keys in each node, which reduces the height of the tree and therefore the number of disk accesses.

You would generally use a B-tree when dealing with large amounts of data stored on disk because their structure minimizes the number of disk I/O operations needed to find elements. Databases often use B-trees (or variants like B+ trees). A BST is suitable for in-memory data where the data volume is relatively small and maintaining a perfectly balanced tree is not a critical requirement, or where the insertion and deletion operations don't cause significant skewing of the tree.

6. How would you implement a trie data structure and what operations would it support?

A trie (also known as a prefix tree) is a tree-like data structure used for storing a dynamic set of strings. Each node in a trie represents a prefix of a string. The root node represents an empty string. Each node can have multiple child nodes, each representing a different character. Strings are stored by traversing down a branch of the trie, character by character. A common implementation uses a map (or array if the alphabet is small and known) to store children, keyed by character.

Typical operations supported by a trie include:

- Insert(string): Adds a string to the trie.

- Search(string): Checks if a string is present in the trie.

- StartsWith(prefix): Checks if there is any string in the trie that starts with the given prefix.

- Delete(string): Removes a string from the trie.

A basic Trie node can be represented in code as follows:

    class TrieNode:
        def __init__(self):
            self.children = {}
            self.is_end_of_word = False
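
Building on that node, the insert, search, and prefix operations could be sketched as follows. The Trie class, its method names, and the _walk helper are illustrative; delete is omitted to keep the example short.

```python
class TrieNode:
    def __init__(self):
        self.children = {}           # character -> TrieNode
        self.is_end_of_word = False  # True if a stored word ends here


class Trie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, word):
        node = self.root
        for ch in word:
            if ch not in node.children:
                node.children[ch] = TrieNode()
            node = node.children[ch]
        node.is_end_of_word = True

    def _walk(self, prefix):
        """Follow prefix from the root; return the final node or None."""
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return None
        return node

    def search(self, word):
        node = self._walk(word)
        return node is not None and node.is_end_of_word

    def starts_with(self, prefix):
        return self._walk(prefix) is not None


trie = Trie()
trie.insert("car")
trie.insert("cart")
```

Both lookups take time proportional to the length of the string, regardless of how many words the trie holds.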

7. Describe the difference between a disjoint set and a union-find data structure, and how can you implement each of these?

A disjoint set is an abstract data type that represents a collection of sets where no two sets share any elements. It supports two main operations: find(x), which determines which set an element x belongs to, and union(x, y), which merges the sets containing elements x and y. It describes what you want to do. On the other hand, a union-find data structure is a concrete data structure that implements the disjoint set abstract data type. It describes how to do it.

Common implementations of the union-find data structure use either a list-based or a tree-based approach. List-based (quick-find) implementations answer find in O(1) but need O(n) time for union, because every member of one set must be relabeled. Tree-based implementations make union a single pointer update once the two roots are known, while the cost of find depends on the height of the tree. Path compression and union by rank are two common optimizations for the tree-based approach; together they keep the trees very shallow. Here is an example of using the list implementation in Python:

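```python
class DisjointSet:
    def __init__(self, elements):
        # Map every element to the ID of the set it currently belongs to.
        self.sets = {element: i for i, element in enumerate(elements)}

    def find(self, element):
        # The set ID is stored directly, so this is a single lookup.
        return self.sets[element]

    def union(self, element1, element2):
        set1 = self.find(element1)
        set2 = self.find(element2)
        if set1 != set2:
            # Relabel every member of set2 as a member of set1.
            for element, set_id in self.sets.items():
                if set_id == set2:
                    self.sets[element] = set1
```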
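For comparison, a tree-based version with the two optimizations mentioned above (path compression and union by rank) might look like the sketch below. The class name `UnionFind` and the boolean return value of `union` are assumptions chosen for the example.

```python
class UnionFind:
    def __init__(self, elements):
        self.parent = {e: e for e in elements}  # every element starts as its own root
        self.rank = {e: 0 for e in elements}    # upper bound on each tree's height

    def find(self, x):
        # First pass: walk up to the root of x's tree.
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Second pass (path compression): point every visited node at the root.
        while self.parent[x] != root:
            next_node = self.parent[x]
            self.parent[x] = root
            x = next_node
        return root

    def union(self, a, b):
        """Merge the sets containing a and b; return False if already merged."""
        root_a, root_b = self.find(a), self.find(b)
        if root_a == root_b:
            return False
        # Union by rank: attach the shallower tree under the deeper one.
        if self.rank[root_a] < self.rank[root_b]:
            root_a, root_b = root_b, root_a
        self.parent[root_b] = root_a
        if self.rank[root_a] == self.rank[root_b]:
            self.rank[root_a] += 1
        return True
```

Here `find` returns a representative element rather than a numeric set ID; two elements are in the same set exactly when their representatives are equal.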

8. How do you find the shortest path between two nodes in a weighted graph?

Dijkstra's algorithm is a common and efficient method for finding the shortest path between two nodes in a weighted graph, especially when all edge weights are non-negative. It works by iteratively exploring nodes from a starting node, maintaining a set of visited nodes and a distance estimate for each node. The algorithm selects the unvisited node with the smallest distance estimate, updates the distance estimates of its neighbors, and adds the current node to the visited set. This process continues until the destination node is reached or all reachable nodes have been visited.

The Bellman-Ford algorithm is an alternative that can handle graphs with negative edge weights, but it is less efficient than Dijkstra's for non-negative weights. The A* search algorithm is another option that uses a heuristic to guide the search, potentially improving performance compared to Dijkstra's, particularly in large graphs, but it is only guaranteed to return a shortest path when the heuristic is admissible, meaning it never overestimates the remaining cost to the destination.
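As a rough illustration of Dijkstra's algorithm as described above, the sketch below uses Python's `heapq` module as the priority queue. The adjacency-list format (a dict mapping each node to a list of `(neighbor, weight)` pairs) and the function name `dijkstra` are assumptions for the example; node labels are assumed to be mutually comparable (for instance, all strings) because they act as tie-breakers in the heap.

```python
import heapq


def dijkstra(graph, source, target):
    """Return (distance, path) from source to target; weights must be non-negative."""
    dist = {source: 0}
    prev = {}                      # prev[n] is the node before n on the best known path
    heap = [(0, source)]           # (distance estimate, node)
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue               # stale heap entry; a shorter route was already used
        visited.add(node)
        if node == target:
            break
        for neighbor, weight in graph.get(node, []):
            new_dist = d + weight
            if new_dist < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_dist
                prev[neighbor] = node
                heapq.heappush(heap, (new_dist, neighbor))
    if target not in dist:
        return float("inf"), []    # target is unreachable
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]
```

Instead of decreasing a key in place, this version pushes a new heap entry and skips outdated ones when they are popped, which is a common simplification with `heapq`.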

9. Explain how a skip list works. What are the advantages of using a skip list over other data structures?

A skip list is a probabilistic data structure that uses multiple levels of linked lists to allow efficient searching, insertion, and deletion operations. It works by having a base list (level 0) that contains all the elements, and then successively higher levels where each element appears with a certain probability (typically 1/2 or 1/4). Searching starts at the highest level and moves forward while the next node's key is less than the target key. When the next key is greater than or equal to the target, or the level ends, the search drops down a level and continues. This repeats until the bottom level is reached, where the next node either holds the target or the target is absent.

Skip lists offer several advantages. They provide an average time complexity of O(log n) for search, insertion, and deletion, which is comparable to balanced trees like AVL trees or red-black trees. However, skip lists are generally simpler to implement than balanced trees. They also offer more efficient insertion and deletion operations compared to sorted arrays (which require shifting elements). Furthermore, skip lists are inherently probabilistic, meaning their shape is determined by random coin flips, so they don't require complex rebalancing operations like self-balancing trees do. The worst case is O(n), but it is extremely unlikely for any input, because it depends on the random level choices rather than on the order in which keys are inserted.
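A compact sketch of search and insertion may make the level structure more concrete. The class names, the cap of 16 levels, and the promotion probability of 1/2 are assumptions picked for the example; deletion is omitted.

```python
import random


class SkipNode:
    def __init__(self, key, level):
        self.key = key
        self.forward = [None] * level   # forward[i] is the next node on level i


class SkipList:
    MAX_LEVEL = 16   # assumed cap on the number of levels
    P = 0.5          # assumed probability of promoting a node one level up

    def __init__(self):
        self.head = SkipNode(None, self.MAX_LEVEL)   # sentinel that holds no key
        self.level = 1                               # number of levels in use

    def _random_level(self):
        level = 1
        while random.random() < self.P and level < self.MAX_LEVEL:
            level += 1
        return level

    def search(self, key):
        node = self.head
        for i in range(self.level - 1, -1, -1):
            # Move right while the next key is still smaller, then drop a level.
            while node.forward[i] is not None and node.forward[i].key < key:
                node = node.forward[i]
        node = node.forward[0]
        return node is not None and node.key == key

    def insert(self, key):
        # update[i] is the last node on level i that precedes the new key.
        update = [self.head] * self.MAX_LEVEL
        node = self.head
        for i in range(self.level - 1, -1, -1):
            while node.forward[i] is not None and node.forward[i].key < key:
                node = node.forward[i]
            update[i] = node
        level = self._random_level()
        self.level = max(self.level, level)
        new_node = SkipNode(key, level)
        for i in range(level):
            # Splice the new node into the linked list on each of its levels.
            new_node.forward[i] = update[i].forward[i]
            update[i].forward[i] = new_node
```

Whatever levels the random choices produce, level 0 always holds every key in sorted order; the higher levels only affect how quickly a search gets there.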

10. How do you efficiently implement a stack using only queues?

To efficiently implement a stack using only queues, you typically need two queues. The core idea is to ensure that only one queue holds the actual stack elements at any given time, while the other acts as a temporary storage.

For the push operation, simply enqueue the new element into the non-empty queue (or the first queue if both are empty). For the pop operation, dequeue all elements from the main queue except the last one, enqueuing each into the auxiliary queue. Then, dequeue the last element from the main queue (which is the element to be popped). Swap the names (or references) of the two queues, so the queue that acted as auxiliary now becomes the main queue holding the stack elements. This process effectively reverses the order to simulate stack-like behavior (LIFO) using queues (FIFO).
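The two-queue scheme described above can be sketched as follows, using `collections.deque` restricted to queue operations (`append` to enqueue, `popleft` to dequeue). The class name `StackFromQueues` is an assumption for the example.

```python
from collections import deque


class StackFromQueues:
    def __init__(self):
        self.main = deque()   # holds all stack elements between operations
        self.aux = deque()    # temporary storage used during pop

    def push(self, item):
        self.main.append(item)                    # enqueue, O(1)

    def pop(self):
        if not self.main:
            raise IndexError("pop from empty stack")
        # Move everything except the most recently pushed element.
        while len(self.main) > 1:
            self.aux.append(self.main.popleft())
        item = self.main.popleft()                # the last element pushed
        # Swap roles so that main holds the remaining elements again.
        self.main, self.aux = self.aux, self.main
        return item

    def is_empty(self):
        return not self.main
```

This variant makes push O(1) and pop O(n). The opposite trade-off (O(n) push, O(1) pop) is also possible by rotating the queue on every push.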

11. Describe the concept of self-balancing trees. Explain the purpose and mechanism.

Self-balancing trees are tree data structures that automatically adjust their structure to maintain a balanced height. This balance ensures that operations like search, insertion, and deletion have a guaranteed time complexity of O(log n), where n is the number of nodes. Without self-balancing, a tree can become skewed, leading to a worst-case time complexity of O(n) for these operations.

The mechanism involves rotations and other restructuring operations (e.g., AVL trees use rotations based on balance factors, red-black trees use color flips and rotations) that are triggered when an insertion or deletion causes the tree to become unbalanced. These operations reorganize the tree locally to restore the balance properties, maintaining the logarithmic time complexity. Examples include AVL trees, Red-Black trees, and B-trees. Each type uses different algorithms to maintain balance after insert and delete operations.

12. What are the different ways to implement a priority queue? What are the pros and cons of each approach?

Several ways to implement a priority queue exist, each with its own trade-offs. A straightforward approach is using an unordered array or list. Insertion is fast (O(1)), but finding and removing the highest priority element requires a linear search (O(n)). Another option is a sorted array or list. Here, finding the highest priority element is quick (O(1)), but insertion becomes O(n) as elements need to be shifted to maintain the sorted order.

A more efficient implementation uses a binary heap. A binary heap offers O(log n) time complexity for both insertion and deletion of the highest priority element. This makes it a good general-purpose choice. Finally, for workloads that perform many decrease-key operations, more advanced data structures like a Fibonacci heap can offer amortized O(1) insertion and decrease-key with amortized O(log n) deletion, but they are more complex to implement and are often slower in practice because of larger constant factors. Another specialized approach is a bucket queue, which can offer O(1) insertion and deletion under specific constraints where the priority values fall within a bounded range.

13. How would you design a data structure to support range queries (e.g., find the sum of elements within a given range) efficiently?

For efficient range queries (like sum, min, max within a range), a Segment Tree or a Fenwick Tree (Binary Indexed Tree) are good choices. A Segment Tree allows for more complex operations and modifications, but requires more space and can be slightly more complex to implement. A Fenwick Tree excels in space efficiency and simpler implementation, but it primarily supports cumulative queries and updates, which can be adapted for range queries.

For the sum of elements within a given range [L, R], using a Fenwick Tree, you can calculate sum(R) - sum(L-1), where sum(i) represents the cumulative sum from index 0 to i. A Segment Tree can directly store the sum for each segment, allowing you to combine the sums of relevant segments to answer the query in O(log n) time. Code Example (Segment Tree - Sum Query):

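```python
def query(tree, node, start, end, l, r):
    # node covers [start, end]; its children are stored at 2*node and 2*node + 1.
    if r < start or end < l:
        return 0             # no overlap with [l, r]
    if l <= start and end <= r:
        return tree[node]    # segment lies entirely inside [l, r]
    mid = (start + end) // 2
    p1 = query(tree, 2 * node, start, mid, l, r)
    p2 = query(tree, 2 * node + 1, mid + 1, end, l, r)
    return p1 + p2
```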
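The query function needs a tree to work on. A possible companion sketch for building and updating that tree is shown below, assuming the same layout (root at index 1, children at `2 * node` and `2 * node + 1`) and an array of size `4 * n`, which is a commonly used safe upper bound. The `build` and `update` helpers and the sample values are illustrative additions; `query` is repeated so the block runs on its own.

```python
def build(tree, values, node, start, end):
    if start == end:
        tree[node] = values[start]          # leaf: a single array element
        return
    mid = (start + end) // 2
    build(tree, values, 2 * node, start, mid)
    build(tree, values, 2 * node + 1, mid + 1, end)
    tree[node] = tree[2 * node] + tree[2 * node + 1]


def update(tree, node, start, end, index, value):
    """Set values[index] = value and refresh the sums on the path to the root."""
    if start == end:
        tree[node] = value
        return
    mid = (start + end) // 2
    if index <= mid:
        update(tree, 2 * node, start, mid, index, value)
    else:
        update(tree, 2 * node + 1, mid + 1, end, index, value)
    tree[node] = tree[2 * node] + tree[2 * node + 1]


def query(tree, node, start, end, l, r):
    if r < start or end < l:
        return 0
    if l <= start and end <= r:
        return tree[node]
    mid = (start + end) // 2
    return (query(tree, 2 * node, start, mid, l, r)
            + query(tree, 2 * node + 1, mid + 1, end, l, r))


values = [2, 4, 5, 7, 8, 9]
tree = [0] * (4 * len(values))
build(tree, values, 1, 0, len(values) - 1)
total = query(tree, 1, 0, len(values) - 1, 1, 3)   # sums values[1..3] = 4 + 5 + 7
```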

14. How can you serialize and deserialize a binary tree?

Serialization converts a tree into a linear representation (like a string), and deserialization reconstructs the tree from that representation. One common approach is using a pre-order traversal. During serialization, traverse the tree in pre-order (root, left, right). Store the value of each node and use a special marker (like # or null) to represent null nodes. The serialized output could be a comma-separated string of node values and null markers. For example, a tree with root 1, left child 2, and right child 3 (and no further children) could be serialized as "1,2,#,#,3,#,#".

For deserialization, read the serialized string, splitting it into an array or list based on the delimiter. Use a recursive function. The first element represents the root. If it's not the null marker, create a new node with that value and recursively call the function to build the left and right subtrees. If it is the null marker, return null. The index into the array advances by one with each call. A queue can also be used to avoid recursion: it holds the nodes whose children are still to be attached, an approach that pairs naturally with a level-order (BFS) serialization.
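The pre-order scheme could be sketched like this. The `TreeNode` class is a hypothetical minimal node type, and the sketch assumes node values are integers so that they can be parsed back with `int`.

```python
class TreeNode:
    def __init__(self, val, left=None, right=None):
        self.val = val
        self.left = left
        self.right = right


def serialize(root):
    parts = []

    def visit(node):
        if node is None:
            parts.append("#")          # marker for a missing child
            return
        parts.append(str(node.val))    # root first, then left, then right
        visit(node.left)
        visit(node.right)

    visit(root)
    return ",".join(parts)


def deserialize(data):
    tokens = iter(data.split(","))     # the iterator plays the role of the index

    def build():
        token = next(tokens)
        if token == "#":
            return None
        node = TreeNode(int(token))
        node.left = build()            # consumes the whole left subtree
        node.right = build()
        return node

    return build()
```

Serializing the three-node tree from the example above yields "1,2,#,#,3,#,#", and deserializing that string rebuilds the same shape.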

15. Explain the concept of a segment tree and its applications.

A segment tree is a tree data structure used for efficiently performing range queries on an array. Each node in the tree represents an interval, and the root represents the entire array. Leaf nodes represent individual array elements. Internal nodes store aggregated information (e.g., sum, min, max) about the interval they represent, which is derived from the information stored in their children.

Segment trees have various applications, including:

- Range Queries: Finding the sum, minimum, maximum, or other aggregate functions within a specific range of indices in an array. For example, sumRange(l, r) and minRange(l, r) run in O(log n) time.

- Range Updates: Updating a range of values in the array efficiently. For example, adding a value to all elements in a given range. With lazy propagation, such operations also take O(log n) time.

- Finding the smallest element in a range: A segment tree can be used to find the smallest element in a range in logarithmic time.

- Other applications: They can be adapted for various other operations that involve dividing a problem into smaller subproblems, like computational geometry problems and dynamic programming optimization.

16. Describe how you would implement a min-max heap.

A min-max heap is a complete binary tree where each level alternates between being a min level and a max level. The root is always a min node. Implementation involves ensuring that elements at min levels are smaller than their descendants, and elements at max levels are larger than their descendants.

Insertion and deletion operations require maintaining this min-max property. After insertion, the new node may need to be 'bubbled up' - comparing it with its parent and grandparent repeatedly. For deletion of the minimum element, we replace it with the last element, then 'trickle down' the element, comparing it with its children and grandchildren to restore the min-max heap property. Similarly, deleting the maximum element involves similar considerations. Arrays or ArrayList can be used as the underlying data structure.

17. Given a large dataset that doesn't fit in memory, how would you sort it?

For sorting a large dataset that doesn't fit in memory, external merge sort is a suitable algorithm. The basic idea is to:

- Divide and Conquer: Split the large dataset into smaller chunks that can fit into memory.

- Sort Chunks: Load each chunk into memory, sort it using an efficient in-memory sorting algorithm (e.g., quicksort or mergesort), and write the sorted chunk back to disk as a temporary file.

- Merge: Merge the sorted chunks into a single sorted file. This is done iteratively, reading a small portion of each sorted chunk into memory, merging them, and writing the result to a new (larger) sorted file. This merging process can be done in multiple passes, depending on the number of chunks and available memory. Optimized implementations often use a k-way merge, where 'k' is the number of chunks being merged simultaneously. This reduces the number of passes.

This approach minimizes memory usage by processing data in smaller, manageable chunks and leverages disk storage for intermediate results. Careful consideration should be given to block sizes to optimize I/O operations.
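The steps above might be sketched as follows for a text file holding one integer per line. The function name `external_sort`, the `chunk_lines` parameter, and the file format are assumptions for the example; `heapq.merge` performs the k-way merge lazily, so only one line per run needs to be in memory at a time.

```python
import heapq
import itertools
import os
import tempfile


def external_sort(input_path, output_path, chunk_lines=100_000):
    """Sort a file of one integer per line while holding only chunk_lines values at once."""
    run_paths = []
    try:
        # Step 1 and 2: cut the input into chunks, sort each, write it out as a run.
        with open(input_path) as src:
            while True:
                chunk = [int(line) for line in itertools.islice(src, chunk_lines)]
                if not chunk:
                    break
                chunk.sort()
                fd, path = tempfile.mkstemp(suffix=".run")
                with os.fdopen(fd, "w") as run:
                    run.writelines(f"{value}\n" for value in chunk)
                run_paths.append(path)
        # Step 3: k-way merge of all sorted runs into the output file.
        runs = [open(path) for path in run_paths]
        try:
            streams = [(int(line) for line in run) for run in runs]
            with open(output_path, "w") as out:
                out.writelines(f"{value}\n" for value in heapq.merge(*streams))
        finally:
            for run in runs:
                run.close()
    finally:
        for path in run_paths:
            os.remove(path)   # clean up the temporary runs
```

This version merges all runs in a single pass. With a very large number of runs, the operating system's limit on open files would force a multi-pass merge instead.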

18. How do you implement a concurrent hash map that can handle multiple threads safely?

A concurrent hash map can be implemented safely using techniques like lock striping or using concurrent data structures provided by languages/libraries. Lock striping involves dividing the hash map into multiple segments, each protected by its own lock. This allows multiple threads to access different segments concurrently without blocking each other. Alternatively, you could leverage existing concurrent hash map implementations provided by libraries like ConcurrentHashMap in Java or similar structures in other languages.

For example, in Java, ConcurrentHashMap handles concurrency internally. In a manual implementation using lock striping:

- Divide the hash map into segments.

- Use a ReentrantLock for each segment.

- When performing operations (put, get, remove), acquire the lock for the relevant segment.

- Release the lock after the operation.
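The same steps can be sketched in Python, with `threading.Lock` standing in for Java's ReentrantLock. The class name `StripedMap`, the default of 16 stripes, and the `update` helper are assumptions for the example, not part of any library.

```python
import threading


class StripedMap:
    def __init__(self, num_stripes=16):
        # One lock and one plain dict per segment.
        self._locks = [threading.Lock() for _ in range(num_stripes)]
        self._buckets = [{} for _ in range(num_stripes)]

    def _stripe(self, key):
        return hash(key) % len(self._locks)

    def put(self, key, value):
        i = self._stripe(key)
        with self._locks[i]:               # acquire, operate, release
            self._buckets[i][key] = value

    def get(self, key, default=None):
        i = self._stripe(key)
        with self._locks[i]:
            return self._buckets[i].get(key, default)

    def remove(self, key):
        i = self._stripe(key)
        with self._locks[i]:
            return self._buckets[i].pop(key, None)

    def update(self, key, fn, default=None):
        """Atomically replace the value for key with fn(current value)."""
        i = self._stripe(key)
        with self._locks[i]:
            new_value = fn(self._buckets[i].get(key, default))
            self._buckets[i][key] = new_value
            return new_value
```

Threads working on keys that fall in different stripes never contend for the same lock. The `update` method matters because a separate `get` followed by `put` would not be atomic, even though each call is individually thread-safe.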

19. Explain the difference between Breadth-First Search (BFS) and Depth-First Search (DFS) and when would you prefer one over the other?

BFS and DFS are both graph traversal algorithms. BFS explores all the neighbor nodes at the present depth prior to moving on to the nodes at the next depth level, using a queue data structure. DFS explores as far as possible along each branch before backtracking, using a stack (implicitly through recursion or explicitly with a stack data structure).

BFS is preferred when you want to find the shortest path between two nodes in an unweighted graph (the path with the fewest edges) or when you need to explore all nodes at a given distance from a starting node. DFS is preferred when you want to explore a graph exhaustively, determine if a path exists between two nodes (though it may not be the shortest), or solve problems that can be naturally expressed recursively. Maze solving and topological sorting, for example, often use DFS.
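The contrast between the queue and the stack can be seen in a short sketch. The graph format (a dict mapping each node to a list of neighbors) and both function names are assumptions for the example.

```python
from collections import deque


def bfs_shortest_path(graph, start, goal):
    """Return a path with the fewest edges from start to goal, or None."""
    parents = {start: None}            # also serves as the visited set
    queue = deque([start])
    while queue:
        node = queue.popleft()         # FIFO: closest nodes are handled first
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


def dfs_order(graph, start):
    """Return nodes in the order an iterative depth-first search visits them."""
    visited, order, stack = set(), [], [start]
    while stack:
        node = stack.pop()             # LIFO: most recently discovered node first
        if node in visited:
            continue
        visited.add(node)
        order.append(node)
        # Push in reverse so the first listed neighbor is explored first.
        stack.extend(reversed(graph.get(node, [])))
    return order
```

The two functions share the same overall loop; replacing the queue with a stack is what changes the exploration order.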

20. How would you implement a data structure to store and efficiently retrieve data based on geographical coordinates?

A Quadtree or a Geohash-based approach are efficient ways to store and retrieve geographical data. A Quadtree recursively divides a geographical area into four quadrants, allowing for efficient searching within specific regions. Geohashing encodes geographical coordinates into short strings; points whose geohashes share a long prefix are geographically close, enabling fast proximity searches, although two nearby points on opposite sides of a cell boundary can have quite different hashes, so proximity queries usually check neighboring cells as well. The choice depends on factors like data distribution and query patterns: a Quadtree subdivides only where points are dense, so it adapts well to clustered data, while Geohashing uses a fixed grid that suits evenly distributed data and is easy to index in an ordinary database.

With Geohashing, the implementation would involve calculating the Geohash of each coordinate and storing the data points indexed by their Geohashes in a database. For example:

```python
import geohash  # third-party module (for example, from the python-geohash package)

latitude = 37.7749
longitude = -122.4194
geohash_value = geohash.encode(latitude, longitude, precision=7)
print(geohash_value)  # Example: '9q8yyk8'
```

21. Explain the concept of a Fenwick tree (Binary Indexed Tree) and its applications.

A Fenwick Tree (or Binary Indexed Tree) is a data structure used for efficiently calculating prefix sums in an array. It allows both querying the sum of the first i elements and modifying the value of an element in O(log n) time, where n is the size of the array. It achieves this by cleverly representing the array as a tree where each node stores the sum of a certain range of elements. The key idea is that each index in the Fenwick tree represents the sum of a range whose length is a power of 2. The lowest bit set in the index determines the range. For example, index 12 (1100 in binary) represents the sum of elements from index 9 to 12.

Applications of Fenwick Trees include:

- Calculating prefix sums: The most basic application.

- Range queries and updates: Can be adapted to handle range updates by using two Fenwick Trees.

- Frequency arrays/counters: Maintaining counts of elements in a data stream.

- Rank queries: Finding the number of elements less than or equal to a given value in a collection that changes over time, by indexing the tree by value.

- 2D Range Queries: By nesting Fenwick Trees, efficient 2D range queries can be answered.
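A minimal sketch of the structure described above, using 1-based indices and the lowest-set-bit trick (`index & -index`). The class and method names are assumptions for the example.

```python
class FenwickTree:
    def __init__(self, size):
        self.size = size
        self.tree = [0] * (size + 1)    # 1-indexed; tree[0] is unused

    def update(self, index, delta):
        """Add delta to the element at index (1-based)."""
        while index <= self.size:
            self.tree[index] += delta
            index += index & -index     # jump to the next node covering this index

    def prefix_sum(self, index):
        """Return the sum of elements 1..index."""
        total = 0
        while index > 0:
            total += self.tree[index]
            index -= index & -index     # strip the lowest set bit
        return total

    def range_sum(self, left, right):
        return self.prefix_sum(right) - self.prefix_sum(left - 1)
```

With 12 elements loaded, `tree[12]` holds the sum of elements 9 through 12, matching the example in the explanation above.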

22. Describe how you would implement a data structure that supports efficiently finding the k-th smallest element in a stream of numbers.

I would use a max-heap of size k. As I iterate through the stream, the heap holds the k smallest elements seen so far, so its root (the largest element in the heap) is the current k-th smallest. For each new element, if the heap holds fewer than k elements I push it; otherwise I compare it with the root, and if the new element is smaller, I replace the root with it and sift down to restore the max-heap property. Once at least k elements have arrived, the root is always the k-th smallest element. This approach has a time complexity of O(log k) for each element in the stream, resulting in O(n log k) overall, where n is the number of elements in the stream. Space complexity is O(k) due to the size of the heap.

Alternatively, the Quickselect algorithm can be adapted. Although its worst-case time complexity is O(n^2), its average time complexity is O(n), making it a practical choice. For a continuous stream, this means storing the incoming values in a buffer and applying Quickselect to that buffer periodically, or whenever the k-th smallest element is requested. The buffer can be cleared afterwards only if each query covers just the values received since the previous one; a query over the whole stream needs every value retained. Either way, this comes at the cost of extra space to store incoming data.
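The size-k heap approach could be sketched with Python's `heapq`. Since `heapq` only provides a min-heap, the sketch stores negated values to get max-heap behaviour over the k smallest elements; this assumes numeric input. The class name `KthSmallest` is hypothetical.

```python
import heapq


class KthSmallest:
    def __init__(self, k):
        self.k = k            # assumed to be at least 1
        self._heap = []       # negated values: the root is the largest of the k smallest

    def add(self, value):
        if len(self._heap) < self.k:
            heapq.heappush(self._heap, -value)
        elif value < -self._heap[0]:
            # The new value belongs among the k smallest; evict the current largest.
            heapq.heapreplace(self._heap, -value)

    def kth_smallest(self):
        """Return the k-th smallest value seen so far, or None if fewer than k."""
        if len(self._heap) < self.k:
            return None
        return -self._heap[0]
```

Each `add` costs O(log k), and a query is O(1) because the answer always sits at the root.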

23. How do you use dynamic programming to solve problems related to trees or graphs?

Dynamic programming (DP) on trees and graphs involves breaking down the problem into smaller, overlapping subproblems, solving them, and storing their solutions to avoid recomputation. For trees, we often use recursion combined with memoization. The recursive calls handle subtrees, and memoization stores the results for each node to prevent redundant calculations. Common approaches include post-order traversal where we compute solutions for children nodes before the parent, or pre-order traversal if the solution requires information from the root. Example: Calculating the maximum independent set in a tree.

For graphs, especially with cyclic dependencies, standard DP might not directly apply. Instead, techniques like memoization with topological sort (for directed acyclic graphs - DAGs) or using shortest path algorithms (like Dijkstra or Bellman-Ford, which inherently use DP) become crucial. We might also combine DP with graph traversal algorithms like DFS or BFS. For example, finding the longest path in a DAG using topological sort and DP. The state definition in DP for graph problems often incorporates the node and potentially other information like the distance from a source, number of edges, etc.
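As an illustration of the tree case, the maximum independent set example mentioned above can be solved with two values per node: the best result when the node is included and the best when it is excluded. The input format (a dict mapping each node to a list of its children) and the function name are assumptions for the sketch, which uses an explicit stack rather than recursion to get a children-before-parent order.

```python
def max_independent_set(tree, root):
    """Return the size of the largest set of nodes with no two adjacent."""
    # Collect nodes so that every parent appears before its children.
    order, stack = [], [root]
    while stack:
        node = stack.pop()
        order.append(node)
        stack.extend(tree.get(node, []))

    include, exclude = {}, {}
    # Process in reverse so children are solved before their parent (post-order).
    for node in reversed(order):
        children = tree.get(node, [])
        # If node is taken, none of its children may be taken.
        include[node] = 1 + sum(exclude[c] for c in children)
        # If node is skipped, each child independently picks its better option.
        exclude[node] = sum(max(include[c], exclude[c]) for c in children)
    return max(include[root], exclude[root])
```

Each node is visited a constant number of times, so the running time is linear in the size of the tree.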

24. Explain what an AVL tree is and the rotation operations required to maintain its balance.

An AVL tree is a self-balancing Binary Search Tree (BST) where the height difference between the left and right subtrees of any node is at most one. This difference is called the balance factor. To maintain this balance after insertions or deletions, AVL trees use rotations.

There are four main types of rotations:

- Left Rotation: Used when a node's right subtree is too heavy.

- Right Rotation: Used when a node's left subtree is too heavy.

- Left-Right Rotation: A left rotation on the left child followed by a right rotation on the node itself, needed when the node is left-heavy but its left child is right-heavy.

- Right-Left Rotation: A right rotation on the right child followed by a left rotation on the node itself, needed when the node is right-heavy but its right child is left-heavy.

These rotations involve rearranging nodes and updating their parent-child relationships to restore the AVL property.
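The four cases can be sketched in code as below. This is an illustrative insertion-only version; the helper names (`rotate_left`, `rotate_right`, `rebalance`) and the convention of storing a height in each node are assumptions for the example.

```python
class AVLNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None
        self.height = 1


def height(node):
    return node.height if node else 0


def update_height(node):
    node.height = 1 + max(height(node.left), height(node.right))


def balance_factor(node):
    return height(node.left) - height(node.right)


def rotate_right(y):
    x = y.left
    y.left, x.right = x.right, y    # x becomes the new subtree root
    update_height(y)
    update_height(x)
    return x


def rotate_left(x):
    y = x.right
    x.right, y.left = y.left, x     # y becomes the new subtree root
    update_height(x)
    update_height(y)
    return y


def rebalance(node):
    update_height(node)
    bf = balance_factor(node)
    if bf > 1:                                   # left-heavy
        if balance_factor(node.left) < 0:        # left-right case
            node.left = rotate_left(node.left)
        return rotate_right(node)
    if bf < -1:                                  # right-heavy
        if balance_factor(node.right) > 0:       # right-left case
            node.right = rotate_right(node.right)
        return rotate_left(node)
    return node


def insert(node, key):
    if node is None:
        return AVLNode(key)
    if key < node.key:
        node.left = insert(node.left, key)
    else:
        node.right = insert(node.right, key)
    return rebalance(node)                       # fix balance on the way back up
```

Inserting the keys 1 through 7 in ascending order, which would produce a linked-list-shaped plain BST, yields a tree of height 3 here.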

25. How would you efficiently find all anagrams in a large list of words?

To efficiently find all anagrams in a large list of words, I would use a hash table (dictionary) where the keys are the sorted versions of the words, and the values are lists of words that have that sorted form. The process involves iterating through the list of words. For each word, I sort its characters alphabetically. Then, I use this sorted string as a key to either create a new entry in the hash table or append the original word to the existing list of anagrams associated with that key. After processing all the words, the hash table will contain all the anagram groups.

For example, in Python:

```python
def find_anagrams(words):
    anagram_groups = {}
    for word in words:
        # Anagrams contain the same letters, so they share the same sorted form.
        sorted_word = "".join(sorted(word))
        if sorted_word in anagram_groups:
            anagram_groups[sorted_word].append(word)
        else:
            anagram_groups[sorted_word] = [word]
    # Return only groups with more than one word
    return [group for group in anagram_groups.values() if len(group) > 1]
```

This approach ensures that words with the same characters (anagrams) map to the same key, allowing for efficient grouping.

Advanced Data Structures interview questions

1. Explain the trade-offs between different implementations of B-trees (e.g., B-tree, B+ tree, B* tree).

B-trees, B+ trees, and B* trees are all variations of balanced tree data structures optimized for disk-based storage, but they have different trade-offs. B-trees store data in both internal and leaf nodes, potentially requiring fewer levels to reach a specific record. However, this mixed structure can lead to less efficient sequential access. B+ trees, on the other hand, store data only in leaf nodes, with internal nodes acting as an index. This results in faster sequential access and better support for range queries, because all data is stored sequentially at the leaf level, at the cost of potentially needing more space for the index.

B* trees are a variation of B-trees that improve space utilization by keeping nodes at least 2/3 full, instead of the standard 1/2. This is achieved by redistributing keys between adjacent sibling nodes before splitting a node, resulting in fewer splits and more efficient storage utilization. However, the redistribution process can add complexity and potentially increase the time for insertions and deletions.

2. How can you implement a persistent data structure and what are the benefits?

A persistent data structure preserves previous versions of itself when modified. This can be implemented using techniques like path copying or using immutable data structures. Path copying involves creating new nodes only for the parts of the structure that change, while sharing the unchanged parts with previous versions. Immutable data structures, once created, cannot be modified. Any operation that would normally modify the structure instead creates a new structure with the desired changes. Languages like Clojure and Scala provide built-in immutable collections.

The benefits include:

- Data immutability: Ensures data integrity and prevents accidental modification.

- Simplified debugging: Easier to track changes and identify the source of errors.

- Concurrency: Safe to use in concurrent environments without locks.

- Auditing: Provides a history of data changes, useful for auditing purposes.

- Time travel: Ability to access previous states of the data structure, useful for debugging or historical analysis.

3. Describe the use cases for a disjoint-set data structure (Union-Find) and how it optimizes connectivity problems.

A disjoint-set data structure (also known as Union-Find) is primarily used to solve connectivity problems. It's particularly effective in scenarios where you need to determine whether two elements are in the same connected component or to merge two components together. Some common use cases include:

- Kruskal's Algorithm: Finding the Minimum Spanning Tree (MST) in a graph. The disjoint-set data structure efficiently tracks which vertices are already part of the same tree to avoid cycles.

- Network Connectivity: Determining if two computers are in the same network.

- Image Segmentation: Grouping pixels with similar properties into regions.

- Maze generation: To ensure that all cells are connected during the maze generation process.

- Dynamic Connectivity: Where connections between objects are added over time, and you need to quickly query whether two objects are connected.

The optimization arises from two key techniques: union by rank and path compression. Union by rank minimizes the height of the trees, ensuring find operations are efficient. Path compression flattens the tree structure during find operations by making each visited node point directly to the root, drastically reducing the cost of subsequent find operations. These optimizations result in near-constant time complexity (amortized O(α(n)), where α(n) is the inverse Ackermann function, which grows extremely slowly) for both union and find operations, making it highly efficient for large datasets.

4. Explain how to implement an efficient auto-completion system using Tries.

A Trie (prefix tree) is an excellent data structure for implementing auto-completion. Each node represents a character, and paths from the root to nodes form prefixes. To implement auto-completion efficiently:

- Construction: Insert all words from the dictionary into the Trie. Each word is traversed character by character, creating nodes as needed and marking the end of a word with a special flag (e.g., is_word = True).

- Completion: When a user enters a prefix, traverse the Trie to the node representing that prefix. Then, perform a depth-first search (DFS) or breadth-first search (BFS) from that node to find all words that have the entered prefix. The DFS/BFS should collect all words by traversing all subtrees under that node. The efficiency stems from quickly locating the prefix (O(k), where k is the prefix length) and then retrieving all completions in O(m), where m is the combined length of all words with the given prefix.
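Both steps could be sketched as follows. The `AutoComplete` class, the `limit` parameter for capping the number of suggestions, and the choice to return completions in alphabetical order are assumptions made for the example.

```python
class TrieNode:
    def __init__(self):
        self.children = {}
        self.is_word = False


class AutoComplete:
    def __init__(self, words=()):
        self.root = TrieNode()
        for word in words:
            self.insert(word)

    def insert(self, word):
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True

    def complete(self, prefix, limit=10):
        # Step 1: walk down to the node for the prefix, O(len(prefix)).
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return []
        # Step 2: depth-first search below that node, collecting whole words.
        results = []
        stack = [(node, prefix)]
        while stack and len(results) < limit:
            current, text = stack.pop()
            if current.is_word:
                results.append(text)
            # Push children in reverse order so they pop alphabetically.
            for ch in sorted(current.children, reverse=True):
                stack.append((current.children[ch], text + ch))
        return results
```

A production system would usually rank suggestions by popularity rather than alphabetically, for example by storing a frequency on each word-ending node.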

5. How does a Bloom filter work, and what are its applications and limitations in probabilistic data structures?

A Bloom filter is a probabilistic data structure used to test whether an element is a member of a set. It works by using multiple hash functions to map each element to multiple positions in a bit array, setting those bits to 1. To check if an element is in the set, hash it with the same functions and see if all corresponding bits are 1. If so, the element might be in the set; if any bit is 0, it's definitely not. Bloom filters offer space efficiency but can produce false positives.

Applications include:

- Caching: Checking if an item is likely in a cache before a more expensive lookup.

- Database: Determining if a key exists in a database to avoid disk access.

- Networking: URL filtering in web browsers.

Limitations:

- False positives: Can indicate an element is present when it's not.

- No deletions: Removing an element is difficult as it may affect other elements' bits.

- Optimal Size Required: The number of hash functions and the size of the bit array need to be chosen carefully to balance the false positive rate and space usage.
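A minimal sketch of the mechanism, assuming string-convertible items and deriving several hash functions from SHA-256 by prefixing a seed. The class name, the default sizes, and the hashing scheme are illustrative choices, not tuned values.

```python
import hashlib


class BloomFilter:
    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)   # bit array packed into bytes

    def _positions(self, item):
        data = str(item).encode("utf-8")
        for seed in range(self.num_hashes):
            # A different seed prefix gives a different hash function.
            digest = hashlib.sha256(seed.to_bytes(4, "big") + data).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        # False means definitely absent; True means possibly present.
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))
```

An item that was added always reports True. An item that was never added usually reports False but can report True when other items happen to have set all of its bits.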

6. Describe the advantages and disadvantages of using a skip list compared to other ordered data structures like balanced trees.

Skip lists offer several advantages over balanced trees. Simpler implementation is a key benefit; the algorithms for insertion and deletion are generally easier to understand and code compared to complex tree balancing operations like rotations in AVL or red-black trees. Skip lists also support efficient search, insertion, and deletion operations with an average time complexity of O(log n), comparable to balanced trees. Furthermore, skip lists can be more space-efficient in some cases, as they don't require storing parent or color bits like balanced trees. Concurrent updates are also often simpler to manage in skip lists than in balanced trees due to the localized nature of changes.

However, skip lists have disadvantages. The space usage can be higher in the worst case compared to balanced trees, especially if the random level assignment results in many levels. Although the average search time is O(log n), the worst-case search time can degrade to O(n), which is less favorable than the guaranteed O(log n) of balanced trees. Debugging can also be tricky. Finally, the performance depends on a good random number generator to ensure even distribution of nodes across levels. A biased random number generator can significantly degrade performance.

7. Explain how to use a segment tree or Fenwick tree to efficiently solve range query problems.

Segment Trees and Fenwick Trees (Binary Indexed Trees) are data structures used to efficiently perform range queries and updates on arrays. A Segment Tree represents the array in a tree-like structure, where each node stores the result of an operation (e.g., sum, min, max) on a segment of the array. Range queries and updates can be performed in logarithmic time (O(log n)). Fenwick Trees achieve the same logarithmic time complexity but use less space and are generally easier to implement. They are particularly well-suited for prefix sum queries and single element updates.

For example, consider finding the sum of elements in a range [l, r] using a Fenwick tree: you query the prefix sum up to r and subtract the prefix sum up to l-1. Updating an element involves traversing the tree structure to update the affected nodes: update(index, value) adds value to every tree node whose range covers index, and query(index) returns the cumulative sum of elements from 1 to index.

8. How can you design a data structure to efficiently store and query spatial data (e.g., using a quadtree or KD-tree)?

For efficient spatial data storage and querying, a quadtree or KD-tree are common choices. A quadtree recursively divides a 2D space into four quadrants, each potentially containing data points. This allows for quick narrowing down of the search area when querying for points within a specific region. A KD-tree, on the other hand, recursively partitions space along different dimensions. At each level of the tree, the data is split based on the median value of one of the dimensions.

To implement such a data structure, you'd start with a root node representing the entire space. Insertions involve traversing the tree, choosing the appropriate branch based on the point's coordinates and the tree's partitioning strategy. Queries similarly traverse the tree, pruning branches that don't overlap the query region. For example, in a quadtree query, if the query region does not overlap a quadrant, we can ignore everything under that sub-tree. For range queries, the algorithm involves checking leaf nodes in the quadtree to find any points that fall within the specified range.

9. Describe the concept of a self-organizing list and how it can improve performance in certain scenarios.

A self-organizing list is a data structure (typically implemented as a linked list or array) that dynamically rearranges its elements based on access frequency. The goal is to move frequently accessed items closer to the front of the list, reducing the average access time. Common self-organization techniques include:

- Move-to-Front: The accessed element is moved to the head of the list.

- Transpose: The accessed element is swapped with its predecessor.

- Frequency Count: Each element maintains a count of its accesses; elements are sorted based on these counts.

Self-organizing lists can improve performance when dealing with data where access patterns exhibit locality of reference - that is, a small subset of items are accessed frequently. For example, in a cache, if a particular memory address is accessed repeatedly, move-to-front will ensure subsequent lookups are fast. This adaptive nature avoids the overhead of maintaining a perfect order based on full knowledge of access patterns, which is often impractical.
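The move-to-front heuristic is simple enough to sketch in a few lines. The class name and the choice to return the number of comparisons (to make the effect visible) are assumptions for the example.

```python
class MoveToFrontList:
    def __init__(self, items=()):
        self.items = list(items)

    def find(self, target):
        """Return the number of comparisons made, or -1 if target is absent."""
        for i, item in enumerate(self.items):
            if item == target:
                # Reorganize: the accessed element moves to the head of the list.
                self.items.insert(0, self.items.pop(i))
                return i + 1
        return -1
```

Looking up the last element of a four-item list costs four comparisons the first time and one comparison immediately afterwards. With a Python list the move itself is O(n); a linked list would make it O(1).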

10. Explain how to implement a data structure that supports both efficient insertion and retrieval of elements based on priority (e.g., a pairing heap or Fibonacci heap).

A pairing heap is a self-adjusting heap data structure that offers efficient insertion and retrieval of elements based on priority. Insertion involves simply merging the new element with the existing heap. The find-min operation is trivial as the root holds the minimum value. The delete-min operation is where the core logic resides. The root is removed, and the remaining child subtrees are merged pairwise. These merged subtrees are then merged from right to left to form the new heap.

While Fibonacci heaps offer theoretically superior amortized time complexity for certain operations (especially decrease-key), pairing heaps often perform better in practice due to lower constant factors and simpler implementation. Both support efficient insertion, find-min, and delete-min. decrease-key is typically implemented by cutting the node from its parent and merging the resulting subtree with the root list (in Fibonacci heaps; similar concepts apply in pairing heaps but may not be explicitly named 'root list').
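The pairing heap operations described above could be sketched as follows (decrease-key is omitted). The function names are assumptions for the example, and children are kept in a Python list with new children appended at the end, which differs from the classic leftmost-child layout but does not affect correctness.

```python
class PairingNode:
    def __init__(self, key):
        self.key = key
        self.children = []    # roots of sub-heaps, each with key >= self.key


def merge(a, b):
    """Link two heaps: the root with the larger key becomes a child of the other."""
    if a is None:
        return b
    if b is None:
        return a
    if b.key < a.key:
        a, b = b, a
    a.children.append(b)
    return a


def insert(heap, key):
    return merge(heap, PairingNode(key))


def delete_min(heap):
    """Remove the root of a non-empty heap; return (min_key, new_heap)."""
    kids = heap.children
    # Pass 1: merge the children in pairs, left to right.
    paired = []
    for i in range(0, len(kids), 2):
        partner = kids[i + 1] if i + 1 < len(kids) else None
        paired.append(merge(kids[i], partner))
    # Pass 2: fold the paired heaps together from right to left.
    new_heap = None
    for tree in reversed(paired):
        new_heap = merge(tree, new_heap)
    return heap.key, new_heap
```

An empty heap is represented by `None`, and find-min is just `heap.key` on a non-empty heap.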

11. How do you handle concurrency issues when multiple threads access and modify a shared data structure?

Concurrency issues when multiple threads access and modify shared data can lead to race conditions, data corruption, and unpredictable behavior. To prevent these issues, synchronization mechanisms are used. Common methods include:

- Locks (Mutexes): Protect critical sections of code, allowing only one thread to access the shared data at a time. For example, in Java you can use `synchronized` blocks or `java.util.concurrent.locks.Lock`; in Python you can use `threading.Lock`.

- Semaphores: Control access to a limited number of resources, preventing too many threads from accessing the data concurrently.

- Atomic Operations: Use hardware-level atomic instructions to perform operations on shared variables without the need for locks, ensuring atomicity and preventing race conditions (e.g., `AtomicInteger` in Java).

- Concurrent Data Structures: Utilize thread-safe data structures designed for concurrent access (e.g., `ConcurrentHashMap` in Java or `Queue` from the `queue` module in Python). These structures typically use internal synchronization to ensure data consistency.

- Read-Write Locks: Allow multiple threads to read the data concurrently but only one thread to write, improving performance in read-heavy scenarios. You can use `ReadWriteLock` in Java. Python's standard library has no read-write lock: `threading.RLock` is a reentrant mutex, not a read-write lock, so one has to be built on `threading.Condition` or taken from a third-party package.

Choosing the appropriate mechanism depends on the specific requirements and the complexity of the data structure.
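As a small illustration of the lock-based approach, the sketch below guards a shared counter with `threading.Lock`. The `Counter` class and the `run` helper are hypothetical names made up for this example.

```python
import threading


class Counter:
    """Shared counter whose read-modify-write step is protected by a mutex."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may execute this critical section.
        with self._lock:
            self._value += 1

    @property
    def value(self):
        with self._lock:
            return self._value


def run(num_threads=4, per_thread=1000):
    """Increment one shared counter from several threads and return the total."""
    counter = Counter()

    def worker():
        for _ in range(per_thread):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value
```

With the lock in place, the final total equals `num_threads * per_thread` regardless of how the threads are interleaved.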

12. Describe the differences between various graph traversal algorithms and their applications.

Graph traversal algorithms visit every vertex reachable from a starting vertex. Two fundamental algorithms are Breadth-First Search (BFS) and Depth-First Search (DFS). BFS explores level by level, using a queue, so it finds shortest paths (fewest edges) in unweighted graphs. Applications include finding nearest neighbors, web crawling, and shortest routes when every edge has the same cost. DFS explores deeply along each branch before backtracking, using a stack (often implicitly through recursion). Applications include topological sorting, detecting cycles in graphs, and solving mazes.

Other variations and specialized algorithms exist, like Dijkstra's algorithm (for shortest paths in graphs with non-negative edge weights), A* search (an informed search using heuristics), and topological sort (specifically for Directed Acyclic Graphs, DAGs). The choice of algorithm depends on the specific problem and graph properties. For example, Dijkstra's algorithm uses a priority queue to repeatedly pick the closest vertex that has not yet been settled. BFS and DFS also differ in memory use: BFS holds the current frontier, which can be as large as the widest level of the graph, while DFS holds only the current path, which grows with the depth of the search.
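To make the queue-versus-stack distinction concrete, here is a minimal sketch of both traversals. It assumes the graph is an adjacency-list dictionary; the function names and the sample graph are invented for illustration.

```python
from collections import deque


def bfs_distances(graph, start):
    """Return the fewest-edges distance from start to every reachable vertex."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()          # FIFO: finish one level before the next
        for neighbor in graph.get(node, ()):
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def dfs_order(graph, start):
    """Return vertices in the order an iterative depth-first search visits them."""
    visited, order = set(), []
    stack = [start]
    while stack:
        node = stack.pop()              # LIFO: keep going deeper first
        if node in visited:
            continue
        visited.add(node)
        order.append(node)
        # Push neighbors in reverse so the first listed neighbor is explored first.
        for neighbor in reversed(graph.get(node, ())):
            stack.append(neighbor)
    return order


graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E"], "E": []}
distances = bfs_distances(graph, "A")
visit_order = dfs_order(graph, "A")
```

On this sample graph, BFS reports `E` at distance 3 from `A`, while DFS runs down the `A`, `B`, `D`, `E` branch before it returns to `C`.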

13. Explain different collision resolution techniques in hash tables and their impact on performance.

Collision resolution techniques in hash tables handle scenarios when different keys map to the same index. Common techniques include:

- Separate Chaining: Each index in the hash table points to a linked list (or another data structure) of key-value pairs. When a collision occurs, the new key-value pair is added to the list at that index. Performance depends on the length of the lists; long lists degrade search to O(n), while short, relatively uniform lists keep search closer to O(1). Implementation is fairly straightforward.

- Open Addressing: If a collision occurs, the algorithm probes (searches) for an empty slot in the table. Common probing methods include:

  - Linear Probing: Searches sequentially for the next available slot. Simple to implement but suffers from primary clustering, where collisions cluster together, degrading performance.

  - Quadratic Probing: Uses a quadratic function to determine the next slot to probe. Reduces primary clustering but can lead to secondary clustering.

  - Double Hashing: Uses a second hash function to determine the step size for probing. Helps to distribute keys more evenly and reduces clustering. More complex to implement than linear or quadratic probing.

Open addressing techniques generally offer better space utilization than separate chaining since they don't require extra data structures. However, performance degrades significantly as the table fills up, that is, as the load factor approaches 1.
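The two families can be contrasted with a deliberately small sketch. Both classes below use a fixed capacity, never resize, and do not support deletion; the class names are hypothetical. Separate chaining keeps a list per bucket, while linear probing walks forward to the next free slot.

```python
class ChainedHashTable:
    """Separate chaining: each bucket holds a list of (key, value) pairs."""

    def __init__(self, capacity=8):
        self.buckets = [[] for _ in range(capacity)]

    def put(self, key, value):
        bucket = self.buckets[hash(key) % len(self.buckets)]
        for i, (existing, _) in enumerate(bucket):
            if existing == key:
                bucket[i] = (key, value)   # overwrite an existing key
                return
        bucket.append((key, value))        # collision: the chain grows

    def get(self, key):
        for existing, value in self.buckets[hash(key) % len(self.buckets)]:
            if existing == key:
                return value
        raise KeyError(key)


class LinearProbingTable:
    """Open addressing with linear probing (no deletion, no resizing)."""

    def __init__(self, capacity=8):
        self.slots = [None] * capacity

    def _probe(self, key):
        start = hash(key) % len(self.slots)
        for step in range(len(self.slots)):
            yield (start + step) % len(self.slots)

    def put(self, key, value):
        for i in self._probe(key):
            if self.slots[i] is None or self.slots[i][0] == key:
                self.slots[i] = (key, value)
                return
        raise RuntimeError("table is full")

    def get(self, key):
        for i in self._probe(key):
            if self.slots[i] is None:
                break                      # an empty slot ends the probe sequence
            if self.slots[i][0] == key:
                return self.slots[i][1]
        raise KeyError(key)
```

In the probing table, colliding keys end up in adjacent slots, which is the primary clustering described above; a production table would also resize before the load factor gets close to 1.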

14. How do you design an in-memory cache using different eviction policies (LRU, LFU, FIFO)?

To design an in-memory cache with LRU (Least Recently Used), LFU (Least Frequently Used), and FIFO (First-In, First-Out) eviction policies, I'd use a combination of data structures. For all policies, a HashMap would provide O(1) average-case time complexity for get and put operations. The different eviction policies would be implemented as follows:

- LRU: A doubly linked list or Java's `LinkedHashMap` can track the order of access. When an item is accessed, it's moved to the tail. On eviction, the head is removed.

- LFU: A `HashMap` would map keys to (frequency, value) tuples. A priority queue (min-heap) or a frequency list (list of buckets) would track frequencies to allow efficient retrieval of the least frequently used items for eviction. When an item is accessed or inserted, its frequency count is increased. When eviction is needed, the least frequently used item(s) are removed.

- FIFO: A simple queue (e.g., `LinkedList`) would store entries in the order they were inserted. New items are added to the tail, and evictions occur from the head of the queue.

The get and put operations of the cache would interact with the HashMap for data access and with the corresponding data structure (linked list, priority queue, or queue) to maintain the eviction policy order. When the cache is full, put first evicts the entry chosen by the policy, removing it from both the HashMap and the ordering structure, and then inserts the new entry.
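As one possible sketch of the LRU variant in Python, `collections.OrderedDict` can play the role of both the hash map and the access-ordered list. The `LRUCache` class name and its `get`/`put` signatures are assumptions chosen to mirror the description above.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self.entries = OrderedDict()   # oldest access first, newest last

    def get(self, key, default=None):
        if key not in self.entries:
            return default
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        if key in self.entries:
            self.entries.move_to_end(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict from the head


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" becomes the most recently used entry
cache.put("c", 3)   # evicts "b", the least recently used entry
```

Dropping the `move_to_end` call in `get` would make eviction depend only on when an entry was last written rather than last read, which moves the behavior toward FIFO.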

15. Describe the concept of a Patricia trie and how it optimizes space usage compared to a standard trie.

A Patricia trie (Practical Algorithm To Retrieve Information Coded in Alphanumeric) is a specialized form of a trie data structure that optimizes space usage by eliminating nodes with only a single child. In a standard trie, each node represents a character, and paths from the root to a leaf represent keys. This wastes space on long runs of characters with no branching: every character in the run gets its own node, even though only one path continues through it.

Patricia tries address this inefficiency by collapsing single-child paths into a single edge labeled with the entire sequence of characters from the skipped nodes. This means instead of storing individual characters at each level of the trie for a long shared prefix, Patricia stores the entire shared prefix string directly on the edge. This optimization dramatically reduces the number of nodes, especially when storing a large set of keys with common prefixes, leading to a more compact representation compared to standard tries.
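The edge-collapsing idea can be sketched as a character-labelled compressed trie (often called a radix tree). This is a simplified illustration of the description above, not the original bit-level PATRICIA algorithm; `RadixNode`, `RadixTree`, and `count_nodes` are hypothetical names.

```python
import os


class RadixNode:
    def __init__(self):
        self.children = {}   # first character of edge label -> (label, child)
        self.is_key = False


class RadixTree:
    def __init__(self):
        self.root = RadixNode()

    def insert(self, key):
        node = self.root
        while True:
            if not key:
                node.is_key = True
                return
            entry = node.children.get(key[0])
            if entry is None:
                # No edge starts with this character: add one edge for the rest.
                leaf = RadixNode()
                leaf.is_key = True
                node.children[key[0]] = (key, leaf)
                return
            label, child = entry
            common = len(os.path.commonprefix([key, label]))
            if common < len(label):
                # Split the edge at the point where key and label diverge.
                middle = RadixNode()
                middle.children[label[common]] = (label[common:], child)
                node.children[key[0]] = (label[:common], middle)
                child = middle
            node, key = child, key[common:]

    def contains(self, key):
        node = self.root
        while key:
            entry = node.children.get(key[0])
            if entry is None or not key.startswith(entry[0]):
                return False
            node, key = entry[1], key[len(entry[0]):]
        return node.is_key


def count_nodes(node):
    """Count the nodes in the subtree rooted at node."""
    return 1 + sum(count_nodes(child) for _, child in node.children.values())


tree = RadixTree()
for word in ["romane", "romanus", "rom"]:
    tree.insert(word)
total_nodes = count_nodes(tree.root)
```

For these three keys the compressed trie needs 5 nodes, whereas a one-character-per-node trie would need 9 (the root plus one node each for r, o, m, a, n, e, u, s).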

16. Explain the use of a Minimax tree in game AI and decision-making problems.

The Minimax algorithm is a decision-making algorithm used in game AI and other adversarial decision problems, classically two-player games in which the players have opposing goals. It works by constructing a game tree, where each node represents a possible game state, and the branches represent the possible moves. The algorithm explores the tree to a certain depth, alternating between the maximizing player (typically the AI) trying to choose the move that yields the highest score, and the minimizing player (typically the opponent) trying to choose the move that yields the lowest score for the AI.

At the leaf nodes of the tree (the end of the exploration depth), a heuristic evaluation function is applied to estimate the value of the game state. These values are then propagated up the tree, with the maximizing player choosing the maximum value among its children, and the minimizing player choosing the minimum value. By recursively applying this process, the Minimax algorithm determines the optimal move for the maximizing player, assuming the opponent plays optimally. Alpha-beta pruning is often used to optimize the Minimax algorithm by eliminating branches that don't need to be explored.

17. How can you use a suffix tree to efficiently solve string-related problems such as finding repeated substrings?

A suffix tree represents all suffixes of a string in a compressed trie, so each path from the root to a leaf spells out one suffix. A substring occurs more than once exactly when it is a prefix of at least two suffixes. The path-label of every internal node other than the root is therefore a repeated substring, and the number of leaves beneath the node is the number of times it occurs. Finding the longest repeated substring becomes a matter of identifying the internal node with the greatest string depth, that is, the longest path-label, which is not necessarily the node with the most edges from the root.

To list repeated substrings, traverse the tree and report the path-label of each internal node other than the root; every non-empty prefix of such a label is also repeated. The tree can be built in O(n) time for a string of length n over a constant-size alphabet (for example with Ukkonen's algorithm) and traversed in O(n), so the longest repeated substring is found in linear time. Printing every repeated substring can take longer, because the total output may be quadratic in n.
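A full linear-time suffix tree is too long to show here, so the sketch below swaps in a naive, uncompressed suffix trie. It takes O(n^2) time and space and is only meant to illustrate the idea that the deepest branching node spells the longest repeated substring. The function name and the `"\0"` terminator are assumptions for the example; the terminator must not occur in the input.

```python
def longest_repeated_substring(text):
    """Longest substring occurring at least twice, via a naive suffix trie."""
    s = text + "\0"                      # unique terminator: every suffix ends at a leaf
    root = {}
    for i in range(len(s)):              # insert every suffix, one character per node
        node = root
        for ch in s[i:]:
            node = node.setdefault(ch, {})

    best = ""
    stack = [(root, "")]
    while stack:
        node, label = stack.pop()
        # A node with two or more children lies on at least two suffixes,
        # so its label occurs at least twice in the text.
        if len(node) >= 2 and len(label) > len(best):
            best = label
        for ch, child in node.items():
            stack.append((child, label + ch))
    return best


result = longest_repeated_substring("banana")
```

For `"banana"` the deepest branching node has the label `"ana"`, which occurs at positions 1 and 3 (the two occurrences overlap).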

18. Describe the implementation and use cases of a space-efficient probabilistic data structure like a Count-min sketch.

A Count-min sketch is a probabilistic data structure used for estimating the frequency of events in a stream of data, while using significantly less space than storing the entire dataset. It uses a 2D array (or matrix) with d rows, one per hash function, and w counters per row. When an item x arrives, it is hashed d times, each hash function mapping x to a counter in a different row of the matrix. Each of these counters is incremented. To estimate the frequency of x, we hash x using the same d hash functions and return the minimum value of the d corresponding counters. Because collisions can only add to a counter, the estimate never undercounts; it may overcount, and taking the minimum across rows limits that error.

Use cases for Count-min sketches include:

- Traffic monitoring: Estimating the frequency of different IP addresses or URLs in network traffic.

- Log analysis: Identifying frequent error messages or user actions in large log files.

- Database query optimization: Estimating the size of intermediate results in query processing.

- Data stream mining: Finding frequent items in a continuous stream of data where storing all items is infeasible.
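A minimal Count-min sketch might look like the following. The row hash functions are derived here from `hashlib.blake2b` with a different salt per row, which is one convenient choice rather than a requirement of the data structure; the class name, the string-only items, and the default sizes are assumptions for the example.

```python
import hashlib


class CountMinSketch:
    """Approximate frequency counter with `depth` rows of `width` counters."""

    def __init__(self, width=256, depth=4):
        self.width = width
        self.depth = depth
        self.table = [[0] * width for _ in range(depth)]

    def _index(self, item, row):
        # One hash function per row, obtained by salting with the row number.
        digest = hashlib.blake2b(
            item.encode("utf-8"),
            digest_size=8,
            salt=row.to_bytes(8, "little"),
        ).digest()
        return int.from_bytes(digest, "little") % self.width

    def add(self, item, count=1):
        for row in range(self.depth):
            self.table[row][self._index(item, row)] += count

    def estimate(self, item):
        # Collisions only inflate counters, so the smallest one is the best guess.
        return min(
            self.table[row][self._index(item, row)] for row in range(self.depth)
        )


sketch = CountMinSketch()
for url in ["/home", "/home", "/login", "/home", "/cart"]:
    sketch.add(url)
home_estimate = sketch.estimate("/home")   # at least 3, the true count
```

Memory use depends only on `width * depth`, not on the number of distinct items seen, which is what makes the structure attractive for streams.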

19. Explain how to build and use a Voronoi diagram to solve proximity-based problems.

A Voronoi diagram divides a plane into regions based on proximity to a set of points (sites). Each region, called a Voronoi cell, contains all points closer to its site than to any other. Building it involves algorithms like Fortune's algorithm (a sweep line algorithm with O(n log n) complexity) or divide-and-conquer. Using libraries like SciPy or CGAL simplifies this process.

To solve proximity problems, such as finding the nearest site to a given point, you simply determine which Voronoi cell the point lies within. The site associated with that cell is the nearest site. Other applications include finding the largest empty circle (center lies at a Voronoi vertex) or neighbor search. For example, in SciPy:

```python
from scipy.spatial import Voronoi, voronoi_plot_2d
import matplotlib.pyplot as plt

# Four sites at the corners of a unit square
points = [[0, 0], [0, 1], [1, 0], [1, 1]]

# Compute the Voronoi diagram and draw it
vor = Voronoi(points)
voronoi_plot_2d(vor)
plt.show()

# Finding the region for a specific point requires further computation/searching
```
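Because a point lies in a site's Voronoi cell exactly when that site is its nearest site, the cell lookup can be illustrated without building the diagram at all. The brute-force sketch below is a stand-in for that lookup, using only the standard library; `nearest_site` is a hypothetical helper, and for many queries a spatial index such as `scipy.spatial.KDTree` would be the more usual tool.

```python
import math


def nearest_site(sites, query):
    """Index of the site closest to query, i.e. the Voronoi cell containing it."""
    return min(range(len(sites)), key=lambda i: math.dist(sites[i], query))


sites = [[0, 0], [0, 1], [1, 0], [1, 1]]
cell = nearest_site(sites, (0.9, 0.2))   # index of the site at [1, 0]
```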

20. How can you efficiently find the median of a large stream of numbers using a combination of data structures?

To efficiently find the median of a large stream of numbers, you can use a combination of two heaps: a max-heap to store the smaller half of the numbers and a min-heap to store the larger half. The max-heap will store elements less than or equal to the median, and the min-heap will store elements greater than or equal to the median.

Whenever a new number arrives:

- Add it to one of the heaps: If the number is smaller than the root of the max-heap or the max-heap is empty, add it to the max-heap; otherwise, add it to the min-heap.

- Rebalance the heaps: If the sizes of the heaps differ by more than 1, transfer the root element from the larger heap to the smaller heap.

At any time, the median can be found as follows:

- If the heaps have the same size, the median is the average of the roots of the max-heap and min-heap.

- If the heaps have different sizes, the median is the root of the larger heap.

This approach maintains a time complexity of O(log n) for each insertion and O(1) for finding the median.
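The two-heap scheme can be sketched with Python's `heapq` module. Since `heapq` only provides a min-heap, the lower half is stored with negated values to simulate a max-heap; the `RunningMedian` class name is an assumption for the example.

```python
import heapq


class RunningMedian:
    """Median of a stream using a max-heap (lower half) and a min-heap (upper half)."""

    def __init__(self):
        self.low = []    # max-heap of the smaller half, stored as negated values
        self.high = []   # min-heap of the larger half

    def add(self, x):
        if not self.low or x <= -self.low[0]:
            heapq.heappush(self.low, -x)
        else:
            heapq.heappush(self.high, x)
        # Rebalance so the heap sizes never differ by more than one.
        if len(self.low) > len(self.high) + 1:
            heapq.heappush(self.high, -heapq.heappop(self.low))
        elif len(self.high) > len(self.low) + 1:
            heapq.heappush(self.low, -heapq.heappop(self.high))

    def median(self):
        if not self.low and not self.high:
            raise ValueError("median of an empty stream")
        if len(self.low) == len(self.high):
            return (-self.low[0] + self.high[0]) / 2
        return -self.low[0] if len(self.low) > len(self.high) else self.high[0]


stream = RunningMedian()
medians = []
for number in [5, 2, 9, 1]:
    stream.add(number)
    medians.append(stream.median())
```

After the values 5, 2, 9, 1 arrive in that order, the running medians are 5, 3.5, 5, and 3.5.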

21. Describe the structure and usage of a BSP tree in computer graphics for spatial partitioning.

A Binary Space Partitioning (BSP) tree is a data structure used in computer graphics for efficient spatial partitioning. It recursively divides space into convex sets using hyperplanes (lines in 2D, planes in 3D). Each node in the tree represents a convex region, and the leaves represent empty or fully occupied regions. The tree structure is built by repeatedly splitting space along a chosen splitting plane. Each node stores the equation of the splitting plane and pointers to its two children, representing the regions on either side of the plane.