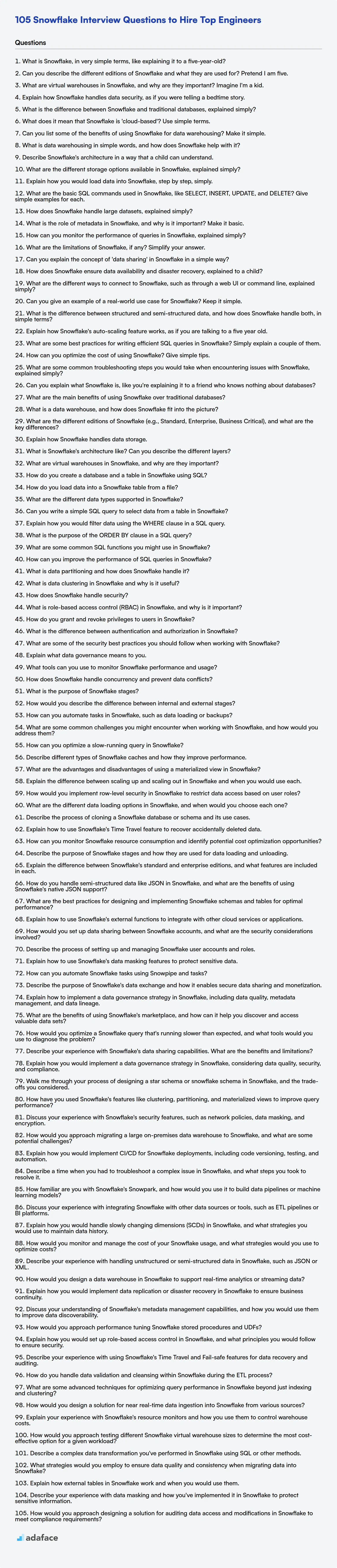

Hiring Snowflake professionals requires a keen understanding of their data warehousing, data lake and cloud computing skills. Recruiters and hiring managers need a well-prepared set of questions to evaluate candidates thoroughly and assess their fit for the role.

This blog post provides a detailed compilation of Snowflake interview questions categorized by experience level, ranging from freshers to experienced professionals, including multiple-choice questions. It aims to equip you with the right questions to gauge a candidate's knowledge and practical skills effectively, helping you to identify the best talent.

By using these questions, you can streamline your interview process and make informed hiring decisions. Enhance your candidate screening with our Snowflake online assessment to ensure you're selecting the most qualified individuals.

Table of contents

Snowflake interview questions for freshers

1. What is Snowflake, in very simple terms, like explaining it to a five-year-old?

Imagine you have lots and lots of toys, like LEGOs, dolls, and cars. Snowflake is like a giant, super-organized playroom in the cloud where you can keep all those toys.

It helps you keep them safe, lets you play with them whenever you want, and even lets your friends play with them too, without messing up your own collection! It's like magic, but it's just a really clever way to store and use information.

2. Can you describe the different editions of Snowflake and what they are used for? Pretend I am five.

Imagine Snowflake is like different flavors of ice cream! Some flavors are simple and cheap, and others are fancy and cost more. Each flavor lets you do different things with your data.

There's a basic flavor called Standard. It's good for starting out and doing simple data tasks. Then, there's Enterprise, which is like adding sprinkles and a cherry on top! It has more features for bigger companies. Business Critical is the super-duper flavor, with extra protection to keep your data safe and sound, like a bodyguard. And finally, Virtual Private Snowflake (VPS), which is the most secure of them all, it is like having your very own locked freezer only for your ice cream, keeping it isolated and secure from everyone else. Each of these editions provides different levels of security, performance, and features, so you pick the one that fits what you need to do with your data the best!

3. What are virtual warehouses in Snowflake, and why are they important? Imagine I'm a kid.

Imagine Snowflake is like a big playground with lots of toys (data!). A virtual warehouse is like a group of kids you can hire to play with those toys. You tell them what you want them to do (like count how many red blocks there are), and they do it for you. You can have different groups of kids (warehouses), some big and fast, others small and slow, depending on how much work you need done.

They're important because without them, nobody can play with the toys! They provide the computing power needed to ask questions and get answers from all that data. Also, you only pay for the time the kids are actually playing, so it's like renting them only when you need them, which saves money.

4. Explain how Snowflake handles data security, as if you were telling a bedtime story.

Once upon a time, in the land of Data Cloud, lived Snowflake, a magical ice castle protecting precious data. Snowflake ensured that only authorized users could access the data using secure keys (authentication). Each key had specific permissions (role-based access control), like letting a prince read only the royal announcements, but not change them. Data was further protected by encrypting it using secret codes (encryption at rest and in transit) while it slept in the ice castle and when it traveled to faraway lands (like when you access it from your computer). Snowflake also keeps a watchful eye on who is doing what (auditing) so if any mischievous goblins try to sneak in, they can be caught and stopped.

To make extra sure no one steals secrets, Snowflake has extra layers of protection. Some secrets are hidden using techniques like data masking, so the important parts can't be seen without permission. Snowflake uses network policies like a magic wall to control the entry of data. It's like Snowflake is keeping data safe and sound, all through the night and day!

5. What is the difference between Snowflake and traditional databases, explained simply?

Snowflake differs from traditional databases in a few key ways. Traditional databases often require you to manage the infrastructure, including servers, storage, and backups. Snowflake is a fully managed service, meaning Snowflake handles all the infrastructure aspects. This allows users to focus on data and analysis rather than database administration.

Another major difference is Snowflake's architecture. Traditional databases typically use a shared-disk or shared-nothing architecture, which can limit scalability and concurrency. Snowflake uses a unique multi-cluster shared data architecture, allowing for independent scaling of compute and storage. This separation allows for better performance and cost optimization, as you can scale compute resources up or down based on demand without impacting storage costs or performance.

6. What does it mean that Snowflake is 'cloud-based'? Use simple terms.

Snowflake being 'cloud-based' simply means that it runs on the internet using someone else's computers (servers) instead of your own. It's like renting computing power and storage from a provider like Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP).

Instead of buying and managing servers yourself, Snowflake takes care of all that. You just pay for what you use. This offers benefits like scalability (easily handle more data or users), reduced costs (no need to buy expensive hardware), and accessibility (access your data from anywhere with an internet connection).

7. Can you list some of the benefits of using Snowflake for data warehousing? Make it simple.

Snowflake offers several key benefits for data warehousing including:

- Scalability and Elasticity: Compute and storage scale independently, allowing you to adjust resources based on workload demands without downtime.

- Performance: Snowflake's architecture and optimized query engine deliver fast query performance.

- Ease of Use: Simplified data loading, management, and a user-friendly interface reduces administrative overhead.

- Cost-Effectiveness: Pay-as-you-go pricing model and efficient resource utilization can lead to cost savings.

- Data Sharing: Securely share data with internal and external users without moving the data.

- Support for Various Data Types: Snowflake handles structured, semi-structured, and unstructured data.

8. What is data warehousing in simple words, and how does Snowflake help with it?

Data warehousing is like creating a special storage area where you collect and organize information from different parts of your business (like sales, marketing, and customer service). This helps you analyze the data to spot trends, make better decisions, and improve your business performance. Think of it as taking all the ingredients from different refrigerators and pantries in a restaurant, and neatly organizing them in a central, easily-accessible walk-in refrigerator, so the chef can easily find and use them for cooking.

Snowflake helps with data warehousing by providing a cloud-based platform to store, process, and analyze vast amounts of data. It's scalable, meaning you can easily increase or decrease resources as needed. Key benefits are:

- Scalability: Handle huge datasets without performance issues.

- Concurrency: Multiple users can query data at the same time.

- Cost-effective: Pay only for the resources you use.

- Security: Built-in security features to protect your data.

- Easy to Use: Snowflake has a simple user interface compared to other older data warehousing solutions.

9. Describe Snowflake's architecture in a way that a child can understand.

Imagine Snowflake is like a big online Lego building platform. All the Lego bricks (your data) are stored in a special, super-safe warehouse (cloud storage). When you want to build something (run a query), you borrow some robot builders (virtual warehouses). These robots don't touch the original Lego bricks; they only make copies to build with, so your original data is always safe. Once the robots are done building, they disappear, and you only pay for the time they were working! You can have many different groups of robots working on different projects at the same time, all using the same Lego bricks from the warehouse. This makes it easy for everyone to share and use the data without messing it up.

So, it has three main parts: The safe Lego warehouse, smart robot builders, and a control center that makes sure everyone plays nicely together. This allows you to analyze your data quickly and easily, without having to worry about managing all the computers yourself.

10. What are the different storage options available in Snowflake, explained simply?

Snowflake abstracts away the complexity of choosing specific storage types. All data in Snowflake is stored in a columnar format, compressed, and encrypted. Snowflake uses cloud storage (either AWS S3, Azure Blob Storage, or Google Cloud Storage) to physically store the data.

Essentially, you don't directly manage storage configurations like you would with traditional databases. Snowflake handles the storage optimization, availability, and durability aspects for you in the background. You simply focus on loading and querying your data.

11. Explain how you would load data into Snowflake, step by step, simply.

Loading data into Snowflake involves a few key steps. First, stage your data in a location Snowflake can access, like AWS S3, Azure Blob Storage, or Google Cloud Storage. Snowflake provides internal stages as well. Second, create a file format object in Snowflake that describes the structure of your data files (e.g., CSV, JSON, Parquet). Define the file type, field delimiter, compression, and other relevant properties using CREATE FILE FORMAT. Third, use the COPY INTO command to load the data from the stage into a Snowflake table. You specify the table, the stage location, and the file format to use. For example, COPY INTO mytable FROM @mystage FILE_FORMAT = (FORMAT_NAME = my_file_format);.

Alternatively, for smaller datasets or real-time ingestion, you might use Snowpipe. Snowpipe is Snowflake's continuous data ingestion service. You set up a pipe object that listens for new files in a stage. When new files arrive, Snowpipe automatically loads the data into the specified table. This requires creating a pipe object and configuring a notification mechanism to alert Snowpipe when new files are staged.

12. What are the basic SQL commands used in Snowflake, like SELECT, INSERT, UPDATE, and DELETE? Give simple examples for each.

Basic SQL commands in Snowflake are used to manipulate data. SELECT retrieves data, INSERT adds new data, UPDATE modifies existing data, and DELETE removes data.

Examples:

SELECT:SELECT * FROM employees;(Retrieves all columns and rows from the 'employees' table.)INSERT:INSERT INTO employees (name, salary) VALUES ('John Doe', 60000);(Inserts a new employee named 'John Doe' with a salary of 60000.)UPDATE:UPDATE employees SET salary = 65000 WHERE name = 'John Doe';(Updates the salary of 'John Doe' to 65000.)DELETE:DELETE FROM employees WHERE name = 'John Doe';(Deletes the employee named 'John Doe'.)

13. How does Snowflake handle large datasets, explained simply?

Snowflake handles large datasets using a massively parallel processing (MPP) architecture. Essentially, data is divided into micro-partitions (small, contiguous units of storage) and spread across many compute nodes. When you run a query, Snowflake automatically distributes the workload across these nodes, allowing them to process data simultaneously, dramatically speeding up query execution.

This separation of storage and compute allows Snowflake to scale compute resources up or down independently based on the workload. It also utilizes columnar storage, which stores data by columns instead of rows, improving query performance for analytical workloads by reading only the necessary columns for a given query. This architecture allows Snowflake to handle very large datasets efficiently and cost-effectively.

14. What is the role of metadata in Snowflake, and why is it important? Make it basic.

Metadata in Snowflake is essentially data about data. It describes various aspects of your Snowflake environment, such as: tables, columns, users, warehouses, queries, and data lineage. Think of it like a library catalog; it tells you what books (data) are available and where to find them.

It's important because it powers almost everything Snowflake does. It enables efficient query processing, security, data governance, and overall system management. Without accurate metadata, Snowflake wouldn't know how to access or manage your data effectively. For example, when you run a query, Snowflake uses metadata to determine the optimal execution plan. Metadata also allows Snowflake to track data lineage, showing how data flows through the system.

15. How can you monitor the performance of queries in Snowflake, explained simply?

You can monitor Snowflake query performance using the web interface, SQL, or partner tools.

Using Snowflake's web interface, you can view query history and examine query profiles for details like execution time, the amount of data scanned, and any bottlenecks. SQL functions and views (e.g., QUERY_HISTORY, QUERY_HISTORY_BY_*) allow you to programmatically retrieve performance metrics. For more advanced monitoring, tools offered by Snowflake partners often provide richer visualizations and alerting capabilities. Key metrics to observe include execution time, warehouse size utilization, bytes scanned, and query compilation time.

16. What are the limitations of Snowflake, if any? Simplify your answer.

Snowflake, while powerful, has some limitations. A key one is cost. Its consumption-based pricing can become expensive if not carefully monitored and optimized, especially with large datasets and complex queries. Also, while Snowflake handles structured and semi-structured data very well, it's not ideally suited for unstructured data like images or videos without significant transformation.

Furthermore, despite continuous improvements, Snowflake's metadata management can be a bottleneck in very large deployments. While it has support for many languages via external functions, it does not natively support procedural languages like PL/SQL that some organizations are accustomed to. This might require rewriting existing logic.

17. Can you explain the concept of 'data sharing' in Snowflake in a simple way?

Data sharing in Snowflake lets you securely share data between Snowflake accounts without actually moving or copying the data. Think of it like granting access to a virtual table (or set of tables, views, etc.) that resides in your account to another account. The other account can then query this 'shared' data as if it were their own, but the data remains physically stored and managed in your account. No ETL or data replication needed.

This is accomplished via Snowflake's secure data sharing architecture. You, as the data provider, create a 'share' which is a pointer to the data you want to share and then grant access to a specific Snowflake account. The consumer (the account receiving the shared data) creates a database from that share, and that database acts as a read-only mirror of the shared data.

18. How does Snowflake ensure data availability and disaster recovery, explained to a child?

Imagine Snowflake has a magical piggy bank where it keeps your data. To make sure you always have your data even if something bad happens, like the piggy bank falling and breaking, Snowflake does a few clever things. First, it makes lots of copies of your data and spreads them out in different places far away. Think of it like having extra piggy banks hidden in different houses! If one house has a problem (like a fire!), you can still get your money (data) from the other houses.

Second, Snowflake constantly backs up your data. This means it regularly takes snapshots of your piggy bank, so if something accidentally gets deleted or changed, you can go back to an older snapshot and get the correct data back. So, even if you accidentally break your toy (data), Snowflake has a picture of it to put it back together again!

19. What are the different ways to connect to Snowflake, such as through a web UI or command line, explained simply?

Snowflake offers several ways to connect. The most common is through the Snowsight web UI, which is a browser-based interface for writing SQL queries, managing users, and monitoring performance. It's accessible directly through your Snowflake account.

Another way is via the SnowSQL command-line client. This requires installation on your local machine. You can then execute SQL commands and scripts directly from your terminal. There are also connectors available for various programming languages like Python (using the Snowflake Connector for Python), allowing you to interact with Snowflake programmatically.

20. Can you give an example of a real-world use case for Snowflake? Keep it simple.

A large online retailer uses Snowflake to consolidate all their sales data from various sources (online store, physical stores, mobile app) into a single, unified data warehouse. This allows them to perform comprehensive sales analytics, such as:

- Identifying top-selling products across all channels.

- Understanding customer purchase behavior patterns.

- Optimizing inventory levels based on demand forecasts.

Because Snowflake can handle large volumes of data and complex queries efficiently, the retailer can gain valuable insights into their sales performance and make data-driven decisions to improve profitability and customer satisfaction.

21. What is the difference between structured and semi-structured data, and how does Snowflake handle both, in simple terms?

Structured data has a predefined schema, like a table in a relational database with columns and rows (think CSV files or SQL databases). Semi-structured data doesn't have a strict schema but uses tags or markers to delineate elements, like JSON or XML. It offers flexibility but still has some organization.

Snowflake can handle both. For structured data, Snowflake works like a standard SQL database, allowing you to define tables and load data into them. For semi-structured data, Snowflake offers native support using the VARIANT data type. This lets you load JSON or XML directly, and then query it using SQL with functions to parse and extract specific elements. Snowflake automatically infers schema on read for semi-structured data stored in VARIANT columns.

22. Explain how Snowflake's auto-scaling feature works, as if you are talking to a five year old.

Imagine Snowflake is like a playground with slides. When only a few kids are playing, we only need one slide open. That's like a small Snowflake! But when lots and lots of kids come to play, one slide isn't enough, so we open more slides automatically so everyone can have fun faster. Snowflake does the same thing with computers. When there's not much work, it uses only a few computers, but when there's lots of work to do, it adds more computers automatically so things get done quickly, like opening more slides on the playground!

23. What are some best practices for writing efficient SQL queries in Snowflake? Simply explain a couple of them.

When writing efficient SQL queries in Snowflake, consider these best practices:

- Use WHERE clause filtering: Always filter data as early as possible in the query using the

WHEREclause. This reduces the amount of data that Snowflake needs to scan and process. Avoid filtering after joins, unless it's unavoidable. For example, preferSELECT * FROM table WHERE column = 'value'over selecting all data and then filtering. - Leverage Clustering: Snowflake's clustering can significantly improve query performance. Choose a clustering key based on frequently filtered or joined columns. When filtering queries use clustered columns with the proper

WHEREclause.

24. How can you optimize the cost of using Snowflake? Give simple tips.

To optimize Snowflake costs, focus on efficient data warehousing practices. Reduce compute costs by right-sizing virtual warehouses, auto-suspending them when idle, and scaling up/down based on workload. Improve query performance through proper data clustering, using appropriate data types, and avoiding SELECT *. Minimize storage costs by leveraging data compression, partitioning large tables, and implementing data retention policies to remove unnecessary data. Materialized views are great for repeat query performance and cost savings.

Additionally, monitor Snowflake usage through the web interface or programmatically to identify cost drivers and areas for improvement. Consider using Snowflake's features like Resource Monitors to limit credit consumption, and take advantage of discounts like capacity pricing if your needs are predictable. Be sure to analyze your queries.

25. What are some common troubleshooting steps you would take when encountering issues with Snowflake, explained simply?

When troubleshooting Snowflake issues, I generally start with the basics. First, I check my connection and credentials to ensure I can actually connect to the Snowflake environment. A good first step is to try a simple SELECT 1; query to verify connectivity and basic functionality. Then, I examine query history to see if any recent queries failed and review their error messages. Snowflake's query profile is invaluable for identifying performance bottlenecks, such as slow-performing joins or full table scans. I also check the Snowflake status page for any reported service outages that might be impacting performance or availability.

Next, I investigate the specific error message or unexpected behavior. If it's a data issue, I'd examine the data itself, checking for nulls, incorrect data types, or inconsistencies. For performance problems, I'd look at query execution plans to identify areas for optimization, such as adding or adjusting clustering keys or using appropriate warehouse sizes. Finally, I often consult Snowflake's documentation and community forums for solutions to common issues, especially if the error message isn't immediately clear. I always try to isolate the problem by simplifying the query or process to pinpoint the root cause.

Snowflake interview questions for juniors

1. Can you explain what Snowflake is, like you're explaining it to a friend who knows nothing about databases?

Imagine you have tons of data, like all the sales records from your shop, or website logs, and you want to understand patterns or trends from it. Snowflake is like a giant, super-powered spreadsheet in the cloud that can handle all that data. It's really good because it's fast, easy to use, and scales automatically – meaning you don't have to worry about it crashing when you load too much data.

Think of it like this: Normally, you'd need to set up complicated database servers, configure storage, and worry about backups. Snowflake handles all of that for you. You just upload your data, and then you can start asking questions and getting answers. It also plays nicely with other tools like data visualization software (Tableau) and programming languages for doing more advanced data analysis.

2. What are the main benefits of using Snowflake over traditional databases?

Snowflake offers several advantages over traditional databases, primarily centered around its cloud-native architecture. These benefits include: Scalability and Performance: Snowflake can independently scale compute and storage resources, allowing for quick adaptation to changing workloads. This typically leads to faster query performance compared to traditional databases where scaling can be more complex and time-consuming. Cost Efficiency: The pay-as-you-go model allows you to only pay for the resources you consume. Also, the ability to independently scale compute means you can scale up compute only when needed and scale back down when not. Data Sharing: Snowflake's architecture facilitates secure and easy data sharing between internal teams, partners, and customers without the need for data replication or complex ETL processes.

Furthermore, Snowflake provides Simplified Management: Snowflake is a fully managed service, reducing the operational overhead associated with traditional database administration tasks like patching, backups, and performance tuning. Support for Semi-structured Data: Snowflake natively supports semi-structured data formats like JSON and XML, simplifying data ingestion and analysis without requiring complex transformations upfront.

3. What is a data warehouse, and how does Snowflake fit into the picture?

A data warehouse is a central repository for storing integrated data from one or more disparate sources. It's designed for reporting and data analysis, and is a core component of business intelligence. Key characteristics include subject-oriented, integrated, time-variant, and non-volatile data.

Snowflake is a cloud-based data warehousing platform offered as Software-as-a-Service (SaaS). It provides a fully managed service which means users don't have to worry about the infrastructure, setup, or maintenance. Snowflake offers features like: * Separate compute and storage scaling. * Support for semi-structured data. * Strong security features. * Pay-as-you-go pricing. Snowflake is used to store and analyze large amounts of structured and semi-structured data, enabling organizations to gain insights and make data-driven decisions.

4. What are the different editions of Snowflake (e.g., Standard, Enterprise, Business Critical), and what are the key differences?

Snowflake offers several editions tailored to different needs and budgets. The main editions are Standard, Enterprise, Business Critical (formerly Enterprise for Sensitive Data), and Virtual Private Snowflake (VPS). Key differences lie in features, security, compliance, and performance.

Standard Edition is the entry-level option, providing core data warehousing functionalities. Enterprise Edition adds advanced features such as time travel, materialized views, and enhanced security. Business Critical Edition provides the highest level of data protection and availability, including HIPAA compliance and enhanced encryption. Virtual Private Snowflake (VPS), is a physically isolated environment for organizations with stringent security and compliance needs. Each higher tier builds upon the features of the lower tiers, offering increasing levels of performance, security, and governance.

5. Explain how Snowflake handles data storage.

Snowflake employs a hybrid architecture for data storage, separating compute and storage. Data is stored in a columnar format, optimized for analytics, within Snowflake's internal storage system, which is typically cloud object storage (like AWS S3, Azure Blob Storage, or Google Cloud Storage). Snowflake compresses and encrypts this data. Snowflake manages the organization, file size, structure, metadata, and compression. You don't have direct access to the underlying object storage.

Snowflake's storage is virtually unlimited and scales elastically. When you load data, Snowflake automatically reorganizes it into its optimized, columnar format. Data is also automatically partitioned and micro-partitioned. Micro-partitions are contiguous units of storage, typically 50 to 500 MB of uncompressed data, organized in a columnar fashion. Snowflake uses metadata about these micro-partitions for query optimization.

6. What is Snowflake's architecture like? Can you describe the different layers?

Snowflake's architecture is a hybrid of shared-disk and shared-nothing architectures. It uses a central data repository that is accessible by all compute nodes, but each compute node has its own independent compute resources. Snowflake has three main layers:

- Cloud Services Layer: This layer manages the entire system, including authentication, access control, infrastructure management, metadata management, query parsing, and optimization.

- Compute Layer: This layer consists of virtual warehouses, which are clusters of compute resources (CPU, memory, and temporary storage) used to execute queries. Each virtual warehouse is independent and doesn't share compute resources.

- Storage Layer: This layer stores all data in a columnar format, optimized for analytical queries. Snowflake manages the storage and compression of the data. Users don't need to worry about data distribution or management; Snowflake handles all of that.

7. What are virtual warehouses in Snowflake, and why are they important?

Virtual warehouses in Snowflake are compute engines that you use to execute queries and perform other data manipulation operations. They are essentially clusters of compute resources (CPU, memory, and temporary storage) that you can dynamically provision and scale independently of storage.

They are important because they provide several key benefits:

- Compute resource isolation: Different workloads can be run on separate warehouses, preventing resource contention and improving performance.

- Scalability: You can easily resize warehouses to handle varying workloads. For example, scale up for large data loads and scale down during off-peak hours.

- Concurrency: Snowflake automatically manages concurrency within a warehouse, allowing multiple users or applications to run queries simultaneously.

- Cost optimization: You only pay for the compute resources you use, and warehouses can be automatically suspended when idle, reducing costs.

- Flexibility: Different warehouse sizes are available to suit different workload requirements.

8. How do you create a database and a table in Snowflake using SQL?

To create a database in Snowflake, use the CREATE DATABASE command followed by the database name.

CREATE DATABASE my_database;

To create a table within that database, first ensure you are using the database with USE DATABASE my_database;. Then, use the CREATE TABLE command, specifying the table name and column definitions (name, data type, constraints).

CREATE TABLE my_table (

id INT,

name VARCHAR(255),

created_at TIMESTAMP

);

9. How do you load data into a Snowflake table from a file?

To load data into a Snowflake table from a file, you typically use the COPY INTO <table> command. First, stage your data file (e.g., CSV, JSON, Parquet) in a location Snowflake can access, such as an internal stage (Snowflake-managed) or an external stage (e.g., AWS S3, Azure Blob Storage, Google Cloud Storage). Then, execute the COPY INTO command, specifying the table name, the stage location, and file format options to match your data file.

For example:

COPY INTO my_table

FROM @my_stage/data.csv

FILE_FORMAT = (TYPE = CSV FIELD_DELIMITER = ',' SKIP_HEADER = 1);

10. What are the different data types supported in Snowflake?

Snowflake supports a variety of data types, which can be broadly categorized into:

- Numeric:

NUMBER,DECIMAL,INT,INTEGER,BIGINT,SMALLINT,TINYINT,BYTEINT,FLOAT,FLOAT8,DOUBLE,DOUBLE PRECISION,REAL - String:

VARCHAR,STRING,TEXT - Binary:

BINARY - Date & Time:

DATE,TIME,DATETIME,TIMESTAMP(and its variants likeTIMESTAMP_LTZ,TIMESTAMP_NTZ,TIMESTAMP_TZ) - Boolean:

BOOLEAN - Semi-structured Data:

VARIANT,OBJECT,ARRAY

VARIANT is particularly important as it allows storing semi-structured data like JSON, Avro, ORC, Parquet, or XML without requiring a predefined schema.

11. Can you write a simple SQL query to select data from a table in Snowflake?

To select all columns from a table named employees in Snowflake, you would use the following SQL query:

SELECT * FROM employees;

If you only want to select specific columns, for example, first_name and last_name, you would modify the query like this:

SELECT first_name, last_name FROM employees;

12. Explain how you would filter data using the WHERE clause in a SQL query.

The WHERE clause in SQL is used to filter rows from a table based on a specified condition. It appears after the FROM clause and allows you to select only those rows that meet your criteria. The condition in the WHERE clause can use various operators such as =, !=, >, <, >=, <=, LIKE, BETWEEN, IN, and NOT. For example:

SELECT * FROM employees WHERE salary > 50000;

This query would retrieve all columns (*) from the employees table, but only for those employees whose salary is greater than 50000.

13. What is the purpose of the ORDER BY clause in a SQL query?

The ORDER BY clause in a SQL query is used to sort the result set of the query. It specifies one or more columns that should be used as the basis for ordering the rows.

By default, ORDER BY sorts in ascending order (A-Z, 0-9). You can explicitly specify ascending order using ASC or descending order using DESC. For example: ORDER BY column_name DESC would sort the results by column_name in descending order. Multiple columns can be specified for sorting, creating a hierarchical sort.

14. What are some common SQL functions you might use in Snowflake?

Snowflake supports a wide range of SQL functions. Some common ones I've used include:

- String functions:

UPPER(),LOWER(),TRIM(),SUBSTRING(),LENGTH(),CONCAT()for manipulating text data. - Date/Time functions:

CURRENT_DATE(),CURRENT_TIMESTAMP(),DATE(),TIME(),DATEADD(),DATEDIFF(),EXTRACT()for working with date and time values. - Numeric functions:

ROUND(),TRUNC(),ABS(),MOD(),SQRT()for performing mathematical operations. - Conditional functions:

CASE WHEN ... THEN ... ELSE ... END,IFNULL(),NVL()for handling conditional logic. - Aggregate functions:

COUNT(),SUM(),AVG(),MIN(),MAX()for summarizing data. - Window functions:

ROW_NUMBER(),RANK(),LAG(),LEAD(),SUM() OVER(),AVG() OVER()for performing calculations across a set of rows. - Variant Functions:

PARSE_JSON(),TO_JSON(),GET()andGET_PATH()for handling semi-structured JSON data.

Here's an example using CASE WHEN and UPPER():

SELECT

CASE

WHEN status = 'active' THEN UPPER(name)

ELSE name

END AS processed_name

FROM users;

15. How can you improve the performance of SQL queries in Snowflake?

To improve SQL query performance in Snowflake, consider these points: Optimize query structure. Use EXPLAIN to understand query plans and identify bottlenecks. Filter data early using WHERE clauses, especially on clustered columns. Leverage Snowflake's features. Utilize clustering keys to physically organize data for faster retrieval. Use materialized views for pre-computing frequently accessed data. Optimize data loading and storage. Ensure data is properly loaded using best practices for file size and format (Parquet is generally preferred). Regularly analyze table metadata to identify opportunities for reclustering or optimization. Use proper data types. Ensure correct data types are used to reduce unnecessary data conversions.

Hardware considerations: Although Snowflake automatically handles the infrastructure aspects, choose the appropriate warehouse size for the workload. Consider scaling up the warehouse for short-term performance boosts or scaling out for concurrency. Caching: Snowflake utilizes caching layers to speed up data retrieval. Ensure the virtual warehouse isn't frequently suspended to maintain cache validity.

16. What is data partitioning and how does Snowflake handle it?

Data partitioning is the process of dividing a large table into smaller, more manageable pieces. This improves query performance by allowing the database to scan only the relevant partitions, rather than the entire table. Snowflake automatically handles data partitioning through its micro-partitioning feature.

Snowflake divides all data in a table into micro-partitions, which are contiguous units of storage typically ranging in size from 50 to 500 MB (before compression). Data within each micro-partition is automatically sorted using a combination of techniques (including clustering keys if defined), providing efficient query performance. Snowflake's query optimizer leverages micro-partition metadata to prune unnecessary partitions during query execution, further optimizing performance. The user does not manage partitions directly, so it simplifies data management.

17. What is data clustering in Snowflake and why is it useful?

Data clustering in Snowflake refers to the process of organizing data within a table based on one or more columns, known as clustering keys. Snowflake physically rearranges the data on disk to improve query performance. When queries filter or sort on the clustering keys, Snowflake can efficiently prune large portions of the table that don't contain relevant data, thus reducing the amount of data scanned.

The usefulness of data clustering lies in its ability to significantly speed up query execution and reduce costs. By minimizing the amount of data scanned, queries complete faster, leading to improved user experience. Furthermore, reduced data scanning translates to lower Snowflake credits consumed, resulting in cost savings. The effectiveness of clustering depends on how well the clustering keys align with common query patterns. The clustering information is available via SYSTEM$CLUSTERING_INFORMATION function.

18. How does Snowflake handle security?

Snowflake employs a multi-layered approach to security, encompassing network, infrastructure, data, and account security. Key features include: network isolation using virtual private clouds (VPCs), encryption of data at rest and in transit (AES 256-bit encryption), support for federated authentication and single sign-on (SSO), multi-factor authentication (MFA), role-based access control (RBAC), and comprehensive auditing capabilities. Snowflake is also compliant with various industry standards like SOC 2 Type II, HIPAA, and PCI DSS.

Data governance is achieved through features like data masking (dynamic and static), row-level security, and column-level security, allowing for fine-grained control over data access. Regular security assessments and penetration testing are performed to identify and address potential vulnerabilities, and Snowflake provides detailed security documentation and best practices for customers.

19. What is role-based access control (RBAC) in Snowflake, and why is it important?

Role-Based Access Control (RBAC) in Snowflake is a method of managing access rights based on the roles assigned to users. Instead of granting permissions directly to individual users, permissions are associated with roles, and users are then assigned to these roles. This simplifies access management. For example, you might have roles like data_engineer, analyst, or manager, each with specific permissions to access certain data or perform specific actions.

RBAC is important because it enhances security, simplifies administration, and improves auditability. It reduces the risk of granting excessive permissions, making it easier to manage user access and ensure compliance with security policies. By centralizing permissions within roles, administrators can easily grant or revoke access rights for groups of users, making user management more efficient and less error-prone. It also helps to track who has access to what, making auditing a breeze.

20. How do you grant and revoke privileges to users in Snowflake?

In Snowflake, you grant and revoke privileges using the GRANT and REVOKE commands, respectively. The general syntax is as follows:

GRANT <privilege> ON <object_type> <object_name> TO ROLE <role_name>; REVOKE <privilege> ON <object_type> <object_name> FROM ROLE <role_name>;

Replace <privilege> with the specific privilege (e.g., SELECT, INSERT, USAGE, OWNERSHIP), <object_type> with the type of object (e.g., DATABASE, TABLE, WAREHOUSE), <object_name> with the name of the object, and <role_name> with the role you are granting or revoking privileges to. You then grant the role to the user.

GRANT ROLE <role_name> TO USER <user_name>;

Typical object types include: DATABASE, SCHEMA, TABLE, VIEW, WAREHOUSE, etc. Roles are central to managing permissions. You grant privileges to roles, and then assign roles to users.

21. What is the difference between authentication and authorization in Snowflake?

Authentication verifies who you are, while authorization determines what you are allowed to do. Authentication confirms your identity, typically through username/password or other credentials. Snowflake supports various authentication methods such as username/password, MFA, OAuth, and key pair authentication.

Authorization, on the other hand, happens after successful authentication. It governs your access rights within Snowflake. For example, even after you've logged in (authenticated), you might only be authorized to read data from certain tables, but not others. Roles and privileges are central to authorization in Snowflake; roles are granted privileges, and users are assigned to roles. This determines what actions users can perform on Snowflake objects.

22. What are some of the security best practices you should follow when working with Snowflake?

When working with Snowflake, security best practices revolve around access control, data protection, and network security. Key practices include: using multi-factor authentication (MFA) for all user accounts, regularly rotating keys and secrets, and implementing strong password policies. Utilize network policies to restrict access to Snowflake based on IP address, and leverage features like data masking and encryption (both at rest and in transit) to protect sensitive information.

Further, enforce role-based access control (RBAC) to grant users only the necessary permissions, monitor user activity and audit logs for suspicious behavior, and keep the Snowflake client software up to date with the latest security patches. Secure data loading processes, validate data inputs, and be mindful of potential SQL injection vulnerabilities when constructing queries. Regularly review and update security configurations to adapt to evolving threats and security best practices.

23. Explain what data governance means to you.

Data governance, to me, is the overall management of the availability, usability, integrity, and security of an organization's data. It defines who can take what action, upon what data, in what situations. It's about establishing policies and procedures to ensure data is accurate, consistent, reliable, and protected throughout its lifecycle.

The goal of data governance is to enable better decision-making, improve operational efficiency, reduce risks, and comply with regulatory requirements. Key aspects include defining data ownership, setting data quality standards, establishing data security protocols, and implementing data access controls. Ultimately, good data governance ensures that data is a valuable asset and not a liability.

24. What tools can you use to monitor Snowflake performance and usage?

Snowflake provides several tools to monitor performance and usage. The primary tool is the Snowflake web interface, which offers a user-friendly interface to view query history, resource utilization, and warehouse activity. You can also use SQL commands to query Snowflake's metadata and system tables for detailed information on query performance, storage usage, and data transfer costs.

Other options include using Snowflake's REST API to programmatically retrieve monitoring data for integration with external monitoring systems like Datadog, New Relic, or Splunk. These third-party tools often provide more advanced visualization and alerting capabilities.

25. How does Snowflake handle concurrency and prevent data conflicts?

Snowflake handles concurrency using its multi-version concurrency control (MVCC) architecture. Each query sees a consistent snapshot of the data as of the query's start time. This eliminates the need for read locks, allowing multiple queries to read the same data concurrently without blocking each other or experiencing data conflicts.

Updates and deletes in Snowflake are handled through a copy-on-write mechanism. When data is modified, Snowflake creates new micro-partitions with the updated data, leaving the original micro-partitions unchanged. The metadata is then updated to point to the new micro-partitions for subsequent queries. This ensures that concurrent queries continue to see the consistent snapshot from when they started, preventing data conflicts and maintaining data integrity.

26. What is the purpose of Snowflake stages?

Snowflake stages are used for storing and managing data files that you want to load into or unload from Snowflake. They act as a pointer to a location where your data files reside, whether it's an internal Snowflake location or an external cloud storage service like AWS S3, Azure Blob Storage, or Google Cloud Storage.

Essentially, stages simplify the process of bulk loading and unloading data by providing a centralized and secure way to access your files. They offer features like automatic data encryption, file format definition, and data compression, which streamline the data transfer process and improve efficiency. You can think of stages as a virtual file system that is accessed by Snowflake for data ingestion and extraction.

27. How would you describe the difference between internal and external stages?

Internal stages in Snowflake store data within Snowflake's managed storage, offering simplicity and performance. Data stored here is automatically encrypted and managed by Snowflake. External stages, on the other hand, point to data stored in external cloud storage services like AWS S3, Azure Blob Storage, or Google Cloud Storage.

The key difference lies in data ownership and management. With internal stages, Snowflake handles everything. With external stages, you manage the storage and access permissions in your cloud storage account, while Snowflake accesses the data based on the credentials you provide. External stages are useful for leveraging existing cloud storage and sharing data with systems outside of Snowflake.

28. How can you automate tasks in Snowflake, such as data loading or backups?

Snowflake provides several options for automating tasks like data loading and backups. These include:

- Snowflake Tasks: These are scheduled SQL statements that can automate data loading, transformation, and other DML operations. You can define dependencies between tasks to create complex workflows.

- Snowpipe: For continuous data ingestion from cloud storage, Snowpipe automatically loads data as soon as it's available in the defined stage.

- External Orchestration Tools: Tools like Airflow, dbt, or other ETL tools can connect to Snowflake and orchestrate complex data pipelines, including loading, transformation, and backups. You can also use cloud provider services such as AWS Step Functions or Azure Data Factory for workflow orchestration.

29. What are some common challenges you might encounter when working with Snowflake, and how would you address them?

Some common challenges encountered with Snowflake include managing costs, optimizing query performance, and handling data governance/security. To address cost management, I'd proactively monitor resource utilization using Snowflake's built-in tools, implement appropriate warehouse sizing and scaling strategies, and leverage features like auto-suspend and auto-resume. For query performance, I would analyze query execution plans using EXPLAIN, optimize table structures using clustering keys, and ensure proper indexing where applicable. Regarding data governance, I would implement robust access controls using roles and permissions, enforce data masking and encryption policies, and utilize Snowflake's data lineage features to track data provenance. Proper documentation is key to ensure everyone follows these best practices. Other common challenges include understanding Snowflakes semi-structured data capabilities. SELECT $1:field_name FROM table; is a useful query example to extract data from VARIANT columns and requires careful thought.

Snowflake intermediate interview questions

1. How can you optimize a slow-running query in Snowflake?

To optimize a slow-running query in Snowflake, several strategies can be employed. First, analyze the query profile in Snowflake's web interface to identify bottlenecks (e.g., full table scans, excessive spilling to disk). Then, consider the following:

- Optimize table structure: Ensure appropriate clustering keys are defined for frequently queried columns. Consider using materialized views for pre-computation of aggregations or complex joins.

- Optimize query structure: Rewrite the query to leverage joins instead of

INsubqueries where possible. UseEXISTSinstead ofCOUNT(*)if you only need to check for the existence of rows. Limit the amount of data being scanned, possibly using partitioning or data pruning techniques. Use theQUALIFYclause to filter results based on window function calculations instead of doing it with subqueries. - Increase warehouse size: Temporarily scaling up the virtual warehouse can provide more compute resources to execute the query faster, especially for large datasets. However, this should be considered after optimizing the query itself. Also, ensure that the proper warehouse size is configured to be optimal for cost and performance.

2. Describe different types of Snowflake caches and how they improve performance.

Snowflake utilizes several layers of caching to optimize query performance. These caches significantly reduce the need to fetch data directly from the underlying cloud storage, thereby improving speed and reducing costs.

Different types of Snowflake caches include:

- Metadata Cache: This cache stores metadata about tables and micro-partitions (file metadata, statistics, etc.). It's crucial for query optimization because Snowflake uses the metadata to determine which micro-partitions need to be scanned for a given query.

- Query Result Cache: Snowflake automatically caches the results of queries. If the same query is executed again, and the underlying data hasn't changed, Snowflake can return the results directly from the cache, providing extremely fast response times. This cache is available across virtual warehouses. It is important to note that even a small change will not trigger a result cache hit.

- Virtual Warehouse Local Disk Cache: Each virtual warehouse has a local disk cache that stores data retrieved from cloud storage. This cache sits on the compute nodes themselves. If data is needed for a query, Snowflake first checks the local disk cache. If the data is present (a cache hit), it can be retrieved much faster than reading it from cloud storage.

3. What are the advantages and disadvantages of using a materialized view in Snowflake?

Materialized views in Snowflake offer significant advantages, primarily improved query performance. They pre-compute and store the results of a query, so subsequent identical queries can retrieve data directly from the materialized view rather than re-executing the original query. This can lead to substantial performance gains, especially for complex aggregations or joins. Other advantages include simplified query logic for end-users and potential cost savings by reducing compute resource usage.

However, materialized views also have disadvantages. They consume storage space, as they store a copy of the data. They require maintenance; Snowflake automatically refreshes them when underlying data changes, which consumes compute resources and can incur costs. The refresh frequency can impact data staleness, meaning the data in the materialized view might not always be perfectly up-to-date. Further, there are limitations on the types of queries that can be used to define materialized views (e.g., no QUALIFY clause).

4. Explain the difference between scaling up and scaling out in Snowflake and when you would use each.

Scaling up (vertical scaling) in Snowflake means increasing the size of your virtual warehouse. You choose a larger warehouse size (e.g., from X-Small to Small, Medium, Large, etc.). This provides more compute resources (CPU, memory) to a single processing unit. You'd use scaling up when you need to improve the performance of individual queries, especially complex ones that are bottlenecked by resource constraints. It is suitable when you need faster results and can tolerate a brief interruption to resize the warehouse. However, it has a limit to how large you can scale a single warehouse.

Scaling out (horizontal scaling) in Snowflake involves adding more virtual warehouses. Instead of one large warehouse, you have multiple smaller or medium-sized warehouses running concurrently. This is often achieved through multi-cluster warehouses. Scaling out is ideal when you have a high volume of concurrent queries, each of which may not be particularly resource-intensive on their own. It allows you to handle more users and workloads without significantly impacting the performance of individual queries. It provides concurrency and better handles spikes in demand. Consider scaling out when you want to improve overall system throughput and reduce queueing for users, or when you need to isolate workloads by assigning dedicated warehouses to different users or processes. Scaling out avoids single point of contention limits found with scaling up.

5. How would you implement row-level security in Snowflake to restrict data access based on user roles?

Row-level security (RLS) in Snowflake can be implemented using security policies and user role mapping. First, you define a security policy that contains a boolean expression determining which rows a user can see. This expression typically uses the CURRENT_ROLE() or CURRENT_USER() function to compare the user's role or username against a column in the table (often a user ID or department ID).

Then, you apply the security policy to the table using the ALTER TABLE command. When a user queries the table, Snowflake automatically applies the security policy, filtering the results based on the user's role and the policy's expression. For example:

CREATE OR REPLACE SECURITY POLICY my_policy AS (

department VARCHAR

) RETURNS BOOLEAN ->

CURRENT_ROLE() = 'ACCOUNTADMIN' OR CURRENT_ROLE() = department;

ALTER TABLE my_table ADD ROW ACCESS POLICY my_policy ON (department);

6. What are the different data loading options in Snowflake, and when would you choose each one?

Snowflake offers several data loading options, each suited for different scenarios:

- COPY INTO [

]:

This is the most common and versatile option. It loads data from staged files (internal or external) into Snowflake tables. Ideal for batch loading, transformation during load, and handling various file formats (CSV, JSON, Parquet, etc.). Choose this when you have data in files and need a reliable, configurable loading process. - Snowpipe: For continuous data ingestion, Snowpipe automates the loading process as soon as new data files are available in a stage. Best for near real-time loading, like streaming data or frequently updated files. It uses event notifications (e.g., from cloud storage) to trigger data loading.

- Data Loading using connectors/drivers (JDBC/ODBC/Snowflake Connector for Python): These allow loading data directly from applications or scripts. Useful for programmatic loading, integration with ETL tools, or smaller data loads.

- Web UI: Snowflake's web interface offers a limited data loading capability suitable for loading from a single file. Useful for ad-hoc loading or experimenting with small datasets.

7. Describe the process of cloning a Snowflake database or schema and its use cases.

Cloning in Snowflake creates a zero-copy replica of a database, schema, or table at a specific point in time. It's metadata operation, so it's fast and doesn't consume extra storage until changes are made to the clone. The basic syntax is CREATE {DATABASE | SCHEMA | TABLE} <object_name> CLONE <source_object_name>;.

Use cases include: creating development/testing environments from production data without impacting performance or storage costs; backing up data to a specific point in time for disaster recovery; enabling data sharing without copying; and facilitating experimentation by allowing modifications to a cloned dataset without affecting the original.

8. Explain how to use Snowflake's Time Travel feature to recover accidentally deleted data.

Snowflake's Time Travel allows you to access historical data at any point within a defined period. To recover accidentally deleted data, you can use the UNDROP command if the table was dropped. For example, UNDROP TABLE my_table; would restore the table if it's within the Time Travel retention period (default is 1 day, can be up to 90 days for Enterprise Edition). If the data within the table was deleted, you can create a clone of the table at a point in time before the deletion using the AT or BEFORE clauses in a CREATE TABLE ... CLONE statement. E.g. CREATE TABLE my_table_restore CLONE my_table AT(timestamp => 'YYYY-MM-DD HH24:MI:SS'); Then insert the recovered data back into the original table.

9. How can you monitor Snowflake resource consumption and identify potential cost optimization opportunities?

Snowflake provides several ways to monitor resource consumption and identify cost optimization opportunities. The primary tools include the Snowflake web interface (Snowsight), SQL queries against Snowflake's metadata tables (ACCOUNT_USAGE and ORGANIZATION_USAGE), and partner monitoring solutions.

To monitor resource usage, you can use Snowsight's dashboards to visualize compute and storage costs over time, broken down by warehouse, database, user, and other dimensions. SQL queries against the WAREHOUSE_METERING_HISTORY, DATABASE_STORAGE_USAGE_HISTORY, and other ACCOUNT_USAGE views allow for more granular analysis. You can also identify inefficient queries using the QUERY_HISTORY view. Cost optimization strategies involve right-sizing warehouses, optimizing query performance (reducing query execution time), leveraging caching, setting appropriate data retention periods, and automating warehouse suspension/resumption. Using features like materialized views and search optimization service wisely can also contribute to cost savings.

10. Describe the purpose of Snowflake stages and how they are used for data loading and unloading.

Snowflake stages are storage locations where data files are stored for loading into or unloading from Snowflake tables. They act as intermediate areas for data transfer. There are two main types of stages: internal stages (managed by Snowflake) and external stages (linked to external cloud storage like AWS S3, Azure Blob Storage, or Google Cloud Storage).

Stages are used to streamline the data loading and unloading processes. For loading, data files are first uploaded to a stage, and then the COPY INTO command is used to load the data from the stage into a Snowflake table. For unloading, the COPY INTO command is used to unload data from a Snowflake table into data files in a stage. From there, the files can be downloaded.

11. Explain the difference between Snowflake's standard and enterprise editions, and what features are included in each.

Snowflake offers various editions with different features and pricing. The Standard edition provides core features like data warehousing, standard security, and basic performance optimization. The Enterprise edition builds upon Standard by adding advanced security features (e.g., HIPAA compliance, key management), enhanced performance capabilities (e.g., materialized views), and more comprehensive support. Features like Time Travel (for data recovery) have longer retention periods in Enterprise.

In essence, Standard is suitable for basic data warehousing needs, while Enterprise caters to organizations requiring higher security, performance, and governance capabilities.

12. How do you handle semi-structured data like JSON in Snowflake, and what are the benefits of using Snowflake's native JSON support?

Snowflake handles semi-structured data like JSON using the VARIANT data type. You can load JSON data directly into a VARIANT column. Snowflake offers functions to parse and query JSON, such as GET, GET_PATH, and FLATTEN. These functions allow you to extract specific elements from the JSON structure. For example SELECT GET(json_column, 'name') from table_name. Snowflake automatically parses JSON data during query execution, without requiring a predefined schema. This schema-on-read capability is a key benefit.

The benefits of Snowflake's native JSON support include flexibility in handling evolving data structures, simplified data loading without upfront transformation, and powerful querying capabilities with JSON-specific functions. It also enables efficient storage and retrieval of JSON data within the Snowflake data warehouse, while leveraging Snowflake's performance and scalability.

13. What are the best practices for designing and implementing Snowflake schemas and tables for optimal performance?

When designing Snowflake schemas and tables for optimal performance, focus on data organization and query efficiency. Key best practices include using appropriate data types, leveraging clustering keys for frequently filtered columns, and employing partitioning strategies for large tables (though Snowflake's micro-partitioning handles much of this automatically). Optimize for star or snowflake schemas where appropriate, using dimension tables for descriptive attributes and fact tables for transactional data.

Also, consider using appropriate table types (transient or temporary) based on the data retention requirements. Regularly analyze query performance using Snowflake's query profiling tools and identify expensive operations. Optimize SQL queries by minimizing the amount of data scanned (using WHERE clauses and avoiding SELECT *), leveraging materialized views for complex aggregations, and considering query hints when needed. Regularly maintain statistics for tables and consider data compression techniques to improve storage efficiency and query performance.

14. Explain how to use Snowflake's external functions to integrate with other cloud services or applications.

Snowflake external functions enable you to call code running outside of Snowflake, such as in AWS Lambda, Azure Functions, or Google Cloud Functions, effectively integrating with other cloud services or applications. You define an external function in Snowflake that points to the remote service. When the external function is called within a SQL statement, Snowflake sends the data to the remote service, which processes it and returns the result back to Snowflake.

For example, let's say you want to use a sentiment analysis API. You'd create an external function in Snowflake that specifies the API endpoint. Then, you'd call this function in a query, passing the text for analysis as input. The API would return the sentiment score, which Snowflake would then include in the query results. CREATE EXTERNAL FUNCTION my_sentiment_analysis(text VARCHAR) RETURNS VARCHAR LANGUAGE PYTHON AS $$ <handler_code> $$ API_INTEGRATION = my_api_integration;

15. How would you set up data sharing between Snowflake accounts, and what are the security considerations involved?

To set up data sharing between Snowflake accounts, I would use Snowflake's Secure Data Sharing feature. A provider account creates a share, grants access to specific database objects (tables, views, etc.), and then grants usage privileges on the share to one or more consumer accounts. The consumer accounts can then create a database from the share, which acts as a read-only reference to the provider's data. No data is copied or moved, ensuring near real-time access.

Security considerations include: access control (granting minimal necessary privileges), data masking (protecting sensitive information), row-level security (limiting data visibility based on user roles), and monitoring (auditing access patterns). It's crucial to ensure that the shared data doesn't violate any compliance regulations (e.g., GDPR, HIPAA). The provider account is responsible for the security of the underlying data, while the consumer account is responsible for securing access to the shared database.

16. Describe the process of setting up and managing Snowflake user accounts and roles.

Setting up and managing Snowflake user accounts and roles involves several steps. First, create user accounts using the CREATE USER command, specifying properties like username, password, and default role. For example: CREATE USER <username> PASSWORD='<password>' DEFAULT_ROLE = <rolename>;. Then, define roles using the CREATE ROLE command to group privileges. Grant privileges to roles using GRANT <privilege> ON <object> TO ROLE <rolename>; which defines what actions roles can perform on database objects.

To manage users, you can grant roles to users using the GRANT ROLE <rolename> TO USER <username>; command. Users inherit the privileges associated with the roles granted to them. You can also use SHOW USERS and SHOW ROLES to view existing users and roles, respectively. Revoking privileges or roles can be done using REVOKE commands similar to the GRANT syntax, ensuring security and access control. User management can also be automated through scripting and CI/CD pipelines for Infrastructure as Code (IaC).

17. Explain how to use Snowflake's data masking features to protect sensitive data.

Snowflake offers data masking to protect sensitive data by replacing it with altered values, without permanently changing the underlying data. There are two primary types: dynamic data masking and external tokenization. Dynamic masking uses masking policies defined on columns, and the transformation happens at query runtime based on the role of the user executing the query. When a user with insufficient privileges queries the masked column, they see the masked value instead of the actual data. Conversely, users with the APPLY MASKING POLICY privilege or a role with the OWNERSHIP privilege on the masked column, will see the unmasked values.

To use dynamic masking, you first create a masking policy using CREATE MASKING POLICY. This policy specifies the masking expression (e.g., replacing characters with 'X', hashing, or using a regular expression). Then, you apply the policy to a column using ALTER TABLE ... ALTER COLUMN ... SET MASKING POLICY. Snowflake also supports external tokenization, where a third-party service handles the tokenization and detokenization. Snowflake provides the interface to call these services through a user-defined function.

18. How can you automate Snowflake tasks using Snowpipe and tasks?

Snowflake's Snowpipe and Tasks can be combined to automate data ingestion and transformation. Snowpipe continuously loads data as it arrives in cloud storage (e.g., S3), while Tasks allow you to schedule and execute SQL statements, including data transformations. To automate, configure Snowpipe to load raw data. Then, create a Task that triggers on a schedule or after a Snowpipe load event using the AFTER keyword. This Task would then execute SQL to transform the newly ingested data from its raw form into a processed, usable format.

For example, the Task could call a stored procedure to perform the transformation, update statistics, or perform other data management operations. The combination creates an automated, end-to-end pipeline. Here's a snippet demonstrating how a task could trigger after snowpipe loads data:

CREATE OR REPLACE TASK my_transformation_task

WAREHOUSE = my_warehouse

AFTER my_snowpipe

AS

CALL my_transformation_procedure();

19. Describe the purpose of Snowflake's data exchange and how it enables secure data sharing and monetization.

Snowflake's Data Exchange facilitates the secure and governed sharing of data between Snowflake customers and business partners. It acts as a marketplace, connecting data providers and consumers directly within the Snowflake environment.

The Data Exchange enables secure data sharing by leveraging Snowflake's zero-copy architecture. Data providers grant access to specific datasets without physically moving or duplicating the data. Consumers can then query and analyze this data directly within their Snowflake instance, ensuring data governance, security, and reducing the risks associated with traditional data transfer methods. Data providers can also monetize their data by offering subscriptions or usage-based pricing through the exchange.

20. Explain how to implement a data governance strategy in Snowflake, including data quality, metadata management, and data lineage.

Implementing a data governance strategy in Snowflake involves several key aspects. For data quality, you can leverage Snowflake's data validation features using constraints, policies, and scheduled tasks to check for anomalies. Tools like Great Expectations can be integrated for more advanced data quality testing. For metadata management, Snowflake's information schema provides metadata about tables, columns, and users. You can enhance this with a dedicated metadata management tool such as Alation or Collibra, which provides a centralized repository for data definitions, business glossaries, and data ownership details. Data lineage can be tracked using Snowflake's query history, which allows you to trace the origin of data transformations. Additionally, tools like Atlan can be used to automatically capture and visualize data lineage across your Snowflake environment and integrated data pipelines.

To ensure proper data governance, establish clear roles and responsibilities. Implement data masking and row-level security to protect sensitive information. Regularly audit data access and usage to identify potential risks. Also, document data governance policies and procedures, and conduct training for all users to ensure compliance. Finally, establish data retention policies to manage data lifecycle and optimize storage costs. Automate as much of the governance process as possible using Snowflake's features and integrated tools.

21. What are the benefits of using Snowflake's marketplace, and how can it help you discover and access valuable data sets?

Snowflake's marketplace offers several benefits for discovering and accessing valuable datasets. First, it provides a centralized location to find a wide variety of data from different providers, eliminating the need for individual agreements and data ingestion pipelines. You can easily browse and search for datasets based on industry, use case, or other relevant criteria.

Second, accessing data through the marketplace often simplifies data acquisition. Datasets are typically shared directly into your Snowflake account, eliminating the need for ETL processes. Furthermore, many datasets are available for free or with a pay-as-you-go pricing model, allowing you to test and evaluate data before committing to a long-term subscription.

Snowflake interview questions for experienced

1. How would you optimize a Snowflake query that's running slower than expected, and what tools would you use to diagnose the problem?

To optimize a slow Snowflake query, I'd start by identifying the bottleneck. Snowflake's web UI provides query profiles which graphically show the execution plan and time spent in each step. I'd look for stages with high execution time or rows scanned. Common issues include: full table scans due to missing or ineffective filters, inefficient joins, and data spillage to local disk (indicating insufficient warehouse size).

Based on the diagnosis, I'd apply optimizations such as: adding appropriate filters using the WHERE clause, creating or leveraging existing indexes (Snowflake automatically manages them, but understanding data distribution helps), rewriting joins (e.g., using JOIN hints, if appropriate), increasing warehouse size to avoid spillage, and partitioning/clustering frequently queried tables. Consider using EXPLAIN to understand the query plan before and after optimization. Materialized views can also pre-compute and store results for frequently executed queries, thereby improving performance. Also, caching can be used in conjunction with materialized views to quickly get to the end results.

2. Describe your experience with Snowflake's data sharing capabilities. What are the benefits and limitations?

I've used Snowflake's data sharing extensively, both as a provider and consumer. I've configured secure views and user-defined functions to control exactly what data is shared and how it can be accessed. I've also worked with direct shares and Snowflake Data Marketplace listings. Data sharing is a powerful way to collaborate without the overhead of traditional ETL processes.

The benefits are numerous: near real-time data access for consumers, no data duplication, simplified data governance, and the ability to monetize data. However, limitations exist. As a provider, you still bear the cost of compute for consumer queries (unless using reader accounts or marketplace), and you are responsible for defining and managing grants. As a consumer, your access is dependent on the provider's uptime and performance and the data is immutable by the consumer. Finally, cross-cloud data sharing is generally unavailable, and cross-region data sharing can incur egress costs.

3. Explain how you would implement a data governance strategy in Snowflake, considering data quality, security, and compliance.

Implementing data governance in Snowflake involves a multi-faceted approach covering data quality, security, and compliance. For data quality, I would leverage Snowflake's data validation features, combined with external tools for profiling and cleansing. This involves defining data quality rules, monitoring data quality metrics, and implementing automated workflows to address data quality issues. Data security would be addressed using Snowflake's robust security features, including network policies, role-based access control (RBAC), data masking, and encryption. These controls ensure that only authorized users have access to sensitive data, and that data is protected both in transit and at rest.

Compliance requirements would be met by implementing audit logging, data lineage tracking, and data retention policies. Snowflake's built-in features for auditing and lineage can be used to track data access and modifications. Integration with external compliance tools would further help automate compliance reporting and monitoring. A key part would be regular reviews and updates to the governance strategy based on evolving business needs and regulatory requirements.

4. Walk me through your process of designing a star schema or snowflake schema in Snowflake, and the trade-offs you considered.

When designing a star or snowflake schema in Snowflake, I usually start by understanding the business requirements and identifying the key performance indicators (KPIs) that need to be measured. Then, I identify the facts (events or transactions) and the dimensions (contextual attributes related to the facts). The fact table typically contains the measures and foreign keys to the dimension tables. Dimension tables contain descriptive attributes, aiming for single-level attributes for the star schema or normalized attributes (multiple levels) for the snowflake schema. In Snowflake, the choice depends on data volume, query patterns, and maintenance considerations. I consider these tradeoffs:

Star schema offers simplicity and faster query performance due to fewer joins, but it can lead to data redundancy if dimensions are highly denormalized. Snowflake schema reduces data redundancy and improves data integrity through normalization but might introduce more complex queries and potentially slower performance due to the need for joining more tables. Snowflake's columnar storage and query optimization engine can mitigate performance issues with snowflake schemas. I also consider the ease of loading and transforming data into each schema. I prototype and test with sample data to validate the chosen schema meets performance requirements.

5. How have you used Snowflake's features like clustering, partitioning, and materialized views to improve query performance?

I've used Snowflake's features to optimize query performance in several ways. For example, I've employed clustering on frequently queried columns to improve data retrieval speeds. By clustering tables on relevant dimensions (e.g., date, region), Snowflake efficiently micro-partitions data, minimizing the amount of data scanned during queries. I've also leveraged materialized views to pre-compute and store the results of complex aggregations or joins. This reduced the query execution time for dashboards and reports that rely on these pre-calculated results.

While Snowflake doesn't support traditional partitioning, its micro-partitioning combined with effective clustering offers similar benefits in terms of query performance. I carefully select clustering keys based on query patterns and analyze Snowflake's query profile to identify areas for optimization. I've also monitored clustering depth to ensure tables remain well-clustered, re-clustering when necessary to maintain optimal performance.

6. Discuss your experience with Snowflake's security features, such as network policies, data masking, and encryption.