Hiring an AI Model Designer requires a structured approach to interviews so that you identify candidates who can truly drive innovation. The hiring process should weigh not just technical skills but also an understanding of product and business, as we discussed in our blog post on the skills required for an AI product manager.

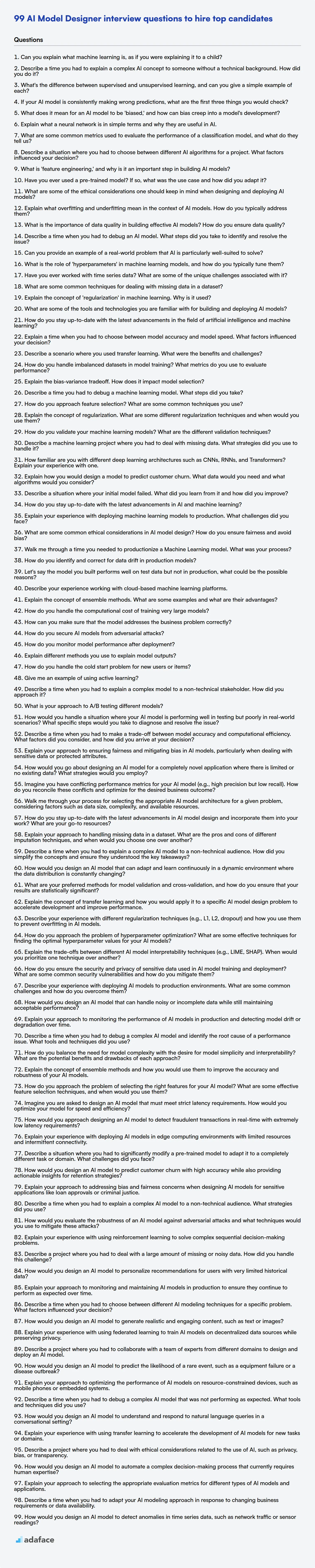

This blog post offers a spectrum of interview questions for AI Model Designers, categorized by experience level, starting from basic to expert, including a set of MCQs. These questions will help you to assess candidates' technical understanding, problem-solving capabilities, and practical knowledge in AI model design.

By using these questions, you can streamline your interview process and quickly identify top-tier talent. To further refine your candidate selection, consider using our AI Model Designer Test before interviews.

Basic AI Model Designer interview questions

1. Can you explain what machine learning is, as if you were explaining it to a child?

Imagine you have a dog, and you want to teach it to sit. You show the dog what 'sit' means, and give it a treat when it does it right. Machine learning is like teaching a computer in the same way! We show the computer lots of examples, and it learns from them. It figures out patterns and rules, just like your dog learning to sit.

So, instead of teaching a dog, we might teach a computer to recognize pictures of cats, or to predict what the weather will be like. The computer gets better and better as it sees more examples, just like your dog gets better at sitting with practice!

2. Describe a time you had to explain a complex AI concept to someone without a technical background. How did you do it?

I once had to explain how a recommendation engine worked to our marketing manager, who primarily focused on campaign performance and didn't have a technical background. I avoided jargon like 'machine learning' and 'algorithms'. Instead, I used the analogy of a bookstore. I explained that just as a bookstore owner might notice that people who buy mystery novels often buy thrillers, a recommendation engine notices patterns in what users buy or watch. It then uses these patterns to suggest similar items they might like. I emphasized that it's all about finding connections and making relevant suggestions, like a helpful salesperson who knows what their customers like.

3. What's the difference between supervised and unsupervised learning, and can you give a simple example of each?

Supervised learning uses labeled data to train a model to predict outcomes. The model learns from input-output pairs. A simple example is image classification, where the model is trained on images labeled with their corresponding classes (e.g., 'cat', 'dog').

Unsupervised learning, on the other hand, uses unlabeled data to discover hidden patterns or structures within the data. The model learns without explicit guidance or correct answers. An example is clustering, where the algorithm groups similar data points together based on their features, without knowing the actual categories beforehand.

4. If your AI model is consistently making wrong predictions, what are the first three things you would check?

If my AI model is consistently making wrong predictions, the first three things I would check are:

- Data Quality: I'd examine the training data for errors, inconsistencies, and biases. This includes checking for missing values, outliers, and ensuring the labels are accurate. Garbage in, garbage out.

- Feature Engineering/Selection: I'd review the features used by the model. Are they relevant to the target variable? Are there any features that are highly correlated or redundant? I might try different feature combinations or feature scaling techniques. For example, if using sklearn, I might try something like StandardScaler or PCA to scale the data and reduce dimensionality.

- Model Configuration/Hyperparameters: I would check the model's hyperparameters and architecture. Are they appropriate for the problem? Is the model underfitting or overfitting the data? I'd experiment with different hyperparameter values (e.g., learning rate, number of layers, regularization strength) using techniques like cross-validation to find a better configuration.
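To make the feature scaling step above concrete, here is a minimal sketch of standardization (z-scoring) in plain Python. The `standardize` helper and the `income` values are hypothetical and exist only for illustration; the sketch divides by the population standard deviation, which is the same convention scikit-learn's StandardScaler uses.

```python
from statistics import mean, pstdev

def standardize(column):
    """Rescale a list of numbers to zero mean and unit variance (z-scores)."""
    mu = mean(column)
    sigma = pstdev(column)  # population standard deviation
    if sigma == 0:
        # A constant feature carries no signal; map it to zeros.
        return [0.0 for _ in column]
    return [(x - mu) / sigma for x in column]

# Hypothetical feature on a large scale (for example, annual income).
income = [30_000, 45_000, 60_000, 75_000, 90_000]
scaled_income = standardize(income)
print([round(z, 3) for z in scaled_income])
```

In a real pipeline the mean and standard deviation would be computed on the training split only and then reused for validation and test data.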

5. What does it mean for an AI model to be 'biased,' and how can bias creep into a model's development?

AI model bias means the model unfairly favors certain outcomes or groups over others. This results in systematic and repeatable errors that discriminate against particular demographics.

Bias can creep into a model's development in several ways:

- Data Bias: The training data might not accurately represent the real world, containing skewed or incomplete information.

- Algorithmic Bias: The design of the algorithm itself might inherently favor certain outcomes.

- Sampling Bias: The way data is collected might introduce bias. For example, using only data from one region for a global model.

- Labeling Bias: If the labels assigned to the data are biased, the model will learn and perpetuate these biases.

- Pre-existing societal biases: Models trained on data that reflects societal biases will also reflect them.

Mitigation strategies include careful data collection, bias detection during training, and fairness-aware algorithms.

6. Explain what a neural network is in simple terms and why they are useful in AI.

A neural network is like a simplified model of the human brain. It's made up of interconnected nodes, or "neurons," organized in layers. These neurons receive inputs, perform simple calculations, and pass the results to other neurons. By adjusting the strength of connections between neurons (weights), the network learns to recognize patterns and make predictions based on the data it's trained on.

Neural networks are useful in AI because they can automatically learn complex relationships in data without needing explicit programming for every scenario. This allows them to solve a wide range of problems, such as image recognition, natural language processing, and predictive modeling, where traditional algorithms might struggle. Essentially, they provide AI systems with the ability to learn and adapt from data, leading to more intelligent and flexible behavior.

7. What are some common metrics used to evaluate the performance of a classification model, and what do they tell us?

Common metrics for evaluating classification models include accuracy, precision, recall, F1-score, and AUC-ROC. Accuracy measures the overall correctness of the model, but it can be misleading with imbalanced datasets. Precision measures the proportion of correctly predicted positives out of all instances predicted as positive. Recall (or sensitivity) measures the proportion of correctly predicted positives out of all actual positive instances. F1-score is the harmonic mean of precision and recall, providing a balanced measure. AUC-ROC (Area Under the Receiver Operating Characteristic curve) assesses the model's ability to discriminate between classes across various threshold settings. These metrics help us understand different aspects of a model's performance, like its ability to avoid false positives (precision), avoid false negatives (recall), and overall classification ability (accuracy, F1-score, AUC-ROC).
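As a rough illustration of how these metrics relate to each other, the sketch below computes accuracy, precision, recall, and F1 from raw labels in plain Python. The `classification_metrics` helper and the toy labels are assumptions made for this example, not part of any library.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical imbalanced labels: only 2 of 10 examples are positive.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Here accuracy comes out at 0.8 even though the model finds only half of the positives, which is the kind of gap between accuracy and recall that imbalanced data tends to produce.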

8. Describe a situation where you had to choose between different AI algorithms for a project. What factors influenced your decision?

In a recent project involving fraud detection, I had to choose between using a Logistic Regression model and a Random Forest classifier. The primary goal was to identify fraudulent transactions in real-time with high accuracy while minimizing false positives, due to the potential for customer disruption. Logistic Regression offered interpretability and speed, critical for real-time analysis. Random Forest, on the other hand, typically provides higher accuracy, but it is harder to interpret and more expensive to compute.

Ultimately, I selected Random Forest due to its superior performance metrics (AUC and F1-score) during initial testing. While Logistic Regression was faster and more interpretable, the higher accuracy of Random Forest significantly reduced financial losses associated with fraud. We also used feature importance scores to gain some insight into the Random Forest model, and the performance benefits outweighed the interpretability trade-off in this instance.

9. What is 'feature engineering,' and why is it an important step in building AI models?

Feature engineering is the process of selecting, transforming, and creating new features from raw data to improve the performance of machine learning models. It involves using domain knowledge to extract meaningful variables that can help the model learn more effectively.

It's important because the quality of features directly impacts the model's ability to learn patterns and make accurate predictions. Well-engineered features can lead to simpler models, higher accuracy, faster training times, and better generalization to unseen data. Poor features, on the other hand, can result in underfitting, overfitting, and overall poor model performance.

10. Have you ever used a pre-trained model? If so, what was the use case and how did you adapt it?

Yes, I've used pre-trained models extensively. A common use case was image classification. I started with a pre-trained ResNet50 model from PyTorch's torchvision.models. This model was trained on ImageNet, so it already had learned useful features.

To adapt it, I removed the final classification layer (the fully connected layer) and replaced it with a new one that matched the number of classes in my specific dataset. Then, I froze the weights of the earlier layers to prevent them from being drastically altered during initial training (transfer learning). After training the new classification layer, I optionally fine-tuned some of the earlier layers with a very low learning rate to further adapt the model to my dataset.

11. What are some of the ethical considerations one should keep in mind when designing and deploying AI models?

When designing and deploying AI models, several ethical considerations are crucial. These include:

- Bias and Fairness: Ensuring the model doesn't discriminate against specific groups due to biased training data.

- Transparency and Explainability: Understanding how the model arrives at its decisions to avoid 'black box' scenarios, enabling debugging and trust.

- Privacy: Protecting sensitive user data used for training and inference.

- Accountability: Establishing who is responsible when the AI makes mistakes or causes harm.

- Security: Protecting the AI system from malicious attacks or manipulation, such as adversarial inputs.

It's important to proactively address these concerns throughout the AI development lifecycle, from data collection and model training to deployment and monitoring. Regularly audit the AI systems, monitor their performance, and implement safeguards to mitigate potential harm and ensure responsible AI adoption. For example, utilizing techniques such as differential privacy, explainable AI methods (e.g., SHAP values), and robust validation datasets can help to build more trustworthy and ethical AI systems.

12. Explain what overfitting and underfitting mean in the context of AI models. How do you typically address them?

Overfitting happens when a model learns the training data too well, including its noise and outliers. This results in high accuracy on the training set but poor generalization to new, unseen data. Underfitting, conversely, occurs when a model is too simple to capture the underlying patterns in the training data. It performs poorly on both the training and test sets.

To address overfitting, techniques like cross-validation, regularization (L1 or L2), data augmentation, and early stopping are commonly used. Reducing model complexity (e.g., fewer layers in a neural network) can also help. For underfitting, increasing model complexity (e.g., adding more features or layers), training for a longer duration, or using a more sophisticated model architecture are typical solutions.
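Early stopping, one of the techniques listed above, can be sketched in a few lines. The example below assumes you already have a validation loss per epoch; the `early_stopping_epoch` helper, the `patience` value, and the loss numbers are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; the model from `best_epoch` would be kept.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # Never triggered: training ran through every epoch.
    return best_epoch, len(val_losses) - 1

# Hypothetical validation losses that start rising after epoch 3.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61]
best_epoch, stop_epoch = early_stopping_epoch(val_losses, patience=2)
print(best_epoch, stop_epoch)
```

A larger `patience` tolerates noisy validation curves at the cost of a few extra epochs of training.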

13. What is the importance of data quality in building effective AI models? How do you ensure data quality?

Data quality is paramount for effective AI models because a model's performance depends heavily on the quality of the data it's trained on. Poor data quality leads to biased, inaccurate, and unreliable models, resulting in flawed predictions and decisions. Essentially, "garbage in, garbage out" applies directly to AI. If the data isn't representative, complete, consistent, and accurate, the model will learn and amplify these flaws.

To ensure data quality, a multi-faceted approach is crucial. This includes data validation and cleaning processes that identify and correct errors, inconsistencies, and missing values. Specific steps include:

- Data Validation: Implementing checks to ensure data conforms to expected formats, ranges, and rules.

- Data Cleaning: Handling missing values through imputation or removal, correcting inconsistencies, and removing duplicates.

- Data Transformation: Converting data into a suitable format for model training.

- Data Auditing: Regularly monitoring data quality metrics and implementing improvements as needed.

- Profiling: Analyzing the data to understand distributions, identify anomalies, and assess completeness.
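The validation and cleaning steps above could look something like the following sketch, which flags missing required fields, out-of-range values, and exact duplicate rows. The `validate_rows` helper, the field names, and the age range are all hypothetical choices for illustration.

```python
def validate_rows(rows, required, ranges):
    """Return (row_index, problem) tuples for rows that fail basic checks."""
    problems = []
    seen = set()
    for i, row in enumerate(rows):
        # Completeness: every required field must be present and not None.
        for field in required:
            if row.get(field) is None:
                problems.append((i, f"missing {field}"))
        # Range rules: numeric fields must fall inside [low, high].
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                problems.append((i, f"{field} out of range"))
        # Duplicates: an identical row seen earlier is flagged.
        key = tuple(sorted(row.items()))
        if key in seen:
            problems.append((i, "duplicate row"))
        seen.add(key)
    return problems

# Hypothetical records with one missing value, one outlier, one duplicate.
rows = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 48000},
    {"age": 212, "income": 61000},
    {"age": 34, "income": 52000},
]
problems = validate_rows(rows, required=["age", "income"],
                         ranges={"age": (0, 120)})
print(problems)
```

Checks like these are usually run before any imputation or transformation, so that the cleaning step works from an explicit list of known problems.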

14. Describe a time when you had to debug an AI model. What steps did you take to identify and resolve the issue?

During a project involving an image classification model, I encountered a significant drop in accuracy after retraining with a new dataset. My initial step was to thoroughly examine the new dataset for inconsistencies or biases, comparing its statistical properties (mean, variance of pixel values) with the original dataset. I also visualized a sample of images from both datasets to check for labeling errors or significant differences in image quality.

Upon identifying that the new dataset contained images with slightly different lighting conditions, I implemented data augmentation techniques to make the model more robust to variations in lighting. This involved randomly adjusting the brightness and contrast of the training images. Furthermore, I utilized techniques like gradient checking and layer-wise relevance propagation (LRP) to identify problematic layers and features within the network. After retraining with the augmented data and using a lower learning rate for fine-tuning, the model's performance on the validation set improved substantially, ultimately resolving the accuracy issue.

15. Can you provide an example of a real-world problem that AI is particularly well-suited to solve?

A great example is fraud detection in financial transactions. AI, specifically machine learning models, can analyze massive datasets of past transactions to identify patterns indicative of fraudulent activity. Traditional rule-based systems often struggle to keep up with the evolving tactics of fraudsters, leading to both false positives and missed detections.

AI models can learn complex patterns and anomalies that humans might miss, and adapt to new fraud techniques over time. By flagging suspicious transactions in real-time, AI enables banks and financial institutions to proactively prevent fraudulent activity, saving significant amounts of money and protecting customers. Techniques like anomaly detection, using algorithms such as Isolation Forest or One-Class SVM, and classification models trained on labeled fraudulent and legitimate transactions are commonly employed.

16. What is the role of 'hyperparameters' in machine learning models, and how do you typically tune them?

Hyperparameters are settings that are external to the model and are not learned from the data during training. They control the learning process itself and influence model complexity. Examples include learning rate, the number of layers in a neural network, or the depth of a decision tree.

Hyperparameter tuning typically involves searching for the optimal set of values that results in the best model performance on a validation set. Common techniques include:

- Grid Search: Exhaustively trying all possible combinations from a predefined set of hyperparameter values.

- Random Search: Randomly sampling hyperparameter values from predefined distributions. Often more efficient than grid search.

- Bayesian Optimization: Building a probabilistic model of the objective function and using it to intelligently explore the hyperparameter space. Can be more efficient than grid or random search.

- Manual Tuning: Iteratively adjusting hyperparameters based on experience and intuition, monitoring validation performance.
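To illustrate the difference between grid search and random search, here is a minimal sketch in plain Python. The `validation_score` function is a hypothetical stand-in for "train a model and score it on a validation set"; its shape and peak are made up, and so are the search ranges.

```python
import itertools
import random

def validation_score(learning_rate, depth):
    """Toy objective (an assumption) that peaks at learning_rate=0.1, depth=5."""
    return -((learning_rate - 0.1) ** 2) * 100 - ((depth - 5) ** 2) * 0.01

def grid_search(grid):
    """Try every combination of the listed values and keep the best."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = validation_score(**params)
        if best is None or score > best[1]:
            best = (params, score)
    return best

def random_search(n_trials, seed=0):
    """Sample hyperparameters from distributions instead of a fixed grid."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-3, 0),  # log-uniform sample
            "depth": rng.randint(2, 10),
        }
        score = validation_score(**params)
        if best is None or score > best[1]:
            best = (params, score)
    return best

grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "depth": [3, 5, 7]}
grid_best_params, grid_best_score = grid_search(grid)
random_best_params, random_best_score = random_search(n_trials=20)
print(grid_best_params, random_best_params)
```

Grid search evaluates all 12 combinations here, while random search spends its 20 trials on values the grid never contains, which is one reason it often scales better as the number of hyperparameters grows.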

17. Have you ever worked with time series data? What are some of the unique challenges associated with it?

Yes, I have worked with time series data. Some unique challenges include handling temporal dependencies, seasonality, and trends. Unlike independent and identically distributed (i.i.d.) data, time series data's value at one point depends on previous values, requiring specialized models like ARIMA or recurrent neural networks. Seasonality (e.g., weekly or yearly patterns) must be accounted for through techniques like decomposition or seasonal differencing to avoid biased predictions. Trends, whether increasing or decreasing, can also skew results if not addressed. These techniques help us preprocess and prepare the data for analysis.

Missing data is also a significant challenge. Simple imputation methods may not be suitable because they might not preserve the temporal dependencies. Specialized methods like interpolation or model-based imputation are often required. Furthermore, time series data can be non-stationary, meaning its statistical properties change over time, so techniques such as differencing are often needed to make the series stationary before applying many models. Evaluating time series models also requires validation schemes that respect temporal order, such as rolling-window (walk-forward) forecasts. For instance, sklearn.model_selection.TimeSeriesSplit produces splits in which the training indices always precede the test indices, which avoids training on future data.
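The idea behind time-ordered validation can be sketched without any library. The `expanding_window_splits` generator below is a hypothetical helper that is similar in spirit to TimeSeriesSplit but is not a reimplementation of it: each split trains on everything before the test window.

```python
def expanding_window_splits(n_samples, n_splits, test_size):
    """Yield (train_indices, test_indices) with training always before test."""
    first_test_start = n_samples - n_splits * test_size
    if first_test_start <= 0:
        raise ValueError("not enough samples for the requested splits")
    for k in range(n_splits):
        test_start = first_test_start + k * test_size
        train = list(range(0, test_start))
        test = list(range(test_start, test_start + test_size))
        yield train, test

# Ten time-ordered observations, three evaluation windows of two points each.
splits = list(expanding_window_splits(n_samples=10, n_splits=3, test_size=2))
for train, test in splits:
    print(train, test)
```

Because indices are never shuffled, no split can leak information from the future into training.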

18. What are some common techniques for dealing with missing data in a dataset?

Common techniques for handling missing data include imputation and deletion. Imputation involves replacing missing values with estimated values. Simple techniques include mean/median imputation (replacing missing values with the mean or median of the feature), or using a constant value. More sophisticated methods involve using machine learning models to predict the missing values based on other features. Deletion involves removing rows or columns with missing values. This is simple, but can lead to loss of information if a large proportion of the data is missing.

Other considerations involve analyzing the patterns of missingness. Is the data missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR)? The appropriate technique will depend on the nature of the missing data. In pandas (Python), assuming df is an existing DataFrame:

    # fill missing values in numeric columns with each column's mean
    df = df.fillna(df.mean(numeric_only=True))

    # or drop rows with missing values
    df = df.dropna()

19. Explain the concept of 'regularization' in machine learning. Why is it used?

Regularization is a technique used to prevent overfitting in machine learning models. Overfitting occurs when a model learns the training data too well, including its noise and outliers, leading to poor performance on unseen data. Regularization adds a penalty term to the model's loss function, discouraging it from learning overly complex patterns.

It is used because it improves the model's ability to generalize to new, unseen data. Common regularization techniques include L1 regularization (Lasso), which adds the sum of the absolute values of the coefficients to the loss function, and L2 regularization (Ridge), which adds the sum of their squared values. These techniques shrink the model's coefficients, simplifying the model and reducing its sensitivity to noise. Elastic Net combines both L1 and L2 regularization.
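A small sketch can show how the penalty term enters the loss. The `regularized_loss` helper below adds L1 and L2 penalties to a mean squared error; the weights, predictions, and penalty strengths are made-up values for illustration.

```python
def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def regularized_loss(y_true, y_pred, weights, l1=0.0, l2=0.0):
    """Data loss plus L1 (sum of |w|) and L2 (sum of w^2) penalties."""
    l1_penalty = l1 * sum(abs(w) for w in weights)
    l2_penalty = l2 * sum(w * w for w in weights)
    return mean_squared_error(y_true, y_pred) + l1_penalty + l2_penalty

# Hypothetical targets, predictions and model coefficients.
y_true = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 4.0]
weights = [0.5, -2.0, 0.0]

plain = regularized_loss(y_true, y_pred, weights)
lasso_style = regularized_loss(y_true, y_pred, weights, l1=0.1)
ridge_style = regularized_loss(y_true, y_pred, weights, l2=0.1)
elastic_net_style = regularized_loss(y_true, y_pred, weights, l1=0.1, l2=0.1)
print(plain, lasso_style, ridge_style, elastic_net_style)
```

Setting both `l1` and `l2` above zero gives an Elastic Net style penalty. Note how the large coefficient (-2.0) dominates the L2 term because it is squared.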

20. What are some of the tools and technologies you are familiar with for building and deploying AI models?

I'm familiar with a range of tools and technologies for building and deploying AI models. For model building, I have experience with Python libraries like TensorFlow, PyTorch, scikit-learn, and transformers. I'm also familiar with tools for data processing and preparation such as Pandas, NumPy, and cloud-based services like Google Cloud Dataflow or AWS Glue. For model deployment, I've worked with containerization technologies like Docker, orchestration tools such as Kubernetes, and cloud platforms like Google Cloud AI Platform, AWS SageMaker, and Azure Machine Learning. I also have experience with model serving frameworks like TensorFlow Serving and TorchServe.

21. How do you stay up-to-date with the latest advancements in the field of artificial intelligence and machine learning?

I stay up-to-date with AI/ML advancements through a multi-faceted approach. I regularly read research papers on arXiv, attend online webinars and conferences (like NeurIPS, ICML, and CVPR), and follow prominent researchers and organizations on social media (Twitter, LinkedIn). I also subscribe to industry newsletters and blogs from companies like Google AI, OpenAI, and DeepMind.

Furthermore, I actively participate in online courses and communities (Coursera, fast.ai, Kaggle) to learn new techniques and tools hands-on. I also dedicate time to experimenting with new libraries and frameworks, such as TensorFlow, PyTorch, and scikit-learn, by implementing small projects or reproducing research results. This practical experience reinforces my understanding and allows me to evaluate the real-world applicability of new advancements.

Intermediate AI Model Designer interview questions

1. Explain a time when you had to choose between model accuracy and model speed. What factors influenced your decision?

In a project involving real-time fraud detection for an e-commerce platform, I faced a trade-off between model accuracy and speed. A complex deep learning model achieved 98% accuracy but had a latency of 500ms per transaction, which was unacceptable for real-time processing. A simpler logistic regression model, on the other hand, achieved 95% accuracy with a latency of just 50ms.

Ultimately, I chose the faster logistic regression model. The 3-percentage-point drop in accuracy was deemed acceptable because the business needed to catch fraudulent transactions in real time without noticeably delaying legitimate ones, and the 500ms model could not meet that constraint. Factors influencing this decision included:

- Business Requirements: Real-time fraud detection was critical, and a slow model would lead to abandoned transactions.

- Impact of False Positives/Negatives: Weighing the cost of blocking legitimate transactions (false positives) against missing fraudulent ones (false negatives). In this case, missing fraud was deemed more costly.

- Computational Resources: The existing infrastructure could easily handle the simpler model's processing load.
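One way to make this kind of trade-off explicit is to treat latency as a hard constraint and accuracy as the objective. The sketch below uses the accuracy and latency figures from the scenario above; the `Candidate` class, the `pick_model` helper, and the 100 ms budget are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    accuracy: float
    latency_ms: float

def pick_model(candidates, latency_budget_ms):
    """Return the most accurate candidate that fits the latency budget."""
    eligible = [c for c in candidates if c.latency_ms <= latency_budget_ms]
    if not eligible:
        return None  # nothing meets the constraint
    return max(eligible, key=lambda c: c.accuracy)

candidates = [
    Candidate("deep_learning", accuracy=0.98, latency_ms=500),
    Candidate("logistic_regression", accuracy=0.95, latency_ms=50),
]
# Hypothetical real-time budget of 100 ms per transaction.
chosen = pick_model(candidates, latency_budget_ms=100)
print(chosen.name)
```

In practice the budget itself is a business decision, and accuracy would often be replaced by a cost-weighted metric that prices false positives and false negatives separately.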

2. Describe a scenario where you used transfer learning. What were the benefits and challenges?

In a recent project, I used transfer learning to classify chest X-ray images for detecting pneumonia. I leveraged a pre-trained ResNet50 model, which was trained on the ImageNet dataset, a large dataset of natural images. Instead of training a deep convolutional neural network from scratch, I fine-tuned the ResNet50 model on my smaller chest X-ray dataset. This involved freezing the initial layers of the pre-trained model (to retain the general image features learned from ImageNet) and retraining only the later layers, along with adding a new classification layer tailored to my pneumonia detection task.

The benefits were significant. Transfer learning drastically reduced the training time and computational resources needed, as I didn't have to train millions of parameters from random initialization. It also improved the model's performance, especially given the limited size of my chest X-ray dataset; the pre-trained model had already learned useful features from a vast amount of image data. One challenge was the potential for negative transfer, where the pre-trained model's features were not entirely relevant to chest X-ray images. To mitigate this, I carefully chose the layers to fine-tune and experimented with different learning rates. Ensuring the dataset was representative of the real-world scenarios and addressing any biases in the pre-trained model were other challenges.

3. How do you handle imbalanced datasets in model training? What metrics do you use to evaluate performance?

To handle imbalanced datasets, I employ several strategies. These include resampling techniques like oversampling the minority class (e.g., using SMOTE) or undersampling the majority class. Cost-sensitive learning, where misclassification costs are higher for the minority class, is another effective approach. I also consider using ensemble methods specifically designed for imbalanced data, such as Balanced Random Forest or EasyEnsemble.

For evaluating performance, accuracy can be misleading with imbalanced data. Therefore, I prioritize metrics such as precision, recall, F1-score, and the area under the receiver operating characteristic curve (AUC-ROC). Precision measures the accuracy of positive predictions, while recall measures the ability to find all actual positives. The F1-score is the harmonic mean of precision and recall. AUC-ROC provides a comprehensive view of the model's ability to distinguish between classes across different threshold settings. I also consider the area under the precision-recall curve (AUC-PR) which is more sensitive to changes in the ratio of positive to negative classes than AUC-ROC.
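As a simple illustration of resampling, the sketch below performs random oversampling: it duplicates minority-class rows until every class matches the largest one. This is deliberately simpler than SMOTE, which synthesizes new points by interpolation. The `random_oversample` helper and the toy data are hypothetical.

```python
import random
from collections import Counter

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen minority rows until all classes are equal."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for label, count in counts.items():
        pool = [x for x, lbl in zip(X, y) if lbl == label]
        for _ in range(target - count):
            X_out.append(rng.choice(pool))
            y_out.append(label)
    return X_out, y_out

# Hypothetical training data: 8 negatives and 2 positives.
X = [[0.1], [0.2], [0.3], [0.4], [0.5], [0.6], [0.7], [0.8], [5.0], [5.2]]
y = [0] * 8 + [1] * 2
X_res, y_res = random_oversample(X, y)
balanced_counts = Counter(y_res)
print(balanced_counts)
```

Resampling should be applied to the training split only; oversampling before the train/validation split would let copies of the same row appear on both sides and inflate the validation metrics.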

4. Explain the bias-variance tradeoff. How does it impact model selection?

The bias-variance tradeoff is a central concept in machine learning that deals with finding the right balance between a model's ability to fit the training data (low bias) and its ability to generalize to unseen data (low variance). Bias refers to the error introduced by approximating a real-world problem, which is often complex, by a simplified model. High bias can lead to underfitting, where the model fails to capture the important patterns in the data. Variance, on the other hand, refers to the model's sensitivity to small fluctuations in the training data. High variance can lead to overfitting, where the model learns the noise in the training data, resulting in poor performance on new data.

In model selection, we aim to choose a model that minimizes both bias and variance. However, decreasing bias often increases variance, and vice versa. This means that model selection involves finding a sweet spot where the model is complex enough to capture the underlying patterns in the data without being too sensitive to noise. Techniques like cross-validation and regularization are commonly used to estimate a model's performance on unseen data and control its complexity, helping us navigate the bias-variance tradeoff effectively. For example, L1 and L2 regularization can be used to prevent overfitting by adding a penalty term to the loss function, thus controlling model complexity.
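For squared-error loss, the tradeoff can be written down exactly. Assuming the data follow $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\operatorname{Var}(\varepsilon) = \sigma^2$, and writing $\hat{f}$ for a model fitted on a random training set (notation introduced here for illustration), the expected prediction error at a point $x$ decomposes as:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Making the model more flexible typically lowers the first term and raises the second, while the noise term stays fixed regardless of the model chosen.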

5. Describe a time you had to debug a machine learning model. What steps did you take?

During a project to predict customer churn, the model's performance on the validation set was significantly worse than on the training set. My first step was to verify the data pipeline for both sets, ensuring no data leakage or inconsistencies existed between training and validation data. I then checked for overfitting by examining the training and validation loss curves; a large gap indicated overfitting. To mitigate this, I employed techniques such as regularization (L1/L2), dropout, and early stopping.

Further investigation revealed that certain features, particularly categorical variables, were not properly encoded, causing the model to misinterpret them. I debugged the feature engineering process, using one-hot encoding and other suitable techniques and confirmed the changes improved the metrics. Finally, I performed error analysis by examining misclassified instances, which allowed me to identify additional data quality issues. Specifically, some of the target labels were not labelled correctly. Addressing these data quality and modelling issues significantly improved the model's generalization performance.
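The encoding fix described above can be illustrated with a minimal one-hot encoder in plain Python. The `one_hot_encode` helper and the `plans` column are hypothetical; in a real project a library encoder fitted on the training data would usually be used instead.

```python
def one_hot_encode(values):
    """Map each category to a 0/1 indicator vector.

    Returns the sorted category list and one encoded row per input value.
    """
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return categories, encoded

# Hypothetical categorical feature from a churn dataset.
plans = ["basic", "premium", "basic", "family"]
categories, encoded = one_hot_encode(plans)
print(categories)
print(encoded)
```

Unlike mapping categories to arbitrary integers, this representation does not suggest to the model that one plan is "greater" than another.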

6. How do you approach feature selection? What are some common techniques you use?

My approach to feature selection involves understanding the data and the problem, followed by applying relevant techniques. Initially, I analyze the features for their individual relevance to the target variable using methods like univariate statistical tests (e.g., chi-squared for categorical features, ANOVA for numerical features with categorical target). Then, I explore feature interactions and potential multicollinearity using correlation matrices or variance inflation factors (VIF). Finally, I consider the trade-off between model complexity and performance using techniques like:

- Filter methods: These use statistical measures to select features, like information gain or correlation.

- Wrapper methods: These use a machine learning algorithm to evaluate subsets of features, like recursive feature elimination (RFE) or sequential feature selection.

- Embedded methods: These incorporate feature selection into the model training process, like LASSO regularization (L1) in linear models or feature importance in tree-based models.

I prioritize a combination of methods and thorough validation to ensure the selected features generalize well to unseen data and improve model performance and interpretability.
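A filter method of the kind listed above can be sketched as ranking features by their absolute Pearson correlation with the target. The `pearson` and `rank_features` helpers and the feature values are hypothetical, and correlation only captures linear relationships, so this is a first pass rather than a complete selection procedure.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0
    return cov / (spread_x * spread_y)

def rank_features(features, target):
    """Sort feature names by |correlation with target|, strongest first."""
    scores = {name: abs(pearson(col, target)) for name, col in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical features and a numeric target.
features = {
    "usage_hours": [1, 2, 3, 4, 5, 6],
    "support_tickets": [5, 3, 6, 2, 4, 1],
    "constant_flag": [1, 1, 1, 1, 1, 1],
}
target = [2, 4, 6, 8, 10, 12]
ranking = rank_features(features, target)
print(ranking)
```

The constant feature scores zero and would be dropped first, which matches the intuition that a feature with no variation cannot help prediction.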

7. Explain the concept of regularization. What are some different regularization techniques and when would you use them?

Regularization is a technique used to prevent overfitting in machine learning models. Overfitting occurs when a model learns the training data too well, including its noise and outliers, and performs poorly on unseen data. Regularization adds a penalty term to the model's loss function, discouraging it from learning overly complex patterns. This penalty term is based on the magnitude of the model's coefficients; larger coefficients are penalized more heavily. Some regularization techniques include:

- L1 Regularization (Lasso): Adds a penalty proportional to the sum of the absolute values of the coefficients. It can perform feature selection by shrinking some coefficients exactly to zero.

- L2 Regularization (Ridge): Adds a penalty proportional to the sum of the squared coefficients. It shrinks coefficients towards zero but rarely sets them exactly to zero.

- Elastic Net Regularization: A combination of L1 and L2 regularization.

- Dropout: Randomly deactivates neurons during training, preventing over-reliance on specific neurons. This is commonly used in neural networks.

L1 regularization is useful when you suspect that many features are irrelevant and want to perform feature selection. L2 regularization is effective when you want to reduce the impact of all features without necessarily eliminating any. Elastic Net is a good choice when you have a large number of features and suspect that some are correlated. Dropout is specifically useful in neural networks to prevent complex co-adaptations.
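The difference between L1 and L2 shrinkage is easiest to see in a special case. Assuming orthonormal features, the lasso objective ½‖y − Xβ‖² + λ‖β‖₁ is solved by soft-thresholding each least-squares coefficient, and the ridge objective ½‖y − Xβ‖² + (λ/2)‖β‖² is solved by scaling each coefficient by 1/(1 + λ). The sketch below applies both rules to made-up coefficients; the helper names are hypothetical.

```python
def lasso_shrink(coef, lam):
    """Soft-thresholding: move the coefficient toward zero by lam, then clip."""
    magnitude = max(abs(coef) - lam, 0.0)
    if magnitude == 0.0:
        return 0.0
    return magnitude if coef > 0 else -magnitude

def ridge_shrink(coef, lam):
    """Ridge shrinkage: scale the coefficient, never reaching exactly zero."""
    return coef / (1.0 + lam)

# Hypothetical least-squares coefficients (orthonormal-feature assumption).
ols = [3.0, -0.4, 0.2, -1.5]
lam = 0.5
lasso = [lasso_shrink(b, lam) for b in ols]
ridge = [ridge_shrink(b, lam) for b in ols]
print(lasso)
print(ridge)
```

The two small coefficients are set exactly to zero by the L1 rule, while the L2 rule keeps all four but makes each smaller, which is the behavior described in the list above.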

8. How do you validate your machine learning models? What are the different validation techniques?

Validating machine learning models is crucial to ensure they generalize well to unseen data. Several techniques can be used, broadly categorized into:

- Hold-out validation: Splitting the dataset into training and testing sets. The model is trained on the training set and evaluated on the testing set.

- K-fold cross-validation: Dividing the dataset into k folds. The model is trained on k-1 folds and tested on the remaining fold. This process is repeated k times, with each fold used as the test set once. The average performance across all folds is then calculated. Stratified k-fold ensures each fold has the same proportion of classes as the whole dataset.

- Leave-one-out cross-validation (LOOCV): A special case of k-fold where k equals the number of data points. Each data point is used as the test set once.

- Time-series validation: For time-series data, splitting the data sequentially, training on the past and predicting the future. This prevents data leakage.

Choosing the right technique depends on the dataset size, computational resources, and the specific problem. We also use metrics like accuracy, precision, recall, F1-score, AUC-ROC, and others depending on the task to measure validation performance.
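
As a rough illustration of k-fold cross-validation, the sketch below generates the train/test index splits by hand. It assumes the data has already been shuffled (or that order does not matter); `k_fold_indices` is a hypothetical helper written for this example, not a library function.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs; each sample lands in a test fold exactly once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(n_samples=10, k=3))
for train_idx, test_idx in folds:
    print("train:", train_idx, "test:", test_idx)
```

In practice you would fit the model on each `train_idx`, score it on the matching `test_idx`, and average the scores across folds.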

9. Describe a machine learning project where you had to deal with missing data. What strategies did you use to handle it?

In a customer churn prediction project, a significant portion of customer profile data was missing, specifically income and usage frequency. To address this, I first analyzed the missing data patterns to determine whether the data was missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR). For income, which seemed MAR (potentially correlated with other observed variables like job title), I used multiple imputation by chained equations (MICE). This involved creating several plausible datasets by predicting the missing values from the observed data. For usage frequency, I initially considered mean/median imputation because it is simple and relatively few values were missing, so the impact on the model was expected to be small. However, the final model used K-Nearest Neighbors (KNN) imputation, which yielded better performance by predicting the missing values from the most similar customers.
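
The contrast between median and KNN imputation can be sketched in a few lines. The rows, column meanings, and helper names below are hypothetical, and the sketch assumes the other columns are fully observed and already on comparable scales (real data would usually need scaling first).

```python
import math
from statistics import median

# Hypothetical customer rows: (tenure_months, monthly_spend, usage_frequency).
# None marks a missing usage_frequency value.
rows = [
    (2.0, 20.0, 3.0),
    (3.0, 22.0, 4.0),
    (24.0, 80.0, 30.0),
    (26.0, 85.0, 28.0),
    (25.0, 82.0, None),
]


def median_impute(rows, col):
    """Replace missing values in `col` with the median of the observed values."""
    fill = median(r[col] for r in rows if r[col] is not None)
    return [r[col] if r[col] is not None else fill for r in rows]


def knn_impute(rows, col, k=2):
    """Replace missing values in `col` with the mean over the k nearest complete rows.

    Distance is Euclidean over all other columns.
    """
    other_cols = [c for c in range(len(rows[0])) if c != col]
    complete = [r for r in rows if r[col] is not None]

    def distance(a, b):
        return math.dist([a[c] for c in other_cols], [b[c] for c in other_cols])

    filled = []
    for row in rows:
        if row[col] is not None:
            filled.append(row[col])
            continue
        nearest = sorted(complete, key=lambda cand: distance(row, cand))[:k]
        filled.append(sum(n[col] for n in nearest) / len(nearest))
    return filled


median_filled = median_impute(rows, col=2)
knn_filled = knn_impute(rows, col=2, k=2)
print("median:", median_filled[-1], "knn:", knn_filled[-1])
```

Here the median pulls the missing value toward the middle of two very different customer segments, while KNN borrows the value from the heavy users the customer actually resembles.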

10. How familiar are you with different deep learning architectures such as CNNs, RNNs, and Transformers? Explain your experience with one.

I am familiar with several deep learning architectures including CNNs, RNNs, and Transformers. CNNs are well-suited for image processing tasks due to their ability to extract spatial features through convolutional layers. RNNs, particularly LSTMs and GRUs, excel in handling sequential data like text or time series, by maintaining an internal state to remember past information. Transformers, based on the attention mechanism, have revolutionized NLP tasks, outperforming RNNs in many sequence-to-sequence problems and showing state-of-the-art results due to parallelization and capturing long-range dependencies.

My experience is strongest with Transformers. I have used the Hugging Face Transformers library extensively for tasks such as text classification, sentiment analysis, and machine translation. For example, I fine-tuned a pre-trained BERT model on a custom dataset to classify customer reviews with high accuracy, achieving significant improvement over traditional machine learning methods. I also experimented with different Transformer variants like RoBERTa and DistilBERT to balance performance and computational cost. Understanding the self-attention mechanism and how it captures relationships between words has been crucial in improving model performance.

11. Explain how you would design a model to predict customer churn. What data would you need and what algorithms would you consider?

To design a customer churn prediction model, I would start by gathering relevant data, including customer demographics (age, location), usage patterns (frequency of use, features used), engagement metrics (website visits, support tickets), billing information (payment history, plan type), and satisfaction scores (from surveys or reviews). Feature engineering might involve creating metrics like recency, frequency, and monetary value (RFM).

For algorithms, I'd consider logistic regression (for its interpretability), support vector machines (SVM) or random forests (for potentially better accuracy), and gradient boosting machines (like XGBoost or LightGBM) for high performance. Model evaluation would involve metrics like precision, recall, F1-score, and AUC, focusing on optimizing for both identifying potential churners and minimizing false positives.
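
As one possible illustration of the feature engineering step, the sketch below derives recency, frequency, and monetary (RFM) features from a transaction log. The log format, the `rfm_features` helper, and the sample data are assumptions made for this example.

```python
from datetime import date

# Hypothetical transaction log: (customer_id, purchase_date, amount).
transactions = [
    ("a", date(2024, 1, 5), 40.0),
    ("a", date(2024, 3, 1), 60.0),
    ("b", date(2023, 11, 20), 15.0),
]


def rfm_features(transactions, as_of):
    """Aggregate per-customer recency (days), frequency (count), and monetary (sum)."""
    stats = {}
    for customer_id, purchase_date, amount in transactions:
        entry = stats.setdefault(
            customer_id, {"last": purchase_date, "frequency": 0, "monetary": 0.0}
        )
        entry["last"] = max(entry["last"], purchase_date)
        entry["frequency"] += 1
        entry["monetary"] += amount
    return {
        cid: {
            "recency_days": (as_of - entry["last"]).days,
            "frequency": entry["frequency"],
            "monetary": entry["monetary"],
        }
        for cid, entry in stats.items()
    }


rfm = rfm_features(transactions, as_of=date(2024, 3, 31))
print(rfm)
```

The resulting dictionary can be joined with demographic and billing features before training any of the candidate models.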

12. Describe a situation where your initial model failed. What did you learn from it and how did you improve?

Early in my career, I built a churn prediction model for a subscription service. The initial model, a simple logistic regression, performed well in cross-validation but poorly in production. I realized that the cross-validation split wasn't representative of the real-world data distribution, specifically the timing of when subscribers were acquired. The training data over-represented long-tenured subscribers, so the model generalized poorly to recently acquired ones.

I learned the importance of creating realistic validation splits that reflect the real-world deployment environment. I improved the model by incorporating time-based validation, where I trained on older data and validated on more recent data. I also added features capturing subscriber tenure and recent activity, which improved the model's performance on newer subscribers. Finally, I ran A/B tests and monitored the results as a continuous feedback loop to evaluate the model.
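
A time-based validation split of this kind might look like the sketch below. The record layout, the `snapshot_date` field, and the cutoff date are hypothetical choices made for illustration.

```python
from datetime import date


def time_based_split(records, cutoff, date_key="snapshot_date"):
    """Train on records strictly before `cutoff`; validate on records at or after it."""
    train = [r for r in records if r[date_key] < cutoff]
    valid = [r for r in records if r[date_key] >= cutoff]
    return train, valid


# Hypothetical subscriber snapshots.
records = [
    {"customer_id": 1, "snapshot_date": date(2023, 1, 15), "churned": 0},
    {"customer_id": 2, "snapshot_date": date(2023, 6, 1), "churned": 1},
    {"customer_id": 3, "snapshot_date": date(2023, 11, 10), "churned": 0},
    {"customer_id": 4, "snapshot_date": date(2024, 2, 20), "churned": 1},
]

train, valid = time_based_split(records, cutoff=date(2023, 10, 1))
print("train ids:", [r["customer_id"] for r in train])
print("valid ids:", [r["customer_id"] for r in valid])
```

Because every validation record is newer than every training record, the validation score is a closer proxy for how the model will behave on future subscribers.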

13. How do you stay up-to-date with the latest advancements in AI and machine learning?

I stay updated on AI/ML advancements through a variety of channels. I regularly read research papers on arXiv and attend virtual conferences like NeurIPS and ICML. I also follow prominent AI researchers and organizations (e.g., DeepMind, OpenAI) on social media and read their blogs.

Furthermore, I participate in online courses and workshops on platforms like Coursera and edX, focusing on specific topics or tools. Experimenting with new libraries and frameworks (e.g., TensorFlow, PyTorch, scikit-learn) and working on personal projects helps me gain practical experience and understand the implications of these advancements.

14. Explain your experience with deploying machine learning models to production. What challenges did you face?

I have experience deploying machine learning models using platforms like AWS SageMaker and Google Cloud AI Platform, as well as Flask APIs running in Docker containers. My deployments typically involve creating a REST API endpoint that receives input data, preprocesses it, feeds it to the model, and returns the prediction. I've used model versioning, A/B testing strategies, and monitoring tools to ensure model performance and stability after deployment.

Some common challenges I've faced include managing dependencies across different environments, ensuring low latency for real-time predictions (often tackled using model optimization techniques like quantization), and addressing data drift over time, which requires continuous monitoring and retraining. Scaling the infrastructure to handle fluctuating request volumes and implementing robust error handling are also important considerations.

15. What are some common ethical considerations in AI model design? How do you ensure fairness and avoid bias?

Ethical considerations in AI model design include fairness, accountability, transparency, and privacy. Addressing bias is crucial; this can arise from biased training data, flawed algorithms, or skewed feature selection. Consequences of bias include discrimination or unfair outcomes for certain demographic groups.

To ensure fairness and mitigate bias, several techniques can be used. This includes careful data collection and pre-processing to identify and correct biases, using fairness-aware algorithms that explicitly optimize for fairness metrics (e.g., equal opportunity, demographic parity), and regularly auditing model outputs for disparate impact. Techniques such as re-weighting the dataset, or adversarial debiasing could be used. Ensuring diverse representation within the development team can also help.
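
To show what auditing for demographic parity and equal opportunity can look like, here is a small sketch that computes both gaps for two groups. The labels, predictions, group names, and helper functions are made up for the example; a real audit would use a held-out dataset and usually more than two groups.

```python
def selection_rate(y_pred):
    """Share of samples that received a positive prediction."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true, y_pred):
    """Share of truly positive samples that were predicted positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_positives) / len(preds_for_positives)


def fairness_gaps(y_true, y_pred, group, groups=("A", "B")):
    """Return (demographic parity gap, equal opportunity gap) between two groups."""
    rates = {}
    for g in groups:
        idx = [i for i, gi in enumerate(group) if gi == g]
        yt = [y_true[i] for i in idx]
        yp = [y_pred[i] for i in idx]
        rates[g] = (selection_rate(yp), true_positive_rate(yt, yp))
    first, second = groups
    dp_gap = abs(rates[first][0] - rates[second][0])
    eo_gap = abs(rates[first][1] - rates[second][1])
    return dp_gap, eo_gap


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["A", "A", "A", "A", "B", "B", "B", "B"]

dp_gap, eo_gap = fairness_gaps(y_true, y_pred, group)
print("demographic parity gap:", dp_gap)
print("equal opportunity gap:", eo_gap)
```

A gap of zero on either metric means the two groups are treated identically by that definition; what counts as an acceptable gap is a policy decision rather than a technical one.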

16. Walk me through a time you needed to productionize a Machine Learning model. What was your process?

When productionizing a churn prediction model, my process involved several key steps. First, I collaborated with the stakeholders to define clear success metrics (e.g., precision, recall, cost savings) and understand deployment constraints (latency, infrastructure). Then, I focused on model retraining and validation, setting up a pipeline that automatically retrained the model on a scheduled basis using the latest data, while also validating that the model performance was within the acceptable threshold.

Next, I containerized the model using Docker and deployed it to a cloud platform, using REST APIs for inference. A/B testing was conducted to evaluate the model's impact in the real world. Finally, I implemented monitoring to track model performance (accuracy, drift) and system health (latency, errors). If the model degraded past a certain threshold, I triggered an automated retraining or alert.

17. How do you identify and correct for data drift in production models?

Data drift, a change in model input data that leads to performance degradation, can be identified using several techniques. Monitoring input data distributions and comparing them to the training data distribution using statistical tests (e.g., Kolmogorov-Smirnov test) or visualization techniques is crucial. We can also track model performance metrics like accuracy, precision, recall, and F1-score; a significant drop often indicates drift. Setting up alerts based on predefined thresholds for these metrics is essential for proactive detection.

Correcting for data drift involves several strategies. Retraining the model with fresh, recent data is the most common approach. Implementing adaptive models that can automatically adjust to changing data patterns is also useful. Data preprocessing techniques like feature scaling or normalization can partly mitigate simple shifts in the scale of input features. Finally, utilizing ensemble methods that combine multiple models trained on different data subsets can improve robustness to data variations.
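
As a sketch of distribution monitoring, the function below computes the two-sample Kolmogorov-Smirnov statistic by hand for a single feature. The sample values and the `DRIFT_THRESHOLD` cutoff are assumptions for illustration; in practice you would more likely rely on a p-value from a statistics library such as `scipy.stats.ks_2samp`.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # The empirical CDFs only change at observed values, so checking those is enough.
    for x in sorted(set(ref) | set(cur)):
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


DRIFT_THRESHOLD = 0.3  # hypothetical alerting cutoff

training_feature = [1, 2, 3, 4, 5]
production_feature = [4, 5, 6, 7, 8]

no_drift = ks_statistic(training_feature, training_feature)
drift = ks_statistic(training_feature, production_feature)
print("same data:", no_drift, "shifted data:", drift)
print("alert:", drift > DRIFT_THRESHOLD)
```

Running a check like this per feature on a schedule gives an early warning before the drop shows up in accuracy metrics, which often depend on delayed labels.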

18. Let's say the model you built performs well on test data but not in production, what could be the possible reasons?

Several reasons could explain a model performing well on test data but poorly in production. One common issue is data drift, where the characteristics of the production data differ significantly from the training or test data. This can include changes in feature distributions, new feature values, or shifts in the relationships between features and the target variable. Another possibility is overfitting to the test set, despite good cross-validation. The test set may not be truly representative of real-world scenarios.

Other potential problems include: pipeline discrepancies (differences in feature engineering or data preprocessing between the training/test environment and production); serving infrastructure issues (e.g., resource constraints, incorrect model version deployed); and delayed feedback loops (where the model's actions influence future data, leading to a feedback loop that wasn't present during training). Finally, bugs in model implementation during deployment can also lead to performance issues. Monitoring key metrics and retraining the model with fresh data are essential for addressing these challenges.

19. Describe your experience working with cloud-based machine learning platforms.

I have experience working with cloud-based machine learning platforms, primarily Google Cloud Platform (GCP) and Amazon Web Services (AWS). On GCP, I've utilized services like Vertex AI for training and deploying custom models, as well as pre-trained APIs such as the Vision API and Natural Language API for various tasks like image recognition and sentiment analysis. I've also worked with BigQuery for data warehousing and analysis, integrating it with my ML pipelines.

On AWS, I've used SageMaker for model building, training, and deployment, including experimenting with different algorithms and hyperparameter tuning. I'm familiar with services like S3 for data storage, Lambda for serverless compute, and IAM for access control. I also have experience with Google Cloud Storage for data storage, and I've used cloud infrastructure to orchestrate and automate machine learning workflows.

20. Explain the concept of ensemble methods. What are some examples and what are their advantages?

Ensemble methods combine multiple individual models to create a stronger, more robust model. The idea is that by aggregating the predictions of several "weak learners", you can achieve better performance than any single model could on its own. This works because different models may have different biases or focus on different aspects of the data, and combining them can smooth out errors and reduce variance.

Some common examples include:

- Bagging (Bootstrap Aggregating): Creates multiple models by training each on a bootstrap sample (a random sample drawn with replacement) of the training data, then averages their predictions or takes a majority vote (e.g., Random Forest).

- Boosting: Sequentially builds models, where each model tries to correct the errors of its predecessors (e.g., AdaBoost, Gradient Boosting Machines).

- Stacking: Combines the predictions of multiple different types of models using another model (a "meta-learner") to learn how to best weight their outputs.

Advantages are:

- Improved accuracy: Often outperforms single models.

- Robustness: Less prone to overfitting and can generalize better to unseen data.

- Handles complex relationships: Can capture more complex patterns in the data than simpler models.
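
The intuition behind combining models can be illustrated with simple hard voting. The predictions below are contrived so that each model makes mistakes on different samples; real models usually have partly correlated errors, so the gain is typically smaller.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Hard voting: for each sample, return the label most models predicted."""
    return [
        Counter(votes).most_common(1)[0][0]
        for votes in zip(*predictions_per_model)
    ]


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1, 0, 1, 1, 0, 1, 0, 0]
model_a = [0, 0, 1, 1, 0, 1, 0, 1]  # wrong on samples 0 and 7
model_b = [1, 1, 1, 0, 0, 1, 0, 0]  # wrong on samples 1 and 3
model_c = [1, 0, 0, 1, 1, 1, 0, 0]  # wrong on samples 2 and 4

ensemble = majority_vote([model_a, model_b, model_c])
for name, preds in [("a", model_a), ("b", model_b), ("c", model_c), ("ensemble", ensemble)]:
    print(name, accuracy(y_true, preds))
```

Because no two models are wrong on the same sample, the vote corrects every individual error in this toy setup.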

21. How do you handle the computational cost of training very large models?

To handle the computational cost of training very large models, I leverage several techniques. These include data parallelism, where the dataset is split across multiple devices (GPUs or TPUs), and model parallelism, where the model itself is split across devices. Gradient accumulation, which sums gradients from several smaller batches before updating the model weights, simulates a larger effective batch size within a fixed memory budget and, in distributed training, can reduce how often gradients must be synchronized.

Furthermore, techniques like mixed-precision training (using FP16 or BF16) and gradient checkpointing can significantly reduce memory footprint, allowing for larger batch sizes or model sizes. Finally, using efficient libraries like TensorFlow, PyTorch, or JAX, which are optimized for distributed training and hardware acceleration, is crucial.
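
Gradient accumulation can be checked on a toy problem. The sketch below uses a one-parameter linear model with mean squared error (an assumption chosen to keep the arithmetic visible) and shows that accumulating scaled micro-batch gradients reproduces the full-batch gradient.

```python
def mse_grad(w, batch):
    """Gradient of mean squared error with respect to w for the model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w, batch, micro_batch_size):
    """Process the batch in micro-batches and accumulate their gradients."""
    total = 0.0
    for start in range(0, len(batch), micro_batch_size):
        micro = batch[start:start + micro_batch_size]
        # Weight each micro-batch by its share of the batch so the sum
        # equals the mean gradient over the full batch.
        total += mse_grad(w, micro) * len(micro) / len(batch)
    return total


data = [(1.0, 2.0), (2.0, 4.5), (3.0, 5.5), (4.0, 8.0)]  # hypothetical (x, y) pairs
w = 0.5

full = mse_grad(w, data)
accum = accumulated_grad(w, data, micro_batch_size=2)
print("full-batch gradient: ", full)
print("accumulated gradient:", accum)
```

Only one micro-batch needs to be held in memory at a time, which is why the technique helps when the desired batch size does not fit on the device.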

22. How can you make sure that the model addresses the business problem correctly?

To ensure the model addresses the business problem correctly, begin by clearly defining the problem and the desired outcomes with stakeholders. Establish specific, measurable, achievable, relevant, and time-bound (SMART) goals. Continuously validate the model's performance against these goals through iterative testing and feedback loops. Involve business users in the validation process to confirm that the model's outputs are meaningful and actionable within their context.

Furthermore, conduct thorough error analysis to identify areas where the model's predictions deviate from expected results. Analyze the impact of these errors on business outcomes, and prioritize model improvements based on their potential business value. Regularly monitor the model's performance in a production environment and be prepared to retrain or refine the model as business conditions evolve.

23. How do you secure AI models from adversarial attacks?

Securing AI models against adversarial attacks involves several strategies. Adversarial training is a core technique where the model is trained on both clean and adversarial examples, making it more robust. Input validation and sanitization can help filter out potentially malicious inputs. Techniques like gradient masking and defensive distillation aim to obfuscate the model's gradients or decision boundaries, making it harder for attackers to craft effective adversarial examples, although adaptive attacks have been shown to circumvent them, so they should not be relied on alone. Rate limiting and input monitoring can also help detect and mitigate attacks in real time.

Furthermore, exploring robust architectures and incorporating certified defenses can provide provable guarantees about the model's robustness against certain types of attacks. These include methods like randomized smoothing and abstract interpretation based techniques. Continuous monitoring and retraining of models with new adversarial examples are crucial for maintaining security over time.
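
To make adversarial examples concrete, here is a minimal sketch of the fast gradient sign method (FGSM) against a logistic regression model. The weights, input, and `epsilon` are arbitrary values chosen for illustration; adversarial training would add examples like `x_adv`, with their original labels, back into the training set.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of the positive class under logistic regression."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(value):
    return (value > 0) - (value < 0)


def fgsm_example(weights, bias, x, y, epsilon):
    """Perturb x by epsilon per feature in the direction that increases the loss.

    For cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


weights, bias = [2.0, -1.0], 0.0  # hypothetical trained parameters
x, y = [1.0, 0.5], 1              # a correctly classified positive example

x_adv = fgsm_example(weights, bias, x, y, epsilon=0.5)
print("clean probability:      ", predict_proba(weights, bias, x))
print("adversarial probability:", predict_proba(weights, bias, x_adv))
```

A small, bounded change to each feature is enough to pull the model's confidence in the correct class down sharply, which is the behavior robust training tries to reduce.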

24. How do you monitor model performance after deployment?

After deploying a model, continuous monitoring is crucial. Key metrics to track include accuracy, precision, recall, F1-score (depending on the problem), and drift (both data and concept drift). Monitoring should also include tracking prediction distributions and input feature statistics. Alerts should be set up to notify the team when performance degrades beyond acceptable thresholds.

Techniques include implementing dashboards using tools like Grafana or Kibana to visualize model performance metrics and setting up automated model retraining pipelines triggered by performance drops. Tools like Prometheus can be used for monitoring the deployed application and models. Log the model predictions and inputs for later analysis and debugging purposes. Furthermore, A/B testing new model versions against the existing production model helps ensure improvements before a full rollout.

25. Explain different methods you use to explain model outputs?

I use several methods to explain model outputs, depending on the model type and the audience. For simpler models like linear regression, I focus on the coefficients and their significance. For more complex models, I use techniques like feature importance scores (e.g., from tree-based models), which rank features based on their contribution to the model's predictions. I also use partial dependence plots (PDPs) to visualize the relationship between a feature and the predicted outcome, holding other features constant. SHAP (SHapley Additive exPlanations) values provide a more comprehensive view by attributing the contribution of each feature to individual predictions, offering local explainability. For NLP models I might use attention weights or LIME to highlight important words or phrases. For image models, techniques like Grad-CAM can highlight the areas of the image that were most important for the prediction.

To communicate these explanations effectively, I use visualizations and simple language. For example, I might say "Feature X is positively correlated with the outcome, meaning that as X increases, the predicted outcome also tends to increase". I also ensure that the explanations are relevant to the specific business problem and the audience's understanding. Finally, I use sanity checks to confirm that the model and its explanations align with domain expertise. For example, do the top features align with what a domain expert would expect? In code, you might use something like:

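    import shap

    # Assumes `model` is a fitted tree-based model (e.g., a random forest or
    # gradient-boosted trees) and `X` is the feature matrix to explain.
    explainer = shap.TreeExplainer(model)
    shap_values = explainer.shap_values(X)

    # Summary plot of per-feature SHAP value distributions across samples.
    shap.summary_plot(shap_values, X)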

26. How do you handle the cold start problem for new users or items?

The cold start problem, where a system lacks sufficient data to make reliable predictions for new users or items, can be addressed using several strategies. For new users, we can leverage content-based filtering by asking them for explicit preferences or implicitly inferring them from their initial interactions (e.g., browsing history). Collaborative filtering can be applied later once enough interaction data is available. Another approach is to use demographic information (if available) to group new users with similar existing users.

For new items, content-based filtering is often the primary approach, relying on item metadata (e.g., descriptions, tags) to recommend similar items to users who have shown interest in related content. Hybrid approaches, combining content-based and collaborative filtering, can also be effective by utilizing both item metadata and limited user interaction data to make initial recommendations, then transitioning to collaborative filtering as more data becomes available. We can also boost the visibility of new items randomly or based on pre-defined business rules.
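
A content-based fallback for a brand-new item might be sketched as follows. The tag vocabulary, catalog, and item names are invented for the example; a real system would typically use richer metadata or learned embeddings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


# Hypothetical tag vectors in the order [mystery, thriller, cooking].
catalog = {
    "mystery_novel": [1, 1, 0],
    "cookbook": [0, 0, 1],
}
new_item_vector = [0, 1, 0]  # a newly added thriller with no interaction data


def most_similar(new_vector, catalog):
    """Return the existing item whose metadata is closest to the new item."""
    return max(catalog, key=lambda name: cosine(new_vector, catalog[name]))


anchor = most_similar(new_item_vector, catalog)
print("recommend the new item to users who liked:", anchor)
```

Once the new item has accumulated enough interactions of its own, it can be handed over to the collaborative filtering model.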

27. Give me an example of using active learning?

Imagine training a spam filter. Instead of passively training on a fixed dataset, active learning allows the model to request labels for the emails it's most uncertain about. The workflow might look like this:

- Train an initial model on a small, labeled dataset.

- Use the model to predict the labels of a larger, unlabeled dataset.

- Identify the emails where the model has the lowest confidence in its prediction (e.g., probabilities close to 0.5).

- Request labels for these uncertain emails from a human annotator.

- Add the newly labeled emails to the training set and retrain the model.

- Repeat the prediction, selection, labeling, and retraining steps until a desired performance level is reached.

This targeted labeling approach can significantly reduce the amount of labeled data needed to achieve a high-performing model, compared to purely supervised learning with random sampling.
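
The selection step of the workflow above (uncertainty sampling) can be sketched as follows. The probabilities are made-up model outputs, and `select_most_uncertain` is a hypothetical helper.

```python
def select_most_uncertain(probabilities, n_queries):
    """Return indices of the n_queries samples whose probability is closest to 0.5."""
    ranked = sorted(
        range(len(probabilities)),
        key=lambda i: abs(probabilities[i] - 0.5),
    )
    return ranked[:n_queries]


# Hypothetical spam probabilities for six unlabeled emails.
spam_probs = [0.98, 0.52, 0.03, 0.45, 0.70, 0.10]

to_label = select_most_uncertain(spam_probs, n_queries=2)
print("send these emails to the annotator:", to_label)
```

The emails the model is already confident about (for example 0.98 or 0.03) are skipped, so annotation effort goes where it is most likely to change the model.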

28. Describe a time when you had to explain a complex model to a non-technical stakeholder. How did you approach it?

In a prior role, I developed a churn prediction model for a subscription service. When presenting it to the marketing director, who wasn't technical, I avoided jargon. I focused on the business value: how the model could identify at-risk customers and help reduce churn, increasing revenue.

Instead of detailing algorithms, I used analogies and visuals. For example, I likened the model to a 'smart filter' that sorted customers based on their likelihood to leave, similar to how email spam filters work. I showed simplified charts illustrating how the model's predictions correlated with actual churn rates and highlighted the potential ROI from targeted intervention campaigns, focusing on actionable insights rather than technical specifics.

29. What is your approach to A/B testing different models?

My approach to A/B testing models involves defining a clear hypothesis and success metric before starting. I then randomly split the user base into two (or more) groups. One group (the control) experiences the existing model, while the other(s) experience the new model(s). The key is ensuring the groups are statistically similar at the outset. Data is collected on the defined metric for each group over a sufficient period.

After the test period, I perform statistical analysis (e.g., t-tests, chi-squared tests) to determine if the difference in the success metric between the groups is statistically significant. If the new model performs significantly better, it is rolled out. I also carefully monitor the model after deployment to ensure the A/B test results translate to real-world performance.
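
For a conversion-style success metric, the significance check could be a two-proportion z-test, sketched below with only the standard library. The conversion counts are invented, and the 0.05 significance level is a conventional choice rather than something the test itself prescribes.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Hypothetical results: control model (A) vs. new model (B).
z, p_value = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=260, n_b=2000)
print("z =", round(z, 3), "p =", round(p_value, 4))
print("significant at 0.05:", p_value < 0.05)
```

The sample size and test duration should be fixed before the experiment starts, since repeatedly checking the p-value and stopping early inflates the false positive rate.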

Advanced AI Model Designer interview questions

1. How would you handle a situation where your AI model is performing well in testing but poorly in real-world scenarios? What specific steps would you take to diagnose and resolve the issue?

When an AI model performs well in testing but poorly in real-world scenarios (a generalization problem), it usually indicates a discrepancy between the training data distribution and the real-world data distribution. I would first diagnose the problem by:

- Data Distribution Analysis: Compare the statistical properties (mean, variance, skewness, missing values) of the training data and real-world data. Look for dataset shift or covariate shift. Identify if the real-world data contains edge cases or outliers not present during training.

- Feature Importance Analysis: Check if the features that were important during training are still relevant in the real-world scenario. A change in feature importance could highlight a problem with feature engineering or data representation.

- Error Analysis: Analyze the specific instances where the model fails in the real world. Look for patterns or common characteristics among these failures. This involves looking at the model's predictions alongside the actual correct answers.

To resolve the issue, I would consider:

- Data Augmentation or Re-sampling: Supplement the training data with more representative samples from the real-world data, or upsample under-represented edge cases.

- Fine-tuning: Fine-tune the model on a smaller set of real-world data. This can help adapt the model to the new data distribution.

- Regularization: Increase regularization to prevent overfitting to the training data.

- Feature Engineering: Re-evaluate feature engineering to create features more robust to real-world variations.

- Ensemble Methods: Use ensemble methods, such as stacking or boosting, that combine multiple models trained on different subsets of the data or with different architectures to improve robustness.

- Domain Adaptation Techniques: Explore domain adaptation techniques, such as adversarial domain adaptation, to bridge the gap between the training and real-world data distributions.

2. Describe a time when you had to make a trade-off between model accuracy and computational efficiency. What factors did you consider, and how did you arrive at your decision?

In a project involving real-time fraud detection, I faced a trade-off between the accuracy of a complex deep learning model and the latency requirements for flagging fraudulent transactions. While the deep learning model achieved 98% accuracy in offline testing, its inference time was around 200ms per transaction. This was unacceptable, as transactions needed to be evaluated in under 50ms to avoid impacting user experience and to prevent fraudulent activity effectively. Factors considered included the business impact of delayed fraud detection, the cost of deploying more powerful infrastructure to support the complex model, and the potential loss of accuracy.

To address this, I explored simpler models like logistic regression and decision trees. Although they achieved lower accuracy (around 95%), their inference times were significantly faster (under 10ms). I also implemented feature selection techniques to reduce the dimensionality of the input data for the deep learning model, and utilized model quantization to reduce model size and hence inference time. Ultimately, I chose a decision tree model, enhanced with carefully engineered features, that met the latency requirements while maintaining an acceptable level of accuracy. This decision was made based on profiling different models, creating a cost-benefit analysis, and collaborating with stakeholders to agree on the acceptable level of risk versus the need for real-time performance. We monitored the model's performance closely and planned to revisit the choice of model if the fraud landscape changed significantly.

3. Explain your approach to ensuring fairness and mitigating bias in AI models, particularly when dealing with sensitive data or protected attributes.

To ensure fairness and mitigate bias in AI models, especially with sensitive data, I take a multi-faceted approach. First, I focus on data collection and preprocessing, ensuring diverse and representative datasets while addressing imbalances that could lead to discriminatory outcomes. This includes techniques like oversampling, undersampling, or synthetic data generation. During data exploration, I conduct thorough bias audits to identify potential biases related to protected attributes such as race, gender, or age.

Model development involves employing fairness-aware algorithms or techniques like re-weighting, adversarial debiasing, or post-processing methods to correct for biases. I also pay close attention to feature selection, carefully considering whether certain features might inadvertently encode discriminatory information. Finally, rigorous evaluation using fairness metrics (e.g., demographic parity, equal opportunity) is crucial, along with ongoing monitoring and auditing of deployed models to detect and address any emerging biases.

4. How would you go about designing an AI model for a completely novel application where there is limited or no existing data? What strategies would you employ?

When designing an AI model for a novel application with limited data, I'd focus on techniques to maximize learning from minimal examples. First, I'd prioritize data augmentation to artificially expand the dataset. This could involve applying transformations, generating synthetic data using domain knowledge or other generative models (if feasible with available resources), or leveraging transfer learning from related domains with pre-trained models.

Next, I'd select a model architecture appropriate for small datasets, such as simpler models with fewer parameters to avoid overfitting. Techniques like few-shot learning or meta-learning become crucial, allowing the model to generalize from very few examples. Regularization techniques, Bayesian methods, and active learning (iteratively selecting the most informative samples for labeling) would further improve performance and reduce reliance on large datasets. Finally, a rigorous evaluation strategy, like cross-validation, would be used to obtain reliable performance estimates from the limited data and to detect overfitting.

5. Imagine you have conflicting performance metrics for your AI model (e.g., high precision but low recall). How do you reconcile these conflicts and optimize for the desired business outcome?

When facing conflicting performance metrics like high precision but low recall, I'd prioritize understanding the business context and the relative costs of false positives versus false negatives. For example, in fraud detection, low recall (missing fraudulent transactions) might be more costly than low precision (flagging legitimate transactions as fraud). Therefore, I'd focus on improving recall, even if it slightly reduces precision. This could involve adjusting the model's prediction threshold.

To reconcile these conflicts, I'd use techniques like:

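- ROC and precision-recall curve analysis: The ROC curve visualizes the trade-off between true positive rate (recall) and false positive rate (1 - specificity), while the precision-recall curve shows the precision/recall trade-off directly. Either can be used to choose an operating threshold.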

- Cost-sensitive learning: Explicitly incorporating the costs of different types of errors into the model's training.

- Ensemble methods: Combining multiple models trained with different objectives to balance precision and recall.

- Additional data collection: Sometimes the model needs more data to discriminate better. In particular, adding examples near the decision boundary can improve model performance.
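
Threshold adjustment driven by business costs can be sketched as follows. The labels, scores, candidate thresholds, and cost values are all hypothetical; the point is that the same model yields different operating points depending on how false positives and false negatives are priced.

```python
def confusion_counts(y_true, scores, threshold):
    """Return (tp, fp, fn) when predicting positive for scores >= threshold."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, preds) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 0)
    return tp, fp, fn


def precision_recall_at(y_true, scores, threshold):
    tp, fp, fn = confusion_counts(y_true, scores, threshold)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def cheapest_threshold(y_true, scores, thresholds, cost_fp, cost_fn):
    """Pick the candidate threshold with the lowest total error cost."""
    def total_cost(threshold):
        _, fp, fn = confusion_counts(y_true, scores, threshold)
        return fp * cost_fp + fn * cost_fn
    return min(thresholds, key=total_cost)


y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.45, 0.35, 0.6, 0.3, 0.2, 0.1]
candidates = [0.7, 0.5, 0.3]

for th in candidates:
    print(th, precision_recall_at(y_true, scores, th))

# Fraud-style costs: a missed positive is assumed 10x as costly as a false alarm.
best = cheapest_threshold(y_true, scores, candidates, cost_fp=1, cost_fn=10)
print("chosen threshold:", best)
```

With the costs reversed (false alarms priced higher than misses), the same procedure would favor the stricter, high-precision threshold instead.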

6. Walk me through your process for selecting the appropriate AI model architecture for a given problem, considering factors such as data size, complexity, and available resources.

My process for selecting an AI model architecture involves several key considerations. First, I assess the data: its size, type (structured/unstructured), and the presence of noise or missing values. Small datasets often benefit from simpler models like linear regression or decision trees, while larger, complex datasets warrant exploring deep learning architectures such as convolutional neural networks (CNNs) for image data, recurrent neural networks (RNNs) or Transformers for sequential data, or graph neural networks (GNNs) for graph-structured data. I also evaluate the problem's complexity. Is it a classification, regression, or something else? This guides the choice of output layer and loss function.

Second, I factor in available resources, including computational power (CPU/GPU), memory, and time constraints. Training large deep learning models requires significant computational resources and time. If resources are limited, I might opt for a smaller model, transfer learning from a pre-trained model, or explore model compression techniques. Finally, I consider the interpretability requirements. If understanding the model's decision-making process is crucial, simpler, more interpretable models like decision trees or linear models might be preferred over black-box deep learning models. I often start with simpler models and gradually increase complexity, monitoring performance metrics and adjusting the architecture as needed. I may also explore AutoML solutions to suggest suitable architectures and hyperparameters.

7. How do you stay up-to-date with the latest advancements in AI model design and incorporate them into your work? What are your go-to resources?

I stay updated on AI model design advancements through a combination of academic and industry resources. I regularly read research papers on arXiv and other academic databases like IEEE Xplore, focusing on areas relevant to my work. I also follow prominent AI research labs and individuals on platforms like Twitter and LinkedIn to stay informed about their latest publications and projects.

My go-to resources include:

- arXiv: For pre-prints of research papers.

- Google AI Blog & other company blogs: For insights into practical applications and research.

- Conference proceedings (NeurIPS, ICML, ICLR): To understand state-of-the-art techniques.

- Online courses (Coursera, edX): For structured learning on specific topics.

- Podcasts & Newsletters (e.g., The Batch from Andrew Ng): For curated summaries of recent developments.

When incorporating new advancements, I start with small experiments and A/B tests to validate their effectiveness within my specific context, while being mindful of resource constraints.

8. Explain your approach to handling missing data in a dataset. What are the pros and cons of different imputation techniques, and when would you choose one over another?

My approach to handling missing data involves first understanding the nature and extent of the missingness. I try to determine if the data is missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR). Then, I decide on a strategy which might include removing rows with missing values (if the amount of missing data is small and won't significantly impact the analysis), or using imputation techniques.

Common imputation techniques include:

- Mean/Median Imputation: Simple but can distort distributions and underestimate variance.

- Mode Imputation: Useful for categorical data, but similarly distorts distributions.

- K-Nearest Neighbors (KNN) Imputation: More sophisticated, uses similar data points to estimate missing values. Can be computationally expensive for large datasets.

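- Regression Imputation: Predicts missing values from other variables. In its basic form it assumes a linear relationship, and because imputed values fall exactly on the fitted line, it can overstate the strength of relationships between variables.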

- Multiple Imputation: Generates multiple plausible datasets, reflecting the uncertainty around the imputed values. Considered a gold standard but is more complex to implement.

I'd choose mean/median imputation for quick and dirty analysis or when the missing data is minimal and the impact on the overall analysis is negligible. KNN imputation is appropriate when there are complex relationships between variables and the dataset isn't too large. Multiple imputation is the preferred method when accuracy and reducing bias are paramount, despite the increased computational cost and complexity.
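The variance-shrinking effect of mean imputation mentioned above is easy to see on a toy column. The following is a minimal sketch using only the Python standard library; the `impute` helper and the `incomes` values are made up for illustration and are not from any particular library.

```python
import statistics

def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]

# Hypothetical column with two missing entries and one outlier.
incomes = [32.0, 35.0, None, 38.0, 41.0, None, 250.0]
observed = [v for v in incomes if v is not None]

mean_filled = impute(incomes, "mean")
median_filled = impute(incomes, "median")

# Mean imputation adds points with zero deviation, so the spread shrinks.
print("observed stdev:    ", round(statistics.stdev(observed), 2))
print("mean-imputed stdev:", round(statistics.stdev(mean_filled), 2))
# The median is less affected by the outlier than the mean.
print("mean fill:", mean_filled[2], "median fill:", median_filled[2])
```

With an outlier in the column, the mean fill is pulled far from the typical value while the median fill stays near it, which is one reason to prefer the median for skewed features.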

9. Describe a time when you had to explain a complex AI model to a non-technical audience. How did you simplify the concepts and ensure they understood the key takeaways?

I once had to explain a fraud detection AI model to a team of bank managers. Instead of diving into the algorithms, I focused on the model's purpose: identifying suspicious transactions to prevent financial losses. I used an analogy of a detective, explaining that the model looks for unusual patterns, like transactions from new locations or unusually large amounts, just like a detective looks for clues.

To ensure understanding, I avoided jargon and used visuals. I showed simple charts illustrating how the model scored transactions based on risk. I also emphasized the model's accuracy rate and how it reduced false positives compared to the previous manual system, focusing on the tangible benefits for their daily work. I made sure to take time to answer questions and address any concerns they had in simple, non-technical terms.

10. How would you design an AI model that can adapt and learn continuously in a dynamic environment where the data distribution is constantly changing?

To design an AI model that adapts and learns continuously in a dynamic environment, I would use a combination of online learning techniques and drift detection methods. Online learning allows the model to update its parameters incrementally with each new data point, rather than requiring retraining on the entire dataset. This is crucial for adapting to changing data distributions in real-time. Techniques like stochastic gradient descent (SGD) and adaptive learning rate optimizers (e.g., Adam) would be beneficial.

Furthermore, I'd incorporate drift detection mechanisms to identify when the data distribution has shifted significantly. Algorithms such as the Drift Detection Method (DDM) or the Page-Hinkley test can signal when retraining or adaptation is necessary. Upon detection, the model could adjust its learning rate, add new models to a performance-weighted ensemble, or initiate a more comprehensive retraining process on a recent data window to focus on the current distribution. Regularization techniques would also be vital to prevent overfitting to the most recent data and to maintain generalization ability.
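As an illustration of drift detection, here is a minimal sketch of a one-sided Page-Hinkley test that watches a stream of per-sample model errors for an upward shift in the mean. The class name, the default values for `delta`, `threshold`, and `min_samples`, and the synthetic error stream are all assumptions chosen for the example; real thresholds would need tuning on the actual error scale.

```python
class PageHinkley:
    """One-sided Page-Hinkley test for an upward shift in a stream's mean."""

    def __init__(self, delta=0.005, threshold=5.0, min_samples=30):
        self.delta = delta              # magnitude of change that is tolerated
        self.threshold = threshold      # alarm level for the test statistic
        self.min_samples = min_samples  # warm-up period before alarms are allowed
        self.n = 0
        self.mean = 0.0
        self.cum_sum = 0.0              # cumulative deviation from the running mean
        self.min_cum_sum = 0.0          # smallest cumulative deviation seen so far

    def update(self, x):
        """Feed one observation; return True if drift is signalled."""
        self.n += 1
        self.mean += (x - self.mean) / self.n
        self.cum_sum += x - self.mean - self.delta
        self.min_cum_sum = min(self.min_cum_sum, self.cum_sum)
        if self.n < self.min_samples:
            return False
        return (self.cum_sum - self.min_cum_sum) > self.threshold

# Synthetic error stream: mean error jumps from about 0.1 to about 0.6 at index 200.
stream = [0.1 + (0.02 if i % 2 == 0 else -0.02) for i in range(200)]
stream += [0.6 + (0.02 if i % 2 == 0 else -0.02) for i in range(200)]

detector = PageHinkley()
detected_at = None
for i, err in enumerate(stream):
    if detector.update(err):
        detected_at = i  # a real system would trigger adaptation or retraining here
        break

print("drift signalled at index:", detected_at)
```

A lower `threshold` reacts faster but raises the false-alarm rate, so it is usually set against a period of known-stable data.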

11. What are your preferred methods for model validation and cross-validation, and how do you ensure that your results are statistically significant?

My preferred methods for model validation include techniques like k-fold cross-validation and stratified k-fold, especially when dealing with imbalanced datasets. I also use hold-out validation sets for a final check on unseen data. To ensure statistically significant results, I focus on metrics appropriate for the problem (e.g., precision/recall for classification, RMSE for regression) and calculate confidence intervals where possible.

Specifically, I will run cross-validation multiple times with different random seeds and then average the performance metrics to obtain a more robust estimate of the model's generalization ability. I also use statistical tests to compare models and determine if the differences are statistically significant: a paired t-test when two models are scored on the same folds, or ANOVA when comparing more than two. If sample sizes are small or the scores are clearly non-normal, I'd opt for a non-parametric test such as the Wilcoxon signed-rank test for paired scores or Mann-Whitney U for independent samples.
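The repeated cross-validation described above can be sketched without any ML library. In this example, `k_fold_indices`, `repeated_cv`, the toy `labels`, and the majority-class scorer are all hypothetical stand-ins; in practice the scoring function would train and evaluate a real model.

```python
import random
import statistics

def k_fold_indices(n_samples, k, seed):
    """Yield (train_idx, test_idx) pairs for one shuffled k-fold split."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx

def repeated_cv(score_fn, n_samples, k=5, seeds=(0, 1, 2)):
    """Run k-fold once per seed and collect every fold score."""
    scores = []
    for seed in seeds:
        for train_idx, test_idx in k_fold_indices(n_samples, k, seed):
            scores.append(score_fn(train_idx, test_idx))
    return scores

# Toy data: one third of the labels are positive.
labels = [i % 3 == 0 for i in range(90)]

def majority_baseline_accuracy(train_idx, test_idx):
    """Predict the majority training label and report accuracy on the test fold."""
    train_labels = [labels[j] for j in train_idx]
    majority = sum(train_labels) * 2 > len(train_labels)
    return sum(labels[j] == majority for j in test_idx) / len(test_idx)

scores = repeated_cv(majority_baseline_accuracy, len(labels))
print("mean accuracy:", round(statistics.mean(scores), 3))
print("stdev across folds:", round(statistics.stdev(scores), 3))
```

Fold scores from repeated cross-validation share training data and are not independent, so the spread across folds is a rough guide to variability rather than an exact standard error.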

12. Explain the concept of transfer learning and how you would apply it to a specific AI model design problem to accelerate development and improve performance.

Transfer learning is a machine learning technique where a model trained on one task is re-used as the starting point for a model on a second task. It's particularly useful when you have limited data for the new task. Instead of training from scratch, you leverage the learned features from a pre-trained model. This saves training time and often improves performance, especially when the original and new tasks are related.

For example, imagine building an image classifier to identify different species of birds, but having only a few hundred images. I would use a pre-trained convolutional neural network (CNN) like ResNet or Inception, trained on a large dataset like ImageNet (millions of general images). I'd remove the final classification layer of the pre-trained model and replace it with a new layer tailored to the bird species. Then, I would freeze the weights of the earlier layers (that learned general image features like edges and textures) and only train the final classification layer, or fine-tune a few of the last layers, using my bird species dataset. This approach drastically reduces training time and, because the model starts with generalizable features, will likely give better results than training a CNN from scratch.

13. Describe your experience with different regularization techniques (e.g., L1, L2, dropout) and how you use them to prevent overfitting in AI models.

I have experience using various regularization techniques to prevent overfitting in AI models. L1 regularization (Lasso) adds the sum of the absolute values of the coefficients, scaled by a strength parameter, to the loss function, promoting sparsity and feature selection. L2 regularization (Ridge) adds the scaled sum of the squared coefficients, shrinking their magnitude but not forcing them to zero. Dropout randomly deactivates neurons during training, preventing the network from relying too heavily on specific neurons and thus enhancing generalization.

In practice, I choose regularization techniques based on the specific problem and dataset. For example, L1 regularization might be preferred when feature selection is important. I often experiment with different regularization strengths (e.g., different values for the lambda parameter in L1 and L2 regularization) using techniques like cross-validation to find the optimal balance between model complexity and performance.
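To make the sparsity difference between L1 and L2 concrete, the sketch below applies the standard proximal (shrinkage) step for each penalty to a handful of weights. The weight values and the strength `lam` are arbitrary illustrative choices, and the function names are my own.

```python
def soft_threshold(w, lam):
    """Proximal step for the L1 penalty lam * |w|: shrink by lam, clip at zero."""
    if w > lam:
        return w - lam
    if w < -lam:
        return w + lam
    return 0.0

def l2_shrink(w, lam):
    """Proximal step for the L2 penalty (lam / 2) * w**2: rescale toward zero."""
    return w / (1.0 + lam)

weights = [2.0, -0.3, 0.05, -1.2]  # hypothetical coefficients
lam = 0.5                          # hypothetical regularization strength

l1_weights = [soft_threshold(w, lam) for w in weights]
l2_weights = [l2_shrink(w, lam) for w in weights]

# L1 sets the small weights exactly to zero; L2 only makes every weight smaller.
print("L1:", l1_weights)
print("L2:", [round(w, 3) for w in l2_weights])
```

Weights smaller in magnitude than `lam` vanish under the L1 step, which is why L1 acts as a feature selector, whereas the L2 step scales every weight by the same factor and never reaches zero.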

14. How do you approach the problem of hyperparameter optimization? What are some effective techniques for finding the optimal hyperparameter values for your AI models?

I approach hyperparameter optimization by first understanding the role of each hyperparameter and its potential impact on model performance. Then, I typically start with a broad search using techniques like random search or grid search to identify promising regions in the hyperparameter space. For more efficient exploration, I leverage techniques like:

- Bayesian optimization: This uses a probabilistic model to guide the search, balancing exploration and exploitation.

- Gradient-based optimization: When hyperparameters are continuous and the validation objective is differentiable with respect to them, gradient descent can be applied to optimize them.

- Hyperband: This focuses on allocating resources to the most promising configurations early on, stopping the poorly performing ones.

After identifying a promising region, I fine-tune the hyperparameters using a narrower search, often combined with cross-validation, to ensure robust performance on unseen data. Regularization and early stopping are also helpful in preventing overfitting during the optimization process.
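The broad-then-narrow search described above can be sketched with plain random search. Here `validation_loss` is a made-up stand-in for a full train-and-validate run (its minimum is placed at a learning rate of 1e-2 and weight decay of 1e-4 purely for illustration), and the search ranges and trial counts are assumptions.

```python
import math
import random

def validation_loss(learning_rate, weight_decay):
    """Hypothetical objective; a real one would train a model and score it."""
    return (math.log10(learning_rate) + 2) ** 2 + (math.log10(weight_decay) + 4) ** 2

def log_uniform(rng, low, high):
    """Sample uniformly on a log scale, which suits scale-type hyperparameters."""
    return 10 ** rng.uniform(math.log10(low), math.log10(high))

def random_search(objective, space, n_trials, seed=0):
    """Return the best (params, loss) found over n_trials random samples."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {name: log_uniform(rng, lo, hi) for name, (lo, hi) in space.items()}
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss

# Stage 1: broad search over several orders of magnitude.
broad = {"learning_rate": (1e-5, 1.0), "weight_decay": (1e-7, 1e-1)}
coarse_params, coarse_loss = random_search(validation_loss, broad, n_trials=50)

# Stage 2: narrow search, one decade either side of the coarse winner.
narrow = {name: (value / 10, value * 10) for name, value in coarse_params.items()}
fine_params, fine_loss = random_search(validation_loss, narrow, n_trials=50, seed=1)

# Keep whichever stage produced the lower loss.
best_params, best_loss = min(
    [(coarse_params, coarse_loss), (fine_params, fine_loss)], key=lambda pair: pair[1]
)
print("best loss:", round(best_loss, 4), "params:", best_params)
```

The same two-stage structure applies if the inner sampler is swapped for Bayesian optimization or Hyperband; only the way candidate configurations are proposed changes.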

15. Explain the trade-offs between different AI model interpretability techniques (e.g., LIME, SHAP). When would you prioritize one technique over another?

LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are both model-agnostic interpretability techniques, but they have different trade-offs. LIME provides local explanations by approximating the model with a simpler, interpretable model around a specific prediction. It's computationally faster, making it suitable for large datasets or real-time analysis. However, LIME's explanations can be unstable, varying slightly with different sampling. SHAP, on the other hand, uses Shapley values from game theory to provide more globally consistent explanations, attributing each feature's contribution to the prediction. SHAP is computationally more expensive than LIME, particularly for complex models or large datasets, but gives more accurate and stable feature importance scores.

I'd prioritize LIME when speed is critical and a rough understanding of local feature importance is sufficient. SHAP would be preferred when accuracy, consistency, and a global understanding of feature importance are paramount, even if it means longer computation times. For example, in a fraud detection scenario where immediate action is needed based on limited features, LIME's speed would be prioritized. Conversely, when analyzing a complex medical diagnosis model for regulatory approval, SHAP's consistency and accuracy would be crucial.
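To show what Shapley-value attribution computes, and why it is expensive, here is a minimal sketch that calculates exact Shapley values for a tiny model by averaging marginal contributions over every feature ordering. This is a simplified illustration, not the SHAP library's implementation: the `toy_score` model, the input, and the all-zeros baseline are invented, and "missing" features are simply replaced by their baseline value.

```python
from itertools import permutations

def shapley_values(model, x, baseline):
    """Exact Shapley values via all feature orderings.

    Features not yet added to the coalition keep their baseline value.
    Cost grows factorially with the number of features, so this only
    suits toy cases.
    """
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        prev = model(current)
        for i in order:
            current[i] = x[i]          # reveal feature i
            new = model(current)
            totals[i] += new - prev    # marginal contribution of feature i
            prev = new
    return [t / len(orderings) for t in totals]

def toy_score(features):
    """Hypothetical model with an interaction between the first two features."""
    a, b, c = features
    return 0.1 * a + 0.3 * b + 0.2 * a * b + 0.05 * c

x = [2.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_score, x, baseline)

print("attributions:", [round(p, 3) for p in phi])
print("sum of attributions:", round(sum(phi), 3))
print("f(x) - f(baseline): ", round(toy_score(x) - toy_score(baseline), 3))
```

The attributions always sum to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved. That additivity is the consistency property SHAP is valued for, and the factorial loop is why practical SHAP explainers rely on approximations or model-specific shortcuts.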

16. How do you ensure the security and privacy of sensitive data used in AI model training and deployment? What are some common security vulnerabilities and how do you mitigate them?

Securing sensitive data in AI involves several strategies. During training, techniques like differential privacy, federated learning, and homomorphic encryption can be used to protect data privacy. Differential privacy adds calibrated noise to the data, query results, or gradient updates so that no single record can be inferred; federated learning trains models on decentralized data without direct access to it; and homomorphic encryption allows computations on encrypted data. Access control mechanisms, data masking/anonymization, and secure enclaves are also crucial.

Common vulnerabilities include data poisoning (adversarial data injected into training sets), model inversion attacks (recovering training data from a model), and membership inference attacks (determining if a data point was used in training). Mitigation strategies involve robust input validation, adversarial training to make models resilient to attacks, regular security audits, and implementing strict access controls and monitoring throughout the AI lifecycle.
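As a small illustration of differential privacy, the sketch below releases a count using the Laplace mechanism. The function names, the `ages` records, and the choice of `epsilon` are assumptions for the example, and Python's `random` module is used only for demonstration; a production system would rely on a vetted differential privacy library with secure noise sampling.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)
ages = [23, 35, 41, 29, 52, 67, 38, 45, 31, 58]  # hypothetical sensitive records

def over_40(age):
    return age > 40

true_count = sum(1 for a in ages if over_40(a))
noisy_count = private_count(ages, over_40, epsilon=1.0, rng=rng)

print("true count: ", true_count)
print("noisy count:", round(noisy_count, 2))
```

Smaller values of `epsilon` give stronger privacy but noisier answers, and every additional query on the same data spends more of the overall privacy budget.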

17. Describe your experience with deploying AI models to production environments. What are some common challenges and how do you overcome them?

My experience with deploying AI models to production includes utilizing tools like Docker, Kubernetes, and cloud platforms such as AWS (SageMaker, ECS) and Google Cloud Platform (Vertex AI). I've worked on projects involving image classification, NLP, and time-series forecasting, where model deployment involved containerizing the model, setting up REST APIs using frameworks like Flask or FastAPI, and integrating it with existing infrastructure. I have also worked on projects deploying models to edge devices for low-latency applications.

Common challenges include model versioning and management, ensuring scalability and reliability, monitoring model performance (drift detection), and handling data privacy and security. I address these challenges by implementing CI/CD pipelines for automated model deployment, using robust monitoring systems (e.g., Prometheus, Grafana) to track model metrics, employing techniques like A/B testing and shadow deployment to validate new models, and following secure coding practices to protect sensitive data. Furthermore, I use model registries to track model versions and facilitate rollbacks when necessary. I also prioritize optimizing model inference speed through techniques like quantization and pruning.

18. How would you design an AI model that can handle noisy or incomplete data while still maintaining acceptable performance?