Hiring Scala developers requires a solid understanding of the language's distinctive features and its functional programming style. As with any other developer role, you need to confirm that candidates have the skills to contribute effectively to your projects.

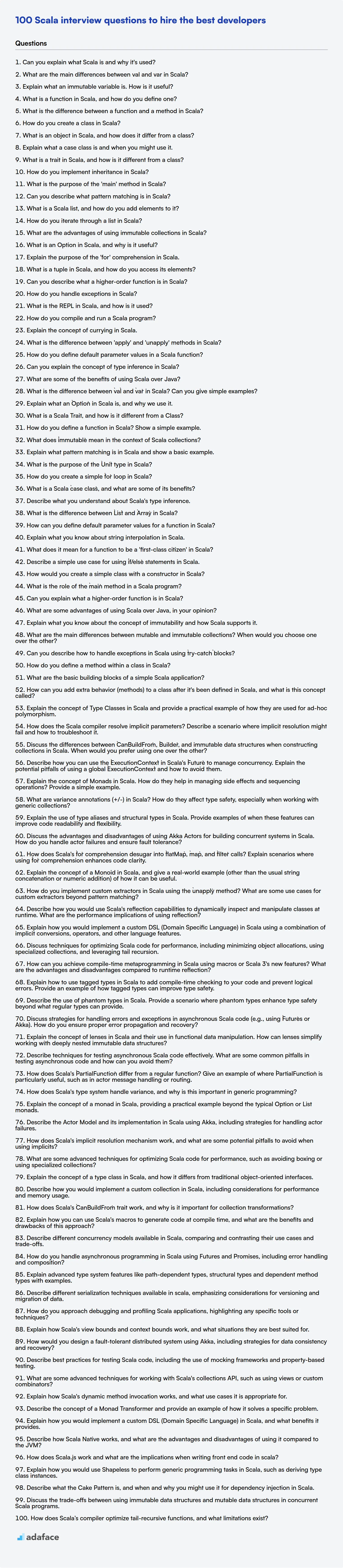

This blog post provides a curated list of Scala interview questions, categorized by experience level, from freshers to experts, including multiple-choice questions. These questions will help you assess candidates on their knowledge of Scala's syntax, data structures, concurrency, and more.

Use these questions to streamline your hiring process and identify top Scala talent or, even better, use a Scala online test to filter candidates before the interview.

Scala interview questions for freshers

1. Can you explain what Scala is and why it's used?

Scala is a modern multi-paradigm programming language designed to be concise, elegant, and type-safe. It blends object-oriented and functional programming features, making it versatile for building various applications. It runs on the Java Virtual Machine (JVM) and is interoperable with Java code.

Scala is used because of several advantages: its ability to handle concurrency efficiently (using Actors or Futures/Promises), its powerful type system that catches errors early, its support for functional programming (immutable data structures, higher-order functions), and its concise syntax. Common use cases include big data processing with frameworks like Spark, building high-performance distributed systems, and developing web applications using frameworks like Play.

2. What are the main differences between val and var in Scala?

The main difference between val and var in Scala lies in their mutability. val declares an immutable variable, meaning its value cannot be changed after initialization. Once assigned, the reference (or value, for primitives) remains constant. var, on the other hand, declares a mutable variable, allowing its value to be reassigned after initialization.

In essence:

- val: Immutable. The reference/value bound to the variable cannot be changed.

- var: Mutable. The reference/value bound to the variable can be changed.

Consider the following examples:

val x = 10 // x cannot be reassigned

var y = 20 // y can be reassigned

// x = 15 // This will result in a compilation error

y = 25 // This is perfectly valid

3. Explain what an immutable variable is. How is it useful?

An immutable variable is one whose value cannot be changed after it is initially assigned. In Scala, you declare one with val, and any attempt to reassign it is rejected at compile time. Operations that appear to modify an immutable value instead produce a new value, leaving the original untouched. Java offers a comparable guarantee for references through the final keyword.

Immutability is useful for several reasons. It simplifies debugging, as the value of an immutable variable is always predictable. It also enhances thread safety, as immutable variables can be safely shared between multiple threads without the risk of race conditions. Furthermore, it can improve performance by allowing compilers to optimize code based on the guarantee that certain values will not change. Immutable objects are also naturally suited for use as keys in hash tables, where their consistent hashCode() is essential.
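As a minimal sketch of these points (the names xs, ys, and buf are illustrative, and the snippet is assumed to run as a script or in the REPL), the following shows that "modifying" an immutable list yields a new list, and that val fixes the binding rather than the contents of a mutable object:

```scala
import scala.collection.mutable.ListBuffer

// An immutable list: prepending returns a new list and leaves xs untouched.
val xs = List(1, 2, 3)
val ys = 0 :: xs

// val fixes the reference, not the object's contents: a mutable buffer
// held in a val can still be changed in place.
val buf = ListBuffer(1, 2)
buf += 3

// xs = List(9) // would not compile: reassignment to val
```

For the thread-safety benefits described above, both the binding and the data structure need to be immutable, which is why val is usually paired with immutable collections.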

4. What is a function in Scala, and how do you define one?

In Scala, a function is a first-class citizen, meaning it can be treated like any other value. You can pass functions as arguments to other functions, return them as values from other functions, and assign them to variables. Functions are fundamental building blocks for creating reusable and modular code.

To define a function in Scala, you typically use the def keyword followed by the function name, parameter list (with types), a return type, and the function body. For example:

def add(x: Int, y: Int): Int = {

x + y

}

This defines a function named add that takes two integer parameters (x and y) and returns their sum as an integer. The return type is specified after the parameter list, and the function body is enclosed in curly braces {}. Scala can often infer the return type, allowing you to omit it in simpler cases.

5. What is the difference between a function and a method in Scala?

In Scala, the primary difference between a function and a method lies in how they are defined and what they are. A method is declared with def as a member of a class, trait, or object, and it implicitly operates on the instance it belongs to. A function, on the other hand, is a value: an object of a function type such as Int => Int that can be stored in a variable, passed as an argument, or returned from another function.

To summarize: methods are part of a class, trait, or object, while functions are standalone values. You can turn a method into a function value (eta-expansion) with the _ placeholder, like so: val myFunc = myObject.myMethod _. In Scala 3 this conversion happens automatically, so val myFunc = myObject.myMethod is enough for methods that take parameters.
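The distinction can be sketched as follows. Calculator, triple, and doubleFn are hypothetical names chosen for illustration, and the snippet is assumed to run as a script or in the REPL. Giving doubleFn an explicit function type lets the compiler convert the method in both Scala 2 and Scala 3:

```scala
object Calculator {
  // A method: declared with def as a member of an object.
  def double(x: Int): Int = x * 2
}

// A function value: an instance of the function type Int => Int.
val triple: Int => Int = x => x * 3

// The method is converted to a function value because a function type is expected.
val doubleFn: Int => Int = Calculator.double

// Both can now be passed to a higher-order method such as map.
val results = List(1, 2, 3).map(doubleFn).map(triple)
```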

6. How do you create a class in Scala?

In Scala, you create a class using the class keyword. The syntax is similar to Java, but with some key differences. Here's a basic example:

class MyClass(val x: Int, var y: String) {

// Class body (fields and methods)

def printValues(): Unit = {

println(s"x = $x, y = $y")

}

}

Key aspects:

- class MyClass(...): Declares a class named MyClass.

- (val x: Int, var y: String): Defines the class parameters, which also serve as the primary constructor's arguments. val makes x a read-only field, while var makes y a mutable field. If neither val nor var is specified, the parameter is not exposed as a member and is visible only inside the class.

- The class body can contain fields (variables), methods (functions), and other class definitions.

7. What is an object in Scala, and how does it differ from a class?

In Scala, an object is a singleton instance of a class. It's a way to have a single instance of a class accessible throughout your application. Unlike a class, you can't create multiple instances of an object using new. Think of it as a class that is instantiated only once.

The key differences are:

- Instantiation: Classes can be instantiated multiple times using new, while objects are singletons and are instantiated only once.

- Purpose: Objects are often used for utility methods, constants, or as entry points to applications (similar to static members in Java), while classes define blueprints for creating objects with state and behavior.

- Companion Objects: Objects can be companion objects to classes. A companion object has the same name as a class and can access the private members of the class and vice versa. This is a way to implement static-like members in Scala.

Example:

object MyObject {

val constant = 10

def utilityMethod(x: Int): Int = x * 2

}

class MyClass {

def printConstant(): Unit = {

println(MyObject.constant)

}

}
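The companion-object relationship mentioned in the list above can be sketched like this. Counter is a hypothetical class used only for illustration. The class and its companion must be defined in the same file (or pasted together in the REPL) for the private access to work:

```scala
// The constructor is private, so only the class and its companion can call it.
class Counter private (val count: Int) {
  def increment: Counter = new Counter(count + 1)
}

object Counter {
  // Factory method: the companion is allowed to use the private constructor.
  def apply(): Counter = new Counter(0)
}

// Counter() is shorthand for Counter.apply().
val counter = Counter().increment.increment
```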

8. Explain what a case class is and when you might use it.

A case class in Scala is a class that is immutable by default and whose instances are compared by value rather than by reference. Scala automatically generates methods like equals, hashCode, and toString, as well as a companion object with an apply method (for easy instantiation) and an unapply method (for pattern matching).

You might use a case class when you need a simple data holder. For example, to represent a Point(x: Int, y: Int) or a message in an actor system. They are also used extensively in functional programming because of their immutability and ease of use with pattern matching and destructuring.
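A short sketch of these generated features, using the Point example mentioned above (the value names are illustrative):

```scala
case class Point(x: Int, y: Int)

// The generated apply method means no `new` is needed.
val p1 = Point(1, 2)
val p2 = Point(1, 2)

// Instances are compared by value, not by reference.
val sameValue = p1 == p2

// copy creates a modified instance and leaves the original unchanged.
val moved = p1.copy(x = 10)

// The generated unapply method enables destructuring in pattern matches.
val description = p1 match {
  case Point(0, 0) => "origin"
  case Point(x, y) => s"point at ($x, $y)"
}
```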

9. What is a trait in Scala, and how is it different from a class?

In Scala, a trait is like an interface with potential implementations. It's a fundamental building block for code reuse, allowing you to define shared behavior that can be mixed into classes. Unlike classes, traits cannot be instantiated directly, but they can be extended by classes or other traits. Traits support multiple inheritance, which allows a class to inherit from multiple traits, overcoming the single inheritance limitation of classes.

The key differences are:

- Instantiation: Classes can be instantiated (created as objects), traits cannot.

- Multiple Inheritance: Classes can inherit from multiple traits, but only one class.

- State: Traits can have state (fields), similar to classes. However, if a class inherits from multiple traits with the same field name, it must resolve the conflict.

10. How do you implement inheritance in Scala?

In Scala, inheritance is implemented using the extends keyword. A class can inherit from one other class (single inheritance) and mix in multiple traits. When a class inherits from another class, it inherits all non-private members (fields and methods). You can override methods from the parent class using the override keyword. Traits are similar to interfaces with default implementations; a class mixes in additional traits by using the with keyword after the extends clause.

For example:

class Animal {

def speak(): Unit = println("Generic animal sound")

}

class Dog extends Animal {

override def speak(): Unit = println("Woof!")

}

trait Swimmer {

def swim(): Unit = println("Swimming!")

}

class Duck extends Animal with Swimmer {

override def speak(): Unit = println("Quack!")

}

11. What is the purpose of the 'main' method in Scala?

The main method in Scala serves as the entry point for a Scala application. When you run a program, the JVM looks for a main method to begin execution; without one, there is nothing to start from.

Specifically, the main method must be defined in an object and have the following signature: def main(args: Array[String]): Unit. args is an array of strings holding the command-line arguments passed to the program. Unit is the return type (similar to void in Java), indicating that main does not return a meaningful value. Scala 3 additionally lets you mark a method with @main to make it an entry point.
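A minimal entry point might look like the sketch below. HelloApp and greeting are hypothetical names. The greeting logic is kept in a separate method so that it can be tested without launching the program:

```scala
object HelloApp {
  // Entry point: args holds the command-line arguments.
  def main(args: Array[String]): Unit = {
    val who = if (args.nonEmpty) args(0) else "world"
    println(greeting(who))
  }

  // Pure helper that builds the message.
  def greeting(who: String): String = s"Hello, $who!"
}
```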

12. Can you describe what pattern matching is in Scala?

Pattern matching in Scala is a mechanism for checking a value against a pattern. It's similar to a switch statement in other languages but is much more powerful. Scala's pattern matching can match on types, values, and the structure of data.

It is commonly used with the match keyword. For example:

x match {

case 1 => "one"

case 2 => "two"

case _ => "other"

}

Key aspects include:

- Matching on values: Exact value matches, like the example above.

- Matching on types: Determining the type of a variable.

- Case classes: Deconstructing case classes to access their fields.
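The three aspects above can be combined in a single match expression. The sketch below uses hypothetical Shape, Circle, and Rectangle types and a describe method invented for illustration:

```scala
sealed trait Shape
case class Circle(radius: Double) extends Shape
case class Rectangle(width: Double, height: Double) extends Shape

def describe(value: Any): String = value match {
  case 0                => "zero"                           // match on a value
  case n: Int if n > 0  => s"positive int $n"               // match on a type, with a guard
  case s: String        => s"string of length ${s.length}"  // match on a type
  case Circle(r)        => s"circle with radius $r"         // deconstruct a case class
  case Rectangle(w, h)  => s"rectangle $w x $h"
  case _                => "something else"                 // wildcard
}
```

Cases are tried from top to bottom, so more specific patterns should come before more general ones.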

13. What is a Scala list, and how do you add elements to it?

In Scala, a List is an ordered, immutable sequence of elements. This means once a list is created, its elements cannot be changed; instead, operations that appear to modify a list actually return a new list. Lists are singly linked lists.

To add elements to a Scala list, you don't actually add in place, but rather create a new list with the added elements. Common methods include:

- :: (cons): Adds an element to the beginning of a list. Example: 1 :: List(2, 3) results in List(1, 2, 3).

- :+ : Adds an element to the end of a list. Example: List(1, 2) :+ 3 results in List(1, 2, 3).

- ::: (concat): Concatenates two lists. Example: List(1, 2) ::: List(3, 4) results in List(1, 2, 3, 4).

For example:

val myList = List(2, 3)

val newList1 = 1 :: myList // List(1, 2, 3)

val newList2 = myList :+ 4 // List(2, 3, 4)

val newList3 = List(0,1) ::: myList // List(0, 1, 2, 3)

14. How do you iterate through a list in Scala?

Scala offers several ways to iterate through a list. The most common methods include using a for loop, the foreach method, and recursion.

- for loop: val myList = List(1, 2, 3, 4, 5); for (element <- myList) { println(element) }

- foreach method: myList.foreach(element => println(element))

- Recursion:

def printList(list: List[Int]): Unit = list match {

case Nil => // Do nothing, end of list

case head :: tail =>

println(head)

printList(tail)

}

printList(myList)

15. What are the advantages of using immutable collections in Scala?

Immutable collections in Scala offer several advantages. They enhance thread safety by eliminating the risk of concurrent modification, crucial in multi-threaded environments. Since their state cannot change, they're inherently safe to share across threads without synchronization. This simplifies concurrent programming and reduces the potential for race conditions and deadlocks.

Furthermore, immutable collections improve reasoning about code. Because their state is fixed, it's easier to predict the behavior of functions that use them. This leads to more maintainable and testable code. They also offer better performance in certain scenarios, such as when used in conjunction with caching or memoization, as the cached results remain valid as long as the underlying collection remains unchanged. They can be efficiently copied or shared because no defensive copying is required, as the original cannot be mutated. Finally, they support functional programming principles, allowing for elegant and concise code using methods like map, filter, and reduce without side effects. Consider for example:

val numbers = List(1, 2, 3)

val doubled = numbers.map(_ * 2) // doubled is a new list, numbers remains unchanged

16. What is an Option in Scala, and why is it useful?

In Scala, an Option is a container object used to represent optional values. It's a type that can hold either Some(value) or None, indicating the presence or absence of a value, respectively. Think of it as a wrapper around a value that might be there or might not be there.

The primary benefit of Option is to handle potentially missing values in a type-safe manner, avoiding NullPointerExceptions. Instead of returning null, which can lead to runtime errors, you return an Option. This forces the caller to explicitly handle the possibility of a missing value, often done using methods like map, flatMap, getOrElse, or pattern matching. Using Option improves code clarity, reliability, and reduces the risk of unexpected null-related crashes. For example:

val maybeValue: Option[String] = Some("hello")

val value: String = maybeValue.getOrElse("default")

17. Explain the purpose of the 'for' comprehension in Scala.

The for comprehension in Scala provides a concise and readable way to express operations on collections like lists, arrays, and options. It's essentially syntactic sugar for chained calls to flatMap, map, and filter (or withFilter). It enables you to iterate, filter, and transform elements within collections in a more declarative style, making code easier to understand and maintain.

For example, you can use it to iterate over a list, apply a filter condition, and transform the resulting elements into a new list. The compiler translates the for comprehension into a series of flatMap, map, and filter operations, handling the underlying details of the collection traversal and transformation.
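The sketch below illustrates this translation with illustrative names (numbers, letters, pairs). The desugared value shows roughly what the compiler generates for the comprehension above it, not the exact code it emits:

```scala
val numbers = List(1, 2, 3, 4)
val letters = List("a", "b")

// Two generators and a guard in one comprehension.
val pairs = for {
  n <- numbers
  if n % 2 == 0
  l <- letters
} yield s"$n$l"

// Roughly the equivalent chain of withFilter, flatMap, and map calls.
val desugared =
  numbers.withFilter(n => n % 2 == 0).flatMap(n => letters.map(l => s"$n$l"))

// The same syntax works for Option: the result is None if any step is None.
val total = for {
  a <- Option(1)
  b <- Option(2)
} yield a + b
```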

18. What is a tuple in Scala, and how do you access its elements?

In Scala, a tuple is a data structure that holds a fixed-size sequence of elements, which can be of different types. Tuples are immutable, meaning their elements cannot be changed after creation.

To access elements of a tuple, you can use either the underscore notation or pattern matching. With the underscore notation, elements are accessed using ._1 for the first element, ._2 for the second, and so on. For instance, if you have val myTuple = (1, "hello", true), you can access the first element using myTuple._1 (which would be 1), the second using myTuple._2 (which would be "hello"), and the third using myTuple._3 (which would be true). Alternatively, pattern matching can be used to extract the elements into named variables, like so: val (x, y, z) = myTuple where x will be 1, y will be "hello" and z will be true.

19. Can you describe what a higher-order function is in Scala?

In Scala, a higher-order function is a function that takes one or more functions as arguments, or returns a function as its result. Essentially, it treats functions as first-class citizens. This allows you to create more abstract and reusable code.

For example, you could define a function that takes another function as an argument and applies it to every element in a list. A simple illustration in Scala is as follows:

def operateOnList(list: List[Int], operation: Int => Int): List[Int] = {

list.map(operation)

}

def square(x: Int): Int = x * x

val numbers = List(1, 2, 3, 4, 5)

val squaredNumbers = operateOnList(numbers, square) // squaredNumbers will be List(1, 4, 9, 16, 25)

20. How do you handle exceptions in Scala?

Scala handles exceptions similarly to Java. You use try, catch, and finally blocks. The try block encloses the code that might throw an exception. The catch block handles specific exceptions, and the finally block executes regardless of whether an exception occurred.

Here's a simple example:

try {

// Code that might throw an exception

val result = 10 / 0

} catch {

case e: ArithmeticException => println("Caught an arithmetic exception: " + e.getMessage)

case e: Exception => println("Caught a generic exception: " + e.getMessage)

} finally {

// Code that always executes (e.g., closing resources)

println("Finally block executed")

}

Unlike Java, Scala has no checked exceptions, so you never have to declare or catch an exception just to satisfy the compiler.

21. What is the REPL in Scala, and how is it used?

The REPL (Read-Eval-Print Loop) is an interactive shell for Scala. It allows you to enter Scala expressions, which are then evaluated and the results are printed out. It's a powerful tool for learning the language, experimenting with code, and quickly testing out ideas.

To use the REPL, you simply type scala in your terminal. Once the REPL is running, you can enter Scala code directly. For example:

scala> 1 + 1

res0: Int = 2

scala> val x = 5

x: Int = 5

scala> println("Hello, world!")

Hello, world!

The REPL is very useful for:

- Learning Scala: Experimenting with language features.

- Prototyping: Quickly testing algorithms and ideas.

- Debugging: Examining variables and evaluating expressions.

22. How do you compile and run a Scala program?

To compile a Scala program, you typically use the Scala compiler, scalac. For example, if your source code is in a file named MyProgram.scala, you would run scalac MyProgram.scala. This will generate .class files containing the compiled bytecode.

To run the compiled Scala program, you use the scala command, which invokes the Scala runtime environment. Assuming your MyProgram.scala file contains a main method within an object named MyProgram, you would run scala MyProgram. This will execute the main method within your Scala program.

23. Explain the concept of currying in Scala.

Currying in Scala is a technique of transforming a function that takes multiple arguments into a sequence of functions that each take a single argument. In essence, a function with multiple parameters is converted into a chain of functions, each accepting one parameter. Each function in the chain returns another function (that accepts the next parameter) until the final function returns the result. This allows you to apply arguments to a function one at a time, creating a series of specialized functions.

For example:

def add(x: Int)(y: Int): Int = x + y

val add5 = add(5) _ //add5 is now a function that takes one Int and adds 5 to it.

val result = add5(3) // result will be 8

Here, add is a curried function. add(5) _ partially applies the first argument, x = 5, resulting in a new function that accepts only y and returns 5 + y. In Scala 3, the trailing underscore is no longer needed: val add5 = add(5) works directly.

24. What is the difference between 'apply' and 'unapply' methods in Scala?

In Scala, the apply and unapply methods are used to define how objects can be created and deconstructed, respectively, often in the context of case classes and companion objects. The apply method typically acts as a factory, allowing you to create instances of a class without explicitly using the new keyword. For example, MyClass(arg1, arg2) is shorthand for MyClass.apply(arg1, arg2) when apply is defined in the companion object of MyClass.

The unapply method, on the other hand, is used for pattern matching. It takes an object and attempts to "extract" its constituent parts, returning them as an Option. If the extraction is successful, it returns Some(extracted_values); otherwise, it returns None. This allows you to use pattern matching to deconstruct objects and access their individual fields. In essence, apply lets you create objects conveniently, and unapply lets you easily take them apart.
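For a regular (non-case) class, both methods can be written by hand in the companion object. The sketch below uses a hypothetical Email class and domainOf helper. The class and its companion are assumed to be defined together in the same file:

```scala
class Email(val user: String, val domain: String)

object Email {
  // Factory: lets callers write Email("alice", "example.com") without `new`.
  def apply(user: String, domain: String): Email = new Email(user, domain)

  // Extractor: takes an Email apart so it can be used in pattern matching.
  def unapply(email: Email): Option[(String, String)] =
    Some((email.user, email.domain))
}

def domainOf(value: Any): String = value match {
  case Email(_, domain) => domain   // calls Email.unapply behind the scenes
  case _                => "unknown"
}
```

Case classes get equivalent apply and unapply methods generated automatically, which is why they work with pattern matching out of the box.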

25. How do you define default parameter values in a Scala function?

In Scala, you can define default parameter values directly in the function signature. If a caller omits an argument, the default value is used. The default value expression is evaluated every time the function is called without the corresponding argument.

For example:

def greet(name: String = "Guest", greeting: String = "Hello") = {

println(s"$greeting, $name!")

}

greet() // Prints "Hello, Guest!"

greet("Alice") // Prints "Hello, Alice!"

greet("Bob", "Hi") // Prints "Hi, Bob!"

26. Can you explain the concept of type inference in Scala?

Type inference in Scala is the compiler's ability to automatically determine the data type of a variable or expression without explicit type annotations provided by the programmer. This simplifies the code and makes it more concise. The Scala compiler analyzes the context in which a variable or expression is used to deduce its type.

For example:

val message = "Hello, Scala!" // The compiler infers 'message' to be of type String

val number = 10 // The compiler infers 'number' to be of type Int

//You can see here that the type is not specified, but the compiler infers it.

27. What are some of the benefits of using Scala over Java?

Scala offers several advantages over Java, including its concise syntax, functional programming capabilities, and powerful type system. Scala's syntax reduces boilerplate code, leading to more readable and maintainable codebases. Its support for functional programming paradigms, like immutable data structures and higher-order functions, can simplify concurrent programming and improve code testability.

Furthermore, Scala's type system, combined with features like type inference and pattern matching, enables the compiler to catch errors earlier, enhancing code reliability. Scala also integrates with existing Java code and libraries, offering a smooth transition for Java developers. Specific features include:

- Conciseness: Less code for the same functionality.

- Immutability: Easier concurrency management.

- Pattern Matching: Elegant code for data manipulation.

- Type Inference: Reduced verbosity and improved readability.

Scala interview questions for juniors

1. What is the difference between `val` and `var` in Scala? Can you give simple examples?

val and var are keywords in Scala used to declare variables. The key difference lies in their mutability. val declares an immutable variable, meaning its value cannot be reassigned after initialization. var, on the other hand, declares a mutable variable, allowing its value to be changed multiple times.

Example:

val immutableValue: Int = 10 // Value cannot be changed after this

var mutableValue: String = "Hello" // Value can be changed

//immutableValue = 20 // This would cause a compilation error

mutableValue = "World" // This is perfectly valid

println(immutableValue) // Prints 10

println(mutableValue) // Prints World

2. Explain what an `Option` in Scala is, and why we use it.

In Scala, an Option is a container object that may or may not contain a non-null value. It's used to represent the optional presence of a value, addressing the issue of null pointer exceptions common in other languages. An Option can be in one of two states: Some(value), which means it contains a value, or None, which means it doesn't.

We use Option to make our code safer and more expressive. Instead of returning null to indicate the absence of a value, which can lead to unexpected errors if not handled properly, we return None. This forces the caller to explicitly handle the possibility that a value might be missing, typically by using methods like map, flatMap, getOrElse, or pattern matching. This encourages more robust and maintainable code, as it makes the potential absence of a value explicit in the type system.
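As a small sketch of these handling styles, the example below looks up values in a Map, whose get method returns an Option. The ages data and the ageOf and describeAge helpers are hypothetical:

```scala
val ages = Map("Alice" -> 30, "Bob" -> 25)

// Map.get returns Some(value) when the key exists and None otherwise.
def ageOf(name: String): Option[Int] = ages.get(name)

// map transforms the value only if it is present; getOrElse supplies a fallback.
val nextYearAlice = ageOf("Alice").map(_ + 1).getOrElse(0)
val nextYearCarol = ageOf("Carol").map(_ + 1).getOrElse(0)

// Pattern matching makes both cases explicit.
def describeAge(name: String): String = ageOf(name) match {
  case Some(age) => s"$name is $age"
  case None      => s"$name not found"
}
```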

3. What is a Scala Trait, and how is it different from a Class?

A Scala Trait is a fundamental building block that allows you to define shared interfaces and behaviors. Unlike classes, traits cannot be instantiated directly. They are designed to be mixed into classes, providing a mechanism for code reuse and multiple inheritance. Think of them as interfaces with potential implementations.

Key differences include:

- Instantiation: Classes can be instantiated; traits cannot.

- Multiple Inheritance: Classes can inherit from only one class but can mix in multiple traits.

- State: Traits can have state (fields), similar to classes.

- Constructors: Classes can have constructors and constructor parameters. In Scala 2, traits cannot take constructor parameters; Scala 3 adds support for trait parameters.
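The differences above can be sketched with two hypothetical traits, Greeter and Logger, mixed into a single User class (all names are illustrative):

```scala
trait Greeter {
  def name: String                      // abstract member, supplied by the class
  def greet: String = s"Hello, $name!"  // concrete member, inherited as-is
}

trait Logger {
  def log(msg: String): String = s"[log] $msg"
}

// A class extends at most one class but can mix in several traits.
class User(val name: String) extends Greeter with Logger

val user = new User("Alice")
val line = user.log(user.greet)

// new Greeter would not compile: the trait has an unimplemented member
// and cannot be instantiated on its own.
```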

4. How do you define a function in Scala? Show a simple example.

In Scala, you define a function using the def keyword, followed by the function name, parameter list (if any), return type, and the function body. The syntax is as follows:

def functionName(param1: Type1, param2: Type2): ReturnType = {

// Function body

// Return value

}

Here's a simple example of a function that adds two integers:

def add(x: Int, y: Int): Int = {

x + y

}

// Or, more concisely:

def addConcise(x: Int, y: Int): Int = x + y

5. What does `immutable` mean in the context of Scala collections?

In Scala, an immutable collection means that once the collection is created, its contents cannot be changed. Operations on immutable collections do not modify the original collection; instead, they return a new collection with the desired modifications.

This immutability provides several benefits:

- Thread Safety: Immutable collections are inherently thread-safe because multiple threads can access them concurrently without the risk of data corruption.

- Predictability: The state of the collection is always consistent, making it easier to reason about the code.

- Referential Transparency: Immutable collections support referential transparency, meaning that an expression can be replaced with its value without changing the program's behavior.

6. Explain what pattern matching is in Scala and show a basic example.

Pattern matching in Scala is a mechanism for checking a value against a pattern. It's similar to a switch statement in other languages, but much more powerful. It allows you to deconstruct data structures and bind values to variables based on the structure of the data.

A simple example:

val x = 10

x match {

case 1 => println("One")

case 10 => println("Ten")

case _ => println("Something else")

}

In this example, x is matched against different cases. If x is 1, it prints "One". If it's 10, it prints "Ten". The _ is a wildcard, matching anything that hasn't already been matched.

7. What is the purpose of the `Unit` type in Scala?

The Unit type in Scala serves a purpose similar to void in Java or C++. It's primarily used to indicate that a method or function does not return a meaningful value, focusing instead on side effects. Essentially, Unit is a singleton type with only one value, denoted as ().

Specifically, Unit is useful:

- Return type for side-effecting functions: When a function's main goal is to perform an action (e.g., print to the console, update a variable) rather than compute a value, its return type is often Unit.

- Placeholder: It can be used as a placeholder type when a type is needed but no actual value is required.

- Avoiding null: Using Unit is often preferred over returning null as it provides type safety and avoids potential NullPointerException errors.

8. How do you create a simple `for` loop in Scala?

In Scala, a simple for loop can be created using the following syntax:

for (i <- 0 to 5) {

println(s"Value of i: $i")

}

This loop iterates from 0 to 5 (inclusive). Alternatively, you can use until if you want to exclude the upper bound. The <- operator is used to iterate over a range of values or a collection.
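The difference between to and until, and iteration over a collection, can be sketched as follows. The value names are illustrative, and the snippet is assumed to run as a script or in the REPL:

```scala
// `to` includes the upper bound, `until` excludes it.
val inclusive = (0 to 5).toList
val exclusive = (0 until 5).toList

// The same <- syntax iterates over any collection.
val fruits = List("apple", "banana", "cherry")
var totalLength = 0
for (fruit <- fruits) {
  totalLength += fruit.length
}
```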

9. What is a Scala `case class`, and what are some of its benefits?

A Scala case class is a regular class that automatically provides several useful features, making it a concise way to define data-holding classes. These classes are immutable by default.

Benefits include:

- Automatic generation of equals(), hashCode(), and toString() methods.

- Automatic generation of a companion object with an apply() method for easy object creation (no new keyword needed).

- Support for pattern matching.

- Copy method for creating modified instances.

- Parameters are implicitly val by default, making them immutable. For example: case class Point(x: Int, y: Int) creates a Point class where x and y are immutable values.

10. Describe what you understand about Scala's type inference.

Scala's type inference is the compiler's ability to automatically deduce the data type of an expression. This allows you to omit explicit type annotations in your code, making it more concise and readable. The compiler analyzes the context in which an expression is used to determine its most suitable type.

Specifically, Scala can infer types for:

- Local variables: val x = 10 // x is inferred to be Int

- Method return types (in some cases): def add(a: Int, b: Int) = a + b // return type Int is inferred

- Generic type parameters: val list = List(1, 2, 3) // list is inferred to be List[Int]

Type inference simplifies code but it's good practice to add explicit typing in public APIs, complicated situations, or when it helps readability.

11. What is the difference between `List` and `Array` in Scala?

In Scala, both List and Array are used to store sequences of elements, but they differ significantly.

Array is a mutable, fixed-size data structure that is contiguous in memory, like Java arrays. Elements in an array can be updated in place. List, on the other hand, is an immutable, linked-list data structure. Because List is immutable, operations like adding or removing elements create a new List rather than modifying the original. Here's a summary:

- Mutability: Array is mutable, List is immutable.

- Size: Array has a fixed size upon creation, List does not (can grow or shrink when creating new lists).

- Implementation: Array is contiguous in memory, List is a linked list.

- Performance: Array provides constant-time access to elements by index (e.g., arr(i)). List provides linear-time access to elements because you need to traverse the list.

- Use Cases: Array is suitable when you need a mutable sequence with fast random access, while List is appropriate when immutability and ease of adding/removing elements (creating a new list) are preferred.
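The mutability difference can be seen in a short sketch. The value names are illustrative, and the snippet is assumed to run as a script or in the REPL:

```scala
// Array: fixed size, elements can be updated in place.
val arr = Array(1, 2, 3)
arr(0) = 10
val second = arr(1) // constant-time indexed access

// List: immutable, so "adding" an element builds a new list.
val list = List(1, 2, 3)
val longer = 0 :: list

// list(0) = 10 // would not compile: List has no update method
```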

12. How can you define default parameter values for a function in Scala?

In Scala, you can define default parameter values directly in the function definition. When a caller omits an argument with a default value, the default value is used. This provides flexibility and reduces the need for multiple overloaded function definitions.

For example:

def greet(name: String, greeting: String = "Hello"): String = {

s"$greeting, $name!"

}

println(greet("Alice")) // Output: Hello, Alice!

println(greet("Bob", "Hi")) // Output: Hi, Bob!

13. Explain what you know about string interpolation in Scala.

String interpolation in Scala allows you to embed variables directly within string literals. It's a way to construct strings by evaluating embedded expressions. Scala provides three main string interpolation methods:

- s-interpolation: Allows you to directly embed variables prefixed with a `$` sign. For example, `val name = "Alice"; println(s"Hello, $name!")` will print "Hello, Alice!". You can also embed arbitrary expressions within curly braces, like `s"The answer is ${1 + 1}"`.

- f-interpolation: Similar to s-interpolation but also allows you to format the embedded values using printf-style format specifiers. For example, `val height = 1.9d; println(f"Height is $height%.2f")` formats the height to two decimal places.

- raw-interpolation: Performs no escaping of characters. It prints the string exactly as is. Useful when you need the raw string representation without interpreting escape sequences like `\n` (newline).
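The three interpolators can be compared side by side. This is a small sketch with made-up values; `InterpolationDemo` is a hypothetical name.

```scala
object InterpolationDemo {
  val name = "Alice"
  val height = 1.9d

  // s: substitutes variables and ${...} expressions
  val greeting: String = s"Hello, $name!"
  val sum: String = s"The answer is ${1 + 1}"

  // f: printf-style formatting, here two decimal places
  // (the decimal separator follows the JVM's default locale)
  val formatted: String = f"Height is $height%.2f"

  // raw: the backslash and the 'n' stay as two separate characters
  val rawText: String = raw"a\nb"
}
```

Here `rawText` contains four characters, whereas `s"a\nb"` would contain three because `\n` becomes a single newline.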

14. What does it mean for a function to be a 'first-class citizen' in Scala?

In Scala, a function is a 'first-class citizen' when it can participate in all operations available to other data types. This means functions can be:

- Assigned to variables, much like integers or strings.

- Passed as arguments to other functions (higher-order functions).

- Returned as the result of a function.

- Stored in data structures like lists or maps.

This capability is fundamental to functional programming and allows for powerful abstractions and code reuse. Functions are treated as values.
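Each of the four capabilities listed above can be sketched as follows; all names (`doubleIt`, `applyTwice`, `adder`, `ops`) are illustrative.

```scala
object FirstClassFunctions {
  // Assigned to a variable
  val doubleIt: Int => Int = x => x * 2

  // Passed as an argument
  def applyTwice(f: Int => Int, x: Int): Int = f(f(x))

  // Returned as a result
  def adder(n: Int): Int => Int = x => x + n

  // Stored in a data structure
  val ops: Map[String, Int => Int] = Map("double" -> doubleIt, "inc" -> adder(1))
}
```

For instance, `FirstClassFunctions.applyTwice(FirstClassFunctions.doubleIt, 3)` doubles 3 twice, and `FirstClassFunctions.ops("inc")(41)` looks up a function by key before applying it.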

15. Describe a simple use case for using `if/else` statements in Scala.

A simple use case for if/else in Scala is determining whether a number is even or odd. You can use the modulo operator (%) to check if a number is divisible by 2. If the remainder is 0, it's even; otherwise, it's odd.

val number = 7

if (number % 2 == 0) {

println("Even")

} else {

println("Odd")

}

This example showcases how if/else facilitates branching logic based on a condition, a fundamental concept in programming.

16. How would you create a simple class with a constructor in Scala?

In Scala, you can create a class with a constructor very easily. The constructor is defined directly within the class definition. Here's a simple example:

class Person(val firstName: String, val lastName: String) {

def fullName: String = firstName + " " + lastName

}

In this example, Person is the class name, and firstName and lastName are the constructor parameters. The val keyword makes these parameters accessible as public read-only fields of the class. You can then create instances of the class like so: val person = new Person("John", "Doe"), and access the fields using person.firstName and person.lastName, or call the method person.fullName.

17. What is the role of the `main` method in a Scala program?

The `main` method in Scala serves as the entry point for the execution of a Scala program. As in Java and C++, execution starts at a `main` method: the JVM looks for it when launching an application.

Specifically, the main method in Scala must have the following signature: def main(args: Array[String]): Unit. The args parameter is an array of strings representing command-line arguments passed to the program. When a Scala program is executed, the code within the main method is the first to be executed. It typically initializes the program's state, processes input, performs computations, and produces output.
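A minimal entry point might look like the sketch below. `HelloApp` and the helper `message` are hypothetical names; the helper is kept separate only so the logic can be called without launching the program.

```scala
object HelloApp {
  // Builds the greeting from the command-line arguments (hypothetical helper)
  def message(args: Array[String]): String = {
    val names = args.mkString(" ")
    if (args.isEmpty) "Hello, world!" else s"Hello, $names!"
  }

  // Entry point: the signature must match what the JVM expects
  def main(args: Array[String]): Unit =
    println(message(args))
}
```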

18. Can you explain what a higher-order function is in Scala?

In Scala, a higher-order function is a function that either takes one or more functions as arguments or returns a function as its result. Essentially, it treats functions as first-class citizens, allowing you to manipulate them like any other value.

For example, consider a function that takes another function as input and applies it to each element of a list: def operateOnList(list: List[Int], operation: Int => Int): List[Int] = list.map(operation). Here, operateOnList is a higher-order function because it takes operation (a function of type Int => Int) as an argument. Another example is a function that returns a function. def multiplier(factor: Int): Int => Int = (x: Int) => x * factor. multiplier is a higher order function because it returns another function Int => Int.

19. What are some advantages of using Scala over Java, in your opinion?

Scala offers several advantages over Java. First, its conciseness leads to more readable and maintainable code. Features like type inference and a more expressive syntax reduce boilerplate. Scala's support for both object-oriented and functional programming paradigms allows developers to choose the most appropriate approach for a given problem. This hybrid approach can result in cleaner, more modular designs.

Second, Scala's advanced features like pattern matching, case classes, and immutable data structures promote safer and more robust code. Its strong concurrency support, through Futures/Promises or actor libraries such as Akka, simplifies building concurrent applications. Furthermore, its seamless interoperability with Java makes it easier to integrate into existing Java projects and leverage existing Java libraries. For instance, using `java.util.ArrayList` directly in Scala code is straightforward, and libraries like Akka expose both Scala and Java APIs, which allows a direct comparison of the two syntaxes.

20. Explain what you know about the concept of immutability and how Scala supports it.

Immutability means that once an object is created, its state cannot be changed. This is beneficial because it simplifies reasoning about code, makes it easier to write concurrent programs (since no locks are needed), and can improve performance through techniques like memoization. Immutability avoids unintended side effects.

Scala strongly supports immutability. It encourages the use of immutable collections (like `List`, `Vector`, `Map`) by default. The `val` keyword declares immutable variables (references), meaning they cannot be reassigned after initialization. Case class fields are `val`s by default, and `copy` produces a modified copy instead of changing the instance. Sealed hierarchies of case classes are a common way to model immutable data, although `sealed` itself restricts inheritance rather than mutation. While Scala allows mutable data structures, the language design guides developers towards using immutable alternatives when possible.
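As a small illustration, a case class can be "updated" only by creating a new instance. The `Account` type here is a made-up example.

```scala
object ImmutabilityDemo {
  // Case class fields are immutable vals by default
  final case class Account(owner: String, balance: Int)

  val original: Account = Account("Alice", 100)

  // copy returns a new instance; `original` is left untouched
  val updated: Account = original.copy(balance = 150)
}
```

Because `original` can never change, it can be shared between threads without locking.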

21. What are the main differences between mutable and immutable collections? When would you choose one over the other?

Mutable collections can be modified after creation (elements can be added, removed, or changed), while immutable collections cannot be modified after creation. Any operation that appears to modify an immutable collection actually returns a new collection with the changes.

Choose mutable collections when you need to modify the collection in-place, optimizing for performance when changes are frequent. Choose immutable collections when you need to ensure data integrity, prevent unintended side effects, and simplify reasoning about your code, especially in concurrent or multi-threaded environments. Immutability can also aid in caching and optimization techniques. For example, in Scala, `val buffer = scala.collection.mutable.ListBuffer("a", "b")` can be appended to in place, whereas `val list = List("a", "b")` can only produce new lists.
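The difference in behavior can be sketched with `ListBuffer` (mutable) and `List` (immutable); the object and value names are illustrative.

```scala
import scala.collection.mutable.ListBuffer

object CollectionsDemo {
  // Mutable: the same buffer object is modified in place
  val buffer: ListBuffer[Int] = ListBuffer(1, 2)
  buffer += 3

  // Immutable: "adding" returns a new list and leaves `base` as it was
  val base: List[Int] = List(1, 2)
  val more: List[Int] = base :+ 3
}
```

A common compromise is to build with a local mutable buffer and expose only the immutable result, for example via `buffer.toList`.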

22. Can you describe how to handle exceptions in Scala using `try-catch` blocks?

In Scala, `try-catch` blocks are used for exception handling, similar to Java. The `try` block encloses the code that might throw an exception. If an exception occurs, control is transferred to the `catch` block. Unlike Java, Scala uses a single `catch` block whose pattern-matching `case` clauses handle different types of exceptions; the first matching clause wins, so more specific exception types should come first. A `finally` block can be included, which will always execute, regardless of whether an exception was thrown or caught; this is useful for resource cleanup.

Here's an example:

try {

// Code that might throw an exception

val result = 10 / 0

} catch {

case e: ArithmeticException =>

println("Caught an arithmetic exception: " + e.getMessage)

case e: Exception =>

println("Caught a generic exception: " + e.getMessage)

} finally {

// Optional finally block (always executed)

println("Finally block executed")

}

23. How do you define a method within a class in Scala?

In Scala, you define a method within a class using the def keyword, followed by the method name, parameter list (if any), return type, and the method body. The general syntax is:

class MyClass {

def myMethod(param1: Type1, param2: Type2): ReturnType = {

// Method body

// ...

value // The last expression is the method's result; an explicit return is unnecessary

}

}

For example, to define a method named add that takes two integers and returns their sum, you would write:

class Calculator {

def add(x: Int, y: Int): Int = {

x + y

}

}

24. What are the basic building blocks of a simple Scala application?

The basic building blocks of a simple Scala application include:

- Classes and Objects: These are fundamental. Classes define blueprints for creating instances, while the `object` keyword defines a singleton object. Scala is an object-oriented language, and every value is an object. A Scala application needs an entry point, typically an object containing a `main` method with the signature `def main(args: Array[String]): Unit`.

- Methods and Functions: Methods are defined within classes or objects, while functions are values that can be passed around independently. Both encapsulate reusable blocks of code. For example: `def add(x: Int, y: Int): Int = x + y`.

- Variables: Used to store data. Scala supports both mutable (`var`) and immutable (`val`) variables. Immutable variables are preferred.

- Expressions: Scala is expression-oriented, meaning that almost everything returns a value. Expressions can be simple values, or complex blocks of code.

- Traits: Used for defining object types by specifying the signature of supported methods. They are similar to interfaces in Java.

25. How can you add extra behavior (methods) to a class after it's been defined in Scala, and what is this concept called?

In Scala, you can add extra behavior (methods) to a class after it's been defined using implicit classes. The concept is known as extension methods, or the "enrich my library" (originally "pimp my library") pattern. It involves defining an implicit class that takes an instance of the original class as a constructor parameter and declares the new methods; the compiler wraps the original value automatically when one of those methods is called. Scala 3 adds a dedicated `extension` syntax for the same purpose.

For example:

object StringImprovements {

implicit class StringHelper(s: String) {

def increment: String = s.map(c => (c + 1).toChar)

}

}

import StringImprovements._

val hello = "hello".increment //Result: ifmmp

In this example, an implicit class StringHelper is defined, which takes a String as input. It adds a new method increment to the String class. When "hello".increment is called, the compiler implicitly converts the String "hello" to an instance of StringHelper which allows the method to be called.

Advanced Scala interview questions

1. Explain the concept of Type Classes in Scala and provide a practical example of how they are used for ad-hoc polymorphism.

Type classes in Scala enable ad-hoc polymorphism, allowing you to define functions that can operate on different types without requiring them to be part of a specific inheritance hierarchy or share a common interface. A type class defines a set of operations (methods) that a type must support to be considered an instance of that type class. The Scala compiler then uses implicit resolution to find the appropriate implementation of these operations for a given type.

For example, consider a JsonWriter type class that serializes objects to JSON. We can define a JsonWriter[A] trait with a toJson method. Specific implementations are provided as implicit instances for different types (e.g., Int, String, custom case classes). A generic format function takes an object of type A and an implicit JsonWriter[A] and uses the toJson method to serialize the object. This means any type A that has an implicit JsonWriter[A] defined, can be serialized using the format function. This demonstrates ad-hoc polymorphism as the format function behaves differently based on the type and associated JsonWriter instance available implicitly at the call site.

trait JsonWriter[A] {

def toJson(value: A): String

}

object JsonWriter {

implicit val intWriter: JsonWriter[Int] = (value: Int) => value.toString // JSON numbers are not quoted

implicit val stringWriter: JsonWriter[String] = (value: String) => "\"" + value + "\"" // Adds quotes only; escaping is omitted for brevity

}

object Main {

def format[A](value: A)(implicit writer: JsonWriter[A]): String = writer.toJson(value)

def main(args: Array[String]): Unit = {

println(format(42))

println(format("hello"))

}

}

2. How does the Scala compiler resolve implicit parameters? Describe a scenario where implicit resolution might fail and how to troubleshoot it.

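The Scala compiler resolves implicit parameters in two stages. It first looks for implicit values that are accessible at the call site without a prefix: local definitions, members of enclosing scopes, inherited members, and imported implicits. If nothing suitable is found there, it searches the implicit scope of the required type, which consists of the companion objects of that type and of its parts (such as its type arguments and supertypes) and, in Scala 2, the package object of the type's package.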

Implicit resolution can fail when the compiler cannot find a unique implicit value that matches the required type. A common scenario is ambiguity: when multiple implicit values of the same type are in scope, and the compiler can't choose one. Troubleshooting involves:

- Ensuring uniqueness: Verify that only one implicit value of the required type is in scope at the call site.

- Import statements: Check for conflicting or missing `import` statements that bring implicits into scope.

- Type mismatch: Confirm the type of the available implicit values matches the expected type of the implicit parameter.

- Scope: Verify that the intended implicit value is actually accessible from the point where it is needed. Sometimes, a simple explicit `import` resolves the issue.

- Using `-Xlog-implicits` flag: Run the compiler with the `-Xlog-implicits` flag to see the implicit search process and pinpoint where it fails. E.g., `scalac -Xlog-implicits MyFile.scala`.
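The ambiguity scenario and one way around it can be sketched as follows. The `Greeting` type class and the `English`/`French` objects are hypothetical, introduced only for illustration.

```scala
object ImplicitResolutionDemo {
  trait Greeting { def text: String }

  def greet(name: String)(implicit g: Greeting): String = s"${g.text}, $name!"

  object English {
    implicit val englishGreeting: Greeting = new Greeting { val text = "Hello" }
  }
  object French {
    implicit val frenchGreeting: Greeting = new Greeting { val text = "Bonjour" }
  }

  // Exactly one candidate in scope: resolution succeeds
  def viaImport: String = {
    import English._
    greet("Ada")
  }

  // With both imports, a plain greet("Ada") would be rejected as ambiguous;
  // passing the instance explicitly sidesteps the implicit search
  def viaExplicit: String = {
    import English._
    import French._
    greet("Ada")(frenchGreeting)
  }
}
```

Passing the argument explicitly is also a quick diagnostic: if the explicit call compiles, the problem lies in which implicits are in scope rather than in the types.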

3. Discuss the differences between `CanBuildFrom`, `Builder`, and immutable data structures when constructing collections in Scala. When would you prefer using one over the other?

`CanBuildFrom` is a type class from the Scala collections library up to version 2.12 that enables the creation of new collections from existing ones based on the source collection's type and the elements being added. Its `apply` method returns a `Builder`. Scala 2.13 redesigned the collections and removed `CanBuildFrom`; `BuildFrom` and `Factory` cover the remaining use cases. `Builder` is a mutable data structure (conceptually) that efficiently accumulates elements to construct the final collection. Immutable data structures, like `List` or `Vector`, are collections that cannot be modified after creation; any operation that appears to modify them returns a new collection. They are built using a builder pattern internally.

When performance is critical and you are performing many operations to build a collection, especially in a loop or within a complex algorithm, using CanBuildFrom implicitly or working with a Builder directly can be more efficient because you're using a mutable intermediate step. If immutability and thread safety are paramount, or if the collection is built through a series of functional transformations (map, filter, etc.), leveraging immutable data structures directly is preferable. CanBuildFrom is used implicitly by many collection methods to select an appropriate builder for the result type, and generally, you don't interact with it directly unless creating custom collections.
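Working with a `Builder` directly might look like this sketch, which uses the standard `List.newBuilder`; the `squares` function is an illustrative example.

```scala
object BuilderDemo {
  // Accumulate with a mutable Builder, then freeze into an immutable List
  def squares(n: Int): List[Int] = {
    val builder = List.newBuilder[Int]
    var i = 1
    while (i <= n) {
      builder += i * i
      i += 1
    }
    builder.result()
  }

  // The same result through functional transformations
  val viaMap: List[Int] = (1 to 3).map(i => i * i).toList
}
```

The mutation stays local to `squares`, so callers only ever see the immutable result.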

4. Describe how you can use the `ExecutionContext` in Scala's `Future` to manage concurrency. Explain the potential pitfalls of using a global `ExecutionContext` and how to avoid them.

The ExecutionContext in Scala's Future is crucial for managing concurrency because it dictates where the asynchronous computation defined by a Future will be executed. It's essentially a thread pool (or something that can execute tasks). When you create a Future, you implicitly (or explicitly) provide an ExecutionContext, telling the Future where to run its code. Different ExecutionContexts can provide different levels of parallelism and resource management.

Using a global ExecutionContext (like scala.concurrent.ExecutionContext.global) can lead to problems if not managed carefully. Since all Futures share the same pool, long-running or blocking operations can starve other Futures, decreasing overall application responsiveness. To avoid this, use a dedicated ExecutionContext for specific tasks or types of tasks. For I/O-bound operations, a cached thread pool might be suitable. For CPU-bound tasks, a fixed-size thread pool tuned to the number of available cores can be a better option. You can create these using java.util.concurrent.Executors and then wrap them as ExecutionContexts. It is also important to appropriately handle exceptions and avoid unbounded queue sizes to prevent memory exhaustion.
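A dedicated pool for blocking work could be set up roughly as below. The pool size of 4 and the name `DedicatedPoolDemo` are arbitrary assumptions, and `Thread.sleep` stands in for a blocking call.

```scala
import java.util.concurrent.Executors
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object DedicatedPoolDemo {
  def run(): Int = {
    // A separate fixed-size pool, so blocking work cannot starve the global one
    val pool = Executors.newFixedThreadPool(4)
    val blockingEc: ExecutionContext = ExecutionContext.fromExecutorService(pool)
    try {
      // The ExecutionContext is passed explicitly instead of importing the global one
      val f = Future { Thread.sleep(50); 42 }(blockingEc)
      Await.result(f, 2.seconds) // Await is used here only to keep the sketch short
    } finally pool.shutdown()
  }
}
```

In a real application the pool would normally live as long as the application and be shut down on exit, rather than being created per call.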

5. Explain the concept of Monads in Scala. How do they help in managing side effects and sequencing operations? Provide a simple example.

Monads in Scala (and functional programming) are a design pattern that allows you to chain operations together, particularly when those operations might have side effects or return values wrapped in some context (like Option, Try, or Future). They provide a way to sequence computations while handling the underlying context. A Monad must implement a flatMap function, which takes a function as input. This function transforms the value inside the Monad and returns another Monad, allowing for chaining. They also have a unit or pure method (often the constructor) for wrapping a normal value into the monadic context.

Monads help manage side effects by isolating them within the monadic context. For example, Option handles potential null values, Try handles exceptions, and Future handles asynchronous operations. flatMap ensures that subsequent operations are only executed if the previous operation was successful (or, in the case of Option, if a value is present). This allows cleaner sequencing of operations, avoiding nested if statements or manual error handling. Here's an example with Option:

val a: Option[Int] = Some(1)

val b: Option[Int] = Some(2)

val c: Option[Int] = None

val result1 = a.flatMap(x => b.map(y => x + y)) // Some(3)

val result2 = a.flatMap(x => c.map(y => x + y)) // None

6. What are variance annotations (+/-) in Scala? How do they affect type safety, especially when working with generic collections?

Variance annotations in Scala (`+` and `-`) specify how subtyping relationships between generic types relate to the subtyping relationships of their type parameters. `+` indicates covariance (e.g., `List[+T]` means `List[Cat]` is a subtype of `List[Animal]` if `Cat` is a subtype of `Animal`). `-` indicates contravariance (e.g., `Function1[-A, +B]` means `Function1[Animal, Cat]` is a subtype of `Function1[Cat, Animal]` if `Cat` is a subtype of `Animal`). No annotation means invariance.

Variance annotations are crucial for type safety, especially with generic collections. Without covariance, you couldn't, for example, treat a `List[Cat]` as a `List[Animal]`, even though a `Cat` is an `Animal`. This would be overly restrictive. However, unchecked variance would lead to runtime type errors. For example, if a covariant mutable collection allowed adding an arbitrary element, you could add a `Dog` to what was originally a collection of `Cat` values, violating type safety. Scala's type system prevents this through variance checks at compile time: a covariant type parameter may not appear in a method parameter position, so such methods are typically given a lower-bounded type parameter (e.g., `def prepend[B >: A](elem: B): List[B]`), which widens the result type instead of changing the original collection.
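A small sketch makes both directions concrete. The `Box` container and the `Animal` hierarchy are hypothetical examples.

```scala
object VarianceDemo {
  class Animal { def name: String = "animal" }
  class Cat extends Animal { override def name: String = "cat" }
  class Dog extends Animal { override def name: String = "dog" }

  // Covariant container: Box[Cat] is a subtype of Box[Animal]
  final case class Box[+A](value: A) {
    // A may not be used as a parameter type here; the lower bound B >: A
    // widens the result type instead of altering the original box
    def replace[B >: A](other: B): Box[B] = Box(other)
  }

  val catBox: Box[Cat] = Box(new Cat)
  val animalBox: Box[Animal] = catBox                 // allowed by covariance
  val widened: Box[Animal] = catBox.replace(new Dog)  // a Box[Animal], not a Box[Cat]

  // Contravariance: a function on any Animal can serve as a function on Cat
  val describe: Animal => String = a => a.name
  val describeCat: Cat => String = describe
}
```

Declaring `def replace(other: A)` instead would be rejected by the compiler, because `A` would then occur in a contravariant position.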

7. Explain the use of type aliases and structural types in Scala. Provide examples of when these features can improve code readability and flexibility.

Type aliases in Scala create alternative names for existing types. This improves readability by giving context-specific names to types. For example, type UserId = String makes it clear that a string represents a user ID. They don't create new types; they're just aliases.

Structural types define a type based on the methods or fields it possesses, rather than inheritance or explicit type declarations. This promotes flexibility because any type possessing the defined structure can be used, regardless of its declared type. For instance, `type HasName = { def name: String }` defines a type that has a `name` method returning a string. Any object with such a method conforms to this structural type. In Scala 2, calls on structural types are dispatched through runtime reflection, which adds overhead and requires the `scala.language.reflectiveCalls` import to avoid a feature warning, so they should be used judiciously. They're most beneficial when interacting with code where you can't or don't want to define strict interface or inheritance relationships.
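Both features can be combined in a short sketch. It is written with Scala 2.13 in mind; Scala 3 handles structural member selection differently. The `User` and `Pet` classes are hypothetical and share no common supertype that declares `name`.

```scala
import scala.language.reflectiveCalls // structural member access uses reflection

object TypeAliasDemo {
  type UserId = String                 // alias: still just a String at compile time and runtime
  type HasName = { def name: String }  // structural type: anything with a `name` member

  final case class User(id: UserId, name: String)
  final class Pet(val name: String)

  // Accepts any value that structurally provides `name`
  def describe(x: HasName): String = s"name=${x.name}"
}
```

Since `UserId` is only an alias, any `String` is accepted where a `UserId` is expected; it documents intent but adds no type checking.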

8. Discuss the advantages and disadvantages of using Akka Actors for building concurrent systems in Scala. How do you handle actor failures and ensure fault tolerance?

Akka Actors offer several advantages for concurrent systems. They provide a high level of abstraction over threads, simplifying concurrent programming. Actors communicate via asynchronous message passing, avoiding shared mutable state and reducing the need for explicit locking. This model promotes concurrency and scalability. Akka also provides built-in support for fault tolerance through the actor hierarchy and supervision strategies. However, Akka Actors also have disadvantages. The actor model can be more complex to learn initially compared to simpler threading models. Debugging can also be more challenging due to the asynchronous nature of message passing. Overuse of actors can introduce overhead, so careful design and profiling are important.

To handle actor failures and ensure fault tolerance, Akka utilizes a hierarchical supervision model. When an actor fails (throws an exception), its supervisor actor decides how to handle the failure. Common supervision strategies include: Resume (keep the actor instance and its internal state, and continue with the next message), Restart (replace the actor with a fresh instance, resetting its state), Stop (terminate the failed actor), and Escalate (pass the failure up to the supervisor's supervisor). Supervisors can also implement custom logic for handling specific types of exceptions, implementing a 'let it crash' paradigm while maintaining the overall stability of the system. Using DeathWatch, one actor can monitor another actor and receive a notification when the monitored actor terminates, allowing for proactive handling of actor failures and dependencies.

9. How does Scala's `for` comprehension desugar into `flatMap`, `map`, and `filter` calls? Explain scenarios where using `for` comprehension enhances code clarity.

Scala's `for` comprehension is syntactic sugar that the compiler transforms into a series of `flatMap`, `map`, and `withFilter` calls. The specific transformation depends on the structure of the `for` comprehension. A comprehension with a single generator and a `yield` translates to `map`; with multiple generators, every generator except the last translates to `flatMap` and the last one to `map`. `if` guards are translated into `withFilter` calls (historically `filter`). Without `yield`, the comprehension translates to `foreach`.

for comprehensions enhance code clarity, especially when dealing with nested collections or option types. For example, processing data from multiple nested Option types becomes much more readable using a for comprehension rather than deeply nested flatMap calls. Also, performing multiple filtering steps becomes more concise and easier to understand. For example:

// Without for comprehension (complex)

option1.flatMap(x => option2.flatMap(y => if (x + y > 10) Some(x + y) else None))

// With for comprehension (cleaner)

for {

x <- option1

y <- option2

if x + y > 10

} yield x + y

The second example clearly demonstrates the intent - iterate through option1, iterate through option2, filter based on the sum, and yield the sum. This declarative style improves readability and maintainability.
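For reference, the desugared form of that comprehension looks roughly like the second expression below. The sample values `Some(7)` and `Some(5)` are assumptions made so the sketch is self-contained.

```scala
object ForDesugarDemo {
  val option1: Option[Int] = Some(7)
  val option2: Option[Int] = Some(5)

  val sugared: Option[Int] =
    for {
      x <- option1
      y <- option2
      if x + y > 10
    } yield x + y

  // Approximately what the compiler generates: flatMap for the first generator,
  // withFilter for the guard, and map for the last generator
  val desugared: Option[Int] =
    option1.flatMap(x => option2.withFilter(y => x + y > 10).map(y => x + y))
}
```

Any type that provides `map`, `flatMap`, and `withFilter` with suitable signatures can be used in a `for` comprehension, not just collections and `Option`.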

10. Explain the concept of a Monoid in Scala, and give a real-world example (other than the usual string concatenation or numeric addition) of how it can be useful.

A Monoid in Scala (and functional programming in general) is a type A along with an associative binary operation (often called combine or op) that takes two As and returns another A, and an identity element (often called empty or zero) that, when combined with any A, returns that same A. Formally, a Monoid must satisfy the following laws:

- Associativity: `op(op(x, y), z) == op(x, op(y, z))` for all `x`, `y`, and `z` of type `A`.

- Identity: `op(x, empty) == x` and `op(empty, x) == x` for all `x` of type `A`.

A real-world example (other than string concatenation or numeric addition) is combining sets of permissions in an access control system. Imagine you have several sources of permissions for a user (e.g., group memberships, direct assignments). Each source provides a Set[Permission]. The union of these sets represents the user's effective permissions. The Set[Permission] type, along with the union operation and the empty set (no permissions), forms a monoid. The combine operation would be set union, and the empty value would be an empty set. This allows you to easily aggregate permissions from multiple sources in a principled and composable way. Code Example:

import cats.Monoid

import cats.implicits._

case class Permission(name: String)

implicit val permissionSetMonoid: Monoid[Set[Permission]] = new Monoid[Set[Permission]] {

override def empty: Set[Permission] = Set.empty[Permission]

override def combine(x: Set[Permission], y: Set[Permission]): Set[Permission] = x union y

}

val permissions1 = Set(Permission("read"), Permission("write"))

val permissions2 = Set(Permission("execute"))

val combinedPermissions = permissions1 |+| permissions2 // Using cats syntax

println(combinedPermissions) // Output: Set(Permission(read), Permission(write), Permission(execute))

11. How do you implement custom extractors in Scala using the `unapply` method? What are some use cases for custom extractors beyond pattern matching?

In Scala, custom extractors are implemented using the unapply method. The unapply method takes an object as input and attempts to "extract" values from it. It returns an Option. If extraction is successful, it returns Some(extractedValues), where extractedValues can be a single value or a tuple of values. If extraction fails, it returns None. Here's a simple example:

object Email {

def unapply(str: String): Option[(String, String)] = {

val parts = str.split("@")

if (parts.length == 2) Some((parts(0), parts(1))) else None

}

}

val email = "john.doe@example.com"

email match {

case Email(user, domain) => println(s"User: $user, Domain: $domain")

case _ => println("Not an email address")

}

Beyond pattern matching, custom extractors are useful for:

- Data validation: Checking if a string conforms to a specific format.

- Data transformation: Extracting and transforming data from one format to another.

- Dependency injection: Providing different implementations based on runtime conditions.

- Routing: Directing requests based on extracted data. For example, you could extract information from a URL to determine which handler to use.

- Simplifying complex conditional logic: Instead of many `if` statements, pattern matching with extractors can provide cleaner code.
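As a sketch of the validation and transformation use cases, an extractor can be combined with `collect` or called directly. The `Email` extractor is repeated here in a slightly different form so the block is self-contained; the input list is made up.

```scala
object ExtractorDemo {
  object Email {
    def unapply(str: String): Option[(String, String)] =
      str.split("@") match {
        case Array(user, domain) => Some((user, domain))
        case _                   => None
      }
  }

  val inputs: List[String] = List("john.doe@example.com", "not-an-email", "jane@example.org")

  // Validation and transformation in one pass: keep only valid addresses, extract the domain
  val domains: List[String] = inputs.collect { case Email(_, domain) => domain }

  // unapply is an ordinary method and can be called directly
  val isValid: Boolean = Email.unapply("not-an-email").isDefined
}
```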

12. Describe how you would use Scala's reflection capabilities to dynamically inspect and manipulate classes at runtime. What are the performance implications of using reflection?

Scala's reflection allows inspecting and manipulating classes at runtime. To use it, you'd first obtain a `Type` for the class you want to work with, typically using `typeOf[MyClass]` (which relies on an implicit `TypeTag`), along with a runtime mirror for the relevant class loader. Then, using the reflection API, you can get information about the class's members (fields, methods, constructors) and even create instances or call methods dynamically. For example:

import scala.reflect.runtime.universe._

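// Assumes MyClass is a top-level class with a no-argument constructor

val mirror = runtimeMirror(getClass.getClassLoader)

val tpe = typeOf[MyClass]

val constructorSymbol = tpe.decl(termNames.CONSTRUCTOR).asMethod

val constructor = mirror.reflectClass(tpe.typeSymbol.asClass).reflectConstructor(constructorSymbol)

val instance = constructor()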

Performance-wise, reflection is generally slower than direct method calls. This is because the compiler cannot optimize code that relies on runtime class lookups. Reflection involves looking up class and method information at runtime, which is computationally expensive compared to direct calls resolved at compile time. Therefore, it should be used sparingly, where compile-time knowledge is unavailable or where dynamic behavior is absolutely necessary.

13. Explain how you would implement a custom DSL (Domain Specific Language) in Scala using a combination of implicit conversions, operators, and other language features.

To implement a custom DSL in Scala, I'd leverage implicit conversions to enrich existing classes with new methods and operators. For example, I could implicitly convert a String to a custom QueryBuilder class. This allows me to define custom operators on QueryBuilder, like where or equals, enabling a more natural syntax.

Further, I would use features like case classes and pattern matching to define the data structures and logic of the DSL. Implicit classes help to avoid the verbosity of explicitly creating a new class for implicit conversions. Using `apply` methods on companion objects allows for construction of DSL elements in a natural way. Finally, because Scala allows symbolic method names and infix notation for method calls, operators such as `===` or `+` can be defined as ordinary methods; Scala has no separate operator-overloading mechanism.
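A tiny query DSL along these lines might look as follows. Everything here (`Condition`, `Equals`, `And`, `ColumnOps`, `render`) is a hypothetical model invented for the sketch, not an existing library.

```scala
object QueryDsl {
  // Data model of the DSL, expressed as case classes
  sealed trait Condition {
    // Alphanumeric method used in infix position: a and b
    def and(other: Condition): Condition = And(this, other)
  }
  final case class Equals(column: String, value: String) extends Condition
  final case class And(left: Condition, right: Condition) extends Condition

  // Implicit class enriching String with a symbolic method
  implicit class ColumnOps(column: String) {
    def ===(value: String): Condition = Equals(column, value)
  }

  // Interpreter for the DSL, written with pattern matching
  def render(c: Condition): String = c match {
    case Equals(col, v) => s"$col = '$v'"
    case And(l, r)      => s"(${render(l)} AND ${render(r)})"
  }

  val query: Condition = ("name" === "Alice") and ("city" === "Paris")
}
```

Keeping the DSL as plain data (`Condition`) and interpreting it separately in `render` means the same query could later be rendered to another target without changing the syntax.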

14. Discuss techniques for optimizing Scala code for performance, including minimizing object allocations, using specialized collections, and leveraging tail recursion.

To optimize Scala code, several techniques can be employed. Minimizing object allocations is crucial: avoid creating short-lived intermediate objects and collections in hot paths, and consider value classes (classes extending `AnyVal`) or, in Scala 3, opaque types to avoid wrapper allocations. Employing primitive arrays like `Array[Int]` or `Array[Double]` instead of `Array[Any]` avoids boxing, which can significantly reduce memory overhead and improve performance, particularly in numerical computations. In hot loops, consider `while` loops, which avoid the closure and iterator overhead that `for` comprehensions over collections can incur. Also, prefer simple data structures where they suffice.

Tail recursion is another powerful optimization technique. When a function's recursive call is the last operation performed (tail position), the compiler can optimize it into a loop, preventing stack overflow errors. Ensure that recursive functions are tail-recursive by marking them with @tailrec annotation (from scala.annotation.tailrec) and verifying that the recursive call is indeed in the tail position. For example:

import scala.annotation.tailrec

@tailrec

final def factorial(n: Int, acc: Int = 1): Int = {

if (n <= 1) acc

else factorial(n - 1, n * acc)

}

15. How can you achieve compile-time metaprogramming in Scala using macros or Scala 3's new features? What are the advantages and disadvantages compared to runtime reflection?

In Scala, compile-time metaprogramming can be achieved using macros or, in Scala 3, using features like inline methods and metaprogramming constructs like scala.quoted.*. Macros allow you to inspect and transform code at compile time, generating new code based on the existing one. Scala 3's inline and quoted constructs offer a more type-safe and maintainable approach to similar tasks, enabling code generation and manipulation during compilation.

Compared to runtime reflection, compile-time metaprogramming offers significant advantages. Compile-time techniques catch errors earlier in the development cycle, improve performance by generating optimized code ahead of time (avoiding runtime overhead), and enhance type safety because the code is generated based on type information available during compilation. However, compile-time metaprogramming can increase compilation time and may be more complex to implement and debug than runtime reflection. Runtime reflection offers more flexibility, allowing code to inspect and modify itself dynamically, but at the cost of performance and type safety since errors might only be caught during execution.

16. Explain how to use tagged types in Scala to add compile-time checking to your code and prevent logical errors. Provide an example of how tagged types can improve type safety.

Tagged types (closely related to phantom types, and not to be confused with scala.reflect's TypeTag) in Scala allow you to add extra compile-time information to existing types without modifying their runtime representation. This helps prevent logical errors by ensuring that values are used in the correct context. You create a tagged type by defining a tag (usually an empty trait) and then using a compound type (Base with Tag) to associate the base type with the tag.

For example:

trait USD

trait EUR

type Money = Double

type USDAmount = Money with USD

type EURAmount = Money with EUR

def addUSD(x: USDAmount, y: USDAmount): USDAmount = (x + y).asInstanceOf[USDAmount]

val usd1: USDAmount = 10.0.asInstanceOf[USDAmount]

val eur1: EURAmount = 20.0.asInstanceOf[EURAmount]

// addUSD(usd1, eur1) // This will not compile, preventing a logical error!

val usd2: USDAmount = addUSD(usd1, usd1)

println(usd2)

In this example, USDAmount and EURAmount are tagged types based on Double. The addUSD function only accepts USDAmount values. Attempting to pass an EURAmount will result in a compile-time error, enhancing type safety and preventing accidental mixing of currencies.

17. Describe the use of phantom types in Scala. Provide a scenario where phantom types enhance type safety beyond what regular types can provide.

Phantom types in Scala are a technique where a type parameter is declared on a class or trait but never appears in its fields or method implementations. It acts as a compile-time marker that encodes additional information about a value without affecting its runtime representation.

Consider a state management scenario. Suppose we have a NetworkConnection class, and it can be in Connected or Disconnected states. Using regular types, we could have methods like send(data: String) and disconnect(). However, we can't prevent calling send() when the connection is Disconnected. With phantom types, we can define Connected and Disconnected marker traits and parameterize NetworkConnection with a type representing its state. Only a NetworkConnection[Connected] would have the send() method available, preventing incorrect method calls at compile time. NetworkConnection[Disconnected] would only have the connect() method. This enhances type safety by encoding state information into the type system itself.
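The scenario above could be encoded as follows. This is one possible sketch, not the only encoding: it uses implicit evidence (=:=) to restrict which methods can be called in each state, and names such as PhantomDemo, host, and the returned strings are made up for illustration.

```scala
object PhantomDemo {
  // Marker types: never instantiated, only used as type arguments.
  sealed trait State
  sealed trait Connected extends State
  sealed trait Disconnected extends State

  // S is the phantom parameter: it appears in no field of the class.
  final class NetworkConnection[S <: State] private (val host: String) {
    // Callable only when the compiler can prove S is Disconnected.
    def connect()(implicit ev: S =:= Disconnected): NetworkConnection[Connected] =
      new NetworkConnection[Connected](host)

    // Callable only when the compiler can prove S is Connected.
    def send(data: String)(implicit ev: S =:= Connected): String =
      s"sent '$data' to $host"

    def disconnect()(implicit ev: S =:= Connected): NetworkConnection[Disconnected] =
      new NetworkConnection[Disconnected](host)
  }

  object NetworkConnection {
    // New connections always start in the Disconnected state.
    def apply(host: String): NetworkConnection[Disconnected] =
      new NetworkConnection[Disconnected](host)
  }

  val conn: NetworkConnection[Disconnected] = NetworkConnection("example.org")
  val reply: String = conn.connect().send("ping")
  // conn.send("ping") // does not compile: conn is still Disconnected
}
```

At runtime every NetworkConnection is the same class; the state exists only in the type, so the check costs nothing after compilation.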

18. Discuss strategies for handling errors and exceptions in asynchronous Scala code (e.g., using `Future`s or Akka). How do you ensure proper error propagation and recovery?

When dealing with errors in asynchronous Scala code, particularly with Futures, it's crucial to handle potential failures gracefully. Several strategies exist. You can use Future.recover to map specific exceptions to fallback values and Future.recoverWith to switch to another Future. Future.fallbackTo offers another option, using the result of a second Future if the original fails. Exceptions thrown inside a Future { ... } body are captured automatically and surface as a failed Future; a try block (or scala.util.Try) is only needed for code that may throw synchronously before a Future is returned.

For error propagation, using Future.transform or Future.transformWith allows you to uniformly map both success and failure cases. In Akka, supervision strategies are key. A supervisor actor defines how to handle exceptions thrown by its children (e.g., resume, restart, stop, escalate). Proper logging of errors is vital, along with metrics for monitoring failure rates. Avoid swallowing exceptions without logging or handling them appropriately; otherwise, you risk masking problems and making debugging significantly harder. Proper error handling is about ensuring resilience.
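The Future combinators mentioned above can be compared side by side in a short sketch that uses only the standard library. The fetchPrice function and its ids are hypothetical, and the blocking await helper is there only so the results can be inspected in a demo or test.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._
import scala.util.{Failure, Success}

object FutureErrors {
  // Hypothetical lookup that fails for unknown ids.
  def fetchPrice(id: String): Future[Double] =
    if (id == "known") Future.successful(9.99)
    else Future.failed(new NoSuchElementException(s"no price for $id"))

  // recover: turn a specific failure into a fallback value.
  def recovered: Future[Double] =
    fetchPrice("missing").recover { case _: NoSuchElementException => 0.0 }

  // recoverWith: switch to another asynchronous computation on failure.
  def retried: Future[Double] =
    fetchPrice("missing").recoverWith { case _: NoSuchElementException => fetchPrice("known") }

  // fallbackTo: use the second future's result if the first one fails.
  def withFallback: Future[Double] =
    fetchPrice("missing").fallbackTo(Future.successful(1.0))

  // transform: handle success and failure in a single place.
  def described: Future[String] =
    fetchPrice("missing").transform {
      case Success(p) => Success(s"price: $p")
      case Failure(e) => Success(s"failed: ${e.getMessage}")
    }

  // Blocking helper for demonstration only; avoid blocking in production code.
  def await[A](f: Future[A]): A = Await.result(f, 2.seconds)
}
```

Note that recover and recoverWith take partial functions, so exceptions they do not match keep propagating as failures.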

19. Explain the concept of lenses in Scala and their use in functional data manipulation. How can lenses simplify working with deeply nested immutable data structures?

Lenses in Scala provide a way to access and modify parts of immutable data structures in a composable and type-safe manner. They essentially offer a functional getter and setter for a specific field within a potentially deeply nested object. Lenses avoid the need to manually copy and update each level of the data structure, which can become verbose and error-prone.

Using lenses simplifies working with nested immutable data because you can focus on the specific part you want to change without worrying about the intermediate structures. For example, consider a nested object Address(Street(name: String), City(name: String)). Without lenses, updating the street name means copying each level by hand: address.copy(street = address.street.copy(name = "New Street Name")). With lenses, you compose a lens from Address to Street with a lens from Street to its name and set the new value through the composed lens. The exact method names depend on the library and version (for example, composeLens and set in older Monocle releases, andThen and replace in Monocle 3). Lenses also provide type safety and composability, making them a powerful tool for functional data manipulation. Libraries like Monocle and quicklens provide convenient ways to define and use lenses.
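To make the idea concrete without tying it to a particular library, here is a minimal hand-rolled lens. The Lens class and its andThen and modify methods are written for this example only; real libraries offer richer APIs and usually generate lenses for you.

```scala
object LensDemo {
  // A minimal lens: a getter plus a copy-based setter.
  final case class Lens[S, A](get: S => A, set: (S, A) => S) {
    // Apply a function to the focused value.
    def modify(s: S)(f: A => A): S = set(s, f(get(s)))

    // Compose with a lens that focuses deeper inside A.
    def andThen[B](inner: Lens[A, B]): Lens[S, B] =
      Lens[S, B](s => inner.get(get(s)), (s, b) => set(s, inner.set(get(s), b)))
  }

  final case class Street(name: String)
  final case class City(name: String)
  final case class Address(street: Street, city: City)

  val streetLens: Lens[Address, Street] =
    Lens[Address, Street](_.street, (a, s) => a.copy(street = s))
  val streetNameLens: Lens[Street, String] =
    Lens[Street, String](_.name, (s, n) => s.copy(name = n))

  // Address -> Street -> name, built by composition.
  val addressStreetName: Lens[Address, String] = streetLens.andThen(streetNameLens)

  val address: Address = Address(Street("Old Street"), City("Berlin"))
  val updated: Address = addressStreetName.set(address, "New Street Name")
  val shouted: Address = addressStreetName.modify(address)(_.toUpperCase)
}
```

The original address value is left untouched; each operation returns a new copy with only the focused field changed.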

20. Describe techniques for testing asynchronous Scala code effectively. What are some common pitfalls in testing asynchronous code and how can you avoid them?

Testing asynchronous Scala code requires strategies to handle non-blocking operations. Techniques include using Future combinators (map, flatMap, recover) to transform asynchronous results into predictable states for assertions. Libraries like ScalaTest with its Eventually trait are crucial for waiting for asynchronous operations to complete before asserting outcomes. Mocking frameworks (Mockito, ScalaMock) can stub external asynchronous dependencies, enabling isolated unit tests. For integration tests, consider using embedded systems (like in-memory databases) or lightweight message queues to simulate real-world asynchronous interactions.

Common pitfalls involve race conditions and non-deterministic behavior. Avoid using Thread.sleep for synchronization: a sleep that is too short makes tests flaky, and one that is too long makes them slow. Instead, prefer Future combinators, or wait explicitly with Await.result (with caution, and always with a timeout). When mocking, simulate asynchronous behavior realistically (e.g., return a Future that completes after a delay). Make sure failures inside asynchronous code reach the test, for example by asserting on the failed Future rather than letting the exception vanish on another thread, and aim for deterministic setup and teardown to avoid flaky tests. Also keep thread pool sizes in mind: a small test pool combined with blocking calls can starve or deadlock the test.
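One way to avoid timing-based tests is to let the test control when an asynchronous dependency completes. The sketch below uses a standard-library Promise as a hand-written test double; greeting and lookupName are hypothetical names, and a real suite would typically wrap the assertions in ScalaTest or a similar framework.

```scala
import scala.concurrent.{Await, Future, Promise}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object AsyncTestDemo {
  // Code under test: depends on an asynchronous lookup passed in as a function.
  def greeting(lookupName: Int => Future[String])(id: Int): Future[String] =
    lookupName(id).map(name => s"Hello, $name!")

  // Test double backed by a Promise, so the test decides when the result arrives.
  val pending: Promise[String] = Promise[String]()
  val result: Future[String] = greeting(_ => pending.future)(42)

  // Nothing has completed the promise yet, so the result cannot be ready.
  val completedBefore: Boolean = result.isCompleted

  // Complete the dependency explicitly instead of sleeping and hoping.
  pending.success("Alice")

  // Bounded wait: fails with a TimeoutException instead of hanging forever.
  def awaited: String = Await.result(result, 2.seconds)
}
```

Because completion is triggered by the test itself, the outcome does not depend on scheduler timing.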

21. How does Scala's `PartialFunction` differ from a regular function? Give an example of where `PartialFunction` is particularly useful, such as in actor message handling or routing.

A regular function in Scala, Function1[A, B] (or A => B), is defined for all possible input values of type A and will always return a value of type B (or throw an exception). A PartialFunction[A, B] is a function that is not defined for all possible input values of type A. It has an isDefinedAt method which returns true if the function can handle the input, and false otherwise. If a partial function is called with an input for which it is not defined, it will typically throw a MatchError.

PartialFunction is useful in actor message handling. An actor's receive method is a partial function that defines how the actor responds to different types of messages. Here's an example:

import akka.actor.{Actor, ActorSystem, Props}

case class Greet(name: String)

case object Goodbye

class MyActor extends Actor {

override def receive: Receive = {

case Greet(name) => println(s"Hello, $name!")

case Goodbye => println("Goodbye!"); context.stop(self)

}

}

object Main extends App {

val system = ActorSystem("MySystem")

val actor = system.actorOf(Props[MyActor], "myActor")

actor ! Greet("Alice")

actor ! Goodbye

Thread.sleep(1000)

system.terminate()

}

In this example, receive is a PartialFunction[Any, Unit]. It's only defined for Greet and Goodbye messages. If the actor receives a message that receive is not defined for, no MatchError is thrown: classic Akka passes the message to the actor's unhandled method, which by default publishes an UnhandledMessage to the actor system's event stream.
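Outside of actors, the same properties can be seen with the standard library alone. The following sketch (all names are illustrative) shows isDefinedAt, lift, collect, and orElse on a small partial function.

```scala
object PartialFunctionDemo {
  // Defined only for non-zero inputs.
  val reciprocal: PartialFunction[Int, Double] = {
    case n if n != 0 => 1.0 / n
  }

  // Ask before calling instead of risking a MatchError.
  val definedAtZero: Boolean = reciprocal.isDefinedAt(0)

  // lift turns the partial function into a total one returning Option.
  val lifted: Option[Double] = reciprocal.lift(0)

  // collect applies the function only where it is defined.
  val collected: List[Double] = List(0, 2, 4).collect(reciprocal)

  // orElse chains handlers, much like composing routes or actor behaviors.
  val fallback: PartialFunction[Int, Double] = { case _ => 0.0 }
  val total: Int => Double = reciprocal.orElse(fallback)
}
```

Chaining with orElse is the same mechanism that lets message handlers be split into small pieces and combined.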

Expert Scala interview questions

1. How does Scala's type system handle variance, and why is this important in generic programming?

Scala's type system handles variance through annotations that specify how subtyping relationships between parameterized types relate to subtyping relationships between their type arguments. The three variance annotations are:

- Covariance (+T): List[Cat] is a subtype of List[Animal] if Cat is a subtype of Animal.

- Contravariance (-T): Function1[Animal, String] is a subtype of Function1[Cat, String] if Cat is a subtype of Animal. The parameter type is contravariant.

- Invariance (no annotation): Array[Cat] is neither a subtype nor a supertype of Array[Animal] unless Cat and Animal are the same type.

Variance is important in generic programming because it allows you to create flexible and reusable code that can work with a variety of types while maintaining type safety. By correctly specifying variance annotations, you can ensure that your generic classes and methods behave as expected when used with different subtypes and supertypes. This leads to more expressive and safer APIs, preventing runtime errors caused by incorrect type assumptions.
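The three cases can be seen in a few lines. In this sketch, Box and Printer are made-up types standing in for a producer and a consumer; Array is used for the invariant case because it is invariant in the standard library.

```scala
object VarianceDemo {
  class Animal { def name: String = "animal" }
  class Cat extends Animal { override def name: String = "cat" }

  // Covariant producer: a Box[Cat] can be used where a Box[Animal] is expected.
  final case class Box[+A](value: A)

  // Contravariant consumer: a Printer[Animal] can be used where a Printer[Cat] is expected.
  trait Printer[-A] { def print(a: A): String }

  val animalPrinter: Printer[Animal] = new Printer[Animal] {
    def print(a: Animal): String = s"I am a ${a.name}"
  }

  val catBox: Box[Cat] = Box(new Cat)
  val animalBox: Box[Animal] = catBox          // allowed because of +A
  val catPrinter: Printer[Cat] = animalPrinter // allowed because of -A

  val printed: String = catPrinter.print(catBox.value)

  // Invariant: the following line does not compile.
  // val animals: Array[Animal] = Array[Cat](new Cat)
}
```

A useful rule of thumb is that types which only produce A can be covariant, types which only consume A can be contravariant, and types that do both must stay invariant.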

2. Explain the concept of a monad in Scala, providing a practical example beyond the typical Option or List monads.

A monad is a design pattern that allows you to chain operations together in a specific context, managing effects like null values, asynchronous computations, or state. It provides a way to sequence computations while handling underlying complexities in a controlled manner. Essential components are a type constructor M[A], a unit function (pure or unit) that lifts a value into the monad A => M[A], and a bind function (flatMap) which chains monadic computations M[A] => (A => M[B]) => M[B].

Consider a State monad for threading state through a computation without mutation. Imagine updating a configuration object immutably. The State[S, A] monad represents a computation that, given a state S, produces a value of type A and a new state S. Here's a conceptual example:

case class Config(setting1: String, setting2: Int)

type State[S, A] = S => (A, S)

def unit[S, A](a: A): State[S, A] = s => (a, s)

def flatMap[S, A, B](st: State[S, A])(f: A => State[S, B]): State[S, B] = s => {

val (a, newState) = st(s)

f(a)(newState)

}

def updateSetting1(newValue: String): State[Config, Unit] = config => ((), config.copy(setting1 = newValue))

val initialState = Config("initial", 0)

val computation = flatMap(updateSetting1("new value"))(_ => unit(123))

val (result, finalState) = computation(initialState) // result = 123, finalState = Config("new value", 0)

In this case, the State monad manages the state transitions, allowing us to chain updates to the Config object without explicitly passing the state around.

3. Describe the Actor Model and its implementation in Scala using Akka, including strategies for handling actor failures.

The Actor Model is a concurrent computation model where 'Actors' are the fundamental units of computation. Actors encapsulate state and behavior, communicating exclusively via asynchronous message passing. Each actor has a mailbox that stores incoming messages, processed sequentially. Key principles include: all communication is asynchronous, actors are independent and concurrent, and actors can create other actors. In Scala, Akka is a popular framework for implementing the Actor Model.

Akka provides an ActorSystem for managing actors. To create an actor, you define a class extending Actor and implement the receive method to handle incoming messages. Actor failures are handled using supervision strategies. These strategies define how a supervisor actor should react to a child actor's failure. Common strategies include: Resume (retains state), Restart (restarts with initial state), Stop (terminates the actor), and Escalate (passes the failure to the supervisor's supervisor). The following demonstrates Akka in Scala:

import akka.actor._

class MyActor extends Actor {

def receive = {

case message: String => println(s"Received message: $message")

case _ => println("Unknown message")

}

}

val system = ActorSystem("MySystem")

val myActor = system.actorOf(Props[MyActor], name = "myActor")

myActor ! "Hello, Akka!"

4. How does Scala's implicit resolution mechanism work, and what are some potential pitfalls to avoid when using implicits?

Scala's implicit resolution mechanism automatically supplies values or conversions when the compiler encounters a missing implicit parameter or a type mismatch. It first looks at implicits visible in the current lexical scope (local definitions, enclosing members, and imports). If none applies, it searches the implicit scope of the required type, which includes the companion objects of that type and of its type arguments and parents. Because of this order, a local or imported implicit takes precedence over one defined in a companion object.
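The lookup order can be observed with a small type class. In this sketch, Show, User, and render are hypothetical names; it uses Scala 2 style implicit syntax, which Scala 3 also accepts.

```scala
object ImplicitDemo {
  trait Show[A] { def show(a: A): String }

  final case class User(name: String)
  object User {
    // Found through the implicit scope of Show[User], i.e. User's companion.
    implicit val defaultShow: Show[User] = new Show[User] {
      def show(u: User): String = s"User(${u.name})"
    }
  }

  def render[A](a: A)(implicit s: Show[A]): String = s.show(a)

  // No implicit in lexical scope, so the companion instance is used.
  val viaCompanion: String = render(User("Ada"))

  val viaLocal: String = {
    // A local implicit is found first, so the companion is never consulted.
    implicit val shouting: Show[User] = new Show[User] {
      def show(u: User): String = u.name.toUpperCase
    }
    render(User("Ada"))
  }
}
```

Putting default instances in companion objects and overriding them locally only where needed is a common way to keep implicit scope small and predictable.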

Potential pitfalls include:

- Ambiguity: Multiple suitable implicits can lead to compile-time errors. Resolve this by explicitly importing the correct implicit or providing a more specific one.

- Implicit conversions overuse: Can make code harder to understand and debug. Use them judiciously, primarily for type enrichment or DSL construction.

- Implicit scope pollution: Defining too many implicits in a common scope can lead to unexpected behavior. Keep implicits close to where they are used.