Interviewing AI Research Engineers requires knowing which questions reveal real expertise. As with hiring a software engineer, you are looking for strong problem-solving abilities and a solid grasp of algorithms and data structures, and you need a structured approach to uncover those skills.

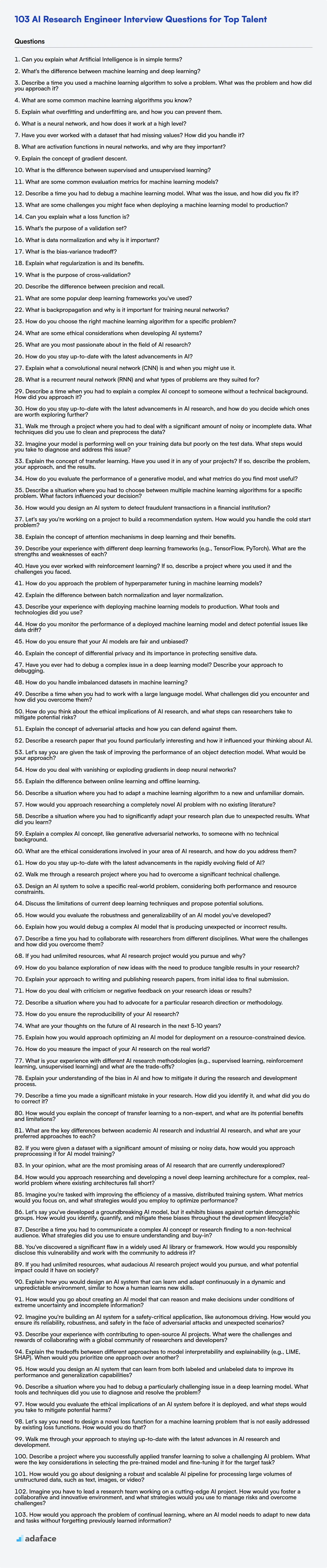

This blog post provides a collection of interview questions categorized by difficulty level: basic, intermediate, advanced, and expert, along with a set of multiple-choice questions. You can evaluate candidates on a broad range of topics, including machine learning, deep learning, and natural language processing.

Use these questions to assess candidates and make data-driven hiring decisions, or use Adaface's Research Engineer Test to screen candidates before the interview.


Basic AI Research Engineer interview questions

1. Can you explain what Artificial Intelligence is in simple terms?

Artificial Intelligence (AI) is essentially about making computers think and act more like humans. Instead of just following pre-programmed instructions, AI allows machines to learn from data, solve problems, and make decisions.

Think of it like teaching a computer to recognize patterns, understand language, or even play games. We do this by feeding it lots of data and using algorithms that let it improve its performance over time, without explicit programming for every single scenario.

2. What's the difference between machine learning and deep learning?

Machine learning is a broader field that encompasses various algorithms allowing computers to learn from data without explicit programming. Deep learning, on the other hand, is a subfield of machine learning that uses artificial neural networks with multiple layers (hence, "deep") to analyze data with complex patterns. The key difference lies in how features are extracted: machine learning often requires manual feature extraction, while deep learning can automatically learn features from raw data.

In short, deep learning models often achieve higher accuracy and can handle more complex tasks, but they typically require significantly more data and computational power than traditional machine learning algorithms. Traditional machine learning algorithms remain a good choice when data is limited or the problem is simpler.

3. Describe a time you used a machine learning algorithm to solve a problem. What was the problem and how did you approach it?

I worked on a project to predict customer churn for a telecommunications company. The problem was high customer attrition, impacting revenue. We approached it using a machine learning classification model. Specifically, we chose a Random Forest algorithm due to its ability to handle non-linear relationships and provide feature importance.

The process involved data cleaning, feature engineering (creating features like call duration ratios and service usage patterns), and then training the Random Forest model. We split the data into training and testing sets. After training, we evaluated the model's performance using metrics like precision, recall, and F1-score. Feature importance analysis helped the business identify key factors driving churn, which were then used to develop targeted retention strategies. For example, we trained the model with Python's scikit-learn:

```python
from sklearn.ensemble import RandomForestClassifier

# X_train, y_train: churn features and labels from the training split
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)
```
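A fuller, runnable sketch of that workflow follows. It assumes a synthetic dataset from `make_classification` in place of the real churn data, so the feature names are hypothetical and the scores say nothing about the original project. It shows the train/test split, the precision/recall/F1 evaluation, and a feature-importance ranking.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for churn data: roughly 20% of customers churn (label 1).
X, y = make_classification(n_samples=1000, n_features=6, n_informative=3,
                           weights=[0.8, 0.2], random_state=42)
feature_names = ["call_duration_ratio", "monthly_charge", "support_calls",
                 "tenure_months", "data_usage", "contract_length"]  # hypothetical

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42)

model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

precision = precision_score(y_test, y_pred)
recall = recall_score(y_test, y_pred)
f1 = f1_score(y_test, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")

# Rank features by impurity-based importance (the importances sum to 1).
ranking = sorted(zip(feature_names, model.feature_importances_),
                 key=lambda pair: pair[1], reverse=True)
for name, importance in ranking:
    print(f"{name:>22}: {importance:.3f}")
```

Stratifying the split keeps the churn rate similar in both sets, which matters when the positive class is the minority.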

4. What are some common machine learning algorithms you know?

Some common machine learning algorithms include:

- Linear Regression: Used for predicting continuous values based on a linear relationship between variables.

- Logistic Regression: Used for binary classification problems, predicting the probability of an instance belonging to a certain class.

- Decision Trees: Tree-like structures that partition data based on feature values, used for both classification and regression.

- Support Vector Machines (SVM): Finds the optimal hyperplane to separate data points into different classes.

- K-Nearest Neighbors (KNN): Classifies data points based on the majority class of their k-nearest neighbors.

- Naive Bayes: A probabilistic classifier based on Bayes' theorem with the "naive" assumption of independence between features.

- K-Means Clustering: An unsupervised learning algorithm used for partitioning data into k clusters.

- Random Forest: An ensemble learning method that combines multiple decision trees to improve accuracy and reduce overfitting.

- Gradient Boosting (e.g., XGBoost, LightGBM, CatBoost): Another ensemble method that builds trees sequentially, where each tree corrects the errors of the previous ones.

5. Explain what overfitting and underfitting are, and how you can prevent them.

Overfitting occurs when a model learns the training data too well, capturing noise and specific details instead of the underlying pattern. This leads to excellent performance on the training set but poor generalization to new, unseen data. Underfitting, conversely, happens when a model is too simple to capture the underlying patterns in the training data, resulting in poor performance on both the training and test sets.

To prevent overfitting:

- Use more data: More data helps the model learn the underlying pattern and reduce the impact of noise.

- Simplify the model: Reduce the number of parameters or use a simpler algorithm. For example, use linear regression instead of a high-degree polynomial regression.

- Regularization: Add a penalty term to the loss function to discourage large weights. Common techniques include L1 (Lasso) and L2 (Ridge) regularization.

- Cross-validation: Use techniques like k-fold cross-validation to evaluate the model's performance on multiple subsets of the data.

- Early stopping: Monitor the model's performance on a validation set and stop training when performance starts to degrade.

- Dropout: In neural networks, randomly dropping units during training acts as a regularizer.

To prevent underfitting:

- Use a more complex model: Increase the number of parameters or use a more sophisticated algorithm.

- Feature engineering: Create new features or transform existing ones to better represent the underlying patterns.

- Reduce regularization: If you're using regularization, reduce the strength of the penalty term.

- Train for longer: Run more epochs (for iteratively trained models such as neural networks) so the model has time to fit the data.
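To make both failure modes concrete, the sketch below fits polynomials of increasing degree to noisy samples of a sine curve using NumPy's `Polynomial.fit`. The sine target, noise level, and degrees are illustrative assumptions, not from the text. A degree-1 fit underfits with high error on both sets, while a high-degree fit drives training error down and typically generalizes worse.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    x = rng.uniform(0, 1, n)
    y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=n)  # signal + noise
    return x, y

x_train, y_train = make_data(20)
x_test, y_test = make_data(200)

def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))

results = {}
for degree in (1, 3, 12):
    model = Polynomial.fit(x_train, y_train, deg=degree)  # least-squares fit
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree={degree:2d}  train MSE={results[degree][0]:.3f}  "
          f"test MSE={results[degree][1]:.3f}")
```

Training error can only fall as the degree grows, because each larger model contains the smaller ones. The test error is what reveals whether the extra capacity is fitting signal or noise.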

6. What is a neural network, and how does it work at a high level?

A neural network is a computational model inspired by the structure and function of the human brain. At a high level, it's composed of interconnected nodes (neurons) organized in layers. Input data is fed into the first layer, and each neuron in a layer performs a weighted sum of its inputs, applies an activation function, and passes the result to the next layer. This process continues until the output layer produces a prediction.

The network learns by adjusting the weights of the connections between neurons during a training process. This adjustment is typically based on comparing the network's predictions with the actual values and using optimization algorithms like gradient descent to minimize the error. Essentially, the network refines its internal parameters to map inputs to desired outputs more accurately.

7. Have you ever worked with a dataset that had missing values? How did you handle it?

Yes, I've frequently encountered missing values in datasets. My approach to handling them depends on the nature of the data and the goals of the analysis.

Common strategies I've used include:

- Imputation: Filling in missing values with reasonable estimates. Techniques range from simple (mean/median imputation) to more sophisticated (k-nearest neighbors, model-based imputation). The choice depends on the data distribution and the level of accuracy needed.

- Removal: If the missing data is minimal or occurs randomly and deleting the affected rows/columns won't significantly bias the results, I might remove them. However, I'm cautious about this as it can lead to data loss.

- Flagging: Rather than only imputing or removing, I'll sometimes create a new feature that indicates whether a value was originally missing. The model can then learn to treat these entries differently.

- Leaving them as-is: Depending on the type of analysis, missing values are sometimes acceptable and can be safely left without imputation or removal.
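These strategies map onto a few lines of pandas. The columns and values below are made up for illustration. The sketch adds a missing-value flag before imputing, fills a numeric column with its median and a categorical column with a `"Missing"` category, and shows row removal as the alternative.

```python
import numpy as np
import pandas as pd

raw = pd.DataFrame({
    "age": [25, np.nan, 40, 35],
    "plan": ["basic", "pro", None, "basic"],
})

df = raw.copy()
# Flag first, so the model can still see which values were originally missing.
df["age_was_missing"] = df["age"].isna().astype(int)
# Median imputation for the numeric column (robust to outliers).
df["age"] = df["age"].fillna(df["age"].median())
# Categorical column: treat "missing" as its own category.
df["plan"] = df["plan"].fillna("Missing")

# Removal alternative: drop any row that has a missing value.
dropped = raw.dropna()
print(df)
print(f"rows kept after dropna: {len(dropped)} of {len(raw)}")
```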

8. What are activation functions in neural networks, and why are they important?

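Activation functions introduce non-linearity to neural networks, enabling them to learn complex patterns. Without them, stacked layers would collapse into a single linear transformation, so the network would be no more expressive than a linear model, regardless of its depth. At each neuron, the activation function transforms the weighted sum of the inputs into the neuron's output; some, like ReLU, effectively decide whether the neuron is active by outputting zero for negative inputs.

Importance: Non-linearity is crucial for modeling real-world data, which is often non-linear. The choice of activation also affects gradient-based training: saturating functions like sigmoid and tanh can cause vanishing gradients in deep networks, which is one reason ReLU is a common default for hidden layers. Common types include ReLU, sigmoid, and tanh, and different activation functions suit different use cases (for example, sigmoid for a binary output probability).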
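A short NumPy sketch checks both claims numerically. The random weights are arbitrary illustrations. It shows that two linear layers with no activation between them equal a single linear layer, and that ReLU is not additive (so it is not linear).

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
x = rng.normal(size=3)
W1 = rng.normal(size=(4, 3))   # layer 1 weights
W2 = rng.normal(size=(2, 4))   # layer 2 weights

# No activation: two layers equal ONE linear layer with weights W2 @ W1.
print(np.allclose(W2 @ (W1 @ x), (W2 @ W1) @ x))

# ReLU breaks linearity: relu(a + b) differs from relu(a) + relu(b) here.
additivity_fails = relu(-1.0 + 2.0) != relu(-1.0) + relu(2.0)
print(additivity_fails)
print(relu(np.array([-2.0, 0.0, 3.0])), sigmoid(0.0), np.tanh(0.0))
```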

9. Explain the concept of gradient descent.

Gradient descent is an iterative optimization algorithm used to find the minimum of a function. Think of it as descending a hill; at each point, you look around to see which direction slopes downwards the most and take a step in that direction. This process repeats until you reach the bottom (the minimum).

In machine learning, the function we're trying to minimize is often a cost or loss function, which represents the error of our model. The gradient indicates the direction of steepest ascent of this function, so we move in the opposite direction (hence 'descent') to reduce the error. The 'learning rate' controls the size of the steps we take during each iteration. If the rate is too large, you might overshoot the minimum or even diverge; if it is too small, convergence can be very slow.
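Below is a minimal sketch of batch gradient descent fitting a line y = w·x + b by minimizing mean squared error. The toy data (the exact line y = 2x + 1) and the learning rates are assumptions chosen so the behavior is easy to check. It also shows the overshooting case from the paragraph above.

```python
import numpy as np

# Toy data from the line y = 2x + 1 (no noise), so the optimum is w=2, b=1.
x = np.linspace(0.0, 1.0, 50)
y = 2.0 * x + 1.0

def mse_loss(w, b):
    return float(np.mean((w * x + b - y) ** 2))

def gradient_descent(learning_rate, steps):
    w, b = 0.0, 0.0
    for _ in range(steps):
        error = w * x + b - y
        grad_w = 2.0 * np.mean(error * x)   # dL/dw
        grad_b = 2.0 * np.mean(error)       # dL/db
        w -= learning_rate * grad_w         # step against the gradient
        b -= learning_rate * grad_b
    return w, b

w, b = gradient_descent(learning_rate=0.5, steps=2000)
print(f"w={w:.3f}, b={b:.3f}, loss={mse_loss(w, b):.2e}")

# Too large a learning rate overshoots: the loss grows instead of shrinking.
w_big, b_big = gradient_descent(learning_rate=1.0, steps=50)
print(f"loss after 50 steps with lr=1.0: {mse_loss(w_big, b_big):.2e}")
```

For this data, a learning rate of 0.5 converges to w = 2, b = 1, while 1.0 is past the stability limit of the problem's curvature, so each step lands farther from the minimum than the last.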

10. What is the difference between supervised and unsupervised learning?

Supervised learning uses labeled data for training, where each input is paired with a corresponding output. The goal is to learn a mapping function that can predict the output for new, unseen inputs. Common supervised learning tasks include classification and regression.

Unsupervised learning, on the other hand, uses unlabeled data. The algorithm explores the data to discover patterns, structures, or groupings without any prior knowledge of the correct output. Examples of unsupervised learning tasks are clustering, dimensionality reduction, and anomaly detection.
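The contrast is easy to see on the same points. In this sketch, which assumes synthetic blob data, a logistic regression classifier is trained with the labels `y`, while k-means only ever sees `X` and has to find the groups on its own.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression

# Three groups of 2-D points; y holds the true group labels.
X, y = make_blobs(n_samples=300, centers=3, cluster_std=0.5, random_state=0)

# Supervised: the model is trained on inputs AND their labels.
classifier = LogisticRegression(max_iter=1000).fit(X, y)
print("classifier predictions:", classifier.predict(X[:5]))

# Unsupervised: the model only sees X and must discover the groups itself.
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
print("cluster assignments:", kmeans.labels_[:5])
```

Note that k-means cluster IDs are arbitrary: cluster 0 need not correspond to class 0, because the algorithm never saw the labels.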

11. What are some common evaluation metrics for machine learning models?

Common evaluation metrics vary by task. For classification, accuracy, precision, recall, F1-score, and AUC-ROC are widely used. Accuracy measures overall correctness; precision measures how many predicted positives are actually positive, while recall measures how many actual positives the model finds. F1-score is the harmonic mean of precision and recall, and AUC-ROC evaluates how well the model ranks positive instances above negative ones across all classification thresholds.

For regression tasks, Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and R-squared are common. MSE is the average squared difference between predicted and actual values, and RMSE is its square root, which puts the error back in the target's units. MAE is the average absolute difference and is less sensitive to outliers than MSE. R-squared represents the proportion of variance in the dependent variable that can be predicted from the independent variables. Consider the trade-offs of each metric when evaluating model performance; for example, accuracy can be misleading on imbalanced datasets.
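The regression formulas are short enough to write by hand. This sketch uses a small made-up set of targets and predictions, checks the hand-written versions against scikit-learn, and computes AUC-ROC from scores (not hard labels) for a toy classification example.

```python
import numpy as np
from sklearn.metrics import (mean_absolute_error, mean_squared_error,
                             r2_score, roc_auc_score)

# Regression: a small made-up set of targets and predictions.
y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])

errors = y_pred - y_true
mse = np.mean(errors ** 2)           # average squared error
rmse = np.sqrt(mse)                  # back in the target's units
mae = np.mean(np.abs(errors))        # average absolute error
r2 = 1 - np.sum(errors ** 2) / np.sum((y_true - y_true.mean()) ** 2)

# The hand-written formulas agree with scikit-learn's implementations.
assert np.isclose(mse, mean_squared_error(y_true, y_pred))
assert np.isclose(mae, mean_absolute_error(y_true, y_pred))
assert np.isclose(r2, r2_score(y_true, y_pred))
print(f"MSE={mse:.3f} RMSE={rmse:.3f} MAE={mae:.3f} R2={r2:.3f}")

# Classification: AUC-ROC is computed from scores, not hard labels.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print("AUC-ROC:", roc_auc_score(labels, scores))
```

Here MSE is 0.375 and MAE is 0.5. The AUC-ROC is 0.75 because 3 of the 4 positive/negative pairs are ranked correctly (the positive scored 0.35 falls below the negative scored 0.4).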

12. Describe a time you had to debug a machine learning model. What was the issue, and how did you fix it?

During a project predicting customer churn, the model exhibited unexpectedly poor performance on a specific segment of customers. Initially, I suspected overfitting, but cross-validation scores were consistent. After digging deeper, I discovered a data preprocessing error: categorical features for this segment were being incorrectly encoded due to a faulty join operation during feature engineering. Specifically, a lookup table used for encoding was not properly filtered, leading to mismatched encodings for the affected customer segment.

To fix this, I rewrote the join operation with more robust filtering and validation steps to ensure accurate mapping of categorical features. I then retrained the model with the corrected data. This significantly improved the model's performance on the affected customer segment, and overall accuracy increased as well. I also added unit tests for the data preprocessing pipeline to prevent similar issues in the future.
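One way to harden a lookup join like this is to let pandas check the join's assumptions. In this sketch, the table and column names are hypothetical. `merge(validate="many_to_one")` raises if the lookup table has duplicate keys, and `indicator=True` exposes rows that found no match. The final `try` block exercises the failure case, in the spirit of the unit tests mentioned above.

```python
import pandas as pd
from pandas.errors import MergeError

def encode_plan(customers: pd.DataFrame, lookup: pd.DataFrame) -> pd.DataFrame:
    """Attach plan codes, failing loudly instead of silently mis-encoding."""
    merged = customers.merge(
        lookup, on="plan", how="left",
        validate="many_to_one",   # each plan must appear once in the lookup
        indicator=True,           # adds a _merge column: "both" or "left_only"
    )
    unmatched = merged.loc[merged["_merge"] == "left_only", "plan"].unique()
    if len(unmatched) > 0:
        raise ValueError(f"plans missing from lookup: {sorted(unmatched)}")
    return merged.drop(columns="_merge")

customers = pd.DataFrame({"customer_id": [1, 2, 3], "plan": ["basic", "pro", "basic"]})
lookup = pd.DataFrame({"plan": ["basic", "pro"], "plan_code": [0, 1]})
print(encode_plan(customers, lookup))

# A duplicated key in the lookup table is caught by validate="many_to_one".
bad_lookup = pd.concat([lookup, pd.DataFrame({"plan": ["pro"], "plan_code": [2]})])
try:
    encode_plan(customers, bad_lookup)
except MergeError as exc:
    print("caught:", exc)
```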

13. What are some challenges you might face when deploying a machine learning model to production?

Deploying machine learning models to production presents several challenges. One key challenge is data drift, where the statistical properties of the input data change over time, leading to model performance degradation. This necessitates continuous monitoring and retraining of the model with fresh data. Another challenge is infrastructure limitations, such as insufficient computational resources or incompatible software dependencies. Addressing this involves careful planning and optimization of the deployment environment. Other challenges include:

- Model Versioning and Management: Keeping track of different model versions and ensuring seamless transitions.

- Monitoring Model Performance: Implementing robust monitoring systems to detect and address performance degradation.

- Explainability and Interpretability: Understanding and explaining model predictions, especially in regulated industries.
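For the data drift challenge above, one common heuristic is to compare each feature's live distribution with its training-time distribution using a two-sample Kolmogorov-Smirnov test. This is a minimal sketch using SciPy's `ks_2samp` on simulated data; the 0.01 threshold is an assumption, and in practice you would run this per feature on a schedule.

```python
import numpy as np
from scipy.stats import ks_2samp

rng = np.random.default_rng(0)
# Reference: a feature's values at training time. Live: the same feature in production.
reference = rng.normal(loc=0.0, scale=1.0, size=1000)
live_same = rng.normal(loc=0.0, scale=1.0, size=1000)
live_shifted = rng.normal(loc=1.0, scale=1.0, size=1000)   # simulated drift

def drifted(reference, live, alpha=0.01):
    """Flag drift when a two-sample KS test rejects 'same distribution'."""
    result = ks_2samp(reference, live)
    return result.pvalue < alpha, result.statistic

print("same distribution:", drifted(reference, live_same))
print("shifted mean:     ", drifted(reference, live_shifted))
```

With large samples, even tiny and harmless shifts become statistically significant, so teams often pair a test like this with an effect-size threshold on the KS statistic itself.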

14. Can you explain what a loss function is?

A loss function, also known as a cost function, quantifies the difference between the predicted output of a machine learning model and the actual target value. It essentially measures how "wrong" the model's predictions are. The goal of training a machine learning model is to minimize this loss function, thereby improving the model's accuracy.

Different loss functions are suitable for different types of problems. For example, mean squared error (MSE) is commonly used for regression problems, while cross-entropy loss is often used for classification problems. The choice of the loss function significantly impacts the training process and the final performance of the model.
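The two losses named above can each be written in a line or two. This sketch implements MSE and binary cross-entropy in NumPy, with made-up labels and predictions. Clipping the probabilities is a standard guard against `log(0)`.

```python
import numpy as np

def mean_squared_error(y_true, y_pred):
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1 - eps)  # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

labels = [1, 0, 1, 1]
confident_right = [0.9, 0.1, 0.8, 0.95]
confident_wrong = [0.1, 0.9, 0.2, 0.05]
print(binary_cross_entropy(labels, confident_right))  # small loss
print(binary_cross_entropy(labels, confident_wrong))  # large loss
print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))
```

Cross-entropy punishes confident wrong answers much more heavily than hesitant ones, which is why it suits probabilistic classifiers better than MSE does.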

15. What's the purpose of a validation set?

The purpose of a validation set is to evaluate a model's performance during development, so that you can tune hyperparameters and detect overfitting. Unlike the training set, which the model learns from, the validation set contains data the model was not trained on, so it estimates how well the model generalizes. Because hyperparameters are chosen based on it, the validation score becomes slightly optimistic, which is why a separate test set is kept for the final, unbiased evaluation.

By monitoring the model's performance on the validation set, we can identify when the model starts to overfit (i.e., performs well on the training data but poorly on the validation data). This allows us to stop training early or adjust hyperparameters to improve generalization. In this way, the validation set provides the signal for tuning the model to balance overfitting against underfitting.
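A minimal sketch of the train/validation/test workflow, assuming a synthetic dataset and a decision tree's `max_depth` as the hyperparameter being tuned: the data is split 60/20/20, the depth is chosen on the validation set only, and the test set is used once at the end.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=10, random_state=0)

# 60/20/20 split: carve off the test set first, then split the rest.
X_rest, X_test, y_rest, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X_rest, y_rest, test_size=0.25, random_state=0)

# Tune one hyperparameter on the validation set only.
val_scores = {}
for depth in (1, 2, 4, 8, None):
    model = DecisionTreeClassifier(max_depth=depth, random_state=0).fit(X_train, y_train)
    val_scores[depth] = model.score(X_val, y_val)
best_depth = max(val_scores, key=val_scores.get)

# Touch the test set once, after all tuning decisions are made.
final_model = DecisionTreeClassifier(max_depth=best_depth, random_state=0).fit(X_train, y_train)
print("best max_depth:", best_depth, "test accuracy:", final_model.score(X_test, y_test))
```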

16. What is data normalization and why is it important?

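In machine learning, data normalization (often discussed under the broader term feature scaling) is the process of rescaling numeric features to a common range or distribution. Two common forms are min-max normalization, which maps each feature to a fixed range such as [0, 1] using (x - min) / (max - min), and standardization (z-score normalization), which gives each feature zero mean and unit variance using (x - mean) / std. The scaling parameters should be computed on the training data only and then applied to validation and test data.

Normalization is important because features measured on very different scales (for example, income in dollars versus age in years) can distort learning. Distance-based algorithms such as KNN, k-means, and SVMs would otherwise be dominated by the features with the largest ranges, gradient descent tends to converge faster when features are on similar scales, and regularization penalties treat coefficients more evenly when features are comparable. Tree-based models such as decision trees and random forests are largely insensitive to feature scaling.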
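For numeric model inputs, the two most common forms of normalization are min-max scaling and z-score standardization. This minimal sketch uses scikit-learn's `MinMaxScaler` and `StandardScaler` on made-up income and age values. Both scalers are fitted on training data only and then reused on new data, so no statistics leak from the test set.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Two features on very different scales: income (dollars) and age (years).
X_train = np.array([[30_000, 25], [60_000, 40], [90_000, 55]], dtype=float)
X_new = np.array([[45_000, 30]], dtype=float)

minmax = MinMaxScaler().fit(X_train)       # maps each training column to [0, 1]
standard = StandardScaler().fit(X_train)   # zero mean, unit variance per column

print(minmax.transform(X_train))
print(minmax.transform(X_new))      # uses the training min and max
print(standard.transform(X_train))
```

After scaling, the new customer's income becomes 0.25 and age becomes about 0.167, so neither feature dominates a distance computation simply because of its units.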

17. What is the bias-variance tradeoff?

The bias-variance tradeoff is a fundamental concept in machine learning that deals with balancing two sources of error that prevent supervised learning models from generalizing well to unseen data. Bias refers to the error introduced by approximating a real-world problem, which is often complex, by a simplified model. High bias can cause a model to underfit the data, missing relevant relationships between features and the target output. Variance, on the other hand, is the sensitivity of the model to variations in the training data. High variance means that the model learns the noise in the training data, leading to overfitting.

The goal is to find the sweet spot that minimizes the total error rather than either term alone, because reducing bias often increases variance and vice versa. Complex models tend to have low bias but high variance, while simple models tend to have high bias but low variance. Techniques like cross-validation and regularization are used to manage this tradeoff and build models that generalize well.
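For squared-error loss, the tradeoff can be written down exactly. Assume the data is generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$ that is independent of the learned model $\hat{f}$. Taking expectations over training sets and noise, the expected prediction error at a point $x$ then decomposes as:

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \underbrace{\sigma^2}_{\text{irreducible noise}}
```

Only the first two terms depend on the model. That is why accepting a little more bias can still lower the total error when it buys a larger drop in variance.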

18. Explain what regularization is and its benefits.

Regularization is a technique used in machine learning to prevent overfitting, which occurs when a model learns the training data too well and performs poorly on unseen data. It works by adding a penalty term to the loss function that grows with the size of the model's coefficients, which discourages overly complex models and effectively simplifies them.

The benefits of regularization include improved generalization performance (better performance on unseen data) and reduced variance (less sensitivity to changes in the training data). Common regularization techniques include L1 regularization (Lasso), which adds the sum of the absolute values of the coefficients to the loss function, and L2 regularization (Ridge), which adds the sum of the squared coefficients. L1 can drive some coefficients exactly to zero, which performs feature selection and can make the model easier to interpret, while L2 shrinks all coefficients toward zero without eliminating them. Elastic Net combines both L1 and L2 penalties.
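The sketch below contrasts the two penalties on a synthetic regression problem in which only 3 of 10 features matter. The `alpha` values are illustrative assumptions, not tuned settings. Ridge shrinks the coefficient vector, while Lasso sets some coefficients exactly to zero.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, LinearRegression, Ridge

# 10 features, only 3 of which actually influence the target.
X, y = make_regression(n_samples=200, n_features=10, n_informative=3,
                       noise=10.0, random_state=0)

models = {
    "no penalty": LinearRegression(),
    "L2 / Ridge": Ridge(alpha=10.0),
    "L1 / Lasso": Lasso(alpha=5.0),
}
for name, model in models.items():
    coef = model.fit(X, y).coef_
    print(f"{name:>10}: ||coef||2={np.linalg.norm(coef):8.2f}, "
          f"zeros={int(np.sum(coef == 0))}")
```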

19. What is the purpose of cross-validation?

The purpose of cross-validation is to assess how well a predictive model will generalize to an independent data set. It estimates the model's performance on unseen data more reliably than a single train/test split and helps detect overfitting, which occurs when a model learns the training data too well and performs poorly on new data.

Cross-validation involves partitioning the available data into multiple subsets. One subset is used as the validation set (or test set), and the others are used to train the model. This process is repeated multiple times, with different subsets used as the validation set each time. The results from each iteration are then averaged to provide an estimate of the model's overall performance. Common techniques include k-fold cross-validation and stratified k-fold cross-validation.
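The mechanics of stratified k-fold fit in a short loop. This sketch uses a synthetic dataset and logistic regression as the model (both assumptions). Each fold serves once as the validation set, and the fold scores are averaged. In practice, `cross_val_score` wraps the same loop.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold

X, y = make_classification(n_samples=500, n_features=8, random_state=0)

# Stratified k-fold keeps the class balance similar in every fold.
kfold = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
fold_scores = []
for train_idx, val_idx in kfold.split(X, y):
    model = LogisticRegression(max_iter=1000)
    model.fit(X[train_idx], y[train_idx])                    # train on k-1 folds
    fold_scores.append(model.score(X[val_idx], y[val_idx]))  # score the held-out fold

print("per-fold accuracy:", np.round(fold_scores, 3))
print(f"mean={np.mean(fold_scores):.3f}  std={np.std(fold_scores):.3f}")
```

The spread across folds is as informative as the mean, because a large standard deviation suggests the estimate depends heavily on which samples land in which fold.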

20. Describe the difference between precision and recall.

Precision and recall are two important metrics used to evaluate the performance of classification models.

Precision measures the accuracy of the positive predictions. It answers the question: "Of all the items that the model predicted as positive, how many were actually positive?" It is calculated as True Positives / (True Positives + False Positives).

Recall, also known as sensitivity, measures the completeness of the positive predictions. It answers the question: "Of all the actual positive items, how many did the model correctly predict as positive?" It is calculated as True Positives / (True Positives + False Negatives).
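A worked example with eight made-up predictions makes the two formulas concrete. The confusion-matrix counts come from scikit-learn, and the hand-computed values are checked against `precision_score` and `recall_score`.

```python
import math

from sklearn.metrics import confusion_matrix, f1_score, precision_score, recall_score

y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]

# For binary labels, ravel() yields the counts in the order TN, FP, FN, TP.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()
precision = tp / (tp + fp)   # 2 / (2 + 1)
recall = tp / (tp + fn)      # 2 / (2 + 2)
print(f"TP={tp} FP={fp} FN={fn} precision={precision:.3f} recall={recall:.3f}")

assert math.isclose(precision, precision_score(y_true, y_pred))
assert math.isclose(recall, recall_score(y_true, y_pred))
print("F1:", f1_score(y_true, y_pred))  # harmonic mean of precision and recall
```

The model is fairly precise (2 of its 3 positive calls are right) but misses half of the actual positives, so its recall is 0.5 and its F1 is 4/7.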

21. What are some popular deep learning frameworks you've used?

I have experience with several popular deep learning frameworks. TensorFlow and PyTorch are the ones I've used most extensively. TensorFlow's ecosystem, including Keras, provides a robust platform for building and deploying large-scale models. PyTorch, on the other hand, I find particularly useful for research and rapid prototyping due to its dynamic computation graph and Pythonic interface.

I've also worked with scikit-learn for simpler machine learning tasks and model evaluation. While not strictly a deep learning framework, it often complements deep learning workflows. Additionally, I've explored ONNX for model interoperability.

22. What is backpropagation and why is it important for training neural networks?

Backpropagation is the algorithm used to compute the gradients needed to train neural networks. It applies the chain rule layer by layer, from the output back to the input, to calculate the gradient of the loss function with respect to every weight. An optimizer such as gradient descent then uses these gradients to update the weights in the direction that reduces the loss.

It's crucial because it allows neural networks to learn from their errors. Without backpropagation, training complex, multi-layered neural networks would be computationally infeasible. Essentially, it efficiently distributes the error signal back through the network's layers, allowing each layer to adjust its parameters and improve the overall performance.
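The sketch below runs backpropagation by hand for a tiny two-layer network (tanh hidden layer, linear output, MSE loss) on random data; the sizes and learning rate are illustrative assumptions. It verifies one analytic gradient against a finite-difference estimate, then uses the gradients for plain gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))         # 8 samples, 3 input features
y = rng.normal(size=(8, 1))         # regression targets
W1 = rng.normal(size=(3, 4)) * 0.5  # input -> hidden weights
W2 = rng.normal(size=(4, 1)) * 0.5  # hidden -> output weights

def forward(W1, W2):
    h = np.tanh(X @ W1)             # hidden layer: weighted sum + activation
    y_hat = h @ W2                  # linear output layer
    return np.mean((y_hat - y) ** 2), h, y_hat

def backward(W1, W2):
    loss, h, y_hat = forward(W1, W2)
    d_yhat = 2 * (y_hat - y) / len(X)      # dL/dy_hat
    dW2 = h.T @ d_yhat                     # dL/dW2
    d_h = d_yhat @ W2.T                    # error passed back to the hidden layer
    dW1 = X.T @ (d_h * (1 - h ** 2))       # chain rule through tanh' = 1 - tanh^2
    return loss, dW1, dW2

# Gradient check: compare one analytic entry with a finite-difference estimate.
analytic = backward(W1, W2)[1][0, 0]
eps = 1e-6
W1_plus, W1_minus = W1.copy(), W1.copy()
W1_plus[0, 0] += eps
W1_minus[0, 0] -= eps
numeric = (forward(W1_plus, W2)[0] - forward(W1_minus, W2)[0]) / (2 * eps)
print("analytic:", analytic, "numeric:", numeric)

# Plain gradient descent using the backpropagated gradients.
initial_loss = forward(W1, W2)[0]
for _ in range(300):
    _, dW1, dW2 = backward(W1, W2)
    W1 -= 0.05 * dW1
    W2 -= 0.05 * dW2
final_loss = forward(W1, W2)[0]
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

The finite-difference check needs two forward passes per weight, while backpropagation gets every gradient from one backward pass. That gap is what makes training large networks feasible.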

23. How do you choose the right machine learning algorithm for a specific problem?

Choosing the right machine learning algorithm depends on several factors. First, understand the type of problem: is it a classification, regression, clustering, or dimensionality reduction task? Next, consider the nature of your data: is it labeled or unlabeled? How much data do you have? What are the data types (numerical, categorical)? Also weigh each algorithm's requirements: some demand extensive computational resources, while others need careful data preprocessing.

Experimentation is key. Start with simpler models like logistic regression or decision trees, and if performance is insufficient, explore more complex models like support vector machines or neural networks. Compare candidates with cross-validation and metrics relevant to the problem (e.g., accuracy, precision, recall, F1-score, RMSE), and choose the algorithm with the best balance of performance, complexity, and cost. Remember, no single algorithm is universally superior.
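A minimal sketch of that comparison loop follows, assuming a synthetic binary classification dataset and F1 as the metric. Each candidate is scored with 5-fold cross-validation. Models that are sensitive to feature scale are wrapped in a pipeline with `StandardScaler`, so scaling is fitted inside each fold.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=600, n_features=12, n_informative=5, random_state=0)

# Simple baselines first, then more complex models; scale-sensitive ones get a scaler.
candidates = {
    "logistic regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "decision tree": DecisionTreeClassifier(max_depth=5, random_state=0),
    "random forest": RandomForestClassifier(n_estimators=200, random_state=0),
    "SVM (RBF)": make_pipeline(StandardScaler(), SVC()),
}
results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=5, scoring="f1")
    results[name] = (scores.mean(), scores.std())
    print(f"{name:>20}: F1 {scores.mean():.3f} ± {scores.std():.3f}")
```

If a simple model lands within the noise (the fold-to-fold standard deviation) of a complex one, the simpler model is usually the better choice.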

24. What are some ethical considerations when developing AI systems?

Ethical considerations in AI development are paramount. Key concerns revolve around bias, fairness, accountability, and transparency. AI systems trained on biased data can perpetuate and amplify existing societal inequalities. Ensuring fairness requires careful attention to data collection, model design, and evaluation metrics. Accountability addresses who is responsible when AI systems make errors or cause harm. Transparency is crucial for understanding how AI systems make decisions, allowing for scrutiny and improvement.

Data privacy is also critical. AI systems often require large amounts of personal data, raising concerns about data security and potential misuse. Developers must adhere to privacy regulations and implement robust data protection measures. The potential for job displacement due to AI-driven automation is another ethical consideration. Mitigation strategies include retraining programs and exploring new economic models.

25. What are you most passionate about in the field of AI research?

I'm most passionate about the potential of AI to solve complex, real-world problems, particularly in areas like healthcare and climate change. The ability to leverage machine learning to analyze vast datasets, identify patterns, and develop innovative solutions excites me. For instance, using AI to predict disease outbreaks or optimize energy consumption could have a significant positive impact on society.

Specifically, I'm fascinated by reinforcement learning and its application to decision-making. The ability of agents to learn through trial and error and to adapt to changing environments offers a powerful framework for tackling complex challenges. I am also interested in improving the robustness and explainability of AI models, ensuring that they are reliable and transparent in their predictions.

26. How do you stay up-to-date with the latest advancements in AI?

I stay up-to-date with AI advancements through a variety of methods. I regularly read research papers on arXiv and other academic platforms. I also follow prominent AI researchers and organizations on social media (Twitter, LinkedIn) and subscribe to AI-related newsletters like The Batch from Andrew Ng.

Furthermore, I participate in online courses on platforms like Coursera and edX to learn about new techniques and tools. I also engage in personal projects, experimenting with new libraries (like TensorFlow or PyTorch) and datasets to gain practical experience. This hands-on experience complements the theoretical knowledge I acquire from other sources.

27. Explain what a convolutional neural network (CNN) is and when you might use it.

A Convolutional Neural Network (CNN) is a type of deep neural network primarily used for processing and analyzing visual data. It excels at tasks like image recognition and classification, and object detection. CNNs use convolutional layers to automatically learn spatial hierarchies of features from images. Because the same filters are applied at every position, a pattern can be detected wherever it appears in the image, and pooling adds some tolerance to small shifts. Robustness to larger changes in scale and orientation is not built in; it usually comes from data augmentation or varied training data.

CNNs are a good choice when you're working with image data or other data that has a grid-like structure (e.g., audio spectrograms, time series data). Specific use cases include medical image analysis (detecting tumors), self-driving cars (identifying traffic signs and pedestrians), facial recognition, and natural language processing tasks that treat text as a 1D sequence. The key advantage is their ability to learn relevant features automatically, which greatly reduces the need for manual feature engineering. Common libraries used for CNNs are TensorFlow and PyTorch.
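The core operation can be written by hand to show the weight-sharing idea. This is a NumPy sketch of a single 2-D convolution (strictly, cross-correlation, as most deep learning libraries implement it) with an assumed vertical-edge kernel. Shifting the input image shifts the feature map by the same amount.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out = np.zeros((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Vertical-edge detector: responds to left/right intensity changes.
kernel = np.array([[1.0, 0.0, -1.0]] * 3)

image = np.zeros((8, 8))
image[1:5, 2] = 1.0                   # a short vertical bar
shifted = np.roll(image, 2, axis=1)   # the same bar, two pixels to the right

fmap = conv2d_valid(image, kernel)
fmap_shifted = conv2d_valid(shifted, kernel)

# Translation equivariance: the feature map moves with the input.
print(np.array_equal(fmap_shifted[:, 2:], fmap[:, :-2]))
```

A real CNN learns many such kernels per layer and stacks layers so that later kernels respond to combinations of earlier features.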

28. What is a recurrent neural network (RNN) and what types of problems are they suited for?

A Recurrent Neural Network (RNN) is a type of neural network designed to handle sequential data. Unlike traditional feedforward networks, RNNs have feedback connections, allowing them to maintain a 'memory' of past inputs. This makes them well-suited for tasks where the order of information is important. They process input sequences element by element, and the hidden state at each step is influenced by both the current input and the previous hidden state.

RNNs excel in tasks like natural language processing (NLP), time series analysis, and speech recognition. Specific examples include:

- Language Modeling: Predicting the next word in a sequence.

- Machine Translation: Converting a sentence from one language to another.

- Sentiment Analysis: Determining the emotional tone of a text.

- Speech Recognition: Converting audio signals into text.

- Video Analysis: Understanding the temporal relationships in video frames.
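The recurrence described above fits in a few lines. This NumPy sketch runs a vanilla RNN cell with random, untrained weights (an assumption for illustration) over a short sequence, computing h_t = tanh(W_x x_t + W_h h_{t-1} + b). It shows that feeding the same elements in reverse order yields a different final state, because the hidden state carries memory of what came before.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 5
W_x = rng.normal(scale=0.5, size=(hidden_size, input_size))   # input -> hidden
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))  # hidden -> hidden (the recurrence)
b = np.zeros(hidden_size)

def rnn_forward(sequence):
    """Run a vanilla RNN cell over a sequence and return every hidden state."""
    h = np.zeros(hidden_size)          # initial "memory"
    states = []
    for x_t in sequence:               # one element at a time, in order
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

sequence = rng.normal(size=(4, input_size))   # 4 time steps
states = rnn_forward(sequence)
reversed_states = rnn_forward(sequence[::-1])

print(states.shape)                                   # one hidden state per step
print(np.allclose(states[-1], reversed_states[-1]))   # order matters
```

In practice, gated variants such as LSTMs and GRUs replace this plain tanh cell because they preserve information over longer sequences.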

Intermediate AI Research Engineer interview questions

1. Describe a time when you had to explain a complex AI concept to someone without a technical background. How did you approach it?

I once had to explain how a recommendation engine worked to a marketing manager. I avoided technical jargon like 'algorithms' or 'neural networks' and used an analogy instead: the engine is like a very experienced librarian who remembers which books people have enjoyed. When someone checks out a book, the librarian thinks of similar readers and suggests other books they liked. In the same way, if a customer buys product A, the engine looks at other customers who bought product A and checks what else they bought; if products B, C, and D come up often, it suggests those. I focused on the outcome (relevant product suggestions) rather than the underlying mechanics, and I used concrete examples, such as how Amazon suggests products based on browsing history. This helped her understand the practical benefits without getting bogged down in technical details.
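The "customers who bought A also bought..." idea in that explanation is essentially co-occurrence counting. Below is a minimal sketch over a few hypothetical shopping baskets. Production recommendation engines add normalization, filtering, and far more data on top of this.

```python
from collections import Counter

def recommend(orders, product, k=3):
    """Suggest the items most often bought together with `product`."""
    counts = Counter()
    for basket in orders:
        if product in basket:
            counts.update(item for item in basket if item != product)
    return [item for item, _ in counts.most_common(k)]

orders = [
    {"A", "B", "C"},
    {"A", "B"},
    {"A", "D"},
    {"B", "C"},
    {"A", "B", "D"},
]
print(recommend(orders, "A"))   # items co-bought with A, most frequent first
```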

2. How do you stay up-to-date with the latest advancements in AI research, and how do you decide which ones are worth exploring further?

I stay up-to-date with AI advancements through a combination of resources. I regularly read research papers on arXiv and follow key researchers and organizations (e.g., Google AI, OpenAI, DeepMind) on platforms like Twitter and LinkedIn. I also subscribe to AI newsletters such as Import AI and The Batch and participate in online communities such as Reddit's r/MachineLearning.

To decide which advancements to explore further, I prioritize based on relevance to my current projects and interests, the potential impact of the research, and its reproducibility. I look for code releases or detailed implementation explanations. A strong theoretical foundation coupled with practical applications signals higher value. I also consider the credibility of the source and cross-validate findings from multiple sources before diving deep.

3. Walk me through a project where you had to deal with a significant amount of noisy or incomplete data. What techniques did you use to clean and preprocess the data?

In a recent project involving customer reviews scraped from various online platforms, I encountered a significant amount of noisy and incomplete data. The noise stemmed from inconsistent formatting, misspellings, abbreviations, and irrelevant information. To address this, I implemented several cleaning and preprocessing techniques. Firstly, I performed data cleaning using regular expressions and string manipulation techniques to standardize the text format, correct misspellings, and remove irrelevant characters or HTML tags.

Secondly, I handled incomplete data (missing fields in some reviews) using imputation methods. For numerical fields, I used mean or median imputation based on the distribution of the data. For categorical fields, I employed mode imputation or introduced a new category 'Missing'. Furthermore, I used techniques like TF-IDF (Term Frequency-Inverse Document Frequency) for feature extraction to convert textual data into numerical representations suitable for machine learning models. These techniques helped me create a cleaner, more consistent, and usable dataset for analysis and modeling.
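A minimal sketch of the text-cleaning and TF-IDF steps follows, using Python's `re` module and scikit-learn's `TfidfVectorizer`. The reviews are made up, and the abbreviation map is a hypothetical stand-in for spelling correction: regular expressions alone cannot fix arbitrary misspellings, so the lookup only handles known variants.

```python
import re

from sklearn.feature_extraction.text import TfidfVectorizer

ABBREVIATIONS = {"gr8": "great", "pls": "please"}  # hypothetical lookup table

def clean_review(text):
    text = re.sub(r"<[^>]+>", " ", text)        # strip HTML tags
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)    # drop punctuation and symbols
    tokens = [ABBREVIATIONS.get(tok, tok) for tok in text.split()]
    return " ".join(tokens)                     # also collapses extra whitespace

reviews = [
    "<p>Gr8 product!!!</p>",
    "Terrible <b>support</b>, pls fix",
    "Great value & fast shipping",
]
cleaned = [clean_review(r) for r in reviews]
print(cleaned)

vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(cleaned)   # sparse matrix: one row per review
print(X.shape, "vocabulary size:", len(vectorizer.vocabulary_))
```

Normalizing "gr8" to "great" matters for TF-IDF: without it, the two spellings would become separate features and dilute each other's weight.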

4. Imagine your model is performing well on your training data but poorly on the test data. What steps would you take to diagnose and address this issue?

This scenario usually indicates overfitting. First, I'd simplify the model by reducing the number of features, layers, or nodes. Regularization techniques like L1 or L2 regularization can penalize large weights, preventing the model from memorizing the training data. Increasing the training data size can also help the model generalize better, and feature selection and engineering can reduce noise. Cross-validation provides a more robust estimate of the model's performance on unseen data than a single split.

I would also examine the training and test data distributions for discrepancies. If the test distribution differs from the training distribution, I'd apply techniques like re-weighting the training data or domain adaptation methods. I'd also verify that the test set is truly representative of the data the model will encounter in production, and check for and fix any data leakage issues.
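Two of these diagnostics take only a few lines. This sketch assumes a synthetic dataset with 10% label noise (`flip_y=0.1`), so a fully grown decision tree will memorize it. It measures the train/test gap for an unconstrained and a depth-limited tree, then runs a cheap leakage check for rows that appear verbatim in both splits.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=800, n_features=20, n_informative=4,
                           flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# 1) Quantify the train/test gap for an unconstrained vs. a constrained model.
gaps = {}
for depth in (None, 3):
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0).fit(X_train, y_train)
    train_acc, test_acc = tree.score(X_train, y_train), tree.score(X_test, y_test)
    gaps[depth] = (train_acc, test_acc)
    print(f"max_depth={depth}: train={train_acc:.3f} test={test_acc:.3f} "
          f"gap={train_acc - test_acc:.3f}")

# 2) A cheap leakage check: rows that appear verbatim in both splits.
train_rows = {row.tobytes() for row in X_train}
overlap = sum(row.tobytes() in train_rows for row in X_test)
print("test rows duplicated in train:", overlap)
```

A perfect training score paired with a much lower test score is the classic overfitting signature. Duplicated rows across splits would instead inflate the test score and hide the problem.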

5. Explain the concept of transfer learning. Have you used it in any of your projects? If so, describe the problem, your approach, and the results.

Transfer learning is a machine learning technique where a model developed for one task is reused as the starting point for a model on a second task. It leverages the knowledge gained while solving the first problem and applies it to a different but related problem. This is particularly useful when you have limited data for the second task, as the pre-trained model already possesses learned features.

Yes, I've used transfer learning in a project involving image classification. The problem was to classify different types of skin diseases from medical images, but the available dataset was relatively small. My approach was to use a pre-trained ResNet50 model (trained on ImageNet, a large dataset of general images) and fine-tune it for the skin disease classification task. The results were significantly better than training a model from scratch, achieving a higher accuracy and faster convergence due to the pre-trained model's ability to extract relevant features from images.

6. How do you evaluate the performance of a generative model, and what metrics do you find most useful?

Evaluating generative models depends on the task. For images, common metrics include: Inception Score (IS), which measures the quality and diversity of generated images (higher is better), and Fréchet Inception Distance (FID), which compares the statistics of generated images to real images (lower is better). For text generation, Perplexity (lower is better) assesses how well a language model predicts a sample, while BLEU and ROUGE scores (higher is better) measure the similarity between generated text and reference text. Human evaluation remains crucial, especially for subjective qualities like coherence and relevance.

Beyond these, more task-specific metrics exist. For example, evaluating VAEs might also involve reconstruction error. For GANs, monitoring the discriminator loss helps detect training instability. It's often best to combine several metrics with qualitative analysis for a thorough evaluation.
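Perplexity is the exponential of the average negative log-likelihood the model assigns to the tokens that actually occurred. The minimal sketch below assumes you already have those per-token probabilities; the two probability lists are made-up inputs, not outputs of a real model.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp(-(1/N) * sum(log p_i)), where p_i is the probability
    the model assigned to the i-th token that actually occurred."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_likelihood = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_likelihood)


# Hypothetical per-token probabilities from two models on the same sentence
confident = [0.9, 0.8, 0.95, 0.7]
uncertain = [0.3, 0.2, 0.25, 0.1]
print(perplexity(confident), perplexity(uncertain))
```

A model that spreads probability uniformly over k choices at every step has perplexity k, which is why perplexity is often read as an effective branching factor: lower means the model is less "surprised" by the text.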

7. Describe a situation where you had to choose between multiple machine learning algorithms for a specific problem. What factors influenced your decision?

In a project aimed at predicting customer churn for a subscription service, I had to choose between Logistic Regression, Support Vector Machines (SVM), and Random Forests. Several factors influenced my decision.

Firstly, interpretability was crucial, since the business stakeholders needed to understand why customers were churning. Logistic Regression was initially favored for its easily interpretable coefficients, especially when combined with L1 (Lasso) regularization, which drives the coefficients of uninformative features to zero. Secondly, I assessed performance on a held-out validation set. While SVMs sometimes provided slightly better accuracy, their training and prediction costs were higher and their explainability lower. Random Forests offered a strong balance: good performance with moderate interpretability through feature importances. Finally, given the potential for overfitting with Random Forests and the need for careful hyperparameter tuning, I combined Logistic Regression (for interpretability, with moderate performance) and Random Forests (for improved predictive accuracy) in a simple ensemble that averaged their predicted probabilities.

8. How would you design an AI system to detect fraudulent transactions in a financial institution?

To design an AI system for detecting fraudulent transactions, I would focus on a multi-layered approach combining machine learning and rule-based systems. First, a rule-based system would catch simple, known fraud patterns (e.g., transactions exceeding a certain amount from a suspicious location). Then, a machine learning model, likely an anomaly detection algorithm (Isolation Forest, One-Class SVM, or an ensemble), would analyze transaction data (amount, time, location, merchant, user behavior) to identify unusual patterns deviating from established norms. Feature engineering would be crucial, creating features representing user behavior, transaction frequency, and geographical patterns.

Finally, I would add a supervised learning model (like a Random Forest or Gradient Boosting Machine) trained on labeled data (historical fraudulent and legitimate transactions) to classify transactions in real time. This model would use the features computed for the anomaly detection algorithm as well as other data such as user demographics. Model performance would be monitored continuously, and the models would be retrained regularly on new data to adapt to evolving fraud tactics. Alerts generated by all of these layers would then be investigated by fraud analysts.
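The layering described above can be sketched as follows. This is a minimal illustration under stated assumptions: the rule threshold and the field names (`amount`, `country`, `home_country`) are hypothetical, a per-user z-score on the transaction amount stands in for the Isolation Forest or One-Class SVM layer, and the supervised classifier is omitted.

```python
from statistics import mean, stdev

# Hypothetical rule threshold; a real system would tune this per product/region
LARGE_AMOUNT = 10_000.0


def rule_flags(txn):
    """Layer 1: hand-written rules for known fraud patterns."""
    flags = []
    if txn["amount"] > LARGE_AMOUNT and txn["country"] != txn["home_country"]:
        flags.append("large_foreign_amount")
    return flags


def amount_zscore(amount, history):
    """Layer 2 (simplified stand-in for a real anomaly detector): how unusual
    is this amount relative to the user's own past transactions?"""
    if len(history) < 2:
        return 0.0  # not enough history to judge
    sd = stdev(history)
    return 0.0 if sd == 0 else (amount - mean(history)) / sd


def score_transaction(txn, history, z_threshold=3.0):
    """Combine both layers; a non-empty result would go to a fraud analyst."""
    flags = rule_flags(txn)
    z = amount_zscore(txn["amount"], history)
    if z > z_threshold:
        flags.append(f"amount_zscore={z:.1f}")
    return flags


history = [42.0, 38.5, 51.0, 45.0, 40.0]  # hypothetical past amounts for one user
txn = {"amount": 900.0, "country": "US", "home_country": "US"}
print(score_transaction(txn, history))
```

The rule layer catches a known pattern regardless of the user's history, while the statistical layer catches amounts that are unusual for this particular user even if no rule covers them.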

9. Let's say you're working on a project to build a recommendation system. How would you handle the cold start problem?

The cold start problem in recommendation systems arises when there's insufficient information about new users or new items to make accurate recommendations. To handle it, I'd employ a hybrid approach. For new users, I'd use non-personalized recommendations based on popular items, trending items, or items with high average ratings. We could also gather explicit feedback through a signup questionnaire asking about preferences or interests. For new items, I'd leverage content-based filtering using item metadata (e.g., descriptions, categories) to find similar items with existing ratings. Exploration strategies like A/B testing different recommendation approaches or using contextual bandits can also help gather data and refine recommendations over time. This ensures a reasonable starting point for recommendations even with limited data.
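The two fallbacks above can be sketched in a few lines: a popularity ranking for new users and tag-based similarity for new items. The interaction log, catalog, and tag sets are hypothetical, and Jaccard overlap of tags is one simple choice of content similarity among many.

```python
from collections import Counter


def popular_items(interactions, k=3):
    """Non-personalized fallback for new users: the most-interacted items."""
    counts = Counter(item for _, item in interactions)
    return [item for item, _ in counts.most_common(k)]


def jaccard(a, b):
    """Tag overlap between two items: |A & B| / |A | B|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_items(new_item_tags, catalog, k=2):
    """Content-based neighbors for a new item with no ratings yet."""
    ranked = sorted(catalog.items(),
                    key=lambda kv: jaccard(new_item_tags, kv[1]),
                    reverse=True)
    return [item for item, _ in ranked[:k]]


# Hypothetical (user, item) interaction log and item metadata
interactions = [("u1", "a"), ("u2", "a"), ("u3", "b"),
                ("u1", "c"), ("u2", "b"), ("u4", "a")]
catalog = {"a": {"sci-fi", "space"}, "b": {"romance"}, "c": {"sci-fi", "robots"}}

print(popular_items(interactions))
print(similar_items({"sci-fi", "robots", "ai"}, catalog))
```

Users who engaged with the neighbors of a new item are natural first candidates to see it, which starts collecting the interaction data that collaborative filtering needs.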

10. Explain the concept of attention mechanisms in deep learning and their benefits.

Attention mechanisms in deep learning allow the model to focus on the most relevant parts of the input when making predictions. Unlike traditional models that process the entire input equally, attention mechanisms assign weights to different parts of the input, indicating their importance. These weights are then used to create a weighted sum of the input, which is used for the prediction.

The benefits of using attention mechanisms are improved performance, especially with long sequences, better interpretability, as you can see which parts of the input the model is focusing on, and the ability to handle variable-length inputs more effectively. For example, in machine translation, attention helps the model focus on the words in the source sentence that are most relevant to generating the next word in the target sentence.
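One common form of attention, the scaled dot-product attention used in Transformers, makes the "weighted sum" concrete. In this minimal pure-Python sketch for a single query, the query, keys, and values are toy vectors chosen for illustration.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    weights_i = softmax_i(q . k_i / sqrt(d));  output = sum_i weights_i * v_i
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[j] for w, v in zip(weights, values))
              for j in range(len(values[0]))]
    return weights, output


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, out = attention([1.0, 0.0], keys, values)
print(weights, out)
```

The query aligns with the first and third keys, so those positions receive equal, larger weights and dominate the output, while the orthogonal second key contributes less. Inspecting `weights` is what gives attention its interpretability.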

11. Describe your experience with different deep learning frameworks (e.g., TensorFlow, PyTorch). What are the strengths and weaknesses of each?

I have experience with both TensorFlow and PyTorch. TensorFlow, backed by Google, boasts a mature ecosystem, strong production deployment capabilities (especially with TensorFlow Serving and TensorFlow Lite), and tools like TensorBoard for visualization. Its graph-based execution model (the default in TensorFlow 1.x; TensorFlow 2 runs eagerly by default but still compiles graphs through tf.function) can make debugging more challenging and the learning curve steeper. PyTorch, supported by Meta, is known for its dynamic computational graph, which offers greater flexibility and ease of debugging. This makes it popular for research and rapid prototyping. PyTorch also has a more Pythonic feel, making it intuitive for many users. However, TensorFlow's production deployment tools are generally considered more robust, though PyTorch is closing the gap with tools like TorchServe.

Specifically, I've used TensorFlow with Keras API for building image classification models and deployed them using TensorFlow Serving. I've also used PyTorch for implementing custom recurrent neural networks for time series analysis and experimented with generative adversarial networks (GANs). I find PyTorch's debugging capabilities and dynamic graph execution very helpful during the experimentation phase, while TensorFlow's production readiness is beneficial for deployment.

12. Have you ever worked with reinforcement learning? If so, describe a project where you used it and the challenges you faced.

Yes, I have worked with reinforcement learning. In one project, I used it to train an AI agent to play a simplified version of the game "Connect Four". The goal was to create an agent that could learn optimal strategies through self-play, without any prior knowledge of game strategy. I implemented a Q-learning algorithm with an epsilon-greedy exploration strategy.

The main challenge was dealing with the large state space, even in the simplified version of Connect Four. This led to slow convergence and required careful tuning of the learning rate and exploration parameters. I also experimented with different reward functions to encourage specific behaviors, such as blocking the opponent's winning moves. Another challenge was optimizing the Q-table updates for efficient memory usage and faster learning. Finally, I encountered the issue of overfitting, where the agent became too specialized in playing against itself and struggled against new opponents. To overcome this, I introduced techniques such as experience replay and implemented a more robust exploration schedule.
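The Q-learning update with epsilon-greedy exploration is easier to see on a far smaller problem than Connect Four. The sketch below assumes a hypothetical five-cell corridor environment, and the hyperparameter values are illustrative rather than tuned. The Q-table holds one value per (state, action) pair, which is exactly what becomes unmanageable as the state space grows.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor of n_states cells.

    The agent starts in cell 0 and gets reward 1 on reaching the last cell.
    Actions: 0 = move left, 1 = move right.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # the Q-table
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy: explore with probability epsilon (or on ties),
            # otherwise take the action with the higher Q-value
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            reward = 1.0 if s_next == goal else 0.0
            # Bootstrapped target: reward plus discounted best next value
            # (no future value once the goal, a terminal state, is reached)
            target = reward if s_next == goal else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(len(q) - 1)]
print(policy)
```

The learning rate `alpha` and exploration rate `epsilon` are the parameters that the answer above describes tuning; too little exploration and the agent can lock into a poor policy early.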

13. How do you approach the problem of hyperparameter tuning in machine learning models?

Hyperparameter tuning involves finding the optimal set of hyperparameters for a machine learning model to maximize its performance on a given task. My approach typically involves these steps:

- Define the search space: Identify the hyperparameters to tune and their possible ranges (e.g., learning rate, number of layers). Consider using techniques like grid search (evaluating all combinations within the search space), random search (randomly sampling combinations), or more advanced methods like Bayesian optimization (using a probabilistic model to guide the search) to explore this space.

- Choose a validation strategy: Split the data into training, validation, and test sets. Use the validation set to evaluate different hyperparameter combinations and select the best one. Cross-validation can provide a more robust estimate of performance, especially with limited data.

- Implement the search: Use libraries like scikit-learn, hyperopt, or optuna to automate the hyperparameter search process. These tools allow you to define the search space, validation strategy, and evaluation metric.

- Evaluate and iterate: After the search, evaluate the best-performing model on the test set to estimate its generalization performance. Depending on the results, further refine the search space or try different algorithms if necessary.
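The random-search step can be sketched without any library. This is a minimal illustration: `fake_validation_loss` is a hypothetical stand-in for "train with these hyperparameters, then score on the validation set", and sampling the learning rate log-uniformly is a common convention for scale-type parameters, not a requirement.

```python
import math
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample hyperparameters (log-uniform for 'log' ranges, uniform otherwise)
    and keep the configuration with the lowest validation loss."""
    rng = random.Random(seed)
    best_params, best_loss = None, math.inf
    for _ in range(n_trials):
        params = {}
        for name, (low, high, scale) in space.items():
            if scale == "log":
                params[name] = 10 ** rng.uniform(math.log10(low), math.log10(high))
            else:
                params[name] = rng.uniform(low, high)
        loss = objective(params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


# Hypothetical validation loss, minimized at learning_rate=1e-3 and dropout=0.2
def fake_validation_loss(p):
    return (math.log10(p["learning_rate"]) + 3) ** 2 + (p["dropout"] - 0.2) ** 2


space = {"learning_rate": (1e-5, 1e-1, "log"), "dropout": (0.0, 0.5, "linear")}
best, loss = random_search(fake_validation_loss, space, n_trials=200)
print(best, loss)
```

Grid search would replace the sampling loop with a Cartesian product over fixed values, and Bayesian optimization would choose each new `params` based on the results so far instead of sampling blindly.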

14. Explain the difference between batch normalization and layer normalization.

Batch normalization normalizes the activations of a layer across a batch of data, computing the mean and variance for each feature across the batch dimension. Layer normalization, on the other hand, normalizes the activations of a layer within each individual data point. It computes the mean and variance for each data point across the feature dimension. In essence, batch norm operates column-wise (across different samples in a batch), while layer norm operates row-wise (across different features for a single sample).

The key difference lies in their applicability and performance. Batch normalization performs well when batch sizes are large enough to provide a reliable estimate of the data distribution. Layer normalization is particularly useful when batch sizes are small, or in recurrent neural networks (RNNs), where sequence lengths can vary significantly, making batch statistics unstable. It is also useful in settings like reinforcement learning, where batch statistics may be unreliable or unavailable.
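The column-wise versus row-wise distinction is easiest to see on a tiny matrix (rows are samples, columns are features). This minimal sketch omits the learnable scale and shift parameters and batch norm's running statistics for inference, so it shows only the normalization axes.

```python
import math


def _normalize(xs, eps=1e-5):
    """Shift to zero mean and scale to (roughly) unit variance."""
    mu = sum(xs) / len(xs)
    var = sum((x - mu) ** 2 for x in xs) / len(xs)
    return [(x - mu) / math.sqrt(var + eps) for x in xs]


def batch_norm(batch):
    """Normalize each feature (column) using statistics across the batch."""
    cols = [_normalize(list(col)) for col in zip(*batch)]
    return [list(row) for row in zip(*cols)]


def layer_norm(batch):
    """Normalize each sample (row) using statistics across its features."""
    return [_normalize(row) for row in batch]


batch = [[1.0, 2.0, 3.0],
         [4.0, 6.0, 8.0]]
print(batch_norm(batch))
print(layer_norm(batch))
print(batch_norm([[1.0, 2.0, 3.0]]))  # batch of one sample
```

The last line shows why batch normalization struggles with tiny batches: with a single sample every feature equals its own batch mean, so the output collapses to zeros, whereas layer normalization of that same sample is unaffected.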

15. Describe your experience with deploying machine learning models to production. What tools and technologies did you use?

My experience with deploying machine learning models to production involves using various tools and technologies depending on the specific requirements of the project. I've used Flask and FastAPI to create REST APIs that serve model predictions. For model deployment, I've worked with platforms like AWS SageMaker, Google Cloud AI Platform, and Azure Machine Learning. These platforms offer features such as model versioning, scaling, and monitoring.

For containerization, I'm familiar with Docker and Kubernetes. Docker helps package the model and its dependencies into a consistent environment, while Kubernetes facilitates orchestration and scaling across multiple servers. I've also used CI/CD pipelines with tools like Jenkins and GitHub Actions to automate the deployment process. Model monitoring is done using tools like Prometheus and Grafana to track performance metrics and detect potential issues.

16. How do you monitor the performance of a deployed machine learning model and detect potential issues like data drift?

Monitoring a deployed machine learning model involves tracking key performance indicators (KPIs) and looking for signs of degradation. This includes model accuracy, precision, recall, and F1-score (or other metrics relevant to the business goal), as well as prediction latency and throughput. We also monitor the input data distribution for data drift, which occurs when the characteristics of the input data change over time. Drift can be detected by comparing the current input distribution with the training data distribution using statistical tests (such as the Kolmogorov-Smirnov test), divergence measures such as KL divergence or the population stability index (PSI), or visual comparisons of feature histograms.

To detect issues like data drift, we set up automated monitoring dashboards and alerts. If a KPI falls below a predefined threshold or data drift is detected, an alert is triggered, prompting further investigation. We might also use techniques such as A/B testing to compare the performance of the current model against a baseline model or a newly trained model. Regular retraining of the model with fresh data is crucial to mitigate the effects of data drift and maintain performance. I would also watch the hardware metrics of the host servers and the model-serving infrastructure, such as CPU/GPU utilization, RAM usage, and network I/O.
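PSI compares the share of values per bin in a reference sample (typically the training data) and a recent production sample. This minimal sketch works on one numeric feature. The bin edges and both samples are hypothetical, and the small floor `eps` on empty bins is one common way to keep the logarithm finite.

```python
import bisect
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values per bin, where `edges` are sorted interior cut points;
    bins are (-inf, e0), [e0, e1), ..., [e_last, inf).
    Empty bins get a small floor `eps` so the log in psi() stays finite."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference, current, edges):
    """Population Stability Index: sum over bins of (c - r) * ln(c / r)."""
    r = bin_proportions(reference, edges)
    c = bin_proportions(current, edges)
    return sum((ci - ri) * math.log(ci / ri) for ci, ri in zip(c, r))


train = [i / 100 for i in range(100)]       # roughly uniform on [0, 1)
prod = [0.5 + i / 200 for i in range(100)]  # shifted toward larger values
edges = [0.25, 0.5, 0.75]
print(psi(train, train, edges), psi(train, prod, edges))
```

A commonly quoted rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as significant drift; in practice the alert thresholds should be tuned per feature.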

17. How do you ensure that your AI models are fair and unbiased?

Ensuring fairness and mitigating bias in AI models involves a multi-faceted approach. Data bias is a primary concern; therefore, careful data collection, preprocessing, and augmentation techniques are crucial. This includes ensuring diverse representation, handling missing data appropriately, and addressing class imbalances. Regular audits using fairness metrics, such as disparate impact, equal opportunity difference, and predictive parity, are essential to identify and quantify bias.

Furthermore, algorithmic fairness techniques can be applied during model training. This could involve re-weighting training samples, adding regularization terms to penalize unfair predictions, or using adversarial training to learn bias-invariant representations. Finally, it's important to consider the societal context and potential impact of the model's decisions and involve diverse stakeholders in the development and evaluation process to ensure responsible AI deployment.
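Two of the audit metrics mentioned above can be computed directly from predictions and group membership. In this minimal sketch the data are hypothetical and `group[i]` is True for the privileged group. Sign and ratio conventions vary between toolkits, so treat these definitions as one common choice.

```python
def positive_rate(y_pred, mask):
    """Fraction of positive predictions among rows where mask is True."""
    selected = [p for p, m in zip(y_pred, mask) if m]
    return sum(selected) / len(selected)


def disparate_impact(y_pred, group):
    """P(pred=1 | unprivileged) / P(pred=1 | privileged).
    Values well below 1 (e.g. under 0.8, the 'four-fifths rule') are a red flag."""
    privileged = positive_rate(y_pred, group)
    unprivileged = positive_rate(y_pred, [not g for g in group])
    return unprivileged / privileged


def equal_opportunity_difference(y_true, y_pred, group):
    """True-positive rate (unprivileged) minus TPR (privileged); 0 is parity."""
    def tpr(is_privileged):
        preds = [p for t, p, g in zip(y_true, y_pred, group)
                 if t == 1 and g == is_privileged]
        return sum(preds) / len(preds)
    return tpr(False) - tpr(True)


# Hypothetical audit data: 4 privileged rows followed by 4 unprivileged rows
group = [True] * 4 + [False] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
print(disparate_impact(y_pred, group))
print(equal_opportunity_difference(y_true, y_pred, group))
```

Here the unprivileged group is selected at a third of the privileged group's rate and qualified members of it are found only half as often, so both metrics would flag the model for further investigation.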

18. Explain the concept of differential privacy and its importance in protecting sensitive data.

Differential privacy is a system for publicly sharing information about a dataset by describing the patterns of groups within the dataset while withholding information about individuals in the dataset. It adds a carefully calibrated amount of random noise to the data or the results of queries on the data. This noise obscures the contribution of any single individual, making it difficult to infer whether a particular person's data was used in the computation.

The importance of differential privacy lies in its ability to balance data utility and privacy. It allows data analysts and researchers to gain valuable insights from sensitive datasets (like medical records or census data) without compromising the privacy of individuals represented in those datasets. This is crucial for building trust in data-driven systems and enabling responsible data sharing for research and policy-making.
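The "carefully calibrated noise" is easiest to see in the Laplace mechanism for a counting query. This is a minimal sketch: the records and the predicate are hypothetical, and the Laplace sample is drawn as the difference of two exponential samples, which has the Laplace distribution.

```python
import random


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials
    with mean `scale` is Laplace-distributed."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Epsilon-differentially private count via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes
    the count by at most 1), so noise with scale 1 / epsilon suffices."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)
ages = [23, 35, 41, 29, 52, 67, 38, 45]  # hypothetical sensitive records
print(private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng))
```

Smaller epsilon means more noise and stronger privacy. Each released answer spends privacy budget: under sequential composition, answering k such queries on the same data costs up to k times epsilon.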

19. Have you ever had to debug a complex issue in a deep learning model? Describe your approach to debugging.

Yes, I've debugged complex issues in deep learning models. My approach generally involves a systematic process of investigation and isolation. First, I ensure the data pipeline is functioning correctly by visualizing and validating input data, checking for inconsistencies, and ensuring proper preprocessing. Then I check for common errors like vanishing/exploding gradients, incorrect loss functions, and improper initialization.

Next, I'd simplify the model by reducing layers or feature dimensions to isolate the source of the problem. I closely monitor training metrics (loss, accuracy, precision, recall) and validation metrics, and often use tools like TensorBoard to visualize training progress. I use techniques like gradient checking (especially for custom layers) and systematically test individual components or layers. Adding print statements or using a debugger to inspect intermediate values and gradients is also useful. If the model is overfitting, I would add regularization. Finally, I carefully review the code for logical errors or incorrect implementations of mathematical operations.
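Gradient checking compares a hand-written backward pass with a finite-difference estimate. In this minimal sketch the "custom layer" is a hypothetical one-line loss. A correct gradient gives a tiny relative error, while a buggy one shows up immediately.

```python
def numerical_grad(f, params, h=1e-5):
    """Central-difference estimate of the gradient of f at params."""
    grads = []
    for i in range(len(params)):
        plus = list(params)
        minus = list(params)
        plus[i] += h
        minus[i] -= h
        grads.append((f(plus) - f(minus)) / (2 * h))
    return grads


def max_relative_error(a, b, tiny=1e-12):
    """Largest elementwise |a - b| / (|a| + |b|), guarded against 0 / 0."""
    return max(abs(x - y) / max(abs(x) + abs(y), tiny) for x, y in zip(a, b))


# Hypothetical "custom layer" with a hand-derived backward pass:
# loss(w) = (w0 * x + w1)^2 for a fixed input x = 3
X_INPUT = 3.0


def loss(w):
    return (w[0] * X_INPUT + w[1]) ** 2


def analytic_grad(w):
    z = w[0] * X_INPUT + w[1]
    return [2 * z * X_INPUT, 2 * z]


w = [0.5, -1.0]
err = max_relative_error(analytic_grad(w), numerical_grad(loss, w))
print(f"max relative error: {err:.2e}")  # tiny if the backward pass is right
```

If `analytic_grad` forgot the chain-rule factor `X_INPUT` in the first component, the relative error would jump to 0.5, pointing straight at the faulty term.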

20. How do you handle imbalanced datasets in machine learning?

To handle imbalanced datasets in machine learning, several techniques can be employed. Resampling techniques like oversampling the minority class (e.g., using SMOTE) or undersampling the majority class can balance the class distribution. Cost-sensitive learning assigns higher misclassification costs to the minority class, forcing the algorithm to pay more attention to it. Alternatively, you could adjust the decision threshold to optimize for metrics like precision and recall rather than solely accuracy. Furthermore, using appropriate evaluation metrics like F1-score, AUC-ROC, or precision-recall curves is crucial, as accuracy can be misleading on imbalanced datasets. Ensemble methods can also be effective; for example, balanced bagging or balanced random forests undersample the majority class within each bootstrap sample, so each tree sees a balanced class distribution.

Here's an example using SMOTE in Python:

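```python
from imblearn.over_sampling import SMOTE
from sklearn.datasets import make_classification

# Toy imbalanced data (~90% class 0, ~10% class 1) so the snippet runs as-is
X, y = make_classification(n_samples=1000, weights=[0.9, 0.1], random_state=42)

# SMOTE creates synthetic minority samples by interpolating between neighbors
sm = SMOTE(random_state=42)
X_resampled, y_resampled = sm.fit_resample(X, y)
```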

21. Describe a time when you had to work with a large language model. What challenges did you encounter and how did you overcome them?

During a recent project, I used a large language model (LLM) to generate product descriptions for an e-commerce website. One significant challenge was the LLM's tendency to produce generic and repetitive content that lacked the specific details needed to differentiate products. I overcame this with a few strategies. First, I refined the prompt engineering, providing the LLM with more detailed input, including specific features, target audience information, and keywords related to each product. Second, I used few-shot prompting, where I included in the prompt a small number of example product descriptions that met the desired quality standards. This helped guide the LLM toward generating more specific and engaging content.

Another issue I faced was managing the LLM's output to ensure factual accuracy and consistency with the brand's voice. For accuracy, I implemented a manual review process where a human editor would verify the generated descriptions against the actual product specifications. To maintain brand consistency, I created a style guide outlining the desired tone and language, and used this guide to provide feedback to the LLM (through prompt adjustments) and to the human editors during the review process.

22. How do you think about the ethical implications of AI research, and what steps can researchers take to mitigate potential risks?

AI research presents significant ethical implications including bias amplification, job displacement, privacy violations, and the potential for misuse in autonomous weapons or surveillance systems. It's crucial to consider these risks proactively. Researchers must prioritize fairness, transparency, and accountability in their work. This includes carefully curating datasets to avoid perpetuating biases, developing explainable AI (XAI) techniques to understand how AI systems make decisions, and implementing robust security measures to prevent malicious use.

Steps to mitigate risks involve interdisciplinary collaboration (ethics, law, and social sciences), establishing ethical review boards for AI projects, promoting open-source development to enhance transparency, and developing clear guidelines and regulations for AI development and deployment. Education and awareness are also key; ensuring that AI professionals are trained on ethical principles and societal impacts is vital for responsible innovation. Furthermore, ongoing monitoring and evaluation of AI systems are needed to identify and address unintended consequences.

23. Explain the concept of adversarial attacks and how you can defend against them.

Adversarial attacks are carefully crafted inputs designed to fool machine learning models. These inputs are often visually indistinguishable from normal data but cause the model to make incorrect predictions. For example, adding a tiny, carefully calculated amount of noise to an image of a cat can cause a classifier to identify it as a dog.

Defenses against adversarial attacks include:

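- Adversarial training: Augmenting the training data with adversarial examples generated against the model itself, so it learns to classify them correctly. This makes the model more robust to the kinds of perturbations it sees during training.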

- Input sanitization: Modifying input data to remove or reduce adversarial perturbations (e.g., using image smoothing or compression).

- Defensive distillation: Training a new model on the softened probabilities produced by a previously trained model. This can make the model less sensitive to small input changes, although stronger attacks (such as the Carlini-Wagner attack) were later shown to bypass it.

- Gradient masking: Obfuscating the gradients of the model to make it harder for attackers to craft adversarial examples. However, this is often ineffective against stronger attacks.
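The "carefully calculated noise" is often produced with the Fast Gradient Sign Method (FGSM), which nudges every input feature by a fixed epsilon in the direction that increases the loss. This minimal sketch attacks a hypothetical hand-set logistic-regression model, where the input gradient of the cross-entropy loss has the closed form (p - y) * w. Real attacks on deep networks obtain that gradient by backpropagation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method: x_adv = x + epsilon * sign(dLoss/dx).
    For logistic regression with cross-entropy loss, dLoss/dx = (p - y) * w."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * math.copysign(1.0, g) if g != 0 else xi
            for xi, g in zip(x, grad)]


w, b = [2.0, -3.0, 1.0], 0.0   # hypothetical trained weights
x, y = [0.5, 0.1, 0.2], 1       # correctly classified as class 1
x_adv = fgsm(w, b, x, y, epsilon=0.3)
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))
```

No feature moves by more than 0.3, yet the predicted class flips from 1 to 0. Adversarial training would add pairs like `(x_adv, y)` back into the training set.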

24. Describe a research paper that you found particularly interesting and how it influenced your thinking about AI.

I found the paper "Attention is All You Need" particularly interesting. It introduced the Transformer architecture, which moved away from recurrent neural networks (RNNs) for sequence-to-sequence tasks and relied solely on attention mechanisms. This paper significantly influenced my thinking about AI because it demonstrated that attention, a relatively simple concept, could be incredibly powerful for capturing long-range dependencies in data. It showed how parallelization could drastically improve training speed and performance compared to the sequential nature of RNNs.

This paper made me appreciate the importance of clever architectural choices in AI, especially how crucial it is to look beyond existing paradigms. The Transformer architecture now forms the foundation of many state-of-the-art language models and has also been adapted to other domains like computer vision, illustrating its profound and widespread impact. It highlighted that focusing on relevant data points through mechanisms like attention can be more effective than simply processing data sequentially.

25. Let's say you are given the task of improving the performance of an object detection model. What would be your approach?

To improve object detection model performance, I'd start with a systematic approach. First, I'd analyze the current model's performance using metrics like mAP (mean Average Precision) and inference time to identify bottlenecks. This involves looking at the precision-recall curves for each class to understand where the model struggles (e.g., low recall for small objects). Then, I would focus on:

- Data Augmentation: Implement more robust data augmentation techniques (e.g., MixUp, CutMix) to increase the training dataset's diversity.

- Backbone Network: Experiment with different backbone architectures. Try more efficient ones such as EfficientNet (the backbone used in EfficientDet) or MobileNet for faster inference.

- Hyperparameter Tuning: Optimize the model's hyperparameters, such as the learning rate, batch size, and optimizer parameters, using techniques like grid search or Bayesian optimization.

- Loss Function: Explore different loss functions to improve object localization and classification accuracy. For example, Focal Loss can help address class imbalance issues.

- Non-Maximum Suppression (NMS): Fine-tune the NMS threshold to balance precision and recall. Adaptive NMS can dynamically adjust the threshold based on object density.

Finally, to improve inference speed for production environments, I would look into model distillation (given enough data to train the student model) and quantization.
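To see what the NMS threshold controls, here is a minimal greedy NMS sketch with boxes given as (x1, y1, x2, y2). The boxes and scores are hypothetical, and production detectors use vectorized, often per-class, implementations of the same idea.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it
    by more than iou_threshold, repeat. Returns indices of kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores, 0.5), nms(boxes, scores, 0.7))
```

The first two boxes overlap with an IoU of about 0.68, so a 0.5 threshold suppresses the second one, which is good for duplicates but can hurt recall on genuinely crowded scenes, while a 0.7 threshold keeps both. That trade-off is what adaptive NMS tries to manage automatically.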

26. How do you deal with vanishing or exploding gradients in deep neural networks?

Vanishing and exploding gradients are common problems in deep neural networks, especially with many layers. Vanishing gradients occur when gradients become extremely small during backpropagation, preventing weights in earlier layers from updating effectively. Exploding gradients, conversely, happen when gradients become excessively large, leading to unstable training.

To mitigate these issues, several techniques can be employed:

- Gradient clipping: Caps the gradient norm (or individual gradient values) so a single large update cannot destabilize training.

- Weight initialization: Methods like Xavier/Glorot or He initialization set initial weights to appropriate scales.

- Batch normalization: Normalizes the activations within each mini-batch, stabilizing learning.

- Non-saturating activations: ReLU or variants like Leaky ReLU help prevent vanishing gradients, as they don't saturate as easily as sigmoid or tanh.

- Residual connections: Skip connections, as in ResNets, allow gradients to flow more directly through the network.
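Clipping by global norm, similar in spirit to `torch.nn.utils.clip_grad_norm_` in PyTorch or `tf.clip_by_global_norm` in TensorFlow, can be sketched on a flat list of gradient values. This is a minimal illustration; real frameworks apply the same rescaling across all parameter tensors at once.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients together so their global L2 norm is <= max_norm.
    The update direction is preserved; only its magnitude shrinks."""
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm or total == 0.0:
        return list(grads)
    scale = max_norm / total
    return [g * scale for g in grads]


print(clip_by_global_norm([30.0, 40.0], max_norm=5.0))  # norm 50 -> rescaled to norm 5
print(clip_by_global_norm([0.3, 0.4], max_norm=5.0))    # already small: unchanged
```

Scaling by the global norm, rather than clipping each value independently, keeps the relative proportions between gradient components intact.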

27. Explain the difference between online learning and offline learning.

Online learning and offline learning are two different approaches to training machine learning models.

Online learning involves training a model incrementally, one data point at a time (or in small batches), updating its parameters after each instance. This is useful when data arrives sequentially or when the dataset is too large to fit into memory. Offline learning, on the other hand, trains the model on the entire dataset at once; the model is trained until convergence and then deployed. Offline learning is suitable when the entire dataset is available beforehand and computational resources are sufficient. Consider a spam filter: an online learner adapts as new spam patterns emerge, while an offline learner must be retrained on a large, fixed dataset of spam and non-spam emails.
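Online learning can be sketched as stochastic gradient descent that sees each example once and then discards it. In this minimal illustration the data stream (points on y = 2x + 1) and the learning rate are hypothetical.

```python
import itertools


def online_linear_regression(stream, lr=0.05):
    """Fit y ~ w * x + b one example at a time with SGD on squared error.
    Only w and b are kept in memory; each example is seen once and discarded.
    Yields the parameters after every update."""
    w, b = 0.0, 0.0
    for x, y in stream:
        err = (w * x + b) - y  # prediction error on this single example
        w -= lr * err * x      # gradient of 0.5 * err**2 with respect to w
        b -= lr * err          # gradient of 0.5 * err**2 with respect to b
        yield w, b


# Hypothetical stream: points on y = 2x + 1 arriving one at a time
xs = [i / 10 for i in range(11)]
stream = ((x, 2 * x + 1) for x in itertools.islice(itertools.cycle(xs), 5000))
for w, b in online_linear_regression(stream):
    pass  # in a live system the latest (w, b) would be served here
print(round(w, 3), round(b, 3))
```

An offline learner would instead collect all 5,000 points first and fit them in one go. The online version never needs more memory than its two parameters, and it keeps adapting if the relationship in the stream changes.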

28. Describe a situation where you had to adapt a machine learning algorithm to a new and unfamiliar domain.

In a prior role, I was tasked with applying a fraud detection algorithm, initially developed for credit card transactions, to identify fraudulent activity in online gaming accounts. The data distributions and behavioral patterns were significantly different. For example, transaction amounts in gaming were generally smaller but far more frequent, and legitimate users often exhibited irregular patterns due to varying gameplay styles.

To adapt, I first performed extensive exploratory data analysis to understand the new domain's specific characteristics. I then re-engineered features, incorporating variables relevant to gaming, such as login locations, in-game activity patterns, and social interactions. I also adjusted the algorithm's parameters and experimented with different anomaly detection techniques, like isolation forests, which proved more effective than the original model in identifying unusual gaming behavior.

Advanced AI Research Engineer interview questions

1. How would you approach researching a completely novel AI problem with no existing literature?

First, I'd rigorously define the problem. What exactly are we trying to achieve? What are the inputs and desired outputs? What metrics will indicate success? I would break down the problem into smaller, more manageable sub-problems. Even if the overall problem is novel, some sub-components might have related research. For instance, if the problem involves a new type of data, I'd research existing methods for processing similar data types.

Next, I'd experiment with fundamental AI techniques to create baseline models. Even simple models can provide insight into the problem's complexity and point to avenues for improvement. If real-world data is scarce, I would create synthetic data or use simulations to generate training data. Throughout, I'd iterate, document results, and share findings with peers.

2. Describe a situation where you had to significantly adapt your research plan due to unexpected results. What did you learn?

During my master's thesis, I was investigating the performance of a novel machine learning algorithm for image classification. My initial plan was to compare it against several established algorithms using a standard benchmark dataset. However, after the first few experiments, the new algorithm consistently underperformed significantly, showing no signs of improvement even after hyperparameter tuning. This was unexpected, as preliminary theoretical analysis suggested it should be competitive.

I adapted my plan by first conducting a thorough error analysis, carefully examining the misclassified images. This revealed that the algorithm struggled specifically with images containing certain types of noise and occlusions, which were not adequately addressed in the preprocessing pipeline. I then shifted my focus to developing a new preprocessing step that specifically targeted these noise types. The modified approach showed considerable improvements in performance on the dataset, demonstrating the importance of aligning preprocessing with algorithm limitations. I learned that unexpected results aren't failures; they are opportunities to discover hidden assumptions or limitations and refine my approach, potentially leading to a more robust and insightful research direction.

3. Explain a complex AI concept, like generative adversarial networks, to someone with no technical background.

Imagine you have two artists: a forger and an art critic. The forger (the generator in GANs) tries to create fake paintings that look like the real thing. The art critic (the discriminator) tries to tell the difference between real and fake paintings. The forger gets feedback from the critic on how to improve their fakes, and the critic learns to become better at spotting fakes as it sees more examples. Over time, the forger gets really good at creating realistic fakes, and the critic becomes an expert at detecting subtle differences.

GANs use this same idea with computers. The generator creates new data (like images, music, or text), and the discriminator tries to distinguish between the generator's output and real data from the training dataset. They both improve over time through a competitive process, leading the generator to create increasingly realistic content.

4. What are the ethical considerations involved in your area of AI research, and how do you address them?

In language-model research, a primary concern is bias in training data. If the training data reflects existing societal biases (e.g., around gender or race), the model will likely perpetuate and even amplify those biases in its outputs. To mitigate this, I carefully curate and audit training data so that it is as representative and fair as possible, and I evaluate model outputs for biased behavior. Another ethical consideration is the potential for misuse, such as generating misleading or harmful content. To address this, I rely on safeguards such as content filters and output restrictions that prevent the generation of inappropriate or dangerous material, along with regular monitoring and refinement of the model's responses to improve accuracy and safety.

5. How do you stay up-to-date with the latest advancements in the rapidly evolving field of AI?

I stay updated on AI advancements through a multi-faceted approach. I regularly follow prominent AI researchers and organizations on platforms like Twitter and LinkedIn. I also subscribe to newsletters and blogs that curate the latest research papers, industry news, and emerging trends, and I regularly check sources such as arXiv, the Google AI Blog, and the OpenAI Blog.

Furthermore, I participate in online communities and forums (like Reddit's r/MachineLearning) to engage in discussions and learn from others' experiences. I also actively seek opportunities to attend webinars, workshops, and conferences, and I allocate time to reading research papers and implementing new techniques through personal projects or online courses. This hands-on experience helps me solidify my understanding and stay ahead of the curve.

6. Walk me through a research project where you had to overcome a significant technical challenge.

During my master's thesis, I worked on developing a novel object detection algorithm for autonomous drones using deep learning. The primary challenge was achieving real-time performance on an embedded platform with limited computational resources: the object detection model, a variant of YOLO, was initially too computationally expensive to run at the required frame rate. To overcome this, I applied several optimizations. First, I quantized the model, reducing the precision of its weights and activations from 32-bit floating point to 8-bit integers, which significantly decreased the model's size and improved inference speed. Second, I used layer fusion and kernel-level optimization of the convolution layers to take advantage of the underlying hardware. Finally, I profiled the model's execution to identify performance bottlenecks and optimized the most time-consuming operations using libraries designed specifically for the target platform. Together, these optimizations achieved real-time object detection on the embedded platform, enabling the drone to navigate and interact with its environment effectively.
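As a rough illustration of the 8-bit quantization step, here is a minimal sketch of symmetric per-tensor quantization. This is one common scheme among several; production toolchains typically add per-channel scales, zero points for activations, and calibration data. The function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to int8 values.

    One float scale is shared by the whole tensor so that the largest
    magnitude maps to 127.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
```

Each weight is stored as a small integer plus one shared float scale, which cuts weight storage by roughly 4x compared with 32-bit floats. The rounding error per weight is at most half the scale, which is why very small weights (like 0.003 here) can collapse to zero.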

Layer fusion was especially important. For example, fusing a convolutional layer, a batch normalization layer, and a ReLU activation into a single operation reduced memory accesses and improved computational throughput. The optimized convolutions were implemented with CUDA and cuDNN on the GPU, with custom handwritten assembly for a few specific operations. The code looked something like this:

```c
void optimized_conv(float *input, float *kernel, float *output) {
    // Handwritten assembly for optimized convolution
    // ...
}
```
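The conv + batch-norm part of that fusion can be done ahead of time. At inference, batch normalization uses fixed running statistics, so it is an affine transform that can be folded into the preceding convolution's weights and bias, leaving ReLU to be applied directly to the fused conv output. The pure-Python sketch below is a generic illustration of this folding, not the thesis code. It uses a single-channel 1-D convolution to keep the arithmetic visible; real frameworks apply the same per-output-channel formula to 4-D kernels.

```python
import math


def conv1d(x, w, b):
    """Valid 1-D cross-correlation for one channel (what DL frameworks call convolution)."""
    k = len(w)
    return [sum(x[i + j] * w[j] for j in range(k)) + b for i in range(len(x) - k + 1)]


def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch norm using stored running statistics."""
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in y]


def relu(y):
    return [max(0.0, v) for v in y]


def fold_bn_into_conv(w, b, gamma, beta, mean, var, eps=1e-5):
    """Return weights/bias whose conv output equals conv followed by batch norm."""
    factor = gamma / math.sqrt(var + eps)
    return [wi * factor for wi in w], (b - mean) * factor + beta


x = [0.2, -1.0, 0.5, 2.0, -0.3]
w, b = [0.4, -0.6, 1.1], 0.05
gamma, beta, mean, var = 1.5, -0.2, 0.1, 0.8  # illustrative BN parameters

# Three separate passes over memory...
unfused = relu(batch_norm(conv1d(x, w, b), gamma, beta, mean, var))
# ...versus one conv pass with folded parameters, then ReLU.
w_f, b_f = fold_bn_into_conv(w, b, gamma, beta, mean, var)
fused = relu(conv1d(x, w_f, b_f))
```

Because the folding happens once, offline, the deployed kernel only ever sees the fused weights.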

7. Design an AI system to solve a specific real-world problem, considering both performance and resource constraints.

Let's design an AI system for smart irrigation in agriculture to conserve water. The system combines soil moisture sensors, weather forecasts, and plant-specific water requirements to determine optimal irrigation schedules. The core AI component is a regression model (e.g., a Random Forest or a lightweight neural network) trained on historical data to predict the ideal amount of water needed. Input features include sensor readings (soil moisture at different depths), weather data (temperature, humidity, rainfall probability), plant type, and growth stage.

Resource constraints are addressed by using low-power sensors, edge computing for local data processing, and a cloud-based platform for model training and remote monitoring. The edge device could be a single-board computer such as a Raspberry Pi, or a microcontroller where the power budget is tighter, so the model must be small enough to run within that hardware's memory and compute limits. Regularized regression techniques help avoid overfitting, which improves generalization and reduces how often the model needs to be retrained. The model can be periodically retrained on a cloud server (e.g., using AWS SageMaker) and then redeployed to the edge device.
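To make the regularized-regression part concrete, here is a minimal closed-form ridge regression in NumPy, a deliberately tiny model that would fit comfortably on an edge device. Everything here is an assumption for illustration: the three features, their units, the synthetic training data, and the regularization strength are stand-ins, not values from a real deployment.

```python
import numpy as np


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression; centering keeps the intercept unpenalized."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    n_features = X.shape[1]
    # Solve (Xc^T Xc + alpha * I) coef = Xc^T yc
    coef = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(n_features), Xc.T @ yc)
    intercept = y_mean - x_mean @ coef
    return coef, intercept


def predict(X, coef, intercept):
    return X @ coef + intercept


# Synthetic stand-in for historical data. Columns: soil moisture (%),
# temperature (°C), rainfall probability (%). Target: litres per plot.
rng = np.random.default_rng(0)
n = 200
X = np.column_stack([
    rng.uniform(10, 40, n),
    rng.uniform(15, 35, n),
    rng.uniform(0, 100, n),
])
true_coef = np.array([-0.8, 0.5, -0.06])
y = X @ true_coef + 30 + rng.normal(0, 0.5, n)

coef, intercept = fit_ridge(X, y, alpha=1.0)

# Tomorrow: dry soil, hot, low chance of rain (hypothetical reading).
tomorrow = np.array([[18.0, 31.0, 10.0]])
# Clip at zero: the system cannot irrigate a negative amount.
litres = max(0.0, float(predict(tomorrow, coef, intercept)[0]))
```

The fitted model is just a coefficient vector and an intercept, so shipping a retrained version from the cloud to the edge device means sending a handful of numbers.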

8. Discuss the limitations of current deep learning techniques and propose potential solutions.

Current deep learning techniques, while powerful, face limitations such as their need for massive labeled datasets, which are often impractical or expensive to obtain. Potential solutions include self-supervised learning, which leverages unlabeled data, and transfer learning, which reuses knowledge from models pre-trained on related tasks. Another limitation is the lack of interpretability: deep learning models often act as 'black boxes'. Techniques such as attention visualization and explainable AI (XAI) methods can provide insights into the model's decision-making process. Furthermore, deep learning models can be brittle and vulnerable to adversarial attacks. Robust optimization techniques and adversarial training can improve model resilience.
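Adversarial training is easiest to see on a model whose input gradient can be written by hand. The sketch below uses logistic regression and the Fast Gradient Sign Method (FGSM), one of the simplest attacks. It illustrates the idea rather than providing a recipe for hardening a deep network, where the gradient would come from autodiff and stronger attacks such as PGD are usually preferred. The function names and example values are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce_loss(w, b, x, y):
    """Binary cross-entropy of a logistic-regression model on one example."""
    p = sigmoid(x @ w + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method for logistic regression.

    For binary cross-entropy, d(loss)/dx = (sigmoid(w.x + b) - y) * w,
    so the attack steps eps in the sign direction of that gradient.
    """
    grad_x = (sigmoid(x @ w + b) - y) * w
    return x + eps * np.sign(grad_x)


def adversarial_training_step(w, b, X, Y, eps, lr):
    """One gradient-descent step on clean examples plus their FGSM versions."""
    X_adv = np.array([fgsm(w, b, x, y, eps) for x, y in zip(X, Y)])
    X_all = np.vstack([X, X_adv])
    Y_all = np.concatenate([Y, Y])
    err = sigmoid(X_all @ w + b) - Y_all  # gradient of mean BCE w.r.t. logits
    w = w - lr * X_all.T @ err / len(Y_all)
    b = b - lr * err.mean()
    return w, b


w, b = np.array([2.0, -1.0]), 0.5
x, y = np.array([0.3, 0.8]), 1.0
x_adv = fgsm(w, b, x, y, eps=0.1)  # a small perturbation that raises the loss

X = np.array([[0.3, 0.8], [-0.5, 0.1]])
Y = np.array([1.0, 0.0])
w2, b2 = adversarial_training_step(w, b, X, Y, eps=0.1, lr=0.5)
```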

9. How would you evaluate the robustness and generalizability of an AI model you've developed?

To evaluate robustness, I'd use adversarial testing, feeding the model subtly altered or noisy inputs to see how performance degrades. I'd also assess performance across different data slices and demographic groups to identify biases and vulnerabilities. Generalizability is checked through k-fold cross-validation and by testing on completely held-out datasets drawn from distributions different from the training data. Key metrics include accuracy, precision, recall, F1-score, and area under the ROC curve (AUC), analyzed across these test conditions. I'd also track Expected Calibration Error (ECE) to understand how well the model's predicted probabilities match actual outcomes.
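Since ECE is less familiar than the other metrics listed, here is a minimal pure-Python sketch using the common equal-width binning scheme. Ten bins is a conventional default rather than a requirement, and other binning schemes exist.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE with equal-width confidence bins.

    confidences: predicted probability of the predicted class, in [0, 1].
    correct: 1 if the prediction was right, else 0.
    ECE = sum over bins of (bin size / N) * |accuracy(bin) - mean confidence(bin)|.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Map confidence to a bin index; conf == 1.0 goes into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(o for _, o in b) / len(b)
        ece += len(b) / n * abs(accuracy - avg_conf)
    return ece


# A model that claims 90% confidence but is right only 3 times out of 4,
# and claims 60% confidence but is right 1 time out of 2.
ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9, 0.6, 0.6], [1, 1, 1, 0, 1, 0])
```

Here both confidence levels are overconfident by 0.15 and 0.1 respectively, weighted by how many predictions fall in each bin, giving an ECE of about 0.133. A perfectly calibrated model scores 0.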

10. Explain how you would debug a complex AI model that is producing unexpected or incorrect results.

Debugging complex AI models calls for a systematic approach. I'd start by isolating the problem: reviewing the input data for anomalies or biases, checking the preprocessing steps, and validating the model's architecture. Then I'd focus on training itself, examining the training and validation curves, the loss function, and the metrics to spot overfitting or underfitting. Visualizing the model's predictions, intermediate-layer activations, and gradients can also provide valuable insights.

Beyond that, I'd use ablation studies (removing features or components to measure their impact), gradient checking (if custom layers are involved), and tools like TensorBoard to understand the model's behavior. Reproducibility is key, so I'd keep the debugging process systematic and well documented. Working with a small subset of the data also makes each debugging iteration faster. For example, to trace how the model handles a particular input, you can print intermediate values layer by layer:

```python
def model_predict(model, input_data):
    # Debugging statements to print intermediate values
    print("Input Data Shape:", input_data.shape)

    layer1_output = model.layer1(input_data)
    print("Layer 1 Output:", layer1_output)

    # ... more layers, each feeding the next and ending in layerN_output ...

    prediction = model.final_layer(layerN_output)
    print("Final Prediction:", prediction)

    return prediction
```
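Gradient checking compares a custom layer's hand-written backward pass against a finite-difference estimate. Here is a minimal pure-Python sketch; the cubic "layer" and its two gradient functions are hypothetical stand-ins for a real custom layer, and the tolerance is an illustrative choice.

```python
def numerical_grad(f, params, h=1e-5):
    """Central-difference estimate of df/dparams for a scalar function f."""
    grads = []
    for i in range(len(params)):
        plus = list(params)
        plus[i] += h
        minus = list(params)
        minus[i] -= h
        grads.append((f(plus) - f(minus)) / (2 * h))
    return grads


def grad_check(f, analytic_grad, params, tol=1e-6):
    """Return True if the analytic gradient matches the numerical one (relative error)."""
    numeric = numerical_grad(f, params)
    analytic = analytic_grad(params)
    for n_i, a_i in zip(numeric, analytic):
        denom = max(abs(n_i), abs(a_i), 1e-12)
        if abs(n_i - a_i) / denom > tol:
            return False
    return True


# Hypothetical custom layer: a cubic activation summed into a scalar loss.
def loss(p):
    return sum(v ** 3 for v in p)


def correct_grad(p):
    return [3 * v ** 2 for v in p]


def buggy_grad(p):
    return [2 * v ** 2 for v in p]  # deliberate bug: wrong coefficient


params = [0.5, -1.2, 2.0]
ok = grad_check(loss, correct_grad, params)
caught = not grad_check(loss, buggy_grad, params)
```

Relative rather than absolute error is used so the check behaves sensibly for both large and small gradient values.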

11. Describe a time you had to collaborate with researchers from different disciplines. What were the challenges and how did you overcome them?

In my previous role, I collaborated on a project to predict patient readmission rates using machine learning. This involved working with clinicians, data scientists, and healthcare administrators. A key challenge was differing perspectives: clinicians focused on patient care nuances, data scientists on model accuracy, and administrators on cost-effectiveness. We overcame this by establishing clear communication channels and holding regular cross-functional meetings where everyone presented their viewpoints and constraints. This allowed us to build a model that was not only accurate but also clinically relevant and economically viable.

Another challenge was the 'siloed' nature of the data. Data was spread across multiple systems with varying formats. We addressed this by creating a unified data repository and standardizing data formats through collaborative efforts and the application of data engineering principles. This improved data quality and streamlined the model training process.

12. If you had unlimited resources, what AI research project would you pursue and why?

I would pursue a project focused on developing Artificial General Intelligence (AGI) with a strong emphasis on ethical considerations and safety mechanisms. The core research would involve creating a robust and explainable AI model capable of reasoning, learning, and adapting across a wide range of tasks, mimicking human-level cognitive abilities.

Specifically, I would prioritize research into:

- Safe AI Design: Implementing layers of safety protocols to prevent unintended consequences and ensure the AGI's goals align with human values.

- Explainability and Transparency: Designing the AGI's decision-making processes to be understandable and auditable.

- Continuous Learning and Adaptation: Enabling the AGI to learn and evolve in a safe and controlled environment, continually refining its understanding of the world.

Unlimited resources would allow for interdisciplinary collaboration, vast computational power, and rigorous testing, accelerating the development of beneficial and safe AGI.

13. How do you balance exploration of new ideas with the need to produce tangible results in your research?

I balance exploration with tangible results by employing a time-boxed approach to experimentation. Before diving into a new idea, I define a clear, measurable goal and a specific timeframe for exploration. This allows me to investigate novel concepts without getting lost in endless rabbit holes. Throughout the exploration phase, I prioritize identifying potential roadblocks early and adapting my approach as needed.

After the allocated time, I evaluate the results against the predefined goal. If the exploration shows promise, I allocate further time and resources, perhaps shifting to a more structured development phase focused on producing a tangible deliverable. If the initial results are unfavorable, I document my findings and move on to other promising avenues. This iterative process ensures I'm both innovative and productive.

14. Explain your approach to writing and publishing research papers, from initial idea to final submission.

My approach to writing and publishing research papers starts with identifying a relevant and impactful problem, often through literature review and discussions with colleagues. I then formulate a clear research question and hypothesis. The next step involves designing and conducting experiments or simulations to gather data. Rigorous data analysis follows, using appropriate statistical or computational methods. Writing the paper begins with outlining the structure, followed by drafting each section (Introduction, Related Work, Methods, Results, Discussion, Conclusion). I iterate through multiple drafts, seeking feedback from collaborators and mentors to improve clarity, accuracy, and completeness. Before submitting, I ensure the paper adheres to the target journal's guidelines and conduct a thorough proofread.

After receiving feedback from reviewers, I carefully address each comment, making necessary revisions to the manuscript. A detailed response to reviewers is prepared, explaining the changes made and justifying any disagreements. Once all revisions are complete and approved by co-authors, the final version is submitted to the journal. I pay close attention to ensuring proper formatting and referencing throughout the process.

15. How do you deal with criticism or negative feedback on your research ideas or results?

I view criticism as an opportunity for growth and improvement. My initial reaction is to listen carefully and try to understand the critic's perspective. I then ask clarifying questions to ensure I fully grasp their concerns and the reasoning behind their feedback.

After understanding the criticism, I take time to objectively evaluate its validity. If the feedback highlights a genuine flaw or weakness in my research, I acknowledge it and brainstorm potential solutions. If I disagree with the criticism, I respectfully explain my reasoning, providing evidence and rationale to support my position. The goal is to have a constructive dialogue, learn from the experience, and ultimately improve the quality of my research.

16. Describe a situation where you had to advocate for a particular research direction or methodology.

During my master's thesis, I advocated for using a deep learning approach for sentiment analysis of financial news articles instead of traditional methods like Support Vector Machines (SVM). While SVMs were the established baseline, I believed that the nuances of language in financial contexts, particularly subtle cues and contextual dependencies, could be better captured by deep learning models like transformers. I presented a detailed comparison of the potential benefits, including the ability of transformers to handle long-range dependencies and learn complex patterns from large datasets.

Initially, my advisor was hesitant due to the higher computational cost and complexity of deep learning. However, I built a prototype using a pre-trained BERT model, fine-tuned it on a financial news dataset, and demonstrated a significant improvement in accuracy compared to a baseline SVM model. This empirical evidence convinced my advisor to support my chosen direction, leading to a successful thesis and a publication.

17. How do you ensure the reproducibility of your AI research?

To ensure reproducibility in AI research, I focus on several key aspects. First, I meticulously document every step of the process, from data preprocessing to model training and evaluation. This includes specifying all software versions (e.g., Python, TensorFlow/PyTorch), hardware configurations, and random seeds used. I use version control (Git) to track all code changes and create detailed commit messages. Data versioning is also crucial, so I either store data snapshots or use data version control tools.

Second, I aim for code modularity and clarity, making it easier for others (and myself) to understand and replicate the experiments. I provide clear instructions on how to run the code, including dependencies and environment setup, often using Conda environments or Docker containers to encapsulate the environment. Finally, I thoroughly document the experimental results, including metrics, visualizations, and hyperparameter settings, so that the results are easily comparable and verifiable. I also try to share code and data publicly whenever possible, within ethical and legal constraints.
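The seeding and environment-recording steps are easy to automate. Here is a minimal sketch assuming a Python stack. NumPy and PyTorch are seeded only if they happen to be installed, and the package list in the report is an illustrative choice.

```python
import os
import platform
import random
from importlib import metadata


def set_seed(seed):
    """Seed the random number generators an experiment is likely to touch.

    Setting PYTHONHASHSEED here only affects subprocesses started later,
    not the hash seed of the already running interpreter.
    """
    random.seed(seed)
    os.environ["PYTHONHASHSEED"] = str(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)  # seeds the CPU and CUDA generators
    except ImportError:
        pass


def environment_report(packages=("numpy", "torch", "tensorflow")):
    """Collect version information worth saving alongside experiment results."""
    report = {"python": platform.python_version(), "platform": platform.platform()}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None  # package not installed
    return report


set_seed(123)
first = [random.random() for _ in range(3)]
set_seed(123)
second = [random.random() for _ in range(3)]
env = environment_report()
```

Seeding alone does not guarantee bit-identical results on GPUs, since some kernels are nondeterministic. PyTorch, for example, offers `torch.use_deterministic_algorithms(True)` to surface or avoid them.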

18. What are your thoughts on the future of AI research in the next 5-10 years?