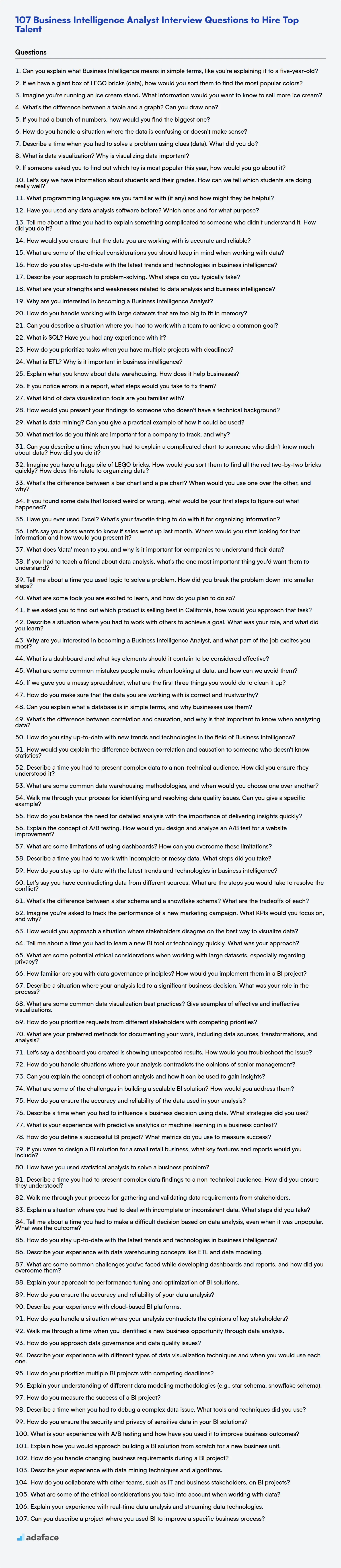

Hiring Business Intelligence Analysts can be difficult without the right preparation and questions. You need a list of questions to ensure that candidates can effectively analyze data and drive business growth, like the analysts discussed in this blog post on the skills required for a business intelligence analyst.

This blog post provides a treasure trove of Business Intelligence Analyst interview questions, spanning from those suitable for freshers to questions designed for experienced professionals. We've also included multiple-choice questions (MCQs) to help you assess a candidate's knowledge.

By using these questions, you can better identify top talent and make informed hiring decisions. To further streamline your hiring, consider using Adaface's Business Intelligence Analyst Test before the interview.

Business Intelligence Analyst interview questions for freshers

1. Can you explain what Business Intelligence means in simple terms, like you're explaining it to a five-year-old?

Imagine you have a big box of toys. Business Intelligence (BI) is like figuring out which toys you play with the most, which ones your friends like, and which ones are broken. It's like using all the information about your toys to make playtime even better. We look at information to help people make smart decisions, just like you deciding which toy to play with next.

So, BI is about collecting information, making sense of it, and using what you learn to do things better. For example, if a store uses BI, they can figure out which toys to order more of because kids like them, and which toys to put on sale because nobody is buying them.

2. If we have a giant box of LEGO bricks (data), how would you sort them to find the most popular colors?

If I were to sort a giant box of LEGO bricks to find the most popular colors, I'd approach it in these steps:

- Initial Scan and Color Grouping: Perform a quick scan to identify the range of colors present. Create initial 'buckets' or piles for each distinct color I observe.

- Distribution and Counting: As I sort, I distribute each brick into its corresponding color bucket. To efficiently count, I could weigh each bucket. Assuming consistent brick sizes, weight differences would correlate to quantity. Alternatively, I could utilize image recognition to automate the color identification and counting process.

- Ranking: Compare the counts (or weights) of each color group and rank them in descending order. The color group with the highest count is the most popular, and so on.
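The counting and ranking steps above can be sketched in a few lines of Python. This is only an illustration: the `rank_colors` helper and the sample list of color names are assumptions, standing in for whatever real data the bricks represent.

```python
from collections import Counter


def rank_colors(bricks):
    """Return (color, count) pairs sorted from most to least common."""
    # Counter tallies each color; most_common() sorts by count, descending.
    return Counter(bricks).most_common()


# Hypothetical sample: each string is the color of one brick.
sample_bricks = ["red", "blue", "red", "yellow", "blue", "red"]
ranking = rank_colors(sample_bricks)
print(ranking)  # [('red', 3), ('blue', 2), ('yellow', 1)]
```

The first entry in the result is the most popular color, which mirrors the final ranking step.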

3. Imagine you're running an ice cream stand. What information would you want to know to sell more ice cream?

To sell more ice cream, I'd want to know several things. Primarily, I'd focus on customer preferences: What are the most popular flavors? What toppings are trending? Are there any unmet needs, like sugar-free or vegan options that are highly requested but not offered? I'd also want data on external factors like weather conditions (temperature, sunny vs. cloudy) and time of day to predict demand and adjust inventory accordingly. Finally, understanding competitor pricing and offerings helps me position my stand effectively.

4. What's the difference between a table and a graph? Can you draw one?

A table organizes data in rows and columns, suitable for structured data where relationships are primarily based on direct lookup. Think of a spreadsheet. A graph, in the context of data structures, represents relationships (edges) between entities (nodes). It's useful for modeling complex networks and connections.

Visually, a table looks like a grid. A graph looks like a collection of dots connected by lines. Graphs can be directed (edges have a direction) or undirected. Unlike a table, you're not just looking up a value, you're traversing relationships.

5. If you had a bunch of numbers, how would you find the biggest one?

Iterate through the numbers, keeping track of the largest number seen so far. Start by assuming the first number is the largest. Then, for each subsequent number, compare it to the current largest. If the current number is larger than the current largest, update the current largest with the current number. After iterating through all the numbers, the final value of the current largest will be the biggest number in the bunch.

In Python, this would look like this:

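    def find_biggest(numbers):
        """Return the largest value in numbers, or None if it is empty."""
        if not numbers:
            return None  # Handle empty list case
        biggest = numbers[0]  # Assume the first number is the largest so far
        for number in numbers:
            if number > biggest:
                biggest = number
        return biggest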

6. How do you handle a situation where the data is confusing or doesn't make sense?

When confronted with confusing or nonsensical data, my initial step is to verify the data source and collection methods. This involves checking for potential errors in data entry, transmission, or processing. I would examine the metadata, if available, to understand the data's context and origin. If possible, I'd consult with the data provider or subject matter experts to clarify any ambiguities and ensure my understanding aligns with theirs. For example, if dealing with sales data, I might check if discounts were applied and if the reporting time period is accurate.

Next, I would apply data cleaning and exploration techniques. This might involve identifying and handling missing values, outliers, and inconsistencies. I would use visualization tools and statistical methods to uncover patterns or anomalies that could explain the confusing data. If the data is technical and involves code, I might use debuggers and logging to trace the origin of the data and identify any coding errors.

7. Describe a time when you had to solve a problem using clues (data). What did you do?

During a recent project, our application's performance degraded significantly. Users reported slow response times, but the monitoring dashboards showed no obvious resource bottlenecks. I started by examining the application logs, searching for error messages or unusual patterns. I noticed a recurring warning related to database connection timeouts. Further investigation revealed that a newly deployed feature was opening and closing database connections rapidly, exhausting the connection pool.

To resolve this, I proposed implementing connection pooling at the application level and optimizing the database queries used by the new feature. We used a connection pool library and refactored the feature's data access logic to reuse connections. After deploying the changes, the database connection timeouts disappeared, and the application's performance returned to normal, resolving the user-reported issues.

8. What is data visualization? Why is visualizing data important?

Data visualization is the graphical representation of information and data. By using visual elements like charts, graphs, and maps, data visualization tools provide an accessible way to see and understand trends, outliers, and patterns in data.

Visualizing data is important because it helps us understand complex datasets more easily. It allows us to quickly identify trends, outliers, and correlations that might be missed in raw data. Effective data visualization can also improve communication, facilitate decision-making, and tell a compelling story with data.

9. If someone asked you to find out which toy is most popular this year, how would you go about it?

To determine the most popular toy this year, I would use a multi-faceted approach. First, I'd gather sales data from major retailers (e.g., Amazon, Walmart, Target) and toy stores, looking for top-selling items. I'd also analyze online search trends using Google Trends and similar tools to identify toys with the highest search volume. Social media mentions and sentiment analysis would provide further insights into consumer interest and popularity.

Second, I would look at expert reviews and industry publications to identify trending toys and assess their potential popularity. Combining quantitative sales and search data with qualitative expert opinions would provide a well-rounded understanding of the toy market, allowing me to identify the most popular toy this year.

10. Let's say we have information about students and their grades. How can we tell which students are doing really well?

To identify high-achieving students, you can analyze their grades using statistical measures. A common approach is to calculate the average grade for each student and then identify those whose average is above a certain threshold or within a specific top percentile (e.g., top 10%).

Alternatively, you could calculate a Z-score for each student's grades. The Z-score indicates how many standard deviations a student's grade is from the mean. Students with significantly positive Z-scores (e.g., Z > 2) are performing well above average. Consider factors like course difficulty and grading scales for accurate comparison.
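As a rough sketch of the Z-score approach, assuming each student already has a single average grade, the calculation might look like the following. The function name, the sample names, and the grades are all made up for illustration, and the sample call uses a lower cut-off than 2 because small groups rarely produce Z-scores that high.

```python
from statistics import mean, pstdev


def top_students_by_zscore(averages, threshold=2.0):
    """Return names whose average grade is more than `threshold`
    population standard deviations above the group mean."""
    values = list(averages.values())
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # Everyone has the same average, so nobody stands out.
    return [
        name
        for name, avg in averages.items()
        if (avg - mu) / sigma > threshold
    ]


# Hypothetical class of five students (name -> average grade).
class_averages = {"Ana": 95, "Ben": 70, "Cal": 72, "Dee": 68, "Eli": 75}
print(top_students_by_zscore(class_averages, threshold=1.5))  # ['Ana']
```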

11. What programming languages are you familiar with (if any) and how might they be helpful?

I am familiar with several programming languages, including Python, Java, C++, and JavaScript. I have written and reviewed code in each of them, so I understand their syntax, semantics, and common use cases. For example, I can write code snippets, explain existing code, translate logic between languages, and identify and fix bugs.

Specifically, Python's versatility makes it helpful for tasks like data analysis and scripting. Java's robustness is beneficial when dealing with large-scale enterprise applications. C++ allows for performance-critical applications through its low-level memory management capabilities. JavaScript, being the language of the web, is helpful when dealing with web development tasks. The ability to leverage knowledge of each of these languages allows me to tackle a diverse range of problems and assist in various stages of the software development lifecycle.

12. Have you used any data analysis software before? Which ones and for what purpose?

Yes, I have experience with several data analysis software tools. I've primarily used Python with libraries like pandas, NumPy, scikit-learn, and matplotlib for various data analysis tasks. Specifically, I've utilized pandas for data cleaning, manipulation, and exploration; NumPy for numerical computations; scikit-learn for building and evaluating machine learning models; and matplotlib for data visualization.

I've also worked with R, particularly for statistical analysis and generating publication-quality graphics using ggplot2. My experience includes using tools like Jupyter Notebooks and Google Colab as environments for writing and executing data analysis code. While not strictly "data analysis software", I've used SQL for extracting and transforming data from databases before analysis in Python or R.

13. Tell me about a time you had to explain something complicated to someone who didn't understand it. How did you do it?

I once had to explain the concept of RESTful APIs to a marketing intern who had no technical background. I started by avoiding technical jargon. Instead of talking about endpoints and HTTP methods, I used an analogy. I compared it to ordering food at a restaurant. The menu is like the API documentation, each item on the menu is a different endpoint, and ordering is like making a request. I then explained that the kitchen is like the server, and the food you receive is the data. This allowed them to grasp the basic idea of how applications communicate and exchange data.

To further simplify it, I focused on the 'what' rather than the 'how'. I emphasized that APIs allow different applications to easily share information and work together. I provided real-world examples they could relate to, like how social media platforms use APIs to embed content on different websites. I then walked them through an example of using a weather API to embed weather information on a webpage, explaining the different parameters that you would need to provide (e.g., city, state). The practical example made it clearer how APIs are used and why they are valuable.

14. How would you ensure that the data you are working with is accurate and reliable?

Ensuring data accuracy and reliability involves a multi-faceted approach. First, data validation is crucial. This includes input validation at the source to prevent incorrect data from entering the system. I'd implement checks for data type, format, range, and consistency against known rules. Data cleaning is also essential, identifying and correcting errors, inconsistencies, and missing values. This can involve techniques like imputation, outlier detection, and data transformation.

Second, data governance and lineage play a key role. This involves establishing clear data ownership, defining data quality standards, and tracking data provenance from source to destination. Regular auditing of data and processes helps identify potential issues and ensures compliance with established standards. Utilizing tools for data profiling and monitoring can provide ongoing insights into data quality and help detect anomalies early on.
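The type, range, and format checks mentioned in the validation step could be sketched as below. The record layout (`amount`, `sale_date`, `product`) and the `validate_sale` helper are assumptions chosen for illustration, not a prescribed schema.

```python
from datetime import date


def validate_sale(record):
    """Return a list of problems found in one sales record (empty = valid)."""
    problems = []
    amount = record.get("amount")
    # Type check, then range check on the amount.
    if not isinstance(amount, (int, float)):
        problems.append("amount must be a number")
    elif amount < 0:
        problems.append("amount must be non-negative")
    # Format check: the date must parse as an ISO date such as 2024-01-05.
    try:
        date.fromisoformat(record.get("sale_date", ""))
    except (TypeError, ValueError):
        problems.append("sale_date must be an ISO date")
    # Required-field check.
    if not record.get("product"):
        problems.append("product is required")
    return problems


print(validate_sale({"amount": 10.0, "sale_date": "2024-01-05", "product": "A"}))  # []
```

Returning a list of problems, rather than raising on the first one, makes it easier to report every issue in a record at once.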

15. What are some of the ethical considerations you should keep in mind when working with data?

When working with data, several ethical considerations are crucial. First, privacy is paramount; protect sensitive information through anonymization, de-identification, and secure storage. Obtain informed consent when collecting data, and be transparent about its intended use.

Second, be mindful of bias. Data can reflect existing societal biases, which can be amplified by algorithms. Strive for fairness and equity in data collection, analysis, and modeling. Ensure data quality and accuracy to prevent misleading conclusions. Finally, be aware of data security; protect data from unauthorized access, breaches, and misuse. Comply with relevant regulations like GDPR or CCPA, and consider the potential for harm from data leaks or misuse.

16. How do you stay up-to-date with the latest trends and technologies in business intelligence?

I stay current with business intelligence trends through a combination of active learning and professional engagement. This includes regularly reading industry blogs (e.g., Towards Data Science, KDnuggets), subscribing to newsletters from BI software vendors (like Tableau, Power BI), and following thought leaders on social media (LinkedIn, Twitter). I also participate in online forums and communities (such as Stack Overflow, Reddit's r/businessintelligence) to learn from others' experiences and ask questions.

Furthermore, I allocate time for hands-on practice with new tools and techniques. I'll often explore new features in BI platforms or work on personal projects to apply emerging methodologies like AI-driven analytics or advanced visualization techniques. Attending webinars, virtual conferences, and relevant courses on platforms like Coursera or Udemy also helps me maintain a strong understanding of the latest advancements in the field.

17. Describe your approach to problem-solving. What steps do you typically take?

My problem-solving approach is generally iterative and structured. First, I clarify and define the problem to ensure a shared understanding. This often involves asking clarifying questions to get more context. Then, I break down the problem into smaller, more manageable parts. This divide and conquer strategy allows me to focus on specific aspects. After breaking it down, I research and gather relevant information, brainstorming potential solutions for each sub-problem.

Next, I evaluate the potential solutions, considering factors like feasibility, efficiency, and potential impact. Once a solution is chosen, I implement it, monitoring its effectiveness and adapting as needed. If the problem involves code, this might involve writing tests: `def test_solution(): assert solve(input) == expected_output`. Finally, I review the entire process, documenting the lessons learned and identifying areas for improvement. This ensures that I learn from each problem and refine my approach for future challenges.

18. What are your strengths and weaknesses related to data analysis and business intelligence?

My strengths in data analysis and business intelligence include strong analytical skills, proficiency in data manipulation using tools like SQL and Python (with libraries like pandas), and experience in data visualization using tools such as Tableau and Power BI. I am adept at identifying trends, patterns, and insights from large datasets to support data-driven decision-making. I also possess good communication skills to present findings clearly and concisely to both technical and non-technical audiences.

As for weaknesses, I sometimes get caught up in the details and can spend too much time perfecting an analysis when a timely, 'good enough' solution would suffice. I'm actively working on prioritizing tasks and focusing on delivering value quickly. Another area for improvement is staying current with the latest advancements in machine learning and AI, although I consistently invest time in self-learning.

19. Why are you interested in becoming a Business Intelligence Analyst?

I am interested in becoming a Business Intelligence Analyst because I enjoy working with data to uncover insights and drive strategic decision-making. I find it rewarding to transform raw data into actionable intelligence that helps organizations improve their performance and achieve their goals.

I'm drawn to the analytical and problem-solving aspects of the role, including data modeling, visualization, and reporting. I am eager to leverage my skills in data analysis and communication to contribute to a data-driven culture within a company. Moreover, I want to continue learning and expanding my knowledge within this field.

20. How do you handle working with large datasets that are too big to fit in memory?

When dealing with datasets that exceed available memory, I typically employ techniques like chunking/batching, using disk-based data structures, or leveraging distributed computing frameworks.

- Chunking/Batching: Read the data in smaller, manageable portions (chunks). Process each chunk individually, and then combine/aggregate the results. This avoids loading the entire dataset into memory at once. Often used with libraries like pandas when reading large CSV files.

- Disk-based data structures: Use data structures designed to reside on disk, such as SQLite databases or specialized file formats like Parquet or HDF5. These structures allow for efficient querying and manipulation of data without loading everything into memory. Dask can also be useful for this purpose.

- Distributed Computing: For extremely large datasets, frameworks like Spark or Hadoop enable parallel processing across a cluster of machines. The data is partitioned and distributed to multiple nodes, each processing a portion of the data concurrently. This significantly reduces the memory requirements on any single machine. Cloud platforms like AWS EMR or Databricks are also often used in conjunction to reduce setup overhead.
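To make the chunking idea from the list above concrete, here is a minimal sketch that uses only the Python standard library instead of pandas (pandas offers a similar pattern through the `chunksize` parameter of `read_csv`). The helper name, the column names, and the tiny in-memory CSV are assumptions for illustration.

```python
import csv
import io
from itertools import islice


def sum_column_in_chunks(file_obj, column, chunk_size=1000):
    """Sum a numeric CSV column while holding at most `chunk_size` rows in memory."""
    reader = csv.DictReader(file_obj)
    total = 0.0
    while True:
        # Pull the next batch of rows; an empty batch means the file is done.
        chunk = list(islice(reader, chunk_size))
        if not chunk:
            break
        total += sum(float(row[column]) for row in chunk)
    return total


# Hypothetical data; in practice file_obj would be an open file on disk.
sample = io.StringIO("order_id,amount\n1,10.5\n2,20.0\n3,4.5\n")
print(sum_column_in_chunks(sample, "amount", chunk_size=2))  # 35.0
```

Only the running total survives between chunks, which is what keeps memory use flat regardless of file size.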

21. Can you describe a situation where you had to work with a team to achieve a common goal?

During my previous role at X company, we were tasked with migrating the legacy database to a new cloud-based solution within a strict six-month deadline. This was a complex project involving multiple teams: the database team, the application development team, and the infrastructure team. My role was primarily focused on the application development team, where I was responsible for ensuring seamless integration with the new database.

To achieve this, we implemented daily stand-up meetings to track progress and identify potential roadblocks. We also used a shared project management tool to assign tasks and monitor completion. Effective communication was crucial. For example, the infrastructure team was facing delays in setting up the new database environment. To mitigate the impact, the application development team temporarily shifted focus to optimizing the existing codebase, which significantly improved performance. This collaborative approach helped us successfully migrate the database within the deadline and within budget.

22. What is SQL? Have you had any experience with it?

SQL stands for Structured Query Language. It's a standard programming language used for managing and manipulating data held in a relational database management system (RDBMS). Using SQL, you can create, read, update, and delete data in databases. It allows you to retrieve specific information from the database based on certain criteria and perform administrative tasks like creating tables, defining relationships between them, and managing user access.

Yes, I have experience with SQL. I have used it for querying databases, creating and modifying tables, and performing data analysis. I'm familiar with common SQL statements and clauses such as SELECT, INSERT, UPDATE, DELETE, CREATE TABLE, JOIN, GROUP BY, and WHERE. I am also familiar with different SQL flavors like MySQL, PostgreSQL, and SQLite. My experience includes tasks such as data cleaning, data aggregation, and report generation using SQL.
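A candidate might back this up with a short query. The sketch below runs a JOIN with WHERE and GROUP BY against an in-memory SQLite database through Python's built-in `sqlite3` module; the `customers` and `orders` tables and their contents are hypothetical.

```python
import sqlite3


def total_sales_by_region(conn):
    """Return (region, total) rows, largest total first."""
    query = """
        SELECT c.region, SUM(o.amount) AS total
        FROM orders AS o
        JOIN customers AS c ON c.id = o.customer_id
        WHERE o.amount > 0
        GROUP BY c.region
        ORDER BY total DESC
    """
    return conn.execute(query).fetchall()


# Hypothetical schema and rows, created in memory for the demo.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'North'), (2, 'South');
    INSERT INTO orders VALUES (1, 1, 100.0), (2, 1, 50.0), (3, 2, 75.0);
""")
print(total_sales_by_region(conn))  # [('North', 150.0), ('South', 75.0)]
```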

23. How do you prioritize tasks when you have multiple projects with deadlines?

When prioritizing tasks across multiple projects with deadlines, I use a combination of urgency, importance, and impact. I start by identifying the hard deadlines and any dependencies between tasks. Then, I assess the importance of each project to the overall goals. I use a framework like the Eisenhower Matrix (urgent/important) to categorize tasks. I also estimate the effort required for each task. Finally, I prioritize tasks based on a combination of these factors, focusing on high-impact, urgent tasks first. I keep stakeholders informed of my priorities and any potential conflicts.

24. What is ETL? Why is it important in business intelligence?

ETL stands for Extract, Transform, Load. It's a process used to copy data from multiple sources into a destination system, typically a data warehouse or data lake. During this process, the data is extracted from its source, transformed to fit operational needs (which can include cleansing, aggregating, and integrating data), and loaded into the final destination.

ETL is crucial for business intelligence (BI) because it provides a consolidated, clean, and consistent view of an organization's data. This enables businesses to make better informed decisions, track performance, identify trends, and gain a competitive advantage. Without ETL, BI systems would struggle to access and analyze data effectively due to inconsistencies and data quality issues across different source systems.
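A toy version of the three ETL stages, assuming a CSV source and a SQLite table standing in for the warehouse, might look like this. The function names, the `sales` table, and the cleaning rules (trim whitespace, normalise product names, drop rows with no amount) are illustrative assumptions rather than a standard.

```python
import csv
import io
import sqlite3


def extract(file_obj):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(file_obj))


def transform(rows):
    """Transform: normalise product names and drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # Skip records with a missing amount.
        cleaned.append((row["product"].strip().title(), float(amount)))
    return cleaned


def load(conn, rows):
    """Load: write the cleaned rows into the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (product TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()


# Hypothetical source data with inconsistent casing and a missing value.
source = io.StringIO("product,amount\n widget ,10\ngadget,\nWIDGET,5.5\n")
warehouse = sqlite3.connect(":memory:")
load(warehouse, transform(extract(source)))
result = warehouse.execute(
    "SELECT product, SUM(amount) FROM sales GROUP BY product"
).fetchall()
print(result)  # [('Widget', 15.5)]
```

After the transform step, the two differently cased "widget" rows aggregate as one product, which is the kind of consistency the paragraph above describes.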

25. Explain what you know about data warehousing. How does it help businesses?

Data warehousing is the process of collecting and storing data from various sources within an organization into a central repository. This repository, the data warehouse, is specifically designed for analysis and reporting, not for transactional operations like updating records. Data is typically transformed, cleaned, and integrated during the warehousing process (ETL - Extract, Transform, Load) to ensure consistency and quality.

Data warehousing helps businesses in several ways. It provides a unified view of data, enabling better decision-making. It also allows for historical analysis and trend identification, improved reporting and business intelligence, increased efficiency in data analysis, and enhanced data quality and consistency. By consolidating and standardizing data, a data warehouse empowers businesses to gain valuable insights and make more informed strategic choices.

26. If you notice errors in a report, what steps would you take to fix them?

If I notice errors in a report, my first step would be to verify the error by checking the source data and the calculations used to generate the report. This ensures that the error is not just a superficial observation.

After confirming the error, I would identify the root cause, whether it's a data entry mistake, a flawed calculation, or a bug in the reporting system. Then I would take steps to correct the error, update the report accordingly, and communicate the changes to the relevant stakeholders. If the error stems from a systemic issue, I'd propose preventative measures to avoid similar errors in the future.

27. What kind of data visualization tools are you familiar with?

I'm familiar with a range of data visualization tools, including Tableau, Power BI, and matplotlib in Python. Tableau and Power BI are great for creating interactive dashboards and reports, especially for business intelligence purposes, and can handle large datasets with ease.

For more customized and programming-centric visualizations, I often use matplotlib and seaborn in Python. These libraries offer a lot of flexibility in terms of plot types and customization options. I also have experience with plotly for creating interactive web-based visualizations and ggplot2 in R. Depending on the project, I choose the tool that best fits the data and the required level of interactivity and customization.

28. How would you present your findings to someone who doesn't have a technical background?

When presenting findings to a non-technical audience, I focus on the 'so what?' First, I avoid jargon and technical terms. Instead of detailing specific algorithms or code, I explain the impact of my findings in simple, plain language. For example, rather than saying "We improved the model's F1 score by 15%", I'd say "We made the system 15% better at identifying fraudulent transactions, which will save the company money and reduce risk."

I use analogies and real-world examples to illustrate complex concepts. Visual aids, like charts or graphs, are also helpful to show trends or patterns clearly. The goal is to make the information accessible and understandable, emphasizing the business value and practical implications rather than the technical details.

29. What is data mining? Can you give a practical example of how it could be used?

Data mining is the process of discovering patterns, trends, and useful information from large datasets. It involves using techniques from statistics, machine learning, and database systems to extract knowledge that can be used for decision-making.

A practical example is in retail. A supermarket chain could use data mining to analyze customer purchase history. By identifying which products are frequently bought together (e.g., bread and butter), they can optimize product placement in the store, run targeted promotions, or offer personalized recommendations, ultimately increasing sales and customer satisfaction. For instance, an Apriori algorithm could be applied to transaction data to find these association rules.
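The "frequently bought together" idea can be illustrated with a simple pair count. Note that this is not the full Apriori algorithm, only the pair-support counting that such algorithms build on, and the baskets and the `min_support` cut-off are made-up values.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(transactions, min_support=2):
    """Return ((item_a, item_b), count) for pairs seen in at least
    `min_support` baskets, most frequent first."""
    pair_counts = Counter()
    for basket in transactions:
        # Deduplicate and sort so each pair is counted once per basket
        # and always appears in the same order.
        for pair in combinations(sorted(set(basket)), 2):
            pair_counts[pair] += 1
    return [(pair, n) for pair, n in pair_counts.most_common() if n >= min_support]


# Hypothetical purchase history: one list of products per transaction.
baskets = [
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "eggs"],
    ["bread", "butter", "eggs"],
]
print(frequent_pairs(baskets))  # [(('bread', 'butter'), 3)]
```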

30. What metrics do you think are important for a company to track, and why?

Important metrics for a company depend heavily on its specific industry and goals, but some generally valuable metrics include: Revenue growth (indicates market traction and overall business health), Customer Acquisition Cost (CAC) (measures efficiency of sales and marketing efforts), Customer Lifetime Value (CLTV) (helps understand long-term profitability of customers), Churn Rate (indicates customer retention), and Net Promoter Score (NPS) (gauges customer loyalty and satisfaction).

Tracking these metrics allows businesses to make data-driven decisions, identify areas for improvement, and measure the effectiveness of their strategies. For example, a high CAC and low CLTV might indicate the need to reassess marketing strategies, while a high churn rate could signal issues with product quality or customer service. Choosing the right metrics and establishing a process for consistently monitoring them is crucial for sustained growth and success.
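Two of these metrics reduce to simple ratios. The sketch below uses common textbook definitions (acquisition spend divided by new customers, and customers lost divided by customers at the start of the period); companies often define them differently, so treat the formulas and the sample figures as assumptions.

```python
def customer_acquisition_cost(spend, new_customers):
    """Sales and marketing spend divided by customers acquired in the period."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return spend / new_customers


def churn_rate(customers_at_start, customers_lost):
    """Share of the starting customer base that left during the period."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return customers_lost / customers_at_start


# Hypothetical quarter: 50,000 spent to win 250 customers; 40 of 1,000 left.
print(customer_acquisition_cost(50_000, 250))  # 200.0
print(churn_rate(1_000, 40))  # 0.04
```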

Business Intelligence Analyst interview questions for juniors

1. Can you describe a time when you had to explain a complicated chart to someone who didn't know much about data? How did you do it?

I once had to present website traffic data to our marketing intern, who had minimal data analysis experience. The chart showed website visits, bounce rate, and conversion rates over the past quarter. Instead of diving into metrics, I started by explaining the 'why' – that we wanted to understand how effective our marketing campaigns were. I then focused on one key trend, like a spike in website visits after a recent campaign launch. I used simple language, explaining that a 'bounce' meant someone left the site quickly, and conversion meant they completed a purchase. I avoided jargon and focused on the story the data told, linking the chart back to their daily tasks and the overall marketing goals. For example, I mentioned how the blog post she helped create directly contributed to the increase in website visits shown in the graph.

2. Imagine you have a huge pile of LEGO bricks. How would you sort them to find all the red two-by-two bricks quickly? How does this relate to organizing data?

To find all the red two-by-two LEGO bricks quickly, I'd use a multi-stage sorting approach. First, I'd separate the bricks by color. This dramatically reduces the pile size I need to search through for red bricks. Next, within the red bricks, I'd sort by size, specifically looking for the two-by-two bricks. If dealing with a truly massive pile, I could further refine this by using multiple people to sort simultaneously, each handling a different sub-pile or sorting criteria.

This relates to organizing data because it mirrors how databases use indexing and filtering. Sorting by color is like filtering data based on a specific field (e.g., WHERE color = 'red'). Sorting by size is like indexing another field. By creating these 'indexes' or doing initial filtering, we significantly speed up the process of finding the specific data (the red two-by-two bricks) we're looking for, rather than scanning every single data element. For example, a database query might use a compound index on (color, size) to quickly locate the desired records.
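As a loose analogy in code, a dictionary keyed on (color, size) plays the role of the compound index: it is built once, and later lookups no longer scan the whole pile. Real database indexes are usually tree-based rather than hash-based, and the brick records here are invented for the example.

```python
from collections import defaultdict


def build_index(bricks):
    """Group brick ids by (color, size) so lookups avoid a full scan."""
    index = defaultdict(list)
    for brick in bricks:
        index[(brick["color"], brick["size"])].append(brick["id"])
    return index


# Hypothetical pile of bricks.
bricks = [
    {"id": 1, "color": "red", "size": "2x2"},
    {"id": 2, "color": "blue", "size": "2x2"},
    {"id": 3, "color": "red", "size": "2x4"},
    {"id": 4, "color": "red", "size": "2x2"},
]
index = build_index(bricks)
print(index[("red", "2x2")])  # [1, 4]
```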

3. What's the difference between a bar chart and a pie chart? When would you use one over the other, and why?

A bar chart uses rectangular bars to represent data values, with the length of the bar proportional to the value it represents. A pie chart, on the other hand, uses a circular 'pie' divided into slices, where each slice represents a proportion of the whole.

Use a bar chart when you want to compare individual values across different categories or show changes in values over time. They're better for displaying precise numerical values and allowing easy comparison between them. Use a pie chart when you want to show the proportion of different categories in relation to the whole. They're useful for illustrating relative percentages but are not as effective for comparing precise values or showing small differences.

4. If you found some data that looked weird or wrong, what would be your first steps to figure out what happened?

My first steps would be to verify the data's source and integrity. I'd start by checking the data collection process and looking for any potential errors or anomalies in the data pipeline. This might involve examining logs, scripts, or data entry forms used to gather the information.

Next, I'd investigate the data itself. This includes looking for patterns, outliers, and inconsistencies that might indicate data corruption, incorrect formatting, or measurement errors. I would also check for missing values or duplicates. Depending on the data, I might calculate summary statistics like mean, median, and standard deviation to get a better understanding of its distribution. If possible, compare the data with similar datasets or known benchmarks to see if the values are within a reasonable range.
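A first-pass profile of a single numeric column, covering the missing values, duplicates, and summary statistics mentioned above, could be sketched as follows. The `profile` helper and the sample values are assumptions, and the function expects at least one non-missing value.

```python
from statistics import mean, median, pstdev


def profile(values):
    """Summarise one numeric column; None marks a missing value."""
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "duplicates": len(present) - len(set(present)),
        "mean": mean(present),
        "median": median(present),
        "stdev": pstdev(present),
    }


# Hypothetical readings with one gap, one repeat, and one suspicious value.
report = profile([10, 12, None, 11, 12, 500])
print(report["missing"], report["duplicates"], report["median"])  # 1 1 12
```

Here the mean (109) sits far above the median (12), which is a quick hint that the value 500 deserves a closer look.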

5. Have you ever used Excel? What's your favorite thing to do with it for organizing information?

Yes, I have used Excel extensively. My favorite thing to do with Excel for organizing information is using tables and pivot tables to summarize and analyze data. Tables provide a structured way to store and manage data, allowing for easy filtering, sorting, and formatting. Pivot tables then take that structured data and quickly summarize it into meaningful insights. For example, I might use a table to store sales data (date, product, customer, amount) and then create a pivot table to show total sales by product and region.

6. Let's say your boss wants to know if sales went up last month. Where would you start looking for that information and how would you present it?

I'd start by checking our sales database or CRM system (like Salesforce, if we use one). I'd look for a report that summarizes sales figures for the past month and compares them to the previous month or the same month last year. If a pre-built report isn't available, I'd create a custom one, filtering the data to show only closed sales within the specified timeframes. I would also check to see if there are any dashboards with sales data already available.

To present the information, I'd prepare a brief summary that highlights the key findings. For example, "Sales increased by X% last month compared to the previous month, primarily driven by [reason, e.g., increased demand for product Y]." I would likely include a simple chart or table visualizing the sales data to make it easier to understand, showing the month-over-month or year-over-year comparison. If there are any notable trends or anomalies, I'd point them out and offer potential explanations.

7. What does 'data' mean to you, and why is it important for companies to understand their data?

To me, data represents facts, figures, and statistics collected together for reference or analysis. It can take many forms – numbers, text, images, audio, and video – and can be structured (like in a database), semi-structured (like JSON or XML), or unstructured (like free-form text). Essentially, it's any information that can be processed or used as a basis for making decisions.

Understanding data is crucial for companies because it allows them to make informed decisions, identify trends, improve efficiency, and gain a competitive advantage. By analyzing data, companies can understand customer behavior, optimize marketing campaigns, streamline operations, and develop new products and services. Ignoring data means relying on guesswork, which can lead to missed opportunities and costly mistakes. In today's data-driven world, a company's ability to effectively collect, analyze, and utilize data is essential for survival and growth.

8. If you had to teach a friend about data analysis, what's the one most important thing you'd want them to understand?

The most important thing to understand about data analysis is that it's about asking the right questions and using data to explore those questions, rather than just confirming pre-existing beliefs. It's a process of iterative investigation and critical thinking, not just applying formulas. Focus on understanding the 'why' behind the data, not just the 'what'.

9. Tell me about a time you used logic to solve a problem. How did you break the problem down into smaller steps?

In my previous role, we encountered a performance bottleneck in our data processing pipeline. The pipeline was responsible for transforming and loading large datasets into our data warehouse, and it was taking significantly longer than expected. To address this, I systematically broke the problem down. First, I identified the specific stages that were consuming the most time using profiling tools. Then, I analyzed the code within those stages to identify potential inefficiencies, such as redundant calculations or unoptimized database queries. Suspecting that the database was the bottleneck, I checked the query execution plan, which confirmed that the query was not using indexes. I rewrote the queries to take advantage of the indexes. Finally, after testing with representative datasets, I deployed the changes, which resulted in a 40% reduction in processing time.

10. What are some tools you are excited to learn, and how do you plan to do so?

I'm currently very interested in learning more about serverless technologies, particularly AWS Lambda and Azure Functions. The ability to deploy and scale applications without managing servers is incredibly appealing. I plan to use a combination of online courses (like those on A Cloud Guru or Coursera), reading the official documentation, and most importantly, building small projects to gain practical experience. For instance, I'd like to build a simple API endpoint that leverages Lambda to process data and store it in DynamoDB.

Additionally, I'm eager to improve my skills in infrastructure-as-code tools like Terraform. The declarative approach to infrastructure management is powerful, and I believe it's crucial for modern DevOps practices. I'll start by going through the HashiCorp Learn platform, then practice creating and managing AWS resources through Terraform. A practical project might involve setting up a fully automated CI/CD pipeline using Terraform, CodePipeline, and ECS.

11. If we asked you to find out which product is selling best in California, how would you approach that task?

To determine the best-selling product in California, I would start by accessing the sales data, ensuring it's segmented by geographical region (specifically California) and product category. I would then aggregate the sales figures for each product within California, using SQL if the data lives in a database or a data analysis tool like Pandas in Python if it arrives as a CSV file.

Specifically, I might use a SQL query like SELECT product_id, SUM(sales_quantity) AS total_sales FROM sales_table WHERE state = 'California' GROUP BY product_id ORDER BY total_sales DESC LIMIT 1; to identify the product with the highest total sales quantity. After identifying the top-selling product based on sales volume, I'd also consider factors like revenue generated and profit margins to get a more comprehensive understanding of 'best-selling'. Finally, I would validate the data to ensure accuracy and account for any potential anomalies or outliers.
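The query above can be tried end to end with Python's built-in sqlite3 module. This is a sketch only: the rows are made up, and `sales_table` with its three columns is assumed to match the names used in the query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales_table (product_id TEXT, state TEXT, sales_quantity INTEGER)"
)
conn.executemany(
    "INSERT INTO sales_table VALUES (?, ?, ?)",
    [
        ("P1", "California", 120),
        ("P2", "California", 300),
        ("P1", "California", 90),
        ("P3", "Texas", 900),  # outside California, so the WHERE clause excludes it
        ("P2", "California", 50),
    ],
)

top_product = conn.execute(
    """
    SELECT product_id, SUM(sales_quantity) AS total_sales
    FROM sales_table
    WHERE state = 'California'
    GROUP BY product_id
    ORDER BY total_sales DESC
    LIMIT 1
    """
).fetchone()

print(top_product)
```

Note that LIMIT 1 silently hides ties. If two products could share the top spot, it may be safer to return the top few rows and inspect them.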

12. Describe a situation where you had to work with others to achieve a goal. What was your role, and what did you learn?

In a previous role, I was part of a four-person team tasked with launching a new marketing campaign within a tight three-week deadline. My role was primarily focused on creating the marketing materials, including designing graphics and writing ad copy. We faced an initial setback when the initial design direction wasn't resonating with the target audience based on early feedback. To address this, I actively sought input from the other team members, including the marketing strategist and the sales representative, to better understand the audience's needs and preferences. We then brainstormed alternative design concepts and messaging together. I volunteered to revise the materials quickly, incorporating the new direction.

I learned the importance of adaptability and open communication. By actively listening to my team's feedback and collaborating on solutions, we were able to overcome the initial obstacle and deliver a successful campaign on time. It highlighted how diverse perspectives can strengthen the final product, and I understood the value of a good team player, which is a trait I try to embody today.

13. Why are you interested in becoming a Business Intelligence Analyst, and what part of the job excites you most?

I'm drawn to Business Intelligence Analyst roles because I enjoy uncovering insights from data and using them to drive better business decisions. I find it satisfying to translate complex datasets into actionable recommendations that can improve efficiency, increase revenue, or solve critical problems. The ability to contribute directly to strategic planning and see the tangible impact of my analysis is a major motivator.

The most exciting part of the job for me is the investigative aspect – diving deep into data to identify trends, patterns, and anomalies that might otherwise go unnoticed. I'm eager to leverage tools like SQL and visualization software to explore data and tell compelling stories that resonate with stakeholders and influence their thinking.

14. What is a dashboard and what key elements should it contain to be considered effective?

A dashboard is a visual display of the most important information needed to achieve one or more objectives, consolidated and arranged on a single screen so the information can be monitored at a glance. An effective dashboard should be clear, concise, and actionable, enabling quick understanding and informed decision-making.

Key elements of an effective dashboard include:

- Key Performance Indicators (KPIs): Display crucial metrics that measure progress toward goals.

- Clear Visualizations: Use charts and graphs appropriate for the data being presented (e.g., bar charts, line graphs, pie charts).

- Relevant Data: Focus on information directly related to the dashboard's purpose.

- Actionable Insights: Highlight trends and anomalies to prompt action.

- Intuitive Design: Ensure the dashboard is easy to understand and navigate.

- Up-to-date Information: Data should be refreshed regularly to reflect the current situation.

- Targeted Audience: Tailor the information and design to the specific needs of the users.

15. What are some common mistakes people make when looking at data, and how can we avoid them?

Several common mistakes can lead to incorrect interpretations of data. One frequent error is confirmation bias, where people selectively focus on data that supports their pre-existing beliefs while ignoring contradictory evidence. To avoid this, it's crucial to actively seek out diverse perspectives and rigorously challenge your own assumptions. Another mistake is confusing correlation with causation. Just because two variables are related doesn't mean one causes the other. Carefully consider potential confounding factors and conduct thorough research to establish causality. Failing to account for outliers or understand the distribution of the data is also a frequent error. Always visualize the data to get a good sense of its characteristics before running any statistical tests.

To mitigate these issues, employ a structured approach. Clearly define your research question before looking at the data. Use appropriate statistical methods and tools to analyze the data objectively. Document your analysis process, including any data cleaning or transformations. Peer review is also incredibly valuable to catch biases or errors that you might have missed. Don't forget to properly communicate your findings, and be transparent about any limitations of the data or analysis.

16. If we gave you a messy spreadsheet, what are the first three things you would do to clean it up?

The first three things I'd do to clean up a messy spreadsheet are:

- Standardize the Data: I would focus on data consistency. This involves correcting inconsistencies in spelling, capitalization, and formatting. For example, ensuring all dates are in the same YYYY-MM-DD format, removing extra spaces, or correcting inconsistent abbreviations (like "St." vs "Street"). I'd use Excel's Find and Replace, Text to Columns, or similar features to achieve this.

- Handle Missing Values: I would identify and address missing data. Depending on the context and the amount of missing data, I might fill the gaps with placeholder values (like "N/A" or "Unknown"), calculate and impute reasonable estimates (e.g., using averages or medians for numerical data), or flag the rows for further review. If the missing data significantly impacts the analysis, I might exclude those rows, provided that dropping them doesn't skew the results. Which option I choose depends on what the downstream analysis needs.

- Remove Duplicates: I'd identify and remove duplicate rows. Duplicates can skew analysis and inflate counts. Excel's "Remove Duplicates" function, or similar features in other spreadsheet software, would be used to identify and eliminate exact duplicates, or near duplicates depending on the analysis requirement. For near duplicates, I might create a new column with concatenated fields and then look for duplicates in that column. I would make sure to understand the meaning of each row before removing any duplicates.
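The same three steps can be sketched outside Excel in a few lines of Python. The sample rows, the two accepted date formats, and the "Unknown" placeholder are assumptions made for this illustration.

```python
from datetime import datetime

# Hypothetical rows as they might come out of a messy spreadsheet export.
rows = [
    {"name": " Alice ", "city": "boston", "joined": "03/15/2023"},
    {"name": "Bob", "city": "", "joined": "2023-04-01"},
    {"name": "alice", "city": "Boston", "joined": "2023-03-15"},
]


def to_iso(text):
    """Try each assumed input format and return the date as YYYY-MM-DD."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(text, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    return "Unknown"


cleaned, seen = [], set()
for row in rows:
    record = (
        row["name"].strip().title(),               # 1. standardize spacing and case
        row["city"].strip().title() or "Unknown",  # 2. placeholder for missing values
        to_iso(row["joined"].strip()),             # 1. standardize the date format
    )
    if record not in seen:                         # 3. drop duplicates after standardizing
        seen.add(record)
        cleaned.append(record)

print(cleaned)
```

The order matters: the first and third rows only turn out to be duplicates once spacing, capitalization, and dates have been standardized.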

17. How do you make sure that the data you are working with is correct and trustworthy?

To ensure data correctness and trustworthiness, I focus on several key areas. Firstly, data validation is crucial. This involves checking data against expected formats, ranges, and constraints during input and processing. I also perform data cleansing to handle missing values, inconsistencies, and outliers. I use techniques like imputation, standardization, and removing duplicates, depending on the context and data characteristics.

Secondly, data provenance is important. Understanding the data's origin, transformations, and lineage helps identify potential biases or errors. I document the data sources and processing steps. Furthermore, I use data quality metrics to track data integrity over time and implement regular data audits to detect and address issues proactively. I might also implement checksums or other data integrity checks, particularly when handling large datasets or sensitive information. I always try to collaborate with domain experts to get their feedback on the data and the quality of my analysis.

18. Can you explain what a database is in simple terms, and why businesses use them?

A database is like a digital filing cabinet that stores and organizes information. Instead of paper documents, it holds data in a structured way, making it easy to find, update, and manage. Think of it as a highly organized spreadsheet, but much more powerful.

Businesses use databases for many reasons. They allow for efficient data retrieval, ensuring quick access to crucial information like customer details or inventory levels. Databases also maintain data integrity, preventing errors and inconsistencies. Furthermore, they enable data sharing and collaboration across different departments, supporting better decision-making and streamlined operations. Examples of database systems include MySQL, PostgreSQL, and MongoDB.

19. What's the difference between correlation and causation, and why is that important to know when analyzing data?

Correlation indicates a statistical relationship between two variables, meaning they tend to move together. Causation, on the other hand, means that one variable directly influences another; a change in one causes a change in the other. Just because two things are correlated doesn't mean one causes the other.

Understanding this difference is crucial in data analysis because mistaking correlation for causation can lead to incorrect conclusions and flawed decision-making. For example, if ice cream sales and crime rates are correlated, it doesn't mean that eating ice cream causes crime. There might be a confounding variable, like hot weather, that influences both. Acting on the assumption that eating less ice cream reduces crime would be ineffective and based on a faulty premise.
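The ice cream example can be simulated to show how a confounder produces a strong correlation with no causal link. Everything below is synthetic: the coefficients, noise levels, and sample size are assumptions picked only to make the effect visible.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


rng = random.Random(0)
temperature = [rng.uniform(10, 35) for _ in range(1000)]
# Both series depend on temperature, but neither depends on the other.
ice_cream = [2.0 * t + rng.gauss(0, 3) for t in temperature]
crime = [1.0 * t + rng.gauss(0, 3) for t in temperature]

raw_r = pearson(ice_cream, crime)

# Partial correlation: the ice cream/crime relationship that remains
# after the linear effect of temperature is removed from both.
r_it = pearson(ice_cream, temperature)
r_ct = pearson(crime, temperature)
partial_r = (raw_r - r_it * r_ct) / math.sqrt((1 - r_it**2) * (1 - r_ct**2))

print(f"raw correlation: {raw_r:.2f}")
print(f"after controlling for temperature: {partial_r:.2f}")
```

The raw correlation comes out high, while the partial correlation falls close to zero, which is what you would expect when hot weather drives both series.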

20. How do you stay up-to-date with new trends and technologies in the field of Business Intelligence?

I stay updated with Business Intelligence trends through a combination of online resources and professional engagement. This includes regularly reading industry blogs and publications like Towards Data Science, Dataversity, and the Tableau Public Gallery. I also follow thought leaders on platforms like LinkedIn and Twitter, and participate in online communities and forums such as Reddit's r/businessintelligence and Stack Overflow, where professionals share insights and discuss emerging technologies.

Furthermore, I actively seek out opportunities for professional development. This includes attending webinars, conferences, and workshops focused on specific BI tools or methodologies. I also enjoy experimenting with new technologies and tools, like cloud-based data warehousing solutions (e.g., Snowflake, BigQuery) and advanced visualization platforms, often through personal projects. Finally, I am an avid follower of technology news from sources such as TechCrunch and Wired to learn more about new releases, trends and product roadmaps.

Business Intelligence Analyst intermediate interview questions

1. How would you explain the difference between correlation and causation to someone who doesn't know statistics?

Correlation means two things are observed to happen together. As one changes, the other also tends to change. For example, ice cream sales and crime rates might both go up in the summer. Causation means that one thing directly causes another to happen. If A causes B, then A makes B happen.

Just because two things are correlated doesn't automatically mean one causes the other. The ice cream example showcases this: hot weather likely causes both increased ice cream sales and increased crime. So, while they are correlated, one doesn't cause the other directly. A third, unseen factor (like the weather) might be the real cause.

2. Describe a time you had to present complex data to a non-technical audience. How did you ensure they understood it?

I once had to present website traffic data to our marketing team, who primarily focus on creative content. The data included metrics like bounce rate, session duration, and conversion rates, which they weren't familiar with. I avoided jargon and instead focused on telling a story with the data. For instance, instead of saying "the bounce rate increased by 15%," I said, "15% more visitors are leaving the website immediately after arriving, which means our landing page might not be relevant to them." I also used visual aids like simple charts and graphs to illustrate the trends and made sure to relate the data back to their goals, like increasing leads and improving brand awareness. I made sure to answer questions patiently and explain everything in layman's terms.

To further ensure understanding, I focused on 'so what?' and 'now what?' For each data point, I explained why it mattered ('so what?') and what actions we could take based on that information ('now what?'). For example, if session duration was low, the 'now what?' could be to improve the website content so it better holds the user's attention. This helped them connect the data to actionable insights they could use in their marketing campaigns. Ultimately, the goal was to translate the data into something meaningful and useful for their specific needs.

3. What are some common data warehousing methodologies, and when would you choose one over another?

Common data warehousing methodologies include:

- Inmon's Corporate Information Factory (CIF): This is a top-down approach focusing on creating a centralized, normalized data warehouse as the single source of truth. Choose this when data quality, consistency, and long-term analytical needs are paramount, and you can afford a longer initial development time.

- Kimball's Dimensional Modeling: This is a bottom-up approach building data marts focused on specific business processes. It's faster to implement and easier for users to understand. Choose this when you need quick wins, have well-defined business processes, and departmental autonomy is preferred.

- Data Vault: Designed for agility and auditability when handling complex and volatile data. Choose this when complex data integration, historical data tracking, and regulatory compliance are crucial. This approach suits the integration of diverse and fast-changing data sources, although its initial implementation can be relatively complex and time-consuming.

4. Walk me through your process for identifying and resolving data quality issues. Can you give a specific example?

My process for identifying and resolving data quality issues typically involves several steps. First, I profile the data to understand its structure, distribution, and potential anomalies using tools like Pandas or SQL queries (e.g., SELECT column, COUNT(*) FROM table GROUP BY column). This helps me identify missing values, incorrect data types, outliers, or inconsistencies. Then, I define data quality rules or constraints based on business requirements. After identifying issues, I work to resolve them using techniques such as data cleaning (e.g., replacing missing values, standardizing formats), data transformation (e.g., converting data types, aggregating data), or data enrichment (e.g., adding missing information from external sources). I document all changes made for auditing purposes.

For example, in a previous role, we had a dataset of customer addresses with inconsistent formatting. Some addresses included apartment numbers while others didn't, leading to issues with delivery. I identified this issue during data profiling using SQL. To resolve this, I used a Python script with regular expressions to standardize the address format, ensuring all addresses had a consistent structure. This involved cleaning special characters, standardizing abbreviations (e.g., 'St' to 'Street'), and ensuring apartment numbers were consistently represented. After cleaning, the delivery success rate improved significantly.
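A simplified version of that kind of script is sketched below. The abbreviation table and the "Apt <number>" convention are assumptions for illustration; a production cleaner would need a much longer rule set and, ideally, validation against a postal reference.

```python
import re

# Hypothetical abbreviation table; extend as needed.
ABBREVIATIONS = {"St": "Street", "Ave": "Avenue", "Rd": "Road"}


def standardize_address(raw):
    # Replace special characters (anything other than word characters,
    # whitespace, '#' and '.') with a space, then collapse repeated spaces.
    text = re.sub(r"[^\w\s#.]", " ", raw)
    text = re.sub(r"\s+", " ", text).strip()

    # Expand abbreviations, with or without a trailing period.
    for short, full in ABBREVIATIONS.items():
        text = re.sub(rf"\b{short}\b\.?", full, text)

    # Represent apartment numbers consistently as "Apt <number>".
    text = re.sub(r"(?:\bApt\b\.?|\bUnit\b|#)\s*(\w+)", r"Apt \1", text)
    return text


first = standardize_address("12  Main St., #4b")
second = standardize_address("45 Oak Ave Apt. 7")
print(first)
print(second)
```

One known weakness of a rule like this is ambiguity: "St" can also mean "Saint", so the output should be spot-checked before it replaces the original column.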

5. How do you balance the need for detailed analysis with the importance of delivering insights quickly?

I balance detailed analysis with quick insights by prioritizing the analysis based on impact and urgency. I start with a high-level overview to identify the most critical areas for immediate attention and then delve deeper into those specific areas first. This allows me to deliver initial insights quickly while continuing a more thorough analysis in parallel. Furthermore, I use techniques like the Pareto principle (80/20 rule) to focus on the vital few insights that drive the most significant outcomes.

Another strategy is to use iterative analysis and reporting. I deliver initial findings as soon as they are available, even if incomplete, and then refine and expand the analysis in subsequent iterations. This ensures stakeholders have timely information while allowing me to maintain rigor. I also communicate any limitations transparently and frame preliminary findings with appropriate caveats.

6. Explain the concept of A/B testing. How would you design and analyze an A/B test for a website improvement?

A/B testing (also known as split testing) is a method of comparing two versions of something (e.g., a webpage, an app feature) to determine which one performs better. You randomly assign users to one of two groups: Group A (the control group, seeing the original version) and Group B (the treatment group, seeing the new version). Key metrics, such as conversion rate, click-through rate, or time spent on page, are then tracked and compared statistically to see if there's a significant difference between the two versions.

To design and analyze an A/B test for a website improvement, first define a clear hypothesis (e.g., 'Changing the button color to orange will increase the click-through rate'). Then identify the key metric you want to improve and create the two versions (A and B). Use an experimentation tool such as Optimizely (Google Optimize, once a common choice, was discontinued in 2023) to randomly assign users to each group and track the defined metrics. Finally, once the test has collected the sample size indicated by a statistical power analysis, analyze the data using statistical tests (e.g., a t-test or chi-squared test) to determine whether the difference between the groups is statistically significant and practically meaningful. Consider potential confounding factors, such as seasonality or user demographics, during the analysis.
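For a conversion-style metric, the analysis step can be sketched with a two-proportion z-test, which for a two-group comparison gives the same verdict as a chi-squared test without continuity correction. The visitor and conversion counts below are invented for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: A converts 200 of 5000 visitors, B converts 260 of 5000.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value only covers statistical significance. Whether a lift from 4.0% to 5.2% is worth shipping is the separate, practical question the answer above also calls out.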

7. What are some limitations of using dashboards? How can you overcome these limitations?

Dashboards, while powerful, have limitations. They can suffer from information overload, presenting too much data at once and obscuring key insights. They can also become static and outdated quickly, failing to reflect real-time changes or requiring manual updates. Furthermore, dashboards are often one-size-fits-all, lacking the personalization needed for different user roles or specific inquiries. Limited drill-down capabilities can prevent users from exploring underlying data and understanding the 'why' behind the numbers.

To overcome these, prioritize clear visualization and focus on key performance indicators (KPIs). Implement real-time data streaming and automation to maintain up-to-date information. Offer customizable dashboards or views tailored to different users or purposes. Integrate interactive elements and drill-down functionality to enable deeper data exploration. For example, consider using tools that provide dynamic filtering and allow exporting data for further analysis.

8. Describe a time you had to work with incomplete or messy data. What steps did you take?

In a previous role, I worked on a project to analyze customer churn. The data we received from various sources was often incomplete and inconsistent. For example, contact information might be missing, or customer activity logs might have conflicting timestamps.

My approach involved several steps. First, I profiled the data to identify missing values, inconsistencies, and outliers using tools like pandas in Python. Second, I implemented data cleaning techniques, such as imputing missing values (e.g., using mean or median for numerical data, or mode for categorical data), standardizing formats (e.g., date formats), and removing duplicate records. Finally, I documented all data cleaning steps to ensure reproducibility and maintain data integrity. For instance, if a customer had contradictory information on subscription dates, I'd create a decision process and document that for future use.
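The imputation step described above might look like the following sketch. The customer records and field names are hypothetical, and flagging imputed cells is my own suggested addition for documenting the cleaning step.

```python
from statistics import median, mode

# Hypothetical churn records; None marks a missing field.
customers = [
    {"id": 1, "monthly_spend": 40.0, "plan": "basic"},
    {"id": 2, "monthly_spend": None, "plan": "premium"},
    {"id": 3, "monthly_spend": 55.0, "plan": None},
    {"id": 4, "monthly_spend": 70.0, "plan": "basic"},
]

# Median for the numerical column, mode for the categorical one.
spend_fill = median(
    c["monthly_spend"] for c in customers if c["monthly_spend"] is not None
)
plan_fill = mode(c["plan"] for c in customers if c["plan"] is not None)

imputed = []
for c in customers:
    row = dict(c)
    # Flag imputed cells so later analysis can tell real values from estimates.
    row["spend_imputed"] = row["monthly_spend"] is None
    row["plan_imputed"] = row["plan"] is None
    if row["spend_imputed"]:
        row["monthly_spend"] = spend_fill
    if row["plan_imputed"]:
        row["plan"] = plan_fill
    imputed.append(row)

for row in imputed:
    print(row)
```

Keeping the flags alongside the data makes it easy to rerun the analysis with and without imputed rows and see whether the conclusions change.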

9. How do you stay up-to-date with the latest trends and technologies in business intelligence?

I stay current with business intelligence trends through a combination of active learning and community engagement. I regularly read industry publications like Forbes, The Information, and Harvard Business Review to understand high-level strategic shifts. I also subscribe to newsletters from BI software vendors (e.g., Tableau, Power BI) and follow key influencers on platforms like LinkedIn and X (formerly Twitter) to capture emerging best practices and product updates.

To dive deeper into specific technologies, I explore online courses on platforms like Coursera and edX, particularly focusing on data visualization, machine learning, and cloud computing, as these areas frequently intersect with BI. Furthermore, I participate in online forums (e.g., Stack Overflow, Reddit) and attend virtual or in-person conferences to learn from practitioners and share my own experiences. This blended approach ensures I have both a broad understanding of the BI landscape and specific knowledge of relevant tools and techniques.

10. Let's say you have contradicting data from different sources. What are the steps you would take to resolve the conflict?

When faced with contradicting data from different sources, I would first verify the data sources themselves. This involves checking the reliability, accuracy, and recency of each source. I'd try to understand the methodology used by each source to collect and process the data, looking for any potential biases or limitations.

Next, I would investigate the data discrepancy itself. This might involve looking at the raw data from each source, identifying the specific data points that are in conflict, and trying to understand the root cause of the discrepancy. If possible, I'd attempt to reconcile the data by applying data cleaning techniques, standardizing data formats, or using a weighted average based on the source's reliability. If reconciliation isn't possible, I'd document the conflicting data points and provide a rationale for choosing one source over another, or present both data points with a clear explanation of the discrepancy, ensuring transparency in the analysis.

11. What's the difference between a star schema and a snowflake schema? What are the tradeoffs of each?

The star schema and snowflake schema are both dimensional data warehouse models. The star schema features a central fact table surrounded by dimension tables. Dimension tables in a star schema are denormalized, meaning redundant data might exist but joins are simpler and query performance is generally faster. The snowflake schema, conversely, normalizes dimension tables. These tables are broken down into multiple related tables, resembling a snowflake shape. This reduces data redundancy and improves data integrity but introduces more complex joins, potentially impacting query performance.

The key tradeoff is between query performance and data integrity/storage space. Star schemas prioritize query speed due to simpler joins, which is helpful for reporting and analysis. Snowflake schemas emphasize data normalization, reducing redundancy and improving data integrity at the cost of more complex queries and potentially slower performance. Choosing between the two depends on the specific requirements of the data warehouse, balancing performance needs with data storage and integrity considerations.
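The difference in join complexity is easy to see in a toy example. The sketch below builds both layouts in an in-memory SQLite database; all table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Star: the category name is stored directly on the product dimension.
    CREATE TABLE dim_product_star (
        product_id INTEGER PRIMARY KEY, name TEXT, category_name TEXT);

    -- Snowflake: the category is normalized into its own table.
    CREATE TABLE dim_category (category_id INTEGER PRIMARY KEY, category_name TEXT);
    CREATE TABLE dim_product_snow (
        product_id INTEGER PRIMARY KEY, name TEXT, category_id INTEGER);

    CREATE TABLE fact_sales (product_id INTEGER, amount REAL);

    INSERT INTO dim_category VALUES (1, 'Toys'), (2, 'Books');
    INSERT INTO dim_product_star VALUES
        (10, 'Robot', 'Toys'), (11, 'Puzzle', 'Toys'), (12, 'Atlas', 'Books');
    INSERT INTO dim_product_snow VALUES
        (10, 'Robot', 1), (11, 'Puzzle', 1), (12, 'Atlas', 2);
    INSERT INTO fact_sales VALUES (10, 50.0), (11, 20.0), (12, 30.0), (10, 25.0);
    """
)

# Star schema: one join from the fact table to the dimension.
star = conn.execute(
    """
    SELECT p.category_name, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_product_star p ON p.product_id = f.product_id
    GROUP BY p.category_name
    ORDER BY p.category_name
    """
).fetchall()

# Snowflake schema: the same question needs an extra join to reach the category.
snowflake = conn.execute(
    """
    SELECT c.category_name, SUM(f.amount)
    FROM fact_sales f
    JOIN dim_product_snow p ON p.product_id = f.product_id
    JOIN dim_category c ON c.category_id = p.category_id
    GROUP BY c.category_name
    ORDER BY c.category_name
    """
).fetchall()

print(star)
print(snowflake)
```

Both queries return the same totals. The star version repeats 'Toys' on every toy product, while the snowflake version stores it once and pays for that with an additional join.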

12. Imagine you're asked to track the performance of a new marketing campaign. What KPIs would you focus on, and why?

I'd focus on several key performance indicators (KPIs) to track the marketing campaign's performance. These would include Reach/Impressions (to understand the breadth of the campaign's visibility), Click-Through Rate (CTR) (to gauge the relevance and engagement of the ads), Conversion Rate (to measure how effectively the campaign turns interest into desired actions, such as purchases or sign-ups), Cost Per Acquisition (CPA) (to determine the efficiency of acquiring new customers), and Return on Ad Spend (ROAS) (to assess the overall profitability of the campaign).

Each of these KPIs provides a crucial piece of the puzzle. Reach shows how many people saw the ad, CTR shows if they found it interesting enough to click, Conversion Rate shows if they followed through with the intended action, CPA shows how much it cost to get a customer to convert, and ROAS shows the big picture of profitability. Analyzing these metrics together allows me to optimize the campaign for better performance.
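The ratio-based KPIs reduce to simple arithmetic, sketched below. The campaign figures are invented, and the definitions are the common ones I am assuming here (for instance, conversion rate measured per click rather than per session); a team should confirm its own definitions before comparing numbers.

```python
def campaign_kpis(impressions, clicks, conversions, spend, revenue):
    """Compute ratio KPIs from raw campaign totals."""
    return {
        "ctr": clicks / impressions,             # share of impressions that were clicked
        "conversion_rate": conversions / clicks, # share of clicks that converted
        "cpa": spend / conversions,              # cost to acquire one conversion
        "roas": revenue / spend,                 # revenue earned per unit of ad spend
    }


# Hypothetical campaign totals.
kpis = campaign_kpis(
    impressions=100_000, clicks=2_000, conversions=100, spend=5_000.0, revenue=20_000.0
)

for name, value in kpis.items():
    print(f"{name}: {value}")
```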

13. How would you approach a situation where stakeholders disagree on the best way to visualize data?

When stakeholders disagree on data visualization, I facilitate a collaborative process to reach a consensus. First, I would aim to understand each stakeholder's perspective, including their goals, target audience, and the insights they hope to convey with the visualization. This can involve asking open-ended questions and actively listening to their concerns.

Next, I would propose several visualization options, explaining the strengths and weaknesses of each in relation to the data and the stakeholders' objectives. I would also present relevant data visualization principles and best practices to support my suggestions, such as avoiding misleading charts or choosing appropriate chart types for different data types. Then, I would lead a discussion to evaluate the options, aiming for a solution that balances everyone's needs and effectively communicates the data's story.

14. Tell me about a time you had to learn a new BI tool or technology quickly. What was your approach?

In my previous role, we transitioned from Tableau to Power BI. The timeline was tight, so I needed to get up to speed quickly. My approach was multi-faceted. First, I leveraged the wealth of online resources like Microsoft's documentation, YouTube tutorials, and community forums. I focused on understanding the core concepts, DAX language, and report building techniques specific to Power BI. Second, I immediately started applying my knowledge by recreating existing Tableau dashboards in Power BI. This hands-on practice helped me identify gaps in my understanding and solidify my learning.

To further accelerate my learning, I collaborated closely with colleagues who were already proficient in Power BI. I sought their guidance on complex calculations and best practices. I also participated in internal training sessions and workshops. By combining self-directed learning, practical application, and peer collaboration, I was able to effectively transition to Power BI within a few weeks and contribute to the successful migration of our reporting infrastructure.

15. What are some potential ethical considerations when working with large datasets, especially regarding privacy?

Working with large datasets brings several ethical considerations, particularly concerning privacy. Anonymization techniques can fail, leading to re-identification of individuals, especially when datasets are combined or contain quasi-identifiers. There's also the risk of data bias, where datasets reflect existing societal inequalities, leading to discriminatory outcomes in algorithms and models trained on them. Furthermore, purpose limitation is crucial; data collected for one purpose should not be used for another without proper consent or legal justification. Proper data governance is essential.

Specific concerns include:

- Informed consent: Ensuring individuals understand how their data will be used.

- Data security: Protecting data from unauthorized access and breaches.

- Transparency: Being open about data collection and usage practices.

- Fairness and non-discrimination: Avoiding biased outcomes.

- Accountability: Establishing mechanisms for addressing harms caused by data use.

Addressing these concerns might involve measures such as implementing differential privacy algorithms, regularly auditing models for bias, and establishing clear data governance policies.
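
One simple way to gauge the re-identification risk from quasi-identifiers is a k-anonymity style check, which counts how many records share each combination of quasi-identifier values. This is a different and simpler technique than differential privacy. The sketch below is illustrative only: the field names, the records, and the threshold `k` are all assumptions.

```python
from collections import Counter


def risky_groups(records, quasi_identifiers, k):
    """Return quasi-identifier combinations shared by fewer than k records.

    Small groups are the ones most exposed to re-identification when the
    dataset is joined with another source.
    """
    counts = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return {combo: n for combo, n in counts.items() if n < k}


# Hypothetical records with direct identifiers already removed.
records = [
    {"zip": "02138", "birth_year": 1985, "gender": "F"},
    {"zip": "02138", "birth_year": 1985, "gender": "F"},
    {"zip": "02139", "birth_year": 1990, "gender": "M"},
]

flagged = risky_groups(records, ["zip", "birth_year", "gender"], k=2)
print(flagged)
```

Any combination that comes back flagged would typically be generalized (for example, truncating the ZIP code) or suppressed before the data is shared.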

16. How familiar are you with data governance principles? How would you implement them in a BI project?

I am familiar with data governance principles like data quality, data security, data lineage, metadata management, and compliance. In a BI project, I'd implement them by first defining clear data ownership and responsibilities. Then, I'd establish data quality rules and monitoring processes, ensuring data accuracy and completeness throughout the BI pipeline. I would also implement data security measures like access controls and encryption to protect sensitive information.

To track data lineage, I'd document the data's origin, transformations, and destinations, and utilize metadata management tools to capture and manage information about the data. Finally, I'd ensure compliance with relevant regulations like GDPR or HIPAA by implementing appropriate policies and procedures.

17. Describe a situation where your analysis led to a significant business decision. What was your role in the process?

During my time at a marketing firm, we were managing advertising spend for a client in the e-commerce space. Initial reports showed increasing spend wasn't translating to proportional revenue growth. My role was to dive deeper into the data. I analyzed campaign performance across different platforms (Google Ads, Facebook Ads), demographics, and product categories. The analysis revealed that a significant portion of the budget was being spent on demographics with low conversion rates and on product categories with poor margins.

I presented my findings, including detailed charts and graphs, to the client and our internal strategy team. Based on my analysis, we recommended a reallocation of budget, shifting focus to demographics with higher conversion rates and product categories with better margins. We also suggested pausing campaigns targeting low-performing demographics and conducting A/B testing to optimize ad copy. This led to a 15% increase in overall revenue within the following quarter while maintaining a similar advertising budget. The client was very happy with the result and continued with our services.

18. What are some common data visualization best practices? Give examples of effective and ineffective visualizations.

Common data visualization best practices include choosing the right chart type for the data (e.g., bar charts for comparisons, line charts for trends), reducing clutter by minimizing the number of elements and using whitespace effectively, using color sparingly to highlight important data points, keeping labels clear and readable, and maintaining a consistent visual style.

An effective visualization might be a simple bar chart comparing sales figures across regions, with clearly labeled bars and a contrasting color highlighting the best-performing region. Ineffective visualizations include 3D charts that add no value and distort the data, pie charts with too many slices to compare, and inconsistent axis scales that can mislead the viewer.

19. How do you prioritize requests from different stakeholders with competing priorities?

When faced with competing priorities from different stakeholders, I first focus on understanding the rationale behind each request. This involves actively listening to each stakeholder to grasp their goals, the impact of their request, and the potential consequences of delay. I then try to quantify the impact using metrics or KPIs where possible.

Next, I work to identify any dependencies between requests and potential synergies that might allow for more efficient solutions. I consider factors like urgency, business value, risk mitigation, and strategic alignment. If direct alignment is not immediately apparent, I facilitate a discussion with all stakeholders involved, presenting the competing priorities, outlining the impacts, and collaboratively seeking a solution that optimizes overall business outcomes.

In cases where consensus is difficult to reach, I escalate to a higher authority or a designated decision-maker to resolve the conflict and provide clear direction. Communication is key throughout the process, ensuring all stakeholders remain informed about the status of their requests and the reasons behind prioritization decisions.

20. What are your preferred methods for documenting your work, including data sources, transformations, and analysis?

I prefer a combination of methods for documenting my work. For data sources, I maintain a README file or similar documentation that outlines the origin of the data, including URLs, database connection details, and any relevant access procedures. I also document any data cleaning or transformation steps applied, often using inline comments within code or a separate document detailing the transformations performed, the reasons behind them, and the tools used (e.g., pandas, SQL).

For analysis, I use tools like Jupyter notebooks, where I can interleave code, explanations, and visualizations, and I comment code liberally to explain complex logic or algorithms. For larger projects, I create dedicated documentation using tools like Sphinx to provide a more structured overview of the project's architecture, data flow, and key findings. I also use version control (Git) to track changes, with descriptive commit messages that state the purpose of each change. For API-related tasks, I use OpenAPI specifications.

21. Let's say a dashboard you created is showing unexpected results. How would you troubleshoot the issue?

First, I'd verify the data sources. I'd check if the data ingestion pipelines ran successfully and if the data is complete and accurate. I'd examine recent changes to the data model or any transformations applied to the data.

Next, I'd validate the dashboard's configuration. This includes confirming the accuracy of the filters, calculations, and aggregations used in the dashboard. I'd also check for any recent dashboard modifications that could have introduced errors. If possible, compare the results with a known good state or a different data source to identify discrepancies. If coding is involved in the dashboard, such as custom SQL queries or scripts, I'd carefully review that code for errors or unexpected behavior, paying close attention to data types and logical operations.
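
The "compare against a different data source" step can be sketched as a small reconciliation check: recompute the aggregate from source rows and flag any group where the dashboard figure differs. This is an illustrative sketch only. The region names, amounts, and tolerance are assumptions, and in practice the source rows would come from a query rather than a literal list.

```python
import math
from collections import defaultdict


def reconcile(source_rows, dashboard_totals, rel_tol=1e-6):
    """Compare per-group totals recomputed from source with dashboard values.

    Returns {group: (source_total, dashboard_total)} for every mismatch,
    including groups that appear on only one side.
    """
    expected = defaultdict(float)
    for group, amount in source_rows:
        expected[group] += amount

    mismatches = {}
    for group in sorted(set(expected) | set(dashboard_totals)):
        source_total = expected.get(group, 0.0)
        dashboard_total = dashboard_totals.get(group, 0.0)
        if not math.isclose(source_total, dashboard_total,
                            rel_tol=rel_tol, abs_tol=1e-9):
            mismatches[group] = (source_total, dashboard_total)
    return mismatches


# Hypothetical source rows and the totals currently shown on the dashboard.
source_rows = [("EU", 100.0), ("EU", 50.0), ("US", 200.0)]
dashboard_totals = {"EU": 150.0, "US": 180.0}

mismatches = reconcile(source_rows, dashboard_totals)
print(mismatches)
```

A mismatch confined to one group usually narrows the search to a filter or join affecting that group, while mismatches everywhere suggest a stale refresh or a changed calculation.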

22. How do you handle situations where your analysis contradicts the opinions of senior management?

When my analysis contradicts senior management's opinions, I prioritize clear and respectful communication. First, I ensure my analysis is thorough, accurate, and based on verifiable data. I then present my findings with supporting evidence in a concise and understandable manner, acknowledging the validity of their perspective while respectfully highlighting the data-driven discrepancies. I focus on the potential impact of each approach and frame the conversation around shared goals and objectives.

I also actively listen to their reasoning and consider whether there are factors I may have overlooked or misjudged. It's crucial to be open to revising my analysis based on new information or perspectives. If a disagreement persists, I suggest exploring alternative analyses or seeking input from other experts to reach a consensus that best serves the company's interests. Ultimately, I emphasize a collaborative and data-driven approach to decision-making, fostering an environment of open communication and mutual respect.

23. Can you explain the concept of cohort analysis and how it can be used to gain insights?

Cohort analysis is a behavioral analytics technique that groups users by a shared characteristic (e.g., sign-up date, acquisition channel) and tracks each group's behavior over time. Instead of looking at aggregate data, it focuses on these specific groups (cohorts) to understand trends, retention rates, and engagement levels.

It can provide valuable insights like:

- Identifying user drop-off points: Discover when users are churning and address potential issues.

- Measuring the impact of changes: See how new features or marketing campaigns affect specific user groups.

- Understanding customer lifetime value: Analyze how different cohorts contribute to revenue over time.

- Improving user segmentation: Group users more effectively based on actual behavior.
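
To make the idea concrete, here is a minimal sketch of a retention table built from sign-up months and activity events. It assumes cohorts are defined by sign-up month and that months are given as `YYYY-MM` strings. The user IDs and events are made up.

```python
from collections import defaultdict


def month_index(year_month):
    """Convert 'YYYY-MM' to a running month number so months can be subtracted."""
    year, month = map(int, year_month.split("-"))
    return year * 12 + month


def retention_table(signups, activity):
    """Compute the share of each cohort active N months after sign-up.

    signups:  {user_id: 'YYYY-MM'} sign-up month per user
    activity: iterable of (user_id, 'YYYY-MM') activity events
    Returns {(cohort_month, months_since_signup): retention_fraction}.
    """
    cohort_users = defaultdict(set)
    for user, month in signups.items():
        cohort_users[month].add(user)

    active = defaultdict(set)
    for user, month in activity:
        cohort = signups.get(user)
        if cohort is None:
            continue  # skip events from users with no known sign-up
        offset = month_index(month) - month_index(cohort)
        if offset >= 0:
            active[(cohort, offset)].add(user)

    return {
        key: len(users) / len(cohort_users[key[0]])
        for key, users in sorted(active.items())
    }


# Hypothetical data: two January sign-ups, one February sign-up.
signups = {"a": "2024-01", "b": "2024-01", "c": "2024-02"}
activity = [
    ("a", "2024-01"), ("b", "2024-01"),
    ("a", "2024-02"), ("c", "2024-02"),
    ("a", "2024-03"), ("c", "2024-03"),
]

table = retention_table(signups, activity)
for (cohort, offset), rate in table.items():
    print(cohort, offset, rate)
```

Reading along a cohort's row shows where that group drops off, and comparing the same offset across cohorts shows whether a change made between sign-up periods moved retention.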

24. What are some of the challenges in building a scalable BI solution? How would you address them?

Building a scalable BI solution presents several challenges. Data volume and velocity are major hurdles: as data volumes grow, traditional BI systems struggle to process and analyze data efficiently. This can be addressed by using distributed computing frameworks like Spark or Hadoop, cloud-based data warehouses like Snowflake or BigQuery, and optimizing data models for query performance (e.g., using star schemas or materialized views).

Another challenge is maintaining data quality and consistency across diverse data sources. Data silos and inconsistent data definitions can lead to inaccurate insights. Implementing robust ETL processes with data validation and cleansing steps is crucial. Employing a centralized metadata management system helps ensure consistent data definitions and improves data governance. Finally, providing self-service BI capabilities can empower users but requires careful governance and security measures. Role-based access control and data masking are essential to protect sensitive information.
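
As a small illustration of combining role-based access with data masking, the sketch below masks sensitive columns unless the viewer's role is allowed to see them. The role names, the column list, and the masking rule are all hypothetical. A real BI platform would normally enforce this in the database or semantic layer rather than in application code.

```python
SENSITIVE_COLUMNS = {"email"}   # assumed list of columns to protect
UNMASKED_ROLES = {"admin"}      # assumed roles allowed to see raw values


def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain


def view_for_role(row, role):
    """Return a copy of the row with sensitive columns masked for most roles."""
    if role in UNMASKED_ROLES:
        return dict(row)
    return {
        column: mask_email(value) if column in SENSITIVE_COLUMNS else value
        for column, value in row.items()
    }


row = {"customer_id": 42, "email": "jane.doe@example.com", "revenue": 310.5}
print(view_for_role(row, "analyst"))
print(view_for_role(row, "admin"))
```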

25. How do you ensure the accuracy and reliability of the data used in your analysis?

To ensure data accuracy and reliability, I follow a multi-faceted approach. First, I prioritize data validation at the point of entry or extraction, using techniques like data type checks, range constraints, and format validation. I also perform data cleaning to handle missing values, outliers, and inconsistencies.

Furthermore, I implement data reconciliation by comparing data from different sources to identify and resolve discrepancies. Regular data audits help detect errors and ensure adherence to data quality standards. For complex analysis, I perform sanity checks on intermediate results and validate final findings against known facts or benchmarks. Finally, I carefully document all data processing steps for reproducibility and auditing.
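
The entry-point checks described above (data type, range, and format) can be sketched as a per-row validator. The field names, the allowed amount range, and the date format here are assumptions chosen for illustration.

```python
from datetime import datetime


def validate_row(row):
    """Return a list of validation errors for one record (empty if it passes)."""
    errors = []

    # Type check: order_id must be an integer.
    if not isinstance(row.get("order_id"), int):
        errors.append("order_id must be an integer")

    # Range check: amount must be numeric and within an assumed plausible range.
    amount = row.get("amount")
    if not isinstance(amount, (int, float)) or not 0 <= amount <= 100_000:
        errors.append("amount must be a number between 0 and 100000")

    # Format check: order_date must parse as YYYY-MM-DD.
    try:
        datetime.strptime(str(row.get("order_date")), "%Y-%m-%d")
    except ValueError:
        errors.append("order_date must be in YYYY-MM-DD format")

    return errors


good = {"order_id": 1, "amount": 25.0, "order_date": "2024-03-01"}
bad = {"order_id": "2", "amount": -5, "order_date": "03/01/2024"}

print(validate_row(good))
print(validate_row(bad))
```

Collecting every error for a row, rather than stopping at the first, makes the resulting audit log more useful when tracing a bad batch back to its source.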

26. Describe a time when you had to influence a business decision using data. What strategies did you use?

In a previous role, our marketing team was planning to increase spending on social media ads targeting a younger demographic. I analyzed website traffic data and conversion rates, segmenting users by age and source. My analysis revealed that while social media drove a significant number of visits from the target demographic, the conversion rate (turning those visits into actual customers) was substantially lower compared to other channels like organic search or email marketing for the same age group.

To influence the decision, I presented my findings clearly and concisely, highlighting the discrepancy in conversion rates. I also suggested A/B testing different ad creatives and landing pages to improve social media's performance and proposed reallocating a portion of the budget to channels with a higher ROI within the target demographic. This data-driven approach led the team to reconsider their initial plan, implement the A/B tests, and reallocate some funds, which eventually improved overall campaign performance by focusing on the channels that yielded the best return.

27. What is your experience with predictive analytics or machine learning in a business context?

I have experience applying predictive analytics and machine learning techniques to solve business problems. For example, I've worked on projects involving customer churn prediction using logistic regression and random forests, where the goal was to identify customers at high risk of leaving so proactive measures could be taken. I also built a sales forecasting model using time series analysis (ARIMA) to predict future sales based on historical data, helping with inventory management and resource allocation.

Specifically, I've used tools like scikit-learn in Python for model building, evaluation, and deployment. Model performance was measured using metrics appropriate for the business problem, such as precision, recall, F1-score, and AUC for classification, and RMSE and MAE for regression. The key was always translating model outputs into actionable business insights to drive measurable improvements.
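
For reference, the classification metrics mentioned above can be computed by hand from the confusion-matrix counts. The sketch below uses plain Python rather than scikit-learn so that the definitions are visible. The labels are made up, with 1 standing for "churned".

```python
def classification_metrics(y_true, y_pred):
    """Compute precision, recall, and F1 for the positive class (label 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged churners
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # flagged but stayed
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # churned but not flagged

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels for eight customers.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In a churn setting, recall answers "how many of the customers who left did we flag?", while precision answers "how many of the flagged customers actually left?", which is why the right balance depends on the cost of a retention offer versus the cost of a lost customer.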