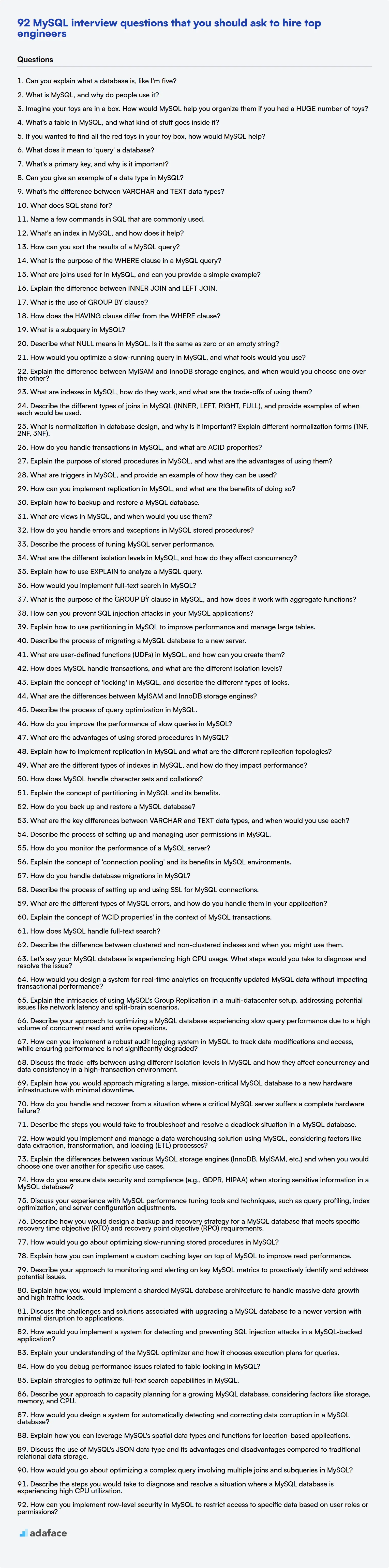

Evaluating MySQL proficiency can be complex, requiring a blend of theoretical knowledge and practical application. Recruiters need a reliable set of questions to accurately gauge a candidate's expertise; you can also read our guide on skills required for data engineers.

This blog post provides a curated list of MySQL interview questions, spanning basic to expert levels, along with a set of multiple-choice questions. It's designed to assist interviewers in assessing candidates' understanding of MySQL concepts and their ability to apply them in real-world scenarios.

By using these questions, you can ensure you're hiring candidates with the MySQL skills your team needs, and before the interview, you can also use Adaface's MySQL online test to screen candidates.


Basic MySQL interview questions

1. Can you explain what a database is, like I'm five?

Imagine you have a big box where you keep all your toys. A database is like a super organized box, but for a computer! It helps the computer remember lots of things like names, numbers, and even your favorite colors.

Instead of toys, the computer stores information in this box. It's like having labels and compartments so the computer can find things quickly. So, if you ask the computer 'What's my name?', it can look in its database box and tell you right away!

2. What is MySQL, and why do people use it?

MySQL is an open-source relational database management system (RDBMS). It uses SQL (Structured Query Language) to manage and manipulate data organized into tables. It's designed for a variety of applications, from small single-server setups to large-scale web applications.

People use MySQL because it's reliable, scalable, and relatively easy to use. Some key reasons include:

- Cost-effective: Being open-source, it offers a free version suitable for many use cases.

- Widely supported: Large community and extensive documentation make it easier to find solutions and support.

- Cross-platform: Runs on various operating systems (Windows, Linux, macOS).

- Scalable: Can handle large datasets and high traffic with proper configuration and optimization.

MySQL is also commonly used alongside web technologies like PHP, Python, and Node.js for backend data storage.

3. Imagine your toys are in a box. How would MySQL help you organize them if you had a HUGE number of toys?

If I had a HUGE number of toys in a box, MySQL could help me organize them by acting like a super-powered list and organizer. Instead of just throwing all the toys in the box randomly, I could create a table in MySQL where each toy is a 'row'. Each 'column' in the table would describe a feature of the toy, like toy_name, toy_type (e.g., action figure, stuffed animal, car), color, size, and storage_location.

Then, I could use MySQL queries (like SELECT * FROM toys WHERE toy_type = 'action figure' AND color = 'blue';) to quickly find specific toys without having to dig through the entire box. I could also use ORDER BY size or ORDER BY toy_name to list the toys in a useful order, and create an index on the columns I search most often so those lookups run faster. This is far better than rummaging through a big box, and it means I can quickly locate my favorite toys whenever I want!
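A minimal sketch of the toy table idea, using Python's built-in sqlite3 module as a stand-in for a MySQL server so it runs without any setup. The toys table, its columns, the index name, and the sample rows are hypothetical; the CREATE TABLE, CREATE INDEX, and SELECT statements are standard SQL that MySQL also accepts.

```python
import sqlite3

# Assumption: an in-memory SQLite database stands in for a MySQL server
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE toys (toy_name TEXT, toy_type TEXT, color TEXT, size INTEGER, storage_location TEXT)"
)
conn.executemany(
    "INSERT INTO toys VALUES (?, ?, ?, ?, ?)",
    [
        ("Robot Rex", "action figure", "blue", 3, "shelf A"),
        ("Captain Wave", "action figure", "blue", 2, "shelf B"),
        ("Teddy", "stuffed animal", "brown", 5, "bin 1"),
        ("Racer", "car", "red", 1, "drawer 2"),
    ],
)

# Hypothetical index on the columns we filter by, so lookups need not scan every row
conn.execute("CREATE INDEX idx_toys_type_color ON toys (toy_type, color)")

# Find the blue action figures, smallest first
blue_figures = conn.execute(
    "SELECT toy_name FROM toys WHERE toy_type = 'action figure' AND color = 'blue' ORDER BY size"
).fetchall()
print(blue_figures)  # [('Captain Wave',), ('Robot Rex',)]
```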

4. What's a table in MySQL, and what kind of stuff goes inside it?

In MySQL, a table is a structured collection of data, organized into rows and columns. Think of it like a spreadsheet. Each row represents a single record or entry, and each column represents a specific attribute or field of that record.

Tables contain the actual data you want to store and manage in your database. This could include things like:

- Customer information (name, address, phone number)

- Product details (name, price, description)

- Order history (order date, items ordered, customer ID)

- User accounts (username, password, email address)

5. If you wanted to find all the red toys in your toy box, how would MySQL help?

MySQL is a database management system, not a physical object recognition system. It can't directly 'see' or identify red toys in a physical toy box.

However, if you had a database table representing the toys in your toy box, with columns like toy_name, color, and type, then you could use MySQL to query for the red toys. For example, a SQL query like SELECT toy_name FROM toys WHERE color = 'red'; would return the names of all toys listed as red in your toy database. The caveat is that the data first needs to be populated into a database table.

6. What does it mean to 'query' a database?

To 'query' a database means to request specific information from it. It's essentially asking the database a question, and the database responds with the data that matches your criteria.

This is typically done using a structured query language (SQL). A query describes what data you want to retrieve, filter, sort, and even manipulate. For example, a simple SQL query might look like SELECT * FROM users WHERE age > 25;, which retrieves all information from the 'users' table where the age is greater than 25.

7. What's a primary key, and why is it important?

A primary key is a column or a set of columns in a database table that uniquely identifies each row in that table. It enforces uniqueness and prevents null values. Only one primary key is allowed per table.

It's important because it ensures data integrity by guaranteeing that each record is uniquely identifiable. This uniqueness is crucial for efficiently retrieving, updating, and deleting specific records. Primary keys also make relationships between tables possible: other tables reference a primary key through foreign keys, enabling data normalization and consistency across the database.
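A minimal sketch of a primary key rejecting a duplicate, using Python's sqlite3 module as a stand-in for MySQL (MySQL rejects the same insert with a "Duplicate entry" error). The customers table and its rows are hypothetical.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; the constraint behaves the same way here
conn = sqlite3.connect(":memory:")
# customer_id is the primary key: every row must have a distinct value
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO customers (customer_id, name) VALUES (1, 'Ada')")

try:
    # A second row with the same key violates the primary key constraint
    conn.execute("INSERT INTO customers (customer_id, name) VALUES (1, 'Grace')")
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True

row_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(duplicate_rejected, row_count)  # True 1
```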

8. Can you give an example of a data type in MySQL?

One example of a data type in MySQL is INT, which represents an integer. INT is used to store whole numbers (numbers without decimal points). Different variations like TINYINT, SMALLINT, MEDIUMINT, INT, and BIGINT offer different storage sizes and ranges for the integer values.

Another common data type is VARCHAR(n), where 'n' specifies the maximum length of the string. VARCHAR is used to store variable-length character strings. For example, VARCHAR(255) can store a string with up to 255 characters.

9. What's the difference between VARCHAR and TEXT data types?

Both VARCHAR and TEXT are used to store strings, but they differ primarily in their storage capacity and indexing capabilities.

VARCHAR has a declared maximum length (e.g., VARCHAR(255)), making it suitable for short strings with a known maximum size. VARCHAR columns can usually be indexed in full, which improves search performance. TEXT, on the other hand, is designed for much larger strings: a TEXT column holds up to 65,535 bytes, and MEDIUMTEXT and LONGTEXT allow far more. In MySQL, a regular index on a TEXT column must specify a prefix length (e.g., INDEX (body(100))), so only the first part of each value is indexed, which can limit search performance on large text fields; FULLTEXT indexes are the usual tool for searching inside them.

10. What does SQL stand for?

SQL stands for Structured Query Language. It is a standard programming language used for managing and manipulating data stored in relational database management systems (RDBMS).

11. Name a few commands in SQL that are commonly used.

Some commonly used SQL commands include:

- SELECT: Retrieves data from one or more tables.

- INSERT: Adds new rows into a table.

- UPDATE: Modifies existing data in a table.

- DELETE: Removes rows from a table.

- CREATE TABLE: Creates a new table in the database.

- ALTER TABLE: Modifies the structure of an existing table.

- DROP TABLE: Deletes a table from the database.

These commands form the basic building blocks for interacting with relational databases. Other important commands cover transaction control (COMMIT, ROLLBACK), data definition (CREATE INDEX, CREATE VIEW), and data control (GRANT, REVOKE).

12. What's an index in MySQL, and how does it help?

In MySQL, an index is a data structure that improves the speed of data retrieval on a table. It's like an index in a book; instead of scanning every page to find information, you can look up the topic in the index to find the page number(s) where it's located.

Indexes help by significantly reducing the number of rows the database server must examine to find the required data. Without an index, MySQL would have to perform a full table scan, which can be very slow, especially for large tables. By using an index on one or more columns, the database can quickly locate the rows that match the search criteria, leading to faster query execution. The basic syntax is:

CREATE INDEX index_name ON table_name (column_name);
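A minimal sketch of an index changing how a query is executed, using Python's sqlite3 module as a stand-in for MySQL. SQLite's EXPLAIN QUERY PLAN plays the role of MySQL's EXPLAIN here; its wording differs from MySQL's and varies between SQLite versions, so the comments show typical output rather than exact text. The users table and the idx_users_email index are hypothetical.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; EXPLAIN QUERY PLAN is SQLite's analog of EXPLAIN
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, age INTEGER)")
conn.executemany(
    "INSERT INTO users (email, age) VALUES (?, ?)",
    [(f"user{i}@example.com", 20 + i % 40) for i in range(1000)],
)

query = "SELECT * FROM users WHERE email = 'user42@example.com'"


def plan(sql):
    # The last column of each EXPLAIN QUERY PLAN row is a human-readable description
    return " ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))


plan_before = plan(query)  # full table scan, typically "SCAN users"
conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = plan(query)  # index lookup, typically "SEARCH users USING INDEX idx_users_email (email=?)"
print(plan_before)
print(plan_after)
```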

13. How can you sort the results of a MySQL query?

You can sort the results of a MySQL query using the ORDER BY clause. This clause specifies the column (or columns) to sort by and the sort order (ascending or descending).

For example:

SELECT * FROM employees ORDER BY last_name ASC, first_name DESC;

This query sorts the employees table first by last_name in ascending order (A-Z) and then by first_name in descending order (Z-A) within each last_name group. ASC is the default, so you can omit it. DESC must be explicitly specified for descending order.

14. What is the purpose of the WHERE clause in a MySQL query?

The WHERE clause in a MySQL query is used to filter records based on a specified condition. It allows you to retrieve only the rows that meet certain criteria, rather than retrieving all rows from a table.

For example, if you have a table named employees with columns like employee_id, name, and salary, you could use the WHERE clause to select only the employees whose salary is greater than $50,000. SELECT * FROM employees WHERE salary > 50000;

15. What are joins used for in MySQL, and can you provide a simple example?

Joins in MySQL are used to combine rows from two or more tables based on a related column between them. They allow you to retrieve data from multiple tables in a single query, creating a unified result set.

Here's a simple example:

Suppose you have two tables: Customers and Orders.

SELECT Customers.CustomerID, Customers.CustomerName, Orders.OrderID

FROM Customers

INNER JOIN Orders ON Customers.CustomerID = Orders.CustomerID;

This INNER JOIN combines rows from the Customers and Orders tables where the CustomerID matches in both tables, effectively linking customers to their respective orders. MySQL also supports LEFT JOIN, RIGHT JOIN, and CROSS JOIN; FULL OUTER JOIN is not supported directly and must be emulated (for example, with a UNION of a LEFT JOIN and a RIGHT JOIN).

16. Explain the difference between INNER JOIN and LEFT JOIN.

INNER JOIN returns only the rows where there is a match in both tables based on the join condition. If a row exists in one table but not the other, it is excluded from the result set.

LEFT JOIN (or LEFT OUTER JOIN) returns all rows from the left table and the matching rows from the right table. If there is no match in the right table for a row in the left table, the columns from the right table will contain NULL values. In essence, it includes all rows from the left table, regardless of whether there is a corresponding row in the right table.
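A minimal sketch of the difference, using Python's sqlite3 module as a stand-in for MySQL; the customers and orders tables and their rows are hypothetical. Linus has no orders, so he disappears from the INNER JOIN but survives the LEFT JOIN with a NULL (None in Python) order ID.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; both join types behave the same here
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (10, 1), (11, 1), (12, 2);
""")

# Only customers with at least one matching order
inner_rows = conn.execute("""
    SELECT c.name, o.order_id FROM customers c
    INNER JOIN orders o ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()

# Every customer; order columns are NULL when there is no match
left_rows = conn.execute("""
    SELECT c.name, o.order_id FROM customers c
    LEFT JOIN orders o ON c.customer_id = o.customer_id
    ORDER BY c.customer_id, o.order_id
""").fetchall()

print(inner_rows)  # [('Ada', 10), ('Ada', 11), ('Grace', 12)]
print(left_rows)   # [('Ada', 10), ('Ada', 11), ('Grace', 12), ('Linus', None)]
```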

17. What is the use of GROUP BY clause?

The GROUP BY clause in SQL is used to group rows that have the same values in one or more columns into a summary row, like finding totals, averages, or counts for each group. It's commonly used with aggregate functions such as SUM(), AVG(), COUNT(), MIN(), and MAX(). The GROUP BY clause allows you to perform calculations on these groups.

For instance, if you have a table of sales data, you could use GROUP BY to calculate the total sales for each region or the number of customers in each city. The columns specified in the GROUP BY clause determine how the data is aggregated.
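A minimal sketch of the sales-by-region example, using Python's sqlite3 module as a stand-in for MySQL; the sales table and amounts are hypothetical. Each region collapses into one summary row carrying its total, average, and count.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; GROUP BY and the aggregates are standard SQL
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 100.0), ("North", 250.0), ("South", 80.0), ("West", 40.0), ("West", 60.0)],
)

# One summary row per region
totals = conn.execute("""
    SELECT region, SUM(amount) AS total, AVG(amount) AS average, COUNT(*) AS n
    FROM sales
    GROUP BY region
    ORDER BY region
""").fetchall()
print(totals)  # [('North', 350.0, 175.0, 2), ('South', 80.0, 80.0, 1), ('West', 100.0, 50.0, 2)]
```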

18. How does the HAVING clause differ from the WHERE clause?

The WHERE clause filters rows before aggregation (grouping), while the HAVING clause filters rows after aggregation. WHERE operates on individual rows based on their values. HAVING operates on the results of aggregate functions (like SUM, AVG, COUNT, MIN, MAX) performed on groups of rows. WHERE cannot use aggregate functions, but HAVING can.

Think of it this way: WHERE is used to select specific records to work on and HAVING is used to select specific groups to report on.
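A minimal sketch showing WHERE and HAVING acting at different stages, using Python's sqlite3 module as a stand-in for MySQL; the sales table and amounts are hypothetical. Adding a WHERE filter removes individual rows before grouping, which changes West's total and therefore whether its group survives HAVING.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; WHERE/HAVING semantics are standard SQL
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 100.0), ("North", 250.0), ("South", 80.0), ("West", 40.0), ("West", 60.0)],
)

# HAVING alone: all rows are grouped, then groups totaling under 100 are dropped
having_only = conn.execute("""
    SELECT region, SUM(amount) FROM sales
    GROUP BY region
    HAVING SUM(amount) >= 100
    ORDER BY region
""").fetchall()

# WHERE + HAVING: sales under 50 are removed before grouping, so West's total falls to 60
where_and_having = conn.execute("""
    SELECT region, SUM(amount) FROM sales
    WHERE amount >= 50
    GROUP BY region
    HAVING SUM(amount) >= 100
    ORDER BY region
""").fetchall()

print(having_only)       # [('North', 350.0), ('West', 100.0)]
print(where_and_having)  # [('North', 350.0)]
```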

19. What is a subquery in MySQL?

A subquery in MySQL is a query nested inside another query. It is used to retrieve data that will be used in the main query. Subqueries can appear in the SELECT, FROM, and WHERE clauses.

For example, you might use a subquery to find all customers who placed orders larger than the average order size. A subquery can return a single value, a single row, or multiple rows, and the main query then uses the result(s). Here's a simpler example that finds customers with at least one order over 100:

SELECT customer_name

FROM customers

WHERE customer_id IN (SELECT customer_id FROM orders WHERE order_total > 100);
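The "larger than the average order" case mentioned above nests a scalar subquery inside the IN subquery. A minimal sketch, using Python's sqlite3 module as a stand-in for MySQL; the tables and rows are hypothetical. The average here is 150, so only Grace's 300 order and Ada's 210 order qualify.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; the nested subqueries are standard SQL
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_total REAL);
    INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
    INSERT INTO orders VALUES (10, 1, 40.0), (11, 2, 300.0), (12, 3, 50.0), (13, 1, 210.0);
""")

# Innermost scalar subquery computes the average; the IN subquery lists qualifying customers
above_average = conn.execute("""
    SELECT customer_name FROM customers
    WHERE customer_id IN (
        SELECT customer_id FROM orders
        WHERE order_total > (SELECT AVG(order_total) FROM orders)
    )
    ORDER BY customer_name
""").fetchall()
print(above_average)  # [('Ada',), ('Grace',)]
```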

20. Describe what NULL means in MySQL. Is it the same as zero or an empty string?

In MySQL, NULL represents a missing or unknown value. It signifies that a field has no value. Importantly, NULL is not the same as zero or an empty string.

- Zero is a numeric value, and an empty string is a string with zero length.

- NULL represents the absence of a value, not a value of zero or a zero-length string. Comparisons like column_name = 0 or column_name = '' will not identify NULL values, and column_name = NULL is never true either. To check for NULL, you must use column_name IS NULL or column_name IS NOT NULL.
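A minimal sketch of these rules, using Python's sqlite3 module as a stand-in for MySQL; the contacts table and rows are hypothetical. Any comparison with NULL yields NULL (None in Python), so only IS NULL finds the missing phone number.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; three-valued NULL logic is standard SQL
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (name TEXT, phone TEXT)")
conn.executemany(
    "INSERT INTO contacts VALUES (?, ?)",
    [("Ada", "555-0100"), ("Grace", ""), ("Linus", None)],  # Python None is stored as SQL NULL
)

# NULL compared with 0 or '' yields NULL, not true or false
null_vs_zero, null_vs_empty = conn.execute("SELECT NULL = 0, NULL = ''").fetchone()

empty_phone = conn.execute("SELECT name FROM contacts WHERE phone = ''").fetchall()
equals_null = conn.execute("SELECT name FROM contacts WHERE phone = NULL").fetchall()
is_null = conn.execute("SELECT name FROM contacts WHERE phone IS NULL").fetchall()

print(null_vs_zero, null_vs_empty)  # None None
print(empty_phone)  # [('Grace',)]
print(equals_null)  # []
print(is_null)      # [('Linus',)]
```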

Intermediate MySQL interview questions

1. How would you optimize a slow-running query in MySQL, and what tools would you use?

To optimize a slow-running MySQL query, I'd start by understanding the query execution plan using EXPLAIN SELECT .... This reveals how MySQL intends to execute the query, highlighting potential bottlenecks like full table scans, missing indexes, or inefficient joins. I'd then focus on addressing these bottlenecks by:

- Adding appropriate indexes: Indexes speed up data retrieval, especially for WHERE clause columns, join conditions, and ORDER BY clauses. Consider composite indexes for multiple columns used together.

- Rewriting the query: Optimize joins (e.g., using INNER JOIN instead of LEFT JOIN if appropriate), avoid SELECT * (specify only needed columns), and simplify complex subqueries or OR conditions.

- Analyzing and updating table statistics: ANALYZE TABLE updates the statistics the optimizer uses for plan selection. Outdated statistics can lead to poor decisions.

- Checking MySQL configuration: Ensure sufficient memory allocation (e.g., innodb_buffer_pool_size). On MySQL 5.7 and earlier, also review the query cache settings if it is enabled; the query cache was removed in MySQL 8.0.

The slow query log also records slow queries, and tools like mysqldumpslow or pt-query-digest help analyze it. pt-index-usage shows which indexes are or aren't being used. SHOW PROCESSLIST helps identify queries that are running long right now. Performance monitoring tools like Percona Monitoring and Management (PMM) can help pinpoint the root cause.

2. Explain the difference between MyISAM and InnoDB storage engines, and when would you choose one over the other?

MyISAM and InnoDB are both storage engines for MySQL, each with distinct characteristics. MyISAM has a simple, non-transactional table structure that can serve read-heavy workloads quickly, and it supported full-text search long before InnoDB did (InnoDB added FULLTEXT indexes in MySQL 5.6). However, it uses only table-level locking, which can lead to bottlenecks in write-intensive environments, and it is not crash-safe. InnoDB, the default engine since MySQL 5.5, provides superior data integrity and reliability. It supports transactions, row-level locking (reducing contention), crash recovery, and foreign keys, making it suitable for applications requiring ACID properties.

Choosing between the two depends on the application's needs. MyISAM can still fit read-mostly tables where transaction support is not critical (e.g., some data warehousing or read-only analytics setups), though in modern MySQL versions InnoDB is usually the better choice even there. Select InnoDB when data integrity, concurrency, and transaction support are paramount (e.g., e-commerce, financial systems, applications with frequent updates and deletes).

3. What are indexes in MySQL, how do they work, and what are the trade-offs of using them?

Indexes in MySQL are special lookup tables that the database search engine can use to speed up data retrieval. They work by creating a data structure (usually a B-tree) that maps the values of one or more columns in a table to the corresponding rows. When you execute a query that uses indexed columns in the WHERE clause, MySQL can use the index to quickly locate the matching rows without having to scan the entire table. This significantly reduces the query execution time, especially for large tables.

The trade-offs of using indexes include:

- Increased storage space: Indexes require additional storage space because they are separate data structures stored alongside the table.

- Slower write operations: INSERT, UPDATE, and DELETE operations become slower because the indexes also need to be updated to reflect the changes in the table data.

- Complexity: Choosing the right columns to index and managing indexes effectively can add complexity to database administration. You need to consider the types of queries you are running and the frequency of write operations.

4. Describe the different types of joins in MySQL (INNER, LEFT, RIGHT, FULL), and provide examples of when each would be used.

MySQL supports several types of joins to combine rows from two or more tables based on a related column. INNER JOIN returns only the rows where there is a match in both tables. Example: SELECT * FROM employees INNER JOIN departments ON employees.department_id = departments.id; - Use this to find employees and their respective departments where department IDs match.

LEFT JOIN (or LEFT OUTER JOIN) returns all rows from the left table and the matched rows from the right table. If there is no match in the right table, it returns NULLs for the right table's columns. Example: SELECT * FROM employees LEFT JOIN departments ON employees.department_id = departments.id; - Use this to retrieve all employees and their department information, even if some employees are not assigned to a department.

RIGHT JOIN (or RIGHT OUTER JOIN) is similar to LEFT JOIN but returns all rows from the right table and the matched rows from the left table, using NULLs for no match in the left table. Example: SELECT * FROM employees RIGHT JOIN departments ON employees.department_id = departments.id; - Use this to find all departments and the associated employees, even if some departments have no employees. Keep in mind that you can achieve the same output with LEFT JOIN by swapping the order of the tables.

FULL OUTER JOIN returns all rows from both tables, matching them where possible and filling in NULLs where there is no match. MySQL doesn't support FULL OUTER JOIN directly, but it can be emulated by combining a LEFT JOIN with only the unmatched rows of a RIGHT JOIN, so the matched (INNER JOIN) rows aren't repeated. Example: SELECT * FROM employees LEFT JOIN departments ON employees.department_id = departments.id UNION ALL SELECT * FROM employees RIGHT JOIN departments ON employees.department_id = departments.id WHERE employees.department_id IS NULL; - Use this when you want every row from both tables, whether or not there's a match between them.
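A minimal sketch of the FULL OUTER JOIN emulation, using Python's sqlite3 module as a stand-in for MySQL; the tables and rows are hypothetical. Older SQLite versions lack RIGHT JOIN, so the second half is written as a LEFT JOIN with the tables swapped, which is an equivalent form that MySQL also accepts. Grace has no department and Legal has no employees, yet both appear.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; the RIGHT JOIN half is rewritten as a swapped LEFT JOIN
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, department_id INTEGER);
    INSERT INTO departments VALUES (1, 'Engineering'), (2, 'Legal');
    INSERT INTO employees VALUES (1, 'Ada', 1), (2, 'Grace', NULL);
""")

# First half: every employee, with a department if one matches.
# Second half: only departments that no employee matched (e.id IS NULL).
full_outer = conn.execute("""
    SELECT e.name, d.name FROM employees e
    LEFT JOIN departments d ON e.department_id = d.id
    UNION ALL
    SELECT e.name, d.name FROM departments d
    LEFT JOIN employees e ON e.department_id = d.id
    WHERE e.id IS NULL
""").fetchall()
print(full_outer)
```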

5. What is normalization in database design, and why is it important? Explain different normalization forms (1NF, 2NF, 3NF).

Normalization in database design is the process of organizing data to reduce redundancy and improve data integrity. It's important because it minimizes storage space, eliminates data anomalies (insertion, update, and deletion), and makes it easier to maintain data consistency. Without normalization, databases can become inefficient and prone to errors.

Different Normal Forms:

- 1NF (First Normal Form): Eliminates repeating groups of data. Each column should contain only atomic values, and there should be a primary key.

- 2NF (Second Normal Form): Must be in 1NF and eliminates redundant data dependent on a part of the composite key. It means all non-key attributes are fully functionally dependent on the primary key.

- 3NF (Third Normal Form): Must be in 2NF and eliminates transitive dependencies. This means that non-key attributes should not be dependent on other non-key attributes.

6. How do you handle transactions in MySQL, and what are ACID properties?

In MySQL, transactions are managed using the START TRANSACTION, COMMIT, and ROLLBACK statements. A transaction is initiated with START TRANSACTION;, followed by a series of SQL operations. If all operations succeed, COMMIT; permanently saves the changes. If any operation fails or a problem occurs, ROLLBACK; reverts the database to its previous state.

ACID properties are crucial for reliable transactions:

- Atomicity: The entire transaction is treated as a single, indivisible unit. Either all changes are applied, or none are.

- Consistency: The transaction ensures that the database transitions from one valid state to another, adhering to defined rules and constraints.

- Isolation: Concurrent transactions are isolated from each other, preventing interference and ensuring data integrity. Isolation levels (e.g., READ COMMITTED, REPEATABLE READ) control the degree of isolation.

- Durability: Once a transaction is committed, the changes are permanent and will survive even system failures. This is typically achieved through write-ahead logging.
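A minimal sketch of atomicity in practice, using Python's sqlite3 module as a stand-in for MySQL. BEGIN takes the place of START TRANSACTION (MySQL accepts BEGIN too), and the CHECK constraint mirrors one MySQL enforces from 8.0.16. The accounts table and the transfer() helper are hypothetical. The failed transfer has already credited the destination account when the debit fails, and ROLLBACK undoes that credit.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL. isolation_level=None stops the sqlite3
# module from opening transactions implicitly, so BEGIN/COMMIT/ROLLBACK are explicit.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))")
conn.execute("INSERT INTO accounts VALUES (1, 100), (2, 50)")


def transfer(amount, src, dst):
    """Hypothetical helper: move money so that both updates happen, or neither does."""
    conn.execute("BEGIN")  # MySQL: START TRANSACTION
    try:
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        conn.execute("COMMIT")
    except sqlite3.IntegrityError:
        conn.execute("ROLLBACK")  # undoes the credit already applied to dst
        raise


transfer(30, 1, 2)  # succeeds: balances become 70 and 80
try:
    transfer(500, 1, 2)  # would make account 1 negative, so the CHECK constraint fails
except sqlite3.IntegrityError:
    pass

balances = conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall()
print(balances)  # [(1, 70), (2, 80)]
```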

7. Explain the purpose of stored procedures in MySQL, and what are the advantages of using them?

Stored procedures in MySQL are named blocks of SQL code stored in the database that you can execute repeatedly. They encapsulate a set of SQL statements into a single unit, acting like a function or subroutine within the database.

The advantages include:

- Improved Performance: MySQL caches a parsed stored procedure for the duration of a session, which can reduce parsing overhead on repeated calls.

- Reduced Network Traffic: Instead of sending multiple SQL statements across the network, you send a single call to the stored procedure.

- Enhanced Security: Stored procedures can help to secure data by granting access only to the procedure and not the underlying tables. They can also reduce the risk of SQL injection, as long as parameters are used directly rather than concatenated into dynamic SQL.

- Code Reusability: Stored procedures can be called from multiple applications, promoting code reuse and consistency.

- Data Consistency: Enforce business rules centrally within the database.

- Simplified Maintenance: Changes to data access logic can be made in one place, the stored procedure, rather than in multiple applications.

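Example of a simple stored procedure (the DELIMITER lines are needed in the mysql command-line client so that the semicolon inside the body doesn't end the CREATE statement early):

DELIMITER //

CREATE PROCEDURE GetCustomerByID (IN cust_id INT)

BEGIN

SELECT * FROM Customers WHERE CustomerID = cust_id;

END //

DELIMITER ;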

Then call it using CALL GetCustomerByID(123);

8. What are triggers in MySQL, and provide an example of how they can be used?

Triggers in MySQL are stored programs that automatically execute in response to certain events on a particular table. These events can be INSERT, UPDATE, or DELETE. Triggers are useful for enforcing business rules, auditing data changes, or replicating data to other tables.

For example, consider a scenario where you want to keep a log of all updates made to an employees table. You can create a trigger that fires AFTER UPDATE on the employees table. This trigger could insert a record into an employee_audit table, capturing the old and new values of the updated row, along with a timestamp and the user who made the change. Here is an example of the trigger's SQL, written for the mysql command-line client (DELIMITER lets the body contain semicolons):

DELIMITER //

CREATE TRIGGER employee_update_audit

AFTER UPDATE ON employees

FOR EACH ROW

BEGIN

INSERT INTO employee_audit (employee_id, old_salary, new_salary, updated_at, updated_by)

VALUES (OLD.id, OLD.salary, NEW.salary, NOW(), USER());

END //

DELIMITER ;
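A minimal sketch of the same audit trigger in action, using Python's sqlite3 module as a stand-in for MySQL. SQLite has no NOW() or USER(), so CURRENT_TIMESTAMP and a fixed placeholder user ('app_user', hypothetical) replace them; the table layouts are likewise assumed.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; NOW()/USER() are replaced by
# CURRENT_TIMESTAMP and a hypothetical fixed user name
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary INTEGER);
    CREATE TABLE employee_audit (
        employee_id INTEGER, old_salary INTEGER, new_salary INTEGER,
        updated_at TEXT, updated_by TEXT
    );
    CREATE TRIGGER employee_update_audit
    AFTER UPDATE ON employees
    FOR EACH ROW
    BEGIN
        INSERT INTO employee_audit (employee_id, old_salary, new_salary, updated_at, updated_by)
        VALUES (OLD.id, OLD.salary, NEW.salary, CURRENT_TIMESTAMP, 'app_user');
    END;
    INSERT INTO employees VALUES (1, 'Ada', 5000);
""")

# The UPDATE fires the trigger, which writes one audit row
conn.execute("UPDATE employees SET salary = 5500 WHERE id = 1")
audit = conn.execute(
    "SELECT employee_id, old_salary, new_salary, updated_by FROM employee_audit"
).fetchall()
print(audit)  # [(1, 5000, 5500, 'app_user')]
```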

9. How can you implement replication in MySQL, and what are the benefits of doing so?

MySQL replication involves copying data from a source server (historically called the master) to one or more replica servers (historically called slaves). This is typically achieved using binary log (binlog) replication, where the replicas replay the events recorded in the source's binlog. To set it up, you give each server a unique server_id, enable binary logging on the source, and create a replication user with the REPLICATION SLAVE privilege. Then, on each replica, you configure the connection details for the source (CHANGE REPLICATION SOURCE TO in MySQL 8.0.23 and later, CHANGE MASTER TO in earlier versions) and start replication (START REPLICA, or START SLAVE before MySQL 8.0.22).

The benefits of MySQL replication include improved read performance by distributing read queries across multiple replicas, increased data availability through redundancy, and the ability to run backups on a replica without impacting the source server's performance. Analytics and reporting can also run on replicas, reducing the load on the primary database. Replication also supports disaster recovery (DR) when a replica is kept in another location.

10. Explain how to backup and restore a MySQL database.

Backing up a MySQL database is typically done using the mysqldump utility. This creates a SQL script containing the commands to recreate the database's tables and data. For example: mysqldump -u [username] -p [database_name] > backup.sql. Using -p without a value makes the client prompt for the password instead of exposing it on the command line, and for InnoDB tables the --single-transaction option produces a consistent snapshot without locking the tables. It's important to take backups regularly and store them securely, for example on a schedule and in a separate physical location or cloud storage.

Restoring a MySQL database uses the mysql command-line client or similar tools like phpMyAdmin. You would point the client to the backup file: mysql -u [username] -p [database_name] < backup.sql. The target database must already exist (a dump of a single named database does not include CREATE DATABASE unless mysqldump was run with --databases), and the user needs the privileges to create tables and insert data. It's wise to test restore procedures periodically to ensure the backups are valid and the process is well understood.

11. What are views in MySQL, and when would you use them?

Views in MySQL are virtual tables based on the result set of a SQL query. They don't store data themselves; instead, they act as a stored query that can be treated like a regular table.

Views are useful for several reasons:

- Simplifying complex queries: A view can encapsulate a complex join or subquery, making it easier to access the data. Instead of rewriting the complex query each time, you can simply query the view.

- Security: Views can restrict access to certain columns or rows of a table, limiting what users can see. You can grant users access to the view without granting them access to the underlying tables.

- Data abstraction: Views provide a layer of abstraction between the physical database schema and the applications that access it. This allows you to change the underlying schema without affecting the applications that use the view.

- Data consistency: If you have a calculated column (e.g., total_price = quantity * price), putting the calculation in a view ensures that it's always calculated the same way.
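A minimal sketch of a view that centralizes the total_price calculation from the list above, using Python's sqlite3 module as a stand-in for MySQL; the order_items table, the order_item_totals view, and the rows are hypothetical. Because the view stores only the query, a row inserted afterwards shows up in the view immediately.

```python
import sqlite3

# Assumption: SQLite stands in for MySQL; CREATE VIEW ... AS SELECT is standard SQL
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE order_items (order_id INTEGER, product TEXT, quantity INTEGER, price REAL);
    INSERT INTO order_items VALUES (1, 'pen', 3, 1.5), (1, 'notebook', 2, 4.0), (2, 'pen', 10, 1.5);
    CREATE VIEW order_item_totals AS
        SELECT order_id, product, quantity * price AS total_price FROM order_items;
""")

summary_sql = "SELECT order_id, SUM(total_price) FROM order_item_totals GROUP BY order_id ORDER BY order_id"
rows = conn.execute(summary_sql).fetchall()

# The view holds no data of its own, so new base-table rows appear at once
conn.execute("INSERT INTO order_items VALUES (2, 'notebook', 1, 4.0)")
rows_after = conn.execute(summary_sql).fetchall()

print(rows)        # [(1, 12.5), (2, 15.0)]
print(rows_after)  # [(1, 12.5), (2, 19.0)]
```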

12. How do you handle errors and exceptions in MySQL stored procedures?

In MySQL stored procedures, errors and exceptions are handled using DECLARE HANDLER statements. These handlers specify actions to be taken when specific errors or classes of errors occur. You can handle SQLEXCEPTION, SQLWARNING, or NOT FOUND conditions. Common actions include logging the error, setting an error flag, or exiting the procedure.

For example:

DECLARE EXIT HANDLER FOR SQLEXCEPTION

BEGIN

-- Error handling logic here

ROLLBACK;

SET @error_message = 'An error occurred.';

END;

Alternatively, you can use SIGNAL or RESIGNAL to explicitly raise exceptions with custom SQLSTATE values and messages.

13. Describe the process of tuning MySQL server performance.

Tuning MySQL performance involves several key areas. First, analyze slow queries using the slow query log and EXPLAIN statements. Optimize these queries by adding indexes to frequently queried columns, rewriting inefficient queries, or restructuring the database schema. Second, configure MySQL server parameters in my.cnf (or my.ini on Windows) such as innodb_buffer_pool_size (adjusting based on available RAM), key_buffer_size (for MyISAM, though InnoDB is generally preferred), query_cache_size (MySQL 5.7 and earlier only; the query cache was removed in MySQL 8.0), table_open_cache, and innodb_log_file_size (superseded by innodb_redo_log_capacity in MySQL 8.0.30).

Third, monitor server resource usage (CPU, memory, disk I/O) using tools like top, iostat, and MySQL's SHOW GLOBAL STATUS and SHOW GLOBAL VARIABLES to identify bottlenecks. Fourth, consider using connection pooling to reduce connection overhead. Regular maintenance tasks like optimizing tables (OPTIMIZE TABLE) and updating statistics (ANALYZE TABLE) can also improve performance. Lastly, regularly review and adjust configuration based on workload changes and growth.

14. What are the different isolation levels in MySQL, and how do they affect concurrency?

MySQL offers four isolation levels that control the degree to which concurrent transactions are isolated from each other: READ UNCOMMITTED, READ COMMITTED, REPEATABLE READ, and SERIALIZABLE. Lower isolation levels offer higher concurrency but are more susceptible to concurrency problems, such as dirty reads, non-repeatable reads, and phantom reads. Higher isolation levels reduce concurrency but prevent these issues.

- READ UNCOMMITTED: The lowest level; transactions can see uncommitted changes from other transactions (dirty reads). It offers the highest concurrency, but is rarely used.

- READ COMMITTED: Transactions can only read committed data, preventing dirty reads. However, non-repeatable reads are still possible (a transaction may read different values for the same row during its execution if another transaction commits an update to that row in between).

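- REPEATABLE READ: This is the default level for InnoDB. It prevents dirty reads and non-repeatable reads. The SQL standard still allows phantom reads at this level (a transaction may see new rows inserted by other transactions), but InnoDB largely prevents them: plain SELECTs read from a consistent snapshot, and locking reads use next-key locks that block inserts into the scanned range.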

- SERIALIZABLE: The highest level; transactions are completely isolated. It prevents dirty reads, non-repeatable reads, and phantom reads by essentially serializing transactions. It provides the lowest concurrency.

15. Explain how to use EXPLAIN to analyze a MySQL query.

To analyze a MySQL query using EXPLAIN, simply prepend the EXPLAIN keyword to your SELECT, INSERT, UPDATE, or DELETE statement. EXPLAIN SELECT * FROM users WHERE age > 25; This will output a table describing how MySQL plans to execute the query. The key information to look at includes:

- id: The identifier of the SELECT the row belongs to; rows that share an id are part of the same SELECT and are listed in the order MySQL joins the tables.

- select_type: The type of the query (e.g., SIMPLE, PRIMARY, SUBQUERY).

- table: The table being accessed.

- type: The join (access) type, indicating how MySQL finds the rows (e.g., ALL, index, range, ref, eq_ref, const, system, NULL). Aim for ref or better.

- possible_keys: The indexes that MySQL could use.

- key: The index MySQL actually chose to use.

- key_len: The length, in bytes, of the part of the key that is used.

- ref: The columns or constants that are compared to the index.

- rows: The number of rows MySQL estimates it will have to examine to execute the query.

- Extra: Additional information about the query execution (e.g., Using index, Using where, Using filesort).

By examining these values, particularly the type, rows, and Extra columns, you can identify potential performance bottlenecks, such as full table scans (type: ALL) or the need for additional indexes. You can then optimize your query or add appropriate indexes to improve performance.

16. How would you implement full-text search in MySQL?

MySQL provides built-in full-text search capabilities using MATCH() and AGAINST(). To implement it, you need to create a FULLTEXT index on the text column(s) you want to search. For example:

ALTER TABLE articles ADD FULLTEXT INDEX article_body_idx (title, body);

Then, you can use the MATCH() function with AGAINST() to perform the search. Different modes like NATURAL LANGUAGE MODE, BOOLEAN MODE, and WITH QUERY EXPANSION are available. BOOLEAN MODE gives more control. For example:

SELECT * FROM articles WHERE MATCH(title, body) AGAINST('MySQL full-text search' IN BOOLEAN MODE);

17. What is the purpose of the `GROUP BY` clause in MySQL, and how does it work with aggregate functions?

The GROUP BY clause in MySQL is used to group rows that have the same values in one or more columns into a summary row. Its primary purpose is to perform aggregate calculations on these groups. Without GROUP BY, aggregate functions like COUNT, SUM, AVG, MIN, and MAX would operate on the entire table.

When used with aggregate functions, GROUP BY instructs MySQL to apply the function to each group separately. For instance, SELECT category, COUNT(*) FROM products GROUP BY category; would return the number of products in each category. The database groups rows by the category column, and then COUNT(*) calculates the number of rows (products) within each distinct category.
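The products example can be run end to end. This sketch uses Python's built-in sqlite3 module as a stand-in for MySQL so it needs no server; the table and its rows are made up, and for this query the GROUP BY behavior is the same in MySQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, category TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [("pen", "office", 1.5), ("stapler", "office", 7.0), ("mug", "kitchen", 4.0)],
)

# One output row per distinct category; COUNT and SUM are computed per group.
rows = conn.execute(
    "SELECT category, COUNT(*), SUM(price) FROM products "
    "GROUP BY category ORDER BY category"
).fetchall()
print(rows)  # [('kitchen', 1, 4.0), ('office', 2, 8.5)]
```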

18. How can you prevent SQL injection attacks in your MySQL applications?

To prevent SQL injection attacks in MySQL applications, prioritize parameterized queries or prepared statements. These methods send the SQL code and the data separately, so the database treats the data as data, not executable code. This ensures that even if a user enters malicious SQL code, it will be treated as a literal string and not executed as part of the SQL query.

Other measures include validating and sanitizing input to filter out potentially malicious characters, applying the principle of least privilege to limit database user permissions, and using a web application firewall (WAF) to detect and block suspicious traffic. Escaping user-provided data with MySQL-specific functions (such as the C API's mysql_real_escape_string(), or mysqli_real_escape_string() in PHP) is a legacy approach that still offers some protection, but it is less robust than parameterized queries and not recommended for new applications.
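The difference between string-built SQL and parameterized queries is easy to demonstrate. This minimal sketch uses Python's sqlite3 module so it runs without a server; MySQL drivers such as mysql-connector-python and PyMySQL use %s placeholders instead of ?, but the principle is identical: the SQL text and the values travel separately. The table and function names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])

def find_user_unsafe(name):
    # Vulnerable: user input is spliced directly into the SQL text.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()

def find_user_safe(name):
    # Safe: the driver binds `name` as a value, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()

payload = "x' OR '1'='1"
print(find_user_unsafe(payload))  # returns every user
print(find_user_safe(payload))    # [] because the payload is just a literal string
```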

19. Explain how to use partitioning in MySQL to improve performance and manage large tables.

Partitioning in MySQL divides a table into smaller, more manageable pieces based on a defined rule. This improves performance primarily by allowing queries to scan only the relevant partitions, significantly reducing the amount of data scanned, especially for large tables. For example, if you partition a sales table by month, queries for a specific month only need to scan that month's partition. This is called partition pruning.

Partitioning also helps with manageability. You can drop or archive older partitions without affecting the rest of the table, making tasks like data retention much simpler. MySQL supports several partitioning types including: RANGE, LIST, HASH, and KEY. The choice depends on how you want to distribute the data. For example, you might use RANGE partitioning based on date ranges, or LIST partitioning if you have discrete values like regions. Here's an example:

```sql
CREATE TABLE sales (
    sale_date DATE,
    product_id INT,
    amount DECIMAL(10, 2)
) PARTITION BY RANGE (YEAR(sale_date)) (
    PARTITION p2020 VALUES LESS THAN (2021),
    PARTITION p2021 VALUES LESS THAN (2022),
    PARTITION p2022 VALUES LESS THAN (2023),
    PARTITION pFuture VALUES LESS THAN MAXVALUE
);
```
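To make partition pruning concrete, here is a toy Python model of the RANGE (YEAR(sale_date)) scheme above. It maps each year to the first partition whose VALUES LESS THAN bound covers it and lists the partitions a date-range query would need to read. It only mimics the idea; to see what MySQL actually reads, run EXPLAIN on the query and check its partitions column.

```python
from datetime import date

# (partition name, exclusive upper bound on YEAR(sale_date)); None stands for MAXVALUE.
PARTITIONS = [("p2020", 2021), ("p2021", 2022), ("p2022", 2023), ("pFuture", None)]

def partition_for(year):
    """First partition whose VALUES LESS THAN bound exceeds the year."""
    for name, upper in PARTITIONS:
        if upper is None or year < upper:
            return name
    raise ValueError("no partition")  # unreachable while a MAXVALUE partition exists

def pruned_partitions(start, end):
    """Partitions a query on start <= sale_date <= end needs to scan."""
    names = []
    for year in range(start.year, end.year + 1):
        name = partition_for(year)
        if name not in names:
            names.append(name)
    return names

print(pruned_partitions(date(2021, 3, 1), date(2021, 3, 31)))  # ['p2021']
print(pruned_partitions(date(2022, 6, 1), date(2024, 1, 1)))   # ['p2022', 'pFuture']
```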

20. Describe the process of migrating a MySQL database to a new server.

Migrating a MySQL database involves several steps:

- Back up the existing database on the old server with mysqldump, which creates a SQL file containing the schema and data: mysqldump -u [user] -p [database_name] > backup.sql. Using -p without a value makes the client prompt for the password instead of exposing it on the command line, and adding --routines includes stored procedures and functions, which are not dumped by default.
- Transfer the backup file to the new server using tools like scp or rsync.
- On the new server, create an empty database to restore into (the dump only contains CREATE DATABASE if it was taken with --databases).
- Restore the database from the backup file using the mysql command-line client: mysql -u [user] -p [database_name] < backup.sql.
- Verify the data (for example, by comparing row counts or running CHECKSUM TABLE on both servers) and update application configurations to point to the new server.

To minimize downtime, consider configuring the new server as an asynchronous replica of the old one and cutting over once it has caught up, or copying the data with a utility such as mysqldbcopy.

Remember to also update DNS records to point to the new server's IP address after the migration is complete and verified.

21. What are user-defined functions (UDFs) in MySQL, and how can you create them?

User-Defined Functions (UDFs), called loadable functions in recent MySQL documentation, extend MySQL's built-in functionality. They are written in C or C++, compiled into a shared library, and then called directly from SQL statements like any built-in function. UDFs can be very useful for implementing complex logic or accessing external resources that MySQL's built-in functions do not support. (They differ from stored functions, which are written in SQL with a CREATE FUNCTION ... BEGIN ... END body.)

To create a UDF, you write the function in C/C++, compile it into a shared library, and register it with MySQL using CREATE FUNCTION ... SONAME. Unlike a stored function, this statement declares only the function name, its return type (STRING, INTEGER, REAL, or DECIMAL), and the shared library file. Argument counts and types are not declared in SQL; the C code checks them in the function's xxx_init() routine. For example:

```sql
CREATE FUNCTION my_udf RETURNS INTEGER SONAME 'my_udf.so';
```

This assumes my_udf.so is a shared library in the directory named by the plugin_dir system variable and that it exports the function my_udf (along with its my_udf_init() and, optionally, my_udf_deinit() helpers).

Advanced MySQL interview questions

1. How does MySQL handle transactions, and what are the different isolation levels?

With a transactional storage engine such as InnoDB (the default), MySQL provides the ACID properties (Atomicity, Consistency, Isolation, Durability). Atomicity ensures that a transaction is treated as a single unit of work (either all changes are applied, or none are). Consistency ensures that a transaction brings the database from one valid state to another. Isolation ensures that concurrent transactions do not interfere with each other. Durability guarantees that once a transaction is committed, it will remain so, even in the event of power loss or system failures. Non-transactional engines such as MyISAM do not support transactions.

MySQL supports four isolation levels, which determine the degree to which transactions are isolated from each other:

- READ UNCOMMITTED: Allows a transaction to read uncommitted changes from other transactions (dirty reads are possible).

- READ COMMITTED: Prevents dirty reads; a transaction can only read committed changes.

- REPEATABLE READ (the default level in MySQL): All consistent reads within a transaction see the snapshot established by its first read, so re-reading a row returns the same data even if other transactions commit changes to it.

- SERIALIZABLE: Provides the highest level of isolation; transactions are executed as if they were executed serially (one after the other).
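The practical difference between READ COMMITTED and REPEATABLE READ comes down to when the read snapshot is taken. The toy multi-version store below illustrates this; it is a simplified model, not how InnoDB stores versions (InnoDB uses undo logs), and the class names are hypothetical. Under READ COMMITTED each read takes a fresh snapshot, while under REPEATABLE READ the snapshot is fixed at the first read.

```python
import itertools

class VersionedStore:
    """Toy multi-version store: each key keeps (commit_ts, value) versions."""

    def __init__(self):
        self.clock = itertools.count(1)   # logical timestamps
        self.versions = {}

    def commit_write(self, key, value):
        # Another transaction commits a new version of the row.
        self.versions.setdefault(key, []).append((next(self.clock), value))

    def read_as_of(self, key, ts):
        visible = [v for t, v in self.versions.get(key, []) if t <= ts]
        return visible[-1] if visible else None

class Reader:
    def __init__(self, store, level):
        self.store, self.level, self.snapshot = store, level, None

    def read(self, key):
        now = next(self.store.clock)
        if self.level == "READ COMMITTED":
            return self.store.read_as_of(key, now)  # fresh snapshot for every read
        if self.snapshot is None:                   # REPEATABLE READ:
            self.snapshot = now                     # snapshot fixed at the first read
        return self.store.read_as_of(key, self.snapshot)

store = VersionedStore()
store.commit_write("balance", 100)
rc, rr = Reader(store, "READ COMMITTED"), Reader(store, "REPEATABLE READ")
first = (rc.read("balance"), rr.read("balance"))
store.commit_write("balance", 50)                   # concurrent commit
second = (rc.read("balance"), rr.read("balance"))
print(first, second)  # (100, 100) (50, 100)
```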

2. Explain the concept of 'locking' in MySQL, and describe the different types of locks.

Locking in MySQL is a mechanism to prevent concurrent access to data, ensuring data integrity and consistency. When a transaction acquires a lock on a resource (e.g., a row, a table), other transactions might be blocked from accessing the same resource until the lock is released. This prevents race conditions and data corruption.

MySQL uses several types of locks, including:

- Table Locks: These locks affect the entire table. A READ (shared) lock lets multiple sessions read the table concurrently but blocks writes; a WRITE (exclusive) lock gives one session exclusive access, so other sessions can neither read nor write the table until it is released.

- Row Locks: These locks apply to individual rows within a table. InnoDB uses row-level locking, which includes:

- Shared Locks (S Locks): Allow multiple transactions to read a row.

- Exclusive Locks (X Locks): Allow a single transaction to write to a row. Other transactions must wait until the X lock is released.

- Intention Locks: InnoDB uses intention locks (IS and IX) at the table level to indicate that a transaction intends to acquire shared (IS) or exclusive (IX) locks on rows within that table. They prevent a transaction from acquiring a table-level lock that would conflict with row-level locks held by other transactions, and they let InnoDB detect that conflict without examining every individual row lock.

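- Metadata Locks (MDL): Protect the definitions of database objects like tables and stored procedures, and are taken during schema changes like ALTER TABLE or DROP TABLE. A DDL statement must wait for open transactions that have used the table, and queries that arrive after it queue behind it, so a single long-running transaction can stall all access to the table (and, occasionally, cause deadlocks) if not carefully managed.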

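Deadlocks can occur when two or more transactions are blocked indefinitely, each waiting for another to release locks. InnoDB automatically detects deadlocks and rolls back one of the transactions (it tries to pick a small one, measured by rows inserted, updated, or deleted); that transaction receives an error and can be retried.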
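Conceptually, deadlock detection is cycle detection in a "wait-for" graph, where an edge T1 → T2 means T1 is waiting for a lock T2 holds. The sketch below is a simplified model of that idea, not InnoDB's actual code; the victim rule follows the "roll back the smallest transaction" heuristic described above, and the transaction names and row counts are hypothetical.

```python
def find_deadlock(waits_for):
    """Return a cycle in a wait-for graph {txn: {txns it waits on}}, or None."""
    visiting, done, path = set(), set(), []

    def dfs(txn):
        visiting.add(txn)
        path.append(txn)
        for other in waits_for.get(txn, ()):
            if other in visiting:                  # back edge: cycle found
                return path[path.index(other):]
            if other not in done:
                cycle = dfs(other)
                if cycle:
                    return cycle
        visiting.discard(txn)
        path.pop()
        done.add(txn)
        return None

    for txn in waits_for:
        if txn not in done:
            cycle = dfs(txn)
            if cycle:
                return cycle
    return None

def choose_victim(cycle, rows_changed):
    # Roll back the transaction in the cycle that has modified the fewest rows.
    return min(cycle, key=lambda t: rows_changed.get(t, 0))

waits = {"T1": {"T2"}, "T2": {"T1"}, "T3": {"T1"}}
cycle = find_deadlock(waits)
print(cycle)                                          # ['T1', 'T2']
print(choose_victim(cycle, {"T1": 500, "T2": 3}))    # T2
```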

3. What are the differences between MyISAM and InnoDB storage engines?

MyISAM and InnoDB are storage engines for MySQL, and the key differences lie in their features and suitability for different workloads. MyISAM uses table-level locking: reads can be fast in read-mostly workloads, but every write locks the whole table, so throughput drops sharply under concurrent writes. It also lacks transactions, foreign keys, and automatic crash recovery. InnoDB, on the other hand, provides row-level locking (improving concurrency), ACID transactions, crash recovery, and referential integrity through foreign keys. This makes it better for applications requiring data integrity and reliability, even if some read-only scans can be slightly slower than on MyISAM.

In practice, InnoDB has been the default engine since MySQL 5.5 and is the right choice for most applications, especially those requiring reliability, concurrency, and transaction support. MyISAM is only worth considering for read-intensive, non-critical data where transactions and crash safety are not needed.

4. Describe the process of query optimization in MySQL.

MySQL query optimization is the process of selecting the most efficient execution plan for a SQL query. It involves several stages. First, the parser checks the SQL syntax. The query optimizer then analyzes the query and considers various execution plans. It uses indexes, table statistics, and join algorithms to estimate the cost of each plan. The cost is typically measured in terms of I/O operations, CPU usage, and memory consumption. Finally, MySQL chooses the plan with the lowest estimated cost and executes it.

Key techniques include:

- Index usage: Selecting appropriate indexes to speed up data retrieval.

- Query rewriting: Transforming the query into a more efficient equivalent form.

- Join order optimization: Determining the optimal order in which tables should be joined.

- Subquery optimization: Converting subqueries into joins where possible.

- Using EXPLAIN: EXPLAIN SELECT * FROM table WHERE ...; helps analyze the execution plan chosen by MySQL.

5. How do you improve the performance of slow queries in MySQL?

To improve the performance of slow queries in MySQL, several strategies can be employed. Firstly, analyze the query using EXPLAIN to identify bottlenecks like missing indexes, full table scans, or inefficient joins. Add appropriate indexes to columns frequently used in WHERE, JOIN, and ORDER BY clauses. Optimize the query structure itself, such as rewriting subqueries into joins or simplifying complex WHERE conditions. Furthermore, ensure statistics are up-to-date by running ANALYZE TABLE on the involved tables.

Consider hardware improvements like increasing RAM for better caching, upgrading to faster storage (SSDs), or scaling the database server. Review MySQL configuration parameters such as innodb_buffer_pool_size to optimize memory allocation. On MySQL 5.7 and earlier, the query cache could also be enabled, but it suffers from invalidation overhead and contention, was deprecated in MySQL 5.7.20, and was removed in MySQL 8.0; an application-level cache is the usual alternative today.

6. What are the advantages of using stored procedures in MySQL?

Stored procedures in MySQL offer several advantages. They can improve performance by letting MySQL reuse a procedure's parsed form; they reduce network traffic, since only the procedure name and parameters are sent; and they enhance security, because users can be granted access to the procedure rather than to the underlying tables. They also promote code reusability and maintainability, since logic is encapsulated in a single unit, making updates easier.

For example, suppose you have a complex query that needs to run repeatedly. Instead of sending the entire query from the client each time, you wrap it in a stored procedure and send only a CALL with its parameters. MySQL parses the procedure the first time it is called in a session and caches it, so later calls in the same session skip re-parsing. Note that this cache is per connection, so the gain is smaller than in databases that compile a procedure once for all sessions. Another example is hiding complex queries from the outside world. Because a procedure runs with its definer's privileges by default (SQL SECURITY DEFINER), a user can be granted EXECUTE on it without SELECT, INSERT, UPDATE, or DELETE privileges on the underlying tables. This keeps business logic secure and centralized on the database server.

7. Explain how to implement replication in MySQL and what are the different replication topologies?

MySQL replication copies data from a master server (called the source in current MySQL documentation) to one or more slave servers (replicas). The master records all data changes in its binary log (binlog). On each slave, an I/O (receiver) thread copies binlog events into a local relay log, and an SQL (applier) thread replays them against the slave's own data. Replication is asynchronous by default, so slaves can lag behind the master. This setup keeps copies of the data on multiple servers, improves read performance by distributing reads across slaves, and provides redundancy.

Different replication topologies include:

- Master-Slave: A single master replicates to one or more slaves. This is the most common setup.
- Master-Master: Two servers act as both master and slave to each other, which can help with high availability. Asynchronous replication does not resolve write conflicts on its own, so writes are usually directed to one server at a time, and auto_increment_increment and auto_increment_offset are set so generated keys do not collide.
- Circular Replication: Similar to master-master, but extended to more servers arranged in a ring.
- Multi-Source Replication: A slave replicates from multiple masters. Useful for aggregating data from different sources.
- Group Replication: Provides a distributed, fault-tolerant system with automatic group membership management.
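The binlog → relay log → apply pipeline can be illustrated with a toy model. This sketch is a deliberate simplification (real binlog events are row or statement events tracked by file position or GTID, and the two steps run in separate threads); the class names are hypothetical. It also shows why asynchronous replicas can briefly serve stale data.

```python
class Source:
    def __init__(self):
        self.data, self.binlog = {}, []

    def write(self, key, value):
        self.data[key] = value
        self.binlog.append(("SET", key, value))   # every change is logged

class Replica:
    def __init__(self, source):
        self.source, self.relay_log, self.data = source, [], {}
        self.read_pos = 0          # how far into the source binlog we have copied

    def fetch(self):
        # Receiver (I/O thread) role: copy new binlog events into the relay log.
        new_events = self.source.binlog[self.read_pos:]
        self.relay_log.extend(new_events)
        self.read_pos += len(new_events)

    def apply(self):
        # Applier (SQL thread) role: replay relay-log events in order.
        for op, key, value in self.relay_log:
            if op == "SET":
                self.data[key] = value
        self.relay_log.clear()

    def sync(self):
        self.fetch()
        self.apply()

source = Source()
replica = Replica(source)
source.write("user:1", "alice")
replica.sync()
source.write("user:1", "alicia")   # not fetched yet: the replica lags
print(replica.data)                # {'user:1': 'alice'}
replica.sync()
print(replica.data)                # {'user:1': 'alicia'}
```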

8. What are the different types of indexes in MySQL, and how do they impact performance?

MySQL offers several index types, each optimized for different query patterns. The most common are: B-Tree indexes, which are the default and work well for range queries, equality checks, and sorting; Hash indexes, used primarily by the MEMORY storage engine for very fast equality lookups, but they don't support range queries; Full-Text indexes, designed for searching text fields, allowing for complex searches based on keywords and phrases; and Spatial indexes, used for indexing spatial data like geographical coordinates.

Indexes generally improve SELECT query performance by allowing the database to quickly locate relevant rows without scanning the entire table. However, they can slow down INSERT, UPDATE, and DELETE operations because the index also needs to be updated whenever data changes. Choosing the right index type and balancing index usage with write performance is crucial for overall database efficiency. Over-indexing can lead to performance degradation, so it's important to analyze query patterns and data characteristics before adding indexes.
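Why hash indexes handle only equality while B-tree indexes also handle ranges comes down to ordering. In the toy sketch below (plain Python structures standing in for real index pages; the data is made up), a dict plays the hash index and a sorted list searched with bisect plays the B-tree.

```python
import bisect

rows = {1: ("alice", 31), 2: ("bob", 25), 3: ("carol", 42), 4: ("dave", 25)}

# Hash-style index: age -> row ids. Fast for equality, useless for ranges.
hash_index = {}
for rid, (_, age) in rows.items():
    hash_index.setdefault(age, []).append(rid)

# Ordered (B-tree-like) index: sorted (age, row id) pairs support ranges and ORDER BY.
ordered_index = sorted((age, rid) for rid, (_, age) in rows.items())

def equal(age):
    return hash_index.get(age, [])

def between(lo, hi):
    """Row ids with lo <= age <= hi, located with two binary searches."""
    start = bisect.bisect_left(ordered_index, (lo, float("-inf")))
    end = bisect.bisect_right(ordered_index, (hi, float("inf")))
    return [rid for _, rid in ordered_index[start:end]]

print(equal(25))         # [2, 4]
print(between(25, 31))   # [2, 4, 1]
```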

9. How does MySQL handle character sets and collations?

MySQL uses character sets to define which characters can be stored in a string and how they are encoded, and collations to define the rules for comparing and sorting those characters. Each character set has one or more collations. For example, MySQL 8.0 defaults to the utf8mb4 character set with the utf8mb4_0900_ai_ci collation, which compares strings accent-insensitively (ai) and case-insensitively (ci).

MySQL supports character sets at multiple levels: server, database, table, and column. The server character set is the default for all databases. The database character set is the default for all tables within that database. The table character set is the default for all columns within that table. Finally, a column can have its own specific character set. Collations are applied at the same levels, and each level inherits from the level above. When comparing strings, MySQL uses the collation associated with the column or expression. You can override these defaults using CHARACTER SET and COLLATE clauses in CREATE and ALTER statements, and in queries.

10. Explain the concept of partitioning in MySQL and its benefits.

Partitioning in MySQL involves dividing a table into smaller, more manageable parts called partitions. Each partition can be stored on different physical storage, although this is not required. This division is transparent to the application, meaning queries don't need to be modified to access partitioned tables.

The benefits include improved query performance, especially for queries that touch only a small subset of the data, because MySQL can skip (prune) irrelevant partitions. It simplifies data management tasks like archiving or purging old data, since an entire partition can be dropped or truncated quickly instead of deleting rows one by one. Maintenance operations such as ALTER TABLE ... REBUILD PARTITION or ANALYZE PARTITION can target individual partitions instead of the entire table. Furthermore, because partitions can be placed in different data directories, frequently accessed partitions can be put on faster storage.

11. How do you back up and restore a MySQL database?

MySQL databases can be backed up using mysqldump. This utility creates a logical backup, typically as a SQL script containing CREATE and INSERT statements. For example: mysqldump -u [user] -p [database_name] > backup.sql. To restore, you can use the mysql client: mysql -u [user] -p [database_name] < backup.sql. (Passing -p without a value makes the client prompt for the password rather than exposing it on the command line.)

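For larger databases, consider using mysqldump with the --single-transaction option (for InnoDB tables) to get a consistent snapshot without blocking other sessions, or Percona XtraBackup (xtrabackup) for faster, physical backups. Logical dumps are restored with the mysql client; physical backups are restored with xtrabackup itself, by running its --prepare step and then --copy-back. A replica can also serve as a backup source (for example, by taking dumps from it to avoid loading the primary), but replication on its own is not a backup: an accidental DELETE or DROP is replicated too.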
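Backup jobs are usually scripted. The sketch below only builds the commands (it does not run them), so it works without a MySQL installation. The options --single-transaction, --routines, --triggers, and --result-file are real mysqldump options; the helper names and the timestamped file-naming scheme are hypothetical. Because the dump is taken without --databases, the target database must exist before restoring.

```python
import shlex
from datetime import datetime

def backup_command(user, database, when=None):
    """Build a mysqldump argv list; -p with no value makes the client prompt."""
    when = when or datetime.now()
    outfile = f"{database}-{when:%Y%m%d-%H%M%S}.sql"
    argv = ["mysqldump", "-u", user, "-p",
            "--single-transaction",      # consistent InnoDB snapshot
            "--routines", "--triggers",  # include stored routines and triggers
            "--result-file=" + outfile, database]
    return argv, outfile

def restore_command(user, database, dumpfile):
    # The mysql client reads SQL from stdin, so this is meant for a shell.
    return (f"mysql -u {shlex.quote(user)} -p {shlex.quote(database)} "
            f"< {shlex.quote(dumpfile)}")

argv, outfile = backup_command("app", "shop", datetime(2024, 1, 2, 3, 4, 5))
print(" ".join(argv))
print(restore_command("app", "shop", outfile))
```

The argv list can be passed to subprocess.run() without a shell, which avoids quoting problems in database or file names.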

12. What are the key differences between VARCHAR and TEXT data types, and when would you use each?

VARCHAR and TEXT are both used to store strings, but they differ in storage and usage. VARCHAR stores variable-length strings up to a declared maximum number of characters (e.g., VARCHAR(255)), limited by MySQL's 65,535-byte maximum row size. The TEXT family instead has fixed maximum sizes in bytes: TINYTEXT (255), TEXT (65,535), MEDIUMTEXT (16,777,215), and LONGTEXT (4,294,967,295).

Use VARCHAR when you know the maximum length of the string and it's relatively short (names, email addresses, codes): it can have a default value and can be indexed in full, subject to the index key length limit. Use TEXT for larger chunks of text, such as articles, blog posts, or comments, where the length is unpredictable and can be quite large. TEXT columns can only be indexed with a prefix length (e.g., INDEX (body(100))), and InnoDB may store long values off-page, which adds I/O when they are read frequently but keeps the main row compact.
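Because TEXT limits are in bytes while VARCHAR lengths are in characters, the character set matters when sizing a column. This small helper (hypothetical, for illustration) picks the smallest TEXT type for a given worst-case character count, assuming utf8mb4's maximum of 4 bytes per character unless told otherwise.

```python
# Maximum byte lengths of MySQL's TEXT family.
TEXT_LIMITS = [("TINYTEXT", 255), ("TEXT", 65_535),
               ("MEDIUMTEXT", 16_777_215), ("LONGTEXT", 4_294_967_295)]

def smallest_text_type(max_chars, bytes_per_char=4):
    """Smallest TEXT type that can hold max_chars characters in the worst case."""
    needed = max_chars * bytes_per_char
    for name, limit in TEXT_LIMITS:
        if needed <= limit:
            return name
    raise ValueError("value too large for any TEXT type")

print(smallest_text_type(20_000))                    # MEDIUMTEXT (80,000 bytes in utf8mb4)
print(smallest_text_type(20_000, bytes_per_char=1))  # TEXT (single-byte character set)
```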

13. Describe the process of setting up and managing user permissions in MySQL.

Setting up and managing user permissions in MySQL involves several steps. First, you create a user account using the CREATE USER statement, specifying the username and hostname (e.g., 'user'@'localhost' or 'user'@'%'). Next, you grant specific privileges to the user using the GRANT statement. This allows you to control what actions the user can perform on databases, tables, or specific columns. Common privileges include SELECT, INSERT, UPDATE, DELETE, CREATE, DROP, and ALL PRIVILEGES (USAGE means "no privileges"). For example: GRANT SELECT, INSERT ON database.table TO 'user'@'localhost';. Changes made with CREATE USER, GRANT, and REVOKE take effect immediately; FLUSH PRIVILEGES; is only needed if you modify the grant tables directly with INSERT, UPDATE, or DELETE, which is not recommended.

To manage permissions, you can revoke privileges using the REVOKE statement. For example: REVOKE SELECT ON database.table FROM 'user'@'localhost';. You can view existing grants for a user with the SHOW GRANTS FOR 'user'@'localhost'; statement. Remember to follow the principle of least privilege, granting only the necessary permissions to each user. Also, consider using roles to group permissions and assign them to users for easier management. You can create and manage roles using the CREATE ROLE, GRANT role TO user, and SET DEFAULT ROLE statements.

14. How do you monitor the performance of a MySQL server?

I monitor MySQL server performance using a combination of tools and techniques. Key metrics include CPU utilization, memory usage, disk I/O, network traffic, query execution time, and connection statistics. Useful tools include innotop or mytop for a live view, pt-query-digest for analyzing query logs, and the MySQL Performance Schema. SHOW GLOBAL STATUS and SHOW ENGINE INNODB STATUS also provide valuable insights.

Specifically, I monitor slow query logs to identify problematic queries. I also use monitoring systems like Prometheus and Grafana (often in conjunction with the mysqld_exporter) for long-term trend analysis and alerting. Regular analysis helps to identify bottlenecks and optimize configurations.
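Most SHOW GLOBAL STATUS values are cumulative counters, so useful numbers come from the difference between two samples. This sketch assumes the samples were already fetched into dicts of name → string value (the form a driver returns them in); the variable names are real status variables, while the helper itself is hypothetical.

```python
def status_rates(before, after, seconds):
    """Per-second rates from two SHOW GLOBAL STATUS samples taken `seconds` apart."""
    counters = ("Questions", "Slow_queries", "Com_select", "Com_insert")
    rates = {}
    for name in counters:
        if name in before and name in after:
            rates[name] = (int(after[name]) - int(before[name])) / seconds
    # Threads_connected is a gauge, not a counter, so report its current value.
    rates["Threads_connected"] = int(after.get("Threads_connected", 0))
    return rates

before = {"Questions": "1000", "Slow_queries": "4", "Threads_connected": "10"}
after = {"Questions": "1600", "Slow_queries": "7", "Threads_connected": "12"}
print(status_rates(before, after, 60))
# {'Questions': 10.0, 'Slow_queries': 0.05, 'Threads_connected': 12}
```

In a real setup, mysqld_exporter and Prometheus perform this sampling and rate calculation continuously.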

15. Explain the concept of 'connection pooling' and its benefits in MySQL environments.

Connection pooling is a technique used to reuse existing database connections instead of creating new ones every time an application needs to interact with the database. When an application requests a connection, it's retrieved from a pool of pre-established connections. When the application is done, the connection is returned to the pool instead of being closed, ready for another request.

The benefits in MySQL environments include:

- Reduced overhead: Creating a new database connection is resource-intensive. Connection pooling avoids this repeated overhead.

- Improved performance: Reusing connections significantly speeds up database operations, resulting in faster response times for applications.

- Scalability: By efficiently managing connections, connection pooling enables applications to handle a larger number of concurrent users.

- Resource Management: Limits the number of total connections to the database server preventing the exhaustion of server resources.
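The mechanics of a pool fit in a few lines. This is a minimal sketch using only the standard library; `factory` is any zero-argument callable that opens a connection (a stand-in here for a driver's connect function). In production you would normally use an existing pool, such as mysql-connector-python's MySQLConnectionPool or SQLAlchemy's built-in pooling, which also handles validating connections that the server closed after wait_timeout.

```python
import queue
from contextlib import contextmanager

class ConnectionPool:
    """Minimal fixed-size connection pool."""

    def __init__(self, factory, size=5):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())      # pay the connection cost once, up front

    def acquire(self, timeout=None):
        # Blocks while every connection is checked out, which also caps the
        # number of connections the MySQL server sees from this process.
        return self._idle.get(timeout=timeout)

    def release(self, conn):
        self._idle.put(conn)

    @contextmanager
    def connection(self, timeout=None):
        conn = self.acquire(timeout)
        try:
            yield conn
        finally:
            self.release(conn)             # returned to the pool, not closed

opened = []
def fake_connect():
    opened.append(object())
    return opened[-1]

pool = ConnectionPool(fake_connect, size=2)
for _ in range(10):
    with pool.connection() as conn:
        pass                               # run queries with conn here
print(len(opened))                         # 2: ten requests served by two connections
```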

16. How do you handle database migrations in MySQL?

I typically use a tool like Flyway or Liquibase, alongside version control (like Git), to manage database migrations. The process involves creating migration scripts that define schema changes (e.g., adding tables, altering columns, creating indexes). Each script is versioned, ensuring changes are applied in the correct order. When deploying changes, the migration tool checks the current database version and applies only the necessary scripts to bring the database up to date. Because most MySQL DDL statements cause an implicit commit, a failed migration cannot simply be rolled back, so it pays to keep each script small and test it against a copy of production data.

With such a tool, the workflow typically looks like this:

- Creating migration scripts (SQL files) that contain DDL statements.

- Storing these scripts in a version control system.

- The tool tracks which migrations have been applied to the database.

- During deployment, the tool automatically applies any pending migrations.
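The core of such a tool is a version table plus an ordered list of scripts. This sketch uses Python's sqlite3 module so it runs without a server, and the migrations and the schema_version table name are hypothetical; Flyway and Liquibase keep a similar history table inside the target database.

```python
import sqlite3

# Hypothetical migrations; in a real project these are versioned .sql files in Git.
MIGRATIONS = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    (2, "ALTER TABLE users ADD COLUMN created_at TEXT"),
    (3, "CREATE INDEX idx_users_email ON users (email)"),
]

def migrate(conn, migrations=MIGRATIONS):
    """Apply pending migrations in version order and return the versions applied."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)")
    applied = {v for (v,) in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, ddl in sorted(migrations):
        if version in applied:
            continue                      # already run on this database
        conn.execute(ddl)
        conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
        conn.commit()
        newly_applied.append(version)
    return newly_applied

conn = sqlite3.connect(":memory:")
print(migrate(conn))  # [1, 2, 3]
print(migrate(conn))  # [] because the database is already up to date
```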

17. Describe the process of setting up and using SSL for MySQL connections.

To set up SSL/TLS for MySQL connections, you first need a Certificate Authority (CA) certificate, a server certificate and key, and optionally a client certificate and key. You can generate these with openssl; MySQL 5.7 and 8.0 also provide mysql_ssl_rsa_setup, and MySQL 8.0 can generate self-signed files automatically at startup if none are present. Configure the server to use the files by setting the ssl-ca, ssl-cert, and ssl-key options in the [mysqld] section of the my.cnf configuration file, then restart the MySQL server.

On the client side, specify --ssl-ca (plus --ssl-cert and --ssl-key if the server requires client certificates) when connecting; --ssl-mode=VERIFY_IDENTITY additionally checks the server certificate against the host name. You can also require encrypted connections for an account. In MySQL 8.0 this is an account property set with CREATE USER or ALTER USER (older versions also accepted REQUIRE SSL in a GRANT statement). For example:

ALTER USER 'your_user'@'%' REQUIRE SSL;

To verify that a connection is encrypted, run SHOW STATUS LIKE 'Ssl_cipher'; within the MySQL client. A non-empty value means SSL/TLS is active; an empty value means the connection is unencrypted.

18. What are the different types of MySQL errors, and how do you handle them in your application?

MySQL errors can be broadly categorized into: Syntax Errors, occurring due to incorrect SQL syntax; Runtime Errors, like division by zero or constraint violations (e.g., duplicate key); and Connection Errors, which arise from issues connecting to the database server. Application handling involves using try...catch blocks around database operations. Within the catch block, you inspect the error code/message (e.g., using language-specific functions like mysqli_errno() in PHP).

Based on the error type, you can implement specific logic. For example, for duplicate key errors, you might display a user-friendly message prompting the user to choose a different value. For connection errors, you could implement retry logic with exponential backoff. Logging errors is crucial for debugging. Use parameterized queries to mitigate SQL injection risks.
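The retry logic can be sketched independently of any driver. The error numbers below are real MySQL codes (1062 duplicate key, 1205 lock wait timeout, 1213 deadlock, 2003/2006/2013 connection problems), but DBError is a hypothetical stand-in for a driver exception: mysql-connector-python exposes the number as err.errno, while PyMySQL puts it in err.args[0]. After a deadlock, the whole transaction must be retried, not just the failing statement.

```python
import random
import time

ER_DUP_ENTRY = 1062
ER_LOCK_WAIT_TIMEOUT = 1205
ER_LOCK_DEADLOCK = 1213
CR_CONN_HOST_ERROR = 2003      # can't connect to the server
CR_SERVER_GONE_ERROR = 2006
CR_SERVER_LOST = 2013

RETRYABLE = {ER_LOCK_WAIT_TIMEOUT, ER_LOCK_DEADLOCK,
             CR_CONN_HOST_ERROR, CR_SERVER_GONE_ERROR, CR_SERVER_LOST}

class DBError(Exception):
    """Stand-in for a driver exception carrying a MySQL error number."""
    def __init__(self, errno, msg=""):
        super().__init__(msg)
        self.errno = errno

def run_with_retry(operation, attempts=5, base_delay=0.1, sleep=time.sleep):
    """Run `operation` (e.g. a whole transaction), retrying transient errors."""
    for attempt in range(attempts):
        try:
            return operation()
        except DBError as err:
            if err.errno not in RETRYABLE or attempt == attempts - 1:
                raise              # e.g. 1062: report to the user instead of retrying
            # Exponential backoff with jitter: the delay roughly doubles per attempt.
            sleep(base_delay * 2 ** attempt * (1 + random.random()))
```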

19. Explain the concept of 'ACID properties' in the context of MySQL transactions.

ACID properties are a set of principles that guarantee reliable processing of database transactions. They ensure data integrity and consistency even in the face of errors, power failures, or other unforeseen events. They are:

- Atomicity: All operations within a transaction are treated as a single "unit" of work. Either all operations succeed, or none do. If any part fails, the entire transaction is rolled back, leaving the database in its original state.

- Consistency: A transaction must maintain the integrity of the database. It moves the database from one valid state to another. This means adhering to all defined rules, constraints, and data types.

- Isolation: Concurrent transactions should not interfere with each other. Each transaction should operate as if it were the only transaction running on the database. Isolation levels control the degree to which transactions are isolated from each other.

- Durability: Once a transaction is committed, its changes are permanent and will survive even system failures. InnoDB achieves this with its redo log, a form of write-ahead logging that, with the default innodb_flush_log_at_trx_commit=1, is flushed to disk at every commit.
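Atomicity is easiest to see in a transfer that fails halfway. This sketch uses Python's sqlite3 module as a stand-in for an InnoDB table so it runs without a server; the accounts table is hypothetical. MySQL enforces CHECK constraints from 8.0.16 (earlier versions parse and ignore them), so on older servers the same guard would need a trigger or application logic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id TEXT PRIMARY KEY, "
             "balance INTEGER NOT NULL CHECK (balance >= 0))")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("a", 100), ("b", 0)])
conn.commit()

def transfer(amount):
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = 'b'", (amount,))
            conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = 'a'", (amount,))
    except sqlite3.IntegrityError:
        pass  # the debit violated the CHECK, so the credit to 'b' was rolled back too

transfer(30)    # succeeds: a=70, b=30
transfer(500)   # fails: neither update is kept
print(conn.execute("SELECT id, balance FROM accounts ORDER BY id").fetchall())
# [('a', 70), ('b', 30)]
```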

20. How does MySQL handle full-text search?

MySQL provides full-text search capabilities through its MATCH ... AGAINST syntax and the FULLTEXT index type. This allows you to efficiently search for words or phrases within text columns. When creating a full-text index, MySQL tokenizes the text data, creating an index of individual words. During a search, MySQL uses this index to quickly identify rows containing the specified search terms.

In BOOLEAN MODE, MySQL's full-text search supports operators such as +, -, >, <, and ~, the * truncation (prefix wildcard) operator, and "..." phrase matching; InnoDB also supports a limited proximity search with the @distance operator, though this is far less capable than a dedicated search engine. Words on the stopword list, such as "the" and "a", are ignored; a default stopword list is applied automatically when a FULLTEXT index is created, which reduces index size and improves search relevance. Words shorter than the minimum token length are not indexed: by default 3 characters for InnoDB (innodb_ft_min_token_size) and 4 for MyISAM (ft_min_word_len). Search performance depends mainly on the size of the index and the number of matching rows.
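The tokenize-then-index process can be modeled with a small inverted index. This is a toy sketch, not MySQL's implementation: the stopword set is a tiny illustrative subset (every entry is on InnoDB's default list, but the real list is longer), the 3-character minimum mirrors InnoDB's default, and boolean_search only approximates the + and - operators.

```python
import re

STOPWORDS = {"the", "a", "an", "of", "to", "in", "is"}   # tiny illustrative subset
MIN_TOKEN = 3                                             # InnoDB's default minimum

def tokenize(text):
    words = re.findall(r"[a-z0-9]+", text.lower())
    return [w for w in words if len(w) >= MIN_TOKEN and w not in STOPWORDS]

def build_index(docs):
    """Map each token to the set of document ids that contain it."""
    index = {}
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index.setdefault(token, set()).add(doc_id)
    return index

def boolean_search(index, must=(), must_not=()):
    """Rough analogue of AGAINST('+w1 +w2 -w3' IN BOOLEAN MODE)."""
    if not must:
        return set()
    result = set.intersection(*(index.get(w, set()) for w in must))
    for w in must_not:
        result -= index.get(w, set())
    return result

docs = {1: "MySQL full-text search in InnoDB",
        2: "Tuning the InnoDB buffer pool",
        3: "Search engines and MySQL"}
index = build_index(docs)
print(boolean_search(index, must=["mysql", "search"]))                 # {1, 3}
print(boolean_search(index, must=["innodb"], must_not=["search"]))     # {2}
```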

21. Describe the difference between clustered and non-clustered indexes and when you might use them.

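Clustered indexes define the physical order of data in a table. A table can only have one clustered index because the data itself can only be sorted in one physical order. Think of it like a phone book sorted by last name; the actual physical book is ordered by last name. In InnoDB, the clustered index is the PRIMARY KEY (or, if there is none, the first UNIQUE index whose columns are all NOT NULL, or else a hidden generated row ID), and the table's rows are stored in its leaf pages. MyISAM tables have no clustered index.

Non-clustered (secondary) indexes, on the other hand, are like a separate structure that points to the data in the main table. A table can have multiple non-clustered indexes. Imagine an index in the back of a textbook; it provides pointers to the actual content but doesn't change the physical arrangement of the pages. In InnoDB, each secondary index entry stores the row's primary key value, so a lookup through a secondary index finds the primary key first and then searches the clustered index for the row. Because you do not choose the clustered index separately in InnoDB, choose a primary key that suits your access pattern: clustering helps when you frequently retrieve data in key order or run range queries on the key (e.g., dates), and a short, ever-increasing key such as an AUTO_INCREMENT integer avoids page splits. Use non-clustered indexes to speed up lookups on other columns without affecting the physical order of the data.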
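The two-step secondary-index lookup can be modeled with sorted lists. This toy sketch stands in for InnoDB's B+trees (the data and function names are hypothetical): the clustered list holds full rows in primary-key order, while the secondary index holds only (email, primary key) pairs, so a lookup by email costs two searches.

```python
import bisect

# Clustered index: full rows stored in primary-key order.
clustered = [(1, {"id": 1, "email": "c@x.io", "city": "Oslo"}),
             (2, {"id": 2, "email": "a@x.io", "city": "Rome"}),
             (3, {"id": 3, "email": "b@x.io", "city": "Oslo"})]
# Secondary index on email: (indexed value, primary key) pairs, sorted by value.
email_idx = sorted((row["email"], pk) for pk, row in clustered)

def get_by_pk(pk):
    # One search of the clustered index returns the whole row.
    keys = [k for k, _ in clustered]
    i = bisect.bisect_left(keys, pk)
    return clustered[i][1] if i < len(keys) and keys[i] == pk else None

def get_by_email(email):
    # Two searches: secondary index -> primary key -> clustered index.
    i = bisect.bisect_left(email_idx, (email,))
    if i < len(email_idx) and email_idx[i][0] == email:
        return get_by_pk(email_idx[i][1])
    return None

print(get_by_email("b@x.io"))  # {'id': 3, 'email': 'b@x.io', 'city': 'Oslo'}
```

If a query needs only columns stored in the secondary index (here, email and id), the second search is unnecessary; EXPLAIN reports such covering-index reads as "Using index".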

22. Let's say your MySQL database is experiencing high CPU usage. What steps would you take to diagnose and resolve the issue?

First, I'd identify the source of the high CPU usage. I'd use SHOW PROCESSLIST to see currently running queries and identify long-running or frequently executed queries. performance_schema or tools like pt-query-digest or mysqldumpslow can help analyze query performance and identify the most resource-intensive queries. I would also check the slow query log. I'd also look at server status variables with SHOW GLOBAL STATUS looking for unusual activity or bottlenecks like high connection counts or excessive table scans.

Once identified, I'd optimize the problematic queries by:

- Adding or optimizing indexes: Use EXPLAIN to analyze query execution plans and identify missing indexes.

- Rewriting inefficient queries: Simplify complex queries or break them down into smaller, more manageable parts.

- Optimizing table structures: Review table structures and data types for efficiency.

- Adjusting MySQL configuration: Tune configuration parameters like innodb_buffer_pool_size and sort_buffer_size (plus query_cache_size on MySQL 5.7 and earlier; the query cache was removed in MySQL 8.0). Consider using a tool like mysqltuner.pl for recommendations.

If needed, scale the database instance (vertically or horizontally) when the load exceeds its capacity.

Expert MySQL interview questions

1. How would you design a system for real-time analytics on frequently updated MySQL data without impacting transactional performance?

To design a real-time analytics system on frequently updated MySQL data without impacting transactional performance, I'd employ a strategy that minimizes direct load on the primary database.

I'd use a combination of techniques:

- Change Data Capture (CDC): Tools like Debezium or Maxwell's daemon can capture changes (inserts, updates, deletes) from the MySQL binlog in real-time.

- Message Queue: The CDC stream publishes these changes to a message queue such as Kafka or RabbitMQ. This decouples the database from the analytics system.

- Data Transformation: A stream processing engine (e.g., Apache Flink, Apache Spark Streaming) consumes the data from the message queue. This engine transforms and aggregates the data as needed.

- Analytics Database: The transformed data is then loaded into a separate analytical database like ClickHouse or Apache Druid optimized for fast aggregations and queries.

- Read Replicas: Offload analytical queries to read replicas. If the data is not time-sensitive, scheduled batch processing to replicate the data is an option.

This architecture minimizes the impact on the primary MySQL database by offloading analytics processing to a separate system.
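The stream-processing step often maintains aggregates incrementally from change events rather than re-querying MySQL. This sketch assumes Debezium-style events (an op code plus before and after row images) and full row images (binlog_row_image=FULL, MySQL's default), so updates and deletes carry the old values; the field names customer_id and amount are hypothetical.

```python
from collections import defaultdict

def apply_change(totals, event):
    """Keep SUM(amount) per customer up to date from one change event."""
    before, after = event.get("before"), event.get("after")
    if before:                                   # remove the old row's contribution
        totals[before["customer_id"]] -= before["amount"]
    if after:                                    # add the new row's contribution
        totals[after["customer_id"]] += after["amount"]

totals = defaultdict(int)
events = [
    {"op": "c", "before": None, "after": {"customer_id": 7, "amount": 40}},
    {"op": "c", "before": None, "after": {"customer_id": 7, "amount": 10}},
    {"op": "u", "before": {"customer_id": 7, "amount": 10},
                "after": {"customer_id": 7, "amount": 25}},
    {"op": "d", "before": {"customer_id": 7, "amount": 40}, "after": None},
]
for event in events:
    apply_change(totals, event)
print(dict(totals))  # {7: 25}
```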

2. Explain the intricacies of using MySQL's Group Replication in a multi-datacenter setup, addressing potential issues like network latency and split-brain scenarios.

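MySQL Group Replication across multiple datacenters presents challenges primarily due to network latency. A transaction can only commit after a majority of members has agreed on its order (through a Paxos-based group communication protocol), so cross-datacenter round trips are added to every commit, which can significantly reduce write throughput. Network partitions are the other major concern. Because writes require a majority, a partition normally leaves the minority side unable to commit rather than producing two primaries. The real split-brain risk arises when an operator forces a new membership on a minority partition (for example, with group_replication_force_members) while the other side is still running, allowing the two sides' data to diverge.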

To mitigate these issues:

- Increase failure-detection timeouts: Settings such as group_replication_member_expel_timeout give members more time to respond before they are expelled, which helps with latency spikes, but setting them too high can mask real failures.

- Utilize asynchronous replication for disaster recovery: In addition to group replication, configure asynchronous replication to a separate site for DR purposes, understanding the potential for data loss.

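- Member weighting: In single-primary mode, group_replication_member_weight controls which member is elected as the new primary after a failure. Give members in the more reliable or better-connected datacenter a higher weight so the primary role fails over there first.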

- Configure group_replication_consistency: This setting (EVENTUAL, BEFORE_ON_PRIMARY_FAILOVER, BEFORE, AFTER, or BEFORE_AND_AFTER) controls the consistency guarantees within the group, allowing trade-offs between consistency and performance.

- Implement robust monitoring: Closely monitor network latency, group membership, and transaction commit times to detect and respond to issues quickly.

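- Use a proper quorum layout: Placing a majority of members in the primary datacenter keeps that side writable if the cross-datacenter link fails, but the group loses quorum if that datacenter itself goes down. Spreading members across three sites (for example, 2 + 2 + 1) lets the group survive the loss of any single site.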
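The quorum arithmetic behind these layouts is simple to check. This hypothetical helper takes member counts per datacenter and the groups of datacenters that can still reach each other after a failure, and reports which side (if any) keeps a strict majority and can therefore keep committing writes.

```python
def surviving_side(members_by_dc, partition):
    """Return the side of a split that keeps a Group Replication majority, or None.

    members_by_dc: e.g. {"dc1": 3, "dc2": 2}
    partition: list of sets of datacenters that can still reach each other;
               datacenters not listed are down.
    """
    total = sum(members_by_dc.values())
    for side in partition:
        count = sum(members_by_dc[dc] for dc in side)
        if count > total // 2:          # strict majority of all configured members
            return side
    return None                         # no majority anywhere: writes block

print(surviving_side({"dc1": 3, "dc2": 2}, [{"dc1"}, {"dc2"}]))  # {'dc1'}
print(surviving_side({"dc1": 3, "dc2": 2}, [{"dc2"}]))           # None: dc1 is down
# Three sites, 2 + 2 + 1: losing dc1 still leaves dc2 + dc3 with 3 of 5 members.
print(surviving_side({"dc1": 2, "dc2": 2, "dc3": 1}, [{"dc2", "dc3"}]))
```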

3. Describe your approach to optimizing a MySQL database experiencing slow query performance due to a high volume of concurrent read and write operations.

When optimizing a MySQL database experiencing slow query performance due to high concurrency, I would first identify the slow queries using tools like MySQL's slow query log or the Performance Schema. Then, I would focus on optimizing those queries by analyzing their execution plans using EXPLAIN and adding appropriate indexes to the tables involved. Caching frequently accessed data in a dedicated caching layer like Redis or Memcached can also reduce the load on the database (MySQL's own query cache is only an option on 5.7 and earlier; it was removed in MySQL 8.0).

Beyond query optimization, I would consider implementing connection pooling to reduce the overhead of establishing new connections for each request. For write-heavy workloads, techniques like partitioning large tables, using appropriate storage engines (InnoDB for ACID properties, MyISAM for read-heavy scenarios if appropriate), and optimizing transaction sizes can improve performance. Replication (read replicas) will scale reads across multiple servers. Finally, monitoring key database metrics such as CPU usage, disk I/O, and network traffic is crucial for identifying bottlenecks and proactively addressing performance issues.
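The caching layer mentioned above usually follows the cache-aside pattern: check the cache, fall back to MySQL on a miss, and invalidate on writes. This sketch uses a tiny in-process TTL cache as a stand-in for Redis or Memcached; the class name and the injectable clock (used to make expiry testable) are illustrative choices.

```python
import time

class TTLCache:
    """In-process stand-in for Redis/Memcached in a cache-aside pattern."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl, self.clock, self._items = ttl_seconds, clock, {}

    def get_or_load(self, key, loader):
        entry = self._items.get(key)
        now = self.clock()
        if entry and entry[1] > now:
            return entry[0]                  # hit: no database round trip
        value = loader(key)                  # miss or expired: query MySQL
        self._items[key] = (value, now + self.ttl)
        return value

    def invalidate(self, key):
        # Call after writes so readers do not see stale data for a full TTL.
        self._items.pop(key, None)
```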

4. How can you implement a robust audit logging system in MySQL to track data modifications and access, while ensuring performance is not significantly degraded?

A robust audit logging system in MySQL can be implemented using a combination of triggers, stored procedures, and a dedicated audit table. Triggers are attached to the tables you want to audit, typically as AFTER INSERT, AFTER UPDATE, and AFTER DELETE triggers, which fire only once the row operation has succeeded. Each trigger calls a stored procedure that inserts the relevant details (timestamp, user, affected columns and values, operation type) into the audit table. Note that triggers only see data modifications; tracking read access requires an audit plugin or the general query log.
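As an illustration, the Python sketch below generates the DDL for one such audit trigger. The `orders` table, its columns, and the `audit_log` table with its `(table_name, action, changed_by, changed_at, row_pk, old_values, new_values)` columns are hypothetical, and for brevity the trigger inserts into the audit table directly instead of calling a stored procedure. It uses `USER()` because inside a trigger `CURRENT_USER()` reports the trigger's definer rather than the connected client, and `JSON_OBJECT()` requires MySQL 5.7.8 or later.

```python
def audit_trigger_ddl(table: str, pk: str, columns: list[str], event: str) -> str:
    """Build CREATE TRIGGER DDL that copies each changed row into a hypothetical audit_log table.

    Identifiers are interpolated directly, so only pass trusted, hard-coded names.
    """
    event = event.upper()
    if event not in ("INSERT", "UPDATE", "DELETE"):
        raise ValueError(f"unsupported trigger event: {event}")

    def row_as_json(alias: str) -> str:
        # JSON_OBJECT('col', OLD.`col`, ...) snapshots the audited columns.
        pairs = ", ".join(f"'{col}', {alias}.`{col}`" for col in columns)
        return f"JSON_OBJECT({pairs})"

    # INSERT triggers have no OLD row and DELETE triggers have no NEW row.
    old_values = "NULL" if event == "INSERT" else row_as_json("OLD")
    new_values = "NULL" if event == "DELETE" else row_as_json("NEW")
    row_pk = f"{'OLD' if event == 'DELETE' else 'NEW'}.`{pk}`"

    return (
        f"CREATE TRIGGER `{table}_audit_{event.lower()}`\n"
        f"AFTER {event} ON `{table}`\n"
        "FOR EACH ROW\n"
        "INSERT INTO audit_log\n"
        "  (table_name, action, changed_by, changed_at, row_pk, old_values, new_values)\n"
        # USER() is the connected client; CURRENT_USER() would be the trigger's definer.
        f"VALUES ('{table}', '{event}', USER(), NOW(6), {row_pk}, {old_values}, {new_values});"
    )


print(audit_trigger_ddl("orders", "id", ["status", "total"], "update"))
```

A single-statement trigger body like this needs no BEGIN ... END block, so it can be sent through a driver without changing the client delimiter.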

To minimize performance impact:

- Asynchronous logging: Consider writing audit logs asynchronously using message queues or similar mechanisms if real-time logging is not critical.

- Selective auditing: Audit only critical tables and columns. Avoid auditing read-only or frequently accessed but low-impact tables.

- Optimized audit table: Index the audit table appropriately for querying (e.g., by timestamp, user, table name). Partitioning may also help for large audit tables.

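- Batch inserts: When audit records are written by the application or a queue consumer rather than by triggers (which fire once per row), group them into multi-row inserts to reduce the number of write operations.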

- Consider MySQL Enterprise Audit: If available, MySQL Enterprise Audit provides a built-in and potentially more performant audit logging solution that writes to its own log file (set with the audit_log_file variable) rather than to a table. It can be configured to audit specific users, hosts, or types of events, including read queries.
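The batching advice can be sketched in Python as a small buffer that hands audit rows to a writer in groups. The `write_batch` callback is an assumption: in a real queue consumer it might call a DB-API cursor's `executemany()` with an `INSERT INTO audit_log ...` statement and then commit. The class is not thread-safe and is meant only to show the flushing logic.

```python
from typing import Callable


class AuditBatcher:
    """Buffers audit rows and passes them to `write_batch` in groups of `batch_size`."""

    def __init__(self, write_batch: Callable[[list[tuple]], None], batch_size: int = 500) -> None:
        if batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        self._write_batch = write_batch
        self._batch_size = batch_size
        self._buffer: list[tuple] = []

    def add(self, row: tuple) -> None:
        self._buffer.append(row)
        if len(self._buffer) >= self._batch_size:
            self.flush()  # one write per full batch instead of one per row

    def flush(self) -> None:
        if self._buffer:
            batch, self._buffer = self._buffer, []
            self._write_batch(batch)


# Usage: collect batches in a list instead of writing to MySQL.
batches = []
batcher = AuditBatcher(batches.append, batch_size=2)
for n in range(3):
    batcher.add(("orders", "UPDATE", "app@10.0.0.5", n))
batcher.flush()  # push the final, partial batch
print([len(b) for b in batches])  # [2, 1]
```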

5. Discuss the trade-offs between using different isolation levels in MySQL and how they affect concurrency and data consistency in a high-transaction environment.

MySQL offers four isolation levels (Read Uncommitted, Read Committed, Repeatable Read, Serializable), each representing a trade-off between concurrency and data consistency. Read Uncommitted allows dirty reads (reading uncommitted data), maximizing concurrency but sacrificing consistency. Read Committed prevents dirty reads but allows non-repeatable reads: a row read twice in the same transaction can return different values if another transaction commits a change in between. Repeatable Read (the InnoDB default) also prevents non-repeatable reads, because every plain SELECT in the transaction reads from the same consistent snapshot; InnoDB additionally uses next-key locks for locking reads, which blocks most phantom rows. Serializable provides the strongest isolation: InnoDB implicitly converts plain SELECT statements into shared-locking reads (when autocommit is disabled), so readers and writers block each other, which severely limits concurrency.

In a high-transaction environment, the choice of isolation level hinges on the application's needs. Repeatable Read strikes a good balance between consistency and concurrency for many applications. If data integrity is paramount and write conflicts are rare, Serializable might be acceptable. If the application can tolerate occasional inconsistencies and needs maximum throughput, Read Committed could be considered. However, Read Uncommitted is generally discouraged due to the risk of reading invalid data. Each level affects how locks are acquired and released, directly influencing the number of concurrent transactions that can be processed without conflicts.
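The trade-off can be expressed as a lookup: pick the most concurrent level that still rules out the anomalies the application cannot tolerate. This Python sketch encodes the textbook ANSI SQL-92 definitions, not InnoDB's exact behavior; InnoDB's Repeatable Read, for example, avoids most phantoms through consistent snapshots and next-key locks, so the phantom-read entry is conservative for MySQL. The helper names are hypothetical; the generated `SET SESSION TRANSACTION ISOLATION LEVEL` statement is standard MySQL syntax.

```python
# Anomalies each level permits under the ANSI SQL-92 definitions (InnoDB is stricter in places).
PERMITTED = {
    "READ UNCOMMITTED": {"dirty read", "non-repeatable read", "phantom read"},
    "READ COMMITTED": {"non-repeatable read", "phantom read"},
    "REPEATABLE READ": {"phantom read"},
    "SERIALIZABLE": set(),
}
LEVELS = list(PERMITTED)  # ordered weakest to strongest (dicts keep insertion order)
KNOWN = PERMITTED["READ UNCOMMITTED"]


def weakest_level_preventing(anomalies: set[str]) -> str:
    """Return the most concurrent level that rules out every anomaly in `anomalies`."""
    unknown = set(anomalies) - KNOWN
    if unknown:
        raise ValueError(f"unknown anomalies: {sorted(unknown)}")
    for level in LEVELS:
        if not PERMITTED[level] & set(anomalies):
            return level
    raise AssertionError("unreachable: SERIALIZABLE permits none of them")


def set_isolation_sql(level: str) -> str:
    """MySQL statement that switches the current session to `level`."""
    level = level.upper()
    if level not in PERMITTED:
        raise ValueError(f"unknown isolation level: {level}")
    return f"SET SESSION TRANSACTION ISOLATION LEVEL {level}"


print(weakest_level_preventing({"dirty read"}))           # READ COMMITTED
print(weakest_level_preventing({"non-repeatable read"}))  # REPEATABLE READ
print(set_isolation_sql("read committed"))
```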

6. Explain how you would approach migrating a large, mission-critical MySQL database to a new hardware infrastructure with minimal downtime.

To migrate a large, mission-critical MySQL database with minimal downtime, I'd rely on replication, with backups as a safety net. First, I would build one or more replicas on the new hardware: seed each one from a consistent physical backup of the source (for example with Percona XtraBackup, or with the clone plugin on MySQL 8.0.17 and later) and then configure binary log replication from the current primary. Once the replicas have caught up, the cutover is a controlled failover: one of the new replicas is promoted to primary and the application's connection settings are pointed at it, so the only downtime is the brief switch itself.

For the final switch, I'd use a short maintenance window. I would stop writes on the old primary (for example by enabling super_read_only), let in-flight transactions finish, wait until the new replica has applied every remaining event, and then stop replication and promote it. The old primary is left intact as a fallback, and a final backup (a logical dump with mysqldump --single-transaction, or a non-blocking physical backup with Percona XtraBackup) can be kept as well. Connection strings are then updated to point to the new primary. Monitoring is crucial throughout the process to confirm data integrity and performance.
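The "wait until the replica has caught up" step can be checked by comparing binary log coordinates. This Python sketch assumes file-and-position replication and that you have already read the values from the server; the function names are hypothetical. With GTIDs enabled you would instead compare GTID sets, for example with WAIT_FOR_EXECUTED_GTID_SET() on the replica.

```python
def binlog_sequence(file_name: str) -> int:
    """Binary log files are named <basename>.<sequence>, for example binlog.000042."""
    return int(file_name.rsplit(".", 1)[1])


def replica_caught_up(source_status: tuple[str, int], replica_executed: tuple[str, int]) -> bool:
    """Compare the source's current binlog position with the position the replica has executed.

    source_status:    (File, Position) from SHOW MASTER STATUS on the old primary
                      (SHOW BINARY LOG STATUS in newer releases).
    replica_executed: (Relay_Source_Log_File, Exec_Source_Log_Pos) from SHOW REPLICA STATUS
                      (Relay_Master_Log_File / Exec_Master_Log_Pos on older versions).
    Only meaningful once writes on the old primary have been stopped.
    """
    src_file, src_pos = source_status
    rep_file, rep_pos = replica_executed
    return (binlog_sequence(rep_file), rep_pos) >= (binlog_sequence(src_file), src_pos)


print(replica_caught_up(("binlog.000042", 1500), ("binlog.000042", 1500)))  # True
print(replica_caught_up(("binlog.000042", 4), ("binlog.000041", 9999)))     # False
```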

7. How do you handle and recover from a situation where a critical MySQL server suffers a complete hardware failure?

In the event of a complete hardware failure of a critical MySQL server, a well-defined disaster recovery plan is crucial. First, promote a standby server, ideally the most up-to-date replica, to primary: stop replication on it, make it writable, and redirect application traffic by updating DNS records or the connection settings used by the application or its proxy. Then investigate the old server to determine the root cause of the failure. If its disks are still readable, consider restoring the data to another server as a read-only replica for analysis or to recover transactions that had not yet been replicated.

To minimize downtime and data loss, implement robust replication strategies (e.g., semi-synchronous replication) and regular backups. Configure monitoring to detect hardware issues before they become critical. Regularly test the failover process to ensure its effectiveness and identify potential problems. Using a cluster solution like MySQL Group Replication or a managed database service can greatly simplify failover and recovery.
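Choosing which replica to promote can be sketched as picking the one that executed the most of the failed source's binary log. This Python example assumes file-and-position replication, that every replica followed the same source (otherwise the coordinates are not comparable), and that each replica has finished applying its relay log; the function name is hypothetical. The promotion statements use MySQL 8.0.22+ syntax; older versions use STOP SLAVE and RESET SLAVE ALL. Remaining replicas would then be repointed at the new primary.

```python
def binlog_sequence(file_name: str) -> int:
    """Binary log files are named <basename>.<sequence>, for example binlog.000042."""
    return int(file_name.rsplit(".", 1)[1])


def pick_promotion_candidate(replicas: dict[str, tuple[str, int]]) -> str:
    """Choose the replica that executed the most of the failed source's binary log.

    Each value is (Relay_Source_Log_File, Exec_Source_Log_Pos) from SHOW REPLICA STATUS.
    """
    if not replicas:
        raise ValueError("no replicas to promote")
    return max(replicas, key=lambda name: (binlog_sequence(replicas[name][0]), replicas[name][1]))


# Statements to run on the chosen replica.
PROMOTION_STATEMENTS = [
    "STOP REPLICA;",                    # stop applying events from the dead source
    "RESET REPLICA ALL;",               # forget the old source's connection settings
    "SET GLOBAL super_read_only = OFF;",
    "SET GLOBAL read_only = OFF;",      # start accepting application writes
]

print(pick_promotion_candidate({"replica-a": ("binlog.000010", 900), "replica-b": ("binlog.000011", 4)}))
```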

8. Describe the steps you would take to troubleshoot and resolve a deadlock situation in a MySQL database.

To troubleshoot a deadlock in MySQL, I'd first confirm and capture it. Running SHOW ENGINE INNODB STATUS; shows the LATEST DETECTED DEADLOCK section, which details the transactions involved and the locks they were contending for. Because that section only holds the most recent deadlock, I'd also enable innodb_print_all_deadlocks so that every deadlock is written to the MySQL error log.

Once a deadlock is identified, I'd analyze the queries involved to understand their locking order, since deadlocks often arise when transactions acquire locks in inconsistent sequences. To resolve it, I'd apply one or more of the following:

- Simplify transactions: Keep transactions short and focused so they hold locks for less time.

- Consistent locking order: Ensure that all code paths acquire locks on rows and tables in the same order.

- Lock wait timeouts: innodb_lock_wait_timeout limits how long a transaction waits for a row lock. InnoDB normally detects deadlocks immediately and rolls back one of the transactions, so this setting mainly matters when deadlock detection is disabled (innodb_deadlock_detect=OFF). By default, a timeout rolls back only the current statement, not the whole transaction.

- Retry logic: Implement application-level logic that automatically retries transactions rolled back due to deadlocks.

Finally, review the application code for other locking issues, and choose the simplest, least disruptive fix first.
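Application-level retry logic might look like the following Python sketch. `DeadlockError` is a hypothetical stand-in for whatever exception your driver raises for MySQL error 1213 (ER_LOCK_DEADLOCK, SQLSTATE 40001). Because InnoDB rolls back the entire victim transaction, the retried callable must contain the whole unit of work from BEGIN to COMMIT, not just the statement that failed.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

ER_LOCK_DEADLOCK = 1213  # MySQL error: "Deadlock found when trying to get lock"


class DeadlockError(Exception):
    """Hypothetical stand-in; real drivers raise their own exception carrying errno 1213."""

    errno = ER_LOCK_DEADLOCK


def run_with_deadlock_retry(
    transaction: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.05,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `transaction` (a function doing BEGIN ... COMMIT), retrying it after a deadlock."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return transaction()
        except DeadlockError:
            if attempt == max_attempts:
                raise  # give up and surface the deadlock to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff: 0.05 s, 0.1 s, ...
    raise AssertionError("unreachable")


calls = []


def transfer_funds() -> str:
    # Hypothetical unit of work that deadlocks on its first attempt.
    calls.append(1)
    if len(calls) == 1:
        raise DeadlockError()
    return "committed"


print(run_with_deadlock_retry(transfer_funds, sleep=lambda _: None))  # committed
```

Backoff gives the competing transaction time to finish; adding random jitter to the delay is a common refinement when many clients retry at once.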

9. How would you implement and manage a data warehousing solution using MySQL, considering factors like data extraction, transformation, and loading (ETL) processes?

Implementing a data warehousing solution with MySQL involves several key steps. First, we'd define the schema for our data warehouse, typically a star or snowflake schema optimized for analytical queries. Then, we would set up ETL processes. For extraction, we can use tools like mysqldump or custom scripts to pull data from our operational databases. Transformation would involve cleaning, aggregating, and reshaping the data, potentially using SQL queries, Python with libraries like Pandas, or dedicated ETL tools like Apache NiFi. Finally, loading the transformed data into the MySQL data warehouse can be done using LOAD DATA INFILE for bulk loading or through scripting. Indexing and partitioning are crucial for performance.

Management involves monitoring ETL processes for errors and performance bottlenecks, scheduling ETL jobs with tools like cron or Airflow, and ensuring data quality through validation checks. We'd also need to implement a data retention policy to manage storage costs and consider backup and recovery strategies for the data warehouse. Regularly updating the data warehouse with fresh data is essential to keep the analytics relevant.
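As a sketch of the transform and load steps, the Python example below cleans raw order rows, aggregates them per day and region, and writes a CSV file shaped for LOAD DATA INFILE. The input field names, the file path, and the `fact_daily_sales` table are hypothetical placeholders, and the server's secure_file_priv setting must allow reading from whichever directory you choose.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal, InvalidOperation

# Hypothetical load step; the path and table name are placeholders.
LOAD_SQL = (
    "LOAD DATA INFILE '/var/lib/mysql-files/daily_sales.csv'\n"
    "INTO TABLE fact_daily_sales\n"
    "FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\"'\n"
    "LINES TERMINATED BY '\\n'\n"
    "IGNORE 1 LINES\n"
    "(sale_date, region, total_amount, order_count)"
)


def transform(rows: list[dict]) -> str:
    """Clean raw order rows and aggregate them into one CSV line per (day, region)."""
    totals = defaultdict(lambda: [Decimal("0"), 0])
    for row in rows:
        try:
            day = row["sale_date"].strip()
            region = row["region"].strip().upper()  # normalize ' eu ' -> 'EU'
            amount = Decimal(row["amount"])
        except (KeyError, AttributeError, TypeError, InvalidOperation):
            continue  # drop rows with missing or unparsable fields
        if not day or not region or not amount.is_finite() or amount < 0:
            continue  # drop rows that parse but are not valid sales
        totals[(day, region)][0] += amount
        totals[(day, region)][1] += 1

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")  # match LINES TERMINATED BY '\n'
    writer.writerow(["sale_date", "region", "total_amount", "order_count"])
    for (day, region), (total, count) in sorted(totals.items()):
        writer.writerow([day, region, total, count])
    return out.getvalue()


raw = [
    {"sale_date": "2024-01-01", "region": " eu ", "amount": "10.50"},
    {"sale_date": "2024-01-01", "region": "EU", "amount": "4.25"},
    {"sale_date": "2024-01-01", "region": "US", "amount": "oops"},
    {"sale_date": "2024-01-02", "region": "us", "amount": "3"},
]
print(transform(raw), end="")
```

Decimal is used instead of float so that monetary totals are summed exactly, and the IGNORE 1 LINES clause skips the header row the writer emits.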

10. Explain the differences between various MySQL storage engines (InnoDB, MyISAM, etc.) and when you would choose one over another for specific use cases.

MySQL offers several storage engines, each with distinct characteristics. InnoDB has been the default since MySQL 5.5 and is by far the most widely used. It supports ACID transactions, row-level locking (minimizing contention), foreign keys, and crash recovery, making it suitable for applications that require data integrity and concurrency, such as e-commerce platforms or financial systems. MyISAM has a simpler storage format without transaction overhead, which can make some read-only workloads fast, but it uses table-level locking (a write blocks the whole table), lacks transactions and foreign key constraints, and is not crash-safe. It has historically been used for read-intensive applications where data integrity is less critical, such as some data warehousing or logging systems.

Other engines include MEMORY (for temporary tables), CSV (for storing data in CSV format), and ARCHIVE (for archiving large amounts of data with high compression). The choice depends heavily on the application's needs. If transactions and data integrity are paramount, InnoDB is the clear choice. If read performance is the primary concern and data integrity is less critical, MyISAM might be considered. MEMORY is ideal for temporary, high-speed data storage, while ARCHIVE is suitable for long-term data storage with minimal storage overhead.

11. How do you ensure data security and compliance (e.g., GDPR, HIPAA) when storing sensitive information in a MySQL database?

To ensure data security and compliance (GDPR, HIPAA) when storing sensitive data in MySQL, several strategies are employed. Data encryption both at rest (using tools like InnoDB's tablespace encryption or column encryption) and in transit (using SSL/TLS for connections) is crucial. Access control mechanisms, such as role-based access control (RBAC), limit user privileges based on their roles. Data masking and anonymization techniques can be used for non-production environments or reporting. Regular auditing and monitoring of database activities help detect and respond to security incidents. Secure configuration and regular patching of the MySQL server are also essential to address vulnerabilities.

Compliance-specific measures include implementing data retention and deletion policies (GDPR limits how long personal data may be kept and gives individuals a right to erasure, the "right to be forgotten"), ensuring proper data breach notification procedures, and documenting all security measures to demonstrate compliance to auditors. For HIPAA, the Security Rule requires audit controls that record and examine activity in systems containing electronic protected health information (ePHI), and it treats encryption of ePHI as an addressable safeguard. Regularly reviewing and updating security protocols as compliance requirements evolve is a necessity.
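For non-production copies and reporting, masking and pseudonymization are often applied while exporting data. This Python sketch shows two common techniques: partial masking for display, and keyed hashing (HMAC-SHA256) so that the same value always maps to the same token and joins still work. The key shown is a hypothetical placeholder that would live outside the database. Note that GDPR still treats pseudonymized data as personal data, because anyone holding the key can re-identify it.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Deterministic keyed hash: equal inputs map to equal tokens, so joins still work."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, at, domain = email.partition("@")
    if not at or not local:
        return "*" * len(email)  # not a recognizable address: hide it entirely
    return local[0] + "*" * (len(local) - 1) + "@" + domain


KEY = b"hypothetical-key-kept-outside-the-database"
print(mask_email("alice@example.com"))  # a****@example.com
print(pseudonymize("alice@example.com", KEY) == pseudonymize("alice@example.com", KEY))  # True
```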

12. Discuss your experience with MySQL performance tuning tools and techniques, such as query profiling, index optimization, and server configuration adjustments.

I have experience with a range of MySQL performance tuning tools and techniques. To find slow queries, I use the slow query log together with pt-query-digest, which aggregates log entries by query pattern to show where the server spends its time. I then use EXPLAIN (and EXPLAIN ANALYZE on MySQL 8.0.18 and later) to inspect the execution plans of those queries and pinpoint bottlenecks. For index optimization, I create indexes based on the query patterns found during profiling, making sure they cover columns frequently used in WHERE clauses and JOIN conditions. I review index definitions with SHOW INDEX and check actual usage with sys schema views such as sys.schema_unused_indexes and sys.schema_redundant_indexes (or pt-index-usage) to find unused or redundant indexes.
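The core idea behind pt-query-digest, grouping queries that differ only in their literal values, can be illustrated with a toy Python version. The regular expressions below are a simplified assumption and far less thorough than the tool's real fingerprinting; the input is assumed to be (SQL text, execution seconds) pairs already parsed from the slow query log.

```python
import re
from collections import defaultdict

_STRING = re.compile(r"'(?:[^'\\]|\\.)*'")            # quoted string literals
_NUMBER = re.compile(r"\b\d+(?:\.\d+)?\b")            # integer and decimal literals
_IN_LIST = re.compile(r"\(\s*\?(?:\s*,\s*\?)*\s*\)")  # (?, ?, ?) after literal removal
_SPACE = re.compile(r"\s+")


def fingerprint(sql: str) -> str:
    """Reduce a query to a pattern so executions that differ only in literals group together."""
    fp = _STRING.sub("?", sql)
    fp = _NUMBER.sub("?", fp)
    fp = _IN_LIST.sub("(?+)", fp)
    return _SPACE.sub(" ", fp).strip().lower()


def top_patterns(log: list[tuple[str, float]], limit: int = 5) -> list[tuple[str, float, int]]:
    """Rank query patterns by total execution time: (pattern, total_seconds, count)."""
    totals = defaultdict(lambda: [0.0, 0])
    for sql, seconds in log:
        entry = totals[fingerprint(sql)]
        entry[0] += seconds
        entry[1] += 1
    ranked = sorted(totals.items(), key=lambda item: item[1][0], reverse=True)
    return [(pattern, total, count) for pattern, (total, count) in ranked[:limit]]


print(fingerprint("SELECT * FROM orders WHERE id IN (1, 2, 3)"))  # select * from orders where id in (?+)
```

Ranking by total time rather than by the single slowest execution surfaces cheap queries that run so often they dominate server load.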

Regarding server configuration, I've sized innodb_buffer_pool_size according to available memory and the workload so that the working set stays in memory, and adjusted connection-related settings such as max_connections to handle concurrent user load. I monitor the server with mysqltuner.pl and the Performance Schema to find issues related to CPU utilization, disk I/O, and memory usage, then tune configuration parameters to allocate resources where they help most.
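A starting value for innodb_buffer_pool_size can be worked out with simple arithmetic. This Python sketch assumes a dedicated database host and uses 75% of RAM as a hypothetical default (the MySQL manual notes that up to 80% of physical memory is often assigned on a dedicated server). MySQL keeps the buffer pool a multiple of innodb_buffer_pool_chunk_size × innodb_buffer_pool_instances and adjusts the configured value if needed, so the sketch rounds down to that unit to stay within budget; the chunk size default is 128 MiB, and 8 instances is an assumption.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def suggest_buffer_pool_size(
    total_ram_bytes: int,
    fraction: float = 0.75,
    chunk_size: int = 128 * MIB,
    instances: int = 8,
) -> int:
    """Suggest innodb_buffer_pool_size in bytes for a dedicated MySQL host."""
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    unit = chunk_size * instances  # buffer pool size must be a multiple of this
    size = int(total_ram_bytes * fraction) // unit * unit
    if size == 0:
        raise ValueError("not enough memory for one chunk per buffer pool instance")
    return size


print(suggest_buffer_pool_size(64 * GIB) // GIB)  # 48
print(suggest_buffer_pool_size(10 * GIB) // GIB)  # 7
```

Treat the result as a starting point and confirm it against the buffer pool hit rate and free memory under real load.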

13. Describe how you would design a backup and recovery strategy for a MySQL database that meets specific recovery time objective (RTO) and recovery point objective (RPO) requirements.

A MySQL backup and recovery strategy that meets specific RTO and RPO targets would combine full backups, incremental backups, and binary logs. For example, full backups could run weekly and incremental backups daily, while the binary logs, which record every change continuously, are copied off the server every hour to allow point-in-time recovery. With that schedule, the worst-case data loss if the server and its disks are lost is about one hour, which sets the RPO. Restoring from backups takes time, so to keep the RTO low I'd also run a replica as a hot standby: if the primary fails, application connections are switched to the replica, giving recovery within minutes.
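The point-in-time recovery side of this plan can be sketched in Python: check that the binary log copy interval fits the RPO, and list which binary log files must be replayed after restoring the last full backup. The starting file name is the one recorded at full-backup time (mysqldump's --source-data option, or --master-data before MySQL 8.0.26, writes it into the dump); the function names are hypothetical.

```python
from typing import Optional


def meets_rpo(binlog_copy_interval_min: float, rpo_min: float) -> bool:
    """If the server and its disks are lost, changes since the last binlog copy are lost too."""
    return binlog_copy_interval_min <= rpo_min


def parse_binlog_name(file_name: str) -> Optional[tuple[str, int]]:
    """Split 'binlog.000042' into ('binlog', 42); return None for files like 'binlog.index'."""
    base, dot, seq = file_name.rpartition(".")
    if not dot or not seq.isdigit():
        return None
    return base, int(seq)


def binlogs_to_replay(start_file: str, available: list[str]) -> list[str]:
    """Binary logs to replay, in order, starting with the file recorded at backup time."""
    start = parse_binlog_name(start_file)
    if start is None:
        raise ValueError(f"not a binary log file name: {start_file}")
    base, start_seq = start
    chosen = []
    for name in available:
        parsed = parse_binlog_name(name)
        if parsed is not None and parsed[0] == base and parsed[1] >= start_seq:
            chosen.append((parsed[1], name))
    return [name for _, name in sorted(chosen)]


print(meets_rpo(60, 60))  # True
print(binlogs_to_replay("binlog.000042", ["binlog.000043", "binlog.000041", "binlog.000042", "binlog.index"]))
```

The resulting files would then be replayed in that order, for example with mysqlbinlog --start-position=<recorded position> binlog.000042 binlog.000043 | mysql, where --start-position applies only to the first file named.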

To streamline the process:

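- Full Backups: Take a consistent dump that also rotates the binary log and records the binary log coordinates at the moment of the dump (use --master-data=2 instead of --source-data=2 before MySQL 8.0.26):

```shell
mysqldump --all-databases --single-transaction --flush-logs --source-data=2 > full_backup.sql
```

- Incremental Backups: mysqldump cannot take true incremental backups. Instead, treat the binary logs written since the last full dump as the increments, and rotate the current log so the closed files can be copied off the server (physical tools such as Percona XtraBackup also support incremental backups):

```shell
mysqladmin flush-logs
```

- Binary Logs: Enable binary logging: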