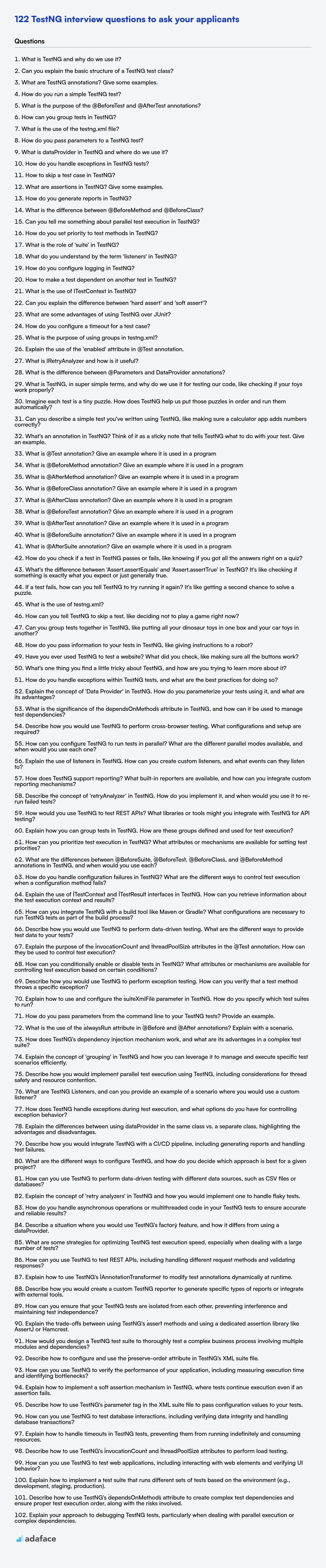

As a recruiter, your primary task is to find and hire the best TestNG professionals. This guide is designed to help you assess candidates' knowledge effectively.

It provides a comprehensive set of interview questions tailored for different experience levels, ensuring you can evaluate candidates' skills across the board.

This blog post is your go-to resource for TestNG interview questions, covering freshers to experienced professionals.

You'll find a range of questions, including multiple-choice questions (MCQs), to help you assess candidates thoroughly.

By utilizing this guide, you'll be well-equipped to gauge a candidate's expertise and suitability for the role. Consider using our Java assessment as a pre-interview tool.

TestNG interview questions for freshers

1. What is TestNG and why do we use it?

TestNG is a testing framework inspired by JUnit and NUnit that adds functionality to make testing more powerful and easier to use. It's designed to cover a wide range of testing categories: unit, functional, end-to-end, integration, etc. It provides features like annotations, parallel test execution, parameterized testing, and reporting.

We use TestNG because it offers several advantages over JUnit and other testing frameworks. Key benefits include annotations for easier test configuration, parallel execution to reduce test execution time, data-driven testing through parameterization, flexible test configuration via testng.xml, and built-in reporting for analyzing test results.

2. Can you explain the basic structure of a TestNG test class?

A TestNG test class in Java typically includes the following elements:

- import statements: Import the necessary TestNG annotations and classes (e.g., org.testng.annotations.*).

- Class declaration: A regular Java class.

- Test methods: Methods annotated with @Test. These methods contain the actual test logic.

- @Before and @After annotations: Methods annotated with @BeforeSuite, @BeforeTest, @BeforeClass, @BeforeMethod, @AfterSuite, @AfterTest, @AfterClass, and @AfterMethod configure and tear down the test environment before and after different scopes (suite, test, class, method). They help manage setup and cleanup of tests. For example, @BeforeMethod runs before each @Test method.

- Assertions: Use Assert class methods (e.g., Assert.assertEquals(), Assert.assertTrue()) to verify expected results.
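The elements listed above can be sketched in one small class. This is only an illustration: ShoppingCartTest and its list-based "cart" are hypothetical names, not part of TestNG.

```java
import org.testng.Assert;
import org.testng.annotations.AfterMethod;
import org.testng.annotations.BeforeMethod;
import org.testng.annotations.Test;

import java.util.ArrayList;
import java.util.List;

public class ShoppingCartTest {

    // Hypothetical state under test; a plain list stands in for a real cart.
    private List<String> cart;

    @BeforeMethod
    public void setUp() {
        // Runs before every @Test method, so each test starts with an empty cart.
        cart = new ArrayList<>();
    }

    @Test
    public void newCartIsEmpty() {
        Assert.assertTrue(cart.isEmpty());
    }

    @Test
    public void addingAnItemIncreasesTheSize() {
        cart.add("book");
        // TestNG's argument order is (actual, expected).
        Assert.assertEquals(cart.size(), 1);
    }

    @AfterMethod
    public void tearDown() {
        // Runs after every @Test method.
        cart.clear();
    }
}
```

A candidate who can explain why setUp() runs twice here (once per test method) has understood the method-level scope.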

3. What are TestNG annotations? Give some examples.

TestNG annotations are special tags that control the execution flow of tests in TestNG. They provide a way to configure and customize test methods, setup and teardown methods, and test suites. Annotations simplify test code by replacing complex XML configurations.

Examples of TestNG annotations include:

- @Test: Marks a method as a test method.

- @BeforeMethod: Method will execute before each test method.

- @AfterMethod: Method will execute after each test method.

- @BeforeClass: Method will execute before the first test method in the current class is invoked.

- @AfterClass: Method will execute after all the test methods in the current class have been run.

- @BeforeSuite: Method will execute before all tests in the suite.

- @AfterSuite: Method will execute after all tests in the suite.

- @DataProvider: Marks a method as supplying data for a test method.

Example usage:

    @DataProvider(name = "testData")
    public Object[][] testData() {
        return new Object[][] {{ "data1" }, { "data2" }};
    }

    @Test(dataProvider = "testData")
    public void myTest(String data) {
        System.out.println("Data: " + data);
    }

4. How do you run a simple TestNG test?

To run a simple TestNG test, you typically need a test class with methods annotated with @Test. These methods contain the test logic. To execute the test:

- Using an IDE (e.g., IntelliJ IDEA, Eclipse): Right-click on the test class or a specific test method in the IDE and select "Run" (or a similar option) which automatically configures TestNG to execute the selected tests.

- Using the command line: You can execute TestNG tests via the command line using a testng.xml configuration file, which specifies which tests to run. To do so, use a command like java -cp "testng.jar;your_classpath" org.testng.TestNG testng.xml. Replace testng.jar with the actual path to the TestNG JAR file and your_classpath with the classpath of your project. The ; separator applies on Windows; on Linux and macOS, use : instead. Recent TestNG versions also need their own dependencies (such as JCommander) on the classpath.
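Tests can also be started from plain Java code through the org.testng.TestNG class, which is roughly what IDE runners and build tools do behind the scenes. The sketch below assumes TestNG is on the classpath; RunSmokeTests and SmokeTest are hypothetical names used only for illustration.

```java
import org.testng.Assert;
import org.testng.TestNG;
import org.testng.annotations.Test;

public class RunSmokeTests {

    // Hypothetical test class, nested here only to keep the sketch in one file.
    public static class SmokeTest {
        @Test
        public void onePlusOneIsTwo() {
            Assert.assertEquals(1 + 1, 2);
        }
    }

    // Runs the test class and returns true when nothing failed.
    static boolean runAll() {
        TestNG testng = new TestNG();
        testng.setTestClasses(new Class[] { SmokeTest.class });
        testng.run();
        return !testng.hasFailure();
    }

    public static void main(String[] args) {
        // A non-zero exit code lets a build script detect failures.
        System.exit(runAll() ? 0 : 1);
    }
}
```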

5. What is the purpose of the @BeforeTest and @AfterTest annotations?

The @BeforeTest and @AfterTest annotations in TestNG define methods that run once per <test> tag in the suite XML file, not around each individual test method (that is the job of @BeforeMethod and @AfterMethod). They are typically used to set up state shared by all classes in that <test> tag (e.g., initializing variables, establishing database connections) and to tear it down afterwards (e.g., closing connections, cleaning up resources).

Specifically, @BeforeTest methods are run once before any of the test methods inside the <test> tag are executed. Conversely, @AfterTest methods are run once after all the test methods inside the <test> tag have been executed. This gives every <test> block a known starting state and ensures that resources are properly released, improving the reliability and repeatability of tests.

6. How can you group tests in TestNG?

In TestNG, tests are assigned to groups with the groups attribute of the @Test annotation, for example @Test(groups = {"groupName"}), placed on a method or on a class. The <groups> tag in the testng.xml file then selects which of those groups to include in or exclude from a run.

Another way to group tests is through meta-groups, which are groups composed of other groups. These are configured within the <groups> section of the testng.xml file using the <define> tag. Example: <define name="regression"> <include name="sanity"/> <include name="performance"/> </define>. In this scenario, the regression group includes all the tests present in the sanity and performance groups.
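On the Java side, group membership might look like the following sketch. LoginTests and its authenticate helper are hypothetical stand-ins for a real system under test.

```java
import org.testng.Assert;
import org.testng.annotations.Test;

public class LoginTests {

    // Hypothetical stand-in for the system under test.
    static boolean authenticate(String user, String password) {
        return "alice".equals(user) && "s3cret".equals(password);
    }

    @Test(groups = { "smoke" })
    public void validUserCanLogIn() {
        Assert.assertTrue(authenticate("alice", "s3cret"));
    }

    @Test(groups = { "regression" })
    public void wrongPasswordIsRejected() {
        Assert.assertFalse(authenticate("alice", "wrong"));
    }

    // A method may belong to several groups at once.
    @Test(groups = { "smoke", "regression" })
    public void unknownUserIsRejected() {
        Assert.assertFalse(authenticate("mallory", "s3cret"));
    }
}
```

Including only the smoke group in a run would select validUserCanLogIn and unknownUserIsRejected, while wrongPasswordIsRejected would be left out.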

7. What is the use of the testng.xml file?

The testng.xml file in TestNG is a configuration file used to control and customize the execution of your tests. It provides a way to define test suites, test groups, parameters, and other settings without modifying the code directly. It acts as a centralized control panel for your tests, making it easier to manage and execute them.

Specifically, testng.xml allows you to:

- Define test suites: Group related tests into logical suites.

- Control test execution order: Specify the order in which tests or test classes should run.

- Parameterize tests: Pass different values to tests using parameters.

- Include/Exclude tests: Selectively include or exclude tests based on groups or other criteria.

- Specify listeners: Configure listeners to monitor test execution and generate reports.

8. How do you pass parameters to a TestNG test?

TestNG offers several ways to pass parameters to tests:

- testng.xml: You can define parameters within the testng.xml file using the <parameter> tag. These parameters can be accessed in your test methods using the @Parameters annotation. This method is useful for configuration values that might change between environments or test runs.

    <parameter name="browser" value="chrome"/>
    <test name="MyTest">
      <classes>
        <class name="MyTestClass"/>
      </classes>
    </test>

- @Parameters annotation: Used in conjunction with testng.xml. It specifies which of the parameters defined there a test method should receive.

    @Parameters("browser")
    @Test
    public void testMethod(String browser) {
        // Use the 'browser' parameter
    }

- Data Providers: Data providers are methods annotated with @DataProvider that return a 2D array of objects. Test methods annotated with @Test(dataProvider = "dataProviderName") will receive data from the specified data provider. This is excellent for data-driven testing where you want to run the same test with multiple sets of data.

    @DataProvider(name = "testData")
    public Object[][] provideData() {
        return new Object[][] {{"data1", 123}, {"data2", 456}};
    }

    @Test(dataProvider = "testData")
    public void testMethod(String data, int number) {
        // Use the data and number parameters
    }

- Programmatic creation of TestNG: Parameters can also be passed when creating TestNG programmatically.

9. What is dataProvider in TestNG and where do we use it?

In TestNG, a dataProvider is an annotation (@DataProvider) used to supply test methods with data. It allows you to run the same test method multiple times with different sets of input data. This is very useful for data-driven testing, where you want to verify the behavior of your code with various inputs without duplicating test code.

We use dataProvider primarily to:

- Provide different input values for a test method.

- Separate test data from test logic, making the tests more maintainable and readable.

- Easily execute the same test case with a variety of data sets.

10. How do you handle exceptions in TestNG tests?

In TestNG, exceptions during test execution can be handled using a few approaches. One common method is to specify expected exceptions directly within the @Test annotation using the expectedExceptions attribute. For example, @Test(expectedExceptions = ArithmeticException.class) tells TestNG to pass the test if an ArithmeticException is thrown and to fail it if the exception is not thrown or if a different exception occurs.

Alternatively, exceptions can be caught within the test method itself using standard Java try-catch blocks. This provides more control, allowing you to perform assertions on the exception or take specific actions based on the type of exception caught. For instance, you can catch a NullPointerException, log details, and then re-throw the exception to fail the test, or assert something about the caught exception before proceeding. This method facilitates more granular handling and validation of exceptions during the test execution.
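Both approaches can be sketched side by side. The second test uses Assert.expectThrows instead of a hand-written try-catch; this assumes a TestNG version that provides it (it is not present in very old releases). DivisionTest and divide are hypothetical names.

```java
import org.testng.Assert;
import org.testng.annotations.Test;

public class DivisionTest {

    // Hypothetical method under test.
    static int divide(int a, int b) {
        return a / b;
    }

    // Passes only if an ArithmeticException escapes the method.
    @Test(expectedExceptions = ArithmeticException.class)
    public void divideByZeroThrows() {
        divide(10, 0);
    }

    // Captures the exception so that further assertions can be made on it.
    @Test
    public void divideByZeroHasUsefulMessage() {
        ArithmeticException e = Assert.expectThrows(
                ArithmeticException.class, () -> divide(10, 0));
        Assert.assertEquals(e.getMessage(), "/ by zero");
    }
}
```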

11. How to skip a test case in TestNG?

In TestNG, you can skip a test case using a few methods:

- Using enabled = false in the @Test annotation: This is the simplest way to skip a test. Set enabled = false within the @Test annotation for the method you want to skip. For example: @Test(enabled = false) public void myTest() { ... }.

- Using SkipException: Throw an org.testng.SkipException from within the test method. This indicates that the test should be skipped during execution. Example:

    import org.testng.SkipException;
    import org.testng.annotations.Test;

    public class MyTestClass {

        @Test
        public void myTest() {
            // Placeholder: replace with your own runtime check
            boolean someCondition = true;
            if (someCondition) {
                throw new SkipException("Skipping this test due to some condition.");
            }
            // Test logic here
        }
    }

- Programmatically via a listener: IRetryAnalyzer is sometimes listed here, but it only decides whether a failed test should be retried; it does not skip tests on its own. To disable tests at runtime based on custom logic, implement IAnnotationTransformer and call setEnabled(false) on the test's annotation.

12. What are assertions in TestNG? Give some examples.

Assertions in TestNG are statements used to verify that the actual output of a test case matches the expected output. If an assertion from the standard Assert class fails, the test case is marked as failed and the rest of that test method is not executed. They are crucial for validating the correctness of your code during testing.

Examples include:

- Assert.assertEquals(actual, expected): Checks if two values are equal.

- Assert.assertTrue(condition): Checks if a condition is true.

- Assert.assertFalse(condition): Checks if a condition is false.

- Assert.assertNull(object): Checks if an object is null.

- Assert.assertNotNull(object): Checks if an object is not null.

- Assert.assertSame(actual, expected): Checks if two references point to the same object.

- Assert.assertNotSame(actual, expected): Checks if two references do not point to the same object.

- Assert.fail(message): Marks the test as failed with a given message.

13. How do you generate reports in TestNG?

TestNG provides built-in reporting capabilities. By default, TestNG generates an HTML report (index.html) in the 'test-output' directory after the test execution. This report summarizes the test results, including pass/fail status, execution time, and any exceptions encountered.

TestNG also allows for custom reporting through listeners and reporters. You can implement the org.testng.IReporter or org.testng.IInvokedMethodListener interfaces to generate custom reports in different formats (e.g., XML, PDF). These listeners can be configured in the testng.xml file using the <listeners> tag. Frameworks like Extent Reports can be integrated easily with TestNG using listeners to generate visually appealing and detailed reports.

14. What is the difference between @BeforeMethod and @BeforeClass?

@BeforeClass annotated methods in TestNG execute only once before the first test method in the current class is invoked. This is commonly used for setting up the test environment like initializing resources, database connections, or any configurations that are required for all tests in that class.

In contrast, @BeforeMethod annotated methods execute before each test method within the class. It's used to perform actions before every test such as resetting the state of an object, loading test data, or logging initial details relevant to each individual test. This ensures that each test starts with a clean and consistent environment.
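The difference is easy to see with two counters. In this sketch (LifecycleTest is a hypothetical name), the counters work because TestNG by default uses one instance of the class for all of its test methods.

```java
import org.testng.Assert;
import org.testng.annotations.AfterClass;
import org.testng.annotations.BeforeClass;
import org.testng.annotations.BeforeMethod;
import org.testng.annotations.Test;

public class LifecycleTest {

    private int classSetups = 0;
    private int methodSetups = 0;

    @BeforeClass
    public void beforeClass() {
        classSetups++;   // once for the whole class
    }

    @BeforeMethod
    public void beforeMethod() {
        methodSetups++;  // once per @Test method
    }

    @Test
    public void firstTest() {
        Assert.assertEquals(classSetups, 1);
        Assert.assertTrue(methodSetups >= 1);
    }

    @Test
    public void secondTest() {
        Assert.assertEquals(classSetups, 1);
        Assert.assertTrue(methodSetups >= 1);
    }

    @AfterClass
    public void afterClass() {
        System.out.println("class setups: " + classSetups
                + ", method setups: " + methodSetups);
    }
}
```

When the whole class is run, the class-level counter should stay at one while the method-level counter grows with each test.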

15. Can you tell me something about parallel test execution in TestNG?

TestNG supports parallel test execution to reduce overall test execution time. You can configure parallel execution at different levels: test methods, classes, or test suites. This is typically done using the <suite> tag in testng.xml. The parallel attribute can take values like methods, classes, tests, or instances, and the thread-count attribute specifies the number of threads to use.

For example, parallel="methods" thread-count="5" will execute test methods in parallel using 5 threads. You can also control parallel execution programmatically, but the testng.xml configuration is the most common approach. Remember to ensure your tests are thread-safe when running them in parallel; avoid shared mutable state without proper synchronization.
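The programmatic route mentioned above might look like this sketch, which builds the in-memory equivalent of a suite with parallel="methods" and thread-count="5". It assumes a TestNG version in which XmlSuite.ParallelMode is available; ParallelSuiteRunner and IndependentTests are hypothetical names.

```java
import org.testng.TestNG;
import org.testng.annotations.Test;
import org.testng.xml.XmlClass;
import org.testng.xml.XmlSuite;
import org.testng.xml.XmlTest;

import java.util.Collections;

public class ParallelSuiteRunner {

    // Hypothetical test class; each method only reports the thread it ran on.
    public static class IndependentTests {
        @Test
        public void first() {
            System.out.println("first on " + Thread.currentThread().getName());
        }

        @Test
        public void second() {
            System.out.println("second on " + Thread.currentThread().getName());
        }
    }

    // Builds the equivalent of <suite parallel="methods" thread-count="5">.
    static XmlSuite buildSuite() {
        XmlSuite suite = new XmlSuite();
        suite.setName("ParallelSuite");
        suite.setParallel(XmlSuite.ParallelMode.METHODS);
        suite.setThreadCount(5);

        XmlTest test = new XmlTest(suite); // the constructor attaches the test to the suite
        test.setName("ParallelTest");
        test.setXmlClasses(Collections.singletonList(new XmlClass(IndependentTests.class)));
        return suite;
    }

    public static void main(String[] args) {
        TestNG testng = new TestNG();
        testng.setXmlSuites(Collections.singletonList(buildSuite()));
        testng.run();
    }
}
```

The two test methods share no state, which is what makes them safe to run on different threads.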

16. How do you set priority to test methods in TestNG?

TestNG allows setting priority to test methods using the priority attribute within the @Test annotation. Lower numbers run first; for example, a method with priority = 1 will execute before a method with priority = 2. Test methods without a specified priority have the default priority of 0, so they run before methods with positive priority values.

Example:

    @Test(priority = 1)
    public void testMethod1() { }

    @Test(priority = 2)
    public void testMethod2() { }

    @Test
    public void testMethod3() { }

In this example, testMethod3 (default priority 0) will execute first, followed by testMethod1, and then testMethod2.

17. What is the role of 'suite' in TestNG?

In TestNG, a 'suite' represents a collection of test cases. It serves as a top-level container to group and organize related tests. You define a suite using an XML file (testng.xml), where you specify the tests to be included and various configurations such as listeners, parameters, and thread pool size.

The main role of a suite is to execute multiple tests in a structured manner. Suites allow you to define dependencies between tests, control the order of execution, and generate comprehensive reports. They provide a way to manage and run large test suites efficiently.

18. What do you understand by the term 'listeners' in TestNG?

In TestNG, listeners are Java interfaces that listen to specific events during the execution of a test suite. They allow you to customize TestNG's behavior and perform actions before, during, or after certain events occur, such as the start or end of a test, method, or suite. This enables features like logging, reporting, taking screenshots upon failure, or modifying test results dynamically.

Listeners are implemented as classes that implement the relevant TestNG listener interface. Common listener interfaces include ITestListener, IAnnotationTransformer, and ISuiteListener. By registering listeners in your TestNG configuration (e.g., via the <listeners> tag in testng.xml or the @Listeners annotation on a test class), you can extend TestNG's capabilities without modifying your core test code.
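A minimal listener might look like the sketch below. It assumes TestNG 7 or later, where the ITestListener methods have default implementations, so only the callbacks of interest need to be overridden. ConsoleListener and its line helper are hypothetical names.

```java
import org.testng.ITestListener;
import org.testng.ITestResult;

public class ConsoleListener implements ITestListener {

    // Small formatting helper so the log lines stay consistent.
    static String line(String status, String testName) {
        return "[" + status + "] " + testName;
    }

    @Override
    public void onTestStart(ITestResult result) {
        System.out.println(line("START", result.getName()));
    }

    @Override
    public void onTestSuccess(ITestResult result) {
        System.out.println(line("PASS", result.getName()));
    }

    @Override
    public void onTestFailure(ITestResult result) {
        System.out.println(line("FAIL", result.getName()));
        // A UI test suite might capture a screenshot at this point.
    }
}
```

It could then be attached to a test class with @Listeners(ConsoleListener.class) or declared in the <listeners> section of testng.xml.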

19. How do you configure logging in TestNG?

TestNG does not ship a full logging framework comparable to Log4j or SLF4J; its Reporter.log() method only adds messages to the generated reports. For regular logging, you integrate an external framework. To configure logging, you'd typically add the necessary dependencies (e.g., Log4j, SLF4J) to your project. Then, configure the logging framework (e.g., using a log4j.properties or logback.xml file) to define log levels, appenders (where logs are written), and the logging format. Within your TestNG tests, you'd use the logging API provided by the framework to log messages.

For example, if using Log4j, you would add the Log4j dependency to pom.xml. A log4j.properties (or log4j.xml) configuration file in the resources folder defines where the logs are written. Then, in your test, you can use Logger logger = Logger.getLogger(YourTestClass.class); logger.info("This is a log message"); to generate your log messages.

20. How to make a test dependent on another test in TestNG?

In TestNG, you can make a test method dependent on another test method using the dependsOnMethods attribute within the @Test annotation. This ensures that the dependent test method will only be executed if the method it depends on has passed.

For example:

    @Test
    public void method1() {
        // Test logic for method1
        Assert.assertTrue(true); // Simulate pass or fail
    }

    @Test(dependsOnMethods = {"method1"})
    public void method2() {
        // Test logic for method2
        // This will only run if method1 passes
    }

If method1 fails, method2 will be skipped. You can also specify multiple dependent methods in the dependsOnMethods array.

21. What is the use of ITestContext in TestNG?

ITestContext in TestNG provides access to the test execution context. It allows you to retrieve information about the test run, such as the test suite name, test name, start time, end time, and any parameters defined in the test suite or test. You can use it to share data between different test methods or classes within the same test run.

Specifically, ITestContext can be used for:

- Accessing test parameters: context.getCurrentXmlTest().getAllParameters()

- Setting and retrieving attributes for the test run: context.setAttribute(String name, Object value) and context.getAttribute(String name)

- Getting the test name and suite name: context.getName() and context.getSuite().getName()

- Adding listeners dynamically during runtime: context.addTestListener(ITestListener listener). Check that this method is available in your TestNG version; listeners are more commonly registered through testng.xml or the @Listeners annotation.
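As an illustration of sharing data, the sketch below passes a value from one test method to another through context attributes. TestNG injects the ITestContext when it is declared as a method parameter. ContextSharingTest and the "orderId" attribute are hypothetical.

```java
import org.testng.Assert;
import org.testng.ITestContext;
import org.testng.annotations.Test;

public class ContextSharingTest {

    @Test
    public void createOrder(ITestContext context) {
        // Hypothetical value produced by one test and needed by another.
        context.setAttribute("orderId", "ORD-42");
    }

    // dependsOnMethods guarantees that createOrder has run (and passed) first.
    @Test(dependsOnMethods = "createOrder")
    public void verifyOrder(ITestContext context) {
        Object orderId = context.getAttribute("orderId");
        Assert.assertEquals(orderId, "ORD-42");
        System.out.println("Verified in test: " + context.getName());
    }
}
```

Sharing state this way couples the two tests, so it is usually reserved for values that are expensive to recreate.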

22. Can you explain the difference between 'hard assert' and 'soft assert'?

Hard assert stops the test execution immediately when an assertion fails. Subsequent test steps within the same test case are skipped. This ensures that you know exactly when and where a failure occurred and prevents cascading failures.

Soft assert, on the other hand, continues the test execution even when an assertion fails. It records the failures but doesn't halt the test. In TestNG, soft assertions are provided by the org.testng.asserts.SoftAssert class, and you call its assertAll() method at the end of the test to report all accumulated failures. This allows you to identify multiple issues in a single test run, but debugging can be a bit more complex due to the continued execution.
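A short sketch of the soft-assert pattern follows. ProfilePageTest and its hard-coded values are hypothetical; in a real test they would come from a page or an API response.

```java
import org.testng.annotations.Test;
import org.testng.asserts.SoftAssert;

public class ProfilePageTest {

    @Test
    public void profileShowsExpectedFields() {
        // Hypothetical values standing in for data read from the application.
        String name = "Ada";
        int age = 36;

        SoftAssert softly = new SoftAssert();
        // Failures are recorded here, but execution continues.
        softly.assertEquals(name, "Ada", "name");
        softly.assertTrue(age >= 18, "age should be at least 18");
        // Without this call, recorded failures would never be reported.
        softly.assertAll();
    }
}
```

Forgetting the final assertAll() is a common mistake, because the test then passes regardless of what the earlier checks found.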

23. What are some advantages of using TestNG over JUnit?

TestNG offers several advantages over JUnit. One key benefit is its flexible test configuration using testng.xml, allowing for easy test suite creation and management. TestNG also supports more complex testing scenarios with features like dependent methods (dependsOnMethods), parallel execution, and parameterization through data providers (@DataProvider). These features simplify the execution of complex scenarios.

Additionally, TestNG provides built-in support for generating detailed HTML reports, making test results easier to understand and analyze. JUnit requires external libraries for similar reporting capabilities. TestNG also supports annotations for specifying test groups and priorities, offering finer-grained control over test execution order and selection. Annotations like @BeforeSuite, @AfterSuite, @BeforeGroups, @AfterGroups, @BeforeClass, and @AfterClass provide more granular control over setup and teardown.

24. How do you configure a timeout for a test case?

In TestNG, a timeout is configured with the timeOut attribute of the @Test annotation, which gives the maximum execution time in milliseconds. For example, @Test(timeOut = 10000) sets a 10-second limit; if the test method runs longer, TestNG marks it as failed. A default limit for many tests at once can be set with the time-out attribute on the <suite> or <test> tag in testng.xml.

Other frameworks offer comparable mechanisms: in pytest (with the pytest-timeout plugin), @pytest.mark.timeout(10) sets a 10-second timeout, and JUnit 4 uses @Test(timeout = 10000). NUnit and JavaScript test runners such as Jest and Mocha have similar timeout settings, often configurable via command-line arguments or configuration files.
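In TestNG specifically, a per-method timeout can be sketched as follows. TimeoutTest is a hypothetical class, and the sleep only stands in for real work.

```java
import org.testng.annotations.Test;

public class TimeoutTest {

    // TestNG fails this test if the method has not returned within 2000 ms.
    @Test(timeOut = 2000)
    public void finishesQuickly() throws InterruptedException {
        Thread.sleep(100); // stands in for real work
    }
}
```

Note the spelling: TestNG's attribute is timeOut with a capital O, whereas JUnit 4 uses timeout.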

25. What is the purpose of using groups in testng.xml?

Groups in TestNG's testng.xml are used to categorize and selectively run tests. They provide a way to organize tests based on functionality, priority, environment, or any other criteria. This allows for more granular control over test execution.

By defining groups, you can easily include or exclude specific tests during a test run. For example, you might have groups for 'regression', 'smoke', or 'performance' tests. You can then configure TestNG to only run the 'smoke' tests during a quick verification cycle, or run all 'regression' tests before a release. In testng.xml, the <groups> tag (placed inside a <suite> or <test> tag) contains a <run> element whose <include> and <exclude> tags name the groups to run or to leave out.

26. Explain the use of the 'enabled' attribute in @Test annotation.

The enabled attribute in the @Test annotation is used to control whether a test method should be executed or skipped during a test run. By default, enabled is true, meaning the test will run. Setting enabled = false effectively disables the test, preventing it from being executed. This is useful for temporarily skipping tests that are failing, not yet implemented, or dependent on features that are not currently available.

When a test is disabled using enabled = false, TestNG leaves it out of the run, so it generally does not show up as a pass or a failure in the results (unlike a test that throws SkipException, which is reported as skipped). It's a better alternative to commenting out the entire test method, as the disabled test remains visible in the code and can be easily re-enabled. For example: @Test(enabled = false)

27. What is IRetryAnalyzer and how is it useful?

IRetryAnalyzer is an interface in TestNG that allows you to automatically retry failed test methods. It's useful when tests fail due to intermittent issues, such as network glitches or temporary resource unavailability, rather than actual code defects.

Its usefulness lies in:

- Reducing false failures: Retries help distinguish between genuine bugs and transient problems.

- Improving test reliability: Makes test suites more stable and less prone to reporting failures due to environmental factors.

- Saving time: Avoids manual re-execution of failing tests caused by flaky infrastructure.
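A retry analyzer can be as small as the sketch below. RetryUpToTwice and its limit of two retries are illustrative choices, not TestNG defaults.

```java
import org.testng.IRetryAnalyzer;
import org.testng.ITestResult;

public class RetryUpToTwice implements IRetryAnalyzer {

    private static final int MAX_RETRIES = 2;
    private int attempts = 0;

    // TestNG calls this after a failure; returning true asks for another run.
    @Override
    public boolean retry(ITestResult result) {
        if (attempts < MAX_RETRIES) {
            attempts++;
            return true;
        }
        return false;
    }
}
```

It is attached to a test with @Test(retryAnalyzer = RetryUpToTwice.class). Retries can hide real defects, so teams often keep the limit low and track which tests needed a retry.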

28. What is the difference between @Parameters and DataProvider annotations?

@Parameters and @DataProvider are both used in TestNG to provide data to test methods, but they differ in how they achieve this.

@Parameters retrieves data from the testng.xml file. You define parameters in the XML and map them to method arguments using the @Parameters annotation. This approach is best suited for simple, static data configurations.

@DataProvider, on the other hand, is a more flexible approach. It uses a method (annotated with @DataProvider) to dynamically generate test data. This allows for more complex data sets, data generation logic, and data sources (e.g., reading from a file or database). The @Test method specifies the dataProvider name to use.

TestNG interview questions for juniors

1. What is TestNG, in super simple terms, and why do we use it for testing our code, like checking if your toys work properly?

TestNG is like a super organized checklist for testing your code. Imagine checking if your toys work properly. Instead of just randomly playing with them, you have a list: Does the car move forward?, Does the doll's voice work?, Is the ball bouncy? TestNG helps you create and run these kinds of checks in your code automatically.

We use it because it makes testing easier and more reliable. It lets you:

- Group tests: You can group tests related to one part of your code.

- Run tests in order: You can define the order in which tests are executed.

- Generate reports: It gives you reports showing which tests passed or failed, making it easy to find problems.

2. Imagine each test is a tiny puzzle. How does TestNG help us put those puzzles in order and run them automatically?

TestNG helps organize and automate tests like this:

It provides features to define the order tests should run using:

- Annotations: Like @Test(priority = 1) to specify execution order. This helps in controlling which test runs first.

- testng.xml: A configuration file where you can group tests into suites and define execution order, parallel execution, and dependencies between tests. For example, you can specify that test methods belong to specific groups and order their execution within a <test> section, or order the execution of <suite> sections.

- Dependencies: You can use the dependsOnMethods or dependsOnGroups attributes in the @Test annotation to define dependencies between tests. If a test that a subsequent test depends on fails, the dependent test will be skipped.

TestNG's reporting features then give a clear overview of which "puzzle pieces" (tests) passed or failed, streamlining the testing process.

3. Can you describe a simple test you've written using TestNG, like making sure a calculator app adds numbers correctly?

I've used TestNG to verify basic calculator functionality. Here’s a simple example:

    import org.testng.Assert;
    import org.testng.annotations.Test;

    public class CalculatorTest {

        @Test
        public void testAddition() {
            Calculator calculator = new Calculator();
            int result = calculator.add(2, 3);
            Assert.assertEquals(result, 5, "Addition failed");
        }
    }

    class Calculator {

        public int add(int a, int b) {
            return a + b;
        }
    }

This test case, testAddition, creates a Calculator object, calls its add method with inputs 2 and 3, and then uses Assert.assertEquals to check if the result is 5. If the result is not 5, the test will fail, and it will display the message "Addition failed". This ensures the calculator's addition functionality works as expected.

4. What's an annotation in TestNG? Think of it as a sticky note that tells TestNG what to do with your test. Give an example.

In TestNG, an annotation is a special tag that provides instructions or metadata about a test method. Think of it as a way to configure and control how TestNG executes your tests. Annotations always start with @ symbol. They tell TestNG what a method's purpose is, such as whether it's a test case, a setup method, or a teardown method.

For example, the @Test annotation marks a method as a test case that TestNG should execute. Other annotations include @BeforeMethod (runs before each test method), @AfterMethod (runs after each test method), @BeforeSuite (runs before all tests in a suite), @AfterSuite (runs after all tests in a suite), and @Parameters (passes values defined in testng.xml to a test method).

    import org.testng.annotations.Test;

    public class ExampleTest {

        @Test
        public void myFirstTest() {
            System.out.println("Running my first test!");
        }
    }

5. What is @Test annotation? Give an example where it is used in a program

The @Test annotation is used in TestNG to mark a method as a test case. When TestNG runs, it identifies methods annotated with @Test and executes them as individual tests.

Example:

    import org.testng.Assert;
    import org.testng.annotations.Test;

    public class MyClassTest {

        @Test
        public void testAdd() {
            // MyClass is the class under test (not shown here)
            MyClass calculator = new MyClass();
            int result = calculator.add(2, 3);
            // TestNG's argument order is (actual, expected)
            Assert.assertEquals(result, 5);
        }
    }

In this example, the testAdd method is annotated with @Test. TestNG will execute this method, and the Assert.assertEquals assertion will verify that the add method of MyClass returns the expected value (5) when given 2 and 3 as arguments.

6. What is @BeforeMethod annotation? Give an example where it is used in a program

The @BeforeMethod annotation in TestNG is used to specify a method that should be executed before each test method within a class. It ensures that certain setup or initialization tasks are performed before every test case, providing a clean and consistent environment for each test. This helps in avoiding dependencies between tests and makes test results more reliable.

Here's an example:

    import org.testng.annotations.Test;
    import org.testng.annotations.BeforeMethod;

    public class ExampleTest {

        @BeforeMethod
        public void setup() {
            // Code to initialize resources or set up the test environment
            System.out.println("Setting up before each test method");
        }

        @Test
        public void testCase1() {
            System.out.println("Executing test case 1");
        }

        @Test
        public void testCase2() {
            System.out.println("Executing test case 2");
        }
    }

In this example, the setup() method annotated with @BeforeMethod will be executed before both testCase1() and testCase2().

7. What is @AfterMethod annotation? Give an example where it is used in a program

The @AfterMethod annotation in TestNG is used to specify a method that should be executed after each test method within a test class. This is typically used for cleanup tasks, such as closing database connections, deleting temporary files, or resetting object states, ensuring that each test starts with a clean environment.

Example:

    import org.testng.annotations.Test;
    import org.testng.annotations.AfterMethod;

    public class ExampleTest {

        @Test
        public void testMethod1() {
            // Test logic here
            System.out.println("testMethod1 executed");
        }

        @Test
        public void testMethod2() {
            // Test logic here
            System.out.println("testMethod2 executed");
        }

        @AfterMethod
        public void cleanup() {
            // Cleanup tasks after each test
            System.out.println("Cleanup after test");
        }
    }

In this example, the cleanup() method will be executed after both testMethod1() and testMethod2() have finished execution.

8. What is @BeforeClass annotation? Give an example where it is used in a program

The @BeforeClass annotation in TestNG is used to signal that a method should be executed once before any of the test methods in a class are run. This is typically used for resource-intensive setup operations that only need to be performed once for the entire test class, such as establishing a database connection or initializing a shared data structure. Unlike in JUnit 4, the method annotated with @BeforeClass does not have to be static in TestNG.

Example:

    import org.testng.annotations.BeforeClass;
    import org.testng.annotations.Test;

    public class MyTest {

        // DatabaseConnection is a placeholder for your own connection class
        private DatabaseConnection connection;

        @BeforeClass
        public void setUpClass() {
            connection = new DatabaseConnection("jdbc:h2:mem:testdb");
            connection.connect();
        }

        @Test
        public void testQuery1() {
            // Use the connection to run a query
        }

        @Test
        public void testQuery2() {
            // Use the connection to run another query
        }
    }

In this example, setUpClass() establishes a database connection once before testQuery1() and testQuery2() (and any other @Test methods) are executed. If the setup were done in @BeforeMethod instead, the connection would be established for each test method, which is less efficient.

9. What is @AfterClass annotation? Give an example where it is used in a program

The @AfterClass annotation in TestNG marks a method that runs once, after all the test methods in the class have finished. It is typically used for cleanup activities, such as closing database connections, releasing resources, or deleting temporary files that were created during the tests. As with @BeforeClass, TestNG does not require the method to be static.

Here's an example:

import org.testng.annotations.AfterClass;
import org.testng.annotations.Test;

public class MyTestClass {

    @Test
    public void testMethod1() {
        // Test logic here
    }

    @Test
    public void testMethod2() {
        // Another test
    }

    @AfterClass
    public void tearDown() {
        // Cleanup code, executed once after all tests in the class
        System.out.println("Cleaning up after all tests");
    }
}

In this example, the tearDown() method, annotated with @AfterClass, is executed only once, after both testMethod1() and testMethod2() have completed.

10. What is @BeforeTest annotation? Give an example where it is used in a program

The @BeforeTest annotation in TestNG marks a method that runs once before any test method belonging to the classes inside a <test> tag of testng.xml. It does not run before each test method; that is the job of @BeforeMethod. It is useful for setup shared by everything in that <test> block, such as preparing test data, initializing resources, or logging into an application.

Example:

import org.testng.annotations.BeforeTest;
import org.testng.annotations.Test;

public class ExampleTest {

    @BeforeTest
    public void setup() {
        System.out.println("Setting up the test environment...");
        // Initialize resources, create test data, etc.
    }

    @Test
    public void testCase1() {
        System.out.println("Running test case 1");
    }

    @Test
    public void testCase2() {
        System.out.println("Running test case 2");
    }
}

In this example, setup() runs once, before testCase1() and testCase2() (both belong to the same <test>), so the environment is configured before the first of them starts.

11. What is @AfterTest annotation? Give an example where it is used in a program

The @AfterTest annotation in TestNG marks a method that runs once after all the test methods belonging to the classes inside a <test> tag of testng.xml have run. It does not run after each test method; that is the job of @AfterMethod. It is useful for tasks like closing database connections, releasing resources, or resetting the environment to a known state. Add alwaysRun = true if the cleanup must happen even when earlier methods failed or were skipped.

Here's an example:

import org.testng.annotations.AfterTest;
import org.testng.annotations.Test;

public class ExampleTest {

    @Test
    public void testMethod1() {
        System.out.println("Executing testMethod1");
    }

    @Test
    public void testMethod2() {
        System.out.println("Executing testMethod2");
    }

    @AfterTest
    public void afterTest() {
        System.out.println("Executing afterTest - Cleaning up resources");
    }
}

In this example, afterTest() runs once, after both testMethod1() and testMethod2() have completed, so resources are cleaned up at the end of the <test> block.

12. What is @BeforeSuite annotation? Give an example where it is used in a program

The @BeforeSuite annotation in TestNG marks a method that runs once, before any test in the suite runs.

Example:

import org.testng.annotations.BeforeSuite;
import org.testng.annotations.Test;

public class MyTestClass {

    @BeforeSuite
    public void setupSuite() {
        System.out.println("Setting up the test suite!");
        // Code to initialize databases, configurations, etc.
    }

    @Test
    public void testMethod1() {
        System.out.println("Running test method 1");
    }

    @Test
    public void testMethod2() {
        System.out.println("Running test method 2");
    }
}

In this example, setupSuite() is executed once, before testMethod1() and testMethod2() and before any other test in the same suite.

13. What is @AfterSuite annotation? Give an example where it is used in a program

The @AfterSuite annotation in TestNG marks a method that is executed only once, after all the tests in the suite have finished. It's commonly used for cleanup that should happen after the entire suite is complete, such as closing database connections, releasing resources, or generating final reports. The method runs at the end of the suite whether the tests passed or failed; add alwaysRun = true if it must also run after an earlier configuration failure.

Example:

import org.testng.annotations.AfterSuite;

public class ExampleTest {

    @AfterSuite
    public void afterSuite() {
        System.out.println("After Suite completed");
        // Close database connection
        // Release resources
    }
}

14. How do you check if a test in TestNG passes or fails, like knowing if you got all the answers right on a quiz?

TestNG provides several ways to determine if a test has passed or failed. One common approach is to use Assert statements within your test methods. If an Assert statement fails (e.g., Assert.assertEquals(actual, expected); note that TestNG takes the actual value first), TestNG marks the test as failed. If the test method completes without any assertion failing or any unexpected exception being thrown, the test is considered passed.

Alternatively, you can use TestNG listeners, specifically ITestListener. Implement the onTestSuccess, onTestFailure, and onTestSkipped methods to perform actions based on the test result. For instance, you could log the test outcome or take a screenshot on failure. These listener methods are automatically called by TestNG after each test method completes, providing a mechanism to react to the test's success, failure, or skip status.

15. What's the difference between 'Assert.assertEquals' and 'Assert.assertTrue' in TestNG? It's like checking if something is exactly what you expect or just generally true.

Assert.assertEquals checks if two values are equal. It compares the actual result with the expected result. If they are equal, the assertion passes; otherwise, it fails. This is for exact matching.

Assert.assertTrue checks if a given condition is true. You provide a boolean expression, and if the expression evaluates to true, the assertion passes. It's useful when you only need to verify that something is generally true, rather than matching a specific value. For example: Assert.assertTrue(x > 0);
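The difference can be seen side by side in a minimal sketch. The add method is a hypothetical stand-in for the code under test and is not part of TestNG.

```java
import org.testng.Assert;
import org.testng.annotations.Test;

public class AssertionStyleTest {

    // Hypothetical method under test, defined here only for illustration.
    static int add(int a, int b) {
        return a + b;
    }

    @Test
    public void exactValueCheck() {
        // TestNG's argument order is (actual, expected, message).
        Assert.assertEquals(add(2, 3), 5, "2 + 3 should equal 5");
    }

    @Test
    public void conditionCheck() {
        // Only the truth of the condition matters, not a specific value.
        Assert.assertTrue(add(2, 3) > 0, "sum should be positive");
    }
}
```

When assertEquals fails, the report shows both the expected and the actual value, which usually makes it the better choice whenever an exact value is known.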

16. If a test fails, how can you tell TestNG to try running it again? It's like getting a second chance to solve a puzzle.

TestNG provides the retryAnalyzer attribute to handle test retries. You need to create a class that implements the IRetryAnalyzer interface. This interface has a single method retry(ITestResult result) which determines whether the test should be retried based on the ITestResult.

To use it, you annotate your test method (or the test class) with @Test(retryAnalyzer = RetryAnalyzerClass.class), where RetryAnalyzerClass is the class implementing IRetryAnalyzer. To apply a retry analyzer across a whole suite, set it from an IAnnotationTransformer listener instead. In the retry method, you return true if you want to retry the test and false otherwise. Keep a retry counter inside the analyzer and stop at a maximum, otherwise a test that always fails would be retried forever. Example:

@Test(retryAnalyzer = MyRetryAnalyzer.class)
public void myFailingTest() {
    // Test code
}
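A retry analyzer with a retry limit might look like the sketch below. The limit of two retries and the simulated flakiness (a static call counter) are assumptions made for illustration only.

```java
import org.testng.IRetryAnalyzer;
import org.testng.ITestResult;
import org.testng.annotations.Test;

public class RetryExampleTest {

    // Retries a failed test up to MAX_RETRIES times; the limit is an arbitrary choice.
    public static class MyRetryAnalyzer implements IRetryAnalyzer {
        private static final int MAX_RETRIES = 2;
        private int attempts = 0;

        @Override
        public boolean retry(ITestResult result) {
            if (attempts < MAX_RETRIES) {
                attempts++;
                return true;  // ask TestNG to run the test again
            }
            return false;     // give up: the failure is reported
        }
    }

    // Counts how often the test body ran (illustration only).
    static int calls = 0;

    @Test(retryAnalyzer = MyRetryAnalyzer.class)
    public void flakyTest() {
        calls++;
        // Simulated flakiness: fail on the first two calls, pass on the third.
        if (calls < 3) {
            throw new AssertionError("simulated transient failure on call " + calls);
        }
    }
}
```

Retries can hide real defects, so it is usually worth keeping the limit small and logging every retried attempt.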

17. What is the use of testng.xml?

testng.xml is a configuration file in TestNG that controls the execution of tests. It defines test suites, tests, classes, methods, and parameters, essentially orchestrating which tests to run, how to run them, and with what data.

Specifically, it allows you to:

- Define test suites: Group related tests together.

- Specify test classes and methods: Control which classes and methods are included in the test run.

- Set parameters: Pass data to test methods.

- Configure listeners: Attach listeners to the test execution lifecycle.

- Manage dependencies: Define dependencies between tests.

- Run tests in parallel: Speed up execution by running tests concurrently.

Example:

<suite name="MyTestSuite" parallel="methods" thread-count="5">
    <test name="MyTest">
        <classes>
            <class name="com.example.MyTestClass"/>
        </classes>
    </test>
</suite>

18. How can you tell TestNG to skip a test, like deciding not to play a game right now?

TestNG offers several ways to skip a test. One common method is to use the @Test annotation's enabled attribute. Setting enabled = false will prevent the test from being executed.

Another approach is to throw org.testng.SkipException from within the test method itself. This allows you to skip a test conditionally, based on runtime conditions, and TestNG reports it as skipped rather than failed. For example:

import org.testng.SkipException;
import org.testng.annotations.Test;

public class MyTest {

    @Test
    public void myConditionalTest() {
        boolean shouldRun = false; // Or any runtime condition
        if (!shouldRun) {
            throw new SkipException("Skipping because shouldRun is false");
        }
        // Actual test logic here
    }
}

A listener can make the same decision centrally: for example, an IAnnotationTransformer can call setEnabled(false) on the @Test annotation of selected methods before the run starts.

19. Can you group tests together in TestNG, like putting all your dinosaur toys in one box and your car toys in another?

Yes. TestNG lets you group tests, much like sorting toys into different boxes. You assign a test method to one or more groups with the @Test(groups = {"group1", "group2"}) annotation. In testng.xml, the <groups> tag then selects which groups to run and can define new groups made up of existing ones.

For example, assuming dinosaurTest and carTest in MyClass are annotated with the groups "dinosaur" and "car":

<test name="MyTest">
    <groups>
        <define name="regression">
            <include name="dinosaur"/>
            <include name="car"/>
        </define>
        <run>
            <include name="regression"/>
        </run>
    </groups>
    <classes>
        <class name="MyClass"/>
    </classes>
</test>

Here the regression group combines the dinosaur and car groups, and the <run> element tells TestNG to execute it. Group membership itself is always declared in the Java code through the groups attribute; testng.xml only selects and combines groups.

20. How do you pass information to your tests in TestNG, like giving instructions to a robot?

In TestNG, you can pass information to your tests using several mechanisms, similar to giving instructions to a robot. One common approach is using testng.xml to define parameters. These parameters can then be accessed within your tests using the @Parameters annotation. For example, you can define <parameter name="browser" value="chrome"/> in your testng.xml file, and then in your test method, use @Parameters("browser") to retrieve the value "chrome".

Another method involves using Data Providers (@DataProvider). Data Providers allow you to feed multiple sets of data to the same test method, effectively running the same test with different inputs. This is especially useful for data-driven testing where you want to validate the same functionality with various inputs. For instance:

@DataProvider(name = "testData")
public Object[][] provideData() {
    return new Object[][] { { "user1", "pass1" }, { "user2", "pass2" } };
}

@Test(dataProvider = "testData")
public void testMethod(String username, String password) {
    // Your test logic here using username and password
}

21. Have you ever used TestNG to test a website? What did you check, like making sure all the buttons work?

Yes, I have used TestNG to test websites. I've automated various aspects of web application testing, including UI functionality, data validation, and API interactions.

Specifically, I have checked things like:

- Button Functionality: Ensuring buttons are clickable and perform the expected actions (e.g., navigation, form submission).

- Form Submission: Verifying that forms submit correctly, data is validated, and appropriate success/error messages are displayed.

- Navigation: Confirming that links and menus navigate to the correct pages.

- Data Display: Validating that data is displayed correctly on the page, including text, images, and tables.

- API Responses: Testing API endpoints used by the website to ensure they return the expected data and status codes. For example, I've used RestAssured with TestNG for these checks.

- Cross-browser Compatibility: Running tests on different browsers (e.g., Chrome, Firefox, Safari) to ensure consistent behavior.

22. What's one thing you find a little tricky about TestNG, and how are you trying to learn more about it?

One aspect of TestNG I find slightly tricky is understanding the interplay between different annotations like @DataProvider, @Factory, and @Parameters, especially when trying to pass complex data structures or create dynamic test configurations. The order of execution and the scope of these annotations can sometimes be confusing.

To improve my understanding, I'm actively working through real-world examples and tutorials that demonstrate their combined usage. I am also experimenting with different configuration scenarios in my projects and debugging extensively to understand how TestNG handles the data flow. Specifically, I've been trying to master passing data to test methods using DataProviders defined in external files. Additionally, I am reading the official TestNG documentation thoroughly and exploring community forums for solutions to common issues.

TestNG intermediate interview questions

1. How do you handle exceptions within TestNG tests, and what are the best practices for doing so?

In TestNG, exceptions within tests can be handled using try-catch blocks directly within the test methods. This allows you to catch specific exceptions, log them, and potentially assert that an expected exception occurred. Alternatively, you can use the @Test(expectedExceptions = {MyException.class}) annotation to assert that a particular exception is thrown during the test execution. If the specified exception (or one of its subclasses) is thrown, the test passes; otherwise, it fails.

Best practices include using specific exception types in both try-catch blocks and @Test annotations to avoid catching/expecting unintended exceptions. Also, log exceptions with sufficient detail to aid in debugging. Avoid catching generic Exception unless absolutely necessary, as this can mask unexpected errors. When using @Test(expectedExceptions = ...) ensure that the exception is actually thrown within the test method to avoid false positives. Remember to use assertions to validate the state of the system after the exception occurs, if appropriate.
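Both approaches are shown in the sketch below. The parseAge method is a hypothetical method under test, introduced only to have something that throws.

```java
import org.testng.Assert;
import org.testng.annotations.Test;

public class ExceptionHandlingTest {

    // Hypothetical method under test: rejects negative input.
    static int parseAge(int age) {
        if (age < 0) {
            throw new IllegalArgumentException("age must not be negative: " + age);
        }
        return age;
    }

    // Passes only if IllegalArgumentException is thrown and its message matches the regex.
    @Test(expectedExceptions = IllegalArgumentException.class,
          expectedExceptionsMessageRegExp = ".*must not be negative.*")
    public void rejectsNegativeAge() {
        parseAge(-1);
    }

    // try-catch variant: useful when the test must inspect details after the exception.
    @Test
    public void rejectsNegativeAgeWithTryCatch() {
        try {
            parseAge(-5);
            Assert.fail("expected IllegalArgumentException");
        } catch (IllegalArgumentException e) {
            Assert.assertTrue(e.getMessage().contains("-5"));
        }
    }
}
```

Checking the message as well as the type reduces the risk of a test passing because of an unrelated exception of the same class.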

2. Explain the concept of 'Data Provider' in TestNG. How do you parameterize your tests using it, and what are its advantages?

In TestNG, a Data Provider is a method annotated with @DataProvider that supplies different sets of data to a test method. This enables you to run the same test multiple times with varying inputs, effectively parameterizing your tests.

To use it, you create a method annotated with @DataProvider that returns a 2D array of Objects ( Object[][] ). Each row in the array represents a set of parameters for a single test execution. Then, in your test method, you use the dataProvider attribute of the @Test annotation to specify the name of the Data Provider method. The advantages include: reduced code duplication, enhanced test coverage, and easier maintenance as test data is centralized. Here's an example:

@DataProvider(name = "testData")
public Object[][] dataProviderMethod() {
    return new Object[][] { { "user1", "pass1" }, { "user2", "pass2" } };
}

@Test(dataProvider = "testData")
public void testMethod(String user, String password) {
    // Test logic using user and password
    System.out.println("User: " + user + ", Password: " + password);
}

3. What is the significance of the `dependsOnMethods` attribute in TestNG, and how can it be used to manage test dependencies?

The dependsOnMethods attribute in TestNG allows you to define dependencies between test methods. A test method that declares dependsOnMethods runs only after the methods it lists have run and passed. This is crucial for managing test execution order and ensuring that tests run in a logical sequence where the outcome of one test determines whether running another makes sense. If a method it depends on fails, the dependent test is skipped (marked as SKIP) rather than failed, which prevents cascading failures and gives clearer, more meaningful test results.

For example, if testLogin needs to pass before testCreateAccount can be meaningfully executed, testCreateAccount would have dependsOnMethods = {"testLogin"}. This dependency management helps in scenarios like testing database interactions or UI flows where a specific state needs to be established before subsequent actions can be validated. It improves test reliability and focuses debugging efforts on the actual root cause of failures, rather than chasing secondary errors due to unmet prerequisites.
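The testLogin and testCreateAccount scenario could be sketched as follows. The loggedIn flag is an assumed stand-in for real application state.

```java
import org.testng.Assert;
import org.testng.annotations.Test;

public class AccountFlowTest {

    // Stand-in for real session state; a real suite would drive an application.
    private boolean loggedIn = false;

    @Test
    public void testLogin() {
        loggedIn = true;
        Assert.assertTrue(loggedIn);
    }

    // Runs only after testLogin has passed; reported as skipped if testLogin fails.
    @Test(dependsOnMethods = {"testLogin"})
    public void testCreateAccount() {
        Assert.assertTrue(loggedIn, "login must have succeeded first");
    }
}
```

Long dependency chains make suites harder to run in parallel, so dependencies are best reserved for genuine prerequisites.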

4. Describe how you would use TestNG to perform cross-browser testing. What configurations and setup are required?

To perform cross-browser testing with TestNG, I would primarily leverage TestNG's parameterization capabilities and Selenium WebDriver. The core idea is to pass the browser type as a parameter to the test class. The value is defined in the testng.xml file, typically in one <test> block per browser, and injected into a setup or test method with the @Parameters annotation. Within that method, a conditional statement instantiates the appropriate WebDriver (e.g., ChromeDriver, FirefoxDriver, EdgeDriver) based on the passed browser parameter.

Setup involves adding Selenium WebDriver dependencies to the project (e.g., via Maven or Gradle) and downloading the necessary browser drivers (ChromeDriver, GeckoDriver, etc.) for the targeted browsers. You would ensure these drivers are accessible, either by placing them in the system PATH or explicitly specifying their location when instantiating the WebDriver. The testng.xml file is crucial for defining the test suites and parameter combinations, thus controlling which tests are executed on which browsers.
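The parameter handling can be sketched without Selenium on the classpath. In the sketch below, selectDriver is a hypothetical placeholder that returns a driver name where a real suite would create the WebDriver instance; the parameter name "browser" is an assumption that must match the name used in testng.xml.

```java
import org.testng.Assert;
import org.testng.annotations.BeforeClass;
import org.testng.annotations.Optional;
import org.testng.annotations.Parameters;
import org.testng.annotations.Test;

public class CrossBrowserTest {

    private String driverName;

    // "browser" is read from <parameter name="browser" value="..."/> in testng.xml;
    // @Optional supplies a fallback when the class is run without a suite file.
    @Parameters("browser")
    @BeforeClass
    public void setUp(@Optional("chrome") String browser) {
        driverName = selectDriver(browser);
    }

    // Placeholder for driver creation: a real suite would return new ChromeDriver(),
    // new FirefoxDriver() or new EdgeDriver() here instead of a name.
    static String selectDriver(String browser) {
        switch (browser.toLowerCase()) {
            case "chrome":  return "ChromeDriver";
            case "firefox": return "FirefoxDriver";
            case "edge":    return "EdgeDriver";
            default: throw new IllegalArgumentException("Unsupported browser: " + browser);
        }
    }

    @Test
    public void driverIsSelected() {
        Assert.assertNotNull(driverName);
    }
}
```

With one 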

5. How can you configure TestNG to run tests in parallel? What are the different parallel modes available, and when would you use each one?

TestNG can be configured to run tests in parallel using the parallel attribute in the testng.xml file. The thread-count attribute specifies the number of threads to use.

Different parallel modes are:

- methods: Each test method runs in a separate thread.

- tests: All methods within a <test> tag run in the same thread, but each <test> tag runs in a separate thread.

- classes: All methods in the same class run in the same thread, but each class runs in a separate thread.

- instances: Similar to classes, but each instance of a class runs in a separate thread.

Use methods for fine-grained parallelism when methods are independent. Use tests when tests represent logical groupings of methods. Use classes or instances when you need to maintain state within a class but want to parallelize across different classes or instances.
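The same settings can also be applied in code, which is handy for experimenting. The sketch below builds the equivalent of a suite with parallel="methods" and thread-count="3" through TestNG's XmlSuite API; the suite and test names are arbitrary, and a recent TestNG release (with XmlSuite.ParallelMode) is assumed.

```java
import java.util.Collections;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import org.testng.TestNG;
import org.testng.annotations.Test;
import org.testng.xml.XmlClass;
import org.testng.xml.XmlSuite;
import org.testng.xml.XmlTest;

public class ParallelMethodsExample {

    // Thread-safe set recording which test methods ran.
    static final Set<String> EXECUTED = ConcurrentHashMap.newKeySet();

    @Test public void first()  { EXECUTED.add("first"); }
    @Test public void second() { EXECUTED.add("second"); }
    @Test public void third()  { EXECUTED.add("third"); }

    // Builds the equivalent of <suite parallel="methods" thread-count="3"> in code.
    public static void main(String[] args) {
        XmlSuite suite = new XmlSuite();
        suite.setName("ParallelSuite");
        suite.setParallel(XmlSuite.ParallelMode.METHODS);
        suite.setThreadCount(3);

        XmlTest test = new XmlTest(suite);
        test.setName("ParallelTest");
        test.setXmlClasses(Collections.singletonList(new XmlClass(ParallelMethodsExample.class)));

        TestNG runner = new TestNG();
        runner.setUseDefaultListeners(false);
        runner.setXmlSuites(Collections.singletonList(suite));
        runner.run();
        System.out.println("Executed methods: " + EXECUTED.size());
    }
}
```

Shared state must be thread-safe once methods run in parallel, which is why the example records results in a concurrent set rather than a plain collection.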

6. Explain the use of listeners in TestNG. How can you create custom listeners, and what events can they listen to?

Listeners in TestNG are interfaces that allow you to customize TestNG's behavior. They listen for specific events during the test execution lifecycle and execute custom code when those events occur. This provides a way to perform actions such as logging, reporting, or modifying test execution flow.

To create a custom listener, you need to implement one of the TestNG listener interfaces (e.g., ITestListener, IAnnotationTransformer, ISuiteListener). Then you override the methods corresponding to the events you want to listen to. For example, ITestListener provides methods like onTestStart, onTestSuccess, onTestFailure, which are triggered at the start, success, and failure of a test method, respectively. Other events can be around suite execution (e.g., ISuiteListener.onStart and ISuiteListener.onFinish). You then configure TestNG to use your custom listener, typically using the <listeners> tag in your testng.xml file or by using the @Listeners annotation in your test class.
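A small custom listener might look like the sketch below. It assumes TestNG 7 or later, where the ITestListener methods have default implementations so only the relevant ones need overriding; the class names and the deliberately failing test are illustrative.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import org.testng.Assert;
import org.testng.ITestListener;
import org.testng.ITestResult;
import org.testng.annotations.Listeners;
import org.testng.annotations.Test;

@Listeners(ListenerExampleTest.OutcomeListener.class)
public class ListenerExampleTest {

    // Collects one line per finished test method.
    public static class OutcomeListener implements ITestListener {
        static final List<String> LOG = new CopyOnWriteArrayList<>();

        @Override
        public void onTestSuccess(ITestResult result) {
            LOG.add("PASS " + result.getName());
        }

        @Override
        public void onTestFailure(ITestResult result) {
            LOG.add("FAIL " + result.getName());
        }
    }

    @Test
    public void passes() {
        Assert.assertEquals(1 + 1, 2);
    }

    @Test
    public void fails() {
        Assert.fail("deliberate failure to trigger onTestFailure");
    }
}
```

In a real project the listener would typically write to a log file or capture a screenshot instead of appending to a list.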

7. How does TestNG support reporting? What built-in reporters are available, and how can you integrate custom reporting mechanisms?

TestNG offers built-in reporting capabilities to provide detailed information about test execution. It includes default HTML reports, XML reports, and console output. The default HTML reports are generated automatically upon test completion, providing a user-friendly interface to view test results, including pass/fail statuses, execution time, and any exceptions encountered.

For custom reporting, TestNG provides several extension points. You can implement the IReporter interface to generate custom reports in any format (e.g., PDF, CSV). You can also use Listeners (ITestListener, ISuiteListener, etc.) to hook into different stages of the test execution lifecycle and gather data for your custom reports. Data can be collected and formatted as needed, then written to external files or databases. Using these approaches you can integrate with tools like Allure or implement your own reporting solution.

8. Describe the concept of 'retryAnalyzer' in TestNG. How do you implement it, and when would you use it to re-run failed tests?

In TestNG, a retryAnalyzer is a mechanism to automatically re-run failed test methods. It's implemented by creating a class that implements the IRetryAnalyzer interface and overriding the retry() method. This method determines whether a test should be retried based on certain conditions. You then associate this retry analyzer with a test using the @Test annotation's retryAnalyzer attribute, like this:

@Test(retryAnalyzer = MyRetryAnalyzer.class)
public void myTest() {
    // Test logic here
}

The retryAnalyzer is used when you have tests that fail intermittently due to external factors like network issues, flaky services, or database connection problems. Instead of immediately marking the test as failed, the retry analyzer allows you to re-run the test a few times, giving it a chance to pass on subsequent attempts. This helps to distinguish between genuine failures and transient issues, leading to more reliable test results.

9. How would you use TestNG to test REST APIs? What libraries or tools might you integrate with TestNG for API testing?

TestNG can be used to test REST APIs by sending HTTP requests to the API endpoints and then asserting the responses using TestNG's assertion methods. You would use a library like RestAssured or Spring's RestTemplate to send the HTTP requests. RestAssured is particularly well-suited for API testing due to its fluent interface and built-in JSON/XML parsing capabilities. You can define your test methods with @Test annotation, use @BeforeTest or @BeforeClass for setup like configuring base URLs, and then within the test methods, make requests and validate the response status codes, headers, and body content using TestNG's Assert class.

Several libraries can be integrated with TestNG for effective API testing. RestAssured, as mentioned, simplifies request sending and response parsing. Jackson or Gson libraries can be used for more complex JSON handling, especially when dealing with data serialization/deserialization. For reporting, you might integrate with tools like Extent Reports to generate detailed and visually appealing test reports. You can also use tools like Swagger or OpenAPI to generate test cases from API specifications. Consider using a data provider (@DataProvider) to parameterize tests and run the same test with different input data.

10. Explain how you can group tests in TestNG. How are these groups defined and used for test execution?

TestNG allows grouping tests for better organization and selective execution. Groups are defined using the @Test annotation's groups attribute. For example, @Test(groups = {"smoke", "regression"}) assigns a test method to both the 'smoke' and 'regression' groups.

These groups are then used in the testng.xml file to control which tests are executed. You can include or exclude specific groups. Here's an example:

<groups>
    <run>
        <include name="smoke"/>
        <exclude name="performance"/>
    </run>
</groups>

This configuration runs the tests that belong to the 'smoke' group and leaves out any test that belongs to the 'performance' group, even if it is also tagged 'smoke'. Groups can also contain other groups: a <define> element in testng.xml builds a new group out of existing ones.
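On the Java side, group membership and selection could be sketched as below. The test names are made up, and the main method uses TestNG's programmatic setGroups and setExcludedGroups calls as a stand-in for the include and exclude elements in testng.xml.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import org.testng.TestNG;
import org.testng.annotations.Test;

public class GroupedTests {

    // Records which test methods actually ran.
    static final List<String> RAN = new CopyOnWriteArrayList<>();

    @Test(groups = {"smoke", "regression"})
    public void login() { RAN.add("login"); }

    @Test(groups = {"regression"})
    public void fullCheckout() { RAN.add("fullCheckout"); }

    @Test(groups = {"smoke", "performance"})
    public void loadHomePage() { RAN.add("loadHomePage"); }

    // Programmatic equivalent of <include name="smoke"/> and <exclude name="performance"/>.
    public static void main(String[] args) {
        TestNG runner = new TestNG();
        runner.setUseDefaultListeners(false);
        runner.setTestClasses(new Class[] { GroupedTests.class });
        runner.setGroups("smoke");
        runner.setExcludedGroups("performance");
        runner.run();
        System.out.println("Ran: " + RAN);
    }
}
```

Only login is selected here: fullCheckout is not in the smoke group, and loadHomePage is removed because exclusion takes precedence over inclusion.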

11. How can you prioritize test execution in TestNG? What attributes or mechanisms are available for setting test priorities?

TestNG provides several ways to prioritize test execution. The primary mechanism is the priority attribute within the @Test annotation. Lower numerical values indicate higher priority; for example, a test with priority=1 will run before a test with priority=2. Tests without an explicit priority attribute are assigned a default priority of 0, meaning they will run before tests with positive priority values but after tests with negative priority values.

Furthermore, the dependsOnMethods attribute influences execution order by defining dependencies between tests: TestNG ensures that a method a test depends on is executed before the test itself, and a dependency takes precedence over priority. You can also organize tests into groups using the @Test(groups = { "group1", "group2" }) annotation and order whole groups with the dependsOnGroups attribute.
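A minimal sketch of priority ordering, assuming sequential (non-parallel) execution; the method names simply state the order in which they are expected to run.

```java
import java.util.ArrayList;
import java.util.List;
import org.testng.annotations.Test;

public class PriorityOrderTest {

    // Records the order in which the methods run (sequential execution assumed).
    static final List<String> ORDER = new ArrayList<>();

    @Test(priority = 2)
    public void third() { ORDER.add("third"); }

    @Test(priority = -1)
    public void first() { ORDER.add("first"); }

    @Test // no priority attribute, so the default of 0 applies
    public void second() { ORDER.add("second"); }
}
```

The declaration order in the source file does not matter here; the priority values alone decide the sequence.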

12. What are the differences between `@BeforeSuite`, `@BeforeTest`, `@BeforeClass`, and `@BeforeMethod` annotations in TestNG, and when would you use each?

In TestNG, @BeforeSuite runs once before all tests in the suite. Use it for setup tasks needed only once, like initializing a database connection. @BeforeTest runs before each <test> tag in your testng.xml file. It's useful for setting up the test environment specific to each test set defined in XML. @BeforeClass runs once before the first test method in the current class is invoked. This is suitable for tasks like creating a WebDriver instance that will be used by all test methods in that class. Finally, @BeforeMethod runs before each test method. Use it for setting up the preconditions or state for each individual test case within a class.

To summarize:

- @BeforeSuite: Suite-wide setup.

- @BeforeTest: Test-specific setup (per <test> tag defined in testng.xml).

- @BeforeClass: Class-wide setup.

- @BeforeMethod: Test method-specific setup.
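The relative order of the four annotations can be observed with a small sketch that records each call. It assumes a single class run sequentially inside one <test>.

```java
import java.util.ArrayList;
import java.util.List;
import org.testng.annotations.BeforeClass;
import org.testng.annotations.BeforeMethod;
import org.testng.annotations.BeforeSuite;
import org.testng.annotations.BeforeTest;
import org.testng.annotations.Test;

public class LifecycleOrderTest {

    // Records every configuration and test call in the order it happens.
    static final List<String> EVENTS = new ArrayList<>();

    @BeforeSuite  public void beforeSuite()  { EVENTS.add("BeforeSuite"); }
    @BeforeTest   public void beforeTest()   { EVENTS.add("BeforeTest"); }
    @BeforeClass  public void beforeClass()  { EVENTS.add("BeforeClass"); }
    @BeforeMethod public void beforeMethod() { EVENTS.add("BeforeMethod"); }

    @Test public void testA() { EVENTS.add("test"); }
    @Test public void testB() { EVENTS.add("test"); }
}
```

The suite, test, and class level methods are each recorded once, while BeforeMethod is recorded before each of the two test methods.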

13. How do you handle configuration failures in TestNG? What are the different ways to control test execution when a configuration method fails?

By default, when a configuration method (@BeforeSuite, @BeforeTest, @BeforeClass, or @BeforeMethod) fails, TestNG skips the test methods that rely on that setup and reports them as SKIP. Several settings change this behavior. The configfailurepolicy attribute of the <suite> tag accepts skip (the default) or continue; with continue, TestNG still attempts the remaining configuration and test methods. On @After methods, alwaysRun = true makes the method run even if earlier methods failed or were skipped, which keeps cleanup reliable. On @Before methods, alwaysRun = true makes the method run regardless of which groups were selected.

You can also inspect or adjust outcomes from a listener: ITestResult offers isSuccess(), getStatus(), and setStatus(), which a TestListenerAdapter subclass can use to check or change a result. Note that the skipFailedInvocations attribute of @Test addresses a different case: when invocationCount is greater than 1, it makes TestNG skip the remaining invocations after one of them fails. Finally, put cleanup in @AfterSuite, @AfterTest, @AfterClass, and @AfterMethod methods, with alwaysRun = true where the cleanup must happen even after a failure.

14. Explain the use of `ITestContext` and `ITestResult` interfaces in TestNG. How can you retrieve information about the test execution context and results?

ITestContext and ITestResult are key interfaces in TestNG providing access to test execution details. ITestContext describes one <test> run: its name, start/end dates, the parameters defined in testng.xml, the passed, failed, and skipped results, and the ISuite it belongs to. TestNG injects an ITestContext into any @Before, @After, or @Test method that declares it as a parameter. ITestResult provides information about a specific test method's execution, including its status (SUCCESS, FAILURE, SKIP), start/end times, any exception thrown, and the test method's name. ITestResult is typically accessed in @AfterMethod (and @BeforeMethod) methods through injection, in listeners such as ITestListener or the afterInvocation method of IInvokedMethodListener, or via Reporter.getCurrentTestResult().

To retrieve information, you would typically use the getter methods provided by these interfaces. For example, ITestContext.getName() returns the test name, and ITestResult.getStatus() returns the test's status as an integer constant (e.g., ITestResult.SUCCESS). ITestResult.getThrowable() gets any exceptions that occurred. You can also get parameters passed to tests using ITestContext.getCurrentXmlTest().getAllParameters() or ITestResult.getParameters(). The ITestContext allows retrieving the suite object via ITestContext.getSuite(). These methods offer a robust way to monitor and react to test execution events within your TestNG tests.
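Both interfaces can be obtained through parameter injection, as in the sketch below. The class and method names are illustrative only.

```java
import java.util.ArrayList;
import java.util.List;
import org.testng.Assert;
import org.testng.ITestContext;
import org.testng.ITestResult;
import org.testng.annotations.AfterMethod;
import org.testng.annotations.BeforeClass;
import org.testng.annotations.Test;

public class ContextAndResultTest {

    // One summary line per finished test method.
    static final List<String> SUMMARY = new ArrayList<>();

    // TestNG injects the ITestContext of the enclosing <test> into configuration methods.
    @BeforeClass
    public void describeContext(ITestContext context) {
        System.out.println("Test: " + context.getName()
                + ", suite: " + context.getSuite().getName());
    }

    @Test
    public void passingTest() {
        Assert.assertTrue(true);
    }

    // The injected ITestResult describes the test method that has just finished.
    @AfterMethod
    public void recordResult(ITestResult result) {
        String status = result.getStatus() == ITestResult.SUCCESS ? "PASS" : "NOT PASSED";
        SUMMARY.add(result.getMethod().getMethodName() + ": " + status);
    }
}
```

An @AfterMethod with an ITestResult parameter is a common place to attach logs or screenshots to failed tests without writing a separate listener.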

15. How can you integrate TestNG with a build tool like Maven or Gradle? What configurations are necessary to run TestNG tests as part of the build process?

To integrate TestNG with Maven, you need to add the TestNG dependency to your pom.xml file:

<dependency>
    <groupId>org.testng</groupId>
    <artifactId>testng</artifactId>
    <version>7.x.x</version>
    <scope>test</scope>
</dependency>

Then, you can configure the maven-surefire-plugin to execute TestNG tests. This involves specifying the location of your TestNG suite XML file (testng.xml) or using annotations. A basic configuration within the <plugins> section of your pom.xml would look like this:

<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-surefire-plugin</artifactId>
    <version>3.0.0</version>
    <configuration>
        <suiteXmlFiles>
            <suiteXmlFile>testng.xml</suiteXmlFile>
        </suiteXmlFiles>
    </configuration>
</plugin>

For Gradle, you would add the TestNG dependency to your build.gradle file:

dependencies {
    testImplementation 'org.testng:testng:7.x.x'
}

Then, configure the test task to use TestNG. You can specify the TestNG XML suite file or let Gradle auto-discover tests based on annotations. Here is an example snippet:

tasks.withType(Test) {
    useTestNG() {
        suites 'src/test/resources/testng.xml' // Optional: to define the suite file
    }
}

With these configurations, running mvn test or gradle test will execute your TestNG tests as part of the build process.

16. Describe how you would use TestNG to perform data-driven testing. What are the different ways to provide test data to your tests?

TestNG facilitates data-driven testing by allowing you to pass different sets of data to the same test method. You can achieve this primarily through the @DataProvider annotation. You create a method annotated with @DataProvider, which returns a two-dimensional array (Object[][]). Each row in the array represents a set of data that will be passed to the test method. The test method then declares parameters that correspond to the columns in the data provider array.

Different ways to provide data include:

- Hardcoding data within the @DataProvider method: Suitable for small datasets.

- Reading data from external sources: This includes reading from CSV files, Excel files, databases, or other data sources. You would use Java code within the @DataProvider method to read and format the data into the required Object[][] structure.

- Using TestNG's dataProviderClass attribute: You can define the @DataProvider in a separate class and refer to it in your test method using dataProviderClass.
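Reading from an external source could be sketched as follows. To keep the example self-contained, an inline string stands in for the contents of a CSV file (hypothetical data), and the provider returns an Iterator of Object[] rows, which TestNG accepts as an alternative to Object[][].

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import org.testng.Assert;
import org.testng.annotations.DataProvider;
import org.testng.annotations.Test;

public class CsvDataProviderTest {

    // Inline stand-in for the contents of an external CSV file (hypothetical data).
    static final String CSV = "2,3,5\n10,-4,6\n0,0,0";

    // Converts each CSV line into one Object[] row: {a, b, expectedSum}.
    @DataProvider(name = "sums")
    public Iterator<Object[]> sums() {
        List<Object[]> rows = new ArrayList<>();
        for (String line : CSV.split("\n")) {
            String[] cells = line.split(",");
            rows.add(new Object[] {
                Integer.parseInt(cells[0]),
                Integer.parseInt(cells[1]),
                Integer.parseInt(cells[2])
            });
        }
        return rows.iterator();
    }

    // Invoked once per row supplied by the data provider.
    @Test(dataProvider = "sums")
    public void addsCorrectly(int a, int b, int expectedSum) {
        Assert.assertEquals(a + b, expectedSum);
    }
}
```

With a real file, only the source of the lines changes, for example by reading them with java.nio.file.Files.readAllLines; the conversion into rows stays the same.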

17. Explain the purpose of the `invocationCount` and `threadPoolSize` attributes in the `@Test` annotation. How can they be used to control test execution?

The invocationCount attribute in TestNG's @Test annotation specifies how many times the test method should be executed. This is useful for stress testing or performance testing scenarios where you want to run the same test repeatedly to observe its behavior under load.

The threadPoolSize attribute defines the number of threads that TestNG will use to execute the test method concurrently. It only has an effect when invocationCount is also specified. When invocationCount is greater than 1, setting threadPoolSize allows you to run multiple invocations of the test in parallel, effectively distributing the load across multiple threads. For example:

    @Test(invocationCount = 10, threadPoolSize = 5)
    public void myTest() {
        // Test logic
    }

This configuration would run myTest() 10 times using 5 threads concurrently. This allows you to control how many concurrent executions of the test occur.
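As a rough mental model (not TestNG's actual implementation), this resembles submitting 10 tasks to a fixed pool of 5 threads. The JDK-only sketch below makes that analogy concrete. `RepeatedInvocationSketch` is a hypothetical name, and the `AtomicInteger` illustrates why any state shared between concurrent invocations has to be thread-safe.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// JDK-only analogy for invocationCount = 10, threadPoolSize = 5.
final class RepeatedInvocationSketch {
    private RepeatedInvocationSketch() {}

    // Runs the task 'invocations' times on 'threads' worker threads
    // and returns how many runs completed without throwing.
    static int run(int invocations, int threads, Runnable task) {
        AtomicInteger completed = new AtomicInteger(); // safe to update from several threads
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < invocations; i++) {
            pool.execute(() -> {
                task.run();
                completed.incrementAndGet();
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
                pool.shutdownNow();
                throw new IllegalStateException("invocations did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return completed.get();
    }
}
```

A plain `int` counter in place of the `AtomicInteger` could lose updates under the same load, which is the kind of defect repeated concurrent invocations tend to expose.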

18. How can you conditionally enable or disable tests in TestNG? What attributes or mechanisms are available for controlling test execution based on certain conditions?

TestNG offers several ways to conditionally enable or disable tests. The most common is using the enabled attribute within the @Test annotation. Setting @Test(enabled = false) will disable a test, preventing it from running. Because annotation attributes must be compile-time constants, enabled cannot be bound to a value computed at runtime. For runtime decisions, either throw org.testng.SkipException from the test or a configuration method, or implement IAnnotationTransformer and call annotation.setEnabled(false) for the tests you want to switch off.

Another approach relies on configuration methods: if a @Before method (e.g., @BeforeMethod) fails, TestNG will skip the associated test methods. You can also use groups to include or exclude tests based on group membership, assigning groups with @Test(groups = { "groupName" }) and selecting them in the testng.xml configuration file. The <include> and <exclude> tags inside <groups><run> control which groups are executed.

19. Describe how you would use TestNG to perform exception testing. How can you verify that a test method throws a specific exception?

TestNG provides a mechanism to test if a method throws an expected exception using the expectedExceptions attribute in the @Test annotation. You specify the exception class you anticipate to be thrown. If the method throws the specified exception (or one of its subclasses), the test passes; otherwise, it fails.

For example:

    import org.testng.annotations.Test;

    public class ExceptionTest {

        @Test(expectedExceptions = ArithmeticException.class)
        public void testDivisionByZero() {
            int result = 10 / 0; // This should throw an ArithmeticException
        }

        @Test(expectedExceptions = {IllegalArgumentException.class, NullPointerException.class})
        public void testMultipleExceptions() {
            String str = null;
            str.length(); // This will throw NullPointerException
        }

        @Test(expectedExceptions = Exception.class)
        public void testAnyException() throws Exception {
            throw new Exception("Generic Exception");
        }
    }

In this example, testDivisionByZero is expected to throw an ArithmeticException. If it does, the test passes; otherwise, it fails. In testMultipleExceptions, the test passes if either an IllegalArgumentException or a NullPointerException is thrown. testAnyException passes when any Exception (or subclass) is thrown, which is usually too broad to be a meaningful check.
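The matching rule can be pictured as an `instanceof` check against each listed class. The following JDK-only sketch is a simplified model of that rule, not TestNG's source. `ExpectedExceptionSketch` and `matches` are hypothetical names.

```java
// Simplified model of how a thrown exception is compared with expectedExceptions.
final class ExpectedExceptionSketch {
    private ExpectedExceptionSketch() {}

    // Returns true when 'thrown' is an instance of any expected class,
    // which includes subclasses of the expected types.
    static boolean matches(Throwable thrown, Class<?>... expected) {
        for (Class<?> type : expected) {
            if (type.isInstance(thrown)) {
                return true;
            }
        }
        return false;
    }
}
```

Under this model a `NumberFormatException` satisfies an expectation of `IllegalArgumentException`, because it is a subclass. When the exception type alone is too coarse, TestNG's `expectedExceptionsMessageRegExp` attribute can additionally constrain the message.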

20. Explain how to use and configure the `suiteXmlFile` parameter in TestNG. How do you specify which test suites to run?

suiteXmlFile is not a TestNG setting in its own right; it is the element of the Maven Surefire plugin's <suiteXmlFiles> configuration that gives the location of a TestNG suite XML file to execute. More generally, you specify which test suites to run by passing the paths of one or more suite XML files, either on the command line or through a build tool like Maven or Gradle.

For example, using the command line, you might run: java org.testng.TestNG testng1.xml testng2.xml. In Maven's pom.xml, you would configure the maven-surefire-plugin like this:

    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <version>3.0.0</version>
        <configuration>
            <suiteXmlFiles>
                <suiteXmlFile>testng1.xml</suiteXmlFile>
                <suiteXmlFile>testng2.xml</suiteXmlFile>
            </suiteXmlFiles>
        </configuration>
    </plugin>

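This configuration tells Surefire to hand testng1.xml and testng2.xml to TestNG, which then runs the suites they define. The list can also be supplied at build time through the surefire.suiteXmlFiles user property, for example mvn test -Dsurefire.suiteXmlFiles=testng1.xml.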

21. How do you pass parameters from the command line to your TestNG tests? Provide an example.

TestNG provides several ways to pass parameters from the command line. One common approach is to declare a <parameter> in the testng.xml file, bind it to a test method with @Parameters, and supply a Java system property of the same name with -D when launching the tests.

For example, in testng.xml:

    <suite name="MyTestSuite">
        <test name="MyTest">
            <parameter name="browser" value="chrome"/>
            <classes>
                <class name="MyTestClass"/>
            </classes>
        </test>
    </suite>

In the test class:

    import org.testng.annotations.Parameters;
    import org.testng.annotations.Test;

    public class MyTestClass {

        @Test
        @Parameters("browser")
        public void testMethod(String browser) {
            System.out.println("Browser: " + browser);
        }
    }

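From the command line, supply a system property with the same name as the parameter (on Windows, use ; instead of : as the classpath separator):

    java -Dbrowser=firefox -cp "path/to/testng.jar:path/to/your/classes" org.testng.TestNG testng.xml

The intent is for testMethod to receive "firefox" as the browser value. Whether the system property overrides the value declared in testng.xml, or is only used when the XML does not define the parameter, depends on the TestNG version, so verify the precedence on the version you run. A version-independent alternative is to read the value inside the test with System.getProperty("browser").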
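Reading a `-D` value directly might look like the following minimal, JDK-only sketch. `RunConfig` and `resolve` are hypothetical names, and `browser` is simply the parameter name used in the example above.

```java
// Hypothetical helper that resolves a run-time setting from a -D system property.
final class RunConfig {
    private RunConfig() {}

    // Returns the trimmed value of -Dname=... when present and non-empty,
    // otherwise the supplied fallback.
    static String resolve(String name, String fallback) {
        String value = System.getProperty(name);
        if (value == null || value.trim().isEmpty()) {
            return fallback;
        }
        return value.trim();
    }
}
```

A test could call `RunConfig.resolve("browser", "chrome")` so that `java -Dbrowser=firefox ...` switches the browser while a plain run keeps the default.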

22. What is the use of the `alwaysRun` attribute in `@Before` and `@After` annotations? Explain with a scenario.

The alwaysRun attribute in @Before and @After annotations controls whether a configuration method still executes when TestNG would otherwise skip it. By default, if a configuration method fails, the test methods and configuration methods that depend on it are skipped. On @After methods, alwaysRun = true makes the method run even if previously invoked methods failed or were skipped. On @Before methods (other than @BeforeGroups), it makes the method run regardless of which groups it belongs to.

Consider a scenario where you have a @BeforeSuite method that sets up a database connection. If this setup fails, you might still want to execute a @AfterSuite method with alwaysRun = true to attempt to close the connection and clean up resources, preventing potential resource leaks or leaving the system in an inconsistent state. Similarly, for @AfterMethod, even if a test fails, you might want to always execute code to take a screenshot for debugging purposes. Using alwaysRun = true ensures that these cleanup or diagnostic actions are performed reliably.

TestNG interview questions for experienced

1. How does TestNG's dependency injection mechanism work, and what are its advantages in a complex test suite?

TestNG's dependency injection allows you to pass parameters to test methods directly from the testng.xml file or using data providers. This is achieved using the @Parameters annotation to specify parameters from testng.xml or the @DataProvider annotation to provide data dynamically. TestNG then injects these values into the test method's arguments when the method is executed. TestNG can also inject certain framework objects, such as ITestContext, ITestResult, or java.lang.reflect.Method, into test and configuration methods that declare them as parameters.

The primary advantage in a complex test suite is increased modularity and reusability. Instead of hardcoding values within test methods, you externalize them. This allows you to run the same test with different datasets or configurations easily. Consider these benefits:

- Data-Driven Testing: Run a test with multiple sets of input data without duplicating the test code.

- Configuration Management: Easily switch between different environments (e.g., development, staging, production) by simply modifying the parameters in the testng.xml file.

- Reduced Code Duplication: You can reuse the same test logic with different parameters, which leads to cleaner and more maintainable code.

- Parameter Reuse: Data providers can be used across multiple tests.

2. Explain the concept of 'grouping' in TestNG and how you can leverage it to manage and execute specific test scenarios efficiently.

TestNG groups allow you to categorize test methods. You can then run specific groups, excluding others, which provides focused test execution. This is particularly useful for running subsets of tests, like smoke tests, regression tests, or tests related to a specific feature.

To use groups, you annotate test methods with the @Test(groups = { "group1", "group2" }) annotation. You can then specify which groups to include or exclude in your testng.xml file or command-line arguments. For example, in testng.xml, you'd use the <groups> tag with <run> and <include> or <exclude> tags. Running testng.xml would then only execute test methods belonging to the included groups.
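The selection rule can be summarized as follows: a method runs if it belongs to at least one included group and to no excluded group, and when no groups are included, every non-excluded method is eligible. The JDK-only sketch below models that rule in simplified form, ignoring regular-expression group names and method-level includes. `GroupFilterSketch` is a hypothetical name and the code is not TestNG's implementation.

```java
import java.util.Set;

// Simplified model of group-based selection from <groups><run>.
final class GroupFilterSketch {
    private GroupFilterSketch() {}

    static boolean shouldRun(Set<String> methodGroups, Set<String> included, Set<String> excluded) {
        // Exclusion wins: any excluded group removes the method.
        for (String group : methodGroups) {
            if (excluded.contains(group)) {
                return false;
            }
        }
        // With no explicit includes, everything not excluded is eligible.
        if (included.isEmpty()) {
            return true;
        }
        // Otherwise the method needs at least one included group.
        for (String group : methodGroups) {
            if (included.contains(group)) {
                return true;
            }
        }
        return false;
    }
}
```

For instance, a method tagged with both smoke and slow would be skipped when smoke is included and slow is excluded.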

3. Describe how you would implement parallel test execution using TestNG, including considerations for thread safety and resource contention.

TestNG facilitates parallel test execution through its parallel attribute in the testng.xml file. We can set parallel to methods, tests, classes, or instances to control the scope of parallelization. For example, <suite name="MyTestSuite" parallel="methods" thread-count="5"> executes methods in parallel using 5 threads. To ensure thread safety, avoid shared mutable state between tests. If shared resources are necessary, employ synchronization mechanisms like synchronized blocks or concurrent collections (ConcurrentHashMap, etc.). Resource contention can be mitigated by careful design to minimize shared resource usage or by using resource pools with appropriate locking or semaphores to limit concurrent access.

To further control execution, use TestNG's @DataProvider to provide test data. Declaring the provider with @DataProvider(parallel = true) lets the data sets run concurrently, with the pool size set by the suite's data-provider-thread-count attribute. You can also use TestNG listeners (IAnnotationTransformer, ISuiteListener, etc.) to configure and monitor test execution in parallel environments. Always review test results for unexpected failures that may indicate thread-safety issues or resource contention problems.
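One common way to avoid shared mutable state is to give each worker thread its own copy of a resource through `ThreadLocal`. The sketch below uses a `StringBuilder` as a stand-in for a heavier per-thread object such as a browser session. `PerThreadResource` is a hypothetical name and only JDK classes are used.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical holder that hands each thread its own resource instance.
final class PerThreadResource {
    // Counts how many instances were created across all threads.
    private static final AtomicInteger CREATED = new AtomicInteger();

    // Lazily creates one StringBuilder per thread on first access.
    private static final ThreadLocal<StringBuilder> RESOURCE =
            ThreadLocal.withInitial(() -> {
                CREATED.incrementAndGet();
                return new StringBuilder();
            });

    private PerThreadResource() {}

    // Returns the calling thread's own instance.
    static StringBuilder get() {
        return RESOURCE.get();
    }

    // Drops the calling thread's instance so pooled threads do not keep stale state.
    static void release() {
        RESOURCE.remove();
    }

    static int createdCount() {
        return CREATED.get();
    }
}
```

In a TestNG suite, `get()` would typically be called from a test or a `@BeforeMethod`, and `release()` from an `@AfterMethod`, so that reused worker threads do not carry state into later tests.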

4. What are TestNG Listeners, and can you provide an example of a scenario where you would use a custom listener?

TestNG Listeners are interfaces that listen to and react to events during the execution of a test suite. They allow you to customize TestNG's behavior. Common listeners include ITestListener, IAnnotationTransformer, and IReporter. They can be used to log test results, take screenshots on failure, or modify test behavior at runtime.

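For example, consider a scenario where you need to automatically retry failed tests a limited number of times. A retry analyzer has to be attached to a test before it runs, so assigning one inside ITestListener.onTestFailure is too late to affect the failed invocation. Instead, implement IRetryAnalyzer for the retry decision and use an IAnnotationTransformer listener to attach it to every @Test method. This can improve test stability by handling transient failures without manual intervention. Note that an IAnnotationTransformer must be registered in testng.xml (via <listeners>) or on the command line, not through the @Listeners annotation. Here's a simple code illustration:

    // RetryListener.java
    import java.lang.reflect.Constructor;
    import java.lang.reflect.Method;
    import org.testng.IAnnotationTransformer;
    import org.testng.annotations.ITestAnnotation;

    // Attaches RetryAnalyzer to every @Test method before the run starts.
    public class RetryListener implements IAnnotationTransformer {
        @Override
        public void transform(ITestAnnotation annotation, Class testClass,
                              Constructor testConstructor, Method testMethod) {
            annotation.setRetryAnalyzer(RetryAnalyzer.class);
        }
    }

    // RetryAnalyzer.java
    import org.testng.IRetryAnalyzer;
    import org.testng.ITestResult;

    // Allows a failed test to be re-run up to three times.
    public class RetryAnalyzer implements IRetryAnalyzer {
        private static final int MAX_RETRY_COUNT = 3;
        private int retryCount = 0;

        @Override
        public boolean retry(ITestResult result) {
            if (retryCount < MAX_RETRY_COUNT) {
                retryCount++;
                return true; // ask TestNG to run the test again
            }
            return false; // give up and report the failure
        }
    }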

5. How does TestNG handle exceptions during test execution, and what options do you have for controlling exception behavior?

TestNG provides several ways to handle exceptions during test execution. By default, if a test method throws an exception, TestNG marks the test as failed. You can control this behavior using the @Test annotation's expectedExceptions attribute, which specifies the exceptions a test is expected to throw. If the specified exception (or any of its subclasses) is thrown, the test will pass; otherwise, it will fail. For example: @Test(expectedExceptions = ArithmeticException.class).

Alternatively, you can handle exceptions within the test method itself using try-catch blocks. This gives you more granular control, allowing you to perform specific actions based on the type of exception caught. The alwaysRun attribute in @Before and @After annotations can also be used to ensure that setup/teardown methods are executed even if exceptions occur in the preceding test methods. Furthermore, you can use ITestListener to implement custom exception handling logic globally for all tests.
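The try-catch approach is often wrapped in a small helper that returns the caught exception so the test can inspect it. The following is a JDK-only sketch with hypothetical names (`ThrowableCapture`, `capture`); TestNG's own `Assert.expectThrows` serves a similar purpose.

```java
// Hypothetical helper that captures an expected exception for further checks.
final class ThrowableCapture {
    private ThrowableCapture() {}

    // Runs the action and returns the thrown exception if it has the expected type.
    // Throws AssertionError when nothing is thrown or the type differs.
    static <T extends Throwable> T capture(Class<T> expected, Runnable action) {
        try {
            action.run();
        } catch (Throwable thrown) {
            if (expected.isInstance(thrown)) {
                return expected.cast(thrown);
            }
            throw new AssertionError("Expected " + expected.getName()
                    + " but got " + thrown.getClass().getName(), thrown);
        }
        throw new AssertionError("Expected " + expected.getName() + " but nothing was thrown");
    }
}
```

Returning the exception lets a test assert on its message or cause, which the annotation-only style cannot do as precisely. Because the sketch takes a `Runnable`, the action cannot throw checked exceptions directly.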

6. Explain the differences between using `dataProvider` in the same class vs. a separate class, highlighting the advantages and disadvantages.

When using dataProvider within the same class, the advantage is simplicity and locality. The data provider is easily accessible and maintainable alongside the test methods it serves. A disadvantage is potential code bloat if the data provider logic becomes extensive, and reduced reusability across different test classes. This approach is fine for simple test setups or when data providers are highly specific to the tests in that class.