Hiring skilled MapReduce developers is crucial for organizations dealing with big data processing and analysis. Effective interviews help identify candidates who possess not only theoretical knowledge but also practical experience in implementing MapReduce paradigms.

This blog post provides a comprehensive list of MapReduce interview questions, ranging from basic to advanced levels. We've categorized the questions to help you assess candidates at different experience levels, covering technical definitions, optimization techniques, and real-world scenarios.

By using these questions, you can effectively evaluate a candidate's MapReduce expertise and problem-solving abilities. Consider complementing your interview process with a MapReduce skills test to get a well-rounded assessment of potential hires.

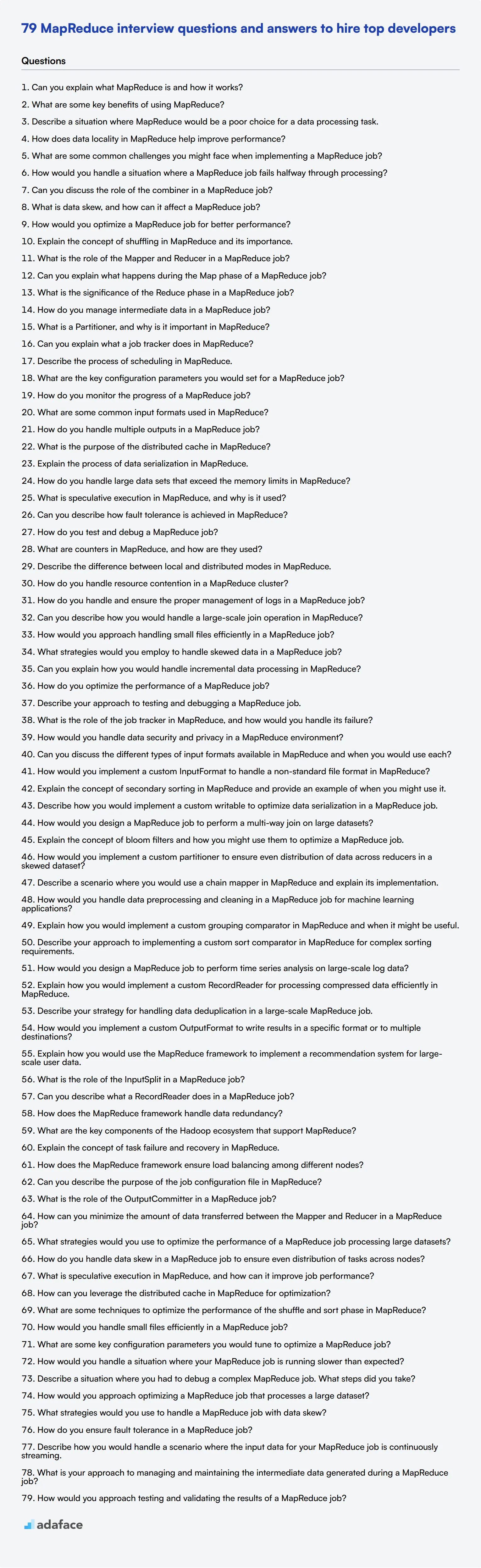

10 basic MapReduce interview questions and answers to assess applicants

To figure out if your applicants have a solid grasp of MapReduce concepts, use these 10 essential interview questions. They'll help you evaluate whether candidates understand key principles and can apply them to real-world scenarios.

1. Can you explain what MapReduce is and how it works?

MapReduce is a programming model used for processing large data sets with a distributed algorithm on a cluster. It simplifies data processing by breaking it into two main functions: the Map function, which processes and filters data, and the Reduce function, which aggregates the results.

An ideal candidate should be able to explain the flow of data through the Map and Reduce stages, including the splitting of data into chunks, mapping tasks to these chunks, shuffling and sorting, and finally reducing the output. Look for clarity in their explanation and their ability to relate it to practical applications.
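The flow described above can be sketched in a few lines of plain Python. This is a single-process simulation for discussion purposes, not Hadoop code; the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_word_count`) are illustrative assumptions.

```python
from collections import defaultdict

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle and sort: group values by key, with keys in sorted order."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))

def reduce_phase(key, values):
    """Reduce: aggregate all values that share a key."""
    return key, sum(values)

def run_word_count(lines):
    # Each line stands in for one input split handled by one map task.
    intermediate = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())

counts = run_word_count(["the quick brown fox", "the lazy dog", "The fox"])
print(counts["the"], counts["fox"])  # prints: 3 2
```

A candidate who can walk through a toy version like this, and then explain what changes when the three steps run on different machines, usually understands the model well.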

2. What are some key benefits of using MapReduce?

MapReduce is highly scalable and can process petabytes of data efficiently. It distributes the computational load across many machines, making it fault-tolerant and reliable. Additionally, it abstracts the complexity of parallel programming, allowing developers to focus on the actual data processing logic.

Candidates should mention benefits like scalability, fault tolerance, and abstraction of complex processes. They should also be able to provide examples of scenarios where MapReduce is particularly advantageous, such as log analysis or large-scale data mining.

3. Describe a situation where MapReduce would be a poor choice for a data processing task.

MapReduce is not ideal for low-latency, real-time data processing tasks or tasks that require extensive iterative processing, such as machine learning algorithms. Its batch processing nature can introduce significant delays.

Look for answers that highlight the limitations of MapReduce, such as its unsuitability for real-time analytics, iterative computations, or tasks requiring complex inter-task communication. An ideal response should also suggest alternative technologies, like Apache Spark, for these scenarios.

4. How does data locality in MapReduce help improve performance?

Data locality refers to the concept of moving computation closer to where the data resides, rather than transferring large volumes of data across the network. This minimizes network congestion and reduces the time taken to process data.

Candidates should explain that MapReduce optimizes performance by executing map tasks on the same nodes where the data is stored. This reduces data transfer overhead and leads to faster processing times. Strong responses will include examples of how data locality has positively impacted performance in past projects.

5. What are some common challenges you might face when implementing a MapReduce job?

Common challenges include data skew, where some nodes end up processing significantly more data than others, leading to inefficiencies. Debugging distributed jobs can also be complex, and managing the overhead of shuffling and sorting data between map and reduce stages can be resource-intensive.

Candidates should mention these challenges and suggest possible solutions, like partitioning the data effectively, using combiners to reduce the amount of data shuffled, and employing monitoring tools to identify bottlenecks. They should demonstrate a problem-solving approach and experience in overcoming these hurdles.

6. How would you handle a situation where a MapReduce job fails halfway through processing?

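First, I would check the logs to identify the root cause of the failure. Common issues include data node failures, insufficient memory, or coding errors. Based on the diagnosis, I would take appropriate action, such as reallocating resources, fixing the code, or rerunning the job, ideally from the last successfully completed stage of a multi-job pipeline. The framework already retries individual failed tasks, so a job-level failure usually points to a persistent problem rather than a transient one.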

The candidate should explain their debugging process and mention any tools or practices they use for monitoring and error handling. Look for a structured approach to problem-solving and their ability to learn from failures to prevent future issues.

7. Can you discuss the role of the combiner in a MapReduce job?

A combiner acts as a mini-reducer that performs local reduction at the map node before the data is sent to the reducer. This helps minimize the volume of data transferred across the network, improving overall efficiency and performance.

Candidates should explain how combiners reduce data transfer and provide examples of when and how to use them effectively. Look for an understanding of the limitations and the correct scenarios for applying combiners.
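To make the effect of a combiner concrete, here is a small pure-Python sketch that counts how many records would cross the network with and without local reduction. It is a simulation under simplifying assumptions (each inner list is one map task's input; the helper names are hypothetical), not the Hadoop combiner API.

```python
from collections import Counter

def map_task(lines):
    """Emit one (word, 1) pair per word, as a plain mapper would."""
    return [(word, 1) for line in lines for word in line.split()]

def combine(pairs):
    """Local reduction on the map side: sum counts per word before the shuffle."""
    local = Counter()
    for word, count in pairs:
        local[word] += count
    return sorted(local.items())

def reduce_all(pair_lists):
    """Final reduce over the output of every map task."""
    total = Counter()
    for pairs in pair_lists:
        for word, count in pairs:
            total[word] += count
    return dict(total)

splits = [["a b a a", "b a"], ["a c", "c c a"]]
raw = [map_task(split) for split in splits]
combined = [combine(pairs) for pairs in raw]

records_without = sum(len(pairs) for pairs in raw)       # 11 records shuffled
records_with = sum(len(pairs) for pairs in combined)     # 4 records shuffled
```

The final result is identical in both cases because summation is commutative and associative. A good follow-up question is why the same shortcut does not work for an average unless the mapper emits (sum, count) pairs.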

8. What is data skew, and how can it affect a MapReduce job?

Data skew occurs when the data distribution across the nodes is uneven, causing some nodes to handle significantly more data than others. This can lead to inefficiencies and longer processing times as the overloaded nodes become bottlenecks.

Candidates should understand the impact of data skew and suggest strategies to mitigate it, such as using custom partitioners, optimizing data distribution, or pre-processing the data to balance the load. Look for practical experience in dealing with data skew and an ability to implement effective solutions.

9. How would you optimize a MapReduce job for better performance?

To optimize a MapReduce job, I would focus on data locality, efficient data partitioning, and minimizing data transfer. Using combiners, tuning the number of map and reduce tasks, and leveraging appropriate file formats (like Parquet or Avro) can also enhance performance.

Candidates should discuss various optimization techniques and provide examples of how they've applied them in past projects. Look for a comprehensive understanding of performance bottlenecks and a proactive approach to optimization.

10. Explain the concept of shuffling in MapReduce and its importance.

Shuffling is the process of transferring data from the map tasks to the reduce tasks based on the intermediate keys. It's a critical phase that involves sorting and merging data to ensure that each reducer receives the correct set of intermediate data.

Candidates should explain the importance of shuffling in aggregating and sorting data for the reduce phase. Look for an understanding of how shuffling affects performance and how to manage its overhead effectively. Experience with shuffling-related issues and their solutions is a plus.
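As a rough illustration of what the shuffle does, the sketch below routes map output to reducers by hashing the key, then sorts and groups each reducer's input. It is a single-process simulation; `zlib.crc32` is only a stand-in for a stable hash, and the function names are assumptions made for this example.

```python
import zlib

def partition(key, num_reducers):
    """Pick a reducer for a key; crc32 is a stand-in for a stable hash function."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers

def shuffle(map_outputs, num_reducers):
    """Route every (key, value) pair to one reducer, then sort and group by key."""
    buckets = [[] for _ in range(num_reducers)]
    for pairs in map_outputs:
        for key, value in pairs:
            buckets[partition(key, num_reducers)].append((key, value))
    reducer_inputs = []
    for bucket in buckets:
        grouped = {}
        # Sorting by key is what lets each reducer see all values for a key together.
        for key, value in sorted(bucket, key=lambda kv: kv[0]):
            grouped.setdefault(key, []).append(value)
        reducer_inputs.append(grouped)
    return reducer_inputs

map_outputs = [[("cat", 1), ("dog", 1)], [("cat", 1), ("emu", 1), ("dog", 1)]]
reducer_inputs = shuffle(map_outputs, num_reducers=2)
```

The property worth probing in an interview is that every occurrence of a key ends up at exactly one reducer, which is also why a single very frequent key can overload that reducer.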

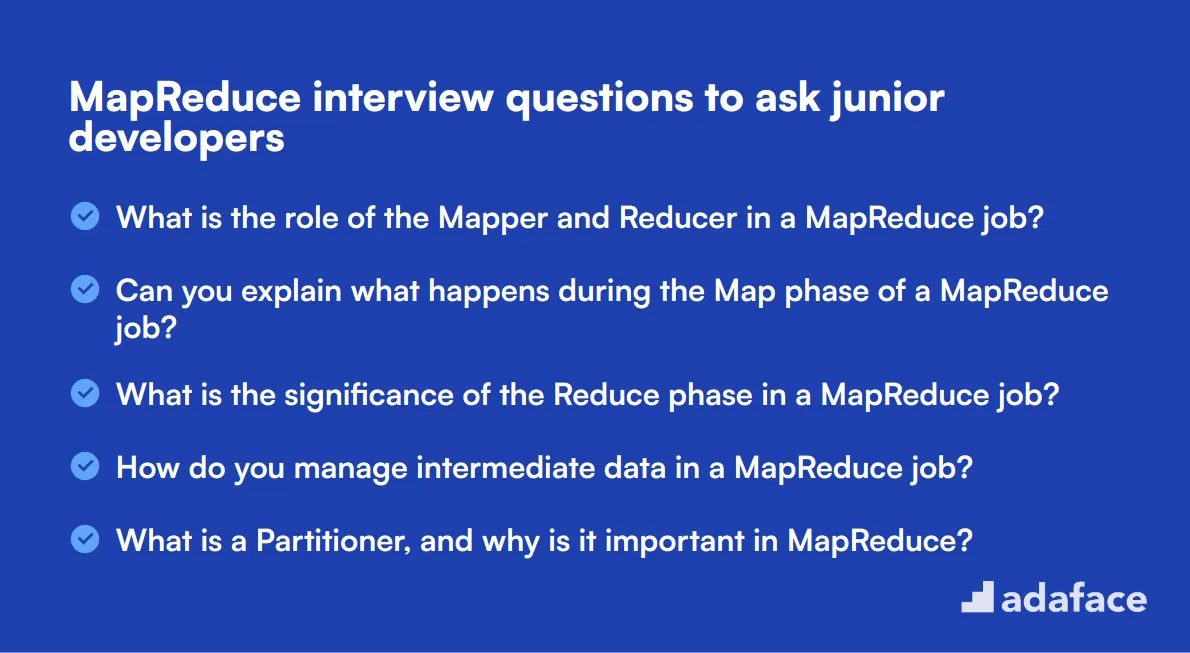

20 MapReduce interview questions to ask junior developers

To identify if candidates have the right foundational knowledge in MapReduce, use these interview questions specifically tailored for junior developers. These questions will help you assess their technical skills and problem-solving abilities, ensuring they are ready for roles such as Hadoop Developer.

- What is the role of the Mapper and Reducer in a MapReduce job?

- Can you explain what happens during the Map phase of a MapReduce job?

- What is the significance of the Reduce phase in a MapReduce job?

- How do you manage intermediate data in a MapReduce job?

- What is a Partitioner, and why is it important in MapReduce?

- Can you explain what a job tracker does in MapReduce?

- Describe the process of scheduling in MapReduce.

- What are the key configuration parameters you would set for a MapReduce job?

- How do you monitor the progress of a MapReduce job?

- What are some common input formats used in MapReduce?

- How do you handle multiple outputs in a MapReduce job?

- What is the purpose of the distributed cache in MapReduce?

- Explain the process of data serialization in MapReduce.

- How do you handle large data sets that exceed the memory limits in MapReduce?

- What is speculative execution in MapReduce, and why is it used?

- Can you describe how fault tolerance is achieved in MapReduce?

- How do you test and debug a MapReduce job?

- What are counters in MapReduce, and how are they used?

- Describe the difference between local and distributed modes in MapReduce.

- How do you handle resource contention in a MapReduce cluster?

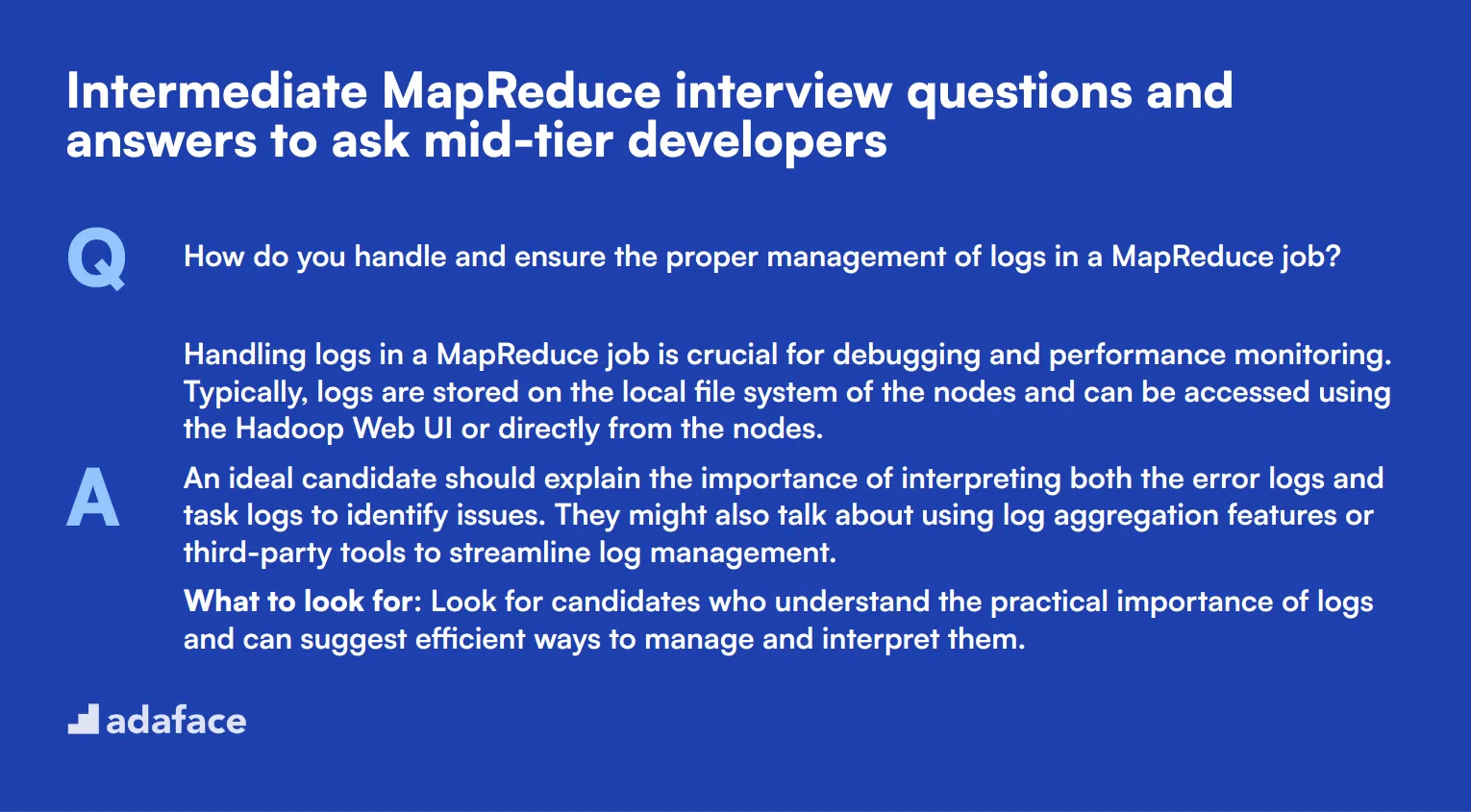

10 intermediate MapReduce interview questions and answers to ask mid-tier developers

To determine whether your applicants have the right skills to tackle intermediate MapReduce tasks, ask them some of these questions. This list is designed to help you gauge the depth of their understanding and their ability to overcome common challenges they might face in a real-world scenario.

1. How do you handle and ensure the proper management of logs in a MapReduce job?

Handling logs in a MapReduce job is crucial for debugging and performance monitoring. Typically, logs are stored on the local file system of the nodes and can be accessed using the Hadoop Web UI or directly from the nodes.

An ideal candidate should explain the importance of interpreting both the error logs and task logs to identify issues. They might also talk about using log aggregation features or third-party tools to streamline log management.

What to look for: Look for candidates who understand the practical importance of logs and can suggest efficient ways to manage and interpret them.

2. Can you describe how you would handle a large-scale join operation in MapReduce?

A large-scale join operation in MapReduce can be managed using different strategies, such as a Reduce-side join, a Map-side join, or a Broadcast (replicated) join, depending on the data size and distribution.

Candidates should explain the pros and cons of each method. For instance, a Reduce-side join is more flexible but less efficient because both datasets are shuffled. A Map-side merge join avoids the shuffle but requires both inputs to be sorted and partitioned identically on the join key. A Broadcast join is usually the fastest, but it requires one dataset to be small enough to fit into memory on every mapper.

What to look for: Look for a detailed understanding of join techniques and scenarios where each would be applicable. Follow up on their experience with specific use cases.
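The difference between a shuffle-based join and an in-memory join is easier to discuss with a toy example. The following is a plain-Python sketch, assuming two hypothetical datasets keyed by user ID and unique keys in the small table; it mimics the data movement of each strategy rather than using any Hadoop API.

```python
from collections import defaultdict

def reduce_side_join(users, orders):
    """Tag each record with its source, group by join key, then pair them up."""
    groups = defaultdict(lambda: {"user": [], "order": []})
    for user_id, name in users:            # map output tagged "user"
        groups[user_id]["user"].append(name)
    for user_id, amount in orders:         # map output tagged "order"
        groups[user_id]["order"].append(amount)
    joined = []
    for user_id in sorted(groups):         # one reduce call per join key
        for name in groups[user_id]["user"]:
            for amount in groups[user_id]["order"]:
                joined.append((user_id, name, amount))
    return joined

def broadcast_join(small_users, orders):
    """Load the small table into memory and join inside the map step, no shuffle."""
    lookup = dict(small_users)             # assumes unique keys in the small table
    return sorted(
        (user_id, lookup[user_id], amount)
        for user_id, amount in orders
        if user_id in lookup
    )

users = [(1, "ana"), (2, "bo")]
orders = [(1, 30), (2, 15), (1, 5), (3, 99)]
```

Both functions produce the same inner-join rows; what differs is that the first moves every record through a grouping step, while the second only works when `users` fits in memory.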

3. How would you approach handling small files efficiently in a MapReduce job?

Small files can cause inefficiencies in a MapReduce job due to the overhead of managing many small tasks. A common approach is to use a tool like Hadoop Archive (HAR) or sequence files to combine small files into larger ones.

Candidates might also mention using a custom InputFormat to consolidate small files during the Map phase or leveraging tools like Apache HBase or Hive for better small file management.

What to look for: Look for practical solutions and an understanding of the implications of small files on performance. Candidates should show a proactive approach to overcoming this common issue.

4. What strategies would you employ to handle skewed data in a MapReduce job?

Handling skewed data involves identifying the skew and then applying strategies to balance the load. Techniques include pre-splitting the data, using custom partitioners, or rebalancing data across nodes.

Candidates should discuss how they would identify data skew using metrics and logs, and detail their approach to mitigating it, such as using a combiner or tweaking the partitioning logic.

What to look for: Look for a comprehensive understanding of data skew issues and practical strategies to address them. They should demonstrate familiarity with monitoring tools and metrics.

5. Can you explain how you would handle incremental data processing in MapReduce?

Incremental data processing involves processing only the new or updated data since the last job run. Techniques include filtering on timestamps, versioning data, or keeping the data in a store like Apache HBase, which supports low-latency reads and writes of individual records.

An ideal candidate should discuss the importance of efficient data storage and retrieval to ensure only the necessary data is processed. They might also mention the use of tools like Apache Flume or Kafka for data ingestion.

What to look for: Look for an understanding of the importance of incremental processing in big data environments, and practical approaches to implementing it.
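One common way to implement the timestamp technique is a watermark: each run processes only records newer than the last timestamp seen and stores the new high-water mark for the next run. Here is a minimal sketch, assuming records are dictionaries with a hypothetical `ts` field.

```python
def select_new_records(records, last_watermark):
    """Return records newer than the watermark plus the watermark for the next run."""
    fresh = [record for record in records if record["ts"] > last_watermark]
    next_watermark = max((record["ts"] for record in fresh), default=last_watermark)
    return fresh, next_watermark

records = [
    {"id": "a", "ts": 100},
    {"id": "b", "ts": 205},
    {"id": "c", "ts": 310},
]
fresh, watermark = select_new_records(records, last_watermark=200)
```

In practice the watermark has to be persisted somewhere durable, and candidates should be able to discuss what happens with late-arriving records that carry an older timestamp.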

6. How do you optimize the performance of a MapReduce job?

Optimizing a MapReduce job involves several strategies such as tuning configuration parameters (e.g., memory allocation, number of reducers), using combiners, and ensuring data locality.

Candidates should also talk about optimizing the map and reduce functions themselves, potentially through code refactoring or using more efficient data structures. They might also mention using Hadoop’s built-in profiling tools.

What to look for: Look for a methodical approach to performance tuning and experience with specific tools and techniques. Follow up on their experience with real-world performance bottlenecks.

7. Describe your approach to testing and debugging a MapReduce job.

Testing and debugging a MapReduce job typically involves unit testing individual map and reduce functions, using local job runners for small-scale testing, and monitoring logs for errors during job execution.

Candidates should also discuss the use of tools like Apache MRUnit for testing and the Hadoop Web UI for monitoring job progress and debugging. They might mention using sampling techniques to test with subsets of data.

What to look for: Look for a systematic approach to testing and debugging, and familiarity with tools that aid in these processes. Candidates should emphasize the importance of thorough testing to ensure reliability.
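Unit testing the map and reduce functions in isolation can be illustrated without a cluster. The sketch below uses Python's standard `unittest` module on a hypothetical temperature job; the function names and the `station,temperature` input format are assumptions for the example, and the same idea applies to Java mappers tested with a framework of the team's choice.

```python
import unittest

def map_temperature(line):
    """Parse 'station,temperature'; malformed lines produce no output."""
    parts = line.strip().split(",")
    if len(parts) != 2:
        return []
    station, raw_temp = parts
    try:
        return [(station, int(raw_temp))]
    except ValueError:
        return []

def reduce_max(station, temps):
    """Keep the highest temperature seen for one station."""
    return station, max(temps)

class TemperatureJobTest(unittest.TestCase):
    def test_mapper_emits_parsed_pair(self):
        self.assertEqual(map_temperature("oslo,12\n"), [("oslo", 12)])

    def test_mapper_skips_malformed_lines(self):
        self.assertEqual(map_temperature("garbage"), [])
        self.assertEqual(map_temperature("oslo,n/a"), [])

    def test_reducer_takes_maximum(self):
        self.assertEqual(reduce_max("oslo", [3, 12, -4]), ("oslo", 12))
```

Saved as a module, these tests can be run with `python -m unittest`. Testing malformed input explicitly matters because a single bad record can otherwise fail a task repeatedly.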

8. What is the role of the job tracker in MapReduce, and how would you handle its failure?

The job tracker is the master daemon in classic MapReduce (Hadoop 1.x). It is responsible for managing resources, scheduling jobs, and monitoring their progress across the cluster, coordinating between the client, name node, and task trackers.

Because it is a single point of failure, a job tracker failure can disrupt all running jobs. Candidates should discuss high-availability solutions, such as running a standby job tracker or switching to YARN, which splits the job tracker's duties between a cluster-wide ResourceManager and a per-job ApplicationMaster.

What to look for: Look for a clear understanding of the job tracker's role and practical solutions to handle its failure. Experience with high-availability setups in Hadoop would be a plus.

9. How would you handle data security and privacy in a MapReduce environment?

Data security and privacy can be managed through encryption, access control, and auditing. Encrypting data both at rest and in transit, and using Kerberos for authentication are common practices.

Candidates should also mention implementing fine-grained access control using Apache Ranger or Sentry, and monitoring data access and usage through auditing tools.

What to look for: Look for a holistic approach to security that covers encryption, authentication, and auditing. Practical experience with security tools in the Hadoop ecosystem is desirable.

10. Can you discuss the different types of input formats available in MapReduce and when you would use each?

MapReduce supports various input formats like TextInputFormat, KeyValueTextInputFormat, SequenceFileInputFormat, and others. The choice depends on the data source and structure.

For example, TextInputFormat is suitable for plain text files, while SequenceFileInputFormat is ideal for binary data. KeyValueTextInputFormat can be used when each line holds a key and a value separated by a delimiter (a tab by default).

What to look for: Look for an understanding of different input formats and their appropriate use cases. Candidates should demonstrate experience with selecting and implementing the most efficient input format for given data types.

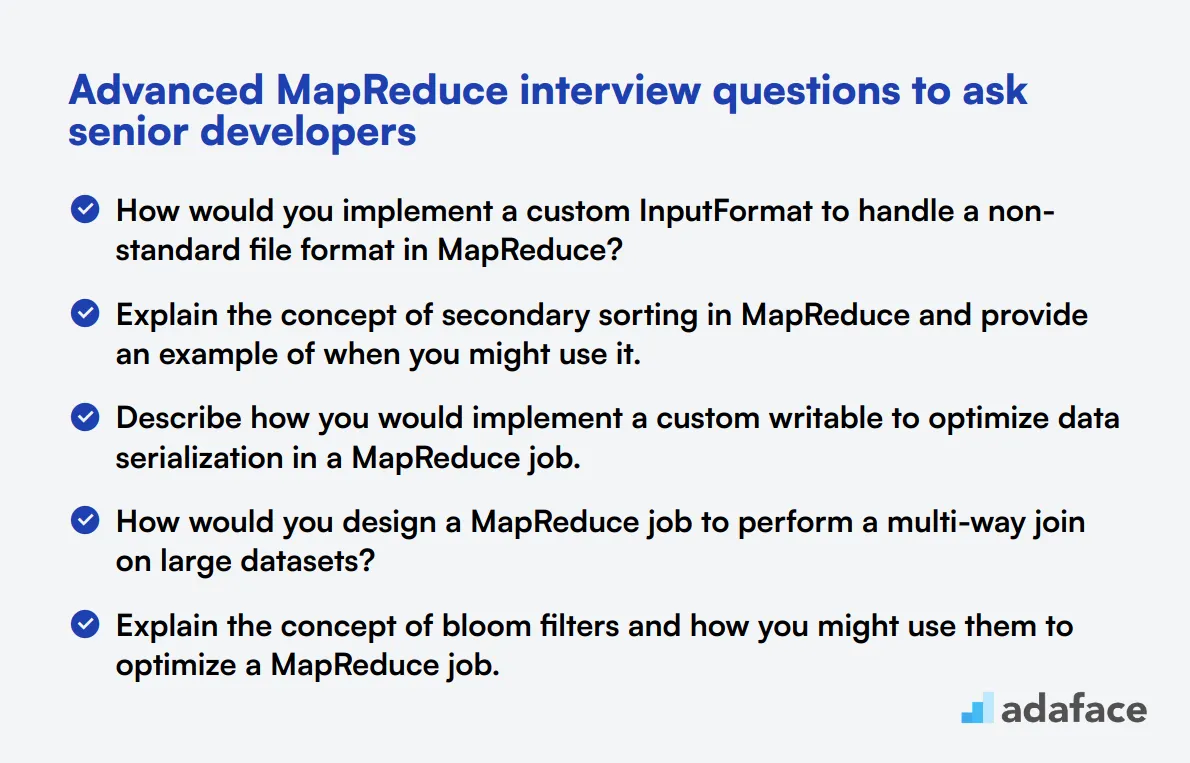

15 advanced MapReduce interview questions to ask senior developers

To assess the advanced MapReduce skills of senior developers, use these 15 in-depth questions. They're designed to probe deep technical knowledge and problem-solving abilities in complex MapReduce scenarios. Use them to identify top-tier candidates who can tackle challenging big data projects.

- How would you implement a custom InputFormat to handle a non-standard file format in MapReduce?

- Explain the concept of secondary sorting in MapReduce and provide an example of when you might use it.

- Describe how you would implement a custom writable to optimize data serialization in a MapReduce job.

- How would you design a MapReduce job to perform a multi-way join on large datasets?

- Explain the concept of bloom filters and how you might use them to optimize a MapReduce job.

- How would you implement a custom partitioner to ensure even distribution of data across reducers in a skewed dataset?

- Describe a scenario where you would use a chain mapper in MapReduce and explain its implementation.

- How would you handle data preprocessing and cleaning in a MapReduce job for machine learning applications?

- Explain how you would implement a custom grouping comparator in MapReduce and when it might be useful.

- Describe your approach to implementing a custom sort comparator in MapReduce for complex sorting requirements.

- How would you design a MapReduce job to perform time series analysis on large-scale log data?

- Explain how you would implement a custom RecordReader for processing compressed data efficiently in MapReduce.

- Describe your strategy for handling data deduplication in a large-scale MapReduce job.

- How would you implement a custom OutputFormat to write results in a specific format or to multiple destinations?

- Explain how you would use the MapReduce framework to implement a recommendation system for large-scale user data.

8 MapReduce interview questions and answers related to technical definitions

To assess whether your candidates grasp the technical definitions critical to MapReduce, ask them some of these interview questions. This list will help you identify candidates who not only understand the core concepts but can also apply them effectively during real-world scenarios.

1. What is the role of the InputSplit in a MapReduce job?

The InputSplit in a MapReduce job defines the chunks of data that a single Mapper will process. It's a logical representation of the data that needs to be processed in parallel.

An ideal candidate should be able to explain how InputSplits contribute to parallel processing and how they help in optimizing the overall performance of a MapReduce job. Look for candidates who understand the balance between the number of InputSplits and the efficiency of data processing.
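The relationship between file size, split size, and the number of map tasks comes down to simple arithmetic. The sketch below is a simplified estimate: the clamping rule follows the usual description of file-based input formats in Hadoop, the function names are hypothetical, and real split counts can differ slightly because the framework treats a small trailing remainder specially.

```python
def compute_split_size(block_size, min_size=1, max_size=2**63 - 1):
    """Clamp the block size between the configured minimum and maximum split size."""
    return max(min_size, min(max_size, block_size))

def estimate_map_tasks(file_size, split_size):
    """Roughly one map task per split (ceiling division on byte counts)."""
    if file_size <= 0:
        return 0
    return (file_size + split_size - 1) // split_size

MB = 1024 * 1024
split = compute_split_size(block_size=128 * MB)
tasks = estimate_map_tasks(1024 * MB, split)   # 1 GiB file, 128 MiB splits -> 8
```

Raising the minimum split size produces fewer, longer map tasks; lowering the maximum produces more, shorter ones. That trade-off is the balance a candidate should be able to reason about.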

2. Can you describe what a RecordReader does in a MapReduce job?

A RecordReader in a MapReduce job converts the data in an InputSplit into key-value pairs that the Mapper can process. It takes raw data as input and breaks it down into a format suitable for processing.

Candidates should mention that the RecordReader is responsible for parsing the raw bytes of a split into records and respecting record boundaries, and that it can affect the performance of the Mapper. Look for responses that show an understanding of how different types of RecordReaders can be used for various data formats.
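A line-oriented reader is the easiest case to picture: it turns the bytes of a split into (byte offset, line) pairs, which is the shape of input a text mapper typically receives. The generator below is an illustrative pure-Python sketch with a hypothetical name; it ignores the real-world complication of a line that straddles two splits.

```python
def read_records(split_bytes, start_offset=0):
    """Yield (byte offset, line text) pairs, one per line in the split."""
    offset = start_offset
    for raw_line in split_bytes.splitlines(keepends=True):
        # The key is the offset of the line start; the value is the line without its newline.
        yield offset, raw_line.rstrip(b"\r\n").decode("utf-8")
        offset += len(raw_line)

records = list(read_records(b"alpha\nbeta\ngamma"))
# records == [(0, "alpha"), (6, "beta"), (11, "gamma")]
```

Asking how the reader should behave when a split boundary falls in the middle of a line is a useful way to test depth here.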

3. How does the MapReduce framework handle data redundancy?

Data redundancy is handled by the storage layer rather than by MapReduce itself: HDFS replicates each block across multiple nodes (three copies by default) to ensure fault tolerance. If one node fails, the data can still be read from another replica, and the framework can reschedule the affected tasks there.

Look for candidates who understand the importance of redundancy in ensuring data availability and reliability. They should be aware of how replication strategies impact the overall performance and fault tolerance of the system.

4. What are the key components of the Hadoop ecosystem that support MapReduce?

Key components of the Hadoop ecosystem that support MapReduce include HDFS (Hadoop Distributed File System) for storage, YARN (Yet Another Resource Negotiator) for resource management, and the MapReduce engine itself for processing.

Candidates should be able to describe how these components work together to facilitate large-scale data processing. Look for a comprehensive understanding of each component's role and how they integrate to support efficient data processing workflows.

5. Explain the concept of task failure and recovery in MapReduce.

In MapReduce, task failure can occur for various reasons, such as hardware failure, software bugs, or network issues. The framework automatically retries a failed task a configurable number of times (four attempts by default in Hadoop) before marking the task, and with it the job, as failed.

Candidates should be able to explain how MapReduce handles task recovery by reassigning the failed task to another node. Look for a clear understanding of the retry mechanism and its importance in ensuring job completion.
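The retry-and-reassign behavior can be sketched as a small loop. This is a simulation with hypothetical names (`run_with_retries`, a `task` callable that takes a node name), not framework code; the default of four attempts mirrors Hadoop's documented default for task attempts and should be treated as a tunable.

```python
def run_with_retries(task, nodes, max_attempts=4):
    """Try a task on successive nodes until it succeeds or attempts run out."""
    errors = []
    for attempt in range(max_attempts):
        node = nodes[attempt % len(nodes)]   # move to a different node on each retry
        try:
            return task(node), attempt + 1
        except RuntimeError as exc:
            errors.append(f"{node}: {exc}")
    raise RuntimeError(f"task failed after {max_attempts} attempts: {errors}")

def flaky_task(node):
    """Hypothetical task that fails on one bad node and succeeds elsewhere."""
    if node == "node-1":
        raise RuntimeError("disk error")
    return f"done on {node}"

result, attempts = run_with_retries(flaky_task, ["node-1", "node-2", "node-3"])
```

A task that fails deterministically, for example on a malformed record, will exhaust its attempts no matter which node runs it, which is why retries alone are not a substitute for input validation.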

6. How does the MapReduce framework ensure load balancing among different nodes?

The MapReduce framework ensures load balancing through the distribution of InputSplits across different nodes. It tries to assign tasks based on data locality to minimize data transfer and optimize performance.

Look for candidates who understand the importance of load balancing in preventing bottlenecks and ensuring efficient resource utilization. They should be able to explain how the framework dynamically adjusts task assignments to maintain balanced workloads.

7. Can you describe the purpose of the job configuration file in MapReduce?

The job configuration file in MapReduce contains all the settings and parameters required to run a MapReduce job, including input and output paths, mapper and reducer classes, and various job-specific parameters.

Candidates should mention that the configuration file is crucial for customizing the behavior of a MapReduce job. Look for an understanding of how different parameters can be adjusted to optimize job performance and meet specific requirements.

8. What is the role of the OutputCommitter in a MapReduce job?

The OutputCommitter in a MapReduce job is responsible for managing the output of the task. It ensures that the task's output is committed once the task successfully completes and handles cleanup in case of task failure.

Ideal candidates should explain how the OutputCommitter contributes to data integrity and fault tolerance. Look for a clear understanding of how it helps in managing the final output and ensuring that only fully successful tasks contribute to the output.
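The commit-or-abort idea can be illustrated with ordinary files: write to a temporary attempt file, then rename it into place only on success. The sketch below is an analogy using the Python standard library, with a hypothetical function name and file naming scheme; it is not the Hadoop OutputCommitter interface.

```python
import os
import tempfile

def commit_task_output(output_dir, filename, lines, fail=False):
    """Write to a temporary attempt file, then rename it into place on success."""
    attempt_path = os.path.join(output_dir, f"_temporary_{filename}")
    final_path = os.path.join(output_dir, filename)
    try:
        with open(attempt_path, "w", encoding="utf-8") as handle:
            for line in lines:
                handle.write(line + "\n")
            if fail:
                raise RuntimeError("simulated task failure")
        os.replace(attempt_path, final_path)   # commit: rename into the final location
        return final_path
    except RuntimeError:
        if os.path.exists(attempt_path):
            os.remove(attempt_path)            # abort: discard the partial output
        return None

with tempfile.TemporaryDirectory() as out_dir:
    committed = commit_task_output(out_dir, "part-00000", ["a\t1"])
    aborted = commit_task_output(out_dir, "part-00001", ["b\t2"], fail=True)
    visible_files = sorted(os.listdir(out_dir))   # only the committed file remains
```

This pattern also explains why speculative or retried attempts do not corrupt results: only one attempt's output is ever promoted to the final location.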

8 MapReduce interview questions and answers related to optimization techniques

To determine whether your applicants have the right skills to optimize MapReduce jobs, ask them some of these MapReduce interview questions about optimization techniques. This will help you identify candidates who can ensure efficient data processing and resource utilization.

1. How can you minimize the amount of data transferred between the Mapper and Reducer in a MapReduce job?

Minimizing data transfer between the Mapper and Reducer can be achieved by using combiners. Combiners perform a local 'reduce' task before the actual reduce phase, summarizing data to reduce the amount of data shuffled across the network.

Another approach is to design your MapReduce logic to emit fewer intermediate key-value pairs. Reducing the size of the intermediate dataset can significantly lower the network overhead.

Ideal answers should mention the use of combiners and efficient key-value pair generation. Look for candidates who can explain the practical benefits and potential trade-offs of these techniques.

2. What strategies would you use to optimize the performance of a MapReduce job processing large datasets?

To optimize the performance of a MapReduce job, you can increase the number of reducers to better distribute the workload. This helps in balancing the load and reduces the execution time.

Another effective strategy is to leverage data locality by ensuring that the data is processed on the node where it resides. This minimizes network latency and improves job performance.

Candidates should also mention tuning key configuration parameters such as memory settings and split sizes. Look for answers that provide a clear strategy for balancing resources and minimizing bottlenecks.

3. How do you handle data skew in a MapReduce job to ensure even distribution of tasks across nodes?

Handling data skew involves using a custom partitioner. A custom partitioner can distribute data more evenly across reducers by assigning partitions based on data characteristics rather than default hash partitioning.

Another approach is to preprocess the data to break down larger, skewed records into smaller chunks. This ensures that no single reducer is overloaded with an excessive amount of data.

Look for candidates who can explain the importance of even data distribution and how custom partitioners or preprocessing can mitigate data skew. They should also discuss the potential impacts of data skew on job performance.
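A skew-aware partitioner can be sketched as follows. Ordinary keys are hashed as usual, while records for known hot keys are rotated across all reducers. This is a plain-Python illustration: `zlib.crc32` stands in for a stable hash, and `record_id` and `hot_keys` are hypothetical inputs that a real job would have to derive from the record and from a prior sampling pass.

```python
import zlib
from collections import Counter

def stable_hash(text):
    """Deterministic hash used in place of a language-specific hashCode."""
    return zlib.crc32(text.encode("utf-8"))

def plain_partition(key, num_reducers):
    """Default-style partitioning: every record for a key goes to one reducer."""
    return stable_hash(key) % num_reducers

def skew_aware_partition(key, record_id, num_reducers, hot_keys):
    """Rotate hot-key records across all reducers instead of pinning them to one."""
    base = stable_hash(key) % num_reducers
    if key in hot_keys:
        return (base + record_id) % num_reducers
    return base

hot = {"popular"}
plain_loads = Counter(plain_partition("popular", 4) for _ in range(900))
spread_loads = Counter(skew_aware_partition("popular", i, 4, hot) for i in range(900))
```

With four reducers, the 900 hot-key records land on a single reducer under plain hashing and are split 225 per reducer under the skew-aware version. The cost is that each reducer now holds only a partial result for the hot key, so a second, much smaller aggregation step is needed to merge them.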

4. What is speculative execution in MapReduce, and how can it improve job performance?

Speculative execution is a feature in MapReduce that launches duplicate attempts of unusually slow tasks on different nodes. The attempt that finishes first is accepted, and the others are killed. This helps complete the job faster by mitigating the impact of slow nodes (stragglers).

It can significantly improve job performance, especially in heterogeneous clusters where some nodes might be slower than others. It acts as a safeguard against unpredictable node performance issues.

Ideal responses should highlight the concept of speculative execution and its benefits in improving job reliability and performance. Candidates should also discuss scenarios where this feature is particularly useful.

5. How can you leverage the distributed cache in MapReduce for optimization?

The distributed cache in MapReduce allows you to cache small to medium-sized files and distribute them to all nodes. This is useful for sharing read-only data such as lookup tables that are needed across multiple nodes.

By using the distributed cache, you can avoid redundant I/O operations and network transfers, thereby improving the efficiency of your MapReduce job.

Candidates should explain how the distributed cache can be used to optimize the job by reducing I/O and network overhead. Look for examples where they successfully used this feature to enhance performance.

6. What are some techniques to optimize the performance of the shuffle and sort phase in MapReduce?

To optimize the shuffle and sort phase, you can increase the number of reduce tasks to better distribute the sorting load across the cluster. This speeds up the sorting process by leveraging parallelism.

Another technique is to tune buffer sizes and memory configurations to ensure that sufficient resources are allocated for the shuffle and sort operations.

Strong candidates should mention the importance of optimizing the shuffle and sort phase and discuss practical tuning techniques. They should also highlight the role of buffer management and memory optimization in improving performance.

7. How would you handle small files efficiently in a MapReduce job?

Handling small files efficiently can be achieved by aggregating them into larger files using tools like Hadoop Archive or SequenceFile. This reduces the overhead associated with processing a large number of small files individually.

Another approach is to use CombineFileInputFormat, which packs multiple small files into a single input split, thereby reducing the number of map tasks and their startup overhead. Unlike archiving, it does not reduce the number of files the NameNode has to track.

Candidates should be able to explain both techniques and their benefits. Look for answers that demonstrate an understanding of the challenges posed by small files and practical solutions to address them.
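The idea of packing many small files into fewer splits can be shown with a greedy grouping routine. This is a conceptual sketch with a hypothetical function name and made-up file sizes; real input formats also take block locations into account when deciding which files to combine.

```python
def pack_small_files(file_sizes, max_split_bytes):
    """Greedily group files into splits whose total size stays within the limit."""
    splits, current, current_size = [], [], 0
    for name, size in file_sizes:
        # Start a new split when adding this file would exceed the limit.
        if current and current_size + size > max_split_bytes:
            splits.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        splits.append(current)
    return splits

files = [("a", 40), ("b", 50), ("c", 30), ("d", 90), ("e", 10)]
splits = pack_small_files(files, max_split_bytes=100)
# splits == [["a", "b"], ["c"], ["d", "e"]]
```

Five files become three map tasks here. On a cluster with millions of small files, the same grouping can cut task counts by orders of magnitude.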

8. What are some key configuration parameters you would tune to optimize a MapReduce job?

Key configuration parameters include the number of mappers and reducers, memory allocation settings, and input split sizes. Tuning these parameters can help balance the workload and ensure efficient resource utilization.

For example, increasing the number of reducers can improve load distribution, while adjusting the memory settings can prevent out-of-memory issues. Setting appropriate input split sizes can also help in optimizing the job execution.

Candidates should mention specific parameters and their impact on job performance. Look for answers that provide a clear rationale for tuning these parameters and examples of how they have done it in the past.

8 situational MapReduce interview questions with answers for hiring top developers

To discover if candidates can handle real-world challenges using MapReduce, consider these 8 situational MapReduce interview questions. These questions will help you gauge their practical knowledge and problem-solving skills, ensuring you hire the best fit for your team.

1. How would you handle a situation where your MapReduce job is running slower than expected?

When a MapReduce job runs slower than expected, the first step is to identify the bottleneck. This could be due to data skew, improper configuration settings, or inefficient algorithms.

Candidates should mention monitoring tools and techniques to diagnose performance issues, such as checking the job tracker (or the ResourceManager UI on YARN), looking at the logs, and using profilers to understand where time is being spent.

An ideal candidate will not only identify the root cause but also suggest solutions like optimizing the algorithm, adjusting configuration parameters, or distributing the data more evenly.

2. Describe a situation where you had to debug a complex MapReduce job. What steps did you take?

Debugging a complex MapReduce job starts with analyzing the logs to identify error messages or exceptions. Understanding the stack trace can help pinpoint the issue.

Candidates should explain the use of counters to track the job's progress and identify any anomalies in the intermediate data. They might also discuss running smaller subsets of data to isolate the problem.

Look for answers that demonstrate a systematic approach, including the use of debugging tools and frameworks, as well as collaboration with team members to resolve complex issues.

3. How would you approach optimizing a MapReduce job that processes a large dataset?

Optimizing a MapReduce job for large datasets involves multiple strategies. First, ensure that data locality is maximized so that tasks run on nodes where the data resides.

Candidates should mention techniques like using combiners to reduce the amount of data shuffled between mappers and reducers, and tuning configuration parameters such as the number of mappers and reducers.

An ideal candidate will also discuss the importance of monitoring and profiling the job to identify bottlenecks, and iteratively refining the job’s logic and configuration for better performance.

4. What strategies would you use to handle a MapReduce job with data skew?

Handling data skew involves ensuring an even distribution of data across all reducers. Candidates might suggest using a custom partitioner to distribute the data more evenly.

Another approach is to preprocess the data to balance the load, such as splitting large records into smaller chunks or merging small records into larger ones before the MapReduce job runs.

Look for candidates who demonstrate an understanding of the impact of data skew and can suggest practical solutions to maintain balanced workloads across the cluster.

5. How do you ensure fault tolerance in a MapReduce job?

Fault tolerance in MapReduce is achieved through mechanisms like re-execution of failed tasks. The framework automatically restarts any failed tasks on different nodes to ensure job completion.

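Candidates should mention the importance of persisting intermediate data so that work can be recovered after partial failures, for example by checkpointing results between the stages of a multi-job pipeline. They might also discuss the role of HDFS replication in keeping input data available when a node fails.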

An ideal response will highlight the candidate’s understanding of the built-in fault-tolerance features of MapReduce and how they can be configured and monitored to ensure job reliability.
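The re-execution behavior described above can be illustrated with a short simulation. This is not how Hadoop implements retries internally. It is a hedged sketch in plain Python where the node names, the `flaky_word_count` task, and the retry limit are hypothetical. The `max_attempts` parameter mirrors the idea behind Hadoop's `mapreduce.map.maxattempts` setting.

```python
def run_with_retries(task, task_input, nodes, max_attempts=4):
    """Re-run a failed task on the next node, up to max_attempts times."""
    failures = []
    for attempt in range(max_attempts):
        node = nodes[attempt % len(nodes)]
        try:
            return task(task_input, node), failures
        except RuntimeError as err:
            # Record the failure and move on to a different node.
            failures.append((node, str(err)))
    raise RuntimeError(f"task failed after {max_attempts} attempts: {failures}")


def flaky_word_count(text, node):
    """Hypothetical task that always fails on one simulated bad node."""
    if node == "node-1":
        raise RuntimeError("simulated disk failure")
    return len(text.split())


result, failures = run_with_retries(
    flaky_word_count, "map reduce shuffle", ["node-1", "node-2", "node-3"]
)
print(result)    # 3
print(failures)  # [('node-1', 'simulated disk failure')]
```

Re-execution is only safe when tasks are deterministic and free of side effects, which is a useful follow-up point to raise with candidates.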

6. Describe how you would handle a scenario where the input data for your MapReduce job is continuously streaming.

Because MapReduce is a batch-oriented model, handling continuous data streams typically involves using a system like Apache Kafka to buffer incoming data and feed it into MapReduce jobs in manageable chunks.

Candidates might discuss setting up periodic batch jobs that process data within specific time windows, ensuring that data is processed efficiently without overwhelming the system.

Look for candidates who understand the challenges of streaming data and can suggest practical solutions, such as integrating big data tools that complement MapReduce for real-time processing.
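The time-window approach can be sketched as follows. This is a simplified, in-memory illustration in plain Python: the event tuples, the 60-second window, and the function names are assumptions, and `count_per_window` merely stands in for the batch job that would run once per closed window.

```python
from collections import defaultdict


def assign_windows(events, window_seconds):
    """Group (timestamp, payload) events into fixed, non-overlapping windows."""
    windows = defaultdict(list)
    for timestamp, payload in events:
        window_start = (timestamp // window_seconds) * window_seconds
        windows[window_start].append(payload)
    return dict(windows)


def count_per_window(windows):
    """Stand-in for the batch job that processes each closed window."""
    return {start: len(payloads) for start, payloads in sorted(windows.items())}


# Hypothetical events with timestamps in seconds.
events = [(0, "a"), (59, "b"), (60, "c"), (125, "d"), (130, "e")]
batches = assign_windows(events, window_seconds=60)

print(count_per_window(batches))  # {0: 2, 60: 1, 120: 2}
```

A good follow-up question is how the candidate would handle events that arrive after their window has already been processed.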

7. What is your approach to managing and maintaining the intermediate data generated during a MapReduce job?

Managing intermediate data involves ensuring it is stored efficiently and can be accessed quickly by reducers. Candidates should mention the use of local disks for temporary storage and the importance of data compression.

They might also discuss techniques like using combiners to reduce the volume of intermediate data and configuring the correct buffer sizes to optimize performance.

An ideal candidate will demonstrate an understanding of various strategies to efficiently manage intermediate data, ensuring the smooth execution of the MapReduce job.
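As a rough illustration of why compressing intermediate data helps, the sketch below serializes simulated map output and compresses it with `zlib`. The `spill` and `load` helpers and the JSON serialization are hypothetical choices for this demo. In Hadoop, the comparable behavior is enabled through configuration such as `mapreduce.map.output.compress` rather than written by hand.

```python
import json
import zlib


def spill(pairs):
    """Serialize map output and return both the raw and compressed bytes."""
    raw = json.dumps(pairs).encode("utf-8")
    return raw, zlib.compress(raw)


def load(compressed):
    """Decompress and deserialize map output on the reducer side."""
    return [tuple(pair) for pair in json.loads(zlib.decompress(compressed))]


# Hypothetical, highly repetitive map output: 1,000 pairs over 10 keys.
pairs = [("word%d" % (i % 10), 1) for i in range(1000)]
raw, compressed = spill(pairs)

print(len(raw), len(compressed))  # compressed size is much smaller here
assert load(compressed) == pairs
```

Compression trades CPU time for less disk and network I/O, so candidates should be able to explain when that trade is worthwhile.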

8. How would you approach testing and validating the results of a MapReduce job?

Testing a MapReduce job involves creating test cases with known input and output to ensure the job produces the expected results. Candidates should mention unit testing for individual map and reduce functions.

They might also discuss the importance of running the job on a sample dataset before scaling up to the full dataset, as well as using end-to-end testing frameworks to validate the entire job pipeline.

Look for candidates who emphasize the importance of thorough testing and validation to catch errors early and ensure the reliability of the MapReduce job.
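Unit testing the map and reduce functions in isolation might look like the following sketch. It uses Python's standard `unittest` module and hypothetical word-count functions (`map_fn`, `reduce_fn`) rather than any specific MapReduce testing framework.

```python
import unittest


def map_fn(line):
    """Emit (word, 1) for each whitespace-separated word, lowercased."""
    return [(word.lower(), 1) for word in line.split()]


def reduce_fn(key, values):
    """Sum all counts emitted for one key."""
    return key, sum(values)


class WordCountTest(unittest.TestCase):
    def test_map_emits_one_pair_per_word(self):
        self.assertEqual(
            map_fn("Map map Reduce"), [("map", 1), ("map", 1), ("reduce", 1)]
        )

    def test_map_handles_empty_line(self):
        self.assertEqual(map_fn(""), [])

    def test_reduce_sums_values(self):
        self.assertEqual(reduce_fn("map", [1, 1, 1]), ("map", 3))


def run_tests():
    """Run the suite programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(WordCountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_tests()
```

Because the functions take plain inputs and return plain outputs, they can be verified with known input and expected output before the job ever touches a cluster.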

Which MapReduce skills should you evaluate during the interview phase?

While a single interview may not uncover every facet of a candidate's abilities, focusing on key MapReduce skills can yield significant insights into their expertise and potential fit for the role. In the context of MapReduce, certain skills are fundamental and serve as reliable indicators of a candidate's capability to handle real-world data processing challenges.

Understanding of Hadoop Ecosystem

A solid understanding of the Hadoop ecosystem, which includes MapReduce, is fundamental for developers working in data processing. MapReduce often operates within Hadoop, and knowledge of the broader ecosystem can greatly enhance a developer's ability to design and implement efficient data processing solutions.

To assess this skill effectively, consider using a series of MCQs that cover various components of the Hadoop ecosystem. For a well-rounded test, try the MapReduce online test from our library.

A targeted interview question can also provide insights into the candidate's practical knowledge of the Hadoop ecosystem. Here's a question you might consider:

Can you describe how MapReduce fits into the Hadoop ecosystem and name at least two other components that interact with MapReduce?

Look for answers that mention HDFS for data storage and YARN for job scheduling, demonstrating the candidate’s holistic understanding of how these components work together with MapReduce.

Optimization Techniques

In MapReduce programming, understanding optimization techniques is key to enhancing performance and reducing costs. Candidates should be familiar with concepts like Combiner functions, Partitioners, and efficient data sorting and shuffling.

To explore the candidate’s proficiency in optimization, pose the following interview question:

What are some effective optimization strategies for a MapReduce job processing large datasets?

Look for detailed strategies such as using Combiners to reduce the amount of data transferred across the network, optimizing the number of reducers, and partitioning techniques to ensure even distribution of data.

Error Handling and Debugging

Proficiency in error handling and debugging is essential for MapReduce developers, as it ensures the reliability and accuracy of data processing jobs. A developer's ability to quickly identify and rectify errors in MapReduce jobs can significantly impact the efficiency and stability of data processing tasks.

Consider asking this question to gauge expertise in error handling and debugging in MapReduce:

Explain how you would identify and resolve a performance bottleneck in a MapReduce job.

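Effective responses should include methods such as reviewing configuration settings, analyzing log files, and using Hadoop's monitoring tools to pinpoint and resolve issues. Depending on the Hadoop version, these tools include the JobTracker and TaskTracker web interfaces (Hadoop 1) or the YARN ResourceManager and JobHistory Server (Hadoop 2 and later).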

3 Tips for Effectively Using MapReduce Interview Questions

Before you start applying what you've learned, here are a few tips to enhance your interviewing process.

1. Implement Skills Tests Before Interviews

Using skills tests before interviews is a smart way to filter candidates effectively. These tests allow you to assess practical skills relevant to the job, providing a clearer picture of a candidate's capabilities.

For assessing MapReduce skills, consider using our MapReduce online test or tests in related areas like Hadoop and Spark. Implementing these tests can significantly reduce time spent on unqualified candidates and streamline the selection process.

This approach helps ensure that only the most competent candidates move forward to interviews, allowing you to focus on evaluating the most relevant aspects of their experience and skills.

2. Curate Interview Questions Wisely

In interviews, time is often limited, so it’s essential to select the right questions that touch on the most critical aspects of the candidate's skill set. Focusing on a manageable number of well-chosen questions will maximize your evaluation success.

Consider including questions about related skills or soft skills such as communication and teamwork. For instance, you might refer to questions from our data science or Java categories for additional context.

This strategy allows you to cover important areas without overwhelming the candidate or yourself, leading to a more productive interview.

3. Ask Relevant Follow-Up Questions

Merely asking interview questions isn't enough to gauge a candidate's true depth. Follow-up questions are essential to explore a candidate's real understanding and practical application of their knowledge, helping to reveal any potential discrepancies.

For example, if a candidate explains how they used MapReduce to process large datasets, a good follow-up question would be, 'Can you describe a specific challenge you faced and how you overcame it?' This not only assesses their problem-solving skills but also provides insight into their experience and thought process.

Use MapReduce interview questions and skills tests to hire talented developers

If you're looking to hire someone with MapReduce skills, it's important to assess those skills accurately. The best way to achieve this is by using skills tests, such as the MapReduce online test.

After conducting this test, you can effectively shortlist the best applicants for interviews. To get started, visit our assessment test library or sign up here to begin your hiring process.


MapReduce Interview Questions FAQs

MapReduce interview questions are designed to assess a candidate's knowledge and skills in MapReduce, a programming model used for processing large data sets.

Using MapReduce questions helps you evaluate a candidate's proficiency in handling large-scale data processing tasks, which is crucial for roles in big data and analytics.

For junior developers, focus on basic and technical definition questions. For senior developers, include advanced questions and optimization techniques.

Look for clarity, problem-solving skills, understanding of MapReduce concepts like map, reduce, and optimization techniques, and practical experience.

Yes, these questions can be used effectively in both in-person and remote interviews to assess a candidate's MapReduce skills.

While these questions are generally applicable, they are particularly useful for industries dealing with big data, data analysis, and large-scale data processing.
