As machine learning becomes a business norm, recruiting the right ML Infrastructure Engineer is more challenging than ever, much like hiring big data engineers. A curated list of questions helps you separate the strongest candidates from the rest.

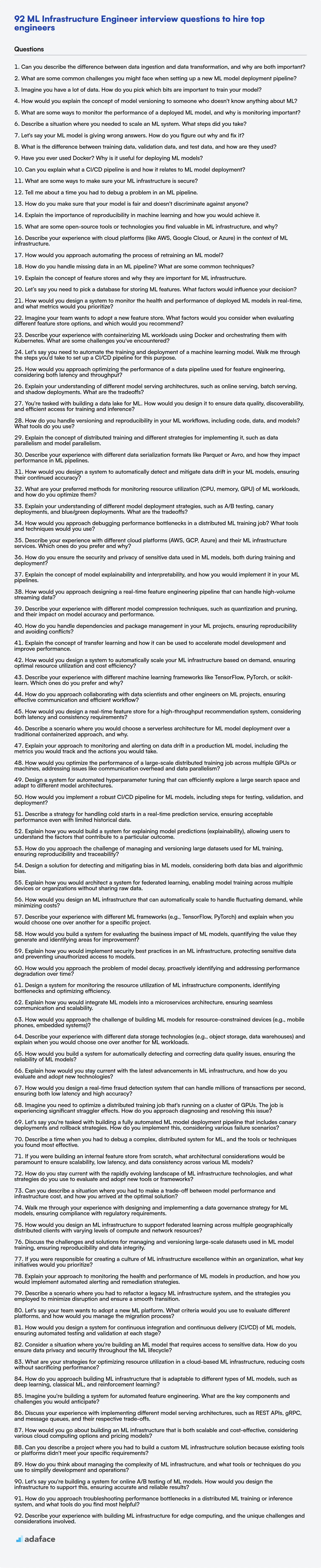

This blog post provides interview questions for ML Infrastructure Engineers across different levels: basic, intermediate, advanced and expert, as well as multiple-choice questions (MCQs). This guide is structured to help you assess the breadth and depth of a candidate's expertise.

Use these questions to identify top talent and build a stronger, more capable ML infrastructure team. To streamline your hiring process, consider using Adaface's ML Infrastructure Engineer test to screen candidates before the interview.


Basic ML Infrastructure Engineer interview questions

1. Can you describe the difference between data ingestion and data transformation, and why are both important?

Data ingestion is the process of collecting or importing data from various sources into a single storage location or system. It's about moving data from point A to point B. Data transformation, on the other hand, involves cleaning, enriching, and converting data into a usable format for analysis or other purposes. It is often necessary to format or restructure the data to make it suitable for downstream consumption.

Both are crucial because ingestion makes the data available, but without transformation, the data might be unusable or provide incorrect insights. Ingestion without transformation results in a data swamp, while transformation without ingestion means you have no data to transform.
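As a rough illustration of the distinction, the sketch below separates an ingestion step from a transformation step. The CSV contents, column names, and function names are hypothetical and chosen only for this example.

```python
import csv
import io

# Hypothetical raw export; in practice this would come from a file, API, or queue.
RAW_CSV = """user_id,signup_date,monthly_spend
1,2024-01-05,19.99
2,2024-01-07,
3,2024-01-09,42.50
"""

def ingest(raw_text):
    """Ingestion: move records from the source into our system unchanged."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transformation: cast types and make the missing spend value explicit."""
    cleaned = []
    for row in rows:
        spend = row["monthly_spend"]
        cleaned.append({
            "user_id": int(row["user_id"]),
            "signup_date": row["signup_date"],
            "monthly_spend": float(spend) if spend else None,
        })
    return cleaned

raw_rows = ingest(RAW_CSV)        # every value is still a string
clean_rows = transform(raw_rows)  # typed values, missing spend is None
```

After ingestion alone, every field is a string and the missing spend is an empty string, which is why downstream consumers usually need the transformation step as well.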

2. What are some common challenges you might face when setting up a new ML model deployment pipeline?

Setting up a new ML model deployment pipeline presents several common challenges:

- Data versioning and management: Ensuring consistency and reproducibility across training, validation, and production environments is critical, often involving managing data drift.

- Model versioning: Tracking different model versions, their performance metrics, and the data they were trained on can become complex.

- Infrastructure setup: Configuring the necessary compute resources, networking, and security for model serving can be challenging, especially in cloud environments.

- Monitoring and logging: Implementing robust monitoring to detect model degradation, data drift, and system errors is essential for maintaining model performance and reliability.

- Scalability and performance optimization: Ensuring the pipeline can handle increasing traffic and data volume while maintaining acceptable latency requires careful design and optimization.

- CI/CD for ML: Automating the process of building, testing, and deploying ML models can be difficult and requires specialized tools and workflows.

- Security: Securing the model and data pipeline against unauthorized access and malicious attacks is a crucial consideration.

3. Imagine you have a lot of data. How do you pick which bits are important to train your model?

Selecting the right data for training involves several strategies. First, data cleaning is crucial to remove noise, handle missing values, and correct inconsistencies. Then, feature selection techniques help identify the most relevant variables. This can involve:

- Univariate selection: Selecting features based on statistical tests.

- Feature importance: Using tree-based models to rank features by their importance.

- Correlation analysis: Identifying and removing highly correlated features.

Furthermore, data sampling can address class imbalance or reduce the dataset size. For example, techniques like oversampling minority classes or undersampling majority classes can improve model performance. Carefully choosing and preparing your data significantly impacts the model's accuracy and efficiency.
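The correlation-analysis idea can be sketched in plain Python as follows. The feature names, values, and the 0.95 threshold are assumptions for illustration; a real pipeline would more likely use pandas or scikit-learn for this.

```python
import math

def pearson(xs, ys):
    """Pearson correlation of two equal-length lists (assumes neither is constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def drop_correlated(features, threshold=0.95):
    """Keep a feature only if it is not highly correlated with one already kept."""
    kept = []
    for name in features:
        if all(abs(pearson(features[name], features[k])) < threshold for k in kept):
            kept.append(name)
    return kept

# Hypothetical features: height_in is a near-duplicate of height_cm.
features = {
    "height_cm": [150.0, 160.0, 170.0, 180.0, 190.0],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],
    "age": [34.0, 22.0, 45.0, 29.0, 38.0],
}
selected = drop_correlated(features)
```

Here the redundant `height_in` column is dropped while `age` is kept, because it carries information the other retained feature does not.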

4. How would you explain the concept of model versioning to someone who doesn't know anything about ML?

Imagine you're building a recipe (your ML model) to bake a cake (make predictions). Model versioning is like saving different versions of that recipe. Maybe version 1 uses regular flour, and version 2 uses gluten-free flour to cater to different dietary needs. As you improve your cake recipe or adapt it to new ingredients (data), you save each version so you can always go back to a previous 'recipe' if a newer one isn't working as well.

Each version is saved (along with details like ingredients, how it was baked etc.) so that we can track and reproduce the results of that particular model. This is important because as your input data changes over time, your models need to be retrained. Model versioning helps you manage these different versions of your model and lets you switch back to older ones easily if needed.

5. What are some ways to monitor the performance of a deployed ML model, and why is monitoring important?

Monitoring the performance of a deployed ML model is crucial to ensure its continued accuracy and reliability. Model performance can degrade over time due to changes in the input data distribution (data drift) or changes in the relationship between input features and the target variable (concept drift). Failing to monitor can lead to inaccurate predictions and potentially significant negative impacts on business outcomes.

Some ways to monitor deployed ML models include:

- Monitoring prediction accuracy: Tracking metrics like precision, recall, F1-score, AUC, or RMSE to detect drops in performance compared to baseline.

- Monitoring data drift: Tracking the distribution of input features over time and comparing them to the training data distribution. Metrics like Population Stability Index (PSI) and Kullback-Leibler (KL) divergence can be used. We also monitor for missing values or unexpected data types.

- Monitoring prediction distribution: Observing the distribution of model outputs to identify unexpected shifts. For classification models, we can track the proportion of predictions for each class. For regression models, we can monitor the mean and variance of the predicted values.

- Monitoring model serving infrastructure: Tracking resource utilization (CPU, memory, network) and response times to identify potential bottlenecks or performance issues with the deployment environment.

- Logging and Alerting: Implement robust logging to capture input data, predictions, and performance metrics. Configure alerts to notify stakeholders when predefined thresholds are breached.
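To make the data drift item concrete, here is a minimal sketch of a Population Stability Index calculation. The bin edges, sample values, and the `eps` floor for empty bins are assumptions for this example; production code would typically derive bins from the training data and handle out-of-range values explicitly.

```python
import math

def bin_proportions(values, edges, eps):
    """Share of values per bin; values outside the edges are ignored here."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            below_upper = v < edges[i + 1] or (is_last and v == edges[-1])
            if edges[i] <= v and below_upper:
                counts[i] += 1
                break
    total = sum(counts)
    # Floor each proportion at eps so the logarithm below is always defined.
    return [max(c / total, eps) for c in counts]

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline sample and a current sample."""
    e = bin_proportions(expected, edges, eps)
    a = bin_proportions(actual, edges, eps)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 0.95]
shifted = [0.6, 0.7, 0.8, 0.85, 0.9, 0.9, 0.95, 0.95, 0.99, 1.0]

no_drift = psi(baseline, baseline, edges)
drift = psi(baseline, shifted, edges)
```

A PSI of zero means the two distributions fall into the bins identically; larger values indicate a bigger shift. The alerting threshold is a judgment call that teams usually tune per feature.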

6. Describe a situation where you needed to scale an ML system. What steps did you take?

In a previous role, I worked on a real-time fraud detection system. Initially, it processed transactions at a rate of ~100 transactions per second (TPS) with acceptable latency. As transaction volume grew to ~1000 TPS during peak hours, the system's latency increased significantly, impacting the user experience. To address this, I profiled the system and identified the model inference stage as the bottleneck. We decided to scale horizontally by deploying multiple instances of the model inference service behind a load balancer. We containerized the inference service using Docker and orchestrated it using Kubernetes. Furthermore, we optimized the model itself by using quantization techniques to reduce its size and improve inference speed. This approach allowed us to handle the increased transaction volume while maintaining acceptable latency.

We also implemented asynchronous processing for certain features that weren't critical for real-time decision making. This reduced the load on the real-time inference service. We monitored the system using Prometheus and Grafana to track key metrics such as TPS, latency, and resource utilization, enabling us to proactively identify and address any potential scaling issues. We adjusted the number of inference service instances based on these metrics.

7. Let's say your ML model is giving wrong answers. How do you figure out why and fix it?

When an ML model gives wrong answers, I would start by systematically investigating potential causes. First, I'd check the input data for quality issues like missing values, outliers, or incorrect labels. Then, I'd examine the model's training data to ensure it's representative of the real-world data the model is encountering and that class distributions are appropriately handled (addressing potential bias). I'd also verify that the evaluation metrics being used are appropriate for the problem. Then, I'd look into model-specific issues such as hyperparameter tuning, regularization strength, or potential overfitting. If the model is overfitting, stronger regularization, more training data, or a simpler model architecture can help, and cross-validation gives a more reliable read on whether the change actually worked.

To fix the issue, depending on the root cause, I would focus on: data cleaning and preprocessing (handling missing data, outliers, and data transformations), data augmentation, feature engineering, re-balancing the training data (if necessary), adjusting model hyperparameters (e.g., learning rate, number of layers), or trying different model architectures. A crucial step is to iterate and validate the changes on a held-out validation set to confirm that the adjustments improved the model's performance and generalizability.

8. What is the difference between training data, validation data, and test data, and how are they used?

Training data is used to train the machine learning model. The model learns patterns and relationships from this data. Validation data is used to evaluate the model's performance during training. This helps to fine-tune the model's hyperparameters and prevent overfitting. Test data is used to evaluate the final model's performance after training is complete. It provides an unbiased assessment of how well the model generalizes to unseen data.

In essence, training data is for learning, validation data is for tuning, and test data is for final evaluation. Splitting your dataset into these three sets is a common practice to build robust and generalizable models.
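A minimal sketch of such a three-way split, using only the standard library, might look like this. The 70/15/15 proportions and the fixed seed are assumptions for illustration, not a recommendation for every dataset.

```python
import random

def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once with a fixed seed, then slice into three disjoint sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))
```

Fixing the seed keeps the split stable between runs, which matters when comparing models: the test set should stay untouched until the final evaluation.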

9. Have you ever used Docker? Why is it useful for deploying ML models?

Yes, I have used Docker. It's particularly useful for deploying ML models because it packages the model, its dependencies (libraries, system tools, etc.), and configurations into a single, consistent container. This ensures that the model runs the same way across different environments (development, testing, production) eliminating the "it works on my machine" problem.

Docker helps with:

- Reproducibility: Guarantees consistent environments.

- Isolation: Prevents conflicts with other applications.

- Scalability: Easily scale deployments using container orchestration tools.

- Simplified Deployment: Streamlines the deployment process by providing a ready-to-run package.

For example, consider this simple Dockerfile:

    FROM python:3.9
    WORKDIR /app
    # Copy and install dependencies first so this layer is cached between builds.
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    # Copy the application code (including the model-serving script).
    COPY . .
    CMD ["python", "app.py"]

10. Can you explain what a CI/CD pipeline is and how it relates to ML model deployment?

A CI/CD pipeline automates the software release process, from code changes to production deployment. CI (Continuous Integration) focuses on automatically building and testing code changes, while CD (Continuous Delivery or Continuous Deployment) automates the release of these changes to various environments. In the context of ML model deployment, a CI/CD pipeline automates the process of training, validating, and deploying ML models.

For ML models, CI/CD involves steps like data validation, model training, model evaluation, model packaging, and deployment to serving infrastructure. Tools like Jenkins, GitLab CI, or cloud-specific services (e.g., AWS CodePipeline, Azure DevOps) can be used to orchestrate these steps. For example, a pipeline might trigger model retraining when new data arrives, automatically evaluate its performance, and deploy it if it meets pre-defined criteria. This ensures models are consistently and reliably updated, and reduces manual intervention.

11. What are some ways to make sure your ML infrastructure is secure?

Securing ML infrastructure involves a multi-faceted approach. Key areas include access control, data encryption, vulnerability management, and secure model deployment. Implement robust authentication and authorization mechanisms, leveraging tools like IAM to restrict access to sensitive data and resources. Encrypt data at rest and in transit, using managed encryption keys and protocols like TLS. Regularly look for vulnerabilities in your systems and dependencies through vulnerability scanning and penetration testing. Follow secure coding practices and apply patches promptly. Secure model deployment involves using model versioning and audit trails. Regularly monitor infrastructure for suspicious activity using logging and alerting.

Specifically, consider these points:

- Access Control: Use role-based access control (RBAC) to limit user permissions.

- Data Encryption: Encrypt data at rest (e.g., using AES-256) and in transit (e.g., using TLS).

- Vulnerability Management: Regularly scan for vulnerabilities using tools like Nessus or OpenVAS. Update dependencies and patch systems promptly. Consider using container security tools like Aqua Security or Twistlock.

- Secure Model Deployment: Implement version control for models. Use secure model serving frameworks.

- Monitoring and Logging: Implement comprehensive logging and monitoring using tools like Prometheus and Grafana to detect anomalies.

12. Tell me about a time you had to debug a problem in an ML pipeline.

In one project, the ML pipeline was unexpectedly predicting significantly lower values for new customer lifetime value. The first step was isolating the issue. I reviewed recent code changes, checked data input quality, and monitored model performance metrics. It turned out that a recent update to the data preprocessing step inadvertently introduced a bug: it was incorrectly handling missing values in one of the key features by imputing them with zeros instead of using the average. This dramatically skewed that feature's distribution.

To fix it, I reverted the imputation logic to use the historical mean for missing values. I then retrained the model with corrected data and monitored the performance. After redeploying, the model's predictions returned to expected levels, and the problem was resolved. We also implemented unit tests for the data preprocessing steps to prevent similar regressions in the future. We added alerts to monitor the distribution of key features, which would notify us if there were sudden changes that could indicate a data issue. A code review process was also put in place for any future data preprocessing changes.

13. How do you make sure that your model is fair and doesn't discriminate against anyone?

To ensure model fairness and prevent discrimination, I employ several strategies. First, I carefully examine the training data for biases, addressing any imbalances or skewed representations through techniques like data augmentation or re-weighting. Feature selection is also crucial; I avoid using features that are highly correlated with protected attributes (e.g., race, gender) unless they are absolutely necessary and justified. During model development, I monitor performance metrics across different demographic groups, looking for disparities in accuracy, precision, or recall. Regularization techniques and fairness-aware algorithms can also be used to mitigate bias.

After deployment, continuous monitoring is essential. I track model outputs and feedback from users to identify any unintended discriminatory effects. If biases are detected, I retrain the model with updated data and fairness constraints. It is also important to document the model's development process, including the steps taken to address fairness concerns, to ensure transparency and accountability.
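Monitoring metrics across demographic groups can be sketched as below, here for recall. The labels, predictions, and group names are made-up illustration data, and recall is only one of several metrics worth comparing.

```python
from collections import defaultdict

def recall_by_group(y_true, y_pred, groups):
    """Recall (true positive rate) computed separately for each group."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            if pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    seen = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in seen}

# Hypothetical evaluation data for two groups, "a" and "b".
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["a"] * 5 + ["b"] * 5

recalls = recall_by_group(y_true, y_pred, groups)
recall_gap = max(recalls.values()) - min(recalls.values())
```

A large gap between groups is a signal to investigate further; what counts as acceptable depends on the application and should be agreed with stakeholders.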

14. Explain the importance of reproducibility in machine learning and how you would achieve it.

Reproducibility is crucial in machine learning because it ensures that research findings and models can be independently verified and built upon. It allows others to validate the results, identify potential errors, and extend the work. Without reproducibility, the credibility and reliability of machine learning models are questionable. It also helps in debugging and improving models, making it easier to track down issues when others can reliably reproduce the results.

To achieve reproducibility, I would focus on several key practices:

- Version Control: Use Git to track changes to code, data, and model parameters.

- Dependency Management: Employ tools like `pip` (with `requirements.txt`) or `conda` to manage package dependencies and ensure consistent environments. Use a tool like `docker` to package all the necessary dependencies and the environment. This can be as simple as creating a `Dockerfile` with instructions for building the environment.

- Data Management: Clearly document data sources, preprocessing steps, and any transformations applied. Store data using a data versioning tool such as `dvc` or `lakefs`.

- Configuration Management: Store all hyperparameters and configuration settings in a configuration file (e.g., YAML or JSON).

- Random Seed Management: Set random seeds for all random number generators to ensure consistent results across runs. This is important for the initial weights of the model, but also for sampling from the dataset.

- Document Everything: Create detailed documentation explaining the experimental setup, code, and results. Tools like MLflow can help track, organize, and document ML experiments in order to reproduce the experiment results.
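Two of these practices, seed management and configuration management, can be sketched with the standard library alone. The config keys and helper names are hypothetical; frameworks such as NumPy or PyTorch have their own random generators that would need seeding separately.

```python
import hashlib
import json
import random

# Hypothetical run configuration, normally loaded from a YAML or JSON file.
config = {"learning_rate": 0.01, "epochs": 5, "seed": 1234}

def config_fingerprint(cfg):
    """Short, stable hash of the config so a run can be tied to its settings."""
    canonical = json.dumps(cfg, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

def sample_batch(seed, population, k):
    """Draw a sample from a dedicated, seeded generator rather than global state."""
    rng = random.Random(seed)
    return rng.sample(population, k)

run_a = sample_batch(config["seed"], list(range(1000)), 5)
run_b = sample_batch(config["seed"], list(range(1000)), 5)
fingerprint = config_fingerprint(config)
```

Both runs draw the same sample because they share a seed, and the fingerprint can be logged next to metrics so results are traceable to exact settings.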

15. What are some open-source tools or technologies you find valuable in ML infrastructure, and why?

Some open-source tools I find valuable in ML infrastructure include:

- Kubernetes: For orchestrating containerized ML workloads. Its scalability and resource management are essential for training and deploying models efficiently.

- TensorFlow/PyTorch: These deep learning frameworks are invaluable for model development and research, due to their flexibility and vast community support.

- MLflow: An excellent tool for managing the ML lifecycle, tracking experiments, packaging code, and deploying models. It improves reproducibility and collaboration.

- MinIO: A high-performance, S3 compatible object store that serves as a data lake, useful for storing large datasets used for training or predictions.

- Prefect/Airflow: For reliable and scalable workflow orchestration. They provide tools to define, schedule, and monitor data pipelines and ML training jobs.

- DVC (Data Version Control): It's great for versioning data and ML models, providing reproducibility and traceability similar to Git for code, but adapted for large data and model files. I find it indispensable when working on collaborative ML projects.

The reason these tools are so valuable is their open-source nature, which fosters community-driven development, and allows for transparency, customization, and cost savings. They are often cloud-native, which makes them fit seamlessly in a modern ML infrastructure.

16. Describe your experience with cloud platforms (like AWS, Google Cloud, or Azure) in the context of ML infrastructure.

I have experience working with AWS for building and deploying ML infrastructure. Specifically, I've used S3 for storing large datasets, EC2 for training models (including GPU-enabled instances), SageMaker for model building and deployment, and Lambda for creating serverless ML inference endpoints. I've also utilized CloudWatch for monitoring model performance and infrastructure health.

My experience involves automating model training pipelines using SageMaker Pipelines, deploying models using SageMaker endpoints, and creating custom Docker containers for specific ML frameworks and dependencies. I've also worked with IAM roles and policies to manage access control and security within the AWS environment. While I have more experience with AWS, I am familiar with core concepts in Azure and GCP, and could adapt to similar services like Azure ML or Google Cloud's Vertex AI (the successor to AI Platform).

17. How would you approach automating the process of retraining an ML model?

To automate ML model retraining, I'd create a pipeline triggered by specific events, such as data drift detection or a scheduled time interval. The pipeline would involve:

- Data Validation: Verify new data quality and schema.

- Model Training: Retrain the model using the updated dataset and optimal hyperparameters. I'd log all experiments using tools like MLflow.

- Model Evaluation: Evaluate the retrained model's performance against a held-out dataset using relevant metrics.

- Model Deployment: If the new model outperforms the existing one, automatically deploy it to production, potentially using canary deployments for gradual rollout and A/B testing. If not, I'd either keep the current one or notify the team.

- Monitoring: Continuously monitor both model performance and data quality in production to trigger further retraining cycles.
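The trigger and promotion decisions in this pipeline can be sketched as two small functions. The thresholds (drift score of 0.2, 30-day maximum age, 0.01 minimum improvement) are placeholder assumptions that a team would tune for its own system.

```python
def should_retrain(drift_score, days_since_training,
                   drift_threshold=0.2, max_age_days=30):
    """Trigger retraining on detected drift or when the model is too old."""
    return drift_score > drift_threshold or days_since_training >= max_age_days

def should_promote(candidate_metric, production_metric, min_improvement=0.01):
    """Promote only if the candidate beats production by a clear margin."""
    return candidate_metric >= production_metric + min_improvement

retrain_on_drift = should_retrain(drift_score=0.35, days_since_training=3)
retrain_on_age = should_retrain(drift_score=0.05, days_since_training=45)
skip_retrain = should_retrain(drift_score=0.05, days_since_training=3)

promote = should_promote(candidate_metric=0.93, production_metric=0.90)
hold = should_promote(candidate_metric=0.905, production_metric=0.90)
```

Requiring a minimum improvement guards against swapping models over differences that are within evaluation noise.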

18. How do you handle missing data in an ML pipeline? What are some common techniques?

Handling missing data is crucial in ML pipelines. Common techniques include:

Imputation: Replacing missing values with estimated ones, e.g., using the mean, median, mode, or a constant value. More sophisticated methods involve using regression models or k-NN to predict missing values based on other features. For example, in Python:

    from sklearn.impute import SimpleImputer

    # `data` is assumed to be a pandas DataFrame with a numeric column.
    # Replace missing values in that column with the column mean.
    imputer = SimpleImputer(strategy='mean')
    data[['column_with_missing']] = imputer.fit_transform(data[['column_with_missing']])

Deletion: Removing rows or columns with missing values. This is suitable when the amount of missing data is small or when the missingness is random and doesn't introduce bias.

Adding a Missing Value Indicator: Creating a new binary feature indicating whether a value was originally missing. This allows the model to learn patterns related to missingness itself.

Using Algorithms Robust to Missing Data: Some algorithms, like tree-based methods (e.g., Random Forests, XGBoost), can inherently handle missing values without requiring explicit imputation. These algorithms often have built-in mechanisms to deal with missing data during the training process.

Choosing the right technique depends on the nature of the data, the amount of missingness, and the specific ML algorithm being used.
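The missing value indicator is often combined with imputation. A minimal standard-library sketch, assuming rows are dictionaries and missing values are `None` (the column name and helper are hypothetical):

```python
from statistics import median

def impute_with_indicator(rows, column):
    """Fill missing values with the median and record which rows were filled."""
    observed = [row[column] for row in rows if row[column] is not None]
    fill_value = median(observed)
    result = []
    for row in rows:
        was_missing = row[column] is None
        new_row = dict(row)  # copy so the input rows are left untouched
        new_row[column] = fill_value if was_missing else row[column]
        new_row[f"{column}_was_missing"] = int(was_missing)
        result.append(new_row)
    return result

rows = [{"age": 30}, {"age": None}, {"age": 50}, {"age": 40}]
imputed = impute_with_indicator(rows, "age")
```

The extra `age_was_missing` column lets the model treat imputed values differently from observed ones, which helps when missingness itself is informative.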

19. Explain the concept of feature stores and why they are important for ML infrastructure.

Feature stores are centralized repositories for storing, managing, and serving features for machine learning models. They address the challenge of feature engineering consistency and accessibility across different stages of the ML lifecycle (training, validation, and inference).

They are important because they:

- Reduce Feature Engineering Duplication: Prevents teams from reinventing the wheel by providing a shared catalog of features.

- Ensure Training/Serving Consistency: Guarantees that the same feature transformations are applied during both training and inference, preventing training-serving skew.

- Enable Feature Discovery: Facilitates the discovery and reuse of existing features, accelerating model development.

- Simplify Feature Monitoring: Provides a central point for monitoring feature quality and identifying potential issues.
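The training/serving consistency point can be illustrated with a toy in-memory feature store. This is only a sketch of the idea: the class, method names, and features are hypothetical and do not mirror the API of any real feature store product.

```python
class InMemoryFeatureStore:
    """Toy store: feature logic is registered once and reused everywhere."""

    def __init__(self):
        self._transforms = {}
        self._values = {}

    def register(self, name, transform):
        """Add a named feature definition to the shared catalog."""
        self._transforms[name] = transform

    def materialize(self, entity_id, raw_record):
        """Compute every registered feature for one entity and store it."""
        self._values[entity_id] = {
            name: fn(raw_record) for name, fn in self._transforms.items()
        }

    def get_features(self, entity_id, names):
        """Same lookup path for building training sets and serving requests."""
        row = self._values[entity_id]
        return [row[n] for n in names]

store = InMemoryFeatureStore()
store.register("order_count", lambda r: len(r["orders"]))
store.register("avg_order_value", lambda r: sum(r["orders"]) / len(r["orders"]))
store.materialize("user_1", {"orders": [10.0, 30.0]})

training_row = store.get_features("user_1", ["order_count", "avg_order_value"])
serving_row = store.get_features("user_1", ["order_count", "avg_order_value"])
```

Because both paths read values produced by the same registered definitions, there is no second implementation of the feature logic that could drift out of sync.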

20. Let’s say you need to pick a database for storing ML features. What factors would influence your decision?

When choosing a database for storing ML features, several factors are critical. First, scalability is key: the database must handle the volume of features and the rate at which they're generated. Consider the size of the dataset and the read/write throughput requirements. Second, performance, including read and write latency, is important for model training and real-time inference. Query patterns matter here, because they determine which kind of database best suits the workload. Finally, cost is always a factor, including storage costs, compute costs, and operational overhead. Other factors include support for specific data types (e.g., vectors for embeddings), integration with ML frameworks, and ease of management.

Specifically, I'd consider these aspects and how the database handles them:

- Data Types: Does it natively support vector embeddings or require workarounds?

- Indexing: Does it offer efficient indexing for feature retrieval?

- Querying: Can I easily perform similarity searches or complex feature selection queries? For example, for similarity search with vectors, I would need a database that efficiently supports approximate nearest neighbor search (ANN).

- Scalability: Can it handle the projected data volume and query load, both horizontally and vertically?

- Integration: Does it integrate smoothly with our existing ML pipeline tools (e.g., feature store, training frameworks, deployment platforms)?
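For context on the similarity search requirement, the sketch below shows exact (brute-force) cosine similarity search, which is the baseline that ANN indexes approximate in order to scale. The vectors and keys are made up for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Exact search: score every stored vector, then keep the k best."""
    scored = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [key for key, _ in scored[:k]]

# Hypothetical 2-dimensional embeddings keyed by item id.
index = {
    "item_a": [1.0, 0.0],
    "item_b": [0.9, 0.1],
    "item_c": [0.0, 1.0],
}
nearest = top_k([1.0, 0.0], index, k=2)
```

This scan costs time proportional to the number of stored vectors per query, which is why databases with native ANN indexing become attractive as the embedding table grows.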

Intermediate ML Infrastructure Engineer interview questions

1. How would you design a system to monitor the health and performance of deployed ML models in real-time, and what metrics would you prioritize?

To monitor ML model health and performance in real-time, I'd design a system that captures model inputs, predictions, and actual outcomes. This system would continuously calculate key metrics, alert on deviations, and provide visualizations for analysis.

Prioritized metrics would include: Performance Metrics such as accuracy, precision, recall, F1-score, and AUC (depending on the model type). Data Quality Metrics to detect data drift (using metrics like KL divergence or PSI). Model Health Metrics monitoring prediction distribution, throughput, latency, and error rates. I would also track resource utilization (CPU, memory) for the model serving infrastructure. Alerting thresholds would be set for each metric to trigger notifications when performance degrades or anomalies are detected.
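A rolling-window monitor with alert thresholds might be sketched as follows. The window size and the thresholds (5% error rate, 200 ms p95 latency) are assumptions for this example; a real deployment would more likely export these metrics to a system such as Prometheus and alert from there.

```python
from collections import deque

class RollingMonitor:
    """Tracks error rate and p95 latency over the most recent requests."""

    def __init__(self, window=100, max_error_rate=0.05, max_p95_latency_ms=200.0):
        self.latencies = deque(maxlen=window)
        self.errors = deque(maxlen=window)
        self.max_error_rate = max_error_rate
        self.max_p95_latency_ms = max_p95_latency_ms

    def record(self, latency_ms, is_error):
        self.latencies.append(latency_ms)
        self.errors.append(1 if is_error else 0)

    def error_rate(self):
        return sum(self.errors) / len(self.errors)

    def p95_latency(self):
        ordered = sorted(self.latencies)
        # Nearest-rank percentile: ceil(0.95 * n), done in integer arithmetic.
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def alerts(self):
        """Names of the thresholds currently breached (empty when healthy)."""
        if not self.latencies:
            return []
        fired = []
        if self.error_rate() > self.max_error_rate:
            fired.append("error_rate")
        if self.p95_latency() > self.max_p95_latency_ms:
            fired.append("p95_latency")
        return fired

monitor = RollingMonitor()
for _ in range(100):
    monitor.record(latency_ms=50.0, is_error=False)
healthy = monitor.alerts()

for _ in range(10):
    monitor.record(latency_ms=500.0, is_error=True)
degraded = monitor.alerts()
```

Using a bounded window means old traffic ages out automatically, so alerts reflect current behavior rather than a lifetime average.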

2. Imagine your team wants to adopt a new feature store. What factors would you consider when evaluating different feature store options, and which would you recommend?

When evaluating feature store options, I'd consider several factors. First, data source compatibility is crucial; the feature store must integrate with our existing data lakes and streaming platforms (e.g., S3, Kafka). Second, feature transformation capabilities are important. Can the store handle the transformations we need (e.g., aggregations, windowing)? Related to this, latency for both batch and real-time feature serving is a key performance metric. Finally, scalability and cost need consideration. Can the feature store handle our data volume and query load without breaking the bank? Also, I would look at feature governance (lineage, discoverability) and monitoring capabilities.

The recommendation depends heavily on the specific requirements and existing infrastructure. For example, if we rely heavily on AWS, SageMaker Feature Store would be a strong contender due to its seamless integration. If we need a cloud-agnostic solution with strong open-source support, Feast could be a better choice. If real-time feature serving at extremely low latency is paramount, a custom solution built on a key-value store like Redis, or a specialist low-latency feature store like Firefly, may be appropriate.

3. Describe your experience with containerizing ML workloads using Docker and orchestrating them with Kubernetes. What are some challenges you've encountered?

I have experience containerizing machine learning workloads using Docker to create reproducible and isolated environments for training and inference. I've built Dockerfiles specifying dependencies like TensorFlow, PyTorch, and scikit-learn, along with code for model training, serving, and preprocessing. These containers ensure consistent behavior across different environments, from development to production.

I've then used Kubernetes to orchestrate these containerized ML workloads, deploying them as pods, managing scaling, and handling rolling updates. I have configured deployments, services, and ingress to expose the models for serving. For example, I can use `kubectl apply -f deployment.yaml` to deploy a model server. I have experience with monitoring the resource utilization of the pods, using tools like Prometheus and Grafana to track CPU usage, memory consumption, and GPU utilization (if applicable).

Some challenges I've encountered include managing large image sizes, especially when including large datasets within the container, and optimizing resource allocation in Kubernetes to efficiently utilize available resources. I've also faced challenges related to data management, such as efficiently accessing and sharing data between containers during training, and ensuring data versioning and reproducibility. Further challenges include model versioning and deployment management for A/B testing.

4. Let's say you need to automate the training and deployment of a machine learning model. Walk me through the steps you'd take to set up a CI/CD pipeline for this purpose.

To automate ML model training and deployment, I'd implement a CI/CD pipeline with these steps:

First, I'd set up a version control system (like Git) for all code, data schemas, and configurations. Next, a CI pipeline would be created to automatically trigger on code changes. This pipeline would include:

- Data Validation: Check for schema changes and data integrity.

- Unit Tests: Ensure the code (preprocessing, model definition, etc.) is working as expected.

- Model Training: Train the model using the updated code/data. Experiment tracking tools like MLflow or Weights & Biases can track each experiment.

- Model Evaluation: Evaluate the trained model against a hold-out dataset, calculating metrics like accuracy, precision, and recall.

- Model Packaging: If the model performs adequately, package it (e.g., using Docker) with necessary dependencies and model weights.

Finally, a CD pipeline would deploy the packaged model. This can include:

- Model Registry: Store the model in a registry (e.g., MLflow Model Registry, AWS SageMaker Model Registry) with metadata (version, metrics, etc.).

- Deployment: Deploy the model to a serving environment (e.g., Kubernetes, AWS SageMaker, a REST API). This may involve A/B testing to compare the new model against the existing one.

- Monitoring: This is crucial; logging model performance, prediction drift, and system health enables quick rollback in case of issues. If performance degrades beyond acceptable thresholds, an automated retraining pipeline can be triggered.
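The gating behavior of such a pipeline, where each stage must pass before the next one runs, can be sketched in a few lines. The stages here are deliberately trivial stand-ins (a majority-class "model" and a hypothetical accuracy threshold), not a real training setup or CI tool configuration.

```python
from collections import Counter

def validate_data(ctx):
    """Data validation: every label must be 0 or 1."""
    return all(label in (0, 1) for label in ctx["train_labels"] + ctx["holdout_labels"])

def train_model(ctx):
    """Stand-in for training: always predict the most common training label."""
    ctx["model"] = Counter(ctx["train_labels"]).most_common(1)[0][0]
    return True

def evaluate_model(ctx):
    """Gate: accuracy on the hold-out labels must reach the threshold."""
    hits = sum(1 for label in ctx["holdout_labels"] if label == ctx["model"])
    ctx["accuracy"] = hits / len(ctx["holdout_labels"])
    return ctx["accuracy"] >= ctx["min_accuracy"]

def package_model(ctx):
    """Stand-in for building a deployable artifact."""
    ctx["artifact"] = {"model": ctx["model"], "accuracy": ctx["accuracy"]}
    return True

STAGES = [
    ("validate_data", validate_data),
    ("train_model", train_model),
    ("evaluate_model", evaluate_model),
    ("package_model", package_model),
]

def run_pipeline(ctx):
    """Run stages in order; stop at the first failure and report where."""
    completed = []
    for name, stage in STAGES:
        if not stage(ctx):
            return completed, name
        completed.append(name)
    return completed, None

good = {"train_labels": [1, 1, 0], "holdout_labels": [1, 1, 1, 0], "min_accuracy": 0.7}
bad = {"train_labels": [1, 1, 0], "holdout_labels": [0, 0, 0, 1], "min_accuracy": 0.7}

good_result = run_pipeline(good)
bad_result = run_pipeline(bad)
```

In the failing run, the pipeline stops at evaluation and never produces an artifact, which is the property that keeps underperforming models out of the registry.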

5. How would you approach optimizing the performance of a data pipeline used for feature engineering, considering both latency and throughput?

To optimize a feature engineering pipeline for both latency and throughput, I'd start by profiling the existing pipeline to identify bottlenecks. This involves measuring the execution time of each stage (data extraction, transformation, feature calculation) and the resource utilization (CPU, memory, I/O). Based on the profiling results, I'd apply optimizations such as:

- Parallelization: Distribute the workload across multiple cores or machines using frameworks like Spark or Dask.

- Vectorization: Utilize vectorized operations in libraries like NumPy and Pandas to perform calculations on entire arrays at once.

- Caching: Store frequently used intermediate results to avoid redundant computations.

- Optimize data formats: Use efficient data formats like Parquet or Arrow for storage and transfer.

- Code optimization: Review and optimize code for algorithmic efficiency and memory usage. For example, using appropriate data structures or optimizing loops.

- Database optimization: Ensure correct indexing and query optimization.

I'd also consider using a message queue (e.g., Kafka, RabbitMQ) for asynchronous processing, allowing different stages of the pipeline to run independently and handle bursts of data more effectively. Finally, monitoring is crucial to track performance metrics and identify new bottlenecks as the pipeline evolves.
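As one small example of these optimizations, caching can be sketched with `functools.lru_cache` from the standard library. The feature function and the call counter are hypothetical and exist only to show that repeated inputs skip the expensive computation.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the expensive body actually runs

@lru_cache(maxsize=1024)
def expensive_feature(user_id):
    """Stand-in for a costly feature computation keyed by user id."""
    CALLS["count"] += 1
    return sum(i * i for i in range(user_id % 1000))

# Five requests, but only two distinct users.
results = [expensive_feature(uid) for uid in [1, 2, 1, 2, 1]]
cache_stats = expensive_feature.cache_info()
```

In-process caching like this only helps when inputs repeat and results do not go stale; for features that change over time, an expiry policy or an external cache would be needed.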

6. Explain your understanding of different model serving architectures, such as online serving, batch serving, and shadow deployments. What are the tradeoffs?

Model serving architectures cater to different latency and throughput requirements. Online serving focuses on low-latency predictions for real-time applications. It processes individual requests as they arrive, making it suitable for applications requiring immediate responses, like recommendation engines. Tradeoffs include higher infrastructure costs to maintain low latency and limited batching capabilities. Batch serving, on the other hand, processes predictions in batches, optimizing for throughput. It's well-suited for applications where latency isn't critical, such as overnight report generation or scoring leads. Tradeoffs include higher latency and staleness of predictions. Shadow deployments involve deploying a new model alongside the existing production model, without directly serving traffic to the new model. Real-world requests are mirrored to the shadow model, and its performance is compared against the existing model. The main tradeoff involves the extra infrastructure cost and resource usage associated with running two models concurrently. However, it provides a safe way to validate the performance of a new model before fully deploying it.
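
As a rough illustration of the shadow deployment idea, the sketch below serves the primary model's answer while also running a shadow model and logging disagreements. The two threshold "models" and the `serve_with_shadow` helper are hypothetical stand-ins; a production system would usually mirror traffic asynchronously so the shadow call adds no latency.

```python
def serve_with_shadow(request, primary, shadow, mismatches):
    """Return the primary model's prediction; run the shadow model on the side."""
    result = primary(request)
    try:
        shadow_result = shadow(request)
        if shadow_result != result:
            mismatches.append((request, result, shadow_result))
    except Exception as exc:  # a failing shadow must never affect the caller
        mismatches.append((request, result, repr(exc)))
    return result


# Hypothetical stand-in models: threshold classifiers on a single number.
def primary_model(x):
    return x > 0.5


def shadow_model(x):
    return x > 0.4


mismatch_log = []
responses = [
    serve_with_shadow(x, primary_model, shadow_model, mismatch_log)
    for x in (0.1, 0.45, 0.9)
]
```

Only the primary model's output reaches the caller; the mismatch log is what gets analyzed before promoting the shadow model.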

7. You're tasked with building a data lake for ML. How would you design it to ensure data quality, discoverability, and efficient access for training and inference?

To design a data lake for ML, I'd focus on three key areas: data quality, discoverability, and efficient access. For data quality, implement validation checks at ingestion (schema validation, data type checks, range constraints) and use data profiling tools to identify anomalies and missing values. Establish a data catalog with metadata (schema, data lineage, descriptions) for discoverability, making it searchable. Apply tags and classifications to further enhance discoverability. Regarding access, partition data based on common query patterns (e.g., time series data by date) and use appropriate file formats (e.g., Parquet, ORC) for efficient storage and retrieval. Implement an access control system to manage permissions and data security.

Additionally, I would ensure data versioning by creating immutable copies of data as it lands, enabling reproducibility for training and inference. I would also set up automated data quality monitoring with alerts to promptly address potential issues. Investigate the use of serverless query services to minimize overhead, and use a data lineage tool to track the data's journey from source to model output for compliance and debugging purposes.
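
The ingestion-time checks mentioned above (schema validation, data type checks, range constraints) could look roughly like the following. The field names and bounds in `SCHEMA` are made up for illustration; real data lakes would more likely use a dedicated validation library, but the logic is the same.

```python
# Hypothetical schema: field name -> (expected type, optional (min, max) bounds).
SCHEMA = {
    "user_id": (str, None),
    "age": (int, (0, 120)),
    "purchase_amount": (float, (0.0, 1e6)),
}


def validate_record(record):
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for field, (expected_type, bounds) in SCHEMA.items():
        if field not in record:
            errors.append(f"{field}: missing")
            continue
        value = record[field]
        if not isinstance(value, expected_type):
            errors.append(f"{field}: expected {expected_type.__name__}")
            continue
        if bounds is not None and not (bounds[0] <= value <= bounds[1]):
            errors.append(f"{field}: {value} outside {bounds}")
    return errors


good = {"user_id": "u1", "age": 30, "purchase_amount": 19.99}
bad = {"user_id": "u2", "age": -5}
```

Records that fail validation can be routed to a quarantine location instead of being dropped, which keeps the raw data available for debugging.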

8. How do you handle versioning and reproducibility in your ML workflows, including code, data, and models? What tools do you use?

I handle versioning and reproducibility in ML workflows by versioning code, data, and models separately. For code, I primarily use Git, following standard branching strategies (e.g., Gitflow) and pull request workflows. Data versioning is handled using tools like DVC or lakeFS, which allow me to track changes to datasets, store different versions, and ensure data lineage. For models, I use MLflow Model Registry or similar tools to version and track model artifacts, parameters, and metrics. These tools create reproducible records of each model version.

To ensure reproducibility, I also employ containerization with Docker to encapsulate the entire environment, including dependencies and configurations. This, combined with a tool like conda to manage package dependencies, ensures consistent execution across different environments. Using experiment tracking tools like MLflow or Weights & Biases, I record all relevant information about each experiment (code version, data version, parameters, metrics) to facilitate easy reproduction and comparison. For pipeline orchestration, I leverage tools like Airflow or Prefect, which store the pipeline definition in code and log execution details, ensuring that each step is executed in a consistent manner.
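
To make the idea of recording code version, data version, parameters, and metrics concrete, here is a small sketch that fingerprints a dataset by content hash and bundles it into an experiment record. It does not use the MLflow or DVC APIs; the `experiment_record` helper, the commit hash, and the metric value are placeholders chosen only for illustration.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """Content hash of a dataset: identical bytes always give the same ID."""
    return hashlib.sha256(data).hexdigest()


def experiment_record(code_version, dataset_bytes, params, metrics):
    """Bundle everything needed to identify and reproduce one training run."""
    return {
        "code_version": code_version,
        "data_sha256": fingerprint(dataset_bytes),
        "params": params,
        "metrics": metrics,
    }


record = experiment_record(
    code_version="abc1234",  # hypothetical Git commit hash
    dataset_bytes=b"id,label\n1,0\n2,1\n",
    params={"learning_rate": 0.01},
    metrics={"accuracy": 0.91},  # placeholder value, not a real result
)
print(json.dumps(record, indent=2, sort_keys=True))
```

Because the data identifier is derived from content, any change to the dataset produces a different record, which is the property that makes a run traceable.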

9. Explain the concept of distributed training and different strategies for implementing it, such as data parallelism and model parallelism.

Distributed training involves splitting the machine learning workload across multiple machines or devices to accelerate the training process. This is particularly useful for large datasets and complex models. Two common strategies are data parallelism and model parallelism.

- Data parallelism: The dataset is divided among the workers, and each worker trains a copy of the entire model on its portion of the data. Gradients computed on each worker are then aggregated (e.g., averaged) to update the global model. Frameworks often provide built-in support for data parallelism via methods such as tf.distribute.MirroredStrategy in TensorFlow or DistributedDataParallel in PyTorch.

- Model parallelism: The model itself is split across multiple workers, with each worker responsible for training a different part of the model. This is necessary when the model is too large to fit on a single device. Communication between workers is required to pass intermediate activations and gradients during the forward and backward passes. Model parallelism can be more complex to implement than data parallelism and often requires manual partitioning and careful management of dependencies between model parts.
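
The gradient-averaging step at the heart of data parallelism can be simulated on a single machine. The sketch below is a toy, assuming a one-parameter model y = w * x and two equally sized shards; it does not use TensorFlow or PyTorch, and the list comprehension stands in for workers that would run in parallel.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, shards, lr):
    # Each "worker" computes a gradient on its own shard with the same weights.
    worker_grads = [gradient(w, shard) for shard in shards]
    # All-reduce step: average the gradients, then apply one shared update.
    avg_grad = sum(worker_grads) / len(worker_grads)
    return w - lr * avg_grad


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # generated by y = 2x
shards = [data[:2], data[2:]]  # two equally sized shards

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)
```

With equally sized shards, the averaged worker gradient equals the full-batch gradient, which is why every replica stays in sync after each update.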

10. Describe your experience with different data serialization formats like Parquet or Avro, and how they impact performance in ML pipelines.

I have experience with Parquet and Avro, two widely used serialization formats with different storage layouts. Parquet is a columnar format that works well for analytical queries thanks to column pruning, predicate pushdown, and efficient encodings. I've used Parquet extensively for storing feature data and intermediate results in ML pipelines, which significantly reduces I/O compared to row-based text formats like CSV, leading to faster model training and evaluation. Avro, on the other hand, is a row-based format that is good for schema evolution and handling complex data structures. I've used it when the pipeline requires schema flexibility and the data doesn't benefit as much from columnar operations.

The impact on performance is noticeable, especially with large datasets. Using Parquet can reduce the storage footprint and improve query performance by only reading the necessary columns. This directly translates to faster data loading and feature extraction, which are often bottlenecks in ML pipelines. I have also used compression techniques available with these formats (like Snappy, Gzip) to further optimize storage and I/O. I have found that choosing the right format and compression codec can lead to significant performance improvements in terms of both processing time and resource utilization within the ML pipeline.

11. How would you design a system to automatically detect and mitigate data drift in your ML models, ensuring their continued accuracy?

To detect data drift, I would implement a monitoring system that continuously tracks the statistical properties of incoming data and compares them to the characteristics of the training data. Key metrics to monitor include mean, variance, and distributions using techniques like Kolmogorov-Smirnov tests or KL divergence. If significant drift is detected (exceeding a predefined threshold), an alert is triggered. For mitigation, I would automate a retraining pipeline. This pipeline would use the new, drifted data to retrain the model, either from scratch or via fine-tuning, and then deploy the updated model, replacing the old one. A/B testing could be used before a full deployment to validate the new model's performance.

Additionally, a shadow deployment strategy can be incorporated to monitor the new model's performance in a production-like environment without impacting live users. This allows a more thorough assessment of the retrained model's effectiveness before a full switch. Feature importance analysis of the retrained model can further help to determine if specific features have become less relevant or if new features need to be engineered.
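
For intuition, the two-sample Kolmogorov-Smirnov statistic mentioned above can be computed in a few lines of plain Python. This is a simplified sketch: it returns only the statistic (not a p-value), and the 0.3 alert threshold in `drift_detected` is an arbitrary assumption that would need tuning per feature.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    points = ref + cur
    return max(
        abs(bisect_right(ref, x) / len(ref) - bisect_right(cur, x) / len(cur))
        for x in points
    )


def drift_detected(reference, current, threshold=0.3):
    # The threshold is an illustrative assumption, not a recommended default.
    return ks_statistic(reference, current) > threshold


training_values = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
same_distribution = [1, 3, 2, 5, 4, 7, 6, 9, 8, 10]
shifted = [x + 6 for x in training_values]
```

In practice a statistics library would be used for the test itself; the point here is that the alerting logic reduces to comparing one number per feature against a threshold.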

12. What are your preferred methods for monitoring resource utilization (CPU, memory, GPU) of ML workloads, and how do you optimize them?

I prefer using a combination of tools for monitoring resource utilization of ML workloads. For real-time monitoring and profiling during training, I often use tools like nvidia-smi (for GPU utilization), top or htop (for CPU and memory), and dedicated profiling tools like TensorBoard (for TensorFlow) or profiling capabilities within PyTorch. For longer-term monitoring and trend analysis, I integrate with systems like Prometheus and Grafana, using exporters like node_exporter to capture system-level metrics and custom exporters to track ML-specific metrics.

To optimize resource utilization, I employ several strategies. These include:

- Code optimization: Profiling code to identify bottlenecks and optimize algorithms or data structures.

- Batch size tuning: Experimenting with different batch sizes to find the optimal balance between throughput and memory usage.

- Mixed precision training: Utilizing mixed precision (e.g., FP16) to reduce memory footprint and accelerate computations, particularly on GPUs.

- Resource allocation: Properly requesting and allocating resources (CPU cores, memory, GPUs) based on workload requirements.

- Distributed training: Scaling out training across multiple nodes, using libraries like Horovod or torch.distributed, to improve throughput and reduce training time when applicable.

13. Explain your understanding of different model deployment strategies, such as A/B testing, canary deployments, and blue/green deployments. What are the tradeoffs?

Model deployment strategies aim to safely introduce new models into production. A/B testing exposes different model versions to different user segments simultaneously, allowing for direct performance comparison using metrics like conversion rate or click-through rate. Canary deployments roll out the new model to a small subset of users first, monitoring its performance closely before gradually increasing the user base. This helps identify issues early on with minimal impact. Blue/Green deployments involve running two identical environments, one with the old model (blue) and one with the new model (green). Traffic is switched to the green environment once it's validated, providing a quick rollback mechanism.

Tradeoffs exist with each approach. A/B testing requires statistically significant traffic to draw conclusions. Canary deployments might delay full deployment if issues are found during the initial rollout. Blue/Green deployments require double the infrastructure resources but offer the fastest and safest rollback option. The choice depends on risk tolerance, available resources, and the need for rapid deployment versus cautious validation.
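
A canary rollout needs a stable way to decide which users see the new model. One common approach, sketched here under the assumption that users are identified by a string ID, is to hash the ID into a bucket from 0 to 99 and compare it to the rollout percentage. The `bucket` and `route` helpers are hypothetical names.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def route(user_id: str, canary_percent: int) -> str:
    """Send the user to the canary model if their bucket is under the cutoff."""
    return "canary" if bucket(user_id) < canary_percent else "stable"


users = [f"user-{i}" for i in range(1000)]
at_5 = {u for u in users if route(u, 5) == "canary"}
at_25 = {u for u in users if route(u, 25) == "canary"}
```

Because the bucket depends only on the user ID, raising the percentage adds new users to the canary group without moving anyone who was already in it.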

14. How would you approach debugging performance bottlenecks in a distributed ML training job? What tools and techniques would you use?

Debugging performance bottlenecks in distributed ML training involves a systematic approach. First, I'd profile the training job to identify the slowest parts. This could involve using tools like: TensorBoard (for visualizing metrics and the computational graph), PyTorch Profiler, or tf.profiler (if using TensorFlow). These tools help pinpoint bottlenecks in data loading, model computations, or communication between workers.

Next, I'd analyze the communication overhead. Tools like tcpdump or network monitoring dashboards can reveal network congestion or inefficient data transfer patterns. I'd examine the data loading pipeline to ensure data is preprocessed and batched efficiently, and that data is sharded correctly across workers to minimize data skew. I would also consider techniques such as gradient compression or asynchronous updates to reduce communication bottlenecks. Finally, consider optimizing model architecture or hyperparameters to reduce the amount of computation performed per iteration. Remember to instrument code with logging to track progress and identify errors.

15. Describe your experience with different cloud platforms (AWS, GCP, Azure) and their ML infrastructure services. Which ones do you prefer and why?

I have experience with AWS, GCP, and Azure, primarily focusing on their ML infrastructure. On AWS, I've utilized SageMaker for model building, training, and deployment, as well as services like S3 for data storage and Lambda for inference endpoints. On GCP, I've worked with Vertex AI for similar ML workflows, leveraging Cloud Storage and Cloud Functions alongside it. With Azure, I've used Azure Machine Learning Studio and Azure Databricks, incorporating Azure Blob Storage and Azure Functions.

My preference leans slightly towards GCP's Vertex AI due to its intuitive interface, streamlined workflow, and strong integration with other Google services like BigQuery. While SageMaker is powerful, I find Vertex AI more user-friendly for rapid experimentation and deployment. However, the optimal choice highly depends on the specific project requirements, existing infrastructure, and team familiarity. For example, if the team is already heavily invested in the AWS ecosystem, SageMaker would likely be the best choice. Each platform has its strengths; it's about aligning those strengths with the project's needs.

16. How do you ensure the security and privacy of sensitive data used in ML models, both during training and deployment?

Securing sensitive data in ML involves several key strategies during both training and deployment. During training, techniques like differential privacy (adding noise to the data or gradients), federated learning (training models on decentralized data without directly accessing it), homomorphic encryption (performing computations on encrypted data), and secure multi-party computation (SMPC) can be employed. Data masking, tokenization, and anonymization are also crucial for de-identifying sensitive information before it's used. Access control, encryption at rest and in transit, and strict data governance policies are essential.

For deployment, model input validation is paramount to prevent adversarial attacks. Output sanitization, where model outputs are modified to remove or obscure sensitive information, can mitigate privacy risks. Continuous monitoring for anomalies and potential data breaches is necessary. Robust access control mechanisms, regular security audits, and adherence to relevant privacy regulations (like GDPR or CCPA) are all vital for ensuring data security and privacy in deployed ML models.
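
As one concrete example of the tokenization step mentioned above, sensitive fields can be replaced with keyed hashes before data reaches a training pipeline. This sketch uses HMAC-SHA256 from the standard library; the field names are invented and the key shown is a placeholder that would come from a secret manager in practice.

```python
import hashlib
import hmac


def tokenize(value: str, secret_key: bytes) -> str:
    """Replace a sensitive value with a keyed, non-reversible token."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize(record: dict, sensitive_fields: set, secret_key: bytes) -> dict:
    """Tokenize only the listed fields; leave all other values untouched."""
    return {
        k: tokenize(str(v), secret_key) if k in sensitive_fields else v
        for k, v in record.items()
    }


key = b"demo-key-load-from-a-secret-manager"  # placeholder, never hard-code keys
raw = {"email": "alice@example.com", "age": 34}
safe = pseudonymize(raw, {"email"}, key)
```

The same input and key always yield the same token, so joins across tables still work, while the original value cannot be read back from the token without the key.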

17. Explain the concept of model explainability and interpretability, and how you would implement it in your ML pipelines.

Model interpretability refers to the degree to which a human can understand the cause-and-effect relationship within a model. It's about making the model's decision-making process transparent. Model explainability, on the other hand, is a broader concept encompassing techniques to explain a model's predictions. It focuses on understanding why a model made a particular decision, even if the inner workings are opaque. Interpretability is often achieved through simpler models (e.g., linear regression, decision trees), while explainability techniques can be applied to complex models like neural networks.

To implement explainability in ML pipelines, I would use tools like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) to understand feature importance for individual predictions or for the model as a whole. These tools provide insights into which features are driving the model's output. I would also visualize model behavior through techniques like partial dependence plots or feature importance rankings, using methods inherent to the algorithm where available (e.g., feature_importances_ in scikit-learn's tree-based models). Monitoring these explanations over time is important to detect model drift or unexpected behavior.

18. How would you approach designing a real-time feature engineering pipeline that can handle high-volume streaming data?

Designing a real-time feature engineering pipeline for high-volume streaming data involves several key steps. First, I'd use Apache Kafka for ingestion and message queuing, coupled with a distributed stream processing framework such as Apache Flink or Spark Streaming for real-time data processing and feature engineering. These frameworks provide fault tolerance and scalability. Feature engineering logic would be implemented as a series of transformations applied to the incoming data streams. For example, using Flink:

```java
DataStream<InputEvent> inputStream = env.addSource(
    new FlinkKafkaConsumer<>("input-topic", new InputEventSchema(), kafkaProps));

DataStream<Feature> featureStream = inputStream.map(new MapFunction<InputEvent, Feature>() {
    @Override
    public Feature map(InputEvent event) throws Exception {
        // Perform feature engineering here based on the event data
        double featureValue = calculateFeature(event);
        return new Feature(event.getId(), featureValue);
    }
});
```

Next, I would monitor the performance of the pipeline (latency, throughput) and implement alerting based on predefined thresholds. I would also use a feature store to persist the engineered features for model serving and offline analysis. The pipeline should be designed to be idempotent so that it handles failures and data replays gracefully. Finally, the deployment should include autoscaling of resources to manage high-volume load.

19. Describe your experience with different model compression techniques, such as quantization and pruning, and their impact on model accuracy and performance.

I have experience with several model compression techniques, including quantization and pruning. Quantization, which reduces the precision of model weights (e.g., from 32-bit floating point to 8-bit integer), can significantly reduce model size and improve inference speed, especially on hardware that supports integer arithmetic. However, it can also lead to a decrease in accuracy, requiring techniques like quantization-aware training to mitigate the loss.
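
The precision tradeoff in quantization is easier to see with numbers. The following sketch applies symmetric linear quantization to a handful of made-up weights in plain Python; it is a simplified illustration of the idea, not how any particular framework implements it.

```python
def quantize(weights, num_bits=8):
    """Symmetric linear quantization of floats to signed integers."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.8, -1.27, 0.003, 0.5]  # made-up example weights
q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each restored weight is off by at most half a quantization step, and very small weights such as 0.003 collapse to zero, which is one source of the accuracy loss described above.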

Pruning, which involves removing less important connections or neurons from the network, also reduces model size and computational cost. I've worked with both unstructured pruning (removing individual weights) and structured pruning (removing entire channels or layers). Structured pruning is generally more hardware-friendly. The impact on accuracy depends on the pruning ratio and the sensitivity of different parts of the network; therefore, it often requires careful fine-tuning or retraining to maintain performance. For example, I have used the torch.nn.utils.prune module in PyTorch to implement pruning techniques.

20. How do you handle dependencies and package management in your ML projects, ensuring reproducibility and avoiding conflicts?

I use pip with requirements.txt to manage dependencies. I create a virtual environment (using venv or conda) for each project to isolate dependencies. This helps avoid conflicts between projects that require different versions of the same package. To ensure reproducibility, I pin down the exact versions of each package in requirements.txt using == (e.g., numpy==1.23.0). For larger projects, I might use tools like Poetry or Docker to further improve dependency management and reproducibility. These tools handle dependency resolution and packaging in a more robust way.

21. Explain the concept of transfer learning and how it can be used to accelerate model development and improve performance.

Transfer learning is a machine learning technique where a model trained on one task is re-used as the starting point for a model on a second task. Instead of training a model from scratch on a new dataset, transfer learning leverages the knowledge (learned features, weights, etc.) gained from a pre-trained model, often trained on a large, general dataset. This is particularly useful when you have limited data for your specific task.

Transfer learning accelerates model development because the pre-trained model has already learned useful features. You can either use the pre-trained model as a feature extractor (freezing the pre-trained layers) or fine-tune the entire model (or a subset of its layers) on your new data. This reduces training time and can significantly improve performance, especially when the new dataset is small, by avoiding overfitting and leveraging the generalized knowledge learned by the pre-trained model. Common libraries like TensorFlow and PyTorch provide many pre-trained models.

22. How would you design a system to automatically scale your ML infrastructure based on demand, ensuring optimal resource utilization and cost efficiency?

To design an auto-scaling ML infrastructure, I would leverage a combination of monitoring, prediction, and automated resource management tools. First, I'd monitor key metrics like CPU/GPU utilization, memory consumption, request latency, and queue lengths for both serving endpoints and training jobs, using tools like Prometheus or CloudWatch. Next, I'd use these metrics as inputs to a predictive model that forecasts future resource demand. This model could be a simple moving average or a more sophisticated time series model. Based on the prediction, an auto-scaling policy would be triggered. This policy would automatically provision or de-provision resources (VMs, containers, etc.) using tools like Kubernetes, AWS Auto Scaling Groups, or cloud-specific ML platform scaling features.

To optimize cost, I'd use spot instances or preemptible VMs for non-critical workloads like experimentation and model retraining. I would also implement resource quotas and limits to prevent runaway processes from consuming excessive resources. Finally, regular monitoring and analysis of scaling events would help refine the prediction models and scaling policies, ensuring optimal resource utilization and minimizing costs over time.
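
The predict-then-scale loop described above can be reduced to two small functions. This sketch assumes a moving-average forecast and a fixed per-replica capacity; the traffic history, the capacity of 50 requests per second, and the replica bounds are invented numbers for illustration.

```python
import math


def forecast_rps(recent_rps, window=3):
    """Moving-average forecast of requests per second."""
    tail = recent_rps[-window:]
    return sum(tail) / len(tail)


def desired_replicas(predicted_rps, rps_per_replica, min_replicas=1, max_replicas=20):
    """Replicas needed for the predicted load, clamped to configured bounds."""
    needed = math.ceil(predicted_rps / rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))


history = [100, 120, 180, 240, 300]  # hypothetical recent traffic samples
predicted = forecast_rps(history)  # (180 + 240 + 300) / 3
replicas = desired_replicas(predicted, rps_per_replica=50)
```

The minimum bound keeps the service warm during quiet periods, and the maximum bound acts as a cost guardrail if the forecast is badly wrong.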

23. Describe your experience with different machine learning frameworks like TensorFlow, PyTorch, or scikit-learn. Which ones do you prefer and why?

I have experience with scikit-learn, TensorFlow, and PyTorch. Scikit-learn I've used extensively for various classical machine learning tasks such as classification, regression, and clustering. I appreciate its ease of use and comprehensive collection of algorithms. TensorFlow I've used primarily for building and training deep learning models, particularly for image classification and object detection, leveraging its powerful computational graph capabilities. PyTorch is my preferred framework for deep learning research and development due to its dynamic computation graph, which provides greater flexibility for debugging and experimentation. I also find its Pythonic nature and strong community support beneficial.

While TensorFlow provides production-ready deployment options and scalability, PyTorch's ease of use, debugging capabilities, and flexibility, particularly when experimenting with novel architectures or custom loss functions, make it my primary tool for deep learning projects. I've used the torch.nn module for building models, torch.optim for optimization, and torchvision for handling image datasets. I am comfortable writing custom layers and training loops. Understanding both frameworks gives me the flexibility to choose the right tool for the problem.

24. How do you approach collaborating with data scientists and other engineers on ML projects, ensuring effective communication and efficient workflow?

Collaboration on ML projects requires clear and frequent communication. I prioritize understanding the data scientists' goals and model requirements, and conversely, ensuring they understand the engineering constraints and deployment needs. I use tools like Jira or similar for tracking tasks and progress, and hold regular meetings (stand-ups) for quick updates and issue resolution. Openly documenting code, design decisions, and API contracts is essential.

For efficient workflow, I advocate for version control (Git) and code reviews. I focus on building robust and maintainable code, including comprehensive unit and integration tests. I also collaborate closely on defining feature pipelines, deployment strategies (e.g., containerization, cloud deployment), and monitoring systems to ensure the models perform as expected in production. I might also use shared notebooks or IDEs for pair programming or collaborative debugging.

Advanced ML Infrastructure Engineer interview questions

1. How would you design a real-time feature store for a high-throughput recommendation system, considering both latency and consistency requirements?

A real-time feature store for a high-throughput recommendation system requires careful consideration of latency, consistency, and scale. I'd architect it with a multi-layered approach. The first layer would involve online feature computation and caching, using a system like Redis or Memcached for extremely low-latency access to frequently used features. Features would be precomputed and updated asynchronously via stream processing (e.g., Kafka Streams or Flink) from upstream data sources. Data transformations in the stream processing layer would use an appropriate tool (e.g., Pandas, Spark) depending on transformation complexity.

The second layer would consist of an offline feature store (e.g., using cloud storage like AWS S3 or Google Cloud Storage, with a query engine like Spark SQL or Presto). This would act as a source of truth for feature values and would be used for backfilling the online cache and for training machine learning models. Consistency would be handled by eventual consistency models at the cache layer and by using idempotent updates to the offline store. Feature versioning is critical for reproducibility and debugging of models. The overall architecture would need monitoring and alerting to detect data quality issues and performance degradation. Feature access patterns would be monitored to optimize caching strategies.

2. Describe a scenario where you would choose a serverless architecture for ML model deployment over a traditional containerized approach, and why.

A good scenario for choosing serverless ML deployment over containerized deployment is one with infrequent or unpredictable inference requests, for example an image classification model used by a small internal team that processes only a few images per day. In this case, a serverless architecture like AWS Lambda with SageMaker Endpoints or Azure Functions with Azure ML would be more cost-effective.

With serverless, you only pay for the compute time used during the actual inference, whereas with a containerized approach (e.g., running on Kubernetes), you're paying for the servers to be up and running even when they're idle. Serverless also simplifies operations by abstracting away the underlying infrastructure management. The autoscaling capabilities of serverless functions ensure that the model can handle sudden spikes in traffic without requiring manual intervention. Conversely, containerized approaches are better suited for consistent, high-volume workloads where maintaining control over the environment and maximizing resource utilization are critical.

3. Explain your approach to monitoring and alerting on data drift in a production ML model, including the metrics you would track and the actions you would take.

My approach to monitoring data drift involves several key steps. First, I'd establish a baseline by analyzing the statistical distribution of features in the training dataset. Then, in production, I'd continuously monitor incoming data and compare its distribution to the baseline. I'd track metrics like: Kolmogorov-Smirnov (KS) test for numerical features, Chi-squared test for categorical features, and Population Stability Index (PSI). Significant deviations in these metrics would trigger alerts.

Upon receiving an alert, I'd first investigate the cause of the drift. This might involve checking for changes in data sources, data pipelines, or upstream systems. Depending on the severity and nature of the drift, I might retrain the model with the new data, adjust the model's hyperparameters, or even engineer new features to better handle the changed data distribution. An example that uses the Evidently library in Python to generate a drift report is shown below:

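```python
# Import paths follow the Report API shown in the original example;
# they may differ in other Evidently releases.
from evidently.report import Report
from evidently.metric_preset import DataDriftPreset

# production_data and training_data are assumed to be pandas DataFrames,
# and column_mapping a ColumnMapping, all defined elsewhere.
drift_report = Report(metrics=[DataDriftPreset()])
drift_report.run(current_data=production_data, reference_data=training_data, column_mapping=column_mapping)
drift_report.show()
```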
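
The Population Stability Index listed among the metrics can also be computed by hand, which helps when reasoning about alert thresholds. The sketch below uses plain Python; the bin edges and sample values are hypothetical, and the small `eps` floor is an assumption added to avoid taking the logarithm of zero for empty bins.

```python
import math


def bin_fractions(values, edges, eps=1e-6):
    """Fraction of values falling into each bin defined by sorted edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v > e for e in edges)] += 1
    # eps avoids log(0) and division by zero for empty bins.
    return [max(c / len(values), eps) for c in counts]


def psi(reference, current, edges):
    """Population Stability Index between two samples of one feature."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


edges = [10, 20, 30]  # hypothetical bin boundaries
reference = [5, 15, 25, 35] * 25  # evenly spread across the four bins
shifted = [25, 35, 35, 35] * 25  # mass moved to the upper bins
```

A commonly cited rule of thumb treats PSI values above roughly 0.25 as a significant shift, though the right cutoff depends on the feature and the cost of false alarms.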

4. How would you optimize the performance of a large-scale distributed training job across multiple GPUs or machines, addressing issues like communication overhead and data parallelism?

To optimize large-scale distributed training, I would focus on minimizing communication overhead and maximizing data parallelism efficiency. Communication overhead can be reduced by using techniques like gradient compression (e.g., using 16-bit floating point numbers or quantization), employing asynchronous communication methods (e.g., using parameter servers or decentralized architectures with gossip protocols), and optimizing network topology for faster data transfer. Data parallelism can be improved by ensuring balanced data distribution across workers to avoid stragglers, using efficient all-reduce algorithms (e.g., ring all-reduce), and overlapping communication and computation through techniques such as pipelining.

Further improvements come from smart data loading and pre-processing. This involves using high-performance data loaders that can efficiently feed data to the GPUs, prefetching data to hide I/O latency, and using optimized data formats. Adjusting the batch size and learning rate schedule based on the number of workers can also significantly improve convergence and overall training speed. Monitoring key metrics like GPU utilization, network bandwidth, and training loss is crucial for identifying bottlenecks and making informed optimization decisions.

5. Design a system for automated hyperparameter tuning that can efficiently explore a large search space and adapt to different model architectures.

An automated hyperparameter tuning system can leverage techniques like Bayesian Optimization or Tree-structured Parzen Estimator (TPE) to efficiently explore the search space. These methods build a probabilistic model of the objective function (validation performance) and intelligently suggest promising hyperparameter configurations to evaluate. Adaptability to different model architectures can be achieved by defining a flexible search space that encompasses all relevant hyperparameters for various models, using conditional hyperparameters where the presence of a hyperparameter depends on the model architecture, and incorporating model-specific knowledge into the optimization process, potentially through custom surrogate models or acquisition functions.

Furthermore, a distributed architecture can accelerate the tuning process by parallelizing the evaluation of different hyperparameter configurations. This involves a central controller that manages the optimization process and distributes tasks to worker nodes, each responsible for training and evaluating a model with a specific set of hyperparameters. The system should also incorporate mechanisms for early stopping to avoid wasting resources on unpromising configurations and support for warm starting to reuse information from previous tuning runs.

6. How would you implement a robust CI/CD pipeline for ML models, including steps for testing, validation, and deployment?

A robust CI/CD pipeline for ML models involves several key steps. First, upon code commit, automated tests are triggered to validate code quality, data integrity, and model performance (e.g., unit tests, integration tests, and model performance tests against a held-out dataset). This includes checking for data drift and model bias. Model validation occurs next, using techniques like A/B testing or shadow deployment to compare the new model against the existing one in a production-like environment. If validation passes, the model is deployed, usually using containerization (e.g., Docker) and orchestration (e.g., Kubernetes) for scalability and reliability.

To implement this, tools like Jenkins, GitLab CI, or GitHub Actions can be used for orchestration. For model registry and versioning, MLflow or similar tools are beneficial. Monitoring is critical post-deployment to detect performance degradation and trigger retraining. Code examples of testing/validation can include:

```python
# Example model performance test
from sklearn.metrics import accuracy_score


def test_model_performance(model, X_test, y_test, threshold=0.8):
    y_pred = model.predict(X_test)
    accuracy = accuracy_score(y_test, y_pred)
    assert accuracy >= threshold, f"Model accuracy {accuracy} below threshold {threshold}"
```

7. Describe a strategy for handling cold starts in a real-time prediction service, ensuring acceptable performance even with limited historical data.

To mitigate cold starts in a real-time prediction service, especially with limited historical data, employ a combination of strategies. First, leverage rule-based or heuristic models as a fallback. These can be based on domain expertise or simple statistical calculations, providing a baseline prediction until enough data is available to train more complex models. Second, use transfer learning. Fine-tune a pre-trained model (trained on a related, larger dataset) with your limited data. This allows you to benefit from the knowledge embedded in the pre-trained model without requiring a massive dataset initially.

Furthermore, implement an exploration-exploitation strategy. Initially, prioritize exploration by randomly sampling predictions or assigning higher weights to uncertain features. As more data is collected, shift towards exploitation by favoring predictions from your evolving model. This balance allows the system to learn and improve its accuracy over time while still providing acceptable predictions during the cold start phase. Consider A/B testing different cold start strategies to identify which performs best for your specific use case.
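
One way to picture the shift from the heuristic fallback to the learned model is a blending weight that grows with the amount of data collected. The sketch below is an assumption for illustration only: the linear schedule, the `warmup` parameter, and both function names are hypothetical, and a real service might prefer a schedule driven by model confidence instead.

```python
def blend_weight(n_observations, warmup=100):
    """Weight given to the learned model: rises linearly from 0 to 1 over `warmup` observations."""
    return min(1.0, max(0.0, n_observations / warmup))


def predict_with_fallback(heuristic_pred, model_pred, n_observations, warmup=100):
    """Blend a rule-based prediction with a model prediction during the cold-start phase."""
    if model_pred is None:
        # No trained model yet: serve the heuristic baseline as-is.
        return heuristic_pred
    w = blend_weight(n_observations, warmup)
    return w * model_pred + (1.0 - w) * heuristic_pred
```

With `warmup=100`, an entity with 50 observations gets an even mix of the two predictions, and one with 100 or more is served by the model alone.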

8. Explain how you would build a system for explaining model predictions (explainability), allowing users to understand the factors that contribute to a particular outcome.

To build an explainable AI system, I would focus on giving users insight into the key factors driving model predictions. This involves a multi-faceted approach. First, I would run feature importance analysis to highlight the most influential features overall; SHAP values or permutation importance can quantify feature contributions. Second, I would provide local explanations for individual predictions; techniques like LIME (Local Interpretable Model-agnostic Explanations) approximate the model locally with a simpler, interpretable model. Third, I would offer visualizations that help users understand feature relationships and their impact on the prediction, such as partial dependence plots or individual conditional expectation plots. Finally, I would present explanations in a user-friendly format that prioritizes clarity and conciseness, for example plain-language explanations or interactive dashboards.

Specifically, I would consider these components:

- Data Preprocessing and Feature Engineering Analysis: Understanding the source features.

- Model-Agnostic Explanation Techniques: Applying SHAP or LIME for local explanations.

- Visualization Tools: Creating interactive plots using libraries like matplotlib, seaborn, or plotly.

- Explanation Interface: Designing a user-friendly interface to present explanations, which could be a web application or a report generation tool.
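
Of the techniques mentioned above, permutation importance is simple enough to sketch without any library. The version below is a minimal illustration, not the SHAP or LIME API: `predict` (one row in, one prediction out) and `metric(y_true, y_pred)` (higher is better) are assumed callables, and rows are assumed to be plain Python sequences.

```python
import random


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Return, per feature, the average drop in `metric` when that feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle one column to break its relationship with the target.
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = []
            for row, value in zip(X, column):
                new_row = list(row)
                new_row[j] = value
                X_perm.append(new_row)
            score = metric(y, [predict(row) for row in X_perm])
            drops.append(baseline - score)
        importances.append(sum(drops) / n_repeats)
    return importances
```

A feature the model ignores yields an importance of zero, while a feature the model relies on yields a positive drop; the resulting list can feed directly into a bar chart in the explanation interface.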

9. How do you approach the challenge of managing and versioning large datasets used for ML training, ensuring reproducibility and traceability?

Managing and versioning large datasets for ML training to ensure reproducibility and traceability involves several key practices. First, I'd use a data versioning tool like DVC (Data Version Control) or lakeFS. These tools allow you to track changes to your data, similar to how Git tracks code changes. This ensures that you can always revert to a specific version of your dataset and know exactly what data was used to train a particular model.

Second, I'd implement a data pipeline with clear steps for data extraction, transformation, and loading (ETL). Each step should be documented and versioned, ideally using tools like Apache Airflow or Prefect for orchestration and lineage tracking. Finally, metadata management is crucial. Tracking metadata like data source, creation date, transformations applied, and data quality metrics helps in understanding the dataset's characteristics and improves traceability. Every experiment should also log the version of the data it was trained on, so that results can be reproduced.
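
The idea of logging a data version with each experiment can be sketched with a content fingerprint. This is not how DVC or lakeFS work internally; it is a simplified illustration, and `file_digest` and `dataset_fingerprint` are hypothetical helper names. The sketch assumes the dataset is a directory of files on local disk.

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dataset_fingerprint(root):
    """Hash the sorted (relative path, file digest) pairs under `root` into one identifier."""
    root = Path(root)
    manifest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        rel = path.relative_to(root).as_posix()
        digest = file_digest(path)
        manifest.update(f"{rel}\t{digest}\n".encode("utf-8"))
    return manifest.hexdigest()
```

Storing the returned fingerprint alongside the model's metrics makes it possible to check later whether two training runs saw byte-identical data.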

10. Design a solution for detecting and mitigating bias in ML models, considering both data bias and algorithmic bias.

To detect and mitigate bias in ML models, a multi-faceted approach addressing both data and algorithmic bias is necessary. For data bias, begin with thorough data exploration and visualization to identify skewed distributions or under-representation of certain groups. Techniques like oversampling minority classes, undersampling majority classes, or using synthetic data generation (e.g., SMOTE) can balance the dataset. Evaluate fairness metrics (e.g., disparate impact, equal opportunity) alongside traditional performance metrics to quantify bias.

Algorithmic bias can be addressed through techniques like: 1) Regularization to prevent overfitting on biased features. 2) Adversarial debiasing which trains a model to be invariant to sensitive attributes. 3) Pre/in/post-processing fairness interventions, such as re-weighting training examples based on sensitive attributes or adjusting model outputs to satisfy fairness constraints. Continuously monitor model performance across different demographic groups in production and retrain models periodically to adapt to evolving data and ensure fairness over time.
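
The two fairness metrics named above can be computed directly from predictions. The sketch below assumes binary predictions and labels (0 or 1) and a single sensitive attribute; the function names and the `unprivileged`/`privileged` arguments are illustrative choices, not a specific library's API.

```python
def selection_rate(predictions, groups, group):
    """Share of positive predictions within one group."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    if not selected:
        raise ValueError(f"no samples for group {group!r}")
    return sum(selected) / len(selected)


def disparate_impact(predictions, groups, unprivileged, privileged):
    """Ratio of selection rates; values well below 1 suggest the unprivileged group is selected less often."""
    privileged_rate = selection_rate(predictions, groups, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no positive predictions")
    return selection_rate(predictions, groups, unprivileged) / privileged_rate


def equal_opportunity_difference(y_true, predictions, groups, unprivileged, privileged):
    """Difference in true positive rates (unprivileged minus privileged)."""
    def true_positive_rate(group):
        positives = [p for t, p, g in zip(y_true, predictions, groups) if g == group and t == 1]
        if not positives:
            raise ValueError(f"no positive labels for group {group!r}")
        return sum(positives) / len(positives)

    return true_positive_rate(unprivileged) - true_positive_rate(privileged)
```

A commonly cited rule of thumb treats a disparate impact ratio below 0.8 as a signal worth investigating, although the appropriate threshold depends on the domain and applicable regulations.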

11. Explain how you would architect a system for federated learning, enabling model training across multiple devices or organizations without sharing raw data.

Federated learning system architecture focuses on training a central model across decentralized devices or organizations holding local data samples. Each participant trains a local model on their data and only shares model updates (e.g., gradients) with a central server. The central server aggregates these updates, often using techniques like federated averaging, to improve the global model. This global model is then redistributed to the participants for further local training. Privacy is enhanced because raw data remains on the devices, and only model updates are exchanged.

Key components include: 1. Local devices/organizations: Train models on their own data. 2. Central server: Aggregates model updates. 3. Privacy-preserving aggregation: Protects updates during aggregation (e.g., secure multi-party computation, optionally combined with differential-privacy noise added to the updates). 4. Model update mechanisms: Define how updates are shared (e.g., synchronous or asynchronous).
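
The aggregation step on the central server can be sketched as a weighted average of client weights, which is the core of federated averaging. The sketch below assumes each client sends a flat list of floats plus the number of local training examples; the function name and input shape are illustrative, and secure aggregation and transport are left out entirely.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighting each client by its number of local examples.

    `client_updates` is a list of (weights, num_examples) pairs.
    """
    if not client_updates:
        raise ValueError("at least one client update is required")
    total_examples = sum(n for _, n in client_updates)
    if total_examples <= 0:
        raise ValueError("total number of examples must be positive")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send vectors of the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_examples
    return averaged
```

Weighting by example count means a client with three times as much data pulls the global model three times as strongly, which is one reason heavily skewed participants may need extra handling.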

12. How would you design an ML infrastructure that can automatically scale to handle fluctuating demand, while minimizing costs?

To design a scalable and cost-effective ML infrastructure, I'd leverage cloud-based services like Amazon SageMaker, Google Vertex AI (formerly AI Platform), or Azure Machine Learning. These platforms offer auto-scaling capabilities for training and inference. For training, I'd run fault-tolerant jobs on spot instances or preemptible VMs to reduce costs, checkpointing regularly so that interrupted jobs can resume, and use managed Kubernetes for orchestration.

For inference, I'd use serverless functions (like AWS Lambda or Google Cloud Functions) or containerized deployments (using Kubernetes with Horizontal Pod Autoscaler) that automatically scale based on incoming request volume. Monitoring tools would track resource utilization, and autoscaling policies would dynamically adjust the number of instances to match the workload, ensuring optimal performance and cost efficiency. We'd also incorporate caching mechanisms to reduce the load on the model endpoints and implement model versioning for easy rollback in case of issues.
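
The scaling decision made by a Horizontal Pod Autoscaler follows a simple proportional rule: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The sketch below reproduces that rule in plain Python for illustration; the function name, the default bounds, and the way the tolerance band is applied are simplifying assumptions, not the autoscaler's actual code.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule: ceil(current_replicas * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: avoid churn from tiny fluctuations.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, four replicas running at twice the target utilization scale to eight, while the `max_replicas` bound acts as a cost ceiling during traffic spikes.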

13. Describe your experience with different ML frameworks (e.g., TensorFlow, PyTorch) and explain when you would choose one over another for a specific project.

I have experience with both TensorFlow and PyTorch. With TensorFlow, I've used Keras for building and training neural networks, particularly for image classification and time series analysis. I appreciate its robust production deployment capabilities, especially TensorFlow Serving and TensorFlow Lite for mobile/embedded devices. With PyTorch, I've focused on projects involving research and experimentation, leveraging its dynamic computation graph for easier debugging and flexibility in defining custom models. I also have experience using both frameworks for NLP tasks involving transformer models.

I would choose TensorFlow for projects prioritizing production readiness and large-scale deployment, where graph-based execution and optimized serving infrastructure are crucial. Its strong community support and established ecosystem are also a plus for enterprise environments. Conversely, I would opt for PyTorch for research-oriented projects, rapid prototyping, or when needing greater flexibility in model design. PyTorch's Python-friendly interface and eager execution make it more intuitive for debugging and experimentation. The choice also depends on team expertise: if the team is highly proficient in PyTorch, I would go with PyTorch, and vice versa.

14. How would you build a system for evaluating the business impact of ML models, quantifying the value they generate and identifying areas for improvement?

To build a system for evaluating the business impact of ML models, I'd focus on defining clear, measurable business metrics upfront (e.g., revenue increase, cost reduction, customer retention). Then, I would establish a framework to track these metrics before and after model deployment, using A/B testing or shadow deployments to isolate the model's impact. This involves setting up data pipelines to collect the necessary data, building dashboards to visualize key performance indicators (KPIs) related to the business goals, and performing statistical analysis to attribute changes in metrics to the model's performance. For example, when evaluating a churn model, we might look at metrics like churn rate, customer lifetime value (CLTV), and retention rate.

To quantify the value and identify areas for improvement, I'd calculate the monetary value of the model's impact on each metric. This might involve estimating the incremental revenue generated or the cost savings achieved. I would also incorporate feedback loops by monitoring user behavior and gathering input from stakeholders to identify areas where the model is underperforming or causing unintended consequences. This feedback can then be used to refine the model, adjust its deployment strategy, or even reconsider the original business goals.
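
The statistical analysis behind an A/B test on a conversion-style metric can be sketched with a two-proportion z-test. The example below uses only the standard library; the function name and the control/treatment framing are illustrative assumptions, and a real analysis would also plan sample sizes in advance and account for multiple comparisons.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference in conversion rates between control (a) and treatment (b)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    if std_err == 0:
        raise ValueError("standard error is zero; rates are degenerate")
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value
```

If the difference is significant, multiplying the uplift `p_b - p_a` by the affected population and the value per conversion gives a first estimate of the incremental revenue attributable to the model.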

15. Explain how you would implement security best practices in an ML infrastructure, protecting sensitive data and preventing unauthorized access to models.

Securing an ML infrastructure involves several key practices. Data encryption, both at rest and in transit, is crucial for protecting sensitive information. Access control lists (ACLs) and role-based access control (RBAC) should be implemented to restrict access to data and models based on user roles and permissions. Regularly auditing access logs helps identify and address potential security breaches.

Furthermore, model security is paramount. Employ techniques like differential privacy and federated learning to protect sensitive data used in training. Implement input validation and sanitization to reduce exposure to adversarial inputs. Regularly monitor model performance for anomalies that could indicate tampering or malicious use. Keeping models under version control and managing infrastructure as code supports reproducibility and security audits.

16. How would you approach the problem of model decay, proactively identifying and addressing performance degradation over time?

To proactively address model decay, I'd implement a monitoring system tracking key performance metrics (accuracy, precision, recall, F1-score) on a rolling basis, using a dedicated validation dataset or real-time inference data. Significant drops in these metrics, detected through statistical tests or predefined thresholds, would trigger alerts, suggesting potential model degradation. I would also track data drift, which refers to changes in the input data distribution, by monitoring features using techniques like calculating the population stability index (PSI).

When decay is detected, several strategies can be applied. These include retraining the model on more recent data, adjusting model parameters based on observed data drift, or ensembling the older model with a newly trained one. Furthermore, periodically re-evaluating the feature set to identify and remove irrelevant or outdated features helps.
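
The population stability index mentioned above compares the binned distribution of a feature at training time with its distribution in production. The sketch below is one simple variant, assuming a numeric feature, equal-width bins derived from the training sample, and a small `eps` to avoid taking the logarithm of zero; other binning schemes (such as quantile bins) are equally common.

```python
import math


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI = sum((actual_i - expected_i) * ln(actual_i / expected_i)) over bins."""
    low, high = min(expected), max(expected)
    width = (high - low) / n_bins
    if width == 0:
        width = 1.0  # Degenerate case: all training values are identical.

    def proportions(values):
        counts = [0] * n_bins
        for value in values:
            # Values outside the training range fall into the first or last bin.
            index = int((value - low) / width)
            index = min(max(index, 0), n_bins - 1)
            counts[index] += 1
        return [max(count / len(values), eps) for count in counts]

    expected_share = proportions(expected)
    actual_share = proportions(actual)
    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(expected_share, actual_share)
    )
```

A commonly used rule of thumb reads a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift, though these cutoffs are conventions rather than guarantees and are best tuned per feature.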

17. Design a system for monitoring the resource utilization of ML infrastructure components, identifying bottlenecks and optimizing efficiency.