Hiring an IT Specialist can be a daunting task, because these roles are vital for maintaining your company's IT infrastructure. Without a skilled IT Specialist, you risk technology disruptions and inefficiencies that can cost your company time and money.

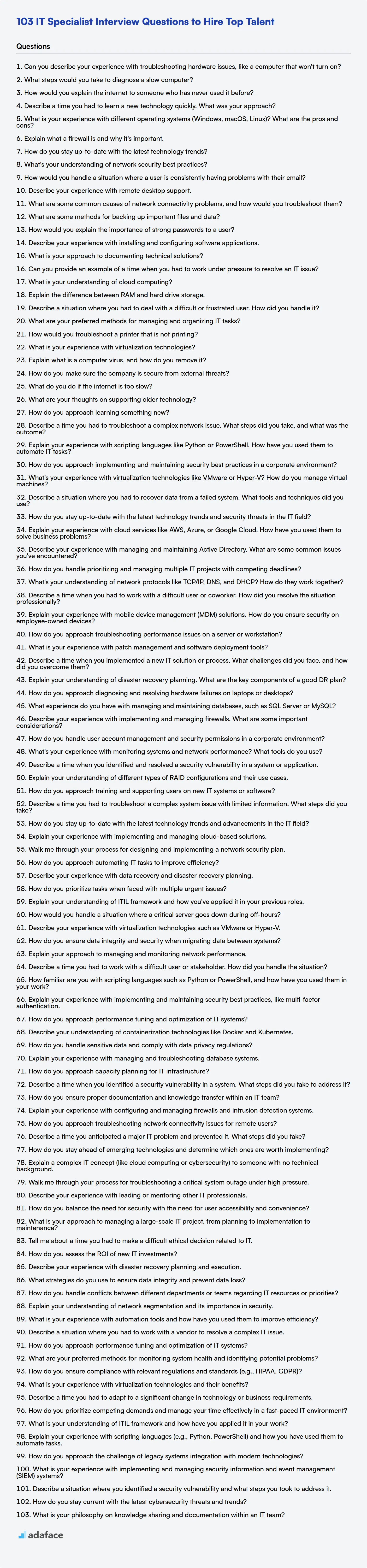

This blog post provides a detailed list of interview questions categorized by experience level (basic, intermediate, advanced, and expert), along with multiple-choice questions (MCQs). This guide helps you assess candidates comprehensively, from their technical understanding to their problem-solving abilities.

Use these questions to find the best IT Specialist for your organization. Before the interviews, you can use our IT Freshers Test to screen candidates quickly.


Basic IT Specialist interview questions

1. Can you describe your experience with troubleshooting hardware issues, like a computer that won't turn on?

When troubleshooting hardware issues like a computer that won't turn on, I typically follow a systematic approach. First, I verify the obvious: is the power cord securely connected to both the computer and the outlet, and is the outlet working? I then check the power supply itself, often by testing it with a multimeter if available or trying a known good power supply. If those checks pass, I'd move on to internal components.

Next, I'd visually inspect the motherboard for signs of damage such as burnt components or bulging capacitors, then reseat components like the RAM and graphics card one at a time to rule out loose connections. If the system has diagnostic LEDs or beep codes, I'd use them to narrow down the problem. Finally, if the system gets far enough to start up, I'd enter the BIOS to check for errors reported against specific hardware.

2. What steps would you take to diagnose a slow computer?

To diagnose a slow computer, I would start by checking the Task Manager (or Activity Monitor on macOS) to identify processes consuming excessive CPU, memory, or disk I/O. I would then close unnecessary applications and browser tabs.

Next, I'd scan for malware using up-to-date antivirus software. I'd also check the drive's health (for example, its SMART status) and, on a hard disk drive, its fragmentation level, defragmenting if needed; SSDs should not be defragmented, because the operating system optimizes them with TRIM instead. Finally, I'd make sure the operating system and drivers are up to date, and consider upgrading the RAM or switching to an SSD if performance remains poor.
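Task Manager and Activity Monitor sort processes for you, but the same triage can be scripted when you collect process snapshots from many machines. The sketch below assumes you already have per-process samples (for example, exported from a monitoring tool); the `ProcessSample` type and the default thresholds are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProcessSample:
    # Hypothetical record describing one process in a snapshot.
    name: str
    cpu_percent: float  # share of total CPU time, 0-100
    memory_mb: float    # resident memory in megabytes


def heavy_processes(samples, cpu_limit=50.0, memory_limit_mb=1024.0):
    """Return processes over either threshold, heaviest CPU users first."""
    flagged = [
        s for s in samples
        if s.cpu_percent >= cpu_limit or s.memory_mb >= memory_limit_mb
    ]
    # Sort by CPU first, then memory, both descending.
    return sorted(flagged, key=lambda s: (s.cpu_percent, s.memory_mb), reverse=True)


snapshot = [
    ProcessSample("browser", 12.0, 2300.0),
    ProcessSample("indexer", 85.0, 300.0),
    ProcessSample("editor", 3.0, 250.0),
]
for p in heavy_processes(snapshot):
    print(f"{p.name}: {p.cpu_percent:.0f}% CPU, {p.memory_mb:.0f} MB")
```

Flagging on either CPU or memory mirrors the manual check: a process can slow the machine by hogging the processor or by forcing the system to swap.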

3. How would you explain the internet to someone who has never used it before?

Imagine a vast network of interconnected computers all over the world. That's the internet. It's like a global library, post office, and telephone system all rolled into one. You can use it to find information, send messages, watch videos, shop, and much more.

Think of it as a way for computers to talk to each other and share information. To access it, you need a device like a phone or computer and a connection to the internet, often through something called Wi-Fi or cellular data. Then, you can use applications or a web browser to navigate and explore this network.

4. Describe a time you had to learn a new technology quickly. What was your approach?

During my previous role, I was tasked with integrating a new GraphQL API into our existing React application. I had limited prior experience with GraphQL. My approach involved several key steps. First, I focused on understanding the fundamental concepts of GraphQL, including schemas, queries, mutations, and subscriptions. I used online resources like the official GraphQL documentation and tutorials from platforms like Apollo and Udemy. Next, I experimented with the new API using tools like GraphiQL to explore the schema and construct queries. Finally, I began integrating the API into our React application using Apollo Client, focusing on implementing simple queries first and gradually increasing complexity.

To accelerate my learning, I also paired with a senior engineer who had experience with GraphQL. This allowed me to quickly resolve any roadblocks and learn best practices for implementation within our specific codebase. I also actively contributed to code reviews to improve my understanding of the overall system. This experience taught me the importance of a structured approach to learning new technologies: start with the basics, experiment with practical examples, and leverage the expertise of others.

5. What is your experience with different operating systems (Windows, macOS, Linux)? What are the pros and cons?

I have experience with Windows, macOS, and Linux. Windows is widely used and has excellent software compatibility, but it can be resource-intensive and is a frequent target for malware. macOS is known for its user-friendliness and strong integration with Apple hardware, but it is limited to Apple devices and can be expensive. Linux offers great flexibility and customization and is open source, but it can have a steeper learning curve and less commercial software support.

Specifically, on Windows, I've used the command line and PowerShell for scripting and automation. With macOS, I'm familiar with the terminal and its BSD-based utilities. On Linux, I've worked with distributions such as Ubuntu and CentOS, using the bash shell for system administration tasks. I'm comfortable with package managers like apt and yum for installing and managing software; on Debian-based systems, I frequently run `sudo apt update && sudo apt upgrade` to apply system updates.

6. Explain what a firewall is and why it's important.

A firewall is a network security system that monitors and controls incoming and outgoing network traffic based on predetermined security rules. Think of it as a gatekeeper that examines each packet of data and decides whether it should be allowed to pass through or be blocked.

Firewalls are important because they protect networks and devices from unauthorized access, malicious attacks (like malware and viruses), and data breaches. Without a firewall, a network is more vulnerable to security threats that can compromise sensitive information and disrupt operations. They provide a critical first line of defense in a comprehensive security strategy.
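To make the gatekeeper idea concrete, here is a minimal sketch of how a packet filter evaluates an ordered rule list: the first matching rule decides, and anything unmatched falls through to a default deny. Real firewalls add connection tracking, protocols, and much more; the `Rule` type and the example rules and addresses below are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    action: str                 # "allow" or "deny"
    source: str                 # source network in CIDR form, e.g. "10.0.0.0/8"
    port: Optional[int] = None  # destination port; None matches any port


def evaluate(rules, src_ip, dst_port, default="deny"):
    """Return the action of the first rule matching the packet, else the default."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        in_network = addr in ipaddress.ip_network(rule.source)
        port_matches = rule.port is None or rule.port == dst_port
        if in_network and port_matches:
            return rule.action
    return default


rules = [
    Rule("deny", "203.0.113.0/24"),        # block a known-bad range entirely
    Rule("allow", "0.0.0.0/0", port=443),  # allow HTTPS from anywhere
    Rule("allow", "10.0.0.0/8", port=22),  # allow SSH only from the internal network
]

print(evaluate(rules, "198.51.100.7", 443))  # allow
print(evaluate(rules, "203.0.113.9", 443))   # deny (the first rule wins)
print(evaluate(rules, "198.51.100.7", 22))   # deny (no rule matches)
```

Rule order matters: placing the broad HTTPS allow above the deny would let the blocked range through on port 443.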

7. How do you stay up-to-date with the latest technology trends?

I stay up-to-date with technology trends through a variety of methods. I regularly read industry news websites and blogs, such as Hacker News, Reddit's r/programming, and specific technology vendor blogs. I also follow key influencers and thought leaders on social media platforms like Twitter and LinkedIn.

To stay current with practical skills, I dedicate time to exploring new tools and frameworks. This might involve working on personal projects, taking online courses on platforms like Coursera or Udemy, or contributing to open-source projects. I also attend relevant webinars, conferences, and meetups whenever possible to network with other professionals and learn about the latest advancements firsthand. For example, if a new JavaScript framework is trending, I might build a small application with it.

8. What's your understanding of network security best practices?

Network security best practices revolve around protecting network infrastructure and data from unauthorized access, use, disclosure, disruption, modification, or destruction. Key practices include implementing strong firewalls, intrusion detection/prevention systems (IDS/IPS), and VPNs for secure remote access. Regular security audits and vulnerability assessments are crucial to identify and remediate weaknesses.

Other important aspects are:

- Strong Password Policies: Enforcing complex passwords and multi-factor authentication (MFA).

- Principle of Least Privilege: Granting users only the necessary access rights.

- Regular Software Updates and Patching: Keeping systems updated to address known vulnerabilities.

- Network Segmentation: Dividing the network into smaller, isolated segments to limit the impact of breaches.

- Data Loss Prevention (DLP): Implementing measures to prevent sensitive data from leaving the network.

- Employee Training: Educating employees about phishing, social engineering, and other security threats.

9. How would you handle a situation where a user is consistently having problems with their email?

First, I'd gather information. I'd ask the user specific questions about the problems they're experiencing: Are they unable to send/receive emails? Are there error messages? What email client are they using (e.g., Outlook, Gmail, Thunderbird)? When did the problems start? This helps narrow down the potential causes.

Next, I'd try basic troubleshooting steps depending on the issue. This could involve checking their internet connection, verifying email server settings (incoming/outgoing), ensuring the email client is up-to-date, checking spam/junk folders, or testing the email account on a different device. If the problem persists, I'd consider more advanced troubleshooting, such as examining email logs (if available), checking for conflicts with other software, or escalating the issue to a higher-level support team if necessary. Throughout the process, clear communication with the user is key, keeping them informed of the steps taken and expected outcomes.

10. Describe your experience with remote desktop support.

I have extensive experience providing remote desktop support, assisting users with technical issues from various locations. I'm proficient in using remote access tools such as TeamViewer, AnyDesk, and Remote Desktop Protocol (RDP) to troubleshoot and resolve problems on Windows, macOS, and Linux systems. My experience includes diagnosing and fixing software errors, configuring network settings, installing applications, and providing user training remotely. I prioritize clear communication, ensuring users understand the steps being taken to resolve their issues. I'm also comfortable using ticketing systems like Jira and Zendesk to manage support requests and track progress.

Specifically, I've helped users recover from system crashes, resolve printer connectivity problems, configure email clients, and work through general software questions. I'm adept at identifying root causes quickly, minimizing downtime and keeping users productive. When an issue is beyond my scope, I escalate it to the appropriate team, documenting the steps taken and my findings to ensure a smooth handover.

11. What are some common causes of network connectivity problems, and how would you troubleshoot them?

Common causes of network connectivity problems include: faulty network hardware (routers, switches, cables), incorrect IP configurations (duplicate IPs, wrong gateway or DNS), firewall issues blocking traffic, wireless interference, and software bugs.

To troubleshoot, I'd start by checking physical connections, then use ping and traceroute (tracert on Windows) to identify where connectivity fails. Next, I'd verify the IP configuration with ipconfig on Windows, ip addr (or the older ifconfig) on Linux, or ifconfig on macOS, and examine firewall rules and logs. Restarting network devices often clears transient faults, and a network analyzer such as Wireshark can capture and analyze traffic when the cause is less obvious.
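When ping is blocked (many hosts drop ICMP), a TCP connection test is a useful complement: it separates a DNS failure from a routing, firewall, or service failure on a specific port. A minimal sketch using only Python's standard library; the function name and the diagnosis messages are illustrative.

```python
import socket


def check_service(host, port, timeout=3.0):
    """Resolve host, then try a TCP connection; return a short diagnosis string."""
    try:
        # Step 1: name resolution. Failing here points at DNS settings or the resolver.
        socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        return f"DNS lookup failed for {host}: {exc}"
    try:
        # Step 2: TCP handshake. Failing here points at routing, a firewall, or the service.
        with socket.create_connection((host, port), timeout=timeout):
            return f"{host}:{port} is reachable"
    except OSError as exc:
        return f"{host}:{port} resolved but is not reachable: {exc}"
```

For example, `check_service("mail.example.com", 587)` would test whether a (placeholder) mail server accepts connections on the SMTP submission port.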

12. What are some methods for backing up important files and data?

Several methods exist for backing up important files and data. A common approach is using external hard drives or USB drives for manual backups. Cloud storage services (like Google Drive, Dropbox, or AWS S3) offer automated and off-site backups, protecting against local disasters. Network Attached Storage (NAS) devices provide centralized storage and backup solutions within a local network.

Specific backup strategies include full backups (copying all data), incremental backups (copying only changes since the last backup), and differential backups (copying changes since the last full backup). Regular testing of backups is crucial to ensure data recoverability. Consider implementing the 3-2-1 backup rule: keep three copies of your data on two different media, with one copy offsite.
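The difference between the three strategies comes down to which reference time a file's modification time is compared against. The sketch below assumes you track the time of the last full backup and of the last backup of any kind; the file names and timestamps are made up for illustration.

```python
def files_to_copy(files, strategy, last_full, last_any):
    """Select files for a backup run.

    files: dict mapping path -> last-modified timestamp (seconds)
    strategy: "full", "incremental", or "differential"
    last_full: time of the most recent full backup
    last_any: time of the most recent backup of any type
    """
    if strategy == "full":
        return sorted(files)  # everything, regardless of age
    if strategy == "incremental":
        cutoff = last_any     # changes since the last backup of any kind
    elif strategy == "differential":
        cutoff = last_full    # changes since the last full backup
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return sorted(path for path, mtime in files.items() if mtime > cutoff)


# A full backup ran at t=100 and an incremental at t=200.
files = {"a.docx": 50, "b.xlsx": 150, "c.pptx": 250}
print(files_to_copy(files, "full", last_full=100, last_any=200))          # ['a.docx', 'b.xlsx', 'c.pptx']
print(files_to_copy(files, "incremental", last_full=100, last_any=200))   # ['c.pptx']
print(files_to_copy(files, "differential", last_full=100, last_any=200))  # ['b.xlsx', 'c.pptx']
```

This is why restoring from incrementals needs the full backup plus every incremental since, while a differential restore needs only the full backup plus the latest differential. Real backup software often relies on filesystem change tracking rather than timestamps alone.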

13. How would you explain the importance of strong passwords to a user?

Strong passwords are vital for protecting your personal information and online accounts from unauthorized access. Think of your password as the key to your digital life. If someone gets hold of it, they can access your emails, bank accounts, social media, and much more, potentially leading to identity theft, financial loss, or reputational damage.

A strong password acts as a robust defense. It should be unique, complex, and difficult to guess. Avoid using easily obtainable information like your name, birthday, or pet's name. Instead, aim for a combination of uppercase and lowercase letters, numbers, and symbols. The longer the password, the better. Using a password manager can help you create and store strong, unique passwords for each of your accounts.
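Password managers generate passwords from a cryptographically secure random source. Python's `secrets` module provides one, and the sketch below builds a password containing every character class mentioned above. The default length of 16 is an assumption, not a standard.

```python
import secrets
import string


def generate_password(length=16):
    """Generate a random password with upper, lower, digit, and symbol characters."""
    classes = [string.ascii_uppercase, string.ascii_lowercase,
               string.digits, string.punctuation]
    if length < len(classes):
        raise ValueError("length must allow one character from each class")
    alphabet = "".join(classes)
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every class is present; rejecting and redrawing keeps
        # the result uniformly random among valid passwords.
        if all(any(ch in cls for ch in candidate) for cls in classes):
            return candidate


print(generate_password())
```

Using `secrets` rather than the `random` module matters here, because `random` is designed for simulations, not for unpredictable security values.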

14. Describe your experience with installing and configuring software applications.

I have extensive experience installing and configuring software applications on Windows, Linux, and macOS, including desktop applications, server software, and command-line tools. I'm comfortable using graphical installers, package managers (like apt, yum, and brew), and command-line interfaces for installation. I follow the principle of least privilege during installation and always back up the system before installing anything.

Configuration tasks I've handled involve setting environment variables, modifying configuration files (e.g., .env, YAML, XML), configuring databases, and setting up network services. I am also experienced with automation tools like Ansible for consistent and repeatable deployments.

15. What is your approach to documenting technical solutions?

My approach to documenting technical solutions involves creating clear, concise, and maintainable documentation that caters to different audiences. I typically start by outlining the problem being solved, the proposed solution, and the reasoning behind choosing that particular approach. I use a combination of diagrams, flowcharts, and textual descriptions to illustrate the solution's architecture and functionality.

Specifically, I focus on writing documentation that addresses the following:

- High-level overview: A general description of the solution for stakeholders.

- Technical specifications: Detailed information for developers and engineers, including API documentation, data models, and code samples. I often use tools such as Swagger (OpenAPI) to generate these.

- Implementation details: Step-by-step instructions for implementing the solution, including configuration settings and dependencies. Example: `npm install --save fastify`

- Troubleshooting guide: Common issues and their solutions.

- Deployment instructions: Steps to deploy the solution to different environments.

I believe in keeping documentation up-to-date and easily accessible, usually within the codebase (e.g., using README files or a dedicated documentation folder) or in a centralized knowledge base (like Confluence or a dedicated documentation website).

16. Can you provide an example of a time when you had to work under pressure to resolve an IT issue?

During a critical system migration, we encountered a major database corruption issue just hours before the go-live. The pressure was immense as the entire company's operations depended on this migration. I quickly assembled a team of database admins, developers, and infrastructure engineers. We worked collaboratively around the clock to diagnose the root cause, which turned out to be an unexpected incompatibility between the new database version and a legacy application.

To resolve the issue under tight time pressure, we implemented a temporary workaround: a data transformation script, built with SQL queries and data manipulation tools, that bridged the compatibility gap. At the same time, we engaged the application vendor for a permanent fix. We monitored the system closely after go-live, and although it was a tense situation, we averted a major business disruption and ensured a smooth transition for the company.

17. What is your understanding of cloud computing?

Cloud computing is essentially the delivery of computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the Internet ("the cloud") to offer faster innovation, flexible resources, and economies of scale. You typically pay only for cloud services you use, helping you lower your operating costs, run your infrastructure more efficiently, and scale as your business needs change.

Key characteristics include on-demand self-service, broad network access, resource pooling, rapid elasticity, and measured service. Examples include services like AWS, Azure, and Google Cloud, offering everything from basic compute power and storage to specialized services like machine learning and data analytics. These resources can be provisioned and released quickly, reducing the need for long-term contracts and hardware investments.

18. Explain the difference between RAM and hard drive storage.

RAM (Random Access Memory) and hard drive storage serve different purposes in a computer system. RAM is a type of volatile memory used for short-term data storage that the CPU actively uses. It provides very fast read and write speeds, enabling quick access to running applications and data. However, data in RAM is lost when the power is turned off.

Storage drives (HDDs and SSDs) provide non-volatile, long-term storage: they retain data even when the power is off. They offer much larger capacities than RAM, but their read and write speeds are significantly slower. They're primarily used for storing the operating system, applications, files, and other data that must be retained persistently.

19. Describe a situation where you had to deal with a difficult or frustrated user. How did you handle it?

In a previous role, I supported a software application used by external clients. One client called in extremely frustrated because they were unable to access a critical report needed for a deadline. They had been trying for hours and were very upset, raising their voice and using strong language.

I remained calm and acknowledged their frustration, apologizing for the inconvenience. I actively listened to fully understand the issue, resisting the urge to interrupt. I assured them I would do everything I could to resolve the problem quickly. After confirming their account details and attempting to reset their password, I discovered a system-wide authentication issue that hadn't been reported yet. I escalated the problem to the development team, and while they worked on a fix, I provided the client with a manual workaround to generate the report, which resolved their immediate need. I followed up after the system issue was fully resolved to ensure they could access the report normally and thanked them for their patience. They were appreciative that I had not dismissed them out of hand and provided them with a viable solution.

20. What are your preferred methods for managing and organizing IT tasks?

I prefer a combination of approaches for managing IT tasks. Primarily, I utilize a task management system like Jira or Trello to track progress, assign ownership, and set priorities. This allows for transparency and collaboration within the team. I also leverage techniques like Kanban to visualize workflow and identify bottlenecks.

Additionally, I rely heavily on documentation and clear communication. For recurring tasks, I create detailed runbooks or standard operating procedures (SOPs) to ensure consistency and reduce errors. I use tools like Slack or Microsoft Teams for real-time communication and quick issue resolution, and where appropriate, I automate repetitive tasks to free up time for more strategic initiatives.

21. How would you troubleshoot a printer that is not printing?

First, check the basics: Is the printer powered on? Is it connected to the computer (either wired or wirelessly)? Is there paper in the tray, and is the ink/toner at a reasonable level? Ensure there are no paper jams. Then, check the computer: Is the correct printer selected as the default? Is the printer online and not paused in the print queue? Restarting both the printer and computer can often resolve simple software glitches.

If the issue persists, try printing a test page directly from the printer's control panel. If that works, the problem likely lies with the computer or the connection, so update or reinstall the printer driver. If the test page also fails, the printer itself probably has a hardware issue. In either case, check the printer's display panel for error messages, which can point to the cause.

22. What is your experience with virtualization technologies?

I have experience working with virtualization technologies such as VMware vSphere and Docker. With VMware, I've performed tasks including virtual machine creation, resource allocation, and snapshot management. I also have experience troubleshooting performance issues within virtualized environments.

Regarding Docker, I'm familiar with containerizing applications, building Docker images using Dockerfiles, and managing containers using Docker Compose. I understand concepts like Docker networking and volume management, and I've used Docker for both development and deployment workflows. I've also worked with orchestration tools like Kubernetes at a basic level, understanding its role in managing containerized applications at scale.

23. Explain what a computer virus is and how you would remove it.

A computer virus is a type of malicious software that, when executed, replicates itself by modifying other computer programs and inserting its own code. This self-replication allows it to spread from one computer to another, often without the user's knowledge. Viruses can cause various types of damage, from slowing down the computer to corrupting data and stealing personal information.

To remove a computer virus, you can follow these steps:

- Update your antivirus software: Make sure it has the latest virus definitions before you disconnect and scan.

- Disconnect from the internet: This prevents the virus from spreading or receiving further instructions.

- Run a full system scan: Use reputable antivirus software to scan the entire computer.

- Quarantine or delete infected files: The antivirus software should identify infected files and offer to quarantine or delete them.

- Restart and rescan: After removing the virus, restart the computer and run another scan to confirm that no traces remain. If the problem continues, consider using a specialized removal tool or, as a last resort, reinstalling the operating system.

24. How do you make sure the company is secure from external threats?

Securing a company from external threats involves a multi-layered approach. A strong firewall is crucial for controlling network traffic and preventing unauthorized access. Regularly applying software updates and security patches mitigates known vulnerabilities that attackers can exploit.

Implementing intrusion detection and prevention systems (IDS/IPS) helps identify and block malicious activity in real time. Employee training on phishing and social engineering is equally vital, as human error is often a significant vulnerability. Furthermore, enforcing strong password policies and multi-factor authentication and conducting periodic security audits and penetration tests enhance the overall security posture.

25. What do you do if the internet is too slow?

When the internet is slow, I first try to identify the cause. I check if the issue is widespread (affecting all devices) or localized to my machine. I also try different websites or services to see if the problem is specific to one site. Then, I proceed with troubleshooting steps.

Some common steps include:

- Restarting my modem and router.

- Checking the Wi-Fi signal strength and making sure I'm within range.

- Closing unnecessary applications or browser tabs that might be consuming bandwidth.

- Running a speed test to quantify the actual speed.

- Contacting my internet service provider if the problem persists or if the speed test results are significantly lower than my subscribed plan.

For more technical issues, I might investigate DNS server problems or network congestion using tools like ping or traceroute.
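When comparing a speed test or a file download against your plan, watch the units: ISPs advertise megabits per second (Mbps), while download dialogs often show megabytes per second (MB/s), and there are 8 bits in a byte. A small helper makes the conversion explicit; it assumes decimal units (1 megabit = 1,000,000 bits), the usual convention for network speeds.

```python
def throughput_mbps(bytes_transferred, seconds):
    """Convert a measured transfer into megabits per second (decimal units)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    bits = bytes_transferred * 8
    return bits / seconds / 1_000_000


# A 250 MB download that took 20 seconds:
print(throughput_mbps(250_000_000, 20))  # 100.0
```

So a browser showing 12.5 MB/s is already delivering the full speed of a 100 Mbps plan.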

26. What are your thoughts on supporting older technology?

Supporting older technology presents a trade-off. On one hand, it can be crucial for maintaining compatibility, avoiding disruption for existing users, and maximizing the lifespan of investments. It also ensures accessibility for those who may not be able to afford the latest hardware or software. Think about critical infrastructure or medical devices that rely on older systems; updates can be risky and expensive.

However, supporting older technology can also be expensive in terms of development effort, security vulnerabilities, and performance. Older systems often lack modern security features, making them vulnerable to attacks. Maintaining compatibility can also hinder innovation and prevent the adoption of newer, more efficient technologies. A careful cost-benefit analysis should be performed considering factors like user base, security risks, and potential for innovation to determine the appropriate level of support.

27. How do you approach learning something new?

When approaching something new, I start by defining clear goals. What do I want to achieve by learning this? This helps focus my efforts. Then, I gather resources – books, online courses, documentation, and tutorials – and create a structured learning plan, breaking the topic into smaller, manageable chunks. I prioritize understanding the fundamentals before moving to more complex concepts.

Next, I actively practice what I learn. For technical topics, this might involve writing code, experimenting with APIs, or building small projects. I seek feedback regularly, whether from mentors, online communities, or by reviewing my own work. I embrace mistakes as learning opportunities and adjust my approach as needed. Finally, I make sure to document my learning journey for future reference.

Intermediate IT Specialist interview questions

1. Describe a time you had to troubleshoot a complex network issue. What steps did you take, and what was the outcome?

In a previous role, we experienced intermittent connectivity issues affecting a critical application used by remote workers. The problem manifested as dropped connections and slow response times, making it difficult to pinpoint the root cause. My initial steps involved gathering data: I examined network device logs (routers, firewalls), monitored bandwidth utilization, and ran packet captures during periods of high latency. This revealed a pattern of excessive retransmissions and TCP resets pointing to a potential issue with the ISP link.

Further investigation with the ISP confirmed they were experiencing congestion during peak hours. As a temporary workaround, we implemented traffic shaping policies to prioritize application traffic. We also worked with the ISP to explore upgrading our bandwidth and implementing QoS on their end. Ultimately, the bandwidth upgrade resolved the congestion issue, leading to stable and reliable connectivity for our remote users. The key was systematic data gathering, analysis, and collaboration with the ISP to address the problem at its source.
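Traffic shaping is commonly built on a token bucket: tokens accrue at the permitted rate up to a burst limit, and a packet may be sent only when enough tokens are available. The sketch below illustrates the idea for a single traffic class; it is not how any particular router implements QoS, and the rate and burst values are arbitrary.

```python
class TokenBucket:
    """Token bucket: refills at `rate` tokens per second, holds at most `burst`."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so an initial burst is allowed
        self.last = now

    def allow(self, size, now):
        """Return True if a packet costing `size` tokens may be sent at time `now`."""
        # Refill for the elapsed time, never exceeding the burst capacity.
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size
            return True
        return False  # a shaper would queue this packet; a policer would drop it


# 1,000 bytes per second sustained, bursts of up to 1,500 bytes.
bucket = TokenBucket(rate=1000, burst=1500)
print(bucket.allow(1500, now=0.0))  # True  (uses the full burst)
print(bucket.allow(500, now=0.25))  # False (only 250 tokens have refilled)
print(bucket.allow(500, now=0.5))   # True  (500 tokens available again)
```

Prioritizing application traffic then amounts to giving its class a larger rate or serving its queue first, while other classes wait for tokens.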

2. Explain your experience with scripting languages like Python or PowerShell. How have you used them to automate IT tasks?

I have experience using both Python and PowerShell to automate IT tasks. With Python, I've primarily focused on automating tasks related to data processing, network device configuration, and report generation. For example, I created a script to automatically parse log files from multiple servers, extract relevant information, and generate a summarized report. Another project involved using Python with libraries like Netmiko to automate configuration backups and changes on Cisco network devices.

I've used PowerShell extensively for Windows system administration, including automating user account creation and management, software installation and patching across multiple machines, and generating system inventory reports. Within Active Directory, I've used it for tasks such as bulk user modifications and group membership management. I often leverage PowerShell's cmdlets and its integration with COM objects to interact with various Windows services and applications programmatically.
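A trimmed-down version of the log-summary script described above might look like the sketch below. The log line format (timestamp, level, message), the server names, and the sample lines are hypothetical; real logs need a pattern that matches their actual format, and the lines would normally be read from files.

```python
import re
from collections import Counter

# Hypothetical format: "2024-05-01 12:00:03 ERROR Disk quota exceeded"
LINE = re.compile(r"^(\S+ \S+) (INFO|WARNING|ERROR) (.*)$")


def summarize(logs_by_server):
    """Count log levels per server and find the most common error messages overall."""
    per_server = {}
    errors = Counter()
    for server, lines in logs_by_server.items():
        levels = Counter()
        for line in lines:
            match = LINE.match(line)
            if not match:
                continue  # skip lines that don't fit the expected format
            _, level, message = match.groups()
            levels[level] += 1
            if level == "ERROR":
                errors[message] += 1
        per_server[server] = dict(levels)
    return per_server, errors.most_common(3)


logs = {
    "web01": ["2024-05-01 12:00:03 ERROR Disk quota exceeded",
              "2024-05-01 12:01:10 INFO Request served"],
    "web02": ["2024-05-01 12:02:44 ERROR Disk quota exceeded",
              "garbled line"],
}
counts, top_errors = summarize(logs)
print(counts)      # {'web01': {'ERROR': 1, 'INFO': 1}, 'web02': {'ERROR': 1}}
print(top_errors)  # [('Disk quota exceeded', 2)]
```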

3. How do you approach implementing and maintaining security best practices in a corporate environment?

Implementing and maintaining security best practices involves a multi-faceted approach. Firstly, I'd focus on establishing a strong security foundation through policies, procedures, and employee training covering areas like password management, phishing awareness, and data handling. This includes regular security audits and vulnerability assessments to identify weaknesses. Technical controls like firewalls, intrusion detection systems, and endpoint protection are crucial, alongside access control mechanisms like multi-factor authentication and the principle of least privilege.

Secondly, continuous monitoring and improvement are essential. This involves staying updated on the latest threats, security patches, and industry best practices. Regularly reviewing and updating security policies, conducting penetration testing, and fostering a security-conscious culture throughout the organization are all crucial. Incident response plans should be in place and tested regularly to ensure a swift and effective response to any security breach. Finally, tools that automate security tasks and generate compliance reports, for example against the CIS Benchmarks, are also very important.

4. What's your experience with virtualization technologies like VMware or Hyper-V? How do you manage virtual machines?

I have experience with both VMware and Hyper-V, primarily in development and testing environments. I've used VMware Workstation and Hyper-V Manager to create, configure, and manage virtual machines. This includes tasks such as allocating resources (CPU, memory, storage), installing operating systems, configuring networking, and taking snapshots for rollback purposes.

Specifically, with VMware, I've used vCenter Converter to migrate physical machines to virtual machines. With Hyper-V, I've used PowerShell cmdlets for scripting VM creation and management. My management typically involves using the respective GUIs or command-line tools to monitor performance, apply updates, and troubleshoot issues. I've also worked with virtual networking concepts like virtual switches and VLANs within these environments.

5. Describe a situation where you had to recover data from a failed system. What tools and techniques did you use?

In a previous role, a critical database server experienced a hardware failure, rendering it inaccessible. We initiated our disaster recovery plan, which involved restoring the database from the most recent backup. The backup was stored on an offsite tape library. We used the database vendor's native restore tools (e.g., pg_restore for PostgreSQL, or RESTORE DATABASE for SQL Server) to bring the database back online on a standby server.

To minimize data loss between the last backup and the failure, we also reviewed the transaction logs. Fortunately, we had configured transaction log shipping to a separate server, which allowed us to recover the most recent transactions. We applied these logs to the restored database to bring it to a consistent state as close as possible to the point of failure. Finally, we used checksum utilities such as md5sum and sha256sum to confirm that the restored files matched the originals.
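The checksum comparison can be scripted when many files are restored at once. This sketch computes SHA-256 digests with Python's `hashlib` (the same algorithm `sha256sum` uses) and compares them against a manifest recorded at backup time; the manifest format, a dict mapping relative path to hex digest, is an assumption.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(restore_root, manifest):
    """Return (missing, mismatched) paths compared with the backup-time manifest."""
    root = Path(restore_root)
    missing, mismatched = [], []
    for rel_path, expected in manifest.items():
        target = root / rel_path
        if not target.is_file():
            missing.append(rel_path)
        elif sha256_of(target) != expected:
            mismatched.append(rel_path)
    return missing, mismatched
```

Two empty lists mean every file in the manifest was restored and matches its original digest.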

6. How do you stay up-to-date with the latest technology trends and security threats in the IT field?

I stay updated through a multi-faceted approach. I regularly read industry blogs and news sites like TechCrunch, Wired, and specialized security blogs like KrebsOnSecurity and Dark Reading. I also follow key influencers and thought leaders on social media platforms like Twitter and LinkedIn.

Furthermore, I actively participate in online communities and forums such as Reddit's r/programming and Stack Overflow to learn from peers and discuss current challenges. I attend webinars and online conferences to gain insights into emerging technologies and security threats. I also explore official documentation and experiment with new tools in personal projects.

7. Explain your experience with cloud services like AWS, Azure, or Google Cloud. How have you used them to solve business problems?

I have experience with AWS, primarily focusing on services like EC2 for compute, S3 for storage, and Lambda for serverless functions. I've used EC2 to deploy and manage web applications, scaling instances based on demand using Auto Scaling groups. S3 has been crucial for storing static assets, backups, and large datasets, taking advantage of its durability and cost-effectiveness. Lambda has enabled me to build event-driven applications and automate tasks, such as processing uploaded images or triggering workflows based on database changes.

For example, I once used AWS Lambda and S3 to build an image resizing service. When a user uploaded an image to S3, a Lambda function was triggered, automatically resizing the image to several sizes and storing the results back in S3. This reduced bandwidth costs and improved website performance, because the site could serve an appropriately sized image for each user's device. Other services I've interacted with include CloudWatch for monitoring and IAM for access control.
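A minimal sketch of the planning half of such a Lambda function, in Python. It assumes the standard S3 event notification shape (`Records[].s3.bucket.name` and `Records[].s3.object.key`, with URL-encoded keys). The target widths and the `resized/<width>/` key layout are hypothetical, and the actual download, resize, and upload (for example with boto3 and Pillow) are deliberately left out.

```python
from urllib.parse import unquote_plus

# Hypothetical target widths for responsive images (pixels).
TARGET_WIDTHS = (320, 768, 1280)

def objects_from_event(event):
    """Extract (bucket, key) pairs from an S3 event notification.

    S3 URL-encodes object keys in the event (spaces arrive as '+'),
    so they must be decoded before being used with the S3 API.
    """
    return [
        (rec["s3"]["bucket"]["name"], unquote_plus(rec["s3"]["object"]["key"]))
        for rec in event.get("Records", [])
    ]

def plan_resizes(width, height, key, target_widths=TARGET_WIDTHS):
    """Return (new_width, new_height, output_key) for each target size.

    Aspect ratio is preserved, and images are never upscaled.
    The 'resized/<width>/' output layout is a hypothetical convention.
    """
    plans = []
    for target in target_widths:
        if target >= width:
            continue  # skip sizes that would enlarge the original
        new_height = max(1, round(height * target / width))
        plans.append((target, new_height, f"resized/{target}/{key}"))
    return plans

def lambda_handler(event, context):
    """Entry point; a real function would fetch each object, read its
    dimensions, resize it, and write the variants back to S3."""
    return [{"bucket": b, "key": k} for b, k in objects_from_event(event)]
```

One design point worth noting: if the resized files are written to the same bucket that triggers the function, the trigger should be restricted (for example, with a key prefix filter such as `uploads/`) so the function's own output doesn't invoke it again.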

8. Describe your experience with managing and maintaining Active Directory. What are some common issues you've encountered?

I've managed Active Directory environments for several years, handling tasks like user and group management, GPO creation and application, DNS management, and security auditing. I'm proficient in using the Active Directory Administrative Center, PowerShell, and command-line tools for these tasks. I've worked with multi-domain forests and understand replication topologies.

Common issues I've encountered include replication problems, DNS resolution failures, GPO conflicts, account lockouts, and performance bottlenecks. I've resolved these through troubleshooting tools like dcdiag, repadmin, and performance monitoring, implementing solutions such as correcting DNS settings, optimizing GPOs, and adjusting replication schedules.

9. How do you handle prioritizing and managing multiple IT projects with competing deadlines?

I prioritize IT projects by assessing their strategic alignment with business goals, potential impact, and urgency. I use a framework like the Eisenhower Matrix (urgent/important) or weighted scoring to rank projects. I then work with stakeholders to confirm priorities and set realistic deadlines, acknowledging potential resource constraints.

To manage multiple projects, I break down each project into smaller, manageable tasks and use project management tools (e.g., Jira, Asana) to track progress, dependencies, and resource allocation. I hold regular status meetings to identify roadblocks, adjust priorities as needed, and ensure clear communication among team members and stakeholders. If conflicts arise, I focus on open communication and collaborative problem-solving to find solutions that minimize disruption.

10. What's your understanding of network protocols like TCP/IP, DNS, and DHCP? How do they work together?

TCP/IP is the foundational protocol suite for the internet, handling reliable data transmission (TCP) and addressing/routing (IP). DNS (Domain Name System) translates human-readable domain names (like google.com) into IP addresses that computers use to locate each other. DHCP (Dynamic Host Configuration Protocol) automatically assigns IP addresses, subnet masks, default gateways, and DNS server addresses to devices on a network.

They work together as follows: A device connecting to a network uses DHCP to obtain an IP address and DNS server information. When the device wants to access a website (e.g., google.com), it uses DNS to resolve the domain name to an IP address. Finally, TCP/IP is used to establish a connection and transmit data between the device and the server hosting the website using that IP address.
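The last two steps are visible from any program. A minimal Python sketch using only the standard `socket` module: `getaddrinfo` asks the operating system's resolver (which typically uses the DNS server address obtained via DHCP) for a name's IP addresses, and `create_connection` then opens a TCP connection to one of them. The DHCP lease itself is handled by the OS, so it doesn't appear in the code; the function names are illustrative.

```python
import socket

def resolve(hostname, port=80):
    """DNS step: ask the system resolver to turn a name into IP addresses."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})

def tcp_connect(ip, port, timeout=5.0):
    """TCP/IP step: open a TCP connection (three-way handshake) to the resolved address."""
    return socket.create_connection((ip, port), timeout=timeout)
```

For example, `ips = resolve("google.com", 443)` followed by `tcp_connect(ips[0], 443)` reproduces what a browser does before it sends any HTTPS traffic (this needs network access).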

11. Describe a time when you had to work with a difficult user or coworker. How did you resolve the situation professionally?

In a previous role, I encountered a coworker, Sarah, who consistently missed deadlines and rarely communicated proactively. This impacted our shared project timelines. To address the situation, I first scheduled a one-on-one meeting with Sarah. During the meeting, I actively listened to understand her perspective and any challenges she might be facing, avoiding accusatory language. It turned out she was struggling with managing her workload due to a lack of clarity on project priorities.

To resolve this, I collaborated with our manager to redefine project priorities and create a shared task management system using Trello. I also offered Sarah support in breaking down tasks into smaller, manageable steps. By fostering open communication, understanding her challenges, and offering practical solutions, we significantly improved her performance and our team's overall productivity. Ultimately, the project was delivered successfully and on time, improving our working relationship.

12. Explain your experience with mobile device management (MDM) solutions. How do you ensure security on employee-owned devices?

My experience with MDM solutions includes using platforms like Microsoft Intune and MobileIron to manage and secure mobile devices, primarily iOS and Android. I've configured policies for password complexity, enforced encryption, and managed app deployments. I've also used MDM to remotely wipe or lock devices in case of loss or theft.

To ensure security on employee-owned devices (BYOD), I implement containerization to separate work and personal data. This involves creating a secure workspace on the device where corporate data resides, and applying stricter policies to that container without affecting the user's personal apps and data. Other measures include requiring multi-factor authentication, implementing data loss prevention (DLP) policies, and regularly patching the mobile OS and apps.

13. How do you approach troubleshooting performance issues on a server or workstation?

When troubleshooting performance issues, I typically start by defining the problem clearly: What is slow? When did it start? Who is affected? Then, I gather data using monitoring tools (Task Manager, Resource Monitor, perfmon, or server-specific tools). I check CPU, memory, disk I/O, and network usage for bottlenecks. Identifying a resource constraint is key. Next, I analyze logs (system, application) for errors or warnings. Based on the data, I form a hypothesis and test it. This might involve restarting services, optimizing code, or upgrading hardware. I iterate until the issue is resolved.

More specifically, I would:

- Check basic system health: CPU, memory, disk, and network usage.

- Examine application logs.

- Look at running processes to see resource consumption.

- If applicable, analyze slow queries or code execution paths using profiling tools.

- Test incremental changes and revert if they don't improve the situation.

14. What is your experience with patch management and software deployment tools?

I have experience with patch management and software deployment tools like SCCM, WSUS, and Chocolatey. I've used these tools to automate the process of deploying software updates and new applications across networks. This includes scheduling deployments, managing software packages, and monitoring the deployment process for success or failure, ensuring systems are up-to-date and secure.

Specifically, with SCCM, I've created and managed software packages, configured deployment task sequences, and used reporting features to track compliance. In smaller environments, I've utilized WSUS for managing Windows updates and Chocolatey for package management on Windows systems. I'm familiar with creating and managing software repositories, and automating software installation using scripts.

15. Describe a time when you implemented a new IT solution or process. What challenges did you face, and how did you overcome them?

During my time at Company X, we needed to migrate our customer support ticketing system from a legacy on-premises solution to a cloud-based platform, Zendesk. The initial challenge was data migration. The old system had a different data structure, and a direct transfer wasn't possible without significant data loss or corruption. To overcome this, I worked with a data engineer to develop a custom ETL (Extract, Transform, Load) script using Python. We carefully mapped the data fields, cleaned inconsistencies, and transformed the data into the format required by Zendesk. This involved extensive testing and several iterations to ensure data integrity.

Another hurdle was user adoption. Some support agents were resistant to change and comfortable with the old system. To address this, I conducted comprehensive training sessions, created detailed documentation, and provided ongoing support during the transition period. We also solicited feedback from the agents and incorporated their suggestions to improve the usability of the new system. This collaborative approach fostered a sense of ownership and ultimately led to a successful and smooth migration.
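A stripped-down sketch of what the transform step of such a script might look like, in Python. The legacy column names (`ticket_no`, `title`, `body`, `prio`, `state`) and the value mappings are hypothetical. The output dictionary only approximates the shape of a Zendesk ticket (subject, comment body, priority, status, external ID), so the real field names and allowed values should be checked against the Zendesk API documentation before use.

```python
# Hypothetical mapping from legacy priority codes to Zendesk-style priorities.
PRIORITY_MAP = {"1": "urgent", "2": "high", "3": "normal", "4": "low"}
# Hypothetical mapping from legacy states to Zendesk-style statuses.
STATUS_MAP = {"NEW": "new", "OPEN": "open", "WAIT": "pending", "DONE": "solved", "CLOSED": "closed"}

def transform(row):
    """Map one legacy record to a ticket payload, or raise ValueError/KeyError."""
    subject = (row.get("title") or "").strip()
    body = (row.get("body") or "").strip()
    if not body:
        raise ValueError("empty ticket body")
    prio = PRIORITY_MAP.get(str(row.get("prio", "")).strip())
    status = STATUS_MAP.get(str(row.get("state", "")).strip().upper())
    if status is None:
        raise ValueError(f"unknown state {row.get('state')!r}")
    return {
        "external_id": str(row["ticket_no"]),  # keeps a link back to the old system
        "subject": subject or "(no subject)",
        "comment": {"body": body},
        "priority": prio or "normal",  # default for missing or unknown codes
        "status": status,
    }

def run_transform(rows):
    """Transform all rows, collecting failures for review instead of stopping."""
    good, rejected = [], []
    for row in rows:
        try:
            good.append(transform(row))
        except (KeyError, ValueError) as exc:
            rejected.append((row, str(exc)))
    return good, rejected
```

Collecting rejected rows rather than failing fast supports the "several iterations" described above: each test run produces a list of records whose mapping rules still need work.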

16. Explain your understanding of disaster recovery planning. What are the key components of a good DR plan?

Disaster recovery planning is the process of creating and implementing strategies to ensure business continuity in the event of a disruptive event, such as natural disasters, cyberattacks, or hardware failures. A good DR plan aims to minimize downtime and data loss, allowing the organization to resume critical functions as quickly as possible.

Key components include:

- Risk Assessment: Identifying potential threats and vulnerabilities.

- Business Impact Analysis (BIA): Determining the criticality of different business functions and their associated recovery time objectives (RTOs) and recovery point objectives (RPOs).

- Recovery Strategies: Defining specific procedures for restoring IT infrastructure, applications, and data, which might include backups, replication, failover systems and cloud-based solutions.

- Testing and Maintenance: Regularly testing the DR plan to identify weaknesses and updating it to reflect changes in the business environment.

- Communication Plan: Establishing clear communication channels and protocols to keep stakeholders informed during a disaster.

- Documentation: Maintaining comprehensive documentation of the DR plan, including roles, responsibilities, procedures, and contact information.
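RPO and RTO become concrete once they are compared with how backups and restores actually behave. A back-of-the-envelope sketch in Python: with periodic backups, the worst-case data loss is roughly the backup interval plus the time it takes for a copy to reach off-site storage, and the recovery time is the sum of detection, provisioning, and restore steps. The option names, step names, and numbers are illustrative, not taken from any real plan.

```python
from dataclasses import dataclass

@dataclass
class RecoveryOption:
    name: str
    backup_interval_min: float   # how often a recoverable copy is taken
    replication_lag_min: float   # delay before that copy is safely off-site
    recovery_steps_min: dict     # step name -> estimated minutes

    def worst_case_data_loss(self):
        # Failure strikes just before the next copy reaches off-site storage,
        # so everything since the previous off-site copy is lost.
        return self.backup_interval_min + self.replication_lag_min

    def recovery_time(self):
        return sum(self.recovery_steps_min.values())

def meets_objectives(option, rpo_min, rto_min):
    """Return (meets_rpo, meets_rto) for a recovery option."""
    return (option.worst_case_data_loss() <= rpo_min,
            option.recovery_time() <= rto_min)
```

For example, nightly backups shipped off-site an hour later give a worst-case data loss of 1,500 minutes (25 hours), which fails a 1-hour RPO no matter how fast the restore is; that is the kind of gap the BIA is meant to expose.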

17. How do you approach diagnosing and resolving hardware failures on laptops or desktops?

My approach to diagnosing and resolving hardware failures on laptops or desktops involves a systematic process. First, I gather information about the problem, including when it started, any recent changes, and any error messages. Then I systematically check the basics: power supply, connections, and any obvious physical damage. Next, I utilize diagnostic tools and techniques: check boot sequence, listen for unusual sounds, observe indicator lights, boot into safe mode, or use bootable diagnostic utilities like Memtest86 or manufacturer-specific tools for hardware tests (CPU, RAM, HDD/SSD).

Once the failing component is identified, I look at options to resolve the issue. This might involve reseating components, updating drivers/firmware, replacing the faulty hardware, or escalating to a specialist if the problem is complex (e.g., a motherboard issue). Finally, after the repair, I thoroughly test the system to ensure the problem is resolved and no new issues have appeared.

18. What experience do you have with managing and maintaining databases, such as SQL Server or MySQL?

I have experience managing and maintaining both SQL Server and MySQL databases. I've worked with SQL Server for about 3 years, primarily focusing on tasks such as creating and modifying database schemas, writing complex queries using T-SQL, performing data backups and restores, and monitoring database performance using tools like SQL Server Management Studio. I've also been involved in setting up database replication for high availability.

My experience with MySQL is similar, though I've used it mainly in a Linux environment. I'm comfortable with tasks such as database installation and configuration, user management, writing SQL queries, and optimizing queries for performance. I've also used command-line tools and scripting for automating database maintenance tasks, such as backups and index optimization. I understand concepts like indexing, normalization, and transaction management in both database systems.

19. Describe your experience with implementing and managing firewalls. What are some important considerations?

I have experience implementing and managing firewalls, including both hardware and software-based solutions like pfSense, iptables and cloud-based firewalls such as AWS Security Groups and Azure Network Security Groups. My experience involves configuring firewall rules based on the principle of least privilege, defining source and destination IPs/ports, and specifying allowed protocols to control network traffic. I have also worked with network address translation (NAT) and port forwarding to expose internal services securely.

Important considerations when implementing and managing firewalls include: regularly reviewing and updating firewall rules to adapt to changing security needs; monitoring firewall logs for suspicious activity and potential breaches; implementing intrusion detection/prevention systems (IDS/IPS) alongside the firewall for enhanced security; documenting all firewall configurations and changes for auditing purposes; and testing the firewall configuration periodically to ensure it's functioning as intended. It's important to consider things like stateful vs stateless inspection, and how the firewall integrates into the broader security architecture.
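To make least privilege and default deny concrete, here is a minimal Python sketch of how a stateless firewall evaluates an ordered rule list: the first matching rule wins, and anything that matches no rule is denied. It uses the standard `ipaddress` module. The `Rule` structure and the example rules are hypothetical and only loosely resemble how tools like iptables or pfSense process rules, which also track connection state.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Rule:
    action: str               # "allow" or "deny"
    src: str                  # source network in CIDR notation
    dst_port: Optional[int]   # destination port, or None for any port
    proto: str                # "tcp", "udp", or "any"

# Hypothetical rule set; order matters because the first match wins.
RULES = [
    Rule("deny", "203.0.113.0/24", None, "any"),   # known-bad range, blocked first
    Rule("allow", "0.0.0.0/0", 443, "tcp"),        # HTTPS from anywhere
    Rule("allow", "10.0.5.0/24", 22, "tcp"),       # SSH only from the admin subnet
]

def evaluate(src_ip, dst_port, proto, rules=RULES):
    """Return the action of the first matching rule; default deny."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if addr not in ipaddress.ip_network(rule.src):
            continue
        if rule.dst_port is not None and rule.dst_port != dst_port:
            continue
        if rule.proto != "any" and rule.proto != proto:
            continue
        return rule.action
    return "deny"  # nothing explicitly allowed it
```

The final `return "deny"` is the least-privilege principle in one line: traffic has to be explicitly permitted, so a forgotten rule fails closed rather than open.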

20. How do you handle user account management and security permissions in a corporate environment?

User account management and security permissions in a corporate environment are handled using a combination of tools and policies. Centralized directory services like Active Directory or Azure AD are used to manage user accounts, groups, and authentication. Role-Based Access Control (RBAC) is implemented to assign permissions based on job roles, ensuring users have only the necessary access to perform their duties. Multi-Factor Authentication (MFA) is enforced to enhance security during login.

Regular security audits and reviews are performed to identify and address any vulnerabilities or misconfigurations in the access control system. Password policies are enforced, and users are trained on security best practices, including recognizing phishing attempts and protecting sensitive information. The principle of least privilege is at the core of this approach: each user, group, or service account is granted only the minimum permissions required to do its job.
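A minimal Python sketch of RBAC: permissions attach to roles, users receive roles, and an access check asks whether any of the user's roles grants the requested permission. The role names, permission strings, and users are hypothetical; in practice this mapping usually lives in Active Directory or Azure AD groups rather than in code.

```python
# Hypothetical role -> permission mapping.
ROLE_PERMISSIONS = {
    "helpdesk": {"user:reset_password", "ticket:read", "ticket:update"},
    "hr": {"employee:read", "employee:update"},
    "it_admin": {"user:reset_password", "user:create", "user:disable", "ticket:read"},
}

# Hypothetical user -> roles assignment (only what each job needs).
USER_ROLES = {
    "alice": {"helpdesk"},
    "bob": {"hr"},
    "carol": {"helpdesk", "it_admin"},
}

def effective_permissions(user):
    """Union of permissions from all roles assigned to the user."""
    perms = set()
    for role in USER_ROLES.get(user, set()):
        perms |= ROLE_PERMISSIONS.get(role, set())
    return perms

def is_allowed(user, permission):
    """Deny by default: unknown users and unknown permissions get no access."""
    return permission in effective_permissions(user)
```

The same `effective_permissions` view is useful during audits, for example to list everyone who can currently create or disable accounts.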

21. What's your experience with monitoring systems and network performance? What tools do you use?

I have experience with monitoring systems and network performance analysis, focusing on identifying bottlenecks and ensuring optimal system health. I've worked with a variety of tools, including Prometheus for metric collection, Grafana for visualization and dashboarding, and Nagios for alerting based on predefined thresholds. I also have experience with cloud-based monitoring solutions like AWS CloudWatch and Azure Monitor. These tools help in monitoring CPU usage, memory utilization, network latency, and application response times.

My approach involves setting up alerts for critical metrics, analyzing historical data to identify trends, and collaborating with development and operations teams to resolve performance issues. For network-specific monitoring, I've utilized tools like tcpdump and Wireshark for packet capture and analysis, helping diagnose network-related problems and optimize network configurations. I also use ping and traceroute to troubleshoot network connectivity and routing issues.
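Threshold alerting of the kind Nagios performs follows a simple convention: a check plugin prints a one-line status and exits with 0 (OK), 1 (WARNING), 2 (CRITICAL), or 3 (UNKNOWN). A minimal Python sketch of such a check for disk usage, using only `shutil.disk_usage`; the 80%/90% thresholds and the default path are illustrative.

```python
import shutil

OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}

def classify(value, warn, crit):
    """Map a metric value to a Nagios-style state (higher is worse)."""
    if value >= crit:
        return CRITICAL
    if value >= warn:
        return WARNING
    return OK

def check_disk(path="/", warn=80.0, crit=90.0):
    """Return (state, message) for the disk usage percentage of `path`."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    if usage.total == 0:
        return UNKNOWN, "DISK UNKNOWN - filesystem reports zero size"
    pct = 100.0 * usage.used / usage.total
    state = classify(pct, warn, crit)
    # Text after '|' is performance data that graphing tools can pick up.
    return state, f"DISK {LABELS[state]} - {pct:.1f}% used | used_pct={pct:.1f}%;{warn};{crit}"

def main():
    """Plugin entry point: print the status line and return the exit code."""
    state, message = check_disk()
    print(message)
    return state
```

Run as a plugin, the script would end with `sys.exit(main())` so the monitoring system can read the state from the exit code.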

22. Describe a time when you identified and resolved a security vulnerability in a system or application.

During a code review for a web application, I identified a potential SQL injection vulnerability. The application was concatenating user-supplied input directly into a SQL query without proper sanitization or parameterization. I immediately flagged this to the team, explained the potential risks (data breaches, unauthorized access), and proposed a solution.

To resolve this, I demonstrated how to use parameterized queries (also known as prepared statements) with placeholder variables. This approach separates the SQL code from the user input, so the database treats the input strictly as data and never executes it as SQL. I then refactored the vulnerable code to use parameterized queries throughout, so that no user input was concatenated into SQL strings. We also implemented input validation and output encoding as additional layers of defense against similar vulnerabilities.
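The difference is easiest to see side by side. A minimal Python sketch using the standard `sqlite3` module as a stand-in for whatever database the application actually used; the `users` table and both functions are hypothetical. The `?` placeholder makes the driver bind the input as a value, so it can never change the structure of the query.

```python
import sqlite3

def setup():
    """Create a small in-memory database for the demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users (name, role) VALUES (?, ?)",
                     [("alice", "admin"), ("bob", "user")])
    return conn

def find_user_vulnerable(conn, name):
    # VULNERABLE: user input is concatenated into the SQL text.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # SAFE: the value is bound to the placeholder and never parsed as SQL.
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()
```

With the input `x' OR '1'='1`, the vulnerable version returns every row in the table, while the parameterized version simply looks for a user literally named `x' OR '1'='1` and finds none.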

23. Explain your understanding of different types of RAID configurations and their use cases.

RAID (Redundant Array of Independent Disks) combines multiple physical drives into a single logical unit to improve performance, redundancy, or both. Common RAID levels include: RAID 0 (striping, for performance, no redundancy), RAID 1 (mirroring, for redundancy, copies data to multiple drives), RAID 5 (striping with parity, good balance of performance and redundancy, requires at least 3 drives), RAID 6 (striping with double parity, higher redundancy than RAID 5, requires at least 4 drives), and RAID 10 (combination of RAID 1 and RAID 0, high performance and redundancy, requires at least 4 drives).

RAID 0 is suitable where speed is more important than data integrity. RAID 1 is good for critical data where redundancy is paramount. RAID 5 is a common choice for general-purpose servers and NAS devices. RAID 6 is useful when very high fault tolerance is required. RAID 10 offers the best performance and redundancy at a higher cost.
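The trade-offs are easier to compare with numbers. A small Python sketch that computes usable capacity and guaranteed drive-failure tolerance for equal-sized drives, plus a demonstration of the XOR parity that lets RAID 5 rebuild a lost drive. It ignores real-world details such as hot spares, controller overhead, and the fact that RAID 10 can survive some (but not all) second failures.

```python
from functools import reduce

def raid_summary(level, drives, size_tb):
    """Return (usable_tb, guaranteed_failures_tolerated) for equal-sized drives."""
    minimum = {"0": 2, "1": 2, "5": 3, "6": 4, "10": 4}[level]
    if drives < minimum:
        raise ValueError(f"RAID {level} needs at least {minimum} drives")
    if level == "0":
        return drives * size_tb, 0
    if level == "1":                      # n-way mirror: every drive holds a full copy
        return size_tb, drives - 1
    if level == "5":                      # one drive's worth of parity
        return (drives - 1) * size_tb, 1
    if level == "6":                      # two drives' worth of parity
        return (drives - 2) * size_tb, 2
    if drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    return drives // 2 * size_tb, 1       # half of the drives are mirror copies

def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks (the RAID 5 parity calculation)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))
```

For example, four 4 TB drives give 12 TB usable in RAID 5 but 8 TB in RAID 10. And because XOR is its own inverse, XOR-ing the surviving data blocks with the parity block reproduces the block from the failed drive.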

24. How do you approach training and supporting users on new IT systems or software?

My approach to training and supporting users on new IT systems involves a multi-faceted strategy. First, I'd create tailored training materials, including documentation and videos, focusing on common user tasks. These materials would be accessible and easy to understand, avoiding technical jargon as much as possible. Hands-on workshops or interactive online sessions would complement these resources, allowing users to practice using the new system in a supportive environment.

Following the training, I'd provide ongoing support through various channels, such as a dedicated help desk, FAQs, and quick-start guides. Gathering user feedback is crucial to identify areas where additional support or modifications to the system are needed. This iterative process ensures that the system effectively meets the users' needs and promotes user adoption.

Advanced IT Specialist interview questions

1. Describe a time you had to troubleshoot a complex system issue with limited information. What steps did you take?

During my time working on a distributed data pipeline, we experienced intermittent failures in data processing jobs. The error logs were vague, simply indicating a 'transformation failure' without pinpointing the source. Limited information was available because the system spanned multiple services and teams, with no centralized monitoring in place at the time.

My approach involved several steps. First, I aggregated logs from all relevant services to correlate events around the failure timestamps. Then, I replicated the failure scenario in a staging environment using sample data similar to the failed job. From there I added granular logging statements to the data transformation module that seemed suspect. After running the pipeline again in staging, the new logging quickly revealed a previously undetected data type mismatch between the source and destination databases which triggered an error during schema validation. Finally, the team implemented more comprehensive data validation and monitoring to prevent future occurrences of this issue, including automated tests to catch schema discrepancies during deployment.
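The first step, correlating logs from several services around a failure, can be sketched in Python. This assumes each service writes lines that start with an ISO 8601 timestamp followed by a space; the service names, log format, and 30-second window are hypothetical.

```python
import heapq
from datetime import datetime, timedelta

def parse(service, lines):
    """Yield (timestamp, service, message) from lines like '2024-05-01T10:00:03 msg'."""
    for line in lines:
        stamp, _, message = line.partition(" ")
        try:
            ts = datetime.fromisoformat(stamp)
        except ValueError:
            continue  # skip lines without a leading timestamp (e.g. stack traces)
        yield ts, service, message

def around(failure_time, logs, window=timedelta(seconds=30)):
    """Merge per-service logs into one timeline limited to +/- window of a failure."""
    streams = [sorted(parse(svc, lines)) for svc, lines in logs.items()]
    merged = heapq.merge(*streams)  # each stream is sorted, so the merge is too
    return [event for event in merged if abs(event[0] - failure_time) <= window]
```

A single merged timeline makes it obvious which service logged what in the seconds before the "transformation failure", which is what narrows the search to a specific module.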

2. How do you stay up-to-date with the latest technology trends and advancements in the IT field?

I stay up-to-date through a combination of active learning and community engagement. I regularly read industry blogs and news sites like TechCrunch, Wired, and specialized publications related to my areas of interest (e.g., specific programming languages, cloud computing, or cybersecurity). I also follow key influencers and thought leaders on platforms like Twitter and LinkedIn.

Furthermore, I participate in online courses and webinars offered by platforms like Coursera, Udemy, and edX. I also dedicate time to explore new technologies through personal projects and contribute to open-source projects when possible. Attending industry conferences and meetups, or watching recordings of these, provides valuable insights and networking opportunities. Finally, I regularly review documentation and publications for the tools and technologies I use on a daily basis.

3. Explain your experience with implementing and managing cloud-based solutions.

I have experience implementing and managing cloud-based solutions primarily using AWS and Azure. I've worked with services like EC2, S3, Lambda, and DynamoDB on AWS, and Azure VMs, Blob Storage, Functions, and Cosmos DB on Azure. This includes tasks like provisioning resources, configuring networking, setting up security policies (IAM roles, Azure AD), and monitoring performance using tools like CloudWatch and Azure Monitor.

My responsibilities have spanned the entire lifecycle, from initial design and deployment using Infrastructure as Code (Terraform, CloudFormation, ARM templates), to ongoing maintenance, scaling, and optimization. I've also been involved in migrating existing on-premises applications to the cloud, leveraging services like AWS DMS and Azure Database Migration Service. I have used CI/CD pipelines (Jenkins, Azure DevOps) to automate deployments and ensure consistent environments, and have experience troubleshooting issues and implementing best practices for cost management and security.

4. Walk me through your process for designing and implementing a network security plan.

My process for designing and implementing a network security plan typically involves several key stages. First, I assess the current network infrastructure and identify potential vulnerabilities through techniques like vulnerability scanning and penetration testing. This also includes understanding the business requirements and compliance regulations that need to be met.

Next, I design the security architecture. This involves selecting appropriate security controls, such as firewalls, intrusion detection/prevention systems (IDS/IPS), VPNs, and access control mechanisms, and then placing them strategically within the network to protect critical assets. I also create security policies and procedures.

Finally, I implement the plan: I configure the security devices, train personnel, and continuously monitor and maintain the effectiveness of the security measures through regular audits and updates, adapting to new threats and vulnerabilities as they arise.

5. How do you approach automating IT tasks to improve efficiency?

I approach automating IT tasks by first identifying repetitive and time-consuming processes. Then, I evaluate available tools and technologies, such as scripting languages (Python, PowerShell), configuration management tools (Ansible, Chef, Puppet), or task schedulers. A prioritized list helps focus on the most impactful automations.

Next, I develop and test the automation scripts or workflows in a staging environment. After rigorous testing, I deploy the automation, monitor its performance, and make necessary adjustments. Documentation and proper error handling are essential for maintainability and troubleshooting. Version control (Git) is also important.
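As an illustration of those practices (a safe default, logging, and error handling that doesn't abort the whole run), here is a minimal Python sketch of a routine clean-up task: deleting log files older than a retention period. The directory, the `*.log` pattern, and the 30-day default are assumptions; the function only reports what it would delete unless `dry_run=False` is passed.

```python
import logging
import time
from pathlib import Path

log = logging.getLogger("cleanup")

def purge_old_files(directory, pattern="*.log", max_age_days=30, dry_run=True):
    """Delete files matching `pattern` older than `max_age_days`.

    Returns the list of affected paths. With dry_run=True (the default),
    nothing is deleted; the candidates are only logged and returned.
    """
    cutoff = time.time() - max_age_days * 86400
    affected = []
    for path in Path(directory).glob(pattern):
        try:
            if not path.is_file() or path.stat().st_mtime >= cutoff:
                continue
            if dry_run:
                log.info("would delete %s", path)
            else:
                path.unlink()
                log.info("deleted %s", path)
            affected.append(path)
        except OSError as exc:
            # One locked or vanished file should not stop the whole run.
            log.warning("skipped %s: %s", path, exc)
    return affected
```

Defaulting to a dry run means a first test run in staging shows exactly what would be removed; the script can then be scheduled (cron or Task Scheduler) and kept in Git alongside its documentation.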

6. Describe your experience with data recovery and disaster recovery planning.

My experience with data recovery includes utilizing tools like ddrescue for imaging failing drives and employing file carving techniques with tools like foremost to recover deleted files from various file systems. I've also worked with RAID recovery, understanding different RAID levels and using specialized software to reconstruct data from degraded or failed arrays. In a previous role, I automated the process of backing up critical database servers using pg_dump and storing the dumps in geographically diverse cloud storage, ensuring business continuity.

Regarding disaster recovery planning, I've contributed to creating and testing DR plans, including defining RTOs and RPOs for critical systems. I participated in simulated failover exercises, documenting procedures for restoring services in a secondary data center. I am familiar with concepts like warm standby, cold standby, and active-active configurations, and I've assisted in implementing infrastructure-as-code (IaC) using Terraform to facilitate rapid infrastructure provisioning during a disaster recovery event.
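File carving, as mentioned above, ignores the file system and scans raw bytes for known file signatures. A much-simplified Python sketch of the header/footer approach used by tools like foremost, for JPEG files (which start with `FF D8 FF` and end with `FF D9`). Real carvers handle fragmentation, embedded thumbnails, and many more formats; the `max_size` cap here is an arbitrary assumption.

```python
JPEG_HEADER = b"\xff\xd8\xff"
JPEG_FOOTER = b"\xff\xd9"

def carve_jpegs(image: bytes, max_size=10 * 1024 * 1024):
    """Return candidate JPEG byte strings found in a raw disk image."""
    found = []
    start = image.find(JPEG_HEADER)
    while start != -1:
        end = image.find(JPEG_FOOTER, start + len(JPEG_HEADER))
        if end == -1:
            break  # header without a footer: truncated or overwritten file
        end += len(JPEG_FOOTER)
        if end - start <= max_size:
            found.append(image[start:end])
        # Continue searching after this candidate.
        start = image.find(JPEG_HEADER, end)
    return found
```

In practice this would run against an image produced by ddrescue rather than the failing drive itself, so the damaged hardware is read only once.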

7. How do you prioritize tasks when faced with multiple urgent issues?

When faced with multiple urgent issues, I prioritize tasks based on a few factors. First, I assess the impact of each issue. Which issue has the most significant consequences if not addressed immediately? This includes considering factors like the number of users affected, potential financial losses, or legal and compliance risks. Second, I consider the urgency and deadlines associated with each issue. Some issues may have strict deadlines that cannot be missed, making them a higher priority. Third, I look at the effort/complexity involved to resolve each issue. If a quick fix can resolve a critical issue, I might prioritize it over a more complex issue with a similar impact.

I then create a prioritized list, often using a simple matrix that scores impact and urgency. I communicate this list to stakeholders to ensure alignment and transparency. For example, I might use a table like this to visualize priority:

| Issue | Impact | Urgency | Priority |
|---|---|---|---|
| Server Outage | High | High | 1 |
| Security Vulnerability | High | Medium | 2 |
| Minor Bug | Low | Low | 3 |

Throughout the process, I maintain open communication with the team and stakeholders to address any concerns and adjust priorities as needed.
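The impact/urgency matrix can be turned into a simple scoring function. A minimal Python sketch, assuming a 1 to 3 scale for impact and urgency, a multiplicative score, and lower effort as the tie-breaker; the scale, the weighting, and the effort estimates are illustrative choices, not a standard.

```python
LEVEL = {"Low": 1, "Medium": 2, "High": 3}

def prioritize(issues):
    """Sort issues by impact x urgency (descending), then by effort (ascending).

    Each issue is a dict with 'name', 'impact', 'urgency' (Low/Medium/High)
    and 'effort_hours'. Returns (rank, name, score) tuples.
    """
    def score(issue):
        return LEVEL[issue["impact"]] * LEVEL[issue["urgency"]]

    ranked = sorted(issues, key=lambda i: (-score(i), i["effort_hours"]))
    return [(rank, i["name"], score(i)) for rank, i in enumerate(ranked, start=1)]
```

Fed the three issues from the table with made-up effort estimates, this reproduces the same order (Server Outage, then Security Vulnerability, then Minor Bug). The effort tie-breaker captures the "quick fix first" rule for issues with similar impact.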

8. Explain your understanding of ITIL framework and how you've applied it in your previous roles.

ITIL (Information Technology Infrastructure Library) is a framework of best practices for IT service management (ITSM). It provides a structured approach to align IT services with the needs of the business, improve efficiency, and manage risk. ITIL v3 organizes these practices around five service lifecycle stages: service strategy, service design, service transition, service operation, and continual service improvement. ITIL 4 reframes them as a service value system built on 34 management practices.

In my previous roles, I've applied ITIL principles by participating in incident management processes, ensuring timely resolution of issues and adhering to defined SLAs. I've also been involved in change management, where I helped assess the impact of changes, documented procedures, and collaborated with stakeholders to minimize disruptions. Additionally, I contributed to problem management by identifying root causes of recurring issues and implementing preventative measures, improving overall service stability. I've also used ITSM tools such as ServiceNow to support incident, problem, and change management.

9. How would you handle a situation where a critical server goes down during off-hours?

First, I'd ensure I'm the appropriate person to respond, and if not, immediately escalate to the on-call personnel. If I am, I'd follow the documented incident response plan, which usually includes: 1) Confirming the server is actually down (check monitoring tools, ping, etc.). 2) Identifying the potential cause (recent deployments, hardware failures, etc.). 3) Attempting immediate restoration (reboot, failover to a backup server). 4) If immediate restoration fails, escalating to subject matter experts based on the suspected cause.

Throughout the process, I'd maintain clear communication with stakeholders about the situation, steps taken, and the estimated time to resolution. I'd also document all actions taken for post-incident analysis. Post-incident, I would participate in a root cause analysis to prevent future occurrences.

10. Describe your experience with virtualization technologies such as VMware or Hyper-V.

I have experience working with VMware vSphere in both development and production environments. I've used vCenter to manage ESXi hosts, deploy and configure virtual machines (VMs), and monitor resource utilization. My tasks included creating and managing VMs using vSphere Client and PowerCLI, configuring network settings (vSwitches, port groups), and managing storage (datastore provisioning, snapshots). I've also worked with VM templates and cloning for rapid deployment.

Additionally, I have some exposure to Hyper-V through lab environments. I've created and managed VMs using Hyper-V Manager, configured virtual networks, and explored features such as Hyper-V Replica for disaster recovery. While my hands-on experience with Hyper-V isn't as extensive as with VMware, I understand the core concepts and principles of virtualization that apply to both platforms.

11. How do you ensure data integrity and security when migrating data between systems?

Data integrity and security during migration can be ensured through a multi-faceted approach. Before the migration, data profiling helps to understand the data and identify potential issues. During the migration, validate the data at the source and destination using checksums or row counts. Encryption both in transit and at rest ensures data confidentiality. Implement access controls and audit logging to track all migration activities and identify any unauthorized access or modifications.

Specific steps may include:

- Data Validation: Implement validation rules to check for data consistency and accuracy.

- Data Transformation: Correct any known data issues.

- Secure Channels: Use HTTPS or VPNs to protect data in transit.

- Backup and Recovery: Maintain backups of the data before migration to allow for rollback if needed.

- Testing: Thoroughly test the migrated data to ensure its functionality and accuracy in the new system.
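Row counts and checksums can be compared automatically once both sides are queryable. A minimal Python sketch using in-memory `sqlite3` databases as stand-ins for the source and target systems; the `customers` table is hypothetical. Hashing each row and combining the digests in sorted order makes the fingerprint independent of row order, which often differs between systems. Across different database engines, values would also need normalizing (dates, decimals, trailing spaces) before hashing.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, columns):
    """Return (row_count, digest) for the given columns of a table.

    `table` and `columns` must come from trusted configuration, not user
    input, because identifiers cannot be passed as query parameters.
    """
    query = f"SELECT {', '.join(columns)} FROM {table}"
    row_hashes = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()
        for row in conn.execute(query)
    )
    combined = hashlib.sha256("".join(row_hashes).encode("ascii")).hexdigest()
    return len(row_hashes), combined

def reconcile(source, target, table, columns):
    """Compare source and target; returns a list of human-readable problems."""
    src_count, src_digest = table_fingerprint(source, table, columns)
    dst_count, dst_digest = table_fingerprint(target, table, columns)
    problems = []
    if src_count != dst_count:
        problems.append(f"row count differs: {src_count} vs {dst_count}")
    if src_digest != dst_digest:
        problems.append("content checksum differs")
    return problems
```

Row counts catch dropped or duplicated records cheaply; the checksum catches the subtler case where the count matches but values were altered in transit or transformation.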

12. Explain your approach to managing and monitoring network performance.

My approach to managing and monitoring network performance involves a multi-faceted strategy. I start with establishing baseline metrics for key performance indicators (KPIs) like latency, throughput, packet loss, and jitter. I then use network monitoring tools like SolarWinds, PRTG, or even open-source solutions like Nagios or Zabbix to continuously track these metrics. These tools provide real-time visibility into network health and alert me to any anomalies or deviations from the baseline.

When issues arise, I employ a systematic troubleshooting approach. This includes analyzing logs, performing packet captures with tools like Wireshark to identify bottlenecks or misconfigurations, and utilizing network diagnostic commands (ping, traceroute, iperf) to pinpoint the source of the problem. I also prioritize proactive maintenance, such as regular firmware updates and capacity planning, to prevent performance degradation before it impacts users. Finally, I document all changes and incidents to build a knowledge base for future reference and improved problem resolution.

13. Describe a time you had to work with a difficult user or stakeholder. How did you handle the situation?

In a previous role, I worked with a stakeholder who consistently pushed back on data visualization designs, often without providing clear reasoning. To handle this, I first made sure to actively listen to their concerns, asking clarifying questions to understand the underlying issues. I then started presenting multiple design options, explaining the pros and cons of each, and explicitly linking them to the stakeholder's goals and the overall project objectives.

By offering choices and involving them in the decision-making process, I was able to foster a sense of ownership and collaboration. We eventually agreed on a design that addressed their concerns while still meeting the project requirements. Maintaining open communication and focusing on shared objectives were key to resolving the conflict and building a more positive working relationship.

14. How familiar are you with scripting languages such as Python or PowerShell, and how have you used them in your work?

I'm proficient in both Python and PowerShell. I've used Python extensively for automating tasks, data analysis, and creating simple web applications. For example, I've written scripts to automate server log analysis using libraries like pandas and re, and I've also built small web tools using Flask for internal team use. I've also used it for interacting with REST APIs to automate tasks like user creation or data extraction.
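As an example of the kind of log-analysis script described here, the sketch below counts HTTP status codes and the paths behind server errors. It uses only the standard library (`re` and `collections.Counter`) and leaves out pandas to stay dependency-free. The log format is an assumption: the pattern targets Common Log Format-style access-log lines, so the regular expression would need adjusting for other servers.

```python
import re
from collections import Counter

# Assumed format: Common Log Format-style lines, e.g.
# 203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3})\b'
)

def summarize(lines):
    """Return (count per status code, count of 5xx responses per path)."""
    statuses, server_errors = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that don't fit the expected format
        status = int(match["status"])
        statuses[status] += 1
        if status >= 500:
            server_errors[match["path"]] += 1
    return statuses, server_errors

sample = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '203.0.113.9 - - [10/Oct/2024:13:55:40 +0000] "POST /api/orders HTTP/1.1" 500 512',
    '198.51.100.2 - - [10/Oct/2024:13:56:02 +0000] "POST /api/orders HTTP/1.1" 500 512',
    'not a log line',
]
statuses, server_errors = summarize(sample)
print(server_errors.most_common(1))  # [('/api/orders', 2)]
```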

I primarily use PowerShell for Windows system administration tasks such as automating software deployments, managing Active Directory users and groups, and configuring server settings. I've written scripts to streamline deploying new virtual machines on Hyper-V, automate user onboarding, and generate reports on system performance. I've also used it to automate tasks within Azure environments, leveraging modules such as Az.Resources and Az.Compute.

15. Explain your experience with implementing and maintaining security best practices, like multi-factor authentication.

I have experience implementing and maintaining various security best practices throughout my career. A key area has been multi-factor authentication (MFA). I've enabled and enforced MFA across different platforms, including cloud services like AWS and Google Cloud, as well as corporate VPNs and internal applications. This involved configuring MFA policies, integrating with identity providers (like Okta or Azure AD), and educating users on how to enroll and use MFA effectively. I've also helped troubleshoot MFA-related issues, such as users being locked out of their accounts or having difficulty with particular authentication methods.
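Many MFA setups rely on time-based one-time passwords (TOTP), the six-digit codes produced by authenticator apps. The sketch below shows what those apps compute. It follows RFC 4226 (HOTP) and RFC 6238 (TOTP) with HMAC-SHA1, 30-second steps, and a one-step clock-drift window. It assumes the shared secret is already available as raw bytes, whereas real provisioning usually passes it base32-encoded. It is meant for understanding and troubleshooting, for example codes failing because a device clock has drifted. It is not a replacement for the verification built into an identity provider such as Okta or Azure AD.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # "dynamic truncation": low 4 bits pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of whole time steps since the epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)

def verify(secret: bytes, submitted: str, at: Optional[float] = None, window: int = 1, step: int = 30) -> bool:
    """Accept codes from the current step or +/- `window` steps to tolerate clock drift."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step), submitted)
        for i in range(-window, window + 1)
    )

secret = b"12345678901234567890"  # RFC test secret; never use a known secret in production
print(totp(secret, at=59, digits=8))  # 94287082 (RFC 6238 SHA-1 test vector)
print(verify(secret, totp(secret)))   # True
```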

Beyond MFA, I've been involved in other security measures such as regular security audits, vulnerability scanning, and penetration testing. I also helped harden the configurations of systems such as firewalls and servers, and implemented security awareness training programs to educate employees about phishing, social engineering, and other common threats.

16. How do you approach performance tuning and optimization of IT systems?

Performance tuning and optimization involve a systematic approach. First, identify bottlenecks using monitoring tools that track metrics such as CPU usage, memory consumption, disk I/O, and network latency. Then, analyze the data to pinpoint the root cause of performance issues. This might involve code profiling, database query analysis, or examining system configurations.

Next, implement targeted solutions. For example, optimizing database queries, improving code efficiency, caching frequently accessed data, or upgrading hardware. After each change, re-measure performance to ensure the optimization is effective and doesn't introduce new problems. This iterative process helps improve overall system responsiveness and efficiency.
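The measure, change, re-measure loop can be illustrated with a small Python experiment. First time a workload, then apply one optimization (here, caching with `functools.lru_cache`), then time it again and confirm the results are unchanged. `slow_lookup` is a hypothetical stand-in for an expensive call such as a database query, simulated with `time.sleep`. Caching is only a safe optimization when the underlying data doesn't change, or when slightly stale results are acceptable.

```python
import time
from functools import lru_cache

def slow_lookup(key: int) -> int:
    """Hypothetical expensive call (think: database query), simulated with a 10 ms sleep."""
    time.sleep(0.01)
    return key * key

@lru_cache(maxsize=1024)
def cached_lookup(key: int) -> int:
    """The optimization under test: memoize results of slow_lookup."""
    return slow_lookup(key)

def measure(func, keys) -> float:
    """Wall-clock seconds to run func over every key."""
    start = time.perf_counter()
    for k in keys:
        func(k)
    return time.perf_counter() - start

workload = [1, 2, 3] * 10  # repeated keys: the access pattern where caching pays off
before = measure(slow_lookup, workload)
after = measure(cached_lookup, workload)
print(f"before: {before:.3f}s, after: {after:.3f}s")

# Re-measuring is only half the check: confirm the optimization didn't change results.
assert all(slow_lookup(k) == cached_lookup(k) for k in set(workload))
```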

17. Describe your understanding of containerization technologies like Docker and Kubernetes.

Containerization, using technologies like Docker, packages an application and its dependencies into a standardized unit for software development. This provides consistency across different environments. Docker provides the tools to create, manage, and run these containers, isolating applications from the underlying host system. Key benefits include portability, efficiency, and consistency.

Kubernetes is a container orchestration platform. It automates the deployment, scaling, and management of containerized applications. Kubernetes handles tasks such as load balancing, service discovery, and self-healing. It ensures the desired state of your application by automatically restarting failed containers and scaling resources based on demand. In short, Docker creates containers, and Kubernetes manages them.
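Kubernetes' self-healing comes from its controller pattern. Controllers repeatedly compare the desired state you declare (for example, a Deployment's replica count) with what is actually running, then act to close the gap. The Python sketch below is a toy model of that reconciliation step, not the Kubernetes API. `Pod`, `reconcile`, and the action strings are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

@dataclass
class Pod:
    name: str
    healthy: bool

def reconcile(desired_replicas: int, pods: list[Pod]) -> list[str]:
    """One pass of a toy control loop: return actions that move observed state toward desired state."""
    actions = [f"delete {p.name}" for p in pods if not p.healthy]  # replace failed pods
    healthy = [p for p in pods if p.healthy]
    gap = desired_replicas - len(healthy)
    if gap > 0:
        actions += [f"create pod #{i + 1}" for i in range(gap)]  # scale up / replace
    elif gap < 0:
        actions += [f"delete {p.name}" for p in healthy[desired_replicas:]]  # scale down
    return actions

observed = [Pod("web-1", healthy=True), Pod("web-2", healthy=False)]
print(reconcile(3, observed))  # ['delete web-2', 'create pod #1', 'create pod #2']
```

A real controller runs this comparison continuously, which is why a crashed container is replaced without anyone intervening.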

18. How do you handle sensitive data and comply with data privacy regulations?

Handling sensitive data requires a multi-faceted approach. First, encrypt data both at rest (for example, with AES-256) and in transit (for example, with TLS). Second, strictly enforce access control using the principle of least privilege, so that each user's role dictates what data they can access. Third, use data masking and tokenization in non-production environments. For data privacy regulations specifically, this involves performing data protection impact assessments (DPIAs), obtaining user consent where required, and ensuring GDPR compliance by supporting rights such as data portability and the right to erasure (the "right to be forgotten"). Finally, regular audits, penetration testing, and security training are essential to ensure ongoing compliance.
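Masking and tokenization are easy to confuse, so here is a minimal Python sketch of each. Masking irreversibly hides part of a value for display or test data. Tokenization swaps the value for a random token and keeps the mapping in a separate, tightly controlled store. The `mask_email` function and the in-memory `TokenVault` class are hypothetical illustrations. A production vault would be a hardened, access-controlled, audited service rather than a Python dictionary.

```python
import secrets

def mask_email(email: str) -> str:
    """Irreversible masking: keep the first character and the domain."""
    local, _, domain = email.partition("@")
    return local[:1] + "*" * max(len(local) - 1, 0) + "@" + domain

class TokenVault:
    """Toy token vault: random tokens stand in for values; only the vault can map back."""

    def __init__(self) -> None:
        self._value_by_token: dict[str, str] = {}
        self._token_by_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to the same token
        # (keeps joins and lookups working in the tokenized dataset).
        if value in self._token_by_value:
            return self._token_by_value[value]
        token = "tok_" + secrets.token_hex(8)  # random, so it reveals nothing about the value
        self._value_by_token[token] = value
        self._token_by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._value_by_token[token]

print(mask_email("alice@example.com"))  # a****@example.com
vault = TokenVault()
token = vault.tokenize("4111 1111 1111 1111")
print(vault.detokenize(token))  # 4111 1111 1111 1111
```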

19. Explain your experience with managing and troubleshooting database systems.

I have experience managing and troubleshooting various database systems, including MySQL, PostgreSQL, and MongoDB. My responsibilities have included database installation, configuration, performance monitoring, query optimization, and backup/recovery strategies. I've used tools like pgAdmin, MySQL Workbench, and MongoDB Compass for administration.

When troubleshooting, I've addressed issues such as slow query performance (by analyzing execution plans and adding indexes), connection issues (by reviewing network configurations and database server settings), and data corruption (by restoring from backups). I'm familiar with techniques like connection pooling, read replicas, and database sharding to improve scalability and reliability.
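The execution-plan workflow can be demonstrated with SQLite, which ships with Python. Inspect the plan for a slow lookup, add an index, and inspect the plan again. This sketch uses SQLite's `EXPLAIN QUERY PLAN`. MySQL and PostgreSQL have their own `EXPLAIN` (and PostgreSQL's `EXPLAIN ANALYZE`) with different output, and the exact plan wording varies between SQLite versions. The `users` table and the index name are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
)

QUERY = "SELECT id, name FROM users WHERE email = ?"

def plan(sql: str, params: tuple = ()) -> str:
    """Return the 'detail' text of SQLite's EXPLAIN QUERY PLAN output (last column of each row)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return "; ".join(str(row[-1]) for row in rows)

before = plan(QUERY, ("user42@example.com",))
conn.execute("CREATE INDEX idx_users_email ON users (email)")
after = plan(QUERY, ("user42@example.com",))
print(before)  # a full-table SCAN of users
print(after)   # a SEARCH of users using idx_users_email
```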

20. How do you approach capacity planning for IT infrastructure?

Capacity planning involves forecasting future IT resource needs to ensure adequate infrastructure. My approach includes:
1. Requirements Gathering: Understanding current and future application demands, user growth, and business objectives.
2. Performance Monitoring: Analyzing existing resource utilization (CPU, memory, disk I/O, network) using monitoring tools.
3. Forecasting: Predicting future resource needs based on trends and growth projections, accounting for seasonality and planned projects.
4. Gap Analysis: Identifying discrepancies between current capacity and projected needs.
5. Solution Evaluation: Exploring options like scaling existing resources (vertical scaling) or adding more resources (horizontal scaling), considering cost, performance, and manageability.
6. Implementation and Monitoring: Deploying the chosen solution and continuously monitoring performance to ensure capacity meets demand.

This is an iterative process, requiring regular review and adjustments.
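The forecasting step can start as simply as fitting a straight-line trend to historical utilization and estimating when it will cross a threshold. The sketch below does an ordinary least-squares fit in plain Python. The monthly CPU figures and the 90% threshold are made-up example values. A linear trend deliberately ignores the seasonality and planned projects mentioned above, which a real forecast would need to account for.

```python
from typing import Optional

def linear_fit(ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = a + b*x, where x = 0, 1, 2, ... (e.g. months)."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    b = sxy / sxx
    return y_mean - b * x_mean, b

def months_until(ys: list[float], threshold: float) -> Optional[float]:
    """Months after the latest observation until the trend reaches `threshold`; None if not rising."""
    a, b = linear_fit(ys)
    if b <= 0:
        return None
    crossing = (threshold - a) / b  # x value where a + b*x == threshold
    return max(crossing - (len(ys) - 1), 0.0)

cpu_pct = [50, 55, 60, 65]  # hypothetical monthly average CPU utilization (%)
print(months_until(cpu_pct, 90))  # 5.0
```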

21. Describe a time when you identified a security vulnerability in a system. What steps did you take to address it?

During a code review of a web application I was working on, I noticed a potential SQL injection vulnerability. Specifically, user input for the search functionality was being directly concatenated into a SQL query without proper sanitization or parameterization. This meant a malicious user could craft an input that would execute arbitrary SQL code.

To address this, I immediately reported the vulnerability to my team lead. I then replaced the string concatenation with prepared statements and parameterized queries, so that user input was bound as data and never interpreted as executable SQL. I also added input validation to further restrict the type of data that could be entered. Finally, I wrote unit tests using known injection payloads to verify the fix and guard against regressions. I subsequently advocated for more frequent and comprehensive security code reviews.
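The difference between the vulnerable and the fixed code can be shown with Python's built-in `sqlite3` module. The table, data, and function names below are hypothetical. Concatenation lets input change the SQL text, while a `?` placeholder makes the driver bind the input as a plain value. The same principle applies to prepared statements in other languages and database drivers.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO products (name) VALUES (?)", [("laptop",), ("monitor",), ("keyboard",)])

def search_vulnerable(term: str) -> list:
    # DON'T: user input is concatenated into the SQL text.
    sql = "SELECT name FROM products WHERE name = '" + term + "'"
    return conn.execute(sql).fetchall()

def search_safe(term: str) -> list:
    # DO: the driver binds `term` as a value; it is never parsed as SQL.
    return conn.execute("SELECT name FROM products WHERE name = ?", (term,)).fetchall()

payload = "x' OR '1'='1"
print(len(search_vulnerable(payload)))  # 3: the injected OR clause matches every row
print(len(search_safe(payload)))        # 0: the payload is treated as a literal string
```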

22. How do you ensure proper documentation and knowledge transfer within an IT team?

Effective documentation and knowledge transfer are crucial, and several methods help achieve them. Firstly, maintain a central, accessible repository (such as a wiki, SharePoint, or Confluence) for all project-related documents, standard operating procedures (SOPs), and architectural diagrams. This includes documenting code (with tools like JSDoc or similar), infrastructure setup, and troubleshooting steps. Document new features and changes in Markdown or a similar format so they stay readable and easily accessible to new team members, and standardize document templates to ensure consistency.

Secondly, promote active knowledge sharing through regular team meetings, code reviews, and pair programming. Encourage team members to create and present training sessions on specific topics. Create shared documents for recurring issues and their solutions, and have designated experts mentor junior team members. Regularly rotate roles to expose team members to different areas. When a team member leaves or changes roles, ensure a thorough handover process with comprehensive documentation and knowledge transfer sessions.

23. Explain your experience with configuring and managing firewalls and intrusion detection systems.

I have experience configuring and managing both firewalls and Intrusion Detection Systems (IDS) to protect network infrastructure. With firewalls, I've worked with both hardware (Cisco ASA) and software-based solutions (iptables, firewalld) to define rulesets that control network traffic based on source/destination IP addresses, ports, and protocols. This includes implementing and managing NAT, VPNs, and access control lists. For example, I have configured firewall rules to only allow specific IP ranges to access critical database servers.
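A rule like "only these IP ranges may reach the database" comes down to ordered, first-match evaluation, which is how iptables chains and Cisco ASA access lists process traffic. The Python sketch below models that logic with the standard `ipaddress` module, which can help when reasoning about or unit-testing a ruleset before deploying it. The subnet, port, and `Rule` structure are hypothetical. The model also ignores protocol, destination address, and connection state, which real firewalls match on as well.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    action: str  # "allow" or "deny"
    source: str  # source network in CIDR notation
    port: int    # destination port

# Hypothetical policy: only the app-server subnet may reach PostgreSQL (port 5432).
RULES = [
    Rule("allow", "10.0.5.0/24", 5432),
    Rule("deny", "0.0.0.0/0", 5432),
]

def evaluate(src_ip: str, port: int, rules: list[Rule] = RULES, default: str = "deny") -> str:
    """Return the action of the first matching rule, or the default if nothing matches."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if rule.port == port and addr in ipaddress.ip_network(rule.source):
            return rule.action
    return default  # implicit default-deny

print(evaluate("10.0.5.20", 5432), evaluate("192.168.1.7", 5432))  # allow deny
```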

Regarding IDS, I've utilized tools like Snort and Suricata to monitor network traffic for malicious activity. This involves configuring signature databases, tuning rules to reduce false positives, and analyzing alerts to identify and respond to security incidents. I have also integrated IDS with SIEM systems to centralize log management and incident response.

24. How do you approach troubleshooting network connectivity issues for remote users?

When troubleshooting network connectivity issues for remote users, I start by gathering information: What is the user experiencing (e.g., no internet, slow speeds, specific application issues)? What is their setup (e.g., home network, VPN, device type)? I then proceed with basic checks such as verifying internet connectivity using ping or traceroute to external resources like Google's DNS server (8.8.8.8) and checking if the VPN is connected. I also ensure that the user's firewall isn't blocking necessary traffic.

Next, I move to more advanced troubleshooting steps like examining the user's network configuration (IP address, gateway, DNS settings) using ipconfig or ifconfig. I would also investigate the VPN connection logs and routing tables to identify any potential issues with the network path. Depending on the issue, I may remotely access the user's machine or guide them through troubleshooting steps, like clearing DNS cache or resetting the network adapter. Throughout the process, clear communication with the user is essential to gather accurate information and provide effective support.
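Part of this layered troubleshooting can be scripted. The Python sketch below checks, in order, whether a name resolves (DNS) and whether a TCP connection can be opened (network path, firewall, VPN), then reports the first layer that fails. It uses a TCP connect instead of ping because sending ICMP usually requires elevated privileges. The targets in the usage note are examples, and `intranet.example.internal` is a hypothetical internal host.

```python
import socket

def resolves(hostname: str) -> bool:
    """DNS layer: does the name resolve to at least one address?"""
    try:
        return bool(socket.getaddrinfo(hostname, None))
    except socket.gaierror:
        return False

def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Transport layer: can a TCP connection be opened within `timeout` seconds?"""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, no route to host, etc.
        return False

def diagnose(targets: list[tuple[str, int]]) -> dict[tuple[str, int], str]:
    """Check each (host, port) layer by layer and report the first layer that fails."""
    report = {}
    for host, port in targets:
        if not resolves(host):
            report[(host, port)] = "DNS resolution failed"
        elif not tcp_reachable(host, port):
            report[(host, port)] = "TCP connect failed (firewall, VPN route, or service down?)"
        else:
            report[(host, port)] = "ok"
    return report
```

For example, `diagnose([("8.8.8.8", 53), ("intranet.example.internal", 443)])` might show the public target as `ok` while the internal one fails. That result points toward the VPN connection or split-DNS configuration rather than the user's internet access.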

Expert IT Specialist interview questions

1. Describe a time you anticipated a major IT problem and prevented it. What steps did you take?

During a system upgrade project, I noticed the projected downtime significantly exceeded the acceptable window for our e-commerce platform. After analyzing the upgrade plan, I identified that a large database migration was the critical path. Instead of the default in-place migration, I proposed and implemented a parallel migration strategy.

This involved setting up a new database instance, migrating the data incrementally while the existing system remained live, and then performing a cutover during a maintenance window. We used tools like rsync for initial data sync and implemented change data capture (CDC) to keep the new database synchronized. This reduced the downtime to under an hour, far below the initial estimate, and prevented significant revenue loss.
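The snapshot-plus-CDC approach can be modeled in a few lines of Python to show why the cutover window shrinks. The bulk copy happens while the system is live, so only the changes captured since then need replaying at the end. This toy model uses dictionaries in place of databases and a list in place of a real change stream (for example, a database's replication log). `initial_copy`, `write`, and `apply_changes` are hypothetical helpers.

```python
def initial_copy(source: dict) -> dict:
    """Bulk snapshot of the live data (the role rsync or a dump/restore plays)."""
    return dict(source)

def write(source: dict, change_log: list, op: str, key, value=None) -> None:
    """Simulated application write on the live system; CDC records every change."""
    if op == "delete":
        source.pop(key, None)
    else:
        source[key] = value
    change_log.append((op, key, value))

def apply_changes(target: dict, change_log: list, position: int = 0) -> int:
    """Replay captured changes from `position` onward, in order; return the new position."""
    for op, key, value in change_log[position:]:
        if op in ("insert", "update"):
            target[key] = value
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation {op!r}")
    return len(change_log)

live = {1: "order-1", 2: "order-2"}
changes: list = []
new_db = initial_copy(live)                       # snapshot taken at log position 0
write(live, changes, "insert", 3, "order-3")      # traffic continues during the copy
write(live, changes, "update", 1, "order-1-paid")
position = apply_changes(new_db, changes)         # catch up while still live
write(live, changes, "delete", 2)
# Cutover: pause writes, replay the small remaining tail, verify, switch traffic.
position = apply_changes(new_db, changes, position)
print(new_db == live)  # True
```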

2. How do you stay ahead of emerging technologies and determine which ones are worth implementing?

I stay updated through various channels, including reading industry blogs and publications like TechCrunch, Wired, and MIT Technology Review. I also follow key influencers and thought leaders on platforms like Twitter and LinkedIn. Attending webinars, conferences, and workshops is crucial for deeper dives into specific technologies.

To determine implementation worth, I assess technologies based on several factors: business need, potential ROI, scalability, integration complexity, and security implications. I often conduct proof-of-concept (POC) projects to test the technology in a controlled environment before broader adoption. Evaluating vendor support, community activity, and long-term viability is also part of my assessment. For example, if evaluating a new framework like TensorFlow vs. PyTorch, I would compare their performance on specific use cases, community support, and deployment options.
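The factor-based assessment can be made explicit with a weighted scoring matrix, which also documents why one option won. The Python sketch below assumes hypothetical weights and 1 to 5 scores taken from a proof of concept. Both are example values that each organization would set for itself.

```python
# Hypothetical criteria weights (they sum to 1.0); adjust to your organization's priorities.
WEIGHTS = {"business_need": 0.3, "roi": 0.25, "scalability": 0.15, "integration": 0.15, "security": 0.15}

def weighted_score(scores: dict) -> float:
    """Combine 1-5 criterion scores into a single weighted total."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(WEIGHTS[c] * scores[c] for c in WEIGHTS), 2)

# Example scores from a hypothetical proof of concept.
candidates = {
    "option_a": {"business_need": 5, "roi": 4, "scalability": 3, "integration": 2, "security": 4},
    "option_b": {"business_need": 3, "roi": 3, "scalability": 5, "integration": 5, "security": 3},
}
best = max(candidates, key=lambda name: weighted_score(candidates[name]))
print(best)  # option_a
```

Keeping the weights explicit makes the trade-off visible. In this example, option_b integrates more easily, but option_a wins on business need and ROI, the criteria weighted most heavily.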