Hiring Scala developers can be a challenge, given the language's complexity and the variety of skills required. Recruiters and hiring managers need a comprehensive set of questions to assess candidates effectively across different experience levels, much like when hiring for Java developer roles.

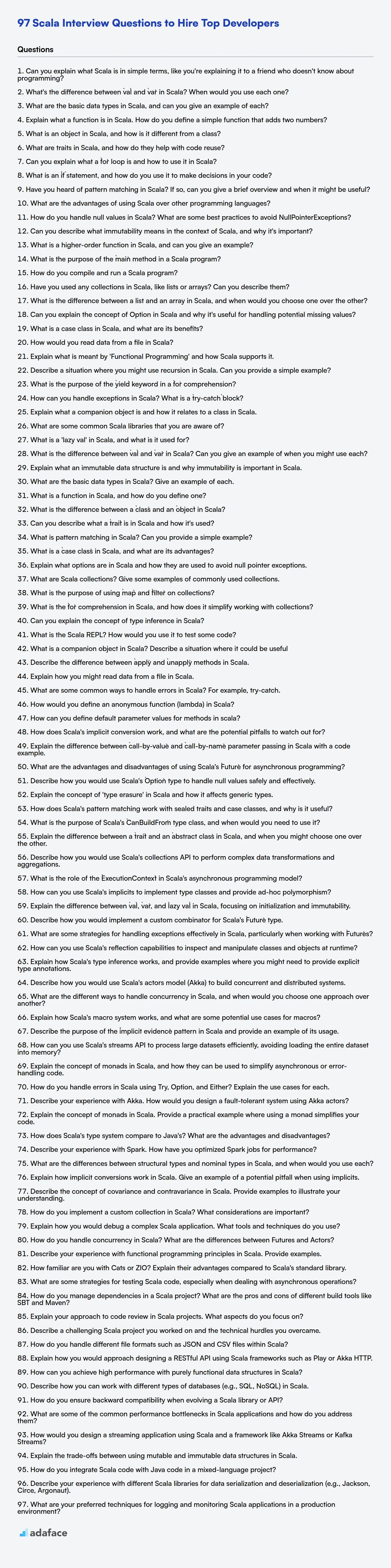

This blog post provides a curated list of Scala interview questions tailored for freshers, juniors, intermediate, and experienced developers, along with Scala MCQs. Each section aims to help you gauge a candidate's proficiency in Scala's core concepts, functional programming principles, and their ability to apply these skills to real-world problems.

By using these questions, you can streamline your interview process and identify candidates who possess the skills you need; for a more structured approach, consider using a Scala online test before the interview stage to filter candidates based on their skills.

Scala Developer interview questions for freshers

1. Can you explain what Scala is in simple terms, like you're explaining it to a friend who doesn't know about programming?

Imagine Scala as a souped-up version of Java. If Java is like a reliable family car, Scala is like a sports car built on the same platform. It's a programming language that runs on the Java Virtual Machine (JVM), so it's compatible with Java code, but it adds a lot of modern features to make programming more concise, expressive, and less prone to errors.

Think of it this way: Scala lets you do more with less code. It blends object-oriented and functional programming paradigms, giving you the flexibility to choose the best approach for each problem. Key features include:

- Conciseness: Write more effective code with fewer lines.

- Type Safety: Helps catch errors early during development.

- Concurrency: Easier to handle multiple tasks at once, especially useful for web applications.

2. What's the difference between `val` and `var` in Scala? When would you use each one?

val and var are keywords in Scala used to declare variables, but they differ in mutability. val creates an immutable variable, meaning its value cannot be changed after it's initialized. var creates a mutable variable, allowing its value to be reassigned.

Use val by default for immutability, which promotes safer and more predictable code. Use var only when you specifically need to change the value of a variable, such as within a loop counter or when updating state. Example:

val x = 10 // x cannot be reassigned

var y = 20 // y can be reassigned

y = 30

3. What are the basic data types in Scala, and can you give an example of each?

Scala has several basic data types. These can be broadly categorized into numeric types, the Boolean type, and the String type.

- Numeric Types: These include `Byte` (8-bit signed integer), `Short` (16-bit signed integer), `Int` (32-bit signed integer), `Long` (64-bit signed integer), `Float` (32-bit floating-point number), and `Double` (64-bit floating-point number). For example: `val age: Int = 30`, `val price: Double = 19.99`

- Boolean Type: Represents truth values, either `true` or `false`. For example: `val isAdult: Boolean = true`

- String Type: Represents a sequence of characters. For example: `val name: String = "John Doe"`

- Unit Type: The `Unit` type is equivalent to `void` in Java and is used when a function doesn't return a meaningful value. Example: `def printMessage(): Unit = { println("Hello") }`

- Char Type: Represents a single character. Example: `val initial: Char = 'J'`

4. Explain what a function is in Scala. How do you define a simple function that adds two numbers?

In Scala, a function is a first-class citizen, meaning it can be treated like any other value. This implies functions can be passed as arguments to other functions, returned as values from functions, and assigned to variables.

To define a simple function in Scala that adds two numbers, you can use the following syntax:

def add(x: Int, y: Int): Int = x + y

Here,

- The `def` keyword is used to define the function.

- `add` is the name of the function.

- `(x: Int, y: Int)` specifies the parameters and their types. Here, x and y are the parameters of type `Int`.

- `: Int` specifies the return type of the function, which is `Int` in this case.

- `= x + y` is the function body, which calculates the sum of x and y and returns the result.
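Because functions are first-class values, the same addition can also be written as a function literal and stored in a val. The following is a minimal sketch; the names `FunctionValues`, `addFn`, and `addAsValue` are made up for illustration.

```scala
object FunctionValues {
  // A method defined with def, as in the answer above
  def add(x: Int, y: Int): Int = x + y

  // The same logic written as a function literal and assigned to a val
  val addFn: (Int, Int) => Int = (x, y) => x + y

  // A method is converted to a function value where one is expected
  val addAsValue: (Int, Int) => Int = add

  def main(args: Array[String]): Unit = {
    println(add(2, 3))        // 5
    println(addFn(2, 3))      // 5
    println(addAsValue(2, 3)) // 5
  }
}
```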

5. What is an object in Scala, and how is it different from a class?

In Scala, an object is a singleton instance of a class. It's similar to a class, but only one instance of an object can ever exist. This is different from a class, where you can create multiple instances (objects) using the new keyword.

Key differences include:

- Instantiation: Objects are instantiated lazily, the first time they are accessed, while classes are instantiated using the `new` keyword.

- Uniqueness: Only one instance of an object can exist, ensuring a single point of access. Classes can have multiple instances.

- Use Cases: Objects are often used for utility functions, constants, or as entry points to applications (like the `main` method), while classes are used to define blueprints for creating objects with state and behavior.
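The contrast can be sketched in a few lines. `Counter`, `MathUtils`, and `ObjectVsClassDemo` are hypothetical names chosen for this example, not part of any library.

```scala
// A class is a blueprint: each `new Counter` has its own state
class Counter {
  private var count = 0
  def increment(): Int = { count += 1; count }
}

// An object is a singleton: there is exactly one MathUtils
object MathUtils {
  val Tau: Double = 2 * math.Pi
  def square(x: Int): Int = x * x
}

object ObjectVsClassDemo {
  def main(args: Array[String]): Unit = {
    val a = new Counter
    val b = new Counter
    a.increment()
    a.increment()
    println(a.increment())       // 3
    println(b.increment())       // 1, because b has separate state
    println(MathUtils.square(4)) // 16, called without creating an instance
  }
}
```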

6. What are traits in Scala, and how do they help with code reuse?

Traits in Scala are like interfaces with implementations. They define a type by specifying the methods and fields that a type must have but can also provide concrete method implementations and field definitions. A class or object can extend multiple traits, allowing for multiple inheritance of behavior.

Traits help with code reuse by allowing you to define common behavior in one place and then mix it into multiple classes. This avoids code duplication and promotes a more modular and maintainable codebase. Instead of inheriting from a single base class, you can combine multiple traits to achieve the desired functionality. This provides flexibility in composing classes with the behaviors they need. Traits enable functionalities like:

- Defining reusable interfaces: Traits define contracts that implementing classes must adhere to.

- Providing default implementations: Traits can provide concrete method implementations.

- Mixing in functionality: Classes can mix in multiple traits to inherit behavior from multiple sources, avoiding single inheritance limitations.
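As a rough illustration of these points, the sketch below mixes two traits into one class. The names `Greeter`, `Timestamped`, and `User` are invented for the example, and `createdAt` returns a fixed value only to keep the output predictable.

```scala
trait Greeter {
  def name: String                      // abstract: implementers must supply it
  def greet(): String = s"Hello, $name" // concrete default implementation
}

trait Timestamped {
  def createdAt: Long = 0L // fixed value to keep the sketch deterministic
}

// One class mixes in both traits with `extends ... with ...`
class User(val name: String) extends Greeter with Timestamped

object TraitDemo {
  def main(args: Array[String]): Unit = {
    val user = new User("Ada")
    println(user.greet())   // Hello, Ada
    println(user.createdAt) // 0
  }
}
```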

7. Can you explain what a `for` loop is and how to use it in Scala?

A for loop in Scala is a control flow statement that allows you to iterate over a sequence of elements. It provides a concise way to execute a block of code repeatedly for each element in the sequence. The basic syntax involves using the for keyword followed by a generator (which specifies the sequence to iterate over and the variable to assign each element to) and then the code block to execute.

For example:

for (i <- 1 to 5) {

println(s"The value of i is: $i")

}

In this example, 1 to 5 creates a range of numbers from 1 to 5 inclusive. The variable i takes on each value in the range successively, and the println statement is executed for each value. The <- operator binds each element of the sequence to i in turn. You can also include guards (if conditions) to filter the elements being processed and use the yield keyword to generate a new collection.
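Guards and yield can be sketched as follows. The object and member names are hypothetical; only the `for` syntax itself comes from the language.

```scala
object ForLoopDemo {
  // Guard: only even values of i reach the body
  def printEvens(): Unit =
    for (i <- 1 to 10 if i % 2 == 0) println(i)

  // yield: collect the result of each iteration into a new collection
  val evenSquares: Seq[Int] =
    for (i <- 1 to 10 if i % 2 == 0) yield i * i

  def main(args: Array[String]): Unit = {
    printEvens()                // prints 2, 4, 6, 8, 10 on separate lines
    println(evenSquares.toList) // List(4, 16, 36, 64, 100)
  }
}
```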

8. What is an `if` statement, and how do you use it to make decisions in your code?

An if statement is a fundamental control flow structure in programming that allows you to execute a block of code conditionally. It checks a boolean expression (a condition), and if the expression evaluates to true, the code block within the if statement is executed. If the expression is false, the code block is skipped.

To use an if statement, you write the keyword if, followed by the condition in parentheses (), and then the code block to be executed in curly braces {}. Here's an example:

if (x > 10) {

println("x is greater than 10")

}

You can also add an else block to execute different code when the condition is false, or use else if for multiple conditions. This allows for branching logic based on different possibilities.
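In Scala, if/else is also an expression that evaluates to a value, so its result can be returned or assigned directly. A minimal sketch, where `describe` is a made-up method name:

```scala
object IfExpressionDemo {
  // if/else returns a value, so no mutable variable is needed
  def describe(x: Int): String =
    if (x > 10) "greater than 10"
    else if (x == 10) "exactly 10"
    else "less than 10"

  def main(args: Array[String]): Unit = {
    println(describe(42)) // greater than 10
    println(describe(10)) // exactly 10
    println(describe(3))  // less than 10
  }
}
```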

9. Have you heard of pattern matching in Scala? If so, can you give a brief overview and when it might be useful?

Yes, I have heard of pattern matching in Scala. It's a mechanism for checking a value against a pattern. It's like a more powerful switch statement. In essence, it allows you to deconstruct data structures and bind values based on the structure of the data.

Pattern matching is useful in several scenarios:

- Type checking and casting: Matching on the type of a variable.

- Deconstructing case classes: Easily extracting values from case classes.

- Matching on collections: Deconstructing lists, tuples, etc.

- Defining functions based on input structure: Creating functions that behave differently depending on the input pattern. For example:

def describe(x: Any): String = x match {

case 5 => "Five"

case "hello" => "Hello string"

case true => "Truth"

case List(1, 2, _*) => "List starting with 1,2"

case _ => "Something else"

}

10. What are the advantages of using Scala over other programming languages?

Scala offers several advantages, including its seamless integration with Java (it runs on the JVM and can use Java libraries), making it easy to adopt in existing Java environments. It combines object-oriented and functional programming paradigms, offering flexibility and enabling concise, expressive code. Scala's static type system helps catch errors at compile time, improving code reliability. It also has strong support for concurrency, which makes it suitable for building high-performance applications, through Futures in the standard library and actor libraries such as Akka. Furthermore, it has powerful features like pattern matching and a preference for immutability.

Compared to Java, Scala reduces boilerplate code, enhancing developer productivity. Compared to dynamic languages like Python or JavaScript, Scala's static typing catches many errors at compile time rather than at runtime. Its functional programming capabilities facilitate writing more maintainable and testable code.

11. How do you handle null values in Scala? What are some best practices to avoid NullPointerExceptions?

Scala, being a hybrid functional language, encourages avoiding null values to prevent NullPointerExceptions. Scala provides Option[T] to represent optional values, which can be either Some(value) or None. Using Option forces you to explicitly handle the case where a value might be absent, making your code safer and more readable.

Best practices include:

- Favor Option over null for representing potentially missing values.

- Use pattern matching or methods like map, flatMap, getOrElse, and orElse to safely extract or provide default values from Option instances.

- Consider using libraries like cats or scalaz, which provide more advanced features for working with Option and other functional constructs, although this is usually needed only for advanced uses.

- Avoid Java APIs that return null when possible. When you cannot avoid them, wrap them in Scala code that returns Option instead. For example, val optionalValue: Option[String] = Option(nullableJavaMethod()), where nullableJavaMethod() returns a potentially null string.
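The wrapping technique can be sketched with a real Java API: `System.getProperty` returns null for a key that is not set. The `property` helper and the key `example.not.set` are assumptions made for this example; the key is assumed to be unset on your JVM.

```scala
object OptionDemo {
  // System.getProperty is a Java API that returns null for an unknown key;
  // Option(...) turns that null into None
  def property(key: String): Option[String] = Option(System.getProperty(key))

  def main(args: Array[String]): Unit = {
    val missing = property("example.not.set") // hypothetical key, assumed unset
    val present = property("java.version")

    println(missing.getOrElse("default")) // default
    println(missing.map(_.toUpperCase))   // None
    println(present.isDefined)            // true on a standard JVM
  }
}
```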

12. Can you describe what immutability means in the context of Scala, and why it's important?

Immutability in Scala means that once an object is created, its state cannot be changed. This applies to fields within the object; their values remain constant throughout the object's lifetime. Scala encourages immutability through the use of val for declaring variables and immutable collections.

Immutability is important because it promotes safer and more predictable code. It simplifies reasoning about program behavior, especially in concurrent environments, as immutable objects are inherently thread-safe. It also enables techniques like caching and memoization, as the result of a function will always be the same given the same immutable input. Data structures like List, Vector, and Map in Scala are immutable by default.

13. What is a higher-order function in Scala, and can you give an example?

A higher-order function in Scala is a function that takes one or more functions as arguments and/or returns a function as its result. Essentially, it treats functions as first-class citizens.

For example:

def operateOnList(list: List[Int], operation: Int => Int): List[Int] = {

list.map(operation)

}

def square(x: Int): Int = x * x

val numbers = List(1, 2, 3, 4, 5)

val squaredNumbers = operateOnList(numbers, square) // squaredNumbers will be List(1, 4, 9, 16, 25)

In this case, operateOnList is a higher-order function because it takes another function (operation) as an argument. square is a regular function that's passed into operateOnList.

14. What is the purpose of the `main` method in a Scala program?

The main method in Scala serves as the entry point of a program's execution. Similar to Java, when you run a Scala application, the JVM looks for a main method to begin the program's execution. Without a main method, the program won't know where to start.

The main method in Scala must be defined inside an object and have a specific signature: def main(args: Array[String]): Unit. Here, args is an array of strings that represents the command-line arguments passed to the program. The Unit return type indicates that the main method does not return any meaningful value (similar to void in Java).
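A minimal entry point might look like the sketch below. `HelloApp` and the `greeting` helper are hypothetical names used only for illustration.

```scala
object HelloApp {
  // Entry point: the JVM calls this with the command-line arguments
  def main(args: Array[String]): Unit = {
    println(greeting(args))
  }

  // Hypothetical helper: greets the first argument, or "world" if none is given
  def greeting(args: Array[String]): String =
    s"Hello, ${args.headOption.getOrElse("world")}!"
}
```

Scala 3 additionally offers the `@main` annotation as a shorter way to declare an entry point, but the explicit signature above works in both Scala 2 and Scala 3.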

15. How do you compile and run a Scala program?

To compile a Scala program, you typically use the scalac compiler. For example, if your Scala file is named MyProgram.scala, you would compile it using the command scalac MyProgram.scala. This generates .class files containing the bytecode.

To run the compiled Scala program, you use the scala command followed by the name of the main class. For example, if your main class is named MyProgram, you would run it using the command scala MyProgram. The scala command executes the compiled bytecode using the Scala runtime environment. You can also package your Scala application into a .jar file using sbt or other build tools and run the jar file with java -jar myapp.jar.

16. Have you used any collections in Scala, like lists or arrays? Can you describe them?

Yes, I have used collections extensively in Scala. Scala offers a rich set of immutable and mutable collections. Common ones include:

- Lists: Immutable, singly linked lists. They are great for functional programming and prepending elements efficiently.

- Arrays: Mutable, fixed-size sequences of elements, similar to Java arrays. `ArrayBuffer` provides a growable array.

- Sets: Collections of unique elements, available in both mutable and immutable variants.

- Maps: Collections of key-value pairs, also available in mutable and immutable variants. They provide efficient lookups by key.

Scala collections come with a wealth of methods for transformation (e.g., map, filter), aggregation (e.g., reduce, fold), and iteration. These methods are often used to avoid explicit loops. For example:

val numbers = List(1, 2, 3, 4, 5)

val squaredNumbers = numbers.map(x => x * x) // Returns List(1, 4, 9, 16, 25)

17. What is the difference between a list and an array in Scala, and when would you choose one over the other?

In Scala, both Lists and Arrays are used to store sequences of elements, but they differ in mutability and memory allocation. List is an immutable, singly-linked list. This means once a List is created, its elements cannot be changed. Operations like adding or removing elements create a new List. Array, on the other hand, is a mutable, contiguous block of memory, similar to Java arrays. Elements in an Array can be modified after creation.

You'd choose a List when immutability is desired or required, such as in functional programming paradigms where avoiding side effects is crucial. List also provides efficient prepend operations (using ::). Choose Array when you need a mutable sequence and/or performance is critical, particularly when accessing elements by index or modifying existing elements frequently, because arrays provide O(1) random access. Arrays are also often preferred when interoperating with Java code that expects Java arrays. Lists carry some overhead compared to arrays: each element lives in its own linked-list cell, indexed access is O(n), and appending an element requires copying the list.
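The difference in mutability can be sketched briefly. The object and value names here are made up for the example.

```scala
object ListVsArrayDemo {
  // List: immutable; prepending with :: returns a new list
  val list: List[Int] = List(2, 3)
  val longer: List[Int] = 1 :: list

  // Array: mutable; elements are updated in place by index
  val array: Array[Int] = Array(1, 2, 3)
  array(0) = 10

  def main(args: Array[String]): Unit = {
    println(list)                 // List(2, 3), unchanged by the prepend
    println(longer)               // List(1, 2, 3)
    println(array.mkString(", ")) // 10, 2, 3
  }
}
```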

18. Can you explain the concept of Option in Scala and why it's useful for handling potential missing values?

In Scala, Option is a container object that may or may not contain a non-null value. It's a way to explicitly represent the possibility of a value being absent, addressing the common problem of NullPointerException in other languages. An Option can be in one of two states: Some(value), which wraps an actual value, or None, which indicates that the value is missing.

Using Option requires you, as a developer, to handle the case where a value might be absent. Instead of implicitly dealing with null, the compiler guides you to explicitly consider the possibility of None. This leads to more robust and less error-prone code. Common operations on Option include getOrElse, map, flatMap, and pattern matching to safely access or transform the contained value, if present. For example:

val present: Option[Int] = Some(5)

val a = present.getOrElse(0) // a is 5

val absent: Option[Int] = None

val b = absent.getOrElse(0) // b is 0

19. What is a case class in Scala, and what are its benefits?

In Scala, a case class is a regular class that is immutable by default and provides several useful methods automatically, such as equals, hashCode, and toString. It simplifies creating data-centric classes.

Benefits include:

- Immutability: Makes code safer and easier to reason about.

- Concise syntax: Reduces boilerplate code.

- Pattern matching support: Lets you deconstruct instances and branch on their contents concisely.

- Automatic methods: `equals`, `hashCode`, and `toString` are generated automatically.

- Copy method: Provides a simple way to create modified copies of objects.

- Example:

case class Point(x: Int, y: Int)
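The benefits listed above can be seen with the same `Point` class. This is a small sketch; `CaseClassDemo` and `quadrant` are hypothetical names.

```scala
case class Point(x: Int, y: Int)

object CaseClassDemo {
  // Pattern matching deconstructs the case class into its fields
  def quadrant(p: Point): String = p match {
    case Point(0, 0)                   => "origin"
    case Point(x, y) if x > 0 && y > 0 => "first quadrant"
    case _                             => "elsewhere"
  }

  def main(args: Array[String]): Unit = {
    val p1 = Point(1, 2)       // no `new` needed
    val p2 = p1.copy(y = 5)    // modified copy; p1 is unchanged
    println(p1)                // Point(1,2)
    println(p2)                // Point(1,5)
    println(p1 == Point(1, 2)) // true: structural equality
    println(quadrant(p2))      // first quadrant
  }
}
```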

20. How would you read data from a file in Scala?

In Scala, you can read data from a file using several approaches. One common way is to use the scala.io.Source object. Here's a basic example:

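import scala.io.Source

val filename = "data.txt"

try {

  val source = Source.fromFile(filename)

  try {

    for (line <- source.getLines()) println(line)

  } finally {

    source.close() // always release the file handle

  }

} catch {

  case e: java.io.IOException => println(s"Couldn't read $filename: ${e.getMessage}")

}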

This code opens the file specified by filename, iterates through each line, and prints it to the console. The try-catch block handles potential exceptions such as the file not existing. Another option for reading an entire file at once into a string is Source.fromFile(filename).mkString.
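On Scala 2.13 and later, `scala.util.Using` is one way to make sure the file is closed after reading. The sketch below assumes that version; `ReadFileDemo`, `readLines`, and the temporary file are made up so the example is self-contained.

```scala
import java.nio.file.{Files, Path}
import scala.io.Source
import scala.util.{Try, Using}

object ReadFileDemo {
  // Returns the lines of the file, or a Failure if it cannot be read.
  // Using closes the Source even when reading throws.
  def readLines(path: String): Try[List[String]] =
    Using(Source.fromFile(path)) { source =>
      source.getLines().toList
    }

  def main(args: Array[String]): Unit = {
    // Create a throwaway file so the sketch does not depend on data.txt
    val tmp: Path = Files.createTempFile("demo", ".txt")
    Files.write(tmp, "first\nsecond".getBytes("UTF-8"))

    println(readLines(tmp.toString))                 // Success(List(first, second))
    println(readLines("no-such-file.txt").isFailure) // true, assuming no such file exists
    Files.delete(tmp)
  }
}
```

Returning a `Try` leaves it to the caller to decide how to react to a missing or unreadable file, instead of printing inside the reader.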

21. Explain what is meant by 'Functional Programming' and how Scala supports it.

Functional Programming (FP) is a programming paradigm that treats computation as the evaluation of mathematical functions and avoids changing state and mutable data. In essence, FP emphasizes immutability, pure functions (functions with no side effects), and treating functions as first-class citizens (meaning functions can be passed as arguments to other functions, returned as values, and assigned to variables).

Scala supports functional programming through several key features:

- Immutability: Scala encourages the use of immutable data structures (like `val` for variables and immutable collections). This helps avoid unintended side effects and makes code easier to reason about.

- Pure Functions: Scala promotes the creation of pure functions.

- First-Class Functions: Functions in Scala are first-class citizens. This allows for higher-order functions (functions that take other functions as arguments or return them as results). Example:

def operate(x: Int, y: Int, f: (Int, Int) => Int): Int = f(x, y)

val sum = operate(2, 3, (a, b) => a + b) // sum is 5

- Case Classes: Case classes provide a concise way to define immutable data structures and automatically generate useful methods like `equals`, `hashCode`, and `toString`.

- Pattern Matching: Scala's pattern matching allows you to deconstruct data structures and handle different cases in a concise and expressive way, which is useful for working with immutable data and defining functions based on data structure.

- Lazy Evaluation: Scala supports lazy evaluation, allowing expressions to be evaluated only when their values are needed.

22. Describe a situation where you might use recursion in Scala. Can you provide a simple example?

Recursion is useful in Scala when dealing with problems that can be broken down into smaller, self-similar subproblems. A classic example is calculating the factorial of a number. Instead of using a loop, a recursive function can call itself with a smaller input until it reaches a base case.

Here's a simple example:

def factorial(n: Int): Int = {

if (n <= 1) {

1 // Base case: factorial of 0 or 1 is 1

} else {

n * factorial(n - 1) // Recursive call

}

}

In this code, factorial calls itself with n - 1 until n is 1 or less. The result is then built up as the calls return.
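A follow-up interviewers often ask is how to make this stack-safe. One common approach is a tail-recursive helper with an accumulator, checked by the `@tailrec` annotation. The sketch below also switches to `BigInt`, since an `Int` result overflows for n greater than 12; the helper name `loop` is arbitrary.

```scala
import scala.annotation.tailrec

object FactorialDemo {
  // BigInt avoids the overflow that Int hits for n > 12
  def factorial(n: Int): BigInt = {
    // The recursive call is the last action, so the compiler can turn it into a loop
    @tailrec
    def loop(remaining: Int, acc: BigInt): BigInt =
      if (remaining <= 1) acc
      else loop(remaining - 1, acc * remaining)

    loop(n, BigInt(1))
  }

  def main(args: Array[String]): Unit = {
    println(factorial(5))  // 120
    println(factorial(20)) // 2432902008176640000
  }
}
```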

23. What is the purpose of the `yield` keyword in a `for` comprehension?

The yield keyword makes a for comprehension produce a value. Without yield, the body runs only for its side effects and the expression returns Unit. With yield, the result of each iteration is collected into a new collection whose type follows the first generator: iterating over a List yields a List, and iterating over an Option yields an Option. The compiler translates such a comprehension into calls to map, flatMap, and withFilter.

Unlike yield in Python or C#, this does not create a lazy generator. For a strict collection such as List, all results are computed immediately. Evaluation is deferred only when the source itself is lazy, for example a LazyList, an Iterator, or a view.
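In Scala, a for comprehension with yield is an expression that returns a new collection and is equivalent to calls such as map and flatMap. The sketch below shows this; all names in it are invented for illustration.

```scala
object YieldDemo {
  val numbers: List[Int] = List(1, 2, 3)

  // With yield, the comprehension returns a new List
  val doubled: List[Int] = for (n <- numbers) yield n * 2

  // Equivalent form using map directly
  val doubledWithMap: List[Int] = numbers.map(n => n * 2)

  // Several generators combine Options; any None makes the result None
  def sum(a: Option[Int], b: Option[Int]): Option[Int] =
    for {
      x <- a
      y <- b
    } yield x + y

  def main(args: Array[String]): Unit = {
    println(doubled)                   // List(2, 4, 6)
    println(doubled == doubledWithMap) // true
    println(sum(Some(1), Some(2)))     // Some(3)
    println(sum(Some(1), None))        // None
  }
}
```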

24. How can you handle exceptions in Scala? What is a `try-catch` block?

In Scala, exceptions are handled using try-catch-finally blocks, similar to Java. The try block encloses the code that might throw an exception. Unlike Java, there is a single catch block, and it uses pattern-matching case clauses to handle different exception types. The first case that matches the thrown exception is executed.

A try-catch block is structured as follows:

try {

// Code that might throw an exception

} catch {

case ex: SpecificExceptionType => {

// Handle SpecificExceptionType

}

case ex: AnotherExceptionType => {

// Handle AnotherExceptionType

}

} finally {

// Optional: Code that always executes, regardless of exceptions

}

The finally block is optional and contains code that will always be executed, whether or not an exception was thrown or caught. This is commonly used for cleanup operations like closing resources.
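Two related points are worth knowing: try/catch is itself an expression that yields a value, and the standard library's `scala.util.Try` wraps a computation as either `Success` or `Failure` instead of throwing. A minimal sketch, with made-up method names `parseOrZero` and `parse`:

```scala
import scala.util.{Failure, Success, Try}

object TryDemo {
  // try/catch is an expression: each branch produces the result
  def parseOrZero(s: String): Int =
    try s.toInt
    catch { case _: NumberFormatException => 0 }

  // Try captures the outcome as Success or Failure instead of throwing
  def parse(s: String): Try[Int] = Try(s.toInt)

  def main(args: Array[String]): Unit = {
    println(parseOrZero("42"))  // 42
    println(parseOrZero("abc")) // 0

    parse("7") match {
      case Success(n) => println(s"Parsed $n") // Parsed 7
      case Failure(e) => println(s"Failed: ${e.getMessage}")
    }
    println(parse("abc").isFailure) // true
  }
}
```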

25. Explain what a companion object is and how it relates to a class in Scala.

In Scala, a companion object is an object that is declared in the same file as a class and shares the same name. A companion object provides a place to put static members or factory methods that are associated with the class. It's a mechanism to achieve what static members provide in languages like Java.

The companion object and its class have a special relationship: they can access each other's private members. This allows the companion object to act as a utility or helper for the class. Common uses include implementing factory patterns to create instances of the class, defining constant values, or providing helper functions. The class is called the companion class of the object, and the object is called the companion object of the class.
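A factory method in a companion object could be sketched like this. `Temperature`, `fromCelsius`, and `AbsoluteZero` are hypothetical names; the class and its companion must be defined in the same source file.

```scala
// The constructor is private, so instances can only be created via the companion
class Temperature private (val celsius: Double)

object Temperature {
  val AbsoluteZero: Double = -273.15

  // Factory method: the companion may call the private constructor
  def fromCelsius(c: Double): Option[Temperature] =
    if (c >= AbsoluteZero) Some(new Temperature(c)) else None
}

object CompanionDemo {
  def main(args: Array[String]): Unit = {
    println(Temperature.fromCelsius(21.5).map(_.celsius)) // Some(21.5)
    println(Temperature.fromCelsius(-300).isEmpty)        // true
  }
}
```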

26. What are some common Scala libraries that you are aware of?

I'm familiar with several common Scala libraries. Some frequently used ones include:

- Cats and Scalaz: For functional programming constructs like type classes, monads, and applicative functors.

- Akka: A toolkit for building concurrent, distributed, and resilient message-driven applications. Includes Akka Actors, Akka Streams, and Akka HTTP.

- Spark: A powerful engine for big data processing and analytics, with Scala providing a clean and concise API for interacting with Spark's distributed computing capabilities.

- Play Framework: A web framework known for its reactive architecture and developer productivity.

- ScalaTest and Specs2: Popular testing frameworks for writing unit and integration tests.

- circe and jackson-module-scala: For JSON serialization/deserialization.

- Http4s: A purely functional, non-blocking HTTP server and client.

27. What is a 'lazy val' in Scala, and what is it used for?

A lazy val in Scala is a value that is initialized only once, and only when it is first accessed. It combines the properties of both val (immutable after initialization) and lazy evaluation. Unlike a regular val, its right-hand side is not evaluated at the point of declaration. Instead, its evaluation is deferred until the first time the lazy val is used.

lazy val is commonly used to:

- Defer expensive computations: If a value's calculation is costly and not always needed, `lazy val` delays the computation until it's actually required, potentially improving performance.

- Avoid initialization order issues: When defining values that depend on each other, `lazy val` can help avoid issues related to initialization order, as the values are only initialized when accessed.

- Handle potentially erroneous values: If the computation of a value might result in an exception, `lazy val` delays the exception until the value is actually used, allowing the program to potentially avoid the error if the value is never accessed.
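The once-only, on-demand evaluation can be observed with a counter. In this sketch, `LazyValDemo`, `evaluations`, and `expensive` are invented names, and the sum stands in for a genuinely costly computation.

```scala
object LazyValDemo {
  var evaluations = 0 // counts how often the right-hand side runs

  lazy val expensive: Int = {
    evaluations += 1
    (1 to 1000).sum // stand-in for a costly computation
  }

  def main(args: Array[String]): Unit = {
    println(evaluations) // 0: nothing computed yet
    println(expensive)   // 500500: computed on first access
    println(expensive)   // 500500: cached, not recomputed
    println(evaluations) // 1
  }
}
```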

Scala Developer interview questions for juniors

1. What is the difference between `val` and `var` in Scala? Can you give an example of when you might use each?

val and var are keywords in Scala used to declare variables. val declares an immutable variable, meaning its value cannot be reassigned after initialization. var declares a mutable variable, meaning its value can be changed after initialization.

Use val when you want to ensure that a variable's value remains constant throughout its scope, promoting immutability and preventing unintended side effects. For example:

val message = "Hello, world!" // message cannot be reassigned

Use var when you need to update a variable's value. For example:

var counter = 0 // counter can be reassigned

counter = counter + 1

2. Explain what an immutable data structure is and why immutability is important in Scala.

An immutable data structure is one whose state cannot be modified after it's created. If you need to change it, you must create a new instance with the desired modifications. In Scala, immutability is a core principle and offers several benefits. It simplifies reasoning about code since the value of an immutable object is predictable throughout its lifetime, preventing unexpected side effects. This makes debugging easier and leads to more reliable code.

Furthermore, immutability naturally supports concurrency. Because immutable objects can't be changed after creation, multiple threads can safely access them without the need for locking or synchronization mechanisms. This results in more efficient and scalable concurrent applications. Scala provides built-in immutable collections (like List, Map, Set), and encourages their use, which aids in writing safer, more maintainable, and concurrent programs. Consider this example:

val list = List(1, 2, 3)

val newList = list :+ 4 // Creates a *new* list with 4 appended

println(list) // Still List(1, 2, 3)

println(newList) // List(1, 2, 3, 4)

3. What are the basic data types in Scala? Give an example of each.

Scala has several basic data types. These can be broadly categorized as numeric types, the Boolean type, and the String type.

- Numeric Types:

  - `Int`: Represents 32-bit signed integers. Example: `val age: Int = 30`

  - `Long`: Represents 64-bit signed integers. Example: `val bigNumber: Long = 1234567890123L`

  - `Float`: Represents 32-bit single-precision floating-point numbers. Example: `val price: Float = 19.99f`

  - `Double`: Represents 64-bit double-precision floating-point numbers. Example: `val pi: Double = 3.14159`

  - `Short`: Represents 16-bit signed integers. Example: `val smallNumber: Short = 10`

  - `Byte`: Represents 8-bit signed integers. Example: `val byteValue: Byte = 127`

- Boolean Type:

  - `Boolean`: Represents a value that can be either `true` or `false`. Example: `val isAdult: Boolean = true`

- String Type:

  - `String`: Represents a sequence of characters. Example: `val name: String = "John Doe"`

- Character Type:

  - `Char`: Represents a single 16-bit Unicode character. Example: `val grade: Char = 'A'`

- Unit Type:

  - `Unit`: Equivalent to `void` in Java/C++. It has only one value, `()`. Example: `def doSomething(): Unit = { println("Done") }`

- Null Type:

  - `Null`: The type of the `null` literal. It is a subtype of all reference types. Example: `val ref: String = null`

- Nothing Type:

  - `Nothing`: A subtype of all other types. It indicates non-termination or an error. For example, a function that always throws an exception has a return type of `Nothing`.

4. What is a function in Scala, and how do you define one?

In Scala, a function is a first-class citizen, meaning it can be treated like any other value. You can pass functions as arguments to other functions, return them as values from other functions, and assign them to variables.

You define a function using the def keyword, followed by the function name, parameter list (with types), return type, and the function body. For example:

def add(x: Int, y: Int): Int = {

x + y

}

This defines a function named add that takes two integer parameters x and y, and returns their sum as an integer. The return type can sometimes be inferred by the compiler, allowing you to omit it.

5. What is the difference between a `class` and an `object` in Scala?

A class in Scala is a blueprint or template for creating objects. It defines the properties (fields) and behaviors (methods) that objects of that class will have. Think of it as a cookie cutter.

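In general object-oriented terms, an object is a concrete instance of a class: the cookie created using the cookie cutter. You can create multiple instances from a single class, each with its own state, by using the new keyword. In Scala, however, the object keyword has a specific meaning: it declares a singleton, a type with exactly one instance that is created lazily on first access. A singleton object cannot be instantiated with new and is typically used for utility methods, constants, companion objects, and application entry points.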

6. Can you describe what a `trait` is in Scala and how it's used?

In Scala, a trait is a collection of abstract and non-abstract methods that can be used to define object characteristics. Think of it as an interface with possible implementations. Unlike classes, traits cannot be instantiated directly. They are designed to be mixed into classes using the extends and with keywords, allowing for code reuse and a form of multiple inheritance.

Traits are similar to Java 8 interfaces, and can also have fields. If a class mixes in multiple traits, it inherits all their members. Traits can define a type, specifying methods a class must implement. Here's a simple example:

trait Logger {

def log(message: String): Unit

}

class MyClass extends Logger {

def log(message: String): Unit = println(message)

}

7. What is pattern matching in Scala? Can you provide a simple example?

Pattern matching in Scala is a mechanism for checking a value against a pattern. It's similar to a switch statement in other languages but is much more powerful. It allows you to deconstruct data structures, match against specific values, types, and even use guards to add more complex conditions.

Here's a simple example:

val x = 10

x match {

case 1 => println("One")

case 10 => println("Ten")

case _ => println("Something else")

}

In this example, x is matched against different patterns. The case _ is a wildcard, which matches if none of the other cases match.

8. What is a `case class` in Scala, and what are its advantages?

A case class in Scala is a regular class with some additional features that are automatically generated by the compiler, making it more concise and convenient to use, especially for data modeling. These features include:

- Automatic constructor parameter promotion: Constructor parameters are implicitly `val` fields.

- `equals` and `hashCode` methods: Implemented based on the class's fields, enabling structural equality.

- `toString` method: Provides a human-readable string representation of the object.

- `copy` method: Creates a new instance of the class with potentially modified field values.

- Companion object with `apply` and `unapply` methods: Enables factory-like object creation without using `new` and pattern matching, respectively.

The main advantages of using case class include reduced boilerplate code, simplified data comparison and manipulation, and enhanced support for pattern matching, leading to more readable and maintainable code. They are commonly used to represent immutable data structures in functional programming.
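A short sketch of these generated features, using a made-up `Point` case class:

```scala
// Hypothetical case class, for illustration only.
case class Point(x: Int, y: Int)

val p1 = Point(1, 2)        // companion apply: no `new` needed
val p2 = p1.copy(y = 5)     // new instance with one field changed

println(p1 == Point(1, 2))  // true: structural equality
println(p1)                 // Point(1,2): generated toString

val Point(px, py) = p2      // unapply: destructure into px and py
```

None of `apply`, `copy`, `equals`, `toString`, or `unapply` is written by hand here; the compiler generates them from the one-line declaration.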

9. Explain what options are in Scala and how they are used to avoid null pointer exceptions.

In Scala, an Option is a container object used to represent optional values. It's a type that can hold either Some(value) if a value is present, or None if a value is absent. This approach helps avoid null pointer exceptions, a common problem in languages like Java.

Instead of returning null to indicate the absence of a value, Scala functions can return an Option. Clients of the function can then use pattern matching or methods like isDefined, get, getOrElse, or map to safely access or handle the potential absence of a value. This forces developers to explicitly consider the case where a value might not be present, making code more robust and less prone to errors. For example:

val maybeValue: Option[String] = Some("hello")

maybeValue match {

case Some(value) => println(s"Value is: $value")

case None => println("No value found")

}
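Pattern matching is not the only option: `map` and `getOrElse` often express the same intent more compactly. A minimal sketch, assuming a made-up `ages` lookup table:

```scala
// Hypothetical data, for illustration only.
val ages: Map[String, Int] = Map("alice" -> 30)

// Map#get returns an Option, so a missing key yields None instead of null.
def ageNextYear(name: String): Option[Int] = ages.get(name).map(_ + 1)

val known = ageNextYear("alice")      // Some(31)
val unknown = ageNextYear("bob")      // None
val fallback = unknown.getOrElse(-1)  // -1
```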

10. What are Scala collections? Give some examples of commonly used collections.

Scala collections are data structures that hold and organize multiple elements. They provide a rich set of operations for manipulating data, such as adding, removing, searching, and transforming elements. Scala's collections are immutable by default, promoting safer and more predictable code. However, mutable collections are also available when needed.

Some commonly used Scala collections include:

- List: An ordered, immutable sequence of elements.

- Array: A mutable, fixed-size sequence of elements.

- Set: An unordered collection of unique elements (immutable by default).

- Map: A collection of key-value pairs (immutable by default). Keys are unique.

- Tuple: An ordered, immutable collection of a fixed number of elements, which can be of different types.

- Option: Represents an optional value; either Some(value) or None.

- Vector: An indexed, immutable sequence providing fast random access and updates. More efficient than List for large datasets.

Example:

val myList = List(1, 2, 3)

val mySet = Set("apple", "banana", "cherry")

val myMap = Map("a" -> 1, "b" -> 2)

11. What is the purpose of using `map` and `filter` on collections?

The map function transforms each element of a collection (like a list or array) into a new value based on a provided function. Essentially, it applies the same operation to every item and returns a new collection containing the transformed results.

filter, on the other hand, selects elements from a collection based on a condition (defined by a function). It returns a new collection containing only the elements that satisfy the condition, effectively removing elements that don't meet the criteria. The same idea exists in most languages; for example, in Python:

numbers = [1, 2, 3, 4, 5, 6]

even_numbers = list(filter(lambda x: x % 2 == 0, numbers))

squared_numbers = list(map(lambda x: x**2, numbers))

print(even_numbers) # Output: [2, 4, 6]

print(squared_numbers) # Output: [1, 4, 9, 16, 25, 36]
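The same operations in Scala, as a minimal sketch. Both methods return new lists and leave `numbers` untouched.

```scala
val numbers = List(1, 2, 3, 4, 5, 6)

val evenNumbers = numbers.filter(_ % 2 == 0)   // List(2, 4, 6)
val squaredNumbers = numbers.map(n => n * n)   // List(1, 4, 9, 16, 25, 36)

println(evenNumbers)
println(squaredNumbers)
```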

12. What is the `for` comprehension in Scala, and how does it simplify working with collections?

In Scala, a for comprehension provides a concise way to work with collections (like lists, arrays, options, etc.). It essentially desugars into a series of map, flatMap, and filter operations. This allows you to perform complex operations on collections in a more readable and expressive manner than using these functions directly.

For example, instead of nested flatMap calls to iterate through multiple collections, you can use a for comprehension with multiple for clauses. Similarly, if conditions within the for comprehension are translated into filter operations. This simplification makes code dealing with collections easier to write, understand, and maintain. Here's a simple example:

val numbers = List(1, 2, 3, 4, 5)

val evenNumbersSquared = for {

n <- numbers

if n % 2 == 0

} yield n * n

// evenNumbersSquared will be List(4, 16)
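To make the desugaring concrete, the sketch below writes the same computation twice: once as a for comprehension with two generators and once with explicit flatMap and map calls. The variable names are illustrative.

```scala
val xs = List(1, 2)
val ys = List("a", "b")

// For comprehension with two generators.
val viaFor = for {
  x <- xs
  y <- ys
} yield s"$x$y"

// Roughly what the compiler turns it into.
val viaFlatMap = xs.flatMap(x => ys.map(y => s"$x$y"))

println(viaFor)      // List(1a, 1b, 2a, 2b)
println(viaFlatMap)  // List(1a, 1b, 2a, 2b)
```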

13. Can you explain the concept of type inference in Scala?

Type inference in Scala is the compiler's ability to automatically deduce the data type of an expression. Instead of explicitly declaring the type of a variable, the compiler infers it based on the context, such as the value assigned to it or the methods called on it. This makes Scala code more concise and readable, as it reduces the need for explicit type annotations.

For example, instead of writing val message: String = "Hello", you can simply write val message = "Hello". The Scala compiler infers that message is of type String because it's initialized with a string literal. Similarly, for function return types, if the return type is straightforward, you can omit it and the compiler will infer it based on the returned value within the function.

14. What is the Scala REPL? How would you use it to test some code?

The Scala REPL (Read-Eval-Print Loop) is an interactive interpreter for the Scala language. It allows you to enter Scala expressions and statements, which are then immediately evaluated, and the result is printed back to the console. It's a powerful tool for experimentation and learning the language.

To use the REPL to test code, you can simply paste code snippets directly into the REPL. For example:

def add(x: Int, y: Int): Int = x + y

add(5, 3) // Output: 8

You can also define variables and classes and test them similarly. If you have code in a file, you can load it into the REPL using :load filename.scala. Then, you can interact with the loaded code by calling functions or creating instances of classes and testing their behavior directly in the REPL.

15. What is a companion object in Scala? Describe a situation where it could be useful

In Scala, a companion object is an object that is declared in the same source file as a class or trait and shares the same name. The class or trait is called the companion class or trait. A companion object can access the private members of its companion class/trait, and vice versa.

A useful situation for companion objects is creating factory methods. For example, if you want to provide a more descriptive way to construct instances of a class or perform some initialization logic before instance creation, you can define factory methods within the companion object. Here's an example:

class Circle(val radius: Double) {

// ... class members

}

object Circle {

def apply(radius: Double): Circle = new Circle(radius) // Factory method

def createUnitCircle(): Circle = new Circle(1.0)

}

// Usage

val circle1 = Circle(5.0) // Calls Circle.apply

val unitCircle = Circle.createUnitCircle()

16. Describe the difference between `apply` and `unapply` methods in Scala.

In Scala, apply and unapply methods are used for object construction and deconstruction, respectively, often in companion objects. The apply method lets you create instances of a class without using the new keyword. For example, MyClass(arg1, arg2) is actually calling MyClass.apply(arg1, arg2) if an apply method is defined in MyClass's companion object. It's the mechanism behind factory methods.

The unapply method, conversely, is used for pattern matching. It takes an object and tries to extract values from it. If successful, it returns Some containing the extracted values (typically as a tuple); otherwise, it returns None. This enables you to use custom types in match expressions and extract their components. If only one value needs to be extracted, then the returned value can be wrapped directly in the Some object without creating a tuple.

object Email {

def apply(user: String, domain: String): String = user + "@" + domain

def unapply(str: String): Option[(String, String)] = {

val parts = str split "@"

if (parts.length == 2) Some((parts(0), parts(1))) else None

}

}

val emailString = Email("john", "example.com") // Uses apply

emailString match {

case Email(user, domain) => println(s"User: $user, Domain: $domain") // Uses unapply

case _ => println("Not an email address")

}

17. Explain how you might read data from a file in Scala.

In Scala, you can read data from a file using several methods. One common approach involves using the scala.io.Source object. You can read the entire file content at once or process it line by line. For example, to read the entire file into a string, you can use Source.fromFile("filename.txt").mkString. Alternatively, to iterate over each line, you can use Source.fromFile("filename.txt").getLines().foreach(println). Note that these one-liners never close the Source, so the underlying file handle stays open until it is eventually finalized. In anything longer-lived than a quick script, keep a reference to the Source and call close() in a finally block, or use scala.util.Using (Scala 2.13+), which closes it for you.
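One way to make sure a Source is always closed is `scala.util.Using`, available from Scala 2.13. The sketch below first writes a small temporary file so that it is self-contained; the file contents are made up for illustration.

```scala
import java.nio.file.Files
import scala.io.Source
import scala.util.{Try, Using}

// Create a throwaway input file so the example can run anywhere.
val path = Files.createTempFile("demo", ".txt")
Files.write(path, "first\nsecond\n".getBytes("UTF-8"))

// Using closes the Source whether the body succeeds or throws,
// and wraps the outcome in a Try.
val lines: Try[List[String]] =
  Using(Source.fromFile(path.toFile, "UTF-8")) { source =>
    source.getLines().toList
  }

println(lines)  // Success(List(first, second))
Files.delete(path)
```

Returning a `Try` keeps I/O failures as values, which fits the functional error handling style used elsewhere in Scala.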

Another approach involves using Java's I/O classes within Scala. This allows leveraging Java's BufferedReader for efficient line-by-line reading, particularly useful for large files. For example:

import java.io.{File, BufferedReader, FileReader}

var reader: BufferedReader = null

try {

reader = new BufferedReader(new FileReader(new File("filename.txt")))

var line: String = reader.readLine()

while (line != null) {

println(line)

line = reader.readLine()

}

} catch {

case e: Exception => e.printStackTrace()

} finally {

// Always release the file handle, even if reading fails part-way.

if (reader != null) reader.close()

}

18. What are some common ways to handle errors in Scala? For example, try-catch.

Scala offers several ways to handle errors. A common approach is using try-catch blocks, similar to Java. This allows you to catch specific exceptions and handle them accordingly, or provide a general fallback. Another idiomatic way is using the Try type, which represents a computation that may either result in a success or a failure. This promotes a more functional style, allowing you to chain operations and handle potential errors in a declarative manner using methods like map, flatMap, recover, and recoverWith.

For situations where a value might be absent, the Option type is often used. It represents either Some(value) or None. Using Option avoids null pointer exceptions and forces you to explicitly handle the case where a value is missing. Either is also useful for representing results that can be either a success (Right) or a failure (Left), allowing you to handle different error types explicitly.
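A minimal sketch of Try and Either working together. The helper names `parseInt` and `parsePositive` are hypothetical; `Try`, `Success`, `Failure`, `Left`, and `Right` are standard library types.

```scala
import scala.util.{Failure, Success, Try}

// Try captures any exception thrown by toInt as a Failure.
def parseInt(raw: String): Try[Int] = Try(raw.trim.toInt)

// Either carries a descriptive error on the Left, the result on the Right.
def parsePositive(raw: String): Either[String, Int] =
  parseInt(raw) match {
    case Success(n) if n > 0 => Right(n)
    case Success(n)          => Left(s"$n is not positive")
    case Failure(_)          => Left(s"not a number: $raw")
  }

// recover supplies a fallback value for selected failures.
val recovered = parseInt("oops").recover { case _: NumberFormatException => 0 }

println(parsePositive("42"))  // Right(42)
println(parsePositive("-1"))  // Left(-1 is not positive)
println(recovered)            // Success(0)
```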

19. How would you define an anonymous function (lambda) in Scala?

In Scala, an anonymous function, also known as a lambda, is a function without a name. You define it using the following syntax:

(parameter1: Type1, parameter2: Type2, ...) => expression

For example:

val add = (x: Int, y: Int) => x + y

println(add(2, 3)) // Output: 5

20. How can you define default parameter values for methods in scala?

In Scala, you can define default values for method parameters directly in the method signature. When calling the method, if an argument for a parameter with a default value is omitted, the default value is used. This is a concise and readable way to provide default behavior.

For example:

def greet(name: String, greeting: String = "Hello") = {

println(s"${greeting}, ${name}!")

}

greet("Alice") // Output: Hello, Alice!

greet("Bob", "Hi") // Output: Hi, Bob!

Scala Developer intermediate interview questions

1. How does Scala's implicit conversion work, and what are the potential pitfalls to watch out for?

Scala's implicit conversions automatically convert a value of one type to another when the compiler expects a different type. An implicit conversion is triggered when the compiler encounters a type mismatch. It searches for an implicit function in scope that can perform the conversion. For example, an Int might be implicitly converted to a String if a function expects a String and an implicit function implicit def intToString(i: Int): String = i.toString is available.

Potential pitfalls include:

- Implicit ambiguity: Multiple applicable implicit conversions can lead to compiler errors.

- Debugging difficulties: Implicit conversions can obscure the actual types being used, making debugging harder.

- Unexpected behavior: Unintended implicit conversions can cause unexpected results.

Use implicits judiciously and with caution, always considering readability and maintainability.
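A small sketch of an implicit conversion in action. `Meters` and `describe` are invented for this example; the conversion lives in the companion object so the compiler finds it without an extra import at the call site.

```scala
import scala.language.implicitConversions

// Hypothetical wrapper type, for illustration only.
final case class Meters(value: Double)

object Meters {
  // Found automatically because it sits in the companion of the target type.
  implicit def fromDouble(d: Double): Meters = Meters(d)
}

def describe(length: Meters): String = s"${length.value} m"

// A Double is passed where Meters is expected,
// so the compiler inserts Meters.fromDouble(2.5).
val text = describe(2.5)
println(text)  // 2.5 m
```

Nothing at the call site reveals that a conversion happened, which is exactly why the pitfalls above matter.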

2. Explain the difference between `call-by-value` and `call-by-name` parameter passing in Scala with a code example.

In Scala, call-by-value evaluates the function arguments before calling the function, and the evaluated values are passed. call-by-name, on the other hand, evaluates the function arguments only when they are used inside the function. It passes the argument expression itself, which is then evaluated each time it's accessed within the function body.

Here's an example:

def callByValue(x: Int): Unit = {

println("By value: " + x)

println("By value: " + x)

}

def callByName(x: => Int): Unit = {

println("By name: " + x)

println("By name: " + x)

}

var i = 5

callByValue(i + 5) // Evaluates i + 5 to 10 before calling

//Output:

//By value: 10

//By value: 10

callByName(i + 5) //Evaluates i + 5 when x is accessed

//Output:

//By name: 10

//By name: 10

var j = 5

def getJ(): Int = {

j += 1

return j

}

callByValue(getJ()) //Evaluates getJ() only once before calling

//Output:

//By value: 6

//By value: 6

callByName(getJ()) //Evaluates getJ() every time when x is accessed

//Output:

//By name: 7

//By name: 8

3. What are the advantages and disadvantages of using Scala's `Future` for asynchronous programming?

Scala's Future offers several advantages for asynchronous programming. It simplifies concurrent operations by providing a clean, composable API for working with values that may not yet be available. This composability, through methods like map, flatMap, filter, and recover, allows you to chain asynchronous operations easily and handle potential errors gracefully. Future also integrates well with Scala's type system and standard collections library. Futures provide a standard and built-in way to handle concurrency, reducing the need for custom thread management.

However, Future also has disadvantages. Debugging can be challenging, especially with complex chains of asynchronous operations, as stack traces can be less informative than in synchronous code. Managing resource consumption is crucial: each running Future occupies a thread from its ExecutionContext while it executes, so blocking calls inside many Futures can starve the pool. Additionally, while Future offers error handling mechanisms, it can be easy to overlook potential exceptions, leading to unhandled errors in the application. Futures are also eager, meaning they start executing immediately upon creation, which may not always be desired. To defer execution, wrap the Future in a function or a lazy val and create it only when needed; a Promise is useful when you want to complete a Future manually from other code, but it does not make a Future lazy.
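The eager behavior can be observed with a counter. In this sketch, `EagerDemo` and its `run` method are illustrative names, and `Await.result` is used only to make the demonstration deterministic.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object EagerDemo {
  // Returns the counter value observed at three points in time.
  def run(): (Int, Int, Int) = {
    val runs = new AtomicInteger(0)

    // Eager: the body is scheduled as soon as the Future is constructed.
    val eager: Future[Int] = Future { runs.incrementAndGet() }
    Await.result(eager, 5.seconds)
    val afterEager = runs.get()   // 1

    // Deferred: wrapping the Future in a function delays it until called.
    val deferred: () => Future[Int] = () => Future { runs.incrementAndGet() }
    val beforeCall = runs.get()   // still 1, nothing has started
    Await.result(deferred(), 5.seconds)
    val afterCall = runs.get()    // 2

    (afterEager, beforeCall, afterCall)
  }
}

println(EagerDemo.run())  // (1,1,2)
```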

4. Describe how you would use Scala's `Option` type to handle null values safely and effectively.

Scala's Option type is a container that represents optional values, addressing null pointer exceptions. Instead of assigning null directly, you wrap a value in Some(value) if it exists or use None if it's absent. This forces you to explicitly handle the possibility of a missing value.

To use Option effectively, you can leverage methods like map, flatMap, getOrElse, and orElse. For example, optionValue.map(_ + 1) will only increment the value if optionValue is Some, otherwise it does nothing and returns None. getOrElse(defaultValue) provides a default value if the Option is None. Pattern matching is another powerful way to work with Option, allowing you to explicitly handle both Some and None cases. This approach enhances code safety and readability by making the potential absence of a value explicit.
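When a value comes from code that may return null, `Option(...)` converts it safely: `Option(null)` is `None`. A minimal sketch, where `findEmail` is a made-up function standing in for such an API:

```scala
// Hypothetical lookup that may return null, standing in for a Java-style API.
def findEmail(user: String): String =
  if (user == "alice") " Alice@Example.com " else null

def normalizedEmail(user: String): Option[String] =
  Option(findEmail(user))      // null becomes None, anything else Some(...)
    .map(_.trim.toLowerCase)   // runs only when a value is present
    .filter(_.contains("@"))   // drops values that fail the check

println(normalizedEmail("alice"))  // Some(alice@example.com)
println(normalizedEmail("bob"))    // None
```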

5. Explain the concept of 'type erasure' in Scala and how it affects generic types.

Type erasure in Scala (and Java) means that the type parameters of generic types are removed during compilation. At runtime, the JVM doesn't know the specific type of a generic collection or class; it only sees the raw type (e.g., List instead of List[String]).

This affects generic types because you can't perform runtime type checks on the type parameters. For instance, you cannot reliably use isInstanceOf[List[String]] to check if a List is specifically a List of Strings: the JVM only sees a List, so the check succeeds for any List and the compiler emits an unchecked warning. This limitation necessitates careful design when dealing with generics and runtime type information, often requiring workarounds like ClassTag or TypeTag (which supersede the older Manifest) to retain type information.
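A minimal sketch of the ClassTag workaround. The function name `keepOnly` is hypothetical; the context bound asks the compiler to pass the runtime class of `T` along, which makes the type test in the pattern possible.

```scala
import scala.reflect.ClassTag

// With a ClassTag in scope, the `v: T` pattern is checked
// against T's runtime class instead of being erased.
def keepOnly[T: ClassTag](values: List[Any]): List[T] =
  values.collect { case v: T => v }

val mixed: List[Any] = List(1, "a", 2.0, "b")
val strings = keepOnly[String](mixed)

println(strings)  // List(a, b)
```

A ClassTag only recovers the outermost class, so it cannot tell a List of Strings from a List of Ints; TypeTag is needed for that level of detail.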

6. How does Scala's pattern matching work with sealed traits and case classes, and why is it useful?

Scala's pattern matching provides a powerful way to deconstruct data structures, especially when combined with sealed traits and case classes. A sealed trait restricts the possible subtypes to those defined within the same file. Case classes provide automatic implementations for things like equality checks and toString and can easily be deconstructed using pattern matching. When pattern matching on a sealed trait, the compiler can check for exhaustiveness, warning you if you haven't covered all possible cases. This ensures your code handles all possible variations of the data.

This combination is useful for building robust and maintainable code. Exhaustiveness checking helps prevent runtime errors by ensuring all cases are handled. The conciseness of case classes simplifies data modeling, and pattern matching makes it easy to work with complex data structures. For example:

sealed trait Option[+A]

case class Some[A](value: A) extends Option[A]

case object None extends Option[Nothing]

def getOrElse[A](option: Option[A], default: A): A = option match {

case Some(v) => v

case None => default

}

In this example, the compiler will warn you if you remove either Some or None from the match expression, ensuring complete handling of Option types.

7. What is the purpose of Scala's `CanBuildFrom` type class, and when would you need to use it?

The CanBuildFrom type class was used by the Scala collections library up to Scala 2.12 to determine the result type of transformations such as map, flatMap, and collect. It acts as a factory for building a new collection of the appropriate type, based on the original collection and the element type produced by the transformation. This keeps the original collection type where possible and falls back to a more general type when the original collection cannot hold the new elements (for example, mapping a BitSet to strings yields a set of strings rather than a BitSet). Scala 2.13 redesigned the collections and removed CanBuildFrom; its role is now played by BuildFrom and Factory.

On Scala 2.12 and earlier, you need CanBuildFrom when you are creating your own custom collection types and want transformations on them to return the correct type. Without a proper CanBuildFrom instance, a transformation falls back to a more general collection like Seq instead of your specific custom collection. You also need it when writing a generic higher-order function that works on multiple collection types and must produce a collection of the matching type. On Scala 2.13 and later, the same needs are met by BuildFrom, Factory, and the collection operation templates such as IterableOps.

8. Explain the difference between a `trait` and an `abstract class` in Scala, and when you might choose one over the other.

Both traits and abstract classes in Scala provide a way to define abstract members and achieve polymorphism. However, a key difference lies in multiple inheritance: a class can extend only one abstract class but can mix in multiple traits. Traits are generally preferred for defining interfaces or behaviors that can be added to multiple unrelated classes.

Choose an abstract class when you want to define a clear inheritance hierarchy and share concrete implementation details. Choose a trait when you want to enable code reuse across different class hierarchies or when you need multiple inheritance. For example, use a trait for functionalities like Serializable or Cloneable that can be added to any class. Use an abstract class when defining the core behavior of a family of related classes.
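A minimal sketch of both choices side by side. All names (`Shape`, `Drawable`, `Resizable`, `Square`) are invented for illustration.

```scala
// Abstract class: the core of a family of related types.
abstract class Shape(val name: String) {
  def area: Double                                   // abstract
  def describe: String = s"$name with area $area"    // shared implementation
}

// Traits: small behaviors that unrelated classes could also mix in.
trait Drawable { def draw(): String }
trait Resizable { def scaled(factor: Double): Shape }

// Exactly one superclass, but any number of traits.
class Square(side: Double) extends Shape("square") with Drawable with Resizable {
  def area: Double = side * side
  def draw(): String = "[]"
  def scaled(factor: Double): Shape = new Square(side * factor)
}

val square = new Square(2.0)
println(square.describe)            // square with area 4.0
println(square.scaled(2.0).area)    // 16.0
```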

9. Describe how you would use Scala's collections API to perform complex data transformations and aggregations.

Scala's collections API offers a rich set of methods for data transformation and aggregation. I would leverage immutable collections, combined with functional programming principles, to achieve this. For complex transformations, I'd use methods like map, flatMap, filter, and groupBy to reshape and prepare the data. For example, data.filter(_ > 10).map(_ * 2) filters elements greater than 10 and then doubles them. groupBy allows categorizing elements based on a function, such as data.groupBy(_.length). Furthermore, I'd use combinators like zip, union, and intersect when dealing with multiple collections.

For aggregations, I'd use methods like reduce, fold, foldLeft, and sum. reduce combines elements into a single result, while fold starts from an initial value. aggregate, which takes separate operations for combining elements within partitions and for merging the partial results, is deprecated since Scala 2.13 in favor of foldLeft on sequential collections. In situations that require parallel execution, I'd convert the collection to a parallel collection (.par) to leverage multi-core processors; since Scala 2.13 this requires the separate scala-parallel-collections module. The standard collections provide min, max, sum, and product directly, but not mean or median, which have to be computed by hand (for example, sum divided by size) or taken from a library.
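A short sketch combining grouping and aggregation on made-up order data. The `Order` class and its values are illustrative only.

```scala
// Hypothetical data model, for illustration only.
final case class Order(customer: String, amount: Double)

val orders = List(
  Order("ann", 20.0), Order("bob", 5.0),
  Order("ann", 40.0), Order("bob", 15.0)
)

// groupBy + map: total amount per customer.
val totalByCustomer: Map[String, Double] =
  orders.groupBy(_.customer).map { case (c, os) => c -> os.map(_.amount).sum }

// foldLeft: accumulate sum and count in a single pass, then derive the mean.
val (sum, count) = orders.foldLeft((0.0, 0)) {
  case ((s, n), order) => (s + order.amount, n + 1)
}
val mean = if (count == 0) 0.0 else sum / count

val largest = orders.maxBy(_.amount)

println(totalByCustomer)  // ann -> 60.0, bob -> 20.0
println(mean)             // 20.0
println(largest)          // Order(ann,40.0)
```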

10. What is the role of the `ExecutionContext` in Scala's asynchronous programming model?

The ExecutionContext in Scala's asynchronous programming model (primarily involving Futures) is essentially a thread pool (or a means of obtaining threads) used to execute asynchronous tasks. It provides the underlying mechanism for managing and scheduling the execution of Futures. Without an ExecutionContext, Futures would not be able to run in the background.

Specifically, an ExecutionContext is responsible for:

- Executing the code associated with a Future: When you create a Future, you provide a block of code to be executed asynchronously. The ExecutionContext determines where and when that code will run.

- Managing threads: It decides which thread will run which task, often using a thread pool to avoid the overhead of creating new threads for each Future.

- Handling exceptions: It provides a way to handle exceptions that might occur during the execution of a Future's code block.

A Future needs an ExecutionContext in implicit scope (or passed explicitly); without one, the code does not compile. A common choice is to import the global one:

import scala.concurrent.Future

import scala.concurrent.ExecutionContext.Implicits.global

val future = Future { println("Running in a Future!") }

In this case, scala.concurrent.ExecutionContext.Implicits.global provides a default ExecutionContext.
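When the global context is not a good fit, for instance to isolate blocking work, an ExecutionContext can be built from a Java executor. A minimal sketch; `PoolDemo`, the pool size of 2, and the 5-second timeout are arbitrary choices for illustration.

```scala
import java.util.concurrent.Executors
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object PoolDemo {
  def run(): Int = {
    // A dedicated pool with two threads backs this ExecutionContext.
    val pool = Executors.newFixedThreadPool(2)
    implicit val ec: ExecutionContext = ExecutionContext.fromExecutorService(pool)
    try {
      // Both Futures run on the custom pool; zip pairs their results.
      val total = Future(20).zip(Future(22)).map { case (a, b) => a + b }
      Await.result(total, 5.seconds)
    } finally {
      pool.shutdown()  // custom pools are not shut down automatically
    }
  }
}

println(PoolDemo.run())  // 42
```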

11. How can you use Scala's implicits to implement type classes and provide ad-hoc polymorphism?

Scala's implicits are fundamental to implementing type classes and enabling ad-hoc polymorphism. A type class defines a behavior (e.g., Serializable, Showable) that a type can support. We define a type class as a trait parameterized by a type T along with methods representing the operations for that behavior. To provide instances of the type class for specific types, we create implicit values of the type class trait for those types.

Ad-hoc polymorphism is achieved because the compiler uses implicits to find the appropriate type class instance based on the type being used. When a method requires a type class instance as an implicit parameter, the compiler searches the implicit scope for a matching instance. This allows different types to behave differently for the same method call, based on the available implicit instances. Example:

trait Showable[T] {

def show(value: T): String

}

object Showable {

implicit val intShowable: Showable[Int] = (value: Int) => s"Int: $value"

implicit val stringShowable: Showable[String] = (value: String) => s"String: $value"

}

def printValue[T](value: T)(implicit showable: Showable[T]): Unit = {

println(showable.show(value))

}

printValue(10) // Output: Int: 10

printValue("hello") // Output: String: hello

12. Explain the difference between `val`, `var`, and `lazy val` in Scala, focusing on initialization and immutability.

val, var, and lazy val in Scala differ primarily in their mutability and initialization timing. val defines an immutable variable, meaning its value cannot be changed after initialization. The value is computed immediately when the val is defined. var defines a mutable variable, allowing its value to be reassigned after initialization. Like val, the value for var is computed immediately.

lazy val also defines an immutable variable, but its initialization is delayed until the first time it is accessed. This is useful for expensive computations that might not always be needed or for breaking circular dependencies. Once a lazy val is initialized, its value is fixed and behaves like a val. To summarise:

- val: Immutable, initialized immediately.

- var: Mutable, initialized immediately.

- lazy val: Immutable, initialized on first access (lazy initialization).
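The initialization timing can be made visible with a counter. A minimal sketch, where `LazyDemo` and its members are illustrative names:

```scala
object LazyDemo {
  var evaluations = 0

  // The block runs on first access only; the result is then cached.
  lazy val expensive: Int = {
    evaluations += 1
    42
  }
}

val before = LazyDemo.evaluations  // 0: nothing has been computed yet
val first = LazyDemo.expensive     // triggers the computation
val second = LazyDemo.expensive    // reuses the cached value
val after = LazyDemo.evaluations   // 1: the block ran exactly once
```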

13. Describe how you would implement a custom combinator for Scala's `Future` type.

To implement a custom combinator for Scala's Future, I would define an extension method using an implicit class. This adds new methods without modifying the original source code. For example, let's say I want to create a retry combinator that retries an asynchronous operation a certain number of times if it fails. Because a Future starts running as soon as it is created and keeps its result once completed, retrying an existing Future would only replay the same failure. The combinator therefore extends a function that creates the Future, so every attempt starts a fresh one.

Here's how the implementation would look:

import scala.concurrent.{ExecutionContext, Future}

implicit class FutureExtensions[T](makeFuture: () => Future[T]) {

def retry(attempts: Int)(implicit ec: ExecutionContext): Future[T] = {

// Each call to makeFuture() starts a new attempt.

makeFuture().recoverWith {

case _ if attempts > 1 => retry(attempts - 1)

case ex => Future.failed(ex)

}

}

}

This code defines an implicit class FutureExtensions that wraps a function of type () => Future[T]. The retry method calls that function and uses recoverWith to try again if the resulting Future fails, up to the specified number of attempts. If every attempt fails, the exception from the last attempt is propagated. A call looks like (() => fetchData()).retry(3), where fetchData stands for any method that returns a Future. This approach lets you add custom logic for handling and transforming Future results in a composable way.

14. What are some strategies for handling exceptions effectively in Scala, particularly when working with `Future`s?

When handling exceptions in Scala, especially with Futures, several strategies can be employed. For synchronous code, standard try-catch blocks are effective. However, Futures require a different approach due to their asynchronous nature. The primary strategies include using recover, recoverWith, and transform methods. recover allows you to provide a fallback value if a Future fails. recoverWith lets you provide another Future as a fallback, enabling more complex error handling logic. transform provides a way to handle both successful and failed Futures in a single operation, and transformWith allows Future operations within that handling.

For example:

import scala.concurrent.ExecutionContext.Implicits.global

import scala.concurrent.Future

val futureResult: Future[Int] = Future {

throw new Exception("Something went wrong")

}

futureResult.recover {

case e: Exception => println(s"Recovered from exception: ${e.getMessage}"); -1 // Provide a default value

}

futureResult.recoverWith {

case e: Exception => Future { println(s"Recovered from exception: ${e.getMessage}"); -1 } // Provide another Future

}

futureResult.transform {

case scala.util.Success(value) => scala.util.Success(value * 2) // process success value

case scala.util.Failure(exception) => scala.util.Success(-1) // process exception and return a success value

}

futureResult.transformWith {

case scala.util.Success(value) => Future.successful(value * 2) // process success value

case scala.util.Failure(exception) => Future.successful(-1) // process exception and return a success value

}

These methods help ensure that errors are handled gracefully instead of crashing the application. Each call returns a new Future that completes with either a success or a manageable failure; the original futureResult is left unchanged, so subsequent steps should use the returned Future.

15. How can you use Scala's reflection capabilities to inspect and manipulate classes and objects at runtime?

Scala's reflection API allows you to examine and modify classes and objects at runtime. You typically start by obtaining a TypeTag for the class you want to inspect. This TypeTag provides access to the class's structure, including its fields, methods, and constructors. Using scala.reflect.runtime.universe._, you can create mirrors (instances of Mirror) which provide the actual runtime access.

With reflection, you can:

- Inspect class members (fields, methods, constructors) by accessing the members method of a Type.

- Create instances of classes dynamically by calling reflectConstructor on a ClassMirror.

- Invoke methods using MethodMirror.

- Access and modify fields using FieldMirror.

Example (Illustrative):

import scala.reflect.runtime.universe._

case class MyClass(x: Int, y: String)

val mirror = runtimeMirror(getClass.getClassLoader)

val tpe = typeOf[MyClass]

val constructorSymbol = tpe.decl(termNames.CONSTRUCTOR).asMethod

val classSymbol = tpe.typeSymbol.asClass

val classMirror = mirror.reflectClass(classSymbol)

val constructorMirror = classMirror.reflectConstructor(constructorSymbol)

val instance = constructorMirror(10, "hello").asInstanceOf[MyClass]

println(instance.x) // Output: 10

16. Explain how Scala's type inference works, and provide examples where you might need to provide explicit type annotations.

Scala's type inference allows the compiler to automatically deduce the type of an expression, reducing the need for explicit type annotations. It analyzes the surrounding code, considering assignments, method signatures, and the types of literals to determine the most appropriate type. For example, val x = 5 doesn't require val x: Int = 5 because the compiler infers x as an Int based on the value 5. Similarly, with methods: def add(x: Int, y: Int) = x + y infers the return type as Int.

Explicit type annotations are necessary in situations where type inference cannot unambiguously determine the type. Some examples include:

- Recursive Methods: When a method calls itself, the return type must be specified, because the compiler cannot infer it from the body.

def factorial(n: Int): Int = if (n <= 1) 1 else n * factorial(n - 1)

- Ambiguous Cases: When the compiler has multiple possible types, an annotation resolves the ambiguity. For example, when creating generic empty collections:

val list: List[String] = List()

- Contravariant or Covariant Positions: Sometimes in more complex type hierarchies, especially with variance, type annotations are important to guide the compiler. In anonymous functions, when the expected type is not clear from context, explicit type annotations for parameters are required:

val f: Int => String = (x: Int) => x.toString

- Controlling API visibility: Explicit types on public methods and fields let you control what the public API exposes.

17. Describe how you would use Scala's actors model (Akka) to build concurrent and distributed systems.

Akka actors provide a powerful way to build concurrent and distributed systems in Scala. The actor model promotes concurrency through message passing, avoiding shared mutable state. To build such a system, I would define actors that represent different components or services. These actors would interact by sending and receiving messages, enabling asynchronous and non-blocking communication.

For example, consider a distributed image processing system. I would create actors for:

- Image Input: Receives images and distributes them to worker actors.

- Worker Actors: Processes image chunks concurrently.

- Aggregator Actor: Collects processed chunks and assembles the final image.

Akka's remoting capabilities would allow these actors to run on different machines, creating a truly distributed system. Features like supervision and fault tolerance would ensure resilience in the face of failures.

18. What are the different ways to handle concurrency in Scala, and when would you choose one approach over another?

Scala provides several concurrency models, each suited to different scenarios. Futures run computations asynchronously. Their results are normally consumed without blocking, through combinators such as `map` and `flatMap` or callbacks such as `onComplete`; `Await.result` blocks the calling thread and is best reserved for tests or the outer edge of a program. Futures are ideal for asynchronous tasks where you don't want to block the main thread. Actors, using Akka or other actor libraries, provide a message-passing concurrency model that is suitable for managing complex state and communication between different parts of a system. They're useful for building resilient and scalable applications.

The choice depends on the application's complexity and requirements. For simple asynchronous operations like API calls, Futures are often sufficient. When you need robust, fault-tolerant systems with complex interactions, Actors are a better choice. Consider using Futures when dealing with a limited number of concurrent tasks and Actors when managing a large number of concurrent, independent entities that communicate with each other.
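As a rough illustration of the Future side of this trade-off, the sketch below combines two independent asynchronous calls using only the standard library. `fetchPrice` and `fetchStock` are hypothetical stand-ins for remote calls, not part of any real API.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object FutureSketch {
  // Hypothetical stand-ins for two independent remote calls
  def fetchPrice(item: String): Future[Int] = Future { item.length * 10 }
  def fetchStock(item: String): Future[Int] = Future { item.length }

  // Start both futures before combining them so they can run concurrently
  def inventoryValue(item: String): Future[Int] = {
    val price = fetchPrice(item)
    val stock = fetchStock(item)
    for {
      p <- price
      s <- stock
    } yield p * s
  }

  // Blocking is acceptable in a test or at the edge of a program;
  // application code would normally keep composing the Future instead
  def blockingTotal(item: String): Int =
    Await.result(inventoryValue(item), 2.seconds)
}
```

Creating the two futures before the for-comprehension matters: if they were created inside it, the second call would only start after the first had completed.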

19. Explain how Scala's macro system works, and what are some potential use cases for macros?

Scala's macro system allows you to perform compile-time code manipulation. Macros operate on the abstract syntax tree (AST) of your code, enabling you to inspect, transform, and generate new code before it's compiled into bytecode. In Scala 2 there are two main kinds: def macros (blackbox or whitebox), which are expanded at the call site of a method, and macro annotations, which can generate boilerplate code for the classes and traits they annotate. Scala 3 replaces this system with inline definitions and quoted code, so the Scala 2 example below does not compile under Scala 3.

Some potential use cases include:

- Code generation: Automatically generate repetitive code like boilerplate, serializers, or accessors.

- Compile-time checking: Enforce constraints or validate code at compile time, preventing runtime errors.

- Domain-Specific Languages (DSLs): Create more expressive and concise syntax for specific domains.

- Performance optimization: Implement specialized algorithms or optimizations at compile time based on the available information.

Example:

import scala.language.experimental.macros

import scala.reflect.macros.blackbox.Context

object HelloMacro {

def hello: String = macro helloImpl

def helloImpl(c: Context): c.Expr[String] = {

import c.universe._

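// Build a string literal tree; q"Hello, world!" would be parsed as Scala code, not as a string
c.Expr[String](Literal(Constant("Hello, world!")))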

}

}

20. Describe the purpose of the `implicit evidence` pattern in Scala and provide an example of its usage.

The implicit evidence pattern in Scala allows you to enforce constraints on type parameters at compile time. In its most common form, a method takes an implicit parameter whose type is a type class instance for the type in question, so the call compiles only if such an instance is available in implicit scope. If the required instance isn't available, the code fails to compile. This enables type-safe operations and allows you to write generic code that operates only on types that satisfy certain properties, defined by type classes.

Example:

trait Summable[A] {

def sum(a: A, b: A): A

}

object Summable {

implicit val intSummable: Summable[Int] = (a, b) => a + b

implicit val stringSummable: Summable[String] = (a, b) => a + b

}

def sumList[A](list: List[A])(implicit summable: Summable[A]): A = {

list.reduce(summable.sum)

}

// Usage

val intList = List(1, 2, 3)

val stringList = List("a", "b", "c")

println(sumList(intList)) // Output: 6

println(sumList(stringList)) // Output: abc

//If Summable[SomeOtherType] is not defined and you try `sumList(List(SomeOtherType()))`, it will not compile.
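The term "evidence" is also commonly used for the standard library's generalized type constraints, `=:=` and `<:<`, which make a method callable only when the compiler can prove a relationship between two types. The sketch below assumes a hypothetical `Box` wrapper purely for illustration.

```scala
object EvidenceSketch {
  final case class Box[A](value: A) {
    // Callable only when the compiler can prove that A is exactly Int
    def increment(implicit ev: A =:= Int): Int = ev(value) + 1

    // Callable only when A is a subtype of Option[B]
    def unwrapOr[B](default: B)(implicit ev: A <:< Option[B]): B =
      ev(value).getOrElse(default)
  }
}
```

With these definitions, `EvidenceSketch.Box(41).increment` compiles, while `EvidenceSketch.Box("text").increment` is rejected at compile time because no evidence that `String =:= Int` can be found.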

21. How can you use Scala's streams API to process large datasets efficiently, avoiding loading the entire dataset into memory?

Scala's lazy collections let you process large datasets without loading everything into memory at once. `Stream` (deprecated since Scala 2.13 in favor of `LazyList`) is a lazy collection: elements are computed only when they are needed, which allows you to work with potentially infinite sequences. Both `Stream` and `LazyList` memoize the elements they have computed, however, so keeping a reference to the head of a long stream retains every element evaluated so far. For a single pass over a dataset that is too large to fit in memory, an `Iterator` is usually the safer choice.

To efficiently process large datasets, create a lazy sequence from your data source (e.g., a file or a database query) and use operations like `map`, `filter`, `flatMap`, and `take` to transform and process the data. Because these operations are lazy, they don't execute immediately. They build up a chain of transformations. When you finally request values (e.g., using `foreach`, `toList`, or `next`), only the necessary elements are computed. Here's an example of how you might read a large file and process each line:

import scala.io.Source

val source = Source.fromFile("large_file.txt")

val lines = source.getLines() // Iterator[String]: lines are read on demand and not retained

val processedData = lines.filter(_.contains("keyword"))

.map(_.toUpperCase)

.take(100) // Process only first 100 matching lines

processedData.foreach(println) // Consumes the iterator and prints the results

source.close() // Release the file handle

In this example, the entire file is not loaded into memory. Lines are read and processed on demand, and none are retained after they are printed, making it efficient for large datasets.
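Lazy evaluation also makes it possible to describe an infinite sequence and materialize only a prefix of it. The following is a minimal sketch, assuming Scala 2.13 or later, where `LazyList` is available.

```scala
object LazySketch {
  // LazyList.from(1) is conceptually infinite; only the elements needed
  // to produce five even squares are ever computed
  val evenSquares: List[Int] =
    LazyList.from(1)
      .map(n => n * n)
      .filter(_ % 2 == 0)
      .take(5)
      .toList
}
```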

22. Explain the concept of monads in Scala, and how they can be used to simplify asynchronous or error-handling code.

In Scala, a monad is a design pattern that allows you to chain operations together while managing context, such as errors or asynchronous computations. A monad must provide two functions: flatMap (also known as bind) which chains operations, and unit (often named apply or pure) which lifts a value into the monad. Common monads in Scala include Option, Try, Future, and List.

Monads simplify asynchronous or error-handling code by providing a structured way to sequence operations that might fail or involve asynchronous execution. For example, using Option avoids nested null checks by allowing you to chain operations that might return None. Similarly, Future lets you chain asynchronous operations using flatMap avoiding callback hell and making the code more readable and manageable. This is because monads define how to handle the context (e.g., a potentially missing value or an asynchronous result) in a consistent manner across multiple operations using flatMap.
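A small sketch of this idea for error handling, using `Try` from the standard library: each step in the for-comprehension runs only if the previous one succeeded, and the first failure short-circuits the rest. The method names are illustrative.

```scala
import scala.util.Try

object MonadSketch {
  def parse(s: String): Try[Int] = Try(s.trim.toInt)

  // Each generator is a flatMap step; a Failure stops the chain
  def ratio(a: String, b: String): Try[Int] =
    for {
      x <- parse(a)
      y <- parse(b)
      r <- Try(x / y)
    } yield r

  // An equivalent chain written with explicit flatMap calls
  def ratioExplicit(a: String, b: String): Try[Int] =
    parse(a).flatMap(x => parse(b).flatMap(y => Try(x / y)))
}
```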

Scala Developer interview questions for experienced

1. How do you handle errors in Scala using Try, Option, and Either? Explain the use cases for each.

Scala offers Try, Option, and Either for error handling, each serving distinct purposes. Try is designed for handling exceptions, particularly those thrown by side-effecting operations. It encapsulates a computation that might fail, returning either Success(value) or Failure(exception). Use it when you want to gracefully manage exceptions without halting program execution. For example:

import scala.util.{Try, Success, Failure}

val result: Try[Int] = Try {

"10".toInt // Could throw NumberFormatException

}

result match {

case Success(value) => println(s"Result: $value")

case Failure(exception) => println(s"Error: ${exception.getMessage}")

}

Option represents an optional value that may or may not be present, replacing the use of null. It is either Some(value) or None. It's perfect for avoiding NullPointerException and explicitly handling cases where a value is missing.

Either is a more general-purpose error handling mechanism, suitable when you need to return either a successful value or an error value with context. It is either Left(error) or Right(value); by convention, Right holds the success and Left holds the failure. Either is ideal when you want to communicate specific error information back to the caller, rather than just a generic exception type.
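To complement the Try example, here is a short sketch of Option and Either in use. The `ports` map and the `parseAge` validation rules are invented for illustration.

```scala
import scala.util.Try

object ErrorHandlingSketch {
  // Option: the value may simply be absent
  private val ports = Map("http" -> 80, "https" -> 443)
  def portFor(scheme: String): Option[Int] = ports.get(scheme)

  // Either: a failure carries a description for the caller
  def parseAge(input: String): Either[String, Int] =
    Try(input.trim.toInt).toOption match {
      case Some(n) if n >= 0 => Right(n)
      case Some(n)           => Left(s"Age must be non-negative, got $n")
      case None              => Left(s"Not a number: '$input'")
    }
}
```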

2. Describe your experience with Akka. How would you design a fault-tolerant system using Akka actors?

I have experience using Akka for building concurrent and distributed systems. Specifically, I've worked with Akka actors, supervision strategies, and Akka remoting to create resilient applications. I'm familiar with the actor model and its benefits for managing state and concurrency.

To design a fault-tolerant system with Akka, I would leverage the actor model's inherent fault tolerance capabilities. Key aspects include:

- Supervision: Define supervision strategies for parent actors that map failures in their children to directives (Resume, Restart, Stop, Escalate). This prevents failures from cascading and keeps the system running.

- Actor Hierarchy: Structure actors in a hierarchy to define clear ownership and responsibility for handling failures. The root supervisor should be designed for maximum stability.

- Persistence (Akka Persistence): Use Akka Persistence to persist the state of critical actors, allowing them to recover after a crash. This ensures data consistency and prevents data loss.

- Clustering (Akka Cluster): For distributed systems, use Akka Cluster to distribute actors across multiple nodes. Use cluster-aware routers and consistent hashing to distribute load and ensure that actors can be accessed even if some nodes fail.

- Circuit Breaker: Wrap calls to failing external services in a circuit breaker, for example `akka.pattern.CircuitBreaker`, which ships with Akka. This prevents the system from being overwhelmed by repeated failures.

- Dead Letters: Monitor dead letters to debug and potentially recover from messages that could not be delivered.

- Idempotent operations: Wherever possible operations should be idempotent to allow for retries.

Example Supervision Strategy code:

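import akka.actor.OneForOneStrategy

import akka.actor.SupervisorStrategy.{Escalate, Restart, Resume, Stop}

import scala.concurrent.duration._

// Inside a classic Akka actor:

override val supervisorStrategy =

OneForOneStrategy(maxNrOfRetries = 10, withinTimeRange = 1.minute) {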

case _: ArithmeticException => Resume

case _: NullPointerException => Restart

case _: IllegalArgumentException => Stop

case _: Exception => Escalate

}

3. Explain the concept of monads in Scala. Provide a practical example where using a monad simplifies your code.

Monads in Scala (and functional programming in general) are a design pattern that allows you to sequence operations that involve wrapping values inside a context. Think of a monad as a container that adds extra behavior to the values it holds, such as dealing with nulls (Option), handling errors (Either), or managing side effects (IO). A monad must provide flatMap (or bind), map, and unit (or pure) operations to adhere to the monad laws which ensure predictable and consistent behavior.

Consider a scenario where you need to retrieve a user's address from a database, where both the user and address retrieval can potentially return null. Without monads, you'd have nested if statements to check for nulls. Using Option (a monad in Scala), you can simplify this: `val address: Option[Address] = Option(userDao.getUser(id)).flatMap(user => Option(addressDao.getAddress(user.addressId)))`. This chains the operations, and if either `getUser` or `getAddress` returns null, the result is automatically None, with no explicit null checks. The result is cleaner, more readable code.
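The same lookup can be written as a self-contained sketch. The `User` and `Address` classes and the in-memory lookups below are hypothetical stand-ins for the DAOs mentioned above; they return null when nothing is found, in the style of a Java API.

```scala
object AddressLookupSketch {
  final case class User(id: Int, addressId: Int)
  final case class Address(city: String)

  // Hypothetical data and null-returning lookups standing in for the DAOs
  private val users = Map(1 -> User(1, 10), 2 -> User(2, 99))
  private val addresses = Map(10 -> Address("Oslo"))
  def getUser(id: Int): User = users.get(id).orNull
  def getAddress(addressId: Int): Address = addresses.get(addressId).orNull

  // Option(...) turns null into None, and the chain stops at the first None
  def addressOf(id: Int): Option[Address] =
    for {
      user    <- Option(getUser(id))
      address <- Option(getAddress(user.addressId))
    } yield address
}
```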

4. How does Scala's type system compare to Java's? What are the advantages and disadvantages?

Scala's type system is significantly more advanced than Java's. Scala includes features like type inference, algebraic data types, pattern matching, traits, and higher-kinded types, which are absent in Java (or recently added, in a limited form). This leads to more concise and expressive code, reducing boilerplate. Scala's type system allows for more robust compile-time checking, potentially catching errors that would only be discovered at runtime in Java.

However, the complexity of Scala's type system can also be a disadvantage. It can lead to a steeper learning curve and make code more difficult to understand for developers unfamiliar with advanced type system features. Compile times can also be slower in Scala due to the more sophisticated type checking. Java's relative simplicity makes it easier to learn and maintain for many developers, and the larger ecosystem can offer better support in some areas.
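As one concrete illustration of the compile-time checking mentioned above, the sketch below models an algebraic data type with a sealed trait. The `Shape` hierarchy is an invented example.

```scala
object ShapeSketch {
  sealed trait Shape
  final case class Circle(radius: Double) extends Shape
  final case class Rect(width: Double, height: Double) extends Shape

  // Because Shape is sealed, the compiler can warn when a case is missing
  def area(shape: Shape): Double = shape match {
    case Circle(r)  => math.Pi * r * r
    case Rect(w, h) => w * h
  }
}
```

Adding a third subclass of `Shape` without updating `area` would produce a non-exhaustive match warning, which is the kind of error that would otherwise surface only at runtime.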

5. Describe your experience with Spark. How have you optimized Spark jobs for performance?

I have experience using Spark for large-scale data processing, including ETL pipelines, data analysis, and machine learning. I've worked with Spark Core, Spark SQL, and Spark MLlib extensively. I am proficient in using Spark's DataFrame API.

To optimize Spark jobs, I've employed various techniques. These include:

- Data partitioning: Choosing the right number of partitions and partitioning keys to minimize data shuffling.

- Caching: Using `cache()` or `persist()` to store frequently accessed DataFrames in memory or on disk.

- Broadcast variables: Broadcasting large, read-only datasets to worker nodes to avoid sending them with every task.

- Tuning Spark configurations: Adjusting settings like