As a recruiter or hiring manager, sourcing the right Product Manager is often a complex task because the role demands a diverse skill set and a deep understanding of both product development and business strategy. A structured guide to the topic can help.

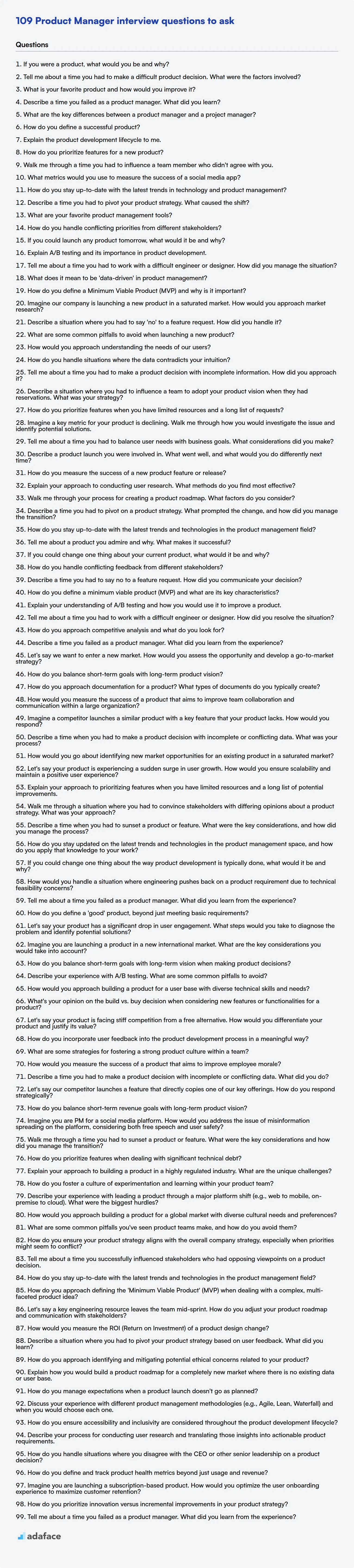

This guide provides a detailed list of interview questions tailored to Product Manager roles at four experience levels: basic, intermediate, advanced, and expert. The questions cover different aspects of product management, from strategy to execution.

By using these questions, you can assess candidates effectively and identify those with the potential to excel in the Product Manager role. Before the interviews, consider using Adaface's pre-employment assessment tests to screen candidates and identify top talent.

Basic Product Manager interview questions

1. If you were a product, what would you be and why?

If I were a product, I would be a Swiss Army knife. I'm versatile and adaptable, capable of handling a variety of tasks and challenges. Just like a Swiss Army knife has different tools for different situations, I have a broad skillset that allows me to contribute effectively in diverse roles and projects.

Specifically, I can quickly learn new technologies and adapt to changing environments. I am reliable, dependable, and committed to delivering high-quality results, much like the consistent performance you expect from a well-made Swiss Army knife. I strive to be a valuable and indispensable tool in any team or organization.

2. Tell me about a time you had to make a difficult product decision. What were the factors involved?

I once had to decide whether to delay a feature launch to fix a performance issue or release on time with the known problem. The factors involved were: customer expectations around the promised launch date, the severity of the performance issue (it caused occasional slowdowns, but wasn't a complete blocker), the potential impact on user experience and adoption, and the engineering team's estimate for fixing the issue (which was uncertain). Ultimately, I decided to delay the launch.

The reasoning was that releasing with a known performance issue could damage our reputation and lead to negative reviews, potentially hurting long-term adoption. We communicated the delay to users transparently, explaining the reason and new timeline, which was well-received. We used the extra time to optimize the code and resolve the performance bottleneck, resulting in a much better user experience upon release.

3. What is your favorite product and how would you improve it?

My favorite product is Google Maps. It's incredibly useful for navigation, exploring new places, and finding businesses.

If I were to improve it, I would focus on enhancing its real-time information and personalization. Specifically, I'd like to see more accurate and granular data on traffic conditions, including lane closures and accidents before encountering them. This could involve better integration with local traffic authorities and user-reported incidents. I would also add more robust personalization options for route planning. For example, users could specify preferences for avoiding highways, tolls, or certain types of roads (e.g., dirt roads). Furthermore, the integration of more social elements could be interesting, like easily sharing favorite routes or places with friends and family within the app.

4. Describe a time you failed as a product manager. What did you learn?

Early in my career, I was responsible for launching a new feature that aimed to increase user engagement. I relied heavily on quantitative data showing a potential need but neglected to conduct thorough user interviews to understand the 'why' behind the data. The feature launched with strong initial adoption, but quickly saw a drop-off in usage. I had failed to truly validate the problem from a user perspective.

I learned the critical importance of qualitative user research. While data is crucial, it only tells part of the story. Understanding user motivations, pain points, and behaviors through direct interaction is essential for building successful products. Since then, I've always prioritized user interviews and usability testing throughout the product development lifecycle. I've also made it a point to present both data and user insights in a balanced manner during prioritization and planning, ensuring that we're building features that solve real user problems.

5. What are the key differences between a product manager and a project manager?

Product managers define the 'what' and 'why' of a product – its vision, strategy, and roadmap. They focus on market needs, customer problems, and overall product success. They own the product, not the project. Project managers, on the other hand, focus on the 'how' and 'when' – planning, executing, and delivering projects to bring the product vision to life.

In essence, product managers chart the course, and project managers navigate the ship. A product manager is externally focused on strategy and the market. Project managers are internally focused on getting the work done on time and within budget. Though both require communication and leadership skills, their focus areas differ significantly.

6. How do you define a successful product?

A successful product is one that achieves its intended goals and provides value to both the users and the business. Key indicators include meeting user needs, solving a specific problem effectively, and demonstrating market viability.

More specifically, success can be measured by metrics like user adoption rate, customer satisfaction (e.g., Net Promoter Score), revenue generation, and overall business impact. A truly successful product is not only well-designed and functional but also contributes to the long-term growth and sustainability of the organization.
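As an illustration of one metric named above, here is a minimal sketch of how Net Promoter Score is conventionally calculated from 0-10 survey ratings: the percentage of promoters (9-10) minus the percentage of detractors (0-6). The function name and the sample ratings are hypothetical and exist only for this example.

```python
def net_promoter_score(ratings):
    """Return NPS on a -100..100 scale from 0-10 survey ratings."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    return 100.0 * (promoters - detractors) / len(ratings)


# Made-up survey responses, purely illustrative.
sample_ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(sample_ratings))
```

Passives (7-8) count toward the total but toward neither group, which is why a product with many lukewarm users can still score near zero.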

7. Explain the product development lifecycle to me.

The product development lifecycle (PDLC) describes the stages a product goes through from conception to retirement. A common model includes these phases:

- Ideation: Generating and brainstorming ideas.

- Research: Validating the market need and feasibility of the idea. Includes user research and competitive analysis.

- Planning: Defining the product requirements, scope, and resources. Creating a roadmap.

- Design: Creating wireframes, mockups, and prototypes. Focusing on user experience (UX) and user interface (UI).

- Development: Building the actual product (e.g., coding, manufacturing).

- Testing: Ensuring the product meets quality standards and user needs through various testing methods.

- Deployment: Releasing the product to the market.

- Maintenance: Providing ongoing support, updates, and bug fixes.

- Retirement: End-of-life planning and eventual decommissioning of the product.

Different organizations or software methodologies (like Agile) might have slightly different or more granular variations of this lifecycle, but this is a general overview.

8. How do you prioritize features for a new product?

Prioritizing features for a new product involves understanding user needs and business goals. I'd start by gathering requirements through user research, market analysis, and competitor analysis to define a clear product vision and strategy. Then, I'd use a framework like RICE (Reach, Impact, Confidence, Effort) or MoSCoW (Must have, Should have, Could have, Won't have) to score and rank potential features.

Features with high impact, high reach, high confidence, and low effort would be prioritized for the initial release (MVP), with must-have features taking precedence. I'd validate assumptions quickly, iterate based on user feedback, and repeat the prioritization exercise regularly throughout the product lifecycle.
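To make the RICE framework concrete, the sketch below uses the commonly cited formula, score = (Reach × Impact × Confidence) / Effort. The feature names and numbers are invented for illustration, and the scales (impact multiplier, confidence as a fraction, effort in person-months) are assumptions a team would calibrate for itself.

```python
def rice_score(reach, impact, confidence, effort):
    """RICE score: (reach * impact * confidence) / effort."""
    if effort <= 0:
        raise ValueError("effort must be positive")
    return reach * impact * confidence / effort


# Hypothetical candidate features: (name, reach, impact, confidence, effort).
candidates = [
    ("Onboarding checklist", 2000, 2.0, 0.8, 4),
    ("Dark mode", 5000, 0.5, 0.9, 3),
    ("CSV export", 500, 1.0, 1.0, 1),
]

# Rank features from highest to lowest score.
ranked = sorted(
    ((name, rice_score(r, i, c, e)) for name, r, i, c, e in candidates),
    key=lambda pair: pair[1],
    reverse=True,
)

for name, score in ranked:
    print(f"{name}: {score:.0f}")
```

The score is only as good as its inputs, so a candidate who mentions RICE should also be able to explain how they would estimate reach and confidence.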

9. Walk me through a time you had to influence a team member who didn't agree with you.

In a recent project, we were deciding on the technology stack for a new microservice. I advocated for using gRPC due to its performance benefits and contract-based approach, which aligned with our long-term architectural goals. However, one team member strongly preferred REST with JSON, citing their familiarity and ease of implementation.

To influence them, I first acknowledged their concerns and the benefits of their preferred approach. Then, I presented data comparing the performance of both approaches under projected load, highlighting the potential scalability issues with REST in our context. I also demonstrated how gRPC's code generation could actually reduce boilerplate code in the long run. Finally, I suggested a compromise: a small, isolated proof-of-concept using gRPC to validate its suitability and address their concerns hands-on. This collaborative approach, coupled with data and a willingness to experiment, eventually convinced them to support gRPC for this project, and they became a strong advocate after seeing the initial results.

10. What metrics would you use to measure the success of a social media app?

To measure the success of a social media app, I'd focus on metrics related to user engagement, growth, and retention. Key metrics would include:

- Daily/Monthly Active Users (DAU/MAU): Indicates the app's popularity and usage frequency.

- Retention Rate: Measures how many users return to the app over time.

- Engagement Metrics: Likes, comments, shares, time spent in-app, and content creation rates. These metrics reflect how actively users are participating.

- Conversion Rate: Measures how many users are completing desired actions such as purchases or subscriptions.

- Churn Rate: The rate at which users stop using the app.

- Customer Acquisition Cost (CAC): The cost to acquire a new user.

- Net Promoter Score (NPS): Measures customer loyalty and satisfaction.
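Several of the metrics listed above reduce to simple ratios. The following is a rough sketch under common definitions: stickiness as DAU divided by MAU, retention as the share of a cohort seen again in a later window, churn as the complement of retention, and CAC as spend divided by new users. All function names, user IDs, and figures are hypothetical.

```python
def stickiness(dau, mau):
    """Share of monthly active users who are active on a typical day."""
    return dau / mau


def retention_rate(cohort, active_later):
    """Fraction of the cohort (a set of user IDs) seen again later."""
    return len(cohort & active_later) / len(cohort)


def churn_rate(cohort, active_later):
    """Fraction of the cohort that did not come back."""
    return 1.0 - retention_rate(cohort, active_later)


def customer_acquisition_cost(spend, new_users):
    """Average acquisition spend per newly acquired user."""
    return spend / new_users


# Illustrative data only.
signup_cohort = {"u1", "u2", "u3", "u4"}
active_in_week_4 = {"u2", "u4", "u9"}

print(stickiness(1200, 4000))
print(retention_rate(signup_cohort, active_in_week_4))
print(churn_rate(signup_cohort, active_in_week_4))
print(customer_acquisition_cost(10000, 400))
```

Exact definitions vary between teams (for example, what counts as "active"), so it is worth asking a candidate how they would define each term before comparing numbers.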

11. How do you stay up-to-date with the latest trends in technology and product management?

I stay current through a variety of methods. I regularly read industry publications like TechCrunch, Wired, and The Product Manager. I also subscribe to newsletters such as Product Habits and attend webinars and online courses on platforms like Coursera and Udemy to learn specific skills or explore new technologies. Following key influencers and thought leaders on LinkedIn and Twitter keeps me abreast of discussions and emerging trends in both technology and product management.

Furthermore, I participate in online communities and forums, such as Reddit's r/ProductManagement, to engage in discussions and learn from the experiences of other professionals. For specific technologies relevant to current or potential projects, I dedicate time to reading documentation, experimenting with new tools, and exploring open-source projects on GitHub.

12. Describe a time you had to pivot your product strategy. What caused the shift?

During the development of a new mobile app for restaurant reservations, we initially focused on a broad audience with a wide range of features, including social networking and detailed restaurant reviews. However, user testing revealed that the core value proposition – quick and easy reservations – was getting lost in the noise. Users found the app overwhelming and difficult to navigate for its primary function.

Based on this feedback, we pivoted our product strategy to focus solely on streamlining the reservation process. We removed the social networking features, simplified the user interface, and concentrated on optimizing the search and booking experience. This shift resulted in a significant increase in user engagement and a higher rate of successful reservations, proving the value of prioritizing core functionality over feature bloat.

13. What are your favorite product management tools?

My favorite product management tools depend on the specific needs of the team and product, but I consistently find value in a few core categories. For project and task management, I often use Jira or Asana. For product analytics, tools like Google Analytics and Amplitude are essential for understanding user behavior and identifying areas for improvement. For communication and collaboration, Slack and Confluence facilitate efficient teamwork and documentation.

Beyond these, I also utilize tools for roadmapping (e.g., Productboard), user research (e.g., surveys via Google Forms or dedicated platforms), and wireframing/prototyping (e.g., Figma or Sketch). The best tools are the ones that help the team stay organized, make data-driven decisions, and effectively communicate throughout the product development lifecycle.

14. How do you handle conflicting priorities from different stakeholders?

When facing conflicting priorities, I first strive to understand the rationale behind each stakeholder's request. This involves actively listening to their perspectives and asking clarifying questions to grasp the urgency and impact of each task. I then attempt to quantify the value or risk associated with each priority, potentially using metrics or scoring systems.

Next, I facilitate a discussion with all involved stakeholders to collaboratively find a solution. This could involve negotiation, compromise, or identifying dependencies that allow for a phased approach. If a consensus cannot be reached, I escalate the issue to a higher authority, providing them with a clear overview of the situation, the potential consequences of each choice, and my recommended course of action, along with supporting data.

15. If you could launch any product tomorrow, what would it be and why?

If I could launch any product tomorrow, it would be a personalized AI-powered educational platform specifically designed to bridge skill gaps in underserved communities. It would offer adaptive learning paths tailored to each user's individual needs and learning style, focusing on in-demand skills like coding, data analysis, and digital marketing.

My motivation stems from the desire to democratize access to quality education and empower individuals with the tools they need to succeed in the modern economy. By providing personalized support and resources, this platform could significantly improve economic mobility and create a more equitable society.

16. Explain A/B testing and its importance in product development.

A/B testing (also known as split testing) is a method of comparing two versions of a product (A and B) to determine which one performs better. Typically, users are randomly assigned to either version A or version B, and their behavior is tracked to see which version leads to a desired outcome, such as higher conversion rates, more clicks, or increased engagement.

A/B testing is important in product development because it allows data-driven decisions. It minimizes reliance on assumptions or gut feelings, and provides empirical evidence to support changes. This helps optimize user experience, improve key metrics, and ultimately create a more successful product. For example, testing different button colors, layouts, or copy can reveal which combination resonates best with users, leading to significant improvements in performance.
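One common way to judge whether version B really outperforms version A is a two-proportion z-test on conversion rates. The sketch below is one possible approach, not the only valid one; it uses only the Python standard library, and the function name and sample counts are assumptions made for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or 100%")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical experiment: 5,000 users per arm.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("Difference is statistically significant at the 5% level.")
```

The 5% threshold is a convention rather than a rule, and the sample size should be decided before the test starts rather than after peeking at results.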

17. Tell me about a time you had to work with a difficult engineer or designer. How did you manage the situation?

In a previous role, I collaborated with a senior engineer who often dismissed others' ideas without fully considering them. This created tension and stifled collaborative problem-solving. To address this, I scheduled a one-on-one meeting to understand their perspective and concerns. I actively listened, acknowledged their experience, and then gently presented alternative solutions, backing them up with data or examples when possible.

By focusing on finding common ground and presenting ideas in a non-confrontational way, I was able to build a more collaborative working relationship. Over time, the engineer became more receptive to input from others. I also worked with my manager to facilitate team-building activities aimed at fostering better communication and understanding among all team members. This approach helped defuse the situation and improve overall team dynamics.

18. What does it mean to be 'data-driven' in product management?

Being 'data-driven' in product management means making decisions based on evidence and insights derived from data, rather than relying solely on intuition, opinions, or assumptions. It involves actively collecting, analyzing, and interpreting data to understand user behavior, market trends, and product performance. This understanding then informs product strategy, prioritization, and roadmap decisions. Examples of data include user analytics, A/B test results, customer feedback, and market research.

Specifically, a data-driven product manager will:

- Define KPIs: Establish clear, measurable Key Performance Indicators to track product success.

- Collect Data: Implement tracking and analytics tools to gather relevant data.

- Analyze Data: Use data analysis techniques to identify patterns, trends, and insights.

- Make Decisions: Base product decisions on data-driven insights.

- Iterate and Improve: Continuously monitor performance and make adjustments based on data.

19. How do you define a Minimum Viable Product (MVP) and why is it important?

An MVP, or Minimum Viable Product, is a version of a new product with just enough features to attract early-adopter customers and validate a product idea early in the product development cycle. It's about delivering the core value proposition with minimal effort and resources, focusing on the essential functionalities that solve a key problem for the target audience. Think of it as the simplest possible working product that still provides value and allows for learning.

It's important because it helps reduce risks by testing assumptions before committing significant resources. An MVP allows you to gather valuable user feedback, iterate quickly based on real-world usage, and ultimately build a product that truly meets market needs. This approach minimizes waste, saves time and money, and increases the chances of building a successful product. For instance, if building a ride-sharing app, the MVP might just be a simple app to connect riders and drivers for a specific route or area, without features like in-app payments or advanced mapping initially.

20. Imagine our company is launching a new product in a saturated market. How would you approach market research?

In a saturated market, thorough market research is crucial. I'd start by focusing on identifying unmet needs or underserved segments within the existing market. This involves analyzing competitor offerings, customer reviews, and available market reports to pinpoint gaps. I'd then conduct primary research, such as surveys and focus groups, targeted at these segments to understand their specific pain points and preferences.

Next, I'd evaluate the feasibility of addressing these needs with our new product. This includes assessing the market size, potential profitability, and competitive landscape for each identified segment. Based on this research, I'd recommend a target market and a tailored marketing strategy that highlights our product's unique value proposition and differentiates it from competitors.

21. Describe a situation where you had to say 'no' to a feature request. How did you handle it?

In a previous role, a key stakeholder requested a new feature that would allow users to customize the application's color scheme. While seemingly minor, the engineering team had just completed a major refactoring of the UI framework to improve performance and accessibility. Introducing this new feature at that stage would have required reverting parts of the refactoring, potentially reintroducing accessibility issues, and delaying the release of the performance improvements. I had to say no.

I explained the technical constraints and the potential negative impact on performance and accessibility to the stakeholder. Instead of an outright 'no', I proposed alternative solutions, such as exploring a limited set of pre-defined themes that could be implemented without impacting the core UI framework. We also agreed to add the customizable color scheme feature to the product roadmap for a later sprint, after the initial performance improvements were released and we had more time to assess the technical feasibility. This approach allowed us to address the underlying user need without jeopardizing critical performance and accessibility goals.

22. What are some common pitfalls to avoid when launching a new product?

Launching a new product is exciting, but several pitfalls can doom it from the start. One common mistake is insufficient market research. Without understanding your target audience and competitive landscape, you risk building something nobody wants. Another frequent problem is a lack of a clear value proposition. If potential customers don't immediately grasp how your product benefits them, they won't bother. Neglecting user feedback during development is also problematic, leading to a product that doesn't meet user needs.

Furthermore, poor execution can be devastating. This includes issues like a buggy product, a confusing user interface, or inadequate customer support. Weak marketing and a lack of pre-launch buzz are another pitfall; you need to create anticipation and excitement before launch to drive initial adoption. Finally, many teams fail to plan for scalability, leading to performance issues as the user base grows. Proper testing and infrastructure are key.

23. How would you approach understanding the needs of our users?

To understand user needs, I'd start with a multi-faceted approach:

- Direct User Research: Conduct user interviews, surveys, and usability testing to gather qualitative and quantitative data about their goals, pain points, and behaviors. I'd focus on understanding their current workflow and the challenges they face. Analyze existing user feedback (e.g., support tickets, reviews) to identify recurring issues and areas for improvement.

- Data Analysis: Use product analytics tools to monitor user behavior, track key metrics (e.g., feature usage, conversion rates, drop-off points), and identify patterns. Employ A/B testing to validate assumptions and optimize the user experience. I'd also use tools like heatmaps and session recordings to understand how users interact with the product.

- Collaboration: Work closely with stakeholders (product managers, designers, engineers, customer support) to gather their insights and perspectives on user needs. Facilitate workshops and brainstorming sessions to generate ideas and prioritize solutions.

24. How do you handle situations where the data contradicts your intuition?

When data contradicts my intuition, I prioritize the data. My initial reaction is to investigate the discrepancy. This involves verifying the data's accuracy and integrity, checking for potential biases or errors in the data collection or processing methods. I also critically re-examine my own assumptions and the reasoning behind my intuition, identifying any flaws or limitations in my mental model.

If the data is validated and my intuition remains challenged, I update my understanding. This might involve exploring alternative explanations or revising my initial hypotheses. I treat the contradiction as a valuable learning opportunity, prompting further investigation and refinement of my knowledge. Ultimately, data-driven insights take precedence over personal hunches, ensuring that decisions are based on evidence rather than preconceived notions.

Intermediate Product Manager interview questions

1. Tell me about a time you had to make a product decision with incomplete information. How did you approach it?

In a previous role, we were launching a new feature but had limited data on user behavior in a similar, older version of the product. I approached the decision by first identifying the key assumptions we were making about user needs and how they would interact with the new feature. Then, I prioritized gathering what data we could get quickly – things like running small user surveys focused on specific questions related to those assumptions, analyzing support tickets for related keywords from the older version, and doing a competitive analysis. Finally, I created a simple decision matrix. I listed the decision criteria (e.g., ease of use, development time, potential impact) and scored each possible implementation option based on the available information and my best judgment. It wasn't perfect, but by outlining our assumptions and systematically evaluating the options, we were able to make a well-reasoned decision and launch a version we could iterate on later.
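The decision matrix described above can be sketched as a weighted sum. In this minimal example the criteria come from the answer, while the weights, option names, and 1-5 scores are hypothetical placeholders; for development time, a higher score is assumed to mean a shorter build.

```python
def weighted_scores(weights, options):
    """Return {option: weighted total} given per-criterion weights and scores."""
    return {
        name: sum(weights[criterion] * scores[criterion] for criterion in weights)
        for name, scores in options.items()
    }


# Assumed relative importance of each criterion.
weights = {"ease_of_use": 4, "development_time": 3, "potential_impact": 3}

# Assumed 1-5 scores for two hypothetical implementation options.
options = {
    "incremental update": {"ease_of_use": 4, "development_time": 5, "potential_impact": 3},
    "full redesign": {"ease_of_use": 5, "development_time": 1, "potential_impact": 5},
}

totals = weighted_scores(weights, options)
best_option = max(totals, key=totals.get)
print(totals)
print("Preferred option:", best_option)
```

Writing the weights down forces the assumptions into the open, which makes it easier to revisit the decision once better data arrives.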

2. Describe a situation where you had to influence a team to adopt your product vision when they had reservations. What was your strategy?

In a prior role, I championed a shift to a microservices architecture. The team, comfortable with a monolithic system, worried about increased complexity and operational overhead. My strategy began with understanding their specific concerns through one-on-one discussions. I then presented a phased adoption plan, starting with non-critical services, backed by data highlighting improved scalability and faster deployment cycles based on industry benchmarks and internal POCs. Crucially, I invested in training and mentorship to upskill the team, ensuring they felt supported and empowered during the transition. This combination of addressing concerns, providing evidence, and enabling the team ultimately led to successful adoption.

Further, I proactively sought feedback at each stage and iterated on the rollout plan based on their input. Demonstrating the value of microservices through tangible, incremental improvements, such as a 20% reduction in deployment time for the initial services, was essential in building trust and solidifying buy-in. I also highlighted the benefits of independent deployments and reduced blast radius, addressing the concerns around single points of failure within a monolith. This transparency and collaborative approach fostered a shared sense of ownership, leading to a successful shift towards the new architecture.

3. How do you prioritize features when you have limited resources and a long list of requests?

Prioritizing features with limited resources requires a structured approach. I'd start by assessing each feature's value (impact on users and business goals) and effort (development time and cost). I'd use a framework like the Value vs. Effort matrix to categorize features into "Quick Wins" (high value, low effort), "Major Projects" (high value, high effort), "Fill-ins" (low value, low effort), and "Hard Slog" (low value, high effort). I'd focus on Quick Wins first, followed by strategically selecting Major Projects that align with the overall product roadmap and business objectives.
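The four quadrants above can be expressed as a small classification rule. This is a sketch that assumes value and effort are each scored from 1 to 10 and that 5 is the cut-off between low and high; both the scale and the sample requests are made up for illustration.

```python
def quadrant(value, effort, threshold=5):
    """Place a feature in the Value vs. Effort matrix (scores assumed 1-10)."""
    high_value = value >= threshold
    high_effort = effort >= threshold
    if high_value and not high_effort:
        return "Quick Win"
    if high_value and high_effort:
        return "Major Project"
    if not high_value and not high_effort:
        return "Fill-in"
    return "Hard Slog"


# Hypothetical feature requests: name -> (value, effort).
requests = {
    "Saved filters": (8, 2),
    "Offline mode": (9, 9),
    "Footer link cleanup": (2, 1),
    "Legacy report rebuild": (3, 8),
}

for name, (value, effort) in requests.items():
    print(f"{name}: {quadrant(value, effort)}")
```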

Furthermore, I'd constantly communicate with stakeholders (users, product owners, developers) to gather feedback and ensure alignment. Techniques like user surveys, A/B testing, and analyzing user behavior metrics can provide valuable data to refine prioritization. I'd re-evaluate priorities regularly, adjusting as needed based on new information and changing circumstances. Using tools like Jira to track and manage feature requests and prioritization can also be beneficial.

4. Imagine a key metric for your product is declining. Walk me through how you would investigate the issue and identify potential solutions.

First, I'd define the metric precisely and understand the scope of the decline (e.g., how much, over what period, and for which segments). I'd then gather data, looking for correlations and anomalies. This involves breaking down the metric by dimensions like platform, geography, user cohort, and acquisition channel. I'd also examine recent changes to the product, marketing campaigns, or external factors (like competitor actions or seasonality) that could be contributing. I'd look at funnels leading up to the metric to identify drop-off points.
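Breaking a metric down by dimension often amounts to comparing each segment before and after the decline. The sketch below shows one simple way to rank segments by relative change; the platform names and counts are invented, and a real investigation would pull these from an analytics tool.

```python
def change_by_segment(before, after):
    """Return (segment, relative change) pairs, steepest decline first."""
    changes = {
        segment: (after.get(segment, 0) - baseline) / baseline
        for segment, baseline in before.items()
        if baseline  # skip segments with no baseline to compare against
    }
    return sorted(changes.items(), key=lambda item: item[1])


# Hypothetical weekly active users per platform.
last_month = {"ios": 1000, "android": 1200, "web": 800}
this_month = {"ios": 990, "android": 840, "web": 808}

ranked_changes = change_by_segment(last_month, this_month)
for segment, change in ranked_changes:
    print(f"{segment}: {change:+.1%}")
```

In this made-up data the drop is concentrated in one platform, which would point the investigation toward a platform-specific release or bug rather than a market-wide shift.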

Based on the data, I'd form hypotheses about the root cause. For example, if user engagement is down, possible causes could be a bug introduced in the latest release, a competitor offering a better service, or changes in user behavior. I'd prioritize these hypotheses by potential impact and likelihood, then test them through A/B testing, user surveys, or further data analysis. Once I've identified the most likely root cause, I'd propose solutions to address it. These could involve bug fixes, product improvements, marketing adjustments, or new feature development.

5. Tell me about a time you had to balance user needs with business goals. What considerations did you make?

In a previous role, I was working on a feature to allow users to customize their dashboard. User research indicated a strong desire for highly granular control, including the ability to rearrange and resize every element. However, the development team estimated that implementing this level of customization would take significantly longer and increase the risk of introducing performance issues, impacting our key business goal of maintaining platform stability and speed.

To balance these competing priorities, I proposed a phased approach. We initially launched a simpler version of the dashboard customization feature, focusing on the most requested elements and a more streamlined rearrangement process. This allowed us to quickly deliver value to users while minimizing the development effort and performance risks. We then planned to iterate based on user feedback and analytics data, gradually adding more advanced customization options as resources allowed. This approach allowed us to meet user needs in a timely manner while still aligning with business goals.

6. Describe a product launch you were involved in. What went well, and what would you do differently next time?

I was involved in the launch of a new mobile app for our company, aimed at improving user engagement. What went well was the pre-launch marketing campaign that generated significant buzz, leading to a high number of downloads in the first week. Also, the cross-functional team collaboration was exceptional, ensuring smooth coordination across different departments.

However, the app initially suffered from performance issues because we had underestimated the server capacity it needed. Next time, I would advocate for more extensive load testing under various simulated user scenarios to identify potential bottlenecks and ensure the infrastructure is robust enough to handle peak loads. I would also prepare a more detailed rollback plan in case of critical issues post-launch.

7. How do you measure the success of a new product feature or release?

Measuring the success of a new product feature or release involves identifying key performance indicators (KPIs) before the launch. These KPIs should be directly tied to the goals of the feature/release. Examples include increased user engagement (time spent, features used), improved conversion rates, reduced churn, positive customer feedback (surveys, reviews), and increased revenue. A/B testing can be crucial to isolate the impact of the new feature.

We track these KPIs using analytics platforms (e.g., Google Analytics, Mixpanel) and monitor them closely after the release. We compare the post-release performance to the pre-release baseline (or a control group in the case of A/B testing). Success is determined by whether the KPIs show a statistically significant improvement and align with the initial goals. Qualitative feedback, such as user interviews, can also provide valuable insights into user satisfaction and areas for improvement.

8. Explain your approach to conducting user research. What methods do you find most effective?

My approach to user research is iterative and begins with clearly defining the research goals and identifying the target users. I then select the most appropriate research methods based on the objectives, budget, and timeline. This often involves a mix of qualitative and quantitative techniques. For example, I might start with user interviews to understand users' needs and pain points, followed by surveys to gather quantitative data on a larger scale. A/B testing can be used to validate design decisions, and usability testing provides direct feedback on product interactions. I analyze the collected data to identify patterns and insights, which then inform product development and design decisions.

Some of the most effective methods I find are user interviews, usability testing, and surveys. User interviews provide rich, qualitative data that can uncover unexpected user behaviors and motivations. Usability testing allows me to observe users interacting with a product in real-time, identifying usability issues and areas for improvement. Surveys are valuable for gathering quantitative data and validating findings from qualitative research.

9. Walk me through your process for creating a product roadmap. What factors do you consider?

My product roadmap process starts with understanding the 'why' – the overall company strategy, user needs, and market analysis. I gather data from customer feedback, market research, and stakeholder interviews. Then, I prioritize potential features and initiatives based on factors like impact, effort, and alignment with strategic goals. I also look at the competitive landscape and technical feasibility.

Next, I create a visual roadmap, typically using a tool like Jira or Aha!, that outlines key themes, epics, and releases over a specific timeframe (e.g., quarterly or annually). The roadmap is iterative and flexible, allowing for adjustments based on new information or changing priorities. I believe it's crucial to communicate the roadmap clearly to all stakeholders and regularly review and update it based on progress and feedback. Important factors are strategic fit, user value, feasibility, and resource availability.

10. Describe a time you had to pivot on a product strategy. What prompted the change, and how did you manage the transition?

In my previous role, we were developing a mobile app focused on social event planning. Our initial strategy centered around a broad audience, targeting anyone interested in organizing or attending local events. However, early user feedback indicated that the app lacked a clear value proposition and was getting lost in the noise of existing social platforms. Usage metrics were also low.

We pivoted to focus specifically on outdoor adventure events (hiking, biking, climbing). This was prompted by qualitative data suggesting a strong interest in this niche, and a lack of dedicated solutions. To manage the transition, we prioritized the features catering to outdoor activities, refined the UI to reflect this focus, and launched targeted marketing campaigns toward adventure enthusiasts. This resulted in higher user engagement and a better product-market fit.

11. How do you stay up-to-date with the latest trends and technologies in the product management field?

I stay up-to-date through a combination of active learning and community engagement. I regularly read industry blogs and publications like Mind the Product, Product Talk, and The Pragmatic Marketer. I also subscribe to newsletters from thought leaders in product management, such as those from Marty Cagan and Teresa Torres. Furthermore, I participate in online communities like Product School and attend relevant webinars and online conferences.

I also value practical learning. I dedicate time to experimenting with new product management tools, such as user research platforms, A/B testing tools, and product analytics platforms. I listen to podcasts focused on product management, such as Product Thinking and This is Product Management, to absorb new perspectives and strategies. I read product books as well, and recently finished 'Inspired' by Marty Cagan.

12. Tell me about a product you admire and why. What makes it successful?

I admire the Tesla Model 3. Its success comes from a combination of factors, primarily its strong brand image as an innovator in the EV space, its sleek and modern design, and its superior performance and range compared to many competitors at its price point. Tesla has also built a comprehensive charging network, which alleviates range anxiety and adds convenience for owners. Regular over-the-air software updates keep improving the car's features and functionality, so it feels like it is always getting better.

Furthermore, Tesla’s direct-to-consumer sales model allows them to control the customer experience and bypass traditional dealerships. This also helps to create a strong community and brand loyalty. Even with increasing competition, the Model 3 continues to be a top-selling EV due to its integrated ecosystem, technological innovation, and overall user experience.

13. If you could change one thing about your current product, what would it be and why?

If I could change one thing about our current product, it would be improving the onboarding experience for new users. Currently, some users find the initial setup and feature discovery process overwhelming, leading to a higher churn rate within the first few weeks.

By streamlining the onboarding with interactive tutorials, contextual help tips, and a more intuitive user interface, we could significantly reduce user frustration, improve initial engagement, and ultimately increase user retention. This could involve A/B testing different onboarding flows and gathering user feedback to identify the most effective approaches.

14. How do you handle conflicting feedback from different stakeholders?

When I receive conflicting feedback from different stakeholders, I prioritize understanding the reasoning behind each perspective. I actively listen to each stakeholder, asking clarifying questions to fully grasp their concerns and objectives. I then try to identify common ground or shared goals that can serve as a foundation for a unified approach. If a consensus is not immediately apparent, I facilitate a discussion where each stakeholder can present their case, and I work to find a compromise or alternative solution that addresses the key concerns of all parties involved. This might involve proposing different options, outlining the pros and cons of each, and seeking further input to refine the solution until it is acceptable to everyone. Ultimately, I aim to find a balanced approach that aligns with the project's overall objectives and minimizes negative impact on any stakeholder.

15. Describe a time you had to say no to a feature request. How did you communicate your decision?

In a previous role, a stakeholder requested a feature that would allow users to upload very large files (multiple GBs) directly to our web application. After analyzing the request with engineering, I realized that our current infrastructure couldn't handle such large uploads without significant performance degradation and potential security vulnerabilities. We also hadn't implemented any chunking or resumable upload capabilities.

I communicated my concerns to the stakeholder, explaining the technical limitations and potential risks. I presented data on current server load and projected the impact of the new feature. I also suggested alternative solutions, such as using a third-party service designed for large file uploads or implementing a phased rollout with a smaller file size limit initially. We ultimately decided to postpone the feature and prioritize upgrading our infrastructure and exploring more robust upload solutions.

16. How do you define a minimum viable product (MVP) and what are its key characteristics?

A Minimum Viable Product (MVP) is a version of a new product with just enough features to be usable by early customers who can then provide feedback for future product development. It focuses on releasing a functional product quickly to validate assumptions and gather data with minimal effort and resources.

Key characteristics include: it solves a core problem, provides sufficient value, is usable, and provides a feedback loop for iteration. The goal is not to build a perfect product, but to test a hypothesis with real users and then iterate based on their feedback. The MVP should also be viable, meaning it can be maintained over time: it should be a quality product, not something that crashes repeatedly.

17. Explain your understanding of A/B testing and how you would use it to improve a product.

A/B testing (also known as split testing) is a method of comparing two versions of something (e.g., a website page, app feature, marketing email) to determine which one performs better. It involves randomly showing one version (A) to a segment of users and another version (B) to a similar segment, then analyzing which version achieves a desired outcome (e.g., higher click-through rate, increased conversion rate). The core principle is to use statistical analysis to confirm which variation performs significantly better.

I would use A/B testing to incrementally improve a product by focusing on specific user interactions. For example, if I wanted to increase the click-through rate on a button, I would create two versions: the original (A) and a modified version (B) with a different color or text. I'd track the click-through rates for each version over a set period. Based on the statistical analysis, if version B shows a statistically significant improvement, I would implement that change. I could also A/B test different landing pages, signup flows, or pricing models to optimize key metrics.
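As a rough illustration of the "statistical analysis" step in the button example, the sketch below applies a two-proportion z-test to click-through counts. The counts and the 5% significance threshold are assumptions chosen for illustration, and a real experiment would also fix the sample size and duration in advance.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (z, two-sided p-value) for the difference in click-through rate."""
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that A and B perform the same.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts, for illustration only.
z, p = two_proportion_z_test(clicks_a=200, users_a=5000, clicks_b=260, users_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:  # assumed significance threshold
    print("Version B's click-through rate differs significantly from A's.")
else:
    print("No significant difference detected.")
```

A positive z with a small p-value suggests version B outperforms A. A large p-value means the observed gap could plausibly be noise.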

18. Tell me about a time you had to work with a difficult engineer or designer. How did you resolve the situation?

In a previous role, I collaborated with a senior engineer who was resistant to new technologies and preferred sticking to older, established methods. This created friction because our team was trying to implement a more modern architecture. Initially, I tried to directly convince them of the benefits, but this wasn't effective.

Instead, I shifted my approach to understanding their concerns and validating their experience. I asked them about the specific challenges they foresaw with the new technology. I then researched solutions to address those challenges, presenting them with data and examples of how the new tech could mitigate those problems. I also suggested a phased rollout, allowing them to gradually adopt the new technology and build confidence. Ultimately, they became more receptive, and we successfully integrated the new architecture by working together. This experience taught me the importance of empathy and tailoring my communication style to individual personalities, rather than pushing my own perspective.

19. How do you approach competitive analysis and what do you look for?

My approach to competitive analysis involves a few key steps. First, I identify direct and indirect competitors. Direct competitors offer similar products/services to the same target market, while indirect competitors address the same need in a different way. Then, I gather information about them by using their products, studying their marketing materials, and reading customer reviews. I look for things like their pricing strategies, target audience, feature sets, strengths and weaknesses, and marketing tactics.

Specifically, I focus on understanding their market share, identifying unique selling propositions (USPs), and evaluating their customer satisfaction. I also keep an eye on their online presence, including website traffic, social media engagement, and content strategy. This helps me identify opportunities to differentiate our offering, improve our strategies, and gain a competitive edge.

20. Describe a time you failed as a product manager. What did you learn from the experience?

Early in my career, I was responsible for launching a new feature aimed at improving user engagement. I relied heavily on initial market research but failed to continuously monitor user behavior and feedback post-launch. As a result, the feature didn't resonate with our target audience, and adoption rates were significantly lower than projected. We had to pull the feature after a few months.

From that experience, I learned the critical importance of iterative development and continuous user feedback. I now prioritize A/B testing, user surveys, and data analysis throughout the entire product lifecycle, not just during the initial planning phase. I also now set up automated dashboards to monitor metrics more closely, and proactively reach out to users at different stages of feature rollouts.

21. Let’s say we want to enter a new market. How would you assess the opportunity and develop a go-to-market strategy?

To assess a new market opportunity, I would first focus on market sizing and attractiveness: Market Size (TAM, SAM, SOM), Growth Rate, Profitability, Competitive Landscape, Regulatory Environment, and Technological Trends. This helps determine if the market is worth pursuing. Next, I would analyze our internal capabilities and resources to see if we have the right skills and assets to succeed in the market. A SWOT analysis helps to identify strengths, weaknesses, opportunities, and threats.
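The market sizing step can be sketched as a simple top-down calculation, where TAM is the total addressable market, SAM the serviceable portion of it, and SOM the share that can realistically be captured. Every figure below is a hypothetical placeholder, and the function name `market_sizing` is introduced only for this example.

```python
def market_sizing(total_customers, annual_revenue_per_customer,
                  serviceable_share, obtainable_share):
    """Top-down estimate of TAM, SAM, and SOM in annual revenue terms."""
    tam = total_customers * annual_revenue_per_customer
    sam = tam * serviceable_share   # portion the product can actually serve
    som = sam * obtainable_share    # portion that can realistically be won
    return {"TAM": tam, "SAM": sam, "SOM": som}


# Hypothetical inputs, for illustration only.
sizes = market_sizing(
    total_customers=2_000_000,
    annual_revenue_per_customer=120,
    serviceable_share=0.25,
    obtainable_share=0.05,
)
for name, value in sizes.items():
    print(f"{name}: ${value:,.0f}")
```

A bottom-up estimate built from actual pricing and reachable accounts is a useful cross-check on numbers like these.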

For the go-to-market strategy, I would define the target customer (their needs, pain points, and behaviors). Then, determine the value proposition (what unique benefits do we offer). Next, choose the distribution channels (sales, partners, online). Finally, establish the marketing and communication strategy to reach the target customer and create awareness. Testing and iterating are vital, so I'd use pilot programs and A/B testing to optimize the approach continuously.

22. How do you balance short-term goals with long-term product vision?

Balancing short-term goals with long-term product vision requires a strategic approach. First, clearly define and communicate the long-term vision to the entire team. This provides a guiding star for all decisions. Then, break down the vision into smaller, achievable short-term goals (e.g., quarterly objectives). Prioritize these short-term goals based on their contribution to the long-term vision and immediate user needs. Regularly review progress and adjust plans as needed, ensuring alignment with both the immediate priorities and the overarching vision.

It's also crucial to maintain flexibility. Be willing to adapt the short-term roadmap based on user feedback, market changes, or technological advancements, but always evaluate these changes against the long-term vision to prevent scope creep or deviation from the core product strategy. Use metrics to track progress on both short-term goals and the overall movement toward the vision, such as tracking feature adoption, user satisfaction, and key performance indicators (KPIs). This allows for informed decision-making and course correction.

23. How do you approach documentation for a product? What types of documents do you typically create?

When approaching product documentation, I first identify the target audience and their needs. This informs the scope and style of the documentation. I aim for clarity, accuracy, and conciseness, prioritizing the information most relevant to the user's goals. Then, I outline the different types of documentation that are required and assign priority according to the product launch plan.

Typical documents I create include:

- User Guides: Step-by-step instructions for using the product's features.

- API Documentation: (If applicable) Explains how developers can interact with the product's API, including endpoints, parameters, and example code.

- Troubleshooting Guides: Solutions to common problems users might encounter, including error codes and how to resolve them.

- Release Notes: Summaries of new features, bug fixes, and known issues in each release.

- FAQ: Answers to frequently asked questions.

- Tutorials/How-to guides: Task-based guides showing usage through examples.

Advanced Product Manager interview questions

1. How would you measure the success of a product that aims to improve team collaboration and communication within a large organization?

To measure the success of a product designed to enhance team collaboration and communication, I would focus on several key metrics. These include: 1) Increased frequency and quality of communication (measured through surveys, sentiment analysis of communication channels, and tracking active users). 2) Improved team performance (tracked through project completion rates, reduced project cycle times, and achievement of team goals). 3) Enhanced employee satisfaction (assessed through employee surveys focusing on collaboration ease, communication clarity, and overall team morale). 4) Product adoption and engagement (measured through daily/weekly active users, feature usage rates, and user retention).

Specifically, I'd look for quantifiable improvements in these areas before and after the product's implementation, using A/B testing or control groups where feasible. Furthermore, qualitative data from user interviews and feedback sessions will provide valuable context and insights into the user experience and product impact.

2. Imagine a competitor launches a similar product with a key feature that your product lacks. How would you respond?

My initial response would be to analyze the competitor's feature in detail. This includes understanding its functionality, how it's implemented, and the value it provides to users. I'd then assess the potential impact on our product and user base, considering whether the feature addresses a genuine unmet need or simply represents a marginal improvement.

Next, I'd prioritize evaluating whether to build, buy, or partner to address the gap. This decision hinges on factors like development costs, time to market, and strategic alignment. We might also explore alternative solutions that differentiate our product in a different, potentially more valuable way. Simultaneously, transparent communication with our users is crucial, explaining our roadmap and vision for the product.

3. Describe a time when you had to make a product decision with incomplete or conflicting data. What was your process?

In a previous role, we were deciding whether to prioritize feature A or feature B for our next sprint. User research indicated high demand for both, but the engineering team estimated significantly longer development time for feature A. Sales data showed a slightly higher potential ROI for feature A, but the data was based on early projections and wasn't conclusive. My process involved: 1) Quantifying the uncertainty: I worked with the data team to estimate confidence intervals for the sales projections and development timelines. 2) Running a sensitivity analysis: We explored how the overall ROI would change under different scenarios (e.g., best-case, worst-case, most likely) for both features. 3) Considering strategic alignment: I assessed which feature better aligned with our long-term product vision, even if the immediate ROI was less certain.

Ultimately, we decided to prioritize feature B. While feature A had higher potential upside, the risk-adjusted ROI, considering the longer development time and uncertain sales projections, was lower. Prioritizing feature B allowed us to deliver value faster, validate assumptions earlier, and remain agile.
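One way to picture the sensitivity analysis is a probability-weighted comparison across worst-case, most-likely, and best-case scenarios. The sketch below uses invented costs, returns, and probabilities purely for illustration; it is not the analysis from the example, only a minimal version of the same idea.

```python
def expected_roi(scenarios, cost):
    """Probability-weighted ROI: (expected return - cost) / cost.

    scenarios is a list of (probability, projected_return) pairs.
    """
    total_probability = sum(p for p, _ in scenarios)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    expected_return = sum(p * value for p, value in scenarios)
    return (expected_return - cost) / cost


def roi_range(scenarios, cost):
    """Worst-case and best-case ROI across the scenarios."""
    returns = [value for _, value in scenarios]
    return (min(returns) - cost) / cost, (max(returns) - cost) / cost


# Hypothetical figures in thousands of dollars, for illustration only.
features = {
    "A": {"cost": 300, "scenarios": [(0.2, 900), (0.5, 400), (0.3, 100)]},
    "B": {"cost": 120, "scenarios": [(0.2, 300), (0.6, 220), (0.2, 150)]},
}

for name, data in features.items():
    worst, best = roi_range(data["scenarios"], data["cost"])
    expected = expected_roi(data["scenarios"], data["cost"])
    print(f"Feature {name}: worst {worst:.0%}, expected {expected:.0%}, best {best:.0%}")
```

With these made-up inputs, feature A has the larger best case but feature B has the higher expected ROI and a much milder worst case, which mirrors the trade-off described above.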

4. How would you go about identifying new market opportunities for an existing product in a saturated market?

To identify new market opportunities for an existing product in a saturated market, I would focus on a multi-pronged approach. First, I would analyze existing customer data to identify underserved segments or unmet needs through surveys, feedback analysis, and usage pattern analysis. Next, I'd explore adjacent markets or applications for the product, considering modifications or adaptations that would appeal to new customer groups, and do competitive research to understand gaps in the market.

Furthermore, I'd look at geographic expansion possibilities, prioritizing regions with similar demographics or needs but less market saturation. Finally, I would explore partnerships or collaborations with other companies to reach new customer bases or bundle the product with complementary offerings. Doing so may offer chances to create new value propositions or tap into synergistic marketing channels.

5. Let's say your product is experiencing a sudden surge in user growth. How would you ensure scalability and maintain a positive user experience?

To ensure scalability and maintain a positive user experience during a sudden user growth surge, I would focus on several key areas. First, I'd monitor system performance closely using metrics like response times, error rates, and resource utilization (CPU, memory, database load). Based on that monitoring, I would scale resources before users feel the strain. This might involve scaling horizontally by adding more servers/instances, optimizing database queries and caching strategies to reduce database load, and leveraging Content Delivery Networks (CDNs) to distribute static assets. We'd also look at implementing load balancing to distribute traffic evenly across servers. Finally, I'd prioritize addressing any critical bugs or performance bottlenecks identified during the surge. If performance problems do occur, we would communicate with users to manage expectations and maintain transparency.

Specifically, we could leverage technologies like auto-scaling groups in cloud environments to dynamically adjust the number of servers based on demand. Database optimizations could involve adding indexes, rewriting slow queries, or implementing read replicas. Caching strategies could leverage tools like Redis or Memcached. The goal is for the system to scale with demand while keeping performance consistent, so that users do not notice any degradation.

6. Explain your approach to prioritizing features when you have limited resources and a long list of potential improvements.

When prioritizing features with limited resources, I focus on impact and effort. I would start by creating a matrix to visually represent features based on these two factors. High-impact, low-effort features are prioritized first as these provide the quickest wins. After that, high-impact, high-effort features are considered. To determine impact, I'd analyze user data, conduct user interviews, and review business goals. Effort is estimated in collaboration with the development team.

Additionally, I consider dependencies and potential for technical debt. Features that unlock other high-value items or reduce future maintenance are given higher priority. I would then clearly communicate the rationale behind these decisions to all stakeholders to ensure alignment and manage expectations. Continual feedback is crucial; after a few iterations I revisit the prioritization and refine it accordingly.
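The impact/effort matrix described above can be sketched in a few lines. The 1-10 scoring scale, the threshold of 5, the quadrant labels, and the backlog items are all assumptions made for this example rather than a standard.

```python
# Lower number = handled earlier. Labels are illustrative, not a standard.
PRIORITY = {"quick win": 0, "major project": 1, "fill-in": 2, "deprioritize": 3}


def quadrant(impact, effort, threshold=5):
    """Classify a feature scored 1-10 on impact and effort."""
    high_impact = impact >= threshold
    low_effort = effort < threshold
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "deprioritize"


def prioritize(features):
    """Sort (name, impact, effort) tuples by quadrant, then by impact."""
    return sorted(features, key=lambda f: (PRIORITY[quadrant(f[1], f[2])], -f[1]))


# Hypothetical backlog, for illustration only.
backlog = [
    ("Offline mode", 9, 8),
    ("Dark theme", 3, 2),
    ("Saved filters", 8, 3),
    ("Custom fonts", 2, 7),
]
for name, impact, effort in prioritize(backlog):
    print(f"{name}: {quadrant(impact, effort)}")
```

Dependencies and technical debt are not modeled here. In practice they would adjust the ordering that this first pass produces.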

7. Walk me through a situation where you had to convince stakeholders with differing opinions about a product strategy. What was your approach?

In a prior role, we needed to decide the best approach for a new feature in our mobile app - whether to build it natively or use a cross-platform framework. Engineering strongly favored native for performance reasons, while product and design preferred a cross-platform approach for faster iteration and unified look-and-feel across platforms.

My approach was to first facilitate a discussion to fully understand each group's priorities and concerns. I then presented a data-driven analysis comparing the two options, including development time estimates, projected performance metrics, and potential maintenance costs. To bridge the gap, I proposed a hybrid approach: developing core functionalities natively while leveraging cross-platform elements for UI components. This addressed the performance concerns of engineering and offered a relatively quicker development time acceptable to product and design. After further discussion and minor adjustments, all stakeholders agreed on this hybrid strategy, leading to a successful feature launch.

8. Describe a time when you had to sunset a product or feature. What were the key considerations, and how did you manage the process?

In a previous role, we had a feature that allowed users to create custom reports based on pre-defined templates. Usage had declined significantly over time as more users adopted the platform's built-in reporting tools. The key considerations for sunsetting it were: 1) identifying the remaining users and understanding their needs, 2) providing alternative solutions (training on the new tool), and 3) minimizing disruption during the migration. We managed the process by communicating the change well in advance, offering personalized support, and setting a firm deprecation date.

We also had to address the technical debt associated with the custom reporting feature. LegacyReportGenerator.java was tightly coupled to other modules. Before removing it completely, we refactored it to reduce dependencies and improve the maintainability of the core platform. This allowed a smoother transition and reduced the risk of unexpected issues after the sunset.

9. How do you stay updated on the latest trends and technologies in the product management space, and how do you apply that knowledge to your work?

I stay updated through a variety of channels. I regularly read industry blogs and publications like Mind the Product, Product Talk, and The Pragmatic Engineer. I also follow key influencers and thought leaders on platforms like LinkedIn and Twitter. Participating in webinars, online courses, and industry conferences (both virtual and in-person) helps me learn about new frameworks, methodologies, and tools. Finally, I actively participate in product management communities and forums to exchange ideas and experiences with other professionals.

I apply this knowledge by experimenting with new approaches in my work. For example, if I learn about a new prioritization framework like RICE, I might pilot it with a small feature set to see if it improves our decision-making process. I also share relevant articles and insights with my team to foster a culture of continuous learning. Furthermore, being informed allows me to proactively identify opportunities to leverage new technologies or adapt our product strategy to address emerging market trends and user needs.
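For readers unfamiliar with RICE, the score is Reach multiplied by Impact and Confidence, divided by Effort. The sketch below shows what a small pilot might compute. The features, the units, and the scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: int         # users affected per quarter (assumed unit)
    impact: float      # relative impact score, e.g. 0.5 low to 3 high
    confidence: float  # 0.0 to 1.0
    effort: float      # person-months (assumed unit)

    @property
    def rice(self) -> float:
        """RICE score: reach * impact * confidence / effort."""
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical pilot set, for illustration only.
pilot = [
    Feature("Bulk export", reach=4000, impact=1.0, confidence=0.8, effort=2),
    Feature("SSO login", reach=1500, impact=2.0, confidence=0.5, effort=3),
    Feature("Onboarding checklist", reach=6000, impact=0.5, confidence=1.0, effort=1),
]

for feature in sorted(pilot, key=lambda f: f.rice, reverse=True):
    print(f"{feature.name}: {feature.rice:,.0f}")
```

Comparing this ranking with the team's intuitive ordering is a quick way to judge whether the framework adds anything to the decision-making process.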

10. If you could change one thing about the way product development is typically done, what would it be and why?

I would change the tendency to over-engineer solutions upfront without sufficient user feedback. Too often, teams spend significant time building features based on assumptions, only to discover later that users don't need or want them in that particular way. This leads to wasted effort and delays in delivering value.

Instead, I'd advocate for a more iterative approach focused on rapid prototyping and continuous user testing. This involves quickly building minimum viable products (MVPs), gathering user feedback, and iterating based on that feedback. This approach keeps product development closely aligned with user needs and preferences, reducing the risk of building features that nobody wants and shortening the time to market.

11. How would you handle a situation where engineering pushes back on a product requirement due to technical feasibility concerns?

When engineering pushes back on a product requirement due to technical feasibility, I first aim to fully understand their concerns. I would schedule a meeting with the relevant engineers to discuss the specific challenges and constraints. This involves actively listening, asking clarifying questions, and ensuring I grasp the technical limitations they're facing. The goal is to establish a shared understanding of the problem.

Next, I'd work collaboratively to explore alternative solutions or compromises. This might involve refining the requirement, adjusting the scope, or researching alternative technologies. I'd present any available data supporting the requirement's importance and potential impact. If necessary, I would involve other stakeholders (e.g., designers, other engineers) to brainstorm solutions or to prioritize which aspect of the requirement is most important. The objective is to find an acceptable solution that balances business needs with technical realities, while making sure stakeholders understand the tradeoffs involved.

12. Tell me about a time you failed as a product manager. What did you learn from the experience?

Early in my career, I championed a new feature that, based on initial user research, seemed like a clear win. We built and launched it, but adoption was shockingly low. I failed to properly validate the depth of the user need beyond the initial interviews.

The key takeaway was the importance of iterative testing and a broader, more quantitative validation approach. I learned to prioritize A/B testing on key assumptions, track leading indicators more closely during the initial rollout, and build a more robust feedback loop to identify and address issues quickly. Now, I am more diligent about post-launch monitoring and user segmentation analysis, ensuring we are measuring success with the right metrics.

13. How do you define a 'good' product, beyond just meeting basic requirements?

A good product goes beyond just fulfilling the specified requirements; it delights the user and solves a real problem effectively. It's intuitive to use, reliable, performant, and aesthetically pleasing where appropriate. Furthermore, a good product is maintainable and scalable, allowing for future improvements and adaptations.

Key aspects of a 'good' product include:

- Usability: Easy to learn and use.

- Reliability: Performs consistently and predictably.

- Performance: Responds quickly and efficiently.

- Maintainability: Easy to update and fix bugs.

- Scalability: Can handle increased load and new features.

- Value: Provides a positive return on investment for the user/business.

- Security: Protects user data and privacy.

14. Let's say your product has a significant drop in user engagement. What steps would you take to diagnose the problem and identify potential solutions?

First, I'd define 'significant drop' with specific metrics and timeframes. Then, I'd analyze data: looking at user activity, feature usage, and conversion funnels, segmenting users by cohort, platform, and behavior. I would also review recent changes to the product, marketing campaigns, or external factors (like competitor activity or seasonality). Furthermore, I'd look at system health metrics to rule out bugs/performance issues.
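The segmentation step can be illustrated with a small comparison of active users per segment before and after the drop. The segments and counts below are invented for the example. Real data would come from the team's analytics platform.

```python
def segment_changes(before, after):
    """Percent change in active users per segment, most negative first."""
    changes = {}
    for segment, baseline in before.items():
        if baseline == 0:
            continue  # no baseline to compare against
        current = after.get(segment, 0)
        changes[segment] = (current - baseline) / baseline * 100
    return sorted(changes.items(), key=lambda item: item[1])


# Hypothetical weekly active users by platform, for illustration only.
before = {"ios": 12000, "android": 15000, "web": 8000}
after = {"ios": 11800, "android": 10500, "web": 7900}

for segment, change in segment_changes(before, after):
    print(f"{segment}: {change:+.1f}%")
```

In this made-up data the drop is concentrated in one platform, which would point the investigation toward a recent release or bug on that platform rather than a product-wide cause.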

Next, I'd formulate hypotheses based on the data. I'd validate these by gathering qualitative data through user surveys, interviews, and analyzing support tickets and social media. Once the root cause(s) are identified, I'd brainstorm potential solutions and prioritize them based on impact and feasibility. Finally, I'd design and run A/B tests to validate proposed solutions before full implementation.

15. Imagine you are launching a product in a new international market. What are the key considerations you would take into account?

Launching a product internationally requires careful planning. Key considerations include: Market Research (understanding local needs, competition, and regulatory landscape), Localization (adapting the product and marketing materials to the local language and culture), Legal and Regulatory Compliance (ensuring adherence to local laws and regulations), Distribution and Logistics (establishing efficient distribution channels and managing logistics), Payment Processing (offering payment options preferred by local customers), and Customer Support (providing support in the local language and time zone).

Beyond these, it's vital to understand cultural nuances to tailor marketing, branding and customer interactions appropriately. A phased roll-out can help with initial testing and gathering feedback. Consider forming partnerships with local businesses to improve market penetration and credibility. Pricing strategies will be dependent on the local market and your competitive positioning within it.

16. How do you balance short-term goals with long-term vision when making product decisions?

Balancing short-term goals with long-term vision requires a strategic approach focusing on iterative progress. Prioritize short-term goals that directly contribute to the long-term vision or provide valuable learning opportunities. This means carefully selecting features that provide immediate user value while laying the foundation for future expansion and innovation. Regularly reassess the product roadmap and adjust short-term plans based on market feedback and progress towards the long-term vision.

Consider using a framework like Objectives and Key Results (OKRs). The Objective represents the long-term vision, while Key Results are the measurable short-term goals that demonstrate progress towards that Objective. This allows for consistent alignment and helps in making informed trade-offs between immediate needs and strategic aspirations. Another tactic is to allocate a percentage of development time to innovation work that doesn't directly serve the current sprint goals but contributes to long-term objectives.

17. Describe your experience with A/B testing. What are some common pitfalls to avoid?

I've used A/B testing extensively to optimize various aspects of user experience, from website landing pages to email campaigns. My experience includes designing tests, implementing them using tools like Google Optimize and Optimizely, analyzing the results statistically (using t-tests or chi-squared tests to determine statistical significance), and making data-driven decisions based on the findings. I've worked with multivariate testing as well, though more commonly with A/B due to simplicity. My focus is always on defining clear hypotheses, selecting appropriate metrics, and ensuring the tests run long enough to achieve statistical significance and account for variations in user behavior (e.g., weekday vs. weekend traffic).

Some common pitfalls to avoid include: stopping a test early (before the planned sample size is reached, or the moment a result first looks significant), testing too many variables at once (making it difficult to isolate the impact of individual changes), ignoring external factors that could influence results (e.g., a major news event), and not segmenting data properly (e.g., mobile vs. desktop users). Another pitfall is focusing solely on short-term gains without considering the long-term impact on user engagement or brand perception. Finally, not having a control group that truly represents the original experience undermines the whole comparison.
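
To make the significance check concrete, here is a minimal sketch of a two-proportion z-test on conversion counts, using only the Python standard library. It is a stand-in for the t-test or chi-squared test mentioned above (for a simple two-variant conversion comparison, it is equivalent to a chi-squared test without continuity correction). The function name and the sample numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: control converts 200/5000, variant converts 250/5000.
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The p-value is only meaningful if the sample size was fixed in advance; checking it repeatedly and stopping at the first value below 0.05 inflates the false-positive rate, which is the early-stopping pitfall described above.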

18. How would you approach building a product for a user base with diverse technical skills and needs?

To build a product for a user base with diverse technical skills and needs, I'd prioritize user research and accessibility. This involves gathering insights through surveys, interviews, and usability testing to understand the different skill levels, needs, and pain points of each user segment. The product should then be designed with a layered approach:

- Beginner-friendly interface: A simple, intuitive interface with clear instructions and tooltips for users with limited technical expertise.

- Advanced features: Offer advanced features and customization options for technically proficient users, potentially accessible through a separate section or settings panel. Consider using progressive disclosure to avoid overwhelming new users.

- Comprehensive documentation: Provide clear, concise documentation, tutorials, and FAQs catering to different skill levels. Visual aids, such as videos and screenshots, can also be helpful. Prioritize accessibility considerations like screen reader compatibility.

19. What's your opinion on the build vs. buy decision when considering new features or functionalities for a product?

The build vs. buy decision is a crucial consideration involving a trade-off between control, cost, and time. Building provides maximum customization and intellectual property control but can be expensive and time-consuming. Buying offers faster implementation and lower upfront costs but may lack specific features and introduce vendor dependencies.

Factors to consider include whether the feature is part of the business's core competency, budget constraints, available resources, time-to-market requirements, and long-term maintenance costs. If the feature is highly specialized and critical, building might be preferable. If it's a commodity and rapid deployment is essential, buying is likely the better option. A hybrid approach combining both might be suitable as well.

20. Let's say your product is facing stiff competition from a free alternative. How would you differentiate your product and justify its value?

Faced with a free competitor, I'd focus on highlighting the areas where our product offers superior value. This could involve several strategies:

- Focus on Premium Features & Support: Clearly articulate the advanced functionalities our product offers that the free alternative lacks. This could include enhanced security, better performance, deeper analytics, or integrations with other key tools. Premium support is also a major differentiator. Quick response times and dedicated assistance can be invaluable, especially for businesses that rely on the product.

- Highlight Total Cost of Ownership (TCO): Even if the initial cost is zero, free alternatives can have hidden costs. These could be in the form of time spent configuring, maintaining, or troubleshooting the product, or limitations requiring workarounds. I would demonstrate how our paid product offers a lower TCO by providing a more streamlined experience, reducing support overhead, and offering features that increase efficiency. We can also focus on stability and reliability that the free version may lack. Highlighting user experience and enterprise-level scalability becomes critical to win over customers.
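
The TCO argument can be backed with simple arithmetic. The sketch below is illustrative only: the function name, the cost components, and every figure are assumptions, not data about any real product.

```python
def total_cost_of_ownership(license_per_year, setup_hours,
                            maintenance_hours_per_year, hourly_rate, years):
    """Sum licence fees and staff time over the evaluation period."""
    labor_hours = setup_hours + maintenance_hours_per_year * years
    return license_per_year * years + labor_hours * hourly_rate


# Hypothetical three-year comparison at a staff cost of 60 per hour.
free_tool = total_cost_of_ownership(
    license_per_year=0, setup_hours=80,
    maintenance_hours_per_year=120, hourly_rate=60, years=3)
paid_tool = total_cost_of_ownership(
    license_per_year=5000, setup_hours=10,
    maintenance_hours_per_year=20, hourly_rate=60, years=3)

print(f"Free alternative: {free_tool}")
print(f"Paid product: {paid_tool}")
```

With these assumed inputs, the staff time spent on the free tool outweighs the paid product's licence fee. The conclusion depends entirely on the hours and rates plugged in, so the comparison is only persuasive when those come from the customer's own estimates.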

21. How do you incorporate user feedback into the product development process in a meaningful way?

I incorporate user feedback through a structured process. First, I collect feedback from various channels like user interviews, surveys, support tickets, and analytics. Then, I analyze the feedback, categorizing it by themes and prioritizing it based on impact and frequency. To ensure the feedback is representative and actionable, I use a combination of qualitative and quantitative data.

Next, I share insights with the product and development teams, fostering discussion and alignment on potential solutions. This might involve presenting data reports, user stories, or even directly involving users in sprint reviews. Finally, I iterate on the product based on these insights and track the impact of changes through A/B testing and monitoring key metrics. I maintain a feedback loop, ensuring that users know their input matters and that we're continuously improving the product.

22. What are some strategies for fostering a strong product culture within a team?

Fostering a strong product culture involves several key strategies. First, emphasize customer empathy by regularly sharing customer feedback and usage data with the team. Encourage direct interaction with users through research and support channels. Second, promote a shared understanding of the product vision and strategy. Clearly define the 'why' behind the product and ensure everyone is aligned on the goals. This can be achieved through regular strategy reviews, product roadmaps, and open communication channels.

Further, empower the team to take ownership and make decisions. Encourage experimentation and learning from failures. Create a safe space for constructive feedback and collaboration. Value continuous improvement through retrospectives and a focus on data-driven decision-making. Celebrate successes and recognize individual and team contributions. Finally, invest in product training and development. Ensure the team has the skills and knowledge necessary to build and deliver high-quality products. Foster a culture of learning and encourage continuous growth.

Expert Product Manager interview questions

1. How would you measure the success of a product that aims to improve employee morale?

Measuring the success of a product aimed at improving employee morale involves a combination of quantitative and qualitative measures. Quantitatively, you could track metrics like employee turnover rate (decreased turnover indicates higher morale), absenteeism (lower absenteeism suggests improved morale), employee Net Promoter Score (eNPS; a higher score is better), participation in company events (increased participation reflects better engagement), and productivity metrics (improved productivity can be a result of better morale). Surveys and questionnaires can also be used to generate measurable scores based on employee responses, tracking changes over time.
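
As an example of one of these metrics, eNPS is conventionally computed from 0-10 survey responses as the percentage of promoters (scores of 9 or 10) minus the percentage of detractors (scores of 0 to 6). The sketch below assumes that convention; the survey responses are made up for illustration.

```python
def enps(scores):
    """Employee Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("at least one survey response is required")
    promoters = sum(1 for score in scores if score >= 9)
    detractors = sum(1 for score in scores if score <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey responses before and after the product rollout.
before_rollout = [9, 10, 6, 7, 8, 3, 9, 5, 10, 7]
after_rollout = [9, 10, 8, 7, 9, 6, 9, 8, 10, 7]

print(f"eNPS before: {enps(before_rollout):+.0f}")
print(f"eNPS after: {enps(after_rollout):+.0f}")
```

Tracking the score across survey rounds, rather than reading a single value in isolation, is what makes it useful as a success measure.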

Qualitatively, gathering feedback through employee interviews, focus groups, and anonymous feedback channels provides deeper insights. Look for changes in employee sentiment, improved communication and collaboration, and a more positive overall work environment. Analyzing employee reviews on platforms like Glassdoor can also provide valuable qualitative data. Regular check-ins with managers to gauge team morale and identify any potential issues are crucial to understand if the product is driving positive changes in employee satisfaction and overall work culture.

2. Describe a time you had to make a product decision with incomplete or conflicting data. What did you do?

In a previous role, we were deciding whether to prioritize development of a new feature based on initial user feedback and market analysis. The user feedback was positive, indicating a potential need, but the market analysis data was conflicting, suggesting a smaller addressable market than anticipated. To resolve this, I initiated a small-scale beta program with a select group of users to gather more qualitative data. This involved conducting user interviews and closely monitoring feature usage.

The beta program provided clearer insights. While the overall market might be smaller, the users who did need the feature found it extremely valuable and were willing to pay a premium. This, combined with the initial positive feedback, led us to prioritize the feature, albeit with a slightly adjusted scope and marketing strategy targeting the identified niche. Ultimately, the feature proved successful within that niche, validating the decision to move forward despite the initial data conflicts.

3. Let's say our competitor launches a feature that directly copies one of our key offerings. How do you respond strategically?

First, we need to validate if the feature is indeed a direct copy and assess its impact on our user base and market share. Then, we should focus on our differentiators and double down on what makes us unique. This could involve:

- Accelerating our product roadmap: Prioritize features that the competitor doesn't have and that address unmet user needs.

- Strengthening our brand and messaging: Emphasize our experience, expertise, and any unique value proposition.

- Improving customer experience: Focus on providing superior customer support and service.

- Considering legal options: If the competitor has infringed on our intellectual property, we may need to pursue legal action.

Ultimately, the best response is to continue innovating and providing superior value to our customers, rendering the competitor's copy irrelevant over time.

4. How do you balance short-term revenue goals with long-term product vision?

Balancing short-term revenue and long-term vision requires a strategic approach focusing on prioritization and communication. I would advocate for a roadmap that incorporates both quick wins and foundational investments. Short-term goals should align with, and not contradict, the overall long-term vision. For example, if the long-term goal is migrating to a new technology, a short-term project could involve trialing it on a small part of the product. Another example is rolling out features in phases, which allows for quicker revenue generation while also validating and improving the product vision.

Regularly communicating the roadmap and trade-offs to stakeholders is crucial. Demonstrating how short-term efforts contribute to the long-term strategy helps maintain buy-in and avoids the perception of sacrificing the future for immediate gains. The key is to identify metrics and milestones for both short-term and long-term goals, so that progress can be tracked and informed adjustments made along the way. If a short-term revenue push starts to hinder the long-term goal, the priorities should be re-evaluated and discussed with the stakeholders.

5. Imagine you are PM for a social media platform. How would you address the issue of misinformation spreading on the platform, considering both free speech and user safety?

As a PM, I'd tackle misinformation using a multi-pronged approach. Balancing free speech with user safety requires careful calibration. First, I'd focus on reducing the economic incentives for spreading misinformation. This includes demonetizing accounts and websites known to consistently share false content. Second, I'd implement robust content moderation policies that clearly define what constitutes misinformation, particularly regarding health, elections, and public safety. These policies need to be transparent and consistently enforced.

Third, I would invest in user education to help people identify and avoid misinformation. This could involve partnering with fact-checking organizations to provide context and warnings on potentially misleading content, clearly flagging disputed information. Finally, algorithmic changes that prioritize credible sources and downrank misinformation would be beneficial. This involves deprioritizing content from accounts known to spread misinformation in search results, feeds, and recommendations. These methods, together, help limit the spread of misinformation while respecting free speech.