In today's competitive job market, hiring the right Data Engineer is crucial for organizations aiming to leverage their data effectively. By asking the right interview questions, recruiters and hiring managers can assess candidates' technical skills, problem-solving abilities, and cultural fit.

This blog post provides a comprehensive list of Data Engineer interview questions tailored for different experience levels and specific areas of expertise. From initial screening questions to in-depth technical assessments, we cover a wide range of topics to help you evaluate candidates thoroughly.

By using these curated questions, you can streamline your hiring process and identify top talent more efficiently. Consider complementing your interviews with a pre-employment Data Engineer assessment to get a holistic view of candidates' capabilities.

Table of contents

10 Data Engineer interview questions to initiate the interview

To effectively assess candidates for data engineering roles, use these questions to gauge their technical skills and practical experience. This list will help you initiate meaningful conversations during the interview, ensuring you find the right fit for your team. For more insights into the role, check out the data engineer job description.

- Can you explain the importance of ETL processes in data engineering and describe your experience with them?

- What data storage solutions have you worked with, and how do you decide which one to use for a specific project?

- How do you ensure the quality and consistency of data in your pipelines?

- Can you walk us through a challenging data migration project you've completed? What were the key steps and outcomes?

- What tools and frameworks do you prefer for data processing and why?

- How do you approach data modeling for a new project? What factors do you consider?

- Could you discuss a time when you had to optimize a data pipeline for performance? What changes did you implement?

- What strategies do you use for version control in data engineering projects?

- How do you handle data privacy and compliance issues in your work?

- Can you describe a scenario where you had to collaborate with data scientists or analysts? How did you ensure effective communication?

8 Data Engineer interview questions and answers to evaluate junior engineers

To gauge whether junior data engineers possess foundational skills and a problem-solving mindset, consider these insightful interview questions. They are designed to reveal how candidates approach real-world challenges, ensuring you find the right fit for your team.

1. How do you approach debugging a data pipeline issue?

When faced with a data pipeline issue, I start by identifying any recent changes made to the pipeline since these are often the source of new problems. Next, I would review the logs to pinpoint where the failure occurred, which can help me understand if it was a data issue or a configuration error.

If the logs don't provide enough information, I might run smaller test datasets through the pipeline to isolate the problem. I also make use of monitoring tools to track metrics that could indicate where the pipeline is failing.

Look for candidates who demonstrate a logical approach to troubleshooting and mention specific tools or methodologies they use, such as log analysis or monitoring tools. This can indicate a systematic approach to problem-solving.

2. Can you describe your experience with automating data workflows?

In my previous role, I automated several data workflows to improve efficiency and accuracy. For instance, I used scheduling tools to automatically trigger data ingestion processes, ensuring fresh data was available without manual intervention.

I also implemented alerting mechanisms to notify the team in case of failures, allowing for quicker response times. Automation helped us maintain consistency and reliability in our data processes.

The ideal candidate should discuss specific tools and strategies they used to automate workflows and any improvements in efficiency or accuracy as a result. Be on the lookout for an understanding of both the technical and operational benefits of automation.

3. What steps do you take to ensure data security in your projects?

Data security is a top priority in all my projects. I ensure that data is encrypted both in transit and at rest. Implementing access control measures is crucial, so I ensure only authorized personnel have access to sensitive data.

I also regularly review and update security protocols to adapt to new threats. Additionally, I collaborate with data security teams to stay aligned with organizational policies and industry standards.

Candidates should demonstrate an understanding of data security principles and mention specific practices they have implemented. It's a good sign if they also show awareness of compliance with industry regulations.

4. How do you prioritize tasks when managing multiple data engineering projects?

When managing multiple projects, I prioritize tasks based on urgency and impact. I start by assessing deadlines and the potential impact of each task on the overall project goals.

I often use project management tools to keep track of deadlines and dependencies and communicate regularly with stakeholders to align priorities. This helps ensure that I'm focusing on tasks that will deliver the most value first.

Look for candidates who can articulate a clear strategy for prioritization and mention tools or methods they use, such as project management software. Effective communication and stakeholder management skills are also important.

5. Describe a time when you had to deal with a large volume of data. What challenges did you face and how did you overcome them?

In a previous project, we had to process a large volume of real-time data from multiple sources. One of the challenges was ensuring that our systems could handle the data load without performance degradation.

We tackled this by implementing data partitioning strategies and optimizing our data storage solutions for better performance. Additionally, we set up scalable cloud resources to manage peak loads effectively.

Candidates should highlight their experience with handling large datasets and discuss specific strategies they used to manage challenges. It's beneficial if they mention how they leveraged cloud solutions or other technologies to scale efficiently.

6. How do you stay updated with the latest trends and technologies in data engineering?

I make it a point to regularly read industry blogs, attend webinars, and participate in online forums related to data engineering. This helps me stay informed about the latest trends and tools.

I also experiment with new technologies in personal projects to evaluate their potential for solving real-world problems. Networking with peers and attending conferences when possible is another way I stay connected to industry advancements.

Candidates should show a proactive approach to continuous learning and mention specific resources or communities they engage with. This indicates a genuine interest in the field and a commitment to professional growth.

7. Can you explain how you document your data engineering processes?

Documentation is crucial for ensuring that team members can understand and maintain data engineering processes. I typically create detailed documentation that includes data flow diagrams, pipeline steps, and configuration settings.

I also keep track of changes and updates using version control systems, so there is a clear history of modifications. This helps in troubleshooting and onboarding new team members.

Look for candidates who emphasize the importance of clear and comprehensive documentation. They should mention using version control and collaboration tools to maintain and share documentation effectively.

8. How do you ensure your data solutions are scalable?

Scalability is key in data engineering. I design solutions with scalability in mind, ensuring that they can handle increased data loads by utilizing distributed computing frameworks and cloud-based services.

I also conduct regular performance evaluations and load testing to identify bottlenecks and optimize resources accordingly. This proactive approach helps in maintaining scalability as data volumes grow.

Candidates should discuss specific strategies or technologies they use to ensure scalability, such as cloud platforms or distributed systems. It's important for them to show an understanding of the balance between performance, cost, and scalability.

15 intermediate Data Engineer interview questions and answers to ask mid-tier engineers.

To identify mid-tier data engineers who can handle more complex tasks and responsibilities, consider using these intermediate-level interview questions. These questions are designed to uncover a candidate’s depth of experience and problem-solving skills, crucial for effective decision-making in data engineering roles.

- Can you explain how you would design a data pipeline to handle real-time data processing?

- What are the key considerations when choosing between batch processing and real-time processing in data engineering?

- Describe a situation where you had to implement data governance in your projects. What steps did you take?

- How do you use data partitioning to enhance query performance in large datasets?

- What experience do you have with cloud-based data solutions, and how do you manage costs effectively?

- Can you discuss your approach to handling schema evolution in a data warehouse?

- How do you troubleshoot and resolve data pipeline bottlenecks?

- What role do you believe data engineers play in ensuring data democratization within an organization?

- Explain your experience with building and maintaining data lakes. What are the challenges, and how do you overcome them?

- How do you ensure effective data integration from multiple sources with different formats and structures?

- Can you provide an example of how you've used machine learning in a data engineering context?

- What methods do you use to monitor data pipeline performance and reliability?

- How do you balance the needs of end-users with the technical constraints of data engineering?

- Describe your approach to collaborating with cross-functional teams, including product managers and software engineers.

- What are the typical steps you take to migrate data from on-premises systems to the cloud?

7 Data Engineer interview questions and answers related to data pipelines

Ready to dive into the world of data pipelines? These seven interview questions will help you assess candidates' understanding of this crucial aspect of data engineering. Use them to gauge applicants' knowledge, problem-solving skills, and real-world experience with data pipeline design and management.

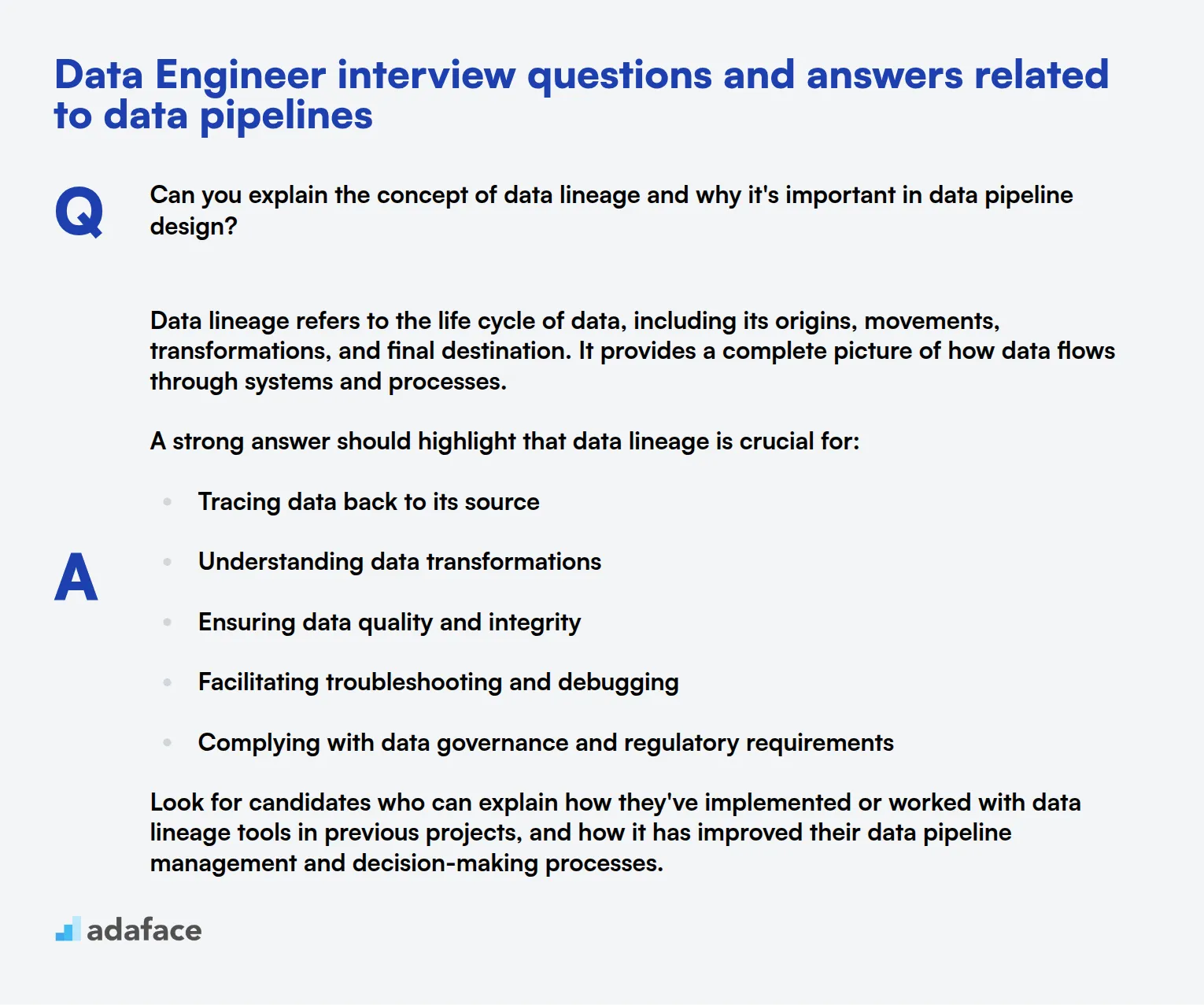

1. Can you explain the concept of data lineage and why it's important in data pipeline design?

Data lineage refers to the life cycle of data, including its origins, movements, transformations, and final destination. It provides a complete picture of how data flows through systems and processes.

A strong answer should highlight that data lineage is crucial for:

- Tracing data back to its source

- Understanding data transformations

- Ensuring data quality and integrity

- Facilitating troubleshooting and debugging

- Complying with data governance and regulatory requirements

Look for candidates who can explain how they've implemented or worked with data lineage tools in previous projects, and how it has improved their data pipeline management and decision-making processes.

2. How would you approach designing a data pipeline that needs to handle both batch and streaming data?

A comprehensive answer should outline a hybrid approach that accommodates both batch and streaming data processing. Key points to listen for include:

- Use of a unified processing framework like Apache Spark or Flink

- Implementation of a lambda or kappa architecture

- Consideration of data storage solutions that support both paradigms

- Strategies for handling late-arriving data and out-of-order events

- Mechanisms for data quality checks and error handling in both modes

Evaluate the candidate's ability to articulate trade-offs between different architectural choices and their understanding of when to use batch vs. streaming processing based on business requirements and data characteristics.

3. Describe a situation where you had to optimize a data pipeline for better performance. What steps did you take?

An ideal response should demonstrate the candidate's problem-solving skills and practical experience. Look for a structured approach such as:

- Identifying bottlenecks through monitoring and profiling

- Analyzing data flow and processing steps

- Implementing optimizations like:

- Parallelization of tasks

- Caching frequently accessed data

- Optimizing query performance

- Adjusting resource allocation

- Testing and measuring the impact of changes

- Iterating on the optimization process

Pay attention to how the candidate balances technical optimizations with business needs and resource constraints. A strong answer will also include metrics that show the quantifiable improvements achieved through their optimization efforts.

4. How do you ensure data quality and consistency across different stages of a data pipeline?

A comprehensive answer should cover multiple aspects of data quality management:

- Implementing data validation checks at ingestion points

- Using schema enforcement and data type checking

- Applying data cleansing and normalization techniques

- Setting up automated data quality tests throughout the pipeline

- Establishing data reconciliation processes between stages

- Implementing error handling and logging mechanisms

- Using data profiling tools to detect anomalies

Look for candidates who emphasize the importance of data governance and mention specific tools or frameworks they've used for maintaining data quality. A strong answer might also touch on how they've handled data quality issues in real-world scenarios and the processes they've put in place for continuous monitoring and improvement.

5. What strategies do you use for versioning data pipelines and managing schema changes?

An effective answer should cover both code versioning and data schema versioning:

For code versioning:

- Using version control systems like Git

- Implementing branching strategies (e.g., GitFlow)

- Applying CI/CD practices for pipeline deployment

For schema versioning:

- Implementing schema evolution techniques (e.g., backward/forward compatibility)

- Using schema registries (like Confluent Schema Registry for Kafka)

- Applying blue-green deployment strategies for schema changes

- Maintaining schema version history

Look for candidates who can explain how they've handled complex schema changes without disrupting downstream consumers. They should also be able to discuss strategies for testing schema changes and rolling back if issues occur. A strong answer might include examples of tools they've used for schema management and how they've integrated schema versioning into their overall data pipeline development process.

6. How do you handle data pipeline failures and ensure fault tolerance?

A comprehensive answer should cover multiple aspects of fault tolerance and error handling:

- Implementing retry mechanisms for transient failures

- Using checkpointing and state management in streaming pipelines

- Designing idempotent operations to handle duplicate processing

- Implementing circuit breakers to prevent cascading failures

- Setting up comprehensive monitoring and alerting systems

- Creating detailed logging for easier troubleshooting

- Implementing data recovery mechanisms (e.g., dead letter queues)

- Designing graceful degradation strategies

Look for candidates who can provide specific examples of how they've handled pipeline failures in the past. They should be able to discuss the trade-offs between different fault tolerance strategies and how they choose the appropriate approach based on the criticality of the data and business requirements. A strong answer might also touch on how they conduct post-mortem analyses and implement improvements to prevent similar failures in the future.

7. Can you explain the concept of data partitioning in the context of data pipelines and when you would use it?

Data partitioning involves dividing large datasets into smaller, more manageable pieces based on specific criteria. In the context of data pipelines, partitioning can be used for:

- Improving query performance by allowing parallel processing

- Enabling easier data management and maintenance

- Facilitating data retention policies

- Optimizing storage costs

- Enhancing data loading and processing efficiency

Candidates should be able to explain different partitioning strategies such as:

- Time-based partitioning (e.g., by date or timestamp)

- Range-based partitioning

- List partitioning

- Hash partitioning

Look for answers that demonstrate an understanding of when to use each strategy and the impact on pipeline design. A strong response might include examples of how they've implemented partitioning in previous projects and the benefits they've observed. Candidates should also be aware of potential drawbacks, such as increased complexity in query writing and the need for careful partition management.

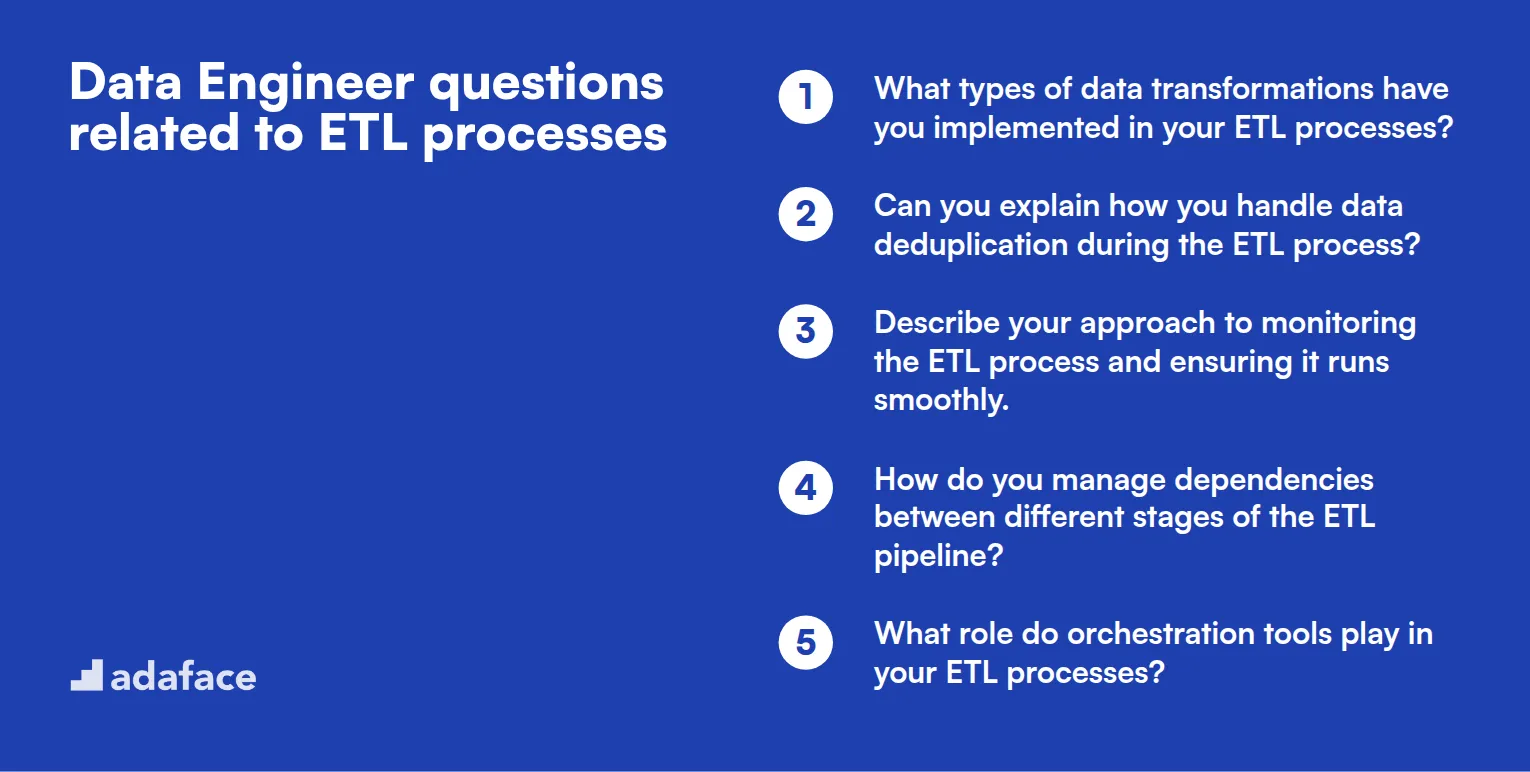

10 Data Engineer questions related to ETL processes

To effectively evaluate candidates' knowledge and abilities in ETL processes, utilize this curated list of questions. These questions are designed to help you assess the technical skills and problem-solving abilities necessary for a data engineer role. For a deeper understanding of the necessary skills, check out our ETL developer job description.

- What types of data transformations have you implemented in your ETL processes?

- Can you explain how you handle data deduplication during the ETL process?

- Describe your approach to monitoring the ETL process and ensuring it runs smoothly.

- How do you manage dependencies between different stages of the ETL pipeline?

- What role do orchestration tools play in your ETL processes?

- Can you give an example of how you have implemented error handling in an ETL workflow?

- How do you optimize ETL jobs to reduce execution time?

- What strategies do you use for scheduling and automating ETL processes?

- Can you discuss your experience with data extraction from various sources, such as APIs or databases?

- How do you ensure that your ETL processes are compliant with data governance policies?

Which Data Engineer skills should you evaluate during the interview phase?

Evaluating candidates during the interview phase can be challenging due to the limited time frame. While it's impossible to assess every skill in a single session, there are certain core skills that are critical to the role of a Data Engineer. These skills lay the groundwork for a successful career in data engineering and should be prioritized during the interview.

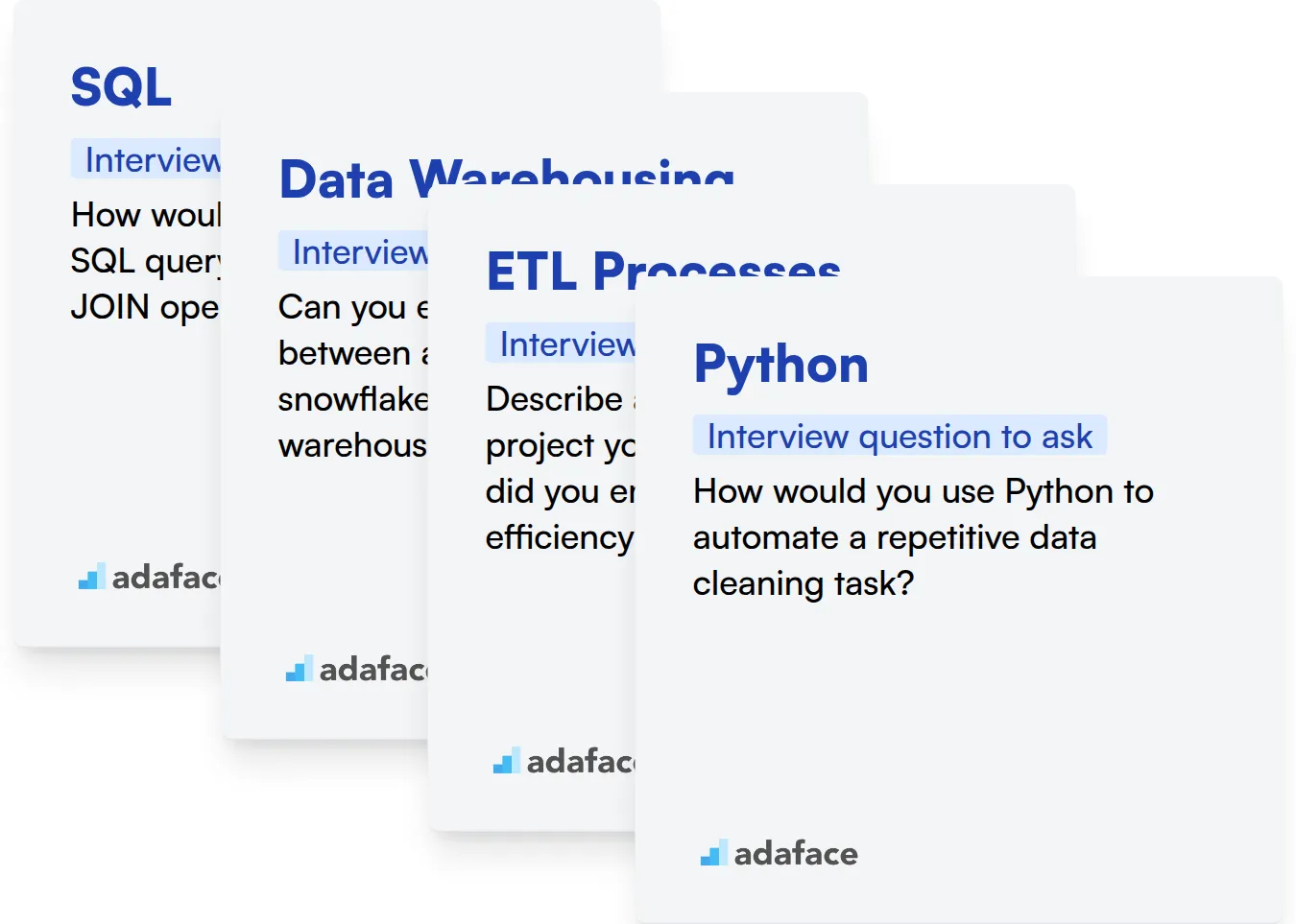

SQL

An assessment test with relevant SQL questions can help you determine a candidate's proficiency in writing and optimizing queries. You might consider using an SQL Coding Test to evaluate this skill.

During the interview, asking targeted questions can further gauge the candidate's SQL capabilities. Here's one question you can use:

How would you optimize a slow SQL query that involves multiple JOIN operations?

When asking this question, listen for the candidate's approach to identifying bottlenecks and their understanding of JOIN operations. Look for a methodical thought process and knowledge of indexing or query restructuring.

Data Warehousing

To evaluate a candidate's understanding of data warehousing, you can pose targeted questions like:

Can you explain the differences between a star schema and a snowflake schema in data warehousing?

Look for clarity in explaining the structural differences and the implications of each schema. A well-rounded answer will showcase an understanding of when to use each schema based on business needs and data complexity.

ETL Processes

Consider utilizing an ETL Online Test to efficiently gauge a candidate's proficiency in ETL processes.

Asking specific questions can also help you understand a candidate's experience with ETL processes. For example:

Describe a challenging ETL project you have worked on. How did you ensure data accuracy and efficiency?

A strong response will highlight the candidate's problem-solving skills and attention to detail. Look for examples of how they tackled data quality issues and optimized the ETL workflow.

Python

A Python Online Test can be a helpful way to assess a candidate's programming capabilities in Python.

To further evaluate Python skills, consider asking questions that explore practical applications. For example:

How would you use Python to automate a repetitive data cleaning task?

Expect the candidate to demonstrate knowledge of Python libraries like Pandas or NumPy, and their ability to write clean, efficient code. Be attentive to their problem-solving approach and code optimization strategies.

Hire the Best Data Engineers with Adaface

If you're aiming to bring top-tier data engineering talent into your team, it's important to ensure candidates possess the necessary skills. Accurate evaluation of these skills is crucial for making the right hiring decision.

A reliable approach to assessing these skills is through specialized skill tests. Adaface offers a variety of relevant tests, such as the Apache NiFi Online Test, Data Modeling Test, and SQL Online Test.

By utilizing these tests, you'll be able to efficiently shortlist the best applicants who are truly qualified. Once you've identified the top candidates, you can proceed to invite them for interviews to further evaluate their fit for your team.

To get started with finding the right talent, consider signing up on our platform here or explore our online assessment platform to learn more about our offerings.

Apache NiFi Online Test

Download Data Engineer interview questions template in multiple formats

Data Engineer Interview Questions FAQs

Look for skills in database management, ETL processes, data modeling, programming languages like Python or SQL, and experience with big data technologies.

Ask scenario-based questions about data pipeline issues or optimization challenges to evaluate their analytical and problem-solving abilities.

Junior roles focus on basic data tasks, while senior roles involve complex system design, optimization, and leadership in data projects.

Very important. Familiarity with cloud platforms like AWS, Azure, or GCP is increasingly necessary for modern data engineering roles.

Yes, coding tests or take-home assignments can help assess practical skills in data manipulation, ETL processes, and algorithm implementation.

40 min skill tests.

No trick questions.

Accurate shortlisting.

We make it easy for you to find the best candidates in your pipeline with a 40 min skills test.

Try for freeRelated posts

Free resources