Cloud Architects are the masterminds behind designing, building, and managing an organization's cloud computing strategy and infrastructure; they oversee the implementation of cloud solutions. As with all tech roles, identifying the right talent involves more than just checking off a list of skills; it's about probing their understanding and practical knowledge.

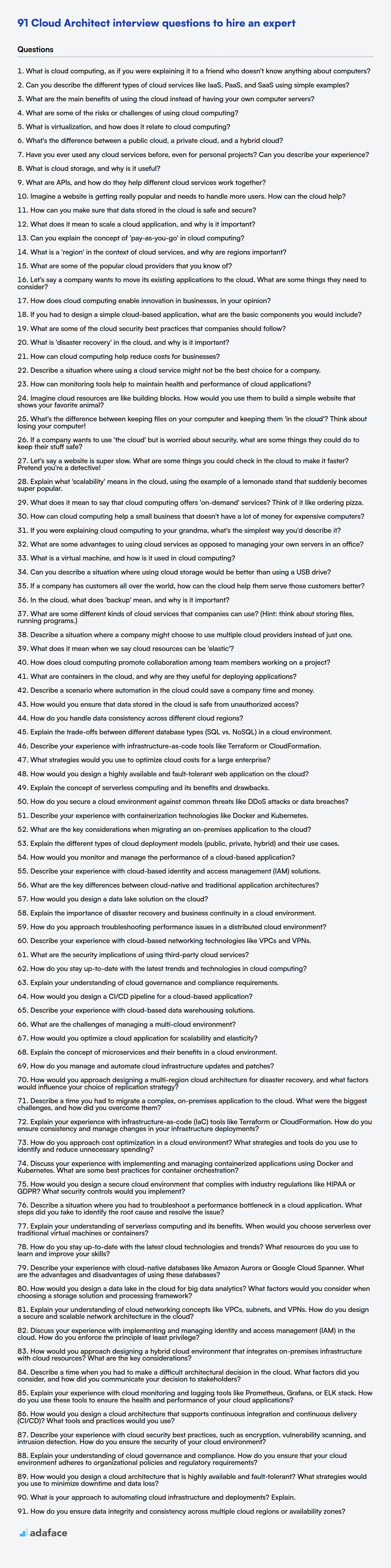

This post provides a detailed compilation of interview questions tailored for Cloud Architects at various career stages, from freshers to experienced professionals, alongside multiple-choice questions (MCQs). We've organized the questions by experience level to help you easily find the most relevant inquiries for each candidate, making the assessment process smoother.

By using these questions, you'll be able to separate the wheat from the chaff and gauge candidates' abilities to architect scalable and secure cloud solutions. To further streamline your hiring, consider using Adaface's cloud computing online test to screen candidates efficiently before the interview stage.

Table of contents

Cloud Architect interview questions for freshers

1. What is cloud computing, like you're explaining it to a kid?

Imagine you have a lot of toys, but instead of keeping them all in your room, you keep some of them in a big playroom that everyone can share. Cloud computing is like that playroom! Instead of keeping all your computer stuff, like your games, documents, and pictures, on your own computer or phone, you keep them in a big, safe computer area that many people can use, called the 'cloud'.

So, you can access your stuff from any computer or phone, anytime you want, as long as you have the internet. It's like borrowing toys from the big playroom whenever you need them, and then putting them back when you're done. This way, you don't need to worry about running out of space on your computer, and you can share your things with others easily!

2. Can you name three different types of cloud services?

Three different types of cloud services are:

- Infrastructure as a Service (IaaS): Provides access to fundamental computing resources like virtual machines, storage, and networks. Users manage the operating system, middleware, and applications.

- Platform as a Service (PaaS): Offers a platform for developing, running, and managing applications without the complexity of managing the underlying infrastructure. It typically includes operating systems, programming execution environment, database, web server.

- Software as a Service (SaaS): Delivers software applications over the internet, on demand. Users access the software through a web browser or other client application. Examples include Salesforce, Gmail, and Dropbox.

3. What does 'scalability' mean in the cloud?

Scalability in the cloud refers to the ability of a system, application, or resource to handle increasing workloads by adding resources, without negatively impacting performance or availability. It means that your cloud infrastructure can grow or shrink dynamically to meet changing demands.

There are two primary types of scalability: vertical scalability (scaling up/down), which involves increasing/decreasing the resources (CPU, RAM, etc.) of a single instance, and horizontal scalability (scaling out/in), which involves adding/removing more instances of a resource. Cloud environments are particularly well-suited for horizontal scalability, allowing for more flexible and cost-effective scaling strategies. For example, auto-scaling groups in AWS can automatically add or remove EC2 instances based on demand.

4. Why is cloud security important?

Cloud security is crucial because it protects data, applications, and infrastructure hosted in the cloud. As organizations increasingly rely on cloud services for storage, computing, and software, securing these environments becomes paramount to prevent data breaches, ensure compliance, and maintain business continuity.

Without robust cloud security measures, organizations are vulnerable to various threats, including unauthorized access, malware infections, denial-of-service attacks, and data loss. Strong cloud security helps organizations maintain customer trust, protect their reputation, and meet regulatory requirements.

5. What's the difference between public and private cloud?

Public and private clouds differ primarily in ownership, management, and resource sharing. A public cloud is owned and operated by a third-party provider (like AWS, Azure, or Google Cloud) and offers resources to multiple tenants over the internet. Users share the underlying infrastructure, which typically leads to lower costs but potentially less control and customization.

In contrast, a private cloud is dedicated to a single organization. It can be hosted on-premises (in the organization's data center) or by a third-party provider. Because the resources are not shared, private clouds offer greater control, security, and customization but usually come with higher upfront and operational costs.

6. How can cloud computing help a small business?

Cloud computing offers several benefits to small businesses. It reduces IT costs by eliminating the need for expensive hardware and in-house IT staff. Cloud services are typically pay-as-you-go, making them budget-friendly. This allows small businesses to scale their resources up or down based on their needs, avoiding overspending during slow periods.

Furthermore, cloud solutions improve collaboration and accessibility. Employees can access data and applications from anywhere with an internet connection, boosting productivity. Automatic backups and disaster recovery features ensure business continuity in case of unexpected events. Security is often enhanced, as cloud providers invest heavily in protecting data, potentially offering better security than a small business could afford on its own.

7. What are some advantages of using cloud storage?

Cloud storage offers several advantages, including cost savings by eliminating the need for on-site hardware and reducing IT maintenance expenses. Scalability is another key benefit, allowing you to easily increase or decrease storage capacity as needed, providing flexibility and responsiveness to changing demands.

Additionally, cloud storage enhances accessibility, enabling you to access your data from anywhere with an internet connection, promoting collaboration and remote work. It also often includes robust security measures like encryption and access controls, improving data protection and disaster recovery capabilities through automated backups and redundancy.

8. Explain 'Infrastructure as Code' in simple terms.

Infrastructure as Code (IaC) is like using a recipe (code) to automatically build and manage your computer networks, servers, and other IT infrastructure. Instead of manually configuring each piece, you write code that defines how your infrastructure should look, and then use tools to automatically provision and update it.

Think of it like this: if you needed ten identical servers, instead of setting each one up by hand, you'd write a script (IaC code) that describes the server's configuration (operating system, software installed, etc.). Then, you'd run the script, and the IaC tool would automatically create and configure all ten servers for you. This ensures consistency, reduces errors, and makes it much easier to manage and scale your infrastructure. Examples of IaC tools include Terraform, Ansible, and CloudFormation.

9. What is a virtual machine?

A virtual machine (VM) is a software-defined emulation of a physical computer. It allows you to run an operating system and applications within another operating system. Think of it as a computer inside a computer.

VMs provide several benefits, including resource optimization, isolation (important for security and testing), and portability. They are commonly used in cloud computing, software development, and server consolidation.

10. What's a container, and how is it different from a virtual machine?

A container is a standardized unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. Unlike virtual machines (VMs), containers virtualize the operating system instead of the hardware. Each VM includes a full copy of an operating system, the application, necessary binaries and libraries - and can be several GBs in size.

Containers, on the other hand, share the host OS kernel. This makes them lightweight (MBs in size) and much faster to start. Because of their small footprint, a single server can host many more containers than VMs. They're also more portable and efficient in resource usage.

11. What is a cloud region and why is it important?

A cloud region is a geographical area containing multiple availability zones. These availability zones are physically isolated datacenters within the region.

Regions are important because they provide fault tolerance and low latency. By distributing resources across multiple availability zones in a region, you can ensure that your application remains available even if one availability zone fails. Also, choosing a region close to your users minimizes latency, improving the user experience.

12. What is the difference between IaaS, PaaS, and SaaS?

IaaS (Infrastructure as a Service) provides you with the basic building blocks for cloud IT. You control the OS, storage, deployed applications, and networking. Examples: AWS EC2, Azure Virtual Machines. PaaS (Platform as a Service) provides a platform allowing customers to develop, run, and manage applications without the complexity of building and maintaining the infrastructure. Examples: AWS Elastic Beanstalk, Google App Engine. SaaS (Software as a Service) delivers software applications over the Internet, on-demand. You simply use the software, with the provider managing everything else. Examples: Salesforce, Gmail, Dropbox.

Think of it this way: with IaaS, you manage the most; with PaaS, you manage the applications and data; and with SaaS, you manage nothing but your data and usage of the application. The provider manages everything else.

13. What is a CDN and how does it help websites?

A CDN (Content Delivery Network) is a geographically distributed network of servers that cache static content like images, videos, CSS, and JavaScript files. When a user requests this content, the CDN serves it from the server closest to the user's location, reducing latency and improving website loading times.

CDNs help websites in several ways: Improved performance: Faster loading times lead to a better user experience. Reduced bandwidth costs: By serving content from geographically closer servers, CDNs reduce the load on the origin server and lower bandwidth consumption. Increased reliability: If the origin server goes down, the CDN can continue to serve cached content, ensuring website availability. Enhanced security: CDNs can provide DDoS protection and other security features.

14. Can you describe a situation where cloud computing might NOT be a good fit?

Cloud computing might not be a good fit when dealing with extremely low latency requirements, such as real-time applications in high-frequency trading or certain medical devices. The inherent network latency of communicating with a remote cloud provider can be a bottleneck. Similarly, situations where data sovereignty and compliance are strict, and regulations prevent data from leaving a specific geographical location, can make cloud adoption problematic. Some organizations might find it more cost-effective to maintain on-premises infrastructure if their computing needs are highly predictable and consistent, thus avoiding the ongoing operational expenses associated with cloud services.

Also, consider scenarios where internet connectivity is unreliable or unavailable. Cloud services rely on stable internet access; without it, critical applications and data become inaccessible. Finally, migrating a very large, monolithic legacy application to the cloud without significant refactoring can be complex and expensive, potentially outweighing the benefits.

15. What is serverless computing?

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. The user only pays for the actual resources consumed by their application, rather than pre-purchasing or renting fixed units of infrastructure. It abstracts away the underlying infrastructure management, allowing developers to focus solely on writing and deploying code.

Key characteristics include automatic scaling, pay-per-use billing, and reduced operational overhead. Services like AWS Lambda, Azure Functions, and Google Cloud Functions are common examples. Developers deploy code as functions, triggered by events (e.g., HTTP requests, database updates), without managing servers.

16. What are some common cloud providers?

Some common cloud providers include:

- Amazon Web Services (AWS): A comprehensive suite of cloud services, offering everything from compute and storage to databases and machine learning.

- Microsoft Azure: Another major player, providing a wide range of services and strong integration with Microsoft products.

- Google Cloud Platform (GCP): Known for its strengths in data analytics, machine learning, and Kubernetes.

- IBM Cloud: Offers a variety of services, with a focus on enterprise solutions and hybrid cloud environments.

- Oracle Cloud: Provides a range of cloud services, including database, infrastructure, and platform services.

- DigitalOcean: Popular among developers for its simplicity and affordable pricing, especially for smaller projects.

17. What is cloud migration?

Cloud migration is the process of moving digital assets, such as applications, data, and IT resources, from on-premises infrastructure to a cloud environment. This can involve different strategies, including rehosting (lift and shift), replatforming, refactoring, repurchasing (SaaS), and retiring.

The goal of cloud migration is often to improve scalability, reduce costs, enhance security, and increase agility. It allows organizations to leverage the benefits of cloud computing, such as pay-as-you-go pricing and access to a wide range of services, without managing physical infrastructure.

18. Explain the concept of 'pay-as-you-go' pricing in the cloud.

Pay-as-you-go (PAYG) pricing in the cloud is a model where you only pay for the resources you consume. Instead of fixed, upfront costs or long-term contracts, you are charged based on actual usage, such as compute time, storage used, data transfer, or number of requests. This is similar to how you pay for utilities like electricity or water.

The key benefits are cost efficiency (avoiding over-provisioning and paying only for what you use), scalability (easily adjust resources based on demand), and flexibility (no long-term commitments). It allows businesses to start small, experiment with different services, and scale up or down as needed, making it ideal for startups and projects with variable workloads.

19. What does high availability mean in the context of cloud computing?

High availability (HA) in cloud computing refers to a system's ability to remain operational and accessible for a defined period. The goal is to minimize downtime and ensure continuous service, even in the face of failures, such as hardware issues, software bugs, or network outages. Systems achieving high availability incorporate redundancy, failover mechanisms, and automatic recovery processes to maintain uptime.

In essence, HA ensures that if one component fails, another immediately takes over, providing a seamless experience for users. Common techniques include load balancing, replication, and automated monitoring with alerts, all contribute to ensuring the system will continue to be available. Specific availability is often defined by a percentage, such as 99.99% uptime.

20. What are some tools you can use to monitor the performance of cloud resources?

There are many tools available for monitoring cloud resource performance. Some popular options include cloud provider native tools like: AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring. These offer comprehensive monitoring capabilities specific to their respective platforms, often providing metrics, logs, and tracing information. There are also third-party tools like Datadog, New Relic, and Dynatrace which offer broader cross-platform monitoring and advanced features like anomaly detection and AI-powered insights.

Other tools include open-source options like Prometheus and Grafana, particularly useful in Kubernetes environments. Choosing the right tool depends on specific needs, the cloud provider being used, and the desired level of detail and integration.

21. What are some ways to optimize costs in the cloud?

Optimizing cloud costs involves several strategies. Right-sizing instances is crucial; avoid over-provisioning resources by monitoring utilization and scaling up or down as needed. Utilize reserved instances or committed use discounts for predictable workloads to secure lower prices. Leveraging spot instances for fault-tolerant applications can significantly reduce costs, but be prepared for potential interruptions.

Further optimization includes deleting unused resources, such as old snapshots or idle databases. Employing auto-scaling ensures resources are only provisioned when demand is high. Consider using serverless computing (e.g., AWS Lambda, Azure Functions) for event-driven tasks, which only charges for actual execution time. Finally, regularly review your cloud spending and identify areas for improvement.

22. How do you ensure data security and compliance in the cloud?

Data security and compliance in the cloud are achieved through a multi-layered approach. Key strategies include implementing strong access controls using Identity and Access Management (IAM), encrypting data both at rest and in transit, and regularly monitoring and logging activities for suspicious behavior. We would also use tools provided by the cloud provider like AWS CloudTrail or Azure Monitor.

To ensure compliance, it's crucial to understand and adhere to relevant regulations like GDPR, HIPAA, or PCI DSS. This involves performing regular audits, implementing data loss prevention (DLP) measures, and establishing a robust incident response plan. You might also use a Configuration Management Database (CMDB) and Infrastructure as Code (IaC) to ensure your environment remains in a consistent, auditable state.

23. What are some common cloud security threats?

Common cloud security threats include:

- Data Breaches: Unauthorized access, theft, or leakage of sensitive data stored in the cloud.

- Misconfiguration: Incorrectly configured cloud services leaving them vulnerable to attack. This can include overly permissive access controls or leaving default configurations enabled.

- Insufficient Access Control: Weak or poorly managed access controls allowing unauthorized users or services to access resources.

- Malware Infections: Introduction of malicious software into the cloud environment.

- Denial of Service (DoS) and Distributed Denial of Service (DDoS) Attacks: Overwhelming cloud resources making them unavailable to legitimate users.

- Insider Threats: Malicious or negligent actions by employees or other insiders with access to cloud resources.

- Account Hijacking: Attackers gaining control of legitimate user accounts to access cloud resources.

- Vulnerabilities in Third-Party Services: Security flaws in services and applications used within the cloud environment that attackers can exploit.

- Lack of Visibility and Control: Difficulty in monitoring and managing security across cloud environments.

- Compliance Violations: Failure to meet regulatory requirements for data security and privacy in the cloud.

24. Explain the shared responsibility model in cloud security.

The shared responsibility model in cloud security dictates that both the cloud provider and the customer have specific security responsibilities. The provider is generally responsible for the security of the cloud, which includes the physical infrastructure, hardware, and software that make up the cloud environment. This covers areas like the physical security of data centers, network infrastructure, and virtualization software.

The customer is responsible for the security in the cloud. This means securing their data, applications, operating systems, identity and access management (IAM), and anything else they put into the cloud. The exact division of responsibilities varies depending on the service model (IaaS, PaaS, SaaS). For example, in IaaS, the customer has more responsibility for managing the operating system and application security than in PaaS or SaaS. In all service models, the customer is always responsible for their data.

25. What is disaster recovery in the cloud?

Disaster recovery (DR) in the cloud refers to the strategies, processes, and technologies used to recover IT infrastructure and data in a cloud computing environment after a disruptive event. It involves replicating data and applications to a separate cloud region or provider, ensuring business continuity with minimal downtime and data loss.

Cloud-based DR offers benefits like cost-effectiveness (pay-as-you-go), scalability, and automated failover capabilities compared to traditional on-premises DR solutions. Key components often include replication services, backup and restore mechanisms, and automated failover procedures to switch to the secondary cloud environment when a disaster occurs.

26. How can you automate tasks in the cloud?

Cloud automation allows you to streamline repetitive tasks, reduce manual intervention, and improve efficiency. Several tools and services facilitate this.

- Cloud-native tools: Cloud providers offer native services like AWS CloudFormation, Azure Resource Manager, and Google Cloud Deployment Manager for infrastructure as code (IaC). You can define your infrastructure using templates (e.g., YAML, JSON) and automatically provision and manage resources. Services such as AWS Lambda, Azure Functions, and Google Cloud Functions enable event-driven automation by executing code in response to triggers.

- Third-party automation tools: Tools like Terraform, Ansible, Chef, and Puppet can manage infrastructure and configurations across multiple cloud platforms. They use declarative or procedural approaches to define the desired state and automate the process of achieving it. Consider using tools like IFTTT or Zapier for connecting various cloud applications.

27. What are some open-source technologies used in cloud computing?

Several open-source technologies are fundamental to cloud computing. Some prominent examples include:

- Operating Systems: Linux (various distributions like Ubuntu, CentOS) form the base of many cloud infrastructures.

- Virtualization: KVM and Xen are widely used for creating and managing virtual machines.

- Containerization: Docker and Kubernetes are essential for container orchestration, enabling portability and scalability.

- Databases: MySQL, PostgreSQL, and MongoDB are popular open-source database options.

- Cloud Management Platforms: OpenStack provides a comprehensive platform for building and managing private and public clouds. Cloud Foundry is a PaaS solution.

- Monitoring and Logging: Prometheus and Grafana are used for monitoring system performance and visualizing metrics. ELK stack (Elasticsearch, Logstash, Kibana) provides log management and analysis capabilities.

- Message Queues: RabbitMQ and Kafka are utilized for asynchronous communication between cloud services.

- Automation: Ansible, Terraform and Chef are Infrastructure as Code (IaC) tools.

These technologies contribute significantly to the flexibility, cost-effectiveness, and innovation within cloud environments.

Cloud Architect interview questions for juniors

1. Imagine cloud is like a big playground. How do you keep all the toys (data and apps) safe and organized so everyone can play nicely?

To keep the cloud playground safe and organized, we need a few key things. First, security is crucial. Think of it like a fence around the playground and locks on the toy boxes. We use things like firewalls (rules about who can enter), identity and access management (IAM, like giving each kid a name tag and only letting them play with certain toys), and encryption (scrambling the toys so only the right kids can understand them). Regular security audits (checking the fence for holes) and penetration testing (trying to break into the toy boxes) are also essential.

Second, organization is important. We need rules about where the toys go when playtime is over. This is where things like resource groups (labeling toy boxes for specific activities), tagging (stickers on each toy with details), and naming conventions (using names everyone understands) come in handy. We also use tools to monitor how many toys are being used and where, so we don't run out or waste them. We can also use infrastructure-as-code (IaC) for consistency. For example using Terraform to create a S3 bucket:

resource "aws_s3_bucket" "example" {

bucket = "my-tf-test-bucket"

acl = "private"

tags = {

Name = "My bucket"

Environment = "Dev"

}

}

2. If a website suddenly gets super popular, how can the cloud help it handle all the new visitors without crashing?

The cloud provides several ways to handle a sudden surge in website traffic. First, auto-scaling automatically increases the number of servers to handle the load. Load balancers distribute incoming traffic across these multiple servers, preventing any single server from being overwhelmed. Secondly, Content Delivery Networks (CDNs) cache website content (images, videos, etc.) and serve it from geographically distributed locations, reducing the load on the origin server. Finally, managed database services can automatically scale database resources, such as CPU and memory, to accommodate increased data access.

These solutions enable a website to handle unexpected spikes in traffic, maintain performance, and avoid crashes by dynamically adjusting resources based on demand. Cloud services handle the complexity of provisioning and managing these resources, allowing developers to focus on the application itself.

3. What's the difference between keeping your files on your computer and keeping them in the cloud, like on Google Drive?

Keeping files on your computer means they're stored locally on your hard drive. You have direct control, but you're responsible for backups and risk losing data if your computer fails. Accessing them requires being at that specific computer.

Cloud storage, like Google Drive, keeps files on remote servers managed by a provider. This offers automatic backups, accessibility from anywhere with an internet connection and typically enables easier sharing. However, you rely on the provider's security and availability, and storage space might come at a cost.

4. Let's say you want to build a simple game. How could you use the cloud to make it available to all your friends?

To make a simple game available to my friends using the cloud, I would utilize a platform like AWS, Google Cloud, or Azure. I could package the game and its dependencies into a container using Docker. Then, I'd deploy this container to a managed container service like AWS ECS or Google Kubernetes Engine (GKE). This would ensure the game runs reliably and scales to handle multiple players. I'd configure a load balancer to distribute traffic and point a domain name to the load balancer for easy access.

Alternatively, for a less technical solution, I could use a game engine with built-in cloud support like Unity or Godot. These engines often offer services for hosting multiplayer games, handling matchmaking, and managing player data. Some even let you directly deploy to the web, making the game accessible through a simple URL. The cost would be usage based and easy for friends to use, rather than setting up servers and load balancers.

5. What are some things you would keep in mind when helping a small business move their files and applications to cloud? How will that affect the business?

When migrating a small business to the cloud, several factors are crucial. Firstly, data security is paramount, requiring robust encryption and access controls. Secondly, cost optimization is key, as cloud expenses can quickly escalate, so selecting the right pricing model (pay-as-you-go, reserved instances) is essential. Thirdly, you need to consider downtime minimization during the migration process, which can be achieved through phased migrations or using specialized migration tools. Finally, application compatibility must be verified to ensure seamless operation in the cloud environment.

The impact on the business can be significant. Cloud migration can enhance scalability and flexibility, allowing the business to adapt quickly to changing demands. It can also improve collaboration by enabling easier file sharing and access to applications from anywhere. Furthermore, it can reduce IT costs associated with hardware maintenance and infrastructure management. However, the business needs to be prepared for a potential learning curve associated with new cloud-based systems and workflows, as well as the need to rely on a stable internet connection. Careful planning and execution are vital to ensure a successful transition and realize the full benefits of cloud adoption.

6. Can you describe a situation where using the cloud would be a better choice than using a traditional server, and why?

A situation where the cloud would be a better choice than a traditional server is when dealing with a highly variable or unpredictable workload. For example, consider an e-commerce website during the holiday season. A traditional server setup would require provisioning enough hardware to handle peak traffic, leading to significant wasted resources during off-peak times. The cloud, using services like AWS Auto Scaling or Azure Virtual Machine Scale Sets, allows for automatic scaling of resources based on demand. This ensures optimal performance during peak loads and reduces costs during periods of low activity by only paying for the resources actually used.

Furthermore, the cloud offers benefits in terms of redundancy and disaster recovery. With cloud providers having geographically diverse data centers, applications can be easily replicated across multiple regions, ensuring high availability and minimizing downtime in case of hardware failures or regional outages. Setting up a similar level of redundancy with traditional servers would be considerably more complex and expensive. Cloud providers often offer managed services like databases, reducing the burden of management and allowing developers to focus on building features rather than infrastructure.

7. What is cloud computing, in your own words? Describe like you're explaining it to your grandparents.

Imagine you have a computer program, like a photo album or a game. Instead of keeping it on your computer at home, you put it on a computer in a big building somewhere else, a place with lots of computers all connected. This big building is the "cloud."

So, cloud computing is like renting space and using software on someone else's powerful computers instead of using your own. You can get to your photos or play your games from anywhere with the internet. It's convenient because you don't have to worry about storing everything yourself or keeping the software up-to-date; the people running the "cloud" take care of that for you.

8. Why is security important when working with cloud services, and what are some basic security measures you might use?

Security is crucial when working with cloud services because you're entrusting your data and applications to a third-party provider and a shared infrastructure. Data breaches, unauthorized access, and denial-of-service attacks can lead to significant financial losses, reputational damage, and legal liabilities. Cloud environments can have vulnerabilities if not configured and secured properly.

Some basic security measures include:

- Strong Passwords and Multi-Factor Authentication (MFA): Protect user accounts from unauthorized access.

- Access Control: Implementing Role-Based Access Control (RBAC) to limit user permissions.

- Encryption: Encrypting data at rest and in transit to protect confidentiality.

- Regular Security Audits and Vulnerability Scanning: Identifying and addressing potential weaknesses in the cloud environment.

- Firewall Configuration: Configuring firewalls to control network traffic and prevent unauthorized access.

- Monitoring and Logging: Monitoring system activity and logging events to detect and respond to security incidents.

9. What does it mean to scale a cloud application, and why is scalability important?

Scaling a cloud application refers to the ability to handle an increasing workload by adding resources to the system. This can be done in two primary ways: vertical scaling (scaling up) and horizontal scaling (scaling out).

Scalability is important because it allows applications to maintain performance and availability as demand grows. Without scalability, applications can become slow, unresponsive, or even crash under heavy load, leading to a poor user experience and potential loss of revenue. Cloud environments provide the infrastructure and tools necessary to efficiently scale applications based on real-time needs.

10. How can cloud services help a company save money on their IT infrastructure?

Cloud services offer several ways for companies to save money on their IT infrastructure. Firstly, they reduce capital expenditure (CAPEX) by eliminating the need to purchase and maintain physical servers, networking equipment, and data centers. Instead of large upfront investments, companies pay for resources as they consume them (OPEX model), often leading to lower overall costs and better resource utilization.

Secondly, cloud services automate many IT tasks like patching, backups, and disaster recovery, reducing the need for a large IT staff. Scalability is also a key factor; companies can easily scale resources up or down based on demand, avoiding over-provisioning and paying only for what they use. Cloud providers also typically offer better security and compliance features than many on-premise solutions, potentially reducing security-related costs.

11. What's one thing that excites you about the future of cloud computing?

The increasing accessibility and democratization of advanced technologies like AI/ML through the cloud are incredibly exciting. This means smaller companies and individual developers can leverage powerful tools that were previously only available to large corporations with significant resources.

Specifically, I'm looking forward to seeing more serverless platforms and managed services that abstract away the complexities of infrastructure management. This will allow developers to focus on building innovative applications and solving real-world problems without being bogged down by operational overhead.

12. If you had to explain the 'pay-as-you-go' model of cloud computing, what analogy would you use?

Imagine utilities like electricity or water. You only pay for what you consume. In cloud computing's 'pay-as-you-go' model, it's similar. You're charged only for the computing resources (like processing power, storage, network bandwidth) that you actually use, and for the time that you use them. No upfront commitments or long-term contracts are required, providing flexibility and cost efficiency.

Another good analogy is a toll road. You only pay the toll for the distance you drive on the road. If you don't use the road, you don't pay anything. Cloud 'pay-as-you-go' works the same way: the more resources you consume, the more you pay; if you don't use any resources, you incur no charges.

13. Tell me about a time you encountered a technical challenge, and how you went about solving it.

During a recent project, I faced a performance bottleneck in our data processing pipeline. We were using a standard for loop to iterate through a large dataset and perform some transformations. The process was taking hours, which was unacceptable. I suspected the problem was the iterative nature and the overhead of each loop iteration.

To solve this, I researched alternative approaches and discovered that vectorizing the operations using NumPy could significantly improve performance. I refactored the code to leverage NumPy's array operations, which allowed us to perform the transformations on the entire dataset at once. This dramatically reduced the processing time from hours to minutes. I also implemented profiling to pinpoint which operations were taking the longest time. After profiling, I realized some of the numpy functions were not as performant as expected and by switching to Numba's JIT compiler I was able to get even more speedup. This experience taught me the importance of understanding the underlying mechanisms of libraries and considering alternative approaches for optimization.

14. Describe your understanding of the different types of cloud computing: IaaS, PaaS, and SaaS. Can you provide a simple example of each?

IaaS (Infrastructure as a Service) provides access to fundamental computing resources like virtual machines, storage, and networks. You control the operating system, storage, deployed applications, and possibly select networking components (e.g., firewalls). An example is AWS EC2, where you manage the server instance. PaaS (Platform as a Service) delivers a platform for developing, running, and managing applications. You don't manage the underlying infrastructure (servers, networks, storage), but you control the applications and data. Google App Engine, which lets you deploy and run web applications without managing servers, is an example. SaaS (Software as a Service) provides ready-to-use applications over the internet. You simply use the software; the provider manages everything else. Salesforce, a CRM application accessed via a web browser, is a common example.

15. What is 'serverless' computing and what are some of its use cases?

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. You don't have to provision or manage servers to run code. You only pay for the compute time your code consumes. This contrasts with traditional cloud models where you reserve and pay for virtual machines or servers regardless of utilization.

Some use cases include:

- Web applications: Hosting APIs and backends for web apps.

- Mobile backends: Handling authentication, data processing, and push notifications for mobile apps.

- Data processing: Performing ETL (Extract, Transform, Load) operations, stream processing, and batch processing.

- Chatbots: Powering conversational interfaces.

- Automation: Automating tasks triggered by events, such as image resizing or sending emails.

- IoT Backends: Ingesting and processing data from IoT devices. Example: ```python

import json

def lambda_handler(event, context):

return {

'statusCode': 200,

'body': json.dumps('Hello from Lambda!')

}

16. How does the cloud help companies with disaster recovery?

The cloud provides several advantages for disaster recovery (DR). Cloud-based DR solutions enable companies to replicate their data and applications to a geographically separate cloud region. This eliminates the need for maintaining expensive, redundant on-premises infrastructure solely for DR purposes.

Specifically, the cloud offers:

- Cost-effectiveness: Pay-as-you-go pricing models reduce capital expenditure.

- Scalability and Flexibility: Resources can be quickly scaled up during a disaster.

- Automated Failover: DR processes can be automated for faster recovery times.

- Reduced Complexity: Cloud providers handle the underlying infrastructure management.

17. What are some trade-offs between different cloud providers like AWS, Azure, and Google Cloud?

Choosing between AWS, Azure, and Google Cloud involves several trade-offs. AWS offers the most mature and extensive service catalog, but its complexity can be overwhelming. Azure is tightly integrated with Microsoft products and ideal for organizations heavily invested in the Microsoft ecosystem. Google Cloud excels in data analytics, machine learning, and Kubernetes, making it a strong choice for data-intensive applications and containerized workloads.

Cost structures also differ. AWS has a pay-as-you-go model that can be complex to optimize. Azure offers reserved instances and hybrid benefits, which can lead to cost savings for long-term commitments or hybrid cloud environments. Google Cloud provides sustained use discounts and committed use discounts, which can also offer cost advantages. Ultimately, the best choice depends on your specific requirements, existing infrastructure, and technical expertise.

18. How would you ensure that your cloud resources are being used efficiently and not wasting money?

To ensure efficient cloud resource utilization and prevent unnecessary spending, I would implement several strategies. First, I'd regularly monitor resource utilization using cloud provider tools (like AWS Cost Explorer, Azure Cost Management, or Google Cloud Cost Management). This involves identifying idle or underutilized resources, such as instances, storage, or databases, and either rightsizing them or decommissioning them entirely. Automated scaling is also crucial; configure services to automatically adjust resource allocation based on demand, scaling up during peak periods and down during off-peak times.

Furthermore, I would leverage cost optimization techniques like reserved instances or committed use discounts offered by cloud providers for predictable workloads. It's important to implement and enforce resource tagging to track costs effectively and allocate them to specific departments or projects. Regularly reviewing billing reports and setting up cost alerts can help quickly identify unexpected spending spikes and address them promptly. Continuous monitoring and optimization are key to maintaining cost-effectiveness in the cloud.

19. Can you explain the concept of 'cloud migration'? What kind of tasks will it include?

Cloud migration is the process of moving digital assets, like data, applications, and IT infrastructure, from on-premises data centers or one cloud environment to another. The goal is typically to improve scalability, reduce costs, increase agility, or enhance security.

Tasks involved in cloud migration often include:

- Assessment and Planning: Analyzing existing infrastructure, identifying dependencies, and defining migration strategy.

- Data Migration: Moving data to the cloud, often involving ETL processes and ensuring data integrity.

- Application Migration: Rehosting (lift and shift), replatforming, or refactoring applications to run in the cloud.

- Security Configuration: Setting up security measures in the cloud, like IAM roles, network security groups, and encryption.

- Testing and Validation: Ensuring applications and data function correctly in the cloud environment.

- Optimization: Fine-tuning cloud resources for performance and cost efficiency.

20. What's your experience with any specific cloud technologies or services, even if it's just in a learning or hobby context?

While my experience is primarily through learning and personal projects, I've been exploring cloud technologies on AWS. I've experimented with services like:

- EC2: I've launched and configured EC2 instances for various purposes, including hosting simple web applications and running batch processing jobs. I've also practiced using security groups to manage network access.

- S3: I've used S3 for object storage, storing and retrieving files programmatically using the AWS SDK for Python (

boto3). - Lambda: I've created serverless functions using Lambda, triggered by events such as S3 uploads or API Gateway requests. This included writing the functions in Python and configuring the necessary IAM roles and permissions. I'm also familiar with the AWS CLI for managing these services.

21. If the cloud is all about sharing, how do you make sure different users have access to only what they are supposed to see?

Access control in the cloud is primarily achieved through a combination of Identity and Access Management (IAM) policies and Role-Based Access Control (RBAC). IAM defines who can access what cloud resources, while RBAC assigns specific permissions to roles, and then assigns those roles to users or groups. Cloud providers offer services that allow administrators to precisely define these policies, ensuring users only have the necessary permissions to perform their tasks, following the principle of least privilege. These mechanisms can control access at a very granular level, even down to individual API calls on specific resources.

Beyond IAM/RBAC, security groups and network access control lists (ACLs) manage network traffic and can restrict access based on IP addresses or ports. Encryption, both in transit and at rest, is crucial for protecting sensitive data. Multi-factor authentication (MFA) adds an extra layer of security, and regular auditing of access logs helps identify and address any potential security breaches or misconfigurations.

22. Suppose your application in the cloud slows down unexpectedly. How would you go about figuring out what's wrong?

First, I'd check the cloud provider's status page for any known outages or service degradations. Then, I'd focus on monitoring key application metrics using the cloud provider's monitoring tools (e.g., CloudWatch, Azure Monitor, Google Cloud Monitoring). Specifically, I'd look at CPU utilization, memory usage, disk I/O, network latency, and request rates. Unusual spikes or sustained high values in any of these metrics could indicate a bottleneck.

Next, I'd examine application logs for errors or warnings. I would correlate the timing of the slowdown with any log entries to pinpoint potential problem areas in the code or configuration. Tools like grep, awk or cloud-based log aggregation services (e.g., ELK stack, Splunk) would be very useful. If database performance is suspected, I'd examine database query performance and resource utilization. Finally, I'd consider recent deployments or configuration changes as potential causes and might revert to a previous stable version if necessary.

23. How would you explain the concept of 'containers' to someone who doesn't know anything about technology?

Imagine containers like lightweight shipping containers in the real world. They package everything an application needs to run - the code, libraries, settings - ensuring it works the same way, regardless of where it's shipped or run, whether that's your computer, a friend's computer, or a big company's server.

Think of it this way: if you give someone a recipe and all the ingredients perfectly measured, they can recreate your dish exactly. Containers do the same for software - they deliver the app and its 'ingredients' in a neat package, guaranteeing consistent results every time.

24. Why is automation important in cloud environments, and what are some ways to automate tasks?

Automation is crucial in cloud environments because it enhances efficiency, reduces errors, and improves scalability. Manual tasks are time-consuming, prone to mistakes, and don't scale well with dynamic cloud resources. Automation enables faster deployments, consistent configurations, and quicker responses to incidents.

Some ways to automate tasks include using Infrastructure as Code (IaC) tools like Terraform or CloudFormation to provision and manage resources. Configuration management tools like Ansible or Chef can automate software installation and configuration. Scripting languages like Python or Bash can be used for custom automation tasks. Cloud providers also offer services like AWS Lambda or Azure Functions for event-driven automation. CI/CD pipelines automate the build, test, and deployment processes.

25. What are some resources you use to stay up-to-date with the latest trends and technologies in cloud computing?

I stay updated with cloud computing trends through a variety of resources. I regularly read industry blogs and publications like the AWS Blog, Google Cloud Blog, and the Microsoft Azure Blog. These provide insights into new services, features, and best practices. I also follow prominent thought leaders on platforms like Twitter and LinkedIn.

Furthermore, I participate in online communities like Reddit's r/aws, r/azure, and r/googlecloud. I also attend webinars and virtual conferences offered by cloud providers and other industry organizations to learn about new technologies and hear from experts. For hands-on learning, I utilize platforms like A Cloud Guru and Udemy for courses on specific cloud services and technologies.

Cloud Architect intermediate interview questions

1. Explain how you would design a hybrid cloud solution for a large enterprise with specific security and compliance requirements, detailing the connectivity, data synchronization, and security considerations.

Designing a hybrid cloud for a large enterprise with stringent security and compliance involves careful planning across connectivity, data synchronization, and security. Connectivity would be established using a secure VPN or dedicated private circuits (e.g., AWS Direct Connect, Azure ExpressRoute) to ensure encrypted communication between the on-premises data center and the cloud. Data synchronization would leverage tools like AWS Storage Gateway, Azure File Sync, or hybrid ETL processes using tools like Informatica or Databricks to maintain data consistency, with differential synchronization minimizing bandwidth usage and near real-time updates where required. Data classification is vital to ensure sensitive data remains on-premise, while less sensitive can go to the cloud. Backup and DR plans would need to be reviewed to support the hybrid architecture.

Security considerations are paramount. Implementing a unified identity and access management (IAM) system across both environments using solutions like Azure AD Connect or Okta is key. Data encryption at rest and in transit using KMS (Key Management Service) or HSM (Hardware Security Modules) is a must. Compliance requirements dictate rigorous auditing, so centralized logging and monitoring using tools like Splunk or ELK stack are crucial. Regular vulnerability scanning, penetration testing, and adherence to frameworks like SOC 2 or HIPAA would also be enforced consistently across the hybrid environment. Network segmentation and microsegmentation are helpful to control the flow of traffic across the two environments based on data classification and security risk. Lastly, incident response procedures must be designed to effectively address issues in either environment.

2. Describe a scenario where a microservices architecture on the cloud would be beneficial, and explain the challenges associated with managing and deploying such an architecture.

A good scenario for a microservices architecture on the cloud is an e-commerce platform. Different microservices could handle product catalog, user authentication, shopping cart, payment processing, and order fulfillment. Each service can be scaled independently based on demand (e.g., the product catalog might need more scaling during a sale). This also allows for independent deployments meaning individual teams can update a single microservice without disrupting the entire platform.

Challenges include increased complexity in deployment and management. Managing inter-service communication becomes crucial and often requires service meshes or API gateways. Monitoring and logging are also more complex as you need to correlate logs across multiple services. Deployment strategies like blue-green deployments can be challenging to implement across a distributed system. Also debugging issues is harder because a single request can span across multiple services. You will also need robust CI/CD pipelines to ensure that the multiple services can be deployed and integrated seamlessly. Security is also an important challenge because you need to secure communication between services, and secure the API gateway or service mesh.

3. How would you approach migrating a large on-premises database to a cloud-based database service, covering aspects such as data consistency, downtime, and cost optimization?

Migrating a large on-premises database to the cloud involves careful planning. I'd start with a thorough assessment of the existing database, including size, schema complexity, dependencies, and performance characteristics. This helps choose the appropriate cloud database service (e.g., AWS RDS, Azure SQL Database, Google Cloud SQL) and migration strategy. Data consistency is paramount, so I'd use techniques like transactional replication or change data capture (CDC) to minimize data loss during the migration process. A phased approach, possibly starting with non-critical data, allows for validation and reduces risk. We would also use checksums and validation scripts to verify data integrity after the migration.

Downtime can be minimized by employing online migration tools or techniques like logical replication. Cost optimization involves selecting the right instance size, storage type, and reserved capacity options in the cloud. Post-migration, continuous monitoring and performance tuning are essential to ensure optimal performance and cost efficiency. Utilizing cloud-native features such as auto-scaling and serverless functions for related applications can further enhance cost savings. Thorough testing and validation at each stage are key to a successful migration.

4. Imagine a sudden surge in traffic to a web application. How would you use cloud services to automatically scale the application to handle the increased load, and what metrics would you monitor?

To automatically scale a web application in response to a traffic surge using cloud services, I'd leverage services like AWS Auto Scaling with Elastic Load Balancing (ELB) or Azure Virtual Machine Scale Sets with Azure Load Balancer. The Auto Scaling group would be configured to automatically increase the number of instances based on predefined metrics. Specifically, I would monitor metrics such as CPU utilization, memory utilization, request latency, and the number of active connections. Thresholds would be set for each metric to trigger scaling events (e.g., if CPU utilization exceeds 70%, add another instance).

The ELB (or Azure Load Balancer) would distribute incoming traffic across all healthy instances, ensuring no single instance is overwhelmed. In addition to instance scaling, I might also consider autoscaling other components of the application, such as databases or caching layers (e.g., using Amazon RDS Auto Scaling or Azure Cache for Redis scaling options), if those are becoming bottlenecks.

5. Explain the difference between Infrastructure as Code (IaC) and traditional infrastructure management, and discuss the benefits of using IaC in a cloud environment.

Traditional infrastructure management involves manual configuration and management of servers, networks, and other infrastructure components. This process is often time-consuming, error-prone, and difficult to scale. Infrastructure as Code (IaC), on the other hand, uses code to define and manage infrastructure. This allows for automation, version control, and repeatability, leading to more efficient and reliable infrastructure management.

The benefits of using IaC in a cloud environment are numerous. IaC enables faster deployment and scaling of resources, reduces the risk of human error, improves consistency across environments, and facilitates easier disaster recovery. It also allows for infrastructure to be treated as code, enabling developers to use familiar tools and workflows for managing infrastructure. This also enhances security through version control and automated auditing. Ultimately, IaC leads to increased agility, reduced costs, and improved overall infrastructure management in the cloud.

6. Describe how you would design a disaster recovery plan for a critical application running in the cloud, including considerations for RTO (Recovery Time Objective) and RPO (Recovery Point Objective).

A disaster recovery (DR) plan for a critical cloud application should prioritize minimizing downtime and data loss, aligning with defined RTO and RPO. A multi-region active-passive setup is a common approach. The active region hosts the live application, while the passive region mirrors the application's infrastructure and data. Data replication should be configured to meet the RPO, employing asynchronous replication for lower latency but potential data loss, or synchronous replication for minimal data loss (but higher latency). Critical components should have automated failover mechanisms, where the passive region automatically takes over if the active region fails.

Considerations include:

- RTO: Implement automation for failover (e.g., using cloud provider's services) to minimize the time it takes to switch to the passive region.

- RPO: Choose a data replication strategy (synchronous or asynchronous) to minimize potential data loss based on the application's needs. Regularly test the DR plan to ensure that it meets the RTO and RPO requirements. Use infrastructure as code(IaC) for both regions to easily replicate environments.

7. Explain the concept of serverless computing and provide an example of when you would choose a serverless architecture over a traditional virtual machine-based architecture.

Serverless computing allows you to run code without managing servers. You deploy functions or applications, and the cloud provider automatically allocates and manages the underlying infrastructure. You only pay for the actual compute time your code consumes.

I'd choose serverless over VMs when building an application with event-driven architecture, such as processing image uploads. With serverless, a function triggers on image upload, processes it (e.g., resizing, watermarking), and stores the result. This avoids the overhead of managing a VM that's constantly running, especially when uploads are infrequent. Another example is a REST API with infrequent usage, where scaling down to zero when not in use is highly advantageous cost-wise.

8. How would you ensure the security of data stored in a cloud-based object storage service, considering aspects like encryption, access control, and data lifecycle management?

Securing cloud object storage involves several key strategies. Encryption, both at rest and in transit, is crucial. For example, using server-side encryption (SSE) options provided by the cloud provider (like SSE-S3, SSE-KMS, or SSE-C with AWS) or encrypting data client-side before uploading. Access control should be implemented using IAM roles and policies, bucket policies, and potentially access control lists (ACLs) to grant granular permissions to users and services. Regular auditing of these policies is important. Data lifecycle management policies help automatically transition data to cheaper storage tiers based on access frequency, or automatically delete it after a specified period, reducing risk and cost.

Further enhance security with multi-factor authentication (MFA) for administrative access. Implement versioning for data recovery. Regularly monitor activity logs and set up alerts for unusual access patterns or suspicious activities. Data masking or tokenization techniques can be employed to protect sensitive information when stored in object storage. Consider using data loss prevention (DLP) tools for monitoring and preventing sensitive data from leaving the organization's control.

9. Describe how you would implement a CI/CD pipeline for deploying applications to the cloud, including automated testing, deployment, and rollback strategies.

A CI/CD pipeline for cloud deployment involves several stages. First, code changes trigger an automated build process, followed by unit and integration tests. If the tests pass, the application is deployed to a staging environment for further testing, including user acceptance testing (UAT). Tools like Jenkins, GitLab CI, or GitHub Actions can automate these processes.

For deployment, I'd use infrastructure-as-code (IaC) tools like Terraform or CloudFormation to provision cloud resources. Deployment strategies include blue/green deployments (deploying a new version alongside the old, then switching traffic), canary deployments (releasing the new version to a subset of users), or rolling updates (gradually replacing instances). Rollback strategies depend on the deployment method; blue/green allows immediate switch back, while canary or rolling updates require monitoring metrics and reverting to the previous version if issues arise. Automated monitoring and alerting systems are essential for detecting and addressing any deployment failures.

10. Explain how you would monitor the performance and health of a cloud-based application, and how you would use monitoring data to identify and resolve performance bottlenecks.

To monitor a cloud-based application's performance and health, I'd employ a multi-faceted approach. First, I'd leverage cloud provider monitoring services like AWS CloudWatch, Azure Monitor, or Google Cloud Monitoring to track key metrics such as CPU utilization, memory usage, network latency, and disk I/O. I would also implement application performance monitoring (APM) tools like DataDog or New Relic to gain deeper insights into application behavior, including response times, error rates, and database query performance. Setting up alerts based on threshold breaches for these metrics would enable proactive issue detection. Logging, both application and system logs, will be crucial for debugging issues. Aggregated logging can be achieved using tools like the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk.

Identifying and resolving performance bottlenecks involves analyzing the collected monitoring data. I'd start by correlating performance dips with specific events or changes. Using APM tools, I can drill down into slow transactions to pinpoint the problematic code sections or database queries. If CPU or memory usage is high, I'd investigate the processes consuming the resources. Network latency issues might require examining network configurations and traffic patterns. I would also use load testing tools to simulate user traffic to identify bottlenecks under stress. Automation of remediation through auto-scaling and automated rollbacks upon error detection can greatly increase application resiliency. Finally, continuous performance testing and optimization based on monitoring data are essential for maintaining a healthy application.

11. How would you optimize cloud costs for a company that is experiencing high cloud spending, identifying potential areas for cost reduction and implementing cost management strategies?

To optimize cloud costs, I'd first analyze current spending using cloud provider cost management tools to identify the largest cost drivers (e.g., compute, storage, network). Then, I'd focus on these areas for reduction. Strategies include: right-sizing instances, utilizing reserved instances or savings plans for consistent workloads, deleting unused resources, optimizing storage tiers (moving infrequently accessed data to cheaper storage), and implementing auto-scaling to adjust resources based on demand.

Furthermore, I'd implement cost monitoring and alerting to track spending and identify anomalies. For continuous improvement, I would establish policies for resource tagging, cost allocation, and regular cost reviews. Infrastructure-as-code (IaC) adoption would help manage resources efficiently. Consider spot instances for fault-tolerant workloads.

12. Describe how you would approach the challenge of vendor lock-in when adopting cloud services, and what strategies you would use to mitigate this risk.

To address vendor lock-in when adopting cloud services, I'd focus on building a flexible and adaptable architecture. This involves prioritizing open standards and avoiding proprietary technologies specific to a single vendor. Key strategies include:

- Containerization (e.g., Docker, Kubernetes): Package applications into containers for easy portability across different cloud environments or even on-premises infrastructure.

- Infrastructure as Code (IaC): Use tools like Terraform or Ansible to define and provision infrastructure in a vendor-neutral way, allowing for easier migration.

- Abstracted Data Layers: Avoid relying heavily on vendor-specific database services or data storage formats. Consider using data lakes or data warehouses that are easily exportable or migrating to open-source database solutions.

- Multi-Cloud or Hybrid Cloud Strategy: Distribute workloads across multiple cloud providers or a combination of cloud and on-premises infrastructure to reduce dependence on a single vendor.

- Careful Selection of Cloud Services: When choosing cloud services, carefully evaluate the level of vendor lock-in involved and prioritize services with strong industry standards support.

- Regularly Audit Dependencies: Continuously monitor the technology stack to identify potential lock-in points and explore alternative solutions.

By implementing these strategies, organizations can minimize the risks associated with vendor lock-in and maintain greater control over their cloud deployments.

13. Explain the different cloud deployment models (e.g., public, private, hybrid, multi-cloud) and discuss the advantages and disadvantages of each model.

Cloud deployment models describe where your infrastructure and applications reside. Public cloud involves using resources owned and operated by a third-party provider (e.g., AWS, Azure, GCP). Advantages are scalability, cost-effectiveness (pay-as-you-go), and reduced maintenance. Disadvantages include potential security concerns, limited control, and vendor lock-in. Private cloud utilizes infrastructure exclusively for a single organization, either on-premises or hosted by a third party. Advantages are greater security, control, and compliance. Disadvantages are higher costs, more maintenance, and less scalability compared to public cloud.

Hybrid cloud combines public and private clouds, allowing workloads to move between them. Advantages include flexibility, cost optimization, and disaster recovery. Disadvantages involve complexity in managing and integrating different environments. Multi-cloud uses multiple public cloud providers. Advantages are reduced vendor lock-in, improved resilience, and access to specialized services from different providers. Disadvantages include increased complexity and potential compatibility issues.

14. How would you design a secure and scalable API gateway for a microservices architecture, including considerations for authentication, authorization, and rate limiting?

A secure and scalable API gateway for a microservices architecture would involve several key components. Authentication would typically be handled via JWT (JSON Web Tokens) or OAuth 2.0, with the gateway verifying token signatures and validity before routing requests. Authorization can be implemented using role-based access control (RBAC) or attribute-based access control (ABAC), often relying on policies defined and enforced at the gateway level to control access to specific microservices or endpoints.

To ensure scalability, the gateway itself should be designed as a distributed system, potentially using technologies like Kubernetes, Envoy, or Kong. Rate limiting is crucial for preventing abuse and ensuring fair usage. This can be achieved through algorithms like token bucket or leaky bucket, applied at different levels (e.g., per user, per IP address) and with configurable thresholds. Technologies like Redis or Memcached can be used for storing rate limit counters efficiently. Monitoring and logging are essential for identifying security threats and performance bottlenecks.

15. Explain how you would use cloud-based identity and access management (IAM) services to control access to cloud resources and ensure compliance with security policies.

Cloud-based IAM services like AWS IAM, Azure Active Directory, or Google Cloud IAM are central to controlling access and ensuring compliance in the cloud. I would use them to define roles and permissions that grant specific access to cloud resources. For example, a role might allow read-only access to a database or full access to a compute instance. These roles are then assigned to users, groups, or applications, adhering to the principle of least privilege.

To ensure compliance, I would leverage features like multi-factor authentication (MFA), conditional access policies (e.g., requiring specific device types or locations), and access reviews. IAM services provide audit logs that track user activity and resource access, facilitating compliance reporting and security investigations. These logs can be integrated with security information and event management (SIEM) systems for real-time monitoring and alerting on suspicious activities. Finally, service control policies (SCPs) can be implemented at the organizational level to enforce mandatory restrictions on IAM permissions, further strengthening security posture and compliance.

16. Describe how you would implement a data lake in the cloud for storing and processing large volumes of structured and unstructured data, and what technologies you would use.

To implement a data lake in the cloud, I'd leverage cloud-native services. For storage, I would use object storage like AWS S3, Azure Blob Storage, or Google Cloud Storage due to their scalability and cost-effectiveness. I'd establish a well-defined folder structure and metadata management system to organize the data. Data ingestion would be handled using services like AWS Glue, Azure Data Factory, or Google Cloud Dataflow, which allow for both batch and real-time data loading. Data would be stored in its raw format (e.g., Parquet, ORC, JSON, CSV) for flexibility.

For processing and analysis, I would use a combination of technologies. For large-scale batch processing, I'd use distributed processing frameworks like Apache Spark (via services like AWS EMR, Azure Synapse Analytics, or Google Dataproc). For interactive querying and analysis, I'd use serverless query services like AWS Athena, Azure Synapse Serverless SQL pool, or Google BigQuery. I'd also consider using machine learning services like Amazon SageMaker, Azure Machine Learning, or Google AI Platform for advanced analytics and predictive modeling. All of this would require robust security measures, including access controls, encryption, and auditing, implemented through the cloud provider's identity and access management (IAM) services.

17. Explain how you would design a solution for real-time data streaming and analytics in the cloud, and what services you would use to ingest, process, and analyze the data.

For real-time data streaming and analytics in the cloud, I would use a combination of cloud-native services. Data ingestion would start with services like Amazon Kinesis Data Streams or Azure Event Hubs, capable of handling high-velocity data. Then, I would utilize a stream processing engine like Apache Flink (managed via Amazon Kinesis Data Analytics or Azure Stream Analytics) or Spark Streaming (managed via Databricks) for real-time transformations, aggregations, and filtering. These services enable windowing, state management, and fault tolerance.

For data storage and analytics, the processed data can be routed to services like Amazon S3, Azure Data Lake Storage, or Google Cloud Storage for archival and batch analytics. Real-time analytical queries can be performed using services like Amazon Athena, Azure Synapse Analytics or Google BigQuery. Furthermore, for real-time dashboards and visualizations, services like Amazon QuickSight, Microsoft Power BI, or Google Data Studio can be used, directly connected to the analytical layer or to the stream processing output, for immediate insights.

18. How do you approach capacity planning in a cloud environment, and what tools and techniques do you use to predict future resource needs?

Capacity planning in the cloud involves understanding current resource utilization and predicting future needs to avoid performance bottlenecks or wasted resources. I typically start by establishing baseline metrics for CPU, memory, network, and storage using cloud monitoring tools like AWS CloudWatch, Azure Monitor, or Google Cloud Monitoring. I then analyze historical trends to identify patterns, seasonality, and growth rates. This helps project future resource demands. I consider both short-term and long-term projections, factoring in planned application changes, marketing campaigns, and business growth.

To predict resource needs, I leverage a combination of techniques. This includes statistical forecasting methods, such as time series analysis, and predictive modeling using machine learning algorithms. I also perform load testing and simulations to understand how applications behave under different traffic scenarios. Cloud-native auto-scaling features are crucial; I configure them based on observed metrics and predicted workloads, setting thresholds for scaling up or down. Additionally, I continuously monitor performance and adjust capacity plans as needed based on real-world usage.

19. Walk me through the process of selecting appropriate cloud services based on a specific business requirement. How do you weigh different factors like cost, performance, and security?

Selecting cloud services starts with a clear understanding of the business requirement. I would begin by defining the specific problem the cloud service needs to solve and the desired outcome. Next, I'd identify the key factors influencing the decision such as cost, performance, security, compliance, scalability, and operational overhead.

To weigh these factors, I'd assign relative importance scores based on the business priorities. For example, security might be the highest priority for sensitive data, while cost might be the primary concern for less critical workloads. Then, I would evaluate potential cloud services based on how well they meet each factor. This involves comparing pricing models, performance benchmarks, security features (encryption, access control), compliance certifications, and scalability options. I'd also consider the level of management required and available support. I would then select the service that best aligns with the prioritized factors and delivers the optimal balance of cost, performance, and security for the given business requirement.

20. Suppose a client wants to reduce latency for users across the globe. How would you leverage cloud services to achieve this, detailing specific technologies and their implementation?

To reduce latency for global users, I would leverage cloud services by primarily using a Content Delivery Network (CDN). Specifically, I'd use services like Amazon CloudFront, Akamai, or Cloudflare. These services cache content (static assets, images, videos) in geographically distributed edge locations. When a user requests content, the CDN serves it from the nearest edge location, minimizing the distance the data has to travel, thereby reducing latency.

Beyond CDNs, consider global load balancing using services like AWS Global Accelerator or Azure Traffic Manager. These services intelligently route user requests to the closest healthy application endpoint (e.g., a web server in a specific region) based on factors like geographic location and server health. Additionally, deploying application servers in multiple regions closer to the user base is crucial. For example, deploying the app in AWS regions like us-east-1, eu-west-1, and ap-southeast-1. The combination of these cloud services ensures that data and applications are delivered to users with the lowest possible latency, regardless of their location. Using a global database like DynamoDB with global tables could also help to keep the database close to the application servers.

21. Explain how you would implement a robust logging and auditing system in a cloud environment to meet compliance requirements and facilitate security investigations.

In a cloud environment, a robust logging and auditing system requires a centralized approach to collect, store, and analyze logs from various sources (applications, systems, network devices). I would implement a solution using cloud-native logging services like AWS CloudWatch, Azure Monitor, or Google Cloud Logging. Logs would be structured using a standard format (e.g., JSON) and enriched with relevant metadata. Security is paramount, therefore, log data must be encrypted both in transit and at rest and access should be strictly controlled via IAM policies. Long-term storage of logs would be in a cost-effective storage tier like S3 or Azure Blob Storage, with data retention policies defined to meet compliance needs.

To facilitate security investigations, I would integrate the logging system with a SIEM (Security Information and Event Management) solution such as Splunk, Sumo Logic, or cloud-native offerings like AWS Security Hub or Azure Sentinel. The SIEM would be configured with alerts for suspicious activities and provide advanced analytics capabilities to detect anomalies. Regular auditing of the logging system itself is crucial, including access controls, configuration changes, and data integrity checks. We would enable audit logging (e.g., CloudTrail in AWS) to track all API calls and user actions performed on the logging infrastructure.

22. What are some of the key differences in architectural considerations between designing for a single cloud provider versus a multi-cloud environment?