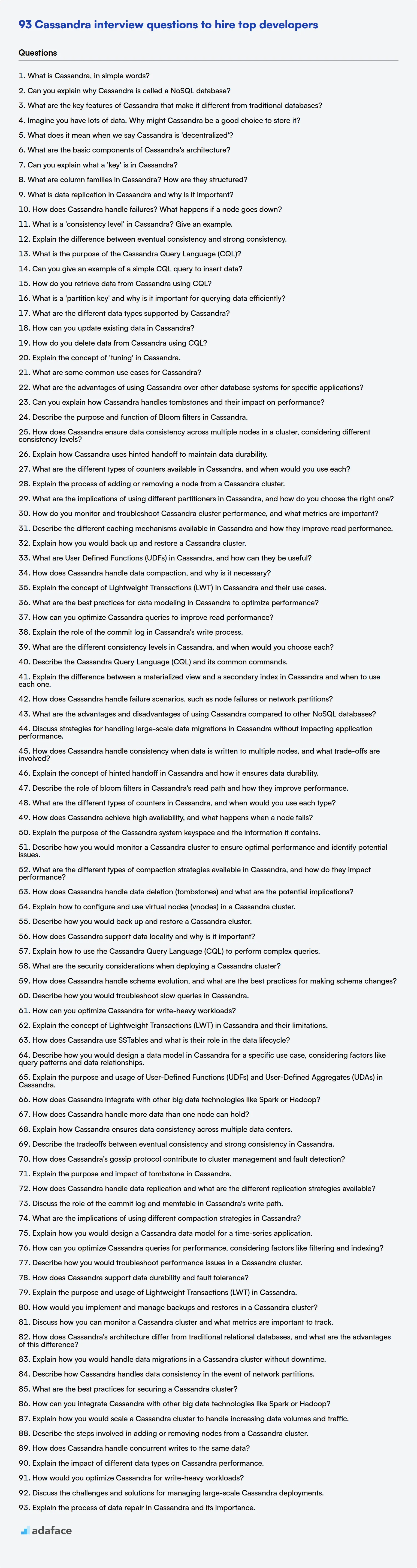

Are you looking to hire Cassandra developers? This comprehensive list of interview questions is designed to help you assess the skills of candidates, regardless of their experience level.

This curated collection covers a range of topics, ensuring you can evaluate both foundational knowledge and advanced expertise. It's a great resource, that could prove useful, similar to the insights in our guide on hiring software engineers.

This blog post is meticulously structured to provide you with interview questions, categorized by experience levels, from fresher to expert. By using these questions, you can confidently evaluate potential hires.

To further refine your candidate assessment, consider leveraging pre-employment tests before the interviews.

Table of contents

Cassandra interview questions for freshers

1. What is Cassandra, in simple words?

Cassandra is a NoSQL database designed for handling massive amounts of data across many servers, providing high availability with no single point of failure. It's like a highly scalable and fault-tolerant data storage system. It's particularly good for use cases needing high write throughput, such as logging, sensor data, or social media feeds.

Unlike traditional relational databases, Cassandra uses a distributed architecture that allows it to scale horizontally by simply adding more nodes to the cluster. Its key features include:

- Decentralized: No master node, ensuring no single point of failure.

- Scalable: Easily add more machines to the cluster as needed.

- Fault-tolerant: Data is replicated across multiple nodes.

- High Write Throughput: Optimized for fast writes.

2. Can you explain why Cassandra is called a NoSQL database?

Cassandra is called a NoSQL database because it deviates from the traditional relational database management system (RDBMS) model. Unlike RDBMS, it doesn't rely on a fixed schema, SQL for querying, and ACID (Atomicity, Consistency, Isolation, Durability) properties in the strict sense. Cassandra prioritizes availability and partition tolerance (AP in CAP theorem) offering eventual consistency, making it suitable for handling large amounts of data across distributed environments.

Instead of SQL, Cassandra uses CQL (Cassandra Query Language), which is similar to SQL but has differences and limitations based on Cassandra's data model. Cassandra's schema is also more flexible, allowing for easier addition of new columns without affecting existing data, which is a characteristic that differs substantially from relational database schema design.

3. What are the key features of Cassandra that make it different from traditional databases?

Cassandra distinguishes itself from traditional databases through several key features. Primarily, it's a distributed, NoSQL database designed for high availability and scalability. Unlike relational databases, Cassandra uses a decentralized architecture with no single point of failure, ensuring continuous operation even if some nodes fail. Data is automatically replicated across multiple nodes, providing fault tolerance and preventing data loss.

Key differences include its flexible schema, which allows for evolving data structures without requiring downtime. Cassandra's write-optimized architecture enables very fast write speeds, making it suitable for applications with high write volumes. Traditional databases often prioritize consistency, while Cassandra offers tunable consistency, allowing users to choose the level of consistency needed for their specific application requirements. Its data model is based on column families, not relational tables. This facilitates storing and retrieving related data together, improving performance for specific query patterns. Finally, Cassandra's linear scalability means you can add more nodes to the cluster to increase capacity without significant performance degradation.

4. Imagine you have lots of data. Why might Cassandra be a good choice to store it?

Cassandra is a good choice for storing large amounts of data primarily due to its scalability and fault tolerance. It's designed to handle massive datasets distributed across many commodity servers, providing high availability with no single point of failure. This makes it ideal for applications requiring constant uptime and the ability to scale horizontally to accommodate growing data volumes and user traffic.

Specifically, Cassandra offers:

- High Availability: Data is automatically replicated across multiple nodes.

- Scalability: Easily add more nodes to the cluster as data grows.

- Fault Tolerance: Continues to operate even if some nodes fail.

- Fast Writes: Optimized for write-heavy workloads.

- Decentralized Architecture: No master node to become a bottleneck.

5. What does it mean when we say Cassandra is 'decentralized'?

When we say Cassandra is 'decentralized,' it means no single node or group of nodes controls the entire cluster. Instead, all nodes in the cluster are equally responsible and contribute to the system's overall availability and fault tolerance.

Specifically, decentralization in Cassandra manifests in several key aspects:

- No Single Point of Failure: Failure of any one node does not bring down the system. Data is replicated across multiple nodes.

- Equal Nodes: All nodes have the same role, which avoids bottlenecks and complexity.

- Autonomous Operation: Nodes communicate with each other to coordinate tasks (data replication, reads, and writes) in a peer-to-peer fashion, without relying on a central master.

- Flexible Topology: New nodes can be added or removed from the cluster without significant disruption, enabling easy scalability.

6. What are the basic components of Cassandra's architecture?

Cassandra's architecture is composed of several key components working together. These include:

- Nodes: The fundamental building blocks of a Cassandra cluster. Each node stores a portion of the data and participates in the cluster's operation.

- Data Centers: A logical grouping of nodes, typically located in the same physical location. Used for fault tolerance and disaster recovery.

- Clusters: The highest level of organization, consisting of one or more data centers. A cluster represents the entire Cassandra deployment.

- Commit Log: Each node has a commit log to capture writes.

- Memtable: Data is first written to memory in a structure called Memtable.

- SSTable: Data from memtables are flushed to disk in sorted files called SSTables. Data is stored permanently in SSTables.

- Partitioner: Determines which node is responsible for storing a particular row of data. Common partitioners include Murmur3Partitioner and RandomPartitioner.

7. Can you explain what a 'key' is in Cassandra?

In Cassandra, a 'key' is a fundamental concept used to identify and locate data within a table. There are two main types of keys:

- Partition Key: This determines which node in the cluster will store the data. Rows with the same partition key are stored on the same node. Good partition key selection is crucial for efficient data distribution and query performance.

- Clustering Key: Within a partition, clustering keys determine the order in which data is stored on disk. They also allow you to efficiently query data within a specific partition based on the clustering order. A composite key, also known as a primary key, is a combination of the partition key and one or more clustering keys. For example,

PRIMARY KEY ((partition_key), clustering_key1, clustering_key2).

8. What are column families in Cassandra? How are they structured?

In Cassandra, a column family (analogous to a table in relational databases, but with significant differences) is a container for rows. It defines the structure of the data stored within it. Think of it as a map of keys to rows. Each row is uniquely identified by a row key.

Column families are structured as follows: each row contains columns, and each column has a name, a value, and a timestamp. It's important to understand that different rows within the same column family need not have the same columns. This flexible schema allows for efficient storage of varied data. Cassandra is now referred to as having tables instead of column families.

9. What is data replication in Cassandra and why is it important?

Data replication in Cassandra involves creating multiple copies of data across different nodes in the cluster. This is crucial for ensuring high availability and fault tolerance. If one node fails, the data is still accessible from other nodes that hold replicas.

Replication is configured using a replication factor, which specifies the number of copies of the data. It's important because it enhances data durability, read performance (as reads can be served from the nearest replica), and disaster recovery capabilities. Different replication strategies such as SimpleStrategy or NetworkTopologyStrategy control how replicas are placed across the cluster for optimal redundancy and performance.

10. How does Cassandra handle failures? What happens if a node goes down?

Cassandra handles failures gracefully through its distributed architecture and replication strategy. When a node goes down, Cassandra continues to operate because data is replicated across multiple nodes.

Specifically, Cassandra uses a concept called a replication factor to determine how many copies of each piece of data are stored. If a node becomes unavailable, the other nodes holding replicas of the data can still serve read and write requests. The system ensures consistency using mechanisms like hinted handoffs and read repair. Hinted handoffs mean that when a node is down, other nodes will temporarily store writes destined for the unavailable node, and replay them when it comes back online. Read repair occurs during read operations; if the data is inconsistent across replicas, Cassandra will repair the data in the background to ensure consistency.

11. What is a 'consistency level' in Cassandra? Give an example.

In Cassandra, a consistency level determines how many nodes in a cluster must acknowledge a write operation before it is considered successful, or how many nodes must respond to a read operation before the data is returned to the client. It represents a trade-off between data consistency and availability/latency. Higher consistency levels guarantee stronger consistency but might lead to higher latency and potential unavailability if many nodes are down. Lower consistency levels offer better availability and lower latency but at the cost of potential data inconsistency.

For example, if you set the consistency level to QUORUM for a write operation, a majority of the replicas for that data must acknowledge the write before Cassandra considers it successful. If you set it to ONE, only one replica needs to acknowledge the write. For reads, if the consistency level is LOCAL_QUORUM, a quorum of replicas within the local data center must agree on the data to be returned. Possible consistency levels include ONE, QUORUM, ALL, LOCAL_QUORUM, EACH_QUORUM, SERIAL, and LOCAL_SERIAL each offering different guarantees.

12. Explain the difference between eventual consistency and strong consistency.

Strong consistency guarantees that after an update, all subsequent accesses will reflect that update immediately. It's like a single source of truth, and everyone sees the same data at the same time. Eventual consistency, on the other hand, guarantees that if no new updates are made to a data item, eventually all accesses will return the last updated value. There's a period of inconsistency, a 'window,' where different users might see different versions of the data.

In practical terms, eventual consistency is often used in distributed systems where high availability and performance are prioritized over immediate consistency. Strong consistency is crucial in scenarios where data accuracy and synchronization are paramount, even at the cost of latency.

13. What is the purpose of the Cassandra Query Language (CQL)?

The Cassandra Query Language (CQL) is the primary language for interacting with Cassandra databases. It's designed to be similar to SQL, making it easier for users familiar with relational databases to learn and use Cassandra. CQL allows you to define the structure of your data (keyspaces, tables, columns), insert data, query data, update data, and delete data.

Essentially, CQL provides a user-friendly interface for performing all necessary database operations in Cassandra. It abstracts away the complexities of Cassandra's distributed architecture, allowing developers to focus on data modeling and application logic. CQL also supports features like indexing and batch processing to optimize performance.

14. Can you give an example of a simple CQL query to insert data?

A simple CQL query to insert data into a Cassandra table looks like this:

INSERT INTO users (id, first_name, last_name, email) VALUES (UUID(), 'John', 'Doe', 'john.doe@example.com');

This statement inserts a new row into the users table, specifying the values for the id, first_name, last_name, and email columns. Note the use of UUID() function to generate a unique identifier. Ensure data types match column definitions.

15. How do you retrieve data from Cassandra using CQL?

Data retrieval from Cassandra using CQL (Cassandra Query Language) is done via the SELECT statement. The basic syntax is SELECT column1, column2, ... FROM table_name WHERE condition;. You can select specific columns or use SELECT * to retrieve all columns. The WHERE clause is used to filter the data based on specified conditions, typically involving primary key columns (partition key and clustering columns).

For example:

SELECT * FROM users WHERE user_id = '123';

This query retrieves all columns from the users table where the user_id is '123'. You can also use operators like =, >, <, >=, <=, IN, and ALLOW FILTERING (though the latter should be used sparingly as it can impact performance) within the WHERE clause. Remember that CQL requires you to specify the full primary key for efficient queries.

16. What is a 'partition key' and why is it important for querying data efficiently?

A partition key is a field in your data that is used to determine which physical partition (or shard) the data will be stored in. It's crucial for efficient querying because when you specify the partition key in your query, the database can directly target the specific partition(s) that contain the data you're looking for, rather than scanning the entire dataset.

This significantly improves query performance, especially in large datasets, because it reduces the amount of data that needs to be read and processed. Without a partition key (or without specifying it in your query), the database might need to perform a full table scan, which is much slower. For example, in DynamoDB, specifying the partition key allows for queries that take O(1) time, rather than O(N).

17. What are the different data types supported by Cassandra?

Cassandra supports a variety of data types, which can be broadly categorized as follows:

- Primitive Types: These include

ascii,bigint,blob,boolean,decimal,double,float,inet,int,smallint,text,timeuuid,timestamp,tinyint,uuid,varchar, andvarint. These represent basic data like numbers, strings, and unique identifiers. - Collection Types: Cassandra provides

list,set, andmapfor storing collections of data within a single column. For example,list<text>can hold a list of strings. - Counter Type: The

countertype is specifically designed for incrementing and decrementing values. It offers atomic operations ideal for tracking counts. - User-Defined Types (UDTs): Cassandra allows you to create custom data types by grouping multiple fields together. This enhances data modeling flexibility by enabling more complex, structured data within columns.

- Tuple Type: Tuples allow you to store a fixed-size sequence of values with different data types in a single column. For example:

tuple<int, text, boolean>

18. How can you update existing data in Cassandra?

In Cassandra, you update existing data using the UPDATE statement, similar to SQL. The UPDATE statement modifies the values of specified columns in a row, identified by its primary key. If a column doesn't exist, it will be created (unless the table is configured otherwise).

For example:

UPDATE users SET email = 'new_email@example.com', age = 35 WHERE id = UUID('a1b2c3d4-e5f6-7890-1234-567890abcdef');

This command updates the email and age columns in the users table for the row where the id matches the provided UUID. You can also use conditional updates using IF clause for optimistic locking.

19. How do you delete data from Cassandra using CQL?

To delete data from Cassandra using CQL (Cassandra Query Language), you primarily use the DELETE statement. The basic syntax is DELETE FROM table_name WHERE condition;. The condition part usually involves the primary key (or part of it) to specify which row(s) you want to delete. For example:

DELETE FROM users WHERE user_id = 'some_user_id';

You can also delete specific columns instead of entire rows. In that case the syntax would be DELETE column_name FROM table_name WHERE condition;. If you need to delete multiple columns, you can list them separated by commas: DELETE column1, column2 FROM table_name WHERE condition;

20. Explain the concept of 'tuning' in Cassandra.

In Cassandra, 'tuning' refers to the process of optimizing the database for performance and stability based on specific workload characteristics and hardware configurations. It involves adjusting various parameters and configurations to achieve desired levels of throughput, latency, and resource utilization.

Tuning can involve several aspects:

- Schema design: Choosing appropriate data models and column families.

- Compaction strategy: Selecting the best compaction strategy based on workload (e.g., SizeTieredCompactionStrategy, LeveledCompactionStrategy).

- Cache settings: Configuring key and row caches.

- JVM settings: Optimizing JVM heap size and garbage collection.

- Hardware: Optimizing disk I/O using RAID configurations or SSDs.

- Network settings: Optimize connections to Cassandra cluster for best throughput and latency. This involves tweaking parameters such as

num_tokensandconcurrent_reads. Proper tuning ensures efficient resource utilization and responsiveness for applications using Cassandra.

21. What are some common use cases for Cassandra?

Cassandra is well-suited for applications requiring high availability, scalability, and fault tolerance, especially when dealing with large volumes of data. Some common use cases include:

- Time-series data: Storing and analyzing sensor data, financial transactions, or IoT data.

- Social media: Handling user profiles, social graphs, activity streams, and messaging.

- E-commerce: Managing product catalogs, order history, and customer information.

- Logging and monitoring: Aggregating and analyzing logs from various systems.

- Recommendation engines: Storing user preferences and product information for personalized recommendations.

- IoT (Internet of Things): Ingesting, storing, and processing massive amounts of data from connected devices.

22. What are the advantages of using Cassandra over other database systems for specific applications?

Cassandra offers advantages in scenarios prioritizing high availability, scalability, and fault tolerance. Its decentralized architecture eliminates single points of failure, making it ideal for applications requiring continuous uptime, like social media feeds or IoT data collection. Data is automatically replicated across multiple nodes, ensuring data availability even if some nodes are down. This robustness is often superior to traditional RDBMS systems that can have more complex failover procedures.

Furthermore, Cassandra excels at handling massive write throughput. Its write-optimized architecture is designed for ingesting large volumes of data quickly, making it suitable for use cases involving high-velocity data streams, such as real-time analytics or sensor data logging. Its ability to scale horizontally by simply adding more nodes is a key advantage over other database systems that may require more complex scaling strategies or have limitations in terms of maximum capacity.

Intermediate Cassandra interview questions

1. Can you explain how Cassandra handles tombstones and their impact on performance?

Cassandra uses tombstones to mark data for deletion. When you delete data, Cassandra doesn't immediately remove it from disk. Instead, it inserts a tombstone, which is a special marker indicating that the data should be treated as deleted. During reads, Cassandra must check for tombstones and filter out the corresponding deleted data. This adds overhead to read operations.

The impact on performance can be significant if tombstones accumulate. Each tombstone requires Cassandra to read and process it, increasing latency. Excessive tombstones can lead to higher disk I/O, increased read latencies, and even prevent Cassandra from efficiently compacting data files. Regular compactions and setting appropriate gc_grace_seconds are essential to manage tombstones and mitigate their performance impact.

2. Describe the purpose and function of Bloom filters in Cassandra.

Bloom filters in Cassandra are a probabilistic data structure used to efficiently test whether an element is a member of a set. In Cassandra, they reside in memory and are used to quickly determine if a requested row exists in an SSTable (Sorted String Table) before performing costly disk I/O.

Functionally, before Cassandra checks an SSTable for a particular piece of data, it consults the Bloom filter. If the Bloom filter says the data is not present, Cassandra skips reading that SSTable entirely. A Bloom filter can have false positives (saying an element is present when it isn't), but it will never have false negatives (saying an element isn't present when it is). The false positive probability is configurable, trading off memory usage for accuracy. When a Bloom filter returns a false positive, Cassandra falls back and checks on disk, guaranteeing correctness.

3. How does Cassandra ensure data consistency across multiple nodes in a cluster, considering different consistency levels?

Cassandra ensures data consistency across multiple nodes using a tunable consistency model. When writing data, the client specifies a consistency level (e.g., ONE, QUORUM, ALL). This determines how many nodes must acknowledge the write before it's considered successful. Similarly, on reads, the consistency level dictates how many nodes must respond with the same data before the read is considered consistent. If the data is not consistent, Cassandra performs read repair in the background to reconcile differences, ensuring eventual consistency.

Different consistency levels offer trade-offs between consistency and availability. Stronger consistency levels like QUORUM or ALL guarantee data consistency but might sacrifice availability if some nodes are down. Weaker levels like ONE allow for higher availability but may return stale data if the data hasn't propagated to all nodes yet. Cassandra also uses hinted handoffs to ensure writes are not lost when nodes are temporarily unavailable. In this approach, another available node will store the write on behalf of the unavailable node and replay it when the unavailable node comes back online.

4. Explain how Cassandra uses hinted handoff to maintain data durability.

Cassandra employs hinted handoff to ensure data durability and availability when a node is temporarily unavailable. When a write request arrives at a coordinator node and one or more replica nodes are down, the coordinator stores a 'hint' locally. This hint contains the data to be written along with metadata indicating the intended recipient(s).

Once a down replica node recovers, the coordinator delivers the stored hints to it. This ensures the node is brought up to date with the missed writes, maintaining data consistency across the cluster. The hints have a time-to-live (TTL), and are discarded if the target node remains unavailable beyond that time, preventing indefinite storage of hints. This mechanism contributes to Cassandra's fault tolerance and ability to handle temporary node outages without losing data.

5. What are the different types of counters available in Cassandra, and when would you use each?

Cassandra offers a specialized counter column type for performing increment and decrement operations efficiently. Unlike standard columns, counter columns are designed to handle concurrent updates without conflicts. There's technically only one type of counter in Cassandra but its usage can be categorized based on scope and application.

When to use counters? Primarily for use cases requiring scalable counting, like website hits, vote tallies, or inventory management where absolute accuracy to the last count isn't crucial (eventual consistency applies). Avoid counters for financial transactions or situations demanding strict ACID properties. Keep in mind that counters cannot be set to an arbitrary value. You can only increment or decrement them. If you need to reset a counter, you effectively set it to zero by decrementing it by its current value or creating a new table.

6. Explain the process of adding or removing a node from a Cassandra cluster.

Adding a node to a Cassandra cluster involves bootstrapping the new node. The node is configured with the cluster's seed node list. When started, the new node contacts the seed node, learns the cluster topology, and begins streaming data from existing nodes to take ownership of its token range. The auto_bootstrap: true setting in cassandra.yaml usually handles this automatically. Post bootstrap, running nodetool status verifies that the new node is up and contributing to the cluster.

Removing a node requires decommissioning it first. Using nodetool decommission on the node initiates the process of streaming the node's data to other nodes responsible for that data. After decommissioning, the node can be safely shut down and removed from the infrastructure. Finally, update the seed node list on remaining nodes to reflect the removed node. nodetool removenode <node ID> may be needed on a healthy node in case of an unclean shutdown.

7. What are the implications of using different partitioners in Cassandra, and how do you choose the right one?

Different partitioners in Cassandra affect how data is distributed across the nodes in the cluster. The primary partitioners are Murmur3Partitioner and RandomPartitioner. Murmur3Partitioner is generally recommended as it provides a more even distribution, reducing hotspots. RandomPartitioner distributes data randomly based on the MD5 hash of the key. OrderPreservingPartitioner is generally discouraged because its inherent ordered partitioning makes it susceptible to hotspots. Also, it can be difficult to change partitioners.

Choosing the right partitioner depends on your data model and access patterns. If you have monotonically increasing or sequential keys, Murmur3Partitioner is almost always the better choice. If keys are random, either Murmur3 or RandomPartitioner may work. Consider the implications of each partitioner carefully before choosing because you may need to migrate data if you change it later. Use nodetool cfstats to analyze data distribution after initial setup.

8. How do you monitor and troubleshoot Cassandra cluster performance, and what metrics are important?

Monitoring Cassandra cluster performance involves tracking key metrics and using tools to identify and resolve issues. Important metrics include: latency (read/write), throughput (operations/second), error rates, storage space usage, CPU utilization, memory usage (heap and off-heap), and garbage collection activity. Tools like nodetool, cqlsh, and monitoring systems (e.g., Prometheus with Grafana) are crucial.

Troubleshooting involves analyzing these metrics for anomalies. High latency could indicate network issues, overloaded nodes, or poorly tuned queries. High CPU or memory usage might point to inefficient data models or insufficient resources. Frequent garbage collection pauses can impact performance. Analyzing Cassandra logs is also critical for identifying errors and debugging problems. Using jstack and jmap can help diagnose memory leaks and thread contention.

9. Describe the different caching mechanisms available in Cassandra and how they improve read performance.

Cassandra employs several caching mechanisms to enhance read performance. These caches minimize disk I/O by storing frequently accessed data in memory. The primary caches are:

- Key Cache: Stores the location of data on disk (partition key to index mapping). Improves lookup speed.

- Row Cache: Stores entire rows of data in memory. Fastest read performance for frequently accessed rows.

- Chunk Cache: Stores SSTable data in off-heap memory. Good balance of capacity and performance. Provides performance boost by avoiding disk reads.

10. Explain how you would back up and restore a Cassandra cluster.

Backing up a Cassandra cluster typically involves using the nodetool snapshot command on each node. This creates a consistent snapshot of the data stored on that node. The snapshots are stored in the data/keyspace/table-UUID/snapshots/snapshot_name directory within the Cassandra data directory. To back up the entire cluster, you would iterate through each node, execute the nodetool snapshot command, and then copy the snapshot directories to a secure external storage location like S3 or a network file system. The snapshot name should be unique and descriptive. Consider also backing up the Cassandra schema using cqlsh DESCRIBE KEYSPACES or nodetool describecluster to capture the cluster's metadata.

Restoring a Cassandra cluster from a backup involves stopping the Cassandra service on all nodes, clearing the existing data directories (or creating new ones), and then copying the backed-up snapshot data into the appropriate data/keyspace/table-UUID/snapshots/snapshot_name directory on each node. Before starting Cassandra, the sstableloader tool is used to stream the data from the snapshot into the cluster. The schema needs to be restored by executing the previously backed-up CQL statements. If the cluster's topology has changed, you may also need to adjust the cassandra.yaml configuration file accordingly before starting the nodes.

11. What are User Defined Functions (UDFs) in Cassandra, and how can they be useful?

User Defined Functions (UDFs) in Cassandra allow you to extend Cassandra's functionality by writing custom functions in languages like Java or Javascript (Nashorn engine prior to Cassandra 4.0, Javascript based on GraalVM in Cassandra 4.0+). These functions can then be called from CQL queries, enabling you to perform operations that are not natively supported by Cassandra.

UDFs can be useful for several reasons:

- Data Transformation: Performing complex data transformations or calculations during query execution.

- Data Validation: Implementing custom validation rules before inserting or updating data.

- Custom Aggregation: Creating custom aggregate functions to summarize data in specific ways.

- Business Logic: Embedding specific business logic directly into the database.

Here is an example for creating and calling a Javascript based UDF. Note the language and appropriate arguments need to be correctly provided.

CREATE FUNCTION IF NOT EXISTS keyspace_name.udf_name (argument1_name data_type, argument2_name data_type)

RETURNS data_type

LANGUAGE javascript

AS 'return argument1_name + argument2_name;';

SELECT keyspace_name.udf_name(column1, column2) FROM table_name WHERE ...;

12. How does Cassandra handle data compaction, and why is it necessary?

Cassandra handles data compaction by merging multiple SSTables (Sorted String Tables) into new ones. This process involves reading data from several SSTables, merging the rows with the same partition key, and writing the merged data into new SSTables. Older SSTables are then marked for deletion. Compaction is essential for several reasons:

- Performance Optimization: Reduces the number of SSTables Cassandra needs to read during queries, improving read performance. Without compaction, queries would need to scan through a large number of SSTables to find the required data.

- Space Reclamation: Eliminates obsolete data (deleted or updated data) and reclaims disk space.

- Tombstone Management: Removes tombstones (markers indicating deleted data) after a certain grace period, preventing them from accumulating indefinitely and impacting performance.

- Data Organization: Optimizes data layout on disk by merging SSTables based on their age or size, resulting in better data locality.

13. Explain the concept of Lightweight Transactions (LWT) in Cassandra and their use cases.

Lightweight Transactions (LWT) in Cassandra provide a way to achieve conditional updates, ensuring atomicity and isolation for operations that modify data only if certain conditions are met. Under the hood, LWTs use Paxos to achieve consensus across replicas, guaranteeing that either all replicas apply the update or none do. This is crucial when you need to prevent race conditions or ensure data consistency in scenarios where multiple clients might be trying to modify the same data concurrently.

Common use cases include implementing counters, user profile updates (e.g., updating a user's email only if the current email matches a specific value), and managing distributed locks. It’s important to note that LWTs are significantly slower than regular writes due to the overhead of Paxos, so they should be used sparingly and only when strict consistency is absolutely necessary. Regular writes are ‘eventually consistent’. LWTs are ‘strongly consistent’.

14. What are the best practices for data modeling in Cassandra to optimize performance?

Cassandra data modeling prioritizes query patterns over data normalization. Key practices include:

- Denormalization: Duplicate data across tables to satisfy different query needs, avoiding joins. This improves read performance.

- Choosing the Right Primary Key: Select a partition key that distributes data evenly across nodes to prevent hotspots. Consider composite partition keys for finer-grained control.

- Clustering Columns: Use clustering columns to sort data within a partition, enabling efficient range queries. The order of clustering columns matters.

- Data Locality: Design tables to retrieve all required data from a minimal number of partitions. This reduces inter-node communication.

- Avoid

ALLOW FILTERING: If possible, design tables to support queries without needingALLOW FILTERINGbecause it can lead to unpredictable performance and full table scans. - Sizing Rows Appropriately: Large rows can impact performance. Consider techniques like bucketing or splitting data into multiple rows if necessary.

- Use appropriate data types: Selecting the right data type can improve storage efficiency and query performance. For example, use

UUIDorTIMEUUIDfor unique identifiers. - Compression: Enable compression to reduce storage space and improve I/O performance. Use

LZ4orSnappycompression algorithms.

15. How can you optimize Cassandra queries to improve read performance?

Optimizing Cassandra queries for improved read performance involves several strategies. Firstly, ensure you're querying by the partition key. Queries that filter on non-partition key columns (without secondary indexes) result in full table scans and are extremely slow. Use ALLOW FILTERING sparingly, only for small datasets, and consider creating appropriate secondary indexes for common non-partition key queries.

Secondly, optimize your data model. Denormalize data to avoid joins (which Cassandra doesn't support). Use appropriate data types and avoid large partitions (ideally under 100MB). Limit the number of columns retrieved using SELECT and consider using LIMIT to restrict the result set size. Regularly run nodetool compact to merge SSTables and remove tombstones. Monitor query performance using tools like nodetool cfstats and address slow queries accordingly.

16. Explain the role of the commit log in Cassandra's write process.

The commit log in Cassandra plays a crucial role in ensuring data durability. Before any data is written to a memtable (in-memory data structure), it is first appended to the commit log on disk. This ensures that even if the Cassandra node crashes before the memtable is flushed to disk (as an SSTable), the data is not lost.

Upon restarting after a crash, Cassandra replays the commit log, effectively re-writing the data that was in memory but not yet persisted to disk. The commit log is a sequential write-only log, optimized for fast writes. Once the data in a commit log segment is successfully flushed to SSTables, the corresponding segment of the commit log can be discarded.

17. What are the different consistency levels in Cassandra, and when would you choose each?

Cassandra offers tunable consistency levels, allowing you to balance consistency and availability. Consistency levels determine how many nodes must acknowledge a write operation as successful before returning confirmation to the client or how many nodes must respond to a read request. Common consistency levels include:

- ANY: Writes are accepted as long as at least one node is available. Least consistent, highest availability. Useful for fire-and-forget scenarios like logging, where data loss is acceptable.

- ONE: Requires at least one replica to acknowledge the write or respond to the read. Good balance between consistency and availability. Often the default choice.

- QUORUM: Requires a majority of replicas to acknowledge the write or respond to the read. Provides strong consistency but can be affected by node failures.

- ALL: Requires all replicas to acknowledge the write or respond to the read. Highest consistency, lowest availability. Use when data integrity is paramount.

- LOCAL_ONE: Similar to ONE, but only considers replicas in the local data center.

- LOCAL_QUORUM: Similar to QUORUM, but only considers replicas in the local data center. Provides strong consistency within the local data center.

- EACH_QUORUM: Requires a quorum of replicas in each data center to acknowledge the write or respond to the read. Provides strong consistency across multiple data centers.

18. Describe the Cassandra Query Language (CQL) and its common commands.

CQL (Cassandra Query Language) is the primary language for interacting with Cassandra databases. It's similar to SQL but tailored for Cassandra's distributed, NoSQL nature. CQL allows users to define schemas, insert, query, update, and delete data.

Common CQL commands include:

CREATE KEYSPACE: Defines a keyspace (similar to a database).CREATE TABLE: Defines a table within a keyspace.INSERT INTO: Inserts data into a table.SELECT: Queries data from a table.UPDATE: Modifies existing data in a table.DELETE: Removes data from a table.ALTER TABLE: Modifies a table's structure.CREATE INDEX: Creates an index on a table column.DROP KEYSPACE: Deletes a keyspace.DROP TABLE: Deletes a table.

Example:

CREATE KEYSPACE mykeyspace WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 1};

USE mykeyspace;

CREATE TABLE users (id UUID PRIMARY KEY, name text, age int);

INSERT INTO users (id, name, age) VALUES (uuid(), 'John Doe', 30);

SELECT * FROM users WHERE name = 'John Doe';

19. Explain the difference between a materialized view and a secondary index in Cassandra and when to use each one.

A materialized view in Cassandra is essentially a pre-computed table derived from a base table. It automatically keeps itself updated whenever the base table changes. You would use it when you need to query data based on a different partition key or clustering columns than the base table offers, and those queries are frequent. It improves read performance for those specific queries at the expense of increased write latency and storage space.

A secondary index, on the other hand, is an index created on a specific column of a table. It helps Cassandra locate rows based on the indexed column. Use a secondary index when you need to filter data based on a non-primary key column, but the query is not as frequent or critical as a materialized view. Secondary indexes are generally less performant than materialized views, especially for high cardinality columns, but they offer lower write latency overhead.

20. How does Cassandra handle failure scenarios, such as node failures or network partitions?

Cassandra is designed to be highly resilient to failures. For node failures, Cassandra uses a gossip protocol to detect downed nodes quickly. Data is replicated across multiple nodes according to the replication factor. When a node fails, reads and writes are automatically handled by the remaining replicas. Once the failed node is back online, data is automatically synchronized.

For network partitions, Cassandra employs a tunable consistency model. Using consistency levels, you can choose how many replicas must acknowledge a read or write operation before it's considered successful. This allows you to prioritize availability (allowing operations to proceed even with some replicas unavailable) or consistency (requiring a majority of replicas to agree before considering an operation successful), depending on the application's needs. Cassandra also supports features like hinted handoff, where a node temporarily stores writes intended for a failed node and replays them when the node recovers, ensuring eventual consistency.

21. What are the advantages and disadvantages of using Cassandra compared to other NoSQL databases?

Cassandra excels in handling massive datasets with high availability and fault tolerance due to its decentralized architecture and data replication across multiple nodes. It provides excellent write performance, making it suitable for applications with heavy write loads like time-series data or logging. Cassandra's linear scalability allows it to easily handle growing data volumes by adding more nodes to the cluster. However, it's eventual consistency model can lead to stale data reads in some scenarios, requiring careful consideration of consistency levels for applications that demand strong consistency. Its complex setup and tuning can also be a disadvantage compared to simpler NoSQL databases.

Compared to other NoSQL databases, Cassandra's focus on high availability and write performance often comes at the cost of read performance, especially for complex queries. For example, MongoDB excels in document-based data storage and provides more flexible querying options, while Redis is renowned for its speed and in-memory data storage, making it suitable for caching and real-time applications. Choosing the right NoSQL database depends heavily on the specific requirements of the application, prioritizing either read-heavy workloads or write-heavy workloads that necessitate extremely high availability.

22. Discuss strategies for handling large-scale data migrations in Cassandra without impacting application performance.

To handle large-scale data migrations in Cassandra without impacting application performance, several strategies can be employed. One key approach is to use techniques like incremental migrations. This involves migrating data in smaller chunks over time, rather than all at once. This can be achieved using timestamp-based filtering or token range splitting. Employing dual writes is crucial: The application writes to both the old and new schema during the migration period. This ensures data consistency and allows for a gradual transition. Monitor system resources carefully (CPU, memory, disk I/O) during the migration to identify and address any bottlenecks promptly. For schema changes, consider online schema changes available in Cassandra 3.0 and later, which allow schema modifications without downtime.

Another effective strategy is using Spark Cassandra Connector to perform the data migration. Spark allows for parallel processing of data, significantly speeding up the migration process. Before starting the migration, perform thorough testing in a staging environment that mirrors production. This helps identify potential issues and refine the migration plan. Finally, always have a rollback plan in place should any issues arise during the migration. Keep the old schema accessible until full migration and validation are done.

Advanced Cassandra interview questions

1. How does Cassandra handle consistency when data is written to multiple nodes, and what trade-offs are involved?

Cassandra achieves tunable consistency by allowing you to configure the consistency level for both read and write operations. When writing data, Cassandra replicates it to multiple nodes as determined by the replication factor. The consistency level dictates how many nodes must acknowledge the write before it's considered successful. Higher consistency levels (like QUORUM or ALL) guarantee stronger consistency, meaning you're more likely to read the most up-to-date data. However, this comes at the cost of increased latency and reduced availability, as more nodes need to be contacted and agree.

The trade-offs are between consistency, availability, and latency. Lower consistency levels (like ONE or LOCAL_ONE) provide faster writes and higher availability, because fewer nodes need to respond. But there's a greater chance of reading stale data. Choosing the right consistency level depends on the application's requirements. If data integrity is paramount, then higher consistency levels should be used. If availability and speed are more important, lower consistency levels are more appropriate. It's also possible to configure different consistency levels for reads and writes, e.g., strong consistency writes and weaker consistency reads for improved performance.

2. Explain the concept of hinted handoff in Cassandra and how it ensures data durability.

Hinted Handoff in Cassandra is a mechanism that ensures data durability and availability when a node is temporarily unavailable. When a write operation is intended for a node that is down, the coordinating node (the one that receives the client request) stores a hint locally. This hint contains the data to be written and information about the target node.

Later, when the target node comes back online, the coordinating node handoffs the hinted data to the now-available node. This ensures that no data is lost due to temporary node outages, maintaining data consistency across the cluster. The hints are stored on disk and are replayed when the target node is available. Cassandra uses a configurable parameter, hinted_handoff_enabled, to control whether hinted handoff is enabled. The max_hint_window_in_ms parameter determines how long Cassandra will store hints before discarding them.

3. Describe the role of bloom filters in Cassandra's read path and how they improve performance.

Bloom filters in Cassandra are probabilistic data structures used to efficiently test whether an element is a member of a set. In Cassandra's read path, a bloom filter is consulted before accessing the SSTable on disk to check if the requested row key exists within that SSTable. If the bloom filter returns 'false', it definitely means the row key is not in the SSTable, avoiding a costly disk I/O operation.

By quickly filtering out SSTables that do not contain the desired data, bloom filters significantly reduce unnecessary disk reads. This optimization dramatically improves read performance, especially when data is not present or when querying for a wide range of data across multiple SSTables. It's important to note that bloom filters can produce false positives (reporting that a key might be present when it is not), but they never produce false negatives (reporting that a key is absent when it is actually present). When a false positive occurs, Cassandra still accesses the SSTable, but the performance gain from avoiding the majority of unnecessary reads far outweighs the occasional false positive penalty.

4. What are the different types of counters in Cassandra, and when would you use each type?

Cassandra offers standard counters. Standard counters track a numerical value that can only be incremented or decremented. They are useful for things like hit counters or tracking likes on a social media post.

Unlike regular columns, counters require special handling. You increment or decrement a counter, never directly set it. Operations on counters are not idempotent, meaning replaying the same operation will change the counter value again. For high consistency, Cassandra uses a special data structure and protocol. Also, counters are only supported in tables created without a default_time_to_live (TTL). If you need to expire counter data, you'll need to handle that in your application logic.

5. How does Cassandra achieve high availability, and what happens when a node fails?

Cassandra achieves high availability through replication and a distributed architecture. Data is automatically replicated across multiple nodes, defined by the replication factor. If one node goes down, the other nodes containing the replicated data can still serve requests, ensuring continuous operation. Cassandra uses a gossip protocol for node failure detection and membership management. When a node fails, the remaining nodes detect the failure and temporarily take over the responsibilities of the failed node, using the replicated data. Data consistency is eventually achieved when the failed node comes back online, or data is re-distributed if the node is permanently removed.

6. Explain the purpose of the Cassandra system keyspace and the information it contains.

The system keyspace in Cassandra is a critical metadata repository that stores information about the cluster's internal state and configuration. It's automatically created when the cluster is initialized and is essential for the proper functioning of Cassandra. It contains details about:

- Keyspaces: Definitions of all keyspaces in the cluster (including replication strategies).

- Column Families (Tables): Metadata about each table, such as column names, data types, and storage parameters.

- Users and Permissions: Information about user accounts and their granted privileges.

- Functions and Aggregates: Definitions of user-defined functions (UDFs) and aggregates.

- Topology Information: Data about nodes in the cluster (status, tokens)

Modifying data directly within the system keyspace can have severe consequences for the cluster's stability and data integrity, and should be done with extreme caution and usually only through the provided CQL commands. It is best left alone, except when performing administration and troubleshooting tasks.

7. Describe how you would monitor a Cassandra cluster to ensure optimal performance and identify potential issues.

To monitor a Cassandra cluster, I'd focus on several key metrics. For node-level monitoring, I'd use nodetool to track things like heap usage, compaction activity, and read/write latencies. OS-level metrics like CPU utilization, disk I/O, and network traffic are also critical; tools like iostat, vmstat, and netstat (or their modern equivalents) would be valuable here. I'd use a monitoring system like Prometheus with the Cassandra exporter or Datadog to collect and visualize these metrics over time, configuring alerts for thresholds that indicate potential problems, like high latency, low disk space, or excessive compaction backlog. For example, critical alerts would be triggered when PendingTasks is consistently high.

At the cluster level, I'd monitor keyspace-specific metrics such as read/write latency, number of mutations, and cache hit ratios. Examining slow query logs and auditing authentication/authorization events is crucial for security. I would also utilize Cassandra's built-in metrics through JMX, visualize the data with Grafana, and set up alerts in tools like PagerDuty. Ultimately, a holistic view combining node and cluster-level metrics allows for proactive identification and resolution of performance bottlenecks or potential failures. Automated playbooks could be set up to address common issues like restarting unresponsive nodes.

8. What are the different types of compaction strategies available in Cassandra, and how do they impact performance?

Cassandra offers several compaction strategies, each impacting performance differently. SizeTieredCompactionStrategy (STCS) is the default. It compacts SSTables of similar sizes, which can lead to write amplification and uneven performance but is simpler to manage. LeveledCompactionStrategy (LCS) is suitable for read-heavy workloads. It creates multiple levels of SSTables based on size, minimizing read latency but increasing write latency and disk space usage. TimeWindowCompactionStrategy (TWCS) is designed for time-series data. It compacts SSTables based on their age, making it efficient for time-bound queries but potentially less efficient for other types of data.

There's also DateTieredCompactionStrategy (DTCS) which is similar to TWCS but deprecated. The choice of compaction strategy depends on the workload characteristics; STCS is good for write intensive workload, LCS for read intensive and TWCS for time series data. Improper selection can negatively affect read/write latencies and disk space utilization. You should choose the appropriate strategy based on your data and query patterns.

9. How does Cassandra handle data deletion (tombstones) and what are the potential implications?

Cassandra handles data deletion using tombstones. When a DELETE operation is performed, Cassandra doesn't immediately remove the data from disk. Instead, it inserts a special marker called a tombstone. This tombstone marks the data as deleted. During subsequent reads, Cassandra checks for tombstones and filters out any data that has been marked for deletion.

The main implication of tombstones is that they can accumulate and impact performance. If tombstones are not properly managed, they can lead to increased storage usage and slower read times. This is because Cassandra has to scan through more data (including tombstones) to satisfy read requests. Regular compaction processes are essential for removing tombstones after a certain grace period (configured by gc_grace_seconds) to reclaim space and maintain performance. If gc_grace_seconds is too short, data can be resurrected if a node is down during deletion and later rejoins the cluster. If the value is too long it can lead to performance problems due to excessive tombstones.

10. Explain how to configure and use virtual nodes (vnodes) in a Cassandra cluster.

Virtual nodes (vnodes) simplify Cassandra cluster management by allowing each physical node to own multiple smaller partitions of data, rather than a single large range. This improves data distribution, load balancing, and recovery. To configure vnodes, you typically set num_tokens in cassandra.yaml to a value greater than 1 (e.g., 256 is common). Cassandra then automatically assigns these tokens randomly across the ring. No initial token assignment is needed per node when using vnodes.

To use vnodes, simply start the Cassandra node after configuring num_tokens. Cassandra handles the token assignment and data distribution automatically. When adding or removing nodes, Cassandra rebalances data across vnodes, minimizing the manual intervention required with traditional token-based assignments.

11. Describe how you would back up and restore a Cassandra cluster.

Backing up a Cassandra cluster involves taking snapshots of the data on each node. This is typically done using the nodetool snapshot command. It creates hard links to the existing SSTable files in a new directory, providing a consistent point-in-time view of the data. These snapshots are then copied to a secure, offsite location (e.g., cloud storage, another data center). Automation is key, using scripts or tools like OpsCenter to schedule regular backups.

Restoring involves copying the snapshot data back to the cluster. First, ensure the cluster is running and that the target keyspaces/tables are either truncated or do not exist. Then, copy the snapshot directories into the appropriate data directories on each node. Finally, use the nodetool refresh command to load the data from the snapshots into Cassandra. Repair operations should follow the restore to ensure data consistency and integrity across the cluster.

12. How does Cassandra support data locality and why is it important?

Cassandra achieves data locality through its consistent hashing-based data distribution strategy and replication. Data is distributed across the cluster nodes based on a partition key, and each node is responsible for a specific range of these keys. Replication ensures that multiple copies of the data exist on different nodes. When a client requests data, Cassandra attempts to route the request to one of the nodes that owns the data replica, preferably the closest one in terms of network proximity. This minimizes latency and improves read performance.

Data locality is crucial in distributed database systems like Cassandra because it directly impacts performance and cost. By minimizing network hops to access data, Cassandra reduces latency and improves throughput. This is especially important for latency-sensitive applications. Furthermore, reduced network traffic translates to lower network costs and more efficient resource utilization across the cluster. By keeping data close to where it's being processed, we optimize performance.

13. Explain how to use the Cassandra Query Language (CQL) to perform complex queries.

CQL (Cassandra Query Language) allows complex queries via several mechanisms. You can filter data using the WHERE clause with operators like =, >, <, >=, <=, IN, and BETWEEN. For more advanced filtering, use ALLOW FILTERING which executes the query even if the WHERE clause doesn't use an index, but be aware this can be slow on large datasets.

Beyond basic filtering, CQL supports functions for data manipulation within queries (e.g., toDate(), token()). Aggregation is also possible using functions like COUNT(), SUM(), MIN(), MAX(), and AVG(), usually in conjunction with GROUP BY. Joins are generally discouraged in Cassandra due to performance implications and data denormalization is the preferred approach; however, materialized views can be used to pre-compute joins which are then queried. Here's an example: SELECT COUNT(*) FROM my_table WHERE partition_key = 'some_value' ALLOW FILTERING;

14. What are the security considerations when deploying a Cassandra cluster?

When deploying a Cassandra cluster, several security aspects demand attention. Authentication is crucial; enable and enforce authentication using Cassandra's built-in mechanisms or integrate with external systems like LDAP. Authorization, which governs user permissions, should be configured to adhere to the principle of least privilege, granting users only the necessary access. Encryption, both in-transit (using SSL/TLS for client-to-node and node-to-node communication) and at-rest (encrypting data on disk), protects sensitive data. Consider network segmentation to isolate the Cassandra cluster from other parts of the infrastructure. Regular security audits and vulnerability scanning are important to identify and address potential weaknesses.

Further considerations include proper firewall configuration to restrict access to essential ports only. Stay updated with Cassandra's security advisories and apply patches promptly. Regularly review and rotate credentials. Implement monitoring and alerting for suspicious activities, such as unusual login attempts or data access patterns. Secure backups are also critical to ensure data recovery in case of a security incident.

15. How does Cassandra handle schema evolution, and what are the best practices for making schema changes?

Cassandra handles schema evolution through mutations. You can add, drop, or modify columns in a table without taking the entire cluster offline. Cassandra propagates schema changes asynchronously to all nodes.

Best practices include: 1. Testing schema changes on a staging environment before applying them to production. 2. Adding columns with a default value to avoid null values in existing rows and application logic errors. 3. Avoid dropping columns that are still in use. Instead, deprecate them and remove them later. 4. Using ALTER TABLE command carefully and monitoring its impact on the cluster's performance. 5. Never changing the PRIMARY KEY as it can lead to data corruption. You would have to create a new table and migrate the data.

16. Describe how you would troubleshoot slow queries in Cassandra.

To troubleshoot slow queries in Cassandra, I would first enable query logging using TRACE level in cassandra.yaml. This will log detailed information about the query execution, including latency at various stages. Then, I'd analyze the logs to identify bottlenecks – for example, high latency during read repair, tombstone scans, or data retrieval from disk. Also, examine system.log for potential garbage collection pauses or disk I/O issues.

Next, I'd use tools like nodetool cfstats and nodetool tablehistograms to check table statistics like average read latency, partition size, and tombstone counts. High tombstone counts or large partitions can significantly impact query performance. Finally, review the query itself; ensure proper indexing and avoid ALLOW FILTERING when possible. Consider denormalizing data to optimize reads if needed. Analyzing the application's data model and query patterns is vital for long-term performance improvements.

17. How can you optimize Cassandra for write-heavy workloads?

To optimize Cassandra for write-heavy workloads, several strategies can be employed. First, tune the consistency level. Using LOCAL_ONE or ONE instead of higher levels like QUORUM reduces the number of nodes that need to acknowledge the write before it's considered successful, thus improving write throughput. Second, optimize the data model. Avoid wide rows, as they can lead to performance bottlenecks during reads and writes. Partition data effectively to distribute the write load evenly across the cluster. Compaction strategy choice is important; SizeTieredCompactionStrategy (STCS) might be better for write-heavy workloads initially but consider LeveledCompactionStrategy (LCS) if read performance degrades later. Third, dedicate sufficient resources to Cassandra nodes: ensure adequate RAM, fast disks (SSDs are preferred), and sufficient CPU cores. Adjust JVM heap size appropriately for the workload. Finally, monitor the cluster's performance using tools like nodetool, and address any bottlenecks that arise.

18. Explain the concept of Lightweight Transactions (LWT) in Cassandra and their limitations.

Lightweight Transactions (LWT) in Cassandra provide a way to achieve linearizable consistency for single-row operations. Under the hood, LWT uses Paxos to achieve consensus before committing the update. This guarantees that only one client can successfully update a row based on a specific condition. Essentially, it's a "compare and set" operation. For example, you might use LWT to ensure a username is unique during registration by checking if it exists before inserting a new user row.

However, LWTs have limitations. They are significantly slower than regular writes because they require multiple round trips for Paxos to reach consensus. They also have a higher chance of conflict, leading to retries and increased latency. Furthermore, LWT performance degrades significantly under high contention and they are not recommended for frequently updated rows or performance-critical operations. Use them sparingly and only when strict consistency is absolutely necessary. Schema changes are not LWT. They also don't provide ACID guarantees for multi-row updates. If you need ACID semantics across multiple rows, Cassandra isn't the right tool.

19. How does Cassandra use SSTables and what is their role in the data lifecycle?

Cassandra uses SSTables (Sorted String Tables) as the fundamental data storage format on disk. SSTables are immutable, sorted files that store data partitions. When data is written to Cassandra, it's first stored in memory (memtable). Once the memtable reaches a certain threshold, it's flushed to disk as an SSTable. Being immutable, SSTables do not get updated in-place. Instead, updates and deletes are written as new entries.

The data lifecycle involves the creation of SSTables, compaction, and eventual deletion. Compaction merges multiple SSTables into new ones, removing duplicates and tombstones (markers for deleted data). This process optimizes read performance and reduces disk space. Over time, old SSTables are replaced by newer, compacted ones, ensuring efficient data retrieval. The use of SSTables and compaction strategies are key to Cassandra's write performance and scalability.

20. Describe how you would design a data model in Cassandra for a specific use case, considering factors like query patterns and data relationships.

For a time-series data use case, like storing sensor readings, I'd design a Cassandra table with sensor_id, reading_time and value as columns. The primary key would be (sensor_id, reading_time) where sensor_id is the partition key and reading_time is the clustering key. This ensures that readings from the same sensor are stored on the same node and ordered by time. Query patterns would likely involve fetching readings for a specific sensor within a time range, so the WHERE clause would use sensor_id = ? AND reading_time >= ? AND reading_time <= ?. Data relationships are implicitly defined through sensor_id, allowing us to aggregate readings from the same sensor.

To optimize for a different query pattern, like finding the latest reading from all sensors, a materialized view could be created. This view would have (reading_time, sensor_id) as the primary key, ordered by reading_time descending. Querying this view would efficiently provide the latest reading across all sensors.

21. Explain the purpose and usage of User-Defined Functions (UDFs) and User-Defined Aggregates (UDAs) in Cassandra.

User-Defined Functions (UDFs) in Cassandra allow you to extend the database's functionality by writing custom functions in languages like Java or JavaScript. These functions can then be called within CQL queries, similar to built-in functions, to perform operations that aren't natively supported. UDFs are useful for data transformations, custom validation logic, or complex calculations within queries.

User-Defined Aggregates (UDAs) are similar to UDFs but are specifically designed for aggregating data across multiple rows. They allow you to define custom aggregation logic beyond the standard functions like SUM, COUNT, AVG, etc. A UDA typically consists of a state variable, a state function that updates the state for each row, and a final function that returns the final aggregated result based on the accumulated state.

22. How does Cassandra integrate with other big data technologies like Spark or Hadoop?

Cassandra integrates with other big data technologies like Spark and Hadoop primarily through connectors. For Spark, the spark-cassandra-connector allows Spark to read data from and write data to Cassandra tables efficiently. This enables using Spark for complex analytics, machine learning, and data transformations on data stored in Cassandra.

For Hadoop, while direct integration isn't as common, Cassandra can be used as a data store for Hadoop jobs. Data can be extracted from Cassandra, processed by Hadoop MapReduce jobs, and the results can be written back to Cassandra or another data store. The cassandra-hadoop project provides the necessary input/output formats for Hadoop to interact with Cassandra.

Expert Cassandra interview questions

1. How does Cassandra handle more data than one node can hold?

Cassandra handles more data than one node can hold by distributing data across multiple nodes in a cluster. This is achieved through a technique called partitioning and replication.

Specifically, data is partitioned based on a partition key, which is a part of the primary key. A hash function is applied to the partition key to determine which node(s) will store the data. Cassandra uses a consistent hashing algorithm, which minimizes data movement when nodes are added or removed from the cluster. Replication ensures that data is stored on multiple nodes, providing fault tolerance. The replication factor determines how many copies of each data item are stored.

2. Explain how Cassandra ensures data consistency across multiple data centers.

Cassandra achieves data consistency across multiple data centers through tunable consistency levels and hinted handoffs. Consistency levels determine how many replicas must acknowledge a write operation before it is considered successful. For example, QUORUM requires a majority of replicas to acknowledge, while LOCAL_QUORUM requires a majority within the local data center. EACH_QUORUM requires a majority from each datacenter to respond. Hinted handoffs ensure that if a replica is temporarily unavailable, the write is stored locally and replayed when the replica comes back online, preventing data loss.

Additionally, Cassandra employs anti-entropy mechanisms like read repair and node repair to reconcile differences between replicas. Read repair happens during read operations; if data inconsistencies are detected, the most recent version is propagated to all replicas. Node repair, triggered manually or automatically, compares data between replicas and synchronizes them, ensuring long-term consistency. Cross-datacenter replication is handled using gossip protocol to disseminate cluster state information.

3. Describe the tradeoffs between eventual consistency and strong consistency in Cassandra.

Cassandra offers tunable consistency, allowing you to choose between strong consistency and eventual consistency based on your application's needs. Strong consistency guarantees that after an update, all subsequent reads will see the latest value. This is achieved through mechanisms like quorum writes, where a majority of replicas must acknowledge the write before it's considered successful. However, strong consistency comes at the cost of availability and performance, as it requires coordination across multiple nodes and can be affected by network latency or node failures.

Eventual consistency, on the other hand, prioritizes availability and performance. Updates are propagated to replicas asynchronously. While this allows for faster writes and better fault tolerance (as writes can succeed even if some nodes are down), there's a period where different replicas might have different versions of the data. Applications need to be designed to handle potentially stale data during this period, often using techniques like read repair or hinted handoff to ensure data convergence over time. The choice depends on the use case. For example, financial transactions often need strong consistency, while social media feeds can tolerate eventual consistency.

4. How does Cassandra’s gossip protocol contribute to cluster management and fault detection?

Cassandra's gossip protocol is a peer-to-peer communication mechanism that enables nodes in a cluster to share information about themselves and other nodes. This facilitates cluster management and fault detection. Each node periodically exchanges gossip messages with a small number of other randomly selected nodes. These messages contain state information, such as node status (up/down), load, schema version, and other metadata.

Through gossip, information about node failures and changes in cluster topology propagates rapidly throughout the cluster. When a node detects a failure (e.g., by not receiving a response from a node), it disseminates this information via gossip. Other nodes then become aware of the failure and can adjust their routing and replication strategies accordingly. This decentralized approach makes Cassandra resilient to single points of failure and ensures that the cluster can automatically adapt to changes in membership and state. Also, gossip helps to maintain consistency of metadata across the cluster.

5. Explain the purpose and impact of tombstone in Cassandra.

Tombstones in Cassandra are markers indicating that a piece of data has been deleted. When a row or column is deleted, Cassandra doesn't immediately remove the data from disk. Instead, it inserts a tombstone, which acts as a 'delete marker'. This is because Cassandra is a distributed database, and immediate deletion across all replicas is inefficient.

The impact of tombstones can be significant. If tombstones accumulate and are not properly managed, they can negatively affect performance. During read operations, Cassandra has to check tombstones to ensure that it doesn't return deleted data. Excessive tombstones can slow down read queries as Cassandra scans through them. Compaction processes eventually remove tombstones, but if the gc_grace_seconds is not configured properly, or if there are many tombstones, it can lead to performance degradation and increased storage requirements.

6. How does Cassandra handle data replication and what are the different replication strategies available?

Cassandra ensures high availability and fault tolerance through data replication across multiple nodes. Data is automatically replicated to multiple nodes based on the configured replication strategy.

Cassandra offers two main replication strategies:

- SimpleStrategy: Replicates data to N nodes sequentially in a ring. Suitable for single datacenter deployments or testing.

- NetworkTopologyStrategy: Replicates data across multiple datacenters. Specifies the number of replicas per datacenter, ensuring data is distributed geographically for disaster recovery and reduced latency for users in different regions.

7. Discuss the role of the commit log and memtable in Cassandra's write path.

In Cassandra's write path, the commit log and memtable play crucial roles in ensuring durability and performance. The commit log is an append-only log on disk where every write operation is recorded before it's applied to the memtable. This ensures data durability; even if the Cassandra node crashes before the memtable is flushed to disk, the writes can be replayed from the commit log upon recovery. The memtable, on the other hand, is an in-memory data structure that stores the writes. It's essentially a sorted map, optimized for fast writes.

Once the memtable reaches a certain threshold (size or number of mutations), it is flushed to disk as an SSTable (Sorted String Table). This process is asynchronous, allowing Cassandra to continue accepting writes with minimal latency. After the memtable is successfully flushed, the corresponding entries in the commit log can be purged. This design effectively balances durability (via the commit log) with write performance (via the memtable and asynchronous flushing).

8. What are the implications of using different compaction strategies in Cassandra?

Different compaction strategies in Cassandra have significant implications for performance, resource utilization, and data lifecycle management.

- SizeTieredCompactionStrategy (STCS): Good for write-heavy workloads; however, can lead to disk fragmentation and high read latency during compaction. It merges SSTables of similar sizes, creating larger SSTables over time.

- LeveledCompactionStrategy (LCS): Optimized for read-heavy workloads; has lower read latency and better disk space utilization compared to STCS. It divides data into levels, minimizing the number of SSTables that need to be read for a single query. However, it has higher write amplification.

- TimeWindowCompactionStrategy (TWCS): Suited for time-series data; automatically compacts data within specific time windows, making it efficient for expiring old data. Not appropriate for non-time-series data.

- DateTieredCompactionStrategy (DTCS): Another option for time-series data; similar to TWCS but compacts based on date tiers. It's deprecated, and TWCS is usually preferred.

The best strategy depends on the specific workload and data characteristics. Incorrect strategy selection can lead to performance bottlenecks or inefficient resource usage.

9. Explain how you would design a Cassandra data model for a time-series application.

For a time-series application in Cassandra, I would design the data model with time as the primary clustering key to optimize for time-based queries. A typical table design would include a primary key comprised of a partition key and clustering key. The partition key would be based on the entity or device generating the time-series data (e.g., sensor ID, user ID), enabling efficient distribution of data across the Cassandra cluster. The clustering key would be the timestamp, allowing for efficient retrieval of data within a specific time range. Other relevant metrics or data points would be stored as regular columns.

For example:

CREATE TABLE time_series_data (

sensor_id UUID,

ts timestamp,

value double,

PRIMARY KEY (sensor_id, ts)

) WITH CLUSTERING ORDER BY (ts ASC);

This structure would facilitate quick retrieval of data for a given sensor within a specified time window. Consider using bucketing strategies (e.g., hourly, daily) for the partition key if a single entity generates very high volume data to avoid large partitions. Additionally, appropriate TTL (Time-To-Live) settings should be configured to automatically expire older data, optimizing storage utilization.

10. How can you optimize Cassandra queries for performance, considering factors like filtering and indexing?