As the DeFi landscape expands, identifying software engineers who understand blockchain technology, smart contracts, and decentralized applications is essential for sustained growth. This matters even more when you need to scale a team quickly: unlike hiring a general software engineer, you also have to test for DeFi-specific skills rather than broad general knowledge.

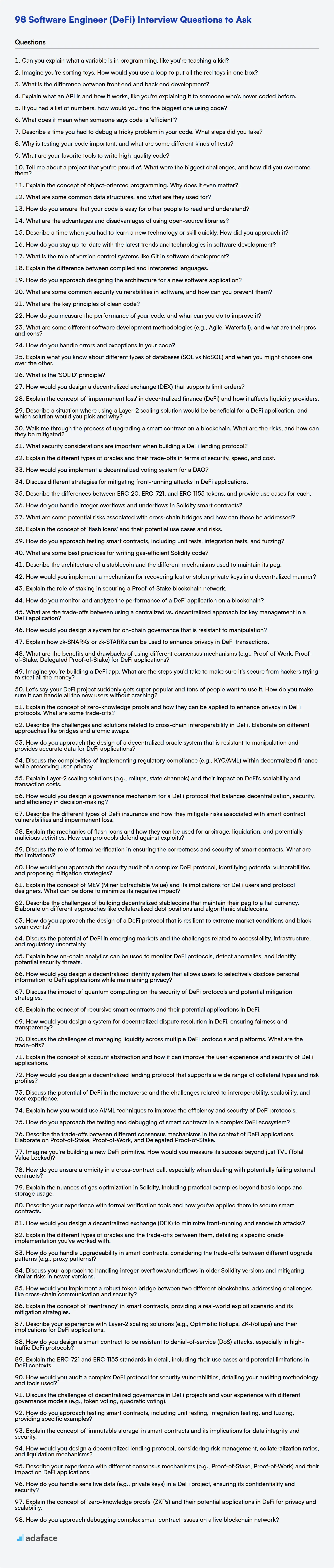

This blog post provides a curated list of interview questions categorized by skill level – Basic, Intermediate, Advanced, and Expert – along with a set of multiple-choice questions (MCQs). It is designed to equip you with the right questions to assess a candidate's expertise in decentralized finance and software engineering.

By using these questions, you can streamline your hiring process and identify top talent for your DeFi projects. Before investing expensive engineering time in interviews, consider using a Decentralized Finance (DeFi) Test to filter for relevant candidates.

Basic Software Engineer (DeFi) interview questions

1. Can you explain what a variable is in programming, like you're teaching a kid?

Imagine a variable like a labeled box. You can put things inside the box, like a number, a word, or even a list of your favorite toys. The label on the box tells you what the box is for or what kind of thing it's supposed to hold. In programming, a variable is a name that represents a memory location where you can store data.

For example, in the code x = 5, x is the variable (the label on the box), and 5 is the value stored in it (what's inside the box). Later, if you write x = 10, you're replacing the old value (5) with a new one (10) in the same box. The value in the variable can be used later in your program to do calculations or make decisions.

2. Imagine you're sorting toys. How would you use a loop to put all the red toys in one box?

Imagine I have a bunch of toys scattered around. To put all the red toys in one box using a loop, I'd do the following:

I'd look at each toy one by one (that's the loop!). For each toy, I'd check if it's red. If the toy is red, I'd put it in the red toy box. If it's not red, I'd leave it where it is or put it in a different box for other colors or types. Essentially, the loop repeats the process of checking the color and moving the toy if it matches my criteria (being red).
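The same idea can be sketched in a few lines of Python. The toy names and colors below are made up for illustration.

```python
# Hypothetical toy data: each toy is a (name, color) pair.
toys = [("car", "red"), ("ball", "blue"), ("truck", "red"), ("duck", "yellow")]

red_box = []    # toys that match the criterion
other_box = []  # everything else

for name, color in toys:        # look at each toy one by one
    if color == "red":          # check whether it is red
        red_box.append(name)    # red toys go in the red box
    else:
        other_box.append(name)  # all other toys go in a different box

print(red_box)    # ['car', 'truck']
print(other_box)  # ['ball', 'duck']
```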

3. What is the difference between front end and back end development?

Front-end development focuses on the user interface (UI) and user experience (UX) of a website or application. It involves using technologies like HTML, CSS, and JavaScript to create the visual elements, interactive components, and overall look and feel that users directly interact with. The goal is to ensure a smooth, responsive, and engaging user experience.

Back-end development, on the other hand, deals with the server-side logic, databases, and infrastructure that power the front-end. It involves using languages like Python, Java, or Node.js, along with databases such as MySQL or MongoDB, to manage data, handle requests, and ensure the application functions correctly. Back-end developers build and maintain the server, application, and database, ensuring data is securely stored and delivered to the front-end as needed. They essentially build the 'engine' that drives the application.

4. Explain what an API is and how it works, like you're explaining it to someone who's never coded before.

Imagine an API as a waiter in a restaurant. You (the user or application) have a menu (list of things you can do). You tell the waiter (the API) what you want (your request). The waiter then goes to the kitchen (the system or database), gets the food (the data or action), and brings it back to you (the response). So, the API is a messenger that allows different applications to talk to each other without needing to know the complex details of how the other application works.

For example, think about booking a flight online. The website uses APIs to talk to different airlines' systems. You search for flights, and the website uses an API to ask each airline for available flights and prices. The airline's system processes the request and sends back the information through the same API. The website then shows you the results from all the airlines. In essence, an API defines a set of rules and specifications that software programs can follow to communicate with each other. API endpoints are the specific URLs where the service "waits" for the requests. Methods like GET, POST, PUT, and DELETE are used to perform actions on the API (e.g. get data, create new data, update, or delete).

5. If you had a list of numbers, how would you find the biggest one using code?

To find the biggest number in a list, you can iterate through the list and keep track of the largest number found so far. Initialize a variable, say largest, with the first element of the list. Then, iterate through the remaining elements. If an element is greater than largest, update largest with that element. After iterating through the entire list, largest will hold the biggest number.

Here's an example in Python:

```python
def find_biggest(numbers):
    if not numbers:
        return None  # Handle empty list case
    largest = numbers[0]
    for number in numbers:
        if number > largest:
            largest = number
    return largest
```

6. What does it mean when someone says code is 'efficient'?

When someone says code is 'efficient', it generally means the code uses resources (like CPU time, memory, and network bandwidth) economically. Efficient code completes its task with minimal resource consumption. This often translates to faster execution times, lower memory footprint, and reduced operational costs.

Key aspects of efficient code include:

- Time Complexity: How the execution time grows with input size (e.g., using an O(n log n) sorting algorithm instead of O(n^2)).

- Space Complexity: How much memory the code uses.

- Code Optimization: Techniques like loop unrolling, inlining functions, and using appropriate data structures (e.g., using a HashMap for fast lookups).

Example: Consider searching for a value in a list. A linear search (O(n)) is less efficient than a binary search (O(log n)) on a sorted list.
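To make the linear versus binary search comparison concrete, here is a minimal Python sketch. The function names and the sample data are illustrative, and the binary search relies on the standard library's `bisect_left`.

```python
from bisect import bisect_left


def linear_search(items, target):
    """Check every element in turn: O(n) comparisons in the worst case."""
    for index, item in enumerate(items):
        if item == target:
            return index
    return -1


def binary_search(sorted_items, target):
    """Halve the search range at each step: O(log n), but the input must be sorted."""
    index = bisect_left(sorted_items, target)
    if index < len(sorted_items) and sorted_items[index] == target:
        return index
    return -1


data = list(range(0, 1000, 2))  # sorted even numbers 0, 2, 4, ...

print(linear_search(data, 10))  # 5
print(binary_search(data, 10))  # 5
print(binary_search(data, 11))  # -1 (odd numbers are not in the list)
```

Both functions return the same index, but the binary search inspects only a handful of elements while the linear search may scan the whole list.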

7. Describe a time you had to debug a tricky problem in your code. What steps did you take?

During a recent project involving a complex data pipeline, I encountered an issue where processed data was intermittently incorrect. The system involved several microservices and message queues, making the root cause difficult to pinpoint.

My debugging process involved several steps. First, I isolated the problem by writing unit tests to reproduce the erroneous output in a controlled environment. Then, I used logging and tracing to follow the data's journey through the system, identifying the specific microservice where the corruption occurred. Within that service, I used a debugger to step through the code, examining variable values at each stage. I discovered that a subtle type conversion error was causing a loss of precision during a calculation. To resolve this, I modified the code to handle the data type conversion correctly and added comprehensive unit tests to prevent similar issues in the future. Finally, I deployed the updated code and monitored the system to ensure the problem was resolved.

8. Why is testing your code important, and what are some different kinds of tests?

Testing is crucial for ensuring code quality, reliability, and maintainability. It helps identify bugs early in the development cycle, reducing the cost and effort required to fix them later. Thorough testing leads to more robust software, improved user experience, and increased confidence in the code's functionality.

Different kinds of tests include: Unit tests (testing individual components or functions in isolation), Integration tests (testing the interaction between different parts of the system), End-to-end tests (testing the entire application workflow from the user's perspective), Regression tests (verifying that new code changes haven't introduced new bugs or broken existing functionality), and Acceptance tests (confirming that the software meets the customer's requirements).

Here's a code example of a unit test in Python using unittest:

```python
import unittest


def add(x, y):
    return x + y


class TestAdd(unittest.TestCase):
    def test_add_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_add_negative_numbers(self):
        self.assertEqual(add(-2, -3), -5)


if __name__ == '__main__':
    unittest.main()
```

9. What are your favorite tools to write high-quality code?

My favorite tools for writing high-quality code include a good IDE like VS Code or IntelliJ IDEA, along with extensions for linting (e.g., ESLint for JavaScript, Pylint for Python) and formatting (e.g., Prettier, Black). These tools help me catch errors early and maintain consistent code style.

I also rely on version control systems like Git for collaboration and tracking changes, and testing frameworks (e.g., Jest, pytest) to ensure the correctness and reliability of my code. Code review tools like GitHub's pull requests are invaluable for getting feedback and improving code quality. Static analysis tools such as SonarQube help me identify potential security vulnerabilities and code smells.

10. Tell me about a project that you're proud of. What were the biggest challenges, and how did you overcome them?

I'm proud of developing a data pipeline for processing real-time social media feeds to identify trending topics. The biggest challenge was handling the high volume and velocity of data. We used Apache Kafka for message queuing to buffer the incoming data stream and then employed Apache Spark for distributed data processing. This allowed us to scale horizontally to handle the load. Another challenge was the noisy data. To overcome this, we implemented robust data cleaning and filtering techniques, including sentiment analysis to filter out irrelevant noise, and implemented regular expression filters to remove unwanted symbols or stop words. Finally, we used rate limiting to gracefully handle API limits imposed by different social media platforms.

Specifically, we built a function to check for common spam indicators and added it into the data cleaning portion of our pipeline. Here is how a simplified check for spam might look:

```python
def is_spam(text):
    spam_keywords = ['buy now', 'limited offer', 'discount']
    text = text.lower()
    for keyword in spam_keywords:
        if keyword in text:
            return True
    return False
```

11. Explain the concept of object-oriented programming. Why does it even matter?

Object-oriented programming (OOP) is a programming paradigm based on "objects", which contain data in the form of fields (attributes or properties) and code in the form of procedures (methods). These objects interact with each other to design applications and computer programs. OOP focuses on creating reusable code, improving modularity, and providing a clear structure for complex projects. The fundamental principles include:

- Encapsulation: Bundling data and methods that operate on that data within a class, hiding internal implementation details.

- Inheritance: Creating new classes (derived classes) from existing classes (base classes), inheriting their properties and methods.

- Polymorphism: The ability of an object to take on many forms, allowing you to write code that can work with objects of different classes in a uniform way.

- Abstraction: Simplifying complex reality by modeling classes appropriate to the problem, and working at the right level of inheritance.

OOP matters because it offers several advantages. It promotes code reuse through inheritance and composition, making development faster and more efficient. Encapsulation helps in managing complexity by hiding internal details and preventing unintended data modification. Polymorphism allows for flexible and extensible code, making it easier to adapt to changing requirements. Overall, OOP improves code organization, maintainability, and scalability, leading to more robust and reliable software systems. It allows for easier reasoning in complex software.
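A small Python sketch can show the four principles working together. The `Account` classes below are hypothetical and exist only for illustration.

```python
from abc import ABC, abstractmethod


class Account(ABC):
    """Abstraction: callers only need deposit(), balance and describe()."""

    def __init__(self, owner):
        self.owner = owner
        self._balance = 0  # Encapsulation: internal state, changed only via methods

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    @property
    def balance(self):
        return self._balance

    @abstractmethod
    def describe(self):
        """Each concrete account type describes itself."""


class SavingsAccount(Account):  # Inheritance: reuses deposit() and balance
    def describe(self):
        return f"Savings account of {self.owner}: {self.balance}"


class CheckingAccount(Account):
    def describe(self):
        return f"Checking account of {self.owner}: {self.balance}"


accounts = [SavingsAccount("Ada"), CheckingAccount("Bob")]
for account in accounts:
    account.deposit(100)

# Polymorphism: the same call works on every Account subtype.
descriptions = [account.describe() for account in accounts]
print(descriptions)
```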

12. What are some common data structures, and what are they used for?

Common data structures include arrays, linked lists, stacks, queues, trees, graphs, hash tables (or dictionaries), and heaps. Arrays store collections of elements of the same type, accessed by index. Linked lists store a sequence of nodes, each containing data and a pointer to the next node. Stacks follow the LIFO (Last-In, First-Out) principle, useful for function call management and undo operations. Queues follow the FIFO (First-In, First-Out) principle, suitable for task scheduling and breadth-first search.

Trees represent hierarchical relationships, often used in file systems and organizational structures. Graphs model relationships between objects, applicable to social networks and route finding algorithms. Hash tables provide efficient key-value lookups, used extensively in databases and caching. Heaps are tree-based data structures that implement priority queues, employed in scheduling and graph algorithms like Dijkstra's algorithm. Code example for hash table insertion:

```python
my_dict = {}
my_dict['key1'] = 'value1'
```
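The stack, queue, and heap behavior described above can be sketched with Python's built-in list, `collections.deque`, and `heapq`. The task names are made up for illustration.

```python
from collections import deque
import heapq

# Stack (LIFO): a plain list, pushing and popping at the end.
stack = []
stack.append("a")
stack.append("b")
last_in = stack.pop()       # 'b' comes out first

# Queue (FIFO): deque gives O(1) appends and pops at both ends.
queue = deque()
queue.append("a")
queue.append("b")
first_in = queue.popleft()  # 'a' comes out first

# Heap (priority queue): the smallest priority value is popped first.
heap = []
for priority, task in [(3, "low"), (1, "urgent"), (2, "normal")]:
    heapq.heappush(heap, (priority, task))
most_urgent = heapq.heappop(heap)  # (1, 'urgent')

print(last_in, first_in, most_urgent)
```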

13. How do you ensure that your code is easy for other people to read and understand?

I focus on several key principles to ensure code readability. Primarily, I use meaningful and descriptive variable and function names. For example, instead of x, I'd use user_age. Consistent code style and formatting is also crucial; I adhere to established style guides (like PEP 8 for Python) and use linters and formatters (like flake8 and black) to enforce them automatically. I also include concise, relevant comments to explain complex logic or the why behind certain decisions, but avoid over-commenting obvious code.

Additionally, I break down large functions into smaller, more manageable, and well-named functions, each with a specific purpose. This promotes modularity and makes the code easier to follow. Using well-established design patterns and documenting the public API with docstrings further enhances understanding. For example, def calculate_total(price: float, quantity: int) -> float: is self-explanatory.

14. What are the advantages and disadvantages of using open-source libraries?

Advantages of using open-source libraries include: Cost-effectiveness (often free to use), Faster development (pre-built components), Community support (large user base for help), Transparency (source code is available for review), and Flexibility (customizable to specific needs).

Disadvantages of using open-source libraries include: Security vulnerabilities (potential for malicious code), Compatibility issues (may not work with all systems), Limited support (reliance on community), Licensing complexities (understanding and adhering to licenses), and Maintenance risks (library may become unmaintained).

15. Describe a time when you had to learn a new technology or skill quickly. How did you approach it?

During a project involving data analysis, I needed to learn Pandas in Python very quickly. I started by identifying the specific Pandas functions and data structures required for my immediate tasks like data cleaning, manipulation, and aggregation. I then focused on online resources such as the official Pandas documentation, tutorials on platforms like DataCamp and Stack Overflow, and practical examples available on GitHub. I prioritized learning by doing, applying what I learned to real project data and experimenting with different approaches to solve the problems at hand.

I adopted a focused and iterative learning approach. I started with the basics, such as reading CSV files, creating DataFrames, and performing basic filtering and sorting. As I progressed, I tackled more complex operations like merging, grouping, and pivoting data. If I encountered a problem, I would first consult the documentation and online resources. If that wasn't enough, I would seek help from colleagues or online communities. The key was to constantly apply what I was learning and to actively seek out solutions to problems as they arose. This helped me retain the information more effectively and build a solid foundation in Pandas in a short amount of time.

16. How do you stay up-to-date with the latest trends and technologies in software development?

I stay up-to-date with the latest trends and technologies in software development through a combination of online resources, community engagement, and personal projects. Specifically, I regularly read industry blogs and newsletters like The Morning Paper, Martin Fowler's blog, and Hacker News. I also follow key influencers and organizations on social media platforms like Twitter and LinkedIn.

Furthermore, I actively participate in online communities such as Stack Overflow and Reddit to learn from others and contribute my own knowledge. I also try to attend relevant conferences and webinars when possible. To solidify my understanding, I often experiment with new technologies and frameworks through personal projects. For example, I recently explored Serverless architecture using AWS Lambda and API Gateway to build a small application, allowing me to gain practical experience with these cutting-edge technologies.

17. What is the role of version control systems like Git in software development?

Version control systems (VCS) like Git are crucial in software development for managing changes to source code over time. They allow multiple developers to collaborate effectively, track modifications, and revert to previous versions if needed. Git enables branching and merging, facilitating parallel development and feature integration.

Specifically, Git provides benefits such as:

- Collaboration: Enables multiple developers to work on the same codebase without overwriting each other's work; conflicting edits are surfaced at merge time so they can be resolved.

- Tracking Changes: Records every modification made to the code, along with who made it and when.

- Branching and Merging: Supports creating separate branches for new features or bug fixes, which can then be merged back into the main codebase. Branches are useful for isolating experimental code, preventing a broken feature from impacting the main code base.

- Reverting: Allows easy rollback to previous versions if necessary.

- Backup and Recovery: Acts as a backup of the codebase, protecting against data loss.

18. Explain the difference between compiled and interpreted languages.

Compiled languages, like C++ or Java (when compiled to native code), are translated directly into machine code that can be executed by the processor. This translation happens before the program is run, by a compiler. This results in faster execution speeds since the processor directly understands the compiled code.

Interpreted languages, such as Python or JavaScript, are not translated to machine code ahead of time. Instead, an interpreter reads and executes the code at run time, conceptually line by line. This shortens the development loop, because no separate compilation step is needed before the code can run. Execution is typically slower, however, because the translation work happens while the program runs. In practice the line is blurry: CPython first compiles source code to bytecode, and modern JavaScript engines use just-in-time compilation.

19. How do you approach designing the architecture for a new software application?

When approaching a new software application architecture, I start by understanding the application's requirements, including its purpose, target audience, expected scale, and performance needs. I then consider key architectural patterns like microservices, layered architecture, or event-driven architecture, selecting the one that best fits the requirements and considering factors like scalability, maintainability, and security. I also consider aspects like the database design, API design (REST or GraphQL, for example), choice of programming languages, frameworks and infrastructure (cloud or on-premise).

Next, I would begin thinking about the deployment strategy and the testing strategy. It is crucial to consider the tradeoffs between different approaches, and I always aim for a design that is modular, testable, and adaptable to future changes. I document my design decisions and regularly review them as the project progresses, incorporating feedback from the development team.

20. What are some common security vulnerabilities in software, and how can you prevent them?

Common security vulnerabilities include SQL Injection, Cross-Site Scripting (XSS), and Buffer Overflows. SQL Injection happens when user input is directly used in SQL queries, allowing attackers to manipulate the database. To prevent it, use parameterized queries or ORMs. XSS occurs when malicious scripts are injected into websites. Sanitize user input and use output encoding to prevent this. Buffer overflows happen when a program writes data beyond the allocated buffer size. Use bounds checking and safer string handling functions to avoid them.

Other vulnerabilities include insecure authentication/authorization, where weak passwords or flawed access control mechanisms are exploited. Use strong password policies, multi-factor authentication, and proper authorization checks. Also, vulnerabilities arise from using components with known vulnerabilities. Regularly update and patch dependencies. Finally, lack of proper error handling and logging can expose sensitive information and hinder debugging. Implement robust error handling and logging mechanisms.
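As an illustration of the SQL injection point above, the following sketch uses Python's built-in `sqlite3` module with an in-memory database. The table, the function names, and the malicious input are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_user_unsafe(name):
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterized query: the driver treats the input strictly as data.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"

print(find_user_unsafe(malicious))  # every row leaks: [(1, 'alice'), (2, 'bob')]
print(find_user_safe(malicious))    # []
```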

21. What are the key principles of clean code?

Clean code emphasizes readability, maintainability, and reducing complexity. Key principles include:

- DRY (Don't Repeat Yourself): Avoid duplicating code; use functions or classes to encapsulate logic.

- KISS (Keep It Simple, Stupid): Favor simplicity and clarity over complex solutions.

- YAGNI (You Ain't Gonna Need It): Don't add functionality until you need it.

- Single Responsibility Principle (SRP): A class should have only one reason to change.

- Meaningful Names: Use clear and descriptive names for variables, functions, and classes.

- Small Functions: Functions should be small and do one thing well. A common rule of thumb is to keep them under about 20 lines.

- Comments should explain why, not what: Self-documenting code is best; where a comment is needed, use it to explain the reasoning behind the code.

- Error Handling: Handle errors gracefully and provide meaningful error messages.

- Testing: Write unit tests to ensure code works as expected and to prevent regressions, ideally following Test-Driven Development (TDD).

22. How do you measure the performance of your code, and what can you do to improve it?

I measure code performance using profiling tools like perf on Linux or the built-in profiler in languages like Python (cProfile). These tools help identify bottlenecks by showing where the program spends most of its time. I also use benchmarking libraries (e.g., pytest-benchmark in Python, Criterion.rs in Rust) to compare different implementations or algorithms. Unit tests with timing assertions are also helpful for catching performance regressions.

To improve performance, I consider several strategies. First, I analyze the algorithm's time complexity and look for opportunities to use more efficient algorithms or data structures (e.g., switching from a linear search to a binary search, or using a hash table for faster lookups). Code optimization techniques like loop unrolling, memoization, and inlining can also help. Finally, optimizing I/O operations (e.g., using buffered I/O, reducing the number of system calls) and leveraging parallelism or concurrency can significantly boost performance.
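A minimal sketch of measuring and then improving performance, using the standard library's `timeit` for timing and `functools.lru_cache` for memoization. The Fibonacci functions are a stand-in workload chosen for illustration, and actual timings will vary by machine.

```python
from functools import lru_cache
import timeit


def fib_naive(n):
    """Exponential time: the same subproblems are recomputed many times."""
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Memoized: each subproblem is computed once and then cached."""
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


# Time 10 calls of each implementation.
naive_seconds = timeit.timeit(lambda: fib_naive(20), number=10)
memo_seconds = timeit.timeit(lambda: fib_memo(20), number=10)

print(f"naive: {naive_seconds:.4f}s, memoized: {memo_seconds:.6f}s")
```

For finding where a larger program spends its time, `python -m cProfile script.py` gives a per-function breakdown.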

23. What are some different software development methodologies (e.g., Agile, Waterfall), and what are their pros and cons?

Software development methodologies provide frameworks for structuring, planning, and controlling the process of developing information systems. Some popular methodologies include Agile and Waterfall.

Waterfall is a linear, sequential approach where each phase (requirements, design, implementation, testing, deployment, maintenance) must be completed before the next begins. Pros: Simple to understand and manage, well-defined stages, suitable for projects with stable requirements. Cons: Inflexible to changes, high risk and uncertainty, significant delays if errors are not detected early.

Agile is an iterative and incremental approach focused on flexibility and customer collaboration. Popular Agile frameworks include Scrum and Kanban. Pros: Adaptable to changing requirements, frequent delivery of working software, high customer satisfaction. Cons: Requires significant customer involvement, less predictable timelines and budgets, can be challenging to manage large teams.

24. How do you handle errors and exceptions in your code?

I use try-except blocks to handle errors gracefully. Within the try block, I place the code that might raise an exception. If an exception occurs, the corresponding except block catches it. This allows me to prevent the program from crashing and to implement specific error handling logic, like logging the error, displaying a user-friendly message, or retrying the operation.

I also use finally blocks for cleanup operations that need to be executed regardless of whether an exception occurred. For example, closing a file or releasing a resource. Furthermore, I raise custom exceptions using raise when necessary to signal specific error conditions within my code, allowing for more informative error handling at a higher level.
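The pattern described above might look like this in Python. `InsufficientFundsError`, `withdraw`, and `safe_withdraw` are hypothetical names used only for this sketch.

```python
class InsufficientFundsError(Exception):
    """Custom exception signalling a specific, recoverable error condition."""


def withdraw(balance, amount):
    if amount > balance:
        raise InsufficientFundsError(
            f"cannot withdraw {amount}, balance is {balance}"
        )
    return balance - amount


def safe_withdraw(balance, amount, log):
    try:
        return withdraw(balance, amount)
    except InsufficientFundsError as error:
        log.append(f"error: {error}")  # record the problem instead of crashing
        return balance                 # leave the balance unchanged
    finally:
        log.append("withdrawal attempt finished")  # runs in both cases


log = []
after_ok = safe_withdraw(100, 30, log)     # 70
after_fail = safe_withdraw(100, 500, log)  # 100, error is logged
print(after_ok, after_fail, log)
```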

25. Explain what you know about different types of databases (SQL vs NoSQL) and when you might choose one over the other.

SQL databases are relational, using a structured schema to define how data is stored in tables with rows and columns. They use SQL (Structured Query Language) to manage and manipulate data. Transactions are ACID compliant (Atomicity, Consistency, Isolation, Durability), ensuring data integrity.

NoSQL databases are non-relational and offer more flexibility in schema design. They come in various types, such as document stores (e.g., MongoDB), key-value stores (e.g., Redis), column-family stores (e.g., Cassandra), and graph databases (e.g., Neo4j). NoSQL databases often prioritize scalability and performance over strict ACID compliance. I'd choose SQL when data consistency and complex relationships are crucial (e.g., financial transactions, inventory management). NoSQL is better suited for applications needing high scalability, rapid development, and flexible data models (e.g., social media feeds, real-time analytics, IoT data).

26. What are the 'SOLID' principles?

The SOLID principles are a set of five design principles intended to make software designs more understandable, flexible, and maintainable. They are a subset of many principles promoted by Robert C. Martin.

- Single Responsibility Principle: A class should have only one reason to change.

- Open/Closed Principle: Software entities should be open for extension, but closed for modification.

- Liskov Substitution Principle: Subtypes must be substitutable for their base types.

- Interface Segregation Principle: Many client-specific interfaces are better than one general-purpose interface.

- Dependency Inversion Principle: Depend upon abstractions, not concretions. High-level modules should not depend on low-level modules. Both should depend on abstractions.
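As one illustration, the Dependency Inversion Principle can be sketched in Python with `typing.Protocol`. The class names and prices below are hypothetical.

```python
from typing import Protocol


class PriceSource(Protocol):
    """The abstraction that both high-level and low-level code depend on."""

    def get_price(self, symbol: str) -> float: ...


class FixedPriceSource:
    """Low-level detail: a hard-coded price table, handy in tests."""

    def __init__(self, prices: dict[str, float]) -> None:
        self._prices = prices

    def get_price(self, symbol: str) -> float:
        return self._prices[symbol]


class PortfolioValuer:
    """High-level policy: knows only the PriceSource interface."""

    def __init__(self, source: PriceSource) -> None:
        self._source = source

    def value(self, holdings: dict[str, float]) -> float:
        return sum(
            quantity * self._source.get_price(symbol)
            for symbol, quantity in holdings.items()
        )


valuer = PortfolioValuer(FixedPriceSource({"ETH": 2000.0, "DAI": 1.0}))
total = valuer.value({"ETH": 2, "DAI": 500})
print(total)  # 4500.0
```

Swapping in a different price source (for example, one backed by an API) requires no change to `PortfolioValuer`, which also touches on the Open/Closed Principle.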

Intermediate Software Engineer (DeFi) interview questions

1. How would you design a decentralized exchange (DEX) that supports limit orders?

To design a DEX with limit orders, I'd use an on-chain order book or an off-chain order relay. For an on-chain order book, users submit limit orders to the smart contract, which stores and matches them. This is fully decentralized but gas intensive. For an off-chain relay, orders are broadcast to relayers who maintain the order book and submit matching orders to the contract for execution, offering better performance.

Key components include:

- Smart Contracts: Handling order placement, cancellation, and execution.

- Order Book: Storing limit orders (either on-chain or managed off-chain by relayers).

- Matching Engine: Matching buy and sell orders based on price and time priority (either on-chain or handled off-chain by relayers).

- Settlement: Executing trades and transferring assets between users' wallets.
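The matching engine component can be sketched off-chain in Python. This is a simplified model under stated assumptions: a single trading pair, trades executing at the resting order's price, and no cancellation, fees, or on-chain settlement. The `OrderBook` class and trader names are hypothetical.

```python
import heapq
from itertools import count


class OrderBook:
    """Toy limit order book with price-time priority."""

    def __init__(self):
        self._bids = []      # heap of (-price, seq, trader, qty): highest bid first
        self._asks = []      # heap of (price, seq, trader, qty): lowest ask first
        self._seq = count()  # arrival counter, breaks ties by time
        self.trades = []     # executed trades: (buyer, seller, price, qty)

    def place(self, trader, side, price, qty):
        is_buy = side == "buy"
        book, opposite = (self._bids, self._asks) if is_buy else (self._asks, self._bids)
        # Match against the best resting orders while prices cross.
        while qty > 0 and opposite:
            key, seq, resting_trader, resting_qty = opposite[0]
            resting_price = key if is_buy else -key
            crosses = price >= resting_price if is_buy else price <= resting_price
            if not crosses:
                break
            heapq.heappop(opposite)
            fill = min(qty, resting_qty)
            buyer, seller = (trader, resting_trader) if is_buy else (resting_trader, trader)
            self.trades.append((buyer, seller, resting_price, fill))
            qty -= fill
            if resting_qty > fill:
                # Partially filled resting order keeps its original time priority.
                heapq.heappush(opposite, (key, seq, resting_trader, resting_qty - fill))
        if qty > 0:
            # Unfilled remainder rests on the book as a limit order.
            heapq.heappush(book, (-price if is_buy else price, next(self._seq), trader, qty))


book = OrderBook()
book.place("alice", "sell", 101, 5)
book.place("bob", "sell", 100, 5)
book.place("carol", "buy", 100, 8)  # fills 5 from bob at 100, rests 3 at 100
book.place("dave", "sell", 99, 2)   # fills 2 against carol's resting bid at 100
print(book.trades)
```

In an off-chain relay design, logic like this would run on the relayer and only the matched pairs would be submitted to the settlement contract; running it fully on-chain is what makes that variant gas intensive.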

2. Explain the concept of 'impermanent loss' in decentralized finance (DeFi) and how it affects liquidity providers.

Impermanent loss (IL) occurs when you provide liquidity to a decentralized exchange (DEX) and the price of your deposited assets changes compared to when you deposited them. The larger the price divergence, the greater the impermanent loss. It's called 'impermanent' because the loss is only realized if you withdraw your liquidity at that point; if the price reverts, the loss could disappear.

Liquidity providers are affected because the value of their deposited assets can decrease relative to simply holding the assets outside of the liquidity pool. While they earn trading fees, these fees may not always offset the impermanent loss, potentially resulting in a lower return compared to holding. The losses are relative; the pool is rebalanced and they receive back a different ratio of the tokens than originally deposited, leading to potentially more of the underperforming asset.
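To put numbers on this, the sketch below assumes a two-asset constant-product pool (the x * y = k design used by Uniswap v2-style AMMs) and ignores trading fees. Under those assumptions, impermanent loss relative to simply holding depends only on the price ratio r between withdrawal and deposit: IL = 2 * sqrt(r) / (1 + r) - 1.

```python
import math


def impermanent_loss(price_ratio):
    """Loss of an LP position versus holding, as a fraction (negative = loss).

    Assumes a 50/50 constant-product pool and ignores earned fees.
    price_ratio is new_price / price_at_deposit of one asset in terms of the other.
    """
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return 2 * math.sqrt(price_ratio) / (1 + price_ratio) - 1


for ratio in (1, 2, 4, 0.25):
    print(f"price x{ratio}: {impermanent_loss(ratio):.2%}")
```

A doubling of the price gives a loss of roughly 5.7% versus holding, and a 4x move (in either direction) gives 20%, which is the amount that trading fees would need to cover for the position to break even.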

3. Describe a situation where using a Layer-2 scaling solution would be beneficial for a DeFi application, and which solution would you pick and why?

A DeFi application experiencing high transaction fees and slow confirmation times on the Ethereum mainnet would greatly benefit from a Layer-2 scaling solution. For example, consider a decentralized exchange (DEX) seeing increased trading volume. As network demand increases, gas fees also rise, making small trades very costly and preventing widespread use of the DEX. In this case, a Layer-2 scaling solution such as Optimism would be highly beneficial.

Optimism uses Optimistic Rollups, which bundle multiple transactions into a single transaction on the main chain, significantly reducing gas costs and improving transaction throughput. Other solutions like zk-rollups (e.g., zkSync) provide enhanced security but are more complex to implement. Therefore, given the scenario, Optimism strikes a good balance between security, EVM compatibility, and relative ease of integration, making it a suitable choice for scaling the DEX. It's important to note that selecting the best Layer-2 solution depends on the specific needs and priorities of the application.

4. Walk me through the process of upgrading a smart contract on a blockchain. What are the risks, and how can they be mitigated?

Upgrading a smart contract involves deploying a new contract and migrating data and/or functionality from the old contract. The process often includes:

- Deployment of New Contract: The updated smart contract is deployed to the blockchain.

- Data Migration: Existing data from the old contract needs to be transferred to the new contract. This can be complex, especially with intricate data structures. Strategies include using proxies, state variable modification, or data export/import mechanisms.

- Functionality Migration: Routing calls to the new contract. Often achieved using proxy patterns.

- Contract Migration: Setting up a proxy to point to the new smart contract.

Risks include data loss or corruption during migration, unexpected behavior in the new contract, and potential security vulnerabilities introduced in the upgrade. Mitigations include thorough testing on testnets, using upgradeable contract patterns like proxy patterns (e.g., Transparent Proxy Pattern or UUPS), implementing data validation and backup mechanisms, and having a rollback plan in case the upgrade fails. Also, time lock delays can provide users with an opportunity to exit before the change.
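The proxy idea can be modeled conceptually in Python. This is only an analogy: real upgradeable contracts are written in a contract language such as Solidity, use `delegatecall`, and must keep storage layouts compatible between versions. All class and function names here are hypothetical.

```python
class LogicV1:
    """First implementation: stateless functions operating on the proxy's storage."""

    @staticmethod
    def deposit(storage, user, amount):
        balances = storage["balances"]
        balances[user] = balances.get(user, 0) + amount


class LogicV2(LogicV1):
    """Upgraded implementation: keeps deposit() and adds withdraw()."""

    @staticmethod
    def withdraw(storage, user, amount):
        balances = storage["balances"]
        if balances.get(user, 0) < amount:
            raise ValueError("insufficient balance")
        balances[user] -= amount


class Proxy:
    """Holds the state and a pointer to the current logic, like a proxy contract."""

    def __init__(self, implementation, admin):
        self.storage = {"balances": {}}  # state lives here, not in the logic
        self._implementation = implementation
        self._admin = admin

    def upgrade_to(self, caller, new_implementation):
        if caller != self._admin:        # access control on upgrades
            raise PermissionError("only the admin can upgrade")
        self._implementation = new_implementation

    def call(self, function_name, *args):
        # Forward the call to the current logic, passing the proxy's own storage.
        function = getattr(self._implementation, function_name)
        return function(self.storage, *args)


proxy = Proxy(LogicV1, admin="admin")
proxy.call("deposit", "alice", 100)
proxy.upgrade_to("admin", LogicV2)    # same address and state, new logic
proxy.call("withdraw", "alice", 40)
print(proxy.storage["balances"])      # {'alice': 60}
```

Because the state stays in the proxy, no data migration is needed for this kind of upgrade; the main risks shift to upgrade access control and keeping the storage layout compatible.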

5. What security considerations are important when building a DeFi lending protocol?

When building a DeFi lending protocol, several security considerations are paramount. Smart contract vulnerabilities are a primary concern; thorough auditing by multiple independent firms is essential. This includes checking for common exploits like reentrancy attacks, integer overflow/underflow, and front-running. Access control mechanisms need careful design to prevent unauthorized modifications to the protocol's parameters or funds.

Oracle manipulation is another significant risk. Since lending protocols rely on external price feeds, securing these oracles against attacks is vital. Strategies include using decentralized oracle networks and implementing outlier detection mechanisms. Finally, consider governance security; a compromised governance system can lead to malicious changes in the protocol. Time locks on critical changes and decentralized governance models can mitigate this risk.

6. Explain the different types of oracles and their trade-offs in terms of security, speed, and cost.

Oracles provide external data to blockchains. Different types exist, each with trade-offs. Centralized oracles are fast and cost-effective but represent a single point of failure, posing a significant security risk. Decentralized oracles, like Chainlink, enhance security by aggregating data from multiple sources, mitigating the risk of manipulation; however, this approach usually impacts speed and increases operational costs.

Another category is hardware oracles, which use physical sensors or devices to provide real-world data. They are secure against digital manipulation but can be expensive and complex to implement. Finally, computation oracles perform off-chain computations and relay results to the blockchain. These can be vulnerable if the computation environment is compromised. The choice of oracle depends on the specific application's requirements and the acceptable balance between security, speed, and cost. For example, price feeds generally use decentralized oracles while a simple 'was event X triggered' may use a cheaper, faster centralized oracle.

7. How would you implement a decentralized voting system for a DAO?

A decentralized voting system for a DAO can be implemented using blockchain technology, ensuring transparency, security, and immutability. Smart contracts on platforms like Ethereum can define voting rules, proposal submission processes, and vote counting mechanisms. Token holders of the DAO would typically use their tokens as voting power, weighted according to their holdings. Examples:

- Token-weighted voting: Each token represents a vote.

- Quadratic voting: Reduces the influence of large token holders.

- Snapshot voting: Uses off-chain voting for gas efficiency, referencing on-chain token balances at a specific block height.

The voting process involves submitting proposals, casting votes (usually signed transactions interacting with the smart contract), and tallying the results after a predefined voting period. The smart contract enforces the rules and automatically executes the outcome if the proposal passes the defined quorum and threshold.
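The tallying step can be sketched off-chain in Python. This is only an illustration: the function name `tally`, the 20% quorum, and the 50% threshold are assumptions, and the quadratic mode uses the common simplification of weighting each voter by the integer square root of their balance.

```python
import math


def tally(votes, balances, total_supply, quorum=0.2, threshold=0.5, quadratic=False):
    """votes maps voter -> True (for) or False (against); balances maps voter -> tokens."""
    def weight(tokens):
        return math.isqrt(tokens) if quadratic else tokens

    votes_for = sum(weight(balances[v]) for v, choice in votes.items() if choice)
    votes_against = sum(weight(balances[v]) for v, choice in votes.items() if not choice)
    # Quorum is measured as the share of the token supply that took part.
    turnout = sum(balances[v] for v in votes) / total_supply
    cast = votes_for + votes_against
    passed = turnout >= quorum and cast > 0 and votes_for / cast > threshold
    return {"for": votes_for, "against": votes_against, "passed": passed}


balances = {"whale": 2_500, "a": 400, "b": 400, "c": 400}
votes = {"whale": False, "a": True, "b": True, "c": True}

linear = tally(votes, balances, total_supply=10_000)
quad = tally(votes, balances, total_supply=10_000, quadratic=True)
```

With these made-up numbers the whale defeats the proposal under token-weighted voting, while the three smaller holders carry it under the quadratic weighting.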

8. Discuss different strategies for mitigating front-running attacks in DeFi applications.

Mitigating front-running attacks in DeFi requires a multi-faceted approach. One common strategy is to submit transactions through private relays (e.g., Flashbots) that bypass the public mempool, so front-runners cannot observe a pending transaction before it is included in a block. Users should also set a minimum acceptable price or maximum slippage, so that a trade reverts rather than executing at a manipulated price. Another strategy is implementing commit-reveal schemes, where users first commit to a hash of their transaction details and reveal the actual details later, preventing front-runners from knowing the transaction's content beforehand.

Further approaches involve using delayed execution via time locks and implementing submarine sends (a commit-reveal variant that conceals the committed transaction until it is revealed). Layer-2 scaling solutions such as optimistic rollups can also change the picture, since faster confirmations and different transaction-ordering rules may shrink the window for front-running, although they do not eliminate it. Finally, smart contract design choices like using batch auctions or frequent price updates from oracles can also help reduce the profitability of front-running.
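The commit-reveal idea can be shown in a few lines of Python. This is a minimal off-chain sketch: `CommitRevealBook` and `make_commitment` are hypothetical names, and SHA-256 stands in for the `keccak256` hash a Solidity contract would typically use.

```python
import hashlib
import secrets


def make_commitment(order: str, salt: bytes) -> str:
    """Hash of salt + order; the salt stops observers from guessing the order."""
    return hashlib.sha256(salt + order.encode()).hexdigest()


class CommitRevealBook:
    def __init__(self):
        self.commitments = {}
        self.revealed = []

    def commit(self, user, commitment):
        # Phase 1: only the hash is public, so the order cannot be front-run.
        self.commitments[user] = commitment

    def reveal(self, user, order, salt):
        # Phase 2: the order is accepted only if it matches the earlier hash.
        if make_commitment(order, salt) != self.commitments.get(user):
            raise ValueError("reveal does not match commitment")
        self.revealed.append((user, order))


book = CommitRevealBook()
salt = secrets.token_bytes(32)
book.commit("alice", make_commitment("buy 10 ETH @ 2000", salt))
book.reveal("alice", "buy 10 ETH @ 2000", salt)
```

The cost of this design is an extra transaction and a waiting period between the two phases.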

9. Describe the differences between ERC-20, ERC-721, and ERC-1155 tokens, and provide use cases for each.

ERC-20 tokens are fungible, meaning each token is identical and interchangeable. They are used for cryptocurrencies, utility tokens, and reward points, where each unit has the same value.

ERC-721 tokens are non-fungible (NFTs), where each token is unique. They are used for representing ownership of digital or physical assets like artwork, collectibles, or real estate. Each token has distinct characteristics and value.

ERC-1155 is a multi-token standard that allows both fungible and non-fungible tokens to be managed in a single contract. This is more efficient for games or applications that require both types of tokens. Use cases include in-game assets, representing both consumable items (fungible) and unique collectibles (non-fungible) within the same system.

10. How do you handle integer overflows and underflows in Solidity smart contracts?

Integer overflows and underflows can lead to unexpected behavior and vulnerabilities in Solidity smart contracts. Prior to Solidity version 0.8.0, manual checks or external libraries like SafeMath were necessary to prevent these issues. SafeMath provides functions like add(), sub(), mul(), and div() which revert the transaction if an overflow or underflow occurs.

Since Solidity 0.8.0, arithmetic operations have built-in overflow and underflow checks. If an overflow or underflow happens, the transaction will revert. To restore the previous behavior (wrapping arithmetic), you can wrap the operation in an unchecked block like so:

    unchecked {
        uint256 x = a + b; // Could overflow, wraps around
    }

Using unchecked requires careful consideration as it disables the safety checks.
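The difference between the two modes is easy to see by simulating 256-bit unsigned arithmetic in Python. The helper names below are made up for illustration; they imitate the semantics described above and are not part of any Solidity tooling.

```python
UINT256_MAX = 2**256 - 1


def checked_add(a: int, b: int) -> int:
    """Mimics the Solidity >= 0.8.0 default: fail instead of overflowing."""
    result = a + b
    if result > UINT256_MAX:
        raise OverflowError("uint256 overflow")
    return result


def unchecked_add(a: int, b: int) -> int:
    """Mimics an unchecked block: the result wraps modulo 2**256."""
    return (a + b) % 2**256


def unchecked_sub(a: int, b: int) -> int:
    """Wrapping subtraction, the classic source of underflow bugs."""
    return (a - b) % 2**256
```

For instance, `unchecked_sub(0, 1)` yields the largest possible uint256, which is how an underflow could turn an empty balance into an enormous one in pre-0.8.0 contracts without SafeMath.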

11. What are some potential risks associated with cross-chain bridges and how can these be addressed?

Cross-chain bridges, while enabling interoperability, introduce several risks. Smart contract vulnerabilities are primary concerns, as flaws can lead to exploits and fund losses. Centralization is another risk; bridges often rely on a limited set of validators or custodians, creating single points of failure and potential for collusion or censorship. Liquidity risks also exist if a bridge doesn't have sufficient assets to meet withdrawal demands. Regulatory uncertainty adds complexity, given the varying legal landscapes in different jurisdictions.

To address these risks, rigorous security audits and formal verification of smart contracts are crucial. Decentralizing bridge governance and validator sets enhances security and resilience. Implementing robust monitoring systems and automated risk management tools helps detect and mitigate suspicious activities. Furthermore, exploring trustless or minimally trusted bridge designs, such as those leveraging zero-knowledge proofs or optimistic rollups, can minimize reliance on centralized entities. Regulatory compliance and proactive engagement with regulatory bodies are essential for navigating the evolving legal landscape.

12. Explain the concept of 'flash loans' and their potential use cases and risks.

Flash loans are uncollateralized loans in decentralized finance (DeFi) that must be repaid within the same transaction. If the loan isn't repaid by the end of the transaction, the entire transaction is reverted, as if it never happened. This is made possible by the atomic nature of blockchain transactions.

Potential use cases include arbitrage (profiting from price differences across exchanges), collateral swapping (moving collateral between lending platforms), and self-liquidation (avoiding liquidation penalties). Risks primarily involve sophisticated attacks, like arbitrage bots front-running profitable trades or malicious actors using borrowed capital to manipulate prices within a single transaction and profit at the expense of others. Smart contract vulnerabilities in the flash loan provider or related protocols also present a significant risk.
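The "repay or revert" rule can be modeled in plain Python. This is a toy sketch, not a real lending pool: the `Pool` class, the 9 basis point fee, and the callback interface are assumptions chosen for illustration.

```python
class Reverted(Exception):
    """Stands in for an EVM revert: nothing the transaction did is kept."""


class Pool:
    def __init__(self, liquidity, fee_bps=9):
        self.liquidity = liquidity
        self.fee_bps = fee_bps

    def flash_loan(self, amount, borrower_callback):
        if amount > self.liquidity:
            raise Reverted("insufficient liquidity")
        fee = amount * self.fee_bps // 10_000
        # The borrower does whatever it wants with the funds and reports
        # how much it hands back at the end of the same transaction.
        repaid = borrower_callback(amount)
        if repaid < amount + fee:
            # Pool state was never modified, so the loan effectively never happened.
            raise Reverted("loan not repaid with fee")
        self.liquidity += repaid - amount
        return fee


pool = Pool(liquidity=1_000_000)
fee = pool.flash_loan(1_000_000, lambda amount: amount + 900)  # profitable arbitrage
```

A borrower whose strategy fails to produce enough to cover the principal plus fee simply triggers `Reverted`, and the pool's liquidity is unchanged.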

13. How do you approach testing smart contracts, including unit tests, integration tests, and fuzzing?

Testing smart contracts requires a multi-faceted approach. Unit tests focus on individual functions or modules in isolation, ensuring they behave as expected with different inputs. Tools like Hardhat, Foundry or Truffle are common for writing and running these tests using languages such as JavaScript/TypeScript or Solidity. We can write assertions to check for expected return values, state changes, and event emissions. For example:

    it("Should transfer tokens correctly", async function () {
      // ...
      expect(await token.balanceOf(receiver.address)).to.equal(amount);
    });

Integration tests verify the interaction between different smart contracts or components within a larger system. This involves deploying contracts to a test network (like Ganache or a public testnet), simulating user interactions, and verifying the overall system behavior. Fuzzing, using tools like Echidna or DappTools' hevm, involves automatically generating a large number of random or semi-random inputs to uncover unexpected behavior, edge cases, and vulnerabilities. The goal is to ensure the contract doesn't break with unexpected input. In practice this means stating properties (invariants) that should always hold and checking that they still hold under randomly generated inputs.
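The invariant-checking idea behind fuzzing can be demonstrated without any blockchain tooling. The sketch below fuzzes a toy Python `Token` class (a hypothetical stand-in for a contract) and checks two invariants after every call: total supply never changes and no balance goes negative.

```python
import random


class Token:
    def __init__(self, balances):
        self.balances = dict(balances)

    def transfer(self, sender, receiver, amount):
        if amount < 0 or self.balances.get(sender, 0) < amount:
            raise ValueError("invalid transfer")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount


def fuzz_total_supply(runs=10_000, seed=0):
    rng = random.Random(seed)  # fixed seed so failures are reproducible
    users = ["alice", "bob", "carol"]
    token = Token({"alice": 1_000, "bob": 500, "carol": 0})
    supply = sum(token.balances.values())
    for _ in range(runs):
        sender, receiver = rng.choice(users), rng.choice(users)
        amount = rng.randint(-10, 2_000)
        try:
            token.transfer(sender, receiver, amount)
        except ValueError:
            pass  # rejected calls are fine; broken invariants are not
        assert sum(token.balances.values()) == supply
        assert all(balance >= 0 for balance in token.balances.values())
    return True
```

Dedicated fuzzers add input shrinking and coverage guidance on top of this basic loop, but the mental model is the same.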

14. What are some best practices for writing gas-efficient Solidity code?

To write gas-efficient Solidity code, consider these best practices:

- Use `calldata` for function arguments: `calldata` prevents data from being copied to memory, saving gas.

- Minimize storage writes: Storage writes are among the most expensive operations. Cache values in memory when possible.

- Use `memory` instead of `storage` for temporary variables: Memory is cheaper than storage.

- Pack variables: Group variables that use less than 256 bits into a single storage slot to reduce storage costs. For example: `struct Example { uint16 a; uint16 b; uint32 c; }`

- Use short-circuiting: Utilize short-circuiting in logical expressions (e.g., `&&`, `||`) to avoid unnecessary evaluations.

- Use the unchecked keyword: Where possible, use the `unchecked` keyword for arithmetic operations where overflow/underflow isn't a concern.

- Be careful with loops: Keep loops as short as possible and avoid unnecessary operations within them.

- Avoid unnecessary external calls: Minimize the number of external calls since they are more expensive.

- Use constants and immutables: Use `constant` for values known at compile time and `immutable` for values known at contract deployment.

- Use appropriate data types: Use the smallest data type that fits your needs (e.g., `uint8` instead of `uint256` if the value is always less than 256) when doing so lets several variables share a storage slot. A standalone `uint8` still occupies a full slot and can cost slightly more gas than `uint256` because of the extra masking operations.
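To see why packing saves storage, the layout of the `Example` struct can be imitated with bit operations in Python. This is only a model of the idea: the helper names are invented, and it assumes the first field sits in the lowest-order bits of the slot.

```python
def pack(a: int, b: int, c: int) -> int:
    """Pack uint16 a, uint16 b, uint32 c into one integer, first field lowest."""
    if not (0 <= a < 2**16 and 0 <= b < 2**16 and 0 <= c < 2**32):
        raise ValueError("value out of range for its field")
    return a | (b << 16) | (c << 32)


def unpack(slot: int):
    """Recover the three fields by shifting and masking."""
    return slot & 0xFFFF, (slot >> 16) & 0xFFFF, (slot >> 32) & 0xFFFFFFFF


slot = pack(7, 65_535, 4_000_000_000)
```

All three fields fit in 64 bits, so they occupy one 256-bit slot instead of the three slots that three `uint256` variables would need.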

15. Describe the architecture of a stablecoin and the different mechanisms used to maintain its peg.

Stablecoins aim to maintain a stable value, typically pegged to a fiat currency like the US dollar. Their architecture depends on the mechanism used to achieve this peg. Broadly, stablecoins fall into these categories:

- Fiat-collateralized: These are backed by reserves of fiat currency held in custody. The issuer mints stablecoins representing a claim on those reserves. The peg is maintained by arbitrageurs who buy or sell the stablecoin to profit from deviations from the peg. Transparency and trust in the custodian are crucial.

- Crypto-collateralized: These are backed by other cryptocurrencies. Due to the volatility of crypto, they are often over-collateralized. Smart contracts manage the collateral and minting/burning of stablecoins. Mechanisms like liquidation ratios and stability fees help maintain the peg. Examples include MakerDAO's DAI.

- Algorithmic: These rely on algorithms to adjust the supply of the stablecoin to maintain the peg. This can involve minting new coins or burning existing ones, often through the use of a secondary token. These are generally more complex and have faced challenges in maintaining stability. Rebase tokens are a subset of algorithmic stablecoins.

- Commodity-collateralized: These are backed by commodities such as gold.
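For the crypto-collateralized case, the over-collateralization check reduces to simple arithmetic. The sketch below is illustrative only; the function names and the 150% liquidation ratio are assumptions, not parameters of any specific protocol.

```python
def collateral_ratio(collateral_amount, collateral_price, debt):
    """Value of the locked collateral divided by the stablecoin debt."""
    return collateral_amount * collateral_price / debt


def is_liquidatable(collateral_amount, collateral_price, debt, liquidation_ratio=1.5):
    """A vault may be liquidated once its ratio falls below the minimum."""
    return collateral_ratio(collateral_amount, collateral_price, debt) < liquidation_ratio


# 10 ETH backing 10,000 stablecoins: safe at $2,000 per ETH, unsafe at $1,400.
safe = is_liquidatable(10, 2_000, 10_000)
unsafe = is_liquidatable(10, 1_400, 10_000)
```

At $2,000 the ratio is 2.0, comfortably above the assumed minimum; at $1,400 it drops to 1.4 and the position becomes eligible for liquidation.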

16. How would you implement a mechanism for recovering lost or stolen private keys in a decentralized manner?

A decentralized key recovery mechanism can be implemented using Shamir's Secret Sharing (SSS). The private key is split into multiple 'shares,' and each share is distributed to different trusted entities or individuals. A threshold number of these shares (e.g., 3 out of 5) are required to reconstruct the original private key. This prevents a single point of failure, as losing one or two shares doesn't compromise the key.

Smart contracts can coordinate the recovery process, but the shares themselves should stay off-chain, since anything submitted to a public blockchain is visible to everyone. For example, a smart contract could be configured to authorize recovery only after the required number of share holders have submitted valid signatures, with the actual reconstruction happening on the owner's device. This approach keeps the key recovery process transparent, auditable, and decentralized, reducing the risk of unauthorized access or manipulation. Here's a basic example:

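    # Shamir's Secret Sharing example (simplified)
    # In a real implementation, use a robust, audited library
    import secrets

    PRIME = 2**521 - 1  # field modulus; the key must be smaller than this

    def split_key(key, num_shares, threshold):
        # Random polynomial of degree threshold - 1 whose constant term is the key
        coeffs = [key] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
        return [(x, sum(c * pow(x, i, PRIME) for i, c in enumerate(coeffs)) % PRIME)
                for x in range(1, num_shares + 1)]

    def recover_key(shares):
        # Lagrange interpolation at x = 0 recovers the constant term (the key)
        key = 0
        for xi, yi in shares:
            num = den = 1
            for xj, _ in shares:
                if xj != xi:
                    num = num * -xj % PRIME
                    den = den * (xi - xj) % PRIME
            key = (key + yi * num * pow(den, -1, PRIME)) % PRIME
        return key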

17. Explain the role of staking in securing a Proof-of-Stake blockchain network.

In a Proof-of-Stake (PoS) blockchain, staking is the process where validators lock up a certain amount of their cryptocurrency holdings as collateral to participate in the consensus mechanism. This locked-up cryptocurrency acts as a guarantee that the validator will act in the best interest of the network. By staking, validators earn the right to propose and validate new blocks, and in return, they receive staking rewards.

The security aspect stems from the fact that validators risk losing their staked coins if they attempt to manipulate the blockchain or validate fraudulent transactions. This economic disincentive encourages validators to behave honestly and follow the rules of the protocol. The more tokens staked overall, the more secure the network becomes, as a larger amount of capital would need to be compromised to attack the chain successfully. Thus, staking aligns the interests of validators with the health and security of the network.

18. How do you monitor and analyze the performance of a DeFi application on a blockchain?

Monitoring and analyzing the performance of a DeFi application on a blockchain involves tracking several key metrics and using various tools. Common metrics include transaction success rates, gas usage, smart contract execution time, and network congestion. Tools such as blockchain explorers (e.g., Etherscan), node monitoring software (e.g., Prometheus, Grafana), and specialized DeFi analytics platforms (e.g., Nansen, Dune Analytics) are used to gather and visualize this data.

Specifically, analyzing smart contract performance often involves estimating gas costs with eth_estimateGas before deployment, and then comparing against the actual gas used in production. Debugging tools like Tenderly or Hardhat can be used to simulate transactions and pinpoint performance bottlenecks in the contract code. Monitoring API response times and error rates from services interacting with the blockchain is also crucial. Finally, setting up alerts for critical events or performance degradations helps ensure proactive management.

19. What are the trade-offs between using a centralized vs. decentralized approach for key management in a DeFi application?

Centralized key management offers simplicity and control. It's easier to implement, manage, and recover keys if lost or compromised. However, it introduces a single point of failure and trust. A breach in the central key store compromises all user funds. Decentralized key management enhances security by distributing key control among multiple parties or devices, reducing the risk of a single point of failure. This approach improves user autonomy but adds complexity in implementation, key recovery, and transaction signing. It may also increase gas costs for certain operations. Trade-offs often involve balancing security, usability, and cost.

20. How would you design a system for on-chain governance that is resistant to manipulation?

To design a manipulation-resistant on-chain governance system, several mechanisms can be combined. Quadratic voting limits the influence of large token holders, as the cost of voting increases quadratically with the number of votes cast on a proposal. Timelocks introduce delays before governance proposals are executed, allowing the community time to analyze and react, reducing the impact of rushed or malicious proposals. Decentralized identity (DID) or proof-of-personhood can help mitigate sybil attacks, where attackers create multiple identities to amplify their voting power. Finally, the use of multisig wallets for key governance functions provides an extra layer of security, requiring multiple independent actors to approve critical actions.

Further mechanisms include:

- Delegated Proof of Stake (DPoS): Select delegates to represent the community.

- Reputation Systems: Weigh votes based on past contributions or reputation.

- Formal Verification: Use of formal methods to verify the correctness of the smart contract code.
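Two of the mechanisms above, quadratic vote pricing and timelocks, are simple enough to sketch in Python. The names `quadratic_cost` and `Timelock` are hypothetical, and timestamps are plain integers rather than block times.

```python
def quadratic_cost(votes: int) -> int:
    """Credits needed to cast `votes` votes on one proposal."""
    return votes ** 2


class Timelock:
    """Queues approved proposals and only lets them execute after a delay."""
    def __init__(self, delay):
        self.delay = delay
        self.eta = {}

    def queue(self, proposal_id, now):
        self.eta[proposal_id] = now + self.delay

    def execute(self, proposal_id, now):
        if proposal_id not in self.eta:
            raise KeyError("proposal was never queued")
        if now < self.eta[proposal_id]:
            raise RuntimeError("timelock has not expired")
        del self.eta[proposal_id]
        return True


timelock = Timelock(delay=2 * 24 * 3600)  # assumed two-day delay
timelock.queue("raise-fee", now=0)
```

Casting 10 votes costs 100 credits while casting 1 vote costs 1, so a holder with 100 times the credits gets only 10 times the votes. The delay gives users a window to exit before a queued change takes effect.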

21. Explain how zk-SNARKs or zk-STARKs can be used to enhance privacy in DeFi transactions.

zk-SNARKs (Zero-Knowledge Succinct Non-Interactive ARguments of Knowledge) and zk-STARKs (Zero-Knowledge Scalable Transparent ARguments of Knowledge) are cryptographic proofs that allow one party (the prover) to prove to another party (the verifier) that a certain computation was performed correctly without revealing any information about the computation itself, or its inputs. In DeFi, they can be used to enhance privacy by allowing users to prove that they have sufficient funds or meet certain conditions for a transaction without revealing the actual amount of funds they possess or the specifics of their eligibility criteria.

For example, a user could prove they have enough tokens to participate in a lending pool without revealing their exact token balance. This is achieved by generating a zero-knowledge proof that the condition encoded in the smart contract logic is satisfied. The smart contract only verifies the proof without learning anything about the user's actual data, preserving the user's privacy. zk-STARKs offer the additional advantage of transparency, eliminating the need for the trusted setup required by many zk-SNARK constructions, although they generally result in larger proof sizes.

22. What are the benefits and drawbacks of using different consensus mechanisms (e.g., Proof-of-Work, Proof-of-Stake, Delegated Proof-of-Stake) for DeFi applications?

Different consensus mechanisms offer varying trade-offs for DeFi applications. Proof-of-Work (PoW), as used by Bitcoin, offers strong security but suffers from scalability issues and high energy consumption, making it less suitable for high-throughput DeFi. Proof-of-Stake (PoS), used by Ethereum, improves energy efficiency but introduces potential centralization risks and the 'nothing at stake' problem, which modern designs address with slashing penalties. Delegated Proof-of-Stake (DPoS) further enhances speed and scalability through elected delegates, but its reliance on a small group intensifies centralization concerns.

The choice depends on the specific DeFi application's priorities. For high-value transactions where security is paramount, PoW might be considered despite its limitations. For applications prioritizing speed and lower fees, PoS or DPoS are more appropriate, but developers must carefully consider the centralization risks and implement measures to mitigate them. Other consensus mechanisms like Proof of Authority (PoA) offer even greater speed, but are usually limited to private or permissioned blockchains, which might not align with the core principles of DeFi.

23. Imagine you're building a DeFi app. What are the steps you'd take to make sure it's secure from hackers trying to steal all the money?

Securing a DeFi app involves a multi-faceted approach. First, rigorous code audits by multiple independent security firms are crucial to identify vulnerabilities in smart contracts. Static analysis tools and fuzzing can also help. Formal verification, although more complex, provides mathematical proof of contract correctness. Implement robust access controls, limiting privileges to only necessary functions. Use established and audited libraries like OpenZeppelin for common functionalities, and avoid writing custom code when possible. Implement circuit breakers to pause the contract in case of suspicious activity, and have a well-defined emergency response plan.

Second, prioritize security during development. Implement thorough testing, including unit, integration, and penetration testing. Monitor on-chain activity for anomalies, such as large or unusual transactions. Secure the private keys used for contract deployment and administration using hardware security modules (HSMs) or multi-signature wallets. Consider bug bounty programs to incentivize ethical hackers to find and report vulnerabilities. Finally, implement rate limits to prevent denial-of-service attacks and consider insurance to mitigate potential losses.

24. Let's say your DeFi project suddenly gets super popular and tons of people want to use it. How do you make sure it can handle all the new users without crashing?

To handle a surge in DeFi project users, scalability is key. First, I'd focus on optimizing the smart contracts for gas efficiency and minimizing on-chain operations. This might involve batch processing transactions or using more efficient data structures. Second, I'd explore Layer 2 scaling solutions like rollups (Optimistic or ZK) or sidechains to offload transaction processing from the main Ethereum chain. Load balancing across multiple nodes would also be essential to distribute the workload and prevent bottlenecks. Finally, robust monitoring and alerting systems are needed to quickly identify and address any performance issues that arise, allowing for proactive intervention and scaling adjustments.

Some concrete steps I would explore:

- Gas Optimization: Review and refactor smart contract code, reduce storage reads/writes

- Layer 2 scaling: Implement optimistic or ZK rollups for off-chain transaction processing

- Database Optimization: Implement proper indexing and caching to improve database performance

- Load Balancing: Distribute traffic across multiple nodes or servers

- Caching: Implement caching mechanisms to reduce database load for frequently accessed data

- Monitoring and Alerting: Use tools like Prometheus and Grafana to monitor performance metrics and set up alerts for critical issues.

Advanced Software Engineer (Defi) interview questions

1. Explain the concept of zero-knowledge proofs and how they can be applied to enhance privacy in DeFi protocols. What are some trade-offs?

Zero-knowledge proofs (ZKPs) allow one party (the prover) to convince another party (the verifier) that a statement is true without revealing any information beyond the validity of the statement itself. In DeFi, ZKPs can enhance privacy by allowing users to prove they meet certain criteria (e.g., having sufficient collateral for a loan, possessing KYC credentials from a trusted source) without disclosing their actual balance, transaction history, or identity. This enables privacy-preserving lending, trading, and other DeFi activities.

Some trade-offs of using ZKPs include the high computational cost of generating proofs, which can lead to increased transaction fees and slower processing times. ZKP systems are complex to implement and audit, which introduces potential security vulnerabilities. The underlying cryptographic libraries are also relatively new and not yet widely battle-tested, so undiscovered flaws and bugs remain a risk. Furthermore, proving certain complex statements using ZKPs can be challenging, limiting their applicability in some DeFi scenarios.
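A classic small example of a zero-knowledge proof is the Schnorr proof of knowledge: the prover shows they know a secret exponent without revealing it. The Python sketch below makes it non-interactive with a hash-based challenge. It is far simpler than the SNARK or STARK systems used in DeFi, and the group parameters are deliberately tiny toy values (an assumption for readability) that offer no real security.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 with P and Q prime; G = 4 generates the subgroup of order Q.
P, Q, G = 2039, 1019, 4


def _challenge(public_key, commitment):
    """Hash-derived challenge (Fiat-Shamir), replacing a live verifier."""
    digest = hashlib.sha256(f"{G}|{public_key}|{commitment}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(secret):
    """Return the public key and a proof of knowing `secret`."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)
    commitment = pow(G, nonce, P)
    response = (nonce + _challenge(public_key, commitment) * secret) % Q
    return public_key, (commitment, response)


def verify(public_key, proof):
    """Check G^response == commitment * public_key^challenge (mod P)."""
    commitment, response = proof
    challenge = _challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, challenge, P)) % P


public_key, proof = prove(secret=123)
```

The verifier sees only the public key, the commitment, and the response; the random nonce masks the secret in the response, which is what keeps the proof from leaking it.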

2. Describe the challenges and solutions related to cross-chain interoperability in DeFi. Elaborate on different approaches like bridges and atomic swaps.

Cross-chain interoperability in DeFi faces several challenges. Security risks are prominent, as bridges often become targets for exploits due to the complexities of managing assets across different chains. Scalability is another hurdle; transaction speeds and costs can vary greatly between chains, impacting the overall user experience. Furthermore, achieving true decentralization and trustlessness is difficult when relying on centralized or federated bridges. Different chains also have varying consensus mechanisms and data structures, making seamless communication difficult.

Solutions include bridges and atomic swaps. Bridges, such as those employing lock-and-mint or burn-and-mint mechanisms, facilitate asset transfers between chains, but must carefully handle security, preventing double spending and ensuring consensus integrity. Atomic swaps allow for direct peer-to-peer exchange of assets across different blockchains without intermediaries, but they often require time-sensitive transactions and may not be suitable for all asset types. Other solutions include LayerZero which aims to provide generic messaging between chains and Chainlink's CCIP (Cross-Chain Interoperability Protocol), which seeks to offer a secure and reliable communication layer for cross-chain applications. The optimal approach often depends on the specific use case and the trade-offs between security, speed, and cost.
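Atomic swaps are usually built from hash time-locked contracts (HTLCs). The following Python sketch models one swap between two chains; the `HTLC` class, the party names, and the integer timestamps are all illustrative assumptions rather than any chain's actual contract interface.

```python
import hashlib


class HTLC:
    """Pays the recipient for the hash preimage before expiry, else refunds the sender."""
    def __init__(self, sender, recipient, amount, hashlock, expiry):
        self.sender, self.recipient = sender, recipient
        self.amount, self.hashlock, self.expiry = amount, hashlock, expiry
        self.settled = False
        self.revealed_preimage = None

    def claim(self, caller, preimage, now):
        if self.settled or caller != self.recipient or now >= self.expiry:
            raise RuntimeError("claim not allowed")
        if hashlib.sha256(preimage).hexdigest() != self.hashlock:
            raise ValueError("wrong preimage")
        self.settled = True
        self.revealed_preimage = preimage  # claiming makes the secret public
        return self.amount

    def refund(self, caller, now):
        if self.settled or caller != self.sender or now < self.expiry:
            raise RuntimeError("refund not allowed")
        self.settled = True
        return self.amount


secret = b"alice-secret"
hashlock = hashlib.sha256(secret).hexdigest()
on_chain_a = HTLC("alice", "bob", 1, hashlock, expiry=200)   # Alice locks 1 coin A
on_chain_b = HTLC("bob", "alice", 30, hashlock, expiry=100)  # Bob locks 30 coin B
alice_gets = on_chain_b.claim("alice", secret, now=50)       # reveals the secret
bob_gets = on_chain_a.claim("bob", on_chain_b.revealed_preimage, now=60)
```

Bob's contract expires first on purpose: once Alice claims and reveals the secret, Bob still has time to claim on the other chain, which is the time-sensitivity mentioned above.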

3. How do you approach the design of a decentralized oracle system that is resistant to manipulation and provides accurate data for DeFi applications?

Designing a manipulation-resistant and accurate decentralized oracle involves several key considerations. Data source selection is crucial; using a diverse set of reputable APIs helps mitigate single-source failures and manipulation. Data aggregation methods like median or weighted averages can filter out outliers and malicious data points. Furthermore, incorporating economic incentives and staking mechanisms where oracle nodes are penalized for providing inaccurate data and rewarded for accuracy improves data integrity.

Additionally, employing techniques like commit-reveal schemes can prevent nodes from influencing the aggregated result based on other nodes' submissions. On-chain verification, where feasible, ensures that the oracle's reported data aligns with off-chain sources. Finally, frequent audits and upgrades are essential to address vulnerabilities and improve security over time.
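Median-based aggregation with outlier filtering can be sketched in a few lines of Python. The function name and the 5% deviation limit are assumptions for illustration; production oracle networks use more elaborate rules.

```python
from statistics import median


def aggregate_price(reports, max_deviation=0.05):
    """Drop reports far from the median, then take the median of the rest."""
    if not reports:
        raise ValueError("no reports submitted")
    mid = median(reports)
    kept = [price for price in reports if abs(price - mid) / mid <= max_deviation]
    return median(kept)


# Four honest nodes and one manipulated report.
price = aggregate_price([2000, 2001, 1999, 2002, 5000])
```

Even before filtering, the median barely moves when a single node lies, which is why it is preferred over a plain average for this purpose.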

4. Discuss the complexities of implementing regulatory compliance (e.g., KYC/AML) within decentralized finance while preserving user privacy.

Implementing regulatory compliance like KYC/AML in DeFi presents a significant challenge due to the inherent conflict with user privacy. DeFi's permissionless and pseudonymous nature clashes with traditional KYC/AML requirements that mandate identifying and verifying users. Balancing these demands requires innovative solutions that minimize data collection and maximize privacy.

Some approaches include: zero-knowledge proofs to verify user attributes without revealing the underlying data, federated learning for collaborative model training without sharing raw data, and decentralized identity solutions where users control their data and selectively disclose information to comply with regulations. However, these technologies are still evolving, and their effectiveness in preventing illicit activities while preserving privacy is an ongoing area of research and development. Furthermore, the global nature of DeFi makes it difficult to harmonize regulatory frameworks across different jurisdictions.

5. Explain Layer-2 scaling solutions (e.g., rollups, state channels) and their impact on DeFi's scalability and transaction costs.

Layer-2 scaling solutions address blockchain limitations by processing transactions off the main chain (Layer-1) while still benefiting from its security. Two primary types are rollups and state channels. Rollups bundle multiple transactions into a single transaction on Layer-1, reducing gas costs and increasing throughput. There are two main types: Optimistic Rollups, which assume transactions are valid unless challenged, and Zero-Knowledge Rollups (ZK-Rollups), which use cryptographic proofs to ensure validity without requiring fraud proofs.

State channels involve direct interaction between participants off-chain, submitting only the final state to the main chain. This drastically reduces on-chain activity and associated costs. These technologies significantly improve DeFi's scalability by handling more transactions per second and lower the barrier to entry by reducing transaction fees, thus fostering broader adoption. Specifically, they make microtransactions and high-frequency trading viable within DeFi ecosystems.

6. How would you design a governance mechanism for a DeFi protocol that balances decentralization, security, and efficiency in decision-making?

A balanced DeFi governance mechanism involves a multi-layered approach. Initially, a token-based voting system allows broad community participation, ensuring decentralization. Proposals are submitted and voted on by token holders, weighted by their stake. To enhance security, a council of elected delegates or reputable community members can review proposals, especially those involving critical protocol changes, before they go to a full vote. This council acts as a safeguard against malicious or poorly vetted proposals.

For efficiency, quadratic voting or conviction voting can be implemented to allow users to express the intensity of their preferences. Furthermore, delegated voting enables users to entrust their voting power to representatives with specialized knowledge. Off-chain voting mechanisms with on-chain execution can reduce gas costs. A clear framework defining proposal types, quorums, and voting periods is crucial. Finally, a constitution outlining core principles and dispute resolution mechanisms ensures long-term stability.

7. Describe the different types of DeFi insurance and how they mitigate risks associated with smart contract vulnerabilities and impermanent loss.

DeFi insurance primarily covers smart contract vulnerabilities and impermanent loss. Smart contract cover mitigates risks from bugs, exploits, and hacks in smart contracts. Policies pay out if a contract is compromised, leading to fund loss. Impermanent loss insurance protects liquidity providers (LPs) in automated market makers (AMMs) like Uniswap. Impermanent loss occurs when the price ratio of deposited tokens changes, reducing their USD value compared to simply holding them. Insurance covers this loss, compensating LPs if the loss exceeds a certain threshold.
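For a 50/50 constant-product pool (the Uniswap v2 style design), impermanent loss has a well-known closed form: if the price ratio between the two tokens changes by a factor $r$, the LP position is worth $\frac{2\sqrt{r}}{1+r}$ of what simply holding would be worth. The Python sketch below evaluates this, ignoring trading fees; the function name is made up for illustration.

```python
import math


def impermanent_loss(price_ratio: float) -> float:
    """Fractional loss versus holding, for a 50/50 constant-product pool.

    price_ratio is new_price / old_price of one token relative to the other.
    The result is zero or negative; fees earned by the LP are ignored.
    """
    return 2 * math.sqrt(price_ratio) / (1 + price_ratio) - 1


loss_at_4x = impermanent_loss(4.0)
loss_at_quarter = impermanent_loss(0.25)
```

A 4x price move in either direction leaves the LP about 20% worse off than holding, which is the kind of threshold an impermanent loss policy would be written against.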

Different types exist: discretionary, where claims are assessed on a case-by-case basis, and parametric, which pays out automatically when pre-defined conditions are met (e.g., a specific smart contract exploit is confirmed by oracles). Also, some insurance focuses on specific events like oracle failures, while others offer broader coverage. Some platforms use pooled capital models where users pool funds to cover each other, while others operate more like traditional insurance companies, using actuarial models to price risk and capital reserves.

8. Explain the mechanics of flash loans and how they can be used for arbitrage, liquidation, and potentially malicious activities. How can protocols defend against exploits?

Flash loans are a type of uncollateralized lending within DeFi where you can borrow funds without providing any collateral, as long as the borrowed amount and a fee are repaid within the same transaction. They enable arbitrage by allowing traders to capitalize on price differences across different exchanges. For example, if an asset is cheaper on exchange A than on exchange B, a trader can borrow funds via a flash loan, buy the asset on A, sell it on B, repay the loan plus fees, and pocket the difference, all in a single transaction. Flash loans also facilitate liquidations in lending protocols, where a borrower's position can be closed by third-party liquidators once its collateral falls below a certain threshold. A liquidator can use a flash loan to repay the borrower's debt, seize the collateral at a discount, and repay the loan in the same transaction, without needing any capital up front.

Flash loans can be used for malicious activities such as manipulating prices on decentralized exchanges or mounting governance attacks. Protocols can defend against these exploits by using reliable price oracles that are resistant to manipulation, implementing slippage controls to limit the impact of large trades on price, introducing delay mechanisms for governance proposals, and employing rigorous security audits and formal verification of smart contract code. Rate limiting can also be used to mitigate a series of flash loan attacks within a short time frame.

9. Discuss the role of formal verification in ensuring the correctness and security of smart contracts. What are the limitations?

Formal verification plays a crucial role in smart contract security by mathematically proving that the contract's code satisfies a formal specification of its intended behavior, which helps rule out whole classes of vulnerabilities and exploits. It uses techniques like model checking, theorem proving, and symbolic execution to analyze the contract's logic against that specification. This helps ensure functional correctness (the code does what it is supposed to) and security properties (e.g., no re-entrancy attacks, no integer overflows).
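The idea of checking code against stated properties can be illustrated with a toy example. The sketch below is not a real verification tool: it exhaustively enumerates a small, bounded state space, which is similar in spirit to bounded model checking. The `transfer` model and the two invariants are assumptions made up for the illustration.

```python
from itertools import product

def transfer(balances, sender, receiver, amount):
    """Toy token transfer: returns the new balances, or the unchanged
    state if the transfer is invalid."""
    if amount < 0 or balances[sender] < amount:
        return balances
    new = list(balances)
    new[sender] -= amount
    new[receiver] += amount
    return tuple(new)

def check_invariants(fn=transfer, max_balance=4, accounts=3):
    """Try every bounded state and call; return the first counterexample
    as (balances, sender, receiver, amount), or None if none is found."""
    for balances in product(range(max_balance + 1), repeat=accounts):
        for sender, receiver in product(range(accounts), repeat=2):
            for amount in range(max_balance + 2):
                after = fn(balances, sender, receiver, amount)
                supply_conserved = sum(after) == sum(balances)
                no_negative = all(b >= 0 for b in after)
                if not (supply_conserved and no_negative):
                    return balances, sender, receiver, amount
    return None

print(check_invariants())  # None means no violation within the bounds
```

The result only says the two listed properties hold within the chosen bounds. Any property left out of the specification is not checked at all.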

Limitations include the complexity of formally specifying the intended behavior, which can be time-consuming and error-prone. It can be challenging to scale formal verification to complex smart contracts. It also may not catch all possible vulnerabilities, as it relies on the accuracy of the formal specification. Furthermore, formal verification only addresses the code itself, not issues related to the blockchain environment (e.g., oracle manipulation) or human errors in deployment or usage.

10. How would you approach the security audit of a complex DeFi protocol, identifying potential vulnerabilities and proposing mitigation strategies?

A security audit of a complex DeFi protocol involves a multi-faceted approach. First, I'd start with a deep dive into the protocol's documentation and smart contract code, focusing on identifying potential vulnerabilities such as reentrancy attacks, integer overflows/underflows, front-running, and flaws in access control mechanisms. Static analysis tools (e.g., Slither, Mythril) would be used to automatically detect common vulnerabilities.

Next, a manual code review by experienced security auditors would be performed to identify logical errors and business logic flaws that automated tools may miss. Dynamic analysis and fuzzing would be used to test the protocol's behavior under various conditions, including edge cases and malicious inputs. Finally, mitigation strategies would be proposed to address the identified vulnerabilities, such as implementing the checks-effects-interactions pattern, using safe math libraries (or the built-in overflow checks in Solidity 0.8 and later), employing rate limiting, and implementing robust access control mechanisms. A bug bounty program should also be considered to continuously improve the protocol's security posture, and formal verification can be considered for critical components.
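The checks-effects-interactions pattern mentioned above can be demonstrated with a small Python simulation of a reentrancy attack. The vault classes, the `receive_hook` callback standing in for an external call, and the account names are all hypothetical.

```python
class VulnerableVault:
    """Makes the external call before updating the caller's balance."""
    def __init__(self):
        self.balances = {}
        self.total = 0

    def deposit(self, user, amount):
        self.balances[user] = self.balances.get(user, 0) + amount
        self.total += amount

    def withdraw(self, user, receive_hook):
        amount = self.balances.get(user, 0)
        if amount == 0 or self.total < amount:
            return
        self.total -= amount        # funds leave the vault
        receive_hook(amount)        # interaction: may re-enter withdraw()
        self.balances[user] = 0     # effect applied too late

class SafeVault(VulnerableVault):
    """Same vault, reordered as checks, then effects, then interactions."""
    def withdraw(self, user, receive_hook):
        amount = self.balances.get(user, 0)       # check
        if amount == 0 or self.total < amount:
            return
        self.balances[user] = 0                   # effect
        self.total -= amount
        receive_hook(amount)                      # interaction last

class Attacker:
    def __init__(self, vault):
        self.vault = vault
        self.stolen = 0

    def receive(self, amount):
        self.stolen += amount
        self.vault.withdraw("attacker", self.receive)  # re-enter

    def attack(self):
        self.vault.withdraw("attacker", self.receive)
        return self.stolen

def run(vault_cls):
    vault = vault_cls()
    vault.deposit("victim", 90)
    vault.deposit("attacker", 10)
    return Attacker(vault).attack()

print(run(VulnerableVault), run(SafeVault))
```

In this model the attacker deposits 10 and drains the whole vulnerable vault, while the reordered vault pays out only the attacker's own 10.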

11. Explain the concept of MEV (Miner Extractable Value) and its implications for DeFi users and protocol designers. What can be done to minimize its negative impact?

Miner Extractable Value (MEV), now often referred to as Maximal Extractable Value, refers to the profit that miners (or validators in Proof-of-Stake systems) can extract by including, excluding, or reordering transactions within a block they produce. This can happen in various ways, such as front-running user transactions on decentralized exchanges (DEXs), sandwich attacks (buying before and selling after a large order), or arbitrage opportunities across different DeFi platforms. For DeFi users, MEV can lead to higher transaction costs, slippage, and overall unfair execution. Protocol designers must be aware of MEV and build mechanisms to mitigate its negative impact.

To minimize the negative impact of MEV, several strategies can be employed. These include using transaction privacy solutions (e.g., hiding transaction details until execution), implementing frequent batch auction mechanisms (e.g., CoW Protocol), using transaction ordering services that aim for fair ordering, and designing protocols that are less susceptible to MEV extraction. Ultimately, addressing MEV requires a multifaceted approach combining technological and economic considerations to create a more equitable and efficient DeFi ecosystem.
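To see why sandwich attacks hurt users, the following sketch replays one on a constant-product pool. It is a simplified model: the `Pool` class, the pool sizes, and the trade amounts are hypothetical, and fees and gas are ignored.

```python
class Pool:
    """Constant-product AMM (eth * usd = k), no fees, for illustration only."""
    def __init__(self, eth: float, usd: float):
        self.eth, self.usd = eth, usd

    def buy_eth(self, usd_in: float) -> float:
        k = self.eth * self.usd
        new_usd = self.usd + usd_in
        eth_out = self.eth - k / new_usd
        self.usd, self.eth = new_usd, self.eth - eth_out
        return eth_out

    def sell_eth(self, eth_in: float) -> float:
        k = self.eth * self.usd
        new_eth = self.eth + eth_in
        usd_out = self.usd - k / new_eth
        self.eth, self.usd = new_eth, self.usd - usd_out
        return usd_out

def simulate(front_run_usd: float):
    """Return (ETH the victim receives, attacker profit in USD)."""
    pool = Pool(eth=1_000.0, usd=2_000_000.0)
    # 1. Attacker buys first, pushing the price up.
    attacker_eth = pool.buy_eth(front_run_usd) if front_run_usd else 0.0
    # 2. Victim's 100,000 USD order executes at the worse price.
    victim_eth = pool.buy_eth(100_000.0)
    # 3. Attacker sells into the price the victim just pushed higher.
    attacker_usd = pool.sell_eth(attacker_eth) if attacker_eth else 0.0
    return victim_eth, attacker_usd - front_run_usd

fair_eth, _ = simulate(0.0)
sandwiched_eth, profit = simulate(50_000.0)
print(f"victim gets {fair_eth:.3f} ETH untouched, {sandwiched_eth:.3f} ETH when sandwiched")
print(f"attacker profit: {profit:.2f} USD")
```

In this model the attacker's profit comes directly out of the victim's execution price, which is why hiding or batching orders removes the opportunity.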

12. Describe the challenges of building decentralized stablecoins that maintain their peg to a fiat currency. Elaborate on different approaches like collateralized debt positions and algorithmic stablecoins.

Maintaining a stable peg for decentralized stablecoins presents several challenges. Collateralized debt positions (CDPs) require users to over-collateralize their stablecoin loans with volatile assets, which can lead to liquidations if the collateral value drops significantly. This requires robust liquidation mechanisms and oracles to accurately track collateral prices. Algorithmic stablecoins, on the other hand, rely on algorithms to adjust the supply based on demand, but they can be susceptible to death spirals if confidence in the system erodes. Maintaining sufficient demand during market downturns is a key challenge.
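The over-collateralization rule for CDPs reduces to a simple ratio check. This is a minimal sketch in which the `Cdp` class and the 150% minimum ratio are hypothetical values chosen for the example.

```python
from dataclasses import dataclass

@dataclass
class Cdp:
    collateral: float  # units of the volatile collateral asset
    debt: float        # stablecoins minted against it

    def ratio(self, price: float) -> float:
        """Collateral value divided by debt at the given oracle price."""
        if self.debt == 0:
            return float("inf")
        return self.collateral * price / self.debt

    def is_liquidatable(self, price: float, min_ratio: float = 1.5) -> bool:
        return self.ratio(price) < min_ratio

def liquidation_price(cdp: Cdp, min_ratio: float = 1.5) -> float:
    """Collateral price below which the position can be liquidated."""
    return min_ratio * cdp.debt / cdp.collateral

position = Cdp(collateral=10.0, debt=10_000.0)
print(position.ratio(2_000.0))      # 200% collateralized at a price of 2,000
print(liquidation_price(position))  # price at which the ratio hits 150%
```

The check depends entirely on the oracle price passed in, which is why accurate, manipulation-resistant price feeds matter so much for this design.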

Specific challenges include:

- Oracle manipulation: Attackers can exploit vulnerabilities in oracle systems to manipulate price feeds.

- Scalability: Many stablecoin mechanisms are slow, expensive, or difficult to implement on current blockchains.

- Centralization risks: While designed to be decentralized, some stablecoins rely on centralized components to function, potentially creating single points of failure or introducing regulatory hurdles.

- Regulatory uncertainty: The regulatory landscape surrounding cryptocurrencies is constantly evolving, which is a big hurdle to stablecoin adoption.

13. How do you approach the design of a DeFi protocol that is resilient to extreme market conditions and black swan events?

Designing a DeFi protocol resilient to extreme market conditions requires a multi-faceted approach. Key considerations include: diversification of collateral types to minimize reliance on any single asset, robust oracle mechanisms that are resistant to manipulation and can handle extreme price volatility (using multiple oracles and outlier detection), circuit breakers or emergency shutdown mechanisms that can pause the protocol in case of catastrophic events, and dynamic risk parameters that adjust collateralization ratios and liquidation thresholds based on market conditions. Furthermore, comprehensive auditing and formal verification of the smart contracts are crucial to identify and eliminate potential vulnerabilities. Finally, implementing a decentralized governance system enables the community to react and adapt quickly to unforeseen events.
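Two of these mechanisms, multi-oracle aggregation with outlier filtering and a circuit breaker, can be sketched as follows. The function and class names, the 5% deviation filter, and the 20% move limit are hypothetical parameters, not recommendations.

```python
from statistics import median

def aggregate_price(reports, max_deviation=0.05):
    """Median of oracle reports after dropping any report that deviates
    from the raw median by more than `max_deviation` (as a fraction)."""
    if not reports:
        raise ValueError("no oracle reports")
    mid = median(reports)
    kept = [p for p in reports if abs(p - mid) / mid <= max_deviation]
    return median(kept)

class CircuitBreaker:
    """Pauses the protocol when the price moves too far in one update."""
    def __init__(self, max_move=0.20):
        self.max_move = max_move
        self.last_price = None
        self.paused = False

    def update(self, price):
        if self.last_price is not None:
            move = abs(price - self.last_price) / self.last_price
            if move > self.max_move:
                self.paused = True
        if not self.paused:
            self.last_price = price
        return self.paused

# One oracle reports a wildly wrong price; the filter discards it.
print(aggregate_price([100.0, 101.0, 99.0, 250.0]))

breaker = CircuitBreaker()
for p in (100.0, 110.0, 60.0):
    print(p, "paused" if breaker.update(p) else "ok")
```

In practice, unpausing would be a governance decision, and the thresholds themselves are the kind of dynamic risk parameter that needs tuning against historical volatility.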

Stress testing and simulation of the protocol under various extreme scenarios (e.g., sudden price crashes, flash loan attacks) are also essential. Monitoring key metrics such as collateralization ratios, liquidity, and oracle accuracy is crucial for early detection of potential issues. Regular audits and updates should be scheduled to ensure the long-term security and stability of the protocol.

14. Discuss the potential of DeFi in emerging markets and the challenges related to accessibility, infrastructure, and regulatory uncertainty.

DeFi offers significant potential in emerging markets by providing access to financial services for the unbanked and underbanked, facilitating cross-border payments, and enabling new lending and investment opportunities. It can bypass traditional intermediaries, reducing costs and increasing efficiency. This can lead to greater financial inclusion and economic empowerment in these regions.

However, challenges abound. Limited internet access and low levels of digital literacy hinder accessibility. Poor infrastructure, including unreliable electricity and network connectivity, can disrupt DeFi services. Regulatory uncertainty and the lack of clear legal frameworks create risks for both users and DeFi providers. Furthermore, volatility in cryptocurrency markets and security vulnerabilities in DeFi protocols pose significant threats, especially for less financially sophisticated users.

15. Explain how on-chain analytics can be used to monitor DeFi protocols, detect anomalies, and identify potential security threats.

On-chain analytics involves examining blockchain data to gain insights into DeFi protocol behavior. By monitoring transaction patterns, smart contract interactions, and token flows, anomalies can be detected. Unusual spikes in transaction volume, large fund withdrawals from a protocol, or unexpected smart contract calls can signal potential security threats like exploits or rug pulls.

Specifically, it allows us to:

- Track TVL (Total Value Locked) changes to identify potential fund drains.

- Monitor smart contract event logs for suspicious function calls or parameter values.

- Analyze transaction graphs to uncover illicit fund flows or coordinated attacks.

- Identify whale movements that could manipulate market prices.

- Set up alerts based on pre-defined thresholds for key metrics.
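A threshold alert of this kind can be as simple as the sketch below, which flags sharp TVL drops in a series of samples. The function name, the three-sample window, and the 20% drop threshold are hypothetical, and the data would in practice come from an indexer or node rather than a hard-coded list.

```python
def tvl_drop_alerts(tvl_series, window=3, max_drop=0.2):
    """Return the indices where TVL fell by more than `max_drop` (as a
    fraction) relative to the peak of the preceding `window` samples."""
    alerts = []
    for i in range(1, len(tvl_series)):
        recent = tvl_series[max(0, i - window):i]
        peak = max(recent)
        if peak > 0 and (peak - tvl_series[i]) / peak > max_drop:
            alerts.append(i)
    return alerts

# TVL in millions of USD, sampled at regular intervals (made-up numbers).
samples = [100, 102, 101, 99, 70, 68]
print(tvl_drop_alerts(samples))
```

Comparing against a recent peak rather than the previous sample catches drains that are spread over several blocks.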

16. How would you design a decentralized identity system that allows users to selectively disclose personal information to DeFi applications while maintaining privacy?

A decentralized identity (DID) system for DeFi could use a combination of verifiable credentials (VCs) and zero-knowledge proofs (ZKPs) to enable selective disclosure and maintain privacy. Users would obtain VCs from trusted issuers (e.g., KYC providers, educational institutions) attesting to specific attributes. When interacting with a DeFi application, instead of sharing the entire VC, users could employ ZKPs to prove that they possess a VC with the required attributes (e.g., age over 18, accredited investor status) without revealing the underlying data.

Technically, this involves:

- DID registration: Users create a DID using a DID method compatible with a decentralized ledger.

- VC issuance: Trusted issuers sign VCs using their private keys, storing them on a decentralized storage or directly with the user.

- Selective Disclosure: Users generate ZKPs based on their VCs, proving specific attributes to DeFi applications. The application verifies the proof against the issuer's public key, ensuring authenticity and validity without revealing the user's private information. For example, a library like circom can be used to create the circuits for zero-knowledge proof construction.

17. Discuss the impact of quantum computing on the security of DeFi protocols and potential mitigation strategies.

Quantum computing poses a significant threat to the security of DeFi protocols, primarily because a sufficiently powerful quantum computer could break widely used cryptographic algorithms like RSA and ECC, which are the foundation for digital signatures and encryption in many DeFi applications. This could allow attackers to forge signatures, steal funds, and take over accounts that control smart contracts.

Mitigation strategies include transitioning to post-quantum cryptography (PQC) algorithms that are resistant to quantum attacks. This involves:

- Identifying vulnerable components: Analyzing the DeFi protocol to pinpoint areas relying on susceptible algorithms.

- Implementing PQC: Replacing classical algorithms with quantum-resistant alternatives. This can involve libraries like liboqs.

- Hybrid approaches: Combining classical and PQC algorithms to maintain security during the transition phase.

- Smart contract upgrades: Designing upgradeable smart contracts to facilitate algorithm updates.

- Key management: Implementing robust key management practices that are resistant to quantum attacks, such as using larger symmetric key sizes or quantum key distribution (QKD) where feasible.

18. Explain the concept of recursive smart contracts and their potential applications in DeFi.

Recursive smart contracts are contracts that can call themselves, either directly or indirectly. This means a function within the contract initiates a call to the same contract, potentially triggering the same function or a different one within it. This allows for complex operations to be broken down into smaller, self-repeating steps, executed within a single transaction, until a certain condition is met. While powerful, recursion depth needs careful control to avoid exceeding gas limits.

In DeFi, recursive contracts can be used for:

- Automated market maker (AMM) strategies: Rebalancing liquidity pools or executing complex trades across multiple pools in a single transaction.

- Decentralized autonomous organizations (DAOs): Enabling complex voting mechanisms or automated fund distribution based on recursive logic.

- Yield farming optimization: Compounding yield farming rewards automatically and recursively across different protocols.

- Layer-2 scaling solutions: Batching transactions and recursively verifying proofs to improve throughput.

However, it's crucial to be aware of gas limits and security vulnerabilities (reentrancy attacks) when designing recursive smart contracts.

19. How would you design a system for decentralized dispute resolution in DeFi, ensuring fairness and transparency?