Recruiting for roles requiring a solid grasp of computer science fundamentals can be challenging. Interviewers need a set of questions to assess candidates effectively, ensuring they possess the required knowledge.

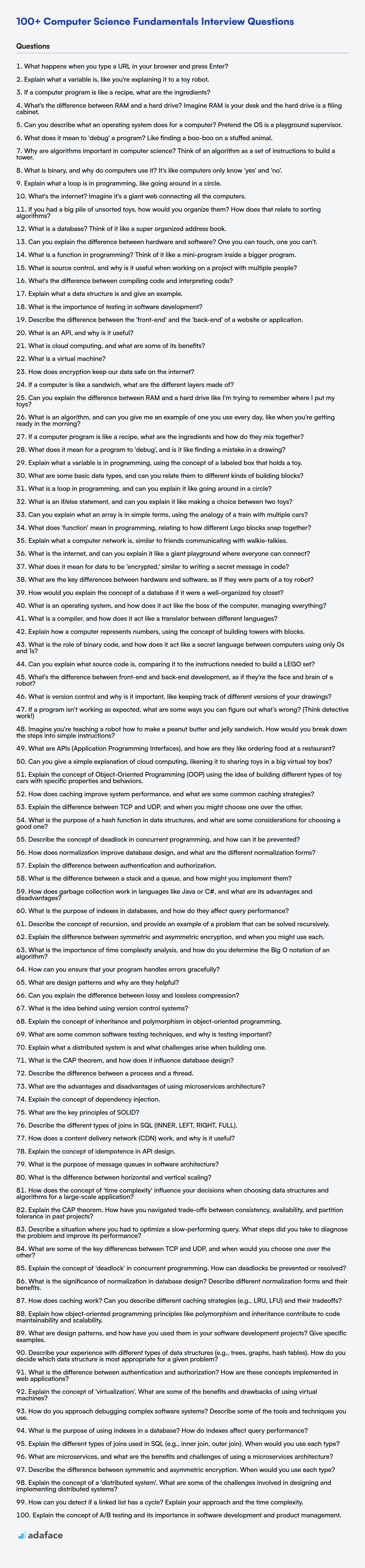

This blog post provides a curated list of computer science interview questions for various experience levels, from freshers to experienced professionals; there are even some MCQs to spice it up. The questions cover data structures, algorithms, and general programming principles.

By using these questions, you can filter candidates more effectively and confirm they have the required skills. You can also use Adaface's online tests for a data-driven hiring process.


Computer Science Fundamentals interview questions for freshers

1. What happens when you type a URL in your browser and press Enter?

When you type a URL in your browser and press Enter, several things happen behind the scenes. First, the browser parses the URL to determine the protocol (e.g., HTTP, HTTPS), domain name (e.g., example.com), and path (e.g., /index.html). It then checks its own DNS cache and the operating system's DNS cache for the IP address associated with the domain name. If the IP address isn't found locally, it queries a DNS server to resolve the domain name to an IP address.

Once the browser has the IP address, it establishes a connection with the server (typically via TCP). For HTTPS, a TLS/SSL handshake occurs to encrypt the connection. The browser then sends an HTTP request to the server, requesting the resource specified in the URL. The server processes the request and sends back an HTTP response, which includes the requested resource (HTML, CSS, JavaScript, images, etc.) and HTTP headers. The browser then renders the received content, displaying the webpage to you.
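The first step (parsing the URL) and the shape of the request the browser sends can be sketched with Python's standard `urllib.parse` module. This is a simplified illustration that does no networking: the DNS lookup, TCP connection, and TLS handshake are left out, the helper names `describe_url` and `build_get_request` are hypothetical, and a real browser sends many more headers.

```python
from urllib.parse import urlsplit


def describe_url(url: str) -> dict:
    """Split a URL into the parts a browser looks at first."""
    parts = urlsplit(url)
    return {
        "protocol": parts.scheme,  # e.g. "https"
        "domain": parts.hostname,  # resolved to an IP address via DNS
        # Standard default ports: 443 for HTTPS, 80 for HTTP.
        "port": parts.port or (443 if parts.scheme == "https" else 80),
        "path": parts.path or "/",  # the resource requested from the server
    }


def build_get_request(url: str) -> str:
    """Build the text of a minimal HTTP/1.1 GET request for the URL."""
    info = describe_url(url)
    return (
        f"GET {info['path']} HTTP/1.1\r\n"
        f"Host: {info['domain']}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )


print(describe_url("https://example.com/index.html"))
print(build_get_request("https://example.com/index.html"))
```

Once the request text is sent over the (possibly encrypted) connection, the server's response headers and body come back over the same connection for the browser to render.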

2. Explain what a variable is, like you're explaining it to a toy robot.

Beep boop! Imagine I have a special box. A variable is like that box. We give the box a name, like 'number_of_wheels'. Then, we can put something inside the box, like the number 4 (because cars have four wheels). Later, if we want to know how many wheels there are, we just look inside the 'number_of_wheels' box and it tells us! We can even change what's inside the box if we want to. For example, if we are talking about a bicycle, we can change the box 'number_of_wheels' to have 2 inside instead.
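In Python, the robot's box looks like this (a minimal sketch using the same name as the analogy):

```python
number_of_wheels = 4     # put 4 in the box named number_of_wheels (a car)
print(number_of_wheels)  # look inside the box: prints 4

number_of_wheels = 2     # now we're talking about a bicycle, so replace the contents
print(number_of_wheels)  # prints 2
```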

3. If a computer program is like a recipe, what are the ingredients?

If a computer program is like a recipe, the ingredients are the data the program uses and the instructions it follows to process that data. Think of data as things like numbers, text, or even files, and the instructions as the steps the program takes to manipulate that data, similar to how a chef would manipulate ingredients.

Specifically, in programming terms, the 'ingredients' could be broken down into:

- Variables: Placeholders for storing data (like flour or sugar amounts).

- Data Structures: Ways of organizing data (like using a specific bowl for mixing).

- Input: Data received from the user or other sources (like adding a pinch of salt from another container).
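The three kinds of 'ingredients' in the list above can all appear in a few lines of Python. This is an illustrative sketch: the function name `make_pancakes` and the per-serving amounts are made up, and the input arrives as a function argument rather than from a keyboard.

```python
def make_pancakes(servings: int) -> dict:
    # Input: the number of servings is passed in by whoever calls the function.
    flour_grams = 50 * servings  # Variables: hold ingredient amounts
    milk_ml = 60 * servings
    # Data structure: a dictionary groups the amounts under labelled keys.
    return {"flour_g": flour_grams, "milk_ml": milk_ml, "eggs": servings}


print(make_pancakes(2))
```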

4. What's the difference between RAM and a hard drive? Imagine RAM is your desk and the hard drive is a filing cabinet.

RAM (Random Access Memory) is like your desk – it's where you actively work on things. It provides fast, temporary storage for the data and instructions your computer is currently using. When you close a program or turn off the computer, the information on your desk (RAM) is cleared.

A hard drive, on the other hand, is like a filing cabinet. It's a much larger, permanent storage space where you keep all your files, documents, and applications even when the computer is off. Accessing data from the hard drive is slower than accessing data from RAM because the computer needs to retrieve the information from the filing cabinet and place it on your desk (RAM) before it can be used.

5. Can you describe what an operating system does for a computer? Pretend the OS is a playground supervisor.

The operating system (OS) is like a playground supervisor for a computer. It manages all the computer's resources, ensuring everything runs smoothly and fairly. It makes sure each program (like each child) gets a turn to use the CPU (playground equipment), memory (sandbox), and storage (toy box) without interfering with others.

Specifically, the OS performs tasks like:

- Resource allocation: CPU time, memory, and disk space.

- Hardware management: communicating with devices like printers and keyboards.

- Process management: starting, stopping, and scheduling programs.

- User interface: allowing users to interact with the computer.

- File system management: organizing and storing files.

Similar to how a playground supervisor prevents fights and makes sure everyone gets a chance to play, the OS prevents conflicts and ensures each program gets the resources it needs.
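Programs don't manage hardware directly; they ask the OS. As a small illustration, each call in this sketch uses Python's standard `os` and `shutil` modules to make a request that the operating system answers:

```python
import os
import shutil

# Process management: the OS gives every running program a process ID.
print("My process ID:", os.getpid())

# Resource allocation: how many CPUs the OS can schedule work on (may be None).
print("CPUs available:", os.cpu_count())

# File system management: list the entries in the current directory.
print("Files here:", os.listdir("."))

# Storage: ask the OS how much disk space is free on this drive.
usage = shutil.disk_usage(".")
print(f"Free disk space: {usage.free // (1024 ** 3)} GiB")
```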

6. What does it mean to 'debug' a program? Like finding a boo-boo on a stuffed animal.

Debugging a program is like finding a 'boo-boo' or problem in your code that's causing it to not work correctly. It involves identifying the source of the error (the 'bug'), understanding why it's happening, and then fixing it so the program runs as expected.

Think of it this way: a program is a set of instructions. When something goes wrong, debugging is the process of carefully going through those instructions to find the point where things went off track. Tools like debuggers help programmers step through code, examine variables, and pinpoint the exact location where the issue lies, similar to a doctor using tools to find what's wrong with a patient.
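A small example of that process: a function meant to add the numbers from 1 to n has an off-by-one bug. Checking it on a small input whose answer we already know (1 + 2 + 3 = 6) reveals the problem, and stepping through the loop shows why. The function names are hypothetical.

```python
def sum_up_to(n):
    """Intended: return 1 + 2 + ... + n."""
    total = 0
    for i in range(1, n):  # BUG: range stops *before* n, so n is never added
        total += i
    return total


print(sum_up_to(3))  # expected 6, but prints 3, so something is wrong


def sum_up_to_fixed(n):
    total = 0
    for i in range(1, n + 1):  # FIX: include n itself
        total += i
    return total


print(sum_up_to_fixed(3))  # prints 6
```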

7. Why are algorithms important in computer science? Think of an algorithm as a set of instructions to build a tower.

Algorithms are fundamental to computer science because they provide a systematic way to solve problems. Like instructions to build a tower, they ensure that the desired outcome (a correctly built tower or a solved computational problem) is achieved efficiently and reliably. Different algorithms can solve the same problem, but some might be faster, use less memory, or be easier to implement.

Specifically, algorithms enable:

- Efficiency: Optimizing resource usage (time and memory).

- Scalability: Handling larger inputs with running time that grows gracefully as the input size increases.

- Correctness: Guaranteeing accurate and consistent results.

- Abstraction: Creating reusable solutions for common problems, as shown with the plethora of sorting or searching algorithms.
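To make the efficiency point concrete, here is a sketch of two algorithms that solve the same problem, finding a number in a sorted list, while counting how many comparisons each one makes. Linear search checks items one by one; binary search repeatedly halves the range, which only works because the list is sorted.

```python
def linear_search(items, target):
    """Check every item in order; returns (index, steps)."""
    for steps, value in enumerate(items, start=1):
        if value == target:
            return steps - 1, steps
    return -1, len(items)


def binary_search(items, target):
    """Repeatedly halve a *sorted* list; returns (index, steps)."""
    low, high, steps = 0, len(items) - 1, 0
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if items[mid] == target:
            return mid, steps
        if items[mid] < target:
            low = mid + 1   # target is in the upper half
        else:
            high = mid - 1  # target is in the lower half
    return -1, steps


numbers = list(range(1_000_000))
print(linear_search(numbers, 999_999))  # (999999, 1000000): one step per item
print(binary_search(numbers, 999_999))  # same index, in at most 20 steps
```

Both return the same answer; the difference is how the work grows with the input size (roughly n steps versus roughly log2(n) steps).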

8. What is binary, and why do computers use it? It's like computers only know 'yes' and 'no'.

Binary is a base-2 number system, meaning it uses only two digits: 0 and 1. Computers use binary because their fundamental components, like transistors, can easily represent two states: on (1) or off (0). It's essentially the simplest way for a computer to process and store information.

Think of it like switches: either a switch is on or off. By combining many of these 'switches' (transistors), computers can represent complex data and perform calculations. This 'yes' (1) and 'no' (0) approach makes binary a very efficient and reliable system for digital logic.
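A sketch of how a whole number becomes a row of on/off switches: repeatedly divide by 2 and collect the remainders. Python's built-in `bin()` and `int(..., 2)` are shown alongside for comparison.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to binary by repeated division by 2."""
    if n == 0:
        return "0"
    bits = []
    while n > 0:
        bits.append(str(n % 2))  # the remainder is the next bit (0 = off, 1 = on)
        n //= 2
    return "".join(reversed(bits))  # remainders come out least-significant first


print(to_binary(13))   # 1101  (8 + 4 + 0 + 1)
print(bin(13))         # built-in equivalent: 0b1101
print(int("1101", 2))  # and back again: 13
```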

9. Explain what a loop is in programming, like going around in a circle.

A loop in programming is a way to repeat a block of code multiple times. Imagine walking in a circle – you keep going around and around until you decide to stop. In programming, this 'circle' is the code block, and the 'walking around' is the repetition. The loop continues to execute as long as a certain condition is true.

For example, you might use a loop to:

- Print numbers from 1 to 10.

- Process each item in a list.

- Keep asking for user input until the input is valid.

Here's a simple example in Python:

```python
for i in range(5):
    print(i)
```

This code will print the numbers 0 to 4, repeating the print(i) statement five times.
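The last case in the list above, repeating until the input is valid, is usually written as a `while` loop because the number of repetitions isn't known in advance. In this sketch, a hypothetical list of answers stands in for real keyboard input so it runs on its own; a real program would call `input()` instead.

```python
# Simulated answers a user might type; a real program would call input().
answers = iter(["abc", "-3", "7"])


def is_valid(text: str) -> bool:
    """Accept only positive whole numbers."""
    return text.isdigit() and int(text) > 0


attempts = 0
while True:  # keep going around the loop...
    attempts += 1
    answer = next(answers)
    if is_valid(answer):  # ...until the input is valid
        break
    print(f"'{answer}' is not valid, please try again")

print(f"Got {answer} after {attempts} attempts")
```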

10. What's the internet? Imagine it's a giant web connecting all the computers.

The internet is a global network of interconnected computer networks that use the Internet Protocol Suite (TCP/IP) to communicate with each other. Think of it like a massive spider web linking computers worldwide.

It allows devices to share information and resources. This includes things like:

- Websites

- Emails

- Streaming video

- Online games

- File sharing

11. If you had a big pile of unsorted toys, how would you organize them? How does that relate to sorting algorithms?

To organize a big pile of unsorted toys, I'd likely start by grouping them based on broad categories, such as type (e.g., cars, dolls, building blocks), size (small, medium, large), or color. Then, within each group, I might further sort them based on more specific characteristics. For example, I might sort the cars by manufacturer or the dolls by type of clothing. This approach mimics several sorting algorithms.

Specifically, the initial grouping by broad categories resembles bucket sort (or a pass of radix sort), where items are distributed into buckets based on a key (here, category, size, or color). Sorting within each group then resembles applying insertion sort or merge sort to each subset individually. Once the toys are organized, finding a specific toy (e.g., a blue car) is much faster than searching the entire unsorted pile, which is the same reason sorting pays off when large datasets need to be searched.
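A rough sketch of that two-step approach: distribute the toys into buckets by category, then sort within each bucket. The toy list is made up, and Python's built-in `sorted()` stands in for the insertion or merge sort used inside each bucket.

```python
from collections import defaultdict

toys = [
    ("car", "red"), ("doll", "blue"), ("block", "green"),
    ("car", "blue"), ("doll", "red"), ("car", "green"),
]

# Step 1 (bucket-style): distribute toys into buckets keyed by category.
buckets = defaultdict(list)
for category, color in toys:
    buckets[category].append(color)

# Step 2: sort within each bucket, and visit the buckets in a fixed order.
organized = {category: sorted(colors) for category, colors in sorted(buckets.items())}
print(organized)

# Finding a blue car now means checking one small bucket, not the whole pile.
print("blue" in organized["car"])
```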

12. What is a database? Think of it like a super organized address book.

A database is a structured collection of data, organized for efficient storage, retrieval, modification, and deletion. Think of it like a super organized address book: it stores information (like names, addresses, and phone numbers) in a way that makes it easy to find specific entries or update the information when needed.

Key characteristics include:

- Organization: In relational databases, data is structured into tables with rows and columns.

- Persistence: Data is stored persistently, even when the system is turned off.

- Querying: Data can be retrieved using a query language like SQL (Structured Query Language). For example:

```sql
SELECT name, address FROM contacts WHERE city = 'New York';
```

- Data Integrity: Enforces rules to maintain the accuracy and consistency of the data.
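The SQL query above can be tried end to end with Python's built-in `sqlite3` module. In this sketch, the `contacts` table's columns and rows are invented for the example, and the database lives only in memory.

```python
import sqlite3

# An in-memory database, so nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (name TEXT, address TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO contacts VALUES (?, ?, ?)",  # placeholders avoid SQL injection
    [
        ("Alice", "12 Park Ave", "New York"),
        ("Bob", "3 Lake St", "Chicago"),
        ("Carol", "98 Broadway", "New York"),
    ],
)

rows = conn.execute(
    "SELECT name, address FROM contacts WHERE city = 'New York'"
).fetchall()
print(rows)
conn.close()
```

Without an `ORDER BY` clause, SQL does not guarantee the order of the returned rows.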

13. Can you explain the difference between hardware and software? One you can touch, one you can't.

Hardware refers to the physical components of a computer system, like the CPU, RAM, hard drive, keyboard, and monitor. These are tangible objects that you can physically touch. Software, on the other hand, consists of the set of instructions or programs that tell the hardware what to do. Examples include operating systems (like Windows or macOS), applications (like web browsers or word processors), and games.

Essentially, hardware provides the platform, and software utilizes that platform to perform specific tasks. You cannot physically touch software; you can only interact with it through the hardware.

14. What is a function in programming? Think of it like a mini-program inside a bigger program.

A function is a reusable block of code that performs a specific task. Think of it as a mini-program within a larger program. It accepts inputs (arguments or parameters), processes them, and often returns an output. Functions help to organize code, making it more readable and maintainable by breaking down complex problems into smaller, manageable units.

Functions offer several advantages: they prevent code duplication (write once, use many times), improve modularity, and make debugging easier. For example, a function could calculate the area of a circle. You define it once, and then reuse it whenever you need to calculate the area, passing in the radius as an argument. Here's an example in Python:

```python
import math

def calculate_area(radius):
    """Return the area of a circle with the given radius."""
    return math.pi * radius * radius

area = calculate_area(5)  # call the function with radius = 5
print(area)
```

15. What is source control, and why is it useful when working on a project with multiple people?

Source control (also known as version control) is a system that tracks changes to a set of files over time, allowing you to revert to specific versions later. It's essentially a database of changes.

When working with multiple people, source control is invaluable because it:

- Manages concurrent changes: Lets multiple developers modify the same files in parallel. Tools like Git merge non-overlapping changes automatically and flag conflicting ones for manual resolution.

- Provides a history of changes: Allows you to see who made what changes and when, facilitating debugging and understanding the evolution of the codebase.

- Enables collaboration: Simplifies sharing code, reviewing changes, and coordinating efforts among team members. Tools often include features like pull requests for code review.

- Facilitates branching and merging: Lets developers work on isolated features or bug fixes without disrupting the main codebase. Branches can then be merged back in.

- Offers backups and redundancy: Protects against data loss and lets the project be restored to a previous state if needed. Teams typically keep a shared central repository, and with distributed tools like Git every full clone also contains the complete history.

- Enables auditing: Useful for compliance and understanding the development process.

16. What's the difference between compiling code and interpreting code?

Compiling converts source code into machine code (or an intermediate representation like bytecode) before execution. The resulting executable file can then be run directly by the operating system or a virtual machine. Interpreting, on the other hand, executes source code line by line at runtime. An interpreter reads a statement, translates it, and then executes it immediately, without generating a separate executable file.

Here's a simple breakdown:

- Compilation: Source code -> Compiler -> Machine code/Bytecode -> Execution

- Interpretation: Source code -> Interpreter -> Execution (line by line)
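In practice the line is blurry. CPython, the standard Python implementation, first compiles source code to bytecode and then interprets that bytecode. The built-in `compile()` and `exec()` functions and the standard `dis` module make both steps visible; the exact bytecode that `dis` prints varies between Python versions.

```python
import dis

source = "x = 2 + 3\nprint(x)"

# Step 1: compile the source text into a bytecode object (no file is written).
code = compile(source, "<example>", "exec")

# Step 2: the interpreter executes that bytecode.
namespace = {}
exec(code, namespace)  # prints 5

# Inspect the bytecode instructions the interpreter actually runs.
dis.dis(code)
```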

17. Explain what a data structure is and give an example.

A data structure is a way of organizing and storing data in a computer so that it can be used efficiently. It defines the relationship between the data and the operations that can be performed on the data. Essentially, it's a blueprint for how data is arranged in memory.

For example, an array is a data structure. It's a collection of elements of the same data type stored in contiguous memory locations. Elements can be accessed using their index.

```python
my_array = [1, 2, 3, 4, 5]

print(my_array[0])  # Access the first element (index 0)
```
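Strictly speaking, a Python list is a dynamic array that stores references and can mix types. Python's standard `array` module is closer to the textbook definition of an array: every element has the same type and is stored contiguously. A short sketch:

```python
from array import array

# 'i' = signed int: every element has the same type and is stored contiguously.
numbers = array("i", [1, 2, 3, 4, 5])

print(numbers[0])        # indexing works like a list: 1
print(numbers.itemsize)  # bytes per element (platform-dependent, commonly 4)

numbers.append(6)        # the array can still grow

try:
    numbers.append("seven")  # wrong type: rejected
except TypeError as err:
    print("TypeError:", err)
```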

18. What is the importance of testing in software development?

Testing is crucial in software development to ensure the quality, reliability, and performance of the software. It helps in identifying and resolving defects or bugs early in the development lifecycle, preventing them from reaching the end-users. Effective testing reduces development costs, improves user satisfaction, and minimizes the risk of software failure.

Specifically, testing provides several key benefits:

- Early bug detection: Finding and fixing bugs early is far cheaper than fixing them later.

- Improved software quality: Testing helps ensure the software meets the required standards.

- Increased reliability: Testing checks that the software behaves correctly under a range of conditions.

- Reduced risk: Testing lowers the risk of software failure and data loss.

- Enhanced user satisfaction: Delivering a high-quality, reliable product leads to satisfied users.
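As a small illustration of automated testing, here is a sketch using Python's built-in `unittest` module. The function under test, `apply_discount`, is hypothetical; the tests cover a typical case, an edge case, and invalid input.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class TestApplyDiscount(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(100, 10), 90.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100, 150)


if __name__ == "__main__":
    # exit=False lets the script continue after the tests run.
    unittest.main(argv=["discount-tests"], exit=False)
```

Running tests like these on every change catches regressions early, which is where the cost savings described above come from.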

19. Describe the difference between the 'front-end' and the 'back-end' of a website or application.

The front-end, also known as the 'client-side', is what users directly interact with. It encompasses the user interface (UI) and user experience (UX) aspects of a website or application. This includes everything from the layout and design to the interactive elements and animations. Technologies commonly used in front-end development include:

- HTML (structure)

- CSS (styling)

- JavaScript (behavior)

The back-end, also known as the 'server-side', is the part of the application that handles the logic, data storage, and processing behind the scenes. It's responsible for tasks like managing databases, handling user authentication, and running server-side code. Technologies commonly used in back-end development include:

- Python, Java, and JavaScript on Node.js (server-side languages and runtimes)

- Databases like PostgreSQL, MySQL, MongoDB

20. What is an API, and why is it useful?

An API (Application Programming Interface) is a set of rules and specifications that software programs can follow to communicate with each other. It defines the methods and data formats that applications use to request and exchange information, enabling them to interact and leverage each other's functionality.

APIs are useful because they promote modularity and reusability. They allow developers to integrate different services and applications without needing to understand the underlying implementation details. This simplifies development, reduces code duplication, and fosters innovation by enabling easy access to existing functionality. Examples:

- A weather app can use a weather API to fetch weather data.

- A payment gateway API allows an e-commerce site to process payments.

- A social media API enables an app to post updates to a user's timeline.

APIs are essential for modern software development, enabling seamless integration and collaboration between diverse systems.
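Inside a program, an API is a contract between the caller and the provider. Here is a sketch of the weather-app example using Python's `typing.Protocol`. `WeatherAPI`, `FakeWeatherService`, and the temperatures are hypothetical; a real weather API would usually be called over HTTP.

```python
from typing import Protocol


class WeatherAPI(Protocol):
    """The contract: any weather provider must offer this method."""

    def current_temperature(self, city: str) -> float: ...


class FakeWeatherService:
    """Hypothetical provider; a real one might call a web service instead."""

    _data = {"London": 14.5, "Cairo": 31.0}

    def current_temperature(self, city: str) -> float:
        return self._data[city]


def weather_report(api: WeatherAPI, city: str) -> str:
    # The app relies only on the interface, not on how the data is fetched.
    return f"It is {api.current_temperature(city)}°C in {city}."


print(weather_report(FakeWeatherService(), "London"))
```

Swapping `FakeWeatherService` for a different provider requires no change to `weather_report`, which is the modularity benefit described above.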

21. What is cloud computing, and what are some of its benefits?

Cloud computing is the delivery of computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the Internet ("the cloud") to offer faster innovation, flexible resources, and economies of scale. Instead of owning and maintaining physical data centers and servers, you access these resources on demand from a cloud provider.

Some key benefits include:

- Cost Savings: Can reduce capital expenditure and operational costs, since you pay for resources as you use them.

- Scalability: Easily scale resources up or down based on demand.

- Flexibility: Access a wide range of services and technologies.

- Reliability: Improved uptime and data redundancy.

- Accessibility: Access resources from anywhere with an internet connection.

22. What is a virtual machine?

A virtual machine (VM) is a software-defined environment that emulates a physical computer. It allows you to run an operating system and applications within another operating system. Think of it as a computer within a computer.

VMs provide isolation: if one VM crashes, it typically doesn't affect other VMs or the host system. They are useful for testing different operating systems, running legacy applications, and consolidating server resources. Hypervisors such as VMware, VirtualBox, and Hyper-V provide this functionality.

23. How does encryption keep our data safe on the internet?

Encryption transforms readable data (plaintext) into an unreadable format (ciphertext), making it incomprehensible to unauthorized individuals. It uses algorithms (mathematical formulas) and keys to perform this transformation. When data is transmitted over the internet, encryption ensures that even if intercepted, the data remains secure because the interceptor lacks the correct key to decrypt it.

Specifically, encryption protects data in several key ways:

- Confidentiality: Only authorized parties with the decryption key can access the original data.

- Integrity: Encryption can include mechanisms to detect if the data has been tampered with during transmission.

- Authentication: Encryption protocols can verify the identity of the sender and receiver, preventing impersonation.

Common encryption methods used on the internet include HTTPS (TLS/SSL) for secure web browsing and end-to-end encryption for messaging apps.
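To make the confidentiality and integrity points concrete, here is a teaching sketch that uses only Python's standard library. It applies a one-time pad (XOR with a random key as long as the message, used only once) for confidentiality and an HMAC tag for integrity. This is not how HTTPS works internally; real systems use vetted ciphers such as AES through a maintained cryptography library.

```python
import hashlib
import hmac
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the matching byte of key."""
    return bytes(d ^ k for d, k in zip(data, key))


message = b"Meet at 10am"

# Confidentiality: a random pad as long as the message, used only once.
pad = secrets.token_bytes(len(message))
ciphertext = xor_bytes(message, pad)    # unreadable without the pad
decrypted = xor_bytes(ciphertext, pad)  # XOR with the same pad restores it

# Integrity: an HMAC tag lets the receiver detect tampering.
mac_key = secrets.token_bytes(32)
tag = hmac.new(mac_key, ciphertext, hashlib.sha256).digest()

tampered = bytes([ciphertext[0] ^ 1]) + ciphertext[1:]  # flip one bit
tampered_tag = hmac.new(mac_key, tampered, hashlib.sha256).digest()

print(ciphertext.hex())
print(decrypted)                               # b'Meet at 10am'
print(hmac.compare_digest(tag, tampered_tag))  # False: the change is detected
```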

Computer Science Fundamentals interview questions for juniors

1. If a computer is like a sandwich, what are the different layers made of?

If a computer is like a sandwich, the layers can be described as follows:

- Bread (Top): The user interface (UI), which is what the user directly interacts with (e.g., a graphical user interface or command line). It's the visible part of the 'sandwich'.

- Filling (Various Layers): This represents the software stack. It includes applications, operating system, libraries, and drivers. Each of these layers builds upon the one below. The OS manages resources, libraries provide reusable code, and drivers allow the OS to communicate with hardware.

- Bread (Bottom): The hardware. These are the physical components of the computer, such as the CPU, memory, storage, and motherboard. They are the foundation upon which everything else is built, like the bottom slice holding the whole sandwich together.

2. Can you explain the difference between RAM and a hard drive like I'm trying to remember where I put my toys?

Imagine RAM is like the floor of your playroom where you keep the toys you're currently playing with. It's fast and easy to grab whatever you need right away. The hard drive is like the big toy box in your closet. It can hold lots more toys, but it takes longer to get something out of it.

So, RAM is small and fast, used for things you're doing right now. The hard drive is big and slow, used for storing everything, even the toys you aren't using at the moment.

3. What is an algorithm, and can you give me an example of one you use every day, like when you're getting ready in the morning?

An algorithm is a well-defined, step-by-step procedure or set of rules to solve a problem or accomplish a specific task. It takes an input, processes it through a sequence of instructions, and produces an output. Think of it like a recipe; it has ingredients (input), instructions (steps), and a final dish (output).

An example of an algorithm I use every morning is getting ready. The steps might look like this: 1. Wake up. 2. Brush my teeth. 3. Take a shower. 4. Get dressed. 5. Eat breakfast. Each step is an instruction, and following them in order helps me get ready for the day. The 'input' is being asleep, and the 'output' is being ready to start the day.

4. If a computer program is like a recipe, what are the ingredients and how do they mix together?

In the recipe analogy, the ingredients are like the data and variables that a program uses. This data could be numbers, text, or more complex structures. The instructions in the recipe are analogous to the code or algorithms that manipulate the data.

The way these ingredients mix together relates to how the code executes. Instructions are executed in a specific order to transform the initial data (ingredients) into the desired result (finished dish). Control flow elements like if statements (checking if the oven is hot enough) or for loops (stirring for a specific time) dictate the order and conditions under which certain instructions are applied, mirroring how a recipe guides the combination and transformation of ingredients.
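The oven check and the timed stirring from the analogy map directly onto an `if` statement and a `for` loop. This is a sketch; the function name `cook`, the temperatures, and the step names are made up for illustration.

```python
def cook(oven_temp_c, ready_temp_c=180, stir_minutes=3):
    """Hypothetical recipe: data goes in, instructions run in order, a result comes out."""
    steps = []
    for minute in range(1, stir_minutes + 1):  # loop: stir for a fixed time
        steps.append(f"stir batter (minute {minute})")
    if oven_temp_c >= ready_temp_c:            # condition: is the oven hot enough?
        steps.append("bake")
    else:
        steps.append("wait for oven to preheat")
    return steps


for step in cook(oven_temp_c=200):
    print(step)
```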

5. What does it mean for a program to 'debug', and is it like finding a mistake in a drawing?

Debugging a program means identifying and fixing errors (bugs) that cause it to behave unexpectedly or incorrectly. It's a systematic process of finding the root cause of a problem and then implementing a solution to correct it. This often involves using debugging tools, testing, and code review. Debugging is fundamental to software development.

While there's some analogy to finding a mistake in a drawing, debugging is often more complex. A drawing mistake is usually visible and has a direct, easily understood cause. Bugs in code can be subtle, hidden, and have indirect or cascading effects. Code might compile without issues but still contain bugs that only manifest under specific conditions or with certain inputs. Debugging often requires understanding the program's logic, data flow, and interactions with external systems.
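Here is a small example of a bug that only shows up with certain inputs. This `average` function works for every non-empty list, so casual testing may never reveal that it crashes on an empty one. Returning `None` for an empty list is one possible fix, chosen here for illustration; raising a clearer error would be another.

```python
def average(values):
    return sum(values) / len(values)


print(average([4, 8, 6]))  # 6.0, which looks correct

try:
    average([])  # empty input: fails only under this condition
except ZeroDivisionError as err:
    print("Bug found:", err)


def average_fixed(values):
    """Return the mean, or None when there is nothing to average."""
    if not values:
        return None
    return sum(values) / len(values)


print(average_fixed([]))  # None
```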

6. Explain what a variable is in programming, using the concept of a labeled box that holds a toy.

Imagine a variable as a labeled box. The label is the variable's name (e.g., my_toy), and the box holds a value, like a toy (e.g., a car). So, my_toy = "car" means we have a box labeled my_toy, and inside that box is the toy "car". We can change the toy in the box (change the variable's value) later. For example, my_toy = "doll" would replace the car with a doll.

In programming terms, a variable is a named storage location in the computer's memory. It holds a value, which can be of different data types such as numbers, text, or more complex structures. The variable's name lets us easily access and modify that value throughout our program. In languages like C, changing the value overwrites the contents of that memory location; in Python, assigning a new value makes the name refer to a new object instead.

7. What are some basic data types, and can you relate them to different kinds of building blocks?

Basic data types represent different kinds of values that a variable can hold. Think of them like different types of building blocks: integers (whole numbers, e.g., 10, -5) are like solid, fixed-size bricks. Floats (numbers with decimal points, e.g., 3.14, -0.001) are like bricks that can be precisely measured to fractional lengths. Strings (sequences of characters, e.g., "Hello", "World!") are like flexible pipes or wires that can carry information. Booleans (true/false values) are like switches that are either on or off. Other examples include char for single characters, and more complex data types are often built from these fundamentals.

In code, these look like:

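```cpp
#include <string>

int my_int = 10;                  // whole number
float my_float = 3.14f;           // decimal number (the f suffix makes it a float literal)
std::string my_string = "Hello";  // sequence of characters
bool my_bool = true;              // true or false
char my_char = 'A';               // single character
```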
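For comparison, Python doesn't require declaring types, but every value still has one. Python also has no separate char type; a single character is simply a string of length 1. A short sketch:

```python
my_int = 10
my_float = 3.14
my_string = "Hello"
my_bool = True
my_char = "A"  # Python has no char type; this is a one-character string

for value in (my_int, my_float, my_string, my_bool, my_char):
    print(repr(value), "->", type(value).__name__)
```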

8. What is a loop in programming, and can you explain it like going around in a circle?

A loop in programming is a way to repeat a block of code multiple times. Think of it like walking around in a circle. You start at a certain point, follow a path, and eventually end up back where you started. If you keep walking, you'll just repeat the same path again and again.

In programming, the 'path' is the block of code you want to repeat. The 'starting point' is the beginning of that code block, and 'walking around the circle' means executing the code block and then going back to the beginning to execute it again. There are different types of loops, like for loops, while loops, and do-while loops, each with slightly different ways to control how many times the code block is repeated. For example, for (int i = 0; i < 10; i++) repeats the code 10 times.

9. What is an if/else statement, and can you explain it like making a choice between two toys?

An if/else statement is a basic control flow tool in programming that lets your program make a decision. Think of it like choosing between two toys: a teddy bear or a toy car. The if part is like checking if you want the teddy bear. If you do want the teddy bear (the condition is true), you get the teddy bear. The else part is what happens if you don't want the teddy bear (the condition is false); in that case, you get the toy car instead.

In code, it looks something like this:

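```python
you_want_teddy_bear = True  # the condition being checked

if you_want_teddy_bear:
    print("get teddy bear")  # runs when the condition is True
else:
    print("get toy car")     # runs when the condition is False
```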

The if checks a condition (you_want_teddy_bear). If that condition is true, the code inside the if block runs. Otherwise, the code inside the else block runs.

10. Can you explain what an array is in simple terms, using the analogy of a train with multiple cars?

Think of an array like a train. The train itself is the array, and each car in the train represents an element within the array. Each car holds something specific (passengers, cargo), just like each element in an array holds a specific value (number, string, object). Each car also has a position, or index, in the train; in most programming languages, array indexes start at 0.

So, if you want to access the contents of the third car, you'd go to the car with the index of 2. In programming terms, my_array[2] would give you the element stored in that position.
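The train in Python might look like this (the car contents are made up for illustration):

```python
train = ["engine", "passengers", "mail", "cargo", "caboose"]

print(train[0])    # engine  (the first car is index 0)
print(train[2])    # mail    (the third car)
print(len(train))  # 5 cars in total
print(train[-1])   # caboose (Python also counts from the end with negative indexes)
```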

11. What does 'function' mean in programming, relating to how different Lego blocks snap together?

In programming, a 'function' is like a specific Lego block that performs a defined task. Just as a Lego brick with studs can connect to other bricks with corresponding holes, a function takes inputs (like 'studs'), processes them (the internal shape of the brick), and produces an output (a 'connected' brick). It's a reusable building block of code. For instance, a function calculating the area of a rectangle might take length and width as inputs, perform the multiplication, and return the area as the output.

Similar to how you can combine different types of Lego blocks to create a complex structure, you can combine different functions in programming to build a complex application. Functions help break down a large problem into smaller, manageable, and reusable pieces. For example, def calculate_area(length, width): return length * width is a simple Python function.
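Building on the one-line `calculate_area` from the answer, here is a sketch of how functions snap together. `calculate_volume` and `paint_needed` are hypothetical extras that reuse the same block.

```python
def calculate_area(length, width):
    return length * width


def calculate_volume(length, width, height):
    # Reuse one block inside another, like snapping Lego bricks together.
    return calculate_area(length, width) * height


def paint_needed(length, width, coats=2):
    """Hypothetical helper: total area to paint for a floor, given the number of coats."""
    return calculate_area(length, width) * coats


print(calculate_area(3, 4))       # 12
print(calculate_volume(3, 4, 5))  # 60
print(paint_needed(3, 4))         # 24
```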

12. Explain what a computer network is, similar to friends communicating with walkie-talkies.

Imagine your friends have walkie-talkies. A computer network is like a group of computers that can 'talk' to each other, just like your friends using the walkie-talkies. Instead of radio waves, computers use cables or wireless signals (like Wi-Fi) to send messages.

Each computer has a unique 'address' so messages reach the right place. They follow specific rules (called protocols) on how to send and receive information, ensuring everyone understands each other. So, a network allows computers to share files, access the internet, and play games together, similar to how your friends share stories and coordinate activities through their walkie-talkies.

13. What is the internet, and can you explain it like a giant playground where everyone can connect?

The internet is like a giant, global playground connecting billions of devices. Instead of swings and slides, it uses cables and wireless signals to let computers, phones, and even smart refrigerators talk to each other.

Think of websites as different areas of the playground. When you visit a website, it's like going to that specific area. You can 'play' by reading articles, watching videos, or chatting with friends, all thanks to the internet connecting everything together.

14. What does it mean for data to be 'encrypted,' similar to writing a secret message in code?

Encryption is transforming data into an unreadable format, called ciphertext, so that only authorized parties can decipher and read it. It's like writing a secret message in code; without the correct key (or method to break the code), the message appears as gibberish.

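Technically, encryption uses algorithms (ciphers) and a key to scramble the data. In symmetric ciphers such as AES, the same key is used both to encrypt the data and to decrypt it back to its original form (plaintext). In asymmetric ciphers such as RSA, data encrypted with a public key can only be decrypted with the matching private key. Common encryption algorithms include AES, RSA, and the older DES, which is no longer considered secure. Schematically: Encrypt(key, plaintext) = ciphertext, and Decrypt(key, ciphertext) = plaintext.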
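The 'secret message in code' idea can be shown with a Caesar cipher, which shifts each letter by a fixed number of places; the shift acts as the key. It is a toy that can be broken trivially by trying all 25 shifts. It appears here only to illustrate the encrypt-with-key, decrypt-with-key round trip that real ciphers such as AES perform far more securely.

```python
import string


def caesar(text: str, shift: int) -> str:
    """Shift each letter by `shift` places; other characters are left alone."""
    result = []
    for ch in text:
        if ch in string.ascii_lowercase:
            base = ord("a")
        elif ch in string.ascii_uppercase:
            base = ord("A")
        else:
            result.append(ch)  # spaces and punctuation pass through unchanged
            continue
        result.append(chr((ord(ch) - base + shift) % 26 + base))
    return "".join(result)


secret = caesar("Meet me at noon", 3)  # the key is the shift, 3
print(secret)                          # Phhw ph dw qrrq
print(caesar(secret, -3))              # shifting back recovers the message
```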

15. What are the key differences between hardware and software, as if they were parts of a toy robot?

Imagine a toy robot. The hardware is like the robot's physical body - the metal frame, the motors that move its limbs, the sensors that allow it to 'see' and 'hear', and the battery that powers it. You can touch it, and it's what gives the robot its physical form and abilities. If the motor breaks or the battery dies, the robot can't function, regardless of how smart it is.

In contrast, the software is like the robot's brain or mind. It's the set of instructions, or program, that tells the robot what to do: how to walk, respond to commands, or interact with its environment. It's intangible; you can't see or touch it directly. The software defines the robot's behavior. Even with perfect hardware, a robot with no software is just a static, unmoving object, and one with faulty software may behave unpredictably.

16. How would you explain the concept of a database if it were a well-organized toy closet?

Imagine a toy closet. A database is like that, but for information. Instead of toys, it holds data. Just like you organize toys into labeled bins or shelves (cars in one bin, dolls on a shelf), a database organizes data into tables and columns, using names and rules (data types, relationships). This makes it easy to find specific information quickly.

Think of each shelf as a table, and each label on the shelf as a field name describing the data on that shelf. If you wanted a specific car, you would know which bin to check. Similarly, if you want to find a specific user's information in the database, you know which table to query, and which column contains the information you are after.
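A minimal sketch of the "shelf and label" idea using Python's built-in sqlite3 module. The users table, its columns, and the sample rows are illustrative assumptions.

```python
import sqlite3

# An in-memory database: nothing is written to disk.
conn = sqlite3.connect(":memory:")

# The table is the shelf; its columns are the labels describing what goes there.
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users (name, email) VALUES (?, ?)",
    [("Ada", "ada@example.com"), ("Alan", "alan@example.com")],
)

# Knowing the table (users) and the column (email) takes us straight to the data.
row = conn.execute("SELECT email FROM users WHERE name = ?", ("Ada",)).fetchone()
print(row[0])  # ada@example.com
conn.close()
```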

17. What is an operating system, and how does it act like the boss of the computer, managing everything?

An operating system (OS) is the software that manages computer hardware and software resources, providing common services for computer programs. Think of it as the 'boss' because it's in charge of everything that happens on your computer.

The OS handles tasks like:

- Resource Allocation: Deciding which program gets access to the CPU, memory, and storage.

- Process Management: Starting, stopping, and coordinating different programs running at the same time.

- Device Management: Communicating with hardware devices like printers, keyboards, and monitors using drivers.

- File System Management: Organizing and accessing files and directories on storage devices.

- Security: Protecting the system from unauthorized access.

Without an OS providing these services, ordinary applications would not be able to function.

18. What is a compiler, and how does it act like a translator between different languages?

A compiler is a program that translates source code written in a high-level programming language (like C or C++) into a low-level language (like assembly code or machine code) that a computer can directly execute. Some languages, such as Java, are compiled into an intermediate bytecode instead, which a virtual machine then runs. A compiler acts like a translator between different languages because it takes human-readable code and converts it into machine-understandable instructions.

Think of it this way: if you have a document written in English (the high-level language) and you need someone who only understands Spanish (the low-level language) to read it, you would use a translator. The compiler does the same thing for code. The source code is like the English document, and the machine code is like the Spanish translation. It analyzes the entire source code before generating the output, often performing optimizations to improve the efficiency of the generated code. A simple example of a compilation command is: gcc myprogram.c -o myprogram
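Python is usually described as interpreted, but CPython compiles source code to bytecode before running it, so its built-in compile() offers a runnable glimpse of the translation step. Treat this as an analogy rather than a C compiler: it produces bytecode for a virtual machine, not native machine code, and the sample source strings are our own.

```python
import dis

source = "result = (2 + 3) * 4"

# compile() reads the whole source and translates it in one step. CPython
# targets bytecode for its own virtual machine rather than native machine code.
code_obj = compile(source, "<example>", "exec")
dis.dis(code_obj)            # show the lower-level instructions (format varies by version)

namespace = {}
exec(code_obj, namespace)    # run the translated instructions
print(namespace["result"])   # 20

# Because the whole program is analyzed first, an error on line 2 stops
# translation before line 1 ever runs: "hi" is never printed.
try:
    compile("print('hi')\nx = (", "<broken>", "exec")
except SyntaxError as err:
    print("rejected before running:", err.msg)
```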

19. Explain how a computer represents numbers, using the concept of building towers with blocks.

Imagine you're building towers with blocks. Instead of our usual base-10 system (where we have digits 0-9), computers use base-2, also known as the binary system. In binary, you only have two "digits": 0 and 1. Think of '0' as "no block" and '1' as "one block". To represent a number, you build a tower. Each position in the tower represents a power of 2, starting from the rightmost digit (the bottom of the tower): 1, 2, 4, 8, 16, and so on.

For example, the number 5 would be represented as 101 in binary. This means (1 * 4) + (0 * 2) + (1 * 1) = 4 + 0 + 1 = 5. So, the computer builds a tower with a "block" in the 4's place and a "block" in the 1's place, and no block in the 2's place. Essentially, computers use patterns of on/off switches (0s and 1s) to represent all sorts of data, including numbers, and these switches become our "blocks" for tower building.
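The tower-building rule can be sketched in a few lines: repeatedly divide by 2 and read off the remainders to get the digits, and add up the place values holding a 1 to go back. The helper names to_binary and from_binary are hypothetical; Python's built-in bin() does the same conversion and is shown for comparison.

```python
def to_binary(n: int) -> str:
    """Return the base-2 digits of a non-negative integer.

    Repeatedly divide by 2: each remainder (0 = no block, 1 = block)
    is the next digit, starting from the rightmost (1's) place.
    """
    if n < 0:
        raise ValueError("only non-negative integers are handled here")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Add up the place values (1, 2, 4, 8, ...) that hold a 1."""
    return sum(2 ** i for i, bit in enumerate(reversed(bits)) if bit == "1")


print(to_binary(5))        # 101
print(from_binary("101"))  # 5
print(bin(5))              # 0b101 (Python's built-in equivalent)
```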

20. What is the role of binary code, and how does it act like a secret language between computers using only 0s and 1s?

Binary code is the fundamental language that computers use to represent and process all information. At its core, a computer operates using electronic switches that are either 'on' (representing 1) or 'off' (representing 0). Binary code allows computers to translate instructions, data, and complex operations into a series of these 0s and 1s. Think of it as a secret language because, to a human without the key (understanding of binary), it's just a series of seemingly random digits. But for a computer, these sequences represent specific actions and data.

Just as humans use words and grammar to communicate, computers use specific binary sequences and protocols. For instance, in ASCII the letter 'A' is represented by the binary code 01000001 (decimal 65). Different combinations of 0s and 1s represent different instructions (like 'add', 'subtract') or data values. Because all computer operations, from running an operating system to displaying graphics, are ultimately broken down into binary, it acts as the universal language understood by every component within a computing system.
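Here is a small sketch of that "secret language" in both directions, assuming plain ASCII text (characters outside ASCII need more than 8 bits in encodings such as UTF-8). The function names are our own.

```python
from typing import List


def text_to_binary(text: str) -> List[str]:
    # ord() gives the character's code point; for ASCII text this is the ASCII code.
    # format(..., "08b") writes it as 8 binary digits, padded with leading zeros.
    return [format(ord(ch), "08b") for ch in text]


def binary_to_text(codes: List[str]) -> str:
    # int(bits, 2) parses a base-2 string; chr() maps the number back to a character.
    return "".join(chr(int(bits, 2)) for bits in codes)


print(text_to_binary("A"))                       # ['01000001']
print(binary_to_text(["01001000", "01101001"]))  # Hi
```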

21. Can you explain what source code is, comparing it to the instructions needed to build a LEGO set?

Source code is like the instructions for building a LEGO set. The instructions tell you, step by step, how to assemble the LEGO bricks in the correct order to create the final model. Similarly, source code is a set of instructions written in a programming language that tells the computer, step by step, what to do. The computer then executes these instructions to perform a task or run a program. Instead of bricks, source code manipulates data and controls the computer's hardware.

Think of the LEGO model as the software application. The source code is the blueprint, or set of instructions, that makes the application work. Without the source code, you wouldn't know how to build the application, just like without instructions, it would be very difficult to build the intended LEGO model.

22. What's the difference between front-end and back-end development, as if they're the face and brain of a robot?

Think of a robot. The front-end is like the robot's face: its appearance, how it interacts with the world (buttons, screens, voice recognition). It's what users see and interact with. This involves technologies like HTML, CSS, and JavaScript.

On the other hand, the back-end is like the robot's brain. It handles all the logic, data storage, and processing that make the robot function. It's the 'behind the scenes' part. This involves languages like Python and Java, and data stores such as relational (SQL) or NoSQL databases.

23. What is version control and why is it important, like keeping track of different versions of your drawings?

Version control is a system that records changes to a file or set of files over time so that you can recall specific versions later. Think of it like having a detailed history of your drawings, showing every edit and allowing you to go back to a previous state if needed. It's like an 'undo' button for your entire project history.

It's important because it enables collaboration, tracks changes, helps in debugging, allows reverting to previous states, and makes it safe to experiment without fear of breaking the main project. Without it, managing changes, especially in collaborative projects, becomes incredibly difficult and error-prone. In software development, for example, tools like Git are used, with commands such as git commit (save a snapshot of the changes) and git branch (start a separate line of work). Similarly, some drawing software keeps the original and each edit as separate versions.

24. If a program isn't working as expected, what are some ways you can figure out what’s wrong? (Think detective work!)

When a program isn't working as expected, I approach it like a detective. First, I carefully review the problem description and expected output to ensure I understand the issue. Then, I start by reproducing the bug consistently. Once reproducible, I begin isolating the problem area by employing techniques like:

- Debugging: Using a debugger to step through the code line by line, inspecting variable values and program flow. Code example using pdb in Python: import pdb; pdb.set_trace()

- Logging: Adding strategic print statements or using a logging library to track the program's execution and data at different points. For example: print(f"Variable x: {x}").

- Code Review: Scrutinizing the code for errors, paying close attention to recent changes or potentially problematic sections. I also utilize tools like linters and static analyzers to catch potential bugs.

- Unit Tests: Writing or running unit tests to verify the behavior of individual components or functions. Failing tests pinpoint the location of the issue.

- Simplify and Isolate: If the code is complex, I simplify it by commenting out parts that are irrelevant to the problem at hand and isolating the logic into the smallest testable section. This makes the underlying problem easier to understand.
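As a sketch of the logging technique, here is Python's standard logging module instrumenting a hypothetical average() function. Unlike print statements, log messages can be filtered by level (DEBUG, WARNING, ...) and silenced without deleting them.

```python
import logging

# Configure once, near program start; DEBUG shows the most detail.
logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(funcName)s: %(message)s")
log = logging.getLogger(__name__)


def average(values):
    """Hypothetical function being investigated."""
    log.debug("called with values=%r", values)          # inspect the input
    if not values:
        log.warning("empty input; returning 0.0 instead of dividing by zero")
        return 0.0
    result = sum(values) / len(values)
    log.debug("sum=%s len=%s result=%s", sum(values), len(values), result)
    return result


average([2, 4, 9])   # logs the input and intermediate values
average([])          # logs a warning about the edge case
```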

25. Imagine you're teaching a robot how to make a peanut butter and jelly sandwich. How would you break down the steps into simple instructions?

Okay, here's how I'd teach a robot to make a PB&J:

- Open the bread bag. Locate the bread bag. If a clip is present, remove it. Unseal the bag opening.

- Get two slices of bread. Reach into the bag. Grasp one slice of bread. Pull it out of the bag. Place it on a clean surface. Repeat to get a second slice.

- Open the peanut butter jar. Locate the peanut butter jar. Unscrew the lid. Place the lid aside.

- Apply peanut butter. Insert a knife into the peanut butter jar. Scoop out a portion of peanut butter. Spread the peanut butter evenly on one slice of bread.

- Open the jelly jar. Locate the jelly jar. Unscrew the lid. Place the lid aside.

- Apply jelly. Using a clean knife (or a different knife), insert it into the jelly jar. Scoop out a portion of jelly. Spread the jelly evenly on the other slice of bread.

- Combine the slices. Carefully pick up the jelly-covered slice of bread. Place it on top of the peanut butter-covered slice, jelly-side down.

- (Optional) Cut the sandwich. If desired, use a knife to cut the sandwich in half (diagonally is a common option).

- Serve. Place the peanut butter and jelly sandwich on a plate or serving surface.

- Clean up. Wipe the knives and any other utensils used during preparation.

26. What are APIs (Application Programming Interfaces), and how are they like ordering food at a restaurant?

APIs, or Application Programming Interfaces, are like ordering food at a restaurant because they act as intermediaries between different systems. Imagine you're at a restaurant (your application) and want to order food (data or functionality) from the kitchen (another application or system). You don't go directly to the kitchen and start cooking. Instead, you interact with the waiter (the API). You tell the waiter what you want (send a request), and the waiter communicates your order to the kitchen. The kitchen prepares the food, and the waiter brings it back to you (returns a response).

In technical terms, an API defines the methods and data formats that applications can use to request and exchange information. The API exposes specific functions or data from an application without requiring you to understand the underlying complexity. For example, if you want to get weather data from a weather service, you wouldn't need to know how the service collects and processes weather information. You just use the API to request the data, and the API returns the weather information in a standardized format like JSON. Here's a simple example of making a request to a weather API:

GET /weather?city=London HTTP/1.1
Host: api.example.com
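The same request can be prepared in Python with the standard library. This is a sketch: api.example.com is a placeholder host, and the JSON fields "city" and "temp_c" are hypothetical, so the network call itself is only described in a comment and a sample response is parsed instead.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Placeholder endpoint from the example above; not a real weather service.
BASE_URL = "https://api.example.com/weather"


def build_weather_request(city: str) -> Request:
    # urlencode handles escaping, e.g. the space in "New York" becomes "+".
    url = f"{BASE_URL}?{urlencode({'city': city})}"
    return Request(url, headers={"Accept": "application/json"})


def parse_weather(body: str) -> dict:
    # The API promises a predictable format; here we assume JSON with
    # hypothetical "city" and "temp_c" fields.
    data = json.loads(body)
    return {"city": data["city"], "temp_c": float(data["temp_c"])}


req = build_weather_request("London")
print(req.full_url)  # https://api.example.com/weather?city=London
# Against a real service you would send it with urllib.request.urlopen(req).
sample_response = '{"city": "London", "temp_c": 14.5}'
print(parse_weather(sample_response))
```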

27. Can you give a simple explanation of cloud computing, likening it to sharing toys in a big virtual toy box?

Imagine a big virtual toy box where lots of people can store and play with toys. That's like cloud computing. Instead of owning and storing software, data, and computing power on your own computer or server (your own toy box), you access them over the internet from a shared resource. You only pay for what you use, like renting a toy instead of buying it.

So, cloud computing is all about delivering computing resources (servers, storage, databases, networking, software, analytics, and intelligence) over the Internet (“the cloud”) to offer faster innovation, flexible resources, and economies of scale. Because you consume these resources as services, you avoid the capital expenditure of owning and maintaining your own infrastructure.

28. Explain the concept of Object-Oriented Programming (OOP) using the idea of building different types of toy cars with specific properties and behaviors.

Object-Oriented Programming (OOP) is like building different types of toy cars. Imagine we want to create various toy cars: a sports car, a truck, and a sedan. In OOP, each type of car would be a class (a blueprint), and each individual toy car built from it is an object, an instance of that class. Each class has its own properties (attributes), like color, number of wheels, and engine type, and behaviors (methods), like accelerate, brake, and turn. So, the sports car might have properties like "red color" and "2 doors" and a behavior like "high acceleration", while the truck may have "large cargo bed", "4 doors", and "strong towing capacity".

OOP allows us to create reusable code. If all our cars need to accelerate, we define an accelerate() method once in a base Car class. The SportsCar, Truck, and Sedan classes can then inherit accelerate() from Car, avoiding redundant code, and any of them can override it with its own specific implementation. Sharing attributes and behaviors this way (inheritance), and letting each class respond to the same method call in its own way (polymorphism), are key aspects of OOP.
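A minimal Python sketch of the toy-car classes. The specific numbers (speed increments, six wheels on the truck, towing capacity) are illustrative assumptions.

```python
class Car:
    """Base class: properties and behaviors every toy car shares."""

    def __init__(self, color: str, wheels: int = 4):
        self.color = color      # property (attribute)
        self.wheels = wheels
        self.speed = 0

    def accelerate(self) -> int:   # behavior (method), inherited by subclasses
        self.speed += 10
        return self.speed

    def brake(self) -> int:
        self.speed = max(0, self.speed - 10)
        return self.speed


class SportsCar(Car):
    def accelerate(self) -> int:   # override: same call, sports-car behavior
        self.speed += 30
        return self.speed


class Truck(Car):
    def __init__(self, color: str, towing_capacity_kg: int):
        super().__init__(color, wheels=6)   # reuse the base-class setup
        self.towing_capacity_kg = towing_capacity_kg


class Sedan(Car):
    pass  # inherits everything from Car unchanged


garage = [SportsCar("red"), Truck("blue", 3500), Sedan("silver")]
for car in garage:
    print(type(car).__name__, car.color, car.accelerate())
# SportsCar red 30
# Truck blue 10
# Sedan silver 10
```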

Computer Science Fundamentals intermediate interview questions

1. How does caching improve system performance, and what are some common caching strategies?

Caching enhances system performance by storing frequently accessed data closer to the requester, reducing latency and network traffic. When data is requested, the cache is checked first. If the data is present (a cache hit), it's served directly, avoiding slower data sources like databases or remote servers. This results in faster response times and reduced load on backend systems.

Common caching strategies include:

- Write-through cache: Data is written to both the cache and the backing store simultaneously.

- Write-back cache: Data is written only to the cache initially, and updates are propagated to the backing store later.

- Cache-aside: The application checks the cache first; if the data is not found, it retrieves it from the backing store and updates the cache.

- Content Delivery Networks (CDNs): Distribute content across multiple servers geographically to serve users from the closest location. For example, a CDN might cache images and static HTML files.

- Local Caching: Storing data on the client side, on the user's own device (for example, in a browser or mobile app).
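Here is a sketch of the cache-aside strategy with a simple time-to-live (TTL). The slow_fetch_user loader is a hypothetical stand-in for a database or remote call; for purely in-process memoization, Python's functools.lru_cache is a ready-made alternative.

```python
import time


def slow_fetch_user(user_id: int) -> dict:
    """Hypothetical slow backing store (stands in for a database query)."""
    time.sleep(0.05)  # simulate latency
    return {"id": user_id, "name": f"user{user_id}"}


class CacheAside:
    """Cache-aside: check the cache first, fall back to the store on a miss."""

    def __init__(self, loader, ttl_seconds: float = 60.0):
        self.loader = loader
        self.ttl = ttl_seconds
        self._store = {}   # key -> (value, expiry timestamp)
        self.hits = 0
        self.misses = 0

    def get(self, key):
        entry = self._store.get(key)
        if entry is not None and entry[1] > time.monotonic():
            self.hits += 1            # cache hit: serve directly
            return entry[0]
        self.misses += 1              # miss or expired: read the backing store
        value = self.loader(key)
        self._store[key] = (value, time.monotonic() + self.ttl)
        return value

    def invalidate(self, key):
        # Call after writing to the backing store so readers don't get stale data.
        self._store.pop(key, None)


cache = CacheAside(slow_fetch_user, ttl_seconds=30)
cache.get(1)   # miss: pays the ~50 ms latency
cache.get(1)   # hit: served from memory
print(cache.hits, cache.misses)  # 1 1
```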

2. Explain the difference between TCP and UDP, and when you might choose one over the other.

TCP (Transmission Control Protocol) and UDP (User Datagram Protocol) are both protocols used for sending data over an IP network, but they differ significantly in their approach. TCP is connection-oriented, meaning it establishes a connection before sending data, ensures reliable data delivery through acknowledgements and retransmissions, and provides in-order delivery. UDP, on the other hand, is connectionless, meaning it sends data without establishing a connection, offers no guarantee of delivery or order, and is generally faster due to the reduced overhead.

You'd choose TCP when reliability and order are crucial, such as for web browsing (HTTP/HTTPS), email (SMTP), and file transfer (FTP). You'd choose UDP when speed and low latency matter more than guaranteed delivery, such as for online gaming, live video and voice calls, and DNS lookups, where a late packet is often no more useful than a lost one. UDP also suits protocols like DHCP, where a client that does not yet have an IP address must broadcast its request, something TCP's point-to-point connections cannot do.
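The difference shows up directly in Python's socket module. This sketch sends a message to itself over the loopback interface (127.0.0.1) both ways: UDP just fires a datagram at an address, while TCP must first establish a connection and then sends a byte stream. On loopback the datagram arrives; across a real network it could be lost, reordered, or duplicated.

```python
import socket
import threading


def udp_roundtrip(message: bytes) -> bytes:
    """Send one datagram to ourselves over UDP: no connection, no handshake."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    receiver.settimeout(2.0)           # no delivery guarantee, so don't wait forever
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sender.sendto(message, receiver.getsockname())   # fire and forget
        data, _addr = receiver.recvfrom(1024)
        return data
    finally:
        sender.close()
        receiver.close()


def tcp_roundtrip(message: bytes) -> bytes:
    """Send bytes over TCP: connect (handshake) first, then stream the data."""
    server = socket.create_server(("127.0.0.1", 0))   # Python 3.8+
    server.settimeout(2.0)
    port = server.getsockname()[1]
    received = []

    def serve() -> None:
        conn, _addr = server.accept()
        with conn:
            received.append(conn.recv(1024))   # reliable, in-order byte stream

    thread = threading.Thread(target=serve)
    thread.start()
    try:
        with socket.create_connection(("127.0.0.1", port), timeout=2.0) as client:
            client.sendall(message)
    finally:
        thread.join()
        server.close()
    return received[0]


print(udp_roundtrip(b"ping"))   # b'ping'
print(tcp_roundtrip(b"hello"))  # b'hello'
```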

3. What is the purpose of a hash function in data structures, and what are some considerations for choosing a good one?

The primary purpose of a hash function is to map data of arbitrary size to a fixed-size value, typically an integer, called a hash code or hash. This hash code serves as an index into a hash table or other data structure, enabling efficient data retrieval. A good hash function should distribute keys uniformly across the hash table to minimize collisions, where different keys map to the same index. Collisions degrade performance, potentially turning search operations from O(1) into O(n) in the worst case.

When choosing a hash function, consider these factors:

- Speed: A fast hash function is crucial for performance.

- Uniform Distribution: The hash function should distribute keys evenly across the table.

- Collision Resistance: It should minimize the chance of collisions.

- Determinism: For a given input, it should always produce the same output.

Common strategies include using prime numbers in the hashing algorithm (for example, a prime table size with modular hashing) and avoiding patterns in the input data that might lead to biased distributions. Typical building blocks are modular arithmetic and bitwise operations. Example code (the division method, for integer keys):

def hash_function(key, table_size):
    # Division method: map an integer key to a bucket index in [0, table_size)
    return key % table_size
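Because collisions are unavoidable, a hash table also needs a way to handle them. This sketch uses separate chaining (each bucket holds a list of key/value pairs) with the same modulo idea, applied to Python's built-in hash() so that any hashable key works. The class name and table size are illustrative. Note that Python randomizes hash() for strings per process, so string keys land in different buckets from run to run, although the mapping is deterministic within a run.

```python
class ChainedHashTable:
    """Hash table that resolves collisions by separate chaining."""

    def __init__(self, size: int = 11):   # a prime size spreads integer keys more evenly
        self.size = size
        self.buckets = [[] for _ in range(size)]

    def _index(self, key) -> int:
        # hash() works for any hashable key; % maps it into [0, size).
        return hash(key) % self.size

    def put(self, key, value) -> None:
        bucket = self.buckets[self._index(key)]
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)   # update an existing key
                return
        bucket.append((key, value))        # new key (possibly a collision)

    def get(self, key):
        for k, v in self.buckets[self._index(key)]:
            if k == key:
                return v
        raise KeyError(key)


table = ChainedHashTable(size=11)
table.put(3, "three")
table.put(14, "fourteen")          # 14 % 11 == 3: collides with key 3
print(table.get(3), table.get(14))  # three fourteen
print(table.buckets[3])             # [(3, 'three'), (14, 'fourteen')]
```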

4. Describe the concept of deadlock in concurrent programming, and how can it be prevented?

Deadlock occurs in concurrent programming when two or more threads are blocked indefinitely, waiting for each other to release resources that they need. This creates a circular dependency, preventing any of the threads from proceeding. For example, thread A holds resource X and is waiting for resource Y, while thread B holds resource Y and is waiting for resource X.

Deadlock prevention involves eliminating one of the four necessary conditions for deadlock to occur (Coffman conditions): mutual exclusion, hold and wait, no preemption, and circular wait. Common prevention techniques include:

- Mutual Exclusion: This is often unavoidable, as some resources inherently require exclusive access.

- Hold and Wait: Threads must request all required resources at once, or release any resources they hold before requesting new ones. For example, a thread tries to acquire every lock it needs up front and, if any is unavailable, releases the ones it already holds and retries later.

- No Preemption: If a thread is holding a resource and requests another that cannot be immediately granted, the held resources can be preempted (taken away) and given to another thread (or the thread releases them). However, this isn't always feasible.

- Circular Wait: Establish a total ordering of resources and require threads to request resources in increasing order. This breaks the circular dependency. For instance, assigning a unique numerical ID to each resource and forcing threads to acquire resources in ascending order.
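The circular-wait fix can be sketched with Python's threading module. Two workers ask for the same two locks in opposite orders, which could deadlock if each grabbed its first lock naively; a hypothetical acquire_in_order() helper sorts the locks by a fixed rank first, so every thread acquires them in the same order.

```python
import threading

lock_x = threading.Lock()   # resource X
lock_y = threading.Lock()   # resource Y

# Give each resource a fixed rank; always acquire in ascending rank.
LOCK_RANK = {id(lock_x): 1, id(lock_y): 2}


def acquire_in_order(*locks):
    ordered = sorted(locks, key=lambda lk: LOCK_RANK[id(lk)])
    for lk in ordered:
        lk.acquire()
    return ordered


def release_all(ordered):
    for lk in reversed(ordered):
        lk.release()


results = []


def worker(name, first, second):
    # Without ordering, A (X then Y) and B (Y then X) could wait on each other forever.
    held = acquire_in_order(first, second)
    try:
        results.append(name)
    finally:
        release_all(held)


threads = [
    threading.Thread(target=worker, args=("A", lock_x, lock_y)),
    threading.Thread(target=worker, args=("B", lock_y, lock_x)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(results))  # ['A', 'B']
```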

5. How does normalization improve database design, and what are the different normalization forms?

Normalization in database design reduces data redundancy and improves data integrity by organizing data into tables in such a way that dependencies of attributes are properly enforced. This minimizes storage space and eliminates update, insertion, and deletion anomalies. Without normalization, databases become prone to inconsistencies and errors, impacting reliability.

Common normalization forms include:

- 1NF (First Normal Form): Eliminates repeating groups of data by ensuring each column contains atomic values.

- 2NF (Second Normal Form): Must be in 1NF and eliminates redundant data that depends on only part of a composite primary key (partial dependencies).

- 3NF (Third Normal Form): Must be in 2NF and eliminates redundant data that depends on non-key attributes (transitive dependencies).

- BCNF (Boyce-Codd Normal Form): A stricter version of 3NF in which every determinant must be a candidate key; it addresses anomalies that 3NF can miss when a table has overlapping composite candidate keys.

- 4NF (Fourth Normal Form): Addresses multi-valued dependencies.

- 5NF (Fifth Normal Form): Deals with join dependencies. Higher normal forms are less commonly used.
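A small sketch of why normalization helps, using Python's built-in sqlite3. If each order row also stored the customer's email, changing that email would require updating every row, and missing one creates an update anomaly. In the normalized design below, the email depends only on the customer, so it is stored once. The table names and data are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Normalized (3NF): customer details live in one table; orders only refer to them.
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name  TEXT NOT NULL,
    email TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    item        TEXT NOT NULL
);
INSERT INTO customers VALUES (1, 'Ada', 'ada@old.example');
INSERT INTO orders VALUES (10, 1, 'keyboard'), (11, 1, 'monitor');
""")

# One UPDATE fixes the email for every order, because it is stored in one place.
conn.execute("UPDATE customers SET email = 'ada@new.example' WHERE customer_id = 1")

# A JOIN reassembles the combined view when it is needed.
rows = conn.execute("""
    SELECT o.order_id, c.name, c.email, o.item
    FROM orders AS o JOIN customers AS c ON c.customer_id = o.customer_id
    ORDER BY o.order_id
""").fetchall()
print(rows)
conn.close()
```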

6. Explain the difference between authentication and authorization.

Authentication verifies who a user is. It's the process of confirming a user's identity, often through credentials like usernames and passwords. Authorization, on the other hand, determines what a user is allowed to access. It governs what resources a user has permission to view, modify, or execute after they have been authenticated. Think of it like this: authentication is showing your ID to get into a building, while authorization is determining which rooms inside the building you have access to.
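This sketch keeps the two steps separate: authenticate() checks a password against a salted PBKDF2 hash from Python's hashlib, and authorize() checks what an already-identified user may do. The in-memory user store, roles, and permission names are hypothetical; a real system would use a persistent store and a vetted auth framework.

```python
import hashlib
import hmac
import os


def hash_password(password: str, salt: bytes) -> bytes:
    # Slow, salted hash so that stolen hashes are expensive to brute-force.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical user store and role -> permissions mapping.
_salt = os.urandom(16)
USERS = {"alice": {"salt": _salt, "hash": hash_password("s3cret", _salt), "role": "editor"}}
PERMISSIONS = {"viewer": {"read"}, "editor": {"read", "write"}}


def authenticate(username: str, password: str) -> bool:
    """Who are you? Verify the supplied credentials."""
    user = USERS.get(username)
    if user is None:
        return False
    candidate = hash_password(password, user["salt"])
    return hmac.compare_digest(candidate, user["hash"])   # constant-time comparison


def authorize(username: str, action: str) -> bool:
    """What may you do? Only meaningful after authentication succeeds."""
    role = USERS[username]["role"]
    return action in PERMISSIONS.get(role, set())


if authenticate("alice", "s3cret"):
    print(authorize("alice", "write"))    # True
    print(authorize("alice", "delete"))   # False
```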

7. What is the difference between a stack and a queue, and how might you implement them?

A stack is a LIFO (Last-In, First-Out) data structure, meaning the last element added is the first one removed. A queue, on the other hand, is a FIFO (First-In, First-Out) data structure, where the first element added is the first one removed.

Stacks can be implemented using arrays or linked lists. Basic operations include push (add an element), pop (remove the top element), and peek (view the top element). Queues can also be implemented using arrays or linked lists. Common operations are enqueue (add an element to the rear), dequeue (remove the element from the front), and peek (view the front element).
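In Python, a list works well as an array-backed stack, and collections.deque is the usual choice for a queue because removing from the front of a list is O(n). The LinkedStack class is a hypothetical linked-list version showing the other implementation route.

```python
from collections import deque

# Stack (LIFO) on a Python list: append/pop at the end are O(1) amortized.
stack = []
stack.append("a")        # push
stack.append("b")
stack.append("c")
print(stack[-1])         # peek -> c
print(stack.pop())       # pop  -> c (last in, first out)

# Queue (FIFO) on a deque: O(1) appends and pops at both ends.
queue = deque()
queue.append("a")        # enqueue at the rear
queue.append("b")
queue.append("c")
print(queue[0])          # peek -> a
print(queue.popleft())   # dequeue -> a (first in, first out)


class LinkedStack:
    """Stack on a singly linked list: push/pop at the head are O(1)."""

    class _Node:
        __slots__ = ("value", "next")

        def __init__(self, value, next_node):
            self.value = value
            self.next = next_node

    def __init__(self):
        self._head = None

    def push(self, value):
        self._head = self._Node(value, self._head)

    def pop(self):
        if self._head is None:
            raise IndexError("pop from empty stack")
        value = self._head.value
        self._head = self._head.next
        return value

    def peek(self):
        if self._head is None:
            raise IndexError("peek at empty stack")
        return self._head.value


ls = LinkedStack()
ls.push(1)
ls.push(2)
print(ls.pop())   # 2
```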

8. How does garbage collection work in languages like Java or C#, and what are its advantages and disadvantages?

Garbage collection in languages like Java and C# automatically reclaims memory occupied by objects that are no longer in use by the application. The collector traces reachable objects starting from roots (e.g., static variables and local variables on the stack) and marks them as 'alive'. Any object that is not reachable is considered garbage, and its memory is freed. Both runtimes use tracing collectors that are generational: they divide objects into generations by age and collect the young generation most often, because most objects are discarded soon after they are created. Examples include HotSpot's G1 collector in Java and the .NET garbage collector's generations 0, 1, and 2 in C#.

The advantages include automatic memory management (reducing memory leaks and dangling pointers), simplified development (developers don't need to manually allocate/free memory), and increased robustness. Disadvantages include performance overhead due to the garbage collection process (which can cause pauses), unpredictable execution times, and potential for increased memory consumption as garbage collection might not immediately reclaim all unused memory.
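Python is not Java or C#: CPython combines reference counting with a generational cycle detector. It still illustrates the reachability idea, though. In this sketch, two objects reference each other, so their reference counts never reach zero on their own; once nothing outside the cycle can reach them, the collector reclaims them. A weakref lets us observe this without keeping the object alive.

```python
import gc
import weakref


class Node:
    def __init__(self, name):
        self.name = name
        self.partner = None


a, b = Node("a"), Node("b")
a.partner, b.partner = b, a   # reference cycle: a -> b -> a
probe = weakref.ref(a)        # a weak reference does not keep `a` alive

del a, b                      # no roots reference the pair any more...
print(probe() is None)        # ...but the cycle keeps them alive (usually False here)
gc.collect()                  # the cycle detector finds the unreachable pair
print(probe() is None)        # True: the memory has been reclaimed
```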

9. What is the purpose of indexes in databases, and how do they affect query performance?

Indexes in databases are auxiliary data structures (commonly B-trees) that the database engine can use to speed up data retrieval. They essentially create a shortcut, allowing the database to quickly locate specific rows in a table without scanning the entire table. This is similar to using an index in a book to find a specific topic.

The primary effect of indexes is significantly improved query performance, especially for SELECT queries that involve WHERE clauses. However, indexes come with a cost. They require additional storage space, and they can slow down INSERT, UPDATE, and DELETE operations because the index also needs to be updated whenever the underlying data changes. The key is to find the right balance between read and write performance by indexing columns that are frequently used in search criteria, while avoiding excessive indexing, which can degrade overall database performance.
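You can watch an index change the query plan with SQLite's EXPLAIN QUERY PLAN through Python's sqlite3 module. The table, index name, and data are illustrative, and the exact wording of the plan text varies between SQLite versions, so the comments show it only as an example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users (name, email) VALUES (?, ?)",
    [(f"user{i}", f"user{i}@example.com") for i in range(10_000)],
)

query = "SELECT * FROM users WHERE email = ?"


def plan(sql: str) -> str:
    # EXPLAIN QUERY PLAN reports how SQLite intends to run the query;
    # the human-readable description is the last column of each row.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, ("user42@example.com",)).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


before = plan(query)   # e.g. "SCAN users": every row is read
conn.execute("CREATE INDEX idx_users_email ON users (email)")
after = plan(query)    # e.g. "SEARCH users USING INDEX idx_users_email (email=?)"
print(before)
print(after)
```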

10. Describe the concept of recursion, and provide an example of a problem that can be solved recursively.

Recursion is a programming technique where a function calls itself within its own definition. It's a way of solving problems by breaking them down into smaller, self-similar subproblems. Each recursive call works on a smaller instance of the problem, eventually reaching a base case, which is a condition that stops the recursion and provides a direct solution. Without a base case, the recursion would continue infinitely, leading to a stack overflow error.

A classic example is calculating the factorial of a number:

def factorial(n):
    if n < 0:
        raise ValueError("factorial is undefined for negative numbers")
    if n == 0:
        return 1  # Base case
    else:
        return n * factorial(n - 1)  # Recursive call

11. Explain the difference between symmetric and asymmetric encryption, and when you might use each.

Symmetric encryption uses the same key for both encryption and decryption. It's faster but requires a secure way to share the key. Examples include AES and the older DES, which is now considered insecure because of its short 56-bit key. Use it when speed is important and you can securely share the key (e.g., encrypting data on a single server or within a secure network).

Asymmetric encryption uses a key pair: a public key for encryption and a private key for decryption. The public key can be shared freely, while the private key must be kept secret. RSA and ECC are common algorithms. It's slower than symmetric encryption but solves the key exchange problem. Use it for key exchange (like in HTTPS with TLS), digital signatures, and when secure key sharing is not feasible.
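To see why a public key can be shared freely, here is textbook RSA with tiny primes (the classic p = 61, q = 53 example). It is insecure and for illustration only; real systems use large keys, padding schemes, and vetted libraries, and typically use RSA or similar only to exchange a symmetric key.

```python
# Textbook RSA with tiny primes: insecure, for illustration only.
p, q = 61, 53
n = p * q                  # 3233, part of both keys
phi = (p - 1) * (q - 1)    # 3120
e = 17                     # public exponent, coprime with phi
d = pow(e, -1, phi)        # private exponent: modular inverse of e (Python 3.8+)

public_key, private_key = (e, n), (d, n)


def encrypt(m: int, key) -> int:
    exp, mod = key
    return pow(m, exp, mod)   # anyone holding the public key can do this


def decrypt(c: int, key) -> int:
    exp, mod = key
    return pow(c, exp, mod)   # only the private-key holder can undo it


message = 65                   # must be smaller than n
cipher = encrypt(message, public_key)
print(d, cipher)               # 2753 2790
print(decrypt(cipher, private_key))  # 65
```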

12. What is the importance of time complexity analysis, and how do you determine the Big O notation of an algorithm?

Time complexity analysis is crucial for understanding how an algorithm's runtime scales with the input size. It helps us predict performance, compare different algorithms for the same task, and identify potential bottlenecks before implementation. A poorly performing algorithm can become unusable as the input data grows, making time complexity a vital consideration for efficient software design. Without understanding time complexity, choosing the right algorithm becomes guesswork, potentially leading to significant performance issues in production.

To determine Big O notation, you analyze the algorithm's steps and identify the dominant operation that executes most frequently as the input size n increases. You then express the number of times this operation is executed as a function of n and simplify it to its highest-order term, discarding constant factors and lower-order terms. For example, if an algorithm has a loop that iterates n times, and inside that loop there's another loop that iterates n/2 times, the inner operation runs n * (n/2) = n^2/2 times, but the Big O notation is O(n^2) because the constant factor 1/2 is dropped. Common Big O notations include O(1) - constant, O(log n) - logarithmic, O(n) - linear, O(n log n) - linearithmic, O(n^2) - quadratic, and O(2^n) - exponential.
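Counting the dominant operation directly makes the nested-loop example concrete. The helper name is our own; the point is that the count divided by n^2 stays constant, which is what O(n^2) captures.

```python
def count_nested_ops(n: int) -> int:
    """Count inner-loop steps for an outer loop of n and an inner loop of n // 2."""
    ops = 0
    for _ in range(n):
        for _ in range(n // 2):
            ops += 1   # the dominant operation
    return ops


for n in (10, 100, 1000):
    ops = count_nested_ops(n)
    print(n, ops, ops / n**2)   # the ratio stays 0.5: growth is proportional to n^2
# 10 50 0.5
# 100 5000 0.5
# 1000 500000 0.5
```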

13. How can you ensure that your program handles errors gracefully?

To ensure graceful error handling, I implement several strategies. Firstly, I use try-except blocks (or equivalent error handling mechanisms in other languages) to anticipate potential exceptions, allowing the program to catch and manage them instead of crashing. Within these blocks, I log the error details for debugging and provide informative error messages to the user, guiding them on how to resolve the issue or when to contact support.

Furthermore, I incorporate validation checks on user inputs and external data to prevent errors before they occur. For critical operations, I implement fallback mechanisms or retry logic to handle temporary failures. Finally, I have global exception handlers to catch any unhandled exceptions, ensuring a controlled shutdown or recovery attempt and preventing unexpected program termination.
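A sketch of two of these strategies in Python: input validation that fails early with a clear message, and retry logic with exponential backoff for temporary failures. TransientError, parse_age, and the flaky operation are hypothetical; which errors are worth retrying depends on the application.

```python
import logging
import time

log = logging.getLogger(__name__)


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (e.g. a timeout)."""


def with_retries(operation, attempts: int = 3, base_delay: float = 0.1):
    """Call operation(); retry transient failures with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return operation()
        except TransientError as exc:
            log.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise                     # give up and let the caller decide
            time.sleep(base_delay * 2 ** (attempt - 1))


def parse_age(text: str) -> int:
    """Validate input up front instead of failing somewhere deeper later."""
    try:
        age = int(text)
    except ValueError:
        raise ValueError(f"age must be a whole number, got {text!r}") from None
    if not 0 <= age <= 150:
        raise ValueError(f"age out of range: {age}")
    return age


calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("temporary network glitch")
    return "ok"


print(with_retries(flaky, base_delay=0.01))   # ok (after two retries)
```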

14. What are design patterns and why are they helpful?

Design patterns are reusable solutions to commonly occurring problems in software design. They represent best practices, providing a template for how to solve a design issue in a particular context.

They are helpful because they:

- Improve code readability and maintainability: Patterns provide a common vocabulary and structure, making code easier to understand.

- Promote code reuse: Patterns offer proven solutions, reducing the need to reinvent the wheel.

- Increase reliability: They are based on experience and have been tested and refined over time.

- Speed up development: Patterns provide a starting point, accelerating the development process.
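As one concrete example, here is a minimal sketch of the Observer pattern, where a publisher notifies any number of subscribers without knowing who they are. The class and event names are illustrative.

```python
from typing import Callable, List


class EventPublisher:
    """Observer pattern: subscribers register callbacks and get notified of events."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[str], None]] = []

    def subscribe(self, callback: Callable[[str], None]) -> None:
        self._subscribers.append(callback)

    def publish(self, event: str) -> None:
        for callback in self._subscribers:
            callback(event)   # the publisher doesn't need to know who is listening


received = []
orders = EventPublisher()
orders.subscribe(lambda e: received.append(f"email: {e}"))
orders.subscribe(lambda e: received.append(f"audit log: {e}"))
orders.publish("order #1 placed")
print(received)  # ['email: order #1 placed', 'audit log: order #1 placed']
```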

15. Can you explain the difference between lossy and lossless compression?

Lossy compression reduces file size by permanently removing some data. This results in smaller files but can degrade the quality. Examples include JPEG for images and MP3 for audio.

Lossless compression, on the other hand, reduces file size without losing any data. The original file can be perfectly reconstructed from the compressed file. Examples include PNG for images and ZIP for archiving. Lossless compression typically achieves less compression than lossy compression because it can't discard any data.
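A quick sketch of both ideas. zlib implements DEFLATE, the method most commonly used inside ZIP files and the one PNG uses, and its round trip restores every byte. The lossy half is a toy quantization, not a real codec like JPEG or MP3, but it shows the trade: fewer distinct values, and the exact originals are gone.

```python
import zlib

# Lossless: decompressing gives back exactly the original bytes.
original = b"AAAAABBBBBCCCCC" * 100
packed = zlib.compress(original)
restored = zlib.decompress(packed)
print(restored == original)              # True
print(len(original), "->", len(packed))  # 1500 -> far fewer bytes (exact size varies)

# Lossy (toy example): round samples to the nearest multiple of 10.
samples = [12, 17, 23, 28, 31]
quantized = [round(s / 10) * 10 for s in samples]
print(quantized)  # [10, 20, 20, 30, 30]: the original values cannot be recovered
```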

16. What is the idea behind using version control systems?

The core idea behind using version control systems (VCS) is to track and manage changes to files (often source code) over time. This allows developers to revert to previous versions, collaborate effectively, and understand the history of modifications made to a project. It helps detect and resolve conflicting edits and allows for easy auditing of changes.

Specifically, VCS enables features like:

- Collaboration: Multiple developers can work on the same files simultaneously without overwriting each other's changes.

- History Tracking: Every change is recorded, allowing you to see who made what changes and when.

- Branching and Merging: You can create separate branches to work on new features or bug fixes without affecting the main codebase. These changes can then be merged back in.

- Reverting Changes: If something goes wrong, you can easily revert to a previous, stable version of the code.

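- Backup and Recovery: With a remote repository (and, in distributed systems like Git, every clone), the project's full history exists in more than one place, protecting it from data loss.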

17. Explain the concept of inheritance and polymorphism in object-oriented programming.

Inheritance is a mechanism where a new class (subclass or derived class) inherits properties and behaviors from an existing class (superclass or base class). This promotes code reuse and establishes an "is-a" relationship. For example, a Dog class can inherit from an Animal class, inheriting properties like name and age, and methods like eat().

Polymorphism, meaning "many forms", allows objects of different classes to respond to the same method call in their own specific ways. This is often achieved through method overriding (where a subclass provides its own implementation of a method inherited from its superclass) or method overloading (having multiple methods with the same name but different parameters in the same class). For instance, if both Dog and Cat classes inherit from Animal and both implement a makeSound() method, calling makeSound() on a Dog object will produce a different sound than calling it on a Cat object.
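A minimal Python sketch of the Animal, Dog, and Cat example, using Python's snake_case name make_sound() for makeSound(). Python has no signature-based method overloading (a later definition with the same name replaces the earlier one), so polymorphism here comes from overriding.

```python
class Animal:
    def __init__(self, name: str, age: int):
        self.name = name
        self.age = age

    def eat(self) -> str:            # inherited unchanged by subclasses
        return f"{self.name} is eating"

    def make_sound(self) -> str:     # meant to be overridden
        raise NotImplementedError


class Dog(Animal):                   # Dog "is-a" Animal
    def make_sound(self) -> str:
        return "Woof"


class Cat(Animal):
    def make_sound(self) -> str:
        return "Meow"


for pet in (Dog("Rex", 3), Cat("Tom", 5)):
    # Same call, different behavior depending on the object's class.
    print(pet.eat(), "-", pet.make_sound())
# Rex is eating - Woof
# Tom is eating - Meow
```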

18. What are some common software testing techniques, and why is testing important?

Some common software testing techniques include:

- Unit Testing: Testing individual components in isolation.

- Integration Testing: Testing how different components work together.

- System Testing: Testing the entire system as a whole.

- Acceptance Testing: Testing from the end-user's perspective to ensure it meets requirements.

- Regression Testing: Re-running tests after code changes to ensure no new issues are introduced.

Testing is important because it helps to identify and fix defects early in the development lifecycle, ensuring software quality, reliability, and security. It reduces the risk of costly failures, improves user satisfaction, and ultimately saves time and resources.
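A small unit-testing sketch with Python's built-in unittest module. The apply_discount function under test is hypothetical. In a real project you would usually run the tests with python -m unittest; here they are run programmatically so the result can be inspected.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
print(result.wasSuccessful())  # True
```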

19. Explain what a distributed system is and what challenges arise when building one.

A distributed system is a collection of independent computers that appear to its users as a single coherent system. These computers communicate and coordinate their actions by exchanging messages.

Challenges in building distributed systems include:

- Concurrency: Managing simultaneous access to shared resources.

- Consistency and Availability: Balancing data consistency with system availability (CAP theorem).

- Fault Tolerance: Designing the system to continue operating correctly even when some components fail.

- Latency: Dealing with delays in communication between nodes.

- Partial Failure: Handling situations where only some parts of the system fail.

- Security: Securing communication and data across multiple nodes.

- Complexity: Increased complexity in design, implementation, and testing.

- Debugging: Bugs are hard to reproduce and trace because events on different nodes interleave non-deterministically and logs are spread across many machines.

20. What is the CAP theorem, and how does it influence database design?

The CAP theorem, also known as Brewer's theorem, states that it is impossible for a distributed data store to simultaneously provide more than two out of the following three guarantees:

- Consistency (C): Every read receives the most recent write or an error.

- Availability (A): Every request receives a (non-error) response, without guarantee that it contains the most recent write.

- Partition Tolerance (P): The system continues to operate despite arbitrary partitioning due to network failures.

CAP influences database design by forcing architects to make trade-offs. For example, a system prioritizing consistency and partition tolerance (CP) might sacrifice availability during network partitions. Conversely, a system prioritizing availability and partition tolerance (AP) might sacrifice consistency, leading to eventual consistency models. Database choice is thus heavily guided by which guarantees are most critical for the specific application.
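The trade-off can be shown with a deliberately simplified two-replica model. ToyStore, Replica and the "CP"/"AP" modes are hypothetical names for this sketch. Real databases use replication protocols and quorums that this model ignores.

```python
class Replica:
    """One copy of a single value."""
    def __init__(self) -> None:
        self.value = None

class ToyStore:
    """Two replicas, a and b; mode is "CP" or "AP". A deliberately simplified model."""

    def __init__(self, mode: str) -> None:
        self.mode = mode
        self.a, self.b = Replica(), Replica()
        self.partitioned = False  # True means a and b cannot exchange messages

    def write(self, value) -> None:
        if self.partitioned and self.mode == "CP":
            # CP: refuse the write rather than let the replicas diverge
            raise RuntimeError("unavailable: cannot replicate during partition")
        self.a.value = value
        if not self.partitioned:
            self.b.value = value  # replication only works while the link is up

    def read_from_b(self):
        if self.partitioned and self.mode == "CP":
            # CP: b cannot confirm it has the latest write, so it returns an error
            raise RuntimeError("unavailable: replica may be stale")
        return self.b.value  # AP: always answer, possibly with stale data

for mode in ("CP", "AP"):
    store = ToyStore(mode)
    store.write("v1")
    store.partitioned = True  # simulate a network partition
    try:
        store.write("v2")
        print(mode, "read from b:", store.read_from_b())
    except RuntimeError as err:
        print(mode, "error:", err)
# CP error: unavailable: cannot replicate during partition
# AP read from b: v1
```

During the partition, the CP store gives up availability by returning errors. The AP store stays available but serves the stale "v1", and it would need to reconcile once the partition heals (eventual consistency).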

21. Describe the difference between a process and a thread.

A process is an independent execution environment, with its own memory space and resources. Think of it as a running program. Threads, on the other hand, are lightweight units of execution within a process. Multiple threads can exist within a single process, sharing the same memory space and resources. This makes communication between threads faster than between processes.

Key differences include:

- Memory: Processes have separate memory spaces; threads share the same memory space.

- Resource usage: Processes have more overhead due to separate resources; threads are more lightweight.

- Communication: Inter-process communication (IPC) is generally more complex than inter-thread communication.

- Isolation: If one process crashes, it typically doesn't affect other processes. If one thread crashes, it can crash the entire process.
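A small Python sketch shows the shared-memory point. Four threads increment the same counter variable, so they need a lock to avoid lost updates. Separate processes (for example, via the multiprocessing module) would each get their own copy of the variable. That side is not shown here.

```python
import threading

counter = 0              # lives in the process's memory, visible to every thread
lock = threading.Lock()  # guards counter against lost updates

def add_many(n: int) -> None:
    global counter
    for _ in range(n):
        with lock:  # without the lock, concurrent += can interleave and lose increments
            counter += 1

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000: all four threads updated the same variable
```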

22. What are the advantages and disadvantages of using microservices architecture?

Microservices offer several advantages, including increased agility and faster deployment cycles, as each service can be developed, deployed, and scaled independently. They also improve fault isolation; if one service fails, it doesn't necessarily bring down the entire application. Furthermore, microservices allow for technology diversity, enabling teams to choose the best technology stack for each specific service. However, microservices also come with disadvantages. Complexity increases significantly due to distributed systems challenges such as inter-service communication, the need to trace requests across services, and eventual consistency. Deployment and operational overhead are also higher, requiring robust automation and monitoring. Lastly, designing a microservices architecture requires careful planning to define service boundaries and avoid tight coupling, which can be a complex undertaking.

In summary:

Advantages:

- Independent Deployment

- Technology Diversity

- Improved Fault Isolation

- Scalability

Disadvantages:

- Increased Complexity

- Operational Overhead

- Distributed Debugging

- Potential for Inconsistency

23. Explain the concept of dependency injection.

Dependency Injection (DI) is a design pattern in which a class receives its dependencies from external sources rather than creating them itself. This promotes loose coupling, making code more modular, testable, and maintainable. Instead of a class being responsible for creating or locating its dependencies, these dependencies are 'injected' into the class, typically through constructor injection, setter injection, or interface injection.

Key benefits include increased code reusability, easier unit testing (by mocking dependencies), and improved overall code structure. Using DI helps adhere to the Dependency Inversion Principle (DIP), a core tenet of SOLID principles, which states that high-level modules should not depend on low-level modules; both should depend on abstractions.
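Here is a minimal sketch of constructor injection in Python. OrderService, Notifier, EmailNotifier and FakeNotifier are hypothetical names. typing.Protocol serves as the abstraction that DIP asks both sides to depend on.

```python
from typing import Protocol

class Notifier(Protocol):
    """The abstraction both the high-level and low-level code depend on."""
    def send(self, to: str, message: str) -> None: ...

class EmailNotifier:
    """Hypothetical production implementation (prints instead of sending real email)."""
    def send(self, to: str, message: str) -> None:
        print(f"emailing {to}: {message}")

class FakeNotifier:
    """Test double that records messages instead of sending them."""
    def __init__(self) -> None:
        self.sent = []  # list of (to, message) pairs

    def send(self, to: str, message: str) -> None:
        self.sent.append((to, message))

class OrderService:
    def __init__(self, notifier: Notifier) -> None:
        # Constructor injection: the dependency is passed in, not created here.
        self.notifier = notifier

    def place_order(self, customer: str, item: str) -> None:
        # Real business logic (validation, persistence) would go here.
        self.notifier.send(customer, f"Order placed: {item}")

# Production wiring
OrderService(EmailNotifier()).place_order("customer@example.com", "book")

# Unit-test wiring: inject the fake and inspect what was "sent"
fake = FakeNotifier()
OrderService(fake).place_order("customer@example.com", "book")
print(fake.sent)  # [('customer@example.com', 'Order placed: book')]
```

OrderService never names a concrete notifier. Swapping email for SMS, or for a fake in tests, therefore requires no change to its code.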

24. What are the key principles of SOLID?

SOLID is an acronym that represents five key principles of object-oriented design, intended to make software designs more understandable, flexible, and maintainable.

- Single Responsibility Principle (SRP): A class should have only one reason to change. In other words, a class should have only one job.

- Open/Closed Principle (OCP): Software entities (classes, modules, functions, etc.) should be open for extension but closed for modification. This means you should be able to add new functionality without changing existing code.

- Liskov Substitution Principle (LSP): Subtypes must be substitutable for their base types without altering the correctness of the program. If class B is a subclass of class A, then we should be able to replace any object of A with an object of B without disrupting the behavior of the application.

- Interface Segregation Principle (ISP): Many client-specific interfaces are better than one general-purpose interface. Clients should not be forced to depend on methods they do not use.

- Dependency Inversion Principle (DIP): High-level modules should not depend on low-level modules. Both should depend on abstractions (e.g., interfaces or abstract classes). Abstractions should not depend on details. Details should depend on abstractions.
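The classic rectangle/square illustration shows what an LSP violation looks like. This sketch shows the anti-pattern, not a recommended design. Square inherits from a mutable Rectangle, so code written against Rectangle gets a surprising result.

```python
class Rectangle:
    def __init__(self, width: float, height: float) -> None:
        self.width = width
        self.height = height

    def set_width(self, w: float) -> None:
        self.width = w

    def set_height(self, h: float) -> None:
        self.height = h

    def area(self) -> float:
        return self.width * self.height

class Square(Rectangle):
    """Keeps width == height, which silently changes the inherited setters' behavior."""
    def __init__(self, side: float) -> None:
        super().__init__(side, side)

    def set_width(self, w: float) -> None:
        self.width = self.height = w

    def set_height(self, h: float) -> None:
        self.width = self.height = h

def stretch(rect: Rectangle) -> float:
    # Written against Rectangle: assumes width and height change independently.
    rect.set_width(5)
    rect.set_height(2)
    return rect.area()

print(stretch(Rectangle(1, 1)))  # 10
print(stretch(Square(1)))        # 4: the subtype broke the caller's expectation
```

A common fix is to drop this inheritance relationship altogether. For example, both shapes can be modeled as immutable types that share only an area() interface.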

25. Describe the different types of joins in SQL (INNER, LEFT, RIGHT, FULL).

SQL joins are used to combine rows from two or more tables based on a related column. The main types are:

- INNER JOIN: Returns rows only when there is a match in both tables.

- LEFT JOIN (or LEFT OUTER JOIN): Returns all rows from the left table, and the matched rows from the right table. If there is no match in the right table, it returns NULL values for the columns from the right table.

- RIGHT JOIN (or RIGHT OUTER JOIN): Returns all rows from the right table, and the matched rows from the left table. If there is no match in the left table, it returns NULL values for the columns from the left table.

- FULL OUTER JOIN: Returns all rows from both tables, matching them where possible. Where a row has no match in the other table, the columns from the other table contain NULL values.
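You can try out the join types with Python's built-in sqlite3 module. The customers and orders tables are made-up sample data. Native RIGHT and FULL OUTER JOIN only arrived in SQLite 3.39.0, so this sketch stays portable by emulating the right join with swapped tables in a LEFT JOIN.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, item TEXT);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cy');
    INSERT INTO orders VALUES (10, 1, 'book'), (11, 1, 'pen'), (12, 2, 'lamp'), (13, 9, 'mug');
""")

# INNER JOIN: only rows with a match on both sides
inner = conn.execute("""
    SELECT c.name, o.item FROM customers c
    INNER JOIN orders o ON o.customer_id = c.id
    ORDER BY o.id
""").fetchall()

# LEFT JOIN: every customer; NULL (None) item for customers without orders
left = conn.execute("""
    SELECT c.name, o.item FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

# RIGHT JOIN emulated: every order; NULL (None) name for orders without a customer
right = conn.execute("""
    SELECT c.name, o.item FROM orders o
    LEFT JOIN customers c ON o.customer_id = c.id
    ORDER BY o.id
""").fetchall()

print(inner)  # [('Ana', 'book'), ('Ana', 'pen'), ('Ben', 'lamp')]
print(left)   # [('Ana', 'book'), ('Ana', 'pen'), ('Ben', 'lamp'), ('Cy', None)]
print(right)  # [('Ana', 'book'), ('Ana', 'pen'), ('Ben', 'lamp'), (None, 'mug')]
```

The mug order references customer 9, who does not exist, so it only appears in the right-join result. A FULL OUTER JOIN would include both the mug row and Cy's row.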

26. How does a content delivery network (CDN) work, and why is it useful?

A Content Delivery Network (CDN) is a geographically distributed network of proxy servers and their data centers. Its primary goal is to serve content to users with high availability and high performance. When a user requests content, the CDN routes the request (typically via DNS or anycast) to a nearby edge server, which reduces latency. CDNs cache static content such as images, videos, and stylesheets on these edge servers, reducing the load on the origin server.

CDNs are useful because they improve website loading speed, reduce bandwidth costs, improve website availability, and enhance website security by mitigating DDoS attacks. CDNs make websites faster and more reliable for end users, no matter where they are located.
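The caching part of a CDN can be sketched as an edge server that keeps copies of origin responses for a fixed time-to-live (TTL). EdgeCache, the origin function and the 60-second TTL are illustrative assumptions. Real CDNs also honor HTTP caching headers, evict entries under memory pressure, and route users to the nearest edge, and none of that is modeled here.

```python
import time
from typing import Callable

class EdgeCache:
    """Toy model of one CDN edge server that caches responses from an origin server."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float) -> None:
        self.fetch_from_origin = fetch_from_origin
        self.ttl = ttl_seconds
        self._store = {}      # path -> (body, expiry timestamp)
        self.origin_hits = 0  # how often the edge had to go back to the origin

    def get(self, path: str) -> bytes:
        now = time.monotonic()
        cached = self._store.get(path)
        if cached is not None and cached[1] > now:
            return cached[0]                 # fresh copy at the edge: no origin trip
        body = self.fetch_from_origin(path)  # miss or expired: fetch from origin
        self.origin_hits += 1
        self._store[path] = (body, now + self.ttl)
        return body

def origin(path: str) -> bytes:
    """Hypothetical origin server."""
    return f"content of {path}".encode()

edge = EdgeCache(origin, ttl_seconds=60)
edge.get("/logo.png")
edge.get("/logo.png")    # second request is served from the edge cache
print(edge.origin_hits)  # 1
```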

27. Explain the concept of idempotence in API design.

Idempotence in API design means that calling an endpoint multiple times with the same input has the same effect on the server's state as calling it once, no matter how many times the request is repeated. A PUT request is a typical example of an idempotent operation: if you PUT a resource with specific data, repeating the same PUT request leaves the resource in the same final state as the first request did.

This is crucial for building reliable and fault-tolerant systems. If a client doesn't receive a response (due to network issues, for instance), it can safely retry the request without worrying about unintended side effects. Creating a resource with POST is generally not idempotent, while updates (PUT) and deletes (DELETE) should be idempotent where possible. You can make even non-idempotent underlying operations safe to retry with mechanisms like:

- Using a unique request ID sent by the client.

- Implementing versioning and conditional updates.
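Here is a sketch of the unique-request-ID approach. The client generates a key once and reuses it on every retry, and the server remembers which keys it has already processed. PaymentAPI and charge are hypothetical. A real service would also need to store keys durably and handle two copies of the same request arriving concurrently.

```python
import uuid

class PaymentAPI:
    """Hypothetical server that makes a non-idempotent 'charge' safe to retry."""

    def __init__(self) -> None:
        self.balance = 100
        self._responses = {}  # idempotency key -> response already returned

    def charge(self, idempotency_key: str, amount: int) -> dict:
        if idempotency_key in self._responses:
            # Retry of a request we already processed: replay the stored result.
            return self._responses[idempotency_key]
        self.balance -= amount  # the side effect happens at most once per key
        response = {"status": "charged", "balance": self.balance}
        self._responses[idempotency_key] = response
        return response

api = PaymentAPI()
key = str(uuid.uuid4())      # generated once by the client, reused on every retry
first = api.charge(key, 30)
retry = api.charge(key, 30)  # e.g. the client timed out and resent the request
print(first == retry, api.balance)  # True 70
```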

28. What is the purpose of message queues in software architecture?

Message queues facilitate asynchronous communication between different components or services in a software system. They decouple the sender (producer) from the receiver (consumer), allowing them to operate independently. Producers send messages to the queue, and consumers retrieve and process them at their own pace.

Key benefits include improved system reliability (if a consumer fails, messages remain in the queue until they can be processed), scalability (consumers can be added or removed as needed), and flexibility (in publish/subscribe setups, several independent consumers can each receive the same message and process it in different ways). Because the queue acts as a buffer, it also absorbs traffic spikes more gracefully than direct service-to-service calls. Examples of popular messaging systems are Kafka, RabbitMQ, and Redis.
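You can sketch producer/consumer decoupling in-process with Python's thread-safe queue.Queue standing in for a broker such as RabbitMQ or Kafka. Unlike a real broker, this queue lives in memory and does not survive a crash. The sketch therefore shows decoupling and buffering but not the reliability benefit. The message names are made up.

```python
import queue
import threading

tasks = queue.Queue()
results = []
results_lock = threading.Lock()

def consumer() -> None:
    while True:
        msg = tasks.get()  # blocks until a message is available
        if msg is None:    # sentinel: no more work for this consumer
            tasks.task_done()
            break
        with results_lock:
            results.append(msg.upper())  # stand-in for real processing
        tasks.task_done()

workers = [threading.Thread(target=consumer) for _ in range(2)]
for w in workers:
    w.start()

for msg in ["order-1", "order-2", "order-3"]:
    tasks.put(msg)  # the producer does not wait for processing
for _ in workers:
    tasks.put(None)  # one sentinel per consumer

tasks.join()  # wait until every message has been marked done
for w in workers:
    w.join()
print(sorted(results))  # ['ORDER-1', 'ORDER-2', 'ORDER-3']
```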

29. What is the difference between horizontal and vertical scaling?

Horizontal scaling (scaling out) means adding more machines to your pool of resources, while vertical scaling (scaling up) means adding more power (CPU, RAM, etc.) to an existing machine.

- Horizontal Scaling: Distributes the load across multiple machines. Easier to implement fault tolerance. Can be more cost-effective for some workloads. Requires software and architecture designed for distributed systems.

- Vertical Scaling: Simpler to implement initially. Can reach a hardware limit. Downtime is usually required for upgrades. Offers less fault tolerance.
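Horizontal scaling relies on something to spread requests across the pool. Round-robin assignment is one simple strategy, sketched below with hypothetical server names. It assumes the servers are stateless and interchangeable.

```python
from collections import Counter
from itertools import cycle
from typing import Sequence

def distribute(num_requests: int, servers: Sequence[str]) -> Counter:
    """Assign requests to servers round-robin; return how many each server received."""
    next_server = cycle(servers)  # repeats web-1, web-2, ..., then starts over
    return Counter(next(next_server) for _ in range(num_requests))

print(distribute(9, ["web-1"]))                    # Counter({'web-1': 9})
print(distribute(9, ["web-1", "web-2", "web-3"]))  # each of the three servers gets 3
```

Adding a server to the list is the software-side counterpart of scaling out: each server's share of the load drops accordingly.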

Computer Science Fundamentals interview questions for experienced

1. How does the concept of 'time complexity' influence your decisions when choosing data structures and algorithms for a large-scale application?

Time complexity is a crucial consideration when selecting data structures and algorithms for large-scale applications because it directly impacts performance and scalability. Inefficient algorithms with high time complexity (e.g., O(n^2) or O(n!)) can become bottlenecks as the input data grows, leading to slow response times and resource exhaustion. For example, if I'm deciding between a linear search (O(n)) and a binary search (O(log n)) for a large, sorted dataset, I'd choose binary search because its logarithmic time complexity ensures significantly faster lookups as the data scales. Similarly, I might prefer using a hash table (average O(1) for insertion and lookup) over a linked list (O(n) for lookup) for frequent data access.

Therefore, I prioritize data structures and algorithms with lower time complexities like O(log n) or O(n) whenever possible. Understanding the trade-offs between different data structures (e.g., space vs. time) is also critical. For instance, using a more complex data structure like a B-tree might be justifiable in certain scenarios to achieve better search performance despite increased memory overhead. Analyzing the time complexity of different approaches helps in making informed decisions that optimize performance and ensure the application remains responsive and efficient even with massive datasets. Profiling the application and identifying performance-critical sections is also essential in verifying choices and identifying potential areas for further optimization.
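Here is a small Python sketch contrasting the two lookups on a sorted list. binary_search relies on the standard library's bisect module, and the data set is made up.

```python
import bisect

def linear_search(items, target) -> int:
    """O(n): may inspect every element."""
    for i, x in enumerate(items):
        if x == target:
            return i
    return -1

def binary_search(sorted_items, target) -> int:
    """O(log n): halves the search range each step; requires sorted input."""
    i = bisect.bisect_left(sorted_items, target)
    if i < len(sorted_items) and sorted_items[i] == target:
        return i
    return -1

data = list(range(0, 2_000_000, 2))  # one million sorted even numbers
# Both return the same index, but binary search needs about 20 comparisons
# (log2 of 1,000,000), while linear search may need up to 1,000,000.
print(linear_search(data, 1_999_998), binary_search(data, 1_999_998))  # 999999 999999
```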

2. Explain the CAP theorem. How have you navigated trade-offs between consistency, availability, and partition tolerance in past projects?

The CAP theorem states that it's impossible for a distributed system to simultaneously guarantee all three of the following properties: Consistency (all nodes see the same data at the same time), Availability (every request receives a response, without guarantee that it contains the most recent version of the information), and Partition Tolerance (the system continues to operate despite arbitrary partitioning due to network failures). In essence, you can only pick two out of three. In practice, because network partitions cannot be ruled out, the real choice is between consistency and availability while a partition is in progress.

In past projects, I've navigated these trade-offs by understanding the specific requirements of the application. For example, in a project requiring high availability for serving cached content, we prioritized availability and partition tolerance (AP), accepting eventual consistency. By contrast, in a financial transaction system where data accuracy was paramount, we opted for consistency and partition tolerance (CP) and accepted potential temporary unavailability during network partitions or outages.

3. Describe a situation where you had to optimize a slow-performing query. What steps did you take to diagnose the problem and improve its performance?

In a previous role, I encountered a slow-performing query that was causing delays in generating a critical daily report. The report aggregated data from several tables, including a large transactions table. Initially, I used the database's query execution plan tool (e.g., EXPLAIN in MySQL or PostgreSQL) to identify the bottleneck. This revealed a full table scan on the transactions table during a JOIN operation. The key column for joining wasn't indexed.

To resolve this, I created an index on the frequently used foreign key column in the transactions table. I also rewrote the query to optimize the JOIN order, ensuring the smallest table was used as the driving table. After these changes, the query execution plan showed index seeks instead of full table scans, significantly reducing the query's execution time and resolving the report generation delays. Finally, I monitored the query's performance after the optimization to ensure the improvements were sustained.
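You can reproduce the diagnose-then-index workflow with SQLite's EXPLAIN QUERY PLAN. This simplified sketch uses a single-table filter rather than the join from the story. The transactions table and idx_transactions_account index are made-up examples. The exact plan wording differs between SQLite versions, so the printed strings are only indicative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (id INTEGER PRIMARY KEY, account_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO transactions (account_id, amount) VALUES (?, ?)",
    [(i % 500, i * 1.5) for i in range(10_000)],
)

query = "SELECT SUM(amount) FROM transactions WHERE account_id = ?"

def plan(sql: str) -> list:
    # The last column of each EXPLAIN QUERY PLAN row is the human-readable step.
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))]

before = plan(query)  # no index yet: expect a full table scan
conn.execute("CREATE INDEX idx_transactions_account ON transactions(account_id)")
after = plan(query)   # now the planner can search via the index

print(before)  # e.g. ['SCAN transactions']
print(after)   # e.g. ['SEARCH transactions USING INDEX idx_transactions_account (account_id=?)']
```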

4. What are some of the key differences between TCP and UDP, and when would you choose one over the other?