As Microsoft Azure solidifies its position as a top cloud platform, identifying candidates with the right expertise is more pressing than ever. Hiring managers must be prepared to assess candidates on a spectrum of Azure services, from compute and storage to networking and security, to ensure they fit the team's requirements.

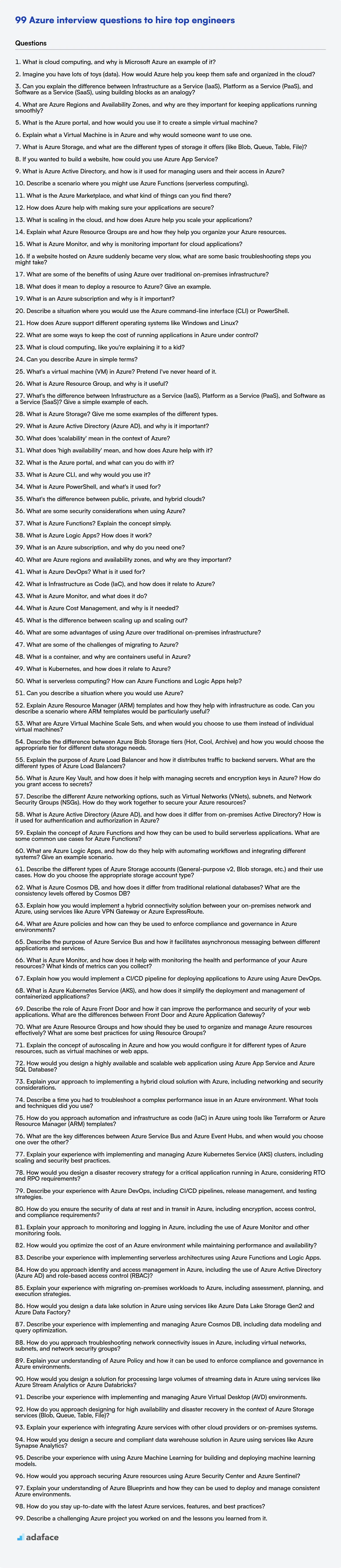

This blog post provides a carefully curated collection of Azure interview questions, spanning various experience levels from freshers to seasoned professionals. It also includes a selection of multiple-choice questions (MCQs) to gauge candidates' grasp of core concepts and challenge their knowledge.

By using these questions, recruiters can streamline their Azure hiring process and identify top talent effectively. To further refine your candidate selection, consider using Adaface's Azure online test to screen candidates before the interview stage.

Azure interview questions for freshers

1. What is cloud computing, and why is Microsoft Azure an example of it?

Cloud computing is the delivery of computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the Internet (“the cloud”) to offer faster innovation, flexible resources, and economies of scale. Instead of owning and maintaining your own data centers, you access these resources on demand from a cloud provider.

Microsoft Azure is an example of cloud computing because it provides a comprehensive suite of these services. Users can provision virtual machines, storage, databases, and more on Azure's infrastructure without managing the underlying hardware. Azure offers all three main service models (IaaS, PaaS, and SaaS), which helps make it a widely adopted cloud platform. By abstracting away physical infrastructure management, it lets businesses focus on application development and service delivery.

2. Imagine you have lots of toys (data). How would Azure help you keep them safe and organized in the cloud?

Azure offers several services to help keep my toys (data) safe and organized in the cloud. For security, I'd use Azure Active Directory (now Microsoft Entra ID) for identity and access management, ensuring only authorized users can access the data. I would also use Azure Key Vault to securely store encryption keys and secrets. Furthermore, I'd use Azure Security Center (now Microsoft Defender for Cloud) for threat detection and vulnerability assessment to proactively identify and mitigate security risks.

For organization, I could use Azure Blob Storage, sorting toys into different containers such as "cars," "dolls," and "board-games" (container names must be lowercase and may contain hyphens, but not underscores). For large analytics datasets, I could use Azure Data Lake Storage Gen2, which adds a hierarchical namespace on top of Blob Storage. To make the toys (data) easier to find, I can apply blob index tags and then search for blobs by tag. For compliance, Azure Policy lets me enforce organizational standards and assess compliance at scale. Finally, I'd use Azure Backup to create regular backups of my data, protecting it from loss due to accidental deletion or disasters.
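Container names like these have to follow Azure's naming rules: 3 to 63 characters; lowercase letters, numbers, and hyphens only; starting and ending with a letter or number; and no consecutive hyphens. A minimal local sketch of that check is shown below. The function name `is_valid_container_name` is hypothetical and not part of any Azure SDK.

```python
import re

# Azure Blob container naming rules:
# - 3 to 63 characters
# - lowercase letters, numbers, and hyphens only
# - must start and end with a letter or number
# - no two consecutive hyphens
_CONTAINER_NAME = re.compile(r"[a-z0-9](?!.*--)[a-z0-9-]{1,61}[a-z0-9]")


def is_valid_container_name(name: str) -> bool:
    """Return True if `name` satisfies the container naming rules above."""
    return _CONTAINER_NAME.fullmatch(name) is not None


for candidate in ["cars", "dolls", "board-games", "board_games", "Cars", "a--b"]:
    print(f"{candidate!r}: {is_valid_container_name(candidate)}")
```

Running a check like this before calling the storage API turns a failed request into an early, readable error.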

3. Can you explain the difference between Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), using building blocks as an analogy?

Think of building blocks. IaaS is like getting basic Lego bricks (servers, storage, networking): you build whatever you want on top, and you manage the operating system, middleware, applications, and data. PaaS is like getting a Lego kit (say, a car kit): it gives you the bricks and instructions (a platform) for building a specific kind of thing (applications). The provider manages the OS, middleware, and runtime; you manage the application and data. SaaS is like getting a fully built Lego model (an already assembled car): it's a ready-to-use application delivered over the internet. You simply use the software; the provider manages everything.
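The same split can be written down as a small lookup table. This is a simplified sketch of the commonly cited responsibility model: the layer names are illustrative, and real offerings blur some boundaries. It lists which layers you manage yourself under each model, matching the analogy above.

```python
from typing import List

# Layers of a typical application stack, from top (closest to the user)
# to bottom (closest to the hardware). Names are illustrative.
LAYERS = [
    "applications", "data", "runtime", "middleware", "operating system",
    "virtualization", "servers", "storage", "networking",
]

# Index of the first layer the *provider* manages under each model.
# Every layer above that index is managed by the customer.
PROVIDER_STARTS_AT = {
    "IaaS": LAYERS.index("virtualization"),  # you manage the OS and everything above it
    "PaaS": LAYERS.index("runtime"),         # you manage only applications and data
    "SaaS": 0,                               # the provider manages everything
}


def customer_managed(model: str) -> List[str]:
    """Return the layers the customer manages under the given service model."""
    return LAYERS[:PROVIDER_STARTS_AT[model]]


for model in ("IaaS", "PaaS", "SaaS"):
    print(model, "->", customer_managed(model) or "nothing (just use the software)")
```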

4. What are Azure Regions and Availability Zones, and why are they important for keeping applications running smoothly?

Azure Regions are geographically distinct areas, each containing one or more datacenters. Deploying to a region close to your users reduces latency, and using several regions spreads redundancy across a wide geographic area. Availability Zones are physically separate locations within a single region, each made up of one or more datacenters with independent power, cooling, and networking. Not every region supports Availability Zones.

They are important for high availability and disaster recovery. By deploying an application across multiple Availability Zones, you protect it against single points of failure within a region: if one zone goes down, the application keeps running in the others. Deploying across multiple regions additionally protects against large-scale disasters that affect an entire region.
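The benefit of spreading across zones can be estimated with a back-of-the-envelope calculation. Assuming zone failures are independent and traffic fails over instantly (both simplifications), an application running in n zones that each have availability a is down only when every zone is down at once, so its availability is 1 - (1 - a)^n. The 99.9% per-zone figure below is hypothetical, not an Azure SLA.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def composite_availability(zone_availability: float, zones: int) -> float:
    """Availability of an app deployed in `zones` independent zones.

    The app is unavailable only if all zones fail at the same time,
    so A = 1 - (1 - a) ** n. Assumes independent failures and instant failover.
    """
    if not 0.0 <= zone_availability <= 1.0 or zones < 1:
        raise ValueError("availability must be in [0, 1] and zones >= 1")
    return 1.0 - (1.0 - zone_availability) ** zones


def downtime_minutes_per_year(availability: float) -> float:
    """Expected minutes of downtime per year at the given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR


# Hypothetical per-zone availability of 99.9%.
for n in (1, 2, 3):
    a = composite_availability(0.999, n)
    print(f"{n} zone(s): availability={a:.9f}, "
          f"downtime ~{downtime_minutes_per_year(a):.4f} min/year")
```

In practice zone failures are not perfectly independent, which is one reason to also consider a multi-region design for critical workloads.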

5. What is the Azure portal, and how would you use it to create a simple virtual machine?

The Azure portal is a web-based, unified console that provides an alternative to command-line tools. You can manage your Azure subscription using a graphical user interface. You can build, manage, and monitor everything from simple web apps to complex cloud deployments.

To create a simple virtual machine, log in to the Azure portal, search for 'Virtual machines', and select the 'Virtual machines' service. Click '+ Create' and then 'Azure virtual machine'. Fill in the required details, such as the subscription, resource group, VM name, region, image, size, administrator username, and password or SSH key. Finally, review the configuration and click 'Create' to deploy the VM.

6. Explain what a Virtual Machine is in Azure and why would someone want to use one.

An Azure Virtual Machine (VM) is an on-demand, scalable computing resource that represents a virtualized instance of a computer. It provides infrastructure as a service (IaaS) and allows you to have complete control over the operating system, applications, and configurations running on it. Think of it as renting a computer in Microsoft's data center.

Someone would use an Azure VM for several reasons:

- Flexibility and Control: Full control over the OS, software, and configurations.

- Application Hosting: Run applications that aren't suitable for platform-as-a-service (PaaS) offerings.

- Testing and Development: Create isolated environments for testing and development purposes.

- Disaster Recovery: Use VMs as part of a disaster recovery strategy.

- Migration: Migrate on-premises workloads to the cloud without significant code changes.

7. What is Azure Storage, and what are the different types of storage it offers (like Blob, Queue, Table, File)?

Azure Storage is a cloud storage service offered by Microsoft Azure for storing various types of data. It's scalable, durable, and highly available. It provides several storage services, each designed for different use cases.

The main types of Azure Storage are:

- Blob Storage: For storing unstructured data like text, images, audio, and video. There are three blob types: block blobs (general-purpose text and binary data, including large files), append blobs (optimized for append operations such as logging), and page blobs (optimized for random read/write access, used for VHDs).

- Queue Storage: A messaging service for asynchronous communication between application components.

- Table Storage: A NoSQL key-attribute data store for structured data. Azure Cosmos DB for Table offers the same Table API with premium features such as global distribution.

- File Storage (Azure Files): Fully managed file shares in the cloud, accessible via the SMB protocol (and NFS on premium file shares).

8. If you wanted to build a website, how could you use Azure App Service?

Azure App Service provides a platform-as-a-service (PaaS) environment for hosting web applications, REST APIs, and mobile back ends. To build a website using it, you would first create an App Service plan which defines the underlying virtual machine resources. Then, you'd create an App Service (e.g., a Web App) within that plan.

You can deploy your website code in several ways, such as local Git deployment, ZIP deploy, or CI/CD pipelines using Azure DevOps, GitHub Actions, or other tools. App Service handles infrastructure management, scaling, security patching, and load balancing. You can configure custom domains, TLS/SSL certificates, and other settings through the Azure portal or Azure CLI. App Service supports various languages and frameworks, including .NET, Node.js, Python, Java, PHP, and Ruby.

9. What is Azure Active Directory, and how is it used for managing users and their access in Azure?

Azure Active Directory (Azure AD), now renamed Microsoft Entra ID, is Microsoft's cloud-based identity and access management service. It's used to manage users and their access to Azure resources, Microsoft 365 applications, and other SaaS applications. Think of it as the central directory for your cloud environment, ensuring only authenticated and authorized users can access specific resources.

Azure AD enables you to create and manage user accounts and groups, assign permissions to control access to resources, and enforce security policies like multi-factor authentication (MFA). It supports various authentication protocols (e.g., OAuth, SAML) allowing seamless integration with different applications. It differs from traditional Active Directory Domain Services (AD DS) in that it's delivered as a service, is highly scalable, and is designed for the cloud.

10. Describe a scenario where you might use Azure Functions (serverless computing).

A good scenario for using Azure Functions is processing data uploaded to Azure Blob Storage. Imagine you have a system where users upload images. You can trigger an Azure Function whenever a new image is uploaded. This function could then perform tasks such as:

- Generating thumbnails.

- Resizing the image.

- Extracting metadata (e.g., dimensions, EXIF data).

- Storing the processed image in another Blob Storage container.

This serverless approach is efficient because, on the Consumption plan, you pay only for the compute time used while the function runs. You don't need to manage any underlying servers, and the function scales automatically with the number of image uploads.
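In such a function, the trigger binding passes the uploaded blob to your code, and the processing itself is ordinary logic that can be tested locally without Azure. As a minimal sketch, assuming thumbnails are capped at a hypothetical 128 pixels on their longest side, the size calculation that preserves the aspect ratio might look like this:

```python
from typing import Tuple


def thumbnail_size(width: int, height: int, max_side: int = 128) -> Tuple[int, int]:
    """Scale (width, height) to fit within max_side x max_side, keeping the aspect ratio.

    Images that already fit keep their original size (no upscaling).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    scale = min(max_side / width, max_side / height, 1.0)
    # Round to whole pixels, but never collapse a side to 0.
    return max(1, round(width * scale)), max(1, round(height * scale))


print(thumbnail_size(1920, 1080))  # (128, 72): landscape photo
print(thumbnail_size(500, 2000))   # (32, 128): tall image
print(thumbnail_size(100, 50))     # (100, 50): already small, unchanged
```

In a real function you would pass these dimensions to an imaging library to produce the resized image; that part is omitted here.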

11. What is the Azure Marketplace, and what kind of things can you find there?

The Azure Marketplace is an online store offering certified and community-driven applications, services, and development tools built on or designed to integrate with Azure. It allows customers to find, try, buy, and deploy these solutions. Think of it as an app store for Azure.

You can find a wide variety of things in the Azure Marketplace, including:

- Virtual Machines: Pre-configured VMs with various operating systems (Windows, Linux) and software stacks (databases, web servers). Examples include preconfigured VMs with SQL Server, or a specific version of Ubuntu.

- Containers: Containerized applications ready to deploy to Azure Kubernetes Service (AKS) or other container platforms.

- Managed Applications: Ready-to-deploy solutions managed by the publisher, simplifying deployment and maintenance. Example: An enterprise-grade firewall with automated updates.

- SaaS Applications: Software as a Service offerings directly integrated with Azure Active Directory for single sign-on.

- Developer Tools: Tools and extensions for Visual Studio and other development environments.

- Data and AI: Machine learning models, datasets, and AI services. Example: Pre-trained computer vision models.

- Consulting Services: Professional services from partners to help with Azure deployments and integrations.

12. How does Azure help with making sure your applications are secure?

Azure provides a multi-layered approach to security, offering both platform-level security features and tools to help you secure your applications. At the platform level, Azure has built-in protections against DDoS attacks, intrusion detection, and threat intelligence. You also have access to Azure Security Center (now Microsoft Defender for Cloud), which provides recommendations and helps you manage your security posture across your Azure resources. Think of it as a unified security management system that helps you prevent, detect, and respond to threats.

To secure your specific applications, Azure offers services like Azure Active Directory for identity and access management, Azure Key Vault for securely storing secrets, and Azure Application Gateway with Web Application Firewall (WAF) to protect web applications from common exploits. It also has Azure Monitor for detecting anomalies that might be security risks.

13. What is scaling in the cloud, and how does Azure help you scale your applications?

Scaling in the cloud refers to the ability to increase or decrease the resources allocated to your application, such as compute power, storage, or network bandwidth, based on demand. This ensures optimal performance and cost efficiency by only using and paying for the resources you need at any given time. There are two main types of scaling: vertical scaling (scaling up/down the resources of a single instance) and horizontal scaling (scaling out/in by adding or removing instances).

Azure provides various services and features to help scale applications. For example, Virtual Machine Scale Sets allow you to automatically create and manage a group of identical VMs. Azure App Service offers built-in autoscaling based on metrics like CPU usage. Azure Kubernetes Service (AKS) enables you to orchestrate containerized applications and easily scale them using Kubernetes features. Azure also provides services like Azure Functions for serverless computing, which automatically scale based on event triggers.
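Metric-based autoscaling, as in App Service or Virtual Machine Scale Sets, comes down to rules like "add an instance when average CPU is above X, remove one when it is below Y, and stay between a minimum and maximum instance count." The sketch below models a single evaluation of such a rule. The thresholds and limits are hypothetical defaults, and real Azure autoscale also applies cooldown periods and time windows that are omitted here.

```python
def desired_instance_count(
    current: int,
    avg_cpu_percent: float,
    min_instances: int = 2,
    max_instances: int = 10,
    scale_out_above: float = 70.0,
    scale_in_below: float = 30.0,
    step: int = 1,
) -> int:
    """Return the instance count after evaluating one CPU-based scale rule."""
    if avg_cpu_percent > scale_out_above:
        target = current + step          # scale out under heavy load
    elif avg_cpu_percent < scale_in_below:
        target = current - step          # scale in when mostly idle
    else:
        target = current                 # inside the comfort band: no change
    # Clamp to the configured instance limits.
    return max(min_instances, min(max_instances, target))


for cpu in (85.0, 50.0, 20.0):
    print(f"CPU {cpu}% with 3 instances -> {desired_instance_count(3, cpu)}")
```

Keeping a gap between the scale-out and scale-in thresholds prevents the instance count from flapping when load hovers around a single value.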

14. Explain what Azure Resource Groups are and how they help you organize your Azure resources.

Azure Resource Groups are logical containers that hold related Azure resources. They provide a way to manage these resources as a single unit, enabling you to apply actions like deployment, monitoring, and access control across the entire group. Think of it as a folder in your Azure subscription.

Resource Groups help organize Azure resources in several ways: they enable you to manage the lifecycle of related resources together, simplify deployment and management, and provide a scope for managing access control (RBAC). For example, you can grant a user access to manage all resources within a resource group without granting access to the entire subscription. You can also easily delete a resource group, which will delete all resources within it.
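Scoped access works this way because Azure resource IDs are hierarchical paths (`/subscriptions/{id}/resourceGroups/{name}/providers/...`), and a role assignment at one scope applies to everything beneath it. A minimal sketch of that inheritance check follows. The function name and sample IDs are hypothetical, and the comparison ignores case because Azure treats resource IDs case-insensitively.

```python
def scope_covers(scope: str, resource_id: str) -> bool:
    """True if a role assignment at `scope` applies to `resource_id`.

    Compares whole path segments (so "rg-web" does not match "rg-web2")
    and ignores case.
    """
    scope_parts = [p.lower() for p in scope.strip("/").split("/")]
    resource_parts = [p.lower() for p in resource_id.strip("/").split("/")]
    return resource_parts[: len(scope_parts)] == scope_parts


SUB = "/subscriptions/00000000-0000-0000-0000-000000000000"
RG_SCOPE = f"{SUB}/resourceGroups/rg-web"
APP = f"{SUB}/resourceGroups/rg-web/providers/Microsoft.Web/sites/my-app"
OTHER = f"{SUB}/resourceGroups/rg-web2/providers/Microsoft.Web/sites/other-app"

print(scope_covers(RG_SCOPE, APP))    # True: the app lives in rg-web
print(scope_covers(RG_SCOPE, OTHER))  # False: different resource group
print(scope_covers(SUB, OTHER))       # True: subscription scope covers all groups
```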

15. What is Azure Monitor, and why is monitoring important for cloud applications?

Azure Monitor is a comprehensive monitoring service in Azure that collects, analyzes, and acts on telemetry data from your cloud and on-premises environments. It helps you understand the performance and health of your applications and infrastructure by collecting metrics and logs, including the Azure Activity Log, which records subscription-level operations.

Monitoring is crucial for cloud applications because it allows you to:

- Identify and resolve issues quickly: Proactively detect and address problems before they impact users.

- Optimize performance: Identify bottlenecks and areas for improvement.

- Ensure availability and reliability: Maintain the health and uptime of your applications.

- Gain insights: Understand user behavior and application usage.

- Meet compliance requirements: Retain logs and audit trails that show who did what, and when.

16. If a website hosted on Azure suddenly became very slow, what are some basic troubleshooting steps you might take?

If a website hosted on Azure becomes slow, I'd start with these basic steps:

- Check Azure Service Health: Look for any known Azure-wide incidents or outages that might be affecting the website's region or services.

- Monitor Website Metrics: Use Azure Monitor to check key performance indicators (KPIs) like CPU usage, memory consumption, request latency, and error rates. Identify any spikes or anomalies. Pay close attention to metrics for the App Service Plan, the App Service itself, and any dependent resources like databases.

- Restart the Web App: A restart can temporarily relieve issues like memory leaks or hung processes, although the underlying cause still needs investigating. You can restart the app from the Azure portal.

- Examine Application Logs: Check the application logs for any errors, warnings, or exceptions that might be causing the slowdown. Look for database query issues or other application-specific problems.

- Test Basic Connectivity: Verify that the website can connect to any backend databases or external services it relies on.

- Scale Up or Out: If you identify resource constraints, consider scaling up the App Service plan for more CPU and memory per instance, or scaling out by adding instances. Scaling up moves the app to different underlying instances and can restart it, so scaling out may be preferable when a restart is not acceptable.

- Check for recent deployments: If the slowdown started suddenly, a recent deployment may have introduced a bug or performance regression. Roll back to a previous deployment if possible, for example by swapping deployment slots back.

- Use the Kudu debug console: For deeper investigation, use the Kudu (Advanced Tools) debug console to inspect processes and logs on the instance.
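When comparing metrics from before and after a slowdown, a tail percentile such as p95 latency is usually more telling than the average, because a regression often affects only some requests. A minimal sketch using the nearest-rank percentile follows. The 1.5x regression threshold and the sample latencies are hypothetical.

```python
import math
from typing import Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    if not values or not 0 < p <= 100:
        raise ValueError("need at least one value and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_regressed(before_ms: Sequence[float], after_ms: Sequence[float],
                      p: float = 95, tolerance: float = 1.5) -> bool:
    """True if the p-th percentile latency grew by more than `tolerance` times."""
    return percentile(after_ms, p) > tolerance * percentile(before_ms, p)


before = [100.0] * 19 + [120.0]          # 20 requests, mostly ~100 ms
after = [100.0] * 10 + [400.0] * 10      # half the requests now take 400 ms
print(percentile(before, 95), percentile(after, 95))  # 100.0 400.0
print(latency_regressed(before, after))               # True
```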

17. What are some of the benefits of using Azure over traditional on-premises infrastructure?

Azure offers several benefits over traditional on-premises infrastructure, including cost savings through reduced capital expenditure and operational expenses. Businesses can scale resources up or down on demand, paying only for what they use, improving agility and responsiveness. Azure also provides enhanced reliability and disaster recovery options with geo-replication and automated backups.

Furthermore, Azure simplifies management with centralized tools for monitoring, security, and compliance. This reduces the burden on IT staff and allows them to focus on strategic initiatives. It also offers a wider range of services, such as AI, machine learning, and analytics, that are often not available or cost-prohibitive to implement on-premises.

18. What does it mean to deploy a resource to Azure? Give an example.

Deploying a resource to Azure means making it available and operational within the Azure cloud environment. It involves configuring and launching a specific Azure service, like a virtual machine, database, or web app, so that it can perform its intended function. This includes provisioning the necessary infrastructure and resources, setting up network configurations, and configuring security settings.

For example, deploying an Azure Web App involves the following steps, in order:

- Select an App Service plan (which specifies resources such as CPU and memory).

- Configure settings such as the runtime stack (e.g., .NET, Python, Node.js).

- Upload the web application code.

- Configure deployment settings (e.g., deployment slots, continuous integration).

Once deployed, the web app is accessible via a public URL and can serve web content.

19. What is an Azure subscription and why is it important?

An Azure subscription is a logical container for accessing and managing Azure resources. Think of it as a billing boundary and access control point. It allows you to organize resources, like virtual machines, databases, and storage accounts, into manageable groups. Each resource belongs to only one subscription.

Subscriptions are important because they:

- Provide a centralized way to manage billing and costs.

- Enable access control and security policies at the subscription level.

- Offer a way to isolate environments (e.g., development, test, production).

- Define quotas and limits (such as regional vCPU quotas) and serve as a scope for budgets, keeping scaling within planned bounds.

20. Describe a situation where you would use the Azure command-line interface (CLI) or PowerShell.

I would use Azure CLI or PowerShell when automating tasks or managing Azure resources at scale. For example, if I needed to create hundreds of virtual machines with similar configurations, scripting the deployment using Azure CLI or PowerShell would be much more efficient and less error-prone than manually creating each VM through the Azure portal.

Another scenario is setting up a complex network topology. Applying Infrastructure as Code (IaC) principles with Azure CLI or PowerShell scripts lets me define the desired network and deploy it consistently across environments (e.g., development, testing, production), including virtual networks, subnets, network security groups, and routing rules. For a one-off change, such as scaling up an App Service plan before a high-traffic release, a single PowerShell command is enough: `Update-AzAppServicePlan -ResourceGroupName "myResourceGroup" -Name "myAppServicePlan" -Tier Standard`.
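The first scenario, creating many similar VMs, can be sketched in Python by generating one `az vm create` command per VM instead of clicking through the portal repeatedly. The resource group, name prefix, image alias, and VM size are hypothetical placeholders (check that the image alias is available in your CLI version). The script only prints the commands unless `run=True`, in which case it needs the Azure CLI installed and an active `az login` session.

```python
import shlex
import subprocess
from typing import List


def vm_create_commands(resource_group: str, prefix: str, count: int,
                       image: str = "Ubuntu2204",
                       size: str = "Standard_B2s") -> List[List[str]]:
    """Build one `az vm create` argument list per VM, named prefix-001, prefix-002, ..."""
    commands = []
    for i in range(1, count + 1):
        commands.append([
            "az", "vm", "create",
            "--resource-group", resource_group,
            "--name", f"{prefix}-{i:03d}",
            "--image", image,
            "--size", size,
            "--admin-username", "azureuser",
            "--generate-ssh-keys",
        ])
    return commands


def create_vms(commands: List[List[str]], run: bool = False) -> None:
    """Print each command; execute it only when run=True (requires the Azure CLI)."""
    for cmd in commands:
        print(shlex.join(cmd))
        if run:
            subprocess.run(cmd, check=True)


create_vms(vm_create_commands("myResourceGroup", "web", 3))
```

Passing argument lists rather than a single shell string avoids quoting problems when names or parameters contain spaces.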

21. How does Azure support different operating systems like Windows and Linux?

Azure supports a wide range of operating systems, including both Windows and Linux, through its virtualization platform. Azure's core infrastructure utilizes Hyper-V, a hypervisor, to create and manage virtual machines (VMs). These VMs can run various operating systems.

For Windows, Azure provides full support for different versions of Windows Server and client operating systems. For Linux, Azure supports numerous distributions, such as Ubuntu, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, Debian, CentOS, and more. Azure offers pre-built images for these distributions in the Azure Marketplace, simplifying deployment. Additionally, users can upload their own custom OS images. Azure also provides specific tools and services optimized for both Windows and Linux, like Azure CLI and PowerShell for management, as well as monitoring tools. It also provides SDKs and APIs for interacting with Azure services from both Windows and Linux environments.

22. What are some ways to keep the cost of running applications in Azure under control?

To keep Azure costs under control, consider several strategies. Firstly, optimize resource utilization by right-sizing VMs, using reserved instances for predictable workloads, and scaling resources up or down based on demand using autoscaling. Regularly review resource utilization metrics to identify underutilized resources. Implement Azure Cost Management + Billing to monitor spending, set budgets, and receive alerts when costs exceed thresholds. Delete or deallocate resources when not in use, especially development/test environments. Choose the appropriate Azure region, as pricing can vary. Explore using Azure Advisor for cost optimization recommendations.

Secondly, take advantage of Azure Hybrid Benefit if you have on-premises Windows Server or SQL Server licenses. Use tagging to organize and track costs by department, project, or environment. Consider using Azure Resource Manager (ARM) templates or other infrastructure-as-code (IaC) tools to automate resource provisioning and ensure consistency. Implement Azure Policy to enforce cost-related policies, such as restricting VM sizes or regions. Finally, regularly review your Azure bill and analyze cost trends to identify areas for improvement. For example, move infrequently accessed data to cooler storage tiers (Cool, Cold, or Archive), and use Azure Spot VMs for fault-tolerant workloads that can handle interruptions.
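The savings from deallocating idle resources are easy to estimate. The sketch below compares a VM that runs around the clock with one that is deallocated outside office hours, using a hypothetical hourly rate and the 730-hour month that Azure's pricing calculator uses. It covers compute only: managed disks and other attached resources keep billing while a VM is deallocated.

```python
HOURS_PER_MONTH = 730  # average hours in a month, used for monthly estimates


def compute_cost(hourly_rate: float, hours: float) -> float:
    """Compute-only cost of running a VM for the given number of hours."""
    return hourly_rate * hours


def office_hours_savings(hourly_rate: float, hours_per_day: float = 10,
                         workdays_per_month: int = 22):
    """Return (monthly savings, fraction saved) compared with running 24/7."""
    always_on = compute_cost(hourly_rate, HOURS_PER_MONTH)
    office_only = compute_cost(hourly_rate, hours_per_day * workdays_per_month)
    savings = always_on - office_only
    return savings, savings / always_on


savings, fraction = office_hours_savings(hourly_rate=0.10)  # hypothetical $0.10/hour
print(f"Monthly compute savings: ${savings:.2f} ({fraction:.0%})")
```

With these assumptions the VM runs 220 of 730 hours, so roughly 70% of its compute cost is avoided.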

Azure interview questions for juniors

1. What is cloud computing, like you're explaining it to a kid?

Imagine you have a lot of toys (like computers and software) but instead of keeping them all in your room, you keep them in a big clubhouse with lots of other kids. Cloud computing is like that clubhouse. Instead of owning all the computers and programs yourself, you can borrow them from the "cloud" (which is just someone else's big clubhouse of computers) whenever you need them.

So you can play games, watch videos, or do your homework using the computers and programs in the cloud, and you only pay for what you use. You don't have to buy expensive computers or keep them running, and you can reach your stuff from anywhere: your home, your friend's house, or even on vacation. And because the clubhouse is shared, lots of people can use it at the same time.

2. Can you describe Azure in simple terms?

Azure is Microsoft's cloud platform. Think of it as a massive collection of computer hardware and software that Microsoft owns and operates in data centers around the world. Instead of buying your own servers and infrastructure, you can rent these resources from Azure, paying only for what you use.

Azure offers a wide range of services, including:

- Compute: Virtual machines, containers, serverless functions.

- Storage: Cloud storage for files, databases, and other data.

- Networking: Virtual networks, load balancers, and gateways.

- Databases: SQL databases, NoSQL databases, and data warehousing.

- AI & Machine Learning: Tools and services for building and deploying AI models.

- Developer Tools: DevOps tools for building, testing, and deploying software.

3. What's a virtual machine (VM) in Azure? Pretend I've never heard of it.

Imagine you have a computer. Now, imagine you can create another, completely separate computer inside that first one, using software. That's essentially what a Virtual Machine (VM) in Azure is. It's a software-based computer that runs on Azure's hardware. You can install an operating system (like Windows or Linux) and any software you need on that VM, just like you would on a physical computer.

Think of it like renting a computer in the cloud. You don't have to buy or maintain the physical hardware, because Azure does that for you. You just pay for the computing power, storage, and network resources the VM uses. This lets you create and deploy applications quickly without investing in physical infrastructure, and it can lower upfront costs compared with buying dedicated servers.

4. What is Azure Resource Group, and why is it useful?

An Azure Resource Group is a logical container that holds related resources for an Azure solution. These resources could include virtual machines, storage accounts, web apps, databases, and more. Think of it as a folder to organize and manage your Azure resources as a single unit.

Resource Groups are useful because they enable you to manage the lifecycle of related resources together. You can deploy, update, or delete all the resources in a resource group in a single operation. This simplifies management, enables consistent application of policies, and facilitates cost tracking at the resource group level. They also allow role-based access control (RBAC) at the resource group level, providing granular permission management.

5. What's the difference between Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS)? Give a simple example of each.

IaaS (Infrastructure as a Service) provides you with the basic building blocks for cloud IT. You manage the operating system, storage, deployed applications, and potentially networking (firewalls, load balancers). The provider manages the hardware. Example: Azure Virtual Machines or AWS EC2 (virtual machines).

PaaS (Platform as a Service) provides a platform allowing customers to develop, run, and manage applications without the complexity of building and maintaining the infrastructure. You manage the application and data. Example: Azure App Service or Google App Engine (where you just deploy your code).

SaaS (Software as a Service) provides a complete product that is run and managed by the service provider. You simply use the software. Example: Microsoft 365 or Salesforce (CRM software).

6. What is Azure Storage? Give me some examples of the different types.

Azure Storage is Microsoft's cloud storage solution, providing scalable, durable, and highly available storage for various data objects. It offers several different storage types, each suited for specific use cases.

Examples include:

- Blob Storage: For storing unstructured data like text, images, audio, and video. Common uses are storing website content, media files, and backups.

- File Storage: Provides fully managed file shares in the cloud, accessible via the SMB protocol. Ideal for migrating on-premises file shares to Azure.

- Queue Storage: A messaging service for reliable message queuing between application components. Used for decoupling applications and implementing asynchronous processing.

- Table Storage: A NoSQL key-attribute data store for storing structured data. Useful for storing metadata and configuration information.

- Disk Storage: Block-level storage volumes for Azure Virtual Machines. Used as operating system disks and data disks for VMs.
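To illustrate how Queue Storage decouples components, here is a toy in-memory model of its core delivery semantics: a received message becomes invisible for a visibility timeout and reappears if the consumer does not delete it in time (for example, because the worker crashed). This is not the Azure SDK. The class and method names are hypothetical, time is passed in explicitly to keep the sketch deterministic, and unlike this model, real queues do not guarantee strict ordering.

```python
import itertools
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Message:
    id: int
    body: str
    visible_at: float = 0.0   # time at which the message can be received again
    dequeue_count: int = 0    # how many times it has been received


class ToyQueue:
    """Simplified in-memory model of queue semantics (not the Azure SDK)."""

    def __init__(self, visibility_timeout: float = 30.0) -> None:
        self.visibility_timeout = visibility_timeout
        self._messages: List[Message] = []
        self._ids = itertools.count(1)

    def send(self, body: str) -> int:
        """Producer side: enqueue a message and return its id."""
        msg = Message(id=next(self._ids), body=body)
        self._messages.append(msg)
        return msg.id

    def receive(self, now: float) -> Optional[Message]:
        """Consumer side: return the oldest visible message and hide it."""
        for msg in self._messages:
            if msg.visible_at <= now:
                msg.visible_at = now + self.visibility_timeout
                msg.dequeue_count += 1
                return msg
        return None

    def delete(self, msg_id: int) -> None:
        """Remove a message once it has been processed successfully."""
        self._messages = [m for m in self._messages if m.id != msg_id]


q = ToyQueue(visibility_timeout=30)
job = q.send("resize photo-001.jpg")
first = q.receive(now=0)        # a worker picks up the job...
print(q.receive(now=10))        # ...so it is hidden from other workers: None
retry = q.receive(now=31)       # the worker never deleted it, so the job reappears
print(retry.body, retry.dequeue_count)  # resize photo-001.jpg 2
q.delete(job)                   # processed successfully this time
print(q.receive(now=100))       # None: the queue is empty
```

Because a message can be delivered more than once, consumers should be written so that processing the same message twice is harmless.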

7. What is Azure Active Directory (Azure AD), and why is it important?

Azure Active Directory (Azure AD), now renamed Microsoft Entra ID, is Microsoft's cloud-based identity and access management service. It provides a way to manage user identities and control access to applications and resources, both on-premises and in the cloud. Think of it as a central directory for your users, groups, and devices, allowing you to easily manage who has access to what.

Azure AD is important because it enables secure and streamlined access to resources, enhances security with features like multi-factor authentication (MFA) and conditional access, and simplifies identity management across diverse environments. It also integrates with other Microsoft services (like Microsoft 365) and many third-party applications, offering a single identity solution for your organization.

8. What does 'scalability' mean in the context of Azure?

In Azure, scalability refers to the ability of a system, application, or resource to handle an increasing amount of workload or demand. This means Azure resources can be easily adjusted to accommodate growth without significant performance degradation or requiring major infrastructure changes.

Scalability in Azure can be achieved in two main ways: vertical scaling (scaling up) and horizontal scaling (scaling out). Vertical scaling involves increasing the resources (e.g., CPU, memory) of a single instance, while horizontal scaling involves adding more instances to handle the increased load. Azure offers various services and features to facilitate both types of scaling, such as virtual machine scale sets, Azure Kubernetes Service (AKS), and Azure App Service's auto-scaling capabilities.

9. What does 'high availability' mean, and how does Azure help with it?

High availability (HA) means designing a system to stay operational with minimal downtime, so your application remains consistently accessible to users. It is often expressed as an uptime percentage, such as 99.9% or 99.99%.

Azure provides several features to achieve HA. These include Availability Sets (which spread VMs across fault domains and update domains within a datacenter), Availability Zones (physically separate locations within an Azure region), Azure Load Balancer (which distributes traffic across healthy VMs), and geo-redundancy (replicating data to a secondary region). Azure also offers Azure Site Recovery for disaster recovery and orchestrated failover, further improving application resilience.
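To make "distributes traffic across healthy VMs" concrete: Azure Load Balancer sends health probes to each backend and, by default, maps each flow to a backend using a hash of its five-tuple (source IP, source port, destination IP, destination port, protocol). The sketch below is a simplified model of that behavior, not Azure's actual hashing algorithm. Backend names and addresses are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple

Flow = Tuple[str, int, str, int, str]  # (src IP, src port, dst IP, dst port, protocol)


def pick_backend(flow: Flow, backends: List[str], healthy: Dict[str, bool]) -> str:
    """Map a flow to one healthy backend using a stable hash of its five-tuple."""
    candidates = [b for b in backends if healthy.get(b, False)]
    if not candidates:
        raise RuntimeError("no healthy backends: the service is unavailable")
    key = "|".join(str(part) for part in flow).encode()
    # Use SHA-256 rather than hash(), which is randomized per Python process.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return candidates[bucket % len(candidates)]


backends = ["vm-1", "vm-2", "vm-3"]
flow = ("203.0.113.7", 50123, "198.51.100.10", 443, "TCP")

print(pick_backend(flow, backends, {b: True for b in backends}))
# If vm-2 fails its health probe, new flows go only to vm-1 or vm-3.
print(pick_backend(flow, backends, {"vm-1": True, "vm-2": False, "vm-3": True}))
```

Hashing the whole five-tuple keeps all packets of one connection on the same VM while still spreading different connections across the pool.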

10. What is the Azure portal, and what can you do with it?

The Azure portal is a web-based, unified console that provides a graphical user interface (GUI) for managing your Azure cloud resources. It acts as a central hub for building, deploying, and managing applications and services within the Azure environment.

With the Azure portal, you can perform a wide array of tasks, including:

- Creating and managing resources: Provision virtual machines, databases, storage accounts, web apps, and other Azure services.

- Monitoring resource health and performance: Track metrics, set up alerts, and diagnose issues.

- Configuring security settings: Manage user access, configure firewalls, and implement security policies.

- Deploying and managing applications: Deploy code, configure deployments, and manage application settings.

- Automating tasks: Create runbooks and automate repetitive tasks.

- Cost management: Monitor your Azure spending and identify cost optimization opportunities.

- Accessing documentation and support: Find answers to your questions and get assistance from Microsoft support.

11. What is Azure CLI, and why would you use it?

Azure CLI is a command-line tool that allows you to manage Azure resources. It provides a set of commands you can use to create, configure, and manage Azure services such as virtual machines, databases, and web apps. It is an alternative to the Azure portal.

You would use it for several reasons:

- Automation: You can script tasks for repetitive operations.

- Speed: CLI commands can be faster than navigating the Azure portal for certain tasks.

- Remote Management: You can manage Azure resources from any machine with the CLI installed, including those without a graphical interface.

- CI/CD: Integrate with continuous integration and continuous delivery pipelines.
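The script below sketches the automation use case. It generates `az` commands that create one resource group per environment and can optionally run them. `az group create --name ... --location ...` is a real Azure CLI command. The environment names, the hypothetical `rg-<app>-<env>` naming pattern, and the region are assumptions for illustration. To actually run the commands, the Azure CLI must be installed and signed in (`az login`).

```python
import shlex
import subprocess


def resource_group_commands(app: str, environments: list[str], location: str) -> list[list[str]]:
    """Build one `az group create` command per environment (hypothetical naming scheme)."""
    return [
        ["az", "group", "create", "--name", f"rg-{app}-{env}", "--location", location]
        for env in environments
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the script at the first command that fails
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmds = resource_group_commands("shop", ["dev", "test", "prod"], "eastus")
    run(cmds, dry_run=True)  # set dry_run=False to actually create the groups
```

Printing the commands before running them makes the same script usable as a dry run in a CI/CD pipeline review step.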

12. What is Azure PowerShell, and what's it used for?

Azure PowerShell is a module that lets you manage Azure resources from the command line. It's built on PowerShell and provides a set of cmdlets (commands) to automate tasks and manage your Azure infrastructure.

It is used for:

- Automating tasks: You can create scripts to automate common Azure tasks, such as creating virtual machines, configuring networks, and deploying applications.

- Managing resources: Azure PowerShell lets you manage Azure resources such as VMs, databases, and storage accounts.

- Configuration and Deployment: It's used for configuring and deploying your applications and services to Azure.

Example:

```powershell
New-AzVM -ResourceGroupName "myResourceGroup" -Name "myVM" -Location "EastUS"
```

13. What's the difference between public, private, and hybrid clouds?

Public clouds are owned and operated by third-party providers, which offer resources like servers and storage over the internet. Customers share the provider's infrastructure. The provider manages and secures the underlying hardware, while customers remain responsible for securing their own data, identities, and configurations (the shared responsibility model). Private clouds, on the other hand, are infrastructure used exclusively by a single organization. A private cloud can be on-premises or hosted by a third party. It offers more control and customization, but also greater responsibility for management and security.

Hybrid clouds combine public and private clouds, allowing data and applications to be shared between them. This offers flexibility, scalability, and cost optimization, enabling organizations to leverage the benefits of both environments. For example, you might run less sensitive applications on the public cloud while keeping sensitive data on a private cloud.

14. What are some security considerations when using Azure?

When using Azure, several security considerations are crucial. These include:

- Identity and Access Management (IAM): Use Azure Active Directory (Azure AD, now Microsoft Entra ID) for strong authentication and authorization. Implement multi-factor authentication (MFA) and least privilege access. Regularly review user permissions.

- Network Security: Utilize Azure Network Security Groups (NSGs) to control inbound and outbound traffic to virtual machines and subnets. Employ Azure Firewall or Web Application Firewall (WAF) to protect against network-based attacks.

- Data Protection: Encrypt data at rest and in transit, and manage encryption keys and secrets in Azure Key Vault. Implement data loss prevention (DLP) measures to prevent sensitive data from leaving the organization.

- Vulnerability Management: Regularly scan for vulnerabilities using Microsoft Defender for Cloud (formerly Azure Security Center) or third-party tools. Patch systems promptly and monitor for security threats.

- Compliance: Ensure compliance with relevant industry regulations and standards (e.g., GDPR, HIPAA) by leveraging Azure Policy and Azure Blueprints.

- Monitoring and Logging: Enable Azure Monitor and Microsoft Defender for Cloud to collect security logs and monitor for suspicious activities. Implement alerts and automated responses to security incidents.

15. What is Azure Functions? Explain the concept simply.

Azure Functions is a serverless compute service that allows you to run event-triggered code without explicitly provisioning or managing infrastructure. Think of it as a way to execute small pieces of code in the cloud without worrying about servers.

Key aspects:

- Serverless: You don't manage the underlying servers.

- Event-triggered: Code runs in response to events (e.g., a file being uploaded, a message arriving in a queue, or a timer).

- Pay-per-use: On the Consumption plan, you pay only for the compute time your code consumes, and the platform automatically scales out function instances as demand grows.
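On the Consumption plan, pay-per-use billing combines two parts: the number of executions, and resource consumption measured in gigabyte-seconds (memory multiplied by execution time). The sketch below shows that arithmetic. The rates are parameters rather than real prices, and the example uses made-up values. The sketch also ignores the monthly free grant and the platform's rounding of memory and duration, so check the current Azure Functions pricing page before relying on any estimate.

```python
def consumption_cost(executions: int, avg_duration_s: float, memory_gb: float,
                     price_per_gb_s: float, price_per_million_executions: float) -> float:
    """Rough Consumption-plan cost estimate.

    Ignores the monthly free grant and the platform's rounding of memory and duration.
    """
    gb_seconds = executions * avg_duration_s * memory_gb
    execution_charge = executions / 1_000_000 * price_per_million_executions
    return gb_seconds * price_per_gb_s + execution_charge


# 3 million runs of 0.5 s at 0.5 GB, with made-up rates
estimate = consumption_cost(3_000_000, 0.5, 0.5,
                            price_per_gb_s=0.00002, price_per_million_executions=0.20)
print(f"{estimate:.2f}")  # 15.60
```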

16. What is Azure Logic Apps? How does it work?

Azure Logic Apps is a cloud-based platform for creating and running automated workflows that integrate applications, data, systems, and services. It allows you to design and automate business processes without writing code, or with minimal code, using a visual designer and pre-built connectors.

Logic Apps works by connecting various services through connectors. These connectors provide pre-built operations that allow you to interact with different applications and data sources. A Logic App workflow starts with a trigger, which initiates the workflow based on an event, schedule, or API call. The workflow then executes a series of actions, which can include data transformation, conditional logic, and integration with other services. These actions are configured using the Logic Apps designer and can be chained together to create complex business processes.

17. What is an Azure subscription, and why do you need one?

An Azure subscription is a logical container for Azure resources. It acts as both a billing boundary and an access control boundary: costs are tracked and invoiced per subscription, and access can be granted at the subscription level. One organization can have several subscriptions, for example, one per environment or department.

You need an Azure subscription to create and manage Azure resources, such as virtual machines, databases, and web apps. Without a subscription, you cannot deploy or use any services within the Azure cloud. It is also needed for billing and usage tracking of Azure resources.
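Every Azure resource ID records the subscription the resource lives in, which shows in practice that the subscription is the top-level container. The helper below is a simplified parser for top-level IDs of the form `/subscriptions/{id}/resourceGroups/{group}/providers/{namespace}/{type}/{name}`. It does not handle nested child resources or extension resources, and the ID in the example is made up.

```python
def parse_resource_id(resource_id: str) -> dict[str, str]:
    """Split a top-level Azure resource ID into its parts (simplified)."""
    parts = resource_id.strip("/").split("/")
    # Expected layout: subscriptions/{sub}/resourceGroups/{rg}/providers/{ns}/{type}/{name}
    if (
        len(parts) != 8
        or parts[0].lower() != "subscriptions"
        or parts[2].lower() != "resourcegroups"
        or parts[4].lower() != "providers"
    ):
        raise ValueError(f"unsupported resource ID: {resource_id}")
    return {
        "subscription_id": parts[1],
        "resource_group": parts[3],
        "provider": parts[5],
        "resource_type": parts[6],
        "name": parts[7],
    }


rid = ("/subscriptions/00000000-0000-0000-0000-000000000000"
       "/resourceGroups/myResourceGroup/providers/Microsoft.Compute/virtualMachines/myVM")
print(parse_resource_id(rid)["subscription_id"])
```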

18. What are Azure regions and availability zones, and why are they important?

Azure regions are geographic areas around the world, each containing one or more datacenters. They are important because they let you deploy applications closer to your users for reduced latency, meet data residency requirements, and add redundancy by deploying to more than one region. Availability Zones are physically separate locations within an Azure region. Each zone is made up of one or more datacenters with independent power, cooling, and networking.

They offer high availability and fault tolerance. By deploying your application across multiple Availability Zones, you can protect it from single points of failure within a region, ensuring business continuity even if one zone experiences an outage.

19. What is Azure DevOps? What is it used for?

Azure DevOps is a suite of cloud-based services from Microsoft that provides a collaborative environment for software development teams. It helps streamline the software development lifecycle from planning and development to testing and deployment.

Azure DevOps is used for:

- Version Control: Managing source code using Git repositories (Azure Repos).

- CI/CD: Automating builds, tests, and deployments (Azure Pipelines).

- Project Management: Planning and tracking work using Agile boards, backlogs, and dashboards (Azure Boards).

- Testing: Planning, executing, and tracking tests (Azure Test Plans).

- Artifact Management: Managing packages and artifacts (Azure Artifacts).

20. What is Infrastructure as Code (IaC), and how does it relate to Azure?

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through machine-readable definition files, rather than manual configuration or interactive tools. This allows for infrastructure to be treated similarly to application code, enabling version control, automated testing, and repeatable deployments.

In the context of Azure, IaC is often implemented using tools like:

- Azure Resource Manager (ARM) templates and Bicep: ARM templates are JSON files that declare the resources to deploy. Bicep is a more concise language that compiles to ARM JSON.

- Terraform: An open-source IaC tool that supports multiple cloud providers, including Azure.

- Azure CLI/PowerShell: Command-line tools that can be used to automate infrastructure deployments.

- Azure DevOps: Provides pipelines for automating the deployment and management of IaC configurations.

By using IaC with Azure, you can automate the creation, modification, and deletion of resources, ensuring consistency and reducing the risk of human error. Here's an example snippet of a basic ARM template:

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "resources": []
}
```
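The sketch below goes one step past an empty skeleton: it uses Python to emit an ARM template with one parameter and one storage account resource. The resource type `Microsoft.Storage/storageAccounts`, the `Standard_LRS` SKU, the `StorageV2` kind, and the `[parameters(...)]` and `[resourceGroup().location]` template expressions are standard ARM syntax. The API version shown is one published version, and you may prefer a newer one. You could deploy the output with `az deployment group create --resource-group <group> --template-file template.json`.

```python
import json


def storage_template() -> dict:
    """Build a minimal ARM template that deploys one general-purpose v2 storage account."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "storageAccountName": {
                "type": "string",
                "minLength": 3,
                "maxLength": 24,  # storage account names are 3-24 lowercase letters and digits
            }
        },
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",
                "name": "[parameters('storageAccountName')]",
                "location": "[resourceGroup().location]",  # same region as the resource group
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
            }
        ],
    }


if __name__ == "__main__":
    # Redirect to a file, for example: python make_template.py > template.json
    print(json.dumps(storage_template(), indent=2))
```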

21. What is Azure Monitor, and what does it do?

Azure Monitor is a comprehensive monitoring solution in Azure that collects, analyzes, and acts on telemetry from your cloud and on-premises environments. It helps you understand the performance and health of your applications and infrastructure.

It provides insights into various aspects, including application performance, infrastructure health, and network activity. Azure Monitor enables you to proactively identify and resolve issues, optimize resource utilization, and improve overall system reliability through features such as: logs and metrics collection, alerting, visualizations (dashboards), and automated actions.
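Metric alert rules in Azure Monitor are configured with a metric, an aggregation (for example, Average), a time window, an operator, and a threshold. The sketch below imitates that evaluation locally to make the idea concrete. It is not the Azure Monitor API, and the 80% CPU threshold and the sample values are made up.

```python
from statistics import mean


def alert_fires(samples: list[float], window: int, threshold: float) -> bool:
    """Fire when the average of the last `window` samples is greater than `threshold`."""
    if window < 1 or len(samples) < window:
        return False  # not enough data to evaluate a full window yet
    return mean(samples[-window:]) > threshold


cpu_percent = [35, 42, 88, 91, 86, 90]  # one sample per minute (illustrative)
print(alert_fires(cpu_percent, window=4, threshold=80))  # True: the last 4 average 88.75
```

Averaging over a window, rather than alerting on a single sample, keeps one brief spike from triggering the alert.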

22. What is Azure Cost Management, and why is it needed?

Azure Cost Management is a suite of tools provided by Microsoft Azure that helps organizations monitor, allocate, and optimize their cloud spending. It offers features for cost analysis, budgeting, forecasting, and recommendations to reduce unnecessary expenses.

It's needed because cloud costs can quickly become unmanageable without proper oversight. Cost Management helps prevent overspending, identifies areas where resources are underutilized, and provides insights to make informed decisions about resource allocation. This leads to better cost control, improved budget adherence, and maximized return on investment in Azure.
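Budgets in Cost Management can send alerts when actual or forecasted spend crosses percentage thresholds of the budget. The sketch below illustrates the idea with a naive straight-line forecast. Azure's own forecasting model is more sophisticated, and the budget, spend, and threshold values here are illustrative.

```python
def forecast_month_end(spend_to_date: float, day_of_month: int, days_in_month: int) -> float:
    """Straight-line projection of month-end spend from the average daily run rate."""
    return spend_to_date / day_of_month * days_in_month


def crossed_thresholds(amount: float, budget: float,
                       thresholds: tuple[int, ...] = (50, 80, 100)) -> list[int]:
    """Return the percentage thresholds of `budget` that `amount` has reached."""
    return [t for t in thresholds if amount >= budget * t / 100]


actual = 620.0  # spend so far this month
forecast = forecast_month_end(actual, day_of_month=12, days_in_month=30)
print(crossed_thresholds(actual, budget=1000))    # [50]
print(crossed_thresholds(forecast, budget=1000))  # [50, 80, 100]
```

The forecast-based check warns you on day 12 that the month is on track to exceed the budget, well before actual spend gets there.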

23. What is the difference between scaling up and scaling out?

Scaling up (vertical scaling) means increasing the resources of a single machine. This could involve adding more CPU, RAM, or storage to an existing server. The benefit is simplicity, but there's a hard limit to how much you can scale up a single machine.

Scaling out (horizontal scaling) involves adding more machines to your system. Instead of upgrading a single powerful server, you distribute the load across multiple smaller servers. This provides greater scalability and redundancy, but requires more complex architecture and management, such as load balancing and distributed databases.
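A small sketch makes the trade-off concrete. The VM size names and per-size capacities below are hypothetical, since real capacity depends on your workload. The logic shows why scaling up eventually hits a ceiling, while scaling out only needs more instances behind a load balancer.

```python
import math
from typing import Optional

# Hypothetical sizes and the requests/second each can handle for this workload
SIZES = [("small", 500), ("medium", 1_000), ("large", 2_000), ("xlarge", 4_000)]


def scale_up(required_rps: int) -> Optional[str]:
    """Pick the smallest single machine that can handle the load, or None if none can."""
    for name, capacity in SIZES:
        if capacity >= required_rps:
            return name
    return None  # the hard limit of vertical scaling


def scale_out(required_rps: int, rps_per_instance: int, min_instances: int = 2) -> int:
    """Number of identical instances needed, keeping a minimum for redundancy."""
    return max(min_instances, math.ceil(required_rps / rps_per_instance))


print(scale_up(3_000), scale_up(10_000))          # xlarge None
print(scale_out(10_000, rps_per_instance=1_000))  # 10
```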

24. What are some advantages of using Azure over traditional on-premises infrastructure?

Azure offers several advantages over traditional on-premises infrastructure. Primarily, it provides scalability and flexibility. You can easily scale resources up or down based on demand, paying only for what you use. This eliminates the need for large upfront investments in hardware and reduces ongoing maintenance costs.

Furthermore, Azure provides built-in disaster recovery and high availability options, which improve business continuity. It also offers advanced security features and compliance certifications that help organizations meet regulatory requirements. Azure's global network lets you deploy applications closer to your users, improving performance and reducing latency. Finally, Azure offers a wide array of specialized managed services, such as databases, analytics, and AI services, that teams can use without building and operating them in-house, enabling faster innovation and development cycles.

25. What are some of the challenges of migrating to Azure?

Migrating to Azure, while offering numerous benefits, presents several challenges. One primary challenge is data migration. Moving large datasets can be time-consuming and expensive, often requiring careful planning to minimize downtime and ensure data integrity. Different data formats, legacy systems, and network bandwidth limitations can all complicate the process. Furthermore, applications often require code changes or re-architecting to fully leverage Azure services.

Another challenge lies in security and compliance. Understanding Azure's security model and configuring appropriate security measures is crucial. Meeting industry-specific compliance requirements (e.g., HIPAA, GDPR) in the cloud can also be complex, demanding a thorough assessment of Azure's compliance certifications and the implementation of necessary security controls. Skill gaps within the IT team and the need for training on Azure-specific technologies represent a further impediment to migration.
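A back-of-the-envelope calculation shows why data migration needs planning. The sketch assumes a sustained link efficiency of 80%. Real throughput depends on latency, protocol overhead, and contention. When the result runs to weeks, offline transfer options such as Azure Data Box are usually worth evaluating.

```python
def transfer_days(data_bytes: float, link_bits_per_s: float, efficiency: float = 0.8) -> float:
    """Days needed to copy `data_bytes` over a link at the given sustained efficiency."""
    seconds = data_bytes * 8 / (link_bits_per_s * efficiency)
    return seconds / 86_400


# 50 TB over a 1 Gbps link at 80% efficiency
print(f"{transfer_days(50e12, 1e9):.1f} days")  # 5.8 days
```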

26. What is a container, and why are containers useful in Azure?

A container is a standardized unit of software that packages up code and all its dependencies, allowing the application to run reliably across different computing environments. Think of it as a lightweight, standalone executable package.

Containers are extremely useful in Azure because they offer portability, consistency, and efficiency. They ensure applications run the same way regardless of the underlying infrastructure, simplifying deployment and management. This is especially beneficial for microservices architectures. Azure offers various container-related services, like Azure Container Instances (ACI) for running individual containers and Azure Kubernetes Service (AKS) for orchestrating container clusters, thus enabling scalable and resilient application deployments.

27. What is Kubernetes, and how does it relate to Azure?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It essentially manages a cluster of machines, scheduling containers to run on them, ensuring they are healthy, and scaling them up or down as needed.

Azure provides several ways to interact with Kubernetes. Azure Kubernetes Service (AKS) is a fully managed Kubernetes service that simplifies deploying and managing Kubernetes clusters in Azure. You can also deploy Kubernetes on Azure VMs yourself, but AKS handles much of the operational overhead. In essence, AKS lets you leverage Kubernetes without having to manage the underlying Kubernetes infrastructure.
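Once an AKS cluster exists and `az aks get-credentials` has configured `kubectl` for it, you describe workloads with standard Kubernetes manifests. The sketch below builds a minimal `apps/v1` Deployment in Python and prints it as JSON. `kubectl apply -f` accepts JSON as well as YAML. The deployment name, the image name, and the replica count are placeholders.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """Minimal Kubernetes Deployment; Kubernetes keeps `replicas` pods running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # e.g. python manifest.py > deployment.json && kubectl apply -f deployment.json
    print(json.dumps(deployment_manifest("web", "myregistry.azurecr.io/web:1.0"), indent=2))
```

If a node fails, Kubernetes reschedules the missing pods so that the declared replica count is maintained. This is the "ensuring they are healthy" part of orchestration.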

28. What is serverless computing? How can Azure Functions and Logic Apps help?

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. You only pay for the compute time you consume, and the underlying infrastructure is completely abstracted away.

Azure Functions and Logic Apps are key services in Azure's serverless offering. Azure Functions lets you run small pieces of code (functions) without worrying about infrastructure. They are ideal for event-driven scenarios. Logic Apps, on the other hand, are designed to automate workflows and integrate different systems using a visual designer, also without managing servers. Together, they help you build scalable and cost-effective serverless applications.

29. Can you describe a situation where you would use Azure?

I would use Azure when a company needs to migrate its existing on-premises infrastructure to the cloud for improved scalability, reliability, and cost-effectiveness. For example, a retail business experiencing seasonal spikes in website traffic could leverage Azure's virtual machines and auto-scaling capabilities to handle the increased load without investing in and maintaining additional on-premises servers.

Another scenario is when developing and deploying a new web application or microservices architecture. Azure offers a comprehensive suite of services like App Service, Azure Kubernetes Service (AKS), and Azure Functions, which simplify the development, deployment, and management processes. Specifically, if I needed to build a serverless function to process image uploads, I could use Azure Functions with a Python script like this:

```python
# Uses the v1 Python programming model: the HTTP trigger binding is declared in function.json.
import logging

import azure.functions as func


def main(req: func.HttpRequest) -> func.HttpResponse:
    logging.info("Python HTTP trigger function processed a request.")

    # Reject requests whose body is not valid JSON
    try:
        req_body = req.get_json()
    except ValueError:
        return func.HttpResponse(
            "Please pass a JSON payload in the request body",
            status_code=400,
        )

    image_data = req_body.get("image_data") if isinstance(req_body, dict) else None
    if image_data:
        # Process the image data here (e.g., resize, save to storage)
        return func.HttpResponse("Image processed successfully!", status_code=200)

    return func.HttpResponse(
        "Please pass an image_data property in the JSON payload",
        status_code=400,
    )


if __name__ == "__main__":
    # Local testing only: assumes the Functions host is running locally (e.g. `func start`).
    import json

    import requests

    # Mock image data (replace with actual base64-encoded image data)
    payload = {"image_data": "base64encodedimagedata"}

    # Simulate a request to the Azure Function endpoint
    url = "http://localhost:7071/api/ImageProcessingFunction"  # Replace with your Azure Function URL
    headers = {"Content-type": "application/json"}

    response = requests.post(url, data=json.dumps(payload), headers=headers)
    print(f"Status Code: {response.status_code}")
    print(f"Response Body: {response.text}")
```

Azure intermediate interview questions

1. Explain Azure Resource Manager (ARM) templates and how they help with infrastructure as code. Can you describe a scenario where ARM templates would be particularly useful?

Azure Resource Manager (ARM) templates are JSON files that define the infrastructure and configuration for your Azure resources. They enable infrastructure as code (IaC) by allowing you to define your entire infrastructure in a declarative way, version control it, and repeatedly deploy it consistently. This eliminates manual configuration and reduces the risk of errors. Think of it as a blueprint for your Azure environment.

ARM templates are particularly useful in scenarios requiring repeatable deployments across different environments (dev, test, production). For example, deploying a complex application stack consisting of virtual machines, databases, and networking components. By using an ARM template, the entire stack can be deployed consistently across all environments, ensuring configuration parity and simplifying the deployment process. This makes it easy to recreate the infrastructure in case of disaster recovery or to scale the application by deploying additional instances.
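In a multi-environment setup, a common pattern is to keep one template and write one parameter file per environment, so that only the values differ. The sketch below generates such parameter files using the standard `deploymentParameters.json` schema. The parameter names (`vmSize`, `instanceCount`) and the per-environment values are hypothetical, and they would need to match the parameters your template declares.

```python
import json

PARAMS_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"

# Hypothetical per-environment values for the same template
ENVIRONMENTS = {
    "dev": {"vmSize": "Standard_B2s", "instanceCount": 1},
    "test": {"vmSize": "Standard_B2s", "instanceCount": 2},
    "prod": {"vmSize": "Standard_D4s_v5", "instanceCount": 4},
}


def parameter_file(values: dict) -> dict:
    """Wrap plain values in the ARM parameter-file format: {"name": {"value": ...}}."""
    return {
        "$schema": PARAMS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


if __name__ == "__main__":
    for env, values in ENVIRONMENTS.items():
        print(f"--- parameters.{env}.json ---")
        print(json.dumps(parameter_file(values), indent=2))
```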

2. What are Azure Virtual Machine Scale Sets, and when would you choose to use them instead of individual virtual machines?

Azure Virtual Machine Scale Sets are an Azure compute resource that you can use to deploy and manage a set of identical virtual machines. They're designed to automatically scale your application, providing high availability and fault tolerance. Instead of managing individual VMs, you manage the scale set, which then handles the creation, configuration, and management of the underlying VMs.

You would choose to use Virtual Machine Scale Sets over individual VMs when you need to deploy a large number of identical VMs, especially for applications that experience variable demand. Key use cases include:

- Scalability: Automatically increase or decrease the number of VMs based on demand.

- High Availability: Distribute VMs across fault domains for resilience.

- Management: Simplified management of a group of VMs as a single entity.

- Cost Optimization: Scale down during periods of low demand to save costs.

- Stateless Applications: Ideal for stateless applications where any VM can handle any request, allowing for easy scaling and fault tolerance.
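Scale set autoscale settings combine a minimum, maximum, and default instance count with rules such as "add an instance when average CPU is above a threshold," plus a cooldown period between scale actions. The function below is a simplified local model of one scale-out rule and one scale-in rule. The 70% and 30% thresholds, the step size, and the cooldown are illustrative values, not Azure defaults.

```python
def next_capacity(current: int, avg_cpu: float, minutes_since_last_action: float,
                  minimum: int = 2, maximum: int = 10, scale_out_above: float = 70.0,
                  scale_in_below: float = 30.0, cooldown_minutes: float = 5.0) -> int:
    """Return the instance count after evaluating one scale-out and one scale-in rule."""
    if minutes_since_last_action < cooldown_minutes:
        return current  # still cooling down from the previous scale action
    if avg_cpu > scale_out_above:
        current += 1
    elif avg_cpu < scale_in_below:
        current -= 1
    # Never go outside the configured instance limits
    return max(minimum, min(maximum, current))


print(next_capacity(4, avg_cpu=85, minutes_since_last_action=10))  # 5
```

The gap between the scale-out and scale-in thresholds, together with the cooldown, keeps the scale set from flapping back and forth when load hovers near a single threshold.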

3. Describe the difference between Azure Blob Storage tiers (Hot, Cool, Archive) and how you would choose the appropriate tier for different data storage needs.

Azure Blob Storage offers different access tiers to optimize costs based on how often data is accessed. The Hot tier is for frequently accessed data, with the highest storage cost but the lowest access cost. The Cool tier is for infrequently accessed data, like backups or older datasets, with lower storage costs but higher access costs. The Archive tier is for rarely accessed data and is offline: blobs must be rehydrated to an online tier (such as Hot or Cool) before they can be read, which can take several hours. Archive has the lowest storage cost but the highest access cost.

Choosing the right tier depends on your data usage patterns. If you need immediate access to data regularly, Hot is best. If you access data less often and can tolerate some delay, Cool is more cost-effective. If you rarely need to access the data and can wait hours for retrieval, Archive is the most cost-effective option. For example, frequently accessed application logs would be suited for Hot, monthly backups for Cool, and yearly compliance data for Archive.
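Instead of moving blobs between tiers by hand, you can attach a lifecycle management policy to the storage account that tiers data down based on age. The sketch below builds such a policy as a Python dictionary. The rule structure (`tierToCool`, `tierToArchive`, `delete`, `daysAfterModificationGreaterThan`) follows the documented policy format. The `backups/` prefix (a container named `backups`) and the 30, 180, and 2555-day thresholds are example values. Cool and Archive have minimum storage durations, so moving or deleting blobs too early can incur early-deletion charges.

```python
import json


def lifecycle_policy(prefix: str, cool_after: int, archive_after: int, delete_after: int) -> dict:
    """Lifecycle rule that moves block blobs under `prefix` to cheaper tiers as they age."""
    if not cool_after < archive_after < delete_after:
        raise ValueError("expected cool_after < archive_after < delete_after")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "age-based-tiering",
                "type": "Lifecycle",
                "definition": {
                    "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": cool_after},
                            "tierToArchive": {"daysAfterModificationGreaterThan": archive_after},
                            "delete": {"daysAfterModificationGreaterThan": delete_after},
                        }
                    },
                },
            }
        ]
    }


print(json.dumps(lifecycle_policy("backups/", 30, 180, 2555), indent=2))
```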

4. Explain the purpose of Azure Load Balancer and how it distributes traffic to backend servers. What are the different types of Azure Load Balancers?

Azure Load Balancer operates at layer 4 (TCP and UDP) and distributes incoming network traffic across multiple backend servers, ensuring high availability and scalability for applications. It acts as a single point of contact for clients and distributes traffic according to configured load-balancing rules and health probes. Health probes monitor the backend servers, and new traffic is sent only to healthy instances, preventing downtime.

Azure Load Balancers are classified by where the frontend is exposed (public or internal) and by SKU (Basic or Standard):

- Public Load Balancer: Distributes traffic from the internet to backend servers in Azure.

- Internal (Private) Load Balancer: Distributes traffic within an Azure virtual network (VNet).

- Basic Load Balancer: An older SKU with limited features, smaller backend pools, and no SLA. It is being retired, so new deployments should use Standard.

- Standard Load Balancer: Supports larger backend pools, availability zones, multiple frontend IPs, outbound rules, HA ports, and richer monitoring through Azure Monitor. It is secure by default (inbound traffic is blocked unless a network security group allows it) and is recommended for production environments.
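By default, Azure Load Balancer maps each flow to a backend instance using a hash of the five-tuple (source IP, source port, destination IP, destination port, protocol). As a result, packets of the same flow keep going to the same backend, and instances that fail their health probe are taken out of rotation. The sketch below models that behavior in plain Python. It does not use Azure's actual hash function, and the addresses are made up.

```python
import hashlib


def pick_backend(flow: tuple[str, int, str, int, str], backends: dict[str, bool]) -> str:
    """Choose a healthy backend for a flow using a hash of its five-tuple.

    `backends` maps each backend address to its latest health-probe result.
    """
    healthy = sorted(addr for addr, ok in backends.items() if ok)
    if not healthy:
        raise RuntimeError("no healthy backends")
    key = "|".join(map(str, flow)).encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return healthy[digest % len(healthy)]


pool = {"10.0.1.4": True, "10.0.1.5": True, "10.0.1.6": False}  # .6 failed its probe
flow = ("203.0.113.10", 51234, "20.0.0.1", 443, "TCP")
print(pick_backend(flow, pool))  # the same flow always maps to the same healthy backend
```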

5. What is Azure Key Vault, and how does it help with managing secrets and encryption keys in Azure? How do you grant access to secrets?

Azure Key Vault is a cloud service for securely storing and managing secrets, keys, and certificates. It helps manage secrets and encryption keys by providing a centralized and secure repository, reducing the risk of accidental exposure or unauthorized access. Secrets are things like API keys, passwords, or connection strings.

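Access to Key Vault is controlled at two levels. The management plane (creating and configuring the vault itself) uses Azure role-based access control (RBAC), for example the Key Vault Contributor role, which does not by itself grant access to secret values. The data plane (reading and writing secrets, keys, and certificates) supports two permission models:

- Azure RBAC, which Microsoft recommends. It uses roles such as Key Vault Administrator (all data plane operations), Key Vault Secrets Officer (manage secrets), or Key Vault Secrets User (read secret contents), and assignments can be scoped down to a single secret.
- Legacy vault access policies, which grant specific operations (e.g., get, list, set, delete) on secrets and keys to users, groups, or applications (service principals and managed identities).

Access can be granted via the Azure portal, Azure CLI, or PowerShell.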
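In application code, the usual pattern is to authenticate with a managed identity (or developer credentials when running locally) and read secrets at runtime instead of storing them in configuration files. The sketch below uses the `azure-identity` and `azure-keyvault-secrets` packages (`DefaultAzureCredential` and `SecretClient.get_secret`). The vault and secret names are placeholders, and the identity that runs the code needs permission to read secrets, for example through the Key Vault Secrets User role.

```python
def vault_url(vault_name: str) -> str:
    """Build the data-plane URL for a vault in the Azure public cloud."""
    return f"https://{vault_name}.vault.azure.net/"


def read_secret(vault_name: str, secret_name: str) -> str:
    # Imported here so the URL helper works without the Azure SDK installed.
    # Requires: pip install azure-identity azure-keyvault-secrets
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    client = SecretClient(vault_url=vault_url(vault_name), credential=DefaultAzureCredential())
    return client.get_secret(secret_name).value


if __name__ == "__main__":
    # Placeholder names; replace them with your own vault and secret.
    print(vault_url("my-vault"))
    # db_password = read_secret("my-vault", "DbPassword")  # avoid printing secret values
```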

6. Describe the different Azure networking options, such as Virtual Networks (VNets), subnets, and Network Security Groups (NSGs). How do they work together to secure your Azure resources?

Azure Virtual Networks (VNets) are the fundamental building blocks for your private network in Azure. A VNet enables Azure resources, like VMs, to securely communicate with each other, the internet, and on-premises networks. Within a VNet, you can create subnets to segment the network. Subnets allow you to logically divide the VNet address space into smaller, more manageable chunks, enabling you to apply different security rules to different parts of your application.

Network Security Groups (NSGs) act as virtual firewalls that control inbound and outbound traffic to Azure resources. Each security rule allows or denies traffic in one direction (inbound or outbound) based on the 5-tuple: source IP, source port, destination IP, destination port, and protocol. Rules are processed in priority order, and the first matching rule decides the outcome. NSGs can be associated with subnets or individual network interfaces (NICs). Associating NSGs at both the subnet and NIC levels gives you a layered security approach. For example, a subnet NSG might block all inbound traffic from the internet except ports 80 and 443, while a NIC-level NSG might further restrict a specific VM to traffic from a particular source IP address. Together, VNets, subnets, and NSGs provide a robust mechanism for securing Azure resources.
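NSG rules are processed in priority order (lower numbers first), and processing stops at the first rule that matches. For inbound traffic, the subnet's NSG is evaluated before the NIC's NSG, and the traffic must be allowed by both. The sketch below models that logic for a layered example like the one described above. It simplifies real NSGs: it has no service tags (only a catch-all `*`), no port ranges, and a single deny-all default in place of the built-in default rules. The addresses are made up.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    priority: int          # lower number = evaluated first
    source: str            # CIDR prefix or "*"
    port: Optional[int]    # destination port; None means any port
    access: str            # "Allow" or "Deny"


def evaluate(rules: list[Rule], source_ip: str, port: int) -> str:
    """Return the access decision of the first matching rule (default: Deny)."""
    for rule in sorted(rules, key=lambda r: r.priority):
        source_ok = rule.source == "*" or (
            ipaddress.ip_address(source_ip) in ipaddress.ip_network(rule.source))
        port_ok = rule.port is None or rule.port == port
        if source_ok and port_ok:
            return rule.access
    return "Deny"  # stands in for the built-in DenyAllInBound rule


def inbound_allowed(subnet_nsg: list[Rule], nic_nsg: list[Rule], source_ip: str, port: int) -> bool:
    # Inbound traffic must be allowed by the subnet NSG and then by the NIC NSG.
    return (evaluate(subnet_nsg, source_ip, port) == "Allow"
            and evaluate(nic_nsg, source_ip, port) == "Allow")


subnet_nsg = [Rule(100, "*", 80, "Allow"), Rule(110, "*", 443, "Allow")]
nic_nsg = [Rule(100, "198.51.100.0/24", 443, "Allow")]  # only one trusted range

print(inbound_allowed(subnet_nsg, nic_nsg, "198.51.100.7", 443))  # True
print(inbound_allowed(subnet_nsg, nic_nsg, "203.0.113.9", 443))   # False: blocked by NIC NSG
print(inbound_allowed(subnet_nsg, nic_nsg, "198.51.100.7", 22))   # False: blocked by subnet NSG
```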

7. What is Azure Active Directory (Azure AD), and how does it differ from on-premises Active Directory? How is it used for authentication and authorization in Azure?

Azure Active Directory (Azure AD), now renamed Microsoft Entra ID, is a cloud-based identity and access management service. It's Microsoft's identity platform, providing authentication, authorization, and access control for applications and resources in the cloud.

Azure AD differs from on-premises Active Directory (AD) in several key ways:

- Deployment: Azure AD is a cloud service, while on-premises AD is a directory service running on domain controllers within your own network.
- Protocols: Azure AD primarily uses web standards such as OAuth 2.0, SAML, and OpenID Connect, while on-premises AD relies heavily on Kerberos and NTLM.
- Structure: Azure AD has a flat structure with no organizational units (OUs) or Group Policy objects, whereas on-premises AD uses a hierarchical, domain-based structure.

In Azure, Azure AD is central to authentication: when a user tries to access an Azure resource, Azure AD verifies their identity. Once the user is authenticated, Azure role-based access control (Azure RBAC) determines which resources they are authorized to access. This involves assigning roles with specific permissions to users, groups, or service principals at a particular scope, controlling access to resources like virtual machines, databases, and storage accounts.
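Azure RBAC role assignments apply at a scope (management group, subscription, resource group, or resource) and are inherited by everything below that scope. The sketch below checks whether a principal may perform an action in two steps: it matches assignment scopes as path prefixes of the resource ID, and it matches role actions as wildcard patterns. The role definitions are heavily simplified stand-ins for built-in roles (real definitions also use `NotActions` and data actions), and the IDs are made up.

```python
from fnmatch import fnmatchcase

# Simplified stand-ins for built-in role definitions (real roles also have NotActions)
ROLES = {
    "Reader": ["*/read"],
    "Virtual Machine Contributor": ["Microsoft.Compute/virtualMachines/*"],
}


def in_scope(assignment_scope: str, resource_id: str) -> bool:
    """An assignment applies to its own scope and everything beneath it."""
    scope = assignment_scope.rstrip("/").lower()
    rid = resource_id.lower()
    return rid == scope or rid.startswith(scope + "/")


def is_authorized(assignments: list[tuple[str, str]], resource_id: str, action: str) -> bool:
    """`assignments` holds (role name, scope) pairs for one user, group, or app."""
    return any(
        in_scope(scope, resource_id)
        and any(fnmatchcase(action.lower(), pattern.lower()) for pattern in ROLES[role])
        for role, scope in assignments
    )


sub = "/subscriptions/00000000-0000-0000-0000-000000000000"
vm = f"{sub}/resourceGroups/app-rg/providers/Microsoft.Compute/virtualMachines/web01"
sa = f"{sub}/resourceGroups/app-rg/providers/Microsoft.Storage/storageAccounts/appdata"
user = [("Reader", sub), ("Virtual Machine Contributor", f"{sub}/resourceGroups/app-rg")]

print(is_authorized(user, vm, "Microsoft.Compute/virtualMachines/read"))            # True
print(is_authorized(user, vm, "Microsoft.Compute/virtualMachines/restart/action"))  # True
print(is_authorized(user, sa, "Microsoft.Storage/storageAccounts/write"))           # False
```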

8. Explain the concept of Azure Functions and how they can be used to build serverless applications. What are some common use cases for Azure Functions?

Azure Functions is a serverless compute service that enables you to run code on-demand without having to explicitly provision or manage infrastructure. It's event-triggered, meaning your code executes in response to various events such as HTTP requests, queue messages, timer triggers, and changes to data in Azure services. You only pay for the compute time consumed while your code is running.

Common use cases include:

- Web API endpoints: Creating HTTP-triggered functions to handle API requests.

- Background processing: Processing queue messages or events from other services.

- Scheduled tasks: Running tasks on a timer basis, such as data cleanup or report generation.

- Event-driven architectures: Responding to events from Azure services like Event Hubs or Cosmos DB change feed.

- Real-time data processing: Functions can be used in IoT solutions to process and analyze data streams from IoT devices.

9. What are Azure Logic Apps, and how do they help with automating workflows and integrating different systems? Give an example scenario.

Azure Logic Apps is a cloud-based platform for building automated workflows and integrating applications, data, and services. It provides a visual designer for modeling and automating processes without writing code. You can also edit the underlying JSON workflow definition in code view, or build custom connectors for APIs that the built-in connectors don't cover. Logic Apps use pre-built connectors to integrate with Azure services, databases, SaaS applications (e.g., Salesforce, Twitter), and on-premises systems. They help automate business processes and system integration, reducing manual effort and improving efficiency.

For example, consider an e-commerce scenario. When a new order is placed in an online store, a Logic App can automatically:

- Receive the order details.

- Update the inventory in a database.

- Send an email confirmation to the customer.

- Create a shipping request in a logistics system.

- Post a message to a Slack channel to notify the sales team.
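Behind the visual designer, a Logic App is stored as a JSON workflow definition: one trigger plus a set of actions, where each action's `runAfter` property names the step it follows. The sketch below builds a definition for the order scenario above, using a Request (HTTP) trigger and generic HTTP actions. The endpoint URLs and action names are hypothetical. In practice, you would more likely use the built-in connectors (for example, for email, SQL, or Slack) than raw HTTP calls.

```python
import json

SCHEMA = ("https://schema.management.azure.com/providers/Microsoft.Logic/"
          "schemas/2016-06-01/workflowdefinition.json#")

# Hypothetical endpoints for each step of the order workflow
STEPS = [
    ("Update_inventory", "https://inventory.example.com/api/reserve"),
    ("Send_confirmation", "https://mail.example.com/api/send"),
    ("Create_shipment", "https://logistics.example.com/api/shipments"),
    ("Notify_sales", "https://hooks.example.com/sales-channel"),
]


def order_workflow() -> dict:
    actions, previous = {}, None
    for name, uri in STEPS:
        actions[name] = {
            "type": "Http",
            "inputs": {"method": "POST", "uri": uri, "body": "@triggerBody()"},
            # Run only after the previous step succeeds; the first step runs right after the trigger
            "runAfter": {previous: ["Succeeded"]} if previous else {},
        }
        previous = name
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        "triggers": {"When_an_order_is_placed": {"type": "Request", "kind": "Http"}},
        "actions": actions,
        "outputs": {},
    }


print(json.dumps(order_workflow(), indent=2))
```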

10. Describe the different types of Azure Storage accounts (General-purpose v2, Blob storage, etc.) and their use cases. How do you choose the appropriate storage account type?

Azure Storage offers several account types, each optimized for different scenarios. General-purpose v2 (GPv2) is the standard and recommended option for most cases. It supports Blobs, Files, Queues, and Tables, offers the lowest per-GB capacity prices for standard storage, and supports the Hot, Cool, and Archive access tiers. For workloads that need consistently low latency, premium account types are available: premium block blob accounts (BlockBlobStorage) for blob data and premium file share accounts (FileStorage) for Azure Files. Legacy accounts, such as General-purpose v1 and the older Blob storage account type, are still supported but generally not recommended for new deployments.

Choosing the right type depends on your workload. If you need a versatile account for various data types and general purposes, GPv2 is the best choice. If you primarily store and access large amounts of unstructured data with high transaction rates or strict latency requirements, a premium block blob account is preferable. Consider factors like storage costs, access patterns, and feature requirements when making your decision. For example, Azure Data Lake Storage Gen2 requires an account with the hierarchical namespace enabled, typically a GPv2 account.

11. What is Azure Cosmos DB, and how does it differ from traditional relational databases? What are the consistency levels offered by Cosmos DB?

Azure Cosmos DB is a globally distributed, multi-model database service. Traditional relational databases enforce a fixed schema and typically scale up on a single primary server. Cosmos DB, by contrast, is schema-agnostic, supports multiple data models (e.g., document, graph, key-value, column-family), and is built for horizontal scaling through partitioning and for high availability across multiple geographic regions. Relational databases provide full ACID transactions across tables. Cosmos DB supports ACID transactions within a single logical partition and offers tunable consistency levels, letting you trade some consistency for higher availability and lower latency.

Cosmos DB offers five consistency levels:

- Strong: Guarantees that reads return the most recent committed version of an item.

- Bounded Staleness: Reads may lag behind writes by at most a configured number of versions (K) or time interval (T), whichever is reached first.

- Session: Within a single client session, guarantees consistent prefix, monotonic reads, monotonic writes, read-your-writes, and write-follows-reads.

- Consistent Prefix: Guarantees that reads return some prefix of all writes, with no out-of-order writes.

- Eventual: No guarantee of read order; reads will eventually catch up with writes.
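One way to make the levels concrete is to ask which replica may answer a read. The sketch below is a conceptual model, not how the Cosmos DB SDK or service works internally. It treats each write to an item as an increasing version number. It also models Bounded Staleness only by version lag (K), leaving out the time bound (T), and the value K = 100 is illustrative.

```python
def replica_can_serve(level: str, replica_version: int, latest_version: int,
                      session_version: int = 0, max_lag_versions: int = 100) -> bool:
    """Conceptual check of whether a replica at `replica_version` may answer a read.

    Versions are increasing write counters for one item (a simplification).
    `session_version` is the latest version this client session has written or read.
    """
    if level == "Strong":
        return replica_version == latest_version  # must reflect the latest committed write
    if level == "BoundedStaleness":
        return latest_version - replica_version <= max_lag_versions  # lag bounded by K versions
    if level == "Session":
        return replica_version >= session_version  # read-your-writes within the session
    if level in ("ConsistentPrefix", "Eventual"):
        # Any replica qualifies: in this model every replica state is already a prefix
        # of the write history, and replicas converge eventually.
        return True
    raise ValueError(f"unknown consistency level: {level}")


print(replica_can_serve("Strong", replica_version=9, latest_version=10))                     # False
print(replica_can_serve("Session", replica_version=9, latest_version=10, session_version=8))  # True
```

Moving down the list, more replicas qualify to serve a read. That is the source of the lower latency and higher availability of the weaker levels.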

12. Explain how you would implement a hybrid connectivity solution between your on-premises network and Azure, using services like Azure VPN Gateway or Azure ExpressRoute.

To implement a hybrid connectivity solution, I'd use either Azure VPN Gateway or Azure ExpressRoute, depending on the requirements. For a cost-effective and quicker setup, I'd choose Azure VPN Gateway. This involves creating a VPN gateway in a dedicated subnet named GatewaySubnet in the Azure virtual network, configuring a VPN device on-premises, and establishing an encrypted IPsec/IKE tunnel (Site-to-Site VPN) over the internet between the on-premises network and the Azure virtual network.

For a more reliable and higher-bandwidth connection, I'd opt for Azure ExpressRoute. This requires partnering with a connectivity provider to establish a private, dedicated network connection between on-premises and Azure. The setup involves ordering an ExpressRoute circuit, configuring routing (BGP peering), and ensuring network security. Because its traffic does not traverse the public internet, ExpressRoute provides more predictable performance and lower latency than VPN Gateway, with less exposure to the public internet. However, ExpressRoute traffic is not encrypted by default. If encryption is required, you can add MACsec (on ExpressRoute Direct) or run an IPsec VPN over the ExpressRoute connection.
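Whichever option you choose, the on-premises address ranges must not overlap the Azure virtual network's address space, or traffic cannot be routed between the two networks. The gateway itself must also be deployed into a subnet named exactly `GatewaySubnet`. Python's standard `ipaddress` module makes both checks easy before you deploy. The ranges below are examples.

```python
import ipaddress


def overlapping_ranges(on_prem: list[str], azure_vnet: list[str]) -> list[tuple[str, str]]:
    """Return every (on-premises, Azure) pair of CIDR ranges that overlap."""
    return [
        (a, b)
        for a in on_prem
        for b in azure_vnet
        if ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))
    ]


def has_gateway_subnet(subnets: dict[str, str], vnet_space: str) -> bool:
    """The gateway needs a subnet named exactly 'GatewaySubnet' inside the VNet's address space."""
    prefix = subnets.get("GatewaySubnet")
    return prefix is not None and ipaddress.ip_network(prefix).subnet_of(
        ipaddress.ip_network(vnet_space))


on_prem = ["10.0.0.0/16", "192.168.10.0/24"]
vnet = ["10.1.0.0/16"]
subnets = {"app": "10.1.1.0/24", "GatewaySubnet": "10.1.255.0/27"}

print(overlapping_ranges(on_prem, vnet))     # [] -> no conflicts
print(has_gateway_subnet(subnets, vnet[0]))  # True
```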

13. What are Azure policies and how can they be used to enforce compliance and governance in Azure environments?

Azure Policy is a service in Azure that enables you to enforce organizational standards and assess compliance at scale. It lets you define rules (policy definitions) that govern the configuration and deployment of Azure resources. Azure Policy evaluates resources against those rules and can take actions like auditing, denying deployment, or modifying resources to bring them into compliance.

They can be used in several ways to enforce compliance and governance:

- Enforcing naming conventions: Ensure all resources follow a specific naming standard.

- Restricting resource types: Limit the types of resources that can be deployed in a specific environment.

- Enforcing location restrictions: Ensure resources are only deployed in approved regions.

- Auditing configuration settings: Verify that resources have specific configurations applied.

- Enforcing tags: Ensure that resources have the correct tags applied for cost tracking or management purposes.
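A policy definition's rule is an `if`/`then` block: the `if` part holds conditions on resource fields, and the `then` part holds an effect such as `deny` or `audit`. The sketch below writes a rule in that shape that restricts locations and requires a tag. It also includes a tiny evaluator for the few operators the rule uses (`anyOf`, `allOf`, `not`, and `field` with `in` or `exists`), so you can see how a resource is judged. The evaluator is a simplified model for illustration, not Azure Policy's engine. Real definitions usually pass the allowed locations in as parameters. The regions and the `costCenter` tag are examples.

```python
from typing import Optional

RULE = {
    "if": {
        "anyOf": [
            {"not": {"field": "location", "in": ["eastus", "westeurope"]}},
            {"field": "tags['costCenter']", "exists": "false"},
        ]
    },
    "then": {"effect": "deny"},
}


def field_value(resource: dict, field: str):
    """Resolve `location`-style fields and `tags['name']` aliases (simplified)."""
    if field.startswith("tags['") and field.endswith("']"):
        return resource.get("tags", {}).get(field[6:-2])
    return resource.get(field)


def matches(cond: dict, resource: dict) -> bool:
    if "anyOf" in cond:
        return any(matches(c, resource) for c in cond["anyOf"])
    if "allOf" in cond:
        return all(matches(c, resource) for c in cond["allOf"])
    if "not" in cond:
        return not matches(cond["not"], resource)
    value = field_value(resource, cond["field"])
    if "in" in cond:
        return value in cond["in"]
    if "exists" in cond:
        return (value is not None) == (str(cond["exists"]).lower() == "true")
    raise ValueError(f"unsupported condition: {cond}")


def effect_for(resource: dict) -> Optional[str]:
    """Return the policy effect if the rule's condition matches, else None (compliant)."""
    return RULE["then"]["effect"] if matches(RULE["if"], resource) else None


print(effect_for({"location": "eastus", "tags": {"costCenter": "42"}}))       # None
print(effect_for({"location": "brazilsouth", "tags": {"costCenter": "42"}}))  # deny
```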

14. Describe the purpose of Azure Service Bus and how it facilitates asynchronous messaging between different applications and services.

Azure Service Bus is a fully managed enterprise integration message broker. Its purpose is to facilitate asynchronous communication between decoupled applications and services. This allows applications to reliably exchange data without requiring a direct, synchronous connection.

Service Bus facilitates asynchronous messaging through features like queues and topics with subscriptions. Queues enable point-to-point communication, where a sender sends a message to a queue, and a single receiver processes it. Topics and subscriptions provide a publish-subscribe model, allowing multiple subscribers to receive a copy of a message published to a topic. This decoupling enhances scalability, reliability, and fault tolerance, enabling different services to operate independently and at their own pace.
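The difference between the two delivery models can be shown with a toy in-memory model. This is not the Service Bus SDK, and the class names are hypothetical. It also ignores real broker features such as peek-lock, sessions, dead-lettering, and subscription filters.

```python
from collections import deque

class Queue:
    """Point-to-point: each message is delivered to exactly one receiver."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        # The first receiver to ask gets the message; no one else sees it.
        return self._messages.popleft() if self._messages else None

class Topic:
    """Publish-subscribe: every subscription gets its own copy."""

    def __init__(self, subscription_names):
        # Each subscription behaves like its own queue.
        self.subscriptions = {name: Queue() for name in subscription_names}

    def publish(self, message):
        for subscription in self.subscriptions.values():
            subscription.send(message)

# Competing workers share one queue, so each order is processed once.
orders = Queue()
orders.send("order-1")
orders.send("order-2")
print(orders.receive(), orders.receive(), orders.receive())  # order-1 order-2 None

# Independent services each receive the same event through a topic.
events = Topic(["billing", "shipping"])
events.publish("order-1 placed")
print(events.subscriptions["billing"].receive())   # order-1 placed
print(events.subscriptions["shipping"].receive())  # order-1 placed
```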

15. What is Azure Monitor, and how does it help with monitoring the health and performance of your Azure resources? What kinds of metrics can you collect?

Azure Monitor is a comprehensive monitoring service in Azure that provides visibility into the performance, health, and availability of your applications and infrastructure. It helps you collect, analyze, and act on telemetry data, allowing you to identify and diagnose problems quickly, optimize performance, and ensure the reliability of your Azure resources.

Azure Monitor can collect a wide variety of metrics and logs, including:

- Platform metrics: CPU utilization, memory usage, disk I/O, network traffic, etc. for Azure resources like VMs, databases, and app services.

- Application metrics: Performance counters, custom metrics emitted by your application code, and request response times.

- Activity logs: Records of operations performed on Azure resources, such as creating, updating, or deleting resources.

- Diagnostic logs: Logs emitted by Azure services, providing insights into their internal operations.

- Azure Kubernetes Service (AKS) metrics: Information about your cluster health, node status, and pod resource utilization.
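Collected metrics are usually acted on through alert rules. An alert rule aggregates a metric over a time window and compares the result with a threshold. The plain-Python sketch below models that evaluation without calling the Azure Monitor API. The sample values, the five-sample window, and the 80% threshold are hypothetical.

```python
from statistics import mean

def should_fire(samples, window, threshold):
    """Fire when the average of the last `window` samples exceeds `threshold`."""
    if len(samples) < window:
        return False  # not enough data to evaluate yet
    return mean(samples[-window:]) > threshold

# Hypothetical per-minute CPU percentages for one VM.
cpu = [35, 40, 38, 85, 90, 92, 88, 95]
print(should_fire(cpu, window=5, threshold=80))  # True: last five average 90

# A single spike is smoothed out by the averaging window.
spiky = [30, 30, 99, 30, 30]
print(should_fire(spiky, window=5, threshold=80))  # False: average is 43.8
```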

16. Explain how you would implement a CI/CD pipeline for deploying applications to Azure using Azure DevOps.

To implement a CI/CD pipeline for deploying applications to Azure using Azure DevOps, I'd start by defining the build and release processes in Azure Pipelines. For Continuous Integration (CI), a pipeline would be triggered on code commits to the source repository (e.g., Azure Repos or GitHub). This pipeline would compile the code, run unit tests, perform static code analysis, and package the application into an artifact (e.g., a zip file or a Docker image).

For Continuous Deployment (CD), a release pipeline would be configured to automatically deploy the artifact to Azure environments (e.g., Dev, Staging, Production). This pipeline would involve tasks such as provisioning Azure resources (using ARM templates or Terraform), deploying the application package, configuring application settings, running integration tests, and performing smoke tests. Approvals and gates can be incorporated into the release pipeline to control deployments to sensitive environments. Azure Key Vault can be integrated for secure storage of secrets. Tools like Azure CLI or PowerShell can be used for interacting with Azure services during deployments.

17. What is Azure Kubernetes Service (AKS), and how does it simplify the deployment and management of containerized applications?

Azure Kubernetes Service (AKS) is a managed container orchestration service provided by Azure, based on Kubernetes. It simplifies deploying and managing containerized applications by abstracting away much of the underlying infrastructure management. AKS handles tasks like provisioning, scaling, and upgrading the Kubernetes control plane, allowing developers to focus on application development and deployment.

AKS simplifies container management through features like automated upgrades, easy scaling, built-in monitoring, and integration with other Azure services. It also offers a serverless option through virtual nodes. Virtual nodes run pods on Azure Container Instances, so containers start quickly without adding nodes to the cluster. Because Azure operates the Kubernetes control plane (the master nodes), you manage only the agent nodes that run your workloads.

18. Describe the role of Azure Front Door and how it can improve the performance and security of your web applications. What are the differences between Front Door and Azure Application Gateway?

Azure Front Door is a global, scalable entry point that uses Microsoft's global network to accelerate web applications and provide security. It improves performance through:

- Content delivery: Caching static content closer to users.

- Dynamic site acceleration: Optimizing routing for dynamic content.

- Global load balancing: Directing traffic to the nearest and healthiest backend.

For security, it offers:

- Web application firewall (WAF): Protecting against common web exploits.

- DDoS protection: Mitigating large-scale attacks.

The main differences between Front Door and Azure Application Gateway are scope and deployment model. Front Door is a global service, suited to routing traffic across multiple regions and applying security at the edge. Application Gateway is a regional load balancer deployed inside your virtual network, which makes it a good fit for distributing traffic to backends, including private ones, within a single region. It offers Layer 7 features such as URL path-based routing, cookie-based session affinity, and end-to-end TLS encryption, although Front Door supports several of these as well. Application Gateway also provides capabilities Front Door lacks, such as acting as a Kubernetes ingress controller through the Application Gateway Ingress Controller (AGIC).
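Front Door's global load balancing toward the "nearest and healthiest backend" can be sketched as a two-step filter. First, drop origins that fail health probes. Then keep the origins whose latency falls within a configurable sensitivity of the fastest healthy one. This is a simplified Python model with hypothetical origin names and latencies. The real service also applies origin priority and weights, which are omitted here.

```python
from dataclasses import dataclass

@dataclass
class Origin:
    name: str
    healthy: bool      # result of the most recent health probes
    latency_ms: float  # measured latency from the edge location to this origin

def eligible_origins(origins, sensitivity_ms):
    """Healthy origins within `sensitivity_ms` of the fastest healthy origin."""
    healthy = [o for o in origins if o.healthy]
    if not healthy:
        return []
    fastest = min(o.latency_ms for o in healthy)
    return [o.name for o in healthy if o.latency_ms <= fastest + sensitivity_ms]

# Hypothetical origins as seen from one edge location.
origins = [
    Origin("app-westeurope", healthy=True, latency_ms=12),
    Origin("app-northeurope", healthy=True, latency_ms=20),
    Origin("app-eastus", healthy=True, latency_ms=95),
    Origin("app-uksouth", healthy=False, latency_ms=8),  # fastest, but failing probes
]

print(eligible_origins(origins, sensitivity_ms=10))  # ['app-westeurope', 'app-northeurope']
```

A larger sensitivity spreads traffic across more regions. A sensitivity of zero sends everything to the single fastest healthy origin.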

19. What are Azure Resource Groups and how should they be used to organize and manage Azure resources effectively? What are some best practices for using Resource Groups?

Azure Resource Groups are logical containers that hold related Azure resources. They enable you to manage resources as a single unit, making it easier to deploy, update, and delete them together. Resource Groups are fundamental to organizing resources based on lifecycle, application, or department.

Best practices include:

- Naming Conventions: Use a consistent and informative naming scheme (e.g., rg-{environment}-{application}-{location}).

- Resource Group per Application/Environment: Group resources for a single application or environment (dev, test, prod) into separate Resource Groups.

- Lifecycle Management: Group resources with the same lifecycle. This allows you to deploy, update, and delete related resources together.

- Role-Based Access Control (RBAC): Assign permissions at the Resource Group level to manage access efficiently.

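- Location: A Resource Group's location determines where its metadata is stored. The resources inside the group can be deployed to other regions. Choose a location that satisfies any data-residency requirements for that metadata. If the group's region becomes unavailable, its resources keep running, but you can't update them until the region recovers.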

- Tagging: Use tags to categorize and organize Resource Groups for billing, monitoring, and automation.
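A naming convention is easiest to keep when it is generated and checked by code rather than typed by hand. The sketch below builds and validates names in the rg-{environment}-{application}-{location} format. The allowed environments and the lowercase, hyphen-free segment rules are hypothetical choices. The 90-character limit is Azure's documented maximum for resource group names.

```python
import re

# Hypothetical values; adjust to your organization's standard.
ENVIRONMENTS = {"dev", "test", "prod"}
NAME_PATTERN = re.compile(
    r"rg-(?P<environment>[a-z]+)-(?P<application>[a-z0-9]+)-(?P<location>[a-z0-9]+)"
)
MAX_LENGTH = 90  # maximum length of an Azure resource group name

def is_valid_rg_name(name):
    """True if name matches rg-{environment}-{application}-{location}."""
    match = NAME_PATTERN.fullmatch(name)
    return (
        match is not None
        and match.group("environment") in ENVIRONMENTS
        and len(name) <= MAX_LENGTH
    )

def make_rg_name(environment, application, location):
    """Build a convention-compliant name, rejecting anything that doesn't fit."""
    name = f"rg-{environment}-{application}-{location}".lower()
    if not is_valid_rg_name(name):
        raise ValueError(f"name does not follow the convention: {name}")
    return name

print(make_rg_name("prod", "shop", "westeurope"))  # rg-prod-shop-westeurope
print(is_valid_rg_name("rg-qa-shop-westeurope"))   # False: 'qa' is not an allowed environment
print(is_valid_rg_name("shop-prod-rg"))            # False: wrong pattern
```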

20. Explain the concept of autoscaling in Azure and how you would configure it for different types of Azure resources, such as virtual machines or web apps.

Autoscaling in Azure automatically adjusts the number of compute instances allocated to your application based on demand. This keeps performance steady while controlling cost. When demand increases, autoscaling adds instances to handle the load; when demand decreases, it removes them to reduce costs.

For virtual machines, you'd typically configure autoscaling with Virtual Machine Scale Sets (VMSS). You define rules based on metrics such as CPU utilization or memory consumption. For example, I'd create a scale set with a rule that adds instances when CPU utilization exceeds 70%, a rule that removes them when it drops below 30%, and minimum and maximum instance counts.

For Azure App Service, autoscaling is configured on the App Service Plan. You define rules based on metrics such as CPU usage, memory usage, or queue length (for example, HTTP queue length or messages waiting in a storage queue). These rules determine how many instances (workers) are allocated to the apps in that plan.

Key settings for autoscaling include:

- Metric Source: The performance data used to trigger scaling (e.g., CPU usage).

- Thresholds: The values that trigger scaling actions (e.g., scale out when CPU > 70%).

- Scale-out/Scale-in Operations: The actions to take when thresholds are met (e.g., add or remove instances).

- Minimum and Maximum Instance Counts: The boundaries for the number of instances.
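The settings above can be modelled as a small function: one scale-out rule, one scale-in rule, and a clamp to the instance bounds. This plain-Python sketch is not the Azure autoscale engine. The defaults (minimum 2, maximum 10, step of one instance) are hypothetical. Real autoscale settings also average the metric over a time window and wait for a cool-down period between actions.

```python
def desired_instance_count(current, cpu_percent, *, scale_out_above=70,
                           scale_in_below=30, step=1, minimum=2, maximum=10):
    """Apply one scale-out and one scale-in rule, then clamp to the bounds."""
    if cpu_percent > scale_out_above:
        target = current + step
    elif cpu_percent < scale_in_below:
        target = current - step
    else:
        target = current  # inside the band: leave the instance count alone
    return max(minimum, min(maximum, target))

count = 2
for cpu in [45, 78, 82, 91, 60, 25, 20, 15]:
    count = desired_instance_count(count, cpu)
    print(f"CPU {cpu:>3}% -> {count} instances")
# Grows to 5 instances during the spike, then shrinks back to the minimum of 2.
```

The gap between the two thresholds matters. If scale-out and scale-in thresholds were close together, each action could immediately trigger the opposite one, causing the instance count to flap.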

Azure interview questions for experienced

1. How would you design a highly available and scalable web application using Azure App Service and Azure SQL Database?

To design a highly available and scalable web application using Azure App Service and Azure SQL Database, I'd focus on several key areas. First, I'd run the App Service on multiple instances. On a plan tier that supports it, I'd enable zone redundancy so those instances are spread across availability zones. I'd use an App Service Plan that supports scaling out and configure autoscaling on metrics such as CPU usage or HTTP queue length. Within a region, App Service's built-in load balancer distributes requests across the instances. To protect against a regional outage, I'd deploy the app to a second region and place Azure Front Door or Azure Traffic Manager in front of both deployments for global load balancing and failover.

For Azure SQL Database, I'd use the Business Critical or Premium tier for high availability; both keep multiple replicas with automatic failover. I'd also enable active geo-replication or a failover group to a secondary region for disaster recovery. For read-heavy workloads, I'd use read scale-out, which routes read-only connections (ApplicationIntent=ReadOnly) to a secondary replica, and I'd scale the compute size up when needed. I'd also implement connection pooling in the application code to reuse database connections and reduce overhead. Finally, monitoring and alerting through Azure Monitor would be essential for proactively identifying and resolving performance or availability issues.
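Connection pooling means reusing a small set of open connections instead of paying the setup cost on every request. In practice the database driver usually provides it; for example, ADO.NET pools connections by default. The Python sketch below only illustrates the idea. The pool class and the fake connection factory are hypothetical stand-ins, not a real driver API.

```python
import itertools
import queue
from contextlib import contextmanager

class ConnectionPool:
    """Hand out a fixed set of open connections and take them back for reuse."""

    def __init__(self, create_connection, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(create_connection())

    def acquire(self, timeout=None):
        # Blocks until a connection is free; raises queue.Empty on timeout.
        return self._idle.get(timeout=timeout)

    def release(self, conn):
        self._idle.put(conn)

    @contextmanager
    def connection(self, timeout=None):
        """Borrow a connection and always return it, even if the caller raises."""
        conn = self.acquire(timeout)
        try:
            yield conn
        finally:
            self.release(conn)

# Hypothetical stand-in for a driver call that opens a real database connection.
_next_id = itertools.count(1)

def fake_connect():
    return f"conn-{next(_next_id)}"

pool = ConnectionPool(fake_connect, size=2)
used = set()
for _ in range(10):  # ten "requests"
    with pool.connection() as conn:
        used.add(conn)

print(sorted(used))  # ['conn-1', 'conn-2']
```

Ten requests were served by only two connections. The pool size also caps how many concurrent connections the app opens against the database.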

2. Explain your approach to implementing a hybrid cloud solution with Azure, including networking and security considerations.

My approach to implementing a hybrid cloud solution with Azure centers on establishing a secure and reliable connection between the on-premises environment and Azure. For networking, I'd use Azure ExpressRoute for a private, high-bandwidth connection, or Azure VPN Gateway for an encrypted connection over the internet. For ExpressRoute, I'd work with a network provider to establish a dedicated circuit; for VPN Gateway, I'd configure a Site-to-Site IPsec tunnel. Azure Virtual Network (VNet) peering would connect multiple VNets within Azure if required. DNS resolution is crucial, so I'd implement a hybrid DNS solution, potentially using Azure Private DNS Zones for internal name resolution.

Security is paramount. I'd use Microsoft Defender for Cloud (formerly Azure Security Center) for threat detection and vulnerability management across both environments. Microsoft Entra ID (formerly Azure Active Directory) would serve as the central identity provider, synchronizing identities from on-premises Active Directory using Microsoft Entra Connect (formerly Azure AD Connect). Network security groups (NSGs) would control traffic flow at the subnet or network interface level in Azure. Azure Key Vault would manage secrets and certificates. Monitoring and logging are also essential; I'd use Azure Monitor to collect and analyze logs from both environments, enabling proactive identification and resolution of issues. Regular security assessments and penetration testing would verify that the security measures remain effective.
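NSG rules are evaluated in priority order, lowest number first, and processing stops at the first rule that matches. This Python sketch models that behavior for inbound traffic only, matching on source prefix and destination port. The rule names, the on-premises range 10.0.0.0/16, and the ports are hypothetical. Real rules also match protocol, destination, and service tags, and a single catch-all deny stands in for the built-in default rules.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    priority: int         # lower numbers are evaluated first
    name: str
    source_prefix: str    # CIDR range the source address must fall in
    port: Optional[int]   # destination port; None matches any port
    action: str           # "Allow" or "Deny"

def evaluate_inbound(rules, source_ip, port):
    """Return (action, rule name) for the first rule that matches."""
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        in_range = address in ipaddress.ip_network(rule.source_prefix)
        port_matches = rule.port is None or rule.port == port
        if in_range and port_matches:
            return rule.action, rule.name
    return "Deny", "no matching rule"

# Hypothetical rules, listed out of order to show that priority decides.
rules = [
    Rule(300, "AllowHttpsInbound", "0.0.0.0/0", 443, "Allow"),
    Rule(100, "AllowOnPremSsh", "10.0.0.0/16", 22, "Allow"),
    Rule(200, "DenySshFromElsewhere", "0.0.0.0/0", 22, "Deny"),
    Rule(65500, "DenyAllInBound", "0.0.0.0/0", None, "Deny"),
]

print(evaluate_inbound(rules, "10.0.5.4", 22))       # ('Allow', 'AllowOnPremSsh')
print(evaluate_inbound(rules, "203.0.113.7", 22))    # ('Deny', 'DenySshFromElsewhere')
print(evaluate_inbound(rules, "203.0.113.7", 3389))  # ('Deny', 'DenyAllInBound')
```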

3. Describe a time you had to troubleshoot a complex performance issue in an Azure environment. What tools and techniques did you use?

In a previous role, we experienced a significant performance degradation in our Azure-based e-commerce platform. Users reported slow loading times and intermittent errors during peak hours. To troubleshoot, I first used Azure Monitor to identify the bottleneck. Metrics like CPU utilization, memory consumption, and network I/O pointed towards the database as the primary issue. Specifically, Azure SQL Database DTU consumption was consistently hitting 100% during peak loads.

I then leveraged Azure SQL Database Query Performance Insight to identify the most resource-intensive queries. Once identified, I used SQL Server Management Studio (SSMS) to analyze query execution plans and identify missing indexes and inefficient query patterns. We optimized these queries by adding appropriate indexes, rewriting poorly performing joins, and implementing query caching. We also scaled up the Azure SQL Database instance to provide additional resources. Finally, we implemented connection pooling to reduce the overhead of establishing new database connections. These steps significantly improved the platform's performance and resolved the reported issues.
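Query Performance Insight ranks queries by resource consumption, such as CPU time, duration, or execution count. The sketch below reproduces that ranking over a hypothetical list of per-query statistics, for instance rows exported from Query Store. The field names and numbers are invented for illustration. Ranking by total CPU rather than average CPU is the key design choice.

```python
from dataclasses import dataclass

@dataclass
class QueryStats:
    query_id: int
    executions: int
    avg_cpu_ms: float

    @property
    def total_cpu_ms(self):
        # A cheap query that runs constantly can cost more than a slow, rare one.
        return self.executions * self.avg_cpu_ms

def top_queries(stats, n=3):
    """Return the n queries with the highest total CPU time."""
    return sorted(stats, key=lambda s: s.total_cpu_ms, reverse=True)[:n]

# Hypothetical statistics for one peak-hour interval.
stats = [
    QueryStats(101, executions=50, avg_cpu_ms=900.0),     # 45,000 ms total
    QueryStats(102, executions=120_000, avg_cpu_ms=2.5),  # 300,000 ms total
    QueryStats(103, executions=4_000, avg_cpu_ms=15.0),   # 60,000 ms total
    QueryStats(104, executions=10, avg_cpu_ms=40.0),      # 400 ms total
]

for s in top_queries(stats):
    print(s.query_id, s.total_cpu_ms)  # 102, then 103, then 101
```

Query 101 is the slowest per execution, but query 102 dominates the load. That makes query 102 the first candidate for an index or rewrite.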

4. How do you approach automation and infrastructure as code (IaC) in Azure using tools like Terraform or Azure Resource Manager (ARM) templates?

I approach automation and IaC in Azure with a focus on repeatability, version control, and collaboration. With Terraform, I define my infrastructure in HCL files, using modules for reusable components. The workflow typically involves writing the Terraform configuration, initializing the environment (terraform init), planning the changes (terraform plan), and applying them (terraform apply). These files are stored in a Git repository to track changes, enable collaboration, and facilitate rollback if necessary.
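At its core, the plan step compares the desired state declared in code with the current state and reports what would be created, updated, or destroyed. ARM's what-if operation serves a similar purpose for templates. Below is a heavily simplified Python model of that comparison. It is not how Terraform is implemented; real plans also resolve dependencies, detect replacements, and account for provider defaults. The resource names and attributes are hypothetical.

```python
def plan(desired, current):
    """Compare desired vs current resources (name -> attributes dict)."""
    to_create = sorted(desired.keys() - current.keys())
    to_destroy = sorted(current.keys() - desired.keys())
    to_update = sorted(
        name for name in desired.keys() & current.keys()
        if desired[name] != current[name]
    )
    return {"create": to_create, "update": to_update, "destroy": to_destroy}

desired = {
    "rg-prod-shop-westeurope": {"location": "westeurope"},
    "stshopprod": {"location": "westeurope", "sku": "Standard_GRS"},
    "vnet-prod-shop": {"address_space": "10.1.0.0/16"},
}
current = {
    "rg-prod-shop-westeurope": {"location": "westeurope"},
    "stshopprod": {"location": "westeurope", "sku": "Standard_LRS"},
    "vm-legacy-01": {"size": "Standard_B2s"},
}

print(plan(desired, current))
# {'create': ['vnet-prod-shop'], 'update': ['stshopprod'], 'destroy': ['vm-legacy-01']}
```

Reviewing this diff before applying it, for example as a pipeline step that requires approval, is what makes IaC changes predictable. Running the plan again after a successful apply should report no changes.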

When using ARM templates, I create JSON files that describe the Azure resources. I utilize parameters to make the templates reusable across different environments. I then deploy the templates using Azure CLI or PowerShell. Similar to Terraform, these ARM templates are also stored in Git for version control and collaboration. I also leverage Azure DevOps pipelines to automate the deployment of both Terraform and ARM templates, integrating testing and validation steps.

5. What are the key differences between Azure Service Bus and Azure Event Hubs, and when would you choose one over the other?

Azure Service Bus and Azure Event Hubs are both messaging services in Azure, but cater to different scenarios.