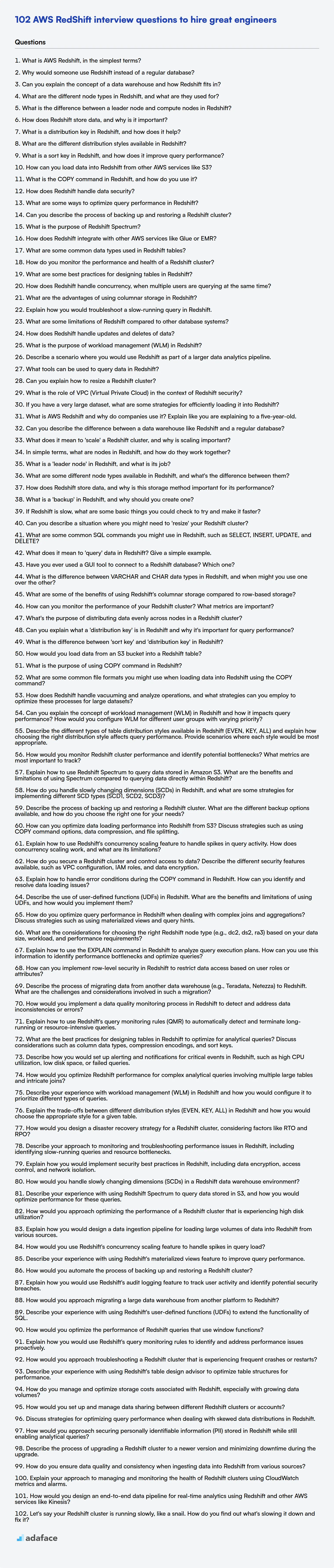

Hiring data professionals who can harness the power of cloud-based data warehousing solutions like AWS Redshift is more important than ever. Recruiters need to be prepared to ask the right questions to filter out the best candidates, as we discuss in skills required for data engineer.

This blog post offers a comprehensive collection of AWS Redshift interview questions, carefully categorized by experience level, covering freshers to seasoned experts. Additionally, we have included a set of multiple-choice questions (MCQs) to provide a well-rounded assessment resource.

By utilizing these questions, you can assess a candidate's practical skills and theoretical knowledge, ensuring you hire someone who can drive data-driven insights using Redshift; consider supplementing your interviews with an AWS online test to further validate their expertise.

Table of contents

AWS RedShift interview questions for freshers

1. What is AWS Redshift, in the simplest terms?

AWS Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. Think of it as a database specifically designed for analytics, allowing you to store and analyze vast amounts of data to gain insights.

In essence, it's like a supercharged database optimized for reading and analyzing data, not for transactional operations like a typical database. It's designed to run complex queries across very large datasets quickly.

2. Why would someone use Redshift instead of a regular database?

Redshift is designed for large-scale data warehousing and analytics, while regular databases are typically optimized for transactional workloads (OLTP). Redshift excels at handling massive datasets and complex queries, which would be slow and inefficient in a standard database.

Specifically, Redshift's columnar storage, massively parallel processing (MPP) architecture, and query optimization techniques allow it to perform analytical queries much faster than a row-oriented database. This makes it suitable for business intelligence, data mining, and generating reports from large volumes of historical data. Standard databases are better suited for handling many small, fast read/write operations.

3. Can you explain the concept of a data warehouse and how Redshift fits in?

A data warehouse is a central repository for integrating data from various sources for analytical purposes. It's designed to support business intelligence activities like reporting, querying, and data mining. It differs from a transactional database, which focuses on real-time operations, because a data warehouse is optimized for read-heavy workloads and historical data analysis.

Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. It's designed for fast querying and analysis of large datasets. Redshift leverages columnar storage, data compression, and parallel processing to achieve high performance. Essentially, Redshift acts as a powerful engine to perform SQL queries on the data stored within the data warehouse, allowing businesses to derive insights quickly. It's a key component in a cloud-based data warehousing solution.

4. What are the different node types in Redshift, and what are they used for?

Amazon Redshift offers different node types optimized for various workload requirements. The two main families are RA3 and DC2.

- RA3 nodes with managed storage are the recommended choice for most workloads. They allow you to scale compute and storage independently. You pay only for the compute capacity you use. They use high-performance storage managed by AWS. RA3 nodes use a large local SSD cache and direct high-bandwidth network connections to Amazon S3 for durable storage.

- DC2 nodes are compute-intensive nodes that use local storage. They're a good choice for workloads that require high performance and less storage. DC2 nodes have fixed storage, so you need to provision enough storage to meet your needs. DC2.Large is for smaller datasets, and DC2.8xlarge provides more compute power.

5. What is the difference between a leader node and compute nodes in Redshift?

In Amazon Redshift, the leader node manages communication between compute nodes and external applications. It handles SQL queries, compiles code, distributes it to the compute nodes, and aggregates the results. The leader node doesn't perform any data processing itself.

Compute nodes, on the other hand, store data and execute queries. They perform the heavy lifting of data warehousing, such as scanning tables, applying filters, joining data, and aggregating results. A Redshift cluster has multiple compute nodes working in parallel to provide high performance.

6. How does Redshift store data, and why is it important?

Redshift stores data in a columnar fashion. This means that data for each column is stored together on disk, as opposed to row-based storage where data for each row is stored together. This is important because analytical queries often only need to access a subset of columns. Columnar storage allows Redshift to read only the necessary columns, significantly reducing I/O and improving query performance. Furthermore, Redshift uses data compression techniques optimized for each column's data type, further reducing storage space and I/O.

7. What is a distribution key in Redshift, and how does it help?

A distribution key in Amazon Redshift specifies how table data is distributed across the compute nodes in the cluster. Choosing an appropriate distribution key is crucial for optimizing query performance.

It helps by:

- Data Localization: Placing related data on the same node minimizes the need to move data between nodes during query execution, reducing network traffic and improving query speed.

- Parallel Processing: Enabling Redshift to process data in parallel across multiple nodes, improving overall query performance.

There are a few distribution styles:

EVEN: Data is distributed evenly across all nodes in a round-robin fashion.KEY: Data is distributed based on the values in the distribution key column. Rows with the same distribution key value are stored on the same node.ALL: A copy of the entire table is stored on every node. This is best for small, frequently joined tables.

8. What are the different distribution styles available in Redshift?

Redshift offers three main distribution styles for tables:

- EVEN: Data is distributed evenly across all nodes in the cluster in a round-robin fashion. This is suitable for tables that don't participate in joins or when no other distribution style is more appropriate.

- KEY: Data is distributed based on the values in a specific column (the distribution key). Rows with the same distribution key value are stored on the same node. This is ideal for tables that are frequently joined on the distribution key column, as it minimizes data movement during joins. Choose a distribution key with high cardinality. Avoid using date/time columns or columns with limited distinct values.

- ALL: A copy of the entire table is stored on every node in the cluster. This is best suited for small, frequently joined tables (dimension tables). It eliminates the need for data redistribution during joins, as all nodes have a local copy of the data.

9. What is a sort key in Redshift, and how does it improve query performance?

A sort key in Redshift specifies the column or columns by which the data within a table is physically stored. When data is sorted according to a sort key, Redshift can efficiently skip large blocks of data during query execution.

This significantly improves query performance because Redshift can perform zone maps (block range elimination). When a query includes a WHERE clause that filters based on the sort key, Redshift only scans the blocks containing the relevant data, skipping irrelevant blocks. For example, if you have a table sorted by date and a query filters by a specific date range, Redshift only reads the blocks containing data within that date range, thus reducing I/O and improving query speed. Using composite sort keys allows data to be sorted by more than one column, for example, sorting by date and then user ID.

10. How can you load data into Redshift from other AWS services like S3?

You can load data into Redshift from S3 using the COPY command. This command allows you to load data in parallel from S3 buckets. You'll need the necessary IAM roles/permissions that allows Redshift to access S3. The command specifies the S3 bucket and path, the data format (e.g., CSV, JSON, Parquet), and credentials.

For example:

COPY table_name

FROM 's3://your-bucket/your-data'

CREDENTIALS 'aws_iam_role=arn:aws:iam::your-account-id:role/your-redshift-role'

FORMAT AS PARQUET;

You can also use other services such as AWS Glue to perform more complex ETL operations before loading data into Redshift. AWS Data Pipeline and AWS Lambda are also common solutions.

11. What is the COPY command in Redshift, and how do you use it?

The COPY command in Redshift is used to load data from various sources like Amazon S3, DynamoDB, or other data warehouses into Redshift tables. It's the primary way to perform bulk data loading efficiently.

To use it, you specify the target table, the data source, the data format (e.g., CSV, JSON), and any necessary authentication or formatting options. Here's a basic example loading from S3:

COPY my_table

FROM 's3://mybucket/data.csv'

CREDENTIALS 'aws_iam_role=arn:aws:iam::123456789012:role/MyRedshiftRole'

DELIMITER ','

IGNOREHEADER 1;

12. How does Redshift handle data security?

Redshift employs several mechanisms to ensure data security. It leverages network isolation using Amazon VPC, allowing you to control network access to your cluster. Encryption is used both in transit (SSL) and at rest (AWS KMS or HSM). Access control is enforced through IAM roles and policies, granting granular permissions to users and groups.

Redshift also offers auditing features, logging user activity and database events for security monitoring and compliance purposes. Further security measures include data masking and tokenization, which can be implemented using custom SQL or third-party tools. Finally, row-level security is supported through views.

13. What are some ways to optimize query performance in Redshift?

To optimize query performance in Redshift, consider these strategies:

- Choose the right distribution style:

EVEN(suitable for tables without clear distribution keys or small tables),KEY(distribute based on a column commonly used in joins), orALL(replicates the entire table to every node, ideal for small, frequently joined tables). Choosing the wrong one impacts join performance and data skew. - Use appropriate sort keys: Define sort keys (compound or interleaved) based on the columns frequently used in

WHEREclauses andORDER BYclauses. This speeds up data retrieval by storing data in a sorted order. Interleaved sort keys are useful if there are many equally important query filters on different columns. - Analyze tables regularly: Use the

ANALYZEcommand to update the query optimizer's statistics. Accurate statistics enable the optimizer to generate efficient query plans. - Use compression: Apply compression encodings (like ZSTD, LZO, or AZ64) to columns to reduce storage space and I/O overhead, leading to faster query execution.

- Optimize table design: Avoid excessive use of VARCHAR columns; use appropriate data types and column sizes. De-normalize tables strategically, especially for read-heavy workloads, to reduce joins. Partition data using date ranges if appropriate.

- Monitor query performance: Use Redshift's query monitoring tools (e.g., query history, system tables) to identify slow-running queries and bottlenecks. Examine query plans using

EXPLAINto understand how Redshift is executing your queries and identify areas for improvement. - Utilize materialized views: For complex, repetitive queries, create materialized views to precompute and store the results. This significantly improves query performance by avoiding repeated calculations. Make sure base tables are updated as needed.

14. Can you describe the process of backing up and restoring a Redshift cluster?

Backing up a Redshift cluster involves creating snapshots of the cluster's data. These snapshots are incremental, meaning they only store the changes since the last snapshot, optimizing storage and backup time. Snapshots can be manual or automated. Automated snapshots are taken periodically (default is every 8 hours or when 5GB of changes occur, retention period is 1 day but can be customized). Manual snapshots are triggered by the user and retained until explicitly deleted.

Restoring a Redshift cluster from a snapshot creates a new cluster with the data from the snapshot. The restoration process involves selecting the snapshot, specifying the new cluster configuration (node type, number of nodes, etc.), and initiating the restore. Redshift creates a new cluster and populates it with the data from the selected snapshot. The new cluster is available once the restoration process is complete, allowing you to resume operations. It's important to verify data integrity after the restore.

15. What is the purpose of Redshift Spectrum?

Redshift Spectrum enables you to run SQL queries directly against exabytes of data stored in Amazon S3. It eliminates the need to load data into Redshift before querying, making it ideal for analyzing large datasets and historical data.

Essentially, Spectrum extends Redshift's querying capabilities to data residing in S3. You manage the Redshift cluster for compute, while Spectrum handles the data access and processing in S3, providing a cost-effective way to query infrequently accessed data without incurring storage costs within Redshift.

16. How does Redshift integrate with other AWS services like Glue or EMR?

Redshift integrates with other AWS services like Glue and EMR to facilitate data warehousing and analytics workflows. Redshift leverages Glue as a central metadata repository, using the Glue Data Catalog to store table definitions, schemas, and partitioning information. This allows Redshift to query data stored in S3 that's been cataloged by Glue, using CREATE EXTERNAL TABLE statements that point to the Glue Data Catalog.

For integration with EMR, Redshift can load data transformed by EMR jobs. EMR can process large datasets and write the results to S3, which Redshift can then copy into its tables using the COPY command. Redshift can also be used as a data source for EMR jobs through JDBC/ODBC connections, allowing EMR to perform complex analytics on data already stored in the data warehouse. Redshift Spectrum, a feature of Redshift, further enhances integration by enabling direct querying of data stored in S3 without loading it into Redshift, using the same SQL syntax.

17. What are some common data types used in Redshift tables?

Redshift supports several common data types optimized for data warehousing and analytical workloads. These include:

- INTEGER:

INT,SMALLINT,BIGINT(for whole numbers) - DECIMAL:

DECIMALorNUMERIC(for precise numeric values, like currency) - REAL:

REAL,DOUBLE PRECISION,FLOAT(for floating-point numbers) - BOOLEAN:

BOOLEAN(for true/false values) - CHARACTER:

CHAR,VARCHAR(for strings, withVARCHARsupporting variable lengths) - DATE:

DATE(for dates without time) - TIMESTAMP:

TIMESTAMP,TIMESTAMPTZ(for dates and times, withTIMESTAMPTZincluding time zone)

18. How do you monitor the performance and health of a Redshift cluster?

I monitor Redshift cluster performance and health using a combination of Redshift's built-in monitoring tools and AWS CloudWatch. Key metrics I track include CPU utilization, disk space usage, query performance (query duration, queue length), and connection statistics. I use the Redshift console, system tables like STL_QUERY, STL_LOAD_ERRORS, and STV_BLOCKLIST, along with CloudWatch dashboards to visualize these metrics and set up alarms for critical thresholds.

Specifically, I monitor for high CPU utilization (approaching 100%), low disk space (less than 20% free), long query queues, and a high number of blocked queries. CloudWatch alarms notify me of these conditions, enabling proactive intervention such as scaling the cluster, optimizing queries, or addressing connection issues. Regular analysis of system tables helps identify slow-running queries or potential data skew issues that impact overall performance.

19. What are some best practices for designing tables in Redshift?

When designing tables in Redshift, focus on performance and storage efficiency. Choose the right distribution style: EVEN (default, good for uniform data), KEY (distribute based on a join key for co-location), or ALL (replicates the table to every node for small, frequently joined tables). Select an appropriate sort key: compound (multiple columns, ordered importance) or interleaved (equal weighting, good for varied query patterns). Use compression encodings like ZSTD or LZO to reduce storage and I/O. Also, consider using data types efficiently; for example, use SMALLINT instead of INTEGER when appropriate.

Regularly analyze table statistics using ANALYZE to help the query optimizer create efficient query plans. Delete data using TRUNCATE or DELETE and then VACUUM and ANALYZE to reclaim space and improve performance. For frequently updated tables, consider using a staging table for updates and then using TRUNCATE and INSERT to refresh the main table, as Redshift is optimized for bulk loading.

20. How does Redshift handle concurrency, when multiple users are querying at the same time?

Redshift handles concurrency primarily through Workload Management (WLM). WLM defines query queues, and for each queue you can specify memory allocation and concurrency levels. When multiple users query simultaneously, Redshift assigns queries to these queues based on defined rules (e.g., user groups, query groups).

Redshift uses a concurrency scaling feature to automatically add additional cluster capacity when workload increases, so you don't need to manually manage this. Resource queues determine priority and resources allocated per query. The number of slots defined in a queue dictates the degree of concurrency that queue can handle. Queries might experience queuing delays if the concurrency level is reached. This ensures resources are managed and some queries are not starved.

21. What are the advantages of using columnar storage in Redshift?

Columnar storage in Redshift offers significant advantages for analytical workloads. Since data is stored by column rather than row, Redshift can efficiently retrieve only the columns needed for a query, reducing I/O and improving query performance. This is particularly beneficial for aggregations and filtering operations common in data warehousing.

Furthermore, columnar storage enables better data compression. Because data within a column is typically of the same type, compression algorithms can achieve higher compression ratios. This leads to reduced storage costs and further improved I/O performance when reading data.

22. Explain how you would troubleshoot a slow-running query in Redshift.

To troubleshoot a slow-running Redshift query, I'd start by checking the query plan using EXPLAIN. This helps identify potential bottlenecks like full table scans, inefficient joins, or skewed data distribution. Analyze the stl_scan and stl_join system tables to understand scan times and join types, respectively. Also, I would examine stl_query to see the overall query performance and timing. If data distribution seems to be the problem, I would consider using the DISTSTYLE key or sort keys properly. If hardware is an issue, consider resizing the cluster or upgrading node types.

I'd also check for common issues like missing or outdated statistics by running ANALYZE on the tables involved. Verify that the queries are using appropriate indexes or sort keys. Finally, review the workload management (WLM) configuration to ensure the query isn't being throttled by resource allocation rules or competing queries, and increase concurrency scaling, if needed.

23. What are some limitations of Redshift compared to other database systems?

Redshift, while powerful for data warehousing, has some limitations. It's not ideal for OLTP workloads due to its columnar storage and lack of indexing options optimized for single-row lookups. Concurrency can also be a challenge with a large number of concurrent queries potentially impacting performance.

Furthermore, Redshift's ecosystem, while growing, isn't as mature as some other databases. Operations like updating/deleting individual rows can be less efficient compared to row-oriented databases. While support for semi-structured data is improving, it might not be as flexible as native NoSQL solutions or databases with built-in JSON support.

24. How does Redshift handle updates and deletes of data?

Redshift doesn't support direct in-place updates or deletes like traditional databases. Instead, it relies on a combination of techniques:

- Updates: Redshift effectively handles updates by marking the existing row as deleted and inserting a new row with the updated values. The old row is still physically present but is hidden from queries using the current snapshot.

VACUUMoperation reclaims space and resort the table after updates. - Deletes: Similar to updates, deletes mark rows as deleted without physically removing them immediately.

VACUUM DELETEremoves the deleted rows. - Vacuuming: The

VACUUMcommand is essential for reclaiming the space occupied by marked-for-deletion rows and for resorting data to improve query performance. It's a crucial maintenance task.

This approach, while not in-place, is optimized for Redshift's columnar storage and parallel processing architecture. Performing frequent small updates or deletes can degrade performance, making batch operations preferred when possible. Use TRUNCATE command if you need to delete a lot of rows.

25. What is the purpose of workload management (WLM) in Redshift?

The purpose of Workload Management (WLM) in Redshift is to manage resource allocation and query concurrency to optimize query performance and system stability. It allows you to prioritize certain queries or user groups by assigning them to specific queues with dedicated memory and concurrency levels.

WLM essentially divides Redshift's resources (memory and CPU) into multiple query queues. By defining rules, you can route queries to the appropriate queue based on factors like user, query group, or query type. This ensures critical queries get the resources they need while preventing resource contention from less important queries, therefore improving the overall efficiency and responsiveness of the Redshift cluster.

26. Describe a scenario where you would use Redshift as part of a larger data analytics pipeline.

Consider an e-commerce company that collects data from various sources: website clickstream data, order management system, customer relationship management (CRM) system, and marketing campaign data. We would use Redshift as the central data warehouse in our analytics pipeline.

Data from these disparate sources is extracted, transformed, and loaded (ETL) into Redshift. Website clickstream data, containing user behavior, could be streamed into Redshift via Kinesis Data Firehose, then transformed by scheduled SQL jobs in Redshift. Order and customer data, likely residing in relational databases, could be extracted using tools like AWS Data Pipeline or Apache Airflow, transformed (e.g., cleaning, aggregating), and loaded into Redshift. Once in Redshift, analysts can use SQL to query and analyze the combined data to understand customer behavior, identify sales trends, optimize marketing campaigns, and improve overall business performance. Business intelligence tools like Tableau or Looker can connect directly to Redshift for visualization and reporting. Furthermore, we can build machine learning models on the data within Redshift using Redshift ML to predict customer churn or product recommendations.

27. What tools can be used to query data in Redshift?

Several tools can be used to query data in Redshift. The most common is the Amazon Redshift Query Editor, a web-based tool available within the AWS console. You can also use SQL clients like DBeaver, SQL Workbench/J, or pgAdmin, configured to connect to your Redshift cluster via JDBC/ODBC.

For programmatic access and automation, you can leverage the AWS SDKs (e.g., boto3 for Python, AWS SDK for Java) to execute SQL queries against Redshift. Additionally, business intelligence (BI) tools such as Tableau, Power BI, and Looker often offer native connectors to Redshift for data visualization and analysis.

28. Can you explain how to resize a Redshift cluster?

Resizing a Redshift cluster involves changing its node type or number of nodes. This is done using the AWS Management Console, the AWS CLI, or the Redshift API. The most common method is using the console, navigating to the cluster, and selecting 'Resize'. You then choose a new node type or number of nodes. The cluster enters a read-only mode during the resizing operation.

There are two primary types of resizing: classic resize and elastic resize. Classic resize creates a new cluster and migrates data, resulting in downtime. Elastic resize, available for certain node types, adds or removes nodes concurrently with cluster operations, minimizing downtime. Choose the appropriate method based on your uptime requirements and the node types involved. After the resize, it's recommended to analyze and vacuum tables for optimal performance.

29. What is the role of VPC (Virtual Private Cloud) in the context of Redshift security?

A VPC in Redshift provides network isolation, enhancing security. It allows you to launch your Redshift cluster into a private, isolated section of the AWS cloud that you control, defining a virtual network. This control restricts access to your cluster.

Specifically, VPC configuration allows defining network ACLs and security groups which govern inbound and outbound traffic to your Redshift cluster. By controlling these rules, you can limit access to the Redshift cluster to specific IP addresses or CIDR blocks, and only allow traffic on necessary ports, therefore reducing the attack surface and preventing unauthorized access. VPC endpoints further enhance security by enabling private connectivity to other AWS services without traversing the public internet.

30. If you have a very large dataset, what are some strategies for efficiently loading it into Redshift?

To efficiently load a very large dataset into Redshift, several strategies can be employed. Firstly, utilize the COPY command, which is designed for parallel data loading. Split the data into multiple files (ideally 1MB to 1GB in size), and store them in Amazon S3. Ensure that the S3 bucket is in the same region as your Redshift cluster for optimal performance. Then, use the COPY command to load data from S3 into Redshift, taking advantage of Redshift's parallel architecture by specifying the MANIFEST file option or using wildcard characters to load from multiple files concurrently. Consider using compression formats like GZIP or LZO to reduce the amount of data transferred.

Secondly, optimize the data format for loading. Using columnar formats like Parquet or ORC can significantly improve loading speed, especially if you're only selecting a subset of columns. Ensure you have sufficient compute resources (nodes) in your Redshift cluster to maximize the parallelism during the loading process. Monitor Redshift's performance metrics during the load to identify potential bottlenecks, such as skewed data distribution. Finally, consider pre-sorting your data by the sort key column of your Redshift table before loading to improve query performance after the data is loaded.

AWS RedShift interview questions for juniors

1. What is AWS Redshift and why do companies use it? Explain like you are explaining to a five-year-old.

Imagine you have a big box of toys, and you want to quickly find all the red cars. AWS Redshift is like a super-fast computer that helps companies find important information in their very big boxes of data, like finding all the customers who bought a specific toy this month. It's built for analyzing that data to help them make better decisions.

Companies use Redshift because it helps them understand what's happening in their business. For example, a toy company can see which toys are most popular, or how much money they made last year. This helps them plan what toys to make next or how to sell them better, making more people happy and maybe even helping them to be more successful.

2. Can you describe the difference between a data warehouse like Redshift and a regular database?

The key difference lies in their purpose and design. A regular database (like MySQL, PostgreSQL) is optimized for Online Transaction Processing (OLTP), handling frequent read and write operations from applications. It focuses on data normalization, minimizing redundancy for efficient updates.

In contrast, a data warehouse like Redshift is designed for Online Analytical Processing (OLAP). It aggregates large volumes of historical data from various sources for reporting and analysis. Data warehouses are often denormalized to optimize read performance for complex queries, focusing on analytical insights rather than real-time transactions.

3. What does it mean to 'scale' a Redshift cluster, and why is scaling important?

Scaling a Redshift cluster refers to increasing or decreasing its compute and storage capacity to handle changes in data volume, query complexity, and user concurrency. This can involve adding or removing nodes, upgrading node types, or enabling features like concurrency scaling.

Scaling is important because it ensures optimal performance and cost-effectiveness. Scaling up can improve query performance during peak loads or when dealing with larger datasets. Scaling down reduces costs when demand is lower, preventing resource waste. Proper scaling maintains a balance between performance and expenses, ensuring the cluster meets business needs efficiently.

4. In simple terms, what are nodes in Redshift, and how do they work together?

In Redshift, nodes are the fundamental processing units that comprise a cluster. Think of a cluster as a team, and each node is a team member responsible for a piece of the overall workload.

They work together in parallel to execute SQL queries. The leader node distributes the query to the compute nodes. Each compute node then works on its portion of the data, and the results are compiled and returned to the user. There are different types of node that have different CPU power and storage depending on workload needs. These nodes effectively handle the data storage and query processing.

5. What is a 'leader node' in Redshift, and what is its job?

In Amazon Redshift, the leader node is the central point of entry and management for the entire cluster. Its primary job is to coordinate and manage communications between client applications and the compute nodes. It doesn't directly execute queries against the data.

Specifically, the leader node:

- Receives queries from client applications.

- Parses and optimizes SQL queries.

- Distributes query execution to the compute nodes.

- Aggregates the results from the compute nodes.

- Returns the final results to the client application.

- Manages cluster metadata, like table definitions.

- Handles communication with Amazon S3 for data loading and unloading.

6. What are some different node types available in Redshift, and what's the difference between them?

Redshift offers different node types optimized for various workload characteristics. The main categories are Dense Compute (DC) and Dense Storage (DS), and RA3.

- Dense Compute (DC) nodes use fast CPUs, large amounts of RAM, and solid-state disks (SSDs). DC nodes are a good choice for performance-intensive workloads with smaller data volumes.

- Dense Storage (DS) nodes use hard disk drives (HDDs) for lower cost and are well-suited for large data warehouses when performance isn't the top priority.

- RA3 nodes store data in Amazon S3, so you only pay for the storage you use. RA3 is a good choice for growing data warehouses that require fast performance.

7. How does Redshift store data, and why is this storage method important for its performance?

Redshift stores data in a columnar fashion. Instead of storing data row by row, it stores data column by column. This is crucial for performance because analytical queries often only need to access a subset of columns. Columnar storage allows Redshift to read only the necessary columns, significantly reducing I/O operations and improving query speeds. It also enables better data compression, as data within a column tends to be more homogenous.

Furthermore, Redshift distributes data across multiple compute nodes using data distribution styles like EVEN, KEY, and ALL. EVEN distributes data evenly across nodes. KEY distributes data based on a key column. ALL copies the entire table to each node. Choosing the right distribution style minimizes data movement between nodes during query execution, further enhancing performance. This parallel processing architecture coupled with columnar storage enables Redshift to handle large datasets efficiently.

8. What is a 'backup' in Redshift, and why should you create one?

In Amazon Redshift, a backup is a copy of your data warehouse's data and metadata. It's essentially a snapshot of your cluster's state at a particular point in time. These backups are crucial for disaster recovery and data protection.

Creating backups is essential for several reasons: Recovery from data loss: If data is accidentally deleted or corrupted, a backup allows you to restore your cluster to a previous state. Business Continuity: In the event of a disaster or outage, backups ensure minimal downtime by enabling you to quickly restore your data warehouse to a new cluster. Compliance: Many regulations require organizations to maintain backups of their data. Migration and Testing: Backups can be used to create test environments or migrate data to new clusters without impacting the production environment.

9. If Redshift is slow, what are some basic things you could check to try and make it faster?

If Redshift is slow, here are a few basic things to check:

- Check Query Performance: Use

EXPLAINto understand the query plan and identify bottlenecks. Look for full table scans, nested loops, or other inefficient operations. Analyze WLM queue statistics for long running queries, high queue wait times, or high memory usage. - Analyze Table Design: Ensure tables are properly distributed (using

DISTKEYandSORTKEY) based on query patterns. Incorrect distribution can lead to data shuffling and slow performance. Consider using appropriate data types and compression encodings. - Vacuum and Analyze: Regularly run

VACUUMto reclaim space from deleted rows andANALYZEto update statistics used by the query optimizer. Outdated statistics can lead to suboptimal query plans. The commandVACUUM FULLreclaims the most space. - Review WLM Configuration: Verify the Workload Management (WLM) configuration is appropriately allocating resources to different query groups. Short running queries might be starved if competing with long running queries with high priority.

- Consider Concurrency Scaling: If you are regularly hitting concurrency limits, enabling concurrency scaling can help. The feature adds temporary capacity when query volume spikes.

- Check resource utilization: Check CPU, memory, and disk I/O. If any of these is consistently high, it may indicate the need to upgrade the cluster size or optimize queries.

10. Can you describe a situation where you might need to 'resize' your Redshift cluster?

Resizing a Redshift cluster is often necessary to accommodate changes in data volume, query complexity, or user concurrency. For example, if we experience a significant increase in the amount of data being loaded into Redshift, or if query performance degrades due to increased workload, resizing the cluster becomes essential. This might involve increasing the number of nodes, upgrading the node type to one with more compute power or storage, or both.

Another situation where resizing is needed is when anticipating a surge in user activity, such as during a critical reporting period or a major product launch. In such cases, a larger cluster can handle the increased query load and maintain acceptable performance. Conversely, if resource utilization is consistently low, downsizing the cluster can help optimize costs. Choosing the right node type also affects performance e.g. compute optimized vs storage optimized nodes. This ensures a balance between performance and cost efficiency.

11. What are some common SQL commands you might use in Redshift, such as SELECT, INSERT, UPDATE, and DELETE?

Redshift, being a data warehouse based on PostgreSQL, supports standard SQL commands. Some common ones include:

SELECT: Used to retrieve data from tables.SELECT column1, column2 FROM table_name WHERE condition;INSERT: Used to add new rows into a table.INSERT INTO table_name (column1, column2) VALUES (value1, value2);UPDATE: Used to modify existing data in a table.UPDATE table_name SET column1 = value1 WHERE condition;DELETE: Used to remove rows from a table.DELETE FROM table_name WHERE condition;CREATE TABLE: Used to create new tables, specifying column names, data types, and constraints.ALTER TABLE: Used to modify the structure of an existing table.DROP TABLE: Used to remove an existing table. These are fundamental commands, and Redshift supports many more for data manipulation, analysis, and administration.

12. What does it mean to 'query' data in Redshift? Give a simple example.

In Redshift, to 'query' data means to request specific information from the database. This is done using SQL (Structured Query Language) statements. Essentially, you're asking Redshift to filter, sort, and retrieve data based on conditions you define.

For example, consider a table named users with columns like user_id, name, and city. A simple query might be:

SELECT user_id, name FROM users WHERE city = 'New York';

This query retrieves the user_id and name columns for all users living in 'New York'. This illustrates a basic 'query' of data within Redshift.

13. Have you ever used a GUI tool to connect to a Redshift database? Which one?

Yes, I have used GUI tools to connect to Redshift databases. One tool I've used is Dbeaver. It's a universal database tool that supports a wide variety of databases, including Redshift. Dbeaver provides a user-friendly interface for browsing schemas, tables, and data, as well as executing SQL queries.

Another tool I have experience with is the SQL Workbench/J. While it requires some configuration for the Redshift JDBC driver, it offers robust features for data browsing, SQL scripting, and exporting data. I also have used AWS Management Console's Query Editor for simple queries. It is the simplest but lacks features offered by a full-fledged database client like Dbeaver or SQL Workbench/J.

14. What is the difference between VARCHAR and CHAR data types in Redshift, and when might you use one over the other?

VARCHAR and CHAR are both character data types in Redshift, used to store strings. CHAR has a fixed length, specified when the table is created. If the string stored is shorter than the defined length, it's padded with spaces. VARCHAR, on the other hand, is a variable-length string. It stores only the characters provided and does not pad spaces, up to a specified maximum length.

Use CHAR when you know the exact length of the strings you'll be storing and that length will always be consistent, like storing state abbreviations (e.g., 'CA'). Use VARCHAR when the length of the strings can vary significantly. VARCHAR generally saves storage space because it doesn't store padding.

15. What are some of the benefits of using Redshift's columnar storage compared to row-based storage?

Redshift's columnar storage offers significant performance advantages over row-based storage, primarily for analytical workloads. Since data is stored by column, Redshift only needs to read the columns relevant to a query, drastically reducing I/O and improving query speed. This is especially beneficial for aggregations and data warehousing tasks that often involve querying only a subset of columns across a large dataset.

In contrast, row-based storage reads entire rows even if only a few columns are needed, resulting in unnecessary I/O. Columnar storage also enables better data compression because data within a column tends to be more homogenous. This leads to reduced storage costs and further improved I/O performance. This is particularly impactful when dealing with large datasets in data warehousing scenarios.

16. How can you monitor the performance of your Redshift cluster? What metrics are important?

Redshift performance monitoring involves tracking various metrics to identify bottlenecks and optimize cluster efficiency. Important metrics include: CPU utilization (high CPU might indicate insufficient compute resources), Disk space utilization (approaching capacity affects performance), Network Throughput (measures data transfer rates in/out of the cluster), Query execution time (indicates query efficiency; monitor long-running queries), WLM queue statistics (queue wait times signify resource contention), and Connection counts (high connection numbers can impact performance). Redshift provides several tools for monitoring, including the AWS Management Console, CloudWatch metrics, and system tables/views (e.g., STL_QUERY, STV_WLM_QUERY_STATE).

Specifically, you'd use CloudWatch to monitor cluster-level metrics (CPU, Disk, Network). For query-level performance, inspect Redshift system tables to identify slow queries. Tools like AWS Redshift Advisor also provide recommendations based on your cluster's workload. You can also setup monitoring to alert on certain events. For example:

- High CPU utilization (e.g., > 80%)

- Low disk space (e.g., < 20% free)

- Long-running queries (e.g., > 5 minutes)

17. What's the purpose of distributing data evenly across nodes in a Redshift cluster?

Distributing data evenly across nodes in a Redshift cluster is crucial for achieving optimal query performance and efficient resource utilization. Uneven data distribution, known as data skew, can lead to some nodes being overloaded while others are underutilized. This imbalance results in longer query execution times because the overall performance is limited by the slowest node.

Even data distribution ensures that each node processes a roughly equal share of the data. This parallelism minimizes the chances of bottlenecks and allows Redshift to fully leverage the computational power of all nodes in the cluster. As a result, queries complete faster, and the cluster operates more efficiently, leading to better overall performance and cost-effectiveness.

18. Can you explain what a 'distribution key' is in Redshift and why it's important for query performance?

In Amazon Redshift, a distribution key specifies how table data is distributed across the compute nodes in the cluster. It's crucial for query performance because it determines where data resides and how it's accessed during query execution. Choosing the right distribution key minimizes data movement between nodes, which is often the most expensive operation in a distributed database.

When chosen optimally, the distribution key can lead to co-location of related data on the same node. This allows Redshift to perform joins and aggregations locally, significantly reducing network traffic and improving query speed. Poor distribution, on the other hand, can cause significant data redistribution during query processing, known as data skew, leading to slower query performance and increased resource consumption.

19. What is the difference between 'sort key' and 'distribution key' in Redshift?

In Amazon Redshift, the sort key defines the order in which data is physically stored within each block on disk for a given table. Choosing an appropriate sort key allows Redshift to efficiently skip over irrelevant data blocks during query execution, significantly improving query performance. Common strategies include choosing a timestamp column for time-series data or a frequently filtered column.

In contrast, the distribution key determines how rows are distributed across the compute nodes in the Redshift cluster. Selecting a good distribution key ensures even data distribution, minimizing data skew and maximizing parallel processing. There are a few main distribution styles: EVEN (distributes rows evenly across nodes), KEY (distributes rows based on the values in the distribution key column, placing rows with the same value on the same node), and ALL (replicates a copy of the entire table on every node).

20. How would you load data from an S3 bucket into a Redshift table?

To load data from an S3 bucket into a Redshift table, I would use the COPY command. This command allows Redshift to directly read data from files stored in S3 and load it into a table. The COPY command requires specifying the target table, the S3 bucket path containing the data, the AWS credentials (IAM role ARN is preferred for security), and the data format (e.g., CSV, JSON, PARQUET). Optionally, delimiters, compression types, and other format-specific parameters can be specified.

For example:

COPY my_table

FROM 's3://mybucket/data_files/'

IAM_ROLE 'arn:aws:iam::123456789012:role/MyRedshiftRole'

FORMAT AS CSV

DELIMITER ','

IGNOREHEADER 1;

This command loads CSV data from the s3://mybucket/data_files/ S3 location into the my_table Redshift table, assuming the MyRedshiftRole has the necessary permissions to access the S3 bucket.

21. What is the purpose of using COPY command in Redshift?

The COPY command in Redshift is used to load data into Redshift tables from various data sources. These sources commonly include Amazon S3 buckets, AWS Glue Data Catalog, DynamoDB, or even remote hosts via SSH. It's the primary and most efficient way to bulk load data into a Redshift cluster.

Using COPY is significantly faster than using INSERT statements for large datasets because it leverages Redshift's massively parallel processing (MPP) architecture. It can load data in parallel from multiple files, optimizing the loading speed. The COPY command supports various data formats, such as delimited text files (CSV), JSON, Avro, Parquet, and others, enabling flexible data ingestion.

22. What are some common file formats you might use when loading data into Redshift using the COPY command?

Common file formats for loading data into Redshift using the COPY command include:

- CSV (Comma-Separated Values): A simple, widely used format for tabular data.

- JSON (JavaScript Object Notation): Suitable for semi-structured data with nested objects and arrays.

COPYsupports loading JSON data directly. - AVRO: A binary data serialization format often used with Hadoop and other big data frameworks.

- Parquet: A columnar storage format optimized for analytical queries and efficient data compression.

- ORC (Optimized Row Columnar): Another columnar storage format, similar to Parquet, designed for Hadoop workloads.

- Text files: Delimited, fixed-width, and regular expression formats are possible to specify using the

DELIMITER,FIXEDWIDTH, andREGEXkeywords respectively.

AWS RedShift intermediate interview questions

1. How does Redshift handle vacuuming and analyze operations, and what strategies can you employ to optimize these processes for large datasets?

Redshift's VACUUM reclaims space occupied by deleted rows and sorts data for optimal query performance. ANALYZE updates statistics used by the query optimizer. For large datasets, VACUUM and ANALYZE can be time-consuming. Strategies to optimize include:

- Targeted Vacuuming: Vacuum specific tables or even slices that have a high percentage of deleted rows rather than vacuuming everything.

- Concurrency Scaling: Utilize concurrency scaling to provision temporary capacity for vacuum and analyze operations, minimizing impact on user queries.

- Analyze Compression Encodings: Verify that column compression encodings are efficient to ensure

ANALYZEcompletes quickly and generates accurate statistics. - Scheduled Maintenance: Schedule these operations during off-peak hours to minimize disruption.

- Incremental Vacuuming: Vacuum sort key columns more frequently than other columns for improved performance of range-restricted queries.

- Analyze Based on Data Changes: Instead of running

ANALYZEon a set schedule, consider triggeringANALYZEon specific tables after significant data changes like large data loads or deletes.

2. Can you explain the concept of workload management (WLM) in Redshift and how it impacts query performance? How would you configure WLM for different user groups with varying priority?

Workload Management (WLM) in Redshift is a feature that allows you to prioritize queries and manage the resources allocated to them. It divides the available query processing power into queues, each configured with specific memory allocation and concurrency levels. This impacts query performance by ensuring that critical queries receive adequate resources, preventing resource contention, and optimizing overall cluster utilization. By defining rules, WLM can route queries to appropriate queues based on user groups, query types, or other criteria, influencing how quickly queries execute and preventing resource monopolization by less important tasks.

To configure WLM for different user groups with varying priorities, you would define separate queues in the WLM configuration. Higher priority queues would be allocated more memory and potentially higher concurrency. Then, you would create WLM rules that map user groups to specific queues. For instance, you can set up a queue for analysts with high priority and more memory, and another queue for reporting with a lower priority and less memory. This ensures that analysts' queries, which might be time-sensitive, are executed faster while less critical reports are processed with lower resource allocation. WLM also supports query monitoring rules (QMRs) to abort or log long-running queries exceeding defined thresholds, further managing resource consumption.

3. Describe the different types of table distribution styles available in Redshift (EVEN, KEY, ALL) and explain how choosing the right distribution style affects query performance. Provide scenarios where each style would be most appropriate.

Redshift offers three main table distribution styles: EVEN, KEY, and ALL. EVEN distribution distributes data evenly across all nodes in a round-robin fashion. This is suitable when you don't have a clear key to distribute on or want to minimize initial setup complexity. However, it can lead to data being scattered requiring more cross-node communication during joins, negatively impacting query performance. KEY distribution distributes rows based on the values in a designated column (the distribution key). Rows with the same key value are stored on the same node. This is ideal when tables are frequently joined on the distribution key, as it minimizes data movement during joins. Selecting a frequently joined column as the key significantly boosts performance. The tradeoff is potential data skew if the key values are not evenly distributed, leading to uneven resource utilization and slower query performance. ALL distribution copies the entire table to every node in the cluster. This is best for small, frequently joined dimension tables because joins can be performed locally on each node without any data transfer. However, it's unsuitable for large tables due to increased storage costs and longer data loading times. Choosing the right distribution style significantly impacts query performance. Incorrect choice will lead to increased network traffic during joins and slower query execution times.

4. How would you monitor Redshift cluster performance and identify potential bottlenecks? What metrics are most important to track?

To monitor Redshift cluster performance and identify bottlenecks, I'd primarily use the AWS Management Console, CloudWatch metrics, and Redshift system tables. Important metrics to track include: CPUUtilization, DiskSpaceUsed, NetworkReceiveThroughput, NetworkTransmitThroughput, QueryQueueLength, and WLMQueueLength. Additionally, I would pay close attention to metrics indicating I/O operations, such as ReadIOPS and WriteIOPS, especially as they relate to disk usage. Analyzing the Redshift system tables like STL_QUERY, STL_LOAD_ERRORS, STL_ALERT_EVENT_LOG, and STV_WLM_QUERY_STATE provides detailed insights into query performance, load errors, and workload management issues.

Beyond metrics, analyzing slow-running queries using the EXPLAIN command and optimizing table design (distribution style, sort key) are crucial for bottleneck identification and resolution. Regularly vacuuming and analyzing tables helps maintain optimal query performance. I would also set up CloudWatch alarms to notify me of any breaches in the key metrics that might indicate performance degradation or potential problems within the cluster, enabling proactive intervention.

5. Explain how to use Redshift Spectrum to query data stored in Amazon S3. What are the benefits and limitations of using Spectrum compared to querying data directly within Redshift?

Redshift Spectrum allows you to run SQL queries directly against exabytes of unstructured data in Amazon S3 without loading the data into Redshift tables. You define external tables in Redshift that point to data stored in S3. When you query these external tables, Redshift Spectrum leverages massively parallel processing to read and process the data directly from S3, returning only the relevant results to Redshift.

Benefits of Spectrum include querying large datasets without Redshift storage costs, querying data in open formats (like Parquet, JSON, CSV), and separating compute and storage. Limitations include query performance dependent on S3 network performance, additional cost for Spectrum query usage, and potential data consistency challenges if data in S3 is frequently updated. Compared to directly querying data within Redshift, Spectrum trades faster query performance (when data is already loaded into Redshift) for flexibility and cost savings on storage.

6. How do you handle slowly changing dimensions (SCDs) in Redshift, and what are some strategies for implementing different SCD types (SCD1, SCD2, SCD3)?

Handling SCDs in Redshift involves updating a dimension table to reflect changes in source data while preserving historical information. Redshift doesn't natively enforce SCD behavior, so it's typically managed through ETL processes. For SCD1 (overwrite), a simple UPDATE statement replaces the old value with the new one. SCD2 (add new row) is trickier; you insert a new row with the updated values, marking the old row as inactive (e.g., setting is_current = false and end_date to the current timestamp) and the new row as active (is_current = true, start_date = current timestamp). SCD3 (add new column) involves adding a new column to the dimension table to store the previous value. This can be implemented using an UPDATE statement to store the old value into the new column before updating the actual column.

7. Describe the process of backing up and restoring a Redshift cluster. What are the different backup options available, and how do you choose the right one for your needs?

Redshift offers automated and manual snapshot options for backups. Automated snapshots are incremental, taken every 8 hours (or when significant changes occur), and retained for a default period (configurable, up to 365 days). Manual snapshots are user-initiated, full backups, allowing for specific points in time to be preserved indefinitely. For restoration, you select a snapshot (automated or manual) and Redshift creates a new cluster from that snapshot. The new cluster will have the data as it existed at the time the snapshot was taken.

Choosing the right option depends on recovery needs. Automated snapshots are suitable for general data protection and point-in-time recovery within the retention period. Manual snapshots are ideal for long-term archiving, pre-upgrade backups, or preserving data before major changes. Consider the Recovery Time Objective (RTO) and Recovery Point Objective (RPO) when choosing between the two. If near-instant recovery from a recent point is critical, rely on automated snapshots. If long-term retention or capturing data states before risky operations are paramount, use manual snapshots.

8. How can you optimize data loading performance into Redshift from S3? Discuss strategies such as using COPY command options, data compression, and file splitting.

To optimize data loading into Redshift from S3 using the COPY command, several strategies can be employed. First, leverage COMPUPDATE and STATUPDATE options in the COPY command to automatically update table statistics after loading, which is crucial for query performance. Use region to specify the S3 bucket's region. Implement data compression (GZIP, LZOP, ZSTD) before uploading to S3, and specify the compression type in the COPY command using the GZIP, LZOP, or ZSTD keywords; this reduces network transfer time and storage costs.

Furthermore, split large data files into multiple smaller files (ideally 1GB-100GB each). Redshift distributes these files across compute nodes, enabling parallel loading and significantly improving speed. Ensure that IAM roles used by Redshift have appropriate read access to the S3 bucket. Consider using manifest files for reliable loading, especially with a large number of files, listing all the S3 object keys to be loaded; this simplifies management and ensures all files are loaded.

9. Explain how to use Redshift's concurrency scaling feature to handle spikes in query activity. How does concurrency scaling work, and what are its limitations?

Redshift's concurrency scaling automatically adds extra cluster capacity to handle spikes in query activity. When the main cluster's resources are exhausted, queries are routed to concurrency scaling clusters, which run concurrently with the main cluster. This prevents query queuing and maintains consistent performance during peak loads. These clusters are billed per-second, only when in use.

The limitations include a short warm-up time for the concurrency scaling clusters, and a finite number of concurrency scaling clusters available. Complex queries, especially those performing significant data transformations, can still experience performance bottlenecks if concurrency scaling clusters aren't sized appropriately or if underlying data skew exists. Additionally, some operations like write operations are not supported on concurrency scaling clusters. Finally, concurrency scaling has a daily free usage limit; exceeding that will incur charges.

10. How do you secure a Redshift cluster and control access to data? Describe the different security features available, such as VPC configuration, IAM roles, and data encryption.

Securing a Redshift cluster involves multiple layers. First, place the cluster within a Virtual Private Cloud (VPC) to isolate it within your AWS network. Network security is managed using security groups, which control inbound and outbound traffic. IAM roles are crucial for controlling access to the Redshift cluster itself and its ability to access other AWS services. Assign roles with the principle of least privilege. For example, use IAM roles to allow Redshift to load data from S3 buckets without embedding credentials.

Data encryption is another key aspect. Redshift supports both at-rest and in-transit encryption. At-rest encryption uses AWS Key Management Service (KMS) or AWS CloudHSM to manage encryption keys. In-transit encryption is enabled using SSL/TLS connections. Additionally, use database user management and grant appropriate privileges to specific users or groups within Redshift. Row-level security using views can also restrict access to specific rows within a table.

11. Explain how to handle error conditions during the COPY command in Redshift. How can you identify and resolve data loading issues?

To handle error conditions during a Redshift COPY command, several mechanisms are available. The ABORTONERROR parameter specifies the maximum number of errors allowed before the COPY command fails. Setting it to 0 means any error causes the command to halt. MAXERROR can be used as well, to specify an upper bound for acceptable errors.

To identify and resolve data loading issues, examine the STL_LOAD_ERRORS system table. This table contains details about each error encountered during the COPY process, including the file name, line number, and error message. Also, consider using the ACCEPTINVCHARS parameter to replace invalid UTF-8 characters during loading, and the TRUNCATECOLUMNS parameter to truncate strings that exceed column lengths to avoid errors related to maximum column width.

12. Describe the use of user-defined functions (UDFs) in Redshift. What are the benefits and limitations of using UDFs, and how would you implement them?

User-defined functions (UDFs) in Redshift allow you to extend the functionality of SQL by creating custom functions. These functions can be used to perform operations that are not available through built-in SQL functions. Benefits include code reusability (avoiding repetition of complex logic), improved readability (encapsulating complex operations), and potentially improved performance in some cases by optimizing specific tasks. You can create scalar or table UDFs using SQL or Python (for more complex logic).

Limitations exist. Python UDFs have performance overhead due to the communication between Redshift and the Python environment. They are also subject to resource constraints (memory, CPU) of the compute nodes. SQL UDFs are generally faster but are limited to SQL syntax. Implementation involves defining the function's input parameters, return type, and function body (SQL or Python code). Example for creating a SQL based UDF:

CREATE FUNCTION f_greater (a INTEGER, b INTEGER)

RETURNS INTEGER

IMMUTABLE

AS $$

SELECT CASE WHEN a > b THEN a ELSE b END;

$$ LANGUAGE SQL;

13. How do you optimize query performance in Redshift when dealing with complex joins and aggregations? Discuss strategies such as using materialized views and query hints.

To optimize query performance in Redshift when handling complex joins and aggregations, several strategies can be employed. Materialized views can precompute and store the results of expensive queries, significantly speeding up subsequent queries that reuse the precomputed data. This is particularly effective for frequently used aggregations or joins. Query hints, such as specifying join orders or distribution styles, can guide the Redshift query optimizer to choose a more efficient execution plan. For example, /*+ label */ can be used to attach a label and examine the query plan.

Other strategies include ensuring proper data distribution using DISTSTYLE (ALL, EVEN, KEY), using SORTKEY to optimize sorting and filtering, and vacuuming and analyzing tables regularly to maintain optimal performance. Consider using smaller data types where possible, and filter data early in the query to reduce the amount of data processed in later stages.

14. What are the considerations for choosing the right Redshift node type (e.g., dc2, ds2, ra3) based on your data size, workload, and performance requirements?

Choosing the right Redshift node type depends heavily on your data size, workload, and performance needs. dc2 nodes are compute-heavy instances with SSD storage, suitable for smaller data warehouses where performance is crucial. ds2 nodes, now considered legacy, were HDD-based and optimized for large data volumes but offered slower performance compared to SSDs. The modern ra3 nodes offer a good balance using managed storage; they automatically scale storage independently of compute, making them cost-effective for growing datasets where compute and storage needs evolve at different rates.

To decide, estimate your data size. If it's relatively small and requires the best possible performance, dc2 is a good starting point. If your data is large and growing and you want scalability/cost optimization, ra3 is likely the best choice. Consider your workload patterns - are they compute-intensive or I/O bound? Use Redshift's performance monitoring tools after initial setup to optimize your choice; don't be afraid to switch instance types as needed.

15. Explain how to use the EXPLAIN command in Redshift to analyze query execution plans. How can you use this information to identify performance bottlenecks and optimize queries?

The EXPLAIN command in Redshift displays the query execution plan without actually running the query. It shows the steps Redshift will take, including table scans, joins, and data distribution methods. To use it, simply prepend EXPLAIN to your SQL query, like so: EXPLAIN SELECT * FROM my_table WHERE column1 = 'value';. The output is a hierarchical tree, with each node representing an operation.

To identify bottlenecks, look for operations that are particularly costly (high estimated cost). Common issues include full table scans (consider adding indexes or using a more selective WHERE clause), inefficient join strategies (ensure distribution keys align for joining tables), and data redistribution (optimize table distribution styles to minimize data movement). Also, examine the join order, as incorrect order could cause suboptimal performance. By analyzing the plan, you can rewrite queries, adjust table designs (distribution keys and sort keys), or add indexes to improve performance. Redshift Advisor (if enabled) provides recommendations based on EXPLAIN output.

16. How can you implement row-level security in Redshift to restrict data access based on user roles or attributes?

Row-level security in Redshift can be implemented primarily using views in conjunction with the SESSION_USER or CURRENT_USER functions, or by leveraging user-defined functions (UDFs). Create views that filter data based on the current user's role or attributes. Grant users access to these views instead of the underlying tables.

For example, if you have a table sales_data and want to restrict access based on region, you could create views like sales_data_east and sales_data_west, each filtering the data accordingly. Grant users from the East region access to sales_data_east and users from the West region access to sales_data_west. You could then use a UDF which queries a user role table to determine if a user has access or not.

17. Describe the process of migrating data from another data warehouse (e.g., Teradata, Netezza) to Redshift. What are the challenges and considerations involved in such a migration?

Migrating data to Redshift typically involves these steps: 1. Assessment: Analyze source data structures, volume, and dependencies. 2. Extraction: Extract data from the source data warehouse (e.g., Teradata). Tools like bcp or vendor-specific utilities can be used. 3. Transformation: Transform data to match Redshift's schema and data types. This might include data cleansing, aggregation, and format conversions. Scripting languages (Python, SQL) are useful here. 4. Loading: Load transformed data into Redshift using COPY command from S3 or other staging areas. 5. Validation: Verify data integrity and accuracy after migration.

Challenges include schema differences, data type incompatibilities, performance tuning for large datasets, and ensuring minimal downtime. Considerations involve choosing the right tools for extraction and transformation, optimizing the COPY command for efficient loading, handling data quality issues, and planning for incremental data updates. Network bandwidth can also be a limitation, and security considerations like encryption during transit are crucial.

18. How would you implement a data quality monitoring process in Redshift to detect and address data inconsistencies or errors?

Implementing data quality monitoring in Redshift involves several steps. First, define data quality rules and metrics, such as completeness, accuracy, consistency, and validity. Then, implement automated checks using SQL queries in Redshift to detect violations of these rules. For example, you could use COUNT(*) with WHERE clauses to find null values in required fields or identify inconsistent data across tables.

Next, schedule these checks to run regularly (e.g., daily or hourly) using Redshift's scheduler or an external orchestration tool like AWS Glue or Airflow. Store the results of these checks in a dedicated monitoring table. Finally, set up alerts based on the monitoring table, using services like AWS SNS, to notify the data team when data quality issues are detected. This allows for timely investigation and remediation of data inconsistencies or errors. Consider using data profiling tools initially to understand the existing data quality before designing rules.

19. Explain how to use Redshift's query monitoring rules (QMR) to automatically detect and terminate long-running or resource-intensive queries.

Redshift's Query Monitoring Rules (QMR) allow you to define rules that automatically monitor and take actions on queries that exceed specified thresholds for resource consumption or execution time. You create QMRs associated with workload management (WLM) queues. These rules can then automatically terminate queries exceeding the defined limits, preventing resource exhaustion and improving overall system performance.

To use QMR, you first define the rules based on metrics like execution time, CPU usage, or disk I/O. For example, you could define a rule that terminates any query running longer than 600 seconds or consuming more than 80% of available CPU. You then associate these rules with specific WLM queues. When a query running in that queue violates a rule, Redshift takes the defined action, such as logging the event, queuing the query for termination, or immediately terminating the query, thereby managing runaway queries effectively.

20. What are the best practices for designing tables in Redshift to optimize for analytical queries? Discuss considerations such as column data types, compression encodings, and sort keys.

When designing tables in Redshift for analytical queries, several best practices should be followed to optimize performance. Choosing appropriate column data types is crucial; use the smallest data type that can accommodate the data to save storage space and improve query speed. Employ compression encodings to reduce storage footprint and enhance I/O performance. Common encodings include ZSTD, LZO, and BYTEDICT, and the optimal choice depends on the data characteristics.

Furthermore, selecting the right sort key is vital for query optimization. Choose a column frequently used in WHERE clauses, JOIN conditions, or GROUP BY operations as the sort key. Compound sort keys are useful when filtering on multiple columns. Distribute data evenly across nodes using appropriate distribution styles like EVEN, KEY, or ALL, considering the size and usage patterns of tables involved in joins. KEY distribution on the join column is beneficial for large tables joining to smaller tables. Regularly analyze table statistics to enable the query optimizer to make informed decisions.

21. Describe how you would set up alerting and notifications for critical events in Redshift, such as high CPU utilization, low disk space, or failed queries.

I would set up alerting and notifications for critical Redshift events using a combination of CloudWatch metrics, CloudWatch Alarms, and SNS. First, I'd leverage the readily available Redshift performance metrics in CloudWatch, such as CPUUtilization, DiskSpaceUsed, and QueryDuration. Then, I would create CloudWatch Alarms for each critical event. For instance, an alarm could be configured to trigger when CPUUtilization exceeds 80% for a specified duration. Each alarm would be configured to send notifications to an SNS topic.

Finally, I would configure the SNS topic to send notifications to the appropriate channels, such as email, Slack, or PagerDuty. This allows for immediate awareness and response to critical Redshift issues. For failed queries, I'd create a process to monitor Redshift system tables like STL_QUERY and STL_ALERT_EVENT_LOG and send alerts based on error codes or query duration exceeding predefined thresholds.

Expert AWS RedShift interview questions

1. How would you optimize Redshift performance for complex analytical queries involving multiple large tables and intricate joins?

To optimize Redshift performance for complex analytical queries with large tables and intricate joins, focus on several key areas. Firstly, use appropriate data distribution strategies. Choose DISTSTYLE KEY on the most frequently joined column to collocate joining rows on the same compute nodes. If no single column is dominant for joins, consider DISTSTYLE ALL for smaller dimensions tables to replicate the data across all nodes. For very large fact tables without a clear join key, DISTSTYLE EVEN might be the best option to distribute data evenly.

Secondly, optimize table schemas and query structures. Use column encoding (e.g., ZSTD, LZO) to reduce storage space and improve compression. Ensure you have appropriate sort keys (SORTKEY) defined on your tables based on the columns used most frequently in WHERE clauses and ORDER BY clauses. Finally, analyze query execution plans using EXPLAIN to identify bottlenecks. Rewrite queries to leverage Redshift's columnar storage and parallel processing capabilities, reducing unnecessary data scans and join operations by using techniques like common table expressions(CTEs) and materialized views when appropriate. Regularly vacuum and analyze tables to maintain optimal performance.

2. Describe your experience with workload management (WLM) in Redshift and how you would configure it to prioritize different types of queries.

My experience with Redshift WLM involves configuring queue assignment rules to prioritize queries based on criteria like user group, query group, or query type. I've used WLM to ensure that critical reporting queries receive higher priority and resources compared to ad-hoc exploration queries. For example, I might configure a dedicated queue for ETL processes with higher memory allocation and concurrency slots, while a separate queue for data analysts receives lower priority to prevent resource contention.

To configure WLM for prioritization, I define queue assignment rules that match specific criteria, such as user_group = 'reporting' or query_group = 'etl'. These rules direct queries to the appropriate queue. Within each queue, I can adjust parameters like memory_percent_to_use, query_concurrency, and priority (e.g., 'high', 'normal', 'low') to fine-tune resource allocation and query execution order. For shorter queries, I might use short query acceleration (SQA) for immediate execution outside of normal queue configurations.

3. Explain the trade-offs between different distribution styles (EVEN, KEY, ALL) in Redshift and how you would choose the appropriate style for a given table.

Redshift distribution styles impact how data is spread across compute nodes. EVEN distribution spreads data evenly, regardless of column values. This is good for small tables or when there's no clear distribution key but can lead to uneven query performance as data isn't localized to specific nodes based on joins or filters. KEY distribution assigns rows to nodes based on the values in a specified distribution key column. This is ideal when you have frequent joins on a specific key, as it co-locates related data, minimizing data transfer between nodes. However, if the key has skewed values (some values appear much more often than others), it can cause hotspots and uneven node utilization. ALL distribution replicates the entire table to every node. This is best for small, frequently joined dimension tables, as it eliminates the need for data transfer during joins. However, it consumes more storage space and is not suitable for large tables.

Choosing the right style depends on the table's size, query patterns, and data characteristics. For small tables with no specific join patterns, EVEN is suitable. For large fact tables, KEY distribution is often preferred if a suitable distribution key (usually the join key with dimensions) exists and is not highly skewed. For small dimension tables that are frequently joined, ALL provides the best performance.

4. How would you design a disaster recovery strategy for a Redshift cluster, considering factors like RTO and RPO?

A Redshift disaster recovery (DR) strategy focuses on minimizing data loss (RPO) and downtime (RTO). Key components include automated backups, cross-region replication, and a failover plan. Redshift's automated snapshots provide a foundation for recovery. To meet specific RTO/RPO requirements, you can configure cross-region snapshots and restore from these snapshots in a secondary region in case of a primary region failure. For faster recovery, consider Redshift RA3 instances which separate compute and storage, allowing for quicker resizing and recovery.

The disaster recovery plan should include:

- Regular automated snapshots: Configured based on desired RPO.

- Cross-region snapshot replication: Enabled to replicate snapshots to a secondary region.

- Testing the failover process: Periodically test the DR plan to ensure it meets RTO objectives. This involves restoring from snapshots in the DR region, verifying data integrity, and validating application connectivity.

- Monitoring and alerting: Implement monitoring to detect outages and trigger the failover process.