Evaluating a candidate's program testing skills can be tricky, especially with the increasing complexity of software development. It's easy to be caught off guard by candidates who sound good but don't actually possess the practical skills needed for the job, as discussed in our article on skills assessment for recruiters.

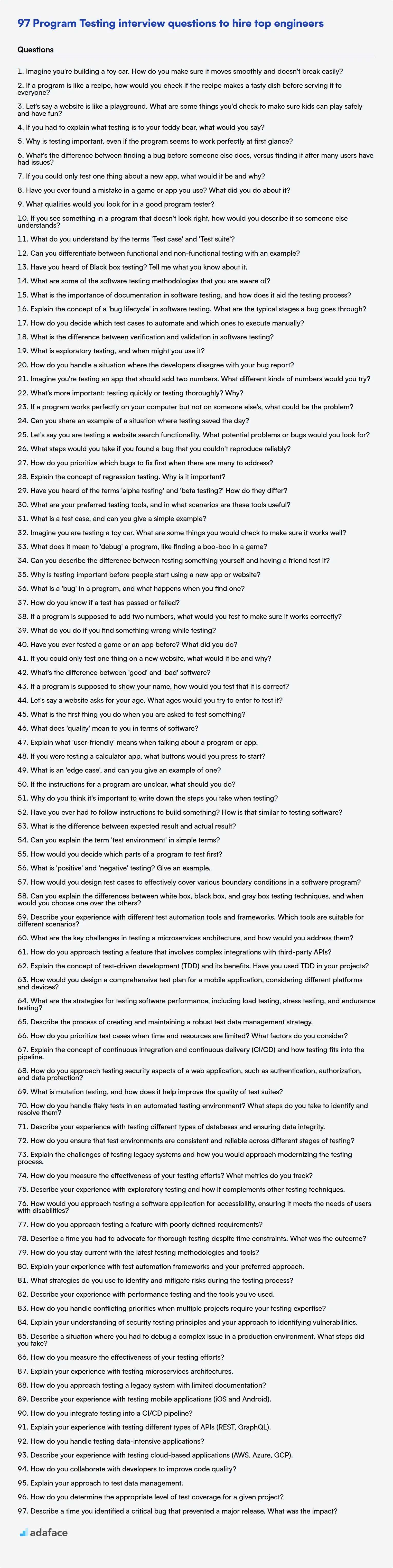

This blog post provides a curated list of interview questions for program testing roles, categorized by experience level from freshers to experienced professionals. You'll also find a selection of multiple-choice questions (MCQs) to quickly gauge a candidate's understanding of key testing concepts.

Use these questions to better assess candidates' program testing knowledge and identify those who can ensure software quality. Alternatively, use our QA Engineer Test to quickly identify candidates with the right skills.


Program Testing interview questions for freshers

1. Imagine you're building a toy car. How do you make sure it moves smoothly and doesn't break easily?

To make the toy car move smoothly, I'd focus on a few key areas. First, I'd choose low-friction materials for the axles and wheels. Things like smooth plastic or metal axles paired with well-fitted wheels are important. Also, I would ensure the wheels are properly aligned to avoid any unnecessary resistance or wobbling. Finally, the weight distribution is key; the car should be balanced so that no single wheel is taking on more weight than the others.

To ensure durability, I'd use strong and flexible materials for the car's body and chassis, such as a tough plastic or even a lightweight metal. The connections between parts also matter: using robust fasteners or adhesives at the joints and reinforcing any potentially weak points in the design helps make sure it doesn't break easily during regular play.

2. If a program is like a recipe, how would you check if the recipe makes a tasty dish before serving it to everyone?

If a program is like a recipe, checking if it makes a tasty dish before serving involves testing and validation. I would start with unit tests, which are like tasting individual ingredients to ensure they are good (e.g., a function returns the expected value). Then, I'd move to integration tests, which are like tasting combinations of ingredients to see if they work well together (e.g., different modules interact correctly).

Finally, I'd perform end-to-end tests or user acceptance testing (UAT), which is like tasting the entire dish to ensure it meets the desired quality and satisfies the end user. This could involve beta testing with a small group of users to get feedback before a wider release. In a development context, automated testing and code reviews are essential to improve the quality of the 'dish'. Furthermore, monitoring and logging after deployment is like checking if people liked the dish at the table and gathering feedback for future improvements.
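To make the analogy concrete, here is a minimal Python sketch. The functions `apply_discount`, `add_shipping`, and `checkout` are hypothetical stand-ins for the "ingredients" and the "dish": the unit tests taste each function on its own, while the integration test tastes them working together.

```python
def apply_discount(price, percent):
    # Ingredient 1 (hypothetical): apply a percentage discount to one price
    return round(price * (1 - percent / 100), 2)

def add_shipping(amount, flat_fee=5.0):
    # Ingredient 2 (hypothetical): add a flat shipping fee
    return round(amount + flat_fee, 2)

def checkout(price, percent):
    # The dish: both steps combined
    return add_shipping(apply_discount(price, percent))

# Unit tests: each ingredient on its own
assert apply_discount(100.0, 10) == 90.0
assert add_shipping(90.0) == 95.0

# Integration test: the ingredients together
assert checkout(100.0, 10) == 95.0
print("unit and integration checks passed")
```

If the unit tests pass but the integration test fails, the problem is likely in how the pieces are combined rather than in either piece alone.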

3. Let's say a website is like a playground. What are some things you'd check to make sure kids can play safely and have fun?

If a website is a playground, I'd check a few things to ensure safety and fun:

- Security: Is the site protected against bad actors? Are user data and interactions safe? Things like proper HTTPS implementation and protection against common web vulnerabilities (XSS, CSRF) are important.

- Content Moderation: Is there a system in place to remove inappropriate or harmful content? This could be user-generated content, or even ads. We'd want a reporting mechanism and a team or automated system to review reports.

- Accessibility: Can all kids play, including those with disabilities? This means following accessibility guidelines (WCAG) for things like screen readers, keyboard navigation, and color contrast.

- Privacy: Is kids' personal information protected? We need to comply with laws like COPPA and ensure data is not collected or shared without parental consent.

- Usability: Is the playground easy to navigate and understand? Clear instructions and intuitive design are crucial for a positive experience. Is the site responsive so it works on different devices like tablets and phones?

4. If you had to explain what testing is to your teddy bear, what would you say?

Hey Teddy, testing is like playing with toys to make sure they work as expected before giving them to other kids. We try all the buttons, see if they break easily, and make sure they're fun and safe. We do this with software too, trying out different things to see if it works right, doesn't crash, and is easy to use.

Basically, it's all about checking if something does what it's supposed to do, and making sure there aren't any surprises or problems when someone else uses it. We want to catch any issues before they do!

5. Why is testing important, even if the program seems to work perfectly at first glance?

Testing is crucial even if a program appears to function correctly initially because it helps uncover hidden defects, edge cases, and vulnerabilities that might not be immediately apparent. Initial observations often only cover a small subset of possible inputs and scenarios. Testing increases confidence in the software's reliability, stability, and security over time.

Specifically, testing can reveal issues like:

- Regression errors: Changes introduced later can unintentionally break existing functionality.

- Performance bottlenecks: The application might be slow under heavy load.

- Security vulnerabilities: Exploitable weaknesses could exist despite the apparent correctness.

- Unexpected behavior: Inputs outside the anticipated range might cause crashes or incorrect results.

Robust testing helps ensure the program functions as intended across a much wider variety of circumstances.
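A tiny, hypothetical illustration of the last point: `average` passes the first check anyone would try, yet an empty list (an input outside the anticipated range) crashes it.

```python
def average(values):
    # Hypothetical function that "works" on the first input a developer tried
    return sum(values) / len(values)

assert average([2, 4, 6]) == 4  # the obvious case passes

try:
    average([])  # an edge case nobody checked at first glance
except ZeroDivisionError:
    print("edge case found: average([]) raises ZeroDivisionError")
```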

6. What's the difference between finding a bug before someone else does, versus finding it after many users have had issues?

Finding a bug before users do significantly reduces its impact and cost. Before release, a fix is cheaper because fewer people are affected, and it prevents negative user experiences, reputational damage, and potential financial losses. The development team can address it in a controlled environment with minimal disruption.

Conversely, discovering a bug after many users encounter it leads to a higher cost due to factors such as support requests, potential data corruption, loss of user trust, and emergency patching procedures. It might involve rolling back deployments, issuing public apologies, and potentially offering compensation. Fixing bugs post-release is more complex and disruptive, demanding immediate attention to minimize further damage. The severity escalates dramatically with the number of impacted users.

7. If you could only test one thing about a new app, what would it be and why?

If I could only test one thing, it would be the core functionality or the "happy path" of the app. This is the primary reason users will download and use the app. Ensuring this works flawlessly provides the most immediate value and user satisfaction.

For example, if it's a banking app, I'd test the basic money transfer functionality. If it's a ride-sharing app, I'd test the ability to successfully request and complete a ride. By focusing on the core feature, I can quickly assess if the app is fundamentally useful and worth further exploration and testing.

8. Have you ever found a mistake in a game or app you use? What did you do about it?

Yes, I've encountered a few mistakes in apps I use. For example, I once found a bug in a popular note-taking app where text formatting would sometimes disappear after syncing across devices.

I reported the issue through the app's built-in feedback mechanism, providing specific details about the steps to reproduce the bug and the affected devices. I also included screenshots to visually demonstrate the problem. Reporting it through the proper channels ensures that the developers are aware and can prioritize the fix.

9. What qualities would you look for in a good program tester?

A good program tester should possess several key qualities. Firstly, they need strong analytical and problem-solving skills to effectively dissect the software and identify potential issues. A tester must be detail-oriented, noticing even minor discrepancies and inconsistencies. They should also have excellent communication skills, both written and verbal, to clearly articulate bugs and collaborate effectively with developers.

Furthermore, a successful tester needs to be patient and persistent, as debugging can be a time-consuming process. A good tester will also be curious and constantly seeking new ways to break the software and uncover hidden vulnerabilities. Some technical knowledge is also useful, such as understanding common data structures like arrays and linked lists and how certain algorithms may affect performance.

10. If you see something in a program that doesn't look right, how would you describe it so someone else understands?

When describing something that 'doesn't look right' in code, I focus on being specific and providing context. I start by stating the observed behavior and expected behavior. For instance, instead of saying "the calculation is wrong," I might say, "The total price should be $100, but the program is displaying $80." Then, I clearly articulate the steps to reproduce the issue, if possible. I highlight the problematic code segment:

```python
def calculate_total(price, tax):
    # Suspect line: expected the total including tax, but this may be miscalculated
    total = price + tax
    return total
```

Following that, I mention any assumptions I'm making or data I'm using. I aim to make it easy for someone else to understand the problem and investigate further, even if they are not familiar with that specific part of the codebase. Clear, concise, and reproducible is the goal.
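One way to make such a report unambiguous is to attach a small reproduction that prints expected versus actual values. The sketch below assumes, hypothetically, that `tax` was meant to be a rate (0.25 for 25%) rather than an amount; `calculate_total_reported`, `calculate_total_fixed`, and `check_total` are illustrative names, not part of any real codebase.

```python
def calculate_total_reported(price, tax):
    # Logic as it appears in the snippet: adds `tax` directly to the price
    return price + tax

def calculate_total_fixed(price, tax_rate):
    # Hypothetical fix, assuming the second argument is a rate such as 0.25
    return round(price * (1 + tax_rate), 2)

def check_total(func):
    # Steps to reproduce: price = 80, tax rate = 0.25; expected total = 100.00
    expected = 100.0
    actual = func(80, 0.25)
    return f"expected {expected:.2f}, got {actual:.2f}"

print(check_total(calculate_total_reported))  # expected 100.00, got 80.25
print(check_total(calculate_total_fixed))     # expected 100.00, got 100.00
```

A developer can run this in seconds, see the discrepancy, and confirm when it is gone.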

11. What do you understand by the terms 'Test case' and 'Test suite'?

A test case is a specific set of actions performed to verify a particular feature or functionality of a software application. It typically includes preconditions, input data, expected results, and postconditions. It's a single, atomic test that aims to validate a specific requirement.

A test suite is a collection of related test cases that are grouped together to test a broader aspect of the application. Test suites are often organized based on functionality, module, or testing type (e.g., regression, smoke, integration). They provide a structured way to execute and manage multiple test cases efficiently. Test suites help in organizing tests and running them in a logical order.
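In Python's built-in `unittest` module, each `test_*` method is a test case, and a `unittest.TestSuite` groups related cases so they can be run together. The `login` function below is a hypothetical stand-in for the feature under test.

```python
import unittest

def login(username, password):
    # Hypothetical function under test
    return username == "alice" and password == "s3cret"

class LoginTests(unittest.TestCase):
    # Each method is one test case
    def test_valid_credentials(self):
        self.assertTrue(login("alice", "s3cret"))

    def test_wrong_password(self):
        self.assertFalse(login("alice", "wrong"))

def build_suite():
    # The suite groups related test cases so they run as one unit
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    suite.addTests(loader.loadTestsFromTestCase(LoginTests))
    return suite

if __name__ == "__main__":
    unittest.TextTestRunner(verbosity=2).run(build_suite())
```

A real project would typically have several such suites (for example, a smoke suite and a regression suite) built from different groups of test cases.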

12. Can you differentiate between functional and non-functional testing with an example?

Functional testing verifies that each function of the software application operates in conformance with the requirement specification. It focuses on what the system does. For instance, testing if a user can successfully log in with valid credentials, or if a shopping cart correctly calculates the total price are examples of functional testing. Non-functional testing, on the other hand, evaluates aspects like performance, security, usability, and reliability. It focuses on how well the system works.

For example, functional testing would ensure that the 'submit order' button correctly submits an order. Non-functional testing would ensure that the 'submit order' button submits the order within an acceptable time frame (e.g., less than 3 seconds) under a normal load and under a stress test with hundreds of concurrent users.
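A sketch of the same distinction in Python. `cart_total` is a hypothetical function, and the 3-second budget is borrowed from the example above; a real performance test would usually run under realistic load with a dedicated tool rather than timing a single call.

```python
import time

def cart_total(prices):
    # Hypothetical function under test
    return sum(prices)

def test_functional_total():
    # Functional: WHAT the system does (correct result)
    assert cart_total([10, 20, 30]) == 60

def test_nonfunctional_speed():
    # Non-functional: HOW WELL it does it (stays within a time budget)
    prices = list(range(100_000))
    start = time.perf_counter()
    cart_total(prices)
    elapsed = time.perf_counter() - start
    assert elapsed < 3.0, f"took {elapsed:.3f}s"

if __name__ == "__main__":
    test_functional_total()
    test_nonfunctional_speed()
    print("both checks passed")
```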

13. Have you heard of Black box testing? Tell me what you know about it.

Black box testing is a software testing method where the internal structure, design, and implementation of the item being tested are not known to the tester. The focus is on testing the functionality of the software based on its specifications and requirements. Testers provide inputs and examine the outputs without any knowledge of the internal workings of the system.

Key characteristics of black box testing include testing from an external or end-user perspective, validating functionality according to requirements, and employing techniques like equivalence partitioning, boundary value analysis, and decision table testing to derive test cases. The goal is to uncover errors such as incorrect or missing functions, interface errors, errors in data structures or external database access, and behavior or performance issues.
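A sketch of equivalence partitioning and boundary value analysis, assuming a hypothetical requirement: "users aged 18 to 65 inclusive are eligible." The test inputs are derived only from that specification; the tester never needs to read the body of `is_eligible`.

```python
def is_eligible(age):
    # Hypothetical implementation; to a black-box tester this is opaque
    return 18 <= age <= 65

# Boundary values on each edge, plus one representative per partition
cases = {
    17: False, 18: True, 19: True,   # lower boundary
    64: True, 65: True, 66: False,   # upper boundary
    5: False, 40: True, 90: False,   # below / inside / above partitions
}

for age, expected in cases.items():
    assert is_eligible(age) == expected, f"age={age}"
print(f"{len(cases)} black-box cases passed")
```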

14. What are some of the software testing methodologies that you are aware of?

I'm familiar with several software testing methodologies, including:

- Agile Testing: Focuses on continuous testing throughout the software development lifecycle, closely integrated with agile development practices. Testers and developers collaborate closely to deliver working software in short iterations. Testing is done early and often.

- Waterfall Testing: A sequential approach where testing occurs after development is complete. Each phase (requirements, design, implementation, testing, deployment) must be fully completed before the next begins. This methodology is less flexible than agile.

- V-Model Testing: An extension of the waterfall model, emphasizing the relationship between each development phase and its corresponding testing phase. Verification and validation activities are performed at each stage.

- Exploratory Testing: An approach where testers explore the software to discover defects based on their knowledge and experience. It's less structured and more focused on learning the application during the testing process. This is a good complement to more structured approaches.

- Behavior Driven Development (BDD): Writing tests from a user's perspective, describing the expected behavior of the system. Tests are written in a natural language-like format, making them easily understood by stakeholders. This helps bridge the gap between business requirements and technical implementation.

- Test-Driven Development (TDD): Writing tests before writing the code. This forces developers to think about the requirements and design before implementation. The development cycle follows the pattern of writing a failing test, writing code to pass the test, and then refactoring the code.
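A compressed view of one TDD cycle in Python. `slugify` is a hypothetical function; in practice the test would live in its own file and be run, and seen to fail, before any implementation exists.

```python
import re

# Step 1 (red): write the test first. Run before slugify exists,
# it fails with a NameError, which is the expected failing test.
def test_slugify():
    assert slugify("Hello World") == "hello-world"
    assert slugify("  Testing, 1 2 3!  ") == "testing-1-2-3"

# Step 2 (green): write just enough code to make the test pass.
# Step 3 (refactor): tidy the code, re-running the test to stay green.
def slugify(text):
    # Lowercase, turn each run of non-alphanumerics into "-", trim edge dashes
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")

test_slugify()
print("test_slugify passed")
```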

15. What is the importance of documentation in software testing, and how does it aid the testing process?

Documentation is crucial in software testing because it provides a clear understanding of the software's intended functionality, design, and behavior. It serves as a reference point for testers, ensuring they have the necessary information to create comprehensive test cases and verify that the software meets the defined requirements. Without proper documentation, testers may misinterpret requirements, overlook critical functionalities, or struggle to reproduce and report defects accurately.

Specifically, documentation aids the testing process by:

- Providing a basis for test case design: Requirements documents, design specifications, and user manuals offer insights into the expected inputs, outputs, and interactions of the software, allowing testers to create targeted test cases.

- Facilitating defect reporting: Clear documentation helps testers accurately describe defects, including steps to reproduce, expected vs. actual results, and the impact of the defect. This improves communication between testers and developers and enables faster resolution.

- Enabling traceability: Documentation allows testers to trace requirements to test cases and defects, ensuring that all functionalities are adequately tested and that defects are linked back to their root causes.

- Supporting regression testing: Documentation helps testers understand the impact of code changes and prioritize regression testing efforts, focusing on areas most likely to be affected.

16. Explain the concept of a 'bug lifecycle' in software testing. What are the typical stages a bug goes through?

The bug lifecycle, also known as the defect lifecycle, is the journey a bug takes from its discovery to its resolution. It outlines the different stages a bug progresses through during the testing and development process. Understanding this lifecycle is crucial for efficient bug tracking and management.

Typical stages include:

- New: A bug is discovered and logged.

- Assigned: The bug is assigned to a developer for investigation.

- Open: The developer begins working on fixing the bug.

- Fixed: The developer believes the bug is resolved and submits the fix.

- Pending Retest: The bug fix is ready for testing.

- Retest: The tester re-tests the fix.

- Verified: The tester confirms the bug is resolved.

- Closed: The bug is successfully fixed and verified.

- Reopened: If the bug persists after the 'Retest' stage, it goes back to 'Open'.

- Rejected: The bug is deemed not a bug or a duplicate.
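The lifecycle can be modelled as a small state machine. The transitions below are one plausible reading of the stages listed above, not the rules of any particular tracker; real tools and teams configure them differently.

```python
from enum import Enum

class BugState(Enum):
    NEW = "New"
    ASSIGNED = "Assigned"
    OPEN = "Open"
    FIXED = "Fixed"
    PENDING_RETEST = "Pending Retest"
    RETEST = "Retest"
    VERIFIED = "Verified"
    CLOSED = "Closed"
    REOPENED = "Reopened"
    REJECTED = "Rejected"

S = BugState
# Assumed set of allowed moves between stages
ALLOWED = {
    S.NEW: {S.ASSIGNED, S.REJECTED},
    S.ASSIGNED: {S.OPEN, S.REJECTED},
    S.OPEN: {S.FIXED, S.REJECTED},
    S.FIXED: {S.PENDING_RETEST},
    S.PENDING_RETEST: {S.RETEST},
    S.RETEST: {S.VERIFIED, S.REOPENED},
    S.VERIFIED: {S.CLOSED},
    S.REOPENED: {S.OPEN},
    S.CLOSED: set(),
    S.REJECTED: set(),
}

def transition(current, target):
    # Refuse moves the workflow does not allow
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target

# A bug that fails its first retest and passes the second
state = S.NEW
for nxt in [S.ASSIGNED, S.OPEN, S.FIXED, S.PENDING_RETEST, S.RETEST,
            S.REOPENED, S.OPEN, S.FIXED, S.PENDING_RETEST, S.RETEST,
            S.VERIFIED, S.CLOSED]:
    state = transition(state, nxt)
print(state.value)  # Closed
```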

17. How do you decide which test cases to automate and which ones to execute manually?

When deciding which test cases to automate, I prioritize those that are repetitive, high-risk or critical, time-consuming, and stable (meaning the application functionality doesn't change frequently). Automation is ideal for regression tests, smoke tests, and data-driven tests where numerous inputs need to be validated. I also consider the ROI, weighing the initial setup cost against the long-term benefits of automation.

Manual testing is better suited for exploratory testing (unstructured, ad-hoc testing to discover unexpected issues), usability testing (evaluating the user experience, which requires human judgment), edge cases, and new features that are still under development and subject to change. Tests requiring subjective assessment (e.g., aesthetics) are also generally performed manually. Ultimately, I aim for a balanced approach, strategically combining automation and manual testing to achieve comprehensive test coverage.

18. What is the difference between verification and validation in software testing?

Verification is the process of checking whether the software meets the specified requirements. It focuses on ensuring that "you built it right." It answers the question: "Are we building the product right?" Activities include reviews, inspections, and testing to confirm that the software conforms to its specification.

Validation, on the other hand, is the process of evaluating the final product to check whether it meets the customer's needs and expectations. It focuses on ensuring that "you built the right thing." It answers the question: "Are we building the right product?" Activities include user acceptance testing and beta testing to confirm that the software is fit for its intended use.

19. What is exploratory testing, and when might you use it?

Exploratory testing is a software testing approach where test cases are not created in advance. Instead, testers dynamically design and execute tests based on their knowledge, experience, and intuition, while simultaneously learning more about the software as they test. It emphasizes discovery, investigation, and learning throughout the testing process.

You might use exploratory testing when requirements are vague or incomplete, when there's limited time for testing, when you want to quickly assess the software's usability and find critical issues, or when you want to supplement existing scripted tests with more creative, in-depth exploration.

20. How do you handle a situation where the developers disagree with your bug report?

If developers disagree with my bug report, I first try to understand their perspective. I carefully review their reasoning and try to see if I missed something or if the bug is actually an intended behavior. I then clearly communicate my findings with specific steps to reproduce, expected vs. actual results, and the impact of the bug.

If the disagreement persists, I'll try to gather more evidence, perhaps by testing on different environments or devices. If it's a complex technical issue, I'll involve other testers or senior developers to get a second opinion. The ultimate goal is to reach a common understanding and resolve the issue constructively, focusing on the quality of the software.

21. Imagine you're testing an app that should add two numbers. What different kinds of numbers would you try?

When testing an app that adds two numbers, I would try a variety of inputs to ensure robustness. This includes:

- Positive integers: Basic functionality test (e.g., 2 + 3)

- Negative integers: Check handling of negative signs (e.g., -5 + 1, -2 + -8)

- Zero: Test the additive identity (e.g., 0 + 5, -3 + 0)

- Large numbers: Identify potential overflow issues or limitations (e.g., 2147483647 + 1)

- Floating-point numbers: Check for precision and rounding errors (e.g., 3.14 + 2.71, 0.1 + 0.2)

- Mixed types: Test adding an integer and a float

- Boundary values: Minimum and maximum values the system is designed to handle.

- Invalid inputs: Characters, strings, or null values to verify error handling and prevent crashes.

- Scientific notation: Numbers in exponential format (e.g., 1.0e+6 + 1)

I would also test edge cases and combinations of these to uncover potential issues.
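A sketch of how several of these input classes might be checked in Python, against a hypothetical `add` that accepts ints and floats and rejects everything else (including booleans, which is a design assumption). Note that Python integers do not overflow, so the large-number case only reveals an overflow in languages or systems with fixed-width integers.

```python
import math

def add(a, b):
    # Hypothetical function under test: numbers only, clear error otherwise
    for value in (a, b):
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"not a number: {value!r}")
    return a + b

# Exact results: integers, negatives, zero, large values, mixed, scientific
assert add(2, 3) == 5
assert add(-5, 1) == -4 and add(-2, -8) == -10
assert add(0, 5) == 5 and add(-3, 0) == -3
assert add(2147483647, 1) == 2147483648  # would overflow a 32-bit int
assert add(2, 0.5) == 2.5
assert add(1.0e+6, 1) == 1000001.0

# Floating point: compare with a tolerance, not ==
assert add(0.1, 0.2) != 0.3
assert math.isclose(add(0.1, 0.2), 0.3)
assert math.isclose(add(3.14, 2.71), 5.85)

# Invalid inputs should raise a clear error instead of crashing later
for bad in ("abc", None, "2"):
    try:
        add(bad, 1)
    except TypeError:
        pass
    else:
        raise AssertionError(f"accepted invalid input {bad!r}")
print("all addition cases behaved as expected")
```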

22. What's more important: testing quickly or testing thoroughly? Why?

The ideal answer balances speed and thoroughness, but prioritizing depends on the context. In fast-paced development, quick testing (unit tests, smoke tests) is crucial for rapid feedback and preventing major regressions. This allows for faster iteration and delivery. However, insufficient testing early on can lead to accumulated technical debt and increased risk of defects.

In safety-critical systems or projects with high reliability requirements, thorough testing (integration, end-to-end, performance, security) is paramount, even if it takes longer. The cost of failure outweighs the benefit of speed. Therefore, a risk-based approach is best, focusing thoroughness on critical areas while using quicker tests for less critical features. Furthermore, automated testing helps to balance speed and thoroughness.

23. If a program works perfectly on your computer but not on someone else's, what could be the problem?

There are several potential reasons why a program might work perfectly on your computer but not on someone else's. The most common involve differences in the environments. These differences can be:

- Operating System: The program may be incompatible with the other person's OS (e.g., written for Windows but run on macOS or Linux).

- Dependencies: Missing or different versions of required libraries or packages. Use a dependency management tool like `pip` (Python), `npm` (Node.js), or Maven (Java) to define and install the necessary packages at their correct versions. For example, in Python you could list dependencies in a `requirements.txt` file and install them with `pip install -r requirements.txt`.

- Hardware: Incompatible hardware requirements (e.g., insufficient RAM, specific GPU needed).

- Configuration: Different settings or configurations (e.g., environment variables, configuration files).

- Permissions: Insufficient permissions to access necessary files or resources.

- Software Conflicts: Conflicts with other software installed on the other person's machine.

- File Paths: Hardcoded absolute file paths that are valid on your system but not the other person's.
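When a "works on my machine" report comes in, a quick way to compare environments is to have both people run the same diagnostic script and diff the output. This is a minimal sketch; the default package names `requests` and `numpy` are placeholders for whatever the program actually depends on.

```python
import os
import platform
import sys
from importlib import metadata

def environment_report(packages=("requests", "numpy")):
    # Facts that commonly differ between "my machine" and "theirs"
    report = {
        "os": platform.system(),           # e.g. 'Windows', 'Linux', 'Darwin'
        "os_release": platform.release(),
        "python": platform.python_version(),
        "executable": sys.executable,      # which interpreter is actually running
        "cwd": os.getcwd(),                # relative paths resolve against this
    }
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = "NOT INSTALLED"
    return report

if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```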

24. Can you share an example of a situation where testing saved the day?

Early in my career, I was working on a critical e-commerce platform update that involved integrating a new payment gateway. We had a tight deadline and the initial development went smoothly. However, during integration testing, the testing team discovered a subtle but critical bug: the system wasn't correctly handling refunds when multiple discounts were applied to an order. This specific scenario was missed during the initial unit tests.

Without thorough testing, this bug would have gone unnoticed and deployed to production. The impact could have been significant, leading to incorrect refund amounts, customer dissatisfaction, and potential legal issues. The testing team's discovery allowed us to quickly address the issue before launch, ultimately saving the day and preventing a potentially damaging situation.

25. Let's say you are testing a website search functionality. What potential problems or bugs would you look for?

- Incorrect search results: The search returns irrelevant or inaccurate results. This could be due to issues with the search algorithm, indexing problems, or poor data quality.

- No results: The search returns no results even when relevant content exists. This could be due to indexing issues, typos in the search query (and lack of handling for them), or issues with how the search term is being processed.

- Slow search performance: The search takes too long to return results, leading to a poor user experience.

- Error messages: The search returns unexpected error messages, indicating a problem with the search functionality.

- Case sensitivity: The search is case-sensitive, meaning that it only returns results that exactly match the case of the search query. Ideally, searches should be case-insensitive.

- Typos and misspellings: The search does not handle typos or misspellings in the search query. Consider implementing "did you mean" suggestions or fuzzy matching.

- Special characters: The search does not handle special characters correctly, leading to errors or unexpected results. Sanitize input.

- Relevance ranking: The search results are not ranked in order of relevance, making it difficult for users to find the information they are looking for.

- Filtering and sorting: The search lacks filtering or sorting options, making it difficult for users to narrow down their search results.

- Security vulnerabilities: The search functionality is vulnerable to attacks such as SQL injection or cross-site scripting (XSS). Use parameterized queries on the backend and escape search terms before rendering them in the results page.

- Accessibility issues: The search functionality is not accessible to users with disabilities.

- UI/UX issues: Poor placement of the search bar, unclear labels, or a confusing search results page. Consider responsive design for mobile devices.

- Lack of pagination: When there are many results, not providing pagination makes it difficult for the user to browse through all the results. Limit results to a reasonable number per page.
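Several of these checks can be automated. The sketch below runs them against a hypothetical in-memory `search` (case-insensitive substring match with pagination); a real search backend would be exercised through its API instead, but the assertions would look much the same.

```python
def search(docs, query, page=1, per_page=10):
    # Hypothetical search: case-insensitive substring match, paginated
    q = query.strip().lower()
    if not q:
        return []
    hits = [d for d in docs if q in d.lower()]
    start = (page - 1) * per_page
    return hits[start:start + per_page]

docs = [f"Python testing guide {i}" for i in range(25)] + ["C++ tips", "<script> notes"]

# Case sensitivity: different casing gives the same results
assert search(docs, "PYTHON") == search(docs, "python")
# No results: an unmatched query returns an empty list, not an error
assert search(docs, "haskell") == []
# Special characters are matched literally and don't crash the search
assert search(docs, "c++") == ["C++ tips"]
assert search(docs, "<script>") == ["<script> notes"]
# Pagination: 25 matches at 10 per page -> pages of 10, 10, 5
assert [len(search(docs, "python", page=p)) for p in (1, 2, 3)] == [10, 10, 5]
print("search checks passed")
```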

26. What steps would you take if you found a bug that you couldn't reproduce reliably?

When facing an intermittent bug, I'd prioritize gathering as much information as possible during each occurrence. This includes detailed logging (timestamps, system state, relevant variable values) and potentially capturing memory dumps or network traffic if applicable. I'd also document the exact steps taken leading up to the bug, even if seemingly unrelated, to identify potential patterns. I would then look for correlations between the observed symptoms and try to reproduce the issue in a test environment with a debugger attached.

Once I have detailed logs and candidate reproduction steps, I'd analyze the data for clues, focusing on unusual resource usage, error messages, or code paths. I'd also consider race conditions, memory corruption, and external dependencies as potential causes, and use techniques like code reviews and static analysis to identify potential weaknesses in the code. If reproduction remains elusive, I'd create automated tests to run frequently, hoping to catch the bug in a controlled environment.
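A minimal sketch of the "run it many times and record what varies" idea. Here randomness stands in for whatever differs between runs (timing, input data, ordering), and `flaky_operation` is hypothetical. Recording the seed of each failing run turns an intermittent failure into a deterministic one; real race conditions are harder to pin down this way, but the principle of capturing the varying factor is the same.

```python
import random

def flaky_operation(rng):
    # Hypothetical code under test: fails only when a rare value comes up
    value = rng.randint(0, 99)
    if value == 42:
        raise RuntimeError(f"intermittent failure (value={value})")
    return value

def hunt_for_failure(attempts=1000):
    # Each run uses a recorded seed, so every failure can be replayed exactly
    failing_seeds = []
    for seed in range(attempts):
        try:
            flaky_operation(random.Random(seed))
        except RuntimeError:
            failing_seeds.append(seed)
    return failing_seeds

if __name__ == "__main__":
    seeds = hunt_for_failure()
    print(f"{len(seeds)} failures; replay with seeds {seeds[:5]}")
```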

27. How do you prioritize which bugs to fix first when there are many to address?

I prioritize bugs based on a combination of impact, urgency, and effort. Impact considers the severity of the bug's effect on users and the business. Urgency looks at how quickly the bug needs to be fixed (e.g., is it blocking a release?). Effort estimates the time and resources required to fix the bug. Typically, I'd tackle bugs with high impact and high urgency first. Then, I might consider lower impact/urgency bugs with low effort as quick wins. I also rely on data and communication with stakeholders to refine my prioritization, incorporating user feedback and business priorities.

Specifically, I might use a system that looks like this:

- Critical Bugs: System down, data loss, security vulnerability

- High Bugs: Significant feature broken, major usability issue

- Medium Bugs: Minor feature broken, moderate usability issue

- Low Bugs: Cosmetic issue, minor inconvenience

And I'd communicate with the Product Owners, developers, and QA to ensure everyone agrees on the prioritization.
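The ordering rule can be sketched in a few lines. This is one hypothetical heuristic (severity first, then release blockers, then the cheapest fix as a quick win); the `Bug` fields and sample backlog are invented for illustration, and the output is a starting point for the conversation with stakeholders, not a replacement for it.

```python
from dataclasses import dataclass

# Lower rank = more important; mirrors the Critical/High/Medium/Low scheme above
SEVERITY_RANK = {"Critical": 0, "High": 1, "Medium": 2, "Low": 3}

@dataclass
class Bug:
    title: str
    severity: str          # one of SEVERITY_RANK's keys
    blocks_release: bool   # urgency signal
    effort_days: float     # rough estimate

def prioritize(bugs):
    # Severity first, then release blockers, then cheapest fix first
    return sorted(
        bugs,
        key=lambda b: (SEVERITY_RANK[b.severity], not b.blocks_release, b.effort_days),
    )

backlog = [
    Bug("Typo on About page", "Low", False, 0.1),
    Bug("Checkout crashes on coupon", "Critical", True, 2.0),
    Bug("Slow report export", "Medium", False, 3.0),
    Bug("Search ignores filters", "High", True, 1.0),
    Bug("Tooltip misaligned", "Low", False, 0.5),
]
for bug in prioritize(backlog):
    print(bug.severity, "-", bug.title)
```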

28. Explain the concept of regression testing. Why is it important?

Regression testing is re-running tests (manual or automated) after code changes (bug fixes, new features, updates) to ensure that existing functionality still works as expected. Its purpose is to verify that the changes haven't unintentionally introduced new bugs or 'regressed' existing working features.

It's important because software changes can have unexpected side effects. Without regression testing, seemingly unrelated parts of the application might break after a modification. Regression testing helps maintain software quality and stability and ensures a consistent user experience. It also avoids the cost and effort of fixing regressions discovered later in the development cycle or, even worse, in production.
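A common habit is to pin every fixed bug with a test so it can't quietly return. In this hypothetical sketch, an earlier version of `is_leap_year` is assumed to have used only `year % 4 == 0`; the regression test locks in the fix, and a second test re-checks behaviour that already worked.

```python
def is_leap_year(year):
    # Hypothetical function; suppose an earlier version returned year % 4 == 0
    # and therefore wrongly treated 1900 as a leap year.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)

def test_regression_century_years():
    # Pins the previously fixed bug so it can't silently return
    assert is_leap_year(1900) is False
    assert is_leap_year(2000) is True

def test_existing_behaviour_still_works():
    # Re-checks behaviour that worked before the fix
    assert is_leap_year(2024) is True
    assert is_leap_year(2023) is False

if __name__ == "__main__":
    test_regression_century_years()
    test_existing_behaviour_still_works()
    print("regression suite passed")
```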

29. Have you heard of the terms 'alpha testing' and 'beta testing?' How do they differ?

Yes, I've heard of alpha and beta testing. Alpha testing is conducted internally by the organization developing the software. The main goal is to identify bugs and issues early in the development cycle, before releasing the software to external users. The alpha version is typically unstable and feature-incomplete.

Beta testing, on the other hand, involves releasing the software to a limited group of external users (beta testers). Beta testers use the software in real-world scenarios and provide feedback on its usability, functionality, and stability. This helps uncover bugs and issues that were not found during alpha testing, and validates the software's readiness for general release.

30. What are your preferred testing tools, and in what scenarios are these tools useful?

My preferred testing tools vary depending on the project and requirements. For unit testing, I prefer Jest or pytest. Jest is great for JavaScript projects due to its ease of setup, mocking capabilities, and snapshot testing features. Pytest is my choice for Python projects, known for its simplicity, extensive plugin ecosystem, and powerful assertion rewriting. For end-to-end testing, I often use Cypress or Selenium. Cypress excels at testing anything that runs in a browser, offering excellent debugging tools and speed. Selenium provides broader browser support and is useful when testing across different platforms and browsers.

For API testing, I rely on tools like Postman or REST Assured. Postman is useful for manual testing and exploring APIs, while REST Assured is beneficial for automated API testing in Java projects due to its fluent interface and easy integration with build systems like Maven or Gradle. I also incorporate linters and static analysis tools (e.g., ESLint, flake8) for code quality and early bug detection. When performance testing is needed, I use k6 due to its simplicity and easy integration into CI/CD pipelines. Each tool covers a different part of overall quality.

Program Testing interview questions for juniors

1. What is a test case, and can you give a simple example?

A test case is a specific set of conditions and inputs designed to verify whether a software application, system, or feature behaves as expected. It typically includes a description of the test, preconditions, input data, expected outcome, and actual outcome. The purpose of a test case is to validate a particular requirement or functionality.

For example, consider a simple function that adds two numbers: add(a, b). A test case could be:

- Test Case ID: TC_001

- Description: Verify that the add function correctly adds two positive integers.

- Preconditions: The add function is implemented and available.

- Input Data: a = 2, b = 3

- Expected Output: 5

- Actual Output: 5 (recorded after running the test)

- Pass/Fail: Pass
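As a rough sketch, TC_001 could also be written as an automated pytest-style test. The add function here is a hypothetical stand-in defined inline; in a real project it would be imported from the code under test.

```python
def add(a, b):
    """Hypothetical function under test."""
    return a + b


def test_tc_001_add_two_positive_integers():
    # Input data and expected output from TC_001
    a, b = 2, 3
    expected = 5
    # Actual output: the result of running the code under test
    actual = add(a, b)
    # The test passes only if this assertion holds
    assert actual == expected
```

Saved in a file named like test_add.py, pytest discovers functions whose names start with test_ automatically, so the test case ID and description end up in the function name rather than in a separate form.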

2. Imagine you are testing a toy car. What are some things you would check to make sure it works well?

I would check several aspects of the toy car to ensure it works well. First, I'd check the basic functionality: does it move forward, backward, left, and right (if applicable)? What are the range and responsiveness of the controls (if it's remote-controlled)? I'd also check that the battery life matches the advertised duration and that the wheels are properly aligned so the car drives in a straight line.

Next, I would assess the build quality and safety. Are there any sharp edges or small parts that could detach and pose a hazard, especially to younger children? Is the plastic durable enough to withstand regular use and minor impacts? I'd also want to see if the toy car adheres to any relevant safety standards like ASTM or EN71. After running it for a while, I'd check for overheating and any unusual sounds that could indicate a problem. Finally, I'd check the car's performance on different surfaces like carpet, hardwood, and tile.

3. What does it mean to 'debug' a program, like finding a boo-boo in a game?

Debugging a program is like being a detective and fixing mistakes in code, similar to finding a 'boo-boo' in a game. When a program doesn't work as expected, or crashes, debugging is the process of identifying the cause of the problem (the 'bug'), and then correcting the code to fix it.

Think of it like this: a game character gets stuck in a wall. Debugging involves figuring out why the character got stuck (perhaps a collision detection error), and then changing the game's code to prevent it from happening again. Tools like debuggers allow programmers to step through code line by line, inspect variables, and understand exactly what the program is doing at each step to find the root cause of the 'boo-boo'.

4. Can you describe the difference between testing something yourself and having a friend test it?

Testing something yourself often leads to confirmation bias. You're likely to test in ways that validate your assumptions and overlook potential problems because you already understand how it's supposed to work. A friend, especially someone unfamiliar with the project, provides a fresh perspective. They're more likely to use the software in unexpected ways, uncovering edge cases and usability issues you wouldn't have considered.

Having a friend test it can reveal points of confusion or failure that you miss because the software seems obvious to you, but that trip up a new user. This outside perspective is invaluable for identifying usability problems, logic bugs, and assumptions you've made that don't hold true for others. It usually gives much better coverage of the user experience than you can achieve on your own.

5. Why is testing important before people start using a new app or website?

Testing is crucial before launch to identify and fix bugs, usability issues, and security vulnerabilities. Early detection saves time and resources, preventing negative user experiences that can damage reputation and lead to user churn. A well-tested app ensures reliability and performance, increasing user satisfaction and adoption.

Specifically, testing validates core functionalities, ensures compatibility across devices and browsers, and verifies data security. For example, in a banking app, testing confirms transactions are processed correctly and personal information is protected. Proper testing also covers edge cases and error handling, providing a robust and user-friendly product from the outset.

6. What is a 'bug' in a program, and what happens when you find one?

A 'bug' in a program is an error, flaw, or defect in the software's code that causes it to produce an incorrect or unexpected result, or to behave in unintended ways. This can range from a minor annoyance to a complete system crash.

When a bug is found, the typical process involves:

- Reproducing the bug: Ensuring the issue can be consistently recreated.

- Isolating the cause: Identifying the specific code section responsible.

- Fixing the bug: Modifying the code to correct the error; this may involve debugging with tools like gdb, pdb, or logging.

- Testing the fix: Verifying that the bug is resolved and that the fix doesn't introduce new problems (regression testing).

- Documenting the fix: Recording details about the bug and its resolution for future reference.
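Writing a regression test together with the fix helps keep the bug from quietly returning. A minimal sketch, using a hypothetical paginate function whose (invented) bug skipped the first page of results:

```python
def paginate(items, page, per_page):
    """Return the items on a 1-based page number (hypothetical function)."""
    # Fix for the hypothetical bug: the start index used to be page * per_page,
    # which silently skipped the first page of results.
    start = (page - 1) * per_page
    return items[start:start + per_page]


def test_first_page_is_not_skipped():
    # Regression test: reproduces the original bug report and guards the fix
    assert paginate(list(range(10)), page=1, per_page=3) == [0, 1, 2]


def test_last_partial_page():
    # Related boundary: the final page may hold fewer than per_page items
    assert paginate(list(range(10)), page=4, per_page=3) == [9]
```

The regression test stays in the suite permanently, so any future change that reintroduces the off-by-one error fails immediately.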

7. How do you know if a test has passed or failed?

A test passes or fails based on its assertions. Assertions are statements that check if a specific condition is true. If all assertions within a test case evaluate to true, the test passes. If even one assertion evaluates to false, the test fails.

Common examples include checking whether a function returns the expected value, whether a variable has the correct type, or whether an exception is raised when it should be. Test frameworks provide functions such as JUnit's assertEquals(expected, actual) or assertTrue(condition) to perform these checks. The framework then reports the overall test status based on the outcome of these assertions.
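Python's built-in unittest module offers equivalents of these checks (assertEqual, assertTrue, assertRaises). A small sketch that exercises Python's own built-in functions, so no hypothetical code under test is needed:

```python
import unittest


class ConversionTests(unittest.TestCase):
    def test_parses_integer_string(self):
        # Passes only if the actual value equals the expected value
        self.assertEqual(int("42"), 42)

    def test_alphabetic_string(self):
        # Passes only if the condition is true
        self.assertTrue("abc".isalpha())

    def test_rejects_non_numeric_string(self):
        # Passes only if the expected exception is raised inside the block
        with self.assertRaises(ValueError):
            int("abc")
```

Running python -m unittest on the module executes all three methods; if any assertion fails, that test is reported as a failure and the overall run is marked unsuccessful.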

8. If a program is supposed to add two numbers, what would you test to make sure it works correctly?

To thoroughly test a program that adds two numbers, I'd consider several categories of inputs.

- Valid inputs: positive integers, negative integers, zero, and large numbers approaching the maximum integer value. For each, I'd assert that the result equals the expected sum.

- Edge cases and boundary conditions: the maximum and minimum integer values and combinations of them, and very small numbers, checking that no overflow or underflow occurs.

- Invalid inputs: non-numeric values (strings, special characters) and null or missing inputs. Depending on the requirements, the program should either handle these gracefully (e.g., return an error message) or reject them.

- Floating-point numbers (if supported): positive and negative decimals, including very small and very large values.

I'd use a combination of unit tests and integration tests to verify the functionality and the error handling.
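These categories translate into table-driven tests. The sketch below assumes a hypothetical requirement that add rejects non-numeric input with a TypeError; note that Python integers never overflow, so the large-number check matters more in fixed-width languages like Java or C.

```python
import sys
import unittest


def add(a, b):
    """Hypothetical function under test: adds two numbers, rejecting other types."""
    for value in (a, b):
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"expected a number, got {value!r}")
    return a + b


class AddTests(unittest.TestCase):
    def test_valid_integers(self):
        cases = [(2, 3, 5), (-2, -3, -5), (0, 7, 7), (-4, 4, 0)]
        for a, b, expected in cases:
            with self.subTest(a=a, b=b):
                self.assertEqual(add(a, b), expected)

    def test_large_integers(self):
        # Python ints are arbitrary-precision, so this sum stays exact
        self.assertEqual(add(sys.maxsize, 1), sys.maxsize + 1)

    def test_floats_compared_with_tolerance(self):
        # 0.1 + 0.2 is not exactly 0.3 in binary floating point
        self.assertAlmostEqual(add(0.1, 0.2), 0.3)
        self.assertAlmostEqual(add(-2.5, 1.25), -1.25)

    def test_invalid_and_missing_inputs_are_rejected(self):
        for a, b in [("2", 3), (None, 3), (2, "!@#")]:
            with self.subTest(a=a, b=b):
                with self.assertRaises(TypeError):
                    add(a, b)
        with self.assertRaises(TypeError):
            add(2)  # missing argument
```

subTest reports each failing row separately, so one bad case doesn't hide the others.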

9. What do you do if you find something wrong while testing?

If I find something wrong while testing, my immediate action is to document it thoroughly. This includes capturing the exact steps to reproduce the issue, the expected behavior, and the actual behavior observed. I'll gather relevant information like error messages, logs, and the environment configuration.

Next, I report the bug in the appropriate bug tracking system (e.g., Jira, Bugzilla) with a clear and concise description, and prioritize it based on its severity and impact. If it's a critical issue blocking further testing, I escalate it immediately. Finally, I stay available to give developers further details or clarification if needed, and I retest the fix once it's implemented.

10. Have you ever tested a game or an app before? What did you do?

Yes, I have tested games and apps before, both formally and informally. When testing, my focus is on identifying bugs, usability issues, and areas for improvement.

My testing process typically involves creating test plans based on requirements or design documents, executing test cases, and documenting any defects found. I also perform ad-hoc testing to explore the application and uncover unexpected issues. When documenting bugs, I make sure to include clear steps to reproduce, expected vs. actual results, and the environment details. Beyond functional testing, I also consider performance, security, and accessibility aspects.

11. If you could only test one thing on a new website, what would it be and why?

If I could only test one thing on a new website, it would be the core functionality related to the website's primary purpose. For example, if it's an e-commerce site, I'd test the 'add to cart' and checkout flow. If it's a blog, I'd test posting and viewing articles.

This is because the core functionality is the most critical part of the user experience. If this is broken, users won't be able to achieve their goals, and the website will fail. Other areas can be improved iteratively, but the core functionality must work reliably from the start.

12. What's the difference between 'good' and 'bad' software?

Good software reliably fulfills its intended purpose, is maintainable, and provides a positive user experience. It's often characterized by qualities like testability, well-defined architecture, clear code, and robust error handling. Performance is usually acceptable and the software is secure against known vulnerabilities.

Bad software, conversely, is unreliable, difficult to maintain, and frustrating to use. Symptoms include frequent crashes, poor performance, hard-to-understand code, lack of documentation, and security flaws. Changes are risky and often introduce new problems. Good software adheres to coding standards and design principles; bad software typically ignores them.

13. If a program is supposed to show your name, how would you test that it is correct?

To test whether a program correctly displays my name, I would perform several checks. First, I would enter my name (and variations of it, such as with a middle initial, different capitalization, or a nickname) and verify that the output matches exactly what I expect, including spelling, spacing, and capitalization.

Second, I'd consider boundary conditions and edge cases. For example, what happens if the name field is left blank? Does it handle very long names correctly, or names with special characters? Testing these scenarios helps ensure the program is robust and handles unexpected inputs gracefully. I'd also consider internationalization and localization if the program is intended to support different languages or regions, to make sure it displays the name correctly with different character sets.
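A minimal sketch of these checks, assuming a hypothetical format_greeting function that trims surrounding whitespace, rejects blank names, and enforces an invented 100-character limit:

```python
import unittest

MAX_NAME_LENGTH = 100  # hypothetical limit; check the real requirements


def format_greeting(name):
    """Hypothetical function under test: builds the text shown to the user."""
    cleaned = name.strip()
    if not cleaned:
        raise ValueError("name must not be blank")
    if len(cleaned) > MAX_NAME_LENGTH:
        raise ValueError("name is too long")
    return f"Hello, {cleaned}!"


class GreetingTests(unittest.TestCase):
    def test_exact_spelling_spacing_and_case(self):
        self.assertEqual(format_greeting("Ada Lovelace"), "Hello, Ada Lovelace!")
        # Capitalization must be shown exactly as entered
        self.assertEqual(format_greeting("ada J. LOVELACE"), "Hello, ada J. LOVELACE!")

    def test_special_and_non_ascii_characters(self):
        for name in ["O'Brien", "Zoë", "Nguyễn Văn An", "李雷"]:
            with self.subTest(name=name):
                self.assertEqual(format_greeting(name), f"Hello, {name}!")

    def test_blank_and_overlong_names_are_rejected(self):
        for name in ["", "   ", "x" * (MAX_NAME_LENGTH + 1)]:
            with self.subTest(name=name):
                with self.assertRaises(ValueError):
                    format_greeting(name)
```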

14. Let's say a website asks for your age. What ages would you try to enter to test it?

- Normal ages: 1, 18, 30, 65, 100 (the upper end of a typical lifespan).

- Boundary/edge cases: 0, 120, 200 (if the system accepts very high ages).

- Invalid inputs: -1, "age", "" (empty string), "!@#", 1.5. These check for negative numbers, non-numeric input, empty strings, special characters, and floating-point numbers.

- Maximum allowed age: Determine if there's a system-defined limit, e.g., by looking at documentation or error messages. Enter values slightly below, at, and slightly above that limit to test the boundary.

- Large numbers: Enter a value such as 99999999999 to check for integer overflow or input validation issues and confirm the field respects its storage size limit.
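A sketch of these inputs as a test, assuming a hypothetical parse_age validator that receives the raw form text and accepts whole numbers from 0 to 120 (the 120 limit is an invented example):

```python
MIN_AGE, MAX_AGE = 0, 120  # hypothetical limits; confirm against the real spec


def parse_age(raw):
    """Hypothetical validator: accepts a whole number of years from a form field."""
    text = raw.strip()
    # isdecimal() rejects "", "-1", "1.5", "age" and "!@#" in one check
    if not text.isdecimal():
        raise ValueError(f"not a whole number: {raw!r}")
    age = int(text)
    if not MIN_AGE <= age <= MAX_AGE:
        raise ValueError(f"age out of range: {age}")
    return age


def test_age_inputs():
    # Normal values and the exact boundaries should be accepted
    for raw in ["1", "18", "30", "65", "100", "0", "120"]:
        assert parse_age(raw) == int(raw)
    # Just outside the range, invalid formats, and huge numbers should be rejected
    for raw in ["-1", "121", "200", "age", "", "!@#", "1.5", "99999999999"]:
        try:
            parse_age(raw)
        except ValueError:
            pass
        else:
            raise AssertionError(f"{raw!r} should have been rejected")
```

With a different upper limit, only MAX_AGE and the "just above" value (121 here) need to change.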

15. What is the first thing you do when you are asked to test something?

The first thing I do is clarify the scope and requirements. This involves asking questions like:

- What are the key features that need to be tested?

- What are the expected behaviors and functionalities?

- Are there any specific performance or security requirements?

- What are the testing priorities and timelines?

Understanding these aspects gives me a clear picture of what needs to be tested and helps me create a focused and effective testing strategy.

16. What does 'quality' mean to you in terms of software?

Software quality, to me, means the software reliably meets the defined requirements and user expectations. This includes functionality, performance, security, usability, and maintainability. High-quality software is not just bug-free; it's also efficient, secure, easy to use, and adaptable to future changes.

Specifically, quality involves aspects like:

- Correctness: Functioning as intended according to specifications.

- Reliability: Consistent performance under specified conditions.

- Efficiency: Utilizing resources (CPU, memory) optimally.

- Usability: Ease of learning and use for the intended users.

- Maintainability: Easy to understand, modify, and extend.

- Security: Protecting data and preventing unauthorized access.

- Testability: Ability to easily create tests to validate software quality.

- Portability: The capability of the software to be transferred from one hardware/software environment to another.

17. Explain what 'user-friendly' means when talking about a program or app.

User-friendly, when describing a program or app, means it's easy for the intended users to understand and use effectively. It focuses on minimizing frustration and maximizing efficiency in achieving their goals. A user-friendly application anticipates user needs, provides clear instructions, and offers intuitive navigation.

Essentially, a user-friendly program prioritizes usability. Key aspects include a simple and consistent interface, helpful error messages, and readily available assistance. If the target audience is technically novice, then simplicity is paramount. For expert users, customizability and efficiency may take precedence. Ultimately, it means that minimal effort is required by the user to perform desired tasks.

18. If you were testing a calculator app, what buttons would you press to start?

I would start by testing basic arithmetic operations using a combination of positive and negative numbers, zero, and decimal values. Specifically, I'd press the following sequences: 1 + 1 =, 5 - 3 =, 2 * 4 =, 10 / 2 =, 0 + 7 =, 9 - 0 =, 6 * 0 =, 0 / 5 =, 5 / 0 = (to check error handling), -2 + 5 =, 3 - (-2) =, 2.5 * 4 =, 7.5 / 1.5 =, 0.5 + 0.5 =. I would also press the clear (C) or all clear (AC) button after each operation to ensure it resets the display to zero. Finally, I would test the +/- button to verify that it toggles a number between positive and negative.

Beyond basic arithmetic, I'd test the order of operations (PEMDAS/BODMAS) with an expression like 2 + 3 * 4 = to confirm the calculator follows the correct precedence. I'd also test the memory functions (M+, M-, MR, MC) if available, using various numbers to store, recall, and clear values from memory.
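Once a calculator's logic is separated from its buttons, the sequences above become a simple table of expected results. This sketch assumes a hypothetical calculate function for single operations and an invented "Error" display text for division by zero; it does not cover chained expressions or operator precedence.

```python
import operator

# Hypothetical calculator core: maps each operator button to a Python function
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}


def calculate(a, op, b):
    """Hypothetical function behind the '=' button for a single operation."""
    if op == "/" and b == 0:
        return "Error"  # assumed display text for division by zero
    return OPERATIONS[op](a, b)


def test_button_sequences():
    # (a, op, b, expected) taken from the sequences listed above
    cases = [
        (1, "+", 1, 2), (5, "-", 3, 2), (2, "*", 4, 8), (10, "/", 2, 5),
        (0, "+", 7, 7), (9, "-", 0, 9), (6, "*", 0, 0), (0, "/", 5, 0),
        (-2, "+", 5, 3), (3, "-", -2, 5), (2.5, "*", 4, 10), (7.5, "/", 1.5, 5),
        (0.5, "+", 0.5, 1),
    ]
    for a, op, b, expected in cases:
        assert calculate(a, op, b) == expected, (a, op, b)
    # Division by zero should show an error instead of crashing
    assert calculate(5, "/", 0) == "Error"
```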

19. What is an 'edge case', and can you give an example of one?

An edge case is a problem or situation that occurs only at an extreme (maximum or minimum) operating parameter. Edge cases are often overlooked during design and testing because they involve unusual inputs or states. Essentially, an edge case is something that could happen but probably won't, yet still needs to be handled correctly.

For example, in a function that calculates the average of a list of numbers, an empty list is an edge case: computing the average would divide by zero. The function must either return a sensible value (such as zero) or raise an exception, and document which behavior it chooses.
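A minimal sketch of the exception-raising option, with a test that covers the empty-list edge case alongside normal input. Python's own statistics.mean takes the same route, raising statistics.StatisticsError on empty data.

```python
def average(numbers):
    """Return the arithmetic mean; raise ValueError for an empty list."""
    if not numbers:
        # Edge case: len(numbers) == 0 would otherwise cause ZeroDivisionError
        raise ValueError("average() requires at least one number")
    return sum(numbers) / len(numbers)


def test_average():
    assert average([2, 4, 6]) == 4
    assert average([5]) == 5  # single element: the smallest valid input
    try:
        average([])
    except ValueError:
        pass
    else:
        raise AssertionError("empty list should raise ValueError")
```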

20. If the instructions for a program are unclear, what should you do?

First, I would try to clarify the instructions. This might involve asking the person who provided them clarifying questions or, if available, consulting any existing documentation or specifications for the program. The goal is to gain a better understanding of the intended functionality and expected behavior.

If clarification isn't readily available, I would make reasonable assumptions based on my existing knowledge and experience, document those assumptions clearly in the code (e.g., using comments), and proceed with implementing the program. I would also design the program in a modular way, so that if the initial assumptions turn out to be incorrect, changes can be made with minimal impact on the rest of the codebase. I'd also add adequate logging to ease debugging in case the assumptions need to be revisited. For example:

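import logging

logger = logging.getLogger(__name__)

# Assuming input is always a positive integer (assumption from unclear instructions)
def process_input(input_val):
    if not isinstance(input_val, int) or input_val <= 0:
        # Log the violated assumption so it is easy to revisit later
        logger.warning("Assumption violated: received %r", input_val)
        raise ValueError("Input must be a positive integer")
    # ... rest of the logic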

21. Why do you think it's important to write down the steps you take when testing?

Documenting testing steps is crucial for several reasons. First, it allows for reproducibility. If a bug is found, having a detailed record of the steps makes it easier to recreate the issue and verify the fix. This is especially helpful when someone else needs to investigate or retest the scenario.

Second, clear documentation aids in knowledge sharing and onboarding. New team members can quickly understand how tests are performed. It also makes it possible to review test coverage and identify gaps. Finally, writing down steps can help refine the test process itself, leading to more efficient and thorough testing in the future. For example, you can easily convert written steps to automated tests.

22. Have you ever had to follow instructions to build something? How is that similar to testing software?

Yes, I've followed instructions to assemble furniture many times. The process shares similarities with software testing. Both require a clear understanding of the instructions (specifications) and a systematic approach to verify each step or component functions as expected.

In furniture assembly, you verify parts are present, correctly oriented, and securely fastened. Similarly, in software testing, you verify functionality meets requirements, edge cases are handled, and the overall system behaves as designed. Both processes also involve identifying and resolving defects or errors in the construction/code. If a screw is missing, you find a replacement; if code produces an error, you debug it.

23. What is the difference between expected result and actual result?

The expected result is the outcome you anticipate or predict based on your understanding of the system or component under test. It's what should happen according to the requirements, specifications, or design. It serves as a benchmark for validating the actual result and is usually documented in the test case.

The actual result is the outcome that actually occurs when you run the test or execute the system. It's the real-world output produced by the system. Comparing the actual result against the expected result determines whether the test has passed or failed, and helps identify bugs or deviations from the intended behavior.

24. Can you explain the term 'test environment' in simple terms?

A test environment is a setup of software and hardware where the testing team executes test cases. It supports test execution with specific hardware, software, and network configurations. Think of it as a safe space that mimics the real-world conditions your application will face, but without affecting the live, production system.

It includes things like the operating system, database, web server, and any other software your application needs to run. The goal is to provide a consistent and controlled environment to find bugs before they reach real users. For example, you might have separate test environments for unit testing, integration testing, and user acceptance testing (UAT), each configured to support the specific needs of that testing phase.

25. How would you decide which parts of a program to test first?

I would prioritize testing parts of a program based on several factors. First, I'd focus on critical functionalities or core features essential for the program's operation. These are the components that, if broken, would render the entire application unusable or significantly impact its value. Second, I would test the parts of the program that are most frequently used by users. These areas have the highest impact on user experience. Finally, I'd also prioritize high-risk areas identified as complex, newly developed, or having a history of bugs.

More specifically, I would also prioritize based on:

- Business Impact: Test features directly impacting revenue or crucial business processes first.

- Complexity: Prioritize testing complex code with intricate logic and dependencies.

- Failure Probability: Focus on areas with a higher likelihood of failure based on past experience or code changes.

- Critical bug fixes: Always test bug fixes thoroughly and rapidly.

26. What is 'positive' and 'negative' testing? Give an example.

Positive testing involves providing valid inputs to a system to verify that it behaves as expected. The goal is to confirm that the system functions correctly under normal conditions. For example, in a login form, entering a valid username and password would be a positive test.

Negative testing involves providing invalid or unexpected inputs to a system to verify that it handles errors and exceptions gracefully. The goal is to ensure that the system does not crash or produce incorrect results when faced with abnormal conditions. For example, in the same login form, entering an incorrect password, leaving required fields empty, or using special characters where they are not allowed would be negative tests.
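A sketch of both kinds of test against a hypothetical login function that returns a (success, message) pair. The in-memory user store is invented for illustration; a real system would compare salted password hashes.

```python
# Hypothetical user store; a real system would check a salted password hash
VALID_USERS = {"alice": "correct-horse-42"}


def login(username, password):
    """Hypothetical login check returning (success, message)."""
    if not username or not password:
        return False, "Username and password are required"
    if VALID_USERS.get(username) != password:
        return False, "Invalid username or password"
    return True, "Welcome"


def test_positive_valid_credentials():
    assert login("alice", "correct-horse-42") == (True, "Welcome")


def test_negative_inputs_fail_gracefully():
    cases = [
        ("alice", "wrong-password"),  # incorrect password
        ("", "correct-horse-42"),  # empty username
        ("alice", ""),  # empty password
        ("bob'; DROP TABLE users;--", "x"),  # special characters
    ]
    for username, password in cases:
        ok, message = login(username, password)
        # Negative tests expect a clean rejection with a message, not a crash
        assert not ok, (username, password)
        assert message
```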

Program Testing intermediate interview questions

1. How would you design test cases to effectively cover various boundary conditions in a software program?

To effectively cover boundary conditions, I'd design test cases focusing on the extreme ends of input ranges and valid/invalid input sets. This involves testing values at the minimum, maximum, just above, and just below these boundaries. For example, if a field accepts integers from 1 to 100, I'd test with 0, 1, 2, 99, 100, and 101.

Specifically, for each boundary, I would create test cases that:

- Use the exact boundary value.

- Use a value slightly below the boundary (boundary - 1).

- Use a value slightly above the boundary (boundary + 1).

- Test for valid and invalid data types at the boundary (e.g., null, empty string, special characters).

For example, if we are testing a function calculate_discount(price) (assuming valid prices run from 0 to 100), relevant test cases may include calculate_discount(0), calculate_discount(1), calculate_discount(99), calculate_discount(100), calculate_discount(-1), calculate_discount(101), calculate_discount(null), and calculate_discount("abc").
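The numeric part of this technique is mechanical enough to generate. A minimal sketch with a hypothetical boundary_values helper for an inclusive integer range; the type checks (null, "abc") still need hand-written cases.

```python
def boundary_values(minimum, maximum):
    """Values at, just below, and just above each edge of an inclusive range."""
    candidates = [
        minimum - 1, minimum, minimum + 1,
        maximum - 1, maximum, maximum + 1,
    ]
    # Keep the order but drop duplicates, which appear when the range is tiny
    return list(dict.fromkeys(candidates))


def is_valid(value, minimum, maximum):
    """Expected result: True only for values inside the range."""
    return minimum <= value <= maximum


for value in boundary_values(0, 100):
    print(value, "valid" if is_valid(value, 0, 100) else "invalid")
```

For the 0 to 100 price range this yields -1, 0, 1, 99, 100, and 101, the same numeric values as the calculate_discount cases listed above.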

2. Can you explain the differences between white box, black box, and gray box testing techniques, and when would you choose one over the others?

White box testing involves testing the internal structure and code of a software application. Testers need knowledge of the code and design to create test cases. Black box testing, on the other hand, tests the functionality of an application without knowledge of its internal workings. Testers interact with the software through its interface, focusing on inputs and outputs. Gray box testing is a combination of both; testers have partial knowledge of the internal structure, which can be used to design more effective test cases than black box, but without the in-depth knowledge required for white box testing.

The choice depends on the testing goals and resources. White box is suitable for unit testing and code-level debugging where internal knowledge is crucial. Black box is often used for system and acceptance testing, where the focus is on user experience and functional requirements. Gray box can be beneficial for integration testing, where understanding interactions between modules is helpful but complete knowledge of each module's code is not necessary. For example, if testing an API, a gray box approach allows for understanding the input and output formats, leading to more targeted test cases without needing to delve into the API's implementation details.

3. Describe your experience with different test automation tools and frameworks. Which tools are suitable for different scenarios?

I have experience with several test automation tools and frameworks. For web application testing, I've worked with Selenium WebDriver, Cypress, and Playwright. Selenium is versatile and supports multiple languages, making it suitable for complex, cross-browser testing scenarios. Cypress is excellent for end-to-end testing with a focus on developer experience and real-time debugging. Playwright is also a great option for reliable cross-browser testing with strong auto-waiting capabilities. For API testing, I've used Rest Assured and Postman. Rest Assured is useful for Java-based projects, providing a fluent interface for API testing, while Postman is a user-friendly tool for manual and automated API testing.

In terms of frameworks, I'm familiar with TestNG and JUnit for Java-based projects, which provide features for test organization, execution, and reporting. For behavior-driven development (BDD), I've used Cucumber, which allows tests to be written in plain language, making them accessible to non-technical stakeholders.

4. What are the key challenges in testing a microservices architecture, and how would you address them?

Testing microservices presents several challenges. Complexity due to the distributed nature and numerous independent services is a major hurdle. It becomes difficult to trace requests across services and pinpoint failures. Also, ensuring data consistency across different databases used by different services requires careful planning and testing. Furthermore, network latency and failures must be considered as potential points of failure, so testing must address resilience. Finally, setting up a realistic test environment that mimics the production environment can be complex and resource-intensive.

To address these, a combination of strategies is helpful. Implement comprehensive API testing to validate each service's functionality and contracts. Employ integration testing to verify interactions between services, focusing on data consistency and error handling. Use contract testing to ensure services adhere to agreed-upon interfaces. Implement end-to-end testing to simulate real-world user scenarios and validate the entire system's behavior. Utilize chaos engineering principles to introduce failures and test the system's resilience. Finally, invest in observability tools to monitor system behavior, identify bottlenecks, and troubleshoot issues effectively.

5. How do you approach testing a feature that involves complex integrations with third-party APIs?

When testing features with complex third-party API integrations, I prioritize a layered approach. First, I focus on unit testing the code that interacts directly with the API, mocking the API responses to ensure proper handling of different scenarios (success, failure, rate limiting, etc.). This helps isolate issues within my code. Then, I use integration tests to verify the end-to-end flow, using a test environment (if available) against the real API or a stable mock. This verifies data mapping, request formatting, and error handling across the entire integration.

Specifically, I'd use tools like pytest with the requests-mock library in Python for unit testing, and potentially containerization to create reproducible test environments that closely mimic production. Load testing tools such as Locust can simulate heavy load on the API to test its behavior, error handling, and throughput.
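The mocking idea can be sketched with the standard library's unittest.mock instead of requests-mock, which keeps the example dependency-free. Everything here is hypothetical: the endpoint URL, the get_exchange_rate function, and the RateLimitedError class. The client receives a requests-style session object so tests can swap in a mock.

```python
from unittest import mock

RATES_URL = "https://api.example.com/rates"  # hypothetical third-party endpoint


class RateLimitedError(Exception):
    """Raised when the provider answers HTTP 429."""


def get_exchange_rate(session, currency):
    """Hypothetical client code; session is any object with a requests-style get()."""
    response = session.get(RATES_URL, params={"symbol": currency}, timeout=5)
    if response.status_code == 429:
        raise RateLimitedError("provider rate limit hit; retry later")
    if response.status_code != 200:
        raise RuntimeError(f"provider error {response.status_code}")
    return float(response.json()["rate"])


def fake_session(status_code, payload=None):
    # The Mock stands in for the real HTTP session, so no network call is made
    session = mock.Mock()
    session.get.return_value.status_code = status_code
    session.get.return_value.json.return_value = payload
    return session


def test_success_maps_response_fields():
    session = fake_session(200, {"rate": "1.08"})
    assert get_exchange_rate(session, "EUR") == 1.08
    session.get.assert_called_once_with(RATES_URL, params={"symbol": "EUR"}, timeout=5)


def test_rate_limit_and_server_error():
    for status, error in [(429, RateLimitedError), (503, RuntimeError)]:
        try:
            get_exchange_rate(fake_session(status), "EUR")
        except error:
            continue
        raise AssertionError(f"status {status} should raise {error.__name__}")
```

Passing the session in (rather than calling a global HTTP client) is the design choice that makes the success, failure, and rate-limiting paths testable without the real provider.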

6. Explain the concept of test-driven development (TDD) and its benefits. Have you used TDD in your projects?

Test-Driven Development (TDD) is a software development process where you write a failing test case before writing any production code. The process follows a short, repetitive cycle: First, you write a test that defines a desired improvement or new function. Then, you write the minimum amount of code to pass that test. Finally, you refactor the code to acceptable standards. This cycle is often referred to as "Red-Green-Refactor".

The benefits of TDD include improved code quality (since tests drive the design), reduced debugging time (tests act as living documentation and regression checks), and increased confidence in the code's correctness. While working on personal projects or proof-of-concept implementations, I've used TDD to ensure core functionalities are well tested from the beginning. For example, when creating a simple API endpoint, I would first write a test to check that the endpoint returns the expected data for a valid request before implementing the actual API logic. Example using Jest: test('should return 200 status code', () => { /* assertion */ });
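One Red-Green-Refactor cycle can be sketched in Python. The parse_price function is a hypothetical example; the comments mark which step each part belongs to.

```python
from decimal import Decimal


# Step 1 (red): write the test first. Before parse_price existed, running this
# test failed with a NameError, which is the expected "red" state.
def test_parse_price():
    assert parse_price("$1,299.50") == Decimal("1299.50")
    assert parse_price("0") == Decimal("0")


# Step 2 (green): write the minimum code that makes the test pass.
def parse_price(text):
    """Hypothetical function: convert a price string like '$1,299.50' to a Decimal."""
    # Decimal avoids binary floating-point rounding errors with money
    return Decimal(text.replace("$", "").replace(",", ""))


# Step 3 (refactor): clean up names or structure without changing behavior,
# rerunning the test after each change to confirm it stays green. New behavior,
# such as rejecting "abc", starts a new cycle with its own failing test.
```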

7. How would you design a comprehensive test plan for a mobile application, considering different platforms and devices?

A comprehensive test plan for a mobile application across different platforms and devices involves several key stages. First, define the scope, objectives, and target audience. Then, identify the different platforms (iOS, Android, web) and a representative set of devices based on market share and user demographics. Next, create test cases covering functional testing (feature validation), usability testing (user experience), performance testing (load, stress, speed), security testing (data protection, authorization), compatibility testing (different OS versions and devices), and localization testing (language and regional settings). Accessibility testing and install/uninstall testing should be included as well.

Use a test management tool to organize test cases, track progress, and manage defects. Automate tests where feasible (e.g., using Appium, Espresso, XCUITest) for regression and performance testing. Prioritize testing based on risk and impact, and report test results and defect information clearly. Example platform-specific tools for checking an app's memory usage:

Android: adb shell dumpsys meminfo <package_name>

iOS: Instruments (Activity Monitor)

8. What are the strategies for testing software performance, including load testing, stress testing, and endurance testing?

Software performance testing involves evaluating the speed, stability, and scalability of a software application. Key strategies include: Load Testing, which simulates expected user traffic to assess response times and identify bottlenecks under normal conditions. Stress Testing exceeds the expected load to determine breaking points and system stability under extreme conditions. Endurance Testing (also called soak testing) maintains a consistent load over a prolonged period to identify memory leaks or performance degradation over time.

These tests often involve specific metrics like response time, throughput, resource utilization (CPU, memory, disk I/O), and error rates. Tools are used to simulate users and monitor system behavior. For example, JMeter or Gatling can be used to simulate user load, while tools like Prometheus can monitor system resources. The choice of tool depends on the technology and scale being tested.
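To show the idea behind tools like JMeter or Gatling, here is a toy load generator using only the standard library. It is a sketch, not a replacement for those tools: target is a hypothetical callable that would normally send one request to a test environment, each thread stands in for one concurrent user, and p95 is a simple nearest-rank estimate.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(target):
    """Run one simulated request; return (latency_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        target()
        ok = True
    except Exception:
        ok = False
    return time.perf_counter() - start, ok


def run_load(target, users=10, requests_per_user=20):
    """Send requests from `users` concurrent workers and summarize the results."""
    total = users * requests_per_user
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        results = list(pool.map(lambda _: timed_call(target), range(total)))
    wall = max(time.perf_counter() - wall_start, 1e-9)  # guard against zero
    latencies = sorted(latency for latency, _ in results)
    return {
        "requests": total,
        "error_rate": sum(not ok for _, ok in results) / total,
        "throughput_rps": total / wall,
        "p50_s": statistics.median(latencies),
        "p95_s": latencies[int(0.95 * (total - 1))],  # nearest-rank estimate
    }
```

Raising users until the error rate or p95 climbs approximates a stress test, and running the same load for hours while watching memory approximates an endurance test.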

9. Describe the process of creating and maintaining a robust test data management strategy.

Creating a robust test data management (TDM) strategy involves several key phases. First, planning and analysis focuses on identifying test data requirements based on test cases and data sensitivity. This includes defining data types, volumes, and dependencies. Next, data creation encompasses generating synthetic data, masking production data, or extracting subsets of production data. Data masking is critical to ensure compliance with privacy regulations. Then, data provisioning involves delivering the necessary test data to the testing environments, often through automation. Finally, data maintenance requires regularly refreshing test data, archiving old data, and ensuring consistency and accuracy.

Maintaining a TDM strategy involves continuous monitoring and improvement. Key considerations include version control of test data, automating data provisioning and refresh cycles, implementing data quality checks to ensure accuracy and consistency, and defining clear roles and responsibilities for managing test data and for compliance with data privacy regulations (e.g., GDPR). Tools like data masking software, synthetic data generators, and test data management platforms are often employed to streamline these processes.
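The masking step can be illustrated with keyed hashing (HMAC), one common pseudonymization technique. It is shown here as a sketch rather than a full TDM tool; the record fields and key are hypothetical. Because the same input always yields the same token, relationships between tables survive masking.

```python
import hashlib
import hmac

# Hypothetical secret kept outside the test environment; rotating it changes all tokens
MASKING_KEY = b"replace-with-a-secret-from-your-vault"


def pseudonymize(value, prefix):
    """Deterministically replace a sensitive value with a stable, non-reversible token."""
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"


def mask_customer(record):
    """Return a masked copy of a (hypothetical) customer record for test use."""
    masked = dict(record)
    masked["name"] = pseudonymize(record["name"], "name")
    # Keep a valid email shape so format validation still passes in tests
    masked["email"] = pseudonymize(record["email"], "user") + "@example.test"
    # Keep only the last four digits, a common convention for card numbers
    masked["card"] = "*" * 12 + record["card"][-4:]
    return masked
```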

10. How do you prioritize test cases when time and resources are limited? What factors do you consider?

When time and resources are limited for testing, I prioritize test cases based on risk, impact, and coverage. High-risk areas, such as critical functionalities or frequently used features, get the highest priority. I also consider the potential impact of a failure on the user experience and business operations. Test cases covering core functionalities and those likely to expose critical defects are prioritized.

Factors I consider include business criticality, failure probability, impact of failure, regulatory compliance requirements, recent code changes (testing recently modified areas first), and user impact. Techniques like risk-based testing, the Pareto principle (the 80/20 rule), and prioritization matrices help me make informed decisions so that the most important parts of the application are tested within the given constraints. I would also consider exploratory testing to cover areas that formal test cases might miss.
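A simple prioritization matrix can be expressed as risk = likelihood × impact. The Python sketch below uses hypothetical test names, 1 to 5 scores, and time estimates, then greedily fills a time budget with the highest-risk tests. Greedy selection is a practical heuristic here, not an optimal solution to the underlying knapsack problem.

```python
from dataclasses import dataclass


@dataclass
class PlannedTest:
    name: str
    likelihood: int  # 1 (rarely fails) to 5 (likely to fail, e.g. recently changed code)
    impact: int      # 1 (cosmetic) to 5 (business-critical or regulatory)
    minutes: int     # estimated execution time

    @property
    def risk(self):
        return self.likelihood * self.impact


def select_within_budget(tests, budget_minutes):
    """Greedily pick the highest-risk tests that fit in the time budget."""
    chosen, used = [], 0
    # Highest risk first; on ties, prefer the cheaper test.
    for test in sorted(tests, key=lambda t: (-t.risk, t.minutes)):
        if used + test.minutes <= budget_minutes:
            chosen.append(test.name)
            used += test.minutes
    return chosen


suite = [
    PlannedTest("checkout_payment", likelihood=4, impact=5, minutes=30),
    PlannedTest("login", likelihood=3, impact=5, minutes=10),
    PlannedTest("report_export", likelihood=3, impact=3, minutes=25),
    PlannedTest("profile_avatar", likelihood=2, impact=1, minutes=5),
]
```

With a 45-minute budget, `select_within_budget(suite, 45)` returns `["checkout_payment", "login", "profile_avatar"]`: the export test has higher risk than the avatar test but no longer fits once the two critical tests are scheduled.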

11. Explain the concept of continuous integration and continuous delivery (CI/CD) and how testing fits into the pipeline.

Continuous Integration (CI) and Continuous Delivery (CD) are practices designed to release software changes more frequently and reliably. CI automates the integration of code changes from multiple developers into a shared repository: each integration triggers automated builds and tests so that integration errors are detected quickly. CD builds on CI by automatically releasing tested changes to environments such as staging, keeping every change ready to deploy to production; in continuous deployment, the production release itself is also automated. Together, they form a pipeline where code changes are automatically built, tested, and deployed.

Testing is integral to the CI/CD pipeline. Automated tests at various stages (unit, integration, end-to-end) validate code changes. If tests fail, the pipeline stops, preventing faulty code from being deployed. This feedback loop lets developers identify and fix issues early, improving quality and speeding up delivery. Different types of tests typically run at different points: fast unit tests run first, followed by integration tests that check the dependencies between modules, and then end-to-end tests that exercise the system as a whole. Each stage increases confidence in the code before release.
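The "stop on failure" behavior of a pipeline can be modeled in a few lines. This Python sketch is only an illustration of the ordering logic a CI server applies; the stage functions are hypothetical placeholders for real test commands, and the integration stage is hard-coded to fail so the effect is visible.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, bool]]:
    """Run stages in order and stop at the first failure (fail fast)."""
    results = []
    for name, run_stage in stages:
        passed = run_stage()
        results.append((name, passed))
        if not passed:
            break  # later stages, including deployment, never run
    return results


# Hypothetical stage functions; a real pipeline would invoke test runners here.
def unit_tests() -> bool:
    return True


def integration_tests() -> bool:
    return False  # simulate a broken dependency between modules


def end_to_end_tests() -> bool:
    return True


def deploy() -> bool:
    return True


pipeline = [
    ("unit", unit_tests),
    ("integration", integration_tests),
    ("end-to-end", end_to_end_tests),
    ("deploy", deploy),
]
```

Running `run_pipeline(pipeline)` returns `[("unit", True), ("integration", False)]`, so neither the end-to-end tests nor the deployment step runs.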

12. How do you approach testing security aspects of a web application, such as authentication, authorization, and data protection?

Testing web application security involves a multi-faceted approach. For authentication, I'd verify login functionality with valid and invalid credentials, test password reset mechanisms, and assess session management. Authorization testing would include checking role-based access control (RBAC) by attempting to access resources with insufficient privileges, as well as verifying proper handling of different user roles. Data protection testing involves techniques like penetration testing for SQL injection and cross-site scripting (XSS) vulnerabilities. I'd use tools like OWASP ZAP or Burp Suite for these penetration tests.
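At its core, an SQL injection test sends a malicious payload and asserts that it cannot change the meaning of the query. This Python sketch uses the standard library's `sqlite3` and a hypothetical `users` table to contrast a vulnerable, string-built query with a parameterized one. Scanners like OWASP ZAP apply the same idea to a running application with many more payloads.

```python
import sqlite3


def setup_db():
    """In-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password_hash TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)", [("alice", "hash-a"), ("bob", "hash-b")]
    )
    return conn


def find_user_unsafe(conn, username):
    # Vulnerable: user input is concatenated straight into the SQL string.
    query = f"SELECT username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Parameterized: the driver passes the input as data, never as SQL.
    return conn.execute(
        "SELECT username FROM users WHERE username = ?", (username,)
    ).fetchall()


INJECTION_PAYLOAD = "' OR '1'='1"
```

With the payload, the unsafe query becomes `... WHERE username = '' OR '1'='1'` and returns every user, while the parameterized version looks for a user literally named `' OR '1'='1` and returns nothing.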

13. What is mutation testing, and how does it help improve the quality of test suites?

Mutation testing is a type of software testing that involves introducing small, artificial faults (mutations) into the source code of a program. The aim is to evaluate the quality of the test suite by determining if these faults are detected by the existing tests. Each mutated version of the code is called a mutant. A test suite is considered effective if it can 'kill' a high percentage of mutants (i.e., detect the introduced faults).

Mutation testing improves test suite quality by identifying weaknesses in the test cases. If a mutant survives (is not killed by any test), it indicates either an equivalent mutant (functionally identical to the original) or a missing or inadequate test case. This prompts developers to write additional or improved tests that specifically target and kill the surviving mutants, increasing the overall fault detection capability and robustness of the test suite. For example, if a function add(a, b) is tested only with add(2, 2) == 4, a mutant that replaces + with * survives because 2 * 2 also equals 4, which signals the need for more comprehensive test inputs.
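The add(a, b) example can be reproduced with a toy mutation tester. This Python sketch uses the standard `ast` module to apply a single mutation operator (replacing `+`) and checks which mutants each suite kills. Real tools such as PIT for Java or mutmut for Python apply many operators across a whole codebase; the suites here are hypothetical, written for illustration.

```python
import ast

# The unit under test, kept as source text so it can be mutated.
SOURCE = "def add(a, b):\n    return a + b\n"


class ReplaceAdd(ast.NodeTransformer):
    """Mutation operator: replace every `+` with another arithmetic operator."""

    def __init__(self, new_op):
        self.new_op = new_op

    def visit_BinOp(self, node):
        self.generic_visit(node)
        if isinstance(node.op, ast.Add):
            node.op = self.new_op()
        return node


def build_add(transformer=None):
    """Compile SOURCE (optionally mutated) and return its `add` function."""
    tree = ast.parse(SOURCE)
    if transformer is not None:
        tree = transformer.visit(tree)
    namespace = {}
    exec(compile(ast.fix_missing_locations(tree), "<mutant>", "exec"), namespace)
    return namespace["add"]


def weak_suite(add):
    return add(2, 2) == 4


def stronger_suite(add):
    return add(2, 2) == 4 and add(2, 3) == 5


def surviving_mutants(suite):
    """Names of mutants the suite fails to kill (i.e. the suite still passes)."""
    survivors = []
    for op in (ast.Sub, ast.Mult):
        if suite(build_add(ReplaceAdd(op))):
            survivors.append(op.__name__)
    return survivors
```

`surviving_mutants(weak_suite)` returns `["Mult"]`, while adding the single input `add(2, 3) == 5` in `stronger_suite` kills both mutants and returns `[]`.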

14. How do you handle flaky tests in an automated testing environment? What steps do you take to identify and resolve them?

Flaky tests are a real pain in automated testing. My approach involves several steps. First, identification. I look for tests that pass and fail intermittently without any code changes. Test reports and CI/CD systems often flag these. I would use tools like test history dashboards in the CI/CD system or specialized tools designed to track test flakiness over time.

Next, I focus on resolving the flakiness. This involves:

- Isolating the test: Run the test repeatedly in isolation to confirm the flakiness.

- Identifying the root cause: Common causes include asynchronous operations (e.g., waiting for an API call), timing issues, resource contention (e.g., database locks), and external dependencies. I might add extra logging, increase timeouts, use more robust synchronization primitives, or mock external dependencies. For example, when dealing with asynchronous operations in JavaScript, I'd make sure every Promise is properly awaited and wait for the actual condition with `async`/`await` rather than relying on fixed `setTimeout` delays. If database connections are the issue, connection pooling can help.

- Fixing the test or the code: Once the cause is identified, either the test is rewritten to be more robust, or the underlying code is fixed.

- Quarantine: If a test can't be immediately fixed, it might be temporarily quarantined (disabled) to prevent blocking the CI/CD pipeline, but with a clear plan to revisit and fix it.

- Reporting: Document the flaky test, the root cause, and the solution or quarantine strategy to ensure transparency and prevent recurring issues.
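Two of these steps lend themselves to small helpers. The Python sketch below re-runs a single test in isolation to confirm flakiness and replaces fixed sleeps with condition-based polling. The helper names and default timings are assumptions for illustration, not features of a particular test framework.

```python
import time


def flakiness_report(test_fn, runs=20):
    """Re-run one test in isolation; mixed results without code changes suggest flakiness."""
    passes = 0
    for _ in range(runs):
        try:
            test_fn()
            passes += 1
        except Exception:
            pass  # any failure (assertion, timeout, connection error) counts as a fail
    return {"runs": runs, "passes": passes, "flaky": 0 < passes < runs}


def wait_until(predicate, timeout_s=5.0, interval_s=0.05):
    """Poll until predicate() is true instead of sleeping for a fixed time."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if predicate():
            return True
        time.sleep(interval_s)
    return bool(predicate())  # one final check at the deadline
```

Polling returns as soon as the condition holds and fails only after the full timeout, so the test is both faster in the common case and more tolerant of slow environments than a hard-coded sleep.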

15. Describe your experience with testing different types of databases and ensuring data integrity.

I have experience testing various types of databases, including relational databases like PostgreSQL and MySQL, as well as NoSQL databases such as MongoDB and Redis. My testing approaches differ based on the database type. For relational databases, I focus on schema validation, referential integrity, and transaction management using SQL queries for data verification.

Ensuring data integrity involves several strategies. I use tools like dbUnit for setting up known states and comparing database snapshots. I write SQL scripts to perform data validation, checking for constraints, data types, and relationships. In NoSQL databases, I perform similar validation checks using queries specific to the database (e.g., MongoDB's query language). I also use automated tests integrated into CI/CD pipelines to validate data after migrations or updates.
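Here is a small illustration of these checks against a relational database, using Python's built-in `sqlite3` and a hypothetical `customers`/`orders` schema. It shows two complementary techniques: asserting that constraints reject bad writes, and running a validation query that finds orphaned rows, which is useful after a migration or on a legacy database where constraints were never enforced.

```python
import sqlite3


def make_db(enforce_foreign_keys=True):
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is on for the connection.
    conn.execute(f"PRAGMA foreign_keys = {'ON' if enforce_foreign_keys else 'OFF'}")
    conn.executescript(
        """
        CREATE TABLE customers (
            id INTEGER PRIMARY KEY,
            email TEXT NOT NULL UNIQUE
        );
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total_cents INTEGER NOT NULL CHECK (total_cents >= 0)
        );
        """
    )
    return conn


def is_rejected(conn, sql, params):
    """True if the database refuses the write with an integrity error."""
    try:
        conn.execute(sql, params)
    except sqlite3.IntegrityError:
        return True
    return False


def orphaned_orders(conn):
    """Validation query: orders whose customer does not exist."""
    return conn.execute(
        """
        SELECT o.id FROM orders AS o
        LEFT JOIN customers AS c ON c.id = o.customer_id
        WHERE c.id IS NULL
        """
    ).fetchall()


INSERT_ORDER = "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)"
```

With enforcement on, an order for a nonexistent customer or with a negative total is rejected. With it off, the same insert succeeds, and `orphaned_orders` is what catches the inconsistency.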

16. How do you ensure that test environments are consistent and reliable across different stages of testing?

Ensuring consistent and reliable test environments involves several strategies. Infrastructure as Code (IaC) tools like Terraform or Ansible can automate environment provisioning, so that every environment is built from the same definitions. Configuration management tools like Chef or Puppet further standardize configurations. Containerization using Docker isolates applications and their dependencies, promoting consistency across environments. Version control for environment configurations allows tracking and reverting changes. Data masking or anonymization protects sensitive information while keeping data consistent across environments. Environment monitoring provides early warnings of deviations or degradation.

Regular environment refreshes from a golden image or template help maintain a clean state. Automating test data generation and management ensures data consistency for each test run. Clear documentation of environment setup, dependencies, and configuration parameters is crucial. Finally, continuous integration and continuous deployment (CI/CD) pipelines enable automated environment creation and testing, reducing manual errors and ensuring environments are consistently built and tested.
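Monitoring for deviations often comes down to comparing each environment's settings against a golden baseline. This Python sketch assumes the settings have already been collected into flat dictionaries (in practice they might come from IaC state, container image tags, or service version endpoints). The keys and values shown are hypothetical.

```python
MISSING = "<missing>"


def config_drift(baseline, candidate):
    """Return {key: (expected, actual)} for every setting that differs from the baseline."""
    drift = {}
    for key in sorted(baseline.keys() | candidate.keys()):
        expected = baseline.get(key, MISSING)
        actual = candidate.get(key, MISSING)
        if expected != actual:
            drift[key] = (expected, actual)
    return drift


golden = {"python": "3.11", "postgres": "15.4", "feature_x_enabled": "false"}
staging = {"python": "3.11", "postgres": "14.9", "debug": "true"}
```

Here `config_drift(golden, staging)` flags the older Postgres version, the missing feature flag, and the unexpected `debug` setting, while an environment refreshed from the golden template reports no drift.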

17. Explain the challenges of testing legacy systems and how you would approach modernizing the testing process.

Testing legacy systems presents several challenges: limited or no documentation, which makes it difficult to understand the system's functionality and intended behavior; a lack of automated tests, which forces time-consuming and error-prone manual testing; tightly coupled, complex code, which increases the risk of introducing new defects whenever changes are made; and difficulty setting up test environments that accurately mimic production.

To modernize the testing process, I would start by:

- Analyzing the system: Prioritize modules based on risk and business impact, and document the existing functionality as much as possible.

- Introducing automation gradually: Start with unit tests for new code and critical components, and use techniques like characterization testing to capture the existing behavior of legacy code.

- Improving testability: Refactor code to reduce coupling and improve modularity, making it easier to test. Introduce dependency injection or other design patterns to improve isolation.

- Implementing a CI/CD pipeline: Automate the build, test, and deployment processes to enable faster feedback and more frequent releases.

- Utilizing modern testing tools: Introduce tools for test management, defect tracking, and performance testing.

- Prioritizing integration and end-to-end tests: As the system becomes more modular, focus on testing the interactions between components to ensure that the system functions as a whole.
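Characterization testing records what legacy code currently does, quirks included, before anyone changes it. In this Python sketch, `legacy_shipping_cost` and its unexplained discount are hypothetical; the snapshot is kept in memory for brevity, although in practice it would be saved as a golden file under version control.

```python
def legacy_shipping_cost(weight_kg, express):
    """Hypothetical legacy function with an undocumented quirk."""
    cost = 5 + weight_kg * 2
    if express:
        cost *= 1.5
    if weight_kg > 20:
        cost -= 3  # quirk nobody remembers the reason for
    return round(cost, 2)


SAMPLE_INPUTS = [(0, False), (1, True), (10, False), (21, False), (21, True)]


def record_behavior(fn, inputs):
    """Capture current outputs as a snapshot keyed by the input arguments."""
    return {args: fn(*args) for args in inputs}


def changed_behavior(fn, snapshot):
    """Return the inputs whose output no longer matches the recorded snapshot."""
    return [args for args, expected in snapshot.items() if fn(*args) != expected]


def refactored_shipping_cost(weight_kg, express):
    """A 'cleanup' that silently drops the quirk."""
    cost = (5 + weight_kg * 2) * (1.5 if express else 1)
    return round(cost, 2)
```

Checking `refactored_shipping_cost` against the snapshot flags `(21, False)` and `(21, True)`, so the team must decide deliberately whether the quirk is a bug or a business rule instead of changing it by accident.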

18. How do you measure the effectiveness of your testing efforts? What metrics do you track?

I measure the effectiveness of my testing efforts by tracking several key metrics. These metrics help me understand the quality of the software, the efficiency of the testing process, and the value that testing is providing.

Specifically, I monitor metrics such as:

- Defect Density: Number of defects found per unit of code size (e.g., per thousand lines of code).

- Defect Severity: Distribution of defects by severity level (e.g., critical, high, medium, low).

- Defect Leakage: Number of defects that escape into production.

- Test Coverage: Percentage of code or requirements covered by tests.

- Test Execution Rate: Percentage of tests executed within a given timeframe.

- Test Pass/Fail Rate: Percentage of tests that pass versus fail.

- Test Automation Rate: Percentage of tests that are automated.

- Mean Time To Detect (MTTD): Average time taken to detect a defect.

- Mean Time To Resolve (MTTR): Average time taken to resolve a defect.

By analyzing these metrics, I can identify areas for improvement in both the testing process and the software itself. For instance, a high defect leakage rate might indicate the need for more thorough testing or better test coverage.
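Several of these metrics are simple ratios over defect records. The Python sketch below assumes a minimal, hypothetical record shape (where a defect was found, when it was opened and resolved) rather than any particular defect tracker's export format, and expresses leakage as a percentage of all defects.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Defect:
    severity: str
    found_in: str                 # "testing" or "production"
    opened: datetime
    resolved: Optional[datetime]  # None while the defect is still open


def defect_density(defects: List[Defect], kloc: float) -> float:
    """Defects per thousand lines of code."""
    return len(defects) / kloc


def defect_leakage_pct(defects: List[Defect]) -> float:
    """Share of all defects that were found in production rather than in testing."""
    escaped = sum(1 for d in defects if d.found_in == "production")
    return 100 * escaped / len(defects) if defects else 0.0


def mttr_hours(defects: List[Defect]) -> float:
    """Mean time to resolve, counting only defects that have been resolved."""
    durations = [
        (d.resolved - d.opened).total_seconds() / 3600
        for d in defects
        if d.resolved is not None
    ]
    return sum(durations) / len(durations) if durations else 0.0
```

For four defects in 2 KLOC, one of which was found in production, this gives a density of 2.0 defects per KLOC and a leakage of 25%. Open defects are excluded from MTTR so they do not drag the average down.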

19. Describe your experience with exploratory testing and how it complements other testing techniques.

Exploratory testing is a dynamic testing approach where test design, test execution, and test result evaluation are performed simultaneously. My experience involves using it to uncover unexpected issues and edge cases that might be missed by scripted tests. It's particularly effective when requirements are vague or rapidly changing. I often use mind maps and session-based test management to structure my exploratory testing efforts, focusing on areas of high risk or complexity.

Exploratory testing complements other techniques like unit, integration, and regression testing. While scripted tests ensure core functionality works as expected, exploratory testing provides a layer of creativity and adaptability, identifying usability problems, performance bottlenecks, or security vulnerabilities that predefined tests may overlook. It also helps in creating more robust and comprehensive test cases for future regression suites based on the issues discovered during exploration.

20. How would you approach testing a software application for accessibility, ensuring it meets the needs of users with disabilities?

To test an application for accessibility, I'd start by understanding the relevant accessibility standards like WCAG (Web Content Accessibility Guidelines) and Section 508. My approach would involve a combination of automated and manual testing techniques. Automated tools can quickly identify common issues like missing alt text or insufficient color contrast. Manual testing is crucial for evaluating the user experience for individuals with disabilities, using assistive technologies such as screen readers, screen magnifiers, and keyboard-only navigation.

Specifically, I would check for things like proper semantic HTML structure, keyboard navigation (tab order, focus indicators), alternative text for images, video captions, ARIA attributes where needed, color contrast, and responsive design for different screen sizes. Usability testing with users who have disabilities is the most valuable step in determining if the application is truly accessible and usable.
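Automated tools catch only the machine-detectable part of this checklist. As a rough illustration, this Python sketch uses the standard library's `html.parser` to flag two of the issues above: images without an `alt` attribute (an empty `alt=""` is valid for decorative images) and positive `tabindex` values that override the natural tab order. It is a toy, not a replacement for a full accessibility checker or for manual testing with assistive technologies.

```python
from html.parser import HTMLParser


class AccessibilityAudit(HTMLParser):
    """Flag a few machine-detectable issues; this is no substitute for manual testing."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        line, _ = self.getpos()
        # A missing alt attribute is an error; alt="" is valid for decorative images.
        if tag == "img" and "alt" not in attributes:
            self.issues.append((line, f"<img src={attributes.get('src')!r}> has no alt attribute"))
        # Positive tabindex values override the natural keyboard tab order.
        tabindex = attributes.get("tabindex")
        if tabindex and tabindex.strip().isdigit() and int(tabindex) > 0:
            self.issues.append((line, f"<{tag}> uses tabindex={tabindex}"))


def audit_html(markup):
    auditor = AccessibilityAudit()
    auditor.feed(markup)
    auditor.close()
    return auditor.issues
```

Each issue is reported with its source line number, so the output can be attached directly to a bug report.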

Program Testing interview questions for experienced

1. How do you approach testing a feature with poorly defined requirements?

When faced with poorly defined requirements, I prioritize clarification and collaboration. First, I'd actively engage with stakeholders (product owners, business analysts, developers) to uncover implicit assumptions and fill in the gaps. I'd ask clarifying questions, propose concrete examples, and iterate on user stories or acceptance criteria until a shared understanding emerges. If possible, I'd try to understand the 'why' behind the feature to better infer the expected behavior.