Test Duration

~30 mins

Difficulty Level

Moderate

Questions

- 15 Spark MCQs

Availability

Available as custom test

The Apache Spark Online Test evaluates the candidate's ability to transform structured data with the RDD API and Spark SQL (Datasets and DataFrames), convert big-data challenges into iterative or multi-stage Spark scripts, optimize existing Spark jobs using partitioning and caching, and analyze graph structures using GraphX.

Covered skills:

The Spark Online Test helps recruiters and hiring managers identify qualified candidates from a pool of resumes and make objective hiring decisions. It reduces the administrative overhead of interviewing too many candidates and saves time by filtering out unqualified candidates at the first step of the hiring process.

The test screens for the following skills that hiring managers look for in candidates:

Use Adaface tests trusted by recruitment teams globally. Adaface skill assessments measure on-the-job skills of candidates, providing employers with an accurate tool for screening potential hires.

We place a strong focus on the quality of questions that test for on-the-job skills. Every question is non-googleable, and we set a very high bar for the subject matter experts we onboard to create these questions. Our crawlers check whether any of the questions have leaked online. If a question is leaked, we get an alert, replace the question for you, and let you know.

How we design questions

These are just a small sample from our library of 15,000+ questions. The actual questions on this Spark Test will be non-googleable.

| 🧐 Question | 🔧 Skill | 💪 Difficulty | ⌛ Time |
|---|---|---|---|
| Character count | Spark | Easy | 2 mins |
| File system director | Spark | Medium | 3 mins |
| Grade-Division-Points | Spark | Medium | 4 mins |

With Adaface, we were able to optimise our initial screening process by upwards of 75%, freeing up precious time for both hiring managers and our talent acquisition team alike!

Brandon Lee, Head of People, Love, Bonito

It's very easy to share assessments with candidates and for candidates to use. We get good feedback from candidates about completing the tests. Adaface are very responsive and friendly to deal with.

Kirsty Wood, Human Resources, WillyWeather

We were able to close 106 positions in a record time of 45 days! Adaface enables us to conduct aptitude and psychometric assessments seamlessly. My hiring managers have never been happier with the quality of candidates shortlisted.

Amit Kataria, CHRO, Hanu

We evaluated several of their competitors and found Adaface to be the most compelling. Great library of questions that are designed to test for fit rather than memorization of algorithms.

Swayam Narain, CTO, Affable

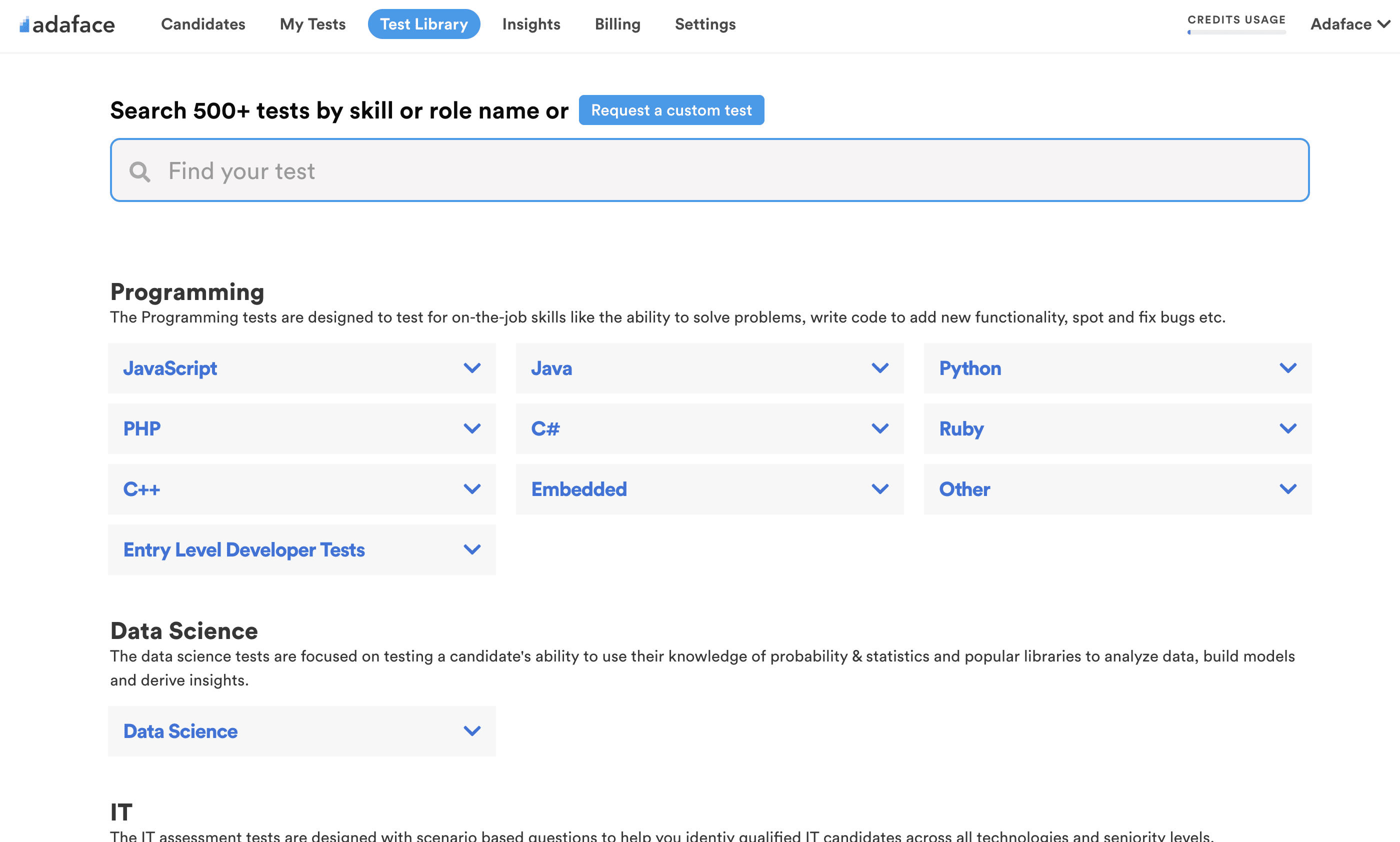

The Adaface test library features 500+ tests that let you assess candidates on all popular skills: programming languages, software frameworks, DevOps, logical reasoning, abstract reasoning, critical thinking, fluid intelligence, content marketing, talent acquisition, customer service, accounting, product management, sales, and more.

The Spark Test is designed to evaluate a candidate's knowledge and skills in Apache Spark. Recruiters use it to identify candidates who are proficient in Spark-related tasks such as developing and running Spark jobs, processing data with Spark SQL, and more. It is helpful when hiring for roles that require Spark expertise.

Yes, recruiters can request a single custom test with multiple skills, including PySpark. For more details on the PySpark Test, please refer to our test library.

The Spark Test covers a wide range of skills, including Spark Core fundamentals, Spark SQL, DataFrames and Datasets, Spark Streaming, running Spark on a cluster, iterative algorithms, the GraphX library, job tuning, and migrating data from various sources.

Use our assessment software at the early stages of your recruitment process. Add a link to the assessment in your job post or invite candidates via email. Adaface helps you identify the most skilled candidates quickly.

Yes, you can combine both Spark and SQL skills in a single test. This is particularly useful for roles requiring expertise in data processing and analysis. For more details, refer to the SQL Online Test.

Popular Big Data tests include:

Yes, absolutely. Custom assessments are set up based on your job description, and will include questions on all must-have skills you specify. Here's a quick guide on how you can request a custom test.

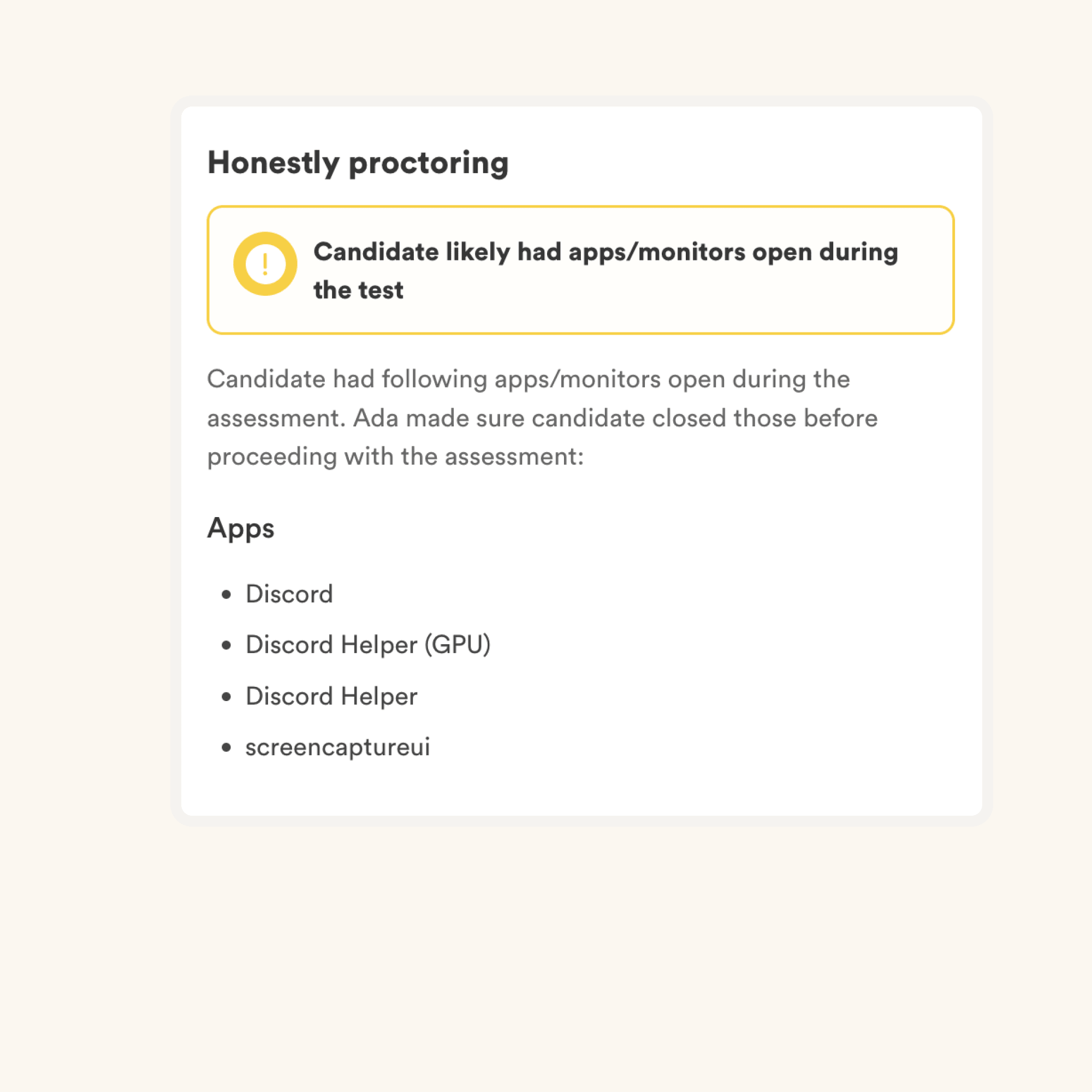

We have the following anti-cheating features in place:

Read more about the proctoring features.

The primary thing to keep in mind is that an assessment is an elimination tool, not a selection tool. A skills assessment is optimized to help you eliminate candidates who are not technically qualified for the role; it is not optimized to help you find the best candidate for the role. So the ideal way to use an assessment is to decide on a threshold score (typically 55%; we help you benchmark it) and invite all candidates who score above the threshold to the next rounds of interviews.
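A minimal sketch of the threshold approach described above, written in Python. The `Result` record, the `shortlist` function, and the sample candidates are hypothetical illustrations, not part of Adaface. Treating a score exactly equal to the threshold as a pass is also an assumption. The function deliberately keeps candidates in their original order instead of sorting by score, because the assessment is used to eliminate, not to rank.

```python
from dataclasses import dataclass


@dataclass
class Result:
    """Hypothetical record of one candidate's assessment result."""
    candidate: str
    score: float  # percentage score, 0 to 100


def shortlist(results: list[Result], threshold: float = 55.0) -> list[str]:
    """Return every candidate who clears the threshold.

    Assumption: a score equal to the threshold counts as a pass.
    Candidates keep their original order and are not ranked, because the
    assessment only eliminates candidates who are not technically qualified.
    """
    return [r.candidate for r in results if r.score >= threshold]


results = [
    Result("cand-a", 72.0),
    Result("cand-b", 40.0),
    Result("cand-c", 55.0),
    Result("cand-d", 61.5),
]
print(shortlist(results))  # ['cand-a', 'cand-c', 'cand-d']
```

Every candidate in the returned list moves on to the interview rounds; the scores are not used to choose among them.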

Each Adaface assessment is customized to your job description or ideal candidate persona (our subject matter experts pick the right questions for your assessment from our library of 10,000+ questions). The assessment can be customized for any experience level.

Yes, it makes it much easier for you to compare candidates. The options for MCQ questions and the order of questions are randomized, and we have anti-cheating and proctoring features in place. On our enterprise plan, you can also create multiple versions of the same assessment with questions of similar difficulty levels.

No. Unfortunately, we do not support practice tests at the moment. However, you can use our sample questions for practice.

You can check out our pricing plans.

Yes, you can sign up for free and preview this test.

Here is a quick guide on how to request a custom assessment on Adaface.