Hiring a skilled game animator can be challenging: it takes a keen eye for talent and a solid understanding of the animation pipeline. Recruiters and hiring managers need to ask the right questions to assess a candidate’s technical skills, artistic abilities, and problem-solving acumen, and to judge whether those skills match what a game animator role requires.

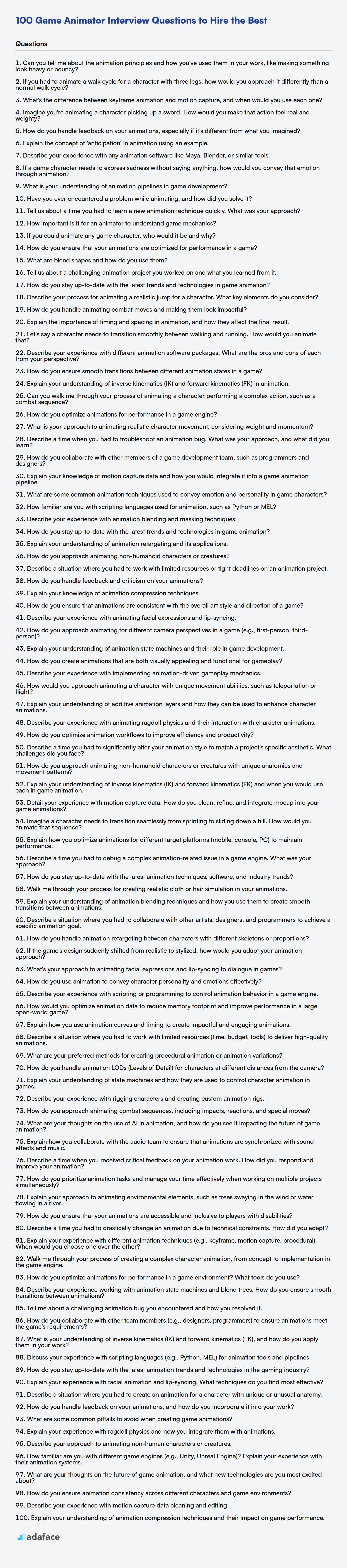

This blog post provides a curated list of interview questions for game animators, covering various experience levels from freshers to advanced professionals. You will find a mix of technical questions, behavioral questions, and multiple-choice questions (MCQs) to comprehensively evaluate candidates.

By using these interview questions, you'll be able to identify top-tier game animators who can bring your game worlds to life. Before the interview, consider using a Unity test to screen candidates for their animation skills, saving you time and resources.


Game Animator interview questions for freshers

1. Can you tell me about the animation principles and how you've used them in your work, like making something look heavy or bouncy?

The animation principles, often called the 12 principles of animation, are fundamental guidelines for creating believable and engaging movement. I've used squash and stretch to exaggerate motion and convey weight, making objects appear bouncy or impactful. For example, in a bouncing ball animation, the ball squashes on impact to show its flexibility and stretches as it moves through the air to emphasize its speed. Timing and spacing are also crucial: closer spacing between frames creates slower movement, while wider spacing creates faster movement. To make something appear heavy, I would use slower timing and less squash and stretch, whereas a lighter object would get faster timing and more exaggerated squash and stretch. Anticipation prepares the audience for an action, while follow-through and overlapping action add realism. Follow-through is the way parts of an object continue to move after the main object stops, and overlapping action means that different parts of an object move at different rates. By applying these principles, I can create animations that are not only visually appealing but also communicate weight, force, and personality effectively.
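Squash and stretch on a ball is usually set up so the ball keeps its volume while it deforms. The sketch below assumes a ball deformed along a single axis; the helper names, the speed-to-stretch mapping, and the 1.3 cap are illustrative choices, not a standard.

```python
import math


def squash_stretch_scale(stretch: float) -> tuple[float, float]:
    """Return (side_scale, axis_scale) for a ball deformed along its motion axis.

    stretch > 1 elongates the ball (fast motion through the air);
    stretch < 1 flattens it (impact). The two perpendicular axes are scaled by
    1 / sqrt(stretch), so side * side * stretch == 1 and the volume is preserved.
    """
    if stretch <= 0:
        raise ValueError("stretch must be positive")
    return 1.0 / math.sqrt(stretch), stretch


def stretch_from_speed(speed: float, max_speed: float, max_stretch: float = 1.3) -> float:
    """Hypothetical mapping: 1.0 at rest, rising linearly to max_stretch at max_speed."""
    t = min(abs(speed) / max_speed, 1.0)
    return 1.0 + (max_stretch - 1.0) * t
```

A heavier object would get a smaller `max_stretch` (less deformation); an impact squash is simply a value below 1, such as `squash_stretch_scale(0.7)`.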

2. If you had to animate a walk cycle for a character with three legs, how would you approach it differently than a normal walk cycle?

Animating a three-legged walk cycle presents unique challenges compared to a standard bipedal walk. The key difference lies in maintaining balance and creating a believable sense of weight distribution. Instead of a simple left-right-left pattern, you'd need to consider a tripod of support at times.

I would approach it by focusing on these points. First, defining the support pattern: when all three feet are planted they form a stable triangle of support, and for much of the cycle the character will have two legs on the ground while the third swings forward. Second, shifting the character's center of gravity significantly to maintain balance as the weight moves between legs. Finally, I'd carefully consider the timing and spacing of each leg's movement to avoid an awkward or unnatural gait, potentially borrowing from insects or tripod-like robots for reference. The up-and-down bob would likely be less pronounced and occur over a longer period.
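One way to plan the support pattern is to stagger the three legs by a third of a cycle each. In this sketch the function name, the two-thirds duty factor (the fraction of the cycle each foot spends on the ground), and the phase offsets are assumptions; with those values exactly two feet are planted on every frame and the legs take turns swinging.

```python
def feet_planted(frame: int, cycle_frames: int, duty: float = 2 / 3,
                 offsets: tuple[float, ...] = (0.0, 1 / 3, 2 / 3)) -> list[bool]:
    """Return, for each of the three legs, whether its foot is on the ground at `frame`.

    Each leg's phase runs from 0 to 1 over one cycle; the foot is planted for the
    first `duty` fraction of that phase and swinging for the rest. Staggering the
    legs by a third of a cycle means only one leg swings at a time.
    """
    phase = (frame % cycle_frames) / cycle_frames
    return [((phase + offset) % 1.0) < duty for offset in offsets]
```

Changing `duty` is a quick way to explore the gait: a higher value keeps all three feet down for longer (a slower, more cautious walk), while a lower one introduces moments with a single foot down.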

3. What's the difference between keyframe animation and motion capture, and when would you use each one?

Keyframe animation involves manually creating poses (keyframes) at specific points in time, and the software interpolates the movement between those poses. It's ideal for stylized or exaggerated movements, where precision and artistic control are paramount. Think of animating a cartoon character or creating a specific camera move.

Motion capture, on the other hand, records the movements of a real-world actor or object and translates that data into animation. Motion capture is generally preferred for realistic movements, especially for complex actions like fighting or dancing, where it would be difficult to achieve the same level of realism using keyframe animation alone. Motion capture often requires post-processing to clean up the data and adapt it to the target character or rig.
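At its simplest, the interpolation between keyframes can be shown with a linear curve. The sketch below assumes one animation channel stored as sorted `(frame, value)` pairs; animation packages normally use editable Bézier or spline tangents instead, which is where much of the artistic control in keyframing comes from.

```python
from bisect import bisect_right


def sample_keyframes(keys: list[tuple[float, float]], frame: float) -> float:
    """Evaluate a channel defined by (frame, value) keyframes at any frame.

    Keys must be sorted by frame. Before the first key and after the last key the
    value is held; in between, the value is linearly interpolated.
    """
    frames = [f for f, _ in keys]
    if frame <= frames[0]:
        return keys[0][1]
    if frame >= frames[-1]:
        return keys[-1][1]
    i = bisect_right(frames, frame)  # keys[i - 1].frame <= frame < keys[i].frame
    (f0, v0), (f1, v1) = keys[i - 1], keys[i]
    t = (frame - f0) / (f1 - f0)
    return v0 + (v1 - v0) * t
```

For example, with keys at frames 0, 10, and 20 holding 0, 100, and 50, frame 15 evaluates to 75.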

4. Imagine you're animating a character picking up a sword. How would you make that action feel real and weighty?

To make the action of a character picking up a sword feel real and weighty, focus on a few key elements. Firstly, timing and anticipation are crucial. The character shouldn't just instantly grab the sword. Instead, there should be a slight pause or a subtle shift in weight before they reach for it, telegraphing the intention and the effort required. Then, the speed of the movement is important. A heavy sword wouldn't be snatched up quickly; the motion should have a deliberate, slightly slower pace initially, accelerating as the character gets a better grip.

Secondly, pay attention to the character's body mechanics. Their entire body should be involved, not just their arm. Observe how a real person lifts something heavy. The legs might bend slightly for leverage, the core muscles engage, and the shoulders might rotate. Add subtle secondary motions, like the character adjusting their grip or bracing themselves, to sell the weight. Finally, consider adding a slight overshoot or recoil after the lift to emphasize the inertia and the effort involved. A small settle in the stance after the lift can further sell the feeling of weight.

5. How do you handle feedback on your animations, especially if it's different from what you imagined?

When receiving feedback on animations that differ from my initial vision, I focus on understanding the reasoning behind the suggestions. I actively listen and ask clarifying questions to grasp the stakeholder's perspective and goals. For example, "Can you elaborate on what feels off about the timing?" or "What is the intended user experience we are aiming for?". I avoid becoming defensive and treat feedback as a valuable opportunity to improve the animation and align it with project objectives.

My next step is to analyze the feedback objectively. If the suggestion is directly implementable, I'll make the change and seek further review. If the feedback requires more exploration, I prototype different approaches and present them for consideration, illustrating the pros and cons of each option. This collaborative approach often leads to animations that are more effective and better meet the desired outcome than my initial concept. Ultimately, the goal is to deliver an animation that serves the project's needs, not just my personal preferences.

6. Explain the concept of 'anticipation' in animation using an example.

Anticipation is a movement that prepares the audience for a major action. It is usually a small action in the opposite direction of the main action: it signals what's about to happen and adds believability and visual interest. Without it, actions can feel sudden and unnatural.

For example, before a character jumps, they might crouch down first. The crouch is the anticipation. It shows the audience they are about to jump and builds up the energy for the action. This brief downward motion prepares the viewer for the subsequent upward jump, making the movement feel more powerful and realistic.

7. Describe your experience with any animation software like Maya, Blender, or similar tools.

While I haven't directly used industry-standard animation software like Maya or Blender in a professional capacity, I've gained experience with animation concepts through related tools and libraries. For example, I've utilized Python's matplotlib library to create basic 2D animations for data visualization, and I'm familiar with the principles of keyframing, interpolation, and rendering involved in the animation pipeline. I also have a theoretical understanding of 3D modeling and animation workflows.

Furthermore, I have experimented with simpler animation tools and with game engines like Unity, which make it relatively easy to build animations for game characters and environments. I am eager to learn Maya, Blender, or a similar tool if the opportunity arises, and I believe my foundational knowledge would let me quickly grasp the specifics of those platforms.

8. If a game character needs to express sadness without saying anything, how would you convey that emotion through animation?

To convey sadness through animation without dialogue, focus on subtle physical cues. The character's posture would be key: a slight slouch, rounded shoulders, and a downward tilt of the head suggest dejection. The character's movements would be slow and deliberate, lacking energy or enthusiasm. The eyes are critical; a slight droop in the eyelids and a downward gaze can effectively communicate sorrow. Consider subtle details like trembling lips or a single tear running down the cheek.

Furthermore, I would build these elements into the character's idle animation. Instead of standing upright, they might lean against a wall or sit slumped on the ground, and the breathing cycle could be slower and shallower. The overall effect should be a visual representation of emotional weight and a lack of vitality.

9. What is your understanding of animation pipelines in game development?

Animation pipelines in game development describe the process of creating, implementing, and managing animations within a game engine. It typically involves several key stages:

- Modeling & Rigging: Creating the 3D model and setting up the skeleton (rig) for animation.

- Skinning/Weighting: Attaching the 3D model to the rig, defining how the mesh deforms with the bone movement.

- Animation: Animators create the actual motion, either through keyframing, motion capture, or procedural methods.

- Import & Integration: Importing the animation data (often in formats like FBX or glTF) into the game engine (e.g., Unity, Unreal Engine).

- Animation Controller/State Machine: Setting up logic to control which animations play and when, based on game events and player input.

- Blending & Transitioning: Smoothing transitions between animations to avoid jarring movements. Common techniques include blending animations together based on weights.

- Optimization: Reducing the size and complexity of animations to improve performance, especially on lower-end hardware, for example through animation compression.
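The animation controller / state machine stage boils down to a small piece of decision logic. This is a hypothetical sketch (the class names, the `speed` parameter, and the thresholds are made up); engine tools such as Unity's Animator Controller or Unreal's Animation Blueprints provide this visually and also handle the blending between states.

```python
from dataclasses import dataclass, field
from typing import Callable

Params = dict[str, float]


@dataclass
class Transition:
    target: str
    condition: Callable[[Params], bool]


@dataclass
class AnimStateMachine:
    state: str
    transitions: dict[str, list[Transition]] = field(default_factory=dict)

    def add(self, source: str, target: str, condition: Callable[[Params], bool]) -> None:
        self.transitions.setdefault(source, []).append(Transition(target, condition))

    def update(self, params: Params) -> str:
        """Follow the first transition out of the current state whose condition holds."""
        for transition in self.transitions.get(self.state, []):
            if transition.condition(params):
                self.state = transition.target
                break
        return self.state


# Example wiring for basic locomotion (thresholds are illustrative).
locomotion = AnimStateMachine("idle")
locomotion.add("idle", "walk", lambda p: p["speed"] > 0.1)
locomotion.add("walk", "run", lambda p: p["speed"] > 3.0)
locomotion.add("walk", "idle", lambda p: p["speed"] <= 0.1)
locomotion.add("run", "walk", lambda p: p["speed"] <= 3.0)
```

Only one transition is taken per update, so going from running to idle passes through walking over two updates; a real controller would also start a blend at each transition.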

10. Have you ever encountered a problem while animating, and how did you solve it?

Yes, I've encountered several animation problems. A common one is achieving smooth transitions between animation states, especially with complex rigs. Sometimes keyframes would 'pop' or create a jarring change. To solve this, I often adjust the easing curves of the keyframes, experimenting with Bézier curves to find a more natural flow. I also make sure the keyframes are properly aligned in time, avoiding abrupt shifts in position or rotation. For code-driven animations, I've used interpolation (lerping, or slerping for rotations) to generate intermediate frames and smooth out the motion.
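For rotations, slerp interpolates along the shortest arc between two orientations, which avoids the uneven speed and scaling that come from lerping quaternion components directly. A minimal sketch, assuming unit quaternions stored as `(w, x, y, z)` tuples; the small-angle fallback threshold is a common but arbitrary choice.

```python
import math

Quat = tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between two scalars."""
    return a + (b - a) * t


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation between two unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q are the same rotation; flip one to take the shorter path.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: normalized lerp avoids dividing by sin(~0).
        q = tuple(lerp(a, b, t) for a, b in zip(q0, q1))
        norm = math.sqrt(sum(c * c for c in q))
        return tuple(c / norm for c in q)
    theta = math.acos(dot)  # angle between the two quaternions
    s0 = math.sin((1 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
```

Halfway between the identity and a 90-degree turn about Z, `slerp` returns the 45-degree turn about Z.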

Another issue I faced was animating UI elements that needed to react to user input responsively, for example a button press. The problem was that the animation felt delayed. The solution was to drive the animation with requestAnimationFrame and to apply a leading-edge debounce to the input, so the animation starts on the first press while rapid repeated clicks or taps are ignored until the input has settled.
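A leading-edge debounce fires on the first input immediately and then ignores further inputs until they have been quiet for a short window, which keeps the response instant. The class name and the 0.2-second window are assumptions, and timestamps are passed in explicitly to keep the sketch testable; in a browser the same logic would sit in the click handler, with the animation itself stepped inside a `requestAnimationFrame` callback.

```python
class LeadingEdgeDebounce:
    """Fire on the first input, then ignore repeats until input has been quiet for `wait` seconds."""

    def __init__(self, wait: float):
        self.wait = wait
        self._last_input = None  # time of the most recent input, whether it fired or not

    def press(self, now: float) -> bool:
        """Register an input at time `now`; return True if the animation should start."""
        quiet = self._last_input is None or (now - self._last_input) >= self.wait
        self._last_input = now
        return quiet
```

Because every press resets the quiet window, a player who keeps hammering the button gets one animation until they pause.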

11. Tell us about a time you had to learn a new animation technique quickly. What was your approach?

During a project involving a UI overhaul, I needed to quickly learn Lottie animations for Android. My approach was multi-faceted: First, I explored the official Lottie documentation and sample projects to understand the core concepts and JSON structure. Next, I found online tutorials and followed along, building simple animations to solidify my understanding. Crucially, I focused on the specific animation techniques needed for the project, such as property animation and shape morphing, rather than trying to learn everything at once.

I then experimented with converting existing After Effects animations into Lottie JSON files using the Bodymovin plugin. This allowed me to understand how complex animations could be structured and optimized for mobile. Whenever I faced issues, I actively searched for solutions on Stack Overflow and GitHub, and also used the Lottie editor to debug the converted JSON files. By focusing on practical application and iterative learning, I was able to effectively integrate Lottie animations into the project within the given timeframe.

12. How important is it for an animator to understand game mechanics?

Understanding game mechanics is crucial for animators. Animations need to react appropriately to game events (e.g., player input, enemy actions, physics). Without this understanding, animations can feel disconnected and unrealistic, which hurts the player experience. For example, an attack animation must be synchronized with the damage-dealing mechanic and the enemy's reaction; if they drift out of sync, hits feel unresponsive or unfair.

Animators who grasp game mechanics can proactively contribute to smoother gameplay. They can optimize animations for performance (reducing frame count where appropriate), anticipate potential animation conflicts, and collaborate effectively with programmers and designers. This collaborative understanding helps to deliver a polished and engaging final product.
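Keeping an attack in sync with damage usually means tying the damage to a specific frame of the clip rather than to a separate timer. Engines expose this as Animation Events (Unity) or Anim Notifies (Unreal); the sketch below is a hypothetical, engine-agnostic version, assuming a 30 fps clip with the hit on frame 12. It checks whether the event frame was crossed during each update, so damage fires exactly once however the frame rate varies.

```python
def crossed_event(prev_time: float, curr_time: float, event_frame: int, fps: int = 30) -> bool:
    """True if the event frame's time falls inside this update's interval (prev_time, curr_time]."""
    event_time = event_frame / fps
    return prev_time < event_time <= curr_time


def simulate_attack(frame_deltas: list[float], event_frame: int = 12, fps: int = 30) -> list[float]:
    """Play the attack clip with the given per-update deltas; return the clip times at which damage fired."""
    clip_time = 0.0
    hits = []
    for dt in frame_deltas:
        prev_time, clip_time = clip_time, clip_time + dt
        if crossed_event(prev_time, clip_time, event_frame, fps):
            # Hypothetical hook: apply damage and trigger the enemy's hit reaction here.
            hits.append(clip_time)
    return hits
```

Whether the game runs at 60 fps or stutters down to 2 fps, the hit lands once, on the same part of the swing.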

13. If you could animate any game character, who would it be and why?

I would animate Hollow Knight from Hollow Knight. His design is deceptively simple, but the potential for expressive animation is immense. His movements could convey a wide range of emotions, from determined resolve to crippling fear, simply through subtle shifts in his posture and the way his cloak flows. His attacks, especially, could be incredibly dynamic and visually interesting.

Animating Hollow Knight would also be a great technical challenge. Achieving that balance between insect-like movement and heroic grace would require careful planning and attention to detail, and I am always up for a challenge.

14. How do you ensure that your animations are optimized for performance in a game?

To ensure optimal animation performance, I focus on several key areas. First, I minimize the number of bones in the skeletal mesh, since every extra bone adds to the cost of evaluating the animation and skinning the mesh. I also optimize animation data by reducing keyframe counts and using animation compression (e.g., quantization). Using animation blending effectively can smooth transitions without requiring complex calculations.

Second, I consider the rendering pipeline. Using simpler shaders for animated objects, especially those far from the camera, improves efficiency. Skeletal mesh LODs (levels of detail) are also important: distant characters are rendered with simpler animations or with entirely different, lower-poly models. Finally, profiling is crucial; I use profilers to identify bottlenecks and iterate on optimization strategies. For example, Unreal Engine's profiling tools can pinpoint performance-intensive animations or skeletal meshes.
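Reducing keyframe counts can be illustrated with simple curve simplification: drop any key that a straight line between the surrounding kept keys already reproduces within a tolerance. This is a sketch assuming linear interpolation and a single channel of `(frame, value)` pairs; engine compression codecs are more elaborate and also quantize the values that remain.

```python
def reduce_keys(keys: list[tuple[float, float]], tolerance: float) -> list[tuple[float, float]]:
    """Remove keys that linear interpolation between the remaining keys reproduces within `tolerance`."""
    if len(keys) <= 2:
        return list(keys)
    kept = [keys[0]]
    anchor = 0  # index of the last key we decided to keep
    for end in range(2, len(keys)):
        (fa, va), (fe, ve) = keys[anchor], keys[end]
        # Would a straight line from the anchor to `end` pass within tolerance
        # of every key in between?
        fits = all(
            abs(va + (ve - va) * (f - fa) / (fe - fa) - v) <= tolerance
            for f, v in keys[anchor + 1:end]
        )
        if not fits:
            # The previous key is needed; it becomes the new anchor.
            anchor = end - 1
            kept.append(keys[anchor])
    kept.append(keys[-1])
    return kept
```

A perfectly linear ramp collapses to its two end keys, while a peak keeps the key at its top.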

15. What are blend shapes and how do you use them?

Blend shapes, also known as morph targets, are a technique used in 3D computer graphics to deform a mesh by interpolating between different pre-defined shapes. These shapes represent various expressions, poses, or any other deformation you want to apply to the base mesh. They're commonly used for facial animation, character rigging, and shape variations.

Blend shapes are used by assigning weights (values between 0 and 1, or sometimes outside this range) to each target shape. When the weight of a blend shape is increased, the vertices of the base mesh move toward the corresponding vertices of the target shape. The final mesh is the base mesh plus the weighted offsets of all the target shapes. In code, this can involve calculating new vertex positions; for a single target:

```
newVertexPosition = baseVertexPosition * (1 - blendWeight) + targetVertexPosition * blendWeight;
```

For multiple blend shapes, you'd iterate through each one, applying its influence based on its weight.
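For several targets, a common formulation adds each target's offset from the base mesh, scaled by its weight; with a single target it reduces to the per-vertex formula above. The sketch assumes every target has the same vertex count and order as the base and uses plain tuples rather than a math library; the function name is hypothetical.

```python
Vec3 = tuple[float, float, float]


def apply_blend_shapes(base: list[Vec3], targets: list[list[Vec3]], weights: list[float]) -> list[Vec3]:
    """Deform `base` by adding each target's offset from the base, scaled by its weight."""
    result = []
    for vi, (bx, by, bz) in enumerate(base):
        x, y, z = bx, by, bz
        for target, w in zip(targets, weights):
            tx, ty, tz = target[vi]
            # Offset of this vertex in the target shape, scaled by the shape's weight.
            x += w * (tx - bx)
            y += w * (ty - by)
            z += w * (tz - bz)
        result.append((x, y, z))
    return result
```

Because the offsets add, a "smile" and a "jaw open" target can be dialed in independently; weights above 1 push a shape past its sculpted extreme.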

16. Tell us about a challenging animation project you worked on and what you learned from it.

One challenging animation project involved creating a 3D explainer video for a complex medical device. The challenge lay in simplifying intricate surgical procedures and technical aspects into visually engaging and easily understandable animations. We faced hurdles in accurately portraying the device's functionality while adhering to strict medical accuracy guidelines. The project required extensive collaboration with medical experts, iterative revisions based on their feedback, and creative problem-solving to translate abstract concepts into clear visual representations.

From this project, I learned the importance of clear communication and collaboration with subject matter experts when dealing with complex topics. I also honed my ability to break down complex information into digestible visual elements and improved my skills in balancing technical accuracy with creative storytelling. Furthermore, I realized the value of iterative feedback and incorporating diverse perspectives to achieve a successful outcome.

17. How do you stay up-to-date with the latest trends and technologies in game animation?

I stay up-to-date with game animation trends through a variety of methods. I regularly read industry sites like Game Developer (formerly Gamasutra) and Animation World Network, as well as the personal blogs of leading animators. I also follow relevant accounts on social media platforms like Twitter and ArtStation to see what's trending and what techniques artists are exploring.

Furthermore, I participate in online forums and communities like those on Unreal Engine and Unity platforms to discuss new tools, workflows, and challenges with other animators. Attending industry conferences (like GDC) and workshops when possible also provides valuable insights and networking opportunities. I also dedicate time to experiment with new software and techniques as they emerge, such as new retargeting tools or advancements in motion capture.

18. Describe your process for animating a realistic jump for a character. What key elements do you consider?

My process for animating a realistic jump involves several key elements. First, I consider the character's weight and strength, which influence the jump's height and speed. The animation starts with an anticipation phase (squash), where the character crouches down, storing energy. This is followed by the take-off, where the character explosively pushes off the ground and the body stretches. Then comes the airborne phase, which respects gravity (the character slows down toward the apex). Finally, the landing phase includes a cushioning squash as the legs bend to absorb the impact. Body mechanics matter too: the limbs act as counterweights and add realism to the jump.

Key elements I consider are timing and spacing (to convey weight and momentum), the arc of the jump (following gravity), and exaggeration of poses (squash and stretch) to emphasize impact and energy. For example, during the airborne phase there is often a brief hang near the apex, which requires careful spacing. I also pay close attention to the character's center of gravity throughout the animation, so the jump doesn't look floaty or unnatural.
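The airborne arc can be computed from projectile motion: to reach a peak height h under gravity g, the take-off speed is sqrt(2 * g * h), and the height follows h(t) = v0 * t - g * t^2 / 2. The sketch below samples that arc once per frame; the function name, metre units, and the 30 fps default are assumptions, and the anticipation crouch and landing squash would still be keyed by hand around it.

```python
import math


def jump_heights(jump_height: float, gravity: float, fps: int = 30) -> list[float]:
    """Root height sampled once per frame from take-off to landing."""
    v0 = math.sqrt(2 * gravity * jump_height)  # take-off speed needed to reach jump_height
    air_time = 2 * v0 / gravity                # time until the character is back on the ground
    frames = math.ceil(air_time * fps)
    heights = []
    for f in range(frames + 1):
        t = min(f / fps, air_time)
        # Clamp tiny negative values caused by floating-point error at landing.
        heights.append(max(0.0, v0 * t - 0.5 * gravity * t * t))
    return heights
```

The per-frame differences shrink toward the apex and grow toward take-off and landing, which is exactly the hang and the fast launch an animator reproduces with spacing; a stylized game may use a stronger gravity for a snappier jump.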

19. How do you handle animating combat moves and making them look impactful?

To animate combat moves effectively and make them feel impactful, I focus on several key areas. First, timing and anticipation are crucial. I'd exaggerate the wind-up before a strike to create a sense of building power. Then the moment of impact itself should be emphasized, for example with hit stop (freezing the action for a few frames), a brief burst of slow motion, and impact sound design.

Secondly, visual and camera effects play a significant role. Particle effects such as dust clouds or sparks add weight and energy, and subtle screen shakes or camera zooms further emphasize the impact. Finally, animation blending and inverse kinematics help the character animations look more natural, for example by keeping the feet planted during a strike.
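Hit stop can be implemented as a clock that stops advancing the animation for a moment after a confirmed hit. A minimal sketch; the class name is hypothetical and durations are assumed to be in seconds.

```python
class HitStopClock:
    """Advances animation time, but holds it still for a short freeze after an impact."""

    def __init__(self) -> None:
        self.anim_time = 0.0
        self._freeze_left = 0.0

    def trigger(self, duration: float) -> None:
        """Start (or extend) a freeze; overlapping hits keep the longer freeze."""
        self._freeze_left = max(self._freeze_left, duration)

    def tick(self, dt: float) -> float:
        """Advance by `dt` seconds of game time and return the current animation time."""
        if self._freeze_left > 0.0:
            used = min(dt, self._freeze_left)
            self._freeze_left -= used
            dt -= used  # any time left over after the freeze still advances the animation
        self.anim_time += dt
        return self.anim_time
```

Only the animation clock freezes here; input and the rest of the game keep running, so the pause reads as impact rather than lag.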

20. Explain the importance of timing and spacing in animation, and how they affect the final result.

Timing and spacing are fundamental principles of animation that directly influence the perceived motion and weight of objects. Timing refers to the number of frames used to depict an action; more frames mean slower action, fewer frames mean faster action. Spacing refers to the distance an object travels between frames; closer spacing creates slower movement, wider spacing creates faster movement.

By manipulating timing and spacing, animators create the illusion of weight, momentum, and emotion. For example, close spacing and slow timing can make an object appear heavy, while wide spacing and fast timing can make it appear light and quick. Inconsistent or poorly judged timing and spacing can make an animation look unnatural or jarring, undermining the believability of the scene.
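The difference between timing and spacing is easy to see numerically. In this sketch (the function name is hypothetical), `frames` sets the timing, and the optional smoothstep curve 3t^2 - 2t^3 changes only the spacing: frames bunch up near the start and end (slow in, slow out) and spread out in the middle.

```python
def positions(start: float, end: float, frames: int, ease: bool) -> list[float]:
    """Position on each frame of a move lasting `frames` frames (timing).

    With ease=False the spacing is even; with ease=True a smoothstep curve makes
    the spacing close at both ends and wide in the middle.
    """
    out = []
    for f in range(frames + 1):
        t = f / frames
        if ease:
            t = t * t * (3 - 2 * t)
        out.append(start + (end - start) * t)
    return out
```

Over four frames, the linear version moves 25 units per frame, while the eased version moves 15.625, 34.375, 34.375, and 15.625; doubling `frames` to eight halves every step, making the whole move read as slower or heavier.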

21. Let's say a character needs to transition smoothly between walking and running. How would you animate that?

To create a smooth transition between walking and running, I'd use animation blending or interpolation. I would define separate walk and run animation cycles. Then, based on the character's speed (an input parameter), I would blend between these two animations.

Specifically, a value like blend_factor (0.0 to 1.0) would determine the weight of each animation. At blend_factor = 0.0, only the walk animation plays. As blend_factor increases to 1.0, the animation gradually transitions to the run cycle. This can be implemented using libraries or animation tools that support blending between animation clips.
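The `blend_factor` can be derived directly from the character's speed. In this sketch the walk and run speeds are assumed inputs, and poses are simplified to one joint angle per channel; production blending works on full bone transforms (rotations are usually blended as quaternions), and engines typically also keep the two cycles in step so the feet stay in phase.

```python
def blend_factor(speed: float, walk_speed: float, run_speed: float) -> float:
    """0.0 at or below walk speed, 1.0 at or above run speed, linear in between."""
    t = (speed - walk_speed) / (run_speed - walk_speed)
    return min(max(t, 0.0), 1.0)


def blend_pose(walk_pose: dict[str, float], run_pose: dict[str, float], k: float) -> dict[str, float]:
    """Weighted blend of two poses, channel by channel (k is the blend_factor)."""
    return {name: walk_pose[name] * (1.0 - k) + run_pose[name] * k for name in walk_pose}
```

Halfway between walk speed 1.5 and run speed 4.0 the factor is 0.5, so a knee angle of 40 degrees in the walk and 80 in the run blends to 60.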

Intermediate Game Animator interview questions

1. Describe your experience with different animation software packages. What are the pros and cons of each from your perspective?

I have experience with several animation software packages, including Adobe Animate, Toon Boom Harmony, and Blender. Adobe Animate is great for 2D vector animation, especially for web and interactive content. Its pros include a large user base, robust drawing tools, and strong integration with the Adobe ecosystem. Cons can include its subscription model cost and occasional performance issues with complex animations.

Toon Boom Harmony is an industry standard for professional 2D animation, offering advanced rigging and compositing features. The strengths of Harmony are its powerful node-based system, excellent character rigging capabilities, and pipeline integration. However, its steeper learning curve and higher price point can be a barrier. Blender is a free and open-source 3D creation suite that also handles 2D animation through its Grease Pencil tool. Blender's pros are its zero cost, versatile feature set for both 2D and 3D, and large community support. The cons include a potentially overwhelming interface and a less streamlined 2D animation workflow compared to dedicated 2D animation software.

2. How do you ensure smooth transitions between different animation states in a game?

Smooth transitions between animation states in a game are typically achieved using animation blending techniques and state machines. Animation blending smoothly interpolates between the poses produced by the outgoing and incoming animations, preventing jarring pops. A common approach is to use a blend tree or a similar system. State machines define the possible animation states of a character or object (e.g., idle, walking, running) and the conditions that trigger transitions between them.

To ensure smooth transitions, it's crucial to define transition parameters (e.g., blend duration) and trigger conditions carefully. A poorly configured transition can still result in visual artifacts. Consider using animation curves to control the blending speed for more nuanced results. For example, you might want a faster blend at the beginning of a transition. Some game engines provide tooling to visually create and manage animation state machines and blend trees, which can greatly simplify the process. Proper tagging and naming conventions for animations also help a lot.
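A curve-shaped crossfade can be sketched as a weight that goes from 0 to 1 over the blend duration. Here the ease-out curve 1 - (1 - t)^2 is one assumed choice for a blend that moves quickly at first; the function names are hypothetical, and a single scalar stands in for a full pose.

```python
def crossfade_weight(elapsed: float, duration: float, fast_start: bool = True) -> float:
    """Weight of the incoming animation `elapsed` seconds into a transition.

    0.0 means only the outgoing clip is visible, 1.0 only the incoming clip.
    With fast_start, an ease-out curve front-loads the change; otherwise it is linear.
    """
    if duration <= 0.0:
        return 1.0  # an instant transition
    t = min(max(elapsed / duration, 0.0), 1.0)
    return 1.0 - (1.0 - t) ** 2 if fast_start else t


def blend(outgoing: float, incoming: float, w: float) -> float:
    """Blend one channel of the outgoing and incoming poses by weight w."""
    return outgoing * (1.0 - w) + incoming * w
```

Halfway through a 0.2-second transition, the ease-out weight is already 0.75 versus 0.5 for a linear fade.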

3. Explain your understanding of inverse kinematics (IK) and forward kinematics (FK) in animation.

Forward kinematics (FK) calculates the position of the end effector of a kinematic chain (like a robot arm or character limb) based on the joint angles. You input the angles of each joint, and the system computes the resulting position and orientation of the end point. It's a direct, feed-forward calculation.

Inverse kinematics (IK) is the opposite. Given a desired position and orientation for the end effector, IK calculates the joint angles needed to achieve that pose. This is a more complex problem, as there can be multiple solutions or no solution at all. Solvers often use iterative methods to find a suitable set of joint angles. IK is crucial for tasks like making a character reach for a specific object or keeping their feet planted on the ground.
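For the common two-bone case (shoulder-elbow-wrist or hip-knee-ankle), IK has a closed-form solution based on the law of cosines. The 2D sketch below assumes the chain is rooted at the origin and that angles are in radians; it picks one of the two mirror-image solutions and clamps unreachable targets to the edge of the chain's reach. Running FK on the IK result should land back on the target, which makes a useful sanity check.

```python
import math


def fk_two_bone(l1: float, l2: float, a1: float, a2: float) -> tuple[float, float]:
    """Forward kinematics: joint angles (radians) -> end-effector position.

    a1 is the upper bone's angle from the x-axis; a2 is the lower bone's
    angle relative to the upper bone.
    """
    elbow_x, elbow_y = l1 * math.cos(a1), l1 * math.sin(a1)
    return elbow_x + l2 * math.cos(a1 + a2), elbow_y + l2 * math.sin(a1 + a2)


def ik_two_bone(l1: float, l2: float, x: float, y: float) -> tuple[float, float]:
    """Analytic inverse kinematics: target position -> joint angles (a1, a2)."""
    # Clamp the distance so unreachable targets resolve to the nearest reachable point.
    d = math.hypot(x, y)
    d = min(max(d, abs(l1 - l2) + 1e-9), l1 + l2 - 1e-9)
    # Law of cosines gives the bend at the middle joint (0 = straight chain).
    cos_a2 = (d * d - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    a2 = math.acos(max(-1.0, min(1.0, cos_a2)))  # picks the positive-bend solution
    # Aim at the target, then subtract the angular offset introduced by the bend.
    a1 = math.atan2(y, x) - math.atan2(l2 * math.sin(a2), l1 + l2 * math.cos(a2))
    return a1, a2
```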

4. Can you walk me through your process of animating a character performing a complex action, such as a combat sequence?

My process usually starts with understanding the action and its purpose in the story. I break down the complex action into smaller, manageable chunks or key poses – the beginning, middle, and end of each impactful movement. I then create a rough storyboard or animatic to visualize the timing and flow of the sequence, focusing on clear storytelling and character intention. This involves research, reference videos of similar actions, and sometimes motion capture data if available.

Next, I refine the key poses in the animation software, ensuring they are dynamic and expressive, considering weight, balance, and anticipation. Then, I add breakdowns and in-betweens to create smooth transitions between the key poses. This step involves paying close attention to arcs, spacing, and timing to achieve a realistic or stylized feel, depending on the project's requirements. Throughout the process, I iterate based on feedback and refine the animation until it meets the desired level of polish and conveys the intended emotion and impact.

5. How do you optimize animations for performance in a game engine?

To optimize animations in a game engine, several techniques can be employed. Reducing the complexity of animation rigs is crucial; simpler rigs with fewer bones and constraints require less processing. Animation blending and layering also let you reuse and combine existing clips rather than authoring and evaluating a separate full-body animation for every case. For sprite-based animation, texture compression formats like ASTC or ETC2 reduce the memory footprint.

Animation atlases or sprite sheets help reduce draw calls, improving rendering performance. Code-level optimizations, such as caching bone transforms and avoiding unnecessary calculations in animation update loops, are also useful. Finally, consider employing skeletal mesh LODs (levels of detail), which reduce the number of polygons rendered for distant characters.
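Distance-based LOD selection can be sketched as a lookup in a sorted list of switch distances. The thresholds here are hypothetical; many engines express LOD switches as on-screen size rather than raw distance, but the lookup works the same way.

```python
from bisect import bisect_right

# Hypothetical switch distances (scene units): LOD1 from 10, LOD2 from 25, LOD3 from 50.
LOD_SWITCH_DISTANCES = (10.0, 25.0, 50.0)


def select_lod(distance: float, switch_distances: tuple[float, ...] = LOD_SWITCH_DISTANCES) -> int:
    """Return the LOD index for a character at `distance` from the camera (0 = full detail)."""
    return bisect_right(switch_distances, distance)
```

The same index can drive more than the mesh, for example a cheaper skeleton or a lower animation update rate for distant characters.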

6. What is your approach to animating realistic character movement, considering weight and momentum?

To animate realistic character movement considering weight and momentum, I'd focus on a few key areas. First, I'd use motion capture data as a reference point, paying close attention to how weight shifts and momentum affect limb movements and the character's center of gravity. This helps ground the animation in reality. I'd also use physics-based animation techniques where appropriate, possibly integrating a physics engine to simulate the effects of gravity, inertia, and collisions.

Specifically, I would analyze the timing and spacing of key poses, ensuring that movements accelerate and decelerate naturally. Overlapping action is crucial; limbs and clothing should continue moving slightly after the character's main body has stopped. Weight is also reflected in the animation using techniques like squash and stretch, and subtle movements of the torso and hips that emphasize weight shift. I would also pay close attention to foot placement and ground reaction forces, ensuring the character's feet make solid contact with the ground at the appropriate times. This can be further enhanced with appropriate sound effects.
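Overlapping action on secondary parts (hair, cloth, a tail) is often approximated procedurally with a damped spring that chases the main body. The sketch assumes one scalar channel (for example a ponytail's angle) sampled at 30 fps; the function name and the stiffness and damping values are illustrative and would be tuned by eye.

```python
def follow_through(targets: list[float], stiffness: float = 120.0,
                   damping: float = 8.0, dt: float = 1 / 30) -> list[float]:
    """Simulate a secondary part that chases the main body's value each frame.

    A spring pulls the part toward the body's value and damping slows it down
    (semi-implicit Euler integration). The part lags while the body moves, then
    overshoots and settles after the body stops.
    """
    pos, vel = float(targets[0]), 0.0
    out = []
    for target in targets:
        accel = stiffness * (target - pos) - damping * vel
        vel += accel * dt
        pos += vel * dt
        out.append(pos)
    return out
```

Lower damping gives a springier, more cartoony follow-through; higher damping makes the part settle quickly, which reads as heavier material.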

7. Describe a time when you had to troubleshoot an animation bug. What was your approach, and what did you learn?

During a recent project involving a character walking animation, I encountered a bug where the character's feet would occasionally clip through the ground. My approach involved several steps. First, I isolated the problem by slowing down the animation and observing exactly when and where the clipping occurred. I then checked the animation data itself in our animation software, Maya. I looked at the bone positions and the character's mesh relative to the ground plane for each frame. It turned out that a few keyframes had slight errors in the character's vertical position, causing the feet to dip too low. I fixed these keyframes directly in Maya.

From this experience, I learned the importance of meticulous animation review and the value of using tools to visualize animation data. I also realized that even small errors in keyframes can have noticeable effects on the final animation. Now, I pay closer attention to foot placement during the animation process and use scripts to check for potential clipping issues automatically, saving time and preventing similar bugs from arising.
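An automatic clipping check can be as simple as scanning sampled foot heights for frames below the ground plane. The sketch assumes the heights have already been sampled once per frame (for example from the foot joints' world-space positions in Maya); the function name, the foot names, and the small tolerance are hypothetical.

```python
def find_ground_clipping(foot_heights: dict[str, list[float]], ground: float = 0.0,
                         tolerance: float = 0.001) -> dict[str, list[int]]:
    """Return, per foot, the frames where its height sinks below the ground by more than `tolerance`.

    `foot_heights` maps a foot name to one height sample per frame.
    """
    return {
        foot: [frame for frame, h in enumerate(heights) if h < ground - tolerance]
        for foot, heights in foot_heights.items()
    }
```

The tolerance keeps harmless floating-point noise from flooding the report, so only real dips get flagged for review.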

8. How do you collaborate with other members of a game development team, such as programmers and designers?

Collaboration is key in game development. I prioritize clear and consistent communication using tools like Slack or shared documents. When working with programmers, I ensure art assets are delivered in the required formats and specifications, and I proactively ask about technical limitations early in the design process to avoid issues later.

With designers, I focus on understanding the gameplay vision and how my art can best support it. I actively participate in design reviews, offering feedback and suggestions based on my artistic expertise and technical understanding. I also iterate on assets based on their feedback and playtesting results, maintaining a flexible and collaborative approach.

9. Explain your knowledge of motion capture data and how you would integrate it into a game animation pipeline.

Motion capture data provides realistic movement for characters in games. My understanding is that it involves recording the movements of a real person (or animal) using specialized equipment, then transferring that data to a digital character. In a game animation pipeline, the raw motion capture data is typically processed to clean up noise, correct errors, and ensure it fits the character's proportions and style. This cleaned data is then used to drive the character's skeleton, creating animations.

To integrate it, I'd first ensure compatibility between the motion capture data format (e.g., FBX, BVH) and the game engine. Then, I'd import the cleaned data and map it to the character's rig. Further refinements such as blending different motion capture clips, adjusting timing, and adding secondary animation (e.g., cloth, hair) would follow. The goal is animation that keeps the realism of the capture while fitting the character and the game's style.
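Cleaning up capture noise can be illustrated with a centred moving average over one animation channel. This is a deliberately simple sketch with a hypothetical function name; averaging also softens sharp contacts such as foot plants and impacts, which is why cleanup tools offer more selective filters (a Butterworth low-pass filter is a common choice) and why contacts are often fixed separately.

```python
def smooth_channel(values: list[float], radius: int = 2) -> list[float]:
    """Centred moving average over 2 * radius + 1 frames; the window shrinks at the clip ends."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out
```

A channel that jitters between 0 and 1 every frame comes out hovering between 0.4 and 0.6, while a steady value passes through unchanged.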

10. What are some common animation techniques used to convey emotion and personality in game characters?

Animation techniques that convey emotion and personality often rely on exaggerated movements and expressions. For example, anticipation before a jump, squash and stretch during impact, and follow-through/overlapping action (hair, clothing) can add depth. Subtle eye movements, head tilts, and posture also significantly influence how players perceive a character's mood. A depressed character might have slumped shoulders and avoid eye contact, while a confident character could stand tall with a broad gait.

Specifically, animation principles like timing and spacing are vital. Faster movements might convey excitement or fear, whereas slow, deliberate motions suggest thoughtfulness or sadness. Facial rigging and blend shapes play a huge role, allowing animators to craft a wide range of expressions. Even small details like the way a character idles or reacts to their environment can communicate volumes about their personality.

11. How familiar are you with scripting languages used for animation, such as Python or MEL?

I am familiar with scripting languages used in animation, particularly Python, and understand how it is commonly used within software like Maya and Blender. I have used Python to automate repetitive tasks, create custom tools, and manipulate scene data.

While I may not have extensive hands-on experience with MEL (Maya Embedded Language), I understand its fundamental role within the Maya ecosystem, and I can learn new scripting languages as required. Scripting is key to extending the functionality of DCC tools. For example, a basic Python script that automates a small task in Maya might look like this:

```python
import maya.cmds as cmds


def create_sphere(radius=1):
    # Create a NURBS sphere of the given radius in the current Maya scene.
    return cmds.sphere(radius=radius)


create_sphere(radius=5)
```

12. Describe your experience with animation blending and masking techniques.

I have experience with animation blending and masking techniques in game development. I've used animation blending to smoothly transition between different character states, such as walking, running, and jumping. This involves techniques like linear blending (lerping), smoothed transitions using blend trees or state machines, and the use of blend spaces to combine multiple animations based on input parameters. For example, in Unity, I've used Animator.CrossFade and blend trees to achieve seamless transitions. I am familiar with animation masking where specific body parts are affected by certain animations, while others are not. This is useful for combining animations, such as applying an upper-body aiming animation while the lower body is still walking or running. In Unreal Engine, I've used layered blend per bone nodes to achieve this.

Specifically, with masking, I've applied animations selectively to specific body parts. For example, when a character aims a weapon, the upper-body aiming animation can be masked so that it affects only the arms and torso while the legs continue a walk cycle. This can be achieved with bone masks (such as Avatar Masks in Unity) or with Layered blend per bone nodes in Unreal Engine's Animation Blueprint editor. I have also written code to adjust blend weights programmatically based on game conditions.
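The next sketch shows the upper-body masking described above in simplified form. Each pose is reduced to one rotation angle per bone, and the bone names are hypothetical. Engines such as Unity and Unreal blend full per-bone transforms with quaternion rotations, but the masking logic is the same: bones outside the mask keep the base pose.

```python
from typing import Dict, Set

Pose = Dict[str, float]  # hypothetical: bone name -> one rotation angle in degrees


def masked_blend(base: Pose, overlay: Pose, mask: Set[str], weight: float = 1.0) -> Pose:
    """Blend `overlay` over `base` only on the bones listed in `mask`.

    Bones outside the mask keep the base pose; bones inside are linearly
    interpolated toward the overlay by `weight` (0..1). Inputs are not modified.
    """
    result = dict(base)
    for bone in mask:
        if bone in base and bone in overlay:
            result[bone] = base[bone] + (overlay[bone] - base[bone]) * weight
    return result


walk = {"spine": 5.0, "upper_arm_r": 20.0, "thigh_l": 30.0}
aim = {"spine": 15.0, "upper_arm_r": 80.0, "thigh_l": 0.0}
upper_body = {"spine", "upper_arm_r"}
aiming_while_walking = masked_blend(walk, aim, upper_body, weight=1.0)
```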

13. How do you stay up-to-date with the latest trends and technologies in game animation?

I stay up-to-date with the latest trends and technologies in game animation through a variety of methods. I regularly read industry blogs, articles, and publications such as Game Developer (formerly Gamasutra), 80.lv, and Animation World Network. I also follow key figures and studios in the animation field on social media platforms such as Twitter and LinkedIn. Attending industry conferences like GDC and SIGGRAPH helps me learn about new techniques and technologies firsthand.

Furthermore, I actively participate in online communities and forums dedicated to game animation, such as the Unreal Engine and Unity forums, as well as specialized animation subreddits. This allows me to learn from other professionals, share my own knowledge, and stay informed about emerging trends and best practices. I also dedicate time to personal projects to experiment with new software and animation techniques, such as motion capture and procedural animation.

14. Explain your understanding of animation retargeting and its applications.

Animation retargeting is the process of transferring animation data from one character or skeletal structure to another, even if they have different proportions or skeletal hierarchies. This allows you to reuse animations created for one character on another, saving significant time and resources in animation production.

Applications include: game development (using the same animations for different character models), film and visual effects (applying motion capture data to stylized characters), and creating diverse character animations quickly. It involves mapping bones from the source skeleton to the target skeleton and adjusting the animation to fit the target's proportions. Techniques used include bone mapping, scaling, and IK (Inverse Kinematics) solvers to address differences in limb lengths and joint positions.
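Here is a minimal sketch of the two core steps, bone mapping and proportion scaling. It assumes both skeletons share a rest-pose orientation, so local rotations can be copied directly. It also reduces each rotation to a single angle, and the bone names are hypothetical. Production retargeters also correct rest-pose differences and apply IK, as described above.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def retarget_frame(
    source_rotations: Dict[str, float],
    source_root: Vec3,
    bone_map: Dict[str, str],
    source_leg_length: float,
    target_leg_length: float,
) -> Tuple[Dict[str, float], Vec3]:
    """Copy local rotations through a bone map and scale root translation.

    Bones missing from the map (e.g. a tail the target lacks) are dropped.
    Root translation is scaled by the leg-length ratio, so a shorter character
    takes proportionally shorter steps instead of sliding.
    """
    target_rotations = {
        bone_map[src]: angle for src, angle in source_rotations.items() if src in bone_map
    }
    scale = target_leg_length / source_leg_length
    target_root = (source_root[0] * scale, source_root[1] * scale, source_root[2] * scale)
    return target_rotations, target_root


bone_map = {"Hips": "pelvis", "LeftUpLeg": "thigh_l", "RightUpLeg": "thigh_r"}
source = {"Hips": 5.0, "LeftUpLeg": 30.0, "RightUpLeg": -25.0, "Tail": 10.0}
rotations, root = retarget_frame(source, (0.0, 1.0, 2.0), bone_map, 1.0, 0.5)
```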

15. How do you approach animating non-humanoid characters or creatures?

When animating non-humanoid characters, I focus on understanding their unique anatomy and locomotion. This involves researching real-world animals or creatures with similar body structures and movement patterns. I then break down the character's movements into key poses and transitions, paying close attention to weight distribution, balance, and how their anatomy affects their range of motion. Iterative refinement, with feedback incorporated, is key to achieving believable and engaging animations.

I also consider the character's personality and how that translates into their movement style. Is the creature graceful, clumsy, powerful, or timid? These qualities influence the timing, spacing, and exaggeration of the animation. Rigging is also crucial, ensuring the rig is tailored to the specific needs of the creature's anatomy to allow for fluid and natural movement.

16. Describe a situation where you had to work with limited resources or tight deadlines on an animation project.

In a student film project, we aimed for a complex 2D animated short but had a tiny team and only three months. We prioritized key scenes and simplified animation styles; instead of fully animating every frame, we used more 'pose-to-pose' animation and held frames longer, creating a stylized, slightly choppy effect that actually enhanced the film's aesthetic. We also reused assets extensively, modifying colors and details to create variety without creating entirely new drawings.

To meet the deadline, we implemented a rigorous schedule and task delegation using Trello. We broke down each scene into smaller, manageable tasks and assigned them to team members based on their strengths. Daily stand-up meetings helped us track progress and identify potential bottlenecks early on, allowing us to shift resources and adjust deadlines as needed. Despite the constraints, we completed the film on time and received positive feedback on its unique visual style.

17. How do you handle feedback and criticism on your animations?

I view feedback and criticism as valuable opportunities for growth. My initial reaction is to listen carefully and try to understand the perspective of the person providing the feedback. I resist the urge to become defensive, and instead focus on clarifying any points I don't fully grasp by asking constructive questions.

Once I understand the feedback, I take time to process it objectively. I consider how the suggested changes align with the overall goals of the animation and whether they improve the piece. If I agree with the feedback, I incorporate it into my work. If I disagree, I respectfully explain my reasoning, providing concrete examples from the animation to support my choices. It's a collaborative process, and I strive to find solutions that benefit the final product.

18. Explain your knowledge of animation compression techniques.

Animation compression techniques aim to reduce the storage space and bandwidth required for animation data. A common approach is keyframe reduction, where only a subset of frames (keyframes) is stored and the intermediate frames are interpolated at runtime. This significantly reduces data size, especially for animations with smooth motion. Other techniques include quantization, which reduces the precision of the animation data (e.g., bone rotations or vertex positions), and delta compression, which stores only the differences between consecutive frames.

More advanced methods leverage techniques like principal component analysis (PCA) or wavelets to represent animation data in a more compact form by identifying and discarding less significant components. Lossless compression algorithms like Huffman coding or Lempel-Ziv (LZ) can further compress the data after applying one of the lossy techniques mentioned above. The choice of technique depends on the desired level of compression, acceptable quality loss, and computational cost of encoding and decoding.
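To make quantization and delta compression concrete, here is a small Python sketch applied to one rotation channel. The 16-bit precision and the value range of -180 to 180 degrees are illustrative assumptions. Engine codecs pick their own bit depths and usually quantize quaternion components rather than Euler angles.

```python
from typing import List


def quantize(values: List[float], lo: float, hi: float, bits: int = 16) -> List[int]:
    """Lossy: map floats in [lo, hi] to integers in [0, 2**bits - 1]."""
    levels = (1 << bits) - 1
    return [round((min(max(v, lo), hi) - lo) / (hi - lo) * levels) for v in values]


def dequantize(codes: List[int], lo: float, hi: float, bits: int = 16) -> List[float]:
    levels = (1 << bits) - 1
    return [lo + c / levels * (hi - lo) for c in codes]


def delta_encode(codes: List[int]) -> List[int]:
    """Keep the first value, then store only frame-to-frame differences."""
    return codes[:1] + [b - a for a, b in zip(codes, codes[1:])]


def delta_decode(deltas: List[int]) -> List[int]:
    out: List[int] = []
    for d in deltas:
        out.append(d if not out else out[-1] + d)
    return out


# One rotation channel (degrees) from a smooth, slowly changing curve.
angles = [0.0, 1.5, 3.1, 4.4, 5.2, 5.6]
codes = quantize(angles, -180.0, 180.0)
deltas = delta_encode(codes)
restored = dequantize(delta_decode(deltas), -180.0, 180.0)
```

After the first value, the deltas are small integers that tend to repeat. That property is what makes a follow-up lossless pass such as Huffman or LZ coding effective.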

19. How do you ensure that animations are consistent with the overall art style and direction of a game?

To ensure animation consistency with the game's art style, I'd first establish a clear animation style guide. This guide would detail key aspects like timing, easing, level of detail, and the general 'feel' the animations should convey. Regular communication and collaboration with the art director and lead animator are crucial to ensure everyone is on the same page. This includes reviews of animation prototypes and ongoing feedback sessions.

Specifically, I'd ensure animators have access to and understand reference materials (concept art, style guides, existing animations). We'd also use tools like animation blueprints or state machines (if applicable to the engine) to enforce consistency and allow for easy adjustments to animation parameters globally. Finally, integrating animation reviews into the regular development pipeline enables continuous monitoring and early identification of any stylistic deviations.

20. Describe your experience with animating facial expressions and lip-syncing.

My experience with animating facial expressions and lip-syncing includes using software like Adobe After Effects, Blender, and occasionally specialized tools like Papagayo for initial phoneme extraction. I've worked on projects ranging from character animations for explainer videos to creating realistic facial performances for short films. The process typically involves analyzing audio waveforms, identifying key phonemes, and then meticulously crafting the corresponding mouth shapes and subtle facial movements to match the dialogue. I pay close attention to timing, weight shifts of the face, and secondary actions like eye blinks and brow movements to create believable performances.

I often use blendshapes or bone-based rigs to control the facial features, depending on the style and requirements of the project. For example, in Blender, I might use shape keys (blendshapes) controlled by drivers based on the audio signal, or by manually animated curves. In After Effects, I typically use the Puppet tools and expressions to deform facial illustrations and sync them to audio. The goal is always to create expressive, engaging animation that enhances the storytelling.
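The following sketch shows one way a phoneme track, such as one produced by an extraction tool, could be turned into shape-key weights over time. The viseme names, the track format, and the 0.06-second crossfade are all illustrative assumptions. This is not Papagayo's export format or Blender's API.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple


def viseme_weights(keys: List[Tuple[float, str]], t: float, blend: float = 0.06) -> Dict[str, float]:
    """Shape-key weights at time t (seconds) from a non-empty, time-sorted track.

    Each viseme holds at full weight, then crossfades linearly into the next one
    during the last `blend` seconds, so the next mouth shape is fully formed
    when its sound starts.
    """
    starts = [start for start, _ in keys]
    i = bisect_right(starts, t) - 1
    if i < 0:
        return {keys[0][1]: 1.0}  # before the first key: hold the first shape
    start, current = keys[i]
    if i + 1 < len(keys):
        next_start, upcoming = keys[i + 1]
        fade_start = max(next_start - blend, start)  # never fade before this key begins
        span = next_start - fade_start
        if span > 0 and t > fade_start and upcoming != current:
            w = (t - fade_start) / span
            return {current: 1.0 - w, upcoming: w}
    return {current: 1.0}


# Hypothetical track: (start time in seconds, viseme name).
track = [(0.0, "rest"), (0.5, "MBP"), (0.7, "AI")]
```

Forming the mouth shape slightly before the sound starts, as this sketch does, tends to read better than reacting after it.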

21. How do you approach animating for different camera perspectives in a game (e.g., first-person, third-person)?

When animating for different camera perspectives, I focus on creating animations that feel natural and responsive from each viewpoint. For first-person, the emphasis is on hand/arm movements and immediate feedback, ensuring the player feels connected to the actions. This often involves more subtle animations and a higher degree of responsiveness to player input. In third-person, the entire character's animation becomes visible, so I pay closer attention to overall body language, fluidity, and creating visually appealing movement. I might use different animation sets or blend animations differently depending on the active camera.

Technically, this often involves using blend trees or animation state machines to switch between animation sets or adjust animation parameters based on the camera perspective. For example, a `CameraPerspective` parameter could drive the blend tree to prioritize first-person animations in that mode. In Unity, a script might set that parameter like this:

```csharp
animator.SetFloat("CameraPerspective", cameraPerspectiveValue);
```

22. Explain your understanding of animation state machines and their role in game development.

Animation state machines are a core component in game development used to manage the different animation states of a game character or object. They define the transitions between various animations, such as idle, walking, running, jumping, and attacking. Each state represents a specific animation clip or blend of clips, and transitions are triggered by certain game events or conditions, ensuring that animations flow logically and responsively to player input or game logic.

The primary role of animation state machines is to provide a structured and efficient way to control character animations. By using states and transitions, developers can avoid writing complex, spaghetti code for animation handling. They promote modularity and make it easier to add new animations or modify existing ones without affecting other parts of the codebase. For example, a transition from idle to walking might be triggered when the player presses the 'W' key and the character's movement speed is greater than zero.
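Below is a minimal, engine-agnostic sketch of the states-and-transitions idea in Python. The class, the state names, and the speed thresholds are hypothetical. Unity's Animator and Unreal's Animation Blueprints provide far richer versions of this, with blend durations, transition priorities, and sub-state machines. This sketch takes at most one transition per update.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]
Condition = Callable[[Params], bool]


@dataclass
class AnimStateMachine:
    state: str
    transitions: Dict[str, List[Tuple[Condition, str]]] = field(default_factory=dict)

    def add_transition(self, src: str, dst: str, condition: Condition) -> None:
        self.transitions.setdefault(src, []).append((condition, dst))

    def update(self, params: Params) -> str:
        """Take the first transition out of the current state whose condition holds."""
        for condition, dst in self.transitions.get(self.state, []):
            if condition(params):
                self.state = dst
                break
        return self.state


sm = AnimStateMachine("idle")
sm.add_transition("idle", "walk", lambda p: p["speed"] > 0.1)
sm.add_transition("walk", "run", lambda p: p["speed"] > 4.0)
sm.add_transition("walk", "idle", lambda p: p["speed"] <= 0.1)
sm.add_transition("run", "walk", lambda p: p["speed"] <= 4.0)
```

Keeping the conditions as data, instead of scattering `if` statements through gameplay code, is what gives state machines the modularity described above.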

23. How do you create animations that are both visually appealing and functional for gameplay?

To create visually appealing and functional gameplay animations, I focus on several key areas. First, I prioritize clear communication of game state and player feedback. Animations should instantly convey information such as attack timings, enemy vulnerabilities, or successful actions. This often involves using distinct animation cues and transitions. For example, a character winding up for a powerful attack should have a clearly telegraphed animation, giving the player ample time to react.

Second, I balance visual flair with performance considerations. Excessive or overly complex animations can impact frame rates, especially on lower-end devices. Therefore, I optimize animations through techniques like sprite sheet usage, skeletal animation with efficient rigs, and judicious use of particle effects. I also use animation layering to create variation without dramatically increasing memory usage. Finally, testing animations across various hardware is critical to ensure optimal performance and visual fidelity.

24. Describe your experience with implementing animation-driven gameplay mechanics.

I have experience implementing animation-driven gameplay mechanics in Unity and Unreal Engine. I've worked on systems where character movement, attacks, and other actions are tied directly to animation events and states. For example, I implemented a melee combat system in which animation notifies triggered damage and sound effects at specific frames, so that hits felt responsive and impactful. I also used animation curves to drive gameplay parameters, such as varying movement speed over the course of a run animation based on the curve's values.

Specifically, I've used Animation Notifies in Unreal Engine and Animation Events in Unity to trigger game logic. I also relied on state machines and blend spaces to create fluid transitions between animations based on player input and game state. For instance, I blended the character between idle, walk, and run animations based on the player's input with code like this:

```csharp
animator.SetFloat("Speed", movementSpeed);
```
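Engines dispatch notifies and events internally. The Python sketch below is only a conceptual illustration of one robust way to do it, not how Unity or Unreal implement it. Events are checked against the time window the playhead crossed since the last frame, rather than an exact frame, so a frame-rate drop cannot skip a damage or footstep event. The event times and callbacks are hypothetical.

```python
from typing import Callable, List, Tuple

AnimEvent = Tuple[float, Callable[[], None]]  # (time in seconds within the clip, callback)


def fire_events(events: List[AnimEvent], prev_time: float, curr_time: float) -> int:
    """Fire every event the playhead crossed this frame; returns how many fired.

    Without looping, that is the window (prev_time, curr_time]. If the clip
    looped (curr_time < prev_time), the window wraps past the clip's end.
    """
    fired = 0
    wrapped = curr_time < prev_time
    for t, callback in events:
        hit = (t > prev_time or t <= curr_time) if wrapped else (prev_time < t <= curr_time)
        if hit:
            callback()
            fired += 1
    return fired


log: List[str] = []
events = [(0.25, lambda: log.append("damage")), (0.9, lambda: log.append("footstep"))]
```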

25. How would you approach animating a character with unique movement abilities, such as teleportation or flight?

For unique movement like teleportation, I'd use a combination of visual effects and animation tricks. Teleportation would involve quickly fading out the character at the origin, using a particle effect to simulate the 'teleport' energy, and fading the character back in at the destination. No traditional animation is really needed in this case, just visual effects to create the illusion of instantaneous movement.

For flight, I would start with a standard running animation that smoothly transitions into a flight animation. The flight animation itself would likely involve subtle movements in the character's limbs and body to convey a sense of soaring. To make the flight more engaging, I might add variable animation speeds and poses that react to player input (e.g., faster flapping for increased speed, leaning into turns). Additionally, effects like wind trails or changes in clothing physics (like cloth simulation) could further enhance the realism and visual appeal of the flight.

26. Explain your understanding of additive animation layers and how they can be used to enhance character animations.

Additive animation layers allow you to blend animations on top of a base animation, creating variations and enhancing the character's performance without modifying the core animation. Instead of completely overwriting the underlying animation, the additive layer contributes to the final pose.

They're useful for things like adding flinching reactions to a character who's already running, or adding subtle facial expressions on top of a base idle animation. The key benefit is that you can reuse the same base animation and add different additive layers to create a variety of different results. This also means animations can be applied and removed dynamically, such as adding a 'wounded' animation on top of normal movement when the character takes damage.
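The arithmetic behind additive layers can be shown in a few lines of Python. The additive clip is stored as a difference from a reference pose (often its first frame), and that difference, scaled by a weight, is added onto whatever base pose is playing. Poses are simplified to one angle per bone, and the bone names are hypothetical. Engines perform the equivalent operation with quaternions, composing the base rotation with the inverse of the reference rotation multiplied by the additive rotation, rather than subtracting angles.

```python
from typing import Dict

Pose = Dict[str, float]  # hypothetical: bone name -> one rotation angle in degrees


def make_additive(additive: Pose, reference: Pose) -> Pose:
    """Store only how the additive clip differs from its reference pose."""
    return {bone: additive[bone] - reference[bone] for bone in additive if bone in reference}


def apply_additive(base: Pose, delta: Pose, weight: float) -> Pose:
    """Layer a weighted delta on top of the base pose without modifying it."""
    result = dict(base)
    for bone, d in delta.items():
        if bone in result:
            result[bone] += d * weight
    return result


reference = {"spine": 0.0, "head": 0.0}
flinch = {"spine": -10.0, "head": 15.0}
flinch_delta = make_additive(flinch, reference)
run_frame = {"spine": 8.0, "head": 2.0, "thigh_l": 35.0}
flinching_run = apply_additive(run_frame, flinch_delta, weight=1.0)
```

Because the delta is independent of the base, the same flinch can be layered on an idle, a walk, or a run, and fading `weight` to 0 removes it dynamically.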

27. Describe your experience with animating ragdoll physics and their interaction with character animations.

I have experience implementing ragdoll physics and integrating it with character animations, primarily using Unity's built-in 3D physics engine, which is based on NVIDIA PhysX. My work involved creating ragdoll representations of characters by connecting rigid bodies with joints and carefully tuning joint constraints (limits, springs, dampers) to achieve realistic, controllable behavior.

I've managed the transition between animation and ragdoll states, often triggered by events like impacts or character death. This involved blending animation poses with physics-driven poses and switching rigid bodies between kinematic and simulated states to ensure smooth transitions without jarring visual artifacts. I also optimized the physics simulation for performance, considering factors like collision complexity and the number of active ragdolls in a scene. For example, I've toggled `GetComponent<Rigidbody>().isKinematic` on each limb: `true` keeps the bodies kinematic so the animation drives the pose, and `false` hands the limbs over to the physics simulation.

28. How do you optimize animation workflows to improve efficiency and productivity?

To optimize animation workflows, several strategies can be employed. First, streamline asset management with a robust naming convention and folder structure. Centralized asset libraries and version control systems (such as Git or Perforce) are crucial. Automating repetitive tasks with scripting (e.g., Python in Maya or Blender), such as rig creation or batch-processing animations, drastically reduces manual work and errors.

Secondly, optimize animation performance by using efficient rigs and avoiding unnecessary complexity. Optimize scene geometry and textures, and utilize techniques like LODs (Level of Detail) for distant objects. Consider using motion capture data where appropriate to accelerate the initial animation phase. Efficient collaboration is key, so using project management tools can help in task management and communication. Finally, provide regular feedback and training to animators to improve their skills and awareness of best practices.

Advanced Game Animator interview questions

1. Describe a time you had to significantly alter your animation style to match a project's specific aesthetic. What challenges did you face?

In a project for a children's educational app, I initially employed a stylized, slightly edgy animation style I was comfortable with. However, the client wanted a much softer, more rounded, and universally appealing aesthetic. This meant abandoning sharp lines and exaggerated movements in favor of smoother transitions and gentle character designs. The biggest challenge was retraining my muscle memory and visual instincts.

I addressed this by creating style guides with clear visual references, practicing simplified character rigging techniques, and seeking frequent feedback from the art director. It also involved researching and deconstructing animation styles from existing successful children's shows to understand the underlying principles of their visual language.

2. How do you approach animating non-humanoid characters or creatures with unique anatomies and movement patterns?

Animating non-humanoid characters requires a different approach than humanoid animation. I focus on understanding the creature's anatomy, its weight distribution, and how its joints and muscles (or analogous structures) function. Reference material is key: observing real-world animals or similar creatures, studying documentaries, and looking at concept art informs the animation process.

I then break down the movement into key poses and timings, paying close attention to how the creature interacts with its environment. This often involves experimenting with different rigging techniques to achieve the desired movement, for example spline IK for tentacles or procedural animation for scales. It's important to iterate and refine the animation based on feedback, focusing on conveying the creature's personality and believability.

3. Explain your understanding of inverse kinematics (IK) and forward kinematics (FK) and when you would use each in game animation.

Forward kinematics (FK) calculates the position of the end effector (e.g., a hand) based on the joint angles of the kinematic chain (e.g., the arm). You set the angles of each joint, and the position of the hand is determined. FK is useful when you want direct control over each joint, for example, in simple animation sequences or when precisely scripting the movements of each individual joint. It's straightforward to implement.

Inverse kinematics (IK), on the other hand, determines the joint angles required to achieve a desired end effector position. You specify where you want the hand to be, and the IK solver calculates the joint angles needed to reach that position. IK is extremely useful when you want characters to interact with their environment, such as reaching for an object, or planting their feet firmly on uneven ground. It automates the process of finding the right joint angles and is useful when you want to control the end result rather than individual joint angles. Consider using IK when creating animations where a character is interacting with world objects.
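The difference between FK and IK is easiest to see with a planar two-bone arm. The Python sketch below computes the hand position from joint angles for FK. For IK, it solves the angles from a target position using the law of cosines. It is the textbook analytic two-bone solution restricted to 2D, shown for illustration, not the solver any particular engine uses. Real rigs add a pole vector to choose the elbow direction.

```python
import math
from typing import Tuple


def fk_2bone(l1: float, l2: float, shoulder: float, elbow: float) -> Tuple[float, float]:
    """Forward kinematics: joint angles (radians) -> hand position in the arm's plane."""
    x1, y1 = l1 * math.cos(shoulder), l1 * math.sin(shoulder)
    return x1 + l2 * math.cos(shoulder + elbow), y1 + l2 * math.sin(shoulder + elbow)


def ik_2bone(l1: float, l2: float, tx: float, ty: float) -> Tuple[float, float]:
    """Inverse kinematics: target position -> (shoulder, elbow) angles in radians.

    Targets out of reach are clamped, so the arm points straight at them
    instead of failing.
    """
    dist = min(max(math.hypot(tx, ty), abs(l1 - l2)), l1 + l2)
    cos_elbow = (dist ** 2 - l1 ** 2 - l2 ** 2) / (2 * l1 * l2)
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))
    shoulder = math.atan2(ty, tx) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return shoulder, elbow


# IK picks the angles; FK confirms the hand lands on the target.
shoulder, elbow = ik_2bone(1.0, 0.8, 1.2, 0.6)
hand = fk_2bone(1.0, 0.8, shoulder, elbow)
```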

4. Detail your experience with motion capture data. How do you clean, refine, and integrate mocap into your game animations?

My experience with motion capture data involves several key steps. First, I address cleaning: this includes filtering noise using tools like Vicon Nexus or MotionBuilder to remove jitter and outliers. I refine the data by filling gaps with interpolation or manual keyframing, paying close attention to foot placement and joint rotations to prevent unnatural movements. Retargeting is crucial, using software like Maya or Blender, to map the mocap data onto the game character's skeleton, adjusting for differences in proportions.

Integration into game animations often involves further refinement in a game engine like Unity or Unreal Engine. I create animation controllers or blueprints to blend mocap animations with other animations, such as transitions or custom actions. If necessary, I write custom scripts to handle specific scenarios, like IK adjustments or procedural animation enhancements. Finally, I optimize the animations for performance, reducing keyframes where possible and ensuring compatibility with the game's animation system.

5. Imagine a character needs to transition seamlessly from sprinting to sliding down a hill. How would you animate that sequence?

I would build the sprint-to-slide sequence by blending two distinct motion-captured or keyframed animations, triggering the slide at the moment the sprint reaches its peak speed and forward momentum. The blend fades the sprint animation out while fading the slide animation in. The key parameters to synchronize are forward velocity, ground contact, and body posture.

Specifically, the animation system should monitor the character's speed. When the speed is above a certain threshold and the character is on a sloped surface, the transition begins. This transition might include a short connecting animation between the sprint and the slide, such as a quick lowering of the character's center of gravity. In Unity, this could be a call such as `animator.CrossFade(slideAnimationName, transitionDuration)` in the character controller script. Note that `CrossFade` expects a duration normalized to the state's length, while `CrossFadeInFixedTime` takes seconds.
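The trigger condition itself can be sketched in a few lines of Python. The speed and slope thresholds are hypothetical tuning values, and the Y-up convention and unit ground normal (as a ground raycast would report) are assumptions. The sketch also checks that the character is moving downhill, so sprinting up a slope does not start a slide.

```python
import math
from typing import Tuple

SLIDE_MIN_SPEED = 6.0       # m/s, hypothetical tuning value
SLIDE_MIN_SLOPE_DEG = 10.0  # degrees, hypothetical tuning value


def slope_degrees(ground_normal: Tuple[float, float, float]) -> float:
    """Angle between a unit ground normal and world up (+Y)."""
    return math.degrees(math.acos(max(-1.0, min(1.0, ground_normal[1]))))


def should_start_slide(
    velocity_xz: Tuple[float, float], grounded: bool, ground_normal: Tuple[float, float, float]
) -> bool:
    vx, vz = velocity_xz
    speed = math.hypot(vx, vz)
    # The normal's horizontal part points downhill; a positive dot product means descending.
    moving_downhill = vx * ground_normal[0] + vz * ground_normal[2] > 0.0
    return (
        grounded
        and speed >= SLIDE_MIN_SPEED
        and moving_downhill
        and slope_degrees(ground_normal) >= SLIDE_MIN_SLOPE_DEG
    )


# A 20-degree slope that rises toward +X, so downhill is -X.
hill_normal = (-math.sin(math.radians(20)), math.cos(math.radians(20)), 0.0)
```

When this returns `True`, the controller would start the crossfade into the slide state.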

6. Explain how you optimize animations for different target platforms (mobile, console, PC) to maintain performance.

Optimizing animations across different platforms involves tailoring the animation complexity and execution based on the platform's capabilities. For mobile, I'd prioritize reducing the number of animated elements, simplifying animation curves, and using texture atlases for animated sprites to minimize draw calls. I'd also leverage techniques like GPU instancing where possible, and carefully profile animations to identify performance bottlenecks, potentially using simpler skeletal setups or morph targets where appropriate.

For console and PC, I can usually afford more complex animations. However, even on these platforms, optimization is crucial. I'd focus on LOD (Level of Detail) for animations, using higher-fidelity animations for close-up views and simpler versions at a distance. Techniques like animation compression and efficient skinning algorithms become even more important with more complex rigs and assets. Always profile to ensure no animation is unexpectedly costing performance, and consider techniques like animation blending or additive animation for smooth transitions and efficient state management.
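One widely used form of animation LOD is evaluating distant characters' animation less often. The Python sketch below illustrates the idea with a hypothetical distance table. Engines typically ship their own version of this, so treat the numbers and function names as illustrative only.

```python
from typing import List, Tuple

# Hypothetical LOD table: (max distance in metres, animation updates per second).
ANIM_LOD_TABLE: List[Tuple[float, float]] = [(10.0, 60.0), (30.0, 30.0), (60.0, 15.0)]
FAR_UPDATE_RATE = 5.0


def anim_update_rate(distance: float) -> float:
    for max_distance, rate in ANIM_LOD_TABLE:
        if distance <= max_distance:
            return rate
    return FAR_UPDATE_RATE


def should_update(frame_index: int, distance: float, render_fps: float = 60.0) -> bool:
    """Evaluate the animation only on every Nth rendered frame for distant characters."""
    step = max(1, round(render_fps / anim_update_rate(distance)))
    return frame_index % step == 0
```

On skipped frames the character simply keeps its last pose (or interpolates toward it). At a distance, that is rarely noticeable, and it frees budget for the characters near the camera.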

7. Describe a time you had to debug a complex animation-related issue in a game engine. What was your approach?

During the development of a character's running animation in Unity, I encountered an issue where the feet were noticeably sliding on the ground despite using root motion. My initial approach involved breaking down the problem into smaller parts. First, I visually inspected the animation curves in the animation window to identify any inconsistencies in the character's forward movement. I also used Unity's profiler to check for performance bottlenecks that could be causing frame rate drops, potentially affecting animation playback smoothness.

Next, I enabled the 'Show Root Motion' option in the animator window to visualize the root motion being applied. Using this, I confirmed that the root motion was indeed driving the character forward. I then implemented debug rays in FixedUpdate to visualize the exact point of contact of the character's feet with the ground at each frame. Comparing this data with the expected ground contact based on the animation curves, I discovered a subtle mismatch between the animation's intended foot placement and the actual ground position due to slight inaccuracies in the collider setup and ground detection. Adjusting the collider and using a more precise ground detection method resolved the foot sliding issue.
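The comparison in that debugging session can also be made numeric. The Python sketch below measures how far a foot travels horizontally while it is supposed to be planted; any non-zero value is visible sliding. The per-frame foot positions and the `planted` flags are assumed inputs, for example logged from the engine during play or derived from a foot-contact curve.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def foot_slide(foot_positions: List[Vec3], planted: List[bool]) -> List[float]:
    """Horizontal distance (Y up) the foot moved between consecutive planted frames."""
    slides = []
    for i in range(1, len(foot_positions)):
        if planted[i] and planted[i - 1]:
            (x0, _, z0), (x1, _, z1) = foot_positions[i - 1], foot_positions[i]
            slides.append(math.hypot(x1 - x0, z1 - z0))
    return slides


# The foot drifts 2 cm on its second planted frame, then lifts off.
positions = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.02), (0.0, 0.0, 0.02), (0.0, 0.1, 0.5)]
contact = [True, True, True, False]
slides = foot_slide(positions, contact)
```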

8. How do you stay up-to-date with the latest animation techniques, software, and industry trends?

I stay updated through a combination of online resources and active participation in the animation community. I regularly read industry blogs like Cartoon Brew and Animation World Network, and follow key animators and studios on social media platforms like Twitter and ArtStation. I also subscribe to relevant newsletters and attend online webinars and conferences when possible.

To keep my technical skills sharp, I explore new software features through official documentation, tutorials (like those from Autodesk or Blender's official channels), and online courses on platforms like Skillshare or Udemy. I also practice implementing new techniques in personal projects to gain hands-on experience and a deeper understanding of their applications. Finally, I engage with online forums and communities to learn from other artists and troubleshoot any challenges I encounter.

9. Walk me through your process for creating realistic cloth or hair simulation in your animations.

My process for realistic cloth/hair simulation involves several stages. First, I focus on research and reference gathering. Observing real-world cloth/hair movement is crucial. Then, in my 3D software (e.g., Maya, Blender), I set up the initial geometry with appropriate polygon density. For cloth, this includes defining the cloth object, collision objects, and sewing patterns. For hair, I create guide hairs and define hair properties. Next, I configure the simulation settings such as wind forces, gravity, friction, and stiffness. This involves a lot of tweaking and iterative testing to achieve the desired look, keeping in mind performance considerations such as solver substeps and constraint parameters. Finally, I refine the simulation with post-processing effects like smoothing and collision adjustments.
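DCC solvers such as Maya's nCloth or Blender's cloth modifier are far more sophisticated, but the core stiffness-versus-cost trade-off shows up even in a tiny simulation. The Python sketch below shows Verlet integration with distance constraints, a technique commonly used in games for strands of hair, capes, or ropes. It is 2D for brevity, and it does not reflect how the DCC solvers above are implemented.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def step_chain(
    points: List[Vec2],
    prev_points: List[Vec2],
    rest_length: float,
    dt: float = 1.0 / 60.0,
    gravity: float = -9.81,
    damping: float = 0.99,
    iterations: int = 10,
) -> Tuple[List[Vec2], List[Vec2]]:
    """Advance a 2D particle chain one step; returns (new_points, new_prev_points).

    points[0] is pinned (e.g. attached to the character). Free particles use
    Verlet integration, with velocity implied by the previous position. Then
    `iterations` passes of distance constraints pull neighbours back to
    rest_length. More passes give a stiffer, less stretchy result at higher cost.
    """
    new = [points[0]]
    for (x, y), (px, py) in zip(points[1:], prev_points[1:]):
        vx, vy = (x - px) * damping, (y - py) * damping
        new.append((x + vx, y + vy + gravity * dt * dt))

    for _ in range(iterations):
        for i in range(len(new) - 1):
            (ax, ay), (bx, by) = new[i], new[i + 1]
            dx, dy = bx - ax, by - ay
            dist = math.hypot(dx, dy) or 1e-9  # avoid division by zero
            diff = (dist - rest_length) / dist
            if i == 0:
                # The anchor is pinned, so only the free particle moves.
                new[i + 1] = (bx - dx * diff, by - dy * diff)
            else:
                # Split the correction evenly between both particles.
                new[i] = (ax + dx * diff * 0.5, ay + dy * diff * 0.5)
                new[i + 1] = (bx - dx * diff * 0.5, by - dy * diff * 0.5)
    return new, list(points)


# A 4-particle strand that starts horizontal and falls under gravity.
strand = [(0.0, 0.0), (0.25, 0.0), (0.5, 0.0), (0.75, 0.0)]
previous = list(strand)
for _ in range(200):
    strand, previous = step_chain(strand, previous, rest_length=0.25)
```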

10. Explain your understanding of animation blending techniques and how you use them to create smooth transitions between animations.

Animation blending is the process of smoothly transitioning between two or more animations. It prevents jarring pops by interpolating between the poses each animation produces over a specified duration, creating a seamless visual experience. Techniques include linear blending (simple interpolation), smoothed blending using easing functions (e.g., ease-in-out), and more advanced methods like additive blending (layering animations) and state machines that control transitions based on game logic.

I typically use animation blending through animation controllers or state machines in game engines like Unity or Unreal Engine. I define transition rules between animation states, specifying the blending duration and any conditions that trigger the transition (e.g., character speed, button press). For example, transitioning from an idle animation to a walk animation, I'd set a blend time of 0.2 seconds. The engine then automatically interpolates between the animations during this time. For additive blending, I'd use it to layer animations, like adding a flinch animation on top of a running animation, before smoothly returning to the original run cycle.
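The blend weights over a 0.2-second transition like the one above can be sketched in Python. The weights always sum to 1, and a smoothstep ease-in-out curve, one common choice assumed here, replaces the straight linear ramp. Engines evaluate their own transition curves internally.

```python
from typing import Tuple


def smoothstep(x: float) -> float:
    """Ease-in-out curve on [0, 1]: slow start, faster middle, slow finish."""
    x = max(0.0, min(1.0, x))
    return x * x * (3.0 - 2.0 * x)


def crossfade_weights(elapsed: float, duration: float = 0.2, eased: bool = True) -> Tuple[float, float]:
    """(outgoing, incoming) weights `elapsed` seconds into a crossfade."""
    t = 1.0 if duration <= 0 else elapsed / duration
    w = smoothstep(t) if eased else max(0.0, min(1.0, t))
    return 1.0 - w, w
```

Each bone's final pose is then `outgoing_pose * w_out + incoming_pose * w_in`, with quaternion slerp or nlerp used for rotations in practice.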

11. Describe a situation where you had to collaborate with other artists, designers, and programmers to achieve a specific animation goal.

During the development of a mobile game, I collaborated closely with a team to create a dynamic animation for the main character's special ability. I worked with a character designer who provided the initial sketches and design specifications, and with a 3D modeler who provided the final character mesh. My role was to rig and animate the character in Maya, ensuring the animation was visually appealing and technically sound. The programmers then integrated the animation into the game engine (Unity), using the state machine to trigger it based on player input. We communicated regularly, sharing animation previews and addressing technical issues as they arose during implementation. For example, we needed to optimize the animation for mobile performance, so I reduced the number of bones in the rig and baked some of the animation details into the mesh to improve the frame rate. In the game code, the programmers triggered the ability with `animator.Play("SpecialAbility");`, where `animator` is the character's Animator component.

12. How do you handle animation retargeting between characters with different skeletons or proportions?

Animation retargeting between characters with different skeletons involves mapping the animation from a source skeleton to a target skeleton. This can be achieved using several techniques. One common method is to use a bone mapping system, where corresponding bones between the source and target skeletons are identified. The animation data (rotation, position) is then transferred based on these mappings, often with adjustments to account for differences in bone lengths and orientations. Techniques like inverse kinematics (IK) can also be used to adapt the animation to the target character's proportions, ensuring that key poses and movements are preserved.
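The bone-mapping approach can be sketched in Python as copying local rotations through a name map and scaling root motion by the ratio of leg lengths so stride length matches the target character's size. This assumes both skeletons share the same rest-pose orientations (real retargeters also correct for differing bone orientations and usually add IK on the feet); the frame format, bone names, and function name are hypothetical.

```python
def retarget(source_frames, bone_map, source_leg_length, target_leg_length):
    """Copy local rotations through a bone-name map and rescale root translation.

    source_frames: list of dicts with "root" (x, y, z) and "rotations" {bone: angle}
    bone_map: source bone name -> target bone name (unmapped bones are dropped)
    """
    scale = target_leg_length / source_leg_length
    result = []
    for frame in source_frames:
        rotations = {bone_map[b]: angle
                     for b, angle in frame["rotations"].items() if b in bone_map}
        # Shorter legs cover less ground, so root motion is scaled down proportionally
        root = tuple(c * scale for c in frame["root"])
        result.append({"root": root, "rotations": rotations})
    return result


frames = [{"root": (0.0, 1.0, 2.0),
           "rotations": {"Hips": 5.0, "LeftUpLeg": 30.0, "Tail": 10.0}}]
print(retarget(frames, {"Hips": "pelvis", "LeftUpLeg": "thigh_l"}, 1.0, 0.5))
```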

Another approach uses a generic intermediate rig, which is common when working with motion capture data. The animation is first mapped to the generic rig and then retargeted from the generic rig to each character, so each skeleton needs only one mapping (to the generic rig) rather than a separate mapping to every other skeleton. Finally, many game engines and animation packages offer built-in retargeting tools, such as Unity's Humanoid Avatar system and Unreal Engine's IK Retargeter, that adapt the animation across characters with different skeletal structures and proportions.

13. If the game's design suddenly shifted from realistic to stylized, how would you adapt your animation approach?

If a game shifted from realistic to stylized, I'd adapt my animation approach by prioritizing exaggeration and clarity over photorealism. This means focusing on key poses, timing, and spacing to create impactful and easily readable movements. I'd research the specific stylized art style to understand its core principles, like squash and stretch, looping animations, and unique deformation.

Technically, this could involve simplifying rigs, using fewer bones, or even incorporating procedural animation techniques for stylized effects like bouncy hair or exaggerated clothing movement. I would also likely decrease the number of frames in animation cycles to achieve a snappier, less realistic feel, and use more baked animation instead of relying on complex constraints.
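Procedural "bouncy hair" is often driven by a damped spring that follows an anchor point. A minimal one-dimensional Python sketch, assuming a hypothetical stiffness, damping, and 60 fps time step; it uses semi-implicit Euler integration (velocity first, then position), which stays stable at these values.

```python
def simulate_spring(targets, stiffness=120.0, damping=8.0, dt=1.0 / 60.0):
    """Make a hair tip lag behind and overshoot its anchor, then settle.

    targets: the anchor position (e.g., the head) sampled once per frame.
    """
    position = targets[0]
    velocity = 0.0
    out = []
    for target in targets:
        # Spring pulls toward the anchor; damping bleeds off velocity
        accel = stiffness * (target - position) - damping * velocity
        velocity += accel * dt
        position += velocity * dt
        out.append(position)
    return out


# The head snaps from 0 to 1; the hair tip overshoots and wobbles back
trail = simulate_spring([0.0] + [1.0] * 600)
print(max(trail), trail[-1])
```

Lower damping gives a looser, bouncier stylized wobble; higher stiffness makes the tip track the anchor more tightly.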

14. What's your approach to animating facial expressions and lip-syncing to dialogue in games?

My approach to animating facial expressions and lip-syncing involves a combination of techniques. First, I'd analyze the dialogue to identify phonemes and key emotional cues. For lip-syncing, I'd use software like Faceware or similar tools, or potentially custom solutions (depending on project needs). This allows for accurate mouth movements synced to the audio. For facial expressions, blend shapes are essential. I'd create a library of expressions (anger, joy, sadness, etc.) mapped to blend shapes and then trigger these based on the character's emotional state and the dialogue context.
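Once the dialogue has been broken into timed phonemes, those phonemes are typically grouped into visemes (mouth shapes) that map to blend shapes. A minimal Python sketch, assuming a hypothetical phoneme-to-viseme table and blend shape names, with each viseme ramping in and out linearly over a short fade; a real project defines its own viseme set and usually smooths coarticulation more carefully.

```python
# Hypothetical phoneme-to-viseme table; real projects define their own viseme set.
PHONEME_TO_VISEME = {
    "AA": "Open", "AE": "Open", "IY": "Wide", "UW": "Round", "OW": "Round",
    "M": "Closed", "B": "Closed", "P": "Closed", "F": "LipBite", "V": "LipBite",
}


def viseme_weights(phonemes, time, fade=0.05):
    """Return blend shape weights at `time` (seconds).

    phonemes: list of (phoneme, start, end) from a timing pass over the dialogue audio.
    Each viseme ramps in/out linearly over `fade` seconds at its edges.
    """
    weights = {}
    for phoneme, start, end in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme)
        if viseme is None or not (start - fade <= time <= end + fade):
            continue
        if time < start:
            w = 1.0 - (start - time) / fade   # fading in
        elif time > end:
            w = 1.0 - (time - end) / fade     # fading out
        else:
            w = 1.0
        weights[viseme] = max(weights.get(viseme, 0.0), w)
    return weights


track = [("M", 0.0, 0.1), ("AA", 0.1, 0.3), ("UW", 0.3, 0.5)]
print(viseme_weights(track, 0.125))
```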

I would then use animation software to blend and refine these expressions, adding secondary animation like eye blinks, eyebrow movements, and subtle head movements to create a more believable and nuanced performance. I prioritize working closely with audio engineers and character artists to ensure the animation aligns with the overall vision for the character and the game. Finally, within the game engine, I set up animation controllers to trigger these animations based on the in-game events and dialogue cues.

15. How do you use animation to convey character personality and emotions effectively?

Animation effectively conveys character personality and emotions through several key elements. Exaggerated movements can emphasize traits like confidence or clumsiness. Facial expressions are crucial; subtle changes in the eyes, mouth, and eyebrows can communicate a wide range of emotions. Body language, including posture and gait, also plays a significant role; a hunched posture might suggest sadness or insecurity, while an upright posture might indicate confidence or authority.

Timing and spacing are also important. A character's speed and the rhythm of their movements can reveal their energy level and emotional state. For instance, a character who moves quickly and erratically might be anxious or excited, while a character who moves slowly and deliberately might be calm or depressed. Using these elements in combination allows animators to bring characters to life and connect with the audience on an emotional level.

16. Describe your experience with scripting or programming to control animation behavior in a game engine.

I have experience using scripting to control animation behavior primarily in Unity. I've used C# to trigger animations via the Animator component, setting parameters like booleans, floats, and triggers based on game logic or player input. For example, I've created systems where the player's movement speed dictates the blend between idle, walk, and run animations. Additionally, I've worked with animation events to trigger specific actions, such as playing sound effects or spawning particle effects, at precise points in the animation timeline. This involved writing code snippets within the animation event function to handle the relevant game logic.
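In Unity, the script side of speed-driven locomotion is usually just a call like `animator.SetFloat("Speed", speed)` (the parameter name here is hypothetical), and a 1D blend tree turns that value into clip weights. The Python sketch below shows roughly what that weighting does: only the two clips whose thresholds bracket the speed get non-zero weight. The threshold values are illustrative.

```python
def blend_1d(speed, thresholds):
    """Weights for a 1D blend tree driven by a single speed parameter.

    thresholds: list of (clip_name, threshold) sorted by threshold.
    """
    names = [n for n, _ in thresholds]
    values = [v for _, v in thresholds]
    weights = {n: 0.0 for n in names}
    # Clamp below the first and above the last threshold
    if speed <= values[0]:
        weights[names[0]] = 1.0
        return weights
    if speed >= values[-1]:
        weights[names[-1]] = 1.0
        return weights
    for i in range(len(values) - 1):
        lo, hi = values[i], values[i + 1]
        if lo <= speed <= hi:
            t = (speed - lo) / (hi - lo)
            weights[names[i]] = 1.0 - t
            weights[names[i + 1]] = t
            return weights
    return weights


locomotion = [("Idle", 0.0), ("Walk", 2.0), ("Run", 6.0)]
print(blend_1d(4.0, locomotion))
```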

Furthermore, I've implemented inverse kinematics (IK) using scripting to achieve more realistic and interactive animations, such as having a character's hand accurately reach for a specified target, dynamically adjusting the arm pose at runtime. For example:

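```csharp
// Runs when "IK Pass" is enabled on the Animator layer; animator, rightHandWeight,
// targetPosition, and targetRotation are fields set elsewhere in the script
void OnAnimatorIK(int layerIndex)
{
    animator.SetIKPositionWeight(AvatarIKGoal.RightHand, rightHandWeight);
    animator.SetIKRotationWeight(AvatarIKGoal.RightHand, rightHandWeight);
    animator.SetIKPosition(AvatarIKGoal.RightHand, targetPosition);
    animator.SetIKRotation(AvatarIKGoal.RightHand, targetRotation);
}
```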
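Unity's IK goals are resolved by the engine's own solver; the Python sketch below only illustrates the analytic two-bone math behind reaching a target with a limb such as an arm, in 2D with the shoulder at the origin. The function names are hypothetical. The law of cosines gives the elbow angle, and unreachable targets are clamped so the arm points at them fully extended.

```python
import math


def two_bone_ik(upper_len, lower_len, target_x, target_y):
    """Return (shoulder_angle, elbow_bend) in radians; elbow_bend is 0 for a straight arm."""
    dist = math.hypot(target_x, target_y)
    # Clamp to the reachable range and avoid dividing by zero
    dist = max(abs(upper_len - lower_len), min(upper_len + lower_len, dist), 1e-9)
    # Law of cosines: interior angle at the elbow
    cos_elbow = (upper_len**2 + lower_len**2 - dist**2) / (2 * upper_len * lower_len)
    elbow_bend = math.pi - math.acos(max(-1.0, min(1.0, cos_elbow)))
    # Angle between the upper arm and the shoulder-to-target line
    cos_offset = (upper_len**2 + dist**2 - lower_len**2) / (2 * upper_len * dist)
    offset = math.acos(max(-1.0, min(1.0, cos_offset)))
    shoulder = math.atan2(target_y, target_x) + offset
    return shoulder, elbow_bend


def forward(upper_len, lower_len, shoulder, elbow_bend):
    """Forward kinematics, to check where the hand ends up."""
    ex = upper_len * math.cos(shoulder)
    ey = upper_len * math.sin(shoulder)
    hx = ex + lower_len * math.cos(shoulder - elbow_bend)
    hy = ey + lower_len * math.sin(shoulder - elbow_bend)
    return hx, hy


angles = two_bone_ik(1.0, 0.8, 1.2, 0.5)
print(forward(1.0, 0.8, *angles))  # approximately (1.2, 0.5)
```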

17. How would you optimize animation data to reduce memory footprint and improve performance in a large open-world game?

To optimize animation data, I'd employ several strategies. First, keyframe reduction removes keys that interpolation between their neighbors can reproduce within an error tolerance, and compact encodings such as quantization (storing values as small integers) and delta encoding (storing only the change from the previous value) shrink the data kept for each remaining key. Second, animation compression algorithms such as PCA (Principal Component Analysis) can significantly reduce the size of the animation data by exploiting correlations between bone tracks. For skeletal animation, reordering bones to improve cache locality and using smaller data types (e.g., half-precision floats) where the precision loss is acceptable can also help. Finally, level of detail (LOD) for animations, where simpler animations are used for distant characters, reduces the processing load and memory usage for characters that don't require high-fidelity animation.
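Two of these ideas can be sketched in a few lines of Python: a greedy keyframe reduction that drops a key when linear interpolation between the kept neighbors stays within a tolerance, and 16-bit quantization of values in a known range. These are simplified illustrations with hypothetical function names; production compressors use more sophisticated error metrics and curve fitting.

```python
def reduce_keys(times, values, tolerance):
    """Return indices of keys to keep; first and last keys are always kept."""
    if len(times) <= 2:
        return list(range(len(times)))
    kept = [0]
    for i in range(1, len(times) - 1):
        a, b = kept[-1], i + 1
        # Can the line from the last kept key to key i+1 reproduce every sample in between?
        for j in range(a + 1, b):
            t = (times[j] - times[a]) / (times[b] - times[a])
            approx = values[a] + (values[b] - values[a]) * t
            if abs(approx - values[j]) > tolerance:
                kept.append(i)  # dropping key i would exceed the tolerance
                break
    kept.append(len(times) - 1)
    return kept


def quantize(value, lo, hi, bits=16):
    """Map a float in [lo, hi] to an unsigned integer with `bits` bits."""
    levels = (1 << bits) - 1
    t = (min(max(value, lo), hi) - lo) / (hi - lo)
    return round(t * levels)


def dequantize(q, lo, hi, bits=16):
    levels = (1 << bits) - 1
    return lo + (hi - lo) * q / levels


print(reduce_keys([0.0, 1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 2.0, 1.0, 0.0], 0.01))  # [0, 2, 4]
```

With 16 bits over a -180 to 180 degree range, the worst-case rounding error is about 0.003 degrees, which is usually invisible on screen.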

18. Explain how you use animation curves and timing to create impactful and engaging animations.

I use animation curves (often defined by easing functions) to control the rate of change of an animation over time. Instead of linear movement, curves allow for acceleration, deceleration, or more complex motion profiles. For example, an 'ease-in' curve starts slowly and speeds up, creating a feeling of weight or anticipation. An 'ease-out' curve starts quickly and slows down, providing a smooth and natural finish. By experimenting with different curves, such as easeInOutQuad and easeOutCubic, I can fine-tune the perceived weight, impact, and realism of the animation.
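The named curves are simple polynomials over normalized time t in [0, 1]. A Python sketch using the common formulas for these easings, plus a hypothetical `tween` helper that applies a curve between two values:

```python
def ease_in_quad(t):
    return t * t


def ease_out_quad(t):
    return 1 - (1 - t) * (1 - t)


def ease_in_out_quad(t):
    # Accelerate through the first half, decelerate through the second
    return 2 * t * t if t < 0.5 else 1 - (-2 * t + 2) ** 2 / 2


def ease_out_cubic(t):
    return 1 - (1 - t) ** 3


def tween(start, end, duration, elapsed, easing):
    """Value at `elapsed` seconds of an eased move from start to end."""
    t = min(max(elapsed / duration, 0.0), 1.0)
    return start + (end - start) * easing(t)


print(tween(0.0, 100.0, 1.0, 0.5, ease_out_cubic))  # 87.5: most of the move happens early
```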

Timing is equally crucial. The duration of an animation significantly affects its impact. A quick animation can feel snappy and responsive, while a longer animation can convey deliberation or grandeur. I often use a combination of animation curves and carefully chosen timings to create engaging experiences. For example, a UI element might slide in with an ease-out curve over 0.3 seconds for a subtle and polished feel, whereas a character jump might use a more complex curve with varying durations for the ascent and descent to emphasize weight and impact. Also, when multiple elements animate together, slightly offsetting their start times can create a more layered and dynamic effect.
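The 0.3-second ease-out slide and the offset start times can be combined in one small Python sketch. The stagger of 0.05 seconds, the -200 starting offset, and the function name are hypothetical values chosen for illustration.

```python
def staggered_positions(count, elapsed, duration=0.3, stagger=0.05,
                        start=-200.0, end=0.0):
    """X positions of `count` UI elements sliding in with an ease-out curve,
    each starting `stagger` seconds after the previous one."""
    positions = []
    for i in range(count):
        local = elapsed - i * stagger  # this element's own clock
        t = min(max(local / duration, 0.0), 1.0)
        eased = 1 - (1 - t) ** 3  # ease-out cubic
        positions.append(start + (end - start) * eased)
    return positions


print(staggered_positions(3, 0.1))  # the first element leads, the third has not started
```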

19. Describe a situation where you had to work with limited resources (time, budget, tools) to deliver high-quality animations.

In a project for a non-profit, we needed to create a series of animated explainer videos to increase awareness about their cause. However, we had a very tight budget and a short deadline. To overcome these limitations, I focused on streamlining the animation process. I utilized pre-made assets and templates wherever possible, significantly reducing the time spent on creating elements from scratch. Instead of complex 3D animation, I opted for a clean, 2D motion graphics style, which was faster to produce and still effectively conveyed the message.

To maximize our limited resources, I prioritized clear communication and collaboration with the team. We held daily stand-up meetings to track progress, identify bottlenecks, and ensure everyone was aligned on priorities. By carefully planning the animation pipeline, leveraging available resources, and maintaining open communication, we were able to deliver high-quality animations within the given constraints, exceeding the client's expectations despite the challenging circumstances.

20. What are your preferred methods for creating procedural animation or animation variations?

My preferred methods for creating procedural animation and variations often combine multiple techniques. One common approach is to use mathematical functions (such as sine waves or Perlin and Simplex noise) to drive animation parameters like position, rotation, or scale. These functions provide a base level of dynamic movement and can be easily modified with parameters like frequency, amplitude, and offset to create variations. For example, a character's idle animation might use noise to subtly shift their weight or create small breathing movements.
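A Python sketch of a procedural idle layer: a slow sine wave scales the chest for breathing, and noise adds a small, non-repeating hip sway. For brevity it swaps in simple 1D value noise (random values at integer points, smoothly interpolated) rather than Perlin or Simplex noise; all parameter names and amounts are hypothetical.

```python
import math
import random


def make_value_noise(seed, size=256):
    """1D value noise: random values at integer points, smoothly interpolated."""
    rng = random.Random(seed)
    table = [rng.uniform(-1.0, 1.0) for _ in range(size)]

    def noise(x):
        i = math.floor(x)
        f = x - i
        a = table[i % size]
        b = table[(i + 1) % size]
        s = f * f * (3 - 2 * f)  # smoothstep between lattice values
        return a + (b - a) * s

    return noise


def idle_offsets(t, noise, breath_rate=0.25, breath_amp=0.01,
                 sway_amp=0.02, sway_freq=0.3):
    """Chest scale from a slow sine 'breath' plus hip sway from noise at time t (seconds)."""
    chest_scale = 1.0 + breath_amp * math.sin(2 * math.pi * breath_rate * t)
    hip_sway = sway_amp * noise(t * sway_freq)
    return chest_scale, hip_sway


character_noise = make_value_noise(seed=42)  # a different seed per character varies the sway
print(idle_offsets(1.5, character_noise))
```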

Another powerful technique is using animation blending and state machines, combined with randomization. Here's a brief snippet:

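```csharp
// Randomize playback speed so characters sharing a clip don't move in lockstep
// (animator is a UnityEngine.Animator reference; Random is UnityEngine.Random)
animator.speed = Random.Range(0.8f, 1.2f);
```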

By blending between different animation clips based on weighted averages and introducing subtle random variations to parameters like timing, speed, or even which animations are selected, I can achieve a wide range of non-repetitive and visually interesting animations. I also make extensive use of animation curves to control the timing and easing of animations for more natural results. Animating directly in code often provides more control, albeit requiring a more complex setup.
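Randomizing which animation is selected can be sketched in Python as a weighted random pick among variants, combined with the same 0.8 to 1.2 speed range as the Unity snippet. The clip names and weights are hypothetical; passing in a seeded `random.Random` makes the variation reproducible, which helps when debugging.

```python
import random


def pick_variant(variants, rng):
    """Pick an idle variant by weight and give it a small random speed change.

    variants: list of (clip_name, weight); rng: a random.Random instance.
    """
    names = [name for name, _ in variants]
    weights = [w for _, w in variants]
    clip = rng.choices(names, weights=weights, k=1)[0]
    speed = rng.uniform(0.8, 1.2)
    return clip, speed


idle_variants = [("Idle_A", 3.0), ("Idle_B", 1.0), ("Idle_Unused", 0.0)]
print(pick_variant(idle_variants, random.Random(1234)))
```

Giving the most neutral idle the highest weight keeps the rarer variants feeling special instead of repetitive.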

21. How do you handle animation LODs (Levels of Detail) for characters at different distances from the camera?

Animation LODs for characters at different distances involve using simpler, less detailed animations as the character moves further away. This improves performance by reducing the computational cost of animation processing. We can implement this by switching animation sets based on distance. For example:

- Closest: Full animation set, including detailed facial expressions and intricate movements.

- Medium Distance: Reduced animation set that simplifies complex movements, e.g., by using fewer keyframes or fewer blended layers.

- Farthest: Very simple or even static animations, possibly using looped idle animations or pre-baked animation clips. At extremely long distances, the character might even be represented by impostors (flat sprites).

To choose a level, calculate the distance between the camera and the character in world space (or camera space) and compare it against predefined threshold values, for example with an if/else-if chain over the thresholds.
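A Python sketch of distance-based LOD selection. The thresholds in metres and the level names are hypothetical and would be tuned per project and per platform.

```python
import math

# Hypothetical distance thresholds in metres; tune per project.
LOD_LEVELS = [
    (15.0, "full"),     # facial animation, all layers
    (40.0, "reduced"),  # simplified body animation
    (120.0, "simple"),  # looped or baked clips
]


def animation_lod(camera_pos, character_pos, levels=LOD_LEVELS):
    """Pick an animation LOD by comparing camera distance against thresholds."""
    distance = math.dist(camera_pos, character_pos)
    for max_distance, level in levels:
        if distance <= max_distance:
            return level
    return "impostor"  # beyond the last threshold


print(animation_lod((0.0, 0.0, 0.0), (30.0, 0.0, 0.0)))  # reduced
```

In practice a small hysteresis margin around each threshold helps prevent a character standing near a boundary from flickering between levels.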

22. Explain your understanding of state machines and how they are used to control character animation in games.

A state machine is a computational model that represents an entity transitioning between different states based on input events. In game character animation, state machines manage the various animations a character can perform, such as idle, walk, run, jump, and attack. Each state corresponds to a specific animation or set of animations.

The character's current state determines which animation is playing. When an event occurs (e.g., the player presses the jump button), the state machine transitions the character to a new state (e.g., jump), which then triggers the corresponding jump animation. This provides a structured and organized way to control complex animation sequences and create responsive character behavior. Transitions are usually defined by conditions: when a transition's condition is met, the state changes. For example:

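```csharp
// Transition to the jump state only when the button is pressed while grounded.
// State, currentState, isGrounded, PlayAnimation, and jumpAnimation are illustrative names.
if (Input.GetButtonDown("Jump") && isGrounded)
{
    currentState = State.Jumping;
    PlayAnimation(jumpAnimation);
}
```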
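As the number of states grows, a transition table keeps the logic in one place instead of scattering if-statements. A minimal table-driven state machine in Python; the states, event names, and animation names are illustrative, and the grounded check mirrors the isGrounded condition above.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    RUNNING = auto()
    JUMPING = auto()


# (current state, event) -> next state
TRANSITIONS = {
    (State.IDLE, "move"): State.RUNNING,
    (State.RUNNING, "stop"): State.IDLE,
    (State.IDLE, "jump"): State.JUMPING,
    (State.RUNNING, "jump"): State.JUMPING,
    (State.JUMPING, "land"): State.IDLE,
}

ANIMATIONS = {State.IDLE: "idle", State.RUNNING: "run", State.JUMPING: "jump"}


class AnimationStateMachine:
    def __init__(self):
        self.state = State.IDLE
        self.playing = ANIMATIONS[self.state]

    def handle(self, event, grounded=True):
        # Jumping is only allowed from the ground
        if event == "jump" and not grounded:
            return self.state
        next_state = TRANSITIONS.get((self.state, event))
        if next_state is not None and next_state != self.state:
            self.state = next_state
            self.playing = ANIMATIONS[next_state]  # switch the animation on entry
        return self.state


sm = AnimationStateMachine()
sm.handle("move")
sm.handle("jump")
print(sm.state, sm.playing)  # State.JUMPING jump
```

Events with no entry in the table (such as "move" while jumping) are simply ignored, which prevents invalid transitions such as starting a run in mid-air.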

23. Describe your experience with rigging characters and creating custom animation rigs.

I have experience rigging characters and creating custom animation rigs, primarily in Maya. I've worked with both joint-based and deformer-based rigging techniques. My process typically involves creating a skeleton, setting up joint orientations, skinning the mesh, and building a control rig with custom attributes for animators. I often use Python scripting in Maya to automate repetitive tasks and to set up specialized functionality, such as corrective blendshapes driven by joint rotations through set driven keys (SDKs).
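A corrective blendshape driven by joint rotation is, at its core, a remap from a joint angle to a blend shape weight. In Maya this is typically wired up with set driven keys (for example via `cmds.setDrivenKeyframe`) or a remapValue node; the plain-Python sketch below only shows the mapping a linear driven key produces. The 90 and 150 degree breakpoints and the function name are hypothetical.

```python
def corrective_weight(elbow_angle, start=90.0, full=150.0):
    """Blend shape weight from elbow flexion in degrees.

    Below `start` the corrective shape is off; at `full` and beyond it is fully on;
    in between it ramps linearly, like a driven key with linear tangents.
    """
    if full == start:
        return 1.0 if elbow_angle >= full else 0.0
    t = (elbow_angle - start) / (full - start)
    return min(max(t, 0.0), 1.0)


print(corrective_weight(120.0))  # 0.5
```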

For example, I once developed a quadruped rig where I scripted custom foot roll attributes and automated the creation of reverse foot locks. I'm familiar with various constraints and deformers used in rigging, such as IK handles, clusters, wire deformers, and lattices. I prioritize creating rigs that are intuitive for animators to use, providing clear controls and minimizing technical overhead.

24. How do you approach animating combat sequences, including impacts, reactions, and special moves?

My approach to animating combat sequences involves careful planning and execution. First, I break down the sequence into key poses or actions, focusing on clear storytelling through character positioning and body language. Impacts are emphasized using techniques like squash and stretch, anticipation frames, and follow-through to create a sense of weight and force. Reactions are tailored to the character and the severity of the blow, ranging from subtle flinches to full knockdowns. Special moves require unique animation to highlight their power, often utilizing exaggerated movements, dynamic camera angles, and visual effects to make them visually impressive.