When evaluating candidates for roles requiring data management, it's easy to be overwhelmed by the technical jargon and nuances of SQL. Like sifting through a mountain of data without the right query, assessing SQL skills can feel chaotic if you're not equipped with the right questions, unlike assessing skills for a data analyst.

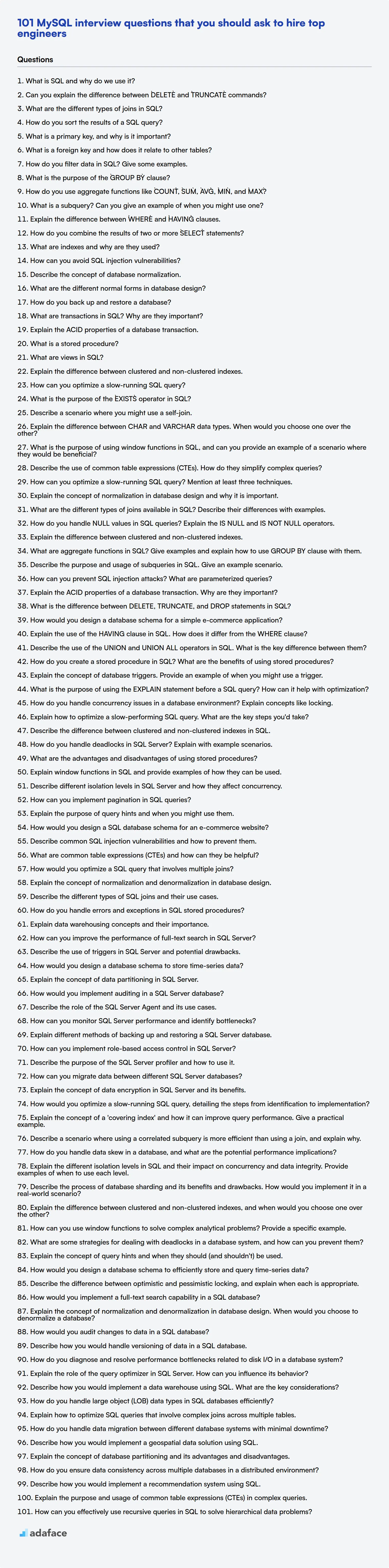

This blog post is structured as a ready-to-use list of SQL interview questions for recruiters and hiring managers. It covers basic, intermediate, advanced and expert level questions along with some multiple-choice questions (MCQs) to make sure you find the best candidate.

By using these questions, you can make sure your interview process is well structured and effective. To ensure candidate SQL mastery before the interview, consider using Adaface's SQL assessments.

Table of contents

Basic SQL interview questions

1. What is SQL and why do we use it?

SQL, which stands for Structured Query Language, is a standard programming language used for managing and manipulating data held in a relational database management system (RDBMS). It allows users to interact with databases to perform various operations.

We use SQL primarily for tasks like:

- Data retrieval: Querying and retrieving specific data from databases.

- Data manipulation: Inserting, updating, and deleting data within databases.

- Data definition: Creating, altering, and dropping database objects like tables and views.

- Data control: Managing user access and permissions to database resources.

2. Can you explain the difference between `DELETE` and `TRUNCATE` commands?

The DELETE command removes rows from a table based on a specified condition. It is a Data Manipulation Language (DML) command. DELETE operations are logged, and can be rolled back. Each deleted row is individually recorded in the transaction log. You can use a WHERE clause to specify which rows to remove.

TRUNCATE is a Data Definition Language (DDL) command that removes all rows from a table. It deallocates the data pages used by the table, resetting the table to an empty state. TRUNCATE is faster than DELETE because it doesn't log individual row deletions and cannot be rolled back. It resets the identity (auto-increment) column to its seed value.

3. What are the different types of joins in SQL?

SQL joins are used to combine rows from two or more tables based on a related column. There are several types of joins:

- INNER JOIN: Returns rows only when there is a match in both tables.

- LEFT (OUTER) JOIN: Returns all rows from the left table and the matched rows from the right table. If there's no match, it returns

NULLvalues for the right table's columns. - RIGHT (OUTER) JOIN: Returns all rows from the right table and the matched rows from the left table. If there's no match, it returns

NULLvalues for the left table's columns. - FULL (OUTER) JOIN: Returns all rows when there is a match in either the left or right table. It combines the results of both LEFT and RIGHT outer joins. Missing values from either table are filled with

NULLs. - CROSS JOIN: Returns the Cartesian product of the tables involved; each row from the first table is combined with each row from the second table.

4. How do you sort the results of a SQL query?

To sort the results of a SQL query, you use the ORDER BY clause. You specify the column or columns you want to sort by after the ORDER BY keyword. By default, ORDER BY sorts in ascending order.

To sort in descending order, you add the DESC keyword after the column name. For example, ORDER BY column_name DESC. You can also sort by multiple columns, with the first column listed taking precedence, like so: ORDER BY column1 ASC, column2 DESC.

5. What is a primary key, and why is it important?

A primary key is a column or a set of columns in a database table that uniquely identifies each row in that table. It enforces uniqueness, meaning no two rows can have the same primary key value, and it cannot contain NULL values.

The importance of a primary key lies in its ability to:

- Uniquely identify records: Allows for easy retrieval, updating, and deletion of specific rows.

- Enforce data integrity: Prevents duplicate records and ensures each row represents a distinct entity.

- Establish relationships: Used as a foreign key in other tables to create relationships between tables.

6. What is a foreign key and how does it relate to other tables?

A foreign key is a column (or set of columns) in one table that refers to the primary key of another table. Its purpose is to establish and enforce a link between the data in the two tables.

This relationship ensures referential integrity, meaning that you can't insert a row into the table containing the foreign key if there is no corresponding row in the linked table (the one with the primary key). It also often dictates what happens when a row is deleted or updated in the parent table. For example, you might set ON DELETE CASCADE so that deleting a row in the parent table automatically deletes corresponding rows in the child table. Consider these SQL examples:

CREATE TABLE Parent (

id INT PRIMARY KEY

);

CREATE TABLE Child (

id INT PRIMARY KEY,

parent_id INT,

FOREIGN KEY (parent_id) REFERENCES Parent(id)

);

In this case, parent_id is a foreign key in the Child table, referencing the id (primary key) column in the Parent table.

7. How do you filter data in SQL? Give some examples.

SQL uses the WHERE clause to filter data. It specifies a condition that rows must satisfy to be included in the result set. The WHERE clause is placed after the FROM clause in a SELECT, UPDATE, or DELETE statement.

Examples include filtering by equality (WHERE column = 'value'), inequality (WHERE column != 'value'), using comparison operators (WHERE column > 10), range operators (WHERE column BETWEEN 1 AND 10), checking for null values (WHERE column IS NULL), and pattern matching (WHERE column LIKE 'pattern%'). Multiple conditions can be combined using AND and OR operators. Example:

SELECT * FROM employees WHERE salary > 50000 AND department = 'Sales';

8. What is the purpose of the `GROUP BY` clause?

The GROUP BY clause in SQL is used to group rows that have the same values in one or more columns into a summary row. It allows you to perform aggregate functions (like COUNT(), SUM(), AVG(), MIN(), MAX()) on these grouped rows.

Essentially, GROUP BY enables you to categorize your data and then perform calculations on each category, providing insights into subsets of your data rather than the entire dataset as a whole.

9. How do you use aggregate functions like `COUNT`, `SUM`, `AVG`, `MIN`, and `MAX`?

Aggregate functions operate on a set of rows and return a single summary value. COUNT returns the number of rows, SUM calculates the sum of values in a column, AVG calculates the average value, MIN finds the smallest value, and MAX finds the largest value. These functions are often used with the GROUP BY clause to calculate aggregate values for different groups of rows.

For example, let's say we have a table named orders with columns customer_id and order_total. SELECT COUNT(*) FROM orders; would return the total number of orders. SELECT SUM(order_total) FROM orders WHERE customer_id = 123; returns the total value for customer 123. SELECT customer_id, AVG(order_total) FROM orders GROUP BY customer_id; would give us the average order total for each customer. Here are few more examples:

SELECT MIN(order_total) FROM orders;SELECT MAX(order_total) FROM orders;

10. What is a subquery? Can you give an example of when you might use one?

A subquery, also known as an inner query or nested query, is a query embedded within another SQL query. It's used to retrieve data that will be used in the main query's conditions or selection.

For example, you might use a subquery to find all customers who placed orders larger than the average order size. The subquery would calculate the average order size, and the outer query would select customers with orders exceeding that value. A sample SQL syntax looks like this: SELECT customer_id FROM orders WHERE order_size > (SELECT AVG(order_size) FROM orders);

11. Explain the difference between `WHERE` and `HAVING` clauses.

The WHERE clause filters records before any grouping occurs. It operates on individual rows and is used to select rows based on column values. WHERE is applied before GROUP BY.

In contrast, the HAVING clause filters records after grouping. It is used to filter groups based on aggregate functions. Therefore, HAVING must be used with GROUP BY and operates on the results of aggregated data. You can use HAVING to check conditions calculated over the groups.

12. How do you combine the results of two or more `SELECT` statements?

You can combine the results of two or more SELECT statements using UNION, UNION ALL, INTERSECT, or EXCEPT (also sometimes called MINUS, depending on the database system).

UNIONcombines the result sets of two or moreSELECTstatements into a single result set, removing duplicate rows. The number and order of columns must be the same, and the data types must be compatible.UNION ALLis similar toUNION, but it includes all rows from theSELECTstatements, including duplicates.INTERSECTreturns only the rows that are common to allSELECTstatements. Column number, order, and datatype compatibility is enforced.EXCEPT(orMINUS) returns the rows from the firstSELECTstatement that are not present in the subsequentSELECTstatement(s). Column number, order, and datatype compatibility is enforced.

SELECT column1, column2 FROM table1

UNION

SELECT columnA, columnB FROM table2;

13. What are indexes and why are they used?

Indexes are special lookup tables that the database search engine can use to speed up data retrieval. Simply put, an index is a pointer to data in a table.

They are used because without indexes, the database would have to scan the entire table to find relevant rows, which can be very slow for large tables. Indexes help to dramatically reduce the number of records that need to be examined by the database server to fulfill a query. They improve query performance, especially for WHERE clauses, JOIN operations, and ORDER BY clauses. However, indexes can slow down INSERT, UPDATE, and DELETE operations because the index also needs to be updated.

14. How can you avoid SQL injection vulnerabilities?

To avoid SQL injection vulnerabilities, prioritize using parameterized queries (also known as prepared statements). These treat user inputs as data, not as executable code, preventing malicious SQL from being injected. Always sanitize user inputs, though this should be secondary to parameterized queries.

Specifically, avoid directly concatenating user input into SQL queries. If you must build queries dynamically, properly escape user input according to the database's escaping rules. Use an ORM (Object-Relational Mapper) framework, as they often have built-in protection against SQL injection. Employ database user accounts with minimal required privileges. Also, consistently apply security patching to the database server software.

15. Describe the concept of database normalization.

Database normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It typically involves dividing a database into two or more tables and defining relationships between the tables. The goal is to isolate data so that modifications of an attribute can be made in just one table and propagated through the rest of the database via defined relationships.

Normalization usually involves splitting tables into smaller, less redundant tables and defining foreign keys. There are several normal forms (1NF, 2NF, 3NF, BCNF, etc.), each building upon the previous one. Each normal form has specific rules about dependencies and redundancies that must be addressed. Achieving higher normal forms generally leads to more tables and more complex queries but ensures data consistency and reduces the risk of anomalies during data modification.

16. What are the different normal forms in database design?

Database normalization reduces data redundancy and improves data integrity by organizing data into tables in such a way that database constraints properly enforce dependencies. The different normal forms are:

- 1NF (First Normal Form): Eliminates repeating groups of data. Each column should contain only atomic values, and there should be a primary key.

- 2NF (Second Normal Form): Must be in 1NF and eliminates redundant data by ensuring each non-key attribute is fully functionally dependent on the entire primary key. Only relevant when the primary key is composite.

- 3NF (Third Normal Form): Must be in 2NF and eliminates transitive dependencies. No non-key attribute should depend on another non-key attribute.

- BCNF (Boyce-Codd Normal Form): A stronger version of 3NF. Every determinant must be a candidate key.

- 4NF (Fourth Normal Form): Must be in BCNF and eliminates multi-valued dependencies.

- 5NF (Fifth Normal Form): Must be in 4NF and eliminates redundancy by isolating semantically related multiple relationships.

17. How do you back up and restore a database?

Database backup and restore are crucial for data protection and disaster recovery. Backups involve creating a copy of the database, while restoration uses that copy to recover the database to a previous state.

Common methods include: 1. Logical backups: Exporting the database schema and data into a file (e.g., using mysqldump for MySQL or pg_dump for PostgreSQL). 2. Physical backups: Copying the underlying data files (e.g., using file system snapshots or specialized tools). Restoration typically involves importing the logical backup file or restoring the physical data files. Incremental backups can optimize backup time and space by only backing up changes since the last backup. Always verify backups to ensure integrity.

18. What are transactions in SQL? Why are they important?

Transactions in SQL are a sequence of one or more SQL operations (e.g., INSERT, UPDATE, DELETE) treated as a single logical unit of work. Either all operations within the transaction succeed, or none of them do. This "all or nothing" property is also known as atomicity.

They are important because they ensure data consistency and integrity. If a system fails midway through a series of operations, transactions guarantee that the database will revert to its previous consistent state, preventing data corruption and ensuring reliability. Transactions adhere to ACID properties: Atomicity, Consistency, Isolation, and Durability. Without transactions, concurrent access and partial failures could lead to inconsistent and unreliable data.

19. Explain the ACID properties of a database transaction.

ACID properties ensure reliable database transactions. Atomicity means a transaction is treated as a single, indivisible unit of work; either all changes are applied, or none are. Consistency ensures that a transaction transforms the database from one valid state to another, adhering to defined rules and constraints. Isolation dictates that concurrent transactions should not interfere with each other, appearing as if they are executed sequentially. Durability guarantees that once a transaction is committed, its changes are permanently stored and will survive even system failures.

For example, in a banking transaction transferring funds, atomicity ensures that if deducting funds from one account fails, the funds are not credited to the other account. Consistency ensures that the total balance across all accounts remains correct. Isolation prevents another transaction from reading the balance of an account mid-transfer. Durability ensures that once the transfer is confirmed, the changes will persist even if the database server crashes immediately afterward.

20. What is a stored procedure?

A stored procedure is a precompiled collection of SQL statements stored under a name and executed as a unit. Think of it as a mini-program or function within a database. Stored procedures can accept input parameters, perform operations like data retrieval or modification, and return output values. They are useful for encapsulating complex logic, improving performance by reducing network traffic and parsing overhead, and enhancing security by granting permissions to execute the procedure rather than directly accessing underlying tables.

Benefits of using stored procedures include:

- Reduced network traffic: Only the call to the procedure is sent over the network.

- Improved security: Access can be granted to procedures rather than tables.

- Code reusability: Procedures can be called from multiple applications.

- Better performance: Stored procedures are precompiled, meaning they are parsed and optimized when they are created.

21. What are views in SQL?

In SQL, a view is a virtual table based on the result-set of an SQL statement. It's essentially a stored query. Views don't store data physically; instead, they provide a way to look at the underlying tables in a specific, tailored manner.

Views can simplify complex queries, hide data complexity from users, and restrict access to certain columns or rows. They also promote data independence, so changes to the underlying table structure don't necessarily require changes to applications that use the view. Views can be read-only or updatable, depending on their complexity and the database system.

22. Explain the difference between clustered and non-clustered indexes.

Clustered indexes determine the physical order of data in a table. There can only be one clustered index per table because data can only be physically sorted in one way. Think of it like a phone book where entries are physically sorted by last name.

Non-clustered indexes, on the other hand, are like creating a separate lookup table that references the actual data. A table can have multiple non-clustered indexes. They contain the indexed columns and a pointer to the actual data row (either the row ID for a heap table or the clustered index key for a table with a clustered index). This is like the index at the back of a textbook, pointing to specific pages where information is found.

23. How can you optimize a slow-running SQL query?

To optimize a slow-running SQL query, start by using EXPLAIN to understand the query execution plan and identify bottlenecks. Then:

- Index appropriately: Add indexes to columns used in

WHERE,JOIN, andORDER BYclauses. - Rewrite the query: Simplify complex queries, avoid using

SELECT *, and use specific column names. - Optimize joins: Ensure efficient join conditions and consider using

INNER JOINinstead ofOUTER JOINwhen possible. - Limit data retrieval: Use

LIMITto restrict the number of rows returned if only a subset is needed. - Update statistics: Ensure database statistics are up-to-date so the query optimizer can make informed decisions. For example, in Postgres run

ANALYZE table_name. - Partition tables: For large tables, consider partitioning to improve query performance on specific data ranges.

24. What is the purpose of the `EXISTS` operator in SQL?

The EXISTS operator in SQL is used to test for the existence of rows in a subquery. It returns TRUE if the subquery returns at least one row, and FALSE if the subquery returns no rows. Unlike other subquery operators, EXISTS doesn't compare values between the outer query and the subquery. It only checks for the existence of matching rows.

Here's how it works:

- The outer query executes.

- For each row in the outer query, the subquery in the

EXISTSclause is executed. - If the subquery returns at least one row,

EXISTSevaluates toTRUE, and the row from the outer query is included in the result set. Otherwise, if the subquery returns no rows,EXISTSevaluates toFALSE, and the row from the outer query is excluded.

25. Describe a scenario where you might use a self-join.

A self-join is useful when you need to compare rows within the same table. Imagine a table of employees that includes each employee's ID and their manager's ID. To find all employees who report to a specific manager, or to list employees alongside their managers' names, a self-join is appropriate.

For example, consider an Employees table with columns EmployeeID, EmployeeName, and ManagerID. You could use a self-join like this (using SQL):

SELECT e.EmployeeName, m.EmployeeName AS ManagerName

FROM Employees e

JOIN Employees m ON e.ManagerID = m.EmployeeID;

This allows you to retrieve each employee's name alongside their manager's name, all from the same table.

Intermediate SQL interview questions

1. Explain the difference between CHAR and VARCHAR data types. When would you choose one over the other?

CHAR and VARCHAR are both string data types used to store character data, but they differ in how they handle storage. CHAR is a fixed-length data type. When you define a CHAR column with a length of, say, 10, it always occupies 10 characters of storage, even if the actual string stored is shorter. Any unused space is padded with spaces. VARCHAR, on the other hand, is a variable-length data type. It only uses the storage space needed for the actual string stored, plus a small overhead to store the length of the string.

You'd choose CHAR when you know the length of the string will always be the same, like storing state abbreviations (e.g., 'CA', 'NY'). This can offer slight performance advantages due to the fixed length. VARCHAR is preferred when the string length varies significantly, as it conserves storage space. Examples include names, addresses, or descriptions. Using CHAR for variable-length data can waste considerable space. In many modern database systems, the performance difference between CHAR and VARCHAR is negligible, making VARCHAR the more common choice due to its storage efficiency.

2. What is the purpose of using window functions in SQL, and can you provide an example of a scenario where they would be beneficial?

Window functions in SQL allow you to perform calculations across a set of table rows that are related to the current row. Unlike aggregate functions (like SUM(), AVG(), COUNT()), window functions don't collapse rows into a single summary row. Instead, they return a value for each row in the query, based on the specified window frame. They are extremely useful for tasks like calculating running totals, ranking rows within a group, or computing moving averages without resorting to complex subqueries or self-joins.

For example, imagine you have a table of sales data with columns date, product, and sales_amount. You could use a window function to calculate the cumulative sales for each product over time. The SQL query might look like this: SELECT date, product, sales_amount, SUM(sales_amount) OVER (PARTITION BY product ORDER BY date) AS cumulative_sales FROM sales_table;. In this case the SUM() function is operating as a window function due to the OVER() clause. The cumulative sales for each product, up to that date, are shown in a new cumulative_sales column without grouping the data.

3. Describe the use of common table expressions (CTEs). How do they simplify complex queries?

Common Table Expressions (CTEs) are named temporary result sets that exist only within the scope of a single query. They are defined using the WITH clause and can be referenced multiple times within that query. CTEs help in breaking down complex queries into smaller, more manageable, and readable parts.

CTEs simplify complex queries by:

- Improving Readability: By giving meaningful names to intermediate result sets, CTEs make queries easier to understand.

- Breaking Down Logic: Complex logic can be divided into smaller, logical steps, each represented by a CTE.

- Enabling Recursion: CTEs support recursive queries, which are useful for traversing hierarchical data.

- Avoiding Redundancy: A CTE can be referenced multiple times in a query, avoiding the need to repeat the same subquery logic. For example:

WITH SalesSummary AS (

SELECT ProductID, SUM(SalesAmount) AS TotalSales

FROM SalesTable

GROUP BY ProductID

)

SELECT ProductID, TotalSales

FROM SalesSummary

WHERE TotalSales > 1000;

4. How can you optimize a slow-running SQL query? Mention at least three techniques.

To optimize a slow-running SQL query, consider these techniques:

- Indexing: Add indexes to columns frequently used in

WHEREclauses,JOINconditions, andORDER BYclauses. Indexes speed up data retrieval by allowing the database to quickly locate rows that match the query's criteria. For example,CREATE INDEX idx_customer_id ON customers (customer_id); - Query Rewriting: Analyze the query's execution plan and rewrite it for better performance. This might involve using

EXISTSinstead ofDISTINCT, simplifying complexJOINoperations, or avoidingSELECT *. Optimize subqueries by converting them to joins or using temporary tables. Review the use of functions withinWHEREclauses as they may prevent index usage. - Analyze Table Statistics: Ensure the database has up-to-date statistics about the tables. The query optimizer uses these statistics to choose the most efficient execution plan. In PostgreSQL, this is done using

ANALYZE table_name;. In MySQL, it is done usingANALYZE TABLE table_name;.

5. Explain the concept of normalization in database design and why it is important.

Database normalization is the process of organizing data to reduce redundancy and improve data integrity. It involves dividing databases into tables and defining relationships between the tables. The goal is to isolate data so that modifications, such as additions and deletions, can be made in only one table, and then propagate through the rest of the database via the defined relationships.

Normalization is important because it minimizes data redundancy, which saves storage space and reduces the risk of inconsistencies. It also improves data integrity, making the database more reliable and easier to maintain. Properly normalized databases support better query performance, because there's less data to scan and more opportunities for indexing.

6. What are the different types of joins available in SQL? Describe their differences with examples.

SQL joins are used to combine rows from two or more tables based on a related column between them. The main types are:

- INNER JOIN: Returns rows only when there is a match in both tables.

SELECT * FROM TableA INNER JOIN TableB ON TableA.column = TableB.column; - LEFT (OUTER) JOIN: Returns all rows from the left table (TableA) and the matched rows from the right table (TableB). If there's no match in TableB, it returns NULL values for the columns from TableB.

SELECT * FROM TableA LEFT JOIN TableB ON TableA.column = TableB.column; - RIGHT (OUTER) JOIN: Returns all rows from the right table (TableB) and the matched rows from the left table (TableA). If there's no match in TableA, it returns NULL values for the columns from TableA.

SELECT * FROM TableA RIGHT JOIN TableB ON TableA.column = TableB.column; - FULL (OUTER) JOIN: Returns all rows when there is a match in either left (TableA) or right (TableB) table. It combines the results of both LEFT and RIGHT outer joins.

SELECT * FROM TableA FULL OUTER JOIN TableB ON TableA.column = TableB.column;(Note: Some databases like MySQL don't directly support FULL OUTER JOIN, but can emulate it using UNION ALL.) - CROSS JOIN: Returns the Cartesian product of the tables. Each row from the first table is combined with each row from the second table.

SELECT * FROM TableA CROSS JOIN TableB;

7. How do you handle NULL values in SQL queries? Explain the IS NULL and IS NOT NULL operators.

In SQL, NULL represents a missing or unknown value, and it requires special handling. Standard comparison operators (=, !=, >, <) don't work with NULL. To check for NULL values, you use the IS NULL and IS NOT NULL operators.

IS NULL checks if a value is NULL. For example, WHERE column_name IS NULL will return rows where column_name contains a NULL value. IS NOT NULL checks if a value is not NULL. The statement WHERE column_name IS NOT NULL will return rows where column_name does not contain a NULL value. You cannot use = or <> operators to accurately find or exclude NULL values.

8. Explain the difference between clustered and non-clustered indexes.

A clustered index determines the physical order of data in a table. There can only be one clustered index per table because data can only be physically sorted in one way. Think of it like a phone book sorted by last name; the physical arrangement is the index. Non-clustered indexes, on the other hand, store a separate copy of the index along with a pointer back to the actual data row. A table can have multiple non-clustered indexes. Imagine a phone book with a separate index based on area code; it points you to the relevant page (data row) in the main phone book.

Key differences:

- Clustered: Determines physical order, one per table.

- Non-clustered: Separate index, multiple allowed, contains pointers to data rows. Because non clustered indexes involve an extra look up, they tend to be slower than clustered indexes.

9. What are aggregate functions in SQL? Give examples and explain how to use GROUP BY clause with them.

Aggregate functions in SQL perform calculations on a set of rows and return a single summary value. Common examples include:

COUNT(): Returns the number of rows.SUM(): Returns the sum of values.AVG(): Returns the average value.MIN(): Returns the minimum value.MAX(): Returns the maximum value.

The GROUP BY clause is used with aggregate functions to group rows that have the same values in one or more columns. The aggregate function is then applied to each group individually. For example, to find the average salary for each department:

SELECT department, AVG(salary) AS average_salary

FROM employees

GROUP BY department;

10. Describe the purpose and usage of subqueries in SQL. Give an example scenario.

Subqueries in SQL are queries nested inside another query. They are used to retrieve data that will be used in the main query's conditions or calculations. A subquery can appear in the SELECT, FROM, WHERE, or HAVING clauses. The purpose is generally to break down complex queries into more manageable, readable parts, and to perform operations that are difficult or impossible to achieve with a single query.

For example, consider a scenario where you want to find all customers who placed orders larger than the average order size. You could use a subquery to first calculate the average order size, and then use that result in the main query's WHERE clause to filter the customers.

SELECT customer_id, order_total

FROM orders

WHERE order_total > (SELECT AVG(order_total) FROM orders);

11. How can you prevent SQL injection attacks? What are parameterized queries?

SQL injection attacks can be prevented primarily by using parameterized queries (also known as prepared statements) and by employing input validation and output encoding.

Parameterized queries treat user inputs as data, not as executable SQL code. Instead of directly embedding user input into the SQL query string, placeholders are used. The database driver then separately sends the query structure and the input parameters to the database, which combines them safely. This ensures that even if a user enters malicious SQL code as input, it will be treated as a literal string and not executed. For example, in Python using psycopg2, a parameterized query would look like: cur.execute("SELECT * FROM users WHERE username = %s", (username,)) where username is user provided input.

12. Explain the ACID properties of a database transaction. Why are they important?

ACID properties are a set of principles that guarantee database transactions are processed reliably. They are: Atomicity (all operations succeed or fail as a single unit), Consistency (the transaction moves the database from one valid state to another), Isolation (concurrent transactions do not interfere with each other), and Durability (once a transaction is committed, it remains so, even in the event of power loss or system failure).

These properties are crucial because they ensure data integrity and reliability. Without ACID properties, a database could become inconsistent or corrupted, leading to inaccurate results, financial losses, or other serious problems. For example, in a banking system, a transfer of funds requires atomicity to prevent money from being deducted from one account without being credited to another, ensuring consistency of the accounts' balances. Isolation prevents race conditions where multiple transfers interfere with each other. Durability ensures completed transactions are never lost, even if the system crashes immediately after the transaction is confirmed.

13. What is the difference between DELETE, TRUNCATE, and DROP statements in SQL?

DELETE, TRUNCATE, and DROP are SQL commands used to remove data or entire tables from a database, but they function differently.

DELETEremoves specific rows from a table based on a condition. It is a Data Manipulation Language (DML) command and logs each deletion, making it slower. It can be rolled back. Syntax:DELETE FROM table_name WHERE condition;TRUNCATEremoves all rows from a table. It is a Data Definition Language (DDL) command and deallocates the data pages. It's faster thanDELETEas it doesn't log individual row deletions.TRUNCATEresets the identity (auto-increment) column. It cannot be rolled back. Syntax:TRUNCATE TABLE table_name;DROPremoves the entire table (structure and data) from the database. It is a DDL command. All table privileges and indexes are also removed. It cannot be rolled back. Syntax:DROP TABLE table_name;

14. How would you design a database schema for a simple e-commerce application?

A simple e-commerce database schema could include tables for users, products, orders, and order_items. The users table would store user information (id, name, email, password, address). The products table would store product details (id, name, description, price, image_url). The orders table would store order information (id, user_id, order_date, total_amount, shipping_address). Finally, the order_items table would link orders to products (order_id, product_id, quantity, price).

Relationships:

- One-to-many relationship between

usersandorders(one user can have multiple orders). - One-to-many relationship between

ordersandorder_items(one order can have multiple order items). - Many-to-one relationship between

order_itemsandproducts(multiple order items can refer to the same product).

Keys:

- Primary keys for the id columns

- Foreign keys link related tables together

15. Explain the use of the HAVING clause in SQL. How does it differ from the WHERE clause?

The HAVING clause in SQL is used to filter the results of a GROUP BY query. It allows you to specify conditions that must be met by the groups themselves, rather than individual rows. You use it to filter groups based on aggregate functions like COUNT, SUM, AVG, MIN, or MAX applied to the group.

The key difference between HAVING and WHERE is when they are applied. WHERE filters rows before grouping occurs, thus affecting which rows are included in the groups. HAVING, on the other hand, filters groups after grouping and aggregation have been performed. Essentially, WHERE filters individual records, and HAVING filters groups of records. For example, the WHERE clause filters rows before any grouping, but the HAVING clause filters rows after the GROUP BY clause has been applied.

16. Describe the use of the UNION and UNION ALL operators in SQL. What is the key difference between them?

The UNION and UNION ALL operators in SQL are used to combine the result sets of two or more SELECT statements into a single result set. Both operators require that the SELECT statements have the same number of columns and compatible data types for corresponding columns.

The key difference is that UNION removes duplicate rows from the combined result set, while UNION ALL includes all rows, including duplicates. Therefore, UNION ALL is generally faster because it doesn't have to perform duplicate elimination. If you know that your result sets will not contain duplicates, or if you don't care about duplicates, UNION ALL is the preferred choice for performance reasons.

17. How do you create a stored procedure in SQL? What are the benefits of using stored procedures?

To create a stored procedure in SQL, you use the CREATE PROCEDURE statement followed by the procedure name, any input parameters, and the SQL code to be executed. For example:

CREATE PROCEDURE GetCustomerByID (@CustomerID INT)

AS

BEGIN

SELECT * FROM Customers WHERE CustomerID = @CustomerID

END

The benefits of using stored procedures include:

- Improved Performance: Stored procedures are precompiled and stored on the database server, reducing parsing and compilation overhead.

- Enhanced Security: They can help prevent SQL injection attacks by encapsulating SQL code and controlling access.

- Code Reusability: Stored procedures can be called multiple times from different applications, reducing code duplication.

- Reduced Network Traffic: Only the procedure call is sent over the network, rather than the entire SQL code.

- Simplified Maintenance: Changes to the database logic can be made in one place (the stored procedure) without affecting the calling applications.

18. Explain the concept of database triggers. Provide an example of when you might use a trigger.

Database triggers are special stored procedures that automatically execute in response to certain events on a table or view. These events can be INSERT, UPDATE, or DELETE operations. Triggers are used to enforce business rules, maintain data integrity, audit changes, or react to data modifications. They can be executed BEFORE or AFTER the event occurs.

An example of when you might use a trigger is to automatically create an audit log record whenever a row in an Employees table is updated. This trigger would capture the old and new values of the modified row and insert them into an EmployeeAuditLog table. This helps in tracking data changes and auditing purposes. Here is some example sql code:

CREATE TRIGGER EmployeeUpdateTrigger

AFTER UPDATE ON Employees

FOR EACH ROW

BEGIN

INSERT INTO EmployeeAuditLog (EmployeeID, OldSalary, NewSalary, UpdateDate)

VALUES (OLD.EmployeeID, OLD.Salary, NEW.Salary, NOW());

END;

19. What is the purpose of using the EXPLAIN statement before a SQL query? How can it help with optimization?

The EXPLAIN statement in SQL is used to analyze a query's execution plan without actually running the query. It shows how the database intends to execute the query, including the tables it will access, the indexes it might use, the join order, and the estimated number of rows to be processed. This is crucial for understanding the query's performance characteristics.

By examining the output of EXPLAIN, you can identify potential bottlenecks such as:

- Missing indexes: Indicated by full table scans instead of index seeks.

- Inefficient join orders: The database might be joining tables in a suboptimal order.

- Use of temporary tables: The database might be creating temporary tables in memory or on disk, which can be slow.

- Full table scans: Avoiding index usage can be a performance killer.

Based on the analysis, you can optimize the query by adding indexes, rewriting the query to use more efficient joins, or simplifying complex subqueries. For example, if EXPLAIN shows a full table scan, adding an index on the relevant column might significantly improve performance. Specifically, EXPLAIN SELECT * FROM users WHERE email = 'test@example.com'; might reveal that the email column needs an index.

20. How do you handle concurrency issues in a database environment? Explain concepts like locking.

Concurrency issues in a database environment arise when multiple users or applications try to access and modify the same data simultaneously, leading to data inconsistency or integrity problems. Several techniques are employed to manage these issues, with locking being a primary mechanism. Locking prevents multiple transactions from accessing the same data concurrently. There are different types of locks, such as:

- Exclusive Locks (Write Locks): These locks prevent any other transaction from reading or writing to the data.

- Shared Locks (Read Locks): Allow multiple transactions to read the data but prevent any transaction from writing to it.

Other concurrency control techniques include optimistic locking (versioning), timestamps, and multi-version concurrency control (MVCC). Optimistic locking assumes conflicts are rare, so it only checks for conflicts at the time of the write, potentially rolling back transactions if conflicts occur. MVCC creates snapshots of the data, so readers and writers don't block each other.

Advanced SQL interview questions

1. Explain how to optimize a slow-performing SQL query. What are the key steps you'd take?

To optimize a slow-performing SQL query, I'd start by understanding the problem. First, I would use EXPLAIN to analyze the query execution plan. This helps identify bottlenecks like full table scans or missing indexes. Based on the analysis, I'd focus on a few key areas:

- Indexing: Ensure appropriate indexes exist on columns used in

WHEREclauses,JOINconditions, andORDER BYclauses. Incorrect or missing indexes are a common performance issue. - Query Structure: Review the query for potential inefficiencies. This includes avoiding

SELECT *, optimizingJOINorder (especially for large tables), and rewriting subqueries or correlated subqueries where possible (sometimes usingJOINoperations or temporary tables instead). - Data Types: Check that data types used in

WHEREandJOINmatch. Implicit conversions can hinder index usage. - Statistics: Ensure the database has up-to-date statistics on the tables involved. This allows the query optimizer to make better decisions.

- Hardware/Configuration: Occasionally, slow queries indicate problems with the database server's hardware (e.g., insufficient memory, slow disk I/O) or configuration. Adjusting settings like buffer pool size can sometimes help.

2. Describe the difference between clustered and non-clustered indexes in SQL.

A clustered index determines the physical order of data in a table. A table can have only one clustered index because the data itself can only be sorted in one physical order. Think of it as a phone book sorted by last name; the physical book is sorted.

Non-clustered indexes, on the other hand, are like a separate lookup table that points to the data's actual location. A table can have multiple non-clustered indexes. It's like an index in the back of a textbook; it points to pages containing specific topics without reordering the entire book.

3. How do you handle deadlocks in SQL Server? Explain with example scenarios.

Deadlocks occur when two or more SQL Server processes block each other indefinitely, each waiting for a resource held by the other. To handle deadlocks, SQL Server automatically chooses one of the processes as a victim and terminates it, allowing the other process(es) to proceed. The victim's transaction is rolled back. This is called deadlock detection and resolution.

For example, consider two transactions: Transaction A wants to update table X and then table Y, while Transaction B wants to update table Y and then table X. If Transaction A acquires a lock on table X and Transaction B acquires a lock on table Y, and then Transaction A tries to acquire a lock on table Y (blocked by B) and Transaction B tries to acquire a lock on table X (blocked by A), a deadlock occurs. SQL Server will kill one of the processes (A or B), rollback the changes and provide error 1205. Strategies for prevention include: Accessing tables in the same order in all concurrent transactions, using shorter transactions, lowering isolation levels (with consideration), and using bound connections.

4. What are the advantages and disadvantages of using stored procedures?

Advantages of stored procedures include improved performance due to pre-compilation and execution on the database server, reduced network traffic as only the procedure call is sent, enhanced security by granting access to procedures rather than underlying tables, and code reusability across multiple applications. They also provide data consistency by centralizing data access logic.

Disadvantages include increased maintenance complexity, potential vendor lock-in as stored procedure languages are database-specific (e.g., T-SQL for SQL Server, PL/SQL for Oracle), and debugging difficulties compared to application code. Stored procedures can also increase load on the database server, especially if not optimized, and version control can be more challenging. They might not be suitable for simple queries that are more efficiently handled by ORMs.

5. Explain window functions in SQL and provide examples of how they can be used.

Window functions in SQL perform calculations across a set of table rows that are related to the current row. This set of rows is called the "window". Unlike aggregate functions which group rows into a single output row, window functions retain the individual rows while providing aggregated or ranked information.

Some common examples include:

ROW_NUMBER(): Assigns a unique sequential integer to each row within a partition of a result set.SELECT employee_id, department, salary, ROW_NUMBER() OVER (PARTITION BY department ORDER BY salary DESC) as row_num FROM employees;RANK(): Assigns a rank to each row within a partition based on the specified ordering. Rows with the same value receive the same rank, and the next rank is skipped.SELECT product_name, category, price, RANK() OVER (PARTITION BY category ORDER BY price DESC) as price_rank FROM products;SUM(),AVG(),MIN(),MAX(): Can be used as window functions to calculate aggregations over a window. For example calculating a cumulative sum of sales:SELECT order_date, sales, SUM(sales) OVER (ORDER BY order_date) as cumulative_sales FROM orders;

6. Describe different isolation levels in SQL Server and how they affect concurrency.

SQL Server defines isolation levels that control the degree to which transactions are isolated from each other's modifications. Higher isolation levels reduce concurrency but provide greater data consistency. Key isolation levels include:

- Read Uncommitted: Allows dirty reads (reading uncommitted data). Highest concurrency, lowest data consistency.

- Read Committed: Prevents dirty reads; only reads committed data. A common default.

- Repeatable Read: Prevents dirty reads and non-repeatable reads (ensures that if a transaction reads a row multiple times, it will see the same data each time).

- Serializable: Highest isolation level; prevents dirty reads, non-repeatable reads, and phantom reads (ensures that a query executed multiple times will return the same result set). Lowest concurrency due to heavy locking.

- Snapshot: Uses row versioning to provide transactional consistency. Readers do not block writers and writers do not block readers. However, it may encounter update conflicts.

7. How can you implement pagination in SQL queries?

Pagination in SQL can be implemented using LIMIT and OFFSET clauses. LIMIT specifies the maximum number of rows to return, while OFFSET specifies the number of rows to skip from the beginning of the result set.

For example, to get the second page of results with a page size of 10, you would use: SELECT * FROM table_name ORDER BY some_column LIMIT 10 OFFSET 10;. Note that different databases have slightly different syntax (e.g. SQL Server uses ROW_NUMBER() and FETCH NEXT clauses, and some older versions may not support OFFSET). Always include an ORDER BY clause to ensure consistent pagination results.

8. Explain the purpose of query hints and when you might use them.

Query hints are instructions given to the database optimizer to influence the execution plan of a query. While the optimizer generally selects the most efficient plan, there are situations where it might make suboptimal choices. Hints provide a way to override the optimizer's default behavior and force it to use a specific index, join order, or other strategies.

Use query hints judiciously. Common use cases include:

- Forcing index usage: When the optimizer doesn't choose the best index for a particular query, a hint can direct it to use a specific index, improving performance.

- Join order optimization: In complex queries with multiple joins, hints can control the order in which tables are joined, potentially reducing the overall execution time.

- Testing and debugging: Hints can be used to experiment with different execution plans and identify performance bottlenecks.

- Circumventing optimizer limitations: Occasionally, optimizers have limitations that prevent them from choosing the ideal plan. Hints can work around these limitations. Be aware that relying heavily on hints can make queries fragile, as changes in data distribution or schema can invalidate the assumptions on which the hints are based, leading to performance regressions. They should be used as a last resort when other optimization techniques have been exhausted. SQL Server example:

SELECT * FROM table1 WITH (INDEX(index_name)) WHERE condition;

9. How would you design a SQL database schema for an e-commerce website?

An e-commerce database schema typically involves several tables, including users, products, orders, order_items, and categories. The users table stores user information (user_id, username, password, email, address, etc.). The products table stores product details (product_id, name, description, price, category_id, etc.). The categories table stores category information (category_id, name). The orders table stores order details (order_id, user_id, order_date, total_amount, shipping_address, etc.). The order_items table acts as a junction table to handle the many-to-many relationship between orders and products (order_id, product_id, quantity, price). Foreign keys are used to establish relationships between these tables (e.g., user_id in orders references users.user_id).

Indexes are crucial for performance; they should be added on frequently queried columns like user_id in orders, product_id in order_items, and category_id in products. Data types should be chosen appropriately (e.g., INT for IDs, VARCHAR for names, DECIMAL for prices, TIMESTAMP for dates). Considerations for scalability may involve sharding or database replication as the site grows. Using normalization principles can reduce data redundancy and improve data integrity.

10. Describe common SQL injection vulnerabilities and how to prevent them.

SQL injection vulnerabilities occur when user-supplied input is directly incorporated into SQL queries without proper sanitization or validation. Common types include:

- String-based injection: Manipulating string literals within a query (e.g.,

' OR '1'='1). - Numeric-based injection: Injecting malicious numeric values to bypass checks.

- Boolean-based blind injection: Inferring information based on the truthiness of injected conditions.

- Time-based blind injection: Inferring information by observing delays caused by injected commands (e.g.,

WAITFOR DELAY '0:0:5').

To prevent SQL injection:

- Use parameterized queries (prepared statements): These treat user input as data, not code. Example (Python):

cursor.execute("SELECT * FROM users WHERE username = %s AND password = %s", (username, password)) - Input validation and sanitization: Validate input types, lengths, and patterns. Sanitize by escaping special characters.

- Principle of Least Privilege: Grant database users only the necessary permissions.

- Use an ORM (Object-Relational Mapper): ORMs often handle query construction and parameterization automatically, reducing the risk of injection.

11. What are common table expressions (CTEs) and how can they be helpful?

Common Table Expressions (CTEs) are temporary, named result sets that you can define within a single SQL statement (like a SELECT, INSERT, UPDATE, or DELETE). Think of them as temporary views that exist only for the duration of the query.

CTEs are helpful for:

- Improving readability: Breaking down complex queries into smaller, logical blocks.

- Simplifying recursive queries: CTEs are often used to handle hierarchical data, for example to traverse an organizational chart.

- Referencing a table multiple times in the same query: Avoiding redundant subqueries.

- Organizing code: Making SQL code easier to understand and maintain.

12. How would you optimize a SQL query that involves multiple joins?

To optimize a SQL query with multiple joins, several strategies can be employed. First, ensure appropriate indexes are in place on the join columns; this significantly speeds up the matching process. Analyze the query execution plan using tools like EXPLAIN to identify bottlenecks, such as full table scans.

Rewrite the query to minimize the amount of data being joined and processed. This can involve filtering early in the process using WHERE clauses to reduce the size of intermediate result sets before joins occur. Consider rewriting the join order, as the database optimizer might not always choose the most efficient one. Sometimes using temporary tables or Common Table Expressions (CTEs) can break down complex queries into smaller, more manageable parts, improving performance. For instance, materialize a small intermediate table using a CTE before joining it with a larger table.

13. Explain the concept of normalization and denormalization in database design.

Normalization is the process of organizing data in a database to reduce redundancy and improve data integrity. It typically involves dividing large tables into smaller, more manageable tables and defining relationships between them. This helps to minimize data duplication and ensure that data is stored consistently across the database. Denormalization, on the other hand, is the process of adding redundancy back into a database to improve read performance. This might involve combining tables or adding duplicate columns to avoid complex joins during queries. While it can speed up read operations, denormalization can also increase the risk of data inconsistencies and make data maintenance more complex. A database designer must carefully weigh the benefits of improved read performance against the potential costs of increased data redundancy and complexity when considering denormalization.

14. Describe the different types of SQL joins and their use cases.

SQL joins are used to combine rows from two or more tables based on a related column between them. Different types of joins include: INNER JOIN (returns rows only when there is a match in both tables), LEFT JOIN (returns all rows from the left table and the matched rows from the right table, filling in NULLs for non-matches), RIGHT JOIN (returns all rows from the right table and the matched rows from the left table, filling in NULLs for non-matches), and FULL OUTER JOIN (returns all rows when there is a match in either the left or right table, filling in NULLs for non-matches). A CROSS JOIN returns the cartesian product of the sets of rows from the joined tables.

The use cases vary. INNER JOIN is ideal for retrieving related data that exists in both tables. LEFT JOIN is useful when you need all records from one table and matching information from another, even if a match doesn't always exist. FULL OUTER JOIN is used when you want all records from both tables, regardless of whether they match. Finally, CROSS JOIN has limited use cases such as generating all possible combinations of data; though, it must be used with caution as it can produce very large result sets.

15. How do you handle errors and exceptions in SQL stored procedures?

In SQL stored procedures, error handling is primarily achieved using TRY...CATCH blocks. The code that might raise an error is placed within the TRY block. If an error occurs, control is immediately transferred to the CATCH block. Within the CATCH block, you can use functions like ERROR_NUMBER(), ERROR_MESSAGE(), ERROR_SEVERITY(), and ERROR_STATE() to retrieve details about the error. These details can then be logged, used to perform rollback operations, or passed back to the calling application.

Specifically, after catching an error in the CATCH block, important actions usually involve rolling back transactions if data modification occurred and re-raising the error (or a custom error) to inform the caller. This avoids masking the original problem and allows calling applications to handle the situation appropriately. Using RAISERROR within the CATCH block is a common method for re-raising the error. You should also consider implementing logging mechanisms to record error details for debugging and auditing purposes.

16. Explain data warehousing concepts and their importance.

Data warehousing is the process of collecting and storing data from various sources into a central repository, typically for analysis and reporting. It involves data extraction, transformation, and loading (ETL) processes to cleanse and structure the data. The data is then optimized for querying and reporting purposes, often utilizing a star or snowflake schema.

Data warehousing is important because it provides a single source of truth for business intelligence, enabling organizations to gain valuable insights into their operations, customer behavior, and market trends. This supports better decision-making, improved forecasting, and enhanced business performance. Without data warehousing, analyzing data from disparate sources becomes complex and time-consuming, hindering effective business strategies.

17. How can you improve the performance of full-text search in SQL Server?

To improve full-text search performance in SQL Server, consider the following:

- Use appropriate data types: Ensure the columns you're searching are of appropriate types (e.g.,

VARCHAR,NVARCHAR,TEXT,NTEXT). - Use Full-Text Indexes: Ensure that you've created full-text indexes on the columns you are querying. Optimize them with proper stop word lists and language settings.

- Optimize Queries: Use

CONTAINSorFREETEXTpredicates efficiently. PreferCONTAINSTABLEorFREETEXTTABLEwhen ranking results is important. - Regular Index Maintenance: Perform regular index maintenance to avoid fragmentation. Schedule index rebuilds or reorganizations during off-peak hours.

- Optimize Stopwords: Customize the stopword list to remove common words specific to your domain that don't add value to search results. This reduces index size and improves performance.

- Increase Resources: Allocate sufficient memory to SQL Server, as full-text search is memory-intensive. Faster storage (SSD) can also significantly improve performance.

- Filtered Indexes: Consider using filtered indexes on underlying tables if the full-text index is being used in conjunction with specific filters. This allows the optimizer to choose a more efficient execution plan.

- Partitioning: For large tables, consider partitioning the table and associated full-text index. This can improve the manageability and query performance.

18. Describe the use of triggers in SQL Server and potential drawbacks.

Triggers in SQL Server are special stored procedures that automatically execute in response to certain events on a table or view. These events can include INSERT, UPDATE, or DELETE operations. Triggers are used for auditing, enforcing data integrity, or reacting to data changes with additional logic. For example, a trigger might automatically log changes to a table or update related tables when a row is deleted. Triggers can be either AFTER triggers, which fire after the triggering event, or INSTEAD OF triggers, which replace the triggering event entirely.

Potential drawbacks include performance overhead, especially with complex logic. Triggers can also make debugging and understanding data modifications more difficult because the logic is hidden and implicit. Additionally, cascading triggers can lead to unexpected behavior and performance problems if not carefully designed. Complex trigger logic can be harder to maintain than explicit stored procedures or application code.

19. How would you design a database schema to store time-series data?

For time-series data, a common approach is to use a table with columns for the timestamp, the metric name, and the value. For example:

CREATE TABLE time_series_data (

timestamp TIMESTAMP NOT NULL,

metric_name VARCHAR(255) NOT NULL,

value DOUBLE PRECISION,

PRIMARY KEY (timestamp, metric_name)

);

Considerations include choosing an appropriate data type for the value (e.g., DOUBLE PRECISION, INTEGER), indexing the timestamp column for efficient querying, and potentially partitioning the table by time range for improved performance and manageability, especially when dealing with large volumes of data. Depending on the data characteristics, a wide-column store or a specialized time-series database (like InfluxDB or TimescaleDB) may be more suitable.

20. Explain the concept of data partitioning in SQL Server.

Data partitioning in SQL Server divides large tables or indexes into smaller, more manageable pieces. This improves query performance, manageability, and availability. SQL Server supports horizontal partitioning, where rows are divided across multiple partitions based on a partitioning function. Each partition can reside on a different filegroup, potentially on different disks or storage tiers.

- Performance: Queries targeting specific partitions scan less data, leading to faster execution.

- Manageability: Allows for easier archiving, backup, and maintenance operations on individual partitions.

- Availability: You can perform maintenance operations on one partition while others remain available.

For example, a table containing sales data could be partitioned by year. Queries for a specific year would only need to access the partition for that year. SQL Server uses a partitioning function and a partitioning scheme to define how data is distributed across partitions.

21. How would you implement auditing in a SQL Server database?

SQL Server offers several ways to implement auditing. SQL Server Audit is a built-in feature allowing you to track database events like data modifications, schema changes, or security events. It can write audit logs to files or the Windows Event Log. You can define audit specifications at the server or database level to specify what actions to audit.

Alternatively, you can use triggers. Triggers can automatically record changes to specific tables in an audit table. The trigger captures before and after values of the modified data, the user who made the change, and the timestamp. This approach offers more flexibility to customize the audit information, but requires more manual configuration and maintenance, with considerations for performance overhead. Temporal tables provide automatic auditing as well, allowing you to view data changes across time.

22. Describe the role of the SQL Server Agent and its use cases.

The SQL Server Agent is a Microsoft Windows service that automates administrative tasks in SQL Server. It executes scheduled jobs containing T-SQL scripts, command-line commands, SSIS packages, and other operations. It acts as a job scheduler, event monitor, and alerting system, freeing DBAs from repetitive manual tasks.

Common use cases include:

- Database backups: Scheduling regular database backups.

- Index maintenance: Rebuilding or reorganizing indexes.

- Statistics updates: Updating statistics for query optimization.

- Alerting: Sending notifications based on SQL Server events, such as errors or performance thresholds.

- Data import/export: Automating data transfers between systems.

- Executing stored procedures: Running stored procedures on a schedule or in response to events.

- Log shipping / Replication: Automating tasks relating to these technologies.

23. How can you monitor SQL Server performance and identify bottlenecks?

SQL Server performance monitoring involves several tools and techniques. SQL Server Management Studio (SSMS) Activity Monitor provides a real-time overview of server resource usage. Performance Monitor (PerfMon) allows tracking various system counters like CPU utilization, disk I/O, and memory usage. Extended Events (XEvents) capture detailed information about server operations, enabling in-depth analysis. Database Engine Tuning Advisor analyzes workload and recommends index and query improvements.

To identify bottlenecks, analyze wait statistics to determine what resources queries are waiting on (e.g., CPU, I/O, locks). Check for long-running queries using sp_who2 or Dynamic Management Views (DMVs) like sys.dm_exec_requests and sys.dm_exec_query_stats. Examine index usage to identify missing or unused indexes. Consider using SQL Profiler (deprecated but still functional) or Extended Events to trace specific queries and pinpoint performance issues.

24. Explain different methods of backing up and restoring a SQL Server database.

SQL Server offers several methods for backing up and restoring databases. The BACKUP DATABASE T-SQL command performs backups, and RESTORE DATABASE performs restores. Different backup types exist:

- Full backups: Capture the entire database. These are the foundation for other backup types.

- Differential backups: Capture changes since the last full backup. They're faster to create than full backups but require a full backup to restore.

- Transaction log backups: Capture transaction log records. Used to restore to a specific point in time. Requires a full backup as a starting point.

For restoration, you restore the last full backup, then the last differential backup (if any), and then all transaction log backups in sequence. SQL Server Management Studio (SSMS) provides a GUI for these operations, and maintenance plans can automate backup schedules. Other methods include using Azure Backup for cloud-based backups and third-party tools.

25. How can you implement role-based access control in SQL Server?

Role-based access control (RBAC) in SQL Server can be implemented using roles and permissions. First, create database roles using the CREATE ROLE statement. Then, grant specific permissions to these roles using the GRANT statement, specifying the object and the desired permissions (e.g., SELECT, INSERT, UPDATE, DELETE). Finally, add SQL Server users or groups to these roles using sp_addrolemember. This ensures that users inherit the permissions associated with the roles they belong to.

For example:

CREATE ROLE DataReaders;

GRANT SELECT ON MyTable TO DataReaders;

EXEC sp_addrolemember 'DataReaders', 'MyUser';

This creates a role DataReaders, grants SELECT permission on MyTable, and adds the user MyUser to that role.

26. Describe the purpose of the SQL Server profiler and how to use it.

SQL Server Profiler is a graphical user interface to monitor events in an instance of SQL Server. It captures data about server performance, query execution, security events, and other activities. This information is valuable for identifying slow-running queries, deadlocks, security vulnerabilities, and general performance bottlenecks. It has been deprecated, and Extended Events is now the preferred method.

To use it (though deprecated), you would:

- Open SQL Server Profiler.

- Connect to the desired SQL Server instance.

- Define a new trace. This includes selecting the events to capture (e.g.,

SQL:BatchCompleted,SP:StmtCompleted) and the data columns to collect for each event (e.g.,TextData,Duration,CPU,Reads,Writes). - Set filters to narrow down the captured events (e.g., filter by database, application, or user).

- Run the trace and analyze the captured data. You can save the trace data to a file or table for later analysis.

Extended Events is used similarly by creating sessions and defining events and targets.

27. How can you migrate data between different SQL Server databases?

Several methods exist for migrating data between SQL Server databases. Common approaches include using the SQL Server Import and Export Wizard, which is a GUI-based tool suitable for simple migrations. Alternatively, you can utilize bcp (Bulk Copy Program), a command-line utility for fast data transfer, especially useful for large datasets. For more complex scenarios or when transformations are needed, SQL Server Integration Services (SSIS) provides a robust platform for building ETL (Extract, Transform, Load) packages. Linked Servers can also be used to directly query and transfer data between servers using SELECT INTO or INSERT INTO ... SELECT statements. Finally, generating SQL scripts (using tools or manually) to create tables and insert data is a viable option, offering greater control but requiring more manual effort.

28. Explain the concept of data encryption in SQL Server and its benefits.

Data encryption in SQL Server protects sensitive data by converting it into an unreadable format (ciphertext), preventing unauthorized access. SQL Server offers several encryption features like Transparent Data Encryption (TDE), column-level encryption using symmetric or asymmetric keys, and Always Encrypted.

Benefits include:

- Data confidentiality: Protects data from unauthorized viewing.

- Compliance: Helps meet regulatory requirements like GDPR, HIPAA.

- Data integrity: Ensures that data hasn't been tampered with.

- Enhanced Security: Protects data at rest and in transit.

- Auditing: Tracks encryption key usage for auditing purposes.

Expert SQL interview questions

1. How would you optimize a slow-running SQL query, detailing the steps from identification to implementation?

To optimize a slow-running SQL query, I'd start by identifying the bottleneck. I'd use tools like EXPLAIN PLAN to understand the query execution plan and pinpoint slow operations like full table scans or inefficient joins. Then, I would focus on the following:

- Indexing: Ensure appropriate indexes exist on columns used in

WHEREclauses,JOINconditions, andORDER BYclauses. Adding missing indexes can drastically improve performance. - Query Rewriting: Optimize the query structure. This could involve simplifying complex subqueries, rewriting joins, or using more efficient functions. Examine the

WHEREclause for sargability. For example,WHERE order_date BETWEEN '2023-01-01' AND '2023-01-31'is better thanWHERE YEAR(order_date) = 2023 AND MONTH(order_date) = 1because the former can use an index onorder_date. - Data Optimization: Consider data partitioning or archiving old data to reduce the amount of data being scanned. Analyze table statistics and update them if necessary so that the query optimizer can make the best decision.

- Hardware/Configuration: If software optimizations are insufficient, review hardware resources (CPU, memory, disk I/O) and database configuration parameters. Increasing memory allocated to the database can significantly improve query performance.

After each change, I'd re-evaluate the query performance using EXPLAIN PLAN and timing metrics to ensure the optimization is effective. It's an iterative process.

2. Explain the concept of a 'covering index' and how it can improve query performance. Give a practical example.

A covering index is a special type of database index that includes all the columns needed to satisfy a particular query. When a query can be resolved entirely from the index itself, without needing to access the actual table rows, it's called a 'covered query'. This avoids the need for a table lookup, which is a relatively expensive operation. This significantly improves query performance, because the database can retrieve all the required data directly from the index, which is typically smaller and stored in a more efficient format than the full table.

For example, consider a table employees with columns id, name, age, and department. If you frequently run queries like SELECT name, age FROM employees WHERE department = 'Sales', a covering index on (department, name, age) could drastically speed up these queries. Without the index, the database would need to scan the employees table and retrieve the name and age for each row where department = 'Sales'. With the covering index, the database can directly retrieve name and age from the index, without needing to read the table at all. Creating such an index in SQL might look like this: CREATE INDEX idx_department_name_age ON employees (department, name, age);

3. Describe a scenario where using a correlated subquery is more efficient than using a join, and explain why.

A correlated subquery can be more efficient than a join when you need to select data based on a condition that depends on each row of the outer table and you only need a small subset of columns from the related table. Consider a scenario where you need to find all customers who have placed at least one order with an amount greater than their average order amount. Using a join would require calculating the average order amount for each customer and then joining it back to the orders table, which can be computationally expensive, especially if the tables are large. A correlated subquery can efficiently achieve this by checking the condition for each customer directly:

SELECT c.customer_id

FROM Customers c

WHERE EXISTS (

SELECT 1

FROM Orders o

WHERE o.customer_id = c.customer_id

AND o.order_amount > (

SELECT AVG(order_amount)

FROM Orders o2

WHERE o2.customer_id = c.customer_id

)

);

In this case, the correlated subquery is more efficient because it avoids the need to compute the average order amount for all customers at once, instead calculating it only for those customers being considered by the outer query. The EXISTS clause further optimizes the query by stopping the subquery execution as soon as it finds one matching row.

4. How do you handle data skew in a database, and what are the potential performance implications?

Data skew refers to an uneven distribution of data across partitions or nodes in a database. This can lead to performance bottlenecks because some partitions/nodes handle significantly more workload than others. Common strategies to address data skew include: salting (adding a random prefix to skewed values to distribute them), pre-splitting (manually creating partitions based on anticipated data distribution), and using techniques like bucketing or consistent hashing.

The performance implications of data skew can be severe. Queries targeting skewed data can experience longer processing times, leading to overall slowdowns. This can also cause resource contention on the overloaded nodes, impacting the performance of other queries and potentially leading to system instability. Effective skew mitigation is crucial for maintaining database performance and scalability.

5. Explain the different isolation levels in SQL and their impact on concurrency and data integrity. Provide examples of when to use each level.

SQL isolation levels control the degree to which concurrent transactions are isolated from each other. Higher isolation levels provide better data integrity but can reduce concurrency. The four standard isolation levels are:

- Read Uncommitted: The lowest level; transactions can read uncommitted changes from other transactions (dirty reads). This offers the highest concurrency but the lowest data integrity. Example use case: When you don't mind potentially reading inaccurate data, such as in some reporting scenarios.

- Read Committed: Transactions can only read committed changes. Prevents dirty reads, but non-repeatable reads are possible (a transaction reads the same row twice but gets different values). Example use case: Basic business operations where dirty reads are unacceptable.

- Repeatable Read: Prevents dirty reads and non-repeatable reads. However, phantom reads are still possible (a transaction executes the same query twice and gets different rows). Example use case: Financial transactions where consistent reads within a single transaction are crucial.

- Serializable: The highest level; provides complete isolation by preventing dirty reads, non-repeatable reads, and phantom reads. Transactions are effectively executed serially. Example use case: Scenarios demanding the highest data integrity, such as updating critical financial data or inventory management where consistency is paramount, even at the cost of concurrency.

6. Describe the process of database sharding and its benefits and drawbacks. How would you implement it in a real-world scenario?

Database sharding is the process of horizontally partitioning a database into smaller, more manageable parts called shards. Each shard contains a subset of the total data and can reside on a separate server. This allows for distributing the load across multiple machines, improving performance and scalability. Benefits include increased storage capacity, reduced query latency, and improved availability. Drawbacks involve increased complexity in data management, potential for inconsistent data across shards, and the need for a sharding key strategy.