As companies embrace Infrastructure as Code (IaC), identifying skilled Terraform engineers is important for maintaining infrastructure. Evaluating candidates can be tricky, especially with the increasing requirements for DevOps Engineers.

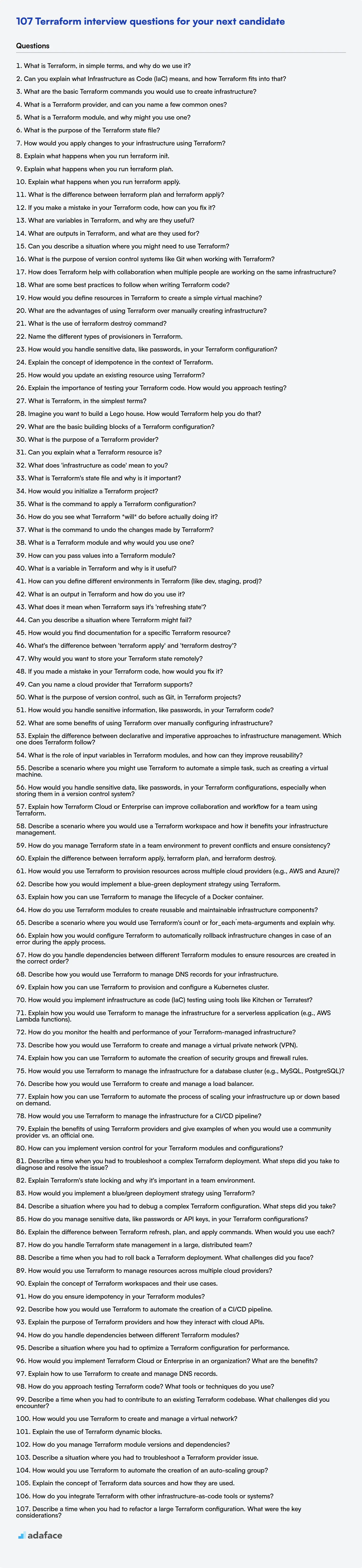

This blog post provides a curated list of Terraform interview questions, designed to help interviewers assess candidates across different experience levels from freshers to experienced professionals. We've also included Terraform MCQs to gauge core understanding.

By using these questions, you can effectively assess a candidate's Terraform skills. Consider using a Terraform online test beforehand to screen candidates more effectively.

Terraform interview questions for freshers

1. What is Terraform, in simple terms, and why do we use it?

Terraform is an Infrastructure as Code (IaC) tool. Simply put, it lets you define your infrastructure (servers, databases, networks, etc.) using code. This code is then used to automatically provision and manage that infrastructure. It's like writing a recipe for your infrastructure and letting Terraform execute it.

We use Terraform because it allows us to automate infrastructure management, making it repeatable, predictable, and less prone to errors. It helps in version controlling our infrastructure like regular code, enabling collaboration and easier rollback. It also supports multiple cloud providers (AWS, Azure, GCP, etc.) allowing us to manage resources across them using a single tool.

2. Can you explain what Infrastructure as Code (IaC) means, and how Terraform fits into that?

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through code, rather than through manual processes. This allows you to automate infrastructure creation, modification, and management in a repeatable and predictable way. Benefits include version control, faster deployments, reduced errors, and improved consistency.

Terraform is a popular IaC tool that enables you to define and provision infrastructure using a declarative configuration language. You describe the desired state of your infrastructure in Terraform configuration files, and Terraform automates the process of bringing your infrastructure into that state. It supports multiple cloud providers (AWS, Azure, GCP, etc.) as well as on-premise infrastructure, making it a versatile solution for managing diverse environments.

Here's a basic example of Terraform configuration:

    resource "aws_instance" "example" {
      ami           = "ami-0c55b76446882a2ad"
      instance_type = "t2.micro"

      tags = {
        Name = "ExampleInstance"
      }
    }

3. What are the basic Terraform commands you would use to create infrastructure?

To create infrastructure with Terraform, you'd primarily use these commands:

- `terraform init`: Initializes the Terraform working directory. This downloads the necessary provider plugins.

- `terraform plan`: Creates an execution plan, showing the changes Terraform will make to your infrastructure. It's a good practice to review this plan.

- `terraform apply`: Applies the changes described in the plan to create or modify the infrastructure. You'll typically need to confirm this action. `terraform apply -auto-approve` can be used to bypass the approval prompt.

- `terraform destroy`: Destroys all the resources managed by your Terraform configuration. Use with caution!
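
As a rough illustration of that workflow, the sketch below is a minimal configuration that needs no cloud credentials. It assumes the `hashicorp/local` provider and a hypothetical file name, `hello.txt`; neither comes from a specific project. The trailing comments show where each command fits.

```hcl
# main.tf - a minimal sketch; the file name and content are illustrative.
terraform {
  required_providers {
    local = {
      source  = "hashicorp/local"
      version = "~> 2.0"
    }
  }
}

# Writes a small text file next to the configuration.
resource "local_file" "hello" {
  filename = "${path.module}/hello.txt"
  content  = "Hello from Terraform\n"
}

# Typical command sequence, run from this directory:
#   terraform init      # downloads the local provider
#   terraform plan      # previews the single resource to be added
#   terraform apply     # creates hello.txt after you confirm
#   terraform destroy   # removes hello.txt again
```

Because the only resource is a local file, this is a low-risk way to practice the four commands before pointing Terraform at a real cloud account.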

4. What is a Terraform provider, and can you name a few common ones?

A Terraform provider is a plugin that enables Terraform to interact with various infrastructure platforms, services, and APIs. It essentially acts as a bridge, allowing Terraform to manage resources on that specific platform. Providers define the resource types Terraform can manage, their properties, and how to create, read, update, and delete (CRUD) them.

Some common Terraform providers include:

- `aws`: For managing resources on Amazon Web Services.

- `azurerm`: For managing resources on Microsoft Azure.

- `google`: For managing resources on Google Cloud Platform.

- `kubernetes`: For managing Kubernetes clusters and resources.

- `docker`: For managing Docker containers and images.

- `digitalocean`: For managing resources on DigitalOcean.
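
To make this concrete, here is a small sketch of how providers are usually declared and configured. The version constraints, region, and kubeconfig path are assumptions chosen for illustration.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # assumed version constraint
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0" # assumed version constraint
    }
  }
}

# Provider configuration: settings the plugin needs to reach the platform.
provider "aws" {
  region = "us-east-1" # assumed region
}

provider "kubernetes" {
  config_path = "~/.kube/config" # assumed kubeconfig location
}
```

The `required_providers` block tells `terraform init` which plugins to download, while each `provider` block supplies that plugin's settings.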

5. What is a Terraform module, and why might you use one?

A Terraform module is a reusable configuration component that encapsulates a set of Terraform resources. It's essentially a directory containing Terraform configuration files that define a logical unit of infrastructure. Modules promote modularity, reusability, and consistency in infrastructure code.

You might use a module to:

- Simplify complex configurations: Break down large, unwieldy configurations into smaller, manageable pieces.

- Promote code reuse: Use the same module multiple times to create identical infrastructure components.

- Enforce consistency: Ensure that infrastructure components are created in a standardized way.

- Abstraction: Hide the complexity of the underlying infrastructure from the user.

For example, you could create a module to provision an AWS EC2 instance with preconfigured security groups and networking. This module can be reused across different projects or environments.
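
A sketch of what calling such a module might look like is shown below. The module path `./modules/web_server`, its input names, and its `instance_id` output are hypothetical; a real module defines its own interface.

```hcl
# Root module: calls a hypothetical local module twice with different inputs.
module "web_dev" {
  source        = "./modules/web_server" # hypothetical path
  instance_type = "t2.micro"
  environment   = "dev"
}

module "web_prod" {
  source        = "./modules/web_server"
  instance_type = "m5.large"
  environment   = "prod"
}

# Assumes the module declares an output named "instance_id".
output "prod_instance_id" {
  value = module.web_prod.instance_id
}
```

Both calls share the same resource definitions, so a fix made inside the module reaches every environment that uses it.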

6. What is the purpose of the Terraform state file?

The Terraform state file serves as a crucial component for Terraform's infrastructure management. It essentially maps real-world resources to your Terraform configuration, allowing Terraform to track and manage your infrastructure effectively. Without the state file, Terraform wouldn't know what resources it has previously created or modified.

Specifically, the state file fulfills several key purposes:

- Tracking resources: It stores metadata about managed infrastructure resources, such as resource IDs, attributes, and dependencies.

- Mapping configuration to resources: It links resources defined in your Terraform configuration to the actual resources in your infrastructure. This is essential for updating or deleting resources.

- Storing sensitive data: The state file can contain sensitive values such as passwords or API keys in plain text, so it should be kept in a remote backend with encryption and restricted access rather than committed to version control.

- Plan generation: Terraform uses the state file to generate execution plans, determining what changes need to be made to achieve the desired state defined in your configuration.

- State locking: With a backend that supports locking, the state is locked during operations, preventing concurrent Terraform runs from corrupting it.
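
For illustration, a remote backend with encryption and locking could be configured roughly as follows. The bucket, key, and table names are hypothetical. The `dynamodb_table` argument has long been the usual way to enable locking with the S3 backend, but locking options have changed over time, so check the backend documentation for your Terraform version.

```hcl
terraform {
  backend "s3" {
    bucket         = "example-terraform-state"   # hypothetical bucket name
    key            = "network/terraform.tfstate" # path of the state object
    region         = "us-east-1"                 # assumed region
    encrypt        = true                        # server-side encryption
    dynamodb_table = "terraform-locks"           # hypothetical lock table
  }
}
```

After adding or changing a backend block, `terraform init` must be run again so Terraform can set up the backend.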

7. How would you apply changes to your infrastructure using Terraform?

I would apply changes to my infrastructure using Terraform by following the standard workflow. First, I'd modify the Terraform configuration files (.tf) to reflect the desired changes. Then, I'd run terraform plan to preview the proposed changes. This allows me to review the additions, modifications, and deletions that Terraform intends to make before actually applying them. If the plan looks correct, I'd execute terraform apply.

To ensure safety and auditability, I would typically use a version control system like Git to manage my Terraform configurations and use CI/CD pipelines to automate the plan and apply steps. I would also leverage Terraform Cloud or Terraform Enterprise for state management, collaboration, and remote execution.

8. Explain what happens when you run `terraform init`.

terraform init initializes a Terraform working directory. This involves several key steps: It initializes the backend where Terraform state will be stored (local by default, but can be remote like AWS S3 or Azure Blob Storage). It downloads and installs the provider plugins specified in the Terraform configuration files (e.g., aws, azurerm, google). These providers allow Terraform to interact with specific infrastructure platforms. Initialization prepares the working directory for subsequent Terraform commands like plan and apply.

Specifically, it:

- Backend Initialization: Configures the backend (where Terraform state is stored).

- Provider Installation: Downloads necessary provider plugins. These plugins are crucial for interacting with specific infrastructure platforms, like AWS, Azure, or Google Cloud.

- Module Installation: Downloads the modules specified in the configuration files.
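
The three steps map onto three parts of a configuration, sketched below. The `random` provider and the `./modules/naming` path are assumptions used only to show where each piece lives.

```hcl
terraform {
  required_version = ">= 1.0"

  # 1. Backend: init configures where state is stored.
  backend "local" {
    path = "terraform.tfstate"
  }

  # 2. Providers: init downloads these plugins into the .terraform directory.
  required_providers {
    random = {
      source  = "hashicorp/random"
      version = "~> 3.0"
    }
  }
}

# 3. Modules: init installs every module source referenced like this one.
module "naming" {
  source = "./modules/naming" # hypothetical local module
}
```

Running `terraform init` again is safe and is needed whenever a backend, provider requirement, or module source changes.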

9. Explain what happens when you run `terraform plan`.

When you run terraform plan, Terraform reads your configuration files and compares them to the current state of your infrastructure. It determines the changes necessary to reach the desired state defined in your configuration. The output is a plan outlining the resources that will be created, modified, or destroyed. It essentially answers the question: 'What will Terraform do?'

Specifically, the process involves:

- Reading Configuration: Parses Terraform configuration files (`.tf`).

- Refreshing State: Reads the recorded state from the state file (or remote backend) and, by default, refreshes it against the real infrastructure through the providers.

- Diffing: Compares desired state (configuration) with actual state.

- Dependency Analysis: Resolves dependencies between resources.

- Plan Generation: Creates an execution plan detailing the actions Terraform will take. The plan includes:

  - Resources to be created (`+` sign).

  - Resources to be modified (`~` sign).

  - Resources to be destroyed (`-` sign).

- Output: Displays the plan in a human-readable format to the console. You can also save it to a file.

10. Explain what happens when you run `terraform apply`.

terraform apply is the command used to apply the changes required to reach the desired state of your infrastructure, as defined in your Terraform configuration files. It performs the following key steps:

- Plan creation: Terraform first creates an execution plan. This plan details all the actions Terraform will take to achieve the desired state. This involves reading the current state, comparing it to the desired state (as defined in the configuration), and generating a plan of changes (creating, updating, or deleting resources).

- Plan approval: Terraform displays the execution plan and prompts for approval. This is a critical step where you review the changes to ensure they align with your intentions and prevent unintended consequences.

- Resource provisioning: Once approved, Terraform executes the plan. It calls the appropriate provider plugins (e.g., AWS, Azure, GCP) to create, update, or delete resources in your infrastructure according to the plan. Terraform also updates the state file to reflect the current state of the infrastructure.

11. What is the difference between `terraform plan` and `terraform apply`?

terraform plan creates an execution plan, which lets you preview the changes that Terraform is going to make to your infrastructure. It analyzes the current state of your infrastructure and compares it to the desired state defined in your Terraform configuration files, showing you what resources will be added, modified, or destroyed. Critically, it doesn't actually make any changes.

`terraform apply` executes changes to your infrastructure: it provisions, modifies, or destroys resources to match the desired state. If you pass it a saved plan file (created with `terraform plan -out=<file>`), it applies exactly that plan. If you run `terraform apply` without a plan file, Terraform creates a new plan and asks for approval before applying. It's best practice to review a plan before applying to avoid unintended consequences.

12. If you make a mistake in your Terraform code, how can you fix it?

Terraform provides several mechanisms to fix mistakes in your code. The primary method is to edit the Terraform configuration files directly, correct the error, and then re-run terraform apply. Terraform's state file keeps track of the managed infrastructure, so it will attempt to reconcile the differences between the desired state (your corrected code) and the current state. If the mistake resulted in resources being created incorrectly, Terraform will modify or destroy those resources as needed to match the updated configuration.

Additionally, the `terraform plan` command is invaluable for previewing changes before applying them. If a mistake has already been applied, the usual fix is to revert the configuration change in version control and apply again. As a last resort, you can restore a backed-up `.tfstate` file with `terraform state push`, but this only replaces Terraform's record of the infrastructure; it does not change the real resources. Manually editing the state file is generally discouraged due to the risk of corruption. To force recreation of a specific resource, use `terraform apply -replace=<resource_address>`; the older `terraform taint` command is deprecated in current Terraform versions.

13. What are variables in Terraform, and why are they useful?

Variables in Terraform are named values that allow you to customize and parameterize your Terraform configurations. They act as placeholders for values that might change between different deployments or environments. Think of them as input parameters to your Terraform modules.

They are useful for several reasons:

- Reusability: Avoid hardcoding values directly in your Terraform code, making your configurations reusable across different environments (e.g., development, staging, production).

- Flexibility: Easily modify infrastructure configurations without changing the actual code.

- Organization: Improve the readability and maintainability of your Terraform code by separating configuration from the code itself. Use `variables.tf` files to define your variables and their types.

- Configuration Management: Integrate with CI/CD pipelines to provide different values to your infrastructure code based on the environment it's deploying to. For example, you can define a variable for instance type and set different values for dev and prod, like `t2.micro` vs `m5.large`.
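
A minimal sketch of the instance-type case might look like this. The variable name, the allowed values, and the placeholder AMI are illustrative assumptions.

```hcl
# variables.tf
variable "instance_type" {
  description = "EC2 instance type for the application servers"
  type        = string
  default     = "t2.micro" # used when no value is supplied (for example in dev)

  validation {
    condition     = contains(["t2.micro", "m5.large"], var.instance_type)
    error_message = "The instance_type value must be t2.micro or m5.large."
  }
}

# main.tf
resource "aws_instance" "app" {
  ami           = "ami-xxxxxxxxxxxxx" # placeholder, replace with a real AMI ID
  instance_type = var.instance_type
}

# A production run could override the default, for example:
#   terraform apply -var="instance_type=m5.large"
# or with a prod.tfvars file containing: instance_type = "m5.large"
```

The resource block never changes between environments; only the value passed in does.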

14. What are outputs in Terraform, and what are they used for?

Outputs in Terraform are used to expose information about your infrastructure after it has been provisioned. They define values that can be easily accessed outside of Terraform, such as the IP address of a server, a generated password, or the ARN of a resource.

Outputs serve several purposes. They can be used to:

- Display important information to the user after `terraform apply` completes.

- Pass data between Terraform configurations (using remote state).

- Make values available to other systems or scripts that need to interact with the provisioned infrastructure.

For example:

    output "instance_ip_addr" {
      value = aws_instance.example.public_ip
    }
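
Passing data between configurations through remote state could be sketched as follows. This assumes a separate "network" configuration that stores its state in S3 and declares an output named `subnet_id`; the bucket and key names are hypothetical.

```hcl
# Read the outputs of another configuration from its remote state.
data "terraform_remote_state" "network" {
  backend = "s3"

  config = {
    bucket = "example-terraform-state"   # hypothetical bucket name
    key    = "network/terraform.tfstate" # state of the network configuration
    region = "us-east-1"                 # assumed region
  }
}

resource "aws_instance" "app" {
  ami           = "ami-xxxxxxxxxxxxx" # placeholder, replace with a real AMI ID
  instance_type = "t2.micro"

  # Uses the "subnet_id" output exported by the network configuration.
  subnet_id = data.terraform_remote_state.network.outputs.subnet_id
}
```

Only values declared as outputs in the other configuration are visible this way, which keeps the interface between the two explicit.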

15. Can you describe a situation where you might need to use Terraform?

I would use Terraform when I need to provision and manage infrastructure as code (IaC) across multiple cloud providers or on-premise environments. For example, imagine setting up a three-tier web application: a load balancer, application servers, and a database.

Using Terraform, I can define the infrastructure (VMs, networks, security groups, etc.) in a configuration file and then use Terraform to automate the creation, modification, and deletion of these resources across AWS, Azure, and GCP if needed. This allows for consistent and repeatable deployments, version control of infrastructure, and easier collaboration among team members. For example, the terraform configuration will look something like this for creating an AWS EC2 instance:

    resource "aws_instance" "example" {
      ami           = "ami-0c55b925" # Replace with a valid AMI
      instance_type = "t2.micro"

      tags = {
        Name = "ExampleInstance"
      }
    }

16. What is the purpose of version control systems like Git when working with Terraform?

Version control systems like Git are crucial for managing Terraform infrastructure as code. They enable tracking changes to Terraform configurations, facilitating collaboration among team members, and providing a mechanism for reverting to previous states. This is essential for maintaining stability and preventing unintended consequences.

Specifically, Git helps with:

- History Tracking: Recording every change made to the Terraform code.

- Collaboration: Allowing multiple developers to work on the same infrastructure concurrently.

- Rollbacks: Reverting to a previous working state if a deployment introduces errors.

- Auditing: Providing a clear audit trail of who made what changes and when.

- Branching: Isolating experimental changes in branches without affecting the main infrastructure configuration.

- Remote Repositories: Using a remote repository such as GitHub, GitLab, or Bitbucket for centralized code management.

17. How does Terraform help with collaboration when multiple people are working on the same infrastructure?

Terraform facilitates collaboration through several key features. Its declarative configuration allows team members to easily understand the desired state of the infrastructure. State management, especially when using a remote backend like Terraform Cloud or HashiCorp Consul, ensures that everyone is working with the latest and consistent view of the infrastructure. This helps to avoid conflicts and unintended overwrites.

Furthermore, Terraform's infrastructure-as-code approach enables version control using Git or other VCS. This means changes can be tracked, reviewed, and audited. Features like branching, pull requests, and code reviews allow for collaborative development and ensure quality and stability. Modules also support reusability and standardized components, promoting consistency and reducing errors across teams.

18. What are some best practices to follow when writing Terraform code?

- Use Terraform Modules: Modules promote reusability and consistency. Break down infrastructure into logical components. Always version control modules.

- Maintain State Properly: Use remote state storage (e.g., AWS S3, Azure Blob Storage, Terraform Cloud) to avoid corruption and enable collaboration. Lock the state file during operations to prevent concurrent modifications.

- Version Control: Store Terraform code in a version control system (e.g., Git). Use branching strategies for different environments or features.

- Use Variables: Parameterize configurations using variables to make them flexible and reusable. Define default values and use input validation.

- Follow a Consistent Naming Convention: Establish and adhere to a clear naming convention for resources and variables to improve readability and maintainability.

- Use a Linter and Formatter: Employ tools like `terraform fmt` for code formatting and `terraform validate` for syntax and configuration validation. Consider using linters like `tflint`.

- Test Your Code: Implement automated testing for your Terraform code. Consider using tools like `Terratest`.

- Keep it DRY (Don't Repeat Yourself): Avoid duplication by using modules, variables, and data sources effectively. Use `for_each` and `count` to manage multiple similar resources.

- Use Data Sources: Use data sources to fetch information about existing infrastructure or external resources. This reduces hardcoding and makes configurations more dynamic.

- Plan and Apply Separately: Always run `terraform plan` to review the changes before applying them with `terraform apply`.

- Use a CI/CD Pipeline: Automate Terraform deployments using a CI/CD pipeline for consistent and reliable infrastructure provisioning.
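
Two of these practices, data sources and `for_each`, can be sketched together. The AMI name filter and the server map below are assumptions for illustration, not values from a real project.

```hcl
# Data source: look up a recent Amazon Linux 2 AMI instead of hardcoding an ID.
data "aws_ami" "amazon_linux" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["amzn2-ami-hvm-*-x86_64-gp2"] # assumed name pattern
  }
}

# Map of server name to instance type (hypothetical values).
variable "servers" {
  type = map(string)
  default = {
    web = "t2.micro"
    api = "t2.micro"
  }
}

# for_each: one instance per map entry, without copy-pasted resource blocks.
resource "aws_instance" "server" {
  for_each      = var.servers
  ami           = data.aws_ami.amazon_linux.id
  instance_type = each.value

  tags = {
    Name = each.key
  }
}
```

Adding a third server then means adding one map entry, and each instance gets a stable address such as `aws_instance.server["web"]`.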

19. How would you define resources in Terraform to create a simple virtual machine?

To define a virtual machine in Terraform, you typically use a resource block along with the appropriate provider. For example, using AWS, you'd use the aws_instance resource. This block specifies the desired state of the VM.

Key attributes would include:

- `ami`: The Amazon Machine Image (AMI) ID.

- `instance_type`: The type of instance (e.g., `t2.micro`).

- `tags`: Metadata for the VM.

- `subnet_id`: The subnet to place the VM in.

- `vpc_security_group_ids`: Security groups for network access.

Here's a snippet of Terraform code:

    resource "aws_instance" "example" {
      ami           = "ami-xxxxxxxxxxxxx" # Replace with your AMI
      instance_type = "t2.micro"

      tags = {
        Name = "ExampleVM"
      }
    }
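
The snippet above leaves out the networking attributes. A slightly fuller sketch, assuming an existing VPC and subnet whose IDs are passed in through the hypothetical variables `vpc_id` and `subnet_id`, might look like this:

```hcl
variable "vpc_id" {
  type = string # ID of an existing VPC (assumed to exist)
}

variable "subnet_id" {
  type = string # ID of an existing subnet in that VPC
}

# Security group allowing inbound HTTP and all outbound traffic.
resource "aws_security_group" "web" {
  name   = "example-web-sg"
  vpc_id = var.vpc_id

  ingress {
    description = "HTTP"
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1" # all protocols
    cidr_blocks = ["0.0.0.0/0"]
  }
}

resource "aws_instance" "example_networked" {
  ami                    = "ami-xxxxxxxxxxxxx" # placeholder, replace with your AMI
  instance_type          = "t2.micro"
  subnet_id              = var.subnet_id
  vpc_security_group_ids = [aws_security_group.web.id]

  tags = {
    Name = "ExampleVM"
  }
}
```

Referencing `aws_security_group.web.id` also tells Terraform to create the security group before the instance.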

20. What are the advantages of using Terraform over manually creating infrastructure?

Terraform offers several advantages over manual infrastructure creation. It enables Infrastructure as Code (IaC), allowing infrastructure to be defined in configuration files that can be version-controlled, shared, and reused. This promotes consistency, reduces errors, and simplifies collaboration. Furthermore, Terraform automates infrastructure provisioning, which significantly speeds up deployment and reduces manual effort.

Key benefits include:

- Version Control: Track changes and revert to previous configurations.

- Automation: Streamline deployment and reduce manual errors.

- Consistency: Ensure infrastructure is consistently configured.

- Collaboration: Facilitate teamwork and knowledge sharing.

- Cost Reduction: Optimize resource utilization through efficient management.

- Idempotence: Terraform ensures that running the same configuration multiple times yields the same result.

21. What is the use of the `terraform destroy` command?

The terraform destroy command is used to destroy all infrastructure managed by the current Terraform configuration. It essentially undoes what terraform apply created.

It reads the Terraform state file to determine which resources are currently managed and then sends deletion requests to the respective providers (e.g., AWS, Azure, GCP) to remove those resources. This command is crucial for cleaning up resources when they are no longer needed, preventing unnecessary costs and maintaining a clean infrastructure environment.

22. Name the different types of provisioners in Terraform.

Terraform provisioners are used to execute scripts or other actions on a resource after it is created. They're generally a last resort, used when Terraform's built-in resources aren't sufficient. There are several types of provisioners, including:

- file: Copies files or directories to the resource.

- local-exec: Executes a local command on the machine running Terraform.

- remote-exec: Executes commands on the resource after it's created. It typically uses SSH or WinRM.

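- chef: Provisions a resource using Chef.

- puppet: Provisions a resource using Puppet.

- salt-masterless: Provisions a resource using Salt in masterless mode.

- habitat: Provisions a resource using Habitat.

Note that the vendor-specific provisioners (chef, puppet, salt-masterless, and habitat) were removed in Terraform 0.15, so only file, local-exec, and remote-exec remain available in current versions.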
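
As a hedged sketch, `local-exec` and `remote-exec` could be attached to an instance like this. The SSH user, the key path variable, and the install command are assumptions that depend on the chosen AMI.

```hcl
variable "private_key_path" {
  type = string # path to the SSH private key (hypothetical input)
}

resource "aws_instance" "web" {
  ami           = "ami-xxxxxxxxxxxxx" # placeholder, replace with a real AMI ID
  instance_type = "t2.micro"

  # Runs on the machine executing Terraform, after the instance is created.
  provisioner "local-exec" {
    command = "echo ${self.private_ip} >> private_ips.txt"
  }

  # Connection details used by remote-exec (and by the file provisioner).
  connection {
    type        = "ssh"
    host        = self.public_ip
    user        = "ec2-user" # assumed default user for the AMI
    private_key = file(var.private_key_path)
  }

  # Runs on the new instance over SSH.
  provisioner "remote-exec" {
    inline = [
      "sudo yum install -y nginx", # assumed package manager and package
    ]
  }
}
```

Provisioners run only when the resource is created, by default, so later edits to the commands do not re-run them on existing instances.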

23. How would you handle sensitive data, like passwords, in your Terraform configuration?

I would avoid hardcoding sensitive data directly in Terraform configurations. Instead, I would leverage secure secret management solutions. Some options include:

- Terraform Cloud/Enterprise Secrets Management: Store secrets directly within the Terraform platform and reference them in configurations.

- HashiCorp Vault: Retrieve secrets dynamically from Vault using the `vault` provider.

- Cloud Provider Secret Managers (AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager): Store secrets in the respective cloud provider's secret management service and access them using appropriate Terraform providers.

For local development, I would use environment variables or input variables marked as sensitive. For example, a `variable "db_password"` block can set `type = string` and `sensitive = true`. The `sensitive` flag redacts the value in `terraform plan` and `terraform apply` output, but the value is still written to the state file in plain text, so the state itself must be protected. I would also use a `.gitignore` to avoid committing files that contain secrets, such as `*.tfvars` files and local state files.
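
A minimal sketch of a sensitive variable in use is shown below. The database settings are illustrative assumptions; the point is that the password is never written in the configuration itself.

```hcl
variable "db_password" {
  description = "Master password for the database"
  type        = string
  sensitive   = true # redacted in plan and apply output
}

resource "aws_db_instance" "example" {
  identifier          = "example-db" # hypothetical name
  engine              = "mysql"
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "admin"
  password            = var.db_password
  skip_final_snapshot = true
}

# Supply the value through the environment rather than a committed file:
#   export TF_VAR_db_password='...'
```

Terraform reads any environment variable named `TF_VAR_<name>` as the value of the input variable `<name>`. The password still ends up in the state file, which is why an encrypted remote backend matters.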

24. Explain the concept of idempotence in the context of Terraform.

Idempotence in Terraform means that applying the same Terraform configuration multiple times will always result in the same desired state of the infrastructure. In other words, whether you apply a configuration once or ten times, the end result is identical. Terraform achieves this by tracking the current state of the infrastructure and only making changes when they are necessary to reach the desired state.

Terraform providers are responsible for implementing idempotency within their resources. For example, if a configuration specifies one virtual machine, running it multiple times will not create multiple VMs: Terraform recognizes that the VM already exists and takes no action. Changes are applied only when the configuration is edited or when the real VM has drifted from the desired state.

25. How would you update an existing resource using Terraform?

To update an existing resource using Terraform, you would typically modify the corresponding resource block in your Terraform configuration file (.tf files). This could involve changing attribute values, adding new attributes, or removing existing ones. After making the necessary changes, you would run terraform apply. Terraform then compares the desired state defined in your configuration with the current state of the resource in your infrastructure.

Terraform will generate a plan showing the proposed changes. Review the plan carefully to understand the impact of the update, in particular whether a resource will be updated in place or destroyed and recreated. If the plan looks correct, approve it, and Terraform will update the resource to match the new configuration. If the resource has a `lifecycle` block with `prevent_destroy = true`, Terraform will refuse any change that requires destroying and recreating it. To force a resource to be recreated even when the configuration has not changed, run `terraform apply -replace=<resource_address>`; on older versions, `terraform taint <resource_address>` followed by `terraform apply` has the same effect.
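
The `lifecycle` settings that influence how updates are carried out can be sketched as follows. The resource names and the bucket name are hypothetical.

```hcl
resource "aws_instance" "example" {
  ami           = "ami-xxxxxxxxxxxxx" # placeholder, replace with a real AMI ID
  instance_type = "t2.micro"

  lifecycle {
    # When a change forces replacement, build the new instance first.
    create_before_destroy = true

    # Do not treat tag edits made outside Terraform as drift to be corrected.
    ignore_changes = [tags]
  }
}

resource "aws_s3_bucket" "logs" {
  bucket = "example-logs-bucket" # hypothetical bucket name

  lifecycle {
    # Any plan that would destroy this bucket fails with an error.
    prevent_destroy = true
  }
}

# Forcing replacement of one resource without editing the configuration:
#   terraform apply -replace="aws_instance.example"
```

`prevent_destroy` is a guard rail rather than a lock: removing the resource block from the configuration also removes the protection.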

26. Explain the importance of testing your Terraform code. How would you approach testing?

Testing Terraform code is crucial for ensuring infrastructure deployments are reliable, predictable, and error-free. Without testing, you risk deploying broken or misconfigured infrastructure, leading to downtime, security vulnerabilities, or wasted resources. It helps catch errors early in the development cycle, preventing costly and time-consuming fixes later on.

My approach to testing Terraform involves several layers:

- Static Analysis: Using tools like `terraform fmt` and `terraform validate` to check code formatting and syntax, plus tools like `tflint` for best practices and potential errors.

- Unit Testing: Testing individual modules in isolation using tools like `Terratest`. This verifies that each module behaves as expected with specific inputs.

- Integration Testing: Deploying actual infrastructure to a test environment (e.g., staging) and verifying that different components work together correctly. This can be automated using tools like `kitchen-terraform` or custom scripts.

- End-to-End Testing: Verifying the entire infrastructure stack and its integration with applications. This confirms that the deployed infrastructure meets the desired functionality and performance requirements. For example, verifying if the deployed web application is accessible and serves content.
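
The tools named above are external to Terraform. As an alternative illustration of the unit-testing layer, the sketch below uses Terraform's built-in `terraform test` command (available in Terraform 1.6 and later) rather than Terratest. The variable, local, and output names are hypothetical, and the two files are shown in one block for brevity.

```hcl
# ---- main.tf (configuration under test) ----
variable "environment" {
  type = string
}

locals {
  bucket_name = "example-${var.environment}-assets"
}

output "bucket_name" {
  value = local.bucket_name
}

# ---- tests/naming.tftest.hcl (separate file, run with `terraform test`) ----
variables {
  environment = "staging"
}

run "bucket_name_uses_environment" {
  command = plan # evaluate the plan only, nothing is created

  assert {
    condition     = output.bucket_name == "example-staging-assets"
    error_message = "Bucket name did not include the environment."
  }
}
```

Because the run block uses `command = plan` and the configuration declares no cloud resources, this test needs no credentials, which makes it a cheap first check in a CI pipeline.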

Terraform interview questions for juniors

1. What is Terraform, in the simplest terms?

Terraform is an Infrastructure as Code (IaC) tool. Essentially, it lets you define and manage your infrastructure (servers, databases, networks, etc.) using code instead of manually configuring them. This code is declarative, meaning you describe the desired state of your infrastructure, and Terraform figures out how to achieve it.

Think of it like this: instead of clicking around in a web console to create a server, you write a Terraform configuration file that specifies the server's settings (size, region, operating system, etc.). Terraform then reads this file and automatically provisions the server for you. This can be stored in version control and allows infrastructure to be treated as code.

2. Imagine you want to build a Lego house. How would Terraform help you do that?

Terraform, like a Lego instruction manual, allows you to define your desired infrastructure (the Lego house) in a declarative way. You describe what you want, not how to build it. In the Lego analogy, the Terraform configuration files are the instructions, and Terraform itself is the builder. You'd define resources such as aws_instance or google_compute_instance which might be equivalent to defining types and quantities of Lego bricks required.

Specifically, Terraform helps with:

- Defining the house (infrastructure): You describe the components of your house (servers, networks, etc.) in Terraform configuration files.

- Building the house (provisioning): Terraform automatically provisions and configures these components on your chosen cloud provider or infrastructure.

- Managing changes: Terraform tracks the state of your infrastructure and ensures that changes are applied consistently and predictably.

- Destroying the house (tearing down infrastructure): When you're finished, Terraform can easily tear down the entire infrastructure, just like dismantling your Lego house.

3. What are the basic building blocks of a Terraform configuration?

The basic building blocks of a Terraform configuration are:

Resources: These are the most important block type. They represent infrastructure components, such as virtual machines, networks, or databases. They tell Terraform what infrastructure to create and manage.

Data Sources: These allow Terraform to fetch information from existing infrastructure or external APIs. This data can then be used to configure resources.

Variables: These act as parameters for your configuration, allowing you to customize deployments without modifying the code directly. They are defined with a `variable` block and can be passed in via command-line arguments, environment variables, or a `terraform.tfvars` file. Use `default` if you require one.

Outputs: These expose values from your Terraform configuration, making them accessible after the deployment. They are useful for retrieving important information, such as IP addresses or database connection strings.

Modules: These are reusable packages of Terraform configurations that can be used to create consistent and repeatable infrastructure deployments. They encapsulate a set of resources and configurations into a single unit, improving code organization and reusability.

Providers: Define the infrastructure platform (e.g., AWS, Azure, GCP) that Terraform will interact with. They are responsible for authenticating with the platform and providing the necessary APIs to manage resources. For example:

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 4.0"
        }
      }
    }

4. What is the purpose of a Terraform provider?

A Terraform provider is a plugin that allows Terraform to interact with various infrastructure platforms or services. It essentially acts as a translator between Terraform's configuration language (HCL) and the API of the target platform (e.g., AWS, Azure, Google Cloud, Kubernetes, or even SaaS applications).

The provider defines the resources and data sources that Terraform can manage or read from the target platform. Without a provider, Terraform wouldn't know how to create, update, or delete resources on that platform. It abstracts the underlying API complexity and provides a consistent interface for Terraform to work with diverse infrastructures.

5. Can you explain what a Terraform resource is?

A Terraform resource is a fundamental component that represents a piece of infrastructure. This could be a physical or virtual resource, such as a virtual machine, a network interface, or a database. It's anything that your infrastructure needs to run.

Terraform manages these resources by defining their desired state in configuration files. When you apply a Terraform configuration, Terraform will create, update, or delete resources to match the state you've defined. Examples of resources are aws_instance for an EC2 instance in AWS, or google_compute_instance for a virtual machine in Google Cloud. Each resource has attributes that define its properties, like instance type, region, etc. The general format for declaring a resource is: resource "resource_type" "resource_name" { ...attributes... }.
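
To illustrate that format, the sketch below declares two resources, one of which refers to the other. The CIDR ranges and resource names are arbitrary examples.

```hcl
# resource "<resource_type>" "<resource_name>" { ...attributes... }
resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

resource "aws_subnet" "app" {
  # Referencing another resource's attribute creates an implicit dependency,
  # so Terraform creates the VPC before the subnet.
  vpc_id     = aws_vpc.main.id
  cidr_block = "10.0.1.0/24"
}
```

The pair `aws_subnet.app` (type plus name) is the address Terraform uses for this resource in plans, state, and commands.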

6. What does 'infrastructure as code' mean to you?

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through machine-readable definition files, rather than through manual, interactive configuration tools. Essentially, you treat your infrastructure setup like software code. This allows you to version control your infrastructure, automate deployments, and create repeatable environments.

Some key benefits include:

- Automation: Reduces manual effort and human error.

- Version Control: Track changes and easily rollback to previous configurations.

- Consistency: Ensures that infrastructure is provisioned in a consistent manner across environments.

- Speed: Speeds up the provisioning and deployment process.

For example, using tools like Terraform or AWS CloudFormation, you can define your servers, networks, and other resources in code, then use those definitions to automatically create and manage your infrastructure.

7. What is Terraform's state file and why is it important?

Terraform's state file is a crucial component that records the last known state of the infrastructure Terraform manages. It maps the resources declared in your configuration to real-world infrastructure objects and keeps track of their properties, allowing Terraform to work out what changes are needed to achieve the desired state.

The state file is important because it enables Terraform to:

- Track resources: Know what infrastructure it's managing.

- Plan changes: Determine the differences between the desired state and the current state, creating an execution plan.

- Apply changes: Modify the infrastructure to match the desired state, updating the state file accordingly.

- Prevent conflicts: Manage resources correctly and avoid conflicts when multiple people or systems are working on the same infrastructure.

8. How would you initialize a Terraform project?

To initialize a Terraform project, you typically start by creating a new directory for your project. Inside this directory, you'll write your Terraform configuration files (usually with a .tf extension) defining your infrastructure. The core command to initialize a project is terraform init. This command performs several crucial steps: It initializes the working directory, downloads the necessary provider plugins specified in your configuration (or required by any modules you're using), and sets up the backend where Terraform will store the state file.

Once terraform init is complete, Terraform is ready to plan and apply your configuration. It's good practice to version control your .tf files using Git or a similar system, but exclude the .terraform directory and the terraform.tfstate file (or configure a remote backend to store state securely). The .terraform directory contains the downloaded provider plugins and is not meant to be version controlled. The terraform.tfstate file stores the current state of your infrastructure and should be protected.

9. What is the command to apply a Terraform configuration?

The command to apply a Terraform configuration is terraform apply. This command executes the actions proposed in the Terraform plan to achieve the desired state of your infrastructure. It will prompt for confirmation before proceeding, unless the -auto-approve flag is used.

10. How do you see what Terraform *will* do before actually doing it?

Terraform provides the terraform plan command to preview the changes it intends to make to your infrastructure. This command compares your current Terraform configuration with the current state of your infrastructure and generates an execution plan, outlining the resources that will be created, modified, or destroyed.

Running terraform plan allows you to review the proposed changes before applying them with terraform apply. This is crucial for understanding the impact of your configuration changes and minimizing the risk of unintended consequences. The output shows a detailed breakdown of each change, including resource attributes and dependencies.

11. What is the command to undo the changes made by Terraform?

The primary command to undo changes made by Terraform is terraform destroy. This command reads the current state file and destroys all the resources managed by Terraform in that state. It effectively reverts the infrastructure to the state before Terraform initially created it.

If you only want to remove specific resources, you can use the `-target` option with `terraform destroy`. However, carefully consider dependencies when using `-target`, as destroying a resource also destroys the resources that depend on it. To undo a configuration change rather than delete infrastructure, the usual approach is to revert the change in your `.tf` files (for example, through version control) and run `terraform apply` again. To force a single resource to be recreated, use `terraform apply -replace=<resource_address>`; the older `terraform taint` command does the same job but has been deprecated since Terraform v0.15.2.

12. What is a Terraform module and why would you use one?

A Terraform module is a reusable, self-contained package of Terraform configurations that manages a set of related infrastructure resources. It's essentially a wrapper around a collection of Terraform configurations (.tf files) in a directory, providing a way to abstract and encapsulate complex infrastructure deployments.

You would use a Terraform module for several reasons:

- Reusability: Modules allow you to define infrastructure components once and reuse them across multiple projects or environments.

- Organization: Modules help to break down large, complex configurations into smaller, more manageable pieces.

- Abstraction: Modules hide the complexity of the underlying infrastructure from the user, providing a simpler interface for deployment.

- Consistency: Modules ensure consistent deployments across different environments.

- Collaboration: Modules make it easier for teams to collaborate on infrastructure projects, as they can share and reuse modules.

13. How can you pass values into a Terraform module?

Values are passed into Terraform modules primarily using input variables. These are declared within the module using the variable block. When calling the module, you can assign values to these input variables using the module block's arguments.

A child module only receives the values that the calling `module` block passes to it explicitly; it does not automatically pick up variables with matching names from the calling configuration. The values you pass on can reach the root module in several ways:

- Root module variables: Declare a variable in the calling configuration and pass it to the module, for example `instance_type = var.instance_type`.

- Environment variables: Terraform reads environment variables prefixed with `TF_VAR_` (for example, `TF_VAR_instance_type`) as values for root module variables.

- Terraform Cloud/Enterprise variables: When running Terraform in a managed environment, variables can be defined and managed there.
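
The sketch below shows one way this could look. The module path `./modules/web_server` and the variable names are hypothetical; the two file comments mark where each part would live.

```hcl
# --- modules/web_server/variables.tf (hypothetical child module) ---
variable "instance_type" {
  type    = string
  default = "t2.micro"
}

variable "environment" {
  type = string
}

# --- main.tf in the root module ---
# Root variable; can be set with -var, a .tfvars file, or TF_VAR_environment.
variable "environment" {
  type = string
}

module "web_server" {
  source = "./modules/web_server"

  # Inputs are passed explicitly as arguments of the module block.
  instance_type = "t2.medium"
  environment   = var.environment
}
```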

14. What is a variable in Terraform and why is it useful?

A variable in Terraform is a named value that can be used to customize Terraform configurations. Variables act as parameters, allowing you to define reusable configurations that can be easily adapted to different environments or scenarios without directly modifying the code.

They are useful because they promote code reusability, improve readability, and allow for better configuration management. Instead of hardcoding values directly into your Terraform configuration, you can define variables and assign values to them when running Terraform. This simplifies the process of managing configurations across different environments (e.g., development, staging, production) or for different resources with slightly varying attributes. Variables enhance the flexibility and maintainability of your Terraform code.

15. How can you define different environments in Terraform (like dev, staging, prod)?

There are several common approaches to define different environments (dev, staging, prod) in Terraform:

- Workspaces: Terraform workspaces allow you to manage multiple states for a single configuration. You can switch between workspaces (e.g., `terraform workspace select dev`) to apply the same infrastructure code to different environments. Each workspace maintains its own state file, effectively isolating the environments.

- Separate Directories: Create separate directories for each environment (e.g., `dev/`, `staging/`, `prod/`). Each directory contains its own Terraform configuration files. This approach provides clear separation and isolation.

- Variables: Use variables within a single Terraform configuration to conditionally configure resources based on the target environment. You can pass environment-specific values through command-line arguments (e.g., `terraform apply -var="environment=dev"`) or environment variables.
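
As a sketch of the variable-driven approach, the configuration below validates an `environment` variable and looks up per-environment settings from a map. The variable name, the allowed values, and the instance sizes are assumptions made for the example.

```hcl
variable "environment" {
  type        = string
  description = "Target environment: dev, staging, or prod."

  validation {
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "Environment must be dev, staging, or prod."
  }
}

locals {
  # Hypothetical per-environment sizing.
  instance_type_by_env = {
    dev     = "t2.micro"
    staging = "t2.small"
    prod    = "t2.medium"
  }

  instance_type = local.instance_type_by_env[var.environment]
}
```

Resources can then use `local.instance_type`, and the environment is chosen at run time with `terraform apply -var="environment=dev"`.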

16. What is an output in Terraform and how do you use it?

An output in Terraform exposes values from your Terraform configuration. These values can then be used by other Terraform configurations or systems. Outputs are defined using the output block in your Terraform configuration.

Outputs are used to:

- Display important information after a Terraform apply, such as IP addresses or DNS names.

- Pass values between Terraform configurations, using `terraform_remote_state` or other data sources.

- Make values available to other systems for automation or monitoring.
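
A short sketch of both sides, under the assumption that state is stored in an S3 backend. The bucket, key, resource names, and output names are hypothetical.

```hcl
# --- Producing configuration ---
resource "aws_instance" "web" {
  ami           = "ami-0c55b1b455e52cb05" # placeholder AMI ID
  instance_type = "t2.micro"
}

output "web_public_ip" {
  description = "Public IP address of the web instance."
  value       = aws_instance.web.public_ip
}

# --- Consuming configuration (a separate Terraform project) ---
data "terraform_remote_state" "web" {
  backend = "s3"

  config = {
    bucket = "example-terraform-state" # hypothetical bucket
    key    = "web/terraform.tfstate"
    region = "us-east-1"
  }
}

locals {
  # Root-level outputs of the other configuration are exposed under `outputs`.
  web_ip = data.terraform_remote_state.web.outputs.web_public_ip
}
```

After an apply, `terraform output web_public_ip` prints the value on the command line.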

17. What does it mean when Terraform says it's 'refreshing state'?

When Terraform says it's 'refreshing state', it means it's reading the current state of your infrastructure resources from the actual cloud provider (like AWS, Azure, or GCP) and updating its local state file (terraform.tfstate) to reflect the real-world configuration. This process ensures that Terraform is aware of any changes that might have occurred outside of Terraform itself, such as manual modifications made through the cloud provider's console or CLI.

The primary purpose of refreshing state is to detect drift. Drift occurs when the actual state of a resource differs from the state recorded in Terraform's state file. Terraform needs an accurate state file to correctly plan and apply changes, so refreshing is crucial for maintaining consistency and preventing unexpected modifications or errors during future Terraform operations. For example, if someone manually changes the number of instances in an autoscaling group, a refresh will detect this change. You can run a refresh on its own with `terraform apply -refresh-only` (or preview it with `terraform plan -refresh-only`); the older `terraform refresh` command still works but is deprecated.

18. Can you describe a situation where Terraform might fail?

Terraform can fail in various situations. One common scenario is when there are dependency issues or incorrect configurations in the Terraform code. For example, if a resource depends on another resource that hasn't been successfully created yet (due to network issues or insufficient permissions), Terraform will likely fail during the apply stage.

Another situation where Terraform might fail is when there are changes made outside of Terraform's control (e.g., manual changes to cloud resources through the console). This can lead to state drift, where Terraform's state file no longer accurately reflects the actual infrastructure. When Terraform attempts to apply changes based on its outdated state, conflicts can arise, causing the deployment to fail. Also, provider version incompatibilities and reaching resource limits within the cloud provider can lead to failures.

19. How would you find documentation for a specific Terraform resource?

The primary way to find documentation for a specific Terraform resource is through the official Terraform website. Specifically, navigate to the Terraform Registry (registry.terraform.io) and search for the provider associated with the resource. Once you've found the provider, you can browse its resources and data sources, each with detailed documentation including arguments, attributes, examples, and import instructions.

Alternatively, you can directly use a search engine like Google, DuckDuckGo, etc. searching for: terraform <provider> <resource> e.g., terraform aws s3 bucket. This usually leads you directly to the relevant documentation page on the Terraform Registry or the provider's website. The documentation typically includes code examples for various use cases.

20. What's the difference between 'terraform apply' and 'terraform destroy'?

terraform apply creates or modifies infrastructure resources defined in your Terraform configuration. It reads the current state and the desired state (defined in your .tf files), calculates the differences, and then takes actions to converge the infrastructure to the desired state. This might involve creating new resources, modifying existing ones, or even deleting resources that are no longer needed based on the configuration.

terraform destroy is the opposite. It removes all the infrastructure resources managed by your Terraform configuration from the cloud provider. It effectively undoes what terraform apply did, deleting all resources that are currently in the Terraform state. Be very careful when running terraform destroy, as it can result in significant data loss and service disruptions if not executed with proper planning and understanding.

21. Why would you want to store your Terraform state remotely?

Storing Terraform state remotely offers several advantages. Primarily, it enables collaboration among team members. When state is stored locally, only the person with the state file can safely modify the infrastructure. Remote state storage, especially when coupled with state locking, ensures that concurrent modifications are prevented, avoiding state corruption.

Furthermore, remote storage provides a more secure and durable solution. Local state files can be accidentally deleted or corrupted. Using services like AWS S3, Azure Blob Storage, or HashiCorp Consul provides redundancy and versioning, safeguarding against data loss and allowing for auditing and rollbacks.

22. If you made a mistake in your Terraform code, how would you fix it?

If I made a mistake in my Terraform code, the first step is to carefully analyze the error messages Terraform provides. These messages often pinpoint the exact location and nature of the problem (e.g., syntax error, incorrect resource attribute, dependency issue). I would then examine the relevant Terraform configuration files (the `.tf` files) to identify the source of the error, and use the `terraform plan` command to see the impact of my changes.

To fix the mistake, I'd edit the Terraform code, correcting any typos, adjusting resource configurations, or resolving dependency conflicts. After making the necessary changes, I would run terraform validate to ensure the syntax and configuration are valid. Next, I'd execute terraform plan again to confirm that the changes align with the desired state and that no unexpected modifications will occur. Finally, I would apply the changes with terraform apply. If the error persists after applying, I'd revert to a previous working state using version control (e.g., Git) if available, or manually undo the changes by updating the state file or running terraform destroy for resources provisioned incorrectly.

23. Can you name a cloud provider that Terraform supports?

Yes, Terraform supports many cloud providers. One popular example is Amazon Web Services (AWS). Terraform can be used to provision and manage AWS resources such as EC2 instances, S3 buckets, and VPCs.

24. What is the purpose of version control, such as Git, in Terraform projects?

Version control with Git is crucial in Terraform projects for several reasons. Primarily, it enables tracking and managing changes to your infrastructure code over time. This includes the Terraform configurations (`.tf` files) and any supporting scripts or modules. State files are the exception: they can contain sensitive values and change on every apply, so they are normally kept out of Git and stored in a remote backend that versions them separately. Keeping the code in Git allows teams to collaborate effectively, revert to previous configurations if needed, and understand the history of infrastructure changes.

Specifically, Git facilitates features like branching for experimenting with new infrastructure changes, pull requests for code review and approval, and a clear audit trail of who made what changes and when. Utilizing Git ensures that infrastructure changes are treated like any other code, promoting best practices for development and deployment. It also aids in disaster recovery by providing a reliable backup and history of the infrastructure configuration.

25. How would you handle sensitive information, like passwords, in your Terraform code?

Sensitive information like passwords should never be hardcoded directly into Terraform code. Instead, leverage secure methods for managing and injecting secrets. A primary approach is to use Terraform's built-in support for sensitive variables and outputs. Declaring a variable or output with `sensitive = true` prevents its value from being displayed in CLI output. It does not encrypt the value, though: sensitive values are still written to the state file in plaintext, so the state itself must be protected.

For injecting secrets, use a secrets management solution like HashiCorp Vault, AWS Secrets Manager, Azure Key Vault, or Google Cloud Secret Manager. Terraform can fetch secrets from these services at run time, which keeps them out of the Terraform code, although values read through data sources are still recorded in state. Alternatively, environment variables can be used to pass secrets into Terraform, especially in CI/CD pipelines. Always encrypt your Terraform state file, preferably using encryption at rest provided by your cloud provider's storage services (e.g., S3 bucket encryption), and restrict who can read it.
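
The sketch below illustrates both ideas: a sensitive input variable and a secret read from AWS Secrets Manager. The secret name `example/db-password`, the variable name, and the database settings are assumptions made for the example.

```hcl
# Marked sensitive: redacted in plan/apply output, but still stored in state.
variable "api_token" {
  type      = string
  sensitive = true
}

# Read an existing secret at run time instead of hardcoding it.
data "aws_secretsmanager_secret_version" "db" {
  secret_id = "example/db-password" # hypothetical secret name
}

resource "aws_db_instance" "example" {
  allocated_storage   = 20
  engine              = "postgres"
  instance_class      = "db.t3.micro"
  username            = "app"
  password            = data.aws_secretsmanager_secret_version.db.secret_string
  skip_final_snapshot = true
}
```

The value of `api_token` could be supplied through a `TF_VAR_api_token` environment variable in a CI/CD pipeline so that it never appears in the repository.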

26. What are some benefits of using Terraform over manually configuring infrastructure?

Terraform offers several benefits over manual infrastructure configuration. Primarily, it allows for Infrastructure as Code (IaC), enabling you to define and manage infrastructure through code. This leads to version control, collaboration, and repeatability. Manual configuration, by contrast, is error-prone and makes changes difficult to track.

Key benefits include increased efficiency through automation, reduced risk of human error, improved consistency and repeatability, better collaboration and version control, and enhanced visibility into infrastructure changes. Terraform also supports a wide range of providers, making it versatile for different cloud platforms and services.

27. Explain the difference between declarative and imperative approaches to infrastructure management. Which one does Terraform follow?

Declarative infrastructure management focuses on defining the desired state of the infrastructure. You specify what you want, and the tool figures out how to achieve it. Imperative infrastructure management, on the other hand, focuses on specifying the exact steps needed to achieve the desired state. You specify how to do it, step-by-step.

Terraform follows a declarative approach. You define the desired state of your infrastructure using HashiCorp Configuration Language (HCL), and Terraform determines the necessary steps to reach that state. For example, you declare you want 3 servers with specific configurations, and Terraform handles the provisioning, configuration, and dependencies without you explicitly scripting each step.

28. What is the role of input variables in Terraform modules, and how can they improve reusability?

Input variables in Terraform modules act as parameters that allow users to customize the module's behavior without modifying its source code. They define what data a module expects to receive from the outside world. By using input variables, you make your modules more flexible and adaptable to different environments and use cases.

They significantly improve reusability because you can use the same module multiple times with different configurations simply by providing different values for the input variables. This avoids code duplication and promotes a modular approach to infrastructure as code. For example:

    variable "instance_type" {
      type        = string
      description = "The type of EC2 instance to create."
      default     = "t2.micro"
    }

    resource "aws_instance" "example" {
      ami           = "ami-0c55b1b455e52cb05"
      instance_type = var.instance_type
    }

In this example, instance_type is an input variable. Users can specify a different instance type when using the module, such as t2.medium, without altering the module's core code. This enables reuse across various environments or for different application needs.

29. Describe a scenario where you might use Terraform to automate a simple task, such as creating a virtual machine.

Imagine needing to quickly spin up a test virtual machine (VM) on AWS for developers to validate a new feature. Manually creating this VM through the AWS console is time-consuming and prone to errors. Terraform can automate this process. I'd define a Terraform configuration file (e.g., main.tf) that specifies the desired VM characteristics, such as the instance type (e.g., t2.micro), AMI ID, region, and security group rules.

Running terraform apply would then automatically provision the VM in AWS according to the defined configuration. This ensures consistent VM creation, reduces manual effort, and allows developers to quickly obtain a test environment. Furthermore, destroying the VM after testing is equally straightforward by using terraform destroy which ensures unused resources don't incur costs.

Terraform intermediate interview questions

1. How would you handle sensitive data, like passwords, in your Terraform configurations, especially when storing them in a version control system?

To handle sensitive data like passwords in Terraform configurations, especially when storing them in version control, I would avoid hardcoding them directly into the code. Instead, I would leverage HashiCorp Vault or a similar secrets management solution to store and manage these secrets securely. Terraform can then retrieve these secrets dynamically during provisioning using the Vault provider.

Alternatively, I'd use Terraform Cloud or Enterprise, which offer features like variable encryption and secret management. Another approach would be to use environment variables or input variables with sensitive attributes, ensuring these variables are not committed to the repository and are handled appropriately in the CI/CD pipeline. It's crucial to avoid storing any secrets directly in the Terraform state file and encrypt it at rest if possible.

2. Explain how Terraform Cloud or Enterprise can improve collaboration and workflow for a team using Terraform.

Terraform Cloud/Enterprise significantly enhances team collaboration and workflow by providing a centralized platform for managing Terraform state, infrastructure configuration, and access controls. It enables version control, ensuring that all team members are working with the correct configuration and allowing for easy rollback if needed. Workspaces provide isolation and organization of infrastructure. Role-based access control (RBAC) allows for granular permissions, limiting who can modify or view sensitive infrastructure configurations. Moreover, the ability to implement Sentinel policies ensures code quality and compliance.

Using Terraform Cloud/Enterprise, teams can establish automated workflows using features such as remote state management, remote operations (applying plans remotely), and integration with CI/CD pipelines. This reduces manual errors, improves consistency, and streamlines the infrastructure provisioning process. It makes sharing configurations and modules easier and facilitates a more consistent and repeatable infrastructure deployment process. Specifically, using a VCS-driven workflow, changes to a Terraform configuration are triggered automatically, creating a new Terraform run that shows the planned changes and enables teams to better collaborate when implementing infrastructure changes.

3. Describe a scenario where you would use a Terraform workspace and how it benefits your infrastructure management.

I would use Terraform workspaces to manage different environments (e.g., development, staging, production) for an application. Each workspace would maintain its own state file, allowing me to apply changes specific to that environment without affecting others. For example, the development workspace might use smaller, less expensive resources compared to the production workspace. This allows for cost optimization.

The benefits are clear: isolation, configuration variance, and streamlined workflows.

- Isolation: Changes in development don't impact production.

- Configuration Variance: I can use variables specific to each environment (e.g., database connection strings).

- Streamlined Workflows: Applying changes to a specific environment is targeted and reduces the risk of unintended consequences across the entire infrastructure.

Workspaces also reduce the need for separate Terraform configurations and help maintain consistent code across all environments.
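
One way this could be sketched is with the built-in `terraform.workspace` value, which holds the name of the currently selected workspace. The workspace names, instance sizes, and resource name below are assumptions for illustration.

```hcl
locals {
  # Hypothetical sizing per workspace name.
  instance_types = {
    dev     = "t2.micro"
    staging = "t2.small"
    prod    = "t2.medium"
  }

  # Fall back to the smallest size for any other workspace, such as "default".
  instance_type = lookup(local.instance_types, terraform.workspace, "t2.micro")
}

resource "aws_instance" "app" {
  ami           = "ami-0c55b1b455e52cb05" # placeholder AMI ID
  instance_type = local.instance_type

  tags = {
    Environment = terraform.workspace
  }
}
```

Running `terraform workspace select prod` before `terraform apply` would then provision the larger instance and record it in the `prod` workspace's state.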

4. How do you manage Terraform state in a team environment to prevent conflicts and ensure consistency?

To manage Terraform state in a team environment and prevent conflicts, remote state management is crucial. We use a backend like AWS S3 with DynamoDB for locking. This ensures that only one person can modify the state at a time, preventing corruption and conflicts.

Specifically, the Terraform configuration specifies the S3 bucket for storing the `terraform.tfstate` file and the DynamoDB table for locking. The DynamoDB table prevents concurrent Terraform operations from modifying the state file simultaneously. Moreover, workspaces allow you to manage multiple environments (e.g., development, staging, production) with separate state files, further isolating changes and reducing the risk of conflicts. The command `terraform workspace select <workspace_name>` switches between environments. This approach, combined with least-privilege IAM roles, keeps state management safe and consistent.
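
A minimal sketch of such a backend block follows. The bucket, key, and table names are hypothetical, and both the bucket and the table are assumed to exist already; the DynamoDB table needs a string partition key named `LockID`.

```hcl
terraform {
  backend "s3" {
    bucket         = "example-terraform-state" # hypothetical bucket name
    key            = "app/terraform.tfstate"   # path of the state object in the bucket
    region         = "us-east-1"
    dynamodb_table = "example-terraform-locks" # hypothetical lock table
    encrypt        = true                      # server-side encryption of the state object
  }
}
```

After adding or changing a backend block, `terraform init` must be run again so Terraform can configure the backend and offer to migrate any existing state.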

5. Explain the difference between `terraform apply`, `terraform plan`, and `terraform destroy`.

terraform plan creates an execution plan. It compares the current state of your infrastructure with the desired state defined in your Terraform configuration files. It then determines the changes needed to reach the desired state and presents them for your review, but does not actually make any changes.

`terraform apply` executes the changes needed to reach the desired state. Given a saved plan file, it carries out exactly that plan; otherwise it generates a fresh plan and asks for approval before provisioning, modifying, or destroying resources to match your configuration.

`terraform destroy` is used to destroy all resources managed by Terraform in the current working directory. It effectively reverses the apply process, deleting all infrastructure components defined in your configuration.

6. How would you use Terraform to provision resources across multiple cloud providers (e.g., AWS and Azure)?

To provision resources across multiple cloud providers using Terraform, you would configure multiple provider blocks within your Terraform configuration. Each provider block specifies the cloud provider (e.g., AWS, Azure) and the necessary credentials and region information to interact with that provider's API. You then define resources as usual. Terraform associates each resource with a provider based on its resource type prefix (for example, `aws_` or `azurerm_`); the `provider` meta-argument is only needed to select a non-default (aliased) provider configuration, such as a second AWS region.

For example, you might have an aws provider block and an azurerm provider block. A resource like an EC2 instance would be associated with the aws provider, while a resource like a virtual machine would be associated with the azurerm provider. Terraform manages the dependencies between these resources and provisions them according to the declared configuration, irrespective of the underlying cloud provider. You could output attributes from resources in one provider and use them as input into resources on the other provider. For example, output the IP address of an AWS EC2 instance and use it to configure the firewall for an Azure virtual machine.
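
The following sketch shows the idea of feeding an AWS attribute into an Azure resource. All names, the region, and the port are assumptions; credentials for both providers are assumed to come from the environment, and this example attaches the rule to a network security group rather than to a specific virtual machine.

```hcl
provider "aws" {
  region = "us-east-1"
}

provider "azurerm" {
  features {}
}

resource "aws_instance" "app" {
  ami           = "ami-0c55b1b455e52cb05" # placeholder AMI ID
  instance_type = "t2.micro"
}

resource "azurerm_resource_group" "example" {
  name     = "example-rg"
  location = "East US"
}

resource "azurerm_network_security_group" "example" {
  name                = "example-nsg"
  location            = azurerm_resource_group.example.location
  resource_group_name = azurerm_resource_group.example.name
}

# Allow HTTPS from the AWS instance; the reference creates a cross-provider dependency.
resource "azurerm_network_security_rule" "allow_aws" {
  name                        = "allow-aws-instance"
  priority                    = 100
  direction                   = "Inbound"
  access                      = "Allow"
  protocol                    = "Tcp"
  source_port_range           = "*"
  destination_port_range      = "443"
  source_address_prefix       = aws_instance.app.public_ip
  destination_address_prefix  = "*"
  resource_group_name         = azurerm_resource_group.example.name
  network_security_group_name = azurerm_network_security_group.example.name
}
```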

7. Describe how you would implement a blue-green deployment strategy using Terraform.

A blue-green deployment with Terraform involves provisioning two identical environments (blue and green). Initially, only one environment (e.g., blue) is live and serving traffic. Using Terraform, you would define your infrastructure as code (IaC), including resources like servers, load balancers, databases, and network configurations.

The steps are:

- Infrastructure as Code (IaC): Define both blue and green environments within Terraform. This includes compute, storage, and network resources. Use Terraform variables to switch between the two environments. For example, an `environment` variable could determine which resource names and tags are applied. The IaC would contain your Terraform scripts and `variables.tf` file.

- Deploy to Green: Apply your Terraform configuration to provision the green environment. Deploy the new application version to the green environment. Test and validate the deployment thoroughly.

- Switch Traffic: Update your load balancer or DNS settings using Terraform to redirect traffic from the blue environment to the green environment. This can be done using Terraform's `aws_lb_listener` or `aws_route53_record` resource, modifying the target group or DNS record to point to the new environment.

- Monitor: Monitor the green environment for performance and errors.

- Rollback (if needed): If issues arise, revert the traffic switch by updating the load balancer or DNS to point back to the blue environment.

- Decommission (optional): After a successful deployment and a sufficient monitoring period, you can decommission the old (blue) environment.
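
The traffic switch in the steps above could be sketched as follows. This is one possible arrangement, not the only one: the variables `active_environment`, `load_balancer_arn`, and `target_group_arns` are hypothetical, and the load balancer and both target groups are assumed to be created elsewhere.

```hcl
variable "active_environment" {
  type        = string
  description = "Which environment receives live traffic: blue or green."
  default     = "blue"

  validation {
    condition     = contains(["blue", "green"], var.active_environment)
    error_message = "The active environment must be blue or green."
  }
}

variable "load_balancer_arn" {
  type = string
}

# Expected keys: "blue" and "green".
variable "target_group_arns" {
  type = map(string)
}

resource "aws_lb_listener" "http" {
  load_balancer_arn = var.load_balancer_arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type = "forward"
    # Changing active_environment re-points the listener in place.
    target_group_arn = var.target_group_arns[var.active_environment]
  }
}
```

Cutting over would then be `terraform apply -var="active_environment=green"`, and rolling back is the same command with `blue`.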

8. Explain how you can use Terraform to manage the lifecycle of a Docker container.

Terraform can manage the lifecycle of Docker containers using the docker provider. This allows you to define container resources in your Terraform configuration, enabling you to create, start, stop, and destroy containers as part of your infrastructure as code. The key resource is docker_container, where you specify the image, ports, volumes, and other container configurations.

Here's a simplified example of how to manage a Docker container:

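    terraform {
      required_providers {
        docker = {
          source  = "kreuzwerker/docker"
          version = "~> 3.0"
        }
      }
    }

    # Pull the image; remove it from the host when the resource is destroyed.
    resource "docker_image" "nginx" {
      name         = "nginx:latest"
      keep_locally = false
    }

    resource "docker_container" "nginx" {
      # image_id replaces the older `latest` attribute in recent provider versions.
      image = docker_image.nginx.image_id
      name  = "nginx-container"

      ports {
        internal = 80
        external = 8000
        protocol = "tcp"
      }
    }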

This example first pulls the nginx:latest image and then defines a container named nginx-container that uses this image. Port 80 of the container is mapped to port 8000 on the host machine. Applying this configuration with terraform apply will create and start the container. Destroying the configuration with terraform destroy will stop and remove the container.

9. How do you use Terraform modules to create reusable and maintainable infrastructure components?

Terraform modules allow you to encapsulate and reuse infrastructure configurations. To create reusable components, define a module with input variables for customization and output values for exposing key attributes. This promotes consistency and reduces code duplication. For maintainability, version your modules and manage them in a central repository. This allows updates to be propagated easily and consistently across multiple environments. Use `terraform init` to download modules, and `module "module_name" { source = "path/to/module" }` to use them.

Modules promote abstraction, hiding complex configurations behind a simple interface. Inputs allow you to configure the module's behavior without modifying its code. Outputs provide a way to access resources created within the module. This approach simplifies infrastructure management and improves collaboration among team members. Good module design includes clear documentation and examples, making them easy to understand and use.
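
As an illustration of consuming a versioned module, the sketch below calls the community VPC module from the public Terraform Registry. The name, CIDR ranges, and availability zones are assumptions made for the example.

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0" # pin a version range so upgrades are deliberate

  # Inputs: the module's interface.
  name            = "example-vpc"
  cidr            = "10.0.0.0/16"
  azs             = ["us-east-1a", "us-east-1b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]
}

# Outputs: values the module exposes to the caller.
output "vpc_id" {
  value = module.vpc.vpc_id
}
```

The `version` argument applies to registry modules; for a module referenced by a local path, versioning comes from the repository that contains it.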

10. Describe a scenario where you would use Terraform's `count` or `for_each` meta-arguments and explain why.

I'd use `count` or `for_each` when I need to create multiple similar resources. For example, imagine deploying multiple identical virtual machines for a web application. Instead of writing the same resource block multiple times with slightly different names, I could define one resource block for a VM and use `count = 3` to create three VMs. Inside the block, `count.index` gives each instance's position; elsewhere in the configuration, individual VMs are referenced by index, for example `aws_instance.vm[0]`.

`for_each` is preferable when the instances are driven by a map or set and you need to customize each one based on the key/value pairs. For instance, I could use `for_each` to create firewall rules based on a map containing port numbers and allowed IP ranges. Within the resource definition, `each.key` and `each.value` configure each rule dynamically. I would choose `for_each` over `count` when each instance needs a stable identity: instances are tracked by key rather than by position, so removing one item from the collection does not force Terraform to renumber and recreate the others.
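
A sketch of both meta-arguments side by side. The resource names, the AMI ID, and the `ingress_rules` variable with its default values are hypothetical.

```hcl
# count: three identical instances, distinguished only by index.
resource "aws_instance" "web" {
  count         = 3
  ami           = "ami-0c55b1b455e52cb05" # placeholder AMI ID
  instance_type = "t2.micro"

  tags = {
    Name = "web-${count.index}"
  }
}

# for_each: one rule per map entry, addressed by key.
variable "ingress_rules" {
  type = map(object({
    port = number
    cidr = string
  }))

  default = {
    http  = { port = 80, cidr = "0.0.0.0/0" }
    https = { port = 443, cidr = "0.0.0.0/0" }
  }
}

resource "aws_security_group" "web" {
  name = "web-sg"
}

resource "aws_security_group_rule" "ingress" {
  for_each = var.ingress_rules

  type              = "ingress"
  description       = each.key
  from_port         = each.value.port
  to_port           = each.value.port
  protocol          = "tcp"
  cidr_blocks       = [each.value.cidr]
  security_group_id = aws_security_group.web.id
}
```

Here the instances are addressed as `aws_instance.web[0]` through `aws_instance.web[2]`, while the rules are addressed as `aws_security_group_rule.ingress["http"]` and `aws_security_group_rule.ingress["https"]`.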

11. Explain how you would configure Terraform to automatically rollback infrastructure changes in case of an error during the apply process.

Terraform doesn't have a built-in, fully automated rollback mechanism like some configuration management tools. However, you can achieve rollback functionality using a combination of Terraform features and potentially external tools. The core idea is to preserve the previous state and have a way to re-apply it.

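When an apply fails partway through, Terraform does not undo the changes it has already made; the resources that were created or modified successfully are recorded in state. The most reliable way to roll back is therefore to revert the configuration to the last known-good commit in version control and run `terraform apply` again, so that Terraform converges the infrastructure back to that definition. Terraform Cloud and Terraform Enterprise automatically store state history, and you can also back up the `.tfstate` file manually before each apply, but note that restoring an older state version does not change real infrastructure by itself. State only records what Terraform believes exists, so these backups are mainly useful for recovering from a lost or corrupted state file. If the failure is isolated to certain resources, `terraform destroy -target=<resource_address>` or `terraform apply -replace=<resource_address>` offers a more controlled cleanup. For critical infrastructure, consider implementing more robust CI/CD pipelines that include pre-apply validation and automated rollback scripts.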

12. How do you handle dependencies between different Terraform modules to ensure resources are created in the correct order?

Terraform handles dependencies between modules using implicit and explicit dependencies. Implicit dependencies are automatically inferred when one resource references another resource's attribute. For example, if module B needs the ID of a resource created in module A, Terraform understands that module A must be created before module B.

For situations where implicit dependencies are not enough, we can declare explicit dependencies using the `depends_on` meta-argument on a resource or module block. This tells Terraform that the resource should not be created until another specific resource (or module) has been successfully created, irrespective of whether there is an implicit dependency. A better approach than `depends_on` for module-level dependencies is often to pass outputs from one module as inputs to another. This clearly defines the module contract and avoids hidden dependencies. Example:

```hcl
resource "aws_instance" "example" {
  ami           = "ami-0c55b3e1ad719cb32"
  instance_type = "t2.micro"

  depends_on = [module.vpc] # explicit dependency on the vpc module
}
```
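
The output-to-input approach could look roughly like the following. Both module paths and the output names `vpc_id` and `public_subnet_id` are hypothetical and would need to exist in your own modules:

```hcl
module "vpc" {
  source     = "./modules/vpc" # hypothetical local module
  cidr_block = "10.0.0.0/16"
}

module "app" {
  source = "./modules/app" # hypothetical local module

  # Referencing module.vpc outputs creates an implicit dependency:
  # the resources behind these values are created before anything
  # inside module.app that consumes them.
  vpc_id    = module.vpc.vpc_id
  subnet_id = module.vpc.public_subnet_id
}
```

No `depends_on` is needed here, because the ordering falls out of the data flow between the two modules.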

13. Describe how you would use Terraform to manage DNS records for your infrastructure.

I would use the Terraform cloudflare_record, aws_route53_record, or similar resource depending on my DNS provider, to manage DNS records. In the Terraform configuration, I'd define the record type (A, CNAME, TXT, etc.), name, value, and TTL. For example, with Cloudflare, I would use the cloudflare_record resource, specifying the zone ID, record name, type, and value. This allows me to declaratively manage DNS records as code, version control them, and apply changes consistently across environments.

Specifically, using a resource like cloudflare_record, I would define records like:

```hcl
resource "cloudflare_record" "example" {
  zone_id = var.cloudflare_zone_id
  name    = "www"
  value   = "192.0.2.1" # recent provider releases deprecate `value` in favor of `content`; check your version
  type    = "A"
  ttl     = 3600
}
```

This configuration creates an A record for www pointing to 192.0.2.1 with a TTL of 3600 seconds in the specified Cloudflare zone. Similar resources are available for other DNS providers, requiring only adjustments to the resource name and provider-specific arguments.
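
For comparison, a roughly equivalent record on AWS Route 53 might look like this. The variable name and the domain are placeholders chosen for illustration:

```hcl
# Hypothetical input: ID of an existing Route 53 hosted zone
variable "route53_zone_id" {
  type = string
}

resource "aws_route53_record" "www" {
  zone_id = var.route53_zone_id
  name    = "www.example.com"
  type    = "A"
  ttl     = 3600
  records = ["192.0.2.1"] # Route 53 takes a list of values
}
```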

14. Explain how you can use Terraform to provision and configure a Kubernetes cluster.

Terraform can provision and configure a Kubernetes cluster using several approaches. Primarily, you define the infrastructure resources (like VMs, network, load balancers) in a Terraform configuration file, usually using providers like AWS, Azure, or GCP. Terraform then automates the creation and management of these resources to form the underlying infrastructure for your cluster. Specifically, you can use resources like aws_eks_cluster, azurerm_kubernetes_cluster, or google_container_cluster depending on the cloud provider.

After provisioning the infrastructure, Terraform can configure Kubernetes itself using the `kubernetes` provider. This allows you to define Kubernetes resources (deployments, services, namespaces, etc.) as Terraform resources. The provider talks to the cluster's Kubernetes API server, so it must be configured with the cluster endpoint and credentials before Terraform can apply these resources and set up your desired application environment. For example:

```hcl
resource "kubernetes_deployment" "example" {
  metadata {
    name = "example-deployment"
  }

  spec {
    replicas = 3

    selector {
      match_labels = {
        app = "example"
      }
    }

    template {
      metadata {
        labels = {
          app = "example"
        }
      }

      spec {
        container {
          image = "nginx:latest"
          name  = "nginx"
        }
      }
    }
  }
}
```
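
Before a resource like this can be applied, the `kubernetes` provider needs connection details for the cluster. One common way to wire this up for an existing EKS cluster is sketched below, assuming a hypothetical `cluster_name` variable:

```hcl
# Hypothetical input: name of an existing EKS cluster
variable "cluster_name" {
  type = string
}

# Look up the cluster endpoint and CA certificate
data "aws_eks_cluster" "this" {
  name = var.cluster_name
}

# Obtain a short-lived authentication token for the cluster
data "aws_eks_cluster_auth" "this" {
  name = var.cluster_name
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.this.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.this.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.this.token
}
```

Many teams keep cluster provisioning and in-cluster resources in separate configurations, so that the provider is never configured from a cluster that does not exist yet.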

15. How would you implement infrastructure as code (IaC) testing using tools like Kitchen or Terratest?

IaC testing involves verifying that infrastructure deployments match the desired state defined in your code. Test Kitchen is typically paired with a provisioner like Chef or Ansible, with verification written in a framework such as InSpec or Serverspec (ChefSpec, by contrast, unit-tests Chef cookbooks without provisioning anything). For Terraform specifically, the kitchen-terraform plugin lets Kitchen apply a configuration and run InSpec controls against the result. You define Kitchen configurations that spin up infrastructure, run your provisioning code, and then execute tests against the resulting environment to confirm resource states, file contents, user permissions, etc. Terratest, on the other hand, is a Go library for testing infrastructure code, with dedicated helpers for Terraform. You write Go tests that run `terraform init` and `terraform apply`, assert on the resulting infrastructure through Terraform outputs or the AWS, Azure, GCP, or other relevant SDK, and destroy the resources when the test finishes.

Key steps include: 1. Writing testable IaC. 2. Setting up an isolated test environment. 3. Writing assertions to validate resource properties, network configurations, security settings, etc. 4. Using appropriate assertion libraries. 5. Running tests and analyzing the results. 6. Integrating the tests into a CI/CD pipeline. For example, a Terratest assertion could check whether an AWS S3 bucket was created with encryption enabled, while a Kitchen test might verify that a specific package is installed on a VM.

16. Explain how you would use Terraform to manage the infrastructure for a serverless application (e.g., AWS Lambda functions).

To manage serverless infrastructure with Terraform, I would define resources like AWS Lambda functions, API Gateway, IAM roles, and S3 buckets in Terraform configuration files (e.g., `main.tf`). For example, a Lambda function would be defined using the `aws_lambda_function` resource, specifying the function name, runtime, handler, and deployment package (zip file containing the code). IAM roles with appropriate permissions would be created using `aws_iam_role` and `aws_iam_role_policy_attachment` to allow the Lambda function to access other AWS services. (`aws_iam_policy_attachment` also exists, but it manages a policy's attachments exclusively across the whole account, so it is usually avoided.) API Gateway resources (`aws_api_gateway_rest_api`, `aws_api_gateway_resource`, `aws_api_gateway_method`, `aws_api_gateway_integration`) would be used to create HTTP endpoints that trigger the Lambda functions, together with an `aws_lambda_permission` that allows API Gateway to invoke them.

After defining these resources, I would use Terraform commands like terraform init, terraform plan, and terraform apply to provision and update the infrastructure. Terraform's state management would track the deployed resources and ensure consistent configuration across environments. Variables and modules can be used to promote reusability and manage complexity, for example, creating separate modules for Lambda functions, API Gateway, and IAM roles to maintain organized and scalable infrastructure code. Version control (e.g., Git) can be used to manage the Terraform code and track changes over time.
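
A minimal sketch of the Lambda and IAM portion follows. The function name, handler, runtime, and zip path are placeholders for illustration, and the API Gateway resources are omitted for brevity:

```hcl
# Trust policy that lets the Lambda service assume the role
data "aws_iam_policy_document" "lambda_assume" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["lambda.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "lambda" {
  name               = "example-lambda-role"
  assume_role_policy = data.aws_iam_policy_document.lambda_assume.json
}

# AWS-managed policy that grants permission to write CloudWatch logs
resource "aws_iam_role_policy_attachment" "lambda_logs" {
  role       = aws_iam_role.lambda.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
}

resource "aws_lambda_function" "example" {
  function_name = "example-function"
  role          = aws_iam_role.lambda.arn
  runtime       = "python3.12"
  handler       = "handler.lambda_handler"

  # Hypothetical path to a pre-built deployment package
  filename         = "build/function.zip"
  source_code_hash = filebase64sha256("build/function.zip")
}
```

Setting `source_code_hash` makes Terraform redeploy the function whenever the contents of the zip file change.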

17. How do you monitor the health and performance of your Terraform-managed infrastructure?

I monitor the health and performance of Terraform-managed infrastructure through a combination of methods. Firstly, I utilize Terraform Cloud's built-in features, like state management, drift detection, and run history, which offer insights into infrastructure changes and potential issues. Secondly, I integrate monitoring tools like Prometheus and Grafana to collect and visualize metrics from the provisioned resources. This includes CPU utilization, memory usage, network traffic, and disk I/O. Alerts are configured based on thresholds to notify me of anomalies, enabling proactive intervention. Cloud provider monitoring services such as AWS CloudWatch or Azure Monitor are also used, leveraging their native integration with the managed resources.

I also rely on automation that surfaces infrastructure changes and misconfigurations. This can include, but is not limited to:

- Terraform Cloud Notifications: Triggered by run completions.

- Custom scripts: These scripts can parse the state file for specific resource changes.

- Infrastructure as Code (IaC) scanning tools: Tools like Checkov and Terrascan scan Terraform code for misconfigurations and vulnerabilities before deployment.
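
The threshold-based alerting mentioned in this answer can itself be managed in Terraform. Below is a sketch of a CloudWatch CPU alarm; the two variables are hypothetical inputs, and the threshold values are arbitrary examples:

```hcl
# Hypothetical inputs: the instance to watch and an SNS topic to notify
variable "instance_id" {
  type = string
}

variable "alarm_topic_arn" {
  type = string
}

resource "aws_cloudwatch_metric_alarm" "high_cpu" {
  alarm_name          = "example-high-cpu"
  namespace           = "AWS/EC2"
  metric_name         = "CPUUtilization"
  statistic           = "Average"
  comparison_operator = "GreaterThanThreshold"
  threshold           = 80  # percent
  period              = 300 # seconds per evaluation window
  evaluation_periods  = 2   # must breach for two consecutive windows

  dimensions = {
    InstanceId = var.instance_id
  }

  alarm_actions = [var.alarm_topic_arn]
}
```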

18. Describe how you would use Terraform to create and manage a virtual private network (VPN).

To create and manage a VPN with Terraform, I would define the necessary resources like VPCs, subnets, VPN gateways, customer gateways, the VPN connection itself, and routing rules in Terraform configuration files. The `aws_vpc`, `aws_subnet`, `aws_vpn_gateway`, `aws_customer_gateway`, `aws_vpn_connection`, and `aws_route` resources (for AWS) would be used to define these components. I would then run `terraform init`, `terraform plan`, and `terraform apply` to provision the VPN infrastructure. Any changes to the VPN configuration, such as modifying the subnet CIDR blocks or adding new routes, would be handled by updating the Terraform configuration and reapplying it.

Terraform allows for infrastructure as code, enabling version control, collaboration, and repeatability. I would also leverage variables and modules to make the configuration reusable and maintainable. For example, a module could define the standard VPN setup, and variables would allow customization like specifying different AWS regions or CIDR blocks. Security groups and network ACLs would also be configured through Terraform to secure the VPN.
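
As a rough sketch of a site-to-site VPN on AWS, the core resources fit together as shown below. The IP address, ASN, and CIDR ranges are placeholders, and the `aws_vpn_connection` resource is what actually ties the two gateways together:

```hcl
resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

# AWS side of the tunnel, attached to the VPC
resource "aws_vpn_gateway" "main" {
  vpc_id = aws_vpc.main.id
}

# On-premises side of the tunnel
resource "aws_customer_gateway" "office" {
  bgp_asn    = 65000
  ip_address = "203.0.113.10" # placeholder public IP of the on-premises device
  type       = "ipsec.1"
}

# The VPN connection between the two gateways
resource "aws_vpn_connection" "office" {
  vpn_gateway_id      = aws_vpn_gateway.main.id
  customer_gateway_id = aws_customer_gateway.office.id
  type                = "ipsec.1"
  static_routes_only  = true
}

# Static route for the on-premises network
resource "aws_vpn_connection_route" "office" {
  vpn_connection_id      = aws_vpn_connection.office.id
  destination_cidr_block = "192.168.0.0/16" # placeholder on-premises range
}
```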

19. Explain how you can use Terraform to automate the creation of security groups and firewall rules.

Terraform can automate the creation of security groups and firewall rules through its declarative configuration language. You define the desired state of your security groups and firewall rules in Terraform configuration files (e.g., .tf files), and Terraform automatically provisions and manages these resources to match that defined state.

Specifically, you can use Terraform resources like aws_security_group (for AWS), google_compute_firewall (for GCP), or equivalent resources for other cloud providers. Within these resources, you define rules for inbound and outbound traffic, specifying protocols, ports, and source/destination IP ranges or security group IDs. For example:

```hcl
resource "aws_security_group" "example" {
  name        = "example-sg"
  description = "Example security group"

  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

After defining these configurations, you apply them using `terraform apply`, and Terraform automatically creates or updates the security groups and firewall rules to match the specified state. Terraform also manages dependencies, ensuring that resources are created in the correct order. Because the rules live in version-controlled configuration, changes can be reviewed before they are applied and audited afterwards.

20. How would you use Terraform to manage the infrastructure for a database cluster (e.g., MySQL, PostgreSQL)?

Using Terraform to manage a database cluster involves defining resources like servers, networking components, and database-specific configurations. A resource block for each server instance (e.g., using aws_instance for AWS, google_compute_instance for GCP) would be created, specifying the AMI, instance type, and network settings. Networking resources such as VPCs, subnets, security groups (or firewalls), and route tables would also be defined to ensure proper connectivity and security for the database cluster.

Database-specific configuration would involve using provisioners (like `remote-exec` or `local-exec`) or, preferably, configuration management tools (like Ansible) to install the database software (MySQL, PostgreSQL, etc.), configure replication, set up backups, and manage user accounts. Terraform's own documentation treats provisioners as a last resort, so I would keep them to a minimum. Data sources can be used to dynamically fetch IDs of existing resources or to reference values like the latest AMI ID. Output values would then expose useful information like the database endpoints or connection strings.
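
The data source and output pieces of this approach might look like the following sketch. The instance type, node count, and naming are assumptions for illustration, and installing and configuring the database itself is left to a configuration management tool:

```hcl
# Look up the latest Ubuntu 22.04 AMI published by Canonical
data "aws_ami" "ubuntu" {
  most_recent = true
  owners      = ["099720109477"] # Canonical's AWS account ID

  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-jammy-22.04-amd64-server-*"]
  }
}

# Three database nodes, e.g. one primary and two replicas
resource "aws_instance" "db" {
  count         = 3
  ami           = data.aws_ami.ubuntu.id
  instance_type = "t3.medium"

  tags = {
    Name = "pg-${count.index}"
  }
}

# Expose the private IPs so other tooling can build connection strings
output "db_private_ips" {
  value = aws_instance.db[*].private_ip
}
```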

21. Describe how you would use Terraform to create and manage a load balancer.

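To create and manage a load balancer using Terraform, I would define a resource block for the specific load balancer type (e.g., `aws_lb` for AWS, `google_compute_global_forwarding_rule` for GCP, or `azurerm_lb` for Azure). This block would include essential attributes such as the name, the subnets or location it is deployed in, and the load balancer type. Depending on the provider, listeners, backend pools (target groups), and health checks are configured either as nested blocks or, more commonly, as separate resources such as `aws_lb_listener` and `aws_lb_target_group` that reference the load balancer.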
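
On AWS, a minimal Application Load Balancer setup could be sketched as follows. The variables and resource names are hypothetical, and the network is assumed to already exist:

```hcl
# Hypothetical inputs describing an existing network
variable "vpc_id" {
  type = string
}

variable "subnet_ids" {
  type = list(string)
}

resource "aws_lb" "web" {
  name               = "example-alb"
  load_balancer_type = "application"
  subnets            = var.subnet_ids
}

# Backend pool with a basic HTTP health check
resource "aws_lb_target_group" "web" {
  name     = "example-tg"
  port     = 80
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  health_check {
    path = "/"
  }
}

# Listener that forwards incoming HTTP traffic to the target group
resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.web.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.web.arn
  }
}
```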