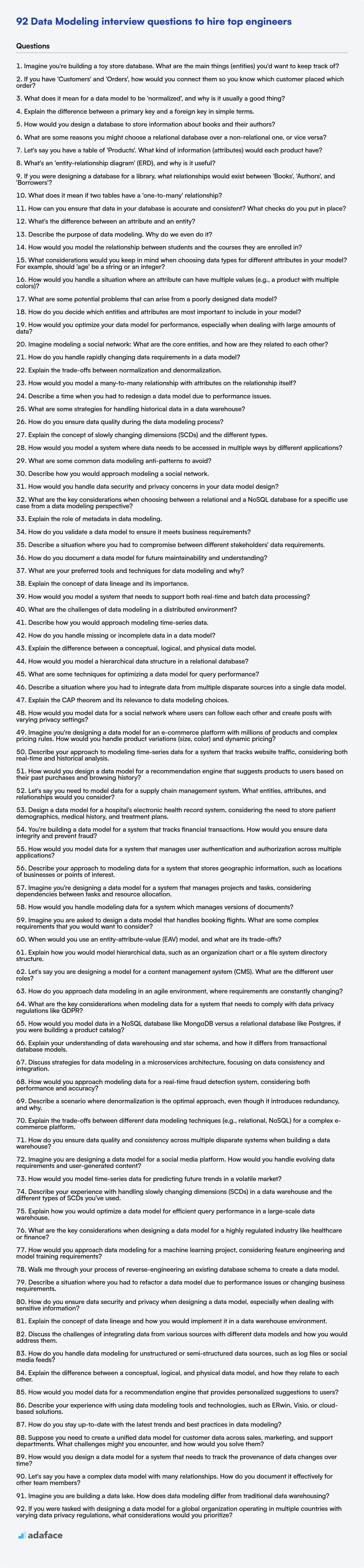

Data modeling is at the heart of any data-driven organization, transforming raw data into understandable structures that drive decisions. Recruiters looking to hire top-tier data modelers need a way to assess candidates effectively, but finding good questions can be hard.

This blog post provides a treasure trove of data modeling interview questions categorized by skill level: basic, intermediate, advanced, and expert along with multiple-choice questions. It is aimed at helping recruiters and hiring managers gauge a candidate's grip on logical thinking, data relationships and database design like someone looking for skills required for a data modeler.

By using these questions, you’ll be able to quickly determine if a candidate has the skills your team needs and to speed up the process, consider using a Data Modeling Test to filter candidates before the interview.

Table of contents

Basic Data Modeling interview questions

1. Imagine you're building a toy store database. What are the main things (entities) you'd want to keep track of?

In a toy store database, I'd primarily want to track these entities:

- Toys: Details like name, description, price, manufacturer, age range, category (e.g., action figures, board games), and quantity in stock. A unique ID would be important.

- Customers: Information like name, address, email, phone number, and purchase history. A unique customer ID is key.

- Orders: Data about each order, including order date, customer ID, items ordered (linking back to the toys), total amount, and shipping address.

- Suppliers: Information about the companies that supply the toys, including name, address, contact person, and toys supplied.

2. If you have 'Customers' and 'Orders', how would you connect them so you know which customer placed which order?

The standard way to connect 'Customers' and 'Orders' is using a foreign key relationship. You would add a CustomerID column to the Orders table. This CustomerID column would reference the primary key (usually CustomerID) in the Customers table.

This allows you to easily identify which customer placed each order. For example, in SQL:

SELECT * FROM Orders WHERE CustomerID = 123;

would retrieve all orders placed by the customer with CustomerID 123.

3. What does it mean for a data model to be 'normalized', and why is it usually a good thing?

Normalization in data modeling is the process of organizing data to reduce redundancy and improve data integrity. It typically involves dividing a database into two or more tables and defining relationships between the tables. The goal is to isolate data so that modifications to an attribute can be made in only one table.

Normalization is usually a good thing because it minimizes data duplication, which saves storage space and reduces the risk of inconsistencies. It also makes data easier to query and update, which can improve the performance of applications that use the database. Following normalization rules such as Boyce-Codd Normal Form (BCNF) is crucial for robust database design. However, in some cases, denormalization might be considered for performance reasons, at the cost of increased complexity in managing data consistency.

4. Explain the difference between a primary key and a foreign key in simple terms.

A primary key uniquely identifies each record in a table. It must contain a unique value for each row and cannot contain null values. Think of it like a student ID - no two students can have the same ID.

A foreign key, on the other hand, is a field in one table that refers to the primary key of another table. It establishes a link between the two tables. It essentially creates a relationship by referencing a unique record in a different table. For example, a 'courses' table might have a foreign key referencing the 'students' table (using student ID). This indicates which student is taking each course. Foreign keys enforce referential integrity, ensuring that relationships between tables remain consistent.

5. How would you design a database to store information about books and their authors?

I would design a database with two tables: Books and Authors. The Books table would have columns like book_id (INT, PRIMARY KEY), title (VARCHAR), isbn (VARCHAR), publication_date (DATE), and author_id (INT, FOREIGN KEY referencing the Authors table). The Authors table would contain author_id (INT, PRIMARY KEY), name (VARCHAR), and optionally other fields like birthdate or biography.

A one-to-many relationship exists between authors and books, using author_id as the foreign key in the Books table. This structure allows efficient querying for books by a specific author or for authors of a particular book. Consider indexes on frequently queried columns like title, author_id, and isbn to improve performance. For example, using SQL:

CREATE TABLE Authors (

author_id INT PRIMARY KEY,

name VARCHAR(255)

);

CREATE TABLE Books (

book_id INT PRIMARY KEY,

title VARCHAR(255),

author_id INT,

FOREIGN KEY (author_id) REFERENCES Authors(author_id)

);

6. What are some reasons you might choose a relational database over a non-relational one, or vice versa?

Choosing between relational and non-relational databases depends heavily on the application's needs. Relational databases (like PostgreSQL or MySQL) excel when data consistency and integrity are paramount. They enforce schemas, support ACID transactions, and are well-suited for applications with complex relationships between data entities, such as financial systems or inventory management. SQL offers powerful querying capabilities for relational data. Using foreign keys, joins and the features like transactions is important in maintaining data integrity. They are a good choice when dealing with structured data.

Non-relational databases (NoSQL databases like MongoDB or Cassandra) offer greater flexibility and scalability, especially for handling unstructured or semi-structured data. They're often preferred for applications with high read/write loads, rapidly evolving data models, or the need for horizontal scalability. Examples include social media platforms, IoT data storage, and content management systems. Non-relational data doesn't enforce constraints, it is the application developers responsibility to ensure data integrity.

7. Let's say you have a table of 'Products'. What kind of information (attributes) would each product have?

Each product in a 'Products' table would likely have attributes like:

- product_id: A unique identifier for the product (INT, PRIMARY KEY)

- product_name: The name of the product (VARCHAR)

- description: A brief description of the product (TEXT)

- category_id: A foreign key referencing a 'Categories' table (INT, FOREIGN KEY)

- price: The price of the product (DECIMAL/NUMERIC)

- stock_quantity: The number of units currently in stock (INT)

- manufacturer: The company that manufactures the product (VARCHAR)

- image_url: URL to a product image (VARCHAR)

- created_at: Timestamp of when the product was added (TIMESTAMP)

- updated_at: Timestamp of when the product was last updated (TIMESTAMP)

These attributes cover basic identification, categorization, pricing, availability, and provenance of the product. Depending on the specific domain, other attributes like weight, dimensions, color, or material might also be relevant.

8. What's an 'entity-relationship diagram' (ERD), and why is it useful?

An Entity-Relationship Diagram (ERD) is a visual representation of data within a system. It illustrates entities (objects or concepts about which data is stored), their attributes (properties of the entities), and the relationships between those entities. ERDs use specific notations to represent these components.

ERDs are useful for several reasons. Primarily, they help in database design by providing a clear blueprint for structuring data. They also improve communication between developers, database administrators, and stakeholders, ensuring everyone understands the data model. Further, they aid in identifying potential data redundancies or inconsistencies early in the development process, leading to a more efficient and robust database schema. Finally, ERDs act as documentation, providing a valuable reference for future maintenance and enhancements.

9. If you were designing a database for a library, what relationships would exist between 'Books', 'Authors', and 'Borrowers'?

In a library database, the following relationships would exist:

- Books and Authors: A many-to-many relationship. One book can have multiple authors (e.g., collaborations), and one author can write multiple books. This would typically be implemented using a linking table (e.g.,

BookAuthors) that stores theBookIDandAuthorIDas foreign keys. - Books and Borrowers: A many-to-many relationship. One book can be borrowed by multiple borrowers over time, and one borrower can borrow multiple books. This is often represented using a

Loanstable. Each record in theLoanstable would link aBookIDand aBorrowerIDalong with other related details likeloan_date,return_date,due_dateetc. This enables tracking who borrowed which book and when.

10. What does it mean if two tables have a 'one-to-many' relationship?

A one-to-many relationship between two tables, say Table A and Table B, means that one record in Table A can be related to multiple records in Table B, but each record in Table B can only be related to one record in Table A.

For example, consider a database with Customers and Orders tables. One customer can place multiple orders, but each order belongs to only one customer. In this case, the relationship from Customers to Orders is one-to-many.

11. How can you ensure that data in your database is accurate and consistent? What checks do you put in place?

To ensure data accuracy and consistency, I implement several checks at different levels. At the database level, I use constraints like NOT NULL, UNIQUE, PRIMARY KEY, FOREIGN KEY, and CHECK constraints to enforce data integrity. I also use data validation during data entry, often within the application layer, to verify data types, formats, and ranges before it reaches the database. Stored procedures and triggers can also be used for complex validation rules and automated data correction. Regular data audits involving data profiling and comparison against known datasets help identify and correct inconsistencies.

Furthermore, data backups and disaster recovery plans are crucial for maintaining data integrity in case of unforeseen events. Implement auditing to track changes to data. Data versioning can also be employed. Data encryption can be useful in some cases where data integrity is paramount and needs to be secured from malicious attempts.

12. What's the difference between an attribute and an entity?

An entity is a real-world object or concept that exists and can be distinguished from other objects. Think of it as a table in a database. An attribute, on the other hand, is a characteristic or property that describes an entity. It's a column in that table.

For example, a Customer is an entity. Attributes of the Customer entity might include customer_id, name, address, and phone_number. Attributes provide details about the entity, while the entity represents the thing itself.

13. Describe the purpose of data modeling. Why do we even do it?

The purpose of data modeling is to create a visual representation of data and its relationships within an information system. We do it to understand and define data requirements, ensure data consistency, and optimize database design. Data modeling helps to improve communication among stakeholders (developers, business analysts, database administrators) and facilitates efficient data storage and retrieval.

Specifically, data modeling:

- Clarifies data requirements and business rules.

- Provides a blueprint for database construction.

- Ensures data integrity and consistency.

- Improves data accessibility and usability.

- Facilitates data analysis and reporting.

14. How would you model the relationship between students and the courses they are enrolled in?

I would model the relationship between students and courses as a many-to-many relationship. This means a student can enroll in multiple courses, and a course can have multiple students enrolled in it.

To represent this in a database, I would use a relational database with three tables: students, courses, and enrollments. The students table would store student information (e.g., student ID, name). The courses table would store course information (e.g., course ID, title, description). The enrollments table would serve as a junction table, containing foreign keys referencing both students and courses (student ID, course ID), effectively linking students to their enrolled courses. This allows for efficient querying of student-course relationships.

15. What considerations would you keep in mind when choosing data types for different attributes in your model? For example, should 'age' be a string or an integer?

When choosing data types, consider storage space, data integrity, and performance. For 'age,' an integer is better than a string because age is inherently a number, allowing for mathematical operations (average age, age comparisons) and efficient storage. Using an integer also enforces data integrity, preventing non-numeric values from being entered. However, if you need to store age ranges (e.g., '25-30'), a string might be more suitable. Furthermore, certain systems might only use strings, forcing the 'age' to be stored as string.

Considerations include:

- Storage size: Integers usually take less space than strings.

- Data integrity: Using appropriate types (like integers for age) prevents invalid data.

- Performance: Numerical calculations are faster on integers than strings.

- Data usage: Think about how the data will be used. Will you need to perform calculations, or just display the data?

- System constraints: The database and programming language may have type limitations. Code example:

age = 25(integer) vs.age = "25"(string).

16. How would you handle a situation where an attribute can have multiple values (e.g., a product with multiple colors)?

There are several ways to handle attributes with multiple values, depending on the context (database, application code, etc.). One common approach is to use a separate table to store the multi-valued attributes. For example, if we have a products table and a colors table, we can create a product_colors table to link products to their respective colors. This table would have columns like product_id and color_id as foreign keys.

Another approach is to serialize the multiple values into a single string within the product table. This could involve using a comma-separated list or a JSON array. However, querying and managing these serialized values can be more complex than using a separate table. For example, the color attribute can be stored as a JSON string ["red", "blue", "green"]. While this is simple, it can make querying directly for products of a specific color difficult. You'd likely need to use JSON functions within the query, which might impact performance depending on the database system.

17. What are some potential problems that can arise from a poorly designed data model?

A poorly designed data model can lead to numerous problems. Data redundancy is a major issue, where the same information is stored multiple times, wasting storage space and increasing the risk of inconsistencies. Inconsistencies make it difficult to ensure data accuracy and reliability, impacting reporting and decision-making. Moreover, complex and poorly structured models can make querying and retrieving data inefficient and slow, hurting application performance.

Furthermore, a poorly designed model can make it difficult to enforce data integrity constraints. For example, if relationships between entities are not properly defined, orphaned records or incorrect data associations can occur. Schema changes and application development become more complex and costly due to the model's rigidity and lack of scalability. Finally, without proper data governance considerations around security and access control, a poorly designed data model can inadvertently expose sensitive information.

18. How do you decide which entities and attributes are most important to include in your model?

Prioritize entities and attributes that directly support core business processes and answer key business questions. Start by identifying the primary objectives the model needs to address. Then, consider data availability, data quality, and the level of detail required. Entities representing core concepts (e.g., customer, product, order) are typically essential.

Attributes should be included based on their relevance to the business objectives. Ask yourself if the attribute is necessary for calculations, reporting, filtering, or decision-making. It's helpful to involve stakeholders from different departments (e.g., sales, marketing, operations) to gain diverse perspectives and ensure the model captures the most critical information. Avoid including attributes that are redundant, irrelevant, or of questionable quality. Iteratively refine the model based on testing and feedback.

19. How would you optimize your data model for performance, especially when dealing with large amounts of data?

To optimize a data model for performance with large datasets, I'd focus on several key areas. Firstly, indexing is crucial. Adding indexes to frequently queried columns drastically speeds up data retrieval. However, it's important to avoid over-indexing, as it can slow down write operations. Secondly, consider data partitioning or sharding. This involves splitting the data into smaller, more manageable chunks, potentially distributed across multiple servers. This reduces the amount of data that needs to be scanned for each query.

Other techniques include: choosing appropriate data types (using the smallest data type that can accurately represent the data), denormalization (carefully adding redundant data to reduce the need for joins), and employing caching mechanisms to store frequently accessed data in memory. Furthermore, efficient query design is paramount. Using EXPLAIN to analyze query execution plans helps identify bottlenecks. For example, avoiding SELECT * and using specific column names improves performance. Choosing the right database technology for the use case is also crucial, whether it be a relational database like PostgreSQL with JSONB support, or a NoSQL solution if the data structure fits. Regularly profiling and monitoring database performance is key to identifying and addressing performance bottlenecks.

20. Imagine modeling a social network: What are the core entities, and how are they related to each other?

The core entities in a social network model are primarily Users and Posts. Users are related to each other through Friendships (or Follows). Users create Posts. Posts can be related to other Posts through Comments or Shares. Optionally, Groups can be another core entity, where Users are members and Posts can be associated with specific groups.

These entities relate as follows:

- User creates Post

- User is friends with User (Friendship).

- User is member of Group.

- Post has Comment.

- Post is shared by User.

- Post belongs to Group (optional).

Intermediate Data Modeling interview questions

1. How do you handle rapidly changing data requirements in a data model?

Handling rapidly changing data requirements involves a combination of flexible modeling techniques and robust change management practices. I would start by understanding the nature and frequency of the changes. Some approaches include employing schema-on-read techniques (like NoSQL databases) or using more adaptable schema designs (like JSON columns in relational databases) to accommodate evolving data structures.

Further strategies include:

- Version control: Maintain versions of the data model and migration scripts.

- Data virtualization: Abstract the data source from the consumer.

- Agile methodologies: Adopt an iterative development process.

- Change management: Establish a clear process for evaluating, approving, and implementing data model changes.

- Automated testing: Ensure changes don't break existing functionality. For example, if new fields are being added to a JSON object, ensure downstream processes can handle them gracefully. Unit tests can be implemented in languages such as Python to validate the data integrity and schema compliance after model changes.

2. Explain the trade-offs between normalization and denormalization.

Normalization aims to reduce data redundancy and improve data integrity by organizing data into multiple related tables. This minimizes storage space and simplifies updates since data is stored in one place only. However, normalization can lead to more complex queries involving multiple joins, potentially slowing down read performance.

Denormalization, conversely, introduces redundancy by adding data to one table that is present in another. This speeds up read operations as fewer joins are needed. The downside is increased storage space, potential data inconsistencies if updates aren't carefully managed across multiple locations, and more complex write operations to maintain data integrity. Choosing between normalization and denormalization involves balancing read and write performance with data integrity and storage costs. Often, a mix of both approaches is optimal, tailoring the database design to specific application needs.

3. How would you model a many-to-many relationship with attributes on the relationship itself?

To model a many-to-many relationship with attributes on the relationship itself, you would typically use a junction table (also called an associative entity or bridge table). This table stores foreign keys referencing both tables participating in the many-to-many relationship. In addition to these foreign keys, the junction table includes columns representing the attributes specific to the relationship.

For example, consider students and courses with a many-to-many relationship where you want to record the grade a student received in a particular course. You would have a Students table, a Courses table, and a StudentCourses junction table. The StudentCourses table would have foreign keys to Students and Courses and an additional column called Grade to store the grade the student received for that course. StudentCourses primary key would typically be a composite key of the two foreign keys pointing to Students and Courses tables. The code to define the StudentCourses junction table in SQL might look like this: CREATE TABLE StudentCourses (StudentID INT, CourseID INT, Grade VARCHAR(2), PRIMARY KEY (StudentID, CourseID), FOREIGN KEY (StudentID) REFERENCES Students(StudentID), FOREIGN KEY (CourseID) REFERENCES Courses(CourseID));

4. Describe a time when you had to redesign a data model due to performance issues.

I once worked on a system that tracked user activity for a large e-commerce platform. The initial data model stored all activity events in a single, massive table. As the volume of data grew, querying this table became incredibly slow, impacting dashboard performance and generating reports. We diagnosed the issue as stemming from full table scans and inefficient indexing on the monolithic table.

To address this, we redesigned the data model to shard the activity data based on time ranges (e.g., monthly tables). We also introduced summary tables that pre-aggregated common query patterns. This involved migrating existing data, updating our ETL pipelines, and modifying our querying logic. The result was a significant improvement in query performance and reduced the load on the database, resolving the performance bottlenecks. CREATE INDEX activity_time ON activity_table (event_time); before was not cutting it.

5. What are some strategies for handling historical data in a data warehouse?

Strategies for handling historical data in a data warehouse revolve around managing large volumes of data while maintaining query performance and data integrity. Partitioning is crucial; divide data based on time (e.g., monthly, yearly) to improve query speed and simplify archiving. Data aggregation and summarization reduce storage needs by creating summarized tables for common reporting periods. Data archiving moves older, less frequently accessed data to cheaper storage, while retaining it for compliance or long-term analysis.

Also, consider slowly changing dimensions (SCDs) to track data changes over time. SCD Type 1 overwrites old data, Type 2 creates new records with effective dates, and Type 3 stores limited history in existing records. Choose the SCD type based on your reporting needs and how much historical context you need to preserve. Implementing data lifecycle management policies helps automate these processes and ensures data is stored and managed appropriately based on its age and value.

6. How do you ensure data quality during the data modeling process?

Ensuring data quality during data modeling involves several key steps. First, I focus on defining clear and consistent data definitions, including data types, constraints, and validation rules. This helps prevent inconsistencies and errors from entering the model. Data profiling is also important to understand the existing data and identify potential issues like missing values or outliers.

Second, I emphasize collaboration with stakeholders to gather accurate requirements and ensure the model aligns with business needs. This includes involving domain experts and data stewards in the design process. Rigorous testing and validation are performed throughout the modeling process to identify and correct any data quality issues before deployment. I also prioritize data governance and documentation to maintain data quality over time.

7. Explain the concept of slowly changing dimensions (SCDs) and the different types.

Slowly Changing Dimensions (SCDs) manage changes to dimension data over time in a data warehouse. Instead of overwriting old data, SCDs preserve historical information. Different types of SCDs handle changes in various ways.

There are several types of SCDs:

- Type 0 (Retain Original): The dimension attribute value never changes. The attribute value remains unchanged.

- Type 1 (Overwrite): The new value replaces the old value. History is not preserved.

- Type 2 (Add New Row): A new row is added to the dimension table with the new value and effective dates. Commonly involves

start_date,end_date, andis_currentflags to identify the active row. This is important for accurate historical analysis. - Type 3 (Add New Attribute): A new column is added to the dimension table to store the new value. Can become unwieldy with many changing attributes.

- Type 4 (History Table): History is kept in a separate history table.

- Type 6 (Combination of Type 1, 2, and 3): A combination of Type 1, 2 and 3 SCD types is employed.

8. How would you model a system where data needs to be accessed in multiple ways by different applications?

I would model the system using a microservices architecture with a data lake or data warehouse at its core. Each microservice exposes specific APIs tailored to the needs of the application it serves. The data lake acts as a central repository, ingesting data from various sources in its raw format.

To enable efficient data access, I would use technologies like:

- APIs (REST/GraphQL): To allow microservices to query the data lake or data warehouse.

- Message queues (Kafka, RabbitMQ): For asynchronous data processing and communication between services.

- Polyglot persistence: Using different database technologies based on each service’s requirements (e.g., NoSQL for high volume, SQL for relational data). An example would be to use PostgreSQL to manage relational data and use MongoDB for document storage.

- Data virtualization: To create a unified view of the data across multiple systems, without physically moving it. This can be done using technologies like Apache Drill or Presto.

9. What are some common data modeling anti-patterns to avoid?

Several data modeling anti-patterns can lead to issues with performance, maintainability, and data integrity. Some common ones include:

- Attribute Splitting: Storing multiple pieces of information in a single column (e.g., storing first and last name in a single 'name' column). This makes querying and reporting more difficult.

- EAV (Entity-Attribute-Value) modeling without a good reason: Overly flexible but can be extremely slow for queries. Should only be used when the attributes are truly unknown and variable.

- Polymorphic Associations: A single foreign key column referencing multiple tables. Complex and hard to enforce integrity.

- Magic Numbers: Using arbitrary numeric codes to represent data values, rather than using descriptive codes or lookup tables. Hard to understand and maintain.

- Index Misuse: Too many indexes can slow down write operations. Missing indexes can slow down read operations. Incorrectly defined indexes are also a problem.

- Ignoring Normalization: Not normalizing data can lead to redundancy, update anomalies, and insert anomalies.

- Over-Normalization: Too many tables that become hard to query effectively. Going too far in the opposite direction of Ignoring Normalization.

- Using Nulls Excessively: Can obscure meaning and make queries more complex. Consider alternative representations or default values.

- Circular Dependencies: Tables that depend on each other, potentially leading to issues with data consistency and updates.

10. Describe how you would approach modeling a social network.

I would model a social network using a graph data structure. Each user would be represented as a node, and the connections between users (e.g., friendships, follows) would be represented as edges. I'd likely use an adjacency list or adjacency matrix to store the graph, depending on the expected density of the network. For instance, a social network where each person knows everyone will be dense, and a social network like twitter would be sparse. For storage and querying, a graph database like Neo4j would be well-suited, especially when dealing with complex relationship queries (e.g., finding friends-of-friends). Alternatively, a relational database like PostgreSQL with graph extensions could also work.

To implement core functionalities, I'd focus on features like adding/removing users, establishing/removing connections, and traversing the graph to find relationships. APIs for searching users, suggesting connections, and displaying network information would be key. Considerations would also include scaling for a large number of users and connections, which might involve sharding the graph or employing distributed graph processing frameworks like Apache Spark's GraphX.

11. How would you handle data security and privacy concerns in your data model design?

Data security and privacy are paramount in data model design. I would address these concerns through several strategies, starting with data minimization, ensuring we only collect and store necessary data. Data encryption at rest and in transit is crucial, using strong encryption algorithms. Access control mechanisms like role-based access control (RBAC) would restrict data access based on user roles.

Furthermore, I would implement data masking and anonymization techniques for non-production environments and reporting purposes. Regular security audits and penetration testing would identify vulnerabilities. The data model would be designed with privacy by design principles, adhering to regulations like GDPR and CCPA. Finally, a clear data retention policy would define how long data is stored and when it is securely deleted. For example, consider a users table with a password column. We should never store the password in plain text, but rather use a strong hashing algorithm like bcrypt.

12. What are the key considerations when choosing between a relational and a NoSQL database for a specific use case from a data modeling perspective?

When choosing between relational and NoSQL databases from a data modeling perspective, consider several key aspects. Relational databases excel when data integrity and consistency are paramount, requiring ACID properties. They enforce strict schemas, ensuring data conforms to predefined relationships. Data is typically normalized to reduce redundancy. Thus they are well suited for use cases with complex relationships and transactional requirements, like financial systems.

NoSQL databases, on the other hand, offer flexibility and scalability for handling unstructured or semi-structured data. They often prioritize availability and partition tolerance over strong consistency (BASE properties). Different NoSQL models (document, key-value, graph, column-family) cater to various data structures and access patterns. NoSQL databases are well-suited for use cases that require high throughput, rapid development, and the ability to adapt to evolving data structures, like social media feeds or IoT data.

13. Explain the role of metadata in data modeling.

Metadata plays a crucial role in data modeling by providing context and information about the data itself. It essentially describes the data, making it easier to understand, manage, and utilize. Metadata defines characteristics like data types, relationships, sources, definitions, and business rules. It's like a data dictionary, offering insights into what each data element represents and how it relates to other elements within the model.

Without metadata, data models are difficult to interpret and maintain. Some specific ways that metadata supports data modeling include:

- Data Discovery: Enables users to easily find and understand the purpose of datasets.

- Data Quality: Helps in identifying and resolving data quality issues through validation rules and data lineage.

- Data Governance: Supports data governance initiatives by providing a clear understanding of data ownership and responsibilities.

- Data Integration: Facilitates data integration efforts by providing a common understanding of data formats and structures across different systems.

14. How do you validate a data model to ensure it meets business requirements?

Validating a data model against business requirements involves several key steps. First, collaborate with stakeholders to thoroughly understand and document their needs, use cases, and data expectations. Translate these requirements into specific validation criteria, such as data types, constraints, relationships, and data quality rules. Data quality rules could be things like ensuring a field is not null or is within a specific range.

Next, implement validation checks within the data model or associated applications. This could involve using database constraints, application-level validation logic, or dedicated data quality tools. Regularly test the data model with sample data that represents real-world scenarios, verifying that it adheres to the defined criteria and handles edge cases appropriately. Finally, iterate on the data model based on feedback and validation results, ensuring that it continuously meets the evolving needs of the business.

15. Describe a situation where you had to compromise between different stakeholders' data requirements.

In a recent project, our marketing team wanted highly granular, real-time website activity data for personalized ad campaigns, while the engineering team was concerned about the performance impact of such detailed tracking on our core e-commerce platform. Legal also raised concerns about PII data collection and compliance with GDPR. To address this, I facilitated a series of meetings to understand each team's needs and constraints. We compromised by implementing aggregated, near real-time data feeds that still provided valuable insights for marketing, minimized the performance overhead, and masked any sensitive user information before it reached the marketing platform.

Specifically, instead of tracking every single click event, we agreed to track aggregated metrics like page views per user session and product categories visited. We also implemented a 15-minute delay on data transfer and used data anonymization techniques to ensure compliance. This compromise allowed marketing to improve campaign effectiveness while respecting technical limitations and adhering to data privacy regulations. This allowed all the stakeholders to achieve a common goal.

16. How do you document a data model for future maintainability and understanding?

To document a data model effectively, I would focus on creating clear and comprehensive documentation that caters to various audiences. This includes:

- Data Dictionary: A central repository defining each attribute/field, its data type, constraints (e.g., nullability, uniqueness), and a descriptive explanation of its purpose.

- Entity Relationship Diagram (ERD): A visual representation of the entities within the data model and the relationships between them. This provides a high-level overview.

- Data Flow Diagrams (DFD): These are diagrams that describe how data flows through the system, providing context for the data model's role in the application as a whole.

- API Documentation (if applicable): If the data model is exposed through an API, thorough documentation outlining endpoints, request/response formats, and authentication methods is essential.

- Versioning: Maintain a version history of the data model, tracking changes and their rationale over time. This is important when the data model has modifications over the life cycle of the product. For example, in code we could include a section for tracking changes:

# Version 1.0 - Initial data model for customer information. # Version 1.1 - Added 'phone_number' field to 'customers' table. - Naming Conventions: Establish and adhere to consistent naming conventions for tables, columns, and relationships. This promotes readability and reduces ambiguity.

17. What are your preferred tools and techniques for data modeling and why?

My preferred tools and techniques for data modeling depend on the project's complexity and scope. I generally start with conceptual modeling using tools like draw.io or Lucidchart to visualize entities, relationships, and attributes. For logical and physical modeling, I prefer using dedicated database modeling tools like ERwin Data Modeler or dbdiagram.io. These tools allow for forward and reverse engineering, generating DDL scripts, and enforcing data integrity constraints.

For techniques, I favor a combination of Entity-Relationship (ER) modeling and dimensional modeling, especially for data warehousing. ER modeling helps in understanding the relationships between entities and designing normalized databases. Dimensional modeling (star schema, snowflake schema) is useful for optimizing data for reporting and analysis. I also emphasize the importance of data normalization to reduce redundancy and improve data consistency. For NoSQL databases, I focus on understanding the data access patterns and designing the data model accordingly, favoring denormalization when appropriate to improve read performance.

18. Explain the concept of data lineage and its importance.

Data lineage is essentially a map of the data's journey, tracking its origin, transformations, and destinations. It shows how data moves through systems and processes, providing a clear and auditable record of its lifecycle. This includes where the data originated, what changes it underwent (transformations, aggregations, etc.), and where it is ultimately stored or used.

The importance of data lineage stems from its ability to improve data quality, ensure compliance, and facilitate better decision-making. Specifically:

- Data Quality: By understanding the data's journey, we can identify and correct errors or inconsistencies introduced during processing.

- Compliance: Lineage helps meet regulatory requirements by demonstrating data provenance and ensuring accountability.

- Auditing: Lineage enables tracing data back to its source, which is crucial for auditing and troubleshooting.

- Impact Analysis: If changes are made to a data source, lineage helps understand the downstream impact on other systems and reports.

- Trust: Understanding the origins builds confidence in the accuracy of the information.

19. How would you model a system that needs to support both real-time and batch data processing?

A hybrid architecture is best suited. Real-time data would flow through a stream processing system like Kafka/Pulsar, processed by tools like Flink/Spark Streaming, and stored in a low-latency database (e.g., Cassandra, Redis). Batch data would be processed periodically using a framework like Spark/Hadoop and stored in a data warehouse (e.g., Snowflake, BigQuery).

Key considerations include:

- Data Ingestion: Separate pipelines or a unified pipeline with routing.

- Data Storage: Choose databases optimized for respective workloads.

- Data Transformation: Apply appropriate transformations for real-time and batch use cases.

- Orchestration: Tools like Airflow can manage batch processing jobs.

- Monitoring: Implement comprehensive monitoring for both systems.

20. What are the challenges of data modeling in a distributed environment?

Data modeling in a distributed environment introduces complexities not typically found in centralized systems. One significant challenge is maintaining data consistency across multiple nodes. As data is spread across different machines, ensuring that all nodes have the most up-to-date and accurate information becomes difficult, potentially leading to data conflicts and inconsistencies. Different nodes might have varying interpretations or representations of the same data, and network latency can exacerbate these issues.

Another challenge is managing data partitioning and distribution. Determining the optimal way to split and distribute data across nodes to maximize query performance and minimize data transfer is crucial. This often involves considering factors such as data locality, data replication, and the specific query patterns of the application. Improper data partitioning can lead to performance bottlenecks, increased network traffic, and difficulties in performing join operations across distributed data sets.

21. Describe how you would approach modeling time-series data.

When approaching time-series data modeling, I'd start by visualizing the data to understand its characteristics, such as trend, seasonality, and stationarity. I would then perform exploratory data analysis (EDA), including statistical tests like the Augmented Dickey-Fuller (ADF) test to assess stationarity. If the data isn't stationary, I'd apply differencing or transformations to achieve stationarity.

Next, I'd choose a suitable model. For simple time series, ARIMA models are a good starting point. If seasonality is present, SARIMA models are more appropriate. For complex patterns, I might consider machine learning models like LSTMs or Prophet. Model evaluation involves using metrics like Mean Squared Error (MSE), Root Mean Squared Error (RMSE), or Mean Absolute Error (MAE) on a holdout dataset. Finally, model tuning would be done via cross-validation.

22. How do you handle missing or incomplete data in a data model?

Handling missing or incomplete data is a crucial part of data modeling. Several strategies can be employed, and the best approach depends on the specific context and the impact on data analysis.

Common strategies include:

- Imputation: Replacing missing values with estimated values (e.g., mean, median, mode, or using more advanced machine learning models).

- Deletion: Removing rows or columns with missing data (use with caution, as it can lead to data loss).

- Creating a new category: Introducing a new category to represent missing values explicitly. This is useful when the absence of data itself is informative.

- Using algorithms that handle missing data: Some algorithms can inherently handle missing values without requiring imputation or deletion.

- Data validation: Implement data validation rules at the point of entry to prevent incomplete data from being added in the first place.

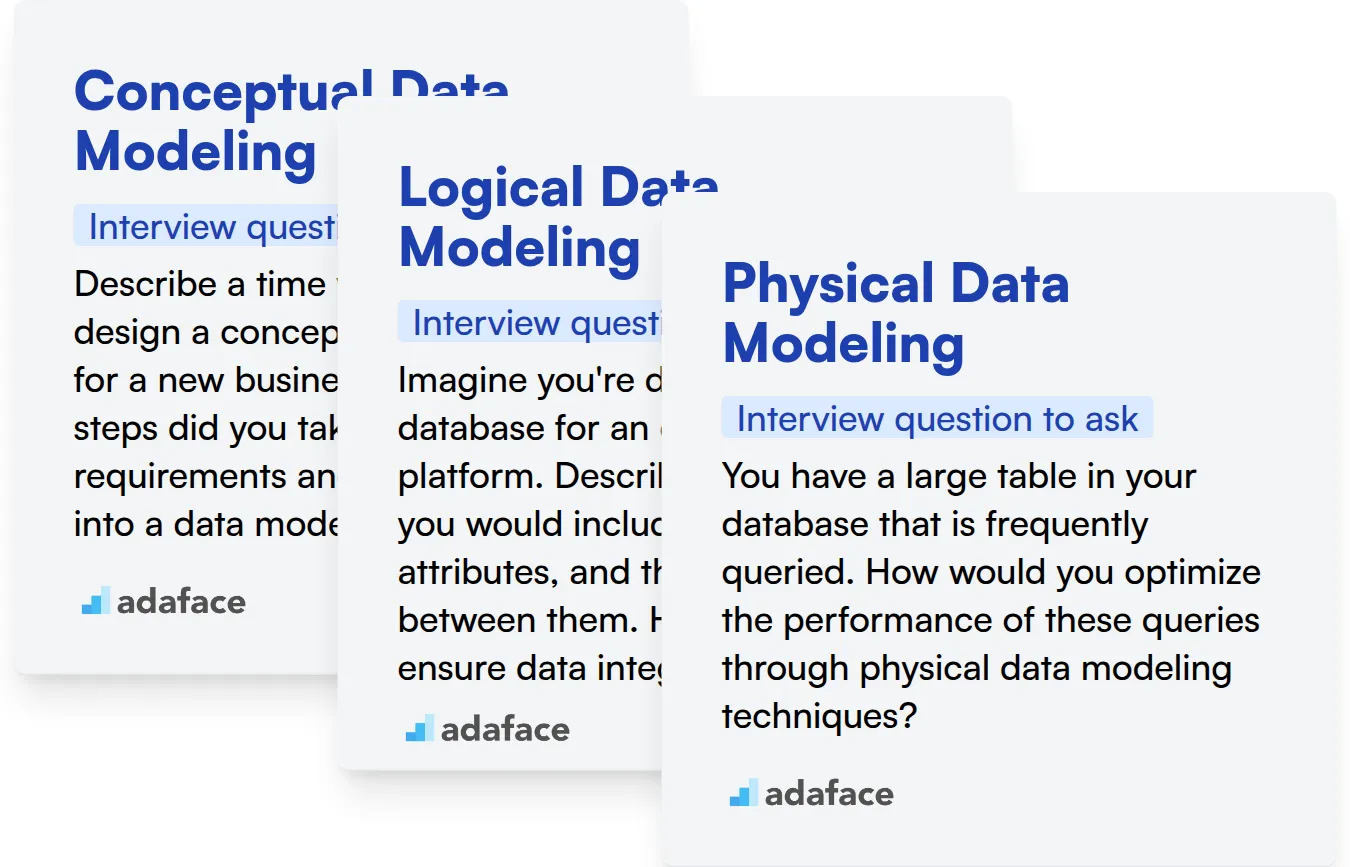

23. Explain the difference between a conceptual, logical, and physical data model.

Conceptual, logical, and physical data models represent different levels of abstraction in database design. The conceptual model provides a high-level, business-oriented view, focusing on what data is important and the relationships between data entities. It's technology-independent and often represented using entity-relationship diagrams (ERDs) that are easy for stakeholders to understand.

The logical model refines the conceptual model by defining the data elements, their data types, and relationships with more detail. It still remains independent of specific database management systems (DBMS) but prepares the model for implementation. Keys (primary, foreign) are usually defined at this stage. The physical model represents the implementation of the data model in a specific DBMS. It includes table structures, data types, indexes, constraints, and other DBMS-specific details. This model defines how the data is physically stored and accessed.

24. How would you model a hierarchical data structure in a relational database?

A hierarchical data structure can be modeled in a relational database using several approaches. The most common is the adjacency list model. This involves having a table with a parent_id column that references the id of another record in the same table. The root node would have a NULL value in the parent_id column.

Other options include the path enumeration model, where each node stores the full path to the root. The nested set model uses numerical values to represent the hierarchy, enabling efficient retrieval of descendants but making modifications complex. Finally, a closure table stores all ancestor-descendant relationships explicitly, offering fast ancestor/descendant queries at the cost of increased storage and insert/update complexity. Example SQL for adjacency list:

CREATE TABLE categories (

id INT PRIMARY KEY,

name VARCHAR(255),

parent_id INT,

FOREIGN KEY (parent_id) REFERENCES categories(id)

);

25. What are some techniques for optimizing a data model for query performance?

Several techniques can optimize a data model for faster query performance. Normalization reduces redundancy, but denormalization, introducing some redundancy, can speed up reads by reducing the need for joins. Indexing key columns significantly improves query speed by allowing the database to quickly locate relevant rows.

Other approaches include partitioning large tables into smaller, more manageable pieces. Consider using summary tables (materialized views) to pre-compute aggregates, avoiding costly calculations during queries. Optimizing data types (e.g., using INT instead of VARCHAR for numerical IDs) can also reduce storage space and improve comparison speed. Finally, analyze query execution plans regularly to identify and address performance bottlenecks.

26. Describe a situation where you had to integrate data from multiple disparate sources into a single data model.

In a previous role, I worked on a project to consolidate customer data from our CRM, marketing automation platform, and e-commerce system into a unified customer view. Each system had its own data structures and identifiers. To achieve this, we first profiled the data in each system to understand the data types, quality, and relationships. Then, we defined a common data model that could represent the key customer attributes and behaviors.

The integration process involved extracting data from each source, transforming it to conform to the common data model (e.g., standardizing address formats, mapping product IDs), and then loading it into a central data warehouse. We used Python and Pandas for the ETL process. Data deduplication was a major challenge, so we implemented fuzzy matching algorithms (like the Levenshtein distance) to identify and merge duplicate customer records based on email addresses, names, and physical addresses. The final output was a single customer view that enabled more effective marketing and customer service.

27. Explain the CAP theorem and its relevance to data modeling choices.

The CAP theorem states that it's impossible for a distributed data store to simultaneously guarantee all three of the following: Consistency (all nodes see the same data at the same time), Availability (every request receives a response, without guarantee that it contains the most recent version of the information), and Partition tolerance (the system continues to operate despite arbitrary partitioning due to network failures). In essence, you can only pick two out of three.

When designing data models, you must consider the implications of the CAP theorem. If high availability is critical (AP system), you might choose a data model that allows for eventual consistency (e.g., using techniques like optimistic locking or conflict resolution). If strong consistency is paramount (CP system), you might sacrifice some availability during network partitions, ensuring data integrity. The choice impacts data modeling choices like data replication strategies, transaction models, and conflict resolution mechanisms. Data models for CA systems are possible in non-distributed setups.

Advanced Data Modeling interview questions

1. How would you model data for a social network where users can follow each other and create posts with varying privacy settings?

We can model the data using several entities: Users, Posts, and Follows.

- Users: Each user would have a unique ID, profile information (name, bio, etc.), and security credentials. We can store this in a

userstable with columns likeuser_id,username,email,profile_data(JSON blob). - Posts: Each post would have a unique ID, the author's user ID, the post content, a timestamp, and a privacy setting. Privacy settings could be an enum (

public,friends,only_me). Apoststable would have columns likepost_id,user_id,content,timestamp,privacy. - Follows: This entity models the follower-following relationship. A table

followswould havefollower_idandfollowee_id, representing a directed edge in the social graph.follower_idfollowsfollowee_id.

Queries would involve joining these tables. For example, to get a user's feed, we'd select posts where user_id is in the set of IDs that the user follows, or where the post is public, or where the post is marked as 'friends' and the two users are friends. The friendship can be derived from the follows table by checking for bidirectional edges.

2. Imagine you're designing a data model for an e-commerce platform with millions of products and complex pricing rules. How would you handle product variations (size, color) and dynamic pricing?

For product variations, I'd use a combination of a core Product table and a ProductVariant table. Product stores general information (name, description), while ProductVariant holds variation-specific data (size, color, SKU, price). The ProductVariant table would have a foreign key referencing the Product table, creating a one-to-many relationship. Attributes specific to a product will be managed in another table referenced in the Product table. Attributes can be things like color, size etc. Dynamic pricing could be implemented using a PricingRule table. This table defines rules based on various factors (time of day, customer segment, quantity purchased). Each rule would specify a price adjustment (percentage or fixed amount). A service would evaluate these rules at runtime to determine the final price. The ProductVariant table can have a field indicating if dynamic pricing applies.

Producttable would store general information, andProductVariantstores information on variations like price, size, weight, SKU etc

3. Describe your approach to modeling time-series data for a system that tracks website traffic, considering both real-time and historical analysis.

My approach involves using a combination of techniques depending on the specific requirements of real-time and historical analysis. For real-time traffic monitoring, I'd likely use streaming data processing with tools like Apache Kafka or Kinesis to ingest data and time-series databases like InfluxDB or TimescaleDB to store and query the data efficiently. Metrics would be calculated and visualized in real-time using dashboards. Algorithms like Exponential Smoothing or ARIMA could be used for anomaly detection to identify sudden spikes or drops in traffic.

For historical analysis, I'd leverage the stored data in the time-series database or potentially move it to a data warehouse like Snowflake or BigQuery for long-term storage and complex analysis. More sophisticated time-series models like Prophet or deep learning models (e.g., LSTMs) could be employed to forecast future traffic patterns, identify trends, and perform root cause analysis of past events. Feature engineering would be crucial, incorporating elements like seasonality, day of the week, and holidays to improve model accuracy.

4. How would you design a data model for a recommendation engine that suggests products to users based on their past purchases and browsing history?

A data model for a recommendation engine could include these key entities:

- Users:

user_id(INT, primary key),user_profile(JSON - demographic info, preferences). - Products:

product_id(INT, primary key),product_name(VARCHAR),category(VARCHAR),price(DECIMAL),product_attributes(JSON - color, size, etc.). - Purchases:

purchase_id(INT, primary key),user_id(INT, foreign key referencing Users),product_id(INT, foreign key referencing Products),purchase_date(TIMESTAMP),quantity(INT). - Browsing History:

session_id(INT, primary key),user_id(INT, foreign key referencing Users),product_id(INT, foreign key referencing Products),timestamp(TIMESTAMP). - Product Interactions:

interaction_id(INT, primary key),user_id(INT, foreign key referencing Users),product_id(INT, foreign key referencing Products),interaction_type(ENUM - view, add_to_cart, wishlist),timestamp(TIMESTAMP).

This structure captures user information, product details, purchase history, browsing behavior, and other interactions. The user_profile and product_attributes fields use JSON to allow for flexible and extensible data storage. Relationships are maintained using foreign keys, enabling efficient queries for recommendation algorithms.

5. Let's say you need to model data for a supply chain management system. What entities, attributes, and relationships would you consider?

Entities would include: Suppliers, Products, Customers, Orders, Shipments, Warehouses. Suppliers would have attributes like name, address, contact info, and lead time. Products might have name, description, SKU, price, and weight. Customers have name, address, and contact details. Orders have order date, order number, customer ID, and total amount. Shipments might have shipment ID, shipping date, expected delivery date, and tracking number. Warehouses would have location, capacity, and contact information.

Relationships include: Suppliers supply Products (one-to-many), Customers place Orders (one-to-many), Orders contain Products (many-to-many, often represented by an order_item table), Orders generate Shipments (one-to-many), and Shipments originate from Warehouses (one-to-many), and Products are stored in Warehouses (many-to-many).

6. Design a data model for a hospital's electronic health record system, considering the need to store patient demographics, medical history, and treatment plans.

A basic EHR data model could include several key entities. Patient would store demographics (name, address, DOB, contact info), with a primary key patient_id. MedicalHistory would track conditions, allergies, and immunizations, linked to Patient via patient_id. Encounter represents a visit and includes date/time, reason, and attending physician. Diagnosis links Encounter to a standard medical coding system (e.g., ICD-10). TreatmentPlan details prescriptions, therapies, and follow-up appointments, also linked to Encounter. Medication stores information about drugs, including dosage and frequency. All data is related back to patients.

For data integrity and relationships, primary and foreign keys would be defined. Specific data types (e.g., dates, strings, integers) would be chosen appropriately for each attribute to ensure data accuracy and consistency. The system can be further extended to hold lab results, radiology reports, billing information, etc.

7. You're building a data model for a system that tracks financial transactions. How would you ensure data integrity and prevent fraud?

To ensure data integrity and prevent fraud in a financial transaction system, I would implement several measures. Data integrity would be enforced through: data validation (checking data types, formats, and ranges), database constraints (primary keys, foreign keys, unique constraints, not null constraints), and transaction management (ACID properties). Fraud prevention would involve: anomaly detection (identifying unusual transaction patterns), rule-based systems (flagging transactions based on predefined rules), multi-factor authentication, and encryption of sensitive data, such as account numbers.

8. How would you model data for a system that manages user authentication and authorization across multiple applications?

I would model the data with tables for Users, Applications, Roles, and Permissions, and tables to manage the relationships between them. The Users table would store user credentials and basic information. The Applications table would list all applications integrated with the authentication system. Roles would define sets of permissions, and Permissions would represent specific actions a user can perform. Tables like UserRoles (linking users to roles) and RolePermissions (linking roles to permissions) would establish the authorization logic. Additionally, a ApplicationPermissions table linking the application to allowed permissions, can allow granular control on allowed actions inside the application.

To manage authentication across multiple applications, a central Sessions table storing session tokens, user IDs, and application IDs could be used. This allows tracking active user sessions per application. For handling application-specific permissions, ApplicationRoles table allows defining roles that are specific to the application, thus avoiding polluting the global namespace. Here is an example:

CREATE TABLE UserRoles (

user_id UUID,

role_id UUID,

application_id UUID NULLABLE -- Specific application context or global role

PRIMARY KEY (user_id, role_id, application_id)

);

9. Describe your approach to modeling data for a system that stores geographic information, such as locations of businesses or points of interest.

My approach to modeling data for a geographic information system (GIS) involves using a spatial database, such as PostGIS (PostgreSQL extension) or GeoPackage. I'd represent locations as geometric data types (e.g., POINT, POLYGON) with a spatial reference identifier (SRID) to ensure correct coordinate system interpretation. Attributes for businesses or points of interest (name, address, type, etc.) would be stored as regular database columns. Spatial indexes are crucial for efficient querying, so I'd create indexes on the geometry column.

For example, consider a businesses table:

CREATE TABLE businesses (

id SERIAL PRIMARY KEY,

name VARCHAR(255),

address VARCHAR(255),

type VARCHAR(50),

location GEOGRAPHY(Point, 4326)

);

CREATE INDEX businesses_location_idx ON businesses USING GIST (location);

This allows for efficient spatial queries like finding businesses within a certain distance of a given point. The GEOGRAPHY type automatically handles spherical calculations, and SRID 4326 represents WGS 84, a common geographic coordinate system.

10. Imagine you're designing a data model for a system that manages projects and tasks, considering dependencies between tasks and resource allocation.

A good data model would include Projects, Tasks, Resources, and Dependencies tables. Projects would have project details (ID, name, description, status). Tasks would store task specifics (ID, project ID, name, description, status, assigned resource ID). Resources stores resource information (ID, name, type, availability). Dependencies links tasks (ID, predecessor task ID, successor task ID, dependency type) enabling representation of relationships. Resource allocation would be managed by linking Tasks to Resources via the assigned resource ID in the Tasks table.

Key considerations include data types (integers for IDs, strings for names/descriptions, dates for deadlines), indexing for performance (especially on foreign key columns), and relationships between tables (one-to-many for project-tasks, task-resource). A relational database (e.g., PostgreSQL, MySQL) would be well-suited for this structure. Using foreign keys ensures data integrity and facilitates efficient querying.

11. How would you handle modeling data for a system which manages versions of documents?

I would model the data with a central Document entity containing metadata (title, author, etc.) and a one-to-many relationship with a DocumentVersion entity. Each DocumentVersion would store the content, version number, and timestamp. I would use a relational database, perhaps with a versions table containing:

document_id(foreign key todocumentstable)version_number(integer, incrementing)content(text or blob)created_at(timestamp)

This allows efficient retrieval of specific versions or the latest version, and allows to easily trace document history. Optimizations like content diffing between versions to reduce storage could also be implemented.

12. Imagine you are asked to design a data model that handles booking flights. What are some complex requirements that you would want to consider?

Some complex requirements for a flight booking data model would include handling multi-leg flights (with layovers and potential different airlines), managing fare classes and availability across different flights and dates (including dynamic pricing), and dealing with ancillaries like baggage, seat selection, and meals. It's also critical to model complex routing rules and restrictions imposed by airlines, such as maximum connection times or specific airport combinations. Further considerations involve accurately representing fare rules (e.g., change fees, cancellation policies), integrating with external systems for real-time flight status updates, and ensuring data consistency across different systems when booking flights.

13. When would you use an entity-attribute-value (EAV) model, and what are its trade-offs?

An Entity-Attribute-Value (EAV) model is useful when you need to store data where the attributes are not known in advance, are sparse, or vary significantly between entities. Imagine a system where you're collecting highly variable scientific measurements or tracking properties of items with a very open-ended set of characteristics. EAV allows you to add new attributes without altering the database schema.

However, EAV models introduce trade-offs. Querying EAV data can be complex and inefficient, often requiring multiple joins to retrieve a single entity's complete set of attributes. This can lead to performance issues, especially with large datasets. Additionally, data validation and integrity can be more challenging to enforce compared to a traditional relational model, and simple things like creating indexes for specific attributes becomes more difficult. Consider whether the flexibility outweighs the performance and complexity costs.

14. Explain how you would model hierarchical data, such as an organization chart or a file system directory structure.

Hierarchical data, like organization charts or file systems, can be modeled using a few different approaches. A common and straightforward method is using the adjacency list model. In this model, each node in the hierarchy stores a direct reference (usually a foreign key) to its parent node. For instance, in a file system, each directory would have a parent_directory_id pointing to its parent. The root node has a NULL or a special value in the parent ID column.

Alternatively, for scenarios requiring efficient retrieval of an entire subtree, the nested set model or materialized path model might be preferred. Nested sets use two numbers to represent each node's position in the hierarchy (left and right values), allowing for subtree selection with a simple range query. Materialized paths store the complete path from the root to the node as a string, facilitating easy path-based queries. For example, a file's path /home/user/documents/report.pdf would be stored directly. The choice depends on the specific use case and the frequency of different types of queries.

15. Let's say you are designing a model for a content management system (CMS). What are the different user roles?

Different user roles in a CMS can include:

- Administrator: Has full control over the CMS, including user management, system configuration, and content management.

- Editor: Can create, edit, and publish content. May have restrictions on publishing to specific sections or access to system settings.

- Author: Can create and submit content for review, but cannot publish directly.

- Contributor: Can add content, but does not have permission to publish or edit existing content.

- Viewer/Subscriber: Can only view content, possibly with restricted access based on subscription level.

16. How do you approach data modeling in an agile environment, where requirements are constantly changing?

In an agile environment, I approach data modeling iteratively and collaboratively. Instead of a big upfront design, I start with a conceptual model based on the initial requirements and business understanding. This initial model is then refined and expanded incrementally as new requirements emerge or existing ones change. I prioritize delivering a minimum viable data model (MVDM) that supports the core functionality of each sprint. Close collaboration with the development team and stakeholders is crucial to ensure the data model aligns with the evolving application and business needs. Regular reviews and feedback loops allow for continuous adaptation and improvement of the data model throughout the development process.

Techniques I use include:

- Embrace change: Design for flexibility from the start.

- Incremental modeling: Build small, functional parts of the model.

- Communication: Constant dialog with stakeholders is key.

- Refactoring: Be prepared to adjust the model as needed.

- Data dictionaries: keep track of your data models and associated documentation.

17. What are the key considerations when modeling data for a system that needs to comply with data privacy regulations like GDPR?

When modeling data for GDPR compliance, key considerations include data minimization (collecting only necessary data), purpose limitation (using data only for specified purposes), and storage limitation (retaining data only as long as necessary). Data privacy by design is crucial; incorporate privacy from the start. Data should be pseudonymized or anonymized where possible to reduce the risk of identification. Implement robust access controls and encryption to protect data at rest and in transit. Consider data residency requirements.

Furthermore, data subject rights like the right to access, rectification, erasure (right to be forgotten), and portability must be supported through the data model. This requires careful design to enable efficient data retrieval, modification, and deletion. Documenting data lineage and implementing data governance policies are also vital for demonstrating compliance.

18. How would you model data in a NoSQL database like MongoDB versus a relational database like Postgres, if you were building a product catalog?

In a relational database like Postgres, I'd normalize the product catalog data across multiple tables (e.g., products, categories, attributes, variants). Relationships would be defined using foreign keys to ensure data integrity and consistency. Queries often involve joins to retrieve complete product information.

In MongoDB, a NoSQL database, I'd likely opt for a more denormalized approach. Each product would be represented as a single document containing all related information, such as categories, attributes, and variants embedded within the document as arrays or sub-documents. This reduces the need for joins and can improve read performance, especially for frequently accessed product details. However, careful consideration is needed to manage data redundancy and potential inconsistencies when updating data.

19. Explain your understanding of data warehousing and star schema, and how it differs from transactional database models.

Data warehousing is designed for analytical purposes, consolidating data from various sources into a central repository for reporting and analysis. A star schema is a data warehousing schema that has a central fact table containing measures, surrounded by dimension tables that contain descriptive attributes. This contrasts with transactional databases, which are optimized for online transaction processing (OLTP) focused on recording individual transactions with highly normalized schemas to minimize redundancy and maintain data integrity during frequent updates.

The key difference lies in their purpose and structure. Data warehouses with star schemas prioritize query performance for analytics, using denormalized structures to facilitate faster aggregations and reporting. Transactional databases, on the other hand, prioritize data consistency and efficient transaction processing through normalized schemas and ACID properties, making them suitable for applications requiring real-time updates and integrity like banking or e-commerce systems.

20. Discuss strategies for data modeling in a microservices architecture, focusing on data consistency and integration.

Data modeling in a microservices architecture requires careful consideration of data consistency and integration across independent services. Strategies often involve embracing eventual consistency and using techniques like:

- Database per service: Each microservice owns its database, promoting autonomy and independent scaling. This inevitably leads to data duplication but simplifies schema evolution and reduces coupling.

- Eventual consistency: Rely on asynchronous event-driven communication (e.g., using message queues like Kafka or RabbitMQ) to propagate data changes between services. This ensures that data will eventually be consistent across services, though there may be a delay.

- Saga pattern: Implement sagas to manage distributed transactions across multiple services. A saga is a sequence of local transactions that either all complete successfully or are all rolled back to maintain data consistency.

- API Composition/Backend for Frontends (BFF): Create dedicated API layers to aggregate data from multiple microservices for specific UI needs, avoiding direct access to individual service databases.

- Change Data Capture (CDC): Capture changes from one service's database and propagate them to other relevant services to keep data synchronized. Solutions like Debezium can be used.

Expert Data Modeling interview questions

1. How would you approach modeling data for a real-time fraud detection system, considering both performance and accuracy?

For a real-time fraud detection system, I'd prioritize a hybrid approach focusing on both performance and accuracy. I'd start by using a combination of rule-based systems for catching obvious fraud patterns (e.g., velocity checks, blacklists) due to their speed and simplicity. Concurrently, I'd implement a machine learning model, likely a gradient boosting algorithm like XGBoost or LightGBM, trained on historical transaction data. Feature engineering is crucial here; features would include transaction amount, user demographics, location data, purchase history, and network information. The ML model would score transactions in real-time, identifying potentially fraudulent activities that the rule-based system might miss.

To handle the performance aspect, I'd utilize a feature store for efficient access to precomputed features. The model would be deployed using a framework like TensorFlow Serving or TorchServe for low-latency inference. To improve accuracy, I'd implement continuous monitoring and retraining of the ML model. I'd also use techniques like A/B testing to compare different model versions or feature sets. Finally, I would use a feedback loop to capture the model predictions along with human labelled data to improve the model.

2. Describe a scenario where denormalization is the optimal approach, even though it introduces redundancy, and why.

Denormalization is often optimal when read performance is critical, and the data is read far more often than it is written. Imagine a social media platform where users frequently view profile pages displaying a user's name, profile picture, and number of followers. Storing the number of followers directly within the user's profile data (denormalized) avoids a costly join operation against a separate 'followers' table every time a profile is viewed. Although this introduces redundancy (the follower count must be updated in the user table whenever a user gains or loses a follower), the improved read performance outweighs the overhead of the occasional write operation.

Another example is generating reports or dashboards that require aggregating data from multiple tables. Creating a pre-computed, denormalized table or materialized view specifically for the report can significantly speed up query execution. The trade-off is that the denormalized table needs to be updated periodically, either in real-time or in batches, to reflect changes in the underlying data, but the performance gains during report generation can be substantial.

3. Explain the trade-offs between different data modeling techniques (e.g., relational, NoSQL) for a complex e-commerce platform.

Relational databases (SQL) offer strong ACID properties, data integrity, and a well-defined schema, making them suitable for managing transactional data like orders, payments, and user accounts in an e-commerce platform. However, they can struggle with scalability and flexibility when dealing with unstructured data like product reviews or clickstream data. NoSQL databases, such as document stores (MongoDB), key-value stores (Redis), or graph databases (Neo4j), provide better scalability and flexibility for handling diverse data types and high traffic volumes. Document stores are good for product catalogs, key-value for caching, and graph for recommendations. The trade-off is often reduced consistency (eventual consistency), weaker data integrity, and the need for application-level data validation. The choice depends on the specific requirements of each part of the platform. For instance, a relational database might be used for order management, while a NoSQL database handles product recommendations and reviews.

4. How do you ensure data quality and consistency across multiple disparate systems when building a data warehouse?

Ensuring data quality and consistency across disparate systems in a data warehouse involves several key strategies. First, implement robust ETL (Extract, Transform, Load) processes. This includes data profiling to understand data characteristics, cleansing to remove errors and inconsistencies, and transformation to standardize data formats and units. Define and enforce data quality rules during the transformation stage, such as validating data types, ranges, and uniqueness.

Second, establish a master data management (MDM) system to maintain a single source of truth for critical data entities like customers or products. MDM helps resolve conflicting information and ensures consistency across systems. Furthermore, implement data governance policies and procedures to define data ownership, access control, and data quality standards. Regular data audits and reconciliation processes are essential to monitor data quality and identify any discrepancies. Finally, consider utilizing data virtualization tools to provide a unified view of data without physically moving it, which can help maintain consistency and reduce integration complexities.

5. Imagine you are designing a data model for a social media platform. How would you handle evolving data requirements and user-generated content?

For evolving data requirements, I'd use a flexible schema approach, likely employing NoSQL databases like MongoDB or Cassandra alongside a relational database for core user data. This allows adding new fields to documents without schema migrations. For relational data, I'd use techniques like schema evolution (adding columns with default values, using NULLABLE fields) or versioning. User-generated content would be stored in a separate data store, possibly an object storage service like AWS S3 or Google Cloud Storage, with metadata stored in the main database.

To handle diverse user content, I'd use polymorphism and interfaces. For example, a Post could be an interface implemented by TextPost, ImagePost, VideoPost, each with specific attributes. Versioning content and using content delivery networks (CDNs) for media will be essential for performance and data integrity. Also, consider using a message queue (like Kafka or RabbitMQ) to handle asynchronous processing of user generated content (e.g., thumbnail generation, sentiment analysis, moderation).