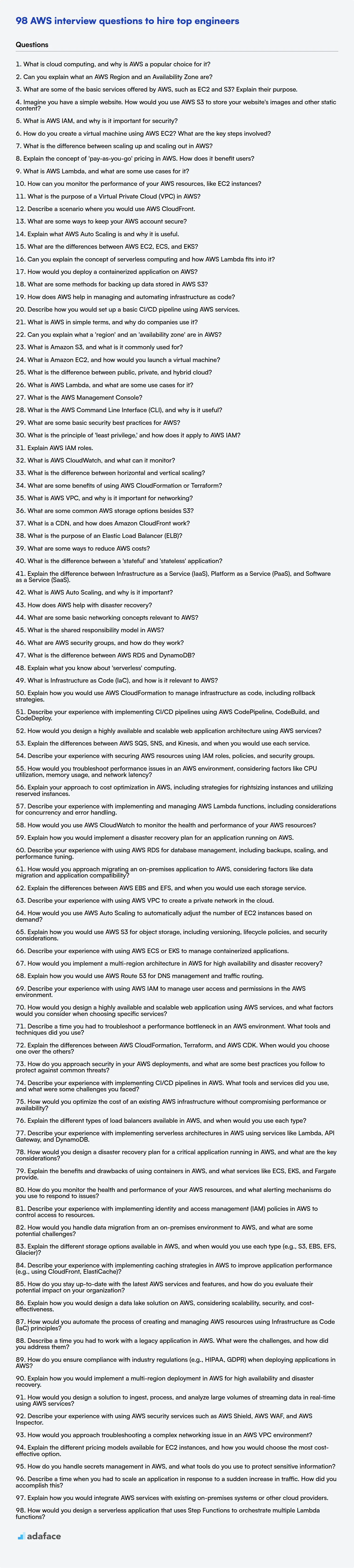

Hiring for Amazon Web Services (AWS) roles requires a keen eye for talent, ensuring candidates possess the right mix of cloud computing skills and hands-on experience. To effectively assess potential hires, recruiters and hiring managers need a targeted set of interview questions to gauge their understanding and abilities.

This blog post provides a curated list of AWS interview questions, spanning from fresher to experienced levels, including multiple-choice questions (MCQs). We've structured these questions to cover a range of AWS services and concepts, designed to help you evaluate candidates across different skill levels.

By using these questions, you can streamline your interview process and ensure you're hiring the best AWS talent; for a more data-driven approach, consider using an AWS online test before the interview to screen candidates quickly.

AWS interview questions for freshers

1. What is cloud computing, and why is AWS a popular choice for it?

Cloud computing is the delivery of computing services—including servers, storage, databases, networking, software, analytics, and intelligence—over the Internet (“the cloud”) to offer faster innovation, flexible resources, and economies of scale. Instead of owning and maintaining their own data centers, companies can access these resources on demand from a cloud provider.

AWS (Amazon Web Services) is a popular choice for cloud computing for several reasons:

- Mature and Comprehensive Services: AWS offers a vast range of services, covering almost every conceivable IT need, and has been a leader in the cloud market for a long time.

- Scalability and Flexibility: AWS allows businesses to easily scale resources up or down based on demand, paying only for what they use.

- Global Infrastructure: AWS has a global network of data centers, providing high availability and low latency for users around the world.

- Cost-Effectiveness: AWS's pay-as-you-go model and competitive pricing can significantly reduce IT costs.

- Security: AWS invests heavily in security, providing a secure platform for businesses to run their applications.

2. Can you explain what an AWS Region and an Availability Zone are?

An AWS Region is a geographical area. Each region contains multiple, isolated locations known as Availability Zones. Think of a Region as a city and Availability Zones as distinct neighborhoods within that city, but with their own independent infrastructure like power and networking.

Availability Zones (AZs) are physically separate and isolated from each other. They are designed to be fault-tolerant. This means that if one AZ fails, the other AZs in the Region should continue to operate. By distributing your applications across multiple AZs, you can protect your applications from a single point of failure.
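To make the single-point-of-failure argument concrete, here is a minimal sketch that places instances across AZs in round-robin order and then checks what survives if one AZ goes down. The instance names and the helper functions are hypothetical, and nothing here calls AWS.

```python
from itertools import cycle


def spread_across_azs(instance_names, availability_zones):
    """Assign each instance to an AZ in round-robin order."""
    if not availability_zones:
        raise ValueError("at least one Availability Zone is required")
    # zip stops when the finite list of instance names is exhausted.
    return dict(zip(instance_names, cycle(availability_zones)))


def surviving_instances(placement, failed_az):
    """Return the instances that keep running when one AZ fails."""
    return sorted(name for name, az in placement.items() if az != failed_az)


# Hypothetical instance names; AZ names follow the <region><letter> pattern.
placement = spread_across_azs(
    ["web-1", "web-2", "web-3", "web-4"],
    ["us-east-1a", "us-east-1b", "us-east-1c"],
)
still_up = surviving_instances(placement, "us-east-1a")
```

With three AZs, losing us-east-1a takes out web-1 and web-4 but leaves web-2 and web-3 serving traffic.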

3. What are some of the basic services offered by AWS, such as EC2 and S3? Explain their purpose.

AWS offers a broad range of services. Two fundamental ones are EC2 and S3.

EC2 (Elastic Compute Cloud) provides virtual servers in the cloud. Think of it as renting computers on demand. You choose the operating system, CPU, memory, storage, and networking, and then you can run your applications on these virtual servers. EC2 is highly configurable and scalable.

S3 (Simple Storage Service) is object storage. It allows you to store and retrieve any amount of data, at any time, from anywhere. S3 is used for storing files, backups, media, and more. It's highly durable, scalable, and secure object storage and often used as the foundation for many other AWS services.

4. Imagine you have a simple website. How would you use AWS S3 to store your website's images and other static content?

I would use AWS S3 to store images and static content by first creating an S3 bucket in the AWS Management Console. Then, I'd configure the bucket's permissions to allow public read access to the objects (images, CSS, JavaScript files, etc.). Next, I'd upload all the website's static assets to this bucket. Finally, I would update the website's HTML to reference these assets using the S3 bucket's URL. For example, an image tag would change from <img src="/images/logo.png"> to <img src="https://my-bucket-name.s3.amazonaws.com/images/logo.png">. To improve performance and reduce latency, I could also configure Amazon CloudFront to act as a CDN in front of the S3 bucket.
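As a rough illustration of the permission and URL steps above, the sketch below builds a public-read bucket policy document and rewrites a local asset path to its S3 URL. The bucket name is the placeholder from the example above, the function names are hypothetical, and the code only produces the JSON and the URL; it does not call AWS.

```python
import json


def public_read_policy(bucket_name):
    """Bucket policy that lets anyone read objects (but not list or write)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


def asset_url(bucket_name, local_path):
    """Map a site-relative path such as /images/logo.png to its S3 URL."""
    key = local_path.lstrip("/")
    return f"https://{bucket_name}.s3.amazonaws.com/{key}"


# "my-bucket-name" is a placeholder, not a real bucket.
policy_json = json.dumps(public_read_policy("my-bucket-name"), indent=2)
logo_url = asset_url("my-bucket-name", "/images/logo.png")
```

New buckets block public policies by default, so a policy like this only takes effect once the bucket's Block Public Access settings allow it. Serving the bucket through CloudFront is a common way to avoid making it public at all.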

5. What is AWS IAM, and why is it important for security?

AWS IAM (Identity and Access Management) is a web service that enables you to control access to AWS resources. It allows you to manage users, groups, and roles, and define permissions to specify what actions they can perform on which resources. IAM is a fundamental component of AWS security because it enables you to implement the principle of least privilege, granting users only the permissions they need to perform their jobs.

IAM is important for security because without it, you would be relying on the root account for all access, which is a major security risk. IAM allows you to:

- Control Access: Define who can access what resources.

- Grant Least Privilege: Allow only necessary access.

- Centralized Management: Manage users and permissions in one place.

- Compliance: Meet regulatory requirements by controlling access to sensitive data.

6. How do you create a virtual machine using AWS EC2? What are the key steps involved?

Creating an EC2 virtual machine (instance) involves several key steps via the AWS Management Console, CLI, or SDK. First, you select an Amazon Machine Image (AMI), which serves as the template for your instance, defining the OS, software, and configuration. Next, you choose an instance type, which determines the hardware resources allocated (CPU, memory, storage, network). Then, you configure instance details like network settings (VPC, subnet), IAM role, and user data (startup scripts).

After configuring details, you add storage (EBS volumes), configure security groups (firewall rules), and review your configuration. Finally, you select or create a key pair for SSH access and launch the instance. Connecting to a Linux instance usually involves SSH with your private key, for example: ssh -i your_key.pem ec2-user@your_instance_public_ip. Here ec2-user is the default user on Amazon Linux AMIs; other AMIs use different user names.
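The same choices map onto the parameters of the EC2 RunInstances call. The sketch below only assembles the parameter dictionary that could be passed to boto3's ec2 client as run_instances(**params); it does not call AWS. All IDs and names in the usage are placeholders, the helper function is hypothetical, and the /dev/xvda root device name is an assumption that depends on the AMI.

```python
def build_run_instances_params(ami_id, instance_type, key_name, subnet_id,
                               security_group_ids, instance_profile_name=None,
                               user_data=None, root_volume_gib=8):
    """Collect the launch choices into RunInstances-style parameters."""
    params = {
        "ImageId": ami_id,                 # AMI: OS and software template
        "InstanceType": instance_type,     # CPU, memory, network capacity
        "KeyName": key_name,               # key pair used for SSH access
        "SubnetId": subnet_id,             # places the instance in a VPC subnet
        "SecurityGroupIds": list(security_group_ids),  # firewall rules
        "MinCount": 1,
        "MaxCount": 1,
        "BlockDeviceMappings": [
            {
                # Assumed root device name; it varies by AMI.
                "DeviceName": "/dev/xvda",
                "Ebs": {"VolumeSize": root_volume_gib, "VolumeType": "gp3"},
            }
        ],
    }
    if instance_profile_name:
        params["IamInstanceProfile"] = {"Name": instance_profile_name}
    if user_data:
        params["UserData"] = user_data     # startup script
    return params


# Placeholder identifiers for illustration only.
params = build_run_instances_params(
    ami_id="ami-0123456789abcdef0",
    instance_type="t3.micro",
    key_name="your_key",
    subnet_id="subnet-0123456789abcdef0",
    security_group_ids=["sg-0123456789abcdef0"],
    user_data="#!/bin/bash\necho hello > /tmp/hello.txt\n",
)
```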

7. What is the difference between scaling up and scaling out in AWS?

Scaling up (vertical scaling) involves increasing the resources of a single instance or server. For example, upgrading a server's CPU, RAM, or storage. Scaling out (horizontal scaling) involves adding more instances or servers to your system. This distributes the workload across multiple machines.

Key differences: Scaling up has limitations because you can only increase the resources of a single machine so much. Scaling out is generally more scalable and resilient, as the failure of one instance doesn't bring down the entire system, though it can be more complex to implement due to the need for load balancing and data synchronization across instances.

8. Explain the concept of 'pay-as-you-go' pricing in AWS. How does it benefit users?

AWS's 'pay-as-you-go' pricing model means you only pay for the computing resources you actually use. Instead of fixed contracts or upfront costs, you're charged based on consumption, like paying for electricity. If you don't use a service, you don't pay for it. This applies to services like EC2 (compute time), S3 (storage), Lambda (function executions), and others.

This model benefits users by:

- Cost Optimization: Avoid over-provisioning and paying for unused resources. Scale up or down as needed and only pay for what's required.

- Flexibility and Scalability: Easily adjust resource usage based on demand without long-term commitments.

- Reduced Capital Expenditure: Eliminate the need to invest in and maintain your own infrastructure.

- Experimentation: Easily test new services and ideas without significant upfront costs. This fosters innovation and allows for rapid prototyping.
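A small worked calculation shows why paying only for usage matters. The rates below are made-up round numbers chosen for easy arithmetic, not actual AWS prices, and the function is a hypothetical helper.

```python
def monthly_cost(hours_running, hourly_rate, gb_stored, rate_per_gb_month):
    """Usage-based bill: compute hours plus stored gigabytes."""
    compute = hours_running * hourly_rate
    storage = gb_stored * rate_per_gb_month
    return round(compute + storage, 2)


# Hypothetical rates for illustration only; real prices vary by service,
# instance type, and Region.
HOURLY_RATE = 0.10
RATE_PER_GB_MONTH = 0.02

# A server left on for a 30-day month versus one that runs
# 8 hours a day on 22 working days, each with 100 GB stored.
always_on = monthly_cost(24 * 30, HOURLY_RATE, 100, RATE_PER_GB_MONTH)
business_hours = monthly_cost(8 * 22, HOURLY_RATE, 100, RATE_PER_GB_MONTH)
```

Under these assumed rates the always-on server costs 74.00 per month and the business-hours server 19.60, because the second one is billed for 176 compute hours instead of 720.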

9. What is AWS Lambda, and what are some use cases for it?

AWS Lambda is a serverless compute service that allows you to run code without provisioning or managing servers. You only pay for the compute time you consume, making it a cost-effective solution for many applications. Lambda functions are event-driven, meaning they are triggered by events from other AWS services or custom applications.

Some use cases for AWS Lambda include:

- Processing data: such as images, videos, or log files.

- Building serverless APIs: using API Gateway to trigger Lambda functions.

- Real-time stream processing: using Kinesis or DynamoDB Streams to trigger Lambda functions.

- Automating tasks: like backups, monitoring, and infrastructure management.

- Chatbots: Processing messages in real-time.

- Extending other AWS Services: Adding custom logic to S3 buckets or other AWS services via Lambda triggers.
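As an example of the event-driven model, here is a minimal sketch of a Python Lambda handler reacting to an S3 upload notification. The sample event is trimmed to the fields the handler reads, and the bucket and key are placeholders. Object keys arrive URL-encoded in S3 events, which is why the key is decoded.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Lambda entry point: list the objects named in an S3 event."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the event (a space arrives as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    return {"processed": len(uploaded), "objects": uploaded}


# Trimmed-down sample event with placeholder names, for local testing.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "my-bucket-name"},
                "object": {"key": "images/team+photo.png"},
            }
        }
    ]
}
result = handler(sample_event, None)
```

Because the handler is a plain function, it can be exercised locally with a sample event before it is deployed.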

10. How can you monitor the performance of your AWS resources, like EC2 instances?

I would monitor AWS resource performance, particularly EC2 instances, primarily using Amazon CloudWatch. By default, CloudWatch collects EC2 metrics such as CPU utilization, disk I/O, and network traffic. Memory usage and disk space are not reported by default, so for OS-level monitoring I would install the CloudWatch agent on the EC2 instances to collect those and any custom metrics. I can then set up CloudWatch alarms to trigger notifications or automated actions when specific thresholds are breached.

Additionally, AWS provides tools like CloudWatch Logs for centralizing logs from EC2 instances and other services, enabling easier troubleshooting and auditing. AWS Trusted Advisor provides recommendations for optimizing resource utilization and improving security. For application-level monitoring, I would integrate services like AWS X-Ray to trace requests and identify performance bottlenecks within my applications running on EC2 instances.
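A CloudWatch alarm on CPU utilization can be described with a handful of parameters. The sketch below builds a parameter dictionary in the shape accepted by the PutMetricAlarm API (for example via boto3's cloudwatch client) and adds a simplified local check of when such an alarm would fire. The instance ID and SNS topic ARN are placeholders, and would_alarm is a hypothetical helper that ignores missing-data handling.

```python
def cpu_alarm_params(instance_id, sns_topic_arn, threshold=80.0):
    """Parameters for an alarm on average CPU over two 5-minute periods."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,              # seconds per datapoint
        "EvaluationPeriods": 2,     # consecutive periods that must breach
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


def would_alarm(datapoints, params):
    """Simplified check: do the most recent N datapoints all breach?"""
    n = params["EvaluationPeriods"]
    recent = datapoints[-n:]
    return len(recent) == n and all(v > params["Threshold"] for v in recent)


# Placeholder identifiers for illustration only.
params = cpu_alarm_params(
    "i-0123456789abcdef0",
    "arn:aws:sns:us-east-1:123456789012:ops-alerts",
)
sustained_spike = would_alarm([40.0, 85.0, 91.0], params)
brief_spike = would_alarm([85.0, 91.0, 40.0], params)
```

Requiring two consecutive breaching periods means a brief spike that has already subsided does not trigger a notification.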

11. What is the purpose of a Virtual Private Cloud (VPC) in AWS?

A Virtual Private Cloud (VPC) in AWS lets you provision a logically isolated section of the AWS Cloud where you can launch AWS resources in a virtual network that you define. It gives you control over your virtual networking environment, including selecting your own IP address ranges, creating subnets, and configuring route tables and network gateways. Essentially, it's like having your own data center within AWS.

The purpose of a VPC is to provide enhanced security, isolation, and control over your AWS resources. It allows you to create private networks within AWS, isolate your applications from the public internet, and connect your VPC to your on-premises network using VPN or Direct Connect. This helps in maintaining compliance, protecting sensitive data, and creating hybrid cloud environments.
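Choosing IP ranges and carving them into subnets is plain CIDR arithmetic, which Python's standard ipaddress module can illustrate. The VPC range and subnet names below are hypothetical, and plan_subnets is an illustrative helper rather than an AWS API.

```python
import ipaddress


def plan_subnets(vpc_cidr, new_prefix, names):
    """Split a VPC CIDR block into equally sized subnets, one per name."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)
    return {name: str(block) for name, block in zip(names, blocks)}


# Hypothetical layout: a /16 VPC split into /24 subnets.
plan = plan_subnets(
    "10.0.0.0/16",
    24,
    ["public-a", "public-b", "private-a", "private-b"],
)
```

In a real VPC, whether a subnet is public or private is decided by its route table (a route to an internet gateway or not), not by its name or address range.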

12. Describe a scenario where you would use AWS CloudFront.

I would use AWS CloudFront to improve the performance and availability of a website or application that serves content to users across the globe. For example, consider a media company hosting videos and images. By using CloudFront, the content is cached in edge locations closer to the users.

When a user requests content, CloudFront delivers it from the nearest edge location, reducing latency and improving the user experience. This also helps to offload traffic from the origin server (e.g., S3 bucket or EC2 instance), improving its overall performance and reducing costs. Additionally, CloudFront provides built-in security features like DDoS protection and SSL/TLS encryption, further enhancing the application's security posture.
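The caching behavior can be pictured with a toy edge cache. This is a simplified in-memory model written for illustration, not CloudFront's implementation or API; the class, the TTL value, and the stand-in origin function are all assumptions. Time is passed in explicitly so the example is deterministic.

```python
class EdgeCache:
    """Toy model of one edge location caching responses from an origin."""

    def __init__(self, fetch_from_origin, ttl_seconds):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._store = {}            # path -> (body, expires_at)
        self.origin_requests = 0

    def get(self, path, now):
        cached = self._store.get(path)
        if cached is not None and now < cached[1]:
            return cached[0], "hit"
        # Not cached or expired: go back to the origin and cache the result.
        self.origin_requests += 1
        body = self._fetch(path)
        self._store[path] = (body, now + self._ttl)
        return body, "miss"


# Stand-in for an S3 bucket or EC2 origin.
edge = EdgeCache(lambda path: f"contents of {path}", ttl_seconds=60)
first = edge.get("/videos/intro.mp4", now=0)
second = edge.get("/videos/intro.mp4", now=30)
third = edge.get("/videos/intro.mp4", now=90)
```

Three requests reach the edge, but only the first and the one after expiry reach the origin, which is the offloading effect described above.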

13. What are some ways to keep your AWS account secure?

Securing an AWS account involves multiple layers. Enable Multi-Factor Authentication (MFA) for all IAM users, especially those with administrative privileges. Use strong and unique passwords and rotate them regularly. Enforce the principle of least privilege by granting users only the permissions they need to perform their tasks using IAM roles.

Monitor account activity with CloudTrail and CloudWatch to detect suspicious behavior. Regularly review and update security groups to restrict network access. Use AWS Config to track resource configurations and identify deviations from desired states. Enable AWS Security Hub for a centralized view of your security posture. Consider using services like AWS GuardDuty for threat detection and AWS Trusted Advisor for best practice recommendations. Finally, regularly audit your account and IAM policies.

14. Explain what AWS Auto Scaling is and why it is useful.

AWS Auto Scaling automatically adjusts the number of compute resources based on demand. It helps maintain application availability and allows you to scale your Amazon EC2 capacity up or down automatically according to conditions you define. This ensures you're only paying for the resources you're actually using, optimizing costs.

Auto Scaling is useful because it ensures applications have the right amount of compute capacity at any given time. This improves application availability, handles traffic spikes seamlessly, and reduces operational overhead by automating the scaling process. It also improves fault tolerance by automatically replacing unhealthy instances.
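One way to reason about scaling decisions is a proportional rule: if average utilization is above the target, add capacity in proportion, and never go outside the configured minimum and maximum. The function below is a simplified model under that assumption, not the exact algorithm the service uses.

```python
import math


def desired_capacity(current_capacity, current_metric, target_metric,
                     min_size, max_size):
    """Proportional estimate of the instance count needed to hit a target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    proportional = math.ceil(current_capacity * current_metric / target_metric)
    # Clamp to the group's configured bounds.
    return max(min_size, min(max_size, proportional))


# 4 instances at 90% average CPU with a 60% target, group bounds 2 to 10.
scale_out = desired_capacity(4, 90, 60, min_size=2, max_size=10)
# The same group at 15% CPU shrinks, but not below the minimum.
scale_in = desired_capacity(4, 15, 60, min_size=2, max_size=10)
```

Here the traffic spike grows the group from 4 to 6 instances, and the quiet period shrinks it to the minimum of 2.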

15. What are the differences between AWS EC2, ECS, and EKS?

AWS EC2, ECS, and EKS are all compute services, but they cater to different needs. EC2 provides virtual machines, giving you full control over the operating system and infrastructure. You manage everything, from patching to scaling. It's great for legacy applications or when you need specific OS configurations.

ECS (Elastic Container Service) is AWS's native container orchestration service. It lets you run, manage, and scale Docker containers. ECS tasks can run on Fargate, a serverless option where AWS handles the underlying infrastructure, or on EC2 instances that you manage yourself. EKS (Elastic Kubernetes Service) is also a container orchestration service, specifically for Kubernetes. EKS simplifies the deployment, management, and scaling of Kubernetes clusters on AWS. Choosing between ECS and EKS often comes down to whether you want to use the Kubernetes ecosystem (EKS) or prefer AWS's native container service (ECS).

16. Can you explain the concept of serverless computing and how AWS Lambda fits into it?

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of server resources. You don't have to provision or manage servers. You simply upload your code, and the provider runs it in response to triggers. This allows developers to focus solely on writing code without worrying about the underlying infrastructure.

AWS Lambda is a serverless compute service offered by Amazon Web Services (AWS). It lets you run code without provisioning or managing servers. Lambda executes your code only when needed and scales automatically. You pay only for the compute time you consume. Lambda functions are typically triggered by events, such as changes to data in an S3 bucket or an HTTP request via API Gateway. Lambda supports several programming languages, including Python, Java, and Node.js.

17. How would you deploy a containerized application on AWS?

I would deploy a containerized application on AWS using several services, choosing the best option based on requirements like scalability, cost, and operational overhead. Options include:

- Amazon ECS (Elastic Container Service): A fully managed container orchestration service. I would define my application's containers in a task definition, create a cluster, and then deploy the tasks to the cluster. ECS offers control and flexibility.

- Amazon EKS (Elastic Kubernetes Service): A managed Kubernetes service, useful if I'm already familiar with Kubernetes or need its advanced features. I'd create an EKS cluster and deploy my application using Kubernetes manifests.

- AWS Fargate: A serverless compute engine for containers that works with both ECS and EKS. I would choose Fargate to avoid managing EC2 instances. Simply create a task definition (for ECS) or deploy Kubernetes pods, and Fargate handles the underlying infrastructure.

- AWS App Runner: A fully managed service that builds and runs containerized web applications and APIs. This is the simplest option, requiring only the container image. App Runner automatically handles scaling and load balancing.

The choice depends on the application needs and my comfort level with the underlying technologies. For example, if simplicity is paramount, AWS App Runner would be a good choice. For more control and Kubernetes compatibility, EKS is suitable. If I am using ECS and want to avoid server management, Fargate is the way to go.
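For the ECS on Fargate path, the task definition is the central piece of configuration. The sketch below builds one as a dictionary using field names from the ECS RegisterTaskDefinition API. The family name, image URI, and port are placeholders, the helper is hypothetical, and optional pieces such as the execution role and logging are left out for brevity.

```python
def fargate_task_definition(family, image, container_port,
                            cpu="256", memory="512"):
    """Minimal ECS task definition for a single container on Fargate."""
    return {
        "family": family,
        "networkMode": "awsvpc",                 # required for Fargate tasks
        "requiresCompatibilities": ["FARGATE"],
        "cpu": cpu,                              # task-level CPU units
        "memory": memory,                        # task-level memory in MiB
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
            }
        ],
    }


# Placeholder image URI and names for illustration only.
task_def = fargate_task_definition(
    family="web-app",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/web-app:latest",
    container_port=8080,
)
```

The same dictionary could be passed to boto3's ecs client as register_task_definition(**task_def), after which a service in the cluster runs the desired number of copies.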

18. What are some methods for backing up data stored in AWS S3?

Several methods exist for backing up data in AWS S3. S3 Versioning is a simple and effective way to maintain multiple versions of your objects, protecting against accidental deletion or overwrites. Enabling it on a bucket automatically keeps previous versions of objects when they are modified or deleted. Another common approach is cross-region replication (CRR) or same-region replication (SRR). CRR asynchronously replicates objects to another AWS region, providing disaster recovery capabilities. SRR replicates objects within the same region, useful for compliance or reduced latency.

Beyond these, you can use AWS Backup to centrally manage and automate backups across various AWS services, including S3. This allows you to define backup policies and schedules. Finally, third-party backup solutions and custom scripts built on the AWS CLI or SDK can also create backups in S3 or other storage locations. For example, the aws s3 sync command can copy data to another S3 bucket.
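To see why versioning protects against accidental deletes and overwrites, consider a toy in-memory model of a versioned bucket. This is an illustration of the semantics only, not the S3 API: real version IDs are opaque strings rather than list indexes, and the class and method names are invented for this sketch.

```python
class VersionedBucket:
    """Toy model: every write is kept, and a delete only adds a marker."""

    _DELETE_MARKER = object()

    def __init__(self):
        self._versions = {}     # key -> list of stored bodies, oldest first

    def put(self, key, body):
        history = self._versions.setdefault(key, [])
        history.append(body)
        return len(history) - 1             # toy version id

    def delete(self, key):
        # Earlier versions stay retrievable after a delete.
        self._versions.setdefault(key, []).append(self._DELETE_MARKER)

    def get(self, key, version=None):
        history = self._versions.get(key, [])
        if not history:
            raise KeyError(key)
        body = history[-1] if version is None else history[version]
        if body is self._DELETE_MARKER:
            raise KeyError(key)
        return body


bucket = VersionedBucket()
v0 = bucket.put("report.csv", "first draft")
v1 = bucket.put("report.csv", "final")      # overwrite keeps the old version
bucket.delete("report.csv")                 # accidental delete
recovered = bucket.get("report.csv", version=v1)
```

After the delete, a plain get fails as if the object were gone, yet both earlier versions can still be fetched by version.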

19. How does AWS help in managing and automating infrastructure as code?

AWS provides several services that facilitate infrastructure as code (IaC) management and automation. AWS CloudFormation allows you to define and provision AWS infrastructure using declarative templates written in JSON or YAML. These templates describe the desired state of your infrastructure, and CloudFormation handles the provisioning and configuration. AWS also offers AWS CDK (Cloud Development Kit), which lets you define your cloud infrastructure in familiar programming languages like Python, TypeScript, Java, and .NET. CDK then synthesizes this code into CloudFormation templates.

Furthermore, services like AWS Systems Manager can be used to automate tasks like patching, configuration management, and software deployment on your infrastructure, all of which can be orchestrated via code. AWS CodePipeline helps create CI/CD pipelines that automatically test and deploy infrastructure changes whenever the IaC code changes. AWS Config allows you to audit and evaluate the configurations of your AWS resources to ensure compliance and infrastructure consistency.
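A CloudFormation template is just structured data, so a small one can be generated and inspected from code. The sketch below builds a JSON template for a versioned S3 bucket; the logical ID AssetsBucket and the helper function are hypothetical names chosen for this example.

```python
import json


def bucket_template(logical_id="AssetsBucket"):
    """CloudFormation template declaring one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Versioned S3 bucket defined as code",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
        # Ref on a bucket resource resolves to the bucket name.
        "Outputs": {"BucketName": {"Value": {"Ref": logical_id}}},
    }


template_body = json.dumps(bucket_template(), indent=2, sort_keys=True)
```

The resulting JSON can be saved to a file and kept in version control, so any change to the desired state goes through review like other code.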

20. Describe how you would set up a basic CI/CD pipeline using AWS services.

I would use AWS CodePipeline, CodeBuild, and CodeDeploy for a basic CI/CD pipeline. First, CodePipeline orchestrates the entire process, triggering actions based on code changes in a source repository like AWS CodeCommit or GitHub. When changes are detected, CodePipeline starts a CodeBuild project. CodeBuild compiles the code, runs unit tests, and creates deployable artifacts like Docker images or zip files. Finally, CodePipeline triggers CodeDeploy to deploy these artifacts to target environments such as EC2 instances, ECS clusters, or Lambda functions.

To configure this, I'd define a CodePipeline pipeline with stages for source, build, and deploy. The source stage integrates with the repository. The build stage specifies the CodeBuild project, including the build environment (e.g., OS, language runtime) and build commands (defined in a buildspec.yml file). The deploy stage integrates with CodeDeploy, specifying the deployment group (target environment) and deployment configuration (deployment strategy, such as blue/green or rolling updates). I would use IAM roles with least privilege to grant each service the necessary permissions. For instance, CodeBuild requires permissions to access the source repository and upload artifacts to S3.

AWS interview questions for juniors

1. What is AWS in simple terms, and why do companies use it?

AWS (Amazon Web Services) is like a giant collection of computers and software that Amazon rents out. Instead of buying and managing their own servers and data centers, companies can use AWS to store data, run applications, and do all sorts of computing tasks.

Companies use AWS for several reasons:

- Cost Savings: It's often cheaper than building and maintaining their own infrastructure.

- Scalability: They can easily increase or decrease their computing power as needed.

- Reliability: AWS has a global network of data centers, so services are highly available.

- Flexibility: AWS offers a wide range of services, from simple storage to complex machine learning.

2. Can you explain what a 'region' and an 'availability zone' are in AWS?

In AWS, a region is a geographical area. Each region is completely isolated from other regions, providing fault tolerance and stability. Choosing a region close to your users can reduce latency.

An availability zone (AZ) is a physically distinct location within an AWS region. Each region consists of multiple AZs, which are isolated from each other but connected through low-latency links. This allows you to build highly available applications by deploying resources across multiple AZs. If one AZ fails, your application can continue running in another AZ.

3. What is Amazon S3, and what is it commonly used for?

Amazon S3 (Simple Storage Service) is a scalable, high-speed, web-based cloud storage service designed for online backup and archiving of data and application programs. It is object storage, meaning data is stored as objects within buckets. Think of buckets as folders and objects as files.

S3 is commonly used for:

- Backup and Disaster Recovery: Storing backups of data for recovery purposes.

- Content Storage and Distribution: Hosting static website content (HTML, CSS, JavaScript, images, videos) and distributing it globally via Amazon CloudFront.

- Data Archiving: Storing infrequently accessed data at a low cost.

- Big Data Analytics: Storing large datasets for processing with services like Amazon EMR.

- Application Hosting: Storing application data and artifacts.

4. What is Amazon EC2, and how would you launch a virtual machine?

Amazon Elastic Compute Cloud (EC2) is a web service that provides resizable compute capacity in the cloud. Essentially, it lets you rent virtual servers (instances) to run your applications.

To launch a virtual machine (EC2 instance), you would typically:

- Choose an Amazon Machine Image (AMI), which is a template containing the operating system, applications, and data.

- Select an instance type, which determines the CPU, memory, storage, and networking capacity.

- Configure network settings like VPC and subnet.

- Set up security groups to control inbound and outbound traffic.

- Assign an IAM role to grant permissions to the instance.

- Launch the instance.

- Connect to the instance using SSH (for Linux) or RDP (for Windows).

5. What is the difference between public, private, and hybrid cloud?

Public cloud infrastructure is owned and operated by a third-party provider, making resources like servers and storage available to many users over the internet. Users share the same infrastructure, and services are billed on a usage basis, offering scalability and cost-effectiveness. Private cloud, in contrast, is infrastructure dedicated to a single organization. It can be hosted on-premises or by a third-party, but the resources are not shared. This option provides greater control and security but usually comes with higher costs.

Hybrid cloud combines public and private cloud environments, allowing data and applications to be shared between them. This approach offers flexibility, enabling organizations to leverage the benefits of both models. For example, sensitive data can be kept in the private cloud, while less sensitive workloads can run in the public cloud for cost efficiency and scalability. The orchestration between these clouds is crucial for a successful hybrid cloud strategy.

6. What is AWS Lambda, and what are some use cases for it?

AWS Lambda is a serverless compute service that lets you run code without provisioning or managing servers. You upload your code as a function, and Lambda runs it in response to events, such as changes to data in an S3 bucket, messages arriving on a queue, or HTTP requests. You only pay for the compute time you consume.

Some use cases include:

- Real-time Data Processing: Processing data streams from sources like IoT devices or clickstreams.

- Web Applications: Building serverless backends for web and mobile applications.

- Chatbots: Powering conversational interfaces.

- Automating Tasks: Automating administrative tasks such as log analysis, backups, and scheduled jobs.

- Extending other AWS Services: Triggering Lambda functions from services like S3, DynamoDB, SNS, and SQS to add custom logic. For example, resizing images when uploaded to S3.

- Serverless APIs: Creating RESTful APIs using API Gateway and Lambda. The code could be written using any supported runtime, such as Node.js, Python, Java, or Go.

7. What is the AWS Management Console?

The AWS Management Console is a web-based interface that allows you to access and manage Amazon Web Services (AWS). It provides a central location to interact with all the AWS services you are authorized to use. Think of it as a graphical user interface (GUI) for AWS, allowing you to perform tasks like launching EC2 instances, configuring S3 buckets, setting up databases with RDS, and monitoring your resources using CloudWatch without needing to write code or use the command line.

It's the primary way many users interact with AWS initially, especially for learning and exploration. While the AWS CLI and SDKs provide more programmatic and automated approaches, the console offers a visual representation and step-by-step guidance for many common tasks, making it easier to understand the cloud platform.

8. What is the AWS Command Line Interface (CLI), and why is it useful?

The AWS Command Line Interface (CLI) is a unified tool to manage your AWS services from the command line. It allows you to control multiple AWS services and automate them through scripts. Instead of using the AWS Management Console (web interface), you can interact with AWS services directly using commands in your terminal.

It's useful because it provides a way to automate tasks, manage infrastructure as code, and integrate AWS services into your existing workflows. Some key use cases include scripting deployments, creating and managing resources (like EC2 instances or S3 buckets), and automating backups. It's particularly beneficial for developers and system administrators who prefer a programmatic approach to managing their AWS infrastructure.

9. What are some basic security best practices for AWS?

Some basic security best practices for AWS include enabling Multi-Factor Authentication (MFA) for all user accounts, especially the root account, and using IAM roles instead of storing credentials directly in applications or on EC2 instances. Regularly review and rotate IAM access keys. Employ the principle of least privilege, granting users only the permissions they need. Enable AWS CloudTrail to log API calls for auditing purposes, and monitor these logs. Use security groups and Network ACLs to control network traffic in and out of your instances.

Also, keep your software up to date by patching EC2 instances and applications regularly. Use AWS security services such as AWS Shield for DDoS protection and AWS WAF to filter malicious web traffic, and configure AWS Config to monitor and evaluate the configurations of your AWS resources. Regularly scan your environment for vulnerabilities using tools like Amazon Inspector.

10. What is the principle of 'least privilege,' and how does it apply to AWS IAM?

The principle of least privilege (PoLP) means granting a user or service only the minimum level of access required to perform their job or function. It's a core security best practice. In AWS IAM, this translates to creating IAM roles and policies that give users and AWS services only the specific permissions they need, and nothing more.

For example, instead of granting a user the AdministratorAccess policy, you'd create a custom policy that allows them only to read from a specific S3 bucket or invoke a particular Lambda function. This limits the potential blast radius of any security breach or accidental misconfiguration. By following PoLP, you reduce the risk of unauthorized access, data breaches, and compliance violations.
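The custom policy described above could look roughly like the following. The bucket name and function ARN are placeholders, the helper function is hypothetical, and the code only builds the policy document rather than attaching it to anything.

```python
import json


def least_privilege_policy(bucket_name, function_arn):
    """Allow reading one bucket and invoking one Lambda function, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOneBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                "Sid": "ReadObjectsInOneBucket",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
            {
                "Sid": "InvokeOneFunction",
                "Effect": "Allow",
                "Action": "lambda:InvokeFunction",
                "Resource": function_arn,
            },
        ],
    }


# Placeholder names and account ID for illustration only.
policy = least_privilege_policy(
    "my-bucket-name",
    "arn:aws:lambda:us-east-1:123456789012:function:resize-image",
)
policy_json = json.dumps(policy, indent=2)
```

Anything not explicitly allowed, such as deleting objects or touching other buckets, is denied by default, which is what keeps the blast radius small.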

11. Explain AWS IAM roles.

IAM roles are AWS identities that you can assume to gain temporary access to AWS resources. Unlike IAM users, roles are not permanently associated with one specific person or application. Instead, an entity (like an EC2 instance, Lambda function, or even an IAM user) assumes the role to obtain temporary security credentials.

Roles define a set of permissions, similar to IAM user policies. When an entity assumes a role, it gets temporary access based on the permissions defined in the role's policies. This temporary access eliminates the need to store long-term credentials (like access keys) on EC2 instances or within applications, improving security. Trust policies define who can assume the role.
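A trust policy is a small JSON document attached to the role. The sketch below builds one that lets an AWS service assume the role; the helper function is hypothetical, while the principal names shown are the standard service principals for EC2 and Lambda.

```python
def trust_policy(service_principal):
    """Trust policy allowing one AWS service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Role for EC2 instances and role for Lambda functions, respectively.
ec2_trust = trust_policy("ec2.amazonaws.com")
lambda_trust = trust_policy("lambda.amazonaws.com")
```

The trust policy answers "who may assume this role", while the permission policies attached to the role answer "what may they do once they have assumed it".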

12. What is AWS CloudWatch, and what can it monitor?

AWS CloudWatch is a monitoring and observability service that provides you with data and actionable insights to monitor your applications, respond to system-wide performance changes, optimize resource utilization, and gain a unified view of operational health. It collects monitoring and operational data in the form of logs, metrics, and events.

CloudWatch can monitor various AWS resources and applications, including:

- EC2 instances: CPU utilization, disk I/O, network traffic, status checks.

- RDS databases: CPU utilization, database connections, disk space usage.

- Lambda functions: Invocation count, error rate, execution duration.

- Custom metrics: Application-specific metrics that you define and publish.

- Logs: Application and system logs for debugging and troubleshooting.

- Container insights: Metrics related to container performance.

- CloudWatch Events/EventBridge: Monitor AWS service events and trigger actions.

13. What is the difference between horizontal and vertical scaling?

Horizontal scaling means adding more machines to your existing pool of resources, while vertical scaling means adding more power (CPU, RAM) to an existing machine.

- Horizontal Scaling: Scaling out. Distributes the load across multiple machines. Improved availability through redundancy. Can be more complex to implement due to distributed systems concerns.

- Vertical Scaling: Scaling up. Increases the resources of a single machine. Simpler to implement initially, but has limitations as you reach hardware maximums.

14. What are some benefits of using AWS CloudFormation or Terraform?

AWS CloudFormation and Terraform both offer infrastructure as code (IaC) benefits. They allow you to define your infrastructure in a declarative way, typically using YAML/JSON (CloudFormation) or HashiCorp Configuration Language (HCL) (Terraform). This enables version control, repeatability, and easier collaboration.

Some key benefits include:

- Infrastructure as Code: Define and manage infrastructure using code.

- Version Control: Track changes to infrastructure configurations.

- Automation: Automate infrastructure provisioning and management.

- Consistency: Ensure consistent infrastructure deployments across environments.

- Cost Reduction: Optimize resource utilization and avoid manual errors.

15. What is AWS VPC, and why is it important for networking?

AWS VPC (Virtual Private Cloud) lets you provision a logically isolated section of the AWS Cloud where you can launch AWS resources in a virtual network that you define. You have complete control over your virtual networking environment, including selection of your own IP address ranges, creation of subnets, and configuration of route tables and network gateways. VPC is a fundamental building block for secure and scalable cloud networking.

It is important because it provides:

- Isolation: You can create a private network that is isolated from other AWS customers.

- Control: You have complete control over your network configuration.

- Security: VPCs can be secured using security groups and network ACLs.

- Scalability: You can easily scale your network as your needs grow.

- Customization: Ability to use your own IP address ranges, create subnets, configure route tables, and network gateways.

16. What are some common AWS storage options besides S3?

Besides S3, AWS offers several other storage options catering to diverse needs. EBS (Elastic Block Store) provides block-level storage volumes for use with EC2 instances, akin to a hard drive. EFS (Elastic File System) offers a fully managed NFS file system for shared file storage across multiple EC2 instances. AWS Storage Gateway connects on-premises environments to AWS storage, enabling hybrid cloud scenarios.

Other options include S3 Glacier for long-term archival storage, the Snow family (Snowball, Snowcone, Snowmobile) for large-scale offline data transfers, and FSx, which provides fully managed shared file systems compatible with popular file systems like Windows File Server, Lustre, and OpenZFS.

17. What is a CDN, and how does Amazon CloudFront work?

A CDN (Content Delivery Network) is a geographically distributed network of servers that caches static content (images, videos, CSS, JavaScript files, etc.) and delivers it to users based on their location. This reduces latency and improves website loading times.

Amazon CloudFront is Amazon's CDN service. When a user requests content, CloudFront routes the request to the nearest edge location (server) that has the content cached. If the content isn't cached, CloudFront retrieves it from the origin server (e.g., an S3 bucket or EC2 instance), caches it at the edge location, and then delivers it to the user. Subsequent requests from nearby users are served directly from the edge location cache. You can configure caching behavior, TTL (Time To Live), and invalidation rules to control how long content is cached. CloudFront supports dynamic content delivery as well, through features like Lambda@Edge and CloudFront Functions.
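The hit, miss, TTL, and invalidation behaviour described above can be sketched with a small in-memory cache. This is a simplified model for discussion, not CloudFront's implementation; `EdgeCache`, the `origin` function, and the injected clock are all hypothetical names introduced here.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy model of a single edge location with a fixed TTL."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}  # path -> (expiry, body)

    def get(self, path: str) -> Tuple[bytes, str]:
        now = self._clock()
        entry = self._store.get(path)
        if entry is not None and entry[0] > now:
            return entry[1], "Hit"            # served from the edge
        body = self._fetch(path)              # go back to the origin
        self._store[path] = (now + self._ttl, body)
        return body, "Miss"

    def invalidate(self, path: str) -> None:
        """Drop a cached object before its TTL expires."""
        self._store.pop(path, None)


origin_calls = []

def origin(path: str) -> bytes:
    origin_calls.append(path)
    return b"content of " + path.encode()

now = [0.0]  # fake clock so the example does not need to sleep
edge = EdgeCache(origin, ttl_seconds=60, clock=lambda: now[0])
print(edge.get("/index.html")[1])  # Miss
print(edge.get("/index.html")[1])  # Hit
now[0] = 61.0
print(edge.get("/index.html")[1])  # Miss (TTL expired)
```

Only the two misses reach the origin, which is the latency and load saving a CDN is meant to provide.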

18. What is the purpose of an Elastic Load Balancer (ELB)?

The purpose of an Elastic Load Balancer (ELB) is to distribute incoming network traffic across multiple targets, such as EC2 instances, containers, and IP addresses, in one or more Availability Zones. This increases the availability and fault tolerance of your applications. ELBs ensure that no single target is overwhelmed by traffic, preventing performance bottlenecks and potential service disruptions.

Essentially, it acts as a single point of contact for clients and routes traffic to healthy backend servers. ELBs automatically scale to handle changes in incoming traffic, providing seamless and consistent performance. Different ELB types, like Application Load Balancers (ALBs) and Network Load Balancers (NLBs), are suited for different types of traffic and use cases. ALBs handle HTTP/HTTPS traffic at layer 7, while NLBs are designed for high-performance TCP/UDP traffic at layer 4.
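To make the "route only to healthy targets" idea concrete, here is a minimal round-robin sketch. It is an illustration only: real load balancers use their own routing algorithms and health checks, and the `RoundRobinBalancer` class and instance IDs are hypothetical.

```python
from itertools import count
from typing import Dict, List


class RoundRobinBalancer:
    """Toy load balancer that rotates across targets marked healthy."""

    def __init__(self, targets: List[str]) -> None:
        self._targets = list(targets)
        self._healthy: Dict[str, bool] = {t: True for t in targets}
        self._counter = count()

    def set_health(self, target: str, healthy: bool) -> None:
        """Record the result of a (simulated) health check."""
        self._healthy[target] = healthy

    def route(self) -> str:
        healthy = [t for t in self._targets if self._healthy[t]]
        if not healthy:
            raise RuntimeError("no healthy targets available")
        return healthy[next(self._counter) % len(healthy)]


lb = RoundRobinBalancer(["i-aaa", "i-bbb", "i-ccc"])  # hypothetical instance IDs
print([lb.route() for _ in range(3)])  # ['i-aaa', 'i-bbb', 'i-ccc']
lb.set_health("i-bbb", False)          # health check fails
print("i-bbb" in [lb.route() for _ in range(6)])  # False
```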

19. What are some ways to reduce AWS costs?

To reduce AWS costs, consider several strategies. Right-size your EC2 instances and use reserved instances or savings plans for predictable workloads. Utilize spot instances for fault-tolerant applications. Optimize storage by using lifecycle policies for S3 and deleting unused EBS volumes.

Further optimizations include leveraging AWS Cost Explorer to identify cost drivers, implementing auto-scaling to dynamically adjust resources, and using AWS Lambda or Fargate for serverless computing. Regularly review and delete unused resources, such as outdated snapshots or unused load balancers. Consider using AWS Budgets to set up cost thresholds and alerts.

20. What is the difference between a 'stateful' and 'stateless' application?

A stateful application remembers the client's data (state) from one request to the next. This state is typically stored on the server. Subsequent requests from the same client rely on this stored state to function correctly. Consider a shopping cart application. The items you add to your cart are stored on the server, so when you browse to another page and return to your cart, the items are still there.

In contrast, a stateless application treats each request as an independent transaction. It does not retain any client data between requests. Each request contains all the information necessary for the server to understand and process it. RESTful APIs are often stateless. For example, each API call to get product information must include the product ID because the server doesn't 'remember' which product you were looking at previously. If we take a look at the protocol the web uses, HTTP, we can see that it is inherently stateless.
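The shopping-cart contrast can be sketched in a few lines. The class and function names below are made up for illustration; the point is only where the cart lives.

```python
from typing import Dict, List


class StatefulCartServer:
    """Keeps each session's cart in server memory between requests."""

    def __init__(self) -> None:
        self._carts: Dict[str, List[str]] = {}

    def add_item(self, session_id: str, item: str) -> List[str]:
        self._carts.setdefault(session_id, []).append(item)
        return list(self._carts[session_id])


def add_item_stateless(cart: List[str], item: str) -> List[str]:
    """The request carries the whole cart, so any server can handle it."""
    return cart + [item]


# Two replicas of the stateful server do not share memory, so a client
# that lands on a different replica "loses" its cart.
server_a, server_b = StatefulCartServer(), StatefulCartServer()
server_a.add_item("session-1", "book")
print(server_b.add_item("session-1", "pen"))  # ['pen']

# The stateless version gives the same answer wherever it runs.
cart = add_item_stateless([], "book")
print(add_item_stateless(cart, "pen"))        # ['book', 'pen']
```

This is why stateless services are easier to place behind a load balancer, while stateful ones usually need sticky sessions or an external store for the state.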

21. Explain the difference between Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

IaaS (Infrastructure as a Service) provides you with the fundamental building blocks of computing infrastructure—servers, virtual machines, storage, networks, and operating systems—over the internet. You manage the OS, storage, deployed applications, and perhaps select networking components. Think of it as renting the hardware.

PaaS (Platform as a Service) provides a platform allowing customers to develop, run, and manage applications without the complexity of building and maintaining the infrastructure. You manage the applications and data. The provider handles the OS, servers, storage, and networking. Consider it renting the tools and the workbench.

SaaS (Software as a Service) provides you with a complete product that is run and managed by the service provider. You simply use the software. Examples include Gmail, Salesforce, and Dropbox. The provider manages everything from the application down to the infrastructure.

22. What is AWS Auto Scaling, and why is it important?

AWS Auto Scaling is a service that automatically adjusts the number of compute resources in your AWS environment based on demand. It helps maintain application availability and allows you to pay only for the resources you use, scaling up during peak times and scaling down during slow periods.

Auto Scaling is important because it ensures your application can handle varying workloads, preventing performance bottlenecks or crashes during high traffic. It also optimizes costs by reducing resource usage when demand is low, leading to significant savings.

23. How does AWS help with disaster recovery?

AWS offers a comprehensive suite of services to support various disaster recovery (DR) strategies. It allows you to replicate your on-premises or cloud-based infrastructure and data to a different AWS Region, ensuring business continuity in case of a disaster. Some key AWS services used for DR include:

- AWS Backup: Centralized backup service for various AWS resources (EBS, RDS, etc.) and on-premises workloads.

- Amazon S3: Durable and scalable storage for backups and data replication.

- Amazon EC2: Used to provision compute resources in the recovery region.

- AWS Elastic Disaster Recovery (the successor to CloudEndure Disaster Recovery): Automates the replication of your entire application stack to AWS.

- AWS Site-to-Site VPN/Direct Connect: Establishes secure connections between your on-premises environment and AWS.

- AWS Route 53: DNS service used for failover by routing traffic to the recovery region.

The flexibility and scalability of AWS allow you to implement different DR approaches, ranging from simple backup and restore to active-active configurations, depending on your recovery time objective (RTO) and recovery point objective (RPO) requirements.

24. What are some basic networking concepts relevant to AWS?

Some fundamental networking concepts crucial for understanding AWS include VPCs (Virtual Private Clouds), which are isolated networks within AWS. Key aspects of VPCs are subnets (public and private), route tables (controlling traffic flow), and security groups (acting as virtual firewalls). Understanding CIDR blocks for IP address allocation is also important.

Furthermore, concepts like DNS (Domain Name System), routing, load balancing, and network security are directly applicable. AWS services rely heavily on these principles for communication between instances, accessing the internet, and ensuring secure and reliable application deployment. Concepts such as NAT (Network Address Translation) and VPNs (Virtual Private Networks) are used for specific use cases like allowing private instances to access the internet and connecting on-premises networks to AWS, respectively.
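CIDR planning is easy to try out with Python's standard `ipaddress` module. The sketch below carves a VPC range into four /24 subnets; the `10.0.0.0/16` range and the subnet names are arbitrary choices for the example. AWS reserves five addresses in every subnet, which is why the usable count is lower than the raw block size.

```python
import ipaddress
from itertools import islice

AWS_RESERVED_PER_SUBNET = 5  # first four addresses and the last one in each subnet

vpc = ipaddress.ip_network("10.0.0.0/16")  # example VPC CIDR block
names = ["public-a", "public-b", "private-a", "private-b"]  # hypothetical names

# Take the first four /24 blocks out of the /16.
subnets = dict(zip(names, islice(vpc.subnets(new_prefix=24), len(names))))

for name, net in subnets.items():
    usable = net.num_addresses - AWS_RESERVED_PER_SUBNET
    print(f"{name}: {net} ({usable} usable addresses)")

# Membership checks answer "which subnet does this address belong to?"
print(ipaddress.ip_address("10.0.2.17") in subnets["private-a"])  # True
```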

25. What is the shared responsibility model in AWS?

The AWS shared responsibility model defines the security obligations between AWS and the customer. AWS is responsible for the security of the cloud, including the infrastructure, hardware, and software that runs AWS services. The customer is responsible for security in the cloud. This includes managing the operating system, platform, data, applications, identity and access management, and anything the customer puts into the cloud.

Specifically, AWS handles physical security, networking, and virtualization. Customers manage their data, operating systems, applications, and security group configurations. The customer responsibility will vary depending on the service being used. For example, with EC2, the customer has more responsibility than with a fully managed service like S3 or DynamoDB.

26. What are AWS security groups, and how do they work?

AWS Security Groups act as virtual firewalls for your EC2 instances. They control inbound and outbound traffic at the instance level. By default, a security group denies all inbound traffic and allows all outbound traffic.

Security groups work by defining rules that specify the allowed traffic. These rules are stateful, meaning that if you allow inbound traffic on a specific port, the corresponding outbound traffic is automatically allowed. You can specify the protocol (TCP, UDP, ICMP), port range, and source or destination IP address/CIDR block for each rule. Multiple security groups can be associated with an instance, and the rules from all associated groups are combined to determine the effective access policy.
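The "allow rules only, combined across groups, implicit deny otherwise" behaviour for inbound traffic can be modelled as follows. This is a simplified sketch: it ignores statefulness, outbound rules, and security-group references, and `IngressRule` and `is_inbound_allowed` are hypothetical names rather than an AWS API.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class IngressRule:
    protocol: str   # "tcp", "udp", or "icmp"
    from_port: int
    to_port: int
    cidr: str       # allowed source range


def is_inbound_allowed(groups: Iterable[Iterable[IngressRule]],
                       protocol: str, port: int, source_ip: str) -> bool:
    """Return True if any rule in any attached group allows the traffic."""
    source = ipaddress.ip_address(source_ip)
    for rules in groups:
        for rule in rules:
            if (rule.protocol == protocol
                    and rule.from_port <= port <= rule.to_port
                    and source in ipaddress.ip_network(rule.cidr)):
                return True
    return False  # nothing matched: implicit deny


web_sg = [IngressRule("tcp", 443, 443, "0.0.0.0/0")]       # HTTPS from anywhere
admin_sg = [IngressRule("tcp", 22, 22, "203.0.113.0/24")]  # SSH from one office range
attached = [web_sg, admin_sg]

print(is_inbound_allowed(attached, "tcp", 443, "198.51.100.7"))  # True
print(is_inbound_allowed(attached, "tcp", 22, "198.51.100.7"))   # False
print(is_inbound_allowed(attached, "tcp", 22, "203.0.113.9"))    # True
```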

27. What is the difference between AWS RDS and DynamoDB?

AWS RDS and DynamoDB are both database services, but they differ significantly in their underlying models and use cases. RDS is a relational database service supporting engines like MySQL, PostgreSQL, SQL Server, etc. Data is stored in tables with predefined schemas, ACID transactions are supported and joins can be performed. DynamoDB, on the other hand, is a NoSQL database, specifically a key-value and document store. It's designed for high scalability, availability, and performance with a flexible schema.

The key difference lies in the data model and scalability approach. RDS provides structured data storage and strong consistency, making it suitable for applications requiring complex queries and relational data. DynamoDB offers schema flexibility and horizontal scalability, making it ideal for applications needing to handle large volumes of data and high traffic, like gaming, ad tech, and IoT. In CAP-theorem terms, DynamoDB favors availability and partition tolerance: reads are eventually consistent by default, and strongly consistent reads are available as an option.

28. Explain what you know about 'serverless' computing.

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. You, as the developer, only write and deploy code without worrying about the underlying infrastructure. The provider automatically scales resources up or down based on demand, and you're typically charged only for the compute time your code consumes.

Key benefits include reduced operational overhead (no servers to manage), automatic scaling, and cost efficiency (pay-per-use). Common use cases are event-driven applications, APIs, and backend services. AWS Lambda, Azure Functions, and Google Cloud Functions are popular serverless platforms.

29. What is Infrastructure as Code (IaC), and how is it relevant to AWS?

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through machine-readable definition files, rather than manual configuration or interactive configuration tools. This allows infrastructure to be treated like software code, enabling version control, automated testing, and repeatable deployments.

In the context of AWS, IaC is highly relevant because AWS provides a wide array of services that can be fully automated using tools like AWS CloudFormation, Terraform, AWS CDK (Cloud Development Kit), and others. For example, you can define your entire AWS environment (EC2 instances, S3 buckets, VPCs, etc.) in a CloudFormation template and deploy it with a single command, ensuring consistency and reducing the risk of human error. These tools allow developers to spin up test environments easily or reliably deploy production updates to their infrastructure. A minimal CloudFormation template that declares a single EC2 instance looks like this:

    Resources:
      MyEC2Instance:
        Type: AWS::EC2::Instance
        Properties:
          ImageId: ami-0c55b60b9c137b9d8
          InstanceType: t2.micro

AWS intermediate interview questions

1. Explain how you would use AWS CloudFormation to manage infrastructure as code, including rollback strategies.

AWS CloudFormation allows me to define and provision AWS infrastructure as code using templates (YAML or JSON). These templates describe the resources, their properties, and dependencies. I would define my infrastructure (e.g., EC2 instances, VPCs, databases) in a CloudFormation template. Then, I'd use the AWS CLI or Management Console to create a stack from that template. CloudFormation handles the provisioning and configuration of these resources in the specified order.

For rollback strategies, CloudFormation automatically rolls back to the previous stable state if stack creation or update fails. I can also configure custom rollback behavior using rollback triggers based on CloudWatch alarms. This lets me automatically roll back the stack if a critical metric, like CPU utilization, exceeds a certain threshold after deployment. Finally, I can implement a blue/green deployment using CloudFormation to minimize downtime during updates. If the new (green) environment fails health checks, I can easily switch back to the original (blue) environment by updating the CloudFormation stack with the original configuration.

2. Describe your experience with implementing CI/CD pipelines using AWS CodePipeline, CodeBuild, and CodeDeploy.

I have experience designing and implementing CI/CD pipelines using AWS CodePipeline, CodeBuild, and CodeDeploy to automate software releases. I've configured CodePipeline to trigger builds on code commits to repositories like CodeCommit and GitHub. CodeBuild is used to compile the code, run unit and integration tests, and package the application into deployable artifacts, often using build specifications defined in buildspec.yml. These artifacts are then stored in S3.

For deployment, I've used CodeDeploy to deploy these artifacts to EC2 instances, ECS clusters, and Lambda functions. I have experience with different deployment strategies like blue/green and rolling deployments to minimize downtime. I'm familiar with writing AppSpec files (appspec.yml) to define deployment steps like stopping the application, installing dependencies, and starting the new version. I've also integrated monitoring tools and automated rollback mechanisms into these pipelines.

3. How would you design a highly available and scalable web application architecture using AWS services?

To design a highly available and scalable web application architecture on AWS, I would leverage several key services. For high availability, I'd use Elastic Load Balancer (ELB) to distribute traffic across multiple EC2 instances in different Availability Zones. These instances would be part of an Auto Scaling Group (ASG), configured to automatically scale the number of instances based on demand. Data would be stored in Amazon RDS (Relational Database Service), configured for Multi-AZ deployment to ensure database failover. For scalability, the application would be designed with loosely coupled microservices, potentially using Amazon ECS/EKS for container orchestration. Caching mechanisms like Amazon ElastiCache (Memcached or Redis) would further improve performance.

Furthermore, Amazon S3 would store static assets, and Amazon CloudFront would be used as a CDN for fast content delivery globally. Monitoring and logging would be crucial, employing Amazon CloudWatch for metrics and alarms, and AWS CloudTrail for auditing. Infrastructure as Code (IaC) principles would be followed, using AWS CloudFormation or Terraform to provision and manage the entire infrastructure in a repeatable and automated manner.

4. Explain the differences between AWS SQS, SNS, and Kinesis, and when you would use each service.

AWS SQS (Simple Queue Service) is a fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications. It's primarily used for asynchronous communication where messages are temporarily stored until a consumer is ready to process them. SNS (Simple Notification Service) is a fully managed pub/sub messaging service. It's used for broadcasting messages to multiple subscribers (e.g., email, SMS, HTTP endpoints). Kinesis, on the other hand, is a platform for streaming data on AWS, offering services like Kinesis Data Streams, Kinesis Data Firehose, and Kinesis Data Analytics.

Use SQS when you need reliable message queuing with at-least-once delivery, for example, processing orders or sending emails. Use SNS when you need to broadcast messages to multiple subscribers for notifications or fan-out architectures. Use Kinesis when you need to ingest, process, and analyze real-time streaming data, such as website clickstreams, application logs, or IoT sensor data.
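The queue versus pub/sub distinction, and the common fan-out pattern that combines them, can be shown with two small in-memory stand-ins. These classes are hypothetical teaching aids, not the SQS or SNS APIs, and they leave out visibility timeouts, retries, and ordering.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class InMemoryQueue:
    """Queue semantics: a message is held until one consumer takes it."""

    def __init__(self) -> None:
        self._messages: Deque[str] = deque()

    def send(self, body: str) -> None:
        self._messages.append(body)

    def receive(self) -> Optional[str]:
        return self._messages.popleft() if self._messages else None


class InMemoryTopic:
    """Pub/sub semantics: every subscriber gets its own copy."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[str], None]] = []

    def subscribe(self, deliver: Callable[[str], None]) -> None:
        self._subscribers.append(deliver)

    def publish(self, body: str) -> None:
        for deliver in self._subscribers:
            deliver(body)


# Fan-out: one topic feeds a separate queue per downstream service.
orders = InMemoryTopic()
billing_queue, shipping_queue = InMemoryQueue(), InMemoryQueue()
orders.subscribe(billing_queue.send)
orders.subscribe(shipping_queue.send)

orders.publish("order-1001 created")
print(billing_queue.receive())   # order-1001 created
print(shipping_queue.receive())  # order-1001 created
print(billing_queue.receive())   # None: the message was already consumed
```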

5. Describe your experience with securing AWS resources using IAM roles, policies, and security groups.

I have extensive experience securing AWS resources using IAM roles, policies, and security groups. I've used IAM roles to grant permissions to EC2 instances, Lambda functions, and other AWS services, allowing them to access other AWS resources without needing to hardcode credentials. This includes creating roles with the principle of least privilege, granting only the necessary permissions to perform specific tasks. For example, a Lambda function writing logs to CloudWatch only needs logs:CreateLogGroup, logs:CreateLogStream, and logs:PutLogEvents permissions.
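A least-privilege logging policy along those lines could be generated as shown below. This is a sketch: the region, the placeholder account ID `123456789012`, and the function name `my-function` are assumptions for the example, and the helper `lambda_logging_policy` is a hypothetical name. It only builds the JSON document and does not call AWS.

```python
import json


def lambda_logging_policy(region: str, account_id: str, function_name: str) -> dict:
    """Build an IAM policy that lets one Lambda function write its own logs."""
    log_group_arn = (
        f"arn:aws:logs:{region}:{account_id}:log-group:/aws/lambda/{function_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "logs:CreateLogGroup",
                "Resource": f"arn:aws:logs:{region}:{account_id}:*",
            },
            {
                # Stream creation and writes are scoped to this function's log group.
                "Effect": "Allow",
                "Action": ["logs:CreateLogStream", "logs:PutLogEvents"],
                "Resource": f"{log_group_arn}:*",
            },
        ],
    }


policy = lambda_logging_policy("us-east-1", "123456789012", "my-function")
print(json.dumps(policy, indent=2))
```

Scoping the `Resource` to a single log group, rather than `*`, is what keeps the role from writing to other functions' logs.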

I also have used security groups to control inbound and outbound traffic to EC2 instances and other resources. I have configured security groups to allow only specific ports and protocols from authorized sources, effectively creating a virtual firewall. I have also worked with Network ACLs to control traffic at the subnet level, providing an additional layer of security. When building cloud infrastructure I always follow AWS best practices for security.

6. How would you troubleshoot performance issues in an AWS environment, considering factors like CPU utilization, memory usage, and network latency?

To troubleshoot performance issues in AWS, I'd start by monitoring key metrics using CloudWatch. For CPU, I'd set up alarms to trigger when utilization exceeds a certain threshold (e.g., 80%) and investigate the processes consuming the most CPU using top or htop on the EC2 instance. High memory usage would be examined similarly, looking for memory leaks or inefficient code. For network latency, tools like traceroute or mtr can help identify bottlenecks. VPC Flow Logs can also provide insights into network traffic patterns.

Next, I'd analyze the logs (CloudWatch Logs or S3 logs) from various AWS services (EC2, Lambda, API Gateway, etc.) to pinpoint errors or slow operations. Tools like X-Ray can trace requests through distributed services. Based on the findings, I'd take corrective actions like scaling up resources, optimizing code, improving database queries, or configuring caching mechanisms. Regular performance testing is crucial to prevent future issues.

7. Explain your approach to cost optimization in AWS, including strategies for rightsizing instances and utilizing reserved instances.

My approach to cost optimization in AWS involves several key strategies. Firstly, I focus on rightsizing instances by continuously monitoring CPU utilization, memory usage, and network I/O using tools like CloudWatch and AWS Compute Optimizer. This helps identify over-provisioned instances that can be scaled down to smaller, more cost-effective instance types or families. I also consider using AWS Auto Scaling to dynamically adjust instance capacity based on demand. Secondly, I leverage Reserved Instances (RIs) and Savings Plans for predictable workloads. By analyzing historical usage patterns, I determine the optimal number of RIs or Savings Plans to purchase, ensuring significant discounts compared to on-demand pricing. It's essential to evaluate usage patterns regularly, adjusting RI strategy to keep up with changing business needs. Finally, I evaluate the pricing of spot instances for workloads which can tolerate interruptions.
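The decision between on-demand pricing and a commitment comes down to simple arithmetic on expected utilization. In the sketch below, both hourly rates are made-up numbers for illustration, not AWS prices, and 730 hours per month is a common approximation.

```python
HOURS_PER_MONTH = 730  # approximate hours in a month


def on_demand_monthly(rate: float, utilization: float) -> float:
    """On-demand cost: pay only for the fraction of the month actually used."""
    return rate * HOURS_PER_MONTH * utilization


def committed_monthly(rate: float) -> float:
    """Committed cost (RI or Savings Plan style): pay for every hour."""
    return rate * HOURS_PER_MONTH


def break_even_utilization(on_demand_rate: float, committed_rate: float) -> float:
    """Utilization above which the commitment is cheaper than on-demand."""
    return committed_rate / on_demand_rate


def cheaper_option(on_demand_rate: float, committed_rate: float,
                   utilization: float) -> str:
    committed = committed_monthly(committed_rate)
    on_demand = on_demand_monthly(on_demand_rate, utilization)
    return "committed" if committed < on_demand else "on-demand"


ON_DEMAND_RATE = 0.10  # hypothetical USD per hour
COMMITTED_RATE = 0.06  # hypothetical effective USD per hour

print(f"break-even at {break_even_utilization(ON_DEMAND_RATE, COMMITTED_RATE):.0%} utilization")
print(cheaper_option(ON_DEMAND_RATE, COMMITTED_RATE, utilization=0.9))  # committed
print(cheaper_option(ON_DEMAND_RATE, COMMITTED_RATE, utilization=0.4))  # on-demand
```

With these example rates, an instance that runs less than roughly 60% of the time is cheaper on demand, which is why analyzing historical usage comes before buying a commitment.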

8. Describe your experience with implementing and managing AWS Lambda functions, including considerations for concurrency and error handling.

I have extensive experience implementing and managing AWS Lambda functions for various use cases, including event-driven architectures, API backends, and data processing pipelines. I'm proficient in writing Lambda functions in Python, Node.js, and Java, and I'm familiar with the Serverless Framework for deployment and infrastructure as code.

When designing Lambda functions, I prioritize concurrency and error handling. For concurrency, I consider factors like memory allocation, function timeout, and provisioned concurrency to optimize performance and prevent throttling. For error handling, I implement robust mechanisms like try-except blocks with logging and dead-letter queues (DLQs) to capture and process failed invocations. I use CloudWatch metrics and logs to monitor function performance and identify potential issues, and I leverage AWS X-Ray for tracing requests through distributed applications using Lambda. I also use tools like Sentry to monitor errors. Here's a simple Python example for error handling:

    import json

    def lambda_handler(event, context):
        try:
            # Your code here
            result = {
                'statusCode': 200,
                'body': json.dumps('Success!')
            }
            return result
        except Exception as e:
            print(f"Error: {e}")
            # Send error to DLQ or monitoring service
            return {
                'statusCode': 500,
                'body': json.dumps('Error processing request')
            }
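On the concurrency side, a useful back-of-the-envelope estimate is that concurrent executions are roughly the request rate multiplied by the average duration. The sketch below applies that rule of thumb; the limit of 1,000 is an assumed regional quota, so check the actual quota for the account in question.

```python
import math

ASSUMED_CONCURRENCY_LIMIT = 1000  # assumption: verify the account's real quota


def estimated_concurrency(requests_per_second: float,
                          avg_duration_seconds: float) -> int:
    """Concurrent executions ~= request rate x average duration."""
    return math.ceil(requests_per_second * avg_duration_seconds)


def is_likely_throttled(requests_per_second: float,
                        avg_duration_seconds: float,
                        limit: int = ASSUMED_CONCURRENCY_LIMIT) -> bool:
    """True when the estimate exceeds the concurrency limit."""
    return estimated_concurrency(requests_per_second, avg_duration_seconds) > limit


print(estimated_concurrency(200, 0.25))  # 50
print(is_likely_throttled(200, 0.25))    # False
print(is_likely_throttled(5000, 0.5))    # True
```

The same estimate shows why shortening function duration is often the cheapest way to reduce throttling risk.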

9. How would you use AWS CloudWatch to monitor the health and performance of your AWS resources?

I would leverage AWS CloudWatch in several ways to monitor the health and performance of AWS resources. Primarily, I would use CloudWatch Metrics to track key performance indicators (KPIs) like CPU utilization, memory usage, disk I/O, and network traffic for EC2 instances, RDS databases, and other AWS services. Because EC2 does not publish memory metrics by default, I would install the CloudWatch agent to collect them. These metrics provide a baseline and allow me to identify anomalies or trends indicating potential issues.

I'd also use CloudWatch Logs to collect, monitor, and analyze log data from various sources, including applications, operating systems, and AWS services. By creating metric filters, I can extract specific data points from the logs and generate alarms based on predefined thresholds. Furthermore, I can configure CloudWatch Alarms to automatically notify me (via SNS, for example) when metrics exceed specified thresholds, enabling proactive identification and resolution of performance bottlenecks or failures. CloudWatch dashboards provide a centralized view of all monitoring data, facilitating efficient troubleshooting and performance optimization.
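The way a threshold alarm decides its state can be sketched as an "M out of N datapoints" check. This is a simplified model for discussion: the parameter names echo the alarm settings for evaluation periods and datapoints to alarm, but the function `alarm_state` itself is hypothetical and skips details such as missing-data handling.

```python
from typing import Sequence


def alarm_state(datapoints: Sequence[float], threshold: float,
                evaluation_periods: int, datapoints_to_alarm: int) -> str:
    """Evaluate the most recent periods against a 'greater than' threshold."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    window = datapoints[-evaluation_periods:]
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


cpu = [50, 85, 90, 95]  # average CPU % per period, oldest first
print(alarm_state(cpu, threshold=80, evaluation_periods=3, datapoints_to_alarm=2))  # ALARM
print(alarm_state([85, 50, 60, 70], 80, 3, 2))                                      # OK
print(alarm_state([90], 80, 3, 2))                                                  # INSUFFICIENT_DATA
```

Requiring several breaching datapoints rather than one helps avoid notifications for short spikes.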

10. Explain how you would implement a disaster recovery plan for an application running on AWS.

A disaster recovery (DR) plan for an AWS application involves several key strategies. First, implement data replication across multiple Availability Zones (AZs) or Regions using services like S3 Cross-Region Replication or RDS Multi-AZ deployments. This ensures data durability and availability even if one AZ fails. Second, define the infrastructure as code (IaC) using CloudFormation or Terraform so the application stack can be rapidly recreated in a different region.

Furthermore, regularly back up critical data and configurations. Test the DR plan periodically to validate its effectiveness and identify potential weaknesses. Utilize AWS services like Route 53 for DNS failover to redirect traffic to the recovery region. Finally, monitor the application and infrastructure continuously to detect failures promptly and trigger the DR process automatically using CloudWatch alarms and Lambda functions.

11. Describe your experience with using AWS RDS for database management, including backups, scaling, and performance tuning.

I have experience using AWS RDS for database management, specifically with MySQL and PostgreSQL. For backups, I've implemented automated backup schedules and tested restoration procedures for disaster recovery. Scaling was handled both vertically (instance size upgrades) and horizontally (read replicas for read-heavy workloads). I've also used RDS Proxy to manage database connections and reduce failover times.

Regarding performance tuning, I've used tools like Performance Insights to identify slow queries and optimize them by creating indexes. Monitoring CloudWatch metrics such as CPU utilization, memory consumption, and disk I/O was crucial for proactively identifying potential bottlenecks. I've also adjusted database parameters like innodb_buffer_pool_size for MySQL to improve performance based on the observed workload.

12. How would you approach migrating an on-premises application to AWS, considering factors like data migration and application compatibility?

Migrating an on-premises application to AWS involves a phased approach. First, assess the application's architecture, dependencies, and data volume to determine the best migration strategy (rehost, replatform, refactor, repurchase, or retire). Data migration is critical; options include using AWS DMS, Snowball, or S3 for transferring data, choosing the appropriate method based on data size, transfer speed, and downtime tolerance. Application compatibility must be verified, potentially requiring code modifications or middleware updates to ensure the application functions correctly in the AWS environment.

Next, create a migration plan that outlines the steps involved, including testing and rollback procedures. Consider using AWS services like CloudFormation or Terraform for infrastructure-as-code deployments. Security is paramount, so implement appropriate security measures, such as IAM roles and security groups. After migration, continuously monitor the application's performance and optimize resources to ensure cost-effectiveness and scalability. Choose services like CloudWatch for monitoring and Auto Scaling for scaling based on demand.

13. Explain the differences between AWS EBS and EFS, and when you would use each storage service.

AWS EBS (Elastic Block Store) and EFS (Elastic File System) are both storage services, but cater to different needs. EBS is block storage, typically attached to a single EC2 instance at a time. Think of it like a hard drive. It's ideal for use cases requiring low-latency access from a single instance, such as operating systems, databases, and applications requiring direct access to block storage.

EFS, on the other hand, is a network file system that can be mounted by multiple EC2 instances concurrently. It's suitable for shared file storage, content repositories, and applications needing shared access to the same data. EBS is generally cheaper and faster for single instance use, while EFS provides scalability and accessibility across multiple instances.

14. Describe your experience with using AWS VPC to create a private network in the cloud.

I have experience using AWS VPC to create private networks in the cloud for various projects. I've configured VPCs with custom CIDR blocks, subnets (both public and private), route tables, and security groups to control network traffic. I've also worked with VPC Peering to connect different VPCs, and used VPN Gateways or Direct Connect to establish secure connections between on-premises networks and VPCs.

Specifically, I have practical experience with:

- Creating VPCs and Subnets: Defining appropriate CIDR blocks and subnet sizes based on application requirements.

- Configuring Route Tables: Setting up routes to direct traffic between subnets, to the internet (through an Internet Gateway), and to other VPCs.

- Implementing Security Groups and Network ACLs: Controlling inbound and outbound traffic at the instance and subnet level.

- Setting up NAT Gateways: Allowing instances in private subnets to access the internet without being directly exposed.

- Utilizing VPC Peering and VPN Gateways: Establishing secure and private connections between VPCs and on-premises networks.

For example, I once configured a VPN gateway to connect a development VPC to our corporate network using IPsec tunnels, ensuring only authorized users could access the development environment. I have also used AWS CloudFormation to automate VPC creation and management.

15. How would you use AWS Auto Scaling to automatically adjust the number of EC2 instances based on demand?

I would use AWS Auto Scaling by first creating a Launch Template (or, in older setups, a Launch Configuration) that defines the EC2 instance type, AMI, security groups, and other instance settings. Next, I would create an Auto Scaling Group (ASG) and configure its desired capacity, minimum capacity, and maximum capacity. To dynamically adjust the number of instances, I would define scaling policies based on CloudWatch metrics, such as CPU utilization or network traffic.

For example, a scaling policy could be configured to add EC2 instances when the average CPU utilization across all instances in the ASG exceeds 70% and remove instances when it falls below 30%. These policies can use simple scaling (e.g., add one instance) or step scaling (e.g., add instances based on the magnitude of the alarm). I would also consider using target tracking scaling to maintain a specific target value for a metric, such as average CPU utilization at 50%.
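The policies in that example can be expressed as two small functions. This is a sketch of the decision logic only: the 90% step and its +3 adjustment are extra assumptions added for illustration, and the proportional formula is a rough approximation of target tracking, not the service's actual algorithm.

```python
import math


def clamp(value: int, min_size: int, max_size: int) -> int:
    """Keep the desired capacity within the group's min and max."""
    return max(min_size, min(max_size, value))


def step_scaling_adjustment(cpu_percent: float) -> int:
    """Step scaling: a bigger breach triggers a bigger change."""
    if cpu_percent >= 90:   # assumed extra step for severe load
        return 3
    if cpu_percent > 70:    # scale-out threshold from the example
        return 1
    if cpu_percent < 30:    # scale-in threshold from the example
        return -1
    return 0


def next_capacity(current: int, cpu_percent: float,
                  min_size: int, max_size: int) -> int:
    return clamp(current + step_scaling_adjustment(cpu_percent), min_size, max_size)


def target_tracking_capacity(current: int, cpu_percent: float,
                             target_percent: float,
                             min_size: int, max_size: int) -> int:
    """Approximate target tracking: scale in proportion to metric / target."""
    desired = math.ceil(current * cpu_percent / target_percent)
    return clamp(desired, min_size, max_size)


print(next_capacity(4, 75, min_size=2, max_size=10))                 # 5
print(next_capacity(2, 10, min_size=2, max_size=10))                 # 2 (already at minimum)
print(target_tracking_capacity(4, 75, 50, min_size=2, max_size=10))  # 6
```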

16. Explain how you would use AWS S3 for object storage, including versioning, lifecycle policies, and security considerations.

I would use AWS S3 for object storage due to its scalability, durability, and cost-effectiveness. Versioning would be enabled to preserve previous versions of objects, protecting against accidental deletion or overwrites. Lifecycle policies would automate object transitions, moving infrequently accessed data to cheaper storage classes like S3 Standard-IA or Glacier after a specified period. This optimizes storage costs based on access patterns.

Security is paramount. Bucket policies and IAM roles would control access permissions, ensuring only authorized users and services can access S3 resources. Encryption at rest (using SSE-S3 or SSE-KMS) and in transit (using HTTPS) would protect data confidentiality. Access logs would be enabled for auditing and security monitoring. MFA Delete would add an extra layer of security against accidental or malicious deletion.
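A lifecycle policy like the one described could be expressed as the following configuration document. The sketch only builds the dictionary in the shape the S3 lifecycle API expects; the rule ID, the day counts, and the helper name `lifecycle_configuration` are assumptions chosen for the example, and nothing here calls AWS.

```python
import json


def lifecycle_configuration(ia_after_days: int, glacier_after_days: int,
                            expire_noncurrent_after_days: int) -> dict:
    """Build an S3 lifecycle configuration with two transitions."""
    if ia_after_days >= glacier_after_days:
        raise ValueError("the Glacier transition must come after the Standard-IA one")
    return {
        "Rules": [
            {
                "ID": "archive-old-objects",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},     # empty prefix: apply to every object
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                # With versioning enabled, clean up old versions eventually.
                "NoncurrentVersionExpiration": {
                    "NoncurrentDays": expire_noncurrent_after_days
                },
            }
        ]
    }


config = lifecycle_configuration(30, 90, 365)
print(json.dumps(config, indent=2))
```

Keeping the configuration in code also means the storage policy can be reviewed and versioned alongside the rest of the infrastructure.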

17. Describe your experience with using AWS ECS or EKS to manage containerized applications.

I have experience using AWS ECS to manage containerized applications. I've used it to deploy and scale applications, defining task definitions, services, and clusters using both the AWS Management Console and Infrastructure as Code (IaC) tools like Terraform. I've configured load balancing using Application Load Balancers (ALB) to distribute traffic across tasks, and I've implemented auto-scaling policies based on CPU and memory utilization. I also have experience setting up CI/CD pipelines with tools like Jenkins and AWS CodePipeline to automate the build, testing, and deployment of container images to ECS.

While I have less hands-on experience with EKS, I understand its core concepts and how it leverages Kubernetes for container orchestration. I've studied its architecture and know that it offers more flexibility and control compared to ECS, albeit at the cost of increased operational complexity. I am familiar with Kubernetes concepts such as pods, deployments, and services, and I am aware of the different networking options and security considerations when using EKS. I am eager to expand my practical experience with EKS.

18. How would you implement a multi-region architecture in AWS for high availability and disaster recovery?

To implement a multi-region architecture in AWS for high availability (HA) and disaster recovery (DR), I would leverage several AWS services. For HA, I'd use Route 53 for DNS-based failover, ensuring traffic is routed to a healthy region. Application Load Balancers (ALBs) would distribute traffic within each region. Data replication using DynamoDB Global Tables or S3 cross-region replication keeps a copy of the data in each region; because this replication is typically asynchronous, the design has to tolerate a short replication lag. RDS Multi-AZ deployments provide HA within a single region.

For DR, I'd implement a strategy involving regular backups (RDS snapshots, EBS snapshots) copied to a separate region. AWS CloudFormation or Terraform can automate infrastructure provisioning in the DR region. In the event of a regional failure, Route 53 would fail over to the DR region and the infrastructure would be provisioned there, allowing the application to resume operations. Regularly testing the failover process is crucial to ensure it actually works.

19. Explain how you would use AWS Route 53 for DNS management and traffic routing.

I would use AWS Route 53 for DNS management by creating hosted zones for my domains. Within these zones, I'd define DNS records like A, CNAME, MX, and TXT to point to my application servers, load balancers, or other AWS services. Route 53 supports various routing policies for traffic management.

For traffic routing, I can leverage policies such as simple routing (one record for all traffic), weighted routing (distributing traffic based on weights), latency-based routing (routing users to the lowest-latency region), geolocation routing (routing based on the user's geographic location), and failover routing (for high availability). Health checks can be associated with records so that traffic automatically fails over to healthy endpoints when an endpoint becomes unavailable. This setup enhances application availability and user experience.
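To make failover routing concrete, here is a hedged sketch that builds a change batch containing a primary and a secondary record. The domain, IP addresses, and health check ID used in the usage note are placeholders; the dictionary is shaped for boto3's `change_resource_record_sets`, which is not called here.

```python
from typing import Optional


def failover_record(name: str, ip: str, role: str,
                    health_check_id: Optional[str] = None, ttl: int = 60) -> dict:
    """One UPSERT change for a failover A record (role is PRIMARY or SECONDARY)."""
    if role not in ("PRIMARY", "SECONDARY"):
        raise ValueError("role must be PRIMARY or SECONDARY")
    record = {
        "Name": name,
        "Type": "A",
        "SetIdentifier": f"{role.lower()}-endpoint",  # must be unique per record
        "Failover": role,
        "TTL": ttl,
        "ResourceRecords": [{"Value": ip}],
    }
    if health_check_id:
        # Route 53 stops answering with this record when the check fails.
        record["HealthCheckId"] = health_check_id
    return {"Action": "UPSERT", "ResourceRecordSet": record}


def failover_change_batch(name: str, primary_ip: str, secondary_ip: str,
                          primary_health_check_id: str) -> dict:
    """Change batch with a health-checked primary and a standby secondary."""
    return {
        "Comment": "Active-passive failover pair",
        "Changes": [
            failover_record(name, primary_ip, "PRIMARY", primary_health_check_id),
            failover_record(name, secondary_ip, "SECONDARY"),
        ],
    }
```

A call such as `failover_change_batch("app.example.com.", "192.0.2.10", "198.51.100.10", "hc-placeholder")` yields a batch that could be passed as `ChangeBatch` alongside a `HostedZoneId`. A low TTL keeps resolvers from caching the failed endpoint for long.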

20. Describe your experience with using AWS IAM to manage user access and permissions in the AWS environment.

I have experience using AWS IAM to manage user access and permissions in AWS environments. I've created IAM users and groups to organize users based on their roles and responsibilities. I've also defined IAM policies that grant specific permissions to these users and groups, ensuring that users only have access to the resources they need to perform their jobs, adhering to the principle of least privilege. These policies often involved using JSON to define access control, specifying allowed actions on specific AWS resources.

Furthermore, I've worked with IAM roles to grant permissions to AWS services like EC2 instances, allowing them to access other AWS resources without requiring long-term credentials. I've also implemented multi-factor authentication (MFA) for IAM users to enhance security. I understand and have practical experience using IAM best practices, such as regularly reviewing and updating IAM policies to maintain a secure and compliant AWS environment.
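A least-privilege policy of the kind described above might look like the following sketch, which grants read-only access to a single bucket. The bucket name and prefix are hypothetical; the function only builds the JSON document and does not call AWS.

```python
import json


def read_only_bucket_policy(bucket: str, prefix: str = "") -> dict:
    """IAM policy allowing listing one bucket and reading objects under a prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                # ListBucket applies to the bucket itself, not to objects.
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                # GetObject applies to object ARNs under the given prefix.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(read_only_bucket_policy("example-reports", "2024/"), indent=2))
```

Naming specific actions and resources, rather than `s3:*` on `*`, is what keeps the policy aligned with least privilege.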

AWS interview questions for experienced

1. How would you design a highly available and scalable web application using AWS services, and what factors would you consider when choosing specific services?

To design a highly available and scalable web application on AWS, I'd leverage several services. For compute, I'd use EC2 within an Auto Scaling Group behind an Elastic Load Balancer (ELB). The Auto Scaling Group ensures scalability by dynamically adjusting the number of EC2 instances based on traffic, while the ELB distributes traffic across these instances and provides high availability. For the database, I'd consider RDS (Relational Database Service) for relational data or DynamoDB for NoSQL. RDS offers features like Multi-AZ deployments for failover, while DynamoDB is highly scalable and suitable for high-throughput applications. For caching, I'd use ElastiCache (Memcached or Redis) to improve response times.

Factors influencing service choices include cost, performance requirements, and application complexity. For example, Lambda (serverless) might be suitable for event-driven tasks. S3 (Simple Storage Service) would be used for storing static content or media files. CloudFront (CDN) could be employed to cache static content closer to users. Monitoring would be implemented through CloudWatch. Security is paramount, so IAM roles, Security Groups, and VPCs (Virtual Private Cloud) would be configured to isolate and protect resources. Cost optimization would involve using reserved instances, spot instances (where applicable) and right-sizing instances. Performance testing and load testing should be performed to validate scalability and identify bottlenecks.

2. Describe a time you had to troubleshoot a performance bottleneck in an AWS environment. What tools and techniques did you use?

In one project, we experienced slow response times for our API deployed on AWS Lambda and API Gateway. Initially, we suspected the database, but after monitoring RDS metrics like CPU utilization and IOPS using CloudWatch, it became clear that the database wasn't the bottleneck. We then turned our attention to the Lambda functions themselves. Using CloudWatch Logs and X-Ray, we identified a particular function that was taking significantly longer than expected. Further investigation of the code revealed an inefficient algorithm for processing data.

We optimized the algorithm, reducing its complexity from O(n^2) to O(n). Additionally, we increased the Lambda function's memory allocation, which provided more CPU resources. Finally, we enabled API Gateway caching to reduce the load on the Lambda functions for frequently accessed data. These changes significantly improved the API's response time and resolved the performance bottleneck.
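The answer does not say which algorithm was involved, so the following is a purely hypothetical illustration of the same kind of fix: finding duplicate IDs with a nested loop versus a single pass using a set.

```python
def find_duplicates_quadratic(ids: list) -> list:
    """O(n^2): compares every element with every later element."""
    duplicates = []
    for i, current in enumerate(ids):
        for other in ids[i + 1:]:
            if current == other and current not in duplicates:
                duplicates.append(current)
    return sorted(duplicates)


def find_duplicates_linear(ids: list) -> list:
    """O(n) on average: one pass, remembering what has been seen in a set."""
    seen = set()
    duplicates = set()
    for current in ids:
        if current in seen:
            duplicates.add(current)
        else:
            seen.add(current)
    return sorted(duplicates)
```

Both functions return the same result; the difference only becomes visible in execution time as the input grows, which is the kind of pattern an X-Ray trace of a slow function can point toward.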

3. Explain the differences between AWS CloudFormation, Terraform, and AWS CDK. When would you choose one over the others?

AWS CloudFormation, Terraform, and AWS CDK are all Infrastructure as Code (IaC) tools, but they differ in their approach. CloudFormation is AWS-native, using YAML or JSON to define infrastructure. It's tightly integrated with AWS services, offering first-party support and often immediate support for new features. Terraform is a vendor-agnostic tool from HashiCorp that uses HashiCorp Configuration Language (HCL); it was open source until its 2023 move to the Business Source License. It supports multiple cloud providers and on-premises infrastructure. AWS CDK allows you to define your infrastructure using familiar programming languages like Python, TypeScript, or Java, abstracting away the complexity of CloudFormation templates. It essentially generates CloudFormation templates under the hood.

Choose CloudFormation when you're heavily invested in the AWS ecosystem and want native integration with the latest AWS features. Choose Terraform when you need multi-cloud support or want a vendor-neutral solution. Choose AWS CDK if you prefer using programming languages to define your infrastructure, especially when dealing with complex or dynamic infrastructure setups, and want the advantages of code constructs and abstraction over raw YAML or JSON templates.
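To illustrate why generating templates from code helps with dynamic setups, here is a small sketch in plain Python. It is not the CDK API; it only mimics the idea of looping over environments to emit a CloudFormation template, and the function names and logical IDs are hypothetical.

```python
import json


def versioned_bucket(logical_id: str) -> dict:
    """CloudFormation resource entry for an S3 bucket with versioning enabled."""
    return {
        logical_id: {
            "Type": "AWS::S3::Bucket",
            "Properties": {"VersioningConfiguration": {"Status": "Enabled"}},
        }
    }


def synthesize(environments: list) -> str:
    """Emit one template containing a bucket per environment."""
    resources = {}
    for env in environments:
        # Logical IDs must be alphanumeric, e.g. "DevDataBucket".
        resources.update(versioned_bucket(f"{env.capitalize()}DataBucket"))
    template = {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}
    return json.dumps(template, indent=2)
```

Adding a third environment is a one-word change to the input list, whereas a hand-written YAML or JSON template would need another copied resource block.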

4. How do you approach security in your AWS deployments, and what are some best practices you follow to protect against common threats?

I approach security in AWS deployments with a layered approach, focusing on prevention, detection, and response. Some best practices include:

- Identity and Access Management (IAM): Enforcing the principle of least privilege by granting only necessary permissions to users and services using IAM roles and policies. Regularly auditing IAM configurations.

- Network Security: Using Security Groups and Network ACLs to control inbound and outbound traffic at the instance and subnet levels, respectively. Employing VPCs to isolate resources and using services like AWS Shield and WAF to protect against DDoS and web application attacks.

- Data Protection: Encrypting data at rest and in transit using KMS, S3 encryption, and TLS/SSL. Implementing strict access controls and auditing data access.

- Monitoring and Logging: Utilizing CloudTrail for auditing API calls, CloudWatch for monitoring resource usage and performance, and Security Hub/GuardDuty for threat detection. Regularly reviewing logs and setting up alerts for suspicious activity.

- Infrastructure as Code (IaC): Managing infrastructure securely through code using tools like Terraform or CloudFormation, incorporating security best practices into the code itself, and performing regular security scans on the code.

- Patch Management and Vulnerability Scanning: Keeping systems up-to-date with the latest security patches and regularly scanning for vulnerabilities using tools like Amazon Inspector.

5. Describe your experience with implementing CI/CD pipelines in AWS. What tools and services did you use, and what were some challenges you faced?

I've built and managed CI/CD pipelines in AWS using services like CodePipeline, CodeBuild, CodeDeploy, and CloudFormation. I've also integrated tools like Jenkins for orchestration and SonarQube for code quality analysis. For example, a typical pipeline would involve: source code from CodeCommit or GitHub, build using CodeBuild with a buildspec.yml defining steps like running unit tests and packaging the application, and deployment to EC2 instances or ECS using CodeDeploy. Infrastructure as Code was managed using CloudFormation, enabling repeatable and versionable infrastructure deployments along with the application.

Some challenges I faced included managing dependencies within CodeBuild environments, ensuring proper IAM permissions for each service to interact securely, and optimizing pipeline execution time. Debugging failed deployments in CodeDeploy, especially when related to application configurations, also required careful log analysis and configuration validation. I have also automated pipeline creation and updates using CloudFormation to handle complex multi-environment setups more efficiently.

6. How would you optimize the cost of an existing AWS infrastructure without compromising performance or availability?

To optimize AWS costs without compromising performance or availability, I'd first focus on identifying areas of waste. This involves analyzing current resource utilization using tools like AWS Cost Explorer, CloudWatch, and Trusted Advisor. Key actions include right-sizing EC2 instances, identifying and deleting unused EBS volumes, and leveraging reserved instances or savings plans for predictable workloads. Storage costs can be reduced by using lifecycle policies for S3 to move infrequently accessed data to cheaper storage tiers like Glacier or Intelligent-Tiering.

Further optimization can be achieved through automation and architecture improvements. Implementing auto-scaling ensures resources are dynamically adjusted based on demand, preventing over-provisioning. For read-heavy databases, read replicas can offload read traffic from the primary instance, which may avoid scaling the primary up to a larger, more expensive instance class. Additionally, explore serverless technologies like Lambda and API Gateway for event-driven workloads, as they offer a pay-per-use model that can be more cost-effective than running dedicated servers. Regularly reviewing security groups and IAM roles also helps: it surfaces resources nobody uses anymore and reduces the risk of a compromised account running up unexpected charges.
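Right-sizing usually starts with a utilization review. The sketch below flags instances whose CPU stays low over a sampling window; the thresholds and instance IDs are hypothetical, and in practice the samples would come from CloudWatch rather than a hard-coded dictionary.

```python
from statistics import mean


def rightsizing_candidates(cpu_samples: dict,
                           avg_threshold: float = 20.0,
                           peak_threshold: float = 50.0) -> list:
    """Return instance IDs whose average and peak CPU both stay below the thresholds."""
    candidates = []
    for instance_id, samples in cpu_samples.items():
        if not samples:
            continue  # no data: do not guess
        if mean(samples) < avg_threshold and max(samples) < peak_threshold:
            candidates.append(instance_id)
    return sorted(candidates)
```

Checking the peak as well as the average avoids downsizing an instance that idles most of the time but needs its full capacity during bursts.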

7. Explain the different types of load balancers available in AWS, and when would you use each type?

AWS offers four types of load balancers: Application Load Balancer (ALB), Network Load Balancer (NLB), Gateway Load Balancer (GWLB), and Classic Load Balancer (CLB). ALB is best for HTTP/HTTPS traffic and provides advanced routing based on content, hostname, or path. Use it for microservices, container-based applications, and scenarios requiring layer 7 routing. NLB operates at layer 4 and is designed for high-performance, low-latency TCP, UDP, and TLS traffic. It's ideal for gaming applications, IoT, and streaming services. GWLB operates at layer 3 and is used to deploy and scale third-party virtual appliances such as firewalls and intrusion detection systems. Finally, CLB is the previous-generation load balancer; it offers basic layer 4 and layer 7 load balancing across multiple EC2 instances. While it is still supported, ALB and NLB are generally preferred for new deployments due to their advanced features and scalability.

8. Describe your experience with implementing serverless architectures in AWS using services like Lambda, API Gateway, and DynamoDB.