As organizations across industries leverage MongoDB to manage vast amounts of data, the demand for skilled MongoDB professionals is skyrocketing. Consequently, recruiters and hiring managers need a solid grasp of the key skills and knowledge areas to screen and hire the best candidates (our guide on skills for a data engineer takes the same approach for that role).

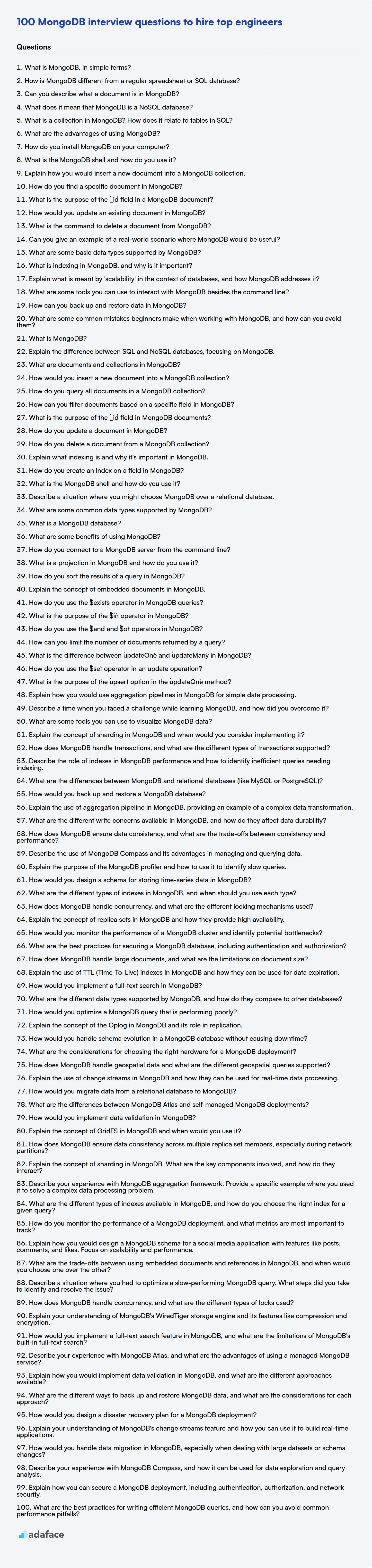

This blog post provides a curated list of MongoDB interview questions, designed to assist you in evaluating candidates across various experience levels. It includes questions tailored for freshers, juniors, intermediate, and experienced professionals, along with a set of multiple-choice questions (MCQs).

By using these questions, you can assess a candidate's MongoDB proficiency and ensure they have the skills to contribute effectively to your team. To streamline your screening process further, you can use our MongoDB online test to identify top talent before the interview.


MongoDB interview questions for freshers

1. What is MongoDB, in simple terms?

MongoDB is a NoSQL database, meaning it's different from traditional relational databases (like MySQL or PostgreSQL). Instead of tables with rows and columns, MongoDB uses documents and collections. Think of a document as a flexible JSON-like structure where you can store data in a more free-form way.

In simpler terms, imagine you have a bunch of files (documents) organized into folders (collections). This makes it easier to handle diverse and evolving data structures, which is common in modern applications. MongoDB is known for its scalability and flexibility.

2. How is MongoDB different from a regular spreadsheet or SQL database?

MongoDB differs significantly from spreadsheets and SQL databases in its structure and functionality. Spreadsheets are primarily for simple data organization and calculations in a tabular format. SQL databases are relational, meaning data is structured in tables with predefined schemas and relationships enforced via foreign keys. MongoDB, on the other hand, is a NoSQL database that stores data in flexible, JSON-like documents. This allows for more dynamic and unstructured data storage compared to SQL databases.

Key differences:

- Data Model: SQL uses tables; MongoDB uses documents (BSON).

- Schema: SQL has a rigid schema; MongoDB has a flexible or schema-less approach.

- Relationships: SQL uses joins; MongoDB often embeds data or uses manual references (the $lookup aggregation stage can perform joins when needed).

- Scalability: MongoDB has built-in horizontal scaling through sharding, whereas relational databases have traditionally scaled vertically. Either can perform well for simpler workloads; the outcome depends on your use case and data model.

3. Can you describe what a document is in MongoDB?

In MongoDB, a document is the fundamental unit of data. It's analogous to a row in a relational database table, but with a more flexible, schema-less structure. Documents are stored in collections. A document is a set of field-value pairs. Field values can be a variety of data types, including:

- Strings

- Numbers

- Booleans

- Arrays

- Nested Documents

Documents are stored in a JSON-like binary format called BSON (Binary JSON), which extends JSON with additional data types (such as dates and ObjectId) and is designed for efficient encoding and decoding.

4. What does it mean that MongoDB is a NoSQL database?

MongoDB being a NoSQL database means it doesn't adhere to the traditional relational database management system (RDBMS) model. Instead of using tables with rows and columns and SQL for querying, MongoDB uses a document-oriented data model. Data is stored in flexible, JSON-like documents with dynamic schemas.

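Key characteristics include schema flexibility, horizontal scalability, and strong performance for certain workloads. Many NoSQL databases relax ACID properties (Atomicity, Consistency, Isolation, Durability) in favor of BASE properties (Basically Available, Soft state, Eventually consistent), which suits applications that prioritize availability and speed over strict consistency. MongoDB is less extreme than that label suggests: every write to a single document is atomic, multi-document ACID transactions are supported since version 4.0, and read and write concerns let you tune the trade-off between consistency and latency.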

5. What is a collection in MongoDB? How does it relate to tables in SQL?

In MongoDB, a collection is a grouping of MongoDB documents. It's analogous to a table in relational databases like SQL. A MongoDB collection exists within a single database.

Relational databases have tables with rows and columns that follow a defined schema. MongoDB collections are schema-less by default, meaning documents within a collection can have different fields (optional schema validation can be added when you need it). Although it is not enforced, it is usually beneficial for documents in the same collection to share a similar structure for querying and indexing efficiency. You can think of collections as a way to organize related data, similar to how tables are used in SQL, but with greater flexibility in data structure.

6. What are the advantages of using MongoDB?

MongoDB offers several advantages, including its flexible schema, which allows for easy adaptation to changing data requirements without complex migrations. It excels at handling unstructured or semi-structured data. Its scalability is a major strength, facilitating horizontal scaling by distributing data across multiple servers.

Key benefits also include its document-oriented nature, making it easier to work with data in many modern programming paradigms. MongoDB also uses a rich query language, supports indexing for faster queries, and provides built-in replication for high availability and data redundancy.

7. How do you install MongoDB on your computer?

Installing MongoDB depends on your operating system. For example, on macOS with Homebrew, run brew tap mongodb/brew followed by brew install mongodb-community. On Debian or Ubuntu, you typically import the MongoDB public GPG key, create a list file for the MongoDB repository, and then run apt-get update and apt-get install mongodb-org (the package name used by MongoDB's official repository).

No matter the OS, you'll likely need to configure the MongoDB service after installation. This may involve setting up the data directory (/data/db is the default when you run mongod without a config file; packaged installs usually set a different dbPath in mongod.conf) and configuring the mongod.conf file for security and network settings. Remember to start the MongoDB service after installation using a command appropriate for your system (e.g., systemctl start mongod or brew services start mongodb-community).

8. What is the MongoDB shell and how do you use it?

The MongoDB shell is an interactive JavaScript interface that allows you to interact with MongoDB databases. It provides a command-line environment to execute commands, query data, and perform administrative tasks.

To use it, open your terminal and run mongosh (the legacy mongo shell was removed in MongoDB 6.0). By default, this connects to the MongoDB instance running on localhost:27017. You can then use commands like show dbs to list databases, use <database_name> to switch to a specific database, db.<collection_name>.find() to query data from a collection, and db.<collection_name>.insertOne() to insert a new document. The shell sends these commands to the MongoDB server and displays the results.

9. Explain how you would insert a new document into a MongoDB collection.

To insert a new document into a MongoDB collection, I would use the insertOne() or insertMany() methods. insertOne() inserts a single document, while insertMany() inserts multiple documents.

For example, using insertOne() with the Node.js driver (in mongosh, the equivalent is db.myCollection.insertOne(...)):

db.collection('myCollection').insertOne({ name: 'New Document', value: 123 });

For multiple documents:

db.collection('myCollection').insertMany([{ name: 'Doc1', value: 1 }, { name: 'Doc2', value: 2 }]);

Both methods return a result object describing the operation, such as whether the write was acknowledged and the generated _id values (insertedId for insertOne(), insertedIds for insertMany()).

10. How do you find a specific document in MongoDB?

To find a specific document in MongoDB, you use the find() method (or findOne(), which returns a single document instead of a cursor) along with a query filter. The query filter specifies the criteria that a document must meet to be returned.

For example, if you want to find a document where the _id field is equal to a specific value, you can use the following code:

db.collection.find({ _id: ObjectId("your_object_id") })

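Replace collection with the name of your collection and your_object_id with the actual ObjectId of the document you're searching for. The ObjectId() constructor turns the 24-character hex string into an ObjectId value; passing the plain string would not match, because the stored _id is an ObjectId, not a string.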

11. What is the purpose of the `_id` field in a MongoDB document?

The _id field in a MongoDB document serves as the primary key for that document within a collection. It uniquely identifies each document, ensuring that no two documents in the same collection have the same _id value.

If you don't specify an _id field when inserting a new document, MongoDB automatically generates one for you. By default, this is an ObjectId, a 12-byte BSON type value that provides uniqueness across different machines and processes, making it suitable for distributed environments.
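To make the 12-byte layout concrete, here is a pure-Python sketch that mimics the ObjectId format: a 4-byte big-endian timestamp in seconds, a 5-byte random value generated once per process, and a 3-byte counter that starts at a random value. It is illustrative only; the helper names are hypothetical, and real applications should use the ObjectId class shipped with their driver (for example, bson.ObjectId in pymongo).

```python
import itertools
import os
import time

# 5-byte random value, generated once per process
_PROCESS_RANDOM = os.urandom(5)
# 3-byte counter, seeded with a random starting value
_counter = itertools.count(int.from_bytes(os.urandom(3), "big"))


def make_object_id(timestamp=None):
    """Return a 24-character hex string laid out like a MongoDB ObjectId."""
    seconds = int(time.time()) if timestamp is None else int(timestamp)
    count = next(_counter) & 0xFFFFFF  # counter wraps around at 3 bytes
    raw = seconds.to_bytes(4, "big") + _PROCESS_RANDOM + count.to_bytes(3, "big")
    return raw.hex()


def object_id_timestamp(oid_hex):
    """Read the creation time (Unix seconds) from the first 4 bytes."""
    return int(oid_hex[:8], 16)


print(make_object_id())
print(object_id_timestamp("64b3e8c695926425f4922a1a"))  # 1689512134
```

Because the timestamp comes first, ObjectIds generated later usually sort after earlier ones, which is why sorting on _id roughly follows insertion time.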

12. How would you update an existing document in MongoDB?

To update an existing document in MongoDB, you typically use the updateOne() or updateMany() methods. updateOne() updates a single document that matches the filter criteria, while updateMany() updates all documents that match. Both methods require a filter to identify the document(s) and an update document that specifies the changes to be made.

For example, using updateOne() with the Node.js driver:

```javascript
const { ObjectId } = require('mongodb');

// Assumes `db` is a connected Db instance
db.collection('myCollection').updateOne(
  { _id: new ObjectId('64b4e1234567890123456789') }, // Filter: document to update
  { $set: { field1: 'new value', field2: 123 } }      // Update: changes to apply
);
```

Here, the filter identifies the document with the specified _id, and the $set operator updates the field1 and field2 fields. Other update operators like $inc, $push, and $pull can also be used depending on the specific update requirements.
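To show what these operators do to a document, here is a plain-Python model of $set, $inc, $push and $pull applied to a dict. It is a simplified sketch with a hypothetical helper name (top-level fields only, $pull by equality only); the server applies the real operators atomically to each matched document.

```python
import copy


def apply_update(doc, update):
    """Return a copy of doc with a subset of update operators applied."""
    new_doc = copy.deepcopy(doc)
    for op, fields in update.items():
        for field, arg in fields.items():
            if op == "$set":
                new_doc[field] = arg
            elif op == "$inc":
                # A missing field is created with the increment value
                new_doc[field] = new_doc.get(field, 0) + arg
            elif op == "$push":
                # A missing field becomes a one-element array
                new_doc.setdefault(field, []).append(arg)
            elif op == "$pull":
                if field in new_doc:  # $pull on a missing field is a no-op
                    new_doc[field] = [v for v in new_doc[field] if v != arg]
            else:
                raise ValueError(f"unsupported operator: {op}")
    return new_doc


order = {"_id": 1, "status": "new", "qty": 2, "tags": ["gift"]}
updated = apply_update(order, {
    "$set": {"status": "shipped"},
    "$inc": {"qty": 3},
    "$push": {"tags": "express"},
})
print(updated)
```

The pymongo call with the same update document would be collection.update_one({"_id": 1}, {...}).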

13. What is the command to delete a document from MongoDB?

The command to delete a document from MongoDB is db.collection.deleteOne() or db.collection.deleteMany().

- deleteOne(): Deletes a single document that matches the specified filter. If multiple documents match, only the first one encountered will be deleted.

- deleteMany(): Deletes all documents that match the specified filter.

For example:

db.users.deleteOne({ name: "John Doe" })

This will delete the first document in the users collection where the name field is equal to "John Doe".

db.products.deleteMany({ quantity: { $lt: 10 } })

This will delete all documents in the products collection where the quantity field is less than 10.

14. Can you give an example of a real-world scenario where MongoDB would be useful?

MongoDB is well-suited for applications that require flexible schemas and can benefit from horizontal scalability. A great example is an e-commerce website's product catalog. Each product can have a varying set of attributes (size, color, material, etc.), and new attributes can be easily added without schema migrations.

Furthermore, MongoDB's document-oriented nature allows you to store all product information in a single document, improving query performance. The ability to easily shard the database across multiple servers is crucial for handling large product catalogs and high traffic, which is a common challenge for successful e-commerce sites.

15. What are some basic data types supported by MongoDB?

MongoDB supports several basic data types, which are similar to those found in most programming languages. Some of the commonly used ones include:

- String: Used to store text. For example, "hello".

- Integer: Used to store whole numbers. MongoDB supports 32-bit and 64-bit integers. For example, 42.

- Double: Used to store floating-point numbers. For example, 3.14.

- Boolean: Used to store true/false values. For example, true or false.

- Date: Used to store dates and times, stored as the number of milliseconds since the Unix epoch.

- Array: Used to store an ordered list of values. The values within an array can be of different types. For example, [1, "hello", true].

- Object: Used to store embedded documents, allowing for complex data structures. For example, { "name": "John", "age": 30 }.

- ObjectId: A unique identifier generated by default for each document's _id field.

- Null: Represents the absence of a value. For example, null.
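The Date type is easiest to understand as a signed count of milliseconds since the Unix epoch (UTC). The plain-Python helpers below are hypothetical and not part of any driver; they only show that conversion. Drivers such as pymongo do it for you when you store a datetime object.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_bson_millis(dt):
    """Convert an aware datetime to milliseconds since the Unix epoch."""
    # Floor division drops sub-millisecond precision
    return (dt - EPOCH) // timedelta(milliseconds=1)


def from_bson_millis(ms):
    """Convert milliseconds since the epoch back to a UTC datetime."""
    return EPOCH + timedelta(milliseconds=ms)


moment = datetime(2024, 1, 15, 12, 30, tzinfo=timezone.utc)
millis = to_bson_millis(moment)
print(millis, from_bson_millis(millis).isoformat())
```

Because the value is signed, dates before 1970 are simply negative numbers.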

16. What is indexing in MongoDB, and why is it important?

Indexing in MongoDB is a way to optimize the performance of queries. It creates a data structure that stores a small portion of the data set in an easy-to-traverse form. Instead of scanning the entire collection to find matching documents, MongoDB can use the index to quickly locate the documents that match the query criteria.

Indexes are crucial because they significantly reduce the query execution time. Without indexes, MongoDB must perform a collection scan (COLLSCAN), which can be very slow, especially on large datasets. With appropriate indexes, queries can use index scans (IXSCAN), resulting in faster and more efficient data retrieval. Example index creation:

db.collection.createIndex( { "field": 1 } )

Here, 1 specifies ascending order. -1 is for descending order.
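The difference between COLLSCAN and IXSCAN can be illustrated with an analogy in plain Python. This is not MongoDB's B-tree implementation: the "index" here is a pair of sorted lists searched with the standard bisect module, and all function names are hypothetical.

```python
import bisect

documents = [
    {"_id": 1, "name": "Ana", "age": 31},
    {"_id": 2, "name": "Ben", "age": 25},
    {"_id": 3, "name": "Cleo", "age": 42},
    {"_id": 4, "name": "Dev", "age": 25},
]


def collection_scan(docs, field, value):
    """COLLSCAN analogue: examine every document."""
    return [doc for doc in docs if doc.get(field) == value]


def build_index(docs, field):
    """Ascending single-field index: sorted keys plus document positions."""
    # Simplification: documents without the field are skipped
    # (a real MongoDB index stores them under null)
    entries = sorted((doc[field], pos) for pos, doc in enumerate(docs) if field in doc)
    keys = [key for key, _ in entries]
    positions = [pos for _, pos in entries]
    return keys, positions


def index_scan(docs, index, value):
    """IXSCAN analogue: binary-search the keys, then fetch only matches."""
    keys, positions = index
    lo = bisect.bisect_left(keys, value)
    hi = bisect.bisect_right(keys, value, lo)
    return [docs[pos] for pos in positions[lo:hi]]


age_index = build_index(documents, "age")
print(index_scan(documents, age_index, 25))
```

The scan touches every document, while the lookup does a logarithmic search and then reads only the matching entries, which is the same trade-off a real index makes in exchange for extra storage and slower writes.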

17. Explain what is meant by 'scalability' in the context of databases, and how MongoDB addresses it?

Scalability in databases refers to the ability of the database system to handle increasing amounts of data, traffic, and user load without a significant degradation in performance. It essentially means the database can grow and adapt to handle more workload. There are primarily two types: vertical (scaling up by adding more resources to a single server) and horizontal (scaling out by adding more servers to a distributed system).

MongoDB addresses scalability primarily through horizontal scaling, using a technique called sharding. Sharding partitions the data across multiple MongoDB instances (shards) according to a shard key. Each shard holds a subset of the data, and the mongos query router sends queries that include the shard key to the relevant shard(s); queries without it are broadcast to all shards. This distributes the workload across multiple machines, increasing both read and write capacity. Replication (keeping multiple copies of the data in a replica set) adds redundancy and high availability, and it can also spread read load if clients are configured to read from secondaries, at the cost of possibly stale reads.
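With range-based sharding, the shard key space is split into contiguous chunks, and the router uses chunk metadata to find the shard that owns a given key. The sketch below models that lookup in plain Python; the chunk boundaries and shard names are made up for illustration, and a real cluster manages chunks (and the hashed-sharding alternative) automatically.

```python
import bisect

# Hypothetical chunk map for a shard key on customer name.
# Each chunk covers [its lower bound, the next lower bound); "" stands in for MinKey.
CHUNK_LOWER_BOUNDS = ["", "g", "n", "t"]
CHUNK_OWNERS = ["shardA", "shardB", "shardC", "shardA"]


def route(shard_key_value):
    """Return the shard owning the chunk that contains this key."""
    idx = bisect.bisect_right(CHUNK_LOWER_BOUNDS, shard_key_value) - 1
    return CHUNK_OWNERS[idx]


for name in ["alice", "grace", "nina", "zoe"]:
    print(name, "->", route(name))
```

A shard can own several non-adjacent chunks (shardA above), which is how the balancer evens out data without renumbering ranges.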

18. What are some tools you can use to interact with MongoDB besides the command line?

Besides the MongoDB command-line shell (mongosh), several GUI and programmatic tools can interact with MongoDB. These tools provide different ways to manage, visualize, and query data.

Some popular options include:

- MongoDB Compass: A GUI that provides a visual interface for exploring your data, running queries, and optimizing performance.

- NoSQLBooster for MongoDB: A shell-centric GUI tool that offers advanced IntelliSense, code snippets, and a visual query builder.

- Studio 3T: A cross-platform GUI client that supports multiple MongoDB connections, visual query building, and data exploration features. There is a free version, and paid versions with more functionality.

- Programming languages: Using drivers for languages like Python (pymongo), Node.js (the official mongodb driver, or the Mongoose ODM built on top of it), Java, etc., you can interact with MongoDB programmatically. For example:

```python
from pymongo import MongoClient

client = MongoClient('mongodb://localhost:27017/')
db = client.mydatabase
collection = db.mycollection

# Insert a document
collection.insert_one({'name': 'Example', 'value': 123})
```

19. How can you back up and restore data in MongoDB?

MongoDB offers several tools for backup and restore operations. mongodump is a utility to create binary backups of your MongoDB data. To back up an entire database, you can run mongodump --db <database_name> --out <backup_directory>. For a specific collection, use mongodump --db <database_name> --collection <collection_name> --out <backup_directory>. These commands write BSON files containing your data, plus JSON metadata files (such as collection options and index definitions).

To restore the data, use the mongorestore utility. Running mongorestore <backup_directory> restores everything in the dump, and mongorestore --nsInclude='<database_name>.<collection_name>' <backup_directory> restores a single collection (many older guides use the --db and --collection flags for this, which are deprecated in favor of --nsInclude). If the dump was created with mongodump --oplog, adding --oplogReplay to mongorestore replays the captured oplog entries so the restored data reflects a single point in time. MongoDB Atlas also offers managed backups.

20. What are some common mistakes beginners make when working with MongoDB, and how can you avoid them?

Beginners often make several common mistakes when starting with MongoDB. One frequent issue is schema design. Newcomers might try to rigidly enforce relational database-like schemas, which negates MongoDB's flexibility. Avoid this by embracing a more document-oriented approach, embedding related data when appropriate, and denormalizing where it improves read performance. Another mistake is not using indexes effectively. Queries without indexes can lead to slow performance, especially on large collections. Use explain() to identify slow queries and add indexes on frequently queried fields. Beginners also often misunderstand the _id field, for example by querying it with a plain string when the stored value is an ObjectId.

Another pitfall is mishandling connections. Opening and closing a connection for each operation is inefficient; official drivers maintain a connection pool automatically, so create one client per application and reuse it. Finally, neglecting data validation can lead to inconsistent data. Validate data in your application code before saving it, and consider MongoDB's built-in schema validation ($jsonSchema validators on a collection) as a second line of defense.

MongoDB interview questions for juniors

1. What is MongoDB?

MongoDB is a NoSQL document database. It stores data in flexible, JSON-like documents, meaning fields can vary from document to document and the schema is not predefined.

Key features include:

- Document-oriented: Data is stored as documents (BSON format).

- Scalable: Easily scale horizontally across multiple servers.

- Flexible Schema: No need to define a rigid schema beforehand.

- High Performance: Embedded documents and rich indexing let common queries read related data in a single operation.

- Indexing: Supports various indexing options for faster queries.

- Replication: Offers data redundancy and high availability through replica sets.

2. Explain the difference between SQL and NoSQL databases, focusing on MongoDB.

SQL databases are relational, using a predefined schema with structured data organized in tables, and they enforce ACID properties (Atomicity, Consistency, Isolation, Durability). NoSQL databases, like MongoDB, are non-relational, often schema-less, and designed for flexibility and scalability with unstructured or semi-structured data. Many NoSQL systems relax ACID guarantees in favor of eventual consistency and higher throughput; MongoDB, however, makes every single-document write atomic and, since version 4.0, supports multi-document ACID transactions.

MongoDB, being a NoSQL database, uses a document-oriented model that stores data in JSON-like documents, making it suitable for handling varying data structures. Instead of SQL, you use MongoDB's query language to retrieve documents that match specified criteria. Where SQL relies on joins across tables, MongoDB typically embeds related data within a single document to optimize read performance, although the $lookup aggregation stage can join collections when needed. The trade-off is data duplication, which the application must keep consistent across documents.

3. What are documents and collections in MongoDB?

In MongoDB, a document is a fundamental unit of data, similar to a row in a relational database table. Documents are stored in a JSON-like binary format called BSON (Binary JSON). Each document contains fields and values. The values can be of various data types, including strings, numbers, booleans, arrays, or even other embedded documents. For example: {"name": "John Doe", "age": 30, "city": "New York"}

A collection is a group of MongoDB documents. It's analogous to a table in a relational database. By default, collections don't enforce a schema, meaning documents within the same collection can have different fields (optional schema validation rules can be added). However, it's generally good practice to store documents with a similar structure within the same collection for efficient querying and data management.

4. How would you insert a new document into a MongoDB collection?

To insert a new document into a MongoDB collection, you would typically use the insertOne() or insertMany() methods provided by the MongoDB driver. For a single document, insertOne() is the correct choice. It takes a document (a JSON-like object) as an argument and inserts it into the specified collection.

Here's an example using JavaScript with the Node.js MongoDB driver:

```javascript
db.collection('myCollection').insertOne({
  name: 'John Doe',
  age: 30,
  city: 'New York'
}).then(result => {
  console.log(`Inserted document with _id: ${result.insertedId}`);
});
```

insertMany() is used to insert multiple documents at once, taking an array of documents as an argument.

5. How do you query all documents in a MongoDB collection?

To query all documents in a MongoDB collection, you can use the find() method without any query parameters. This returns a cursor to all documents in the collection. For example, using the MongoDB shell:

db.collectionName.find()

This returns a cursor over every document in collectionName; mongosh prints the first batch of results, and you can type it to see more. mongosh already formats results for readability, but the legacy mongo shell needed the .pretty() method, which mongosh still accepts:

db.collectionName.find().pretty()

6. How can you filter documents based on a specific field in MongoDB?

In MongoDB, you can filter documents based on a specific field using the find() method with a query object. The query object specifies the criteria for filtering. For example, to find all documents where the status field is equal to "active", you would use the following:

db.collection.find( { status: "active" } )

You can also use comparison operators like $gt (greater than), $lt (less than), $gte (greater than or equal to), $lte (less than or equal to), and $ne (not equal); logical operators like $and, $or, and $nor; and $not, which negates the operator expression on a single field, to build more complex filter conditions. For instance, to find documents where the age is greater than 25:

db.collection.find( { age: { $gt: 25 } } )
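To see how these operators evaluate, here is a deliberately tiny in-memory matcher for a subset of the query language ($eq, $ne, $gt, $gte, $lt, $lte, plus top-level $and and $or). It is a teaching sketch with hypothetical names, not how the server evaluates queries: it assumes comparable value types, treats every dict value as an operator document, and ignores array fields and BSON type ordering.

```python
import operator

COMPARISONS = {
    "$eq": operator.eq,
    "$ne": operator.ne,
    "$gt": operator.gt,
    "$gte": operator.ge,
    "$lt": operator.lt,
    "$lte": operator.le,
}


def matches(doc, query):
    """Return True if doc satisfies the (simplified) query document."""
    for key, condition in query.items():
        if key == "$and":
            if not all(matches(doc, sub) for sub in condition):
                return False
        elif key == "$or":
            if not any(matches(doc, sub) for sub in condition):
                return False
        elif isinstance(condition, dict):
            value = doc.get(key)
            for op, operand in condition.items():
                if value is None and op not in ("$eq", "$ne"):
                    return False  # a missing field never satisfies a range test
                if not COMPARISONS[op](value, operand):
                    return False
        elif doc.get(key) != condition:
            return False  # plain {field: value} means equality
    return True


people = [
    {"name": "Ana", "age": 31, "status": "active"},
    {"name": "Ben", "age": 22, "status": "inactive"},
    {"name": "Cleo", "status": "active"},
]
print([p["name"] for p in people if matches(p, {"age": {"$gt": 25}})])
```

With pymongo, the same filter document is passed unchanged: collection.find({"age": {"$gt": 25}}).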

7. What is the purpose of the `_id` field in MongoDB documents?

The _id field in MongoDB serves as a unique identifier for each document within a collection. Its primary purpose is to ensure that every document can be uniquely identified and retrieved. By default, MongoDB automatically creates an _id field and assigns a unique ObjectId value if one isn't provided when inserting a document.

- It acts as the primary key for the collection.

- It is indexed by default: MongoDB automatically creates a unique index on _id, which speeds up queries that filter on _id.

- While MongoDB generates an ObjectId by default, you can supply your own value for the _id field (e.g., a string, number, or UUID), as long as it is unique within the collection and is not an array.

8. How do you update a document in MongoDB?

To update a document in MongoDB, you typically use the updateOne() or updateMany() methods. These methods require a filter to identify the document(s) to update and an update document that specifies the changes to be made. The update document uses update operators like $set, $inc, $push, etc., to modify specific fields. For example:

```javascript
db.collection.updateOne(
  { _id: ObjectId("654...") },
  { $set: { status: "updated" } }
);
```

updateOne updates a single document matching the filter, whereas updateMany updates all documents that match. You can also use the replaceOne() method to completely replace an existing document with a new one.

9. How do you delete a document from a MongoDB collection?

To delete a document from a MongoDB collection, you can use the deleteOne() or deleteMany() methods. deleteOne() deletes a single document that matches the specified filter, while deleteMany() deletes all documents that match the filter.

For example, using deleteOne():

db.collectionName.deleteOne({ field: "value" })

This command deletes the first document in collectionName where field is equal to "value". deleteMany() with the same filter would remove every matching document, so make sure your filter is specific enough to avoid unintended deletions.

10. Explain what indexing is and why it's important in MongoDB.

Indexing in MongoDB is a way to improve the speed of read operations on a database. It involves creating a data structure that stores a small portion of the data set in an easy-to-traverse form. MongoDB indexes use a B-tree data structure. Without indexes, MongoDB must scan every document in a collection to find the documents that match a query, which is very inefficient for large collections.

Indexes are crucial for performance because they allow MongoDB to quickly locate the relevant documents without scanning the entire collection. This dramatically reduces the query execution time, especially for large datasets. For example, if you frequently query by the userId field, creating an index on userId would significantly speed up those queries.

11. How do you create an index on a field in MongoDB?

To create an index on a field in MongoDB, you use the createIndex() method. The basic syntax is db.collection.createIndex(keys, options). The keys argument specifies the field(s) to index and the index type (ascending or descending). For example, to create an ascending index on the name field in the users collection, you'd use: db.users.createIndex( { name: 1 } ). The 1 indicates ascending order; -1 would indicate descending order. You can also create compound indexes by specifying multiple fields in the keys object. For example: db.users.createIndex( { name: 1, age: -1 } )

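Options can also be specified, such as creating a unique index ({ unique: true }) to enforce uniqueness of values in the indexed field. For example, db.users.createIndex( { email: 1 }, { unique: true } ) ensures unique email addresses in the users collection. Older tutorials also show { background: true }; since MongoDB 4.2 that option is ignored, because all index builds use an optimized process that holds an exclusive lock only at the beginning and end of the build.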
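A unique index rejects any insert whose key already exists. The sketch below models that behavior with a Python dict acting as the index; the class and exception names are hypothetical (in pymongo, the real error for this case is pymongo.errors.DuplicateKeyError, reported by the server as an E11000 duplicate key error).

```python
class DuplicateKey(Exception):
    """Stand-in for a duplicate key (E11000) error."""


class UniqueIndexedCollection:
    """Toy collection with a unique single-field index."""

    def __init__(self, unique_field):
        self.unique_field = unique_field
        self.docs = []
        self.index = {}  # indexed value -> position in self.docs

    def insert_one(self, doc):
        # A missing field is indexed as None (null), so it counts as a value too
        key = doc.get(self.unique_field)
        if key in self.index:
            raise DuplicateKey(f"duplicate {self.unique_field}: {key!r}")
        self.index[key] = len(self.docs)
        self.docs.append(doc)


users = UniqueIndexedCollection("email")
users.insert_one({"email": "ana@example.com"})
try:
    users.insert_one({"email": "ana@example.com"})
except DuplicateKey as exc:
    print("rejected:", exc)
```

Note the null behavior: with a plain unique index, only one document may omit the field. A partial index (partialFilterExpression) is the usual way around that.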

12. What is the MongoDB shell and how do you use it?

The MongoDB shell, mongosh, is a JavaScript interface to interact with MongoDB databases. It allows you to connect to a MongoDB instance, execute commands, and perform administrative tasks.

To use it, you first need to install it. Then, you typically open your terminal or command prompt and type mongosh. This connects to your default MongoDB instance (usually mongodb://localhost:27017). If you need to connect to a different instance, you specify the connection string, like mongosh "mongodb://<user>:<password>@<host>:<port>/<database>?authSource=admin". Once connected, you can run MongoDB commands, such as db.collection.find() to query documents, db.collection.insertOne() to insert a document or show dbs to list available databases.

13. Describe a situation where you might choose MongoDB over a relational database.

I would choose MongoDB over a relational database when dealing with unstructured or semi-structured data. For example, if I'm building a content management system (CMS) where each piece of content can have a flexible set of attributes that can change frequently, MongoDB's document-oriented approach is a better fit. Relational databases require a predefined schema, which can be cumbersome to maintain when dealing with evolving data structures.

Another scenario is when horizontal scalability and high write throughput are critical. MongoDB's sharding capabilities make it easier to distribute data across multiple servers, allowing for increased performance and availability. This is useful for applications with large amounts of data and high traffic, such as logging systems or real-time analytics platforms.

14. What are some common data types supported by MongoDB?

MongoDB supports several data types, allowing for flexible schema design. Some common data types include:

- String: UTF-8 strings.

- Integer: Signed 32-bit or 64-bit integers.

- Double: 64-bit floating-point numbers.

- Boolean: True or false values.

- Date: Stored as a 64-bit integer representing milliseconds since the Unix epoch.

- Array: An ordered list of values.

- Object: Embedded documents (nested key-value pairs).

- ObjectId: A unique identifier for documents. Example: { "_id" : ObjectId("64b3e8c695926425f4922a1a") }

- Null: Represents an explicitly empty value (a query for null also matches documents where the field is missing).

15. What is a MongoDB database?

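Within a MongoDB deployment, a database is a named container for collections; a single server can host many databases, each with its own collections. MongoDB itself is a NoSQL, document-oriented database system. It stores data in flexible, JSON-like documents, meaning the schema of a collection is not strictly enforced. This allows for more agile development and easier handling of diverse data structures. Key features include: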

- Scalability: Horizontally scalable via sharding.

- Flexibility: Schema-less document structure.

- Performance: Indexing and aggregation framework for fast queries.

- Replication: High availability through replica sets.

- Querying: Rich query language for filtering and manipulating data.

16. What are some benefits of using MongoDB?

MongoDB offers several benefits, including its flexible schema, which allows for easy adaptation to changing data structures. Its document-oriented model aligns well with object-oriented programming paradigms and simplifies data access for developers. Scalability is another key advantage, as MongoDB is designed to handle large volumes of data and high traffic loads through sharding and replication.

Furthermore, MongoDB's rich query language and indexing capabilities facilitate efficient data retrieval. Features such as aggregation pipelines and geospatial indexing let developers perform complex data analysis and location-based queries. MongoDB also has a large user community and extensive official documentation.

17. How do you connect to a MongoDB server from the command line?

To connect to a MongoDB server from the command line, you typically use mongosh (older installations used the legacy mongo shell, which was removed in MongoDB 6.0). Simply open your terminal and type mongosh.

By default, this attempts to connect to a MongoDB server running on localhost (127.0.0.1) on the default port 27017. To connect to a different server or port, pass a connection string: mongosh mongodb://<host>:<port>, where <host> is the hostname or IP address of the MongoDB server and <port> is the port number. For example: mongosh mongodb://192.168.1.100:27017.

18. What is a projection in MongoDB and how do you use it?

In MongoDB, a projection is used to select only the necessary fields from a document, thereby limiting the amount of data that MongoDB has to send over the network. This can significantly improve query performance, especially when dealing with large documents or when you only need a subset of the fields.

To use a projection, you include a second argument in the find() method. This argument is a document that specifies which fields to include (1) or exclude (0). For example, db.collection.find({}, {name: 1, _id: 0}) would retrieve all documents from the collection, but only include the name field and exclude the _id field (which is included by default). Remember, you cannot generally mix inclusion and exclusion in the same projection (excluding _id is an exception).
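The include/exclude rules are easy to model. This plain-Python sketch (a hypothetical helper that handles top-level fields only, with no dot notation or projection operators) applies a projection document the way the answer describes: _id is returned unless explicitly excluded, and inclusion and exclusion cannot be mixed except for _id.

```python
def apply_projection(doc, projection):
    """Apply a simple top-level projection such as {"name": 1, "_id": 0}."""
    flags = {field: bool(v) for field, v in projection.items() if field != "_id"}
    if len(set(flags.values())) > 1:
        raise ValueError("cannot mix inclusion and exclusion (except for _id)")
    include_id = bool(projection.get("_id", 1))  # _id is returned unless set to 0

    if flags and all(flags.values()):
        # Inclusion projection: keep only the listed fields (plus _id by default)
        result = {field: doc[field] for field in flags if field in doc}
        if include_id and "_id" in doc:
            result = {"_id": doc["_id"], **result}
    else:
        # Exclusion projection: drop the listed fields, keep everything else
        result = {k: v for k, v in doc.items() if k not in flags}
        if not include_id:
            result.pop("_id", None)
    return result


doc = {"_id": 7, "name": "Ana", "age": 31, "city": "Lisbon"}
print(apply_projection(doc, {"name": 1, "_id": 0}))
```

In pymongo, the projection is the second argument as well: collection.find({}, {"name": 1, "_id": 0}).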

19. How do you sort the results of a query in MongoDB?

In MongoDB, you can sort the results of a query using the sort() method. This method takes a document as an argument, where the fields represent the fields to sort by, and the values represent the sort order (1 for ascending, -1 for descending).

For example, to sort a collection named 'users' by the 'age' field in ascending order and then by the 'name' field in descending order, you would use the following query:

db.users.find().sort({ age: 1, name: -1 })
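The ordering that { age: 1, name: -1 } produces can be reproduced in plain Python by relying on the stability of list.sort(): apply the least significant key first, then the most significant one. This is only an illustration of the resulting order (it assumes every document has both fields); the hypothetical spec format mirrors the list of (key, direction) pairs that pymongo's Cursor.sort accepts.

```python
users = [
    {"name": "Cleo", "age": 30},
    {"name": "Ana", "age": 25},
    {"name": "Ben", "age": 30},
    {"name": "Dev", "age": 25},
]


def sort_like_mongo(docs, spec):
    """Sort docs by a list of (field, direction) pairs, 1 = asc, -1 = desc."""
    result = list(docs)
    # Apply keys from last to first; stable sorting preserves earlier tie-breaks
    for field, direction in reversed(spec):
        result.sort(key=lambda d: d[field], reverse=(direction == -1))
    return result


ordered = sort_like_mongo(users, [("age", 1), ("name", -1)])
print([(u["age"], u["name"]) for u in ordered])
```

Users with the same age end up in descending name order, exactly as the compound sort specifies.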

20. Explain the concept of embedded documents in MongoDB.

Embedded documents in MongoDB allow you to store related data within a single document. Instead of using separate collections and performing joins, you can nest documents inside each other. This is also referred to as denormalization.

For example, consider a users collection. Instead of storing the user's address in a separate addresses collection, you can embed the address information directly within the users document. This improves read performance because all the necessary data is retrieved in a single query. However, it can make updates more complex, especially if the embedded data is frequently updated or shared across multiple documents.
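Fields inside embedded documents are queried with dot notation, for example db.users.find({ "address.city": "New York" }). The plain-Python helper below is hypothetical and ignores arrays and numeric positions; it simply resolves such a path against a nested document so you can see what the path refers to.

```python
def get_path(doc, path):
    """Follow a dot-notation path like 'address.city' through nested dicts."""
    current = doc
    for part in path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None  # treat an unresolvable path like a missing field
        current = current[part]
    return current


user = {
    "_id": 1,
    "name": "John Doe",
    "address": {"street": "1 Main St", "city": "New York", "zip": "10001"},
}

print(get_path(user, "address.city"))
```

Because the address lives inside the user document, one read returns both, which is the performance benefit the answer describes.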

21. How do you use the `$exists` operator in MongoDB queries?

The $exists operator in MongoDB is used to filter documents based on the existence of a specific field. It returns documents that contain the specified field (if set to true) or documents that do not contain the specified field (if set to false).

For example, to find all documents in a collection named products that have a field called description, you would use the following query:

db.products.find({ description: { $exists: true } })

Conversely, to find documents that do not have the description field, you would use:

db.products.find({ description: { $exists: false } })
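One subtlety worth checking in an interview: { $exists: true } also matches documents where the field is present but set to null. The pure-Python sketch below models that behavior with a hypothetical field_exists helper; it is not MongoDB code. If you want only documents with a non-null value, the usual query is { description: { $ne: null } }, which excludes both missing and null fields.

```python
def field_exists(doc, field, should_exist=True):
    """Model of {field: {$exists: should_exist}} for a top-level field.

    $exists checks whether the key is present, not whether it has a value.
    """
    return (field in doc) == should_exist


products = [
    {"_id": 1, "description": "Blue mug"},
    {"_id": 2, "description": None},  # field present, value is null
    {"_id": 3},                        # field missing
]

has_field = [p["_id"] for p in products if field_exists(p, "description")]
missing_field = [p["_id"] for p in products if field_exists(p, "description", False)]
print(has_field, missing_field)  # [1, 2] [3]
```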

22. What is the purpose of the `$in` operator in MongoDB?

The $in operator in MongoDB is used to select documents where the value of a specified field matches any of the values in a provided array. Essentially, it's a shorthand for multiple OR conditions on the same field.

For example, if you want to find all documents where the status field is either "active" or "inactive", you could use the $in operator like this: db.collection.find({ status: { $in: [ "active", "inactive" ] } }). This is more concise and often more efficient than writing db.collection.find({ $or: [ { status: "active" }, { status: "inactive" } ] }).

23. How do you use the `$and` and `$or` operators in MongoDB?

In MongoDB, $and and $or operators are used to create complex query conditions. $and requires all specified conditions to be true for a document to be included in the result set. $or, on the other hand, requires at least one of the specified conditions to be true.

Both operators accept an array of expressions as their argument. For example, to find documents where age is greater than 25 and city is "New York", you'd use $and. Conversely, to find documents where age is less than 20 or city is "Los Angeles", you'd use $or. When a query combines $and and $or, nest them explicitly so that the grouping matches the logic you intend. The following query matches active documents where age is greater than 25 or city is "Los Angeles":

```javascript
db.collection.find({
  $and: [
    { $or: [ { age: { $gt: 25 } }, { city: "Los Angeles" } ] },
    { status: "active" }
  ]
})
```
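To check how $and, $or, and $in combine, the query above can be evaluated against a few sample documents with a simplified Python matcher. matches and OPERATORS are hypothetical helpers that cover only top-level scalar fields and a handful of operators. The filter itself is written as Python dicts, the same shape a driver such as pymongo accepts. Keys in a single filter document are implicitly combined with AND, so the explicit $and in this query can also be dropped.

```python
# Comparison operators supported by this simplified model
OPERATORS = {
    "$gt": lambda value, arg: value is not None and value > arg,
    "$lt": lambda value, arg: value is not None and value < arg,
    "$in": lambda value, arg: value in arg,
}


def matches(doc, query):
    """Simplified model of MongoDB query matching on top-level fields.

    Keys in one query document are implicitly ANDed, exactly like $and.
    """
    for key, cond in query.items():
        if key == "$and":
            if not all(matches(doc, sub) for sub in cond):
                return False
        elif key == "$or":
            if not any(matches(doc, sub) for sub in cond):
                return False
        elif isinstance(cond, dict):
            # Operator expression such as {"$gt": 25}
            for op, arg in cond.items():
                if op not in OPERATORS:
                    raise NotImplementedError(op)
                if not OPERATORS[op](doc.get(key), arg):
                    return False
        elif doc.get(key) != cond:
            # Plain equality condition such as {"status": "active"}
            return False
    return True


query = {
    "$and": [
        {"$or": [{"age": {"$gt": 25}}, {"city": "Los Angeles"}]},
        {"status": "active"},
    ]
}
people = [
    {"name": "A", "age": 30, "city": "New York", "status": "active"},
    {"name": "B", "age": 20, "city": "Los Angeles", "status": "active"},
    {"name": "C", "age": 20, "city": "Boston", "status": "active"},
    {"name": "D", "age": 40, "city": "Boston", "status": "inactive"},
]
print([p["name"] for p in people if matches(p, query)])  # ['A', 'B']
```

The same matcher shows that { status: { $in: ["active", "inactive"] } } selects the same documents as the equivalent $or of two equality conditions.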

24. How can you limit the number of documents returned by a query?

In MongoDB, you limit the number of returned documents by chaining the limit() method onto a query. For example, db.users.find().limit(10) returns at most 10 documents. This is MongoDB's equivalent of the SQL LIMIT clause (SELECT * FROM table_name LIMIT 10) and of the size parameter in an Elasticsearch query body.

Limiting results is essential for query performance and for displaying results in manageable pages, especially with large datasets.

25. What is the difference between `updateOne` and `updateMany` in MongoDB?

In MongoDB, both updateOne and updateMany are used to modify documents within a collection, but they differ in the number of documents they affect. updateOne updates at most a single document, even if the query matches multiple documents. It will update the first matching document it finds. updateMany, on the other hand, updates all documents that match the specified query.

Consider this example:

```javascript
db.collection.updateOne({ status: "active" }, { $set: { last_updated: new Date() } })
// vs.
db.collection.updateMany({ status: "active" }, { $set: { last_updated: new Date() } })
```

updateOne updates only the first document it finds where status is "active", while updateMany updates every document with that status. If no documents match the query, both methods complete without error; the result simply reports a matchedCount and modifiedCount of 0.
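The difference in scope is easy to see with an in-memory model. update_docs is a hypothetical helper, not a driver API. It supports only equality filters and $set, treats list order as "first", and returns counts that mirror the matchedCount and modifiedCount fields of the real result.

```python
def update_docs(docs, query, set_fields, many=False):
    """Model of updateOne/updateMany with a $set update (in-memory list).

    Returns (matched, modified), mirroring the matchedCount and
    modifiedCount fields of the real result. Only equality filters are
    supported, and "first" means first in list order here.
    """
    matched = modified = 0
    for doc in docs:
        if all(doc.get(k) == v for k, v in query.items()):
            matched += 1
            # A document only counts as modified if $set changes something
            if any(k not in doc or doc[k] != v for k, v in set_fields.items()):
                doc.update(set_fields)
                modified += 1
            if not many:
                break  # updateOne stops after the first match
    return matched, modified


one = [{"_id": 1, "status": "active"}, {"_id": 2, "status": "active"}]
many = [{"_id": 1, "status": "active"}, {"_id": 2, "status": "active"}]
print(update_docs(one, {"status": "active"}, {"flag": True}))                # (1, 1)
print(update_docs(many, {"status": "active"}, {"flag": True}, many=True))    # (2, 2)
print(update_docs(many, {"status": "archived"}, {"flag": True}, many=True))  # (0, 0)
```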

26. How do you use the `$set` operator in an update operation?

The $set operator replaces the value of a field with the specified value. If the field does not exist, $set will add a new field with the specified value. It's used in update operations to modify existing documents.

For example, to update the name field of a document to 'John Doe', you would use the following MongoDB update operation:

```javascript
db.collection.updateOne(
  { _id: ObjectId("64d3b9e1a0b1c7f6e0e12345") },
  { $set: { name: "John Doe" } }
)
```

27. What is the purpose of the `upsert` option in the `updateOne` method?

The upsert option in the updateOne method is used to perform an "update or insert" operation. When upsert is set to true, the updateOne method will:

- Update: If a document matching the query criteria is found, it will update that document according to the update definition.

- Insert: If no document matches the query criteria, it inserts a new document. The equality conditions in the query form the base of the new document. The fields from the update document (for example, those set with the $set operator) are then applied on top, adding new fields or overwriting existing ones.
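Here's a small Python model of that upsert behavior, assuming equality-only filters. upsert_one and its new_id parameter are hypothetical. A real server generates an ObjectId for _id unless the query supplies one; the sketch imitates this by letting an _id in the query take precedence.

```python
def upsert_one(docs, query, set_fields, new_id):
    """Model of updateOne(query, {$set: set_fields}, {upsert: true}).

    Only equality conditions are supported. new_id is a hypothetical
    stand-in for the ObjectId MongoDB would generate.
    """
    for doc in docs:
        if all(doc.get(k) == v for k, v in query.items()):
            doc.update(set_fields)
            return "updated"
    # No match: the query's equality fields form the base of the new
    # document, then the $set fields are applied on top of them.
    new_doc = {"_id": new_id, **query, **set_fields}
    docs.append(new_doc)
    return "inserted"


inventory = [{"_id": 1, "sku": "A1", "qty": 5}]
print(upsert_one(inventory, {"sku": "A1"}, {"qty": 7}, new_id=2))  # updated
print(upsert_one(inventory, {"sku": "B2"}, {"qty": 3}, new_id=2))  # inserted
print(inventory[1])  # {'_id': 2, 'sku': 'B2', 'qty': 3}
```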

28. Explain how you would use aggregation pipelines in MongoDB for simple data processing.

Aggregation pipelines in MongoDB are a powerful framework for data transformation and processing. They consist of a series of stages, each performing a specific operation on the input documents. Simple data processing can be achieved by chaining together stages like $match to filter documents, $group to aggregate data based on a key, $project to reshape documents by including, excluding, or renaming fields, and $sort to order the results.

For example, suppose you have a collection of sales records and want to find the total sales for each product category. You could use a $group stage to group the documents by category and calculate the sum of sales for each group. A $project stage could then be used to refine the output to only include the category and total sales fields. Such pipelines enable efficient data manipulation directly within the database, reducing the need to transfer large datasets to application code.

29. Describe a time when you faced a challenge while learning MongoDB, and how did you overcome it?

One challenge I faced while learning MongoDB was understanding aggregation pipelines. Initially, the concept of chaining multiple stages to transform data seemed complex. I struggled with effectively using operators like $group, $unwind, and $lookup to achieve the desired data manipulation. To overcome this, I started with simple aggregation examples and gradually increased the complexity. I also found the MongoDB documentation and online tutorials extremely helpful.

Specifically, I worked through examples that involved grouping data by multiple fields, calculating aggregates, and reshaping the output documents. I also experimented with different aggregation operators and observed their effects on the data. Another helpful resource was Stack Overflow, where I found solutions to specific problems I encountered. By consistently practicing and referring to available resources, I eventually gained a solid understanding of aggregation pipelines and their capabilities. I also practiced writing unit tests for my pipelines to ensure the correctness of the results.

30. What are some tools you can use to visualize MongoDB data?

Several tools can be used to visualize MongoDB data. Some popular options include:

- MongoDB Compass: MongoDB's official GUI, offering visual exploration, schema analysis, and query execution.

- BI tools: Tableau, Power BI, and Qlik can connect to MongoDB and create interactive dashboards and reports.

- Third-party GUIs such as Studio 3T and NoSQLBooster: Studio 3T, for example, offers advanced features like SQL-to-MongoDB query conversion and visual explain plans.

- Custom applications: Libraries like pymongo (Python) or mongoose (Node.js) can be used to fetch data, which you then visualize with libraries like matplotlib, seaborn, or Chart.js.

- MongoDB Charts: A data visualization tool built specifically for MongoDB data, providing embeddable charts and dashboards.

MongoDB intermediate interview questions

1. Explain the concept of sharding in MongoDB and when would you consider implementing it?

Sharding in MongoDB is a method of horizontally partitioning data across multiple machines. It distributes data across a cluster of MongoDB instances, allowing the database to handle larger datasets and higher throughput than a single server could manage. Data is divided into chunks based on a shard key, and these chunks are distributed across the shards.

You would consider implementing sharding when:

- The dataset size exceeds the storage capacity of a single server.

- The write or read throughput exceeds the capacity of a single server.

- You need to improve query performance by distributing the query load across multiple servers.

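- You want to distribute data across multiple locations, for example using zone sharding to keep data close to the users who access it. Note that high availability and fault tolerance come from replica sets (each shard is itself deployed as a replica set), not from sharding alone. Without sharding, a growing workload eventually hits the hardware limits of a single server, so sharding is essential for scaling out your database.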

2. How does MongoDB handle transactions, and what are the different types of transactions supported?

MongoDB provides ACID guarantees (Atomicity, Consistency, Isolation, Durability) for transactions. Operations on a single document have always been atomic. Multi-document transactions were added in version 4.0 for replica sets and extended to sharded clusters in version 4.2. A multi-document transaction ensures that a series of operations either all succeed or all roll back, maintaining data integrity.

MongoDB supports several types of transactions:

- Single-Document Atomicity: Available in all versions. Any write to a single document, including changes to its embedded documents and arrays, is atomic.

- Multi-Document Transactions: Introduced in 4.0, these transactions span multiple documents, collections, and even databases within a replica set. They provide ACID guarantees across multiple operations.

- Distributed Transactions: Introduced in MongoDB 4.2, these extend multi-document transactions across multiple shards in a sharded cluster, providing ACID guarantees across horizontally scaled data.

3. Describe the role of indexes in MongoDB performance and how to identify inefficient queries needing indexing.

Indexes in MongoDB are crucial for optimizing query performance. They work by creating a data structure that stores a small portion of the data set in an easy-to-traverse form. Without indexes, MongoDB must perform a collection scan, examining every document in a collection to find those that match the query criteria. This is highly inefficient, especially on large collections. With indexes, MongoDB can quickly locate the relevant documents, significantly reducing query execution time.

Inefficient queries needing indexing can be identified through the explain() method. By running db.collection.find(query).explain("executionStats"), you can analyze the query plan. Look for the stage attribute in the explain output. A COLLSCAN stage indicates a full collection scan, meaning an index is likely needed. Also, high executionTimeMillis values suggest the query is slow and could benefit from indexing. Consider creating indexes on the fields used in your queries, especially those used for filtering, sorting, or joining.
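The check described above can be automated. The sketch below assumes you already have the explain("executionStats") output as a Python dict, for example from a driver. It walks the winning plan looking for a COLLSCAN stage and compares totalDocsExamined with nReturned. The helper names are hypothetical, and the sample output is abridged and invented for illustration. Real explain documents contain many more fields, and their layout varies between server versions; the code also looks under a queryPlan key, which some newer versions use.

```python
def plan_stages(plan):
    """Collect stage names from an explain() plan tree, depth first."""
    stages = [plan["stage"]]
    if "inputStage" in plan:
        stages += plan_stages(plan["inputStage"])
    for child in plan.get("inputStages", []):
        stages += plan_stages(child)
    return stages


def diagnose(explain_output):
    """Flag collection scans in explain("executionStats") output."""
    winning = explain_output["queryPlanner"]["winningPlan"]
    # Some newer server versions nest the plan tree under "queryPlan"
    winning = winning.get("queryPlan", winning)
    stats = explain_output["executionStats"]
    return {
        "collscan": "COLLSCAN" in plan_stages(winning),
        # Documents examined per document returned; close to 1 is ideal
        "docs_per_result": stats["totalDocsExamined"] / max(stats["nReturned"], 1),
        "millis": stats["executionTimeMillis"],
    }


# Abridged, made-up explain output for a query without a usable index
sample = {
    "queryPlanner": {"winningPlan": {"stage": "COLLSCAN", "direction": "forward"}},
    "executionStats": {"nReturned": 12, "executionTimeMillis": 85,
                       "totalKeysExamined": 0, "totalDocsExamined": 120000},
}
print(diagnose(sample))  # {'collscan': True, 'docs_per_result': 10000.0, 'millis': 85}
```

A ratio in the thousands, as here, means the server read far more documents than it returned, which is a strong hint that an index on the filtered fields is missing.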

4. What are the differences between MongoDB and relational databases (like MySQL or PostgreSQL)?

MongoDB is a NoSQL document database, while relational databases (like MySQL or PostgreSQL) are SQL-based. This core difference leads to several distinctions. Relational databases use a structured schema with tables, rows, and columns, enforcing data integrity through relationships and transactions (ACID properties). MongoDB uses a flexible schema (or schemaless design) storing data in JSON-like documents within collections. This offers greater agility and scalability.

Key differences include:

- Data Model: Relational: Tables with fixed columns; MongoDB: Documents with dynamic fields.

- Schema: Relational: Fixed schema; MongoDB: Flexible schema.

- Query Language: Relational: SQL; MongoDB: MongoDB Query Language (MQL).

- Scalability: Relational: Primarily vertical scaling; MongoDB: Horizontal scaling.

5. How would you back up and restore a MongoDB database?

MongoDB offers several tools for backup and restore. mongodump creates a binary (BSON) export of the database's contents, and mongorestore imports data from mongodump output or a BSON file. For example, to back up a database named mydb to a directory /opt/backup, use mongodump --db mydb --out /opt/backup. To restore it, use mongorestore --db mydb /opt/backup/mydb.

For larger deployments or production environments, consider using MongoDB Atlas's built-in backup features or MongoDB Ops Manager, which provide continuous backups and point-in-time recovery. These methods are generally preferred over mongodump and mongorestore because they offer more robust and automated backup solutions.

6. Explain the use of aggregation pipeline in MongoDB, providing an example of a complex data transformation.

The aggregation pipeline in MongoDB is a framework for data aggregation, processing, and transformation. It's structured as a multi-stage pipeline that transforms documents into aggregated results. Each stage performs a specific operation on the input documents, and the output of one stage becomes the input of the next. Some common stages include $match (filtering documents), $group (grouping documents by a specified key and applying aggregation functions), $project (reshaping documents by including, excluding, or renaming fields), $sort (sorting documents), and $unwind (deconstructing array fields).

Here's an example of a complex data transformation using an aggregation pipeline:

Consider a collection named orders with documents like this: { _id: ObjectId("..."), customerId: "cust123", items: [ { productId: "prod1", quantity: 2, price: 10 }, { productId: "prod2", quantity: 1, price: 20 } ], status: "shipped" }

To calculate the total revenue per customer for shipped orders, the aggregation pipeline would be:

```javascript
[
  { $match: { status: "shipped" } },
  { $unwind: "$items" },
  { $group: { _id: "$customerId", totalRevenue: { $sum: { $multiply: [ "$items.quantity", "$items.price" ] } } } },
  { $sort: { totalRevenue: -1 } }
]
```

This pipeline first filters orders to only include those with the status "shipped". Then, it unwinds the items array to create separate documents for each item in the order. After that, it groups the items by customerId and calculates the totalRevenue by summing the product of quantity and price for each item. Finally, it sorts the results by totalRevenue in descending order.
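When testing a pipeline like this, it helps to have an independent expectation for the result. The sketch below computes the same totals in plain Python so you can compare it with the server's output. revenue_per_customer and the sample orders are hypothetical, and the code mirrors each stage only for this specific document shape.

```python
from collections import defaultdict


def revenue_per_customer(orders):
    """Pure-Python equivalent of the orders pipeline above (in memory)."""
    totals = defaultdict(int)
    for order in orders:
        if order.get("status") != "shipped":  # $match: { status: "shipped" }
            continue
        for item in order.get("items", []):  # $unwind: "$items"
            # $group by customerId with $sum of $multiply(quantity, price)
            totals[order["customerId"]] += item["quantity"] * item["price"]
    results = [{"_id": cid, "totalRevenue": total} for cid, total in totals.items()]
    results.sort(key=lambda d: d["totalRevenue"], reverse=True)  # $sort: -1
    return results


orders = [
    {"customerId": "cust123", "status": "shipped",
     "items": [{"productId": "prod1", "quantity": 2, "price": 10},
               {"productId": "prod2", "quantity": 1, "price": 20}]},
    {"customerId": "cust456", "status": "shipped",
     "items": [{"productId": "prod1", "quantity": 5, "price": 10}]},
    {"customerId": "cust123", "status": "pending",
     "items": [{"productId": "prod3", "quantity": 9, "price": 99}]},
]
print(revenue_per_customer(orders))
# [{'_id': 'cust456', 'totalRevenue': 50}, {'_id': 'cust123', 'totalRevenue': 40}]
```

With pymongo, the stage list shown above would be passed as a list of dicts to collection.aggregate(), which returns a cursor over the result documents.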

7. What are the different write concerns available in MongoDB, and how do they affect data durability?

MongoDB's write concerns control the level of guarantee that MongoDB provides when reporting success of a write operation. They affect data durability by dictating how many nodes must acknowledge the write before it's considered successful.

Available write concerns include:

- w: <number> or "majority": Requests acknowledgment from the specified number of replica set members, or from a majority of them.

- j: <boolean>: Whether the write must be recorded in the on-disk journal before it is acknowledged. With j: true, the acknowledgment guarantees the data has been written to the on-disk journal.

- wtimeout: <number>: Maximum time, in milliseconds, to wait for the write concern to be satisfied.

Choosing a stronger write concern (e.g., w: "majority", j: true) improves data durability but can increase latency. Weaker write concerns offer faster writes but carry a greater risk of data loss in the event of a failure. Before MongoDB 5.0 the default write concern was w: 1, meaning acknowledgment from the primary only. Starting in 5.0, the implicit default is w: "majority" for most replica set configurations.
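A small Python sketch of the arithmetic shows why w: "majority" costs latency and why replica sets usually have an odd number of members. It assumes every member is a data-bearing voting member; arbiters and non-voting members change the calculation. Both helpers are hypothetical.

```python
def majority(voting_members):
    """Acknowledgments needed for w: "majority" in a replica set where
    every member is a data-bearing voting member (no arbiters)."""
    return voting_members // 2 + 1


def tolerated_failures(voting_members):
    """Members that can be down while majority writes still succeed."""
    return voting_members - majority(voting_members)


for n in (3, 4, 5):
    print(n, majority(n), tolerated_failures(n))
# 3 2 1
# 4 3 1
# 5 3 2
```

A fourth member raises the number of acknowledgments a majority write needs without tolerating any more failures than three members do, which is one reason odd-sized replica sets are the usual recommendation.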

8. How does MongoDB ensure data consistency, and what are the trade-offs between consistency and performance?

MongoDB lets you tune data consistency through several mechanisms. By default, reads use the "local" read concern. This returns the most recent data on the member being queried, even if that data has not yet replicated to a majority and could later be rolled back. For stronger guarantees, you combine write concern, read concern, and read preference. Write concern specifies the number of replica set members that must acknowledge a write operation before it is considered successful. A write concern of majority ensures that writes are acknowledged by a majority of the replica set, so they survive a failover. Read concern "majority" returns only data that a majority of members have acknowledged. Read preference dictates which replica set members a client can read from. A read preference of primary sends every read to the primary, so reads see the latest writes rather than possibly stale data on a secondary.

The tradeoff between consistency and performance lies in the resources required to enforce stronger consistency. For instance, requiring majority write concern adds latency to write operations, as the primary must wait for acknowledgements from other members. Similarly, reading from the primary can add latency, especially if the primary is under heavy load or geographically distant. Looser consistency settings (e.g., write concern of 1 and read preference of secondaryPreferred) improve performance but at the risk of reading stale data. Choosing the appropriate balance depends on the application's requirements for data accuracy versus response time.

9. Describe the use of MongoDB Compass and its advantages in managing and querying data.

MongoDB Compass is a GUI for visualizing and managing your MongoDB data. It provides a user-friendly interface to explore databases, collections, and documents. You can use it to perform CRUD operations, create and manage indexes, and analyze query performance.

Advantages include its visual query builder (great for users unfamiliar with MongoDB query language), real-time performance monitoring, schema visualization, and easy index management. It simplifies database administration and development tasks, especially for those who prefer a graphical interface over the command line.

10. Explain the purpose of the MongoDB profiler and how to use it to identify slow queries.

The MongoDB profiler is a diagnostic tool that collects detailed information about database operations. Its primary purpose is to help identify slow-running queries or operations that are impacting performance. By analyzing the profiler's output, developers and database administrators can pinpoint inefficient queries and optimize them.

To use the profiler, you first need to enable it. The profiling level determines which operations are logged. A level of 1 logs operations that take longer than a specified threshold (in milliseconds), set by slowms. A level of 2 logs all operations. You can set the profiling level using db.setProfilingLevel(level, slowms). After enabling the profiler, you can query the system.profile collection (e.g., db.system.profile.find()) to view the logged operations. The output will show information like the query itself, the execution time (millis), and other relevant details. Analyzing the millis field will help identify slow queries for optimization.

11. How would you design a schema for storing time-series data in MongoDB?

For time-series data in MongoDB, I'd opt for a schema that balances query efficiency and storage size. A common approach involves using a 'measurements' collection. Each document would represent a single measurement at a specific timestamp. The schema would include fields like:

- timestamp: ISODate (indexed for efficient time-based queries).

- metric: String (name of the metric being measured).

- tags: Object (key-value pairs for metadata, e.g., server ID, region).

- value: Number (the actual measurement value).

An alternative and more compact approach is bucketing: Grouping multiple measurements within a single document for a specific time range. Each document contains an array of measurements for a time range, like an hour, reducing storage overhead. This approach requires careful planning of the bucket size.
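The bucketing approach can be sketched in Python by converting flat measurement documents (using the fields listed above) into one bucket document per metric, tag set, and hour. bucket_by_hour is a hypothetical helper, and the bucket layout ("start", "count", "measurements") is an illustrative choice, not a MongoDB format. MongoDB 5.0 and later also offer native time series collections, created with db.createCollection() and a timeseries option naming the timeField and metaField. These collections apply a similar bucketing internally.

```python
from datetime import datetime, timezone


def bucket_by_hour(measurements):
    """Group flat measurement documents into one bucket per metric, tags,
    and hour. The bucket layout ("start", "count", "measurements") is an
    illustrative choice, not a format MongoDB requires."""
    buckets = {}
    for m in measurements:
        hour = m["timestamp"].replace(minute=0, second=0, microsecond=0)
        key = (m["metric"], tuple(sorted(m["tags"].items())), hour)
        bucket = buckets.setdefault(key, {
            "metric": m["metric"],
            "tags": m["tags"],
            "start": hour,
            "count": 0,
            "measurements": [],
        })
        bucket["measurements"].append({"timestamp": m["timestamp"], "value": m["value"]})
        bucket["count"] += 1
    return list(buckets.values())


def reading(hour, minute, value):
    # Hypothetical CPU readings from a single server
    return {"timestamp": datetime(2024, 1, 1, hour, minute, tzinfo=timezone.utc),
            "metric": "cpu", "tags": {"server": "web-1"}, "value": value}


buckets = bucket_by_hour([reading(10, 5, 0.4), reading(10, 40, 0.7), reading(11, 2, 0.5)])
print([(b["start"].hour, b["count"]) for b in buckets])  # [(10, 2), (11, 1)]
```

Choosing the bucket size is the planning step the text mentions. Larger buckets mean fewer documents but larger arrays to update and scan.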

12. What are the different types of indexes in MongoDB, and when should you use each type?

MongoDB offers several index types to optimize query performance. The most common is the Single Field Index, used to improve queries filtering on a single field. Compound Indexes are crucial for queries involving multiple fields, as they index multiple fields together, optimizing queries that filter on these fields in a specific order. Multikey Indexes are automatically created when indexing fields that hold array values; they index each element in the array. Geospatial Indexes (2d and 2dsphere) are specialized for location-based queries. The 2d index is for data stored as legacy coordinate pairs, while 2dsphere supports GeoJSON objects.

Other specialized index types include Text Indexes, which support text search queries across string content; Hashed Indexes, which index a hash of the field's value and are commonly used for hashed sharding because they spread values evenly across shards; and TTL (Time-To-Live) Indexes, used to automatically remove documents after a certain period. Choosing the right index depends on your query patterns. Analyze common query shapes, select index types that support them efficiently, and use explain() to confirm that queries use the indexes you expect.

13. How does MongoDB handle concurrency, and what are the different locking mechanisms used?

MongoDB handles concurrency by combining multi-granularity locking with document-level concurrency control in the WiredTiger storage engine. At the global, database, and collection levels, most reads and writes take intent locks, so many clients can read and write the same collection at the same time. Only certain operations, such as dropping a collection, need an exclusive lock on it. Within a collection, WiredTiger controls concurrent access at the document level.

Specifically, MongoDB utilizes the following locking modes:

- Intent Shared (IS): Indicates an intent to read resources.

- Intent Exclusive (IX): Indicates an intent to modify resources.

- Shared (S): Grants read access to a resource.

- Exclusive (X): Grants exclusive write access to a resource.

MongoDB's lock manager acquires these locks at the global, database, and collection levels, depending on the operation being performed. Below the collection level, the WiredTiger storage engine uses Multi-Version Concurrency Control (MVCC), which allows readers and writers to operate concurrently without blocking each other. When two operations try to modify the same document, WiredTiger detects the write conflict and MongoDB transparently retries one of the operations.

14. Explain the concept of replica sets in MongoDB and how they provide high availability.

Replica sets in MongoDB provide high availability and data redundancy by maintaining multiple copies of data across different server instances. A replica set is essentially a cluster of MongoDB servers that includes a primary node and one or more secondary nodes. The primary node handles all write operations and is the main source of data. The secondary nodes replicate the primary node's data, ensuring that a copy of the data is available even if the primary node fails.

In case of a primary node failure, the replica set automatically initiates an election process to choose a new primary from the available secondaries. This failover process ensures minimal downtime and continuous operation. Clients automatically redirect their operations to the new primary. This automatic failover capability and data redundancy are the key components that make replica sets suitable for high-availability applications.

15. How would you monitor the performance of a MongoDB cluster and identify potential bottlenecks?

To monitor a MongoDB cluster's performance and identify bottlenecks, I'd use a combination of tools and techniques. mongostat and mongotop provide real-time insights into database operations and resource utilization. MongoDB Atlas provides a hosted monitoring solution, and other tools like Prometheus and Grafana can be integrated. Key metrics to watch include CPU utilization, memory usage, disk I/O, query execution times (using explain()), replication lag, and connection counts. High CPU or I/O can indicate slow queries or indexing issues, while replication lag can signal network problems or overloaded secondaries.

Identifying bottlenecks involves analyzing slow queries with explain() to check index usage, and profiling database operations with the database profiler to find the most time-consuming queries. Remedies include optimizing indexes, rewriting inefficient queries, or sharding the database for horizontal scalability. Regularly reviewing MongoDB logs can also reveal errors or warnings that point to potential problems.

16. What are the best practices for securing a MongoDB database, including authentication and authorization?

Securing a MongoDB database involves several key best practices. Authentication is crucial: always enable access control, either by setting security.authorization: enabled in the configuration file or by starting mongod with the --auth option. Create administrative users with strong passwords using db.createUser() in the admin database. Then create users and roles with the least privilege needed for each application or person. For authorization, MongoDB's role-based access control (RBAC) allows fine-grained control over data access. Define custom roles with specific privileges, such as read or write access to particular collections, and assign these roles to users. Use TLS/SSL encryption for all client connections to protect data in transit.

Other practices include regularly auditing your database configurations and user permissions. Keep your MongoDB server and drivers up to date with the latest security patches. Limit network exposure by binding MongoDB to a private network interface and using firewalls to restrict access to authorized IP addresses or networks. Consider field-level encryption for sensitive data at rest, and enable auditing to track database activities for security monitoring and compliance. Always sanitize user inputs to prevent injection attacks. Be mindful of data exposure in logs as well, and avoid writing sensitive data to them where possible.

17. How does MongoDB handle large documents, and what are the limitations on document size?

MongoDB handles large documents through its BSON (Binary JSON) format, which is designed for efficient storage and querying of complex data structures. While MongoDB supports large documents, there's a hard limit of 16MB per document. This limitation is in place to ensure predictable performance and prevent individual documents from monopolizing resources.

When data exceeds this limit, there are two common strategies. GridFS splits a large file into chunks stored across multiple documents. Data model redesign breaks a large document into smaller, related documents, for example by moving an unbounded array into its own collection. Sharding does not help here, because it distributes whole documents across servers and never splits a single document. GridFS is particularly suited for storing large binary files like images and videos. The choice of strategy depends on the specific use case and the nature of the data.

18. Explain the use of TTL (Time-To-Live) indexes in MongoDB and how they can be used for data expiration.

TTL (Time-To-Live) indexes in MongoDB are special single-field indexes that MongoDB uses to automatically remove documents from a collection after a certain period. This is useful for data expiration scenarios like session management, event logging, or storing temporary data that doesn't need to be persisted indefinitely.

To create a TTL index, you specify a field whose value is a date or an array of dates, plus an expireAfterSeconds value. A background process automatically deletes documents whose indexed date is more than expireAfterSeconds in the past. If the field holds an array, the earliest date is used. Documents where the field is missing or is not a date never expire. For example:

db.collection.createIndex( { "lastActivity": 1 }, { expireAfterSeconds: 3600 } )

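This removes documents whose lastActivity value is more than 3600 seconds (1 hour) old. Deletion is not immediate: the background task that removes expired documents runs every 60 seconds, so a document can remain for a short while after it expires.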
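The expiry rule can be expressed as a small Python predicate. ttl_expired is a hypothetical helper. It models when a document becomes eligible for deletion under an index like the one above, including the rules for arrays and non-date values, but it does not model the timing of the background deletion task.

```python
from datetime import datetime, timedelta, timezone


def ttl_expired(doc, field, expire_after_seconds, now):
    """Return True if a TTL index on `field` would treat doc as expired.

    This models eligibility only; the real deletion happens later, when
    the background TTL task next runs.
    """
    value = doc.get(field)
    if isinstance(value, list):
        dates = [v for v in value if isinstance(v, datetime)]
        value = min(dates) if dates else None  # arrays use the earliest date
    if not isinstance(value, datetime):
        return False  # missing or non-date values never expire
    return now - value > timedelta(seconds=expire_after_seconds)


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(ttl_expired({"lastActivity": now - timedelta(hours=2)}, "lastActivity", 3600, now))     # True
print(ttl_expired({"lastActivity": now - timedelta(minutes=30)}, "lastActivity", 3600, now))  # False
```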

19. How would you implement a full-text search in MongoDB?

MongoDB offers built-in full-text search capabilities using text indexes. To implement it, you first create a text index on the field(s) you want to search. For example, db.collection.createIndex({ field1: "text", field2: "text" }). Then, you use the $text operator in your queries along with the $search expression to perform the search. For example: db.collection.find({ $text: { $search: "search term" } }).

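Text indexes already apply language-specific stemming and ignore stop words. For more advanced requirements, such as typo tolerance (fuzzy matching), autocomplete, or custom relevance scoring, consider Atlas Search. Atlas Search is a fully managed search service built on Apache Lucene and integrated directly with MongoDB Atlas.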

20. What are the different data types supported by MongoDB, and how do they compare to other databases?

MongoDB supports several BSON data types, including: Double, String, Boolean, Date, Timestamp, ObjectId, Array, Embedded Document (Object), Binary Data, JavaScript (Code), Int32, Int64, Decimal128, MinKey, MaxKey, and Null. A Symbol type also exists but is deprecated. Many of these have direct counterparts in other databases, but there are some key differences. The ObjectId type is specific to MongoDB and serves as the default primary key (_id). MongoDB also has built-in support for arrays and embedded documents, which avoids many of the joins a relational database would need. Because BSON has native Date, Int32, Int64, and Decimal128 types, values that plain JSON would have to store as strings or generic numbers keep their precise type. Finally, unlike relational databases with pre-defined schemas, MongoDB offers schema flexibility. This means the application, or MongoDB's optional schema validation, must enforce integrity constraints.

21. How would you optimize a MongoDB query that is performing poorly?

To optimize a poorly performing MongoDB query, I'd start by analyzing the query using explain(). This reveals the query execution plan and identifies potential bottlenecks like missing indexes or slow stages.

Next, I'd focus on the following optimization techniques:

- Indexing: Ensure appropriate indexes exist for the fields used in query filters, sort operations, and covered queries (queries that can be satisfied entirely from the index).

- Query Structure: Refactor the query to be more selective. Avoid $where clauses and unanchored regular expressions, as they cannot use indexes efficiently; a prefix pattern anchored with ^ (for example, /^abc/) can use an index. Use a projection (for example, {field: 1}) to return only the fields you need instead of entire documents.

- Aggregation Pipeline Optimization: If using aggregation pipelines, place the most selective stages early in the pipeline to reduce the amount of data processed in subsequent stages. Use $match early and $project to reduce the size of documents flowing through the pipeline.

- Sharding: If the dataset is very large, consider sharding the collection across multiple servers.

- Read Preference: Consider setting read preferences to secondary nodes if read-heavy and eventual consistency is acceptable.

22. Explain the concept of the Oplog in MongoDB and its role in replication.

The Oplog (operations log) in MongoDB is a capped collection that resides on each replica set member. It records all write operations (insert, update, delete) that modify data. It's essentially a chronological record of changes to the database. This is critical for replication, as secondary members use the Oplog to keep their data synchronized with the primary.

When data is written to the primary, that write operation is recorded in the primary's Oplog. Secondary members then pull these Oplog entries from their sync source and apply them to their own datasets. The sync source is usually the primary, though a secondary can also sync from another secondary. This process ensures that secondary members maintain a consistent copy of the data. Because the Oplog is a capped collection, it has a fixed size, meaning older operations are eventually overwritten, providing a rolling history of changes. The size of the Oplog directly impacts how long a secondary member can be offline before it becomes too far behind to catch up.
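The relationship between oplog size and how long a secondary can stay offline is simple arithmetic. The sketch below assumes a steady write rate and uses made-up figures; both helpers are hypothetical. In practice you would read the actual window from rs.printReplicationInfo() (its "log length start to end" line). You would also leave headroom, since a returning secondary must apply new writes while it catches up.

```python
def oplog_window_hours(oplog_size_gb, oplog_gb_per_hour):
    """Approximate hours of history the oplog holds at a steady write rate."""
    return oplog_size_gb / oplog_gb_per_hour


def can_resync_from_oplog(oplog_size_gb, oplog_gb_per_hour, offline_hours):
    """True if a secondary offline this long can still catch up from the
    oplog; otherwise it would need a full initial sync."""
    return offline_hours < oplog_window_hours(oplog_size_gb, oplog_gb_per_hour)


# Hypothetical figures: a 50 GB oplog filling at 2 GB of oplog entries per hour
print(oplog_window_hours(50, 2))          # 25.0
print(can_resync_from_oplog(50, 2, 10))   # True
print(can_resync_from_oplog(50, 2, 30))   # False
```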

23. How would you handle schema evolution in a MongoDB database without causing downtime?

Schema evolution in MongoDB without downtime typically involves several strategies. First, leverage MongoDB's schema-less nature by allowing documents in a collection to have different structures. New fields can be added without affecting existing documents. Second, implement backward compatibility in your application. Your code should be able to handle both old and new document structures gracefully. This might involve checking for the existence of fields before using them or providing default values for missing fields.

Third, for more complex changes, consider a gradual schema migration. This can involve adding new fields and populating them over time using background processes or during regular application operations. To rename a field, you can add a new field with the desired name, copy data from the old field, and remove the old field once all documents have been migrated (for a one-step rename, the $rename update operator is also available). You can also write scripts using the MongoDB shell or a driver library that perform updates in batches to minimize the impact on performance. For example, on MongoDB 4.2 or later, db.collection.updateMany({newField: {$exists: false}}, [{$set: {newField: '$oldField'}}]) copies each document's oldField value into newField. The square brackets matter: they make the update an aggregation pipeline, in which '$oldField' is a field reference, whereas in a plain update document the string '$oldField' would be stored literally. Finally, thorough testing in a staging environment is crucial before deploying any schema changes to production.
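The backward-compatible read path and batched migration can be sketched in Python as follows. This is a minimal sketch under assumed names: a hypothetical users collection where an old name field is being replaced by fullName and a new tags field defaults to an empty list. read_user is pure Python; migrate_batch expects a pymongo Collection and relies on update pipelines, which need MongoDB 4.2 or later.

```python
# Hypothetical schema change: "name" (old) -> "fullName" (new), plus a new
# "tags" field that older documents do not have yet.


def read_user(doc):
    """Normalize a user document regardless of which schema version it uses."""
    return {
        "_id": doc.get("_id"),
        "fullName": doc.get("fullName", doc.get("name")),  # fall back to old field
        "tags": doc.get("tags", []),                       # default for missing field
    }


def migrate_batch(collection, batch_size=1000):
    """Copy name -> fullName for up to batch_size unmigrated documents.

    The update is a pipeline (a list), so "$name" is a field reference.
    Returns the number of modified documents; call repeatedly until it
    returns 0.
    """
    ids = [
        d["_id"]
        for d in collection.find(
            {"fullName": {"$exists": False}, "name": {"$exists": True}},
            {"_id": 1},
        ).limit(batch_size)
    ]
    if not ids:
        return 0
    result = collection.update_many(
        {"_id": {"$in": ids}},
        [{"$set": {"fullName": "$name"}}],
    )
    return result.modified_count
```

Because the filter only matches documents that still lack fullName, the migration can be stopped and resumed safely, and application code using read_user keeps working throughout.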

24. What are the considerations for choosing the right hardware for a MongoDB deployment?

Choosing the right hardware for MongoDB involves considering several factors, chiefly CPU, RAM, storage, and network.

- CPU: The right choice depends on the workload. Higher core counts benefit write-heavy workloads with many concurrent operations, as well as complex aggregations.

- RAM: RAM is crucial for caching data. MongoDB performs best when the working set (the data and indexes accessed most frequently) fits in RAM, minimizing disk I/O; insufficient RAM leads to performance degradation.

- Storage: SSDs offer significantly better performance than HDDs, especially for random read/write operations. Choose storage capacity based on your data size and expected growth.

- Network: Bandwidth is vital for replication and client connections. Ensure sufficient bandwidth to handle data transfer between replica set members and client applications, and consider dedicated network interfaces for inter-node communication within the replica set.

Alternatively, consider the cloud-based MongoDB Atlas, which manages the infrastructure for you.
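A quick back-of-the-envelope check helps with RAM sizing. By default, the WiredTiger storage engine's internal cache uses the larger of 50% of (RAM minus 1 GB) or 256 MB. The sketch below compares an estimated working set against that default. The hot-data and index sizes are inputs you would have to estimate yourself, and treating them as uncompressed sizes is a simplifying assumption; the operating system's file-system cache adds some headroom beyond this figure.

```python
def default_wiredtiger_cache_gb(ram_gb):
    """Default WiredTiger cache: the larger of 50% of (RAM - 1 GB) or 256 MB."""
    return max(0.5 * (ram_gb - 1), 0.25)


def working_set_fits(hot_data_gb, index_gb, ram_gb):
    """Rough check: do frequently used data plus indexes fit in the default cache?

    Sizes are treated as uncompressed, since the WiredTiger cache holds
    data in uncompressed form.
    """
    return hot_data_gb + index_gb <= default_wiredtiger_cache_gb(ram_gb)


# A 16 GB server gets a 7.5 GB default cache.
print(default_wiredtiger_cache_gb(16))
print(working_set_fits(hot_data_gb=4, index_gb=2, ram_gb=16))
```

If the check fails, the usual options are more RAM, a smaller working set (for example, better indexes or projections), or sharding to spread the working set across servers.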

25. How does MongoDB handle geospatial data and what are the different geospatial queries supported?

MongoDB stores location data either as GeoJSON objects (such as Point, LineString, and Polygon) embedded within documents, or as legacy coordinate pairs. It supports two types of geospatial indexes: the 2dsphere index for spherical geometry (used for most real-world geospatial data on the Earth's surface, including GeoJSON objects) and the 2d index for planar geometry on legacy coordinate pairs.

MongoDB offers various geospatial queries. Here are a few frequently used queries:

- $geoWithin: Selects documents whose geospatial data lies entirely within a specified shape (e.g., a polygon).

- $geoIntersects: Selects documents whose geospatial data intersects with a specified shape.

- $near: Returns documents in order from nearest to farthest from a specified point.

- $nearSphere: Similar to $near, but calculates distances using spherical geometry.
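The query operators above take GeoJSON shapes, which are easy to get subtly wrong. The helpers below are a minimal sketch that builds the filter documents in the shape pymongo accepts. The location field name and the find_nearby helper are hypothetical. Two GeoJSON details are worth noting: coordinates are ordered [longitude, latitude], and a polygon ring must end at the same point where it starts. With a 2dsphere index and a GeoJSON point, $maxDistance is measured in meters.

```python
def point(lng, lat):
    """GeoJSON Point. Note the order: longitude first, then latitude."""
    return {"type": "Point", "coordinates": [lng, lat]}


def polygon(vertices):
    """GeoJSON Polygon with a single outer ring, closed if necessary."""
    ring = [list(v) for v in vertices]
    if ring[0] != ring[-1]:
        ring.append(list(ring[0]))  # GeoJSON rings must end where they start
    return {"type": "Polygon", "coordinates": [ring]}


def near_query(lng, lat, max_meters):
    # With a 2dsphere index and a GeoJSON point, $maxDistance is in meters.
    return {"location": {"$near": {"$geometry": point(lng, lat),
                                   "$maxDistance": max_meters}}}


def within_query(vertices):
    return {"location": {"$geoWithin": {"$geometry": polygon(vertices)}}}


def find_nearby(collection, lng, lat, max_meters=1000):
    """Run against a pymongo Collection (requires a live server)."""
    collection.create_index([("location", "2dsphere")])  # $near needs a geo index
    return list(collection.find(near_query(lng, lat, max_meters)))


# Example document shape: {"name": "Cafe", "location": point(-73.97, 40.77)}
```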

26. Explain the use of change streams in MongoDB and how they can be used for real-time data processing.

MongoDB change streams allow applications to access real-time data changes without the complexity of manual polling or tailing the oplog. They provide a unified API to subscribe to data modifications (insert, update, delete, replace, invalidate) across collections, databases, or the entire deployment. Change streams are built on top of the oplog but offer a more user-friendly and reliable way to consume changes. They are available on replica sets and sharded clusters, not on standalone servers.

For real-time data processing, change streams can trigger actions based on specific data events. For example, an application could update a search index whenever a document is inserted or modified, send notifications when a user profile is updated, or maintain a real-time dashboard reflecting the latest data. Code to open a change stream to a collection myCollection in myDatabase would look like this:

db.getSiblingDB("myDatabase").getCollection("myCollection").watch()
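For an application written in Python, a change stream consumer might look like the sketch below. It separates a pure event handler from the blocking watch loop so the handling logic can be tested without a server. The search-index action names are hypothetical; collection.watch with full_document="updateLookup" is pymongo's API for also receiving the current version of updated documents (fullDocument can still be None if the document was deleted in the meantime).

```python
# Only react to document-level changes.
PIPELINE = [
    {"$match": {"operationType": {"$in": ["insert", "update", "replace", "delete"]}}}
]


def handle_change(change):
    """Map a change event to a (hypothetical) search-index action."""
    doc_id = change["documentKey"]["_id"]
    if change["operationType"] == "delete":
        return ("delete_from_index", doc_id)
    # With full_document="updateLookup", update events also carry fullDocument.
    return ("index_document", doc_id, change.get("fullDocument"))


def watch_collection(collection):
    """Blocking loop over a pymongo collection's change stream.

    Requires a live replica set or sharded cluster.
    """
    with collection.watch(PIPELINE, full_document="updateLookup") as stream:
        for change in stream:
            action = handle_change(change)
            print(action)  # replace with a call to your own search service
```

For resilience, a consumer would typically persist the stream's resume token (available as stream.resume_token in pymongo) and pass it back via resume_after after a restart.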

27. How would you migrate data from a relational database to MongoDB?

Migrating data from a relational database to MongoDB involves several steps. First, analyze the relational schema and map it to a suitable MongoDB document structure. This often means denormalizing data to fit MongoDB's document-oriented nature. Then, extract data from the relational database, potentially transforming it during the process. Several approaches can be used for data extraction:

- Direct Export: Use database-specific tools or commands to export tables into a flat-file format such as CSV or TSV (e.g., MySQL's mysqldump --tab, or PostgreSQL's COPY ... TO with the CSV format), then import the files into MongoDB using mongoimport.

- ETL Tools: Employ ETL (Extract, Transform, Load) tools such as Apache Kafka Connect or Apache NiFi, or write custom scripts in a language like Python with libraries such as SQLAlchemy and pymongo. These approaches give you more control over the transformation process. For example:

```python
import pymongo
import sqlalchemy

# Relational DB connection (placeholder credentials and host)
engine = sqlalchemy.create_engine('postgresql://user:password@host:port/database')
metadata = sqlalchemy.MetaData()
table = sqlalchemy.Table('mytable', metadata, autoload_with=engine)  # reflect columns

# MongoDB connection
client = pymongo.MongoClient('mongodb://user:password@host:port/')
collection = client['mydatabase']['mycollection']

BATCH_SIZE = 1000  # insert_many in batches instead of one insert_one per row

# Data migration
with engine.connect() as connection:
    batch = []
    for row in connection.execute(table.select()):
        # Row -> dict (SQLAlchemy 1.4+). Types such as decimal.Decimal
        # must be converted before pymongo can encode them as BSON.
        batch.append(dict(row._mapping))
        if len(batch) >= BATCH_SIZE:
            collection.insert_many(batch)
            batch = []
    if batch:
        collection.insert_many(batch)

client.close()
```

- MongoDB Connector for BI: Note that the Connector for BI works in the opposite direction: it lets SQL-based BI and reporting tools query data that already lives in MongoDB. It is not a tool for importing relational data into MongoDB.

Finally, validate the migrated data in MongoDB to ensure accuracy and completeness. Consider indexing relevant fields in MongoDB to optimize query performance after migration.
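The schema-mapping step, where related rows are denormalized into a single document, can be sketched in plain Python. The orders/order_items tables and their columns are hypothetical: the function embeds each order's line items as an array inside the order document, which is the typical one-to-many mapping when the child rows are always read together with the parent.

```python
from collections import defaultdict


def denormalize_orders(order_rows, item_rows):
    """Embed each order's line items inside the order document."""
    items_by_order = defaultdict(list)
    for item in item_rows:
        items_by_order[item["order_id"]].append(
            {"sku": item["sku"], "quantity": item["quantity"]}
        )
    return [
        {
            "_id": order["id"],  # reuse the relational primary key
            "customer": order["customer"],
            "items": items_by_order.get(order["id"], []),
        }
        for order in order_rows
    ]


# Rows as they might come out of the relational database.
orders = [{"id": 1, "customer": "alice"}, {"id": 2, "customer": "bob"}]
items = [
    {"order_id": 1, "sku": "A", "quantity": 2},
    {"order_id": 1, "sku": "B", "quantity": 1},
]
docs = denormalize_orders(orders, items)
# docs could then be written with collection.insert_many(docs)
```

Reusing the relational primary key as _id also makes validation easier, because each source row can be matched to exactly one migrated document.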

28. What are the differences between MongoDB Atlas and self-managed MongoDB deployments?

MongoDB Atlas is a fully-managed cloud database service, while self-managed MongoDB requires you to handle all aspects of the database infrastructure. Atlas automates tasks like provisioning, scaling, backups, and software patching, reducing operational overhead. With self-managed, you are responsible for these tasks, offering greater control but demanding more expertise and time.

Key differences include:

- Management: Atlas handles most management tasks; self-managed deployments require manual configuration and maintenance.

- Infrastructure: Atlas runs on the cloud provider's infrastructure; self-managed deployments can run on-premises or on your own cloud infrastructure.

- Scaling: Atlas offers easier horizontal scaling; self-managed scaling requires more manual effort.

- Cost: Atlas has a predictable cost structure; self-managed costs vary based on infrastructure and labor.

In summary, Atlas provides simplicity and automation, while self-managed deployments give greater control and customization.

29. How would you implement data validation in MongoDB?

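MongoDB offers several ways to implement data validation. One approach is schema validation, which lets you define rules specifying the structure and data types of documents within a collection. You can specify these rules when creating or modifying a collection using the validator option in db.createCollection() or the collMod command. Rules are written with the $jsonSchema operator (the recommended approach) or with other query operators, and the validationLevel and validationAction options control which documents are checked and whether invalid documents are rejected or only logged as warnings.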

The $where operator cannot be used inside a validator; for rules that compare fields within the same document, use $expr instead. In addition, perform application-level validation so that bad data is caught before it reaches the database. Note that db.collection.validate( { full: <boolean> } ) is a different feature: it checks the integrity of a collection's internal data and index structures (for example, to detect corruption), not whether documents conform to a schema.
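A $jsonSchema validator can be sketched as a Python dictionary and passed to pymongo's create_collection, which forwards options such as validator, validationLevel, and validationAction to the server's create command. The users collection and its name/email/age fields are hypothetical. The missing_required_fields helper shows one way to mirror the server-side rules in application-level validation.

```python
# Hypothetical "users" collection rules expressed with $jsonSchema.
USER_VALIDATOR = {
    "$jsonSchema": {
        "bsonType": "object",
        "required": ["name", "email"],
        "properties": {
            "name": {"bsonType": "string"},
            "email": {"bsonType": "string", "pattern": "^[^@\\s]+@[^@\\s]+$"},
            "age": {"bsonType": "int", "minimum": 0},
        },
    }
}


def create_users_collection(db):
    """Create the collection with server-side validation (pymongo Database)."""
    return db.create_collection(
        "users",
        validator=USER_VALIDATOR,
        validationLevel="strict",  # check all inserts and updates
        validationAction="error",  # reject documents that fail validation
    )


def missing_required_fields(doc):
    """Application-level pre-check mirroring the schema's required fields."""
    return [f for f in USER_VALIDATOR["$jsonSchema"]["required"] if f not in doc]
```

For an existing collection, the same rules could be applied with db.command("collMod", "users", validator=USER_VALIDATOR).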

30. Explain the concept of GridFS in MongoDB and when would you use it?

GridFS is a specification in MongoDB for storing and retrieving large files, such as images, audio files, and videos, that exceed the 16MB BSON document size limit. It works by splitting the file into smaller chunks (defaulting to 255KB) and storing each chunk as a separate document in a chunks collection. Metadata about the file, such as its filename, size, and content type, is stored in a files collection. This allows for efficient storage and retrieval of large files without being constrained by the document size limit.

You would use GridFS when you need to store and serve large files directly from your MongoDB database. This approach simplifies your application architecture by avoiding the need for a separate file storage system. It's particularly useful when you want to leverage MongoDB's features like replication, sharding, and indexing for your files. For example, user-uploaded profile pictures, large documents, and media files used by an application are all suitable GridFS use cases.
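The chunking scheme can be modeled in a few lines of Python. This is a simplified model of the documents GridFS writes, not a replacement for the driver: real fs.files documents carry additional fields such as uploadDate, and in practice pymongo's gridfs.GridFSBucket (with upload_from_stream and open_download_stream_by_name) handles all of this. The file_id and filename values are hypothetical.

```python
DEFAULT_CHUNK_SIZE = 255 * 1024  # GridFS default chunk size (255 KiB)


def split_into_chunks(file_id, filename, data, chunk_size=DEFAULT_CHUNK_SIZE):
    """Model the fs.files and fs.chunks documents GridFS would create."""
    chunks = [
        {"files_id": file_id, "n": n, "data": data[offset:offset + chunk_size]}
        for n, offset in enumerate(range(0, len(data), chunk_size))
    ]
    files_doc = {"_id": file_id, "filename": filename,
                 "length": len(data), "chunkSize": chunk_size}
    return files_doc, chunks


def reassemble(chunks):
    """Concatenate chunk data in order of the sequence number n."""
    return b"".join(c["data"] for c in sorted(chunks, key=lambda c: c["n"]))


data = bytes(600_000)  # a 600 KB file needs 3 chunks of at most 255 KiB
files_doc, chunks = split_into_chunks("file-1", "video.mp4", data)
```

Because each chunk is an ordinary document with a sequence number n, a reader can fetch only the chunks covering a byte range instead of the whole file.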

MongoDB interview questions for experienced

1. How does MongoDB ensure data consistency across multiple replica set members, especially during network partitions?

MongoDB keeps replica set members consistent through replication and a majority-based election process. The primary accepts all write operations and records them in its oplog; secondaries asynchronously copy and apply those oplog entries. During a network partition, a primary that can no longer reach a majority of voting members steps down, and only the side of the partition that still holds a majority of votes can elect a new primary. A member can win an election only if its oplog is at least as up to date as those of the members voting for it, which favors the most current secondary.

However, consistency guarantees are tunable. The write concern w: "majority" ensures that a write is acknowledged only after a majority of data-bearing voting members have it, so acknowledged writes are not rolled back after a failover, at the cost of extra latency. Writes acknowledged only by a primary that then becomes isolated can be rolled back when it rejoins the set. Read preference settings control which members clients read from, trading consistency against read latency and availability: reading from secondaries (for example with secondary, secondaryPreferred, or nearest, or with primaryPreferred while the primary is unavailable) may return stale data.
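The majority rule behind elections and w: "majority" comes down to simple arithmetic. The sketch below assumes every member is a voting, data-bearing member (no arbiters or non-voting members) and checks which side of a network partition can elect or keep a primary. It also shows why odd-sized replica sets are preferred: a 4-member set split 2/2 leaves neither side with a majority.

```python
def majority(voting_members):
    """Number of votes that constitutes a majority."""
    return voting_members // 2 + 1


def sides_that_can_elect(partition_sizes):
    """For each side of a partition, can it elect (or keep) a primary?"""
    need = majority(sum(partition_sizes))
    return [size >= need for size in partition_sizes]


print(majority(5))                   # 3 votes needed in a 5-member set
print(sides_that_can_elect([3, 2]))  # only the 3-member side
print(sides_that_can_elect([2, 2]))  # neither side: no primary at all
```

In pymongo, the corresponding write concern can be requested with collection.with_options(write_concern=WriteConcern(w="majority")), where WriteConcern is imported from pymongo.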

2. Explain the concept of sharding in MongoDB. What are the key components involved, and how do they interact?

Sharding in MongoDB is a method of horizontally partitioning data across multiple machines to handle large datasets and high-throughput operations. It involves distributing data across multiple shards, each of which is a replica set holding a subset of the data. This allows for scaling beyond the limits of a single server.

The key components are:

- Shards: Store subsets of the data. Each shard is deployed as a replica set for redundancy.

- mongos (Query Routers): Act as a routing service for client applications, directing queries to the appropriate shards and merging the results. Clients connect to mongos instances, not directly to the shards.

- Config Servers: Store metadata about the cluster, including how data (as chunks of shard-key ranges) is distributed across shards. Config servers are themselves deployed as a replica set.

The interaction goes like this: a client sends a request to a mongos instance. The mongos uses the routing metadata it obtains from the config servers (and caches locally) to determine which shard(s) contain the requested data. If the query includes the shard key, mongos can target only the relevant shards; otherwise it broadcasts the query to all shards (a scatter-gather query). The mongos then routes the request, receives the results, merges them, and returns them to the client. Without the config servers' metadata, mongos could not route queries correctly.
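A toy version of the mongos routing decision makes the targeted versus scatter-gather distinction concrete. This is an illustrative model under simplifying assumptions, not MongoDB's implementation: range sharding on a single numeric shard key, chunk ranges with an inclusive lower bound and exclusive upper bound, a hard-coded routing table in place of config-server metadata, and only equality matches on the shard key being targeted (any operator query such as $gt is broadcast here, although real mongos can also target range queries).

```python
from bisect import bisect_right


class ToyRouter:
    """Toy mongos: routes by shard-key ranges (lower bound inclusive)."""

    def __init__(self, split_points, shards):
        # Range 0 is (-inf, split_points[0]); range i is
        # [split_points[i-1], split_points[i]); the last range is open-ended.
        if len(shards) != len(split_points) + 1:
            raise ValueError("need exactly one more shard than split points")
        self.split_points = sorted(split_points)
        self.shards = shards

    def shard_for(self, key_value):
        return self.shards[bisect_right(self.split_points, key_value)]

    def route(self, query, shard_key):
        """Targeted if the query has an equality match on the shard key."""
        if shard_key in query and not isinstance(query[shard_key], dict):
            return [self.shard_for(query[shard_key])]
        return list(self.shards)  # scatter-gather to every shard


router = ToyRouter([100, 200], ["shardA", "shardB", "shardC"])
print(router.route({"userId": 150}, "userId"))      # ['shardB']
print(router.route({"email": "a@b.c"}, "userId"))  # all three shards
```

This is why choosing a shard key that appears in most queries matters: queries that omit it must visit every shard.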

3. Describe your experience with MongoDB aggregation framework. Provide a specific example where you used it to solve a complex data processing problem.

I have extensive experience with the MongoDB aggregation framework, using it for various data processing tasks, including data transformation, reporting, and analytics. I'm comfortable with all stages, from initial data shaping to complex calculations and output formatting. I've worked with operators like $match, $group, $project, $unwind, $lookup, $sort, and $limit frequently.

For example, I once worked on a project requiring complex user behavior analysis for an e-commerce platform. We needed to identify user segments based on their purchasing patterns across different product categories, calculate their average order value within specific timeframes, and then rank these segments by their overall contribution to revenue. Using the aggregation framework, I built a pipeline that:

- Used $match to filter relevant order data.

- Used $unwind on the products array within each order.

- Used $lookup to enrich product data with category information.

- Used $group by user and product category to calculate purchase frequency and total spending.

- Used a second $group stage to aggregate across categories and calculate total spending per user and the average order value.

- Finally, used $bucketAuto to segment users based on spending, and $sort and $limit to rank the segments.

This aggregation pipeline effectively transformed raw order data into actionable insights for marketing and product development: db.orders.aggregate([...])
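A pipeline along the lines of the six steps described in that answer could be sketched in Python as below. It is one possible reconstruction, not the original project's code: the orders and products collections and fields such as status, orderDate, userId, products.productId, price, quantity, and category are all hypothetical. Since a user's orders can span several categories, the sketch collects order IDs per category and merges them with $setUnion so the average order value counts each order once.

```python
from datetime import datetime


def build_segment_pipeline(start, end, buckets=4, top=3):
    """Sketch of a user-segmentation pipeline (field names are hypothetical)."""
    return [
        # Keep only completed orders in the time window.
        {"$match": {"status": "completed", "orderDate": {"$gte": start, "$lt": end}}},
        # One document per order line item.
        {"$unwind": "$products"},
        # Attach product details (including category).
        {"$lookup": {"from": "products", "localField": "products.productId",
                     "foreignField": "_id", "as": "product"}},
        {"$unwind": "$product"},
        # Per user and category: purchase count, spend, and orders involved.
        {"$group": {
            "_id": {"user": "$userId", "category": "$product.category"},
            "purchases": {"$sum": 1},
            "spend": {"$sum": {"$multiply": ["$products.price", "$products.quantity"]}},
            "orders": {"$addToSet": "$_id"},
        }},
        # Per user: total spend, plus the order sets from each category.
        {"$group": {
            "_id": "$_id.user",
            "totalSpend": {"$sum": "$spend"},
            "orderSets": {"$push": "$orders"},
        }},
        # Count distinct orders across categories, then average order value.
        {"$set": {"orderCount": {"$size": {"$reduce": {
            "input": "$orderSets", "initialValue": [],
            "in": {"$setUnion": ["$$value", "$$this"]},
        }}}}},
        {"$set": {"avgOrderValue": {"$divide": ["$totalSpend", "$orderCount"]}}},
        # Segment users by spend, then rank segments by revenue.
        {"$bucketAuto": {"groupBy": "$totalSpend", "buckets": buckets, "output": {
            "users": {"$sum": 1},
            "revenue": {"$sum": "$totalSpend"},
            "avgOrderValue": {"$avg": "$avgOrderValue"},
        }}},
        {"$sort": {"revenue": -1}},
        {"$limit": top},
    ]


# Example: segment Q1 2024 orders; run with pymongo as db.orders.aggregate(pipeline).
pipeline = build_segment_pipeline(datetime(2024, 1, 1), datetime(2024, 4, 1))
```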

4. What are the different types of indexes available in MongoDB, and how do you choose the right index for a given query?

MongoDB offers several index types, including:

- Single Field: Indexes a single field in a document.

- Compound: Indexes multiple fields; the order matters for query performance.

- Multikey: Indexes fields that hold array values; MongoDB creates separate index entries for each element of the array.

- Geospatial: Indexes fields containing geospatial data for location-based queries (2d, 2dsphere).

- Text: Supports text search queries on string content.

- Hashed: Indexes the hash of a field's value; used for shard keys in sharded clusters.