Microservices architecture is now the defacto standard for building scalable and resilient applications, which means assessing candidates requires a nuanced understanding of the underlying principles. Interviewers need a well-prepared list of questions to accurately gauge a candidate's expertise in this field, and our guide on skills required for software architect can provide some context.

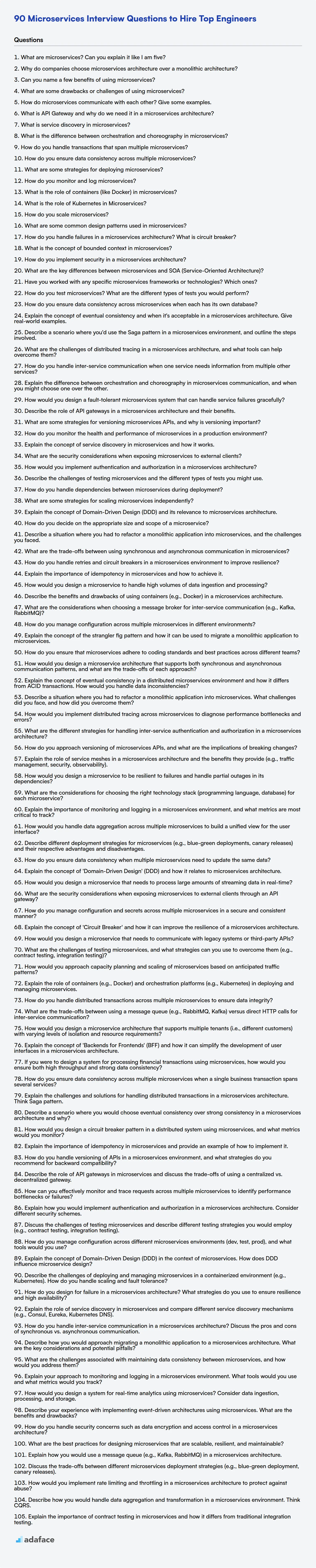

This blog post provides a question bank for hiring managers and recruiters to evaluate candidates across various experience levels, ranging from basic to expert, and also includes a set of multiple-choice questions. Each question is designed to reveal the depth of a candidate's knowledge and their practical experience with microservices.

By using these questions, you can identify candidates who not only understand the theory but also have hands-on experience building and deploying microservices and to further enhance your evaluation process, consider using Adaface's skill assessments like our Java online test before the interview.

Table of contents

Basic Microservices interview questions

1. What are microservices? Can you explain it like I am five?

Imagine you have a big toy robot, but it's hard to play with because it does everything! Microservices are like taking that big robot and breaking it into smaller robots, each doing just one thing. One robot might move, another might talk, and another might shoot lasers. These small robots can work together to do everything the big robot did, but they're easier to understand and fix if something goes wrong.

So, instead of one big program doing everything, we have many tiny programs, each responsible for one small part of the job. This makes it easier to update parts of the system without affecting the whole thing, just like fixing one small robot without stopping all the others!

2. Why do companies choose microservices architecture over a monolithic architecture?

Companies choose microservices architecture over monolithic architecture primarily for increased agility, scalability, and resilience. Microservices allow teams to work independently on smaller, more manageable codebases, leading to faster development cycles and easier deployments. Each service can be scaled independently based on its specific needs, optimizing resource utilization. If one microservice fails, the rest of the application can continue to function, improving overall system resilience.

Monolithic architectures, while simpler to initially develop, can become difficult to maintain and scale as the application grows. Changes in one part of the application can have unintended consequences in other parts, leading to longer testing cycles and slower release cadences. Furthermore, scaling a monolithic application often requires scaling the entire application, even if only a small part of it is under heavy load.

3. Can you name a few benefits of using microservices?

Microservices offer several benefits, including improved scalability, independent deployments, and increased resilience. Because services are independent, individual components can be scaled as needed without impacting the entire application. This also allows for faster and more frequent deployments, as changes to one service do not require redeploying the whole application. Furthermore, if one microservice fails, it doesn't necessarily bring down the entire system, increasing overall resilience.

Another advantage is technology diversity. Different microservices can be built using different technologies that are best suited for their specific functions. For example, one microservice might use Python and Flask, while another uses Java and Spring Boot. This allows teams to choose the right tool for the job, increasing development speed and efficiency.

4. What are some drawbacks or challenges of using microservices?

Microservices, while offering benefits like independent deployments and scalability, introduce several challenges. Increased complexity is a major drawback, requiring robust infrastructure, tooling, and monitoring to manage numerous services. Distributed systems are inherently more complex to debug and trace issues across service boundaries.

Data consistency can also be difficult to maintain across multiple databases owned by different services, potentially leading to eventual consistency models. Other challenges include the overhead of inter-service communication (latency, serialization/deserialization), the need for decentralized governance, and increased operational costs due to managing a more complex environment.

5. How do microservices communicate with each other? Give some examples.

Microservices communicate with each other using various mechanisms, primarily through APIs. The most common approach is synchronous communication via REST APIs (using HTTP). For instance, one service might make a GET request to another service's endpoint to retrieve data, or a POST request to trigger an action. Another common approach is asynchronous communication using message queues or brokers like RabbitMQ or Kafka. In this case, services publish messages to a queue/topic, and other services subscribe to those queues/topics to receive and process the messages.

Examples include:

- A product service calling an inventory service via REST to check stock levels.

- An order service publishing an "OrderCreated" event to a message queue, which is then consumed by the shipping and billing services.

- Using gRPC for high-performance internal communication.

6. What is API Gateway and why do we need it in a microservices architecture?

An API Gateway acts as a single entry point for all client requests in a microservices architecture. Instead of clients directly accessing individual microservices, they interact with the API Gateway, which then routes the requests to the appropriate backend services. It is a reverse proxy.

We need it because it decouples clients from the internal structure of the microservices, providing several benefits:

- Centralized entry point: Simplifies client interactions and reduces complexity.

- Request routing: Routes requests to the appropriate microservices.

- Authentication and authorization: Handles security concerns in one place.

- Rate limiting: Protects backend services from overload.

- Transformation: Allows request and response transformation for different client needs.

- Reduces coupling: The architecture of microservices can be changed without affecting the consumers of the API.

7. What is service discovery in microservices?

Service discovery in microservices is the automated process of locating and connecting to available service instances in a dynamic environment. It allows services to find each other without hardcoded configurations, which is essential in a microservices architecture where service instances are frequently created, destroyed, and scaled. Without service discovery, managing the constantly changing network locations of services would be extremely complex.

There are two main patterns for service discovery:

- Client-side discovery: The client queries a service registry to find available service instances and then directly connects to one of them.

- Server-side discovery: Clients connect to a load balancer, which in turn queries the service registry and routes the request to an available service instance. Examples of technologies used include:

Eureka,Consul, andetcd.

8. What is the difference between orchestration and choreography in microservices?

Orchestration and choreography are both patterns for managing interactions between microservices, but they differ in how the interactions are controlled. Orchestration relies on a central orchestrator that tells each microservice what to do. This central component manages the overall workflow. Choreography, on the other hand, has each microservice react to events and know what actions to perform based on those events without a central controller. It is a more decentralized approach.

In orchestration, the communication is top-down, with the orchestrator coordinating the services. In choreography, the services communicate by publishing events that other services subscribe to, creating a reactive system. Examples of orchestration frameworks include BPMN engines or custom workflow implementations. Examples of choreography patterns include message queues like Kafka or RabbitMQ where services publish and consume events.

9. How do you handle transactions that span multiple microservices?

Handling transactions across multiple microservices is complex, as there's no distributed transaction management like in monolithic databases. Two common patterns are Saga and Two-Phase Commit (2PC). Saga involves a sequence of local transactions, each updating a single microservice. If one fails, compensating transactions are executed to undo previous changes, ensuring eventual consistency. There are two types of Saga: Choreography-based (services communicate via events) and Orchestration-based (a central orchestrator manages the workflow).

2PC is another approach where all participating microservices must agree to commit before any changes are made permanent. While it provides strong consistency, it can introduce performance bottlenecks and tight coupling, making it less suitable for highly distributed microservices architectures. The choice between Saga and 2PC depends on the specific requirements of the system, balancing consistency, performance, and complexity.

10. How do you ensure data consistency across multiple microservices?

Ensuring data consistency across microservices is a complex challenge. Common strategies include: Saga Pattern (orchestration or choreography), where a series of local transactions across services are coordinated; Two-Phase Commit (2PC) (less common in microservices due to tight coupling), a distributed transaction protocol; and Eventual Consistency, where data may be temporarily inconsistent but converges over time. For eventual consistency, techniques like compensating transactions and idempotency are crucial for handling failures. Using message queues (e.g., Kafka, RabbitMQ) can help ensure reliable event delivery.

For instance, if an order service needs to update inventory in an inventory service, the order service publishes an 'OrderCreated' event. The inventory service consumes this event and attempts to update its stock. If the inventory update fails, a compensating transaction (e.g., 'OrderCancelled' event) can be triggered to revert the order. Idempotency ensures that processing the same event multiple times has the same effect as processing it once, preventing unintended side effects due to retries. Each service should ideally own its data, minimizing direct database access between services.

11. What are some strategies for deploying microservices?

Several strategies exist for deploying microservices. Some common approaches include:

- Blue/Green Deployment: Deploy a new version (green) alongside the old (blue). Once the new version is verified, switch traffic. This minimizes downtime.

- Canary Deployment: Gradually roll out a new version to a small subset of users. Monitor performance and errors before rolling out to everyone. This allows for early issue detection.

- Rolling Deployment: Update microservice instances one at a time or in small batches. This avoids a complete outage but requires backward compatibility during the update process.

- In-place deployment: Stop the old version and deploy new version on the same instances.

- Serverless Deployment: Deploy microservices as functions using platforms like AWS Lambda or Azure Functions. This simplifies deployment and scaling.

Consider factors like downtime tolerance, rollback strategy, and complexity when choosing a deployment method. Containerization (e.g., Docker) and orchestration (e.g., Kubernetes) are often used to facilitate these deployments. For example, using a rolling update in Kubernetes: kubectl rolling-update my-app --image=new-image:latest.

12. How do you monitor and log microservices?

Effective microservice monitoring and logging involve several key aspects. For monitoring, I'd use a combination of metrics, distributed tracing, and health checks. Metrics, gathered by tools like Prometheus, provide insights into resource utilization, response times, and error rates. Distributed tracing, using tools like Jaeger or Zipkin, helps track requests across multiple services. Health checks expose endpoints to verify service availability. I would also use Grafana or similar tools for creating dashboards that visualize these metrics and traces.

For logging, I'd implement centralized logging using tools like ELK stack (Elasticsearch, Logstash, Kibana) or Splunk. Structured logging (e.g., using JSON format) is crucial for efficient querying and analysis. Correlation IDs should be added to logs to track requests across services. Alerting mechanisms are set up to notify the team when specific thresholds are breached. Centralized dashboards with alerts allow for proactive responses to potential problems.

13. What is the role of containers (like Docker) in microservices?

Containers, such as Docker, play a crucial role in microservices architectures by providing a lightweight and isolated environment for each microservice. They encapsulate a microservice and all its dependencies (libraries, binaries, configuration files) into a single, portable unit. This ensures that the microservice runs consistently across different environments (development, testing, production).

Using containers offers several benefits:

- Isolation: Each microservice runs in its own isolated container, preventing dependencies conflicts and ensuring that failures in one microservice don't affect others.

- Portability: Containers can be easily moved and deployed across different environments, simplifying the deployment process.

- Scalability: Containers can be easily scaled up or down based on demand, allowing for efficient resource utilization.

- Simplified Deployment: Container orchestration tools like Kubernetes automate the deployment, scaling, and management of containerized microservices.

14. What is the role of Kubernetes in Microservices?

Kubernetes is a container orchestration platform that plays a vital role in managing and scaling microservices. It automates the deployment, scaling, and management of containerized applications.

Specifically, Kubernetes helps with:

- Service Discovery: Kubernetes provides mechanisms for microservices to discover and communicate with each other using service names instead of hardcoded IP addresses, by using its own DNS.

- Load Balancing: It distributes traffic across multiple instances of a microservice to ensure high availability and performance.

- Scaling: Kubernetes can automatically scale the number of microservice instances based on demand, ensuring that the application can handle varying loads.

- Self-healing: Kubernetes monitors the health of microservices and automatically restarts failed containers.

- Automated Deployments and Rollbacks: Simplifies the process of deploying new versions of microservices and rolling back to previous versions if necessary.

15. How do you scale microservices?

Scaling microservices involves scaling each service independently based on its specific needs. Horizontal scaling, where you add more instances of a service, is the most common approach. This can be automated using container orchestration platforms like Kubernetes, which dynamically adjusts the number of instances based on resource utilization or request volume. Load balancing distributes traffic across these instances.

Several strategies exist to optimize scaling. These include:

- Caching: Reduce load on services by caching frequently accessed data.

- Database Optimization: Optimize database queries and consider using read replicas.

- Asynchronous Communication: Use message queues (e.g., Kafka, RabbitMQ) for non-critical operations to decouple services and improve responsiveness.

- Code Optimization: Profile code to identify bottlenecks and optimize performance.

- Autoscaling: Utilize tools to automatically scale services based on predefined metrics. Proper monitoring and logging are crucial to identify scaling bottlenecks and ensure the health of your microservices.

16. What are some common design patterns used in microservices?

Common design patterns in microservices include:

- API Gateway: Acts as a single entry point for clients, routing requests to the appropriate microservices.

- Circuit Breaker: Prevents cascading failures by stopping requests to a failing service temporarily.

- Aggregator: Combines data from multiple services into a single response.

- CQRS (Command Query Responsibility Segregation): Separates read and write operations for optimized performance and scalability. Useful if the ratio of reads is much higher than writes and vice-versa.

- Event Sourcing: Captures all changes to an application's state as a sequence of events, enabling auditing and replay capabilities. Useful if auditing is required and application complexity is low.

- Saga: Manages distributed transactions across multiple services, ensuring data consistency. There are two main types of sagas: Choreography-based sagas and Orchestration-based sagas.

- Strangler Fig: Gradually replaces a monolithic application with microservices, allowing for a smooth transition. This includes introducing new functionalities with microservices and slowly phasing out old monolithic application functionalities.

- Backends for Frontends (BFF): Creates separate backend services tailored to specific user interfaces, optimizing the user experience.

These patterns address challenges like service discovery, fault tolerance, and data consistency in a distributed microservices architecture.

17. How do you handle failures in a microservices architecture? What is circuit breaker?

In a microservices architecture, failures are inevitable. Handling them gracefully is crucial for maintaining system stability. Strategies include retries (with exponential backoff), timeouts, load shedding (limiting incoming requests), and, most importantly, circuit breakers.

A circuit breaker is a design pattern that prevents cascading failures. It works like an electrical circuit breaker: when a service repeatedly fails, the circuit breaker "opens", preventing further requests from reaching the failing service. Instead, it returns a fallback response (e.g., cached data or a default value) or throws an exception. After a timeout period, the circuit breaker enters a "half-open" state, allowing a limited number of test requests to pass through. If these requests succeed, the circuit breaker "closes", resuming normal operation. If they fail, the circuit breaker returns to the "open" state.

18. What is the concept of bounded context in microservices?

A bounded context in microservices defines a specific domain or subdomain within a larger system, establishing clear boundaries for models, data, and code. It means each microservice owns its data and logic within this context and is responsible for maintaining its consistency. It's a strategic way to decompose a complex system into smaller, manageable units, preventing conceptual overlap and reducing the risk of creating a tightly coupled monolithic application. Different bounded contexts can use different technologies and data models without impacting each other.

Key benefits of using bounded contexts include:

- Improved maintainability and scalability.

- Reduced complexity within each microservice.

- Increased autonomy for development teams.

- Better alignment with business domains.

19. How do you implement security in a microservices architecture?

Securing a microservices architecture involves several key strategies. Authentication and authorization are crucial, often implemented using standards like OAuth 2.0 and OpenID Connect. An API Gateway acts as a central point for handling authentication, authorization, and rate limiting before requests reach individual services. Consider using JSON Web Tokens (JWTs) to securely propagate user identity between services.

Service-to-service communication should be secured with TLS/SSL. Implement proper input validation and output encoding in each service to prevent injection attacks. Regularly scan for vulnerabilities, enforce the principle of least privilege when assigning permissions, and implement robust logging and monitoring to detect and respond to security incidents.

20. What are the key differences between microservices and SOA (Service-Oriented Architecture)?

Microservices and SOA both aim to build applications using services, but differ in philosophy and implementation. SOA often uses a centralized enterprise service bus (ESB) for communication and governance, leading to heavier, more monolithic services that share resources. Microservices, in contrast, advocate for decentralized governance, with each service being small, independent, and deployable. They typically use lightweight protocols like REST or gRPC for communication, and favor smart endpoints with dumb pipes.

Key differences include:

- Service Size: SOA services tend to be larger and coarser-grained, while microservices are smaller and more fine-grained.

- Communication: SOA often relies on a central ESB, microservices favor direct communication or lightweight message queues.

- Governance: SOA typically has centralized governance, microservices favor decentralized governance.

- Technology: SOA can be technology-agnostic, microservices often use modern, lightweight technologies.

- Deployment: Microservices are independently deployable, SOA services may have dependencies making deployment more complex.

- Coupling: SOA services are often more loosely coupled via the ESB, microservices are ideally independently deployable and thus highly decoupled.

21. Have you worked with any specific microservices frameworks or technologies? Which ones?

Yes, I have experience with several microservices frameworks and technologies. Specifically, I've worked with Spring Boot and Spring Cloud in the Java ecosystem, using features like service discovery with Eureka, API gateways with Zuul (now Spring Cloud Gateway), and configuration management with Spring Cloud Config. I also have experience building microservices using Node.js with Express, often utilizing Docker and Kubernetes for containerization and orchestration.

Additionally, I've worked with message queues like RabbitMQ and Kafka for asynchronous communication between services. I have some familiarity with gRPC for high-performance inter-service communication and have used RESTful APIs extensively for synchronous interactions. In terms of service meshes, I've explored Istio and Linkerd to manage service-to-service communication. docker-compose was also used to manage inter-dependencies.

22. How do you test microservices? What are the different types of tests you would perform?

Testing microservices involves various levels and types of tests to ensure their functionality, reliability, and integration. Different types of tests performed include:

Unit Tests: Testing individual microservice components/modules in isolation. These ensure each function/method works as expected. Code coverage tools are often used.

Integration Tests: Testing the interaction between two or more microservices. This validates that they can communicate and exchange data correctly. Mocking and stubbing are often used to isolate the services being tested.

Contract Tests: Verifying that microservices adhere to the agreed-upon contracts (APIs). This helps prevent breaking changes when one service updates.

End-to-End (E2E) Tests: Testing the entire system, including all microservices and external dependencies. This ensures the overall functionality of the application from the user's perspective.

Performance Tests: Measuring the performance of microservices under different load conditions. This helps identify bottlenecks and ensure the system can handle the expected traffic.

Security Tests: Identifying security vulnerabilities in microservices. This includes testing for authentication, authorization, and data protection.

Load Tests: Evaluating how the microservice behaves under expected peak load. This will help identify potential issues that arise with large number of concurrent requests.

Chaos Engineering: Intentionally introducing failures (e.g., service outages, network latency) to test the system's resilience and fault tolerance.

For example, when using

RestTemplatefor calling other services, you might mockRestTemplateusingMockitoor similar mocking frameworks. You will want to check response status codes and expected data returned by the other service.

Intermediate Microservices interview questions

1. How do you ensure data consistency across microservices when each has its own database?

Ensuring data consistency across microservices with independent databases is challenging but crucial. Several patterns can be employed, often in combination.

- Saga Pattern: This involves a sequence of local transactions across services. If one transaction fails, compensating transactions are executed to undo previous changes, maintaining eventual consistency.

- Two-Phase Commit (2PC): A distributed transaction protocol where all participating services must agree to commit before the changes are made durable. This is generally discouraged in microservices due to its tight coupling and performance impact.

- Eventual Consistency: Accept that data may be temporarily inconsistent. Microservices publish events when data changes, and other services subscribe to these events to update their own data. Message brokers like Kafka or RabbitMQ are commonly used. Using techniques like idempotent consumers can reduce the effect of duplicated event processing.

- API Composition/Data Mesh: Querying data from multiple microservices at the API layer to create a consistent view. Data mesh is a decentralised approach with a focus on data ownership and domain-driven design.

2. Explain the concept of eventual consistency and when it's acceptable in a microservices architecture. Give real-world examples.

Eventual consistency is a consistency model where, if no new updates are made to a data item, all accesses to that item will eventually return the last updated value. It's a weaker guarantee than strong consistency, which requires that all reads see the most recent write immediately. In a microservices architecture, eventual consistency is often acceptable when dealing with distributed systems where strong consistency would introduce significant latency and reduce availability. It is particularly suitable when high availability, partition tolerance, and scalability are prioritized over immediate consistency.

Acceptable scenarios include:

- E-commerce order processing: When a user places an order, updates to inventory, order status, and payment processing systems may not be immediately consistent. A slight delay is acceptable as long as the order is eventually fulfilled correctly.

- Social media feeds: Posts and likes may not be immediately visible to all users due to caching and distribution delays. However, eventually, everyone will see the updated information.

- Content Delivery Networks (CDNs): Updates to content may take time to propagate across all CDN nodes. While some users might see older versions temporarily, the content will eventually be consistent across the network.

3. Describe a scenario where you'd use the Saga pattern in a microservices environment, and outline the steps involved.

Let's say we have an e-commerce application built with microservices: OrderService, PaymentService, and InventoryService. When a user places an order, we need to ensure that the payment is processed, and the inventory is updated. If any of these operations fail, we need to rollback the previous successful operations to maintain data consistency. This is where the Saga pattern is useful.

The steps involved are:

- The

OrderServicereceives an order request and creates a pending order. - It then initiates the Saga by sending a message to the

PaymentServiceto process the payment. - If the payment is successful, the

PaymentServicesends a confirmation message back to theOrderService. - The

OrderServicethen sends a message to theInventoryServiceto reserve the items from the inventory. - If the inventory reservation is successful, the

InventoryServicesends a confirmation message back to theOrderService. - The

OrderServicethen marks the order as complete. However, if either the payment or inventory reservation fails, a compensation transaction is triggered to rollback the completed actions. For example, If inventory reservation fails,InventoryServicesends a failure message toOrderService, which in turn sends a message toPaymentServiceto cancel the payment, and then updates the order status as failed.

4. What are the challenges of distributed tracing in a microservices architecture, and what tools can help overcome them?

Distributed tracing in a microservices architecture presents several challenges. One major hurdle is the increased complexity of tracking requests as they hop between multiple services. This makes it difficult to pinpoint the root cause of latency or errors. Another challenge is the overhead associated with collecting and propagating tracing data, which can impact performance if not implemented carefully. Data consistency and correlation across different services and technologies also pose significant problems.

Tools like Jaeger, Zipkin, and Prometheus can help overcome these challenges. Jaeger and Zipkin provide end-to-end tracing capabilities, allowing you to visualize request flows and identify performance bottlenecks. Prometheus is used for monitoring and alerting based on metrics derived from tracing data. The OpenTelemetry standard provides a vendor-agnostic way to instrument code for tracing, ensuring portability across different tracing backends. By using these tools and standards, you can effectively manage and analyze distributed traces, improving the observability and resilience of your microservices architecture.

5. How do you handle inter-service communication when one service needs information from multiple other services?

When a service needs data from multiple other services, several approaches can be used. A common pattern is orchestration, where a central service calls other services to gather the necessary information. This approach can be simple to implement initially, but it can lead to tight coupling and a single point of failure. Another pattern is choreography, where each service publishes events, and the requesting service subscribes to the relevant events to build its own view of the data. This offers better decoupling and resilience.

Alternatives include the Backend for Frontend (BFF) pattern, which creates a specific API for each client, aggregating data from multiple services. GraphQL can also be used to allow the client to specify the exact data it needs from multiple services, which is then resolved by a GraphQL server. The choice of method depends on the complexity of the data requirements, performance needs, and the desired level of coupling between services.

6. Explain the difference between orchestration and choreography in microservices communication, and when you might choose one over the other.

Orchestration and choreography are two different approaches to managing communication between microservices. Orchestration relies on a central 'orchestrator' service that tells other services what to do. The orchestrator makes decisions and coordinates the interactions. Choreography, on the other hand, is a decentralized approach where each service knows its responsibilities and communicates with other services independently based on events. Each service reacts to events and publishes new events as needed, creating a collaborative flow.

The choice between orchestration and choreography depends on the specific requirements of the system. Orchestration can be easier to understand and manage in simpler systems, providing a clear view of the overall process. However, it can become a bottleneck and a single point of failure in complex systems. Choreography offers greater scalability and resilience as it eliminates the central orchestrator, but it can be more challenging to debug and understand the overall flow, potentially leading to circular dependencies or cascading failures if not designed carefully. Therefore, choreography is often favored in complex, highly distributed systems where scalability and fault tolerance are paramount. Consider orchestration when you need a central authority to manage a workflow, and choreography when you need a more decoupled and scalable system. Event-driven architectures often lend themselves well to choreography.

7. How would you design a fault-tolerant microservices system that can handle service failures gracefully?

To design a fault-tolerant microservices system, I'd use several key strategies. Firstly, implementing service discovery (like Consul or etcd) ensures services can always locate each other, even if instances fail. Circuit breakers (using libraries like Hystrix or Resilience4j) prevent cascading failures by stopping requests to failing services. Retries with exponential backoff allow transient errors to resolve without overwhelming the system.

Secondly, employing asynchronous communication (message queues like RabbitMQ or Kafka) decouples services and allows them to continue operating even when other services are temporarily unavailable. Health checks expose service status, enabling automated monitoring and alerting, as well as automated restart or redeployment of failing instances. Finally, redundancy is critical. Multiple instances of each service should run across different availability zones to minimize the impact of infrastructure failures.

8. Describe the role of API gateways in a microservices architecture and their benefits.

API Gateways act as a single entry point for all client requests in a microservices architecture. Instead of clients directly communicating with multiple microservices, they interact with the API Gateway, which then routes the request to the appropriate microservice(s) and aggregates the responses. This simplifies the client experience and decouples the client applications from the internal microservice architecture.

Benefits include:

- Centralized Security: Implementing authentication, authorization, and rate limiting in one place.

- Request Routing: Directing requests to the correct microservice.

- Protocol Translation: Translating between different protocols (e.g., HTTP/1.1 to HTTP/2).

- Load Balancing: Distributing traffic across multiple instances of a microservice.

- Monitoring and Analytics: Providing insights into API usage and performance.

- Simplified Client Communication: Clients only need to interact with a single endpoint.

- Reduced Complexity: Hides the internal microservice structure from the client.

- Enables API versioning: facilitates seamless API evolution.

9. What are some strategies for versioning microservices APIs, and why is versioning important?

Versioning microservice APIs is crucial for maintaining compatibility as services evolve. Without it, changes can break existing clients, leading to application failures. Common strategies include:

- URI Versioning: Incorporating the version number in the URI (e.g.,

/api/v1/users). - Header Versioning: Using custom headers to specify the version (e.g.,

Accept-Version: v2). - Media Type Versioning: Defining different media types for each version (e.g.,

Accept: application/vnd.example.v1+json). - Query Parameter Versioning: Adding the version as a query parameter, such as

/api/users?version=v1

Each method has trade-offs, but the key is to choose one that aligns with the API's design and client needs. For example, URI versioning is easily discoverable, while header versioning keeps URLs cleaner. By versioning APIs, teams can safely introduce breaking changes, maintain backward compatibility, and provide a smooth transition for clients.

10. How do you monitor the health and performance of microservices in a production environment?

Monitoring microservice health and performance involves several key strategies. I'd use a combination of techniques, including centralized logging, distributed tracing, and metrics collection. Centralized logging aggregates logs from all microservices into a single, searchable location (e.g., using ELK stack or Splunk), facilitating troubleshooting and identifying patterns.

Distributed tracing (e.g., using Jaeger, Zipkin) tracks requests as they propagate through different microservices, helping pinpoint latency bottlenecks and dependencies. Metrics collection uses tools like Prometheus or Grafana to capture performance indicators (CPU usage, memory consumption, response times, error rates). These metrics are then visualized on dashboards and used to set up alerts for anomalies. Health checks should also be implemented to quickly detect failed services.

11. Explain the concept of service discovery in microservices and how it works.

Service discovery in microservices is the process of automatically locating services on a network. In a microservices architecture, service instances are dynamically created and destroyed, making it difficult to hardcode their network locations (IP addresses, ports). Service discovery mechanisms allow services to find each other without manual configuration.

The process typically involves a central registry or a distributed discovery system. Services register themselves with the registry upon startup, providing their network location and other relevant metadata. When a service needs to communicate with another service, it queries the registry to obtain the target service's address. Popular technologies include: Eureka, Consul, etcd, and ZooKeeper. A simple example with Eureka involves a service registering itself:

@EnableEurekaClient

@SpringBootApplication

public class MyServiceApplication {

public static void main(String[] args) {

SpringApplication.run(MyServiceApplication.class, args);

}

}

12. What are the security considerations when exposing microservices to external clients?

When exposing microservices to external clients, several security considerations are paramount. Authentication is crucial to verify the identity of clients, often achieved through API keys, OAuth 2.0, or JWT. Authorization then ensures that authenticated clients only access the resources they are permitted to. Implement robust input validation to prevent injection attacks, and employ rate limiting to mitigate denial-of-service attacks.

Furthermore, use HTTPS for all communication to encrypt data in transit. Implement a Web Application Firewall (WAF) to protect against common web exploits. Regularly audit and monitor your microservices for vulnerabilities, and ensure that all dependencies are up-to-date to patch known security flaws. Centralized logging is very important for auditing security related issues.

13. How would you implement authentication and authorization in a microservices architecture?

Authentication and authorization in a microservices architecture typically involves a centralized or distributed approach. A common pattern is using an API Gateway as a central point for authentication. The gateway verifies the user's identity (e.g., using JWT tokens) before routing requests to the appropriate microservice. Microservices then rely on the gateway's decision for authorization.

Each microservice should not handle authentication directly, improving security and reducing code duplication. For authorization, microservices can use roles or permissions passed by the API Gateway (contained within the JWT). They can then use this data to decide if the user is allowed to perform the requested action. For inter-service communication use mTLS. This approach ensures that only authenticated and authorized requests reach the microservices.

14. Describe the challenges of testing microservices and the different types of tests you might use.

Testing microservices presents unique challenges due to their distributed nature and independent deployability. Key challenges include: Complexity: Managing dependencies and interactions between multiple services. Network Latency: Accounting for potential delays and failures in communication. Data Consistency: Ensuring data integrity across distributed databases. Environment Setup: Replicating realistic environments for testing. Service Discovery: Properly testing services that locate each other dynamically.

Different types of tests address these challenges: Unit Tests: Validate individual service components in isolation. Integration Tests: Verify interactions between two or more services. Contract Tests: Ensure that services adhere to agreed-upon contracts (APIs). End-to-End Tests: Validate the entire system workflow from end to end. Performance Tests: Assess the scalability and responsiveness of services under load. Security Tests: Identify vulnerabilities and ensure data protection. Tools like Postman, JUnit, Mockito, and WireMock are helpful in testing microservices.

15. How do you handle dependencies between microservices during deployment?

Handling dependencies between microservices during deployment requires careful coordination. Several strategies can be employed, including:

- Rolling deployments: Deploy changes to a subset of instances, gradually increasing the updated percentage. This minimizes downtime and allows for monitoring the new version's impact before a full rollout.

- Blue-green deployments: Maintain two identical environments, 'blue' (current live) and 'green' (new version). Deploy the new version to 'green,' test it, and then switch traffic from 'blue' to 'green'. A rollback is easy if issues arise.

- Canary deployments: Route a small percentage of user traffic to the new version (canary) while the majority remains on the old version. This allows for real-world testing with minimal risk. Monitoring the canary's performance helps identify problems early.

- Feature flags: Introduce new features as flags within the existing codebase. These flags can be toggled on or off at runtime, allowing you to deploy code changes without immediately exposing them to all users.

Proper communication and versioning are crucial. Semantic versioning helps indicate the nature and scope of changes. Consumer-Driven Contracts (CDCs) or API Gateways can manage compatibility between microservices and minimize breaking changes.

16. What are some strategies for scaling microservices independently?

To scale microservices independently, several strategies can be employed. Vertical scaling involves increasing the resources (CPU, memory) of a single instance. Horizontal scaling is more common, adding more instances of the service. This often involves using a load balancer to distribute traffic.

Other strategies include:

- Database sharding: Distributing database load across multiple servers.

- Read replicas: Creating read-only copies of the database for read-heavy operations.

- Caching: Implementing caching layers to reduce database load, for example using Redis or Memcached.

- Asynchronous communication: Using message queues (e.g., Kafka, RabbitMQ) to decouple services and handle traffic spikes.

- Autoscaling: Automatically adjusting the number of instances based on demand using tools like Kubernetes.

- Code Optimization: Improving code for less resource consumption.

17. Explain the concept of Domain-Driven Design (DDD) and its relevance to microservices architecture.

Domain-Driven Design (DDD) is an approach to software development that centers the development process around the core business domain. It emphasizes understanding the business, its rules, and its language (ubiquitous language) to create a software model that accurately reflects it. DDD aims to build software that is highly aligned with business needs, making it easier to evolve and maintain as the business changes. Key concepts include: Entities, Value Objects, Aggregates, Repositories, Domain Services, and the Ubiquitous Language.

DDD is highly relevant to microservices because it helps define clear boundaries for each service based on bounded contexts within the overall domain. Each microservice can then be responsible for a specific subdomain, encapsulating its own data and logic. This promotes autonomy, independent deployability, and scalability, as each team can focus on their specific area of the business without tightly coupling to other services. It helps ensure that the microservices architecture aligns with the business needs. Example: An e-commerce domain might have separate microservices for 'Order Management', 'Inventory', and 'Customer Profiles', each designed according to its subdomain.

18. How do you decide on the appropriate size and scope of a microservice?

Determining the right size and scope for a microservice involves balancing several factors. A good starting point is the Single Responsibility Principle: a microservice should ideally own a single business capability or a well-defined set of related functionalities. It should 'do one thing, and do it well'. Teams can look at common data access patterns, transaction boundaries, and deployment frequency. If code changes in two modules always happen together, they might belong in the same microservice.

Avoid creating 'nano services' that are too granular, as the overhead of inter-service communication and coordination can outweigh the benefits. Conversely, avoid creating overly large services that resemble monoliths, as they become difficult to maintain and deploy independently. Focus on independent deployability and scalability. Also consider team autonomy; a microservice should be small enough for a small team to own and manage independently.

19. Describe a situation where you had to refactor a monolithic application into microservices, and the challenges you faced.

In a previous role, I led the effort to migrate a large monolithic e-commerce application to a microservices architecture. The monolith handled everything from product catalog management and order processing to user authentication and payment. We faced several challenges. Firstly, identifying clear boundaries for services was difficult. We opted to start by splitting out the order processing and user authentication components based on business functionality and independent deployability. Secondly, ensuring data consistency across services became a concern. We initially used eventual consistency with message queues (like Kafka) for inter-service communication.

Another significant hurdle was managing dependencies. The monolith had tightly coupled modules, making it hard to isolate and extract code. We had to carefully refactor the codebase, introduce well-defined APIs, and use techniques like dependency injection to decouple services. Monitoring and debugging also became more complex, requiring centralized logging and distributed tracing (using tools like Jaeger) to pinpoint issues across service boundaries. We also faced challenges around deployment, as we moved to a containerized environment (Docker/Kubernetes) and had to automate the deployment process. Ultimately, this involved implementing CI/CD pipelines.

20. What are the trade-offs between using synchronous and asynchronous communication in microservices?

Synchronous communication in microservices, like REST, offers simplicity and immediate feedback. You know right away if a service is down or an operation failed. However, it introduces tight coupling and can lead to cascading failures. If one service is slow or unavailable, others may be blocked, reducing overall system resilience.

Asynchronous communication, like using message queues (e.g., Kafka, RabbitMQ), promotes loose coupling and improved resilience. Services can continue operating even if others are temporarily unavailable. However, it adds complexity with message brokers, eventual consistency, and increased debugging challenges. It's harder to track request flows and immediate feedback isn't guaranteed.

21. How do you handle retries and circuit breakers in a microservices environment to improve resilience?

In a microservices environment, retries and circuit breakers are crucial for resilience. Retries involve automatically re-attempting failed requests, typically with exponential backoff to avoid overwhelming the failing service. This handles transient errors like temporary network glitches. Circuit breakers, on the other hand, prevent repeated calls to a service that is consistently failing. They "open" the circuit, failing fast and potentially using a fallback mechanism, until the service recovers, at which point the circuit "closes", allowing traffic to flow again. Common libraries like Resilience4j or Hystrix can be used to implement these patterns.

To effectively use these mechanisms, consider: defining appropriate retry policies (number of retries, backoff strategy), setting thresholds for circuit breaker tripping (error rate, failure count), and providing meaningful fallback logic. Monitoring circuit breaker state and retry metrics is also important for identifying and addressing underlying issues.

22. Explain the importance of idempotency in microservices and how to achieve it.

Idempotency in microservices is crucial because it ensures that performing an operation multiple times has the same effect as performing it once. This is vital for dealing with failures and retries in distributed systems. Without idempotency, duplicate requests due to network issues or timeouts can lead to unintended consequences like duplicate orders, incorrect balances, or data corruption.

Idempotency can be achieved through several methods. A common approach is using a unique identifier (UUID) for each request. The service checks if a request with that ID has already been processed. If it has, the service returns the previous result without re-executing the operation. Other strategies include using idempotent operations provided by databases (e.g., incrementing a counter by a specific value) or designing operations to be inherently idempotent (e.g., setting a value instead of incrementing it). Code example: if (requestAlreadyProcessed(requestId)) { return previousResult; } else { processRequest(); saveRequestStatus(requestId); return result;}

23. How would you design a microservice to handle high volumes of data ingestion and processing?

To handle high volumes of data ingestion and processing in a microservice, I'd focus on scalability, reliability, and efficiency. Key elements include:

- Asynchronous Processing: Use message queues (like Kafka, RabbitMQ) to decouple data ingestion from processing. This allows the microservice to handle bursts of data without being overwhelmed. The ingestion component writes data to the queue, and separate processing workers consume and process the data at their own pace.

- Horizontal Scaling: Design the microservice to be stateless so that multiple instances can run concurrently. Use a load balancer to distribute the traffic evenly across the instances. Technologies like Kubernetes can help with automated scaling.

- Data Partitioning: Partition the data across multiple databases or storage systems based on a relevant key (e.g., customer ID, timestamp). This distributes the load and improves query performance. Consider using sharding or distributed databases like Cassandra.

- Buffering and Batching: Buffer incoming data and process it in batches rather than individually. This reduces the overhead of processing each record and improves throughput.

- Efficient Data Formats: Use efficient data serialization formats like Protocol Buffers or Apache Avro to reduce the size of the data being transmitted and stored.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to track key metrics like ingestion rate, processing time, error rate, and resource utilization. This allows you to identify and address potential bottlenecks or issues proactively.

24. Describe the benefits and drawbacks of using containers (e.g., Docker) in a microservices architecture.

Containers offer significant benefits for microservices. They provide isolation, ensuring each service runs in its own environment, preventing dependency conflicts. They facilitate portability, allowing services to be easily deployed across different environments (dev, staging, production) and infrastructure providers. Containerization also improves resource utilization and scalability because containers are lightweight and have a small footprint. Furthermore, they simplify deployment and management through tools like Docker and Kubernetes. In a nutshell, containers allow us to easily package and deploy our microservices.

However, there are drawbacks. Increased complexity is a primary concern, requiring expertise in container orchestration, networking, and security. Monitoring and debugging can be more challenging due to the distributed nature of containerized microservices. Security vulnerabilities in container images or the container runtime environment must be addressed proactively. Finally, resource overhead, while small compared to VMs, is still present. You need to account for CPU, memory, and storage used by the container runtime itself. This extra overhead will increase the cost.

25. What are the considerations when choosing a message broker for inter-service communication (e.g., Kafka, RabbitMQ)?

When choosing a message broker, consider several factors. Performance and Scalability: Kafka excels at high throughput and horizontal scalability, suitable for event streaming. RabbitMQ is generally faster for complex routing scenarios. Reliability: Assess message delivery guarantees (at least once, at most once, exactly once). Kafka offers robust fault tolerance through replication. RabbitMQ provides features like message acknowledgments and persistence. Complexity: Kafka requires ZooKeeper for cluster management, increasing operational complexity. RabbitMQ is generally easier to set up and manage. Use Case: If you need to build an event-driven architecture with high volumes of data, Kafka is a strong choice. If you need complex routing and flexible messaging patterns, RabbitMQ might be a better fit. Ecosystem and Community: Consider the availability of client libraries, integrations, and community support. Both have large communities and extensive resources.

26. How do you manage configuration across multiple microservices in different environments?

Managing configuration across multiple microservices in different environments involves several strategies. A common approach is using a centralized configuration server like Spring Cloud Config, HashiCorp Vault, or etcd. Each microservice retrieves its configuration from this server at startup, specifying the environment (e.g., development, staging, production). These tools provide versioning, encryption, and audit trails.

Alternatively, environment variables can be used, especially in containerized environments like Docker and Kubernetes. Configuration is injected as environment variables at runtime. For more complex scenarios, a combination of both centralized configuration and environment variables can be utilized. For example, sensitive information like database passwords might be stored in a secure vault and accessed via environment variables, while application-specific settings are managed by a centralized configuration server.

27. Explain the concept of the strangler fig pattern and how it can be used to migrate a monolithic application to microservices.

The Strangler Fig Pattern is a migration strategy for gradually transforming a monolithic application into a microservices architecture. It involves creating a new, parallel application (the 'strangler fig') that incrementally replaces the functionality of the old monolith. As new features are built or existing features are rewritten as microservices, they are exposed via the new application. User traffic is then progressively shifted from the monolith to the new microservices, feature by feature, until the monolith is eventually 'strangled' and can be decommissioned.

This pattern minimizes risk by allowing for incremental changes and rollback capabilities. Functionality can be migrated in smaller, manageable chunks. Here are the key steps:

- Transform: Create a new application (the 'strangler fig').

- Coexist: The new application and the monolith run side-by-side.

- Redirect: Route traffic incrementally to the new application for specific features. The rest remains on monolith.

- Remove: Once a feature is fully migrated, remove it from the monolith.

28. How do you ensure that microservices adhere to coding standards and best practices across different teams?

To ensure microservices adhere to coding standards and best practices across different teams, a combination of strategies is crucial. Implementing centralized linting and formatting tools (e.g., ESLint, Prettier) configured with the agreed-upon standards allows for automated enforcement during development and CI/CD pipelines. Sharing common libraries and frameworks provides pre-built components that embody best practices. Regular code reviews, utilizing defined checklists aligned with the standards, can help identify and correct deviations.

Furthermore, establishing clear documentation outlining the coding standards and best practices is essential for consistent understanding and application. Providing training sessions and workshops can help teams adopt and maintain these standards. Consider using tools like SonarQube for static code analysis to detect potential issues and enforce quality gates.

Advanced Microservices interview questions

1. How would you design a microservice architecture that supports both synchronous and asynchronous communication patterns, and what are the trade-offs of each approach?

A microservice architecture supporting both synchronous and asynchronous communication utilizes distinct patterns to manage interactions. Synchronous communication, often REST-based, offers immediate responses, suitable for real-time queries and commands where the client needs to know the outcome immediately. Trade-offs include tight coupling between services, potential for cascading failures if one service is down, and increased latency due to request-response cycles.

Asynchronous communication, typically employing message queues (e.g., Kafka, RabbitMQ), decouples services, enhancing resilience and scalability. Services publish events or messages to a queue, and other services subscribe to these queues to process the information. This introduces eventual consistency. Trade-offs are increased complexity in managing message queues, debugging distributed systems, and ensuring message delivery (at-least-once, exactly-once).

2. Explain the concept of eventual consistency in a distributed microservices environment and how it differs from ACID transactions. How would you handle data inconsistencies?

Eventual consistency in a microservices environment means that data will become consistent across all services eventually, but there might be a delay. This contrasts with ACID (Atomicity, Consistency, Isolation, Durability) transactions, which guarantee immediate consistency. ACID transactions are typically used within a single database, whereas eventual consistency is more appropriate for distributed systems where maintaining immediate consistency across multiple services would be too costly in terms of performance and availability.

Handling data inconsistencies in an eventually consistent system often involves techniques like compensating transactions, idempotency, and reconciliation processes. Compensating transactions undo the effects of a previous operation if it fails downstream. Idempotency ensures that an operation can be applied multiple times without changing the result beyond the initial application. Reconciliation processes periodically compare data across services and correct any discrepancies, for example using tools like data audits and repair scripts. It also involves careful monitoring and alerting to detect inconsistencies early. Message queues and asynchronous communication patterns also facilitate this eventual data synchronization.

3. Describe a situation where you had to refactor a monolithic application into microservices. What challenges did you face, and how did you overcome them?

In a previous role, I worked on refactoring a large e-commerce platform. The initial application was a monolith built with PHP and a tightly coupled architecture. As the business grew, the monolith became difficult to maintain, deploy, and scale. We decided to transition to microservices, focusing on key areas like product catalog, order management, and user accounts.

The challenges included:

- Data consistency: Breaking down the shared database required careful planning. We used techniques like eventual consistency and sagas to manage transactions across services. We also leveraged message queues (like RabbitMQ) for asynchronous communication.

- Service discovery: Implementing a reliable service discovery mechanism was crucial. We used Consul for service registration and discovery.

- Monitoring and Logging: Centralized logging and monitoring became essential to track requests across multiple services. We implemented an ELK stack (Elasticsearch, Logstash, Kibana) for log aggregation and monitoring.

- Increased Complexity: Refactoring introduced more complexity. We addressed this with well-defined APIs, thorough documentation, and a strong CI/CD pipeline with automated testing. We used tools like Docker and Kubernetes to ease deployment and management of microservices.

4. How would you implement distributed tracing across microservices to diagnose performance bottlenecks and errors?

To implement distributed tracing across microservices, I'd use a tracing system like Jaeger, Zipkin, or Honeycomb. I would instrument each microservice with a tracing library (e.g., OpenTelemetry) to automatically inject trace IDs into requests as they propagate. This library would capture timing information for requests entering and exiting each service, creating spans representing units of work. These spans are then sent to the tracing backend which aggregates and correlates them into traces, allowing for end-to-end visibility of request flows.

Specifically, the steps would involve:

- Instrumentation: Add tracing libraries to each microservice.

- Context Propagation: Ensure trace IDs are passed between services (e.g., via HTTP headers).

- Data Collection: Configure agents to collect and forward trace data to the backend.

- Analysis: Use the tracing backend's UI to visualize traces, identify performance bottlenecks, and pinpoint error sources. We might also use sampling to reduce overhead of tracing in a high volume environment. Examples of headers used for propagation include

traceparentandtracestate.

5. What are the different strategies for handling inter-service authentication and authorization in a microservices architecture?

Microservices inter-service authentication/authorization strategies commonly involve these approaches:

- Mutual TLS (mTLS): Each service authenticates the other using certificates. It provides strong authentication and encryption.

- API Gateway with JWT: The API gateway handles authentication and authorization, issuing JSON Web Tokens (JWTs). Services then validate these JWTs for authorization.

- Dedicated Authentication/Authorization Service: A central service (e.g., OAuth 2.0 server, Keycloak) handles authentication. Other services delegate authorization decisions to this service, using techniques like token introspection or policy enforcement points (PEPs).

- Service Mesh: Service meshes like Istio can handle authentication and authorization via policies defined at the mesh level, abstracting the complexity from individual services. For example, Istio policies can authorize/deny requests based on the JWT claims.

- Shared Database/Cache (Avoid if Possible): While discouraged, services might share a database or cache to verify user permissions. This creates tight coupling and should be avoided if possible.

6. How do you approach versioning of microservices APIs, and what are the implications of breaking changes?

Microservice API versioning is crucial for managing changes without disrupting consumers. A common approach is using semantic versioning (major.minor.patch). API versioning can be implemented through URI versioning (e.g., /v1/resource), header-based versioning (e.g., Accept: application/vnd.example.v2+json), or custom request headers.

Breaking changes (e.g., removing fields, changing data types) in a microservice API can have significant implications. Clients relying on the old API version will likely experience errors or unexpected behavior. To mitigate this:

- Communicate: Inform consumers well in advance about breaking changes.

- Maintain compatibility: Support older versions for a reasonable transition period.

- Provide migration paths: Offer guidance or tools to help consumers update to the new API.

- Use API gateways: Leverage API gateways for routing and transformation to handle different versions and maintain backward compatibility where possible.

7. Explain the role of service meshes in a microservices architecture and the benefits they provide (e.g., traffic management, security, observability).

Service meshes are dedicated infrastructure layers for managing service-to-service communication in microservices architectures. They act like a network proxy, intercepting and managing calls between services without requiring changes to the service code itself. They enhance the operation of microservices by handling concerns such as:

- Traffic Management: Intelligent routing, load balancing, canary deployments, and circuit breaking.

- Security: Authentication, authorization, and encryption of communication between services (mTLS).

- Observability: Detailed metrics, tracing, and logging for monitoring service health and performance. The benefits include improved reliability, enhanced security, and better insights into the behavior of the microservices ecosystem.

8. How would you design a microservice to be resilient to failures and handle partial outages in its dependencies?

To design a resilient microservice, I'd focus on handling failures gracefully. Key strategies include: timeouts (to prevent indefinite blocking), retries (with exponential backoff to avoid overwhelming failing services), circuit breakers (to quickly stop calling a failing dependency and prevent cascading failures), and bulkheads (to isolate failures, preventing one failing dependency from taking down the entire service). Fallback mechanisms, like returning cached data or a default response, are also critical.

For partial outages, I'd implement idempotency (ensuring operations can be safely retried without unintended side effects) and asynchronous communication (using message queues like Kafka or RabbitMQ) to decouple the service from its dependencies. Monitoring and alerting are crucial for early detection and rapid response to failures. Logging also helps with troubleshooting.

9. What are the considerations for choosing the right technology stack (programming language, database) for each microservice?

Choosing the right tech stack for each microservice involves several considerations. Primarily, consider the service's specific functionality and performance requirements. For instance, a computationally intensive service might benefit from a language like Go or Rust, while a data-heavy service might be best suited for a NoSQL database if schema flexibility is key, or a traditional SQL database for structured data and ACID properties. For event processing systems, consider message queues like Kafka.

Other important factors include team expertise, existing infrastructure, and maintainability. Favoring languages and databases your team already knows reduces the learning curve. Ensure the chosen technology integrates well with your current systems. Also consider the technology’s maturity, community support and long-term maintainability. Avoid obscure technologies or ones with limited support.

10. Explain the importance of monitoring and logging in a microservices environment, and what metrics are most critical to track?

Monitoring and logging are critical in microservices due to the distributed nature of the architecture. They provide visibility into the system's behavior, allowing for quick identification and resolution of issues. Without centralized monitoring and logging, troubleshooting problems across multiple services becomes incredibly difficult and time-consuming.

Key metrics to track include:

- Request Latency: The time it takes for a service to respond to a request. High latency indicates potential bottlenecks.

- Error Rate: The percentage of requests that result in errors. An increasing error rate signals problems within a service.

- Throughput: The number of requests a service can handle per unit of time. Low throughput can indicate resource constraints.

- CPU Utilization: The amount of CPU resources being used by a service. High CPU utilization might indicate inefficient code or resource contention.

- Memory Utilization: The amount of memory being used by a service. High memory utilization can lead to performance degradation and crashes.

- Database Query Times: Time taken for database queries to execute.

- Custom Application Metrics: Specific metrics relevant to the business logic of a service (e.g., number of orders processed, number of users logged in).

- Log aggregation: centralize logging information is crucial to correlate logs.

11. How would you handle data aggregation across multiple microservices to build a unified view for the user interface?

Data aggregation across microservices for a unified UI can be achieved through several patterns. A common approach is the Backend for Frontend (BFF) pattern. Each UI client (e.g., web, mobile) has its own dedicated BFF. The BFF aggregates data from various microservices, transforming and tailoring it to the specific needs of that UI. This avoids over-fetching data or exposing unnecessary information to the client. Another pattern is using an API Gateway. The API Gateway acts as a single entry point and can handle routing requests to appropriate microservices and aggregating responses.

Alternatively, you could use an asynchronous approach with a message queue like Kafka or RabbitMQ. Microservices publish events when data changes, and a dedicated aggregation service subscribes to these events, building the unified view in a separate database or cache. The UI then queries this aggregated data store. Technologies like GraphQL can also be leveraged to enable the UI to specify exactly the data it needs from multiple services, reducing over-fetching. Code examples of calling multiple microservices from the gateway would be useful too.

12. Describe different deployment strategies for microservices (e.g., blue-green deployments, canary releases) and their respective advantages and disadvantages.

Several deployment strategies exist for microservices, each with its trade-offs. Blue-Green deployment involves running two identical environments (blue and green). New code is deployed to the green environment, and traffic is switched over once testing is complete. Advantage: simple rollback. Disadvantage: requires double the infrastructure. Canary releases deploy the new version to a small subset of users. If no errors are detected, the deployment is gradually rolled out to more users. Advantage: minimizes risk. Disadvantage: complex monitoring required. Rolling deployments gradually update instances with the new version. Advantage: minimal downtime. Disadvantage: slower rollback. A/B testing which is a type of canary deployment, directs different traffic to different versions of a new feature. Advantage: Data driven feedback loop, disadvange: requires more infrastructure.

13. How do you ensure data consistency when multiple microservices need to update the same data?

Ensuring data consistency across microservices when multiple services need to update the same data can be achieved through several strategies. One common approach is using distributed transactions with a protocol like two-phase commit (2PC), although this can introduce performance bottlenecks and complexity. Another, more popular approach is the Saga pattern. With the Saga pattern, a series of local transactions are coordinated across services. If one transaction fails, compensating transactions are executed to rollback previous changes, ensuring eventual consistency.

Eventual consistency can also be handled via message queues and proper design. Microservices publish events when data changes, and other services subscribe to these events to update their local copies of the data. This approach is asynchronous, and proper design can mitigate conflicts. For instance, last-write-wins strategy or conflict resolution logic in the consuming services, is needed to handle competing updates.

14. Explain the concept of 'Domain-Driven Design' (DDD) and how it relates to microservices architecture.

Domain-Driven Design (DDD) is an approach to software development that centers the development process around the domain, which is the subject area the software is being built for. It focuses on understanding the business's concepts, language, and rules, and then reflecting these directly in the code. DDD involves collaboration with domain experts to create a shared understanding, modeled in a ubiquitous language that is used by both business and technical teams. Key concepts in DDD include entities, value objects, aggregates, repositories, services, and bounded contexts.

DDD and microservices are closely related. DDD's bounded contexts naturally align with the concept of microservices. Each bounded context represents a specific business capability with its own model and data. This allows you to design microservices around these independent bounded contexts, leading to services that are highly cohesive, loosely coupled, and aligned with business needs. Each microservice can then own and manage its own data store, further promoting independence and scalability. DDD principles help in defining the boundaries and responsibilities of each microservice, enabling a more manageable and maintainable microservices architecture.

15. How would you design a microservice that needs to process large amounts of streaming data in real-time?

To design a microservice for real-time processing of large streaming data, I'd leverage a combination of technologies:

- Message Queue: Use a message queue like Kafka or RabbitMQ to ingest the streaming data. This provides buffering and decouples the data source from the processing service.

- Stream Processing Engine: Employ a stream processing engine such as Apache Flink, Apache Spark Streaming, or Kafka Streams to perform real-time data transformations, aggregations, and analysis. Flink is often preferred for its low latency.

- Scalable Storage: Store processed data in a scalable database like Cassandra or a data lake (e.g., using Apache Hadoop/HDFS or cloud storage).

- Horizontal Scaling: Design the microservice to scale horizontally by partitioning the data stream and deploying multiple instances. Each instance processes a subset of the data.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to detect and address any issues promptly. Metrics like processing latency and throughput are crucial.

Here's how a simple example can be coded with kafka and Flink.

# Example Flink job reading from Kafka

from pyflink.datastream import StreamExecutionEnvironment, CheckpointConfig

from pyflink.common.restart_strategy import RestartStrategies

env = StreamExecutionEnvironment.get_execution_environment()

env.set_restart_strategy(RestartStrategies.fixed_delay_restart(1, 1000))

# Configure checkpointing for fault tolerance

env.get_checkpoint_config().enable_checkpointing(5000)

env.get_checkpoint_config().set_checkpointing_mode(CheckpointConfig.CheckpointingMode.EXACTLY_ONCE)

k_consumer = FlinkKafkaConsumer(

topics='input_topic',

deserialization_schema=SimpleStringSchema(),

properties={'bootstrap.servers': 'kafka_broker:9092', 'group.id': 'flink_consumer_group'})

stream = env.add_source(k_consumer)

stream.map(lambda x: f"Processed: {x}").print()

env.execute("Streaming Data Processing")

16. What are the security considerations when exposing microservices to external clients through an API gateway?

When exposing microservices to external clients via an API gateway, several security aspects must be considered. These include:

- Authentication and Authorization: The API gateway should verify the identity of the client and ensure they have the necessary permissions to access specific microservices. This often involves technologies like OAuth 2.0, JWT, or API keys.

- Rate Limiting and Throttling: Implement rate limiting to prevent abuse, DDoS attacks, and ensure fair usage of the microservices. Throttling can limit the number of requests within a certain timeframe.

- Input Validation: The gateway needs to validate all incoming requests to prevent injection attacks and other malicious inputs. This could include checking request size, data types, and formats.

- TLS/SSL Encryption: All communication between the API gateway and external clients should be encrypted using TLS/SSL to protect sensitive data in transit.

- API Security Policies: Enforce security policies, such as CORS (Cross-Origin Resource Sharing), to control which origins can access your APIs.