Hiring a Unity developer means assessing both their technical skills and their hands-on experience with the engine. You need to know whether they can build your next top-rated game or simulation, so asking the right questions matters, and it takes more than knowing the skills required for a game programmer.

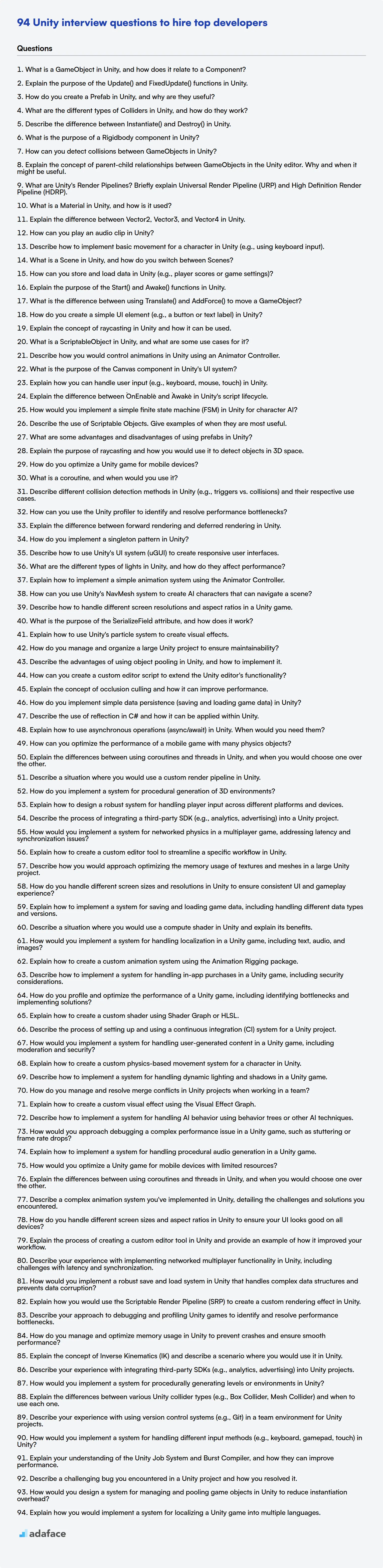

This blog post provides a categorized compilation of Unity interview questions, ranging from basic to expert levels, including Unity-specific multiple-choice questions. These questions are designed to assist recruiters and hiring managers in assessing candidates effectively.

By using these questions, you can better gauge a candidate's proficiency and fit for your team. Consider using a Unity test before the interview to streamline your hiring process.

Basic Unity interview questions

1. What is a GameObject in Unity, and how does it relate to a Component?

A GameObject in Unity is the fundamental building block of any scene. It is a container that can hold various components, which define its behavior and appearance. Think of it as an empty box that you fill with functionalities.

A Component, on the other hand, is a modular piece of code that adds specific functionality to a GameObject. For example, a Transform component controls the GameObject's position, rotation, and scale. A Rigidbody component adds physics behavior, and a custom script (like a .cs file) can define unique game logic. A GameObject must have a Transform component, but can have zero or many other components attached to it.
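The relationship can also be seen from script. The following is a minimal sketch, assuming a hypothetical `CrateBuilder` class attached to any object in the scene; the names and values are illustrative only.

```csharp
using UnityEngine;

// Hypothetical example: builds a GameObject from code and fills it with components.
public class CrateBuilder : MonoBehaviour
{
    void Start()
    {
        // A new GameObject starts with only a Transform component.
        GameObject crate = new GameObject("Crate");
        crate.transform.position = new Vector3(0f, 2f, 0f);

        // Each AddComponent call attaches one more piece of functionality.
        crate.AddComponent<BoxCollider>();
        Rigidbody body = crate.AddComponent<Rigidbody>();
        body.mass = 5f;
    }
}
```

The crate has no renderer, so it is invisible, but it will fall under gravity and collide with other colliders because of the two components added to it.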

2. Explain the purpose of the Update() and FixedUpdate() functions in Unity.

The Update() function in Unity is called every frame. This makes it suitable for most game logic, animations, and input handling that don't require precise timing. The frequency of Update() depends on the frame rate of the game, which can vary.

FixedUpdate() is called at a fixed time interval. This is crucial for physics calculations. Because it runs at a consistent rate, regardless of frame rate, it ensures predictable physics behavior across different hardware. Use FixedUpdate() for applying forces, torques, or directly manipulating Rigidbody components. For instance, any code that uses Rigidbody.AddForce should be in FixedUpdate().
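A common way to split work between the two functions is to read input in Update() and apply forces in FixedUpdate(). The sketch below assumes the legacy Input Manager with its default "Vertical" axis; `ThrustController` and `thrust` are hypothetical names.

```csharp
using UnityEngine;

// Hypothetical example: input is sampled per frame, physics is applied per fixed step.
[RequireComponent(typeof(Rigidbody))]
public class ThrustController : MonoBehaviour
{
    public float thrust = 10f;

    private Rigidbody body;
    private float forwardInput;

    void Awake()
    {
        body = GetComponent<Rigidbody>();
    }

    // Called once per rendered frame: sample input here.
    void Update()
    {
        forwardInput = Input.GetAxis("Vertical");
    }

    // Called on the fixed physics timestep: apply continuous forces here.
    void FixedUpdate()
    {
        body.AddForce(transform.forward * forwardInput * thrust);
    }
}
```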

3. How do you create a Prefab in Unity, and why are they useful?

To create a Prefab in Unity, you simply drag a GameObject from your Scene Hierarchy into your Project window. This creates a reusable asset of that GameObject, including its components, child objects, and settings.

Prefabs are useful because they allow you to create multiple instances of the same object with consistent properties. If you later need to change something about all instances of that object, you can modify the Prefab, and every instance that has not overridden that property is updated automatically. This promotes reusability, reduces errors, and makes scene management much easier. For example, imagine you have an Enemy prefab. Changing the health value on the base prefab updates all Enemy instances in your scenes that have not overridden it.

4. What are the different types of Colliders in Unity, and how do they work?

Colliders in Unity define the shape of an object for the purposes of physical collisions. Unity provides several types of colliders, each suited for different shapes and performance considerations. The main types are:

- Box Collider: A simple rectangular prism. Efficient for basic shapes like walls or crates.

- Sphere Collider: A sphere shape. Good for representing balls or objects with a roughly spherical form.

- Capsule Collider: A capsule shape (cylinder with hemispherical ends). Useful for character controllers.

- Mesh Collider: Uses the object's actual mesh for collision. Can be very precise but is also the most performance-intensive. It can be marked Convex or left non-convex. A convex Mesh Collider can collide with other Mesh Colliders. A non-convex Mesh Collider cannot collide with another non-convex Mesh Collider and is not supported on a GameObject with a non-kinematic Rigidbody, so it is typically reserved for static level geometry.

- Terrain Collider: Specifically designed for Unity Terrain objects, optimized for large, complex landscapes.

- Wheel Collider: Used to simulate the behavior of vehicle wheels. Handles suspension, friction, and motor torque.

- Composite Collider 2D: Used with 2D physics, they merge multiple 2D colliders into one for optimization. This is often used with Tilemap Colliders.

Colliders work by detecting when their shapes overlap with other colliders in the scene. When a collision occurs, Unity can trigger events like OnCollisionEnter, OnCollisionStay, and OnCollisionExit, allowing scripts to react to the collision. Colliders marked as triggers raise OnTriggerEnter, OnTriggerStay, and OnTriggerExit instead. For 2D physics, analogous methods exist with a 2D suffix, such as OnCollisionEnter2D and OnTriggerEnter2D.

5. Describe the difference between Instantiate() and Destroy() in Unity.

In Unity, Instantiate() and Destroy() are fundamental functions for managing objects in a scene.

Instantiate() creates a new instance of a prefab or existing object. It essentially clones an object, allowing you to dynamically create new game objects during runtime. For example: GameObject newObject = Instantiate(myPrefab, position, rotation);.

Destroy(), on the other hand, removes an object from the scene. When Destroy(gameObject) is called, the specified gameObject is marked for deletion. The object is not removed immediately: the actual destruction is deferred until after the current Update loop and happens before rendering.
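The two calls are often used together. Here is a minimal sketch, assuming a hypothetical `ProjectileSpawner` component with a `projectilePrefab` assigned in the Inspector and the legacy Input class for key detection.

```csharp
using UnityEngine;

// Hypothetical example: spawns a projectile and schedules its removal.
public class ProjectileSpawner : MonoBehaviour
{
    public GameObject projectilePrefab; // assign in the Inspector
    public float lifetime = 3f;

    void Update()
    {
        if (Input.GetKeyDown(KeyCode.Space))
        {
            // Clone the prefab at this object's position and rotation.
            GameObject projectile = Instantiate(projectilePrefab, transform.position, transform.rotation);

            // The optional second argument delays destruction by that many seconds.
            Destroy(projectile, lifetime);
        }
    }
}
```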

6. What is the purpose of a Rigidbody component in Unity?

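The Rigidbody component in Unity enables a GameObject to be controlled by Unity's physics engine. It essentially gives an object mass, inertia, and the ability to respond to collisions and to forces like gravity. Without a Rigidbody, the physics engine does not move the object: it stays where it is unless a script moves it directly via its Transform component, and any Collider on it is treated as a static collider. (A kinematic object is something different: it has a Rigidbody with Is Kinematic enabled, so it is moved by script or animation rather than by forces.) Rigidbody physics interactions are what create realistic movement and collisions.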

Specifically, adding a Rigidbody does the following:

- Enables the object to be affected by gravity.

- Allows the object to collide with other objects that have colliders.

- Allows applying forces and torques to the object to control its movement and rotation using methods like AddForce() and AddTorque().

7. How can you detect collisions between GameObjects in Unity?

In Unity, you can detect collisions between GameObjects using several methods. The primary approaches involve using Unity's built-in collision detection system, which relies on Collider and Rigidbody components. To detect collisions, at least one of the colliding GameObjects must have a Rigidbody component attached. There are OnCollisionEnter, OnCollisionStay, and OnCollisionExit methods to track the collision event. These are used when you want physical collision interactions like bouncing or applying force.

Another approach is to use trigger colliders. Triggers allow GameObjects to pass through each other while still detecting the contact. You can use the OnTriggerEnter, OnTriggerStay, and OnTriggerExit methods for these types of interactions. The OnCollision methods receive a Collision parameter and the OnTrigger methods receive a Collider parameter, each containing information about the other GameObject involved. Example:

```csharp
void OnCollisionEnter(Collision collision)
{
    Debug.Log("Collided with: " + collision.gameObject.name);
}
```

8. Explain the concept of parent-child relationships between GameObjects in the Unity editor. Why and when might they be useful?

In Unity, the parent-child relationship between GameObjects establishes a hierarchy. The child GameObject inherits transformations (position, rotation, scale) from its parent. Moving, rotating, or scaling the parent will also affect the child. This creates a dependency where the child is relatively positioned to the parent.

This relationship is useful for several reasons:

- Organization: It helps structure the scene in a logical way.

- Complex Objects: Combining multiple GameObjects into a single functional unit. For example, a car can be a parent object and the wheels can be children. Moving the car moves the wheels along with it.

- Relative Positioning: Maintaining relative distances and orientations between objects. If you want a character's weapon to always be held in the same relative position in their hand, make the weapon a child of the hand GameObject.

- Simplified Control: Allows control of multiple objects through a single parent object. This simplifies scripting and animation.
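The weapon-in-hand case above can be sketched from script as follows. `WeaponAttacher`, `hand`, and `weapon` are hypothetical names, and both Transforms are assumed to be assigned in the Inspector.

```csharp
using UnityEngine;

// Hypothetical example: parents a weapon to a hand so it follows the hand's transform.
public class WeaponAttacher : MonoBehaviour
{
    public Transform hand;
    public Transform weapon;

    void Start()
    {
        // Passing false keeps the weapon's local values instead of its world position.
        weapon.SetParent(hand, false);

        // Local position and rotation are now measured relative to the hand.
        weapon.localPosition = Vector3.zero;
        weapon.localRotation = Quaternion.identity;
    }
}
```

From this point on, moving or rotating the hand moves the weapon with it, with no per-frame code required.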

9. What are Unity's Render Pipelines? Briefly explain Universal Render Pipeline (URP) and High Definition Render Pipeline (HDRP).

Unity's render pipelines are pre-built rendering paths that define how the engine renders graphics. They dictate lighting, shading, and other visual effects. The three main pipelines are the Built-in Render Pipeline (legacy), Universal Render Pipeline (URP), and High Definition Render Pipeline (HDRP).

URP is a scriptable render pipeline designed for scalability across a wide range of platforms, from mobile to high-end PCs. It provides streamlined, performant graphics suitable for projects targeting multiple platforms. HDRP, on the other hand, is a scriptable render pipeline geared towards high-fidelity graphics on high-end hardware. It offers advanced features like realistic lighting, volumetric effects, and advanced material shading models, best suited for PC, consoles, and similar platforms prioritizing visual quality.

10. What is a Material in Unity, and how is it used?

In Unity, a Material defines how a surface should be rendered. It's essentially a recipe that tells Unity's rendering engine how light should interact with a specific object. Materials hold information about the object's color, texture, shininess, whether it should be opaque or transparent, and much more.

Materials are used by assigning them to Renderer components (like MeshRenderer or SkinnedMeshRenderer) attached to GameObjects. By changing the Material of a GameObject, you can radically alter its appearance without changing the underlying mesh. You can adjust material properties in the Inspector or via scripting. For example, to change the color of a material through code you might use:

```csharp
GetComponent<Renderer>().material.color = Color.red;
```

11. Explain the difference between Vector2, Vector3, and Vector4 in Unity.

In Unity, Vector2, Vector3, and Vector4 represent vectors of 2, 3, and 4 dimensions, respectively. Vector2 is commonly used for 2D graphics and UI, storing x and y components representing position or direction in a 2D space. Vector3 is used more broadly for 3D positions, directions, and forces, containing x, y, and z components.

Vector4 is used less frequently. It represents a 4D vector and is useful for homogeneous coordinates in 3D graphics, for shader parameters, and for other specialized calculations. It can also hold color data (RGBA), with x, y, z, and w standing for the red, green, blue, and alpha channels, although Unity provides a dedicated Color type for that purpose.
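A short sketch of the three types in use is shown below. `VectorExamples` and `target` are hypothetical names, and the values are arbitrary.

```csharp
using UnityEngine;

// Hypothetical example: typical uses of Vector2, Vector3, and Vector4.
public class VectorExamples : MonoBehaviour
{
    public Transform target; // assign in the Inspector

    void Start()
    {
        // Vector2: a 2D offset, e.g. for UI or sprite work.
        Vector2 screenOffset = new Vector2(10f, -5f);

        // Vector3: a direction and distance in 3D space.
        Vector3 toTarget = target.position - transform.position;
        float distance = toTarget.magnitude;
        Vector3 direction = toTarget.normalized;

        // Vector4: four packed floats, here converted implicitly to a Color.
        Vector4 packed = new Vector4(1f, 0.5f, 0f, 1f);
        Color color = packed;

        Debug.Log("Offset " + screenOffset + ", distance " + distance +
                  ", direction " + direction + ", color " + color);
    }
}
```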

12. How can you play an audio clip in Unity?

In Unity, you can play an audio clip using the AudioSource component. First, you need an AudioSource component attached to a GameObject. Then, you can assign an AudioClip to the AudioSource. Finally, you can use the AudioSource.Play() method to play the clip. Here's some example code:

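```csharp
// myAudioClip is an AudioClip reference, e.g. a public field assigned in the Inspector.
AudioSource audioSource = GetComponent<AudioSource>();
audioSource.clip = myAudioClip;
audioSource.Play();
```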

Alternatively, for simple one-off sounds, you can use AudioSource.PlayClipAtPoint(). This creates a temporary GameObject with an AudioSource at a specified position and plays the clip.

```csharp
AudioSource.PlayClipAtPoint(myAudioClip, transform.position);
```

13. Describe how to implement basic movement for a character in Unity (e.g., using keyboard input).

To implement basic character movement in Unity using keyboard input, you typically use the CharacterController component or Rigidbody. If using the CharacterController, in a C# script attached to the character, you would use Input.GetAxis to get the horizontal and vertical input (e.g., "Horizontal" and "Vertical" axes are mapped to the A/D or left/right arrow keys, and W/S or up/down arrow keys respectively). You then create a movement vector by multiplying the input values with a speed variable. Finally, you call CharacterController.Move(movementVector * Time.deltaTime) to move the character. The Time.deltaTime ensures movement is framerate-independent.

If using Rigidbody, you will use AddForce to control the movement. The input from the keyboard is the same as for the CharacterController, but instead of calling Move you will use rigidbody.AddForce(movementVector * speed). Below is sample code demonstrating CharacterController movement.

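```csharp
using UnityEngine;

public class PlayerMovement : MonoBehaviour
{
    public CharacterController controller;
    public float speed = 5f;

    void Update()
    {
        float x = Input.GetAxis("Horizontal");
        float z = Input.GetAxis("Vertical");

        // Build the movement direction relative to the character's facing.
        Vector3 move = transform.right * x + transform.forward * z;

        // Clamp rather than normalize so diagonals are not faster and
        // partial (analog or smoothed) input is preserved.
        move = Vector3.ClampMagnitude(move, 1f) * speed;

        controller.Move(move * Time.deltaTime);
    }
}
```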

14. What is a Scene in Unity, and how do you switch between Scenes?

In Unity, a Scene is a container that holds all the game objects, assets, and scripts that make up a specific part of your game, like a level, a menu, or a cutscene. Think of it as a single map or location in your game world.

To switch between Scenes, you typically use the SceneManager.LoadScene() method. You can load a scene by its name (string) or by its index in the Build Settings. Here's an example in C#:

```csharp
using UnityEngine;
using UnityEngine.SceneManagement;

public class SceneSwitcher : MonoBehaviour
{
    // sceneName must match a scene that has been added to the build.
    public void LoadNextScene(string sceneName)
    {
        SceneManager.LoadScene(sceneName);
    }
}
```

Make sure the scenes you want to switch to are added to the Build Settings (File -> Build Settings).

15. How can you store and load data in Unity (e.g., player scores or game settings)?

In Unity, player scores or game settings can be stored and loaded using several methods. PlayerPrefs is a simple option for storing small amounts of data like scores, settings, or player names. It's easy to use but not suitable for large or complex data. Data is stored locally on the user's device using platform-specific storage.

For more complex data structures or larger datasets, consider using serialization. This involves converting your C# objects into a format that can be stored in a file (e.g., JSON or XML). The JsonUtility class or libraries like Newtonsoft.Json can serialize and deserialize C# objects to and from JSON. Binary serialization is another option, but the output is less human-readable and less portable. After serializing to a string (e.g., JSON), you can write it to a file using System.IO. Loading the data involves reading the file and deserializing it back into objects.
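Both approaches can be sketched in a few lines. The example below assumes a hypothetical `SaveData` class and `SaveSystem` helper, a file named save.json, and a PlayerPrefs key called "HighScore"; none of these names come from Unity itself.

```csharp
using System.IO;
using UnityEngine;

// Hypothetical data class; JsonUtility serializes public fields of [Serializable] types.
[System.Serializable]
public class SaveData
{
    public int highScore;
    public float musicVolume = 1f;
}

// Hypothetical helper showing PlayerPrefs and JSON file storage side by side.
public static class SaveSystem
{
    private static string SavePath
    {
        get { return Path.Combine(Application.persistentDataPath, "save.json"); }
    }

    // Small values: PlayerPrefs.
    public static void SaveHighScore(int score)
    {
        PlayerPrefs.SetInt("HighScore", score);
        PlayerPrefs.Save();
    }

    public static int LoadHighScore()
    {
        return PlayerPrefs.GetInt("HighScore", 0); // 0 is the default if no value is stored
    }

    // Structured data: serialize to JSON and write to a file.
    public static void Save(SaveData data)
    {
        string json = JsonUtility.ToJson(data, true);
        File.WriteAllText(SavePath, json);
    }

    public static SaveData Load()
    {
        if (!File.Exists(SavePath))
        {
            return new SaveData();
        }

        string json = File.ReadAllText(SavePath);
        return JsonUtility.FromJson<SaveData>(json);
    }
}
```

Application.persistentDataPath is used here because it points to a writable, per-user location on each platform.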

16. Explain the purpose of the Start() and Awake() functions in Unity.

In Unity, both Awake() and Start() are lifecycle functions, but they serve distinct purposes. Awake() is called when a script instance is being loaded, regardless of whether the script is enabled or disabled. It's primarily used for initialization tasks that need to happen before the game starts, such as setting up references or initializing variables. Importantly, Awake() is called only once during the lifetime of the script instance.

Start(), on the other hand, is called once, after Awake() and just before the first frame update, and only if the script instance is enabled. It is often used for initialization that relies on other scripts having been initialized or for tasks that should be delayed until the game actually begins. For objects loaded together, every Awake() runs before any Start(), so Start() is the better choice when you need to interact with other components that might not be fully initialized during Awake().
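One way to apply this split is to set up an object's own state in Awake() and look up other objects in Start(). The sketch below uses a hypothetical `EnemyHealth` class and assumes the player object carries Unity's built-in "Player" tag.

```csharp
using UnityEngine;

// Hypothetical example: self-initialization in Awake, cross-object lookups in Start.
public class EnemyHealth : MonoBehaviour
{
    public int maxHealth = 100;

    private int currentHealth;
    private Transform player;

    // Runs when the script instance is loaded: initialize this object's own state.
    void Awake()
    {
        currentHealth = maxHealth;
    }

    // Runs before the first frame update, after Awake calls: safe to reach other objects.
    void Start()
    {
        GameObject playerObject = GameObject.FindWithTag("Player");
        if (playerObject != null)
        {
            player = playerObject.transform;
        }

        Debug.Log("Health " + currentHealth + ", player found: " + (player != null));
    }
}
```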

17. What is the difference between using Translate() and AddForce() to move a GameObject?

Translate() directly manipulates the transform.position of a GameObject, instantly moving it without considering physics. It's ideal for simple movements, teleportation, or when precise positioning is needed, ignoring collisions and forces. It does not interact with the physics engine at all.

AddForce(), on the other hand, applies a force to the GameObject's Rigidbody, causing it to accelerate and move based on its mass, drag, and other physics properties. It's suitable for realistic movements influenced by physics, like pushing, throwing, or simulating gravity. AddForce() considers collisions and other forces in the scene.

18. How do you create a simple UI element (e.g., a button or text label) in Unity?

In Unity, you can create UI elements using the UnityEngine.UI namespace. For example, to create a button, you would typically use the Unity Editor. Go to GameObject > UI > Button. This automatically creates a Canvas and EventSystem if they don't already exist.

Alternatively, you can create UI elements programmatically. First, add using UnityEngine.UI; to your script. Then, instantiate a GameObject, add a Button component to it, and set its properties like position and text using code. Example:

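```csharp
// Assumes `canvas` references an existing Canvas in the scene.
// An Image gives the button a visible, clickable graphic.
GameObject buttonObject = new GameObject("MyButton", typeof(RectTransform), typeof(Image));
Button button = buttonObject.AddComponent<Button>();

// Set button parent to the canvas
buttonObject.transform.SetParent(canvas.transform, false);
button.onClick.AddListener(() => Debug.Log("Button clicked"));
```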

19. Explain the concept of raycasting in Unity and how it can be used.

Raycasting in Unity is a technique used to project an invisible ray from a point in a specified direction and detect collisions with objects in its path. This allows you to determine what objects are in front of the ray emitter and how far away they are. The Physics.Raycast() function is the primary way to implement this. It returns true if the ray hits something, and provides information about the hit, such as the collider, the point of contact, and the normal of the surface.

Raycasting is used for a wide range of purposes. Examples include:

- Character Controller Ground Detection: Determining if a character is standing on the ground by casting a ray downwards.

- Object Selection: Clicking on objects in the scene by casting a ray from the camera through the mouse cursor position.

- AI Line of Sight: Checking if an AI agent can see the player by casting a ray from the agent to the player.

- Laser Beams/Projectiles: Simulating laser beams or projectiles by checking for collisions along their path. For example:

```csharp
if (Physics.Raycast(transform.position, transform.forward, out RaycastHit hit, 10f))
{
    Debug.Log("Hit: " + hit.collider.name);
}
```

20. What is a ScriptableObject in Unity, and what are some use cases for it?

A ScriptableObject is a data container in Unity that allows you to store large amounts of data, independent of class instances. Unlike MonoBehaviours, ScriptableObjects don't need to be attached to GameObjects. They are stored as assets in your project.

Some common use cases include:

- Storing game configuration data (e.g., character stats, item definitions).

- Creating reusable data templates.

- Managing complex game logic by decoupling data from code.

- Reducing memory usage by sharing data across multiple objects.

Example:

```csharp
using UnityEngine;

[CreateAssetMenu(fileName = "NewItem", menuName = "Inventory/Item")]
public class ItemData : ScriptableObject
{
    public string itemName;
    public string description;
    public Sprite icon;
    public int maxStackSize;
}
```

21. Describe how you would control animations in Unity using an Animator Controller.

In Unity, I'd control animations using an Animator Controller. The Animator Controller acts as a state machine where each state represents an animation or animation blend tree. Transitions between these states are governed by conditions, typically based on parameters (variables) defined within the Animator. These parameters can be of various types like float, int, bool, or trigger, and are controlled via scripting to influence which animation is playing at any given time.

For example, to transition from an 'Idle' state to a 'Walking' state, I would create a transition in the Animator Controller. I'd then add a condition to this transition, such as a boolean parameter 'isWalking'. In the script, I'd set animator.SetBool("isWalking", true) to initiate the transition to the 'Walking' animation. Conversely, setting it to false would transition back to the 'Idle' state, provided a matching transition exists in that direction. Blend trees could be used for more complex behaviors, such as varying walk speed based on a float parameter. Calling animator.Play("MyAnimation") forces a specific state to play immediately, bypassing transitions.
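The script side of that setup might look like the sketch below. It assumes an Animator Controller with a bool parameter named "isWalking" and the legacy Input Manager axes; `WalkAnimation` is a hypothetical class name.

```csharp
using UnityEngine;

// Hypothetical example: drives an Animator bool parameter from movement input.
[RequireComponent(typeof(Animator))]
public class WalkAnimation : MonoBehaviour
{
    // Hashing the parameter name once avoids repeated string lookups.
    private static readonly int IsWalking = Animator.StringToHash("isWalking");

    private Animator animator;

    void Awake()
    {
        animator = GetComponent<Animator>();
    }

    void Update()
    {
        float horizontal = Input.GetAxis("Horizontal");
        float vertical = Input.GetAxis("Vertical");

        // Treat any noticeable input as walking.
        bool moving = Mathf.Abs(horizontal) > 0.01f || Mathf.Abs(vertical) > 0.01f;
        animator.SetBool(IsWalking, moving);
    }
}
```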

22. What is the purpose of the Canvas component in Unity's UI system?

The Canvas component in Unity's UI system serves as the base for all UI elements. It's a container that defines the area where UI elements are rendered. Think of it as the drawing surface for your UI.

The Canvas manages how UI elements are scaled and rendered based on the chosen render mode (Screen Space - Overlay, Screen Space - Camera, or World Space). It also handles input events, ensuring UI elements respond to user interactions like clicks and touches.

23. Explain how you can handle user input (e.g., keyboard, mouse, touch) in Unity.

In Unity, handling user input involves using the Input class. For keyboard input, you can use methods like Input.GetKey(), Input.GetKeyDown(), and Input.GetKeyUp() to detect key presses. For mouse input, methods like Input.GetMouseButton(), Input.GetMouseButtonDown(), and Input.GetMouseButtonUp() detect mouse button events, and Input.mousePosition gives the mouse's screen coordinates. Touch input can be managed using Input.touchCount to get the number of touches, and Input.GetTouch(index) to retrieve a specific Touch object, which contains information like position, phase (began, moved, ended, etc.), and tap count.

For more complex input schemes, consider using Unity's Input Manager (Edit > Project Settings > Input Manager) or the new Input System package. The Input Manager allows you to define named axes and buttons and map them to various input sources. Input.GetAxis() and Input.GetAxisRaw() read the axes configured there: GetAxis() returns a smoothed value, while GetAxisRaw() returns the value without smoothing. The new Input System provides more flexibility with device support and input action maps, and uses C# events or callbacks for responding to input events.
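As a rough illustration of the Input class methods described above, the following sketch logs keyboard, mouse, and touch events. It assumes the legacy Input Manager is active in the project; `InputLogger` is a hypothetical name.

```csharp
using UnityEngine;

// Hypothetical example: polls keyboard, mouse, and touch input each frame.
public class InputLogger : MonoBehaviour
{
    void Update()
    {
        // Keyboard: true only on the frame the key goes down.
        if (Input.GetKeyDown(KeyCode.Space))
        {
            Debug.Log("Space pressed");
        }

        // Mouse: button 0 is the left button.
        if (Input.GetMouseButtonDown(0))
        {
            Debug.Log("Left click at " + Input.mousePosition);
        }

        // Touch: iterate over all active touches.
        for (int i = 0; i < Input.touchCount; i++)
        {
            Touch touch = Input.GetTouch(i);
            if (touch.phase == TouchPhase.Began)
            {
                Debug.Log("Touch began at " + touch.position);
            }
        }
    }
}
```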

Intermediate Unity interview questions

1. Explain the difference between `OnEnable` and `Awake` in Unity's script lifecycle.

Awake is called only once during the script's lifetime, when the script instance is being loaded. It's used to initialize variables and set up components before the game starts. OnEnable is called every time the script becomes enabled and active. This can happen multiple times during the script's lifetime.

Think of it this way: Awake is for one-time initialization, while OnEnable is for when the script becomes active. OnEnable is also called after Awake when the object is first instantiated. Common uses for OnEnable include registering events, subscribing to delegates, or re-initializing states when an object is pooled and reactivated.

Consider the following example:

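```csharp
using UnityEngine;

public class ExampleScript : MonoBehaviour
{
    // Called once, when the script instance is loaded.
    void Awake()
    {
        Debug.Log("Awake called");
    }

    // Called every time the component becomes enabled and active.
    void OnEnable()
    {
        Debug.Log("OnEnable called");
    }
}
```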

2. How would you implement a simple finite state machine (FSM) in Unity for character AI?

A simple FSM in Unity can be implemented using an enum to represent the states and a switch statement (or a dictionary) to handle state transitions and actions. The character AI script would have a variable to store the current state. Each state within the enum would correspond to a specific behavior (e.g., Idle, Patrol, Chase). The Update() method would contain the switch statement, executing different code blocks based on the current state. These code blocks would handle state-specific logic and determine when to transition to a new state.

For example:

```csharp
enum AIState { Idle, Patrol, Chase }

AIState currentState = AIState.Idle;
bool playerInSight; // set by your own detection logic (e.g. a raycast or trigger)

void Update()
{
    switch (currentState)
    {
        case AIState.Idle:
            // Idle logic
            if (playerInSight)
            {
                currentState = AIState.Chase;
            }
            break;
        case AIState.Patrol:
            // Patrol logic
            break;
        case AIState.Chase:
            // Chase logic
            break;
    }
}
```

To manage more complex behavior, consider using a dictionary to map states to functions or actions. This avoids deeply nested switch statements and promotes cleaner, more maintainable code.
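A dictionary-based variant could look like the sketch below. It is only one possible arrangement: `EnemyAI`, `player`, `chaseRange`, and the per-state methods are hypothetical, and the transition rules are deliberately simplistic.

```csharp
using System;
using System.Collections.Generic;
using UnityEngine;

// Hypothetical example: each state maps to a handler method instead of a switch case.
public class EnemyAI : MonoBehaviour
{
    private enum AIState { Idle, Patrol, Chase }

    public Transform player;       // assign in the Inspector
    public float chaseRange = 10f;

    private AIState currentState = AIState.Idle;
    private Dictionary<AIState, Action> stateHandlers;

    void Awake()
    {
        stateHandlers = new Dictionary<AIState, Action>
        {
            { AIState.Idle, UpdateIdle },
            { AIState.Patrol, UpdatePatrol },
            { AIState.Chase, UpdateChase },
        };
    }

    void Update()
    {
        // Run the handler registered for the current state.
        stateHandlers[currentState]();
    }

    private bool PlayerInRange()
    {
        return player != null &&
               Vector3.Distance(transform.position, player.position) <= chaseRange;
    }

    private void UpdateIdle()
    {
        currentState = PlayerInRange() ? AIState.Chase : AIState.Patrol;
    }

    private void UpdatePatrol()
    {
        // Patrol movement would go here.
        if (PlayerInRange())
        {
            currentState = AIState.Chase;
        }
    }

    private void UpdateChase()
    {
        // Chase movement would go here.
        if (!PlayerInRange())
        {
            currentState = AIState.Idle;
        }
    }
}
```

Adding a state then means adding one enum value, one handler method, and one dictionary entry, without touching Update().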

3. Describe the use of Scriptable Objects. Give examples of when they are most useful.

Scriptable Objects are data containers that store information independent of script instances. Unlike regular MonoBehaviour scripts attached to GameObjects, Scriptable Objects aren't attached to scene objects; instead, they exist as assets in your project. This allows you to store data and share it across multiple objects or scenes without duplicating the data in memory.

They are most useful for:

- Configuration data: Storing game settings, character stats, or item definitions. For example, defining different types of enemies with health, damage, and speed values.

- Shared data: Representing data that needs to be accessed and modified by multiple objects. For instance, a resource manager or a global event system.

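- Data persistence (with caveats): Changes made to a Scriptable Object asset persist in the Editor, but in a built player they are lost when the game closes. To keep player progress or game state between sessions, serialize the data to a file or to PlayerPrefs instead.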

- Creating reusable data templates: Making base stats for items and characters.

```csharp
using UnityEngine;

[CreateAssetMenu(fileName = "NewCharacterData", menuName = "Character Data")]
public class CharacterData : ScriptableObject
{
    public string characterName;
    public int health;
    public float speed;
}
```

4. What are some advantages and disadvantages of using prefabs in Unity?

Prefabs in Unity offer several advantages. They promote reusability by allowing you to create a template object that can be instantiated multiple times, ensuring consistency across your scene. This significantly speeds up development and reduces the risk of errors. Making changes to the prefab automatically updates all instances, saving considerable time during adjustments.

However, prefabs also have disadvantages. Overuse can lead to rigid structures, making it difficult to introduce variations if many instances need slight modifications. While prefab variants exist, managing complex hierarchies of variants can become cumbersome. Furthermore, deeply nested prefabs can sometimes impact performance due to the increased overhead of managing numerous interconnected objects.

5. Explain the purpose of raycasting and how you would use it to detect objects in 3D space.

Raycasting is a technique used to find what objects are in the line of sight of a ray. You essentially cast an invisible ray from a point in a specific direction and detect the first object that the ray intersects. This is very useful for object detection, collision detection, and even things like determining if a character is looking at a specific object in 3D space.

To detect objects, you'd first define the origin point of the ray and the direction in which it travels. Then, using Unity's Physics.Raycast() function, you'd check for intersections with colliders in the scene. The function reports the intersection point, the distance to the object, and the collider that was hit through a RaycastHit value. You can then use this information to determine what object was detected and act accordingly; for instance, trigger an event or perform some calculation. Using it might look something like this:

```csharp
// origin and direction are Vector3 values; maxDistance is a float.
Ray ray = new Ray(origin, direction);
RaycastHit hitInfo;

if (Physics.Raycast(ray, out hitInfo, maxDistance))
{
    GameObject hitObject = hitInfo.collider.gameObject;
    // Do something with the hitObject
}
```

6. How do you optimize a Unity game for mobile devices?

Optimizing a Unity game for mobile involves several key areas. First, optimize assets: reduce texture sizes, use texture compression (like ETC1/2, ASTC), minimize polygon count in models, and use audio compression. Employ level of detail (LOD) to reduce the detail of distant objects. Optimize shaders by using mobile-friendly versions and minimizing complex calculations. Use texture atlases and sprite sheets to reduce draw calls.

Second, optimize code and performance: use object pooling to reduce garbage collection, avoid using Find functions excessively, use coroutines efficiently, and profile your game frequently using Unity's Profiler to identify performance bottlenecks. Keep your scripts lean and avoid unnecessary calculations per frame. When developing, target a specific hardware profile (low/mid/high range mobile devices).
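Object pooling, mentioned above, can be sketched as follows. This is a bare-bones illustration rather than a production pool: `SimplePool`, `prefab`, and `initialSize` are hypothetical names, and callers are expected to hand instances back through Release().

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical example: reuses deactivated instances instead of creating and destroying them.
public class SimplePool : MonoBehaviour
{
    public GameObject prefab;    // assign in the Inspector
    public int initialSize = 20;

    private readonly Queue<GameObject> available = new Queue<GameObject>();

    void Awake()
    {
        // Pre-create the instances up front so gameplay does not allocate later.
        for (int i = 0; i < initialSize; i++)
        {
            GameObject instance = Instantiate(prefab, transform);
            instance.SetActive(false);
            available.Enqueue(instance);
        }
    }

    public GameObject Get(Vector3 position, Quaternion rotation)
    {
        // Reuse a pooled instance if one is free; otherwise grow the pool.
        GameObject instance = available.Count > 0
            ? available.Dequeue()
            : Instantiate(prefab, transform);

        instance.transform.SetPositionAndRotation(position, rotation);
        instance.SetActive(true);
        return instance;
    }

    public void Release(GameObject instance)
    {
        instance.SetActive(false);
        available.Enqueue(instance);
    }
}
```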

7. What is a coroutine, and when would you use it?

A coroutine is a function that can suspend its execution and resume it later, allowing other code to run in the meantime. In Unity, a coroutine is a method that returns IEnumerator, is started with StartCoroutine(), and pauses at each yield statement. It is a form of concurrency, but unlike threads, Unity coroutines run on the main thread and are resumed by the engine, which makes them lightweight but means heavy work inside one still stalls the frame.

Use coroutines when you need to spread work over several frames or wait for something without the overhead of traditional threads. Common use cases include:

- I/O-bound tasks: Waiting for network requests, file operations, or scene and asset loading to finish without freezing the main thread.

- Concurrency: Managing several tasks that progress side by side, such as timers, cooldowns, or spawn sequences, each in its own coroutine.

- Implementing generators: Coroutines are built on C# iterators, which produce values on demand instead of building a full collection up front.

- Asynchronous programming: C# async/await keywords are an alternative for asynchronous code and can be more readable and maintainable than callbacks.
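In Unity specifically, a coroutine is typically written as an IEnumerator method and started with StartCoroutine(). The sketch below spawns a wave of objects with a pause between each; `WaveSpawner`, `enemyPrefab`, and the other fields are hypothetical.

```csharp
using System.Collections;
using UnityEngine;

// Hypothetical example: spawns enemies one at a time without blocking the game loop.
public class WaveSpawner : MonoBehaviour
{
    public GameObject enemyPrefab;          // assign in the Inspector
    public int enemyCount = 5;
    public float delayBetweenSpawns = 1f;

    void Start()
    {
        StartCoroutine(SpawnWave());
    }

    private IEnumerator SpawnWave()
    {
        for (int i = 0; i < enemyCount; i++)
        {
            Instantiate(enemyPrefab, transform.position, Quaternion.identity);

            // Suspend here; Unity resumes the method after the delay.
            yield return new WaitForSeconds(delayBetweenSpawns);
        }

        Debug.Log("Wave finished");
    }
}
```

Between yields the rest of the game keeps running, which is why this reads like a simple loop yet does not freeze the frame.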

8. Describe different collision detection methods in Unity (e.g., triggers vs. collisions) and their respective use cases.

In Unity, collision detection primarily uses two methods: Triggers and Collisions. Triggers are activated when a Collider enters their space. Objects passing through a trigger do not experience any physical interaction or collision response. They are useful for events such as collecting items, entering a new area, or activating sensors. We typically use OnTriggerEnter, OnTriggerStay, and OnTriggerExit methods to handle trigger events. Note the IsTrigger property should be enabled on the Collider.

Collisions, on the other hand, represent physical interactions between objects with Collider and Rigidbody components (at least one object must have a non-kinematic Rigidbody). When objects collide, Unity's physics engine calculates the response based on their physical properties. Collisions are handled via OnCollisionEnter, OnCollisionStay, and OnCollisionExit methods. These are used for realistic physical interactions like objects bouncing off each other, stopping movement, or applying forces. The Rigidbody component is essential for any object that needs to be affected by Unity's physics engine for the collision to work.

For example, if we wanted to check if a player entered a certain area, we can use a trigger collider like this:

```csharp
void OnTriggerEnter(Collider other)
{
    if (other.gameObject.CompareTag("Player"))
    {
        Debug.Log("Player entered the area.");
    }
}
```

If, however, we wanted to create a bouncy ball, we can attach Rigidbody and Collider components to it and assign the Collider a physics material with a Bounciness value above zero. Then we don't need to write any code to implement bouncing, since Unity's physics engine handles it.

9. How can you use the Unity profiler to identify and resolve performance bottlenecks?

The Unity Profiler is a powerful tool to identify performance bottlenecks. To use it, first enable the Profiler (Window > Analysis > Profiler). Then, run your game/application and observe the various sections: CPU, Memory, Rendering, Audio, etc. Look for spikes or consistently high values in any of these sections. Specifically, pay attention to CPU Usage, looking at which functions consume the most time (Deep Profile can help). Also analyze Rendering, for example, high SetPass Calls or Batches could indicate shader or draw call issues. High memory allocations or garbage collection events (in Memory) can also significantly impact performance. Once a bottleneck is identified, you can address it by optimizing code (e.g., reducing complex calculations, caching results), reducing draw calls (e.g., using static batching, GPU instancing), optimizing memory usage (e.g., object pooling, avoiding unnecessary allocations), or optimizing assets (e.g., compressing textures, reducing polygon count).
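To make a suspect piece of code easier to find in the CPU Usage view, it can be wrapped in a named profiler sample. This is a minimal sketch: `PathfindingAgent` and `RecalculatePath` are hypothetical, and the loop is only a stand-in workload.

```csharp
using UnityEngine;
using UnityEngine.Profiling;

// Hypothetical example: labels a block of work so it shows up by name in the Profiler.
public class PathfindingAgent : MonoBehaviour
{
    void Update()
    {
        Profiler.BeginSample("PathfindingAgent.RecalculatePath");
        RecalculatePath();
        Profiler.EndSample();
    }

    private void RecalculatePath()
    {
        // Stand-in for real work that you suspect is expensive.
        float total = 0f;
        for (int i = 0; i < 10000; i++)
        {
            total += Mathf.Sqrt(i);
        }

        if (total < 0f)
        {
            Debug.Log("Unreachable; keeps the loop result in use.");
        }
    }
}
```

Every BeginSample call needs a matching EndSample, otherwise the Profiler reports mismatched samples.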

10. Explain the difference between forward rendering and deferred rendering in Unity.

Forward rendering and deferred rendering are two different rendering paths in Unity. In forward rendering, each object in the scene is rendered in one or more passes, and lighting calculations are performed for each object during these passes. This approach is simple to implement and supports features like transparency and MSAA (Multi-Sample Anti-Aliasing) easily. However, the rendering cost increases linearly with the number of lights and objects, as the lighting calculations are repeated for each visible object per light.

In deferred rendering, the rendering process is split into two main passes. In the first pass, the geometry of the scene is rendered into multiple textures called G-buffer. This buffer typically contains information like color, normal, and depth. In the second pass, lighting calculations are performed using the data stored in the G-buffer. This approach allows for a large number of lights to be rendered efficiently because the lighting calculations are performed per pixel rather than per object. However, it has limitations such as difficulty handling transparency and lack of built-in MSAA support.

11. How do you implement a singleton pattern in Unity?

To implement the singleton pattern in Unity, you typically create a C# script that ensures only one instance of a class exists. This is usually achieved using a private static instance field exposed through a static Instance property (or a GetInstance() method). In the Awake() method, the script checks if an instance already exists; if it does, the current instance is destroyed to prevent duplicates.

Here's a simple example:

```csharp
public class MySingleton : MonoBehaviour
{
    private static MySingleton _instance;

    public static MySingleton Instance
    {
        get
        {
            if (_instance == null)
            {
                _instance = FindObjectOfType<MySingleton>();
                if (_instance == null)
                {
                    GameObject singleton = new GameObject("MySingleton");
                    _instance = singleton.AddComponent<MySingleton>();
                }
            }
            return _instance;
        }
    }

    private void Awake()
    {
        if (_instance != null && _instance != this)
        {
            Destroy(this.gameObject);
            return;
        }

        _instance = this;
        DontDestroyOnLoad(this.gameObject); // Optional: Persist across scene loads
    }

    // Your singleton's functionality here
}
```

12. Describe how to use Unity's UI system (uGUI) to create responsive user interfaces.

To create responsive UIs in Unity using uGUI, leverage anchors and pivot points. Anchors define how a UI element repositions and resizes relative to its parent. Use anchor presets to quickly set common anchor positions (e.g., top-left, bottom-right, stretch). The pivot point determines the origin of scaling and rotation. Also add a Canvas Scaler component and set its UI Scale Mode to 'Scale With Screen Size', then configure the reference resolution and screen match mode to ensure the UI scales appropriately across different screen sizes.

Consider using Layout Groups (Horizontal, Vertical, Grid) to automatically arrange UI elements. This simplifies dynamic layouts, especially when content changes. The Content Size Fitter component can be used to automatically resize UI elements based on their content. It is crucial to test the UI on different resolutions and aspect ratios to ensure responsiveness.

13. What are the different types of lights in Unity, and how do they affect performance?

Unity has several light types, each with different performance characteristics. Directional lights simulate distant light sources like the sun; a scene usually needs only one, but it affects every object and its realtime shadows (typically cascaded shadow maps) can be costly. Point lights emit light from a single point in all directions and are more expensive with shadows, because shadows have to be rendered in every direction around the light. Spot lights are similar to point lights but emit light in a cone, so they touch fewer objects and need only one shadow map, which usually makes them cheaper than point lights when you only need light in a specific area. Finally, Area lights emit light from a rectangle or disc. In the Built-in Render Pipeline and URP they are baked only, so their cost is paid at bake time rather than at runtime; HDRP also supports realtime area lights, which are comparatively expensive and should be used sparingly. They are useful for realistic lighting, particularly in interior scenes.

The main factors affecting light performance are the number of lights, the type of lights, shadow casting, and the rendering path used. Realtime shadows are very costly, so consider using baked lighting where possible, especially for static objects. Unity's rendering paths (Forward vs. Deferred) also impact lighting performance. Deferred rendering generally handles many lights better, but has its own performance overhead. Finally, using lightmaps can dramatically improve performance by pre-calculating lighting for static objects.

14. Explain how to implement a simple animation system using the Animator Controller.

To implement a simple animation system using Unity's Animator Controller, you first need to create an Animator Controller asset. Then, define animation states within the controller, each representing a different animation clip. These states are connected by transitions, which determine when and how the animation changes. Transitions are governed by conditions based on parameters defined in the Animator Controller such as float, int, bool, and trigger.

For example, if you have an 'Idle' and 'Walking' animation, create corresponding states in the Animator. Create a float parameter named Speed. Add a transition from 'Idle' to 'Walking' with the condition Speed > 0.1 and a transition back from 'Walking' to 'Idle' with the condition Speed < 0.1. Assign the Animator Controller to the Animator component on your game object. Finally, use a script to set the Speed parameter of the Animator based on player input, which will trigger the transitions between animations, for example: animator.SetFloat("Speed", currentSpeed);

15. How can you use Unity's NavMesh system to create AI characters that can navigate a scene?

To use Unity's NavMesh system for AI navigation, first, bake a NavMesh. This involves selecting static game objects that form the walkable surfaces and using the Navigation window to generate the NavMesh. Then, add a NavMeshAgent component to your AI character. The NavMeshAgent handles pathfinding and movement. You can then control the AI's movement by setting the destination property of the NavMeshAgent in a script, for example: GetComponent<NavMeshAgent>().destination = targetPosition;

Further, you can use the NavMesh.CalculatePath function to determine a path's validity before setting a destination. For more complex scenarios, consider incorporating things like obstacle avoidance and off-mesh links to handle jumps or other non-navmesh traversal. Consider utilizing raycasting or other sensing mechanisms to detect the environment. Utilize NavMeshObstacle for dynamic obstacles.

16. Describe how to handle different screen resolutions and aspect ratios in a Unity game.

To handle different screen resolutions and aspect ratios in Unity, use the Canvas Scaler component in your UI. Set the UI Scale Mode to Scale With Screen Size. Then, specify a Reference Resolution (e.g., 1920x1080). The UI will scale accordingly. You can also use anchors and pivots to ensure UI elements are positioned correctly relative to the screen edges.

For gameplay elements, adjust the camera's orthographicSize (for 2D) or fieldOfView (for 3D) based on the aspect ratio. Consider using different camera setups or adjusting the viewport rectangle if the aspect ratio changes dramatically, and ensure your game world is designed to be flexible enough to accommodate different screen sizes without clipping or excessive empty space. Use Screen.width and Screen.height to read the current screen dimensions and adjust gameplay accordingly.
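As a minimal sketch of the orthographic camera adjustment described above, the helper below computes a size that keeps a fixed world width visible at any aspect ratio. The class and method names are hypothetical, and the function is plain C# so it can be checked outside the engine. It assumes Unity's convention that orthographicSize is half the vertical height of the view.

```csharp
using System;

public static class CameraFit
{
    // Returns half the vertical view height (Unity's orthographicSize convention)
    // needed to show targetWorldWidth world units across the full screen width.
    public static float OrthographicSizeForWidth(float targetWorldWidth, int screenWidth, int screenHeight)
    {
        if (screenWidth <= 0 || screenHeight <= 0)
            throw new ArgumentException("Screen dimensions must be positive.");

        float aspect = (float)screenWidth / screenHeight;
        return targetWorldWidth / (2f * aspect);
    }
}
```

In a Unity script, this would typically be called with Screen.width and Screen.height and the result assigned to Camera.orthographicSize whenever the resolution changes.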

17. What is the purpose of the `SerializeField` attribute, and how does it work?

The SerializeField attribute in Unity is used to make a private or protected field visible and accessible in the Unity Editor's Inspector window. By default, only public fields are serialized (saved and loaded with the scene or prefab). SerializeField allows you to expose specific private fields for editing in the Inspector without making them publicly accessible to other scripts, thus maintaining encapsulation.

When Unity encounters SerializeField, its serialization system treats that field as if it were public for the purposes of saving and loading its value. This means the value you set in the Inspector is stored in the scene or prefab asset and restored whenever that scene or prefab is loaded. It does not, by itself, carry changes made while the game is running over to the next play session. It essentially bridges the gap between private data and the Unity Editor's visual editing capabilities.

18. Explain how to use Unity's particle system to create visual effects.

Unity's particle system is a powerful tool for creating visual effects. To use it, you typically create a new Particle System GameObject. Then, in the Inspector, you modify various modules like Emission, Shape, Velocity over Lifetime, Color over Lifetime, and Size over Lifetime to define how particles are created, how they move, and how they look. You can adjust parameters such as the number of particles emitted, their initial velocity, their color gradient over time, and the shape from which they are emitted.

For example, to create a simple fire effect, you could use a sphere-shaped emitter, set the emission rate to a high value, and use a color gradient that transitions from yellow to orange to red. You can also use textures for the particles themselves, and shaders for more advanced visual control. You can control particle behavior via scripts using the ParticleSystem API in C#. Common methods include Play(), Stop(), and Emit() for direct control over particle generation.

19. How do you manage and organize a large Unity project to ensure maintainability?

To manage a large Unity project, I focus on clear organization and maintainability. I use a structured folder system, separating assets into logical categories like _Project/Art, _Project/Scripts, _Project/Prefabs, and _Project/Scenes. Within scripts, I follow coding conventions (e.g., consistent naming, comments) and utilize namespaces to prevent naming conflicts. Version control (Git) is essential. Regularly refactoring code, using scriptable objects for data storage, and employing design patterns (like the Singleton pattern or the Observer pattern) helps reduce complexity.

Furthermore, I prioritize modularity. Breaking down large scenes and complex scripts into smaller, reusable components is crucial. Using prefabs effectively to avoid duplication is also key. Tools like the Addressable Asset System help manage asset loading and dependencies. For testing, I write unit tests using the Unity Test Framework to ensure individual components work as expected and integration tests for larger systems.

20. Describe the advantages of using object pooling in Unity, and how to implement it.

Object pooling in Unity offers significant performance advantages by reusing existing objects instead of constantly instantiating and destroying them. This is particularly beneficial for frequently created and destroyed objects like bullets, particle effects, or enemies. Instantiation and destruction are expensive operations, and object pooling reduces garbage collection overhead, leading to smoother gameplay and improved performance, especially on mobile devices.

To implement object pooling, you typically create a collection (like a List or Queue) to store inactive objects. When you need an object, you first check if one is available in the pool. If so, you reactivate it (e.g., by calling SetActive(true) and repositioning it). If the pool is empty, you instantiate a new object. When the object is no longer needed, you deactivate it and return it to the pool instead of destroying it. Here's a simplified example:

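```csharp
using System.Collections.Generic;
using UnityEngine;

public class ObjectPool : MonoBehaviour
{
    public GameObject pooledObject;
    public int poolSize = 10;

    private List<GameObject> pool;

    // Awake runs before other scripts' Start, so the pool is ready when they request objects.
    void Awake()
    {
        // Pre-instantiate the pool and keep every object inactive until requested.
        pool = new List<GameObject>();
        for (int i = 0; i < poolSize; i++)
        {
            GameObject obj = Instantiate(pooledObject);
            obj.SetActive(false);
            pool.Add(obj);
        }
    }

    // Returns the first inactive object; the caller positions and activates it.
    public GameObject GetPooledObject()
    {
        for (int i = 0; i < pool.Count; i++)
        {
            if (!pool[i].activeInHierarchy)
            {
                return pool[i];
            }
        }
        return null; // Or instantiate a new one if needed
    }

    public void ReturnToPool(GameObject obj)
    {
        obj.SetActive(false);
    }
}
```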

21. How can you create a custom editor script to extend the Unity editor's functionality?

To create a custom editor script in Unity, you create a new C# script placed within an Editor folder in your project's Assets directory (e.g., Assets/Editor/MyCustomEditor.cs). This script inherits from UnityEditor.Editor or UnityEditor.EditorWindow. You use the [CustomEditor(typeof(YourTargetScript))] attribute to link the editor script to a specific component (YourTargetScript).

Inside the script, you can override the OnInspectorGUI() method to customize the Inspector window for the target component. You can use SerializedObject and SerializedProperty to interact with the target object's properties, enabling modification and saving of values. The EditorGUILayout and EditorGUI classes provide functions for drawing custom UI elements, such as buttons, fields, and labels. For example:

```csharp
using UnityEditor;
using UnityEngine;

[CustomEditor(typeof(MyScript))]
public class MyScriptEditor : Editor
{
    public override void OnInspectorGUI()
    {
        DrawDefaultInspector(); // Draws the default inspector

        MyScript myScript = (MyScript)target;
        if (GUILayout.Button("Do Something"))
        {
            myScript.DoSomething();
        }
    }
}
```

22. Explain the concept of occlusion culling and how it can improve performance.

Occlusion culling is a rendering optimization technique that prevents the GPU from drawing objects that are completely hidden from the camera's view by other objects. It avoids wasting resources on rendering pixels that won't be visible in the final image.

By not drawing occluded objects, occlusion culling reduces the number of draw calls and the amount of pixel processing (shading) the GPU needs to perform. This directly translates to improved performance (higher frame rates) and reduced power consumption, especially in scenes with high object density and significant overlap. This is often used alongside other culling techniques such as frustum culling.

23. How do you implement simple data persistence (saving and loading game data) in Unity?

For simple data persistence in Unity, I typically use PlayerPrefs. It's straightforward for saving primitive data types like integers, floats, and strings. To save data, I use methods like PlayerPrefs.SetInt("score", scoreValue), PlayerPrefs.SetFloat("volume", volumeValue), and PlayerPrefs.SetString("playerName", playerName). To load data, I use the corresponding PlayerPrefs.GetInt("score"), PlayerPrefs.GetFloat("volume"), and PlayerPrefs.GetString("playerName") methods. I also check if a key exists before loading using PlayerPrefs.HasKey("keyName") to provide default values if needed.

Alternatively, I might serialize data into a JSON or binary file for more complex data structures. For JSON, I would use JsonUtility.ToJson() to serialize an object and JsonUtility.FromJson() to deserialize it back. For binary, I would write the fields with BinaryWriter and read them back with BinaryReader rather than use BinaryFormatter, which Microsoft has deprecated as unsafe for untrusted data. Saving to a file involves using File.WriteAllText() or File.WriteAllBytes() and reading from a file involves using File.ReadAllText() or File.ReadAllBytes(), respectively, usually with a path under Application.persistentDataPath. This is often used when more complex game data needs to be stored persistently.

24. Describe the use of reflection in C# and how it can be applied within Unity.

Reflection in C# allows you to inspect and manipulate types, properties, methods, and events at runtime. It lets you discover type information without knowing it at compile time. Within Unity, reflection can be applied to:

- Accessing private members: Modify private variables or call private methods of components (use with caution!).

- Creating generic editors: Dynamically generate editor interfaces based on component properties.

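- Loading assets dynamically: Find and load assets based on type names. Note that AssetDatabase belongs to the UnityEditor namespace, so this example works only in editor scripts, not in a built player. For example:

  ```csharp
  Type assetType = Type.GetType("MyCustomAsset, Assembly-CSharp");
  AssetDatabase.LoadAssetAtPath("Assets/MyAsset.asset", assetType);
  ```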

- Implementing attribute-based systems: Discover classes or methods marked with custom attributes. For instance:

  ```csharp
  [MyCustomAttribute]
  public class MyClass {}

  // Reflection to find all classes with MyCustomAttribute
  ```

Reflection offers great flexibility but can impact performance due to the runtime overhead of type discovery. Use it judiciously, especially in performance-critical sections of your Unity game.
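The attribute-based discovery mentioned in the list above could be sketched as follows. This is a self-contained illustration, not code from a specific project: MyCustomAttribute, MyClass, UnmarkedClass, and AttributeScanner are all hypothetical names defined here so the example compiles on its own.

```csharp
using System;
using System.Linq;
using System.Reflection;

// Hypothetical marker attribute, standing in for MyCustomAttribute above.
[AttributeUsage(AttributeTargets.Class)]
public sealed class MyCustomAttribute : Attribute { }

[MyCustom]
public class MyClass { }

public class UnmarkedClass { }

public static class AttributeScanner
{
    // Returns every class in the given assembly that carries MyCustomAttribute.
    public static Type[] FindMarkedTypes(Assembly assembly)
    {
        return assembly.GetTypes()
            .Where(t => t.IsClass && t.GetCustomAttribute<MyCustomAttribute>() != null)
            .ToArray();
    }
}
```

Calling AttributeScanner.FindMarkedTypes(typeof(MyClass).Assembly) returns the marked types. Because scanning an assembly is comparatively slow, it is usually done once at startup and the result cached.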

25. Explain how to use asynchronous operations (async/await) in Unity. When would you need them?

Asynchronous operations in Unity (using async/await) allow you to perform long-running tasks without freezing the main Unity thread. This is crucial for tasks like network requests, file I/O, or complex calculations. To use it, you need to define an async method that returns a Task or Task<T>, and use await before operations that might take time.

For example:

```csharp
using System.Threading.Tasks;
using UnityEngine;

public class Example : MonoBehaviour
{
    async Task LoadDataAsync()
    {
        string data = await LongRunningOperation();
        Debug.Log(data);
    }

    Task<string> LongRunningOperation()
    {
        return Task.Run(() =>
        {
            // Simulate a long operation
            System.Threading.Thread.Sleep(2000);
            return "Data loaded";
        });
    }

    void Start()
    {
        _ = LoadDataAsync();
    }
}
```

You'd need asynchronous operations whenever a task might block the main thread for a noticeable amount of time, leading to a choppy or unresponsive user experience. They are especially useful when you don't want to hold up rendering or user input processing.

Advanced Unity interview questions

1. How can you optimize the performance of a mobile game with many physics objects?

Optimizing a mobile game with many physics objects involves several techniques. First, reduce the number of active physics objects by using techniques like object pooling or deactivating objects that are off-screen or not actively interacting. Second, simplify the physics representation by using simpler collision shapes (e.g., circles instead of complex polygons) and reducing the number of physics updates per second. Consider also using techniques to avoid performing updates if the state hasn't changed.

Further optimizations include using fixed timesteps for physics calculations to ensure consistency across different devices and frame rates, profiling the physics engine to identify performance bottlenecks, and using multithreading to offload physics calculations to a separate core (but be careful to address thread synchronization issues correctly!). Finally, if possible, baking static physics into level data can reduce the need for continuous calculations. Be certain to select a performant physics engine for the target hardware.

2. Explain the differences between using coroutines and threads in Unity, and when you would choose one over the other.

Coroutines and threads both allow you to perform tasks concurrently, but they operate differently in Unity. Coroutines are 'pseudo-concurrent' as they run on the main thread and yield execution at specific points (e.g., yield return null, yield return new WaitForSeconds(1)). This means they don't truly run in parallel but simulate concurrency by breaking a task into smaller parts. Threads, on the other hand, offer true parallelism by running code on separate cores, freeing the main thread.

Choose coroutines for tasks that don't require heavy processing and can be broken down into smaller, manageable chunks, like animations, simple AI, or timed events. They are easier to manage since they are tied to the Unity main thread. Opt for threads when you need to perform computationally intensive tasks (e.g., complex calculations, large file I/O, network operations) that would otherwise freeze the main thread. Be aware that threads require careful handling to avoid race conditions and synchronization issues. Importantly, most of the Unity API can only be called from the main thread, so you cannot directly access Unity objects from within a worker thread. Use a main-thread dispatcher (such as the community UnityMainThreadDispatcher script) or a similar approach to marshal data or actions back to the main thread for Unity-related operations.
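One way to marshal work back to the main thread is a thread-safe queue that workers fill and the main thread drains once per frame. The sketch below is a minimal, engine-independent illustration of that idea; MainThreadQueue and MainThreadQueueDemo are hypothetical names, and in Unity the Drain() call would typically live in a MonoBehaviour's Update().

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical helper: queues actions from worker threads so the main thread can run them.
public class MainThreadQueue
{
    private readonly ConcurrentQueue<Action> _pending = new ConcurrentQueue<Action>();

    // Safe to call from any thread.
    public void Enqueue(Action action) => _pending.Enqueue(action);

    // Call from the main thread, e.g. once per frame. Returns how many actions ran.
    public int Drain()
    {
        int executed = 0;
        while (_pending.TryDequeue(out Action action))
        {
            action();
            executed++;
        }
        return executed;
    }
}

public static class MainThreadQueueDemo
{
    public static int Run()
    {
        var queue = new MainThreadQueue();
        int result = 0;

        // Heavy work runs on a thread-pool thread; only the result hand-off is queued.
        Task worker = Task.Run(() =>
        {
            int sum = 0;
            for (int i = 1; i <= 100; i++) sum += i;
            queue.Enqueue(() => result = sum);
        });

        worker.Wait();  // Blocking is for the demo only; a game would keep rendering.
        queue.Drain();  // Stands in for the per-frame drain on the main thread.
        return result;
    }
}
```

The design keeps all Unity-facing code inside the queued actions, so worker threads never touch engine objects directly.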

3. Describe a situation where you would use a custom render pipeline in Unity.

A custom render pipeline in Unity, built on the Scriptable Render Pipeline (SRP) API, becomes essential when the provided pipelines (Built-in, URP, HDRP) don't meet the specific visual requirements or performance constraints of a project. For instance, if developing a stylized game with a unique rendering style, like a hand-painted look or a specific type of cel-shading, implementing a custom pipeline allows precise control over every rendering stage.

Another scenario is optimizing for very low-end hardware. Built-in pipelines can be too feature-rich, leading to performance bottlenecks. A custom pipeline lets you strip away unnecessary features and implement only the essential rendering steps, drastically improving performance. You might write custom shaders and rendering logic to control every draw call. For example, you can do custom shadow rendering using techniques not supported by the built-in pipelines, or implement custom deferred rendering paths with only the passes your game needs to render for maximum performance on your target hardware.

4. How do you implement a system for procedural generation of 3D environments?

Procedural generation of 3D environments typically involves breaking down the environment into manageable components and using algorithms to create variations. A common approach involves these steps:

- Define building blocks: Identify reusable elements like walls, floors, props, etc. These can be represented as 3D models or code-based primitives.

- Random number generation: Use a pseudorandom number generator (PRNG) with a seed to ensure reproducibility. The seed value determines the specific environment generated.

- Layout algorithms: Employ algorithms to arrange building blocks. Examples include:

  - Tiling: Arrange elements in a grid or other pattern.

  - L-systems: Use grammar rules to generate complex structures.

  - Dungeon generation algorithms: Adapt algorithms like Binary Space Partitioning (BSP) or random walks to create interconnected spaces.

- Detailing: Add variations to the generated environment. This can include changing textures, adding props, and modifying geometry.

- Optimization: Optimize the generated environment for performance, which may involve techniques such as level of detail (LOD) and occlusion culling.

For example, generating a forest could involve scattering trees (defined as 3D models) randomly, with constraints to avoid overlapping. Generating a dungeon level might use a BSP algorithm to subdivide the level into rooms and corridors, which can then be populated with traps and treasures.
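The forest example can be sketched as a seeded scatter with a minimum-spacing constraint. This is one simple rejection-sampling approach under stated assumptions, not the only way to do it; ForestScatter and its parameters are hypothetical names, and the code uses System.Random so it runs outside the engine.

```csharp
using System;
using System.Collections.Generic;

public static class ForestScatter
{
    // Places up to `count` points in a size x size square, rejecting any point
    // closer than minSpacing to one already placed. The same seed gives the same layout.
    public static List<(float x, float z)> Generate(int seed, int count, float size, float minSpacing)
    {
        var rng = new Random(seed);
        var points = new List<(float x, float z)>();
        int attempts = 0;
        int maxAttempts = count * 30; // Give up eventually if the area is too crowded.

        while (points.Count < count && attempts < maxAttempts)
        {
            attempts++;
            float x = (float)rng.NextDouble() * size;
            float z = (float)rng.NextDouble() * size;

            bool tooClose = false;
            foreach (var p in points)
            {
                float dx = p.x - x, dz = p.z - z;
                if (dx * dx + dz * dz < minSpacing * minSpacing) { tooClose = true; break; }
            }

            if (!tooClose) points.Add((x, z));
        }
        return points;
    }
}
```

Each returned point would become the position of an instantiated tree prefab. Storing only the seed is enough to regenerate the same forest later.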

5. Explain how to design a robust system for handling player input across different platforms and devices.

To design a robust player input system, abstract input events into a platform-agnostic format. Use an input manager that maps physical controls (keyboard, gamepad, touch) to virtual actions (jump, move forward, etc.). This allows for easy remapping and customization.

Consider using a layered approach: 1) Input Drivers: low-level platform-specific code. 2) Input Manager: Translates driver events to virtual actions. 3) Game Logic: Reacts to virtual actions. This approach allows you to change input methods without modifying game logic. Use techniques such as input buffering to handle dropped input or lag. Ensure that your code can support multiple input methods at once, such as keyboard and gamepad.
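The middle layer of that design, the input manager, could look roughly like the sketch below. The GameAction enum, the InputMap class, and the control-name strings are all hypothetical; Unity's Input System package provides its own action maps that fill the same role.

```csharp
using System;
using System.Collections.Generic;

public enum GameAction { Jump, Fire }

// Hypothetical input manager: maps device-specific control names to virtual actions.
public class InputMap
{
    private readonly Dictionary<string, GameAction> _bindings = new Dictionary<string, GameAction>();

    // Game logic subscribes here and never sees which device was used.
    public event Action<GameAction> ActionTriggered;

    // Several controls may be bound to the same action (keyboard and gamepad at once).
    // Binding an already-bound control again remaps it.
    public void Bind(string control, GameAction action) => _bindings[control] = action;

    // Called by platform-specific input drivers when a control is pressed.
    // Returns false if the control has no binding.
    public bool HandleControlPressed(string control)
    {
        if (!_bindings.TryGetValue(control, out GameAction action)) return false;
        ActionTriggered?.Invoke(action);
        return true;
    }
}
```

Because game logic only listens to ActionTriggered, adding touch support or letting players remap keys changes the bindings, not the gameplay code.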

6. Describe the process of integrating a third-party SDK (e.g., analytics, advertising) into a Unity project.

Integrating a third-party SDK into a Unity project typically involves these steps:

- Download the SDK: Obtain the SDK package (usually a .unitypackage file, or a .aar/.jar for Android and a .framework/.xcframework for iOS) from the SDK provider's website or repository.

- Import the SDK: In the Unity Editor, import the SDK package by going to Assets > Import Package > Custom Package... and selecting the downloaded file. This adds the SDK's scripts, plugins, and other assets to your project. If the SDK includes native plugins (like .aar, .jar, .framework, .xcframework), ensure they're placed in the correct Plugins folder structure (e.g., Assets/Plugins/Android or Assets/Plugins/iOS).

- Configure the SDK: Follow the SDK's documentation to configure it correctly. This might involve adding specific script components to GameObjects, initializing the SDK with your API keys, and configuring platform-specific settings (e.g., in Player Settings for Android and iOS).

- Write Code: Use the SDK's API in your C# scripts to trigger events or access its features. This often involves calling functions provided by the SDK's classes and methods. For example:

  ```csharp
  using ThirdPartySDK;

  public class MyScript : MonoBehaviour
  {
      void Start()
      {
          ThirdPartySDK.Initialize("your_api_key");
          ThirdPartySDK.TrackEvent("game_started");
      }
  }
  ```

- Test and Build: Thoroughly test the integration in the Unity Editor and on target devices (Android, iOS, etc.). Build the project for the desired platform and verify that the SDK is functioning as expected. Debug any issues by checking logs and using the SDK's documentation or support resources.

7. How would you implement a system for networked physics in a multiplayer game, addressing latency and synchronization issues?

For networked physics, I'd use a client-side prediction and server reconciliation approach. Clients predict their own physics locally to minimize latency. The server runs the authoritative physics simulation and periodically sends updates to clients. Clients then reconcile their predicted states with the server's authoritative state, correcting any discrepancies smoothly, often using techniques like interpolation or blending. To mitigate cheating, server-side validation can be implemented to reject highly divergent client actions.

Consider using techniques like dead reckoning or interest management to further optimize bandwidth usage. Dead reckoning extrapolates object positions between updates. Interest management only sends updates about objects relevant to a specific client. For the transport, consider UDP for its speed (handling lost or out-of-order packets yourself) through a library like ENet, or a Unity networking solution such as Netcode for GameObjects, Mirror, or Photon, which provide built-in networking features and often some physics synchronization tools.
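Dead reckoning in its simplest linear form can be sketched as below. The NetworkSnapshot struct, the DeadReckoning class, and the extrapolation cap are assumptions made for illustration, and the code uses System.Numerics.Vector3 so it runs outside the engine; in a Unity project UnityEngine.Vector3 would take its place.

```csharp
using System.Numerics;

// Last authoritative state received from the server for one object.
public struct NetworkSnapshot
{
    public Vector3 Position;
    public Vector3 Velocity;
    public double Timestamp; // Seconds, on the same clock as the `now` argument below.
}

public static class DeadReckoning
{
    // Extrapolates linearly from the snapshot, capping how far ahead we are willing to guess
    // so that a long gap between updates does not fling the object far off course.
    public static Vector3 Extrapolate(NetworkSnapshot snapshot, double now, double maxExtrapolationSeconds)
    {
        double elapsed = now - snapshot.Timestamp;
        if (elapsed < 0) elapsed = 0;
        if (elapsed > maxExtrapolationSeconds) elapsed = maxExtrapolationSeconds;
        return snapshot.Position + snapshot.Velocity * (float)elapsed;
    }
}
```

When the next snapshot arrives, the displayed position is usually blended toward the new authoritative state rather than snapped, which ties in with the reconciliation step described earlier.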

8. Explain how to create a custom editor tool to streamline a specific workflow in Unity.

To create a custom editor tool in Unity, you typically start by creating a C# script in an Editor folder. This script will contain the logic for your custom tool. Derive from UnityEditor.Editor to create a custom inspector or from UnityEditor.EditorWindow for a separate window. For example, use [CustomEditor(typeof(YourComponent))] to extend the inspector of YourComponent. Within the script, use OnInspectorGUI() (for custom inspectors) or OnGUI() (for editor windows) to define the user interface using Unity's IMGUI system, or use UI Toolkit (formerly UIElements) in newer versions of Unity. You can add buttons, fields, and other controls to manipulate scene objects or component properties.

To streamline a specific workflow, identify the repetitive or complex tasks. Then, build your custom editor tool to automate those steps. Let's say you want to batch rename game objects. You could create an editor window with a text field for the prefix, a number field for the starting index, and a button that iterates through selected game objects and renames them accordingly, making the process more efficient. Here's a simple example:

```csharp
using UnityEditor;
using UnityEngine;

public class RenameTool : EditorWindow
{
    string prefix = "NewObject";
    int startIndex = 0;

    [MenuItem("Tools/Rename Tool")]
    public static void ShowWindow()
    {
        GetWindow<RenameTool>("Rename Tool");
    }

    void OnGUI()
    {
        GUILayout.Label("Rename Settings", EditorStyles.boldLabel);
        prefix = EditorGUILayout.TextField("Prefix", prefix);
        startIndex = EditorGUILayout.IntField("Start Index", startIndex);

        if (GUILayout.Button("Rename Selected"))
        {
            RenameSelectedObjects();
        }
    }

    void RenameSelectedObjects()
    {
        GameObject[] selectedObjects = Selection.gameObjects;

        // Register the objects with the Undo system so the rename can be reverted.
        Undo.RecordObjects(selectedObjects, "Rename Selected Objects");

        for (int i = 0; i < selectedObjects.Length; i++)
        {
            selectedObjects[i].name = prefix + (startIndex + i);
        }
    }
}
```

9. Describe how you would approach optimizing the memory usage of textures and meshes in a large Unity project.

To optimize texture memory, I'd focus on several techniques. First, texture compression (e.g., ASTC, ETC) is crucial to reduce the size on the GPU. Mipmaps improve sampling performance and quality for distant surfaces, but a full mip chain adds roughly a third to a texture's memory, so I'd disable them for UI textures and pair them with Mipmap Streaming where memory is tight. Also, use texture atlases to combine multiple smaller textures into a single larger one to reduce draw calls and state changes. Consider reducing the overall texture resolution if the visual quality permits it.

For meshes, use mesh compression to reduce storage space. Optimize the mesh topology by removing unnecessary vertices and triangles. Use the lowest acceptable level of detail (LODs) for objects further away from the camera. Enable mesh optimization on import to consolidate shared vertices. Consider using procedural generation or tiling techniques for repeating patterns, reducing the need for large, unique meshes.

10. How do you handle different screen sizes and resolutions in Unity to ensure consistent UI and gameplay experience?

To handle different screen sizes and resolutions in Unity, I primarily use Unity's Canvas Scaler component, setting it to 'Scale With Screen Size'. This allows the UI to dynamically adjust based on the screen resolution, maintaining a consistent look across devices. I also ensure UI elements are anchored and positioned correctly within the canvas, using anchors to maintain relative positions.

For gameplay, I design levels with a resolution-independent approach. This might involve using orthographic cameras and adjusting the camera's size based on aspect ratio or using a perspective camera with field of view adjustments. Additionally, I test my game on various devices and emulators to identify and address any scaling or layout issues specific to certain screen sizes. I also use Screen.width and Screen.height to programmatically adjust game elements or logic for different screen ratios.

11. Explain how to implement a system for saving and loading game data, including handling different data types and versions.

To implement saving and loading, serialize game data (game state, player progress) into a persistent format like JSON or binary. Use a game save object that holds all relevant data. Serialization converts the game save object into a string or byte stream for storage. Deserialization reverses this, recreating the game save object from the saved data.

Data type handling requires careful consideration. Simple types (int, float, string) map directly to JSON or binary representations. For complex objects, implement custom serialization/deserialization logic. Versioning is crucial. Include a version number in the save data. On load, check the version; if it's outdated, perform a data migration to update the data to the current version using conditional logic to update fields appropriately. This ensures compatibility between different game versions. For example:

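```csharp
[System.Serializable]
public class GameSave
{
    public int version = 2;     // Current version
    public int playerHealth;
    public float playerPositionX;
    public float playerPositionY;
    public int playerMana;      // Added in version 2
}

// Example data migration, run after deserializing loadedSave:
if (loadedSave.version < 2)
{
    loadedSave.playerMana = 100; // Default for saves that predate playerMana
    loadedSave.version = 2;
}
```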
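To show the version check as complete, runnable code, here is a minimal sketch of a migrator that upgrades a loaded save step by step. SaveData and SaveMigrator are hypothetical names, and the rule that unversioned saves count as version 1 is an assumption made for this example.

```csharp
using System;

[Serializable]
public class SaveData
{
    public int version;
    public int playerHealth;
    public int playerMana;
}

public static class SaveMigrator
{
    public const int CurrentVersion = 2;

    // Upgrades a loaded save one version at a time until it matches CurrentVersion.
    public static SaveData Migrate(SaveData save)
    {
        if (save.version > CurrentVersion)
            throw new InvalidOperationException("Save was written by a newer build.");

        if (save.version < 1) save.version = 1; // Assumption: unversioned saves are version 1.

        if (save.version == 1)
        {
            save.playerMana = 100; // Field introduced in version 2; pick a default.
            save.version = 2;
        }

        return save;
    }
}
```

Each future format change adds one more `if (save.version == N)` step, so a save from any older build passes through every migration in order.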

12. Describe a situation where you would use a compute shader in Unity and explain its benefits.

A good situation to use a compute shader in Unity is for particle simulations. Instead of updating particle positions and velocities on the CPU every frame, which can become a bottleneck with a large number of particles, we can offload that computation to the GPU using a compute shader.

The benefits are significant performance gains, especially when dealing with thousands or millions of particles. The GPU is highly parallelized, making it much faster at performing the same operation on many data points simultaneously. This frees up the CPU for other tasks, resulting in a smoother, more responsive game. For example, the compute shader code might look like this:

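    #pragma kernel CSMain

    struct Particle
    {
        float3 position;
        float3 velocity;
    };

    RWStructuredBuffer<Particle> particles;
    float deltaTime;
    uint particleCount; // Number of valid elements in the buffer

    [numthreads(64, 1, 1)]
    void CSMain (uint3 id : SV_DispatchThreadID)
    {
        // Skip surplus threads when the count is not a multiple of 64
        if (id.x >= particleCount) return;

        Particle p = particles[id.x];
        p.position += p.velocity * deltaTime; // Simple Euler integration step
        particles[id.x] = p;
    }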
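
On the C# side, two numbers have to agree with the kernel: the byte size of one Particle and the number of thread groups to dispatch. The helper below is a minimal sketch of that arithmetic; the class name `ParticleDispatch` is hypothetical, and the constants assume the struct layout and the numthreads(64,1,1) attribute shown above.

```csharp
using System;

public static class ParticleDispatch
{
    // Must match [numthreads(64,1,1)] in the kernel.
    public const int ThreadsPerGroup = 64;

    // Two float3 fields (position, velocity) = 6 floats = 24 bytes per particle.
    public const int ParticleStride = sizeof(float) * 6;

    // Rounds up so a partially filled final group still covers every particle.
    public static int ThreadGroupCount(int particleCount)
    {
        if (particleCount < 0)
            throw new ArgumentOutOfRangeException(nameof(particleCount));
        return (particleCount + ThreadsPerGroup - 1) / ThreadsPerGroup;
    }
}
```

In Unity, the stride would be passed to the ComputeBuffer constructor and the group count to ComputeShader.Dispatch. Because the count is rounded up, the last group may contain threads with no particle to process, so the kernel should guard against indices past the end of the buffer.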

13. How would you implement a system for handling localization in a Unity game, including text, audio, and images?

For localization in Unity, I'd use ScriptableObjects to store localized data. For text, each ScriptableObject would hold a mapping from locale keys (e.g., 'en', 'fr') to strings. Because Unity does not serialize Dictionary fields directly, this is typically stored as a serialized list of key-value entries and converted to a dictionary at load time. A localization manager script would handle switching locales and retrieving the correct text. For audio and images, similar ScriptableObjects would store locale-specific assets.

To streamline the workflow, I'd integrate an existing solution such as Unity's official Localization package or the I2 Localization asset. These provide tools for importing and exporting localization data (e.g., CSV files), managing translations, and previewing localized content in the editor. Import and export simplify the handoff between developers and translators, and the editor preview makes it easier to test and refine the localized game.
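
The lookup logic of such a localization manager can be sketched in plain C#. The class `LocalizationTable` and its fallback behavior are assumptions made for illustration, not part of any Unity package; in a real project the entries would be loaded from ScriptableObjects or imported files.

```csharp
using System.Collections.Generic;

public sealed class LocalizationTable
{
    // locale code -> (text key -> translated string)
    private readonly Dictionary<string, Dictionary<string, string>> _tables =
        new Dictionary<string, Dictionary<string, string>>();

    private readonly string _fallbackLocale;

    public string CurrentLocale { get; set; }

    public LocalizationTable(string fallbackLocale)
    {
        _fallbackLocale = fallbackLocale;
        CurrentLocale = fallbackLocale;
    }

    public void Add(string locale, string key, string value)
    {
        if (!_tables.TryGetValue(locale, out var table))
        {
            table = new Dictionary<string, string>();
            _tables[locale] = table;
        }
        table[key] = value;
    }

    // Tries the current locale, then the fallback locale, then returns the key
    // itself so missing translations are easy to spot on screen.
    public string Get(string key)
    {
        if (TryGet(CurrentLocale, key, out var text)) return text;
        if (TryGet(_fallbackLocale, key, out text)) return text;
        return key;
    }

    private bool TryGet(string locale, string key, out string text)
    {
        text = null;
        return _tables.TryGetValue(locale, out var table)
            && table.TryGetValue(key, out text);
    }
}
```

Falling back to a default locale keeps partially translated builds playable, which is one reasonable design choice among several.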

14. Explain how to create a custom animation system using the Animation Rigging package.

To create a custom animation system with Animation Rigging, you write constraints that modify the transforms of GameObjects based on the state of other GameObjects or variables. A custom constraint in the package is built from four parts. A data struct implementing IAnimationJobData holds the serialized properties, such as the effect's intensity and its target objects. A job struct implementing IWeightedAnimationJob calculates the desired transform modification in its ProcessAnimation method and applies it to the constrained object. A binder derived from AnimationJobBinder creates the job from the data, and a component derived from RigConstraint ties the three together. To use the constraint, add a Rig Builder component to the GameObject that has the Animator, add a child GameObject with a Rig component to your character's hierarchy, and then add your constraint component under that rig, setting its properties and connecting it to the appropriate GameObjects. This makes the animation respond dynamically to the game's context or to other animations.

15. Describe how to implement a system for handling in-app purchases in a Unity game, including security considerations.

Implementing in-app purchases (IAP) in Unity involves using the Unity IAP service. First, import the IAP package through the Package Manager. Then, configure your products (consumable, non-consumable, subscription) in the Unity IAP catalog, specifying product IDs that match those in the app stores (e.g., Google Play, Apple App Store). Use the UnityPurchasing API to initialize IAP, register your products, and handle purchase events. The key pieces are the UnityPurchasing.Initialize call and the IStoreListener callbacks OnInitialized, OnInitializeFailed, ProcessPurchase, and OnPurchaseFailed. Implement UI elements to display products and initiate purchases, and return PurchaseProcessingResult.Complete from ProcessPurchase to finalize the transaction. For example:

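    using System;
    using UnityEngine;
    using UnityEngine.Purchasing;

    // Sketch against the IStoreListener API of Unity IAP 4.x.
    public class IAPManager : MonoBehaviour, IStoreListener
    {
        // Hypothetical product ID; it must match the ID configured in the app stores.
        private const string kProductIDConsumable = "consumable";

        private static IStoreController m_StoreController;
        private static IExtensionProvider m_StoreExtensionProvider;

        void Start()
        {
            // Begin initializing the purchasing system as soon as this script starts.
            InitializePurchasing();
        }

        private bool IsInitialized()
        {
            return m_StoreController != null && m_StoreExtensionProvider != null;
        }

        public void InitializePurchasing()
        {
            // If we have already connected to Purchasing, we are done here.
            if (IsInitialized()) return;

            var builder = ConfigurationBuilder.Instance(StandardPurchasingModule.Instance());
            builder.AddProduct(kProductIDConsumable, ProductType.Consumable);
            UnityPurchasing.Initialize(this, builder);
        }

        public void OnInitialized(IStoreController controller, IExtensionProvider extensions)
        {
            m_StoreController = controller;
            m_StoreExtensionProvider = extensions;
        }

        public void OnInitializeFailed(InitializationFailureReason error)
        {
            Debug.Log("OnInitializeFailed: " + error);
        }

        // Newer 4.x releases also report a message with the failure reason.
        public void OnInitializeFailed(InitializationFailureReason error, string message)
        {
            Debug.Log("OnInitializeFailed: " + error + " " + message);
        }

        public void OnPurchaseFailed(Product product, PurchaseFailureReason failureReason)
        {
            Debug.Log(string.Format("OnPurchaseFailed: '{0}', reason: {1}", product.definition.id, failureReason));
        }

        public PurchaseProcessingResult ProcessPurchase(PurchaseEventArgs args)
        {
            string productId = args.purchasedProduct.definition.id;

            // A consumable product has been purchased by this user.
            if (String.Equals(productId, kProductIDConsumable, StringComparison.Ordinal))
            {
                Debug.Log(string.Format("ProcessPurchase: PASS. Product: '{0}'", productId));
                // The consumable item has been successfully purchased, so add 100 coins.
                GiveCoins(100);
                // Complete tells the store the product has been fully delivered.
                return PurchaseProcessingResult.Complete;
            }

            Debug.Log(string.Format("ProcessPurchase: FAIL. Unrecognized product: '{0}'", productId));
            // Pending keeps the transaction open, so the app is reminded of it at next launch.
            return PurchaseProcessingResult.Pending;
        }

        private void GiveCoins(int amount)
        {
            // Placeholder: add the coins to the player's balance here.
            Debug.Log("Granted " + amount + " coins.");
        }
    }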

Security is crucial. Never trust client-side data for purchase validation. Implement server-side receipt validation to confirm purchases with the app stores' APIs. This prevents fraud and ensures legitimate transactions. Use robust error handling and logging to detect and address potential issues. Store receipt validation results securely on your server and provide only authorized content to the user after successful validation.

16. How do you profile and optimize the performance of a Unity game, including identifying bottlenecks and implementing solutions?

Profiling a Unity game involves identifying performance bottlenecks and implementing optimizations. Start with the Unity Profiler, which provides detailed insights into CPU usage, memory allocation, rendering performance, and audio. Analyze the profiler data to pinpoint areas consuming the most resources, such as scripts, rendering, or physics. Common bottlenecks include excessive garbage collection, complex shaders, or inefficient scripts.

Optimization techniques include object pooling to reduce garbage collection, optimizing shaders by simplifying calculations or using mobile-specific shaders, and using LOD (Level of Detail) groups to reduce polygon count for distant objects. Code optimization is crucial, so caching calculations, using efficient data structures, and minimizing Update() calls are beneficial. Optimize textures by using compression and mipmaps. Finally, for physics, simplify colliders and reduce the number of physics calculations. Remember to test on target hardware to ensure optimizations have the desired impact. Always profile before and after any change.
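
Object pooling, the first technique mentioned above, can be sketched in a few lines of plain C#. The class `SimplePool` is a hypothetical illustration; recent Unity versions also ship a built-in ObjectPool in the UnityEngine.Pool namespace, which is usually preferable in production code.

```csharp
using System;
using System.Collections.Generic;

public sealed class SimplePool<T> where T : class
{
    private readonly Stack<T> _inactive = new Stack<T>();
    private readonly Func<T> _create;

    // Counts how many instances were actually allocated.
    public int CreatedCount { get; private set; }

    public SimplePool(Func<T> create, int prewarm = 0)
    {
        _create = create ?? throw new ArgumentNullException(nameof(create));
        for (int i = 0; i < prewarm; i++)
        {
            _inactive.Push(CreateNew());
        }
    }

    // Reuses an inactive instance when one is available, otherwise allocates.
    public T Get()
    {
        return _inactive.Count > 0 ? _inactive.Pop() : CreateNew();
    }

    // Returns an instance to the pool instead of leaving it for the garbage collector.
    public void Release(T item)
    {
        if (item == null) throw new ArgumentNullException(nameof(item));
        _inactive.Push(item);
    }

    private T CreateNew()
    {
        CreatedCount++;
        return _create();
    }
}
```

For GameObjects such as bullets, the factory delegate would instantiate a prefab, and Get and Release would additionally toggle the object's active state.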

17. Explain how to create a custom shader using Shader Graph or HLSL.

Creating custom shaders depends on whether you're using Shader Graph (a visual editor) or HLSL (a code-based language). Shader Graph allows you to create shaders without writing code by connecting nodes that represent different shader operations. You start by creating a new Shader Graph asset, opening it in the editor, and then adding nodes for inputs (like textures or colors), mathematical operations, and outputs (like the final color of the surface). HLSL, on the other hand, requires writing shader code. A basic HLSL shader defines vertex and fragment functions. The vertex function transforms the object's vertices, and the fragment function calculates the color of each pixel. HLSL offers more control but requires understanding shader programming concepts.

For example, a simple HLSL shader might look like this:

    Shader "Custom/SimpleShader" {
        SubShader {
            Pass {
                HLSLPROGRAM
                #pragma vertex vert
                #pragma fragment frag
                #include "UnityCG.cginc"

                struct appdata {
                    float4 vertex : POSITION;
                };

                struct v2f {
                    float4 vertex : SV_POSITION;
                };

                v2f vert (appdata v) {
                    v2f o;
                    o.vertex = UnityObjectToClipPos(v.vertex);
                    return o;
                }

                fixed4 frag (v2f i) : SV_Target {
                    return fixed4(1, 0, 0, 1); // Red color
                }
                ENDHLSL
            }
        }
    }

18. Describe the process of setting up and using a continuous integration (CI) system for a Unity project.

Setting up CI for a Unity project typically involves these steps: First, choose a CI platform (e.g., Jenkins, GitLab CI, GitHub Actions, CircleCI). Then, create a repository for your Unity project (Git is highly recommended). Next, configure the CI server to monitor your repository for changes. This usually involves creating a CI configuration file (e.g., .gitlab-ci.yml, Jenkinsfile) in the root of your repository. This file defines the build pipeline steps.

The CI pipeline will generally include steps like: activating your Unity license, building the Unity project for the desired platforms, running automated tests (if you have them), and potentially deploying the built application to a staging or production environment. These steps often involve command-line execution of Unity (using -batchmode, -quit, etc.) and other build tools. Example of build command:

/Applications/Unity/Unity.app/Contents/MacOS/Unity -batchmode -projectPath . -buildTarget iOS -executeMethod BuildScript.PerformiOSBuild -quit
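
CI pipelines often need to pass extra values, such as an output path, to the build method named by -executeMethod. One common approach is to read them from the process command line. The helper below is a minimal sketch; the class `BuildArgs` and the `-outputPath` flag in the usage note are hypothetical, not Unity options.

```csharp
using System;

public static class BuildArgs
{
    // Returns the value following the given flag, or fallback when the flag is
    // missing or has no value after it.
    public static string GetValue(string[] args, string flag, string fallback)
    {
        for (int i = 0; i < args.Length - 1; i++)
        {
            if (string.Equals(args[i], flag, StringComparison.Ordinal))
            {
                return args[i + 1];
            }
        }
        return fallback;
    }
}
```

Inside a build method such as BuildScript.PerformiOSBuild, this could be called as BuildArgs.GetValue(Environment.GetCommandLineArgs(), "-outputPath", "Builds/iOS"), with the CI job appending -outputPath and a directory to the Unity command.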

19. How would you implement a system for handling user-generated content in a Unity game, including moderation and security?

To implement user-generated content (UGC) in Unity with moderation and security, I'd use a client-server architecture. The Unity client allows players to create content using in-game tools, then uploads it to a central server. The server stores the UGC (models, textures, scripts) securely in a database or cloud storage. This backend would also implement moderation features, like automated filtering based on keywords or image analysis, and manual review by moderators. For security, all client-server communication must be encrypted (HTTPS), and UGC should be validated and sanitized on the server-side to prevent exploits like code injection.

Specifically, to prevent script injection, UGC scripts would be disallowed or restricted to a safe subset of Unity API calls. Content validation would check file sizes, formats, and potentially malicious code patterns. Automated and manual moderation queues would be established. Finally, a reporting system would allow players to flag inappropriate content. For example, a PlayerReports table with the fields reporterId, contentId, reportReason, reportTime, and resolved could track reports.
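
The server-side size and format checks could start from something like the following sketch. The class `UgcValidator`, the size limit, and the extension whitelist are all hypothetical values chosen for illustration; a real service would also inspect file contents rather than trusting the name.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

public static class UgcValidator
{
    // Hypothetical limits; tune them to the content types the game accepts.
    private const long MaxBytes = 5 * 1024 * 1024;

    private static readonly HashSet<string> AllowedExtensions =
        new HashSet<string>(StringComparer.OrdinalIgnoreCase) { ".png", ".jpg", ".json" };

    // Returns null when the upload passes, otherwise a rejection reason.
    public static string Validate(string fileName, long sizeInBytes)
    {
        if (string.IsNullOrWhiteSpace(fileName))
            return "Missing file name";

        // Reject names that carry directory components (path traversal).
        if (fileName.IndexOfAny(new[] { '/', '\\' }) >= 0 || fileName.Contains(".."))
            return "Invalid file name";

        // Whitelist formats; scripts and binaries are rejected by omission.
        if (!AllowedExtensions.Contains(Path.GetExtension(fileName)))
            return "File type not allowed";

        if (sizeInBytes <= 0 || sizeInBytes > MaxBytes)
            return "File size out of range";

        return null;
    }
}
```

A whitelist is used instead of a blacklist so that unknown file types are rejected by default.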

20. Explain how to create a custom physics-based movement system for a character in Unity.

To create a custom physics-based movement system in Unity, start by making sure only your code drives the character: remove or disable any built-in CharacterController, and configure the Rigidbody (for example, by freezing its rotation) so the physics engine does not move it in unintended ways. Then, use Rigidbody.AddForce() or Rigidbody.MovePosition() in FixedUpdate() to apply forces or directly manipulate the character's position based on player input. Remember to account for factors like ground friction, air resistance, and maximum speed. For example, rb.AddForce(movementDirection * acceleration); where movementDirection is the calculated input direction and acceleration is a tweakable float.

Implement collision detection manually using Physics.SphereCast or similar methods to detect obstacles and adjust the character's movement accordingly. This allows for fine-grained control over how the character interacts with the environment. For advanced movement, incorporate concepts like state machines to manage different movement states (e.g., walking, running, jumping), and animation blending to create a visually appealing experience.
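
The velocity update behind such a system can be sketched independently of the engine. The example below uses System.Numerics.Vector3 so it runs outside Unity; in a Unity script the same arithmetic would use UnityEngine.Vector3 inside FixedUpdate(). The class name, parameters, and the simple linear drag model are assumptions made for illustration.

```csharp
using System;
using System.Numerics;

public static class MovementStep
{
    // Advances the velocity by one fixed timestep.
    public static Vector3 NextVelocity(Vector3 velocity, Vector3 inputDirection,
                                       float acceleration, float drag,
                                       float maxSpeed, float fixedDeltaTime)
    {
        // Accelerate along the normalized input direction, if there is any input.
        if (inputDirection.LengthSquared() > 0f)
        {
            velocity += Vector3.Normalize(inputDirection) * acceleration * fixedDeltaTime;
        }

        // Linear drag stands in for ground friction and air resistance.
        float damping = Math.Max(0f, 1f - drag * fixedDeltaTime);
        velocity *= damping;

        // Clamp to the maximum speed while keeping the direction.
        float speed = velocity.Length();
        if (speed > maxSpeed)
        {
            velocity *= maxSpeed / speed;
        }

        return velocity;
    }
}
```

Keeping the math in a pure function like this makes the movement rules easy to unit test before wiring them to a Rigidbody.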

21. Describe how to implement a system for handling dynamic lighting and shadows in a Unity game.

To implement dynamic lighting and shadows in Unity, start with Unity's built-in lighting system. Use Realtime lights for dynamic lighting, and make sure your rendering path (e.g., Forward or Deferred) supports the shadows you need. Configure light properties like intensity, color, and shadow type (hard/soft) on each light source. Use the shadow settings in the Quality Settings to adjust shadow resolution and distance. Scripts can control light properties at runtime, such as changing intensity based on time of day. To improve performance, bake static lights using the Lighting window and blend baked and dynamic lighting. Light probes let moving objects pick up baked lighting information as they move between lit and shadowed areas.
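
As one way to drive a light from the time of day, the sketch below maps an hour to an intensity along a cosine curve. The class `DayNightLight` and the choice of curve are assumptions for illustration; in Unity the result would typically be assigned to a Light component's intensity from a script.

```csharp
using System;

public static class DayNightLight
{
    // Maps an hour in [0, 24) to a light intensity between minIntensity and
    // maxIntensity, peaking at noon and bottoming out at midnight.
    public static float IntensityAt(float hour, float minIntensity, float maxIntensity)
    {
        // Cosine curve: -1 at midnight, +1 at noon.
        double phase = (hour - 12.0) / 24.0 * 2.0 * Math.PI;
        double t = (Math.Cos(phase) + 1.0) / 2.0; // 0 at midnight, 1 at noon
        return (float)(minIntensity + (maxIntensity - minIntensity) * t);
    }
}
```

A smooth curve avoids visible jumps in brightness, and an artist-authored curve could replace the cosine without changing the calling code.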