Hiring the right talent for Hive-related roles involves more than just scanning resumes and verifying basic qualifications. Ensuring candidates possess the necessary skills can be challenging but is critical to the success of your projects involving big data technology.

This blog post provides a comprehensive list of interview questions tailored for evaluating Hive expertise. From data querying to data modeling to situational problems, you'll find targeted questions and expected answers for assessing applicants at various skill levels.

Using these questions can help you identify top-tier candidates and make informed hiring decisions. For a thorough evaluation, consider supplementing the interview with our Hive test.


10 common Hive interview questions to ask your applicants

To determine if your applicants possess the necessary skills for working with Hive, use these common interview questions. They are designed to gauge both technical proficiency and practical understanding, ensuring you find the right fit for your data engineer role.

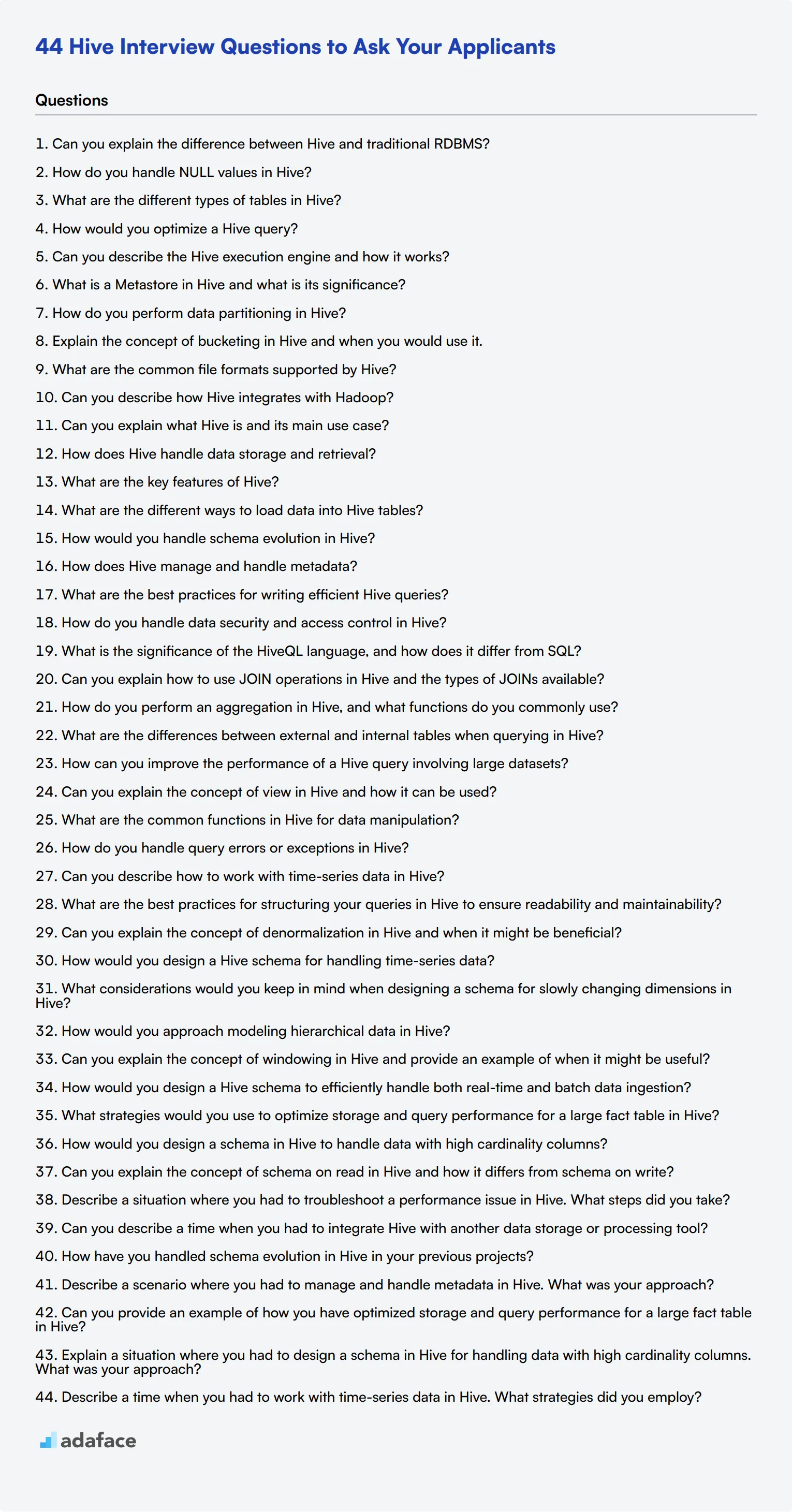

- Can you explain the difference between Hive and traditional RDBMS?

- How do you handle NULL values in Hive?

- What are the different types of tables in Hive?

- How would you optimize a Hive query?

- Can you describe the Hive execution engine and how it works?

- What is a Metastore in Hive and what is its significance?

- How do you perform data partitioning in Hive?

- Explain the concept of bucketing in Hive and when you would use it.

- What are the common file formats supported by Hive?

- Can you describe how Hive integrates with Hadoop?

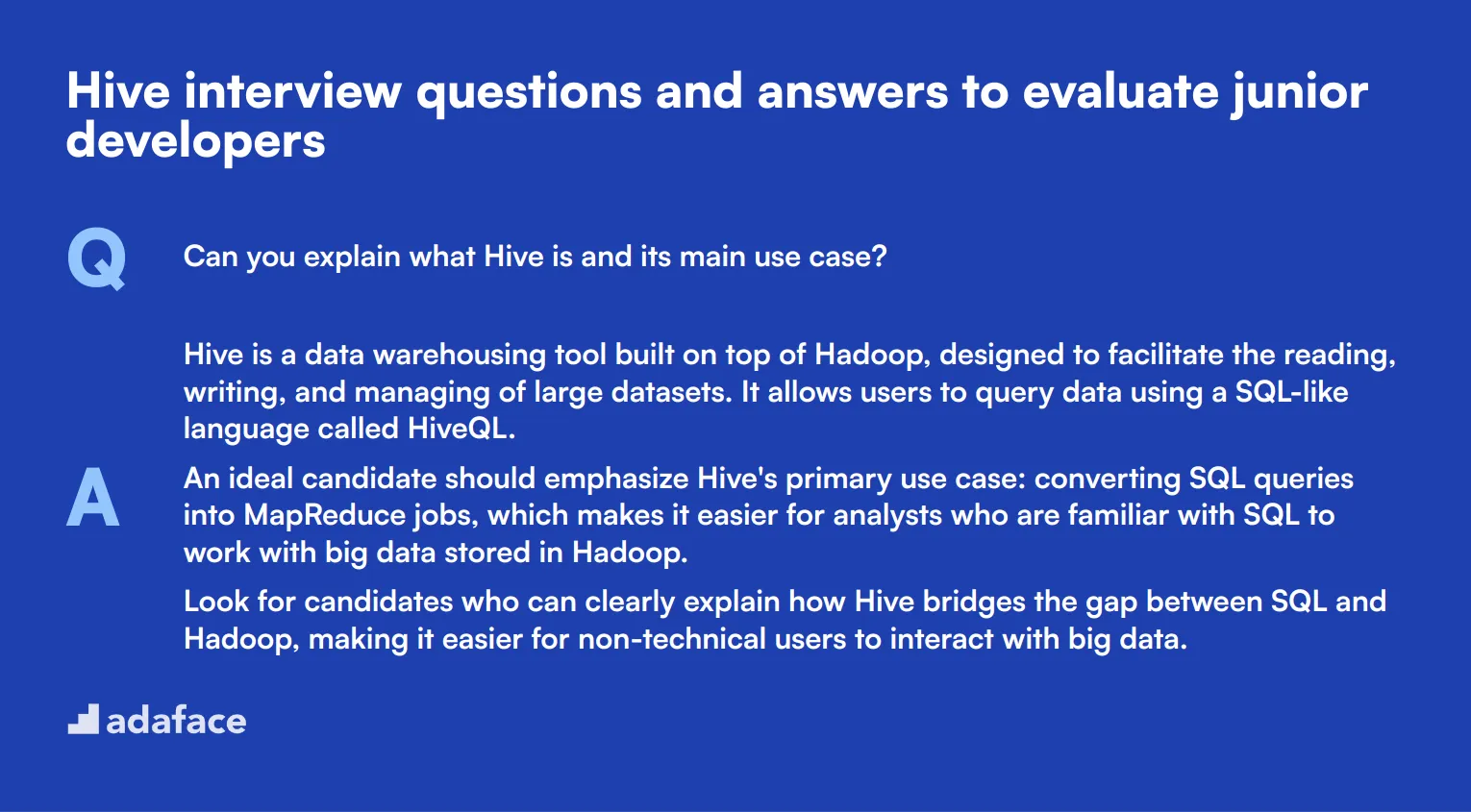

8 Hive interview questions and answers to evaluate junior developers

To evaluate whether your junior developers have a solid grasp of Hive, it’s crucial to ask the right questions. This list of questions is designed to help you assess their understanding of key concepts and practical skills in Hive during interviews.

1. Can you explain what Hive is and its main use case?

Hive is a data warehousing tool built on top of Hadoop, designed to facilitate the reading, writing, and managing of large datasets. It allows users to query data using a SQL-like language called HiveQL.

An ideal candidate should emphasize Hive's primary use case: letting analysts who already know SQL query big data stored in Hadoop, with Hive translating their queries into MapReduce, Tez, or Spark jobs behind the scenes.

Look for candidates who can clearly explain how Hive bridges the gap between SQL and Hadoop, making it easier for non-technical users to interact with big data.

2. How does Hive handle data storage and retrieval?

Hive stores data in a distributed storage system, such as HDFS, and allows users to query this data using HiveQL. The data can be stored in various formats like text, ORC, and Parquet.

During retrieval, Hive translates HiveQL queries into a series of MapReduce or Tez jobs that run on Hadoop, allowing efficient processing of large datasets.

Candidates should demonstrate an understanding of Hive's architecture and its reliance on Hadoop for storage and processing. Look for knowledge on various storage formats and their impact on performance.

3. What are the key features of Hive?

Hive offers several key features, including a SQL-like query language (HiveQL), support for large datasets, and compatibility with Hadoop’s distributed storage and processing capabilities. It also provides features like partitioning and bucketing to optimize query performance.

Other notable features include schema flexibility, data summarization, and the ability to handle both structured and semi-structured data.

Look for candidates who can list and elaborate on these features, emphasizing how they contribute to Hive's effectiveness as a data warehousing tool.

4. What are the different ways to load data into Hive tables?

Data can be loaded into Hive tables in several ways: using the LOAD DATA statement to move files from HDFS (or, with the LOCAL keyword, copy them from the local filesystem) into a table, using INSERT statements to write individual rows or the results of a query, or creating external tables that reference data already stored outside Hive's warehouse directory.

Candidates should also mention the option to use tools like Apache Sqoop to import data from relational databases into Hive.

An ideal response will include various methods and highlight scenarios where each method is most appropriate. This shows the candidate's practical knowledge and flexibility in handling data ingestion.

5. How would you handle schema evolution in Hive?

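Schema evolution in Hive is usually handled through metadata changes rather than data rewrites, often in combination with external tables. When the data schema changes, you can add new columns or modify existing ones without rewriting existing data; rows written before the change typically return NULL for columns they lack.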

Candidates might also mention using the ALTER TABLE command to add, change, or replace columns in Hive tables. External tables are useful because they allow changes in the schema without the need to reload the data.

Look for answers that reflect an understanding of practical methods to manage schema changes while minimizing disruption to existing data and operations.

6. How does Hive manage and handle metadata?

Hive uses a component called the Metastore to manage metadata. The Metastore maintains information about the structure of tables, partitions, columns, data types, and HDFS locations.

It can be configured to use different databases like MySQL or Derby to store this metadata.

An ideal candidate should understand the importance of the Metastore and how it interacts with other components in Hive. Look for awareness of how metadata management impacts query planning and execution.

7. What are the best practices for writing efficient Hive queries?

Best practices for writing efficient Hive queries include optimizing join operations by using map-side joins, leveraging partitioning and bucketing, and using appropriate file formats like ORC or Parquet for storage.

Candidates should also mention avoiding deeply nested subqueries where a simpler form exists, and preferring Hive's built-in functions over custom code to keep queries fast.

Look for responses that demonstrate a strong understanding of query optimization techniques and their practical application to ensure faster and more efficient data retrieval.

8. How do you handle data security and access control in Hive?

Data security and access control in Hive can be managed using Apache Ranger or, on older clusters, Apache Sentry (a project that has since been retired). Both provide fine-grained access control and auditing capabilities, letting you define policies that control who can access specific data or perform certain actions.

Candidates should also mention the use of HDFS file permissions and encryption to secure data at rest.

An ideal response will cover various layers of security and demonstrate an understanding of how to implement and enforce data access policies to ensure data protection.

10 Hive interview questions about data querying

To assess whether candidates possess the necessary skills for effective data querying in Hive, consider using this list of targeted interview questions. These questions will help you gauge their technical expertise and familiarity with practical Hive scenarios, making it easier to find the right fit for roles such as a data engineer or big data engineer.

- What is the significance of the HiveQL language, and how does it differ from SQL?

- Can you explain how to use JOIN operations in Hive and the types of JOINs available?

- How do you perform an aggregation in Hive, and what functions do you commonly use?

- What are the differences between external and internal tables when querying in Hive?

- How can you improve the performance of a Hive query involving large datasets?

- Can you explain the concept of view in Hive and how it can be used?

- What are the common functions in Hive for data manipulation?

- How do you handle query errors or exceptions in Hive?

- Can you describe how to work with time-series data in Hive?

- What are the best practices for structuring your queries in Hive to ensure readability and maintainability?

9 Hive interview questions and answers related to data modeling

When interviewing candidates for Hive-related positions, it's crucial to assess their understanding of data modeling concepts. These 9 Hive interview questions will help you gauge a candidate's ability to design efficient and scalable data structures. Use them to spark discussions about real-world scenarios and uncover the depth of a candidate's data engineering expertise.

1. Can you explain the concept of denormalization in Hive and when it might be beneficial?

Denormalization in Hive is the process of combining data from multiple tables into a single table, often duplicating information to improve query performance. This approach is contrary to the normalization principles used in traditional relational databases.

Denormalization can be beneficial in Hive for several reasons:

- Improved query performance by reducing the need for complex joins

- Faster data retrieval due to reduced I/O operations

- Simplified query writing for end-users

- Better compatibility with Hive's read-heavy, write-once paradigm

Look for candidates who can explain the trade-offs between storage space and query performance, and who understand that denormalization is often necessary in big data scenarios to achieve acceptable query speeds.

2. How would you design a Hive schema for handling time-series data?

When designing a Hive schema for time-series data, candidates should consider the following aspects:

- Partitioning: Use time-based partitions (e.g., by year, month, or day) to improve query performance

- Bucketing: Implement bucketing on a finer time granularity or other relevant columns

- Columnar storage: Utilize file formats like ORC or Parquet for efficient compression and faster queries

- Granularity: Determine the appropriate level of time granularity for storing data

A good schema design might look like this:

- Table partitioned by year and month

- Bucketed by day or hour within each partition

- Columns for timestamp, metrics, and any relevant dimensions

- Use of a columnar file format like ORC

Evaluate candidates based on their ability to balance query performance with storage efficiency, and their understanding of Hive's strengths in handling large-scale data processing tasks.
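To make the layout above concrete, here is a minimal Python sketch of where a single row would land in a table partitioned by year and month and bucketed by day. The table root, the bucket count, and the plain modulo are assumptions for illustration; Hive's actual bucket hash depends on the column type and Hive version.

```python
from datetime import datetime

NUM_BUCKETS = 8  # hypothetical bucket count chosen for the example


def partition_path(table_root: str, ts: datetime) -> str:
    """Directory for a row in a table partitioned by (year, month)."""
    return f"{table_root}/year={ts.year}/month={ts.month:02d}"


def bucket_for(ts: datetime, num_buckets: int = NUM_BUCKETS) -> int:
    """Simplified bucketing: day of month modulo the bucket count."""
    return ts.day % num_buckets


event_time = datetime(2024, 3, 15, 9, 30)
print(partition_path("/warehouse/metrics", event_time))  # /warehouse/metrics/year=2024/month=03
print(bucket_for(event_time))  # 7
```

A query that filters on year and month only needs to read the matching directory, which is the main reason time-based partitioning pays off for time-series data.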

3. What considerations would you keep in mind when designing a schema for slowly changing dimensions in Hive?

When designing a schema for slowly changing dimensions (SCDs) in Hive, candidates should consider the following:

- SCD Type: Understand different SCD types (e.g., Type 1, Type 2, Type 3) and their implications

- Versioning: For Type 2 SCDs, include columns for version numbers or effective date ranges

- Storage format: Choose between storing all versions in a single table or using separate tables for current and historical data

- Partitioning: Partition data based on effective dates or other relevant attributes to improve query performance

- Update strategy: Determine how updates will be applied (e.g., through Hive transactions or batch updates)

A strong candidate should be able to discuss the trade-offs between different SCD implementations, such as storage overhead vs. query complexity. They should also mention the importance of considering the specific business requirements and query patterns when designing the schema.

Look for answers that demonstrate an understanding of both Hive's capabilities and limitations in handling SCDs, as well as the ability to design solutions that balance performance, storage efficiency, and data integrity.
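The Type 2 versioning described above can be sketched in a few lines of Python. This is an illustration of the logic only, not Hive code: the `CustomerRow` fields and the `apply_type2_change` helper are hypothetical names, and in Hive the same effect would come from a transactional MERGE or a batch rewrite of the dimension table.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class CustomerRow:
    customer_id: int
    city: str
    effective_from: date
    effective_to: Optional[date]  # None marks the current version


def apply_type2_change(rows: List[CustomerRow], customer_id: int,
                       new_city: str, change_date: date) -> List[CustomerRow]:
    """Close the current version of a customer and append the new one."""
    updated: List[CustomerRow] = []
    changed = False
    for row in rows:
        is_current = row.customer_id == customer_id and row.effective_to is None
        if is_current and row.city != new_city:
            # Keep the old version, but end its validity at the change date.
            updated.append(replace(row, effective_to=change_date))
            changed = True
        else:
            updated.append(row)
    if changed:
        updated.append(CustomerRow(customer_id, new_city, change_date, None))
    return updated


history = [CustomerRow(1, "Pune", date(2023, 1, 1), None)]
history = apply_type2_change(history, 1, "Mumbai", date(2024, 6, 1))
```

After the call, `history` holds two rows: the Pune version closed on 2024-06-01 and an open-ended Mumbai version. A Type 1 design would instead overwrite the city in place and keep no history.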

4. How would you approach modeling hierarchical data in Hive?

When modeling hierarchical data in Hive, candidates should discuss several approaches:

- Flattened structure: Denormalize the hierarchy into a single table with columns for each level

- Parent-child table: Use a table with parent and child ID columns to represent relationships

- Adjacency list: Store each node with a reference to its parent

- Nested structures: Utilize Hive's support for complex data types like arrays, maps, or structs

- Path enumeration: Store the full path of each node in a string column

A strong answer should include pros and cons of each approach. For example:

- Flattened structures are simple to query but can lead to data redundancy

- Nested structures can efficiently represent complex hierarchies but may be more challenging to query

- Path enumeration allows for easy ancestor/descendant queries but can be less efficient for updates

Evaluate candidates based on their ability to weigh the trade-offs between query performance, storage efficiency, and ease of maintenance. Look for those who consider factors like the depth of the hierarchy, frequency of updates, and typical query patterns when recommending an approach.
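As a rough illustration of how two of these approaches relate, the Python sketch below derives path-enumeration strings from an adjacency list and then finds descendants with a prefix match. The category names and helper functions are made up for the example; in Hive the descendant lookup would typically be a `LIKE 'prefix/%'` filter on the stored path column.

```python
from typing import Dict, List, Optional

# Hypothetical adjacency list: node -> parent (None marks the root).
PARENTS: Dict[str, Optional[str]] = {
    "electronics": None,
    "phones": "electronics",
    "android": "phones",
    "laptops": "electronics",
}


def enumerate_path(node: str, parents: Dict[str, Optional[str]]) -> str:
    """Walk up the parent links and return the root-to-node path."""
    parts: List[str] = []
    current: Optional[str] = node
    while current is not None:
        parts.append(current)
        current = parents[current]
    return "/" + "/".join(reversed(parts))


def descendants(ancestor: str, parents: Dict[str, Optional[str]]) -> List[str]:
    """All nodes whose path starts with the ancestor's path plus a slash."""
    prefix = enumerate_path(ancestor, parents) + "/"
    return sorted(n for n in parents if enumerate_path(n, parents).startswith(prefix))


print(enumerate_path("android", PARENTS))  # /electronics/phones/android
print(descendants("electronics", PARENTS))  # ['android', 'laptops', 'phones']
```

The sketch also shows the update cost mentioned above: moving `phones` under a different parent would change the stored path of every node beneath it.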

5. Can you explain the concept of windowing in Hive and provide an example of when it might be useful?

Windowing in Hive allows for performing calculations across a set of rows that are related to the current row. It's useful for computing moving averages, running totals, rankings, and other analytics that require context from surrounding rows.

Key concepts of windowing include:

- Window functions: Operate on a window of data (e.g., ROW_NUMBER, RANK, LAG, LEAD)

- PARTITION BY: Divides rows into partitions to which the window function is applied

- ORDER BY: Determines the order of rows within each partition

- Window frame: Specifies which rows to include in the window (e.g., ROWS BETWEEN)

An example use case could be calculating a moving average of sales over the last 7 days for each product. This would help identify trends without the need for complex self-joins or subqueries.

Look for candidates who can explain both the syntax and the underlying concepts of windowing. They should be able to discuss scenarios where windowing provides more efficient or readable solutions compared to traditional SQL approaches.
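The moving-average use case can be tried out without a Hive cluster. The sketch below runs the window query in Python's built-in SQLite (version 3.25 or later), which stands in for Hive here; the `daily_sales` table and its data are invented, and the frame clause assumes exactly one row per product per day so that "6 preceding rows" means "6 preceding days".

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_sales (product TEXT, sale_date TEXT, amount REAL)")
# Nine days of made-up sales for one product: 10, 20, ..., 90.
rows = [("widget", f"2024-01-{day:02d}", float(day * 10)) for day in range(1, 10)]
conn.executemany("INSERT INTO daily_sales VALUES (?, ?, ?)", rows)

QUERY = """
SELECT product,
       sale_date,
       AVG(amount) OVER (
           PARTITION BY product
           ORDER BY sale_date
           ROWS BETWEEN 6 PRECEDING AND CURRENT ROW
       ) AS moving_avg_7d
FROM daily_sales
ORDER BY product, sale_date
"""

moving_avg = conn.execute(QUERY).fetchall()
for product, sale_date, avg_amount in moving_avg:
    print(product, sale_date, avg_amount)
```

The first rows average over fewer than seven days because the window is still filling; from the seventh day onward each value covers a full week (40.0 on day 7, 60.0 on day 9). A candidate should be able to say how they would handle missing days, since a row-based frame silently stretches over gaps.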

6. How would you design a Hive schema to efficiently handle both real-time and batch data ingestion?

Designing a Hive schema for both real-time and batch data ingestion requires careful consideration of several factors:

- Partitioning strategy: Use time-based partitions to separate real-time and historical data

- Storage formats: Choose formats that support fast writes for real-time data (e.g., text files) and efficient reads for batch processing (e.g., ORC or Parquet)

- Table structure: Consider using separate tables for real-time and historical data, with a view to unify them

- Compaction strategy: Implement a process to compact small files from real-time ingestion into larger files for better query performance

- Schema evolution: Design the schema to accommodate potential changes over time

A possible approach could be:

- Create a 'landing' table for real-time data with minimal partitioning and text format

- Set up a process to periodically move data from the landing table to a 'historical' table with optimized storage and partitioning

- Use a unified view for querying both real-time and historical data

Evaluate candidates based on their ability to balance the needs of real-time ingestion (low latency, high throughput) with those of batch processing (query performance, storage efficiency). Look for answers that demonstrate an understanding of Hive's strengths and limitations in handling different data ingestion patterns.
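The compaction step is often the least obvious part of this design, so here is a minimal Python sketch of how a job might plan which small files to merge. The greedy grouping and the 128 MB target are assumptions for illustration, not a description of Hive's own compactor.

```python
from typing import List

TARGET_BYTES = 128 * 1024 * 1024  # assumed target size, roughly one HDFS block


def plan_compaction(file_sizes: List[int], target: int = TARGET_BYTES) -> List[List[int]]:
    """Greedily group files into batches of at most `target` bytes each."""
    batches: List[List[int]] = []
    current: List[int] = []
    current_size = 0
    for size in sorted(file_sizes, reverse=True):
        if current and current_size + size > target:
            batches.append(current)
            current, current_size = [], 0
        current.append(size)  # a file larger than the target gets its own batch
        current_size += size
    if current:
        batches.append(current)
    return batches


# Five small files (sizes in arbitrary units) merged toward a target of 100.
print(plan_compaction([60, 50, 40, 30, 20], target=100))  # [[60], [50, 40], [30, 20]]
```

Each batch would then be rewritten as one file in the historical table, for example with an INSERT ... SELECT into the ORC-backed table.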

7. What strategies would you use to optimize storage and query performance for a large fact table in Hive?

To optimize storage and query performance for a large fact table in Hive, candidates should discuss several strategies:

- Partitioning: Divide the table based on frequently filtered columns (e.g., date, region)

- Bucketing: Further organize data within partitions to optimize joins and sampling

- Columnar storage: Use file formats like ORC or Parquet for better compression and query performance

- Compression: Apply appropriate compression algorithms (e.g., Snappy, ZLIB) based on the use case

- Denormalization: Selectively denormalize to reduce complex joins for common queries

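- Indexing: On Hive versions before 3.0, index columns frequently used in WHERE clauses; Hive 3.0 removed its index feature, so on newer versions rely on the min/max indexes and bloom filters built into ORC, or on materialized views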

- Statistics gathering: Regularly update table and column statistics for better query optimization

A strong answer might also include:

- Discussion of trade-offs between storage efficiency and query performance

- Consideration of the specific query patterns and access frequency

- Mention of Hive features like vectorization and cost-based optimization

Look for candidates who can provide a holistic view of optimization, considering both storage and query aspects. They should be able to explain how different strategies complement each other and how to choose the right combination based on specific use cases and data engineering requirements.
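Partition pruning is the mechanism behind the first strategy, and a toy model makes its effect easy to see. In the Python sketch below the fact table is a dictionary keyed by partition value; the data and the `total_sales` helper are hypothetical, and the point is only to compare how many rows get scanned.

```python
from typing import Dict, List, Tuple

# Hypothetical fact table: partition value (sale date) -> rows of (region, amount).
FACT_PARTITIONS: Dict[str, List[Tuple[str, float]]] = {
    "2024-01-01": [("eu", 10.0), ("us", 20.0)],
    "2024-01-02": [("eu", 5.0), ("us", 7.0)],
    "2024-01-03": [("eu", 1.0), ("us", 2.0)],
}


def total_sales(partitions: Dict[str, List[Tuple[str, float]]],
                date_from: str, date_to: str) -> Tuple[float, int]:
    """Sum amounts, reading only partitions inside the date range."""
    rows_scanned = 0
    total = 0.0
    for partition_date, rows in partitions.items():
        if not (date_from <= partition_date <= date_to):
            continue  # pruned: this partition's files are never opened
        rows_scanned += len(rows)
        total += sum(amount for _, amount in rows)
    return total, rows_scanned


print(total_sales(FACT_PARTITIONS, "2024-01-02", "2024-01-02"))  # (12.0, 2)
print(total_sales(FACT_PARTITIONS, "2024-01-01", "2024-01-03"))  # (45.0, 6)
```

A filter on the partition column cuts the scan from six rows to two here; a filter on `region`, which is not a partition column in this model, would still have to read every partition.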

8. How would you design a schema in Hive to handle data with high cardinality columns?

When designing a schema in Hive to handle data with high cardinality columns, candidates should consider the following strategies:

- Avoid partitioning on high cardinality columns: This can lead to too many small partitions

- Use bucketing: Distribute data evenly across a fixed number of buckets based on a hash of the high cardinality column

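- Prefer bloom filters over bitmap indexes: Bitmap indexes suit low cardinality columns and were removed with the rest of Hive's index feature in 3.0; ORC bloom filters are the more usual aid for point lookups on high cardinality columns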

- Leverage columnar storage: Use ORC or Parquet formats for better compression and query performance

- Implement dimension tables: For high cardinality categorical data, use dimension tables with surrogate keys

- Data skew handling: Implement techniques like salting or distribution keys to handle skewed data

A strong answer might also include:

- Discussion of the impact of high cardinality on join performance and how to mitigate it

- Consideration of using approximate algorithms for certain types of queries (e.g., HyperLogLog for count distinct)

- Mention of the trade-offs between query performance and storage overhead

Evaluate candidates based on their ability to balance performance considerations with practical implementation. Look for answers that demonstrate an understanding of Hive's architecture and how it handles large-scale data processing tasks involving high cardinality columns.
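Salting is the technique candidates most often name without being able to explain, so a small Python sketch may help when probing their answer. The salt count and helper names are assumptions; the idea is that the large, skewed side of a join gets a random suffix on its key while the small side is replicated once per suffix so every salted key still finds a match.

```python
import random
from collections import Counter
from typing import List

NUM_SALTS = 4  # hypothetical number of salt values


def salted_key(key: str, rng: random.Random, num_salts: int = NUM_SALTS) -> str:
    """Large side: append a random salt so one hot key spreads across tasks."""
    return f"{key}_{rng.randrange(num_salts)}"


def expand_dimension_key(key: str, num_salts: int = NUM_SALTS) -> List[str]:
    """Small side: emit one copy of the key per salt value."""
    return [f"{key}_{salt}" for salt in range(num_salts)]


rng = random.Random(0)  # fixed seed so the example is repeatable
spread = Counter(salted_key("hot_customer", rng) for _ in range(1000))
print(sorted(spread))  # the salted variants the hot key was spread across
print(expand_dimension_key("hot_customer"))
```

The cost is that the small side grows by a factor of `NUM_SALTS`, which is the storage-versus-performance trade-off a strong answer should mention.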

9. Can you explain the concept of schema on read in Hive and how it differs from schema on write?

Schema on read in Hive refers to the ability to apply a schema to data at the time it's queried, rather than when it's written to storage. This is in contrast to schema on write, which is the traditional approach used in relational databases where data must conform to a predefined schema when it's inserted.

Key differences include:

- Flexibility: Schema on read allows for easier schema evolution and handling of semi-structured data

- Performance: Schema on write typically offers better query performance but slower data loading

- Data validation: Schema on write enforces data quality at insertion, while schema on read postpones validation

- Storage efficiency: Schema on read often results in more efficient storage of raw data

A strong candidate should be able to discuss the advantages and disadvantages of each approach. They should understand that schema on read is particularly useful in big data scenarios where data variety and velocity make strict schemas impractical. Look for answers that demonstrate an understanding of how this concept fits into the broader data lake architecture and its implications for data governance and quality.
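The following Python sketch illustrates the schema-on-read idea under simplified assumptions: raw comma-separated lines are stored untouched, and an `(id INT, name STRING, age INT)` schema is applied only when the data is read. The sample lines and helper names are invented; the handling of bad values mirrors in spirit how Hive text tables return NULL for fields that do not fit the declared type.

```python
from typing import List, Optional, Tuple

# Raw lines are stored as-is; nothing was validated at write time.
RAW_LINES = ["1,alice,34", "2,bob,not_a_number", "3,carol"]

Row = Tuple[Optional[int], Optional[str], Optional[int]]


def to_int(value: Optional[str]) -> Optional[int]:
    """Parse an integer, returning None (NULL) when the value does not fit."""
    if value is None:
        return None
    try:
        return int(value)
    except ValueError:
        return None


def read_with_schema(lines: List[str]) -> List[Row]:
    """Apply the (id INT, name STRING, age INT) schema while reading."""
    rows: List[Row] = []
    for line in lines:
        fields: List[Optional[str]] = list(line.split(","))
        fields += [None] * (3 - len(fields))  # pad missing trailing columns
        rows.append((to_int(fields[0]), fields[1], to_int(fields[2])))
    return rows


print(read_with_schema(RAW_LINES))
# [(1, 'alice', 34), (2, 'bob', None), (3, 'carol', None)]
```

A schema-on-write system would have rejected the second and third lines at insert time, which is the data validation difference listed above.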

7 situational Hive interview questions with answers for hiring top developers

To identify top-notch Hive developers who can handle complex data tasks, ask them these situational Hive interview questions. These questions are designed to gauge their practical problem-solving skills and understanding of real-world scenarios.

1. Describe a situation where you had to troubleshoot a performance issue in Hive. What steps did you take?

When troubleshooting a performance issue in Hive, the first step is to identify the bottleneck. This usually involves analyzing the query execution plan and checking for any resource-intensive operations.

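Next, you would look into optimizing the query by breaking it down into smaller parts, making sure filters hit partition columns, and ensuring that the data is properly partitioned and bucketed. Adding indexes is only an option on Hive versions before 3.0, which removed the feature.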

A strong candidate should mention the importance of monitoring resource usage and possibly adjusting the cluster configuration. Look for a detailed explanation of their troubleshooting process and any tools they used.

2. Can you describe a time when you had to integrate Hive with another data storage or processing tool?

Integrating Hive with other data storage or processing tools often involves using connectors or APIs. For instance, integrating with HBase involves creating a Hive table that is STORED BY the HBaseStorageHandler and mapping its columns to HBase column families.

During the integration process, ensuring data consistency and optimizing data flow is crucial. One must also validate the data formats to ensure compatibility.

Look for candidates who discuss specific challenges they faced and how they resolved them, demonstrating their problem-solving skills and technical expertise.

3. How have you handled schema evolution in Hive in your previous projects?

Handling schema evolution in Hive typically involves using the Avro or Parquet file formats, which support schema evolution by allowing you to add new fields to your schema without affecting existing data.

It is also important to keep track of schema changes using a version control system and ensuring backward compatibility of queries.

An ideal candidate should explain their approach to managing schema changes, including any tools or practices they used to ensure data integrity and minimize disruptions.

4. Describe a scenario where you had to manage and handle metadata in Hive. What was your approach?

Managing metadata in Hive involves working with the Hive Metastore, which stores information about the structure of tables and partitions.

In a scenario where the Metastore is out of sync with storage, for example when partition directories were written to HDFS directly, one might use the MSCK REPAIR TABLE command to register the missing partitions in the Metastore.

Candidates should emphasize their understanding of the Metastore's role and any specific steps they took to resolve metadata issues, such as cleaning up stale metadata or using appropriate tools.
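Conceptually, a metadata repair compares what exists on storage with what the Metastore has registered. The Python sketch below models that comparison with plain lists; the partition names and helper functions are hypothetical, and it is meant as a mental model rather than a description of Hive's implementation.

```python
from typing import Iterable, List


def missing_partitions(storage_dirs: Iterable[str],
                       registered: Iterable[str]) -> List[str]:
    """Partitions present on storage but not registered in the Metastore."""
    return sorted(set(storage_dirs) - set(registered))


def stale_partitions(storage_dirs: Iterable[str],
                     registered: Iterable[str]) -> List[str]:
    """Partitions registered in the Metastore whose directory is gone."""
    return sorted(set(registered) - set(storage_dirs))


on_storage = ["dt=2024-01-01", "dt=2024-01-02", "dt=2024-01-03"]
in_metastore = ["dt=2023-12-31", "dt=2024-01-01"]

print(missing_partitions(on_storage, in_metastore))  # ['dt=2024-01-02', 'dt=2024-01-03']
print(stale_partitions(on_storage, in_metastore))    # ['dt=2023-12-31']
```

By default a repair adds the missing partitions; cleaning up stale entries is a separate decision, and it is worth asking candidates how they handled it on the Hive version they used.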

5. Can you provide an example of how you have optimized storage and query performance for a large fact table in Hive?

Optimizing storage and query performance for large fact tables in Hive often involves partitioning the table to reduce the amount of data scanned during queries.

Additionally, one might employ bucketing to further optimize join operations and use columnar storage formats like ORC or Parquet to improve read performance.

Look for candidates who mention specific techniques they used and any measurable outcomes, such as reduced query times or lower storage costs.

6. Explain a situation where you had to design a schema in Hive for handling data with high cardinality columns. What was your approach?

Designing a schema for high cardinality columns often requires the use of techniques like composite keys or encoding to reduce storage requirements and improve query performance.

One might also use partitioning and bucketing to manage high cardinality data effectively, ensuring that queries remain efficient.

An ideal candidate should explain their thought process and any specific challenges they faced, demonstrating their ability to design scalable and efficient schemas.

7. Describe a time when you had to work with time-series data in Hive. What strategies did you employ?

Working with time-series data in Hive typically involves partitioning the data by time intervals, such as by day or month, to improve query efficiency.

It's also important to use the appropriate file formats and compression to manage storage and retrieval performance.

Candidates should discuss their approach to managing time-series data, including any specific techniques they used to handle large volumes of data and ensure fast query performance.

Which Hive skills should you evaluate during the interview phase?

It's essential to recognize that a single interview won't allow you to evaluate every aspect of a candidate's skills and knowledge. However, when it comes to assessing Hive expertise, there are several core skills that can provide significant insights into a candidate's capabilities.

HiveQL

To filter candidates based on their HiveQL knowledge, consider using an assessment test with relevant MCQs, such as our Hive Online Test. This can help you gauge their understanding of the language and its functionality.

In addition to assessments, you can pose targeted interview questions to further evaluate their HiveQL skills. One such question could be:

Can you explain the difference between Managed Tables and External Tables in Hive?

When asking this question, look for a clear understanding of how data is stored in both table types, as well as the implications for data management and access. Candidates should be able to discuss scenarios in which one type would be preferable over the other.

Data Partitioning

An assessment that includes questions on data partitioning can help identify candidates with the necessary expertise. Use our Hive Online Test for this purpose.

A targeted interview question to assess their knowledge of data partitioning could be:

How would you implement partitioning in Hive for a large dataset?

Evaluate their response for clarity on the partitioning strategy they would use, including the criteria for partitioning and the potential impact on performance. Look for knowledge of partitioning by date, region, or another logical category.

Optimization Techniques

To gauge optimization knowledge, consider including MCQs related to optimization techniques in your assessments. Check out the Hive Online Test for relevant questions.

Another insightful question for candidates could be:

What are some optimization techniques you can apply to improve Hive query performance?

Look for responses that mention various strategies, such as partitioning, bucketing, optimizing joins, or (on Hive versions before 3.0) using indexes. A good candidate should demonstrate familiarity with practical examples and be able to explain the impact of these techniques on performance.

Hire top talent with Hive skills tests and the right interview questions

If you're looking to hire someone with Hive skills, it's important to ensure they truly have those skills. Evaluating accurately is key to making the right hiring decision.

The best way to do this is by using skill tests. You can use our Hive Test or explore other related tests like the Hadoop Online Test and the Data Analysis Test.

Once you've used these tests, you can shortlist the best applicants and call them for interviews. This way, you're focusing on candidates who have already proven their skills.

To get started, sign up on our dashboard and start assessing right away. For more information, visit our test library.

Hive Interview Questions FAQs

Key skills include proficiency in Hadoop, SQL queries, data warehousing concepts, and familiarity with data modeling and scripting.

Ask questions about their experience with data querying, data modeling, and situational problem-solving within Hive environments.

Situational questions help gauge the candidate's practical problem-solving abilities and how they apply their technical knowledge in real-world scenarios.

It allows you to assess their foundational knowledge, enthusiasm for the technology, and their potential for growth within your team.

Start with basic questions to assess foundational knowledge, then proceed to more complex questions about data querying, data modeling, and situational scenarios.

Familiarize yourself with Hive, prepare a list of questions covering various aspects of Hive, and consider practical tests or coding challenges to evaluate hands-on skills.
