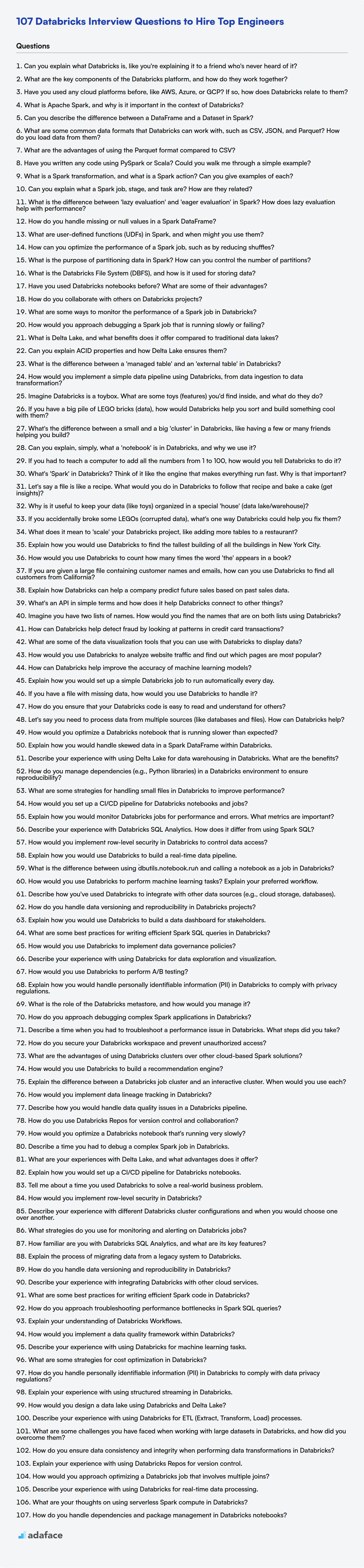

Hiring the right Databricks professional is like finding a needle in a haystack; you need to ask the right questions to sift through the noise. As with hiring any other specialized role, such as a data scientist, the interview process is a critical step.

This blog post aims to equip you with a comprehensive list of Databricks interview questions tailored for various experience levels, from freshers to seasoned professionals. We'll also include a section of multiple-choice questions to help you quickly assess a candidate's knowledge.

By using these questions, you'll be able to gauge a candidate's skills and experience effectively, and you can even use our Spark online test to screen candidates before the interview.

Table of contents

Databricks interview questions for freshers

1. Can you explain what Databricks is, like you're explaining it to a friend who's never heard of it?

Imagine you have tons of data and you want to analyze it quickly and easily, but it's scattered all over the place. Databricks is like a super-powered workspace in the cloud that helps you do just that. It's built on top of Apache Spark, which is a really fast engine for processing large amounts of data. Databricks makes Spark easier to use and adds a bunch of tools on top, like collaborative notebooks (where you can write code and see results instantly), machine learning libraries, and ways to manage your data pipelines.

Think of it as a collaborative environment for data scientists, engineers, and analysts. They can all work together on the same data, using tools like Python, SQL, Scala, and R. Databricks handles the infrastructure (like setting up and managing servers), so you can focus on actually analyzing the data and building models instead of worrying about the underlying technology. Plus, it integrates well with cloud storage like AWS S3 or Azure Blob Storage to easily read and write data.

2. What are the key components of the Databricks platform, and how do they work together?

The key components of the Databricks platform are:

- Apache Spark: The core processing engine for large-scale data processing. Databricks provides a optimized version of Spark.

- Databricks Runtime: A pre-configured environment with optimized Spark, libraries, and performance enhancements.

- Delta Lake: An open-source storage layer that brings reliability to data lakes, enabling ACID transactions, scalable metadata handling, and unified streaming and batch data processing. It allows for building a Lakehouse.

- MLflow: An open-source platform to manage the ML lifecycle, including experiment tracking, model packaging, and deployment.

- Databricks Workspace: A collaborative environment for data scientists, engineers, and analysts to work together. It provides notebooks, dashboards, and other tools for data exploration, development, and deployment.

These components work together by leveraging Spark for data processing, Delta Lake for reliable storage, MLflow for ML lifecycle management, and the Databricks Workspace for collaboration and productivity. The Databricks Runtime provides a optimized environment to run all of these components. Users can write and execute code using notebooks, schedule jobs, and deploy machine learning models, all within the Databricks platform.

3. Have you used any cloud platforms before, like AWS, Azure, or GCP? If so, how does Databricks relate to them?

Yes, I have experience with cloud platforms like AWS, Azure, and GCP. Databricks is typically deployed on top of these cloud platforms, leveraging their underlying infrastructure for compute, storage, and networking. It's not a cloud platform itself, but rather a data analytics and machine learning platform optimized to run within those environments.

Specifically, Databricks integrates deeply. For example, on AWS, Databricks utilizes services like S3 for storage and EC2 for compute. Similarly, on Azure, it leverages Azure Blob Storage and Azure VMs, and on GCP it utilizes Google Cloud Storage and Compute Engine. This allows Databricks to focus on providing a unified analytics platform while relying on the cloud providers for infrastructure management and scalability.

4. What is Apache Spark, and why is it important in the context of Databricks?

Apache Spark is a powerful open-source, distributed processing system designed for big data processing and analytics. It provides high-level APIs in Java, Scala, Python, and R, and an optimized engine that supports general computation graphs for data analysis. Spark is crucial in Databricks because Databricks is built upon Spark; it enhances Spark's capabilities by providing a collaborative notebook environment, automated cluster management, and optimized performance.

Specifically, Databricks uses Spark for various data engineering and data science tasks, including data ingestion, transformation, machine learning, and real-time data streaming. Databricks simplifies Spark usage with features like auto-scaling clusters, built-in Delta Lake for reliable data storage, and MLflow for managing machine learning workflows. Therefore, Spark is the fundamental engine that powers the Databricks platform, making it essential for processing large datasets efficiently and effectively.

5. Can you describe the difference between a DataFrame and a Dataset in Spark?

In Spark, both DataFrames and Datasets are distributed collections of data. The main difference lies in the level of abstraction and type safety. A DataFrame is like a table with columns, similar to a relational database table or a spreadsheet. It provides a schema view of the data, but without compile-time type safety. This means you won't know if you're using the wrong data type until runtime.

A Dataset, on the other hand, provides compile-time type safety. It's essentially a DataFrame with strong typing. You define the structure of your data using a Scala or Java class, and Spark ensures that the data conforms to that structure at compile time. Datasets are available in Scala and Java. With Datasets, you can perform operations directly on objects (e.g., dataset.map(x => x.attribute) instead of using string column names) making code cleaner and less error-prone. Datasets leverage Encoders to convert between JVM objects and Spark's internal binary format for storage and processing.

6. What are some common data formats that Databricks can work with, such as CSV, JSON, and Parquet? How do you load data from them?

Databricks can work with a variety of data formats including: CSV, JSON, Parquet, ORC, Avro, and plain text files. It also supports working with data stored in relational databases via JDBC connections.

To load data, you typically use Spark's spark.read API. For example, to load a CSV file:

df = spark.read.csv("/path/to/your/file.csv", header=True, inferSchema=True)

df.show()

Similarly, for JSON, you'd use spark.read.json(), and for Parquet, spark.read.parquet(). The header=True option in the CSV example indicates that the first row contains column names, and inferSchema=True attempts to automatically determine the data type of each column. Different options are available for each format to control how the data is parsed and loaded.

7. What are the advantages of using the Parquet format compared to CSV?

Parquet offers significant advantages over CSV, primarily due to its columnar storage format. This allows for efficient data compression and encoding, resulting in smaller file sizes and reduced storage costs. Columnar storage also enables faster query performance as only the necessary columns are read, skipping irrelevant data.

In contrast, CSV stores data in row-based format, leading to larger file sizes and slower query performance, especially when dealing with large datasets. Parquet also supports schema evolution and better data type handling, which are often lacking or cumbersome in CSV files.

8. Have you written any code using PySpark or Scala? Could you walk me through a simple example?

Yes, I have experience with PySpark. Here's a simple example:

Let's say we have a text file data.txt with some lines of text. We want to count the occurrences of each word using PySpark. Here's the code:

from pyspark import SparkContext

sc = SparkContext("local", "Word Count")

# Load the text file into an RDD

rdd = sc.textFile("data.txt")

# Split each line into words, flatten the RDD, convert to lowercase, and add a count of 1 to each word

word_counts = rdd.flatMap(lambda line: line.split())\

.map(lambda word: word.lower())\

.map(lambda word: (word, 1))

# Reduce by key to sum the counts for each word

word_counts = word_counts.reduceByKey(lambda a, b: a + b)

# Print the word counts

for word, count in word_counts.collect():

print(f"{word}: {count}")

sc.stop()

This code first creates a SparkContext. Then, it reads the text file, splits each line into words, and converts them to lowercase. Finally, it counts the occurrences of each word and prints the results. The flatMap function applies the lambda function to each element, returning a new RDD and then flattening the result. The reduceByKey function aggregates the values based on the key by using the lambda function.

9. What is a Spark transformation, and what is a Spark action? Can you give examples of each?

Spark transformations are lazy operations that create a new RDD (Resilient Distributed Dataset) from an existing one. They don't execute immediately; instead, Spark builds a Directed Acyclic Graph (DAG) of transformations. Examples include map(), filter(), flatMap(), groupByKey(), and reduceByKey().

Spark actions, on the other hand, trigger the execution of the DAG and return a value to the driver program or write data to external storage. Actions force Spark to perform the computations defined by the transformations. Examples include collect(), count(), first(), take(), reduce(), and saveAsTextFile().

10. Can you explain what a Spark job, stage, and task are? How are they related?

In Spark, a job is the highest-level unit of execution. It represents a complete application or workflow that Spark executes. A job is triggered when you call an action on a RDD (Resilient Distributed Dataset) or DataFrame, such as count(), collect(), or saveAsTextFile(). The Spark driver breaks down the job into smaller units called stages.

A stage is a set of independent tasks that can be executed in parallel. Stages are created based on shuffle dependencies, which occur when data needs to be redistributed across partitions (e.g., during a groupByKey() or reduceByKey() operation). Each stage consists of tasks, which are the smallest units of work in Spark. A task is a single unit of execution that operates on a partition of data. Each task performs the same operation on its respective partition. Thus, a Job is comprised of multiple stages, and each stage is comprised of multiple tasks.

11. What is the difference between 'lazy evaluation' and 'eager evaluation' in Spark? How does lazy evaluation help with performance?

In Spark, eager evaluation executes a transformation as soon as it's called. In contrast, lazy evaluation (used by Spark) delays execution until an action is triggered. Transformations are recorded in a Directed Acyclic Graph (DAG), and only when an action like count() or saveAsTextFile() is called does Spark actually process the transformations.

Lazy evaluation helps with performance in a couple of key ways:

- Optimization: Spark can optimize the entire DAG before execution. It might reorder transformations, combine them, or choose the most efficient execution strategy.

- Avoidance of unnecessary computations: If only a subset of the data is needed, Spark might only compute the necessary transformations for that subset, avoiding processing the entire dataset.

- Example:

val data = sc.textFile("mydata.txt") val filteredData = data.filter(_.contains("error")) //Transformation - lazy evaluated filteredData.count() // Action - triggers evaluation

12. How do you handle missing or null values in a Spark DataFrame?

Spark provides several ways to handle missing or null values in DataFrames. Common approaches include:

- Filtering: Removing rows with null values using

df.na.drop()ordf.filter(col("column_name").isNull()). Thedrop()method offers options likehow='any'(drop if any value in the row is null) orhow='all'(drop only if all values in the row are null). - Imputation: Replacing null values with a specific value. This can be done using

df.na.fill(value, subset=["column_name"]). Common imputation strategies include using the mean, median, or a constant value. For example,df.na.fill(df.stat.mean("column_name"), subset=["column_name"])to replace missing values with the mean of the column. You can also usefillnafunction. Example:df.fillna({'column_name': 0}) - Using

coalesce: Thecoalescefunction returns the first non-null value from a list of columns. This is helpful when you have multiple columns that might contain the desired data. Example:df.withColumn("new_column", coalesce(col("column1"), col("column2")))

13. What are user-defined functions (UDFs) in Spark, and when might you use them?

User-defined functions (UDFs) in Spark are custom functions that extend Spark's built-in functionality. You define these functions using languages like Python, Scala, Java, or R, and then register them with Spark to be used within Spark SQL expressions and DataFrames/Datasets. Essentially, they allow you to apply custom logic to your data during transformations.

You might use UDFs when:

- You need to perform complex calculations or transformations that aren't available in Spark's built-in functions.

- You need to integrate with external libraries or systems within your Spark jobs. For example:

spark.udf.register("my_udf", my_python_function)

14. How can you optimize the performance of a Spark job, such as by reducing shuffles?

Optimizing a Spark job involves several strategies to minimize data shuffling and resource consumption. One key approach is to reduce shuffles, which are expensive operations involving data redistribution across the cluster. This can be achieved through techniques like:

- Using

broadcastvariables: Broadcast small datasets to all worker nodes, avoiding the need to shuffle a larger table for joins. Example:val broadcastState = sc.broadcast(statesMap). Then, in a map or join operation, usebroadcastState.value. - Filtering early: Apply filters as early as possible in the data processing pipeline to reduce the amount of data that needs to be shuffled.

- Using appropriate partitioning: Choose the right number of partitions based on the data size and cluster resources. Too few partitions can lead to underutilization, while too many can increase overhead. Consider using repartition or coalesce strategically.

- Avoiding unnecessary

groupByKey: PreferreduceByKeyoraggregateByKeywhen possible, as they perform pre-aggregation on the mapper side before shuffling.groupByKeyshuffles all the data. - Optimizing join operations: Consider techniques like bucketing or using

skew joinhints if there's significant data skew. Also, ensure proper join ordering to minimize data transfer.

15. What is the purpose of partitioning data in Spark? How can you control the number of partitions?

Partitioning in Spark is crucial for parallel processing. It divides data into smaller, logical chunks that can be processed independently by different executors across the cluster. This significantly improves performance and scalability, especially for large datasets, as it enables true parallel computation.

You can control the number of partitions in several ways:

- During data loading: Many

SparkSession.readmethods allow you to specify the number of partitions (e.g.,spark.read.text("file.txt").repartition(10)). - Using

repartition()orcoalesce():repartition()creates a new RDD/DataFrame with the specified number of partitions, shuffling the data.coalesce()attempts to reduce the number of partitions more efficiently, avoiding a full shuffle if possible. Use repartition when increasing partitions or when a full shuffle is acceptable/desired. Use coalesce when decreasing the number of partitions to avoid a full shuffle. - Spark configuration: The

spark.default.parallelismproperty affects the default number of partitions for RDDs created from data sources like files or collections. However, explicitly setting the number of partitions withrepartition()or during data loading is often preferred for better control.

16. What is the Databricks File System (DBFS), and how is it used for storing data?

Databricks File System (DBFS) is a distributed file system mounted into a Databricks workspace, available on Databricks clusters. It's an abstraction layer on top of cloud storage (like AWS S3, Azure Blob Storage, or Google Cloud Storage), allowing users to interact with cloud storage as if it were a local file system.

DBFS is used for various purposes: storing data for processing with Spark, storing libraries, configuration files, and experiment results. Users can interact with DBFS through the DBFS CLI, the Databricks UI, the Databricks REST API, and Spark APIs. This makes it easy to load data into Spark DataFrames and Datasets. For example, you could use spark.read.csv("dbfs:/FileStore/mydata.csv") to read a CSV file from DBFS.

17. Have you used Databricks notebooks before? What are some of their advantages?

Yes, I have used Databricks notebooks extensively. Some advantages include:

- Collaboration: Multiple users can simultaneously work on the same notebook, fostering teamwork. Real-time co-authoring is supported.

- Integrated Environment: Provides a unified environment for data engineering, data science, and machine learning tasks. It integrates Spark, Python, Scala, R, and SQL.

- Version Control: Integration with Git allows for tracking changes and collaborating on code effectively.

- Interactive Computing: Enables interactive data exploration and visualization, making it easier to understand and analyze data. Inline display of plots and tables.

- Scalability: Leverages the power of Apache Spark for processing large datasets.

- Simplified Deployment: Notebooks can be easily deployed as production jobs. You can schedule jobs directly from the Databricks UI.

- Reproducibility: Easy to recreate environments with defined dependencies.

For example, using %pip install <package_name> allows specific packages to be installed for the current notebook session, ensuring environment consistency.

18. How do you collaborate with others on Databricks projects?

Collaboration in Databricks involves several key practices. I leverage Databricks Repos for version control using Git, allowing multiple developers to work on the same notebooks or code files simultaneously and manage changes effectively through branching, merging, and pull requests. I also use Databricks' built-in commenting features within notebooks to discuss code, share insights, and provide context for changes, which improves communication and understanding.

Furthermore, I utilize shared Databricks workspaces and clusters to facilitate teamwork. For example, when developing a data pipeline, team members can work on different stages (e.g., data ingestion, transformation, loading) in separate notebooks within the same repo, then use a central notebook to orchestrate the entire pipeline. Using shared clusters ensures consistent environments and simplifies dependency management, avoiding the 'works on my machine' problem. Also, I actively participate in code reviews, providing constructive feedback to ensure code quality and adherence to coding standards. Finally, clear communication is key, and I utilize tools such as Slack or Teams for quick discussions and updates on project progress.

19. What are some ways to monitor the performance of a Spark job in Databricks?

You can monitor Spark job performance in Databricks through several methods. The Spark UI is a primary tool, offering insights into job execution, stages, tasks, and resource usage. Access it directly from the Databricks UI or through the Spark History Server for completed jobs. You can also use Databricks Monitoring UI, available in the Databricks UI.

Additionally, Databricks Repos and integrated logging solutions like Azure Monitor or AWS CloudWatch (depending on the cloud provider) can capture logs and metrics for analysis. Consider using spark.sparkContext.addSparkListener() to programmatically track and collect metrics and push them to external monitoring systems. For example, one can use this to send data to monitoring tools like Prometheus or Grafana.

20. How would you approach debugging a Spark job that is running slowly or failing?

When debugging slow or failing Spark jobs, I'd start by examining the Spark UI for bottlenecks, focusing on stages with long durations or high shuffle read/write. I would check for data skew, which can cause uneven task distribution and slow performance. Reviewing executor logs and driver logs is crucial for identifying errors, exceptions, or resource issues like memory errors (OOM).

Next, I would look at the code to ensure the data is being processed efficiently. This includes avoiding unnecessary shuffles (e.g., using broadcast joins for small datasets), optimizing data serialization (using Kryo), and ensuring that data is filtered as early as possible in the pipeline to reduce the amount of data being processed. I might also consider increasing the parallelism of the job by adjusting the number of partitions.

21. What is Delta Lake, and what benefits does it offer compared to traditional data lakes?

Delta Lake is an open-source storage layer that brings reliability to data lakes. It provides ACID (Atomicity, Consistency, Isolation, Durability) transactions, scalable metadata handling, and unifies streaming and batch data processing. It's essentially a layer on top of existing object storage like AWS S3, Azure Data Lake Storage, or Google Cloud Storage, providing enhanced features for data management.

Compared to traditional data lakes, Delta Lake offers several benefits: It ensures data reliability through ACID transactions, preventing data corruption. It enables schema enforcement and evolution, maintaining data quality over time. It provides versioning and time travel capabilities, allowing you to audit and revert to previous data states. Traditional data lakes often lack these features, leading to data quality issues and difficulties in managing large-scale data.

22. Can you explain ACID properties and how Delta Lake ensures them?

ACID properties (Atomicity, Consistency, Isolation, Durability) are crucial for reliable data transactions. Delta Lake ensures ACID properties on your data lake using a transaction log.

- Atomicity: Transactions are all-or-nothing. Delta Lake uses a commit protocol to ensure either all changes in a transaction are applied, or none are. The Delta transaction log serves as the source of truth, guaranteeing that partial writes are never exposed.

- Consistency: Transactions move the data from one valid state to another. Delta Lake enforces schema validation and constraints, preventing bad data from being written. It ensures that only valid data, conforming to the defined schema, is added to the table.

- Isolation: Concurrent transactions are isolated from each other. Delta Lake uses optimistic concurrency control with serializable isolation, meaning that multiple transactions can run concurrently, but they will appear as if they executed serially. Conflicts are detected and resolved, and transactions will retry automatically.

- Durability: Once a transaction is committed, it is permanent. Delta Lake stores the transaction log in reliable storage (e.g., cloud object storage like S3 or Azure Blob Storage), making committed changes durable even in the face of failures. Checkpointing the transaction log improves read performance.

23. What is the difference between a 'managed table' and an 'external table' in Databricks?

In Databricks, a managed table's lifecycle is fully controlled by Databricks. When you drop a managed table, both the metadata and the underlying data are deleted. The data is stored in the Databricks-managed storage location, often the DBFS root. An external table, on the other hand, only has its metadata managed by Databricks. The data itself resides in an external storage location that you specify, such as an Azure Blob Storage or AWS S3 bucket. Dropping an external table only removes the metadata from the metastore; the underlying data remains untouched in its external location.

Key differences are:

- Data Location: Managed tables store data in Databricks-controlled storage; external tables store data in user-defined locations.

- Data Deletion: Dropping a managed table deletes both metadata and data; dropping an external table only deletes metadata.

- Data Access: External tables are useful when data needs to be accessed by other tools or processes outside of Databricks because they reside in external storage, while managed tables are primarily used within Databricks.

24. How would you implement a simple data pipeline using Databricks, from data ingestion to data transformation?

A simple Databricks data pipeline involves ingesting data from a source, transforming it, and then storing it. First, use Databricks notebooks or Delta Live Tables (DLT) to read data from various sources (e.g., cloud storage like AWS S3, Azure Blob Storage, or databases). This can be done using Spark's spark.read functionality with appropriate format options (e.g., csv, json, parquet).

Next, the data is transformed using Spark SQL or PySpark DataFrames. This involves cleaning, filtering, aggregating, and joining datasets. For example, you can use functions like filter(), groupBy(), agg(), and join(). The transformed data is then written to a target storage location, typically a Delta Lake table for improved reliability and performance. This is accomplished using df.write.format("delta").save("/path/to/delta/table"). Scheduling can be achieved using Databricks Jobs or DLT pipelines for automated execution.

Databricks interview questions for juniors

1. Imagine Databricks is a toybox. What are some toys (features) you'd find inside, and what do they do?

Imagine Databricks as a toybox! Here are some toys you'd find:

- Spark: The core engine. It's like a set of building blocks for distributed data processing, letting you construct complex data pipelines and run them fast across a cluster.

- Delta Lake: Think of it as a magical organizational tool for your data lake. It adds reliability and structure (ACID transactions, schema enforcement) to data stored in cloud object storage, ensuring data quality.

- MLflow: This is a toolbox for machine learning projects. It helps you track experiments, manage models, and deploy them easily. It's like a system for keeping all your ML building projects organized.

- SQL Analytics (Databricks SQL): A magnifying glass, giving business users the ability to explore data stored in the lakehouse using standard SQL queries. Great for dashboards and reporting.

- Notebooks: Interactive coding environments. Use languages like Python, Scala, R, and SQL to interact with the data. You can write some useful code, such as

print('Hello World!')orspark.read.format('delta').load('/path/to/delta').show().

2. If you have a big pile of LEGO bricks (data), how would Databricks help you sort and build something cool with them?

Databricks provides a powerful platform to process and transform large datasets, much like sorting and building with a big pile of LEGO bricks. Here's how:

Databricks' Spark engine allows for parallel processing of data, enabling you to efficiently sort, filter, and transform your 'LEGO bricks' (data) at scale. Key features include:

- Scalability: Easily handle large datasets with distributed computing.

- Data Engineering: Use tools like Delta Lake for reliable data storage and versioning.

- Data Science & Machine Learning: Leverage integrated MLflow for model building and deployment.

- SQL Analytics: Run SQL queries to explore and analyze your 'LEGO brick' data.

Essentially, Databricks gives you the tools to organize, manipulate, and combine your data (LEGO bricks) to build complex and valuable structures (insights, models, applications).

3. What's the difference between a small and a big 'cluster' in Databricks, like having a few or many friends helping you build?

In Databricks, a small cluster is like having a few friends help you build something: it's cheaper and faster to start, good for smaller tasks or testing. It has limited compute resources, so tasks take longer if they're data-intensive or computationally complex. A big cluster is like having many friends: it's more expensive but can handle larger, more complex tasks much faster because it has more compute power (more cores and memory).

Choosing the right size depends on your workload. Small clusters are great for development, testing, and small datasets. Big clusters shine when you need to process large datasets or run complex computations quickly. Consider the tradeoff between cost and performance when deciding.

4. Can you explain, simply, what a 'notebook' is in Databricks, and why we use it?

In Databricks, a notebook is a web-based interface for creating and running code, visualizations, and documentation collaboratively. Think of it as a digital, interactive notebook where you can combine code, its output, and explanatory text in a single document.

We use notebooks for several reasons:

- Collaboration: Multiple users can work on the same notebook simultaneously.

- Interactive Development: Write and execute code in cells, see results immediately, and iterate quickly.

- Data Exploration & Visualization: Easily explore data, create visualizations, and document findings.

- Workflow Orchestration: Chain together multiple steps in a data pipeline.

- Support for Multiple Languages: Databricks notebooks support languages like Python, SQL, Scala, and R. For example, you can use

%python,%sql,%scala, or%rmagic commands to specify the language for a particular cell. This allows for a mix of languages within a single notebook.

5. If you had to teach a computer to add all the numbers from 1 to 100, how would you tell Databricks to do it?

I would tell Databricks to add the numbers from 1 to 100 using either a loop or a mathematical formula within a Spark job. Using Scala in Databricks, I could implement it like this:

val sum = spark.range(1, 101).sum()

println(sum) // Output: 5050.0

Alternatively, I could use the formula for the sum of an arithmetic series: n * (n + 1) / 2. This would be implemented as: val n = 100; val sum = n * (n + 1) / 2; println(sum). This approach avoids iteration and is more efficient for large ranges.

6. What's 'Spark' in Databricks? Think of it like the engine that makes everything run fast. Why is that important?

In Databricks, Spark is the unified analytics engine used for large-scale data processing. Think of it as the powerful core that enables Databricks to handle massive datasets and complex computations efficiently. It's important because it allows users to perform tasks like data cleaning, transformation, machine learning, and real-time analytics at speeds significantly faster than traditional data processing frameworks.

Spark achieves this speed through several key features:

- In-memory processing: Spark caches data in RAM, reducing the need to read from disk.

- Distributed computing: Spark distributes the workload across a cluster of machines, enabling parallel processing.

- Lazy evaluation: Spark optimizes the execution plan by only computing results when needed.

Without Spark, Databricks would be unable to deliver its promise of fast and scalable data analytics.

7. Let's say a file is like a recipe. What would you do in Databricks to follow that recipe and bake a cake (get insights)?

If a file is like a recipe (instructions for data processing), in Databricks, I'd follow these steps to 'bake a cake' (derive insights):

First, I'd upload the 'recipe' file to Databricks, likely to DBFS or a mounted storage location. Then, I would create a Databricks notebook (Python, Scala, SQL, or R) to orchestrate the data processing. Inside the notebook, I'd:

Read the 'recipe' file: Use code to read and parse the file, for example:

with open("/dbfs/mnt/my_data/recipe.txt", "r") as f: recipe = f.read()Implement data ingestion: Use Spark to load the data ('ingredients') from the specified source (e.g., files, databases) into DataFrames. For example:

df = spark.read.csv("/dbfs/mnt/my_data/ingredients.csv", header=True)Apply data transformations: Perform the data transformations ('steps') described in the recipe using Spark DataFrame operations (filtering, aggregation, joining, etc.).

Analyze the results: Use Spark to perform analysis and derive insights, possibly visualizing the data using Databricks' built-in visualization tools or libraries like Matplotlib and Seaborn.

Store/present the 'cake': Save the resulting insights ('baked cake') to a table, visualization, or dashboard to make them accessible. Or, if the recipe contains instructions to generate a report, I would create that report.

8. Why is it useful to keep your data (like toys) organized in a special 'house' (data lake/warehouse)?

Keeping data organized in a central repository like a data lake or warehouse makes it much easier to find and use. Imagine searching for a specific toy in a room overflowing with clutter versus finding it neatly stored in its designated box within a toy house. Data lakes/warehouses centralize information, making it accessible for analysis, reporting, and machine learning. This improves efficiency and reduces the time it takes to extract valuable insights.

Furthermore, organized data allows for better consistency and quality. Cleaning and transforming data becomes simpler when it's centrally managed. This enables us to use data more reliably for decision-making, and ensures that different teams are working with the same, trustworthy information. Think of it like labeling all the toy boxes, so you know exactly what's inside, preventing mix-ups and ensuring everyone plays with the right toys.

9. If you accidentally broke some LEGOs (corrupted data), what's one way Databricks could help you fix them?

Databricks can help fix corrupted data (like broken LEGOs) primarily through its data engineering capabilities centered around Delta Lake. Delta Lake provides ACID transactions on top of data lakes, enabling features like versioning and time travel. If data is corrupted, you can use Delta Lake's time travel feature to revert to a previous, known-good version of the data. For example:

%sql RESTORE TABLE my_table TO VERSION AS OF 2;

This command would restore my_table to the state it was in at version 2, effectively undoing the damage caused by the "broken LEGOs". Further, Databricks' Spark engine allows you to perform data cleansing and transformation operations at scale. Corrupted records could be identified, filtered, and corrected, before rewriting a clean version of the dataset. Delta Lake provides the framework, and Spark provides the tools to fix it.

10. What does it mean to 'scale' your Databricks project, like adding more tables to a restaurant?

Scaling a Databricks project, like adding tables to a restaurant, means increasing its capacity and resources to handle larger workloads and more data. Instead of literal tables, think of tables in your data lake or data warehouse. This could involve processing larger datasets, supporting more concurrent users, or performing more complex analyses.

In practical terms, scaling involves several key aspects: increasing compute power (e.g., using larger Databricks clusters or more clusters), optimizing code for performance (e.g., using efficient Spark transformations), managing data storage effectively (e.g., partitioning data), and potentially improving the overall architecture to handle the increased demands. You might optimize Spark configurations, like spark.sql.shuffle.partitions, and increase cluster size as well.

11. Explain how you would use Databricks to find the tallest building of all the buildings in New York City.

I would use Databricks with Spark to process a dataset containing information about New York City buildings. First, I'd load the dataset (e.g., a CSV or Parquet file) into a Spark DataFrame. This could involve reading the data from cloud storage like AWS S3 or Azure Blob Storage using Spark's data source API. Next, I would select the columns relevant to building height (e.g., 'building_name', 'height_feet'). Finally, I would use Spark's aggregation functions to find the maximum height. Specifically, I would use the orderBy function on the height column and then grab the building name via first() or head(1).

For example, in PySpark:

df = spark.read.csv("nyc_buildings.csv", header=True, inferSchema=True)

tallest_building = df.orderBy(desc("height_feet")).select("building_name", "height_feet").first()

print(tallest_building)

This code reads the CSV, orders by height descending, selects the name and height, and prints the building name with the highest height.

12. How would you use Databricks to count how many times the word 'the' appears in a book?

First, load the book's text into a Databricks DataFrame. You can read the text file using spark.read.text().

Then, use the following steps:

- Split the text into words: Use

split()function to split each line into an array of words. - Explode the array: Use

explode()function to create a new row for each word. - Filter for 'the': Filter the DataFrame to keep only rows where the word is 'the' (case-insensitive comparison is recommended:

lower()function). - Count the occurrences: Use

count()function to count the number of rows in the filtered DataFrame.

from pyspark.sql.functions import split, explode, lower

book_df = spark.read.text("path/to/your/book.txt")

word_df = book_df.select(explode(split(book_df.value, "\\s+")).alias("word"))

the_count = word_df.filter(lower(word_df["word"]) == "the").count()

print(f"The word 'the' appears {the_count} times.")

13. If you are given a large file containing customer names and emails, how can you use Databricks to find all customers from California?

Assuming the large file is accessible in Databricks (e.g., in DBFS or an external cloud storage like S3), you can use Spark SQL or PySpark to read the file, apply filtering logic, and extract the required customer data. First, load the data into a Spark DataFrame. Then, assuming there's a 'state' column, filter the DataFrame to only include rows where the 'state' is 'California'. Finally, you can display the results or save them to a new file.

For example, in PySpark:

from pyspark.sql.functions import col

df = spark.read.csv("/path/to/your/file.csv", header=True, inferSchema=True) # Load the CSV file into a DataFrame

california_customers = df.filter(col("state") == "California") # Filter for California customers

california_customers.show() # Display the California customers

#california_customers.write.csv("/path/to/california_customers.csv", header=True) # Save to a new CSV file

14. Explain how Databricks can help a company predict future sales based on past sales data.

Databricks empowers companies to predict future sales by leveraging its unified platform for data engineering, data science, and machine learning. First, historical sales data from various sources (CRM, ERP, point-of-sale systems) is ingested and processed using Databricks' data engineering capabilities with Spark, enabling efficient cleaning, transformation, and aggregation of the data. This cleaned data is then used to train machine learning models using MLlib or other libraries like scikit-learn within the Databricks environment, building predictive models like regression or time series models, which could be productionized easily with MLflow or Databricks Model Serving.

Databricks simplifies the entire sales forecasting process, from data preparation to model deployment. The collaborative workspace allows data scientists and engineers to work together seamlessly. The scalable Spark infrastructure ensures efficient processing of large datasets, and the integrated MLflow platform streamlines model management, tracking, and deployment. Furthermore, Databricks Delta Lake offers reliable data storage and versioning, ensuring data integrity and reproducibility of results, improving confidence in predicted sales figures and enabling proactive decision-making.

15. What's an API in simple terms and how does it help Databricks connect to other things?

An API (Application Programming Interface) is like a waiter in a restaurant. The waiter takes your order (request) to the kitchen (application/system), and then brings back your food (response). In the context of Databricks, an API is a set of rules and specifications that allow different software applications to communicate with each other.

APIs enable Databricks to connect to various data sources, tools, and services. For example, Databricks uses APIs to:

- Read data from cloud storage like AWS S3 or Azure Blob Storage.

- Write data to databases like MySQL or PostgreSQL.

- Integrate with other services like Tableau for visualization or Airflow for orchestration. The underlying calls usually happen using HTTP requests which contain the request and response in a standard format (e.g. JSON).

curl https://someapi.com/datais an example of such an API call. Databricks can then process the data obtained via the API.

16. Imagine you have two lists of names. How would you find the names that are on both lists using Databricks?

To find names present in both lists using Databricks, you can leverage the power of Apache Spark and its DataFrame API. Assuming your lists are stored as DataFrames (e.g., df1 and df2), you can use an inner join operation. First create two dataframes for the name list and load the data into them. For the join to work you'll need a common column, which can be done by specifying the column using .join after specifying the dataframes.

Here's an example using PySpark:

from pyspark.sql.functions import col

# Assuming df1 and df2 are your DataFrames with a column named 'name'

common_names = df1.join(df2, df1["name"] == df2["name"], "inner")

# Select only the 'name' column to get the list of common names (optional)

common_names = common_names.select(col("df1.name"))

common_names.show()

The resulting common_names DataFrame will contain the names that appear in both input DataFrames. This approach is efficient for large datasets because Spark distributes the join operation across the cluster. Note you can write code in Scala as well.

17. How can Databricks help detect fraud by looking at patterns in credit card transactions?

Databricks, leveraging Apache Spark, can efficiently process and analyze large volumes of credit card transaction data to detect fraudulent patterns. It enables data engineers and data scientists to build robust fraud detection systems by:

- Data Ingestion & Preprocessing: Connecting to various data sources (databases, streams), cleaning, transforming, and aggregating transaction data using Spark DataFrames and SQL.

- Feature Engineering: Creating features that are indicative of fraud, such as transaction frequency, amount, location anomalies, spending patterns compared to historical data, etc., all through scalable Spark jobs.

- Model Training & Deployment: Training machine learning models (e.g., Logistic Regression, Random Forest, Gradient Boosted Trees) using MLlib or other ML libraries in Databricks. Databricks' MLflow can track experiments, manage models, and deploy the best-performing model to production for real-time or batch fraud scoring.

- Real-time Monitoring: Implementing streaming data pipelines with Spark Streaming or Structured Streaming to analyze transactions in real-time and trigger alerts when suspicious activities are detected. Databricks Delta Lake allows for reliable and performant data warehousing for both historical analysis and real-time ingestion.

- Collaboration: Provides collaborative notebooks for data scientists and engineers to work together on building, testing, and deploying fraud detection solutions.

18. What are some of the data visualization tools that you can use with Databricks to display data?

Databricks offers several options for data visualization. The built-in display() function is a quick and easy way to generate charts and tables directly from DataFrames within notebooks. This works well for exploratory data analysis. Additionally, you can use popular Python libraries like Matplotlib, Seaborn, and Plotly within Databricks notebooks. These libraries provide a wide range of customization options for creating sophisticated visualizations. For interactive dashboards, Databricks SQL (formerly known as Databricks Workspace SQL) provides a platform to create and share dashboards using SQL queries.

You can also connect Databricks to external BI tools like Tableau, Power BI, and Looker using JDBC/ODBC drivers to leverage their advanced visualization capabilities and integrate Databricks data into existing reporting workflows. For example, with Plotly you can render interactive chart using plotly.offline.iplot().

19. How would you use Databricks to analyze website traffic and find out which pages are most popular?

I would use Databricks with Spark SQL or Python (PySpark) to analyze website traffic logs, typically stored in a format like Apache access logs. First, I'd ingest the log data into a Databricks Delta table. Then, using Spark SQL, I'd query the table to extract the page URLs and aggregate the number of visits for each page. Finally, I'd order the results in descending order to identify the most popular pages. The code would look like SELECT page_url, COUNT(*) AS visit_count FROM logs_table GROUP BY page_url ORDER BY visit_count DESC.

Alternatively, in PySpark, I would load the logs, extract the URLs, and then use groupBy and count functions to achieve the same result. After getting the results, I'd visualize them using Databricks built-in charting tools or other libraries like Matplotlib to easily identify the most popular pages and trends.

20. How can Databricks help improve the accuracy of machine learning models?

Databricks enhances machine learning model accuracy through several key capabilities. Its unified platform streamlines the entire ML lifecycle, facilitating data engineering, feature engineering, model training, and deployment, leading to more consistent and reliable results. Specifically, features like Delta Lake ensure data quality and reliability, crucial for accurate model training, and MLflow enables experiment tracking, versioning, and reproducibility, allowing data scientists to iteratively improve models while maintaining a clear audit trail.

Furthermore, Databricks supports distributed training using Apache Spark, enabling faster model training on large datasets. This is vital for complex models that require substantial data for optimal performance. The platform's collaborative environment promotes knowledge sharing and code reuse within data science teams. AutoML capabilities automate model selection and hyperparameter tuning to improve efficiency.

21. Explain how you would set up a simple Databricks job to run automatically every day.

To set up a daily Databricks job, I'd use the Databricks Jobs UI or the Databricks REST API. Within the UI, I'd create a new job, configure a task to run a specific notebook or JAR, and then define a schedule trigger. This schedule would be set to run daily at the desired time. For example, I could select a daily recurrence and set the 'Hour' and 'Minute' fields to define the exact time of day.

Alternatively, using the REST API, I would create a JSON payload specifying the task details (notebook path, parameters if any) and the schedule (using cron syntax to define daily execution). A sample payload might include:

{

"name": "MyDailyJob",

"tasks": [

{

"task_key": "mytask",

"notebook_task": {

"notebook_path": "/Users/me@example.com/MyNotebook"

},

"new_cluster": {

"spark_version": "12.2.x-scala2.12",

"node_type_id": "Standard_DS3_v2",

"num_workers": 2

}

}

],

"schedule": {

"quartz_cron_expression": "0 0 0 * * ?",

"timezone": "UTC"

}

}

Then, I'd use curl or a similar tool to POST this payload to the Databricks Jobs API endpoint.

22. If you have a file with missing data, how would you use Databricks to handle it?

To handle missing data in Databricks, I would first load the data into a Spark DataFrame. Then, I'd explore the missing data patterns using functions like isNull(), isnan(), count(), and groupBy() to understand the extent and distribution of missing values. Next, I'd choose a suitable imputation strategy.

Common strategies include:

- Dropping rows/columns: Use

dropna()to remove rows or columns with missing values (suitable if missing data is minimal). - Imputation:

- Mean/Median/Mode Imputation: Replace missing values with the mean, median, or mode of the column (using

fillna()with the calculated statistic). - Constant Value Imputation: Replace missing values with a specific constant value (also using

fillna()). - More advanced techniques: For more complex scenarios, I might consider using more advanced imputation techniques like k-Nearest Neighbors imputation (using libraries like

sklearn.imputethrough Spark UDFs) or model-based imputation (training a model to predict missing values). Finally, I'd evaluate the impact of the chosen strategy on downstream analysis.

- Mean/Median/Mode Imputation: Replace missing values with the mean, median, or mode of the column (using

23. How do you ensure that your Databricks code is easy to read and understand for others?

To ensure readability and understanding of my Databricks code, I prioritize clean coding practices. This includes using descriptive variable and function names, adding comments to explain complex logic, and adhering to consistent code formatting (e.g., consistent indentation, line breaks). I also try to keep functions small and focused, with clear input and output parameters. Code should be broken down into smaller, manageable chunks using functions. Avoid long or deeply nested blocks of code. Use markdown cells in Databricks notebooks to explain the purpose of code sections.

I also structure my Databricks notebooks logically, grouping related code cells together and using markdown headings to provide context. Using meaningful cell titles is important. When possible, I follow style guides such as PEP 8 for Python and general SQL style guides when writing SQL queries. If a transformation is particularly complicated, a small sample of the data going into the transformation and the expected output data helps explain it. Consider using Databricks' built in documentation features. For example:

def calculate_average(data: list[int]) -> float:

"""Calculates the average of a list of integers.

Args:

data: A list of integers.

Returns:

The average of the integers in the list.

Raises:

TypeError: If the input is not a list of integers.

ValueError: If the input list is empty.

"""

if not isinstance(data, list):

raise TypeError("Input must be a list.")

if not all(isinstance(x, int) for x in data):

raise TypeError("List must contain only integers.")

if not data:

raise ValueError("Input list cannot be empty.")

return sum(data) / len(data)

24. Let's say you need to process data from multiple sources (like databases and files). How can Databricks help?

Databricks offers several features to help process data from multiple sources efficiently. First, it provides connectors to various data sources like databases (e.g., MySQL, PostgreSQL), cloud storage (e.g., AWS S3, Azure Blob Storage), and file formats (e.g., CSV, JSON, Parquet). These connectors allow you to easily read data into Databricks using Spark's DataFrame API. For example, you can load a CSV file like this:

df = spark.read.csv("path/to/your/file.csv", header=True, inferSchema=True)

Second, Databricks' unified analytics platform allows you to perform transformations and analysis on data from these disparate sources using a single, consistent environment. You can use SQL, Python, Scala, or R to query and process the data. By leveraging Spark's distributed processing capabilities, Databricks can handle large volumes of data from multiple sources with high performance. Furthermore, Databricks Delta Lake adds a storage layer on top of cloud storage for reliability and ACID transactions.

Databricks intermediate interview questions

1. How would you optimize a Databricks notebook that is running slower than expected?

To optimize a slow Databricks notebook, first identify bottlenecks using the Spark UI or Databricks' profiling tools to pinpoint stages or operations consuming the most resources. Then consider these optimizations:

- Data Partitioning: Ensure data is properly partitioned using

repartition()orcoalesce()based on workload to minimize shuffling. - Caching: Cache frequently accessed DataFrames/Datasets using

cache()orpersist(). - Efficient Data Formats: Use efficient formats like Parquet or Delta, leveraging features like predicate pushdown.

- Broadcast Variables: Use broadcast variables for smaller datasets to avoid shuffles during joins.

- Optimize Joins: Choose the appropriate join strategy based on dataset sizes (e.g., broadcast hash join for small-large joins).

- Code Optimization: Review Spark code for inefficiencies; utilize vectorized UDFs where possible and avoid loops.

- Resource Allocation: Tune Spark configuration parameters (e.g.,

spark.executor.memory,spark.executor.cores,spark.driver.memory) to provide sufficient resources. - Data Skew: Handle data skew by salting keys or using techniques like range partitioning.

- Avoid unnecessary shuffles: Refactor the code to avoid wide transformations that require shuffles.

- Optimize file reads: Ensure that the number of files read is optimal. Too many small files can cause slow reads.

- Optimize writes: Ensure that the number of output files is optimal by adjusting the number of partitions.

- Use Delta Lake Optimization Commands: Run

OPTIMIZEandVACUUMcommands on Delta tables.

2. Explain how you would handle skewed data in a Spark DataFrame within Databricks.

To handle skewed data in a Spark DataFrame within Databricks, I would consider strategies like salting, bucketing, and using broadcast joins. Salting involves adding a random prefix or suffix to the skewed key to distribute it across multiple partitions. Bucketing is helpful when joining on skewed keys by dividing the data into buckets. For small dimension tables joined with large fact tables exhibiting skew, broadcast joins can be very effective.

Specifically, for salting, the code might resemble this:

import org.apache.spark.sql.functions._

def addSalt(key: String, numBuckets: Int): String = {

val rand = new scala.util.Random

key + "_" + rand.nextInt(numBuckets).toString

}

val addSaltUDF = udf(addSalt _ , StringType)

df.withColumn("salted_key", addSaltUDF(col("skewed_key"), lit(10)))

After salting/bucketing, we can perform joins on the new 'salted_key' column, thereby reducing skew and improving performance. Adaptive Query Execution (AQE) in Spark is also helpful, as it dynamically optimizes query execution plans at runtime based on data statistics, which can mitigate skew effects. AQE can coalesce small partitions, split skewed partitions, and optimize join strategies.

3. Describe your experience with using Delta Lake for data warehousing in Databricks. What are the benefits?

I have experience using Delta Lake in Databricks for building reliable and scalable data warehouses. My work involved creating data pipelines that ingested data from various sources, performed transformations, and stored the results in Delta tables. I leveraged Delta Lake's features such as ACID transactions to ensure data consistency, especially during concurrent write operations and updates. Time travel was also used to audit data changes and revert to previous versions when necessary.

The benefits of using Delta Lake include improved data reliability due to ACID properties, enhanced data governance through schema enforcement and evolution, and increased query performance with features like data skipping and Z-ordering. Additionally, its seamless integration with Spark made data processing and manipulation efficient, which allowed me to build and maintain robust data warehousing solutions.

4. How do you manage dependencies (e.g., Python libraries) in a Databricks environment to ensure reproducibility?

To manage dependencies in Databricks for reproducibility, I primarily use pip and Databricks init scripts along with Databricks Repos.

Specifically:

requirements.txt: I create arequirements.txtfile listing all necessary Python packages and their versions. This file is then used in an init script that runs when the Databricks cluster starts. The init script executespip install -r requirements.txt, ensuring consistent package versions across all nodes.- Databricks Repos: Code, including init scripts and

requirements.txt, is stored in a Databricks Repo, version-controlled (e.g., using Git). This allows for easy rollback and ensures that the correct version of the code and dependencies are used. - Cluster Libraries: Another approach is to directly install libraries onto a cluster. However,

requirements.txtand init scripts are preferable for greater reproducibility. Libraries are installed to the cluster using the UI or the Databricks CLI. - Wheel Files: For complex or private dependencies, I build wheel files and store them, installing them via

pip install /path/to/wheel_file.whlin the init script.

5. What are some strategies for handling small files in Databricks to improve performance?

Small files in Databricks can lead to performance issues due to increased overhead in managing numerous files. Several strategies can mitigate this:

- Repartioning: Increase the partition size by using

repartition(numPartitions)orcoalesce(numPartitions)methods to reduce the number of partitions and create larger files.repartition()shuffles data fully across the cluster, creating an equal distribution, whilecoalesce()tries to minimize data movement (use coalesce if you know that data is already partitioned reasonably well). However,coalesce()can result in uneven partition sizes. - Writing Data with Optimized Partitioning: When writing data to storage, you can ensure efficient partitioning by specifying the number of partitions. For example, if writing a DataFrame:

This limits file size. Adapt thedf.write.option("maxRecordsPerFile", 1000000).parquet("path/to/output")maxRecordsPerFilevalue based on your data and cluster. - Compaction: Periodically compact small files into larger files. This involves reading small files, concatenating them, and writing the result back to storage. This can be automated with scheduled jobs.

- Using Delta Lake: Delta Lake provides automatic compaction (auto optimize) and data skipping which help in managing small files and improving query performance.

6. How would you set up a CI/CD pipeline for Databricks notebooks and jobs?

A CI/CD pipeline for Databricks notebooks and jobs can be set up using tools like Azure DevOps, GitHub Actions, or GitLab CI/CD. The basic steps involve version controlling your Databricks notebooks (typically as .ipynb files or .py files exported from notebooks) in a Git repository. Then, the CI/CD pipeline would trigger on code commits to the repository. The pipeline would then perform steps such as linting, unit testing (if applicable), and deploying the notebooks and jobs to a Databricks workspace. For deployment, the Databricks CLI or Databricks REST API can be used to import notebooks, create or update jobs based on the latest code in the repository. Consider using databricks bundle feature for managing deployment artifacts as part of CI/CD.

For example, a simple pipeline might:

- Checkout code: Fetch the latest code from the Git repository.

- Lint & Test: Run linters (e.g.,

flake8,pylint) and unit tests. - Deploy to Databricks: Use the Databricks CLI (

databricks bundles deploy) to deploy the notebooks and jobs to a specified Databricks workspace. This could involve:databricks bundles validate: validating the deploymentdatabricks bundles deploy -t <target>: deploying the bundles

- Run tests on Databricks: Trigger a Databricks job to run integration or acceptance tests against the deployed code.

- Report results: Publish the test results and deployment status.

7. Explain how you would monitor Databricks jobs for performance and errors. What metrics are important?

To monitor Databricks jobs, I would leverage several tools and metrics. Primarily, I'd use the Databricks UI to monitor job runs, paying close attention to the Spark UI for detailed information about stages, tasks, and executors. Important metrics include job duration, task duration, shuffle read/write sizes, and executor memory usage. These can help identify bottlenecks.

For proactive monitoring and alerting, I'd configure Databricks monitoring using tools like Azure Monitor, Prometheus, or Datadog to track these key metrics. I'd set up alerts based on thresholds for job failures, long-running tasks, or excessive resource consumption. Additionally, I'd implement logging within the Databricks jobs themselves using log4j or similar, to capture application-specific errors and warnings, which can then be routed to a central logging system for analysis.

8. Describe your experience with Databricks SQL Analytics. How does it differ from using Spark SQL?

My experience with Databricks SQL Analytics involves using it for interactive querying and dashboarding on data lakes. I've used it to build dashboards for business users, perform ad-hoc analysis, and create reports. It's a very performant serverless SQL warehouse, especially when querying data in Delta Lake format.

Databricks SQL and Spark SQL are similar but have key differences. Spark SQL is a general-purpose distributed SQL engine within the broader Apache Spark framework, suitable for large-scale data processing and ETL pipelines. Databricks SQL, on the other hand, is optimized for low-latency SQL queries for BI and analytics workloads. Databricks SQL offers features like a SQL-optimized query engine, query history, dashboards, and alerts that are not readily available in Spark SQL. Think of it this way: Spark SQL is for building the data, while Databricks SQL is for analyzing the finished product.

9. How would you implement row-level security in Databricks to control data access?

Row-level security in Databricks can be implemented using several techniques. A common approach is to leverage Dynamic Views. This involves creating a view that filters data based on a user's identity or group membership using the CURRENT_USER() or a custom function that retrieves group information. The view's definition includes a WHERE clause that restricts access to rows based on the user's context. For example:

CREATE VIEW secured_table AS

SELECT * FROM original_table

WHERE user_id = CURRENT_USER();

Another approach, especially useful with Delta Lake, involves using Delta Lake's table access control features in conjunction with user-defined functions (UDFs) to define fine-grained access policies. UDFs can encapsulate complex authorization logic. These features, when combined, ensure that users only see the data they are authorized to view based on predefined policies or roles within the Databricks environment.

10. Explain how you would use Databricks to build a real-time data pipeline.

To build a real-time data pipeline in Databricks, I'd leverage Structured Streaming. First, I'd configure a streaming source, such as Kafka or Azure Event Hubs, to ingest the incoming data. Then, using Spark Structured Streaming, I'd perform transformations like filtering, aggregation, and enrichment on the data. Finally, I'd write the processed data to a real-time sink like a Delta Lake table configured for streaming reads, a NoSQL database, or a real-time dashboarding solution.

Key considerations include choosing the appropriate trigger interval (e.g., micro-batch or continuous), handling late-arriving data using watermarks, and ensuring fault tolerance with checkpoints. Monitoring the pipeline's performance, including latency and throughput, is crucial for maintaining its stability and efficiency. I would use Databricks' built in monitoring, Spark UI and logging capabilities to achieve this.

11. What is the difference between using `dbutils.notebook.run` and calling a notebook as a job in Databricks?

dbutils.notebook.run executes a notebook within the context of the calling notebook. This means it runs inline, waits for the called notebook to complete, and any variables or configurations set in the called notebook can be directly accessed or affect the calling notebook's state, subject to scope. Also, errors in the called notebook halt the calling notebook.

Calling a notebook as a Databricks job, on the other hand, executes the notebook in a separate, isolated context. It doesn't block the calling process; it's asynchronous. Changes or errors in the job notebook don't directly affect the caller's notebook's execution. Data is passed through well-defined input and output methods (e.g., parameters and returning a value using dbutils.notebook.exit()), ensuring proper separation. Jobs are typically triggered on a schedule or event, and are better suited for production workloads requiring robust error handling and isolation.

12. How would you use Databricks to perform machine learning tasks? Explain your preferred workflow.

I would use Databricks to perform machine learning tasks by leveraging its collaborative workspace, scalable compute, and integrated MLflow tracking. My preferred workflow involves several steps:

First, I'd ingest and prepare data using Spark SQL or PySpark. This involves cleaning, transforming, and feature engineering the data. Second, I would explore different machine learning models using libraries like scikit-learn, TensorFlow, or PyTorch, experimenting with hyperparameters. Then, I'd use MLflow to track experiments, log parameters, metrics, and artifacts. I would deploy the best performing model to a Databricks serving endpoint for real-time predictions or use it for batch inference, and subsequently monitor its performance. Key steps include:

- Data Exploration and Preparation: Use Spark for distributed data processing.

- Model Training: Leverage Databricks' optimized compute for fast training cycles.

- Experiment Tracking (MLflow): Track parameters, metrics, and artifacts.

- Model Deployment: Deploy to Databricks serving or external platforms.

- Monitoring: Continuously monitor model performance and retrain as needed.

13. Describe how you've used Databricks to integrate with other data sources (e.g., cloud storage, databases).

I've used Databricks extensively to integrate with various data sources. For cloud storage, I've connected to AWS S3, Azure Blob Storage, and Google Cloud Storage using the respective connector libraries and configuring access keys or service principals. spark.read.format("parquet").load("s3a://<bucket>/<path>") is a common pattern. For relational databases like PostgreSQL and MySQL, I've utilized JDBC drivers to establish connections and read/write data using Spark's DataFrame API. I also used Databricks secrets to securely manage database credentials. For example, connecting to a postgres database:

df = spark.read.format("jdbc") \

.option("url", "jdbc:postgresql://<host>:<port>/<database>") \

.option("dbtable", "<table>") \

.option("user", dbutils.secrets.get(scope="<scope_name>", key="<user_key>")) \

.option("password", dbutils.secrets.get(scope="<scope_name>", key="<password_key>")) \

.load()

Furthermore, I have integrated with message queues like Kafka using the Spark Streaming API to ingest real-time data into Databricks for processing and analysis. The structured streaming approach makes it easier to work with.

14. How do you handle data versioning and reproducibility in Databricks projects?

Data versioning and reproducibility in Databricks projects can be handled using several techniques. For data, Delta Lake's time travel feature is key. It allows you to query previous versions of your data using SELECT * FROM table VERSION AS OF timestamp or SELECT * FROM table@v1. This enables auditing, debugging, and reproducing past analysis results. Another approach is to use data lineage tools to track the origins and transformations of data.

For code, using Databricks Repos with Git integration is recommended. This allows you to track changes to your notebooks and other code files. It also facilitates collaboration and enables you to revert to previous versions of your code, guaranteeing that you can reproduce specific code states and corresponding analysis. Using %pip freeze > requirements.txt in a notebook and tracking this file with Git alongside your code will also help in managing and reproducing environments.

15. Explain how you would use Databricks to build a data dashboard for stakeholders.

To build a data dashboard for stakeholders using Databricks, I would leverage Databricks SQL and Databricks Dashboards. First, I would use Databricks SQL to create SQL queries that aggregate and transform the raw data stored in Delta Lake tables. These queries would calculate the key metrics and KPIs relevant to the stakeholders, such as daily active users, revenue trends, or customer churn rate. Then, I would use Databricks Dashboards to visualize these metrics. I'd create charts, graphs, and tables that clearly present the data and allow stakeholders to easily understand the trends and patterns.

Specifically, I'd configure the dashboards to automatically refresh at regular intervals (e.g., daily) to ensure the stakeholders always have access to the latest information. I'd also implement access control to restrict the dashboard to specific user groups and give them appropriate permissions, and use parameters, filters, and drill downs to allow for interactivity. To monitor performance I would use Databricks monitoring tools. The choice of charts and tables used in the dashboards would depend on the type of metrics needed by stakeholders.

16. What are some best practices for writing efficient Spark SQL queries in Databricks?

When writing efficient Spark SQL queries in Databricks, consider these best practices:

Partitioning and Bucketing: Use appropriate partitioning and bucketing strategies based on your data and query patterns. This allows Spark to read only relevant data. For example,

PARTITIONED BY (date)orCLUSTERED BY (user_id INTO 200 BUCKETS). Select partitioning keys based on frequently used filter columns.Filtering Early: Apply filters as early as possible in the query to reduce the amount of data processed in subsequent stages. This minimizes data shuffled across the network.

Avoid

SELECT *: Specify the columns you need instead of selecting all columns. This reduces I/O and memory usage.Use Broadcast Joins for Small Tables: For smaller tables, use broadcast joins to avoid shuffling the larger table. You can hint this using

BROADCASTJOINor enable auto-broadcasting using spark config:spark.sql.autoBroadcastJoinThreshold.Optimize Joins: Carefully consider the join order. Start by joining the smallest tables first. For example:

SELECT /*+ BROADCASTJOIN(small_table) */ * FROM large_table JOIN small_table ON large_table.key = small_table.key;Use built-in functions: leverage spark's built-in functions for performance. These are usually highly optimized.

Caching Judiciously: Cache frequently used datasets or intermediate results using

CACHE TABLEordf.cache()to avoid recomputation. However, be mindful of memory constraints.Optimize Data Types: Use the smallest possible data types to reduce storage and memory footprint. For example, use

Intinstead ofLongif the values fit within theIntrange.Analyze Tables: Run

ANALYZE TABLE table_name COMPUTE STATISTICSto update table statistics, which helps the Spark SQL optimizer make better decisions.Avoid UDFs when possible: While UDFs are flexible, they can often be a performance bottleneck. Explore using built-in functions or Spark SQL expressions instead. If UDFs are necessary, consider using Scala or Java UDFs for better performance than Python UDFs.

Consider Delta Lake: Use Delta Lake for data storage. Delta Lake provides features such as data skipping, optimized writes, and caching to improve query performance.

17. How would you use Databricks to implement data governance policies?

Databricks offers several features to implement data governance policies. Unity Catalog provides centralized data governance, security, auditing, and data lineage across Databricks workspaces. You can define access controls, manage data ownership, and track data lineage using Unity Catalog. Data masking and row-level security can be applied to sensitive data.

Additionally, Databricks integrates with external governance tools. Data quality can be monitored through Delta Live Tables with expectations, and audit logs provide a record of data access and modifications. Workflow orchestration tools ensure consistent application of governance rules across pipelines. Furthermore, you can use notebooks for data discovery, documentation, and policy management to improve data understanding and usability.

18. Describe your experience with using Databricks for data exploration and visualization.

I've used Databricks extensively for data exploration and visualization, leveraging its collaborative notebook environment and integration with various data sources. My typical workflow involves loading data from sources like Azure Data Lake Storage Gen2 or AWS S3 into Spark DataFrames. Then, I use Spark SQL or DataFrame operations to perform data cleaning, transformation, and aggregation.

For visualization, I primarily use Databricks' built-in plotting capabilities via %matplotlib inline with libraries like Matplotlib, Seaborn, and Plotly. I also use Databricks widgets to create interactive dashboards. I've also used the display() function to easily render Pandas DataFrames and create basic charts directly within the notebook for quick data insights.

19. How would you use Databricks to perform A/B testing?

To perform A/B testing in Databricks, you would typically use a combination of data manipulation, machine learning, and statistical analysis tools available within the platform. First, you'd need to define your A/B test, identifying the control and treatment groups and the key metrics you want to track (e.g., conversion rate, click-through rate). Then, you can use Spark to process and transform your data, segmenting users or sessions into the A and B groups based on a random assignment.

Next, you would calculate the relevant metrics for each group. Databricks' built-in libraries like mllib or scikit-learn enable machine learning models which may be necessary to predict user behavior based on treatment. Finally, you would employ statistical tests (e.g., t-tests, chi-squared tests) using libraries such as scipy or custom implementations in Spark to determine if the difference in metrics between the A and B groups is statistically significant. If the treatment group performs significantly better, you can confidently implement the change. Logging results to Delta Lake enables repeatability and monitoring over time.

20. Explain how you would handle personally identifiable information (PII) in Databricks to comply with privacy regulations.

To handle PII in Databricks and comply with privacy regulations, I would implement a multi-layered approach. Firstly, I would identify and classify all PII data within the Databricks environment. This involves data discovery and tagging sensitive columns. Secondly, I would implement access controls using Databricks' built-in features like table ACLs and row-level security to restrict access to PII data to only authorized users and services. Data masking or redaction techniques using Spark SQL functions (e.g., regexp_replace, sha2) could be used to protect PII data at rest and in transit, especially when used in development or testing environments. Data encryption using customer-managed keys (CMK) would further protect data at rest. Audit logging would be enabled to track access to PII data, providing traceability for compliance reporting and security investigations.

Finally, data minimization would be practiced by only collecting and storing necessary PII. Data retention policies would be enforced to remove PII data when it is no longer needed. For example, I could use dbutils.fs.rm to delete old files after a certain period (after moving to archival storage). All these processes will be documented and regularly reviewed.

21. What is the role of the Databricks metastore, and how would you manage it?

The Databricks Metastore is a central repository that stores metadata about data assets, such as tables, databases, views, and access control privileges. It acts as a catalog, allowing users and systems to discover, understand, and access data managed by Databricks. It provides a unified view of data across different storage systems like ADLS Gen2 or S3.