Cloud computing is transforming businesses, which makes identifying qualified candidates more critical than ever: hiring the right cloud engineer helps ensure your technology decisions are sound. As demand for cloud skills grows, recruiters need the right questions to assess candidates' expertise.

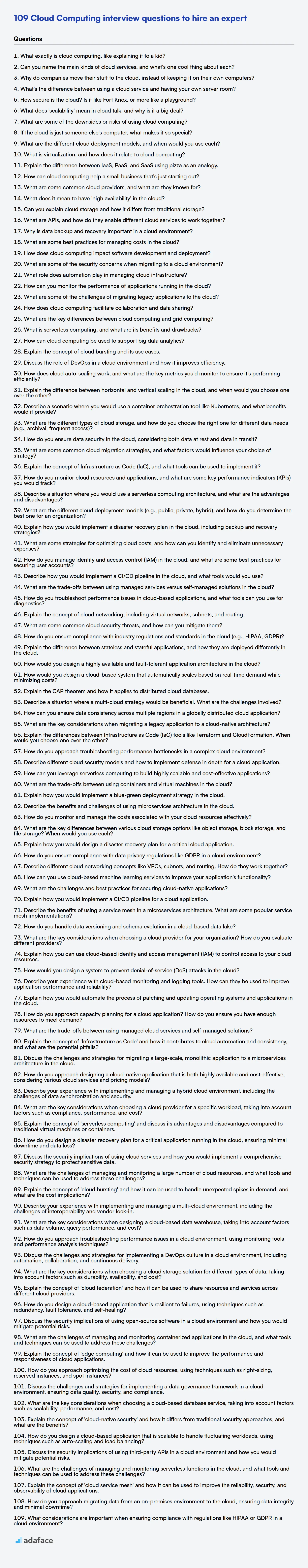

This blog post provides a curated list of cloud computing interview questions, categorized by difficulty, from basic to expert. We also include cloud computing MCQs, ensuring a structured approach to evaluating candidates across various skill levels.

These questions will help you hire strong cloud computing professionals. If you want to screen candidates before the interview, Adaface's cloud computing test can automate your screening process so you can focus on top talent.

Basic Cloud Computing interview questions

1. What exactly is cloud computing? Explain it as you would to a kid.

Imagine you have lots of toys, but instead of keeping them all at your house, you keep them in a giant playroom that everyone can share. That playroom is like the "cloud". Instead of storing things like photos, videos, or programs on your own computer or phone, you store them on computers in that giant playroom. You can get to them from anywhere with the internet, just like visiting that playroom.

So, cloud computing is like using someone else's computers to store your stuff and run programs. This means you don't need super powerful or big computers at home, and you can access your things from any device, anywhere, as long as you have internet!

2. Can you name the main kinds of cloud services, and what's one cool thing about each?

The main kinds of cloud services are Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

- IaaS: The cool thing is control. You manage the operating system, storage, and deployed applications on virtual machines, while the provider manages the physical hardware. It's like having a virtual data center you can customize.

- PaaS: The cool thing is simplified development. You focus solely on your application while the cloud provider handles the underlying infrastructure and runtime, which makes deployment easy and enables rapid development cycles.

- SaaS: The cool thing is accessibility. You use finished software over the internet on any device, as with Gmail or Salesforce, and the provider handles all maintenance, which makes it very user-friendly.

3. Why do companies move their stuff to the cloud, instead of keeping it on their own computers?

Companies move to the cloud for several reasons, primarily cost, scalability, and reliability. Cloud providers offer a pay-as-you-go model, which reduces upfront investment in hardware and ongoing maintenance expenses and can make the cloud cheaper than running everything in-house. Cloud resources can also scale up or down with demand, so the system can absorb traffic spikes without the company over-provisioning hardware for occasional peak loads.

Furthermore, cloud providers offer robust infrastructure with built-in redundancy and disaster recovery capabilities. This reduces downtime and improves data availability, both of which are much more costly and complex to implement on-premises. Cloud environments also simplify collaboration and access to data from anywhere. Security is another important factor: cloud providers invest heavily in security infrastructure and expertise that an individual company may not be able to match.

4. What's the difference between using a cloud service and having your own server room?

Using a cloud service means renting computing resources (servers, storage, and so on) from a provider like AWS, Azure, or Google Cloud. You pay only for what you use, and the provider handles the underlying physical infrastructure, its maintenance and physical security, and the spare capacity needed for scaling. This offers benefits like reduced upfront costs, greater agility, and faster deployment.

Having your own server room means owning and maintaining all the physical hardware yourself. This requires significant upfront investment plus ongoing costs for electricity, cooling, and IT staff. However, it gives you more direct control over data security and compliance, and it may be preferable if you have very specific hardware or software requirements that cloud providers can't easily meet, or legal requirements about where data must be stored (data locality).

5. How secure is the cloud? Is it like Fort Knox, or more like a playground?

Cloud security is neither 'Fort Knox' nor a 'playground'; it is better described by the shared responsibility model. Cloud providers invest heavily in security measures like physical security, network security, and compliance certifications. However, the security of your data and applications in the cloud depends heavily on your own configurations and practices.

Factors like properly configuring access controls (IAM), encrypting data at rest and in transit, regularly patching systems, and implementing robust monitoring and logging are crucial. Neglecting these responsibilities can leave you vulnerable, regardless of the cloud provider's inherent security. Think of it like a secure building (the cloud provider's infrastructure), where you are responsible for locking your apartment (your data and applications).
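To make least-privilege access control concrete, here is a minimal Python sketch of how a permission check can combine allow and deny rules. The policy format, `POLICY`, and `is_allowed` are hypothetical simplifications, not a real provider's API; they only mirror two rules AWS IAM applies when evaluating requests: anything not explicitly allowed is denied, and an explicit deny overrides any allow.

```python
from fnmatch import fnmatchcase

# Hypothetical, simplified policy format (not the exact AWS IAM JSON schema):
# each statement has an effect, action patterns, and resource patterns.
POLICY = [
    {"effect": "Allow", "actions": ["s3:GetObject"],
     "resources": ["arn:aws:s3:::reports/*"]},
    {"effect": "Deny", "actions": ["s3:*"],
     "resources": ["arn:aws:s3:::reports/secret/*"]},
]


def _matches(patterns, value):
    # Shell-style wildcards: "*" matches any run of characters.
    return any(fnmatchcase(value, pattern) for pattern in patterns)


def is_allowed(policy, action, resource):
    """Default deny; an explicit Deny always wins over any Allow."""
    allowed = False
    for stmt in policy:
        if _matches(stmt["actions"], action) and _matches(stmt["resources"], resource):
            if stmt["effect"] == "Deny":
                return False  # explicit deny short-circuits everything
            allowed = True
    return allowed
```

Real IAM evaluation also considers conditions, resource-based policies, and permission boundaries, so treat this as an illustration of the reasoning rather than a substitute for the provider's own evaluation tools.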

6. What does 'scalability' mean in cloud talk, and why is it a big deal?

In cloud computing, scalability refers to the ability of a system, application, or infrastructure to handle a growing amount of workload or users. It means the system can easily adapt to increased demand without negatively impacting performance or availability. There are two primary types: Vertical scalability (scaling up), which involves adding more resources (CPU, memory) to an existing server, and Horizontal scalability (scaling out), which involves adding more servers to the system.

Scalability is crucial because it allows businesses to efficiently manage fluctuating workloads, meet growing customer demands, and avoid performance bottlenecks. Without scalability, a sudden surge in traffic could overwhelm the system, leading to slow response times, application crashes, and ultimately, a poor user experience and potential revenue loss. Cloud platforms provide tools and services that make scaling easier and more cost-effective compared to traditional on-premises infrastructure.

7. What are some of the downsides or risks of using cloud computing?

While cloud computing offers numerous benefits, some downsides and risks include:

- Security Risks: Data breaches, vulnerabilities in the cloud provider's infrastructure, and unauthorized access are significant concerns. You are entrusting your data to a third party, and their security becomes your security.

- Downtime and Availability: Cloud services can experience outages, impacting your application's availability. Reliance on the cloud provider's infrastructure means you're subject to their uptime guarantees and potential service disruptions.

- Vendor Lock-in: Migrating from one cloud provider to another can be complex and expensive, leading to vendor lock-in. Standardizing on a specific cloud provider's services and APIs can make switching difficult.

- Cost Management: While cloud computing can be cost-effective, unexpected usage patterns, hidden fees, and inefficient resource allocation can lead to cost overruns. Careful monitoring and optimization are crucial.

- Compliance and Regulatory Issues: Depending on your industry and data location, cloud computing may introduce compliance challenges. Adhering to regulations like GDPR or HIPAA can be more complex in a cloud environment.

- Loss of Control: You relinquish some control over your infrastructure and data when using cloud services. You are dependent on the cloud provider for maintenance, security updates, and other operational aspects.

- Network Dependency: Cloud services rely on a stable and reliable internet connection. Poor network connectivity can significantly impact application performance and accessibility.

8. If the cloud is just someone else's computer, what makes it so special?

While the cloud utilizes physical servers in data centers, its power lies in abstraction and automation. It's not just someone else's computer because it provides on-demand access to a vast pool of resources (compute, storage, databases, etc.) managed by the provider. This abstraction allows users to scale resources up or down rapidly, pay only for what they use, and offload infrastructure management burdens.

Furthermore, cloud platforms offer a range of managed services and tools that would be complex and time-consuming to build and maintain in a traditional environment. These include things like load balancing, auto-scaling, monitoring, security, and serverless computing (e.g., AWS Lambda, Azure Functions). This reduces operational overhead and allows organizations to focus on their core business and innovation rather than IT infrastructure.

9. What are the different cloud deployment models, and when would you use each?

Cloud deployment models dictate where your cloud infrastructure resides and how it's managed. The main types are:

- Public Cloud: Resources are owned and operated by a third-party provider (e.g., AWS, Azure, GCP) and shared among multiple tenants. Use when cost-effectiveness, scalability, and minimal management overhead are priorities. Suitable for general-purpose applications, development/testing, and handling variable workloads.

- Private Cloud: Resources are dedicated to a single organization, either on-premises or hosted by a third-party. Use when strict security, compliance, and control are required. Suitable for sensitive data, regulated industries, and legacy applications.

- Hybrid Cloud: A combination of public and private clouds, allowing data and applications to be shared between them. Use when you need to leverage the benefits of both models, such as scaling to the public cloud during peak demand while keeping sensitive data in a private cloud. Good for phased migration, disaster recovery, and workload optimization.

- Community Cloud: Resources are shared among several organizations with similar interests or requirements (e.g., security, compliance). Use when a group of organizations needs to collaborate and share resources in a secure environment, such as government agencies or research institutions.

10. What is virtualization, and how does it relate to cloud computing?

Virtualization is the process of creating a software-based (or virtual) representation of something physical, like a computer, server, network, or operating system. It allows you to run multiple operating systems or applications on a single physical machine, maximizing resource utilization and reducing hardware costs.

Virtualization is a core technology underpinning cloud computing. Cloud providers use virtualization to create and manage virtual machines (VMs) that customers can rent and use. This allows for on-demand scaling and resource allocation, which are key characteristics of cloud services. Without virtualization, cloud computing wouldn't be nearly as efficient or cost-effective.

11. Explain the difference between IaaS, PaaS, and SaaS using pizza as an analogy.

Imagine you want to eat pizza. Making everything at home, including buying your own oven, is like running your own data center. With IaaS (Infrastructure as a Service), someone rents you a fully equipped kitchen with an oven (the infrastructure), but you buy the ingredients (dough, sauce, cheese, toppings) and make and bake the pizza yourself. You manage everything except the kitchen itself.

With PaaS (Platform as a Service), the kitchen, the oven, and a pre-made dough and sauce base are all provided. You add your own cheese and toppings (your application) and put it in the oven. You worry about your toppings, but not about sourcing the basic ingredients or maintaining the oven.

With SaaS (Software as a Service), you simply order a pizza that's already made and delivered to your door. You just eat it; the pizza company handles everything else (ingredients, oven, baking, delivery).

12. How can cloud computing help a small business that's just starting out?

Cloud computing offers several advantages for startups. It eliminates the need for significant upfront investment in hardware and software, reducing capital expenditure. Instead of buying servers and licenses, businesses can pay for resources as they consume them (pay-as-you-go model). This can significantly improve cash flow and allow the business to allocate resources to other critical areas like product development or marketing.

Furthermore, cloud services offer scalability and flexibility. A startup can easily adjust its computing resources based on demand, scaling up during peak seasons and scaling down during quieter periods. This avoids over-provisioning and wasted resources. Cloud providers also handle infrastructure maintenance, updates, and much of the underlying security, freeing the small business's technical staff to focus on core business activities, and managed backup services make it easier to protect data. This improved efficiency and business continuity is a big plus for smaller, less established companies.

13. What are some common cloud providers, and what are they known for?

Some common cloud providers include:

- Amazon Web Services (AWS): Known for its wide range of services, mature platform, and large market share. It's suitable for virtually any use case.

- Microsoft Azure: Strong integration with Microsoft products (Windows Server, .NET), hybrid cloud capabilities, and a global network. Good for enterprises already using Microsoft technologies.

- Google Cloud Platform (GCP): Known for its strengths in data analytics, machine learning, and Kubernetes. Often chosen for data-intensive applications and innovative solutions.

- DigitalOcean: Simple, developer-friendly platform focusing on virtual machines and straightforward deployments. A good choice for smaller projects and individual developers.

- IBM Cloud: Provides a range of services, including infrastructure, platform, and software, with a focus on enterprise solutions and hybrid cloud environments.

14. What does it mean to have 'high availability' in the cloud?

High availability (HA) in the cloud means that your applications and services are consistently accessible and operational, minimizing downtime. It ensures that your system can withstand failures (hardware, software, or network) without significant interruption to users. HA is achieved through redundancy and fault tolerance. Key aspects include:

- Redundancy: Multiple instances of your application or service running in different availability zones.

- Failover mechanisms: Automatic switching to a healthy instance when another fails.

- Monitoring: Continuous monitoring of system health to detect and respond to failures quickly.

- Load balancing: Distributing traffic across multiple instances to prevent overload and improve resilience.

- Automated recovery: Implementing automated processes to restore service after a failure.
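Redundancy pays off because of simple probability. The sketch below, assuming component failures are independent, shows why running copies in several availability zones raises availability, while chaining dependencies in series lowers it. The function names and the 99%/99.99% figures are illustrative assumptions, not provider SLAs.

```python
import math

HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def parallel_availability(single, replicas):
    """Redundant copies: the service is down only if every copy is down.
    Assumes failures are independent, e.g. copies in separate zones."""
    return 1 - (1 - single) ** replicas


def serial_availability(*components):
    """Chained dependencies: every component must be up at the same time."""
    return math.prod(components)


def downtime_hours_per_year(availability):
    return (1 - availability) * HOURS_PER_YEAR


# Two zones at 99% each, behind a load balancer assumed to be 99.99% available.
web_tier = parallel_availability(0.99, 2)          # about 0.9999
overall = serial_availability(0.9999, web_tier)    # about 0.9998
print(f"{overall:.4%} -> {downtime_hours_per_year(overall):.2f} h/year")
```

The independence assumption is optimistic: correlated failures such as a region-wide outage or a bad deployment hit every copy at once, which is why failover, monitoring, and automated recovery still matter.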

15. Can you explain cloud storage and how it differs from traditional storage?

Cloud storage is a service where data is stored on remote servers accessible over the internet, managed by a third-party provider. Traditional storage, on the other hand, involves storing data on local devices like hard drives or network-attached storage (NAS) within your own infrastructure.

The main differences lie in accessibility, scalability, and management. Cloud storage offers anytime, anywhere access, easy scalability to adjust storage capacity, and reduced management overhead because the provider handles hardware maintenance and much of the security. Traditional storage is usually reachable only from your own premises or network, scales only as far as the hardware you buy, and requires in-house management of infrastructure, backups, and security.

16. What are APIs, and how do they enable different cloud services to work together?

APIs (Application Programming Interfaces) are sets of rules and specifications that software programs can follow to communicate with each other. They define the methods and data formats that applications use to request and exchange information, acting as intermediaries that allow different systems to interact without needing to know the underlying implementation details of each other. Think of it like a restaurant menu: you (the application) order specific dishes (API requests) without needing to know how the chef (the service) prepares them.

In the context of cloud services, APIs are crucial for interoperability. They enable services offered by different providers or even different services within the same provider to work together seamlessly. For example, a cloud storage service can use an API to allow a cloud-based image processing service to access and modify stored images. This interconnectedness allows developers to build complex applications by leveraging the capabilities of multiple independent cloud services, fostering innovation and efficiency.

17. Why is data backup and recovery important in a cloud environment?

Data backup and recovery are crucial in the cloud due to the inherent risks of data loss. These risks can stem from various sources, including hardware failures, software bugs, accidental deletions, security breaches (like ransomware attacks), and natural disasters. Without robust backup and recovery strategies, businesses face potential financial losses, reputational damage, and legal liabilities. Regular backups ensure that data can be restored to a previous state, minimizing downtime and data loss in case of an incident.

Recovery mechanisms ensure business continuity. Cloud environments, while generally reliable, are still susceptible to failures. Backup and recovery strategies also facilitate data migration and disaster recovery, allowing organizations to maintain operational resilience and meet regulatory compliance requirements. Effective strategies include automated backups, geographically diverse storage, and well-defined recovery procedures.
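Automated backups usually come with a retention policy that decides which snapshots to keep and which to expire. Here is a minimal sketch of a simplified two-tier policy in the spirit of grandfather-father-son rotation; the function name, the 7-day and 4-week windows, and the choice of Sunday as the weekly backup are all hypothetical parameters.

```python
from datetime import date, timedelta


def backups_to_keep(backup_dates, today, daily_days=7, weekly_weeks=4):
    """Hypothetical two-tier retention policy: keep every backup from the
    last `daily_days` days, plus Sunday backups from the last `weekly_weeks`
    weeks. Anything not returned is a candidate for expiry."""
    keep = set()
    for d in backup_dates:
        age = (today - d).days
        if 0 <= age < daily_days:
            keep.add(d)  # daily tier
        elif 0 <= age < weekly_weeks * 7 and d.weekday() == 6:
            keep.add(d)  # weekly tier (weekday 6 is Sunday)
    return sorted(keep)


today = date(2024, 6, 30)
nightly = [today - timedelta(days=i) for i in range(40)]  # 40 nightly backups
print(len(backups_to_keep(nightly, today)))  # 10: 7 dailies + 3 older Sundays
```

Keeping the rule in code makes it easy to test before it deletes anything; many providers also offer built-in lifecycle or backup-plan settings that express the same idea declaratively.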

18. What are some best practices for managing costs in the cloud?

Some best practices for managing cloud costs include right-sizing resources, which means choosing the appropriate instance types and storage sizes for your workloads. Regularly monitor resource utilization and adjust as needed. Implement auto-scaling to scale resources up or down automatically based on demand, avoiding over-provisioning during periods of low activity. Use reserved instances or committed use discounts for predictable workloads to significantly reduce costs compared to on-demand pricing.

Another important aspect is cost allocation by using tags to track costs by department, project, or environment. This allows for better visibility and accountability. Additionally, identify and eliminate idle resources that are no longer in use. You can also leverage cloud provider cost management tools to gain insights into spending patterns and identify optimization opportunities. Regularly reviewing and optimizing your cloud architecture can also help minimize costs.
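Tag-based cost allocation and idle-resource detection come down to simple aggregation over a billing or inventory export. The sketch below assumes a hypothetical inventory format (`RESOURCES`, with a `project` tag, a monthly cost, and average CPU); real billing exports differ by provider, and low CPU alone only flags a resource for review rather than proving it is unused.

```python
from collections import defaultdict

# Hypothetical inventory: monthly cost in USD and average CPU utilization (%).
RESOURCES = [
    {"id": "vm-1", "tags": {"project": "checkout"}, "monthly_cost": 120.0, "avg_cpu": 45.0},
    {"id": "vm-2", "tags": {"project": "checkout"}, "monthly_cost": 80.0, "avg_cpu": 2.0},
    {"id": "vm-3", "tags": {"project": "search"}, "monthly_cost": 200.0, "avg_cpu": 60.0},
    {"id": "vm-4", "tags": {}, "monthly_cost": 50.0, "avg_cpu": 1.0},
]


def cost_by_tag(resources, key="project"):
    """Sum monthly cost per tag value; untagged spend is grouped separately."""
    totals = defaultdict(float)
    for r in resources:
        totals[r["tags"].get(key, "untagged")] += r["monthly_cost"]
    return dict(totals)


def idle_candidates(resources, cpu_threshold=5.0):
    """Resources whose average CPU is below the threshold, flagged for review."""
    return [r["id"] for r in resources if r["avg_cpu"] < cpu_threshold]


print(cost_by_tag(RESOURCES))
print(idle_candidates(RESOURCES))
```

Surfacing an explicit "untagged" bucket is a deliberate choice: it shows how much spend nobody is accountable for, which is often the first thing to fix.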

19. How does cloud computing impact software development and deployment?

Cloud computing significantly impacts software development and deployment by offering scalable and on-demand resources, which streamlines the entire process. Developers can leverage cloud-based IDEs, testing environments, and collaboration tools, accelerating development cycles.

For deployment, cloud platforms provide automated deployment pipelines, continuous integration and continuous delivery (CI/CD) capabilities, and global content delivery networks (CDNs). This results in faster release cycles, improved application availability, and reduced infrastructure management overhead. Using services like AWS Lambda or Azure Functions enables serverless architectures, allowing developers to focus solely on code. These technologies shift the focus from infrastructure concerns to code quality and features.

20. What are some of the security concerns when migrating to a cloud environment?

Migrating to the cloud introduces several security concerns. Data breaches are a primary worry, as sensitive data stored in the cloud becomes a target. Insufficient access controls and misconfigured cloud services can expose data. Compliance is another concern, ensuring the cloud environment meets regulatory requirements like GDPR or HIPAA.

Another key area is provider risk: make sure the cloud provider's security practices align with your organization's, keeping in mind that vendor lock-in can make it hard to switch providers if they don't. Insider threats from cloud provider employees or compromised accounts are also potential risks. Furthermore, denial-of-service (DoS) and advanced persistent threat (APT) attacks targeting cloud infrastructure can disrupt services and compromise data. Finally, the shared responsibility model, where security is divided between the cloud provider and the customer, can lead to gaps if responsibilities aren't clearly defined and managed.

21. What role does automation play in managing cloud infrastructure?

Automation is crucial for efficient cloud infrastructure management. It reduces manual effort, minimizes errors, and improves scalability. Common use cases include automated provisioning and configuration of resources, automated deployment of applications using CI/CD pipelines, automated scaling based on demand, and automated monitoring and remediation of issues. Infrastructure as Code (IaC) tools like Terraform and CloudFormation enable declarative management of infrastructure, ensuring consistency and repeatability.
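The core idea behind declarative IaC tools is reconciliation: compare the desired state you wrote down with the state that actually exists, and compute the actions needed to close the gap. The `plan` function below is a hypothetical, heavily simplified sketch of that idea; real tools such as Terraform also track dependencies, distinguish in-place updates from replacements, and persist state.

```python
def plan(desired, current):
    """Compare desired and current state (dicts keyed by resource name)
    and return the actions needed to make current match desired."""
    actions = []
    for name, spec in desired.items():
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("update", name))
    for name in current:
        if name not in desired:
            actions.append(("delete", name))
    return actions


desired = {"web": {"size": "medium", "count": 3}, "db": {"size": "large"}}
current = {"web": {"size": "small", "count": 3}, "cache": {"size": "small"}}
print(plan(desired, current))
```

Because the plan is derived from state rather than from a list of steps, running it again after the changes are applied yields no actions, which is what makes declarative provisioning repeatable.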

22. How can you monitor the performance of applications running in the cloud?

Monitoring cloud application performance involves several key strategies. Cloud providers offer native monitoring tools like AWS CloudWatch, Azure Monitor, and Google Cloud Monitoring that provide metrics, logs, and tracing capabilities. These tools allow you to track CPU utilization, memory consumption, network latency, and other vital statistics. You can also set up alerts based on thresholds to be notified of performance degradations or anomalies. Furthermore, Application Performance Monitoring (APM) tools such as New Relic, Datadog, and Dynatrace offer deeper insights into application code, database queries, and external dependencies.

Besides cloud-native and APM tools, log aggregators such as the ELK stack (Elasticsearch, Logstash, Kibana) or Splunk can be invaluable for centralized log analysis. Synthetic monitoring, which simulates user traffic to test application availability and responsiveness from different geographic locations, is also beneficial. Regularly reviewing logs and metrics and proactively addressing identified bottlenecks is crucial for maintaining optimal application performance in the cloud.
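Threshold alerts are usually evaluated over a window of datapoints rather than a single reading, so one brief spike does not page anyone. The sketch below implements an "M out of N datapoints" rule, similar in spirit to the datapoints-to-alarm setting CloudWatch alarms offer; the `ThresholdAlarm` class and its parameters are hypothetical and not a provider API.

```python
from collections import deque


class ThresholdAlarm:
    """Alarm when at least `m` of the last `n` datapoints exceed `threshold`."""

    def __init__(self, threshold, m, n):
        if not 1 <= m <= n:
            raise ValueError("need 1 <= m <= n")
        self.threshold = threshold
        self.m = m
        self.window = deque(maxlen=n)  # oldest datapoint drops off automatically

    def observe(self, value):
        """Record one datapoint and return True if the alarm is firing."""
        self.window.append(value > self.threshold)
        return sum(self.window) >= self.m


cpu_alarm = ThresholdAlarm(threshold=80, m=3, n=5)
for reading in [70, 85, 90, 60, 95]:
    print(reading, cpu_alarm.observe(reading))
```

Raising `m` or `n` trades detection speed for fewer false alarms; the right values depend on how quickly the problem you are watching for causes user-visible harm.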

23. What are some of the challenges of migrating legacy applications to the cloud?

Migrating legacy applications to the cloud presents several challenges. One key obstacle is often compatibility. Legacy systems may rely on outdated technologies or specific hardware configurations that don't translate well to the cloud environment. This can necessitate significant code refactoring, re-architecting, or even complete application replacement, which can be costly and time-consuming.

Another major challenge is data migration. Moving large volumes of data from on-premises systems to the cloud can be complex, especially when dealing with sensitive data that must comply with regulations. Network bandwidth limitations, data inconsistencies, and downtime during the migration also need to be carefully addressed. Security is paramount as well: the cloud environment must meet or exceed existing security standards.

24. How does cloud computing facilitate collaboration and data sharing?

Cloud computing significantly eases collaboration and data sharing through centralized storage and accessibility. Multiple users can access and work on the same data simultaneously from different locations, eliminating geographical barriers.

Key mechanisms include:

- Centralized Storage: Data is stored in a central cloud repository, making it accessible to all authorized users.

- Access Control: Role-based access control mechanisms manage who can view, edit, or share specific data.

- Version Control: Cloud platforms often maintain version histories of files, enabling users to track changes and revert to previous versions if necessary.

- Collaboration Tools: Many cloud services integrate collaboration features like real-time co-editing (e.g., Google Docs) or commenting, enhancing teamwork.

- Sharing Options: Cloud platforms provide various sharing options, such as generating shareable links with specific permissions, facilitating easy data distribution.

25. What are the key differences between cloud computing and grid computing?

Cloud computing and grid computing are both distributed computing paradigms, but they differ in their focus and management. Cloud computing provides on-demand access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. It emphasizes scalability, elasticity, and pay-per-use models.

Grid computing, on the other hand, focuses on aggregating geographically dispersed resources (often heterogeneous) to solve complex problems that require massive computational power. It's typically used for research and scientific applications. While cloud computing aims to provide a readily available and managed environment, grid computing is more about resource sharing and collaboration among different organizations or individuals, with a higher degree of user involvement in resource management.

26. What is serverless computing, and what are its benefits and drawbacks?

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. You don't have to provision or manage servers. You simply deploy your code, and the cloud provider takes care of the rest. Common examples include AWS Lambda, Azure Functions, and Google Cloud Functions.

Benefits include reduced operational costs (pay-per-use), automatic scaling, faster deployment, and increased developer productivity. Drawbacks include potential vendor lock-in, cold starts (initial latency), limitations in execution time and resources, debugging complexities, and architectural complexity from composing many functions together.
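In practice, "just deploy your code" means writing a handler function the platform invokes per event. AWS Lambda's Python runtime calls a handler with an `event` and a `context` argument. The sketch below assumes the function sits behind API Gateway with a proxy integration, so the event carries `queryStringParameters` and the return value uses the `statusCode`/`headers`/`body` shape that integration expects; the greeting logic itself is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Minimal Lambda-style handler, assuming an API Gateway proxy event."""
    # API Gateway sends null when there is no query string, hence `or {}`.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, you can call it locally, for example `lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)`, before deploying it; provisioning, scaling, and per-invocation billing are then handled by the platform.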

27. How can cloud computing be used to support big data analytics?

Cloud computing provides scalable and cost-effective infrastructure for big data analytics. Cloud platforms offer services like object storage (e.g., AWS S3, Azure Blob Storage) to store massive datasets and compute services (e.g., AWS EC2, Azure Virtual Machines) to process them. Managed services such as data warehousing (e.g., Amazon Redshift, Google BigQuery), data processing (e.g., AWS EMR, Azure HDInsight), and serverless computing (e.g., AWS Lambda, Azure Functions) further simplify the analytics pipeline.

Cloud's elasticity allows users to scale resources up or down based on demand, optimizing costs. For example, you can spin up a cluster of machines to run a Spark job, and then shut them down when the job is complete. Furthermore, cloud platforms integrate well with big data analytics tools and frameworks like Hadoop, Spark, and Kafka, enabling seamless data processing and analysis. Cloud also provides security features and compliance certifications to protect sensitive data.
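Frameworks like Hadoop and Spark scale because work is split into partitions that are processed independently and then merged. The sketch below shows that map-then-reduce pattern as a word count in a single Python process; the function names and sample data are hypothetical, and on EMR, HDInsight, or a Spark cluster each partition would be processed on a different worker.

```python
from collections import Counter
from functools import reduce


def map_partition(lines):
    """'Map' step: count words within one partition, independently of others."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def reduce_counts(partials):
    """'Reduce' step: merge the per-partition results into one total."""
    return reduce(lambda a, b: a + b, partials, Counter())


partitions = [["the cloud scales", "the data grows"], ["cloud data", "the end"]]
totals = reduce_counts(map(map_partition, partitions))
print(totals.most_common(3))
```

Because each partition needs no data from the others during the map step, adding machines to the cluster shortens the job roughly in proportion, which is what makes short-lived, elastic clusters cost-effective.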

28. Explain the concept of cloud bursting and its use cases.

Cloud bursting is a hybrid cloud strategy where an application or workload normally runs in a private cloud or on-premises data center, but "bursts" into a public cloud when demand spikes. This allows organizations to handle unexpected increases in traffic or processing needs without investing in additional on-premises infrastructure that would sit idle most of the time. It effectively uses public cloud resources as an extension of the private cloud.

Common use cases include: handling seasonal traffic spikes for e-commerce, processing large datasets for scientific research, providing on-demand compute power for media rendering, and ensuring business continuity during disaster recovery scenarios. Cloud bursting is also beneficial when companies want to test or deploy new applications in the public cloud environment without making a significant upfront investment.
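The placement decision at the heart of cloud bursting can be sketched in a few lines: fill local capacity first, then send the overflow to the public cloud. The `place_jobs` function, the job format, and the core counts are hypothetical, and the greedy first-fit rule is just one simple choice; production schedulers also weigh data locality, cost, and compliance constraints.

```python
def place_jobs(jobs, on_prem_capacity):
    """Assign (name, cpu_cores) jobs to on-prem until its capacity is used,
    then burst the overflow to the public cloud (greedy first-fit)."""
    placement = {"on_prem": [], "public_cloud": []}
    used = 0
    for name, cores in jobs:
        if used + cores <= on_prem_capacity:
            placement["on_prem"].append(name)
            used += cores
        else:
            placement["public_cloud"].append(name)
    return placement


jobs = [("render-a", 8), ("render-b", 8), ("render-c", 8), ("report", 2)]
print(place_jobs(jobs, on_prem_capacity=18))
```

Note that the small `report` job still fits on-premises after `render-c` overflows; keeping local capacity as full as possible minimizes the public cloud bill.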

29. Discuss the role of DevOps in a cloud environment and how it improves efficiency.

DevOps in a cloud environment streamlines software development and deployment. It bridges the gap between development and operations teams, enabling faster release cycles, improved collaboration, and enhanced reliability. DevOps leverages cloud-native tools and services for automation, continuous integration (CI), and continuous delivery (CD).

Specifically, cloud environments benefit from DevOps through infrastructure as code (IaC) for automated resource provisioning, automated testing and monitoring, and auto-scaling capabilities which improve resource utilization and reduce costs. This translates into increased efficiency by reducing manual interventions, faster time-to-market for new features, and improved overall system stability. By using cloud-specific CI/CD pipelines, organizations can automate builds, tests, and deployments across various cloud environments with ease.

Intermediate Cloud Computing interview questions

1. How does cloud auto-scaling work, and what are the key metrics you'd monitor to ensure it's performing efficiently?

Cloud auto-scaling automatically adjusts the number of compute resources (e.g., virtual machines) based on demand. It typically works by monitoring resource utilization and triggering scaling actions (adding or removing resources) when predefined thresholds are crossed. The scaling process usually involves these steps: resource monitoring, decision-making (evaluating policies and thresholds), and execution (provisioning/de-provisioning instances).

Key metrics to monitor for efficient auto-scaling include CPU utilization, memory utilization, network traffic, request latency, the number of active requests, and queue length. It is also important to monitor the cost of scaling operations and the number of scaling events, since very frequent scaling can indicate a suboptimal configuration. Application-specific metrics such as transactions per second or error rates can be important too. Monitoring these metrics lets you fine-tune the auto-scaling configuration to balance performance, cost, and responsiveness.
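A common way to turn a monitored metric into a scaling decision is target tracking: scale the fleet in proportion to how far the metric is from its target. The sketch below uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × metric / target), clamped to configured bounds; the function name and example numbers are assumptions, and real autoscalers add tolerances and cooldowns that are omitted here.

```python
import math


def desired_instances(current, metric_value, target_value, min_size, max_size):
    """Proportional target-tracking calculation, clamped to [min_size, max_size].
    Assumes target_value > 0 and that the metric is averaged per instance."""
    desired = math.ceil(current * metric_value / target_value)
    return max(min_size, min(max_size, desired))


# 4 instances averaging 90% CPU against a 60% target -> scale out to 6.
print(desired_instances(4, 90, 60, min_size=2, max_size=10))
```

The clamp is what keeps a runaway metric from scaling costs without limit, and the floor keeps enough capacity for redundancy even when traffic is near zero.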

2. Explain the difference between horizontal and vertical scaling in the cloud, and when would you choose one over the other?

Horizontal scaling (scaling out) involves adding more machines to your pool of resources, while vertical scaling (scaling up) involves adding more power (CPU, RAM) to an existing machine.

You'd choose horizontal scaling when your application is designed to be distributed across multiple machines, allowing you to handle more traffic by simply adding more servers. It's often preferred for cloud environments due to its elasticity and fault tolerance. You'd choose vertical scaling when your application's performance is bottlenecked by the resources of a single machine, and it can benefit from more CPU or RAM. However, vertical scaling has limitations as you can only scale up to the maximum capacity of a single machine and may require downtime during upgrades.

3. Describe a scenario where you would use a container orchestration tool like Kubernetes, and what benefits would it provide?

Consider a scenario where you're deploying a microservices-based e-commerce application. This application consists of multiple independent services like product catalog, order management, user authentication, and payment processing. Each service is packaged as a Docker container.

Using Kubernetes offers several benefits. First, it automates the deployment, scaling, and management of these containers. Kubernetes supports high availability by automatically restarting failed containers and distributing traffic across healthy replicas. Second, it simplifies updates with rolling deployments, minimizing downtime. Finally, it improves resource utilization by scheduling containers onto nodes based on their resource requests and, with autoscaling, adjusting the number of replicas to demand. This leads to better efficiency and cost savings compared to manually managing these services on individual servers.
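The rolling deployments mentioned above replace a service's instances a few at a time, checking health before moving on. The sketch below simulates that idea; it is a simplification, not how the Kubernetes Deployment controller is implemented. `max_unavailable` is loosely analogous to the Deployment setting of the same name, and `is_healthy` is a hypothetical callback standing in for a readiness probe.

```python
def rolling_update(pods, new_version, max_unavailable, is_healthy):
    """Replace pods in batches of `max_unavailable`; halt if a batch is
    unhealthy so the remaining pods keep serving the old version."""
    updated = list(pods)
    for start in range(0, len(updated), max_unavailable):
        end = min(start + max_unavailable, len(updated))
        for i in range(start, end):
            updated[i] = new_version  # stop an old pod, start a new one
        if not all(is_healthy(updated[i]) for i in range(start, end)):
            return updated, False  # halted mid-rollout
    return updated, True


print(rolling_update(["v1"] * 5, "v2", 2, lambda version: True))
```

Halting after the first bad batch limits the damage to `max_unavailable` pods, which is the main reason rolling updates keep downtime low even when a release is faulty.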

4. What are the different types of cloud storage, and how do you choose the right one for different data needs (e.g., archival, frequent access)?

Cloud storage comes in several main types: Object Storage (like AWS S3), Block Storage (like AWS EBS), and File Storage (like AWS EFS or Amazon FSx). Object storage is ideal for unstructured data like images, videos, and backups, and is often used for archival because it is cost-effective and scales almost without limit. Block storage is similar to a virtual hard drive attached to a single machine; it is best for databases, virtual machines, and applications needing low-latency access. File storage provides a shared file system for multiple users or applications and suits scenarios like content repositories, development environments, or media workflows.

The choice depends on access frequency, data type, performance needs, and cost. For archival data needing infrequent access, object storage is generally the best option. For applications requiring frequent, low-latency access, block storage is usually the preferred choice. File storage excels when you need a shared file system among multiple resources.

5. How do you ensure data security in the cloud, considering both data at rest and data in transit?

To ensure data security in the cloud, both at rest and in transit, a multi-layered approach is crucial. For data at rest, encryption is paramount: encrypt data stored in databases, object storage, and file systems. Key management is also critical; use a service such as a cloud KMS (Key Management Service) to securely store and manage encryption keys. Access control lists (ACLs) and Identity and Access Management (IAM) policies should restrict access to data according to the principle of least privilege. Regular vulnerability scanning and penetration testing help identify and address potential weaknesses.

For data in transit, encryption with TLS (for example, HTTPS) is essential whenever data travels over the internet; legacy SSL versions are deprecated and should be disabled. VPNs or dedicated private network connections can be used for secure communication between on-premises environments and the cloud. Monitoring network traffic and implementing intrusion detection/prevention systems (IDS/IPS) can help detect and prevent unauthorized access. Additionally, secure APIs with authentication and authorization mechanisms protect data exchanged between applications.
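On the client side, enforcing encryption in transit mostly means refusing unverified or outdated connections. A minimal sketch using Python's standard `ssl` module follows; the TLS 1.2 floor is an assumed policy choice, and encryption at rest (usually handled by the provider's KMS integration) is not covered here.

```python
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings for data in transit (a sketch; adjust to your policy)."""
    # create_default_context() loads the system CA bundle and turns on
    # certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse SSLv3 and TLS 1.0/1.1 handshakes.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_tls_context()
print(ctx.verify_mode, ctx.minimum_version)
```

The context can then be passed to standard clients, for example `urllib.request.urlopen(url, context=ctx)`, so that every outbound HTTPS call is verified against trusted certificates.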

6. What are some common cloud migration strategies, and what factors would influence your choice of strategy?

Common cloud migration strategies include: Rehosting (lift and shift), Replatforming (lift, tinker, and shift), Refactoring (re-architecting), Repurchasing (switching to a new product), and Retiring (decommissioning). Each has different levels of complexity and associated costs.

Factors influencing the choice of strategy include the application's complexity and business criticality, time constraints, budget, available technical skills, and the desired level of cloud optimization. For example, if an application is simple and time is limited, rehosting might be appropriate. For a critical application where cost optimization matters and time allows, refactoring might be a better option. Compliance requirements can also constrain which strategies are viable.

7. Explain the concept of Infrastructure as Code (IaC), and what tools can be used to implement it?

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure through machine-readable definition files, rather than manual processes or interactive configuration tools. Think of it as treating your infrastructure configuration like you treat application code: write it, version it, test it, and deploy it. This allows for automation, consistency, repeatability, and version control of your infrastructure.

Several tools can be used to implement IaC. Some popular options include:

- Terraform: An open-source infrastructure as code tool that allows you to define and provision infrastructure using a declarative configuration language.

- Ansible: An open-source automation engine that uses playbooks (YAML files) to define infrastructure configurations and automate tasks.

- CloudFormation: A service offered by AWS that allows you to define and provision AWS infrastructure using JSON or YAML templates.

- Azure Resource Manager (ARM) Templates: A service offered by Azure that allows you to define and provision Azure infrastructure using JSON templates.

- Pulumi: An open-source IaC tool that allows you to define and provision infrastructure using familiar programming languages like Python, TypeScript, Go, and C#.
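All of these tools share one core idea: you declare the desired state, and the tool computes the changes needed to reach it (what `terraform plan` or a CloudFormation change set shows you). The sketch below illustrates only that diffing step; real tools also track dependencies, state, and provider APIs. The resource names and attributes are hypothetical.

```python
def plan(desired: dict, current: dict) -> dict:
    """Compare declared resources with what exists and list the required changes.

    Both arguments map a resource name to its configuration dict.
    """
    to_create = sorted(desired.keys() - current.keys())
    to_delete = sorted(current.keys() - desired.keys())
    to_update = sorted(
        name for name in desired.keys() & current.keys()
        if desired[name] != current[name]
    )
    return {"create": to_create, "update": to_update, "delete": to_delete}


# Declared in version-controlled code
desired = {
    "web_server": {"type": "t3.small", "count": 2},
    "logs_bucket": {"versioning": True},
}
# What actually exists in the account right now
current = {
    "web_server": {"type": "t3.micro", "count": 2},
    "old_queue": {},
}

print(plan(desired, current))
```

Running the plan a second time after applying it yields no changes, which is the repeatability property described above.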

8. How do you monitor cloud resources and applications, and what are some key performance indicators (KPIs) you would track?

I monitor cloud resources and applications using a combination of native cloud provider tools (like AWS CloudWatch, Azure Monitor, or Google Cloud Monitoring) and third-party monitoring solutions (like Prometheus, Datadog, or New Relic). These tools allow me to collect metrics, logs, and traces from various resources and applications.

Key performance indicators (KPIs) I'd track include: CPU utilization, memory usage, disk I/O, network traffic, request latency, error rates (e.g., 5xx errors), application response time, database query performance, and the number of active users. For containerized environments, I'd also monitor container resource usage and the number of container restarts. For serverless, I'd monitor invocation counts, execution duration, and cold starts.
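Latency KPIs are usually tracked as percentiles rather than averages, because a handful of slow requests can hide behind a healthy mean. The sketch below computes p50/p95 latency and a 5xx error rate from raw request samples. In practice these numbers come from CloudWatch, Prometheus, or similar tools; the `Request` record and the nearest-rank percentile method are assumptions chosen for illustration (other percentile definitions exist).

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    status: int


def percentile(values, pct):
    """Nearest-rank percentile, with pct in the range 0..100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(requests):
    if not requests:
        raise ValueError("no requests to summarize")
    latencies = [r.latency_ms for r in requests]
    server_errors = sum(1 for r in requests if 500 <= r.status <= 599)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "error_rate": server_errors / len(requests),
    }


# 20 synthetic requests (10 ms .. 200 ms), two of which failed with 503
requests = [Request(10.0 * i, 503 if i in (7, 13) else 200) for i in range(1, 21)]
print(summarize(requests))
```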

9. Describe a situation where you would use a serverless computing architecture, and what are the advantages and disadvantages?

A good scenario for serverless computing is processing images uploaded to a website. When a user uploads an image, a serverless function (like AWS Lambda) can be triggered to automatically resize the image, convert it to different formats (e.g., JPEG, PNG, WebP), and store the optimized versions in cloud storage (e.g., AWS S3). This eliminates the need to manage a dedicated server for image processing.

Advantages include automatic scaling (handles varying workloads seamlessly), cost efficiency (you only pay for the compute time used), and reduced operational overhead (no server maintenance). Disadvantages can include cold starts (initial latency when a function hasn't been used recently), potential vendor lock-in, and limitations on execution time and resources (memory, disk space). Debugging and monitoring can also be more complex compared to traditional server-based applications.

10. What are the different cloud deployment models (e.g., public, private, hybrid), and how do you determine the best one for an organization?

Cloud deployment models include public, private, hybrid, and community clouds. Public clouds offer resources over the internet, managed by a third-party provider (e.g., AWS, Azure, GCP). Private clouds provide dedicated resources for a single organization, either on-premises or hosted by a provider. Hybrid clouds combine public and private clouds, allowing data and applications to be shared between them. Community clouds serve a specific community with shared concerns (e.g., regulatory compliance).

Choosing the best model depends on factors like cost, security, compliance, control, and scalability. Public clouds are generally cost-effective for applications with fluctuating demands. Private clouds offer more control and security for sensitive data. Hybrid clouds provide flexibility and scalability, allowing organizations to leverage the benefits of both public and private clouds. A thorough assessment of an organization's specific needs and constraints is crucial for selecting the optimal deployment model.

11. Explain how you would implement a disaster recovery plan in the cloud, including backup and recovery strategies?

Implementing a disaster recovery (DR) plan in the cloud involves several key strategies. Firstly, backup and replication are crucial. Back up data and applications regularly and store the copies in a different geographical region from the primary deployment. Cloud providers offer services for automated backups and cross-region replication; for example, AWS offers EBS snapshots, cross-region replication for S3 buckets, and RDS backups. The recovery strategy should start by defining the Recovery Time Objective (RTO), the maximum acceptable downtime, and the Recovery Point Objective (RPO), the maximum acceptable data loss measured in time.

Secondly, infrastructure as code (IaC) enables rapid recovery of the entire environment. By defining the infrastructure in code (e.g., using Terraform or CloudFormation), the environment can be quickly recreated in a new region in case of a disaster. Failover and failback procedures should be documented and regularly tested. This includes automating the process of switching traffic to the secondary region and back to the primary region once it's restored. Monitoring and alerting systems should be in place to detect failures and trigger the DR plan automatically. Regular DR drills are essential to ensure the plan's effectiveness and identify areas for improvement.
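Whether a plan meets its RPO and RTO can be checked with simple worst-case arithmetic. The sketch below uses a simplified model (assumed, not a provider formula): the worst-case data loss is one full backup interval plus the replication lag to the DR region, and the worst-case downtime is the time to rebuild the environment plus the time to fail traffic over. The field names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DrPlan:
    backup_interval_min: float   # how often backups/snapshots are taken
    replication_lag_min: float   # delay before a backup is usable in the DR region
    restore_time_min: float      # time to recreate the environment (e.g. via IaC)
    failover_time_min: float     # time to shift traffic to the DR region


def worst_case_data_loss(plan: DrPlan) -> float:
    # A failure just before the next backup loses a full interval of writes,
    # plus whatever had not yet been replicated to the DR region.
    return plan.backup_interval_min + plan.replication_lag_min


def worst_case_downtime(plan: DrPlan) -> float:
    return plan.restore_time_min + plan.failover_time_min


def meets_objectives(plan: DrPlan, rpo_min: float, rto_min: float) -> bool:
    return worst_case_data_loss(plan) <= rpo_min and worst_case_downtime(plan) <= rto_min


hourly = DrPlan(backup_interval_min=60, replication_lag_min=15,
                restore_time_min=45, failover_time_min=10)
print(meets_objectives(hourly, rpo_min=60, rto_min=60))   # 75 min of possible loss misses a 60 min RPO
```

Tightening the backup interval to 15 minutes brings the worst-case loss to 30 minutes, which is the kind of adjustment regular DR drills should validate.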

12. What are some strategies for optimizing cloud costs, and how can you identify and eliminate unnecessary expenses?

Strategies for optimizing cloud costs involve several key areas. Right-sizing resources is crucial; analyze CPU and memory utilization to avoid over-provisioning. Implement auto-scaling to dynamically adjust resources based on demand. Leverage reserved instances or committed use discounts for predictable workloads. Utilize spot instances for fault-tolerant tasks to save money.

To identify and eliminate unnecessary expenses, regularly monitor cloud usage and spending with cost analysis tools. Look for idle or underutilized resources, such as virtual machines and databases. Identify and remove orphaned resources (e.g., unattached volumes, unused snapshots). Implement tagging to improve cost allocation and accountability. Regularly review pricing models and explore more cost-effective options for your needs. Consider using serverless functions (e.g., AWS Lambda) when applicable.
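Much of this review can be automated against an inventory export. The sketch below flags unattached volumes, old snapshots, idle VMs, and untagged resources. The record schema, resource IDs, and thresholds (5% average CPU, 90-day snapshot age) are hypothetical; a real version would read from the provider's inventory or cost APIs.

```python
from datetime import datetime, timedelta, timezone


def find_waste(resources, now=None, idle_cpu_pct=5.0, snapshot_max_age_days=90):
    """Flag resources that are likely costing money without doing useful work.

    Each resource is a dict with 'id', 'kind', and kind-specific fields
    (hypothetical schema).
    """
    now = now or datetime.now(timezone.utc)
    findings = []
    for r in resources:
        if r["kind"] == "volume" and r.get("attached_to") is None:
            findings.append((r["id"], "unattached volume"))
        elif r["kind"] == "snapshot" and now - r["created"] > timedelta(days=snapshot_max_age_days):
            findings.append((r["id"], "old snapshot"))
        elif r["kind"] == "vm" and r.get("avg_cpu_pct", 100.0) < idle_cpu_pct:
            findings.append((r["id"], "idle VM, consider right-sizing or stopping"))
        # Untagged resources cannot be attributed to a team or cost center.
        if not r.get("tags", {}).get("owner"):
            findings.append((r["id"], "missing owner tag"))
    return findings


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
resources = [
    {"id": "vol-1", "kind": "volume", "attached_to": None, "tags": {"owner": "web"}},
    {"id": "vol-2", "kind": "volume", "attached_to": "vm-1", "tags": {"owner": "web"}},
    {"id": "snap-1", "kind": "snapshot", "created": datetime(2024, 1, 1, tzinfo=timezone.utc),
     "tags": {"owner": "db"}},
    {"id": "vm-1", "kind": "vm", "avg_cpu_pct": 2.0, "tags": {}},
]
for finding in find_waste(resources, now=now):
    print(finding)
```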

13. How do you manage identity and access control (IAM) in the cloud, and what are some best practices for securing user accounts?

In the cloud, IAM is managed through services provided by the cloud provider (e.g., AWS IAM, Microsoft Entra ID (formerly Azure Active Directory), Google Cloud IAM). These services allow you to create and manage user identities, define roles with specific permissions, and control access to cloud resources. I typically use a least-privilege approach, granting users only the permissions they need to perform their job functions. Multi-Factor Authentication (MFA) is crucial for all user accounts, especially those with administrative privileges.

Some best practices for securing user accounts include: using strong, unique passwords; regularly rotating credentials; implementing role-based access control (RBAC); auditing IAM configurations and activity; using groups to manage permissions efficiently; enforcing password policies (complexity, expiration); and monitoring for unusual activity that might indicate compromised accounts. It's also important to remove unnecessary accounts and permissions promptly when users leave the organization or change roles. I also automate IAM tasks with tools like Infrastructure as Code (IaC) to ensure consistency and auditability.
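Least privilege is easiest to see in an actual policy document. The example below follows the AWS IAM JSON policy grammar (`Version`, `Statement`, `Effect`, `Action`, `Resource`); the bucket name is hypothetical. The small lint function that flags wildcard grants is an illustrative sketch, not a substitute for tools such as IAM Access Analyzer.

```python
def _as_list(value):
    # IAM allows Statement, Action, and Resource to be a single value or a list.
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy: dict) -> list:
    """Return (statement index, problem) pairs for Allow statements using wildcards."""
    problems = []
    for i, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        if any(a == "*" or a.endswith(":*") for a in actions):
            problems.append((i, "wildcard action"))
        if "*" in resources:
            problems.append((i, "wildcard resource"))
    return problems


# Read-only access to the objects of one bucket, and nothing else.
least_privilege = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["s3:GetObject"],
        "Resource": ["arn:aws:s3:::example-reports-bucket/*"],
    }],
}

# Every S3 action on every resource: far more than most roles need.
too_broad = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}],
}

print(overly_broad_statements(least_privilege))  # no findings
print(overly_broad_statements(too_broad))
```

A check like this fits naturally into the IaC workflow, failing a pull request before an overly broad policy is ever deployed.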

14. Describe how you would implement a CI/CD pipeline in the cloud, and what tools would you use?

To implement a CI/CD pipeline in the cloud, I would leverage cloud-native services and open-source tools. For source code management, I would use Git, hosted on GitHub, GitLab, or AWS CodeCommit. For CI, I'd use Jenkins, GitLab CI, GitHub Actions, or AWS CodePipeline with CodeBuild to automate builds, tests, and static code analysis (using tools like SonarQube). Artifacts would be stored in a repository like JFrog Artifactory or AWS S3.

For CD, I'd use a tool like Spinnaker, Argo CD, or AWS CodeDeploy for deploying to environments like staging and production. Infrastructure as Code (IaC) using Terraform or CloudFormation would be used to provision and manage cloud resources, ensuring consistency across environments. Monitoring and alerting would be integrated using tools like Prometheus, Grafana, or CloudWatch to ensure application health and performance post-deployment.

15. What are the trade-offs between using managed services versus self-managed solutions in the cloud?

Managed services offer simplified operations, reduced overhead (patching, scaling, backups), and often lower initial setup costs. They allow focusing on core business logic rather than infrastructure management. However, they can be more expensive long-term, offer less customization and control, and introduce vendor lock-in. Performance tuning and debugging can be more challenging due to limited access to the underlying infrastructure.

Self-managed solutions provide full control and customization, avoid vendor lock-in, and can lead to cost savings through optimized resource utilization. However, they require significant expertise and resources for setup, maintenance, scaling, and security. The organization is responsible for all aspects of the infrastructure, including incident response and ensuring high availability, which can be time-consuming and costly.

16. How do you troubleshoot performance issues in cloud-based applications, and what tools can you use for diagnostics?

Troubleshooting performance issues in cloud applications involves a systematic approach. First, identify the bottleneck: Is it the application code, database, network, or infrastructure? I'd start by monitoring key metrics using cloud provider tools like AWS CloudWatch, Azure Monitor, or Google Cloud Monitoring. Look for high CPU utilization, memory leaks, slow database queries, network latency, and error rates.

Next, diagnose the root cause: use distributed tracing tools like AWS X-Ray, Azure Application Insights, or Google Cloud Trace to follow requests across services and identify slow code execution paths. Examine database query performance using tools like SQL Server Profiler or pgAdmin. Analyze network traffic using tools like tcpdump or Wireshark. For application code, I'd use logging extensively and, if needed, a debugger to step through the code and identify issues. Finally, I would use load testing tools like JMeter or Gatling to simulate user traffic and identify performance bottlenecks under load.
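The core idea behind tracing is to time each unit of work and see where the time goes. The sketch below records per-function durations with a decorator and ranks functions by total time. It is a stand-in for a real tracing SDK (X-Ray, Application Insights, or OpenTelemetry); the function names and simulated delays are hypothetical.

```python
import functools
import time
from collections import defaultdict

# Recorded durations in seconds, keyed by function name (stand-in for a tracing backend).
timings = defaultdict(list)


def traced(func):
    """Record how long each call takes, similar in spirit to a tracing span."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            timings[func.__name__].append(time.perf_counter() - start)
    return wrapper


def slowest(n=3):
    """Function names ranked by total time spent, slowest first."""
    totals = {name: sum(durations) for name, durations in timings.items()}
    return sorted(totals, key=totals.get, reverse=True)[:n]


@traced
def load_user(user_id):
    time.sleep(0.001)  # simulate a fast cache lookup
    return {"id": user_id}


@traced
def query_orders(user_id):
    time.sleep(0.02)  # simulate a slow database query
    return []


for uid in range(3):
    load_user(uid)
    query_orders(uid)
print(slowest())
```

Once the slow path is known (here, the simulated database query), the next step is the targeted tooling mentioned above, such as examining the query plan.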

17. Explain the concept of cloud networking, including virtual networks, subnets, and routing.

Cloud networking refers to the creation and management of network resources within a cloud computing environment. It enables organizations to build and operate networks without the need for physical infrastructure. Virtual networks are logically isolated networks within the cloud, allowing users to define their own network topology. Subnets are divisions within a virtual network, used to further segment the network and improve security and performance. Routing determines the path that network traffic takes between different subnets, virtual networks, or to external networks.

Key components include: Virtual Networks (isolated network spaces), Subnets (divisions within virtual networks), Routing (traffic path determination), Network Security Groups (firewall rules), and Virtual Appliances (e.g., firewalls, load balancers). Cloud providers offer services to manage these components, such as AWS VPC, Azure Virtual Network, and Google Cloud VPC.
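Subnetting and routing can be explored with Python's standard `ipaddress` module. The sketch below carves a /16 virtual network into /24 subnets and resolves destinations against a route table using longest-prefix matching, the rule cloud route tables such as AWS VPC route tables apply. The CIDR ranges and target names are hypothetical, and providers typically reserve a few addresses in each subnet (AWS reserves five), which this sketch ignores.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC range into /24 subnets (256 addresses each) and take the first two.
subnet_iter = vpc.subnets(new_prefix=24)
public_subnet = next(subnet_iter)    # 10.0.0.0/24
private_subnet = next(subnet_iter)   # 10.0.1.0/24

# Route table: destination CIDR -> target (hypothetical target names).
route_table = {
    ipaddress.ip_network("10.0.0.0/16"): "local",
    ipaddress.ip_network("0.0.0.0/0"): "internet-gateway",
    ipaddress.ip_network("192.168.0.0/16"): "vpn-gateway",
}


def next_hop(destination: str) -> str:
    """Pick the most specific (longest-prefix) route that contains the address."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in route_table if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return route_table[best]


print(public_subnet, private_subnet)
print(next_hop("10.0.1.25"))    # stays inside the VPC
print(next_hop("8.8.8.8"))      # leaves through the internet gateway
print(next_hop("192.168.4.2"))  # goes to on-premises over the VPN
```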

18. What are some common cloud security threats, and how can you mitigate them?

Common cloud security threats include data breaches, misconfigurations, insufficient access control, insecure APIs, and denial-of-service attacks. Malware and ransomware also pose significant risks, exploiting vulnerabilities in cloud infrastructure or applications.

Mitigation strategies involve implementing strong access controls (like multi-factor authentication), regularly monitoring and auditing cloud configurations, using encryption for data at rest and in transit, securing APIs with authentication and authorization mechanisms, and employing intrusion detection/prevention systems. Regular vulnerability scanning and penetration testing can proactively identify and address weaknesses. Cloud providers also offer security tools and services that strengthen security posture, like AWS Security Hub or Microsoft Defender for Cloud (formerly Azure Security Center).
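Misconfigurations are among the most common causes of breaches, and many can be caught with simple automated checks. The sketch below flags inbound firewall rules that expose administrative or database ports to the whole internet. The rule format is a hypothetical schema loosely modeled on security-group rules, and the list of sensitive ports is an illustrative assumption.

```python
SENSITIVE_PORTS = {22: "SSH", 3389: "RDP", 3306: "MySQL", 5432: "PostgreSQL"}


def open_to_world(rules):
    """Flag inbound rules that expose sensitive ports to any IPv4 or IPv6 address.

    Each rule is a dict with 'from_port', 'to_port', and 'cidr' (hypothetical schema).
    """
    findings = []
    for rule in rules:
        if rule["cidr"] not in ("0.0.0.0/0", "::/0"):
            continue
        for port, name in SENSITIVE_PORTS.items():
            if rule["from_port"] <= port <= rule["to_port"]:
                findings.append(f"{name} (port {port}) open to {rule['cidr']}")
    return findings


rules = [
    {"from_port": 443, "to_port": 443, "cidr": "0.0.0.0/0"},     # public HTTPS: expected
    {"from_port": 22, "to_port": 22, "cidr": "0.0.0.0/0"},       # SSH from anywhere: risky
    {"from_port": 5432, "to_port": 5432, "cidr": "10.0.0.0/16"},  # database, internal only
]
print(open_to_world(rules))
```

Running checks like this continuously, rather than only during audits, is what services such as AWS Security Hub automate at scale.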

19. How do you ensure compliance with industry regulations and standards in the cloud (e.g., HIPAA, GDPR)?

To ensure compliance with industry regulations and standards in the cloud, a multi-faceted approach is crucial. This includes implementing strong data governance policies, conducting regular risk assessments, and establishing robust security controls. Specifically, for HIPAA, this means implementing technical safeguards like encryption, access controls, and audit logging. For GDPR, it requires ensuring data privacy by design, obtaining proper consent for data processing, and providing individuals with the right to access, rectify, and erase their data.

Furthermore, leverage cloud providers' compliance tools and services (e.g., AWS Artifact, Microsoft Purview Compliance Manager) to assess and maintain compliance. Regularly monitor and audit systems to verify adherence to policies and standards. Automated compliance checks and continuous monitoring are vital for rapidly identifying and addressing potential violations. Training employees on compliance requirements is also essential.

20. Explain the difference between stateless and stateful applications, and how they are deployed differently in the cloud.

Stateless applications do not store any client data (state) on the server between requests. Each request from a client is treated as a new, independent transaction. This makes them highly scalable, as any server can handle any request. They are deployed in the cloud using load balancers to distribute requests across multiple instances, allowing for easy horizontal scaling. Common deployment strategies include using container orchestration platforms like Kubernetes or serverless functions.

Stateful applications, on the other hand, store client data (state) on the server, and subsequent requests depend on this stored data. This makes them more complex to scale, because you must either route a client's requests to the same server or provide a mechanism to share state across servers. Deployment in the cloud often involves persistent storage solutions (e.g., databases, distributed caches) and sticky sessions (routing requests from the same client to the same server). The orchestrator must also support stateful workloads to keep data consistent and available; in Kubernetes, for example, this typically means running the application as a StatefulSet backed by PersistentVolumes.
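Sticky sessions need a routing rule that always maps the same client to the same server. One common technique is consistent hashing, sketched below: each session ID hashes to a point on a ring, and removing a server only moves the sessions that lived on it. The class, server names, and virtual-node count are hypothetical. Note that stickiness alone does not protect state: if a server dies, its in-memory sessions are lost, which is why state is usually externalized to a database or distributed cache.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Stable across processes, unlike Python's built-in hash() for strings.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class StickyRouter:
    """Route each session to the same backend using a consistent-hash ring."""

    def __init__(self, servers, vnodes=100):
        self.vnodes = vnodes   # virtual nodes per server smooth out the distribution
        self._ring = []        # sorted list of (hash, server)
        for server in servers:
            self.add(server)

    def add(self, server):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{server}#{i}"), server))

    def remove(self, server):
        self._ring = [(h, s) for h, s in self._ring if s != server]

    def route(self, session_id):
        if not self._ring:
            raise RuntimeError("no servers available")
        # First ring point clockwise from the session's hash, wrapping around.
        idx = bisect.bisect(self._ring, (_hash(session_id), ""))
        return self._ring[idx % len(self._ring)][1]


router = StickyRouter(["app-1", "app-2", "app-3"])
print(router.route("session-42") == router.route("session-42"))  # same server every time
```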

21. How would you design a highly available and fault-tolerant application architecture in the cloud?

To design a highly available and fault-tolerant application architecture in the cloud, I would leverage several key principles. First, redundancy is critical. This involves deploying multiple instances of the application across different availability zones (AZs) or regions. Load balancers would distribute traffic across these instances, ensuring that if one instance fails, others can seamlessly take over. Data replication across multiple AZs/regions is also essential for data durability.

Second, I'd implement monitoring and automated failover mechanisms. Monitoring tools would track the health of application instances and infrastructure components. If a failure is detected, automated failover procedures would quickly switch traffic to healthy instances, minimizing downtime. Immutable infrastructure and infrastructure-as-code practices further improve resilience, enabling rapid provisioning of replacement resources. Lastly, utilizing managed services like databases and message queues improves availability as the cloud provider handles much of the underlying infrastructure management.

Advanced Cloud Computing interview questions

1. How would you design a cloud-based system that automatically scales based on real-time demand while minimizing costs?

A cloud-based autoscaling system minimizes cost by dynamically adjusting resources based on real-time demand. Key components include: monitoring tools (e.g., Prometheus, CloudWatch) to track metrics like CPU utilization, memory usage, and request latency; an autoscaling service (e.g., AWS Auto Scaling, Azure Autoscale) configured with scaling policies (e.g., scale out when CPU > 70%, scale in when CPU < 30%); and a load balancer (e.g., Nginx, HAProxy, cloud provider load balancers) to distribute traffic evenly across instances. Implement a cooldown period to prevent rapid scaling fluctuations. Utilize cost optimization strategies such as reserved instances or spot instances where appropriate. Also, regularly review scaling policies and resource usage to identify areas for further optimization.

To further minimize costs, consider serverless functions (e.g., AWS Lambda, Azure Functions) for event-driven workloads, as they scale automatically without requiring server management. Implement caching mechanisms (e.g., Redis, Memcached) to reduce database load and improve response times. Application code should also be optimized for performance, since efficient code needs fewer instances to serve the same load. For example:

```python
def process_data(data):
    # Optimized data processing logic here
    pass
```
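The threshold policy described above (scale out when CPU > 70%, scale in when CPU < 30%, with a cooldown period) can be sketched as a small decision function. This is a simplified model, not the API of AWS Auto Scaling or Azure Autoscale: the one-instance step, the min/max bounds of 2 and 10, and the 300-second cooldown are assumed values, and real services evaluate metrics over a time window rather than a single reading.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScalingPolicy:
    scale_out_cpu: float = 70.0   # add an instance above this average CPU %
    scale_in_cpu: float = 30.0    # remove an instance below this average CPU %
    min_instances: int = 2
    max_instances: int = 10
    cooldown_s: float = 300.0     # ignore new decisions this long after a change


class AutoScaler:
    def __init__(self, policy: ScalingPolicy, instances: int):
        self.policy = policy
        self.instances = instances
        self._last_change: Optional[float] = None

    def evaluate(self, avg_cpu: float, now: float) -> int:
        """Return the instance count after observing avg_cpu at time `now` (seconds)."""
        p = self.policy
        if self._last_change is not None and now - self._last_change < p.cooldown_s:
            return self.instances  # still cooling down; avoids flapping
        target = self.instances
        if avg_cpu > p.scale_out_cpu:
            target += 1
        elif avg_cpu < p.scale_in_cpu:
            target -= 1
        # Clamp to the allowed range so cost and capacity stay bounded.
        target = max(p.min_instances, min(p.max_instances, target))
        if target != self.instances:
            self.instances = target
            self._last_change = now
        return self.instances


scaler = AutoScaler(ScalingPolicy(), instances=2)
print(scaler.evaluate(85.0, now=0))    # scale out to 3
print(scaler.evaluate(90.0, now=60))   # still 3: inside the cooldown window
```

The `min_instances` floor keeps a baseline for availability, while `max_instances` caps spend if a metric misbehaves.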

2. Explain the CAP theorem and how it applies to distributed cloud databases.

The CAP theorem states that a distributed system cannot simultaneously guarantee all three of the following properties: Consistency (every read sees the most recent write, so all nodes see the same data at the same time), Availability (every request receives a non-error response, without a guarantee that it contains the most recent write), and Partition Tolerance (the system continues to operate despite messages being lost or delayed between nodes due to network failures). Because network partitions cannot be ruled out in practice, the real choice is what to give up while a partition is in progress: consistency or availability.

In the context of distributed cloud databases, the CAP theorem drives design choices. A CP system prioritizes Consistency and Partition Tolerance, refusing or timing out some requests during a partition so that no client sees inconsistent data. An AP system prioritizes Availability and Partition Tolerance, continuing to answer on both sides of the partition but possibly returning stale data, and relies on techniques like eventual consistency to converge once the partition heals. A CA system is only realistic where partitions cannot occur, such as a single-node database; a distributed database still has to give up consistency or availability when the network splits. Choosing the right balance depends on the specific application requirements. Cloud databases often offer configurable consistency levels, sometimes per request, so users can fine-tune this trade-off.
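Configurable consistency in Dynamo-style databases is often expressed with quorums: data is stored on N replicas, a write waits for W acknowledgements, and a read consults R replicas. When R + W > N, every read set overlaps every write set, so (assuming strict quorums and versioned values) at least one replica consulted by a read holds the latest acknowledged write. The sketch below captures only that arithmetic; function names are hypothetical.

```python
def is_strongly_consistent(n: int, r: int, w: int) -> bool:
    """True when every read quorum overlaps every write quorum (R + W > N)."""
    return r + w > n


def tolerated_failures(n: int, r: int, w: int) -> dict:
    """How many replicas can be unreachable while reads or writes still succeed."""
    return {"reads": n - r, "writes": n - w}


# N=3, R=W=2: overlapping quorums, and one replica may be down.
print(is_strongly_consistent(3, 2, 2), tolerated_failures(3, 2, 2))
# N=3, R=W=1: fastest and most available, but reads may be stale (eventual consistency).
print(is_strongly_consistent(3, 1, 1), tolerated_failures(3, 1, 1))
```

Moving from R=W=2 to R=W=1 trades consistency for availability and latency, which is exactly the CAP-style dial these databases expose.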

3. Describe a situation where a multi-cloud strategy would be beneficial. What are the challenges involved?

A multi-cloud strategy can be beneficial when organizations want to avoid vendor lock-in, improve resilience, or optimize costs. For example, an e-commerce company might use AWS for its compute-intensive product recommendation engine, Azure for its enterprise applications tightly integrated with Microsoft services, and Google Cloud Platform for its data analytics and machine learning workloads, leveraging each cloud's strengths and choosing the most cost-effective option for each task. Spreading workloads this way also limits the impact of an outage: a failure at one provider affects only the workloads running there, and critical services can be deployed on more than one provider if they must survive a full provider outage.

However, multi-cloud introduces complexities. Challenges include managing data consistency across different cloud environments, increased security concerns due to a larger attack surface, the need for specialized skillsets to operate different cloud platforms, and the difficulty of maintaining consistent application deployment and monitoring procedures across the different clouds. Also, cost management and optimization can become more challenging since pricing models differ among cloud providers.

4. How can you ensure data consistency across multiple regions in a globally distributed cloud application?

Ensuring data consistency in a globally distributed cloud application involves several strategies. Data replication is fundamental: data is copied across multiple regions. However, naive replication can lead to inconsistencies. Strong consistency models such as linearizability guarantee that every read sees the most recent completed write, but coordinating across regions adds significant latency; weaker models such as sequential consistency only guarantee that all nodes observe operations in the same order. Consensus protocols like Paxos or Raft can be used to agree on the order of updates across regions. Data sharding or partitioning can also help by placing data near its users, reducing latency, but it requires careful key design to avoid hotspots.

Alternatively, eventual consistency offers higher availability and lower latency but accepts that data might be temporarily inconsistent. Strategies like conflict resolution mechanisms (e.g., last-write-wins, vector clocks) are then crucial. Implementing robust monitoring and alerting systems helps detect and resolve data inconsistencies promptly. Choosing the right consistency model depends on the application's specific requirements, balancing the need for strong consistency with the acceptable level of latency and availability.
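Vector clocks let replicas tell whether one update causally follows another or whether the two are genuinely concurrent and need conflict resolution. A minimal sketch follows; clocks are plain dicts mapping a node (here, hypothetical region names) to a counter.

```python
def increment(clock: dict, node: str) -> dict:
    """Record a local write on `node`."""
    updated = dict(clock)
    updated[node] = updated.get(node, 0) + 1
    return updated


def merge(a: dict, b: dict) -> dict:
    """Element-wise max: the clock of a replica that has seen both histories."""
    return {node: max(a.get(node, 0), b.get(node, 0)) for node in a.keys() | b.keys()}


def compare(a: dict, b: dict) -> str:
    """Return 'before', 'after', 'equal', or 'concurrent' for clocks a and b."""
    nodes = a.keys() | b.keys()
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    # Neither history contains the other: a real conflict that needs
    # application logic or a policy such as last-write-wins.
    return "concurrent"


base = increment({}, "us-east")   # first write in one region
eu = increment(base, "eu-west")   # later edit in a second region
us = increment(base, "us-east")   # simultaneous edit back in the first region
print(compare(base, eu))          # the edit causally follows the first write
print(compare(eu, us))            # conflicting edits detected
print(merge(eu, us))              # clock after the conflict is resolved
```

Unlike last-write-wins alone, vector clocks never silently discard a concurrent update; they surface it so the application can decide how to merge.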

5. What are the key considerations when migrating a legacy application to a cloud-native architecture?

Migrating a legacy application to a cloud-native architecture requires careful planning and execution. Key considerations include: application refactoring (or rewriting) to align with microservices and containerization. Choosing the right cloud provider and services for compute, storage, and databases is crucial. Data migration strategies to ensure minimal downtime and data integrity are also very important. Also consider security implications when moving from a potentially isolated on-premise setup to a distributed cloud environment.

Furthermore, teams need to adopt DevOps practices, with automation for CI/CD, infrastructure as code, and monitoring. Cost optimization is also vital, which means carefully evaluating cloud service pricing and resource utilization. Legacy applications may have dependencies on specific operating systems, libraries, or frameworks that create challenges and need to be addressed during the migration.

6. Explain the differences between Infrastructure as Code (IaC) tools like Terraform and CloudFormation. When would you choose one over the other?

Terraform and CloudFormation are both Infrastructure as Code (IaC) tools, but they differ in scope and functionality. Terraform is vendor-agnostic: through providers, it can manage infrastructure across multiple cloud providers (AWS, Azure, GCP, etc.) and even on-premises environments. CloudFormation, on the other hand, is AWS-specific and manages only AWS resources. Terraform uses HashiCorp Configuration Language (HCL), which many find intuitive, and records deployed resources in a state file that you must store and protect; CloudFormation uses YAML or JSON templates, and AWS manages the stack state for you. Because AWS maintains CloudFormation, new AWS services are often supported at or soon after launch, although coverage varies and Terraform support sometimes arrives first. In practice, Terraform is the better choice for multi-cloud deployments or when you anticipate moving infrastructure between providers, while CloudFormation suits AWS-only environments that value native integration and provider-managed state.

7. How do you approach troubleshooting performance bottlenecks in a complex cloud environment?

Troubleshooting performance bottlenecks in a complex cloud environment involves a systematic approach. First, I'd identify the bottleneck using monitoring tools like CloudWatch, Prometheus, or Datadog to observe metrics such as CPU utilization, memory usage, network latency, and disk I/O. Once identified, I would analyze logs (application, system, and network) to understand the events leading to the bottleneck. Then, I'd use profiling tools or distributed tracing to pinpoint the slow parts of code execution or the flow of requests through the system.

Next, I'd formulate and test hypotheses. For example, if the database is slow, I would check query performance and indexing. If the network is the issue, I'd examine routing, firewall rules, and bandwidth. I would then implement solutions like scaling resources, optimizing code or queries, caching, or re-architecting components, while continuously monitoring to confirm that the changes improved performance and did not cause regressions. I'd also use infrastructure as code to manage changes and avoid configuration drift. Finally, automated alerting and self-healing mechanisms are essential for proactive bottleneck management in the cloud.

8. Describe different cloud security models and how to implement defense in depth for a cloud application.

Cloud security models revolve around shared responsibility. The provider secures the underlying infrastructure (compute, storage, network), while the customer secures what they put in the cloud (data, applications, configurations). Where the line falls depends on the service model:

- IaaS (Infrastructure as a Service): You manage the most, controlling OS, storage, deployed applications. Security is heavily your responsibility.

- PaaS (Platform as a Service): The provider manages OS, patching, and infrastructure. You focus on application development and security of your code/data.

- SaaS (Software as a Service): The provider manages almost everything, including the application itself. Your main security concerns are data access and user management.

Defense in depth for a cloud application involves multiple layers of security controls. This can include:

- Identity and Access Management (IAM): Strong authentication (MFA), least privilege principle.

- Network Security: Firewalls, network segmentation, intrusion detection/prevention systems (IDS/IPS).

- Data Encryption: Encrypt data at rest and in transit.

- Application Security: Secure coding practices, vulnerability scanning, web application firewalls (WAF).

- Logging and Monitoring: Centralized logging, security information and event management (SIEM).

- Regular Security Assessments: Penetration testing, vulnerability assessments, security audits.

9. How can you leverage serverless computing to build highly scalable and cost-effective applications?

Serverless computing enables building highly scalable and cost-effective applications by abstracting away server management. You only pay for the compute time consumed, scaling automatically based on demand. This eliminates the need to provision and manage servers, reducing operational overhead and infrastructure costs.

To leverage this, you can break down your application into independent, stateless functions triggered by events (e.g., HTTP requests, database changes, messages). Services like AWS Lambda, Azure Functions, and Google Cloud Functions allow you to deploy and execute these functions without managing the underlying infrastructure. This approach simplifies development, deployment, and scaling, resulting in lower costs and improved efficiency, especially for applications with variable or unpredictable workloads. For example, images uploaded to cloud storage can easily be processed by serverless functions triggered on upload events. Consider the following example:

```python
import urllib.parse

import boto3

# Created once per execution environment and reused across invocations.
s3 = boto3.client('s3')


def lambda_handler(event, context):
    # An S3 event notification can contain several records; handle each upload.
    for record in event['Records']:
        bucket = record['s3']['bucket']['name']
        # Object keys arrive URL-encoded (e.g. spaces become '+').
        key = urllib.parse.unquote_plus(record['s3']['object']['key'])
        print(f"Processing image {key} from bucket {bucket}")
        # Image processing logic here, e.g. s3.get_object(Bucket=bucket, Key=key)

    return {
        'statusCode': 200,
        'body': 'Image processed successfully!'
    }
```

10. What are the trade-offs between using containers and virtual machines in the cloud?

Containers and VMs offer different trade-offs in the cloud. VMs provide strong isolation: each VM runs its own guest OS on resources allocated by the hypervisor, which enforces the security boundary between VMs. This comes at the cost of higher overhead, because every VM carries a full OS, leading to slower startup times and less efficient resource utilization.

Containers, on the other hand, offer lightweight virtualization by sharing the host OS kernel. This results in faster startup times, better resource efficiency, and higher density. However, they offer weaker isolation than VMs, as a compromised container could potentially affect other containers or the host OS. Choosing between them depends on the application's security needs, resource requirements, and performance priorities.

11. Explain how you would implement a blue-green deployment strategy in the cloud.

Blue-green deployment involves running two identical environments: blue (the current live environment) and green (the new version). To implement this in the cloud, first, provision two sets of identical infrastructure (compute, database, storage). Deploy the new version of your application to the green environment. Thoroughly test the green environment to ensure it's stable and performs as expected. Once testing is complete, switch the traffic from the blue environment to the green environment using a load balancer or DNS update. The old blue environment can then be kept as a backup or updated to become the next green deployment.

Rollback is simple: if issues arise in the green environment, switch traffic back to the blue environment. To automate this, consider using cloud-specific services. On AWS, for example, you might use Elastic Beanstalk's environment URL swap, CloudFormation for infrastructure as code, and Route 53 for DNS switching. Other cloud providers offer similar tools. Infrastructure as code (IaC) tools help ensure the two environments are truly identical.
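The deploy, switch, and rollback cycle can be modeled in a few lines of Python. This is a toy sketch, not a cloud API. `BlueGreenRouter` is a hypothetical stand-in for whatever actually flips traffic (a load balancer listener or a DNS record), and the health check is a placeholder callable.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class BlueGreenRouter:
    """Hypothetical stand-in for the load balancer or DNS record that sends
    all live traffic to exactly one of two environments."""
    environments: Dict[str, Optional[str]] = field(
        default_factory=lambda: {"blue": "v1", "green": None}
    )
    live: str = "blue"
    previous: Optional[str] = None

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version: str) -> str:
        # Deploy the new release to whichever environment is not serving traffic
        self.environments[self.idle] = version
        return self.idle

    def switch(self, health_check: Callable[[str], bool]) -> bool:
        # Cut traffic over only if the idle environment passes its checks
        target = self.idle
        version = self.environments[target]
        if version is None or not health_check(version):
            return False
        self.previous, self.live = self.live, target
        return True

    def rollback(self) -> None:
        # Send traffic back to the previously live environment, which is still running
        if self.previous is not None:
            self.live, self.previous = self.previous, self.live

    def serving_version(self) -> Optional[str]:
        return self.environments[self.live]


router = BlueGreenRouter()
router.deploy_to_idle("v2")          # green now runs v2; blue still serves v1
router.switch(lambda version: True)  # placeholder health check passes
print(router.serving_version())      # v2
router.rollback()
print(router.serving_version())      # v1
```

Rollback is cheap here because the old environment is never torn down; only the pointer to the live environment moves.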

12. Describe the benefits and challenges of using microservices architecture in the cloud.

Microservices in the cloud offer significant benefits: increased agility through independent deployment and scaling of services, improved resilience as failures are isolated, enhanced scalability by scaling only the necessary services, and technology diversity enabling different teams to choose the best technology for each service. Cloud platforms also simplify deployment and management through services like container orchestration (e.g., Kubernetes), serverless computing (e.g., AWS Lambda), and API gateways.

However, challenges exist: increased complexity in development and deployment due to the system's distributed nature, higher operational overhead in managing numerous services, potential inter-service communication bottlenecks, difficulty maintaining data consistency across services (consider eventual consistency models instead of ACID transactions), and the need for robust monitoring and logging infrastructure to track service health and performance. Effective distributed tracing becomes crucial for debugging issues that span multiple services.

13. How do you monitor and manage the costs associated with your cloud resources effectively?

I monitor and manage cloud costs through a combination of strategies. First, I leverage cloud provider cost management tools like AWS Cost Explorer or Azure Cost Management to gain visibility into spending patterns, identify cost drivers, and forecast future expenses. These tools allow me to break down costs by service, region, and resource, enabling granular analysis.

Furthermore, I implement cost optimization techniques such as right-sizing instances, utilizing reserved instances or savings plans for long-term workloads, and automating the shutdown of idle resources. I also use tagging to categorize resources and allocate costs to specific projects or departments, making it easier to track spending and enforce accountability. Budget alerts and automated responses are configured to notify me of unexpected cost spikes and trigger corrective actions, ensuring costs stay within defined limits.
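The tagging and budget-alert ideas can be sketched in plain Python. This sketch assumes billing data has already been exported as line items, each with a `cost` and a `tags` dictionary. The field names, the `project` tag key, the threshold, and the budgets are all hypothetical. In practice the figures would come from a tool such as AWS Cost Explorer or Azure Cost Management, and alerting would usually rely on the provider's native budget features.

```python
from collections import defaultdict


def allocate_costs(line_items, tag_key="project"):
    """Sum cost per value of a cost-allocation tag. Untagged spend is
    grouped under 'untagged' so it stays visible instead of disappearing."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


def budget_alerts(totals, budgets, threshold=0.8):
    """Return (owner, spend, budget) for every owner whose spend has
    reached `threshold` (80% by default) of its budget."""
    alerts = []
    for owner, spend in sorted(totals.items()):
        budget = budgets.get(owner)
        if budget is not None and spend >= threshold * budget:
            alerts.append((owner, spend, budget))
    return alerts


# Hypothetical billing line items, e.g. flattened from a cost export
items = [
    {"service": "EC2", "cost": 420.0, "tags": {"project": "checkout"}},
    {"service": "S3", "cost": 35.5, "tags": {"project": "checkout"}},
    {"service": "RDS", "cost": 310.0, "tags": {"project": "search"}},
    {"service": "EC2", "cost": 90.0, "tags": {}},
]
totals = allocate_costs(items)
print(totals)  # {'checkout': 455.5, 'search': 310.0, 'untagged': 90.0}
print(budget_alerts(totals, {"checkout": 500.0, "search": 1000.0}))
# [('checkout', 455.5, 500.0)]
```

Keeping an explicit "untagged" bucket is a deliberate choice. Spend that nobody owns is often the first place to look for idle resources.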

14. What are the key differences between various cloud storage options like object storage, block storage, and file storage? When would you use each?

- Object storage stores data as objects, each with metadata and a unique identifier, accessed via HTTP APIs. It offers high scalability and cost-effectiveness, which makes it ideal for unstructured data like images, videos, and backups. Use it for data lakes, archiving, and content delivery.

- Block storage stores data in fixed-size blocks, providing raw storage volumes. It's best for databases, virtual machines, and applications requiring low-latency access. Think of it as a hard drive attached to a server.

- File storage stores data in a hierarchical file system, accessible via protocols like NFS or SMB. It's suitable for shared file storage, collaboration, and applications that need file-level access. It's akin to a network drive.

The key differences lie in data structure, access method, and performance. Object storage excels in scalability and cost but has higher latency. Block storage offers the best performance for random read/write operations. File storage provides ease of use and file sharing capabilities, but may have performance limitations compared to block storage.

15. Explain how you would design a disaster recovery plan for a critical cloud application.

A disaster recovery (DR) plan for a critical cloud application focuses on minimizing downtime and data loss. Key elements include:

- Redundancy: Deploy the application across multiple availability zones or regions. Use load balancers to distribute traffic.

- Data Backup & Replication: Implement regular automated backups of application data. Choose a database replication strategy (e.g., asynchronous or synchronous) based on RTO/RPO requirements.

- Failover Mechanisms: Define automated failover processes. These include DNS updates, load balancer configuration changes, and application restarts in the DR environment.

- Monitoring & Alerting: Implement robust monitoring to detect failures quickly. Configure alerts to notify the operations team.

- Testing: Conduct regular DR drills to validate the plan's effectiveness. Document the plan and keep it up to date.

To implement this, I'd start by establishing two targets for the application. The RTO (Recovery Time Objective) is the maximum acceptable downtime. The RPO (Recovery Point Objective) is the maximum acceptable data loss, measured in time. Next, I'd pick a cloud provider (e.g., AWS, Azure, GCP) and services that offer multi-AZ deployments, managed databases with replication, and automated failover. On AWS, for example, I might use Route 53 for DNS failover, RDS Multi-AZ deployments or cross-region read replicas for database replication, and CloudWatch for monitoring. Finally, I'd test the failover process regularly and document every step.
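A quick way to sanity-check a DR design is to compare its worst-case data-loss window with the RPO and its estimated failover time with the RTO. The sketch makes three simplifying assumptions:

- With periodic backups, worst-case loss is roughly one backup interval.
- With continuous replication, worst-case loss is roughly the replication lag.
- Recovery steps run one after another.

The step durations in the example are hypothetical estimates, not measured figures.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval, replication_lag=None):
    """With continuous replication, loss is bounded by the replication lag;
    otherwise everything written since the last backup can be lost."""
    return replication_lag if replication_lag is not None else backup_interval


def meets_objectives(rpo, rto, backup_interval, recovery_steps, replication_lag=None):
    """Check a DR design against its RPO and RTO.

    recovery_steps: estimated durations of each failover step
    (e.g. detection, replica promotion, DNS update), assumed sequential.
    """
    data_loss = worst_case_data_loss(backup_interval, replication_lag)
    recovery_time = sum(recovery_steps, timedelta())
    return {
        "rpo_met": data_loss <= rpo,
        "rto_met": recovery_time <= rto,
        "data_loss": data_loss,
        "recovery_time": recovery_time,
    }


# Nightly backups cannot meet a one-hour RPO, even if failover is fast enough
nightly = meets_objectives(
    rpo=timedelta(hours=1),
    rto=timedelta(minutes=30),
    backup_interval=timedelta(hours=24),
    recovery_steps=[timedelta(minutes=5), timedelta(minutes=10), timedelta(minutes=5)],
)
print(nightly["rpo_met"], nightly["rto_met"])  # False True
```

Running the same check with a `replication_lag` of a few seconds shows why replication, rather than more frequent backups, is usually what makes tight RPOs achievable.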

16. How do you ensure compliance with data privacy regulations like GDPR in a cloud environment?

Ensuring GDPR compliance in a cloud environment involves several key strategies. First, data mapping is crucial to understand what personal data you hold, where it's stored, and how it flows. Implement strong access controls and encryption both in transit and at rest. Regularly audit your cloud environment and processes to identify and address potential compliance gaps.

Second, establish clear data processing agreements with your cloud providers, ensuring they also comply with GDPR requirements. Implement data loss prevention (DLP) measures to prevent unauthorized access or disclosure. Have a robust incident response plan in place to handle data breaches effectively. Finally, give data subjects the ability to exercise their GDPR rights, such as access to, rectification of, and erasure of their personal data. For encryption at rest, managed key services such as AWS KMS, Azure Key Vault, or Google Cloud KMS can hold and rotate the encryption keys.
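As a small illustration of a DLP measure, the sketch below redacts personal data from text before it is logged or exported. It is a toy regex-based detector under stated assumptions. The two patterns (email addresses and IBAN-like bank account numbers) are illustrative only. Managed DLP services use far richer detectors and should be preferred in production.

```python
import re

# Illustrative patterns only; real DLP tooling uses far more robust detectors
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
}


def redact(text):
    """Replace each detected item with a typed placeholder and report
    which categories of personal data were found."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


clean, categories = redact("Contact jane.doe@example.com about DE89370400440532013000")
print(clean)       # Contact [EMAIL] about [IBAN]
print(categories)  # ['EMAIL', 'IBAN']
```

Reporting the detected categories, not just the redacted text, makes it possible to alert on or block a write rather than silently cleaning it.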

17. Describe different cloud networking concepts like VPCs, subnets, and routing. How do they work together?

Virtual Private Clouds (VPCs) provide isolated network environments within a public cloud. Subnets are subdivisions of a VPC that let you organize resources into logical groups (e.g., public and private subnets). Route tables define the paths network traffic takes between subnets, to the internet, or to other networks.

The VPC acts as the overall container and owns an IP address range (a CIDR block). Each subnet resides inside the VPC and is assigned its own smaller CIDR block carved from that range. Route tables are associated with subnets to control where their traffic goes. For example, a subnet's route table can send internet-bound traffic to an Internet Gateway, or send on-premises traffic to a Virtual Private Gateway. Together, these pieces let resources in different subnets and networks communicate only along the paths you define.
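The interplay of VPC, subnets, and route tables can be modeled with Python's standard `ipaddress` module. This is a simplified model, not a cloud SDK. The address ranges, the on-premises range, and the target labels (`local`, `igw` for an Internet Gateway, `vgw` for a Virtual Private Gateway) are hypothetical. Each lookup picks the most specific matching route (longest prefix), which is how cloud route tables resolve overlapping routes.

```python
import ipaddress

# Hypothetical VPC address range, carved into /24 subnets
vpc = ipaddress.ip_network("10.0.0.0/16")
subnets = vpc.subnets(new_prefix=24)
public_subnet = next(subnets)   # 10.0.0.0/24
private_subnet = next(subnets)  # 10.0.1.0/24

# Hypothetical route tables as (destination CIDR, target) pairs.
# "local" keeps traffic inside the VPC, "igw" = Internet Gateway,
# "vgw" = Virtual Private Gateway to an assumed on-premises range.
ROUTE_TABLES = {
    "public": [(vpc, "local"), (ipaddress.ip_network("0.0.0.0/0"), "igw")],
    "private": [(vpc, "local"), (ipaddress.ip_network("192.168.0.0/16"), "vgw")],
}

# Each subnet is associated with exactly one route table
SUBNET_ROUTE_TABLE = {public_subnet: "public", private_subnet: "private"}


def next_hop(source_ip, destination_ip):
    """Find the source's subnet, then return the target of the most specific
    matching route in that subnet's route table (None means no route)."""
    src = ipaddress.ip_address(source_ip)
    dst = ipaddress.ip_address(destination_ip)
    subnet = next((s for s in SUBNET_ROUTE_TABLE if src in s), None)
    if subnet is None:
        raise ValueError(f"{source_ip} is not in any known subnet")
    routes = ROUTE_TABLES[SUBNET_ROUTE_TABLE[subnet]]
    matches = [(net, target) for net, target in routes if dst in net]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


print(next_hop("10.0.0.10", "10.0.1.20"))    # local
print(next_hop("10.0.0.10", "203.0.113.5"))  # igw
print(next_hop("10.0.1.20", "203.0.113.5"))  # None (private subnet has no internet route)
print(next_hop("10.0.1.20", "192.168.5.5"))  # vgw
```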

18. How can you use cloud-based machine learning services to improve your application's functionality?

Cloud-based machine learning services offer a powerful way to enhance application functionality without the overhead of managing infrastructure. By leveraging pre-trained or custom-trained models hosted on platforms like Amazon SageMaker, Google Cloud Vertex AI (formerly AI Platform), or Azure Machine Learning, applications can gain capabilities such as image recognition, natural language processing, and predictive analytics.

Specifically, consider a few examples:

- Image Recognition: Use a service like Amazon Rekognition to automatically tag images uploaded by users, improving searchability and content moderation.

- Sentiment Analysis: Integrate with a service like Google Cloud Natural Language to analyze user reviews or social media mentions, providing insights into customer satisfaction.

- Predictive Maintenance: Train a model on historical sensor data using Azure Machine Learning to predict equipment failures, enabling proactive maintenance and reducing downtime.

These integrations are often exposed through simple API calls, making it easy to incorporate sophisticated ML capabilities into existing applications.

19. What are the challenges and best practices for securing cloud-native applications?

Securing cloud-native applications presents unique challenges due to their distributed, dynamic, and often ephemeral nature. Common challenges include managing complex access control across microservices, ensuring consistent security policies across diverse environments (development, staging, production), and effectively monitoring and responding to threats in real-time. Furthermore, the rapid pace of development in cloud-native environments can lead to security vulnerabilities if security is not integrated early in the development lifecycle.

Best practices for securing cloud-native applications include:

- implementing strong identity and access management (IAM) based on the principle of least privilege;
- automating security scanning and vulnerability management in the CI/CD pipeline;
- using container security tools to scan images and enforce security policies;
- implementing network segmentation to limit the blast radius of potential attacks;
- establishing robust monitoring and logging to detect and respond to security incidents.

Infrastructure as Code (IaC) should be used to define and manage infrastructure in a secure, repeatable manner. Code signing and verification help ensure that only trusted code is deployed. Kubernetes Network Policies can restrict traffic between microservices, and regular security audits are also beneficial.
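To make least privilege concrete, here is a simplified sketch of IAM-style policy evaluation. Access is denied by default, allowed only by an explicit Allow, and any explicit Deny overrides every Allow. The statement format mimics AWS IAM JSON but is heavily simplified: it ignores principals, conditions, and case-insensitive action matching. The bucket name is hypothetical.

```python
from fnmatch import fnmatchcase


def is_allowed(statements, action, resource):
    """Simplified IAM-style evaluation: default deny, explicit Allow grants
    access, and any matching explicit Deny wins over every Allow."""
    allowed = False
    for statement in statements:
        if fnmatchcase(action, statement["Action"]) and fnmatchcase(resource, statement["Resource"]):
            if statement["Effect"] == "Deny":
                return False  # explicit deny always wins
            if statement["Effect"] == "Allow":
                allowed = True
    return allowed


# Least-privilege, read-only access to one hypothetical bucket, minus a secret prefix
policy = [
    {"Effect": "Allow", "Action": "s3:GetObject", "Resource": "arn:aws:s3:::reports-bucket/*"},
    {"Effect": "Deny", "Action": "s3:*", "Resource": "arn:aws:s3:::reports-bucket/secret/*"},
]

print(is_allowed(policy, "s3:GetObject", "arn:aws:s3:::reports-bucket/q1.csv"))          # True
print(is_allowed(policy, "s3:PutObject", "arn:aws:s3:::reports-bucket/q1.csv"))          # False
print(is_allowed(policy, "s3:GetObject", "arn:aws:s3:::reports-bucket/secret/keys.txt"))  # False
```

The design point is that least privilege falls out of the default: anything not explicitly granted, such as `s3:PutObject` here, is refused.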

20. Explain how you would implement a CI/CD pipeline for a cloud application.

A CI/CD pipeline for a cloud application automates the build, test, and deployment processes. I would typically use tools like Jenkins, GitLab CI, or GitHub Actions. The pipeline would consist of stages such as: build (compiling code, creating artifacts), test (running unit, integration, and end-to-end tests), and deploy (pushing code to staging/production environments). Cloud-specific services like AWS CodePipeline or Azure DevOps can also be used for a more integrated experience.

For example, with GitHub Actions, I'd define the pipeline in a workflow file under .github/workflows/ (e.g., deploy.yml). This file specifies the triggers (e.g., a push to the main branch), the jobs (build, test, deploy), and the steps within each job (e.g., npm install, npm test, aws s3 sync). The workflow runs automatically on each code change, and failing tests prevent deployment. Rollback would be handled in the deployment stage, reverting to a previous working version if issues arise after deployment.
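The core gating rule (stages run in order, and a failure stops everything after it) can be sketched as a tiny pipeline runner. This is a toy illustration of the concept, not a replacement for a CI service. It runs each stage as a subprocess and treats a non-zero exit code as failure. The stand-in commands simulate a failing test stage so the example runs anywhere.

```python
import subprocess
import sys


def run_pipeline(stages):
    """Run (name, command) stages in order. A non-zero exit code fails the
    stage and skips every later stage, so failing tests block the deploy."""
    results = []
    for i, (name, command) in enumerate(stages):
        completed = subprocess.run(command)
        status = "passed" if completed.returncode == 0 else "failed"
        results.append((name, status))
        if status == "failed":
            results.extend((later, "skipped") for later, _ in stages[i + 1:])
            break
    return results


if __name__ == "__main__":
    # Stand-in commands so the sketch runs anywhere. A real pipeline might run
    # ["npm", "install"], ["npm", "test"] and an "aws s3 sync" command instead.
    stages = [
        ("build", [sys.executable, "-c", "print('building')"]),
        ("test", [sys.executable, "-c", "raise SystemExit(1)"]),  # simulated test failure
        ("deploy", [sys.executable, "-c", "print('deploying')"]),
    ]
    print(run_pipeline(stages))
    # [('build', 'passed'), ('test', 'failed'), ('deploy', 'skipped')]
```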

21. Describe the benefits of using a service mesh in a microservices architecture. What are some popular service mesh implementations?

Service meshes offer numerous benefits in a microservices architecture, primarily by addressing inter-service communication challenges. They enhance reliability through features like traffic management (load balancing, retries, circuit breaking), security (authentication, authorization, encryption), and observability (metrics, tracing, logging). These capabilities reduce the operational complexity of managing distributed systems. By abstracting these concerns away from individual services, developers can focus on business logic.

Some popular service mesh implementations include Istio, Linkerd, and Consul Connect. Istio is feature-rich and widely adopted, offering advanced traffic management and security features. Linkerd is designed for simplicity and performance, focusing on low latency and low resource consumption. Consul Connect, the service mesh capability built into HashiCorp Consul, builds on Consul's service discovery and configuration to provide a unified solution for service networking.
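Circuit breaking is one of the traffic-management features a mesh provides. A mesh applies it in the sidecar proxy rather than in application code, but the logic is easier to see in a small sketch. After a set number of consecutive failures the circuit "opens" and calls fail fast. Once a timeout passes, trial calls are let through again (the "half-open" state). The thresholds are illustrative, and the injectable `clock` exists only to make the sketch testable.

```python
import time


class CircuitBreaker:
    """Minimal circuit breaker: after `max_failures` consecutive failures the
    circuit opens and calls fail fast until `reset_timeout` seconds pass;
    then a trial call is allowed (half-open), and success closes it again."""

    def __init__(self, max_failures=3, reset_timeout=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise RuntimeError("circuit open: failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call while half-open, or too many failures, (re)opens the circuit
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast while the circuit is open protects the struggling downstream service from retry storms. It also frees callers from waiting on timeouts.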

22. How do you handle data versioning and schema evolution in a cloud-based data lake?

Data versioning and schema evolution in a data lake are crucial for maintaining data integrity and enabling backward compatibility. For data versioning, techniques like immutable storage (where new versions of files are written instead of overwriting existing ones) and time-travel capabilities offered by services like Delta Lake or Apache Iceberg are employed. Each version of the data is stored, allowing you to query historical states.
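The immutable-storage and time-travel idea can be shown with a toy append-only table. Every write produces a new snapshot instead of modifying an old one, so any earlier version can still be read. For clarity, this sketch keeps full copies of each snapshot. Table formats like Delta Lake and Apache Iceberg instead record in table metadata which data files make up each version. Rows are assumed to be immutable values such as tuples.

```python
class VersionedTable:
    """Toy append-only table: each write creates a new immutable snapshot,
    and the snapshot's index in the history is its version number."""

    def __init__(self):
        self._versions = []  # list of tuples of rows; index == version number

    def write(self, rows, mode="append"):
        current = self._versions[-1] if self._versions else ()
        if mode == "append":
            snapshot = current + tuple(rows)
        elif mode == "overwrite":
            snapshot = tuple(rows)
        else:
            raise ValueError(f"unknown mode: {mode}")
        self._versions.append(snapshot)  # older snapshots are never modified
        return len(self._versions) - 1   # the new version number

    def read(self, version=None):
        """Read the latest version, or 'time travel' to an earlier one."""
        if not self._versions:
            return []
        return list(self._versions[-1 if version is None else version])


table = VersionedTable()
table.write([("alice", 1)])
table.write([("bob", 2)])
table.write([("carol", 3)], mode="overwrite")
print(table.read())           # [('carol', 3)]
print(table.read(version=1))  # [('alice', 1), ('bob', 2)]
```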