Hiring the right Big Data talent can be a game-changer for any organization. In this post, we provide a comprehensive list of Big Data interview questions and answers to help interviewers make informed decisions.

This blog post covers a range of questions suitable for assessing candidates at different levels: junior analysts, mid-tier analysts, and senior analysts. We also include questions related to data processing, data storage, and situational scenarios to help you evaluate technical proficiency and problem-solving skills.

By using these questions, you can confidently gauge the capabilities of potential hires and ensure they fit your team’s needs. To further streamline your recruitment process, consider using our Big Data assessment tests.

8 general Big Data interview questions and answers to assess candidates

Ready to dive into the world of Big Data interviews? These 8 general questions will help you assess candidates' understanding of key concepts and their ability to apply them. Use this list to gauge a candidate's knowledge, problem-solving skills, and critical thinking abilities in the ever-evolving field of Big Data.

1. Can you explain the concept of the 3 V's in Big Data?

The 3 V's in Big Data refer to Volume, Velocity, and Variety. These are the three defining properties or dimensions of Big Data:

• Volume: This refers to the vast amount of data generated every second. Big Data often involves petabytes and exabytes of data.

• Velocity: This represents the speed at which new data is generated and the pace at which data moves around. Think of real-time or nearly real-time information delivery.

• Variety: This refers to the different types of data available. Traditional data types were structured and fit neatly in a relational database. With Big Data, data comes in new unstructured data types, including text, audio, and video.

Look for candidates who can clearly explain each 'V' and provide relevant examples. Strong candidates might also mention additional V's that have been proposed, such as Veracity (the trustworthiness of the data) and Value (the worth of the data being extracted).

2. How would you explain Big Data to a non-technical person?

A good explanation for a non-technical person might go something like this: 'Big Data is like having a giant library with millions of books in different languages and formats. Some are text books, some are audio books, some are even movies. Now imagine trying to find specific information across all these books quickly. That's the challenge of Big Data.'

'It's not just about having lots of information, but being able to make sense of it all. Big Data technologies help us organize, search through, and understand this massive amount of information, so we can use it to make better decisions or create new products and services.'

Look for candidates who can simplify complex concepts without losing their essence. The ability to communicate technical ideas to non-technical stakeholders is crucial for many Big Data roles.

3. What are some common challenges in implementing Big Data solutions?

Candidates should be able to identify several challenges in implementing Big Data solutions, such as:

• Data Quality and Cleansing: Ensuring the data is accurate, complete, and consistent.

• Data Integration: Combining data from various sources and formats.

• Data Security and Privacy: Protecting sensitive information and complying with regulations.

• Scalability: Designing systems that can handle growing volumes of data.

• Skill Gap: Finding professionals with the right expertise to work with Big Data technologies.

• Cost: Managing the expenses associated with storing and processing large volumes of data.

A strong candidate will not only list these challenges but also discuss potential solutions or strategies to address them. They might mention techniques like data governance, distributed computing, or continuous learning programs for addressing the skill gap.

4. How does Big Data analytics differ from traditional data analytics?

When answering this question, candidates should highlight key differences such as:

• Scale: Big Data analytics deals with much larger volumes of data, often in petabytes or exabytes.

• Data Types: Traditional analytics typically works with structured data, while Big Data analytics can handle unstructured and semi-structured data.

• Processing Speed: Big Data analytics often involves real-time or near real-time processing, whereas traditional analytics typically relies on scheduled batch processing.

• Technology: Big Data requires specialized tools and platforms like Hadoop, Spark, or NoSQL databases, whereas traditional analytics might use relational databases and business intelligence tools.

• Approach: Big Data often uses machine learning and AI techniques to find patterns and insights, while traditional analytics might rely more on hypothesis testing and statistical methods.

Look for candidates who can explain these differences clearly and provide examples of when Big Data analytics might be preferred over traditional methods. Strong candidates might also discuss the concept of data engineering and its role in preparing data for analysis.

5. What is the role of machine learning in Big Data?

Machine learning plays a crucial role in Big Data analytics. Candidates should be able to explain that machine learning algorithms can automatically identify patterns and make predictions or decisions based on large volumes of data. Some key points they might mention include:

• Predictive Analytics: Machine learning models can forecast future trends based on historical Big Data.

• Anomaly Detection: ML algorithms can identify unusual patterns that might indicate fraud or system failures.

• Personalization: ML can analyze user behavior data to provide personalized recommendations or experiences.

• Natural Language Processing: ML enables the analysis of unstructured text data, allowing for sentiment analysis, text classification, and more.

• Image and Video Analysis: ML algorithms can process and extract insights from visual Big Data.

Strong candidates will be able to provide specific examples of how machine learning is applied in Big Data scenarios. They might also discuss the challenges of implementing ML in Big Data environments, such as the need for distributed computing and the importance of feature engineering.

6. How would you approach data quality issues in a Big Data environment?

Candidates should outline a systematic approach to addressing data quality issues in Big Data:

- Data Profiling: Analyze the data to understand its structure, content, and quality.

- Define Quality Metrics: Establish clear criteria for what constitutes 'good' data.

- Implement Data Validation: Use rules and algorithms to check data against quality standards.

- Data Cleansing: Apply techniques to correct or remove inaccurate records.

- Continuous Monitoring: Set up systems to continuously check data quality as new data flows in.

- Root Cause Analysis: Investigate the sources of data quality issues to prevent future occurrences.

- Data Governance: Establish policies and procedures for maintaining data quality across the organization.

Look for candidates who emphasize the importance of a proactive approach to data quality and understand that it's an ongoing process, not a one-time fix. Strong candidates might also discuss specific tools or technologies they've used for data quality management in Big Data environments.
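To make the profiling and validation steps concrete, here is a minimal sketch in plain Python. The sample records, field names, and the two quality rules are hypothetical and chosen only for illustration; in a real Big Data environment the same logic would normally run inside a distributed engine rather than over an in-memory list.

```python
from collections import Counter

# Hypothetical sample records; the None and the negative amount are deliberate defects.
records = [
    {"id": 1, "country": "US", "amount": 120.0},
    {"id": 2, "country": None, "amount": 75.5},
    {"id": 3, "country": "DE", "amount": -10.0},
    {"id": 3, "country": "DE", "amount": 42.0},
]

def profile(rows):
    """Data profiling: count missing values per field and find duplicate ids."""
    missing = Counter()
    for row in rows:
        for field, value in row.items():
            if value is None:
                missing[field] += 1
    id_counts = Counter(row["id"] for row in rows)
    duplicates = sorted(i for i, n in id_counts.items() if n > 1)
    return {"missing": dict(missing), "duplicate_ids": duplicates}

# Quality metrics expressed as rules: rule name -> predicate a valid row must satisfy.
RULES = {
    "country_present": lambda row: row["country"] is not None,
    "amount_non_negative": lambda row: row["amount"] >= 0,
}

def validate(rows):
    """Split rows into valid ones and rejected ones paired with their failed rules."""
    valid, rejected = [], []
    for row in rows:
        failed = [name for name, check in RULES.items() if not check(row)]
        if failed:
            rejected.append((row, failed))
        else:
            valid.append(row)
    return valid, rejected

report = profile(records)
valid_rows, rejected_rows = validate(records)
```

Keeping the rejected rows together with the names of the rules they failed supports the root cause analysis step, because it shows which kind of defect each source produces.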

7. Can you explain the concept of data lakes and how they differ from data warehouses?

Candidates should be able to explain that a data lake is a storage repository that holds a vast amount of raw data in its native format until it's needed. Key points to cover include:

• Structure: Data lakes store data in its raw form, while data warehouses store structured, processed data.

• Data Types: Data lakes can store structured, semi-structured, and unstructured data, whereas data warehouses typically only handle structured data.

• Schema: Data lakes use a schema-on-read approach, while data warehouses use schema-on-write.

• Use Cases: Data lakes are ideal for big data analytics, machine learning, and data discovery. Data warehouses are better for operational reporting and business intelligence.

• Flexibility: Data lakes offer more flexibility in terms of data storage and analysis, while data warehouses are more rigid but offer faster query performance for specific use cases.

Look for candidates who can clearly articulate these differences and discuss scenarios where one might be preferred over the other. Strong candidates might also mention challenges associated with data lakes, such as potential data swamps and the need for effective metadata management.
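The schema-on-write versus schema-on-read distinction can be sketched in a few lines of Python. This is a simplified illustration, not how any particular warehouse or lake product works: the schema, the function names, and the sample lines are all assumptions made for the example.

```python
import json

# Schema-on-write (warehouse style): records are checked against a schema before storage.
WAREHOUSE_SCHEMA = {"user_id": int, "amount": float}

def write_to_warehouse(table, record):
    """Reject records that do not match the declared schema at write time."""
    if set(record) != set(WAREHOUSE_SCHEMA):
        raise ValueError(f"unexpected fields: {sorted(record)}")
    for field, expected_type in WAREHOUSE_SCHEMA.items():
        if not isinstance(record[field], expected_type):
            raise ValueError(f"{field} must be {expected_type.__name__}")
    table.append(record)

# Schema-on-read (lake style): raw lines are stored untouched and interpreted when queried.
def write_to_lake(lake, raw_line):
    lake.append(raw_line)

def read_amounts(lake):
    """Apply a schema while reading; skip lines that cannot be interpreted."""
    amounts = []
    for raw_line in lake:
        try:
            amounts.append(float(json.loads(raw_line)["amount"]))
        except (ValueError, KeyError, TypeError):
            continue
    return amounts

warehouse, lake = [], []
write_to_warehouse(warehouse, {"user_id": 1, "amount": 9.5})
for line in ['{"user_id": 1, "amount": "9.5"}', '{"event": "click"}', "not json"]:
    write_to_lake(lake, line)
```

The lake accepts all three lines, including the malformed one, and only `read_amounts` decides what counts as usable. That flexibility is also how a lake can drift into a data swamp if nobody tracks what the raw lines contain.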

8. How do you stay updated with the latest trends and developments in Big Data?

This question assesses a candidate's commitment to continuous learning and their engagement with the Big Data community. Strong answers might include:

• Following industry blogs and news sites (e.g., KDnuggets, Data Science Central)

• Attending conferences and webinars

• Participating in online courses or obtaining certifications

• Engaging in open-source projects or hackathons

• Reading academic papers and industry reports

• Following thought leaders on social media platforms

• Experimenting with new tools and technologies in personal projects

Look for candidates who demonstrate a genuine enthusiasm for the field and a proactive approach to learning. The best candidates will be able to discuss recent trends or developments that have caught their interest and explain how they might apply this knowledge in their work.

20 Big Data interview questions to ask junior analysts

To help you assess the foundational knowledge and potential of junior analysts in Big Data, consider these carefully curated interview questions. Use this guide to identify candidates who can grow into more advanced roles and contribute meaningfully to your Big Data initiatives.

- Can you walk me through your experience with data cleaning and preprocessing?

- How do you handle missing or inconsistent data in a dataset?

- What tools or programming languages have you used for Big Data analysis?

- Can you explain the difference between structured and unstructured data?

- Describe a project where you had to analyze a large dataset. What challenges did you face and how did you overcome them?

- How do you ensure data security and privacy when working with Big Data?

- What is your understanding of Hadoop, and have you had any hands-on experience with it?

- Can you explain what MapReduce is and its role in Big Data processing?

- How do you validate the reliability of the data you analyze?

- What are some ways you can optimize queries in a Big Data environment?

- How would you go about visualizing Big Data results to make them understandable to a non-technical audience?

- Explain any experience you have with cloud-based Big Data platforms like AWS, Azure, or Google Cloud.

- What measures do you take to maintain data integrity during data transformation processes?

- How do you prioritize tasks and manage time when working on multiple data projects?

- Can you describe a situation where you had to collaborate with a team to achieve a Big Data project goal?

- What is your experience with real-time data processing and streaming analytics?

- How do you choose the right Big Data tools and technologies for a specific project?

- Can you discuss any experience you have with NoSQL databases?

- How do you approach learning and adopting new Big Data technologies?

- What is your process for debugging and testing Big Data applications?

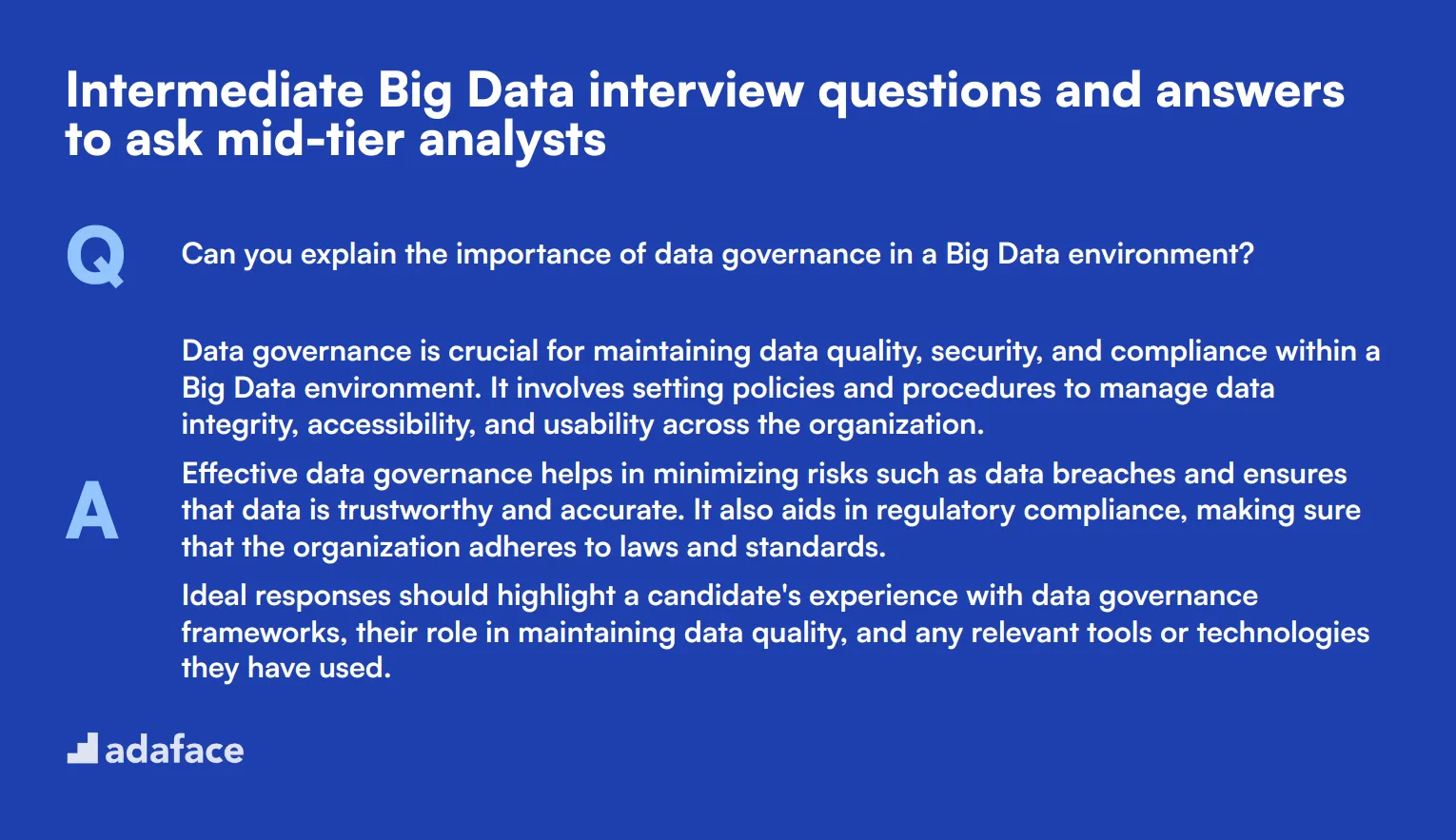

10 intermediate Big Data interview questions and answers to ask mid-tier analysts

To evaluate mid-tier analysts' competency in Big Data, these intermediate questions will help you gauge their knowledge and problem-solving skills. Use this list during interviews to ensure candidates are well-versed in real-world Big Data challenges and solutions.

1. Can you explain the importance of data governance in a Big Data environment?

Data governance is crucial for maintaining data quality, security, and compliance within a Big Data environment. It involves setting policies and procedures to manage data integrity, accessibility, and usability across the organization.

Effective data governance helps in minimizing risks such as data breaches and ensures that data is trustworthy and accurate. It also aids in regulatory compliance, making sure that the organization adheres to laws and standards.

Ideal responses should highlight a candidate's experience with data governance frameworks, their role in maintaining data quality, and any relevant tools or technologies they have used.

2. What strategies would you use to handle large-scale data processing efficiently?

Handling large-scale data processing efficiently requires a combination of optimized algorithms, distributed computing, and appropriate data storage solutions. For instance, using parallel processing frameworks like Apache Hadoop or Apache Spark can significantly speed up data processing tasks.

Another strategy is data partitioning, which involves dividing data into smaller, manageable chunks that can be processed concurrently. This helps in reducing processing time and improving performance.

Look for candidates who can discuss specific tools and techniques they have used to manage large datasets, as well as their understanding of the trade-offs involved in different approaches.
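As a small-scale illustration of chunking data and processing the chunks concurrently, the sketch below counts words across log lines with a thread pool from the Python standard library. It is a stand-in for what frameworks like Hadoop or Spark do across many machines; the log lines, chunk size, and function names are hypothetical, and CPU-bound work in practice would use processes or a cluster rather than threads.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def split_into_chunks(items, chunk_size):
    """Divide the input into fixed-size chunks that can be processed independently."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]

def count_words(lines):
    """Map step: compute partial word counts for one chunk."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts

def parallel_word_count(lines, chunk_size=2, workers=4):
    chunks = split_into_chunks(lines, chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_words, chunks))
    # Reduce step: merge the partial counts into one result.
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total

log_lines = [
    "error disk full",
    "info job done",
    "error network down",
    "info job started",
    "error disk full",
]
totals = parallel_word_count(log_lines)
```

A candidate who understands the trade-offs should be able to point out that the merge step is the part that does not parallelize for free, which is why chunk size and the cost of combining results both matter.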

3. How do you ensure data accuracy and consistency in a Big Data project?

Ensuring data accuracy and consistency often involves implementing robust data validation techniques, such as schema validation, data profiling, and integrity checks. These methods help identify and rectify errors or inconsistencies in the data.

Regular audits and monitoring are also essential to maintain data quality over time. Automated tools can be employed to continuously check for data anomalies and ensure compliance with predefined standards.

Strong candidates will be able to discuss their experience with various data validation tools and techniques, and how they have implemented these practices in previous projects to ensure data quality.

4. Describe your experience with data integration in a Big Data environment.

Data integration in a Big Data environment involves combining data from various sources to provide a unified view. This process can be complex, especially when dealing with diverse data formats and structures.

Techniques like ETL (Extract, Transform, Load), data warehousing, and the use of integration platforms can help streamline this process. Effective data integration ensures that data is consistent, accurate, and readily available for analysis.

An ideal candidate should discuss specific integration tools they have used, challenges they faced during data integration, and how they addressed those challenges to ensure smooth data flow.

5. What methods do you use to optimize data storage in Big Data projects?

Optimizing data storage involves choosing the right storage solutions and formats based on the specific needs of the project. Techniques such as data compression, partitioning, and indexing can significantly reduce storage costs and improve retrieval times.

Using cloud-based storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage can offer scalability and cost-efficiency. Additionally, selecting an appropriate columnar file format (e.g., Parquet, ORC) can improve query performance.

Candidates should demonstrate their understanding of various storage optimization techniques and their experience with different storage solutions, highlighting how these choices impacted the overall performance and cost of their projects.

6. How do you manage data privacy and security in Big Data projects?

Managing data privacy and security involves implementing robust encryption, access controls, and data masking techniques to protect sensitive information. Ensuring compliance with regulations like GDPR or CCPA is also crucial.

Regular security audits and monitoring can help identify vulnerabilities and prevent data breaches. Using secure data transmission protocols and maintaining strict user access policies are also essential practices.

Look for candidates who can discuss specific security measures they have implemented in past projects, their understanding of regulatory requirements, and how they stay updated with the latest security trends and technologies.
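Data masking is easier to discuss with an example in hand. The following sketch shows two common ideas, keyed hashing of an identifier (pseudonymization) and partial masking of an email address. The key, field names, and masking format are assumptions for illustration; a real project would load the key from a secrets manager and follow its own regulatory guidance.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; never hard-code a real key.
SECRET_KEY = b"example-key-do-not-use"

def pseudonymize(value):
    """Replace an identifier with a keyed hash so records can still be joined."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_email(email):
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * (len(local) - 1)}@{domain}"

def mask_record(record):
    return {
        "user_id": pseudonymize(record["user_id"]),
        "email": mask_email(record["email"]),
        "country": record["country"],  # non-sensitive field kept as-is
    }

masked = mask_record({"user_id": "u-1001", "email": "alice@example.com", "country": "FR"})
```

Because the hash is deterministic for a given key, the same user maps to the same pseudonym in every dataset, which preserves joins while keeping the raw identifier out of the analytics layer.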

7. Can you discuss your approach to real-time data analytics and its challenges?

Real-time data analytics involves processing data as it is generated to provide immediate insights. This requires highly efficient data ingestion and processing frameworks like Apache Kafka and Apache Flink.

Challenges include managing high data velocity, ensuring low-latency processing, and maintaining data accuracy. Handling these challenges often requires a combination of robust infrastructure, optimized algorithms, and scalable solutions.

Ideal candidates should provide examples of real-time analytics projects they have worked on, the specific tools and techniques they employed, and how they addressed common challenges to deliver timely and accurate insights.
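One building block candidates often describe for real-time analytics is windowed aggregation. The sketch below counts events per fixed, non-overlapping (tumbling) window in plain Python. It processes a finished list rather than an unbounded stream, so it leaves out what stream processors add on top, such as late-event handling and watermarks; the event data and function name are hypothetical.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds):
    """Count events per fixed, non-overlapping time window.

    Each event is a (timestamp_seconds, key) pair; the window start is the
    timestamp rounded down to a multiple of window_seconds.
    """
    counts = defaultdict(int)
    for timestamp, key in events:
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)

# Hypothetical click events: (seconds since start, page).
events = [(1, "home"), (4, "home"), (9, "cart"), (11, "home"), (19, "cart"), (21, "home")]
counts = tumbling_window_counts(events, window_seconds=10)
```

A useful follow-up question is how the candidate would handle an event with timestamp 9 that arrives after the window starting at 0 has already been emitted.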

8. Explain the importance of scalability in Big Data solutions and how you achieve it.

Scalability is critical in Big Data solutions to handle increasing data volumes and user demands without compromising performance. Achieving scalability often involves using distributed computing frameworks and scalable storage solutions.

Strategies like horizontal scaling (adding more nodes to a system) and vertical scaling (enhancing the capacity of existing nodes) can help manage growing data and processing needs. Cloud-based solutions also offer flexible scaling options.

Look for candidates who can discuss their experience with scalable architectures, the challenges they faced in scaling Big Data systems, and the tools or strategies they used to ensure seamless scalability.

9. What role does data visualization play in Big Data, and what tools do you prefer?

Data visualization is essential in Big Data as it helps transform complex data sets into understandable and actionable insights. Effective visualization can reveal patterns, trends, and correlations that might be missed in raw data.

Popular tools for data visualization include Tableau, Power BI, and D3.js. These tools offer powerful features for creating interactive and dynamic visualizations, making data analysis more intuitive and accessible.

Candidates should discuss their experience with different visualization tools, the types of visualizations they have created, and how these visualizations helped stakeholders make informed decisions.

10. How do you approach performance tuning in a Big Data environment?

Performance tuning in a Big Data environment involves optimizing queries, adjusting system configurations, and ensuring efficient resource utilization. Techniques like indexing, partitioning, and query optimization can significantly enhance performance.

Regular monitoring and profiling can help identify performance bottlenecks and areas for improvement. Tools like the Apache Spark web UI and its built-in metrics, or third-party monitoring solutions, can assist in this process.

Ideal candidates should share specific examples of performance tuning they have performed, the tools and techniques they used, and the tangible improvements they achieved in system performance.

15 advanced Big Data interview questions to ask senior analysts

When interviewing for senior Big Data analyst roles, it's crucial to ask the right questions to assess their expertise and practical skills. This list of advanced Big Data interview questions helps you evaluate candidates effectively and identify top talent for data engineer or similar roles.

- Can you describe your experience with distributed computing frameworks like Apache Spark?

- How do you manage and optimize resource allocation in a Hadoop cluster?

- What are the key considerations when designing a data pipeline for large-scale data ingestion?

- How do you approach the implementation of machine learning models at scale in a Big Data environment?

- Can you discuss your experience with data serialization formats like Avro, Parquet, or ORC?

- How do you handle schema evolution in a data lake environment?

- What strategies do you employ for indexing and partitioning large datasets to improve query performance?

- Can you elaborate on your experience with stream processing technologies like Apache Kafka (including Kafka Streams) or Apache Flink?

- What methods do you use to ensure fault tolerance and high availability in Big Data systems?

- How do you approach the integration of different data sources in a unified data platform?

- Can you explain your approach to implementing data lineage and auditability in your Big Data projects?

- What role does metadata management play in your Big Data architecture, and how do you handle it?

- How do you approach the challenge of data versioning and rollback in a dynamic Big Data environment?

- Can you discuss how you implement real-time analytics solutions while ensuring low latency?

- What techniques do you use to balance load and avoid bottlenecks in a distributed data processing system?

9 Big Data interview questions and answers related to data processing

To help you pinpoint the right candidate for handling data processing tasks, use these questions in your Big Data interviews. They will help you assess the candidate's understanding of data processing principles, methodologies, and their practical application.

1. Can you explain the concept of data partitioning and its importance in Big Data processing?

Data partitioning involves dividing a large dataset into smaller, manageable pieces, known as partitions. This technique is crucial in Big Data because it allows for parallel processing, which significantly speeds up data operations and enhances system performance.

Effective partitioning can also help in optimizing resource usage and reducing query latencies. It ensures that data is evenly distributed across nodes, preventing bottlenecks and ensuring efficient data retrieval.

Look for candidates who can articulate the technical and performance benefits of data partitioning. A strong response should include examples of how they have implemented partitioning in past projects and the impact it had on performance.
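The latency benefit of partitioning is easy to see in a toy example. In the sketch below, records are partitioned by day, and a query for one day scans only that partition instead of the whole dataset (often called partition pruning). The event data, field names, and helper functions are hypothetical.

```python
from collections import defaultdict

def partition_by(records, key_field):
    """Group records into partitions keyed by the value of key_field."""
    partitions = defaultdict(list)
    for record in records:
        partitions[record[key_field]].append(record)
    return dict(partitions)

def total_for_day(partitions, day):
    """Partition pruning: scan only the partition for the requested day."""
    rows = partitions.get(day, [])
    return sum(row["amount"] for row in rows), len(rows)

events = [
    {"day": "2024-01-01", "amount": 10},
    {"day": "2024-01-01", "amount": 5},
    {"day": "2024-01-02", "amount": 7},
    {"day": "2024-01-03", "amount": 3},
]
by_day = partition_by(events, "day")
total, rows_scanned = total_for_day(by_day, "2024-01-01")
```

Here the query touches two of the four rows. The choice of partition key matters: a key that most queries filter on enables pruning, while a key with very uneven value counts produces skewed partitions.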

2. What is your approach to handling large-scale data transformations?

Handling large-scale data transformations typically involves using ETL (Extract, Transform, Load) processes. Candidates may mention tools like Apache Spark or Apache Flink, which are designed to handle massive datasets efficiently.

A good approach includes defining clear workflows, ensuring data quality during transformations, and optimizing the performance of transformation processes. This often involves writing efficient transformation logic and leveraging parallel processing.

The ideal candidate should demonstrate a solid understanding of ETL tools and techniques, and provide examples of how they have managed data transformations in previous roles.

3. How do you approach optimizing data storage in Big Data projects?

Optimizing data storage in Big Data projects involves several strategies, such as data compression, choosing the right storage format (e.g., ORC, Parquet), and efficient data partitioning.

Candidates should also mention the importance of eliminating redundant data and archiving old data to reduce storage costs. Using columnar storage formats can also enhance query performance and reduce disk I/O.

Look for candidates who are familiar with different storage formats and techniques. They should provide specific examples of how these strategies were applied in previous projects to optimize storage and improve performance.
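To ground the compression point, here is a minimal sketch using the `zlib` module from the Python standard library. The event data is hypothetical and deliberately repetitive, so the ratio it produces says nothing about real workloads; columnar formats such as Parquet and ORC apply their own encodings on top of general-purpose compression.

```python
import json
import zlib

# Hypothetical, highly repetitive event data; real data compresses less predictably.
events = [{"sensor": "s-01", "status": "OK", "reading": i % 5} for i in range(1000)]

raw = json.dumps(events).encode("utf-8")
compressed = zlib.compress(raw, level=6)

# Compression is lossless: decompressing restores the original records.
restored = json.loads(zlib.decompress(compressed))
ratio = len(raw) / len(compressed)
```

The trade-off to probe in an interview is CPU time against storage and I/O savings: higher compression levels shrink data further but cost more to write and read.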

4. How do you ensure data privacy and security in Big Data processing?

Ensuring data privacy and security in Big Data involves implementing robust encryption methods, access control mechanisms, and regular audits. Candidates should mention using encryption both at rest and in transit to protect sensitive data.

Additionally, they should discuss the importance of role-based access control (RBAC) to restrict access to data based on user roles, and maintaining detailed audit logs to track data access and modifications.

An ideal candidate will demonstrate a comprehensive understanding of data security practices and provide examples of how they have enforced these measures in previous projects to maintain data privacy and security.
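Role-based access control and audit logging can be illustrated together. The sketch below is a simplified model, not the API of any specific security product: the roles, permissions, and log fields are assumptions for the example.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
}

audit_log = []

def access(user, role, action, dataset):
    """Check the role's permissions and record every attempt in the audit log."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed

can_read = access("dana", "analyst", "read", "sales")
can_write = access("dana", "analyst", "write", "sales")
```

Note that denied attempts are logged as well as granted ones. Unknown roles fall back to an empty permission set, so access is denied by default.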

5. What are the key considerations when designing a data ingestion pipeline?

When designing a data ingestion pipeline, it's crucial to consider scalability, data quality, and fault tolerance. The pipeline should be able to handle varying data volumes and ensure that the data being ingested is accurate and clean.

Candidates should also mention the importance of choosing the right ingestion tools and frameworks, such as Apache Kafka or Amazon Kinesis, which support real-time data ingestion and are designed for high availability.

Look for candidates who can discuss their experience with different ingestion tools and provide examples of how they designed and implemented ingestion pipelines that met the scalability and reliability requirements of their projects.
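Fault tolerance in an ingestion pipeline usually comes down to two behaviors: retrying transient failures and setting aside messages that can never be processed. The sketch below shows both with a dead-letter list. The `FlakySink` class, the message format, and the retry limit are hypothetical; tools like Kafka or Kinesis provide their own delivery and retry mechanisms.

```python
import json

def ingest(raw_messages, sink, max_attempts=3):
    """Parse and store messages; route unprocessable ones to a dead-letter list.

    Transient sink errors are retried up to max_attempts times.
    """
    dead_letters = []
    for raw in raw_messages:
        try:
            record = json.loads(raw)
        except json.JSONDecodeError:
            dead_letters.append(raw)  # bad data: retrying would not help
            continue
        for attempt in range(1, max_attempts + 1):
            try:
                sink(record)
                break
            except ConnectionError:
                if attempt == max_attempts:
                    dead_letters.append(raw)
    return dead_letters

class FlakySink:
    """Hypothetical sink that fails on its first call, then succeeds."""

    def __init__(self):
        self.stored = []
        self.calls = 0

    def __call__(self, record):
        self.calls += 1
        if self.calls == 1:
            raise ConnectionError("temporary outage")
        self.stored.append(record)

sink = FlakySink()
dead = ingest(['{"id": 1}', "not-json", '{"id": 2}'], sink)
```

The first message succeeds on its second attempt, the malformed message goes straight to the dead-letter list, and the pipeline keeps running instead of stopping at the first bad input.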

6. Can you discuss the role of data governance in Big Data processing?

Data governance involves establishing policies and procedures to manage data quality, security, and privacy. In Big Data processing, it ensures that data is accurate, consistent, and used responsibly.

Candidates should highlight the importance of data governance frameworks, compliance with regulations such as GDPR, and data stewardship roles that oversee data management practices. Proper governance helps in maintaining data integrity and reduces the risk of data breaches.

An ideal candidate will show a strong understanding of data governance principles and provide examples of how they have implemented governance practices in their previous roles to ensure data quality and compliance.

7. How do you handle data deduplication in a Big Data environment?

Data deduplication involves identifying and removing duplicate records from datasets to ensure data quality and reduce storage costs. Candidates may use techniques such as hashing, record linkage, or machine learning algorithms for deduplication.

They should also mention using tools like Apache Spark, which offers built-in operations such as distinct and dropDuplicates, or Hadoop MapReduce, where deduplication is typically implemented by grouping records on a key. Regular deduplication processes can help maintain the integrity of the dataset over time.

Look for candidates who can explain different deduplication methods and provide examples of how they have successfully implemented these methods in past projects to improve data quality and efficiency.
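The hashing technique mentioned above can be sketched as follows. Records are normalized (trimmed and lowercased), fingerprinted with SHA-256, and only the first record per fingerprint is kept. The customer data and the choice of key fields are hypothetical; fuzzy matches such as misspelled names would need record linkage rather than exact hashing.

```python
import hashlib

def record_fingerprint(record, key_fields):
    """Hash the normalized key fields so trivially different copies collide."""
    normalized = "|".join(str(record[f]).strip().lower() for f in key_fields)
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def deduplicate(records, key_fields):
    """Keep the first record seen for each fingerprint."""
    seen = set()
    unique = []
    for record in records:
        fingerprint = record_fingerprint(record, key_fields)
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(record)
    return unique

customers = [
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": "ada lovelace ", "email": "ADA@example.com"},
    {"name": "Alan Turing", "email": "alan@example.com"},
]
unique_customers = deduplicate(customers, ["name", "email"])
```

The second record differs from the first only in case and trailing whitespace, so it is dropped. At scale, the same fingerprint can serve as the grouping key in a distributed job.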

8. What strategies do you use to manage and monitor data processing workflows?

Managing and monitoring data processing workflows involves using scheduling and orchestration tools like Apache Airflow, Oozie, or NiFi. These tools help automate workflows, manage dependencies, and provide visibility into the processing pipelines.

Candidates should discuss setting up alerting and monitoring systems to detect and address issues promptly. They may use tools like Prometheus for metrics collection and alerting, and Grafana for dashboards and visualization.

An ideal candidate will demonstrate familiarity with workflow orchestration tools and monitoring systems. They should provide examples of how they have managed and monitored data processing workflows to ensure smooth and efficient operations.
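At their core, orchestration tools run tasks in dependency order and surface failures. The sketch below shows that idea with `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). It is not how Airflow, Oozie, or NiFi are implemented; the pipeline, task names, and `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"validate"},
    "load": {"transform"},
    "report": {"load"},
}

def run_pipeline(dependencies, tasks):
    """Run tasks in dependency order; stop at the first failure and report it."""
    completed = []
    for name in TopologicalSorter(dependencies).static_order():
        try:
            tasks[name]()
        except Exception as error:
            return completed, f"{name} failed: {error}"
        completed.append(name)
    return completed, None

def fail():
    raise RuntimeError("bad input file")

# First run: every task is a no-op that succeeds.
tasks = {name: (lambda: None) for name in dependencies}
ok_order, ok_error = run_pipeline(dependencies, tasks)

# Second run: the transform step fails, so downstream tasks never start.
tasks["transform"] = fail
partial_order, failure = run_pipeline(dependencies, tasks)
```

The returned failure message is the kind of signal an alerting system would act on, and the list of completed tasks tells an operator where a rerun can resume.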

9. How do you approach data validation and quality checks in Big Data processing?

Data validation and quality checks are essential to ensure the accuracy and reliability of the data being processed. Candidates should mention techniques like schema validation, data profiling, and setting up data quality rules.

They may also use tools like Apache Griffin or Deequ for automating data quality checks. Regular validation processes help identify and rectify data issues before they affect downstream applications.

Look for candidates who can discuss their experience with data validation tools and techniques. They should provide examples of how they have implemented data quality checks to maintain high data standards in their projects.

6 Big Data interview questions and answers related to data storage

When interviewing for Big Data roles, it's crucial to assess candidates' understanding of data storage concepts. These questions will help you gauge applicants' knowledge of efficient data management techniques and their ability to handle large-scale storage challenges. Use this list to evaluate candidates for roles that require expertise in Big Data storage solutions.

1. How would you approach designing a data storage solution for a petabyte-scale dataset?

A strong candidate should outline a structured approach to designing a petabyte-scale data storage solution. They might mention the following key points:

- Assessing the nature of the data (structured, semi-structured, or unstructured)

- Considering data access patterns and frequency

- Evaluating scalability requirements

- Choosing between on-premises, cloud, or hybrid solutions

- Selecting appropriate storage technologies (e.g., distributed file systems, object storage)

- Implementing data partitioning and sharding strategies

- Planning for data replication and backup

Look for answers that demonstrate a holistic understanding of large-scale data storage challenges. The ideal candidate should also mention the importance of cost-effectiveness, performance optimization, and future scalability in their design approach.

2. Can you explain the concept of data sharding and its importance in Big Data storage?

Data sharding is a technique used to distribute large datasets across multiple servers or nodes. It involves breaking down a large database into smaller, more manageable pieces called shards. Each shard is stored on a separate server, allowing for parallel processing and improved performance.

The importance of data sharding in Big Data storage includes:

- Improved scalability: Easily add more servers to handle growing data volumes

- Enhanced performance: Parallel processing of queries across multiple shards

- Better availability: If one shard fails, others remain accessible

- Load balancing: Distribute data and queries evenly across servers

A strong candidate should be able to explain how sharding is implemented, including strategies for choosing shard keys and handling cross-shard queries. Look for answers that also address potential challenges, such as maintaining data consistency across shards and the complexity of managing a sharded system.
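A toy sharded store makes the difference between single-shard lookups and cross-shard queries visible. The sketch below routes keys to in-memory shards with a stable CRC32 hash. The `ShardedStore` class and the sample data are hypothetical, and the simple modulo routing shown here would move most keys if the shard count changed, which is the problem consistent hashing addresses.

```python
import zlib

class ShardedStore:
    """Toy key-value store that routes each key to one of several in-memory shards."""

    def __init__(self, num_shards):
        self.shards = [{} for _ in range(num_shards)]

    def _shard_for(self, shard_key):
        # A stable hash keeps a key on the same shard across runs and processes.
        index = zlib.crc32(shard_key.encode("utf-8")) % len(self.shards)
        return self.shards[index]

    def put(self, shard_key, value):
        self._shard_for(shard_key)[shard_key] = value

    def get(self, shard_key):
        # Single-shard lookup: only one shard is touched.
        return self._shard_for(shard_key).get(shard_key)

    def scan(self, predicate):
        # Cross-shard query: every shard is searched and the results are merged.
        return [v for shard in self.shards for v in shard.values() if predicate(v)]

store = ShardedStore(num_shards=4)
for user_id, spend in [("u1", 30), ("u2", 120), ("u3", 75), ("u4", 200)]:
    store.put(user_id, {"user": user_id, "spend": spend})

big_spenders = sorted(r["user"] for r in store.scan(lambda r: r["spend"] > 100))
```

Lookups by shard key stay cheap as shards are added, while `scan` must visit every shard. That asymmetry is why the choice of shard key should follow the most frequent query pattern.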

3. How do you ensure data durability in a distributed storage system?

Ensuring data durability in a distributed storage system involves implementing multiple strategies to protect against data loss. A knowledgeable candidate might mention the following approaches:

- Replication: Storing multiple copies of data across different nodes or data centers

- Erasure coding: Splitting data into fragments and adding parity fragments so that lost data can be reconstructed from the fragments that remain

- Regular backups: Creating periodic snapshots of the entire dataset

- Consistency checks: Implementing mechanisms to verify data integrity

- Fault-tolerant hardware: Using redundant components to minimize hardware failures

- Geographically distributed storage: Storing data across multiple physical locations

Look for answers that demonstrate an understanding of trade-offs between durability, performance, and cost. The ideal candidate should also mention the importance of regular testing and disaster recovery planning to ensure the effectiveness of durability measures.
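The idea behind erasure coding can be shown with the simplest possible scheme, a single XOR parity block over two data blocks. This is a teaching sketch only: production systems generally use stronger codes such as Reed-Solomon that tolerate multiple losses, and the function names and sample data here are hypothetical.

```python
def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))

def encode_with_parity(block_a, block_b):
    """Store two equal-length data blocks plus one XOR parity block."""
    if len(block_a) != len(block_b):
        raise ValueError("blocks must have equal length")
    return {"a": block_a, "b": block_b, "parity": xor_bytes(block_a, block_b)}

def recover(fragments, lost):
    """Rebuild one lost fragment by XOR-ing the two that survive."""
    survivors = [data for name, data in fragments.items() if name != lost]
    return xor_bytes(survivors[0], survivors[1])

stored = encode_with_parity(b"big-data", b"storage!")

# Simulate losing fragment "b" (for example, a failed node).
surviving = {name: data for name, data in stored.items() if name != "b"}
rebuilt = recover(surviving, lost="b")
```

In this scheme three fragments protect two blocks of data, a 1.5x storage overhead, whereas keeping one extra full copy of both blocks would cost 2x. The price is the extra computation needed to rebuild a lost fragment, which is the durability versus performance trade-off the question is probing.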

4. What are the key considerations when choosing between row-oriented and column-oriented storage for Big Data?

When choosing between row-oriented and column-oriented storage for Big Data, several key factors come into play. A strong candidate should be able to articulate the following considerations:

- Query patterns: Row-oriented is better for retrieving entire records, while column-oriented excels at aggregations and analytics on specific columns

- Data compression: Column-oriented storage often achieves better compression ratios

- Write performance: Row-oriented storage typically offers faster write operations

- Scalability: Column-oriented storage often scales better for large analytical datasets, because a query reads only the columns it needs

- Data variety: Row-oriented is more flexible for heterogeneous data, while column-oriented works well with uniform data

Look for answers that demonstrate an understanding of the trade-offs between the two approaches. The ideal candidate should be able to provide examples of use cases where each storage type would be more appropriate, such as transactional systems for row-oriented and analytical workloads for column-oriented storage.
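The difference between the two layouts can be shown with a few lines of plain Python. This is a simplified sketch with made-up sample data: real columnar formats add encodings, statistics, and file-level metadata, and the helper names are hypothetical.

```python
# Row-oriented layout: each record is stored together.
rows = [
    {"id": 1, "region": "EU", "amount": 10.0},
    {"id": 2, "region": "EU", "amount": 15.5},
    {"id": 3, "region": "US", "amount": 7.25},
    {"id": 4, "region": "US", "amount": 12.0},
]


def to_columns(records):
    """Pivot records into a column-oriented layout.

    Assumes every record has the same keys.
    """
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


def run_length_encode(values):
    """Compress runs of repeated values into (value, count) pairs."""
    encoded = []
    for value in values:
        if encoded and encoded[-1][0] == value:
            encoded[-1][1] += 1
        else:
            encoded.append([value, 1])
    return [(value, count) for value, count in encoded]


columns = to_columns(rows)

# Fetching a whole record is a single lookup in the row layout.
print(rows[2])

# An aggregate touches only one column in the columnar layout.
print(sum(columns["amount"]))

# Values of one type sit next to each other, so they compress well.
print(run_length_encode(columns["region"]))
```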

5. How would you handle data versioning in a Big Data environment?

Data versioning in a Big Data environment is crucial for maintaining data lineage, enabling rollbacks, and supporting compliance requirements. A knowledgeable candidate might discuss the following approaches:

- Immutable data storage: Keeping all versions of data and never overwriting existing data

- Time-travel capabilities: Enabling queries on data as it existed at a specific point in time

- Delta Lake or similar technologies: Using open-source frameworks that provide ACID transactions and versioning

- Metadata management: Maintaining detailed metadata about each version of the data

- Snapshot-based versioning: Creating periodic snapshots of the entire dataset

- Version control systems: Adapting Git-like version control concepts to data versioning

Look for answers that address the challenges of implementing versioning at scale, such as storage efficiency and query performance. The ideal candidate should also mention the importance of clear versioning policies and automated cleanup processes to manage storage costs.
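The immutable storage and time-travel ideas above can be sketched with a small append-only table. The `VersionedTable` class is a hypothetical illustration and does not reflect the API of Delta Lake or any other framework. It stores a full copy per version, which is exactly the storage cost that real systems reduce by sharing unchanged files between versions.

```python
import bisect


class VersionedTable:
    """Append-only table: every commit adds a version, nothing is overwritten."""

    def __init__(self):
        self._timestamps = []   # commit times, strictly increasing
        self._snapshots = []    # one immutable snapshot per commit

    def commit(self, timestamp: int, rows) -> int:
        """Store a new version and return its version number."""
        if self._timestamps and timestamp <= self._timestamps[-1]:
            raise ValueError("commits must have increasing timestamps")
        self._timestamps.append(timestamp)
        self._snapshots.append(tuple(rows))
        return len(self._snapshots) - 1

    def version(self, number: int):
        """Read a specific version by number."""
        return self._snapshots[number]

    def as_of(self, timestamp: int):
        """Time travel: read the latest version committed at or before timestamp."""
        index = bisect.bisect_right(self._timestamps, timestamp) - 1
        if index < 0:
            raise LookupError("no version exists at that time")
        return self._snapshots[index]


table = VersionedTable()
table.commit(100, ["order-1"])
table.commit(200, ["order-1", "order-2"])

print(table.as_of(150))   # state before the second commit
print(table.as_of(200))   # state after the second commit
```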

6. Explain the concept of data tiering in Big Data storage and its benefits.

Data tiering in Big Data storage involves categorizing data based on its importance, access frequency, and performance requirements, and then storing it on appropriate storage mediums. This concept allows organizations to optimize storage costs while maintaining performance for frequently accessed data.

Benefits of data tiering include:

- Cost optimization: Storing less frequently accessed data on cheaper storage mediums

- Improved performance: Keeping hot data on high-performance storage for quick access

- Efficient resource utilization: Balancing data across different storage types based on needs

- Scalability: Easier management of growing datasets by moving older data to lower tiers

- Compliance: Ability to meet data retention requirements while managing costs

A strong candidate should be able to explain different tiering strategies, such as automated tiering based on access patterns or policy-based tiering. Look for answers that also address the challenges of implementing tiering, such as data movement overhead and maintaining data consistency across tiers.
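A policy-based tiering rule can be as simple as the sketch below, which assigns a tier from the time since last access. The 7-day and 90-day thresholds, the tier names, and the sample objects are all assumptions chosen for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical policy thresholds.
HOT_MAX_AGE = timedelta(days=7)
WARM_MAX_AGE = timedelta(days=90)


def choose_tier(last_accessed: datetime, now: datetime) -> str:
    """Pick a storage tier from how recently the data was accessed."""
    age = now - last_accessed
    if age <= HOT_MAX_AGE:
        return "hot"    # e.g. fast SSD-backed storage
    if age <= WARM_MAX_AGE:
        return "warm"   # e.g. standard object storage
    return "cold"       # e.g. archival storage


def plan_moves(objects, now: datetime):
    """List (name, current_tier, target_tier) for objects in the wrong tier."""
    moves = []
    for name, (current_tier, last_accessed) in objects.items():
        target = choose_tier(last_accessed, now)
        if target != current_tier:
            moves.append((name, current_tier, target))
    return moves


now = datetime(2024, 1, 31)
objects = {
    "clicks/2024-01-30": ("hot", datetime(2024, 1, 30)),
    "clicks/2023-12-01": ("hot", datetime(2023, 12, 1)),
    "clicks/2023-01-01": ("warm", datetime(2023, 1, 1)),
}
print(plan_moves(objects, now))
```

Running the planner as a scheduled job rather than on every access is one way to limit the data movement overhead mentioned above.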

5 situational Big Data interview questions with answers for hiring top analysts

Ready to find your next Big Data superstar? These situational interview questions will help you uncover how candidates think on their feet and apply their knowledge to real-world scenarios. Use these questions to gauge a candidate's problem-solving skills, analytical thinking, and ability to handle complex data challenges in a practical context.

1. How would you design a data pipeline to handle real-time streaming data from IoT devices?

A strong candidate should outline a robust and scalable approach to handling real-time streaming data from IoT devices. They might describe a pipeline that includes:

- Data ingestion using a streaming platform like Apache Kafka or Amazon Kinesis

- Stream processing with tools like Apache Flink or Spark Structured Streaming

- Data storage in a scalable database or data lake

- Real-time analytics and visualization layer

- Error handling and data quality checks throughout the pipeline

Look for candidates who emphasize the importance of low-latency processing, fault tolerance, and scalability in their design. They should also mention considerations for data security and privacy, especially when dealing with potentially sensitive IoT data.
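The stream processing and data quality steps can be illustrated without any streaming framework. The sketch below validates readings and computes a per-device average over tumbling (fixed, non-overlapping) time windows. The field names `device_id`, `ts`, and `temperature` are assumptions, and a plain list stands in for a Kafka or Kinesis stream.

```python
from collections import defaultdict


def is_valid(reading: dict) -> bool:
    """Basic data quality check on one reading."""
    return (
        isinstance(reading.get("device_id"), str)
        and isinstance(reading.get("ts"), (int, float))
        and isinstance(reading.get("temperature"), (int, float))
    )


def tumbling_window_average(readings, window_seconds: int):
    """Average temperature per (device, window start); invalid readings are set aside."""
    totals = defaultdict(lambda: [0.0, 0])   # key -> [sum, count]
    rejected = []
    for reading in readings:
        if not is_valid(reading):
            rejected.append(reading)         # would go to a dead-letter queue
            continue
        window_start = int(reading["ts"] // window_seconds) * window_seconds
        bucket = totals[(reading["device_id"], window_start)]
        bucket[0] += reading["temperature"]
        bucket[1] += 1
    averages = {key: total / count for key, (total, count) in totals.items()}
    return averages, rejected


stream = [
    {"device_id": "d1", "ts": 0, "temperature": 20.0},
    {"device_id": "d1", "ts": 30, "temperature": 22.0},
    {"device_id": "d1", "ts": 61, "temperature": 30.0},
    {"device_id": "d2"},                     # malformed reading
]
averages, rejected = tumbling_window_average(stream, window_seconds=60)
print(averages)
print(len(rejected))
```

A real engine would also have to deal with late and out-of-order events, which is a useful follow-up topic for candidates who mention Flink or Spark.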

2. Describe a situation where you had to optimize a slow-running Big Data query. What steps did you take?

An ideal response should demonstrate the candidate's problem-solving skills and familiarity with query optimization techniques. They might describe steps such as:

- Analyzing the query execution plan to identify bottlenecks

- Optimizing data partitioning or indexing strategies

- Rewriting the query to improve efficiency

- Considering data denormalization or pre-aggregation

- Leveraging caching mechanisms

- Tuning cluster resources or scaling infrastructure

Pay attention to candidates who emphasize the importance of benchmarking before and after optimization, as well as those who consider the trade-offs between query performance and resource utilization. A strong answer will also touch on the iterative nature of optimization and the importance of monitoring query performance over time.
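The first two steps, reading the execution plan and then changing the indexing strategy, can be demonstrated on a small scale. The sketch below uses SQLite from the Python standard library purely as a stand-in for a distributed engine. The table, index name, and data are made up, and the exact wording of the plan text varies between SQLite versions.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the planner's description of how it will run the query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)   # 4th column holds the detail text


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(10_000)],
)

SQL = "SELECT SUM(amount) FROM events WHERE user_id = ?"

# Step 1: inspect the plan. Without an index the engine scans the whole table.
plan_before = query_plan(conn, SQL, (42,))
result_before = conn.execute(SQL, (42,)).fetchone()[0]

# Step 2: add an index on the filter column and inspect the plan again.
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
plan_after = query_plan(conn, SQL, (42,))
result_after = conn.execute(SQL, (42,)).fetchone()[0]

print(plan_before)
print(plan_after)
print(result_before == result_after)   # the optimization must not change results
```

The same habit carries over to engines such as Spark or Hive, where partition pruning plays a role similar to the index here.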

3. How would you approach building a recommendation system for an e-commerce platform using Big Data technologies?

A comprehensive answer should cover the key components of building a recommendation system using Big Data technologies. The candidate might outline an approach that includes:

- Data collection: Gathering user behavior data, purchase history, product attributes, and possibly external data sources

- Data processing: Cleaning and transforming the data using tools like Apache Spark or Hadoop

- Feature engineering: Creating relevant features for the recommendation model

- Model selection: Choosing appropriate algorithms (e.g., collaborative filtering, content-based filtering, or hybrid approaches)

- Model training and evaluation: Using frameworks like MLlib or TensorFlow on distributed systems

- Deployment: Implementing the model in a scalable, real-time serving infrastructure

- Feedback loop: Continuously updating the model based on new data and user interactions

Look for candidates who discuss the challenges of handling large-scale data, the importance of personalization, and strategies for dealing with the cold start problem. A strong answer will also touch on ethical considerations, such as avoiding bias in recommendations and protecting user privacy.
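To ground the model selection step, here is a minimal item-based collaborative filtering sketch in plain Python. The ratings are made up and the function names are hypothetical. At scale the same idea would run on a distributed framework, and the popularity fallback shown here is only one simple way to handle the cold start problem.

```python
from math import sqrt

# Hypothetical sample data: user -> {item: rating}.
ratings = {
    "alice": {"book": 5, "lamp": 4},
    "bob": {"book": 5, "lamp": 5, "desk": 4},
    "carol": {"mug": 5, "desk": 1},
}


def item_vectors(user_ratings):
    """Invert the data to item -> {user: rating}."""
    items = {}
    for user, scores in user_ratings.items():
        for item, score in scores.items():
            items.setdefault(item, {})[user] = score
    return items


def cosine(a, b):
    """Cosine similarity between two sparse rating vectors."""
    dot = sum(a[user] * b[user] for user in set(a) & set(b))
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user, user_ratings, top_n=3):
    """Rank unseen items by similarity to items the user already rated."""
    items = item_vectors(user_ratings)
    seen = user_ratings.get(user, {})
    if not seen:
        # Cold start: no history, so fall back to the most-rated items.
        return sorted(items, key=lambda i: (-len(items[i]), i))[:top_n]
    scores = {
        candidate: sum(cosine(vector, items[s]) * r for s, r in seen.items())
        for candidate, vector in items.items()
        if candidate not in seen
    }
    return sorted(scores, key=lambda i: (-scores[i], i))[:top_n]


print(recommend("alice", ratings))
print(recommend("new_user", ratings, top_n=1))
```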

4. Imagine you're tasked with implementing a fraud detection system for a large financial institution. How would you approach this using Big Data technologies?

A strong answer should demonstrate understanding of both fraud detection principles and Big Data technologies. The candidate might describe an approach that includes:

- Data ingestion: Collecting transactional data, account information, and external data sources in real-time

- Data processing: Using stream processing technologies like Apache Flink or Kafka Streams for real-time analysis

- Feature engineering: Creating relevant features that indicate potential fraud

- Model development: Implementing machine learning models (e.g., anomaly detection, decision trees, or deep learning) using distributed frameworks

- Real-time scoring: Deploying models to score transactions in real-time

- Alert system: Developing a system to flag suspicious activities for further investigation

- Continuous learning: Implementing a feedback loop to update models based on confirmed fraud cases

Look for candidates who emphasize the importance of balancing false positives and false negatives, as well as those who mention the need for explainable AI in the financial sector. A comprehensive answer will also touch on data privacy concerns and regulatory compliance in handling sensitive financial data.
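The trade-off between false positives and false negatives is easy to see in a very simple anomaly detector. The sketch below scores a transaction by how many standard deviations it lies from the account's past amounts. The threshold of 3.0 and the sample history are assumptions, and a production system would combine many more features and models.

```python
from statistics import mean, pstdev


def anomaly_score(history, amount: float) -> float:
    """Distance of the amount from the account's mean, in standard deviations."""
    if len(history) < 2:
        return 0.0                      # not enough history to judge
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return 0.0 if amount == mu else float("inf")
    return abs(amount - mu) / sigma


def flag_transaction(history, amount: float, threshold: float = 3.0) -> bool:
    """Flag the transaction for review when its score exceeds the threshold."""
    return anomaly_score(history, amount) > threshold


history = [20.0, 25.0, 22.0, 19.0, 24.0]   # past amounts for one account

print(flag_transaction(history, 23.0))     # a typical amount
print(flag_transaction(history, 500.0))    # far outside the usual range
```

Lowering `threshold` catches more fraud but flags more legitimate transactions, while raising it does the opposite. A score like this is also straightforward to explain to an investigator, which matters for the explainability requirement.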

5. How would you design a system to handle and analyze social media sentiment at scale?

An effective answer should outline a comprehensive approach to handling large volumes of social media data and performing sentiment analysis. The candidate might describe a system that includes:

- Data collection: Using social media APIs or data streaming services to gather posts and comments

- Data processing: Implementing distributed processing with tools like Apache Spark or Flink for text preprocessing and feature extraction

- Sentiment analysis: Applying natural language processing (NLP) techniques and machine learning models for sentiment classification

- Data storage: Utilizing a scalable database or data lake solution for storing raw and processed data

- Real-time analytics: Implementing a stream processing pipeline for immediate insights

- Batch processing: Running deeper analyses on historical data

- Visualization: Creating dashboards and reports to present sentiment trends and insights

Look for candidates who discuss challenges such as handling multiple languages, dealing with sarcasm and context, and managing the high velocity of social media data. A strong answer will also touch on ethical considerations, such as privacy concerns and potential biases in sentiment analysis models.
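As a baseline for the sentiment analysis step, the sketch below scores posts with a tiny word lexicon and a one-word negation rule. The word lists are made up and far too small for real use, and this approach cannot handle sarcasm or context, which is why candidates usually propose trained models for production.

```python
import re
from collections import Counter

# Hypothetical miniature lexicon.
POSITIVE = {"good", "great", "love", "excellent", "happy"}
NEGATIVE = {"bad", "terrible", "hate", "awful", "slow"}
NEGATIONS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Classify text as positive, negative, or neutral."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    negate = False
    for token in tokens:
        if token in NEGATIONS:
            negate = True               # flips the polarity of the next word
            continue
        polarity = (token in POSITIVE) - (token in NEGATIVE)
        if polarity:
            score += -polarity if negate else polarity
        negate = False
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


posts = [
    "I love this phone",
    "This is not good",
    "The app is slow and the support is terrible",
    "It arrived on Tuesday",
]
summary = Counter(sentiment(post) for post in posts)
print(summary)
```

Because each post is scored independently, the classification step parallelizes naturally across a cluster, and the per-label counts are the kind of aggregate a dashboard would display.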

Which Big Data skills should you evaluate during the interview phase?

In the fast-paced world of Big Data, evaluating a candidate's skills in a single interview can be challenging. However, certain core competencies are essential to ensure that the candidate can thrive in data-intensive environments. Focusing on these key skills will help interviewers make informed decisions.

Data Analysis

To filter candidates effectively, consider using an assessment test that includes relevant multiple-choice questions related to data analysis. Our Data Analysis Online Test offers tailored questions to assess this skill.

Additionally, you can ask targeted interview questions to further evaluate a candidate's data analysis skills. One such question is:

Can you describe a complex data analysis project you worked on and the tools you used?

When asking this question, look for clarity in their explanation, the relevance of the tools mentioned, and how they approached problem-solving. A strong candidate will demonstrate a structured thought process and practical experience.

Big Data Technologies

Evaluate this skill through an assessment test with multiple-choice questions on Big Data technologies. While we don't have a specific test in our library, you can adapt general tech assessments to focus on this area.

You might also want to ask candidates specific questions about their experience with these technologies. For example:

What are the key differences between Hadoop and Spark, and when would you use one over the other?

Pay attention to their ability to articulate the differences clearly and their practical experiences using these technologies in real-world scenarios.

Data Visualization

Consider using an assessment test that includes questions on data visualization techniques and tools. If needed, you can create customized assessments to suit your specific data visualization requirements.

To assess this skill further, you might ask candidates:

Which data visualization tools have you worked with, and can you provide an example of a visualization you created?

When evaluating responses, look for familiarity with various tools and the ability to explain why they chose specific visualizations based on the data.

Tips for Effective Big Data Interviews

Before putting your new knowledge to use, consider these tips to make your Big Data interviews more effective and insightful.

1. Start with a Skills Assessment

Begin your hiring process with a skills assessment to filter candidates efficiently. This approach saves time and ensures you're interviewing candidates with the right technical abilities.

For Big Data roles, consider using tests that evaluate Hadoop, Data Science, and Data Mining skills. These assessments help identify candidates with strong foundations in key Big Data technologies and methodologies.

By using skills tests, you can focus your interviews on deeper technical discussions and problem-solving scenarios. This strategy allows you to make the most of your interview time and assess candidates more thoroughly.

2. Prepare a Balanced Set of Interview Questions

Compile a mix of technical and situational questions to evaluate both hard and soft skills. With limited interview time, choosing the right questions is crucial for assessing candidates comprehensively.

Include questions about related technologies like Spark or Machine Learning. This approach helps gauge a candidate's broader knowledge of the Big Data ecosystem.

Don't forget to assess soft skills such as communication and problem-solving. These qualities are essential for success in Big Data roles, especially when working in teams or explaining complex concepts to non-technical stakeholders.

3. Ask Thoughtful Follow-up Questions

Prepare to ask follow-up questions to dig deeper into a candidate's knowledge and experience. This technique helps you distinguish between candidates who have memorized answers and those with genuine understanding and problem-solving skills.

For example, after asking about Hadoop, you might follow up with a question about how they've optimized Hadoop jobs in previous roles. Look for detailed, experience-based answers that demonstrate practical knowledge and critical thinking.

Use Big Data interview questions and skills tests to hire talented analysts

If you are looking to hire someone with Big Data skills, you need an accurate way to verify those skills. The best way to do this is to use skills tests. Consider using our Hadoop Online Test or Data Science Test to assess candidates.

Once you use these tests, you can shortlist the best applicants and invite them for interviews. For more details, visit our online assessment platform or sign up to get started.

Big Data Interview Questions FAQs

Tailoring questions to the candidate's experience level helps you better assess their capabilities and ensures a more accurate evaluation.

Focus on general knowledge, data processing, data storage, and situational questions to get a comprehensive view of the candidate's skills.

Select questions that match the job role and candidate's experience level. Mix general, technical, and situational questions for a balanced interview.

Look for clear explanations, problem-solving skills, and familiarity with Big Data tools and concepts. Assess their ability to apply knowledge practically.

Sample answers are included for some questions to guide you, but you'll need to evaluate candidate responses based on your specific requirements.

Yes, these questions can be used in both in-person and remote interview settings. Ensure you have a reliable way to assess technical skills if interviewing remotely.
