In today's fast-paced software development landscape, automation testing is an integral part of ensuring software quality. Recruiters and hiring managers often struggle to evaluate candidates, needing a structured approach to gauge their proficiency in this specialized field, as you can see from our post on skills required for a test engineer.

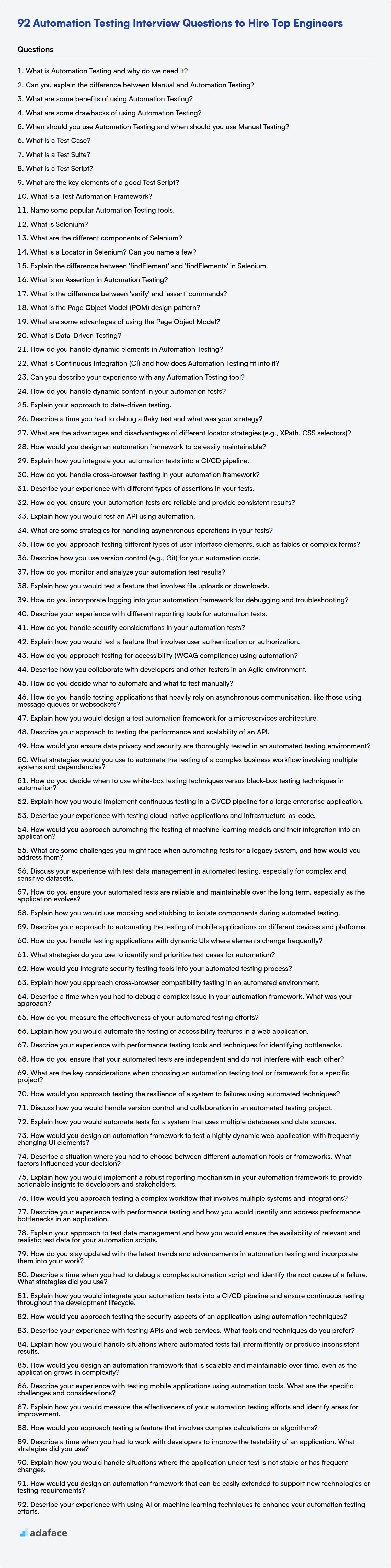

This post provides a curated list of automation testing interview questions, categorized by skill level, including basic, intermediate, advanced, and expert levels, along with multiple-choice questions. This guide helps you ask the right questions and identify candidates with the skills and knowledge to excel in automation testing roles.

By using these questions, you can refine your interview process and make data-driven hiring decisions. Before the interview, consider using Adaface's online assessments to screen candidates for practical skills and save valuable interview time.


Basic Automation Testing interview questions

1. What is Automation Testing and why do we need it?

Automation testing uses software to control the execution of tests, compare actual outcomes with predicted outcomes, and report on the test results. It automates repetitive tasks like regression testing, performance testing, and data entry, which would be time-consuming and error-prone if done manually.

We need automation testing to improve software quality, increase test coverage, accelerate the testing process, and reduce costs. It allows for frequent and consistent test execution, early detection of defects, and faster feedback to developers. This leads to more reliable and robust software.

2. Can you explain the difference between Manual and Automation Testing?

Manual testing involves testers executing test cases without automation tools. They manually verify the software against requirements, documenting findings and reporting bugs. This relies heavily on human observation and judgment.

Automation testing uses scripts and tools to execute pre-defined test cases automatically. It's especially useful for regression testing, performance testing, and repetitive tasks. Automation aims to improve efficiency, speed, and repeatability, but often requires an initial investment in script development and ongoing maintenance. It also gives developers quicker feedback, which allows bugs to be fixed sooner.

3. What are some benefits of using Automation Testing?

Automation testing offers several key benefits. Primarily, it reduces the time and cost associated with repetitive testing tasks. Automated tests can be executed much faster than manual tests, and they can run unattended, freeing up human testers to focus on more complex or exploratory testing. This leads to faster feedback cycles and quicker identification of bugs, ultimately accelerating the development process.

Furthermore, automation enhances test coverage and consistency. Automated tests can be designed to cover a wider range of scenarios and edge cases than is practical to test manually. Because they execute the same steps in the same way on every run, they reduce the risk of human error during execution. This consistency contributes to improved software quality and reliability. Tools such as Selenium, JUnit, and Cypress provide the building blocks for this kind of automation.

4. What are some drawbacks of using Automation Testing?

Automation testing, while beneficial, has drawbacks. Initial setup can be costly due to tool acquisition, training, and script development. It also requires expertise to design and maintain automated test suites effectively.

Furthermore, automation isn't a replacement for manual testing. It's less adaptable to unexpected UI changes and struggles with exploratory testing or usability assessments. Some tests are simply better suited for human observation, and relying solely on automation can miss subtle issues or edge cases that a human tester would catch.

5. When should you use Automation Testing and when should you use Manual Testing?

Automation testing is best suited for repetitive tasks, regression testing, performance testing, and load testing. It excels when testing large datasets, complex scenarios, and critical functionalities that require frequent validation. Use automation where consistency and speed are paramount. Automated tests are often used for API testing and testing unchanging UI elements.

Manual testing is preferred for exploratory testing, usability testing, and ad-hoc testing. It's vital when assessing user experience, aesthetics, and areas where subjective judgment is crucial. Manual testing also shines in early stages of development, during proof of concept, or when the application is frequently changing and automation scripts would require constant updates. Consider manual testing when dealing with new features or complex edge cases that are difficult to automate.

6. What is a Test Case?

A test case is a specific set of actions performed on a system or software to verify a particular feature or functionality. It includes preconditions, input data, execution steps, and expected results.

Essentially, it's a documented procedure to determine if an application works correctly under certain conditions. A well-defined test case helps testers ensure that the software meets requirements and identify any defects or deviations from the expected behavior.

7. What is a Test Suite?

A test suite is a collection of test cases intended to be run together to verify that a software program meets its requirements. A test suite often contains:

- A set of test cases or test scripts, each designed to verify a specific aspect of the software.

- Setup procedures, such as configuring the test environment.

- Execution procedures, defining the order in which the tests should be run.

- Validation procedures, specifying how to determine whether a test has passed or failed.

Test suites are designed to improve testing efficiency by organizing and automating the execution of tests. For example, in Python, you might use unittest or pytest to create and run test suites. They help ensure comprehensive and repeatable testing of a software application or component.
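As a minimal sketch of the idea in Python's built-in unittest module, the snippet below groups two test cases into a suite and runs them in a fixed order. The CartTests class and its cart list are hypothetical placeholders for real code under test.

```python
import unittest


class CartTests(unittest.TestCase):
    """Hypothetical test cases; each verifies one aspect of the code under test."""

    def setUp(self):
        # Setup procedure: every test starts from a fresh, empty cart.
        self.cart = []

    def test_add_item(self):
        self.cart.append("book")
        self.assertEqual(self.cart, ["book"])

    def test_cart_starts_empty(self):
        self.assertEqual(len(self.cart), 0)


def build_suite():
    # Execution procedure: tests run in the order they are added.
    suite = unittest.TestSuite()
    suite.addTest(CartTests("test_cart_starts_empty"))
    suite.addTest(CartTests("test_add_item"))
    return suite


# Validation: the runner records a pass or fail for each test case.
result = unittest.TextTestRunner(verbosity=0).run(build_suite())
print(result.testsRun, result.wasSuccessful())
```

In practice a test loader or pytest's discovery usually builds the suite for you; assembling it by hand is mainly useful when the order or selection of tests matters.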

8. What is a Test Script?

A test script is a set of instructions that are executed to verify that a software application or system functions as expected. It's essentially a detailed procedure designed to validate specific functionalities or requirements. Test scripts can be manual (executed by a human tester) or automated (executed by a software tool).

Key elements often included in a test script are: test case ID, test case name, preconditions (steps to be taken before the test), test steps (detailed actions to perform), expected results, and actual results. Automated test scripts are often written in programming languages like Python, Java, or JavaScript and utilize testing frameworks such as Selenium or JUnit.

9. What are the key elements of a good Test Script?

A good test script should be reliable, repeatable, and maintainable. Key elements include a clear and concise description of the test's purpose, well-defined preconditions and postconditions, and detailed steps with expected results. Use of meaningful variable names and data sets makes the script readable and easier to debug.

Furthermore, it should be designed for easy failure diagnosis, meaning it provides sufficient information to pinpoint the root cause of any failures. Modularity and proper error handling are also important. For example, in a Python script, consider using try...except blocks to handle potential exceptions gracefully.

10. What is a Test Automation Framework?

A Test Automation Framework is a structured approach to automated testing. It's a set of guidelines, best practices, and tools that, when followed, lead to more efficient, maintainable, and reliable automated tests. Think of it as the architecture for your automated testing efforts.

A good framework typically includes:

- Test Libraries/Modules: Reusable code for common tasks.

- Test Data Management: How test data is handled.

- Reporting Mechanisms: How test results are presented.

- Configuration Management: Managing different environments.

- Execution Engine: The component that runs the tests.

For example, if you're automating web tests using Selenium, a framework might define how you locate elements (e.g., using Page Object Model), handle browser setup, and generate reports.

11. Name some popular Automation Testing tools.

Some popular automation testing tools include:

- Selenium: A widely used open-source framework for web application testing.

- Cypress: A modern front-end testing tool built for web.

- Playwright: A framework for reliable end-to-end testing for web apps.

- Appium: An open-source tool for automating native, mobile web, and hybrid applications.

- JUnit/TestNG: Popular Java testing frameworks, often used for unit and integration testing.

- Robot Framework: A generic open-source automation framework.

12. What is Selenium?

Selenium is a powerful open-source framework used for automating web browsers. It primarily tests web applications, verifying that they behave as expected. Selenium supports multiple browsers (Chrome, Firefox, Safari, etc.) and operating systems (Windows, macOS, Linux).

It consists of several components:

- Selenium IDE: A record and playback tool.

- Selenium WebDriver: Allows you to control a browser through code, using various language bindings like Java, Python, C#, and more.

- Selenium Grid: Enables parallel test execution across different browsers and machines, speeding up the testing process.

13. What are the different components of Selenium?

Selenium is a suite of tools to automate web browsers. Its main components include:

- Selenium IDE: A record-and-playback tool for creating simple automation scripts. It is a browser extension.

- Selenium WebDriver: A collection of language-specific bindings to control web browsers. It uses browser-specific drivers to communicate with the browser. Supports multiple languages like Java, Python, C#, etc.

- Selenium Grid: Enables running tests in parallel across multiple machines and browsers, which helps in reducing test execution time. It involves a hub (central point) and nodes (test execution environments).

14. What is a Locator in Selenium? Can you name a few?

In Selenium, a Locator is a way to uniquely identify a web element on a webpage. It's crucial for interacting with elements, such as clicking buttons, entering text, or retrieving data. Without locators, Selenium wouldn't know which element to act upon.

Some common types of Locators include:

- ID: Locates elements based on their id attribute.

- Name: Locates elements based on their name attribute.

- ClassName: Locates elements based on their class attribute.

- TagName: Locates elements based on their HTML tag name (e.g., button, input).

- LinkText: Locates anchor elements (links) based on their exact link text.

- PartialLinkText: Locates anchor elements based on a partial match of their link text.

- CSS Selector: Locates elements based on CSS selectors, offering flexible and powerful targeting.

- XPath: Locates elements based on their XML path, enabling navigation through the HTML structure.

15. Explain the difference between 'findElement' and 'findElements' in Selenium.

In Selenium, findElement and findElements are used to locate web elements on a webpage, but they differ in their behavior when the element is found or not found.

findElement returns the first matching element based on the provided locator. If no element is found that matches the locator, it throws a NoSuchElementException. findElements returns a list of all matching elements. If no elements are found, it returns an empty list, not throwing an exception. This makes findElements safer when you are unsure if an element exists. Using findElement requires you to handle exceptions, while findElements enables checking the size of the returned list.

16. What is an Assertion in Automation Testing?

In automation testing, an assertion is a statement that verifies whether a specific condition or expected outcome is true at a particular point during the execution of a test script. It's a crucial mechanism for validating that the system under test behaves as intended. If the assertion evaluates to true, the test continues; if it evaluates to false, the test fails, indicating a potential defect.

For example, in Selenium with Java, an assertion might look like this:

    import org.junit.jupiter.api.Assertions;

    // "driver" is an already initialized WebDriver instance.
    String expectedTitle = "Example Website";
    String actualTitle = driver.getTitle();
    Assertions.assertEquals(expectedTitle, actualTitle, "Title mismatch!");

In this example, Assertions.assertEquals checks if the actualTitle matches the expectedTitle. If they don't match, the test will fail, and the message "Title mismatch!" will be displayed. Assertions are fundamental to determining the success or failure of automated tests.

17. What is the difference between 'verify' and 'assert' commands?

Both verify and assert are used for validation in testing, but they differ in their behavior upon failure. Assert commands halt the test execution immediately when a failure occurs. This means that if an assert statement evaluates to false, the test case is terminated, and no further steps are executed. Conversely, verify commands do not stop the test execution. If a verify statement fails, the failure is logged, but the test continues to run, executing subsequent steps.

The key difference lies in whether the test should continue after a failure. Use assert when a failure indicates a critical error that invalidates the rest of the test. Use verify when you want to check multiple conditions and log all failures within a single test run, even if some conditions are not met.
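The "verify" behavior is often implemented as a soft assertion that collects failures and reports them together at the end. The sketch below is one possible way to do that in plain Python; SoftAssert, verify_equal, and check_page are hypothetical names, not part of any particular testing library.

```python
class SoftAssert:
    """Collects failures instead of stopping at the first one ("verify" style)."""

    def __init__(self):
        self.failures = []

    def verify_equal(self, actual, expected, message=""):
        # Log the failure and let the test keep running.
        if actual != expected:
            self.failures.append(f"{message}: expected {expected!r}, got {actual!r}")

    def assert_all(self):
        # Fail once at the end, reporting every collected failure.
        if self.failures:
            raise AssertionError("; ".join(self.failures))


def check_page(title, heading):
    soft = SoftAssert()
    soft.verify_equal(title, "Example Website", "title")
    soft.verify_equal(heading, "Welcome", "heading")
    return soft
```

Calling assert_all() as the last step of a test keeps the "log everything" behavior of verify while still marking the test as failed.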

18. What is the Page Object Model (POM) design pattern?

The Page Object Model (POM) is a design pattern commonly used in test automation to create a repository of web elements. Each page within the application under test has its corresponding page object. This object contains all the web elements present on that specific page and methods to interact with those elements.

The key benefits of POM include improved code maintainability, reusability, and readability. By encapsulating page elements and their interactions, changes to the UI only require updates to the corresponding page object, rather than throughout the entire test suite. This reduces test maintenance costs and improves overall test reliability. Code duplication is also minimized, as common actions can be defined once within a page object and reused across multiple tests. For example, if a login button's id changes, you only update the locator in the LoginPage object, not in every test that uses the login functionality.
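A small sketch of a page object in Python follows. To keep it runnable without a browser, it uses FakeDriver and FakeElement as stand-ins; they are assumptions for illustration only. With Selenium you would pass a real WebDriver, whose find_element(by, value) call has the same shape. The element ids are also invented.

```python
class FakeElement:
    """Stand-in for a web element so the sketch runs without a browser."""

    def __init__(self):
        self.text = ""
        self.clicked = False

    def send_keys(self, value):
        self.text += value

    def click(self):
        self.clicked = True


class FakeDriver:
    """Stand-in for a WebDriver; returns one element per locator."""

    def __init__(self):
        self.elements = {}

    def find_element(self, by, value):
        return self.elements.setdefault((by, value), FakeElement())


class LoginPage:
    # Locators live in one place; a changed id is fixed here only.
    USERNAME = ("id", "username")
    PASSWORD = ("id", "password")
    LOGIN_BUTTON = ("id", "login-btn")

    def __init__(self, driver):
        self.driver = driver

    def login(self, username, password):
        self.driver.find_element(*self.USERNAME).send_keys(username)
        self.driver.find_element(*self.PASSWORD).send_keys(password)
        self.driver.find_element(*self.LOGIN_BUTTON).click()


driver = FakeDriver()
LoginPage(driver).login("alice", "s3cret")
```

Tests call LoginPage.login() and never touch locators directly, which is what keeps UI changes from spreading through the test suite.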

19. What are some advantages of using the Page Object Model?

The Page Object Model (POM) offers several advantages in test automation. Firstly, it enhances code maintainability by encapsulating page elements and interactions within dedicated page object classes. This separation of concerns means that UI changes only require updates to the corresponding page object, rather than scattered modifications across multiple test cases. Secondly, POM promotes code reusability. Common workflows or UI components can be represented as methods within a page object, and these methods can be called by different tests, reducing code duplication. This also makes the test code more readable and easier to understand. Another advantage is increased test stability. Changes to the UI are less likely to break tests as the page objects act as a shield between the UI and the test. Lastly, using POM improves collaboration within the team. Different team members can work on different page objects or test cases simultaneously without interfering with each other's work.

20. What is Data-Driven Testing?

Data-Driven Testing (DDT) is a testing methodology where the test input and expected output are read from data sources (like CSV, Excel, databases) rather than being hard-coded in the test script. This allows running the same test logic with multiple sets of data, increasing test coverage and reducing code duplication.

Instead of writing separate tests for each input value, a single test script reads the data, feeds it to the application, and verifies the output. Key benefits include enhanced maintainability, improved test coverage, and easier parameterization of tests.

21. How do you handle dynamic elements in Automation Testing?

Handling dynamic elements in automation testing requires robust locators and flexible strategies. Instead of relying on fixed attributes, use relative locators (XPath axes like following-sibling, ancestor), partial attribute matches (contains() and starts-with() in XPath), or CSS selectors that target stable portions of the element's structure. Note that ends-with() is an XPath 2.0 function and browsers only support XPath 1.0, so for suffix matches use a CSS attribute selector such as [id$='suffix'] instead.

Additionally, incorporate wait mechanisms (explicit or implicit waits) to allow elements to load before interacting with them, preventing false failures due to timing issues. Consider using data-driven testing to iterate through different element variations if applicable. Using a combination of these techniques, you can create more resilient and adaptable automation scripts.
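The idea behind an explicit wait can be sketched as a polling loop: check a condition repeatedly until it holds or a timeout expires. The wait_until helper and the element_is_ready condition below are hypothetical; Selenium's WebDriverWait provides a similar polling mechanism for real browsers.

```python
import time


def wait_until(condition, timeout=5.0, poll_interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` elapses."""
    deadline = time.monotonic() + timeout
    while True:
        value = condition()
        if value:
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Hypothetical element that becomes available on the third check.
attempts = {"count": 0}


def element_is_ready():
    attempts["count"] += 1
    return "submit-button" if attempts["count"] >= 3 else None


found = wait_until(element_is_ready, timeout=2.0, poll_interval=0.01)
```

Unlike a fixed sleep, the loop returns as soon as the condition is met, so tests are not slowed down when the element appears quickly.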

22. What is Continuous Integration (CI) and how does Automation Testing fit into it?

Continuous Integration (CI) is a development practice where developers regularly merge their code changes into a central repository, after which automated builds and tests are run. The primary goal of CI is to detect integration errors as quickly as possible, enabling faster feedback and preventing integration problems from accumulating.

Automation testing is a critical component of CI. By automating tests (unit, integration, and end-to-end), the CI system can quickly and reliably verify that new code changes haven't introduced regressions or broken existing functionality. Without automated testing, the CI process would be significantly slower and less effective, as manual testing is time-consuming and prone to human error. Here's how it fits in:

- Code is pushed to the central repository.

- The CI server detects the change.

- The CI server automatically builds and tests the code, running the automated test suite.

- Feedback is provided to the developers about the build and test results. A failing test indicates a problem that needs to be addressed.

23. Can you describe your experience with any Automation Testing tool?

I have experience with Selenium WebDriver for automating web application testing. I've used it with Java to create test scripts that interact with web elements, verify functionality, and generate reports. My experience includes writing robust locators (using IDs, XPath, CSS selectors), implementing explicit and implicit waits, and handling different browser configurations. I am also familiar with testing frameworks like JUnit and TestNG for structuring and running tests, and with integrating Selenium tests into CI/CD pipelines.

Additionally, I have worked with Postman for API testing. I have created test suites to validate API endpoints, request/response schemas, and error handling. This included parameterizing requests, setting up pre-request scripts, and writing assertions to verify the correctness of API responses. I also understand how to create API tests that can be automated as part of a larger integration testing strategy.

Intermediate Automation Testing interview questions

1. How do you handle dynamic content in your automation tests?

Handling dynamic content in automation tests involves strategies to identify and validate elements that change frequently. One common approach is to avoid relying on exact text matches, especially for things like dates or timestamps. Instead, focus on stable attributes like id or class names whenever possible. If these are not available, use relative XPath expressions to locate elements based on their proximity to more stable elements.

When dealing with dynamic text, you can use regular expressions to match patterns instead of exact values. For instance, element.text.matches("Order ID: \d+") could validate an order ID. Another effective technique is to extract the dynamic value and then use it in subsequent steps. For example, you might extract a dynamically generated ID and use it to click a link or button associated with that ID.
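Both techniques, pattern matching and value extraction, can be sketched with Python's re module. The confirmation text, the extract_order_id helper, and the link selector are invented for illustration.

```python
import re

ORDER_ID_PATTERN = re.compile(r"Order ID: (\d+)")


def extract_order_id(text):
    """Return the numeric order ID found in `text`, or None if absent."""
    match = ORDER_ID_PATTERN.search(text)
    return match.group(1) if match else None


confirmation = "Thank you! Order ID: 48213 was placed on 2024-05-01."
order_id = extract_order_id(confirmation)

# The extracted value can drive a later step, e.g. building a locator.
order_link_locator = f"a[href$='/orders/{order_id}']"
```

The test asserts on the pattern rather than on the full sentence, so the changing date and ID no longer cause false failures.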

2. Explain your approach to data-driven testing.

My approach to data-driven testing (DDT) involves creating test cases that use external data sources, such as CSV files, Excel spreadsheets, or databases, to drive the testing process. Instead of hardcoding test data directly into the test scripts, I parameterize the tests and supply the input data from these external sources. This allows me to execute the same test logic with various sets of data without modifying the test script itself.

The key steps include:

- Identifying test parameters: Determine which aspects of the test should be varied.

- Creating data source: Build the external data source with the required data sets.

- Parameterizing test scripts: Modify the test scripts to read data from the data source.

- Executing tests: Run the tests, which will iterate through the data sets.

- Analyzing results: Review the test results for each data set. For example, a parameterized test to validate login functionality would read usernames and passwords from the external data source.
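The steps above can be sketched in Python with the csv and unittest modules. The in-memory CSV stands in for an external file, and both the rows and the login function are hypothetical.

```python
import csv
import io
import unittest

# Stand-in for an external CSV file; the rows are invented sample data.
LOGIN_DATA = io.StringIO(
    "username,password,expected\n"
    "alice,correct-horse,success\n"
    "alice,wrong,failure\n"
    ",correct-horse,failure\n"
)


def login(username, password):
    """Hypothetical system under test."""
    valid = (username, password) == ("alice", "correct-horse")
    return "success" if valid else "failure"


class LoginDataDrivenTest(unittest.TestCase):
    def test_login_with_each_row(self):
        LOGIN_DATA.seek(0)
        for row in csv.DictReader(LOGIN_DATA):
            # subTest reports each data row separately on failure.
            with self.subTest(**row):
                actual = login(row["username"], row["password"])
                self.assertEqual(actual, row["expected"])


suite = unittest.defaultTestLoader.loadTestsFromTestCase(LoginDataDrivenTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Adding a new scenario then means adding a row to the data source, with no change to the test logic.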

3. Describe a time you had to debug a flaky test and what was your strategy?

A flaky test I encountered involved intermittent failures in an end-to-end test for a user registration flow. My strategy involved first trying to reproduce the failure consistently. This was difficult due to the flakiness, but I used a loop to run the test repeatedly. Once I could reproduce it more reliably, I started by examining the test logs and any available error messages, looking for patterns or clues about the root cause.

My debugging process involved several techniques: I added more logging around the failing steps to capture the application's state. I also introduced delays/retries in areas where timing seemed to be a factor, specifically around asynchronous operations like database writes. Through this, I discovered that the database index creation after user registration was not always completing before subsequent queries, leading to the intermittent failures. The fix was to add an explicit check for the index's existence before proceeding. This eliminated the flakiness and made the test reliable.

4. What are the advantages and disadvantages of different locator strategies (e.g., XPath, CSS selectors)?

XPath and CSS selectors are common locator strategies in web automation. XPath excels in traversing the DOM, allowing you to locate elements based on relationships (parent, child, sibling) and attributes. Its advantages include flexibility and the ability to locate elements even without unique IDs or classes. Disadvantages include potentially slower performance compared to CSS selectors and increased complexity, leading to brittle locators if not carefully crafted.

CSS selectors, on the other hand, are generally faster and more readable. They are well-suited for locating elements based on IDs, classes, and attributes. Advantages include ease of use and better performance. However, CSS selectors have limitations in traversing the DOM upwards (to parent elements) and may not be as powerful as XPath in complex scenarios. Choosing the right strategy depends on the specific element and the stability of the locator. For instance, using id or class is preferable to complex XPath, provided they are uniquely and consistently named by the developers.

5. How would you design an automation framework to be easily maintainable?

To design an easily maintainable automation framework, I'd focus on modularity, abstraction, and reporting. I'd break down test cases into smaller, reusable components or modules. This way, changes in one area don't ripple through the entire framework. I would implement abstraction layers to hide complex implementation details, such as using a Page Object Model for UI testing.

Key considerations include using descriptive naming conventions, implementing comprehensive logging and reporting, and adhering to coding standards. Also, utilizing configuration files to manage test data and environment settings promotes maintainability. Furthermore, incorporating a version control system like Git is essential for tracking changes and facilitating collaboration. For example:

    # A small, reusable unit of code
    def add(x, y):
        return x + y

    # In a separate test module
    assert add(2, 3) == 5

6. Explain how you integrate your automation tests into a CI/CD pipeline.

I integrate automation tests into a CI/CD pipeline to ensure code quality and prevent regressions. The process typically involves the following steps:

First, I configure the CI/CD tool (e.g., Jenkins, GitLab CI, Azure DevOps) to trigger automation tests upon specific events, such as code commits or pull requests. The pipeline then executes the tests using a testing framework like Selenium, Cypress, or Playwright, often within a containerized environment (e.g., Docker) to ensure consistency. Reporting is crucial, so I generate reports (e.g., JUnit XML, Allure) and publish them to a centralized dashboard, making test results readily accessible. Finally, based on test results, the pipeline can either proceed to the next stage (e.g., deployment) or halt, preventing faulty code from reaching production. If the tests fail, the build is marked as failed, and the test results and the triggering commit are reviewed to find the cause. In pseudocode: if (testResults.failed) { pipeline.fail(); }
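One common way to halt a pipeline is through the exit code of the test command, since most CI tools treat a non-zero exit code as a failed stage. The sketch below shows the idea with unittest; SmokeTest and run_suite_for_ci are hypothetical names standing in for a real suite and entry point.

```python
import unittest


class SmokeTest(unittest.TestCase):
    """Hypothetical placeholder for the real automated suite."""

    def test_application_starts(self):
        self.assertTrue(True)


def run_suite_for_ci():
    """Run the suite and return a process exit code for the pipeline."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SmokeTest)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    # Zero means success; anything else fails the pipeline stage.
    return 0 if result.wasSuccessful() else 1


exit_code = run_suite_for_ci()
# In a real pipeline script, finish with: sys.exit(exit_code)
```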

7. How do you handle cross-browser testing in your automation framework?

I handle cross-browser testing in my automation framework by utilizing tools like Selenium Grid or cloud-based testing platforms such as BrowserStack or Sauce Labs. These tools allow me to execute the same test scripts across multiple browsers and operating systems concurrently. My framework is designed with browser-specific configurations (e.g., driver setup, browser options) that can be easily switched based on the target browser.

To ensure comprehensive coverage, I define a matrix of supported browsers and versions based on analytics and user demographics. Test scripts are written to be browser-agnostic as much as possible, using WebDriver's standard APIs. When browser-specific behavior is encountered, conditional logic or browser-specific locators are implemented. Reporting includes browser-specific results for easy identification of issues. We also incorporate visual testing to compare snapshots across different browsers to ensure consistency.

8. Describe your experience with different types of assertions in your tests.

I've used various assertion types to validate expected outcomes in my tests. Primarily, I use equality assertions (e.g., assertEqual, assertEquals, ===) to verify that actual values match expected values. For numerical comparisons, I use assertGreater and assertLess, and I use assertAlmostEqual when floating-point precision makes exact equality unreliable. I also utilize boolean and null checks (assertTrue, assertFalse, assertIsNone, assertIsNotNone) and type assertions (assertInstanceOf, assertIsInstance) to ensure objects are of the expected type.

Beyond basic comparisons, I've worked with more specific assertions. For instance, assertIn and assertNotIn to check for membership in collections, assertRaises or similar constructs to confirm that code raises expected exceptions, and regular expression assertions (assertRegex, assertNotRegex) to validate string patterns. The choice of assertion depends on the specific requirement of the test and the level of detail needed to confirm correctness. Code examples:

    self.assertEqual(result, expected_result)    # check equality
    self.assertIsInstance(obj, MyClass)          # check type
    self.assertRaises(ValueError, my_func, arg)  # check exception raised

9. How do you ensure your automation tests are reliable and provide consistent results?

To ensure automation tests are reliable and consistent, I focus on several key areas. First, test isolation is crucial; each test should be independent and not rely on the state of previous tests. This can be achieved by cleaning up data or resources after each test run. Second, robust error handling is essential. Tests should gracefully handle unexpected errors, log them appropriately, and avoid cascading failures.

Further, using stable locators in UI tests is vital (e.g., IDs, data attributes rather than dynamic XPaths). Employing retry mechanisms for flaky tests (common in asynchronous environments) and parameterizing tests with data-driven approaches to cover various scenarios improves reliability. Finally, implementing a reliable test reporting and logging system helps identify and address inconsistencies quickly.
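A retry mechanism can be sketched as a decorator that re-runs a check a limited number of times. The retry decorator and flaky_check function below are hypothetical; test runners often offer plugins for the same purpose.

```python
import functools
import time


def retry(times=3, delay=0.0, exceptions=(AssertionError,)):
    """Re-run a flaky callable up to `times` attempts before giving up."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, times + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == times:
                        raise  # out of attempts: surface the real failure
                    time.sleep(delay)
        return wrapper
    return decorator


calls = {"count": 0}


@retry(times=3)
def flaky_check():
    # Hypothetical check that only passes on the third attempt.
    calls["count"] += 1
    assert calls["count"] >= 3, "backend not ready yet"
    return "ok"


outcome = flaky_check()
```

Retries should be used sparingly and logged, because they can hide a genuine intermittent defect rather than fix it.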

10. Explain how you would test an API using automation.

To automate API testing, I'd use tools like Postman, Rest Assured (Java), or PyTest with Requests (Python). The process generally involves creating test cases that cover different scenarios, such as verifying status codes, response times, and data accuracy. The tests would send various requests (GET, POST, PUT, DELETE) with different payloads and headers, then assert that the responses match expected values. These tests can be integrated into a CI/CD pipeline for continuous testing.

Key steps include:

- Setup: Configure the test environment and import necessary libraries.

- Request Construction: Define API endpoints, request methods, headers, and request bodies (JSON, XML, etc).

- Execution: Send the API requests using the chosen testing tool/library.

- Validation: Assertions verify the response status code (e.g., 200, 400), response headers, and the data in the response body. Examples include checking that specific fields exist and have the correct data type or verifying that a list contains a certain number of items.

- Reporting: Generate reports showing the test results (pass/fail) and any errors encountered. Error logs should be descriptive enough to pinpoint issues quickly.
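The steps above can be sketched using only the Python standard library. A tiny stub server stands in for the API under test so the example is self-contained; the /users/1 endpoint, its payload, and the get helper are all invented for illustration. With Requests or Rest Assured the request and assertion steps would look similar.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubApiHandler(BaseHTTPRequestHandler):
    """Hypothetical API: GET /users/1 returns a user, anything else is 404."""

    def do_GET(self):
        if self.path == "/users/1":
            body = json.dumps({"id": 1, "name": "Alice"}).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, *args):
        pass  # keep test output quiet


# Ignore any proxy settings so requests go straight to the local stub.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def get(url):
    """Return (status_code, content_type, parsed_json_or_None)."""
    try:
        with opener.open(url, timeout=5) as response:
            content_type = response.headers.get("Content-Type")
            return response.status, content_type, json.load(response)
    except urllib.error.HTTPError as error:
        return error.code, error.headers.get("Content-Type"), None


# Setup: start the stub on a free local port.
server = HTTPServer(("127.0.0.1", 0), StubApiHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_port}"

# Execution and validation: status code, header, and body fields.
status, content_type, user = get(f"{base_url}/users/1")
assert status == 200
assert content_type == "application/json"
assert isinstance(user["id"], int) and user["name"] == "Alice"

# Negative case: an unknown resource should return 404.
missing_status, _, _ = get(f"{base_url}/users/999")
assert missing_status == 404

server.shutdown()
server.server_close()
```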

11. What are some strategies for handling asynchronous operations in your tests?

When testing asynchronous operations, several strategies can ensure tests are reliable and accurate.

- Promises/Async/Await: Utilize async/await to simplify the handling of Promises. Await the resolution of the promise before making assertions. For example: it('should fetch data', async () => { const data = await fetchData(); expect(data).toBeDefined(); });

- Callbacks: If dealing with callback-based asynchronous functions, use techniques like done() in Mocha or similar mechanisms in other testing frameworks to signal the completion of the asynchronous operation. Ensure the done() callback is invoked within the asynchronous operation's callback.

- Mocking and Stubbing: Mock asynchronous dependencies (e.g., API calls) to control their behavior and responses. This allows you to test different scenarios (success, failure, timeouts) without relying on external services.

- Timeouts: Adjust test timeouts appropriately for slower asynchronous operations. However, avoid overly long timeouts, as they can mask performance issues. Prefer using more precise methods like awaiting promises instead of relying solely on timeouts.
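The same strategies carry over to other languages. As a rough Python equivalent, the sketch below awaits the code under test, mocks its asynchronous dependency with unittest.mock.AsyncMock, and bounds a slow call with asyncio.wait_for. The load_profile function and the client object are hypothetical.

```python
import asyncio
from unittest.mock import AsyncMock


async def load_profile(client, user_id):
    """Code under test: awaits a (hypothetical) async API client."""
    response = await client.get(f"/users/{user_id}")
    return response["name"].upper()


async def test_load_profile_success():
    # Mock the async dependency so no real network call is made.
    client = AsyncMock()
    client.get.return_value = {"name": "alice"}
    assert await load_profile(client, 1) == "ALICE"
    client.get.assert_awaited_once_with("/users/1")


async def test_load_profile_timeout():
    # Simulate a slow dependency and bound the wait with a timeout.
    async def slow_get(path):
        await asyncio.sleep(1)

    client = AsyncMock()
    client.get.side_effect = slow_get
    try:
        await asyncio.wait_for(load_profile(client, 1), timeout=0.05)
    except asyncio.TimeoutError:
        return "timed out"
    return "completed"


asyncio.run(test_load_profile_success())
timeout_outcome = asyncio.run(test_load_profile_timeout())
```

Awaiting the coroutine directly makes the assertion run only after the operation has finished, which is the main guard against timing-related flakiness.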

12. How do you approach testing different types of user interface elements, such as tables or complex forms?

When testing UI elements like tables and complex forms, I focus on functionality, data integrity, and user experience. For tables, I verify correct data display, sorting, filtering, pagination, and that actions (e.g., editing, deleting) work as expected. I use a combination of manual testing and automated UI tests (e.g., using Selenium or Cypress) to ensure all scenarios are covered. I check for boundary conditions (empty tables, large datasets) and accessibility. For tables specifically, I also test the layout at various screen resolutions to confirm it is responsive, and I make sure that any modifications made through the table are correctly persisted.

For complex forms, I validate input fields, data types, required fields, and error messages. I check that form submission works correctly and that data is saved and retrieved accurately. I pay close attention to user workflow and ensure the form is easy to navigate. For forms, I make sure form validation works as expected for all input types, including custom validators, such as email, number, or regular expressions. The tests also check how the data changes on the backend with the values entered into the form. If the form uses conditional logic (e.g., showing/hiding fields), those conditions must also be tested.

13. Describe how you use version control (e.g., Git) for your automation code.

I use Git for version control of my automation code, following a standard workflow. I typically create a new branch for each feature or bug fix, ensuring that the main or develop branch remains stable. This allows me to work on isolated changes without affecting the overall project. When the changes are complete, I create a pull request for review, and after approval, merge it into the main branch.

Specifically, I utilize commands like `git clone`, `git pull`, `git checkout -b <branch_name>`, `git add`, `git commit -m "<commit_message>"`, `git push origin <branch_name>`, `git merge`, and `git rebase`. I also leverage `.gitignore` files to exclude unnecessary files like temporary files or credentials from the repository. Furthermore, I use branching strategies, such as Gitflow, and follow commit message conventions for better code maintainability and collaboration.

14. How do you monitor and analyze your automation test results?

I monitor and analyze automation test results using a combination of tools and techniques. First, the automation framework itself usually generates reports, often in formats like HTML or JUnit-style XML. These reports provide a summary of test executions, including the number of tests passed, failed, and skipped. I also configure the framework to output detailed logs that help in pinpointing the root cause of any failures.

To further analyze the results, I often integrate the automation framework with a CI/CD pipeline. This enables automated test execution and reporting with tools like Jenkins, Azure DevOps, or GitLab CI. These tools provide visualizations and dashboards that show test trends and help track the stability of the application over time. I also push results to test management or reporting tools such as TestRail, Xray, or Allure, which provide features such as advanced filtering, grouping, and historical analysis.
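As an illustration of working with those reports directly, here is a small sketch that summarizes a JUnit-style XML report using only the Python standard library. The sample report and the `summarize` function are made up for this example; real reports may nest `testsuite` elements inside a `testsuites` root, which `iter("testcase")` also handles.

```python
import xml.etree.ElementTree as ET

# Made-up report in the common JUnit XML layout.
SAMPLE_REPORT = """<testsuite name="checkout" tests="3" failures="1" errors="0" skipped="1">
  <testcase classname="cart" name="test_add_item" time="0.12"/>
  <testcase classname="cart" name="test_remove_item" time="0.30">
    <failure message="expected 0 items, found 1">AssertionError</failure>
  </testcase>
  <testcase classname="cart" name="test_coupon" time="0.00">
    <skipped/>
  </testcase>
</testsuite>"""


def summarize(report_xml):
    """Count passed, failed, and skipped test cases and list the failing ones."""
    root = ET.fromstring(report_xml)
    summary = {"passed": 0, "failed": 0, "skipped": 0, "failures": []}
    for case in root.iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            summary["failed"] += 1
            summary["failures"].append(f'{case.get("classname")}.{case.get("name")}')
        elif case.find("skipped") is not None:
            summary["skipped"] += 1
        else:
            summary["passed"] += 1
    return summary


summary = summarize(SAMPLE_REPORT)
print(summary)
```

A summary like this can feed a trend chart or a chat notification when the dashboard tooling does not cover a particular need.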

15. Explain how you would test a feature that involves file uploads or downloads.

Testing file uploads and downloads involves verifying functionality, security, and performance. For uploads, I'd check different file types (allowed and disallowed), file sizes (boundary and large files), and filenames (including special characters). I'd also test error handling for scenarios like incorrect file types or exceeding size limits. Security tests would involve checking for vulnerabilities like malware uploads and path traversal.

For downloads, I'd verify the integrity of the downloaded file (using checksums), test download speeds, and ensure proper handling of interrupted downloads. Additionally, I'd check if the downloaded file opens correctly and that access controls are enforced, preventing unauthorized downloads. Testing different browsers and operating systems is important to ensure cross-platform compatibility for both uploads and downloads.
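The checksum comparison mentioned above can be sketched as follows. This is a self-contained illustration: the "downloaded" file is written locally to a temporary directory, and the file name and payload are placeholders. In a real test the expected digest would come from the server or a known-good fixture.

```python
import hashlib
import os
import tempfile


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large downloads do not have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Placeholder content standing in for the file served by the application.
payload = b"example file contents"
expected = hashlib.sha256(payload).hexdigest()

with tempfile.TemporaryDirectory() as tmp_dir:
    downloaded = os.path.join(tmp_dir, "report.bin")
    with open(downloaded, "wb") as handle:
        handle.write(payload)  # simulates the browser or HTTP client saving the file
    actual = sha256_of(downloaded)

intact = actual == expected
print("download intact:", intact)
```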

16. How do you incorporate logging into your automation framework for debugging and troubleshooting?

Logging is crucial for debugging and troubleshooting automation frameworks. I typically integrate a logging library (like Python's logging module) to record events at different severity levels (DEBUG, INFO, WARNING, ERROR, CRITICAL). These logs capture details about test execution, variable values, and any exceptions encountered. This allows for detailed analysis of test failures or unexpected behavior without needing to rerun the tests in debug mode.

Specifically, I incorporate logging at key points within the framework: at the start and end of tests, before and after critical actions (e.g., clicking a button, submitting a form), and within exception handling blocks. I also configure the logging to output to both the console and a file for persistent records. Furthermore, I'd include contextual information in logs, such as test case ID, timestamp, and relevant data, which helps in pinpointing the root cause of issues more quickly.
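A minimal sketch of such a setup with Python's `logging` module is shown below. The logger name, the `test_id` field, and the sample messages are assumptions made for illustration; the console shows INFO and above while the file keeps the full DEBUG trail.

```python
import logging
import os
import tempfile


def build_logger(name, log_path):
    """Create a logger that writes to both the console and a log file."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    logger.propagate = False
    logger.handlers.clear()
    # `test_id` is a custom field supplied through the LoggerAdapter below.
    formatter = logging.Formatter("%(asctime)s %(levelname)s [%(test_id)s] %(message)s")
    console = logging.StreamHandler()
    console.setLevel(logging.INFO)
    file_handler = logging.FileHandler(log_path, encoding="utf-8")
    file_handler.setLevel(logging.DEBUG)
    for handler in (console, file_handler):
        handler.setFormatter(formatter)
        logger.addHandler(handler)
    return logger


log_path = os.path.join(tempfile.mkdtemp(), "run.log")
base_logger = build_logger("automation", log_path)
# The adapter attaches the test case ID to every record it emits.
log = logging.LoggerAdapter(base_logger, {"test_id": "TC-101"})

log.info("test started")
log.debug("clicking submit button")
try:
    raise TimeoutError("submit button not clickable")  # simulated step failure
except TimeoutError:
    log.exception("step failed")  # records the message plus the traceback

for handler in base_logger.handlers:
    handler.flush()
with open(log_path, encoding="utf-8") as handle:
    log_text = handle.read()
```

Because the format string requires `test_id`, all logging in this sketch goes through the adapter rather than the base logger.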

17. Describe your experience with different reporting tools for automation tests.

I have experience with several reporting tools commonly used in automation testing. These include tools that generate HTML reports, such as Allure Report, and those integrated with testing frameworks like pytest-html for Python and TestNG for Java. I've also used cloud-based reporting platforms like TestRail and Zephyr, which provide centralized test management and reporting capabilities.

My typical workflow involves configuring the reporting tool to collect test results during execution. After the tests complete, I analyze the generated reports to identify failures, performance bottlenecks, and overall test coverage. I use the reports to communicate test outcomes to stakeholders and to track progress over time. In cases where custom reporting is needed, I've used libraries like reportlab in Python to generate PDF reports from raw test data. I can also build reports to integrate with tools like Slack to inform stakeholders about build status and test execution outcomes.

18. How do you handle security considerations in your automation tests?

Security in automation tests is crucial. I address it by avoiding hardcoding credentials, using secure credential management (e.g., environment variables, secrets management tools), and ensuring test data is sanitized and doesn't expose sensitive information. Input validation testing is automated to look for vulnerabilities like SQL injection and cross-site scripting.

Specifically, tests are designed to check authorization and authentication mechanisms. For example, API tests verify that users without proper roles cannot access restricted endpoints. Regularly updating dependencies and using static analysis tools on test code helps prevent security vulnerabilities in the test framework itself. Fuzzing techniques can also be integrated to identify unexpected vulnerabilities.

19. Explain how you would test a feature that involves user authentication or authorization.

Testing user authentication/authorization involves several layers. First, I'd verify successful login with valid credentials. Then, I'd test failure scenarios: invalid username, incorrect password, locked accounts, and brute-force protection. Testing password reset functionality (email verification, token expiry) is crucial. For authorization, I'd check that users can only access resources/features permitted by their roles or permissions. I'd also test the absence of access, i.e., explicitly check that a user cannot access something they aren't supposed to.

Specifically, some tests include verifying session management (timeout, invalidation), multi-factor authentication (if applicable), and role-based access control (RBAC). For RBAC, ensure users with specific roles can perform actions and users without those roles cannot. Consider using tools like Postman or curl to send requests with different user tokens/cookies and verify the server's response (e.g., 403 Forbidden, 200 OK).
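One way to keep RBAC checks exhaustive is to express them as a table of role, action, and expected status. The sketch below uses a hypothetical in-memory permission map and a `status_for` function in place of the real system; in an actual suite, `status_for` would send a request with that role's token and return the response code. The 401 case for unauthenticated callers is an addition for illustration.

```python
# Hypothetical role-to-permission mapping standing in for the system under test.
ROLE_PERMISSIONS = {
    "admin": {"read_report", "edit_report", "delete_report"},
    "editor": {"read_report", "edit_report"},
    "viewer": {"read_report"},
}


def status_for(role, action):
    """Return the HTTP status a request would receive: 200, 401, or 403."""
    if role is None:
        return 401  # not authenticated at all
    if action in ROLE_PERMISSIONS.get(role, set()):
        return 200
    return 403  # authenticated but not permitted


# Each row covers either granted access or the explicit absence of access.
EXPECTATIONS = [
    ("admin", "delete_report", 200),
    ("editor", "delete_report", 403),
    ("viewer", "edit_report", 403),
    ("viewer", "read_report", 200),
    (None, "read_report", 401),
]

mismatches = [
    (role, action, expected, status_for(role, action))
    for role, action, expected in EXPECTATIONS
    if status_for(role, action) != expected
]
print("mismatches:", mismatches)
```

With pytest, the same table could drive a parametrized test so that each row is reported separately.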

20. How do you approach testing for accessibility (WCAG compliance) using automation?

Automated accessibility testing focuses on catching common WCAG violations early in the development cycle. I typically use tools like axe-core, Lighthouse, or Pa11y integrated into my CI/CD pipeline. These tools scan the rendered HTML and report violations of accessibility guidelines automatically.

My approach involves:

- Integrating accessibility testing into existing automated tests: For example, adding axe checks to Cypress or Selenium tests.

- Running automated audits as part of the build process: This flags any new violations or regressions in accessibility.

- Configuring the rules: I often configure the ruleset to only check for WCAG 2.1 AA compliance. Also, if needed, selectively disable some rules to reduce false positives and focus on core accessibility issues, for example: `axe.configure({ rules: [{ id: '...', enabled: false }] })`

- Using custom rules: If there are accessibility issues that are specific to the website, it's possible to define custom rules.

While automation is helpful, it's crucial to remember that it only covers a portion of accessibility testing. Manual testing with assistive technologies like screen readers is also necessary for comprehensive WCAG compliance.

21. Describe how you collaborate with developers and other testers in an Agile environment.

In an Agile environment, collaboration is key. I actively participate in daily stand-ups to stay informed about progress and roadblocks. I work closely with developers by providing prompt feedback on bug reports and test results, using tools like Jira for clear communication and issue tracking. I engage in test-driven development (TDD) or behavior-driven development (BDD) discussions with developers to ensure testability early on, improve clarity, and reduce bugs. I also pair-test with developers to work on complex issues and improve test coverage efficiently.

With other testers, I collaborate on test plan creation, test case reviews, and knowledge sharing. We work together to ensure comprehensive test coverage and avoid duplication of effort. Regular discussions and brainstorming sessions help us to identify potential risks and improve the overall quality of the software. I share testing tools, scripts, and automation frameworks to maximize efficiency across the team. I participate in retrospective meetings to discuss completed sprints and improve processes for future sprints.

22. How do you decide what to automate and what to test manually?

I decide what to automate based on risk, repetition, and stability. High-risk areas, frequently executed tests, and features unlikely to change are prime candidates for automation. Regression tests are almost always automated, as are tests verifying core functionality. Anything executed multiple times benefits from automation.

Manual testing is prioritized for exploratory testing, usability testing, and edge cases that are difficult to define programmatically. New features under development or functionalities undergoing frequent changes are often tested manually initially to allow for quicker feedback and adjustments. Any testing that requires human intuition and subjective evaluation also falls into manual testing.

Advanced Automation Testing interview questions

1. How do you handle testing applications that heavily rely on asynchronous communication, like those using message queues or websockets?

Testing asynchronous applications requires a strategic approach. The key is isolating components and simulating asynchronous events. For message queues, I'd focus on verifying message production (correct format, routing keys), message consumption (processing logic, side effects), and error handling (retry mechanisms, dead-letter queues). Tools like RabbitMQ's shovel plugin or Kafka's MirrorMaker are useful for testing data replication.

For WebSockets, I'd simulate client connections and messages. Testing includes verifying correct message exchange, handling different connection states (open, close, error), and validating real-time updates. We can use libraries like Socket.IO or ws in test scripts to send and receive messages, and tools like Postman or Insomnia also support WebSocket testing. Mocks and stubs are helpful for isolating the application from external services and controlling asynchronous behavior. We also use integration tests to verify interaction between components.
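The message-queue checks described above (consumption logic, retries, dead-letter handling) can be exercised without a broker by substituting an in-memory queue. The sketch below is one such setup under that assumption; `consume`, `handle_order`, and the message shapes are hypothetical, and `queue.Queue` stands in for RabbitMQ or Kafka.

```python
import json
import queue


def consume(inbox, dead_letters, handler, max_attempts=3):
    """Drain `inbox`, retrying failures and dead-lettering what keeps failing."""
    processed = []
    while True:
        try:
            raw = inbox.get_nowait()
        except queue.Empty:
            return processed
        for attempt in range(1, max_attempts + 1):
            try:
                processed.append(handler(json.loads(raw)))
                break
            except (ValueError, KeyError):
                # Malformed JSON raises ValueError; a missing field raises KeyError.
                if attempt == max_attempts:
                    dead_letters.put(raw)


def handle_order(message):
    """Hypothetical processing logic: return the ID of the handled order."""
    return message["order_id"]


inbox, dead_letters = queue.Queue(), queue.Queue()
inbox.put(json.dumps({"order_id": 1}))
inbox.put("not valid json")
inbox.put(json.dumps({"customer": "missing order_id"}))
inbox.put(json.dumps({"order_id": 2}))

processed = consume(inbox, dead_letters, handle_order)
print("processed:", processed, "dead-lettered:", dead_letters.qsize())
```

Tests at this level verify the consumer's logic; a smaller set of integration tests against a real broker is still needed to cover routing and delivery guarantees.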

2. Explain how you would design a test automation framework for a microservices architecture.

Designing a test automation framework for a microservices architecture requires a strategic approach. Key aspects include:

- API Testing: Since microservices communicate via APIs, robust API testing is crucial. Tools like Postman, Rest-Assured, or Pytest with Requests can be used. We need to cover contract testing (using tools like Pact) to ensure services adhere to agreed-upon schemas.

- Component Testing: Unit tests focus on individual service logic. Integration tests verify interactions between related services, possibly using service virtualization or mocks to isolate dependencies.

- End-to-End Testing: Validate critical business flows that span multiple services. Tools like Selenium or Cypress can be employed, but minimize reliance due to maintenance overhead.

- Deployment Pipeline Integration: Automation should be integrated into the CI/CD pipeline to ensure tests run on every build and deployment.

Furthermore, effective monitoring and logging are essential to diagnose failures in a distributed environment. Consider tools like Prometheus and Grafana for monitoring test execution and service health. Use a centralized logging system to aggregate logs from all microservices and test components, simplifying debugging. We also need good reporting and dashboards to showcase the results of the test executions. Finally, consider using containerization (e.g., Docker) to create consistent test environments that mirror production.

3. Describe your approach to testing the performance and scalability of an API.

My approach to testing API performance and scalability involves several key steps. First, I define clear performance goals, such as response time thresholds, throughput expectations (requests per second), and resource utilization limits (CPU, memory). Then, I create realistic test scenarios mimicking anticipated user behavior, including peak load, sustained load, and stress testing.

I use tools like k6, Gatling, or JMeter to simulate concurrent users and measure key metrics. During testing, I monitor server-side metrics (CPU, memory, database performance) to identify bottlenecks. I analyze test results to pinpoint areas for optimization, such as inefficient database queries, slow algorithms, or resource constraints. Based on the results, I provide recommendations for improving performance and scalability, which might include code optimization, infrastructure scaling, caching strategies, or load balancing.
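Dedicated tools handle this at scale, but the core measurements are simple enough to sketch. The example below fires concurrent calls at a stand-in function and reports the 95th-percentile latency and throughput; `call_api` is a hypothetical placeholder that merely sleeps, and the 500 ms budget is an illustrative goal, not a recommendation.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def call_api():
    """Hypothetical stand-in for one API request; returns its latency in seconds."""
    started = time.perf_counter()
    time.sleep(0.01)  # replace with a real HTTP call against the API under test
    return time.perf_counter() - started


def run_load(total_requests=40, concurrency=8):
    """Fire `total_requests` calls from `concurrency` worker threads."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: call_api(), range(total_requests)))
    elapsed = time.perf_counter() - started
    return {
        "requests": len(latencies),
        # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
        "p95_seconds": statistics.quantiles(latencies, n=100)[94],
        "throughput_rps": len(latencies) / elapsed,
    }


report = run_load()
# Illustrative performance goal: 95% of requests finish within 500 ms.
within_budget = report["p95_seconds"] < 0.5
print(report, "within budget:", within_budget)
```

Percentiles are usually more informative than averages here, because a small share of slow requests can hide behind a healthy mean.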

4. How would you ensure data privacy and security are thoroughly tested in an automated testing environment?

To ensure data privacy and security are thoroughly tested in an automated environment, I would focus on several key areas. Firstly, data masking and anonymization techniques should be implemented to use realistic but non-sensitive data in tests. Secondly, I'd integrate security testing tools like static analysis (SAST) and dynamic analysis (DAST) into the CI/CD pipeline to automatically detect vulnerabilities. Thirdly, implement automated access control tests to verify that only authorized users can access specific data or functionalities.

Furthermore, automated audit logging tests should be implemented to ensure that all data access and modifications are properly logged and monitored. Regular automated penetration testing against test environments is crucial. Finally, reporting must be in place, for instance, automatically flagging failing test cases related to security such as HTTP status code checks or exception details within the test results. Code example in pytest:

```python
import pytest
import requests


def test_sensitive_data_not_exposed():
    # "your_api_endpoint" is a placeholder for the URL under test.
    response = requests.get("your_api_endpoint")
    assert response.status_code == 200
    assert "sensitive_field" not in response.text
```

5. What strategies would you use to automate the testing of a complex business workflow involving multiple systems and dependencies?

To automate testing a complex business workflow, I'd use a combination of strategies. First, I'd define clear test cases based on user stories and business requirements, focusing on end-to-end scenarios. I'd leverage test automation frameworks like Selenium or Cypress for UI testing, and API testing tools like Postman or RestAssured to validate data flow and integrations between systems. Consider using a Behavior-Driven Development (BDD) approach with tools like Cucumber to create human-readable test scripts that are easy to maintain.

Second, for systems with message queues or asynchronous processing, I'd implement message interception and validation techniques. This includes tools and scripts to check the content and order of messages. I'd also isolate systems under test by virtualizing external services with tools like WireMock and mocking in-process dependencies with libraries like Mockito. Continuous Integration/Continuous Deployment (CI/CD) pipelines are crucial for automated execution of these tests on every code change. Finally, robust logging and monitoring will aid in debugging failures and identifying performance bottlenecks.

6. How do you decide when to use white-box testing techniques versus black-box testing techniques in automation?

The decision to use white-box or black-box testing in automation depends on the testing goals, the tester's knowledge of the system, and the stage of testing. Black-box testing is often preferred when testing from the user's perspective without knowledge of the internal code structure. It's useful for functional testing, integration testing, and system testing. Examples include testing user workflows or API endpoints by providing inputs and verifying the outputs against expected results. Automation is often centered on verifying specified requirements or user stories. These tests generally involve UI tests or API tests.

White-box testing is applied when the tester has access to the code and needs to verify specific code paths, logic, and internal structures. It's suitable for unit testing, code coverage analysis, and performance testing. For example, one might write unit tests that directly invoke functions or methods within a specific class to ensure its behavior under various conditions. White-box testing helps to catch edge cases or verify error handling that might be missed by black-box tests. It often requires code instrumentation or the use of testing frameworks like JUnit for Java or pytest for Python.

7. Explain how you would implement continuous testing in a CI/CD pipeline for a large enterprise application.

Continuous Testing in a CI/CD pipeline for a large enterprise application involves automating tests at various stages to provide rapid feedback on code changes. I'd integrate different types of tests - unit, integration, API, performance, and security - into the pipeline using tools like JUnit, Selenium, JMeter, and SonarQube.

To implement it effectively, I would ensure that:

- Unit tests run at the commit stage.

- Integration and API tests are triggered after deployment to a test environment.

- Performance and security tests run in a staging environment before production deployment.

Test results are aggregated and displayed in a centralized dashboard, like Xray or TestRail, providing visibility to the entire team. Automated gates would prevent promotion of builds that fail critical tests. I would use a tool like Jenkins, GitLab CI, or Azure DevOps to orchestrate this process.

8. Describe your experience with testing cloud-native applications and infrastructure-as-code.

I have experience testing cloud-native applications, focusing on microservices deployed on Kubernetes using Infrastructure-as-Code (IaC) tools like Terraform and CloudFormation. My testing strategy includes unit tests, integration tests, and end-to-end tests, often automated within CI/CD pipelines using tools like Jenkins or GitLab CI. I also use tools like kubectl for direct interaction with the Kubernetes cluster to verify deployments and resources.

For IaC, I focus on validating that the deployed infrastructure matches the defined configuration, using tools like tfsec for security scanning of Terraform code. I also write automated tests using Python and libraries like boto3 or the Terraform SDK to verify resource properties after deployment. Further, I use tools like k6 to perform load testing on the cloud-native application to ensure scalability and performance under various conditions. I also validate deployments using tools like Helm.

9. How would you approach automating the testing of machine learning models and their integration into an application?

Automating ML model testing involves several key aspects. First, data validation is crucial. This ensures incoming data matches the expected schema and distribution using tools like Great Expectations or custom scripts. Next, model performance testing needs to be automated. This includes calculating relevant metrics (e.g., accuracy, precision, recall) on held-out datasets and comparing them to predefined thresholds. We can use tools like MLflow to track these metrics across different model versions. Code example of a metric computation:

```python
from sklearn.metrics import accuracy_score

# Ground-truth labels and model predictions for a tiny held-out set.
y_true = [0, 1, 1, 0]
y_pred = [0, 1, 0, 0]

accuracy = accuracy_score(y_true, y_pred)
print(f"Accuracy: {accuracy}")
```

Finally, for integration testing, focus on verifying the model's end-to-end behavior within the application. This might involve setting up a CI/CD pipeline that automatically deploys the model to a staging environment, running automated tests against the application's API endpoints that use the model, and rolling back the deployment if any tests fail.
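The comparison of metrics against predefined thresholds can be turned into an automated gate. The sketch below computes accuracy, precision, and recall in plain Python (so it runs without scikit-learn) and lists which metrics fall short; the threshold values and the `evaluate` and `failed_gates` names are illustrative assumptions.

```python
def evaluate(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


def failed_gates(metrics, thresholds):
    """Return the names of metrics that fall below their minimum threshold."""
    return sorted(name for name, minimum in thresholds.items() if metrics[name] < minimum)


# Illustrative thresholds; real values depend on the model and business needs.
THRESHOLDS = {"accuracy": 0.7, "precision": 0.9, "recall": 0.6}

metrics = evaluate([0, 1, 1, 0], [0, 1, 0, 0])
failed = failed_gates(metrics, THRESHOLDS)
print(metrics, "failed gates:", failed)
```

In a pipeline, a non-empty `failed` list would fail the build or block promotion of the model version.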

10. What are some challenges you might face when automating tests for a legacy system, and how would you address them?

Automating tests for legacy systems often presents unique challenges. One common issue is lack of documentation and poorly understood code, making it difficult to determine expected behavior and write effective tests. Addressing this involves dedicating time to reverse engineering, manually exploring the system, and collaborating with developers who have historical knowledge. Another challenge is brittle tests due to tightly coupled code and lack of clear separation of concerns. Refactoring may be necessary, but often isn't feasible due to time or budget constraints. I would prioritize testing critical functionalities first and use techniques such as characterization testing to establish a baseline of existing behavior before making any changes. Also, old frameworks or libraries can make it difficult to integrate modern testing tools.

Another significant issue is data setup, often involving complex database schemas or manual data entry processes. I would aim to create automated data setup scripts using tools like SQL scripts or APIs (if available). If APIs aren't available, I would explore creating my own simple APIs that interact with the database to make data setup more programmatic, for example using Python with the pyodbc library.
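A sketch of a programmatic data setup script follows. To keep it runnable without a database server, it swaps in Python's built-in `sqlite3` and an in-memory database; with pyodbc the connection would instead come from `pyodbc.connect(...)` using the legacy system's connection string. The schema and sample rows are invented for illustration.

```python
import sqlite3

# Invented schema standing in for the legacy system's tables.
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total_cents INTEGER NOT NULL
);
"""


def seed(connection):
    """Create the tables and load a small, known set of test data."""
    connection.executescript(SCHEMA)
    connection.executemany(
        "INSERT INTO customers (id, name) VALUES (?, ?)",
        [(1, "Test Customer A"), (2, "Test Customer B")],
    )
    connection.executemany(
        "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
        [(1, 1999), (1, 500), (2, 12000)],
    )
    connection.commit()


connection = sqlite3.connect(":memory:")
seed(connection)
order_counts = dict(
    connection.execute(
        "SELECT customer_id, COUNT(*) FROM orders GROUP BY customer_id ORDER BY customer_id"
    ).fetchall()
)
connection.close()
print("orders per customer:", order_counts)
```

Keeping the seed data small and explicit makes expected values in later assertions easy to derive.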

11. Discuss your experience with test data management in automated testing, especially for complex and sensitive datasets.

In automated testing, I've managed test data using various techniques. For simpler datasets, I've employed data generation tools or utilized in-memory data structures. However, for complex and sensitive datasets, I prioritize security and realism. I've worked with data masking and anonymization techniques to protect sensitive information while maintaining the data's structural integrity. I’ve used tools like Faker and custom scripts to generate realistic data that mimics production data without exposing real user information. A key aspect has been version control for test data, treating it like code to ensure reproducibility and manage changes effectively.

For instance, when testing banking applications, I've created pipelines to extract data from production databases, anonymize it, and then load it into test environments. We follow strict data governance policies during this process. I've also used database subsetting to create smaller, more manageable test datasets that represent the necessary scenarios, optimizing testing time and resources. Ensuring data compliance and preventing data breaches are paramount when handling sensitive datasets.
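The anonymization step in such a pipeline can be sketched as below. This is one possible approach, assuming keyed hashing (HMAC) for pseudonymization so that the same input always maps to the same token and joins between tables still work. The field names, the key, and the `mask_record` helper are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real pipeline would load it from a secrets manager.
SECRET_KEY = b"test-only-key"


def pseudonymize(value, key=SECRET_KEY):
    """Map a value to a stable, non-reversible token using keyed hashing."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def mask_record(record):
    """Return a copy with identifying fields replaced and other fields intact."""
    masked = dict(record)
    masked["name"] = "user_" + pseudonymize(record["name"])
    masked["email"] = pseudonymize(record["email"]) + "@example.test"
    account = record["account_number"]
    masked["account_number"] = "*" * (len(account) - 4) + account[-4:]
    return masked


original = {
    "name": "Jane Doe",
    "email": "jane.doe@example.com",
    "account_number": "1234567890",
    "balance": 2500,
}
masked = mask_record(original)
print(masked)
```

Non-identifying fields such as the balance stay untouched, which preserves the data's structure and realism for testing.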

12. How do you ensure your automated tests are reliable and maintainable over the long term, especially as the application evolves?

To ensure automated tests are reliable and maintainable, I focus on several key principles. First, I prioritize writing atomic tests that test only one specific functionality, making it easier to identify the root cause of failures. I also follow the DRY (Don't Repeat Yourself) principle by creating reusable test components and helper functions. Well-defined test data management and environment setup are crucial, for example using factories or fixtures to generate test data consistently (e.g., fixture.create_user()).

Furthermore, I aim for high test coverage targeting the most critical functionalities and APIs. I regularly review and refactor tests to keep them aligned with application changes. Utilizing Page Object Model (POM), or similar design patterns, helps to abstract UI elements, so UI changes require minimal modifications to the test suite. Additionally, integrating tests into CI/CD pipelines provides continuous feedback and ensures early detection of issues. Regularly updating dependencies and addressing flaky tests also contributes to long-term reliability.

13. Explain how you would use mocking and stubbing to isolate components during automated testing.

Mocking and stubbing are techniques used to isolate units of code during automated testing. Stubs provide controlled, simple replacements for dependencies. For example, if testing a function that relies on a database connection, a stub could return a hardcoded set of results instead of actually querying the database. This ensures the test is fast and doesn't depend on external factors.

Mocks, on the other hand, are used to verify interactions. A mock object not only replaces a dependency but also allows you to assert that specific methods were called with specific arguments a certain number of times. This allows you to confirm that the unit under test is interacting with its dependencies correctly. Using mocks and stubs lets developers test functionality without needing the whole system running, which increases confidence and speeds up development.
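The distinction can be illustrated with Python's `unittest.mock`. In this sketch, the repository object plays the stub role (it only supplies canned data) and the mailer plays the mock role (the test asserts how it was called). The `notify_overdue` function and its collaborators are hypothetical.

```python
from unittest.mock import Mock


def notify_overdue(repository, mailer):
    """Unit under test: email every customer who has an overdue invoice."""
    overdue = repository.find_overdue_invoices()
    for invoice in overdue:
        mailer.send(invoice["email"], f"Invoice {invoice['id']} is overdue")
    return len(overdue)


# Stub: returns a hardcoded result instead of querying a real database.
repository = Mock()
repository.find_overdue_invoices.return_value = [{"id": 7, "email": "a@example.test"}]

# Mock: records calls so the interaction itself can be verified.
mailer = Mock()

sent = notify_overdue(repository, mailer)

# Raises AssertionError if send() was not called exactly once with these arguments.
mailer.send.assert_called_once_with("a@example.test", "Invoice 7 is overdue")
print("notifications sent:", sent)
```

The same `Mock` class serves both purposes; the role depends on whether the test only feeds data through it or also asserts on its calls.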

14. Describe your approach to automating the testing of mobile applications on different devices and platforms.

My approach to automating mobile application testing involves utilizing a combination of tools and strategies to ensure comprehensive coverage across different devices and platforms. I typically start by identifying the critical functionalities and user flows that need to be tested. For test automation, I would choose Appium along with a programming language such as Python or Java to write test scripts. These scripts would interact with the application under test on various emulators, simulators, and real devices, covering a range of operating systems (iOS and Android) and screen sizes.

To manage device diversity, I would use a cloud-based device farm like BrowserStack or Sauce Labs for scalable and on-demand access to a wide array of real devices. Additionally, I prioritize integrating automated tests into the CI/CD pipeline, using tools like Jenkins or GitLab CI, to enable continuous testing and faster feedback cycles. This includes UI testing for functionality and visual validation, API testing to ensure backend integration, and performance testing to evaluate app responsiveness and resource usage.

15. How do you handle testing applications with dynamic UIs where elements change frequently?

Testing applications with dynamic UIs requires a robust and adaptable approach. Strategies include using explicit waits instead of implicit waits to handle element loading, implementing data-driven testing to manage changing data, and employing more resilient locators like XPath axes or relative locators that are less prone to breakage when UI elements shift.

Additionally, adopting visual validation tools can help detect unexpected UI changes. Consider using abstraction layers like the Page Object Model (POM) to encapsulate UI element interactions. This makes tests more maintainable. Furthermore, incorporate regular test maintenance and refactoring to keep the tests aligned with the evolving UI. Using techniques like try-catch blocks with retry mechanisms for element interactions might be useful to handle transient UI changes during test execution.
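The retry mechanism mentioned above might look like the following sketch. `StaleElementError` and `flaky_click` are hypothetical stand-ins for a driver's transient exception and a UI interaction; with Selenium, the `retry_on` tuple would name the relevant driver exceptions instead.

```python
import time


class StaleElementError(Exception):
    """Hypothetical transient error raised when the UI re-renders an element."""


def with_retries(action, attempts=3, delay=0.05, retry_on=(StaleElementError,)):
    """Run `action`, retrying on the listed exceptions up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the original error
            time.sleep(delay)


calls = {"count": 0}


def flaky_click():
    # Simulated interaction that fails twice before the element settles.
    calls["count"] += 1
    if calls["count"] < 3:
        raise StaleElementError("element was re-rendered")
    return "clicked"


outcome = with_retries(flaky_click)
print(outcome, "after", calls["count"], "attempts")
```

Retries should stay limited to known transient errors; retrying on every exception tends to hide real defects.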

16. What strategies do you use to identify and prioritize test cases for automation?

I identify test cases for automation by focusing on high-risk, repetitive, and time-consuming scenarios. Key factors include the frequency of execution, business criticality, and the stability of the feature. I prioritize test cases that cover core functionality, critical workflows, and areas prone to regression bugs.

Specifically, I look for test cases that are:

- High-risk: Covering critical functionality where failure would have significant impact.

- Repetitive: Executed frequently, such as regression tests.

- Stable: Testing features with minimal changes to avoid maintenance overhead.

- Data-driven: Easily parameterized with different inputs.

- Integration tests: Validating interactions between components.

I also consider the test case's complexity and the effort required to automate it. It's crucial to balance the benefits of automation with the associated costs.

17. How would you integrate security testing tools into your automated testing process?

Integrating security testing tools into an automated testing process involves several key steps. First, identify the appropriate tools based on the application's technology stack and potential vulnerabilities, such as static analysis security testing (SAST) tools like SonarQube or dynamic analysis security testing (DAST) tools like OWASP ZAP. Integrate these tools into the CI/CD pipeline. For example, use plugins to trigger security scans after each build or deployment.

Configuration is crucial. Fine-tune the rules and thresholds of the security tools to minimize false positives and focus on critical vulnerabilities. Furthermore, incorporate security testing into various stages, such as unit tests, integration tests, and end-to-end tests. The results of the security scans should be automatically reported and tracked, using tools like Jira, to ensure timely remediation of identified issues. For example:

```shell
# Example using OWASP ZAP in a pipeline
zaproxy -cmd -quickurl "http://example.com" -quickprogress
```

18. Explain how you approach cross-browser compatibility testing in an automated environment.

My approach to cross-browser compatibility testing in an automated environment involves several key steps. First, I define a matrix of target browsers and versions based on market share and user analytics. Then, I use a framework like Selenium Grid or cloud-based testing platforms (e.g., BrowserStack, Sauce Labs) to execute my automated tests across this matrix. I write tests using tools like Selenium WebDriver or Cypress that abstract away browser-specific details, but I also incorporate browser-specific assertions where necessary to validate rendering or behavior differences.

Specifically, I leverage environment variables or configuration files to parameterize my test execution, allowing me to easily switch between different browsers and versions. I also use visual testing tools like Applitools Eyes or Percy to detect subtle rendering differences across browsers. Reporting is crucial; I configure my test framework to generate detailed reports that highlight any compatibility issues along with screenshots and videos. I integrate these reports into CI/CD pipelines to provide fast feedback to developers.

19. Describe a time when you had to debug a complex issue in your automation framework. What was your approach?

During a recent project, our automation suite intermittently failed when interacting with a specific web element. The failures were inconsistent, and the logs didn't point to a clear root cause. My approach involved several steps. First, I isolated the issue by running the failing test case repeatedly in a controlled environment, eliminating external factors. Then, I implemented more detailed logging, capturing element attributes (like position and visibility), network requests/responses, and JavaScript errors just before the interaction.

I also used debugging tools built into the browser (developer console) to inspect the application state at the point of failure and stepped through the JavaScript code. After analysing the logs and the browser's behaviour, I discovered a race condition where the element was sometimes not fully rendered before the automation script attempted to interact with it. The fix was to implement an explicit wait using WebDriverWait with ExpectedConditions to ensure the element was fully loaded and interactable before proceeding.
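The idea behind an explicit wait can be shown without a browser: poll a condition until it holds or a timeout expires. This is a library-independent sketch of that polling pattern, not Selenium's implementation; the function name and default values are chosen for illustration.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Poll condition() until it returns a truthy value or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # hand back whatever the condition produced
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

In a real suite the condition would check that the element is present, visible, and enabled, which replaces a fixed sleep with a wait that ends as soon as the page is ready.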

20. How do you measure the effectiveness of your automated testing efforts?

I measure the effectiveness of automated testing efforts through several key metrics. Coverage tracks the percentage of code, features, or requirements exercised by automated tests; higher coverage suggests more thorough testing, although it does not guarantee that the assertions are meaningful. Defect detection rate compares how many defects are found by automated tests with how many are found by manual testing or in production; a high rate indicates effective automated tests. Test execution time and cost per test case help assess efficiency, and reducing either is a sign of improvement. Test stability indicates how reliable the automated tests are, because frequent flaky tests lead to distrust in the suite.

Other metrics to consider are Mean Time To Failure (MTTF) and Mean Time To Recovery (MTTR), applied to the automated test suite itself: how long the suite runs between breakages and how quickly a broken suite is repaired. A longer MTTF and a shorter MTTR indicate a more stable and maintainable framework.
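Several of these metrics reduce to simple arithmetic over run history. The sketch below shows one possible set of definitions; the function names and the rule that a test counts as flaky when it both passed and failed across runs of the same code are assumptions, and teams often define these differently.

```python
def pass_rate(results):
    """results: list of booleans, True for a passing test."""
    return sum(results) / len(results) if results else 0.0


def flaky_tests(history):
    """history maps a test name to its pass/fail results across repeated runs.

    A test is treated as flaky here when it has both passed and failed.
    """
    return sorted(name for name, runs in history.items() if len(set(runs)) > 1)


def defect_detection_percentage(found_by_automation, found_elsewhere):
    """Share of all known defects that the automated tests caught."""
    total = found_by_automation + found_elsewhere
    return 100.0 * found_by_automation / total if total else 0.0
```

For example, 30 defects caught by automation and 10 found manually or in production gives a detection percentage of 75.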

21. Explain how you would automate the testing of accessibility features in a web application.

To automate accessibility testing, I would leverage tools like axe-core, pa11y, or Lighthouse. These tools can be integrated into our CI/CD pipeline to run automated checks on each build. For example, using axe-core with Selenium, we could write tests that navigate through the application and assert that no accessibility violations are found.

Specifically, the process would involve: 1. Integrating the accessibility testing library (e.g., axe-core) into the test suite. 2. Writing test cases that target specific components or pages. 3. Using assertions to verify that elements have proper ARIA attributes, sufficient color contrast, keyboard navigability, and correct semantic structure. 4. Running these tests as part of the automated build process to identify and address accessibility issues early in the development lifecycle. In a Node.js project, for example, setup would start with npm install @axe-core/webdriverjs selenium-webdriver, and the test file would begin with const AxeBuilder = require('@axe-core/webdriverjs'); const webdriver = require('selenium-webdriver');
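Once a scan has run, the assertion step usually means filtering the reported violations by impact. The sketch below assumes the results are available as a dictionary shaped like an axe-core results object, with a `violations` list whose entries carry `id`, `impact`, and `nodes`. The function name and the sample data are hypothetical and hand-written, not real tool output.

```python
# axe-core impact levels, from least to most severe.
IMPACT_ORDER = ["minor", "moderate", "serious", "critical"]


def violations_at_or_above(results, min_impact="serious"):
    """Return (rule id, impact, affected node count) for qualifying violations."""
    floor = IMPACT_ORDER.index(min_impact)
    selected = []
    for violation in results.get("violations", []):
        impact = violation.get("impact")
        if impact in IMPACT_ORDER and IMPACT_ORDER.index(impact) >= floor:
            selected.append((violation["id"], impact, len(violation["nodes"])))
    return selected


# Hand-written sample for illustration only.
sample_results = {
    "violations": [
        {"id": "color-contrast", "impact": "serious", "nodes": [{}, {}]},
        {"id": "region", "impact": "moderate", "nodes": [{}]},
        {"id": "image-alt", "impact": "critical", "nodes": [{}]},
    ]
}
```

A test could then assert that the returned list is empty, failing the build only for serious or critical issues while lower-impact findings are tracked separately.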

22. Describe your experience with performance testing tools and techniques for identifying bottlenecks.

I have experience using tools like JMeter and Gatling for performance testing. With JMeter, I've created test plans to simulate various user loads and analyze response times, throughput, and error rates. Gatling has been useful for defining load scenarios as code, allowing for better version control and collaboration. I've also used browser developer tools to identify front-end bottlenecks like slow-loading images or inefficient JavaScript.

Techniques I use include load testing to determine system limits, stress testing to identify breaking points, and soak testing to observe performance over extended periods. Analyzing metrics like CPU utilization, memory consumption, and database query times helps me pinpoint bottlenecks. I use profiling tools (e.g., perf on Linux, or profilers built into IDEs) to examine code execution and identify slow functions or algorithms. For example, if database queries are slow, I'd use EXPLAIN to understand query execution plans and identify missing indexes or inefficient joins.
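Response-time analysis usually relies on percentiles rather than averages, because a few slow requests can hide behind a healthy mean. The following sketch uses the nearest-rank method; the function names and the choice of p50 and p95 are illustrative assumptions.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(latencies_ms):
    """Summarize response times in milliseconds."""
    return {
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "max": max(latencies_ms),
    }
```

A large gap between p50 and p95 is a common sign of an intermittent bottleneck such as lock contention or a slow query on a subset of requests.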

23. How do you ensure that your automated tests are independent and do not interfere with each other?

To ensure automated tests are independent, I focus on several key practices. First, each test should set up its own initial state and clean up after itself. This can involve creating unique data for each test run and deleting that data or resetting the database to a known state after the test finishes. Avoid sharing resources or data between tests. For example, if you are testing a user registration function, each test should register a new unique user, rather than relying on pre-existing accounts.

Second, consider using test isolation techniques. This might involve running tests in parallel in separate environments or using mocking and stubbing to isolate components under test. This prevents shared resources from influencing test outcomes. A good practice is also to randomize your test execution order to ensure that tests that run earlier do not affect the tests that run later. Proper setup and teardown routines are crucial for maintaining test independence.
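A small sketch of these practices, using Python's standard `unittest` module: each test generates its own unique user and registers a cleanup step, so no test depends on data left behind by another. The `InMemoryUserStore` class and the shared `STORE` object are hypothetical stand-ins for a real system under test.

```python
import unittest
import uuid


class InMemoryUserStore:
    """Hypothetical stand-in for the system under test."""

    def __init__(self):
        self.users = {}

    def register(self, email):
        if email in self.users:
            raise ValueError("duplicate user")
        self.users[email] = {"email": email}

    def delete(self, email):
        self.users.pop(email, None)


# Shared on purpose, to mimic a shared test environment.
STORE = InMemoryUserStore()


def unique_email():
    return f"user-{uuid.uuid4().hex}@example.test"


class RegistrationTests(unittest.TestCase):
    def setUp(self):
        # Fresh data per test, removed again even if the test fails.
        self.email = unique_email()
        self.addCleanup(STORE.delete, self.email)

    def test_register_new_user(self):
        STORE.register(self.email)
        self.assertIn(self.email, STORE.users)

    def test_duplicate_registration_rejected(self):
        STORE.register(self.email)
        with self.assertRaises(ValueError):
            STORE.register(self.email)
```

Because each test creates and removes its own user, the two tests pass in either order and leave the shared store empty.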

24. What are the key considerations when choosing an automation testing tool or framework for a specific project?

When selecting an automation testing tool or framework, several factors are crucial. Project requirements dictate the capabilities the tool must have. Consider the application's technology stack (e.g., web, mobile, API) and choose a tool that supports it. Evaluate the team's existing skills, since a tool that requires extensive learning might slow down progress. Budget is also a key consideration: some tools are open-source and free, while others require licensing fees. Reporting capabilities, integration with CI/CD pipelines, and the scalability of the tool are also important.

Other factors include ease of use, maintenance effort, and community support. A tool with good documentation and a large community provides better resources for troubleshooting and learning. Also, consider if the tool or framework allows for data-driven testing and keyword-driven testing, if the project requires those approaches. Evaluate how well the tool handles dynamic elements and asynchronous operations within the application under test. Finally, think about long-term maintainability. A well-structured and adaptable framework will save time and effort in the long run.

25. How would you approach testing the resilience of a system to failures using automated techniques?

To test system resilience using automation, I'd employ techniques like chaos engineering. This involves injecting controlled failures into the system to observe its behavior. Specifically, I would:

- Identify critical services: Determine the core components vital for the system's operation.

- Define failure scenarios: Simulate events like server crashes, network latency, or database outages.

- Automate failure injection: Use tools or scripts to automatically introduce these failures into the environment. Common tools for this include Gremlin and Chaos Toolkit.

- Monitor system behavior: Continuously track key metrics (e.g., error rates, response times) to detect any degradation in performance or availability. Tools like Prometheus and Grafana can be beneficial for this.

- Validate recovery mechanisms: Ensure that the system's automatic recovery mechanisms (e.g., failover, retries) function as expected. Code examples might use retry libraries with configurable backoff strategies.

- Analyze and improve: Based on the results, identify weaknesses in the system's resilience and implement improvements to address them. This could involve enhancing redundancy, improving error handling, or optimizing recovery processes.
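The failure-injection and recovery-validation steps above can be sketched at small scale. Here a hypothetical `FlakyService` injects a fixed number of connection failures, and `call_with_retry` applies exponential backoff. Both names, the delay values, and the choice of `ConnectionError` are assumptions for illustration; a real chaos experiment would inject failures at the infrastructure level.

```python
import time


class FlakyService:
    """Hypothetical dependency that fails a fixed number of times before succeeding."""

    def __init__(self, failures_to_inject):
        self.remaining_failures = failures_to_inject
        self.calls = 0

    def fetch(self):
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("injected failure")
        return "ok"


def call_with_retry(operation, max_attempts=4, base_delay=0.01, sleep=time.sleep):
    """Retry operation with exponential backoff; re-raise after the final attempt."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise
            # Delay doubles on each retry: base, 2x base, 4x base, ...
            sleep(base_delay * 2 ** (attempt - 1))
```

Passing the `sleep` function as a parameter lets a test record the backoff delays without actually waiting, which keeps the resilience check fast and deterministic.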

26. Discuss how you would handle version control and collaboration in an automated testing project.

For version control and collaboration in an automated testing project, I would primarily use Git and a platform like GitHub or GitLab. All test scripts, configuration files, and test data would be stored in a repository. Branches would be used for developing new features or fixing bugs, ensuring that the main branch (e.g., main or develop) remains stable. Pull requests would be mandatory for merging code, requiring code review by other team members before integration. This promotes knowledge sharing and early detection of potential issues. Continuous Integration (CI) pipelines would automatically run tests upon each commit or pull request to validate code changes and provide immediate feedback.

To facilitate collaboration, I'd emphasize clear commit messages and documentation. A standardized testing framework and coding style are crucial. Regularly scheduled team meetings and code review sessions would improve communication and ensure that everyone is aligned on the project's goals and progress. For example, pytest could be used with a well-defined directory structure, with linting tools like flake8 enforcing the style guide, which keeps the code readable and diffs free of style-only noise. Utilizing git blame can help to understand code origins during debugging.

27. Explain how you would automate tests for a system that uses multiple databases and data sources.

Automating tests for a system with multiple databases and data sources involves several key strategies. Firstly, data mocking and stubbing allow you to isolate components and simulate responses from different data sources, improving test speed and reliability. We can use tools like Mockito or create custom mock objects in code. Secondly, use database seeding to create consistent test data across all databases before each test run, so that tests run against a known state. A tool like dbmate can be useful for managing database schemas and migrations. Finally, implement integration tests to verify data flow and consistency between the databases. These can use a test runner like pytest, with plugins that manage database connections and transactions, to assert on the data in each database after an operation.
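Seeding and cross-database assertions can be illustrated with two in-memory SQLite databases from Python's standard library. The table names, columns, and the `mismatched_orders` helper are hypothetical; a real system would connect to its actual databases and seed them through migrations or fixtures.

```python
import sqlite3


def seed(orders_db, billing_db):
    """Create schemas and load the same known state into both databases."""
    orders_db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total_cents INTEGER)")
    billing_db.execute("CREATE TABLE invoices (order_id INTEGER PRIMARY KEY, amount_cents INTEGER)")
    orders_db.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 1500), (2, 4200)])
    billing_db.executemany("INSERT INTO invoices VALUES (?, ?)", [(1, 1500), (2, 4200)])


def mismatched_orders(orders_db, billing_db):
    """Return order ids whose invoice is missing or has a different amount."""
    invoices = dict(billing_db.execute("SELECT order_id, amount_cents FROM invoices"))
    return [
        order_id
        for order_id, total in orders_db.execute("SELECT id, total_cents FROM orders ORDER BY id")
        if invoices.get(order_id) != total
    ]


# Two separate in-memory databases stand in for independent data sources.
orders_db = sqlite3.connect(":memory:")
billing_db = sqlite3.connect(":memory:")
seed(orders_db, billing_db)
```

An integration test would run the operation under test and then assert that `mismatched_orders` returns an empty list, confirming the two stores still agree.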

Expert Automation Testing interview questions

1. How would you design an automation framework to test a highly dynamic web application with frequently changing UI elements?

To design an automation framework for a highly dynamic web application, I would prioritize flexibility and maintainability. I'd use a data-driven or keyword-driven approach, storing UI element locators (XPath, CSS selectors) in external files or databases. This allows easy updates when UI elements change without modifying the core test scripts. I'd also implement a robust wait strategy using explicit waits with dynamic conditions to handle asynchronous loading and rendering.

Key considerations include using relative locators where possible, implementing a retry mechanism for flaky tests, and incorporating self-healing locators (using multiple strategies to find an element). A modular design with reusable components and a reporting system that clearly indicates which locators failed will also be crucial. I would use a Page Object Model (POM) to encapsulate UI elements and their related operations, reducing code duplication and enhancing maintainability. For example, driver.findElement(By.xpath("//button[@id='dynamicButton']")).click(); could be encapsulated in a POM method.
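The combination of a page object with fallback locators might look like the sketch below. It uses a hypothetical `FakeDriver` in place of a real browser driver so the lookup logic can be shown in isolation; the class names, the `find` method, and all locator values are assumptions for illustration.

```python
class ElementNotFound(Exception):
    pass


class FakeDriver:
    """Stand-in for a browser driver; it only knows the locators it was given."""

    def __init__(self, present):
        self.present = set(present)

    def find(self, strategy, value):
        if (strategy, value) not in self.present:
            raise ElementNotFound(f"{strategy}={value}")
        return f"<element {strategy}={value}>"


class LoginPage:
    # Locators in order of preference; all values are illustrative.
    SUBMIT_LOCATORS = [
        ("id", "dynamicButton"),
        ("css", "button[data-test='submit']"),
        ("xpath", "//button[text()='Submit']"),
    ]

    def __init__(self, driver):
        self.driver = driver

    def submit_button(self):
        """Try each locator in turn and report every one that failed."""
        failed = []
        for strategy, value in self.SUBMIT_LOCATORS:
            try:
                return self.driver.find(strategy, value)
            except ElementNotFound:
                failed.append((strategy, value))
        raise ElementNotFound(f"no locator matched: {failed}")
```

Tests call `LoginPage(driver).submit_button()` and never touch locators directly, so a UI change means updating one list, and the error message names exactly which locators stopped matching.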

2. Describe a situation where you had to choose between different automation tools or frameworks. What factors influenced your decision?

In a recent project, we had to automate the testing of our REST APIs. We considered using Postman with Newman, and REST-assured (Java library). The primary factors influencing our decision were ease of use, integration with our existing CI/CD pipeline, and the learning curve for the team.

Ultimately, we chose REST-assured. While Postman/Newman offered a quicker start and a user-friendly GUI, REST-assured provided better integration with our existing Java-based testing framework and allowed for more complex test scenarios through code. The team already had Java expertise, so the learning curve wasn't a significant barrier. Furthermore, REST-assured offered greater flexibility in terms of data handling and custom assertions, which were crucial for the project's specific requirements.

3. Explain how you would implement a robust reporting mechanism in your automation framework to provide actionable insights to developers and stakeholders.

To implement a robust reporting mechanism, I would integrate the automation framework with reporting libraries like Allure or Extent Reports. These libraries allow capturing detailed test execution information, including steps, screenshots, logs, and timings. The framework would generate interactive HTML reports with features like filtering, sorting, and trend analysis, making it easy for developers and stakeholders to quickly identify failures, performance bottlenecks, and overall test coverage.

For actionable insights, the reports would include:

- Clear error messages: Pinpointing the root cause of failures.

- Screenshots/Videos: For visual validation and debugging.

- Performance metrics: To track execution time and resource usage.

- Test execution history: To identify regressions.

- Integration with CI/CD: Automatically generate and publish reports after each build. I'd ensure the system is configurable to send notifications (email, Slack) to relevant parties upon test completion or failure, providing a direct link to the report. For example, if using